Abstract

Background Health service providers must understand their digital health capability if they are to drive digital transformation in a strategic and informed manner. Little is known about the assessment and benchmarking of digital maturity or capability at scale across an entire jurisdiction. The public health care system across the state of Queensland, Australia has an ambitious 10-year digital transformation strategy.

Objective The aim of this research was to evaluate the digital health capability in Queensland to inform digital health strategy and investment.

Methods The Healthcare Information and Management Systems Society Digital Health Indicator (DHI) was used via a cross-sectional survey design to assess four core dimensions of digital health transformation: governance and workforce; interoperability; person-enabled health; and predictive analytics across an entire jurisdiction simultaneously. The DHI questionnaire was completed by each health care system ( n = 16) within Queensland between February and July 2021. The total DHI is scored from 0 to 400 and each dimension is scored from 0 to 100.

Results The results reveal a variation in DHI scores reflecting the diverse stages of health care digitization across the state. The average DHI score across sites was 143 (range 78–193; SD 35.3), which is similar to other systems in the Oceania region and global public systems but below the global private average. Governance and workforce was on average the highest scoring dimension (x̅ = 54), followed by interoperability (x̅ = 46), person-enabled health (x̅ = 36), and predictive analytics (x̅ = 30).

Conclusion The findings were incorporated into the new digital health strategy for the jurisdiction. As one of the largest single simultaneous assessments of digital health capability globally, the findings and lessons learnt offer insights for policy makers and organizational managers.

Keywords: digital health, digital capability, digital maturity, digital hospitals, digital transformation, health information management, organizational characteristics

Background and Significance

Health care delivery is increasingly challenging as demand for care escalates against a static resourcing profile. Digital health care platforms are seen as a solution to deliver health care at scale. 1 2 Large fiscal investments are required to implement these digital solutions. However, it can be confusing for health care organizations to plan and justify such large investments.

Digital maturity is “the extent to which health IT is an enabler of high-quality care through supporting improvements to service delivery and patient experience.” 3 Digital health maturity evaluations help identify the level of capability across a series of “dimensions” to understand different aspects from business processes and organizational characteristics, to information and people. 4 Digital maturity models (MM) provide structure to a maturity or capability assessment and have risen in use 4 in response to the increased drive to digitize health care. Limitations of current MMs include a lack of agreement over which dimensions should be assessed, 5 an overly technological focus, 6 a narrow scope, and the lack of a peer-reviewed evidence base. 7 MMs are often developed for specific clinical areas or information systems, 8 yet there is a growing need for MMs to include all areas and subsystems in a health care organization 9 to capture the complex reality of digital transformations. 10

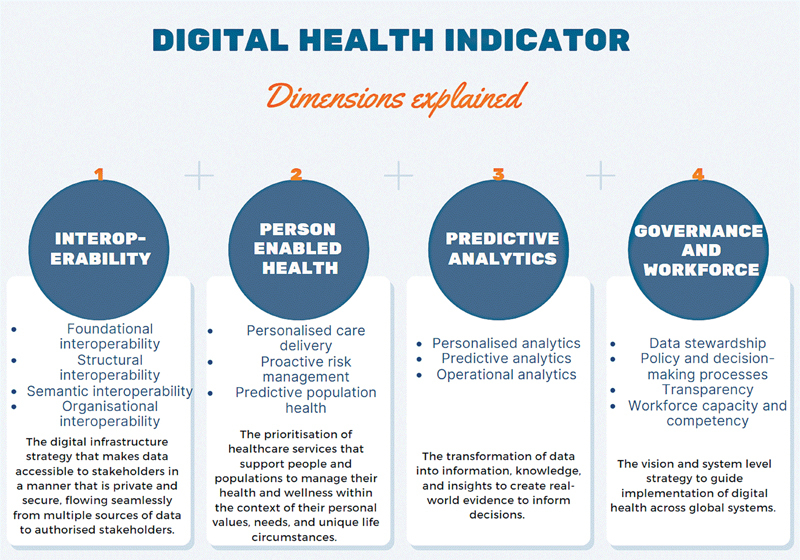

The digital health indicator (DHI) from the Healthcare Information and Management Systems Society (HIMSS) documents the digital capability of health care services (beyond simple assessments of the presence or absence of electronic medical record systems) using outcome driven, specific and balanced measures. 11 The DHI is a self-assessment tool 11 which assesses four key dimensions of digital transformation: Interoperability; Person-Enabled Health; Predictive Analytics; and Governance and Workforce ( Fig. 1 ). These four dimensions recognize the need for data to be mobilized across the health system to enable advanced analytics for outcome tracking and risk management, and to cue health care teams to focus on preventative action that helps keep people and populations well. 11

Fig. 1. Digital health indicator dimensions.

The DHI aims to provide a baseline understanding of where the health care organization has strengths and opportunities to improve as it progresses in digital transformation. Launched in the global market in 2019, the DHI was developed from a critical analysis of published, peer-reviewed digital health literature, and was tested in health care organizations. 11 The DHI is currently adopted by many global health systems across jurisdictions such as Canada, United States of America, England, Saudi Arabia, Australia, New Zealand, Korea, Indonesia, Japan, Taiwan, and Hong Kong. It was chosen over other MMs or capability frameworks because the dimensions measured extend beyond technological implementations, include patient-centeredness, which is often underrepresented in existing models, 12 and provide the ability for global benchmarking. As the DHI is a new tool, there have been limited published results of how it has been applied to health services to shape digital transformation strategies and investment decisions.

In the state of Queensland, Australia, an ambitious digital transformation of the public health care system is underway, aiming to provide a single electronic record to all consumers as a key foundational component. 13 To understand progress made toward this digital health vision, an evaluation was undertaken with the research question: What is the digital health capability of the Queensland health system? Our hypothesis was that digital capability would vary widely across the jurisdiction.

Objective

The aim of this research was to apply the DHI 11 to evaluate the digital health capability in Queensland to inform digital health strategy and investment.

Methods

A cross-sectional survey design involving the DHI was employed in the state of Queensland. The study received multisite ethics approval from the Royal Brisbane and Women's Hospital [ID: HREC/2020/QRBW/66895], followed by site-specific research governance approvals.

Setting

Queensland Health (QH) provides state-wide health care to over 5 million people, covering a geographical area 2.5 times the size of Texas, United States. At an annual cost of over AUD$29 billion, QH funds universal free health care across acute inpatient care; emergency care; mental health and alcohol and other drug services; outpatient care; prevention, primary and community care; ambulance services; and sub- and non-acute care. 14 Queensland is also serviced by multiple private hospitals, non-government organizations, Aboriginal and Torres Strait Islander Community Controlled Health Organizations, general practices, primary health networks, and charitable organizations. 15 In 2018 to 2019, 57% of inpatient care was in Queensland's public hospitals. 15

The population is unevenly dispersed, ranging from metropolitan areas in the southeast corner of the state, through regional sites with large base hospitals, to considerably isolated remote and ultra-remote communities with a fly-in fly-out health workforce. To effectively manage health services across a large geographically dispersed state, QH decentralized its operations into 16 regionally divided independent statutory bodies called health care systems. Each health care system has a variable number of health services covering the full spectrum of complexity from quaternary academic hospitals to small rural hospitals. 13 16 Publicly funded health care systems were assessed in this study with a focus on the digital capabilities of the hospitals contained within, and not the private hospitals across the systems.

At the time of the assessment, 15 individual hospitals across nine health care systems were digital hospitals with the single instance Cerner integrated Electronic Medical Record (EMR) system. The full stack of advanced EMR capability covers the patient journey across various health care sites, and is integrated with computerized provider order entry, ePrescribing, and clinical decision support systems. 17 The remaining hospitals use paper-based clinical documentation with various levels of infrastructure, connectivity, and point of care technologies for integration of business, patient administration, diagnostics and virtual care systems.

Data Collection

Data were collected using the DHI electronic self-assessment questionnaire. The DHI consists of 121 indicator statements measured on a five-point scale ranging from not enabled to fully enabled covering the dimensions of digital transformation. Organizational data are collected using 10 demographic questions which do not contribute to the overall DHI score.

The survey was administered electronically to each individual site and completed between February and July 2021. Survey respondents were voluntary staff representatives from each site, who (1) had an awareness of digital health across the health care system, (2) had the ability to network with the local workforce to complete the survey accurately, and (3) provided informed consent. The survey respondents included chief information officers ( n = 8), chief digital officers ( n = 2), clinical directors of digital health ( n = 2), directors of information communication technologies ( n = 2), an executive director of medical services ( n = 1), and a chief digital director medical services ( n = 1). Respondents required at least 2 hours to complete the survey, receiving support and clarification from HIMSS to avoid partial completions.

Data Analysis

The DHI score was calculated for each health care system using prebuilt algorithms that are proprietary to HIMSS. Through application of these algorithms, each DHI dimension (i.e., interoperability; person-enabled health; predictive analytics; and governance and workforce) is scored from 0 to 100. A proprietary algorithm is then applied to calculate a total score (i.e., the total score is not the sum of the dimension scores). The dimension level scores and the overall DHI scores were exported to IBM SPSS Statistics (Version 28) where a series of analyses were performed.

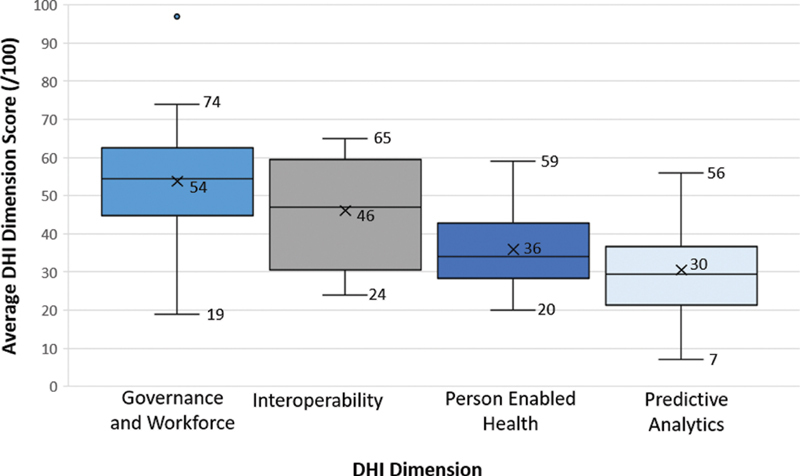

Dimension Capability

A dimension level analysis was performed to provide granular insights into the strengths and weaknesses in digital capability. The scores of each DHI dimension per site were aggregated, summarized with descriptive statistics, and visualized using box and whisker plots.
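The quantities behind a box and whisker plot can be illustrated with a short sketch. The scores below are hypothetical and are not study data, and the `inclusive` quantile method is an assumption; SPSS may use a different quartile definition, so quartiles could differ slightly from those in the published figures.

```python
import statistics


def five_number_summary(scores):
    """Return (min, Q1, median, Q3, max), the values a box plot displays."""
    q1, median, q3 = statistics.quantiles(scores, n=4, method="inclusive")
    return min(scores), q1, median, q3, max(scores)


# Hypothetical dimension scores (0-100) for five sites; NOT the study data.
example_scores = [22, 35, 41, 48, 60]

low, q1, median, q3, high = five_number_summary(example_scores)
print(f"min={low}, Q1={q1}, median={median}, Q3={q3}, max={high}")
print(f"mean={statistics.mean(example_scores):.1f}")
```

The box spans Q1 to Q3, the line inside the box marks the median, and the whiskers extend toward the minimum and maximum.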

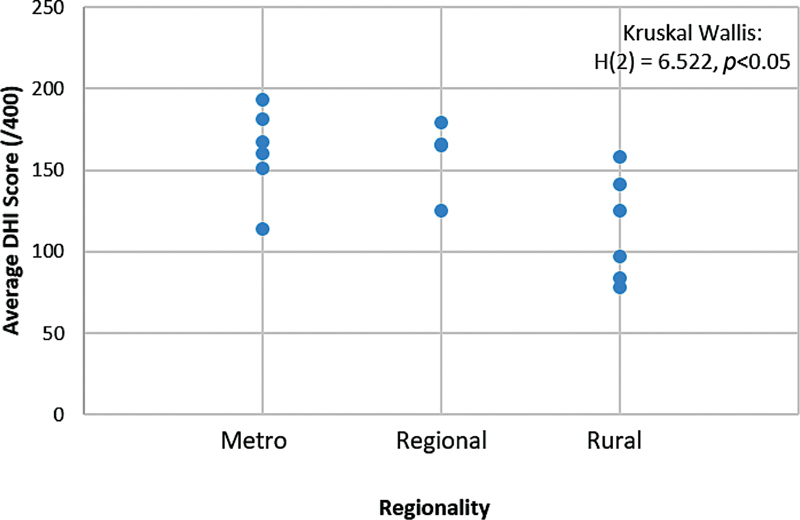

Regional Capability

A region level analysis was conducted to examine differences in digital capability due to the geographical spread of Queensland. This required the regionality of each health care system to be determined. Applying the Modified Monash Model 2019 tool (MMM) in the Health Workforce Locator, 18 six sites were determined to be metro (MMM1), four regional (MMM2), and six rural (MMM3–7). A Kruskal–Wallis test was performed to identify if there were any statistical differences among the regions. Mann-Whitney U tests were then performed to identify differences between all combinations of groups (i.e., metro vs. regional; metro vs. rural; regional vs. rural).
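The region grouping and the Kruskal–Wallis statistic can be sketched as follows. This is an illustration only: the site scores are hypothetical, the function names are our own, and the H statistic is computed without a tie correction, whereas statistical packages such as SPSS typically apply one.

```python
from collections import defaultdict


def region_from_mmm(category):
    """Map a Modified Monash category (1-7) to the three regions used here."""
    if category == 1:
        return "metro"
    if category == 2:
        return "regional"
    if 3 <= category <= 7:
        return "rural"
    raise ValueError(f"unknown MMM category: {category}")


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups):
    """Kruskal-Wallis H statistic (no tie correction) for a list of groups."""
    pooled = [value for group in groups for value in group]
    ranks = average_ranks(pooled)
    n = len(pooled)
    total, start = 0.0, 0
    for group in groups:
        rank_sum = sum(ranks[start:start + len(group)])
        total += rank_sum ** 2 / len(group)
        start += len(group)
    return 12.0 / (n * (n + 1)) * total - 3 * (n + 1)


# Hypothetical (MMM category, DHI score) pairs; NOT the study data.
sites = [(1, 170), (1, 150), (2, 165), (2, 140), (5, 110), (6, 95)]
by_region = defaultdict(list)
for category, score in sites:
    by_region[region_from_mmm(category)].append(score)

h = kruskal_wallis_h(list(by_region.values()))
print(f"H({len(by_region) - 1}) = {h:.3f}")
```

With three regions the statistic has two degrees of freedom, which is why the results are reported as H (2).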

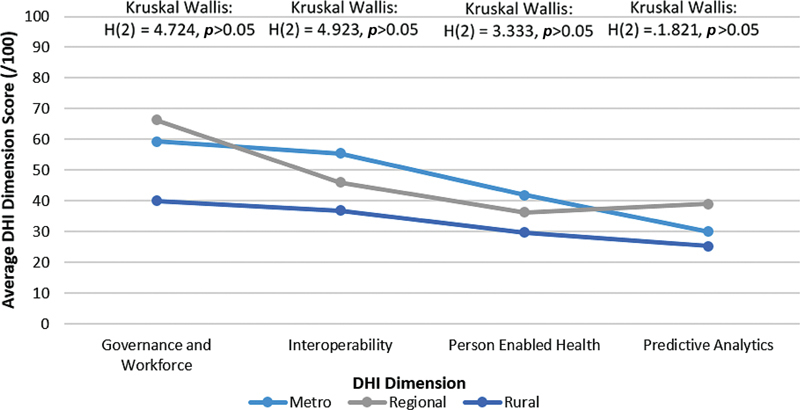

Regional Dimension Analysis

A region dimension level analysis was conducted to examine if regional areas differed in their evaluations of the digital capability dimensions. For each region classification, the DHI dimension scores (i.e., interoperability; person-enabled health; predictive analytics; and governance and workforce) were extracted. A Kruskal–Wallis test was performed to identify if there were any statistical differences among regions.

Digital Hospital Analysis

A digital hospital analysis was conducted to examine if EMR implementation impacted digital capability between health care systems. The DHI score for each health care system was grouped (EMR vs. non-EMR) and a Mann-Whitney U test performed to identify any statistically significant differences between EMR sites and non-EMR sites.
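A minimal sketch of the Mann-Whitney U statistic for a two-group comparison of this kind is given below. The scores are hypothetical and are not study data, and reporting the smaller of the two U values is a common convention that we assume here rather than a documented detail of the SPSS output used in the study.

```python
def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(sample_a, sample_b):
    """Return the smaller of the two Mann-Whitney U statistics."""
    n_a, n_b = len(sample_a), len(sample_b)
    ranks = average_ranks(list(sample_a) + list(sample_b))
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    u_b = n_a * n_b - u_a
    return min(u_a, u_b)


# Hypothetical DHI scores; NOT the study data.
emr_scores = [160, 172, 181, 190]
non_emr_scores = [98, 120, 131, 165]

print(f"U = {mann_whitney_u(emr_scores, non_emr_scores)}")
```

A U value close to zero indicates that almost every score in one group exceeds almost every score in the other.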

External Benchmarking

External comparisons were performed to benchmark the digital capability of QH globally. This involved examining archival data provided by HIMSS for the DHI scores reported by other private and public health systems across Oceania ( n = 7) and North America ( n = 10). For the first comparison, a Kruskal–Wallis test was performed to identify if there were any differences in DHI scores among the continental areas. Mann-Whitney U tests were then performed to identify differences between all combinations of groups (i.e., North America vs. QH; North America vs. Oceania; QH vs. Oceania). For the second comparison, Mann-Whitney U tests were performed to identify if there were any statistical differences between QH's DHI score and global DHI scores, global public DHI scores, and global private DHI scores. For the final comparison, a global regionality level analysis was performed; Kruskal–Wallis and Mann-Whitney U tests were performed to identify if there were any differences between global metro and rural DHI scores and QH's metro, rural, and regional DHI scores.

Results

Analysis of Queensland's Digital Capability

The overall digital capability, denoted by the mean DHI score across the health care systems, was 143 (out of 400), ranging from 78 for the health care system with the lowest digital capability to 193 for the system with the highest.

The dimension level analysis ( Fig. 2 ) identified that Governance and Workforce was on average the highest scoring dimension (x̅ = 54), although there was a potential outlier present. This was followed by interoperability (x̅ = 46), person-enabled health (x̅ = 36), and predictive analytics (x̅ = 30).

Fig. 2. DHI dimensional scores for 16 health care services in Queensland, Australia. DHI, digital health indicator.
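A quick arithmetic check on the reported means is consistent with the statement in the Methods that the total DHI score is not the sum of the dimension scores. Because the mean of a sum equals the sum of the means, a simple additive total would have produced a mean close to 166 rather than the reported 143. The sketch below uses only the rounded values reported in this paper; the variable names are our own.

```python
# Rounded dimension means as reported in the Results (each scored 0-100).
dimension_means = {
    "governance and workforce": 54,
    "interoperability": 46,
    "person-enabled health": 36,
    "predictive analytics": 30,
}
reported_mean_total = 143  # reported mean DHI score (scored 0-400)
max_total = 400

sum_of_dimension_means = sum(dimension_means.values())
share_of_max = reported_mean_total / max_total

print(f"Sum of dimension means: {sum_of_dimension_means}")
print(f"Reported mean total:    {reported_mean_total}")
print(f"Mean total as a share of the maximum: {share_of_max:.1%}")
```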

Fig. 3 illustrates the region level analysis comparing DHI scores of metro, regional, and rural areas. A Kruskal–Wallis test showed that the regionality of the site affects the DHI scores ( p < 0.05). Post-hoc Mann-Whitney U tests ( Table 1 ) indicated that the DHI scores of health care systems in metro regions did not differ significantly from those in regional regions ( p > 0.05), although metro and regional regions received higher DHI scores than rural regions ( p < 0.05).

Fig. 3. DHI scores comparing health care systems across geographic regions. DHI, digital health indicator.

Table 1. Region level analysis.

| | Metro vs. regional | | Metro vs. rural | | Regional vs. rural | |
|---|---|---|---|---|---|---|
| | Metro | Regional | Metro | Rural | Regional | Rural |
| N | 6 | 4 | 6 | 6 | 4 | 6 |
| Mean | 161 | 159 | 161 | 114 | 159 | 114 |
| Median | 163 | 166 | 163 | 111 | 166 | 111 |
| Mann-Whitney result | U = 11, z = −0.213 | | U = 4, z = 2.242 | | U = 2.5, z = −2.032 | |
| p-Value | p > 0.05 | | p < 0.05 | | p < 0.05 | |
| Outcome | Metro = Regional | | Metro > Rural | | Regional > Rural | |
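As a consistency check, the group sizes and rounded group means in Table 1 can be pooled into a size-weighted mean, which should land close to the overall mean DHI score of 143. This sketch uses only values reported in the paper; small differences are expected because the group means are rounded.

```python
# (number of health care systems, mean DHI score) per region, from Table 1.
region_summary = {
    "metro": (6, 161),
    "regional": (4, 159),
    "rural": (6, 114),
}

total_sites = sum(n for n, _ in region_summary.values())
pooled_mean = sum(n * mean for n, mean in region_summary.values()) / total_sites

print(f"Sites: {total_sites}")
print(f"Size-weighted mean DHI score: {pooled_mean:.1f}")
```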

The region dimension level analysis ( Fig. 4 ) identified similar results to the dimension level analysis ( Fig. 2 ), with Governance and Workforce scoring higher than interoperability, person-enabled health, and predictive analytics, although the Kruskal–Wallis test ( Table 2 ) indicated that the scores for each dimension were comparable across regions ( p > 0.05).

Fig. 4. DHI dimensional scores comparing health care systems across different geographic regions. DHI, digital health indicator.

Table 2. Region-dimension level analysis.

| | Governance and workforce | | | Interoperability | | | Person-enabled health | | | Predictive analytics | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Metro | Reg. | Rural | Metro | Reg. | Rural | Metro | Reg. | Rural | Metro | Reg. | Rural |
| N | 6 | 4 | 6 | 6 | 4 | 6 | 6 | 4 | 6 | 6 | 4 | 6 |
| Mean | 59 | 66 | 40 | 56 | 46 | 37 | 42 | 36 | 30 | 30 | 39 | 25 |
| Median | 62 | 60 | 44 | 58 | 47 | 35 | 41 | 36 | 29 | 30 | 40 | 27 |
| Kruskal–Wallis result | H (2) = 4.724 | | | H (2) = 4.923 | | | H (2) = 3.333 | | | H (2) = 1.821 | | |
| p-Value | p > 0.05 | | | p > 0.05 | | | p > 0.05 | | | p > 0.05 | | |

Abbreviation: Reg, regional.
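The relationship between the H statistics in Table 2 and the reported p-values can be illustrated under the usual chi-square approximation. For two degrees of freedom the chi-square survival function has the closed form exp(−H/2), so no statistics library is needed. This is a sketch under the assumption that asymptotic p-values were used; with groups as small as four to six sites, exact p-values may differ.

```python
import math


def kruskal_wallis_p_df2(h):
    """Asymptotic p-value for a Kruskal-Wallis H statistic with 2 degrees of freedom."""
    return math.exp(-h / 2)


# H(2) statistics as reported in Table 2.
reported_h = {
    "governance and workforce": 4.724,
    "interoperability": 4.923,
    "person-enabled health": 3.333,
    "predictive analytics": 1.821,
}

for dimension, h in reported_h.items():
    print(f"{dimension}: H(2) = {h}, approximate p = {kruskal_wallis_p_df2(h):.3f}")
```

Under this approximation all four p-values exceed 0.05, in line with the table.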

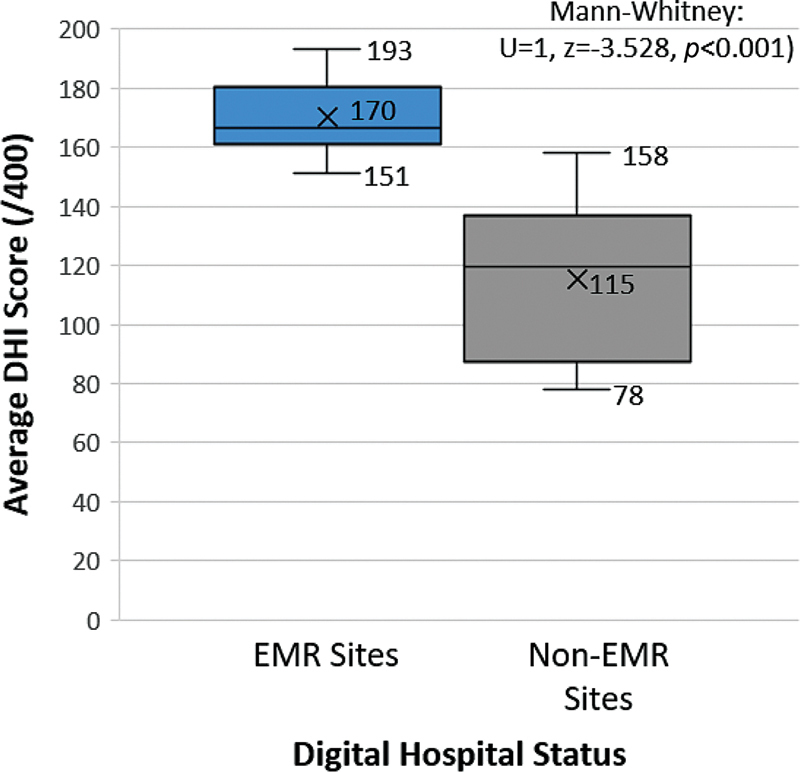

The digital hospital analysis is illustrated in Fig. 5 . The Mann-Whitney U test ( Table 3 ) indicated that sites with an EMR have a higher DHI score than sites that do not have the EMR ( p <0.001).

Fig. 5. DHI scores comparing health care systems stratified by EMR use. DHI, digital health indicator; EMR, electronic medical record.

Table 3. Digital hospital analysis.

| | EMR site | Non-EMR site |
|---|---|---|
| N | 8 | 8 |
| Mean | 170 | 115 |
| Median | 167 | 119 |
| Mann-Whitney result | U = 1, z = −3.258 | |
| p-Value | p < 0.001 | |
| Outcome | EMR site > non-EMR site | |

External Comparisons of QH's Digital Capability with Global DHI Scores

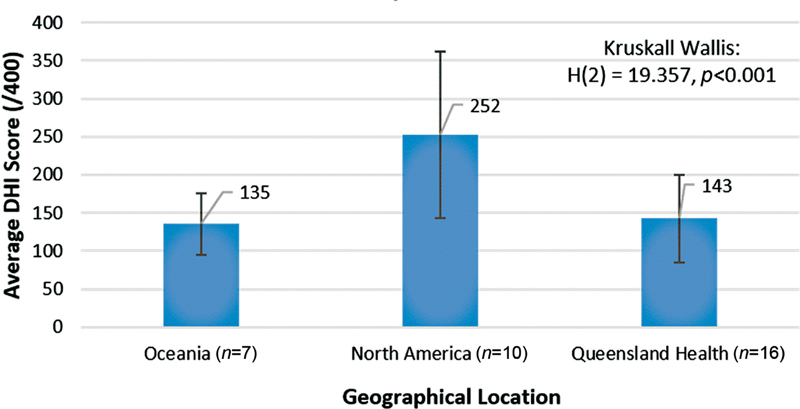

A Kruskal–Wallis test indicated that there are differences in DHI scores across locations ( Fig. 6 ) ( p <0.001). Post-hoc Mann-Whitney U tests indicated that the DHI scores of health systems in North America were significantly higher than QH ( p <0.001) and Oceania ( p <0.001). There was no significant difference in DHI scores reported between QH and Oceania ( p > 0.05) ( Table 4 ).

Fig. 6. Comparison of DHI scores against global location. DHI, digital health indicator.

Table 4. International comparison.

| | North America vs. QH | | North America vs. Oceania | | Oceania vs. QH | |
|---|---|---|---|---|---|---|
| | North America | QH | North America | Oceania | Oceania | QH |
| N | 10 | 16 | 10 | 7 | 7 | 16 |
| Mean | 252 | 143 | 252 | 135 | 135 | 143 |
| Median | 238 | 155 | 238 | 132 | 132 | 155 |
| Mann-Whitney result | U = 3, z = −4.059 | | U = 0, z = −3.416 | | U = 50, z = −0.401 | |
| p-Value | p < 0.001 | | p < 0.001 | | p > 0.05 | |
| Outcome | North America > QH | | North America > Oceania | | Oceania = QH | |
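The z values in Table 4 can be approximately recovered from the reported U statistics and group sizes using the standard large-sample normal approximation, z = (U − n₁n₂/2) / √(n₁n₂(n₁ + n₂ + 1)/12). The sketch below omits the tie correction, which we assume the statistical software applied, so agreement to about two decimal places is the most that should be expected.

```python
import math


def z_from_u(u, n1, n2):
    """Normal-approximation z score for a Mann-Whitney U (no tie correction)."""
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    return (u - mean_u) / sd_u


# (U, n1, n2, reported z) for each comparison in Table 4.
comparisons = {
    "North America vs. QH": (3, 10, 16, -4.059),
    "North America vs. Oceania": (0, 10, 7, -3.416),
    "Oceania vs. QH": (50, 7, 16, -0.401),
}

for label, (u, n1, n2, reported_z) in comparisons.items():
    z = z_from_u(u, n1, n2)
    print(f"{label}: computed z = {z:.3f}, reported z = {reported_z}")
```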

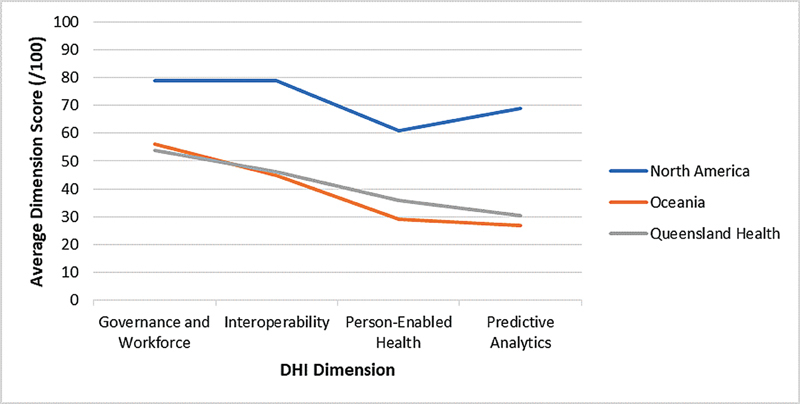

The global-dimension level analysis ( Fig. 7 ) indicates a similar pattern across Oceania and QH, with governance and workforce being the highest scoring dimension, followed by interoperability, person-enabled health, and predictive analytics. In North America, however, interoperability scored equally with governance and workforce, with person-enabled health being the lowest scoring dimension.

Fig. 7. Comparison of DHI dimensions scores against global location. DHI, digital health indicator.

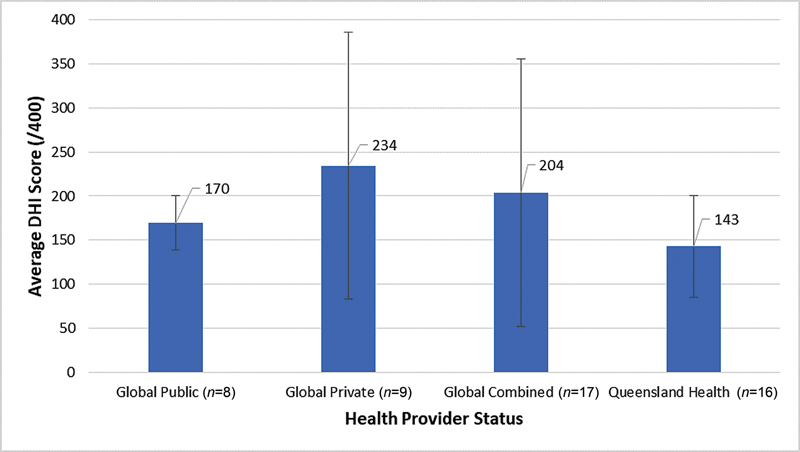

Subsequently, a global comparison was performed with a Mann-Whitney U test ( Fig. 8 , Table 5 ), indicating that the QH DHI score was lower than those reported globally ( p < 0.05). To identify if the difference at the global level is partially explained by the public or private nature of the health care system, follow-up Mann-Whitney U tests were performed, which indicated that QH's DHI scores were comparable to global public DHI scores ( p > 0.05) and lower than global private DHI scores ( p < 0.01).

Fig. 8. DHI scores comparing global private and public health systems. DHI, digital health indicator.

Table 5. Global health provider status comparison.

| | Global vs. QH | | Global private vs. QH | | Global public vs. QH | |
|---|---|---|---|---|---|---|
| | Global | QH | Global private | QH | Global public | QH |
| N | 17 | 16 | 9 | 16 | | 16 |
| Mean | 204 | 143 | 234 | 143 | | 143 |
| Median | 202 | 155 | 244 | 155 | | 155 |
| Mann-Whitney result | U = 68, z = −2.558 | | U = 24, z = −2.718 | | U = 41, z = −1.409 | |
| p-Value | p < 0.05 | | p < 0.01 | | p > 0.05 | |
| Outcome | Global > QH | | Global private > QH | | Global public = QH | |

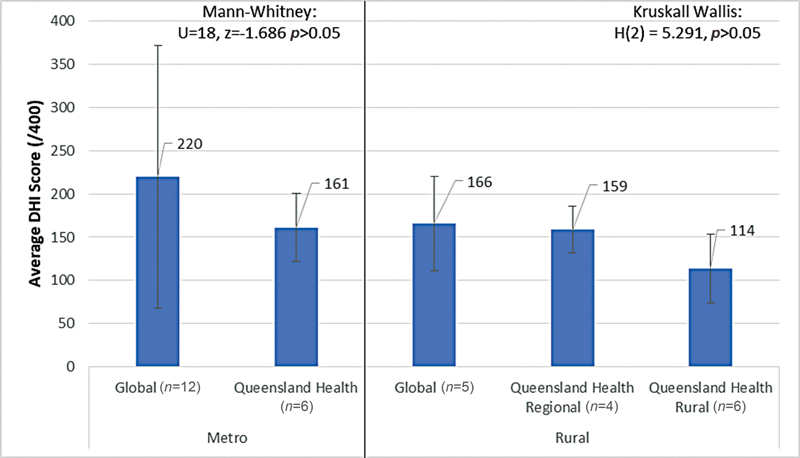

A Kruskal–Wallis test ( Fig. 9 , Table 6 ) indicated that QH's DHI scores in regional and rural areas were comparable to global rural DHI scores ( p >0.05). A Mann-Whitney analysis ( Table 7 ) further indicated that QH's DHI scores in metro areas were comparable to global metro DHI scores ( p >0.05).

Fig. 9. DHI scores comparing global and QH data stratified by geographic location (regional, metro, and rural). DHI, digital health indicator.

Table 6. Global rural/regional comparison.

| | Global-rural | QH-regional | QH-rural |
|---|---|---|---|
| N | 5 | 4 | 6 |
| Mean | 166 | 159 | 114 |
| Median | 168 | 166 | 111 |
| Kruskal–Wallis result | H (2) = 5.291 | | |
| p-Value | p > 0.05 | | |

Table 7. Global metro comparison.

| | Global-metro | QH-metro |
|---|---|---|
| N | 12 | 6 |
| Mean | 220 | 161 |
| Median | 227 | 164 |
| Mann-Whitney result | U = 18, z = −1.686 | |
| p-Value | p > 0.05 | |
| Outcome | Global-Metro = QH-Metro | |

Discussion

There is a significant drive to digitize health care to enable new models of care, data, and analytics and to form the foundation to apply emerging technologies such as artificial intelligence and precision medicine. To digitally transform health care delivery in a strategic and informed manner, understanding and benchmarking the current digital capability is essential to drive and monitor progress.

Summary of Key Findings

Queensland's results reveal a variation in DHI scores reflecting the diverse stages of health care digitization across the state, with the findings indicating that sites that have adopted an integrated EMR system possess higher levels of digital capability than non-digital sites. The DHI score is similar to other systems in the Oceania region and global public systems but below the global private average. Dimension-specific results were explored to understand digital capability across four categories: Interoperability; Person Enabled Health; Predictive Analytics; and Governance and Workforce. Relevant findings are informing the updated state-wide digital health strategic plan.

Governance and Workforce received the highest dimension score. The dimension captures organizational elements of governance, risk, culture, leadership, and accountability, which have always existed within organizations. These policies can be modernized to reflect the broader institutional environment, which has shifted over the past decades to recognize the criticality of digital health. 19 However, the large variance in scores indicates that not all health care systems have data strategy, security and privacy, and safety and quality outcome tracking in place. Capability needs to be developed from within each site, because digital health implementation strategies copied from other sites may not transfer successfully due to context-specific factors. 20 21 Informed by these results, the strategic focuses include clinician workflow improvement and increasing the digital health competence of staff (workforce), and transparency of health system performance, improvements in service planning, and an increase in artificial intelligence capability (governance).

Interoperability is a relatively strong dimension across QH with the large variation in scores linked to regionality; metropolitan and regional sites are more likely to have higher scores. These sites are more likely to have implemented digital tools including the EMR. To improve interoperability and the connectivity between health care stakeholders, health care systems can invest in technology advancements and centralization of patient information. The state-wide digital health investment strategy outlined the key investment of EMR implementations at large tertiary and quaternary care hospitals in metropolitan and regional sites. The rollout in rural areas is currently under review for funding allocation.

Person-enabled health is an area for development across all geographic regions. Absence of digitally enabled self-management platforms, patient access to medical documents or care plans, and connectivity between clinicians and patients, together with underutilization of patient-reported outcomes, emerged as limitations in this dimension. Standardized design features in wide-scale EMR implementations do not necessarily account for well-established person-centered care delivery approaches, 21 which will need to be managed. The acceptance of telehealth options by consumers, clinicians, and system administrators during the COVID-19 pandemic, uncovered through the analysis, means that QH will continue to promote the use of virtual care services. These service options not only ensure that consumers have the option to receive care safely and effectively in their homes, and be with family and carers whenever possible, but also increase the efficiency of the health system to cope with ever increasing demand.

The predictive analytics dimension scores the lowest of the four dimensions, which is to be expected given this is an emerging technology. Predictive tools employing machine learning and artificial intelligence are not currently used, yet real-time descriptive analytics are being leveraged in pockets across the state. Adoption of predictive analytics tools is a major step toward making the health care system prevention-focused through targeted interventions and resourcing. 22 The Predictive Analytics assessment provided an opportunity to modernize data strategy and infrastructure, aiming to: (1) enable business areas to have timely access to high quality data; (2) effectively integrate across the system, achieving horizontal collaboration through establishing a single source of truth; (3) coproduce knowledge and insights with key research and industry partners to drive health service and system improvements; and (4) establish the underpinnings of precision medicine to effectively reduce low value-based care, waste, and harm.

Comparison to Existing Literature

This is the first large simultaneous application of the DHI across a single jurisdiction reported in the academic literature. While HIMSS describes the DHI as a “capability assessment,” the applications of comprehensive MMs or capability assessments in health care are scarcely described. 23 MMs are often conducted without academic input by national or supranational organizations, corporations, and national health organizations limiting the peer reviewed evidence. 8 Existing literature is focused on MM design over deployment, 24 with few reporting on evaluations of multifunctional systems within complex health care organisations. 3 Measuring single technological implementations alone is unlikely to capture the full spectrum of capability required for system transformation. Evidence of practical application and efficacy of implementations are needed to address the practice-research gaps 23 with this study contributing to the growing evidence base.

The closest comparison of a large scale digital capability assessment was the English National Health Service's Clinical Digital Maturity Index (CDMI). The CDMI uses readiness, capability, and infrastructure to contribute to a total score out of 1,400. When 136 hospitals were assessed in 2016, the mean total CDMI score was 797 (range 324–1,253; SD 174). 3 Significant variation among hospitals in all domains was uncovered, 3 similar to the findings from Queensland and demonstrating the possibility for improvement in dimension and overall scores for both the DHI and CDMI tools.
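Because the two tools use different scales, one rough way to put the reported spread on a common footing is the coefficient of variation (SD divided by mean). The sketch below uses only the summary figures reported for each assessment. It is a descriptive illustration rather than an analysis performed in this study, and the two assessments differ in tool, unit of analysis (health care systems vs. hospitals), and sample size, so the comparison should be read with caution.

```python
def coefficient_of_variation(sd, mean):
    """Relative spread: standard deviation as a fraction of the mean."""
    return sd / mean


# Summary figures as reported: DHI (Queensland, n = 16) and CDMI (England, n = 136).
dhi_cv = coefficient_of_variation(sd=35.3, mean=143)
cdmi_cv = coefficient_of_variation(sd=174, mean=797)

print(f"DHI coefficient of variation:  {dhi_cv:.1%}")
print(f"CDMI coefficient of variation: {cdmi_cv:.1%}")
```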

The most widely reported maturity application is HIMSS's seven stage Electronic Medical Record Adoption Model (EMRAM). 25 EMRAM is a technology-focused staged MM rather than a comprehensive assessment across governance and workforce, interoperability, person-enabled health and predictive analytics, which is what the DHI assesses. Large applications of EMRAM were reported in two jurisdictions. In The Netherlands in 2014, 48% ( n = 32) of hospitals sampled (80% total non-academic Dutch hospitals) received a score of five on the EMRAM and no hospital received the highest score of seven. 26 In the same year in the United States over 5,200 hospitals (86% total U.S. hospitals) completed the EMRAM, demonstrating an incremental increase in scores from previous years. 27 More than 96% of hospitals were identified to be in Stage 3 or below in 2006, while this number decreased to approximately 31% in 2014. 27 In comparison to single technology and implementation focused MMs such as EMRAM, a holistic capability assessment such as the DHI provides contextual detail, highlights weaknesses, and documents specific actions for system wide improvements.

Limitations

The DHI was the chosen method for assessing digital capability in this instance, and results may vary using another tool due to the methodologically diverse approaches to assessing digital maturity. The individual indicator statements and the algorithm used to calculate the DHI scores are proprietary to HIMSS, and the weighting of the dimension scores to generate the total DHI is unknown. We do not believe this discredits the approach, as it still provides a useful benchmark for others employing the DHI. Although our findings indicate that DHI scores are higher with the presence of an EMR within the health systems examined, due to limitations in the archival dataset it was not possible to evaluate whether this finding also manifests at the global level. This is certainly possible, given EMRs can be associated with improved interoperability and are a precursor to predictive analytics; however, there is no guarantee, as these technology systems can be implemented and adopted by users in a myriad of ways. Assessing digital capability at an individual hospital level, longitudinally, or objectively was not possible using this study design and provides an opportunity for future research. The state health system was assessed by aggregating multiple site analyses, and the results are therefore subject to the accuracy of localized assessments. Global comparisons were limited to DHI scores, with no accompanying comparison of health care systems or point-of-care digital health capabilities. Possible explanations for differences in scores include differences in EMR implementation, health care expenditure, and the nature of health funding, which would benefit from future research.

Future Research

Future research involves building the evidence base for advancing digital capabilities in practice, including validation of the DHI tool. An analysis of routinely collected hospital performance and clinical quality and safety data is underway to correlate digital capability with outcomes mapped to the quadruple aims of health care, 28 including a longitudinal analysis of digitizing health care systems. Measuring the impact of the digitization of health care is necessary to quantify the meaningful impacts on health outcomes, cost of care, and the patient and clinician experience.

Conclusion

For health care organizations to drive digital transformation in a strategic and informed manner, it is critical they understand and benchmark their current digital health capability. Queensland, a large state in Australia, undertook a capability assessment of public health services using the DHI. The results reveal a variation in DHI scores reflecting the diverse stages of health care digitization across the state which is consistent with global trends. Governance and Workforce was on average the highest scoring dimension, followed by interoperability, person-enabled health, and predictive analytics. The findings helped derive specific insights for future digital health planning. As the first large scale application of the DHI globally and the first published state-wide digital capability report in Australia, the findings also offer insights for policy makers and organizational managers. Understanding and monitoring digital transformation at scale is critical for strategic and evidence-based digital transformation investments.

Clinical Relevance Statement

A digital health maturity or capability assessment involves clinicians and managers completing a self-assessment by answering a series of indicator statements describing the current system and workforce capability. Reports are generated that help staff identify strengths and weaknesses in the current state and provide recommendations on how these might be addressed in the future state. The process generates insights beyond the common focus on technology implementation by health care teams, affording the opportunity to guide the digital transformation to meet organizational goals for patient care. Business cases or reports can be generated for health care executives, decision-makers, and policy makers for targeted digital health planning, resourcing, and investment.

Multiple Choice Questions

1. The Digital Health Indicator is a capability assessment of which of the following dimensions?

a. Business; organization; information; people.

b. People; process; information; technology.

c. Interoperability; person-enabled health; predictive analytics; governance and workforce.

d. Interoperability; patient centered care; quality and safety; leadership.

Correct Answer: The correct answer is option c. The Digital Health Indicator assesses the digital health capability of the health system across the dimensions of: interoperability; person-enabled health; predictive analytics; governance and workforce.

2. The benefits of conducting a digital health capability assessment in Queensland include all the following except:

a. Identify key directions for future resourcing.

b. Name who was responsible for suboptimal implementation efforts.

c. Benchmark against global counterparts.

d. Assess the current state of digital health.

Correct Answer: The correct answer is option b. There are many reasons for conducting a digital health capability assessment, including: identification of key directions for future resourcing; benchmarking against global counterparts; and assessment of the current state of digital health. Naming individuals who were responsible for suboptimal implementation efforts was not a benefit.

Acknowledgments

The authors would like to thank the participants for their contribution to this research. This research was supported by the Digital Health Cooperative Research Centre which is funded under the Commonwealth Government's Cooperative Research Program and Queensland Department of Health.

Conflict of Interest

A.P. and L.J. are employees of HIMSS, the organization that developed the DHI. The remaining authors declare that they have no conflict of interest in the research.

Protection of Human and Animal Subjects

The study was performed in compliance with the Ethical Principles for Medical Research Involving Human Subjects and received multisite ethics approval from the Royal Brisbane and Women's Hospital [ID: HREC/2020/QRBW/66895], and research governance approvals from all sites.

References

- 1. World Health Organization. Recommendations on digital interventions for health system strengthening. World Health Organization; 2019:2020–2010.

- 2. World Health Organization. Global Strategy on Digital Health 2020–2025. Geneva: World Health Organization; 2021.

- 3. Martin G, Clarke J, Liew F. Evaluating the impact of organisational digital maturity on clinical outcomes in secondary care in England. NPJ Digit Med. 2019;2(01):41. doi: 10.1038/s41746-019-0118-9.

- 4. Kolukısa Tarhan A, Garousi V, Turetken O, Söylemez M, Garossi S. Maturity assessment and maturity models in health care: a multivocal literature review. Digit Health. 2020;6:2055207620914772. doi: 10.1177/2055207620914772.

- 5. Krasuska M, Williams R, Sheikh A. Technological capabilities to assess digital excellence in hospitals in high performing health care systems: international eDelphi exercise. J Med Internet Res. 2020;22(08):e17022. doi: 10.2196/17022.

- 6. Cresswell K, Sheikh A, Krasuska M. Reconceptualising the digital maturity of health systems. Lancet Digit Health. 2019;1(05):e200–e201. doi: 10.1016/S2589-7500(19)30083-4.

- 7. Carvalho J V, Rocha Á, Abreu A. Maturity assessment methodology for HISMM – Hospital Information System Maturity Model. J Med Syst. 2019;43(02):35. doi: 10.1007/s10916-018-1143-y.

- 8. Carvalho J V, Rocha Á, Abreu A. Maturity models of healthcare information systems and technologies: a literature review. J Med Syst. 2016;40(06):131. doi: 10.1007/s10916-016-0486-5.

- 9. Vidal Carvalho J, Rocha Á, Abreu A. Maturity of hospital information systems: most important influencing factors. Health Informatics J. 2019;25(03):617–631. doi: 10.1177/1460458217720054.

- 10. Marwaha J S, Landman A B, Brat G A, Dunn T, Gordon W J. Deploying digital health tools within large, complex health systems: key considerations for adoption and implementation. NPJ Digit Med. 2022;5(01):13. doi: 10.1038/s41746-022-00557-1.

- 11. Snowdon A. Digital Health: A Framework for Healthcare Transformation. Illinois, USA: Healthcare Information and Management Systems Society; 2020.

- 12. Duncan R, Eden R, Woods L, Wong I, Sullivan C. Synthesizing dimensions of digital maturity in hospitals: systematic review. J Med Internet Res. 2022;24(03):e32994. doi: 10.2196/32994.

- 13. Queensland Health. Digital Health Strategic Vision for Queensland 2026. Digital Strategy Branch eHealth. Queensland, Brisbane: State of Queensland (Queensland Health); 2017.

- 14. Queensland Department of Health. Department of Health Annual Report 2020–2021. Queensland: The State of Queensland (Department of Health); 2021.

- 15. Queensland Health. The Health of Queenslanders 2020: Report of the Chief Health Officer Queensland. Brisbane: State of Queensland (Queensland Health); 2020.

- 16. Burton-Jones A, Akhlaghpour S, Ayre S, Barde P, Staib A, Sullivan C. Changing the conversation on evaluating digital transformation in healthcare: insights from an institutional analysis. Inf Organ. 2020;30(01):100255.

- 17. Eden R, Burton-Jones A, Staib A, Sullivan C. Surveying perceptions of the early impacts of an integrated electronic medical record across a hospital and healthcare service. Aust Health Rev. 2020;44(05):690–698. doi: 10.1071/AH19157.

- 18. Australian Government Department of Health. Health Workforce Locator. Published (no date). Accessed March 07, 2022 at: https://www.health.gov.au/resources/apps-and-tools/health-workforce-locator/health-workforce-locator

- 19. Kaplan B. The computer prescription: Medical computing, public policy, and views of history. Sci Technol Human Values. 1995;20(01):5–38.

- 20. Eden R, Burton-Jones A, Scott I, Staib A, Sullivan C. Effects of eHealth on hospital practice: synthesis of the current literature. Aust Health Rev. 2018;42(05):568–578. doi: 10.1071/AH17255.

- 21. Burridge L H, Foster M, Jones R, Geraghty T, Atresh S. Nurses' perspectives of person-centered spinal cord injury rehabilitation in a digital hospital. Rehabil Nurs. 2020;45(05):263–270. doi: 10.1097/rnj.0000000000000201.

- 22. Canfell O J, Davidson K, Woods L. Precision public health for noncommunicable diseases: an emerging strategic roadmap and multinational use cases. Front Public Health. 2022;10:854525. doi: 10.3389/fpubh.2022.854525.

- 23. Burmann A, Meister S. Practical Application of Maturity Models in Healthcare: Findings from Multiple Digitalization Case Studies. Paper presented at: HEALTHINF 2021.

- 24. Blondiau A, Mettler T, Winter R. Designing and implementing maturity models in hospitals: an experience report from 5 years of research. Health Informatics J. 2016;22(03):758–767. doi: 10.1177/1460458215590249.

- 25. HIMSS Analytics. EMRAM: A strategic roadmap for effective EMR adoption and maturity. Published 2017; 2021. Accessed March 31, 2021 at: https://www.himssanalytics.org/emram

- 26. van Poelgeest R, Heida J-P, Pettit L, de Leeuw R J, Schrijvers G. The association between eHealth capabilities and the quality and safety of health care in the Netherlands: comparison of HIMSS analytics EMRAM data with Elsevier's ‘The Best Hospitals’ data. J Med Syst. 2015;39(09):90. doi: 10.1007/s10916-015-0274-7.

- 27. Kharrazi H, Gonzalez C P, Lowe K B, Huerta T R, Ford E W. Forecasting the maturation of electronic health record functions among US hospitals: retrospective analysis and predictive model. J Med Internet Res. 2018;20(08):e10458. doi: 10.2196/10458.

- 28. Bodenheimer T, Sinsky C. From triple to quadruple aim: care of the patient requires care of the provider. Ann Fam Med. 2014;12(06):573–576. doi: 10.1370/afm.1713.