Abstract

The Behavior Analyst Certification Board (BACB) annually publishes data on the pass rates of institutions with verified course sequences (VCS). The current study analyzed BACB-published data from the years 2015–2019 and explored relations among program mode, number of first-time candidates, and examination pass rates. In a correlation analysis of number of first-time candidates and pass rates, there was a weak negative correlation, indicating that larger numbers of first-time candidates are associated with lower pass rates. Further, statistically significant differences were found among the mean number of first-time candidates, mean pass rates, and mean number of passing first-time candidates across program modes. Campus and hybrid programs had higher mean pass rates than distance programs, whereas distance programs had higher numbers of passing first-time candidates than campus programs. External validity and implications for indicators of program quality are discussed.

Keywords: BACB, Certification examination pass rates, Distance learning

The Behavior Analyst Certification Board (BACB) is the credentialing body that regulates board certification in behavior analysis. It was founded in 1998 to protect consumers of behavior analytic clinical services (BACB, 2020a). The BACB oversees the issuance and regulation of four credentials; the Board Certified Behavior Analyst (BCBA) is the certification that is held by the greatest number of professionals who may practice independently (Carr & Nosik, 2017). Although there are alternate pathways for faculty and senior practitioners, the most common approach to earn the BCBA credential involves satisfying three criteria: (1) complete a master’s degree in a related field that includes a verified course sequence (VCS), (2) complete all required supervised practice hours, and (3) successfully pass the board certification examination constructed by the BACB and proctored at a Pearson VUE testing site.

The BACB and academic institutions that provide behavior analytic coursework are designed to be independent of one another. However, the graduate program where one chooses to complete the coursework requirement is often considered by prospective students to differentially prepare one for the board certification exam. In fact, the BACB annually publishes the board certification examination pass rate data for all academic institutions with VCS programs on its website (BACB, 2020b). These pass rates are often referred to in admissions marketing materials for academic institutions with VCS programs (e.g., Applied Behavior Analysis Programs Guide, 2019).

To be considered a VCS, a program must include courses covering specified content areas that meet the hours required by the BACB. Beyond guidelines regarding content hour allocation, not all VCS programs are delivered in exactly the same package or with the same content (Shepley et al., 2017). For instance, a VCS may or may not be embedded in a master’s program in behavior analysis (or similar field); at some institutions, a VCS is offered as a stand-alone credentialing track or certificate program for individuals with a previously earned master’s degree. Shepley et al. identified differences in departmental affiliation, methods of course delivery, additional program accreditation, provision of supervised experience, and ABA-related topic areas across VCS programs. In addition, a VCS may be awarded additional peer-reviewed accreditation through the Association for Behavior Analysis International (ABAI). In other words, some VCS programs are also ABAI-accredited and are held to a higher standard than programs without ABAI accreditation (ABAI, n.d.).

These differences across types of VCS programs may have implications for the type of program that students select and for the rigor and intensity of the preparation they receive to pass the BCBA examination in the short term, and they may affect the field in the long term. For example, a master’s degree program in counseling or special education with an optional VCS track may produce students with a different breadth and depth of coursework and practice experience than that of a fully embedded VCS within a behavior analysis master’s degree program. The BACB (2020b) does not currently specify these differences in VCS programs in its published pass rate data.

Despite the variability in structure of VCS programs, several authors have examined curricula across VCS and ABAI-accredited programs and identified variables that may influence the quality of this coursework component in training prospective BCBAs. Given that the field is growing rapidly due to a preponderance of practitioner-focused employment opportunities, concerns have arisen about the shrinking proportion of behavior analysts with the research training needed to continue advancing the field in basic and applied research (Kelley III et al., 2015). In addition to practice methods, research training provided to students (Critchfield, 2015) and research productivity of faculty teaching in a VCS (Dixon et al., 2015) were identified as necessary indicators of program quality. In particular, Dixon et al. conducted an analysis of combined faculty publications across VCS programs (at the time of their publication, these were referred to as “BACB-approved” programs) and found that only 50% of programs were taught by faculty with 10 or more total publications in the field’s six top-tier journals. Monitoring the current and ongoing capacity for research training in behavior analysis is essential to ensuring that the field will continue to produce future researchers, along with practitioners.

With specific focus on training in experimentation, Kazemi et al. (2019) conducted an analysis of syllabi across 70% of the 20 ABAI-accredited programs in order to examine assigned readings in experimental analysis of behavior (EAB) across programs. Although this study identified a list of common readings (specific books, chapters, and journal articles) by EAB topic area, it also found variability beyond those common readings and found that instructors were more likely to assign applied or subspecialty topics. As a field, it may be necessary to examine and continue to assess specific training in EAB across VCS programs.

Although Kazemi et al. (2019) focused primarily on EAB content areas, Pastrana et al. (2018) conducted a similar analysis of course syllabi and reported the 10 most frequently assigned readings from the VCS programs with pass rates of 80% or higher and at least six first-time test-takers (if the program had fewer than six first-time test-takers, the 2013 pass rate was combined with the 2014 pass rate). Although the authors framed the results of this analysis as a resource for curriculum developers, they also stated, “the pass rate of an institution is unlikely the most important dependent variable for graduate training. However, it is available, quantifiable, and important nonetheless” (Pastrana et al., 2018, p. 272). A number of publications have examined a variety of variables as possible indicators of VCS quality as they may relate to BCBA examination pass rates (see Table 1).

Table 1.

Variables examined in publications exploring VCS programs

| Citation | Number of first-time test-takers | VCS program mode | VCS curricular focus/topics | VCS departmental affiliation | VCS faculty research record | VCS with ABAI-accreditation | VCS supervision provided | Total |
|---|---|---|---|---|---|---|---|---|
| Behavior Analyst Certification Board (BACB) (2020a, 2020b) | x | x | | | | | | 2 |
| Dixon et al. (2015) | | | | | x | x | | 2 |
| Pastrana et al. (2018) | x | | x | | | | | 2 |
| Shepley et al. (2017) | x | x | x | x | | x | x | 6 |
| Shepley et al. (2018) | x | x | x | x | | x | x | 6 |
| Total | 4 | 3 | 3 | 2 | 1 | 3 | 2 | |

Note. VCS = Verified Course Sequence; BACB = Behavior Analyst Certification Board; ABAI = Association for Behavior Analysis International. “x” indicates that the article included the variable as a dependent measure or inclusion/exclusion criterion

Using the published BACB pass rate data and delving deeply into additional data across VCS programs, Shepley et al. (2018) conducted regression analyses of program characteristics as they may predict examination pass rate data. In particular, the program characteristics evaluated were: (1) provision of supervision experience, (2) ABAI accreditation, (3) departmental affiliation, (4) course delivery methods, (5) course topics, and (6) number of first-time test-takers in 2014. These data were gathered in an initial article (Shepley et al., 2017) and were coded for analysis in a subsequent article (Shepley et al., 2018). The results of these analyses hold valuable implications for the field, such as statistically significant increased pass rates for on-campus course delivery versus online course delivery, and ABAI-accredited programs versus nonaccredited programs. However, as the data set included data from only 1 year (i.e., 2014), an updated analysis is warranted.

In addition, Shepley et al. (2017, 2018) conducted complex analyses using data that were gathered following moderate to high response effort. The publicly available BACB pass rate data provide a narrower range of variables than what was included in the Shepley et al. studies. In its reporting of pass rate data, the BACB characterizes each VCS only by its program mode (distance, campus, hybrid, or both) and number of first-time test-takers or candidates. Because consumers of the BACB pass rate data consist not only of highly trained researchers and higher education leadership, but also prospective graduate students (without such training or perspective), we chose to conduct an analysis using exclusively the data publicly available on the BACB reporting page to determine the conclusions that may be drawn from those data alone. Therefore, the purpose of the current study was to analyze the 2015–2019 pass rate data published by the BACB and explore relations among reported program characteristics, including mode and number of first-time candidates, and certification examination pass rates across VCS programs in behavior analysis.

Method

Data Retrieval

Pass rate data for the years 2015–2019 were presented in a PDF document on the BACB website (BACB, 2020b). We downloaded this document and converted it to Microsoft Excel format for analysis. The initial data set included 347 VCS programs. Per the BACB, pass rate data are not reported if (1) a VCS is in its first 4 years of operation or (2) fewer than six first-time candidates sit for the examination in a calendar year. One hundred sixty-five VCS programs did not report any pass rate data between 2015 and 2019 for one or both of the aforementioned reasons, leaving 182 VCS programs reporting pass rate data for at least 1 year during this period.

Program Mode

Four program modes were defined and coded by the BACB (2020b). Distance programs were those in which all classes were delivered online. Campus programs were those in which all classes were delivered on campus. Hybrid programs were those in which some classes were delivered online and some were delivered on campus. Programs coded as “both” were those in which students were required to select a program mode of either all-online or all-on-campus class delivery. No additional details regarding program mode classifications were provided in the BACB publication.

Number of First-Time Candidates and Percentage Pass Rates

The number of first-time candidates and percentage pass rates for each VCS across the years 2015–2019 were taken directly from the BACB publication (2020b). The BACB also noted that these measures only included candidates who “. . . used coursework entirely from that sequence to meet certification eligibility requirements” (p. 1). That is, if a candidate began study with one VCS but completed their coursework with another VCS, that candidate would not be counted for either VCS.

Number of First-Time Passing Candidates

The number of first-time passing candidates was calculated by multiplying the number of first-time candidates by the percentage pass rate and rounding to the nearest whole number (e.g., 92% pass rate × 11 first-time candidates = 10.12, converted to 10 first-time passing candidates).
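The calculation described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' procedure: the function name `passing_candidates` is hypothetical, and the half-up rule for exact ties (e.g., 4.5) is an assumption, because the text specifies only rounding to the nearest whole number.

```python
from decimal import Decimal, ROUND_HALF_UP


def passing_candidates(n_candidates: int, pass_rate_percent: int) -> int:
    """Estimate passing candidates as candidates x pass rate, rounded to a whole number."""
    exact = Decimal(n_candidates) * Decimal(pass_rate_percent) / Decimal(100)
    # Assumption: exact ties such as 4.5 round up; the text only says "nearest whole number".
    return int(exact.quantize(Decimal("1"), rounding=ROUND_HALF_UP))


# Worked example from the text: 11 candidates at a 92% pass rate (10.12) gives 10.
print(passing_candidates(11, 92))
```

Decimal arithmetic is used here so that the tie rule is explicit; Python's built-in `round` rounds exact halves to the nearest even integer, which would give a different count in tie cases.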

Data Analysis

Each year’s pass rate was treated as an individual data point. For example, if “University A” reported pass rates for 2019, 2018, and 2016, these were treated as three separate data points. Across the 182 VCS programs that reported pass rate data for at least 1 year between 2015 and 2019, 558 data points were analyzed. Further, because the data were not normally distributed, we selected nonparametric tests for each of our statistical analyses (Dodge, 2008).
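The pooling of program-years into individual data points can be sketched as follows. The record layout, the `DataPoint` type, and the example values are hypothetical and are not taken from the BACB document; a year with no reported pass rate is represented as `None`.

```python
from typing import NamedTuple


class DataPoint(NamedTuple):
    program: str
    mode: str
    year: int
    n_candidates: int
    pass_rate_percent: int


def flatten(programs):
    """Turn per-program yearly records into one data point per reported year."""
    points = []
    for program in programs:
        for year, record in sorted(program["years"].items()):
            if record is None:  # pass rate not reported for this year
                continue
            n_candidates, pass_rate = record
            points.append(
                DataPoint(program["name"], program["mode"], year, n_candidates, pass_rate)
            )
    return points


# Hypothetical records: "University A" reports three years, "University B" none.
programs = [
    {"name": "University A", "mode": "campus",
     "years": {2015: None, 2016: (8, 75), 2017: None, 2018: (11, 92), 2019: (9, 89)}},
    {"name": "University B", "mode": "distance",
     "years": {2015: None, 2016: None, 2017: None, 2018: None, 2019: None}},
]
points = flatten(programs)
print(len(points))  # three data points, all from University A
```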

Results

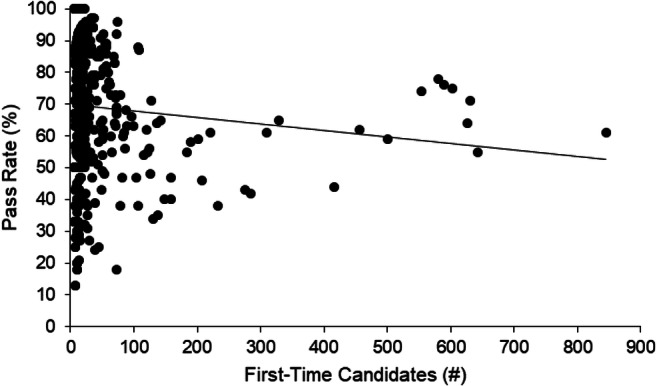

A Spearman correlation analysis was conducted to evaluate the relation between number of first-time candidates and pass rates. We selected a Spearman correlation because (1) it is a nonparametric test (whereas the Pearson correlation is a parametric test) and (2) it is more resistant to outliers than the Pearson correlation (de Winter et al., 2016), which was a concern due to the wide range of number of first-time candidates (i.e., 6–845). The analysis indicated a weak, negative relation between these variables (rs = -.095, p = .025), suggesting that larger numbers of first-time candidates were associated with lower pass rates. It should be noted that although this relation was statistically significant, the correlation coefficient was extremely weak. Figure 1 depicts a scatterplot of number of first-time candidates and pass rate with a line of best fit.
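To illustrate why a rank-based coefficient is less sensitive to extreme values such as 845 candidates, the sketch below computes Spearman's coefficient as a Pearson correlation on average ranks. It uses only the Python standard library; the software the authors used is not stated, and the example values are hypothetical rather than BACB data.

```python
import math


def average_ranks(values):
    """Rank values from 1..N, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rank correlation: Pearson's r computed on the ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mean_x, mean_y = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical (candidates, pass rate %) pairs, not the BACB data.
n_candidates = [6, 10, 25, 100, 845]
pass_rates = [90, 85, 80, 60, 55]
print(spearman(n_candidates, pass_rates))  # strictly decreasing pairs give -1.0
```

Because only the ordering matters, the value 845 contributes a rank of 5 and no more, so a single very large program cannot dominate the coefficient.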

Fig. 1.

Scatterplot of pass rate percentage and number of first-time candidates with line of best fit. Note. N = 558 across 182 verified course sequences

Table 2 depicts the mean number of first-time candidates, mean pass rate percentage, and mean number of passing first-time candidates across the four program modes: campus, distance, hybrid, and both. Three Kruskal-Wallis tests with Dunn’s post-hoc comparisons were conducted to compare the aforementioned variables across program modes. The Kruskal-Wallis test is a nonparametric, rank-based counterpart of a one-way ANOVA that determines whether there is a statistically significant difference among three or more independent groups. Dunn’s test serves as a post-hoc comparison (also nonparametric) to identify the specific pairs of groups that are significantly different from one another.
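The two procedures can be sketched with the Python standard library as shown below. Several points are assumptions rather than details from the source: the Bonferroni adjustment of Dunn's p values (the adjustment used is not reported), the function names, and the example pass rates, which are hypothetical. The closed-form chi-square tail used for the p value holds only for 3 degrees of freedom, which matches a comparison of four program modes.

```python
import math
from itertools import combinations


def average_ranks(values):
    """Rank values from 1..N, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def tie_sum(values):
    """Sum of (t^3 - t) over each set of t tied values."""
    counts = {}
    for v in values:
        counts[v] = counts.get(v, 0) + 1
    return sum(t**3 - t for t in counts.values())


def kruskal_wallis(groups):
    """Return (H, mean_ranks, N) for a dict mapping group name to a list of values."""
    pooled = [v for vals in groups.values() for v in vals]
    n_total = len(pooled)
    ranks = average_ranks(pooled)
    mean_ranks, h, start = {}, 0.0, 0
    for name, vals in groups.items():
        group_ranks = ranks[start:start + len(vals)]
        start += len(vals)
        mean_ranks[name] = sum(group_ranks) / len(vals)
        h += sum(group_ranks) ** 2 / len(vals)
    h = 12 / (n_total * (n_total + 1)) * h - 3 * (n_total + 1)
    h /= 1 - tie_sum(pooled) / (n_total**3 - n_total)  # correction for ties
    return h, mean_ranks, n_total


def chi2_sf_df3(x):
    """Upper-tail probability of a chi-square variable with exactly 3 df."""
    return math.erfc(math.sqrt(x / 2)) + math.sqrt(2 * x / math.pi) * math.exp(-x / 2)


def dunn_bonferroni(groups):
    """Dunn's pairwise z tests; two-sided p values with an assumed Bonferroni adjustment."""
    _, mean_ranks, n = kruskal_wallis(groups)
    pooled = [v for vals in groups.values() for v in vals]
    variance = n * (n + 1) / 12 - tie_sum(pooled) / (12 * (n - 1))
    pairs = list(combinations(groups, 2))
    results = {}
    for a, b in pairs:
        se = math.sqrt(variance * (1 / len(groups[a]) + 1 / len(groups[b])))
        z = (mean_ranks[a] - mean_ranks[b]) / se
        p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p value
        results[(a, b)] = (z, min(1.0, p * len(pairs)))
    return results


# Hypothetical pass rates (%) by program mode, not the BACB data.
pass_rates = {
    "campus": [80, 75, 90, 85],
    "distance": [50, 55, 60, 45],
    "hybrid": [78, 82, 88, 70],
    "both": [65, 68, 62, 72],
}
h, _, _ = kruskal_wallis(pass_rates)
print(f"H = {h:.2f}, p = {chi2_sf_df3(h):.4f}")
for pair, (z, p_adj) in dunn_bonferroni(pass_rates).items():
    print(pair, f"z = {z:.2f}, adjusted p = {p_adj:.4f}")
```

Both statistics are computed from pooled ranks rather than raw values, so the tests compare rank distributions; the group means reported alongside them serve as descriptive summaries.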

Table 2.

Descriptive statistics across verified course sequences in behavior analysis 2015–2019

| Program Mode | Mean Number First-Time Candidates (range) | Mean Percentage First-Time Candidates Passing (range) | Mean Number First-Time Candidates Passing (range) |
|---|---|---|---|
| Campus | 13.68 (6–74) | 74.27% (13%–100%) | 10.4 (1–71) |
| Distance | 90.24 (6–845) | 56.55% (18%–100%) | 55.3 (2–515) |
| Hybrid | 20.93 (6–108) | 75.83% (30%–100%) | 16.29 (2–94) |
| Both | 50.98 (6–285) | 66.95% (24%–100%) | 31.3 (3–120) |
| Overall | 38.02 (6–845) | 69.25% (13%–100%) | 24.72 (1–120) |

Note. N = 558 across 182 verified course sequences

The Kruskal-Wallis test of program mode and mean number of first-time candidates indicated a statistically significant difference among the mean number of first-time candidates of the four program modes (H = 88.5, df = 3, p < .0001). Dunn’s post-hoc comparison detected statistically significant differences in mean number of first-time candidates between distance (M = 90.24, range = 6–845) and campus (M = 13.68, range = 6–74) programs (p < .0001), distance and hybrid (M = 20.93, range = 6–108) programs (p = .0017), campus and both (M = 50.98, range = 6–285) programs (p < .0001), and hybrid and both programs (p < .0001). The differences in mean number of first-time candidates were not statistically significant between campus and hybrid programs (p = .7589) or between distance and both programs (p = .6864).

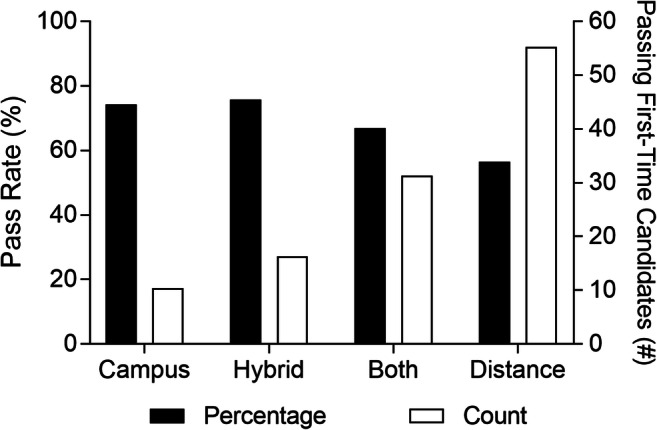

The Kruskal-Wallis test of program mode and mean pass rate percentage indicated a statistically significant difference among the mean pass rates across the four program modes (H = 77.84, df = 3, p < .0001). Dunn’s test indicated statistically significant differences in mean pass rate between campus (M = 74.27%, range = 13%–100%) and distance (M = 56.55%, range = 18%–100%) programs (p < .0001), campus and both (M = 66.95%, range = 24%–100%) programs (p = .0043), distance and hybrid (M = 75.83%, range = 30%–100%) programs (p < .0001), distance and both programs (p = .0043), and hybrid and both programs (p = .0147). The difference in mean pass rates between campus and hybrid programs was not statistically significant (p > .9999). Mean pass rates across the four program modes are depicted in Fig. 2.

Fig. 2.

Percentage pass rate and number of passing first-time candidates across program modes. Note. N = 558 across 182 verified course sequences. Black bars orient to left y-axis; white bars orient to right y-axis

The Kruskal-Wallis test of program mode and mean number of passing first-time candidates indicated a statistically significant difference among the four program modes (H = 50.23, df = 3, p < .0001). Dunn’s test indicated statistically significant differences in mean number of passing candidates between campus (M = 10.4, range = 1–71) and distance (M = 55.3, range = 2–515) programs (p = .0005), campus and both (M = 31.3, range = 3–120) programs (p < .0001), distance and both programs (p = .0184), and hybrid (M = 16.29, range = 2–94) and both programs (p = .0016). The difference in mean number of passing candidates was not statistically significant between campus and hybrid programs (p = .4232) or between distance and hybrid programs (p > .9999). The mean number of passing candidates across the four program modes is depicted in Fig. 2.

Discussion

The field of applied behavior analysis, including consumers and professionals, has come to rely on the regulatory functions of the BACB to protect consumers and oversee the credentialing of practicing clinicians. Prospective graduate students seeking their BCBA may refer to the pass rate data of institutions in selecting their program, and these data are published by the BACB alongside the number of first-time candidates and program mode. This study explored possible relations between the BACB examination pass rate data published for 2015–2019 and the variables the BACB reports about the institution where candidates completed their VCS program to prepare for the examination.

A negative correlation was found between number of first-time candidates and pass rates, consistent with the findings of Shepley et al. (2018), though it should be noted that this correlation was extremely weak. Comparisons of the mean number of first-time candidates, mean pass rates, and mean number of passing candidates revealed a number of statistically significant differences. Distance programs had a significantly higher mean number of first-time candidates and mean number of passing first-time candidates than campus programs. Campus and hybrid programs had significantly higher mean pass rates than distance and both programs.

The weak relation between number of first-time candidates and pass rates raises a number of questions. The number of first-time candidates for each VCS simply indicates the number of individuals who sat for the examination in a particular year. Although this variable is critical for interpreting the mean percentage pass rate, it does not necessarily capture two additional important variables: (1) average class size and (2) average latency between graduation and sitting for the examination. First, it stands to reason that larger average class sizes will affect the teacher–student ratio and potentially provide students with less attention from instructors, which may affect eventual pass rates. The aforementioned research on indicators of behavior analysis program quality (see Table 1) did not examine the relation between class size and outcome measures, and results of research from other fields are mixed (e.g., Arias & Walker, 2004; Guder et al., 2009; Tseng, 2010). Further research is needed to explore the potential impact of class size on BACB examination pass rates. Publishing these data alongside the current annual dissemination of pass rate data may facilitate future investigations, while simultaneously providing prospective students with additional information to aid in choosing a graduate program.

Second, the number of first-time candidates does not necessarily reflect how many students graduated from the VCS in a particular year. Some students may opt to take the examination within weeks of conferral of their master’s degree and completion of the VCS program, whereas other students may wait the entire allotted 2 years after their application is approved to take the examination. Latency to sitting for the examination is another variable that has yet to be explored in the behavior analytic literature, though research from other fields suggests that longer delays are associated with lower pass rates (Malangoni et al., 2012; Marco et al., 2014). Delaying sitting for the examination may be a factor outside of the VCS program’s control that decreases its overall pass rate. Therefore, reporting the average time between completion of coursework and sitting for the examination may assist prospective graduate students in assessing how this variable might interact with the VCS program’s pass rate.

Our analyses also revealed significant differences in the mean pass rates across program modes, with campus and hybrid programs reporting higher average pass rates than distance and both programs. One possible explanation is that the practice of in-person learning (in the case of a fully in-person program or a hybrid program with in-person elements of instruction) is more effective at preparing students for the BCBA examination, but another possibility is that there are characteristics of students who select a distance versus an on campus program that are responsible for the difference in pass rates. Shepley et al. (2018) also found higher average pass rates for campus programs, but in the absence of a controlled experiment, the exact nature of the relation between program mode and pass rate is unclear.

Further, the mean number of passing first-time candidates was significantly higher for distance programs than for campus programs. This is not unexpected given that the mean number of first-time candidates was also significantly higher for distance programs than for campus programs, but it raises additional questions about the reporting of these data. Marketing materials frequently cite VCS percentage pass rates (e.g., ABA Program Guide, n.d.) as opposed to the number of BCBAs the program produced in a particular year. By reporting the number of first-time candidates who sat for the examination, the BACB provides some context for interpreting pass rate percentages. For example, two programs may both have a 100% pass rate, but one program had 50 first-time candidates and the other had only 6. A larger number of passing candidates may suggest the high pass rate is more representative of the VCS (whereas a smaller number could represent an anomaly). Although the number of passing first-time candidates is calculated fairly easily with the data provided by the BACB, presenting this variable more saliently may provide additional valuable information for prospective students and higher education administrators who may use these data to inform decisions.
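One way to make the 50-versus-6 comparison concrete is to attach an interval to each pass rate. The sketch below uses the Wilson score interval, a technique that is not part of the present analysis and is swapped in purely for illustration; the function name and the choice of a 95% level (z = 1.96) are assumptions.

```python
import math


def wilson_lower_bound(n_passed: int, n_candidates: int, z: float = 1.96) -> float:
    """Lower limit of the Wilson score interval for a pass proportion."""
    p = n_passed / n_candidates
    z2 = z * z
    centre = p + z2 / (2 * n_candidates)
    margin = z * math.sqrt(p * (1 - p) / n_candidates + z2 / (4 * n_candidates**2))
    return (centre - margin) / (1 + z2 / n_candidates)


# Two hypothetical programs, each with a 100% pass rate.
for n in (6, 50):
    print(n, round(wilson_lower_bound(n, n), 3))
```

Under these assumptions, the lower limit is roughly 0.61 for 6 of 6 candidates passing and roughly 0.93 for 50 of 50, which quantifies why the same percentage is less informative when few candidates sat for the examination.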

In conducting this exploratory analysis, additional questions arise as to which other variables may affect VCS pass rates, and whether the BACB should report on these variables. The BACB publishes data on two critical criteria required to earn the BCBA credential (i.e., completion of coursework and passing the certification examination), but leaves out the equally essential requirement of supervised practice. Some VCS programs provide intensive practicum or support to students in identifying a practicum site, whereas others offer coursework only. This variability across VCS programs may also affect the degree to which students are prepared for the examination. For instance, students may complete a rigorous VCS that offers coursework only, and then proceed to earn their supervised practice hours with an employer unaffiliated with the VCS. In this situation, only one of the two preceding steps to taking the examination was under the control of the VCS, yet the ultimate pass rate still reflects on the program. When that scenario is contrasted with the performance of students who complete a VCS that provides an intensive practicum component, the pass rate data may better reflect the VCS program’s influence on student examination performance (i.e., the maximum number of variables was controlled by the institution reporting the particular pass rate). Shepley et al. (2018) did not find any significant differences in mean pass rates across VCS programs based on supervision. However, data from only 1 year were analyzed, and VCS programs were coded binarily as to whether they provided supervision, without specifying the type of supervision provided (intensive practicum, practicum, or supervised independent field experience). Publishing the type of supervision offered by each VCS would assist in more accurate analyses of these data and better inform prospective students.

Although the most immediate implications of these data apply to prospective students themselves and their decision of whether to apply to or enroll in certain graduate programs, BCBA examination pass rate data also extend to decisions made by leadership in higher education institutions. Priority decisions involving funding and allocation of resources, investment in faculty training and retention, and resources to sustain departments of behavior analysis and VCS programs are influenced by BCBA examination pass rate data. When these data are reported with conflated or unreported variables, their utility as an accurate metric of program quality decreases.

The published pass rates of VCS programs in behavior analysis are used to make critical decisions, and understanding the variables that influence pass rates is vital for their interpretation. The current study analyzed data published by the BACB on program characteristics and pass rates across VCS programs. A weak, negative correlation was detected between pass rate and number of first-time candidates. Differences in mean pass rates were detected among program modes, but a causal relation cannot be inferred from the present data. Moreover, additional program characteristics such as average class size, average latency to sitting for the examination, and practicum requirements may also be associated with differential pass rates. Publishing these data alongside existing VCS characteristics would facilitate additional investigation in this area.

Declarations

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Ethical Approval

This article does not include research with human participants or animals by any of the authors.

Footnotes

Research Highlights

• Statistical analyses revealed relations among VCS characteristics reported by the BACB and certification examination pass rates.

• A negative correlation between number of first-time candidates and pass rates was detected, though this correlation was extremely weak.

• Mean pass rates were higher for campus and hybrid programs than for distance programs, and mean numbers of first-time candidates were higher for distance programs than for campus programs.

• Potential explanations for these findings include differences in VCS quality and/or characteristics of students who select different program modes or cohort sizes, and further investigation is needed to clarify the nature of these variables’ influences.

• Additional variables, such as practicum requirements, average class sizes, and average latency to sitting for the examination may also influence an institution’s pass rate, and therefore would be beneficial additions to the BACB’s publications.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Applied Behavior Analysis Programs Guide. (2019). Top 25 best applied behavior analysis programs 2019. https://www.appliedbehavioranalysisprograms.com/rankings/applied-behavior-analysis-programs/

- Arias, J. J., & Walker, D. M. (2004). Additional evidence on the relationship between class size and student performance. Journal of Economic Education, 35(4), 311–329. https://doi.org/10.3200/JECE.35.4.311-329

- Association for Behavior Analysis International (ABAI). (n.d.). Accreditation standards. https://accreditation.abainternational.org/apply/accreditation-standards.aspx

- Behavior Analyst Certification Board (BACB). (2020a). About the BACB. https://www.bacb.com/about/

- Behavior Analyst Certification Board (BACB). (2020b). BCBA examination pass rates for verified course sequences. https://www.bacb.com/wp-content/uploads/2020/05/BCBA-Pass-Rates-Combined-20200422.pdf

- Carr, J. E., & Nosik, M. R. (2017). Professional credentialing of practicing behavior analysts. Policy Insights from the Behavioral & Brain Sciences, 4(1), 3–8. https://doi.org/10.1177/2372732216685861

- Critchfield, T. S. (2015). What counts as high-quality practitioner training in applied behavior analysis? Behavior Analysis in Practice, 8(1), 3–6. https://doi.org/10.1007/s40617-015-0049-0

- de Winter, J. C. F., Gosling, S. D., & Potter, J. (2016). Comparing the Pearson and Spearman correlation coefficients across distributions and sample sizes: A tutorial using simulations and empirical data. Psychological Methods, 21(3), 273–290. https://doi.org/10.1037/met0000079

- Dixon, M. R., Reed, D. D., Smith, T., Belisle, J., & Jackson, R. E. (2015). Research ranking of behavior analytic training programs and their faculty. Behavior Analysis in Practice, 8(1), 7–15. https://doi.org/10.1007/s40617-015-0057-0

- Dodge, Y. (2008). The concise encyclopedia of statistics. Springer. https://doi.org/10.1111/j.1751-5823.2008.00062_25.x

- Guder, F., Malliaris, M., & Jalilvand, A. (2009). Changing the culture of a school: The effect of larger class size on instructor and student performance. American Journal of Business Education, 2(9), 83–90.

- Kazemi, E., Fahmie, T. A., & Eldevik, S. (2019). Experimental analysis of behavior readings assigned by accredited master's degree programs in behavior analysis. Behavior Analysis in Practice, 12, 12–21. https://doi.org/10.1007/s40617-018-00301-w

- Kelley, D. P., III, Wilder, D. A., Carr, J. E., Rey, C., Green, N., & Lipschultz, J. (2015). Research productivity among practitioners in behavior analysis: Recommendations from the prolific. Behavior Analysis in Practice, 8(2), 201–206. https://doi.org/10.1007/s40617-015-0064-1

- Malangoni, M. A., Jones, A. T., Rubright, J., Biester, T. W., Buyske, J., & Lewis, J. F. R. (2012). Delay in taking the American Board of Surgery qualifying examination affects examination performance. Surgery, 152(4), 738–746. https://doi.org/10.1016/j.surg.2012.07.001

- Marco, C. A., Counselman, F. L., Korte, R. C., Purosky, R. G., Whitley, C. T., Reisdorff, E. J., & Burton, J. (2014). Delaying the American Board of Emergency Medicine qualifying examination is associated with poorer performance. Academic Emergency Medicine, 21(6), 688–693. https://doi.org/10.1111/acem.12391

- Pastrana, S. J., Frewing, T. M., Grow, L. L., Nosik, M. R., Turner, M., & Carr, J. E. (2018). Frequently assigned readings in behavior analysis graduate training programs. Behavior Analysis in Practice, 11(3), 267–273. https://doi.org/10.1007/s40617-016-0137-9

- Shepley, C., Allday, R. A., Crawford, D., Pence, R., Johnson, M., & Winstead, O. (2017). Examining the emphasis on consultation in behavior analyst preparation programs. Behavior Analysis: Research & Practice, 17(4), 381–392. https://doi.org/10.1037/bar0000064

- Shepley, C., Allday, R. A., & Shepley, S. B. (2018). Towards a meaningful analysis of behavior analyst preparation programs. Behavior Analysis in Practice, 11(1), 39–45. https://doi.org/10.1007/s40617-017-0193-9

- Tseng, H. K. (2010). Has the student performance in managerial economics been affected by the class size of principles of microeconomics? Journal of Economics & Economic Education Research, 11(3), 15–27.