Abstract

Categorical perception, indicated by superior discrimination between stimuli that cross categorical boundaries than between stimuli within a category, is an efficient manner of classification. The current study examined the development of categorical perception of emotional stimuli in infancy. We used morphed facial images to investigate whether infants find contrasts between emotional facial images that cross categorical boundaries to be more salient than those that do not, while matching the degree of differences in the two contrasts. Five-month-olds exhibited sensitivity to the categorical boundary between sadness and disgust, between happiness and surprise, as well as between sadness and anger but not between anger and disgust. Even 9-month-olds failed to exhibit evidence of a definitive category boundary between anger and disgust. These findings indicate the presence of discrete boundaries between some, but not all, of the basic emotions early in life. Implications of these findings for the major theories of emotion representation are discussed.

Our ability to appropriately categorize emotions is essential to our success in interacting and communicating with others. An efficient manner in which adults represent basic emotion categories is by treating them as discrete entities with definitive borders (Etcoff & Magee, 1992; de Gelder, Teunisse, & Benson, 1997). However, there is ongoing debate and uncertainty about the nature of these categories and their developmental origins (Barrett, 2006; Ekman, 2016; Widen, 2013). Specifically, it is unknown whether young infants treat emotion categories within positive and negative valence classes as discrete entities and how this kind of categorical perception develops during the first year of life. This topic was addressed in the current experiments.

Some theorists assume that emotions are fundamental entities that are segregated from each other early in life via innate mechanisms (e.g., Ekman, 1972; Izard, Woodburn, & Finlon, 2010), while others believe that emotion categories develop slowly and depend upon conceptual development associated with language and culture (e.g., Widen, 2013; Widen & Russell, 2008). However, this debate regarding the nature of emotion categories is intertwined with the complex nature of emotions, such that both theoretical approaches might be correct to some extent depending upon the level at which emotion knowledge is analyzed. Thus, for example, Brosch, Pourtois, and Sander (2010) reviewed the literature on emotion categorization and concluded that the basic percept of emotions may lend itself to discrete categorization at above-chance levels, although higher levels of emotion knowledge reflected in such functions as naming of emotions and discrimination of subtle emotions may be affected by language development and culture. By testing infants within the first year of life, the current experiments addressed the development of emotion categories at the basic percept level. Specifically, we examined whether 5- and 9-month-olds’ discrimination of emotional information in faces indicates discrete category boundaries. Dichotomous perception of emotion continua during the first year of life would indicate the presence of an early developing perceptual process for efficient categorical representations of emotion expressions.

Distinct category boundaries

In principle, categories could have strict boundaries such that a contrast between exemplars that cross a category boundary would be more salient than a contrast within a category. Alternatively, category boundaries may not be well defined, and equivalent changes across and within category boundaries might be equally discriminable. Studies attempting to differentiate between discrete and continuous categorization have been conducted in a number of domains, including color (Bornstein, Kessen, & Weiskopf, 1976; Clifford, Franklin, Davies, & Holmes, 2009; Hu, Hanley, Zhang, Liu, & Roberson, 2014), emotion (Etcoff & Magee, 1992; Kotsoni, de Haan, & Johnson, 2001; Lee, Cheal, & Rutherford, 2015), and speech sounds (Altmann et al., 2014; Eimas, Siqueland, Jusczyk, & Vigorito, 1971). In particular, Etcoff and Magee (1992) found that adults discriminated between facial expressions that crossed an emotion category boundary more accurately than between expressions that were within an emotion category. The authors suggested that their findings provide support for the theory that emotion categories are discrete (Ekman, 1992; Izard, 1994). Furthermore, de Gelder et al. (1997) found that both adults and children (9- to 10-year-olds) demonstrate categorical perception of facial expressions on happy–sad, angry–sad, and angry–afraid continua. Additionally, Sauter, LeGuen, and Haun (2011) found that individuals who speak Yucatec Maya exhibit categorical perception of disgust and anger despite their language not containing words that distinguish between these two emotion categories, indicating that verbal labels for emotion categories are not a prerequisite for demonstrating categorical perception of emotion.

Moreover, extensive evidence of infants’ ability to discriminate among facial emotion expressions has been thought to suggest innateness or at least rapid development of emotion categories (e.g., Adams, Gordon, Baird, Ambady, & Kleck, 2003; Ludemann & Nelson, 1988; Schwartz, Izard, & Ansul, 1985; Walker-Andrews, 1997). For instance, newborns show a preference for happy over fearful faces (Farroni, Menon, Rigato, & Johnson, 2007), 3-month-olds discriminate smiling from frowning faces (Barrera & Maurer, 1981) and happy from surprised faces (Young-Browne, Rosenfeld, & Horowitz, 1977), 4-month-olds look longer at happy than at angry expressions (LaBarbera, Izard, Vietze, & Parisi, 1976), and 4- to 6-month-olds discriminate among facial expressions of anger, fear, and surprise (Serrano, Iglesias, & Loeches, 1992). Furthermore, 7-month-old infants show evidence of categorization when familiarized to happy expressions and tested with happy/fear contrasts (Kotsoni et al., 2001; Ludemann & Nelson, 1988; Nelson, Morse, & Leavitt, 1979), and Bornstein and Arterberry (2003) documented evidence of the development of categorization for happy facial expressions as early as 5 months of age. Based on these kinds of results, Ekman, Friesen, and Ellsworth (2013) argue that preverbal infants can discriminate between emotion expressions which, along with the universality of emotion categories, suggest an innate or quickly developing ability to categorize emotions (Izard, 1994; Ludemann & Nelson, 1988; Serrano et al., 1992). Thus, such empirical findings have been thought to support Ekman’s (1972, 1989) basic emotions theory which posits that discrete emotion categories are innate.

However, other researchers disagree with the view that emotion knowledge develops early and involves contrasts between discrete categories (e.g., Russell & Pratt, 1980; Widen, 2013; Widen & Russell, 2008). Widen and Russell (2008) suggest that emotion categories develop slowly, driven by extensive experience, language, and conceptual development. In other words, rather than reflecting an innate sensitivity to emotion categories, the previously discussed preference that young infants exhibit toward happy faces (e.g., Barrera & Maurer, 1981; Farroni et al., 2007; Young-Browne et al., 1977) and their superior categorization of happy faces (e.g., Kotsoni et al., 2001) could be a function of the differing frequencies of infants’ experience with positively versus negatively valenced emotions. Furthermore, Widen and Russell urge caution when interpreting findings of emotion discrimination in infancy, as discrimination could be based on contrasts of values along dimensions rather than due to discrete categories. Moreover, Barrett (2006) suggests that emotions are not “natural kinds” but are categories constructed by adults as a function of experience and developed conceptual knowledge about emotions.

It is difficult to separate the effects of language and emotion labels from the perception of the physical structure of facial expressions of emotion when investigating children’s and adults’ emotion category knowledge. Therefore, testing infants who have not yet attained verbal proficiency may help elucidate the process of how visual differentiation of emotion categories develops. Specifically, if young infants exhibit evidence of categorical emotion perception, it would mean that, at least at a basic perceptual level, emotion categories are available early in life before language and labels develop.

Categorical perception of emotion in infancy

While many researchers have examined infants’ discrimination of emotions, few studies have specifically investigated infants’ categorical perception of emotions. Kotsoni et al. (2001) were among the first to apply the logic of categorical perception to emotions in infants. They hypothesized that 7-month-old infants would discriminate between facial expressions that cross an emotion category boundary, but not between those that are within an emotion category. Infants were familiarized to morphed happy and fearful facial expressions, with half of the infants being familiarized to majority fearful faces (e.g., 60% fearful/40% happy) and the other half being familiarized to majority happy faces (e.g., 40% fearful/60% happy). Following familiarization, infants were tested on two trial types: within-emotion and across-emotion. For example, in the within-emotion trials, infants familiarized with a majority happy face (i.e., 60% happy/40% fearful) were tested for discrimination between that face and an 80% happy/20% fearful face. In the across-emotion trials, infants were tested for discrimination between the familiarization face and a 60% fearful/40% happy face. In line with the authors’ predictions, infants discriminated between the test stimuli in the across-emotion condition but not in the within-emotion condition. That is, following familiarization to one expression, 7-month-olds discriminated it from an expression that crossed the boundary, but failed to discriminate it from another expression that did not cross the boundary. (Note that this result was obtained only when infants were familiarized to majority happy faces. The authors suggested that this asymmetry in performance was due to interference from infants’ spontaneous preference for fearful faces rather than a failure to discriminate the between-category faces when familiarized to fear.)

Furthermore, Leppänen, Richmond, Vogel-Farley, Moulson, and Nelson (2009) tested 7-month-olds’ categorical perception of emotion in faces, using within-emotion (e.g., happy/happy) and between-category (happy/sad) pairings. Like Kotsoni et al. (2001), Leppänen et al. (2009) found that 7-month-olds discriminated between stimuli that crossed the happy/sad categorical boundary but not between stimuli that were both either happy or sad. These findings suggest that preverbal infants are sensitive to discrete boundaries between emotions of opposing valence (e.g., happiness, sadness).

It is important to note that both the Kotsoni et al. (2001) and Leppänen et al. (2009) studies only contrasted oppositely valenced emotions (e.g., happiness versus fear; happiness versus sadness), rather than within-valence contrasts (e.g., fear versus sadness). Widen and Russell (2008) suggested that, rather than providing evidence of categorical perception of emotion, these findings may simply be explained as infants’ discrimination between extreme ends of the valence dimension (positive or negative). This would be consistent with the circumplex model of affect, which posits that cognitive representations of emotion categories are organized according to similarity in different dimensions, most importantly those of pleasure/displeasure (valence) and degree of arousal expressed by the actor (Russell, 1980).

Recently, Ruba, Johnson, Harris, and Wilbourn (2017) reported that 10- and 18-month-old infants do categorize stimuli within a valence class, specifically anger versus disgust. Infants were sequentially habituated to images of four different actresses all displaying the same emotion (i.e., all angry or all disgusted) and then tested on four different types of test trials (i.e., novel individual displaying novel emotion, novel individual displaying familiar emotion, familiar individual displaying novel emotion, and familiar individual displaying familiar emotion). Critically, both age-groups dishabituated (exhibited heightened looking) based on emotion expression. This indicates that they were able to categorize exemplars of anger and disgust. This contrast is noteworthy because the two emotions are not only negative but also similarly high in arousal. Thus, around the end of the first year of life, infants are capable of categorizing stimuli that come from similar emotion classes. However, the Ruba et al. study did not address the question of whether infants classify the anger/disgust stimuli into discrete or continuous classes. Thus, it remains unknown whether infants perceive emotions within a valence as categories with definitive discrete boundaries or as stimuli that vary along continuous dimensions of the sort posited by the circumplex model. Moreover, the youngest age tested by Ruba et al. was 10 months, so it is not clear whether infants in the first half-year of life are sensitive to discrete emotion categories.

We addressed these issues in the current experiments by testing 5-month-olds on within-valence emotion continua (sadness/disgust, sadness/anger, anger/disgust, and happiness/surprise) and observing infants’ visual preferences between pairs of images that either did, or did not, cross over the categorical boundary. Importantly, the degree of difference between stimuli in each of these sets was matched, which allowed us to document whether infants exhibited categorical perception within the positive valence (happiness/surprise) and negative valence (sadness/disgust, sadness/anger, anger/disgust) dimensions. If emotion categories early in life are discrete and mutually exclusive, one would expect differences in attention to images or image pairs that cross the categorical boundary compared to those that do not. However, if emotion categories in infancy vary continuously along certain underlying dimensions, then one would not expect such differences. The current investigation focused on contrasts within the positive and negative valence dimensions, rather than degree of arousal, as previous studies have demonstrated that infants are sensitive to discrete boundaries between oppositely valenced emotions (Kotsoni et al., 2001; Leppänen et al., 2009).

We chose to study the categorical perception of emotions at 5 months in Experiments 1 and 2. This age was chosen because researchers have suggested that the recognition of facial emotional expressions is available within the first 6 months of life (e.g., Haviland & Lelwica, 1987; Walker-Andrews, 2005), and 5 months is the youngest age at which emotion categorization has been previously documented (Bornstein & Arterberry, 2003). Also, 5-month-olds match facial (Vaillant-Molina, Bahrick, & Flom, 2013) and bodily (Heck, Chroust, White, Jubran, & Bhatt, 2018) emotions to vocal emotions, suggesting at least some level of emotion knowledge beyond simple discrimination of percepts within the visual modality. We also tested an older age-group, 9 months, in Experiment 3 on a contrast (anger/disgust) on which 5-month-olds failed to exhibit evidence of categorical perception in Experiments 1 and 2. Prior studies have found evidence of significant emotional and social development at 9 months compared to younger ages (Anzures, Quinn, Pascalis, Slater, & Lee, 2010; Hoehl & Pauen, 2017; Hoehl & Striano, 2010; Lee et al., 2015; Moore & Corkum, 1994). For example, 9-month-olds, but not 6-month-olds, form discrete categories of Caucasian and Asian faces (Anzures et al., 2010). Moreover, Lee et al. (2015) found that 9-month-olds exhibit categorical perception of happy/angry contrasts under conditions in which 6-month-olds do not. In Experiment 3, we tested the similar possibility that 9-month-olds would exhibit categorical perception of anger/disgust contrasts under conditions in which 5-month-olds do not.

EXPERIMENT 1

In this experiment, we used a spontaneous preference procedure to examine whether 5-month-old infants form discrete categories of emotions. A digital morphing software program was used to systematically create equally spaced intermediates along a continuum between two emotions. Infants were simultaneously shown two pairs of images that differed systematically in the proportion of two emotions. In one pair, the two images spanned the 50% category boundary (40% emotion A/60% emotion B and 60% emotion A/40% emotion B), while in the other pair, both images were within the same category (60% emotion A/40% emotion B and 80% emotion A/20% emotion B). Given that the images in each pair were equally spaced along the continuum and were therefore equally visually discrepant, differences in attention to across-category image pairs compared to the within-category pairs would be evidence of a discrete categorical boundary. Specifically, if the category boundary is salient to infants, it should render the across-emotion contrast more complex than the within-emotion contrast. Thus, it was predicted that infants would exhibit heightened attention to the across-emotion contrast, as they have been known to prefer complex over simple stimuli, at least within certain limits (Martin, 1975). Such heightened attention to the across-emotion contrast would be consistent with the results of previous studies utilizing event-related potentials suggesting that infants employ a deeper level of processing when viewing across-emotion contrasts compared to within-emotion contrasts (Leppänen et al., 2009). In Experiment 1, 5-month-olds were tested on one of four emotion contrasts: sadness/disgust, sadness/anger, anger/disgust, or happiness/surprise. These emotion pairs were chosen to specifically examine within-valence contrasts, with the first three involving negative emotions and the last involving positive emotions.

Within the negative dimension, the sadness/anger and sadness/disgust comparisons contrasted low arousal (sadness) with high-arousal (disgust, anger) stimuli, while the anger/disgust contrast contained two high-arousal emotions. If the categorical perception of these contrasts is affected by the similarity in arousal levels, it is possible that young infants would not exhibit categorical perception of the contrast between disgust and anger. If, on the other hand, these categories are all basic, universally available classes of stimuli that even young infants are sensitive to, then there would be no expectation of a difference in performance in the anger/disgust versus other conditions.

Method

The present study was conducted according to guidelines laid down in the Declaration of Helsinki, with written informed consent obtained from a parent or guardian for each child before any assessment or data collection. All procedures involving human subjects in this study were approved by the Institutional Review Board at the University of Kentucky.

Participants

Forty-eight 5-month-old infants (mean age = 151.12 days, SD = 5.35; 24 female) were tested in Experiment 1. Infants were recruited through birth announcements and a local university hospital. They were predominately Caucasian and from middle-class families. Data from six additional infants were excluded due to <20% looking during test trials.

Stimuli

In order to investigate whether infants categorize emotions in a discrete manner, we contrasted two pairs of faces, presented side-by-side, with one pair containing two faces from different emotion categories (across-emotion pair) and the other containing two faces from within the same category (within-emotion pair). Critically, the change in expression intensity between the two images in the across-emotion pair was the same as the change in expression intensity between the two images in the within-emotion pair. This was accomplished by using morphed images of the same actress displaying two emotions of interest, with a boundary between the emotions at 50% (see Figure 1). For example, infants in the sadness/disgust condition were shown two pairs of stimuli, one pair being the across-emotion contrast and the other being the within-emotion contrast. If infants’ total fixation duration to across-emotion contrasts (e.g., 60% sadness/40% disgust versus 40% sadness/60% disgust) is longer than their total fixation duration to within-emotion contrasts (e.g., 60% sadness/40% disgust versus 80% sadness/20% disgust), despite the equivalence in the degree of differences (each contrast spanning 20% of the continuum), it would indicate that infants are sensitive to the boundary between emotion categories.
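The logic of the two contrasts can be made concrete with a short sketch. It is illustrative only: the names `Contrast`, `build_contrasts`, and `BOUNDARY` are hypothetical and not part of the study's materials, and the code simply encodes the morph levels given in the text (percent of emotion A) to check that the two pairs are equally spaced while only one of them straddles the 50% boundary.

```python
from typing import List, NamedTuple

BOUNDARY = 50  # category boundary assumed in the text, in percent of emotion A


class Contrast(NamedTuple):
    kind: str     # "across" or "within"
    first: int    # percent of emotion A in the first face
    second: int   # percent of emotion A in the second face

    @property
    def step(self) -> int:
        """Distance between the two faces along the morph continuum."""
        return abs(self.first - self.second)

    @property
    def crosses_boundary(self) -> bool:
        """True when the two faces fall on opposite sides of the boundary."""
        low, high = sorted((self.first, self.second))
        return low < BOUNDARY < high


def build_contrasts() -> List[Contrast]:
    """Return the across-emotion and within-emotion pairs for one continuum."""
    return [Contrast("across", 40, 60), Contrast("within", 60, 80)]


for contrast in build_contrasts():
    print(contrast.kind, contrast.step, contrast.crosses_boundary)
```

Both contrasts span 20% of the continuum, so any difference in looking between them cannot be attributed to the nominal size of the morph step.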

Figure 1.

Examples of the faces used in the spontaneous preference looking procedure in the sad/disgust emotion contrast condition during Experiment 1. Note that the 0/100% faces are the original emotion facial expressions from the Radboud Faces Database (Langner et al., 2010) that were used in the FantaMorph software to create the continuum of morphed emotional expressions. The resulting morphed emotion expressions were retouched in Adobe Photoshop.

The face stimuli were created from full color Caucasian female faces taken from the NimStim set (Tottenham et al., 2009; face numbers 02, 07, and 09) and from the Radboud set (Langner et al., 2010; face number 01). Only female faces were used in this study because previous research indicates that infants generally exhibit a greater degree of expertise on female images than on male images (Quinn, Yahr, Kuhn, Slater, & Pascalis, 2002; Ramsey, Langlois, & Marti, 2005). Therefore, the use of female models in the current experiment provided infants with the best opportunity to demonstrate sensitivity to the boundary between emotion categories. Each individual displayed a happy, surprise, sad, angry, or disgust expression. These are prototypical facial expressions conveying purported “basic” emotions (Ekman, 1992). We elected not to include fearful facial expressions because of the early emergence of differential attention to fearful versus other emotional stimuli (Heck, Hock, White, Jubran, & Bhatt, 2016; Leppänen, Moulson, Vogel-Farley, & Nelson, 2007; Peltola, Hietanen, Forssman, & Leppänen, 2013), which could interfere with the measurement of differential attention to across-emotion versus within-emotion contrasts. Furthermore, recall that there was an asymmetry noted in the Kotsoni et al. (2001) study of infants’ categorical perception of happy/fear contrasts. The authors speculated that infants’ spontaneous preference for fearful faces interfered with their performance on the categorical perception task.

The 100% emotion faces were used as endpoints in the morphing process. Three 100% faces for each emotion (from the four possible actresses) were selected. To represent the emotional continua, four morphed images were created for each pair of faces (20, 40, 60, and 80% emotion A, with the complementary percent composed of emotion B) using Abrosoft FantaMorph SE. This is similar to the morphing technique used by Kotsoni et al. (2001) and Leppänen et al. (2009). The visual angle for each face subtended approximately 8.67° horizontally and 11.14° vertically. The images within a pair were separated by a visual angle of 1.62°, and the image pairs were separated by 4.78°.

We verified the equivalence of the degree of difference between the stimuli in the within-emotion and across-emotion contrasts by examining the number of pixel changes. The amount of pixel change between the two images in the across-emotion contrasts was compared to the amount of pixel change in the within-emotion contrasts using ImageDiff 1.0.1 (created by ionForge). That is, the average percent of different pixels between the 40% emotion A/60% emotion B stimulus and the 60% emotion A/40% emotion B stimulus was compared to the average percent of different pixels between the 60% emotion A/40% emotion B stimulus and the 80% emotion A/20% emotion B stimulus. A stimulus set shown to an individual infant consisted of two paired contrasts (each including 2 images; see Figure 2). Difference scores were calculated for each contrast, and a paired-sample t-test was used to determine whether there was a systematic difference between the across-emotion contrasts compared to the within-emotion contrasts paired by stimulus set. Across all infants, there were 24 pairs of contrasts (3 exemplars × 4 Emotion Contrasts × 2 Majority Emotions). Majority emotion refers to the emotion expressed by three of the four faces that infants were exposed to in each contrast; that is, the faces composed of 60%, 60%, and 80% of that emotion. Across all four emotion continua, there was no evidence to suggest a difference between the pixel changes in the across-emotion (M = 38.66%; SE = 1.72) versus the within-emotion contrasts (M = 38.39%; SE = 1.86), t(23) = 0.18, p = .86. This suggests that differences in infants’ looking behavior were not due to greater changes in pixels in the across-emotion compared to the within-emotion morphed stimulus pairs.
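A check of this kind can be sketched as follows. This is not the ImageDiff implementation: the exact pixel-difference criterion used by that tool is not described here, so the sketch assumes that a pixel counts as "different" whenever its value is not identical in the two images. Images are represented as nested lists of pixel values, and `percent_different_pixels` and `paired_t` are hypothetical helper names.

```python
import math
from statistics import mean, stdev


def percent_different_pixels(img_a, img_b):
    """Percent of pixel positions whose values differ between two images.

    Assumption: a pixel is 'different' if its value is not exactly equal
    in the two images; the criterion used by ImageDiff may differ.
    """
    same_shape = len(img_a) == len(img_b) and all(
        len(row_a) == len(row_b) for row_a, row_b in zip(img_a, img_b)
    )
    if not same_shape:
        raise ValueError("images must have identical dimensions")
    total = sum(len(row) for row in img_a)
    changed = sum(
        pix_a != pix_b
        for row_a, row_b in zip(img_a, img_b)
        for pix_a, pix_b in zip(row_a, row_b)
    )
    return 100.0 * changed / total


def paired_t(across, within):
    """Paired-samples t statistic and degrees of freedom.

    `across` and `within` hold one pixel-change score per stimulus set,
    in the same order. Undefined if all differences are identical.
    """
    diffs = [a - w for a, w in zip(across, within)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1
```

With the 24 stimulus sets described above, `paired_t` would return 23 degrees of freedom, matching the reported t(23).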

Figure 2.

Examples of the stimuli shown to participants in Experiment 1. The black rectangles imposed on the images depict the across-emotion (left pair) and within-emotion (right pair) category boundary areas of interest (AOIs) used in data analysis. These rectangles were not visible to infants during test.

Procedure and apparatus

Infants were tested on a spontaneous preference paired-comparison procedure similar to that used in prior studies (e.g., Hock, Kangas, Zieber, & Bhatt, 2015; Zieber et al., 2010). They were tested for their preference between an across-emotion boundary (40% emotion A and 60% emotion A) face pair versus a within-emotion boundary (60% emotion A and 80% emotion A) face pair presented side-by-side on the screen (Figure 2). Each infant was tested on one of the four emotion contrasts noted earlier (sadness/disgust, sadness/anger, anger/disgust, or happiness/surprise). Infants sat on their parent’s lap approximately 60 cm in front of a 58-cm Tobii TX300 eye-tracking monitor. Parents wore opaque glasses and were instructed not to gesture toward the screen. The surroundings of the monitor and the video camera above the monitor were concealed with black curtains.

The eye tracker’s cameras recorded the reflection of an infrared light source on the cornea relative to the pupil from both eyes at a frequency of 300 Hz. In order to remove noise from the data, the I-VT fixation filter provided within Tobii Studio was used to classify which eye movements were considered to be valid fixations (i.e., any look that exceeded 60 msec while remaining within a 0.5° radius). This criterion is similar to that used in other studies on infants’ scanning (e.g., Hunnius, de Wit, Vrins, & von Hofsten, 2011; Papageorgiou et al., 2014).

Before starting data collection, each infant’s eyes were calibrated using a 5-point calibration procedure in which a 23.04 cm² red and yellow rattle coupled with a rhythmic sound was presented sequentially at five locations on the screen (i.e., the four corners and the center). An experimenter controlled the calibration process with a key press to advance to the next calibration point after the infant was judged (via a live video feed) to be looking at the current calibration point. The calibration procedure was repeated if calibration was not obtained for both eyes in more than one location. Eye-tracker calibration and stimulus presentation were controlled by Tobii Studio 3.3.1 software (Tobii Technology AB; www.tobii.com).

Once the infant was calibrated, four 12-second trials immediately followed. The experimenter controlled the stimulus presentation at the beginning of the trials from behind the curtain while monitoring the infant’s behavior through the live video feed. The interval between each trial consisted entirely of an attention-getter displayed on the screen in which colorful shapes alternated in a continuous fashion to attract infants’ attention to the center of the screen. The duration of the intertrial interval was not prespecified to allow each infant to be given as much time as they needed to become calmly attentive and focused on the center of the screen. When an experimenter judged the infant’s attention to be centered, he/she pressed a button to present the test stimuli. During each trial, the across-emotion pair (40% emotion A and 60% emotion A) was presented on one side of the monitor, while the within-emotion pair (60% emotion A and 80% emotion A) was presented on the other side. The left/right location of each pair on the first trial was counterbalanced across infants and varied across the four trials (e.g., the location of the across-emotion pair could be on the left during trial 1, right during trial 2, right during trial 3, left during trial 4). Additionally, in order to avoid the influence of potential side bias, the left/right location of the individual faces within each boundary pair was counterbalanced across infants (i.e., from left to right, half of the infants saw 40% A, 60% A, 60% A, and 80% A faces, while the other half saw 60% A, 40% A, 80% A, and 60% A faces).

Areas of interest (AOIs) were defined around the across- and within-emotion pairs (see Figure 2). Each AOI comprised 18% of the screen and had a horizontal visual angle of 18.46° and a vertical visual angle of 11.71°. The dependent measure was the proportion of fixation duration directed to the across-emotion contrast. This was computed by summing the total fixation duration to the across-emotion contrasts across all four trials and dividing this sum by the total fixation duration to both the across- and within-emotion contrasts across all trials. If infants’ mean proportional fixation duration is >50%, indicating that they looked longer at the pair of faces crossing the category boundary than at the pair from within category boundaries, this would suggest that they are sensitive to the boundaries between emotion categories. If, however, infants look equally at both the across- and within-emotion stimulus pairs, then that would suggest that infants may not be sensitive to discrete categorical boundaries between emotions at 5 months.
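As a minimal sketch of this computation, assuming fixation durations have already been summed per AOI within each trial, the measure can be written as below. The function name `across_preference` and the example durations are hypothetical and are not data from the study.

```python
def across_preference(trials):
    """Percent of total AOI fixation time spent on the across-emotion pair.

    `trials` is a list of (across_seconds, within_seconds) tuples, one per
    test trial. Durations are pooled over trials before dividing, as
    described in the text, rather than averaging per-trial proportions.
    """
    across_total = sum(across for across, _ in trials)
    within_total = sum(within for _, within in trials)
    total = across_total + within_total
    if total == 0:
        raise ValueError("no fixations recorded in either AOI")
    return 100.0 * across_total / total


# Hypothetical infant: four 12-second trials, durations in seconds.
example = [(3.0, 2.0), (2.5, 2.5), (4.0, 1.0), (1.5, 3.5)]
print(across_preference(example))  # 55.0, i.e., above the 50% chance level
```

Pooling before dividing weights each trial by how much the infant looked on it, so trials with very little looking contribute little to the score.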

Results and Discussion

An analysis of outlier status using percentiles and boxplots (Tukey, 1977; using SPSS version 22) was conducted in accordance with standard practice to protect against inflated error rates and distortions of statistical estimates. The scores of two infants in the sadness/disgust condition were identified as outliers and removed from subsequent analyses (see Table 1).
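For readers unfamiliar with the boxplot rule, the following sketch shows one common form of it: scores lying more than 1.5 interquartile ranges beyond the quartiles are flagged. It is an approximation under stated assumptions, not a reproduction of the SPSS procedure. SPSS computes quartiles with its own method (Tukey's hinges for boxplots), whereas this sketch uses the "inclusive" method of Python's `statistics.quantiles`, so borderline cases could be classified differently. The scores in the example are hypothetical.

```python
from statistics import quantiles


def tukey_outliers(scores, k=1.5):
    """Return scores lying beyond k * IQR from the first or third quartile.

    Assumption: quartiles are computed with the 'inclusive' method, which
    may not match the hinges used by SPSS boxplots.
    """
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [s for s in scores if s < lower or s > upper]


# Hypothetical percent-preference scores for one condition.
scores = [50, 52, 54, 55, 56, 57, 58, 60, 95]
print(tukey_outliers(scores))  # [95]
```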

TABLE 1.

Percent Preference for the Across-emotion Pair in Experiment 1

| Emotion contrast | n | Majority emotion | Mean % preference (SE) | t versus chance (50%) | p-value |
|---|---|---|---|---|---|
| Sadness/Disgust | 10 | Overall | 56.64 (2.43)ᵃ | 2.74 | .023* |
| | 5 | Sad | 57.82 (4.09) | 1.91 | .128 |
| | 5 | Disgust | 55.47 (3.02) | 1.81 | .145 |
| Sadness/Anger | 12 | Overall | 53.49 (1.75) | 1.99 | .072† |
| | 6 | Sad | 51.35 (2.87) | 0.47 | .658 |
| | 6 | Angry | 55.63 (1.85) | 3.05 | .028* |
| Anger/Disgust | 12 | Overall | 47.02 (3.35) | −0.89 | .393 |
| | 6 | Angry | 42.62 (2.94) | −2.51 | .054 |
| | 6 | Disgust | 51.41 (5.75) | 0.25 | .816 |
| Happiness/Surprise | 12 | Overall | 60.11 (4.24) | 2.39 | .036* |
| | 6 | Happy | 61.11 (7.62) | 1.46 | .204 |
| | 6 | Surprise | 59.11 (4.54) | 2.00 | .101 |

Note. Standard errors are presented in parentheses.

ᵃ When the two outliers in the sadness/disgust condition are included, the mean preference is still above chance (56.54%), but no longer statistically significant (p = .177).

* p < .05; † p < .10, two-tailed.

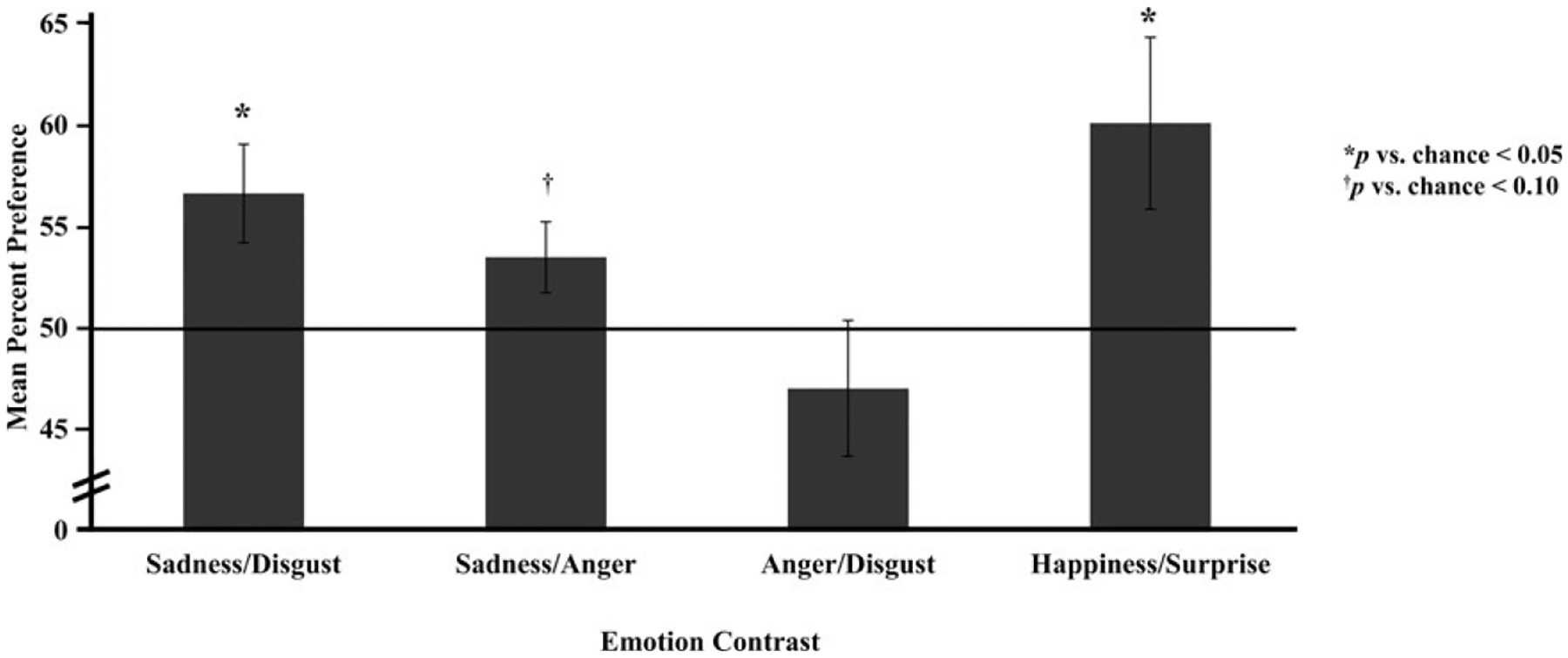

A univariate analysis of variance (ANOVA) with a between-subjects factor of emotion contrast (sadness/disgust, sadness/anger, anger/disgust, happiness/surprise) was used to investigate whether infants’ proportion fixation duration to the across-emotion pair differed based on the emotion contrast. All significance values reported in the current paper are two-tailed. Results from the ANOVA indicated that infants performed differently across the various emotion contrasts, F(3, 42) = 3.23, p = .03. Thus, planned follow-up one-sample t-tests comparing infants’ proportion fixation duration to the across-emotion pair to chance performance (50%) were performed for each emotion contrast condition tested in Experiment 1 (see Table 1). Infants in the happiness/surprise condition fixated significantly longer on the across-emotion pair (M = 60.11%; SE = 4.24) than would be expected by chance, t(11) = 2.39, p = .04, d = 0.69. Infants in the sadness/disgust condition also fixated significantly longer (M = 56.64%; SE = 2.43) than predicted by 50% chance, t(9) = 2.74, p = .02, d = 0.87. Infants’ proportion fixation duration to the across-emotion pair in the sadness/anger condition (M = 53.49%; SE = 1.75) was not significantly different from chance, t(11) = 1.99, p = .07, d = 0.58. Similarly, infants’ proportion fixation duration to the across-emotion pair in the anger/disgust condition (M = 47.02%; SE = 3.35) was not significantly different from chance, t(11) = −0.89, p = .39, d = 0.26. Thus, 5-month-olds in two emotion contrast conditions (sadness/disgust and happiness/surprise) looked significantly longer at face pairs that crossed an emotional boundary than at face contrasts that were within the same emotional boundary, but infants in the sadness/anger and anger/disgust conditions failed to do so (see Figure 3).
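The one-sample comparisons against chance can be approximately reconstructed from the summary statistics alone. The sketch below assumes that t is the distance of the mean from 50% in standard-error units and that Cohen's d is the same distance in standard-deviation units (equivalently, t divided by the square root of n). The function name is hypothetical, and because the inputs are rounded values from Table 1, the results match the reported statistics only to within rounding.

```python
import math


def one_sample_t_from_summary(mean, se, n, chance=50.0):
    """Return (t, df, d) for a one-sample test against `chance`.

    t = (mean - chance) / SE; Cohen's d = (mean - chance) / SD,
    where SD = SE * sqrt(n), so d = t / sqrt(n).
    """
    t = (mean - chance) / se
    d = t / math.sqrt(n)
    return t, n - 1, d


# Happiness/surprise condition of Experiment 1: M = 60.11, SE = 4.24, n = 12.
t_value, df, d_value = one_sample_t_from_summary(60.11, 4.24, 12)
# t_value is about 2.38 and d_value about 0.69 with df = 11.
```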

Figure 3.

Performance in Experiment 1 by contrast type. Independent-samples t-tests comparing each possible pair of contrasts revealed that the only significant differences were between anger/disgust and happiness/surprise, t(22) = 2.42, p = .02, d = 0.99, and between anger/disgust and sadness/disgust, t(20) = 2.24, p = .04, d = 0.98. However, it should be noted that the current investigation was not powered with the intention of directly comparing performance in the various emotion contrasts. Furthermore, emotion contrasts were not equated for discriminability, which may have resulted in between-contrast differences in the level of difficulty that were unrelated to the emotions expressed. Thus, such differences should be interpreted with caution.

These data suggest that discrete emotion category boundaries between happiness and surprise as well as between sadness and disgust develop by 5 months of age. The one-sample t-test comparing infants’ performance in the sadness/anger condition to chance revealed a nonsignificant trend (p = .072), providing only weak evidence that a perceptually discrete emotion category boundary between sadness and anger also develops by 5 months of age. Moreover, there was no evidence to indicate that 5-month-olds were sensitive to the categorical boundary between anger and disgust. In Experiment 2, we further examined 5-month-olds’ performance on the latter two contrasts.

EXPERIMENT 2

Recall that infants in Experiment 1 were tested using a spontaneous preference procedure that relied upon an a priori preference for the across- over the within-emotion contrast. This procedure may not have been sufficiently sensitive to generate clear evidence of categorical perception within the sadness/anger and anger/disgust contrasts. For example, infants may have processed the differences between across- versus within-emotion contrasts but not exhibited a preference. In other words, rather than infants being unable to detect the boundary between certain emotions, it is possible that certain boundaries did not attract the same level of attention spontaneously because the contrast between the emotions was not as familiar to young infants or as functionally significant. In prior studies, familiarization/novelty preference procedures have been used to induce infants to exhibit a preference under conditions in which they have previously failed to exhibit a spontaneous preference (e.g., Bhatt & Quinn, 2011; Slater, Earle, Morison, & Rose, 1985; Zieber, Kangas, Hock, & Bhatt, 2015). Because the familiarization procedure involves repeated exposure to a particular stimulus prior to testing infants for a preference between the familiarized versus novel stimulus, infants may be more likely to fixate on the novel stimulus, resulting in a novelty preference. Hence, in the current experiment, we used an infant-control habituation procedure to first familiarize infants to a stimulus from an emotion category (60% emotion A/40% emotion B) and then test infants for their preference between a novel face from within the familiar emotion category (80% emotion A/20% emotion B) versus a novel face that crossed the emotional boundary (40% emotion A/60% emotion B). This procedure has been used previously to demonstrate infants’ categorical perception of positive versus negative facial expressions (Kotsoni et al., 2001; Leppänen et al., 2009). Given that the amount of change going from the habituated image to both the novel across- and within-boundary images is equated, if infants exhibit a preference for the across-category image over the within-category image, it would indicate that infants are sensitive to a discrete boundary within that contrast.

Method

Participants

Thirty-six 5-month-old infants (mean age = 151.64 days, SD = 5.39; 18 female) participated in Experiment 2. Infants were recruited in a similar manner as in Experiment 1 and were predominantly Caucasian and from middle-class families. Data from sixteen additional infants were excluded for less than 20% looking during test trials (summed across all trials and divided by the total trial length; n = 13), fussiness (n = 1), or side bias (more than 90% of fixations directed to one side of the screen during the test trials; n = 2).

Stimuli

The stimuli were the same morphed faces used in the sadness/anger and anger/disgust contrast conditions of Experiment 1 (Figure 4). The faces were separated by a visual angle of 4.78°.

Figure 4.

Examples of the faces used in the infant-control habituation procedure in the sadness/anger emotion contrast in Experiments 2 and 3. Row (a) displays a sample habituation trial in which two 60% sad/40% angry faces are presented side-by-side. Row (b) is an example of a test trial in which a 40% sad/60% angry face (left) and an 80% sad/20% angry face (right) are paired. The rectangles overlaid on the images depict the 40% face AOI (across) and the 80% face AOI (within) used in data analysis. These rectangles were not visible to infants during test.

Procedure and apparatus

Each infant was tested on either the sadness/anger or anger/disgust contrast. An infant-control habituation procedure was used (Bhatt, Hayden, & Quinn, 2007; Hayden, Bhatt, & Quinn, 2008; Kangas, Zieber, Hayden, & Bhatt, 2013). Each habituation and test trial began with the presentation of alternating green and purple shapes to direct infants’ attention to the middle of the screen. Once an experimenter judged that the infant was looking to these alternating shapes, he/she pressed a key to start the trial. During habituation trials, two identical copies of a 60% emotion face were presented, one on the left side and the other on the right side of the monitor (Figure 4). The faces remained on the screen until the infant looked away for 2 sec or until 75 sec had passed. Habituation trials continued until infants’ mean look duration during three consecutive trials was <50% of the average for the three longest look duration trials or until a maximum of 20 trials was reached. Once the infant reached habituation criterion, four 10-sec test trials immediately followed. During the test trials, the across-emotion face (40%) was presented on one side of the monitor and the within-emotion face (80%) was presented on the other side (Figure 4). The left/right location of each face on the first trial was counterbalanced across infants. Additionally, to avoid the influence of potential side bias, the left/right location of the faces switched across the four trials (e.g., the location of the 40% face could be on the left during trial 1, right during trial 2, right during trial 3, and left during trial 4). Moreover, half of the infants were habituated to 60% emotion A faces, thus making stimuli from the emotion B category the across-emotion faces, while the other half of infants were habituated to 60% emotion B faces, making stimuli from the emotion A category the across-emotion faces. 
For example, in the sadness/anger condition, half of the infants were habituated to sadness and the other half were habituated to anger.
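The habituation criterion described above can be sketched as follows. Several details are assumptions made for illustration only: the criterion is evaluated after every trial from the third onward, the "three consecutive trials" are the three most recent ones, and the "three longest" trials are taken over all trials completed so far. The function name and look durations are hypothetical and are not drawn from the study's testing software.

```python
def trials_to_habituation(look_durations, window=3, ratio=0.5, max_trials=20):
    """Return (trial_number, criterion_met) for one infant.

    Habituation stops at the first trial on which the mean of the last
    `window` look durations is below `ratio` times the mean of the
    `window` longest look durations so far, or at `max_trials`.
    """
    last_trial = min(len(look_durations), max_trials)
    for n in range(window, last_trial + 1):
        seen = look_durations[:n]
        recent_mean = sum(seen[-window:]) / window
        longest_mean = sum(sorted(seen, reverse=True)[:window]) / window
        if recent_mean < ratio * longest_mean:
            return n, True
    # Criterion never met: testing ends at the trial cap (or when data end).
    return last_trial, False


# Made-up look durations in seconds, one value per habituation trial.
example_looks = [30, 28, 25, 20, 12, 10, 9]
stop_trial, met = trials_to_habituation(example_looks)
```

In this invented sequence the criterion is first met on trial 7, where the last three looks average about 10.3 s against a threshold of about 13.8 s (half the mean of the three longest looks).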

Areas of interest (AOIs) during the test trials were defined around the across- and within-emotion faces (Figure 4). The across and within AOIs each comprised 9.14% of the screen and had a horizontal visual angle of 8.8° and a vertical visual angle of 11°. The dependent measure was the proportion fixation duration to the across-emotion face during the four test trials. This was calculated by summing the total fixation duration to the across-emotion face across all four test trials and dividing this sum by the total fixation duration to both the across- and within-emotion faces across all trials. If infants look longer to a face from the emotion B category following habituation to a face from the emotion A category, this would indicate that infants are sensitive to differences in facial expressions between emotion categories. Recall that the visual discrepancy of each across- and within-test image from the habituation image was equated. Therefore, a preference for the image that crosses the category boundary over the one that does not can be interpreted as sensitivity to a discrete category boundary. In contrast, if there are no significant differences in looking times to across- versus within-emotion faces, then it would suggest lack of evidence of discrete categories.

Results and Discussion

An analysis of outlier status conducted in the same manner as in Experiment 1 revealed one outlier in the sadness/anger condition. This score was removed from the remaining analyses. The mean times required for infants to habituate to the 60% stimuli are presented in Table 2. An independent-samples t-test comparing infants’ habituation times in the sadness/anger condition (M = 180.59 s; SE = 40.37) versus the anger/disgust condition (M = 178.49 s; SE = 23.17) revealed no significant difference, t(33) = 0.05, p = .96, d = 0.02. Thus, we found no evidence to suggest differences in the patterns of habituation across the two conditions.

TABLE 2.

Time to Habituate and Percent Preference for the Across-emotion Stimulus in Experiments 2 and 3

| Emotion contrast | Age group | n | Habituation emotion | Mean number of trials to habituate (SE) | Mean habituation time in seconds (SE) | Mean seconds, first three habituation trials (SE) | Mean seconds, last three habituation trials (SE) | Mean % preference (SE) | t versus chance (50%) | p-value |
|---|---|---|---|---|---|---|---|---|---|---|
| Sadness/Anger | 5 month | 17 | Overall | 7.59 (0.52) | 180.59 (23.87) | 26.48 (4.76) | 12.84 (2.17) | 57.35 (3.06)a | 2.40 | .029* |
| | | 9 | Sad | 6.78 (0.57) | 165.69 (28.74) | 32.36 (7.04) | 12.16 (2.95) | 55.45 (5.00) | 1.09 | .307 |
| | | 8 | Angry | 8.50 (0.82) | 197.36 (40.38) | 19.86 (5.88) | 13.60 (3.40) | 59.72 (3.48) | 2.72 | .029* |
| Anger/Disgust | 5 month | 18 | Overall | 6.67 (0.28) | 178.49 (23.17) | 30.47 (4.08) | 13.91 (2.34) | 48.17 (2.48) | −0.73 | .472 |
| | | 9 | Angry | 7.00 (0.50) | 191.68 (32.97) | 31.29 (5.67) | 14.27 (3.37) | 46.07 (3.95) | −0.97 | .349 |
| | | 9 | Disgust | 6.33 (0.24) | 165.29 (33.94) | 29.65 (6.20) | 13.55 (3.44) | 50.28 (3.08) | 0.92 | .283 |
| Anger/Disgust | 9 month | 18 | Overall | 8.06 (0.59) | 113.19 (15.72) | 14.39 (1.93) | 9.07 (2.59) | 52.20 (3.41) | 0.64 | .528 |
| | | 9 | Angry | 7.89 (0.87) | 95.69 (7.67) | 13.69 (2.36) | 12.47 (4.98) | 52.97 (5.33) | 0.56 | .592 |
| | | 9 | Disgust | 8.22 (0.83) | 130.68 (30.26) | 15.08 (3.19) | 5.67 (0.84) | 51.43 (4.58) | 0.31 | .763 |

Note. Standard errors are presented in parentheses.

a When the outlier in the sadness/anger condition is included, the mean preference is still above chance (55.20%), but no longer significant (p = .167).

*p < .05, two-tailed.

Infants’ mean across-emotion preference scores during the test trials are also shown in Table 2. Similar to Experiment 1, one-sample t-tests comparing infants’ proportion fixation duration to the face that crosses the emotional boundary (40% face) to chance performance were performed. Infants in the sadness/anger condition fixated significantly longer (M = 57.35%; SE = 3.06) on the 40% face than chance performance would predict, t(16) = 2.40, p = .03, d = 0.58. However, infants in the anger/disgust condition (M = 48.17%; SE = 2.48) failed to exhibit evidence of discrimination, t(17) = −0.74, p = .47, d = 0.17. Moreover, an independent-samples t-test indicated that the score in the sadness/anger condition was significantly greater than the score in the anger/disgust condition, t(33) = 2.34, p = .03, d = 0.79. Thus, 5-month-olds exhibited clear evidence of categorical perception in the sadness/anger condition but not in the anger/disgust condition. Together, Experiments 1 and 2 provide evidence of sensitivity to a discrete category boundary at 5 months of age in the case of the sadness/disgust, happiness/surprise, and sadness/anger emotion contrasts. However, 5-month-olds failed to exhibit evidence of sensitivity to a discrete category boundary between anger and disgust.
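The between-condition comparison can likewise be approximated from the summary statistics in Table 2. The sketch below assumes a pooled-variance (equal variances) independent-samples t-test and a Cohen's d based on the pooled standard deviation; whether the original analysis used exactly these definitions is an assumption, and the function name is hypothetical. Inputs are the rounded means and standard errors, so the output agrees with the reported values only to within rounding.

```python
import math


def pooled_t_from_summary(m1, se1, n1, m2, se2, n2):
    """Return (t, df, d) for two independent groups, pooled variance.

    Standard deviations are recovered from standard errors
    (SD = SE * sqrt(n)) before pooling.
    """
    var1 = (se1 * math.sqrt(n1)) ** 2
    var2 = (se2 * math.sqrt(n2)) ** 2
    df = n1 + n2 - 2
    pooled_sd = math.sqrt(((n1 - 1) * var1 + (n2 - 1) * var2) / df)
    t = (m1 - m2) / (pooled_sd * math.sqrt(1 / n1 + 1 / n2))
    d = (m1 - m2) / pooled_sd
    return t, df, d


# Experiment 2: sadness/anger (M = 57.35, SE = 3.06, n = 17)
# versus anger/disgust (M = 48.17, SE = 2.48, n = 18).
t_between, df_between, d_between = pooled_t_from_summary(
    57.35, 3.06, 17, 48.17, 2.48, 18
)
# t_between is about 2.34 with df = 33, and d_between about 0.79.
```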

EXPERIMENT 3

It is possible that 5-month-olds’ failure to exhibit categorical perception of anger/disgust contrasts in Experiments 1 and 2 is because these emotions are not fully differentiated by 5 months, and it takes longer for the categorical boundary between anger and disgust to develop (Ruba et al., 2017). One reason for this might be the overlap in several physical facial features (e.g., face muscular structure) associated with angry and disgusted expressions (Aviezer, Hassin, Bentin, & Trope, 2008). Computerized analysis (Susskind, Littlewort, Bartlett, Movellan, & Anderson, 2007) and human errors research (Ekman & Friesen, 1976) attest to the similarity between anger and disgust. Anger and disgust are also quite similar to each other in Russell’s dimensional model (Russell, 2003). It is thus possible that only infants older than 5 months exhibit categorical perception of the contrast between disgust and anger. To examine this possibility in Experiment 3, we tested 9-month-old infants’ sensitivity to the emotion boundary between anger and disgust using the infant-control habituation procedure from Experiment 2.

We chose to study 9-month-olds because, as discussed in the Introduction, prior research has demonstrated critical differences in many aspects of social perception between 9 months and younger ages. For example, 9-month-olds in Lee et al. (2015) exhibited categorical perception of happy/angry contrasts under conditions in which 6-month-olds did not.

Similarly, 9-month-olds, but not 6-month-olds, formed discrete categories of Caucasian and Asian faces in Anzures et al. (2010). If 9-month-olds exhibit evidence of categorical perception when tested using the same procedure as in Experiment 2, then it would indicate that there is a developmental change in categorical perception from 5 to 9 months of age. If, however, even 9-month-olds fail to exhibit evidence of categorical perception, then it would suggest that it takes longer than 9 months to develop categorical representation of the anger/disgust contrast.

Method

Participants

Eighteen 9-month-old infants (mean age = 268.78 days, SD = 10.20; 10 female) participated in Experiment 3. Infants were recruited in a similar manner as in Experiments 1 and 2 and were predominantly Caucasian and from middle-class families. The data from seven additional infants were excluded due to fussiness (n = 1), less than 20% looking during test trials (n = 4), sibling interference (n = 1), or side bias (n = 1).

Stimuli, procedure, and apparatus

The stimuli were the same morphed faces used in the anger/disgust emotion contrast condition of Experiments 1 and 2. The procedure, apparatus, and dependent measure were the same as those used in Experiment 2.

Results and Discussion

An analysis of outlier status conducted in the same manner as in Experiments 1 and 2 revealed no outliers. Therefore, the scores of all infants were included in the following analyses. The mean times required for infants to habituate and mean percent preferences for the across-emotion stimulus are presented in Table 2. A one-sample t-test indicated that 9-month-olds did not fixate on the 40% face longer than predicted by chance, [M = 52.20%; SE = 3.41; t(17) = 0.65, p = .53, d = 0.15]. Furthermore, an independent-samples t-test revealed that 9-month-olds’ performance did not significantly differ from 5-month-olds’ performance in the anger/disgust condition from Experiment 2, t(34) = 0.95, p = .36, d = 0.38. Thus, as in the case of 5-month-olds in Experiment 2, this result suggests the lack of a discrete category boundary between anger and disgust at 9 months. This finding indicates that sensitivity to the boundary between anger and disgust develops sometime after 9 months of age.

GENERAL DISCUSSION

The current results provide clear evidence of sensitivity to category boundaries among emotions early in life. This was demonstrated by infants’ greater attention to stimulus comparisons that crossed a categorical boundary than to comparisons that did not, even when controlling for the degree of difference between images. Five-month-olds exhibited sensitivity to category boundaries between happiness and surprise, between sadness and disgust, and between sadness and anger but not between anger and disgust. Even 9-month-olds failed to exhibit sensitivity to a boundary between angry and disgusted faces. These findings indicate that, at least at a basic perceptual level, many emotion categories are differentiated early in life, although some categorical contrasts take longer than 9 months to develop.

It is possible that the presence of dichotomized emotion categories early in life is due to a rapidly developing social cognition system that is geared to respond to social cues like emotion (Bhatt, Hock, White, Jubran, & Galati, 2016; Simion & Di Giorgio, 2015). Such a system might benefit from regularities in the facial features that signal emotions and lend themselves to organization and categorization. The regular nature of emotional signals, at least when they portray prototypical emotions, is supported by reports in the literature that computer models are able to accurately categorize emotions (Susskind et al., 2007), dogs are able to match human emotional faces to voices (Albuquerque et al., 2016), and that pigeons discriminate between human emotions (Soto & Wasserman, 2011). Thus, low-level perceptual differentiation might develop early in infancy based on the systematic nature of the features of emotional faces, as suggested by the results of the current study. This conclusion is consistent with numerous reports in the literature on the rapid development of emotion processing in infancy (for reviews, see Grossmann, 2010; Quinn et al., 2011; Walker-Andrews, 2008). However, higher-level emotional knowledge that includes the processing of subtle emotions in context and the understanding of subjective states and affect might take longer to develop, perhaps based on the development of language and other higher-level cognition (Widen, 2013).

When examining the stimuli used in these experiments, salient differences between specific emotion expressions are readily apparent in some cases, the most striking being the visibility of the teeth. This raises the question whether infants were processing images based on “toothiness” rather than the emotion expressed (Caron, Caron, & Myers, 1985). However, “toothiness” does not differ systematically in all of the contrasts included. For example, in the happiness/surprise contrasts, teeth were visible in the portrayals of both emotions. Furthermore, performance in the contrasts that do differ in “toothiness” is not consistent (infants succeeded in the sadness/disgust contrast but failed in the anger/disgust contrast). Moreover, if stimulus-level salience differences varied between the across-emotion and within-emotion contrast types, it would have been reflected in the pixel-level image analysis (presented in the stimulus section of Experiment 1). Thus, it is unlikely that “toothiness” (or any other single low-level feature of that nature) was driving infants’ performance in the current investigation.

As noted in the Introduction, a few prior studies have demonstrated categorical perception of contrasts between emotional stimuli (Kotsoni et al., 2001; Leppänen et al., 2009). However, these studies examined contrasts across the positive and negative valence classes and did not test contrasts within each of the valence classes. Some researchers have argued that young infants are only sensitive to valence information and do not classify emotions within a valence class. For instance, Widen (2013) suggested that “Infants younger than 10 months of age respond emotionally and behaviorally to the valence of facial expressions … but do not interpret faces in terms of discrete negative emotions” (p. 73). However, the current results suggest that infants as young as 5 months are sensitive to categorical boundaries within negative emotions. This indicates a greater degree of perceptual development early in life than envisioned by some researchers.

Moreover, in prior studies, 7 months is the youngest age at which infants exhibited evidence of discrete categories (Kotsoni et al., 2001; Leppänen et al., 2009). The current research suggests that even younger infants, 5-month-olds, are sensitive to discrete category boundaries. The evidence of discrete category boundaries early in life is consistent with prior findings indicating that, by 5 months, infants attend differentially to negative emotions (Heck et al., 2016) and match facial (Vaillant-Molina et al., 2013) and bodily emotions (Heck et al., 2018) to vocal emotions. Altogether, these results suggest a sufficient level of emotion perception to detect boundaries between emotion categories by 5 months of age.

However, in the case of the anger/disgust contrast, neither 5-month-olds nor 9-month-olds exhibited sensitivity to category boundaries. This indicates that not all category contrasts are available as discrete categories early in life. The fact that 5-month-olds failed to exhibit sensitivity to a discrete category boundary between anger and disgust under conditions in which they did dichotomize happiness/surprise, sadness/disgust, and anger/sadness contrasts may be due to different levels of similarity between the prototypical basic emotions on the underlying dimensions of valence and arousal (Russell & Pratt, 1980). All the emotion pairs that 5-month-olds dichotomized varied in the arousal dimension. For example, anger and sadness have the same valence, but they differ in the arousal dimension. In contrast, anger and disgust are quite similar in the arousal dimension, and this may have prevented young infants’ dichotomization of these categories. This similarity between disgust and anger is illustrated by the fact that even children have difficulties distinguishing between anger and disgust (Pochedly, Widen, & Russell, 2012; Widen & Russell, 2010a). For example, Widen and Russell (2010a) found that children were more likely than adults to label the “disgust face” as anger than as disgust. It is also possible that young infants’ relatively low exposure to anger expressions prior to the onset of self-locomotion (Campos, Kermoian, & Zumbahlen, 1992), in conjunction with the high perceptual similarity of angry and disgusted faces, negatively impacted their ability to perceive the discrete category boundary between anger and disgust. As noted earlier, Ruba et al. (2017) reported that 10-month-olds categorize the anger/disgust contrast in faces. However, Ruba et al. did not test for discrete category boundaries of the sort studied in the current experiments, so it is not possible to directly compare the two studies. 
It thus remains unclear as to when a discrete category boundary between disgust and anger develops.

The current study provides evidence of discrete category boundaries in happiness/surprise, sadness/disgust, and anger/sadness contrasts as early as 5 months of age. This is in contrast to several prior studies that did not find evidence of discrete categories until much later in life, well after the development of language (for reviews, see Widen, 2013; Widen & Russell, 2008). Perhaps infants in the current set of experiments succeeded because our study examined basic perceptual differentiation, whereas previous work with older children (Widen & Russell, 2008) has typically required the development of language abilities and verbal labeling. For example, in Widen and Russell (2010b), preschool-aged children were asked to name the emotion conveyed by different facial expressions by answering the question, “How does John feel?” Thus, the current study may have tapped into something implicit or purely perceptual in nature, while studies that have reported failure of categorization in even older children may have required a higher level of emotion processing involving conceptual and linguistic understanding of emotions.

As noted earlier, current models of emotion processing make conflicting claims about the development of emotion knowledge. Ekman’s (1972) basic emotions theory suggests that basic emotions are innately specified and emotion categories should be evident early in life (see Ekman, 2016; Izard et al., 2010). In contrast, Russell’s dimensional model (Russell & Pratt, 1980; Widen, 2013; Widen & Russell, 2008) assumes that emotion categories are available only after extensive conceptual and language development. The current study does not necessarily distinguish between the two approaches because it did not test innateness predicted by basic emotions theory and did not assess complex higher-level conceptual understanding of emotions addressed by the dimensional model. However, the current results challenge each of the theories. Recall that not all basic emotion contrasts led to categorical distinctions by 5-month-olds: Categorical perception of the anger/disgust contrast was not evident even at 9 months. Basic emotions theory would have to account for such differences in the development of categorical distinctions of emotion contrasts. At the same time, the dimensional model of emotion knowledge has to consider the fact that even young infants classify emotional stimuli into discrete categories at a basic perceptual or implicit level. Although infants did not exhibit evidence of discrete categories when the contrasts involved similar high-arousal emotions (anger/disgust), even 5-month-olds exhibited discrete categorization of stimuli that varied in arousal (happiness/surprise, sadness/anger, sadness/disgust), suggesting that, even early in life, the arousal dimension is not continuous in the manner proposed in the circumplex model. Perhaps language and conceptual development build upon these early implicit categories to generate the complex understanding of emotions displayed by adults.

It should also be noted that there is growing realization that contextual factors such as the specific events engendering emotions and the presence of body emotion information affect adults’ perception of emotions from faces (Aviezer et al., 2008). Thus, a limitation of the current study is that faces were presented without any context. Future studies should address how infants’ perception of facial emotions is affected by different contexts such as the presence of congruent and incongruent bodily emotions and the nature of events that precipitated the emotion. There is also growing realization that the depiction of emotion in real-world contexts is not necessarily prototypical. As is the case in most studies of infants’ emotion perception, the starting point for the stimuli used in the current experiments was a set of pictures of adults’ depictions of prototypical emotions, although the actual stimuli that the infants were tested on were morphed blends of these pictures. Future studies should test infants on a range of naturalistic images in order to obtain a complete picture of infants’ perception of emotions in everyday contexts.

In summary, to our knowledge, the current study provides the first evidence of discrete emotion categories within the first half-year of life. Thus, early in life, infants respond to many kinds of emotional faces as organized classes of stimuli with definitive boundaries. These findings challenge extant theories of emotion processing and development.

ACKNOWLEDGEMENTS

This research was supported by a grant from the National Institute of Child Health and Human Development (HD075829). The authors would like to thank the infants and parents who participated in this study. Alyson Chroust is now at East Tennessee State University and Ashley Galati is at Kent State University at Tuscarawas.

CONFLICT OF INTEREST

There is no conflict of interest.

REFERENCES

- Adams RB, Gordon HL, Baird AA, Ambady N, & Kleck RE (2003). Effects of gaze on amygdala sensitivity to anger and fear faces. Science, 300(5625), 1536. [DOI] [PubMed] [Google Scholar]

- Albuquerque N, Guo K, Wilkinson A, Savalli C, Otta E, & Mills D (2016). Dogs recognize dog and human emotions. Biology Letters, 12(1), 20150883. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Altmann CF, Uesaki M, Ono K, Matsuhashi M, Mima T, & Fukuyama H (2014). Categorical speech perception during active discrimination of consonants and vowels. Neuropsychologia, 64(1), 13–23. [DOI] [PubMed] [Google Scholar]

- Anzures G, Quinn PC, Pascalis O, Slater AM, & Lee K (2010). Categorization, categorical perception, and asymmetry in infants’ representation of face race. Developmental Science, 13(4), 553–564. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Aviezer H, Hassin RR, Bentin S, & Trope Y (2008). Putting facial expressions back in context. In Ambady N, & Skowronski JJ (Eds.), First impressions (pp. 255–286). New York, NY: Guilford Publications. [Google Scholar]

- Barrera ME, & Maurer D (1981). The perception of facial expressions by the three-month-old. Child Development, 52(1), 203–206. [PubMed] [Google Scholar]

- Barrett LF (2006). Are emotions natural kinds? Perspectives on Psychological Science, 1(1), 28–58. [DOI] [PubMed] [Google Scholar]

- Bhatt RS, Hayden A, & Quinn PC (2007). Perceptual organization based on common region in infancy. Infancy, 12(2), 147–168. [DOI] [PubMed] [Google Scholar]

- Bhatt RS, Hock A, White H, Jubran R, & Galati A (2016). The development of body structure knowledge in infancy. Child Development Perspectives, 10(1), 45–52. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bhatt RS, & Quinn PC (2011). How does learning impact development in infancy? The case of perceptual organization Infancy, 16(1), 2–38. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bornstein MH, & Arterberry ME (2003). Recognition, discrimination and categorization of smiling by 5-month-old infants. Developmental Science, 6(5), 585–599. [Google Scholar]

- Bornstein MH, Kessen W, & Weiskopf S (1976). The categories of hue in infancy. Science, 191(4223), 201–202.

- Brosch T, Pourtois G, & Sander D (2010). The perception and categorization of emotional stimuli: A review. Cognition and Emotion, 24(3), 377–400.

- Campos JJ, Kermoian R, & Zumbahlen MR (1992). Socioemotional transformations in the family system following infant crawling onset. New Directions for Child and Adolescent Development, 1992(55), 25–40.

- Caron RF, Caron AJ, & Myers RS (1985). Do infants see emotional expressions in static faces? Child Development, 56, 1552–1560.

- Clifford A, Franklin A, Davies IRL, & Holmes A (2009). Electrophysiological markers of categorical perception of color in 7-month-old infants. Brain and Cognition, 71(2), 165–172.

- Eimas PD, Siqueland ER, Jusczyk P, & Vigorito J (1971). Speech perception in infants. Science, 171(3968), 303–306.

- Ekman P (1972). Universals and cultural differences in facial expressions of emotion. In Cole J (Ed.), Nebraska symposium on motivation, 1971 (pp. 207–283). Lincoln, NE: University of Nebraska Press.

- Ekman P (1989). The argument and evidence about universals in facial expressions of emotion. In Wagner H, & Manstead A (Eds.), Handbook of social psychophysiology (pp. 143–164). Chichester, UK: Wiley.

- Ekman P (1992). An argument for basic emotions. Cognition and Emotion, 6(3–4), 169–200.

- Ekman P (2016). What scientists who study emotion agree about. Perspectives on Psychological Science, 11(1), 31–34.

- Ekman P, & Friesen WV (1976). Measuring facial movement. Environmental Psychology and Nonverbal Behavior, 1(1), 56–75.

- Ekman P, Friesen WV, & Ellsworth P (2013). Emotion in the human face: Guidelines for research and an integration of findings. Elmsford, NY: Pergamon Press Inc.

- Etcoff NL, & Magee JJ (1992). Categorical perception of facial expressions. Cognition, 44(3), 227–240.

- Farroni T, Menon E, Rigato S, & Johnson MH (2007). The perception of facial expressions in newborns. European Journal of Developmental Psychology, 4(1), 2–13.

- de Gelder B, Teunisse JP, & Benson PJ (1997). Categorical perception of facial expressions: Categories and their internal structure. Cognition and Emotion, 11(1), 1–23.

- Grossmann T (2010). The development of emotion perception in face and voice during infancy. Restorative Neurology and Neuroscience, 28(2), 219–236.

- Haviland JM, & Lelwica M (1987). The induced affect response: 10-week-old infants’ responses to three emotion expressions. Developmental Psychology, 23(1), 97–104.

- Hayden A, Bhatt RS, & Quinn PC (2008). Perceptual organization based on illusory regions in infancy. Psychonomic Bulletin & Review, 15(2), 443–447.

- Heck A, Chroust A, White H, Jubran R, & Bhatt RS (2018). Development of body emotion perception in infancy: From discrimination to recognition. Infant Behavior and Development, 50, 42–51.

- Heck A, Hock A, White H, Jubran R, & Bhatt RS (2016). The development of attention to dynamic facial emotions. Journal of Experimental Child Psychology, 147(1), 100–110.

- Hock A, Kangas A, Zieber N, & Bhatt RS (2015). The development of sex category representation in infancy: Matching of faces and bodies. Developmental Psychology, 51(3), 346–352.

- Hoehl S, & Pauen S (2017). Do infants associate spiders and snakes with fearful facial expressions? Evolution and Human Behavior, 38(3), 404–413.

- Hoehl S, & Striano T (2010). The development of emotional face and eye gaze processing. Developmental Science, 13(6), 813–825.

- Hu Z, Hanley JR, Zhang R, Liu Q, & Roberson D (2014). A conflict-based model of color categorical perception: Evidence from a priming study. Psychonomic Bulletin & Review, 21(5), 1214–1223.

- Hunnius S, de Wit TC, Vrins S, & von Hofsten C (2011). Facing threat: Infants’ and adults’ visual scanning of faces with neutral, happy, sad, angry, and fearful emotional expressions. Cognition and Emotion, 25(2), 193–205.

- Izard CE (1994). Innate and universal facial expressions: Evidence from developmental and cross-cultural research. Psychological Bulletin, 115(2), 288–299.

- Izard CE, Woodburn EM, & Finlon KJ (2010). Extending emotion science to the study of discrete emotions in infants. Emotion Review, 2(2), 134–136.

- Kangas A, Zieber N, Hayden A, & Bhatt RS (2013). Parts function as perceptual organizational entities in infancy. Psychonomic Bulletin & Review, 20(4), 726–731.

- Kotsoni E, de Haan M, & Johnson MH (2001). Categorical perception of facial expressions by 7-month-old infants. Perception, 30(9), 1115–1125.

- LaBarbera JD, Izard CE, Vietze P, & Parisi SA (1976). Four- and six-month-old infants’ visual responses to joy, anger, and neutral expressions. Child Development, 47(2), 535–538.

- Langner O, Dotsch R, Bijlstra G, Wigboldus DH, Hawk ST, & van Knippenberg A (2010). Presentation and validation of the Radboud Faces Database. Cognition and Emotion, 24(8), 1377–1388.

- Lee V, Cheal JL, & Rutherford MD (2015). Categorical perception along the happy-angry and happy-sad continua in the first year of life. Infant Behavior and Development, 40(1), 95–102.

- Leppänen JM, Moulson MC, Vogel-Farley VK, & Nelson CA (2007). An ERP study of emotional face processing in the adult and infant brain. Child Development, 78(1), 232–245.

- Leppänen JM, Richmond J, Vogel-Farley VK, Moulson MC, & Nelson CA (2009). Categorical representation of facial expressions in the infant brain. Infancy, 14(3), 346–362.

- Ludemann PM, & Nelson CA (1988). Categorical representation of facial expressions by 7-month-old infants. Developmental Psychology, 24(4), 492–501.

- Martin RM (1975). Effects of familiar and complex stimuli on infant attention. Developmental Psychology, 11(2), 178–185.

- Moore C, & Corkum V (1994). Social understanding at the end of the first year of life. Developmental Review, 14(4), 349–372.

- Nelson CA, Morse PA, & Leavitt LA (1979). Recognition of facial expressions by seven-month-old infants. Child Development, 50(4), 1239–1242.

- Papageorgiou KA, Smith TJ, Wu R, Johnson MH, Kirkham NZ, & Ronald A (2014). Individual differences in infant fixation duration relate to attention and behavioral control in childhood. Psychological Science, 25(7), 1371–1379.

- Peltola MJ, Hietanen JK, Forssman L, & Leppänen JM (2013). The emergence and stability of the attentional bias to fearful faces in infancy. Infancy, 18(6), 905–926.

- Pochedly JT, Widen SC, & Russell JA (2012). What emotion does the “facial expression of disgust” express? Emotion, 12(6), 1315–1319.

- Quinn PC, Anzures G, Izard CE, Lee K, Pascalis O, Slater AM, & Tanaka JW (2011). Looking across domains to understand infant representation of emotion. Emotion Review, 3(2), 197–206.

- Quinn PC, Yahr J, Kuhn A, Slater AM, & Pascalis O (2002). Representation of the gender of human faces by infants: A preference for female. Perception, 31(9), 1109–1121.

- Ramsey JL, Langlois JH, & Marti NC (2005). Infant categorization of faces: Ladies first. Developmental Review, 25(2), 212–246.

- Ruba AL, Johnson KM, Harris LT, & Wilbourn MP (2017). Developmental changes in infants’ categorization of anger and disgust facial expressions. Developmental Psychology, 53(10), 1826–1832.

- Russell JA (1980). A circumplex model of affect. Journal of Personality and Social Psychology, 39(6), 1161.

- Russell JA (2003). Core affect and the psychological construction of emotion. Psychological Review, 110(1), 145–172.

- Russell JA, & Pratt G (1980). A description of the affective quality attributed to environments. Journal of Personality and Social Psychology, 38(2), 311–322.

- Sauter DA, LeGuen O, & Haun DBM (2011). Categorical perception of emotional facial expressions does not require lexical categories. Emotion, 11(6), 1471–1483.

- Schwartz GM, Izard CE, & Ansul SE (1985). The 5-month-old’s ability to discriminate facial expressions of emotion. Infant Behavior and Development, 8(1), 65–77.

- Serrano JM, Iglesias J, & Loeches A (1992). Visual discrimination and recognition of facial expressions of anger, fear, and surprise in 4- to 6-month-old infants. Developmental Psychobiology, 25(6), 411–425.

- Simion F, & Di Giorgio E (2015). Face perception and processing in early infancy: Inborn predispositions and developmental changes. Frontiers in Psychology, 6(969), 1–11.

- Slater A, Earle DC, Morison V, & Rose D (1985). Pattern preferences at birth and their interaction with habituation-induced novelty preferences. Journal of Experimental Child Psychology, 39(1), 37–54.

- Soto FA, & Wasserman EA (2011). Asymmetrical interactions in the perception of face identity and emotional expression are not unique to the primate visual system. Journal of Vision, 11(3), 24.

- Susskind JM, Littlewort G, Bartlett MS, Movellan J, & Anderson AK (2007). Human and computer recognition of facial expressions of emotion. Neuropsychologia, 45(1), 152–162.

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, … Nelson C (2009). The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research, 168(3), 242–249.

- Tukey JW (1977). Exploratory data analysis. Addison–Wesley Series in Behavioral Science: Quantitative Methods. Reading, Massachusetts.

- Vaillant-Molina M, Bahrick LE, & Flom R (2013). Young infants match facial and vocal emotional expressions of other infants. Infancy, 18(Suppl 1), E97–E111.

- Walker-Andrews AS (1997). Infants’ perception of expressive behaviors: Differentiation of multimodal information. Psychological Bulletin, 121(3), 437–456.

- Walker-Andrews AS (2005). Perceiving social affordances: The development of emotion understanding. In Homer BD, & Tamis-LeMonda CS (Eds.), The development of social cognition and communication (pp. 93–116). New York, NY: Psychology Press.

- Walker-Andrews AS (2008). Intermodal emotional processes in infancy. In Lewis M, Haviland-Jones JM, & Barrett LF (Eds.), Handbook of emotions (3rd ed., pp. 364–375). New York, NY: Guilford Press.

- Widen SC (2013). Children’s interpretation of facial expressions: The long path from valence-based to specific discrete categories. Emotion Review, 5(1), 72–77.

- Widen SC, & Russell JA (2008). Children acquire emotion categories gradually. Cognitive Development, 23(2), 291–312.

- Widen SC, & Russell JA (2010a). The “disgust face” conveys anger to children. Emotion, 10(4), 455–466.

- Widen SC, & Russell JA (2010b). Differentiation in preschooler’s categories of emotion. Emotion, 10(5), 651–661.

- Young-Browne G, Rosenfeld HM, & Horowitz FD (1977). Infant discrimination of facial expressions. Child Development, 48(2), 555–562.

- Zieber N, Bhatt RS, Hayden A, Kangas A, Collins R, & Bada H (2010). Body representation in the first year of life. Infancy, 15(5), 534–544.

- Zieber N, Kangas A, Hock A, & Bhatt RS (2015). Body structure perception in infancy. Infancy, 20(1), 1–17.