Abstract

Neurons convert external stimuli into action potentials, or spikes, and encode the contained information into the biological nervous system. Despite the complexity of neurons and the synaptic interactions between them, rate models are often adopted to describe neural encoding with modest success. However, it is not clear whether the firing rate, the reciprocal of the time interval between spikes, is sufficient to capture the essential features of the neuronal dynamics. Going beyond the usual relaxation dynamics in Ginzburg-Landau theory for statistical systems, we propose that neural activities can be captured by the U(1) dynamics, integrating the action potential and the “phase” of the neuron together. The gain function of the Hodgkin-Huxley neuron and the corresponding dynamical phase transitions can be described within the U(1) neuron framework. In addition, the phase dependence of the synaptic interactions is illustrated and the mapping to the Kinouchi-Copelli neuron is established. These results suggest that the U(1) neuron is the minimal model for single-neuron activities and serves as the building block of the neuronal network for information processing.

Subject terms: Computational neuroscience; Biological physics; Statistical physics, thermodynamics and nonlinear dynamics

Introduction

Human beings rely on their nervous systems to detect external stimuli and take proper reactions afterwards. Neurons are the fundamental units in the nervous system and deserve careful and thorough characterizations for their responses to external stimuli1–4. However, because neurons display considerable diversity in morphological and physiological properties, it is rather challenging to pin down the essential degrees of freedom even when studying the single-neuron dynamics. While the neural encoding and decoding4 are not fully understood yet, the action potentials, or the spikes, of the neurons upon external stimuli are the apparent means to pass the information onto the nervous system. In consequence, the firing rate of the neuronal spiking is often used for data analysis and modeling5–9. The firing-rate models are appealing due to their simplicity and accessibility for numerical simulations.

Although the firing-rate models are helpful descriptions for neural circuits, it is not clear whether the neuronal spiking alone is sufficient to capture the essential features of neuronal activities. At the microscopic scale, the electric activities of a single neuron arise from a variety of ionic flows passing the relevant ion channels embedded on the membrane of the nerve cell10,11. The conductance-based approach, such as the Hodgkin-Huxley model12, provides an effective description for the emergence of action potentials by the inclusion of gating variables of the ion channels involved2. Above some current thresholds, the neuronal spikes start to appear. Hodgkin proposed to classify the neurons into type I or type II depending on whether the firing rate changes continuously or discontinuously above the current threshold13. While the conductance-based model with ion-channel dynamics explains the emergence of the neuronal spikes and provides a better description for biological details, it blurs the priority of various degrees of freedom in a single neuron, rendering a clear understanding of neuronal dynamics intractable.

The dynamical transitions in a single neuron above the current threshold pose another challenge. Both the biologically based models, such as the Hodgkin-Huxley model and its generalizations, and the reduced neuron models, including the Ermentrout-Kopell model14, the FitzHugh-Nagumo model15, the Izhikevich model16,17 and so on, exhibit rich types of dynamical phase transitions above the firing threshold. There are three major types of dynamical phase transitions found in single-neuron dynamics: saddle-node on invariant circle (SNIC), supercritical Hopf bifurcation and subcritical Hopf bifurcation2,3. Ermentrout18 showed that type I neurons undergo a SNIC dynamical transition at the threshold, while Izhikevich3 pointed out that type II neurons may go through all three different bifurcations. It is therefore desirable to build a theoretical framework that captures the essential degrees of freedom for spiking neurons and incorporates all types of dynamical phase transitions systematically.

In this Article, we propose the essential degrees of freedom for a spiking neuron are the membrane potential and the temporal sequence. It is rather remarkable that both can be integrated into a unified theoretical framework described by a single complex dynamical variable and the U(1) dynamics emerges naturally. The real part of the complex dynamical variable represents the potential of the neuron and the phase describes the temporal sequence during the firing process. When describing the neuronal dynamics of the complex dynamical variable, our U(1) neuron model not only reproduces the action potentials from the Hodgkin-Huxley neuron but also captures the gain function of the firing rate in response to the external current.

It is known that the classification of spiking neurons is closely related to the bifurcation of neuronal dynamics. The gain function of the type I neuron is continuous at the current threshold while the type II neuron exhibits a discontinuous jump at the threshold. The U(1) neuron described by the complex variable provides a natural explanation for the classification. The bifurcations on the complex plane, either in radial or phase directions, lead to various transitions among resting, excitable, firing states of a single neuron. In short, the U(1) neuron not only captures the single-neuron activity upon external stimuli, but also provides a coherent understanding for the dynamical phase transition between different types of neuronal activities.

The main purpose of the U(1) neuron is not to provide a realistic description of a spiking neuron (although this can be done, as shown in later sections), just as Fermi liquid theory does not aim to provide a precise quantitative description of metals. The key is to capture the essential features of neuronal dynamics so that model building for different purposes can be facilitated with these ingredients. For instance, within the U(1) neuron description, we find that the phase dynamics of spiking neurons is nonuniform during the firing process and the firing rate is thus dictated by the bottleneck (the phase regime with smaller angular velocity). In addition, it is known that a neuron reacts differently when stimulated at different stages of the firing process. Going beyond the usual Kuramoto-like interactions, the U(1) neuron framework provides a systematic approach to describe the phase dependence of the synaptic interactions on the presynaptic and postsynaptic neurons. With the phase dependence in mind, the refractory effect can be incorporated seamlessly into the U(1) neuron framework. In fact, we show that the Kinouchi-Copelli neuronal network is equivalent to the discrete version of the U(1) neuronal network and that the spontaneous asynchronous firing (SAF) state, crucial for information processing, can be realized when the synaptic strength is strong enough.

The remainder of the paper is organized as follows. In Section II, we first compare the artificial and biological neuronal networks and point out the importance of neuronal dynamics. In Section III, we discuss the mode-locking phenomena in biological neurons. In Section IV, we go beyond the usual Ginzburg-Landau theory and construct the theoretical foundation for the U(1) neuron. In Section V, we demonstrate how the Hodgkin-Huxley neuron can be described with the theoretical framework of the U(1) neuron. In Section VI, we reveal the importance of phase dependence in the synaptic interactions and establish the equivalence of the Kinouchi-Copelli neuron and the discrete version of the U(1) neuron. Finally, we extend the single-neuron approach to neuronal networks and show that the spontaneous asynchronous firing phase, beneficial for information processing, emerges in the Kinouchi-Copelli neuronal network.

Artificial and biological neurons

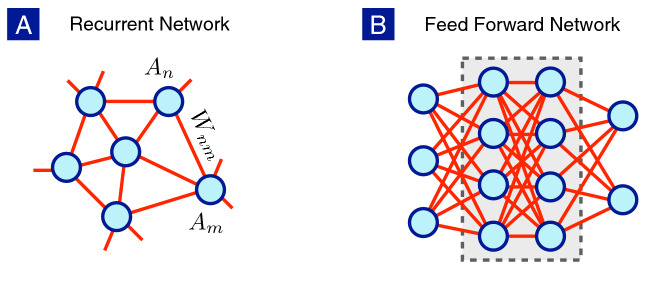

We first briefly explain the difference between artificial and biological neurons as shown in Fig. 1. In biological nervous systems, recurrent neuronal networks are often found, while the artificial neural networks used in deep learning19,20 usually belong to the feed-forward type with well-defined input, output and hidden layers. Despite the difference between the network structures, the neuronal dynamics within the rate-model framework is captured by the set of coupled non-linear differential equations,

$$\tau \frac{dr_n}{dt} = -r_n + G\Big(I_n + \sum_m W_{nm}\, r_m\Big), \tag{1}$$

where $r_n$ denotes the firing rate of the single neuron or the activity of the neuronal population at the n-th node, $I_n$ is the external current injected into the n-th neuron and $W_{nm}$ is the synaptic weight from the m-th neuron to the n-th neuron. The relaxation time $\tau$ is the typical time scale for a single neuron to return to its resting state when the external stimulus is turned off. The gain function G relates the firing rate to the stimuli, including the external current and the synaptic currents from connected neurons. The variety of individual neurons is ignored here for simplicity but can be included without technical difficulty.
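As a minimal sketch of how such a rate model can be integrated, the snippet below applies forward Euler to the relaxation form $\tau\, dr_n/dt = -r_n + G(I_n + \sum_m W_{nm} r_m)$. The `tanh` gain function, the two-neuron weight matrix and all numerical values are illustrative assumptions, not taken from the paper.

```python
import math

def simulate_rate_model(weights, currents, tau=10.0, dt=0.1, steps=2000, gain=math.tanh):
    """Forward-Euler integration of tau * dr_n/dt = -r_n + G(I_n + sum_m W[n][m] * r_m)."""
    size = len(currents)
    rates = [0.0] * size
    for _ in range(steps):
        # Total input to each node: external current plus weighted presynaptic rates.
        drive = [currents[n] + sum(weights[n][m] * rates[m] for m in range(size))
                 for n in range(size)]
        rates = [rates[n] + dt / tau * (-rates[n] + gain(drive[n])) for n in range(size)]
    return rates

# Two weakly coupled units (hypothetical numbers, for illustration only).
weights = [[0.0, 0.5], [0.5, 0.0]]
currents = [0.2, 0.1]
final_rates = simulate_rate_model(weights, currents)

# At late times the rates should satisfy the stationary condition r_n = G(...).
residual = max(
    abs(final_rates[n] - math.tanh(currents[n] + sum(weights[n][m] * final_rates[m] for m in range(2))))
    for n in range(2)
)
```

After roughly twenty relaxation times the residual of the stationary condition is small, which illustrates why the time dependence can be dropped when only the fixed point matters.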

Figure 1.

Neurons with different network structures: (A) Recurrent neuronal network often observed in biological nerve systems. (B) Feed forward network with input, output and hidden layers, largely put to practice in deep learning.

Even with the simple relaxation dynamics, the coupled non-linear differential equations are already extremely complicated. Because the synaptic dynamics (how the synaptic weight $W_{nm}$ evolves with time) is typically much slower than the neuronal dynamics, the stationary solution plays a significant role in some cases,

$$r_n = G\Big(I_n + \sum_m W_{nm}\, r_m\Big). \tag{2}$$

Note that the true dynamics drops out completely in the above relations. In the feed-forward network, these are exactly the relations between input and output neurons commonly used in the artificial neural network. The synaptic weight $W_{nm}$ can be adjusted by employing an appropriate algorithm to minimize the cost function, but the evolution of $W_{nm}$ over different epochs does not represent the true dynamics of the neuronal network.

The deep neural network19 has enjoyed great success in recent years and has made a strong impact in many areas of science and technology. However, as explained in the previous paragraph, it describes slower processes such as learning but does not include the reactive information processing at the shorter time scale. If the neuronal dynamics is properly included, would a neuronal network with both types of dynamics exhibit a different class of intelligence? The first step to answer this important question is to capture the essential features of neuronal dynamics before constructing networks with complicated structures. It will become evident later that the activity (firing rate) is insufficient to describe the dynamics of a single neuron and more degrees of freedom must be included to account for proper synaptic interactions.

Mode-locking in a single neuron

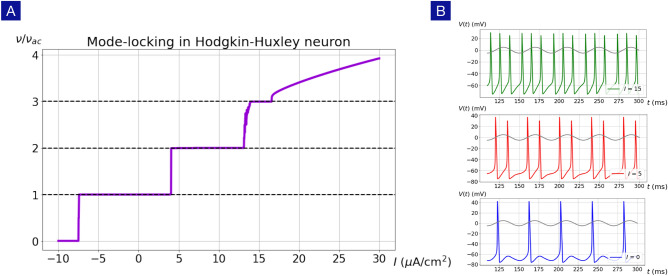

Mode locking21–23 is a common phenomenon in physical and biological systems24–28 with non-linear dynamics and may play an important role in neural information processing. In a wide variety of neuron models including the Hodgkin-Huxley model, the FitzHugh-Nagumo model, the Izhikevich model and some integrate-and-fire models, the firing rate is locked to the integer multiples of the oscillatory frequency of the external ac current. As shown in Fig. 2, the Hodgkin-Huxley neuron shows robust mode-locking behavior in the presence of the time-dependent current stimulus,

| 3 |

where I and represent the strengths of dc and ac currents respectively. It is rather remarkable that, even with a moderate , the gain function of the Hodgkin-Huxley neuron changes drastically and robust firing-rate plateaus appear with , where . The action potentials on the firing-rate plateaus indicate clear mode-locking behaviors as shown in Fig. 2b.

Figure 2.

Mode-locking in the Hodgkin-Huxley neuron. (A) The firing rate of the Hodgkin-Huxley neuron exhibits robust plateaus at , where n is an integer, in the presence of an external ac drive with frequency Hz. (B) Action potentials at μA/cm² exhibit different mode-locking behaviors with respectively.
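The kind of numerical experiment behind such a gain function can be sketched as follows. The snippet integrates the standard textbook Hodgkin-Huxley equations with forward Euler and counts spikes. The sinusoidal form of the drive, `I(t) = i_dc + i_ac * sin(2*pi*f_ac*t)`, the parameter set, the initial state and the spike-detection threshold at 0 mV are all assumptions made for illustration; they are not claimed to match the settings used for Fig. 2.

```python
import math

def vtrap(x, y):
    """Evaluate x / (1 - exp(-x / y)), using the limit y at the removable singularity x = 0."""
    if abs(x / y) < 1e-6:
        return y
    return x / (1.0 - math.exp(-x / y))

def hh_firing_rate(i_dc, i_ac=0.0, f_ac=0.0, t_max=500.0, t_skip=100.0, dt=0.01):
    """Firing rate in Hz for I(t) = i_dc + i_ac * sin(2*pi*f_ac*t).

    Currents in uA/cm^2, f_ac in Hz, times in ms. Spikes before t_skip are ignored.
    """
    c_m, g_na, g_k, g_l = 1.0, 120.0, 36.0, 0.3   # uF/cm^2 and mS/cm^2
    e_na, e_k, e_l = 50.0, -77.0, -54.387          # reversal potentials in mV
    v, m, h, n = -65.0, 0.053, 0.596, 0.317        # approximate resting state
    spikes, above = 0, False
    for step in range(int(round(t_max / dt))):
        t = step * dt
        i_t = i_dc + i_ac * math.sin(2.0 * math.pi * f_ac * t / 1000.0)
        # Voltage-dependent opening (a_*) and closing (b_*) rates of the gating variables.
        a_m = 0.1 * vtrap(v + 40.0, 10.0)
        b_m = 4.0 * math.exp(-(v + 65.0) / 18.0)
        a_h = 0.07 * math.exp(-(v + 65.0) / 20.0)
        b_h = 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
        a_n = 0.01 * vtrap(v + 55.0, 10.0)
        b_n = 0.125 * math.exp(-(v + 65.0) / 80.0)
        i_ion = (g_na * m**3 * h * (v - e_na)
                 + g_k * n**4 * (v - e_k)
                 + g_l * (v - e_l))
        dv = (i_t - i_ion) / c_m
        dm = a_m * (1.0 - m) - b_m * m
        dh = a_h * (1.0 - h) - b_h * h
        dn = a_n * (1.0 - n) - b_n * n
        v, m, h, n = v + dt * dv, m + dt * dm, h + dt * dh, n + dt * dn
        # Count an upward crossing of 0 mV as one spike.
        crossed = v > 0.0 and not above
        above = v > 0.0
        if crossed and t >= t_skip:
            spikes += 1
    return spikes / ((t_max - t_skip) / 1000.0)

rate_dc = hh_firing_rate(10.0)  # dc drive only
```

Sweeping `i_dc` at fixed `i_ac` and `f_ac` and plotting the returned rate is one way to look for plateaus; the sketch itself makes no claim about where they appear.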

The mode-locking phenomena have been known in the neuroscience community for quite a long time, but their deeper implications seem to have been neglected. First of all, the mode-locking phenomena provide a stable method to transfer information between neurons in the presence of stochastic noise. Furthermore, the mode-locking phenomena arise from the underlying non-linear phase dynamics, or the so-called U(1) dynamics.

To unveil the underlying phase dynamics, we introduce a complex dynamical variable to describe the neuronal dynamics,

| 4 |

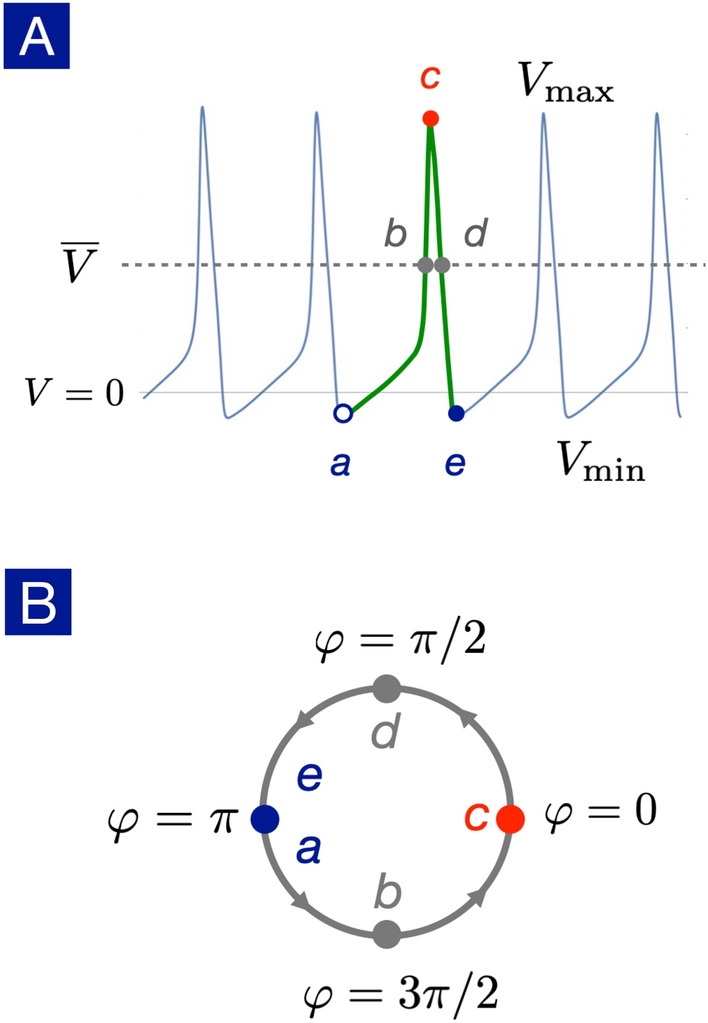

where r(t) denotes the firing amplitude and represents the phase during the firing process. We propose the essential degrees of freedom for a spiking neuron are the membrane potential and the phase as shown in Fig. 3. The phases correspond to the potential maximum and minimum respectively. When a spiking neuron completes a full firing cycle, it can be viewed as a winding process of in the phase dynamics.

Figure 3.

Definition of the U(1) phase. (A) The action potential of a spiking neuron is marked by its potential minimum (points a, e), average (points b, d) and maximum (point c). (B) The points from a to e correspond to the U(1) phases $\theta = -\pi, -\pi/2, 0, \pi/2, \pi$ respectively. A complete firing process can thus be viewed as a winding process of $2\pi$ in the phase dynamics.

In general, the angular velocity $\omega = d\theta/dt$ is not constant and depends on the phase $\theta$,

$$\frac{d\theta}{dt} = \omega(\theta). \tag{5}$$

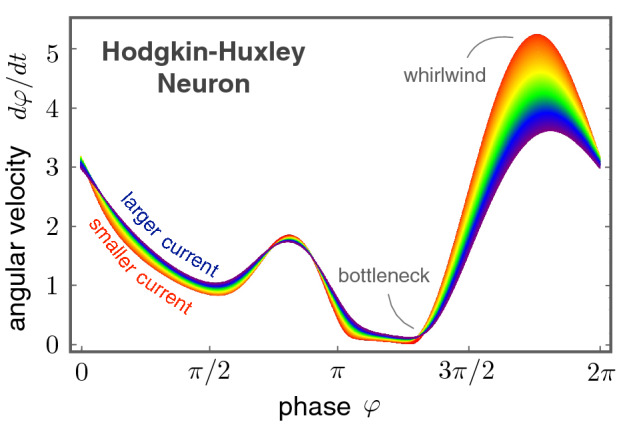

As a demonstration, we extract the nonuniform phase dynamics of the Hodgkin-Huxley neuron with our U(1) neuron framework. The phase dynamics reveals several interesting features, as shown in Fig. 4. First of all, the angular velocity is nonuniform, showing a bottleneck (small angular velocity) starting around and a whirlwind (large angular velocity) slightly below . A non-trivial correlation between membrane potential V(t) and the phase is thus established: the phase dynamics is fast near the potential maximum while it slows down near the potential minimum.

Figure 4.

Nonuniform phase dynamics in the Hodgkin-Huxley neuron. The angular velocity exhibits a highly non-trivial dependence on the U(1) phase. In the regime of current stimuli from 6.3 μA/cm² (red) to 46.3 μA/cm² (blue), one finds the overall shape remains more or less the same. However, because the firing rate is dictated by the bottleneck regime, a slight increase of the angular velocity there boosts the firing rate significantly.

Upon changing the external current stimulus, the overall shape of the angular velocity profile remains more or less the same, indicating that the nonuniform phase dynamics we found here is an intrinsic property of the Hodgkin-Huxley neuron. Zooming into the finer differences caused by different current stimuli, a larger current increases the angular velocity slightly near the bottleneck regime while slowing down the phase dynamics around the whirlwind regime. This means that an increase of the injected current suppresses the non-uniformity of the angular velocity.

The firing rate can be determined from the phase dynamics as well. Because the complete firing cycle corresponds to a $2\pi$ phase winding, the firing rate of a spiking neuron is

$$f = \frac{1}{T}, \qquad T = \int_0^{2\pi} \frac{d\theta}{\omega(\theta)}, \tag{6}$$

where $T$ is the time duration between adjacent firing events and $\omega(\theta) = d\theta/dt$ is the phase-dependent angular velocity. From the above rate-phase relation, it is clear that the firing rate is dominated by the angular velocity in the bottleneck regime. Thus, a slight increase of $\omega(\theta)$ in the bottleneck gives rise to a significant upsurge in the firing rate $f$. On the other hand, the relatively large changes in the whirlwind regime for different current stimuli are irrelevant to the firing rate.
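The dominance of the bottleneck can be checked with a short numerical sketch. It assumes a hypothetical angular velocity $\omega(\theta) = \omega_0 + a\cos\theta$, slowest at $\theta = \pi$ and fastest at $\theta = 0$, and evaluates the period as the integral of $d\theta/\omega(\theta)$ over one winding. This toy profile and its parameters are illustrative assumptions, not the profile extracted from the Hodgkin-Huxley neuron.

```python
import math

def winding_period(omega, n=20000):
    """Approximate T = integral_0^{2*pi} d(theta) / omega(theta) with the midpoint rule."""
    h = 2.0 * math.pi / n
    return sum(h / omega((k + 0.5) * h) for k in range(n))

def toy_omega(omega0, a):
    """Hypothetical angular velocity: slowest at theta = pi, fastest at theta = 0."""
    return lambda theta: omega0 + a * math.cos(theta)

# Illustrative parameters only; they are not fitted to any neuron.
rate_slow = 1.0 / winding_period(toy_omega(1.0, 0.99))   # bottleneck speed 0.01
rate_fast = 1.0 / winding_period(toy_omega(1.0, 0.98))   # bottleneck speed 0.02

# For this toy profile the integral is known in closed form: T = 2*pi / sqrt(omega0^2 - a^2).
exact_slow = math.sqrt(1.0 - 0.99**2) / (2.0 * math.pi)
```

In this toy case the speed at the whirlwind changes by less than one percent between the two parameter sets, yet the firing rate changes substantially, because almost all of the period is spent crossing the bottleneck.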

The nonuniform phase dynamics revealed by the U(1) neuron framework presents a natural explanation for the observed mode-locking phenomena. It is worth emphasizing that a uniform angular velocity can be gauged away by redefining the complex dynamical variable but the phase-dependent angular velocity is an intrinsic property of the neuron and cannot be gauged away. The simple yet stimulating findings presented in Fig. 4 encourage us to go beyond the rate models and to integrate both membrane potential V(t) and the phase together into a coherent theoretical framework.

The U(1) Neuron

Extending the conventional Ginzburg-Landau theory for the complex dynamical variable z(t), its dynamical equation contains two parts,

| 7 |

where the Lyapunov function is a real-valued potential with U(1) symmetry and is another real-valued function describing the nonuniform rotation of the phase. It can be shown that the Lyapunov function decreases throughout the temporal evolution, seeking the potential minimum that represents the free energy in thermal equilibrium. Thus, the radial dynamics of the firing amplitude r(t) is relatively simple and, in most cases, can be understood with the usual Ginzburg-Landau theory with slight modifications. However, the presence of the nonuniform phase dynamics goes beyond the relaxation dynamics (seeking specific potential minima) and gives rise to interesting non-equilibrium phenomena, as anticipated in excitable neuronal systems.

To make the radial and phase dynamics explicit, one can choose the biased double-well potential in the Ginzburg-Landau theory (for both the first-order and the second-order phase transitions) supplemented with the Fourier expansion for the phase dynamics,

| 8 |

| 9 |

Here , , are real while are in general complex. Note that, unlike the Lyapunov function, the U(1) symmetry does not hold for the phase-rotation function . Separating the complex Eq. (7) into amplitude and phase parts, the dynamical equations read

| 10 |

| 11 |

where and are the amplitudes and phases of the complex numbers . Note that the radial dynamics contains no phase dependence and F(r) serves as the conservative force driving the firing amplitude to the potential minima at satisfying the equilibrium condition . The phase dynamics can be rather unconventional because the angular velocity is nonuniform29, a direct consequence from the spike-like action potential.

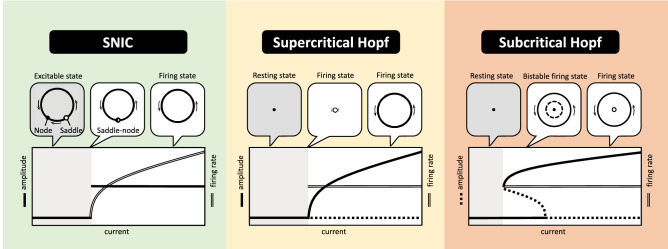

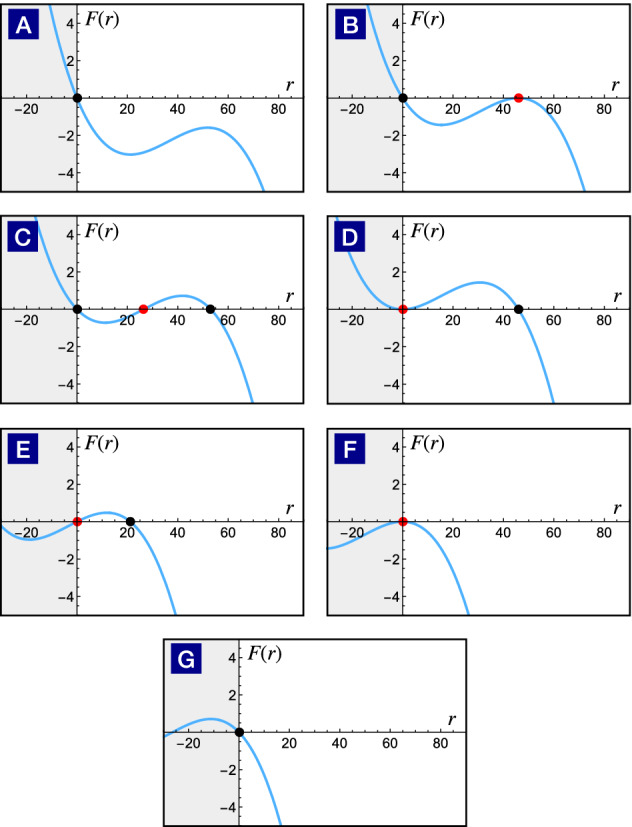

The above dynamical equations for firing amplitude and phase provide a coherent understanding of different types of dynamical phase transitions in spiking neurons. Three major types of dynamical phase transitions are illustrated in Fig. 5: the saddle-node on invariant circle (SNIC) transition is associated with bifurcations in the phase dynamics, while the supercritical and subcritical Hopf transitions are driven by bifurcations in the radial dynamics for the firing amplitude. While these dynamical phase transitions are found in various neuron models in the literature, it is rather satisfying that all three types of transitions emerge naturally within the U(1) neuron framework.

Figure 5.

Dynamical phase transitions in the U(1) neuron: SNIC, supercritical Hopf, subcritical Hopf. The upper panels present the topological structures of the limit cycles and the fixed points in the vicinity of the dynamical phase transitions. The firing amplitude and rate of the neuron versus external current stimulus are illustrated in the bottom panels.

Let us focus on the SNIC transition first. As shown in Fig. 5, in the presence of a finite amplitude , the equilibrium condition gives rise to a pair of saddle-node fixed points in phase dynamics. Below the current threshold, the saddle-node structure makes the neuron excitable. Upon increasing current injection, the saddle and the node approach each other, merge into a critical point and eventually disappear from the limit cycle. Because the limit cycle already exists below the current threshold, the firing amplitude exhibits a discontinuous jump. It is known in statistical physics that the dynamics slows down indefinitely in the vicinity of a critical point. In consequence, near the SNIC transition, the time duration between adjacent firing events diverges, indicating a vanishing firing rate. Thus, the firing rate changes continuously across the current threshold, corresponding to the type I neuron.
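The vanishing firing rate at a SNIC transition can be made explicit with a short worked example. It assumes the simplest phase equation with a single harmonic, $d\theta/dt = I + I_c\cos\theta$, where $I_c > 0$ is a hypothetical constant playing the role of the current threshold; this toy form is an illustration and is not the fitted phase-rotation function of the paper.

```latex
\begin{align}
\frac{d\theta}{dt} &= \omega(\theta) = I + I_c\cos\theta, \qquad I_c > 0, \\
% For |I| < I_c the fixed points satisfy cos(theta*) = -I / I_c: one stable node and one saddle.
% They merge at theta* = pi when I = I_c and disappear for I > I_c.
T &= \int_0^{2\pi} \frac{d\theta}{I + I_c\cos\theta}
   = \frac{2\pi}{\sqrt{I^2 - I_c^2}}, \qquad I > I_c, \\
f &= \frac{1}{T} = \frac{\sqrt{I^2 - I_c^2}}{2\pi}
   \approx \frac{\sqrt{2 I_c\,(I - I_c)}}{2\pi} \quad \text{as } I \to I_c^{+}.
\end{align}
```

Under this assumption the firing rate rises continuously from zero with a square-root dependence on the distance from threshold, which is the type I behavior described above.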

On the other hand, in the regime where the angular velocity remains positive ($d\theta/dt > 0$), the dynamical transitions are driven by the competition among the equilibrium points in the radial dynamics determined by $F(r) = 0$. In the supercritical Hopf transition, a stable limit cycle (non-zero $r$ solution) appears at the current threshold, rendering the resting state ($r = 0$ solution) unstable. Because the limit cycle grows out of the resting state, the amplitude changes continuously across the current threshold as shown in Fig. 5. Because there is no critical point involved here, the firing rate associated with the emergent limit cycle is finite in general. Thus, the gain function exhibits a discontinuous jump at the current threshold and corresponds to the type II neuron.

The subcritical Hopf transition arises when a pair of limit cycles appears at the current threshold. Due to the simultaneous presence of the stable limit cycle (firing state) and the stable fixed point (resting state), the neuron is bistable. As shown in Fig. 5, both the firing rate and amplitude are discontinuous at the current threshold. Although neurons undergoing the subcritical Hopf transition can be classified as type II, their dynamics are more complicated than in the supercritical Hopf transition, where neurons are either in the resting state or in the firing state.
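The two Hopf scenarios can be illustrated with textbook normal forms for the radial dynamics. The sketch below relaxes the amplitude under $dr/dt = \mu r - r^3$ (supercritical) and $dr/dt = \mu r + r^3 - r^5$ (subcritical); these forms and the control parameter `mu` are stand-ins chosen for illustration and are not the force F(r) constructed in the paper.

```python
import math

def relax_amplitude(force, r0, dt=0.01, steps=20000):
    """Forward-Euler relaxation of dr/dt = force(r), keeping the amplitude non-negative."""
    r = r0
    for _ in range(steps):
        r = max(r + dt * force(r), 0.0)
    return r

def supercritical_force(mu):
    """Normal form mu*r - r^3: a limit cycle of radius sqrt(mu) grows out of r = 0 for mu > 0."""
    return lambda r: mu * r - r**3

def subcritical_force(mu):
    """Normal form mu*r + r^3 - r^5: for -1/4 < mu < 0 both r = 0 and a large cycle are stable."""
    return lambda r: mu * r + r**3 - r**5

# Supercritical case: the amplitude grows continuously from zero.
r_super_on = relax_amplitude(supercritical_force(0.04), r0=0.5)
r_super_off = relax_amplitude(supercritical_force(-0.04), r0=0.5)

# Subcritical case at mu = -0.1: the final state depends on the initial amplitude.
r_sub_rest = relax_amplitude(subcritical_force(-0.1), r0=0.2)
r_sub_fire = relax_amplitude(subcritical_force(-0.1), r0=0.5)
r_sub_expected = math.sqrt((1.0 + math.sqrt(1.0 - 0.4)) / 2.0)
```

In the subcritical case the unstable limit cycle separates the basin of the resting state from that of the firing state, which is the bistability mentioned above.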

Fitting Hodgkin-Huxley Neuron

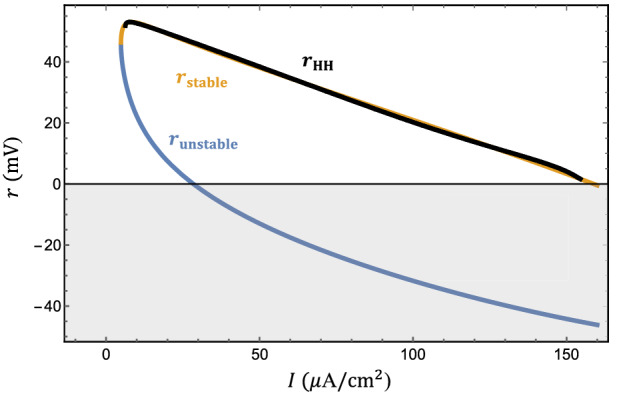

In this section, we demonstrate how the Hodgkin-Huxley neuron can be described by the U(1) neuron in a wide range of parameter regimes. According to its bifurcation diagram30, the Hodgkin-Huxley neuron first undergoes a subcritical Hopf transition at the current threshold at μA/cm², followed by a supercritical Hopf transition at μA/cm². These dynamical phase transitions can be captured within the U(1) neuron framework.

Let us focus on the radial dynamics of the firing amplitude first. Because the resting state ($r = 0$) is always present, the potential flow in the Ginzburg-Landau theory can be constructed in the following way. As shown in Fig. 6, we introduce a cubic function f(r) with three zeroes at ,

| 12 |

The parameters and can be chosen as the amplitudes of the stable and unstable limit cycle, and A should be negative because of the limit of the ionic sources. Then the force in the amplitude equation is the coordinate transformation concerning the external input current I that switches neurons between firing states and resting states:

| 13 |

Here s(I) depends on the external current and serves as a fitting function to reproduce the correct dynamical phase transitions in the targeted parameter regime.

Figure 6.

Dynamical phase transitions driven by the F(r) evolution. As the fitting function s(I) changes with the external current, the cubic function slides through different configurations from (A) to (G). The black and red points indicate the radii of the stable and unstable limit cycles respectively. Configurations from (A) to (D) show the birth of a pair of limit cycles, after which the unstable limit cycle swallows the stable fixed point at the origin. In the previous classification scheme, this belongs to the subcritical Hopf transition. Configurations from (E) to (G) illustrate how a stable limit cycle shrinks onto an unstable fixed point, which subsequently becomes a stable fixed point. This dynamical transition belongs to the supercritical Hopf transition.

The solutions for describe the fixed point or the amplitudes of the limit cycles,

| 14 |

Note that only non-negative solutions are physical because the firing amplitude cannot be negative. The $r = 0$ solution is always present, as anticipated. The other two solutions appear as a pair and represent a pair of stable and unstable limit cycles.

The remaining task to map the Hodgkin-Huxley neuron into the U(1) neuron is to equate firing amplitudes in both descriptions,

| 15 |

by numerical fitting. For convenience, we suppose that the stable solution has its maximum when the fitting function s(I) equals 0. Therefore, the first step is to take the derivative of with respect to s and set this derivative to zero to find the extreme point of .

| 16 |

We can find the expression of s at the maximal by setting the above equation equal to 0.

| 17 |

Now we use the assumption stated above that the maximum of the stable solution occurs when the fitting function s(I) equals 0. After setting , we find the relation between the parameters and .

| 18 |

However, since we previously defined that and stand for the amplitudes of the stable and unstable limit cycles, should always be smaller than , see Fig. 6. As a result, we should only keep the solution. From the bifurcation diagram of the Hodgkin-Huxley neuron, we know that the maximal firing amplitude is 53.0632 mV when the current is 7.67 μA/cm². Therefore, we get values for the parameters and . Next, we anchor the maximum of the stable solution to the maximal firing amplitude of the Hodgkin-Huxley neuron to obtain a reference for the relation. Then we just need to map currents I smaller than 7.67 μA/cm² to negative s and those larger than 7.67 μA/cm² to positive s according to Eq. (15).

As a result, we will have a number of (I, s) pairs to fit. Keeping the lower-order terms in the fitting function s(I),

| 19 |

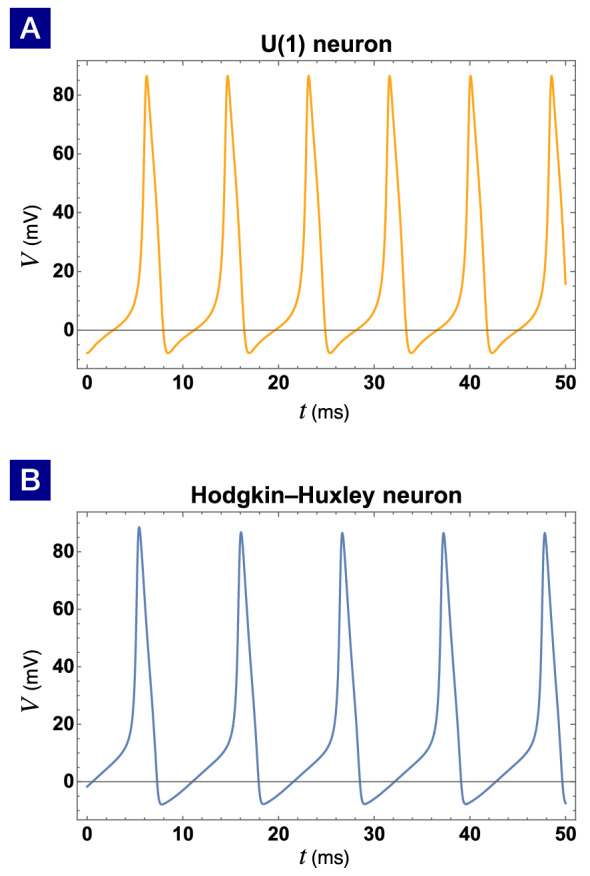

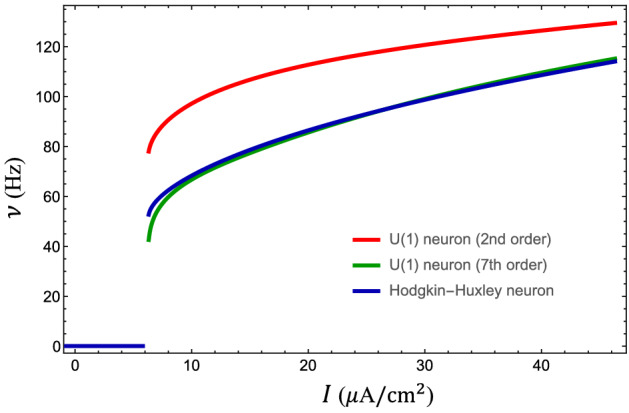

it is sufficient to match the firing amplitude rather well as shown in Fig. 7. The coefficients in the phase dynamics can be found in numerical fitting as well. Keeping the Fourier expansion to the seventh order in phase dynamics, the action potential of the Hodgkin-Huxley neuron is almost identical to the effective U(1) neuron presented in Fig. 8.

Figure 7.

Fitting the firing amplitude of the Hodgkin-Huxley neuron. The firing amplitude of the effective U(1) neuron (orange) fits that of the Hodgkin-Huxley neuron (black) rather well. The firing amplitude of the unstable limit cycle (light blue) is also shown for reference.

Figure 8.

The action potentials of (A) the effective U(1) neuron and (B) the Hodgkin-Huxley neuron are almost identical. The external current is μA/cm² for both neurons. The voltage of the resting state mV is shifted to zero for visual clarity.

To capture the spike-like profile of the action potential, it is necessary to include higher order terms in the phase dynamics. However, in the cases where the precise shape of the action potential is irrelevant, the precision of the Fourier expansion can be relaxed. As shown in Fig. 9, the gain function of the U(1) neuron up to the seventh order in phase dynamics matches that of the Hodgkin-Huxley neuron rather well. However, the gain function up to the second order in phase dynamics still keeps the qualitative trend with reasonable compromise in quantitative precision. The parameters in the Fourier expansion to the second order in the phase-rotation function are found to be

| 20 |

Figure 9.

Gain function of the U(1) neuron. The gain function of the Hodgkin-Huxley neuron (blue) is well captured by that of the U(1) neuron with Fourier expansion to the seventh order in phase dynamics. However, the U(1) neuron to the second order delivers an approximate gain function with the same trend, indicating lower-order terms already secure the qualitative behavior of the neuronal dynamics.

Phase-dependent synaptic interactions

With the established theoretical framework for a single neuron, we move on to investigate the synaptic interactions between connected neurons. As the nonuniform angular velocity in phase dynamics plays a significant role for the single neuron, we anticipate that the synaptic interactions also carry nontrivial phase dependence.

Suppose the phases of presynaptic and postsynaptic neurons are labeled as and respectively. The Kuramoto interaction31 carries the phase dependence and tends to synchronize both neurons. In terms of the complex dynamical variables, the synaptic interaction can be written as

| 21 |

where denotes the synaptic strength of the Kuramoto interaction between the neurons. Note that the above interaction is U(1) symmetric, i.e. it is invariant under a constant phase shift for all neurons.
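The synchronizing tendency of the Kuramoto interaction can be seen in a two-oscillator sketch. The code uses the standard phase-only Kuramoto form, $d\theta_i/dt = \omega_i + K\sin(\theta_j - \theta_i)$, with fixed amplitudes; the natural frequencies, the coupling `k` and the initial phases are hypothetical values chosen for illustration, and the phase-only reduction is an assumption rather than the complex-variable form of the paper.

```python
import math

def simulate_pair(omega1, omega2, k, theta1=0.0, theta2=2.0, dt=0.01, steps=5000):
    """Forward-Euler integration of two Kuramoto-coupled phases; returns theta2 - theta1."""
    for _ in range(steps):
        d1 = omega1 + k * math.sin(theta2 - theta1)
        d2 = omega2 + k * math.sin(theta1 - theta2)
        theta1, theta2 = theta1 + dt * d1, theta2 + dt * d2
    return theta2 - theta1

# Slightly detuned oscillators lock to a constant phase lag when |omega2 - omega1| <= 2k.
lag = simulate_pair(omega1=1.0, omega2=1.2, k=0.5)
expected_lag = math.asin((1.2 - 1.0) / (2.0 * 0.5))
```

Because the coupling depends only on the phase difference, adding the same constant to both initial phases leaves the lag unchanged, which is the U(1) symmetry noted above.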

The above synaptic interaction is simple and widely used in the generalized Kuramoto models31 for studying synchronization phenomena. However, the U(1) symmetry imposes a rather unrealistic constraint on the neuronal dynamics. A constant phase shift means a temporal shift in the firing process, and the dynamics of a realistic neuron is certainly not invariant under such a shift. Furthermore, even though synchronization phenomena are widely observed in many biological systems, synchronization is detrimental to information processing. In fact, Parkinson’s disease is correlated with excessively strong oscillatory synchronization in brain areas such as the thalami and basal ganglia32–34, while a healthy brain is asynchronous in these areas.

Let us try to model the phase dependence of the synaptic interactions from realistic neuronal properties. When the presynaptic neuron fires, it provides a synaptic current and enhances the chance for the postsynaptic neuron to fire. This process can be approximated by the phase factor qualitatively because it reaches the maximum at (spike) and vanishes at (hyperpolarized). It is also known that the postsynaptic neuron is sensitive to external stimuli in the hyperpolarized period while almost insensitive around the spike. Thus, there is another phase factor arising from the postsynaptic neuron. Combining the phase factors for presynaptic and postsynaptic neurons, we anticipate that the synaptic interaction carries the overall phase dependence . In terms of the complex dynamical variables, the synaptic interaction can be written as

| 22 |

where denotes the strength of the synaptic interaction between the neurons. After taking the realistic neuronal properties into account, the synaptic interaction is no longer U(1) symmetric and the non-physical constraints are lifted. It is rather interesting that the above synaptic interaction is similar to the Bardeen-Cooper-Schrieffer interaction, as the U(1) symmetry is also broken in superconductors. The dynamical behaviors of neuronal networks with this type of phase-dependent synaptic interactions avoid the ultimate fate of synchronization, are effective for information processing, and remain open for further investigations.
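The loss of U(1) symmetry can be illustrated by comparing two phase-dependent coupling terms under a global phase shift. The gating factors below, $(1 + \cos\theta_{\text{pre}})/2$ for the presynaptic neuron and $(1 - \cos\theta_{\text{post}})/2$ for the postsynaptic neuron, are hypothetical forms chosen only to match the qualitative description (presynaptic drive largest at the spike, postsynaptic sensitivity largest when hyperpolarized), with the spike placed at $\theta = 0$ by assumption. They are not claimed to be the factors used in the paper.

```python
import math

def kuramoto_term(theta_pre, theta_post):
    """Phase-difference coupling: unchanged when both phases are shifted by the same amount."""
    return math.sin(theta_pre - theta_post)

def phase_gated_term(theta_pre, theta_post):
    """Hypothetical gated coupling: presynaptic drive times postsynaptic sensitivity."""
    pre_drive = 0.5 * (1.0 + math.cos(theta_pre))          # 1 at the spike, 0 when hyperpolarized
    post_sensitivity = 0.5 * (1.0 - math.cos(theta_post))  # 0 at the spike, 1 when hyperpolarized
    return pre_drive * post_sensitivity

shift = math.pi  # a global phase shift applied to both neurons

# Presynaptic neuron spiking (theta = 0), postsynaptic neuron hyperpolarized (theta = pi).
gated_before = phase_gated_term(0.0, math.pi)
gated_after = phase_gated_term(0.0 + shift, math.pi + shift)

kuramoto_before = kuramoto_term(0.3, 1.1)
kuramoto_after = kuramoto_term(0.3 + shift, 1.1 + shift)
```

The Kuramoto term is the same before and after the shift, while the gated term drops from its maximum to zero, so a constant phase shift is no longer a symmetry.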

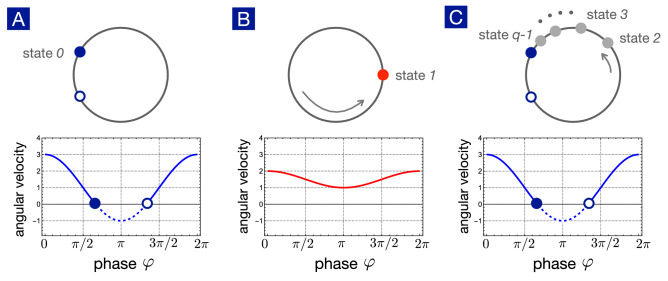

The refractory effects in spiking neurons also lead to phase-dependent synaptic interactions. Kinouchi and Copelli proposed a q-state neuron model to account for the observed refractory effects35. As shown in Fig. 10, the resting state is labeled as state 0 and the firing state is labeled as state 1. The other states are refractory and thus insensitive to external stimuli. Time evolution is discrete in the Kinouchi-Copelli model, and the update for the whole system is synchronous. A neuron i in state 0 can be excited to state 1 in the next time step in two ways: (1) excited by the external stimulus, driven by a Poisson process with a rate r, or (2) excited by a neighbor neuron j, which is in the excited state in the previous time step, with probability . However, the dynamics is deterministic after excitation. If the neuron is in state n, it must evolve to state sequentially in the next time step until the last refractory state leads back to the state 0 (resting state). Using to represent the state of the neuron i at the time step t, we summarize the rules of dynamics for a q-state KCNN in the math form:

If , then ;

If , then ;

If , then if triggered by either the external stimulus or neighboring firing neurons at the last time step. Otherwise, .
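The three rules above can be condensed into a single update function. The following is a minimal sketch rather than the code used in this work; the function name `next_state`, its argument names, and the encoding of the states as the integers 0 to q-1 are assumptions made for illustration.

```python
def next_state(state: int, q: int, triggered: bool) -> int:
    """Advance one q-state Kinouchi-Copelli neuron by one discrete time step.

    Assumed encoding (hypothetical, for illustration): 0 = resting,
    1 = firing, 2 .. q-1 = refractory.
    """
    if not 0 <= state < q:
        raise ValueError("state must lie in 0 .. q-1")
    if state == 0:
        # Only a resting neuron can respond to a stimulus or a firing neighbor.
        return 1 if triggered else 0
    # Firing and refractory states advance deterministically; the last
    # refractory state q-1 wraps around to the resting state 0.
    return (state + 1) % q


# Follow one neuron with q = 5 that is triggered once and then left alone.
trajectory = [0]
for step in range(6):
    trajectory.append(next_state(trajectory[-1], q=5, triggered=(step == 0)))
print(trajectory)  # [0, 1, 2, 3, 4, 0, 0]
```

The `triggered` flag lumps together the external stimulus and the neighboring firing neurons, so the stochastic part of the model stays outside this function.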

Because the U(1) neuron provides the general theoretical framework for nonuniform phase dynamics, it is not surprising that the Kinouchi-Copelli neuron can be mapped to the U(1) neuron as well.

Figure 10.

Kinouchi-Copelli neuron as the U(1) neuron in discrete time steps. (A) Without external stimuli, the neuron is excitable with a stable fixed point (state 0) denoting the resting state. (B) In the presence of external stimuli, by either external or synaptic currents, the neuron fires and the phase swings to with maximum potential. (C) After firing, the neuron enters the refractory states denoted by states and eventually returns to the resting state.

In the absence of external stimuli, the Kinouchi-Copelli neuron is excitable, described by a saddle and a node on the limit cycle as shown in Fig. 10. The resting state corresponds to the stable node, labeled as state 0. When the neuron is activated by external stimuli, the angular velocity increases and the neuron fires. The phase swings to (spike) with maximum potential, labeled as state 1. The change of the angular velocity upon external stimuli resembles that of the Hodgkin-Huxley neuron studied in the previous section. After firing, the neuron enters the refractory period and gradually relaxes back to the resting state. Cutting the refractory period into time steps of equal intervals, the refractory states are defined accordingly. In short, the Kinouchi-Copelli neuron can be viewed as a discrete version of the U(1) neuron going through the SNIC transition upon external stimuli.

Now we turn to the phase dependence of the synaptic interaction caused by the refractory effect. For simplicity, we place these neurons on a two-dimensional grid to form the Kinouchi-Copelli neuronal network (KCNN). The phase dependence of the presynaptic neuron takes the usual form of pulse coupling: when the presynaptic neuron fires (state 1), it activates the postsynaptic neuron with a finite probability p. In a two-dimensional grid, each postsynaptic neuron is connected to four presynaptic neurons. Thus, it is convenient to introduce the branching ratio as the sum of all activation probabilities, , to parameterize the dynamical behaviors of the KCNN. The postsynaptic neuron also brings about another phase dependence due to refractory effects: only when the postsynaptic neuron is in the resting state (state 0) can it be activated to fire upon external stimuli.

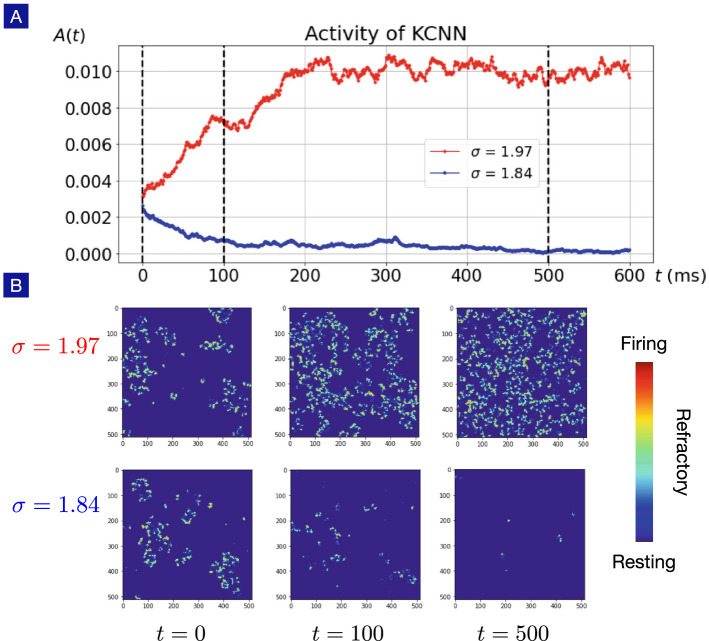

Although the phase dependence of the synaptic interactions in the KCNN is not the same as that in Eq. (22), the broken U(1) symmetry is manifest. Therefore, a spontaneous asynchronous firing (SAF) phase is anticipated when the synaptic weight is strong enough. This is indeed the case. We perform numerical simulations for the KCNN with different branching ratios as shown in Fig. 11. The initial configurations are randomly set with sparsely distributed activated neurons. When the branching ratio is larger than some critical value, the firing activity is enhanced and the neuronal activity is described by the SAF phase. On the other hand, when the branching ratio is smaller than the critical value, the firing activities are suppressed and the network enters the quiescent phase with diminishing neuronal activities. The dynamical phase transition and associated statistical analysis for the KCNN will be presented in detail elsewhere.
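A simulation of this kind can be sketched in a few lines. The code below is an illustrative sketch under stated assumptions, not the simulation used for Fig. 11: it assumes the same activation probability p for each of the four neighbors (so that the branching ratio equals 4p), open boundaries, no external stimulus after the initial seeding, and hypothetical values for the grid size, the number of states q, the seeding density and the random seed. All function names are introduced here for illustration only, and no claim is made about where the critical branching ratio lies.

```python
import random


def kcnn_step(grid, q, p, rng):
    """One synchronous update of a q-state KCNN on a square grid (open boundaries)."""
    n_rows, n_cols = len(grid), len(grid[0])
    new_grid = [[0] * n_cols for _ in range(n_rows)]
    for i in range(n_rows):
        for j in range(n_cols):
            s = grid[i][j]
            if s > 0:
                # Firing and refractory neurons advance deterministically.
                new_grid[i][j] = (s + 1) % q
                continue
            # A resting neuron is activated independently by each firing neighbor.
            for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                ni, nj = i + di, j + dj
                if 0 <= ni < n_rows and 0 <= nj < n_cols and grid[ni][nj] == 1:
                    if rng.random() < p:
                        new_grid[i][j] = 1
                        break
    return new_grid


def firing_fraction(grid):
    """Fraction of neurons currently in the firing state 1."""
    cells = [s for row in grid for s in row]
    return sum(1 for s in cells if s == 1) / len(cells)


def simulate(sigma, size=32, q=3, steps=50, seed_density=0.05, seed=0):
    """Return the firing fraction at each step for branching ratio sigma."""
    rng = random.Random(seed)
    p = sigma / 4.0  # assumption: four neighbors with equal activation probability
    grid = [[1 if rng.random() < seed_density else 0 for _ in range(size)]
            for _ in range(size)]
    history = [firing_fraction(grid)]
    for _ in range(steps):
        grid = kcnn_step(grid, q, p, rng)
        history.append(firing_fraction(grid))
    return history


# Final firing fraction for a few (hypothetical) branching ratios.
for sigma in (0.0, 0.5, 1.5):
    print(sigma, simulate(sigma)[-1])
```

Tracking the firing fraction over time for several branching ratios is one simple way to distinguish sustained activity from decay toward the quiescent state; the printed values depend on the assumed parameters.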

Figure 11.

Spontaneous asynchronous firing in the Kinouchi-Copelli neuronal network. (A) Given a weak neuronal activity as the initial configuration, a larger branching ratio () drives the Kinouchi-Copelli neuronal network to the spontaneous asynchronous firing (SAF) phase with stable neuronal activities, while a smaller branching ratio () leads to the quiescent state with diminishing neuronal activities. (B) Snapshots of the Kinouchi-Copelli neuronal network in SAF and quiescent phases.

Unlike the synchronous phase, the SAF phase can encode and decode information effectively and is of vital importance for studying neuronal networks. To achieve this goal, the phase dependence of the synaptic interactions must be properly taken into account and the U(1) neuron framework provides a natural and convenient approach to tackle this daunting challenge.

Discussions and conclusions

The notion of a “minimum model” is rather common in physics: one constructs the simplest model that captures the essential degrees of freedom of the system. When adopting the minimum-model approach, one does not intend to describe all the detailed features observed in experiments. Instead, one is interested in extracting the most important features of the dynamical system. The firing-rate model, widely used in computational neuroscience, serves as a good example of the minimum-model approach because only one feature (the firing rate) is kept. However, our findings here demonstrate that the rate models miss important features and, thus, the appropriate minimum model should be the U(1) neuron model proposed here. The U(1) neuron model integrates the dynamics of firing amplitude and phase and serves as a better minimum model to capture the essential ingredients of neuronal dynamics. In fact, we study the dynamics of the Hodgkin-Huxley neuron within the U(1) neuron framework and show that the action potential, the gain function and the associated dynamical phase transitions can all be described consistently by the U(1) neuron model.

The central result of the U(1) neuron is shown in Fig. 4. The nonuniform phase dynamics exhibits “whirlwind” (fast dynamics) and “bottleneck” (slow dynamics) features, which can be understood as being far from or close to the critical point. For instance, in the vicinity of the critical point, the bottleneck feature emerges due to critical slowing down. One thus anticipates that the neuron is sensitive to external stimuli there, consistent with the experimental observations. On the other hand, the whirlwind point is relatively far away from the critical point so that the dynamics remains robust and insensitive to external stimuli, also consistent with current observations. The U(1) neuron model thus provides a coherent understanding of the neuronal sensitivity during different firing periods.
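The bottleneck and the associated critical slowing down can be made quantitative with the textbook nonuniform oscillator on a circle. It is used here only as an illustration of a saddle-node transition on a limit cycle and is not claimed to be the exact U(1) neuron equation of this work; the symbols \omega (drive) and a (strength of the nonuniformity) are assumptions introduced for this sketch.

```latex
\begin{align}
\dot{\theta} &= \omega - a\sin\theta, \qquad \omega > a > 0, \\
T &= \int_{0}^{2\pi}\frac{d\theta}{\omega - a\sin\theta}
   = \frac{2\pi}{\sqrt{\omega^{2}-a^{2}}}, \\
T &\simeq \frac{2\pi}{\sqrt{2a}}\,(\omega-a)^{-1/2}
   \quad \text{as } \omega \to a^{+}.
\end{align}
```

In this sketch most of the period is spent near \theta = \pi/2, where the angular velocity takes its minimum value \omega - a, so the period diverges and the firing rate 1/T vanishes continuously as the drive approaches the critical value. For \omega < a the fixed points \sin\theta^{*} = \omega/a appear as a saddle-node pair and the oscillator becomes excitable.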

The phase dependence is also important when studying synaptic interactions between neurons. The U(1) neuron framework provides a systematic approach to construct the phase-dependent synaptic interaction between presynaptic and postsynaptic neurons. For instance, let us denote the phases of the presynaptic and postsynaptic neurons as respectively. Since the presynaptic neuron provides a pulse of synaptic current when it fires, we can approximate this process by the phase-dependent factor . Similarly, because the postsynaptic neuron is sensitive to the stimuli during the hyperpolarized period but insensitive during the depolarized period, these phenomena can be approximated by a factor of . Combining both factors captures the dependence on both the presynaptic and postsynaptic phases. Note that the synaptic interactions discussed here are different from those in the usual Kuramoto model and the like, where the synaptic interactions depend on the phase difference and readily trigger a synchronization instability among neurons. The phase-dependent synaptic interactions are much richer within the U(1) neuron framework and avoid the ultimate fate of synchronization. Therefore, they may lead to asynchronous phases, which are more effective for information processing. These important issues remain open for future investigations.
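The contrast with Kuramoto-type coupling can be checked numerically. The sketch below is illustrative only: it assumes that the spike sits at phase π and the hyperpolarized state at phase 0, and it uses the hypothetical factors (1 - cos θ)/2 for the presynaptic pulse and (1 + cos θ)/2 for the postsynaptic sensitivity, chosen merely because they match the qualitative description above. They are not claimed to be the factors of Eq. (22).

```python
import math


def product_coupling(theta_pre, theta_post):
    """Hypothetical phase-dependent coupling: pulse factor times sensitivity factor."""
    pulse = 0.5 * (1.0 - math.cos(theta_pre))         # 1 at the spike, 0 when hyperpolarized
    sensitivity = 0.5 * (1.0 + math.cos(theta_post))  # 0 at the spike, 1 when hyperpolarized
    return pulse * sensitivity


def kuramoto_coupling(theta_pre, theta_post):
    """Kuramoto-type coupling, which depends only on the phase difference."""
    return math.sin(theta_pre - theta_post)


theta_pre, theta_post, shift = math.pi, 0.0, 1.0

# Shifting both phases by the same amount leaves the Kuramoto term unchanged ...
kuramoto_change = (kuramoto_coupling(theta_pre + shift, theta_post + shift)
                   - kuramoto_coupling(theta_pre, theta_post))
# ... but changes the product coupling, so the global U(1) symmetry is broken.
product_change = (product_coupling(theta_pre + shift, theta_post + shift)
                  - product_coupling(theta_pre, theta_post))

print(abs(kuramoto_change) < 1e-12, abs(product_change) > 1e-3)  # True True
```

Any coupling that depends on the two phases separately, rather than only on their difference, fails this invariance test in the same way.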

Finally, we would like to address the role of network structure. As demonstrated in this article, we assemble the Kinouchi-Copelli neurons, a discrete version of the U(1) neurons, on a two-dimensional grid to form a neuronal network. Our numerical simulations show that the SAF phase, crucial for information processing, can be realized in the square-lattice KCNN. Compared to the fully-connected KCNN, which can be solved by the mean-field approximation, the square-lattice KCNN demonstrates a better capability for spatial information processing, as in the neuronal networks of the retina. There is plenty of room between these two extreme network structures. It is expected that, as longer-ranged synaptic interactions appear, the collective modes of the neurons are enhanced while the spatial resolution is compromised. To make the neuronal network smarter, it is crucial to abandon the naive fully-connected network structure. It remains an interesting challenge to explore various dynamical phases on different network structures.

Acknowledgements

The authors would like to express their gratitude to Ching-Lung Hsu, Chin-Yuan Lee, Georg Northoff and Wei-Ping Pan for helpful discussions and comments. We acknowledge support from the Ministry of Science and Technology through grants MOST 109-2112-M-007-026-MY3, MOST 109-2124-M-007-005 and MOST 110-2112-M-005-011. Financial support and the friendly environment provided by the National Center for Theoretical Sciences in Taiwan are also greatly appreciated.

Author contributions

H.H.L. proposed the research topics and supervised the project. C.Y.L. worked out most of the analytic derivations and numerical simulations. P.H.C. carried out the numerical simulations on the neuronal network. W.M.H. joined the discussions and provided suggestions. C.Y.L. and H.H.L. wrote the manuscript and all authors reviewed the manuscript.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Competing interests

The authors declare no competing interests.

Contributor Information

Hsiu-Hau Lin, Email: hsiuhau.lin@phys.nthu.edu.tw.

Wen-Min Huang, Email: wenmin@phys.nchu.edu.tw.

References

- 1. Galizia CG, Lledo P-M. Neurosciences - from molecule to behavior: A university textbook. Berlin: Springer-Verlag; 2013.

- 2. Gerstner, W., Kistler, W. M., Naud, R., & Paninski, L. Neuronal dynamics: From single neurons to networks and models of cognition (Cambridge University Press, 2014).

- 3. Izhikevich, E. M. Dynamical systems in neuroscience (MIT Press, 2007).

- 4. Dayan, P. & Abbott, L. F. Theoretical Neuroscience: computational and mathematical modeling of neural systems (MIT Press, 2014).

- 5. Wilson HR, Cowan JD. Excitatory and inhibitory interactions in localized populations of model neurons. Biophys. J. 1972;12:1. doi: 10.1016/S0006-3495(72)86068-5.

- 6. Ermentrout B. Reduction of conductance-based models with slow synapses to neural nets. Neural Comput. 1994;6:679. doi: 10.1162/neco.1994.6.4.679.

- 7. Gerstner W. Time structure of the activity in the neural network models. Phys. Rev. E. 1995;51:738. doi: 10.1103/PhysRevE.51.738.

- 8. Shriki O, Hansel D, Sompolinsky H. Rate models for conductance-based cortical neuronal networks. Neural Comput. 2003;15:1809. doi: 10.1162/08997660360675053.

- 9. Ostojic S, Brunel N. From spiking neuron models to linear-nonlinear models. PLoS Comput. Biol. 2011;7:e1001056. doi: 10.1371/journal.pcbi.1001056.

- 10. Choe S. Potassium channel structures. Nat. Rev. Neurosci. 2002;3:115. doi: 10.1038/nrn727.

- 11. Naylor CE, Bagnéris C, DeCaen PG, Sula A, Scaglione A, Clapham DE, Wallace BA. Molecular basis of ion permeability in a voltage-gated sodium channel. EMBO J. 2016;35:820. doi: 10.15252/embj.201593285.

- 12. Hodgkin AL, Huxley AF. A quantitative description of membrane current and its application to conduction and excitation in nerve. J. Physiol. 1952;117:500. doi: 10.1113/jphysiol.1952.sp004764.

- 13. Hodgkin AL. The local electric changes associated with repetitive action in a non-medullated axon. J. Physiol. 1948;107:165. doi: 10.1113/jphysiol.1948.sp004260.

- 14. Ermentrout GB, Kopell N. Parabolic bursting in an excitable system coupled with a slow oscillation. SIAM J. Appl. Math. 1986;46:233. doi: 10.1137/0146017.

- 15. FitzHugh R. Impulses and physiological states in theoretical models of nerve membrane. Biophys. J. 1961;1:445. doi: 10.1016/S0006-3495(61)86902-6.

- 16. Izhikevich EM. Simple model of spiking neurons. IEEE Trans. Neural Netw. 2003;14:1569. doi: 10.1109/TNN.2003.820440.

- 17. Izhikevich EM. Which model to use for cortical spiking neurons? IEEE Trans. Neural Netw. 2004;15:1063. doi: 10.1109/TNN.2004.832719.

- 18. Ermentrout B. Type I membranes, phase resetting curves, and synchrony. Neural Comput. 1996;8:979. doi: 10.1162/neco.1996.8.5.979.

- 19. Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning (MIT Press, 2016).

- 20. Haykin, S. Neural Networks and Learning Machines (Pearson, 2009).

- 21. Jensen MH, Bak P, Bohr T. Transition to chaos by interaction of resonances in dissipative systems. I. Circle maps. Phys. Rev. A. 1984;30:1960.

- 22. Flaherty JE, Hoppensteadt FC. Frequency entrainment of a forced van der Pol oscillator. Stud. Appl. Math. 1978;58:5. doi: 10.1002/sapm19785815.

- 23. Fletcher NH. Mode locking in nonlinearly excited inharmonic musical oscillators. J. Acoust. Soc. Am. 1978;64:1566. doi: 10.1121/1.382139.

- 24. Guevara MR, Glass L, Shrier A. Phase locking, period-doubling bifurcations, and irregular dynamics in periodically stimulated cardiac cells. Science. 1981;214:1350. doi: 10.1126/science.7313693.

- 25. Aihara K, Numajiri T, Matsumoto G, Kotani M. Structures of attractors in periodically forced neural oscillators. Phys. Lett. A. 1986;116:313. doi: 10.1016/0375-9601(86)90578-5.

- 26. Takahashi N, Hanyu Y, Musha T, Kubo R, Matsumoto G. Global bifurcation structure in periodically stimulated giant axons of squid. Physica D. 1990;43:318. doi: 10.1016/0167-2789(90)90140-K.

- 27. Gray CM, McCormick DA. Chattering cells: Superficial pyramidal neurons contributing to the generation of synchronous oscillations in the visual cortex. Science. 1996;274:109. doi: 10.1126/science.274.5284.109.

- 28. Szucs A, Elson RC, Rabinovich MI, Abarbanel HDI, Selverston AI. Nonlinear behavior of sinusoidally forced pyloric pacemaker neurons. J. Neurophysiol. 2001;85:1623. doi: 10.1152/jn.2001.85.4.1623.

- 29. Matthews PC, Strogatz SH. Phase diagram for the collective behavior of limit-cycle oscillators. Phys. Rev. Lett. 1990;65:1701. doi: 10.1103/PhysRevLett.65.1701.

- 30. Xie Y, Chen L, Kang YM, Aihara K. Controlling the onset of Hopf bifurcation in the Hodgkin-Huxley model. Phys. Rev. E. 2008;77:061921. doi: 10.1103/PhysRevE.77.061921.

- 31. Acebron JA, Bonilla LL, Vicente CJP, Ritort F, Spigler R. The Kuramoto model: A simple paradigm for synchronization phenomena. Rev. Mod. Phys. 2005;77:137. doi: 10.1103/RevModPhys.77.137.

- 32. Pare D, Curro’Dossi R, Steriade M. Neuronal basis of the parkinsonian resting tremor. Neuroscience. 1990;35:217. doi: 10.1016/0306-4522(90)90077-H.

- 33. Nini A, Feingold A, Slovin H, Bergman H. Neurons in the globus pallidus do not show correlated activity in the normal monkey, but phase-locked oscillations appear in the MPTP model of parkinsonism. J. Neurophysiol. 1995;74:1800. doi: 10.1152/jn.1995.74.4.1800.

- 34. Tass P, et al. The causal relationship between subcortical local field potential oscillations and parkinsonian resting tremor. J. Neural Eng. 2010;7:016009. doi: 10.1088/1741-2560/7/1/016009.

- 35. Kinouchi O, Copelli M. Optimal dynamical range of excitable networks at criticality. Nat. Phys. 2006;2:348. doi: 10.1038/nphys289.
