Abstract

Background

There is a fundamental gap in understanding the causal mechanisms by which strategies for implementing evidence-based practices address local barriers to effective, appropriate service delivery. Until this gap is addressed, scientific knowledge and practical guidance about which implementation strategies to use in which contexts will remain elusive. This research project aims to identify plausible strategy-mechanism linkages, develop causal models for mechanism evaluation, produce measures needed to evaluate such linkages, and make these models, methods, and measures available in a user-friendly website. The specific aims are as follows: (1) build a database of strategy-mechanism linkages and associated causal pathway diagrams, (2) develop psychometrically strong, pragmatic measures of mechanisms, and (3) develop and disseminate a website of implementation mechanisms knowledge for use by diverse stakeholders.

Methods

For the first aim, a combination of qualitative inquiry, expert panel methods, and causal pathway diagramming will be used to identify and confirm plausible strategy-mechanism linkages and articulate moderators, preconditions, and proximal and distal outcomes associated with those linkages. For the second aim, rapid-cycle measure development and testing methods will be employed to create reliable, valid, pragmatic measures of six mechanisms of common strategies for which no high-quality measures exist. For the third aim, we will develop a user-friendly website and searchable database that incorporates user-centered design, disseminating the final product using social marketing principles.

Discussion

Once strategy-mechanism linkages are identified using this multi-method approach, implementation scientists can use the searchable database to develop tailored implementation strategies and generate more robust evidence about which strategies work best in which contexts. Moreover, practitioners will be better able to select implementation strategies to address their specific implementation problems. New horizons in implementation strategy development, optimization, evaluation, and deployment are expected to be more attainable as a result of this research, which will lead to enhanced implementation of evidence-based interventions for cancer control, and ultimately improvements in patient outcomes.

Supplementary Information

The online version contains supplementary material available at 10.1186/s43058-022-00358-3.

Keywords: Mechanisms, Implementation Science, Measures, Causal pathway diagrams, Theory, Determinants

Contributions to the literature.

This protocol paper surfaces four critical challenges to advancing the study of implementation mechanisms

This paper details a series of studies to address these four challenges

Factors implicated in implementation strategy causal pathways are defined, and methods for articulating them are provided

Background

Implementation science is poised to make a transformative advance by illuminating the causal mechanisms through which implementation strategies influence evidence-based practice (EBP) implementation, care delivery, and, ultimately, patient outcomes. Knowing how strategies work—that is, knowing their mechanisms—will facilitate identifying the barriers and facilitators that specific strategies effectively address, and the conditions under which strategies work well, poorly, or not at all. Likewise, implementation strategies, including multilevel or multicomponent ones, could be optimized by adding or strengthening components that activate mechanisms to influence key barriers while eliminating components that do not. Knowledge from mechanisms-focused implementation research will offer practitioners guidance about which implementation strategies to use in which contexts.

To address calls from funders, journals, leaders in cancer control [1, 2], and the implementation research field at large [3–5], we have identified critical challenges that must be addressed to inform when and in what contexts specific implementation strategies should be used and when they should not. First, many strategies are underspecified in their core components [6, 7], which makes it difficult to identify mechanisms of change [7] and to replicate successful approaches. Second, little evidence of implementation strategy mechanisms exists. A 2016 systematic review of implementation mechanisms in mental health found no empirically supported mechanisms in nine studies [8]. Another systematic review of implementation mechanisms examined 46 studies across health domains and found that none identified a mechanism for even a single strategy [9]. Third, there are few testable causal accounts of how implementation strategies operate, the proximal and distal outcomes they impact, and the preconditions (i.e., factors that must be in place to activate mechanisms) and moderators that influence their effectiveness. Fourth, reliable, valid, pragmatic measures of implementation mechanisms are sorely lacking [10], impeding evaluation of implementation strategies and their causal pathways. Furthermore, existing measures often lack desirable psychometric qualities.

Current study

This manuscript presents the protocol for a three-year research project that seeks to address these critical issues. Our research project will employ a structured approach that integrates theory, empirical literature, and qualitative inquiry with expert panel methods across a series of studies that build on and validate prior ones. We draw on Agile Science [11], a new approach for developing and studying behavioral interventions that borrows concepts from computer science and offers tools for formulating causal pathway diagrams. We will use it to articulate plausible mechanisms for commonly used strategies [12, 13] and thereby clarify how implementation strategies operate. Three aims guide this research project.

Aim 1: Build a database of causal pathway diagrams of implementation strategy functioning. We will first identify plausible strategy-mechanism linkages by interviewing 30 principal investigators of implementation science-focused grants funded by the National Institutes of Health (NIH) in cancer control or mental health. We, the investigative team, will then develop causal pathway diagrams (CPDs; i.e., graphical depictions of factors implicated in strategy operations) for 30 commonly used implementation strategies [10]. Finally, we will affirm the plausibility and strength of strategy-mechanism linkages, and the completeness of the CPDs, for the 30 strategies by soliciting feedback from diverse stakeholders with expertise in the use of each strategy.

Aim 2: Develop reliable, valid, pragmatic measures of six implementation mechanisms that operate at intrapersonal, interpersonal, or organizational levels, using our established rapid-cycle measure development and testing procedures [14].

Aim 3: Develop and disseminate an interactive website repository of implementation mechanisms knowledge using iterative user-centered design and social marketing principles.

Methods

This project is a series of interrelated studies and activities intended to yield causal pathway diagrams of common implementation strategies, methods and associated toolkits, and measures to be disseminated through a user-friendly website. Our approach ensures the application of the most appropriate methods for rigorous and efficient study completion for each aim, with each activity serving as a validation check for preceding activities (Table 1).

Table 1.

Research strategy overview

| Aim | Inputs | Methods | Outcomes |
|---|---|---|---|
| 1a | Expertise of principal investigators | Semi-structured interviews | Specified strategies, plausible mechanisms |
| 1b | 30 commonly used strategies; extant literature | Causal pathway diagramming | Causal pathway diagrams |
| 1c | Diagrams of 30 commonly used strategies | Expert panel validation process | Confirmed strategy-mechanism linkages |
| 2 | Existing measures, theory | Rapid measure development | 6 measures of mechanisms |
| 3 | Causal pathway diagrams; 6 new measures | User-centered design, social marketing | User-friendly website, relational database, dissemination effort |

Aim 1a: Identify strategy-mechanism linkages in US National Institutes of Health (NIH)-funded research

Design and sample

This study will involve semi-structured interviews, conducted via Zoom, with principal investigators (PIs; N = 30) of federally funded studies on the development and/or testing of an implementation strategy in cancer control or behavioral health. Priority will be given to studies funded by the National Cancer Institute (NCI) and the National Institute of Mental Health (NIMH) that were reviewed by the Dissemination and Implementation Research in Health or the Science of Implementation in Health and Health Care study sections.

Data collection

Before each interview, interviewers will review the PI's published and unpublished study protocol documents and complete a structured abstraction sheet that will serve as background information for the interview questions. Experienced qualitative interviewers (BJP and RLH) will conduct 1-h, Zoom-based interviews using a semi-structured interview guide (see Supplemental file 1). The interviews will include four major sections that provide the opportunity to (1) become better oriented to each PI's study and the implementation strategies being tested, (2) understand the discrete components of the implementation strategies, (3) explore whether and how PIs conceptualize and study mechanisms in their studies, and (4) discuss their studies' strengths and opportunities to improve research to accelerate our understanding of how and why implementation strategies work. Interviews will be recorded and transcribed.

Data analysis

After each interview, interviewers will draft field notes to record key takeaways and similarities and differences with previously conducted interviews. Transcribed interviews will be imported into NVivo [15] and analyzed using qualitative content analysis, which allows for both deductive and inductive coding [16]. Deductive coding will be based on key concepts from the interview guide (e.g., discrete strategies, mechanisms, barriers, implementation outcomes), while inductive coding will allow additional concepts and themes to be identified. Two analysts will independently code transcripts to increase reliability and reduce bias, with a goal of at least 80% agreement. Regular team meetings will be used to discuss and resolve coding discrepancies throughout the coding process. Descriptive and interpretive summaries with direct quotes will be developed to support descriptions and analytic assertions. In addition to analytic memos and co-coding of interview transcripts, we will engage in peer debriefing among the interviewers after each interview and throughout the analytic process. As we synthesize the qualitative interview findings, we will continuously update the study protocols and interview guide to enhance the rigor and impact of our qualitative findings [17]. We expect this study to yield a detailed qualitative account of how NIH-funded PIs understand the functioning of implementation strategies.
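The protocol sets a goal of at least 80% agreement between the two coders but does not say how agreement will be computed. The following is a minimal sketch. It assumes each coding decision is recorded as whether a given code was applied to a given transcript segment. It computes simple percent agreement and, as an optional chance-corrected complement that the protocol does not name, Cohen's kappa. The function names and example data are hypothetical.

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Share of coding decisions on which the two coders agree."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must rate the same, non-empty set of decisions")
    return sum(a == b for a, b in zip(coder_a, coder_b)) / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Agreement corrected for chance, based on each coder's marginal frequencies."""
    n = len(coder_a)
    observed = percent_agreement(coder_a, coder_b)
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n**2
    if expected == 1:  # both coders used one identical category throughout
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical decisions: 1 = code applied to the segment, 0 = not applied.
analyst_1 = [1, 1, 0, 0, 1, 0, 1, 1, 0, 1]
analyst_2 = [1, 1, 0, 1, 1, 0, 1, 0, 0, 1]
agreement = percent_agreement(analyst_1, analyst_2)  # 0.8
kappa = cohens_kappa(analyst_1, analyst_2)            # about 0.58
print(f"agreement={agreement:.2f}, meets 80% goal: {agreement >= 0.80}, kappa={kappa:.2f}")
```

Percent agreement can look high when one code is rarely applied. Reporting a chance-corrected statistic alongside it is a common safeguard, though the protocol specifies only the 80% target.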

Aim 1b: Develop causal pathway diagrams for 30 commonly used implementation strategies

Overview

To effectively enact change, implementation researchers and practitioners must have a clearer understanding of the factors required for mechanism activation as well as the factors that might influence strategy strength. The investigative team, which includes experts in implementation science and agile science, will develop causal pathway diagrams for 30 commonly used implementation strategies.

Causal pathway diagrams are an efficient way to represent evidence and hypotheses about a strategy's operation. This includes the mechanisms it is intended to activate, the barrier it is intended to affect, the downstream implementation outcomes that should result, and the factors that are necessary for, or that moderate, this causal process. Such diagrams offer initial accounts of strategy functioning that can be further developed into robust theories of strategy operation [10]. Advances in path modeling and latent variable modeling allow for empirical testing of proposed causal pathways.

Research strategy

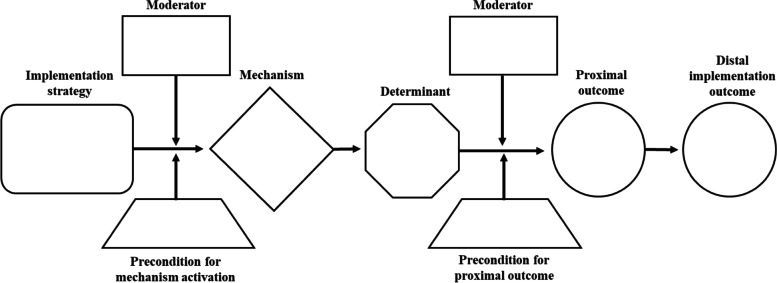

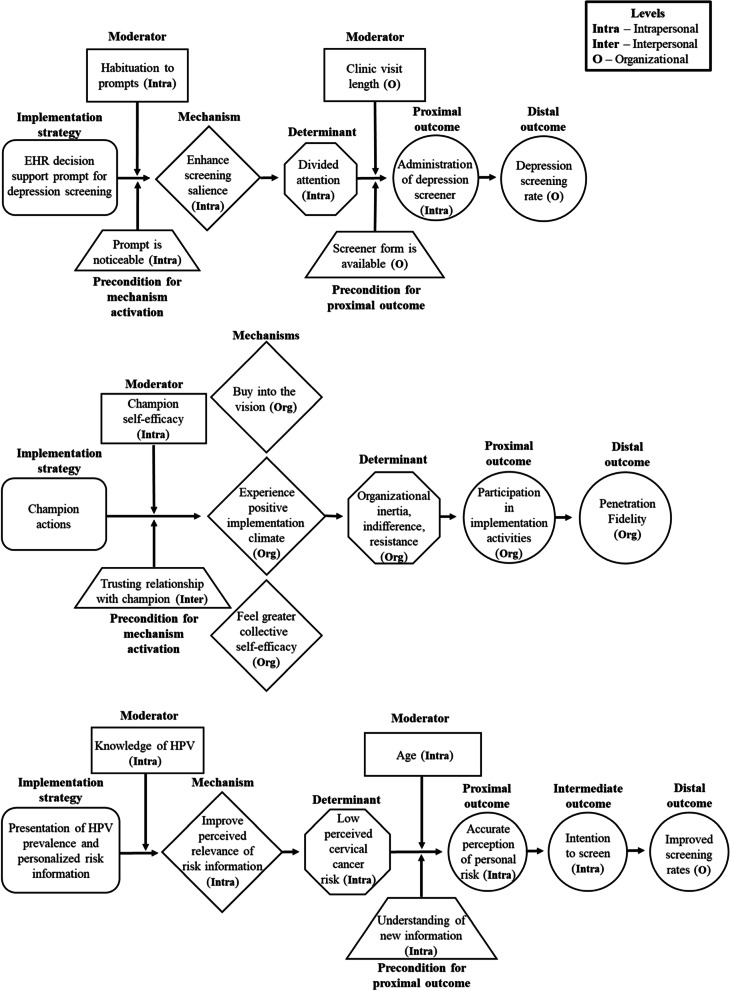

We will develop sets of causal pathway diagrams for 30 strategies across five levels (patient, provider, innovation, organization, system). Strategies will be selected according to several criteria, including how commonly the PIs interviewed in Aim 1a use them and the level of evidence documented in systematic reviews (e.g., Effective Practice and Organization of Care) [18]. Investigative team members will develop diagrams iteratively using the online diagramming tool Miro [19], which allows asynchronous collaborative work on shared diagrams. Each diagram will contain these elements: (1) operationalization of an implementation strategy; (2) target barrier(s); (3) the mechanism by which the strategy is hypothesized to affect the barrier; (4) observable proximal outcomes for testing mechanism activation and barrier change, which are precursors to implementation outcomes; (5) preconditions for the mechanism to be activated and to affect outcomes; (6) moderators that could facilitate or impede strategy effectiveness; and (7) distal implementation outcomes that should be altered by changes in the target barrier (Fig. 1 presents a CPD template; Fig. 2 depicts three completed example CPDs).

Fig. 1.

Causal pathway diagram template. Note: The number and placement of moderators and preconditions will depend on the specific causal pathway diagram being created; the placement shown is merely illustrative.

Fig. 2.

Causal pathway diagram examples. Note. EHR electronic health record, HPV human papillomavirus
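The following Python sketch makes the seven required diagram elements concrete. It represents one diagram as a record and flags the elements that are still empty in a draft. The class and field names are hypothetical and are not the project's actual data model. The strategy and mechanism in the example come from the Aim 2 examples in this protocol. The barrier text is invented for illustration.

```python
from dataclasses import dataclass, field, fields


@dataclass
class CausalPathwayDiagram:
    """One diagram holding the seven elements listed above (field names are hypothetical)."""
    strategy: str                                                # (1) operationalized strategy
    barriers: list[str] = field(default_factory=list)           # (2) target barrier(s)
    mechanisms: list[str] = field(default_factory=list)         # (3) hypothesized mechanism(s)
    proximal_outcomes: list[str] = field(default_factory=list)  # (4) observable proximal outcomes
    preconditions: list[str] = field(default_factory=list)      # (5) factors that must be in place
    moderators: list[str] = field(default_factory=list)         # (6) factors that amplify or weaken effects
    distal_outcomes: list[str] = field(default_factory=list)    # (7) distal implementation outcomes

    def missing_elements(self) -> list[str]:
        """Names of elements that are still empty, as a simple completeness check for drafts."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


draft = CausalPathwayDiagram(
    strategy="audit and feedback",
    barriers=["clinicians overestimate their own performance (hypothetical)"],
    mechanisms=["highlighting performance discrepancy"],
)
print(draft.missing_elements())
# ['proximal_outcomes', 'preconditions', 'moderators', 'distal_outcomes']
```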

Causal pathway diagram development for each strategy will (1) draw on existing theories and empirical studies of the strategy; (2) begin with implementation strategy specification; (3) articulate putative mechanisms that reflect the processes through which the strategy operates; (4) center the specific barrier(s) that a strategy can plausibly address given its mechanism of action; (5) identify the strategy’s preconditions, or factors that must be in place, for a part of the causal process to occur; (6) identify key moderators at different levels that might amplify or diminish strategy effects and depict where in the causal chain effect modification is likely to be most relevant; and (7) operationalize observable, proximal outcomes that precede implementation outcomes and provide evidence that the mechanism is being activated or that the barrier is impacted by the strategy’s administration.

For both preconditions and moderators, we will start with a list of any effect modifiers identified in existing theories and empirical literature on the strategy and theorize additional ones by applying structured prompts to those theories and studies. If a strategy is linked to multiple mechanisms, we will graph how those mechanisms and their effect modifiers might interact in the strategy’s operation by adding multiple paths in a strategy’s diagram. This sub-aim will yield causal pathway diagrams depicting strategy-mechanism-barrier-outcome linkages, preconditions, and moderators for 30 common implementation strategies.
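When a strategy is linked to more than one mechanism, each route from the strategy to an outcome is a separate path in the diagram. The minimal sketch below assumes a diagram is stored as a directed graph (adjacency lists), with effect modifiers attached to the edges where they are thought to act. It enumerates every path together with the modifiers along it. Only "practice facilitation" and "engaging in quality improvement" come from this protocol; the nodes labeled hypothetical are invented.

```python
def enumerate_paths(edges, source, target):
    """Depth-first enumeration of every simple path from source to target."""
    paths = []

    def walk(node, path):
        if node == target:
            paths.append(path)
            return
        for nxt in edges.get(node, []):
            if nxt not in path:  # skip cycles
                walk(nxt, path + [nxt])

    walk(source, [source])
    return paths


# Hypothetical diagram: one strategy, two mechanisms, each acting on its own barrier.
edges = {
    "practice facilitation": ["engaging in quality improvement", "building staff skills (hypothetical)"],
    "engaging in quality improvement": ["no routine EBP workflow (hypothetical barrier)"],
    "building staff skills (hypothetical)": ["low EBP skill (hypothetical barrier)"],
    "no routine EBP workflow (hypothetical barrier)": ["EBP adoption"],
    "low EBP skill (hypothetical barrier)": ["EBP adoption"],
}
# Effect modifiers attached to the edge where they are thought to act.
modifiers = {
    ("practice facilitation", "engaging in quality improvement"): ["precondition: leadership support (hypothetical)"],
    ("low EBP skill (hypothetical barrier)", "EBP adoption"): ["moderator: staff turnover (hypothetical)"],
}

for path in enumerate_paths(edges, "practice facilitation", "EBP adoption"):
    notes = [m for edge in zip(path, path[1:]) for m in modifiers.get(edge, [])]
    print(" -> ".join(path), "|", notes)
```

Attaching modifiers to edges rather than nodes mirrors the protocol's aim of depicting where in the causal chain effect modification is most relevant.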

Aim 1c: Affirm plausibility and strength of 30 strategy-mechanism linkages

Overview

Aim 1c will affirm the plausibility and strength of strategy-mechanism linkages, and completeness of the CPDs, by engaging implementation scientists and other stakeholders in an expert panel process for the 30 commonly used strategies from 1b. This step ensures that the most commonly used strategies (and their associated mechanisms) are carefully vetted by subject matter experts, serving as a validation check for Aim 1b.

Design

Using a web-based meeting platform, we will engage nine stakeholders per strategy-mechanism linkage to rate each linkage for plausibility and strength. After this rating, we will invite stakeholders to articulate up to three operationalizations of the strategy, that is, specific ways the strategy could be implemented in a particular setting. This step provides further validation of the generated strategy-mechanism linkages (i.e., checking whether different operationalizations of the same strategy feasibly activate the same mechanisms) and articulates more comprehensive sets of moderators and preconditions.

Participants

Each implementation strategy will be vetted by at least nine subject matter experts; the same experts may be asked to validate one or more strategy-mechanism linkages and associated CPDs. We will attempt to include diverse stakeholders with respect to role (e.g., researcher, practitioner, purveyor, patient), geography, gender, and race/ethnicity. We will recruit stakeholders through multiple professional connections, such as the Implementation Science Centers in Cancer Control Consortium, the Cancer Prevention and Control Research Network [20], the Mentored Training for Dissemination and Implementation Research in Cancer alumni network, the Implementation Research Hub (Ireland), and the National Centre of Implementation Science (Australia).

Strategy-mechanism-barrier ratings

Participants will receive, in advance, key terms and definitions and a standard rubric for rating strategy-mechanism plausibility and strength. Participants will first be presented with each strategy (its definition and any specification work from Aim 1a) and its putative mechanisms of action from Aim 1b, which they will rate for plausibility and strength. Participants will rate linkages using 10-point ordinal scales ranging from “low” to “high.” They will then be invited to describe qualitatively up to three different ways in which the strategy could be operationalized, guided by prompts (e.g., delivery mode, actor, action). Next, they will rate the causal pathway diagram for completeness using a 10-point ordinal scale. Participants will have the option to propose new moderators, preconditions, and other elements, and to provide feedback on the content of all variables in the diagram.
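The protocol does not state how the nine panelists' ratings will be aggregated. One conventional summary, offered here purely as an assumption, is the median, with the interquartile range (IQR) as an index of dispersion, plus the share of panelists rating at or above a cutoff. The cutoff of 7, the function name, and the example ratings are hypothetical.

```python
import statistics


def summarize_linkage(ratings, high_cutoff=7):
    """Summarize one linkage's 10-point panel ratings (the cutoff is a hypothetical choice)."""
    if len(ratings) < 2:
        raise ValueError("need at least two ratings")
    q1, _, q3 = statistics.quantiles(ratings, n=4, method="inclusive")
    return {
        "median": statistics.median(ratings),
        "iqr": q3 - q1,  # smaller IQR = closer panel agreement
        "share_high": sum(r >= high_cutoff for r in ratings) / len(ratings),
    }


# Hypothetical plausibility ratings from nine panelists for one strategy-mechanism linkage.
plausibility = [7, 8, 8, 9, 6, 7, 8, 9, 10]
print(summarize_linkage(plausibility))
```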

Expected outcomes

Practice stakeholders could use these causal pathway diagrams to plan, guide, monitor, and evaluate their EBP implementation efforts. Implementation researchers could use them to conduct mechanisms-focused implementation research by testing (i.e., validating) and refining the hypothesized linkages. Moreover, our method for developing causal pathway diagrams will be packaged into a toolkit using principles of Agile Science and housed on our website so other researchers can apply these methods to other implementation strategies.

Aim 2: Develop reliable, valid, pragmatic measures of six implementation mechanisms

Overview

We will develop reliable, valid, pragmatic measures for six mechanisms identified in Aim 1. These measures will be administered across two data collection waves, an approach used in our team's previously established rapid-cycle process and recommended by experts [21–24]. The measure development and testing process involves domain delineation, survey item generation, and assessment of content validity, structural validity, known-groups validity, test-retest reliability, and sensitivity to change. We will develop pragmatic measures using a process that garners feedback from a diverse pool of stakeholders (implementation scientists, implementation-experienced cancer control practitioners, oncology nurses), ensuring brevity, readability, and relevance [25].

Research design: measure selection

Our measures will assess implementation mechanisms that operate at intrapersonal, interpersonal, or organizational levels, as strategies at these levels are more feasible and are more commonly deployed than those at higher levels (e.g., changing system licensure standards). In prior work [26], our team identified no psychometrically validated measures of mechanisms for the following strategies commonly used in cancer control: audit and feedback, clinical reminders, championing, multidisciplinary care teams, practice facilitation, and workflow redesign [27–31]. Provisional but plausible mechanisms for these aforementioned strategies include, respectively, highlighting performance discrepancy, cueing action (intrapersonal); promoting vision buy-in, fostering teamwork (interpersonal); and engaging in quality improvement and improving workflow (organizational).

Domain delineation

We anticipate that all of the 30 strategies evaluated by our team of stakeholders will include one or more theoretical constructs—that is, psychosocial and/or behavioral phenomena that are not directly measurable [23]. Domain delineation is the process of defining what a construct is and is not [22, 32]. Not only are most strategy mechanisms composed of constructs, so too are many barriers (e.g., readiness for change) and some implementation outcomes (e.g., acceptability). For each mechanism’s components, we will use its respective research literature to develop conceptual definitions, working as much as possible to distinguish it from related constructs and create a nomological network specifying causal relations (i.e., a CPD). Given that many constructs have some overlap (e.g., perceived behavioral control vs. self-efficacy) [33], we acknowledge that this will be a difficult endeavor; however,

Item generation

We will generate a minimum of 10–12 survey items per construct, assuming at least half will be eliminated during psychometric testing [21]. Our deductive approach to item generation [21] will use a mix of existing items from the literature and novel items that align with the conceptual definitions and nomological network.

Psychometric study 1

Study 1 will primarily focus on content validity, or the extent to which a measure is judged to fully reflect a construct of interest, be it unidimensional or multidimensional [34]. We will convene panels of content experts, one for each measure in development. Each panel will consist of 9–10 implementation scientists, cancer control practitioners, and psychometricians with experience in implementation science. Participants will be recruited from organizations such as the Implementation Science Centers in Cancer Control (ISC3) and the Cancer Prevention and Control Research Network, as well as our professional networks. Using a web-based survey, content experts will assess the content validity of items measuring one or more constructs associated with the implementation strategy mechanism of interest. Specifically, they will receive an orientation to the content validity assessment task, information about the mechanism and associated constructs, and a survey in which they (a) rate the relevance of each item as an indicator of the mechanism on a four-point ordinal scale ranging from “not relevant” to “very relevant”; (b) rate the clarity of each item's wording on a four-point ordinal scale ranging from “not clear, major revision needed” to “very clear, no revision needed”; and (c) respond to open-ended questions asking for suggested revisions to item wording, additional items, and the coverage of the items as a whole (i.e., whether any aspects of the mechanism are not covered by the items). For each item, we will compute an Item-level Content Validity Index (I-CVI) by dividing the number of experts giving a rating of 3 (“quite relevant”) or 4 (“very relevant”) by the total number of experts [35]. I-CVIs will be translated into values of a modified kappa statistic to adjust for chance agreement among content experts' ratings [36]. Items with I-CVIs of 0.78 or higher will be considered to have acceptable content validity [36].
If content experts suggest item wording revisions or additional items, a follow-up survey containing these items will be sent to the panel for content validity assessment. Based on qualitative and quantitative analysis of the responses, the panel will identify a set of candidate measures for use in Psychometric Study 2. Items will be selected with an eye toward maximum clarity, brevity, and relevance.
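The I-CVI and its chance-adjusted modified kappa can be computed as shown below. This sketch assumes that reference [36] uses the widely cited formulation. For N experts of whom A rate the item 3 or 4, the probability of chance agreement is p_c = C(N, A) × 0.5^N, and k* = (I-CVI - p_c) / (1 - p_c). The function names and example ratings are hypothetical.

```python
from math import comb


def item_cvi(relevance_ratings):
    """I-CVI: share of experts rating the item 3 ("quite relevant") or 4 ("very relevant")."""
    return sum(r >= 3 for r in relevance_ratings) / len(relevance_ratings)


def modified_kappa(relevance_ratings):
    """I-CVI adjusted for the probability that experts agreed on relevance by chance."""
    n_experts = len(relevance_ratings)
    n_agree = sum(r >= 3 for r in relevance_ratings)
    p_chance = comb(n_experts, n_agree) * 0.5 ** n_experts
    return (item_cvi(relevance_ratings) - p_chance) / (1 - p_chance)


# Hypothetical ratings from a nine-member panel; one expert rated the item 2.
ratings = [4, 4, 3, 4, 3, 4, 4, 2, 4]
i_cvi = item_cvi(ratings)          # 8/9, about 0.89
k_star = modified_kappa(ratings)   # about 0.89
print(f"I-CVI={i_cvi:.2f}, kappa*={k_star:.2f}, acceptable: {i_cvi >= 0.78}")
```

With panels of 9–10 experts, the chance-agreement term is small when most experts agree, so k* stays close to the I-CVI. The adjustment matters more for smaller panels or split ratings.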

Psychometric study 2

Study 2 will assess structural validity, reliability, known-groups validity, test-retest reliability, and sensitivity to change. Structural validity refers to the extent to which relationships among items measuring a construct accord with the construct's expected internal structure [37]. Reliability refers to the extent to which items measuring a construct exhibit internal consistency [38]. Known-groups validity refers to the extent to which a measure is sensitive to known differences between groups [39]. Test-retest reliability refers to the consistency of test scores over time. Sensitivity to change refers to the ability of a measure to detect a change in state as a change in test scores [23].

In each development wave, we will use a 2 × 2 × 2 factorial between-subjects design. We will manipulate three mechanisms at two opposing levels (e.g., high vs. low relative priority) in vignettes depicting a multilevel intervention to support EBP use in cancer care (e.g., integrated symptom assessment and management). We have used the vignette method successfully in prior work [40]. Based on statistical power calculations, we plan to recruit 240 members of the Oncology Nursing Society (ONS) for each development wave. ONS is an organization with 35,000+ active members working in hospital-based clinics, medical oncology units, physician offices, and other settings. We previously recruited 346 mental health counselors from a similar professional organization in a single development wave using these procedures [41]. To assess response bias, we will use one-sample tests to compare respondents' demographics and practice characteristics with known information about ONS members. For each development wave, we will recruit from a non-overlapping sample of 1500 ONS members.

Non-retired ONS members will receive an email invitation to participate in a web-based survey, and non-respondents will receive three weekly reminder emails. Participants will read one of eight randomly assigned vignettes. Using the items retained from Psychometric Study 1, participants will indicate the extent to which the mechanism is activated from the perspective of the oncology nurse in the vignette (e.g., whether they perceive EBP use to be a relatively high or low priority, as depicted in the vignette). Three weeks later, participants will be re-randomized to rate either the same or the opposite vignette, to test test-retest reliability or sensitivity to change, respectively. For example, consider a participant who first received a vignette in which relative priority, teamwork, and tension for change were all designed to be high. If randomized to the opposite condition, that participant would 3 weeks later rate the vignette in which all three mechanisms were designed to be low. We will stratify random assignment across vignettes to ensure balance for the test-retest and sensitivity analyses. Each survey is anticipated to take about 10 min, and participants will receive $25 for their efforts.
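The 2 × 2 × 2 design and the stratified wave-2 re-randomization can be sketched as follows. For simplicity, the sketch assumes a fixed roster of 240 participants known before wave 1; in practice, enrollment would be sequential. The mechanism labels follow the example above. The function names and the random seed are hypothetical.

```python
import itertools
import random
from collections import Counter

MECHANISMS = ("relative priority", "teamwork", "tension for change")


def vignette_profiles():
    """All 2 x 2 x 2 = 8 low/high combinations of the three manipulated mechanisms."""
    return list(itertools.product(("low", "high"), repeat=len(MECHANISMS)))


def opposite(profile):
    """The vignette with every mechanism flipped to its other level."""
    return tuple("high" if level == "low" else "low" for level in profile)


def assign_waves(participant_ids, seed=2022):
    """Map each participant to a (wave 1, wave 2) pair of vignette profiles."""
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)
    profiles = vignette_profiles()
    plan = {}
    for i, profile in enumerate(profiles):
        cell = ids[i::len(profiles)]  # equal-sized, randomly composed wave-1 cells
        half = len(cell) // 2
        for position, pid in enumerate(cell):
            # Stratified within each wave-1 cell: the first half repeats the same vignette
            # (test-retest), the second half receives the opposite one (sensitivity to change).
            plan[pid] = (profile, profile if position < half else opposite(profile))
    return plan


plan = assign_waves(range(240))
print(Counter(first for first, _ in plan.values()))  # 30 participants per vignette
```

Because the roster is shuffled before cells are formed, splitting each cell into halves still randomizes the same-versus-opposite assignment. This gives 15 participants per vignette in each wave-2 arm.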

To assess structural validity and reliability and to identify poorly performing items, we will test a separate confirmatory factor analysis (CFA) model for each scale. Adequate model fit will be defined as a comparative fit index (CFI) and Tucker-Lewis index (TLI) greater than 0.95 [42], a standardized root mean square residual (SRMR) less than 0.05, and a root mean square error of approximation (RMSEA) less than 0.08 [42, 43]. We will also examine factor loadings for statistical significance and adequate size (i.e., b ≥ .65). With six latent constructs and five indicators per construct (df = 390), the RMSEA test of close fit [44] with a critical alpha of 0.05 has an estimated power of 100% at a sample size of 240. To assess known-groups validity, we will conduct a 2 × 2 × 2 analysis of covariance with the Tukey test for multiple comparisons, controlling for nurse demographics (e.g., sex as a biological variable). This analysis will determine whether mechanism scale scores vary as expected by vignette. Achieved power for this test with 240 participants and a medium effect size (f = 0.25) is 85.7%. Significant main effects for vignette level will indicate whether the measure differentiates vignette clinics that are high or low on the mechanism. We will assess test-retest reliability by calculating a two-way mixed-effects intraclass correlation coefficient (ICC; > 0.70 indicating reliability) between the first and second surveys for participants randomized to receive the same vignette. We will assess sensitivity to change using linear regression models that predict the difference score (i.e., change in the measure) from the vignette assignment order between the two surveys: low-low-high, low-high-low, low-high-high, high-low-low, high-high-low, high-low-high, or high-high-high. The assignment order low-low-low will serve as the reference group.
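A short Python sketch can check two quantities in this analysis plan. First, the reported df = 390 is consistent with a simple-structure CFA of six correlated factors with five indicators each, assuming factor variances are fixed to 1. That model has 30 × 31 / 2 = 465 unique moments, minus 30 loadings, 30 residual variances, and 15 factor covariances. Second, the protocol names a two-way mixed-effects ICC without specifying its type. The sketch assumes the consistency, single-measure form, ICC(3,1) = (MS_R - MS_E) / (MS_R + (k - 1) MS_E), computed from repeated-measures ANOVA mean squares. The function names and example scores are hypothetical.

```python
def cfa_degrees_of_freedom(n_factors, indicators_per_factor):
    """df of a simple-structure CFA with correlated factors whose variances are fixed to 1."""
    p = n_factors * indicators_per_factor
    unique_moments = p * (p + 1) // 2  # distinct variances and covariances
    free_parameters = (
        p                                    # factor loadings
        + p                                  # residual variances
        + n_factors * (n_factors - 1) // 2   # factor covariances
    )
    return unique_moments - free_parameters


def icc_consistency(scores):
    """Two-way mixed-effects, consistency, single-measure ICC, i.e. ICC(3,1).

    scores: one row per participant, one column per survey occasion.
    """
    n, k = len(scores), len(scores[0])
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between participants
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between occasions
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)


print(cfa_degrees_of_freedom(6, 5))  # 390
# Hypothetical scale scores for three participants who rated the same vignette twice.
retest = [[1.0, 2.0], [2.0, 2.0], [3.0, 5.0]]
print(icc_consistency(retest))  # 0.75, just above the 0.70 threshold
```

The consistency form ignores a uniform shift between the two surveys. An absolute-agreement ICC would be the stricter choice if systematic drift between occasions should count against reliability.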

Aim 3: Develop and disseminate a website repository of implementation mechanisms knowledge

We propose (1) to develop a public website that hosts a knowledge repository with our CPDs, methods and associated toolkits, and measures and (2) to actively disseminate this information to diverse stakeholders, including researchers and the practice community. The goal of our website will be to enable stakeholders to access our methods, measures, and results in ways that are useful to their own projects and research questions. The public-facing website will be powered by a relational database structured to accommodate the information contained in causal pathway diagrams. The database will link (i.e., relate) information about barriers, strategies, mechanisms, outcomes, moderators, and preconditions using standardized data types, which allows knowledge to be curated over time. For example, suppose an organizational leader is interested in addressing a barrier to EBP implementation such as time. Typing “time” as a barrier into the website's search tool will return information about potential strategies to address this barrier, plausible mechanisms through which these strategies operate, proximal and distal outcomes of the strategies, and preconditions and moderators affecting strategy success to consider.
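The following is a minimal sketch of such a relational schema, using Python's built-in sqlite3 module. The table and column names are hypothetical. The example pathway pairs workflow redesign with the "improving workflow" mechanism named in Aim 2. The barrier wording, outcomes, precondition, and moderator are invented for illustration. A LIKE search on barrier names mirrors the "time" example above.

```python
import sqlite3

SCHEMA = """
CREATE TABLE barrier   (id INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE);
CREATE TABLE strategy  (id INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE);
CREATE TABLE mechanism (id INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE);
CREATE TABLE outcome   (id INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE,
                        kind TEXT NOT NULL CHECK (kind IN ('proximal', 'distal')));
-- One row per strategy -> mechanism -> barrier link drawn in a causal pathway diagram.
CREATE TABLE pathway (
    id           INTEGER PRIMARY KEY,
    strategy_id  INTEGER NOT NULL REFERENCES strategy(id),
    mechanism_id INTEGER NOT NULL REFERENCES mechanism(id),
    barrier_id   INTEGER NOT NULL REFERENCES barrier(id)
);
CREATE TABLE pathway_outcome (
    pathway_id INTEGER NOT NULL REFERENCES pathway(id),
    outcome_id INTEGER NOT NULL REFERENCES outcome(id)
);
-- Preconditions and moderators both attach to a pathway; 'role' tells them apart.
CREATE TABLE effect_modifier (
    pathway_id  INTEGER NOT NULL REFERENCES pathway(id),
    role        TEXT NOT NULL CHECK (role IN ('precondition', 'moderator')),
    description TEXT NOT NULL
);
"""


def _get_or_create(conn, table, name):
    # 'table' is always one of the fixed names above, never user input.
    conn.execute(f"INSERT OR IGNORE INTO {table} (name) VALUES (?)", (name,))
    return conn.execute(f"SELECT id FROM {table} WHERE name = ?", (name,)).fetchone()[0]


def add_pathway(conn, strategy, mechanism, barrier, outcomes=(), modifiers=()):
    """outcomes: (name, kind) pairs; modifiers: (role, description) pairs."""
    pathway_id = conn.execute(
        "INSERT INTO pathway (strategy_id, mechanism_id, barrier_id) VALUES (?, ?, ?)",
        (_get_or_create(conn, "strategy", strategy),
         _get_or_create(conn, "mechanism", mechanism),
         _get_or_create(conn, "barrier", barrier)),
    ).lastrowid
    for name, kind in outcomes:
        conn.execute("INSERT OR IGNORE INTO outcome (name, kind) VALUES (?, ?)", (name, kind))
        outcome_id = conn.execute("SELECT id FROM outcome WHERE name = ?", (name,)).fetchone()[0]
        conn.execute("INSERT INTO pathway_outcome VALUES (?, ?)", (pathway_id, outcome_id))
    conn.executemany("INSERT INTO effect_modifier VALUES (?, ?, ?)",
                     [(pathway_id, role, text) for role, text in modifiers])
    return pathway_id


def search_by_barrier(conn, term):
    """Strategies, mechanisms, outcomes, and effect modifiers for barriers matching 'term'."""
    return conn.execute(
        """
        SELECT b.name, s.name, m.name,
               (SELECT group_concat(o.kind || ': ' || o.name, '; ')
                  FROM pathway_outcome po JOIN outcome o ON o.id = po.outcome_id
                 WHERE po.pathway_id = p.id),
               (SELECT group_concat(e.role || ': ' || e.description, '; ')
                  FROM effect_modifier e WHERE e.pathway_id = p.id)
          FROM pathway p
          JOIN barrier b   ON b.id = p.barrier_id
          JOIN strategy s  ON s.id = p.strategy_id
          JOIN mechanism m ON m.id = p.mechanism_id
         WHERE b.name LIKE ?
         ORDER BY s.name
        """,
        (f"%{term}%",),
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
add_pathway(
    conn, "workflow redesign", "improving workflow", "limited clinician time",
    outcomes=[("minutes per visit spent on the EBP", "proximal"), ("adoption", "distal")],
    modifiers=[("precondition", "leaders approve process changes"), ("moderator", "clinic size")],
)
for row in search_by_barrier(conn, "time"):
    print(row)
```

Storing each strategy-mechanism-barrier link as its own row lets one strategy appear in several diagrams without duplicating its record. This is one way a database could support curation of knowledge over time.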

Dissemination

We will conduct a social marketing campaign to promote public use of the website. We will conduct user research across key user segments to answer the four “Ps” of marketing (product, price, place, and promotion) and to tailor dissemination strategies to diverse audiences' needs and preferences. In our user research, we will inquire about what diverse users need and how our research results could meet those needs (product). We will also ask how to design the website and database to make those results accessible and convenient to use (place). We will obtain additional information about how to reduce the effort diverse users must expend to find the website (price) and how best to reach diverse users to inform them about the website and promote its use (promotion). We will work with communication experts to construct messages that appeal to different user segments and send them through users' preferred channels. We will also create a professional video overview of our products geared toward the practice community.

Product/website design

To design the website, we will conduct two series of focus groups with diverse stakeholders to identify their information access needs: one series with researchers and one with representatives of the practice community (providers, administrators, policy makers). Focus groups are a commonly used needs-assessment method in user-centered design (UCD) [45, 46]. Each series will consist of three virtual sessions. The first session will introduce participants to the products of Aims 1 and 2 and elicit ideas about who might be interested in using these products and why. The expected outcome will be an initial set of personas [47, 48], that is, characters that represent the main types of target users for the website, acknowledging that both researchers and the practice community comprise heterogeneous stakeholders. The second session will concretize the target users' information needs by creating design scenarios [49, 50] that represent different use cases for visiting the website. To this end, participants will generate situations that different target users (represented by the personas) might encounter in their work that would motivate them to visit the website. They will also list the questions those users would want to answer by consulting its resources. For the main types of questions, participants will think aloud about how the user would want to interact with the website to get relevant information and how that information should be presented. Participants will then draft brief scenarios (short narratives) that describe users' motivations and these interactions. The third session will generate ideas for metadata that enable users to find what they are looking for. Using the scenarios, participants will consider how the user in each scenario might try to access needed information and how results should be presented to help the user find other relevant results. This exercise will generate a preliminary list of attributes to accompany each major class of website content.
The process will be repeated for the second series of focus groups. We will then synthesize findings from both series into a design specification.

We will work with a web developer who specializes in information architecture to create an appropriate organizational structure for the website’s database schema. In addition to its practical utility, this information architecture is a scientific contribution to the field, offering a way to unify and structure evidence on how a wide range of implementation strategies operate. The web developer will design front-end functionality based on the personas and scenarios.
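The protocol leaves the database schema to the information architecture work, so the following is only an illustrative sketch. It assumes one record per strategy-mechanism linkage, carrying the causal pathway elements named in Aim 1 (preconditions, moderators, proximal and distal outcomes) plus free-form metadata attributes of the kind the third focus group session is meant to elicit, and a simple faceted search that requires every requested facet to match. The class `Linkage`, the functions `facet_value`, `matches`, and `search`, the attribute names, and all record values are hypothetical placeholders, not study findings or the project's actual design.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Linkage:
    """Hypothetical record for one strategy-mechanism linkage and its causal pathway elements."""
    strategy: str
    mechanism: str
    preconditions: tuple[str, ...] = ()
    moderators: tuple[str, ...] = ()
    proximal_outcomes: tuple[str, ...] = ()
    distal_outcomes: tuple[str, ...] = ()
    # Extra metadata attributes (e.g., setting) elicited from users; used as search facets.
    attributes: dict[str, Any] = field(default_factory=dict)


def facet_value(record: Linkage, key: str) -> Any:
    """Look up a facet on the record's own fields first, then in its metadata attributes."""
    if key != "attributes" and hasattr(record, key):
        return getattr(record, key)
    return record.attributes.get(key)


def matches(value: Any, wanted: Any) -> bool:
    """Tuple-valued fields (e.g., moderators) match if they contain the wanted value."""
    if isinstance(value, tuple):
        return wanted in value
    return value == wanted


def search(records: list[Linkage], **facets: Any) -> list[Linkage]:
    """Return records that satisfy every requested facet (logical AND across facets)."""
    return [r for r in records if all(matches(facet_value(r, k), v) for k, v in facets.items())]


# Placeholder records for illustration only; these are not study findings.
records = [
    Linkage("Strategy A", "Mechanism X", moderators=("Moderator M",),
            proximal_outcomes=("Adoption",), attributes={"setting": "primary care"}),
    Linkage("Strategy B", "Mechanism Y", preconditions=("Precondition P",),
            proximal_outcomes=("Fidelity",), attributes={"setting": "oncology clinic"}),
]

for r in search(records, setting="primary care"):
    print(r.strategy, "->", r.mechanism)  # prints: Strategy A -> Mechanism X
```

Treating every facet as a conjunctive filter keeps the sketch simple; a production site might instead rank partial matches or support OR-combinations within a facet, which is the kind of choice the focus group scenarios would inform.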

The design will be usability-tested iteratively with target users, starting with low-fidelity prototypes [22, 23] and moving to higher-fidelity prototypes as the design matures. As is typical in UCD, evaluations will focus on comprehensibility, perceived usefulness, usability, and user satisfaction. These characteristics will be assessed using qualitative interviews, task-based prototype walk-throughs [23], and, in the final evaluation, a set of standard usability scales [25–27]. The evaluation results will be used to iteratively revise the design to optimize the website’s usefulness and ease of use. After review of all user data, the website will be refined and ready for dissemination.
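The protocol does not name the standard usability scales it will use. Assuming, for illustration only, that the widely used System Usability Scale (SUS) were among them, scoring works as follows: each of the ten items is rated from 1 to 5, odd-numbered (positively worded) items contribute the rating minus 1, even-numbered (negatively worded) items contribute 5 minus the rating, and the sum is multiplied by 2.5 to yield a score from 0 to 100. The function name `sus_score` is hypothetical.

```python
def sus_score(responses: list[int]) -> float:
    """Score one respondent's System Usability Scale (SUS) questionnaire on a 0-100 scale.

    responses: ten ratings on a 1-5 agreement scale, in questionnaire order (item 1 first).
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1  # odd items are positively worded
        else:
            total += 5 - rating  # even items are negatively worded
    return total * 2.5


print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))  # prints 75.0
```

Individual scores would typically be averaged across participants in each round of testing, so that changes between prototype iterations can be compared.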

Discussion

Despite advances in identifying implementation barriers [51], developing models and frameworks [52, 53], defining outcomes [54], and evaluating the general effectiveness of strategies [55], implementation mechanisms remain underdeveloped and understudied [9]. The robust methods and measures described herein will facilitate mechanism-focused implementation research, opening new horizons in implementation strategy development, optimization, evaluation, and deployment [56]. Strategy-mechanism linkages and full causal pathway diagrams will improve the design of implementation strategies and generate more robust evidence about which strategies work best in which contexts. Multicomponent and multilevel strategies could be optimized by focusing on the elements that best engage key mechanisms. When implementation strategies fail, scientists could investigate why by examining whether the strategy failed to engage key mechanism(s) or whether a contextual factor moderated the flow of the effect from the strategy to the mechanism(s) to the outcome [57]. These advances in implementation science, in turn, could guide practitioners in selecting strategies optimized to address specific problems. Practitioners could also use our reliable, valid, pragmatic measures of mechanisms to detect early whether a strategy is working. Using UCD principles to build the website repository of implementation mechanisms knowledge, combined with social marketing to actively engage intended users, increases our potential for impact.
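The failure analysis described in the Discussion can be made concrete as a simple decision rule over three yes/no observations: whether the mechanism measure moved, whether a hypothesized moderator was present, and whether the implementation outcome improved. The sketch below is a hypothetical, rule-based illustration of that reasoning under those assumptions; it is not a substitute for formal mediation or moderation analysis, and the function `diagnose` and its messages are invented for this example.

```python
def diagnose(mechanism_engaged: bool, moderator_present: bool, outcome_improved: bool) -> str:
    """Suggest where a strategy -> mechanism -> outcome pathway may have broken down.

    Hypothetical rule-based illustration; inputs are yes/no summaries of study measures.
    """
    if outcome_improved and mechanism_engaged:
        return "pathway operated as hypothesized"
    if outcome_improved:
        # Outcome moved without the mechanism moving: another pathway may be at work.
        return "outcome improved without mechanism engagement; revisit the causal model"
    if not mechanism_engaged:
        # Break between strategy and mechanism.
        if moderator_present:
            return "moderator may have blocked the strategy's effect on the mechanism"
        return "strategy failed to engage the mechanism"
    # Mechanism engaged but outcome did not improve: break between mechanism and outcome.
    if moderator_present:
        return "moderator may have blocked the mechanism's effect on the outcome"
    return "mechanism engaged but outcome unchanged; check timing, measurement, or the causal model"


# Example: neither the mechanism nor the outcome moved, and no moderator was observed.
print(diagnose(mechanism_engaged=False, moderator_present=False, outcome_improved=False))
# prints: strategy failed to engage the mechanism
```

Because the mechanism measure is usually observable before the distal outcome, the first branch point (did the mechanism move?) is also where pragmatic mechanism measures could give an early signal.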

Supplementary Information

Additional file 1. Aim 1a Interview Guide.

Acknowledgements

NA.

Abbreviations

- EBP: Evidence-based practice
- EHR: Electronic health record
- CPD: Causal pathway diagrams
- HPV: Human papillomavirus
- ICC: Intraclass correlation coefficient
- I-CVI: Item-level Content Validity Index
- ISC3: Implementation Science Centers in Cancer Control
- NCI: National Cancer Institute
- NIH: U.S. National Institutes of Health
- NIMH: National Institute of Mental Health
- ONS: Oncology Nursing Society
- PIs: Principal investigators
- RMSEA: Root mean square error of approximation
- UCD: User-centered design

Authors’ contributions

CCL drafted the manuscript based on the co-created grant submission. BJP, RLH, and GB drafted the Aim 1a methods section. CCL, RM, and PK drafted the Aim 1b and 1c methods sections. BJW and MHB drafted the Aim 2 methods section. ARL, PK, and BJW drafted the Aim 3 methods section. BJW led the generation of the example causal pathway diagrams. MC contributed to the operationalization of Aim 2 methods and provided critical edits throughout the manuscript. All authors reviewed, edited, and approved the final manuscript content.

Funding

This study is supported by funding from the National Cancer Institute (R01CA262325, P50CA244432), the National Institute of Mental Health (P50MH126219), and the Agency for Healthcare Research and Quality (R13HS025632).

Availability of data and materials

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

Declarations

Ethics approval and consent to participate

NA.

Consent for publication

NA.

Competing interests

The authors declare they have no competing interests to report.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Rabin BA, Glasgow RE, Kerner JF, Klump MP, Brownson RC. Dissemination and implementation research on community-based cancer prevention: a systematic review. Am J Prev Med. 2010;38(4):443–456. doi: 10.1016/j.amepre.2009.12.035.

2. Glasgow RE, Marcus AC, Bull SS, Wilson KM. Disseminating effective cancer screening interventions. Cancer. 2004;101(S5):1239–50.

3. Society for Implementation Research Collaboration. 4th Biennial Society for Implementation Research Collaboration 2018. Available from: https://societyforimplementationresearchcollaboration.org/4th-biennial-society-for-implementation-research-collaboration/. [Cited 2022 July 15].

4. Lewis CC, Larson M, Marriott BR, Jackson CB, Dorsey CN, Kerns SEU, et al. Optimizing psychological science’s impact on public health: Association for Psychological Science; 2018. Available from: https://www.psychologicalscience.org/observer/optimizing-psychological-sciences-impact-on-public-health. [Cited 2022 July 15].

5. Ngo VK. Randomized control trial on implementation strategies for task-shifting depression care in Vietnam: Grantome; 2017. Available from: http://grantome.com/grant/NIH/R56-MH112630-01. [Cited 2022 July 15].

6. Michie S, Fixsen D, Grimshaw JM, Eccles MP. Specifying and reporting complex behaviour change interventions: the need for a scientific method. Implement Sci. 2009;4:40. doi: 10.1186/1748-5908-4-40.

7. Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8:139. doi: 10.1186/1748-5908-8-139.

8. Williams NJ. Multilevel mechanisms of implementation strategies in mental health: integrating theory, research, and practice. Admin Pol Ment Health. 2016;43(5):783–798. doi: 10.1007/s10488-015-0693-2.

9. Lewis CC, Boyd MR, Walsh-Bailey C, Lyon AR, Beidas R, Mittman B, Aarons GA, Weiner BJ, Chambers DA. A systematic review of empirical studies examining mechanisms of implementation in health. Implement Sci. 2020;15(1):1–25. doi: 10.1186/s13012-020-00983-3.

10. Lewis CC, Klasnja P, Powell BJ, Lyon AR, Tuzzio L, Jones S, et al. From classification to causality: advancing understanding of mechanisms of change in implementation science. Front Public Health. 2018;6:136. doi: 10.3389/fpubh.2018.00136.

11. Birken SA, Powell BJ, Presseau J, Kirk MA, Lorencatto F, Gould NJ, et al. Combined use of the Consolidated Framework for Implementation Research (CFIR) and the Theoretical Domains Framework (TDF): a systematic review. Implement Sci. 2017;12(1):2. doi: 10.1186/s13012-016-0534-z.

12. Gude WT, Brown B, van der Veer SN, Colquhoun HL, Ivers NM, Brehaut JC, et al. Clinical performance comparators in audit and feedback: a review of theory and evidence. Implement Sci. 2019;14(1):39. doi: 10.1186/s13012-019-0887-1.

13. Brown B, Gude WT, Blakeman T, van der Veer SN, Ivers N, Francis JJ, et al. Clinical Performance Feedback Intervention Theory (CP-FIT): a new theory for designing, implementing, and evaluating feedback in health care based on a systematic review and meta-synthesis of qualitative research. Implement Sci. 2019;14(1):40. doi: 10.1186/s13012-019-0883-5.

14. Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10:21. doi: 10.1186/s13012-015-0209-1.

15. QSR International. NVIVO (release 2020) 2022. Available from: https://www.qsrinternational.com/nvivo-qualitative-data-analysis-software/home. [Cited 2022 July 15].

16. Forman J, Damschroder L. Qualitative Content Analysis. In: Jacoby L, Siminoff LA, editors. Empirical Methods for Bioethics: A Primer. Advances in Bioethics. 11: Emerald Group Publishing Limited; 2007. p. 39–62.

17. Padgett DK. Qualitative and mixed methods in public health. Sage Publications; 2011.

18. The Cochrane Collaboration. Cochrane Effective Practice and Organisation of Care (EPOC). London, UK: The Cochrane Collaboration; 2022. Available from: https://epoc.cochrane.org/. [Cited 2022 July 15].

19. Miro. Miro home page 2022. Available from: miro.com. [Cited 2022 July 15].

20. Oh A, Vinson CA, Chambers DA. Future directions for implementation science at the National Cancer Institute: Implementation Science Centers in Cancer Control. Transl Behav Med. 2020;11(2):669–675. doi: 10.1093/tbm/ibaa018.

21. Hinkin TR. A brief tutorial on the development of measures for use in survey questionnaires. Organ Res Methods. 1998;1(1):104–121. doi: 10.1177/109442819800100106.

22. Viswanathan M. Measurement error and research design. Thousand Oaks: Sage; 2005.

23. DeVellis RF. Scale development: theory and applications (Applied social research methods). Third ed. SAGE Publications, Inc; 2011.

24. Grol RP, Bosch MC, Hulscher ME, Eccles MP, Wensing M. Planning and studying improvement in patient care: the use of theoretical perspectives. Milbank Q. 2007;85(1):93–138. doi: 10.1111/j.1468-0009.2007.00478.x.

25. Glasgow RE, Riley WT. Pragmatic measures: what they are and why we need them. Am J Prev Med. 2013;45(2):237–243. doi: 10.1016/j.amepre.2013.03.010.

26. Lewis CC, Stanick CF, Martinez RG, Weiner BJ, Kim M, Barwick M, et al. The Society for Implementation Research Collaboration Instrument Review Project: a methodology to promote rigorous evaluation. Implement Sci. 2015;10:2. doi: 10.1186/s13012-014-0193-x.

27. Powell BJ, Mettert KD, Dorsey CN, Weiner BJ, Stanick CF, Lengnick-Hall R, et al. Measures of organizational culture, organizational climate, and implementation climate in behavioral health: A systematic review. Implement Res Pract. 2021;2:26334895211018862.

28. Berta W, Cranley L, Dearing JW, Dogherty EJ, Squires JE, Estabrooks CA. Why (we think) facilitation works: insights from organizational learning theory. Implement Sci. 2015;10:141. doi: 10.1186/s13012-015-0323-0.

29. Armenakis AA, Harris SG, Mossholder KW. Creating readiness for organizational change. Hum Relat. 1993;46:681–703. doi: 10.1177/001872679304600601.

30. Aarons GA, Ehrhart MG, Moullin JC, Torres EM, Green AE. Testing the leadership and organizational change for implementation (LOCI) intervention in substance abuse treatment: a cluster randomized trial study protocol. Implement Sci. 2017;12(1):29. doi: 10.1186/s13012-017-0562-3.

31. Klein KJ, Sorra JS. The challenge of innovation implementation. Acad Manag Rev. 1996;21(4):1055–1080. doi: 10.2307/259164.

32. Chor KH, Wisdom JP, Olin SC, Hoagwood KE, Horwitz SM. Measures for predictors of innovation adoption. Admin Pol Ment Health. 2015;42(5):545–573. doi: 10.1007/s10488-014-0551-7.

33. Tavousi M, Hidarnia AR, Montazeri A, Hajizadeh E, Taremian F, Ghofranipour F. Are Perceived Behavioral Control and Self-Efficacy Distinct Constructs? Eur J Sci Res. 2009;30(1):146–152.

34. Nunnally J, Bernstein I. Psychometric Theory. 3rd ed. New York: McGraw-Hill; 1994.

35. Polit DF, Beck CT. The content validity index: are you sure you know what's being reported? Critique and recommendations. Res Nurs Health. 2006;29(5):489–497. doi: 10.1002/nur.20147.

36. Polit DF, Beck CT, Owen SV. Is the CVI an acceptable indicator of content validity? Appraisal and recommendations. Res Nurs Health. 2007;30(4):459–467. doi: 10.1002/nur.20199.

37. Anderson JC, Gerbing DW. Predicting the performance of measures in a confirmatory factor analysis with a pretest assessment of their substantive validities. J Appl Psychol. 1991;76(5):732–740. doi: 10.1037/0021-9010.76.5.732.

38. Huijg JM, Gebhardt WA, Crone MR, Dusseldorp E, Presseau J. Discriminant content validity of a theoretical domains framework questionnaire for use in implementation research. Implement Sci. 2014;9:11. doi: 10.1186/1748-5908-9-11.

39. Davidson M. Known-groups validity. In: Michalos AC, editor. Encyclopedia of quality of life and well-being research. Dordrecht: Springer; 2014.

40. Weiner BJ, Lewis CC, Stanick C, Powell BJ, Dorsey CN, Clary AS, et al. Psychometric assessment of three newly developed implementation outcome measures. Implement Sci. 2017;12(1):108. doi: 10.1186/s13012-017-0635-3.

41. Eccles M. Improved Clinical Effectiveness through Behavioural Research Group. Designing theoretically-informed implementation interventions. Implement Sci. 2006;1(1):4. doi: 10.1186/1748-5908-1-4.

42. Schreiber JB, Nora A, Stage FK, Barlow EA, King J. Reporting structural equation modeling and confirmatory factor analysis results: a review. J Educ Res. 2006;99(6):323–338. doi: 10.3200/JOER.99.6.323-338.

43. Hox JJ, Bechger TM. An introduction to structural equation modelling. Fam Sci Rev. 1998;11:354–373.

44. MacCallum RC, Browne MW, Sugawara HM. Power analysis and determination of sample size for covariance structure modeling. Psychol Methods. 1996;1(2):130–149. doi: 10.1037/1082-989X.1.2.130.

45. Hasson H, Blomberg S, Duner A. Fidelity and moderating factors in complex interventions: a case study of a continuum of care program for frail elderly people in health and social care. Implement Sci. 2012;7:23. doi: 10.1186/1748-5908-7-23.

46. Pill J. The Delphi method: substance, context, a critique and an annotated bibliography. Socio Econ Plan Sci. 1971;5(1):57–71. doi: 10.1016/0038-0121(71)90041-3.

47. Dajani JS, Sincoff MZ, Talley WK. Stability and agreement criteria for the termination of Delphi studies. Technol Forecast Soc Change. 1979;13(1):83–90. doi: 10.1016/0040-1625(79)90007-6.

48. Klasnja P, Hekler EB, Korinek EV, Harlow J, Mishra SR. Toward usable evidence: optimizing knowledge accumulation in HCI research on health behavior change. Proc SIGCHI Conf Hum Factor Comput Syst. 2017;2017:3071–3082. doi: 10.1145/3025453.3026013.

49. Pearl J. Causality. Cambridge: Cambridge University Press; 2009.

50. Pearl J, Mackenzie D. The book of why. New York: Basic Books; 2018.

51. Krause J, Van Lieshout J, Klomp R, Huntink E, Aakhus E, Flottorp S, et al. Identifying determinants of care for tailoring implementation in chronic diseases: an evaluation of different methods. Implement Sci. 2014;9:102. doi: 10.1186/s13012-014-0102-3.

52. Tabak RG, Khoong EC, Chambers DA, Brownson RC. Bridging research and practice: models for dissemination and implementation research. Am J Prev Med. 2012;43(3):337–350. doi: 10.1016/j.amepre.2012.05.024.

53. Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10(1):53. doi: 10.1186/s13012-015-0242-0.

54. Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Admin Pol Ment Health. 2011;38(2):65–76. doi: 10.1007/s10488-010-0319-7.

55. Grimshaw JM, Eccles MP, Lavis JN, Hill SJ, Squires JE. Knowledge translation of research findings. Implement Sci. 2012;7(1):50. doi: 10.1186/1748-5908-7-50.

56. Lewis CC, Powell BJ, Brewer SK, Nguyen AM, Schriger SH, Vejnoska SF, et al. Advancing mechanisms of implementation to accelerate sustainable evidence-based practice integration: protocol for generating a research agenda. BMJ Open. 2021;11(10):e053474. doi: 10.1136/bmjopen-2021-053474.

57. Geng EH, Baumann AA, Powell BJ. Mechanism mapping to advance research on implementation strategies. PLoS Med. 2022;19(2):e1003918. doi: 10.1371/journal.pmed.1003918.
