Abstract

This paper proposes a novel meta-heuristic algorithm, the Egret Swarm Optimization Algorithm (ESOA), inspired by the hunting behavior of two egret species (the Great Egret and the Snowy Egret). ESOA consists of three primary components: a sit-and-wait strategy, an aggressive strategy, and a discriminant condition. The learnable sit-and-wait strategy guides the egret to the most probable solution by applying a pseudo-gradient estimator. The aggressive strategy uses random wandering and encirclement mechanisms to explore for the optimal solution. The discriminant condition balances the two strategies. The proposed approach provides a parallel framework and a strategy for learning parameters from historical information, which can be adapted to most scenarios and shows good stability. The performance of ESOA on 36 benchmark functions and 3 engineering problems is compared with that of Particle Swarm Optimization (PSO), Genetic Algorithm (GA), Differential Evolution (DE), Grey Wolf Optimizer (GWO), and Harris Hawks Optimization (HHO). The results demonstrate the effectiveness and robustness of ESOA. ESOA ranks first on all unimodal functions, with statistical scores all above 9.9, and achieves higher scores of 10.96 and 11.92 on the complex functions.

Keywords: metaheuristic algorithm, swarm intelligence, egret swarm optimization algorithm, constrained optimization

1. Introduction

Many engineering applications, such as manipulator control, path planning, and fault diagnosis, can be described as optimization problems. Since most of these problems are non-convex, conventional gradient approaches are difficult to apply and frequently become trapped in local optima [1]. For this reason, meta-heuristic algorithms are increasingly used to solve such problems, as they can find a sufficiently good solution without relying on gradient information.

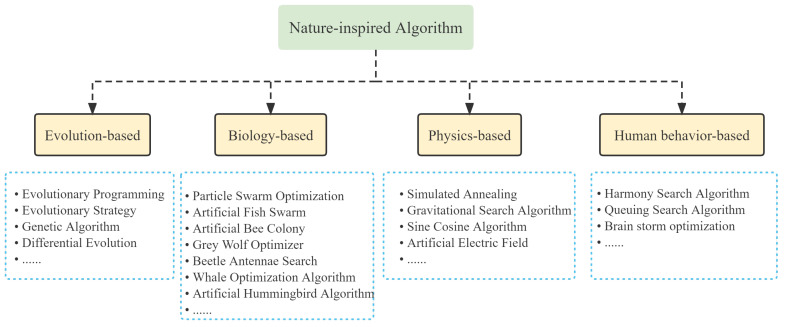

Meta-heuristic algorithms imitate natural phenomena by simulating animal and environmental behaviours [2]. As shown in Figure 1, these algorithms are broadly inspired by four concepts: species evolution, biological behavior, human behavior, and physical principles [3,4].

Figure 1.

The taxonomy of existing meta-heuristic algorithms.

Evolution-based algorithms generate various candidate solutions by mimicking the natural evolution of a species. Potential solutions are considered as members of a population, which evolve over time towards better solutions through crossover, mutation, and survival of the fittest. The Darwinian evolution-inspired Genetic Algorithm (GA) has remarkable global search capabilities and has been applied in a variety of disciplines [5]. Comparable to GA, Differential Evolution (DE) has also been shown to adapt to a number of optimization problems [6]. In addition, evolutionary strategies [7] and evolutionary programming [8] are among the well-known algorithms in this class. Physics-based approaches apply physical laws to reach an optimal solution. Common examples include Simulated Annealing (SA) [9], Gravitational Search Algorithm (GSA) [10], Black Hole Algorithm (BH) [11], and Multi-Verse Optimizer (MVO) [12]. Human behavior-based algorithms simulate the evolution of human society or human intelligence. Examples include the Harmony Search Algorithm (HSA) [13], Queuing Search Algorithm (QSA) [14], and the Brain Storm Optimization Algorithm (BSO) [15].

Biologically inspired algorithms mimic behaviours like hunting, pathfinding, growth, and aggregation in order to solve numerical optimization problems. A well-known example is Particle Swarm Optimization (PSO), a swarm intelligence algorithm inspired by bird flocking behavior [16]. PSO leverages information exchange among individuals in a population to develop the population’s motion from disorder to order, resulting in the location of an optimal solution. Alternatively, the authors of [17] were inspired by the behaviour of ant colonies and proposed the Ant Colony Optimization (ACO) algorithm. In ACO, a feasible solution to the optimization problem is represented by the ants' pathways, where a greater amount of pheromone is deposited on shorter paths. The concentration of pheromone on the shorter pathways steadily rises over time, which causes the ant colony to focus on the optimal path through positive feedback.

With the widespread application of PSO and ACO in areas such as robot control, route planning, artificial intelligence, and combinatorial optimization, meta-heuristic algorithms have enabled a plethora of excellent research. Authors in [18] presented Grey Wolf Optimizer (GWO) based on the hierarchy and hunting mechanism of grey wolves. In [19], authors applied GWO to restructure a maximum power extraction model for a photovoltaic system under a partial shading situation. GWO has also been utilized in non-linear servo systems to tune the Takagi-Sugeno parameters of the proportional-integral-fuzzy controller [20]. Paper [21] introduced the Sparrow Search Algorithm (SSA), inspired by the collective foraging and anti-predation behaviours of sparrows. Authors in [22] suggested a novel incremental generation model based on a chaotic Sparrow Search Algorithm to handle large-scale data regression and classification challenges. An integrated optimization model for dynamic reconfiguration of active distribution networks was built with a multi-objective SSA by [23]. Authors in [24] constructed a tiny but efficient meta-heuristic algorithm named Beetle Antennae Search Algorithm (BAS), through modelling the beetle’s predatory behavior. Paper [25] integrated BAS with a recurrent neural network to create a novel robot control framework for redundant robotic manipulator trajectory planning and obstacle avoidance. BAS is applied in [26] to optimize the initial parameters of a convolutional neural network for medical imaging diagnosis, resulting in high accuracy and a short period of tuning time.

In recent years, there has been a proliferation of swarm-based algorithms for various situations due to the massive adoption of swarm intelligence in engineering applications [27,28,29,30,31,32,33,34,35,36]. Authors in [37] proposed a novel meta-heuristic algorithm named Artificial Hummingbird Algorithm (AHA), influenced by the flight skills and hunting strategies of hummingbirds. In addition, paper [38] introduced the African Vultures Optimization Algorithm (AVOA) inspired by vultures’ navigation behavior. Meanwhile, the Starling Maturation Optimizer (SMO) and Orca Predation Algorithm (OPA) were proposed in [39,40] to suit complex optimization problems by mimicking bird migration and orca hunting strategies. Other notable examples include Aptenodytes Forsteri Optimization (AFO) inspired by penguin hugging behaviour [41], Golden Eagle Optimizer (GEO) inspired by golden eagle feeding trails [42], Chameleon Swarm Algorithm (CSA) inspired by Chameleon dynamic hunting routes [43], Red Fox Optimization Algorithm (RFOA) inspired by red fox habits, and Elephant Clan Optimization (ECO) inspired by elephant survival strategies [44,45]. Unlike most other bio-inspired methods, authors in [46] proposed a Quantum-based Avian Navigation Optimizer Algorithm (QANA) that contains a V-echelon topology to disperse information flow and a quantum mutation strategy to enhance search efficiency. Overall, it can be observed that swarm intelligence algorithms have gradually progressed from simply imitating the appearance of animal behavior to modelling the behavior with a deeper understanding of their underlying principles.

In addition, evolutionary algorithms perform well on some benchmark functions [47,48,49,50,51,52]. Paper [53] introduced IPOP-CMA-ES, which uses CMA-ES [54] within a restart method that increases the population size at each restart. Paper [55] integrated IPOP-CMA-ES with an iterated local search as ICMAES-ILS, in which the better solution generated is adopted in the rest of the evaluations. Authors in [56] developed DRMA, which uses CMA-ES as the local searcher in GA and divides the solution space into different parts for global optimization. There is also a series of further evolutionary algorithms, such as GaAPPADE [57], MVMO14 [58], L-SHADE [59], L-SHADE-ND [60], and SPS-L-SHADE-EIG [61].

Although meta-heuristic algorithms have been shown to be well suited to various engineering applications, as Wolpert analyses in [62], there is no near-perfect method that can deal with all optimization problems. To put it another way, if an algorithm is appropriate for one class of optimization problems, it may not be acceptable for another. Furthermore, the search efficiency of an algorithm is inversely related to its computational complexity, and a certain amount of computation needs to be sacrificed in order to enhance search efficiency. Nevertheless, the No Free Lunch (NFL) theorem has ensured that the field continues to flourish, with new structures and frameworks for meta-heuristic algorithms constantly being developed.

For instance, most metaheuristic algorithms contain just a single strategy, typically a best-solution-following strategy, and have no learnable parameters; they are merely variations built on stochastic optimization. Although this category of algorithms performs well on CEC benchmark functions, they produce disappointing results in practical application settings because they lack feedback and parameter-learning mechanisms [63,64]. In the field of robotics, the control of a manipulator is a continuous process. If the original metaheuristic algorithm is utilized, the algorithm needs to iterate and converge again at each solution, resulting in a possible discontinuity in the solution space, which degrades the control performance [65]. In contrast, the method proposed in this paper includes a learnable tangent surface estimation parameter, which provides a base reference to assist each solution, reducing computational difficulty while ensuring continuity of the solution. Despite the proliferation of studies into meta-heuristic algorithms, the balance between exploitation and exploration has remained a significant topic of research [66,67]. The field requires a framework that balances both, enabling algorithms to be more adaptable and stable in a wider range of situations. This paper proposes a novel meta-heuristic algorithm (Egret Swarm Optimization Algorithm, ESOA) to examine how to improve the balance between the algorithm’s exploration and exploitation. The contributions of Egret Swarm Optimization Algorithm include:

Proposing a parallel framework to balance exploitation and exploration with excellent performance and stability.

Introducing a sit-and-wait strategy guided by a pseudo-gradient estimator with learnable parameters.

Introducing an aggressive strategy controlled by a random wandering and encirclement mechanism.

Introducing a discriminant condition that is capable of ensembling various strategies.

Developing a pseudo-gradient estimator referenced to historical data and swarm information.

The rest of the paper is structured as follows: Section 2 describes the observed behavior of egrets as well as the development of the ESOA framework and mathematical model. Section 3 compares the performance and efficiency of ESOA with other algorithms on CEC2005 and CEC2017. The results and convergence of ESOA on two engineering optimization problems are discussed in Section 4. Section 5 presents the conclusion of this paper and outlines further work.

2. Egret Swarm Optimization Algorithm

This section describes the inspiration behind ESOA and then discusses the mathematical model of the proposed method.

2.1. Inspiration

The egret is the collective term for four bird species: the Great Egret, the Middle Egret, the Little Egret, and the Yellow-billed Egret, all of which are known for their magnificent white plumage. The majority of egrets inhabit coastal islands, coasts, estuaries, and rivers, as well as lakes, ponds, streams, rice paddies, and marshes near their shores. Egrets are usually observed in pairs or in small groups; however, vast flocks of tens or hundreds can also be spotted [68,69,70]. Maccarone observed that Great Egrets fly at an average speed of 9.2 m/s and balance their movements and energy expenditure whilst hunting [71]. Because flying consumes a large amount of energy, the decision to prey typically requires a thorough inspection of the trajectory to guarantee that more energy would be obtained from the food found than would be expended through flight. Compared to Great Egrets, Snowy Egrets tend to sample more sites, and they observe and select locations where other birds have already discovered food [72]. Snowy Egrets often adopt a sit-and-wait strategy, a scheme that involves observing the behavior of prey for a period of time and then anticipating their next move in order to hunt with the least energy expenditure [73]. Maccarone indicated in [74] that Snowy Egrets applying this strategy not only consume less energy but are also 50% more efficient at catching prey than other egrets. Although Great Egrets adopt a higher-exertion strategy and pursue prey aggressively, they are capable of capturing larger prey, since it is rare for large prey to travel through the same place multiple times [75]. Overall, Great Egrets with an aggressive search strategy trade high energy consumption for potentially greater returns, whereas Snowy Egrets with a sit-and-wait approach trade lower energy expenditure for smaller but more reliable gains [74].

2.2. Mathematical Model and Algorithm

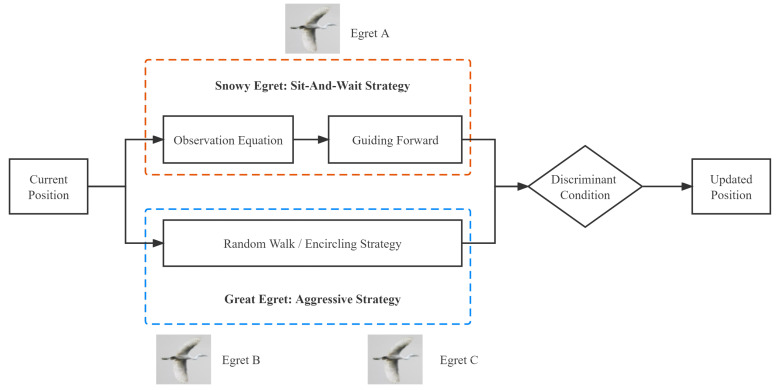

Inspired by the Snowy Egret’s sit-and-wait strategy and the Great Egret’s aggressive strategy, ESOA combines the advantages of both strategies and constructs a corresponding mathematical model to quantify the behaviors. As shown in Figure 2, ESOA is a parallel algorithm with three essential components: the sit-and-wait strategy, the aggressive strategy, and the discriminant condition. Each Egret squad contains three Egrets: Egret A applies a guiding forward mechanism, while Egret B and Egret C adopt random walk and encircling mechanisms, respectively. Each part is detailed below.

Figure 2.

The Framework Of Egret Swarm Optimization Algorithm.

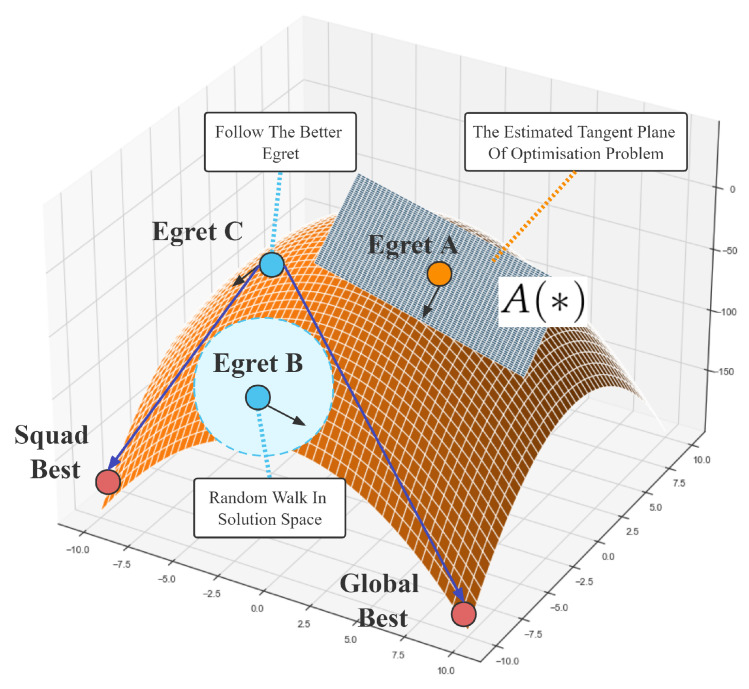

The individual roles and search preferences of the Egret Squad can be seen in Figure 3. Egret A will estimate a descent plane and search based on the gradient of the plane parameters, Egret B performs a global random wander, and Egret C selectively explores based on the location of better egrets. In this way, ESOA will be more balanced in terms of exploitation and exploration and will be capable of performing fast searches for feasible solutions. Unlike gradient descent, ESOA refers to historical information as well as stochasticity in the gradient estimation, meaning it is less likely to fall into the saddle point of the optimization problem. ESOA also differs from other meta-heuristic algorithms by estimating the tangent plane of the optimization problem, enabling a rapid descent to the current optimal point.

Figure 3.

The Detailed Search Behavior of ESOA.

2.2.1. Sit-and-Wait Strategy

Observation Equation: Assuming that the position of the i-th egret squad is , n is the dimension of problem, is the Snowy Egret’s estimate approach of the possible presence of prey in its own current location. is the estimation of prey in current location,

| (1) |

then the estimate method could be parameterized as,

| (2) |

where the is the weight of estimate method. The error could be described as,

| (3) |

Meanwhile, , the practical gradient of , can be retrieved by taking the partial derivative of for the error Equation (3), and its direction is .

| (4) |
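The chain from the parameterized estimate to the practical gradient can be illustrated with a minimal sketch. It assumes, purely for illustration, that the estimate is linear in the position (the dot product of a weight vector and the position) and that the error is half the squared difference between the estimate and the observed fitness; neither form survives in the text above, and the names `pseudo_gradient`, `w`, `x`, and `y` are hypothetical.

```python
import math

def pseudo_gradient(w, x, y):
    """Gradient of e = 0.5 * (w.x - y)**2 with respect to w, plus its unit direction.

    Assumed forms (not confirmed by the text): linear estimate, half squared error.
    """
    y_hat = sum(wi * xi for wi, xi in zip(w, x))   # assumed linear estimate of the fitness
    residual = y_hat - y                           # estimate minus observed fitness
    grad = [residual * xi for xi in x]             # d e / d w_j = (y_hat - y) * x_j
    norm = math.sqrt(sum(g * g for g in grad))
    # Only the direction is kept; a zero gradient has no direction.
    direction = [g / norm for g in grad] if norm > 0 else [0.0] * len(grad)
    return grad, direction

grad, direction = pseudo_gradient(w=[0.5, -1.0], x=[2.0, 1.0], y=3.0)
```

Under these assumptions the gradient needs only the current position and one fitness evaluation, which is what makes it usable when the objective itself is not differentiable.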

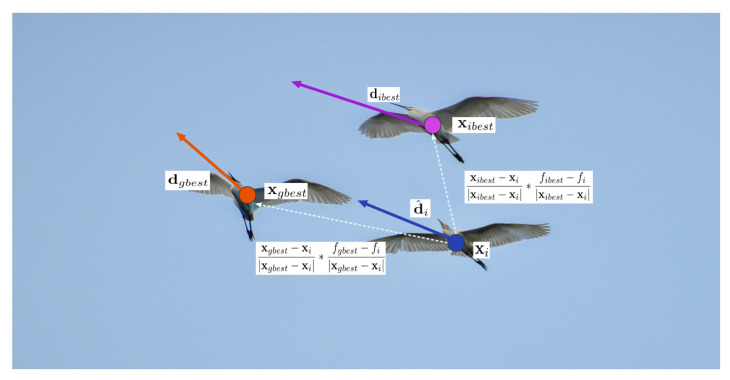

Figure 4 demonstrates the Egret’s following behavior: during preying, Egrets refer to better Egrets, drawing on their experience of estimating prey behavior and incorporating their own judgement. Equation (5) gives the directional correction of the best location of the squad, while Equation (6) gives the directional correction of the best location of all squads.

| (5) |

| (6) |

Figure 4.

Following behaviour of Egret Swarms as an effective way of gradient estimation.

The integrated gradient can be represented as below, and , :

| (7) |

An adaptive weight update method is applied here [76], is 0.9 and is 0.99:

| (8) |
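The two coefficients quoted above (0.9 and 0.99) match the decay rates of a moment-based adaptive update. The following sketch assumes an Adam-style rule without bias correction and with a unit learning rate; these choices, the small constant `eps`, and the function name `adaptive_update` are assumptions for illustration and are not taken from the text.

```python
import math

def adaptive_update(w, g, m, v, beta1=0.9, beta2=0.99, eps=1e-8):
    """One moment-based update of the weights w from the integrated gradient g.

    Returns the new (w, m, v). Assumed: Adam-style moments, no bias correction,
    unit learning rate, eps added only to avoid division by zero.
    """
    m = [beta1 * mi + (1 - beta1) * gi for mi, gi in zip(m, g)]        # first moment (mean)
    v = [beta2 * vi + (1 - beta2) * gi * gi for vi, gi in zip(v, g)]   # second moment (uncentered)
    w = [wi - mi / (math.sqrt(vi) + eps) for wi, mi, vi in zip(w, m, v)]
    return w, m, v

w, m, v = adaptive_update(w=[1.0, 1.0], g=[0.5, -2.0], m=[0.0, 0.0], v=[0.0, 0.0])
```

Because each step is scaled by the running second moment, the weight change stays of similar magnitude whether the gradient is large or small, which is consistent with the self-adjusting behavior described for the sit-and-wait strategy.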

According to Egret A’s judgement of the current situation, the next sampling location can be described as,

| (9) |

| (10) |

where t and is the current iteration time and the maximum iteration time, while is the gap between the low bound and the up bound of solution space. is Egret A’s step size factor. is the fitness of .

2.2.2. Aggressive Strategy

Egret B tends to randomly search prey and its behavior could be depicted as below,

| (11) |

| (12) |

where is a random number in , is Egret B’s expected next location and is the fitness.

Egret C prefers to pursue prey aggressively, so the encircling mechanism is used as the update method of its position:

| (13) |

| (14) |

is the gap matrix between current location and the best position of this Egret squad while compares with the best location of all Egret squads. is the expected location of Egret C. is Egret B’s step size factor. and are random numbers in .

2.2.3. Discriminant Condition

After each member of the Egret squad has decided on its plan, the squad selects the optimal option and takes the action together. is the solution matrix of i-th Egret squad:

| (15) |

| (16) |

| (17) |

| (18) |

If the minimal fitness among the squad's candidate solutions is better than the current fitness, the Egret squad accepts the choice. Otherwise, the squad still accepts the plan if a random number is less than 0.3, which means there is a 30% probability of accepting a worse plan.
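The acceptance rule described above can be sketched as follows, assuming a minimization problem. The function name `discriminant`, its arguments, and the `sphere` test function are hypothetical; only the "best candidate, else accept with probability 0.3" logic comes from the text.

```python
import random

def discriminant(x_current, f_current, candidates, fitness, accept_worse=0.3, rng=random):
    """Choose the best candidate of the squad and decide whether to move there.

    candidates: expected positions proposed by Egrets A, B, and C.
    Returns the (position, fitness) the squad adopts.
    """
    scored = [(fitness(c), c) for c in candidates]
    f_best, x_best = min(scored, key=lambda item: item[0])
    # Accept an improvement always; accept a worse plan with probability accept_worse.
    if f_best < f_current or rng.random() < accept_worse:
        return x_best, f_best
    return x_current, f_current

sphere = lambda x: sum(v * v for v in x)
x, f = discriminant([1.0, 1.0], 2.0, [[0.5, 0.5], [2.0, 0.0], [1.0, -1.0]], sphere)
```

Occasionally accepting a worse plan lets a squad leave a local optimum that all three of its candidates would otherwise fail to improve on.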

2.3. Pseudo Code

Based on the discussion above, the pseudo-code of ESOA is constructed as Algorithm 1, which contains two main functions to retrieve the Egret squad’s expected position matrix and a discriminant condition to choose a better scheme. ESOA requires an initial matrix of the P size Egret Swarm position as input, while it returns the optimal position and fitness .

We will analyse the computational complexity of each part of ESOA in turn and provide the final results. For sit-and-wait strategy, Equation (4) requires , Equations (5) and (6) need the same while Equation (7) require floating-point operators. Weight updating Equation (8) and position search need as well as respectively. Then the total operators of sit-and-wait strategy is . As for aggressive strategy, random wander Equation (11) and encircling mechanism require both then in total operators. Discriminant condition need n operators. So ESOA requires a total of floating-point operators and then its computational complexity is . Assuming that the population size of ESOA is k, the complexity then becomes .

| Algorithm 1 Egret Swarm Optimization Algorithm |

|

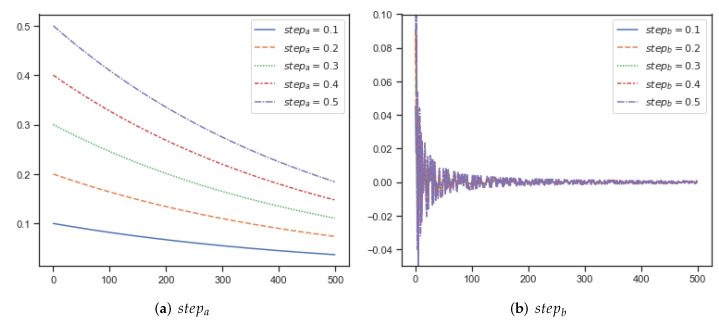

2.4. Parameters

The two parameters required for ESOA are the step factors of Egret A and Egret B. Larger step coefficients represent more aggressive exploration behavior. Table 1 shows how the parameters required for ESOA compare to those of other state-of-the-art algorithms. ESOA requires only two parameters, which can be easily adjusted to obtain better optimization results. In fact, because Equation (8) in the sit-and-wait strategy contains an adaptive mechanism, ESOA is able to respond to different parameter settings by self-adjusting. In the general case, both step factors can be set to 0.1 to deal with most problems. Figure 5 presents the effect of the two step factors on the step size. A larger step factor for Egret A significantly increases the search step during the initial iterations, but the gap to a smaller step factor gradually closes after many iterations. In simple applications or unimodal problems, this step factor can be tuned up for faster convergence, while in multimodal problems or complex applications it can be tuned down for scenarios that require continuous optimization. A larger step factor for Egret B means that Egret B will wander randomly in larger steps, which suits complex scenarios by helping ESOA search a larger region and jump out of local optima where possible.

Table 1.

The Comparison of Required Parameters.

| Algorithm | Mechanism | Parameters |
|---|---|---|
| PSO [16] | Bird Predation | |
| GA [5] | Mutation, Crossover | Selection Rate, Crossover Rate, Mutation Rate |
| GSO [77] | Galactic Motion | Subswarm, |
| CSA [43] | Hiding Place | Awareness Probability, Flight Length |
| FFA [78] | Multiswarm | |
| GWO [18] | Social Hierarchy, Encircling Prey | a |
| WOA [79] | Whale Predation | |
| JS [80] | Active And Passive Motions | |
| ALSO [81] | Balanced Lumping | |
| ESOA | Predation Strategy | |

Figure 5.

(a,b) represent the effect of different step factors of Egret A and Egret B on the step size, respectively.

3. Experimental Results and Discussion

In this section, the performance of ESOA is quantified on 36 optimization functions. The first 7 unimodal functions are typical benchmark optimization problems presented in [8]; their mathematical expressions, dimensions, solution-space ranges, and best fitness values are given in Table 2. The final results and partial convergence curves are shown in Table A3 and Table A4, respectively. The remaining 29 test functions, introduced in [82], are constructed by combining features from other benchmark problems, such as cascade, rotation, shift, and shuffle traps. An overview of these functions is given in Table 3 and the comparison is reported in Table A5. All experiments use 30 dimensions, a population size of 50, and a maximum of 500 iterations.

Table 2.

Unimodal test function.

| Function | Dim | Range | Best fitness |
|---|---|---|---|
| | 30 | | 0 |
| | 30 | | 0 |
| | 30 | | 0 |
| | 30 | | 0 |
| | 30 | | 0 |
| | 30 | | 0 |
| | 30 | | 0 |

Table 3.

Summary of the CEC’17 Test Functions.

| Category | No. | Functions | Optimal value |
|---|---|---|---|
| Unimodal Functions | 1 | Shifted and Rotated Bent Cigar Function | 100 |
| | 2 | Shifted and Rotated Zakharov Function | 200 |
| Simple Multimodal Functions | 3 | Shifted and Rotated Rosenbrock’s Function | 300 |
| | 4 | Shifted and Rotated Rastrigin’s Function | 400 |
| | 5 | Shifted and Rotated Expanded Scaffer’s F6 Function | 500 |
| | 6 | Shifted and Rotated Lunacek Bi_Rastrigin Function | 600 |
| | 7 | Shifted and Rotated Non-Continuous Rastrigin’s Function | 700 |
| | 8 | Shifted and Rotated Levy Function | 800 |
| | 9 | Shifted and Rotated Schwefel’s Function | 900 |
| Hybrid Functions | 10 | Hybrid Function 1 (N = 3) | 1000 |
| | 11 | Hybrid Function 2 (N = 3) | 1100 |
| | 12 | Hybrid Function 3 (N = 3) | 1200 |
| | 13 | Hybrid Function 4 (N = 4) | 1300 |
| | 14 | Hybrid Function 5 (N = 4) | 1400 |
| | 15 | Hybrid Function 6 (N = 4) | 1500 |
| | 16 | Hybrid Function 6 (N = 5) | 1600 |
| | 17 | Hybrid Function 6 (N = 5) | 1700 |
| | 18 | Hybrid Function 6 (N = 5) | 1800 |
| | 19 | Hybrid Function 6 (N = 6) | 1900 |
| Composition Functions | 20 | Composition Function 1 (N = 3) | 2000 |
| | 21 | Composition Function 2 (N = 3) | 2100 |
| | 22 | Composition Function 3 (N = 4) | 2200 |
| | 23 | Composition Function 4 (N = 4) | 2300 |
| | 24 | Composition Function 5 (N = 5) | 2400 |
| | 25 | Composition Function 6 (N = 5) | 2500 |
| | 26 | Composition Function 7 (N = 6) | 2600 |
| | 27 | Composition Function 8 (N = 6) | 2700 |
| | 28 | Composition Function 9 (N = 3) | 2800 |
| | 29 | Composition Function 10 (N = 3) | 2900 |

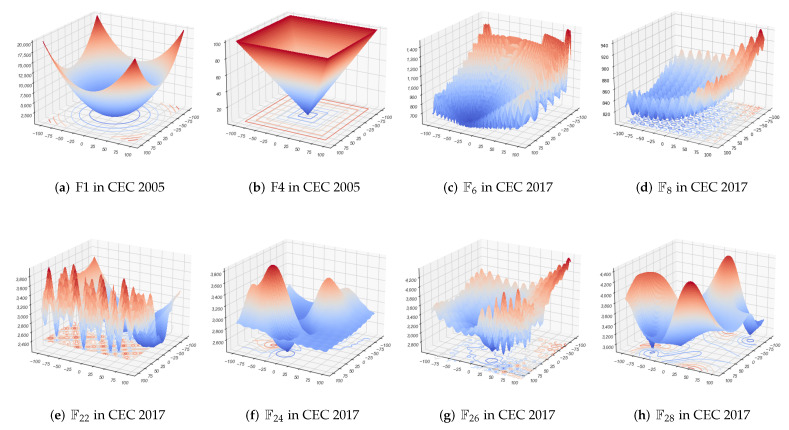

Researchers generally classify optimization test functions as Unimodal, Simple Multimodal, Hybrid, and Composition Functions. 3D visualizations of several of these functions are shown in Figure 6. Panels (a) and (b) are Unimodal Functions from CEC 2005, which have only one global minimum and no local optima. Panels (c) and (d), the Simple Multimodal problems, contain numerous local optima and multiple peaks that impede the search for the global optimum. Hybrid Functions are a series of problems that add up several different test functions with well-designed weights; because of their dimension restrictions, they are hard to show in 3D graphics. Panels (e), (f), (g), and (h) are Composition Functions, which are non-linear combinations of numerous test functions. These functions are extremely hard to optimize because of their various local traps and spikes, all of which are designed to impede the algorithm’s progress.
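The difference between a unimodal and a multimodal landscape can be made concrete with two textbook functions. The sphere and Rastrigin functions below are stand-ins chosen for illustration; they are not claimed to be the functions plotted in Figure 6.

```python
import math

def sphere(x):
    """Unimodal: a single global minimum at the origin and no local optima."""
    return sum(v * v for v in x)

def rastrigin(x):
    """Multimodal: global minimum at the origin, with local minima near every integer point."""
    return 10 * len(x) + sum(v * v - 10 * math.cos(2 * math.pi * v) for v in x)

# Both functions reach 0 at the origin, but Rastrigin has a nearby trap at (1, 1).
origin_values = (sphere([0.0, 0.0]), rastrigin([0.0, 0.0]))
trap_value = rastrigin([1.0, 1.0])
```

A purely greedy search started near (1, 1) would stall on Rastrigin at a value of about 2, whereas on the sphere it would slide directly to the origin.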

Figure 6.

(a,b) are Unimodal Functions, (c,d) are Simple Multimodal Functions, (e–h) are Composition Functions.

ESOA was compared with three traditional algorithms (PSO [16], GA [5], DE [6]) as well as two novel methods (GWO [18], HHO [83]) on the 36 benchmark functions. The numerical results for a maximum of 500 iterations are presented in Table A3 and Table A5. The initial input for each algorithm is a random matrix with 30 dimensions and a population of 50 within the solution space. In PSO, the inertia weight w and the two acceleration coefficients are 0.8, 0.5, and 0.5, respectively, while the mutation value in GA is 0.001.
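For reference, the PSO baseline settings can be read against the standard PSO update. The sketch below assumes that the three values 0.8, 0.5, and 0.5 are the inertia weight and the cognitive and social coefficients of the textbook velocity rule; the function name `pso_step` and the argument names are hypothetical, and this is not the authors' implementation.

```python
import random

def pso_step(x, v, p_best, g_best, w=0.8, c1=0.5, c2=0.5, rng=random):
    """One textbook PSO update for a single particle; returns (new_x, new_v)."""
    new_v = []
    for xi, vi, pi, gi in zip(x, v, p_best, g_best):
        r1, r2 = rng.random(), rng.random()
        # Inertia term + pull towards the particle's own best + pull towards the swarm best.
        new_v.append(w * vi + c1 * r1 * (pi - xi) + c2 * r2 * (gi - xi))
    new_x = [xi + vi for xi, vi in zip(x, new_v)]
    return new_x, new_v

# A particle already sitting on both best positions only keeps its damped velocity.
new_x, new_v = pso_step([1.0, 2.0], [1.0, -1.0], [1.0, 2.0], [1.0, 2.0])
```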

3.1. Computational Complexity Analysis

The computational complexity test was performed on a laptop with Windows 11, 16 GB of RAM, and a quad-core i5-10210U CPU. Table 4 reports the time cost over 100 runs of ESOA and the other algorithms on the CEC05 benchmark functions in 30 dimensions. In general, ESOA is in the middle of the field in terms of running time: in Table 4 it takes the shortest time of all the algorithms on F4 and the longest on F2 and F7.
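An average time cost of this kind can be measured with a small harness such as the one below. It is a generic sketch: `average_runtime` and the dummy workload are hypothetical, and the actual measurement procedure used for Table 4 is not described beyond the number of runs.

```python
import time

def average_runtime(run, repeats=100):
    """Average wall-clock time in seconds of calling run() repeats times."""
    start = time.perf_counter()
    for _ in range(repeats):
        run()
    return (time.perf_counter() - start) / repeats

# Dummy workload standing in for one full optimization run.
avg = average_runtime(lambda: sum(i * i for i in range(1000)), repeats=10)
```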

Table 4.

The average cost time of each algorithm for the CEC05 problem, Dimension = 30, Maximum Iterations = 500.

| Function | ESOA | PSO [16] | GA [5] | DE [6] | GWO [18] | HHO [83] |
|---|---|---|---|---|---|---|
| F1 | 0.743205 | 0.813564 | 0.932624 | 0.69359 | 0.710619 | 0.820192 |
| F2 | 1.3374 | 1.0858 | 1.268 | 0.938598 | 0.9772 | 1.1564 |
| F3 | 4.4884 | 4.35339 | 4.5192 | 4.2196 | 4.2218 | 6.98319 |
| F4 | 0.577956 | 0.682438 | 0.95496 | 0.630826 | 0.580365 | 0.809334 |
| F5 | 1.08654 | 0.848078 | 1.10613 | 0.822341 | 0.743037 | 1.07561 |
| F6 | 0.761223 | 0.75511 | 1.01592 | 0.718751 | 0.646592 | 0.899095 |
| F7 | 1.81767 | 1.05312 | 1.3103 | 1.03578 | 0.953007 | 1.32848 |

3.2. Evaluation of Exploitation Ability

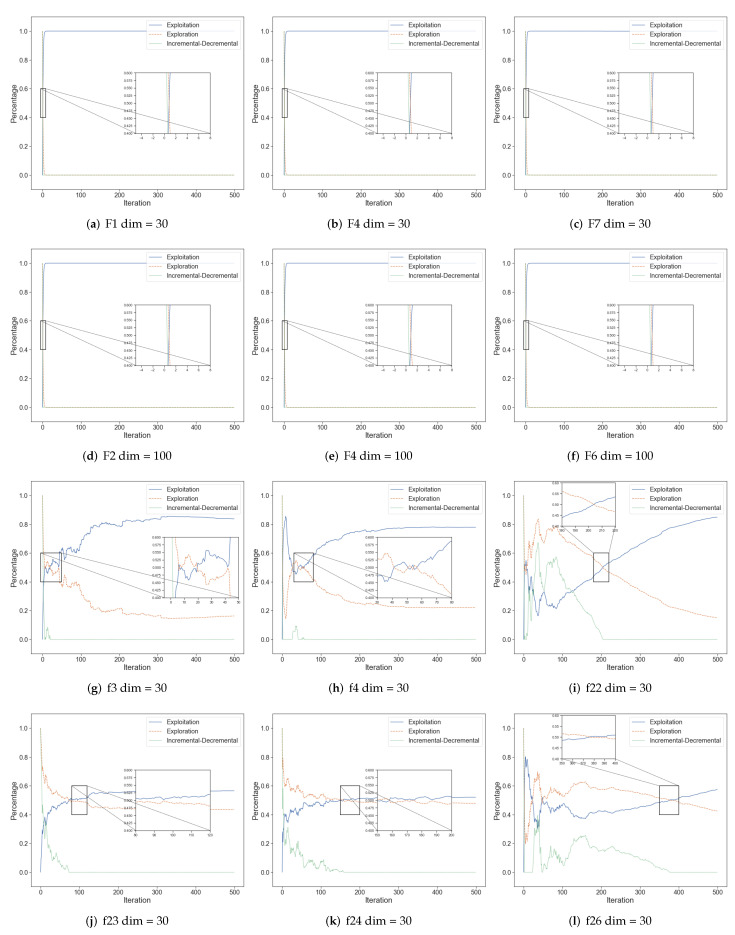

The exploration and exploitation measurement method utilized in this paper is based on computing the dimension-wise diversity of the meta-heuristic algorithm population in the following way [84,85].

| (19) |

where means the median value of algorithm swarm in dimension j, whereas is the value of individual i in dimension j, n is the population size. Then presents the average value of the whole swarm.

Moreover, the percentage of exploration and exploitation based on dimension-wise diversity could be calculated as below,

| (20) |

where the two quantities defined in Equation (20) are the exploration and exploitation percentages, respectively, and the remaining term is the maximum diversity over the whole iteration process. The exploration, exploitation, and incremental-decremental curves are shown in Figure 7. Increment indicates growth in an algorithm's exploration, while decrement indicates the opposite. In most unimodal benchmark functions, owing to the fast convergence of ESOA, all agents find the optimal solution swiftly and cluster together within about 10 iterations. In complex functions, after some iterations all agents gradually approach the optimal solution and are distributed around it, which appears as exploitation gradually overtaking exploration.
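The dimension-wise diversity measure and the derived percentages can be sketched as below. The formulas follow the description in this subsection (median per dimension, mean absolute deviation over the population, average over dimensions, normalization by the maximum diversity); the exact form of the lost equations is assumed to match the commonly used definition, and the function names are hypothetical.

```python
import statistics

def diversity(population):
    """Mean absolute deviation from the per-dimension median, averaged over dimensions."""
    n, dims = len(population), len(population[0])
    per_dim = []
    for j in range(dims):
        column = [individual[j] for individual in population]
        med = statistics.median(column)
        per_dim.append(sum(abs(med - value) for value in column) / n)
    return sum(per_dim) / dims

def exploration_exploitation(div_history):
    """Map a diversity history to (exploration %, exploitation %) pairs per iteration."""
    div_max = max(div_history)
    if div_max == 0:
        # Degenerate case (swarm never spread out); returning zeros is a choice made here.
        return [(0.0, 0.0) for _ in div_history]
    return [(100 * d / div_max, 100 * abs(d - div_max) / div_max) for d in div_history]

div = diversity([[0.0, 0.0], [2.0, 4.0], [4.0, 8.0]])
percentages = exploration_exploitation([2.0, 1.0, 0.5])
```

As the swarm contracts around a solution the diversity falls below its maximum, so the exploration percentage drops and the exploitation percentage rises, which is the crossover pattern described for Figure 7.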

Figure 7.

The exploration and exploitation of ESOA in benchmark test.

The Unimodal Function is utilized to evaluate the convergence speed and exploitation ability of the algorithms, as only one global optimum point is present. As shown in Table 5, ESOA demonstrates outstanding performance from to . ESOA trails GWO and HHO in to , however, the result is considerably superior to PSO, GA, as well as DE. Therefore, the excellent exploitation ability of ESOA is evident here.

Table 5.

Comparison Of Optimization Results Under The Unimodal Functions, Dimension = 30, Maximum Iterations = 500.

3.3. Evaluation of Exploration Ability (–)

In the MultiModal Functions and Hybrid Functions, there are numerous local optimum positions to impede the algorithm’s progress. The optimization difficulty increases exponentially with rising dimensions, which are useful for evaluating the exploration capability of an algorithm. The result of – shown in Table A5 is clear evidence of the remarkable exploration ability of ESOA. In particular, ESOA has superior performance to the other five algorithms for the average fitness on . Because of the aggressive strategy component of ESOA, it is capable of overcoming the interference from numerous local optimum points in the exploration of the global solution.

3.4. Comprehensive Performance Assessment (–)

Composition Functions are a difficult type of test function that require a good balance between exploitation and exploration. They are usually employed to undertake comprehensive evaluations of algorithms. The performance of each algorithm in – is shown in Table A5, the average fitness of ESOA in each test function is extremely competitive when compared to the other listed algorithms. Especially in , ESOA outperforms the other approaches and reaches 2346 average fitness while the second method (DE) only obtains 3129. In fact, ESOA possesses a sit-and-wait strategy for exploitation as well as an aggressive strategy for exploration. Both features are regulated by a discriminant condition which is fundamental to the performance of the algorithm in such scenarios.

3.5. Algorithm Stability

In general, the standard deviation of an algorithm’s outcomes when it is repeatedly applied to a problem reflects its stability. The standard deviation of ESOA ranks among the best results in both tables in most situations, and is far ahead of the second-best in certain test functions. This supports the stability of ESOA.
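The stability measure amounts to repeating an optimizer on the same problem and summarizing the final fitness values. The sketch below uses a plain random search as a placeholder optimizer and a sphere function as the problem; both, along with the function names and the settings of 30 runs and 200 iterations, are illustrative assumptions.

```python
import random
import statistics

def random_search(fitness, dims, bounds, iterations, rng):
    """Placeholder optimizer: best fitness found by uniform random sampling."""
    low, high = bounds
    best = float("inf")
    for _ in range(iterations):
        x = [rng.uniform(low, high) for _ in range(dims)]
        best = min(best, fitness(x))
    return best

def stability(fitness, runs=30, seed=0):
    """Mean and sample standard deviation of the final fitness over repeated runs."""
    rng = random.Random(seed)
    results = [random_search(fitness, 5, (-10.0, 10.0), 200, rng) for _ in range(runs)]
    return statistics.mean(results), statistics.stdev(results)

mean_fit, std_fit = stability(lambda x: sum(v * v for v in x))
```

A smaller standard deviation across runs means the optimizer's outcome depends less on its random initialization.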

Table A1 and Table A2 report the two-sided t-test results at the 5% level for ESOA’s performance against the other algorithms in CEC05 and CEC17, respectively. Combined with Table A3, Table A4 and Table A5, these results indicate that ESOA outperforms the other algorithms by a wide margin on the benchmark functions.

In addition, in the field of evolutionary computation, the conditions of parametric hypothesis tests are difficult to satisfy fully, so the Wilcoxon non-parametric test has been added to this section [86]. The Wilcoxon test results for ESOA’s performance in CEC05 and CEC17 are given in Table A6 and Table A7, which provide further evidence of ESOA’s superiority.

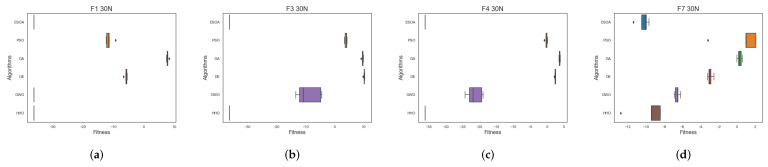

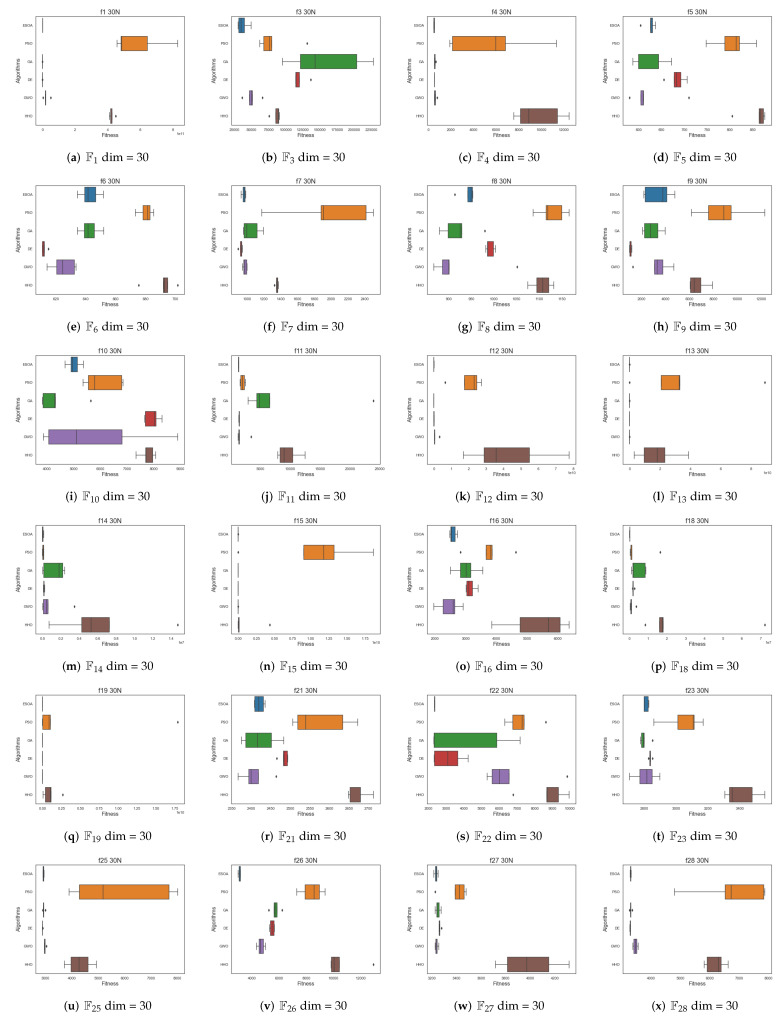

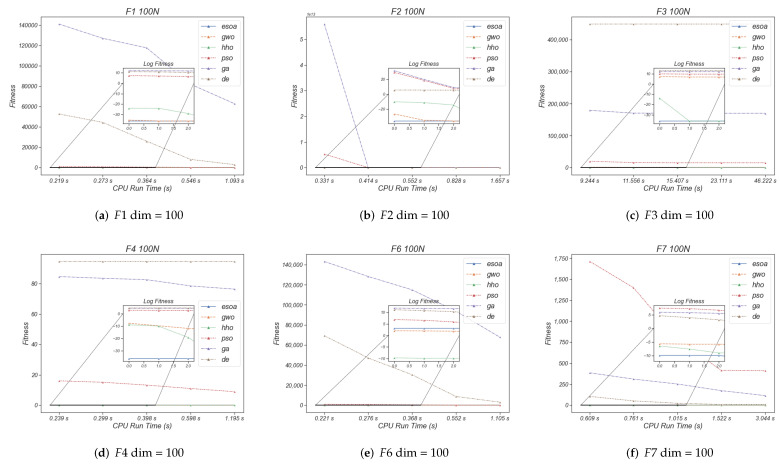

Figure 8 and Figure 9 show box plots of the results of multiple algorithms run 30 times on the two types of test functions, respectively, where Figure 6 has been ln-processed. The results show that ESOA outperforms the other algorithms in most cases and has a smaller box, indicating a more stable algorithm. In the unimodal test functions, ESOA’s boxes are significantly narrower than those of the other algorithms. With most complex test functions, ESOA also has a significantly smaller box than the other algorithms, and its superiority can be clearly seen.

Figure 8.

Box plots of the various algorithms’ performances for the CEC05 benchmark function. (a) F1 dim = 30, (b) F3 dim = 30, (c) F4 dim = 30, (d) F7 dim = 30.

Figure 9.

Box Plots of the Various Algorithms’ Performances for the CEC17 Benchmark Function.

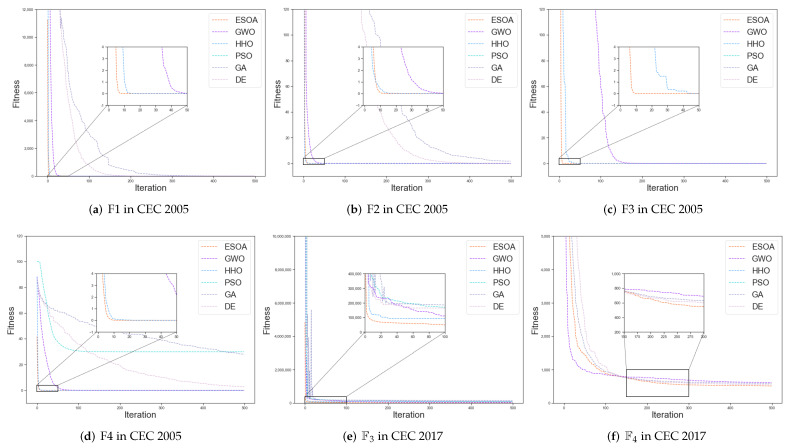

3.6. Analysis of Convergence Behavior

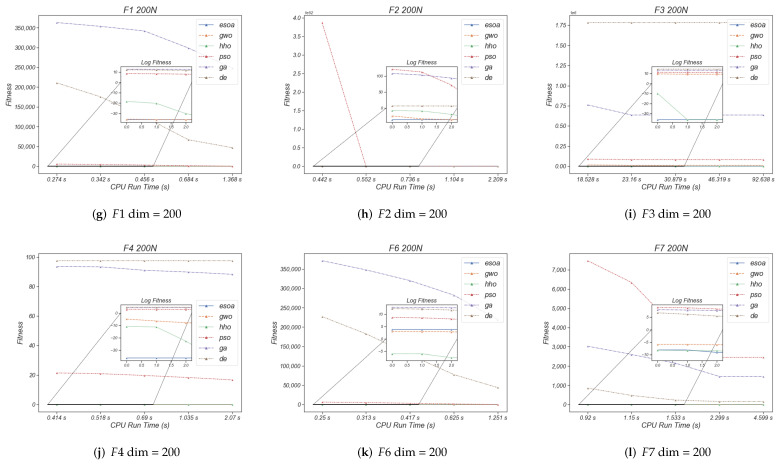

The partial convergence curves of each method are shown in Figure 10. In (a), (b), and (c), the Unimodal Functions, ESOA converges to near the global optimum in fewer than 10 iterations, while PSO, GA and DE have yet to find a descent path for the fitness. This fast convergence on unimodal tasks allows ESOA to be applied to some online optimization problems. In (d), (e), and (f), the Multimodal and Hybrid Functions, after a period of searching ESOA surpasses almost all other algorithms in most cases and continues to explore afterward. ESOA’s effectiveness on Multimodal problems indicates notable potential for general engineering applications. In (g), (h) and (i), the Composition problems, ESOA’s search and estimation mechanisms allow for continuous optimization in most cases and ultimately yield excellent results. The performance on the Composition Functions is evidence of ESOA’s applicability to complex engineering applications.

Figure 10.

(a–d) are Unimodal Functions, (e,f) are Simple Multimodal Functions, (g,h) are Hybrid Functions, (i–l) are Composition Functions.

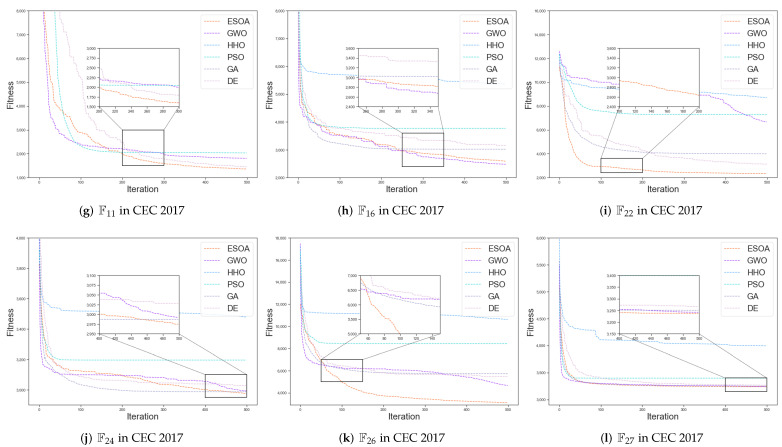

To complete the experiments and to further demonstrate the superiority of ESOA, we added a supplementary experiment to this section. The experiment uses the 100- and 200-dimensional versions of the CEC2005 benchmark, with five fixed CPU runtime budgets in each experiment. Figure 11 shows the best value found by each algorithm within a fixed CPU runtime, with a subplot of the logarithm of the fitness value to make the differences visible. Figure 11 reveals that ESOA remains the best at most fixed times, for instance in (a), (b) and (c), while second place is usually taken by GWO or HHO. This experiment further supports the superiority of ESOA.
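A fixed-CPU-time comparison of this kind can be sketched as follows. This is only an illustration of the budgeting idea: plain random search stands in for the optimizers, and the function name, the sphere objective and the 0.01 s budget are all hypothetical choices rather than the settings used in the experiment.

```python
import random
import time


def random_search_with_budget(objective, bounds, budget_s, seed=0):
    """Run random search until a CPU-time budget (in seconds) is used up."""
    rng = random.Random(seed)
    best_x, best_f = None, float("inf")
    deadline = time.process_time() + budget_s
    while True:
        # Sample one candidate uniformly inside the box constraints.
        x = [rng.uniform(lo, hi) for lo, hi in bounds]
        f = objective(x)
        if f < best_f:
            best_x, best_f = x, f
        # Check the budget after each evaluation, so at least one is made.
        if time.process_time() >= deadline:
            break
    return best_x, best_f


def sphere(x):
    """Simple unimodal test objective with its minimum of 0 at the origin."""
    return sum(v * v for v in x)


best_x, best_f = random_search_with_budget(sphere, [(-100.0, 100.0)] * 3, 0.01)
print(best_f)
```

Measuring CPU time with `time.process_time` rather than wall-clock time keeps the budget independent of other load on the machine, which is the reason for that design choice here.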

Figure 11.

The performance of each algorithm for a given CPU run time.

3.7. Statistical Analysis

To clarify the comparison of the algorithms, this section counts the number of wins and losses and reports the score and ranking of each algorithm on the different test functions. The score is calculated as below,

| (21) |

where denotes the score of the i-th algorithm and denotes the optimal value of the i-th algorithm on the j-th problem. is the minimal value among all algorithms on the j-th problem, while is the maximal value, and is the number of wins of the i-th algorithm over all problems.
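One reading of this scoring rule that is consistent with the definitions above is a sum of min-max normalized results over the problems plus the number of wins. It also matches Table 6, where ESOA has 4 wins and a score of 10.9997 over seven functions. The sketch below implements that reading; the exact formula, the treatment of ties (only a unique best counts as a win) and all names are assumptions.

```python
def overall_scores(results):
    """Score each algorithm from its best values on a set of problems.

    results maps an algorithm name to a list of best fitness values, one
    per problem (minimization). Assumed rule: sum over problems of
    (max - value) / (max - min), plus the number of problems won.
    """
    names = list(results)
    n_problems = len(results[names[0]])
    scores = {name: 0.0 for name in names}
    wins = {name: 0 for name in names}
    for j in range(n_problems):
        column = {name: results[name][j] for name in names}
        lo, hi = min(column.values()), max(column.values())
        span = hi - lo
        for name in names:
            # If all algorithms tie, give each the full normalized point.
            scores[name] += 1.0 if span == 0 else (hi - column[name]) / span
        winners = [name for name in names if column[name] == lo]
        if len(winners) == 1:  # assumed: only a unique best counts as a win
            wins[winners[0]] += 1
    return {name: scores[name] + wins[name] for name in names}


# Hypothetical best values of three algorithms on two problems.
example = {"A": [0.0, 0.0], "B": [1.0, 2.0], "C": [2.0, 1.0]}
totals = overall_scores(example)
print(totals)
```

Under this reading, an algorithm that is worst on every problem scores 0, and one that is uniquely best on every problem scores twice the number of problems.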

As the CEC 2005 results in Table 6 and Table 7 show, ESOA consistently ranked first among all algorithms in all dimensions, with HHO in second place. ESOA performed particularly well in the 50, 100, and 200 dimensions, with scores all close to 12, ahead of second place by almost 3 points. The results of CEC2017 are shown in Table 8. For the simple multimodal problems, ESOA was slightly behind GWO, but the scores were very close, at 8.99378 and 9.01376, respectively. For the hybrid functions and composition functions, ESOA was again the winner. As the data indicate, ESOA is capable of fast convergence on simple problems while maintaining excellent generalization and robustness on complex problems. These properties stem from the coordination of the algorithmic framework, in which the discriminant condition effectively balances the exploitation of the sit-and-wait strategy with the exploration of the aggressive strategy.

Table 6.

The overall rank on the CEC 2005 test functions (a). Bold numbers indicate the best performance among all competitors.

| | Dim = 30 | | | | Dim = 50 | | | | Dim = 100 | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Algorithm | Winner | Loser | Score | Rank | Winner | Loser | Score | Rank | Winner | Loser | Score | Rank |
| ESOA | 4 | 0 | 10.9997 | 1 | 5 | 0 | 11.9991 | 1 | 5 | 0 | 11.9996 | 1 |
| PSO [16] | 0 | 7 | 0 | 6 | 0 | 1 | 5.73968 | 4 | 0 | 1 | 5.40695 | 4 |
| GA [5] | 0 | 0 | 5.73443 | 5 | 0 | 4 | 1.32583 | 6 | 0 | 4 | 1.06203 | 6 |
| DE [6] | 0 | 0 | 6.40334 | 4 | 0 | 2 | 4.90238 | 5 | 0 | 2 | 4.07895 | 5 |
| GWO [18] | 0 | 0 | 7 | 3 | 0 | 0 | 6.99967 | 3 | 0 | 0 | 6.99396 | 3 |
| HHO [83] | 3 | 0 | 10 | 2 | 2 | 0 | 9 | 2 | 2 | 0 | 9 | 2 |

Table 7.

The overall rank on the CEC 2005 test functions (b). Bold numbers indicate the best performance among all competitors.

| | Dim = 200 | | | | Dim = 500 | | | | Dim = 1000 | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Algorithm | Winner | Loser | Score | Rank | Winner | Loser | Score | Rank | Winner | Loser | Score | Rank |
| ESOA | 5 | 0 | 11.9998 | 1 | 4 | 0 | 9.99987 | 1 | 4 | 0 | 9.99989 | 1 |
| PSO [16] | 0 | 1 | 5.77841 | 4 | 0 | 1 | 4.6879 | 4 | 0 | 1 | 4.59214 | 4 |
| GA [5] | 0 | 4 | 1.01684 | 6 | 0 | 3 | 1.05412 | 6 | 0 | 3 | 1.10947 | 6 |
| DE [6] | 0 | 2 | 4.44476 | 5 | 0 | 2 | 2.96067 | 5 | 0 | 2 | 2.73122 | 5 |
| GWO [18] | 0 | 0 | 6.97992 | 3 | 0 | 0 | 5.95328 | 3 | 0 | 0 | 5.82731 | 3 |
| HHO [83] | 2 | 0 | 9 | 2 | 2 | 0 | 8 | 2 | 2 | 0 | 8 | 2 |

Table 8.

The overall rank on the CEC 2017 test functions (a). Bold numbers indicate the best performance among all competitors.

| | Simple Multimodal | | | | Hybrid Functions | | | | Composition Functions | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Algorithm | Winner | Loser | Score | Rank | Winner | Loser | Score | Rank | Winner | Loser | Score | Rank |
| ESOA | 2 | 0 | 8.99378 | 2 | 3 | 0 | 10.9602 | 1 | 4 | 0 | 11.9205 | 1 |
| PSO [16] | 0 | 3 | 1.94422 | 5 | 0 | 3 | 3.85613 | 5 | 0 | 2 | 2.82345 | 5 |
| GA [5] | 1 | 1 | 7.23141 | 4 | 4 | 0 | 10.5838 | 2 | 1 | 0 | 8.27331 | 3 |
| DE [6] | 3 | 1 | 8.71516 | 3 | 0 | 0 | 7.66103 | 4 | 2 | 0 | 9.07179 | 2 |
| GWO [18] | 2 | 0 | 9.01376 | 1 | 1 | 0 | 8.74242 | 3 | 1 | 0 | 7.97473 | 4 |
| HHO [83] | 0 | 3 | 1.62757 | 6 | 0 | 5 | 2.11415 | 6 | 0 | 6 | 0.674735 | 6 |

To better reflect the superiority of ESOA, two generic ranking systems, ELO and TrueSkill, are used for additional ranking [87]. Table 9, Table 10 and Table 11 give the ranking of each algorithm on the CEC05 and CEC17 benchmark test sets, respectively. On the CEC05 benchmark, ESOA reached first place under ELO in every case and maintained scores above 1450 (ELO applies benchmark scores of [1500, 1450, 1400, 1350, 1300, 1250]), and its performance on CEC17 was consistent. Under TrueSkill, ESOA continues to lead on the CEC05 test set with scores of 29+ and takes first place in all CEC17 results.
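To illustrate how an ELO-style ranking can be derived from benchmark results, the sketch below treats every pair of algorithms on every problem as one match and applies the standard ELO update. The match schedule, the K-factor of 16, the common initial rating of 1400 and all function names are assumptions, since the text does not describe how the ratings were computed.

```python
def expected_score(r_a, r_b):
    """Standard ELO expected score of player A against player B."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def elo_update(r_a, r_b, score_a, k=16.0):
    """Return the updated ratings after one match (score_a is 1, 0.5 or 0)."""
    delta = k * (score_a - expected_score(r_a, r_b))
    return r_a + delta, r_b - delta


def rate_algorithms(results, initial=1400.0, k=16.0):
    """Rate algorithms by pairwise comparison on each problem.

    results maps an algorithm name to a list of fitness values, one per
    problem; the lower value wins the match (minimization).
    """
    names = list(results)
    ratings = {name: initial for name in names}
    n_problems = len(results[names[0]])
    for j in range(n_problems):
        for a_idx in range(len(names)):
            for b_idx in range(a_idx + 1, len(names)):
                a, b = names[a_idx], names[b_idx]
                fa, fb = results[a][j], results[b][j]
                score_a = 1.0 if fa < fb else 0.0 if fa > fb else 0.5
                ratings[a], ratings[b] = elo_update(
                    ratings[a], ratings[b], score_a, k
                )
    return ratings


# Hypothetical fitness values of three algorithms on three problems.
ratings = rate_algorithms({"A": [0.1, 0.2, 0.1], "B": [0.5, 0.4, 0.3], "C": [0.9, 0.8, 0.7]})
print(ratings)
```

Because each update adds to one rating exactly what it subtracts from the other, the total rating is conserved, so the final values depend only on the match outcomes and their order.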

Table 9.

The ELO and TrueSkill rank on the CEC 2005 test functions (a). Bold numbers indicate the best performance among all competitors.

| | Dim = 30 | | | | Dim = 50 | | | | Dim = 100 | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Algorithm | ELO | Rank | TrueSkill | Rank | ELO | Rank | TrueSkill | Rank | ELO | Rank | TrueSkill | Rank |
| ESOA | 1470.39 | 1 | 31.258 | 1 | 1500.9 | 1 | 33.8623 | 1 | 1493.29 | 1 | 33.2997 | 1 |
| PSO [16] | 1383.44 | 4 | 24.7029 | 4 | 1345.27 | 4 | 21.2705 | 4 | 1375.78 | 4 | 23.8748 | 4 |
| GA [5] | 1273.48 | 6 | 15.5308 | 6 | 1288.79 | 6 | 17.187 | 6 | 1281.13 | 6 | 16.3589 | 6 |
| DE [6] | 1308.69 | 5 | 20.0568 | 5 | 1301.03 | 5 | 19.2287 | 5 | 1293.41 | 5 | 18.6219 | 5 |
| GWO [18] | 1397.08 | 3 | 27.9015 | 3 | 1389.45 | 3 | 27.2946 | 3 | 1381.83 | 3 | 26.6878 | 3 |
| HHO [83] | 1416.93 | 2 | 30.55 | 2 | 1424.56 | 2 | 31.1569 | 2 | 1424.56 | 2 | 31.1569 | 2 |

Table 10.

The ELO and TrueSkill rank on the CEC 2005 test functions (b). Bold numbers indicate the best performance among all competitors.

| | Dim = 200 | | | | Dim = 500 | | | | Dim = 1000 | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Algorithm | ELO | Rank | TrueSkill | Rank | ELO | Rank | TrueSkill | Rank | ELO | Rank | TrueSkill | Rank |
| ESOA | 1478.01 | 1 | 31.8648 | 2 | 1455.11 | 1 | 29.8231 | 2 | 1485.62 | 1 | 32.4274 | 1 |
| PSO [16] | 1352.88 | 4 | 21.8331 | 4 | 1352.89 | 4 | 21.8773 | 4 | 1352.89 | 4 | 21.8773 | 4 |
| GA [5] | 1281.13 | 6 | 16.3589 | 6 | 1281.13 | 6 | 16.3589 | 6 | 1288.79 | 6 | 17.187 | 6 |
| DE [6] | 1308.69 | 5 | 20.0568 | 5 | 1316.3 | 5 | 20.6194 | 5 | 1293.41 | 5 | 18.6219 | 5 |
| GWO [18] | 1397.08 | 3 | 27.9015 | 3 | 1404.7 | 3 | 28.5083 | 3 | 1397.08 | 3 | 27.9015 | 3 |
| HHO [83] | 1432.22 | 2 | 31.9849 | 1 | 1439.87 | 2 | 32.813 | 1 | 1432.22 | 2 | 31.9849 | 2 |

Table 11.

The ELO and TrueSkill rank on the CEC 2017 test functions (a). Bold numbers indicate the best performance among all competitors.

| | Simple Multimodal | | | | Hybrid Functions | | | | Composition Functions | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Algorithm | ELO | Rank | TrueSkill | Rank | ELO | Rank | TrueSkill | Rank | ELO | Rank | TrueSkill | Rank |
| ESOA | 1452.45 | 1 | 28.8727 | 1 | 1477.02 | 1 | 29.3237 | 1 | 1481.63 | 1 | 30.4362 | 1 |
| PSO [16] | 1327.35 | 5 | 19.0284 | 6 | 1383.93 | 5 | 22.0796 | 5 | 1343.86 | 5 | 19.7913 | 5 |
| GA [5] | 1421.97 | 2 | 28.0675 | 2 | 1432.86 | 2 | 27.7156 | 2 | 1399.32 | 4 | 25.5226 | 4 |
| DE [6] | 1402.15 | 3 | 27.3398 | 4 | 1403.81 | 4 | 25.7017 | 4 | 1414.12 | 2 | 27.8698 | 3 |
| GWO [18] | 1391.4 | 4 | 27.3977 | 3 | 1406.88 | 3 | 26.9267 | 3 | 1413.57 | 3 | 28.4724 | 2 |
| HHO [83] | 1284.68 | 6 | 19.294 | 5 | 1295.51 | 6 | 18.2527 | 6 | 1280.83 | 6 | 17.9076 | 6 |

In conclusion, this section revealed ESOA’s properties under various test functions. The sit-and-wait strategy in ESOA allows the algorithm to perform fast descent on deterministic surfaces. ESOA’s aggressive strategy ensures that the algorithm is extremely exploratory and does not readily slip into local optima. Therefore, ESOA has shown excellent outcomes in both exploration and exploitation.

4. Typical Application

In this section, ESOA is applied to three practical engineering applications to demonstrate its competitiveness on constrained optimization problems. We compare ESOA not only with the original metaheuristic algorithms used in the previous section, but also with some improved variants, such as IWHO [88], QANA [46], L-Shade [59], iL-Shade [89] and MPEDE [90]. The results show that although the improved variants are able to achieve better objective values, they fall short in terms of adaptability to the constraints, whereas ESOA satisfies all constraints. Although some methods perform very well on the CEC test functions, they may show weak results when applied to real scenarios or applications, because each optimization method suits particular kinds of problems and none is universally applicable.

In order to simplify the computational procedure, a penalty function is adopted to integrate the inequality constraints into the objective function [91]. The specific form is as below,

| (22) |

| (23) |

where is the transformed objective function and is the penalty parameter, while and are the original objective function and the inequality constraints, respectively, and p is the number of constraints. is used to determine whether the independent variable violates a constraint.
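A penalty transformation of this kind can be sketched as follows. The sketch assumes a static quadratic penalty with a 0/1 violation indicator and inequality constraints written as g(x) ≤ 0; the exact form of Equations (22) and (23) may differ, and the names `penalized`, `phi` and the toy problem are hypothetical.

```python
def penalized(objective, constraints, phi):
    """Wrap an objective so that violated constraints add a penalty.

    Each constraint g must satisfy g(x) <= 0 when feasible. Assumed form:
    F(x) = f(x) + phi * sum(delta_i * g_i(x) ** 2), where delta_i is 1
    if constraint i is violated and 0 otherwise.
    """
    def transformed(x):
        total = objective(x)
        for g in constraints:
            value = g(x)
            delta = 1.0 if value > 0 else 0.0  # violation indicator
            total += phi * delta * value ** 2
        return total
    return transformed


# Hypothetical toy problem: minimize x^2 subject to x >= 1, i.e. 1 - x <= 0.
toy = penalized(lambda x: x[0] ** 2, [lambda x: 1.0 - x[0]], phi=1000.0)
print(toy([2.0]))  # feasible point: the objective is unchanged
print(toy([0.0]))  # infeasible point: the penalty dominates
```

The wrapped function can be handed to any unconstrained optimizer; a larger `phi` pushes the search harder toward the feasible region at the cost of a steeper landscape near the boundary.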

4.1. Himmelblau’s Nonlinear Optimization Problem

Himmelblau introduced a nonlinear optimization problem as one of the famous benchmark problems for meta-heuristic algorithms [92]. The problem is described below,

For this problem, the in Equation (22) is set to , the number of search agents used by each algorithm is set to 10, and the maximum number of iterations is set to 500.
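As a hedged sketch, the commonly cited form of Himmelblau's problem (five variables, three two-sided constraints written as six inequalities) can be coded as below. The coefficients and bounds come from the standard statement in the literature rather than from this paper, so they should be treated as an assumption; the function names are hypothetical. The evaluation point is the ESOA solution listed in Table 12.

```python
def himmelblau_objective(x):
    """Objective of the commonly cited Himmelblau problem (minimize)."""
    x1, x2, x3, x4, x5 = x
    return 5.3578547 * x3 ** 2 + 0.8356891 * x1 * x5 + 37.293239 * x1 - 40792.141


def himmelblau_constraints(x):
    """Return six inequality values; each should be <= 0 when feasible."""
    x1, x2, x3, x4, x5 = x
    u1 = 85.334407 + 0.0056858 * x2 * x5 + 0.0006262 * x1 * x4 - 0.0022053 * x3 * x5
    u2 = 80.51249 + 0.0071317 * x2 * x5 + 0.0029955 * x1 * x2 + 0.0021813 * x3 ** 2
    u3 = 9.300961 + 0.0047026 * x3 * x5 + 0.0012547 * x1 * x3 + 0.0019085 * x3 * x4
    # 0 <= u1 <= 92, 90 <= u2 <= 110, 20 <= u3 <= 25
    return [u1 - 92.0, -u1, u2 - 110.0, 90.0 - u2, u3 - 25.0, 20.0 - u3]


# Variable bounds of the standard statement (assumed).
BOUNDS = [(78.0, 102.0), (33.0, 45.0), (27.0, 45.0), (27.0, 45.0), (27.0, 45.0)]

# ESOA solution as printed in Table 12 (rounded values).
x_esoa = [78.0, 33.0, 29.9984, 45.0, 36.77]
f_esoa = himmelblau_objective(x_esoa)
g_esoa = himmelblau_constraints(x_esoa)
print(f_esoa)
print(g_esoa)
```

Because the table lists rounded variables, the recomputed objective and constraint values agree with the tabulated ones only approximately.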

The optimal results are presented in Table 12, while the statistical results from 30 trials of each algorithm are shown in Table 13. PSO is the best-performing algorithm in terms of the optimal result, reaching −30,665.5 with variables (78, 33, 29.9953, 45, 36.77). The second best was achieved by ESOA at −30,664.5 with variables (78, 33, 29.9984, 45, 36.77). The standard deviation represents the algorithm’s stability: ESOA, although ranking second in the best result, has a lower standard deviation than PSO, GA, and HHO. The experimental results demonstrate the engineering feasibility of the proposed method.

Table 12.

The optimal results of the various algorithms for the Himmelblau problem. Bold numbers indicate the best performance among all competitors.

| Value | Constraints | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ESOA | 78 | 33 | 29.9984 | 45 | 36.77 | −0.00018 | −91.9998 | −11.16 | −8.841 | −4.9988 | −0.00120342 | −30,664.5 | Yes |

| PSO [16] | 78 | 33 | 29.9953 | 45 | 36.77 | 0 | −92 | −11.15 | −8.84 | −5 | 0 | −30,665.5 | Yes |

| GA [5] | 78.047 | 35.02 | 31.81 | 44.81 | 32.57 | −0.27373 | −91.7263 | −10.95 | −9.04 | −4.98832 | −0.0116773 | −30,333.1 | Yes |

| DE [6] | 78 | 33.00 | 30.002 | 44.97 | 36.77 | −0.00107 | −91.9989 | −11.15 | −8.84 | −4.99875 | −0.00124893 | −30,663.2 | Yes |

| GWO [18] | 78.0031 | 33.00 | 30.0069 | 45 | 36.75 | −0.00201 | −91.998 | −11.15 | −8.84 | −4.99815 | −0.0018509 | −30,662.7 | Yes |

| HHO [83] | 78 | 33 | 32.4546 | 43.68 | 31.56 | −0.86883 | −91.1312 | −12.05 | −7.94 | −5 | 9.56 × 10−7 | −30,182.6 | Yes |

| L-Shade [59] | 78 | 33 | 27 | 27 | 27 | −1.88843 | −90.11157 | −13.83258 | −6.16742 | −8.23715 | 3.23715 | −32,217.4 | No |

| iL-Shade [89] | 78 | 33 | 27 | 27 | 27 | −1.88843 | −90.11157 | −13.83258 | −6.16742 | −8.23715 | 3.23715 | −32,217.4 | No |

| MPEDE [90] | 78 | 33 | 27 | 27 | 27 | −1.88843 | −90.11157 | −13.83258 | −6.16742 | −8.23715 | 3.23715 | −32,217.4 | No |

Table 13.

The statistical results of the various algorithms for the Himmelblau problem. Bold numbers indicate the best performance among all competitors.

| Algorithm | Best | Worst | Ave | Std | Time |
|---|---|---|---|---|---|
| ESOA | −30,664.5 | −30,422.6 | −30,615.4 | 88.457 | 0.68607 |
| PSO [16] | −30,665.5 | −30,186.2 | −30,509.8 | 200.628 | 0.64493 |
| GA [5] | −30,333.1 | −29,221.1 | −29,853.2 | 312.106 | 0.71309 |
| DE [6] | −30,663.2 | −30,655.2 | −30,658.8 | 2.35697 | 0.62209 |
| GWO [18] | −30,662.7 | −30,453.7 | −30,637.6 | 61.3822 | 0.615 |
| HHO [83] | −30,182.6 | −29,643 | −29,902.7 | 186.764 | 0.85409 |

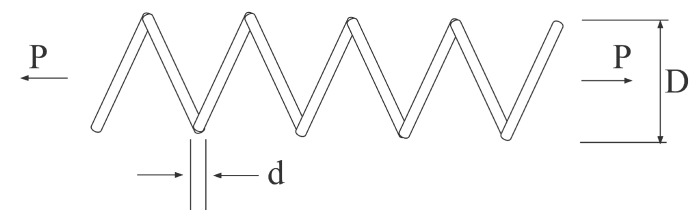

4.2. Tension/Compression Spring Design

The spring design problem was described by Arora and Belegundu; the goal is to minimize the spring weight under constraints on minimum deflection, shear stress, and surge frequency [93,94]. Figure 12 illustrates the details of the problem, where P is the number of active coils, d is the wire diameter, and D is the mean coil diameter.

Figure 12.

Tension/compression spring design problem.

The mathematical modeling is given below,

For this problem, the in Equation (22) is set to , the number of search agents used by each algorithm is again set to 10, and the maximum number of iterations is set to 500.
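For reference, the widely used formulation of the tension/compression spring problem can be sketched as below, using the paper's symbols d, D and P. The constants follow the standard statement in the literature and are an assumption with respect to this paper; the function names are hypothetical. The evaluation point is the ESOA solution listed in Table 14.

```python
def spring_weight(x):
    """Spring weight to minimize: (P + 2) * D * d^2."""
    d, D, P = x
    return (P + 2.0) * D * d ** 2


def spring_constraints(x):
    """Return the four inequality values; each should be <= 0 when feasible."""
    d, D, P = x
    # Minimum deflection.
    g1 = 1.0 - (D ** 3 * P) / (71785.0 * d ** 4)
    # Shear stress.
    g2 = ((4.0 * D ** 2 - d * D) / (12566.0 * (D * d ** 3 - d ** 4))
          + 1.0 / (5108.0 * d ** 2) - 1.0)
    # Surge frequency.
    g3 = 1.0 - 140.45 * d / (D ** 2 * P)
    # Limit on the outer diameter.
    g4 = (d + D) / 1.5 - 1.0
    return [g1, g2, g3, g4]


# ESOA solution as printed in Table 14: (d, D, P).
x_esoa = [0.05, 0.317168, 14.0715]
w_esoa = spring_weight(x_esoa)
g_esoa = spring_constraints(x_esoa)
print(w_esoa)
print(g_esoa)
```

The first two constraints are nearly active at this point, which is typical of the best known solutions of this problem and explains why small changes in the variables can flip feasibility.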

Table 14 and Table 15 show the optimal and statistical results from 30 independent runs of the six algorithms, respectively. DE achieves the best average fitness at 0.0127371, whilst ESOA achieves the second best at 0.0127839. ESOA has the lowest standard deviation of all the algorithms, which demonstrates its exceptional stability. The optimal result of ESOA was 0.01274345 with variables (d, D, P) = (0.05, 0.317168, 14.0715).

Table 14.

The optimal results of the various algorithms for the spring problem. Bold numbers indicate the best performance among all competitors.

| d | D | P | Value | Constraints | |||||

|---|---|---|---|---|---|---|---|---|---|

| ESOA | 0.05 | 0.317168 | 14.0715 | −0.000684592 | −0.000637523 | −3.96102 | −0.755221 | 0.01274345 | Yes |

| PSO [16] | 0.05 | 0.317425 | 14.0278 | 5.57 × 10−8 | −3.96844 | −0.75505 | 0.01271905 | No | |

| GA [5] | 0.0534462 | 0.39517 | 9.57495 | −0.00876173 | −0.0108724 | −4.02034 | −0.700922 | 0.01306582 | Yes |

| DE [6] | 0.0516891 | 0.356718 | 11.289 | −4.05379 | −0.727729 | 0.01266523 | No | ||

| GWO [18] | 0.0532407 | 0.394828 | 9.37671 | −0.000610472 | −0.000786781 | −4.11563 | −0.701287 | 0.01273248 | Yes |

| HHO [83] | 0.0536234 | 0.405061 | 8.93075 | −4.13981 | −0.69421 | 0.012731469 | No | ||

| IWHO [88] | 0.0517 | 0.4155 | 7.1564 | −0.000948687 | 0.132366 | −4.87727 | −0.688533 | 0.0102 | No |

| QANA [46] | 0.051926 | 0.362432 | 10.961632 | −4.06498 | −0.72376 | 0.01266625 | No | ||

| L-Shade [59] | 0.06899394 | 0.93343162 | 2 | −4.56081 | −0.33172 | 0.01777 | No | ||

| iL-Shade [89] | 0.05573737 | 0.46215675 | 7.01862125 | −4.22201 | −0.65473 | 0.01295 | No | ||

| MPEDE [90] | 0.05956062 | 0.5767404 | 4.71717282 | −0.00173 | −0.00087 | −4.33136 | −0.5758 | 0.01374 | Yes |

Table 15.

The statistical results of the various algorithms for the spring problem. Bold numbers indicate the best performance among all competitors.

| Algorithm | Best | Worst | Ave | Std | Time |
|---|---|---|---|---|---|
| ESOA | 0.0127434 | 0.0128516 | 0.0127839 | 0.00003092 | 0.59006 |
| PSO [16] | 0.0127190 | 0.030455 | 0.0149345 | 0.00520118 | 0.58293 |
| GA [5] | 0.0130658 | 0.0180691 | 0.0151508 | 0.00186 | 0.623 |
| DE [6] | 0.0126652 | 0.0131926 | 0.0127371 | 0.000154639 | 0.56308 |
| GWO [18] | 0.0127324 | 0.0136782 | 0.0131852 | 0.000340304 | 0.535 |
| HHO [83] | 0.0127314 | 0.0145846 | 0.0133872 | 0.000615518 | 0.64992 |

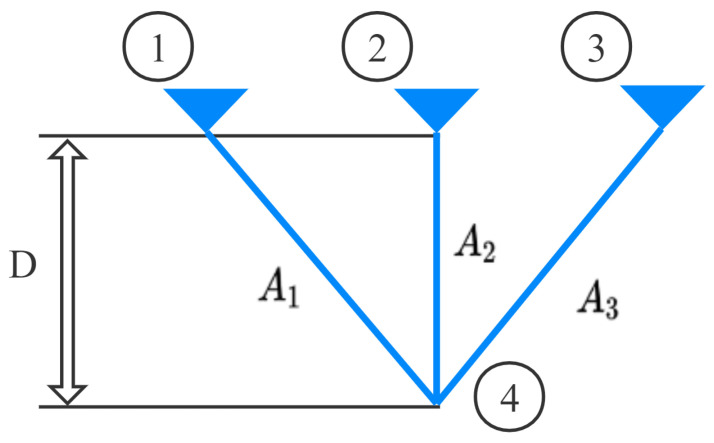

4.3. Three-Bar Truss Design

The three-bar truss design problem was introduced in [95]; its objective is to optimize the weight under constraints on stress, deflection, and buckling. Figure 13 presents the structure of the three-bar truss and the parameters to be optimized. The specific mathematical formula is as below,

Figure 13.

Three-Bar Truss Design Problem.

For this problem, the in Equation (22) is set to , the number of search agents used by each algorithm is again set to 10, and the maximum number of iterations is set to 500.

Table 16 indicates that ESOA, PSO, DE and HHO reach the optimal result of this problem. The optimal result achieved by ESOA is 263.896 with variables (0.788192, 0.409618). Table 17 presents the statistical results of each algorithm, which support the effectiveness and robustness of the proposed algorithm (ESOA).

Table 16.

The optimal results of the various algorithms for the Three-Bar Truss Design problem. Bold numbers indicate the best performance among all competitors.

| Value | Constraints | ||||||

|---|---|---|---|---|---|---|---|

| ESOA | 0.788192 | 0.409618 | −1.46255 | −0.948953 | 263.896 | Yes | |

| PSO [16] | 0.788763 | 0.408 | −1.46438 | −0.949714 | 263.896 | Yes | |

| GA [5] | 0.793214 | 0.395595 | −1.47859 | −0.955608 | 263.914 | Yes | |

| DE [6] | 0.788675 | 0.408248 | −1.4641 | −0.949596 | 263.896 | No | |

| GWO [18] | 0.788853 | 0.40775 | −1.46467 | −0.949833 | 263.897 | Yes | |

| HHO [83] | 0.788727 | 0.408102 | −1.46427 | −0.949665 | 263.896 | No | |

| IWHO [88] | 0.7884 | 0.4081 | 0.00070 | −1.46391 | −0.949229 | 263.8523 | No |

| QANA [46] | 0.788675 | 0.408248 | −1.4641 | −0.94959 | 263.895 | No | |

| L-Shade [59] | 0.78867514 | 0.40824829 | −0.0 | −1.4641 | −0.9496 | 263.896 | Yes |

| iL-Shade [89] | 0.78867513 | 0.40824829 | −0.0 | −1.4641 | −0.9496 | 263.896 | Yes |

| MPEDE [90] | 0.78924889 | 0.40662803 | −0.0 | −1.46595 | −0.95036 | 263.896 | Yes |

Table 17.

The statistical results of the various algorithms for the Three-Bar Truss Design problem. Bold numbers indicate the best performance among all competitors.

| Algorithm | Best | Worst | Ave | Std | Time |
|---|---|---|---|---|---|
| ESOA | 263.896 | 263.948 | 263.909 | 0.0146444 | 0.69 |
| PSO [16] | 263.896 | 263.905 | 263.897 | 0.00186868 | 0.626 |
| GA [5] | 263.914 | 264.522 | 264.084 | 0.158269 | 0.73401 |
| DE [6] | 263.896 | 263.896 | 263.896 | | 0.656 |
| GWO [18] | 263.897 | 263.921 | 263.904 | 0.00564346 | 0.63508 |
| HHO [83] | 263.896 | 268.296 | 264.424 | 0.99462 | 0.78 |

5. Conclusions

This paper introduced a novel meta-heuristic algorithm, the Egret Swarm Optimization Algorithm, which mimics the typical hunting behavior of two egret species (Great Egret and Snowy Egret). ESOA consists of three essential components: the Snowy Egret’s sit-and-wait strategy, the Great Egret’s aggressive strategy, and a discriminant condition. The performance of ESOA was compared with 5 other state-of-the-art methods (PSO, GA, DE, GWO, and HHO) on 36 test functions, including Unimodal, Multimodal, Hybrid, and Composition Functions. The results demonstrate ESOA’s exploitation, exploration, comprehensive performance, stability, and convergence behavior. In addition, three practical engineering problem instances demonstrate the excellent performance and robustness of ESOA in typical optimization applications. The code developed in this paper is available at https://github.com/Knightsll/Egret_Swarm_Optimization_Algorithm; https://ww2.mathworks.cn/matlabcentral/fileexchange/115595-egret-swarm-optimization-algorithm-esoa (accessed on 26 September 2022).

To accommodate more applications and optimization scenarios, other mathematical forms of sit-and-wait strategy, aggressive strategy, and discriminant condition in ESOA are currently under development.

Acknowledgments

The authors would like to express their appreciation for the support of the National Natural Science Foundation of China under Grants 62066015, 61962023, and 61966014, the EPSRC grant PURIFY (EP/V000756/1), and the Fundamental Research Funds for the Central Universities.

Appendix A

Table A1.

The T-test Result Between ESOA And Other Algorithm On CEC05 Benchmark Functions.

| ESOA vs. PSO | ESOA vs. GA | ESOA vs. DE | ESOA vs. GWO | ESOA vs. HHO | ||

|---|---|---|---|---|---|---|

| F1 | 30 | |||||

| 50 | ||||||

| 100 | ||||||

| 200 | ||||||

| 500 | ||||||

| 1000 | ||||||

| F2 | 30 | |||||

| 50 | ||||||

| 100 | ||||||

| 200 | ||||||

| 500 | N/A | N/A | N/A | N/A | N/A | |

| 1000 | N/A | N/A | N/A | N/A | N/A | |

| F3 | 30 | |||||

| 50 | ||||||

| 100 | ||||||

| 200 | ||||||

| 500 | ||||||

| 1000 | ||||||

| F4 | 30 | |||||

| 50 | ||||||

| 100 | ||||||

| 200 | ||||||

| 500 | ||||||

| 1000 | ||||||

| F5 | 30 | |||||

| 50 | ||||||

| 100 | ||||||

| 200 | ||||||

| 500 | ||||||

| 1000 | ||||||

| F6 | 30 | |||||

| 50 | ||||||

| 100 | ||||||

| 200 | ||||||

| 500 | ||||||

| 1000 | ||||||

| F7 | 30 | |||||

| 50 | ||||||

| 100 | ||||||

| 200 | ||||||

| 500 | ||||||

| 1000 |

Table A2.

The T-test Result Between ESOA And Other Algorithm On CEC17 Benchmark Functions.

| ESOA vs. PSO | ESOA vs. GA | ESOA vs. DE | ESOA vs. GWO | ESOA vs. HHO | ||

|---|---|---|---|---|---|---|

| 30 | ||||||

| 30 | N/A | N/A | N/A | N/A | ||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | N/A | N/A | N/A | N/A | N/A | |

| 30 | ||||||

| 30 | ||||||

| 30 | N/A | N/A | N/A | N/A | N/A | |

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | N/A | N/A | N/A | N/A | N/A |

Table A3.

The Detailed Comparison of Various Algorithms on Various Dimensions of CEC05 Benchmark Functions(a).

| ESOA | PSO [16] | GA [5] | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ave | best | worst | std | ave | best | worst | std | ave | best | worst | std | ||

| F1 | 50 | ||||||||||||

| 100 | |||||||||||||

| 200 | |||||||||||||

| 500 | |||||||||||||

| 1000 | |||||||||||||

| F2 | 50 | ||||||||||||

| 100 | |||||||||||||

| 200 | |||||||||||||

| 500 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | |||||

| 1000 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | |||||

| F3 | 50 | ||||||||||||

| 100 | |||||||||||||

| 200 | |||||||||||||

| 500 | |||||||||||||

| 1000 | |||||||||||||

| F4 | 50 | ||||||||||||

| 100 | |||||||||||||

| 200 | |||||||||||||

| 500 | |||||||||||||

| 1000 | |||||||||||||

| F5 | 50 | ||||||||||||

| 100 | |||||||||||||

| 200 | |||||||||||||

| 500 | |||||||||||||

| 1000 | |||||||||||||

| F6 | 50 | ||||||||||||

| 100 | |||||||||||||

| 200 | |||||||||||||

| 500 | |||||||||||||

| 1000 | |||||||||||||

| F7 | 50 | ||||||||||||

| 100 | |||||||||||||

| 200 | |||||||||||||

| 500 | |||||||||||||

| 1000 | |||||||||||||

Table A4.

The Detailed Comparison Of Various Algorithms On Various Dimensions Of CEC05 Benchmark Functions(b).

Table A5.

Comparison of optimization results under CEC17 test functions, dimension = 30, maximum iterations = 500.

| ESOA | PSO [16] | GA [5] | DE [6] | GWO [18] | HHO [83] | |||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ave | std | ave | std | ave | std | ave | std | ave | std | ave | std | |

| 1 | ||||||||||||

| 2 | inf | inf | inf | inf | inf | inf | inf | inf | inf | inf | inf | inf |

| 3 | ||||||||||||

| 4 | ||||||||||||

| 5 | ||||||||||||

| 6 | ||||||||||||

| 7 | ||||||||||||

| 8 | ||||||||||||

| 9 | ||||||||||||

| 10 | ||||||||||||

| 11 | ||||||||||||

| 12 | ||||||||||||

| 13 | ||||||||||||

| 14 | ||||||||||||

| 15 | ||||||||||||

| 16 | ||||||||||||

| 17 | inf | inf | inf | inf | inf | inf | inf | inf | inf | inf | inf | inf |

| 18 | ||||||||||||

| 19 | ||||||||||||

| 20 | inf | inf | inf | inf | inf | inf | inf | inf | inf | inf | inf | inf |

| 21 | ||||||||||||

| 22 | ||||||||||||

| 23 | ||||||||||||

| 24 | ||||||||||||

| 25 | ||||||||||||

| 26 | ||||||||||||

| 27 | ||||||||||||

| 28 | ||||||||||||

| 29 | inf | inf | inf | inf | inf | inf | inf | inf | inf | inf | inf | inf |

Table A6.

The Wilcoxon Test Result Between ESOA And Other Algorithm On CEC05 Benchmark Functions.

| ESOA vs. PSO | ESOA vs. GA | ESOA vs. DE | ESOA vs. GWO | ESOA vs. HHO | ||

|---|---|---|---|---|---|---|

| F1 | 30 | |||||

| 50 | ||||||

| 100 | ||||||

| 200 | ||||||

| 500 | ||||||

| 1000 | ||||||

| F2 | 30 | |||||

| 50 | ||||||

| 100 | ||||||

| 200 | ||||||

| 500 | N/A | N/A | N/A | N/A | N/A | |

| 1000 | N/A | N/A | N/A | N/A | N/A | |

| F3 | 30 | |||||

| 50 | ||||||

| 100 | ||||||

| 200 | ||||||

| 500 | ||||||

| 1000 | ||||||

| F4 | 30 | |||||

| 50 | ||||||

| 100 | ||||||

| 200 | ||||||

| 500 | ||||||

| 1000 | ||||||

| F5 | 30 | |||||

| 50 | ||||||

| 100 | ||||||

| 200 | ||||||

| 500 | ||||||

| 1000 | ||||||

| F6 | 30 | |||||

| 50 | ||||||

| 100 | ||||||

| 200 | ||||||

| 500 | ||||||

| 1000 | ||||||

| F7 | 30 | |||||

| 50 | ||||||

| 100 | ||||||

| 200 | ||||||

| 500 | ||||||

| 1000 |

Table A7.

The Wilcoxon Test Result Between ESOA And Other Algorithm On CEC17 Benchmark Functions.

| ESOA vs. PSO | ESOA vs. GA | ESOA vs. DE | ESOA vs. GWO | ESOA vs. HHO | ||

|---|---|---|---|---|---|---|

| 30 | ||||||

| 30 | N/A | N/A | N/A | N/A | N/A | |

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | N/A | N/A | N/A | N/A | N/A | |

| 30 | ||||||

| 30 | ||||||

| 30 | N/A | N/A | N/A | N/A | N/A | |

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | ||||||

| 30 | N/A | N/A | N/A | N/A | N/A |

Author Contributions

The authors confirm their contribution to the paper as follows: conceptualization, S.L. and Z.C.; mathematical modeling, S.L., A.F., B.L. and D.X.; data analysis, Z.C., A.F., B.L., D.X. and T.T.H.; validation, J.L., L.D. and X.C.; visualization, T.T.H., J.L. and X.C.; review, L.D., J.L. and X.C.; manuscript preparation, Z.C., A.F. and S.L. All authors have read and agreed to the published version of the manuscript.

Data Availability Statement

The source code used in this work can be retrieved from Github Link: https://github.com/Knightsll/Egret_Swarm_Optimization_Algorithm; Mathworks Link: https://ww2.mathworks.cn/matlabcentral/fileexchange/115595-egret-swarm-optimization-algorithm-esoa.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research received no external funding.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Nanda S.J., Panda G. A Survey on Nature Inspired Metaheuristic Algorithms for Partitional Clustering. Swarm Evol. Comput. 2014;16:1–18. doi: 10.1016/j.swevo.2013.11.003. [DOI] [Google Scholar]

- 2.Hussain K., Mohd Salleh M.N., Cheng S., Shi Y. Metaheuristic Research: A Comprehensive Survey. Artif. Intell. Rev. 2019;52:2191–2233. doi: 10.1007/s10462-017-9605-z. [DOI] [Google Scholar]

- 3.Tang J., Liu G., Pan Q. A Review on Representative Swarm Intelligence Algorithms for Solving Optimization Problems: Applications and Trends. IEEE/CAA J. Autom. Sin. 2021;8:1627–1643. doi: 10.1109/JAS.2021.1004129. [DOI] [Google Scholar]

- 4.Shaikh P.W., El-Abd M., Khanafer M., Gao K. A Review on Swarm Intelligence and Evolutionary Algorithms for Solving the Traffic Signal Control Problem. IEEE Trans. Intell. Transp. Syst. 2022;23:48–63. doi: 10.1109/TITS.2020.3014296. [DOI] [Google Scholar]

- 5.Holland J.H. Genetic Algorithms. Sci. Am. 1992;267:66–73. doi: 10.1038/scientificamerican0792-66. [DOI] [Google Scholar]

- 6.Storn R., Price K.V. Differential evolution-A simple and efficient heuristic for global optimization over continuous Spaces. J. Glob. Optim. 1997;11:341–359. doi: 10.1023/A:1008202821328. [DOI] [Google Scholar]

- 7.Francois O. An evolutionary strategy for global minimization and its Markov chain analysis. IEEE Trans. Evol. Comput. 1998;2:77–90. doi: 10.1109/4235.735430. [DOI] [Google Scholar]

- 8.Yao X., Liu Y., Lin G. Evolutionary Programming Made Faster. IEEE Trans. Evol. Comput. 1999;3:82–102. [Google Scholar]

- 9.Kirkpatrick S., Gelatt C.D., Vecchi M.P. Optimization by Simmulated Annealing. Science. 1983;220:671–680. doi: 10.1126/science.220.4598.671. [DOI] [PubMed] [Google Scholar]

- 10.Rashedi E., Nezamabadi-Pour H., Saryazdi S. GSA: A Gravitational Search Algorithm. Inf. Sci. 2009;179:2232–2248. doi: 10.1016/j.ins.2009.03.004. [DOI] [Google Scholar]

- 11.Hatamlou A. Black Hole: A New Heuristic Optimization Approach for Data Clustering. Inf. Sci. 2013;222:75–184. doi: 10.1016/j.ins.2012.08.023. [DOI] [Google Scholar]

- 12.Mirjalili S., Mirjalili S.M., Hatamlou A. Multi-Verse Optimizer: A Nature-Inspired Algorithm For Global Optimization. Neural Comput. Appl. 2016;27:495–513. doi: 10.1007/s00521-015-1870-7. [DOI] [Google Scholar]

- 13.Yang X. Harmony Search as a Metaheuristic Algorithm. In: Geem Z.W., editor. Music-Inspired Harmony Search Algorithm. Studies in Computational Intelligence. Volume 191 Springer; Berlin/Heidelberg, Germany: 2009. [Google Scholar]

- 14.Zhao J., Xiao M., Gao L., Pan Q. Queuing search algorithm: A novel metaheuristic algorithm for solving engineering optimization problems. Appl. Math. Model. 2018;63:464–490. [Google Scholar]

- 15.Shi Y. Brain Storm Optimization Algorithm. In: Tan Y., Shi Y., Chai Y., Wang G., editors. Advances in Swarm Intelligence. Volume 6728 Springer; Berlin/Heidelberg, Germany: 2011. ICSI 2011. Lecture Notes in Computer Science. [Google Scholar]

- 16.Kennedy J., Eberhart R. Particle Swarm Optimization; Proceedings of the ICNN’95—International Conference on Neural Networks; Perth, WA, Australia. 27 November–1 December 1995; pp. 1942–1948. [Google Scholar]

- 17.Dorigo M., Birattari M., Stutzle T. Ant Colony Optimization. IEEE Comput. Intell. Mag. 2006;1:28–39. doi: 10.1109/MCI.2006.329691. [DOI] [Google Scholar]

- 18.Mirjalili S., Mirjalili S.M., Lewis A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014;69:46–61. doi: 10.1016/j.advengsoft.2013.12.007. [DOI] [Google Scholar]

- 19.Mohanty S., Subudhi B., Ray P.K. A New Mppt Design Using Grey Wolf Optimization Technique For Photovoltaic System Under Partial Shading Conditions. IEEE Trans. Sustain. Energy. 2016;7:181–188. doi: 10.1109/TSTE.2015.2482120. [DOI] [Google Scholar]

- 20.Precup R., David R., Petriu E.M. Grey Wolf Optimizer Algorithm-Based Tuning of Fuzzy Control Systems with Reduced Parametric Sensitivity. IEEE Trans. Ind. Electron. 2017;64:527–534. doi: 10.1109/TIE.2016.2607698. [DOI] [Google Scholar]

- 21.Xue J., Shen B. A Novel Swarm Intelligence Optimization Approach: Sparrow Search Algorithm. Syst. Sci. Control. Eng. 2020;8:22–34. doi: 10.1080/21642583.2019.1708830. [DOI] [Google Scholar]

- 22.Zhang C., Ding S. A Stochastic Configuration Network Based On Chaotic Sparrow Search Algorithm. Knowl.-Based Syst. 2021;220:106924. doi: 10.1016/j.knosys.2021.106924. [DOI] [Google Scholar]

- 23.Li L., Xiong J., Tseng M., Yan Z., Lim M.K. Using Multi-Objective Sparrow Search Algorithm To Establish Active Distribution Network Dynamic Reconfiguration Integrated Optimization. Expert Syst. Appl. 2022;193:116445. doi: 10.1016/j.eswa.2021.116445. [DOI] [Google Scholar]

- 24.Jiang X., Li S. BAS: Beetle Antennae Search Algorithm For Optimization Problems. arXiv. 2017. arXiv:1710.10724. doi: 10.5430/ijrc.v1n1p1. [DOI] [Google Scholar]

- 25.Khan A.H., Li S., Luo X. Obstacle Avoidance and Tracking Control of Redundant Robotic Manipulator: An RNN-Based Metaheuristic Approach. IEEE Trans. Ind. Inform. 2020;16:4670–4680. doi: 10.1109/TII.2019.2941916. [DOI] [Google Scholar]

- 26.Chen D., Li X., Li S. A Novel Convolutional Neural Network Model Based on Beetle Antennae Search Optimization Algorithm for Computerized Tomography Diagnosis. IEEE Trans. Neural Netw. Learn. Syst. 2021:1–12. doi: 10.1109/TNNLS.2021.3105384. [DOI] [PubMed] [Google Scholar]

- 27.Valdez F. Swarm Intelligence: A Review of Optimization Algorithms Based on Animal Behavior. Recent Advances of Hybrid Intelligent Systems Based on Soft Computing. Stud. Comput. Intell. 2021;915:273–298. [Google Scholar]

- 28.Rostami M., Berahmand K., Nasiri E., Forouzandeh S. Review of swarm intelligence-based feature selection methods. Eng. Appl. Artif. Intell. 2021;100:104210. doi: 10.1016/j.engappai.2021.104210. [DOI] [Google Scholar]

- 29.Osaba E., Yang X.S. Applied Optimization and Swarm Intelligence: A Systematic Review and Prospect Opportunities. Springer; Singapore: 2021. pp. 1–23. [Google Scholar]

- 30.Sharma A., Sharma A., Pandey J.K., Ram M. Swarm Intelligence: Foundation, Principles, and Engineering Applications. CRC Press; Boca Raton, FL, USA: 2022. [Google Scholar]

- 31.Vasuki A. Nature-Inspired Optimization Algorithms. Academic Press/Chapman and Hall/CRC; London, UK: 2020. [Google Scholar]

- 32.Naderi E., Pourakbari-Kasmaei M., Lehtonen M. Transmission expansion planning integrated with wind farms: A review, comparative study, and a novel profound search approach. Int. J. Electr. Power Energy Syst. 2020;115:105460. doi: 10.1016/j.ijepes.2019.105460. [DOI] [Google Scholar]

- 33.Naderi E., Azizivahed A., Narimani H., Fathi M., Narimani M.R. A comprehensive study of practical economic dispatch problems by a new hybrid evolutionary algorithm. Appl. Soft Comput. 2017;61:1186–1206. doi: 10.1016/j.asoc.2017.06.041. [DOI] [Google Scholar]

- 34.Narimani H., Azizivahed A., Naderi E., Fathi M., Narimani M.R. A practical approach for reliability-oriented multi-objective unit commitment problem. Appl. Soft Comput. 2019;85:105786. doi: 10.1016/j.asoc.2019.105786. [DOI] [Google Scholar]

- 35.Naderi E., Azizivahed A., Asrari A. A step toward cleaner energy production: A water saving-based optimization approach for economic dispatch in modern power systems. Electr. Power Syst. Res. 2022;204:107689. doi: 10.1016/j.epsr.2021.107689. [DOI] [Google Scholar]

- 36.Naderi E., Pourakbari-Kasmaei M., Cerna F.V., Lehtonen M. A novel hybrid self-adaptive heuristic algorithm to handle single- and multi-objective optimal power flow problems. Int. J. Electr. Power Energy Syst. 2021;125:106492. doi: 10.1016/j.ijepes.2020.106492. [DOI] [Google Scholar]

- 37.Zhao W., Wang L., Mirjalili S. Artificial hummingbird algorithm: A new bio-inspired optimizer with its engineering applications. Comput. Methods Appl. Mech. Eng. 2022;388:114194. doi: 10.1016/j.cma.2021.114194. [DOI] [Google Scholar]

- 38.Abdollahzadeh B., Gharehchopogh F.S., Mirjalili S. African vultures optimization algorithm: A new nature-inspired metaheuristic algorithm for global optimization problems. Comput. Ind. Eng. 2021;158:107408. doi: 10.1016/j.cie.2021.107408. [DOI] [Google Scholar]

- 39.Zamani H., Nadimi-Shahraki M.H., Gandomi A.H. Starling murmuration optimizer: A novel bio-inspired algorithm for global and engineering optimization. Comput. Methods Appl. Mech. Eng. 2022;392:114616. doi: 10.1016/j.cma.2022.114616. [DOI] [Google Scholar]

- 40.Jiang Y., Wu Q., Zhu S., Zhang L. Orca predation algorithm: A novel bio-inspired algorithm for global optimization problems. Expert Syst. Appl. 2022;188:116026. doi: 10.1016/j.eswa.2021.116026. [DOI] [Google Scholar]

- 41.Yang Z., Deng L., Wang Y., Liu J. Aptenodytes forsteri optimization: Algorithm and applications. Knowl.-Based Syst. 2021;232:107483. doi: 10.1016/j.knosys.2021.107483. [DOI] [Google Scholar]

- 42.Mohammadi-Balani A., Nayeri M.D., Azar A., Taghizadeh-Yazdi M. Golden eagle optimizer: A nature-inspired metaheuristic algorithm. Comput. Ind. Eng. 2021;152:107050. doi: 10.1016/j.cie.2020.107050. [DOI] [Google Scholar]

- 43.Braik M.S. Chameleon Swarm Algorithm: A bio-inspired optimizer for solving engineering design problems. Expert Syst. Appl. 2021;174:114685. doi: 10.1016/j.eswa.2021.114685. [DOI] [Google Scholar]

- 44.Połap D., Woźniak M. Red fox optimization algorithm. Expert Syst. Appl. 2021;166:114107. doi: 10.1016/j.eswa.2020.114107. [DOI] [Google Scholar]

- 45.Jafari M., Salajegheh E., Salajegheh J. Elephant clan optimization: A nature-inspired metaheuristic algorithm for the optimal design of structures. Appl. Soft Comput. 2021;113:107892. doi: 10.1016/j.asoc.2021.107892. [DOI] [Google Scholar]

- 46.Zamani H., Nadimi-Shahraki M.H., Gandomi A.H. QANA: Quantum-based avian navigation optimizer algorithm. Eng. Appl. Artif. Intell. 2021;104:104314. doi: 10.1016/j.engappai.2021.104314. [DOI] [Google Scholar]

- 47.Stork J., Eiben A.E., Bartz-Beielstein T. A new taxonomy of continuous global optimization algorithms. arXiv. 2018. arXiv:1808.08818. [Google Scholar]

- 48.Liu S.-H., Mernik M., Hrnčič D., Črepinšek M. A parameter control method of evolutionary algorithms using exploration and exploitation measures with a practical application for fitting Sovova’s mass transfer model. Appl. Soft Comput. 2013;13:3792–3805. doi: 10.1016/j.asoc.2013.05.010. [DOI] [Google Scholar]

- 49.LaTorre A., Molina D., Osaba E., Poyatos J., Del Ser J., Herrera F. A prescription of methodological guidelines for comparing bio-inspired optimization algorithms. Swarm Evol. Comput. 2021;67:100973. doi: 10.1016/j.swevo.2021.100973. [DOI] [Google Scholar]

- 50.Tanabe R., Fukunaga A. Success-history based parameter adaptation for Differential Evolution; Proceedings of the 2013 IEEE Congress on Evolutionary Computation; Cancun, Mexico. 20–23 June 2013. [Google Scholar]

- 51.Ravber M., Liu S.-H., Mernik M., Črepinšek M. Maximum number of generations as a stopping criterion considered harmful. Appl. Soft Comput. 2022;128:109478. doi: 10.1016/j.asoc.2022.109478. [DOI] [Google Scholar]

- 52.Derrac J., García S., Molina D., Herrera F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 2011;1:3–18. doi: 10.1016/j.swevo.2011.02.002. [DOI] [Google Scholar]

- 53.Auger A., Hansen N. A restart CMA evolution strategy with increasing population size; Proceedings of the 2005 IEEE Congress on Evolutionary Computation; Edinburgh, UK. 2–5 September 2005. [Google Scholar]

- 54.Hansen N., Müller S.D., Koumoutsakos P. Reducing the time complexity of the derandomized evolution strategy with covariance matrix adaptation (CMA-ES). Evol. Comput. 2003;11:1–18. doi: 10.1162/106365603321828970. [DOI] [PubMed] [Google Scholar]

- 55.Liao T., Stützle T. Benchmark results for a simple hybrid algorithm on the CEC 2013 benchmark set for real-parameter optimization; Proceedings of the 2013 IEEE Congress on Evolutionary Computation; Cancun, Mexico. 20–23 June 2013. [Google Scholar]

- 56.Lacroix B., Molina D., Herrera F. Dynamically updated region based memetic algorithm for the 2013 CEC Special Session and Competition on Real Parameter Single Objective Optimization; Proceedings of the 2013 IEEE Congress on Evolutionary Computation; Cancun, Mexico. 20–23 June 2013. [Google Scholar]

- 57.Mallipeddi R., Wu G., Lee M., Suganthan P.N. Gaussian adaptation based parameter adaptation for differential evolution; Proceedings of the 2014 IEEE Congress on Evolutionary Computation (CEC); Beijing, China. 6–11 July 2014. [Google Scholar]

- 58.Erlich I., Rueda J.L., Wildenhues S., Shewarega F. Evaluating the Mean-Variance Mapping Optimization on the IEEE-CEC 2014 test suite; Proceedings of the 2014 IEEE Congress on Evolutionary Computation (CEC); Beijing, China. 6–11 July 2014. [Google Scholar]

- 59.Tanabe R., Fukunaga A.S. Improving the search performance of SHADE using linear population size reduction; Proceedings of the 2014 IEEE Congress on Evolutionary Computation (CEC); Beijing, China. 6–11 July 2014. [Google Scholar]

- 60.Sallam K.M., Sarker R.A., Essam D.L., Elsayed S.M. Neurodynamic differential evolution algorithm and solving CEC2015 competition problems; Proceedings of the 2015 IEEE Congress on Evolutionary Computation (CEC); Sendai, Japan. 25–28 May 2015. [Google Scholar]

- 61.Guo S.M., Tsai J.S.H., Yang C.C., Hsu P.H. A self-optimization approach for L-SHADE incorporated with eigenvector-based crossover and successful-parent-selecting framework on CEC 2015 benchmark set; Proceedings of the 2015 IEEE Congress on Evolutionary Computation (CEC); Sendai, Japan. 25–28 May 2015; pp. 1003–1010. [Google Scholar]

- 62.Wolpert D.H., Macready W.G. No Free Lunch Theorems For Optimization. IEEE Trans. Evol. Comput. 1997;1:67–82. doi: 10.1109/4235.585893. [DOI] [Google Scholar]

- 63.Villalón C.L.C., Stützle T., Dorigo M. Lecture Notes in Computer Science. Springer International Publishing; Cham, Switzerland: 2020. Grey wolf, firefly and bat algorithms: Three widespread algorithms that do not contain any novelty; pp. 121–133. [Google Scholar]

- 64.Sörensen K. Metaheuristics-the metaphor exposed. Int. Trans. Oper. Res. 2015;22:3–18. doi: 10.1111/itor.12001. [DOI] [Google Scholar]

- 65.Wahab M.N.A., Nefti-Meziani S., Atyabi A. A comparative review on mobile robot path planning: Classical or meta-heuristic methods? Annu. Rev. Control. 2020;50:233–252. doi: 10.1016/j.arcontrol.2020.10.001. [DOI] [Google Scholar]

- 66.Črepinšek M., Liu S.-H., Mernik M. Exploration and exploitation in evolutionary algorithms: A survey. ACM Comput. Surv. 2013;45:1–33. doi: 10.1145/2480741.2480752. [DOI] [Google Scholar]

- 67.Jerebic J., Mernik M., Liu S.H., Ravber M., Baketarić M., Mernik L., Črepinšek M. A novel direct measure of exploration and exploitation based on attraction basins. Expert Syst. Appl. 2021;167:114353. doi: 10.1016/j.eswa.2020.114353. [DOI] [Google Scholar]

- 68.Dimalexis A., Pyrovetsi M., Sgardelis S. Foraging Ecology of the Grey Heron (Ardea cinerea), Great Egret (Ardea alba) and Little Egret (Egretta garzetta) in Response to Habitat, at 2 Greek Wetlands. Colon. Waterbirds. 1997;20:261. doi: 10.2307/1521692. [DOI] [Google Scholar]

- 69.Wiggins D.A. Foraging success and aggression in solitary and group-feeding great egrets (Casmerodius albus). Colon. Waterbirds. 1991;14:176. doi: 10.2307/1521508. [DOI] [Google Scholar]

- 70.Kent D.M. Behavior, habitat use, and food of three egrets in a marine habitat. Colon. Waterbirds. 1986;9:25. doi: 10.2307/1521140. [DOI] [Google Scholar]

- 71.Maccarone A.D., Brzorad J.N., Stone H.M. Characteristics and Energetics of Great Egret and Snowy Egret Foraging Flights. Waterbirds. 2008;31:541–549. [Google Scholar]

- 72.Brzorad J.N., Maccarone A.D., Conley K.J. Foraging Energetics of Great Egrets and Snowy Egrets. J. Field Ornithol. 2004;75:266–280. doi: 10.1648/0273-8570-75.3.266. [DOI] [Google Scholar]

- 73.Master T.L., Leiser J.K., Bennett K.A., Bretsch J.K., Wolfe H.J. Patch Selection by Snowy Egrets. Waterbirds. 2005;28:220–224. doi: 10.1675/1524-4695(2005)028[0220:PSBSE]2.0.CO;2. [DOI] [Google Scholar]

- 74.Maccarone A.D., Brzorad J.N., Stone H.M. A Telemetry-based Study of Snowy Egret (Egretta thula) Nest-activity Patterns, Food-provisioning Rates and Foraging Energetics. Waterbirds. 2012;35:394–401. doi: 10.1675/063.035.0304. [DOI] [Google Scholar]

- 75.Maccarone A.D., Brzorad J.N. Foraging Behavior and Energetics of Great Egrets and Snowy Egrets at Interior Rivers and Weirs. J. Field Ornithol. 2007;78:411–419. doi: 10.1111/j.1557-9263.2007.00133.x. [DOI] [Google Scholar]