Abstract

During the last decade, there has been rapid adoption of ground and aerial platforms carrying multiple sensors for phenotyping various biotic and abiotic stresses throughout the developmental stages of crop plants. High throughput phenotyping (HTP) involves the application of these tools to phenotype plants and ranges from ground-based imaging to aerial phenotyping to remote sensing. Adoption of these HTP tools has helped reduce the phenotyping bottleneck in breeding programs and increase the pace of genetic gain. In particular, several root phenotyping tools are discussed for studying the plant’s hidden half, an area long neglected. However, these HTP technologies produce big datasets that make inference difficult. Machine learning and deep learning provide a means of extracting useful information from such datasets for drawing conclusions. These are interdisciplinary approaches to data analysis that use probability, statistics, classification, regression, decision theory, data visualization, and neural networks to relate the extracted information to the observed phenotypes. These techniques use feature extraction, identification, classification, and prediction criteria to identify pertinent data for use in plant breeding and pathology. This review focuses on recent findings in which machine learning and deep learning approaches have been used for plant stress phenotyping with data collected using various HTP platforms. We provide a comprehensive overview of the machine learning and deep learning tools available, with their potential advantages and pitfalls. Overall, this review provides an avenue for studying various HTP platforms, with particular emphasis on using machine learning and deep learning tools to draw legitimate conclusions. Finally, we outline the conceptual challenges being faced and provide insights on future perspectives for managing those issues.

Keywords: Biotic and abiotic stresses, Deep learning, Ground-based imaging, High throughput phenotyping, Machine learning, Unmanned aerial vehicle

Introduction

The world population is expected to reach approximately 9 to 10 billion by 2050; therefore, production will need to rise by around 25–70% above present-day levels to meet the demands of this burgeoning population (Hunter et al. 2017). Further, various biotic and abiotic factors create adverse environmental conditions, or stress, for crop plants, significantly reducing their yields. Such stress-induced yield losses can jeopardize global food security (Strange and Scott 2005). Enhancing crop yields is an ever-changing global challenge for plant breeders, entomologists, pathologists, and farmers. Hence, an in-depth understanding of plant stress is pivotal for improving yield protection in sustainable production systems (Pessarakli 2019). Plant scientists rely on crop phenotyping for precise and reliable trait collection and for the effective use of genetic resources and tools to accomplish their research goals.

Plant phenotyping is defined as the comprehensive assessment of complex plant traits such as development, growth, resistance, tolerance, physiology, architecture, yield, and ecology, together with the elementary measurement of the individual quantitative parameters that form the foundation for complex trait assessment (Li et al. 2014). Breeding programs generally aim to phenotype large populations for numerous traits throughout the crop cycle (Sandhu et al. 2021b,c). This challenge is further aggravated by the need to sample multiple environments with replicated trials. Traditional phenotyping is costly, laborious, and often destructive, and it can reduce the precision of the results. The development of automated high throughput phenotyping (HTP) systems combined with artificial intelligence has largely overcome these limitations of conventional crop stress phenotyping. HTP offers great potential for non-destructive and effective field-based plant phenotyping. Manual, semi-autonomous, or autonomous platforms equipped with single or multiple sensors record temporal and spatial data, producing large amounts of data for storage and analysis (Kaur et al. 2021; Sandhu et al. 2021c). To analyze and interpret these massive datasets, machine learning (ML) and its subset, deep learning (DL), are used (Ashourloo et al. 2014; Atieno et al. 2017; LeCun et al. 2015; Lin et al. 2019; Ramcharan et al. 2019; Sandhu et al. 2021a).

Machine learning is a multidisciplinary approach that relies largely on probability and decision theory, visualization, and optimization. ML approaches can handle large amounts of data effectively and allow plant researchers to search massive datasets for patterns by examining combinations of traits simultaneously rather than analyzing each trait or feature separately. The ability to identify a hierarchy of features and infer generalized trends from data is one of the major reasons for the success of ML tools. Supervised and unsupervised learning are the two major categories of ML techniques, and both have been used extensively for biotic and abiotic stress phenotyping in crops (Ashourloo et al. 2014; Peña et al. 2015; Raza et al. 2015; Naik et al. 2017; Zhang et al. 2019c). Traditional ML approaches require significant effort in feature design, a laborious procedure that calls for expertise in computation and image analysis, which has limited their use in trait phenotyping applications. DL has emerged as a powerful ML approach that exploits both advanced computing power and massive datasets and allows hierarchical learning from data (LeCun et al. 2015; Min et al. 2017). DL also bypasses the need for feature design, because features are learned automatically from the data. Important DL models include the multilayer perceptron (MLP), generative adversarial networks (GAN), convolutional neural networks (CNN), and recurrent neural networks (RNN) (LeCun et al. 2015). Deep CNNs are the DL architecture that has now attained state-of-the-art performance in crucial computer vision tasks such as image classification, object recognition, and image segmentation (Pérez-Enciso and Zingaretti 2019).
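To make the idea of a convolutional layer concrete, the following minimal sketch (plain Python, no DL library) computes one CNN feature map: a 3 × 3 filter slid over a toy single-band image, followed by ReLU activation and 2 × 2 max pooling. The toy image and the hand-set vertical-edge filter are illustrative assumptions; in a trained CNN the filter weights would be learned from labelled images rather than designed by hand, which is how DL bypasses manual feature design.

```python
def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation with stride 1 (what DL libraries call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(fmap):
    """Element-wise rectified linear unit: negative responses become 0."""
    return [[max(0, v) for v in row] for row in fmap]

def max_pool2x2(fmap):
    """Non-overlapping 2 x 2 max pooling (an odd trailing row/column is dropped)."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]

# Toy 6 x 6 single-band image: soil (0) on the left, leaf tissue (1) on the right.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
# Hand-set vertical-edge filter; a trained CNN would learn these weights instead.
kernel = [[-1, 0, 1]] * 3

feature_map = max_pool2x2(relu(conv2d(image, kernel)))
print(feature_map)
```

The filter responds only where soil meets leaf; stacking many such learned filters in successive layers yields the hierarchical features described above.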

In this article, we review state-of-the-art image-based HTP methods, discussing the different imaging platforms, imaging techniques, and spectral indices deployed for plant stress phenotyping. We also provide a comprehensive overview of the ML and DL tools available, with their comparative advantages and shortcomings. We focus on DL applications that mainly use image data because digital imaging is comparatively cheap, can be combined with ground-based, manual, and aerial platforms in a scalable manner, and requires little technical expertise to install with off-the-shelf components for HTP of plant stress. Finally, we summarize several recent studies involving ML and DL approaches for phenotyping different biotic and abiotic stresses in plants.

Phenotyping Platforms

The area of plant stress phenotyping is steadily progressing, with destructive, low-throughput protocols being replaced by non-invasive high-throughput methods (Barbedo 2019). Rapid developments in affordable non-invasive sensors, imaging techniques, and tools over the past decades have transformed plant phenomics. These developments have also brought sensors, imaging techniques, and analytical tools into closer integration, which has led to compact, all-in-one imaging platforms for HTP studies. Several HTP platforms exist and are presently employed to phenotype different biotic and abiotic stress-associated traits in various crops (Table 1). Examples include “PHENOPSIS”, an automated platform for phenotyping plant responses to soil water stress in Arabidopsis (Arabidopsis thaliana L.) (Granier et al. 2006); “GROWSCREEN FLUORO” for phenotyping leaf growth and chlorophyll fluorescence, which allowed the detection of tolerance to different abiotic stresses in Arabidopsis (Jansen et al. 2009); the “LemnaTec 3D Scanalyzer system” for non-invasive screening of different salinity tolerance traits in rice (Oryza sativa L.) (Hairmansis et al. 2014); “HyperART” for non-destructive quantification of leaf traits such as leaf chlorophyll content and disease severity in four crop species (barley, maize, tomato and rapeseed) (Bergsträsser et al. 2015); “PhenoBox” for detection of head smut and corn smut diseases on Brachypodium and maize (Zea mays L.), respectively, and of the salt stress response in tobacco (Nicotiana tabacum L.) (Czedik-Eysenberg et al. 2018); “PHENOVISION” for detection of drought stress and recovery in maize plants (Asaari et al. 2019); “PhénoField” for characterization of different abiotic stresses in wheat (Triticum aestivum L.) (Beauchêne et al. 2019); and the “PlantScreen™ Robotic XYZ System” for analyzing different traits associated with drought tolerance in rice (Kim et al. 2020).

Table 1.

Details of some selected imaging platforms used for trait phenotyping for biotic and abiotic stress and other morphological traits in crops

| Platform | Traits recorded | Crop | References |
|---|---|---|---|
| A. Biotic and abiotic stresses | | | |
| PHENOPSIS | Plant responses to water stress | Arabidopsis (Arabidopsis thaliana) | Granier et al. (2006) |
| PHENODYN | Soil water status (drought scenarios), leaf elongation rate, and micrometeorological variables | Rice (Oryza sativa) and maize (Zea mays) | Sadok et al. (2007) |
| GROWSCREEN FLUORO | Leaf growth and chlorophyll fluorescence, which allow detection of stress tolerance | Arabidopsis (Arabidopsis thaliana) | Jansen et al. (2009) |
| Field monitoring support system | Occurrence of the rice bug in the field | Rice (Oryza sativa) | Fukatsu et al. (2012) |
| BreedVision | Lodging, plant moisture content, biomass yield or tiller density | Triticale (×Triticosecale Wittmack) | Busemeyer et al. (2013a) |
| LemnaTec 3D Scanalyzer system | Salinity tolerance traits | Rice (Oryza sativa) | Hairmansis et al. (2014) |
| HyperART | Leaf traits such as disease severity or leaf chlorophyll content | Barley (Hordeum vulgare), maize (Zea mays), tomato (Lycopersicon esculentum) and rapeseed (Brassica rapa) | Bergsträsser et al. (2015) |
| Automated video tracking platform | Resistance to aphids and other piercing-sucking insects | Lettuce (Lactuca sativa) and Arabidopsis (Arabidopsis thaliana) | Kloth et al. (2015) |
| RhizoTubes | Root-related traits under non-stressed and stressed conditions | Medicago (Medicago truncatula), pea (Pisum sativum), rapeseed (Brassica napus), grape (Vitis vinifera), wheat (Triticum aestivum) | Jeudy et al. (2016) |
| RADIX | Root- and shoot-related traits under control as well as stress conditions | Maize (Zea mays) | Le Marié et al. (2016) |
| PhenoBox | Detection of head smut and corn smut on Brachypodium and maize, respectively, and salt stress response in tobacco | Brachypodium (Brachypodium distachyon), maize (Zea mays), tobacco (Nicotiana benthamiana) | Czedik-Eysenberg et al. (2018) |
| PHENOVISION | Detection of drought stress and recovery | Maize (Zea mays) | Asaari et al. (2019) |
| PhénoField | Characterization of different abiotic stresses | Wheat (Triticum aestivum) | Beauchêne et al. (2019) |
| Liaphen | Leaf expansion or transpiration rate in response to water deficit | Sunflower (Helianthus annuus) | Gosseau et al. (2018) |
| PlantScreen™ robotic XYZ system | Drought tolerance traits | Rice (Oryza sativa) | Kim et al. (2020) |
| PHENOTIC | Evaluation of plant resistance to pathogens and of pathogen virulence | Horticultural crops | Boureau (2020) |
| PhenoImage | Plant responses to water stress | Wheat (Triticum aestivum), sorghum (Sorghum bicolor) | Zhu et al. (2021) |
| B. Morphological and physiological traits (recorded under unstressed conditions) | | | |
| Plant root monitoring platform (PlaRoM) | Root-related traits | Arabidopsis (Arabidopsis thaliana) | Yazdanbakhsh and Fisahn (2009) |
| Phenoscope | Complex traits involved in growth responses to the environment | Arabidopsis (Arabidopsis thaliana) | Tisné et al. (2013) |
| High-throughput rice phenotyping facility (HRPF) | Agronomic traits | Rice (Oryza sativa) | Yang et al. (2014) |
| Zeppelin NT aircraft | Leaf area index, leaf biomass, early vigour, plant height | Maize (Zea mays) | Liebisch et al. (2015) |
| Rhizoponics | Root-related traits | Arabidopsis (Arabidopsis thaliana) | Mathieu et al. (2015) |
| Phenocart | Morphological traits | Wheat (Triticum aestivum) | Crain et al. (2016) |
| PhenoArch phenotyping platform | Light interception and radiation-use efficiency | Maize (Zea mays) | Cabrera-Bosquet et al. (2016) |
| Phenovator | Growth, photosynthesis, and spectral reflectance | Arabidopsis (Arabidopsis thaliana) | Flood et al. (2016) |
| PHENOARCH | Growth of maize ears and silks | Maize (Zea mays) | Brichet et al. (2017) |
| Phenobot 1.0 | Biomass-related traits | Sorghum (Sorghum bicolor) | Salas Fernandez et al. (2017) |
| Field Scanalyzer | Morphological traits | Wheat (Triticum aestivum) | Virlet et al. (2016) |
| CropQuant | Performance-related traits | Wheat (Triticum aestivum) | Zhou et al. (2017) |
| PhenoRoots | Root-related traits | Cotton (Gossypium hirsutum) | Martins et al. (2020) |
| Self-propelled electric HTPP platform | Plant height | Wheat (Triticum aestivum) | Pérez-Ruiz et al. (2020) |
| MVS-Pheno | Plant height and leaf traits | Maize (Zea mays) | Wu et al. (2020) |

Data have also been recorded in an automated, high-throughput manner for root- and shoot-related traits, leaf traits, plant height, plant biomass, early vigor, radiation use efficiency, and photosynthesis in different plant species such as rice, wheat, maize, sorghum (Sorghum bicolor L.), cotton (Gossypium hirsutum L.), Arabidopsis, Brachypodium (Brachypodium distachyon L.), rapeseed (Brassica napus L.), and barley (Hordeum vulgare L.), among others, using phenotyping platforms such as RootReader3D (Clark et al. 2011), GROWSCREEN-Rhizo (Nagel et al. 2012), Zeppelin NT aircraft (Liebisch et al. 2015), Phenocart (Crain et al. 2016), Phenovator (Flood et al. 2016), PHENOARCH (Brichet et al. 2017), Field Scanalyzer (Virlet et al. 2016), CropQuant (Zhou et al. 2017), and MVS-Pheno (Wu et al. 2020) (Table 1). These platforms have the potential to be used for HTP of traits associated with stress tolerance or resistance in different crops.

In the last decade, several state-of-the-art phenomics centers that use sensor platforms for phenotyping under controlled conditions have been established across the world. Major phenomics centers include the ‘High-Resolution Plant Phenomics Center’ and the ‘Plant Accelerator’ in Australia; the ‘Leibniz Institute of Plant Genetics and Crop Plant Research’ and the ‘Jülich Plant Phenotyping Center’ in Germany; the ‘National Plant Phenomics Center’ in the United Kingdom; and the ‘Nanaji Deshmukh Plant Phenomics Center’ in India (Mir et al. 2019). To disseminate information about HTP, an international association of major plant phenotyping centers, the International Plant Phenotyping Network (https://www.plant-phenotyping.org/IPPN_home), was also established in Germany.

Significant efforts are also being made to develop advanced technologies for use under field conditions at industrial and experimental scales. Some private companies, including ‘LemnaTec’, ‘PhenoSpex’, ‘Phenokey’, ‘WIWAM’, ‘Photon System Instruments’, and ‘We Provide Solutions’, provide large-scale, customized HTP platforms for both controlled and field environments (Gehan and Kellogg 2017; Mir et al. 2019). A list of major HTP platforms being used in different laboratories is given in Table 1. These imaging platforms can be broken down into their components to understand the evolution and scope of HTP studies. Understanding these components, namely imaging sensors, imaging techniques, and analytical tools such as spectral indices, helps justify the role of ML and DL in HTP studies; they are summarized below.

Imaging Techniques

Phenomics has been extensively used to monitor diseases, pest infestations, drought stress, nutrient status, growth, the presence of weeds, and yield under stressed and normal conditions in different crop species (Barbedo 2019). Technological advances have made novel imaging techniques available for HTP, ranging from handheld mobile phones to highly flexible drone imaging using unmanned aerial vehicles (UAVs). UAVs offer a platform that rapidly records data using different imaging sensors over large areas and can deliver images with high spatial resolution. A UAV can cover plots or multiple fields in one flight, but limited battery capacity reduces its utility for very large-scale HTP. Remote sensing using satellite imagery has been used extensively to assess plant stresses since the early 1970s (Saini et al. 2022; Sishodia et al. 2020). Multispectral images obtained from satellites can be used to assess drought conditions in a particular area, crop damage due to insect pests (for example, tracking the damage caused by a swarm of locusts), or crop damage due to diseases (Kaur et al. 2021). As mentioned earlier, HTP is an evolving field, and the same is true of its imaging techniques. To score minute changes in plant development in more detail and to automate the imaging process, ground-based imaging platforms are also used. Although ground-based platforms share the battery-capacity limitation of drones, they provide more detailed and accurate images at the level of individual plants and branches, even down to single leaves. More detailed images provide more data and thus support more accurate models and assessments. Moreover, ground-based platforms can be programmed to run time-scheduled analyses even in the absence of the researcher, making them more convenient and efficient.
Together, these imaging techniques can generate terabytes of data per day, which cannot be handled manually; managing them requires ML and DL programs. Different machine learning methods, especially deep learning, can process millions of images quickly and with high reliability (Ashourloo et al. 2014; LeCun et al. 2015; Atieno et al. 2017). Below, we provide a brief overview of the imaging techniques used for plant stress phenotyping.
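The scale of “terabytes per day” follows from simple arithmetic. The sketch below estimates uncompressed daily data volume; the image counts, image sizes, band counts, and bit depths in the example are hypothetical values chosen for illustration, not figures from any cited platform.

```python
def daily_volume_tb(images_per_day, width_px, height_px, bands, bytes_per_value):
    """Uncompressed data volume per day in terabytes (1 TB = 10**12 bytes)."""
    bytes_per_image = width_px * height_px * bands * bytes_per_value
    return images_per_day * bytes_per_image / 1e12

# Hypothetical RGB camera: 12-megapixel images, 3 bands, 8 bits (1 byte) per value.
rgb_tb = daily_volume_tb(20_000, 4000, 3000, bands=3, bytes_per_value=1)

# Hypothetical hyperspectral imager: 1000 x 1000 pixel cubes, 270 bands, 16-bit values.
hsi_tb = daily_volume_tb(5_000, 1000, 1000, bands=270, bytes_per_value=2)

print(f"RGB: {rgb_tb:.2f} TB/day, hyperspectral: {hsi_tb:.2f} TB/day")
```

Under these assumptions the RGB camera produces about 0.72 TB and the hyperspectral imager about 2.7 TB per day before compression, which is why storage and automated analysis become bottlenecks.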

Satellite Imagery

Satellite imagery, or remote sensing, is the oldest of all HTP methods. Satellites can cover a large area, from 1,000 hectares to an entire county, at a time. Earth observation satellites equipped with multiple large-aperture sensors are used to capture ground information. These sensors include RGB, multispectral, hyperspectral, thermal, and time-of-flight sensors. Multispectral sensors collect information in specific wavelength ranges (bands) of the electromagnetic (EM) spectrum. They typically target 2–10 bands at a time, generally including the red (R), green (G), and blue (B) bands visible to the human eye. The information collected in these individual bands is then overlaid (stacked) to generate high-resolution RGB images. Besides these bands, near-infrared (NIR) or infrared (IR) bands are also used (Pineda et al. 2020). Multispectral sensors can achieve a spatial resolution as fine as 0.25 m, and resolution continues to improve with advances in technology.
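Combining separate band images into a colour composite can be sketched as follows. The example stacks red, green, and blue reflectance bands pixel by pixel and linearly stretches reflectance to 8-bit display values; the 0 to 0.3 reflectance display range and the toy pixel values are assumptions for illustration, not a standard from any satellite product.

```python
def stretch_to_uint8(band, lo=0.0, hi=0.3):
    """Linearly map reflectance in [lo, hi] to 0-255, clipping values outside the range."""
    scale = 255.0 / (hi - lo)
    return [[min(255, max(0, round((v - lo) * scale))) for v in row] for row in band]

def rgb_composite(red, green, blue, lo=0.0, hi=0.3):
    """Stack three co-registered single-band images into rows of (R, G, B) pixel tuples."""
    r, g, b = (stretch_to_uint8(band, lo, hi) for band in (red, green, blue))
    return [list(zip(r_row, g_row, b_row)) for r_row, g_row, b_row in zip(r, g, b)]

# Toy 1 x 2 scene; each list is one spectral band of the same two pixels.
red = [[0.06, 0.30]]
green = [[0.18, 0.00]]
blue = [[0.024, 0.45]]  # 0.45 exceeds the display range and is clipped to 255

print(rgb_composite(red, green, blue))
```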

Hyperspectral sensors can target numerous bands (up to thousands), each covering a narrow range of wavelengths (Pettorelli 2019). Because their bands are narrow and contiguous, their spectral resolution is higher than that of multispectral sensors. Studies have used hyperspectral images for plant disease identification (Das et al. 2015; Nagasubramanian et al. 2019). Most multispectral and hyperspectral sensors gather information from sunlight reflected by the earth’s surface, which makes them passive sensors. Conversely, active sensors such as RADAR (Radio Detection and Ranging) and LiDAR (Light Detection and Ranging) emit their own radiation to gather ground information (Teke et al. 2013). The band information gathered by multispectral and hyperspectral sensors is evaluated using different spectral indices to assess plant health and condition.
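The difference between narrow hyperspectral bands and broader multispectral bands can be illustrated by averaging narrow-band reflectance over a wider window, which approximates what a broadband sensor would record. In this sketch the 10 nm band spacing, the toy leaf spectrum, and the band windows (630 to 690 nm for red, 770 to 890 nm for NIR) are hypothetical; real sensors weight wavelengths by their spectral response functions rather than taking a simple mean.

```python
def band_average(wavelengths_nm, reflectance, lo_nm, hi_nm):
    """Mean reflectance of the narrow bands whose centre lies in [lo_nm, hi_nm]."""
    values = [r for w, r in zip(wavelengths_nm, reflectance) if lo_nm <= w <= hi_nm]
    if not values:
        raise ValueError("no narrow bands fall inside the requested window")
    return sum(values) / len(values)

# Toy hyperspectral leaf spectrum: 51 narrow bands from 400 to 900 nm at 10 nm spacing.
wavelengths = list(range(400, 901, 10))
# Low reflectance in the visible, high on the NIR plateau (illustrative values only).
spectrum = [0.05 if w < 700 else 0.45 for w in wavelengths]

broad_red = band_average(wavelengths, spectrum, 630, 690)  # averages 7 narrow bands
broad_nir = band_average(wavelengths, spectrum, 770, 890)  # averages 13 narrow bands
print(broad_red, broad_nir)
```

A single broad value per window is all a multispectral sensor retains, whereas the hyperspectral spectrum keeps the detail within each window that narrow-band indices rely on.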

One of the biggest limitations of satellite imaging is the high cost of constructing and launching satellites. A list of satellite sensors and their spatial resolutions is provided by Pettorelli (2019). Satellites used in plant stress assessment include Resourcesat-2 and Resourcesat-2A (Indian Space Research Organisation), the Sentinel-2A and Sentinel-2B twin platform (European Space Agency) (Segarra et al. 2020), EO-1 Hyperion (National Aeronautics and Space Administration) (Apan et al. 2004), SPOT-6 and SPOT-7 (Centre national d'études spatiales), and KOMPSAT-3A (Korean Aerospace Industries, Ltd.).

Mobile Cameras/Imaging

Mobile phones are equipped with high-quality cameras that are mostly limited to basic photography, although some manufacturers add advanced sensors such as LiDAR for 3D imaging. The rapid development of smartphones with powerful computing and high-resolution cameras has also facilitated the creation of mobile applications with expanding utility. Moreover, features of smartphone technology are being incorporated into other portable devices and instruments, expanding the range of sensors and improving the portability and connectivity of conventional phenotyping equipment. These advancements benefit researchers, who can conveniently record phenotypes with a portable handheld device. However, these advances are of little practical use for large-scale research, because photographing each plant in the field with a phone is not practical. The approach is equivalent to manual phenotyping, as researchers still need to visit and image every plant or plant part. Although mobile imaging combined with ML and DL can help diagnose biotic (Hallau et al. 2017) and abiotic stresses (Naik et al. 2017), it is of little use in HTP studies. Many mobile applications are available that can help a researcher, or even a farmer, run a quick diagnosis of symptoms before an in-depth analysis. Such applications may be adapted to other important crops and their diseases, thereby contributing to better decision-making in integrated disease management, but this utility is limited.

UAV Imaging

Imaging using UAVs is considered the most convenient and economical option for large-scale HTP studies (Ehsani and Maja 2013). UAVs take multiple photographs during their flight, each covering part of the field; these are then stitched together into a larger image, called an orthomosaic, that provides an overall view of the field. OpenDroneMap, Pix4D, and QGIS are some of the software applications that can be used to create these orthomosaics. Because UAVs fly much closer to the ground than satellites, their images have higher spatial resolution, which is particularly helpful for hyperspectral sensors, whose spatial resolution is low. As their payload capacity has increased, UAVs can carry the same types of sensors that are installed on satellites. Still, they cannot cover as much area as satellites because of their limited battery capacity and flight height. Like satellites, UAVs provide data as various spectral bands that are further evaluated using spectral indices. Numerous studies have used drone imaging to assess biotic and abiotic stresses in plants; some of these are listed in Table 2.
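Why flying lower yields finer detail can be quantified with the ground sampling distance (GSD), the ground width covered by one image pixel for a nadir-pointing camera: GSD = sensor width × flight altitude / (focal length × image width in pixels). The sketch below applies this pinhole-camera relation; the camera parameters (13.2 mm sensor width, 8.8 mm focal length, 5472-pixel image width) are hypothetical example values, and lens distortion and terrain relief are ignored.

```python
def footprint_width_m(sensor_width_mm, focal_length_mm, altitude_m):
    """Ground width (m) imaged by a nadir camera, from similar triangles."""
    return sensor_width_mm * altitude_m / focal_length_mm

def gsd_cm_per_px(sensor_width_mm, focal_length_mm, image_width_px, altitude_m):
    """Ground sampling distance: ground width covered by one pixel, in cm."""
    footprint_m = footprint_width_m(sensor_width_mm, focal_length_mm, altitude_m)
    return footprint_m * 100 / image_width_px

# Hypothetical camera flown at three altitudes.
for altitude in (30, 60, 100):
    gsd = gsd_cm_per_px(13.2, 8.8, 5472, altitude)
    width = footprint_width_m(13.2, 8.8, altitude)
    print(f"{altitude:>3} m: {gsd:.2f} cm/pixel, image footprint {width:.0f} m wide")
```

Lowering the altitude refines the GSD but shrinks the footprint in proportion, so more images, flight time, and battery are needed to cover the same field; this is the trade-off behind the limited coverage noted above.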

Table 2.

Different imaging techniques, plant assessment studies conducted using the imaging technique, and their advantages and limitations

| Imaging technique | Studies conducted using the technique | Advantages | Limitations |
|---|---|---|---|
| Satellite imagery | Heat stress (Cârlan et al. 2020), wheat yield (Fieuzal et al. 2020), cotton yield (He and Mostovoy 2019), dry bean (Sankaran et al. 2019), drought assessment (Babaeian et al. 2019), Hessian fly infestation in wheat (Bhattarai et al. 2019), crop water stress (Omran 2018), alien invasive species (Royimani et al. 2018), purple spot disease in asparagus (Navrozidis et al. 2018), Phytophthora root rot in avocado (Salgadoe et al. 2018), heavy-metal-induced stress in rice (Liu et al. 2018), wheat yellow rust (Zheng et al. 2018), cotton root rot (Song et al. 2017), red palm weevil attack (Bannari et al. 2017), wheat biomass (Dong et al. 2016), powdery mildew (Yuan et al. 2016), orange rust in sugarcane (Apan et al. 2004) | Easily covers very large areas; the data can help predict droughts and epiphytotics because very large areas are covered at the same time | High cost of satellites and their launch; RGB imaging is hindered by clouds and inclement weather; the satellite's revisit cycle prevents imaging at any chosen time |
| Mobile cameras | Cercospora leaf spot (Hallau et al. 2017), iron deficiency chlorosis severity in soybean (Naik et al. 2017), salinity stress tolerance (Awlia et al. 2016) | Convenient and portable; rapid; no operational costs | No practical use in large-scale research, as imaging is limited to a handful of samples |
| UAV/drone imaging | Target spot and bacterial spot in tomato (Abdulridha et al. 2020), iron deficiency in soybean (Dobbels and Lorenz 2019), early stress detection (Sagan et al. 2019), dry bean (Sankaran et al. 2019), grain yield in wheat (Hassan et al. 2019), plant nitrogen content (Camino et al. 2018), wheat biomass (Yue et al. 2017), maize yield (Maresma et al. 2016), grapevine leaf stripe (Gennaro et al. 2016), bacterial leaf blight in rice (Das et al. 2015), low-nitrogen (low-N) stress tolerance (Zaman-Allah et al. 2015) | Very economical; can cover large areas; high-resolution data; easy to operate, with a low learning curve | Cannot cover areas as large as a satellite can; orthomosaics must be created from a myriad of sectional images; limited battery capacity |
| Imaging using robots | Nitrogen content in maize (Chen et al. 2021), vegetation indices (Bai et al. 2019), Xylella fastidiosa infection in olive (Rey et al. 2019), leaf traits (Atefi et al. 2019), plant architecture (Qiu et al. 2019), heat stress and stripe rust resistance in wheat (Zhang et al. 2019a), plant architecture (Young et al. 2018) | Most advanced technique; highly efficient, as it provides results comparable to manual phenotyping; test images are run through models autonomously to assess plant health, with no need for separate evaluation with spectral indices; programmable to run on a schedule like a cron job, which increases efficiency | Still an evolving technique; much work required for workflow and data management; high initial cost of equipment; programming skills are required |

Ground Based Imaging Platforms

Imaging using ground-based platforms is the most advanced of all these techniques. Ground-based platforms provide very close-range imaging for plant stress assessment (Mishra et al. 2020). This proximity provides results comparable to manual phenotyping, but with greater efficiency (Atefi et al. 2019). Most ground-based platforms are autonomous systems that can be integrated with onboard chips to evaluate the parameters of each phenotype (Vougioukas 2019). Alongside the test images, such autonomous systems add much more information to the project, such as phenotype scores, metrics, and parameters, generating up to terabytes of data per day. To organize all of this information, efficient workflow managers such as PhytoOracle (Peri 2020) become important for data management and processing. Ground-based platforms are a prime example of IoT-based intelligent systems for plant stress assessment and HTP studies (Das et al. 2019).
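The time-scheduled, unattended operation of ground-based platforms can be sketched as a list of capture times. The function below is a hypothetical illustration and is not part of PhytoOracle or any platform's API; the 06:00 to 18:00 window and the 2-hour interval are assumed values. On a Unix system the same schedule could be expressed as the cron entry `0 6-18/2 * * *`.

```python
from datetime import date, datetime, timedelta

def capture_times(day, start_hour, end_hour, interval_min):
    """Hypothetical helper: capture timestamps on `day` from start_hour to end_hour
    inclusive, spaced interval_min minutes apart."""
    t = datetime(day.year, day.month, day.day, start_hour)
    end = datetime(day.year, day.month, day.day, end_hour)
    times = []
    while t <= end:
        times.append(t)
        t += timedelta(minutes=interval_min)
    return times

schedule = capture_times(date(2024, 6, 1), start_hour=6, end_hour=18, interval_min=120)
print([t.strftime("%H:%M") for t in schedule])
```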

Spectral Indices for Plant Stress Phenotyping

Images captured by the aforementioned techniques need to be decoded, and spectral indices (SIs) are used to assess the information in these images (Hunt et al. 2013). Computing an SI involves a set of operations on different spectral layers of an image, namely mathematical combinations of the spectral reflectance at two or more wavelengths. The result is a number that denotes the relative abundance of the feature of interest (Jackson and Huete 1991). Various SIs are available to assess different types of features captured in an image. For the purposes of this review, we discuss only vegetation indices (VIs), which are spectral calculations using different spectral bands to decode features and information about the vegetation captured in an image. VIs can provide a plethora of information, such as plant phenotype, plant architecture, stress level, and biomass (Kokhan and Vostokov 2020). The most important factor in using VIs is choosing the right VI for a particular application (Xue and Su 2017). Because healthy plants reflect more near-infrared (NIR) radiation than stressed plants (Xue and Su 2017), NIR or IR bands are mostly used to monitor biotic (Mahlein et al. 2012a; Oerke et al. 2014) and abiotic stresses (Prashar and Jones 2016). Therefore, VIs that involve NIR/IR data, such as the normalized difference vegetation index (NDVI), are of prime importance in plant stress assessment studies. A list of various VIs is provided in Table 3.
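As an example of such a calculation, the sketch below computes NDVI pixel by pixel from co-registered NIR and red reflectance rasters and averages it over a plot. The reflectance values are made up for illustration, and pixels where NIR + red is zero (for example, masked or no-data pixels) are skipped rather than divided by zero.

```python
def ndvi(nir, red):
    """Per-pixel NDVI = (NIR - red) / (NIR + red); None where NIR + red == 0."""
    return [
        [(n - r) / (n + r) if (n + r) != 0 else None for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir, red)
    ]

def plot_mean(index_map):
    """Mean of the valid (non-None) pixels in an index map."""
    valid = [v for row in index_map for v in row if v is not None]
    return sum(valid) / len(valid)

# Toy 2 x 2 plot: dense canopy, sparse or stressed canopy, dense canopy, no-data pixel.
nir = [[0.50, 0.30], [0.45, 0.00]]
red = [[0.05, 0.20], [0.05, 0.00]]

ndvi_map = ndvi(nir, red)
print([[None if v is None else round(v, 2) for v in row] for row in ndvi_map])
print(round(plot_mean(ndvi_map), 2))
```

Dense, healthy canopy pixels give NDVI values near 0.8, while the sparse or stressed pixel drops to about 0.2, which is the contrast that stress-monitoring studies exploit.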

Table 3.

Commonly used vegetation indices for plant stress phenotyping. Rn, Rre, Rr, Rg, and Rb denote reflectance in the NIR, red-edge, red, green, and blue bands, respectively; Rλ (e.g., R700) denotes reflectance at wavelength λ nm

| Index name | Formula | Relevance | References |
|---|---|---|---|
| Normalized difference vegetation index | (Rn − Rr)/(Rn + Rr) | Plant health monitoring, assess plant stress | Tucker (1979) |
| Triangular vegetation index | 0.5[120(Rn − Rg) − 200(Rr − Rg)] | Leaf chlorophyll measurements and leaf area index | Broge and Leblanc (2001) |
| Triangular greenness index | Rg − 0.39·Rr − 0.61·Rb | Leaf chlorophyll measurements | Hunt et al. (2011) |
| Normalized green red difference index | (Rg − Rr)/(Rg + Rr) | Biomass measurements | Tucker (1979) |
| Green normalized difference vegetation index | (Rn − Rg)/(Rn + Rg) | Leaf chlorophyll measurements | Gitelson et al. (1996) |
| Enhanced vegetation index | 2.5(Rn − Rr)/(Rn + 6·Rr − 7.5·Rb + 1) | Improved vegetation monitoring and biomass measurements | Huete et al. (2002) |
| Chlorophyll vegetation index | Rn·Rr/Rg² | Leaf chlorophyll measurements | Vincini et al. (2008) |
| Chlorophyll index (green) | Rn/Rg − 1 | Leaf chlorophyll measurements | Gitelson et al. (2003) |
| Chlorophyll index (red edge) | Rn/Rre − 1 | Leaf chlorophyll measurements | Gitelson et al. (2003) |
| Visible atmospherically resistant index | (Rg − Rr)/(Rg + Rr − Rb) | Highlights vegetation in images | Gitelson et al. (2002) |
| Triangular chlorophyll index | 1.2(R700 − R550) − 1.5(R670 − R550)·√(R700/R670) | Leaf chlorophyll measurements | Haboudane et al. (2008) |
| Green leaf index | (2·Rg − Rr − Rb)/(2·Rg + Rr + Rb) | Differentiates vegetation from bare soil | Louhaichi et al. (2001) |
| Normalized difference red edge index | (Rn − Rre)/(Rn + Rre) | Leaf chlorophyll measurements | Gitelson and Merzlyak (1994) |
| MERIS total chlorophyll index | (R750 − R710)/(R710 − R680) | Leaf chlorophyll measurements | Dash and Curran (2004) |
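The narrow-band indices in Table 3, such as the triangular chlorophyll index (TCI) and the MERIS total chlorophyll index (MTCI), need reflectance at specific wavelengths, which a hyperspectral sensor rarely samples exactly. The sketch below assumes a spectrum stored as a dictionary mapping band-centre wavelength (nm) to reflectance and simply takes the nearest band to each required wavelength; the band centres and reflectance values are hypothetical, and operational workflows may interpolate between bands instead.

```python
import math

def reflectance_at(spectrum, target_nm):
    """Reflectance of the band whose centre wavelength is closest to target_nm."""
    nearest = min(spectrum, key=lambda w: abs(w - target_nm))
    return spectrum[nearest]

def mtci(spectrum):
    """MERIS total chlorophyll index, (R750 - R710) / (R710 - R680), as in Table 3."""
    r750, r710, r680 = (reflectance_at(spectrum, w) for w in (750, 710, 680))
    return (r750 - r710) / (r710 - r680)

def tci(spectrum):
    """Triangular chlorophyll index, 1.2(R700 - R550) - 1.5(R670 - R550)*sqrt(R700/R670)."""
    r700, r670, r550 = (reflectance_at(spectrum, w) for w in (700, 670, 550))
    return 1.2 * (r700 - r550) - 1.5 * (r670 - r550) * math.sqrt(r700 / r670)

# Hypothetical band centres (nm) that do not match the index wavelengths exactly.
spectrum = {551: 0.10, 669: 0.04, 681: 0.05, 702: 0.12, 709: 0.20, 752: 0.40}

print(round(mtci(spectrum), 3), round(tci(spectrum), 3))
```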

Big Data and Machine Learning

The rapid adoption of HTP platforms in agriculture has generated data of enormous volume, variety, and veracity, collected at multiple time points, which creates big data issues. The ability to analyze and understand these data is becoming critical for developing new tools and findings. A McKinsey Global Institute report (Manyika et al. 2011) estimated that the amount of data generated is increasing by approximately 50% per year, equivalent to a 40-fold rise in data since 2001. Only a few years ago, storing such enormous amounts of data was challenging, but with improved storage capacities it is now possible to archive such data efficiently and potentially use them in the near future. The biggest hesitation in adopting HTP at a massive scale for most agricultural operations is the challenge of analyzing and interpreting the information. Machine learning (ML) and deep learning (DL) are valuable computer science techniques that could increase the utilization of HTP in agriculture, especially for monitoring plant stresses. Numerous studies have used ML approaches to process images and identify different types and levels of stresses, such as powdery mildew in cucumber (Cucumis sativus L.) (Lin et al. 2019), aflatoxin level in maize (Yao et al. 2013), leaf rust in wheat (Ashourloo et al. 2014), and salinity stress in chickpea (Cicer arietinum L.) (Atieno et al. 2017), among many other examples (Tables 4 and 6). These studies show how ML can be used for managing plant stresses.

Table 4.

Studies using various machine and deep learning tools for abiotic stress management in field crops during the last decade

| Plant | Plant trait/disease | Spectral range (nm) | Domain (field/lab) | Sensing modality | Data analysis | References |
|---|---|---|---|---|---|---|
| Barley | Drought | 400–900 | Greenhouse | Hyperspectral imaging | Simplex volume maximization | Römer et al. (2012) |
| Barley | Drought | 430–890 | Field | Hyperspectral imaging | Support vector machine | Behmann et al. (2014) |
| Barley | Drought | 400–1000 | Field | Hyperspectral imaging | Dirichlet aggregation regression | Kersting et al. (2012) |
| Chickpea | Salinity | 380–760 | Greenhouse | RGB imaging | PLS and correlation analysis | Atieno et al. (2017) |
| Chilean strawberry (Fragaria chiloensis) | Salt stress | 350–2500 | Greenhouse | Spectral reflectance imaging | ANOVA, SRI linear regression analysis, multilinear regression analysis | Garriga et al. (2014) |
| Citrus | Chlorophyll fluorescence, water stress | 400–885 | Field | Non-imaging | Regression | Zarco-Tejada et al. (2012) |
| Cotton | Water stress | 490–900 and 7500–13,500 | Field | Thermal imaging | Mapping and correlation | Bian et al. (2019) |
| Maize | Water stress | 475–840 | Field | Non-imaging | Correlations among indices | Zhang et al. (2019c) |
| Maize | Nitrogen, oxygen, and ash | 400–2500 | Field | Non-imaging | ANOVA and regression | Cabrera-Bosquet et al. (2011) |
| Maize | Weeds | 380–760 | Field | RGB imaging | Image processing and color segmentation | Burgos-Artizzu et al. (2011) |
| Maize | Weeds | 380–760 | Field | RGB imaging | Support vector machine for separating plants based on spectral components | Guerrero et al. (2012) |
| Oilseed rape | Proteins | 500–900 | Laboratory | Near-infrared and hyperspectral imaging | PLS | Zhang et al. (2015) |
| Pepper | Nitrogen | 380–1030 | Laboratory | Hyperspectral imaging | PLSR | Yu et al. (2014) |
| Rice | Nitrogen | 400–1000 | Field | Hyperspectral imaging | PLSR | Onoyama et al. (2013) |
| Rice | Salinity | 380–760 | Field | RGB imaging | Smoothing spline curves and gene mapping | Al-Tamimi et al. (2016) |
| Rice | Salt stress | 400–500 | Greenhouse | Fluorescence imaging | Hierarchical clustering, Pearson correlation analysis, non-linear mixed model | Campbell et al. (2015) |
| Soybean | Iron deficiency chlorosis | 380–760 | Field | RGB imaging | Decision trees, random forests, KNN, LDA, and QDA | Naik et al. (2017) |
| Soybean | Drought | 400–780 | Greenhouse | Hyperspectral fluorescence imaging | PLSR | Mo et al. (2015) |
| Spinach | Quality | 400–1000 | Laboratory | Hyperspectral imaging | PLS-DA | Diezma et al. (2013) |
| Spinach | Drought | 380–760 and 8000–14,000 | Field | Visible and thermal imaging | Support vector machine and Gaussian processes classifier | Raza et al. (2014) |
| Spring wheat | Water stress | 350–2500 | Field | Non-imaging | Vegetation indices and regression | Wang et al. (2015) |
| Sunflower | Weeds | 450–780 | Field | Visible light and multispectral imaging | Classification | Peña et al. (2015) |
| Vineyard | Water stress variability | 530–800 | Field | Thermal and multispectral imaging | Regression | Baluja et al. (2012) |
| Wheat | Water stress | 841–1652 | Landsat data | Multispectral imaging | Indices and regression | Dangwal et al. (2016) |
| Wheat | Nitrogen | 400–1000 | Both | Hyperspectral imaging | PLSR | Vigneau et al. (2011) |
| Wheat | Nitrogen, phosphorus, potassium, sulfur | 350–2500 | Field | Hyperspectral imaging | Regression | Mahajan et al. (2014) |
| Wheat | Ozone | 300–1100 | Field | Non-imaging | Correlation analysis | Chi et al. (2016) |

ML offers an alternative way to extract valuable information and draw conclusions that were previously difficult to reach because patterns in the datasets were hard to discover (Sandhu et al. 2020, 2021b). ML is an interdisciplinary approach to data analysis that uses probability, statistics, classification, regression, decision theory, data visualization, and neural networks to relate the extracted information to the observed phenotype (Samuel 1959). ML provides a significant advantage to plant breeders, pathologists, physiologists, and agronomists because multiple extracted parameters can be analyzed together, instead of the single feature at a time on which traditional methods focused. Another important advantage of ML is that it directly links the variables extracted from HTP data to plant stresses (Zhang et al. 2019c), biomass accumulation (Busemeyer et al. 2013b), grain yield (Crain et al. 2018), and soil characteristics. ML's greatest success lies in inferring trends from the data and generalizing the results to new data by training a model. ML has recently been adopted in bioinformatics (Min et al. 2017), cell biology, epigenetics (Samantara et al. 2021), plant breeding (Sandhu et al. 2021a), pathology (Ashourloo et al. 2014), computer vision, image processing (Rousseau et al. 2013), voice recognition, and disease classification (Fuentes et al. 2017). The main driving forces behind applying these techniques in agriculture are their use by commercial companies and the falling cost of sensors and imaging platforms (Araus and Cairns 2014).

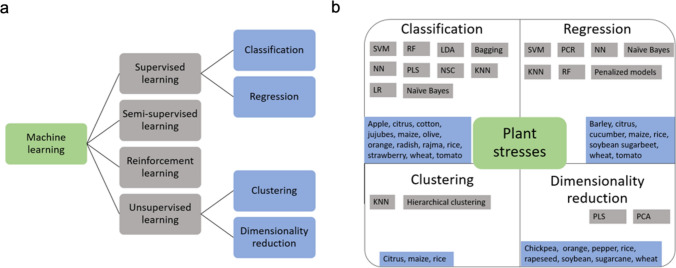

ML involves learning patterns from a dataset using computerized models to draw reliable conclusions without being explicitly programmed (Sandhu et al. 2022a, 2022b). ML uses its experience to identify the underlying structure, patterns, similarities, or dissimilarities in the provided dataset for classification and prediction problems (LeCun et al. 2015). Typically, building an ML model involves a calibration process in which the model is trained on a large subset of the data called the training set. The remaining data, on which the model's performance is validated, are called the testing set. The accuracy and precision of the calibrated model on the testing set determine its suitability for future applications. Generally, two approaches are used during model training, namely supervised and unsupervised machine learning. Supervised ML models require labeled data during training; for example, to differentiate wheat and rice images, each training image is labeled with its crop. In contrast, unsupervised models do not use labels during training; the model attempts to differentiate the two crops on its own by learning similarities and dissimilarities among the images. Numerous studies have used ML for managing biotic and abiotic stresses in HTP, with methods such as support vector machines, discriminant analysis (including linear discriminant analysis), k-means and other clustering methods, neural networks, and dimensionality reduction (Tables 4 and 6). All of these models help to identify, classify, quantify, and predict different phenotyping components in plants. An overview of these ML models is provided in Fig. 1 and the sections below.
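The training/testing split and the supervised versus unsupervised distinction can be sketched with scikit-learn (assumed to be installed). The two "crops" below are synthetic two-feature point clouds standing in for image-derived features, and the decision tree and K-means models are illustrative choices, not methods prescribed by the studies above.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

rng = np.random.default_rng(0)
# Hypothetical image features (e.g., mean greenness, canopy height) for two crops.
wheat = rng.normal(loc=[0.3, 0.8], scale=0.05, size=(100, 2))
rice = rng.normal(loc=[0.6, 1.1], scale=0.05, size=(100, 2))
X = np.vstack([wheat, rice])
y = np.array([0] * 100 + [1] * 100)  # labels: 0 = wheat, 1 = rice

# Supervised: calibrate on the training set, validate on the held-out testing set.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)
clf = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)
test_accuracy = clf.score(X_test, y_test)

# Unsupervised: K-means never sees y; it groups samples by similarity alone.
clusters = KMeans(n_clusters=2, n_init=10, random_state=0).fit_predict(X)

print(f"supervised test accuracy: {test_accuracy:.2f}")
```

Cluster numbers from K-means are arbitrary, so comparing them with the true crop labels requires allowing for a swap of the two cluster IDs.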

Fig. 1.

Classification of the machine and deep learning models most commonly used in high throughput phenotyping (a, b)

Supervised Learning

Supervised learning learns from labeled data, in which each input is paired with a known output. Its main goal is to learn a mapping function between the input and output variables so that predictions about the output can be made for new input data. Training a supervised learning model means estimating its parameters by minimizing a loss function on the training data. Supervised learning can be further classified into classification and regression problems (Fig. 1). In a classification problem, the output variable is categorical, and the model assigns each observation to one of the categories; for example, plants can be grouped by disease severity rating. Here, we review studies that used classification algorithms for plant stress detection. In regression problems, by contrast, the output consists of real values, and a model trained on the training set predicts these values for new data. For example, a plant's grain yield can be predicted by a model trained on the previous year's dataset. Various supervised machine learning models that have been used for plant stress phenotyping are described below.
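What "minimizing a loss function on the training data" means can be shown for the simplest regression case. The sketch below assumes a linear model, a mean squared error (MSE) loss, and plain batch gradient descent; `fit_linear_gd` is a hypothetical helper, and the yield-like data are synthetic.

```python
import numpy as np


def fit_linear_gd(X, y, lr=0.1, n_iter=2000):
    """Fit y ~ X @ w + b by batch gradient descent on the mean squared error loss."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    w = np.zeros(p)
    b = 0.0
    losses = []
    for _ in range(n_iter):
        residual = X @ w + b - y              # prediction error on the training data
        losses.append(np.mean(residual ** 2))  # MSE loss being minimized
        grad_w = 2.0 / n * (X.T @ residual)    # gradient of the MSE w.r.t. the weights
        grad_b = 2.0 / n * residual.sum()      # gradient of the MSE w.r.t. the bias
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, losses


rng = np.random.default_rng(1)
X = rng.uniform(0, 1, size=(200, 2))  # e.g., two scaled vegetation indices (synthetic)
y = 2.0 + 3.0 * X[:, 0] - 1.0 * X[:, 1] + rng.normal(0, 0.05, size=200)  # synthetic "yield"
w, b, losses = fit_linear_gd(X, y)
print(w, b, losses[0], losses[-1])
```

The loss drops from its initial value to roughly the noise variance as the parameters approach the values used to generate the data; classification models follow the same pattern with a different loss (e.g., cross-entropy).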

Linear Discriminant Analysis

Linear discriminant analysis (LDA) uses a linear combination of features to categorize the output into two or more classes. LDA uses continuous independent variables to predict a categorical dependent variable (Kim et al. 2011). Kim et al. (2011) applied discriminant analysis to classify 14 different Arabidopsis seeds into two groups using direct analysis in real time (DART) mass spectrometry. LDA and support vector machine (SVM) were used for the early detection of almond (Prunus dulcis) red leaf blotch from high-resolution hyperspectral and thermal imagery, and they helped differentiate trees without symptoms from those with high infestation (López-López et al. 2016). In another study, classification algorithms such as LDA, quadratic discriminant analysis (QDA), k-nearest neighbor (KNN), and soft independent modelling of class analogies (SIMCA) were used to detect Huanglongbing in citrus orchards from spectra acquired through visible-near infrared spectroscopy. The classification accuracies of QDA and SIMCA were 95% and 92%, respectively (Sankaran et al. 2011).
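LDA and QDA differ only in whether the classes share one covariance matrix (linear boundary) or each has its own (quadratic boundary). The sketch below compares the two with scikit-learn (assumed installed) on synthetic three-band reflectance features for hypothetical healthy and infected trees; it does not reproduce any cited study.

```python
import numpy as np
from sklearn.discriminant_analysis import (
    LinearDiscriminantAnalysis,
    QuadraticDiscriminantAnalysis,
)
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(42)
n = 150
# Synthetic reflectance in three hypothetical bands: healthy (0) vs. infected (1).
healthy = rng.normal([0.45, 0.08, 0.30], 0.03, size=(n, 3))
infected = rng.normal([0.35, 0.12, 0.28], 0.03, size=(n, 3))
X = np.vstack([healthy, infected])
y = np.repeat([0, 1], n)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

lda = LinearDiscriminantAnalysis().fit(X_train, y_train)     # shared covariance
qda = QuadraticDiscriminantAnalysis().fit(X_train, y_train)  # class-specific covariances
lda_acc = lda.score(X_test, y_test)
qda_acc = qda.score(X_test, y_test)

# LDA also acts as supervised dimensionality reduction: two classes -> one discriminant axis.
projected = lda.transform(X_test)
print(f"LDA accuracy {lda_acc:.2f}, QDA accuracy {qda_acc:.2f}, projected shape {projected.shape}")
```

When class covariances really differ, QDA can win (as with the Huanglongbing spectra above); with few samples per class, LDA's pooled covariance is usually the more stable estimate.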

Support Vector Machine

The support vector machine (SVM) finds the hyperplane that lies at the maximum distance (margin) from the nearest examples of each class in the training dataset; these nearest examples are called support vectors. This hyperplane clearly separates the classes while maximizing the margin between them (Eichner et al. 2011). Eichner et al. (2011) used SVMs to detect intron retention and exon skipping in tiling arrays. SVM was also used to segment images for analyzing the attack of the human pathogen Salmonella typhimurium on Arabidopsis (Schikora et al. 2012). LDA and SVM classification methods were used to detect verticillium wilt in olive (Olea europaea) from thermal and hyperspectral images; SVM performed better, with a classification accuracy of 79.2% compared with 59.0% for LDA (Calderón et al. 2015). SVM was also used to identify canopy regions responding to soil water deficit in spinach (Spinacia oleracea) from infrared thermal images, with an average accuracy of 96.3% (Raza et al. 2014). In another study, SVM was used to detect drought stress in barley (Hordeum vulgare) plants from a series of hyperspectral images. The final dataset contained 211,500 test and 211,500 training instances. Five supervised prediction methods for deriving local stress levels were evaluated: one-vs.-one SVM, one-vs.-all SVM, support vector regression (SVR), support vector ordinal regression (SVORIM), and linear ordinal SVM classification. The highest accuracy was achieved by the one-vs.-one SVM (83%) (Behmann et al. 2014). Furthermore, SVM was used to identify weeds with the green spectral components masked and unmasked; the masked plants were detected by identifying support vectors (Guerrero et al. 2012).
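A one-vs.-one multiclass SVM, the best-performing variant in the barley drought example, trains one binary maximum-margin classifier per pair of stress levels. The sketch below uses scikit-learn's `SVC` (assumed installed), which handles multiclass problems this way internally; the four stress levels and their four-band spectra are synthetic, and the RBF kernel and C = 1.0 are illustrative settings.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

rng = np.random.default_rng(7)
# Synthetic per-pixel spectra in 4 hypothetical bands for 4 ordered stress levels (0 = none, 3 = severe).
centers = np.array([
    [0.50, 0.30, 0.08, 0.05],
    [0.44, 0.28, 0.10, 0.06],
    [0.38, 0.26, 0.12, 0.08],
    [0.32, 0.24, 0.14, 0.10],
])
X = np.vstack([rng.normal(c, 0.015, size=(200, 4)) for c in centers])
y = np.repeat(np.arange(4), 200)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# SVC fits one binary classifier per pair of classes (one-vs-one);
# decision_function_shape="ovo" exposes those pairwise scores directly.
model = make_pipeline(StandardScaler(), SVC(kernel="rbf", C=1.0, decision_function_shape="ovo"))
model.fit(X_train, y_train)
accuracy = model.score(X_test, y_test)
pairwise_scores = model.decision_function(X_test[:1])  # 4 classes -> 6 pairwise classifiers
print(f"test accuracy: {accuracy:.3f}, pairwise score shape: {pairwise_scores.shape}")
```

Scaling the bands before fitting matters for SVMs, because the margin is measured in the feature space and bands with larger numeric ranges would otherwise dominate it.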

Logistic Regression

Logistic regression uses a logistic function to classify binary variables. It models the log-odds that the dependent variable belongs to one of two classes as a linear combination of the predictors, and the logistic function converts these log-odds into a probability; each exponentiated coefficient is the odds ratio associated with a one-unit change in its predictor. Outcomes with more than two classes can be handled with multinomial logistic regression. Hyperspectral imaging was used for the early detection of apple scab to guide pesticide application and crop management strategies in the orchard. In this study, logistic regression efficiently separated infected from non-infected plants using hyperspectral bands selected with classification algorithms (Delalieux et al. 2007).
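The link between the logistic function, class probabilities, and odds ratios can be made concrete. The sketch below simulates binary infection status from two hypothetical standardized band predictors and fits scikit-learn's `LogisticRegression` (assumed installed, default L2-penalized fit); the data and coefficients are synthetic, not values from Delalieux et al. (2007).

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

rng = np.random.default_rng(3)
n = 400
# Hypothetical standardized reflectance in two bands.
X = rng.normal(size=(n, 2))
true_logit = -0.5 + 2.0 * X[:, 0] - 1.0 * X[:, 1]   # linear predictor on the log-odds scale
p_infected = 1.0 / (1.0 + np.exp(-true_logit))       # logistic function -> probability
y = rng.binomial(1, p_infected)                      # 1 = infected, 0 = healthy

model = LogisticRegression().fit(X, y)
# exp(coefficient) = multiplicative change in the odds of infection per unit increase in a predictor.
odds_ratios = np.exp(model.coef_[0])
prob_first = model.predict_proba(X[:1])[0, 1]        # predicted probability of infection
print("odds ratios:", odds_ratios.round(2))
```

An odds ratio above 1 means the band raises the odds of infection and one below 1 lowers it; for more than two classes, the same estimator fits a multinomial (softmax) model with its default solver in recent scikit-learn versions.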

Random Forest

Random forests (RF) are an ensemble learning method that combines many decision trees. Each tree classifies individuals by recursively splitting them into separate nodes, and each is typically grown on a bootstrap sample of the data with a random subset of variables considered at each split; the forest's prediction is the majority vote (classification) or the average (regression) of the trees. Random forests have several advantages over other tree-based classifiers because of their ability to handle noise, control model overfitting, and accommodate many variables. Random forest was used for feature selection from spectroradiometer data to detect phaeosphaeria leaf spot in maize (Adam et al. 2017).
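Feature (band) selection with a random forest is often done by ranking variables by importance. The sketch below, assuming scikit-learn, ranks 20 synthetic spectral bands of which only two (indices 5 and 12, chosen arbitrarily) carry the disease signal; it uses impurity-based importances and the out-of-bag (OOB) score, which may differ from the exact procedure used by Adam et al. (2017).

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(11)
n_samples, n_bands = 300, 20
# Synthetic spectra: only bands 5 and 12 (hypothetical) are related to disease status.
X = rng.normal(size=(n_samples, n_bands))
y = (X[:, 5] + 0.8 * X[:, 12] + rng.normal(scale=0.3, size=n_samples) > 0).astype(int)

rf = RandomForestClassifier(n_estimators=300, oob_score=True, random_state=0)
rf.fit(X, y)

ranking = np.argsort(rf.feature_importances_)[::-1]  # bands ordered by impurity-based importance
top_two = set(ranking[:2].tolist())
oob_accuracy = rf.oob_score_  # accuracy on samples left out of each tree's bootstrap sample
print("top bands:", ranking[:5], "OOB accuracy:", round(oob_accuracy, 3))
```

Because each tree sees only a bootstrap sample, the OOB score gives a built-in validation estimate without a separate testing set; impurity-based importances can favor high-cardinality features, so permutation importance is a common cross-check.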

Linear Regression

Linear regression is used in most phenomics studies because of its simplicity and ease of interpretation. The focus is on how much of the variation in the target is explained by a particular feature in the dataset. In maize, regression models between the crop water stress index (CWSI) and vegetation indices (VIs) derived from multispectral images were used to measure and successfully map water stress (Zhang et al. 2019c). In another study, Pearson correlations and linear regressions were calculated between thermal indices and leaf stomatal conductance (gs) and stem water potential (ΨSTEM) to measure water status variability in a vineyard using multispectral and thermal imagery (Baluja et al. 2012). Also, linear regression models based on correlation coefficients between nitrogen absorption and different radiometric variables were developed to determine nitrogen uptake in rice paddies using a two-band imaging system, namely the visible red band (650–670 nm) and the near-infrared band (820–900 nm). The equation developed for nitrogen absorption per unit area from the two-band reflectance values showed a high correlation (r = 0.96) (Shibayama et al. 2009). In addition, the correlation and linear relationship between projected shoot area and shoot fresh weight were calculated to study salinity tolerance in rice with high throughput phenotyping technologies (Hairmansis et al. 2014), and a linear mixed model fitted by restricted maximum likelihood (REML) was used to detect salinity tolerance in barley using infrared thermography (Sirault et al. 2009).
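A simple regression of a stress index on a vegetation index, as in the CWSI–VI example, reduces to an ordinary least squares line plus a goodness-of-fit measure. The sketch below assumes NumPy; `simple_linear_regression` is a hypothetical helper, and the plot-level NDVI and CWSI values are synthetic (their negative relationship is assumed for illustration only).

```python
import numpy as np


def simple_linear_regression(x, y):
    """OLS fit y = a + b*x; returns intercept, slope, R^2 and Pearson r."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    slope, intercept = np.polyfit(x, y, deg=1)  # polyfit returns the highest degree first
    fitted = intercept + slope * x
    ss_res = np.sum((y - fitted) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    r_squared = 1.0 - ss_res / ss_tot            # share of variance explained by x
    r = np.corrcoef(x, y)[0, 1]
    return intercept, slope, r_squared, r


rng = np.random.default_rng(6)
ndvi = rng.uniform(0.3, 0.9, size=50)                     # synthetic plot-level NDVI
cwsi = 1.1 - 1.0 * ndvi + rng.normal(0, 0.03, size=50)    # synthetic CWSI
intercept, slope, r_squared, r = simple_linear_regression(ndvi, cwsi)
print(f"CWSI = {intercept:.2f} + {slope:.2f} * NDVI, R^2 = {r_squared:.3f}, r = {r:.3f}")
```

For a single predictor with an intercept, R² equals the square of Pearson's r, which is why studies often report either one.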

Multiple Linear Regression

Multiple linear regression (MLR), also known as multiple regression, predicts an outcome from several explanatory variables by modeling the outcome as a linear function of the predictors. Hyperspectral images were used to measure powdery mildew, with multivariate linear regression (MLR), partial least squares regression (PLSR), and Fisher linear discriminant analysis (FLDA) as data analysis techniques; the PLSR model performed better than the MLR model, whereas FLDA had the highest accuracy (Zhang et al. 2012a). Bacterial spot in tomato (Lycopersicon esculentum) was measured using spectral images, and the data were analyzed using partial least squares (PLS) regression, analysis of the correlation coefficient spectrum, and stepwise multiple linear regression (SMLR). Various predictive models were developed for predicting bacterial spot (Jones et al. 2010).
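MLR can be fitted by least squares on a design matrix with an intercept column. The sketch below assumes NumPy; `fit_mlr` and `predict_mlr` are hypothetical helpers, and the three "band reflectances" and disease severity scores are synthetic. Stepwise MLR, mentioned above, would add or remove predictors around this same fit.

```python
import numpy as np


def fit_mlr(X, y):
    """Multiple linear regression by least squares; returns [intercept, b1, ..., bp]."""
    X = np.asarray(X, dtype=float)
    design = np.column_stack([np.ones(len(X)), X])  # column of ones estimates the intercept
    coef, *_ = np.linalg.lstsq(design, np.asarray(y, dtype=float), rcond=None)
    return coef


def predict_mlr(coef, X):
    """Predicted outcome for new rows of predictors."""
    return coef[0] + np.asarray(X, dtype=float) @ coef[1:]


rng = np.random.default_rng(4)
X = rng.uniform(0, 1, size=(100, 3))  # three band reflectances (synthetic)
severity = 5.0 + 40.0 * X[:, 0] - 25.0 * X[:, 1] + 10.0 * X[:, 2] + rng.normal(0, 0.5, 100)
coef = fit_mlr(X, severity)
pred = predict_mlr(coef, X)
print("estimated coefficients:", coef.round(2))
```

Using `lstsq` rather than explicitly inverting XᵀX is numerically safer, although strongly collinear bands still make individual coefficients unstable, which is the situation PLSR is designed for.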

Partial Least Square Regression

Partial least squares regression (PLSR) is a very useful tool for modelling and multivariate analysis because it can handle a large number of variables and collinearity among them (Yu et al. 2014). The best model is usually selected as the one with a high correlation coefficient (r) and a low root mean square error (RMSE) (Zhang et al. 2015). A PLSR model relating the reflectance of rice (Oryza sativa) plants to their nitrogen content was developed to estimate nitrogen content from ground-based hyperspectral imaging. The best model for estimating rice nitrogen content combined the reflectance and temperature data and had a low RMSE (0.95 g/m²) and relative error (RE, 13%) (Onoyama et al. 2013). In another study, nitrogen content in citrus leaves was measured by hyperspectral imaging at 719 nm and analyzed using PLS; the stepwise multiple linear regression (SMLR) and MLR calibration models performed better, with 70% accuracy (Min et al. 2008). Also, the PLSR model successfully predicted drought stress in soybean plants, with accuracies of 0.973 and 0.969 for two cultivars in the 8-day and 6-day treatment groups, respectively (Mo et al. 2015).

Unsupervised Learning

Unsupervised learning finds the structure present in an unlabeled dataset, where output variables are not provided. Its main focus is to identify the underlying structure in the data to gain more insight into it. Unsupervised learning can be grouped into clustering of the dataset and extraction of latent factors using dimensionality reduction approaches (Fig. 1a). Clustering finds similarities in the dataset and groups similar individuals together, while dissimilar individuals are placed in separate clusters. Clustering approaches include K-means clustering and hierarchical clustering.
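Hierarchical clustering builds a tree of successive merges and is then cut into a chosen number of groups. The sketch below assumes SciPy and uses synthetic plot-level canopy temperature and NDVI values for three hidden groups; Ward linkage, standardization, and the three-cluster cut are illustrative choices.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage

rng = np.random.default_rng(2)
# Hypothetical plot-level traits (canopy temperature in deg C, NDVI) for three unlabeled groups.
X = np.vstack([
    rng.normal([25.0, 0.80], [0.5, 0.02], size=(20, 2)),
    rng.normal([29.0, 0.65], [0.5, 0.02], size=(20, 2)),
    rng.normal([33.0, 0.45], [0.5, 0.02], size=(20, 2)),
])
# Standardize so both traits contribute comparably to Euclidean distances.
standardized = (X - X.mean(axis=0)) / X.std(axis=0)

tree = linkage(standardized, method="ward")          # agglomerative merge history (dendrogram)
labels = fcluster(tree, t=3, criterion="maxclust")   # cut the tree into at most 3 clusters
print(labels)
```

Unlike K-means, the full merge tree is kept, so the same fit can be cut at different heights to explore coarser or finer groupings.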

K-means Clustering

K-means clustering is an unsupervised method that partitions observations into K clusters, where K is specified before clustering, by repeatedly assigning each observation to the cluster with the nearest centroid and recomputing the centroids. It is distinct from K-nearest neighbor (K-NN), a nonparametric supervised classification method that assigns an object to the class most common among its K nearest neighbors (a majority vote). Pérez et al. (2000) used K-NN and a Bayes rule classifier for weed detection in cereal crops, and K-NN gave better results; however, the K-NN decision rule, which is widely used for pattern recognition, has a high storage demand because all training samples must be retained. In another study, K-means clustering was used to extract plant surface values in the RGB channels to study salinity tolerance in Arabidopsis thaliana (Awlia et al. 2016).
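Separating plant pixels from background with K-means, as in the RGB segmentation example, can be sketched directly with Lloyd's algorithm. The sketch assumes NumPy; `kmeans` and `_assign` are hypothetical helpers, the 10 × 10 image is synthetic, and picking the cluster with the largest green-minus-red centroid as "plant" is an assumed rule, not the procedure of Awlia et al. (2016).

```python
import numpy as np


def _assign(points, centroids):
    """Index of the nearest centroid (squared Euclidean distance) for every point."""
    d2 = ((points[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)


def kmeans(points, k, n_iter=100, seed=0):
    """Lloyd's algorithm: alternate nearest-centroid assignment and centroid update."""
    points = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    centroids = points[rng.choice(len(points), size=k, replace=False)]
    for _ in range(n_iter):
        labels = _assign(points, centroids)
        # Move each centroid to the mean of its points; keep it in place if its cluster is empty.
        new_centroids = np.array([
            points[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return _assign(points, centroids), centroids


# Synthetic 10 x 10 RGB image: left half green plant tissue, right half brown soil.
rng = np.random.default_rng(0)
image = np.empty((10, 10, 3))
image[:, :5] = [60, 140, 50]
image[:, 5:] = [130, 100, 80]
image += rng.normal(0, 5, size=image.shape)

labels, centroids = kmeans(image.reshape(-1, 3), k=2)
plant_cluster = np.argmax(centroids[:, 1] - centroids[:, 0])  # greenest centroid (G minus R)
plant_mask = (labels == plant_cluster).reshape(10, 10)
print(plant_mask.astype(int))
```

K-means results depend on the random initial centroids, so library implementations usually run several initializations and keep the one with the lowest within-cluster variance.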

Dimensionality Reduction

Dimensionality reduction approaches try to explain the whole dataset with a small number of variables by extracting useful or latent variables. The most common dimensionality reduction technique is principal component analysis (PCA), which reduces the data's dimensionality by extracting mutually uncorrelated (orthogonal) variables while minimizing information loss. PCA aims to explain the majority of the variance in the dataset using a few principal components. Stepwise discriminant analysis was performed to identify insect infestation in jujubes (Ziziphus jujuba Mill.) using visible and NIR spectroscopy, with PCA used to analyze the spectra (Wang et al. 2011). In another study, aflatoxin B1 in maize (Zea mays) was detected by hyperspectral imaging; PCA was used to reduce the dimensionality of the data, and stepwise factorial discriminant analysis was carried out on the latent variables provided by the PCA (Wang et al. 2014). PCA was also used to predict toxigenic fungi on maize with hyperspectral imaging (400–1000 nm), and discriminant analysis was used to create a model for identifying fungal growth (Del Fiore et al. 2010). Furthermore, Fusarium infestation in wheat (Triticum aestivum) was identified with hyperspectral imaging, and diseased and healthy plants were distinguished using PCA (Bauriegel et al. 2011).
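PCA can be computed from the singular value decomposition of the mean-centered data matrix, which directly yields the uncorrelated component scores and the share of variance each component explains. The sketch below assumes NumPy; `pca` is a hypothetical helper, and the 100-band "hyperspectral" pixels are synthetic, generated from two hidden factors.

```python
import numpy as np


def pca(X, n_components):
    """PCA via SVD of the mean-centered data; returns scores, components, explained-variance ratio."""
    X = np.asarray(X, dtype=float)
    centered = X - X.mean(axis=0)
    U, S, Vt = np.linalg.svd(centered, full_matrices=False)
    explained_variance = S ** 2 / (len(X) - 1)
    ratio = explained_variance / explained_variance.sum()
    scores = centered @ Vt[:n_components].T  # projections onto the leading components
    return scores, Vt[:n_components], ratio[:n_components]


rng = np.random.default_rng(9)
latent = rng.normal(size=(200, 2))    # two hidden factors per pixel (synthetic)
loadings = rng.normal(size=(2, 100))  # how each factor appears across 100 bands
spectra = latent @ loadings + 0.1 * rng.normal(size=(200, 100))

scores, components, ratio = pca(spectra, n_components=2)
print("explained variance ratio:", ratio.round(3), "total:", round(float(ratio.sum()), 3))
```

Here two components summarize almost all of the variance in 100 correlated bands, and their scores can then be passed to a classifier such as discriminant analysis, as in the aflatoxin and fungal studies above.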

Deep Learning

In the ML section, we described various tools that could potentially be used to extract valuable information from plants. However, deep learning (DL) tools are being rapidly adopted because they can analyze large numbers of images easily and with high accuracy. DL is a branch of ML that uses deep networks of neurons arranged in layers to process information (LeCun et al. 2015). DL has achieved remarkable results in other disciplines such as fraud detection, automated financial management, autonomous vehicles, consumer analytics, and automated medical diagnostics (Min et al. 2017). Unlike traditional ML models, DL does not require prior feature selection, which is often the most time-consuming and error-prone step (Sandhu et al. 2021b). DL models automatically learn patterns from large datasets using non-linear activation functions to draw conclusions such as classifications or predictions (Sandhu et al. 2021d).

Important DL models used in phenomics include, but are not limited to, the multilayer perceptron (MLP), generative adversarial networks (GAN), convolutional neural networks (CNN), and recurrent neural networks (RNN). CNNs have been observed to be superior for image analysis, and several CNN image recognition architectures have been used for plants, namely ResNet, ZFNet, VGGNet, GoogLeNet, and AlexNet (Fuentes et al. 2017). In general, a deep learning model consists of multiple neurons connected across layers. The concept of DL was proposed in the 1950s (Samuel 1959) and is designed to work through a particular task using a logical structure, as a human would. DL models have been adopted rapidly because of the development of efficient algorithms for tuning complex hyperparameters and training the models (Sandhu et al. 2020). Some common DL terms are defined in Table 5.

Table 5.

Terms used in deep learning models for the model’s optimization and prediction

| Term | Definition |
|---|---|
| Activation function | The function that transforms a neuron's weighted input into the neuron's output |
| Batch | A subset of the training data processed together before the model weights are updated; each epoch consists of one or more batches |
| Dropout | Random removal of a fixed proportion of neurons during each training step to control overfitting |
| Early stopping | A strategy to control overfitting by stopping training once performance on a validation set stops improving |
| Epoch | One complete pass of the training set through the model, usually divided into batches |
| Neuron | The primary unit of a DL model, which learns from the information it receives and passes its output to the next layer through an activation function |
| Regularization | A penalty on the magnitude of the neurons' weights, added to the loss during model training to control overfitting |
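Several Table 5 terms (epoch, batch, regularization, early stopping, activation function) appear together in an ordinary training loop. The sketch below assumes NumPy and, to keep the mechanics visible, trains a single sigmoid neuron (equivalent to logistic regression) rather than a deep network; dropout is omitted, and `train_neuron`, the learning rate, L2 strength, patience, and the synthetic data are all hypothetical choices.

```python
import numpy as np


def sigmoid(z):
    """Activation function mapping a weighted sum to a probability."""
    return 1.0 / (1.0 + np.exp(-z))


def train_neuron(X_tr, y_tr, X_val, y_val, lr=0.5, batch_size=32, max_epochs=200,
                 l2=1e-3, patience=10, seed=0):
    """Mini-batch gradient descent with an L2 penalty and early stopping on validation loss."""
    rng = np.random.default_rng(seed)
    w, b = np.zeros(X_tr.shape[1]), 0.0
    best = (np.inf, w.copy(), b, 0)  # (validation loss, weights, bias, epoch)
    epochs_without_improvement = 0
    for epoch in range(max_epochs):                       # epoch: one full pass over the training set
        order = rng.permutation(len(X_tr))
        for start in range(0, len(order), batch_size):    # batch: one weight update
            idx = order[start:start + batch_size]
            err = sigmoid(X_tr[idx] @ w + b) - y_tr[idx]
            # Cross-entropy gradient plus the gradient of the penalty (l2 / 2) * ||w||^2.
            w -= lr * (X_tr[idx].T @ err / len(idx) + l2 * w)
            b -= lr * err.mean()
        p_val = np.clip(sigmoid(X_val @ w + b), 1e-12, 1 - 1e-12)
        val_loss = -np.mean(y_val * np.log(p_val) + (1 - y_val) * np.log(1 - p_val))
        if val_loss < best[0] - 1e-6:
            best = (val_loss, w.copy(), b, epoch)
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:   # early stopping
                break
    return best[1], best[2], best[3], epoch + 1


rng = np.random.default_rng(12)
X = rng.normal(size=(500, 2))
y = (X[:, 0] + X[:, 1] + rng.normal(0, 0.3, 500) > 0).astype(float)
X_tr, y_tr, X_val, y_val = X[:400], y[:400], X[400:], y[400:]
w, b, best_epoch, epochs_run = train_neuron(X_tr, y_tr, X_val, y_val)
val_accuracy = np.mean((sigmoid(X_val @ w + b) > 0.5) == y_val)
print(f"best epoch {best_epoch}, epochs run {epochs_run}, validation accuracy {val_accuracy:.2f}")
```

The weights returned are those from the epoch with the lowest validation loss, which is how early stopping guards against continuing to fit noise in the training set.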

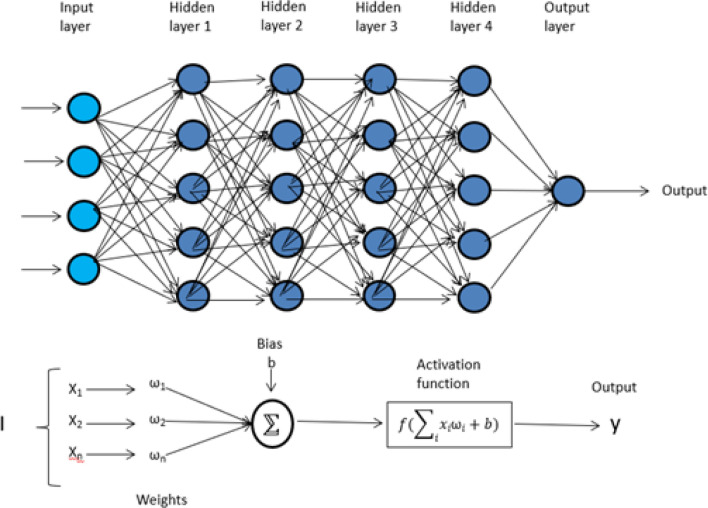

MLP is the most commonly used neural network in genomics. It consists of multiple fully connected layers, namely an input layer, one or more hidden layers, and an output layer, linked by a dense network of neurons. The input layer contains all of the input features. Each neuron in the first hidden layer learns a set of weights and a bias term during model training. The output of the first hidden layer acts as the input to the second hidden layer, and so on. The final layer, known as the output layer, combines the input from the last hidden layer into a single value. The output from the first hidden layer can be represented as:

$$Z_1 = \phi(b_0 + W_0 X)$$

where Z1 is the output of the first hidden layer, X is the vector of input features, b0 is the bias estimated along with the weights W0, and φ(x) is the activation function used in the model (Gulli and Pal 2017). The detailed working of the MLP is illustrated in Fig. 2.
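The layer-by-layer computation described for Z1 can be traced with a small forward pass. The sketch below assumes NumPy, a ReLU activation for the hidden layers, and a linear output layer; the 4-3-2-1 network and its hand-picked weights are hypothetical, chosen so the result can be checked by hand.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def mlp_forward(x, layers, activation=relu):
    """Forward pass through (W, b) layers; hidden layers use the activation, the output is linear."""
    h = np.asarray(x, dtype=float)
    for i, (W, b) in enumerate(layers):
        z = b + W @ h  # weighted sum plus bias, as in Z1 = phi(b0 + W0 X)
        h = z if i == len(layers) - 1 else activation(z)
    return h


# Hypothetical 4-3-2-1 network with hand-picked weights.
layers = [
    (np.array([[0.5, 0, 0, 0], [0, 0.5, 0, 0], [0, 0, 1, 1]]), np.array([0.0, -2.0, 0.0])),
    (np.array([[2.0, 1, 1], [-1, 1, 1]]), np.array([0.0, 0.0])),
    (np.array([[3.0, 4.0]]), np.array([0.5])),
]
y_hat = mlp_forward([1.0, 2.0, 0.0, -1.0], layers)
print(y_hat)  # [3.5]
```

Tracing it: the first hidden layer gives ReLU([0.5, −1, −1]) = [0.5, 0, 0], the second gives ReLU([1, −0.5]) = [1, 0], and the output is 0.5 + 3·1 = 3.5. Training would adjust these weights and biases to minimize a loss.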

Fig. 2.

Multilayer perceptron showing how the neural network works through its input, hidden, and output layers. The bottom half of the figure shows the weights associated with each neuron and the transformation by an activation function. Y represents the final output of the model, which is obtained after training the weights and tuning the hyperparameters

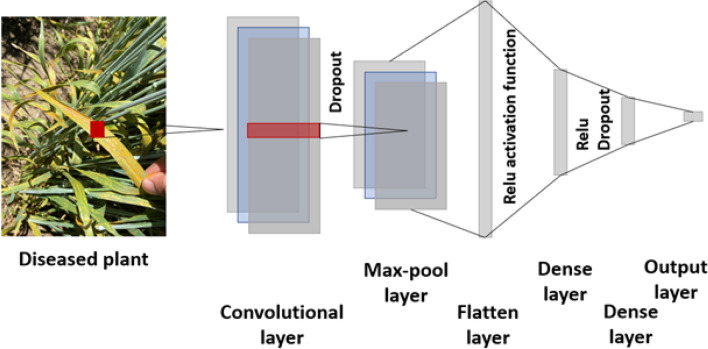

Convolutional neural networks (CNNs) are used when variables are distributed in one or two dimensions, and they are mostly used for plant image analysis. A CNN is a special case of DL that uses convolutional layers, in which each neuron is connected only to a local region of the input and shares its weights with the other neurons of the layer, rather than being fully connected. In each convolutional layer, a filter of predefined width is convolved over the inputs with a predefined stride (Pérez-Enciso and Zingaretti 2019). The results are then smoothed (down-sampled) in a pooling layer. The detailed layout and working of a CNN are shown in Fig. 3. CNN is the most commonly used classification model in plant studies, especially for classifying different disease types. The biggest advantage of CNNs is that they use the raw image as input with little or no prior pre-processing or manual feature extraction. CNNs have demonstrated superiority in plant detection and diagnosis, classification of fruits and flowers, and detection of disease severity in infected plants (Sandhu et al. 2020, 2021b).
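The convolution and pooling operations can be written out explicitly for a single-channel image. The sketch below assumes NumPy; `conv2d` and `max_pool` are hypothetical helpers (deep learning libraries provide optimized equivalents), and the 2 × 2 vertical-edge kernel is hand-picked rather than learned.

```python
import numpy as np


def conv2d(image, kernel, stride=1):
    """'Valid' 2-D cross-correlation (what CNN layers call convolution) with a given stride."""
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i * stride:i * stride + kh, j * stride:j * stride + kw]
            out[i, j] = np.sum(patch * kernel)  # the same kernel weights are reused at every position
    return out


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a whole window are dropped."""
    h, w = feature_map.shape[0] // size, feature_map.shape[1] // size
    trimmed = feature_map[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))


image = np.zeros((6, 6))
image[:, 3:] = 1.0                                     # vertical edge between columns 2 and 3
kernel = np.array([[-1.0, 1.0], [-1.0, 1.0]])          # hypothetical vertical-edge filter
feature_map = np.maximum(conv2d(image, kernel), 0.0)   # ReLU activation
pooled = max_pool(feature_map, size=2)
print(feature_map)
print(pooled)
```

The filter responds only where the edge is, and pooling keeps that response while shrinking the map; in a trained CNN the kernel values are learned from labeled images instead of being set by hand.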

Fig. 3.

A simple convolutional neural network design with convolutional, max-pooling, flatten, dense, and output layers. Each region of interest is processed separately in the CNN to predict the output

Ramcharan et al. (2019) showed that CNNs could be used in mobile-based apps to detect foliar disease symptoms in cassava (Manihot esculenta Crantz). They also found that model performance differed between images taken under real-world field conditions and existing images, demonstrating the importance of lighting and orientation for efficient model performance. Powdery mildew is a devastating disease of cucumber (Cucumis sativus) during the middle and final stages of growth, and quantitative assessment of the disease on cucumber leaves is especially important for plant breeders making selections. Lin et al. (2019) segmented diseased leaf images at the pixel level using a CNN-based segmentation model and achieved a pixel accuracy of up to 96%. Their CNN model outperformed existing segmentation models, namely random forest, K-means, and support vector machines. Similarly, deep convolutional neural networks were used to detect 10 different diseases in rice and differentiate them from healthy plants on a dataset of 500 images. Using ten-fold cross-validation, the deep CNN models achieved an accuracy of 95.48%, significantly higher than conventional machine learning models (Lu et al. 2017; Table 6).
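Ten-fold cross-validation, used to evaluate the rice disease models, rotates each tenth of the data through the role of testing set and averages the scores. The sketch below assumes scikit-learn; to keep it short, a K-NN classifier on synthetic five-feature data stands in for a CNN on images, so only the evaluation procedure (not the model) reflects the study.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

rng = np.random.default_rng(8)
# Synthetic features for healthy (0) and diseased (1) leaves; a CNN would learn such features itself.
X = np.vstack([rng.normal(0.0, 1.0, size=(250, 5)), rng.normal(1.5, 1.0, size=(250, 5))])
y = np.repeat([0, 1], 250)

# Ten folds with class proportions preserved; each fold serves once as the testing set.
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=0)
scores = cross_val_score(KNeighborsClassifier(n_neighbors=5), X, y, cv=cv, scoring="accuracy")
print(f"mean accuracy {scores.mean():.3f} (sd {scores.std():.3f}) over {len(scores)} folds")
```

Reporting the mean and spread across folds gives a more reliable estimate than a single train/test split, which matters for small image sets such as the 500-image rice dataset.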

Table 6.

Studies using various machine and deep learning tools for biotic stress management in field crops during the last decade

| Plant | Plant trait/disease | Pathogen | Spectral range (nm) | Domain (field/lab) | Sensing modality | Data analysis | References |

|---|---|---|---|---|---|---|---|

| Almond | Red leaf blotch | Polystigma amygdalinum | 400–790 | Field | RGB, hyperspectral and thermal imaging | ANOVA, LDA, SVM | López-López et al. (2016) |

| Apple | Scab | Venturia inaequalis | 400–800 | Field | RGB and 3D imaging | Image processing and segmentation | Chéné et al. (2012) |

| Arabidopsis | Salmonella bacteria | Salmonella typhimurium | 380–760 | Laboratory | RGB imaging | Support vector machine | Schikora et al. (2012) |

| Banana | Black Sigatoka and fusarium wilt | Mycosphaerella fijiensis | 380–760 | Field | RGB imaging | Deep learning and image processing | Sanga et al. (2020) |

| Banana | Black Sigatoka and speckle | Mycosphaerella fijiensis | 380–760 | Field | RGB imaging | Deep learning | Amara et al. (2017) |

| Banana | Black Sigatoka | Mycosphaerella fijiensis | 380–1024 | Laboratory | Hyperspectral imaging | Visual inspection | Ochoa et al. (2016) |

| Blackgram | Yellow mosaic disease | Yellow mosaic virus | 350–2500 | Field | Non-imaging | Multinomial logistic regression | Prabhakar et al. (2013a) |

| Barley | Powdery mildew, and rust | Blumeria graminis hordei, and Puccinia hordei | 400–2500 | Greenhouse | Hyperspectral imaging | Simplex volume maximization | Wahabzada et al. (2015) |

| Cassava | Cassava mosaic, cassava brown streak, and green might | Bemisia tabaci | 380–750 | Field | RGB imaging | Deep learning | Ramcharan et al. (2019) |

| Cassava | Brown leaf spot, cassava brown streak, and red mite | Bemisia tabaci | 380–750 | Field | RGB imaging | Deep learning | Ramcharan et al. (2017) |

| Chili Pepper | Aflatoxins | Aspergillus flavus, Aspergillus parasiticus | 400–720 | Laboratory | Hyperspectral imaging | Fisher discrimination power | Ataş et al. 2012) |

| Citrus | Huanglongbing | Candidatus spp | 530–690 | Laboratory | Fluorescence imaging | Support vector machine and artificial neural network | Wetterich et al. (2017) |

| Citrus | Huanglongbing | Candidatus spp | 530–1000 | Field | NIR spectroscopy | Support vector machine and vegetation indices | Garcia-Ruiz et al. (2013) |

| Citrus | Huanglongbing | Candidatus spp | 350–2500 | Field | Infra-red spectroscopy | Linear and quadratic discriminant analysis, k nearest neighbor and support vector machine | Sankaran et al. (2011) |

| Cotton | Mealybug | Phenacoccus solenopsis | 350–2500 | Field | Spectro-radiometry | Linear discriminant analysis, and multivariate logistic regression | Prabhakar et al. (2013b) |

| Cucumber | Cucumber mosaic virus (CMV), Cucumber green mottle mosaic virus (CGMMV), Powdery mildew | Sphaerotheca fuliginea (Powdery mildew) | 400–1000 nm | Greenhouse | Hyperspectral and fluorescence Imaging | Linear regression | Berdugo et al. (2014) |

| Cucumber | Powdery mildew | Podosphaera xanthii | 400–700 | Laboratory | RGB imaging | CNN | Lin et al. (2019) |

| Jujubes | Internal insect infestation | Ancylis sativa | 400–2000 | Laboratory | NIR spectroscopy | Classification | Wang et al. (2011) |

| Maize | Northern leaf blight | Setosphaeria turcica | 380–760 | Field | RGB Imaging | Deep learning | DeChant et al. (2017) |

| Maize | Phaeosphaeria Leaf Spot | Phaeosphaeria maydis | 350–2500 | Field | Hyperspectral Imaging | Random forest classifier | Adam et al. (2017) |

| Maize | Aflatoxin | Aspergillus flavus, Aspergillus parasiticus | 1100–1700 | Lab | Hyperspectral Imaging | Deep learning | Kandpal et al. (2015) |

| Maize | Aflatoxin | Aspergillus flavus | 1000–2500 | Laboratory | Hyperspectral Imaging | PCA and stepwise factorial discriminant analysis | Wang et al. (2014) |

| Maize | Aflatoxin | Aspergillus flavus | 400–700 | Field and laboratory | Hyperspectral Imaging | Discriminant analysis | Yao et al. (2013) |

| Maize | |||||||

| Oilseed rape | Alternaria spots | Alternaria alternata | 400–2500 | Laboratory | Hyperspectral and thermal Imaging | Neural networks | Baranowski et al. (2015) |

| Olive | Wilt | Verticillium dahliae | 400–885 and 7500–13,000 | Field | Hyperspectral and thermal Imaging | Support vector machine and linear discriminant analysis | Calderón et al. (2015) |

| Orange | |||||||

| Pearl millet | Mildew diseases | Sclerospora graminicola | 380–760 | Field | RGB Imaging | Deep learning | Coulibaly et al. (2019) |

| Rice | Aflatoxin | Aspergillus flavus | 950–1650 | Laboratory | Non-imaging | PLSR | Dachoupakan Sirisomboon et al. (2013) |

| Rice | Rice blast, false smut, brown spot, bakanae disease, sheath blight, bacterial leaf blight, and bacterial wilt | Magnaporthe grisea | 380–760 | Field | RGB Imaging | Deep learning | Lu et al. (2017) |

| Strawberry | Anthracnose | Colletotrichum gloeosporioides | 400–1000 | Greenhouse | Hyperspectral Imaging | Discriminant analysis | Yeh et al. (2016) |

| Sugarbeet | Cercospora leaf spot | Cercospora beticola | 400–1000 | Greenhouse | Hyperspectral Imaging | Spectral angle mapper (SAM) | Mahlein et al. (2012b) |

| Sugarbeet | Cercospora leaf spot | Cercospora beticola | 380–760 | Greenhouse and field | RGB Imaging | Support vector machine with radial basis kernel function | Hallau et al. (2017) |

| Sugarbeet | Nematode and root rot | Heterodera schachtii and Rhizoctonia solani | 400–2500 | Field | Hyperspectral Imaging | Vegetation indices and classification approaches | Hillnhütter et al. (2011) |

| Sugarcane | African stalk borer, smut, sugarcane thrips, brown rust | Eldana saccharina (African stalk borer), Sporisorium scitamineum (smut), Fulmekiola serrata (sugarcane thrips), Puccinia melanocephala (brown rust) | 1100–2300 | Field | NIR spectroscopy | PCA, PLS | Mahlein et al. (2012b) |

| Wheat | Head blight | Fusarium sp. | 400–1000 | Field | RGB and multispectral imaging | Linear and quadratic regression | Dammer et al. (2011) |

| Wheat | Head blight | Fusarium sp. | 400–1000 | Laboratory | Visible/near-infrared hyperspectral imaging | PCA and LDA | Shahin and Symons (2011) |

| Wheat | Head blight | Fusarium sp. | 400–1000 | Laboratory | Hyperspectral imaging | PCA | Bauriegel et al. (2011) |

| Wheat | Leaf rust and powdery mildew | Puccinia triticina and Blumeria graminis | 370–800 | Greenhouse | Hyperspectral imaging | Linear regression | Bürling et al. (2011) |

| Wheat | Leaf rust | Puccinia triticina | 350–2500 | Field | Hyperspectral imaging | Vegetation indices | Zhang et al. (2012a) |

| Wheat | Powdery mildew | Blumeria graminis | 350–2500 | Field | Hyperspectral imaging | Vegetation indices | Cao et al. (2013) |

| Wheat | Powdery mildew | Blumeria graminis | 450–950 | Field and laboratory | Hyperspectral imaging | Multivariate linear regression and PLSR | Zhang et al. (2012b) |

| Wheat | Wheat streak mosaic virus | Tritimovirus spp. | 380–760 | Field | RGB imaging | Expectation maximization for analysing hue value differences | Casanova et al. (2014) |

| Wheat | Powdery mildew | Blumeria graminis | 400–2450 | Field | Hyperspectral imaging | Classification | Mewes et al. (2011) |

| Wheat | Mycotoxins | Fusarium spp. | 400–1000 | Greenhouse, Field | Hyperspectral imaging | Classification | Bauriegel et al. (2011) |

| Wheat | Leaf rust | Puccinia triticina | 350–2500 | Greenhouse | Hyperspectral imaging | Classification | Ashourloo et al. (2014) |

| Wheat | Stripe rust | Puccinia striiformis | 400–900 | Field | Hyperspectral imaging | Correlation | Devadas et al. (2015) |

| Tomato | Gray mold, leaf mold, and canker | Botrytis cinerea | 380–780 | Field | Fluorescence and hyperspectral imaging | CNN | Fuentes et al. (2017) |

| Tomato | Gray mold | Botrytis cinerea | 380–1050 | Greenhouse | Hyperspectral imaging | GA-PLS | Kong et al. (2014) |

| Tomato | Early blight | Alternaria solani | 380–1023 | Greenhouse | Hyperspectral imaging | Extreme learning machine (ELM) | Xie et al. (2015) |

| Tomato | Powdery mildew | Oidium neolycopersici | 380–760 and 7000–13,000 | Greenhouse | Thermal and visible light imaging | Support vector machine | Raza et al. (2015) |

| Tomato | Powdery mildew | Oidium neolycopersici | 380–760 | Greenhouse | Color image processing (using self-organizing map) | Bayesian classifier | Hernández-Rabadán et al. (2014) |

| Tomato | Leaf curl virus | Begomovirus spp. | 380–760 | Laboratory | Gray-level co-occurrence matrix detection | Multilayer perceptron, quadratic kernel, and support vector machine | Mokhtar et al. (2015) |

| Zucchini | Soft rot | Dickeya dadantii | 7500–13,000 | Greenhouse | Fluorescence imaging | Logistic regression analysis and artificial neural networks | Pérez-Bueno et al. (2016) |

The main bottleneck in DL is the optimization of hyperparameters, which include the number of neurons, activation functions, number of epochs, total number of convolutional layers, number of filters and hidden layers, solver, dropout, and regularization. Because these hyperparameters can be combined in many different ways, tuning them imposes a heavy computational burden and requires strong programming skills. The four most common approaches for selecting hyperparameters are Latin hypercube sampling, grid search, random search, and optimization (Cho and Hegde 2019). Furthermore, DL models are prone to overfitting if it is not accounted for (Sandhu et al. 2021b). Tools such as early stopping, L1 and L2 regularization, and dropout are available to reduce the risk of overfitting in the trained model. Detailed information on hyperparameter optimization and on tools for controlling overfitting can be found elsewhere (Cho and Hegde 2019).
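To make these strategies concrete, the following minimal Python sketch compares three of the four approaches (grid search, random search, and Latin hypercube sampling) under the same budget of nine evaluations. The search space, its bounds, and `toy_validation_loss` are hypothetical stand-ins; in a real study each evaluation would be a full training run of a DL model on phenotyping data.

```python
import itertools
import random

# Hypothetical search space: (low, high) bounds for two DL hyperparameters.
# The learning rate is searched on a log10 scale; dropout on a linear scale.
SPACE = {"log10_lr": (-5.0, -1.0), "dropout": (0.0, 0.5)}


def toy_validation_loss(params):
    """Hypothetical stand-in for training a model and returning its validation loss."""
    return (params["log10_lr"] + 3.0) ** 2 + (params["dropout"] - 0.2) ** 2


def grid_search(space, points_per_dim):
    """Every combination of evenly spaced values along each dimension."""
    axes = [
        [lo + i * (hi - lo) / (points_per_dim - 1) for i in range(points_per_dim)]
        for lo, hi in space.values()
    ]
    names = list(space)
    return [dict(zip(names, combo)) for combo in itertools.product(*axes)]


def random_search(space, n_samples, rng):
    """Each hyperparameter drawn independently and uniformly within its bounds."""
    return [
        {name: rng.uniform(lo, hi) for name, (lo, hi) in space.items()}
        for _ in range(n_samples)
    ]


def latin_hypercube(space, n_samples, rng):
    """Split each dimension into n_samples equal strata; use every stratum exactly once."""
    samples = [{} for _ in range(n_samples)]
    for name, (lo, hi) in space.items():
        strata = list(range(n_samples))
        rng.shuffle(strata)  # random pairing of strata across dimensions
        width = (hi - lo) / n_samples
        for sample, stratum in zip(samples, strata):
            sample[name] = lo + (stratum + rng.random()) * width
    return samples


def best(candidates):
    """Candidate with the lowest (toy) validation loss."""
    return min(candidates, key=toy_validation_loss)


rng = random.Random(0)
budget = 9  # same number of "training runs" for every strategy
for label, candidates in [
    ("grid", grid_search(SPACE, 3)),
    ("random", random_search(SPACE, budget, rng)),
    ("latin hypercube", latin_hypercube(SPACE, budget, rng)),
]:
    winner = best(candidates)
    print(f"{label:>15}: {len(candidates)} runs, best loss {toy_validation_loss(winner):.4f}")
```

Grid search spends its budget on a fixed lattice, whereas Latin hypercube sampling guarantees that every stratum of each hyperparameter is tried once, which tends to cover each dimension more evenly than purely random draws.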

Conclusion

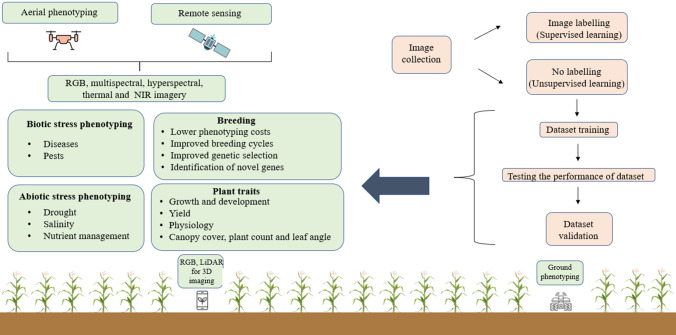

Plant stress phenotyping is an important tool for predicting crop losses caused by various biotic and abiotic stresses. It can be used to identify superior disease-resistant and stress-tolerant genotypes as well as to inform disease management decisions (Fig. 4). Phenotypic parameters include not only morphological data but also a large number of physiological and biochemical data, as well as deeper mechanistic data, allowing scientists to identify and predict heritable traits through controlled phenotypic and genotypic studies. Current methods for phenotyping stress severity operate at various scales, ranging from the number of plants affected or exact lesion counts to estimates of the severity or surface area affected by a particular biotic or abiotic stress at the canopy, single-plant, and field levels (Table 4). Stationary HTP platforms can carry a variety of sensors to monitor both potted plants and crops in specific field areas simultaneously. However, their operation is limited to a small area, and their construction is costly. More research is needed to improve UAV-based sensing for plant phenotyping, and future studies should introduce high-performance, low-cost UAVs. Long-term and large-field plant phenotyping requires high-performance UAVs with high flight stability, precision, long flight duration, and heavy payload capacity. Unlike ground-based phenotyping, UAV-based phenotyping faces a serious issue: the safety of the UAV and its sensors.
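The incidence and severity scales mentioned above reduce to simple ratios: incidence is the percentage of plants showing symptoms, and severity is the percentage of tissue area affected. The minimal Python sketch below assumes that per-plant lesion counts and lesion and leaf areas have already been extracted from images; the `PlantRecord` fields and all values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PlantRecord:
    """Hypothetical per-plant measurement, e.g. derived from segmented images."""
    plant_id: str
    lesion_count: int
    lesion_area_cm2: float
    leaf_area_cm2: float


def incidence_percent(plants):
    """Disease incidence: percentage of plants with at least one lesion."""
    diseased = sum(1 for p in plants if p.lesion_count > 0)
    return 100.0 * diseased / len(plants)


def mean_severity_percent(plants):
    """Disease severity: lesion area as a percentage of leaf area, averaged over plants."""
    return sum(100.0 * p.lesion_area_cm2 / p.leaf_area_cm2 for p in plants) / len(plants)


plot = [
    PlantRecord("P1", 0, 0.0, 120.0),
    PlantRecord("P2", 4, 6.0, 100.0),
    PlantRecord("P3", 12, 30.0, 150.0),
    PlantRecord("P4", 1, 1.0, 80.0),
]
print(f"Incidence: {incidence_percent(plot):.1f}%")  # 3 of 4 plants -> 75.0%
print(f"Mean severity: {mean_severity_percent(plot):.2f}%")
print(f"Total lesions: {sum(p.lesion_count for p in plot)}")
```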

Fig. 4.

The outline of different phenotyping tools and platforms for plant stress detection in the field

Presently, RGB and multispectral sensors are primarily used to estimate crop height, biomass, and other agronomic traits under normal and stressed conditions, whereas the use of hyperspectral sensors is still in its infancy. Hyperspectral imaging has emerged as a cutting-edge method to assess crop attributes such as water content, leaf nitrogen concentration, chlorophyll content, leaf area index, and other physiological and biochemical parameters. For thermal infrared cameras, the main limitation is interference from soil signals, ambient air, and canopy temperature. Because the collected data depend heavily on these sensors, sensor limitations directly affect the final phenotyping results. Simultaneous acquisition and analysis of the same crop phenotypes by multiple sensors can provide a more comprehensive and accurate assessment of crop traits. For instance, when screening crops for biotic and abiotic stress tolerance, a variety of phenotypic data is typically combined for effective and accurate analysis. However, the data types and formats collected by different phenotyping sensors vary, so combining and managing data from multiple sensors remains a challenge for future phenotypic data analysis, as does the development of novel sensors such as laser-induced breakdown spectroscopy and electrochemical micro-sensing for detecting plant signal molecules (Li et al. 2019). Addressing these challenges will require collaboration between multidisciplinary laboratories.
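Combining sensors first requires harmonizing identifiers, formats, and units. The minimal Python sketch below assumes three hypothetical sensor outputs (an RGB-derived canopy height list, a hyperspectral NDVI dictionary, and a thermal CSV in kelvin) and joins them by plot identifier; the field names and values are illustrative only.

```python
import csv
import io

# Hypothetical per-plot outputs from three sensors, each in its own format.
rgb_rows = [  # RGB pipeline output: list of records, canopy height in cm
    {"plot": "A01", "height_cm": 82.0},
    {"plot": "A02", "height_cm": 75.5},
]
hyperspectral = {"A01": {"ndvi": 0.71}, "A02": {"ndvi": 0.64}, "A03": {"ndvi": 0.58}}
thermal_csv = "plot,canopy_temp_K\nA01,301.15\nA03,305.65\n"  # CSV, kelvin


def merge_sensor_data(rgb_rows, hyperspectral, thermal_csv):
    """Outer-join per-plot records from all sensors; missing values become None."""
    thermal = {}
    for row in csv.DictReader(io.StringIO(thermal_csv)):
        # Harmonize units: kelvin -> degrees Celsius.
        thermal[row["plot"]] = round(float(row["canopy_temp_K"]) - 273.15, 2)
    height = {r["plot"]: r["height_cm"] for r in rgb_rows}
    plots = sorted(set(height) | set(hyperspectral) | set(thermal))
    return [
        {
            "plot": plot,
            "height_cm": height.get(plot),
            "ndvi": hyperspectral.get(plot, {}).get("ndvi"),
            "canopy_temp_C": thermal.get(plot),
        }
        for plot in plots
    ]


for record in merge_sensor_data(rgb_rows, hyperspectral, thermal_csv):
    print(record)
```

An outer join keeps plots observed by only some sensors and leaves the gaps explicit (`None`) rather than silently dropping those plots.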

In addition, environmental factors are critical and should receive at least as much attention as the traits being measured, which raises the question of how all environmental impacts can be measured. Envirotyping, the comprehensive characterization of environmental factors using next-generation, high-throughput, and accurate envirotyping technologies, could help address this issue (Xu 2016). Furthermore, integrating multi-typing data would make it possible to investigate genotype × environment × management interactions and to realize predictive phenomics (Xu 2016; Araus et al. 2018). High-throughput and accurate phenotyping, when combined with optimized experimental design, high-quality field trials, a robust crop model, envirotyping, and other strategies, will improve heritability and the potential for genetic gain (Araus et al. 2018).
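The link between heritability and genetic gain can be made explicit with the standard breeder's equation from quantitative genetics (general background, not a result of the studies cited here):

$$
\Delta G = \frac{i \, h \, \sigma_A}{L} = \frac{i \, h^2 \, \sigma_P}{L}, \qquad h^2 = \frac{\sigma_A^2}{\sigma_P^2}
$$

where $\Delta G$ is the expected genetic gain per unit time, $i$ is the selection intensity, $h$ is the square root of the narrow-sense heritability $h^2$ (the accuracy of phenotypic selection), $\sigma_A$ and $\sigma_P$ are the additive genetic and phenotypic standard deviations, and $L$ is the length of the breeding cycle. More accurate phenotyping lowers the error component of $\sigma_P^2$, which raises $h^2$ while $\sigma_A$ is unchanged, so $\Delta G$ increases for the same $i$ and $L$.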

Phenotyping researchers prefer mobile high-throughput plant phenotyping platforms that can be transported to experimental sites (Roitsch et al. 2019). This indicates that the development of mobile high-throughput plant phenotyping must focus on flexibility and portability, and that modular, customizable designs will be appreciated by the phenotyping community. With recent advances in artificial intelligence analysis techniques, fifth-generation (5G) mobile networks, and cloud-based innovations, more smart "pocket" phenotyping tools are likely to be introduced, potentially replacing the manual field phenotyping that has been practiced for hundreds of years.

Overall, whether phenotyping is conducted in the greenhouse or in the field, by ground-based proximal sensing or by aerial large-scale remote sensing, the future of high-throughput plant phenotyping lies in improving spatial–temporal resolution, data analysis turnaround time, sensor integration, human–machine interaction, throughput, operational stability, operability, automation, and accessibility. Notably, the selection, development, and application of high-throughput plant phenotyping platforms should be guided by concrete project requirements, practical application scenarios, and specific phenotyping tasks, such as field coverage (Kim 2020), rather than by the assumption that the more devices, innovations, and funds a platform is furnished with, the better. One reason is that collecting a large amount of data does not imply that all of it is useful (Haagsma et al. 2020). In some cases, the experimental conclusions obtained with a single sensor and with multiple sensors are similar (Meacham-Hensold et al. 2020), and data acquired from different devices are redundant.
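One simple way to check whether devices deliver redundant information is to correlate the plot-level features they produce. The Python sketch below flags feature pairs whose absolute Pearson correlation meets a threshold; the feature names, values, and the 0.95 cut-off are hypothetical, and a high correlation alone does not prove that one sensor can replace another.

```python
import itertools
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def redundant_pairs(features, threshold=0.95):
    """Feature pairs whose absolute correlation is at or above the threshold."""
    pairs = []
    for (name_a, a), (name_b, b) in itertools.combinations(features.items(), 2):
        r = pearson(a, b)
        if abs(r) >= threshold:
            pairs.append((name_a, name_b, round(r, 3)))
    return pairs


# Hypothetical plot-level features from three devices over six plots.
features = {
    "ndvi_multispectral": [0.52, 0.61, 0.58, 0.70, 0.66, 0.49],
    "ndvi_hyperspectral": [0.53, 0.62, 0.57, 0.71, 0.67, 0.50],
    "canopy_temp_C": [31.2, 29.8, 30.1, 28.0, 30.9, 29.5],
}
print(redundant_pairs(features))
```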

Challenges and Future Prospects

Combining various high-throughput plant phenotyping platforms for comparative verification and detailed evaluation could open up new avenues for the inspection, extraction, and quantification of complex physiological and functional phenotypes. However, developing standards and coordinating calibrations for these numerous combinations are challenging technical tasks. Furthermore, to achieve truly “cost-effective phenotyping”, the trade-off between investment in phenotyping technology and manpower cost, which depends primarily on the objectives, should be considered (Reynolds et al. 2019).
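Coordinating calibrations across devices often starts with the radiometric calibration of each imaging sensor. A common approach is the empirical line method, which maps raw digital numbers (DN) to reflectance using reference panels of known reflectance. The Python sketch below is a minimal, single-band version fitted by least squares; the panel values are hypothetical, and real workflows also correct for illumination changes and sensor-specific effects.

```python
def fit_empirical_line(panel_dn, panel_reflectance):
    """Least-squares fit of reflectance = gain * DN + offset from reference panels."""
    n = len(panel_dn)
    mean_dn = sum(panel_dn) / n
    mean_r = sum(panel_reflectance) / n
    sxy = sum((d - mean_dn) * (r - mean_r) for d, r in zip(panel_dn, panel_reflectance))
    sxx = sum((d - mean_dn) ** 2 for d in panel_dn)
    gain = sxy / sxx
    offset = mean_r - gain * mean_dn
    return gain, offset


def to_reflectance(dn_values, gain, offset):
    """Apply the fitted linear calibration to raw sensor values."""
    return [gain * d + offset for d in dn_values]


# Hypothetical panels: raw sensor values (DN) for panels of known reflectance.
panel_dn = [4100.0, 20500.0, 36900.0]
panel_reflectance = [0.05, 0.25, 0.45]
gain, offset = fit_empirical_line(panel_dn, panel_reflectance)
print(to_reflectance([12300.0, 28700.0], gain, offset))
```

Calibrating every sensor to a common physical unit such as reflectance is what makes measurements from different devices comparable in the first place.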

After massive amounts of data have been collected, the next concern is how to handle “Big Data”. Wilkinson et al. (2016) proposed the FAIR (findable, accessible, interoperable, and reusable) principles to enable data to be found and reused across individuals and groups. This means that all essential metadata, such as resource and data acquisition information, measurement protocols, environmental conditions, and data descriptions, should be clearly addressable. Several data management and analysis efforts have been made in accordance with the FAIR principles, such as PHENOPSIS DB (Fabre et al. 2011) and CropSight (Reynolds et al. 2019). Data sharing and standardization across communities continue to be a major challenge. Another issue is the lack of funding and data infrastructure to manage these data sets (Bolger et al. 2019). We support the creation of open data infrastructures and the publication of primary data with DOIs, which would facilitate data reuse as well as the development, testing, and comparison of technologies.
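A lightweight way to enforce the metadata requirements described above is to validate each data set record before it is shared. The Python sketch below checks a record against a hypothetical list of required fields drawn from the items named in this paragraph; it is not an implementation of the FAIR principles or of any existing database schema.

```python
# Hypothetical minimum metadata fields, taken from the items listed in the text.
REQUIRED_FIELDS = (
    "identifier",                # persistent identifier, e.g. a DOI once published
    "acquisition_info",          # resource, sensor, platform, date of acquisition
    "measurement_protocol",
    "environmental_conditions",
    "data_description",
)


def missing_metadata(record):
    """Return the required fields that are absent or empty in a metadata record."""
    return [field for field in REQUIRED_FIELDS if not record.get(field)]


# Hypothetical record for an imaging data set (all values are placeholders).
record = {
    "identifier": "example-dataset-0001",
    "acquisition_info": {"sensor": "RGB camera", "platform": "UAV", "date": "2020-06-15"},
    "measurement_protocol": "Nadir images at fixed altitude; see protocol document.",
    "environmental_conditions": {},
    "data_description": "Plot-level RGB images of a wheat trial.",
}
print(missing_metadata(record))
```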

Online databases, such as http://www.plant-image-analysis.org, can effectively connect developers and users. The PlantVillage database, which comprised 54,306 images of 14 crop species and 26 diseases, opened up new avenues for meeting some research needs (crops, number of diseases, severity of expression, stages of disease infection, and so on) and highlighted the need for a publicly accessible, open-source, shared database of annotated plant stresses at the individual leaf scale. However, PlantVillage data have been overexploited, and new data sources will be required to develop robust models. Other open-source databases are also available, including the maize northern leaf blight (NLB) disease database (Wiesner-Hanks et al. 2018), which contains 18,222 digital images of maize leaves in the field, captured by hand, with a boom-mounted camera, or by UAV. Human experts annotated approximately 105,705 NLB lesions, making this the largest publicly accessible annotated image set for a plant disease. Nevertheless, comprehensive management platforms covering software, web-based tools, toolkits, pipelines, ML and DL tools, and other phenotypic solutions are still lacking; developing them will be both a significant milestone and a significant challenge.
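Annotated image sets such as the NLB data are typically distributed as images plus per-lesion annotations. The Python sketch below summarizes lesion counts and total bounding-box area per image from a hypothetical CSV layout (`image,x1,y1,x2,y2`); these column names are assumptions for illustration and may not match the format of any specific published data set.

```python
import csv
import io
from collections import defaultdict

# Hypothetical annotation export: one row per annotated lesion (bounding box in pixels).
ANNOTATIONS = """image,x1,y1,x2,y2
leaf_001.jpg,10,20,60,40
leaf_001.jpg,100,120,130,200
leaf_002.jpg,5,5,25,15
"""


def summarize(csv_text):
    """Count lesions and total bounding-box area (pixels) per image."""
    counts = defaultdict(int)
    areas = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        width = int(row["x2"]) - int(row["x1"])
        height = int(row["y2"]) - int(row["y1"])
        counts[row["image"]] += 1
        areas[row["image"]] += width * height
    return {image: (counts[image], areas[image]) for image in counts}


print(summarize(ANNOTATIONS))
```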

On the other hand, poorly annotated and disorganized data can introduce noise and inconsistencies. Relevant standards are, however, being proposed. Krajewski et al. (2015), for instance, published a technical paper with effective recommendations (at http://cropnet.pl/phenotypes) and initiatives (such as http://wheatis.org), taking another step toward establishing globally practical solutions. Furthermore, standardizing phenotyping can improve the understanding and explanation of biological phenomena, contributing to the transformation of biological knowledge and the creation of a truly coherent semantic network.
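In practice, standardization often begins with mapping the inconsistent labels found in raw data files onto a controlled vocabulary. The minimal Python sketch below uses a small hypothetical vocabulary of standard trait names and synonyms; production systems would instead draw terms from community ontologies and published recommendations such as those cited above.

```python
# Hypothetical controlled vocabulary: standard trait names with synonyms seen in raw files.
VOCABULARY = {
    "leaf rust severity (%)": {"lr sev", "leaf rust %", "rust_severity"},
    "canopy temperature (°C)": {"canopy temp", "ct", "canopy_temp_c"},
}


def normalize_label(raw):
    """Map a raw column label to its standard trait name, or None if unknown."""
    key = raw.strip().lower()
    for standard, synonyms in VOCABULARY.items():
        if key == standard.lower() or key in synonyms:
            return standard
    return None


raw_headers = ["Rust_Severity", " canopy temp ", "LR sev", "plant vigour"]
print({header: normalize_label(header) for header in raw_headers})
```

Returning `None` for unknown labels, rather than guessing, keeps unmapped traits visible so they can be reviewed and added to the vocabulary.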