Abstract

The novel coronavirus disease 2019 (COVID-19) spread rapidly around the world, and its outbreak became a pandemic. Because of the increase in cases, the number of COVID-19 test kits available in hospitals has decreased. An autonomous detection system is therefore an essential tool for reducing infection risk and the spread of the virus. Various models based on machine learning (ML) and deep learning (DL) have been introduced in the literature to detect different types of pneumonia from chest X-ray images. The cornerstone of this paper is the use of pretrained deep CNN architectures to build an automated system for COVID-19 detection and diagnosis. We use the deep feature concatenation (DFC) mechanism to combine the features extracted from input images by two pretrained CNN models, AlexNet and Xception. We thus propose COVID-AleXception, a neural network that concatenates the AlexNet and Xception models to improve overall prediction performance for COVID-19. To build a large-scale X-ray image dataset for evaluating the proposed model, X-ray images were carefully selected from several sources. The COVID-AleXception model achieves a classification accuracy of 98.68%, outperforming AlexNet and Xception, which achieve 94.86% and 95.63%, respectively. These results demonstrate the model's potential to help radiologists diagnose COVID-19 more quickly.

Keywords: deep learning, COVID-19, chest X-ray images, AlexNet, Xception, deep feature concatenation, COVID-AleXception

1. Introduction

On 31 December 2019, the World Health Organization (WHO) received notification of a novel coronavirus [1]. The disease's most troublesome characteristics are its ease of transmission and its asymptomatic presentation, which can contribute to its spread [2]. Dry cough, sore throat, and fever are the most prevalent COVID-19 symptoms. If the disease progresses to a severe form of pneumonia, septic shock, pulmonary edema, acute respiratory distress syndrome, and multi-organ failure can all occur. According to a WHO update of 9 October 2022, 626,500,862 COVID-19 cases had been confirmed, including 6,560,744 deaths [3]. Because no medical treatment is available, prompt diagnosis of COVID-19 is required to prevent further spread and to treat patients in a timely manner. Initially, RT-PCR was the technique used to detect the disease and the first available tool for combating its worldwide spread. Because of the high demand for test kits in many parts of the world, screening for new cases of coronavirus pneumonia is delayed, which contributes to the spread of the virus [4]. It is therefore necessary to develop an intelligent predictive model of COVID-19, based on machine learning methods, to support corrective measures following clinicians' decisions. Beyond clinical procedures, researchers have shown that artificial intelligence and machine learning are promising technologies adopted by a variety of health care providers because of their potential to scale up and speed up processing, their reliability, and their ability to outperform humans in certain healthcare tasks [5].

Even when RT-PCR results are negative, they should not be treated as definitive, because the sensitivity of RT-PCR is low, only about 60% to 70%. In addition, the disease can be diagnosed and detected with the aid of the patient's radiographic images [6]. Chest radiological imaging, mainly X-ray and computed tomography (CT), plays a significant role not only in the prompt diagnosis but also in the treatment of COVID-19 [7]. It is therefore important to combine these radiographic images with an artificial intelligence system for more accurate COVID-19 predictions. Deep CNNs have achieved considerable success over conventional methods on images of varying complexity [8]. Deep learning has enabled researchers to solve various complex problems, and it has shown high performance in tasks such as image classification, speech and voice recognition, natural language processing, object detection, and medical imaging [9].

Given the importance of this topic, numerous approaches have recently been proposed to classify COVID-19 from chest X-ray images. Anichur et al. [10] used deep learning to diagnose COVID-19 from X-ray images and proposed COVIDX-Net, a model comprising seven CNN models. Sujata et al. [11] proposed COVID-Net, a deep learning model for COVID-19 detection that achieved 92.4% accuracy in classifying three classes: normal (non-COVID), pneumonia, and COVID-19. In another study, Muhammad et al. [12] developed a deep learning model that classified COVID-19 infection as well as normal and pneumonia cases, using 224 images of confirmed COVID-19 cases; it achieved accuracies of 98.75% and 93.48% for two-class and three-class classification, respectively. Kumar et al. [13] developed a custom deep learning architecture named SARS-Net to classify chest X-ray images for COVID-19 diagnosis, achieving an accuracy of 97.60% and a sensitivity of 92.90% on the validation set. Zouch et al. [14] used convolutional neural network (CNN) models together with a support vector machine (SVM) classifier to classify the features obtained from different X-ray images.

In this paper, we propose a hybrid deep learning model for predicting COVID-19 from chest X-ray images. The model, named COVID-AleXception, concatenates the AlexNet and Xception models. Combining publicly available datasets enabled us to obtain 15,153 X-ray images belonging to three classes: normal (healthy), COVID-19, and pneumonia. COVID-AleXception achieves a classification accuracy of 98.68%, outperforming AlexNet and Xception, which achieve 94.86% and 95.63%, respectively.

Our main contributions can be summarized as follows:

- The collection of a medical X-ray image dataset comprising three main classes (normal, pneumonia, and COVID-19) for training and testing the proposed system.
- The application of a DFC technique to combine the deep features extracted from the AlexNet and Xception models.
- The proposal of COVID-AleXception, a deep learning model that concatenates AlexNet and Xception to detect COVID-19 from X-ray images.
- The evaluation of the performance of COVID-AleXception.
- A comparison of COVID-AleXception with competitive methods in terms of several performance metrics, mainly accuracy, precision, recall, and F1 score.

The remainder of the paper is organized as follows. Related work is reviewed in Section 2, and the deep learning architectures used in this work are described in Section 3. Materials and methods are presented in detail in Section 4. Section 5 describes the implementation and testbed and reports the experimental results. Finally, conclusions are presented in the last section.

2. State-of-the-Art Methods

Several works apply transfer learning to chest X-ray images to identify people infected with COVID-19. The works presented in this section are summarized in Table 1.

The work in [15] presents nCOVnet, an algorithm that detects COVID-19 patients from X-ray images. The system is meant to help determine whether or not a person is actively infected with the coronavirus. The prediction model consists of 24 layers. The first layer is the input layer, which takes an RGB image of fixed size 224 × 224 × 3. The next 18 layers combine convolution layers with ReLU (Rectified Linear Unit) activations and max pooling layers. Transfer learning was then applied using five additional layers that were proposed and trained. The fundamental structure of the network is based on four layer types: convolution, aggregation, flattening, and fully connected layers. The authors used 337 images to evaluate the system. The nCOVnet model detects patients infected with COVID-19 with 97% accuracy, an encouraging result compared with previous models.

Belal Hossain et al. [16] developed a transfer learning approach based on a fine-tuned deep CNN (ResNet50) for classifying COVID-19 from chest X-ray images. The authors used ten different sets of pre-trained weights for the ResNet50 model: ChestX-ray14, ChexPert, ImageNet, ImageNet_ChestX-ray14, ImageNet_ChexPert, iNat2021_Supervised, iNat2021_Supervised_From_Scratch, iNat2021_Mini_SwAV_1k, MoCo_v1, and MoCo_v2. The goal of the system was to determine whether a person is a carrier of the virus or normal. The proposed model detects COVID-19 with 99.17% validation accuracy, 99.95% training accuracy, 99.31% precision, 99.03% sensitivity, and a 99.17% F1-score.

To detect COVID-19, Sakshi et al. [17] deployed a transfer learning model on CT scan images decomposed into three levels using stationary wavelets. The proposed model has three major steps. Step one is data augmentation: because of the shortage of data, the processed images were augmented using a three-level stationary wavelet decomposition, and shear, rotation, and translation were applied to all of them. Step two is COVID-19 detection using pre-trained CNN models: the CT images are classified into two categories (COVID+ and COVID−) using transfer learning. Four pre-trained models, ResNet18, ResNet101, ResNet50, and SqueezeNet, were used for this task, and their performance was compared to find the best one. Step three is anomaly localization: the feature maps and activation layers of the best transfer learning model from step two are used to localize the anomaly in the chest CT scan images of COVID-19-positive cases. The results showed that ResNet18 achieved the highest classification accuracy (training = 99.82%, validation = 97.32%, and testing = 99.4%).

Similarly, the authors of [18] used four CNN architectures, VGG19 + CNN, ResNet152V2, ResNet152V2 + Gated Recurrent Unit (GRU), and ResNet152V2 + Bidirectional GRU (Bi-GRU), to detect COVID-19 from public digital chest X-ray and CT datasets with four classes (normal, COVID-19, pneumonia, and lung cancer). The first step handles image pre-processing, including resizing, image augmentation, and a random split of the dataset into 70% for training and 30% for testing. The second and third steps are feature extraction and image classification, respectively. The experiments show that the VGG19 + CNN model outperforms the other three models, with an accuracy (ACC) of 98.05%, recall of 98.05%, precision of 98.43%, specificity (SPC) of 99.5%, negative predictive value (NPV) of 99.3%, and F1 score of 98.24%.

In [19], the authors proposed a diagnostic test to detect COVID-19 cases. The rationale was to distinguish COVID-19 cases from bacterial pneumonia, viral pneumonia, and normal healthy people using chest X-ray images and the deep neural networks ResNet50 and ResNet101. The two open-source image datasets were collected from Cohen and Kaggle. The proposed method was carried out in four major stages: data visualization, data augmentation, data pre-processing, and deep neural network model design, the last of which is subdivided into stage-I and stage-II. Pre-processing involves two sub-steps: first finding the minimum height and width of the dataset images, and then resizing them according to the ImageNet database. For data augmentation, the authors used rotation and Gaussian blur to increase the number of images. In stage-I of model design, a ResNet50 network is built and trained to distinguish viral pneumonia, bacterial pneumonia, and normal cases; this model performs satisfactorily, reaching an accuracy of 93.01%. In stage-II, a ResNet101 network is built to detect the presence of COVID-19, and it achieves 97.22% accuracy.

Chiranjibi et al. [20] developed a new model to detect COVID-19 automatically from raw chest X-ray images. It is considered one of the first solutions based on a DL model (VGG-16) combined with an attention module. The solution is meant to provide dependable diagnostics not only for binary classification (COVID-19 and normal) but also for multi-class classification (COVID-19, normal, and pneumonia). The method (called attention-based VGG-16) consists of four main building blocks: an attention module, a convolution module, FC layers, and a Softmax classifier. Three publicly available COVID-19 chest X-ray (CXR) datasets were used. The system achieved 79.58% accuracy, which leaves room for further improvement.

In [21], the authors presented FocusCOVID, a deep convolutional neural network architecture for detecting COVID-19 from chest radiography. It is an end-to-end CNN architecture that does not rely on handcrafted feature extraction. To boost its performance, the model employs residual learning and a squeeze-and-excitation network. Two datasets, Kaggle-1 and Kaggle-2, were chosen; they include 1143 COVID-19 specimens and 1345 normal and pneumonia radiograph specimens, with resolutions of 1024 × 1024 and 256 × 256 pixels, respectively. The architecture has a total of 2,940,122 parameters. In two-class classification of COVID-19 instances (COVID-19 vs. pneumonia and normal), FocusCOVID achieved a 95.20% validation accuracy, a 96.40% training accuracy, a precision of 96.40%, a sensitivity of 95.20%, and a 95.20% F1-score.

CoroNet, proposed in [22], is a deep convolutional neural network model that automatically detects COVID-19 infection from chest X-ray images. CoroNet is based on the Xception architecture pre-trained on the ImageNet dataset and then trained end-to-end on COVID-19 and pneumonia chest X-ray images drawn from two publicly available databases. CoroNet was trained to classify chest X-ray images into four categories: COVID-19, normal, pneumonia–bacterial, and pneumonia–viral. The researchers collected 1300 images from these two sources and resized them to 224 × 224 pixels at a resolution of 72 dpi. CoroNet has 33,969,964 parameters, of which 33,915,436 are trainable and 54,528 are non-trainable. It is implemented in Keras on top of TensorFlow 2.0. For the four-class case (COVID vs. pneumonia–bacterial vs. pneumonia–viral vs. normal), CoroNet achieved an 89.6% validation accuracy, a training accuracy of 90.40%, a precision of 90%, a sensitivity of 96.40%, and an 89.9% F1-score.

In [23], the researchers proposed a new architecture named CheXImageNet, which shows encouraging performance in classifying COVID-19 from digital chest X-ray images. It is built on deep convolutional neural networks, and the two open-source image databases used were obtained from Cohen and Kaggle. Pre-processing methods, including merging the datasets and resizing the images to 256 × 256 pixels, are applied to enhance image quality. After pre-processing, the data are split into training (80%) and testing (20%) sets. The images are then classified into two sets for binary classification (normal and COVID-19 images) and into three sets (COVID-19, normal, and pneumonia images) for multi-class classification. The CheXImageNet architecture consists of three convolutional layers, four batch normalization layers, three max pooling layers, seven LeakyReLU layers, five dense layers, one flatten layer, and four dropout layers. The authors claimed 100% accuracy for both binary classification (COVID-19 vs. normal X-ray) and three-class classification (COVID-19, normal X-ray, and pneumonia).

Ritika Nandi et al. [24] proposed a new hybrid deep learning model that combines ResNet50 and MobileNet to detect coronavirus from chest X-ray images. The two models are applied separately, and their results are concatenated to produce the final result, followed by two fully connected layers. The Adam optimization algorithm was used to update the network weights. The hybrid model was evaluated on two publicly available COVID-19 chest X-ray datasets, each containing normal, pneumonia, and COVID-19 chest X-ray images. It achieved an accuracy of 84.35% on the first dataset and 94.43% on the second.

Table 1.

Overview of studies using deep learning approaches for COVID-19 detection.

| Ref | CNN Model | Data Sources | Accuracy (%) | Limitations |
|---|---|---|---|---|
| [15] | nCOVnet | Cohen et al. [25] | 97.97 | High execution time |
| [16] | ResNet50 | Radiography Database [26] | 99.17 | Imbalanced data |
| [17] | ResNet18 | CT scan images [27] | 99.60 | Overfitting issue |
| | ResNet50 | | 99.20 | |
| | ResNet101 | | 99.30 | |
| | SqueezeNet | | 99.50 | |
| [18] | VGG19 + CNN | GitHub + cancer X-ray and CT images [28] | 98.05 | Imbalanced data |
| | ResNet152V2 | | 95.31 | |
| | ResNet152V2 + GRU | | 96.09 | |
| | ResNet152V2 + Bi-GRU | | 93.36 | |
| [19] | ResNet50 | Cohen [25], Kaggle [29] | 93.01 | Imbalanced data; overfitting issue |
| | ResNet101 | | 97.22 | |
| [20] | VGG-16 | Khan et al. [22], Ozturk [20] | 79.58 | Overfitting issue |
| [21] | FocusCOVID | Kaggle-1 [30], Kaggle-2 [31] | 95.20 | Cannot provide the optimal accuracy |
| [22] | CoroNet | Chest X-ray Images [32] | 89.6 | This method is slow |
| [23] | CheXImageNet | Cohen [25], Kaggle [29] | 100 | Overfitting issue |
| [24] | ResNet50 | Kaggle chest X-ray [33], RSNA pneumonia [34] | 91.13 | Cannot provide the optimal accuracy |
| | MobileNet | | 93.73 | |
| | Hybrid model | | 94.43 | |

3. Deep Learning Architectures

Deep learning (DL) is a class of machine learning algorithms that has recently shown tremendous promise in computer vision and image processing [35]. Several deep learning models have been widely applied to chest X-ray images, producing excellent results in detection, segmentation, and classification tasks [26]. This section discusses the AlexNet and Xception models in depth.

3.1. AlexNet Model Architecture

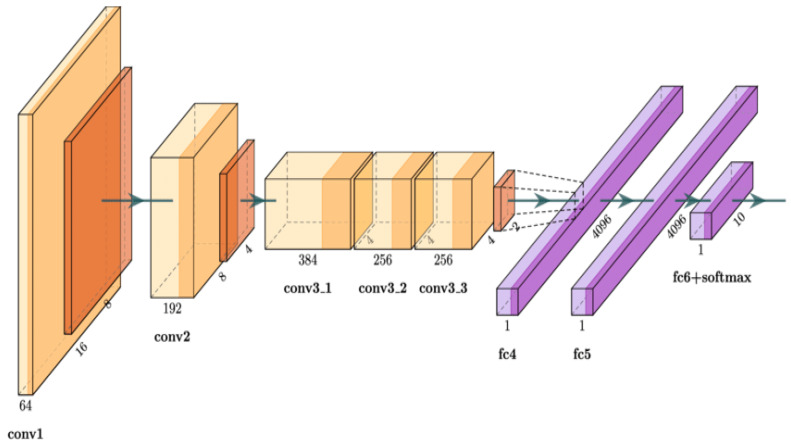

The AlexNet architecture was developed by Alex Krizhevsky et al. [35]. It consists of eight layers: five convolutional layers and three fully connected layers. Figure 1 illustrates the basic design of the AlexNet architecture. In the first convolutional layer, the input image (224 × 224 × 3) is filtered with 96 kernels of size 11 × 11 × 3. The output of the first convolutional layer is fed into the second convolutional layer, which filters it with 256 kernels of size 5 × 5 × 48. The third convolutional layer has 384 kernels of size 3 × 3 × 256 connected to the (normalized, pooled) outputs of the second convolutional layer. The fourth convolutional layer has 384 kernels of size 3 × 3 × 192, and the fifth has 256 kernels of size 3 × 3 × 192. The first two fully connected layers have 4096 neurons each and use dropout; they are followed by a final fully connected layer with a Softmax output. This architecture has frequently been used to analyze high-dimensional biomedical data automatically.

Figure 1.

The architecture of the AlexNet model.
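As a worked check on the kernel sizes listed above, the short Python sketch below counts the weights of AlexNet's five convolutional layers, assuming one bias term per kernel. The kernel depths of 48 and 192 reflect the original two-GPU implementation, in which some layers see only half of the previous layer's channels; the fully connected layers, which hold most of AlexNet's parameters, are not counted. The names `ALEXNET_CONV_KERNELS` and `conv_params` are illustrative, not taken from the paper.

```python
# Kernel shapes of AlexNet's five convolutional layers as listed in Section 3.1:
# (number of kernels, kernel height, kernel width, kernel depth).
ALEXNET_CONV_KERNELS = [
    (96, 11, 11, 3),
    (256, 5, 5, 48),
    (384, 3, 3, 256),
    (384, 3, 3, 192),
    (256, 3, 3, 192),
]


def conv_params(n_kernels, kh, kw, depth, bias=True):
    """Weights of one convolutional layer, plus one bias per kernel (assumed)."""
    return n_kernels * kh * kw * depth + (n_kernels if bias else 0)


def alexnet_conv_param_counts():
    """Parameter count of each convolutional layer, in order."""
    return [conv_params(*shape) for shape in ALEXNET_CONV_KERNELS]


if __name__ == "__main__":
    counts = alexnet_conv_param_counts()
    for i, c in enumerate(counts, start=1):
        print(f"conv{i}: {c:,} parameters")
    print(f"total: {sum(counts):,}")
```

Running it prints one line per layer and a total of 2,334,080 convolutional parameters.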

3.2. Xception Model Architecture

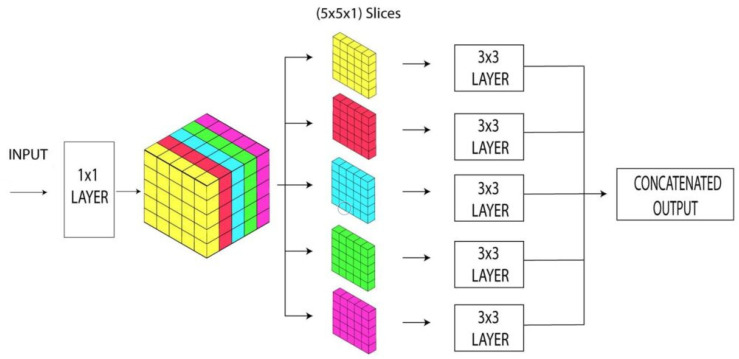

François Chollet [36] introduced the Xception architecture, an extension of the Inception architecture in which the standard Inception modules are replaced with depthwise separable convolutions. The aim of Xception is to make more efficient use of model parameters, which makes it useful for a wide range of computer vision problems. Figure 2 depicts the architecture of the Xception network model. Xception relies on depthwise separable convolutions, which have two phases: a depthwise convolution is performed first, followed by a pointwise convolution. The depthwise convolution is a channel-wise n × n spatial convolution; if the input has five channels, for example, there are five n × n spatial convolutions. The pointwise convolution is a 1 × 1 convolution. As in residual networks, there are shortcut connections between convolution blocks. Because Xception is a relatively new model, only a few published cases demonstrate its success [37].

Figure 2.

The architecture of the Xception model.
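The two phases of a depthwise separable convolution can be illustrated with a small pure-Python sketch: a depthwise step applies one n × n kernel to each channel, and a pointwise (1 × 1) step mixes the resulting maps into output channels. It assumes valid padding, stride 1, no biases, and no batch normalization, and the helper names are hypothetical; it illustrates the operation only and is not Xception's actual implementation. The last two functions compare weight counts with a standard convolution.

```python
def depthwise_conv(channels, kernels):
    """Depthwise step: one n x n kernel per input channel (valid padding, stride 1, no bias)."""
    out = []
    for ch, k in zip(channels, kernels):
        n = len(k)
        rows, cols = len(ch), len(ch[0])
        out.append([
            [sum(ch[i + a][j + b] * k[a][b] for a in range(n) for b in range(n))
             for j in range(cols - n + 1)]
            for i in range(rows - n + 1)
        ])
    return out


def pointwise_conv(maps, weights):
    """Pointwise (1 x 1) step: weights[o][c] mixes the C depthwise maps into output map o."""
    rows, cols = len(maps[0]), len(maps[0][0])
    return [
        [[sum(w * m[i][j] for w, m in zip(w_o, maps)) for j in range(cols)]
         for i in range(rows)]
        for w_o in weights
    ]


def standard_conv_params(n, c_in, c_out):
    """Weights of a standard n x n convolution from c_in to c_out channels (no bias)."""
    return n * n * c_in * c_out


def separable_conv_params(n, c_in, c_out):
    """n*n weights per input channel (depthwise) plus a c_in x c_out 1 x 1 matrix (pointwise)."""
    return n * n * c_in + c_in * c_out


if __name__ == "__main__":
    x = [[[1.0] * 4 for _ in range(4)] for _ in range(5)]  # 5 channels, 4 x 4 each
    k = [[[1.0] * 3 for _ in range(3)] for _ in range(5)]  # five 3 x 3 depthwise kernels
    d = depthwise_conv(x, k)                               # five 2 x 2 maps
    y = pointwise_conv(d, [[1.0] * 5, [0.5] * 5])          # two output maps
    print(y)
    print(standard_conv_params(3, 5, 8), separable_conv_params(3, 5, 8))
```

With a 3 × 3 kernel, five input channels, and eight output channels, a standard convolution needs 360 weights, whereas the separable version needs 45 + 40 = 85, which is the saving that lets Xception use its parameters more efficiently.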

4. Materials and Methods

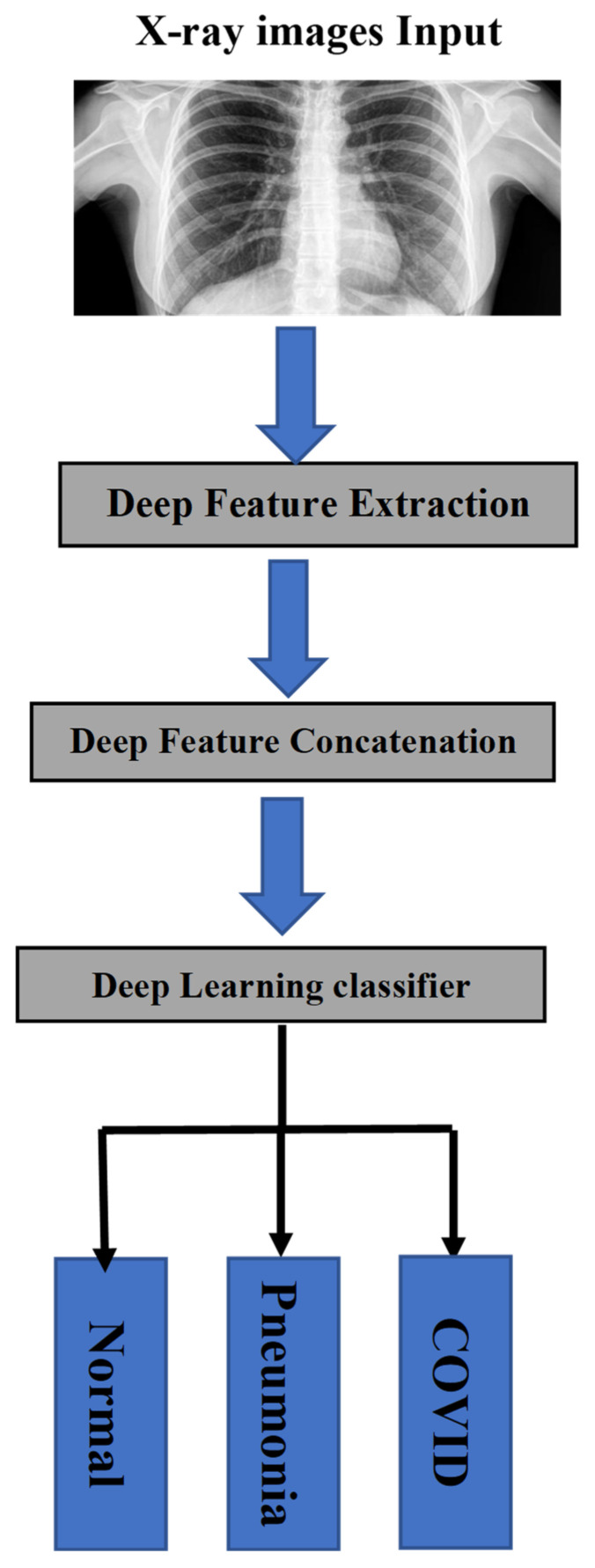

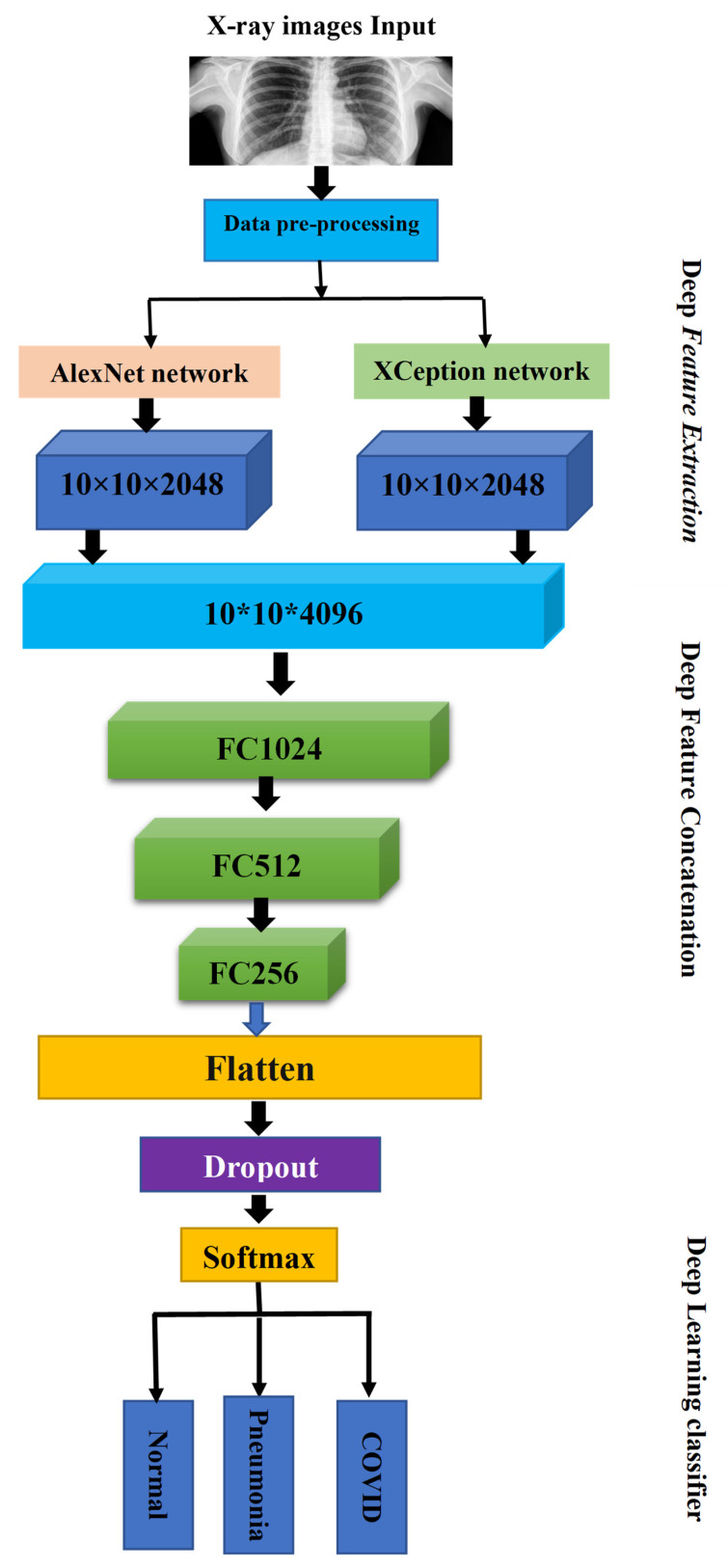

This section presents the dataset and the block diagram of the proposed system. Figure 3 gives an overview of the study's methodology, describing the proposed deep learning method built on deep feature concatenation. (1) Images were gathered from five COVID-19 radiography databases. (2) The images were pre-processed by resizing and normalization, as explained below. (3) Data augmentation was applied to address the overfitting caused by the limited number of training images. (4) The AlexNet and Xception deep learning models were used to extract features from the X-ray images. (5) The DFC methodology was applied to combine the extracted features into a single descriptor for classification. The proposed method performs multiclass classification (COVID-19, normal, and pneumonia). Each step is described in detail below.

Figure 3.

Simple block diagram of the proposed system.

4.1. Dataset Description

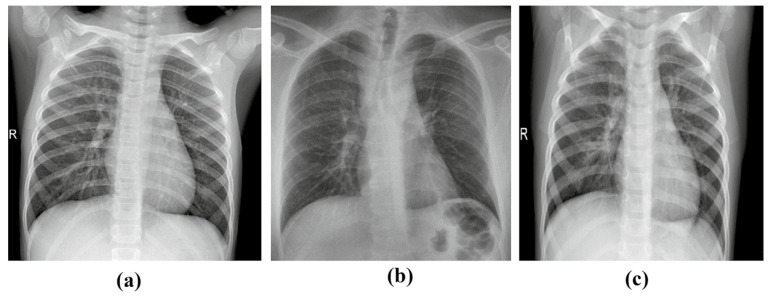

Because COVID-19 is caused by a newly discovered virus, the number of available COVID-19 X-ray images is limited. In this work, X-ray images were carefully gathered from publicly available datasets to build a large X-ray image dataset. Five open-source repositories were combined: (1) Cohen et al. [25], (2) Radiography Database [26], (3) Kaggle-1 [30], (4) Kaggle-2 [31], and (5) Chest X-ray Images [32]. The images were examined by experienced radiologists, who excluded any that lacked convincing evidence of COVID-19. Three classes of chest X-ray images (normal, COVID-19, and pneumonia) were considered in the experimental analysis. The dataset consists of 15,153 X-ray images divided into the three classes as follows: 10,192 normal images, 3616 COVID-19 images, and 1345 pneumonia images. Figure 4 shows a sample chest X-ray image from each class.

Figure 4.

Samples of frontal-view chest X-ray images from the dataset: (a) normal case, (b) COVID-19 case, and (c) pneumonia.

4.2. Data Preparation and Preprocessing

The input images are pre-processed with several techniques to improve the training process. The goal of image pre-processing is to improve the visual quality of the input image by enhancing contrast, reducing noise, or removing low or high frequencies from the original image. In this work, image resizing and image normalization were applied to improve image quality. The data were pre-processed in two stages:

Image resizing: The images in this dataset have a wide range of dimensions. All the original images were examined to determine the minimum height and width in the dataset, which was 224 × 224 pixels. To match this minimum and the input size used for the pretrained AlexNet and Xception models, all images in the dataset were resized to 224 × 224.

Image normalization: Contrast enhancement and image normalization were used to adjust pixel intensity values and obtain better-enhanced images. Changing the pixel intensities reveals hidden information in the low-intensity range. All image intensity values were then normalized to the range [-1, 1]. Because this removes bias and yields a more uniform distribution of input values, it helps accelerate the convergence of the model.
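The two pre-processing stages can be sketched in plain Python as follows. The paper does not state the interpolation method or the input intensity range, so this sketch assumes 8-bit grayscale images (values 0 to 255) and nearest-neighbour interpolation; image libraries usually offer smoother options such as bilinear interpolation. The mapping x / 127.5 − 1 is one common way to reach [-1, 1], the contrast-enhancement step is not reproduced, and the function names are hypothetical.

```python
def resize_nearest(img, out_h=224, out_w=224):
    """Nearest-neighbour resize of an H x W grayscale image stored as a list of lists."""
    in_h, in_w = len(img), len(img[0])
    return [[img[i * in_h // out_h][j * in_w // out_w] for j in range(out_w)]
            for i in range(out_h)]


def normalize_to_unit_range(img, max_value=255.0):
    """Linearly map intensities from [0, max_value] to [-1, 1]."""
    half = max_value / 2.0
    return [[p / half - 1.0 for p in row] for row in img]


if __name__ == "__main__":
    tiny = [[0, 255], [255, 0]]
    big = resize_nearest(tiny, 4, 4)  # each source pixel becomes a 2 x 2 block
    print(big)
    print(normalize_to_unit_range(big)[0])  # [-1.0, -1.0, 1.0, 1.0]
```

Nearest-neighbour sampling keeps the sketch dependency-free; it works for both upscaling and downscaling, since every output index maps back to a valid input index.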

4.3. Data Augmentation

Data augmentation is a common deep learning technique that increases the amount of available training/testing data [15]. Multiple image augmentation techniques are applied to improve the system's overall performance by adding more diversified data to the existing limited dataset. This is especially critical here, given the small number of positive COVID-19 cases. Table 2 shows the variation in the original number of images acquired for each class. This difference in image counts creates a significant class imbalance, which can cause various issues, including overfitting, where the model fails to generalize to unseen data. In this article, data augmentation (rotation and zoom) was used to address the overfitting caused by the limited number of training images: images were randomly rotated by up to 30 degrees and randomly zoomed by up to 20%. Table 2 gives the number of images before (15,153) and after (26,386) data augmentation. The dataset was then divided into 80% for training and 20% for validation, as summarized in Table 3.
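The rotation and zoom augmentation can be sketched in plain Python as below. The paper gives only the ranges (30 degrees, 20%), so the sketch assumes that the angle and zoom factor are drawn uniformly from [-30, 30] degrees and [0.8, 1.2], that pixels are sampled by nearest neighbour, and that uncovered pixels are filled with 0; these choices and the function names are assumptions for illustration. In practice a library routine would normally be used.

```python
import math
import random


def rotate_zoom(img, angle_deg, zoom):
    """Rotate by angle_deg and scale by zoom about the image centre.

    Each output pixel is mapped back into the input (inverse mapping) and
    sampled with nearest-neighbour interpolation; pixels that fall outside
    the input are filled with 0 (an assumed fill mode).
    """
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # undo the zoom, then undo the rotation, relative to the centre
            dy, dx = (y - cy) / zoom, (x - cx) / zoom
            sx = round(cos_a * dx + sin_a * dy + cx)
            sy = round(-sin_a * dx + cos_a * dy + cy)
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = img[sy][sx]
    return out


def random_rotate_zoom(img, max_angle=30.0, max_zoom=0.2, rng=random):
    """Sample an angle in [-max_angle, max_angle] and a zoom in [1 - max_zoom, 1 + max_zoom]."""
    angle = rng.uniform(-max_angle, max_angle)
    zoom = rng.uniform(1.0 - max_zoom, 1.0 + max_zoom)
    return rotate_zoom(img, angle, zoom)


if __name__ == "__main__":
    img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    print(rotate_zoom(img, 90, 1.0))
    print(random_rotate_zoom(img, rng=random.Random(0)))
```

Passing a seeded `random.Random` instance makes the augmentation reproducible, which helps when comparing runs.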

Table 2.

Number of images in the dataset before and after the augmentation technique.

| Class | Normal | Pneumonia | COVID-19 |
|---|---|---|---|
| No. of images before augmentation | 10,192 | 1345 | 3616 |
| No. of images after augmentation | 10,192 | 8087 | 8107 |

Table 3.

Class-wise training/validation distribution of the dataset after the augmentation technique.

| Class | Training (80%) | Validation (20%) | Total |
|---|---|---|---|
| Normal | 8154 | 2038 | 10,192 |
| Pneumonia | 6470 | 1617 | 8087 |
| COVID-19 | 6486 | 1621 | 8107 |
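The per-class counts in Tables 2 and 3 can be cross-checked with a few lines of Python. The sketch assumes a stratified 80/20 split in which each class's training share is rounded to the nearest integer, an assumption that reproduces Table 3; it also shows how augmentation reduces the ratio between the largest and smallest class. The names are illustrative.

```python
# Per-class image counts from Table 2.
BEFORE = {"Normal": 10_192, "Pneumonia": 1_345, "COVID-19": 3_616}
AFTER = {"Normal": 10_192, "Pneumonia": 8_087, "COVID-19": 8_107}


def stratified_split_counts(counts, train_fraction=0.8):
    """(train, validation) sizes per class; the training share is rounded (assumed)."""
    split = {}
    for name, n in counts.items():
        n_train = round(n * train_fraction)
        split[name] = (n_train, n - n_train)
    return split


def imbalance_ratio(counts):
    """Size of the largest class divided by the size of the smallest class."""
    return max(counts.values()) / min(counts.values())


if __name__ == "__main__":
    print(sum(BEFORE.values()), sum(AFTER.values()))  # 15153 26386
    print({k: AFTER[k] - BEFORE[k] for k in AFTER})    # augmented images added per class
    print(stratified_split_counts(AFTER))
    print(round(imbalance_ratio(BEFORE), 2), round(imbalance_ratio(AFTER), 2))  # 7.58 1.26
```

The largest-to-smallest class ratio drops from about 7.6 to about 1.3, which is the rebalancing the augmentation step is meant to achieve.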

4.4. Deep Feature Concatenation (DFC)

Many researchers have used AlexNet and Xception separately to detect COVID-19 from X-ray images, and in most of these works the individual models give limited results. Moreover, the main difficulty in this study is the relatively small number of available images of infected patients. To overcome this problem, the deep feature concatenation mechanism was used to improve the classification process. First, two CNNs, AlexNet and Xception, were used to extract deep features from the X-ray images. The extracted features were then combined into a single descriptor for classification using the DFC approach. The principle of DFC is to take a set of different learners and combine their outputs through a new learning stage. Three fully connected layers of 1024, 512, and 256 neurons, respectively, were then added. The outputs of the fully connected layers are unnormalized values, so the model ends with a Softmax activation layer that normalizes these outputs into probability values. The last classification layer has three classes: normal, pneumonia, and COVID-19. The architecture of the proposed COVID-AleXception model is shown in Figure 5.

Figure 5.

Graphical abstract of the proposed method.
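The concatenation-plus-classifier head described above can be sketched in plain Python. The two input vectors are placeholders for the feature outputs of the pretrained AlexNet and Xception backbones (the paper does not say which layers are tapped); the ReLU activations on the hidden layers and the random initialisation are assumptions, and training is omitted. In a framework such as Keras the same join would normally be expressed with a `Concatenate` layer followed by `Dense` layers.

```python
import math
import random


def dense(x, weights, biases, relu=False):
    """Fully connected layer: one weight row per output neuron."""
    out = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]
    return [max(0.0, v) for v in out] if relu else out


def softmax(logits):
    """Normalise unnormalised scores into probabilities (max subtracted for stability)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def build_head(n_features, hidden=(1024, 512, 256), n_classes=3, seed=0):
    """Randomly initialised FC layers (hypothetical initialisation, illustration only)."""
    rng = random.Random(seed)
    sizes = [n_features, *hidden, n_classes]
    layers = []
    for n_in, n_out in zip(sizes, sizes[1:]):
        scale = 1.0 / math.sqrt(n_in)
        weights = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers


def dfc_predict(alexnet_features, xception_features, head):
    """Concatenate the two deep feature vectors and classify them."""
    x = list(alexnet_features) + list(xception_features)  # deep feature concatenation
    for i, (weights, biases) in enumerate(head):
        is_last = i == len(head) - 1
        x = dense(x, weights, biases, relu=not is_last)  # ReLU on hidden layers (assumed)
    return softmax(x)


if __name__ == "__main__":
    # Tiny sizes keep the demo fast; the paper's head uses 1024, 512, and 256 neurons.
    head = build_head(6, hidden=(8, 4, 4), n_classes=3, seed=1)
    print(dfc_predict([0.1, 0.2, 0.3], [0.4, 0.5, 0.6], head))
```

The output is a list of three probabilities that sum to 1, one per class (for example in the order normal, pneumonia, COVID-19).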

5. Implementation and Testbed

In this section, we evaluate the efficiency of the proposed scheme based on the results of the experiments conducted. A machine with an Intel Core i7 3.5 GHz processor, 16 GB of RAM, and a 2 GB integrated NVIDIA graphics card was used to train and test all the methods described in Section 4. Anaconda3 (Python 3.7) was used to implement the image pre-processing algorithms, data augmentation tasks, and deep learning models. The dataset presented in Section 4.1 is divided into two groups, training (80%) and validation (20%); the first is used for training and the second for the final evaluation.

The performance of the developed model was analyzed using the following metrics: accuracy, precision, recall, and F1 score. Each metric is defined as follows:

- Accuracy: The accuracy is the percentage of correctly predicted images out of the total number of predictions [24]. It is calculated as:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

- Precision: Precision is the ratio of correctly predicted positive results (TP) to all positive results predicted by the model (TP + FP). A large number of FPs lowers precision [30]. Precision ranges from 0 to 1 and is calculated as:

$$\text{Precision} = \frac{TP}{TP + FP}$$

- Recall: Recall measures how many of the actual positives are correctly predicted, as the ratio of true positive results (TP) to the total number of actual positive samples (TP + FN) [30]. It is calculated as:

$$\text{Recall} = \frac{TP}{TP + FN}$$

- F1 score: The F1 score is the harmonic mean of precision and recall. It is defined as:

$$F1 = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

where TP = true positive, FP = false positive, TN = true negative, and FN = false negative.
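The four formulas above extend to the three-class case by treating each class in turn as the positive class. The sketch below (hypothetical function name) derives per-class TP, FP, FN, and TN from a confusion matrix, reports overall accuracy as the fraction of correct predictions, and macro-averages the per-class scores. The paper does not state which averaging it uses for Table 4, so macro-averaging is an assumption, and the example matrix is invented for illustration rather than taken from the experiments.

```python
def classification_metrics(cm):
    """cm[i][j] = number of images of true class i predicted as class j."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    per_class = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, actually another class
        fn = sum(cm[k]) - tp                       # actually k, predicted another class
        tn = total - tp - fp - fn
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class.append({"tp": tp, "fp": fp, "fn": fn, "tn": tn,
                          "precision": precision, "recall": recall, "f1": f1})
    accuracy = sum(cm[k][k] for k in range(n)) / total  # correct predictions / all predictions
    macro = {m: sum(c[m] for c in per_class) / n for m in ("precision", "recall", "f1")}
    return accuracy, per_class, macro


if __name__ == "__main__":
    # Illustrative confusion matrix: rows/columns = normal, pneumonia, COVID-19.
    cm = [[50, 2, 3], [4, 40, 1], [1, 0, 49]]
    accuracy, per_class, macro = classification_metrics(cm)
    print(round(accuracy, 3), macro)
```

For the example matrix, overall accuracy is 139/150, about 0.927.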

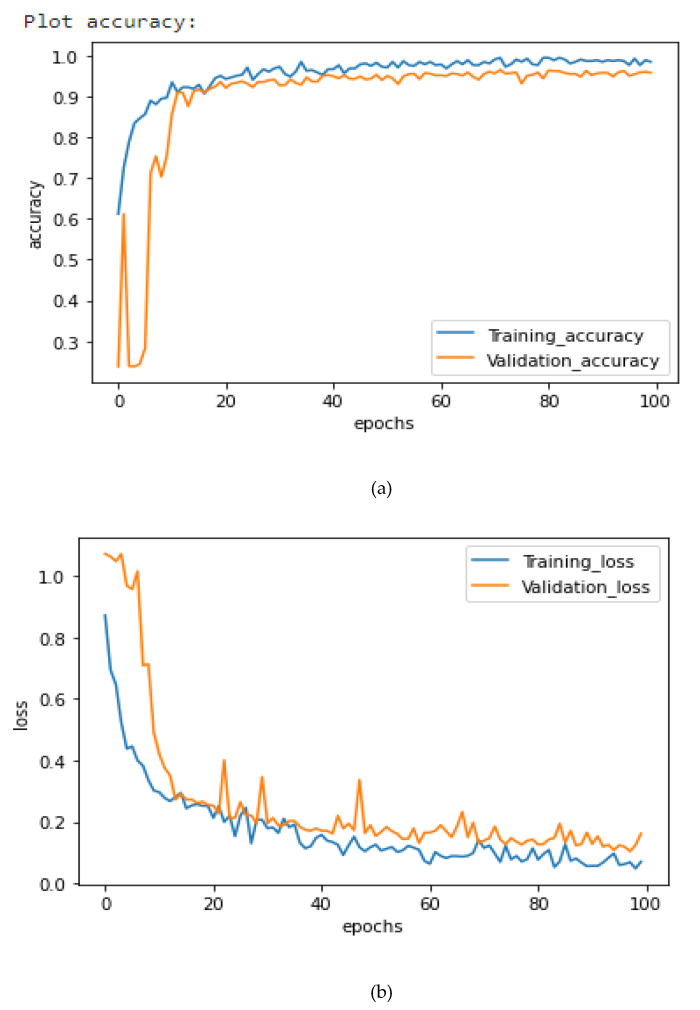

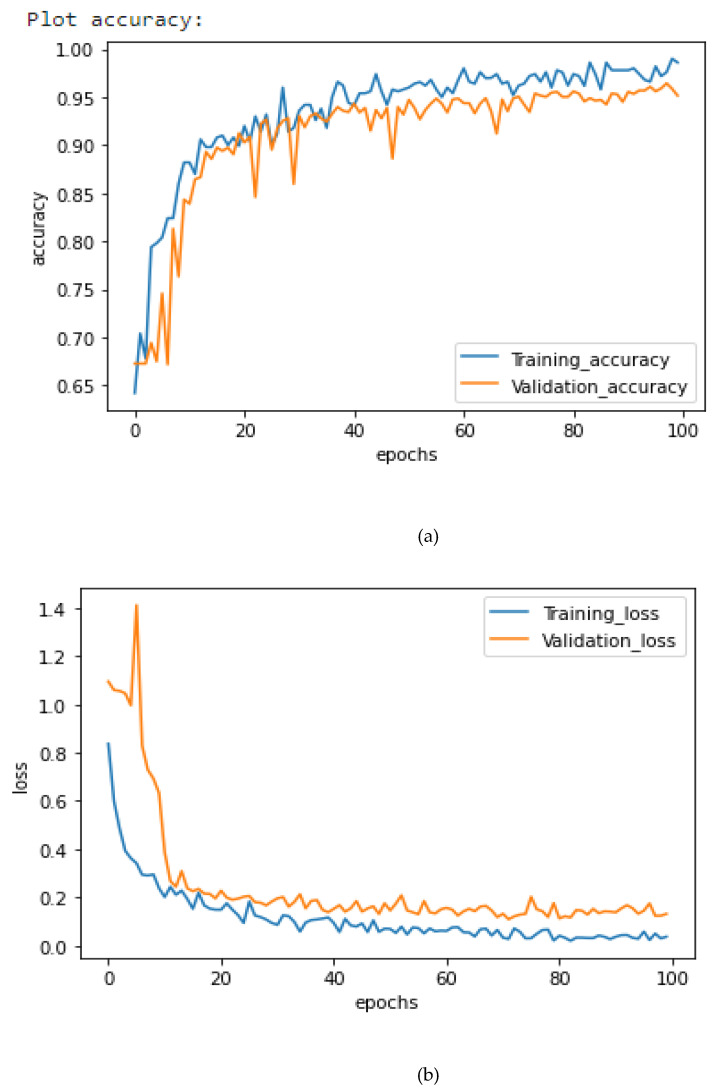

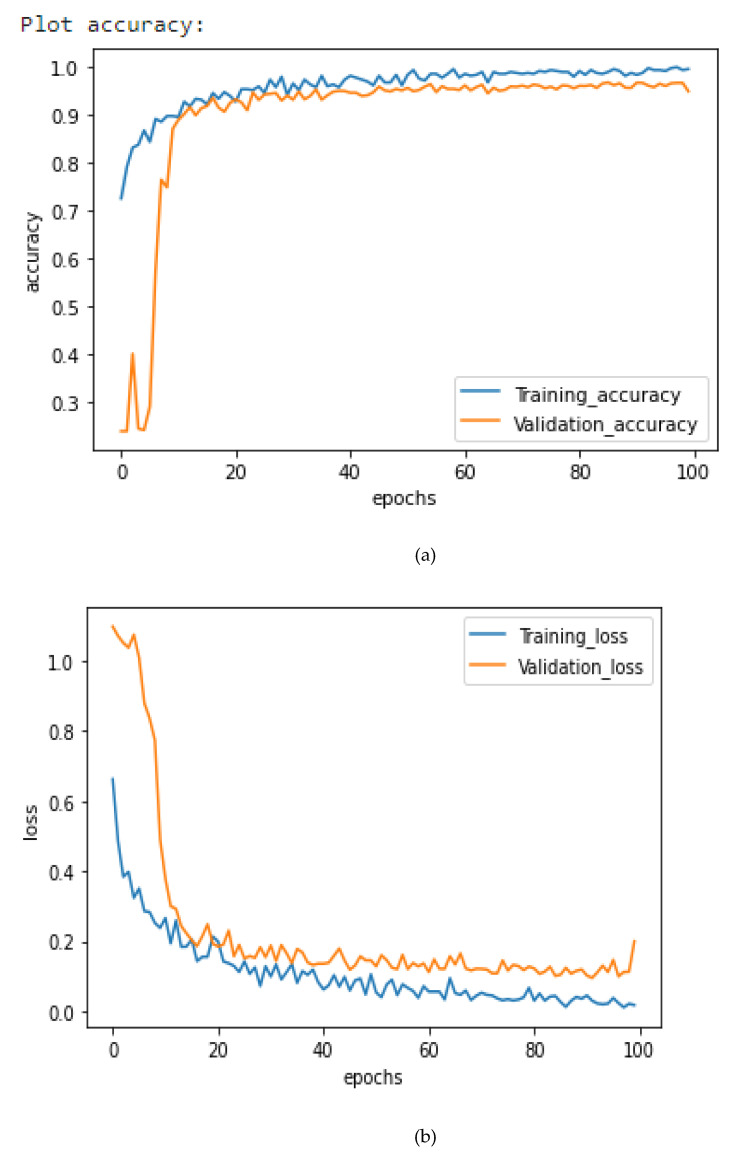

5.1. Performance Analysis

Figures 6, 7, and 8 show the accuracy and loss curves of the three models. As shown in Figure 6, after 100 epochs the AlexNet model reaches a maximum validation accuracy of 94.86%, with a training accuracy of 95.25% and a loss of 0.2. As shown in Figure 7, after 100 epochs the Xception model reaches a validation accuracy of 95.63%, with a training accuracy of 96.75% and a loss of 0.16. Concatenating the features of AlexNet and Xception and passing them through the fully connected layers gave the best result: as shown in Figure 8, this hybrid strategy reached 98.68% accuracy with a loss of only 0.1.

Figure 6.

AlexNet Loss and Accuracy curves. (a) Training and Validation Accuracy, (b) Training and Validation Loss.

Figure 7.

Xception Loss and Accuracy curves. (a) Training and Validation Accuracy, (b) Training and Validation Loss.

Figure 8.

COVID-AleXception Loss and Accuracy curves. (a) Training and Validation Accuracy, (b) Training and Validation Loss.
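The deep feature concatenation step described above, in which AlexNet and Xception features are joined and passed through a fully connected layer, can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: the feature sizes (4096 for AlexNet's penultimate fully connected layer, 2048 for Xception after global average pooling), the single dense softmax layer and the random stand-in features are all assumptions.

```python
import numpy as np

# Assumed feature sizes: AlexNet penultimate FC layer (4096) and Xception after
# global average pooling (2048). The paper's exact layers may differ.
ALEXNET_DIM = 4096
XCEPTION_DIM = 2048
NUM_CLASSES = 3  # normal, pneumonia, COVID-19


def softmax(z: np.ndarray) -> np.ndarray:
    # Numerically stable softmax over the last axis
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def dfc_head(f_alex: np.ndarray, f_xcep: np.ndarray,
             weights: np.ndarray, bias: np.ndarray) -> np.ndarray:
    # Deep feature concatenation: join the two feature vectors of each image...
    fused = np.concatenate([f_alex, f_xcep], axis=-1)  # shape (batch, 6144)
    # ...then a single fully connected layer maps the fused vector to class probabilities
    return softmax(fused @ weights + bias)


rng = np.random.default_rng(0)
batch = 4
# Random stand-ins for backbone outputs; a real pipeline would run the pretrained CNNs
f_alex = rng.standard_normal((batch, ALEXNET_DIM))
f_xcep = rng.standard_normal((batch, XCEPTION_DIM))
W = rng.standard_normal((ALEXNET_DIM + XCEPTION_DIM, NUM_CLASSES)) * 0.01
b = np.zeros(NUM_CLASSES)

probs = dfc_head(f_alex, f_xcep, W, b)
print(probs.shape)  # (4, 3)
```

Concatenation keeps both feature sets intact, so the classifier can weight AlexNet and Xception features independently; the cost is a wider input to the dense layer.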

Table 4 reports the average classification results of the three deep learning models on the dataset; training and testing run times are given in seconds. As Table 4 shows, AlexNet and Xception reach average accuracies above 94% (94.86% and 95.63%, respectively), while the COVID-AleXception model achieves the highest average accuracy of 98.68%. The same model also gives the highest F1 score (98.46%) and the highest recall (98.77%). These results support the feasibility and reliability of the proposed method for COVID-19 detection. However, because of the more complex structure of its internal modules, COVID-AleXception needs more time for training and testing than the other two models (938 s and 4.23 s, respectively), which is one of the shortcomings of the proposed model. Note that run times depend strongly on the computing environment. In addition, high-performance hardware is required to run these models.

Table 4.

Average classification results for the classification task of the original dataset.

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) | Training Time (s) | Testing Time (s) |
|---|---|---|---|---|---|---|
| AlexNet | 94.86 | 94.75 | 94.08 | 94.78 | 690 | 3.29 |
| Xception | 95.63 | 94.26 | 94.12 | 95.16 | 740 | 2.49 |
| COVID-AleXception | 98.68 | 99.11 | 98.77 | 98.46 | 938 | 4.23 |
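To put the run-time cost in Table 4 in perspective, the relative extra time of COVID-AleXception over each baseline can be computed directly from the reported values. The short Python sketch below performs only this arithmetic; the helper name `overhead` is hypothetical.

```python
# Run times copied from Table 4 (seconds)
times = {
    "AlexNet": {"train": 690, "test": 3.29},
    "Xception": {"train": 740, "test": 2.49},
    "COVID-AleXception": {"train": 938, "test": 4.23},
}


def overhead(model: str, baseline: str, phase: str) -> float:
    # Relative extra time of `model` over `baseline` for one phase, as a percentage
    return 100.0 * (times[model][phase] / times[baseline][phase] - 1.0)


for base in ("AlexNet", "Xception"):
    for phase in ("train", "test"):
        print(f"vs {base} ({phase}): +{overhead('COVID-AleXception', base, phase):.1f}%")
```

With the Table 4 values, training takes about 35.9% longer than AlexNet and 26.8% longer than Xception, and testing about 28.6% and 69.9% longer, respectively.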

Table 5 presents the per-class classification results (normal, pneumonia and COVID-19) obtained from the same AlexNet, Xception and COVID-AleXception models in terms of accuracy, precision, recall and F1 score. AlexNet performs best on the normal category, with an accuracy of 96.25%, and Xception also reaches its highest recall (97.25%) on the normal category. For the COVID-19 class, COVID-AleXception outperforms both other models on every metric, with an accuracy of 98.55%, a precision of 98.45%, a recall of 98.72% and an F1 score of 98.75%.

Table 5.

Model classification results for a 3-category classification task for the original dataset.

| Model | Category | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|---|
| AlexNet | Normal | 96.25 | 95.25 | 95.55 | 95.36 |
| | Pneumonia | 95.25 | 95.45 | 95.85 | 95.57 |
| | COVID-19 | 93.55 | 93.75 | 93.35 | 93.03 |
| Xception | Normal | 97.15 | 97.52 | 97.25 | 97.55 |
| | Pneumonia | 95.52 | 95.63 | 95.25 | 95.85 |
| | COVID-19 | 95.01 | 95.21 | 95.15 | 95.05 |
| COVID-AleXception | Normal | 99.05 | 99.35 | 99.27 | 99.78 |
| | Pneumonia | 98.75 | 99.85 | 99.48 | 99.05 |
| | COVID-19 | 98.55 | 98.45 | 98.72 | 98.75 |

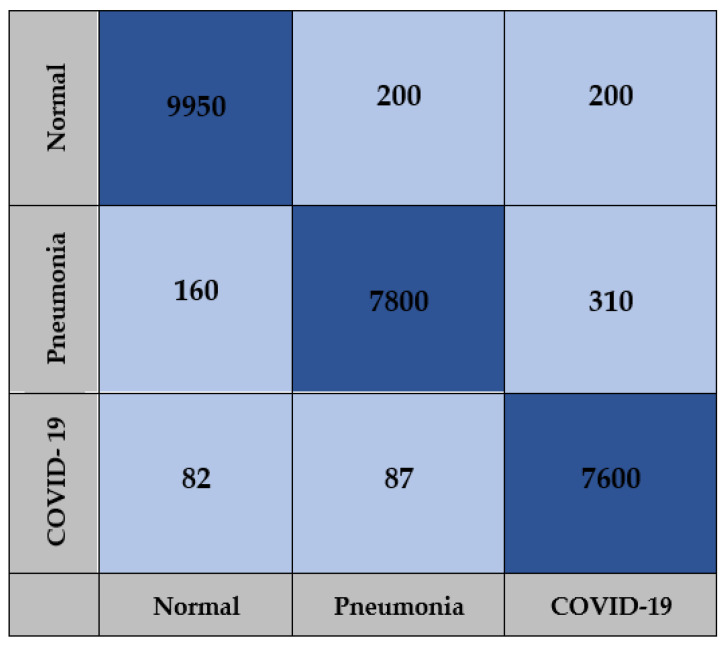

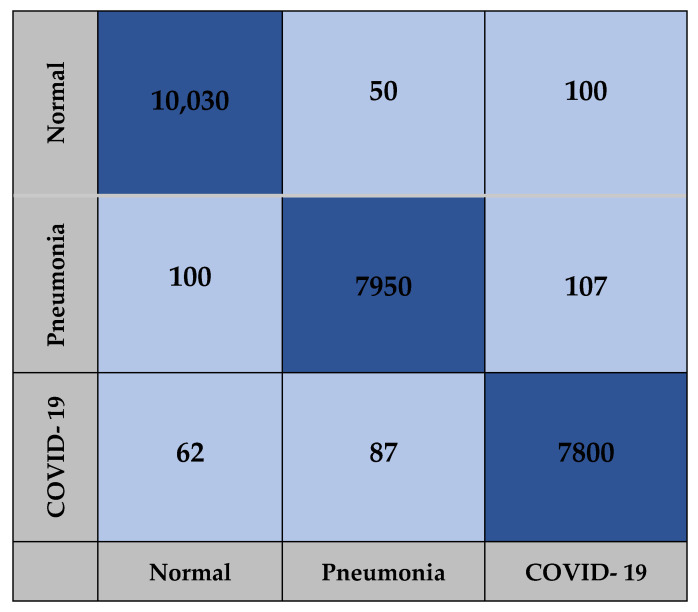

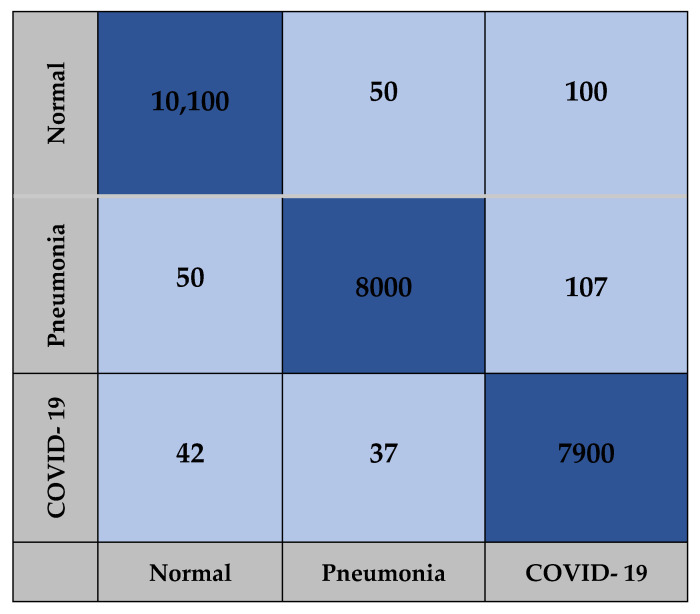

The confusion matrices of the AlexNet, Xception and COVID-AleXception models, computed on the test dataset, are presented in Figure 9, Figure 10 and Figure 11.

Figure 9.

Confusion matrix for AlexNet model.

Figure 10.

Confusion matrix for Xception model.

Figure 11.

Confusion matrix for COVID-AleXception model.
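Per-class results such as those in Table 5 are typically derived from a confusion matrix by treating each class one-vs-rest. The sketch below shows one way to do this with NumPy; the confusion matrix is hypothetical and is not read from Figures 9 to 11, and the paper does not state exactly how its per-class values were computed.

```python
import numpy as np

CLASSES = ["Normal", "Pneumonia", "COVID-19"]


def per_class_metrics(cm: np.ndarray) -> dict:
    # cm[i, j] = number of images of true class i predicted as class j
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as class k but belonging to another class
    fn = cm.sum(axis=1) - tp  # belonging to class k but predicted as another class
    tn = total - tp - fp - fn
    # np.divide with `where` leaves 0.0 wherever a denominator is zero
    precision = np.divide(tp, tp + fp, out=np.zeros_like(tp), where=(tp + fp) > 0)
    recall = np.divide(tp, tp + fn, out=np.zeros_like(tp), where=(tp + fn) > 0)
    f1 = np.divide(2 * precision * recall, precision + recall,
                   out=np.zeros_like(tp), where=(precision + recall) > 0)
    accuracy = (tp + tn) / total  # one-vs-rest accuracy for each class
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical confusion matrix for illustration only (rows: true, columns: predicted)
cm = np.array([
    [95, 3, 2],
    [4, 92, 4],
    [1, 2, 97],
])
m = per_class_metrics(cm)
for k, name in enumerate(CLASSES):
    print(f"{name:10s} acc={m['accuracy'][k]:.3f} prec={m['precision'][k]:.3f} "
          f"rec={m['recall'][k]:.3f} f1={m['f1'][k]:.3f}")
```

Column sums give the FPs and row sums the FNs for each class, so one matrix yields all four counts per class without re-running the model.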

5.2. Comparative Analysis and Discussion

The collected results show that, for the three-class classification task, our proposed model outperforms several of the state-of-the-art approaches listed in Table 6 in terms of accuracy, and that COVID-AleXception performs better than the AlexNet and Xception models on which it is built. The algorithms proposed in the state of the art focus on accuracy but do not take into account the computation time required, for example, by the training procedure. Although CNN-based image classification methods extract essential features and reduce computational complexity, the processing time they require can still be substantial. When training on a large database, this time can be considerable, and applications must wait until training has been completed. COVID-AleXception was designed with the aim of keeping this preparation time as short as possible. These comparisons should be taken as indicative only, because the datasets, methodologies and metrics used to evaluate each system differ, so an exact comparison cannot be made.

Table 6.

Comparison of the obtained results with state-of-the-art methods.

| Ref | CNN Model | Data Sources | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|---|---|
| [15] | nCOVnet | Cohen et al. [25] | 97.97 | 97.61 | 97.78 | 97.67 |
| [16] | ResNet50 | Radiography Database [26] | 99.17 | 99.45 | 98.97 | 99.25 |
| [17] | ResNet18 | CT scan images [27] | 99.60 | 99.52 | 99.71 | 99.75 |
| | ResNet50 | | 99.20 | 99.05 | 99.35 | 99.25 |
| | ResNet101 | | 99.30 | 99.35 | 99.25 | 99.18 |
| | SqueezeNet | | 99.50 | 99.45 | 99.40 | 99.55 |
| [18] | VGG19 + CNN | GitHub + cancer X-ray and CT images [28] | 98.05 | 97.95 | 97.87 | 97.90 |
| | ResNet152V2 | | 95.31 | 95.23 | 95.17 | 95.25 |
| | ResNet152V2 + GRU | | 96.09 | 95.85 | 95.95 | 95.80 |
| | ResNet152V2 + Bi-GRU | | 93.36 | 93.15 | 93.22 | 93.25 |
| [19] | ResNet50 | Cohen [25], Kaggle [29] | 93.01 | 92.90 | 92.95 | 92.85 |
| | ResNet101 | | 97.22 | 97.05 | 97.15 | 97.03 |
| [20] | VGG-16 | Khan et al. [22], Ozturk [20] | 79.58 | 79.58 | 85.43 | 87.49 |
| [21] | FocusCOVID | Kaggle-1 [30], Kaggle-2 [31] | 95.20 | 95.36 | 95.16 | 95.26 |
| [22] | CoroNet | Chest X-ray Images [32] | 89.60 | 89.45 | 89.36 | 89.50 |
| [23] | CheXImageNet | Cohen [25], Kaggle [29] | 100 | 100 | 100 | 100 |
| [24] | ResNet50 | Kaggle chest X-ray [33], RSNA pneumonia [34] | 91.13 | 92.96 | 92.85 | 92.73 |
| | MobileNet | | 93.73 | 93.66 | 93.55 | 93.48 |
| | Hybrid model | | 94.43 | 94.26 | 94.35 | 94.15 |
| COVID-AleXception | AlexNet | [25,26,30,31,32] | 94.86 | 94.75 | 94.08 | 94.78 |
| | Xception | | 95.63 | 94.26 | 94.12 | 95.16 |
| | COVID-AleXception | | 98.68 | 99.11 | 98.77 | 98.46 |

6. Conclusions

COVID-19, a severe disease that spreads through human contact, has affected many nations worldwide. Given the ever-increasing demand for the PCR tests commonly used to screen for possible COVID-19 cases, their limited availability and their significant false-negative rates, developing an alternative diagnostic tool is paramount to minimize risks and spread. In this article, two pre-trained CNN models, AlexNet and Xception, were used to extract features from chest X-ray images, and these features were combined using the deep feature concatenation (DFC) technique. To improve the overall prediction capability for this pandemic, we propose COVID-AleXception: a neural network that concatenates the AlexNet and Xception networks. X-ray images were carefully collected from various sources to form a relatively large-scale dataset for evaluating the proposed model. The COVID-AleXception model achieves a classification accuracy of 98.68%, demonstrating its superiority over AlexNet and Xception, which achieve classification accuracies of 94.86% and 95.63%, respectively. The proposed COVID-AleXception model nevertheless has some limitations. One is its longer training and testing run times compared with the other models, due to the complex structure of the modules it contains. Another is that high-performance hardware is required to run these models. In the future, the COVID-AleXception model could be implemented on an FPGA (Field-Programmable Gate Array) to build a standalone device that would be easier to integrate into a drone monitoring system.

Author Contributions

Conceptualization, methodology, writing—original draft, results analysis, M.A.; data collection, data analysis, writing—review and editing, results analysis, A.K.; methodology, writing—review and editing, design and presentation, references, B.O.S.; methodology, writing—review and editing, A.A.-R. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This work was supported by Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2022R235), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Luz E., Silva P., Silva R., Silva L., Guimarães J., Miozzo G., Moreira G., Menotti D. Towards an effective and efficient deep learning model for COVID-19 patterns detection in X-ray images. Res. Biomed. Eng. 2022;38:149–162. doi: 10.1007/s42600-021-00151-6.
2. Senan E.M., Alzahrani A., Alzahrani M.Y., Alsharif N., Aldhyani T.H.H. Automated Diagnosis of Chest X-Ray for Early Detection of COVID-19 Disease. Comput. Math. Methods Med. 2021;2021:6919483. doi: 10.1155/2021/6919483.
3. Iwendi C., Huescas C.G.Y., Chakraborty C., Mohan S. COVID-19 health analysis and prediction using machine learning algorithms for Mexico and Brazil patients. J. Exp. Theor. Artif. Intell. 2022;34:725–733. doi: 10.1080/0952813X.2022.2058097.
4. Sitaula C., Shahi T.B. Monkeypox Virus Detection Using Pre-Trained Deep Learning-based Approaches. J. Med. Syst. 2022;46:78. doi: 10.1007/s10916-022-01868-2.
5. Krishnaraj C., Chinmay C., Srikanth P., Shashikiran U., KVivekananda B., Niranjana S. Clinical and Laboratory approach to Diagnose COVID-19 using Machine Learning. Interdiscip. Sci. Comput. Life Sci. 2022;14:452–470. doi: 10.1007/s12539-021-00499-4.
6. Wu C., Khishe M., Mohammadi M., Taher Karim S.H., Rashid T.A. Evolving deep convolutional neutral network by hybrid sine–cosine and extreme learning machine for real-time COVID19 diagnosis from X-ray images. Soft Comput. 2021;5:9663–9676. doi: 10.1007/s00500-021-05839-6.
7. Nasiri H., Hasani S. Automated detection of COVID-19 cases from chest X-ray images using deep neural network and XGBoost. Radiography. 2022;28:732–738. doi: 10.1016/j.radi.2022.03.011.
8. Chakraborty C., Kishor A., Rodrigues J.J. Novel Enhanced-Grey Wolf Optimization hybrid machine learning technique for biomedical data computation. Comput. Electr. Eng. 2022;99:107778. doi: 10.1016/j.compeleceng.2022.107778.
9. Hassan H., Ren Z., Zhou C., Khan M.A., Pan Y., Zhao J., Huang B. Supervised and weakly supervised deep learning models for COVID-19 CT diagnosis: A systematic review. Comput. Methods Progr. Biomed. 2022;218:106731. doi: 10.1016/j.cmpb.2022.106731.
10. Rahman A., Chakraborty C., Anwar A., Karim M., Islam M., Kundu D., Rahman Z., Band S.S. SDN-IoT Empowered Intelligent Framework for Industry 4.0 Applications during COVID-19 Pandemic. Clust. Comput. 2021;25:2351–2368. doi: 10.1007/s10586-021-03367-4.
11. Sujata D., Chinmay C., Sourav K.G., Subhendu K.P., Jaroslav F. BIFM: Big-data Driven Intelligent Forecasting Model for COVID-19. IEEE Access. 2021;9:97505–97517. doi: 10.1109/ACCESS.2021.3094658.
12. Muhammad S., Javeria A., Nadia G., Seifedine K., Chinmay C. Quantum Machine Learning Architecture for COVID-19 Classification based on Synthetic Data Generation using Conditional Adversarial Neural Network (CGAN). Cogn. Comput. 2021;14:1677–1688. doi: 10.1007/s12559-021-09926-6.
13. Kumar A., Tripathi A.R., Satapathy S.C., Zhang Y.D. SARS-Net: COVID-19 detection from chest x-rays by combining graph convolutional network and convolutional neural network. Pattern Recognit. 2022;122:108255. doi: 10.1016/j.patcog.2021.108255.
14. Zouch W., Sagga D., Echtioui A., Khemakhem R., Ghorbel M., Mhiri C., Hamida A.B. Detection of COVID-19 from CT and Chest X-ray Images Using Deep Learning Models. Ann. Biomed. Eng. 2022;50:825–835. doi: 10.1007/s10439-022-02958-5.
15. Panwar H., Gupta P.K., Siddiqui M.K., Morales-Menendez R., Singh V. Application of deep learning for fast detection of COVID-19 in X-Rays using nCOVnet. Chaos Solitons Fractals. 2020;138:109944. doi: 10.1016/j.chaos.2020.109944.
16. Hossain M.B., Iqbal S.H.S., Islam M.M., Akhtar M.N., Sarker I.H. Transfer learning with fine-tuned deep CNN ResNet50 model for classifying COVID-19 from chest X-ray images. Inform. Med. Unlocked. 2022;30:100916. doi: 10.1016/j.imu.2022.100916.
17. Ahuja S., Panigrahi B.K., Dey N., Rajinikanth V., Gandhi T.K. Deep transfer learning-based automated detection of COVID-19 from lung CT scan slices. Appl. Intell. 2020;51:571–585. doi: 10.1007/s10489-020-01826-w.
18. Ibrahim D.M., Elshennawy N.M., Sarhan A.M. Deep-chest: Multi-classification deep learning model for diagnosing COVID-19, pneumonia, and lung cancer chest diseases. Comput. Biol. Med. 2021;132:104348. doi: 10.1016/j.compbiomed.2021.104348.
19. Jain G., Mittal D., Thakur D., Mittal M.K. A deep learning approach to detect COVID-19 coronavirus with X-ray images. Biocybern. Biomed. Eng. 2020;40:1391–1405. doi: 10.1016/j.bbe.2020.08.008.
20. Sitaula C., Hossain M.B. Attention-based VGG-16 model for COVID-19 chest X-ray image classification. Appl. Intell. 2021;51:2850–2863. doi: 10.1007/s10489-020-02055-x.
21. Li L., Qin L., Xu Z., Yin Y., Wang X., Kong B., Bai J., Lu Y., Fang Z., Song Q., et al. Using Artificial Intelligence to Detect COVID-19 and Community-acquired Pneumonia Based on Pulmonary CT: Evaluation of the Diagnostic Accuracy. Radiology. 2020;296:E65–E71. doi: 10.1148/radiol.2020200905.
22. Khan A.I., Shah J.L., Bhat M.M. CoroNet: A deep neural network for detection and diagnosis of COVID-19 from chest X-ray images. Comput. Methods Progr. Biomed. 2020;196:105581. doi: 10.1016/j.cmpb.2020.105581.
23. Shastri S., Kansal I., Kumar S., Singh K., Popli R., Mansotra V. CheXImageNet: A novel architecture for accurate classification of COVID-19 with chest x-ray digital images using deep convolutional neural networks. Health Technol. 2022;12:193–204. doi: 10.1007/s12553-021-00630-x.
24. Nandi R., Mulimani M. Detection of COVID-19 from X-rays using hybrid deep learning models. Res. Biomed. Eng. 2021;37:687–695. doi: 10.1007/s42600-021-00181-0.
25. Cohen J.P., Morrison P., Dao L. COVID-19 image data collection. arXiv. 2020:2003.11597.
26. Rahman T., Khandakar A., Qiblawey Y., Tahir A., Kiranyaz S., Kashem S.B.A., Islam M.T., Al Maadeed S., Zughaier S.M., Khan M.S., et al. Exploring the effect of image enhancement techniques on COVID-19 detection using chest X-ray images. Comput. Biol. Med. 2021;132:104319. doi: 10.1016/j.compbiomed.2021.104319.
27. Zhao J., Zhang Y., He X., Xie P. COVID-ct-dataset: A ct scan dataset about COVID-19. arXiv. 2020:2003.13865.
28. Bhandary A., Prabhu G.A., Rajinikanth V., Thanaraj K.P., Satapathy S.C., Robbins D.E., Shasky C., Zhang Y.D., Tavares J.M.R., Raja N.S.M. Deep-learning framework to detect lung abnormality – A study with chest X-ray and lung CT scan images. Pattern Recognit. Lett. 2020;129:271–278. doi: 10.1016/j.patrec.2019.11.013.
29. Mooney P. Chest X-ray Images (Pneumonia). (accessed on 5 April 2022). Available online: https://www.kaggle.com/datasets/paultimothymooney/chest-xray-pneumonia.
30. Chowdhury M.E.H., Rahman T., Khandakar A., Mazhar R., Kadir M.A., Mahbub Z.B., Islam K.R., Khan M.S., Iqbal A., Emadi N.A., et al. Can AI help in screening viral and COVID-19 pneumonia? IEEE Access. 2020;8:132665–132676. doi: 10.1109/ACCESS.2020.3010287.
31. Asraf A. COVID Dataset. 2020. (accessed on 2 January 2022). Available online: https://www.kaggle.com/amanullahasraf/COVID19-pneumonia-normal-chest-xraypa-dataset.
32. Chest X-ray Images (Pneumonia). (accessed on 1 April 2022). Available online: https://www.kaggle.com/datasets/prashant268/chest-xray-COVID19-pneumonia.
33. Kermany D.S., Zhang K., Goldbaum M.H. Labeled optical coherence tomography (OCT) and chest x-ray images for classification. Mendeley Data. 2018:2. doi: 10.17632/rscbjbr9sj.2.
34. RSNA Pneumonia Detection Challenge. 2018. (accessed on 25 June 2022). Available online: https://www.kaggle.com/c/rsna-pneumonia-detection-challenge/data.
35. Alzubaidi L., Zhang J., Humaidi A.J., Al-Dujaili A., Duan Y., Al-Shamma O., Santamaría J., Fadhel M.A., Al-Amidie M., Farhan L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data. 2021;8:53. doi: 10.1186/s40537-021-00444-8.
36. Turkoglu M. COVIDetectioNet: COVID-19 diagnosis system based on X-ray images using features selected from pre-learned deep features ensemble. Appl. Intell. 2021;51:1213–1226. doi: 10.1007/s10489-020-01888-w.
37. Ozturk T., Talo M., Yildirim E.A., Baloglu U.B., Yildirim O. Automated detection of COVID-19 cases using deep neural networks with X-ray images. Comput. Biol. Med. 2020;121:103792. doi: 10.1016/j.compbiomed.2020.103792.
