Abstract

Artificial intelligence (AI)-assisted diagnosis and treatment could expand the range of medical scenarios and improve work efficiency and accuracy. However, the factors influencing healthcare workers’ adoption intention of AI-assisted diagnosis and treatment are not well understood. This cross-sectional study surveyed 343 dental healthcare workers from tertiary and secondary hospitals in Anhui Province. The data were analyzed using structural equation modeling. The results showed that performance expectancy and effort expectancy were both positively related to healthcare workers’ adoption intention of AI-assisted diagnosis and treatment. Social influence and human–computer trust each mediated the relationship between expectancy (performance expectancy and effort expectancy) and healthcare workers’ adoption intention of AI-assisted diagnosis and treatment. Furthermore, social influence and human–computer trust played a chain mediation role between expectancy and healthcare workers’ adoption intention of AI-assisted diagnosis and treatment. Our study provides novel insights into the path mechanism of healthcare workers’ adoption intention of AI-assisted diagnosis and treatment.

Keywords: performance expectancy, effort expectancy, social influence, human–computer trust, adoption intention, healthcare worker, AI-assisted diagnosis and treatment

1. Introduction

Artificial intelligence (AI) is touted as the principal general-purpose technology of this era [1,2]. Medical AI denotes applying a series of functions, including auxiliary diagnosis, risk prediction, disease triage, health management, and hospital management, through intelligent algorithms and technologies such as machine learning, representation learning, and deep learning [3]. Among them, AI-assisted diagnosis and treatment attracts attention worldwide, and domestic and foreign technology giants are competing in this field. AI robots perform medical AI-assisted diagnosis and treatment to complete daily supporting tasks, including directing methods, consultation in hospitals, image capture and recognition, assistive support in surgery, and epidemic-prevention information [4,5]. The last decade has witnessed noticeable progress in the use of AI-assisted diagnosis and treatment in the field of dentistry [6].

AI-assisted diagnosis and treatment is the application of AI in disease diagnosis and treatment. For disease diagnosis, in oral implantology, several artificial intelligence models have been able to classify normal and osteoporotic subjects on panoramic radiographs, and their accuracy, sensitivity, and specificity are above 95%. They can assist doctors in identifying osteoporotic patients before implant treatment and improve the success rate of treatment [7]. In endodontics, some scholars [8,9] found that a deep-learning-based convolutional neural network algorithm can provide accurate diagnosis of dental caries, with an accuracy of 89.0% for premolars and 88.0% for molars, respectively, which is expected to be one of the effective methods for diagnosing caries. For treatment, dental robots play an important role in various fields of dentistry. In maxillofacial surgery, the robot can provide high-definition, three-dimensional magnified images and enter the body through a minimally invasive incision during surgery, which can significantly improve the precision, safety, and therapeutic effect of surgery [10]. In oral implantology, the robot system can achieve the reproduction of the anatomical structure of the surgical area, the precise design of preoperative implants, the automatic and precise implementation of surgery, and immediate implant restoration, which meet the requirements of precise, efficient, minimally invasive, and comfortable surgery [11]. Although the application of AI-assisted diagnosis and treatment could expand the medical scenarios [12,13] and augment work efficiency and accuracy [14,15], healthcare workers are unwilling to believe and rely on things that cannot be explained [16,17]. Owing to the lack of algorithm transparency, data security risks, uncertain medical responsibilities, and substitution threats, healthcare workers refuse to use AI [18,19]. 
Thus, it is imperative and urgent to investigate the acceptance of AI-assisted diagnosis and treatment for healthcare workers at this stage.

Research on technology-adoption intention in healthcare can be divided into three categories according to the different subjects of adoption: healthcare recipients (e.g., patients), healthcare workers (e.g., doctors, nurses), and healthcare institutions (e.g., hospitals, clinics). For different adopters, there are different factors influencing the intention to adopt technology, and the research models also differ [20,21,22,23,24,25]. This study compares the relevant literature, summarizes the theoretical basis and factors of healthcare workers’ intention to adopt technology, and lays the foundation for subsequent research on healthcare workers’ adoption intention of AI-assisted treatment technology (see Table 1).

Table 1.

Literature review on healthcare workers’ adoption intention.

| Authors | Context | Theoretical Basis | Region | Key Findings |
|---|---|---|---|---|
| Alsyouf et al. (2022) [23] | Nurses’ continuance intention of EHR | UTAUT, ECT, FFM | Jordan | Performance expectancy as a mediating variable on the relationships between the different personality dimensions and continuance intention, specifically conscientiousness as a moderator. |
| Pikkemaat et al. (2021) [24] | Physicians’ adoption intention of telemedicine | TPB | Sweden | Attitudes and perceived behavioral control being significant predictors for physicians to use telemedicine. |
| Hossain et al. (2019) [25] | Physicians’ adoption intention of EHR | Extended UTAUT | Bangladesh | Social influence, facilitating conditions, and personal innovativeness in information technology had a significant influence on physicians’ adoption intention to adopt the EHR system. |
| Alsyouf and Ishak (2018) [26] | Nurses’ continuance intention to use EHR | UTAUT and TMS | Jordan | Effort expectancy, performance expectancy, and facilitating conditions positively influence nurses’ continuance intention to use and top management support as significant and negatively related to nurses’ continuance adoption intention. |
| Fan et al. (2018) [27] | Healthcare workers’ adoption intention of AIMDSS | UTAUT, TTF, trust theory | China | Initial trust mediates the relationship between UTAUT factors and behavioral intentions. |
| Bawack and Kamdjoug (2018) [28] | Clinicians’ adoption intention of HIS | Extended UTAUT | Cameroon | Performance expectancy, effort expectancy, social influence, and facilitating conditions have a positive direct effect on clinicians’ adoption intention of HIS. |
| Adenuga et al. (2017) [29] | Clinicians’ adoption intention of telemedicine | UTAUT | Nigeria | Performance expectancy, effort expectancy, facilitating condition, and reinforcement factor have significant effects on clinicians’ adoption intention of telemedicine. |
| Liu and Cheng (2015) [30] | Physicians’ adoption intention of MEMR | The dual-factor model | Taiwan | Physicians’ intention to use MEMRs is significantly and directly related to perceived ease of use and perceived usefulness, but perceived threat has a negative influence on physicians’ adoption intention. |
| Hsieh (2015) [31] | Healthcare professionals’ adoption intention of health clouds | TPB and Status quo bias theory | Taiwan | Attitude, subjective norm, and perceived behavior control are shown to have positive and direct effects on healthcare professionals’ intention to use the health cloud. |
| Wu et al. (2011) [32] | Healthcare professionals’ adoption intention of mobile healthcare | TAM and TPB | Taiwan | Perceived usefulness, attitude, perceived behavioral control, and subjective norm have a positive effect on healthcare professionals’ adoption intention of mobile healthcare. |
| Egea and González (2011) [33] | Physicians’ acceptance of EHCR | Extended TAM | Southern Spain | Trust fully mediated the influences of perceived risk and information integrity perceptions on physicians’ acceptance of EHCR systems. |

Note: EHR, electronic health record; AIMDSS, AI-based medical diagnosis support system; HIS, health information system; MEMR, mobile electronic medical records; EHCR, electronic health care records; UTAUT, unified theory of acceptance and use of technology; ECT, the theory of expectation confirmation; FFM, five-factor model; TPB, theory of planned behavior; TMS, top management support; TTF, task technology fit; TAM, technology-acceptance model.

The current research on healthcare workers’ adoption intention is primarily based on a single technology-adoption theory (e.g., technology-acceptance model (TAM) and unified theory of acceptance and use of technology (UTAUT)) [34,35], and has explored the impact of AI technical characteristics [27], individual psychological cognition [26,28,29], and social norms [25,31,32] on healthcare workers’ intention to adopt AI technology. Of these, expectancy includes performance expectancy and effort expectancy, which are psychological cognitive factors that affect technology adoption [34,35,36,37]. Performance expectancy signifies the degree to which an individual believes that adopting new technology could improve his/her work performance and is an individual perception of the practicality of new technology [34,35]. Effort expectancy denotes the level of effort required by healthcare workers to use AI-assisted diagnosis and treatment and their perception of the ease of use of the new technology [34,35]. Both expectancies positively affect users’ adoption intention [26,28,29]. Nevertheless, the adoption intention of AI by healthcare workers might change because of technological and environmental changes. At present, limited research has been conducted on the microprocess mechanism and medical scenarios of healthcare workers’ acceptance of AI.

It is worth noting that although existing studies have confirmed the validity of models such as TAM and UTAUT in assessing healthcare workers’ technology-adoption intention, medical AI is different from the previous technologies and presents the characteristics of high motility, high risk, and low trust. Therefore, a single model based on the traditional TAM or UTAUT has low explanatory power for the intention to use AI [38]. Most existing studies have used an extended TAM or UTAUT to explore the factors influencing healthcare workers’ technology-adoption intention (see Table 1).

AI represents a highly capable, complex technology designed to mimic human intelligence, characterized by agency and control shifting from humans to technology and altering people’s previous understanding of the relationship between humans and technology, thereby creating a sense of trust [39]. Research has confirmed that human–computer trust (HCT) is an important prerequisite for users’ acceptance of medical AI, especially for more automated AI applications [40]. HCT contributes to reliability and the anthropomorphic features of AI [4,39,41]. Although many studies have examined trust in the interpersonal and societal domains and in different technologies, studies addressing trust in medical AI-assisted diagnosis and treatment are scarce. Madsen and Gregor (2000) defined HCT as “the extent to which a user is confident in, and willing to act on the basis of the recommendations, actions, and decisions of an artificially intelligent decision aid” [42], which enhances healthcare workers’ adoption intention of AI-assisted diagnosis and treatment [27,33]. Theories of interpersonal relationships have established trust as a social glue in relationships, groups, and societies [21,43]. However, the current literature leaves unanswered questions. For example, how is HCT built among healthcare workers, and how does it affect adoption intention? In addition, based on the UTAUT model, social influence exerts a positive impact on technology adoption [34,35]. Research has established that social influence indirectly affects users’ adoption intention of AI through trust [44]. Thus, based on the UTAUT model and HCT theory, this study investigates the path mechanism of expectancy on healthcare workers’ adoption intention of AI-assisted diagnosis and treatment.

This study contributes to the extant research literature in two ways. First, previous studies primarily used a single technology-adoption model to examine healthcare workers’ adoption intention toward the electronic health record (EHR) [23,25,26] and telemedicine [24,29]. This study proposes an integrated model of the UTAUT model and HCT theory to determine what factors affect the intention of healthcare workers to adopt AI-assisted diagnosis and treatment, enriches the theoretical research of the UTAUT model, and expands the application scenarios of medical AI.

Second, previous research focused more on the direct impact of technology adoption [34,35]. The intermediary mechanism influencing the expectancy on healthcare workers’ adoption intention of AI-assisted diagnosis and treatment has received limited attention. Of note, HCT fails to elucidate the underlying mechanisms of why some healthcare workers are reluctant to believe medical AI. This study constructs a chain mediation model to illustrate the psychological mechanism of how healthcare workers’ expectancy affects their intention of embracing AI-assisted diagnosis and treatment. In addition, this study demonstrates the single mediating effect and chain mediation effect of social influence and HCT. By integrating social influence and HCT in the model, we offer a better understanding of how social influence and HCT can individually and collectively influence the association between expectancy and adoption intention for healthcare workers. Moreover, the conclusions could also provide a theoretical basis for medical explainable AI research and provide management enlightenment or reference for service providers, hospital managers, and government sectors.

The rest of the paper is structured as follows. Section 2 presents the proposed model with theoretical background. The research methodology is explained in Section 3. Data analysis and results are presented in Section 4. In Section 5, we discuss the implications of the findings, contributions, limitations, and directions for future research. Section 6 concludes the paper with some final thoughts.

2. Theoretical Background and Research Hypotheses

2.1. Theoretical Background

2.1.1. The Unified Theory of Acceptance and Use of Technology

The UTAUT is a model to explain the generation of behavior proposed by integrating eight theoretical models, including the theory of reasoned action (TRA) [35,45], TAM [34], and theory of planned behavior (TPB) [46]. The UTAUT model could explain 70% of individual intentions to adopt information technology and 50% of information technology-adoption behavior. Among them, performance expectancy, effort expectancy, and social influence play a decisive role in individual intention, and facilitating conditions directly influence individual behavior [35]. This study focuses on healthcare workers’ adoption intention of AI-assisted diagnosis and treatment rather than on their adoption behavior. Therefore, the impact of facilitating conditions on healthcare workers’ adoption intention was not considered.

Wang et al. (2020) integrated UTAUT and task–technology fit (TTF) to understand the factors influencing consumer acceptance of healthcare wearable devices (HWDs). The key findings revealed that consumer acceptance is influenced by both users’ perceptions (performance expectancy, effort expectancy, social influence, and facilitating conditions) and the task–technology fit [20]. As the faster application of medical AI makes healthcare workers face greater uncertainty, the reasons for healthcare workers to adopt new technology are more diverse. Hossain et al. (2019) explored factors influencing the physicians’ adoption of EHR in Bangladesh and determined that social influence, facilitating conditions, and personal innovation positively influenced physicians’ intention to adopt the EHR system [25]. A Chinese study reported that doctors’ initial trust in AI-assisted diagnosis and treatment exerted a significant positive impact on doctors’ adoption intention [27]. The UTAUT model has been broadly used in healthcare.

2.1.2. Human–Computer Trust Theory

The interaction between people and technology has special trust characteristics [47]. HCT is the degree to which people have confidence in AI systems and are willing to take action [42]. Trust is considered an attitude intention [47], which could directly influence acceptance and help people make cognitive judgments by decreasing risk perception [48] and enhancing benefit perception [49]. HCT is an attitude of trust that stems from the interaction between human and AI [50]. Fan et al. (2020) stated that perceived trust positively correlated with the adoption of AI-based medical diagnosis support system (AIMDSS) by healthcare professionals [27]. Furthermore, a Chinese study established that initial trust in an AI-assisted diagnosis system affects doctors’ adoption intention [37].

The traditional medical service relationship primarily occurs between patients and medical institutions or medical personnel, while in the medical AI scenario, the vital factor of human–technology interaction is added. HCT largely focuses on the collaboration between the human being and the automatic system [51]. From the perspective of technological object, the performance (such as trustworthiness and reliability) and attributes (such as appearance and sound) of the AI system itself as well as the different social and cultural situations might affect the HCT establishment [52]. From the viewpoint of technology users, people’s perceived expertise and responsiveness, risk cognition and brand perceptions, and other influencing factors constitute a preliminary model of technology trust, which emphasizes that users’ trust in AI chatbots could be a direct factor affecting users’ behavior [53].

2.2. Research Hypotheses

2.2.1. Expectancy and Adoption Intention

Performance expectancy denotes the degree to which using technology would bring effectiveness to users in performing specific tasks [34,35]. In the context of AI-assisted diagnosis and treatment, performance expectancy indicates the extent to which AI-assisted diagnosis and treatment help healthcare workers increase their work efficiency. Effort expectancy is defined as the degree to which a person believes that using a particular system would be free of effort [34,35]. In this study, effort expectancy is to mirror healthcare workers’ perception of how easy it is to adopt AI-assisted diagnosis and treatment. Previous studies demonstrated that performance expectancy and effort expectancy are the primary determinants of intention to adopt a new technology [20,27,35]. Adenuga et al. (2017) posited that performance expectancy and effort expectancy exerted significant effects on Nigerian clinicians’ intention to adopt the telemedicine systems [29]. Regarding the adoption of the EHR [23,25] and the health information system (HIS) [28], studies have confirmed that performance expectancy and effort expectancy are positively related to physicians’ adoption intention. Hence, the following hypotheses are proposed:

Hypothesis 1a (H1a).

Performance expectancy is positively related to healthcare workers’ adoption intention of AI-assisted diagnosis and treatment.

Hypothesis 1b (H1b).

Effort expectancy is positively related to healthcare workers’ adoption intention of AI-assisted diagnosis and treatment.

2.2.2. The Mediating Role of Social Influence

Social influence is defined as the degree to which an individual believes that important others believe he/she should adopt a new technology, which is considered the main predictor of general technology-acceptance behavior [34,35,54,55]. The rationale behind social influence could be that individuals want to fortify their relationships with critical persons by following their views of specific behaviors [56]. Based on the UTAUT model, Shiferaw and Mehari (2019) stated that social influence significantly and positively affected the intention of healthcare workers to use the electronic medical record system [57]. In addition, previous studies confirmed the positive association between social influence and behavioral intention [25,28]. Social influence can also aid the understanding of uncertainty reduction, as it might function as a substitute for interaction with the unknown and not-yet-available technology [58]. In other words, social influence is an active information-seeking method [59]. In this study, healthcare workers’ expectancy (performance expectancy and effort expectancy) of AI-assisted diagnosis and treatment are influenced by other healthcare workers’ attitudes, in turn influencing other healthcare workers’ attitudes toward AI-assisted diagnosis and treatment. This social interaction allows healthcare workers to gain information about AI-assisted diagnosis and treatment, reducing their perception of uncertainty and thus influencing their willingness to adopt AI-assisted diagnosis and treatment. Hence, the following hypotheses are proposed:

Hypothesis 2a (H2a).

Social influence mediates the relationship between performance expectancy and healthcare workers’ adoption intention of AI-assisted diagnosis and treatment.

Hypothesis 2b (H2b).

Social influence mediates the relationship between effort expectancy and healthcare workers’ adoption intention of AI-assisted diagnosis and treatment.

2.2.3. The Mediating Role of Human–Computer Trust

Human–computer trust (HCT) denotes the beliefs that technology contributes to attaining personal goals and determining attitudes toward subsequent behavior in situations of uncertainty and vulnerability [60,61]. In the context of AI-assisted diagnosis and treatment, HCT demonstrates that healthcare workers believe the suggestions, actions, and decisions provided by AI-assisted diagnosis and treatment are reliable [62,63]. HCT can be viewed as a potential and critical prerequisite for the adoption of AI technology [40,64,65]. Nordheim et al. (2019) first developed an initial model of technology trust in a chatbot scenario and deduced that technology trust might be a direct factor influencing users’ adoption intention of AI chatbots [53]. Meanwhile, the association between expectancy and trust has been illustrated in the field of healthcare [27,66,67]. Furthermore, Prakash and Das (2021) surveyed 183 radiologists and demonstrated that trust played a mediating role between expectancy and adoption intention [68]. Of note, healthcare workers are more likely to trust AI-assisted diagnosis and treatment when they believe it would be more efficient or require less effort and then more likely to adopt it. Hence, the following hypotheses are proposed:

Hypothesis 3a (H3a).

HCT mediates the relationship between performance expectancy and healthcare workers’ adoption intention of AI-assisted diagnosis and treatment.

Hypothesis 3b (H3b).

HCT mediates the relationship between effort expectancy and healthcare workers’ adoption intention of AI-assisted diagnosis and treatment.

2.2.4. The Chain Mediation Model of Social Influence and HCT

In the context of medical AI, the social reaction of medical AI would affect the trust and attitude of healthcare workers toward AI-assisted diagnosis and treatment. A study on trust in information systems also confirmed that social influence is related to HCT [44,54]. In addition, a study in China showed that social influence affects user behavior indirectly through trust [44,69]. Zhang et al. (2020) posited that in the automated vehicle sector, social influence manifests itself in the propaganda and assessment of users, which warrants service providers to attach importance to propaganda and word-of-mouth because the improvement of social acceptance could help enhance users’ trust and thus affect adoption intention [44]. Moreover, based on the above-mentioned discussion, users’ expectancy (performance expectancy and effort expectancy) influences others’ attitudes toward technology. When deciding whether to use AI-assisted diagnosis and treatment, healthcare workers will consider whether AI-assisted diagnosis and treatment could improve their work efficiency and whether the cost of learning AI-assisted diagnosis and treatment is less than the benefit [69,70]. Healthcare workers themselves are influenced by others’ attitudes toward AI-assisted diagnosis and treatment [71,72]. That is, when the person they think is critical to them has a positive attitude toward AI-assisted diagnosis and treatment, healthcare workers believe that AI-assisted diagnosis and treatment is reliable, accurate, and convenient [73,74]. When most people are negative about AI-assisted diagnosis and treatment, healthcare workers query the advice and decisions provided by AI-assisted diagnosis and treatment. Hence, the following hypotheses are proposed:

Hypothesis 4a (H4a).

The relationship between performance expectancy and healthcare workers’ adoption intention of AI-assisted diagnosis and treatment can be mediated sequentially by social influence and HCT.

Hypothesis 4b (H4b).

The relationship between effort expectancy and healthcare workers’ adoption intention of AI-assisted diagnosis and treatment can be mediated sequentially by social influence and HCT.

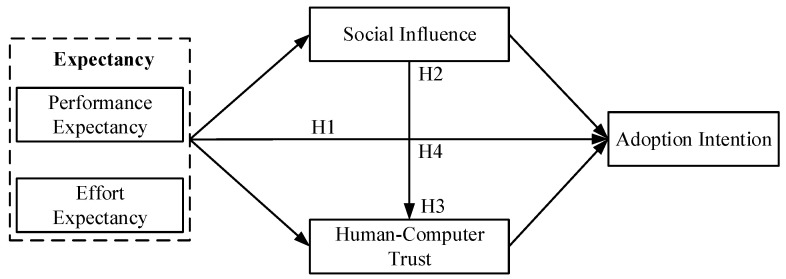

Figure 1 presents the theoretical model of this study.

Figure 1.

Research model.

3. Materials and Methods

3.1. Participants and Data Collection

Considering the service model of AI-assisted diagnosis and treatment, this study focused on the dental department, a medical scenario where AI was used widely, and selected healthcare professionals serving this department as the research subjects. Inclusion criteria consisted of dental healthcare workers who had a qualification certificate and a practice certificate, worked for at least 3 months, used AI-assisted diagnosis and treatment in the department, and understood the purpose of the survey, agreed, and participated voluntarily. A total of 450 questionnaires were distributed, of which 379 were collected, with a recovery rate of 84.2%. After screening the incomplete and visibly unqualified questionnaires, the total number of valid questionnaires was 343.

The demographic characteristics of participants are summarized in Table 2. Most participants were female (71.1%), and 77.0% were younger than 41 years. Approximately half were married (47.5%), were doctors (44.3%), and had a university degree (49.3%). The majority of the participants had 10 years or less of clinical experience (70.6%) and worked in tertiary hospitals (57.7%).

Table 2.

Demographic characteristics for the participants (N = 343).

| Characteristics | Frequency (f) | Percentage (%) |
|---|---|---|
| Gender | | |
| Male | 99 | 28.9 |
| Female | 244 | 71.1 |
| Age (years) | | |
| ≤20 | 37 | 10.8 |
| 21–30 | 105 | 30.6 |
| 31–40 | 122 | 35.6 |
| 41–50 | 57 | 16.6 |
| ≥51 | 22 | 6.4 |
| Marital status | | |
| Single | 118 | 34.4 |
| Married | 163 | 47.5 |
| Divorced | 62 | 18.1 |
| Education | | |
| High school | 27 | 7.9 |
| Junior college | 66 | 19.2 |
| University | 169 | 49.3 |
| Master and above | 81 | 23.6 |
| Clinical experience (years) | | |
| <1 | 23 | 6.7 |
| 1–5 | 133 | 38.8 |
| 6–10 | 86 | 25.1 |
| 11–15 | 54 | 15.7 |
| 16–20 | 32 | 9.3 |
| >20 | 15 | 4.4 |
| Position | | |
| Doctor | 152 | 44.3 |
| Nurse | 124 | 36.2 |
| Medical technician | 67 | 19.5 |
| Type of hospital | | |
| Tertiary | 198 | 57.7 |
| Secondary | 145 | 42.3 |

Note: In China, there are three hospital levels (the rank of hospitals). The best hospital level is level three (tertiary hospitals). Hospitals in this level can provide more beds, departments, professional nurses, professional doctors, and good service for patients. The higher the rank of hospital, the greater the use of AI.

Before the survey, participants were explicitly informed that the survey was for academic purposes only and that personal information (such as gender, age, and education) would be involved [75,76]. In the survey, participants were allowed to complete the questionnaire voluntarily and anonymously. After the survey, the survey data were kept in safe custody to protect the participants’ privacy.

3.2. Measures

All measurements were based on established, validated scales. To ensure the applicability and validity of foreign scales in the Chinese context, we strictly followed the “forward-backward translation” procedure [77]. Meanwhile, appropriate adjustments were made to the questions based on the AI-assisted diagnosis and treatment context, and two professionals were invited to examine the questionnaire for its clarity, terminology, logical consistency, and contextual relevance. The items and sources of the questionnaire are shown in Appendix A. All responses were recorded on a 5-point Likert scale, where 1 = strongly disagree and 5 = strongly agree. Moreover, a pretest was administered to 20 dental healthcare workers before the formal survey, and the questionnaire was finalized based on the pretest results and feedback on the questions. To assess the reliability of our research instrument, Cronbach’s α values were calculated. The Cronbach’s α values of all the scales were greater than the threshold of 0.60 [78], which indicated that our research instrument had good reliability.
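As a rough illustration of the reliability statistic used here, the following Python sketch computes Cronbach’s α from a respondent-by-item score matrix with the standard formula α = k/(k − 1) × (1 − Σ item variances / variance of the total score). The response data and the function name `cronbach_alpha` are hypothetical and are not taken from this study, whose reliability analysis was run in SPSS.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(items[0])
    # Sample variance of each item across respondents
    item_vars = [variance([row[j] for row in items]) for j in range(k)]
    # Sample variance of each respondent's total score
    total_var = variance([sum(row) for row in items])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical 5-point Likert responses to a 4-item scale (NOT the study's data)
responses = [
    [4, 5, 4, 4],
    [3, 3, 2, 3],
    [5, 5, 5, 4],
    [2, 2, 3, 2],
    [4, 4, 4, 5],
    [3, 4, 3, 3],
]

alpha = cronbach_alpha(responses)
print(f"Cronbach's alpha = {alpha:.2f}")
```

A value above the 0.60 threshold cited above would be read as acceptable internal consistency for the scale.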

The 4-item performance expectancy scale was applied to measure performance expectancy [35]. A sample item from the questionnaire is “AI-assisted diagnosis and treatment will make my work more efficient”. The Cronbach’s α for this scale in the present study was 0.90. The 4-item effort expectancy scale was applied to measure effort expectancy [35]. A sample item from the questionnaire is “I can skillfully use AI-assisted diagnosis and treatment”. The Cronbach’s α for this scale in the present study was 0.93.

The 4-item social influence scale was applied to measure social influence [35]. A sample item from the questionnaire is “People who are important to me think that I should use AI-assisted diagnosis and treatment”. The Cronbach’s α for this scale in the present study was 0.92.

The 12-item human–computer trust scale developed by Gulati et al. (2018) was adapted [79]. A sample item from the questionnaire is “I can always rely on AI-assisted diagnosis and treatment”. The Cronbach’s α for this scale in the present study was 0.92.

The 3-item behavioral intention scale was applied to measure adoption intention [35]. A sample item from the questionnaire is “I intend to use AI-assisted diagnosis and treatment in the future”. The Cronbach’s α for this scale in the present study was 0.94.

3.3. Data Analysis

Before reliability and validity testing, this study tested for common method bias (CMB) [80,81]. To minimize the threat of CMB, data confidentiality and anonymity, concealment of variable names, and item mismatching were guaranteed, but it was still necessary to test for possible common-source variance. CMB was examined using Harman’s single-factor test [82,83]. With the number of extracted factors constrained to one, exploratory factor analysis yielded a single factor explaining 34.72% of the variance, lower than 50%, indicating that there was no serious common method bias.
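The logic of Harman’s single-factor test can be sketched in Python as follows. This is only an approximation under stated assumptions: it uses the share of variance captured by the first principal component of the item correlation matrix (found by power iteration) as a stand-in for the single unrotated factor, which need not match the extraction method used in SPSS. The simulated item scores and all function names are hypothetical.

```python
import random
from statistics import mean, pstdev


def correlation_matrix(data):
    """Pearson correlation matrix of the columns of data (rows = respondents)."""
    n, p = len(data), len(data[0])
    cols = [[row[j] for row in data] for j in range(p)]
    z = []
    for c in cols:
        m, s = mean(c), pstdev(c)
        z.append([(v - m) / s for v in c])  # standardize each item
    return [[sum(a * b for a, b in zip(z[i], z[j])) / n for j in range(p)]
            for i in range(p)]


def largest_eigenvalue(matrix, iterations=500):
    """Largest eigenvalue of a symmetric positive semidefinite matrix (power iteration)."""
    p = len(matrix)
    v = [1.0] * p
    lam = 0.0
    for _ in range(iterations):
        w = [sum(matrix[i][j] * v[j] for j in range(p)) for i in range(p)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
        # Rayleigh quotient of the current unit vector
        lam = sum(v[i] * sum(matrix[i][j] * v[j] for j in range(p)) for i in range(p))
    return lam


# Simulated scores for six items driven by two independent latent factors
# (hypothetical data, NOT the study's questionnaire responses)
rng = random.Random(42)
data = []
for _ in range(300):
    f1, f2 = rng.gauss(0, 1), rng.gauss(0, 1)
    data.append([f1 + rng.gauss(0, 1) for _ in range(3)]
                + [f2 + rng.gauss(0, 1.5) for _ in range(3)])

# Variance share of the first component = largest eigenvalue / number of items
share = largest_eigenvalue(correlation_matrix(data)) / len(data[0])
print(f"Single-factor variance share: {share:.1%}; below 50%: {share < 0.5}")
```

Under this reading, a share below the 50% cutoff mentioned above suggests that no single factor dominates the item covariance.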

In this study, SPSS v22.0 was used to compute the descriptive statistics, the internal reliability of the scales, and the correlations between variables. AMOS 23.0 was used to conduct confirmatory factor analysis (CFA) and assess the convergent validity of the scales. We used the SPSS PROCESS macro 3.5 (Model 6) to test the chain mediation effect of social influence and HCT. The bootstrapping method produced 95% confidence intervals (CI) of these effects from 5000 bootstrap samples, which is an efficient method for testing mediating effects [84].
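For readers unfamiliar with chain (serial) mediation, the following Python sketch shows one way the indirect effect X → M1 → M2 → Y and a percentile bootstrap confidence interval can be computed. It is a minimal sketch under stated assumptions, not a reproduction of the PROCESS macro: the data are simulated, the function names (`ols`, `chain_indirect`, `bootstrap_ci`) are hypothetical, covariates are omitted, and the demonstration uses 1000 resamples rather than 5000 only to keep the run time short.

```python
import random


def ols(y, *predictors):
    """Ordinary least squares with intercept; returns [b0, b1, ...]."""
    n = len(y)
    cols = [[1.0] * n] + [list(p) for p in predictors]
    k = len(cols)
    # Augmented normal equations: (X'X | X'y)
    a = [[sum(ci[t] * cj[t] for t in range(n)) for cj in cols]
         + [sum(ci[t] * y[t] for t in range(n))] for ci in cols]
    # Gauss-Jordan elimination with partial pivoting
    for i in range(k):
        pivot = max(range(i, k), key=lambda r: abs(a[r][i]))
        a[i], a[pivot] = a[pivot], a[i]
        for r in range(k):
            if r != i:
                f = a[r][i] / a[i][i]
                a[r] = [vr - f * vi for vr, vi in zip(a[r], a[i])]
    return [a[i][k] / a[i][i] for i in range(k)]


def chain_indirect(x, m1, m2, y):
    """Serial indirect effect a1 * d21 * b2 for X -> M1 -> M2 -> Y."""
    a1 = ols(m1, x)[1]          # X -> M1
    d21 = ols(m2, x, m1)[2]     # M1 -> M2, controlling for X
    b2 = ols(y, x, m1, m2)[3]   # M2 -> Y, controlling for X and M1
    return a1 * d21 * b2


def bootstrap_ci(x, m1, m2, y, draws=5000, seed=0):
    """95% percentile bootstrap interval for the serial indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(draws):
        idx = [rng.randrange(n) for _ in range(n)]  # resample respondents with replacement
        estimates.append(chain_indirect(*([v[i] for i in idx] for v in (x, m1, m2, y))))
    estimates.sort()
    return estimates[round(0.025 * draws)], estimates[round(0.975 * draws) - 1]


# Simulated data with a built-in chain effect (hypothetical, NOT the study's data)
rng = random.Random(1)
n = 200
x = [rng.gauss(0, 1) for _ in range(n)]
m1 = [0.7 * xi + rng.gauss(0, 1) for xi in x]
m2 = [0.2 * xi + 0.7 * a + rng.gauss(0, 1) for xi, a in zip(x, m1)]
y = [0.2 * xi + 0.2 * a + 0.7 * b + rng.gauss(0, 1) for xi, a, b in zip(x, m1, m2)]

point = chain_indirect(x, m1, m2, y)
low, high = bootstrap_ci(x, m1, m2, y, draws=1000)
print(f"indirect effect = {point:.3f}, 95% CI [{low:.3f}, {high:.3f}]")
```

In this setup, X would correspond to an expectancy variable, M1 to social influence, M2 to HCT, and Y to adoption intention; an interval that excludes zero is conventionally taken as support for the chain mediation effect.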

4. Results

4.1. Descriptive Statistics

Table 3 summarized the descriptive statistics and correlation among variables. Performance expectancy was significantly positively correlated with social influence (r = 0.583, p < 0.01), HCT (r = 0.559, p < 0.01), and adoption intention (r = 0.441, p < 0.01). Effort expectancy was significantly positively correlated with social influence (r = 0.391, p < 0.01), HCT (r = 0.558, p < 0.01), and adoption intention (r = 0.261, p < 0.01). Social influence had a positive correlation with HCT (r = 0.451, p < 0.01) and adoption intention (r = 0.551, p < 0.01). Similarly, there was a positive correlation between HCT and adoption intention (r = 0.604, p < 0.01).

Table 3.

Descriptive statistics and correlation among variables (N = 343).

| | M | SD | AVE | PE | EE | SI | HCT | ADI |
|---|---|---|---|---|---|---|---|---|
| PE | 3.96 | 0.75 | 0.697 | 0.835 | | | | |
| EE | 3.11 | 0.97 | 0.779 | 0.276 ** | 0.883 | | | |
| SI | 3.53 | 0.75 | 0.800 | 0.583 ** | 0.391 ** | 0.894 | | |
| HCT | 3.44 | 0.72 | 0.623 | 0.559 ** | 0.558 ** | 0.451 ** | 0.789 | |
| ADI | 3.70 | 0.72 | 0.650 | 0.441 ** | 0.261 ** | 0.511 ** | 0.604 ** | 0.806 |

Note: M, mean; SD, standard deviation; AVE, average variance extracted; PE, performance expectancy; EE, effort expectancy; SI, social influence; HCT, human–computer trust; ADI, adoption intention. ** p < 0.05 (two-tailed). Values on the diagonal are the square root of the AVE of each variable.

4.2. Confirmatory Factor Analysis

We used CFA to assess the fit of the theoretical model and to establish the distinctiveness of the study variables [85]. As shown in Table 4, the fit of the one-factor model was poor, and our hypothesized five-factor model fit the data best (χ2/df = 2.213, GFI = 0.901, NFI = 0.937, RFI = 0.915, CFI = 0.964, RMSEA = 0.068). This indicated that the five-factor model had a good fit and that the five constructs in the current study were clearly distinct.

Table 4.

Comparisons of measurement models.

| Model | Variables | χ2/df | GFI | NFI | RFI | CFI | RMSEA |
|---|---|---|---|---|---|---|---|
| Five-factor model | PE, EE, SI, HCT, ADI | 2.213 | 0.901 | 0.937 | 0.915 | 0.964 | 0.068 |
| Four-factor model | PE + EE, SI, HCT, ADI | 3.021 | 0.851 | 0.903 | 0.884 | 0.933 | 0.088 |
| Three-factor model | PE + EE, SI + HCT, ADI | 4.676 | 0.763 | 0.845 | 0.821 | 0.873 | 0.118 |
| Two-factor model | PE + EE + SI + HCT, ADI | 6.469 | 0.648 | 0.778 | 0.752 | 0.805 | 0.144 |
| One-factor model | PE + EE + SI + HCT + ADI | 10.870 | 0.481 | 0.621 | 0.583 | 0.642 | 0.194 |

Note: PE, performance expectancy; EE, effort expectancy; SI, social influence; HCT, human–computer trust; ADI, adoption intention. χ2/df, cmin/df; GFI, goodness-of-fit index; NFI, normed fit index; RFI, relative fit index; CFI, comparative fit index; RMSEA, root mean square error of approximation.

In addition, as shown in Table 3, the AVE of all constructs scored above the conventional value of 0.5, and the convergent validity of the model could be confirmed [86,87]. Discriminant validity was evaluated using the AVE square root calculated for every construct; all square roots were greater than the correlations among the constructs, proving discriminant validity (Table 3) [86].
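The two validity checks described above can be reproduced directly from the values in Table 3. The sketch below is illustrative only: it copies the AVE values and inter-construct correlations from the table, checks each AVE against the 0.5 convention, and applies the square-root-of-AVE comparison; the function name `discriminant_violations` is hypothetical.

```python
from math import sqrt

# AVE values and inter-construct correlations copied from Table 3.
ave = {"PE": 0.697, "EE": 0.779, "SI": 0.800, "HCT": 0.623, "ADI": 0.650}
correlations = {
    ("PE", "EE"): 0.276, ("PE", "SI"): 0.583, ("EE", "SI"): 0.391,
    ("PE", "HCT"): 0.559, ("EE", "HCT"): 0.558, ("SI", "HCT"): 0.451,
    ("PE", "ADI"): 0.441, ("EE", "ADI"): 0.261, ("SI", "ADI"): 0.511,
    ("HCT", "ADI"): 0.604,
}


def discriminant_violations(ave, correlations):
    """Pairs whose correlation reaches the smaller square root of AVE of the two constructs."""
    violations = []
    for (a, b), r in correlations.items():
        if abs(r) >= min(sqrt(ave[a]), sqrt(ave[b])):
            violations.append((a, b, r))
    return violations


# Convergent validity: every AVE should exceed 0.5.
convergent_ok = all(value > 0.5 for value in ave.values())
# Discriminant validity: no correlation should reach the square root of either AVE.
violations = discriminant_violations(ave, correlations)

print({name: round(sqrt(value), 3) for name, value in ave.items()})
print(convergent_ok, violations)
```

The rounded square roots match the diagonal of Table 3, and the largest correlation (0.604, between HCT and ADI) stays below the smallest square root of AVE (0.789, for HCT).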

4.3. Structural Model Testing

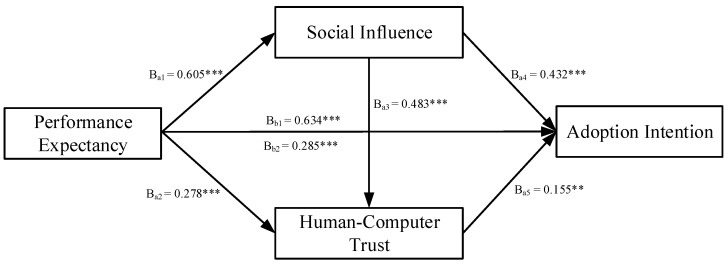

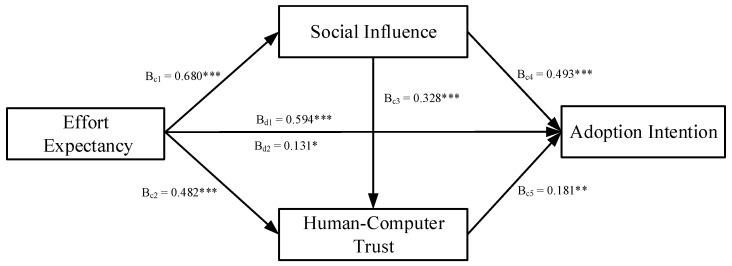

Figures 2 and 3 show the results of the serial multiple mediation model. As shown in Figure 2, the total effect (βb1 = 0.634, p < 0.001) and the direct effect (βb2 = 0.285, p < 0.001) of performance expectancy on healthcare workers’ adoption intention of AI-assisted diagnosis and treatment were found to be significant. Hence, H1a was supported. As shown in Figure 3, the total effect (βd1 = 0.594, p < 0.001) and the direct effect (βd2 = 0.131, p < 0.05) of effort expectancy on healthcare workers’ adoption intention of AI-assisted diagnosis and treatment were found to be significant. Hence, H1b was supported.

Figure 2.

The serial multiple mediation model of performance expectancy. Note: a1, direct effect of performance expectancy on social influence; a2, direct effect of performance expectancy on human-computer trust; a3, direct effect of social influence on human-computer trust; a4, direct effect of social influence on adoption intention; a5, direct effect of human-computer trust on adoption intention; b1, total effect of performance expectancy on adoption intention; b2, direct effect of performance expectancy on adoption intention. ** p < 0.01; *** p < 0.001.

Figure 3.

The serial multiple mediation model of effort expectancy. Note: c1, direct effect of effort expectancy on social influence; c2, direct effect of effort expectancy on human–computer trust; c3, direct effect of social influence on human–computer trust; c4, direct effect of social influence on adoption intention; c5, direct effect of human–computer trust on adoption intention; d1, total effect of effort expectancy on adoption intention; d2, direct effect of effort expectancy on adoption intention. * p < 0.05; ** p < 0.01; *** p < 0.001.

Using the bootstrap method, we tested the mediating effects of social influence, HCT, and chain mediation effect of social influence and HCT, where the sampling value was set to 5000, and the CI was set to 95% [88]. Table 5 presents the results of the hypothesis testing.

Table 5.

Direct and indirect effects.

| Effect | X = PE: Point Estimate | X = PE: Boot SE | X = PE: 95% CI | X = EE: Point Estimate | X = EE: Boot SE | X = EE: 95% CI |
|---|---|---|---|---|---|---|
| Total indirect effect of X on ADI | 0.349 | 0.064 | [0.230, 0.481] | 0.463 | 0.064 | [0.332, 0.585] |
| Indirect 1: X → SI → ADI | 0.261 | 0.061 | [0.147, 0.386] | 0.335 | 0.061 | [0.213, 0.456] |
| Indirect 2: X → HCT → ADI | 0.043 | 0.020 | [0.010, 0.088] | 0.088 | 0.033 | [0.027, 0.157] |
| Indirect 3: X → SI → HCT → ADI | 0.045 | 0.020 | [0.011, 0.090] | 0.040 | 0.017 | [0.012, 0.077] |

Note: CI, confidence interval; PE, performance expectancy; EE, effort expectancy; SI, social influence; HCT, human–computer trust; ADI, adoption intention.

For performance expectancy, the total indirect effect of performance expectancy on adoption intention was 0.349. The total indirect effect was significant, with a 95% CI (0.230, 0.481) excluding 0. Of these, indirect effect 1 was performance expectancy → social influence → adoption intention, which tested the mediating effect of social influence between performance expectancy and adoption intention. The effect value of indirect effect 1 was 0.261, with 95% CI (0.147, 0.386), excluding 0. Indirect effect 2 was performance expectancy → HCT → adoption intention, which tested the mediating effect of HCT between performance expectancy and adoption intention. The effect value of indirect effect 2 was 0.043, with 95% CI (0.010, 0.088), excluding 0. Indirect effect 3 was performance expectancy → social influence → HCT → adoption intention, which tested the chain mediation effect of social influence and HCT between performance expectancy and adoption intention. The effect value of indirect effect 3 was 0.045, with 95% CI (0.011, 0.090), excluding 0. Hence, H2a, H3a, and H4a were supported.

For effort expectancy, the total indirect effect of effort expectancy on adoption intention was 0.463. The total indirect effect was significant, with a 95% CI (0.332, 0.585) excluding 0. Of these, indirect effect 1 was effort expectancy → social influence → adoption intention, which tested the mediating effect of social influence between effort expectancy and adoption intention. The effect value of indirect effect 1 was 0.335, with 95% CI (0.213, 0.456), excluding 0. Indirect effect 2 was effort expectancy → HCT → adoption intention, which tested the mediating effect of HCT between effort expectancy and adoption intention. The effect value of indirect effect 2 was 0.088, with 95% CI (0.027, 0.157), excluding 0. Indirect effect 3 was effort expectancy → social influence → HCT → adoption intention, which tested the chain mediation effect of social influence and HCT between effort expectancy and adoption intention. The effect value of indirect effect 3 was 0.040, with 95% CI (0.012, 0.077), excluding 0. Hence, H2b, H3b, and H4b were supported.
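The reported estimates can be cross-checked against the usual decomposition for a serial two-mediator model, in which the total effect equals the direct effect plus the three specific indirect effects. The product-of-coefficients form below is the standard regression-based definition and is assumed here rather than stated in the text; the symbols follow the path labels in the notes to Figures 2 and 3, and the numbers are the point estimates reported above.

```latex
\begin{align}
b_1 &= b_2 + a_1 a_4 + a_2 a_5 + a_1 a_3 a_5 \\
0.634 &= 0.285 + 0.261 + 0.043 + 0.045 \\[4pt]
d_1 &= d_2 + c_1 c_4 + c_2 c_5 + c_1 c_3 c_5 \\
0.594 &= 0.131 + 0.335 + 0.088 + 0.040
\end{align}
```

In both models the three specific indirect effects sum to the total indirect effect in Table 5 (0.349 and 0.463, respectively), and adding the direct effect recovers the total effect.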

5. Discussion

This study explored a theoretical model of the intention to adopt AI-assisted diagnosis and treatment by integrating the UTAUT model and HCT theory. The findings revealed that expectancy (performance expectancy and effort expectancy) positively influenced healthcare workers’ adoption intention of AI-assisted diagnosis and treatment, corroborating well-established evidence in previous UTAUT studies [20,23,25,27,28,29]. Notably, effort expectancy had a relatively smaller impact in determining healthcare workers’ adoption intention of AI-assisted diagnosis and treatment compared with performance expectancy. The reason might be that nowadays, the public has much experience in using high-tech devices. They might believe that they can handle AI-assisted diagnosis and treatment without spending too much effort. Moreover, if a technology offers the needed functions, the public will accept greater effort in using it [89,90].

Our findings established that expectancy (performance expectancy and effort expectancy) influenced healthcare workers’ adoption intention through the mediation of social influence. The perceived utility and ease of use of AI-assisted diagnosis and treatment by healthcare workers would trigger positive attitudes among those around them [75,91]. When people engage in social interactions, healthcare workers are more likely to believe that adopting AI-assisted diagnosis and treatment is useful and effortless and thus would like to accept it. In addition, we found that expectancy (performance expectancy and effort expectancy) exerted a positive impact on adoption intention by the mediating effect of HCT, in line with previous studies [27,44,68,92]. That is, healthcare workers’ expectancy affected their trust in AI-assisted diagnosis and treatment, and subsequently, they would like to accept AI-assisted diagnosis and treatment.

This study supported the hypothesis that social influence and HCT played a chain mediation role between expectancy and healthcare workers’ adoption intention of AI-assisted diagnosis and treatment. Social influence was positively related to HCT, thereby supporting previous studies [27,44]. Fan et al. (2020) claimed that user advocacy and assessment are particularly crucial in the promotion and popularization of artificial intelligence-based medical diagnosis support systems and that increased recognition of the service helps in enhancing users’ trust [27]. Healthcare workers could reduce their decision-making costs by referring to the attitudes of people around them toward AI-assisted diagnosis and treatment. Hence, healthcare workers’ expectancy was influenced by the positive attitudes of those around them toward the technology. Furthermore, the positive impact would eventually transform into trust in AI-assisted diagnosis and treatment, resulting in the adoption intention of AI-assisted diagnosis and treatment by healthcare workers.

5.1. Theoretical Implications

The major implications of this study can be summarized as follows. First, this study enriches theoretical research on the application of medical AI scenarios. Previous research on healthcare workers’ intention to adopt technology focused on technologies such as the EHR [23,25,26], telemedicine [24,29], and the HIS [28]. However, limited research has been conducted on AI-assisted diagnosis and treatment. We extended scholarship by offering a theoretical framework and an empirically tested model of healthcare workers’ adoption intention of AI-assisted diagnosis and treatment, considering healthcare workers’ perception of AI-assisted diagnosis and treatment as well as the anticipated positive effects on work and society that AI may have. Perhaps this model will serve as a foundation for others seeking to understand the mixed attitudes and reactions of healthcare workers in the face of other medical AI scenarios [93].

Second, this study broadens the theoretical research of the UTAUT model by revealing the impact of human–computer trust (HCT) on healthcare workers’ adoption intention of AI-assisted diagnosis and treatment. Previous studies primarily used a single technology-adoption model, such as TAM or UTAUT, as the main research framework, establishing that performance expectancy and effort expectancy markedly affected the adoption intention of new technologies [34,35,94]. Nevertheless, medical AI differs from the previous technologies and presents the characteristics of high motility, high risk, and low trust. Our results suggested that HCT mediated the relationship between expectancy and healthcare workers’ adoption intention of AI-assisted diagnosis and treatment, similar to previous studies [27,44,68]. This study explains how HCT is promoted and how it subsequently leads to acceptance of AI-assisted diagnosis and treatment from the perspective of individual perception. This result is notable because it provides an explanatory mechanism for HCT with regard to medical AI and extends the theoretical framework of the UTAUT model in the medical field.

Third, this study addresses how performance expectancy and effort expectancy affected adoption intention of AI-assisted diagnosis and treatment, thereby enriching the research on the mediating mechanism between the above-mentioned relationships. While previous studies focused more on the direct effects of technology adoption, this study incorporated the HCT theory based on the UTAUT model and established a chain-mediated mechanism of social influence and HCT. Moreover, our findings tested the single mediating effect and chain mediating effect of social influence and HCT between expectancy and adoption intention, revealing interesting conversions between social influence and HCT. To some extent, the research conclusions also compensate for the low degree of explanation of AI-adoption intention based on single models, such as traditional TAM and UTAUT [38].

5.2. Practical Implications

The contributions of this study extend beyond the empirical findings and lie in the significance of its theoretical extension for the acceptance of AI-assisted diagnosis and treatment. First, service developers should focus on the performance expectancy and effort expectancy of healthcare workers for medical AI. Service developers should effectively comprehend the needs of healthcare workers for AI-assisted diagnosis and treatment, increase the R&D of AI-assisted diagnosis and treatment, develop professional functions that fulfill the needs of healthcare workers, ensure the accuracy of information and services provided by AI-assisted diagnosis and treatment, enhance work efficiency and service quality of healthcare workers, and improve healthcare workers’ perception of its value. Furthermore, service providers should be user-centered, focus on the experience of AI-assisted diagnosis and treatment, improve the simplicity of operation and interface friendliness, and enhance the perception of the ease of use for healthcare workers.

Second, hospital administrators could adjust their management strategies to augment the trust and acceptance of AI-assisted diagnosis and treatment among healthcare workers. For example, hospital managers should encourage healthcare workers to adopt the AI-assisted diagnosis and treatment as well as convey the hospitals’ support for the use of AI-assisted diagnosis and treatment. In addition, hospitals should conduct AI technology training for relevant healthcare workers to help them quickly understand AI-assisted diagnosis and treatment and enhance their expectancy [75]. Hospital managers could also associate work performance with salary and promotion for healthcare workers to urge them to adopt AI-assisted diagnosis and treatment.

Third, the government could amplify publicity on AI-assisted diagnosis and treatment and enhance its social influence. A study demonstrated that technology is adopted faster in mandatory settings [28]. The government’s vigorous promotion of AI-assisted diagnosis and treatment would enhance healthcare workers’ recognition of AI-assisted diagnosis and treatment. Moreover, social influence is a key factor affecting healthcare workers’ trust in AI-assisted diagnosis and treatment. When building trust with AI-assisted diagnosis and treatment, healthcare workers would refer to the positive or negative attitudes of those around them toward the technology. Thus, service providers must focus on their own publicity and word-of-mouth to increase the recognition of AI-assisted diagnosis and treatment, enhance HCT, and in turn influence healthcare workers’ adoption intention.

5.3. Limitations and Future Research

Although this study provided meaningful findings about healthcare workers’ adoption intention of AI-assisted diagnosis and treatment, the following points merit further research. First, our study used adoption intention instead of actual usage behaviors as the agent of acceptance because it is hard to measure potential users’ actual usage behavior in such a cross-sectional survey study, a fact that is commonly encountered by many previous studies [95]. A meta-analysis inferred that medium-to-large changes in intention induce small-to-medium changes in behavior [96]. Future studies could focus on healthcare workers’ adoption behavior of AI-assisted diagnosis and treatment to make the findings more practical. Second, this study was conducted in the dental department; thus, the findings might not be applicable to other departments. Further research might also involve other departments, such as imaging and clinic. Third, this study investigated the antecedents of healthcare workers’ adoption intention of AI-assisted diagnosis and treatment only from the viewpoint of healthcare workers’ perceptions. Notably, some other scenario-related factors (e.g., perceived risk and task–technology fit) might also contribute to healthcare workers’ acceptance and merit future explorations. Finally, this study was conducted with healthcare workers, including doctors, nurses, and medical technicians, but there were differences in the psychological perceptions of different types of healthcare workers (e.g., performance expectancy and effort expectancy). Research on the intention to adopt medical AI for just one type of healthcare worker would also be valuable in the future.

6. Conclusions

The adoption of AI-assisted diagnosis and treatment by healthcare workers could enhance work efficiency and accuracy; however, the “black box” nature of AI technology is a real barrier to its acceptance by healthcare workers. This study proposed and verified a theoretical model of adoption intention by integrating the UTAUT model and HCT theory to explain healthcare workers’ adoption intention of AI-assisted diagnosis and treatment. The results revealed that expectancy (performance expectancy and effort expectancy) positively affected healthcare workers’ adoption intention. In addition, we explored the single mediating effect and chain mediating effect of social influence and HCT between expectancy and adoption intention. This study could also effectively assist AI technology companies in their algorithm optimization, product development, and promotion and provide a reference for policy decisions on establishing trustworthy AI as well as for the management needs of AI systems in government, public, and other sectors.

Appendix A

Table A1.

Measurement items of constructs.

| Constructs | Variables | Measurement Items |
|---|---|---|
| Performance Expectancy (PE) | PE1 | AI-assisted diagnosis and treatment will enhance the efficiency of my medical consultation process. |
| | PE2 | AI-assisted diagnosis and treatment will make my work more efficient. |
| | PE3 | AI-assisted diagnosis and treatment will provide me with new abilities that I did not have before. |
| | PE4 | AI-assisted diagnosis and treatment will expand my existing knowledge base and provide new ideas. |
| Effort Expectancy (EE) | EE1 | I think the openness of AI-assisted diagnosis and treatment is clear and unambiguous. |
| | EE2 | I can skillfully use AI-assisted diagnosis and treatment. |
| | EE3 | I think getting the information I need through AI-assisted diagnosis and treatment is easy for me. |
| | EE4 | AI-assisted diagnosis and treatment doesn’t take much of my energy. |
| Social Influence (SI) | SI1 | People around me use AI-assisted diagnosis and treatment. |
| | SI2 | People who are important to me think that I should use AI-assisted diagnosis and treatment. |
| | SI3 | My professional interaction with my peers requires knowledge of AI-assisted diagnosis and treatment. |
| Human–Computer Trust (HCT) | HCT1 | I believe AI-assisted diagnosis and treatment will help me do my job. |
| | HCT2 | I trust AI-assisted diagnosis and treatment to understand my work needs and preferences. |
| | HCT3 | I believe AI-assisted diagnosis and treatment is an effective tool. |
| | HCT4 | I think AI-assisted diagnosis and treatment works well for diagnostic and treatment purposes. |
| | HCT5 | I believe AI-assisted diagnosis and treatment has all the functions I expect in a medical procedure. |
| | HCT6 | I can always rely on AI-assisted diagnosis and treatment. |
| | HCT7 | I can trust the reference information provided by AI-assisted diagnosis and treatment. |
| Adoption Intention (ADI) | ADI1 | I am willing to learn and use AI-assisted diagnosis and treatment. |
| | ADI2 | I intend to use AI-assisted diagnosis and treatment in the future. |
| | ADI3 | I would advise people around me to use AI-assisted diagnosis and treatment. |

Author Contributions

Conceptualization, M.C. and X.L.; methodology, M.C., X.L. and J.X.; software, M.C., X.L. and J.X.; validation, M.C. and X.L.; formal analysis, M.C., X.L. and J.X.; investigation, M.C. and J.X.; resources, M.C. and J.X.; data curation, M.C. and X.L.; writing – original draft, M.C. and X.L.; draft preparation, M.C., X.L. and J.X.; writing – review and editing, M.C., X.L. and J.X. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from each respondent.

Data Availability Statement

The datasets analyzed during the current study are not yet publicly available but are available from the corresponding authors upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research was funded by the Anhui Education Humanities and Social Science Research Major Program (SK2020ZD18) and Anhui University of Science and Technology Innovation Fund Program (2021CX2133).

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Malik P., Pathania M., Rathaur V.K. Overview of artificial intelligence in medicine. J. Fam. Med. Prim. Care. 2019;8:2328–2331. doi: 10.4103/jfmpc.jfmpc_440_19. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Brynjolfsson E., Mcafee A. What’s driving the machine learning explosion? Harv. Bus. Rev. 2017;1:1–31. [Google Scholar]

- 3.He J., Baxter S.L., Xu J., Xu J.M., Zhou X., Zhang K. The practical implementation of artificial intelligence technologies in medicine. Nat. Med. 2019;25:30–36. doi: 10.1038/s41591-018-0307-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Huo W., Zheng G., Yan J., Sun L., Han L. Interacting with medical artificial intelligence: Integrating self-responsibility attribution, human-computer trust, and personality. Comput. Hum. Behav. 2022;132:107253. doi: 10.1016/j.chb.2022.107253. [DOI] [Google Scholar]

- 5.Vinod D.N., Prabaharan S.R.S. Data science and the role of Artificial Intelligence in achieving the fast diagnosis of COVID-19. Chaos Solitons Fractals. 2020;140:110182. doi: 10.1016/j.chaos.2020.110182. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Chen Y.W., Stanley K., Att W. Artificial intelligence in dentistry: Current applications and future perspectives. Quintessence Int. 2020;51:248–257. doi: 10.3290/j.qi.a43952. [DOI] [PubMed] [Google Scholar]

- 7.Hung K., Montalvao C., Tanaka R., Kawai T., Bornstein M.M. The use and performance of artificial intelligence applications in dental and maxillofacial radiology: A systematic review. Dentomaxillofac. Radiol. 2020;49:20190107. doi: 10.1259/dmfr.20190107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Lee J.H., Kim D.H., Jeong S.N., Choi S.H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J. Dent. 2018;77:106–111. doi: 10.1016/j.jdent.2018.07.015. [DOI] [PubMed] [Google Scholar]

- 9.Casalegno F., Newton T., Daher R., Abdelaziz M., Lodi-Rizzini A., Schürmann F., Krejci I., Markram H. Caries detection with near-infrared transillumination using deep learning. J. Dent. Res. 2019;98:1227–1233. doi: 10.1177/0022034519871884. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Holsinger F.C. A flexible, single-arm robotic surgical system for transoral resection of the tonsil and lateral pharyngeal wall: Next-generation robotic head and neck surgery. Laryngoscope. 2016;126:864–869. doi: 10.1002/lary.25724. [DOI] [PubMed] [Google Scholar]

- 11.Genden E.M., Desai S., Sung C.K. Transoral robotic surgery for the management of head and neck cancer: A preliminary experience. Head Neck. 2009;31:283–289. doi: 10.1002/hed.20972. [DOI] [PubMed] [Google Scholar]

- 12.Shademan A., Decker R.S., Opfermann J.D., Leonard S., Krieger A., Kim P.C. Supervised autonomous robotic soft tissue surgery. Sci. Transl. Med. 2016;8:337ra64. doi: 10.1126/scitranslmed.aad9398. [DOI] [PubMed] [Google Scholar]

- 13.Yu K.H., Beam A.L., Kohane I.S. Artificial intelligence in healthcare. Nat. Biomed. Eng. 2018;2:719–731. doi: 10.1038/s41551-018-0305-z. [DOI] [PubMed] [Google Scholar]

- 14.Qin Y., Zhou R., Wu Q., Huang X., Chen X., Wang W., Wang X., Xu H., Zheng J., Qian S., et al. The effect of nursing participation in the design of a critical care information system: A case study in a Chinese hospital. BMC Med. Inform. Decis. Mak. 2017;17:1–12. doi: 10.1186/s12911-017-0569-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Vockley M. Game-changing technologies: 10 promising innovations for healthcare. Biomed. Instrum. Technol. 2017;51:96–108. doi: 10.2345/0899-8205-51.2.96. [DOI] [PubMed] [Google Scholar]

- 16.Vanman E.J., Kappas A. “Danger, Will Robinson!” The challenges of social robots for intergroup relations. Soc. Personal. Psychol. Compass. 2019;13:e12489. doi: 10.1111/spc3.12489. [DOI] [Google Scholar]

- 17.Robin A.L., Muir K.W. Medication adherence in patients with ocular hypertension or glaucoma. Expert Rev. Ophthalmol. 2019;14:199–210. doi: 10.1080/17469899.2019.1635456. [DOI] [Google Scholar]

- 18.Lötsch J., Kringel D., Ultsch A. Explainable artificial intelligence (XAI) in biomedicine: Making AI decisions trustworthy for physicians and patients. Biomedinformatics. 2021;2:1. doi: 10.3390/biomedinformatics2010001. [DOI] [Google Scholar]

- 19.Dietvorst B.J., Simmons J.P., Massey C. Algorithm aversion: People erroneously avoid algorithms after seeing them err. J. Exp. Psychol. Gen. 2014;144:114–126. doi: 10.1037/xge0000033. [DOI] [PubMed] [Google Scholar]

- 20.Wang H., Tao D., Yu N., Qu X. Understanding consumer acceptance of healthcare wearable devices: An integrated model of UTAUT and TTF. Int. J. Med. Inform. 2020;139:104156. doi: 10.1016/j.ijmedinf.2020.104156. [DOI] [PubMed] [Google Scholar]

- 21.Dhagarra D., Goswami M., Kumar G. Impact of trust and privacy concerns on technology acceptance in healthcare: An Indian perspective. Int. J. Med. Inform. 2020;141:104164. doi: 10.1016/j.ijmedinf.2020.104164. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Fernandes T., Oliveira E. Understanding consumers’ acceptance of automated technologies in service encounters: Drivers of digital voice assistants adoption. J. Bus. Res. 2021;122:180–191. doi: 10.1016/j.jbusres.2020.08.058. [DOI] [Google Scholar]

- 23.Alsyouf A., Ishak A.K., Lutfi A., Alhazmi F.N., Al-Okaily M. The role of personality and top management support in continuance intention to use electronic health record systems among nurses. Int. J. Environ. Res. Public Health. 2022;19:11125. doi: 10.3390/ijerph191711125. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Pikkemaat M., Thulesius H., Nymberg V.M. Swedish primary care physicians’ intentions to use telemedicine: A survey using a new questionnaire–physician attitudes and intentions to use telemedicine (PAIT) Int. J. Gen. Med. 2021;14:3445–3455. doi: 10.2147/IJGM.S319497. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Hossain A., Quaresma R., Rahman H. Investigating factors influencing the physicians’ adoption of electronic health record (EHR) in healthcare system of Bangladesh: An empirical study. Int. J. Inf. Manag. 2019;44:76–87. doi: 10.1016/j.ijinfomgt.2018.09.016. [DOI] [Google Scholar]

- 26.Alsyouf A., Ishak A.K. Understanding EHRs continuance intention to use from the perspectives of UTAUT: Practice environment moderating effect and top management support as predictor variables. Int. J. Electron. Healthc. 2018;10:24–59. doi: 10.1504/IJEH.2018.092175. [DOI] [Google Scholar]

- 27.Fan W., Liu J., Zhu S., Pardalos P.M. Investigating the impacting factors for the healthcare professionals to adopt artificial intelligence-based medical diagnosis support system (AIMDSS) Ann. Oper. Res. 2020;294:567–592. doi: 10.1007/s10479-018-2818-y. [DOI] [Google Scholar]

- 28.Bawack R.E., Kamdjoug J.R.K. Adequacy of UTAUT in clinician adoption of health information systems in developing countries: The case of Cameroon. Int. J. Med. Inform. 2018;109:15–22. doi: 10.1016/j.ijmedinf.2017.10.016. [DOI] [PubMed] [Google Scholar]

- 29.Adenuga K.I., Iahad N.A., Miskon S. Towards reinforcing telemedicine adoption amongst clinicians in Nigeria. Int. J. Med. Inform. 2017;104:84–96. doi: 10.1016/j.ijmedinf.2017.05.008. [DOI] [PubMed] [Google Scholar]

- 30.Liu C.F., Cheng T.J. Exploring critical factors influencing physicians’ acceptance of mobile electronic medical records based on the dual-factor model: A validation in Taiwan. BMC Med. Inform. Decis. Mak. 2015;15:1–12. doi: 10.1186/s12911-014-0125-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Hsieh P.J. Healthcare professionals’ use of health clouds: Integrating technology acceptance and status quo bias perspectives. Int. J. Med. Inform. 2015;84:512–523. doi: 10.1016/j.ijmedinf.2015.03.004. [DOI] [PubMed] [Google Scholar]

- 32.Wu L., Li J.Y., Fu C.Y. The adoption of mobile healthcare by hospital’s professionals: An integrative perspective. Decis. Support Syst. 2011;51:587–596. doi: 10.1016/j.dss.2011.03.003. [DOI] [Google Scholar]

- 33.Egea J.M.O., González M.V.R. Explaining physicians’ acceptance of EHCR systems: An extension of TAM with trust and risk factors. Comput. Hum. Behav. 2011;27:319–332. doi: 10.1016/j.chb.2010.08.010. [DOI] [Google Scholar]

- 34.Davis F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989;13:319–340. doi: 10.2307/249008. [DOI] [Google Scholar]

- 35.Venkatesh V., Morris M.G., Davis G.B., Davis F.D. User acceptance of information technology: Toward a unified view. MIS Q. 2003;27:425–478. doi: 10.2307/30036540. [DOI] [Google Scholar]

- 36.Huang C.Y., Yang M.C. Empirical investigation of factors influencing consumer intention to use an artificial intelligence-powered mobile application for weight loss and health management. Telemed. e-Health. 2020;26:1240–1251. doi: 10.1089/tmj.2019.0182. [DOI] [PubMed] [Google Scholar]

- 37.Gerli P., Clement J., Esposito G., Mora L., Crutzen N. The hidden power of emotions: How psychological factors influence skill development in smart technology adoption. Technol. Forecast. Soc. Chang. 2022;180:121721. doi: 10.1016/j.techfore.2022.121721.

- 38.Fernández-Llamas C., Conde M.A., Rodríguez-Lera F.J., Rodríguez-Sedano F.J., García F. May I teach you? Students’ behavior when lectured by robotic vs. human teachers. Comput. Hum. Behav. 2018;80:460–469. doi: 10.1016/j.chb.2017.09.028.

- 39.Hancock P.A., Billings D.R., Schaefer K.E., Chen J.Y., De Visser E.J., Parasuraman R. A meta-analysis of factors affecting trust in human-robot interaction. Hum. Factors. 2011;53:517–527. doi: 10.1177/0018720811417254.

- 40.Glikson E., Woolley A.W. Human trust in artificial intelligence: Review of empirical research. Acad. Manag. Ann. 2020;14:627–660. doi: 10.5465/annals.2018.0057.

- 41.Choi J.K., Ji Y.G. Investigating the importance of trust on adopting an autonomous vehicle. Int. J. Hum.-Comput. Int. 2015;31:692–702. doi: 10.1080/10447318.2015.1070549.

- 42.Madsen M., Gregor S. Measuring human-computer trust; Proceedings of the 11th Australasian Conference on Information Systems; Brisbane, Australia. 6–8 December 2000; pp. 6–8.

- 43.Van Lange P.A. Generalized trust: Four lessons from genetics and culture. Curr. Dir. Psychol. Sci. 2015;24:71–76. doi: 10.1177/0963721414552473.

- 44.Zhang T., Tao D., Qu X., Zhang X., Zeng J., Zhu H., Zhu H. Automated vehicle acceptance in China: Social influence and initial trust are key determinants. Transp. Res. Pt. C-Emerg. Technol. 2020;112:220–233. doi: 10.1016/j.trc.2020.01.027.

- 45.Fishbein M., Ajzen I. Belief, Attitude, Intention, and Behavior: An Introduction to Theory and Research. Addison-Wesley; Reading, MA, USA: 1975.

- 46.Ajzen I. Perceived behavioral control, self-efficacy, locus of control and the theory of planned behavior. J. Appl. Soc. Psychol. 2002;32:665–683. doi: 10.1111/j.1559-1816.2002.tb00236.x.

- 47.Hardjono T., Deegan P., Clippinger J.H. On the design of trustworthy compute frameworks for self-organizing digital institutions; Proceedings of the International Conference on Social Computing and Social Media; Crete, Greece. 22–27 June 2014; pp. 342–353.

- 48.Huijts N.M., Molin E.J., Steg L. Psychological factors influencing sustainable energy technology acceptance: A review-based comprehensive framework. Renew. Sust. Energ. Rev. 2012;16:525–531. doi: 10.1016/j.rser.2011.08.018.

- 49.Liu P., Yang R., Xu Z. Public acceptance of fully automated driving: Effects of social trust and risk/benefit perceptions. Risk Anal. 2019;39:326–341. doi: 10.1111/risa.13143.

- 50.Robinette P., Howard A.M., Wagner A.R. Effect of robot performance on human-robot trust in time-critical situations. IEEE Trans. Hum.-Mach. Syst. 2017;47:425–436. doi: 10.1109/THMS.2017.2648849.

- 51.Johnson P. Human Computer Interaction: Psychology, Task Analysis and Software Engineering. McGraw-Hill; London, UK: 1992.

- 52.Williams A., Sherman I., Smarr S., Posadas B., Gilbert J.E. Human trust factors in image analysis; Proceedings of the International Conference on Applied Human Factors and Ergonomics; San Diego, CA, USA. 20–24 July 2018; pp. 3–12.

- 53.Nordheim C.B., Følstad A., Bjørkli C.A. An initial model of trust in chatbots for customer service-findings from a questionnaire study. Interact. Comput. 2019;31:317–335. doi: 10.1093/iwc/iwz022.

- 54.Li X., Hess T.J., Valacich J.S. Using attitude and social influence to develop an extended trust model for information systems. ACM SIGMIS Database: Database Adv. Inf. Syst. 2006;37:108–124. doi: 10.1145/1161345.1161359.

- 55.Li W., Mao Y., Zhou L. The impact of interactivity on user satisfaction in digital social reading: Social presence as a mediator. Int. J. Hum.-Comput. Int. 2021;37:1636–1647. doi: 10.1080/10447318.2021.1898850.

- 56.Ifinedo P. Applying uses and gratifications theory and social influence processes to understand students’ pervasive adoption of social networking sites: Perspectives from the Americas. Int. J. Inf. Manag. 2016;36:192–206. doi: 10.1016/j.ijinfomgt.2015.11.007.

- 57.Shiferaw K.B., Mehari E.A. Modeling predictors of acceptance and use of electronic medical record system in a resource limited setting: Using modified UTAUT model. Inform. Med. Unlocked. 2019;17:100182. doi: 10.1016/j.imu.2019.100182.

- 58.Oldeweme A., Märtins J., Westmattelmann D., Schewe G. The role of transparency, trust, and social influence on uncertainty reduction in times of pandemics: Empirical study on the adoption of COVID-19 tracing apps. J. Med. Internet Res. 2021;23:e25893. doi: 10.2196/25893.

- 59.Alsyouf A., Lutfi A., Al-Bsheish M., Jarrar M.T., Al-Mugheed K., Almaiah M.A., Alhazmi F.N., Masa’deh R.E., Anshasi R.J., Ashour A. Exposure detection applications acceptance: The case of COVID-19. Int. J. Environ. Res. Public Health. 2022;19:7307. doi: 10.3390/ijerph19127307.

- 60.Allam H., Qusa H., Alameer O., Ahmad J., Shoib E., Tammi H. Theoretical perspective of technology acceptance models: Towards a unified model for social media applications; Proceedings of the 2019 Sixth HCT Information Technology Trends (ITT); Ras Al Khaimah, United Arab Emirates. 20–21 November 2019; pp. 154–159.

- 61.Hengstler M., Enkel E., Duelli S. Applied artificial intelligence and trust-The case of autonomous vehicles and medical assistance devices. Technol. Forecast. Soc. Chang. 2016;105:105–120. doi: 10.1016/j.techfore.2015.12.014.

- 62.Choung H., David P., Ross A. Trust in AI and its role in the acceptance of AI technologies. Int. J. Hum.-Comput. Int. 2022:1–13. doi: 10.1080/10447318.2022.2050543.

- 63.De Angelis F., Pranno N., Franchina A., Di Carlo S., Brauner E., Ferri A., Pellegrino G., Grecchi E., Goker F., Stefanelli L.V. Artificial intelligence: A new diagnostic software in dentistry: A preliminary performance diagnostic study. Int. J. Environ. Res. Public Health. 2022;19:1728. doi: 10.3390/ijerph19031728.

- 64.Shin D. How do people judge the credibility of algorithmic sources? AI Soc. 2022;37:81–96. doi: 10.1007/s00146-021-01158-4.

- 65.Hoff K.A., Bashir M. Trust in automation: Integrating empirical evidence on factors that influence trust. Hum. Factors. 2015;57:407–434. doi: 10.1177/0018720814547570.

- 66.Liu L.L., He Y.M., Liu X.D. Investigation on patients’ cognition and trust in artificial intelligence medicine. Chin. Med. Ethics. 2019;32:986–990.

- 67.Yang C.C., Li C.L., Yeh T.F., Chang Y.C. Assessing older adults’ intentions to use a smartphone: Using the meta-unified theory of the acceptance and use of technology. Int. J. Environ. Res. Public Health. 2022;19:5403. doi: 10.3390/ijerph19095403.

- 68.Prakash A.V., Das S. Medical practitioner’s adoption of intelligent clinical diagnostic decision support systems: A mixed-methods study. Inf. Manag. 2021;58:103524. doi: 10.1016/j.im.2021.103524.

- 69.Siau K., Wang W. Building trust in artificial intelligence, machine learning, and robotics. Cut. Bus. Technol. J. 2018;31:47–53.

- 70.Walczak R., Kludacz-Alessandri M., Hawrysz L. Use of telemedicine technology among general practitioners during COVID-19: A modified technology acceptance model study in Poland. Int. J. Environ. Res. Public Health. 2022;19:10937. doi: 10.3390/ijerph191710937.

- 71.Du C.Z., Gu J. Adoptability and limitation of cancer treatment guidelines: A Chinese oncologist’s perspective. Chin. Med. J. 2012;125:725–727. doi: 10.3760/cma.j.issn.0366-6999.2012.05.001.

- 72.Kijsanayotin B., Pannarunothai S., Speedie S.M. Factors influencing health information technology adoption in Thailand’s community health centers: Applying the UTAUT model. Int. J. Med. Inform. 2009;78:404–416. doi: 10.1016/j.ijmedinf.2008.12.005.

- 73.Huqh M.Z.U., Abdullah J.Y., Wong L.S., Jamayet N.B., Alam M.K., Rashid Q.F., Husein A., Ahmad W.M.A.W., Eusufzai S.Z., Prasadh S., et al. Clinical applications of artificial intelligence and machine learning in children with cleft lip and palate-A systematic review. Int. J. Environ. Res. Public Health. 2022;19:10860. doi: 10.3390/ijerph191710860.

- 74.Damoah I.S., Ayakwah A., Tingbani I. Artificial intelligence (AI)-enhanced medical drones in the healthcare supply chain (HSC) for sustainability development: A case study. J. Clean Prod. 2021;328:129598. doi: 10.1016/j.jclepro.2021.129598.

- 75.Li X., Cheng M., Xu J. Leaders’ innovation expectation and nurses’ innovation behaviour in conjunction with artificial intelligence: The chain mediation of job control and creative self-efficacy. J. Nurs. Manag. 2022. doi: 10.1111/jonm.13749.

- 76.Martikainen S., Kaipio J., Lääveri T. End-user participation in health information systems (HIS) development: Physicians’ and nurses’ experiences. Int. J. Med. Inform. 2020;137:104117. doi: 10.1016/j.ijmedinf.2020.104117.

- 77.Brislin R.W. Translation and content analysis of oral and written material. In: Triandis H.C., Berry J.W., editors. Handbook of Cross-Cultural Psychology: Methodology. Allyn and Bacon; Boston, MA, USA: 1980. pp. 389–444.

- 78.Fornell C., Larcker D.F. Structural equation models with unobservable variables and measurement error: Algebra and statistics. J. Mark. Res. 1981;18:382–388. doi: 10.1177/002224378101800313.

- 79.Gulati S., Sousa S., Lamas D. Modelling trust in human-like technologies; Proceedings of the 9th Indian Conference on Human Computer Interaction; Allahabad, India. 16–18 December 2018; pp. 1–10.

- 80.Zhou H., Long L. Statistical remedies for common method biases. Adv. Psychol. Sci. 2004;12:942–950. doi: 10.1007/BF02911031.

- 81.Meade A.W., Watson A.M., Kroustalis C.M. Assessing common methods bias in organizational research; Proceedings of the 22nd Annual Meeting of the Society for Industrial and Organizational Psychology; New York, NY, USA. 27 April 2007; pp. 1–10.

- 82.Aguirre-Urreta M.I., Hu J. Detecting common method bias: Performance of the Harman’s single-factor test. ACM SIGMIS Database: Database Adv. Inf. Syst. 2019;50:45–70. doi: 10.1145/3330472.3330477.

- 83.Podsakoff P.M., MacKenzie S.B., Lee J.Y., Podsakoff N.P. Common method biases in behavioral research: A critical review of the literature and recommended remedies. J. Appl. Psychol. 2003;88:879–903. doi: 10.1037/0021-9010.88.5.879.

- 84.Hayes A.F. Introduction to Mediation, Moderation, and Conditional Process Analysis: A Regression-Based Approach. Guilford Publications; New York, NY, USA: 2013.

- 85.Byrne B.M. Structural Equation Modeling with AMOS: Basic Concepts, Applications, and Programming. 3rd ed. Routledge; New York, NY, USA: 2016.

- 86.Fornell C., Larcker D.F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981;18:39–50. doi: 10.1177/002224378101800104.

- 87.Chin W.W., Newsted P.R. Structural equation modeling analysis with small samples using partial least squares. In: Hoyle R.H., editor. Statistical Strategies for Small Sample Research. Volume 1. Sage Publications; Thousand Oaks, CA, USA: 1999. pp. 307–341.

- 88.Hayes A.F., Preacher K.J. Statistical mediation analysis with a multi-categorical independent variable. Br. J. Math. Stat. Psychol. 2014;67:451–470. doi: 10.1111/bmsp.12028.

- 89.Wu B., Chen X. Continuance intention to use MOOCs: Integrating the technology acceptance model (TAM) and task technology fit (TTF) model. Comput. Hum. Behav. 2017;67:221–232. doi: 10.1016/j.chb.2016.10.028.

- 90.Lin Y.H., Huang G.S., Ho Y.L., Lou M.F. Patient willingness to undergo a two-week free trial of a telemedicine service for coronary artery disease after coronary intervention: A mixed-methods study. J. Nurs. Manag. 2020;28:407–416. doi: 10.1111/jonm.12942.

- 91.Raymond L., Castonguay A., Doyon O., Paré G. Nurse practitioners’ involvement and experience with AI-based health technologies: A systematic review. Appl. Nurs. Res. 2022:151604. doi: 10.1016/j.apnr.2022.151604.

- 92.Chuah S.H.W., Rauschnabel P.A., Krey N., Nguyen B., Ramayah T., Lade S. Wearable technologies: The role of usefulness and visibility in smartwatch adoption. Comput. Hum. Behav. 2016;65:276–284. doi: 10.1016/j.chb.2016.07.047.

- 93.Lysaght T., Lim H.Y., Xafis V., Ngiam K.Y. AI-assisted decision-making in healthcare: The application of an ethics framework for big data in health and research. Asian Bioeth. Rev. 2019;11:299–314. doi: 10.1007/s41649-019-00096-0.

- 94.Im I., Hong S., Kang M.S. An international comparison of technology adoption: Testing the UTAUT model. Inf. Manag. 2011;48:1–8. doi: 10.1016/j.im.2010.09.001.