Abstract

Emerging sleep health technologies will have an impact on monitoring patients with sleep disorders. This study proposes a new deep learning model architecture that improves the accuracy of under-blanket sleep posture classification by leveraging anatomical landmark features through an attention strategy. The system used an integrated visible light and depth camera. Deep learning models (ResNet-34, EfficientNet B4, and ECA-Net50) were trained using depth images. We compared the models with and without an input of anatomical landmark coordinates, which were generated from the visible light images using an open-source pose estimation model. We recruited 120 participants to perform seven major sleep postures, namely, the supine posture, prone postures with the head turned left and right, left- and right-sided log postures, and left- and right-sided fetal postures, under four blanket conditions: no blanket, thin, medium, and thick. A data augmentation technique was applied to synthesize additional blanket conditions. The data were split at an 8:2 training-to-testing ratio. ECA-Net50 produced the best classification results. Incorporating the anatomical landmark features increased the F1 score of ECA-Net50 from 87.4% to 92.2%. Our findings also suggest that the classification performance of deep learning models guided by anatomical landmark features was less affected by the interference of blanket conditions.

Keywords: sleep posture recognition, sleep surveillance, sleep monitoring, sleep behavior, ubiquitous health, digital health

1. Introduction

Sleep is an indispensable activity that maintains physiological functions and processes in life [1]. Humans spend one-third of their lifetime sleeping [2]. Adults are recommended to sleep at least seven hours a day to mitigate the risks of obesity, diabetes, hypertension, cardiovascular disease, stroke, mental distress, and premature death [3]. Poor sleep quality not only affects productivity and academic performance, but may also lead to injuries and car accidents [4]. Sleep postures are closely related to sleep quality and disorders [5], and analyses of sleep posture changes have been used to identify the deep sleep stage [6]. Individuals with poor sleep quality were reported to spend more time in provocative postures and to change posture more frequently [5]. While sleep aims to relieve the fatigue of muscles and ligaments, a side-lying posture with poor support may lead to a sagging spine and a sinking lumbar region, resulting in sleep-related musculoskeletal problems such as neck and back pain [7,8,9]. Sleep postures and posture changes have also been associated with sleep bruxism [10], carpal tunnel syndrome [11], and restless legs syndrome [12]. Additionally, in patients with obstructive sleep apnea, supine and prone postures may affect respiration because of tongue and palate prolapse and pressure on the thorax [13]. Investigating sleep postures is important for understanding the pathomechanisms of sleep disorders and designing interventions [14], while sleep assessment technologies such as polysomnography, unobtrusive intelligent sensors, and wearables can monitor sleep behaviors and physiological signals for a better diagnosis of sleep problems [15].

Clinically, evaluating sleep disorders requires individuals to stay overnight in a specialized clinic for a sleep test. The process is expensive and inconvenient, requires medical and technical professionals to operate, and takes place in an unfamiliar sleeping environment that can affect the test’s accuracy. Ubiquitous sleep posture monitoring is, thus, necessary for the long-term assessment of sleep deprivation and sleep disorders in a nonclinical setting [15].

Traditional sleep posture measurements include videotaping with manual labeling and self-reported assessments, which can be tedious and inaccurate [16,17]. Recently, different nonintrusive technologies have been used for sleep posture recognition, including visible light, infrared, and depth cameras [18,19], inertial measurement units with a wireless connection [20], radar/radio sensors [21,22,23], and pressure sensors [24,25,26]. However, there have been few attempts to recognize and classify sleep postures accurately [27]. Various machine learning techniques have emerged recently to improve the accuracy of posture classification for both optical and pressure sensing, including the support vector machine (SVM), k-nearest neighbors (KNN), and convolutional neural networks (CNNs) [18,19,28,29,30,31,32,33].

Depth-camera-based systems are preferred for sleep monitoring, since they are noncontact, easy to maintain, and privacy-preserving, and can work at nighttime without visible light [34]. Grimm et al. [35] classified three common sleep postures (supine, left-sided, and right-sided lying) using a CNN with an overall accuracy of 97.5%. Similarly, Ren et al. [36] reported high accuracy in classifying seven postures using an SVM on images acquired using a Kinect Artec scanner. Nevertheless, these systems did not consider the interference of blankets, which is known to be the primary barrier to noncontact sleep surveillance [37]. Our previous study [14] classified seven postures under blankets of different thicknesses using a CNN, but did not achieve a satisfactory accuracy (<90%).

In summary, wearable sensors can achieve high accuracy in posture classification, but they are uncomfortable and suffer from poor compliance [38]. Blankets, in turn, reduce the classification accuracy of noncontact optical systems and make it difficult to distinguish prone from supine postures [37]. To this end, we propose to enhance the accuracy of under-blanket sleep posture classification with a new deep learning model architecture guided by anatomical features generated using a pose estimator [39]. The classification covers seven common sleep postures: the supine posture, prone postures with the head turned left and right, left- and right-sided log postures, and left- and right-sided fetal postures. The key contributions of this study are as follows:

We developed a posture classification system that can be generalized to various blanket conditions.

We proposed a deep learning model architecture that improves classification performance by incorporating anatomical landmark features generated using a pose estimator.

2. Materials and Methods

2.1. Participant Recruitment

We recruited 120 healthy adults (61 male and 59 female) from the university and the community. Their mean age was 45.5 years (SD: 20.7; range: 11 to 77). Their mean height and weight were 165 cm (SD: 9.02; range: 148 to 185) and 60.6 kg (SD: 11.2; range: 40.8 to 98.1), respectively. The age and gender distribution of the participants is shown in Table 1. We endeavored to recruit participants of different ages to adequately test the robustness of the deep learning model. Participants were excluded if they reported severe sleep deprivation, a sleep behavioral disorder, musculoskeletal pain, or a deformity.

Table 1.

Age and gender distribution of participants.

| Characteristic | Category | Number of Participants (n = 120) | Proportion (%) |
|---|---|---|---|
| Gender | Male | 61 | 50.8 |
| | Female | 59 | 49.2 |
| Age Group (years) | 18–19 | 10 | 8.3 |
| | 20–29 | 35 | 29.2 |
| | 30–39 | 11 | 9.2 |
| | 40–49 | 3 | 2.5 |
| | 50–59 | 11 | 9.2 |
| | 60–69 | 37 | 30.8 |
| | 70–79 | 13 | 10.8 |

The experiment was approved by the Institutional Review Board (reference number: HSEARS20210127007). All participants signed an informed consent form after receiving oral and written descriptions of the experimental procedures before the start of the experiment.

2.2. Hardware Setup

We used an active infrared stereo depth camera (RealSense D435i, Intel Corp., Santa Clara, CA, USA) that incorporates an auxiliary visible light (RGB) camera and an inertial measurement unit (IMU). The depth camera had a resolution of 848 × 480 pixels and a sampling rate of six frames per second. The camera was mounted 1.6 m above the bed, which was 196 cm long, 90 cm wide, and 55 cm tall. To resemble real-life scenarios, we considered a no-blanket condition and three blanket thicknesses (thick, medium, and thin), using the FJALLARNIKA extra warm duvet (8 cm thick), the SAFFERO light warm duvet (2 cm thick), and the VALLKRASSING duvet (0.4 cm thick), respectively. All of them were sourced from IKEA (Delft, The Netherlands).

2.3. Experimental Procedure

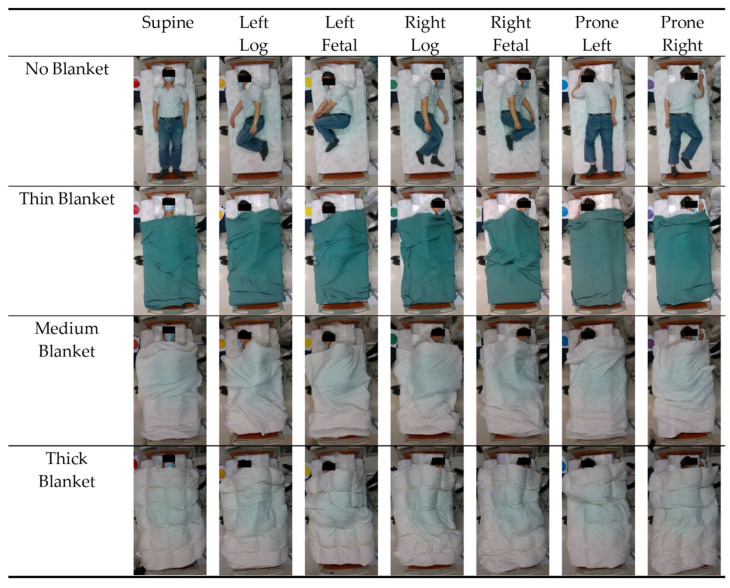

During the experiment, participants were instructed to lie on the bed in seven sleep (recumbent) postures in order: (1) supine, (2) prone with head turned left, (3) prone with head turned right, (4) log left, (5) log right, (6) fetal left, and (7) fetal right (Figure 1). Time was given to allow participants to adjust to their most comfortable position before data collection.

Figure 1.

Seven sleep postures classified in the deep learning models: supine, left-sided log, left-sided fetal, right-sided log, right-sided fetal, prone with head turned left, and prone with head turned right.

For each posture, the participants held the position while we covered their full body (except the head) with blankets in the sequence of thick, medium, thin, and no blanket. Data were collected continuously throughout the experiment, and postures were labeled by placing color-coded paper adjacent to the bed.

2.4. Data Processing

The RealSense Software Development Kit (Intel, Santa Clara, CA, USA) was used to extract the raw data. A pair of synchronized RGB and infrared depth images was extracted for every posture and blanket condition. For every participant, 28 image pairs (4 blanket conditions × 7 postures) were captured, resulting in a total of 3360 image pairs. Additional labeling and cropping were performed with a manual pipeline program written using the OpenCV SDK (Intel, Santa Clara, CA, USA). Image pairs were labeled based on the color-coded paper, cross-checked against the video, and cropped to the bed area.

A customized data augmentation strategy, described previously [14], was applied to generate more blanket conditions (blanket-augmented dataset). In brief, data augmentation was conducted using affine transformations of the depth image data and the fusion of blanket conditions. Note that the data augmentation was performed within the model training process.
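The exact augmentation pipeline is given in [14]. As a rough illustration only, the sketch below shows the two ingredients named above under our own assumptions: blanket fusion is modeled as a weighted blend of two depth maps of the same participant and posture captured under different blanket conditions, and the affine transformation is reduced to a random integer translation with zero fill. The function names and parameters (`alpha`, `max_shift`) are hypothetical and not taken from the original implementation.

```python
import numpy as np

def fuse_blanket_conditions(depth_a, depth_b, alpha):
    """Hypothetical fusion: blend two depth maps of the same participant and
    posture; alpha=1 returns depth_a and alpha=0 returns depth_b."""
    if depth_a.shape != depth_b.shape:
        raise ValueError("depth maps must have the same shape")
    return alpha * depth_a + (1.0 - alpha) * depth_b

def random_translate(depth, max_shift, rng):
    """Simple affine transformation: shift by a random integer offset in
    [-max_shift, max_shift]; pixels shifted in from outside are set to zero."""
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    h, w = depth.shape
    out = np.zeros_like(depth)
    # Source and destination windows for a shift of (dy, dx)
    src_y = slice(max(0, -dy), min(h, h - dy))
    dst_y = slice(max(0, dy), min(h, h + dy))
    src_x = slice(max(0, -dx), min(w, w - dx))
    dst_x = slice(max(0, dx), min(w, w + dx))
    out[dst_y, dst_x] = depth[src_y, src_x]
    return out

def augment_sample(depth_by_blanket, rng, max_shift=10):
    """Create one synthetic sample on the fly: blend two randomly chosen
    blanket conditions, then apply a random translation."""
    keys = list(depth_by_blanket)
    a, b = rng.choice(len(keys), size=2, replace=False)
    alpha = rng.uniform(0.0, 1.0)
    fused = fuse_blanket_conditions(
        depth_by_blanket[keys[a]], depth_by_blanket[keys[b]], alpha)
    return random_translate(fused, max_shift, rng)
```

Because `augment_sample` draws new random parameters on every call, invoking it inside the training data loader yields a different synthetic blanket condition in each epoch, which matches the idea of augmentation incorporated into training.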

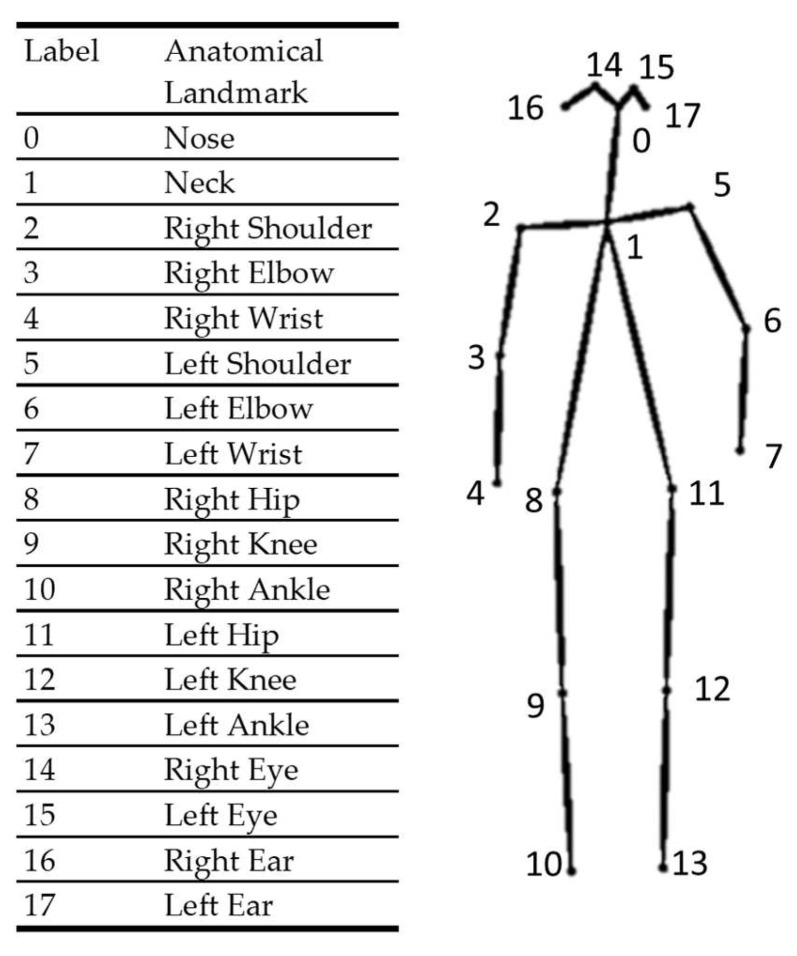

The RGB images collected from the auxiliary visible light camera were input into an open-source, deep-learning-based human pose estimator, whose key role was to provide anatomical landmark information to guide the deep learning model. We used OpenPose [39] as the human pose estimator. OpenPose adopts a strategy named part affinity fields, a nonparametric representation of association that encodes the positions and orientations of body parts [39]. The estimator learns part detection and association simultaneously and identifies poses through a greedy parsing algorithm [39]. A confidence map was calculated to represent the probability that a particular body landmark is located at any given pixel. The two-dimensional coordinates of a body landmark were taken as those of the maximum of its confidence map. The pose estimator thus output the two-dimensional coordinates of 18 anatomical landmarks (detailed in Figure 2) together with their confidence levels. We then mapped the coordinates onto the corresponding depth images.
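The landmark extraction and mapping steps described above can be sketched as follows. This assumes a single person in view and confidence maps stacked as an array of shape (landmarks, H, W); OpenPose itself performs peak detection and part-affinity-field grouping, which this sketch omits. The mapping step additionally assumes that the RGB and depth images were already aligned and cropped to the same bed area, so only a rescaling is needed. Both function names are hypothetical.

```python
import numpy as np

def keypoints_from_heatmaps(heatmaps):
    """Return an (n_landmarks, 3) array of (x, y, confidence), taking each
    landmark's coordinates at the maximum of its confidence map.
    heatmaps: array of shape (n_landmarks, H, W)."""
    n, h, w = heatmaps.shape
    flat = heatmaps.reshape(n, -1)
    idx = flat.argmax(axis=1)                 # peak location per landmark
    ys, xs = np.unravel_index(idx, (h, w))    # back to 2D pixel coordinates
    conf = flat[np.arange(n), idx]            # confidence at the peak
    return np.stack([xs, ys, conf], axis=1).astype(float)

def map_to_depth(keypoints, rgb_shape, depth_shape):
    """Rescale (x, y) from the cropped RGB image to the cropped depth image.
    Assumes both crops cover the same bed area after RGB-depth alignment;
    the confidence column is left unchanged."""
    sy = depth_shape[0] / rgb_shape[0]
    sx = depth_shape[1] / rgb_shape[1]
    mapped = keypoints.copy()
    mapped[:, 0] *= sx
    mapped[:, 1] *= sy
    return mapped
```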

Figure 2.

The anatomical landmarks generated by the pose estimator (OpenPose).

2.5. Model Architecture

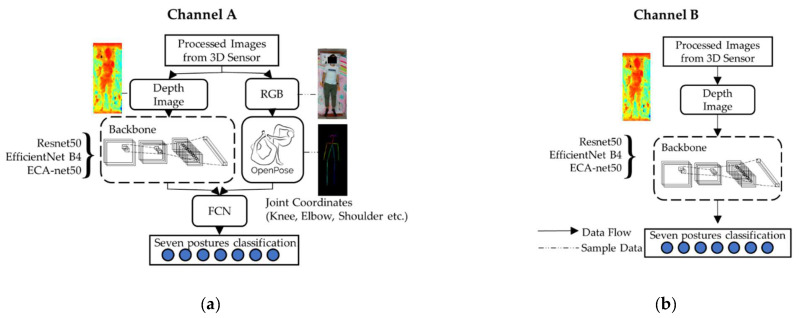

We evaluated six model architectures, comprising three deep learning models (ResNet-34, EfficientNet B4, and ECA-Net50) trained using depth camera images, each with (Channel A) and without (Channel B) pose estimator input, as shown in Figure 3 [39]. In brief, ResNet (short for residual network) [40] addresses the vanishing gradient problem of traditional deep neural networks by using shortcut connections that skip some layers. EfficientNet [41] uses a compound scaling method that jointly scales the network depth, width, and resolution with fixed coefficients, and was shown to outperform conventional CNNs whose dimensions are scaled arbitrarily. ECA-Net (short for efficient channel attention network) [42] adds a lightweight attention module that avoids channel dimensionality reduction while still capturing cross-channel interactions through a fast 1D convolution whose kernel size is determined adaptively from the channel dimension.
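To make the ECA mechanism concrete, the following NumPy sketch shows a forward pass of one efficient channel attention step on a single (C, H, W) feature map: global average pooling, a 1D convolution across neighboring channels (no dimensionality reduction), and a sigmoid gate. The kernel-size rule uses γ = 2 and b = 1 from the ECA-Net paper, computed as in commonly used implementations. In a real network the kernel weights are learned; here they are passed in, and the function names are our own.

```python
import math
import numpy as np

def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive 1D kernel size: |(log2(C) + b) / gamma|, forced to be odd."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1

def eca(feature_map, kernel):
    """Efficient channel attention on a (C, H, W) feature map.
    kernel: 1D weights of odd length k (learned in a real network)."""
    c = feature_map.shape[0]
    k = kernel.shape[0]
    pooled = feature_map.mean(axis=(1, 2))   # global average pooling -> (C,)
    padded = np.pad(pooled, k // 2)          # zero padding keeps C outputs
    # 1D convolution (cross-correlation) over each channel's k neighbors
    mixed = np.array([padded[i:i + k] @ kernel for i in range(c)])
    weights = 1.0 / (1.0 + np.exp(-mixed))   # sigmoid gate per channel
    return feature_map * weights[:, None, None]
```

For example, a 256-channel layer gets a kernel of size 5 and a 64-channel layer a kernel of size 3, so the number of attention parameters stays tiny compared with a fully connected squeeze-and-excitation block.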

Figure 3.

Model training architectures (system units) with the ResNet-34, EfficientNet B4, and ECA-Net50 backbones: (a) Channel A: with pose estimator; (b) Channel B: without pose estimator.

As shown in Channel B (Figure 3b), for the architecture without the pose estimator, the depth image data were input into the backbone of the model. The model then output the classified postures.

For the architecture with the pose estimator (Channel A, Figure 3a), the RGB image data were input into the pose estimator to approximate the coordinates of anatomical landmarks, and the depth image data were input into the backbone of the model. The coordinate information was then concatenated with the output of the backbone’s fully connected layer, and the combined vector was fed into another fully connected layer for posture classification.
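A framework-agnostic sketch of the Channel A classification head is shown below (in PyTorch, the same step would typically be written with `torch.cat` followed by an `nn.Linear` layer). We assume that only the 18 (x, y) landmark coordinates are concatenated, without confidence values or normalization, and that the head is a single linear layer producing seven logits; the backbone feature size F, the function name, and the weight shapes are illustrative assumptions.

```python
import numpy as np

N_LANDMARKS = 18   # OpenPose body landmarks used in this study
N_CLASSES = 7      # sleep postures

def channel_a_head(backbone_fc_out, landmarks_xy, weight, bias):
    """Concatenate backbone features with flattened landmark coordinates and
    apply one more fully connected layer.

    backbone_fc_out: (batch, F); landmarks_xy: (batch, 18, 2)
    weight: (F + 36, 7); bias: (7,). Returns class logits of shape (batch, 7)."""
    coords = landmarks_xy.reshape(landmarks_xy.shape[0], -1)   # (batch, 36)
    fused = np.concatenate([backbone_fc_out, coords], axis=1)  # (batch, F + 36)
    return fused @ weight + bias
```

The predicted posture is the index of the largest logit (`argmax` over axis 1); during training, the logits would feed the cross-entropy loss described in Section 2.6.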

2.6. Model Training

Overall, the models were trained with the depth image data. In Channel A, model training additionally used the coordinates of anatomical landmarks, which were generated using the pose estimator (OpenPose) on the no-blanket dataset and mapped onto the corresponding blanket data (i.e., the same posture of the same participant). Channel B did not use coordinate information for model training. The selection between Channels A and B is detailed in Section 2.7.

We split the data at an 8:2 training-to-testing ratio (i.e., 96 participants with 2688 images for model training). Model training was implemented using the PyTorch deep learning framework 1.10 [43]. A randomized grid search (n = 10) on the hyperparameters (learning rate and L2 regularization) was performed over the values 1 × 10⁻³, 1 × 10⁻⁴, 1 × 10⁻⁵, 1 × 10⁻⁶, 2 × 10⁻³, 2 × 10⁻⁴, 2 × 10⁻⁵, 2 × 10⁻⁶, 5 × 10⁻³, 5 × 10⁻⁴, 5 × 10⁻⁵, and 5 × 10⁻⁶. Cross-entropy loss was used as the objective function. The Adam optimizer was then configured with a fixed learning rate of 0.0001, an L2 regularization of 0.0005, and a batch size of eight. The batch size was fixed at eight because of computational constraints. Based on the learning curve, we trained the models for 1000 epochs.
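The split and search described above can be sketched in a few lines. Two points are our assumptions rather than details stated in the text: the 8:2 split is made at the participant level (suggested by the 96 training participants), and the randomized search draws 10 distinct (learning rate, L2 weight) pairs from the Cartesian product of the 12 listed values. Function names and seeds are hypothetical.

```python
import itertools
import random

# The 12 candidate values listed above: {1, 2, 5} x 10^-3 ... 10^-6
GRID = [m * 10.0 ** -e for e in (3, 4, 5, 6) for m in (1, 2, 5)]

def split_by_participant(participant_ids, train_ratio=0.8, seed=0):
    """Assign whole participants to training or testing, so that no
    participant's images appear in both sets."""
    ids = sorted(set(participant_ids))
    rng = random.Random(seed)
    rng.shuffle(ids)
    n_train = round(len(ids) * train_ratio)
    return set(ids[:n_train]), set(ids[n_train:])

def sample_hyperparameters(n=10, seed=0):
    """Randomly draw n distinct (learning rate, L2 weight) pairs from the grid."""
    combos = list(itertools.product(GRID, GRID))
    return random.Random(seed).sample(combos, n)

# In PyTorch, the selected setting would correspond to something like
# torch.optim.Adam(model.parameters(), lr=1e-4, weight_decay=5e-4),
# where weight_decay adds an L2 penalty to the gradients.
```

With 120 participant IDs, `split_by_participant` returns 96 training and 24 testing participants, which gives 96 × 28 = 2688 training images.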

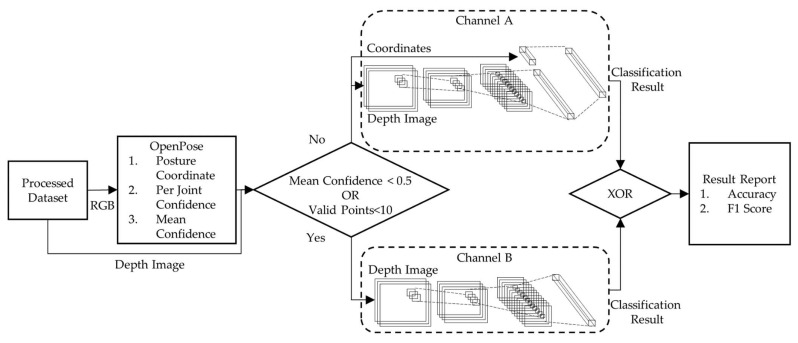

2.7. System Architecture (with Model Testing)

Figure 4 shows the system-level architecture after the models were trained. The test data were input into the pose estimator (OpenPose) to generate landmark coordinates and confidence levels. Whether a data sample was processed by Channel A or B was determined by the confidence levels of the landmark coordinates. If the confidence level averaged over all landmarks was less than 0.5, or the number of valid landmarks (i.e., those with a non-NULL confidence level) was less than 10, the sample was delivered to Channel B without pose estimation (Figure 4). Otherwise, the sample was delivered to Channel A with pose estimation. Because each sample was processed by exactly one channel, the final prediction was the output of that channel, i.e., an exclusive OR (XOR) of the outputs of Channels A and B (Figure 4).
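The routing rule can be expressed as a small function. This is a sketch under one stated assumption: a landmark that was not detected is represented as `None` (the NULL confidence level), and the mean confidence is computed over the detected landmarks only; the text does not say how undetected landmarks enter the average. `channel_a` and `channel_b` stand in for the two trained models and are hypothetical callables.

```python
from typing import Callable, Optional, Sequence

def route_sample(confidences: Sequence[Optional[float]],
                 min_mean_conf: float = 0.5, min_valid: int = 10) -> str:
    """Return 'A' (landmark-guided) or 'B' (depth only) for one sample."""
    valid = [c for c in confidences if c is not None]
    if len(valid) < min_valid:          # too few detected landmarks
        return "B"
    if sum(valid) / len(valid) < min_mean_conf:
        return "B"                      # landmarks detected but unreliable
    return "A"

def predict(sample, confidences, channel_a: Callable, channel_b: Callable):
    # Each sample is processed by exactly one channel, so the final output
    # is simply the prediction of the selected channel.
    if route_sample(confidences) == "A":
        return channel_a(sample)
    return channel_b(sample)
```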

Figure 4.

System application architecture to predict sleep posture from testing data after the models were trained.

2.8. Evaluation

The models were evaluated using accuracy and the F1 score, which correspond to the proportion of correct predictions and the harmonic mean of precision and recall, respectively [44]. The primary evaluation compared the performance of the three deep learning models with and without guidance from anatomical landmarks. The secondary evaluation compared the performance of the models trained with different blanket datasets. For models without anatomical landmark guidance, all data flowed to Channel B only. For models with anatomical landmark guidance, data flowed to either Channel A or Channel B, depending on whether they fulfilled the confidence level criteria.
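For reference, both metrics can be computed as below. The text does not state how the per-class F1 scores were averaged over the seven postures; this sketch assumes macro averaging over the classes present in either the true or predicted labels, which mirrors the default label handling of scikit-learn's `f1_score(average="macro")`.

```python
import numpy as np

def accuracy(y_true, y_pred):
    """Proportion of correct predictions."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    return float(np.mean(y_true == y_pred))

def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (harmonic mean of precision
    and recall), over the classes that occur in y_true or y_pred."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    f1s = []
    for c in np.union1d(y_true, y_pred):
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1s.append(2 * precision * recall / denom if denom else 0.0)
    return float(np.mean(f1s))
```

For instance, with true labels `[0, 0, 1, 1, 2, 2]` and predictions `[0, 1, 1, 1, 2, 0]`, the accuracy is 4/6 and the per-class F1 scores are 0.5, 0.8, and 2/3.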

3. Results

3.1. Performance of Different Models with and without Pose Estimator

The accuracy and F1 scores are presented in Table 2. In general, models guided by anatomical features had a higher accuracy and F1 score. The mean accuracy and F1 score of the models without anatomical feature guidance were 86.6% and 86.1%, respectively, whereas those of the models with the pose estimator were 90.8% and 91.4%. The improvement ranged from 2.3 to 7.5 percentage points for accuracy and from 3.5 to 7.7 percentage points for the F1 score. ECA-Net50 demonstrated the best performance, with an accuracy of 91.5% and an F1 score of 92.2%.

Table 2.

Performance of deep learning models (EfficientNetB4, ResNet50, and ECA-Net50) trained using the augmented dataset with and without pose estimator.

| Outcome | Channel | Pose Estimator | EfficientNetB4 | ResNet50 | ECA-Net50 |
|---|---|---|---|---|---|
| Accuracy | A + B | Yes | 89.7% | 91.1% | 91.5% |
| | B | No | 87.4% | 83.6% | 88.7% |
| F1 score | A + B | Yes | 90.8% | 91.3% | 92.2% |
| | B | No | 87.3% | 83.6% | 87.4% |
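The summary figures in Section 3.1 can be recomputed directly from Table 2. The short script below takes the table values as given and derives the mean scores and the per-model gains in percentage points; it is a worked check of the arithmetic, not part of the classification pipeline.

```python
# Table 2 values (%), in the order EfficientNetB4, ResNet50, ECA-Net50
acc_with, acc_without = [89.7, 91.1, 91.5], [87.4, 83.6, 88.7]
f1_with, f1_without = [90.8, 91.3, 92.2], [87.3, 83.6, 87.4]

def mean(xs):
    return sum(xs) / len(xs)

def gains(with_pe, without_pe):
    """Per-model improvement in percentage points, rounded to one decimal."""
    return [round(a - b, 1) for a, b in zip(with_pe, without_pe)]

print(round(mean(acc_without), 1), round(mean(f1_without), 1))  # 86.6 86.1
print(round(mean(acc_with), 1), round(mean(f1_with), 1))        # 90.8 91.4
print(gains(acc_with, acc_without))  # [2.3, 7.5, 2.8]
print(gains(f1_with, f1_without))    # [3.5, 7.7, 4.8]
```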

3.2. Influence of Blankets on Model Performance

The accuracy and F1 scores of the models with the pose estimator trained using different blanket datasets are presented in Table 3. As in Table 2, ECA-Net50 generally had superior performance, although EfficientNetB4 outperformed the other models on the all-blanket dataset. In general, the blanket conditions did not considerably change the classification performance of the models enhanced with the pose estimator: for each model, the accuracy and F1 score varied by less than 3 percentage points across datasets. The classification accuracy was less affected by the blanket conditions when the pose estimator was present.

Table 3.

Performance of deep learning models (EfficientNetB4, ResNet50, and ECA-Net50) with pose estimator input trained using datasets with no blanket, all blankets, and augmented data.

| Outcome | Dataset | EfficientNetB4 | ResNet50 | ECA-Net50 |
|---|---|---|---|---|
| Accuracy | No blanket data | 91.1% | 91.7% | 92.3% |
| | All blanket data | 91.4% | 89.3% | 90.9% |
| | Augmented data | 89.7% | 91.1% | 91.5% |
| F1 score | No blanket data | 91.1% | 91.6% | 92.2% |
| | All blanket data | 91.9% | 88.9% | 91.7% |
| | Augmented data | 90.8% | 91.3% | 92.2% |

4. Discussion

This study endeavored to improve the classification performance for sleep postures under blanket interference by using anatomical information from a pose estimator as input and guidance. The significance of this study lies in the development of a relatively accurate classifier of under-blanket sleep postures, which could facilitate the monitoring and diagnosis of sleep disorders. The key findings of this study were as follows:

ECA-Net50 produced the best classification results both without (F1 score: 87.4%) and with (F1 score: 92.2%) anatomical landmark guidance.

The anatomical landmark features improved the F1 score of ECA-Net50 from 87.4% to 92.2%.

Classification performances of ECA-Net50 with anatomical landmark features were less affected by the interference of blanket conditions.

Sleep postures are characterized by the position of the head and trunk and the placement of the limbs. Therefore, anatomical landmarks or musculoskeletal joint positions are intuitively critical features for representing sleep postures. Furthermore, the deep learning models were less sensitive to the interference of blanket conditions after guidance from anatomical landmarks, likely because the landmarks identified critical anatomical features. In particular, the improvement was considerable in ResNet and ECA-Net, with F1 score increments of more than 7% and 4%, respectively. We speculate that the anatomical landmark-guided strategy is more beneficial for models that require larger datasets. Moreover, ECA-Net produced the best accuracy among the three models. This may be because the model applies an “attention” function that can leverage the weights of anatomical landmark features, which are determinants of sleep postures, resulting in improved accuracy. However, it should be noted that the current pose estimator relies on RGB images, which cannot be acquired at night without lighting. Further development of the pose estimator to accommodate depth camera images is necessary.

Apart from noncontact methods, other modalities are commonly used for sleep posture classification. Ostadabbas et al. [45] developed a body pressure measurement system to classify three sleep postures (supine and left- and right-sided lying) using a Gaussian mixture model, with an overall accuracy of 98.4%. Fallmann et al. [38] mounted accelerometers onto the trunk and limbs of participants to classify six sleep postures using the matrix learning vector quantization approach. Despite their higher accuracy, these contact-based methods can be obtrusive, difficult to maintain, and prone to poor compliance, which limits the feasibility of long-term surveillance, particularly in the clinical setting [46].

We identified one study that considered sleep posture classification under blanket conditions using a noncontact approach. Mohammadi et al. [47] used the Microsoft Kinect infrared depth camera to classify 12 sleep postures under thin blankets and achieved an accuracy of 76%. Our study demonstrated a higher accuracy with blankets of different thicknesses. In addition, the data augmentation simulated hypothetical blanket variations, which may have enhanced generalizability.

A four-posture classification system (i.e., supine, prone, right lateral, and left lateral) has been commonly adopted [19,48,49,50,51] because of its relationship with the Apnea-Hypopnea Index (AHI) [37,52]. Some studies excluded the prone posture or merged it with the supine posture because the two are difficult to distinguish [53,54,55,56,57,58]. Classification at a higher granularity is of particular interest, especially subdividing the lateral posture into log and fetal postures [59,60]. We found one study that explored the yearner posture but ignored the log and prone postures [59]. In this study, we classified seven postures with fine granularity in the prone posture (head turned left or right) and the lateral postures (log and fetal), covering the majority of common sleep postures. Finer-grained classifications have also targeted limb placement [38,47,61,62] or joint angles [63,64] to address sleep-related musculoskeletal disorders [37]. Furthermore, while existing fine-grained classifications often exploit head or limb orientation/placement, the postures of the head, trunk, and limbs could be classified separately, since some sleep disorders are sensitive to the postures of individual body parts. For example, a supine head posture, rather than a supine trunk posture, can worsen sleep apnea [37,52]. A hierarchical posture classification system that progresses from the localized postures of individual body parts to generalized sleep postures could benefit the clinical diagnosis of sleep disorders.

Determining the sample size for machine learning models is often challenging. Machine learning models can suffer from overfitting, underfitting, nonconvergence, and/or biased accuracy estimates when samples are scarce [65,66]. Data augmentation is widely accepted as a technique that ameliorates the sample size issue in machine learning [65,66]. In our study, we synthesized additional blanket conditions using a data augmentation technique [14], which produced a data size per class comparable to that of common benchmark datasets such as CIFAR-100 [67].

This study had some limitations. First, the pose estimator did not always produce valid coordinates for the anatomical landmarks. In the model training process, the guided strategy was bypassed for these challenging cases, although a satisfactory accuracy could still be achieved. Second, the evaluation of our current system targeted discrete events (i.e., static images). We are currently incorporating the model into a video-based system to monitor the proportion of time spent in different postures during sleep. Third, we recruited only healthy participants through convenience sampling, which could have led to some imbalance in the age and gender distributions. Since our model, at this stage, only considered posture classification for healthy individuals, we did not include participants with sleep disorders. Finally, the deep learning model performed data-driven feature discovery, without hand-crafted features, together with a classifier. The model may or may not have used the anatomical features, although they might have been weighted heavily because of their strong association with sleep postures. Deep learning models are often referred to as inscrutable black-box models: the methodology cannot reveal the physical meaning of the features or the relationships between them, which could be important for clinicians [68]. Future studies should consider quantifying the spinal curvature and joint kinematics to better understand and monitor sleep-related musculoskeletal disorders [7].

5. Conclusions

This study presented a new deep learning model architecture for an under-blanket sleep posture classification system using infrared depth camera images. A key feature of the architecture is the use of a pose estimator to generate anatomical landmarks that guide the deep learning models, which improved the posture classification accuracy and supports practical application across varied real-life blanket conditions. The F1 score of ECA-Net50 increased from 87.4% to 92.2% when anatomical landmark information from the pose estimator was considered. ECA-Net50 adopts an “attention” strategy that might have leveraged the weights of the critical determinants of sleep postures (i.e., the anatomical landmark information). In addition, our findings suggest that the performance of deep learning models enhanced with anatomical landmarks was less affected by the interference of blanket conditions.

Author Contributions

Conceptualization, D.W.-C.W. and J.C.-W.C.; methodology, D.W.-C.W. and J.C.-W.C.; software, D.K.-H.L.; validation, Y.-J.M.; formal analysis, A.Y.-C.T. and B.P.-H.S.; investigation, A.Y.-C.T., B.P.-H.S. and H.-J.L.; resources, D.W.-C.W. and J.C.-W.C.; data curation, A.Y.-C.T., D.K.-H.L. and H.-J.L.; writing—original draft preparation, L.-W.Z.; writing—review and editing, D.W.-C.W. and J.C.-W.C.; visualization, D.K.-H.L. and Y.-J.M.; supervision, D.W.-C.W. and J.C.-W.C.; project administration, D.W.-C.W. and J.C.-W.C.; funding acquisition, D.W.-C.W. and J.C.-W.C. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of The Hong Kong Polytechnic University (reference number: HSEARS20210127007).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Funding Statement

The APC was supported by an internal fund from the Research Institute of Smart Ageing, The Hong Kong Polytechnic University (reference number: P0039001) and the General Research Fund granted by the Hong Kong Research Grants Council (reference number: PolyU15223822).

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Zielinski M.R., McKenna J.T., McCarley R.W. Functions and mechanisms of sleep. AIMS Neurosci. 2016;3:67. doi: 10.3934/Neuroscience.2016.1.67. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Devaraj D., Devaraj U., Venkatnarayan K., Veluthat C., Ramachandran P., D’Souza G., Maheswari K.U. Prevalence of sleep practices, circadian types and their effect on sleep beliefs in general population: Knowledge and Beliefs About Sleep and Sleep Practices (KNOBS Survey) Sleep Vigil. 2021;5:61–69. doi: 10.1007/s41782-021-00128-6. [DOI] [Google Scholar]

- 3.Liu Y., Wheaton A.G., Chapman D.P., Cunningham T.J., Lu H., Croft J.B. Prevalence of healthy sleep duration among adults—United States, 2014. Morb. Mortal. Wkly. Rep. 2016;65:137–141. doi: 10.15585/mmwr.mm6506a1. [DOI] [PubMed] [Google Scholar]

- 4.Wickwire E.M. Value-based sleep and breathing: Health economic aspects of obstructive sleep apnea. Fac. Rev. 2021;10:40. doi: 10.12703/r/10-40. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Cary D., Jacques A., Briffa K. Examining relationships between sleep posture, waking spinal symptoms and quality of sleep: A cross sectional study. PLoS ONE. 2021;16:e0260582. doi: 10.1371/journal.pone.0260582. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Lorrain D., Koninck J.D. Sleep position and sleep stages: Evidence of their independence. Sleep. 1998;21:335–340. doi: 10.1093/sleep/21.4.335. [DOI] [PubMed] [Google Scholar]

- 7.Wong D.W.-C., Wang Y., Lin J., Tan Q., Chen T.L.-W., Zhang M. Sleeping mattress determinants and evaluation: A biomechanical review and critique. PeerJ. 2019;7:e6364. doi: 10.7717/peerj.6364. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Ren S., Wong D.W.-C., Yang H., Zhou Y., Lin J., Zhang M. Effect of pillow height on the biomechanics of the head-neck complex: Investigation of the cranio-cervical pressure and cervical spine alignment. PeerJ. 2016;4:e2397. doi: 10.7717/peerj.2397. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Hong T.T.-H., Wang Y., Wong D.W.-C., Zhang G., Tan Q., Chen T.L.-W., Zhang M. The Influence of Mattress Stiffness on Spinal Curvature and Intervertebral Disc Stress—An Experimental and Computational Study. Biology. 2022;11:1030. doi: 10.3390/biology11071030. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Miyawaki S., Tanimoto Y., Araki Y., Katayama A., Imai M., Takano-Yamamoto T. Relationships among nocturnal jaw muscle activities, decreased esophageal pH, and sleep positions. Am. J. Orthod. Dentofac. Orthop. 2004;126:615–619. doi: 10.1016/j.ajodo.2004.02.007. [DOI] [PubMed] [Google Scholar]

- 11.McCabe S.J., Uebele A.L., Pihur V., Rosales R.S., Atroshi I. Epidemiologic associations of carpal tunnel syndrome and sleep position: Is there a case for causation? Hand. 2007;2:127–134. doi: 10.1007/s11552-007-9035-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Pien G.W., Schwab R.J. Sleep disorders during pregnancy. Sleep. 2004;27:1405–1417. doi: 10.1093/sleep/27.7.1405. [DOI] [PubMed] [Google Scholar]

- 13.Menon A., Kumar M. Influence of body position on severity of obstructive sleep apnea: A systematic review. Int. Sch. Res. Not. 2013;2013:670381. doi: 10.1155/2013/670381. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Tam A.Y.-C., So B.P.-H., Chan T.T.-C., Cheung A.K.-Y., Wong D.W.-C., Cheung J.C.-W. A Blanket Accommodative Sleep Posture Classification System Using an Infrared Depth Camera: A Deep Learning Approach with Synthetic Augmentation of Blanket Conditions. Sensors. 2021;21:5553. doi: 10.3390/s21165553.

- 15. Hussain Z., Sheng Q.Z., Zhang W.E., Ortiz J., Pouriyeh S. Non-invasive techniques for monitoring different aspects of sleep: A comprehensive review. ACM Trans. Comput. Healthc. (HEALTH) 2022;3:1–26. doi: 10.1145/3491245.

- 16. Cary D., Briffa K., McKenna L. Identifying relationships between sleep posture and non-specific spinal symptoms in adults: A scoping review. BMJ Open. 2019;9:e027633. doi: 10.1136/bmjopen-2018-027633.

- 17. Kubota T., Ohshima N., Kunisawa N., Murayama R., Okano S., Mori-Okamoto J. Characteristic features of the nocturnal sleeping posture of healthy men. Sleep Biol. Rhythm. 2003;1:183–185. doi: 10.1046/j.1446-9235.2003.00040.x.

- 18. Yu M., Rhuma A., Naqvi S.M., Wang L., Chambers J. A posture recognition based fall detection system for monitoring an elderly person in a smart home environment. IEEE Trans. Inf. Technol. Biomed. 2012;16:1274–1286. doi: 10.1109/titb.2012.2214786.

- 19. Masek M., Lam C.P., Tranthim-Fryer C., Jansen B., Baptist K. Sleep monitor: A tool for monitoring and categorical scoring of lying position using 3D camera data. SoftwareX. 2018;7:341–346. doi: 10.1016/j.softx.2018.10.001.

- 20. Hoque E., Dickerson R., Stankovic J. Monitoring Body Positions and Movements during Sleep Using WISPs; Proceedings of the WH’10 Wireless Health Conference 2010; San Diego, CA, USA. 5–7 October 2010; pp. 44–53.

- 21. Lin F., Zhuang Y., Song C., Wang A., Li Y., Gu C., Li C., Xu W. SleepSense: A Noncontact and Cost-Effective Sleep Monitoring System. IEEE Trans. Biomed. Circuits Syst. 2017;11:189–202. doi: 10.1109/TBCAS.2016.2541680.

- 22. Liu J., Chen X., Chen S., Liu X., Wang Y., Chen L. TagSheet: Sleeping Posture Recognition with an Unobtrusive Passive Tag Matrix; Proceedings of the IEEE INFOCOM 2019-IEEE Conference on Computer Communications; Paris, France. 29 April–2 May 2019; pp. 874–882.

- 23. Zhang F., Wu C., Wang B., Wu M., Bugos D., Zhang H., Liu K.J.R. SMARS: Sleep Monitoring via Ambient Radio Signals. IEEE Trans. Mob. Comput. 2021;20:217–231. doi: 10.1109/TMC.2019.2939791.

- 24. Liu J.J., Xu W., Huang M.-C., Alshurafa N., Sarrafzadeh M., Raut N., Yadegar B. A dense pressure sensitive bedsheet design for unobtrusive sleep posture monitoring; Proceedings of the 2013 IEEE International Conference on Pervasive Computing and Communications (PerCom); San Diego, CA, USA. 18–22 March 2013; pp. 207–215.

- 25. Pino E.J., Dörner De la Paz A., Aqueveque P., Chávez J.A., Morán A.A. Contact pressure monitoring device for sleep studies; Proceedings of the Annu Int Conf IEEE Eng Med Biol Soc; Osaka, Japan. 3–7 July 2013; pp. 4160–4163.

- 26. Lin L., Xie Y., Wang S., Wu W., Niu S., Wen X., Wang Z.L. Triboelectric Active Sensor Array for Self-Powered Static and Dynamic Pressure Detection and Tactile Imaging. ACS Nano. 2013;7:8266–8274. doi: 10.1021/nn4037514.

- 27. Tang K., Kumar A., Nadeem M., Maaz I. CNN-Based Smart Sleep Posture Recognition System. IoT. 2021;2:119–139. doi: 10.3390/iot2010007.

- 28. Matar G., Lina J.M., Kaddoum G. Artificial Neural Network for in-Bed Posture Classification Using Bed-Sheet Pressure Sensors. IEEE J. Biomed. Health Inform. 2020;24:101–110. doi: 10.1109/JBHI.2019.2899070.

- 29. Liu Z., Wang X.a., Su M., Lu K. A Method to Recognize Sleeping Position Using an CNN Model Based on Human Body Pressure Image; Proceedings of the 2019 IEEE International Conference on Power, Intelligent Computing and Systems (ICPICS); Shenyang, China. 12–24 July 2019; pp. 219–224.

- 30. Zhao A., Dong J., Zhou H. Self-Supervised Learning From Multi-Sensor Data for Sleep Recognition. IEEE Access. 2020;8:93907–93921. doi: 10.1109/ACCESS.2020.2994593.

- 31. Byeon Y.-H., Lee J.-Y., Kim D.-H., Kwak K.-C. Posture Recognition Using Ensemble Deep Models under Various Home Environments. Appl. Sci. 2020;10:1287. doi: 10.3390/app10041287.

- 32. Viriyavit W., Sornlertlamvanich V. Bed Position Classification by a Neural Network and Bayesian Network Using Noninvasive Sensors for Fall Prevention. J. Sens. 2020;2020:5689860. doi: 10.1155/2020/5689860.

- 33. Wang Z.-W., Wang S.-K., Wan B.-T., Song W.W. A novel multi-label classification algorithm based on K-nearest neighbor and random walk. Int. J. Distrib. Sens. Netw. 2020;16:1550147720911892. doi: 10.1177/1550147720911892.

- 34. Cheung J.C.-W., Tam E.W.-C., Mak A.H.-Y., Chan T.T.-C., Lai W.P.-Y., Zheng Y.-P. Night-time monitoring system (eNightLog) for elderly wandering behavior. Sensors. 2021;21:704. doi: 10.3390/s21030704.

- 35. Grimm T., Martinez M., Benz A., Stiefelhagen R. Sleep position classification from a depth camera using bed aligned maps; Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR); Cancun, Mexico. 4–8 December 2016; pp. 319–324.

- 36. Ren A., Dong B., Lv X., Zhu T., Hu F., Yang X. A non-contact sleep posture sensing strategy considering three dimensional human body models; Proceedings of the 2016 2nd IEEE International Conference on Computer and Communications (ICCC); Chengdu, China. 14–17 October 2016; pp. 414–417.

- 37. Fallmann S., Chen L. Computational sleep behavior analysis: A survey. IEEE Access. 2019;7:142421–142440. doi: 10.1109/ACCESS.2019.2944801.

- 38. Fallmann S., Veen R.v., Chen L.L., Walker D., Chen F., Pan C. Wearable accelerometer based extended sleep position recognition; Proceedings of the 2017 IEEE 19th International Conference on e-Health Networking, Applications and Services (Healthcom); Dalian, China. 12–15 October 2017; pp. 1–6.

- 39. Cao Z., Hidalgo G., Simon T., Wei S.E., Sheikh Y. OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2021;43:172–186. doi: 10.1109/TPAMI.2019.2929257.

- 40. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition; Proceedings of the IEEE conference on computer vision and pattern recognition; Las Vegas, NV, USA. 27–30 June 2016; pp. 770–778.

- 41. Tan M., Le Q. EfficientNet: Rethinking model scaling for convolutional neural networks; Proceedings of the International Conference on Machine Learning; Long Beach, CA, USA. 10–15 June 2019; pp. 6105–6114.

- 42. Wang Q., Wu B., Zhu P., Li P., Zuo W., Hu Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks; Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); Seattle, WA, USA. 13–19 June 2020; pp. 11531–11539.

- 43. Paszke A., Gross S., Massa F., Lerer A., Bradbury J., Chanan G., Killeen T., Lin Z., Gimelshein N., Antiga L. PyTorch: An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 2019;32:8024–8035.

- 44. Mao Y.-J., Lim H.-J., Ni M., Yan W.-H., Wong D.W.-C., Cheung J.C.-W. Breast Tumour Classification Using Ultrasound Elastography with Machine Learning: A Systematic Scoping Review. Cancers. 2022;14:367. doi: 10.3390/cancers14020367.

- 45. Ostadabbas S., Pouyan M.B., Nourani M., Kehtarnavaz N. In-bed posture classification and limb identification; Proceedings of the 2014 IEEE Biomedical Circuits and Systems Conference (BioCAS); Lausanne, Switzerland. 22–24 October 2014; pp. 133–136.

- 46. Cheung J.C.-W., Tam E.W.-C., Mak A.H.-Y., Chan T.T.-C., Zheng Y.-P. A night-time monitoring system (eNightLog) to prevent elderly wandering in hostels: A three-month field study. Int. J. Environ. Res. Public Health. 2022;19:2103. doi: 10.3390/ijerph19042103.

- 47. Mohammadi S.M., Alnowami M., Khan S., Dijk D.J., Hilton A., Wells K. Sleep Posture Classification using a Convolutional Neural Network; Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Honolulu, HI, USA. 18–21 July 2018; pp. 3501–3504.

- 48. Shinar Z., Baharav A., Akselrod S. Detection of different recumbent body positions from the electrocardiogram. Med. Biol. Eng. Comput. 2003;41:206–210. doi: 10.1007/BF02344890.

- 49. Liu G., Li K., Zheng L., Chen W.-H., Zhou G., Jiang Q. A respiration-derived posture method based on dual-channel respiration impedance signals. IEEE Access. 2017;5:17514–17524. doi: 10.1109/ACCESS.2017.2737461.

- 50. Zhang Z., Yang G.-Z. Monitoring cardio-respiratory and posture movements during sleep: What can be achieved by a single motion sensor; Proceedings of the 2015 IEEE 12th International Conference on Wearable and Implantable Body Sensor Networks (BSN); Cambridge, MA, USA. 9–12 June 2015; pp. 1–6.

- 51. Enayati M., Skubic M., Keller J.M., Popescu M., Farahani N.Z. Sleep posture classification using bed sensor data and neural networks; Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Honolulu, HI, USA. 18–21 July 2018; pp. 461–465.

- 52. Pinna G.D., Robbi E., La Rovere M.T., Taurino A.E., Bruschi C., Guazzotti G., Maestri R. Differential impact of body position on the severity of disordered breathing in heart failure patients with obstructive vs. central sleep apnoea. Eur. J. Heart Fail. 2015;17:1302–1309. doi: 10.1002/ejhf.410.

- 53. Pouyan M.B., Birjandtalab J., Heydarzadeh M., Nourani M., Ostadabbas S. A pressure map dataset for posture and subject analytics; Proceedings of the 2017 IEEE EMBS International Conference on Biomedical & Health Informatics (BHI); Orlando, FL, USA. 16–19 February 2017; pp. 65–68.

- 54. Hsia C.-C., Hung Y.-W., Chiu Y.-H., Kang C.-H. Bayesian classification for bed posture detection based on kurtosis and skewness estimation; Proceedings of the HealthCom 2008-10th International Conference on e-health Networking, Applications and Services; Biopolis, Singapore. 7–9 July 2008; pp. 165–168.

- 55. Viriyavit W., Sornlertlamvanich V., Kongprawechnon W., Pongpaibool P., Isshiki T. Neural network based bed posture classification enhanced by Bayesian approach; Proceedings of the 2017 8th International Conference of Information and Communication Technology for Embedded Systems (IC-ICTES); Chonburi, Thailand. 7–9 May 2017; pp. 1–5.

- 56. Liu S., Yin Y., Ostadabbas S. In-bed pose estimation: Deep learning with shallow dataset. IEEE J. Transl. Eng. Health Med. 2019;7:1–12. doi: 10.1109/JTEHM.2019.2892970.

- 57. Wang M., Liu P., Gao W. Automatic sleeping posture detection in ballistocardiography; Proceedings of the International Conference in Communications, Signal Processing, and Systems; Springer: Berlin/Heidelberg, Germany. 2017; pp. 1785–1792.

- 58. Matar G., Lina J.-M., Carrier J., Riley A., Kaddoum G. Internet of Things in sleep monitoring: An application for posture recognition using supervised learning; Proceedings of the 2016 IEEE 18th International conference on e-Health networking, applications and services (Healthcom); Munich, Germany. 14–16 September 2016; pp. 1–6.

- 59. Yousefi R., Ostadabbas S., Faezipour M., Farshbaf M., Nourani M., Tamil L., Pompeo M. Bed posture classification for pressure ulcer prevention; Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Boston, MA, USA. 30 August–1 September 2011; pp. 7175–7178.

- 60. Liu J.J., Xu W., Huang M.-C., Alshurafa N., Sarrafzadeh M., Raut N., Yadegar B. Sleep posture analysis using a dense pressure sensitive bedsheet. Pervasive Mob. Comput. 2014;10:34–50. doi: 10.1016/j.pmcj.2013.10.008.

- 61. Pouyan M.B., Ostadabbas S., Farshbaf M., Yousefi R., Nourani M., Pompeo M. Continuous eight-posture classification for bed-bound patients; Proceedings of the 2013 6th International Conference on Biomedical Engineering and Informatics; Hangzhou, China. 16–18 September 2013; pp. 121–126.

- 62. Kwasnicki R.M., Cross G.W., Geoghegan L., Zhang Z., Reilly P., Darzi A., Yang G.Z., Emery R. A lightweight sensing platform for monitoring sleep quality and posture: A simulated validation study. Eur. J. Med. Res. 2018;23:28. doi: 10.1186/s40001-018-0326-9.

- 63. Barsocchi P. Position recognition to support bedsores prevention. IEEE J. Biomed. Health Inform. 2012;17:53–59. doi: 10.1109/TITB.2012.2220374.

- 64. Lee J., Hong M., Ryu S. Sleep monitoring system using Kinect sensor. Int. J. Distrib. Sens. Netw. 2015;11:875371. doi: 10.1155/2015/875371.

- 65. Larracy R., Phinyomark A., Scheme E. Machine learning model validation for early stage studies with small sample sizes; Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC); Guadalajara, Mexico. 1–5 November 2021; pp. 2314–2319.

- 66. Keshari R., Ghosh S., Chhabra S., Vatsa M., Singh R. Unravelling small sample size problems in the deep learning world; Proceedings of the 2020 IEEE Sixth International Conference on Multimedia Big Data (BigMM); New Delhi, India. 24–26 September 2020; pp. 134–143.

- 67. Krizhevsky A., Hinton G. Learning Multiple Layers of Features from Tiny Images. Available online: https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.222.9220&rep=rep1&type=pdf (accessed on 5 September 2022).

- 68. Cabitza F., Rasoini R., Gensini G.F. Unintended consequences of machine learning in medicine. JAMA. 2017;318:517–518. doi: 10.1001/jama.2017.7797.


Data Availability Statement

The data presented in this study are available on request from the corresponding author.