Abstract

Background

Obtaining patient-reported outcomes (PROs) is becoming a standard component of patient care. For nonacademic practices, this can be challenging. With this in mind, we designed a nearly autonomous patient outcomes reporting system and then conducted a prospective cohort pilot study to assess its efficacy.

Methods

We created an automated system to gather PROs. All operative patients of 4 surgeons in an upper-extremity private practice were asked to participate. Participants completed the Quick Disabilities of the Arm, Shoulder, and Hand (QuickDASH) questionnaire preoperatively and received follow-up e-mails asking them to complete additional QuickDASH questionnaires at 3, 6, and 12 weeks postoperatively and a satisfaction survey at 13 weeks postoperatively. Response rates and satisfaction levels are reported with descriptive statistics.

Results

Sixty-two percent of participants completed the 3-week assessment, 55% completed the 6-week assessment, and 43% completed the 12-week assessment. Overall, 36% of patients completed all questionnaires, and 71% completed at least 1 postoperative assessment. The collection of follow-up questionnaires required no additional time from the clinical staff, surgeon, or a research associate.

Conclusions

Automated e-mail assessments can collect reliable clinical data with minimal surgeon or staff intervention to administer the assessments and collect the data, minimizing financial cost. For nonacademic practices without access to additional research resources, such a system is feasible. Further improvements in communication with patients could increase response rates.

Keywords: automated data collection, outcomes research, patient-reported outcomes, QuickDASH

Introduction

Over the past decade, patient-reported outcomes (PROs) have increasingly been recognized as an integral part of critically assessing and improving clinical care.1-4 As some recent federal mandates have linked hospital and surgeon compensation to patient outcomes, the collection of PROs is likely to become a clinical standard, if not universally required.5-7 Fortunately, the ubiquity of wireless and touch screen technology has made it easier to collect and analyze outcomes in the form of electronic PROs (ePROs) via tablet devices.2,8,9 Although the vast majority of PROs were created and validated in a standard paper format, extensive evidence supports the equivalence of paper PROs and ePROs.10 The benefits of transitioning to ePROs are widely recognized, but adoption has been slow.8,11,12 Benefits of ePROs include linking ePROs with electronic health records, triggering automated alerts for designated results, immediate scoring and presentation of data, reducing the cost and time of monitoring completion, reducing data entry errors, improving data quality, and increasing patient and provider satisfaction.1,12-16 However, logistic barriers have slowed the adoption of electronic platforms for this process, especially in nonacademic settings. These include startup and potential device purchase expenses, training of clinical staff and patients to use the platform, limited integration with existing electronic health records, and resistance to changing existing clinical workflows.1,13,14,16

Several clinical studies have validated the use of ePROs in orthopedic surgery, with conclusions similar to those above.9,17-19 Electronic PROs are more effective than paper PROs and are preferred by both physicians and patients.18,19 Their advantages include the ability to integrate computer adaptive testing principles to reduce survey length, to improve the quality and number of scorable responses, and potentially to shift the time burden of survey completion from the orthopedic office to the patient’s time at home. This last point, the ability of patients to complete ePROs at home, uses the concept of “bring your own device” (BYOD), in which patients are asked to complete ePROs on their personal computers, laptops, tablets, or smartphones independent of the office setting.11 The BYOD principle has limitations, including the requirement that all patients have compatible devices and are capable of using the appropriate technology (e-mail, text message, etc) to complete the assessments.

With a rapid trend toward the ubiquitous collection of PROs, we recognized that traditional pen/paper PROs are time-consuming and imperfect. Numerous recently developed Web-based technologies can help automate patient reminders and data collection. Using these technologies, we designed a novel system to collect a large number of ePROs with minimal cost and clinical staff time investment. We then conducted a prospective cohort pilot study to assess the efficacy of this nearly autonomous patient outcomes reporting system in a private practice setting. Our purpose was to measure the time required to integrate the new system, evaluate response rates, and identify barriers to surgeon and patient participation.

Materials and Methods

This study was approved by our institutional review board. All procedures followed were in accordance with the ethical standards of the responsible committee on human experimentation (institutional and national) and with the Helsinki Declaration of 1975, as revised in 2008. Informed consent was obtained from all patients included in the study. The study was divided into 3 parts: the creation of an automated data collection system, integration of the system into an orthopedic hand surgery practice, and assessment of patient response rates, satisfaction, and barriers to participation.

Creating the Automated System

Although many academic institutions have access to services such as REDCap, this is not true for practices in other settings. Therefore, we sought to develop a low-cost system that required minimal time investment to build and integrate. Based on these principles, we elected to use existing Health Insurance Portability and Accountability Act (HIPAA)-compliant Web-based services to create validated ePRO surveys, in combination with other Web-based services to send e-mail notifications. We used e-mail as the primary communication modality because it is ubiquitous and easier to integrate with automation servers than other options, such as text messaging or telephone calls. We used a validated upper-extremity outcome assessment, the Quick Disabilities of the Arm, Shoulder, and Hand (QuickDASH) questionnaire, to reduce question burden and improve response rates.20,21 The DASH has previously been validated for use in electronic and touch screen formats.17,19,22 The Web-based form creation tool (Google Apps) was both HIPAA-compliant and mobile-responsive, thereby fulfilling critical requirements for both security and easy accessibility on a variety of mobile device types and sizes.

The software was programmed to e-mail patients at 3, 6, and 12 weeks from the day of surgery with a link and request to complete the ePRO. If a patient had not completed the survey within 3 days, an automatic reminder was generated, followed by a second reminder 3 days after that (with a maximum of 2 reminders). At each time point, the number of e-mails sent and surveys collected was recorded. At 13 weeks after surgery, all patients received a request to complete a voluntary satisfaction survey reporting on their experience using the automated e-mail system.
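The reminder schedule described above amounts to a small date calculation. The following is a minimal Python sketch, assuming that each follow-up request goes out exactly 3, 6, or 12 weeks after the surgery date and that a reminder is skipped once the survey has been submitted before that reminder's send date. The function `planned_emails` and its `completed_on` argument are hypothetical illustrations, not the study's actual software.

```python
from datetime import date, timedelta

FOLLOW_UP_WEEKS = (3, 6, 12)   # postoperative QuickDASH time points
REMINDER_INTERVAL_DAYS = 3     # reminder sent 3 days after the previous e-mail
MAX_REMINDERS = 2              # at most 2 reminders per time point
SATISFACTION_WEEK = 13         # voluntary satisfaction survey


def planned_emails(surgery_date, completed_on=None):
    """List (send_date, time_point, request_number) tuples for one patient.

    completed_on is a hypothetical mapping {follow-up week: submission date};
    a reminder is dropped if the survey was submitted before its send date.
    """
    completed_on = completed_on or {}
    emails = []
    for week in FOLLOW_UP_WEEKS:
        first_request = surgery_date + timedelta(weeks=week)
        submitted = completed_on.get(week)
        for n in range(MAX_REMINDERS + 1):  # initial request + reminders
            send_date = first_request + timedelta(days=n * REMINDER_INTERVAL_DAYS)
            if n > 0 and submitted is not None and submitted < send_date:
                break  # survey already completed: no further reminders
            emails.append((send_date, f"{week} wk", n + 1))
    # Every patient receives the satisfaction survey request at 13 weeks.
    emails.append((surgery_date + timedelta(weeks=SATISFACTION_WEEK), "satisfaction", 1))
    return emails


# Hypothetical patient operated on January 7, 2019, who answered the
# 3-week survey on January 29, 2019.
for email in planned_emails(date(2019, 1, 7), {3: date(2019, 1, 29)}):
    print(email)
```

For this hypothetical patient, the sketch plans 1 e-mail at the 3-week time point, 3 e-mails each at 6 and 12 weeks, and the 13-week satisfaction request.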

When patients completed an assessment, the software automatically tabulated and calculated the QuickDASH score and populated a cloud-based HIPAA-compliant database, where the responses were processed in real time and organized for later analysis. The database then automatically generated continuously updated reports as programmed by the surgeon. All scores were available in real time via a secure Web site, allowing care providers to review individual patient outcomes and aggregate data.
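The QuickDASH score is a simple transformation of the 11 item responses, each rated from 1 to 5: the mean of the answered items minus 1, multiplied by 25, giving a 0 to 100 scale on which higher scores indicate greater disability. The sketch below follows the standard QuickDASH rule that a score can be calculated only if no more than 1 item is missing; the study's own software was stricter and accepted a submission only when all 11 items were answered. The function name is hypothetical.

```python
from typing import Optional, Sequence

N_ITEMS = 11      # number of QuickDASH disability/symptom items
MAX_MISSING = 1   # standard QuickDASH rule: at most 1 unanswered item


def quickdash_score(responses: Sequence[Optional[int]]) -> Optional[float]:
    """Return the 0-100 QuickDASH score, or None if the form is unscorable.

    responses holds the 11 item ratings (1-5), with None for a skipped item.
    """
    if len(responses) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses, got {len(responses)}")
    answered = [r for r in responses if r is not None]
    if any(not 1 <= r <= 5 for r in answered):
        raise ValueError("item ratings must be between 1 and 5")
    if N_ITEMS - len(answered) > MAX_MISSING:
        return None  # more than 1 missing item: the score cannot be calculated
    # Mean of the answered items, rescaled from the 1-5 range to 0-100.
    return (sum(answered) / len(answered) - 1) * 25


print(quickdash_score([3] * 11))                 # -> 50.0
print(quickdash_score([3] * 10 + [None]))        # one item skipped -> 50.0
print(quickdash_score([3] * 9 + [None, None]))   # two items skipped -> None
```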

Once the beta version was created, the system was tested among select providers to identify and correct potential flaws. The system was deemed ready for patient enrollment after passing all quality assessment tests.

Integrating Into Practice

One of our primary objectives was to reduce the clinical staff burden. After discussing various enrollment options (at the time of an office visit, by a scheduling secretary, during the preoperative visit, etc), we elected to enroll patients on the day of surgery in the preoperative area, immediately before surgery. We believed this would give us the most control over patient enrollment while avoiding the scheduling changes and cancellations that would complicate enrollment during a preoperative office visit. Different enrollment strategies may be more successful in other clinical environments. An in-service training session was held with preoperative nurses to explain the purpose and protocol for patient enrollment. All nurses were equipped to enroll patients and demonstrated mastery of the system and the tablets within 20 minutes of training. To further limit the burden on office clinical staff and to decouple questionnaire completion from office appointments, all follow-up questionnaires were sent via e-mail in an automated fashion. Such a setup is also beneficial for offices with frequent substitution of clinical staff who may not be aware of a particular surgeon’s clinical documentation workflow.

Patient enrollment

All adult patients who underwent standard preoperative preparation at an outpatient hand surgery center were eligible to participate. During the preoperative nursing assessment, nurses enrolled patients using a touch screen tablet. The tablets were configured with a single enrollment icon on the home screen linked to the Web-based enrollment form. After the nurse entered the patient’s information and e-mail address, the patient was given the tablet to read and agree to participate in the study (digital consent) and to complete a preoperative QuickDASH assessment (11 questions). Once this was submitted, enrollment was complete. Patients were not required to download or install any software on their personal phone, mobile device, or computer.

Assessing Efficacy

Clinical efficacy of the system was based on 3 primary factors: (1) ease of enrollment, (2) patient response rates, and (3) clinical staff time investment. Ease of enrollment was assessed through personal interviews with preoperative nursing staff and through patient satisfaction surveys. Patient response rates were analyzed and reported with descriptive statistics. Clinical staff time investment and satisfaction were assessed through unstructured interviews.

Statistical Analysis

All raw data are reported with descriptive statistics. Mean age was compared between respondents and nonrespondents using the Student t test, as the data met the requirements for parametric analysis. An α value of 0.05 was used to determine statistical significance.
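For reference, the Student t test compares 2 group means using a pooled estimate of variance. A minimal Python sketch of the test statistic, using only the standard library, is shown below; the function name and the toy ages are illustrative assumptions, because the study's individual ages are not reported. Converting the statistic to a P value requires the cumulative distribution function of the t distribution, which the Python standard library does not provide, so a statistics package would normally handle that step.

```python
import math
from statistics import mean, variance


def student_t(a, b):
    """Pooled-variance (Student) two-sample t statistic and degrees of freedom."""
    n1, n2 = len(a), len(b)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least 2 observations")
    # statistics.variance is the sample variance (n - 1 denominator).
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (mean(a) - mean(b)) / standard_error
    return t, n1 + n2 - 2


# Toy ages (years) for 2 small hypothetical groups, not study data.
t, df = student_t([50, 52, 54, 56, 58], [54, 56, 58, 60, 62])
print(t, df)
```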

Results

Patients were enrolled over 6 months, with 454 of 546 invited patients enrolling (83% enrollment rate). Reasons for nonparticipation included not having e-mail (n = 45; 49%; mean age, 60 years), not using e-mail (n = 32; 35%; mean age, 55 years), and other reasons such as not speaking English (n = 15; 16%; mean age, 54 years). The mean age of participating patients was 54 years, compared with 58 years for those not participating (P > .05). Demographics of participating and nonparticipating patients are reported in Table 1.

Table 1.

Demographics of Patients Who Were Invited to Participate in the Study.

| Variable | Participating | Nonparticipating | P value |
|---|---|---|---|
| Age, mean, y | 54 | 58 | >.05 |
| Employed, % | 65 | Unknown | |
| Procedure, % | | | |
| CTR | 28 | 27 | >.05 |
| CTR + other | 9 | 11 | |
| Distal radius fracture | 2 | 3 | |
| Excision mass | 8 | 8 | |
| Metacarpal/Phalanx fracture | 5 | 5 | |
| LRTI | 6 | 3 | |
| Trigger finger release | 13 | 8 | |
| De Quervain release | 3 | 2 | |
| Other | 26 | 33 | |
| Surgeon | | | |
| A | 78 | 22 | >.05 |
| B | 87 | 13 | |
| C | 77 | 23 | |
| D | 83 | 17 | |

Note. CTR = carpal tunnel release; LRTI = ligament reconstruction and tendon interposition.

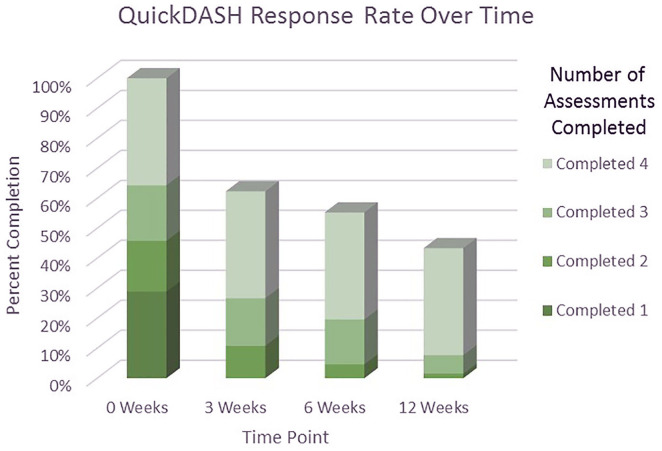

Each of the 454 participants was asked to complete the QuickDASH at 4 time points, for a total of 1816 requested surveys. Data collection yielded 1185 responses, an overall completion rate of 65%. In total, 731 (54%) of the 1362 follow-up questionnaires were completed at home through the automated system, independent of any staff intervention. Only 36% of enrolled patients completed all 4 assessments, whereas 19% completed 3 assessments, 17% completed 2 assessments, and 29% completed only the first (preoperative) assessment. The completion rates at 3, 6, and 12 weeks were 62%, 55%, and 43%, respectively (Figure 1).
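The response counts above can be checked with simple arithmetic. This short Python sketch uses only numbers reported in the text, plus the assumption, implied by the enrollment protocol in which submitting the preoperative QuickDASH completed enrollment, that every enrolled patient completed the preoperative assessment. The constant names are illustrative.

```python
ENROLLED = 454
TIME_POINTS = 4                       # preoperative + 3, 6, and 12 weeks
TOTAL_RESPONSES = 1185
FOLLOW_UP_RATES = (0.62, 0.55, 0.43)  # reported 3-, 6-, and 12-week completion

requested = ENROLLED * TIME_POINTS                  # requested surveys
follow_up_requested = ENROLLED * (TIME_POINTS - 1)  # follow-up surveys
# Assumes every enrolled patient completed the preoperative QuickDASH, so the
# remaining responses are the automated follow-up completions.
follow_up_completed = TOTAL_RESPONSES - ENROLLED

overall_rate = TOTAL_RESPONSES / requested
follow_up_rate = follow_up_completed / follow_up_requested
# The rounded per-time-point rates reconstruct approximately the same count.
approx_follow_up = round(ENROLLED * sum(FOLLOW_UP_RATES))

print(f"overall: {TOTAL_RESPONSES}/{requested} = {overall_rate:.0%}")
print(f"follow-up: {follow_up_completed}/{follow_up_requested} = {follow_up_rate:.0%}")
print(f"from rounded time-point rates: about {approx_follow_up} follow-ups")
```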

Figure 1.

The percentage of participants who completed the QuickDASH assessment stratified by the total number of assessments that participants completed.

Note. QuickDASH = Quick Disabilities of the Arm, Shoulder, and Hand.

E-mail analytics revealed that 4% of e-mails sent “bounced” back and could not be delivered. Initial e-mail requests were opened by patients 66% of the time, with a downward trend after reminders and across all time points (Table 2). For any given e-mail, the survey link was clicked 17% to 44% of the time, depending on the time point and e-mail reminder (Table 2). Only 3 patients unsubscribed from e-mails at any point during the study.

Table 2.

Percentage of E-Mails That Could Not Be Delivered (Bounced), Were Opened, or Had the Assessment Link Clicked, and Percentage of Assessments Completed, Stratified by Time Point and Number of E-Mail Requests Sent.

| Assessment | 3 wk | | | 6 wk | | | 12 wk | | |
|---|---|---|---|---|---|---|---|---|---|
| E-mail request | 1st | 2nd | 3rd | 1st | 2nd | 3rd | 1st | 2nd | 3rd |
| Bounced, % | 4 | | | 4 | | | 4 | | |
| Opened, % | 66 | 48 | 39 | 57 | 43 | 41 | 50 | 38 | 33 |
| Clicked, % | 44 | 31 | 24 | 40 | 24 | 25 | 31 | 21 | 17 |
| Completed, % | 62 | | | 55 | | | 43 | | |
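Table 2 reports opened and clicked percentages separately, so the share of opened e-mails that led to a click is not shown directly. A minimal Python sketch of that ratio follows. It assumes that both percentages use the same denominator (e-mails sent at that time point and request number), which the table implies but does not state, and it is an illustrative calculation rather than an analysis reported by the authors.

```python
# Opened and clicked percentages from Table 2, keyed by (time point, request).
opened = {("3 wk", 1): 66, ("3 wk", 2): 48, ("3 wk", 3): 39,
          ("6 wk", 1): 57, ("6 wk", 2): 43, ("6 wk", 3): 41,
          ("12 wk", 1): 50, ("12 wk", 2): 38, ("12 wk", 3): 33}
clicked = {("3 wk", 1): 44, ("3 wk", 2): 31, ("3 wk", 3): 24,
           ("6 wk", 1): 40, ("6 wk", 2): 24, ("6 wk", 3): 25,
           ("12 wk", 1): 31, ("12 wk", 2): 21, ("12 wk", 3): 17}

# Approximate share of opened e-mails whose survey link was clicked,
# assuming both percentages are of the e-mails sent.
click_given_open = {key: clicked[key] / opened[key] for key in opened}

for (time_point, request), rate in click_given_open.items():
    print(f"{time_point}, request {request}: {rate:.0%} of opened e-mails clicked")
```

Under that assumption, roughly half to two-thirds of opened e-mails (about 52% to 70%) led to the survey link being clicked.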

Analysis of response rates by age showed higher enrollment rates among patients younger than 70 years but the highest response rates to the automated assessment e-mails among patients older than 70 years (Table 3).

Table 3.

The Percentage of Participants Who Participated at Various Time Points of the Study Stratified by Their Age Range.

| Participation rate | <30 y (n = 35) | 31-50 y (n = 109) | 51-70 y (n = 232) | >70 y (n = 58) | All patients |
|---|---|---|---|---|---|
| Enrollment, % | 83 | 84 | 85 | 73 | 82 |
| 3 wk, % | 43 | 49 | 67 | 80 | 62 |
| 6 wk, % | 31 | 39 | 63 | 67 | 55 |
| 12 wk, % | 17 | 31 | 50 | 52 | 43 |

Only 41 participants responded to the satisfaction survey, corresponding to 25% (41 of 164) of the participants who had completed all 4 previous assessments. Satisfaction survey results are shown in Table 4: most respondents were satisfied or very satisfied with the e-mail-based reporting system (80% to 93%, depending on the item) and rated it better or much better than traditional paper/pen forms (83% to 86%), and 92% would likely or definitely choose e-mail over paper. This survey was sent 13 weeks after surgery, after all of the previous assessments.

Table 4.

The Distribution of Responses to Survey Questions Related to Participants’ Satisfaction With E-Mail Reminders and Electronic Completion of Assessments.

| Satisfaction with e-mail surveys | Very dissatisfied | Dissatisfied | Neutral | Satisfied | Very satisfied |
|---|---|---|---|---|---|
| Convenience | 0 (0) | 0 (0) | 5 (12) | 7 (17) | 26 (63) |
| Clarity | 0 (0) | 0 (0) | 4 (10) | 11 (27) | 24 (59) |
| Flexibility | 0 (0) | 0 (0) | 2 (5) | 7 (18) | 30 (75) |
| Reminders | 0 (0) | 1 (2) | 4 (10) | 8 (20) | 25 (61) |
| E-mail compared with paper surveys | Much worse | Worse | Same | Better | Much better |
| Convenience | 1 (2) | 0 (0) | 4 (10) | 9 (22) | 26 (63) |
| Time Completion | 1 (2) | 0 (0) | 4 (10) | 10 (24) | 24 (59) |
| Personal Preference | 1 (2) | 0 (0) | 3 (7) | 8 (20) | 27 (66) |
| Would you choose electronic over paper? | Definitely pencil/paper | Likely pencil/paper | Not sure | Likely e-mail | Definitely e-mail |
| Yes | 0 (0) | 1 (2) | 2 (5) | 10 (24) | 28 (68) |

Note. Values are number of responses (response rate, %).

Clinical staff satisfaction, assessed anecdotally through personal interviews, was very high. Surgeons were very positive about obtaining valuable data and reports without requiring surgeon, nurse, or medical assistant time and without causing delays in the office/clinic setting.

Preoperative nurses were also positive in their feedback but acknowledged that adding the enrollment step to their preoperative assessment was sometimes cumbersome. Nurses required less than 2 minutes to enter the patient data (name, medical record number, birthdate, surgeon, and procedure). Patients required approximately 5 minutes for instructions, training, and completion of the QuickDASH assessment, typically while nurses were confirming allergies or measuring vital signs. There were no measurable delays in operative start times as a result of the enrollment protocol.

Discussion

Collection of PROs can be challenging, especially for nonacademic practices. The purpose of this pilot study was to create a novel, low-cost, automated ePRO collection system that was easy to integrate with existing systems and to report on its efficacy. Our results demonstrate that the system was well received by both patients and clinical staff, with minimal time investment and cost. We collected 731 automated ePRO responses without any external input or time investment during the 6-month trial period. This corresponded to at least 1 postoperative outcomes assessment for 71% of patients and complete data for 36% of patients.

The interpretation of these results depends largely on one’s perspective. For academic institutions with access to additional resources, such as clinical research staff and the ability to provide patients with tablet devices at check-in, a 36% rate of complete data is low; a response rate at this level would be incompatible with the goal of publishing clinical research. However, for practices that are not currently collecting PROs and do not have access to additional research resources or other online collection systems, we have demonstrated the ability to capture patient data with few additional resources.

With a reported average of 2½ minutes to complete the paper QuickDASH,23,24 plus an estimated 3 to 5 minutes per patient for training, teaching, and instruction, as well as clinical staff time for scoring and data entry, we estimate that this ePRO collection system saved 7 minutes of clinical staff time per patient at each assessment. Under this study’s protocol, which included a preoperative QuickDASH assessment and 3 postoperative QuickDASH assessments, that amounted to nearly 30 minutes saved for each operative patient. Furthermore, this e-mail-based system avoids the need to manage and maintain tablet devices in a busy clinical office. These results are promising and suggest that fully automated data collection systems are reasonable options for collecting valuable outcome assessments with minimal impact on clinical pathways.
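The time-savings estimate above combines a few simple quantities, restated in the following Python sketch. The 7-minute per-assessment figure is the authors' overall estimate, which also covers scoring and data entry time that the text does not itemize; the constant names are illustrative.

```python
PAPER_QUICKDASH_MIN = 2.5        # reported mean time to complete the paper QuickDASH
INSTRUCTION_MIN_RANGE = (3, 5)   # estimated training/instruction time per patient
SAVING_PER_ASSESSMENT_MIN = 7    # authors' overall estimate, incl. scoring and entry
ASSESSMENTS_PER_PATIENT = 4      # 1 preoperative + 3 postoperative QuickDASH

# Completion plus instruction alone, before scoring and data entry are added.
low, high = [PAPER_QUICKDASH_MIN + m for m in INSTRUCTION_MIN_RANGE]

# Protocol-level saving for one operative patient ("nearly 30 minutes").
per_patient_min = SAVING_PER_ASSESSMENT_MIN * ASSESSMENTS_PER_PATIENT

print(f"completion + instruction: {low}-{high} min per assessment")
print(f"estimated saving per patient: {per_patient_min} min")
```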

The literature is replete with studies validating electronic data collection systems, including studies specifically examining their use in orthopedics and hand surgery. Dy et al 17 compared tablet computer and paper administration of the DASH questionnaire and found that 24% of paper surveys could not be scored, compared with only 2% of electronic surveys. In a similar study, Tyser et al 19 evaluated DASH survey completion by tablet computer versus traditional paper. They reported that 14% of paper survey responses could not be scored, compared with 4% in the tablet group. Time to completion was longer in the tablet group than in the paper group (4.3 vs 3.1 minutes), and they found no correlation between age and the outcome variables analyzed. Smith et al 3 recruited 308 patients to compare paper and electronic forms for the completion of orthopedic outcome questionnaires by upper extremity, lower extremity, and spine patients. They concluded that there were no differences between electronic and paper surveys, but significantly more questions were missed on paper forms, and patients strongly preferred the electronic forms.

Our results build on the protocols above, demonstrating that all surveys could be scored and that a high overall completion rate of 65% across all age groups could be achieved. We attribute the 100% scorable rate to our software, which permitted survey submission only if all questions were answered. This likely reduced our overall response rates, which might have been higher had we allowed 1 missing question, as the QuickDASH permits.

Although we were encouraged by our response rate, there is room for improvement. In total, 65% of survey requests were completed. Response rates to any e-mail survey depend on numerous factors, including patient motivation, preenrollment counseling, the accuracy of recorded e-mail addresses, the wording of e-mail reminders, the number and frequency of reminders, and the potential use of other reminder media such as text messages or automated phone calls. Our e-mail analytics show that 4% of e-mails were never delivered, most likely because spelling errors during enrollment produced nonfunctional addresses. They also reveal that only 66% of first e-mail requests were opened, with a downward trend over time, suggesting that e-mails may have been marked as spam or otherwise overlooked. Moreover, the survey link was clicked for only 44% of first e-mail requests, with even lower rates for subsequent requests. These numbers suggest that further improvements can be made to the format, wording, and brevity of both the e-mail and the survey. However, despite a relatively low response rate to any individual e-mail request, the reminder e-mails were highly effective and resulted in a clinically relevant rate of survey responses and time savings. We suspect that additional reminders beyond 3 e-mails would further increase response rates, but this would need to be balanced against the risk of harassing patients and against e-mail etiquette guidelines. In addition, in-person verbal reminders at the first postoperative appointment could be useful, as many patients did not have 6- or 12-week postoperative appointments.

Traditional outcome assessment formats, such as those completed in a clinical setting, have considerable drawbacks, including cost, time, and overall convenience, as outlined above. Alternatively, mailed paper assessments can be considered a form of “automated” survey completion that eliminates in-office time, with response rates to orthopedic surveys of approximately 67%.25 However, mailed paper surveys do not eliminate the staff time required for preparing, addressing, and mailing the surveys and then collecting, tabulating, and recording the results. We believe that this automated e-mail system offers a comparable alternative with similar response rates and a substantially reduced time investment.

Recently, there has been a focus on the limitations of and barriers to integrating electronic outcome reporting in medical practices.11 Some limitations, such as encryption and security, are easily overcome. Others may resolve over time, such as the incomplete penetration of mobile devices among users. However, some barriers will be very difficult to surmount, such as the custom programming required for integration with a plethora of electronic medical record vendors. In this study, we focused on the highest-yield characteristics to make the system effective while bypassing limitations that could not be addressed. For example, integrating the system with our practice’s electronic medical record would have been an extraordinarily slow, expensive, and unsatisfying process. Thus, we created a system that was independent of the medical record but could be easily queried and analyzed via a secure Web site that, in some ways, is even more accessible than the medical record. Another example was the use of existing Web-based services to send assessments and collect data. Although these systems are “prebuilt” and have inherent limitations, their very low cost and immediate availability outweighed the potential benefit of creating custom software with more complex features.

Our study has several limitations that should influence the interpretation of these results. Our study population consisted of hand surgery patients with a mean age of 55 years. Initially, we were concerned that older patients would be less likely to enroll and comply with electronic outcome monitoring because of unfamiliarity or discomfort with mobile devices. However, our results largely refuted this: although patients aged >70 years enrolled at a somewhat lower rate, they had higher response rates than younger patients. This could be because older patients generally receive fewer e-mails and are not overwhelmed by their baseline e-mail burden. This finding is consistent with other research suggesting that touch screen devices are equally usable by all age groups.26 Based on these data, we would anticipate that a surgical practice with a younger patient demographic would have lower response rates. Similarly, participants reported being highly satisfied with the system; however, a relatively small percentage of participants completed the satisfaction survey, which could introduce selection bias. This low response rate is likely because the satisfaction survey was a fifth survey, sent separately after the 12-week postoperative QuickDASH survey.

In addition, our study was performed at a private outpatient surgery center where all preoperative nurses could be easily gathered and trained to enroll patients. This would be much more challenging in a large hospital or university setting. To simplify data collection, we elected to use the QuickDASH outcome questionnaire for its familiarity and brevity. Using a longer or more complex PRO measure would likely reduce response rates.

The overall participation rate in our study was 71% for a baseline plus at least 1 follow-up assessment but only 36% for a complete data set at all 4 time points, which likely would not be sufficient for peer-reviewed clinical research. Completion may have declined because patients were less likely to respond the further out they were from surgery, especially if they had already experienced clinical benefit, as with carpal tunnel and trigger finger releases. Furthermore, patients who underwent those procedures were typically discharged from care after 1 to 2 postoperative appointments, usually no later than 6 weeks after surgery, and may not have anticipated receiving further questionnaires in their inbox.

Although our response rate would not be adequate for clinical research, from the perspective of government reporting and quality improvement, these data represent valuable patient outcomes that would otherwise be lost. For example, our practice has been able to submit our data to our larger hospital system to demonstrate quality improvement tracking. Furthermore, we believe the response rate can be raised with system improvements and patient counseling, as outlined above.

Conclusions

Collection of PROs is commonplace in many academic institutions. However, in certain clinical settings, such processes can be challenging. Through this pilot study, we demonstrated that a low-cost, minimal-burden protocol can be integrated into any practice regardless of the electronic medical record platform or the practice type. Such integration can allow a wider spectrum of practices to participate in the collection of PROs, thereby meeting reporting requirements while possibly allowing PROs to be incorporated into clinical care discussions.

Footnotes

Ethical Approval: This study was approved by our institutional review board.

Statement of Human and Animal Rights: All procedures followed were in accordance with the ethical standards of the responsible committee on human experimentation (institutional and national) and with the Helsinki Declaration of 1975, as revised in 2008.

Statement of Informed Consent: Informed consent was obtained from all patients for being included in the study.

The author(s) declared the following potential conflicts of interest with respect to the research, authorship, and/or publication of this article: O.I.F. created and owns the website SurgiSurvey.com, which was used in this study. The rest of the authors declare no potential conflicts of interest with respect to this article.

Funding: The author(s) received no financial support for the research, authorship, and/or publication of this article.

ORCID iD: Daniel A. London https://orcid.org/0000-0002-1711-0762

References

- 1. Basch E, Goldfarb S. Electronic patient-reported outcomes for collecting sensitive information from patients. J Support Oncol. 2009;7(3):98-99.

- 2. Dupont A, Wheeler J, Herndon JE, 2nd, et al. Use of tablet personal computers for sensitive patient-reported information. J Support Oncol. 2009;7(3):91-97.

- 3. Smith SK, Rowe K, Abernethy AP. Use of an electronic patient-reported outcome measurement system to improve distress management in oncology. Palliat Support Care. 2014;12(1):69-73.

- 4. Zbrozek A, Hebert J, Gogates G, et al. Validation of electronic systems to collect patient-reported outcome (PRO) data-recommendations for clinical trial teams: report of the ISPOR ePRO systems validation good research practices task force. Value Health. 2013;16(4):480-489.

- 5. Epstein AM. Pay for performance at the tipping point. N Engl J Med. 2007;356(5):515-517.

- 6. Graham B, Green A, James M, et al. Current concepts review measuring patient satisfaction in orthopaedic surgery. J Bone Joint Surg Am. 2015;97(1):80-84.

- 7. Pierce RG, Bozic KJ, Bradford DS. Pay for performance in orthopaedic surgery. Clin Orthop Relat Res. 2007;457:87-95.

- 8. Schick-Makaroff K, Molzahn A. Strategies to use tablet computers for collection of electronic patient-reported outcomes. Health Qual Life Outcomes. 2015;13:2.

- 9. Roberts N, Bradley B, Williams D. Use of SMS and tablet computer improves the electronic collection of elective orthopaedic patient reported outcome measures. Ann R Coll Surg Engl. 2014;96(5):348-351.

- 10. Gwaltney CJ, Shields AL, Shiffman S. Equivalence of electronic and paper-and-pencil administration of patient-reported outcome measures: a meta-analytic review. Value Health. 2008;11(2):322-333.

- 11. Coons SJ, Eremenco S, Lundy JJ, et al. Capturing patient-reported outcome (PRO) data electronically: the past, present, and promise of ePRO measurement in clinical trials. Patient. 2015;8(4):301-309.

- 12. Snyder CF, Aaronson NK, Choucair AK, et al. Implementing patient-reported outcomes assessment in clinical practice: a review of the options and considerations. Qual Life Res. 2012;21(8):1305-1314.

- 13. Abernethy AP, Ahmad A, Zafar SY, et al. Electronic patient-reported data capture as a foundation of rapid learning cancer care. Med Care. 2010;48(suppl 6):S32-S38.

- 14. Bennett AV, Jensen RE, Basch E. Electronic patient-reported outcome systems in oncology clinical practice. CA Cancer J Clin. 2012;62(5):337-347.

- 15. Espallargues M, Valderas JM, Alonso J. Provision of feedback on perceived health status to health care professionals: a systematic review of its impact. Med Care. 2000;38(2):175-186.

- 16. Holzner B, Giesinger JM, Pinggera J, et al. The Computer-Based Health Evaluation Software (CHES): a software for electronic patient-reported outcome monitoring. BMC Med Inform Decis Mak. 2012;12:126.

- 17. Dy CJ, Schmicker T, Tran Q, et al. The use of a tablet computer to complete the DASH questionnaire. J Hand Surg Am. 2012;37(12):2589-2594.

- 18. Gakhar H, McConnell B, Apostolopoulos AP, et al. A pilot study investigating the use of at-home, web-based questionnaires compiling patient-reported outcome measures following total hip and knee replacement surgeries. J Long Term Eff Med Implants. 2013;23(1):39-43.

- 19. Tyser AR, Beckmann J, Weng C, et al. A randomized trial of the disabilities of the arm, shoulder, and hand administration: tablet computer versus paper and pencil. J Hand Surg Am. 2015;40(3):554-559.

- 20. Beaton DE, Wright JG, Katz JN, et al. Development of the QuickDASH: comparison of three item-reduction approaches. J Bone Joint Surg Am. 2005;87(5):1038-1046.

- 21. Hudak PL, Amadio PC, Bombardier C, et al. Development of an upper extremity outcome measure: the DASH (Disabilities of the Arm, Shoulder, and Head). Am J Ind Med. 1996;29(6):602-608.

- 22. Yucel H, Seyithanoglu H. Choosing the most efficacious scoring method for carpal tunnel syndrome. Acta Orthop Traumatol Turc. 2015;49(1):23-29.

- 23. Gabel CP, Yelland M, Melloh M, et al. A modified QuickDASH-9 provides a valid outcome instrument for upper limb function. BMC Musculoskelet Disord. 2009;10:161.

- 24. Yaffe M, Goyal N, Kokmeyer D, et al. The use of an iPad to collect patient-reported functional outcome measures in hand surgery. Hand (N Y). 2015;10(3):522-528.

- 25. Hunsaker FG, Cioffi DA, Amadio PC, et al. The American Academy of Orthopaedic Surgeons outcomes instruments: normative values from the general population. J Bone Joint Surg Am. 2002;84(2):208-215.

- 26. Dixon S, Bunker T, Chan D. Outcome scores collected by touchscreen: medical audit as it should be in the 21st century? Ann R Coll Surg Engl. 2007;89(7):689-691.