Abstract

Humans have a remarkable capacity for perceiving and producing rhythm. Rhythmic competence is often viewed as a single concept, with participants who perform more or less accurately on a single rhythm task. However, research is revealing numerous sub-processes and competencies involved in rhythm perception and production, which can be selectively impaired or enhanced. To investigate whether different patterns of performance emerge across tasks and individuals, we measured performance across a range of rhythm tasks from different test batteries. Distinct performance patterns could potentially reveal separable rhythmic competencies that may draw on distinct neural mechanisms. Participants completed nine rhythm perception and production tasks selected from the Battery for the Assessment of Auditory Sensorimotor and Timing Abilities (BAASTA), the Beat Alignment Test (BAT), the Beat-Based Advantage task (BBA), and two tasks from the Burgundy best Musical Aptitude Test (BbMAT). Principal component analyses revealed clear separation of task performance along three main dimensions: production, beat-based rhythm perception, and sequence memory-based rhythm perception. Hierarchical cluster analyses supported these results, revealing clusters of participants who performed selectively more or less accurately along different dimensions. The current results support the hypothesis of divergence of rhythmic skills. Based on these results, we provide guidelines towards a comprehensive testing of rhythm abilities, including at least three short tasks measuring: (1) rhythm production (e.g., tapping to metronome/music), (2) beat-based rhythm perception (e.g., BAT), and (3) sequence memory-based rhythm processing (e.g., BBA). Implications for underlying neural mechanisms, future research, and potential directions for rehabilitation and training programs are discussed.

Keywords: Music, Rhythm, Rhythmic skills, Rhythmic competencies, Rhythm battery

Introduction

The human proclivity for rhythm is widespread throughout the population and can be clearly seen on the dancefloor (Carlson et al., 2018), in infancy (Winkler et al., 2009), and across different cultures (Jacoby & McDermott, 2017; Polak et al., 2018). We refer to rhythm as the serially ordered pattern of time intervals in a stimulus sequence (i.e., time spans marked by event onsets). The beat can be considered as the most prominent periodicity within a musical piece, for example, where the listener is likely to want to clap their hands or move in time with the rhythm (McAuley, 2010). Meter refers to the temporal organization of beats, in which some beats are perceived as more salient than others, on multiple time scales (i.e., a perceived hierarchy of patterns of strong and weak beats) (Fitch, 2013). Extracting a beat/metrical structure from a rhythm engages several cognitive processes, including time/duration processing and more general cognitive processing, including working memory and attention. Rhythm perception is also strongly linked to movement, as just listening to rhythmic patterns activates (pre)motor areas in the brain (Grahn & Brett, 2007), and invokes an urge to move in time with the music (Vuust et al., 2014). The large majority of the population are able to move in time with an external rhythm (e.g., Repp, 2010; Sowiński & Dalla Bella, 2013) and find the beat without difficulty; however, different patterns of rhythm impairments observed in the population can be valuable to reveal the multidimensionality of rhythmic abilities (Bégel et al., 2017; Phillips-Silver et al., 2011) and provide insight into potential differences in underlying neural mechanisms.

To assess rhythmic skills, previous research has used different types of tasks that potentially tap into separable underlying competencies. One distinction observed in the literature is between tasks that involve the perception of rhythm and tasks that involve the production of rhythm. Rhythm perception tasks refer to tasks where the listener makes a judgment on the rhythm (with no production element), and rhythm production tasks refer to tasks where the listener is asked to produce a rhythm (i.e., synchronization/paced tapping tasks, reproducing a previously heard rhythm). Rhythm production tasks often additionally require rhythm perception skills (i.e., tapping to a metronome or music), but not always (i.e., unpaced tapping). A second distinction in the literature is between tasks that involve memory for and discrimination of rhythmic sequences (during perception and/or production), and tasks that involve judgments on or synchronization to the timing, beat, or alignment of rhythms. We refer to tasks that involve a strong short-term memory component (i.e., rhythm pattern discrimination and rhythm reproduction tasks) as sequence memory-based rhythm tasks, and tasks that involve judgments about the timing, beat, or alignment of a rhythm, as well as tapping tasks, as beat-based rhythm tasks. Note that this distinction is not always clear-cut, as sequence memory-based rhythm tasks may also involve some beat-based processing and beat-based rhythm tasks may also involve some sequence memory-based processing. However, for current purposes, and to align with previous research in the field (e.g., Bonacina et al., 2019; Tierney & Kraus, 2015), we use this distinction. In the typically developing population, performance on rhythm perception and production tasks (Dalla Bella et al., 2017), and sequence memory-based and beat-based rhythm tasks (Bonacina et al., 2019; Tierney & Kraus, 2015) are not routinely correlated, suggesting separable rhythmic competencies.

Within the larger umbrella term of rhythm ability, different patterns of performance have been observed in single-case studies, which indicate various dissociations between different rhythmic competencies. Isolated difficulties have been observed for rhythm perception (Bégel et al., 2017), rhythm synchronization (Sowiński & Dalla Bella, 2013), or both perception and synchronization (Palmer et al., 2014; Phillips-Silver et al., 2011). Synchronization with a musical beat can also be selectively impaired (i.e., beat/meter extraction), while synchronization to an isochronous stimulus and unpaced tapping remain unimpaired (Launay et al., 2014; Phillips-Silver et al., 2011). Rhythm deficits have also been observed to occur comorbidly with developmental disorders, including attention deficit hyperactivity disorder (Puyjarinet et al., 2017), dyslexia (Bégel et al., 2022; Colling et al., 2017; Overy et al., 2003), developmental language disorder (Cumming et al., 2015), as well as in patients with Parkinson’s disease (Puyjarinet et al., 2019). Such cases provide an interesting basis to suggest that different rhythm skills are (a) somewhat separable and (b) come into play during social and language development (Lense et al., 2021). They may also be linked to pathology in some cases (for review, see Fiveash et al., 2021; Ladányi et al., 2020). It is therefore important to understand the multidimensionality of rhythm skills in the general population, with the larger goal to better understand rhythm deficits and their underlying neural correlates in patient populations.

The distinctions and dissociations observed for performance on different types of rhythm tasks in the literature make a strong case for multiple rhythmic competencies (Tierney & Kraus, 2015). These competencies can be measured with different types of tasks and are likely to be supported by different underlying neural mechanisms (Bouwer et al., 2020; Leow & Grahn, 2014; Thaut et al., 2014). Based on such findings, theoretical work is beginning to re-categorize rhythmic ability as multi-faceted, with potentially distinct biological bases and evolutionary histories underlying different competencies (Bouwer et al., 2021; Greenfield et al., 2021; Kotz et al., 2018). With the aim of measuring separable rhythmic competencies within an overall picture of competencies instead of investigating them independently (as in earlier research), we refer here primarily to two distinctions: performance measured by perception versus production tasks, and performance measured by sequence memory-based versus beat-based rhythm perception tasks.

Perception versus production

One common distinction that has emerged from previous research is between rhythm perception and production skills. Although these skills are often intertwined, and encompass various sub-processes (i.e., beat extraction, attention, working memory), evidence for dissociation suggests the need for further exploration of individual differences and underlying mechanisms. The Battery for the Assessment of Auditory Sensorimotor and Timing Abilities (BAASTA; Dalla Bella et al., 2017) and the Harvard Beat Assessment Test (H-BAT; Fujii & Schlaug, 2013) are rhythm perception and production test batteries that each use the same set of materials across tasks. These batteries have shown both correlation and lack of correlation between different measures of rhythmic perception and production. For example, using BAASTA (Dalla Bella et al., 2017; Fig. 8; n = 20), correlations were found between the perceptual beat alignment test (determine whether a super-imposed metronome was on or off the beat of a musical sequence) and a number of production measures: unpaced tapping, paced tapping to an isochronous sequence (but only marginally for paced tapping to music), and the synchronization-continuation task (continue tapping after the external metronome has stopped). The perceptual Anisochrony detection task (detect whether a sequence of tones was regular or not regular) was correlated with paced tapping to an isochronous sequence and paced tapping to music, but not with unpaced tapping or synchronization-continuation. In the H-BAT, Fujii and Schlaug (2013, n = 30) only found one significant correlation between equivalent perception and production measures, which was in the beat saliency test. In this test, participants were asked to detect a duple or triple meter, or to produce the same meter by tapping on a drum pad. For the beat interval test (discriminate or synchronize with increases/decreases in tempo) and the beat finding and interval test (find the underlying beat and produce or discriminate increases/decreases in tempo), there were no correlations between the perception and production measures. Different patterns of correlation and no correlation across these different batteries suggest that rhythm processing skills are complex and may be composed of different underlying competencies. Note, though, that these correlations should be interpreted with caution, as they are based on small samples (n = 20 and n = 30).

Single-case studies have shown dissociations in participants with selective impairments in rhythm perception or production. On the one hand, Sowiński and Dalla Bella (2013) reported two participants (S1 and S5) who were atypical in their marked inaccuracy at tapping to music and to amplitude-modulated noise, but performed at control level on the Anisochrony detection task and the Montreal Battery of Evaluation of Amusia (both the pitch and rhythm sub-tests; Peretz et al., 2003) and were unimpaired in unpaced tapping. Such a pattern suggests a specific synchronization impairment. To distinguish it from beat deafness, the authors labelled this new form of impairment as “pure sensorimotor coupling disorder.” This distinction is in line with an earlier case study that reported a patient with brain damage following stroke who exhibited intact rhythm perception and reproduction skills, but impaired synchronization to both a metronome and marching band music (Fries & Swihart, 1990).

On the other hand, Bégel et al. (2017) showed the reverse pattern in two cases of “beat deaf” individuals (L.A. and L.C.) who showed impaired beat perception (detecting time shifts in a regular sequence; estimating if a metronome is aligned/not aligned with a musical beat) compared to controls, but were unimpaired in beat production, as measured by tapping to a metronome or to the beat of music (they also present the case of L.V., who showed impairments in both perception and production tasks). It therefore appears that neural mechanisms serving perception and production can dissociate in participants with rhythm disorders, even though beat perception and production are commonly linked in the brain (Cannon & Patel, 2021; Chen et al., 2008). Different patterns of association and dissociation across different perception and production tasks have also been shown in the pitch domain, with evidence for both dissociations (e.g., Dalla Bella et al., 2007; Loui et al., 2008) and associations (Pfordresher & Demorest, 2021; Pfordresher & Nolan, 2019; Williamson et al., 2012) across different perception and production tasks.

Sequence memory-based rhythm versus beat-based rhythm

The other distinction observed in the literature is between sequence memory-based and beat-based rhythm processing tasks. Sequence memory-based tasks that require the participant to remember or reproduce a rhythm are suggested to draw on mechanisms that differ from those in beat-based tasks; the latter requiring the participant to extract a beat or synchronize with an external rhythm. Such distinctions appear related to the different time-scales and sequencing/memory demands necessary to complete the task. As outlined in Tierney and Kraus (2015), memory for rhythm largely operates over a longer, supra-second time scale, whereas synchronization to a regular pulse generally operates on a shorter, sub-second time scale (specifically in the case where participants tap at a low level of the metric hierarchy). A meta-analysis of fMRI studies showed that although sub- and supra-second processing do draw on similar areas in the brain, subcortical areas appear more strongly involved in sub-second tasks, and cortical areas appear more strongly involved in supra-second tasks (Nani et al., 2019). A specialization of the cerebellum for processing sub-second time scales and the basal ganglia for processing supra-second time scales has also been proposed (Schwartze et al., 2012). It therefore appears that sub-second and supra-second time scales may be processed differently in the brain, though it should be noted that this does not discount roles for cortical areas in sub-second timing or subcortical areas in supra-second timing (see Nani et al., 2019). Importantly, discrimination tasks requiring the comparison of two rhythmic stimuli, or the reproduction of a previously heard stimulus, additionally require the maintenance of these rhythms in short-term memory (STM), which could also account for the larger involvement of cortical areas for longer time scales (see also links with STM and rhythm reproduction in Grahn & Schuit, 2012, and Tierney & Kraus, 2015). 
However, it should be noted that shared processes are likely to still be involved in both types of tasks, including beat and meter processing, duration processing, attention, and working memory, though perhaps the relative contribution of these processes differs depending on the task, resulting in separable skills.

Behavioral research has shown a separation between performance on sequence memory-based rhythm tasks compared to beat-based rhythm tasks. To test the potential divergence of sequence memory-based and beat-based rhythm skills, both Tierney and Kraus (2015) and Bonacina et al. (2019) ran four separate tasks on 67 adults and 68 children (aged between 5 and 8 years), respectively. All tasks consisted of production measures, though as acknowledged by the authors, perception was also strongly involved. In Tierney and Kraus (2015), two of the tasks were suggested to implicate beat-tapping (i.e., beat-based) skills: (a) drumming to a metronome and (b) a tempo adaptation task. It was suggested that the other two tasks involved memory/sequencing (i.e., memory-based) skills: (a) drumming along to repeated rhythmic sequences and (b) reproducing previously heard rhythms. As predicted, the two beat-tapping tasks correlated with each other, and the two memory/sequencing tasks correlated with each other, but there were no correlations between the beat-tapping and memory/sequencing tasks, supporting the hypothesis that these tasks may recruit different underlying mechanisms.

Bonacina et al. (2019) asked participants to (a) drum in time with an isochronous beat, (b) drum to the beat of music, (c) remember and reproduce rhythms, and (d) clap in time with visual feedback. Consistent with the results from Tierney and Kraus (2015), drumming to an isochronous beat and remembering and reproducing rhythms did not correlate. However, clapping in time with visual feedback correlated with the other three measures, and drumming to music also correlated with the other three measures (including remembering and reproducing rhythms). These results therefore replicate a distinction between tapping to a metronome and reproducing a rhythm from memory, but suggest that there may be a connection between beat extraction in music and remembering and repeating rhythms. This connection may be related to beat and meter extraction, as the rhythms to be reproduced consisted of both strong and weak metrical sequences. It therefore appears that the connections between different rhythmic skills reflect more than a simple dichotomy between perception and production, but likely provide an insight into underlying neural mechanisms driving these separable skills (see also distinctions between beat-based and memory-based temporal expectations in Bouwer et al., 2020).

To test the hypothesis that shorter and longer rhythmic time scales relate to synchronization and sequencing skills (respectively) in the brain, Tierney et al. (2017) administered six different drumming production tasks and measured neural response consistency to a sound (i.e., the syllable “da”) in 64 young adults (49 with the neural measures). The rationale behind this experiment was that there should be a link between tapping consistency and the neural response to sound. The drumming production tasks consisted of synchronization and memory/sequencing tasks, while both subcortical and cortical neural responses were measured. The subcortical measurement was the consistency of the fast frequency-following response (FFR) to the sound presented with an inter-onset-interval (IOI) of 251 ms. The cortical measurement was the consistency of the evoked response to the same sound presented with a 1,006 ms IOI. Four main findings emerged from this study: First, there were no correlations between synchronization (tapping to an isochronous metronome) and memory/sequencing tasks (repeating rhythmic sequences and drumming to repeated rhythmic sequences), in line with other studies (Bonacina et al., 2019; Tierney & Kraus, 2015). Second, a factor analysis across the six tasks independently revealed two main factors: a synchronization factor and a sequencing factor (as observed in Bonacina et al., 2019; Tierney & Kraus, 2015). Third, a measure of STM correlated with the sequencing factor, but not the synchronization factor, revealing the expected link between STM and memory/sequencing tasks. Fourth, the synchronization factor only correlated with subcortical FFR consistency (both reflecting shorter time scales), and the sequencing factor only correlated with cortical evoked consistency (both reflecting longer time scales). 
The authors therefore suggest that synchronization tasks rely on shorter time scales of processing in the brain, and that memory/sequencing tasks rely on longer time scales of processing in the brain, and can be dissociated.

Although research is beginning to find distinctions between performance in perception and production tasks, as well as sequence memory-based and beat-based rhythm tasks, these distinctions have not yet been systematically investigated within one group of participants and across different types of tasks. Further, to our knowledge there has been no investigation of individual differences in relation to unique variance on different types of rhythm tasks, or whether participants can have selectively strong or weak performance depending on the rhythm task tested. The current study aims to investigate these questions, which have implications for how rhythm is processed by the brain, how rhythm skills are measured in the literature, and how different rhythm tasks may relate to other skills, such as speech/language processing (Fiveash et al., 2021; Ladányi et al., 2020).

The current study

The aim of the current study was to test on the same participants a set of rhythm tasks conceived across different labs to capture separable underlying rhythm competencies that exist above and beyond methodological differences of the various tasks. From currently available rhythm tasks, we selected nine representative tasks to cover different aspects of perception, production, sequence memory-based rhythm perception, and beat-based rhythm perception, including tests for internal beat generation, and beat and meter extraction. Based on previous research, we hypothesized that (a) perception and production tasks and (b) sequence memory-based and beat-based rhythm perception tasks would map onto different latent factors within principal component analyses (PCAs). We further predicted that clusters of participants would emerge who presented with different performance patterns across the different tasks. Differences across individuals and across tasks would provide insight into the complexity and variation of rhythmic skills within the general population, as well as potential clues for separable underlying neural mechanisms. This investigation aimed to provide insights into the diversity of rhythmic competencies in the general population, which could provide perspectives for the understanding of potential deficits emerging in both typically developing and patient populations.

Method

Participants

Thirty-one native French-speaking adults, not selected for musical training, participated in the current experimental battery (mean age = 20 years, SD = 1.9; range = 18–26; 26 females). Participants were recruited from different universities in Lyon and through social media. Nineteen participants reported that they had previously played music and eight reported currently playing music. On average, participants had 3.61 years (SD = 4.24; range = 0–13, median = 2.00) of musical experience (including years of classes and years of individual playing). Seventeen participants reported attending dance classes in the past, and two currently attended dance classes. In total, participants had taken an average of 3.32 years of dance classes (SD = 4.45 years, range = 0–15 years). See Online Supplementary Material (Table 5) for all music and dance training information. Participants reported no history of dyslexia or neurological issues, and no issues with hearing or vision that precluded them from participating in the study. All participants provided written informed consent, as approved by the French ethics committee (Comité de Protection des Personnes Ile de France X, CPP). They were paid 12 euros an hour for their participation.

Sample size was determined based on a power analysis using G*Power (Faul et al., 2007). As the required sample size for principal component analysis (PCA) is highly variable and inconsistent (Mundfrom et al., 2005), and PCA is considered a form of multivariate statistics (Chi, 2012), we ran a power analysis to aim for enough power to detect an effect within a repeated-measures MANOVA-based analysis. We set α = 0.05, power = 0.80, and specified a medium effect size (f = 0.25, as suggested by Cunningham & McCrum-Gardner, 2007). We further specified one group with eight measurements (i.e., our dependent variables) and a correlation of 0.5 between variables. The power analysis suggested that 23 participants were necessary to detect an effect, but considering we were running a PCA, we tested additional participants (n = 31), aimed to reduce redundancy in the variables entered into the PCA, and limited the extracted components to three to avoid possible over-interpretation. All participants were tested before data were analyzed, to avoid optional stopping (Rouder, 2014; Simmons et al., 2011).

Tasks and apparatus

Nine rhythm tasks were selected to encompass various rhythmic competencies (see Table 1). Five tasks measured different aspects of perception: (1) the Anisochrony detection task (BAASTA; Dalla Bella et al., 2017); (2) the beat-based advantage task (BBA; Gordon et al., 2015; Niarchou et al., 2021); (3) the beat alignment test (BAT; Dalla Bella et al., 2017; original version, Iversen & Patel, 2008); (4) the Burgundy best musical aptitude test (http://leadserv.u-bourgogne.fr/~cimus/) for synchronization (BbMAT-Synch); and (5) the BbMAT metric regularity task (BbMAT-Metric). Four tasks (all from the BAASTA) measured different aspects of production using finger tapping: (1) unpaced tapping; (2) synchronization-continuation; (3) paced tapping to a metronome, and (4) paced tapping to music. See more details below in the descriptions of each task. Even though we did expect some degree of overlap across tasks, each task was chosen to assess a different aspect of rhythm-based processing, as outlined in Table 1. All production tasks were performed before the perception tasks, so the perception of errors would not have influenced tapping performance, even though paced tapping to music and BAT used the same music. The BAT and BbMAT both assess an aspect of beat alignment; however, the BAT is a well-established test that uses an external timbre to investigate beat alignment, whereas the BbMAT has not been widely tested up to now and is a more subtle measure of alignment sensitivity within a complex musical piece (with finer manipulations and no external stimulus). The combination of these tests provides a unique opportunity to extend the domain to other tasks and investigate potential overlap across tasks and competencies.

Table 1.

Summary of perception and production tasks, the dependent variables (DVs) measured, the duration of each task, and potential rhythm abilities measured by these tasks

| Category | Task | DV | Duration | Rhythm ability |
|---|---|---|---|---|
| Perception | Anisochrony detection | Threshold estimation | ~3 min | Deviation detection from an isochronous beat |
| Perception | BBA | Sensitivity, c, accuracy | ~6 min | Rhythm discrimination, potential beat and meter extraction (simple and complex rhythms) |
| Perception | BAT | Sensitivity, c, accuracy | ~8 min | Beat alignment |
| Perception | BbMAT-Synch | Sensitivity, c, accuracy | ~6 min | Beat and meter extraction, alignment |
| Perception | BbMAT-Metric | Sensitivity, c, accuracy | ~5 min | Beat and meter extraction (impaired performers) |
| Production | Unpaced tapping | Mean ITI, motor variability | ~1 min | Preferred tapping rate. Internal rhythm generation and consistency |
| Production | Synch-continuation | Mean ITI, motor variability | ~1.5 min | Internal rhythm generation and consistency |
| Production | Paced tapping metronome | Synchronization accuracy and consistency, motor variability | ~1.7 min | Synchronization |
| Production | Paced tapping music | Synchronization accuracy and consistency, motor variability | ~1.8 min | Beat extraction, metric extraction, synchronization |

ITI inter-tap interval, BBA beat-based advantage task, BbMAT Burgundy best musical aptitude test, BAT beat alignment test, c response bias c
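For readers unfamiliar with the signal-detection measures listed in Table 1, sensitivity (d′) and response bias (c) can be computed from the four response counts of a yes/no task. The following is a minimal sketch and not the authors' analysis code: the function name, the choice of which response counts as a "hit", and the log-linear correction for extreme rates are all assumptions made for illustration.

```python
from statistics import NormalDist


def dprime_and_c(hits, misses, false_alarms, correct_rejections):
    """Return (d', c) for a yes/no detection task.

    Assumption: a log-linear correction (add 0.5 to each count, 1 to each
    total) keeps hit and false-alarm rates away from 0 and 1. The source
    does not state which correction, if any, was applied.
    """
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    d_prime = z(hit_rate) - z(fa_rate)      # sensitivity
    c = -0.5 * (z(hit_rate) + z(fa_rate))   # response bias
    return d_prime, c


# Hypothetical participant on a 16-trial same/different task
# (8 "different" trials, 8 "same" trials), treating "different" as signal.
d_prime, c = dprime_and_c(hits=6, misses=2, false_alarms=2, correct_rejections=6)
print(round(d_prime, 2), round(c, 2))
```

With symmetric hit and correct-rejection counts, as in the hypothetical example, c is zero (no bias toward either response), while d′ is positive.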

All production tasks and the Anisochrony detection task were run on a tablet version of BAASTA (as in Bégel et al., 2018; Dauvergne et al., 2018; Puyjarinet et al., 2017). This version of BAASTA affords high temporal precision (≤ 1 ms) by relying on the audio recording of the sound of the taps when they reach the touchscreen, and thereby bypassing possible sources of delay and jitter typical of mobile devices (Dalla Bella & Andary, 2020). The BBA, BbMAT-Synch, and BbMAT-Metric tasks were run with Matlab (version 2018a) and PsychToolbox (version 3.0.14) through an Apple Mac Mini desktop computer. The BAT was run with Eprime (Schneider et al., 2002) on a Dell laptop.

Procedure

Participants completed all tasks in a fixed order, starting with the five tasks from BAASTA, in the order: unpaced tapping, paced tapping to a metronome, paced tapping to music, synchronization-continuation, and Anisochrony detection. Each production task was completed twice, and paced tapping to music was completed once with music 1 and once with music 2 (more details below). After BAASTA, participants completed the BBA, BbMAT-Synch, BbMAT-Metric, BAT, and the questionnaires. Participants wore headphones for all tasks and had a short break between each task. The tasks are outlined in more detail below, grouped into perception and then production tasks. The total testing time was approximately 1 h.

Perception tasks

Anisochrony detection

Participants heard a sequence of five tones (tone frequency = 1,047 Hz, duration = 150 ms) and indicated whether the sequence was regular or irregular. The IOI for regular sequences was constant (750 ms IOI). For the irregular sequences, the fourth tone was up to 30% of the IOI earlier than expected. The difference in IOI was adapted based on participant responses to estimate a detection threshold using a 2-down/1-up staircase procedure. With this procedure, participants needed to detect an irregular trial twice consecutively before the IOI difference was reduced in subsequent trials (see Dalla Bella et al., 2017, Exp. 2 for further explanation). Participants responded after each sequence by pressing a regular or irregular button on the BAASTA tablet. The task was performed twice, and the final threshold was the average of the two thresholds.
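The adaptive rule can be illustrated with a minimal sketch of a 2-down/1-up staircase. The step size, the floor, the increase after a single miss, and all names below are assumptions chosen for illustration; they are not the BAASTA parameters, which are described in Dalla Bella et al. (2017).

```python
def run_staircase(responses, start_pct=30.0, step_pct=2.0,
                  min_pct=0.5, max_pct=30.0):
    """Simulate a 2-down/1-up staircase.

    responses: iterable of bool, True = the participant detected the deviation.
    Returns (levels_tested, next_level), with levels in % of the IOI.
    step_pct and min_pct are hypothetical values.
    """
    level = start_pct
    consecutive_hits = 0
    levels_tested = []
    for detected in responses:
        levels_tested.append(level)
        if detected:
            consecutive_hits += 1
            if consecutive_hits == 2:      # two detections in a row: harder
                level = max(min_pct, level - step_pct)
                consecutive_hits = 0
        else:                              # one miss: easier
            level = min(max_pct, level + step_pct)
            consecutive_hits = 0
    return levels_tested, level


def pct_to_ms(pct, ioi_ms=750.0):
    """Convert a deviation in % of the IOI to milliseconds."""
    return ioi_ms * pct / 100.0


levels, next_level = run_staircase([True, True, True, False, True, True])
print(levels, next_level, pct_to_ms(30.0))
```

In this sketch the largest deviation (30% of a 750 ms IOI) corresponds to a 225 ms shift of the fourth tone.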

Beat-based advantage task

The BBA consisted of 16 same-different trials derived from previous work (Grahn & Brett, 2007; Povel & Essens, 1985) and similar to Gordon et al. (2015). Sixteen items were chosen here to reflect a wide range of difficulty, from the 32-trial version of the same task conducted on 724 participants (Niarchou et al., 2021). Eight trials consisted of simple rhythms and the other eight consisted of complex rhythms (half same, half different). Simple rhythms contained a strong metrical structure and were considered easier to discriminate, whereas complex rhythms contained a weaker metrical structure with more syncopation and were considered more difficult to discriminate (based on principles of subjective accenting from Povel & Essens, 1985). For each trial, a visual schema was presented on the screen to make the structure of the trial format clear, i.e., rhythm 1, rhythm 2, rhythm 3. The first two rhythms were identical, and the third rhythm was either the same or different. Different trials consisted of the reversal of an adjacent interval. A 1,500 ms silence occurred between each rhythm. All three rhythms in each trial were presented in one of four pure tone frequencies (294, 587, 411, 470 Hz). Participants were asked to detect whether the third rhythm was the same or different. Participants responded at the end of the third rhythm by pressing one of two keys on the computer keyboard.

Beat alignment test

The beat alignment test used in the current experiment was an adaptation of the task created by Iversen and Patel (2008). The current implementation used four unique musical sequences (two different fragments from Bach’s Badinerie and Rossini’s William Tell Overture) with an inter-beat interval of 600 ms, taken from BAASTA. Approximately 3–4 s into each musical sequence, a high-pitched triangle timbre was introduced that was either in phase and period with the rhythm (aligned), out of phase (phase misaligned), or out of period (period misaligned). For phase misaligned trials, the triangle tones were presented at the same tempo as that of the excerpt, but shifted before or after the beat by 33% of the inter-beat-interval. In the period misaligned trials, the triangle timbre was presented at a consistent tempo that was 10% slower or faster than the beat. There were two blocks consisting of 24 trials each (four rhythms, presented twice in each condition), for a total of 48 trials. Participants were asked to detect whether the triangle timbre was aligned or not aligned with the beat and to respond as soon as they knew their answer. Participants pressed one of two buttons to indicate their response.
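The timing manipulations above can be made concrete with a short worked example. This is a sketch under stated assumptions: the function name, the 3,000 ms start time, and the choice to start period-misaligned tones on a beat are illustrative and not taken from the BAT implementation.

```python
def triangle_onsets(condition, direction=1, n_tones=4,
                    ibi_ms=600.0, start_ms=3000.0):
    """Onset times (ms) of the superimposed triangle tones.

    condition: "aligned", "phase", or "period".
    direction: +1 = later/slower, -1 = earlier/faster.
    start_ms is a hypothetical beat time about 3 s into the excerpt.
    """
    if condition == "aligned":
        period, offset = ibi_ms, 0.0
    elif condition == "phase":
        # Same tempo, shifted by 33% of the inter-beat interval.
        period, offset = ibi_ms, direction * ibi_ms * 33 / 100
    elif condition == "period":
        # Tempo 10% slower (longer period) or faster (shorter period).
        period, offset = ibi_ms * (100 + direction * 10) / 100, 0.0
    else:
        raise ValueError(f"unknown condition: {condition}")
    return [start_ms + offset + i * period for i in range(n_tones)]


# 4 excerpts x 2 presentations x 3 conditions per block, 2 blocks.
n_trials = 4 * 2 * 3 * 2

print(triangle_onsets("phase", direction=1))    # shifted 198 ms after each beat
print(triangle_onsets("period", direction=-1))  # 540 ms period (10% faster)
print(n_trials)
```

With a 600 ms inter-beat interval, the phase shift is 198 ms and the misaligned periods are 540 ms and 660 ms, so the period-misaligned tones drift progressively away from the beat.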

BbMAT synchronization and metric regularity tests

Synchronization

In the synchronization test, participants were presented with sound files created for the BbMAT with complex percussion and accompaniment and were asked whether the music was played well together and synchronized or not played well together and not synchronized. Synchronized rhythms were designed with a polyrhythmic structure including four instrument streams in the style of Brazilian "batucada" from MIDI virtual studio technology (VST) instrument timbres. The rhythms were arranged to create a stable beat percept in a 4/4 meter at 120 beats per minute (bpm) including some syncopations. To create the unsynchronized rhythms, all onsets of three of the four polyrhythmic streams were shifted by random values relative to the expected onset. The random values could be between −60 ms/+60 ms, −40 ms/+30 ms, −50 ms/+60 ms, or −50 ms/+50 ms, depending on the polyrhythmic sequence. These slight differences in the shift ranges were intended to make the asynchrony more easily perceptible.

There were eight synchronized and eight unsynchronized trials, as well as an example trial for each, where synchronized or not synchronized was explicitly indicated on the screen. In the current implementation, all rhythms were limited to 17 s in duration with a fade-out at the end, and were randomized for each participant, with the restrictions that (a) the synchronized and unsynchronized versions of the same rhythm were not played in succession, and (b) no more than four of the same type of stimulus were played sequentially. Once the sound file had finished, participants pressed one of two buttons on the keyboard to indicate whether the rhythm was synchronized or not synchronized.
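The two ordering restrictions above can be illustrated with a small rejection-sampling sketch. This is only one possible way to satisfy the constraints, not the procedure the authors used; the function names (`is_valid`, `draw_order`), the trial encoding, and the retry limit are assumptions made for illustration.

```python
import random


def is_valid(order, max_run=4):
    """Check the two restrictions on a list of (rhythm_id, kind) trials."""
    run = 1
    for prev, cur in zip(order, order[1:]):
        # (a) the two versions of the same rhythm must not be adjacent
        if prev[0] == cur[0]:
            return False
        # (b) no more than `max_run` trials of the same type in a row
        run = run + 1 if prev[1] == cur[1] else 1
        if run > max_run:
            return False
    return True


def draw_order(n_rhythms=8, seed=None, max_tries=10000):
    """Shuffle until an order satisfies both restrictions (hypothetical helper)."""
    rng = random.Random(seed)
    trials = [(r, kind) for r in range(n_rhythms) for kind in ("sync", "unsync")]
    for _ in range(max_tries):
        rng.shuffle(trials)
        if is_valid(trials):
            return list(trials)
    raise RuntimeError("no valid order found within max_tries")
```

Calling `draw_order(seed=1)` returns a list of 16 trials; a different seed per participant would give each participant a different valid order.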

Metric regularity

In the metric regularity test, participants were presented with regular and irregular musical sequences similar to those used in Canette et al. (2020) and Fiveash et al. (2020a, 2020b). Regular rhythms contained different percussion instruments and electronic sounds (i.e., bass drum, snare drum, tom-tom, and cymbal), created with MIDI VST instrument timbres. Regular rhythms were in a 4/4 meter and 120 bpm. Irregular rhythms were composed by taking the regular rhythms and rearranging the acoustic information in time so that there were no regularities in beat or meter. In the current implementation, all rhythms were shortened to 17 s with a fade-out at the end. Participants were asked to judge whether the rhythms were pulsed or not pulsed. Pulsed rhythms were defined as rhythms that made you want to tap your feet, to dance, or to move. Non-pulsed rhythms were defined as a bit disjointed, less made to move, and less regular. After two example rhythms (where pulsed or not pulsed was indicated on the screen), six regular and six irregular rhythms were randomly presented to participants, with the same randomization restrictions as in the synchronization task. Because this was a relatively easy task, participants were able to respond as soon as they knew their response (thereby stopping the rhythm) by pressing one of two buttons to indicate pulsed or not pulsed.

Production tasks

In all production tasks, participants tapped with the index finger of their dominant hand within a large green square on the tablet screen.

Unpaced tapping

Participants tapped at a natural rate for 60 s, as regularly as possible. This task was performed twice, separated by a short break.

Paced tapping to a metronome

Participants heard an isochronous sequence of 60 identical tones (600 ms IOI, tone frequency = 1,319 Hz) and tapped along with each tone. To ensure they were as accurate as possible, it was suggested that participants wait for the first four or five tones to be comfortable with the tapping speed before starting to tap. This task was performed twice, separated by a small break.

Paced tapping with music

Participants tapped to the beat (defined as a regular pulse in the music, where you might clap your hands or tap your foot) of two different pieces of music (the same as in the BAT above): The Badinerie (Bach), and the William Tell Overture (Rossini), referred to as music 1 and music 2, respectively. Each piece had a 600 ms inter-beat interval (IBI), with 64 beats each (~38 s). Participants were asked to keep their tapping regular, with the same interval between each tap, and to start tapping when they felt comfortable with the tapping speed. Music 1 was followed by music 2. Tapping results for the two pieces were averaged for a global music tapping measure.

Synchronization-continuation

Participants heard ten piano tones (600 ms IOI, tone frequency = 1,319 Hz, as in paced tapping to metronome), which they tapped along with (synchronization phase). After the last tone, they continued tapping at the same pace (continuation phase). The continuation phase lasted for the equivalent of 30 IOIs from the synchronization phase. Participants stopped tapping when they heard a low-pitched tone. They were asked to tap as regularly as possible, and to maintain the same interval between each tap. This task was performed twice, separated by a short break.

Questionnaires

Participants completed a series of questionnaires at the end of the experiment, in addition to a general questionnaire of musical background and training. To measure a participant’s sensitivity to music reward, we administered the Barcelona Music Reward Questionnaire (BMRQ; Mas-Herrero et al., 2013), consisting of 20 questions, four in each sub-scale of: musical seeking, emotional evocation, mood regulation, sensory-motor, and social reward. The French translation was used from Saliba et al. (2016) and can be accessed in their Appendix S2. Normed scores were then calculated at http://brainvitge.org/z_oldsite/bmrq.php. To measure musicality/music engagement, three questions were selected from the Goldsmiths Musical Sophistication Index (Gold-MSI; Müllensiefen et al., 2014) based on their face validity for musicality/music engagement. These questions were: (1) I can sing or play music from memory (7-point scale; strongly disagree to strongly agree), (2) I have never been complimented for my talents as a musical performer (7-point scale; strongly disagree to strongly agree), and (3) At the peak of my interest, I practiced… hours per day on my primary instrument (7-point scale; 0–5 or more hours). The French translation for the Gold-MSI from Degrave and Dedonder (2019) was used and can be accessed in their supplementary material (and our OSM Table 6). Additionally, we added the question used in Niarchou et al. (2021): can you clap in time with a musical beat?, with a French translation of savez-vous taper en rythme sur la musique?3 We also included a final question from the adaptation of the Creative Achievement Questionnaire (Carson et al., 2005) used in Mosing et al. (2016): How engaged with music are you? Singing, playing, and even writing music counts here (7-point scale; I am not engaged in music – I am a professional musician). The French translations can be seen in OSM Table 6. Non-standardized French translations were verified with at least two native French speakers.

Data processing

Perception tasks

For the Anisochrony detection task, the mean threshold for detecting a change in the five-note sequences was expressed as a percentage of the IOI (as in Dalla Bella et al., 2017, Exp. 2). Smaller thresholds indicate better performance. For the other perceptual tasks (BBA, BAT, BbMAT-Synch, BbMAT-Metric), accuracy, sensitivity to the signal (d prime, d’), and response bias c were calculated (see Table 1). D prime is a signal detection theory measure that incorporates hits (i.e., when there was an error/difference and the participant detects the error/difference) and false alarms (i.e., when there was no error/difference, but the participant detects an error/difference) to determine participants’ discrimination sensitivity (Stanislaw & Todorov, 1999). To calculate d’, the z-score of the false alarm rate was subtracted from the z-score of the hit rate. Extreme values of 1 and 0 were corrected to 0.99 and 0.01, respectively. Higher d’ values reflect greater detection of the signal, and a value of 0 reflects that the signal cannot be distinguished from the noise. To calculate response bias c, the sum of the z-scores for hits and false alarms was multiplied by −0.50. Positive values of c suggest a bias to respond same/aligned/synchronized/pulsed, whereas negative values suggest a bias to respond different/not aligned/unsynchronized/not pulsed.
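The d’ and c calculations described above can be sketched as follows. This is a minimal illustration using the Python standard library rather than the authors' own analysis code; the function names and the count-based interface are assumptions.

```python
from statistics import NormalDist


def clamp_rate(rate, low=0.01, high=0.99):
    """Replace extreme rates of exactly 0 or 1 with 0.01 or 0.99."""
    if rate >= 1.0:
        return high
    if rate <= 0.0:
        return low
    return rate


def dprime_and_c(hits, misses, false_alarms, correct_rejections):
    """Return (d', c) from raw response counts (hypothetical interface)."""
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    hit_rate = clamp_rate(hits / (hits + misses))
    fa_rate = clamp_rate(false_alarms / (false_alarms + correct_rejections))
    d_prime = z(hit_rate) - z(fa_rate)        # z(hits) minus z(false alarms)
    bias_c = -0.5 * (z(hit_rate) + z(fa_rate))  # sum of z-scores times -0.50
    return d_prime, bias_c
```

For example, a participant with 8 hits and 0 false alarms on 16 trials reaches the maximum d’ allowed by the correction (about 4.65) with c = 0, whereas equal hit and false alarm rates give d’ = 0.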

Production tasks

All analyses were conducted as in Dalla Bella et al. (2017), with both linear and circular statistics. Measures obtained using circular statistics for auditory-motor synchronization tasks are particularly sensitive to individual differences, and capture a range of tapping situations (Fujii & Schlaug, 2013; Kirschner & Tomasello, 2009; Sowiński & Dalla Bella, 2013). For all tapping measures, the mean inter-tap interval (ITI) and motor variability (the mean coefficient of variation, CV; calculated by taking the SD of the ITIs divided by the mean ITI) were calculated (for the synchronization-continuation task only the continuation phase was analyzed). For circular statistics, synchronization accuracy (i.e., angle) and synchronization consistency (i.e., vector length R) were calculated (Dalla Bella et al., 2017; Dalla Bella & Sowiński, 2015; Sowiński & Dalla Bella, 2013). The inter-stimulus interval (or inter-beat interval) is presented on a polar scale (from 0 to 360°), where 0° represents the beat time. Taps are represented as angles (unitary vectors) in this circular space, depending on their occurrence relative to the beat time. The resultant vector R is calculated from this distribution of angles. The direction of the vector (or relative phase) indicates synchronization accuracy and refers to tapping time relative to the beat on average. A negative value indicates that taps on average anticipated the beat, while a positive value indicates that taps lagged after the beat. The length of this vector indicates synchronization consistency, which ranges from 0 to 1 (1 = perfect synchronization; 0 = random alignment of the taps to the beat). Note that synchronization accuracy was only calculated when performance was above chance, according to the Rayleigh test (Pewsey et al., 2013). Synchronization consistency (vector length R) was logit transformed before analysis (as in Cumming et al., 2015; Dalla Bella et al., 2017; Falk et al., 2015). 
For tasks repeated twice, averages were calculated. See Table 1 for an overview.
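As an illustration of the measures above, the sketch below computes motor variability (CV of the ITI), the mean angle, the vector length R, and the logit of R. It assumes beats fall at multiples of the inter-beat interval starting at 0 ms and uses the sample SD for the CV; both choices, and the function names, are assumptions of this sketch rather than details taken from the BAASTA analysis pipeline. The Rayleigh test is not included.

```python
import math
import statistics


def motor_variability(tap_times_ms):
    """CV of the inter-tap intervals: SD of the ITIs divided by the mean ITI."""
    itis = [b - a for a, b in zip(tap_times_ms, tap_times_ms[1:])]
    return statistics.stdev(itis) / statistics.fmean(itis)


def synchronization_stats(tap_times_ms, ibi_ms=600.0):
    """Return (mean angle in degrees, vector length R) for a tapping trial."""
    # Map each tap onto the circle: 0 degrees is the beat time.
    angles = [2 * math.pi * ((t % ibi_ms) / ibi_ms) for t in tap_times_ms]
    mean_cos = statistics.fmean([math.cos(a) for a in angles])
    mean_sin = statistics.fmean([math.sin(a) for a in angles])
    vector_length = math.hypot(mean_cos, mean_sin)  # consistency, 0 to 1
    # atan2 returns (-180, 180]: negative = taps ahead of the beat.
    angle_deg = math.degrees(math.atan2(mean_sin, mean_cos))
    return angle_deg, vector_length


def logit(r):
    """Logit transform of R; undefined for r equal to 0 or 1."""
    return math.log(r / (1.0 - r))
```

Taps that consistently precede a 600 ms beat by 30 ms give an angle of −18° (30/600 of a full cycle) and a vector length close to 1.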

Statistical analyses

Principal components and hierarchical cluster analyses

PCAs were run in R using the package FactoMineR (Lê et al., 2008). Based on distinctions observed in the literature, we first ran a PCA with only the perception variables to investigate whether we could observe a difference between sequence memory-based rhythm perception tasks and beat-based rhythm perception tasks. We then added the production variables to these same perception variables in a second PCA to investigate whether we would observe distinct performance for perception compared to production tasks. One value for each task was used for the PCAs to reduce redundancy, and composite scores were used instead of sub-scores for the same reason. For perception, the threshold value from the Anisochrony detection task and the sensitivity d’ values from the BBA, BAT, and BbMAT-Synch were used, while BbMAT-Metric was excluded because of ceiling performance. For production, the motor variability (CV of ITI) values were used for all tasks (unpaced tapping, synchronization-continuation, paced tapping to metronome, paced tapping to music) because these values were available across all tasks and reflect tapping variability (e.g., Cameron & Grahn, 2014; Dalla Bella et al., 2017). All variables were z-score normalized as implemented within the PCA analysis of FactoMineR to compare measurements across different variables. Negatively scored values (i.e., motor variability and Anisochrony threshold) were multiplied by −1 so that higher scores on a variable always indicated better performance.
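To make the preprocessing and PCA steps concrete, the following sketch standardizes each variable, flips negatively scored variables, and extracts the leading components of the correlation matrix. It is a pure-Python illustration using power iteration, not a reimplementation of FactoMineR; the function names, the use of the population SD, and the iteration count are assumptions, and the sign of each component is arbitrary.

```python
import math
import statistics


def zscore(values, flip=False):
    """Standardize one variable; flip=True multiplies by -1 (higher = better)."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)  # population SD: an assumption of this sketch
    sign = -1.0 if flip else 1.0
    return [sign * (v - mean) / sd for v in values]


def correlation_matrix(z_columns):
    """Correlation matrix of already standardized columns."""
    n = len(z_columns[0])
    return [[sum(a * b for a, b in zip(ci, cj)) / n for cj in z_columns]
            for ci in z_columns]


def principal_components(matrix, k, iterations=500):
    """Top-k (eigenvalue, eigenvector) pairs via power iteration and deflation."""
    p = len(matrix)
    work = [row[:] for row in matrix]
    components = []
    for _ in range(k):
        vec = [1.0 + 0.1 * i for i in range(p)]  # arbitrary start vector
        norm = math.sqrt(sum(x * x for x in vec))
        vec = [x / norm for x in vec]
        for _ in range(iterations):
            nxt = [sum(work[i][j] * vec[j] for j in range(p)) for i in range(p)]
            norm = math.sqrt(sum(x * x for x in nxt))
            if norm < 1e-12:
                break
            vec = [x / norm for x in nxt]
        eigval = sum(vec[i] * sum(work[i][j] * vec[j] for j in range(p))
                     for i in range(p))
        components.append((eigval, vec))
        # Remove the component just found before searching for the next one.
        work = [[work[i][j] - eigval * vec[i] * vec[j] for j in range(p)]
                for i in range(p)]
    return components


def describe(columns, flips=(), k=2):
    """Percent variance and variable-dimension correlations per component."""
    names = list(columns)
    z = [zscore(columns[name], flip=name in flips) for name in names]
    report = []
    for eigval, vec in principal_components(correlation_matrix(z), k):
        loadings = {name: math.sqrt(max(eigval, 0.0)) * w
                    for name, w in zip(names, vec)}
        report.append({"percent_variance": 100.0 * eigval / len(names),
                       "loadings": loadings})
    return report
```

With standardized variables, the loading of a variable on a dimension equals its correlation with that dimension, which is the quantity reported as r in the Results.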

Missing values were imputed using regularized iterative PCA and replaced with an estimation of the missing data based on performance on other tasks within the same dimension (as implemented with FactoMineR using the command imputePCA). Across all perception tasks, only one data point for one participant (#18) was missing for the Anisochrony detection task. For production, one participant (#31) was missing all motor variability values because of a technical problem with the tapping recording file. For the perception + production PCA, this participant’s data were not included.

Clustering was performed using Hierarchical Classification on Principal Components (HCPC) within FactoMineR and was based on the first three principal components extracted from each PCA. The hierarchical trees were cut based on suggested heights defined by the program. Clusters were confirmed within each task with ANOVAs when variance between groups was equal, or Welch’s F-tests if variance between groups was not equal. Clusters were then compared using independent-sample t-tests (if group variance was equal), or Welch’s two-sample t-tests (if group variance was not equal).
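For the pairwise cluster comparisons, Welch's two-sample t-test differs from the equal-variance test in its standard error and degrees of freedom. The sketch below shows the Welch statistic, the Welch-Satterthwaite degrees of freedom, and a pooled-SD Cohen's d; the function names are hypothetical, and the source does not state which formula was used for d.

```python
import math
import statistics


def welch_t(group_a, group_b):
    """Return (t, df) for Welch's two-sample t-test."""
    n_a, n_b = len(group_a), len(group_b)
    se2_a = statistics.variance(group_a) / n_a  # squared standard errors
    se2_b = statistics.variance(group_b) / n_b
    diff = statistics.fmean(group_a) - statistics.fmean(group_b)
    t = diff / math.sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation of the degrees of freedom
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1))
    return t, df


def cohens_d(group_a, group_b):
    """Cohen's d with a pooled SD (one common definition, assumed here)."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = ((n_a - 1) * statistics.variance(group_a)
                  + (n_b - 1) * statistics.variance(group_b)) / (n_a + n_b - 2)
    diff = statistics.fmean(group_a) - statistics.fmean(group_b)
    return diff / math.sqrt(pooled_var)
```

The non-integer degrees of freedom that appear in some of the reported F-tests and t-tests are characteristic of this kind of unequal-variance correction.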

Results

All descriptive statistics are outlined in the OSM, shown in Figs. 1 (perception) and 2 (production) for all aggregate results, and OSM Figs. 1 and 2 for a more detailed breakdown of conditions. Bivariate correlations between all measures included in the PCAs are reported in OSM Table 3.

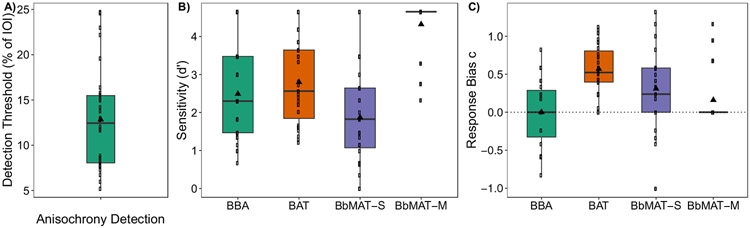

Fig. 1.

Perception results. (A) Anisochrony detection threshold presented as a percentage of the inter-onset interval (IOI, 750 ms); (B) d′ sensitivity values for the beat-based advantage task (BBA), the beat-alignment test (BAT), the synchronization task in the Burgundy best Musical Aptitude Test (BbMAT-S), and the Metric task in the BbMAT (BbMAT-M); and (C) response bias c for the four perception tasks. Individual dots represent individual participant data, and the mean is represented by a black triangle. Boxplots represent the distribution of data as implemented in ggplot2 in R (R Core Team, 2018), with the black line representing the median

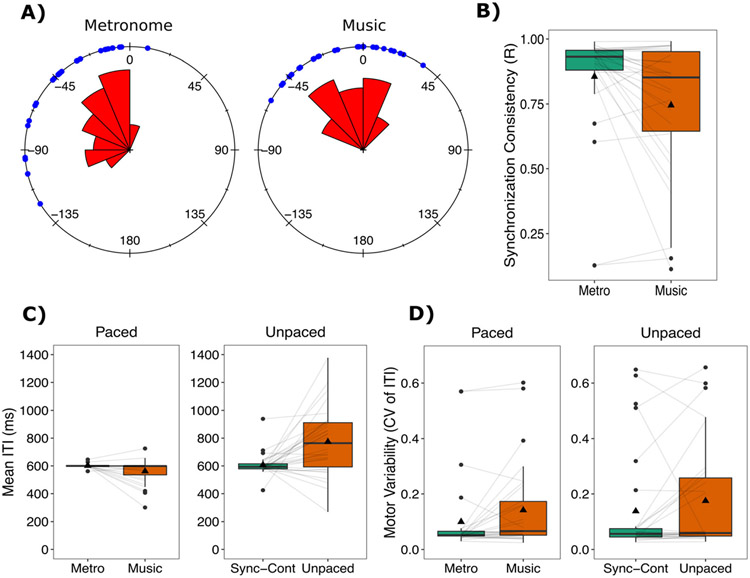

Fig. 2.

Production results. (A) Synchronization accuracy (i.e., angle) and rose plots for paced tapping measures represented with circular statistics. The zero point refers to when the beat occurred. Blue dots reflect individual participant responses. Negative values reflect taps before the beat, and positive values reflect taps after the beat. The rose diagram (in red) reflects the frequency of responses in each segment. Sixteen bins were specified, and the radius of each segment reflects the square root of the relative frequency in each bin. See Pewsey et al. (2013) for more details. (B) Synchronization consistency (vector length R) values where 0 = no consistency between taps and 1 = absolute consistency between taps. (C) Mean inter-tap interval (ITI) for the paced and unpaced tapping tasks. For tasks with an external rhythm, all ITIs were 600 ms. (D) Motor variability (coefficient of variation (CV) of the ITI) for paced and unpaced tapping tasks. Boxplots represent the spread of data as implemented in ggplot2 in R. The black line represents the median in each condition, and the black triangle represents the mean. The box represents the interquartile range (quartile 1–quartile 3), and individual dots represent participants who might be considered as outliers in relation to the interquartile range. Individual lines represent individual participants

Principal component analyses

Participant performance on all tasks entered in the PCA is reported in the OSM, as well as the clusters, musical and dance training, and questionnaire scores and responses.

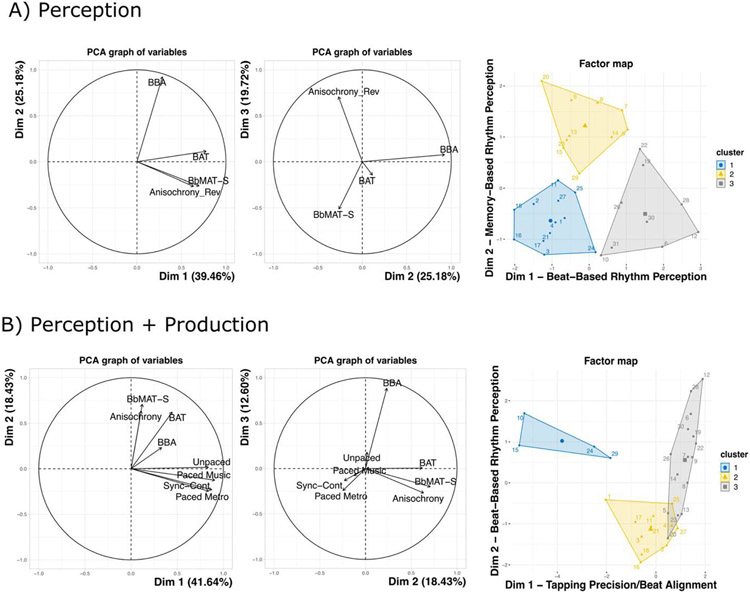

Perception principal component analysis (PCA)

The perception PCA revealed clear dimensions of rhythmic perception skills, with the first three dimensions accounting for 84.35% of the total variance (see Table 2 for variables entered into the PCA, Fig. 3A for the PCA dimensions, and Table 3 for an outline of all dimensions and clusters). Dimension 1 accounted for 39.46% of the variance and correlated with performance on the BAT, r(29) = 0.784, p < .001, BbMAT-Synch, r(29) = 0.70, p < .001, and Anisochrony detection, r(29) = 0.64, p < .001. This dimension could be considered as beat-based or alignment perception. Dimension 2 accounted for 25.18% of the total variance and correlated with the BBA, r(29) = 0.92, p < .001, suggesting that it is related to sequence memory-based rhythm perception. Dimension 3 accounted for 19.72% of the total variance and correlated with Anisochrony detection, r(29) = 0.71, p < .001 and BbMAT-Synch, r(29) = −0.51, p < .001. The negative correlation with BbMAT-Synch was unexpected, but might be explained by a difference between the two tasks in relation to duration-based timing or complexity: Anisochrony detection is quite simple and based on durations between isochronous tones, whereas BbMAT-Synch involves complex beat extraction and hierarchical meter perception, with musical material that uses complex timbres and multiple instruments. These tasks could therefore reflect extremes along the same dimension.

Table 2.

Tasks and dependent variables included in each principal component analysis. Sensitivity refers to the d′ measurement. Note that the paced tapping to metronome, paced tapping to music, and synchronization-continuation also require perception abilities. Duration is approximate and includes instructions. For trials that were completed twice (Anisochrony detection and all tapping tasks), the duration should be doubled to include the repetition

| PCA | Task | DV |
|---|---|---|
| Perception | BBA, BAT, BbMAT-S | Sensitivity d’ |
| | Anisochrony detection | Threshold of detection |
| Perception + Production | BBA, BAT, BbMAT-S | Sensitivity d’ |
| | Anisochrony detection | Threshold of detection |
| | Unpaced tapping, synch-cont, paced tapping metronome, paced tapping music (average) | Motor variability |

PCA principal components analysis, DV dependent variable, BBA beat-based advantage task, BAT beat alignment test, BbMAT-S Burgundy best musical aptitude test – synchronization, synch-cont synchronization-continuation, motor variability coefficient of variation of the inter-tap interval

Fig. 3.

Dimensions (1, 2, and 3) and clusters (1, 2, and 3) for (A) the perception PCA and (B) the perception + production PCA. (A) In the perception PCA, Cluster 1 reflects weak perceivers, Cluster 2 reflects strong sequence memory-based rhythm perceivers, and Cluster 3 reflects strong beat-based rhythm perceivers. (B) In the perception + production PCA, Cluster 1 reflects weak tappers, Cluster 2 reflects weak perceivers, and Cluster 3 reflects participants with strong rhythm (perception and production). Note that only Dimensions 1 and 2 are shown for the cluster graphs for clarity, but Dimension 3 for the perception + production PCA can be seen in OSM Fig. 3

Table 3.

The first three dimensions, clusters, and their interpretation for the principal component analyses

| | Dim 1 | Dim 2 | Dim 3 | Cluster 1 | Cluster 2 | Cluster 3 |
|---|---|---|---|---|---|---|
| PCA Perc | Beat-based perception | Sequence memory-based perception | Duration-based / complexity | Weak perceivers: 1, 2, 3, 4, 11, 16, 17, 18, 21, 24, 25, 27 | Strong sequence memory-based perceivers: 5, 7, 8, 9, 13, 14, 15, 20, 23, 29 | Strong beat-based perceivers: 6, 10, 12, 19, 22, 26, 28, 30, 31 |
| Task | BAT, BbMAT-S, Anisoch | BBA | Anisoch, BbMAT-S* | BAT*, Anisoch*, BBA* | BBA | BAT, BbMAT-S, Anisoch |
| PCA Perc/Prod | Tapping precision and beat alignment | Beat-based perception | Sequence memory-based perception | Weak tappers: 10, 15, 24, 29 | Weak perceivers: 1, 2, 3, 4, 11, 16, 17, 18, 21, 25, 27 | Strong rhythm (perc/prod): 5, 6, 7, 8, 9, 12, 13, 14, 19, 20, 22, 23, 26, 28, 30 |
| Task | Unpaced, Synch-cont, Metro, Music avg, BAT | BAT, Anisoch, BbMAT-S | BBA | Unpaced*, Synch-cont*, Metro*, Music avg* | BAT*, Anisoch*, BBA* | Unpaced, Synch-cont, Metro, Music, BBA, BAT |

\* Indicates negative correlation. Participant numbers (1–31) presented to show overlap between clusters

Dim dimension, Anisoch Anisochrony detection, Synch-cont synchronization-continuation, Metro metronome, BBA beat-based advantage task, BAT beat alignment test, BbMAT-S Burgundy best musical aptitude test – synchronization, Perc perception, Prod production

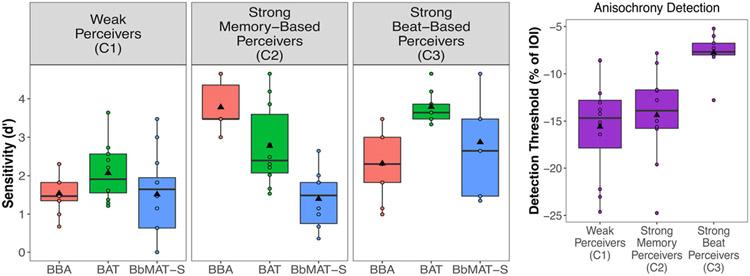

For perception, the hierarchical cluster analysis revealed three clear and distinct clusters of participants (see Fig. 4 for each cluster’s performance on the tests and Fig. 3A for cluster maps). Note that performance on all tasks significantly contributed to the clusters (all values, p < .008), but only along Dimensions 1 and 2 (both p < .001). Cluster 1 (n = 12) contained participants who performed inaccurately along both the beat-based (Dimension 1, v = −3.60, p < .001) and the sequence memory-based (Dimension 2, v = −2.76, p = .006) rhythm dimensions. Cluster 1 performed inaccurately on the BBA (v = −3.62, p < .001), the BAT (v = −3.06, p = .002), and the Anisochrony detection (v = −2.14, p = .03) tasks, and can be considered “weak perceivers.”5 Cluster 2 (n = 10) contained participants who performed well on the sequence memory-based rhythm dimension (Dimension 2, v = 4.59, p < .001), with particularly high performance on the BBA (v = 4.29, p < .001), and can be considered “strong sequence memory-based rhythm perceivers.” Cluster 3 (n = 9) performed well along the beat-based dimension (Dimension 1, v = 4.20, p < .001), with high performance on the BAT (v = 3.37, p < .001), Anisochrony detection (v = 3.33, p < .001), and the BbMAT-Synch (v = 2.93, p = .003) tasks, and can be considered “strong beat-based rhythm perceivers.”

Fig. 4.

The three clusters that emerged from the perception principal component analyses (PCAs) across the four tasks. Note that Anisochrony detection threshold scores (presented as a percentage of the 750 ms inter-onset interval) were reversed in scoring for the analysis and in the figure, such that more negative scores reflect more inaccurate performance. Boxplots represent the distribution of data as implemented in ggplot2 in R, with the black line representing the median. Individual dots represent individual participant data, and the black triangle represents the mean

Clusters were confirmed with ANOVAs showing a significant main effect of cluster in each perceptual task: BBA, F(2, 28) = 31.31, p < .001, ηp2 = 0.69; BAT, F(2, 28) = 11.89, p < .001, ηp2 = 0.46; Anisochrony detection: F(2, 28) = 8.56, p = .001, ηp2 = 0.38; and BbMAT-Synch, F(2, 28) = 5.67, p = .009, ηp2 = 0.29. Independent-samples t-tests (adjusted p-values presented with p’ after Holm-Bonferroni correction) showed that strong beat-based rhythm perceivers (Cluster 3) performed significantly better than weak perceivers (Cluster 1) on the BAT, t(19) = 6.29, p’ < .001, d = 2.77, Anisochrony detection, t(19) = 4.21, p’ < .001, d = 1.86, BbMAT-Synch, t(19) = 2.59, p’ = .04, d = 1.14, and the BBA, t(19) = 2.55, p’ = .02, d = 1.12 tasks. Strong beat-based rhythm perceivers (Cluster 3) also performed significantly better than strong sequence memory-based rhythm perceivers (Cluster 2) on Anisochrony detection, t(17) = 3.65, p’ = .004, d = 1.68, BbMAT-Synch, t(17) = 3.09, p’ = .02, d = 1.42, and the BAT, t(17) = 2.64, p’ = .03, d = 1.21. However, the strong sequence memory-based rhythm perceivers (Cluster 2) were significantly better than both the weak perceivers (Cluster 1), t(20) = 9.63, p’ < .001, d = 4.12, and the strong beat-based rhythm perceivers (Cluster 3), t(19) = 4.17, p’ = .001, d = 1.92 on the BBA. There were no differences between weak perceivers (Cluster 1) and strong sequence memory-based perceivers (Cluster 2) on the BAT, the BbMAT-Synch, or Anisochrony detection, all corrected p-values > .09.
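The Holm-Bonferroni adjustment applied to the pairwise comparisons can be sketched as below. This is the standard step-down procedure written with the standard library only; the function name is hypothetical, and how the authors grouped the comparisons into families is not specified here.

```python
def holm_adjust(p_values):
    """Return Holm-Bonferroni adjusted p-values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # smallest p first
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The k-th smallest p-value is multiplied by (m - k + 1), capped at 1.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Adjusted values must not decrease as the raw p-values increase.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted
```

For three raw p-values of .01, .04, and .03, the adjusted values are .03, .06, and .06: the smallest is multiplied by 3, the next by 2, and the largest inherits the running maximum.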

Note that of the six participants who did not score at ceiling on the BbMAT-M (not included in the PCA analyses), four were clustered as weak perceivers, and two were clustered as strong sequence memory-based perceivers, consistent with the cluster groupings.

Perception + production PCA

The perception + production PCA consisted of the same four perception tasks in the perception PCA (BBA, BAT, BbMAT-Synch, Anisochrony detection) to which we added the motor variability scores (CV of ITI) for four additional production tasks (unpaced tapping, synchronization-continuation, paced tapping to metronome, paced tapping to music; see Table 2, Fig. 3B, and Table 3). The first three dimensions accounted for 72.68% of the total variance (see Fig. 3B). Dimension 1 accounted for 41.64% of the variance and correlated with performance on paced tapping to music, r(29) = 0.91, p < .001, synchronization-continuation, r(29) = 0.87, p < .001, unpaced tapping, r(29) = 0.84, p < .001, paced tapping to a metronome, r(29) = 0.84, p < .001, and BAT, r(29) = 0.44, p = .01. This dimension could be considered as tapping precision and beat alignment. Dimension 2 accounted for 18.43% of the total variance and correlated with performance on the BbMAT-Synch, r(29) = 0.71, p < .001, Anisochrony detection, r(29) = 0.63, p < .001, and the BAT, r(29) = 0.62, p < .001. This dimension therefore appears to reflect beat-based perception. Dimension 3 accounted for 12.60% of the total variance and correlated with performance on the BBA, r(29) = 0.88, p < .001, suggesting that it is related to sequence memory-based perception.

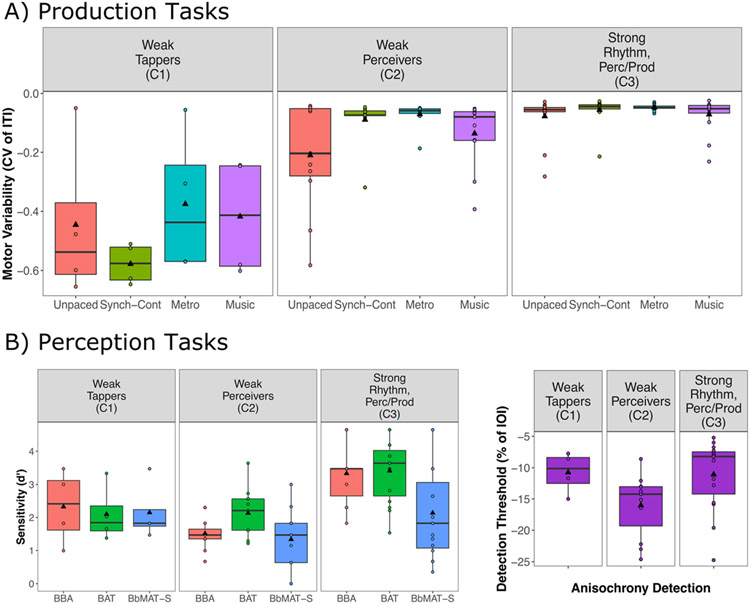

The hierarchical cluster analysis revealed three clusters of participants (see Fig. 3B for the clusters and Fig. 5 for performance on each task depending on cluster). Both production (unpaced tapping, synchronization-continuation, paced tapping to metronome, and paced tapping to music, all ps < .001) and perception (BBA and BAT, all ps < .001) tasks contributed significantly to the clusters; however, BbMAT-Synch and Anisochrony detection did not. All three dimensions contributed significantly to the clusters (all ps < .015). Cluster 1 (n = 4) contained participants who performed inaccurately along the tapping dimension (Dimension 1, v = −4.41, p < .001), and was associated with inaccurate performance on synchronization-continuation (v = −5.08, p < .001), paced tapping to metronome (v = −4.29, p < .001), paced tapping to music (v = −3.90, p < .001), and unpaced tapping (v = −3.01, p = .003). Cluster 1 can therefore be considered as the outlying “weak tappers.” Cluster 2 (n = 11) contained participants who performed inaccurately along both beat-based (Dimension 2, v = −3.82, p < .001) and sequence memory-based (Dimension 3, v = −2.77, p = .006) rhythm perception dimensions (see also OSM Fig. 3). Cluster 2 was associated with inaccurate performance on the BBA (v = −3.69, p < .001), Anisochrony detection (v = −2.36, p = .02), and the BAT (v = −2.50, p = .01). This cluster can therefore be considered as “weak perceivers.”6 Cluster 3 (n = 15) contained participants who performed well across all dimensions and tasks. They performed accurately across the tapping dimension (Dimension 1, v = 3.46, p < .001), the sequence memory-based rhythm perception dimension (Dimension 3, v = 2.13, p = .03) and the beat-based rhythm perception dimension (Dimension 2, v = 2.47, p = .01). Cluster 3 showed positive correlations for performance on unpaced tapping (v = 2.76, p = .006), paced tapping to music (v = 2.52, p = .01), synchronization-continuation (v = 2.41, p = .02), paced tapping to metronome (v = 2.06, p = .04), BBA (v = 3.82, p < .001), and BAT (v = 3.33, p < .001), suggesting that they were strong at rhythm perception and production in general.

Fig. 5.

Performance across all tasks for the three clusters observed in the perception + production principal component analysis. (A) Motor variability of all production tasks. (B) Performance on all perception tasks. Boxplots represent the distribution of data as implemented in ggplot2 in R, with the black line representing the median. Individual dots refer to individual participant data, and black triangles represent the mean

Clusters were confirmed with ANOVAs showing a significant main effect of cluster for synchronization-continuation, F(2, 27) = 118.48, p < .001, ηp2 = 0.90; paced tapping to music, F(2, 6.76) = 6.43, p = .03; unpaced tapping, F(2, 6.57) = 5.29, p = .04; BBA, F(2, 27) = 16.72, p < .001, ηp2 = 0.55; and BAT, F(2, 27) = 8.39, p = .001, ηp2 = 0.38. Paced tapping to a metronome, F(2, 6.28) = 4.73, p = .056 and Anisochrony detection, F(2, 27) = 3.20, p = .056 showed a marginal difference between clusters, and there was no difference between clusters for BbMAT-Synch, F(2, 27) = 1.55, p = .23. Independent-samples t-tests (adjusted p-values presented with p’ after Holm-Bonferroni correction) showed significantly worse performance on the synchronization-continuation task for the weak tappers (Cluster 1) compared to both the weak perceivers (Cluster 2), t(13) = 11.10, p’ < .001, d = 6.48, and the cluster with strong rhythm (Cluster 3), t(17) = 18.51, p’ < .001, d = 10.41. When controlling for multiple comparisons, there were no significant differences between clusters for unpaced tapping (all corrected values, p’ > .13), paced tapping to music (all corrected values, p’ > .12), or paced tapping to metronome (all corrected values, p’ = .23). However, better performance for the strong rhythm cluster (Cluster 3) on the BBA and BAT was confirmed, with this cluster performing better than the weak perceivers (Cluster 2) on the BBA, t(24) = 6.15, p’ < .001, d = 2.44, and the BAT, t(24) = 3.74, p’ = .003, d = 1.49. The strong rhythm cluster (Cluster 3) also performed significantly better than the weak tappers (Cluster 1) on the BAT, t(17) = 2.52, p’ = .04, d = 1.42. All other comparisons were not significant after correction for multiple comparisons (all corrected values, p’ > .09). It should be noted that there was a strong overlap between this combined PCA and the perception PCA for participants identified as weak versus strong perceivers (see Table 3). The emerging clusters of strong and weak perceivers can be considered as quite robust, considering that they remained with the addition of the tapping data.

Note that of the six participants who did not score at ceiling on the BbMAT-M (not included in the PCA analyses), three were clustered as weak perceivers, one was clustered as a weak tapper, and two were clustered with the strong rhythm category, most likely driven by their high sequence memory-based perception, as revealed in the perception PCA.

Links with questionnaire data

Based on the clusters identified in the hierarchical cluster analysis for the perception and production PCA, we identified two groups of participants: weak performers (the weak tappers and weak perceivers, n = 15) and strong performers (the strong rhythm group, i.e., the strong tappers and strong perceivers, n = 15).7 It is particularly interesting to see whether these groupings align with subjective self-report measures. For example, the standardized scores from the BMRQ allowed us to observe whether participants were considered to have a strong (scores above 60) or weak (scores below 40) sensitivity to music (Mas-Herrero et al., 2013; Saliba et al., 2016). Three of our participants scored as having a weak sensitivity to music (two from the weak performer group and one from the strong performer group), and eight of our participants scored as having a strong sensitivity to music (seven from the strong performer group and one from the weak performer group).

A logistic regression8 was run to investigate whether the sub-scales of the BMRQ and participants’ music and dance training could predict whether they were classified as a weak or strong performer. None of the predictors were significant; however, the mood regulation sub-scale, χ2(1, N = 30) = 3.74, p = .053, and years of dance training, χ2(1, N = 30) = 3.35, p = .067, approached significance. For the mood regulation sub-scale, strong performers (M = 51.07, SD = 8.32) tended to score higher than weak performers (M = 41.73, SD = 14.58), suggesting that strong performers were more likely to use music to regulate their mood. For dance training, strong performers (M = 2.53 years, SD = 4.02, range = 0–13) tended to have had fewer years of dance training than weak performers (M = 4.33 years, SD = 4.86, range = 0–15). This marginal effect may be based on some extreme values, as two participants who had more than 10 years of dance training both were clustered as weak perceivers (see OSM Table 5).

We also asked participants whether they could clap in time with a musical beat9 (as in Niarchou et al., 2021). Of the 11 participants who said they were “not sure,” seven were in the weak performing group (two within the weak tapping cluster), and four were in the strong performing group. All other participants indicated that they could tap in time to a rhythm, including two who were identified as weak tappers in the perception and production hierarchical cluster analysis. These results indicate that self-report data may not be entirely reliable to identify participants who perform inaccurately across different tasks.

Finally, because our distribution of self-reported musical training was approaching bimodal, we checked whether there was a difference in performance across the different tasks depending on whether participants reported that they had never engaged in musical training or practice (n = 12), or whether they had engaged in musical training or practice (perception tasks: n = 19, range = 1–13 years, M = 5.89, SD = 3.97, median = 6.0; production tasks: n = 18, range = 1–13 years, M = 5.89, SD = 4.09, median = 5.0). Wilcoxon rank-sum tests for independent samples were run because of unequal group sizes. For perception tasks, performance on the BAT was significantly better for the music training group (d′: M = 3.15, SD = 0.87) compared to the non-music training group (d′: M = 2.23, SD = 1.11), W = 53, p = .014, r = .45. There was no difference depending on music training for the BbMAT-Synch, W = 95, p = .45, the BBA, W = 102, p = .64, or the Anisochrony detection, W = 86, p = .44, tasks. For production tasks, paced tapping to music (average) was significantly less variable for the music training group (CV of ITI: M = 0.11, SD = 0.14) compared to the non-music training group (CV of ITI: M = 0.19, SD = 0.17), W = 160, p = .03, r = .40. Paced tapping to the metronome and synchronization-continuation showed a marginally significant difference between the groups, both in the direction of lower variability for participants with musical training compared to participants without musical training, both W = 154, p = .05, r = .36. There was no significant difference between groups for unpaced tapping, W = 130, p = .37. Note that Spearman correlations confirmed this pattern of results, with significant correlations between years of music training and the BAT, r(29) = .42, p = .02, paced tapping to music, r(28) = −.44, p = .01, as well as significant correlations for paced tapping to metronome, r(28) = −.38, p = .04, and synchronization-continuation, r(28) = −.46, p = .01.
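To illustrate how an effect size r can relate to a rank-sum statistic, the following minimal sketch converts W into a z score and then into r = |z| / √N. Several points are assumptions rather than details given in the text: that W is the Mann-Whitney-type statistic (as returned, for example, by R's wilcox.test), that r was derived as |z| / √N, and that a large-sample normal approximation without tie or continuity correction is adequate. The function name is hypothetical.

```python
import math


def rank_sum_effect_size(w: float, n1: int, n2: int) -> tuple[float, float, float]:
    """Return (z, two-sided p, r) for a Mann-Whitney-type W statistic.

    Uses the large-sample normal approximation with no tie or continuity
    correction, so all three values are approximate.
    """
    mean_w = n1 * n2 / 2.0                            # expected W under H0
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)  # SD of W under H0
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2.0))            # two-sided normal p value
    r = abs(z) / math.sqrt(n1 + n2)                   # effect size r = |z| / sqrt(N)
    return z, p, r


# Paced tapping to music: W = 160, groups of n = 12 and n = 18.
z, p, r = rank_sum_effect_size(160, 12, 18)
print(f"z = {z:.2f}, p = {p:.3f}, r = {r:.2f}")
```

Under these assumptions, W = 160 with groups of 12 and 18 gives r of roughly .40, and W = 154 gives roughly .36, close to the reported effect sizes. Exact tests or tie corrections would shift these values slightly.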

Discussion

The goal of the current study was to investigate whether different types of rhythmic tasks would diverge into distinct patterns of performance across tasks and within individuals. Nine tasks originating from different laboratories were selected to cover a variety of potentially distinct rhythmic processing skills. Principal component analyses (PCAs) showed a clear distinction between (1) perception compared to production tasks, and (2) beat-based compared to sequence memory-based rhythm perception tasks. Further, hierarchical cluster analyses revealed participants with selectively strong or weak performance on different types of tasks, suggesting distinct rhythmic competencies across individuals in the broader population from which our participants were sampled. These results are discussed in relation to distinct rhythmic competencies, implications for underlying neural mechanisms, and suggestions for future research.

Distinct rhythmic competencies measured by different rhythm tasks

Across both the perception and perception + production PCAs, three primary dimensions emerged; these corresponded to: (1) tapping precision and beat alignment, (2) beat-based rhythm perception, and (3) sequence memory-based rhythm perception. These dimensions corresponded to our hypotheses of a separation between perception and production tasks (Bégel et al., 2017; Dalla Bella et al., 2017; Sowiński & Dalla Bella, 2013), as well as between sequence memory-based and beat-based rhythm perception tasks (Bonacina et al., 2019; Tierney & Kraus, 2015). Our findings also support and extend the previous findings from Tierney and Kraus (2015) and Bonacina et al. (2019) to show a distinction between sequence memory- and beat-based processing in the perception domain rather than only in the production domain as they had previously shown. The observed separation in performance across different tasks is particularly interesting as it provides behavioral evidence for distinct rhythmic competencies. Such evidence could provide some insight into potential differences in underlying neural architecture, or potential differences in task sensitivity that tap into separable aspects of rhythmic abilities.

It has proven difficult to isolate different rhythmic competencies or sub-components in the brain, as rhythm processing activates a wide range of neural areas, and overlapping cognitive processes are implicated across different tasks (Grahn & McAuley, 2009; Schubotz et al., 2000). Considering that rhythm production tasks that involve synchronization to or reproduction of an external auditory rhythm necessarily involve perception (Leow & Grahn, 2014), and that rhythm perception activates motor areas in the brain (Bengtsson et al., 2009; Chen et al., 2008; Fujioka et al., 2012; Grahn & Brett, 2007; Stephan et al., 2018), it is challenging to separate potentially distinct processes in typically developing individuals. Further, rhythm perception is suggested to be aided by predictions from the motor system, and a tight link between perception and production has been postulated (Cannon & Patel, 2021; Morillon & Baillet, 2017; Patel & Iversen, 2014). Indeed, generally speaking, it appears that perception and production are tightly linked in the brain and there is strong sensorimotor coupling involved in rhythm processing (Zatorre et al., 2007). Nevertheless, rhythm perception and production can dissociate in individuals with rhythm disorders: Accurate beat perception does not appear to be required for accurate synchronization (Bégel et al., 2017) and accurate synchronization does not appear to be required for accurate beat perception (Sowiński & Dalla Bella, 2013). This separation may be possible based on implicit processing of temporal information, allowing for intact production with impaired perception (Bégel et al., 2017). Although the underlying neural networks are difficult to distinguish, the current results suggest that different rhythm tasks may tap into different rhythmic competencies in the general population.

Distinct performance clusters support separable rhythmic competencies

The hierarchical cluster analysis revealed clusters of participants who presented with different profiles of rhythm performance that were remarkably consistent between the perception and the perception + production PCAs. When perception and production variables were combined, a broad distinction was observed between participants who generally performed accurately across all tasks, and those who were selectively inaccurate in production tasks (across all tasks) or perception tasks (in both sequence memory-based and beat-based tasks). This distinction suggests that participants can be selectively impaired at production or perception of rhythm in general, supporting previous research (Bégel et al., 2017; Sowiński & Dalla Bella, 2013).

However, we found that when participants performed accurately in rhythm tasks, they generally performed accurately across both perception and production tasks. An fMRI study using a perceptual tempo judgment task showed that strong beat perceivers had greater activation in motor areas (supplementary motor area, the left premotor cortex, and the left insula) than did weak beat perceivers, who showed stronger activation in largely non-motor areas (left posterior superior and middle temporal gyri, but also the right premotor cortex) (Grahn & McAuley, 2009). The authors suggest that strong beat perceivers are more likely to use implicit beat perception when performing rhythm tasks, whereas weak beat perceivers may use more explicit strategies (i.e., interval duration judgments). The ability to use implicit beat processing mechanisms may therefore result in improved performance across both perception and production tasks. Our results provide behavioral support for this suggestion, as participants with high performance tended to perform well across both rhythm production and rhythm perception tasks. However, as also shown in single case studies, our results suggest that production or perception competencies can be selectively impaired, reflected by participant clusters that were selectively weak at tapping or perceiving. Such evidence suggests that patterns of dissociation may be more common than previously thought in the general population. These findings could help to explain why different patterns of correlations between various rhythm tasks are found across different studies (see examples in Tierney & Kraus, 2015), as rhythmic competencies may be both related and unrelated, depending on the participant and their specific constellation of rhythmic competencies and beat processing strategies.

When only perception tasks were included in the PCA, clusters of participants emerged who performed inaccurately on the perception tasks in general (as seen also in the combined PCA), selectively well for the sequence memory-based rhythm task (BBA), or selectively well for the beat-based rhythm tasks (BAT, BbMAT-Synch, Anisochrony detection). Combined with the perception and production results, it appears that weak perceivers perform inaccurately across all perceptual tasks (i.e., a general lack of perception skills that affects both short-term sequence memory- and beat-based rhythm perception), but that strong perceivers can show selective enhancements to either sequence memory- or beat-based skills. These selective enhancements could be based on different cognitive skills necessary to perform well in each type of task. Both beat-based and sequence memory-based tasks require some level of beat-based processing. However, the sequence memory task requires the additional contribution of short-term memory, sequencing, and supra-second judgments (Tierney & Kraus, 2015), which could compensate for deficits in beat-based skills or boost these skills. Therefore, participants could draw on stronger sequence learning and memory-based skills to perform selectively well in the BBA (see the contribution of short-term memory to rhythm reproduction in Grahn & Schuit, 2012), and draw on stronger beat-based skills to perform selectively well in the beat-based tasks. Our results suggest that the necessary skills to perform perceptual tasks somewhat overlap, but that selective abilities can enhance performance in sequence memory-based or beat-based tasks.

Further evidence for distinct performance patterns across individuals can be observed when the clusters that emerge from the PCAs are examined. Only one of the identified weak tappers was also identified as a weak perceiver in the perception PCA (note that this participant also had low music reward sensitivity on the BMRQ, and appeared to have large, general impairments in rhythm and/or music processing). Two other weak tappers were identified as strong sequence memory-based rhythm perceivers, suggesting that they were able to use other cognitive skills for rhythm perception, and that accurate tapping was not necessary for accurate perception. One weak tapper was identified as a strong beat-based rhythm perceiver. This pattern further suggests that tapping can be impaired while perception is spared, as has been observed in the general population (Sowiński & Dalla Bella, 2013), and in children with cerebellar lesions (Provasi et al., 2014). Sowiński and Dalla Bella (2013) suggest that a distinction between synchronization and beat-based perception within the general population could be related to a disruption in auditory-motor mapping. However, Tranchant and Vuvan (2015) pointed out that the perceptual tests used in Sowiński and Dalla Bella (2013) (the Anisochrony detection task and the MBEA rhythm test) could be performed without using beat-based processing (i.e., by comparing durations between intervals). The current study supports the conclusions of Sowiński and Dalla Bella (2013) by including clearly beat-based processing tasks (the BAT and BbMAT-Synch) alongside the Anisochrony detection task, and by identifying a single participant who showed weak synchronization together with intact, strong beat-based perceptual processing. Such result patterns suggest a large variety of rhythmic competency patterns within the population, and that individual differences are important to consider in future research, in healthy and pathological brains.

Production tasks