Abstract

This article presents a new framework for realizing the value of linked data understood as a strategic asset and increasingly necessary form of infrastructure for policy-making and research in many domains. We outline a framework, the ‘data mosaic’ approach, which combines socio-organizational and technical aspects. After demonstrating the value of linked data, we highlight key concepts and dangers for community-developed data infrastructures. We concretize the framework in the context of work on science and innovation generally. Next we consider how a new partnership to link federal survey data, university data, and a range of public and proprietary data represents a concrete step toward building and sustaining a valuable data mosaic. We discuss technical issues surrounding linked data but emphasize that linking data involves addressing the varied concerns of wide-ranging data holders, including privacy, confidentiality, and security, as well as ensuring that all parties receive value from participating. The core of successful data mosaic projects, we contend, is as much institutional and organizational as it is technical. As such, sustained efforts to fully engage and develop diverse, innovative communities are essential.

Keywords: science, innovation, community, linkage, graduate education

1. Introduction

Over the last two decades, computational and data revolutions have dramatically shifted the landscape for fundamental research and policy analysis. Recent changes in law, such as the Foundations for Evidence-Policy Act of 2018 (2019), and administrative efforts, such as the Federal Data Strategy, highlight a transformation in how the U.S. government views data as a strategic asset. Recent executive orders emphasize that far from being a neutral commodity, data—its development, characteristics, accessibility, and use—are key to addressing persistent disparities and ensuring equality of opportunity in the United States (Exec. Order No. 13985, 2021). All these policy developments (and parallel trends in industry and academe) highlight that in today’s world, data are a necessary form of infrastructure that require strategic development, the success of which is implicated in nearly every aspect of government and civil society.

But what does it mean to consider data a strategic asset on par with other, more tangible forms of infrastructure? How can a 21st-century data infrastructure that protects privacy, ensures equity, and transparently enables rigorous, timely research and analysis across a wide range of topics be developed, maintained, and used? We propose that the answers to these questions are as much social and institutional as they are technical. This article seeks to develop a framework for creating and maintaining such an infrastructure. We identify the social and institutional conditions under which large-scale, often restricted data might be productively and sustainably integrated to support and improve fundamental and evidence-based policy research in multiple domains.

Substantial linked data assets are not new. The intellectual and analytic value of analyses that draw together data from multiple sources is not news. However, we argue that current models do not realize the full value or permit the same flexibility as the ‘data mosaic’ structure that we propose here. One prominent approach tends toward a centralized, hierarchical form of organization that makes the timelines, goals, and needs of a small number of near monopolies preeminent in the design, protection, and use of data infrastructures. This structure can work in some contexts but is insufficient to realize the vision of contemporary evidence-based policy research in many important cases, especially when data resources are widely held by different, co-equal custodians.

In such cases, a hierarchical model often resolves to attaching one or more ‘child’ data sets to a ‘parent’ data set where the child data sets are typically used in conjunction with the parent, but not with each other (i.e., not independently of the parent data set). Often, thin slices of such integrated data can be made available for public use with few or no restrictions, allowing broad, decentralized work by many users. However, such data releases generally lack the features necessary to support a comprehensive, equitable, general-purpose data architecture. A second approach involves attaching thin slices of data from one or more child data sets to a parent data set to answer a specific question. Such work may be artisanal and hard to replicate, and any resulting integrated data generally lack the features necessary to support a broad, equitable, general-purpose data architecture.

A new approach is necessary because the value of large-scale data infrastructure is manifest. Lengthy traditions of research in sociology, organizational theory, and economics can inform an organizational framework and set of principles that avoid the pitfalls of too much centralization on the one hand and too little coordination on the other.

We propose that realizing the full value of linked data for both fundamental research and policy analysis requires a network form of organization and a set of practices to promote generalized exchange among stakeholders with very different goals, who face varied, sometimes contradictory legal, ethical, and proprietary restrictions. Mindful efforts to organize and sustain diverse, innovative communities that build, protect, and use data are essential.

There has been much work done on the statistical algorithms used for data integration (Baldwin et al., 1987; Batini & Scannapieca, 2006; Christen, 2012; Herzog et al., 2007; Talburt, 2011; Winkler & Newcombe, 1989), but to our knowledge, this is the first article that focuses on the network form of organization that is essential to cooperative long-term partnerships necessary to establish a linked data mosaic. Our argument centers on the conditions necessary for, and the substantial value of, linked data mosaics. We take such mosaics to have several key features. They are: (1) collectively designed and collaboratively integrated data architectures that (2) support coherent, productive, accessible, and equitable data infrastructures and (3) deeply integrate a wide range of co-equal data sources (4) through the work of a diverse, decentralized but interdependent community. We distinguish data mosaics from hierarchical data infrastructures that subordinate partners, users, and data to the needs of a powerful, central authority. We also distinguish data mosaics from decentralized systems that make carefully curated, but often thin slices of data broadly available to users who have little input in their creation but who creatively integrate them with often laboriously collected data sets tuned to address a particular topic or question.

In what follows, section 2 presents a stylized model of the value of linked data mosaics. Section 3 summarizes the key features of an innovative data science community organized as a network form of governance. We attend particularly to the dangers of relying on network communities to develop and sustain reliable, secure, and equitable data infrastructures. Close focus on a particular case, where a data mosaic is valuable —establishing the value of science through the people who conduct it—concretizes our more abstract conceptual claims. We lay out that context in section 4. Section 5 sketches the two organizations that are at the heart of our work: the Institute for Research on Innovation & Science (IRIS) and the National Center for Science and Engineering Statistics (NCSES). Section 6 discusses the issues that have arisen in linking these data sets. Section 7 presents initial linkage results that highlight the value of our integration of three types of data: transaction-level administrative data on research funding, national survey data on the population of doctoral recipients, and bibliometric data from scientific publications. Section 8 concludes by laying out lessons that can be drawn from our partnership.

2. Value of the Network Form

We begin with a toy model to make the general case for linked data, especially the value of linked data in a mosaic. In discussing linked data, it is important to emphasize that to be fruitfully linked two data sets must be relatable (i.e., cover common units and have common identifiers). To see the power of linked data, we posit the relationship Y = QD, where Y denotes the knowledge that can be produced by research using given data; Q is a constant that denotes the number of questions that can be answered per distinct data element available; and D denotes the number of distinct data elements that are available. Consider an area where each data element can be used to study two questions (i.e., Q = 2). If there are two data elements available (D = 2), then four questions can be answered, but if we increase the number of data elements to three (D = 3), then eight questions can be answered, and so forth. Thus, we posit that the value of linked data is that it generates exponential growth by adding in more distinct data elements.

A few points are worth clarifying. First, by distinct data elements, we mean data elements that do not overlap substantially, such as measures of hours worked in one week and an hourly wage, instead of two measures of hours worked in two, unexceptional, consecutive weeks. Second, the constant Q in our model should not be taken as an immutable, universal constant like Planck’s constant, but rather one that is likely to vary across data sets based on their data elements. Indeed, different combinations of data sets are likely to have different values of Q depending on their complementary data elements and possibilities for linkage. Thus, our use of a given value of Q should be viewed as a heuristic rather than definitive. Second, we note that the final value of Y depends not just on the characteristics of data sets but also on the conditions under which they were combined, rules for their accessibility and use, and the characteristics of their potential users. In short, the potential value of linked data architectures is determined by the characteristics of their component pieces, but also by the larger socio-organizational contexts in which they are created, sustained, and used. Third, from the perspective of economic industrial organization, exponential value or any form of increasing returns generates the potential for a monopoly in industry as well as in government or research, a danger that has to be managed and for which we propose governance mechanisms.

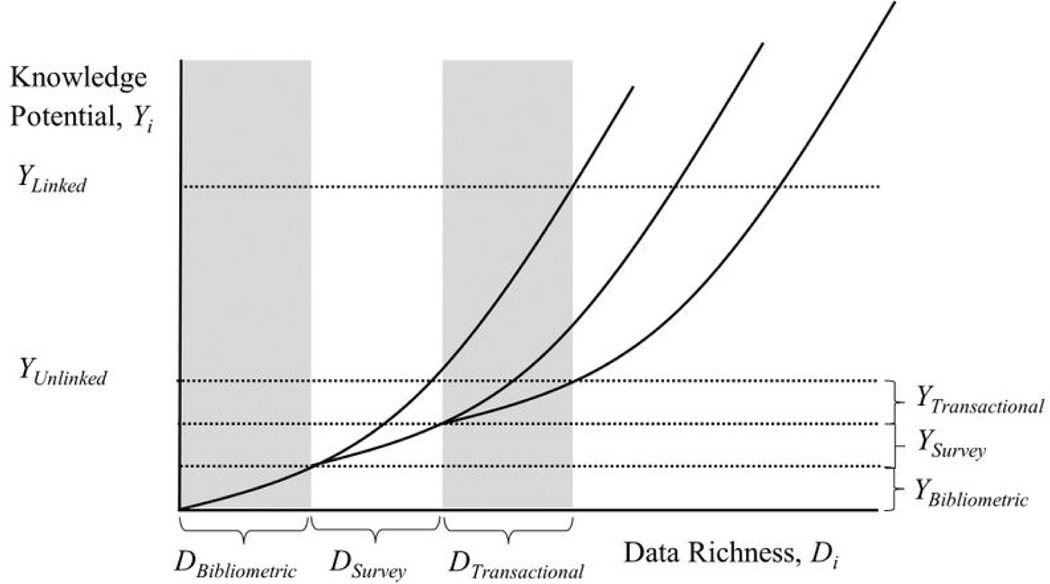

Figure 1 illustrates the case where there are three sets of distinct data elements, bibliometric, survey, and transactional, which correspond very broadly to some of the main classes of data relevant for our illustrative example, innovation. The specific types factor into the discussion, but are less critical for the moment. What is critical is that each represents distinct data elements from the others. If these three types of data are not linked and we assume that they each comprise the same number of data elements (D) of comparable utility (Q), it is possible to produce knowledge of YUnlinked = 3QD, but if these data elements are fully linked and interoperable in mosaic fashion, it is possible to produce considerably more knowledge, YLinked = Q3D. Intuitively, the combination of different data allows for an exponential expansion in opportunities compared to unlinked data because it increases D dramatically from a very high initial level, greatly increasing potential knowledge. More concretely, any of the explanatory variables in a given data set can be used to analyze any of the outcome variables present in any of the others. Further, it is likely that some of the ‘explanatory’ variables in one data set will be valuable as outcome variables when linked to another data set and vice versa.

Figure 1. Power of linked data.

The figure illustrates the case where there are three sets of distinct data elements, bibliometric, survey, and transactional. If these three data elements are not linked, it is possible to produce knowledge of YUnlinked, but if these data elements are linked, it is possible to produce considerably more knowledge, YLinked.

We have already mentioned two common ways of linking data that are distinct from a data mosaic, in which the data sets are linking on multiple keys and where each pair of data sets can be used in conjunction with or independently of the others because integration work builds rich linkages among all relevant pairs of data sets. Contrast this case to the two stylized alternative models: decentralization and hierarchy. In the former, individual researchers might construct a data set suitable to answer their particular questions and then draw on a publicly available, already curated data set to compute additional variables (e.g., as explanatory variables or instruments) they then integrate with their data to address one or more concrete research questions. An example in the case of innovation might be to merge some data on the institutions at which researchers work drawn from publicly available sources such as the Higher Education Research and Development (HERD) survey into proprietary bibliometric data to provide contextual variables based on author affiliations. In this decentralized case, we expect there to be some gain from integration, but we anticipate lower values of Q and D because the ability to combine data sets is relatively limited as are the units of analysis that might be examined.

More importantly, perhaps, this kind of decentralized, artisanal approach to data integration might allow replication and potentially some extension of research depending on individual data-sharing practices, but would not result in a broadly accessible and expandable resource and would not, without significant further work, represent a component of a larger integrated data architecture. As a result, the range of questions that this kind of decentralized approach might be able to address and the knowledge that might be produced through their answers will be relatively limited.

A second approach mentioned earlier is hierarchical, in which one data set is the parent and other child data sets are linked to it. For instance, restricted administrative data from many organizations might be linked to a restricted federal data asset. Here the value hinges on whether the child data sets are integrated with each other and on whether the links between them can be accessed (in terms of data architecture and data use provisions) without the parent data set. If the child data sets were themselves integrated and usable with each other and without need to rely on the parent data set or adhere to its rules for access and use, then the gains might be comparable to those of a linked data mosaic, provided the data are accessible by the relevant community and their custodians are responsive to community needs. If, as is more typical, the various child data sets are only linked indirectly through each’s integration with the parent—a situation that would not permit their use independent of the parent data set —the potential knowledge available from combining the parent data set with each child data set would be Y = Q2D and the gain from combining the parent data set with two child data sets would be Y = 2Q2D. This is considerable, but for reasonable values of D unlikely to produce the same gains as a linked data mosaic, where the potential knowledge available from fully integrating the three data sets is Y = Q3D.

The network form, or linked data mosaic, maximizes the potential uses of each data set through linkage because it allows many possible integration strategies and levels of analysis to be explored in use by the relevant community members who seek to develop them for their own purposes. The linkage dimensions can be defined using all possible analysis units, or all identifiers that exist in the data. For instance, in the Universities Measuring the EffecTs of Research on Innovation, Competitiveness and Science (UMETRICS) case we consider in more detail below, a transaction from one project (payer) can be linked for all payees (person or vendor). Moreover, when a new data source enters the architecture through linkage to an already integrated component, it does not merely become available for analysis using the data set to which it is directly linked. Instead, additional possibilities are opened because the new data can be indirectly connected to all other data sets that are ‘reachable’ because of their integration in the larger architecture, which can also be conceptualized as a network where data sets themselves are the nodes. In those terms, part of the power of a mosaic approach is that new nodes can potentially be linked to other existing related nodes in the network because the existing nodes are all already integrated (or at least integratable) with each other. While we have emphasized the potential value of linking data sets, there are many factors to consider when exploring whether to link a pair of linkable data sets. Technical feasibility is often not the determining factor. Rather, the benefits of the linkage must outweigh the costs. And, as we argue below, the community-building component is a major benefit in addition to the connected data itself and a way of lowering the cost of linkage.

A separate, slightly more technical advantage of linked data, which is not covered in the figure but potentially quite important in practice, is that a large portion of data construction involves algorithmically cleaning and linking data sets and, while adding data sets increases the amount of linkage and cleaning required, it also greatly increases the data resources available by providing multiple angles on the same data elements. And we speculate that in most cases the gains from greater visibility exceed the complexity of more links.

3. Features of the Network Form of Data Integration

Realizing the kinds of synergies our conceptual model highlights requires social and organizational integration as well. If we are to avoid the twin poles of hierarchical, centralized data assets and uncoordinated, decentralized fields while ensuring that participants gain something of value for their work, we require a new model for the construction and maintenance of data mosaics. A conceptual toolkit drawn from sociological, organizational, and network theory can help us solve this problem. Here we focus on the social aspects, briefly introduce two key concepts (network forms of organization and generalized exchange), highlight a few of their features, and identify some points of possible failure. We can then outline the essential components of an innovative learning community built around integrated data and its effective use, which we illustrate with examples drawn from a data-integration relationship between IRIS and NCSES. That partnership highlights both the technical and the socio-institutional features required to start building the kind of generative community that can maintain and expand a data mosaic of the sort we describe. This community matches a cohesive network of interdependent data custodians and researchers (a generative community) with a deeply integrated network of co-equal data sets (a data mosaic) that can be used by many community members in a wide variety of combinations (a data infrastructure suitable as a strategic asset for policy and research in multiple domains).

Generative communities are fickle things. Their existence implies a degree of trust and affinity among their members, often anchored on some productive form of interdependence enforced by reputational or other social means. For voluntary communities to work, membership must provide something of value that participants could not realize solely with their own skills and resources. That value must offer sufficient inducement to defer some of their individual interests and some of their autonomy in favor of the community’s health. It must also lead them to stick and stay in the face of the inevitable problems and conflicts that accompany any complex, collaborative endeavor. Communities are defined in part by a willingness to exercise voice in the face of those challenges rather than exit (Hirschman, 2007). These features are particularly hard to develop when communities must draw from diverse groups whose cultures, priorities, and constraints are at least somewhat alien to one another. They are harder to sustain when participants and their interests are at least somewhat inimical. Both things are true of the diverse communities that would be needed to support the national data mosaic we envision.

Several questions follow: (1) what kinds of relationships create and sustain the positive social and affective features of community? (2) what kind of organizational arrangements can support and sustain such relationships? and (3) what dangers must we guard against in cultivating both?

Question 1. What kinds of relationships?

Voluntary communities that one must choose to join and work to help maintain at some cost to oneself are forged from interdependent goals and value. At the heart of both things is a peculiar social relationship, exchange. Some types of exchange relationships, for instance between strangers in market contexts, are relatively socially thin. Buying an apple from a stranger at a farmer’s market while visiting a distant town requires a lot of formal institutional infrastructure (such as enforceable property rights) (North, 1993) but relatively little social embeddedness (Granovetter, 1985). It is not necessary to have any kind of personal relationship or any degree of commonality or fellow-feeling with the person who sells one a snack. Other types of exchange, like gift giving, or sharing of valuable and costly-to-transfer private information, are more socially complicated (Blau, 1964; Homans, 1974) and require deeper social support. The problems are compounded when exchanges carry significant risk or might be perceived as illegitimate (Healy, 2006).

The kinds of resource exchanges—of access to restricted, complicated data; of expertise and skill—necessary to vibrant data science communities have much of this character. Likewise, the conditions under which relationships must work—under time pressure; when parties’ collaborators might also be rivals pursuing orthogonal or unrelated ends; when there are high scientific and practical stakes—can introduce a substantial degree of risk. Finally, real and important concerns about privacy and security as well as binding legal restrictions raise the possibility that at least some potentially powerful observers might consider the sorts of exchanges we hope to facilitate to be illegitimate.

Under these conditions, we could trust altruism. That has possibilities, but we are realistic enough not to trust it too much. Instead, we should focus on reciprocity, the familiar tit-for-tat of game theory. Reciprocal exchanges are those where one party gives to another with the expectation that similarly valuable resources will be returned. When the shadow of the future is long, that can be sufficient to support the emergence of relatively complex cooperative systems, but it also runs the risk of a downward spiral of defection.

There are two general types of reciprocity. Direct reciprocation happens when one enters an exchange relationship expecting that the specific partner with whom one is exchanging valuable resources will also be the one who gives back. A second form, indirect or generalized exchange, is more diffuse and takes place when one gives to a partner with the expectation of return from a person or organization who was not party to the original exchange. This requires first that there be multiple parties (a community if you will) engaged in similar forms of valuable, reciprocal exchange who are aware of and engaged with one another (Axelrod, 1984 Takahashi, 2000). Generalized exchange is a common form of gift giving among kinship groups (Levi-Strauss, 1955). Someone might offer something of value to a cousin with the expectation that eventually an uncle will return the favor, even if that cousin never does.

This kind of ‘pay it forward’ ethos is subject to real dangers of free-riding or opportunistic exit and thus requires a degree of trust that typically only emerges within a system of ongoing, successful relationships; an exchange network. However, when it works, generalized exchange generates and sustains social solidarity, which encompasses most of the features of generative communities that opened this section (Molm et al., 2007). Kinship clearly won’t do the trick here. Instead, we suggest an organizational scaffold.

Question 2. What kinds of organizational arrangements?

Network forms of organization exist at a midpoint between markets—the socially thin but institutionally thick contexts for generally short-term, transactional, and armslength exchanges like buying that apple in a far-away farmers market—and hierarchies —the formal bureaucratic structures that characterize large organizations. It seems unlikely that market coordination will result in the kind of community we need. Even if it were possible, internalizing the wide range of data, skills, and expertise necessary to our task in a large bureaucracy would create a dangerous monopoly and might increase security risks by concentrating all the data in a single, presumably fallible organization. We also know that generalized exchange and the benefits it could bring requires a distributed network of interdependent players.

Network forms of organization are more nimble and ‘lighter on their feet’ than bureaucracies and much better at the transfer of complicated, highly tacit information necessary to the sorts of substantive work we would want an innovative data community to accomplish compared to markets (Podolny & Page, 1998; Powell, 1990). They are generally stable enough to create a long shadow of the future and to foster the kinds of trust, informal, reputation-based social control, and forbearance that are necessary in the absence of perfect contracts (Uzzi, 1997). Network forms of organization are common and generally effective in precisely the kinds of diverse, skilled, and creative communities we seek to develop. They are often found in industrial districts, regional technology communities, creative industries, and open-source software projects where rivals on one project may be collaborators on another and where disparate, sometimes conflicting interests and goals are commonplace (Hargadon & Bechky, 2006; O’Mahony & Ferraro, 2007; O’Mahony & Bechky, 2008; Sable & Piore, 1984; Whittington et al., 2009).

Network forms are particularly suited to situations where “the knowledge base is both complex and expanding and the sources of expertise are widely dispersed” (Powell et al., 1996, p. 116). Dispersed, rapidly changing knowledge and complex interdependencies among component parts of innovations characterize this type of governance. Those are also exactly the features of a data science community that seeks to understand, explain, and improve the value of science.

The seed crystals for successful networks have particular characteristics. They are often themselves organizations who anchor communities, convene partners, and make significant, substantive contributions to common projects. Network anchors help to set the technical directions and the tone for their communities (Owen-Smith & Powell, 2004, 2006). Though they lack the authority or power necessary to compel participation or dictate behavior, they exert significant influence by serving as neutral brokers and meeting grounds for participants. Network anchors are most effective when they are active and credible participants in collective efforts and thus can play important roles in fostering new partnerships, engaging new participants, aiding in collaborative problem solving, and helping to build systemwide capabilities so they are in no way disinterested (Owen-Smith, 2018; Powell & Owen-Smith, 2012). Their ability to do all these things is aided by transparency, by rigor, and by the ability to help translate across the different languages and goals of their many partners to help ensure that all find their participation valuable. In doing so, they help provide a basis for the emergence of generalized exchange in a community. As discussed in a later section, a network anchor also performs a valuable service by acting as a neutral broker, collecting and disseminating information that can help identify new partners and smooth their entry into the community. IRIS, which seeks to play just this kind of role in a consortium of major research universities and a growing, interdisciplinary research community, was designed and operates according to these principles.

Question 3. What are the dangers?

Networks, like markets and hierarchies, can and do fail. Setting aside changes in circumstances rendering their work less valuable and to put it starkly, networks fail “when exchange partners either screw each other or screw up” (Schrank & Whitford, 2011, p. 170). There are instructive differences between screwing your collaborators and screwing up. The former is most often a function of opportunism, “self-interest seeking with guile” (Williamson, 1979, p. 234). The problems of defection, free-riding, self-dealing, and strategic exit from relationships or communities that are dangers of both direct and generalized reciprocal exchange are all examples. While it is often hard to distinguish them in practice, or in the heat of the moment, screwing up is less malignant but no less dangerous. Partners screw up when they lack the competencies or knowledge necessary to successfully pursue complex and difficult collaborative projects. Networks screw up when they stop seeking new information from outside their current orbit and calcify, closing themselves off and becoming unable to identify and adapt to new situations or needs. Such ‘closure’ renders them incapable of the kind of flexibility and responsiveness that help ensure membership in the community is valuable. Beyond natural obsolescence, the problem here is not guile, it is ignorance or a simple competency shortfall. These, along with various forms of opportunism, are the primary dangers for network forms of organization that seek to foster generalized exchange in support of vibrant, innovative learning communities.

In the context of data architectures, innovative communities organized around network forms of governance and oriented toward generalized forms of exchange represent a key difference between what we call a data mosaic and either of the other stylized models for data infrastructure we discuss. This is the case because the potential value of a mosaic derives as much from the conditions under which it is developed, expanded, accessed, and used as from the features of data itself. Linked data architectures are themselves networks where nodes are data sets and ties are defined by links across them. Under conditions where the nodes of such a ‘data network’ are controlled by many different custodians and governed by multiple restrictions, the options for organizing such a network have typically either required centralized control (an analogue of the hierarches that are one pole of the organizational spectrum) or decentralized modes for allowing access to thinner subsets of data that do not run afoul of restrictions on use (an analogue of the markets that represent the other).

In contrast, a vibrant data mosaic that can take full advantage of many different linking assets and levels for many purposes is a network of linked data sets that is grounded in a community infrastructure that neither requires all participants to subordinate their requirements and goals to a single dominant player, nor necessitates the thinning of data to allow them to be used broadly. Such communities are also themselves networks, albeit of a very different type than the networks of data they might create and sustain. The value of communities that are organized as networks and integrated through generalized exchange are particularly apparent when we turn our attention to research on innovation and the public value of science and engineering research.

4. The Illustrative Case of Innovation Research

Innovation is critical for improving living standards, advancing health, and self-actualization. The study of innovation allows scholars and practitioners to explore the depth of value created by new discoveries and inventions, including dramatic advances in fundamental knowledge in multiple fields, relevant, practical contributions to some of today’s most pressing policy issues and new technologies. Despite its importance, the innovative process is surprisingly poorly understood.

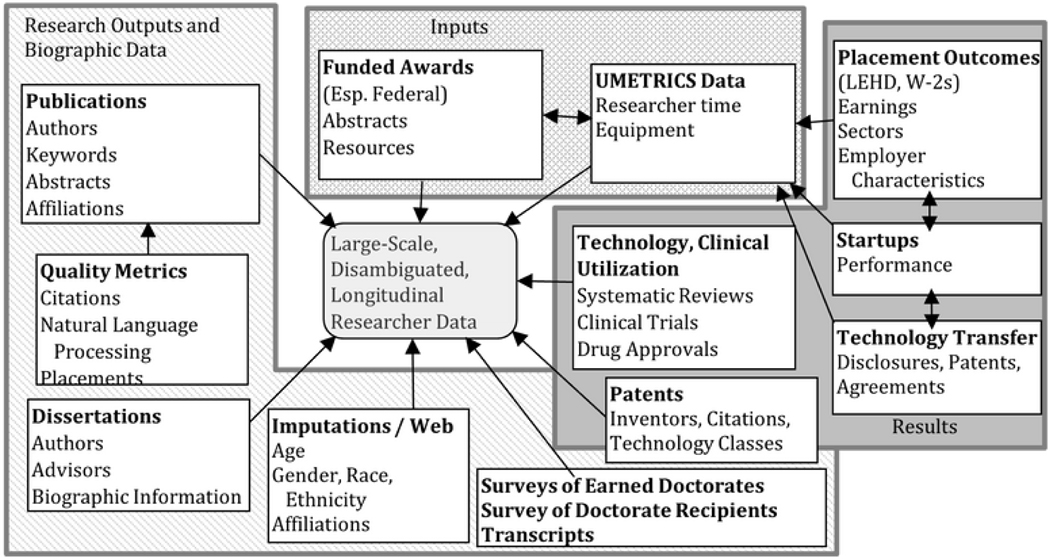

To see the limits to understanding innovation without linked data and to better understand the value of linked data for studying innovation, it is useful to have a question in mind. Consider a relatively simple example: what is the return on federal investments in academic research and development (R&D)? To dramatically oversimplify things, billions of dollars of grants are made every year by more than a dozen federal agencies to thousands of universities where they support innovative research conducted by hundreds of thousands of people who organize their work in a wide variety of ways.

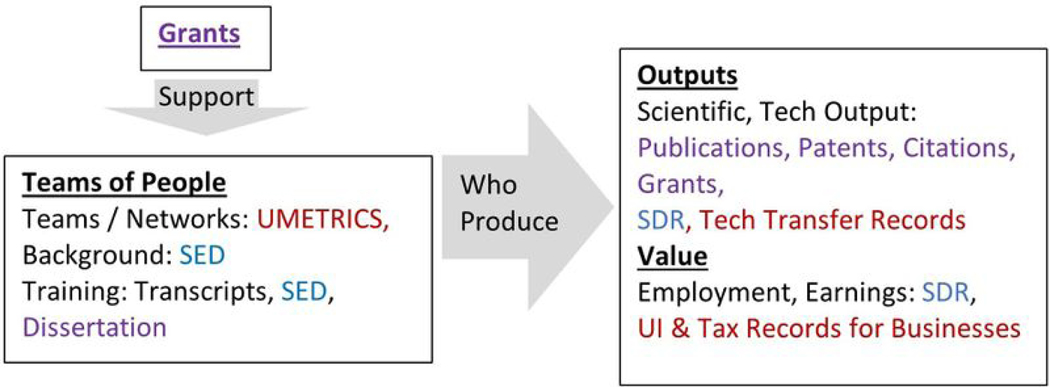

However, as illustrated in Figure 2, grants, by themselves, do not do anything. They enable research conducted by people. And, as Corrado and Lane (2009) observe, innovations are made by relatively small numbers of people, frequently working on teams (Wuchty et al., 2007). Their work produces new knowledge while training people to the levels of expertise necessary to effectively apply it. Ultimately, most of those highly skilled people leave the academy for employment across all sectors, where their work impacts the economy and society. Yet, they carry with them the knowledge and skills they gained during their grant-funded work (Stokes, 1997; Zolas et al., 2015). While much of that knowledge is codified in print (Stephan, 1996) or concretized in technology (e.g., patents) or both (Murray & Stern, 2007), much more remains tacit, embodied in the people who produced it (Polanyi, 1966). Private sector access to tacit knowledge largely comes through employment as companies learn by hiring (Palomeras & Melero, 2010; Song et al., 2003).

Figure 2. Types of data and roles.

The figure illustrates the types of data elements that can be brought to bear to understand how research support affects researchers and teams, output, and the economy. Blue indicates survey data. Red indicates transactional data. Purple indicates naturally occurring data and bibliometric data, which are both unobtrusive. SDR = Survey of Doctorate Recipients; SED = Survey of Earned Doctorates. UMETRICS = Universities Measuring the EffecTs of Research on Competitiveness, Innovation and Science.

At a bare minimum, making a start on understanding who research touches and how it affects them and through them society at large requires that we know what specific grants have been made, in what amounts, to what institutions for what kinds of work. We also need to know how they were spent, what inputs were purchased and, critically, which particular people were employed and trained in what kinds of teams. Then we need to know what those teams produced, what role specific researchers played in that production, where those people eventually found other work, what they earned for themselves and what value they produced for their employers. The list could go on and on, but this should be sufficient to make the point—answering even a relatively simple question in this domain requires many types of data (grant obligations and details, granular research expenditures, publication and patent data, demographics, dissertation information, transcripts, employment and wages, and detailed business data to name just a few). These data are housed in many different locations (federal science agencies, university administrative systems, publishers and repositories, federal statistical agencies, state workforce and higher education agencies, firms). Moreover, each of these entities operates under many different sets of restrictions (federal and state laws, ethical protections, proprietary limits on use). Because the focus of this analysis is largely on people, it is inherently sensitive at some level and requires social capital, protocols, and data systems that all of the fiduciaries holding data can rely upon.

Trust in common protocols and systems is essential, but the value of linked data assets can only be fully realized if their use addresses the needs and concerns of all participants. In an area such as ours, where the results of cutting-edge data science can make immediate contributions to both the frontiers of academic knowledge and directly inform pressing policy and social debates, researchers, data custodians, and communicators who make use of results must be engaged at all phases of the work, and all must find value in participation on their own terms. And, for questions revolving around the diversity of the STEM workforce and the breadth of its impacts, it is essential that a diverse community be engaged in data construction and in an analysis.

Transparency is essential to both engaging communities and establishing trust in what they produce. This theme is apparent in our work at multiple scales. In technical work with complex data, transparency resolves to clear, complete, and discoverable documentation and the broadest responsible access possible. In the soci-oorganizational work of community building, transparency requires clear processes and practices to ensure equitable and inclusive access to data, to address emergent needs and concerns, and to ensure well-understood and effective protections that meet all necessary legal and ethical requirements while addressing varied participants’ different degrees of willingness to, for instance, tradeoff privacy protections and utility. Transparency also resolves to explicit and sustained efforts to match technical and methodological choices to substantive domain knowledge to help ensure the face validity of work that results.

Finally, rigor in the construction, analysis, and use of data and findings is paramount. We take this to mean more than simple replicability (though that too is important). Large, complicated data sets pose challenges in part because they enable a huge range of possible analyses and increase the chance of misuse at the same time. The analytic decisions necessary to render them tractable for particular uses and sensible to relevant communities requires attention not just as to clarity and transparency in technical and methodological work, but also in terms of guiding theoretical principles and concern with the substantive particularities of relevant domains. Large-scale data, we contend, underdetermine knowledge and thus making effective transparent use of them to ensure value for all relevant stakeholders requires that we reach beyond the records and fields themselves in our efforts to render them both sensible and useful.

Speaking to these core principles in a particular area, science and innovation, highlights the general challenges and opportunities of complex linked data in many others, and thus provides broader insights. But all the benefits that might be realized from such work begin with the value that participants derive from it. In the absence of that, the necessary precondition of any such project—data from multiple sources that can be linked—are often impossible to access. In what follows we describe one, concrete, collaborative relationship to build out a single piece of the large data mosaic we envision. The partnership between NCSES and IRIS and the new linked data that results from it (in Figures 4 and 5) offers a case in point.

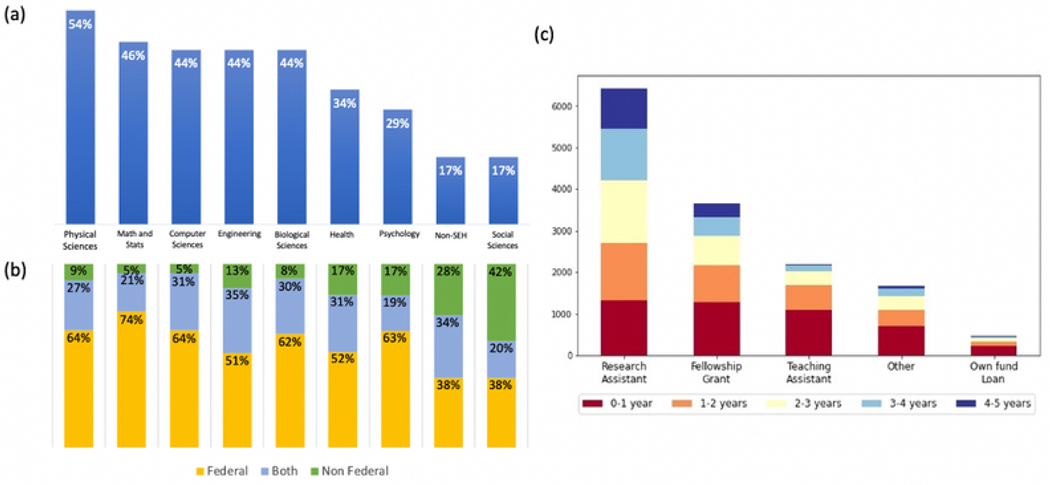

Figure 4. SED-UMETRICS linked data for graduate cohort FY 2014–2017.

(a) Match rate by doctoral field of study. (b) Distribution (percent) of funding type by field of study. Funding type is classified into federal source only, nonfederal source only, and funded by both federal and nonfederal sources. (c) Distribution (count) of funding duration by the primary source of financial support during graduate school reported in SED. SED = Survey of Earned Doctorates.

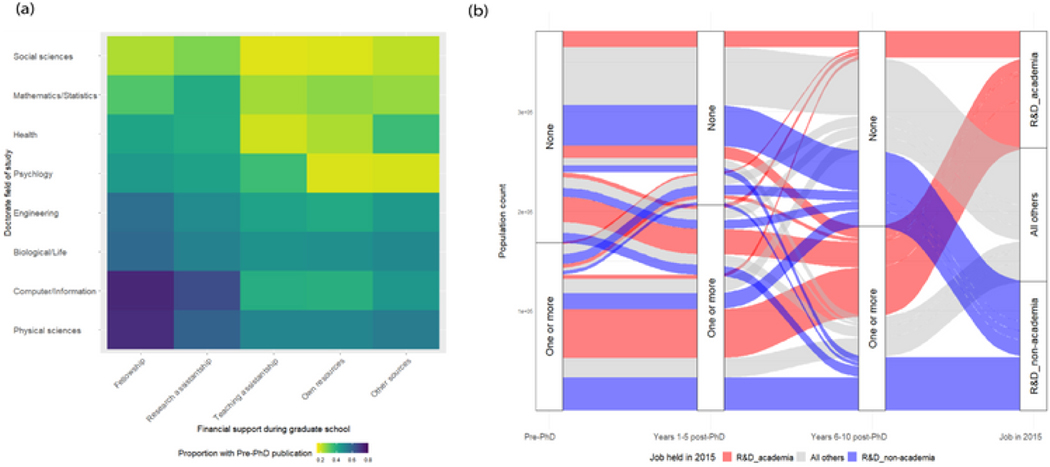

Figure 5. Early career scholarly publishing and employment outcome of the science and engineering doctorate recipients, graduate cohort 1994–2007.

(a) Proportion having at least one published article, review, or conference proceeding paper during the pre-PhD period by doctoral field of study and the primary source of financial support during graduate school. (b) Publishing and research trajectories of early career graduates denoted in order of time by indicators of predoctorate publishing, postdoctorate publishing in years 1–5 and 6–10, and employment type in 2015. The pre-PhD period includes 4 years leading to the degree award and the degree year. The 2015 employment type is defined by whether it is an academic position and if performing R&D for primary or secondary work activities.

5. Network Example

Ongoing work in the study of science and innovation offers concrete examples of successful network anchors. This article examines two differently organized illustrations and then considers the ways in which their collaboration around a particular data integration task provides concrete insight into both the challenges and the benefits of the data mosaic framework we propose.

5.1. IRIS

IRIS is an IRB-approved data repository housed at the University of Michigan, which anchors a consortium of major research universities and maintains productive partnerships with federal statistical and science agencies, foundations, higher education advocacy groups, and other stakeholders in or adjacent to the science policy domain. University members of the IRIS network share transaction-level administrative data on the direct cost expenditures of federal and nonfederal sponsored project grants. IRIS uses these data along with information derived from more than 50 other sources to construct and document an integrated research data release, the UMETRICS data set, that then can serve as the basis for future projects such as those documented earlier. For now, what is important to know is that university administrative data form the kernel of a growing data mosaic that, because of the central role of academic research in the development and value of science, can be extended through responsible linkage to include many types of information at a high degree of granularity. IRIS is a network form of organization and UMETRICS is a network data structure that together represent a growing data mosaic and illustrate the conditions for its expansion through partnership with other network anchors that allow linkage to other high-quality data sets.

IRIS uses UMETRICS data for several purposes under protections established in MOUs and other agreements executed with university members and diverse other partners. Three of those uses are particularly important background for the examples and discussion to follow. First, IRIS makes research data, documentation, and support available to a growing interdisciplinary research community of more than 350 people from nearly 150 institutions through a secure virtual data enclave maintained at Michigan, through the Federal Research Data Center (FSRDC) system and, soon, through the Coleridge Initiative’s Administrative Data Research facility (ADRF). Developing and supporting a vibrant research community represents one use for the data the IRIS network produces and protects.

Part of what makes IRIS a network anchor, has to do with the very terms of the memoranda of understanding (MOUs) that support its work. At base, IRIS is designed to absorb two kinds of costs that often impede the collaborative creation of public goods. First, technically, research staff at IRIS collaborate with partners ranging from universities and statistical agencies to individual research groups to clean, improve, and document research data releases. This ‘build once-use many times’ framework results in a common data resource that can be used and expanded for many purposes by many different constituents. Rather than offering partners access to a complete, conclusive data resource, UMETRICS represents a central tile that can be attached to many different data sources to meet the needs of different users. More important than the technical aspects of this work are the institutional dimensions. IRIS is organized to absorb transaction costs that might make more bilateral, decentralized data-linkage projects prohibitively difficult. For instance, research users of UMETRICS data and approved partner organizations, such as the U.S. Census Bureau or NCSES, are able to sign a single agreement with IRIS to access data from many participating institutions. By combining technical and institutional procedures to streamline later data integration and use, IRIS facilitates connections and serves as a network anchor.

Second, IRIS uses UMETRICS, the results of its own and community research, and the skills it has built in working with the data to design, develop, and share valuable data products with participating universities. IRIS routinely produces nine different interactive data products for a variety of use cases—ranging from government relations and communication to faculty and research development and the improvement of education. Universities thus receive direct and tangible benefits from their involvement that would be very difficult to realize on their own. The IRIS community continually updates data and pilots new products in collaboration as research findings create new knowledge and as participants’ needs shift. For instance, rapid turnaround reporting and benchmarking on the research effects of COVID-19 aided many of IRIS member institutions and partner organizations in tracking and responding to the effects of pandemic shutdowns.

Third, IRIS uses data aggregated across all participating campuses, which currently represent about 41% of total academic R&D spending in the nation, to develop and produce public-facing data products used by policymakers, advocacy groups, science agencies, and other stakeholders to forward their own goals and missions in science policy, science communication, and federal and state outreach. IRIS is increasingly turning these privacy-disclosed aggregate products toward communicating the value of science to various publics.

Realizing the Benefits.

At its base, then, IRIS is a network anchor that makes transparent, rigorous, and secure use of a wide variety of open access and restricted data from many sources to support fundamental and policy-relevant research and provide valuable benefits to all members of its community. The technical and institutional features that help make it a network anchor by absorbing costs that would otherwise fall to researchers or data custodians and the strong focus on ensuring that streamlined access is accompanied by direct benefits makes the kinds of collaborative integration and improvement work we describe possible. Consider a simple example that will become important in our discussion of linking Survey of Earned Doctorates (SED) and UMETRICS data.

Universities submit monthly transactional data to IRIS. Those data include information on payments of wages from individual grants to particular people. This ‘employee transaction’ table includes information on the job title for which a person is paid on a particular grant and a university-submitted classification of more general occupations (e.g., faculty, students, staff) (IRIS, 2020). Those occupation categories, however, are often incomplete or missing, so university-submitted classifications cannot be used alone for most purposes. Instead, IRIS has, over the course of years, worked with various partners to develop and expand classification systems that work for both research and reporting purposes (Ikudo et al., 2020). This system emerged from work done by university data representatives under the STARMETRICS program, and it was improved by IRIS cofounders Bruce Weinberg and Julia Lane and their research teams while UMETRICS was being piloted with a set of universities from the Big 10 Academic Alliance.

Over multiple iterations of IRIS’s research data release, that classification system has been adapted and improved. Universities receive classifications for their employees with IRIS-produced reports and IRIS technical staff have created mechanisms for university partners to improve the classifications, which they have an incentive to do as a means to make the reports they receive more valuable and usable to local stakeholders. Research teams who access IRIS data for their projects also create improvements using particular methods for specific purposes that can then be tested and where appropriate integrated with future data releases. We shall see that linkage between UMETRICS and SED offers another example, where IRIS classifications of graduate students (based on job titles) can be validated against a universe of known PhD recipients. The result of all this is that IRIS serves simultaneously as a responsible curator for restricted data for many purposes and as a location where different parts of a larger community can collaborate to improve a shared resource. This is the context in which we work to mitigate the dangers of opportunism and competency shortfalls.

Addressing the Dangers.

Minimizing opportunism requires formal and informal arrangements designed to promote fairness and transparency in procedures while also supporting inclusiveness and accessibility in operations and data access. IRIS strives to accomplish this by making all requirements and materials for data access public along with easily accessible and clear documentation, ready and equitably distributed administrative and research support, and encouragement for data and expertise sharing within groups (e.g., researchers and data producers) and across them.

A key part of this work is ensuring not only that access and support are transparent and fair but also that there are mechanisms to allow all members of the community to receive credit for work they do that contributes to the common good. With credit comes credibility for IRIS and, more importantly, a reward for individual community members engaged in the work of generalized exchange. A major source of value for research community members is recognition for completing quality work that is documented and freely shared. One means to limit opportunism is to facilitate mechanisms for data users and data providers to establish positive (or negative) reputations for themselves, which can help guide the collaboration decisions of their future partners. Both of these mechanisms benefit from transparency and reinforce procedural fairness. They are augmented by mechanisms such as research support for documenting and archiving code and measures as well as replication data sets. Collaborative tools (such as secure git repositories within the IRIS virtual data enclave) and means to discover, disseminate, and cite user-generated work (such as IRIS-minted DOIs) aid in both these processes while helping to make certain that the network IRIS anchors do not become closed to new ideas, new techniques, or new analysts.

Running throughout the whole of our argument is the theme of value for participants. Given that we cannot and should not rely on altruism or preexisting bonds of affinity or kinship (which could themselves contribute to network closure and calcification), the pursuit of individual interests and goals that can only be met with inputs from others becomes a primary driver of participation and spur toward generalized exchange. IRIS directly ensures value to data providers, which builds trust that facilitates the creation of streamlined data protections that, in turn, put access to data within reach of a wider community and increase the range of types and sources of data that are available. As the quality, scale, and scope of data resources increase, so too does the value for all members of the community. Perhaps unsurprisingly, shared, community-driven data resources and vibrant generalized exchange networks have positive externalities, reinforcing a virtuous cycle of credit and engagement while further diminishing the likelihood of opportunism by increasing the amount one stands to lose when bad behavior is identified.

Taking advantage of the many sources of value that are ‘baked into’ joining and fully participating in the IRIS network also requires attention to costs. The fundamental cost of complex collaborations that involve data sharing and integration are as much transactional as they are technical or financial. Indeed, the challenge individual researchers would face in overcoming the frictions necessary to secure data access permissions from a wide range of providers would be insurmountable for most. The work to build trust and establish value that is core to the IRIS model allow it (with the help of well-crafted agreements) to minimize the transaction costs of data access and collaboration for all. IRIS negotiates dozens of agreements with provisions that allow us to make the full data set we produce available to researchers under a single agreement with no additional university approvals.

Likewise, non-university data providers can, with appropriate approvals from IRIS’s elected board of directors, access data and link assets from many sources with a single agreement. Establishing the value of the data set as a whole for aggregate reporting on matters of pressing concern to non-university partners increases the likelihood that future data-sharing agreements will augment value for everyone. The benefits of participation compound to limit the likelihood of opportunistic behavior by lowering the cost of participation through streamlined data access and increasing its value through growing scale and scope. Here too, reciprocity becomes an essential, and positive, force for community development. The linkages we present between SED and restricted transaction data from nearly two dozen different institutions offers just one case in point.

Transparency, fairness, accessibility, value, and efficiency may seem far removed from the day-to-day details of developing a research community. But they are essential accelerants for generalized exchange and retardants for opportunism.

The primary means IRIS uses to decrease the dangers associated with screwing up flow from and reinforce these principles too. If one primary danger for data science communities is competency shortfalls among participants, it becomes essential to meet data users and data producers where they are. Building research community is thus inseparable from building collaborative, technical, and analytic capacity. That capacity, in turn, improves transparency and increases the rigor of both individual and collective work.

Training opportunities, public convenings, and clear documentation and support for mechanisms to allow learning within and across constituent groups increase accessibility and the flow of new people and ideas into the network. They also reduce the forms of ignorance that can lead to unintentional screw-ups. IRIS routinely offers a 9-day-long virtual workshop series aimed at providing novice research users of largescale restricted data with the tools they need to get started. Sustained collaborative work and substantial technical support in those settings jumpstarts expertise building and generalized exchange. Other courses, which IRIS contributes to, are run by our partners NCSES and the Coleridge Initiative. Those courses aim to engage analysts and technical staff at federal and state agencies as well as universities and they rely directly on the analytic data sets whose production we describe in some detail while providing more description of the classes themselves in a subsequent section. Training opportunities such as these serve not only to increase capacities for collaboration but also to further integrate disparate but interdependent portions of the community and to help members with different backgrounds and expertise learn from and communicate with each other. The macroscopic results of all that work refresh the network, expand the grounds for generalized exchange, and decrease the likelihood of both screwups and screwings.

5.2. NCSES

NCSES is a leading provider of statistical data on the U.S. science and engineering enterprise. As a principal federal statistical agency, NCSES serves as a clearinghouse for the collection, acquisition, analysis, reporting, and dissemination of statistical data related to the United States and other nations. NCSES conducts more than a dozen surveys in the areas of education of scientists and engineers, R&D funding and expenditures, science and engineering research facilities, and the science and engineering workforce with type of respondent ranging from individual, academic department and institution, state agency, federal agency, to company, business, and establishment. In recent years, NCSES has explored alternative data sources in conjunction with survey data to bolster the utility of its surveys and avoid imposing further response burden on the survey participants. NCSES also supported requests to match its restricted-use survey data with administrative or other data as potential research tools.

Results of these data linkage explorations formed a basis for a research data network centered around the SED, an annual census of individuals who earned research doctoral degrees from accredited U.S. academic institutions. These census data of the highly trained and highly invested U.S. research doctorate holders is a natural magnet attracting data-matching requests to study outcomes of federal programs such as those supporting diversity in doctoral training (McNaire Scholars program), engineering workforce development (National Science Foundation [NSF] Engineering Research Centers), and research opportunities for undergraduate students (NSF Research Experiences for Undergraduates program). For this population, postgraduation career trajectory, scientific productivity, and their contributions to innovation are also high-priority topics in science policy research. Connected to the SED by design is a NCSES workforce survey, the Survey of Doctorate Recipients (SDR) a biennial longitudinal survey. The two linked surveys provide rich demographic, education, and career history information for this population, and significantly increased feasibility of further expansions through data linkage to illuminate policy-relevant topics ranging from the impact federal research funding has on graduate education, job placement, career progression, and research output as well as scientific mobility indicators, factors impacting scientific workforce diversity, and gender gaps in publication productivity, earnings, and employment (Goulden et al., 2011; Kahn & Ginther, 2017; Millar, 2013).

Data linkage involving restricted-use data is governed by the center’s matching policy, which requires work to be performed by NCSES contractors or, with NCSES approval, by a federal agency. The center’s practices in protection of the confidentiality of NCSES collected data and statistical standards placed on data production add cost and operational challenges on top of the technical challenges of developing linking methods. For example, contractors are required to have a NCSES-approved confidentiality plan and data security procedures before the work can begin and it historically entails building a customized secure data system for the project. At times, this practice and data use agreement can take a significant amount of time to establish. Not until 2019, when NCSES started housing-linkage projects in the NCSES Secure Data Access Facility (SDAF), did linkage projects begin to flow more efficiently. Using the same facility, where NCSES also provides secure remote access to confidential survey data for licensed researchers, cross-pollination between project teams and collaborations with data users that have shared data interests could be efficiently facilitated and managed effectively by NCSES.

Many questions of interest for the population of doctorate recipients revolve around research publications, and the SDR has periodically included self-reported data on publications. At the same time, a wealth of data on publications is available in publication and citation databases. These data constitute valuable information that, through linkage, might augment the SED/SDR without increasing burden on respondents. NCSES partnered with the research community in multiple ways to conduct this work. First, linking respondents in the SED/SDR to authored scientific publications and U.S. patent inventors is a NCSES major research undertaking that began in 2012. The linkage methodology continues to evolve from an initial deterministic matching attempt to advanced machine learning approaches. All the while, venders of bibliometric data continue to improve data coverage and offer new data features that could redirect the linkage methodology. The first pilot project with Clarivate (then Thomson Reuters) delivered results in 2016. Staff reshuffling during the lengthy project with the departures of key technical personnel and project managers resulted in incomplete linkage quality assessment and process documentation. NCSES engaged the community to complete data-quality assessments and prepare for data release, contracting with three independent research groups. One (Science-Metrix) conducted a review of the potential data source biases and methodology shortfalls, one (Ginther et al., 2020) performed independent validation of matched records of publications, and one (Jiang et al., 2021) performed analysis of matched publications and provided guidance on how to use the linked data. Valuable information was gathered on linkage quality and data limitations. Here NCSES was able to facilitate the construction of a data mosaic, supporting linkages that would require resources beyond those of most or all research groups, address privacy and confidentiality issues, and engage community expertise to assess those linkages to produce high-value data for the community.

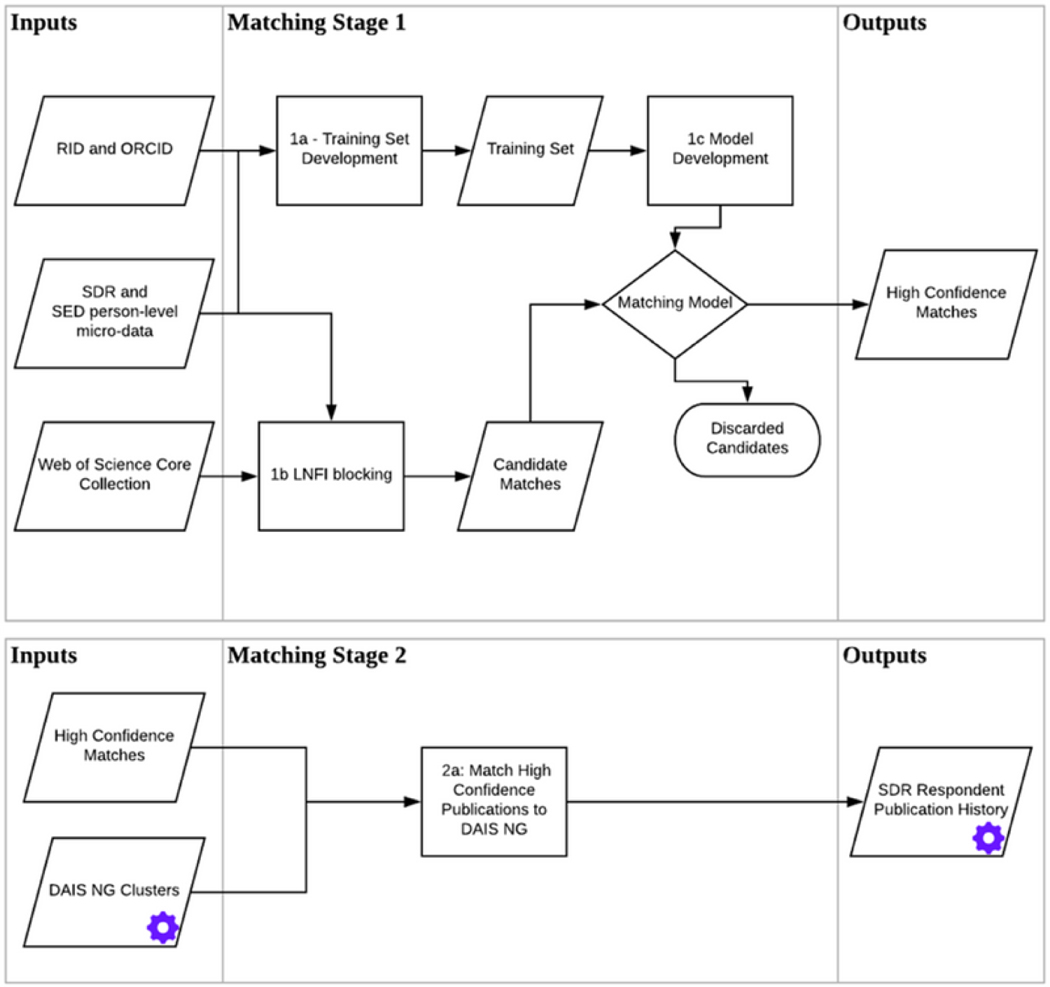

NCSES kicked off a new round of linkage in 2019 to link a new and larger sample (~80,000) from SDR 2015 to the two leading bibliometric databases, Scopus and Web of Science (WoS). By contracting with both Elsevier and Clarivate, NCSES plans to explore contractor capability and identify a longer-term partner to build a repeatable and sustainable data production. The Elsevier team utilized a well-established author ID system and a sophisticated query algorithm to link SDR respondents directly to Scopus author IDs using all identifiers and linkable attributes from the cluster of publications associated with each author ID. The Clarivate team took a sequential two-stage machine learning approach as detailed and illustrated in Appendix Figure A1. In stage 1, high-confidence matches were determined by applying a supervised gradient boosting machine learning algorithm (GBM) constructed with 67 features ranging from comparable direct and indirect identifiers to auxiliary variables containing only information from the SDR (e.g., race/ethnicity) or Web of Science (WoS) (e.g., name commonality.) In stage 2, the resulting high-confidence publications were used to seed into and selectively append additional publications from WoS Author Clusters, an independent methodology that disambiguates the more than 171 million WoS publication records.

Lessons learned from initial pilot work made it clear that (i) model performance is not uniform across subgroups and prediction thresholds must be adjusted to reflect limitation of source data to achieve the required high-precision level (for example, individual author affiliation data are not available for all years or all types of publications); (ii) linkage errors need to be assessed at levels aligned with the expected unit of analysis (in this case, at the individual publication and individual researcher levels); (iii) most of readily available bibliometric measures are derived for single publications, and meaningful measures of publication output and impact are needed at the researcher level to support population estimation and reporting. This activity relies heavily on analysts from both bibliometric and survey sides to collaborate; and (iv) a new set of statistical standards need to be developed to support releasing of research microdata by a statistical agency.

5.3. The IRIS-Coleridge-NCSES Partnership

If the UMETRICS and NCSES data architectures are both evolving mosaics, the next step is to combine these mosaics. The exponential value of data mosaics suggested in our toy model is a function of just this kind of extension, where a strategic linkage (in this case between UMETRICS and SED) conducted through an ongoing collaboration opens a wide range of new possibilities for improving access to and use of both the newly connected mosaics. This work is in progress under a NCSES contract with the Coleridge Initiative and being conducted in the Administrative Data Research Facility (ADRF). Combining these data sets adds considerable value to both, as is illustrated following. For instance, the SED is administered at a single point in time, although it has retrospective questions. Adding UMETRICS adds dynamic richness. For UMETRICS, which does not contain data on field of study or background, the SED provides context. Of course, UMETRICS also provides context to the SED in that the SED contains limited information on researchers’ formative training experiences in the form of funding and research teams, while UMETRICS contains data on the source of research support (funder and specific award) as well as the research teams in which people are embedded.

The key to realizing the value of this linkage beyond the specific projects come from: (a) the process by which the linkage process draws on complementary expertise of network anchors, (b) the enabling features of the data platform (ADRF), and (c) the institutional arrangements of IRIS that allow for integration of data from many institutions and sources with NCSES surveys under conditions that minimize transaction costs for NCSES but that maintain necessary data protections required by both partners.

All of these features are enabled by both parties’ recognition of and attention to multiple sources of value. NCSES and IRIS share the goal of making well-documented, linked data that can address key questions in the study of science and innovation broadly and make it responsibly available to a diverse research community. The partnership thus reflects a common recognition of just the kind of value our model abstractly identifies with linked data mosaics. This common source of value is matched by independent needs for each organization, which the joint project helps to address. For IRIS and its member institutions, linking university administrative data to a widely used and recognized ‘gold standard’ data set for understanding graduate education represents the potential for important new findings and data products that might be developed to address key concerns of universities and their graduate programs that pertain to diversity, equity, and inclusion and to widespread efforts to re-envision STEM graduate education for the 21st century (NASEM, 2018a). Thus, the partnership has immense potential value to IRIS and its stakeholders.

For NCSES, streamlined access to administrative data from many institutions and collaborative means to develop expertise in their use likewise address specific needs. Recall that, in its role as a network anchor, IRIS is designed to absorb transaction costs and to facilitate the development of community expertise in the use of the data it creates. Because of these features, NCSES can sign one agreement to access many institutions’ data and the collaborative work to develop linkages (discussed following) can grow each organization’s relevant expertise. This work thus addresses both requirements under the Evidence Based Policy Act and Federal Data Strategy and recent recommendations made by a NASEM panel that evaluated NCSES data products (NASEM, 2018b). Training classes that use the linked data this partnership produces serve both organization’s needs and their joint goals by developing local and community capacity and deeply engaging researchers in the process of expanding and documenting data linkage.

5.4. Technical issues in Linking SED and UMETRICS

Realizing the value of this partnership has required consistent, long-term, and iterative work that engages analysts from IRIS, NCSES, and the Coleridge Initiative as well as participants in four training classes. While our goal is not to dwell on the deep technical details of this particular linkage, which largely rely on standard methods in data science, we do highlight key questions and processes that we take to be necessary for the project’s success. We summarize them here in the belief that similar issues are likely to arise in data integration efforts in this and other fields and hope that the discussion will illustrate common critical challenges and illuminate in very concrete terms aspects of the data mosaic approach we propose.

Units of Analysis.

Earlier, we noted that one of the first substantive questions for any data mosaic project has to do with establishing a small number of fundamental levels of analysis for the overall effort. In this pilot effort, as in the larger initiatives of which it partakes, that level of analysis is the individual researcher, or, more accurately, the individual research career. This decision was motivated by the exemplary discussion in section 4 and represents a key distinction between this data linkage effort and other large-scale projects relevant to our domain, which often focus specifically on documents, publications, patents, proposals, and grants.

Linking Variables.

The selection of that level of analysis is driven by theoretical concerns, but also by substantive knowledge of the field and by the needs of participating community members. It is important for the general project of data linkage because the fundamental level of analysis dictates the key linking assets and also the levels and types of privacy protections required. In our case, we rely on personal identifiable information (PII), either hashed (in the case of SED-UMETRICS linkage) or clear text (in the case of SDR-WoS) linkages. The SED-UMETRICS linkage is augmented by information on each individual research employee’s month and year of birth. Those fields, rather than complete date of birth information, were selected in concert with university data providers after careful pilot tests of linking procedures that helped us determine the highest value assets for improving linkage. While adding day of birth to these data would offer a minor improvement in linkage quality, that improvement came at the cost of expanded concerns about privacy and risk and thus we chose not to pursue the additional data asset. Such risk-utility tradeoffs with regard to privacy and security are best driven transparently by discussion of community needs and value, and represent another general challenge for this type of project.

Protecting Privacy.

A fundamental concern for linked individual data is the increased danger of primary and secondary reidentification that accompanies a dramatic increase in information associated with individual-level identifiers. This danger is particularly salient in fields such as health care and education (which this project implicates) where federal regulations such as Health Insurance Portability and Accountability Act (HIPAA), Federal Education Rights and Privacy Act (FERPA), and Confidential Information Protection and Statistical Efficiency Act (CIPSEA) impose specific requirements on participants and on research users. A substantial challenge for bringing together data from two different organizations with their own privacy and security protocols is finding and agreeing upon an environment that will allow the members of the prospective organizations to collaborate while upholding their separate privacy and protection requirements.

IRIS and NCSES agreed to have the SED-UMETRICS linkage conducted within the Coleridge Initiative’s ADRF. The ADRF is a FedRAMP Certified secure cloud-based computing platform designed to promote collaboration, facilitate documentation, and provide information about data use to the agencies that own the data. The U.S. Census Bureau established the ADRF with funding from the Office of Management and Budget to inform the decision-making of the Commission on Evidence-Based Policy. The ADRF was designed to apply the “five safes” framework (safe projects, safe people, safe settings, safe data, safe exports) for data protection (Desai et al., 2016; Arbuckle & Ritchie, 2019). Since the ADRF was designed to bring together data from different organizations and facilitate collaboration, it provided the framework to establish data-security protocols that met the needs of both NCSES and IRIS. Still, developing a protocol to establish safe people and safe exports was not a trivial process.

We match individuals in the SED and UMETRICS data sets on name, institution, and date of birth information. To meet IRIS’s privacy and protection requirements, the personally identifiable information (PII) had to be hashed before bringing the data together in the ADRF. To realize this requirement, we apply the same standardization techniques to information from each data set and hash the resulting strings using the same algorithm and seed. The result is that identical cleartext PII strings are transformed into unique, identical hashed strings, which can then be used for deidentified linkage to integrate the two data sources. Since the personally identifiable information is hashed, our options for linkage are restricted to using an exact matching strategy. We are unable to use a sound-matching or statistical similarity approach.

This exact matching strategy across multiple name-token combinations gives us more confidence in the matches we identify; however, the relative inflexibility of our approach will fail to link individuals who appear in both data sources with a misspelled first or last name in one, and it will also face challenges where survey data contains nicknames or other substantive differences between an individual’s self-identification and their formal legal name as registered in university administrative systems. Parallel data-cleaning processes applied to both data sources included a step that harmonizes full names and common nicknames associated with them. Nevertheless, the tradeoffs involved in these privacy protection procedures may lead us to underestimate the number of individuals who receive grant funding while earning a PhD. Plans to conduct parallel cleartext PII linkage of UMETRICS and SED once appropriate institutional clearances are secured will allow for additional validation. But, in keeping with the mantra of establishing value while hewing closely to the privacy requirements of particular communities, an imperfect initial match can be used to demonstrate value and build further support for expanded work with these restricted data.

Similarly, the SDR and WoS linkage was carried out within a closed, secure data-access environment, NCSES’s SDAF, to safeguard the privacy and confidentiality of the survey respondents. The constraints added to the operational cost and efficiency. The data construction does not end at the completion of data linkage. In the case of appending longitudinal publication outcomes to surveys rich in demographic information at the individual level, the risk of reidentification can increase dramatically. We face new challenges in designing research data that can be disseminated broadly to the communities while addressing the heightened disclosure risk. Here, too, the dictates of data structures and privacy requirements are a key component of analytic decision-making in early project stages.

Identifying a Cohort With Nonoverlapping Samples.

One of the first issues that arises in any linkage, which confronted the work we describe, is the timeframe to use. The SED has covered all accredited U.S. institutions since 1957; therefore, its duration and timeframe was not a limiting factor when considering the appropriate timeframe for analysis. On the other hand, the availability of UMETRICS data varies across universities and ranges from 3 months to 18 years, with an average of 7 years and 8 months. The earliest UMETRICS data available begins in 2001; however, the number of reporting universities is sparse in the earlier years, with fewer than half of the universities available before 2013 (IRIS, 2020). We have the most coverage for the fiscal years 2014–2017. Each of the 21 universities we analyze here is represented during this time; 16 universities have full coverage, and the other five universities are only missing one year. Thus, we focus on PhD graduates from SED during the fiscal years 2014–2017. (One of the IRIS universities only had 3 months of UMETRICS data available for this time and thus only captured a fraction of the grant funding from the doctoral graduates; thus, we removed this institution from the analysis.) As with any such decision both data sufficiency and technical needs must be balanced with substantive concerns (e.g., changes over time in fields).

Another, potentially related, issue that can arise is how to harmonize time across data sets. This was less of an issue in our context because the SED is administered at a single point in time while UMETRICS is longitudinal. On the other hand, there was more of an issue to develop standardized time variables within UMETRICS. While it would be possible to provide full-time detail, there is an advantage to providing a more parsimonious set of variables. For many purposes, we chose to provide data at a monthly level, which still provides considerable richness, but with more parsimony.

Validation Strategies.