Abstract

Objective

To address gaps in routine recommended care for children with Down syndrome through a quality improvement initiative conducted during the coronavirus disease 2019 (COVID-19) pandemic.

Study design

A retrospective chart review of patients with Down syndrome was conducted. Records of visits to the Massachusetts General Hospital Down Syndrome Program were assessed for adherence to 5 components of the 2011 American Academy of Pediatrics (AAP) Clinical Report, “Health Supervision for Children with Down Syndrome.” The impact of 2 major changes was analyzed using statistical process control charts: a natural disruption in care due to the COVID-19 pandemic, starting in March 2020; and a planned intervention, implemented in July 2020, that integrated routine health maintenance reminders into the electronic health record using age-based logic triggered by a diagnosis of Down syndrome.

Results

From December 2018 to March 2022, 433 patients with Down syndrome had 940 visits. During the COVID-19 pandemic, adherence to the audiology component decreased (from 58% to 45%, P < .001); composite adherence decreased but later improved. Ophthalmology evaluation remained stable. Improvement in adherence to 3 components (thyroid-stimulating hormone, hemoglobin, sleep study ever) in July 2020 coincided with electronic health record integrations. Total adherence to the 5 AAP guideline components was greater for follow-up visits compared with new patient visits (69% and 61%, respectively; P < .01).

Conclusions

The COVID-19 pandemic influenced adherence to components of the AAP Clinical Report “Health Supervision for Children with Down Syndrome,” but improvements in adherence coincided with implementation of our intervention and with reopening after the COVID-19 pandemic.

Keywords: Down syndrome, health maintenance, quality improvement

Abbreviations: AAP, American Academy of Pediatrics; COVID-19, Coronavirus disease 2019; EHR, Electronic health record; MGB, Mass General Brigham; MGH DSP, Massachusetts General Hospital Down Syndrome Program; QI, Quality improvement; SPC, Statistical process control; TSH, Thyroid-stimulating hormone

Because each primary care pediatrician typically cares for only 1-2 patients with Down syndrome,1 the current care model for many children relies on a primary care physician to provide health supervision following the American Academy of Pediatrics' (AAP) Clinical Report.2, 3, 4

Studies show wide variation in adherence to the care elements recommended in the AAP report. Annual blood work, including thyroid screening with thyroid-stimulating hormone (TSH), is conducted in 56%-92% of patients, and hemoglobin determination is completed in 48%-67%.1, 5, 6, 7, 8, 9 Hearing is screened at least annually in 18%-85% of patients, and vision in 43%-88%.1, 6, 7, 8, 9 Sleep studies are conducted in 4%-70%.1, 5, 6, 8, 9

The AAP guidelines are revised over time as new evidence emerges. Sleep studies were previously recommended for children with symptoms of obstructive sleep apnea, but in 2011 they were recommended universally by age 4 years. Sleep study completion rates were lower before publication of the 2011 AAP document and increased subsequently.1, 5, 6, 8, 9 Two single-institution studies in Ohio focused on adherence to the 2011 document.1, 5 At baseline, 13%-16.7% of patients were fully up-to-date on the components studied.1, 9 Adherence improved with physician education, integration of components of the AAP guidelines directly into the electronic health record (EHR), and direct-to-consumer tools.1, 5, 10

We began this quality improvement initiative within our subspecialty clinic for Down syndrome, the Massachusetts General Hospital Down Syndrome Program (MGH DSP), to improve health supervision. We based our approach on our previous work in a different hospital system, which demonstrated that a combination of EHR tools built in the same EHR system, Epic (Epic Systems Corp), improved adherence for patients with Down syndrome.5

Methods

The MGH DSP is affiliated with Partners HealthCare, now Mass General Brigham (MGB), in Boston, Massachusetts, and is housed in the Genetics Department. The MGH DSP is a multidisciplinary specialty program for individuals with Down syndrome, and medical visits include a physician, a social worker, a nutritionist, a self-advocate with Down syndrome, and a program coordinator. Phlebotomy is available on-site; bloodwork ordered during a visit is completed the same day. In addition to the medical visit, patients may see audiology and/or ophthalmology at the MGH-affiliated Mass Eye and Ear Institute on the same day. Before a visit, parents complete an electronic intake form with parent-reported interval medical history and health surveillance.

Beginning in 2019, our team conducted a quality improvement (QI) initiative focused on adherence to 2011 AAP guideline components, based on previous work and the existing literature. We used a typical team-based approach to evaluate barriers and drivers, to study adherence, and to monitor the EHR integrations at MGH. The team consisted of an Epic analyst, the Director of Quality Improvement Research for the MGH DSP (a geneticist), the MGH DSP Director (a geneticist), a parent of a child with Down syndrome (who is also a developmental–behavioral pediatrician), and a research coordinator. In previous work using EHR integrations at another institution, process-improvement methods were critical for successful implementation and adoption5; we needed to follow the integrations closely to confirm that they were functioning appropriately without alerting excessively, and to check in with locations where the intervention was active to determine whether changes were needed.5 We included an Epic analyst to monitor feedback about the EHR integrations, and a parent to incorporate a parent's insight and perspective. The first author presented to the Clinical Committee for MGB in October 2019 for approval to implement these pediatric integrations throughout the MGB health care system. The team communicated by email and met in person or by videoconference.

In April 2020, due to the coronavirus disease 2019 (COVID-19) pandemic, the MGH DSP transitioned from a fully in-person clinic model to a virtual visit model using videoconferencing.11 Because we were already conducting this planned QI project, we were able to capture in real time the impact on adherence of the unplanned, natural disruption of the COVID-19 pandemic, as well as our ability to maintain adherence under the virtual visit model.

Baseline

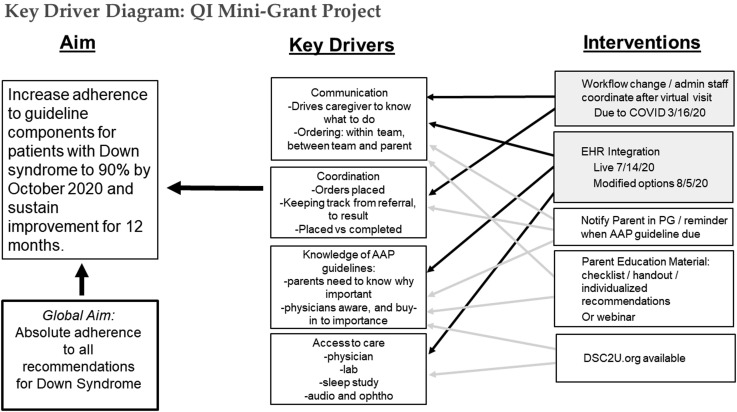

Baseline data were collected in 2019, while we were developing the intervention and before the COVID-19 pandemic. From these data, our Specific, Measurable, Achievable, Relevant, and Time-Bound aim was to increase adherence to components of the AAP Clinical Report for patients with Down syndrome to 90% by October 2020 and sustain improvement for 12 months. We created a Key Driver Diagram as we planned our initiative (Figure 1).

Figure 1.

Graphic of Key Driver Diagram with drivers, interventions, and aims. PG, patient gateway (an electronic mode of communication between patients or parents and the medical team that is housed in the electronic health record).

The Intervention

In 2019, our team developed an intervention to address gaps in adherence. Epic analysts in the MGB system built EHR integrations that replicated previous work showing the benefit of specific EHR integrations at a pediatric hospital in Ohio.5 The MGB integrations we studied listed the recommended components in the Health Maintenance Record section of Epic for patients whose Epic “problem list” already included a diagnosis of Down syndrome.

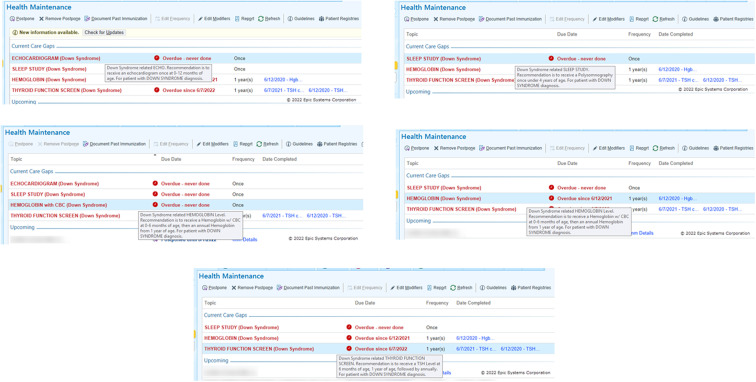

The goal of the intervention was to alert physicians in the MGH DSP to missing components of the 2011 AAP guidelines. The EHR integrations used the same approach as previously described,5 integrating reminders for key components that apply to all children with Down syndrome. We studied 3 of these: a sleep study once before age 4 years; a serum hemoglobin with complete blood count at age 0-6 months, then annual hemoglobin; and a TSH at 6 months of age, then annually. These items appeared as text in the Health Maintenance Record of Epic and, in our build, were listed in red text as “Care Gaps” (Figure 2; available at www.jpeds.com).
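The age-based reminder logic can be sketched as a small rule function. This is a minimal illustration under stated assumptions, not the Epic build: the `Patient` fields, the `care_gaps` and `_annual_gap` helpers, and the simplification that hemoglobin and TSH are both flagged once a child is older than 6 months with no result in the previous 12 months are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Patient:
    """Hypothetical chart snapshot; field names are illustrative, not Epic's."""
    age_months: int
    down_syndrome_on_problem_list: bool
    months_since_tsh: Optional[int] = None         # None = no result on file
    months_since_hemoglobin: Optional[int] = None  # None = no result on file
    ever_had_sleep_study: bool = False


def _annual_gap(age_months: int, due_from_month: int,
                months_since: Optional[int]) -> bool:
    """Assumed rule: overdue once past the first due age with no result in 12 months."""
    if age_months <= due_from_month:
        return False  # first test not yet overdue
    return months_since is None or months_since > 12


def care_gaps(p: Patient) -> List[str]:
    """Return reminder labels that a Health Maintenance-style rule might show."""
    if not p.down_syndrome_on_problem_list:
        return []  # reminders only apply when the diagnosis is on the problem list
    gaps = []
    # Sleep study: expected once before age 4 years (48 months).
    if p.age_months >= 48 and not p.ever_had_sleep_study:
        gaps.append("Sleep study")
    # Hemoglobin with CBC at 0-6 months, then annually.
    if _annual_gap(p.age_months, 6, p.months_since_hemoglobin):
        gaps.append("Hemoglobin")
    # TSH at 6 months of age, then annually.
    if _annual_gap(p.age_months, 6, p.months_since_tsh):
        gaps.append("TSH")
    return gaps
```

For a hypothetical 5-year-old (60 months) with a TSH 3 months ago, a hemoglobin 14 months ago, and no sleep study, `care_gaps(Patient(60, True, months_since_tsh=3, months_since_hemoglobin=14))` flags the sleep study and hemoglobin. Gating on the problem-list diagnosis mirrors the text; the January 2021 adjustment to the sleep study age range is not modeled.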

Figure 2.

Screenshots of integrations in the EHR.

Implementation

EHR integrations began in July 2020. At implementation, an Epic analyst reviewed user responses. In August 2020, the response options in the best practice advisory were changed to include all genetics departments in the MGB hospital system, and genetics department names were combined to reduce the number of options listed and improve readability. User feedback continued to be monitored. In January 2021, additional modifications were made to the best practice advisory: the age range for the sleep study health maintenance item was changed to give users time to review the chart and order a sleep study before it became “overdue,” and the hemoglobin health maintenance item was updated to accept the common name “HGB” as completing the hemoglobin component.

When we began planning and developing the intervention, we did not foresee that implementation would occur during a global pandemic with broad consequences at many levels, from our MGH DSP clinic operations to institutional procedures and the provision of health care statewide.

Chart Review

Although our MGH DSP follows patients throughout the lifespan, we retrospectively studied patients aged 18 years and younger to correspond with the MGB-approved pediatric EHR integrations. We included all completed clinic visits to the MGH DSP from December 2018 onward, regardless of visit format (eg, telemedicine, phone only, or in-person). Scheduled but not completed visits and encounters outside a clinic visit were excluded. We extracted data from finalized progress notes written and signed by MGH DSP physicians, including medical record information dated before (but not on) the clinic visit date, as well as age, sex, race, ethnicity, and visit type. The MGB Institutional Review Board approved this study.

Outcome Measures

We studied adherence to 5 components of the AAP Clinical Report “Health Supervision for Children with Down Syndrome.”3 We defined adherence to each component as follows: TSH measurement within the past 12 months; hemoglobin check within the past 12 months; sleep study at any time, for those aged 4 years and older; audiogram within the past 6 months for those younger than 5 years and within the past 12 months for those aged 5 years and older; and ophthalmology consultation within the past 12 months for those aged 1-4 years, within the past 2 years for those aged 5-12 years, and within the past 3 years for those aged 13 years and older.
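The age-dependent look-back windows above can be expressed as one scoring function. This is a sketch under assumptions: the function name `is_adherent`, the component labels, the use of completed years of age for the age bands, and applying the 12-month ophthalmology window to infants younger than 1 year (a case the definitions do not cover) are all illustrative choices, not the study's chart-review procedure.

```python
from typing import Optional


def is_adherent(component: str, age_years: float,
                months_since_last: Optional[float]) -> Optional[bool]:
    """Score one component at one visit.

    Returns True/False when the component is recommended at this age, or None
    when it is not (sleep study before age 4 years). months_since_last is None
    when no completed test is documented.
    """
    age = int(age_years)  # completed years, so 12.9 y falls in the 5-12 y band
    if component == "sleep_study":
        if age < 4:
            return None  # not yet recommended, so excluded from scoring
        return months_since_last is not None  # a study at any time counts
    if months_since_last is None:
        return False
    if component in ("tsh", "hemoglobin"):
        window = 12
    elif component == "audiology":
        window = 6 if age < 5 else 12
    elif component == "ophthalmology":
        if age <= 4:
            window = 12  # assumption: also applied to infants under 1 year
        elif age <= 12:
            window = 24
        else:
            window = 36
    else:
        raise ValueError(f"unknown component: {component!r}")
    return months_since_last <= window
```

For example, an audiogram 8 months before the visit scores as not adherent for a 3-year-old (6-month window) but adherent for a 6-year-old (12-month window). Returning `None` rather than `False` keeps non-recommended components out of the denominators used in the composite measures.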

We calculated adherence in reference to the date of the clinic visit to the MGH DSP. Each of the 5 components was scored at each visit as either adherent or not adherent. Adherence was defined as completion of a component as documented in an MGH DSP physician's progress note. The first 3 components (TSH, hemoglobin, and sleep study) were included in the intervention; the latter 2 (audiology and ophthalmology) were not.

Our outcome measures were 3 composite measures of adherence, sleep study ever, adherence to each individual component, and the percentage of patients fully up-to-date. First, we calculated a composite measure of total adherence for each patient at each visit: [(the number of adherent components)/(the number of recommended components) × 100]. Second, we calculated a composite measure of total adherence each month: [(the number of adherent components for all patients with visits in month X)/(the number of recommended components for all patients with visits in month X) × 100]. Finally, we calculated a monthly composite measure of the EHR-integration components (TSH, hemoglobin, sleep study ever): [(the number of adherent EHR-integration components for all patients with visits in month X)/(the number of recommended EHR-integration components for all patients with visits in month X) × 100].
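The 3 composite measures differ only in which components are pooled and over which visits. The sketch below assumes each visit is recorded as a mapping from a component label to `True` (adherent), `False` (not adherent), or `None` (not recommended at that age); the data layout and function names are hypothetical.

```python
from typing import Dict, Iterable, List, Optional, Set, Tuple

# One visit: component -> True (adherent), False (not adherent),
# or None (not recommended at this age, eg, sleep study before 4 years).
Visit = Dict[str, Optional[bool]]

EHR_COMPONENTS = {"tsh", "hemoglobin", "sleep_study"}


def _tally(visits: Iterable[Visit],
           only: Optional[Set[str]] = None) -> Tuple[int, int]:
    """Count (adherent, recommended) components, optionally restricted to `only`."""
    adherent = recommended = 0
    for visit in visits:
        for component, status in visit.items():
            if status is None or (only is not None and component not in only):
                continue
            recommended += 1
            adherent += int(status)
    return adherent, recommended


def visit_total_adherence(visit: Visit) -> float:
    """Composite total adherence for one patient at one visit (percent)."""
    a, r = _tally([visit])
    return 100 * a / r


def monthly_composite(visits: List[Visit],
                      only: Optional[Set[str]] = None) -> float:
    """Pooled monthly composite; pass EHR_COMPONENTS for the EHR-integration measure."""
    a, r = _tally(visits, only)
    return 100 * a / r


# Hypothetical month with 2 visits.
v1 = {"tsh": True, "hemoglobin": False, "sleep_study": None,
      "audiology": True, "ophthalmology": True}
v2 = {"tsh": True, "hemoglobin": True, "sleep_study": False,
      "audiology": False, "ophthalmology": True}
```

Here `visit_total_adherence(v1)` is 75.0 and `visit_total_adherence(v2)` is 60.0, while `monthly_composite([v1, v2])` is about 66.7 (6 of 9 recommended components), not the 67.5 average of the 2 per-visit scores, because the monthly measure pools components across visits. `monthly_composite([v1, v2], EHR_COMPONENTS)` gives 60.0 (3 of 5).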

Sleep study adherence among patients aged 4 years and older each month was calculated as [(the number of patients aged ≥4 years with visits in month X who had ever completed a sleep study)/(the number of patients aged ≥4 years with visits in month X) × 100]. Adherence to each individual component (TSH, hemoglobin, audiology, ophthalmology) each month was calculated as [(the number of adherent patients with visits in month X)/(the number of patients with visits in month X) × 100]. In addition, the percentage of patients fully up-to-date each month was calculated as [(the number of patients with 100% adherence with visits in month X)/(the number of patients with visits in month X) × 100]. To be 100% adherent, patients aged 4 years and older needed all 5 components, and patients younger than 4 years needed all 4 components other than the sleep study.
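The per-component and fully up-to-date measures are patient-level rather than component-level proportions. A sketch, assuming the same hypothetical visit layout as a mapping of component to `True`/`False`/`None` and assuming one visit per patient per month; the function names are illustrative.

```python
from typing import Dict, List, Optional

Visit = Dict[str, Optional[bool]]  # component -> adherent?, None = not recommended


def component_adherence(visits: List[Visit], component: str) -> float:
    """Monthly adherence to one component among patients for whom it is recommended.

    For the sleep study, patients younger than 4 years carry None and so drop
    out of the denominator, matching the ">=4 years" definition.
    """
    statuses = [v[component] for v in visits if v.get(component) is not None]
    return 100 * sum(statuses) / len(statuses)


def percent_fully_up_to_date(visits: List[Visit]) -> float:
    """Share of patients adherent to every component recommended at their age."""
    full = sum(all(s for s in v.values() if s is not None) for v in visits)
    return 100 * full / len(visits)


# Hypothetical month with 3 patients.
month = [
    {"tsh": True, "hemoglobin": False, "sleep_study": None,
     "audiology": True, "ophthalmology": True},   # younger than 4 years
    {"tsh": True, "hemoglobin": True, "sleep_study": False,
     "audiology": False, "ophthalmology": True},
    {"tsh": True, "hemoglobin": True, "sleep_study": None,
     "audiology": True, "ophthalmology": True},   # younger than 4, all 4 done
]
```

In this made-up month, hemoglobin adherence is about 66.7%, sleep study adherence is 0% (1 eligible patient, no study), and 1 of 3 patients (about 33.3%) is fully up-to-date. The functions assume a nonempty month with at least 1 eligible patient.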

Statistical Analyses

We plotted p-charts using software from a local QI course12 to analyze monthly percentages of composite adherence for all measures tracked, composite adherence for EHR-integration measures, sleep study adherence, adherence to TSH/hemoglobin/audiology/ophthalmology, and the percentage of patients fully up-to-date. We tracked the impact of the integration for more than 12 months. Centerline shifts were determined using standard statistical process control (SPC) chart rules.13 , 14 We used the American Society for Quality rules to detect special cause variation on control charts.15 Final charts were reviewed by QI course faculty.12
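For reference, a p-chart plots each month's proportion against a pooled center line with 3-sigma limits that depend on that month's denominator, which is why the limits in the figures vary month to month. The sketch below uses the standard p-chart formulas; it is not the QI course software, and the function name and example counts are hypothetical.

```python
import math
from typing import List, Tuple


def p_chart(adherent: List[int], recommended: List[int]):
    """Center line, monthly proportions, 3-sigma limits, and out-of-limit months.

    Month i's proportion is adherent[i] / recommended[i]; limits are
    p_bar +/- 3 * sqrt(p_bar * (1 - p_bar) / n_i), clipped to [0, 1].
    """
    p_bar = sum(adherent) / sum(recommended)  # pooled center line
    proportions = [x / n for x, n in zip(adherent, recommended)]
    limits: List[Tuple[float, float]] = []
    for n in recommended:
        sigma = math.sqrt(p_bar * (1 - p_bar) / n)
        limits.append((max(0.0, p_bar - 3 * sigma), min(1.0, p_bar + 3 * sigma)))
    outside = [i for i, (p, (lo, hi)) in enumerate(zip(proportions, limits))
               if p < lo or p > hi]
    return p_bar, proportions, limits, outside


# Hypothetical 4 months of adherent / recommended component counts.
p_bar, props, limits, outside = p_chart([30, 40, 25, 10], [45, 60, 40, 40])
```

With these made-up counts the center line is 105/185 (about 0.57), and only the fourth month (10/40 = 0.25) falls below its lower limit (about 0.33). Months with smaller denominators get wider limits, so a low proportion in a small month is less likely to signal special cause.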

Given the general stability of the charts, we conducted χ2 analyses to compare aggregate values (total adherence) by race/ethnicity (non-Hispanic White, Black, Hispanic, and Asian), by visit type (new patient vs follow-up), and by residence (Massachusetts residents vs non-Massachusetts residents). Race was determined from EHR documentation, generally obtained by patient report at the time of registration. A “new” patient visit referred to a patient establishing care with the MGH DSP who had never been seen previously in our clinic. Any patient with previous visits to the MGH DSP was considered a “follow-up” patient.
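A χ2 test of independence compares observed counts in a groups × (adherent, not adherent) table with the counts expected if adherence did not depend on group. The sketch below computes the Pearson statistic and an upper-tail P value from closed forms for integer degrees of freedom; the exact table layout the authors used, and whether a continuity correction was applied, are not stated, so both are assumptions here. (For comparison, `scipy.stats.chi2_contingency` applies Yates' correction to 2 × 2 tables by default.)

```python
import math
from typing import List, Tuple


def chi_square_statistic(table: List[List[int]]) -> Tuple[float, int]:
    """Pearson chi-square statistic (no continuity correction) and its df."""
    row_totals = [sum(r) for r in table]
    col_totals = [sum(c) for c in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


def chi_square_sf(x: float, df: int) -> float:
    """Upper-tail probability P(chi2_df >= x) for integer df >= 1."""
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i < df/2} (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= (x / 2) / i
            total += term
        return math.exp(-x / 2) * total
    # Odd df: erfc(sqrt(x/2)) + sqrt(2x/pi) e^(-x/2) sum x^(r-1) / (1*3*...*(2r-1))
    p = math.erfc(math.sqrt(x / 2))
    term = math.sqrt(2 * x / math.pi) * math.exp(-x / 2)
    for r in range(1, (df - 1) // 2 + 1):
        p += term
        term *= x / (2 * r + 1)
    return p


# Toy 2 x 2 table (hypothetical counts): rows = groups, columns = adherent / not.
stat, df = chi_square_statistic([[10, 20], [20, 10]])
p_value = chi_square_sf(stat, df)
```

The toy table gives χ2 = 20/3 with 1 df and P of about .01. As a consistency check on the reported values, `chi_square_sf(7.6, 3)` is about .055, in line with P = .06 if the race comparison was a 4 × 2 table (3 df), and `chi_square_sf(0.11, 1)` is about .74, matching the residence comparison.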

Results

From December 2018 to March 2022, there were 940 eligible visits to the MGH DSP: 109 new patient visits and 831 follow-up visits. These visits corresponded to 433 unique patients with a mean age at first visit of 7.7 years (range 1.0-18.9 years), who were most commonly of White race and non-Hispanic ethnicity (Table I). Patients most often lived in Massachusetts and other New England states, but some were from a variety of other states and countries.

Table I.

Demographic characteristics of 433 unique patients in the MGH DSP from 2018 to 2021

| Characteristics | No. (%) |
|---|---|
| Sex | |
| Male | 201 (46) |
| Race | |
| White | 304 (70) |
| Black | 16 (4) |
| Asian | 20 (5) |
| Other | 44 (10) |
| Multiple races | 14 (3) |
| Unknown | 35 (8) |
| Ethnicity | |
| Hispanic | 64 (15) |
| Non-Hispanic | 300 (69) |
| Other | 13 (3) |
| Unknown | 56 (13) |
| Residence | |
| State | |
| MA | 284 (66) |
| NY | 56 (13) |
| NH | 33 (8) |
| CT | 15 (3) |
| NJ | 9 (2) |
| ME | 8 (2) |
| RI | 5 (1) |
| PA | 4 (1) |
| MI | 3 (1) |
| FL | 2 (<1) |
| WA, MO, TX, or MS | 1 (<1) |
| International | 4 (1) |
| Unknown | 2 (<1) |
| Missing/blank | 1 (<1) |
| Age at first visit, y, mean (SD, range) | 7.7 (5.2, 1.0-18.9) |

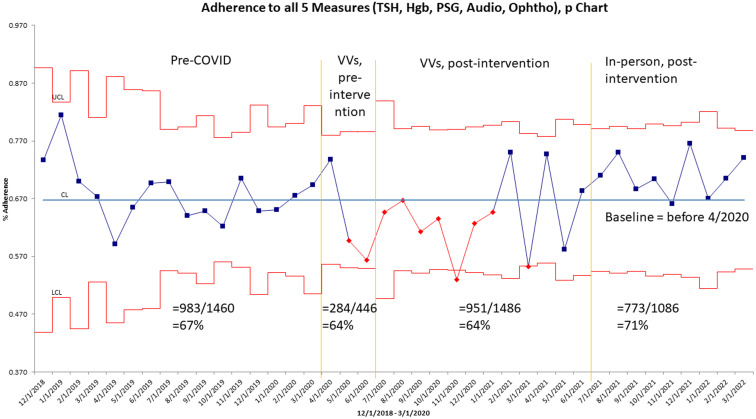

Plotting measures over time, we considered 4 time periods: (1) baseline (before April 2020, when our clinic practiced in person, before the COVID-19 pandemic); (2) virtual visits, preintervention (April 2020 to June 2020, when our clinic had transitioned to virtual visits but before our intervention was implemented); (3) virtual visits, postintervention (July 2020 to June 2021, when our clinic remained in virtual visits after our intervention was implemented); and (4) in-person, postintervention (July 2021 and after, when our clinic returned to in-person visits and our intervention remained active).

Figure 3 (available at www.jpeds.com) shows adherence to the 5 components of the 2011 AAP Clinical Report from December 2018 to March 2022; the baseline median was 67%. From May 2020 to January 2021, adherence was below the baseline median for 9 consecutive months; under the SPC rule of “7 or more consecutive points on one side of the average,” this run represents special cause variation. The run began in May 2020, before our intervention in July 2020, so it does not coincide with the timing of the intervention; it does coincide with the COVID-19 pandemic and virtual visits. In later months, adherence to the 5 components returned to the baseline range, with values both above and below the baseline median and within control limits. The percentage of patients each month who were fully adherent to the 5 AAP components was 24% at baseline (April 2019 to September 2019), varied during the COVID-19 pandemic and virtual visits, and was 34% from July 2021 to March 2022.
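The shift rule used here (a run of consecutive points on one side of the center line) is simple to check mechanically. The sketch below is illustrative only: the function name, the default run length of 7, and the convention that a point exactly on the center line breaks a run are assumptions (published rule sets differ on that last point), and the monthly values are hypothetical.

```python
from typing import List, Sequence, Tuple


def find_shifts(values: Sequence[float], center: float,
                run_length: int = 7) -> List[Tuple[int, int]]:
    """Return (start, end) indices of runs of >= run_length consecutive points
    strictly above or strictly below the center line.

    Assumption: a point exactly on the center line ends the current run.
    """
    runs: List[Tuple[int, int]] = []
    start, side = None, 0
    # A trailing sentinel equal to the center line closes any open run.
    for i, v in enumerate(list(values) + [center]):
        s = (v > center) - (v < center)  # +1 above, -1 below, 0 on the line
        if s != 0 and s == side:
            continue  # run continues on the same side
        if side != 0 and i - start >= run_length:
            runs.append((start, i - 1))
        start, side = (i, s) if s != 0 else (None, 0)
    return runs


# Hypothetical monthly adherence (%) with a baseline median of 67.
monthly = [70, 68, 72, 60, 61, 62, 63, 64, 65, 66, 60, 61, 70, 72]
```

Here `find_shifts(monthly, 67)` returns `[(3, 11)]`: 9 consecutive months below the median, the same pattern as the May 2020 to January 2021 run. Raising `run_length` to 10 finds no shift, which is why the chosen rule matters when comparing charts.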

Figure 3.

Total adherence rate to 5 select age-based AAP guidelines for individuals with Down syndrome in the MGH DSP from December 2018 to March 2022. Yellow lines denote the transition to virtual visits from April 2020 to June 2021 due to the COVID-19 pandemic and the timing of EHR integration intervention in July 2020. Gray lines indicate the process stage mean, which refers to the arithmetic mean for all points within that process stage; statistical rules indicate that there is 1 stable process stage. Red lines indicate the control limits (±3 SDs based on the process mean and number for that month). PSG, polysomnogram; VV, virtual visits.

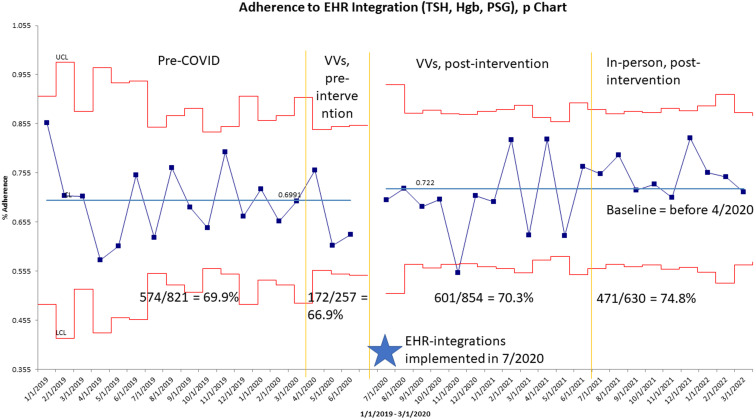

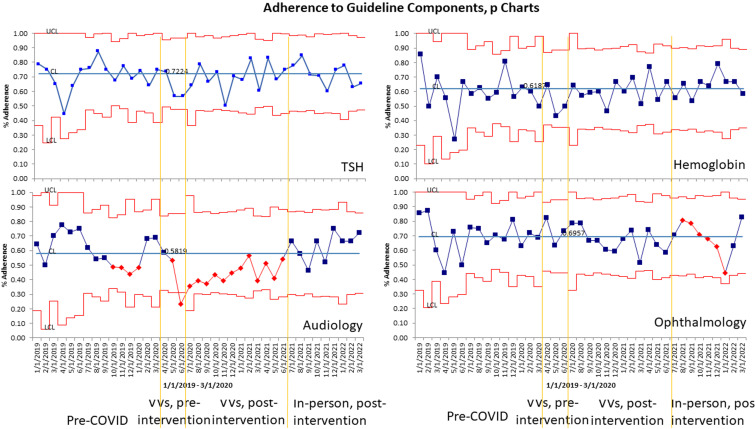

To study the impact of our EHR integrations, we examined adherence to the 3 components included in the integrations (TSH, hemoglobin, sleep study ever), which was 69.9% at baseline from January 2019 to March 2020 (Figure 4; available at www.jpeds.com). Special cause was detected in July 2020, coinciding with implementation of our EHR integrations; adherence was 72.2% from July 2020 to March 2022. Among the individual components of this composite, 78% of patients aged 4 years and older had ever had a sleep study from 2019 to 2022, without special cause, and 72% were adherent to TSH screening and 62% to hemoglobin screening at baseline, also without special cause (Figure 5; available at www.jpeds.com). Audiogram adherence (58%) was the lowest of the individual components and showed a downward shift, with more than 8 consecutive points below the centerline from May 2020 to June 2021 (average adherence 43%), aligning with the transition to virtual visits and the COVID-19 pandemic; it subsequently returned to the baseline range (Figure 5). Ophthalmology adherence was 70% at baseline and showed special cause from August 2021 to January 2022, with consecutively decreasing points (Figure 5).

Figure 4.

Total adherence rate to 3 EHR-integration components of the AAP guidelines for individuals with Down syndrome in the MGH DSP from January 2019 to March 2022. Yellow lines denote the transition to virtual visits from April 2020 to June 2021 due to the COVID-19 pandemic and the timing of EHR-integration intervention in July 2020. Gray lines indicate the process stage mean, which refers to the arithmetic mean for all points within that process stage; statistical rules indicate that there are 2 stable process stages, which are indicated by the shift in July 2020. Red lines indicate the control limits (±3 SDs based on the process mean and number for that month).

Figure 5.

Adherence to guideline components (TSH, hemoglobin, audiology, and ophthalmology evaluation) from January 2019 to March 2022. Yellow lines denote the transition to virtual visits from April 2020 to June 2021 due to the COVID-19 pandemic and the timing of EHR-integration intervention in July 2020. Gray lines indicate the process stage mean, which refers to the arithmetic mean for all points within that process stage; statistical rules indicate that there is 1 stable process stage. Red lines indicate the control limits (±3 SDs based on the process mean and number for that month).

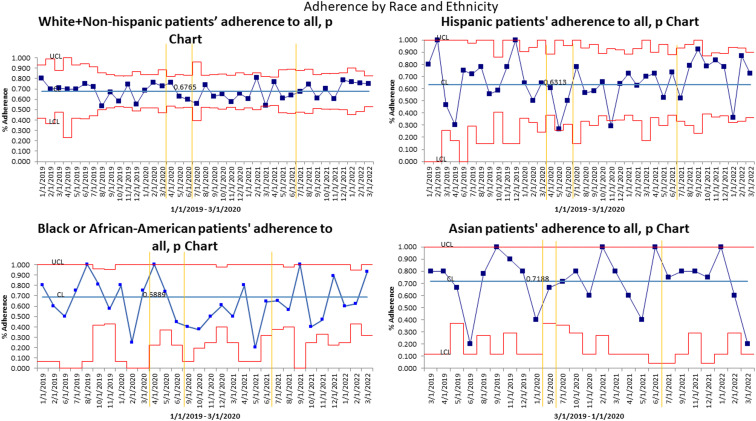

The composite measure of monthly total adherence to the 5 components was plotted on p-charts by demographic characteristics. By visit type, follow-up visits averaged 69% adherence at baseline, compared with 60% for new patient visits; χ2 analysis by visit type was significant (χ2 = 11.10, P < .01), with greater adherence at follow-up visits than at new patient visits. On average, non-Hispanic White patients were 68% adherent, Black patients (of any ethnicity) 69%, Hispanic patients (of any race) 63%, and Asian patients 72% (Figure 6; available at www.jpeds.com). Total adherence by race showed no special cause coinciding with either our EHR integrations or the period of virtual visits during the COVID-19 pandemic, and χ2 analysis by race was not significant (χ2 = 7.6, P = .06). By location of residence, Massachusetts and non-Massachusetts residents averaged 68% and 66% adherence to the 5 AAP guideline components, respectively, at baseline; χ2 analysis by residence was not significant (χ2 = 0.11, P = .74). We summarized our results and existing studies of adherence to the 2001 and 2011 AAP documents (Table II; available at www.jpeds.com).

Figure 6.

Total adherence rate to 5 select age-based American Academy of Pediatrics guidelines for individuals with Down syndrome in the MGH DSP by race/ethnicity from January 2019 to March 2022. Yellow lines denote the transition to virtual visits from April 2020 to June 2021 due to the COVID-19 pandemic and the timing of EHR-integration intervention in July 2020. Gray lines indicate the process stage mean, which refers to the arithmetic mean for all points within that process stage; statistical rules indicate that there is 1 stable process stage. Red lines indicate the control limits (±3 SDs based on the process mean and number for that month).

Discussion

In this QI project, we aimed to improve adherence to 5 AAP guideline components for Down syndrome to 90% by October 2020 and to sustain improvement for 12 months. Because we began in 2019, our project unexpectedly overlapped with the COVID-19 pandemic, providing an unanticipated opportunity to study adherence in real time during the natural disruption of the pandemic and our transition to a virtual visit model.11

Assessing adherence in the MGH DSP for the first time, we found a median monthly adherence rate at baseline of 67%, showing that many, but not all, of our patients were up-to-date on the 5 components before their MGH DSP clinic visit. At baseline, only 24% of patients had 100% adherence to the components, demonstrating opportunity for improvement; a previous study reported 10% adherence to all guidelines.9 Baseline monthly adherence rates were greater for follow-up (69%) than new patients (61%), which could reflect interval completion of components through a Down syndrome specialty clinic,9 like the MGH DSP. From our baseline adherence, we set a Specific, Measurable, Achievable, Relevant, and Time-Bound aim of 90% adherence sustained for 12 months. We did not meet that aim. Possible explanations are that our target (90%) was unrealistically high, that our system underwent too many changes to sustain improvement for 12 months, and that additional or different interventions may be needed to reach 90% adherence.

Compared with published studies of adherence in Down syndrome at other sites, our adherence rates to TSH and hemoglobin were similar to those in studies of the 2001 AAP statement6, 7, 8, 9 or the revised 2011 AAP statement.1, 5, 8 Our sleep study adherence was greater than published rates of 4.6%-69.0%.1, 5, 6, 8 The change in sleep study guidance may account for some of this difference, but our sleep study adherence is also greater than that in studies using the 2011 AAP statement (12%-57% at baseline).1, 5 This difference could reflect selection bias; patients in the MGH DSP may not represent the national population with Down syndrome. For example, pediatricians may be more likely to refer complicated, “sicker” patients with Down syndrome to the MGH DSP, and these more medically complex patients may have seen more specialists and been more likely to be referred for a sleep study. Additional regional differences, such as distance to MGH, the time needed to incorporate the new recommendation, stakeholder buy-in, or sleep laboratory availability, could also affect adherence.

Audiology was the least-adherent component in the MGH DSP. Audiology adherence decreased during the COVID-19 pandemic, and our parent representative agreed that in-person visits were limited or delayed by the pandemic in 2020. As outpatient elective procedures were canceled during the pandemic, audiology testing may also have been canceled; decreased availability or closure of in-person testing centers may explain our finding. However, the other components (TSH and hemoglobin blood tests and an ophthalmology evaluation) also require an in-person encounter, and adherence to those components did not decrease. Access may have differed between laboratories and testing centers; for example, phlebotomy may have been more open and accessible during the pandemic than audiology testing. It is also possible that the more frequent recommended interval for audiologic evaluation made a change in adherence easier to detect during the 16 months when we were in virtual visits.

We began our QI project to study the impact of EHR integrations and demonstrated improvement in a composite measure of the 3 EHR-integration components that coincided with the intervention in July 2020. In our other outcome measures (composite total adherence, sleep study adherence, adherence to TSH/hemoglobin/audiology/ophthalmology, and the percentage of patients fully up-to-date), we did not see changes coinciding with implementation of the EHR integrations in July 2020. We tracked adherence across 4 time frames to attempt to distinguish the impact of the COVID-19 pandemic from that of the intervention. We selected the baseline (before April 2020) to include only data from before both the transition to virtual visits due to the COVID-19 pandemic and the intervention. Using standard, accepted QI methods of following data over time and applying SPC rules for shifts and trends to determine special cause, we found special cause (Figure 4) that aligned with our intervention. Given the broad implications of the COVID-19 pandemic, we anticipated that total adherence, or adherence to individual components, might worsen because of disruptions to medical care systems, and we saw decreased adherence to audiology, as outlined previously. In the third time frame, adherence to the 3 EHR-integration components (Figure 4) might have improved regardless of our intervention, owing to reopening and the return to in-person clinic after the COVID-19 pandemic. Our adherence to the 5 components (Figure 3) was greatest in the fourth time frame, giving hope that increased adherence in the future is possible.

EHR integrations were effective in Ohio,5 but not for all measures in this study. In developing this QI initiative, we replicated the Ohio EHR integrations with many similarities, including the integrations themselves, the EHR platform, the outcomes measured, the patient population, and the implementation. Yet there are inherent differences between sites, and multisite QI studies show variation between sites even when all locations use a cohesive, consistent approach.16 In other research, we have seen site differences in the prevalence of iron deficiency and iron-deficiency anemia.17 In our study, we relied on clinical notes from MGH DSP physicians for documentation, which included physician review of the chart, whereas the Ohio study reviewed the full medical chart5; this more detailed review may have captured additional components in the other study. Regarding the 2 hospital systems, the MGH DSP is a subspecialty clinic for Down syndrome housed in the genetics department, whereas the Ohio integrations were previously effective in the genetics department, neonatal intensive care units, and primary care clinics but were not studied in the Down syndrome specialty clinic housed in developmental pediatrics.5 The MGH DSP is associated with the pediatric component of Mass General for Children, within MGH, and the MGB medicine system, whereas the Ohio hospital system is a large, standalone pediatric hospital system.5 The 2 studies also differed in timing: the Ohio project was conducted in conjunction with larger outreach efforts to local neonatal intensive care units during a generally stable time (2015-2017),5 whereas our project began just before the COVID-19 pandemic and spanned the changes it entailed, when families may have had priorities other than routine health maintenance for Down syndrome.

Overall, it is important to consider nuances in all aspects of a QI project from the team, the aim, the intervention, the measures, and the broader context.14 , 18 , 19 We compared adherence among subgroups by race/ethnicity and location of residence and saw similar rates of adherence between groups; in the future, additional factors could be evaluated. If we could identify any features that are common among patients with the lowest adherence rates, this could help us to choose interventions of greatest benefit.

Many aspects of preventive health care in primary care have been negatively affected by COVID-19.20, 21, 22, 23 We attribute our success in maintaining health supervision through the transition to a virtual visit model to our dedicated, multidisciplinary team, which took on new roles and worked to maintain our clinic during the COVID-19 pandemic.11

Our findings from the MGH DSP may not generalize to other Down syndrome clinics that follow different care models, and our measures may need to be updated as the AAP statement is revised.24 Studies to date have also focused on single hospital systems or on Medicaid claims; in the future, it would be useful to study adherence to the AAP statement for Down syndrome in other hospitals that have adopted the EHR integrations,25 or in a population-based cohort, such as a national ambulatory pediatric database.

In conclusion, total adherence to components of the 2011 AAP Clinical Report “Health Supervision for Children with Down Syndrome” was imperfect at baseline, decreased during the COVID-19 pandemic, and subsequently improved, especially once in-person visits resumed. Adherence to the 3 EHR-integration components improved in July 2020, coinciding with the intervention.

Acknowledgments

Appreciation is given to Victoria Carballo for assistance with use of statistical process control charts and quality improvement software, and to Zbigniew Lech for assistance with creation of electronic health record integrations. We acknowledge the Mass General Brigham Clinical Process Improvement Leadership Program (CPIP) for project methodology training and data analysis support.

Footnotes

All phases of this study were supported by an internal grant from the Quality and Safety Mini-grant program at the Massachusetts General Hospital for Children. The QI group had no role in the design and conduct of the study. S.S. has received research funding from LuMind Research Down Syndrome Foundation to conduct clinical trials for people with Down syndrome within the past 2 years. She serves in a nonpaid capacity on the Medical and Scientific Advisory Council of the Massachusetts Down Syndrome Congress, the Board of Directors of the Down Syndrome Medical Interest Group (DSMIG-USA), and the Executive Committee of the American Academy of Pediatrics Council on Genetics. B.S. occasionally consults on the topic of Down syndrome through the Gerson Lehrman Group. He receives remuneration from Down syndrome nonprofit organizations for speaking engagements and associated travel expenses. B.S. receives annual royalties from Woodbine House, Inc, for the publication of his book, Fasten your seatbelt: A crash course on Down syndrome for brothers and sisters. Within the past 2 years, he has received research funding from F. Hoffmann-La Roche, Inc, AC Immune, and LuMind IDSC Down Syndrome Foundation to conduct clinical trials for people with Down syndrome. B.S. is occasionally asked to serve as an expert witness for legal cases in which Down syndrome is discussed. B.S. serves in a nonpaid capacity on the Honorary Board of Directors for the Massachusetts Down Syndrome Congress and the Professional Advisory Committee for the National Center for Prenatal and Postnatal Down Syndrome Resources. B.S. has a sister with Down syndrome. Y.H. has received education-related funding (Autism Speaks Autism Care Network for teaching in autism ECHO), internal funding, and philanthropic funding for clinical support (Nancy Lurie Marks Foundation). Her daughter has Down syndrome. The other authors declare no conflicts of interest.

Appendix

Table II.

Summary of adherence to Down syndrome health supervision guidelines reported in the literature and in this study

| Component | Jensen et al6 | Williams et al7 | O'Neill et al8 | Santoro et al5, baseline | Santoro et al5, postintervention | Santoro et al1, baseline | Santoro et al1, postintervention | Skotko et al9 | This study, baseline (before 4/2020) | This study, 4/2020 and after (includes COVID-19, post-COVID-19, and postintervention periods) |
|---|---|---|---|---|---|---|---|---|---|---|
| Source | CO, CA, MI, PA Medicaid claims; 2006-2010 | University of Wisconsin-Madison; 2001-2011 | Lurie Children's Hospital of Chicago: 2 urban academic clinic sites; 2008-2012 | Nationwide Children's Hospital system; 2015-2017 | | Cincinnati Children's Hospital: 22 pediatric care sites | | Boston Children's Hospital; 2009-2010 | Massachusetts General Hospital Down Syndrome Program; 2018-2021 | |
| Age | 12+ y | 0-21 y | 0-17 y | 0-32 y | | Pediatric | | 3-21 y | 0-18 y | |
| Guideline version | 2001 | 2001 | 2001 | 2011 | | 2011 | | 2001 | 2011 | |
| Thyroid | 2517/3501 = 72% had annually | 445/732 = 61% had at ages 6 and 12 mo, yearly ages 2-21 | 55/60 = 92% had annually | 72/118 = 61% | 166/226 = 73% | 48/82 = 59% | 52/82 = 63% | 58/103 = 56% had annually | 216/333 = 65% | 444/638 = 70% |
| Hearing | 637/3500 = 18% had annually | 265/794 = 33% had every 6 mo ages 1-3 y, yearly ages 4-21 | 68/80 = 85% had annually at ages 1-4 and 13+ y; once from ages 5-12 y | | | 52/82 = 63% | 75/82 = 91% | 49/104 = 47% had annually | 174/299 = 58% | 327/638 = 51% |
| Vision | 1919/3502 = 55% had annually | 285/661 = 43% had by age 6 mo, every 2 y ages 1-5, then yearly ages 5-21 | 52/59 = 88% had annually at ages 1-4 and 13+ y; as needed at ages 5-12 y | | | 57/82 = 70% | 68/82 = 83% | 58/104 = 56% had annually | 208/299 = 70% | 438/638 = 69% |
| OSA/sleep study | 162/3521 = 5% if risk/symptoms | 58/84 = 69% had if symptoms; 57% had by age 4 y | | 33/65 = 51% | 78/119 = 66% | 12% | 43% | | 173/223 = 78% | 410/465 = 88% |
| Complete blood count/hemoglobin | | 114/206 = 55% had CBC yearly if ages 13-21 y | 10/15 = 67% had Hgb annually for 13+ y | 88/167 = 53% | 206/299 = 69% | 39/82 = 48% | 41/82 = 50% | | 185/333 = 56% | 388/638 = 61% |
| Echocardiogram | | | | 62/66 = 94% | 107/108 = 99% | 35/82 = 43% | 74/82 = 90% | | | |
| Genetics visit | | | | 37/66 = 56% | 97/108 = 90% | 25/82 = 30% | 55/82 = 67% | | | |

CBC, complete blood count; COVID-19, coronavirus disease 2019; Hgb, hemoglobin; OSA, obstructive sleep apnea.

References

- 1. Santoro S.L., Martin L.J., Pleatman S.I., Hopkin R.J. Stakeholder buy-in and physician education improve adherence to guidelines for Down syndrome. J Pediatr. 2016;171:262–268.e1-2. doi: 10.1016/j.jpeds.2015.12.026.

- 2. American Academy of Pediatrics, Committee on Genetics. Health supervision for children with Down syndrome. Pediatrics. 2001;107:442–449. doi: 10.1542/peds.107.2.442.

- 3. Bull M.J., Committee on Genetics. Health supervision for children with Down syndrome. Pediatrics. 2011;128:393–406. doi: 10.1542/peds.2011-1605.

- 4. AAP publications reaffirmed or retired. Pediatrics. 2018;141:e20180518.

- 5. Santoro S.L., Bartman T., Cua C.L., Lemle S., Skotko B.G. Use of electronic health record integration for Down syndrome guidelines. Pediatrics. 2018;142:e20174119. doi: 10.1542/peds.2017-4119.

- 6. Jensen K.M., Campagna E.J., Juarez-Colunga E., Prochazka A.V., Runyan D.K. Low rates of preventive healthcare service utilization among adolescents and adults with Down syndrome. Am J Prev Med. 2021;60:1–12. doi: 10.1016/j.amepre.2020.06.009.

- 7. Williams K., Wargowski D., Eickhoff J., Wald E. Disparities in health supervision for children with Down syndrome. Clin Pediatr (Phila). 2017;56:1319–1327. doi: 10.1177/0009922816685817.

- 8. O'Neill M.E., Ryan A., Kwon S., Binns H.J. Evaluation of pediatrician adherence to the American Academy of Pediatrics Health Supervision guidelines for Down syndrome. Am J Intellect Dev Disabil. 2018;123:387–398. doi: 10.1352/1944-7558-123.5.387.

- 9. Skotko B.G., Davidson E.J., Weintraub G.S. Contributions of a specialty clinic for children and adolescents with Down syndrome. Am J Med Genet A. 2013;161A:430–437. doi: 10.1002/ajmg.a.35795.

- 10. Chung J., Donelan K., Macklin E.A., Schwartz A., Elsharkawi I., Torres A., et al. A randomized controlled trial of an online health tool about Down syndrome. Genet Med. 2021;23:163–173. doi: 10.1038/s41436-020-00952-7.

- 11. Santoro S.L., Donelan K., Haugen K., Oreskovic N.M., Torres A., Skotko B.G. Transition to virtual clinic: experience in a multidisciplinary clinic for Down syndrome. Am J Med Genet C Semin Med Genet. 2021;187:70–82. doi: 10.1002/ajmg.c.31876.

- 12. Rao S.K., Carballo V., Cummings B.M., Millham F., Jacobson J.O. Developing an interdisciplinary, team-based quality improvement leadership training program for clinicians: the Partners Clinical Process Improvement Leadership Program. Am J Med Qual. 2017;32:271–277. doi: 10.1177/1062860616648773.

- 13. Provost L.P., Murray S.K. The health care data guide: learning from data for improvement. John Wiley & Sons; 2011.

- 14. Langley G.J., Moen R., Nolan K.M., Nolan T.W., Norman C.L., Provost L.P. The improvement guide: a practical approach to enhancing organizational performance. Jossey-Bass Publishers; 2009.

- 15. Tague N.R. The quality toolbox. 2nd ed. ASQ Quality Press; 2005.

- 16. Raney L., McManaman J., Elsaid M., Morgan J., Bowman R., Mohamed A., et al. Multisite quality improvement initiative to repair incomplete electronic medical record documentation as one of many causes of provider burnout. JCO Oncol Pract. 2020;16:e1412–e1416. doi: 10.1200/OP.20.00294.

- 17. Hart S.J., Zimmerman K., Linardic C.M., Cannon S., Pastore A., Patsiogiannis V., et al. Detection of iron deficiency in children with Down syndrome. Genet Med. 2020;22:317–325. doi: 10.1038/s41436-019-0637-4.

- 18. Beal A.C., Co J.P.T., Dougherty D., Jorsling T., Kam J., Perrin J., et al. Quality measures for children's health care. Pediatrics. 2004;113:199–209.

- 19. Crandall W., Davis J.T., Dotson J., Elmaraghy C., Fetzer M., Hayes D., et al. Clinical indices can standardize and monitor pediatric care: a novel mechanism to improve quality and safety. J Pediatr. 2018;193:190–195.e1. doi: 10.1016/j.jpeds.2017.09.073.

- 20. Diaz Kane M.M. Effects of the COVID-19 pandemic on well-child care and recommendations for remediation. Pediatr Ann. 2021;50:e488–e493. doi: 10.3928/19382359-20211113-01.

- 21. Alcocer Alkureishi L., Young S. Pediatric practice changes during the COVID-19 pandemic. Pediatr Ann. 2021;50:e486–e487. doi: 10.3928/19382359-20211112-02.

- 22. Spindel J.F., Spindel J., Gordon K., Koch J. The effects of the COVID-19 pandemic on primary prevention. Am J Med Sci. 2022;363:204–205. doi: 10.1016/j.amjms.2021.12.003.

- 23. Mayne S.L., Hannan C., Davis M., Young J.F., Kelly M.K., Powell M., et al. COVID-19 and adolescent depression and suicide risk screening outcomes. Pediatrics. 2021;148:e2021051507. doi: 10.1542/peds.2021-051507.

- 24. Bull M.J., Trotter T., Santoro S.L., Christensen C., Grout R.W. Health supervision for children and adolescents with Down syndrome. Pediatrics. 2022;149:e2022057010. doi: 10.1542/peds.2022-057010.

- 25. Santoro S.L. Building connections to improve care of those with Down syndrome [Internet]. AAP Voices. 2022. https://www.aap.org/en/news-room/aap-voices/building-connections-to-improve-care-of-those-with-down-syndrome/