Significance

Neural activity is traditionally thought to occur over fixed timescales. However, recent animal work has suggested that some neural responses occur over varying timescales. We extended this animal result to humans by detecting temporally scaled signals noninvasively at the scalp in four different tasks. Our results suggest that temporal scaling is an important feature of cognitive processes known to unfold over varying timescales.

Keywords: EEG, timing, regression

Abstract

Much of human behavior is governed by common processes that unfold over varying timescales. Standard event-related potential analysis assumes fixed-duration responses relative to experimental events. However, recent single-unit recordings in animals have revealed that neural activity scales to span different durations during behaviors demanding flexible timing. Here, we employed a general linear modeling approach using a combination of fixed-duration and variable-duration regressors to unmix fixed-time and scaled-time components in human magneto-/electroencephalography (M/EEG) data. We used this approach to reveal consistent temporal scaling of human scalp–recorded potentials across four independent electroencephalogram (EEG) datasets, including interval perception, production, prediction, and value-based decision making. Between-trial variation in the temporally scaled response predicts between-trial variation in subject reaction times, demonstrating the relevance of this temporally scaled signal for temporal variation in behavior. Our results provide a general approach for studying flexibly timed behavior in the human brain.

Action and perception in the real world require flexible timing. We can walk quickly or slowly, recognize the same piece of music played at different tempos, and form temporal expectations over long and short intervals. In many cognitive tasks, reaction-time variability is modeled in terms of internal evidence accumulation (1), whereby the same dynamical process unfolds at different speeds on different trials.

Flexible timing is critical in our lives, yet despite several decades of research (2–5), its neural correlates remain subject to extensive debate. Due to their high temporal resolution, magnetoencephalography and electroencephalography (M/EEG) have played a particularly prominent role in understanding the neural basis of timing (5–13), and the method typically used to analyze such data has been the event-related potential (ERP), which averages event-locked responses across multiple repetitions. For example, this approach has been used to identify the presence of a slow negative-going signal during timed intervals. This signal, called the contingent negative variation (CNV) (14), is thought to be timing related because its slope depends inversely on the duration of the timed interval (7, 8, 12).

Crucially, the ERP analysis strategy implicitly assumes that neural activity occurs at fixed-time latencies with respect to experimental events. However, it has recently been shown that brain activity at the level of individual neurons can be best explained by a temporal scaling model (15, 16), in which activity is explained by a single response that is stretched or compressed according to the length of the produced interval. When monkeys are cued to produce intervals of different lengths, the temporal scaling model explains the majority of variance in neural responses from medial frontal cortex single units (15). This suggests that one mechanism by which flexible motor timing can be achieved is by adjusting the speed of a common neural process, a perspective readily viewed through the lens of dynamical systems theory (16). Consistent with the broad role played by dynamical systems in a range of neural computations (17, 18), recent studies in neural populations have revealed time warping as a common property across many different population recordings and behavioral tasks (19). For example, temporal scaling is also implicit in the neural correlates of evidence integration during sensory and value-based decision making (20) (which itself has also been proposed as a mechanism for time estimation in previous work (21)).

Successfully characterizing scaled-time components in humans could open the door to studying the role of temporal scaling in more-complex, hierarchical tasks, such as music production or language perception, as well as in patient populations in which timing is impaired (22). Yet it is currently unclear how temporal scaling of neural responses may manifest at the scalp (if at all) using noninvasive recording in humans. This is because of the fixed-time nature of the ERP analysis strategy. Again, one component of the ERP, called the CNV, has been found to ramp at different speeds for different temporal intervals (7, 8, 12), suggestive of temporal scaling. Crucially though, any scaled activity would appear mixed at the scalp with fixed-time components due to the superposition problem (23).

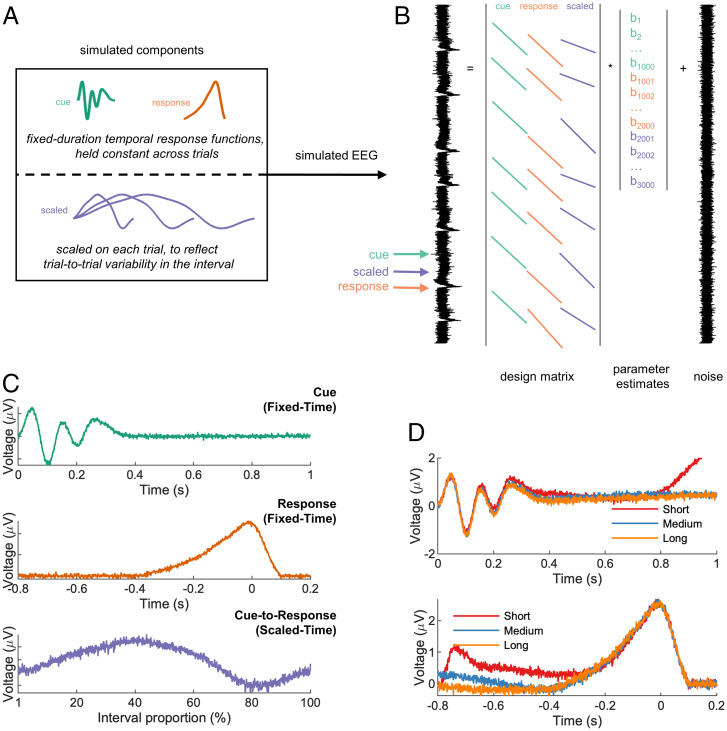

We therefore developed an approach to unmix scaled-time and fixed-time components in the EEG (Fig. 1A). Our proposed method builds on recently developed least squares regression–based approaches (24–29) that have proven useful in unmixing fixed-time components that overlap with one another, such as stimulus-related activity and response-related activity. To overcome the superposition problem, these approaches use a convolutional general linear model (GLM) to deconvolve neural responses that are potentially overlapping. Following this work, we estimate the fixed-time ERPs using a GLM in which the design matrix is filled with time-lagged “stick functions” (regressors valued 1 around the timepoint of interest and 0 otherwise). Importantly, the stick functions can overlap in time to capture overlap in the underlying neural responses (Fig. 1B), and the degree of fit to neural data can be improved by adding a regularization penalty to the model estimation (29). In situations without any overlap, the GLM would exactly return the conventional ERP.
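The overlap-correcting GLM described above can be illustrated with a minimal Python/NumPy sketch. This is not the authors' MATLAB code: the function name `stick_design`, the toy waveforms, and all sizes are assumptions chosen for illustration. Two event types with overlapping responses are summed without noise, then recovered by ordinary least squares and compared with a conventional cue-locked average.

```python
import numpy as np

def stick_design(n_samples, onsets, n_lags):
    """Time-lagged stick functions for one event type (hypothetical helper).

    Column k holds a 1 at sample (onset + k) for every event and 0 elsewhere.
    """
    X = np.zeros((n_samples, n_lags))
    lags = np.arange(n_lags)
    for onset in onsets:
        rows = onset + lags
        keep = rows < n_samples
        X[rows[keep], lags[keep]] = 1.0
    return X

# Toy data (assumed): responses follow cues by a jittered 10-29 samples,
# so the two 40-sample responses overlap in time on every trial.
rng = np.random.default_rng(0)
n_samples, n_lags = 2000, 40
cue_onsets = np.arange(50, 1900, 100)
resp_onsets = cue_onsets + rng.integers(10, 30, size=cue_onsets.size)

true_cue = np.hanning(n_lags)
true_resp = -0.5 * np.hanning(n_lags)

X = np.hstack([stick_design(n_samples, cue_onsets, n_lags),
               stick_design(n_samples, resp_onsets, n_lags)])
y = X @ np.concatenate([true_cue, true_resp])  # noise-free "EEG"

# Least-squares deconvolution of the two overlapping responses.
beta, *_ = np.linalg.lstsq(X, y, rcond=None)
est_cue, est_resp = beta[:n_lags], beta[n_lags:]

# Conventional cue-locked average, contaminated by the response component.
naive_cue = np.mean([y[s:s + n_lags] for s in cue_onsets], axis=0)

glm_error = np.max(np.abs(est_cue - true_cue))
naive_error = np.max(np.abs(naive_cue - true_cue))
```

In this toy case the regression separates the two components because the cue-to-response delay varies across trials; with a constant delay the two sets of columns would be collinear.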

Fig. 1.

Regression-based unmixing of simulated data successfully recovers scaled-time and fixed-time components. (A) EEG data were simulated by summing fixed-time components (cue and response), a scaled-time component with differing durations for different trials (short, medium, or long), and noise. (B) The simulated responses were unmixed via a GLM with stick basis functions: cue-locked, response-locked, and a single scaled-time basis spanning from cue to response (i.e., variable duration). (C) The GLM successfully recovered all three components, including the scaled-time component. (D) A conventional ERP analysis (cue-locked and response-locked averages) of the same data obscured the scaled-time component.

The key innovation that we introduce here is to allow for variable-duration regressors in such models, in addition to fixed-duration regressors, to test for the presence of scaled-time responses. In particular, we allow the duration of the stick function to vary depending upon the interval between stimulus and response, meaning that the same neural response can span different durations on different trials. Thus, rather than modeling the mean interval duration of each condition (e.g., via traditional ERPs), the proposed method captures trial-to-trial response variability. The returned scaled-time potential is no longer a function of real-world (“wall clock”) time but instead a function of the percentage of time elapsed between stimulus and response.
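A variable-duration regressor of this kind can be sketched as follows in Python/NumPy. The sketch assumes a simple nearest-bin assignment of samples to percent-time bins, which is only an approximation of the interpolation described in the Methods; the function name `scaled_design` and the toy durations are hypothetical.

```python
import numpy as np

def scaled_design(n_samples, onsets, durations, n_bins):
    """Scaled-time regressor block (hypothetical helper).

    Each column is a bin of elapsed time (as a fraction of the interval).
    On every trial the same n_bins columns are stretched or compressed
    so that together they span that trial's cue-to-response interval.
    """
    X = np.zeros((n_samples, n_bins))
    for onset, dur in zip(onsets, durations):
        within = np.arange(dur)             # sample index within the interval
        bins = (within * n_bins) // dur     # nearest-bin mapping (assumption)
        X[onset + within, bins] = 1.0
    return X

# Two toy trials: a 20-sample interval and a 40-sample interval.
n_bins = 10
X_scaled = scaled_design(n_samples=200, onsets=[0, 100],
                         durations=[20, 40], n_bins=n_bins)

short_counts = X_scaled[0:20].sum(axis=0)     # samples per bin, short trial
long_counts = X_scaled[100:140].sum(axis=0)   # samples per bin, long trial
```

Each bin covers 2 samples on the short trial and 4 samples on the long trial, so the estimated weight for a given column describes the response at the same fraction of elapsed time regardless of interval length.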

As a proof of concept, we simulated data at a single EEG sensor for an interval timing task, consisting of two fixed-time components (locked to cues and responses) and one scaled-time component spanning between cues and responses (Fig. 1A). Our proposed method was successful in recovering all three components (Fig. 1C), whereas a conventional ERP approach obscured the scaled-time component (Fig. 1D). Crucially, in real EEG data, we repeated this approach across all sensors, potentially revealing different scalp distributions (and hence different neural sources) for fixed-time versus scaled-time components.

By unmixing fixed and scaled components, our method goes beyond previous approaches for dealing with timing variability in EEG experiments. For example, the event-related timing of EEG trials can be aligned by translating either the entire waveform (30) or individual ERP components (31). Such methods allow for component alignment but do not involve any scaling. On the other hand, raw EEG can be scaled to align trials to a common time frame, e.g., through upsampling/downsampling (32) or dynamic time warping (33). However, these methods are not designed to unmix fixed-time and scaled-time components. Finally, the effect of a continuous variable on EEG can be quantified using the method of temporal response functions (TRFs), another regression-based approach (27, 34–36). TRFs are particularly flexible in capturing different types of delay activity, e.g., during periods of growing expectancy (34) or while listening to fast/slow speech (35). Our method is related to TRFs in that it involves the convolution of a to-be-estimated input signal with a continuous regressor. Unlike TRFs, however, the proposed method involves an additional scaling step in which the input signal is stretched or compressed (Fig. 1 and SI Appendix, Fig. 1).

We note that a time-frequency decomposition might also readily separate the responses at higher and lower frequencies. Indeed, a wide range of neural oscillations have been implicated in time perception (37). One might reasonably expect stretched/compressed signals to manifest differently in the time-frequency domain, e.g., as they correlate more strongly with different stretched/compressed versions of the same wavelet function. Unlike our proposed approach, however, a time-frequency decomposition is not designed to look for temporal scaling, namely the same neural response unfolding over different timescales on different trials. Nor will a time-frequency decomposition separate fixed-time responses from scaled-time responses if the signals occupy the same frequency band (38).

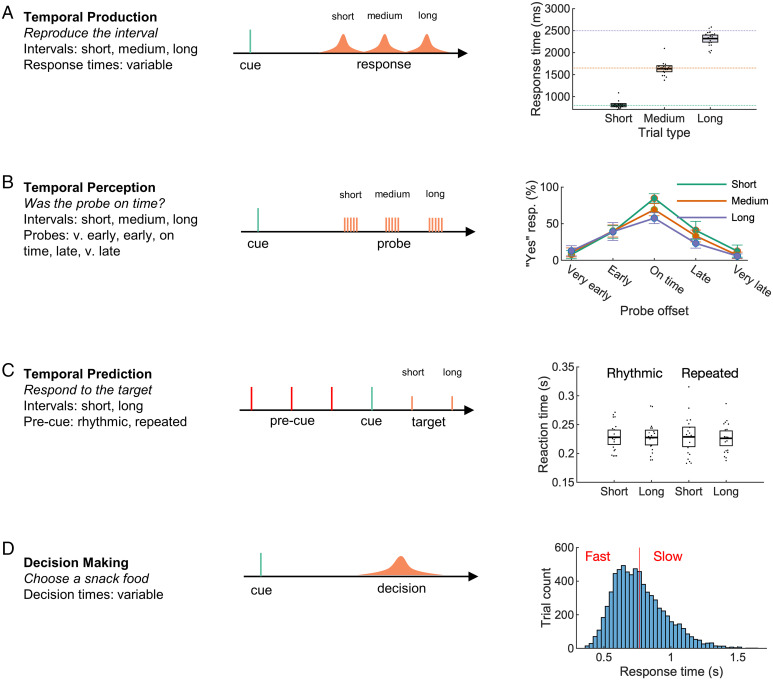

We used our approach to analyze EEG recorded across four independent datasets, comprising three interval-timing tasks and one decision-making task. In the first task, participants produced a target interval (short, medium, or long) following a cue (Fig. 2A). Feedback was provided, and participants were able to closely match the target intervals. In the second, participants evaluated a computer-produced interval (Fig. 2B). The closer the produced interval was to the target interval, the more likely participants were to judge the response as “on time”. In the third (previously analyzed (39, 40)) task, participants made temporal predictions about upcoming events based on rhythmic predictions (Fig. 2C).

Fig. 2.

Datasets from three time-estimation and one decision-making paradigm were analyzed. In the temporal production task (A), participants successfully produced one of three cued intervals. In the temporal perception task (B), participants were able to properly judge a computer-produced interval. In a previously analyzed temporal prediction task (C) (39, 40), participants responded quickly to targets following either a rhythmic or repeated (nonrhythmic) cue. In a previously analyzed decision-making task (D) (41, 42), participants were cued to choose one of two snack food items, resulting in a range of response times (mean shown as red line). Error bars represent 95% CIs.

In the fourth task (also previously analyzed (41, 42)), participants chose between pairs of snack items (Fig. 2D), a process in which reaction-time variability can be modeled as internal evidence accumulation across time (43). Neural activity related to evidence accumulation is measurable on the scalp as ramping activity that scales with decision difficulty. EEG for fast, easy trials increases at a faster rate compared with EEG for slow, difficult trials, indicating a higher rate of internal evidence accumulation (41). Thus, we predicted that the EEG would contain an underlying scaled component associated with different rates of evidence accumulation.

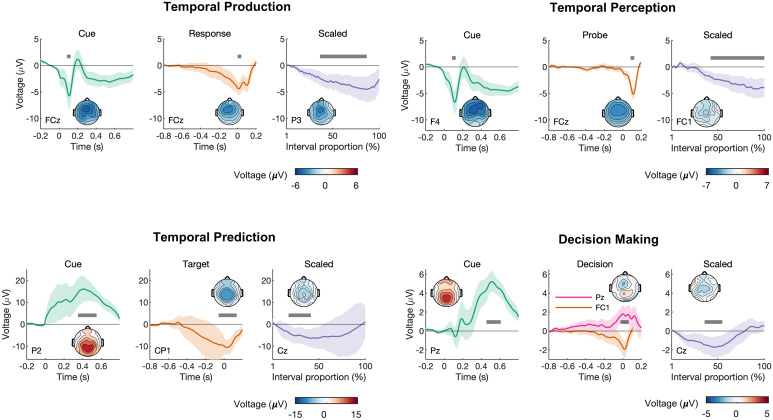

In all four tasks, we observed a scaled-time component that was distinct from the preceding and following fixed-time components (Fig. 3), which resembled conventional ERPs (SI Appendix, Fig. 3). Typically, ERP components are defined by their polarity and scalp distribution (44). The observed scaled-time components shared a common polarity (negative) and scalp distribution (central). In each task, cluster-based permutation testing revealed that the scaled-time component differed significantly from zero. The differences were driven by clusters spanning 36–87% in the production task (P < 0.001), 42–100% in the perception task (P < 0.001), 18–27% in the prediction task (P = 0.004), and 36–55% in the decision-making task (P < 0.001). For each task, including a scaled-time component improved model fit compared with a model with fixed-time components only: production: t(19) = −3.97, P < 0.001, Cohen’s d = −0.89; perception: t(19) = −5.09, P < 0.001, Cohen’s d = −1.14; prediction: t(18) = −4.90, P < 0.001, Cohen’s d = −1.12; decision making: t(17) = −7.77, P < 0.001, Cohen’s d = −1.83 (see SI Appendix, Table 8 for model errors). In many cases, scaled-time components were reliably observed at the single-subject level (SI Appendix, Figs. 5–8).
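The reported effect sizes can be checked against the reported t statistics with a short Python sketch. It assumes that the tests were paired (within-participant) comparisons and that Cohen's d was computed as the mean difference divided by the SD of the differences, in which case d = t/√n; this convention is an assumption, not something stated in the text.

```python
import math

def cohens_d_from_paired_t(t_stat, n_participants):
    """Cohen's d for paired samples, assuming d = t / sqrt(n)."""
    return t_stat / math.sqrt(n_participants)

# (t statistic, degrees of freedom) as reported above; n = df + 1.
reported = {
    "production": (-3.97, 19),
    "perception": (-5.09, 19),
    "prediction": (-4.90, 18),
    "decision making": (-7.77, 17),
}

effect_sizes = {
    task: round(cohens_d_from_paired_t(t, df + 1), 2)
    for task, (t, df) in reported.items()
}
```

Under this assumption the computed values match the effect sizes given in the text for all four model comparisons.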

Fig. 3.

Scaled-time components were consistently observed across all four paradigms, with distinct scalp topographies from fixed-time components. Each had distinct fixed-time components relative to task-relevant events (Left/Middle Columns) and a common negative scaled-time component over central electrodes, reflecting interval time (Right Column). The scalp topographies represent the mean voltage across the intervals indicated by the gray bars. For the fixed-time components, the intervals were chosen to visualize prominent deflections in the average waveform. For the scaled-time components, the intervals represent regions of significance as determined by cluster-based permutation tests. The error bars represent 95% CIs.

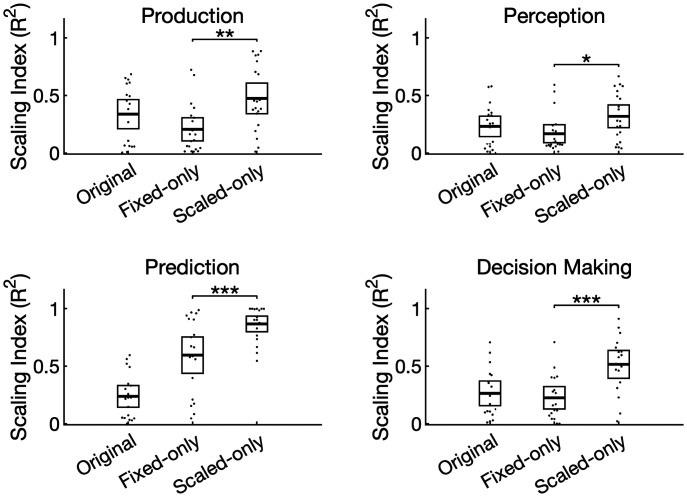

To further validate our method, we quantified temporal scaling by computing a “scaling index” (15) for each task and participant (Fig. 4). To calculate this, we stretched/compressed each epoch to match the longest interval in each task, averaged by condition, then calculated the coefficient of determination for predicting the longer interval using stretched versions of the shorter intervals. We did this first on the raw data (“original”), then separately for the data containing only the fixed-time components (“fixed-only”, i.e., scaled-time components regressed out) and the scaled-time components (“scaled-only”, i.e., fixed-time components regressed out). In all four tasks, the scaling index for the scaled component exceeded the scaling index for the fixed component (production: t(19) = 2.95, P = 0.008, Cohen’s d = 0.66; perception: t(19) = 2.63, P = 0.017, Cohen’s d = 0.59; prediction: t(18) = 5.45, P < 0.001, Cohen’s d = 1.27; decision making: t(17) = 5.45, P < 0.001, Cohen’s d = 1.29).
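The scaling-index computation can be sketched in Python/NumPy as follows. This is an illustrative reading of the description above rather than the published implementation: linear interpolation is assumed for the stretching step, the coefficient of determination is taken as 1 − SS_res/SS_tot, and the two toy signals (one that scales with interval length and one that stays at a fixed latency) are invented for the example.

```python
import numpy as np

def stretch(waveform, n_out):
    """Linearly resample a waveform to n_out points (assumed interpolation)."""
    old = np.linspace(0.0, 1.0, len(waveform))
    new = np.linspace(0.0, 1.0, n_out)
    return np.interp(new, old, waveform)

def scaling_index(short_avg, long_avg):
    """R^2 for predicting the long-interval average from the stretched short one."""
    pred = stretch(short_avg, len(long_avg))
    ss_res = np.sum((long_avg - pred) ** 2)
    ss_tot = np.sum((long_avg - long_avg.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

def scaled_shape(n):
    """Toy scaled-time signal: one negative deflection filling the interval."""
    return -np.sin(np.pi * np.linspace(0.0, 1.0, n))

# Scaled-time toy signal: same shape, different durations (160 vs 500 samples).
scaled_short, scaled_long = scaled_shape(160), scaled_shape(500)

# Fixed-time toy signal: identical 100-sample bump at the start of both epochs.
bump = np.hanning(100)
fixed_short = np.concatenate([bump, np.zeros(60)])
fixed_long = np.concatenate([bump, np.zeros(400)])

si_scaled = scaling_index(scaled_short, scaled_long)
si_fixed = scaling_index(fixed_short, fixed_long)
```

Stretching helps only when the underlying response itself scales: the index is close to 1 for the scaled toy signal and much lower for the fixed-latency one, which is smeared across the longer epoch by the stretch.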

Fig. 4.

The unmixed signals differed quantitatively in their degree of scaling. The scaling index, defined as the coefficient of determination between epochs after stretching, was first computed for the raw data (“original”) and after isolating either the fixed (“fixed only”) or scaled (“scaled only”) components. In all four tasks, the scaled-time components had a greater scaling index compared with the fixed-time components. Dots represent individual participants, and error bars represent 95% CIs. *P < 0.05, **P < 0.01, ***P < 0.001.

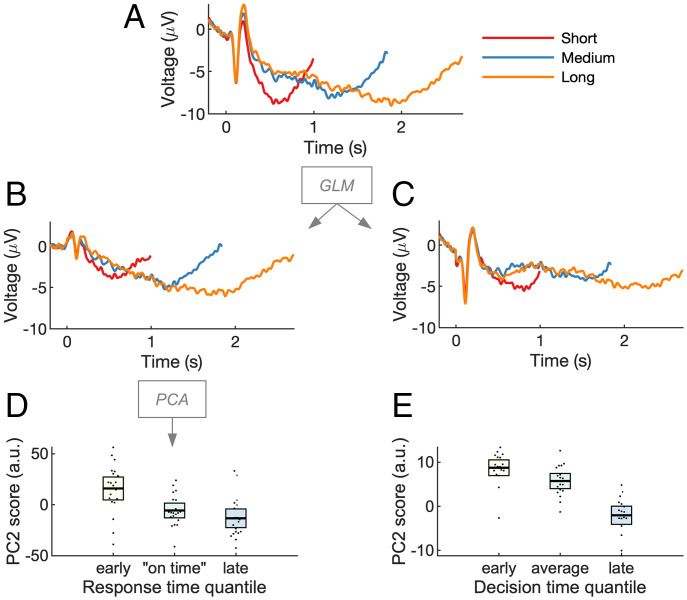

We then examined how the scaled-time component relates to behavioral variability: does the latency of the scaled-time component predict participants’ response time? We focused on the temporal production and decision-making tasks, in which the interval duration was equal to the response time. As response time varied from trial to trial, so did the modeled scaled component. To measure component latency, we applied an approach developed in refs. (45, 46), using principal-component analysis (PCA) to model delay activity over central electrodes in the temporal production task. The approach works by detecting latency shifts in a common underlying component (45, 46). Unlike simple peak detection, PCA can account for a range of waveform dynamics (e.g., multiple peaks). We first regressed out the fixed-time component as identified by the GLM, resulting in a dataset that consisted only of the residual scaled-time activity. We then computed the average scaled-time activity for each of the three interval conditions (Fig. 5 A–C). PCA was applied separately to each interval. This consistently revealed a first principal component that matched the shape of the scaled-time component and a second principal component that matched its temporal derivative. This analysis confirms the presence of the scaled-time component in our data, as it is the first principal component of the residuals after removing fixed-time components. Crucially, adding or subtracting the second principal component captures variation in the latency of the scaled-time component (SI Appendix, Fig. 4). Across response time quantiles, we found that PC2 scores (SI Appendix, Table 6) were significantly related to response times (Fig. 5D) (F(2,38) = 6.18, P = 0.005, ηp2 = 0.25, ηg2 = 0.19). This implies that the earlier in time that the scaled-time component peaked, the faster the subject would respond on that trial. This result was replicated in the decision-making task (F(2,34) = 4.18, P = 0.02, ηp2 = 0.20, ηg2 = 0.18) (Fig. 5E).
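The logic of the PCA-based latency measure can be illustrated with a toy Python/NumPy example. This is not the procedure of refs. (45, 46) as applied to the real data; it assumes a single Gaussian-shaped component whose latency jitters slightly across trials, and it uses an uncentered PCA computed by singular value decomposition. All names and parameter values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
t = np.linspace(0.0, 1.0, 200)   # fraction of the interval elapsed

def component(latency, width=0.15):
    """Toy negative-going component peaking at the given latency."""
    return -np.exp(-0.5 * ((t - latency) / width) ** 2)

# 300 simulated trials with small latency jitter plus sensor noise.
latencies = rng.uniform(0.45, 0.55, size=300)
trials = np.array([component(lat) for lat in latencies])
trials += 0.01 * rng.standard_normal(trials.shape)

# Uncentered PCA via SVD (assumption): rows are trials, columns are timepoints.
_, _, components = np.linalg.svd(trials, full_matrices=False)
pc1, pc2 = components[0], components[1]
pc2_scores = trials @ pc2

mean_wave = trials.mean(axis=0)
# Absolute correlations are used because the sign of each PC is arbitrary.
r_shape = abs(np.corrcoef(pc1, mean_wave)[0, 1])
r_derivative = abs(np.corrcoef(pc2, np.gradient(mean_wave))[0, 1])
r_latency = abs(np.corrcoef(pc2_scores, latencies)[0, 1])
```

For small latency shifts, a shifted waveform is approximately the original waveform plus a multiple of its temporal derivative, which is why the second component resembles the derivative and its scores track latency in this toy case.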

Fig. 5.

Variation in scaled-time components predicts behavioral variation in time estimation. (A) Cue-locked EEG, shown as ERPs, was analyzed via GLM. (B and C) To visualize the unmixing of scaled-time and fixed-time components, the residual (noise) was recombined with either the scaled-time component (B) or the fixed-time components (C). PCA was run on the “scaled time plus residual” EEG. The second principal component resembled the temporal derivative or “rate” of the scaled component (SI Appendix, Fig. 4). (D) PC2 scores depended on response time, implying the scaled-time component peaked earlier for fast responses and later for slow responses. (E) The effect replicated in a decision-making task. PC2 scores are in arbitrary units (a.u.). Error bars represent 95% CIs.

Discussion

Our results provide a general method for recovering temporally scaled signals in human M/EEG, where scaled-time components are mixed at the scalp with conventional fixed-time ERPs. We focused here on tasks that have been widely used in the timing literature, namely interval production, perception, and prediction, as well as an example of a cognitive task that exhibits variable reaction times across trials (value-based decision making). Distinct scaled-time components and scalp topographies were revealed in all four tasks. These results suggest that flexible cognition relies on temporally scaled neural activity, as seen in recent animal work (15, 16).

The existence of temporally scaled signals at the scalp may not be surprising to those familiar with the study of time perception. Because of its excellent temporal resolution, EEG has long been used to study delay activity in interval-timing tasks. As discussed, one signal of interest has been a ramping frontal–central signal called the CNV, which we observed in our conventional ERP analysis (SI Appendix, Fig. 3). Notably, CNV slope has been interpreted as an accumulation signal in pacemaker-accumulation models of timing (7, 8, 11, 13). Our work differs from these previous studies in one important respect. In a conventional ERP analysis, delay activity is assumed to occur over fixed latencies. The CNV is thus computed by averaging over many cue-locked EEG epochs of the same duration. In contrast, we have considered the possibility that scalp-recorded potentials reflect a mixture of both fixed-time and scaled-time components. By modeling fixed-time and scaled-time components separately, we revealed scaled activity that was common across all timed intervals. This, in turn, is consistent with a recent class of models of timing that propose time estimation reflects the variable speed over which an underlying dynamical system unfolds (16–18).

We also observed temporally scaled activity in a decision-making task, a somewhat surprising result given that the task did not have an explicit timing component (participants made simple binary decisions (41)). Nevertheless, time is the medium within which decisions are made (47). Computationally, the timing of binary decisions can be captured in a drift-diffusion model as the accumulation of evidence in favor of each alternative (1). This accumulation is thought to be indexed by an ERP component called the central parietal positivity (CPP) (48). There is evidence that the slope of the CPP—which can be either stimulus locked or response locked—captures the rate of evidence accumulation (49). For faster/easier decisions, the CPP climbs more rapidly compared with slower/harder decisions (48, 49). Perhaps these effects can also be explained by stretching/compressing a common scaled-time component while holding stimulus- and response-related activity constant. Furthermore, variation in the scaled-time component is relevant to decision making according to our results: it predicts when a decision will be made. However, we also note that the topography observed in our scaled-time component was a negative-going potential rather than positive (Fig. 3D). This can potentially be explained by the standard CPP-like ERP (41) being a mixture of our observed negative scaled-time topography with the positive fixed-time topographies.

Although our approach makes no assumptions about the overall shape of the scaled-time component, it does assume a consistent, linear scaling across intervals. This is an assumption that could be relaxed in a more-complex model, e.g., using spline regression (25). We also note that, although we made no a priori predictions about waveform shape, some between-task similarities and differences were noted in the resulting scaled-time components. For example, similar responses were seen in the tasks for which the interval of interest ended with a motor response (temporal production and decision making—see Fig. 3 A and D). In both cases, activity immediately preceding the response depended on a ramping, fixed-time, motor-related component, with little contribution from a scaled component. A similar observation was made in the temporal prediction task—activity just before the appearance of the target depended on anticipatory fixed-time activity, not scaled activity (Fig. 3C). In contrast, preprobe activity in the temporal perception task showed almost no fixed-time activity but a robust scaled-time component (Fig. 3B). The reason for this difference cannot be identified by the current experiments, however. First, the perception and prediction tasks involved different task instructions (“listen for the probe” versus “respond to the target”). Second, the probe/target distributions differed in the two tasks; the mean duration was 75% likely in the prediction task but only 20% likely in the perception task. We therefore speculate that scaled activity may be somewhat task dependent.

Our approach is not only conceptually different from previous work that models variability in timing using a regression framework (27, 34–36); it also yields a mechanistically important finding. It indicates the brain may support flexible timing by adapting the duration of an otherwise consistent neural response. This can be understood as varying the rate of a dynamical system (17, 18) during interval estimation. Although there is evidence for such temporally scaled responses in the monkey neurophysiology literature (e.g., (15, 16), which inspired the current study), we are not aware of any direct evidence in support of this idea in humans. Indeed, it runs counter to the framework of fixed-duration responses that has thus far dominated M/EEG analysis.

Although we have focused here on interval timing and decision-making tasks, we anticipate other temporally scaled EEG and magnetoencephalography signals will be discovered for cognitive processes known to unfold over varying timescales. For example, the neural basis of flexible (fast/slow) speech production and perception is an active area of research (50–52) and may involve a form of temporal scaling (32). Flexible timing is also important across a vast array of decision-making tasks, where evidence accumulation can proceed quickly or slowly depending on the strength of the evidence (20). Flexible timing helps facilitate a range of adaptive behaviors via temporal attention (4), while disordered timing characterizes several clinical disorders (53), underscoring the importance of characterizing temporal scaling of neural responses in human participants.

Methods

Simulations.

We simulated cue-related and response-related EEG in a temporal production task using MATLAB 2020a (Mathworks, Natick, USA). Cue and response were separated by either a short, medium, or long interval. During the delay period, we simulated a scaled response that stretched or compressed to fill the interval. All three responses (cue, response, and scaled) were summed together at appropriate lags (short, medium, or long), with noise—see Fig. 1A. In total, we simulated 50 trials of each condition (short, medium, and long).
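A simulation of this kind can be sketched in Python/NumPy as follows. The original simulation was written in MATLAB; every specific choice here is an assumption made for illustration: the 200-Hz sampling rate, the interval durations (borrowed from the production task), the waveform shapes, the noise level, and the inter-trial gap.

```python
import numpy as np

rng = np.random.default_rng(0)
fs = 200                                             # assumed sampling rate (Hz)
intervals_s = {"short": 0.8, "medium": 1.65, "long": 2.5}   # assumed durations
n_trials_per_condition = 50

cue_response = np.hanning(int(0.4 * fs))     # fixed-time, cue-locked (assumed shape)
resp_response = -np.hanning(int(0.4 * fs))   # fixed-time, response-locked (assumed)
gap = int(1.0 * fs)                          # silent gap between trials (assumed)

def scaled_response(n):
    """One template stretched or compressed to fill an interval of n samples."""
    return -np.sin(np.pi * np.linspace(0.0, 1.0, n))

conditions = rng.permutation(
    np.repeat(list(intervals_s), n_trials_per_condition))

segments, cue_samples, resp_samples = [], [], []
cursor = 0
for cond in conditions:
    n_int = int(round(intervals_s[cond] * fs))
    seg = np.zeros(n_int + len(resp_response) + gap)
    seg[:len(cue_response)] += cue_response                # cue-locked component
    seg[:n_int] += scaled_response(n_int)                  # scaled-time component
    seg[n_int:n_int + len(resp_response)] += resp_response # response-locked
    cue_samples.append(cursor)
    resp_samples.append(cursor + n_int)
    segments.append(seg)
    cursor += len(seg)

eeg = np.concatenate(segments)
eeg += 0.5 * rng.standard_normal(eeg.size)   # additive sensor noise (assumed SD)
```

The event lists `cue_samples` and `resp_samples` are what a regression-based unmixing step would need: cue and response times define the fixed-time regressors, and their difference on each trial defines the duration of the scaled-time regressor.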

To unmix fixed-time and scaled-time components, we used a regression-based approach (24, 25, 54) in which the continuous EEG at one sensor Y is modeled as a linear combination of the underlying event-related responses β, which are unknown initially. The model can be written in equation form as

$$Y = X\beta + \varepsilon$$

where X is the design matrix and ε is the residual EEG not accounted for by the model. X contains as many rows as EEG data points and as many columns as predictors (that is, the number of points in the estimated event-related responses). In our case, X was populated by stick functions: nonzero values around the time of the modeled events and zeros otherwise. We included in X two fixed-time components, the cue and the response, as stick functions of fixed EEG duration (with values set to 1). In other words, the height of the fixed-time stick function was constant across events of the same type and equal to its width. To model a temporally scaled response, we used the MATLAB imresize function (Image Processing Toolbox, R2020b) with “box” interpolation to stretch/compress a stick function so that it spanned the duration between cue and response (other interpolation methods were tried, but this choice had little effect on the results; see SI Appendix, Fig. 1). Thus, the duration of the scaled stick function varied from trial to trial (Fig. 1B). The goal here was to estimate a single scaled-time response to account for EEG activity across multiple varying delay periods. For the fixed-time responses, each column of X represents a latency in milliseconds before/after an experimental event; by contrast, for the scaled-time responses, each column of X represents the percentage of time that has elapsed between two events (stimulus and response). Simulation code is available at https://github.com/chassall/temporalscaling.
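The full model, with two fixed-time blocks and one scaled-time block in the design matrix, can be sketched in Python/NumPy. This is a simplified stand-in for the published MATLAB code: a nearest-bin mapping replaces imresize with “box” interpolation, the data are generated directly from the model, and all helper names, sizes, and noise levels are assumptions.

```python
import numpy as np

def stick_design(n_samples, onsets, n_lags):
    """Fixed-time block: column k is 1 at (onset + k) for each event."""
    X = np.zeros((n_samples, n_lags))
    lags = np.arange(n_lags)
    for onset in onsets:
        rows = onset + lags
        keep = rows < n_samples
        X[rows[keep], lags[keep]] = 1.0
    return X

def scaled_design(n_samples, onsets, durations, n_bins):
    """Scaled-time block: n_bins columns stretched over each trial's interval."""
    X = np.zeros((n_samples, n_bins))
    for onset, dur in zip(onsets, durations):
        within = np.arange(dur)
        X[onset + within, (within * n_bins) // dur] = 1.0   # nearest bin
    return X

rng = np.random.default_rng(0)
n_lags, n_bins, gap = 80, 50, 300
durations = rng.permutation(np.repeat([160, 330, 500], 50))  # samples per interval
trial_lengths = durations + n_lags + gap
cue_onsets = np.concatenate([[0], np.cumsum(trial_lengths)[:-1]])
resp_onsets = cue_onsets + durations
n_samples = int(trial_lengths.sum())

# X = [cue block | response block | scaled-time block]
X = np.hstack([
    stick_design(n_samples, cue_onsets, n_lags),
    stick_design(n_samples, resp_onsets, n_lags),
    scaled_design(n_samples, cue_onsets, durations, n_bins),
])

true_beta = np.concatenate([
    np.hanning(n_lags),                                # cue-locked response
    -np.hanning(n_lags),                               # response-locked response
    -np.sin(np.pi * np.linspace(0.0, 1.0, n_bins)),    # scaled-time response
])
y = X @ true_beta + 0.3 * rng.standard_normal(n_samples)   # Y = X*beta + noise

beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
est_cue = beta_hat[:n_lags]
est_resp = beta_hat[n_lags:2 * n_lags]
est_scaled = beta_hat[2 * n_lags:]
```

Because the cue-locked and scaled-time regressors both begin at cue onset, they can only be told apart when interval durations differ across trials; the three durations used here are enough to make the toy design matrix full rank.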

Production and Perception Tasks.

Participants.

Participants completed both the production and perception tasks within the same recording session. We tested 20 university-aged participants, five male, two left handed, Mage = 23.40, 95% CI [21.29, 25.51]. Participants had normal or corrected-to-normal vision and no known neurological impairments. This study was approved by the Medical Sciences Interdivisional Research Ethics Committee at the University of Oxford, and participants provided informed consent. Following the experiment, participants were compensated £20 (£10 per hour of participation) plus a mean performance bonus of £3.23, 95% CI [2.92, 3.55].

Apparatus and procedure.

Participants were seated ∼64 cm from a 27-inch liquid-crystal display (144 Hz, 1-ms response rate, 1,920 × 1,080 pixels, Acer XB270H, New Taipei City, Taiwan). Visual stimuli were presented using the Psychophysics Toolbox Extension (55, 56) for MATLAB 2014b (Mathworks, Natick, USA). Participants were given written and verbal instructions to minimize head and eye movements. The goal of the production task was to produce a target interval, and the goal of the perception task was to judge whether or not a computer-produced interval was correct.

The experiment was blocked with 10 trials per block. There were 18 production blocks and 18 perception blocks, completed in random order. Prior to each block, participants listened to five isochronic tones indicating the target interval. Beeps were 400-Hz sine waves of duration 50 ms and an onset/offset ramping to a point 1/8 of the length of the wave (to avoid abrupt transitions). The target interval was either short (0.8 s), medium (1.65 s), or long (2.5 s).
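The tone described above can be sketched in Python/NumPy. The audio sampling rate (44.1 kHz) and the linear shape of the onset/offset ramps are assumptions, since neither is specified; the function name `make_beep` is hypothetical.

```python
import numpy as np

def make_beep(freq_hz=400.0, dur_s=0.05, fs=44100, ramp_fraction=1 / 8):
    """400-Hz, 50-ms sine tone with onset/offset ramps of 1/8 its length.

    fs and the linear ramp shape are assumptions made for illustration.
    """
    n = int(round(dur_s * fs))
    t = np.arange(n) / fs
    tone = np.sin(2 * np.pi * freq_hz * t)

    n_ramp = int(round(n * ramp_fraction))
    ramp = np.linspace(0.0, 1.0, n_ramp)
    envelope = np.ones(n)
    envelope[:n_ramp] = ramp                # fade in
    envelope[n - n_ramp:] = ramp[::-1]      # fade out
    return tone * envelope

beep = make_beep()
```

The ramps bring the waveform smoothly to zero at both ends, which avoids the audible click that an abrupt onset or offset would produce.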

In production trials, participants listened to a beep, then waited the target time before responding. Feedback appeared after a 400- to 600-ms delay (uniform distribution) and remained on the display for 1,000 ms. Feedback was a “quarter to” clockface to indicate “too early”, a “quarter after” clockface to indicate “too late”, or a checkmark to indicate an on-time response. Feedback itself was determined by where the participant’s response fell relative to a window around the target duration. The response window was initialized to ±100 ms around each target, then changed following each feedback via a staircase procedure: decreased on each side by 10 ms following a correct response and increased by 10 ms following an incorrect response (either too early or too late).
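The feedback rule and staircase can be sketched in plain Python. The sketch assumes the conventional staircase direction, in which the window narrows by 10 ms per side after an on-time response and widens by 10 ms after a miss; the function names and the example response times are hypothetical, and times are kept in integer milliseconds.

```python
def classify_response(rt_ms, target_ms, half_width_ms):
    """Label a produced interval relative to the window around the target."""
    if rt_ms < target_ms - half_width_ms:
        return "too early"
    if rt_ms > target_ms + half_width_ms:
        return "too late"
    return "on time"

def update_window(half_width_ms, outcome, step_ms=10):
    """Staircase update (assumed direction): narrow after a hit, widen after a miss."""
    if outcome == "on time":
        return half_width_ms - step_ms
    return half_width_ms + step_ms

# Example: medium target (1,650 ms), window initialized to +/-100 ms.
target_ms, half_width_ms = 1650, 100
outcomes = []
for rt_ms in [1600, 1800, 1500, 1660]:    # hypothetical produced intervals
    outcome = classify_response(rt_ms, target_ms, half_width_ms)
    outcomes.append(outcome)
    half_width_ms = update_window(half_width_ms, outcome)
```

With a symmetric step size, a rule of this kind tends to hold the proportion of on-time responses near 50%.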

In perception trials, participants heard two beeps, then were asked to judge the correctness of the interval, that is, whether or not the test interval matched the target interval. Test intervals (very early, early, on time, late, and very late) were set such that each subsequent interval was 25% longer than the previous (see SI Appendix, Table 1). Participants were then given feedback on their judgement—a checkmark for a correct judgement or an “x” for an incorrect judgement.
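The spacing rule for the test intervals can be sketched in plain Python. The sketch assumes that the “on time” interval equals the target and that the other four intervals follow from repeated multiplication or division by 1.25; the exact values used in the experiment are those listed in SI Appendix, Table 1, and the function name is hypothetical.

```python
LABELS = ("very early", "early", "on time", "late", "very late")

def make_probe_intervals(target_s, ratio=1.25, labels=LABELS):
    """Geometric series of test intervals centred on the target (assumption)."""
    centre = labels.index("on time")
    return {label: target_s * ratio ** (i - centre)
            for i, label in enumerate(labels)}

short_probes = make_probe_intervals(0.8)    # short target, in seconds
```

Under these assumptions the short target (0.8 s) would give test intervals of roughly 0.51, 0.64, 0.80, 1.00, and 1.25 s.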

For each task, participants gained two points for each correct response and lost one point for each incorrect response. At the end of the experiment, points were converted to a monetary bonus at a rate of £0.01 per point.

Data collection.

In the production task, we recorded participant response time from cue, trial outcome (early, late, or on time), and staircase-response window. In the perception task, we recorded trial on time judgements (yes/no) and trial outcome (correct/incorrect).

We recorded 36 channels of EEG, referenced to AFz. Data were recorded at 1,000 Hz using a Synamps amplifier and CURRY 8 software (Compumedics Neuroscan, Charlotte, USA). The electrodes were sintered Ag/AgCl (EasyCap, Herrsching, Germany). Thirty-one of the electrodes were laid out according to the 10–20 system. Additional electrodes were placed on the left and right mastoids, on the outer canthi of the left and right eyes, and below the right eye. The reference electrode was placed at location AFz and the ground electrode at Fpz.

Prediction Task.

In this previously published (39, 40) experiment, 19 participants responded to the onset of a visual target following a visual warning cue. The delay between cue and target was either short (700 ms) or long (1,300 ms) and, in some conditions, congruent with a preceding stimulus stream. Only congruent trials were included in the current analysis (i.e., the “valid” trials in the “rhythmic” and “repeated” conditions). Each trial was preceded by a 500-ms fixation cross subtending 0.6° of visual angle. During the precue period, participants were shown a flashing stimulus for four to six repetitions to indicate the target interval. The stimulus was a centrally presented black disk (1.2°) that appeared on the display for 100 ms. In the rhythmic condition, the black disk appeared every 700 ms or 1,300 ms (“short” or “long”). In the repeated condition, a red disk appeared either 700 ms or 1,300 ms after the appearance of the black disk, followed by a variable delay period of either 1,500–1,900 ms (short) or 1,900–2,700 ms (long). Following the precue period, participants were then shown the warning cue, a white disk (1.2°) that appeared for 100 ms. After either a short or long delay (700 ms or 1,300 ms), the target appeared—a green 1.2° disk—for 100 ms, followed by the participant’s response. The experimental program recorded the response time (time since the onset of the target). See SI Appendix, Fig. 2C and refs. (39, 40) for more detail.

Decision-Making Task.

In this experiment, also previously published (41, 42), 18 participants were presented with two snack foods and asked to pick one. This was not an interval timing task, and on average, participants took 763 ms, 95% CI [713, 813], to respond. Trials began with the appearance of a centrally presented fixation cross (0.6°) for 2–4 s followed by the presentation of the snack items (3° across, in total). Participants were asked to indicate their preference by making a left or right button press within a 1.25-s window. The experimental program recorded the response time (time since the onset of the snack items). See SI Appendix, Fig. 2D and ref. (41) for more detail.

Data Analysis.

Behavioral data.

For the production task, we computed the mean produced interval for each participant. For the perception task, we computed mean likelihood of responding yes to the on time prompt, for each condition (short, medium, and long) and interval (very early, early, on time, late, and very late). For the prediction task, we computed the mean reaction time for each analyzed condition (rhythmic or repeated) and interval (short or long). For the decision-making task, we computed the mean response (decision) time. See Fig. 2 and SI Appendix, Tables 2–4 for behavioral results.

EEG preprocessing.

For all three timing tasks, EEG was preprocessed in MATLAB 2020b (Mathworks, Natick, USA) using EEGLAB (57). We first downsampled the EEG to 200 Hz, then applied a 0.1- to 20-Hz bandpass filter and 50-Hz notch filter. The EEG was then rereferenced to the average of the left and right mastoids (and AFz recovered in the production/perception tasks). Ocular artifacts were removed using independent component analysis (ICA). The ICA was trained on 3-s epochs of data following the appearance of the fixation cross at the beginning of each trial. Ocular components were identified using the iclabel function and then removed from the continuous data.

EEG for the decision-making task was already preprocessed prior to our analysis. This was a simultaneous EEG–functional MRI recording, and preprocessing included the removal of magnetic resonance–related artifacts via filtering and PCA, as well as a 0.5- to 40-Hz bandpass filter. In line with our other analyses, we rereferenced the EEG to the average of TP7 and TP8 (located close to the mastoids) and applied an additional 20-Hz low-pass filter.

ERPs.

To construct conventional ERPs, we first created epochs of EEG around cues (all tasks), responses (perception task), probes (production task), targets (prediction task), and decisions (decision-making task). Cue-locked ERPs extended from 200 ms precue to either 800, 1,650, or 2,500 ms postcue (the short, medium, and long targets) in the perception/production tasks; 700 or 1,300 ms in the prediction task (the short and long targets); and 600 ms in the decision-making task. Epochs were baseline corrected using a 200-ms precue window. We also constructed epochs from 800, 1,650, or 2,500 ms prior to the response/probe in the production/perception tasks; 700 or 1,300 ms prior to the target in the prediction task; and 600 ms prior to the decision in the decision-making task to 200 ms after the response/probe/target/decision. A baseline was defined around the event of interest (mean EEG from −20 to 20 ms) and removed in all cases except for the decision-making task, in line with the original analysis (41). We then removed any trials in which the sample-to-sample voltage differed by more than 50 μV or the voltage change across the entire epoch exceeded 150 μV. We then created conditional cue and response/probe/target/decision averages for each participant and task: production/perception (short, medium, and long), prediction (short and long), and decision making (early and late, via a median split (41)). Finally, participant averages in the timing tasks were combined into grand-average waveforms at electrode FCz, a location where timing-related activity has been previously observed (5), and Pz in the decision-making task, in line with the previously published analysis (41) (SI Appendix, Fig. 3).
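The baseline correction and trial-rejection criteria described above can be sketched as follows. This is a minimal illustration rather than the authors' EEGLAB code: the function names are hypothetical, each epoch is a plain list of voltages in microvolts for one channel, and the "voltage change across the entire epoch" is read here as the maximum minus the minimum, which is an assumption.

```python
def baseline_correct(epoch_uv, n_baseline):
    """Subtract the mean of the first n_baseline samples (e.g., 40 samples = 200 ms at 200 Hz)."""
    base = sum(epoch_uv[:n_baseline]) / n_baseline
    return [v - base for v in epoch_uv]


def reject_epoch(epoch_uv, step_limit=50.0, range_limit=150.0):
    """Return True if the epoch breaks either rejection criterion."""
    # Largest absolute change between consecutive samples.
    max_step = max(abs(b - a) for a, b in zip(epoch_uv, epoch_uv[1:]))
    # Total voltage change, taken here as max minus min (an assumption).
    total_range = max(epoch_uv) - min(epoch_uv)
    return max_step > step_limit or total_range > range_limit


clean = [0.0, 5.0, -3.0, 10.0, 2.0]       # small steps, small range
jump = [0.0, 5.0, 60.0, 10.0, 2.0]        # contains a 55 uV step
drift = [0.0, 40.0, 80.0, 120.0, 160.0]   # 40 uV steps but a 160 uV range

kept = [e for e in (clean, jump, drift) if not reject_epoch(e)]
```

In this toy example only `clean` survives; `jump` fails the sample-to-sample criterion and `drift` fails the whole-epoch criterion.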

Regression ERPs (rERPs).

To unmix fixed-time and scaled-time components in our EEG data, we estimated rERPs following the same GLM procedure we used with our simulated data but now applied to each sensor. We used a design matrix consisting of regular stick functions for cue and response/probe/target and a stretched/compressed stick function spanning the interval from cue to response/probe/target/decision. In particular, we estimated cue-locked responses that spanned from 200 ms precue to 800 ms postcue. The response/probe/target/decision response interval spanned from −800 to 200 ms. Each fixed-time response thus spanned 1,000 ms or 200 EEG sample points. The scaled-time component, as described earlier, was modeled as a single underlying component (set width in X) that spanned over multiple EEG durations (varying No. of rows in X). Thus, our method required choosing how many scaled-time sample points to estimate (the width in X). For the production/perception tasks, we chose to estimate 330 scaled-time points, equivalent to the duration of the “medium” interval. For the prediction task, we chose to estimate 200 scaled-time points, equivalent to the mean of the short and long conditions (700 ms and 1,300 ms). For the decision-making task, we estimated 153 scaled-time points (roughly equivalent to the mean decision time). Unlike the conventional ERP approach, this analysis was conducted on the continuous EEG. To identify artifacts in the continuous EEG, we used the basicrap function from the ERPLAB (58) toolbox with a 150-μV threshold (2,000 ms window, 1,000 ms step size). A sample was flagged if it was “bad” for any channel. Flagged samples were excluded from the GLM (samples removed from the EEG and rows removed from the design matrix). Additionally, we removed samples/rows associated with unusually fast or slow responses in the production task (less than 0.2 s or more than 5 s). 
On average, we removed 10.16% of samples in the production task (95% CI [8.90, 11.42]), 3.75% of samples in the perception task (95% CI [2.39, 5.10]), 5.57% of samples in the prediction task (95% CI [4.94, 6.20]), and 5.56% of samples in the decision-making task (95% CI [4.99, 6.12]).
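To make the design-matrix structure concrete, the sketch below builds the three blocks of regressors for a single hypothetical production trial sampled at 200 Hz. The function names, the trial timings, and the nearest-column rule used to stretch the scaled-time block are all assumptions made for illustration; the interpolation actually used to stretch or compress the stick function may differ.

```python
import numpy as np


def fixed_block(n_samples, onset, pre, post):
    """Stick-function regressors: one column per latency from -pre to post-1 samples around onset."""
    width = pre + post
    X = np.zeros((n_samples, width))
    for k in range(width):
        row = onset - pre + k
        if 0 <= row < n_samples:
            X[row, k] = 1.0
    return X


def scaled_block(n_samples, start, stop, width):
    """Scaled-time regressors: a fixed number of columns spread over rows start..stop-1."""
    X = np.zeros((n_samples, width))
    duration = stop - start
    for i in range(duration):
        # Nearest-column assignment: a simple stand-in for proper interpolation.
        col = min(int(i * width / duration), width - 1)
        X[start + i, col] = 1.0
    return X


fs = 200                      # Hz, after downsampling
n_samples = 1200              # hypothetical 6-s stretch of continuous EEG
cue, response = 400, 760      # hypothetical event samples (1.8-s produced interval)

X_cue = fixed_block(n_samples, cue, pre=40, post=160)        # -200 to 800 ms
X_resp = fixed_block(n_samples, response, pre=160, post=40)  # -800 to 200 ms
X_scaled = scaled_block(n_samples, cue, response, width=330) # 330 scaled-time points
X = np.hstack([X_cue, X_resp, X_scaled])                     # shape (1200, 730)
```

With several trials, the blocks for each event would be summed into the same columns, and rows flagged as artifacts would be dropped from both `X` and the EEG before fitting.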

To test for multicollinearity between the regressors, we computed the variance inflation factor (VIF) for each regressor, i.e., at each timepoint in the estimated waveforms. This was done using the uf_vif function in the Unfold toolbox (25). We were concerned about multicollinearity because the fixed-time and scaled-time components occurred over the same “real time” durations. For example, in the production task, the early and later parts of the scaled waveform always coincided with the start of the cue-locked and end of the response-locked responses, respectively. The overlap was not consistent, however; alignment between the fixed and scaled regressors was lessened due to distortions in the scaled stick function (see SI Appendix, Fig. 1). As a result, the VIF was low (<10) at nearly all points other than the start/end (SI Appendix, Fig. 9). This was true in all tasks except for the temporal prediction task (VIFs > 10), because the other tasks incorporated greater temporal variability across trials. We therefore expected the waveform estimates in the temporal prediction task to be noisier relative to the other tasks. We note that future studies could use VIF to evaluate the likelihood of successfully unmixing fixed-time and scaled-time components. Introducing elements of experimental design (such as increased interval variability across trials) could help to address concerns over multicollinearity.
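For readers unfamiliar with the VIF, the sketch below computes it from its textbook definition: each regressor is predicted from all the others, and the VIF is 1 / (1 − R²). This is a generic illustration with a hypothetical function name, not the Unfold uf_vif implementation, whose internals may differ.

```python
import numpy as np


def vif(X):
    """Variance inflation factor for each column of X."""
    X = np.asarray(X, dtype=float)
    out = np.empty(X.shape[1])
    for j in range(X.shape[1]):
        y = X[:, j]
        others = np.delete(X, j, axis=1)
        # Regress column j on the remaining columns plus an intercept.
        A = np.column_stack([np.ones(len(y)), others])
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)
        resid = y - A @ coef
        r2 = 1.0 - resid.var() / y.var()
        out[j] = 1.0 / (1.0 - r2)
    return out


rng = np.random.default_rng(0)
a = rng.standard_normal(500)
b = rng.standard_normal(500)            # unrelated to a
c = a + 0.1 * rng.standard_normal(500)  # nearly a copy of a
values = vif(np.column_stack([a, b, c]))
```

Here the first and third columns overlap almost completely and receive large VIFs, while the independent second column stays close to 1.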

To lessen the effect of multicollinearity and impose a smoothness constraint on our estimates, we used a first-derivative form of Tikhonov regularization (29). Tikhonov regularization reframes the GLM solution as the minimization of

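$$\lVert X\beta - Y \rVert^{2} + \lambda \lVert L\beta \rVert^{2},$$

where X is the design matrix, β is the vector of regression weights (the estimated waveforms), Y is the EEG, L is the regularization operator, and λ is the regularization parameter. In other words, we aimed to minimize a penalty term in addition to the usual residual. This has the solution

$$\hat{\beta} = \left(X^{\mathsf{T}}X + \lambda L^{\mathsf{T}}L\right)^{-1}X^{\mathsf{T}}Y.$$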

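In our case, L approximated the first derivative as a scaled finite difference (59):

$$L = \frac{1}{2}\begin{bmatrix} 1 & -1 & & & \\ & 1 & -1 & & \\ & & \ddots & \ddots & \\ & & & 1 & -1 \end{bmatrix}.$$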

We then chose regularization parameters for each participant using 10-fold cross validation. Our goal here was to minimize the mean squared error of the residual EEG at electrode FCz, our electrode of interest. The following λs were tested on each fold: 0.001, 0.01, 0, 1, 10, 100, 1,000, 10,000, and 100,000. An optimal λ was chosen for each participant, corresponding to the parameter with the lowest mean squared error across all folds. See SI Appendix, Table 7 for a summary.
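The regularized fit and the choice of λ by cross validation can be sketched as below. This is an illustration under stated assumptions, not the authors' MATLAB code: the function names are hypothetical, the first-difference operator is taken to have rows of 0.5 × [1, −1], and folds are formed by randomly assigning samples, which may not match how the folds were actually constructed.

```python
import numpy as np


def first_difference(n):
    """(n-1) x n scaled first-difference operator with rows 0.5 * [1, -1] (assumed scaling)."""
    L = np.zeros((n - 1, n))
    idx = np.arange(n - 1)
    L[idx, idx] = 0.5
    L[idx, idx + 1] = -0.5
    return L


def tikhonov_fit(X, y, lam):
    """Minimize ||X b - y||^2 + lam * ||L b||^2 by solving the regularized normal equations."""
    L = first_difference(X.shape[1])
    return np.linalg.solve(X.T @ X + lam * (L.T @ L), X.T @ y)


def choose_lambda(X, y, lams, n_folds=10, seed=0):
    """Return the lambda with the lowest mean squared prediction error across folds."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), n_folds)
    mean_errors = []
    for lam in lams:
        errors = []
        for test in folds:
            train = np.setdiff1d(np.arange(len(y)), test)
            b = tikhonov_fit(X[train], y[train], lam)
            errors.append(np.mean((y[test] - X[test] @ b) ** 2))
        mean_errors.append(np.mean(errors))
    return lams[int(np.argmin(mean_errors))]


# Toy data: a smooth "waveform" of 20 weights observed through a random design.
rng = np.random.default_rng(1)
true_b = np.sin(np.linspace(0.0, np.pi, 20))
X = rng.standard_normal((300, 20))
y = X @ true_b + rng.standard_normal(300)

b_unregularized = tikhonov_fit(X, y, 0.0)
b_heavy = tikhonov_fit(X, y, 1e6)
best = choose_lambda(X, y, [0.0, 1.0, 100.0])  # hypothetical grid for the toy data
```

With λ = 0 the estimate reduces to ordinary least squares, and as λ grows the penalty on neighboring differences pulls the estimated waveform toward a flat line.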

For each task and participant, we computed the mean squared error according to the model described above and—for comparison—a model with fixed components only. To make the comparison fair, we only considered those timepoints for which the fixed components were active, e.g., from −200 ms to 800 ms relative to the cue and from −800 to 200 ms relative to the response.

Statistics.

We quantified the amplitude of the scaled-time component by conducting a nonparametric statistical test of the scaled-time component according to the procedure outlined in ref. (60). After computing a single-sample t-statistic at each sample point and electrode, we identified clusters of points for which the t-value exceeded a critical threshold, corresponding to an alpha value of 0.001 for the production task, 0.01 for the perception task, and 0.05 for the prediction and decision-making tasks. Lower alpha values were used for the production and perception tasks to better isolate the effect; using an alpha value of 0.05 yielded longer windows of significance but did not change our results. Clusters were identified both spatially and temporally. For each electrode, we defined a cluster by identifying neighboring electrodes according to a template available in the FieldTrip toolbox (61). For each spatial cluster, we then identified temporal clusters for which the t-values of all the included electrodes exceeded the critical value. Within this common window, we computed the “cluster mass”, defined as the spatial mean of the sum of the absolute values of the t-values within the temporal cluster. To determine whether the observed cluster masses exceeded what could occur by chance, we permuted the scaled components by randomly flipping (multiplying by −1) the entire waveform. We then computed and recorded the cluster masses for 1,000 permuted waveforms. If more than one temporal cluster was found within a spatial cluster, only the maximum cluster mass was recorded. A value of zero was recorded if there were no clusters. We then labeled our observed cluster masses as “significant” if they exceeded 95% of the maximum cluster masses of the permuted waveforms. 
Finally, we examined and reported the cluster extents and P values for the clusters of maximum cluster mass: (P3, CP5, and CP1) in the production task, (F3, Fz, FCz, C3, and Cz) in the perception task, (FC1, FCz, FC2, C1, C2, CP1, CPz, and CP2) in the prediction task, and (Cz, FC1, FCz, FC2, C1, C2, CP1, CPz, and CP2) in the decision-making task.
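The logic of the cluster-mass permutation test can be illustrated with a simplified, single-electrode version. The sketch below is an assumption-laden reduction of the procedure: it clusters only over time, ignores the sign of t when forming clusters, omits the spatial neighborhood step, and takes the critical t-value as an input. All function names are hypothetical.

```python
import numpy as np


def t_values(data):
    """One-sample t statistic at each timepoint; data is participants x timepoints."""
    n = data.shape[0]
    return data.mean(axis=0) / (data.std(axis=0, ddof=1) / np.sqrt(n))


def max_cluster_mass(t, t_crit):
    """Largest summed |t| over runs of consecutive supra-threshold timepoints (0 if none)."""
    best = run = 0.0
    for value in np.abs(t):
        run = run + value if value > t_crit else 0.0
        best = max(best, run)
    return best


def sign_flip_test(data, t_crit, n_perm=1000, seed=0):
    """Observed maximum cluster mass and its permutation p-value."""
    rng = np.random.default_rng(seed)
    observed = max_cluster_mass(t_values(data), t_crit)
    null = np.empty(n_perm)
    for i in range(n_perm):
        # Randomly flip the sign of each participant's entire waveform.
        signs = rng.choice([-1.0, 1.0], size=(data.shape[0], 1))
        null[i] = max_cluster_mass(t_values(data * signs), t_crit)
    return observed, float(np.mean(null >= observed))


# Toy data: 20 participants, 50 timepoints, with an effect at samples 20-29.
rng = np.random.default_rng(1)
data = rng.standard_normal((20, 50))
data[:, 20:30] += 2.0
observed, p_value = sign_flip_test(data, t_crit=2.093, n_perm=200)  # 2.093: two-sided alpha = 0.05, df = 19
```

Because only the maximum cluster mass of each permutation enters the null distribution, the test controls for the many timepoints examined without a separate correction.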

Scaling index.

To validate the unmixing procedure, we regressed out either the scaled-time component or the fixed-time components from the EEG in each task and participant to create fixed-only or scaled-only datasets. We then quantified the amount of temporal scaling present in each task, participant, and dataset (original, fixed-only, and scaled only) using a similar procedure as ref. (15). Specifically, we constructed epochs spanning the intervals of interest (e.g., cue to response), then stretched or compressed each epoch to match a common duration (the longest duration in the interval timing tasks; the mean of the “late” responses in the decision-making task, as defined above). For each task and participant, we averaged by condition (e.g., short, medium, and long) to create conditional ERPs with a common duration, then computed a scaling index defined as the coefficient of determination. Specifically, we asked how well the long waveform could be predicted by the short waveform. If there was also a medium waveform (the production/perception tasks), another coefficient of determination was computed, and the two coefficients were averaged. A larger scaling index can therefore be interpreted as a greater postscaling similarity between conditions. Scaling indices in the fixed-only and scaled-only datasets were compared via paired-samples t tests. For each t test, we computed Cohen’s d as

$$d = \frac{M_{\mathrm{diff}}}{s_{\mathrm{diff}}},$$

where $M_{\mathrm{diff}}$ is the mean difference between the scores being compared and $s_{\mathrm{diff}}$ is the SD of the difference of the scores being compared (62). Interestingly, the scaling index of the original signal appeared to be a mixture of the scaling indices of the fixed-only and scaled-only signals in all tasks except for the temporal prediction task (Fig. 4). We interpreted this as further evidence that the unmixing procedure was less effective here due to multicollinearity.
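A minimal sketch of the scaling index and the paired effect size follows. The function names are hypothetical, linear interpolation is assumed for stretching, and the shorter waveform is stretched to the length of the longer one as the common duration; the original implementation may differ in these details.

```python
import numpy as np


def stretch(waveform, n_out):
    """Linearly resample a waveform to n_out points (stretching or compressing in time)."""
    waveform = np.asarray(waveform, dtype=float)
    old = np.linspace(0.0, 1.0, len(waveform))
    new = np.linspace(0.0, 1.0, n_out)
    return np.interp(new, old, waveform)


def r_squared(target, prediction):
    """Coefficient of determination for predicting target from prediction."""
    ss_res = np.sum((target - prediction) ** 2)
    ss_tot = np.sum((target - np.mean(target)) ** 2)
    return 1.0 - ss_res / ss_tot


def scaling_index(short, long):
    """How well the long waveform is predicted by the short one after stretching."""
    return r_squared(np.asarray(long, dtype=float), stretch(short, len(long)))


def cohens_d_paired(a, b):
    """Mean of the paired differences divided by their standard deviation."""
    diff = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    return diff.mean() / diff.std(ddof=1)


# A temporally scaled signal: the same half-sine spread over 160 or 500 samples.
scaled_short = np.sin(np.linspace(0.0, np.pi, 160))
scaled_long = np.sin(np.linspace(0.0, np.pi, 500))

# A fixed-time signal: the same 40-sample bump regardless of interval length.
fixed_short = np.r_[np.hanning(40), np.zeros(120)]
fixed_long = np.r_[np.hanning(40), np.zeros(460)]

index_scaled = scaling_index(scaled_short, scaled_long)
index_fixed = scaling_index(fixed_short, fixed_long)
```

The scaled signal yields an index near 1, whereas stretching the fixed-time bump misaligns it with its long-interval counterpart and the index drops sharply. When a medium condition is available, a second coefficient would be computed in the same way and the two averaged.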

PCA.

To explore the link between the scaled-time component and behavior, we examined the scaled-only dataset described above—that is, the scaled-time regressors plus residuals. Only midfrontal electrodes were considered: FC1, FCz, FC2, Cz, CP1, CPz, and CP2. We then constructed epochs starting at the cue and ending at the target interval (800 ms, 1,650 ms, or 2,500 ms). Epochs within each condition (short, medium, or long) were further grouped into three equal-sized response-time bins (early, on time, or late) and averaged for each electrode and participant. We then conducted a PCA for each condition (short, medium, or long) and participant. See SI Appendix, Table 5 for amount of variance explained by PC1 and PC2. To visualize the effect of PC2, we computed the mean PC2 across all participants. We then added more or less of the mean PC2 to the mean PC1 projection and applied a 25-point moving-mean window for visualization purposes (SI Appendix, Fig. 4). In order to choose a reasonable range of PC2 scores, we examined the average minimum and maximum PC2 score for each participant and condition (short, medium, or long). The PC2 score ranges were −21 to 15 (short), −41 to 38 (medium), and −40 to 55 (long). To assess the relationship between PC2 score and behavior, we binned PC2 scores according to our response-time bins (early, on time, and late) and collapsed across conditions (short, medium, or long). This gave us a single mean PC2 score for each participant and response-time bin (early, on time, or late), which we analyzed using a two-sided, repeated-measures ANOVA (Fig. 5D) after verifying the assumption of normality using the Shapiro–Wilk test. Two different effect sizes, ηp2 and ηg2, were computed, according to

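$$\eta_p^2 = \frac{SS_Q}{SS_Q + SS_{sQ}}, \qquad \eta_g^2 = \frac{SS_Q}{SS_Q + SS_S + SS_{sQ}},$$

where $SS_Q$ is the sum of squares of the quantile effect (early, on time, or late), $SS_{sQ}$ is the error sum of squares of the quantile effect, and $SS_S$ is the sum of squares between subjects (63).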
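As a worked illustration of the two effect sizes, the sketch below partitions the sums of squares for a one-way repeated-measures design and forms partial and generalized eta squared from them. The function name and the toy scores are hypothetical.

```python
def eta_squared(scores):
    """scores[s][q] is the mean score for subject s in quantile q.

    Returns (partial eta squared, generalized eta squared).
    """
    n_subj, n_q = len(scores), len(scores[0])
    grand = sum(sum(row) for row in scores) / (n_subj * n_q)
    subj_means = [sum(row) / n_q for row in scores]
    q_means = [sum(row[q] for row in scores) / n_subj for q in range(n_q)]

    ss_q = n_subj * sum((m - grand) ** 2 for m in q_means)    # quantile effect
    ss_s = n_q * sum((m - grand) ** 2 for m in subj_means)    # between subjects
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_sq = ss_total - ss_q - ss_s                            # error (subject x quantile)

    eta_p = ss_q / (ss_q + ss_sq)
    eta_g = ss_q / (ss_q + ss_s + ss_sq)
    return eta_p, eta_g


# Three hypothetical participants by three response-time bins (early, on time, late).
toy_scores = [[1.0, 2.0, 3.0], [2.0, 3.0, 5.0], [3.0, 5.0, 6.0]]
eta_p, eta_g = eta_squared(toy_scores)
```

Generalized eta squared keeps between-subject variability in the denominator, so it is never larger than the partial version for the same data.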

We then replicated the PCA procedure for the decision-making task using an epoch extending 800 ms from the cue at a central electrode cluster (FC3, FC1, FC2, FC4, C3, C1, Cz, C2, C4, CP3, CP1, CP2, CP4, P3, P1, Pz, P2, and P4). Note that the assumption of normality was violated for “early” responses in the decision-making task. However, as repeated-measures ANOVA is robust to violations of normality, no statistical correction was made.

Acknowledgments

This research was funded by a Natural Sciences and Engineering Research Council of Canada (https://www.nserc-crsng.gc.ca/) Postdoctoral Fellowship to C.D.H. (PDF 546078 – 2020), a Biotechnology and Biological Sciences Research Council (https://www.ukri.org/councils/bbsrc/) Anniversary Future Leader Fellowship (BB/R010803/1) to N.K., a Sir Henry Dale Fellowship from the Royal Society (https://royalsociety.org) and Wellcome (https://wellcome.org/) to L.T.H. (208789/Z/17/Z), and a National Alliance for Research on Schizophrenia and Depression Young Investigator Award from the Brain and Behavior Research Foundation (https://www.bbrfoundation.org/) to L.T.H. and was supported by the National Institute for Health and Care Research Oxford Health Biomedical Research Centre (https://oxfordhealthbrc.nihr.ac.uk/). The Wellcome Centre for Integrative Neuroimaging was supported by core funding from Wellcome Trust (203139/Z/16/Z). For the purpose of Open Access, the author has applied a CC BY public copyright license to any author-accepted manuscript version arising from this submission.

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission. S.L. is a guest editor invited by the Editorial Board.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.2214638119/-/DCSupplemental.

Data, Materials, and Software Availability

Raw data for the production and perception tasks are available at https://openneuro.org/datasets/ds004200/versions/1.0.0 (64). Raw data for the prediction task are available at datadryad.org/stash/dataset/doi:10.5061/dryad.5vb8h (40). Raw data for the decision-making task are available at https://openneuro.org/datasets/ds002734/versions/1.0.2 (42). Simulation and analysis scripts are available at https://github.com/chassall/temporalscaling (65).

Data have been deposited in OpenNeuro (production and perception tasks: https://doi.org/10.18112/openneuro.ds004200.v1.0.0; decision-making task: https://doi.org/10.18112/openneuro.ds002734.v1.0.2), Dryad (prediction task: https://doi.org/10.5061/dryad.5vb8h), and GitHub (simulation and analysis scripts: https://github.com/chassall/temporalscaling).

References

- 1. Bogacz R., Brown E., Moehlis J., Holmes P., Cohen J. D., The physics of optimal decision making: A formal analysis of models of performance in two-alternative forced-choice tasks. Psychol. Rev. 113, 700–765 (2006).

- 2. Merchant H., Harrington D. L., Meck W. H., Neural basis of the perception and estimation of time. Annu. Rev. Neurosci. 36, 313–336 (2013).

- 3. Wittmann M., The inner sense of time: How the brain creates a representation of duration. Nat. Rev. Neurosci. 14, 217–223 (2013).

- 4. Nobre A. C., van Ede F., Anticipated moments: Temporal structure in attention. Nat. Rev. Neurosci. 19, 34–48 (2018).

- 5. Macar F., Vidal F., Event-related potentials as indices of time processing: A review. J. Psychophysiol. 18, 89–104 (2004).

- 6. Kononowicz T. W., van Rijn H., Decoupling interval timing and climbing neural activity: A dissociation between CNV and N1P2 amplitudes. J. Neurosci. 34, 2931–2939 (2014).

- 7. Pfeuty M., Ragot R., Pouthas V., Relationship between CNV and timing of an upcoming event. Neurosci. Lett. 382, 106–111 (2005).

- 8. Macar F., Vidal F., Casini L., The supplementary motor area in motor and sensory timing: Evidence from slow brain potential changes. Exp. Brain Res. 125, 271–280 (1999).

- 9. Trillenberg P., Verleger R., Wascher E., Wauschkuhn B., Wessel K., CNV and temporal uncertainty with ‘ageing’ and ‘non-ageing’ S1-S2 intervals. Clin. Neurophysiol. 111, 1216–1226 (2000).

- 10. Miniussi C., Wilding E. L., Coull J. T., Nobre A. C., Orienting attention in time. Modulation of brain potentials. Brain 122, 1507–1518 (1999).

- 11. Macar F., Vidal F., The CNV peak: An index of decision making and temporal memory. Psychophysiology 40, 950–954 (2003).

- 12. Praamstra P., Kourtis D., Kwok H. F., Oostenveld R., Neurophysiology of implicit timing in serial choice reaction-time performance. J. Neurosci. 26, 5448–5455 (2006).

- 13. Macar F., Vidal F., Time processing reflected by EEG surface Laplacians. Exp. Brain Res. 145, 403–406 (2002).

- 14. Walter W. G., Cooper R., Aldridge V. J., McCallum W. C., Winter A. L., Contingent negative variation: An electric sign of sensori-motor association and expectancy in the human brain. Nature 203, 380–384 (1964).

- 15. Wang J., Narain D., Hosseini E. A., Jazayeri M., Flexible timing by temporal scaling of cortical responses. Nat. Neurosci. 21, 102–110 (2018).

- 16. Remington E. D., Egger S. W., Narain D., Wang J., Jazayeri M., A dynamical systems perspective on flexible motor timing. Trends Cogn. Sci. 22, 938–952 (2018).

- 17. Vyas S., Golub M. D., Sussillo D., Shenoy K. V., Computation through neural population dynamics. Annu. Rev. Neurosci. 43, 249–275 (2020).

- 18. Sussillo D., Neural circuits as computational dynamical systems. Curr. Opin. Neurobiol. 25, 156–163 (2014).

- 19. Williams A. H., et al., Discovering precise temporal patterns in large-scale neural recordings through robust and interpretable time warping. Neuron 105, 246–259.e8 (2020).

- 20. O’Connell R. G., Shadlen M. N., Wong-Lin K., Kelly S. P., Bridging neural and computational viewpoints on perceptual decision-making. Trends Neurosci. 41, 838–852 (2018).

- 21. Boehm U., van Maanen L., Forstmann B., van Rijn H., Trial-by-trial fluctuations in CNV amplitude reflect anticipatory adjustment of response caution. Neuroimage 96, 95–105 (2014).

- 22. Meck W. H., Neuropsychology of timing and time perception. Brain Cogn. 58, 1–8 (2005).

- 23. Luck S. J., “A closer look at ERPs and ERP components” in An Introduction to the Event-Related Potential Technique, Second Edition (The MIT Press, Cambridge, MA, 2014), pp. 35–70.

- 24. Smith N. J., Kutas M., Regression-based estimation of ERP waveforms: I. The rERP framework. Psychophysiology 52, 157–168 (2015).

- 25. Ehinger B. V., Dimigen O., Unfold: An integrated toolbox for overlap correction, non-linear modeling, and regression-based EEG analysis. PeerJ 7, e7838 (2019).

- 26. Litvak V., Jha A., Flandin G., Friston K., Convolution models for induced electromagnetic responses. Neuroimage 64, 388–398 (2013).

- 27. Lalor E. C., Pearlmutter B. A., Reilly R. B., McDarby G., Foxe J. J., The VESPA: A method for the rapid estimation of a visual evoked potential. Neuroimage 32, 1549–1561 (2006).

- 28. Crosse M. J., Di Liberto G. M., Bednar A., Lalor E. C., The multivariate temporal response function (mTRF) toolbox: A MATLAB toolbox for relating neural signals to continuous stimuli. Front. Hum. Neurosci. 10, 604 (2016).

- 29. Kristensen E., Guerin-Dugué A., Rivet B., Regularization and a general linear model for event-related potential estimation. Behav. Res. Methods 49, 2255–2274 (2017).

- 30. Woody C. D., Characterization of an adaptive filter for the analysis of variable latency neuroelectric signals. Med. Biol. Eng. 5, 539–554 (1967).

- 31. Ouyang G., Herzmann G., Zhou C., Sommer W., Residue iteration decomposition (RIDE): A new method to separate ERP components on the basis of latency variability in single trials. Psychophysiology 48, 1631–1647 (2011).

- 32. Lerner Y., Honey C. J., Katkov M., Hasson U., Temporal scaling of neural responses to compressed and dilated natural speech. J. Neurophysiol. 111, 2433–2444 (2014).

- 33. Huang H.-C., Jansen B. H., EEG waveform analysis by means of dynamic time-warping. Int. J. Biomed. Comput. 17, 135–144 (1985).

- 34. Herbst S. K., Fiedler L., Obleser J., Tracking temporal hazard in the human electroencephalogram using a forward encoding model. eNeuro 5, ENEURO.0017-18.2018 (2018).

- 35. Kulasingham J. P., Joshi N. H., Rezaeizadeh M., Simon J. Z., Cortical processing of arithmetic and simple sentences in an auditory attention task. J. Neurosci. 41, 8023–8039 (2021).

- 36. Gonçalves N. R., Whelan R., Foxe J. J., Lalor E. C., Towards obtaining spatiotemporally precise responses to continuous sensory stimuli in humans: A general linear modeling approach to EEG. Neuroimage 97, 196–205 (2014).

- 37. Wiener M., Kanai R., Frequency tuning for temporal perception and prediction. Curr. Opin. Behav. Sci. 8, 1–6 (2016).

- 38. Herrmann C. S., Rach S., Vosskuhl J., Strüber D., Time-frequency analysis of event-related potentials: A brief tutorial. Brain Topogr. 27, 438–450 (2014).

- 39. Breska A., Deouell L. Y., Neural mechanisms of rhythm-based temporal prediction: Delta phase-locking reflects temporal predictability but not rhythmic entrainment. PLoS Biol. 15, e2001665 (2017).

- 40. Breska A., Deouell L. Y., Neural mechanisms of rhythm-based temporal prediction: Delta phase-locking reflects temporal predictability but not rhythmic entrainment. Dryad. 10.5061/dryad.5vb8h. Accessed 5 November 2020.

- 41. Pisauro M. A., Fouragnan E., Retzler C., Philiastides M. G., Neural correlates of evidence accumulation during value-based decisions revealed via simultaneous EEG-fMRI. Nat. Commun. 8, 15808 (2017).

- 42. Pisauro M. A., Fouragnan E., Retzler C., Philiastides M. G., Evidence accumulation in value-based decisions. OpenNeuro. 10.18112/openneuro.ds002734.v1.0.2. Accessed 12 January 2021.

- 43. Krajbich I., Armel C., Rangel A., Visual fixations and the computation and comparison of value in simple choice. Nat. Neurosci. 13, 1292–1298 (2010).

- 44. Luck S. J., An Introduction to the Event-Related Potential Technique (The MIT Press, Cambridge, MA, ed. 2, 2014).

- 45. Möcks J., The influence of latency jitter in principal component analysis of event-related potentials. Psychophysiology 23, 480–484 (1986).

- 46. Hunt L. T., Behrens T. E., Hosokawa T., Wallis J. D., Kennerley S. W., Capturing the temporal evolution of choice across prefrontal cortex. eLife 4, e11945 (2015).

- 47. Ariely D., Zakay D., A timely account of the role of duration in decision making. Acta Psychol. (Amst.) 108, 187–207 (2001).

- 48. O’Connell R. G., Dockree P. M., Kelly S. P., A supramodal accumulation-to-bound signal that determines perceptual decisions in humans. Nat. Neurosci. 15, 1729–1735 (2012).

- 49. Kelly S. P., O’Connell R. G., Internal and external influences on the rate of sensory evidence accumulation in the human brain. J. Neurosci. 33, 19434–19441 (2013).

- 50. Kösem A., et al., Neural entrainment determines the words we hear. Curr. Biol. 28, 2867–2875.e3 (2018).

- 51. Abrams D. A., Nicol T., Zecker S., Kraus N., Abnormal cortical processing of the syllable rate of speech in poor readers. J. Neurosci. 29, 7686–7693 (2009).

- 52. Müller J. A., Wendt D., Kollmeier B., Debener S., Brand T., Effect of speech rate on neural tracking of speech. Front. Psychol. 10, 449 (2019).

- 53. Allman M. J., Meck W. H., Pathophysiological distortions in time perception and timed performance. Brain 135, 656–677 (2012).

- 54. Smith N. J., Kutas M., Regression-based estimation of ERP waveforms: II. Nonlinear effects, overlap correction, and practical considerations. Psychophysiology 52, 169–181 (2015).

- 55. Brainard D. H., The psychophysics toolbox. Spat. Vis. 10, 433–436 (1997).

- 56. Pelli D. G., The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spat. Vis. 10, 437–442 (1997).

- 57. Delorme A., Makeig S., EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21 (2004).

- 58. Lopez-Calderon J., Luck S. J., ERPLAB: An open-source toolbox for the analysis of event-related potentials. Front. Hum. Neurosci. 8, 213 (2014).

- 59. Reichel L., Ye Q., Simple square smoothing regularization operators. Electron. Trans. Numer. Anal. 33, 63–83 (2008).

- 60. Maris E., Oostenveld R., Nonparametric statistical testing of EEG- and MEG-data. J. Neurosci. Methods 164, 177–190 (2007).

- 61. Oostenveld R., Fries P., Maris E., Schoffelen J.-M., FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput. Intell. Neurosci. 2011, 156869 (2011).

- 62. Cumming G., The new statistics: Why and how. Psychol. Sci. 25, 7–29 (2014).

- 63. Olejnik S., Algina J., Generalized eta and omega squared statistics: Measures of effect size for some common research designs. Psychol. Methods 8, 434–447 (2003).

- 64. Hassall C. D., Harley J., Kolling N., Hunt L. T., Temporal scaling. OpenNeuro. 10.18112/openneuro.ds004200.v1.0.1. Deposited 6 October 2022.

- 65. Hassall C. D., Harley J., Kolling N., Hunt L. T., CCNHuntLab: temporal scaling. GitHub. https://github.com/CCNHuntLab/temporalscaling. Deposited 31 August 2022.
