Significance

Sensory input is continuous and yet humans perceive discrete events. This event segmentation has been studied in adults by asking them to indicate natural breaks in continuous input. This classic parsing task is impossible in infants, who cannot understand or follow instructions. We circumvent this barrier by testing how the infant brain parses experience. We applied a computational model to rare awake functional MRI data from infants to identify how their brains transitioned between stable states during a cartoon. Whereas adults showed a gradient in event timescales, from shorter events in sensory regions to longer events in associative regions, infants persistently segmented fewer, longer events across the cortical hierarchy. These findings provide neuroscientific insight into how infants represent their environment.

Keywords: event cognition, early development, timescale hierarchy, naturalistic movies, fMRI

Abstract

How infants experience the world is fundamental to understanding their cognition and development. A key principle of adult experience is that, despite receiving continuous sensory input, we perceive this input as discrete events. Here we investigate such event segmentation in infants and how it differs from adults. Research on event cognition in infants often uses simplified tasks in which (adult) experimenters help solve the segmentation problem for infants by defining event boundaries or presenting discrete actions/vignettes. This presupposes which events are experienced by infants and leaves open questions about the principles governing infant segmentation. We take a different, data-driven approach by studying infant event segmentation of continuous input. We collected whole-brain functional MRI (fMRI) data from awake infants (and adults, for comparison) watching a cartoon and used a hidden Markov model to identify event states in the brain. We quantified the existence, timescale, and organization of multiple-event representations across brain regions. The adult brain exhibited a known hierarchical gradient of event timescales, from shorter events in early visual regions to longer events in later visual and associative regions. In contrast, the infant brain represented only longer events, even in early visual regions, with no timescale hierarchy. The boundaries defining these infant events only partially overlapped with boundaries defined from adult brain activity and behavioral judgments. These findings suggest that events are organized differently in infants, with longer timescales and more stable neural patterns, even in sensory regions. This may indicate greater temporal integration and reduced temporal precision during dynamic, naturalistic perception.

From the moment we are born, our sensory systems are bombarded with information. Infants must make sense of this input to learn regularities in their environment (1, 2) and remember objects and events (3, 4). How infants overcome this perceptual challenge has consequences for other cognitive abilities, such as social competence and language (5). Adults segment continuous experience into meaningful events (6), both online (7, 8) and after the fact (9). This occurs automatically and at multiple timescales (10), allowing us to perceive the passage of long events (e.g., a talk from a visiting scientist) and to differentiate or integrate shorter events that compose them (e.g., an impressive results slide or a funny anecdote). These multiple timescales of event perception can be modulated by attentional states (11) and goals (10) to support adaptive decision making and prediction (12). Thus, characterizing event segmentation in infants and how it relates to that of adults is important for understanding infant perception and cognition.

Research on infant event perception has found that infants are sensitive to complex event types such as human actions (2, 13, 14) and cartoons (4, 15). Infants recognize the similarity between target action segments and longer sequences that contain them, with greater sensitivity to discrete events (e.g., an object being occluded) than to transitions between events (e.g., an object sliding along the ground) (16, 17). Such findings suggest that infants not only segment experience at a basic sensory level, in reaction to changes in low-level properties, but also are capable of segmenting at a more abstract level like adults. Behavioral measures such as looking time have expanded our understanding of infant event processing. Yet, such measures provide indirect evidence and may reflect multiple different underlying cognitive processes. For example, the same amount of looking to a surprising event could reflect novelty detection or visual memory for components of the event (18). Thus, infant researchers are increasingly using neural measures such as electroencephalography (EEG) to study infant event processing. These studies find differences in brain activity to pauses that disrupt events versus coincide with event boundaries (19–21). In sum, there is rich evidence across paradigms that infants are capable of event segmentation and that this guides their processing of ongoing experience.

However, a limitation in the current literature on infant event segmentation is that it relies on boundaries determined by (adult) experimenters. This reflects an unstated or unintended assumption that infants experience the same event boundaries as adults, which may obscure events that are specific to either age group. Because behavioral research with infants tests their looking preferences after part versus whole events (13, 16), it would be infeasible to test all possible boundary locations and event durations. Brain imaging has the potential to contribute to our understanding by circumventing this limitation. Although most infant EEG studies have followed the behavioral work by introducing pauses at or between predetermined event boundaries, some adult EEG studies have found signatures of event segmentation during a continuous sequence of images (22) or a movie (23). Although similar studies could be performed in infants, EEG may not have the spatial resolution or sensitivity away from the scalp to recover event representations in subcortical structures such as the hippocampus (24) and midline regions such as the precuneus and medial prefrontal cortex (25), each of which has been shown to play a distinct role in adult event segmentation (26).

Functional MRI (fMRI) has proved effective at capturing event representations in adults during continuous, naturalistic experience (27). In one fMRI approach, behavioral boundaries from an explicit parsing task are used as event markers to model fMRI activity during passive movie watching. Regions such as the superior temporal sulcus and middle temporal area respond to events at different timescales, with larger responses at coarser boundaries (8, 28, 29). Other brain regions respond transiently to different types of event changes, such as character changes in the precuneus and spatial changes in the parahippocampal gyrus (8). However, applied to infants, this approach would suffer the aforementioned limitation of adult experimenters predetermining event boundaries. An alternative fMRI approach discovers events in a data-driven manner from brain activity (25, 30), using an unsupervised computational model to learn stable neural patterns during movie watching. This model can be fit to different brain regions to discover a range of event timescales. In adults, sensory regions process shorter events, whereas higher-order regions process longer events, mirroring the topography of temporal receptive windows (31–33). Moreover, event boundaries in the precuneus and posterior cingulate best match narrative changes in the movie (25). Thus, fMRI could reveal fundamental aspects of infant event perception that cannot otherwise be accessed easily (34–38).

In this study, we collected movie-watching fMRI data from infants in their first year to investigate the early development of event perception during continuous, naturalistic experience. We also collected fMRI data from adults watching the same movie. We first asked whether the movie was processed reliably across infants using an intersubject correlation analysis (39). By comparing each infant to the average of the others, this analysis isolates the component of fMRI activity shared across infants from participant-specific responses and noise (40). Eye movements during movie watching are less consistent in infants than in adults (41, 42), and thus it was not a given that infant neural activity would be reliable during the movie. After establishing this reliability, we then asked whether and how infants segmented the movie into events using a data-driven computational model. As a comparison and to validate our methods, we first performed this analysis on adult data. We attempted to replicate previous work showing a hierarchical gradient of timescales in event processing across regions in the adult brain, with more/shorter events in early sensory regions at the bottom of the hierarchy and fewer/longer events in associative regions at the top of the hierarchy. This itself was an open question and extension of prior studies because the infant-friendly movie we used was animated rather than live action and was much shorter in length, reducing the range of possible event durations and the amount of data and statistical power.

With this adult comparison in hand, we tested three hypotheses about event segmentation in the infant brain. The first hypothesis is that infants possess an adult-like hierarchy of event timescales across the brain. This would fit with findings that aspects of adult brain function, including resting-state networks (43), are present early in infancy. The second hypothesis is that the infant brain shows a bias to segment events at shorter timescales than the adult brain. This would result in a flatter hierarchy in which higher-order brain regions are structured into a greater number of events, akin to adult early visual cortex. Such a pattern would fit with findings that sensory regions mature early in development (38) and may provide bottom–up input that dominates the function of (comparatively immature) higher-order regions. This pattern could also result from the shorter attention span of infants (44). The third hypothesis is that the infant brain shows a bias to segment events at longer timescales than the adult brain. This would also result in a flatter hierarchy, just in reverse, with fewer events in sensory regions, akin to higher-order regions in adults. This could reflect attentional limitations that reduce the number of sensory transients perceived by infants (45), attentional inertia that sustains engagement through changes (46), or greater temporal integration in infant visual (47, 48) and multisensory processing (49, 50). We adjudicate these hypotheses and provide detailed comparisons between infant and adult event boundaries.

Results

Intersubject Correlation Reveals Reliable Neural Responses in Infants.

We scanned infants (n = 24; 3.6 to 12.7 mo) and adults (n = 24; 18 to 32 y) while they watched a short, silent movie (“Aeronaut”) that had a complete narrative arc. To investigate the consistency of infants’ neural responses during movie watching, we performed leave-one-out intersubject correlation (ISC), in which the voxel activity of each individual participant was correlated with the average voxel activity of all other participants (39). This analysis was performed separately in adults and infants for every voxel in the brain and then averaged within eight regions of interest (ROIs), spanning from early visual cortex (EVC) to later visual regions (lateral occipital cortex [LOC]), higher-order associative regions (angular gyrus [AG], posterior cingulate cortex [PCC], precuneus, and medial prefrontal cortex [mPFC]), and the hippocampus. Because the movie was silent, we used early auditory cortex (EAC) as a control region.
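Leave-one-out ISC as described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' pipeline: it assumes each participant's data are already preprocessed and aligned to a common space and stacked in a hypothetical array `data` of shape (participants, timepoints, voxels), and that an ROI is given as a hypothetical boolean voxel mask. Voxel-wise values are computed first and then averaged within the ROI, mirroring the order of operations in the text.

```python
import numpy as np


def leave_one_out_isc(data):
    """Correlate each participant with the mean of all others, voxel by voxel.

    data: array of shape (n_participants, n_timepoints, n_voxels).
    Returns an array of shape (n_participants, n_voxels).
    """
    n_sub = data.shape[0]
    total = data.sum(axis=0)
    isc = np.empty((n_sub, data.shape[2]))
    for s in range(n_sub):
        others = (total - data[s]) / (n_sub - 1)  # average of the remaining participants
        a = data[s] - data[s].mean(axis=0)
        b = others - others.mean(axis=0)
        isc[s] = (a * b).sum(axis=0) / np.sqrt((a ** 2).sum(axis=0) * (b ** 2).sum(axis=0))
    return isc


def roi_isc(isc, roi_mask):
    """Average voxel-wise ISC within an ROI (boolean mask over voxels), per participant."""
    return isc[:, roi_mask].mean(axis=1)


# Toy example: 10 voxels share a movie-driven signal, 10 voxels are pure noise.
rng = np.random.default_rng(0)
shared = rng.normal(size=(200, 10))
data = np.concatenate(
    [shared + 0.5 * rng.normal(size=(10, 200, 10)), rng.normal(size=(10, 200, 10))], axis=2
)
isc = leave_one_out_isc(data)
mask = np.arange(20) < 10
print(roi_isc(isc, mask).mean(), roi_isc(isc, ~mask).mean())
```

Averaging the other participants before correlating is what isolates the shared, stimulus-driven component: idiosyncratic noise in the held-out participant is uncorrelated with the group average, so it lowers ISC rather than inflating it.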

Whole-brain ISC was highest in visual regions in adults (Fig. 1A), similar to prior studies with movies (39, 51). That said, all eight ROIs were statistically significant in adults (EVC, M = 0.498, CI [0.446, 0.541], P < 0.001; LOC, M = 0.430, CI [0.391, 0.466], P < 0.001; AG, M = 0.094, CI [0.058, 0.127], P < 0.001; PCC, M = 0.143, CI [0.097, 0.190], P < 0.001; precuneus, M = 0.160, CI [0.128, 0.193], P < 0.001; mPFC, M = 0.053, CI [0.032, 0.077], P < 0.001; hippocampus, M = 0.047, CI [0.032, 0.062], P < 0.001; EAC, M = 0.081, CI [0.044, 0.115], P < 0.001; Fig. 1B). Broad ISC in these regions is consistent with what has been found in prior studies that used longer movies. The one surprise was EAC, given that the movie was silent (see Discussion for potential explanations).

Fig. 1.

Average leave-one-out ISC in adults and infants. (A) Voxel-wise ISC values in the two groups, thresholded arbitrarily at a mean correlation value of 0.10 to visualize the distribution across the whole brain. (B) ISC values were significant in both adults and infants across ROIs (except EAC in infants). Dots represent individual participants and error bars represent 95% CIs of the mean from bootstrap resampling. ***P < 0.001, **P < 0.01, *P < 0.05. ROIs: EVC, LOC, AG, PCC, precuneus (Prec), mPFC, hippocampus (Hipp), and EAC.

ISC was weaker overall in infants than in adults, but again higher in visual regions than in other regions. All ROIs except EAC were statistically significant in infants (EVC, M = 0.290, CI [0.197, 0.379], P < 0.001; LOC, M = 0.206, CI [0.135, 0.275], P < 0.001; AG, M = 0.079, CI [0.030, 0.133]; PCC, M = 0.087, CI [0.028, 0.154]; precuneus, M = 0.073, CI [0.030, 0.118], P < 0.001; mPFC, M = 0.073, CI [0.017, 0.124]; hippocampus, M = 0.059, CI [0.012, 0.113]; EAC, M = 0.013, CI [–0.018, 0.039]). ISC was lower in infants than in adults in EVC (difference M = 0.208, permutation P < 0.001), LOC (M = 0.224, P < 0.001), precuneus (M = 0.087), and EAC (M = 0.068); all other regions did not differ between groups (AG, M = 0.015; PCC, M = 0.056; mPFC; hippocampus). In sum, there is strong evidence of a shared response across infants, not just in visual regions, but also in regions involved in narrative processing in adults. This shared response was specific to the timing of the movie, with higher ISC values when timecourses were aligned vs. shifted in time (SI Appendix, Fig. S1A), and specific to the content of the movie, with higher ISC values for comparisons of the same vs. different movies (SI Appendix, Fig. S1B).
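The statistics reported here (means with bootstrapped 95% CIs, and permutation P values for group differences) can be sketched as follows. This is an assumption-laden illustration, not the authors' exact procedure: the function names and the toy per-participant ROI values are hypothetical, and the permutation test shuffles per-participant summary values. A stricter test for ISC would recompute ISC within each shuffled group, because each participant's leave-one-out value depends on who else is in the group.

```python
import numpy as np


def bootstrap_ci(values, n_boot=10000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the mean, resampling participants with replacement."""
    rng = np.random.default_rng(seed)
    values = np.asarray(values, dtype=float)
    idx = rng.integers(0, len(values), size=(n_boot, len(values)))
    boot_means = values[idx].mean(axis=1)
    lo, hi = np.quantile(boot_means, [alpha / 2, 1 - alpha / 2])
    return float(lo), float(hi)


def group_permutation_test(a, b, n_perm=10000, seed=0):
    """Two-sided permutation test on the difference in group means (a minus b)."""
    rng = np.random.default_rng(seed)
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    observed = a.mean() - b.mean()
    pooled = np.concatenate([a, b])
    exceed = 0
    for _ in range(n_perm):
        shuffled = rng.permutation(pooled)  # reassign group labels at random
        diff = shuffled[: len(a)].mean() - shuffled[len(a):].mean()
        exceed += abs(diff) >= abs(observed)
    p = (exceed + 1) / (n_perm + 1)  # count the observed labeling as one permutation
    return float(observed), p


# Hypothetical per-participant ROI ISC values for two groups of 24.
rng = np.random.default_rng(1)
adult_roi_isc = rng.normal(0.50, 0.05, size=24)
infant_roi_isc = rng.normal(0.29, 0.20, size=24)
print(bootstrap_ci(adult_roi_isc))
print(group_permutation_test(adult_roi_isc, infant_roi_isc, n_perm=2000))
```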

Flattened Hierarchical Gradient of Event Timescales in the Infant Brain.

Given that infants process the movie in a similar way to each other, we next asked whether their neural activity contains evidence of event segmentation, as in adults. Our analysis tested whether infant brains transition through discrete event states characterized by stable voxel activity patterns, which then shift into new stable activity patterns at event boundaries. We used a computational model to characterize the stable neural event patterns of infant and adult brains (25). We analyzed the data from infant and adult groups separately. Within each group, we repeatedly split the data in half, with one set of participants forming a training set and the other a test set. We learned the model on the training set using a range of event numbers from 2 to 21 and then applied it to the test set. Model fit was assessed by the log probability of the test data according to the event segmentation that was learned (i.e., log-likelihood). In a searchlight analysis, we assigned to each voxel the number of events that maximized the log-likelihood of the model for activity patterns from surrounding voxels across test iterations. In an ROI analysis, the patterns were defined from all voxels in each ROI.
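The event segmentation model (25) is a hidden Markov model in which each event is a stable spatial activity pattern and, at each timepoint, the brain either stays in the current event or advances to the next. The sketch below is a deliberately simplified stand-in, not the model used in this study: it assumes isotropic Gaussian emissions, a fixed stay probability (`p_stay = 0.9`, an arbitrary choice), and hard (Viterbi) EM instead of the soft EM of the original model. All function names are hypothetical, and `train` and `test` stand for averaged ROI timecourses (timepoints × voxels) from the two halves of participants. Fuller implementations exist, for example the `EventSegment` class in the BrainIAK toolkit.

```python
import numpy as np


def _log_emissions(data, patterns, var):
    """Isotropic Gaussian log-density of each timepoint under each event pattern, (T, K)."""
    sq_dist = ((data[:, None, :] - patterns[None, :, :]) ** 2).sum(axis=2)
    return -0.5 * data.shape[1] * np.log(2 * np.pi * var) - sq_dist / (2 * var)


def heldout_loglik(data, patterns, var, p_stay=0.9):
    """Forward algorithm: log p(data), summing over every left-to-right path that
    starts in the first event and ends in the last one."""
    emit = _log_emissions(data, patterns, var)
    log_stay, log_adv = np.log(p_stay), np.log(1 - p_stay)
    alpha = np.full(len(patterns), -np.inf)
    alpha[0] = emit[0, 0]
    for t in range(1, len(data)):
        advanced = np.concatenate(([-np.inf], alpha[:-1] + log_adv))
        alpha = np.logaddexp(alpha + log_stay, advanced) + emit[t]
    return float(alpha[-1])


def viterbi_labels(data, patterns, var, p_stay=0.9):
    """Most likely event label for each timepoint (a hard segmentation)."""
    emit = _log_emissions(data, patterns, var)
    n_t, k = emit.shape
    log_stay, log_adv = np.log(p_stay), np.log(1 - p_stay)
    delta = np.full(k, -np.inf)
    delta[0] = emit[0, 0]
    came_from_prev = np.zeros((n_t, k), dtype=bool)  # True = entered this event at t
    for t in range(1, n_t):
        stay = delta + log_stay
        advanced = np.concatenate(([-np.inf], delta[:-1] + log_adv))
        came_from_prev[t] = advanced > stay
        delta = np.maximum(stay, advanced) + emit[t]
    labels = np.empty(n_t, dtype=int)
    event = k - 1  # the path must end in the last event
    for t in range(n_t - 1, -1, -1):
        labels[t] = event
        if came_from_prev[t, event]:
            event -= 1
    return labels


def fit_events(train, k, p_stay=0.9, n_iter=10):
    """Hard-EM fit of k event patterns, initialized with equal-length events."""
    n_t = len(train)
    labels = np.arange(n_t) * k // n_t
    for _ in range(n_iter):
        patterns = np.stack([train[labels == e].mean(axis=0) for e in range(k)])
        var = max(float(np.mean((train - patterns[labels]) ** 2)), 1e-6)
        labels = viterbi_labels(train, patterns, var, p_stay)
    return patterns, var


def best_num_events(train, test, k_values=range(2, 22)):
    """Number of events whose training fit gives the highest held-out log-likelihood."""
    scores = {k: heldout_loglik(test, *fit_events(train, k)) for k in k_values}
    return max(scores, key=scores.get), scores


# Toy data: three events of 15, 25, and 20 TRs; independent noise in train and test halves.
rng = np.random.default_rng(0)
truth = np.repeat([0, 1, 2], [15, 25, 20])
true_patterns = rng.normal(size=(3, 20))
train = true_patterns[truth] + 0.5 * rng.normal(size=(60, 20))
test = true_patterns[truth] + 0.5 * rng.normal(size=(60, 20))
k_best, scores = best_num_events(train, test)
print(k_best)
```

Scoring each candidate number of events on held-out participants, rather than on the data used for fitting, is what keeps the model from simply preferring more events: extra events fit training noise and are penalized when applied to independent data.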

In adults, we replicated previous work showing a hierarchical gradient of event timescales across cortex, with more/shorter events in early visual compared to higher-order associative regions (Fig. 2A). Qualitative inspection revealed that boundaries in EVC seemed to correspond to multiple types of visual changes in the movie (e.g., between camera angles, viewpoints of main characters), while precuneus boundaries included major plot points (e.g., arrival of pilot, flying machine breaking, return of blueprint that fixes machine). In contrast, infants did not show strong evidence of a hierarchical gradient. In fact, the model performed optimally with fewer/longer events across the brain (Fig. 2B). Although the timing of infant EVC boundaries differed from that for adults, the infant precuneus boundary was part of the set of adult precuneus boundaries (corresponding to the flying machine breaking). These findings support the third hypothesis of infants being biased to longer event timescales throughout the cortical hierarchy.

Fig. 2.

Event structure across the adult and infant brain. (A) The optimal number of events for a given voxel was determined via a searchlight across the brain, which found the number of events that maximized the model log-likelihood in held-out data. Voxels with an average ISC value greater than 0.10 are plotted for visualization purposes. In adults, there was a clear difference in the number of events found in early visual regions vs. higher-order associative regions, but this was not present in infants. Instead, there was a flattened hierarchy in the infant brain, with fewer/longer events in both early visual and associative regions. (B) Example timepoint-by-timepoint correlation matrices in EVC and precuneus in the two groups. The model event boundaries found for each age group are outlined in red (EVC) and aqua (precuneus).

Coarser but Reliable Event Structure across Brain Regions in Infants.

The above analysis provides a qualitative description of the timescale of event processing in the infant brain. However, comparing the relative model fits for different timescales does not allow us to assess whether the model fit at the optimal timescale is significantly above chance. Furthermore, it does not give us a sense of the reliability of these results and whether they generalize to unseen participants. To quantify whether these learned events truly demarcated state changes in neural activity patterns, we used nested cross-validation. For each ROI, we followed the steps above for finding the optimal number of events, but critically held one participant out of the analysis completely (and iterated so each participant was held out once). On each leave-one-participant-out iteration, the number of optimal events in the remaining training participants could vary; the held-out participant had no impact on the event model that was learned. The model with the optimal event structure in the training participants was then fit to the held-out participant’s data. To establish a null distribution, we rotated the held-out participant’s data in time. A z score of the log-likelihood for the actual result versus the null distribution was calculated to determine whether the event structure learned by the model generalized to a new participant better than chance (Fig. 3A). This analysis can tell us whether the smaller number of events observed in infants reflects true differences in processing granularity between adults and infants or results from combining across infants who have idiosyncratic event structures.
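The time-rotation null can be sketched independently of any particular event model. In this minimal sketch, `score` is a hypothetical callable standing in for "the log-likelihood of a model trained on the other participants, evaluated on this participant's data"; circularly shifting the held-out timecourse preserves its autocorrelation and spatial structure while breaking its alignment with the learned events. The toy scorer and template below are illustrative assumptions only.

```python
import numpy as np


def rotation_null_z(score, data, n_perm=1000, seed=0):
    """z score of score(data) against circularly shifted copies of data.

    score: callable mapping a (timepoints, voxels) array to a scalar, higher = better fit.
    """
    rng = np.random.default_rng(seed)
    n_t = data.shape[0]
    real = score(data)
    shifts = rng.integers(1, n_t, size=n_perm)  # nonzero shifts, so timing is always broken
    null = np.array([score(np.roll(data, s, axis=0)) for s in shifts])
    return float((real - null.mean()) / null.std())


# Toy check: a hypothetical "model" that expects three events of fixed length and level.
template = np.repeat([0.0, 3.0, -2.0], [20, 25, 15])[:, None]
rng = np.random.default_rng(0)
heldout = template + 0.3 * rng.normal(size=template.shape)
z = rotation_null_z(lambda x: -np.sum((x - template) ** 2), heldout)
print(round(z, 2))
```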

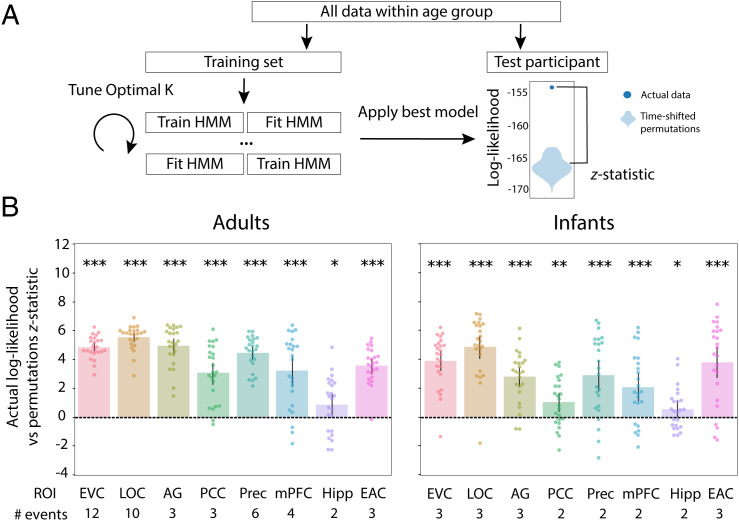

Fig. 3.

Nested cross-validation of adult and infant age groups. (A) Schematic explaining the nested cross-validation procedure for computing the reliability of event segmentation. (B) Across ROIs and for both adults and infants, the model fit the real held-out data better than time-rotated (permuted) held-out data. The number of events that optimized model log-likelihood in the full sample of participants is labeled below the x axis. Dots represent individual participants and error bars represent 95% CIs of the mean from bootstrap resampling. ***P < 0.001, **P < 0.01, *P < 0.05.

Overall, the models for different ROIs reliably fit independent data (Fig. 3B). All ROIs were significant in adults (EVC, M = 4.85, CI [4.55, 5.16], P < 0.001; LOC, M = 5.53, CI [5.18, 5.86], P < 0.001; AG, M = 4.94, CI [4.38, 5.43], P < 0.001; PCC, M = 3.07, CI [2.27, 3.77], P < 0.001; precuneus, M = 4.46, CI [4.02, 4.91], P < 0.001; mPFC, M = 3.23, CI [2.21, 4.20], P < 0.001; hippocampus, M = 0.907, CI [0.147, 1.64]; EAC, M = 3.60, CI [3.06, 4.06], P < 0.001). This was also true for infants (EVC, M = 3.89, CI [3.14, 4.54], P < 0.001; LOC, M = 4.87, CI [4.05, 5.55], P < 0.001; AG, M = 2.83, CI [2.13, 3.53], P < 0.001; PCC, M = 1.05, CI [0.406, 1.68]; precuneus, M = 2.91, CI [1.79, 3.93], P < 0.001; mPFC, M = 2.08, CI [1.13, 3.03], P < 0.001; hippocampus, M = 0.567, CI [0.071, 1.11]; EAC, M = 3.83, CI [2.67, 4.89], P < 0.001). This analysis confirms that the longer event timescales we observed across the cortical hierarchy in infants are reliable.

Infant data are often noisier than adult data (35), so we took additional steps to verify that the longer events in infants were not a mere byproduct of this noise. In particular, we simulated fMRI data with different levels of noise and then fit the event segmentation model at each level. The model tended to overestimate the optimal number of events (i.e., more/shorter events) as noise increased (SI Appendix, Fig. S2A), regardless of the shape of the neural data assumed (SI Appendix, Fig. S2B). This suggests that the smaller number of longer events in infants does not result from increased noise per se.

Although infants did not fixate the movie as much as adults, we could not find evidence that this was responsible for their fewer/longer neural events. In a reanalysis of the few infants who maintained fixation throughout the movie (n = 4), we obtained the same optimal number/duration of neural events as in the full sample in EVC (3), PCC (2), precuneus (2), mPFC (2), hippocampus (2), and EAC (3) and very similar number/duration of neural events in LOC (2 vs. 3) and AG (2 vs. 3). Moreover, in a split-half analysis of infants based on the proportion of movie frames that were fixated, infants with below-median fixation and above-median fixation showed nearly identical log-likelihoods of the hidden Markov model (HMM) fits across a range of different event numbers/durations (SI Appendix, Fig. S3).

Relationship between Adult and Infant Event Structure.

The optimal number of events for a given region differs across adults and infants, but this does not necessarily mean that the patterns of neural activity are unrelated. The coarser event structure in infants may still be present in the adult brain, with their additional events carving up these longer events more finely. Conversely, the finer event structure in adults may still be developing in the infant brain, such that it may be present but have a less optimal fit. We thus investigated whether event structure from one group could explain the neural activity of individual participants in the other group (Fig. 4). We compared this across-group prediction to a baseline of how well other members of the same group could explain an individual’s neural activity. If event structure better explains neural data from the same age group compared to the other age group, then we can conclude that event structures differ between age groups.

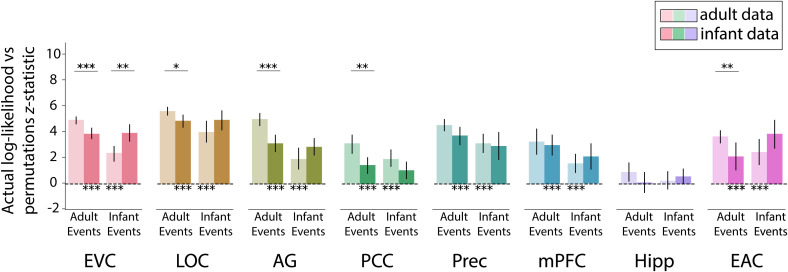

Fig. 4.

Reliability of event structure for models learned on participants of the same vs. other age group. Light bars indicate fit of adult and infant event structures to adult data, and dark bars indicate fit of adult and infant event structures to infant data. Note that the fits to the same group (adult events in adults, infant events in infants) are simply replotted from Fig. 3, without duplicating the statistics. Overall, event structures learned from adults and infants fit data from the other group (clearest in EVC and LOC). However, in several regions, these fits were weaker than to data from the same group (clearest in EVC, LOC, AG, PCC, and EAC for adult events and in EVC for infant events). Error bars represent 95% CIs of the mean from bootstrap resampling. ***P < 0.001, **P < 0.01, *P < 0.05.

When event segmentation models fit to adults were applied to infant neural activity, all ROIs except hippocampus showed significant model fit over permutations (EVC, M = 3.81, CI [3.40, 4.26], P < 0.001; LOC, M = 4.82, CI [4.30, 5.29], P < 0.001; AG, M = 3.12, CI [2.45, 3.72], P < 0.001; PCC, M = 1.43, CI [0.796, 2.03], P < 0.001; precuneus, CI [2.93, 4.34], P < 0.001; mPFC, M = 2.95, CI [2.18, 3.73], P < 0.001; hippocampus, M = 0.106, CI [–0.659, 0.892]; EAC, M = 2.12, CI [1.03, 3.18], P < 0.001). This suggests that although infants and adults had a different optimal number of events in these regions, there was some overlap in their event representations. In many of these regions, models trained on adults showed significantly better fit to adults than to infants (EVC, M = 1.04, CI [0.490, 1.56], P < 0.001; LOC, M = 0.715, CI [0.152, 1.31]; AG, M = 1.82, CI [0.952, 2.65], P < 0.001; PCC, CI [0.691, 2.68]; precuneus, M = 0.781, CI [–0.024, 1.61]; EAC, M = 1.48, CI [0.385, 2.65]), suggesting that adult-like event structure is still developing in these regions. Indeed, how well adult event structure fit an infant was related to infant age in LOC. No other ROI showed a reliable relationship with age, although we had a relatively small sample and a truncated age range for evaluating individual differences.

When event segmentation models learned from infants were applied to adult neural activity, all ROIs except hippocampus showed significant model fit over permutations (EVC, M = 2.33, CI [1.73, 2.89], P < 0.001; LOC, M = 3.99, CI [3.14, 4.81], P < 0.001; AG, M = 1.90, CI [1.08, 2.75], P < 0.001; PCC, M = 1.93, CI [1.33, 2.63], P < 0.001; precuneus, M = 3.08, CI [2.35, 3.84], P < 0.001; mPFC, M = 1.59, CI [0.846, 2.31]; hippocampus, M = 0.215, CI [–0.433, 0.941]; EAC, M = 2.41, CI [1.46, 3.42], P < 0.001). Infant event models explained infant data better than adult data only in EVC (M = 1.56, CI [0.585, 2.42]). Intriguingly, infant event models showed better fit to adult than to infant neural activity in PCC, although the effect did not reach statistical significance (CI [–1.84, 0.126]).

Altogether, the finding that events from one age group significantly fit data from the other age group shows that infant and adult event representations are related in all regions except the hippocampus. Nonetheless, the better fit in some ROIs when applying events from one age group to neural activity from the same vs. other age group provides evidence that these event representations are not the same and may in fact change over development, compatible with the second and third hypotheses that the infant brain segments experience differently than the adult brain.

Expression of Behavioral Event Boundaries.

We took a brain-based, data-driven approach to discovering event representations in adults and infants, but how do these neural event representations relate to behavior? The behavioral task of asking participants to explicitly parse or annotate a movie is not possible in infants. However, in adults such annotations of high-level scene changes have been shown to align with neural event boundaries in the adult AG, precuneus, and PCC (25). Given that we found that adult neural event boundaries in these same regions significantly fit infant data, we tested whether adult behavioral event boundaries might also be reflected in the infant brain.

We collected behavioral event segmentation data from 22 independent adult participants who watched “Aeronaut” while identifying salient boundaries (24) (Fig. 5A). Participants were not instructed to annotate at any particular timescale and were simply asked to indicate when it felt like a new event occurred. We quantified the fit of behavioral boundaries to neural activity by calculating the difference in pattern similarity between two timepoints within vs. across boundaries, equating temporal distance. Results were weighted by the number of unique timepoint pairs that made up the smaller group of correlations (e.g., close to the boundary, there are fewer across-event pairs than within-event pairs). A more conservative approach considered timepoint pairs within vs. across event boundaries anchored to the same timepoint (SI Appendix, Fig. S4). To the extent that behavioral segmentation manifested in the event structure of a region, we expected greater neural similarity for timepoints within events.
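The within-minus-across comparison can be sketched as follows. This is a minimal version under stated assumptions, not the authors' code: `data` is a hypothetical (timepoints × voxels) ROI activity array for one participant, `boundaries` lists the TRs at which a new behavioral event begins, and pattern similarity is Pearson correlation between timepoints. Temporal distance is equated by comparing within- and across-event pairs only at the same lag, and each lag is weighted by the size of the smaller group of pairs, as described above; the more conservative anchored variant is not shown.

```python
import numpy as np


def within_minus_across(data, boundaries):
    """Weighted within-event minus across-event pattern similarity.

    data: (timepoints, voxels) ROI activity; boundaries: TR indices where a new event begins.
    """
    n_t = len(data)
    labels = np.zeros(n_t, dtype=int)
    for b in boundaries:
        labels[b:] += 1  # event index of every timepoint
    corr = np.corrcoef(data)  # timepoint-by-timepoint pattern correlation, (T, T)
    diffs, weights = [], []
    for d in range(1, n_t):
        t = np.arange(n_t - d)
        same_event = labels[t] == labels[t + d]
        if same_event.all() or not same_event.any():
            continue  # need both within- and across-event pairs at this lag
        sims = corr[t, t + d]
        diffs.append(sims[same_event].mean() - sims[~same_event].mean())
        weights.append(min(same_event.sum(), (~same_event).sum()))
    return float(np.average(diffs, weights=weights))


# Toy data: three 20-TR events with distinct patterns plus noise.
rng = np.random.default_rng(0)
patterns = rng.normal(size=(3, 50))
event_of_tr = np.repeat([0, 1, 2], 20)
roi = patterns[event_of_tr] + rng.normal(size=(60, 50))
print(within_minus_across(roi, [20, 40]))  # structured data: clearly positive
print(within_minus_across(rng.normal(size=(60, 50)), [20, 40]))  # pure noise: near zero
```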

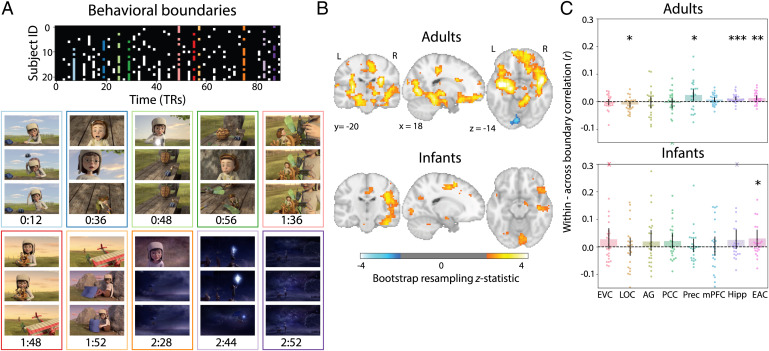

Fig. 5.

Relating behavioral boundaries to neural activity. (A) Matrix showing which behavioral participants indicated the presence of an event boundary at each TR in the movie. The 10 TRs with the highest percentage of agreement robust to response time adjustment were used as event boundaries (colored columns; see Materials and Methods). Movie frames from the TR before, during, and after each event boundary are depicted below for qualitative inspection. (B) Whole-brain searchlight analysis for each age group comparing pattern similarity between timepoints drawn from within vs. across behavioral event boundaries. Bootstrapped z scores are thresholded at , uncorrected. (C) ROI analysis of difference in pattern similarity within minus across behavioral events. Dots represent individual participants and error bars represent 95% CIs of the mean from bootstrap resampling. One adult participant with a value beyond the y axis range for PCC is indicated with an X at the negative edge. Infant participants with values beyond the y axis range for EVC and hippocampus are indicated with Xs at the positive edge. ***P < 0.001, **P < 0.01, *P < 0.05.

We performed this analysis for both whole-brain searchlights and ROIs. In adults, the searchlight revealed greater pattern similarity within vs. across behavioral event boundaries throughout the brain, including visual regions, medial frontal cortex, bilateral hippocampus, supramarginal gyrus, and posterior cingulate (Fig. 5B). This generally fits with previous work showing that neural events in visual and semantic regions can relate to behavioral boundaries (25). For the ROIs (Fig. 5C), we found significantly greater pattern similarity within vs. across behavioral boundaries in precuneus (M = 0.024, CI [0.003, 0.045]), hippocampus (M = 0.011, CI [0.004, 0.018], P < 0.001), and EAC (M = 0.013, CI [0.004, 0.022]), as well as significantly less pattern similarity within vs. across behavioral boundaries in LOC (CI [–0.022, –0.001]); the other regions were insensitive to the behavioral boundaries (EVC, CI [–0.013, 0.008]; AG, CI [–0.025, 0.018]; PCC, CI [–0.025, 0.013]; mPFC, M = 0.007, CI [–0.002, 0.015]). Given that most of the nonsignificant ROIs in this analysis showed reliable event segmentation overall (Fig. 3B), the behavioral event boundaries may have been misaligned with neural boundaries, for example because of anticipation (52), or may have been focused on a particular timescale that could have been modified through instructions.

In infants, several regions showed greater pattern similarity within vs. across behavioral boundaries in the searchlight analysis, including visual regions, supramarginal gyrus, posterior and anterior cingulate, and right lateral frontal cortex. In the ROIs, there was significantly greater pattern similarity within vs. across behavioral boundaries in EAC (M = 0.030, CI [0.001, 0.062]); the other regions were insensitive to the behavioral boundaries (EVC, M = 0.027, CI [–0.007, 0.068]; LOC, CI [–0.033, 0.031]; AG, M = 0.019, CI [–0.014, 0.060]; PCC, M = 0.021, CI [–0.003, 0.049]; precuneus, M = 0.003, CI [–0.019, 0.029]; mPFC, M = 0.000, CI [–0.032, 0.037]; hippocampus, M = 0.025, CI [–0.006, 0.062]). Expression of behavioral boundaries in neural event structure in EAC was also related to infants’ age (r = 0.326). This is especially striking because adult behavioral segmentation also manifested in adult EAC. Thus, infants can have neural representations related to how adults explicitly segment a movie, long before they can perform the behavior, understand task instructions, or even speak. This is true when we consider all timepoints within behavioral events (Fig. 5) and when we take into account temporal distance from behavioral event boundaries (SI Appendix, Figs. S5 and S6). However, this occurred in a small number of regions that did not fully overlap with those of adults, and no region showed reliable alignment between the probability of adult behavioral boundaries and infant neural boundaries from the HMM (SI Appendix, Fig. S7). Together, these results suggest functional changes over development in the behavioral relevance of neural signals for event segmentation.

Event Structure in an Additional Infant Cohort.

To provide additional evidence of coarser event structure in infancy, we applied our analyses to a more heterogeneous sample of infants who watched a different, short cartoon movie (“Mickey”) during breaks between tasks for other studies. We first asked whether there were consistent neural responses within a group of 15 adults and found an ISC topography similar to that of the main cohort, with significant values in EVC, LOC, AG, PCC, precuneus, and EAC (SI Appendix, Fig. S8). ISC was weaker in 15 infants, although still significant in EVC and LOC. Weaker ISC may be related to the broader age range of these infants (4 to 33 mo, spanning almost 2 more years than the main cohort), given the dramatic developmental changes that occur in this age range and the reliance of ISC on common signal across participants. The smaller sample size, smaller stimulus display (one-quarter size), and different movie may also have influenced ISC. We next fit the event segmentation model and found a hierarchical gradient of event timescales across adult cortex, with more events in sensory regions and fewer events in associative regions (SI Appendix, Fig. S9). However, this gradient was less pronounced than in the “Aeronaut” dataset, with fewer events in visual regions. In all ROIs except mPFC and hippocampus, event structure significantly explained held-out adult data. We therefore found a similar pattern of results in adults across both datasets. In infants, there was no evidence of a hierarchical gradient in the number/length of events (SI Appendix, Fig. S9). The model again favored fewer/longer events across regions, and these events reliably fit neural activity from a held-out participant in EVC, LOC, precuneus, mPFC, and hippocampus (SI Appendix, Fig. S9). This additional sample provides further evidence for the third hypothesis that there is reliable but coarser event structure across the infant brain.

Discussion

In this study, we investigated neural event segmentation using a data-driven, computational approach in adults and infants watching a short movie. We found synchronous processing of the movie and reliable event structure in both groups. In adults, we replicated a previously observed hierarchical gradient of timescales in event processing across brain regions. This gradient was flattened in infants, who instead had coarse neural event structure across regions, both in our main dataset and in a second dataset of infants watching a different movie. Although event structure from one age group provided a reliable fit to the other age group, suggesting some similarity in their representations, adult event structure best fit adult data, suggesting developmental differences. Furthermore, behavioral boundaries were expressed in neural event structure in overlapping but distinct regions across the two age groups. Altogether, this study provides insights into how infants represent continuous experience, namely that they automatically segment experience into discrete events, as in adults, but at a coarser granularity lacking a hierarchical gradient.

Different mechanisms could be responsible for longer event timescales in infant visual regions. One account involves which inputs are processed by the infant brain. Developmental differences in sensation (e.g., acuity), perception (e.g., object recognition), and/or attention (e.g., selection, vigilance) could limit the number of visual features or transients that are registered, decreasing the number of events that could be represented. For example, although it seems intuitive that the limited attention of infants might lead to shorter neural event timescales, this shorter attention span may hamper integration of information from before to after a boundary, causing infants to miss some events that adults would notice. This is consistent with work in adults showing that shifts of attention can decrease the temporal resolution of perception (53) and that working-memory limitations can be associated with coarser event segmentation in some cases (54). By this account, the architecture for hierarchical event processing may be ready in infancy but not fully engaged given input or processing constraints.

An alternative account is that the infant brain receives rich input but that the architecture itself takes time to develop. This is consistent with findings that infants integrate sensory inputs more flexibly in time. Namely, young children bind multisensory and visual features over longer temporal receptive windows than older children and adults (47–50). Interestingly, this diminished temporal precision may be advantageous to infants when gathering information about objects, labels, and events in their environment (55). For instance, infants may better extract meaning from social interactions if they can bind together continuously unfolding visual, auditory, and emotional information; accordingly, toddlers with autism spectrum disorder have shorter than normal temporal receptive windows (48). This behavioral literature has been agnostic to how or why temporal receptive windows are dilated in infancy, but the lack of neural gradient may be related. Future work combining behavioral and neural approaches to temporal processing and attention could inform this relationship.

A less theoretically interesting explanation for the smaller number of events in infant visual regions could be model bias. For example, the model may default to fewer events in heterogeneous participant groups. Although the “Aeronaut” dataset had a relatively narrow band of absolute age (9 mo), there are dramatic cognitive and neural changes during the first year of life (56). We found only limited evidence for developmental trajectories in infant event representation (e.g., in how well adult event structure fit infant LOC). That said, to test whether heterogeneity and noise reduce the estimated number of events, we performed a series of simulations. Contrary to what this account predicts, the optimal number of events increased when we added more noise. This is inconsistent with attributing the fewer/longer events in infant visual regions to greater functional and anatomical variability. Nonetheless, research with larger samples targeted at more specific age bands across a broader range of development could inform whether there are meaningful changes in event structure during infancy.

In contrast to visual regions, higher-order regions of adult and infant brains represented events over a similar timescale. This maturity might be understood in light of the sensitivity of infants to goal-directed actions and events (5). Young infants both predict the outcomes of actions (57) and are surprised by inefficient paths toward a goal (58) when a causal agent is involved. Unambiguous agency also increases the ability of older infants to learn statistical structure (59), suggesting that the infant mind may prioritize agency. Indeed, infants are better at imitating a sequence of actions that have hierarchical versus arbitrary structure (3, 60) and show better memory for events that have a clear agent (61), perhaps because of a propensity to segment events according to goals during encoding. Our findings of seemingly mature temporal processing windows in higher-order but not visual regions in infancy suggest that coarser event representations may precede fine-grained representations. Longitudinal tracking of infants’ neural event representations could inform this possibility.

We chose the “Aeronaut” movie for this study because it is dynamic, has appropriate content for infants, and completes a narrative arc within a short timeframe. Nevertheless, the event boundaries detected in the brain and behavior surely depend in part on the particularities of this movie, including both low-level changes such as color and motion and high-level changes to characters and locations. Furthermore, neural synchrony across participants is driven not only by shared perceptual or cognitive factors, but also by eye movements (42) and physiological signals (62), which may themselves become synchronized when participants view the same content. This study is a first step, parallel to initial adult studies (25), in demonstrating the existence and characteristics of neural event segmentation in infants. Relying on an off-the-shelf movie makes it more difficult to determine which factors drive segmentation. Adult EVC vs. precuneus boundaries seemed to occur at timepoints that made sense for each region’s respective function (visual changes vs. major plot points), but we recognize that this is a post hoc, qualitative observation. Nonetheless, our study lays the groundwork for future studies to manipulate video content and test various factors. For instance, in adults it was subsequently determined that neural event patterns in mPFC reflect event schemas (26) and neural event patterns in precuneus and mPFC track surprisal with respect to beliefs (63). Future infant studies could experimentally manipulate the content and timescales of dynamic visual stimuli to better understand the nature of longer neural event structure in infants.

The nature of conducting fMRI research in awake infants means our study has several limitations. First, there were more missing data in the infant group than in the adult group because of eye closure and movement. We partially addressed this issue by introducing a new variance parameter to the computational model, but acknowledge that it remains an unavoidable difference in the data. Second, our analyses were conducted in a common adult standard space, requiring alignment across participants. Because of uncertainty in the localization and extent of these regions in infants, we defined our ROIs liberally. This may explain the curious finding of reliable event structure and relation to behavioral boundaries in EAC for both adults and infants. Indeed, the EAC ROI encompassed secondary auditory regions and superior temporal cortex, which is important for social cognition, motion, and face processing (64, 65) and shows a modality-invariant response to narratives (66). Alternatively, events in EAC may have been driven by auditory imagery while watching the movie (e.g., the sound of the flying machine crashing or the click of the girl’s camera). Future work could define ROIs based on a child atlas (67), although that would complicate comparison to adults. Alternatively, ROIs could be defined in each individual using a functional localizer task, although collecting both movie and localizer data from a single awake infant session is difficult. Regardless, in other studies we have successfully used adult-defined ROIs (35, 36). Finally, the age range of infants was wide from a developmental perspective. This was a practical reality given the difficulty of recruiting and testing awake infants in fMRI. Nevertheless, it is potentially problematic in that our model can only capture event structure shared across participants. Future work could focus on larger samples from narrower age bands to ascertain how changes in neural event representations relate to other developmental processes (e.g., language acquisition, motor development).

In conclusion, we found that infants’ brains automatically segment continuous experience into discrete neural events, but do so in a coarser way than the corresponding brain regions in adults and without a resulting hierarchical gradient in the timescale of event processing across these regions. This neuroscientific approach for accessing infant mental representations complements a rich body of prior behavioral work on event cognition, providing a different lens on how infants bring order to the “blooming, buzzing confusion” (68) of their first year.

Materials and Methods

Participants.

Data were collected from 24 infants aged 3.60 to 12.70 mo (M = 7.43, SD = 2.70; 12 female) while they watched a silent cartoon (“Aeronaut”). This number does not include data from infants who moved their head excessively (n = 11), who did not look at the screen during more than half of the movie (n = 4), who did not watch the full movie because of fussiness (n = 9), or whose data were lost to technical error (n = 1). Our final sample size of 24 infants was chosen prior to the start of data collection to align with recent infant fMRI studies from our laboratory (36, 37) and others (69). For comparison, we also collected data from 24 adult participants aged 18 to 32 y (M = 22.54, SD = 3.66; 14 female) who watched the same movie. Data from one additional adult participant were collected but subsequently excluded to equate infant and adult group sizes. Although some infant and adult participants watched “Aeronaut” one or more additional times in later fMRI sessions, the current paper analyzes only the first session with usable data. The study was approved by the Human Subjects Committee (HSC) at Yale University. All adults provided informed consent, and parents provided informed consent on behalf of their child.

Materials.

“Aeronaut” is a 3-min-long segment of a short film entitled “Soar” created by Alyce Tzue (https://vimeo.com/148198462). The film was downloaded from YouTube in Fall 2017 and iMovie was used to trim the length. The audio was not played to participants in the scanner. The movie spanned 45.5 visual degrees in width and 22.5 visual degrees in height. In the video, a girl is looking at airplane blueprints when a miniature boy crashes his flying machine onto her workbench. The pilot appears frightened at first, but the girl helps him fix the machine. After a few failed attempts, a blueprint flies into the girl’s shoes, which they use to finally launch the flying machine into the air to join a flotilla of other ships drifting away. In the night sky, the pilot opens his suitcase, revealing a diamond star, and tosses it into the sky. The pilot then looks down at Earth and signals to the girl, who looks up as the night sky fills with stars.

The code used to show the movies on the experimental display is available at https://github.com/ntblab/experiment_menu/tree/Movies/. The code used to perform the data analyses is available at https://github.com/ntblab/infant_neuropipe/tree/EventSeg/; this code builds on tools from the Brain Imaging Analysis Kit (70) (https://brainiak.org/docs/). Raw and preprocessed data are available at https://doi.org/10.5061/dryad.vhhmgqnx1.

Data Acquisition.

Procedures and parameters for collecting MRI data from awake infants were developed and validated in a previous methods paper (35), with key details repeated below. Data were collected at the Brain Imaging Center in the Faculty of Arts and Sciences at Yale University. We used a Siemens Prisma (3T) MRI and the bottom half of the 20-channel head coil. Functional images were acquired with a whole-brain T2* gradient-echo echo-planar imaging (EPI) sequence (repetition time [TR] = 2 s, echo time [TE] = 30 ms, flip angle = 71°, matrix = 64 × 64, slices = 34, resolution = 3 mm isotropic, interleaved slice acquisition). Anatomical images were acquired with a T1 pointwise encoding time reduction with radial acquisition (PETRA) sequence for infants (TR1 = 3.32 ms, TR2 = 2,250 ms, TE = 0.07 ms, flip angle = 6°, matrix = 320 × 320, slices = 320, resolution = 0.94 mm isotropic, radial slices = 30,000) and a T1 magnetization-prepared rapid gradient-echo (MPRAGE) sequence for adults (TR = 2,300 ms, TE = 2.96 ms, inversion time [TI] = 900 ms, flip angle = 9°, integrated parallel acquisition technique [iPAT] = 2, slices = 176, matrix = 256 × 256, resolution = 1.0 mm isotropic). For the adult MPRAGE sequence, the top half of the 20-channel head coil was also used.

Procedure.

Before their first session, infant participants and their parents met with the researchers for a mock scanning session to familiarize them with the scanning environment. Scans were scheduled for a time when the infant was thought to be most comfortable and calm. Infants and their accompanying parents were extensively screened for metal. Three layers of hearing protection (silicone inner ear putty, over-ear adhesive covers, and ear muffs) were applied to the infant participant. Infants were then placed on the scanner bed on top of a vacuum pillow that comfortably reduced movement. Stimuli were projected directly onto the surface of the bore. A video camera (MRC high-resolution camera) was placed above the participant to record the participant’s face during scanning. Adult participants underwent the same procedure with the following exceptions: They did not attend a mock scanning session, hearing protection was only two layers (earplugs and optoacoustics noise-canceling headphones), and they were not given a vacuum pillow. Finally, infants may have participated in additional tasks during their scanning session, whereas adult sessions contained only the movie task (and an anatomical image).

Gaze Coding.

Gaze was coded offline by two to three coders for infants (M = 2.2, SD = 0.6) and by one coder for adults. Based on recordings from the in-bore camera, coders determined whether the participant’s eyes were on screen, off screen (i.e., closed, blinking, or looking away from the screen), or undetected (i.e., out of the camera’s field of view). In one infant, gaze data were not collected because of technical issues; in this case, a researcher monitored the infant visually and judged them attentive enough to warrant inclusion. For all other infants, coders were highly reliable: They reported the same response code on an average of 93.2% (SD = 5.2%; range across participants 77.7 to 99.6%) of frames. The modal response across coders from a moving window of five frames was used to determine the final response for the frame centered in that window. In the case of ties, the response from the previous frame was used. Frames were pooled within TRs, and the average proportion of TRs included was high for both adults (M = 98.8%, SD = 3.2%; range across participants 84.4 to 100%) and infants (M = 88.4%, SD = 12.2%; range across participants 55.6 to 100%).
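The smoothing rule for gaze codes can be sketched in Python. This is a minimal illustration, not the laboratory's coding software: the function name, the truncated window at the start and end of the recording, and the tie fallback on the very first frame are assumptions. "The response from the previous frame" is read here as the previous frame's final (smoothed) code.

```python
from collections import Counter
from typing import List, Sequence


def smooth_gaze_codes(frame_codes: Sequence[Sequence[str]], window: int = 5) -> List[str]:
    """Assign each frame the modal code pooled across coders within a centered window.

    frame_codes[f] lists the code each coder gave frame f (e.g., "on", "off",
    "undetected"). Near the start and end of the video the window is simply
    truncated (an assumption; edge handling is not described in the text).
    """
    n_frames = len(frame_codes)
    half = window // 2
    final: List[str] = []
    for f in range(n_frames):
        lo, hi = max(0, f - half), min(n_frames, f + half + 1)
        # Pool every coder's code for every frame in the window.
        counts = Counter(code for frame in frame_codes[lo:hi] for code in frame)
        top = max(counts.values())
        winners = sorted(code for code, n in counts.items() if n == top)
        if len(winners) == 1:
            final.append(winners[0])
        elif final:
            # Tie: reuse the decision made for the previous frame.
            final.append(final[-1])
        else:
            # Tie on the very first frame (no previous frame): hypothetical
            # fallback that picks the alphabetically first tied code.
            final.append(winners[0])
    return final
```

For example, with two coders and a three-frame window, a frame whose window holds three "on" and three "off" codes inherits the previous frame's decision.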

Preprocessing.

Data from both age groups were preprocessed using a custom pipeline designed for awake infant fMRI (35), based on the FMRIB Software Library (FSL) FMRI Expert Analysis Tool (FEAT). If infants participated in other tasks during the same functional run (n = 12), the movie data were cleaved to create a pseudorun. Three burn-in volumes were discarded from the beginning of each run/pseudorun. Motion correction was applied using the centroid volume as the reference, determined by calculating the Euclidean distance between all volumes and choosing the volume that minimized the distance to all other volumes. Slices in each volume were realigned using slice-timing correction. Timepoints with greater than 3 mm of translational motion were excluded and temporally interpolated so as not to bias linear detrending. The vast majority of infant timepoints were included after motion exclusion (M = 92.6%, SD = 9.9%; range across participants 65.6 to 100%), and all adult timepoints were included. Timepoints with excessive motion and timepoints during which eyes were closed for a majority of movie frames in the volume (out of 48, given the 2-s TR and movie frame rate of 24 frames per second) were excluded from subsequent analyses. Combining across exclusion types, a total of 2,135 TRs (98.8% of possible) or 71.2 min of data were retained in adults (M = 2.97 min per participant, range 2.57 to 3.00) and 1,830 TRs (84.7% of possible) or 61.0 min of data were retained in infants (M = 2.54 min per participant, range 1.37 to 3.00). The signal-to-fluctuating-noise ratio (SFNR) was calculated (71) and thresholded to form the mask of brain vs. nonbrain voxels. Data were spatially smoothed with a Gaussian kernel (5 mm full width at half maximum [FWHM]) and linearly detrended in time. The despiking algorithm of Analysis of Functional NeuroImages (AFNI; https://afni.nimh.nih.gov) was used to attenuate aberrant timepoints within voxels. After removing excess burn-out TRs, functional data were z scored within run.
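Two preprocessing steps lend themselves to a short sketch: choosing the centroid volume as the motion-correction reference, and flagging TRs in which the eyes were closed for most of the 48 movie frames. The sketch assumes that "minimized the distance to all other volumes" means the smallest summed Euclidean distance; the function names are hypothetical, and this is not the FSL-based pipeline itself.

```python
import numpy as np


def centroid_volume_index(volumes: np.ndarray) -> int:
    """Index of the volume with the smallest summed Euclidean distance to all others.

    volumes: (n_volumes, ...) array; each volume is flattened to a vector.
    """
    flat = volumes.reshape(volumes.shape[0], -1).astype(float)
    sq_norms = np.sum(flat ** 2, axis=1)
    # Pairwise squared distances via ||a||^2 + ||b||^2 - 2 a.b (clipped for rounding).
    sq_dist = sq_norms[:, None] + sq_norms[None, :] - 2.0 * flat @ flat.T
    dist = np.sqrt(np.clip(sq_dist, 0.0, None))
    return int(np.argmin(dist.sum(axis=1)))


def tr_keep_mask(eyes_closed: np.ndarray, frames_per_tr: int = 48) -> np.ndarray:
    """True for TRs in which eyes were closed on at most half of the movie frames.

    eyes_closed: boolean array with one entry per movie frame (24 frames/s x 2-s TR
    = 48 frames per TR); its length is assumed to be a multiple of frames_per_tr.
    """
    per_tr = np.asarray(eyes_closed, dtype=bool).reshape(-1, frames_per_tr)
    return per_tr.mean(axis=1) <= 0.5
```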

The centroid functional volume was registered to the anatomical image. Initial alignment was performed using FMRIB’s Linear Image Registration Tool (FLIRT) with 6 degrees of freedom (DOF) and a normalized mutual information cost function. This automatic registration was manually inspected and then corrected if necessary using mrAlign from mrTools (Gardner Laboratory). To compare across participants, functional data were further transformed into standard space. For infants, anatomical images were first aligned automatically (FLIRT) and then manually (Freeview) to an age-specific Montreal Neurological Institute (MNI) infant template (72). This infant template was then aligned to the adult MNI standard (MNI152). Adult anatomical images were directly aligned to the adult MNI standard. For all analyses, we considered only voxels included in the intersection of all infant and adult brain masks.

In an additional exploratory analysis, we realigned participants’ anatomical data to the adult standard using Advanced Normalization Tools (ANTs) (73), a nonlinear alignment algorithm. For infants, an initial linear alignment with 12 DOF was used to align anatomical data to the age-specific infant template, followed by nonlinear warping using diffeomorphic symmetric normalization. Then, as before, we used a predefined transformation (12 DOF) to linearly align between the infant template and the adult standard. For adults, we used the same alignment procedure, except participants were directly aligned to the adult standard. Results using this nonlinear procedure were nearly identical to the original analyses (SI Appendix, Fig. S10).

Regions of Interest.

We performed analyses over the whole brain and in ROIs. We defined the ROIs using the Harvard-Oxford probabilistic atlas (74) (0% probability threshold) in EVC, LOC, AG, precuneus, EAC, and the hippocampus. We used functionally defined parcellations obtained in resting state (75) to define two additional ROIs: mPFC and PCC. We included these regions because of their involvement in longer timescale narratives, events, and integration (76).

Intersubject Correlation.

We assessed whether participants were processing the movie in a similar way using ISC (39, 40). For each voxel, we correlated the timecourse of activity of a single held-out participant with the average timecourse of all other participants in the same age group. We iterated through each participant and then created the average ISC map by Fisher transforming the Pearson correlations, averaging these transformed values, and applying an inverse Fisher transformation to the average. We visualized the whole-brain ISC maps for adults and infants separately, thresholded at a correlation of 0.10.
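A minimal NumPy sketch of the leave-one-out ISC and the Fisher-transform averaging described above. It assumes complete data (no excluded timepoints) and clips correlations just below ±1 before the Fisher transform; both are simplifications, and BrainIAK also provides its own ISC utilities that may differ in detail. The function names are illustrative.

```python
import numpy as np


def leave_one_out_isc(data: np.ndarray) -> np.ndarray:
    """Pearson r between each held-out participant and the mean of the others.

    data: (n_participants, n_timepoints, n_voxels), assumed to have no missing values.
    Returns an (n_participants, n_voxels) array of correlations.
    """
    n_subj = data.shape[0]
    isc = np.empty((n_subj, data.shape[2]))
    for s in range(n_subj):
        held_out = data[s]
        others = np.delete(data, s, axis=0).mean(axis=0)
        a = held_out - held_out.mean(axis=0)
        b = others - others.mean(axis=0)
        isc[s] = (a * b).sum(axis=0) / np.sqrt((a ** 2).sum(axis=0) * (b ** 2).sum(axis=0))
    return isc


def fisher_mean(r: np.ndarray, axis: int = 0) -> np.ndarray:
    """Average correlations in Fisher z space, then transform back to r."""
    z = np.arctanh(np.clip(r, -0.999999, 0.999999))  # clip avoids infinite z at |r| = 1
    return np.tanh(z.mean(axis=axis))
```

A group ISC map is then `fisher_mean(isc, axis=0)`, and a per-participant ROI value is `fisher_mean(isc[:, roi_voxels], axis=1)`, where `roi_voxels` is a hypothetical boolean mask.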

For the ROI analysis, the voxel ISCs within a region were averaged separately for each held-out participant using the Fisher-transform method described above. Statistical significance was determined by bootstrap resampling. We randomly sampled participants with replacement 1,000 times, on each iteration forming a new sample of the same size as the original group, and then averaged their ISC values to form a sampling distribution. The P value was calculated as the proportion of resampling iterations on which the group average had the opposite sign to the original effect and doubled to make it two tailed. For comparing ISC across infant and adult groups, we permuted the age group labels 1,000 times, each time recalculating ISC values for these shuffled groups and then finding the difference of group means. This created a null distribution for the difference between age groups.
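The two resampling tests can be sketched as follows. The bootstrap mirrors the sign-based rule in the text; for the group comparison, the text does not say how the P value is read off the null distribution, so the two-tailed comparison of absolute differences below is an assumption, as are the function names.

```python
from typing import Callable

import numpy as np


def bootstrap_sign_p(values, n_boot: int = 1000, seed: int = 0) -> float:
    """Two-tailed P: share of resampled means whose sign opposes the observed mean, doubled."""
    rng = np.random.default_rng(seed)
    values = np.asarray(values, dtype=float)
    observed = values.mean()
    # Resample participants with replacement, keeping the original sample size.
    idx = rng.integers(0, len(values), size=(n_boot, len(values)))
    boot_means = values[idx].mean(axis=1)
    opposite = np.sign(boot_means) != np.sign(observed)
    return min(1.0, 2.0 * float(opposite.mean()))


def permutation_group_difference(group_a, group_b, group_stat: Callable,
                                 n_perm: int = 1000, seed: int = 0):
    """Null distribution for group_stat(A) - group_stat(B) under shuffled group labels.

    group_stat should recompute the statistic from scratch for whatever participants
    it receives (e.g., mean leave-one-out ISC), because ISC depends on group membership.
    """
    rng = np.random.default_rng(seed)
    pooled = np.concatenate([np.asarray(group_a), np.asarray(group_b)])
    n_a = len(group_a)
    observed = group_stat(pooled[:n_a]) - group_stat(pooled[n_a:])
    null = np.empty(n_perm)
    for i in range(n_perm):
        perm = rng.permutation(len(pooled))
        null[i] = group_stat(pooled[perm[:n_a]]) - group_stat(pooled[perm[n_a:]])
    # Two-tailed P from the null (an assumption; the text does not specify this step).
    p = float(np.mean(np.abs(null) >= abs(observed)))
    return observed, null, p
```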

Event Segmentation Model.

To determine the characteristic patterns of event states and their structure, we applied an HMM variant (25) available in BrainIAK (70) to the average fMRI activity of participants from the same age group. This model uses an algorithm that alternates between estimating two related components of stable neural events: 1) multivariate event patterns and 2) their event structure (i.e., placement of boundaries between events). The constraints of the model are that each event state is visited only once and that staying versus transitioning into a new event state has the same prior probability. Model fitting stopped when the log probability that the data were generated from the learned event structure (i.e., log-likelihood) (77) began to decrease.

To deal with missing data in the input (a reality of infant fMRI data), we modified the BrainIAK implementation of the HMM. First, in calculating the probability that each observed timepoint was generated from each possible event model, timepoint variance was scaled by the proportion of participants with data at that timepoint. In other words, if some infants had missing data at a timepoint because of head motion or gaze, the variance at that timepoint was adjusted by the square root of the maximum number of participants divided by the square root of the number of participants with data at that point. This meant that even though the model was fit on averaged data that obscured missing timepoints, it had an estimate of the “trustworthiness” of each timepoint. Second, for the case in which missing timepoints persisted after averaging across participants, the log probability for the missing timepoint was linearly interpolated based on nearby values.
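The two modifications can be illustrated with a short sketch. Here "adjusted by" is read as multiplying a base variance by sqrt(N_max)/sqrt(N_t), where N_max is the group size and N_t is the number of participants with data at timepoint t, and the per-timepoint variance is plugged into an isotropic Gaussian observation model. This is an illustration under those assumptions with hypothetical function names, not the modified BrainIAK code.

```python
import numpy as np


def timepoint_variance(base_var: float, n_with_data, n_total: int) -> np.ndarray:
    """Inflate the event variance at timepoints where fewer participants have data.

    Scale factor = sqrt(n_total) / sqrt(n_t); timepoints with no data become NaN.
    """
    n = np.asarray(n_with_data, dtype=float)
    safe_n = np.where(n > 0, n, np.nan)
    return base_var * np.sqrt(n_total) / np.sqrt(safe_n)


def gaussian_logprob(data: np.ndarray, event_patterns: np.ndarray, var_t: np.ndarray) -> np.ndarray:
    """Isotropic Gaussian log p(x_t | event k) with a per-timepoint variance.

    data: (T, V) averaged timecourse; event_patterns: (K, V); var_t: (T,).
    Returns a (T, K) array; rows with NaN variance stay NaN.
    """
    n_vox = data.shape[1]
    sq_dist = ((data[:, None, :] - event_patterns[None, :, :]) ** 2).sum(axis=2)
    var = np.asarray(var_t, dtype=float)[:, None]
    return -0.5 * n_vox * np.log(2 * np.pi * var) - 0.5 * sq_dist / var


def interpolate_missing_logprob(logp: np.ndarray) -> np.ndarray:
    """Linearly interpolate (per event) the log probabilities of fully missing timepoints."""
    logp = np.array(logp, dtype=float)
    t = np.arange(logp.shape[0])
    missing = np.isnan(logp).any(axis=1)
    for k in range(logp.shape[1]):
        logp[missing, k] = np.interp(t[missing], t[~missing], logp[~missing, k])
    return logp
```

A timepoint where only a quarter of the group has data therefore gets twice the base variance, so it pulls less on the event assignment.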

The HMM requires a hyperparameter indicating the number of event states. By testing a range of event numbers and assessing model fit, we determined the optimal number of events for a given voxel or region. We used a cubical searchlight (7 × 7 × 7 voxels) to look at the timescales of event segmentation across the whole brain. In a given searchlight, the HMM was fit to the average timecourse of activity for a random split half of participants using a range of event counts between 2 and 21. We capped the maximum number of possible events at 21 to ensure that at least some events would be 3 TRs long. The learned-event patterns and structure for each event count were then applied to the average timecourse of activity for held-out data, and model fit was assessed using the log-likelihood. We iterated through this procedure, each time splitting the data in half differently. The center voxel of the searchlight was assigned the number of events that maximized the average log-likelihood across 24 iterations (chosen to be the same number of iterations as in a leave-one-participant-out analysis). This analysis was performed in each searchlight, separately for adults and infants, to obtain a topography of event timescales. We also used this method to determine the optimal number of events for each of our ROIs. In these analyses, the timecourse of activity for every voxel in the ROI was used to learn the event structure.
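The split-half search over event counts might look like the sketch below. Model fitting is abstracted into a user-supplied `score_fn` (hypothetical) that fits a k-event model to one half and returns the log-likelihood of the other half; the defaults mirror the text (2 to 21 events, 24 random splits).

```python
from typing import Callable, Sequence, Tuple

import numpy as np


def choose_n_events(
    data: np.ndarray,
    score_fn: Callable[[np.ndarray, np.ndarray, int], float],
    event_counts: Sequence[int] = range(2, 22),
    n_iter: int = 24,
    seed: int = 0,
) -> Tuple[int, np.ndarray]:
    """Pick the event count that maximizes held-out log-likelihood across random split halves.

    data: (n_participants, T, V) with NaN for missing timepoints.
    score_fn(train_avg, test_avg, k): fits a k-event model to train_avg and returns the
    log-likelihood of test_avg (hypothetical callable supplied by the user).
    """
    rng = np.random.default_rng(seed)
    counts = list(event_counts)
    n_subj = data.shape[0]
    ll = np.empty((n_iter, len(counts)))
    for i in range(n_iter):
        order = rng.permutation(n_subj)  # a fresh random split on every iteration
        train, test = order[: n_subj // 2], order[n_subj // 2:]
        train_avg = np.nanmean(data[train], axis=0)
        test_avg = np.nanmean(data[test], axis=0)
        for j, k in enumerate(counts):
            ll[i, j] = score_fn(train_avg, test_avg, k)
    best = counts[int(np.argmax(ll.mean(axis=0)))]
    return best, ll
```

With BrainIAK, `score_fn` could wrap `EventSegment(k).fit(train_avg)` followed by `find_events(test_avg)`, whose second return value is the test log-likelihood; check the BrainIAK documentation for the installed version.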

To test whether a given ROI had statistically significant event structure, we used a nested cross-validation approach. Here, we can assess whether event structure is generalizable to an entirely unseen participant’s data and is reliable across unseen participants. The inner loop of this analysis was identical to what is described above, except that a single participant was completely held out from the analysis. After finding the optimal number of events for all but that held-out participant, the event patterns and structure were fit to that participant’s data. The log-likelihood for those data was compared to a permuted rotation distribution, where the participant’s data were time shifted for every possible shift value between one and the length of the movie (wrapping around to the beginning). We calculated a z statistic as the difference between the actual log-likelihood and the average log-likelihood of the permuted distribution, divided by the SD of the permuted distribution. We then iterated through all participants and used bootstrap resampling of the z statistics to determine significance. We randomly sampled participants with replacement 1,000 times, on each iteration forming a new sample of the same size as the original group, and then averaged their z statistics to form a sampling distribution. The P value was calculated as the proportion of resampling iterations with values less than zero, doubled to make it two tailed.
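The held-out-participant test can be sketched as a rotation null followed by a bootstrap. `loglik_fn` is a hypothetical callable that scores a timecourse under the event patterns and structure learned from the other participants; the shift range (1 to T-1, since a shift of T is the identity) and the use of the population SD are assumptions.

```python
from typing import Callable

import numpy as np


def rotation_z(held_out: np.ndarray, loglik_fn: Callable[[np.ndarray], float]) -> float:
    """z of the actual log-likelihood against every circular time shift of the held-out data."""
    n_t = held_out.shape[0]
    actual = loglik_fn(held_out)
    # Shifts 1..T-1 wrap the end of the timecourse back to the start.
    null = np.array([loglik_fn(np.roll(held_out, shift, axis=0)) for shift in range(1, n_t)])
    return float((actual - null.mean()) / null.std())


def bootstrap_p_below_zero(z_values, n_boot: int = 1000, seed: int = 0) -> float:
    """Two-tailed P: share of bootstrap means below zero, doubled."""
    rng = np.random.default_rng(seed)
    z_values = np.asarray(z_values, dtype=float)
    idx = rng.integers(0, len(z_values), size=(n_boot, len(z_values)))
    boot_means = z_values[idx].mean(axis=1)
    return min(1.0, 2.0 * float(np.mean(boot_means < 0)))
```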

Behavioral Segmentation.

Behavioral segmentation was collected from 22 naive undergraduate students attending Yale University aged 18 to 22 y (M = 18.9, SD = 1.0; 14 female). All participants provided informed consent and received course credit. Participants were instructed to watch the “Aeronaut” movie and press a key on the keyboard when a new, meaningful event occurred. Participants watched a version of the movie with its accompanying audio, a musical track without language. Although the visual input remained the same as in the fMRI data collection, these auditory cues may have influenced event segmentation (78). During data collection, participants also evaluated nine other movies, not described here, and verbally recalled each movie after segmenting it. We elected to have participants use their own judgment for what constituted an event change. Participants had a 1-min practice movie to orient them to the task, and the “Aeronaut” movie appeared in a random order among the other movies. To capture “true” event boundaries and avoid contamination by accidental or delayed key presses, we followed a previously published procedure (24). That is, we set a threshold for the number of participants who indicated the same event boundary, such that the number of event boundaries agreed upon by at least that many participants was equal to or close to the average number of key presses across participants. Participant responses were binned into 2-TR (4-s) windows. We found 10 event boundaries (11 events) that were each agreed upon by at least 36% of participants and were robust to whether or not key presses were shifted 0.9 s to account for response time (for comparison, ~31% was used in ref. 24). These event boundaries were then shifted 4 s in time to account for the hemodynamic lag.
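The threshold-selection rule can be sketched as below. Assumptions: each participant contributes at most one vote per 4-s bin, ties in the threshold search go to the smaller threshold, and a boundary's time is taken as the onset of its bin before the 4-s hemodynamic shift; the function name is hypothetical. The optional 0.9-s response-time shift is left to the caller.

```python
from typing import Sequence, Tuple

import numpy as np


def consensus_boundaries(
    press_times_s: Sequence[Sequence[float]],
    movie_len_s: float,
    bin_s: float = 4.0,
    hrf_shift_s: float = 4.0,
) -> Tuple[int, np.ndarray, np.ndarray]:
    """Choose an agreement threshold so the boundary count matches the mean number of key presses."""
    n_bins = int(np.ceil(movie_len_s / bin_s))
    counts = np.zeros(n_bins, dtype=int)
    for presses in press_times_s:
        bins = np.unique(np.floor(np.asarray(presses, dtype=float) / bin_s).astype(int))
        bins = bins[(bins >= 0) & (bins < n_bins)]
        counts[bins] += 1  # each participant counts at most once per bin
    mean_presses = np.mean([len(p) for p in press_times_s])
    thresholds = range(1, len(press_times_s) + 1)
    # Smallest threshold whose boundary count is closest to the mean press count.
    threshold = min(thresholds, key=lambda thr: abs(int(np.sum(counts >= thr)) - mean_presses))
    boundary_bins = np.flatnonzero(counts >= threshold)
    # Boundary time = bin onset, shifted to account for hemodynamic lag.
    return threshold, boundary_bins, boundary_bins * bin_s + hrf_shift_s
```

In the study's terms, a threshold of 8 of 22 participants corresponds to the roughly 36% agreement level reported above.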

To evaluate whether and how these behavioral boundaries relate to neural data, we tested whether voxel activity patterns for timepoints within the same event were more correlated than those for timepoints spanning a boundary. This within vs. across boundary comparison has been used previously as a metric of event structure (25). For our analysis, we considered all possible pairs of timepoints within and across boundaries. For each temporal distance from the boundary, we subtracted the average correlation value for pairs of timepoints that were across events from the average correlation value for pairs of timepoints within the same event. At different temporal distances, there are either more or fewer within-event pairs than across-event pairs. To equate the number of within- and across-event pairs, we subsampled values and recomputed the within vs. across difference score 1,000 times. To combine across distances that had different numbers of possible pairs, we weighted the average difference score for each distance by the number of unique timepoint pairs that made up the smaller group of timepoint pairs (i.e., across-event pairs when temporal distance was low, within-event pairs when temporal distance was high). This was repeated for all participants, resulting in a single weighted within vs. across difference score for each participant. For the ROIs, we used bootstrap resampling of these participant difference scores to determine statistical significance. The P value was the proportion of difference values that were less than zero after 1,000 resamples, doubled to make it two tailed. For the whole-brain searchlight results, we also used 1,000 bootstrap resamples to determine statistical significance for within vs. across difference scores for each voxel. We then calculated a z score for each voxel as the distance between the bootstrap distribution and zero and thresholded the bootstrapped z-score map at P < 0.05, uncorrected.
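A simplified sketch of the within vs. across score for one participant. It treats "temporal distance" as the lag between the two timepoints in a pair (an assumption consistent with the weighting rule described in the paragraph), subsamples both groups to the smaller group's size, and omits missing-data handling and the searchlight machinery; the function name is hypothetical.

```python
import numpy as np


def within_minus_across(data: np.ndarray, labels, n_sub: int = 1000, seed: int = 0) -> float:
    """Weighted within- minus across-event pattern correlation for one participant.

    data: (T, V) voxel patterns; labels: (T,) event index of each timepoint.
    Pairs are grouped by their lag (temporal distance between the two timepoints).
    """
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    corr = np.corrcoef(data)  # (T, T) correlations between timepoint patterns
    n_t = len(labels)
    scores, weights = [], []
    for lag in range(1, n_t):
        t = np.arange(n_t - lag)
        r = corr[t, t + lag]
        same = labels[t] == labels[t + lag]
        within, across = r[same], r[~same]
        n = min(len(within), len(across))
        if n == 0:
            continue  # this lag has no within- or no across-event pairs
        # Subsample both groups to the smaller size so the difference uses equal counts.
        diffs = [
            rng.choice(within, n, replace=False).mean() - rng.choice(across, n, replace=False).mean()
            for _ in range(n_sub)
        ]
        scores.append(np.mean(diffs))
        weights.append(n)  # weight = size of the smaller group at this lag
    return float(np.average(scores, weights=weights))
```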


Acknowledgments

We are thankful to the families of infants who participated. We also acknowledge the hard work of the Yale Baby School team, including L. Rait, J. Daniels, and K. Armstrong for recruitment, scheduling, and administration. We thank J. Wu, J. Fel, and A. Klein for help with gaze coding and R. Watts for technical support. We are grateful for internal funding from the Department of Psychology and Faculty of Arts and Sciences at Yale University. T.S.Y. was supported by an NSF Graduate Research Fellowship (Grant/Award DGE 1752134). N.B.T-B. was further supported by the Canadian Institute for Advanced Research and the James S. McDonnell Foundation (https://doi.org/10.37717/2020-1208).

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.2200257119/-/DCSupplemental.

Data, Materials, and Software Availability

Anonymized data are available on Dryad (https://doi.org/10.5061/dryad.vhhmgqnx1) (79). The code for performing the specific analyses described in this paper can be found on GitHub (https://github.com/ntblab/infant_neuropipe/tree/EventSeg/) (80). The code used to show the movies on the experimental display is also available on GitHub (https://github.com/ntblab/experiment_menu/tree/Movies/) (81).

References

1. Roseberry S., Richie R., Hirsh-Pasek K., Golinkoff R. M., Shipley T. F., Babies catch a break: 7- to 9-month-olds track statistical probabilities in continuous dynamic events. Psychol. Sci. 22, 1422–1424 (2011).

2. Stahl A. E., Romberg A. R., Roseberry S., Golinkoff R. M., Hirsh-Pasek K., Infants segment continuous events using transitional probabilities. Child Dev. 85, 1821–1826 (2014).

3. Bauer P. J., Mandler J. M., One thing follows another: Effects of temporal structure on 1- to 2-year-olds’ recall of events. Dev. Psychol. 25, 197–206 (1989).

4. Sonne T., Kingo O. S., Krøjgaard P., Bound to remember: Infants show superior memory for objects presented at event boundaries. Scand. J. Psychol. 58, 107–113 (2017).

5. Levine D., Buchsbaum D., Hirsh-Pasek K., Golinkoff R. M., Finding events in a continuous world: A developmental account. Dev. Psychobiol. 61, 376–389 (2019).

6. Zacks J. M., Event perception and memory. Annu. Rev. Psychol. 71, 165–191 (2020).

7. Kurby C. A., Zacks J. M., Segmentation in the perception and memory of events. Trends Cogn. Sci. 12, 72–79 (2008).

8. Zacks J. M., Speer N. K., Swallow K. M., Maley C. J., The brain’s cutting-room floor: Segmentation of narrative cinema. Front. Hum. Neurosci. 4, 168 (2010).

9. Clewett D., DuBrow S., Davachi L., Transcending time in the brain: How event memories are constructed from experience. Hippocampus 29, 162–183 (2019).

10. Hard B. M., Tversky B., Lang D. S., Making sense of abstract events: Building event schemas. Mem. Cognit. 34, 1221–1235 (2006).

11. Bailey H. R., Kurby C. A., Sargent J. Q., Zacks J. M., Attentional focus affects how events are segmented and updated in narrative reading. Mem. Cognit. 45, 940–955 (2017).

12. Shin Y. S., DuBrow S., Structuring memory through inference-based event segmentation. Top. Cogn. Sci. 13, 106–127 (2021).

13. Baldwin D. A., Baird J. A., Saylor M. M., Clark M. A., Infants parse dynamic action. Child Dev. 72, 708–717 (2001).

14. Saylor M. M., Baldwin D. A., Baird J. A., LaBounty J., Infants’ on-line segmentation of dynamic human action. J. Cogn. Dev. 8, 113–128 (2007).

15. Sonne T., Kingo O. S., Krøjgaard P., Occlusions at event boundaries during encoding have a negative effect on infant memory. Conscious. Cogn. 41, 72–82 (2016).

16. Hespos S. J., Saylor M. M., Grossman S. R., Infants’ ability to parse continuous actions. Dev. Psychol. 45, 575–585 (2009).

17. Hespos S. J., Grossman S. R., Saylor M. M., Infants’ ability to parse continuous actions: Further evidence. Neural Netw. 23, 1026–1032 (2010).

18. Aslin R. N., What’s in a look? Dev. Sci. 10, 48–53 (2007).

19. Reid V. M., Csibra G., Belsky J., Johnson M. H., Neural correlates of the perception of goal-directed action in infants. Acta Psychol. (Amst.) 124, 129–138 (2007).

20. Pace A., Carver L. J., Friend M., Event-related potentials to intact and disrupted actions in children and adults. J. Exp. Child Psychol. 116, 453–470 (2013).

21. Pace A., Levine D. F., Golinkoff R. M., Carver L. J., Hirsh-Pasek K., Keeping the end in mind: Preliminary brain and behavioral evidence for broad attention to endpoints in pre-linguistic infants. Infant Behav. Dev. 58, 101425 (2020).

22. Sols I., DuBrow S., Davachi L., Fuentemilla L., Event boundaries trigger rapid memory reinstatement of the prior events to promote their representation in long-term memory. Curr. Biol. 27, 3499–3504.e4 (2017).

23. Silva M., Baldassano C., Fuentemilla L., Rapid memory reactivation at movie event boundaries promotes episodic encoding. J. Neurosci. 39, 8538–8548 (2019).

24. Ben-Yakov A., Henson R. N., The hippocampal film editor: Sensitivity and specificity to event boundaries in continuous experience. J. Neurosci. 38, 10057–10068 (2018).

25. Baldassano C., et al., Discovering event structure in continuous narrative perception and memory. Neuron 95, 709–721.e5 (2017).

26. Baldassano C., Hasson U., Norman K. A., Representation of real-world event schemas during narrative perception. J. Neurosci. 38, 9689–9699 (2018).

27. Stawarczyk D., Bezdek M. A., Zacks J. M., Event representations and predictive processing: The role of the midline default network core. Top. Cogn. Sci. 13, 164–186 (2021).

28. Zacks J. M., et al., Human brain activity time-locked to perceptual event boundaries. Nat. Neurosci. 4, 651–655 (2001).

29. Zacks J. M., Swallow K. M., Vettel J. M., McAvoy M. P., Visual motion and the neural correlates of event perception. Brain Res. 1076, 150–162 (2006).

30. Geerligs L., van Gerven M., Güçlü U., Detecting neural state transitions underlying event segmentation. Neuroimage 236, 118085 (2021).

31. Hasson U., Yang E., Vallines I., Heeger D. J., Rubin N., A hierarchy of temporal receptive windows in human cortex. J. Neurosci. 28, 2539–2550 (2008).

32. Lerner Y., Honey C. J., Silbert L. J., Hasson U., Topographic mapping of a hierarchy of temporal receptive windows using a narrated story. J. Neurosci. 31, 2906–2915 (2011).

33. Himberger K. D., Chien H. Y., Honey C. J., Principles of temporal processing across the cortical hierarchy. Neuroscience 389, 161–174 (2018).

34. Deen B., et al., Organization of high-level visual cortex in human infants. Nat. Commun. 8, 13995 (2017).

35. Ellis C. T., et al., Re-imagining fMRI for awake behaving infants. Nat. Commun. 11, 4523 (2020).

36. Ellis C. T., Skalaban L. J., Yates T. S., Turk-Browne N. B., Attention recruits frontal cortex in human infants. Proc. Natl. Acad. Sci. U.S.A. 118, e2021474118 (2021).

37. Ellis C. T., et al., Evidence of hippocampal learning in human infants. Curr. Biol. 31, 3358–3364.e4 (2021).

38. Ellis C. T., et al., Retinotopic organization of visual cortex in human infants. Neuron 109, 2616–2626.e6 (2021).

39. Hasson U., Nir Y., Levy I., Fuhrmann G., Malach R., Intersubject synchronization of cortical activity during natural vision. Science 303, 1634–1640 (2004).

40. Nastase S. A., Gazzola V., Hasson U., Keysers C., Measuring shared responses across subjects using intersubject correlation. Soc. Cogn. Affect. Neurosci. 14, 667–685 (2019).

41. Kirkorian H. L., Anderson D. R., Keen R., Age differences in online processing of video: An eye movement study. Child Dev. 83, 497–507 (2012).

42. Franchak J. M., Heeger D. J., Hasson U., Adolph K. E., Free viewing gaze behavior in infants and adults. Infancy 21, 262–287 (2016).

43. Gao W., Lin W., Grewen K., Gilmore J. H., Functional connectivity of the infant human brain: Plastic and modifiable. Neuroscientist 23, 169–184 (2017).

44. Richards J. E., Casey B. J., “Development of sustained visual attention in the human infant” in Attention and Information Processing in Infants and Adults: Perspectives from Human and Animal Research, Campbell B. A., Hayne H., Richardson R., Eds. (Lawrence Erlbaum Associates, Inc., 1992), pp. 30–60.

45. Colombo J., The development of visual attention in infancy. Annu. Rev. Psychol. 52, 337–367 (2001).

46. Richards J. E., Anderson D. R., “Attentional inertia in children’s extended looking at television” in Advances in Child Development and Behavior, Kail R. V., Ed. (JAI, 2004), vol. 32, pp. 163–212.

47. Farzin F., Rivera S. M., Whitney D., Time crawls: The temporal resolution of infants’ visual attention. Psychol. Sci. 22, 1004–1010 (2011).

48. Freschl J., Melcher D., Carter A., Kaldy Z., Blaser E., Seeing a page in a flipbook: Shorter visual temporal integration windows in 2-year-old toddlers with autism spectrum disorder. Autism Res. 14, 946–958 (2021).

49. Lewkowicz D. J., Perception of auditory-visual temporal synchrony in human infants. J. Exp. Psychol. Hum. Percept. Perform. 22, 1094–1106 (1996).

50. Lewkowicz D. J., Flom R., The audiovisual temporal binding window narrows in early childhood. Child Dev. 85, 685–694 (2014).

51. Chen J., et al., Shared memories reveal shared structure in neural activity across individuals. Nat. Neurosci. 20, 115–125 (2017).

52. Lee C. S., Aly M., Baldassano C., Anticipation of temporally structured events in the brain. eLife 10, e64972 (2021).

53. Yeshurun Y., Levy L., Transient spatial attention degrades temporal resolution. Psychol. Sci. 14, 225–231 (2003).

54. Jafarpour A., Buffalo E. A., Knight R. T., Collins A. G. E., Event segmentation reveals working memory forgetting rate. iScience 25, 103902 (2022).

55. Freschl J., Melcher D., Kaldy Z., Blaser E., Visual temporal integration windows are adult-like in 5- to 7-year-old children. J. Vis. 19, 5 (2019).

56. Turesky T. K., Vanderauwera J., Gaab N., Imaging the rapidly developing brain: Current challenges for MRI studies in the first five years of life. Dev. Cogn. Neurosci. 47, 100893 (2021).

57. Woodward A. L., Infants selectively encode the goal object of an actor’s reach. Cognition 69, 1–34 (1998).

58. Liu S., Brooks N. B., Spelke E. S., Origins of the concepts cause, cost, and goal in prereaching infants. Proc. Natl. Acad. Sci. U.S.A. 116, 17747–17752 (2019).

59. Monroy C. D., Gerson S. A., Hunnius S., Toddlers’ action prediction: Statistical learning of continuous action sequences. J. Exp. Child Psychol. 157, 14–28 (2017).

60. Bauer P. J., Holding it all together: How enabling relations facilitate young children’s event recall. Cogn. Dev. 7, 1–28 (1992).

61. Howard L. H., Woodward A. L., Human actions support infant memory. J. Cogn. Dev. 20, 772–789 (2019).

62. Pérez P., et al., Conscious processing of narrative stimuli synchronizes heart rate between individuals. Cell Rep. 36, 109692 (2021).

63. Antony J. W., et al., Behavioral, physiological, and neural signatures of surprise during naturalistic sports viewing. Neuron 109, 377–390.e7 (2021).

64. Hein G., Knight R. T., Superior temporal sulcus—It’s my area: Or is it? J. Cogn. Neurosci. 20, 2125–2136 (2008).

65. Jacoby N., Bruneau E., Koster-Hale J., Saxe R., Localizing pain matrix and theory of mind networks with both verbal and non-verbal stimuli. Neuroimage 126, 39–48 (2016).