ABSTRACT

Introduction

Medical physician residency program websites often serve as the first contact for any prospective applicant. No analysis of military residency program websites has yet been conducted, in contrast to their civilian counterparts. This study evaluated all military residency programs accredited by the Accreditation Council for Graduate Medical Education (ACGME) for 2021-2022 to determine program website comprehensiveness and accessibility and identify areas for improvement.

Materials and Methods

A list of military residency programs in the USA was compiled using Defense Health Agency Graduate Medical Education resources together with the ACGME database. A total of 15 objective website criteria covering education and recruitment content were assessed by two independent evaluators. Accessibility was also scored. Programs’ website scores were compared by geographic location, specialty affiliation, type of institution partnership, and program size. Analysis was performed with descriptive statistics and comparison via an unpaired t-test or Kruskal–Wallis analysis, as appropriate.

Results

A total of 124 military residency program websites were evaluated with a range of scores from 0 to 15 out of 15 possible points. Six programs had no identifiable website. All three services were represented with 43% joint-service programs. Content concerning physician education and development was more widely available than content directed toward the recruitment of applicants. The number of residency program websites reporting each content criterion varied greatly, but overall, no single service had a significantly higher score across its residencies’ websites. Significant variation occurred among individual specialties (P < .05), but there was no significant difference between surgical and nonsurgical specialties. Civilian-associated programs (18 programs, 14.5%) had significantly greater website comprehensiveness, scored best on informatics measures for recruitment, and performed 64% better than military-only programs overall.

Conclusions

Program information in an accessible website platform allows prospective applicants to gain comprehensive perspectives of programs during the application process without reliance on personal visits and audition rotations. Limitations to in-person experiences, such as those caused by reductions in travel and concern for student safety during the global pandemic caused by the SARS-CoV-2 virus, may be alleviated by accessible virtual information. Our results indicate that there is opportunity for all military residency programs to improve their websites and better recruit applicants through understanding their audience and optimizing their reach online.

INTRODUCTION

Applicants to medical residency programs use websites to obtain program information, send applications, manage interviews, and optimize the selection process. The pattern of use of digital residency information by prospective applicants was amplified in the COVID-19 pandemic when nearly all residency interviews converted to a virtual platform. A national review of orthopedic residency programs found that 48.1% of programs updated their websites to include program videos and 13.2% added virtual tours.1 Likely indicating prospective resident physicians’ use of media and information published online, program websites were accessed by applicants in an exponential manner before interview dates.2

From 2019 to 2021, 36 studies evaluated the information presented on program websites across medical specialties and subspecialties.2–37 These studies addressed information regarding current fellows or residents (58% of sources), call or rotation schedule (53% of sources), and positions available per year (58% of sources). Overall, these data points can be categorized as pertaining to education or recruitment.

There is considerable variation in website comprehensiveness and usability among physician residency programs despite evidence that potential future residents rely heavily on information put forth online.14,38 Usability and accessibility also vary among residency websites.10 In one study, half of applicants to a highly competitive specialty reported that they would have applied to fewer programs if better information were available. Mentorship and word-of-mouth information were shown to be more highly favored than websites when the latter were of lower quality.39

Unique features of U.S. Military Graduate Medical Education (GME) can make navigating preparation for a successful match into medical residency more complicated than in the civilian sector. Nuances in the military sphere include the interview timeline, application system, and the “match” process. There is also the consideration of Department of Defense (DoD) mandates that regulate information release. The application process for interviews and interview rotations is not standardized within specialty, within branches of the military, or by institution. The Medical Operational Data System, in contrast to the Electronic Residency Application Service system, is only accessible on secure networks at military treatment facilities and bases. There is no publicly available database of residency programs sponsored by the DoD. The number of positions in a given residency class will often change from year to year as the needs of the military change. Finally, in terms of availability of information, the DoD mandates that official DoD information be cleared for public release with the authority in DoD Directive 5144.02. This often creates a barrier between individuals with up-to-date information and those who update the website.

While there are data on many aspects of the residency match in the civilian sphere of medicine, military residencies are not represented in the literature. Recruiting the most competitive and diverse cohort of applicants for military medical residencies requires a transparent and accessible source of information. Unless informational gaps are noted, programs cannot rise to a higher standard.

Our objective was to evaluate military residency programs’ websites on a quantitative scale according to specific information pertinent to recruitment and educational opportunities available to military applicants. Recruitment details included application requirements, benefits, contact information for the program, current residents, location description, number of positions available, postgraduate placement, and schedule. Educational opportunity data points were research opportunities, research requirement, program description, professional development, faculty information, clinical site description, and clinical rotation off-service or elective opportunities.

The secondary objective was to evaluate any variation in score by geographic region, military service, civilian affiliation, and medical specialty. Each website was evaluated for comprehensiveness using 15-point scoring criteria that aimed to reflect the most necessary items for programs to include online, based on information sought out by prospective applicants.

METHODS

Three reviewers performed a cross-sectional review of all current military residency programs. Inclusion criteria for medical physician residency programs were accreditation awarded by the Accreditation Council for Graduate Medical Education (ACGME) in the 2021-2022 application cycle, and U.S. Air Force, Navy, and Army-sponsored or civilian-associated with dedicated military positions. Exclusion criteria were civilian-only residencies, transitional year programs and fellowship programs. Social media sites (Facebook, Twitter and Instagram) were not regarded as independent websites, although links to such were noted. Non-ACGME programs were not included.

The Defense Health Agency’s GME Program Director in December 2021 provided residency and fellowship information for military medical centers and clinics. This list was cross-referenced with the ACGME listings of accredited programs. A final list of ACGME-accredited military residency programs was created from these resources and encompassed all known active programs for the academic year 2021-2022.

No current validated tool exists to evaluate physician residency program websites. Furthermore, the unique nature of military programs precludes simple comparison with their civilian counterparts. We developed scoring criteria based on a literature review of residency and fellowship website studies from 2019 to 2021.2–37 The 15 most frequently used content criteria in recent literature were considered and adapted to fit the nature of military residencies. This included removing salary information from the criteria, which was otherwise among the 15 most frequently used criteria. The criteria studied cover application and contact information, resident benefits, faculty, description of location, current residents and number of positions per class, postgraduate placement, daily to monthly schedule, service rotations, research, and professional development. The scoring criteria were tested by three independent evaluators on two residency program websites and modified for clarity, efficiency, and reproducibility before implementation for data collection.

Each criterion was evaluated as a binary variable (presence or absence of certain information) to minimize subjective bias. Ease of information access was evaluated on a 4-point Likert scale: a score of 3 denoted easy access, 2 adequate access, 1 poor access, and 0 the absence of a website. Accessibility of information was measured by the ways the website could be found, including search engine, military, and medical center links. Finally, free text fields were used to collect information on additional program appearance on social media. Demographic information was collected to characterize each program including service affiliation, number of residents per year, geographic location (using Census Bureau regions), and specialty.

Programs’ websites were identified with a Google search of “medical center name + specialty + residency program.” The assessment of websites was conducted between December 14, 2021, and February 24, 2022. Two independent evaluators assessed each website. Agreement between evaluators was assessed across the 15 binary data points. Credit was given if information was present directly on the residency program webpage or was accessible from a link on the program website. The 15 criteria were subdivided into educational and recruitment content domains for a more detailed analysis. Any website with greater than 25% disagreement was evaluated by a third evaluator and a composite score was utilized.
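The agreement rule described above can be illustrated with a minimal Python sketch. The function names are hypothetical, and the source does not define how the composite score was formed, so averaging the evaluators' totals is an assumption made here purely for illustration.

```python
from statistics import mean

N_CRITERIA = 15            # binary content criteria in the scoring rubric
DISAGREEMENT_LIMIT = 0.25  # above this fraction, a third evaluator reviews the site


def disagreement_rate(a, b):
    """Fraction of the 15 binary criteria on which two evaluators differ."""
    if len(a) != N_CRITERIA or len(b) != N_CRITERIA:
        raise ValueError("each evaluator must score all 15 criteria")
    return sum(x != y for x, y in zip(a, b)) / N_CRITERIA


def needs_third_evaluator(a, b):
    """True when disagreement exceeds 25% (4 or more of 15 criteria)."""
    return disagreement_rate(a, b) > DISAGREEMENT_LIMIT


def composite_score(*evaluations):
    """ASSUMPTION: composite = mean of each evaluator's total (0-15).

    The paper does not state the formula; this is one plausible reading.
    """
    return mean(sum(e) for e in evaluations)


# Hypothetical evaluations of one website (1 = information present).
evaluator_1 = [1] * 10 + [0] * 5
evaluator_2 = [1] * 8 + [0] * 7
print(disagreement_rate(evaluator_1, evaluator_2))
print(needs_third_evaluator(evaluator_1, evaluator_2))
print(composite_score(evaluator_1, evaluator_2))
```

Because the rubric has 15 items, the 25% threshold is crossed only when the evaluators differ on at least 4 criteria.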

Physician residency programs were further characterized based on (1) military service branch affiliation, (2) U.S. geographic regions, (3) program class size, and (4) ACGME designated specialty. Programs were grouped based on their geographic location into one of four regions (Northeast, Midwest, South, and West) listed by the U.S. Census Bureau in 2010. Program class size was defined as the stated number of accepted residents per class on the residency program website.

Primary statistical analysis was performed with descriptive statistics. Categorical variables were summarized as percentage or frequency. Continuous variables were summarized as mean ± SD. Secondary analysis, including comparison of scores across individual specialties, regions, and program size by number of trainees, was performed using a Mann–Whitney test for two groups or analysis of variance (ANOVA) with Kruskal–Wallis analysis if comparison was across three or more groups, with no assumption of parametric distribution, at a two-tailed significance level of 0.05, as previously demonstrated as a reasonable strategy.9 All analyses were completed in GraphPad Prism version 8.0.0 for Windows, GraphPad Software, San Diego, California, USA, www.graphpad.com.
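For readers without access to GraphPad Prism, the Kruskal–Wallis comparison can be sketched in plain Python. This is not the authors' implementation: it is a minimal illustration that uses the standard tie-corrected H statistic and the large-sample chi-square approximation for the P value, and statistical packages may instead report exact P values for small samples. The scores in the example are hypothetical, not the study's data.

```python
import math
from collections import Counter


def average_ranks(values):
    """Rank values 1..N, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable with integer df."""
    if x <= 0:
        return 1.0
    h = x / 2
    if df % 2 == 0:
        # Even df = 2m: exp(-h) * sum_{i=0}^{m-1} h^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= h / i
            total += term
        return math.exp(-h) * total
    # Odd df: erfc(sqrt(h)) + exp(-h) * sum_{i>=1} h^(i-1/2) / Gamma(i+1/2)
    total = math.erfc(math.sqrt(h))
    term = math.sqrt(h) / math.gamma(1.5)
    for i in range(1, (df - 1) // 2 + 1):
        total += math.exp(-h) * term
        term *= h / (i + 0.5)
    return total


def kruskal_wallis(groups):
    """Return (H, approximate p) for a list of score lists."""
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    acc, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        acc += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * acc - 3 * (n + 1)
    correction = 1 - sum(t ** 3 - t for t in Counter(pooled).values()) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are identical")
    h /= correction  # adjust for tied scores
    return h, chi2_sf(h, len(groups) - 1)


# Hypothetical website scores (0-15) for three groups of programs.
example_groups = [[6, 7, 4, 9, 5], [8, 10, 7, 9], [12, 11, 13]]
h_stat, p_value = kruskal_wallis(example_groups)
print(f"H = {h_stat:.2f}, p = {p_value:.4f}")
```

A rank-based test suits these data because website scores are bounded counts out of 15 and the text reports that their distribution was not parametric.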

RESULTS

In total, 124 programs within 21 specialties with military association were reviewed. Military associations were spread among the services with 44 Navy, 54 Air Force, and 70 Army programs. Overall, 35% of programs were associated with more than one service, and 14.5% of programs were associated with a civilian program. The number of residents per year varied from 1 to 16 trainees per year, with 64% of programs not reporting resident class size. These baseline characteristics are represented in Table I.

TABLE I.

Baseline Program Characteristics

| Characteristic | N (%) |
|---|---|
| Number of residents per year | |
| Not disclosed | 79 (64%) |
| 1-5 | 25 (20%) |
| 6+ | 20 (16%) |
| Geographic region | |
| Northeast | 0 (0%) |
| Midwest | 10 (8%) |
| South | 65 (52%) |
| West | 49 (40%) |
| Specialty | |
| Anesthesiology | 4 (3%) |
| Dermatology | 3 (2%) |
| Diagnostic Radiology | 7 (6%) |
| Emergency Medicine | 9 (7%) |
| Otolaryngology-Head & Neck Surgery | 6 (5%) |
| Family medicine | 15 (12%) |
| General surgery | 14 (11%) |
| Integrated vascular surgery | 1 (1%) |
| Internal medicine | 11 (9%) |
| Neurology | 3 (2%) |
| Neurosurgery | 1 (1%) |
| Gynecologic Surgery and Obstetrics | 9 (7%) |
| Ophthalmology | 4 (3%) |
| Orthopedic surgery | 9 (7%) |
| Pathology | 4 (3%) |
| Pediatrics | 7 (6%) |
| Physical Medicine | 1 (1%) |
| Preventive Medicine/Public health | 4 (3%) |
| Psychiatry | 6 (5%) |
| Radiation Oncology | 1 (1%) |
| Urology | 4 (3%) |

Program scores varied greatly across the queried points. The percentage of programs that provided each information area on their website is listed in Table II. More than half of programs provided a program description (92.7%), contact information for the program (89.9%), a clinical site description (89.5%), a description of clinical rotations off-service or electives (74.2%), the number of positions available (66.1%), and a description of research opportunities (59.3%). Websites were least likely to report schedule expectations (14.9%), current residents (19.8%), non-salary benefits/wellness measures (27%), or postgraduate placement (28.2%). Social media presence was not featured prominently on sites, with only 14.5% of sites displaying a link to any content (Twitter, Instagram, Facebook, or other).

TABLE II.

Website Informatics and Percentage of Programs Reporting Each Point

| Website informatics point | Criterion detail | Programs disclosing (%) |
|---|---|---|
| Recruitment | | |
| Application requirements or eligibility criteria | Including military service commitment, board scores, letters of recommendation, and letter of good standing | 46.8 |
| Benefits for residents | At least one non-salary component of compensation including complimentary lunches, wellness events, and residency retreat | 27 |
| Contact information for the program | Including email or phone number for director, coordinator, or faculty | 89.9 |
| Current residents | Must include names of residents | 19.8 |
| Location description | At least one statement of details about the location of the program | 38.3 |
| Number of positions available for military applicants | Per year or total in residency | 66.1 |
| Postgraduate placement | Including recent fellowship acceptances, duty stations, next position, and multiple alumni | 28.2 |
| Schedule | Including specific requirements for call, number of rotations on night float, and work hour minimums or maximums | 14.9 |
| Education | | |
| Research opportunities | Including types of facilities, research produced in the program, ongoing resident projects, and research-specific faculty | 59.3 |
| Research requirement stated | Or lack of requirement | 45.6 |
| Program description | May include educational curriculum, informational brochure, and presentation | 92.7 |
| Professional development | Including conference allotment/coverage, teaching, leadership, and military development | 39.1 |
| Faculty information | At least one faculty member listed with name, may include photo, contact information, and subspecialty information | 44.4 |
| Clinical rotation site description | Characteristics or specific details of at least one clinic site or rotation | 89.5 |
| Clinical rotations off-service/electives and opportunities for individual interests | Rotations that are outside of the specialty-specific scope, such as critical care or research rotations | 74.2 |

In order to evaluate secondary outcomes including variation across service affiliation, region, class size, and specialty, the actual scores out of the 15 points assessed were compared.

No single service had a significantly higher score across its websites. However, civilian-associated programs provided significantly more information to applicants, with a mean score of 11.68 (SD 2.1) out of 15 points compared with a mean of 7.16 (SD 3.1) for military-only programs (P < .0001). This is illustrated in Supplementary Figure S1. In particular, civilian-associated programs scored better on informatics measures for recruitment.

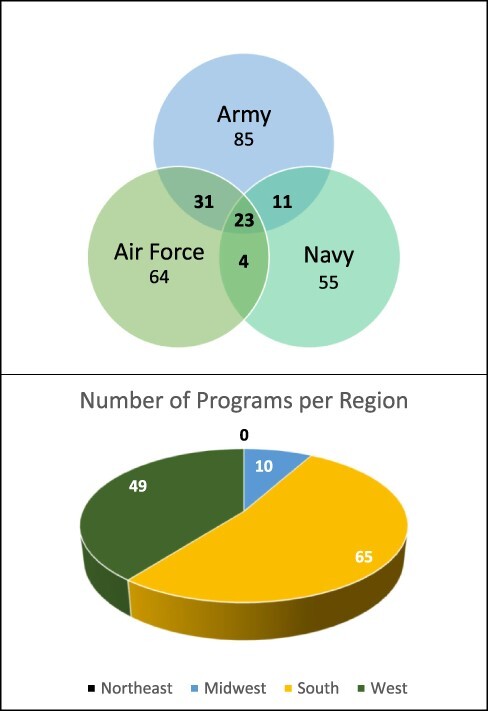

Programs were not spread evenly across the regions, as reflected in Fig. 1. No residencies were located within the Northeast; 10 were in the Midwest. The majority were in the West (49) and South (65). This distribution varies from the concentration density of civilian residencies, which is much higher in the Northeast. Despite having the largest number of programs, the South had the lowest mean total score of 6.74 (SD 3.64). The Western region scored better at 8.23 (SD 2.61). The Midwest had the highest mean score of 11.68 (SD 1.42). The scores differed significantly between regions (P = .0147) when compared with one-way ANOVA Kruskal–Wallis nonparametric analysis.

FIGURE 1.

Baseline characteristics of service affiliation and region.

To determine the effect of program class size on information available, we divided the programs into three subgroups. Programs that did not specify the number of residents per class were excluded from this sub-analysis (79 programs), but it was noted that they had the lowest mean score of 4.66 (SD 2.83). Programs with 1-2 residents had a mean total score of 8.5 (SD 3.31). Those with 3-6 residents had a mean score of 8.54 (SD 3.03). Finally, the large programs with seven or more trainees per year had a mean score of 8.57 (SD 3.08). The distribution was not parametric and so a Kruskal–Wallis test was used to evaluate for differences. A small but significant difference was noted as the program size increased, P < .0001, with a Kruskal–Wallis statistic of 23.74.

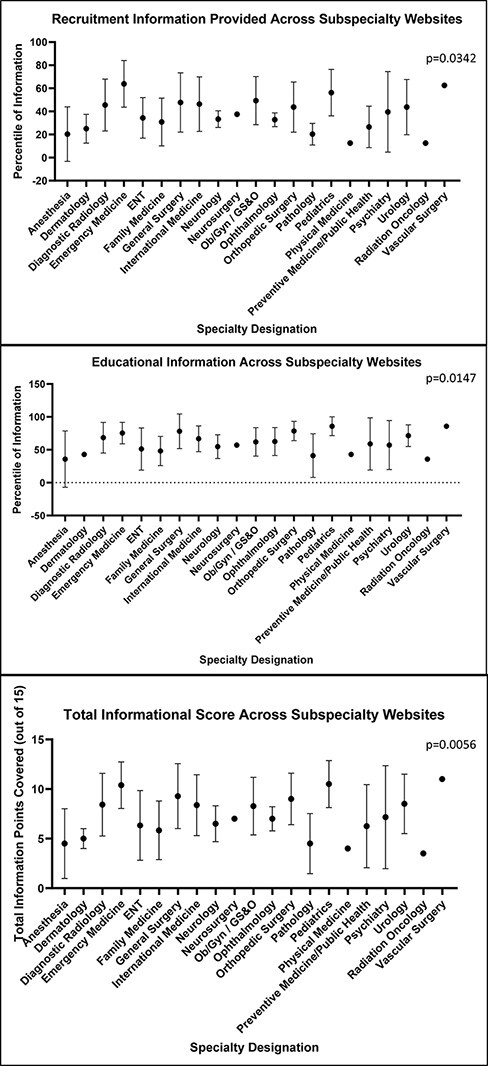

When compared across the 21 specialties currently available in the military medical health system, there was significant variation in scores. Scores ranged from 3.5 in the single radiation oncology program to a high of 11 in the single integrated vascular surgery program. In groups with more than one program, the highest scoring group was pediatrics with a mean score of 10.5 (SD 2.36). The individual specialty scores are presented as percentages out of the total 15 points in Fig. 2. The variation among groups was again analyzed using an ANOVA with a Kruskal–Wallis test that demonstrated significant variation (P = .0135), with a Kruskal–Wallis statistic of 36.49. There was not a significant difference between surgical versus nonsurgical specialties when compared with a Mann–Whitney test, P = .098. Surgical programs had a mean score of 8.41 (SD 2.96); nonsurgical programs scored a mean of 7.35 (SD 3.63).
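As a rough consistency check that is not part of the original analysis, the reported Kruskal–Wallis statistic and P value for the specialty comparison can be related through the large-sample chi-square approximation. This assumes that the 21 specialty groups give 21 − 1 = 20 degrees of freedom and that the software applied this approximation. For an even number of degrees of freedom the upper tail has a closed form:

$$
P \approx \Pr\left(\chi^2_{20} \ge H\right) = e^{-H/2} \sum_{i=0}^{9} \frac{(H/2)^{i}}{i!}, \qquad H = 36.49 \;\Rightarrow\; P \approx 0.0135
$$

Under these assumptions the result agrees with the P value of .0135 reported for the specialty comparison.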

FIGURE 2.

Variance in informational website scores across specialties.

Finally, on a 4-point scale from 0 (website not available) to 3 (quality website with easily accessible information), the mean accessibility score was only 1.95 (SD 0.74).

DISCUSSION

The poor quality of military physician residency program websites was reflected in the low mean scores found across educational and recruitment information areas. Our findings reflect a gap in informational content on military medical residency programs.

The National Resident Matching Program set forth the ideal of maximizing a fair, equitable, efficient, transparent, and reliable process to merge matching applicants’ preferences with the ranking of residency programs. In the separate military residency match, applicants apply to residency with similar goals. Ensuring that military trainees are best suited for a given residency and program location involves well-informed applicants and transparent programs. It is known that an informative website has the potential to positively impact the perception of a residency program.25 Our findings reflect a low priority to online information and lack of standardization across the residency websites. While some individual medical centers had standard formatting, this was not correlated with greater information availability.

As information becomes increasingly available in an online format, it is necessary that residency programs follow the same trend to expand accessibility. Programs have increased the availability of online information since an initial study conducted in 2001 by Rozental et al., with still much room for growth and improvement.40 Military programs should strive to be on the leading charge—not barely getting by.

One of these forward-leaning changes is the use of social media sites, which allows residency programs to regularly post information that interested individuals can follow directly for updates.41 In 2017, Sterling et al. conducted a literature review on the impact of social media in GME. They concluded that GME programs were transitioning information to social media to attract applicants at that time.42 In this study, less than a sixth of military medicine residency programs evaluated had social media pages linked to their website. In a civilian survey, applicants to anesthesia residency utilized social media to glean information on programs 42.7% of the time and 52.8% felt that residency-based social media accounts impacted their evaluation of programs, according to a study by Renew et al. in 2019.43 Military programs could grow their involvement in the free, accessible social resources to expand outreach in accordance with these shifts identified in the literature.

In the COVID-19 pandemic, virtual recruitment jeopardized applicants’ opportunities to learn about the culture of a program and its city, obtain accurate information about training, and interact with residents.9 Geography is consistently reported as one of the most important factors for residency applicants, but less than 40% of programs evaluated provided specific regional information on their location. Program scores also varied significantly when stratified by region, which ultimately affects availability of information for applicants interested in a specific geographic location. This variability highlights the need for a more standardized forum that includes necessary information for applicants. El Shatanofy et al., in their survey of orthopedic programs, concluded that smaller programs were at a potential disadvantage for applicant recruiting in orthopedic residencies due to being less likely to have information that applicants would find critical if in-person interaction was truncated.1 This conclusion could translate to military programs as well.

This study reflects only a portion of the information available on residency programs. The strengths of this study are the inclusion of all military residency program websites regardless of service affiliation and medical specialty. Review by at least two reviewers with standardized, binary, literature-based criteria allows for future reproducibility of these findings. Specific detailed descriptions for each informatics point were referenced during data collection and are included. Limitations include the inference of topics that are important to prospective residents. Specifically, we did not directly query current military applicants or residents as to the information points that drove their decision-making. A follow-up study could consider this feedback and an updated scoring of websites. Additional direct information from programs to candidates via email, presentation, or conversation was not evaluated and may serve as the primary source for some candidates.

Ultimately, decision-making regarding residency training is individualized and criteria are rated differently among applicants, which include but are not limited to medical specialty choice, career goals, and specific interests.44 Further research in this area could address this lack of data for military-specific applicants.

Programs seeking to close the information gap could look to websites published by civilian–military combined programs that, as a group, were significantly more likely to provide more information to applicants. Features of these websites to incorporate into design may include clear links to resources, adding a video to capture more information in a smaller space, and updating frequently to highlight current information, such as the list of residents. Ways of implementing these changes across military programs could include creating checklists based on the criteria outlined in this study. Other options may be to design website templates that would maximize impact while minimizing additional tech support across military treatment facility, medical specialty, or military branch.

Currently, inconsistent availability and accessibility of information makes meeting the needs of future trainees more difficult. Applicants use websites more than other platforms to gain information about residency programs.39 Programs can turn to these key informatic areas to focus their efforts and create a well-rounded profile of their program for all applicants to access on the internet.

Contributor Information

2d Lt Raegan A Chunn, F. Edward Herbert School of Medicine, Uniformed Services University, Bethesda, MD 20814, USA.

Delaney E S Clark, John Sealy School of Medicine, University of Texas Medical Branch at Galveston, Galveston, TX 77555, USA.

Maj Meghan C H Ozcan, Warren Alpert Medical School, Brown University, Providence, RI 02903, USA; Women and Infants, Division of Reproductive Endocrinology and Infertility, Providence, RI 02905, USA.

SUPPLEMENTARY MATERIAL

Supplementary Material is available at Military Medicine online.

FUNDING

None.

INSTITUTIONAL REVIEW BOARD

Care New England—Women and Infants Institutional Review Board, under number 1808503-1, found to be not Human Subject Research.

CONFLICT OF INTEREST STATEMENT

None declared.

DATA AVAILABILITY

The data that support the findings of this study are available on request from the corresponding author. All data are freely accessible.

AUTHOR CONTRIBUTION STATEMENT

R.A.C. and M.C.H.O. designed this study. All authors collected the data and drafted the original manuscript. R.A.C. and M.C.H.O. analyzed the data. All authors read and approved the final manuscript.

REFERENCES

- 1. El Shatanofy M, Brown L, Berger P, et al.: Orthopedic surgery residency program website content and accessibility during the COVID-19 pandemic: observational study. JMIR Med Educ 2021; 7(3): e30821. doi: 10.2196/30821.

- 2. Dyer S, Dickson B, Chhabra N: Utilizing analytics to identify trends in residency program website visits. Cureus 2020; 12(2): e6910. doi: 10.7759/cureus.6910.

- 3. Adham S, Nasir MU, Niu B, Hamid S, Xu A, Khosa F: How well do we represent ourselves: an analysis of musculoskeletal radiology fellowships website content in Canada and the USA. Skeletal Radiol 2020; 49(12): 1951–5. doi: 10.1007/s00256-020-03481-1.

- 4. Carnevale ML, Phair J, Indes JE, Koleilat I: Digital footprint of vascular surgery training programs in the United States and Canada. Ann Vasc Surg 2020; 67: 115–22. doi: 10.1016/j.avsg.2020.01.105.

- 5. Chan M, Chan E, Wei C, et al.: Geriatrics fellowship-family medicine: evaluation of fellowship program accessibility and content for family medicine applicants. Cureus 2020; 12(9): e10388. doi: 10.7759/cureus.10388.

- 6. Chawla S, Ding J, Faheem S, Shelly S, Khosa F: How comprehensive are Canadian plastic surgery fellowship websites? Cureus 2021; 13(6): e15815.doi: 10.7759/cureus.15815. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7. Chen PY, Dai YX, Hsu YC, Chen TJ: Analysis of the content and comprehensiveness of dermatology residency training websites in Taiwan. Healthcare (Basel) 2021; 9(6): 773.doi: 10.3390/healthcare9060773. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Daniel D, Vila C, Leon Guerrero CR, Karroum EG: Evaluation of adult neurology residency program websites. Ann Neurol 2021; 89(4): 637–42.doi: 10.1002/ana.26016. [DOI] [PubMed] [Google Scholar]

- 9. Everett AS, Strickler S, Marcrom SR, McDonald AM: Students’ perspectives and concerns for the 2020 to 2021 radiation oncology interview season. Adv Radiat Oncol 2021; 6(1): 100554.doi: 10.1016/j.adro.2020.08.011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Fundingsland E, Fike J, Calvano J, et al. : Website usability analysis of United States emergency medicine residencies. AEM Educ Train 2021; 5(3): e10604.doi: 10.1002/aet2.10604. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Gerlach EB, Plantz MA, Swiatek PR, et al. : The content and accessibility of spine surgery fellowship websites and the North American Spine Surgery (NASS) fellowship directory. Spine J 2021; 21(9): 1542–8.doi: 10.1016/j.spinee.2021.04.011. [DOI] [PubMed] [Google Scholar]

- 12. Gupta S, Palmer S, Ferreira-Dos-Santos G, Hurdle MF: Pain medicine fellowship program websites in the United States of America—a nonparametric statistic analysis of 14 different criteria. J Pain Res 2021; 14: 1339–43.doi: 10.2147/JPR.S313513. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Hsu AL, Chien JL, Sarkany D, Smith C: Evaluating neuroradiology fellowship program websites: a critical analysis of all 84 programs in the United States. Curr Probl Diagn Radiol 2021; 50(2): 147–50.doi: 10.1067/j.cpradiol.2019.11.002. [DOI] [PubMed] [Google Scholar]

- 14. Huang BY, Hicks TD, Haidar GM, Pounds LL, Davies MG: An evaluation of the availability, accessibility, and quality of online content of vascular surgery training program websites for residency and fellowship applicants. J Vasc Surg 2017; 66(6): 1892–901.doi: 10.1016/j.jvs.2017.08.064. [DOI] [PubMed] [Google Scholar]

- 15. Jain M, Sood N, Varguise R, Karol DL, Alwazzan AB, Khosa F: North American urogynecology fellowship programs: value of program website content. Int Urogynecol J. 2021; 32(9): 2443–8.doi: 10.1007/s00192-021-04808-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16. Khwaja A, Du PZ, DeSilva GL: Website evaluation for shoulder and elbow fellowships in the United States: an evaluation of accessibility and content. JSES Int 2020; 4(3): 449–52.doi: 10.1016/j.jseint.2020.04.011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17. Maisner RS, Babu A, Ayyala HS, Ramanadham S: How comprehensive are aesthetic surgery fellowship websites? Ann Plast Surg 2021; 86(6): 615–7.doi: 10.1097/SAP.0000000000002518. [DOI] [PubMed] [Google Scholar]

- 18. Maybee C, Nguyen NT, Chan M, et al. : Assessing the accessibility of content for geriatric fellowship programs. J Am Geriatr Soc 2021; 69(1): 197–200.doi: 10.1111/jgs.16948. [DOI] [PubMed] [Google Scholar]

- 19. Miller VM, Padilla LA, Schuh A, et al. : Evaluation of cardiothoracic surgery residency and fellowship program websites. J Surg Res 2020; 246: 200–6.doi: 10.1016/j.jss.2019.09.009. [DOI] [PubMed] [Google Scholar]

- 20. CHt M, Goyer S, Cantrell CK, Hendershot K, Corey B: An assessment of the online presentation of MIS fellowship information for residents. Surg Endosc 2020; 34(9): 3986–91.doi: 10.1007/s00464-019-07179-x. [DOI] [PubMed] [Google Scholar]

- 21. Nasir MU, Murray N, Mathur S, et al.: Advertise right by addressing the concerns: an evaluation of women’s/breast imaging radiology fellowship website content for prospective fellows. Curr Probl Diagn Radiol 2021; 50(4): 481–4. doi: 10.1067/j.cpradiol.2020.05.010.

- 22. Nguyen KT, Chen FR, Maracheril R, et al.: Assessment of the accessibility and content of both ACGME accredited and nonaccredited regional anesthesiology and acute pain medicine fellowship websites. J Educ Perioper Med 2021; 23(2): E663. doi: 10.46374/volxxiii_issue1_wei.

- 23. Patel BG, Gallo K, Cherullo EE, Chow AK: Content analysis of ACGME accredited urology residency program webpages. Urology 2020; 138: 11–5. doi: 10.1016/j.urology.2019.11.053.

- 24. Peyser A, Abittan B, Mullin C, Goldman RH: A content and quality evaluation of ACGME-accredited reproductive endocrinology and infertility fellowship program webpages. J Assist Reprod Genet 2021; 38(4): 895–9. doi: 10.1007/s10815-021-02073-9.

- 25. Pollock JR, Weyand JA, Reyes AB, et al.: Descriptive analysis of components of emergency medicine residency program websites. West J Emerg Med 2021; 22(4): 937–42. doi: 10.5811/westjem.2021.4.50135.

- 26. Rajaram R, Abreu JA, Mehran R, Nguyen TC, Antonoff MB, Vaporciyan A: Using quality improvement principles to redesign a cardiothoracic surgery fellowship program website. Ann Thorac Surg 2021; 111(3): 1079–85. doi: 10.1016/j.athoracsur.2020.05.158.

- 27. Ruddell JH, Eltorai AEM, Bakhit M, Lateef AM, Moss SF: Analysis of accredited gastroenterology fellowship internet-available content: twenty-nine steps toward a better program website. Dig Dis Sci 2019; 64(5): 1074–8. doi: 10.1007/s10620-019-05579-6.

- 28. Ruddell JH, Eltorai AEM, Tang OY, et al.: The current state of nuclear medicine and nuclear radiology: workforce trends, training pathways, and training program websites. Acad Radiol 2020; 27(12): 1751–9. doi: 10.1016/j.acra.2019.09.026.

- 29. Ruddell JH, Tang OY, Persaud B, Eltorai AEM, Daniels AH, Ng T: Thoracic surgery program websites: bridging the content gap for improved applicant recruitment. J Thorac Cardiovasc Surg 2021; 162(3): 724–32. doi: 10.1016/j.jtcvs.2020.06.131.

- 30. Sardana A, Kapani N, Gu A, Petersen SM, Gimovsky AC: Why is applying to fellowship so difficult? Am J Obstet Gynecol MFM 2019; 1(4): 100042. doi: 10.1016/j.ajogmf.2019.100042.

- 31. Sayegh F, Perdikis G, Eaves M, Taub D, Glassman GE, Taub PJ: Evaluation of plastic surgery resident aesthetic clinic websites. JPRAS Open 2021; 27: 99–103. doi: 10.1016/j.jpra.2020.12.002.

- 32. Shaath MK, Avilucea FR, Lim PK, Warner SJ, Achor TS: Increasing fellow recruitment: how can fellowship program websites be optimized? J Am Acad Orthop Surg 2020; 28(24): e1105–e1110. doi: 10.5435/JAAOS-D-19-00804.

- 33. Sherman NC, Sorenson JC, Khwaja AM, DeSilva GL: The content and accessibility of orthopaedic residency program websites. JB JS Open Access 2020; 5(4): e20.00087. doi: 10.2106/JBJS.OA.20.00087.

- 34. Shimshak SJE, Witt BS, Chen JY, Pollock JR, Sokumbi O: A cross-sectional evaluation of dermatopathology fellowship website content. J Cutan Pathol 2021; 48(9): 1213–5. doi: 10.1111/cup.13982.

- 35. Stoeger SM, Freeman H, Bitter B, Helmer SD, Reyes J, Vincent KB: Evaluation of general surgery residency program websites. Am J Surg 2019; 217(4): 794–9. doi: 10.1016/j.amjsurg.2018.12.060.

- 36. Vilanilam GK, Wadhwa V, Purushothaman R, Desai S, Kamran M, Radvany MG: Critical evaluation of interventional neuroradiology fellowship program websites in North America. World Neurosurg 2021; 146: e48–52. doi: 10.1016/j.wneu.2020.09.164.

- 37. Wei C, Quan T, Wu T, et al.: Assessment of the accessibility and content of dermatology fellowship websites. J Am Acad Dermatol 2021; 84(5): 1423–5. doi: 10.1016/j.jaad.2020.06.017.

- 38. Oladeji LO, Yu JC, Oladeji AK, Ponce BA: How useful are orthopedic surgery residency web pages? J Surg Educ 2015; 72(6): 1185–9. doi: 10.1016/j.jsurg.2015.05.012.

- 39. Yong TM, Austin DC, Molloy IB, Torchia MT, Coe MP: Online information and mentorship: perspectives from orthopaedic surgery residency applicants. J Am Acad Orthop Surg 2021; 29(14): 616–23. doi: 10.5435/JAAOS-D-20-00512.

- 40. Rozental TD, Lonner JH, Parekh SG: The Internet as a communication tool for academic orthopaedic surgery departments in the United States. J Bone Joint Surg Am 2001; 83(7): 987–91. doi: 10.2106/00004623-200107000-00002.

- 41. Davidson AR, Loftis CM, Throckmorton TW, Kelly DM: Accessibility and availability of online information for orthopedic surgery residency programs. Iowa Orthop J 2016; 36: 31–6.

- 42. Sterling M, Leung P, Wright D, Bishop TF: The use of social media in graduate medical education: a systematic review. Acad Med 2017; 92(7): 1043–56. doi: 10.1097/ACM.0000000000001617.

- 43. Renew JR, Ladlie B, Gorlin A, Long T: The impact of social media on anesthesia resident recruitment. J Educ Perioper Med 2019; 21(1): E632.

- 44. Zigrossi D, Ralls G, Martel M, et al.: Ranking programs: medical student strategies. J Emerg Med 2019; 57(4): e141–5. doi: 10.1016/j.jemermed.2019.04.027.

Data Availability Statement

The data that support the findings of this study are available on request from the corresponding author. All data are freely accessible.