Abstract

Objectives

Although eHealth tools are potentially useful for remote disease monitoring, barriers include concerns about low engagement and high attrition. We aimed to summarise evidence on patients’ engagement and attrition with eHealth tools for remotely monitoring disease activity/impact in chronic arthritis.

Methods

A systematic literature search was conducted for original articles and abstracts published before September 2022. Eligible studies reported quantitative measures of patients’ engagement with eHealth instruments used for remote monitoring in chronic arthritis. Engagement rates were pooled using random effects meta-analysis.

Results

Of 8246 references, 45 studies were included: 23 using smartphone applications, 13 evaluating wearable activity trackers, 7 using personal digital assistants, 6 including web-based platforms and 2 using short message service. Wearable-based studies mostly reported engagement as the proportion of days the tracker was worn (70% pooled across 6 studies). For other eHealth tools, engagement was mostly reported as completion rates for remote patient-reported outcomes (PROs). The pooled completion rate was 80%, although between-study heterogeneity was high (I² 93%) with significant differences between eHealth tools and frequency of PRO-collection. Engagement significantly decreased with longer study duration, but attrition varied across studies (0%–89%). Several predictors of higher engagement were reported. Data on the influence of PRO-reporting frequency were conflicting.

Conclusion

Generally high patient engagement was reported with eHealth tools for remote monitoring in chronic arthritis. However, we found considerable between-study heterogeneity and a relative lack of real-world data. Future studies should use standardised measures of engagement, preferably assessed in a daily practice setting.

Trial registration number

The protocol was registered on PROSPERO (CRD42021267936).

Keywords: arthritis, epidemiology, health services research, patient reported outcome measures

WHAT IS ALREADY KNOWN ON THIS TOPIC

eHealth tools, such as smartphone applications, wearable activity trackers and web-based platforms, are increasingly used in the management of chronic arthritis. Although these tools could provide unique opportunities to improve care, for instance through remote monitoring and by facilitating patient-initiated follow-up, concerns are often raised in relation to attrition and limited patient engagement with these tools.

WHAT THIS STUDY ADDS

We found generally high reported engagement rates with eHealth tools used to remotely monitor disease activity or impact in patients with chronic arthritis. However, engagement declined over time to a highly variable degree and data mostly came from strictly controlled research settings, possibly underestimating the problem of attrition.

HOW THIS STUDY MIGHT AFFECT RESEARCH, PRACTICE OR POLICY

Although remote monitoring of chronic arthritis using eHealth tools seems a feasible approach, future eHealth-related research should aim to optimally characterise feasibility in a real-world setting.

Introduction

Chronic arthritis is an umbrella term for several inflammatory and non-inflammatory musculoskeletal conditions that rank among the most prevalent chronic diseases worldwide and represent a considerable societal burden.1 People suffering from chronic arthritis are faced with pain, stiffness, fatigue and functional decline, negatively affecting their quality of life and social participation.2 These symptoms fluctuate over time and can even persist when the disease is clinically well-controlled, often giving rise to discordance between patients’ and physicians’ views on disease activity.3 Consequently, potentially relevant information about the disease’s impact between clinic visits is insufficiently captured in routine care.4 Moreover, following shifts to targeted treatment strategies, managing chronic inflammatory arthritis increasingly requires lifelong follow-up on a regular basis.5 However, the resulting increase in demand has not been met with a corresponding expansion of the rheumatology workforce, leading to increasing referral times and rising pressure on the conventional care model.6

Possible solutions to some of these challenges could be found in the form of eHealth, defined as the use of information and communication technologies to support healthcare.7 Recent years have brought a revolution of technological innovations, including the widespread availability of internet connectivity, smartphones and wearable activity trackers, all of which could provide healthcare practitioners and researchers with opportunities to improve patient care.8 One potential strategy is to use eHealth tools like these to monitor patients with chronic arthritis remotely. Remote monitoring can be implemented in a synchronous setting, where patients and care providers remain in real-time contact through digital communication tools like telephone or video calls. Alternatively, this strategy can be approached asynchronously, which implies that the eHealth tool collects information, such as patient-reported outcomes (PROs), that is only later accessed by the care provider.9 Particularly in an asynchronous setting, eHealth tools could provide researchers or care providers with a unique window into the day-to-day variability of disease activity and its impact on patients.10 Such information has also shown potential to facilitate patient-initiated follow-up, as opposed to prescheduled clinic visits,11 ultimately contributing to reduced healthcare utilisation.12 Finally, there is an ongoing evolution in technology that allows patients to self-sample capillary blood for biochemical markers like urate or C-reactive protein, and recent studies have shown promising feasibility for such devices.13–15

However, despite their potential benefits, the implementation of eHealth tools in routine rheumatology care is associated with several challenges, including concerns about vulnerable populations, legal and organisational barriers, and respondent fatigue.16 Among these, arguably the biggest challenge of eHealth studies is missing data due to attrition and limited patient engagement, potentially biasing study results and hampering larger-scale implementation.17 Consequently, it is crucial to comprehensively describe how engagement with eHealth tools is currently measured, how reliably patients use and continue to use these tools, and which population-based or study-specific factors are associated with eHealth engagement.

The objective of this systematic review was to summarise the available evidence on patients’ engagement and attrition with eHealth tools for remote monitoring of disease activity or impact in chronic arthritis. For these purposes, this review focused on asynchronous eHealth interventions, rather than on tools intended for remote consultations.

Methods

This systematic review was conducted in accordance with the Cochrane Handbook and reported following the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines.18

Eligibility criteria

Studies were eligible for inclusion if they were conducted in patients with chronic arthritis, defined as either rheumatoid arthritis (RA), spondyloarthritis (SpA), psoriatic arthritis, osteoarthritis (OA), gout or juvenile idiopathic arthritis (JIA). Records not reporting delineable outcome data for any of these populations were excluded. Participants of all ages were eligible. Moreover, studies were considered only if they included any eHealth instrument for asynchronous remote monitoring purposes, and additionally provided information on patients’ objectively measured engagement, adherence or compliance with the use of this eHealth instrument. Records were excluded if engagement was solely self-reported or if no information on data completeness was reported. As an eHealth tool, we considered any application of information and communication technology in the context of health or health-related fields.7

Study design

We included randomised controlled trials (RCTs), observational studies and case–control studies published in English and in peer-reviewed journals. Given the rapid evolution of this research field, we additionally included conference abstracts adhering to the eligibility criteria and updated the search a second time. To allow for maximal comparability, we did not consider purely qualitative studies.

Outcomes

The primary outcome was any quantitative assessment of patients’ engagement or adherence with the reported eHealth instrument. As secondary outcomes, we aimed to summarise the evidence on attrition, defined as a loss of participant engagement over time, and to describe demographic, disease-related or study-related factors associated with engagement or attrition.

Search strategy and study selection

We systematically searched the following databases from inception to 29 May 2021 (updated to 31 August 2022): Embase, PubMed, Cochrane Central, CINAHL, Web of Science, ClinicalTrials.gov and the International Clinical Trials Registry Platform. The search string was developed in collaboration with biomedical reference librarians of KU Leuven Libraries and was based on keywords and free-text entries combining the concepts of “chronic arthritis” AND “eHealth” (online supplemental material 1). In addition, we screened the reference lists of included reports as a backward citation search.


Duplicates were removed with EndNote V.20.1. First, two reviewers (CVL and MD) independently screened all records by title and abstract, using Rayyan QCRI (https://www.rayyan.ai/). The full texts of all remaining articles were then screened. A third reviewer (DDC) was consulted to resolve conflicts.

Data extraction

Two reviewers (CVL and MD) independently extracted data from included studies into a Microsoft Excel database. The following data were considered: general study characteristics (first author, publication year, study design and study duration); population characteristics (number of participants, age, sex and diagnosis); eHealth-related characteristics (type of eHealth tool, outcomes collected by the tool, requested frequency of outcome collection and incentives for use of the tool); and engagement or attrition-related characteristics (definition and quantitative outcome).

Data synthesis

Meta-analysis was performed for engagement outcomes available in ≥3 studies. As we expected high between-study heterogeneity, a random-effects model (restricted maximum likelihood method with Hartung-Knapp adjustment) was applied to estimate the pooled effect across studies with similar engagement outcomes. An inverse variance method was used for weighting each study in the meta-analysis. The proportion of variability in effect estimates due to between-study heterogeneity was summarised using τ² and I². Logit-transformed proportions were used for variance stabilisation. If ≥10 studies were available and relevant subgroups were sufficiently large, heterogeneity among studies with similar engagement outcomes was further explored by subgroup analyses for the type of eHealth tool, the outcome collection frequency, diagnosis and study design (RCT or observational/case–control study). In addition, the impact of study duration was explored with univariable meta-regression. Finally, sensitivity analyses were performed by excluding abstracts. Meta-analysis was conducted with R (V.2021.09.1), using the meta package.
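As a rough illustration of the pooling approach described above, the following sketch pools logit-transformed proportions with inverse-variance weights under a random-effects model. It is not the authors' analysis: for brevity it uses the DerSimonian-Laird estimator of τ² instead of restricted maximum likelihood, omits the Hartung-Knapp adjustment, and runs on made-up counts. The function name `pool_proportions` and the input format are assumptions made for this example.

```python
import math


def pool_proportions(studies):
    """Pool proportions given as (events, total) pairs on the logit scale.

    Uses inverse-variance weights and the DerSimonian-Laird tau^2 estimator
    (a simpler stand-in for REML; no Hartung-Knapp adjustment).
    Each study must satisfy 0 < events < total; proportions of exactly
    0% or 100% would need a continuity correction, not handled here.
    """
    # Logit-transform each proportion; approximate variance is 1/e + 1/(n - e).
    y = [math.log(e / (n - e)) for e, n in studies]
    v = [1 / e + 1 / (n - e) for e, n in studies]

    # Fixed-effect (inverse-variance) estimate, needed for Cochran's Q.
    w = [1 / vi for vi in v]
    y_fixed = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    q = sum(wi * (yi - y_fixed) ** 2 for wi, yi in zip(w, y))
    df = len(studies) - 1

    # DerSimonian-Laird between-study variance, truncated at zero.
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c)

    # I^2: share of total variability attributed to between-study heterogeneity.
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0

    # Random-effects weights add tau^2 to each within-study variance.
    w_re = [1 / (vi + tau2) for vi in v]
    y_re = sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))

    def expit(x):
        # Back-transform from the logit scale to a proportion.
        return 1 / (1 + math.exp(-x))

    return {
        "pooled": expit(y_re),
        "ci_low": expit(y_re - 1.96 * se),
        "ci_high": expit(y_re + 1.96 * se),
        "tau2": tau2,
        "i2": i2,
    }


# Hypothetical data: (completed entries, requested entries) per study.
example = [(860, 1000), (400, 500), (1300, 2000), (95, 100)]
result = pool_proportions(example)
print(result)
```

Pooling on the logit scale keeps the back-transformed estimate and its confidence limits between 0% and 100%, which matters when many studies report completion rates close to 100%.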

Risk of bias assessment

Risk of bias was assessed for all included studies for which a full text was available, using the Newcastle-Ottawa scale for cohort studies and the PEDro scale for RCTs.19 20

Results

Search results

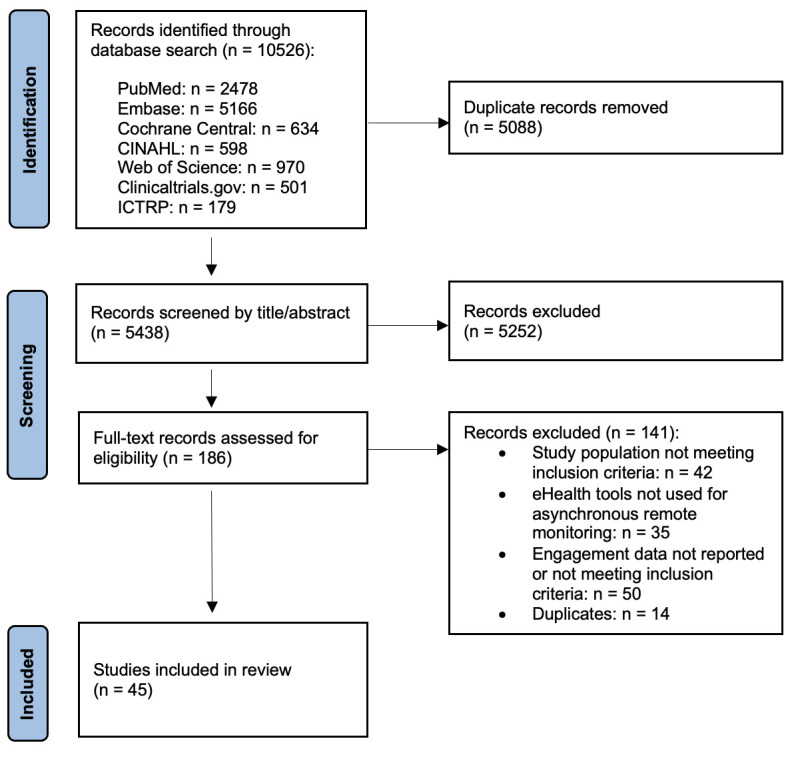

The systematic search resulted in 10 526 potentially relevant records (figure 1). After deduplication, 5438 articles were screened by title and abstract, 186 of which were eligible for full-text screening. Among these, 45 articles were eventually included in the final review, with publication dates ranging from 2008 to 2022. No additional records meeting the inclusion criteria were identified through backward citation searching.

Figure 1.

PRISMA flow chart of systematic review. ICTRP, International Clinical Trials Registry Platform.

Study characteristics and risk of bias

A total of 14 341 patients were included across the 45 eligible studies (table 1). Of these 45 studies, 32 were observational studies, 8 were RCTs or post hoc analyses thereof, 2 were case–control studies and 3 were conference abstracts. Sixteen (36%) studies were conducted in an RA population, 13 (29%) in an OA population, 9 (20%) in JIA, 2 (4%) in a population with gout and 3 (7%) in patients with ankylosing spondylitis (AS) or axial SpA, while 4 (9%) studies included mixed populations. The reported mean age of included participants ranged from <18 years in the JIA studies to over 60 years in several OA-focused studies. Most studies included a predominantly female patient population. Only six articles included mostly men with either gout,21 22 JIA,23 SpA24 25 or OA.26

Table 1.

Characteristics and engagement data for included eHealth studies

| First author (year) | Diagnosis | N | Age, years (SD) | Female, % | Duration, days | Recruitment | eHealth tool | Monitoring frequency | Incentives | Engagement (definition) | Engagement (%) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Austin (2020)61 | RA | 20 | 32–84 | 75 | 85 | Physical/clinic | Smartphone app | Daily; weekly; monthly | Reminders | Completion rate | 86; 85; 58 |
| Bellamy (2010)62 | OA | 12 | 63 (9) | 75 | 12 | Physical/clinic | Smartphone app | Once every 3 days | Reminders | Completion rate | 100 |
| Beukenhorst (2020)30 | OA | 26 | 64 | 50 | 90 | Advertisements -> event | WAT (C) | Continuous + PROs daily; weekly; monthly | Reminders | Proportion of days worn (WAT) + completion rate (PRO) | 73 + 66; 69; 89 |
| Bingham III (2019)39 | RA | 1305 | 53 (12) | 77 | 84 | Physical/clinic | PDA e-diary | Daily | Reminders | Completion rate | 94 |
| | RA | 684 | 52 (12) | 82 | 84 | Physical/clinic | PDA e-diary | Daily | Reminders | Completion rate | 93 |
| Broderick (2013)44 | OA | 98 | 57 (10) | 60 | 28 | Online survey -> phone call | Web-based | Daily; weekly | Financial; phone calls | Completion rate | 96; 100 |
| Bromberg (2014)23 | JIA | 59 | 13 (3) | 45 | 28 | Physical/clinic | Smartphone app | Thrice daily | Reminders; financial; phone calls | Completion rate | 66 |
| Christodoulou (2014)29 | OA | 100 | 57 (10) | 60 | 28 | Online survey -> phone call | Web-based | Daily | Reminders; financial; phone calls | Completion rate | 93 |
| Colls (2021)40 | RA | 78 | 55 (11) | 81 | 210 | Physical/clinic | Smartphone app | Daily | Reminders | Completion rate | 79 |
| Connelly (2010)63 | JIA | 9 | 12 (3) | 89 | 14 | Physical/clinic | PDA e-diary | Thrice daily | Reminders | Completion rate | 88 |
| Connelly (2012)64 | JIA | 43 | 13 (3) | 86 | 28 | Physical/clinic | Smartphone app | Thrice daily | Reminders; phone calls | Completion rate | 69 |
| Connelly (2017)65 | JIA | 66 | 13 (3) | 73 | 30 | Physical/clinic | Smartphone app | Thrice daily | Reminders; financial | Completion rate | 81 |
| Costantino (2022)66 | AxSpA | 99 | NR | 64 | 356 | Online (SPONDY+ platform) | Web-based | Weekly | NR | Completion rate | 54 |
| Crouthamel (2018)27 | RA | 388 | 48 (12) | 81 | 84 | Social media | Smartphone app | Weekly; monthly | Reminders | Proportion of active participants (per week) | 20-100* |
| Druce (2017)31 | RA (19%); SpA (9%); Arthritis (40%); Gout (3%); FMS (24%); Headache (7%); Neuropathic (13%); Other (23%) | 6370 | 49 (13) | 81 | 180 | Advertisements; directing to app | Smartphone app | Daily | Reminders | Categorical engagement: High (15%), Moderate (22%), Low (38%), Tourists (25%). For each group: proportion of days with complete data | High: 70; Moderate: 28; Low: 3; Tourists: 1 |
| Elmagboul (2020)21 | Gout | 33/44† | 49 (15) | 15 | 183 | Physical/clinic | WAT (C) + smartphone app | Continuous + ePROs weekly | Reminders | Proportion of days worn + completion rate | 61; 81 |
| Fu (2019)67 | OA | 252 | 62 (8) | 79 | 90 | Online survey -> phone call | Web-based | Once every 10 days + in case of flare | Reminders | Completion rate | 81 |
| Gilbert (2021)68 | OA | 2127 | 65 (9) | 56 | 7 | Physical/clinic | WAT (R) | Continuous | NR | Proportion wearing WAT ≥4/7 days | 91 |
| Harbottle (2018)35 | JIA | 12 | 14 | 50 | 90 | Physical/clinic | WAT (R) | Continuous | NR | Proportion of days worn | 17 |
| Heale (2018)36 | JIA | 28 | 15 | 74 | 28 | Physical/clinic | WAT (C) | Continuous | Reminders | Proportion of days worn | 72 |
| Heiberg (2007)69 | RA | 38 | 58 (13) | 66 | 84 | Mail -> event | PDA e-diary | Daily; weekly | Phone calls | Completion rate (overall daily/weekly) | 85 |
| Jacquemin (2018)37 | RA & axSpA | 177 | 46 (12) | 64 | 90 | Physical/clinic | WAT (C) | Continuous | NR | Proportion of days worn | 88 |
| Kempin (2022)25 | AxSpA | 69 | 42 (11) | 42 | 168 | Physical/clinic | Smartphone app | Once every 2 weeks | Reminders (1/2); phone calls | Proportion providing requested data ≥80% of moments by week 12 | 29 (but 81% exported at least once) |
| Lalloo (2021)70 | JIA | 60 | 15 (2) | 78 | 56 | Physical/clinic | Smartphone app | Daily | Financial; phone calls | Completion rate | 52 |
| Lazaridou (2019)71 | OA | 121 | 66 (9) | 59 | 7 | Physical/clinic | PDA e-diary | Daily | NR | Completion rate | 98 |
| Lee (2013)42 | RA | 85 | 18+ (categorical) | 77 | 60 | Physical/clinic | SMS | Monthly | Reminders; financial | Completion rate | 75 |
| Lee (2020)43 | JIA | 14 | 13 (2) | 64 | 56 | Physical/clinic | Smartphone app | Twice daily; daily; weekly + in case of pain | Phone calls | Completion rate | 51; 63; 38 |
| Martin (2021)72 | RA | 104 | | | Ongoing | SMS | SMS | Monthly | Reminders | Completion rate | 69 |
| McBeth (2022)33 | RA | 254 | 57 (49-64) | 81 | 30 | Email -> phone call | Smartphone app | Thrice daily; daily; once every 10 days | Reminders | Completion rate (overall thrice daily & daily) | 92 |
| Murray (2022)73 | RA | 26 | 18+ (categorical) | 77 | 56 | Mail‡ -> phone call | Smartphone app | Daily (alternating cycle) | Reminders | Completion rate | 66 |
| Nowell (2020)32 | RA | 278 | 50 (11) | 92 | 84 | Email to ArthritisPower members | WAT (C) + smartphone app | Continuous + PROs daily; weekly | Reminders; financial; phone calls/emails | Proportion providing requested data >70% of days | 82 + 57; 87 |
| Nowell (2021)28 | OA (65%); RA (49%); PsA (26%); AS (16%); FMS (40%); OP (16%); SLE (9%) | 253 | 56 (9) | 89 | 90 | Email to ArthritisPower members | Smartphone app | Monthly | Reminders | Proportion completing all ePROs | 55 |
| Östlind (2021)38 | OA | 74 | 57 (5) | 87 | 84 | Physical/clinic | WAT (C) | Continuous | Emails | Proportion of days worn | 88 |
| Pers (2021)74 | RA | 45 | 18-75 | 73 | 183 | Physical/clinic | Smartphone app | Weekly | Reminders; phone calls | Completion rate (final 16 weeks) | 67 |
| Pouls (2021)22 | Gout | 29 | 57 (13) | 3 | 90 | Physical/clinic | Smartphone app | Daily | Reminders | Completion rate | 96 |
| Reade (2017)57 | RA | 20 | | | 60 | Physical/clinic | Smartphone app | Daily | Reminders | Completion rate | 68 |
| Renskers (2020)34 | RA & SpA | 47 | 57 (11) | 57 | 14-597 (mean 350) | Physical/clinic | Web-based | Free to choose (at baseline) | Reminders | Completion rate | 68 |
| Rouzaud-Laborde (2021)45 | OA | 28 | 73 (6) | 70 | 14 | Physical/clinic | WAT-based ePRO-app (C) | Thrice daily; daily | Reminders | Completion rate (adjusted for wear time - technical issues) | 81; 93 |
| Seppen (2020)41 | RA | 42 | 54 (13) | 86 | 28 | Physical/clinic | Smartphone app | Weekly | NR | Completion rate | 82 |
| | RA | 27 | 52 (11) | 78 | 28 | Physical/clinic | Smartphone app | Weekly | NR | Completion rate | 70 |
| Seppen (2022)11 | RA | 50 | 58 (13) | 56 | 356 | Physical/clinic | Smartphone app | Weekly | Reminders (only 1 month) | Completion rate | 59 |
| Skrepnik (2017)46 | OA | 211 | 63 (9) | 50 | 90 (+ 90 day follow-up) | Physical/clinic | WAT (C) ± smartphone app (1:1) | Continuous | Reminders (app only) | Proportion providing data >80% of days (first 90 days) | 91 |
| Stinson (2008)58 | JIA | 13 | 13 (3) | 85 | 14 | Physical/clinic | PDA e-diary | Thrice daily | Reminders; financial; phone calls | Completion rate | 72 |
| Tyrrell (2016)24 | AS | 223 | 50 (14) | 39 | 84 | Email/social media | Web-based | Weekly | Reminders | Completion rate | 67 |
| Wilson (2013)75 | OA | 144 | 66 (10) | 57 | 22 | Physical/clinic | PDA e-diary | Daily | NR | Completion rate | 92 |
| Yu (2022)26 | OA | 65 | 61 (6) | 46 | 2x7 | Physical/clinic | WAT (C) | Continuous | NR | Proportion wearing WAT ≥6/7 days | 99 |
| Zaslavsky (2019)76 | OA | 24 | 71 (4) | 70 | 133 | Physical/clinic | WAT (C) | Continuous | Reminders; financial; phone calls | Proportion of hours worn per day | 88 |

*Proportion of active participants was reported separately for each week, ranging from 100% in week 1 to approximately 20% at week 12.

†ePROs were evaluated in 44 participants, WAT in a subgroup of 33 participants from the same study.

‡Recruitment letters were mailed to patients who participated in the preceding trial with the same smartphone app.40

AS, ankylosing spondylitis; FMS, fibromyalgia syndrome; JIA, juvenile idiopathic arthritis; OA, osteoarthritis; OP, osteoporosis; PDA, personal digital assistant; PROs, patient-reported outcomes; PsA, psoriatic arthritis; RA, rheumatoid arthritis; SLE, systemic lupus erythematosus; SMS, short message service; SpA, spondyloarthritis; WAT, wearable activity tracker (either consumer-grade C or research-grade R).

Study durations varied considerably across the included studies, ranging from 7 days up to 1 year. Moreover, a large degree of heterogeneity was seen in the studies’ approach to patient recruitment. Although most studies recruited participants during a physical clinic visit, 13 studies (29%) approached eligible participants through fully digital channels such as email, social media, short message service (SMS) messages or online surveys.

Finally, risk of bias was assessed for all 42 studies with full-text availability (online supplemental material 2). Overall, included studies had a moderate risk of bias when considering the outcome of participant engagement. The main source of bias was selection bias, with most studies including only participants who had both access to smartphones or computers and sufficient experience using them. Furthermore, some studies recruited participants based on a self-reported physician’s diagnosis of arthritis,27–33 particularly when a fully digital recruitment approach was taken, and most studies lacked control groups.

Characteristics of reported eHealth tools

In all, 23 studies (51%) evaluated the use of a smartphone application, while 13 studies (29%) reported on the use of wearable activity trackers, 7 (16%) provided participants with a personal digital assistant (PDA) e-diary, 6 (13%) used a web-based platform and 2 (4%) were based on an SMS messaging system (table 1). Generally, in studies evaluating activity trackers, participant data such as step counts and physical activity were collected continuously while wearing the tracker. By contrast, other eHealth tools primarily collected PROs, usually in the form of questionnaires sent to participants via the system. However, these eHealth systems differed significantly in the specific type of outcome measures collected, in the frequency of requested PRO-reporting, and in the incentives provided to participants to adhere to this reporting frequency. For instance, 7 studies (16%) asked participants to complete PROs three times each day, whereas once-a-day reporting was requested in 19 studies (42%), weekly reporting in 14 (31%), and monthly reporting in 6 (13%). Some systems allowed data entry at any time, for instance in case of a flare, while one study asked participants to choose their preferred reporting frequency at baseline.34 Finally, incentives to participants for providing complete data ranged from financial compensation and regular compliance checks in some studies to no incentives whatsoever in others.

Participant engagement with eHealth tools

Engagement was defined in several ways across the included studies (table 1). Some studies defined engagement categorically or based on the proportion of patients providing data above a certain threshold of completeness.

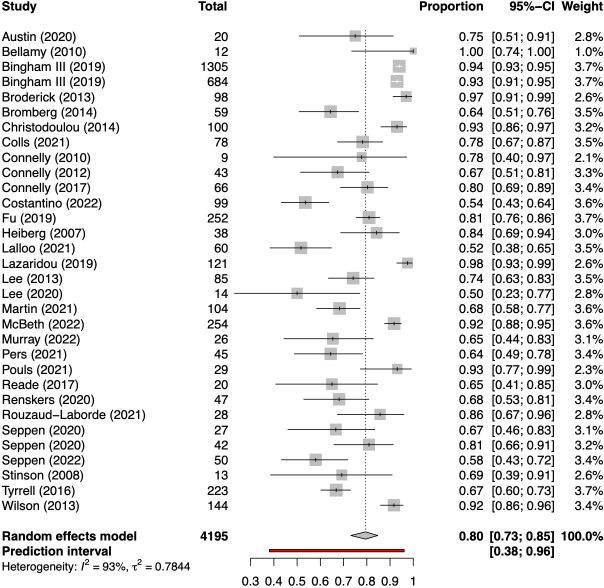

However, where applicable, most articles reported a completion rate for the collected PRO data, defined as the proportion of all requested data entries in the study that were effectively completed by participants. Meta-analysis of the 34 studies that reported completion rates (figure 2) resulted in a pooled global completion rate of 80% (95% CI 73% to 85%) with high between-study heterogeneity (τ² 0.78; I² 93%).

Figure 2.

Forest plot of pooled completion rates for patient-reported outcomes collected with eHealth tools.

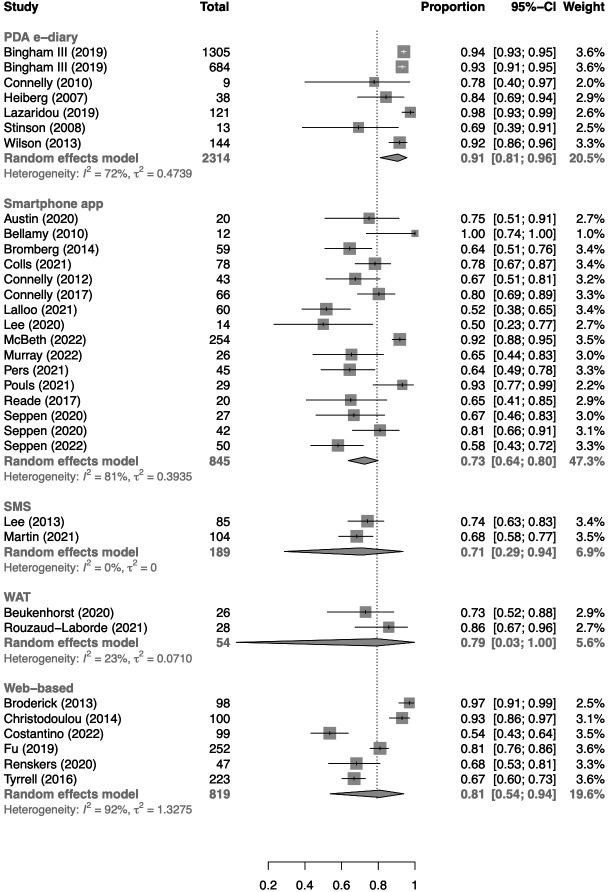

Subgroup analysis (figure 3) showed significant differences between eHealth tools (p for interaction<0.01), with a pooled completion rate of 73% (95% CI 64% to 80%) in studies using smartphone applications, 91% (95% CI 81% to 96%) in studies using PDA e-diaries, 81% (95% CI 54% to 94%) in studies evaluating a web-based platform and 71% (95% CI 29% to 94%) in studies using an SMS messaging system. In addition, two studies involved PRO registration via a smartwatch app and reported a pooled completion rate of 79%.

Figure 3.

Forest plot of pooled completion rates according to eHealth tool. PDA, personal digital assistant; SMS, short message service; WAT, wearable activity tracker.

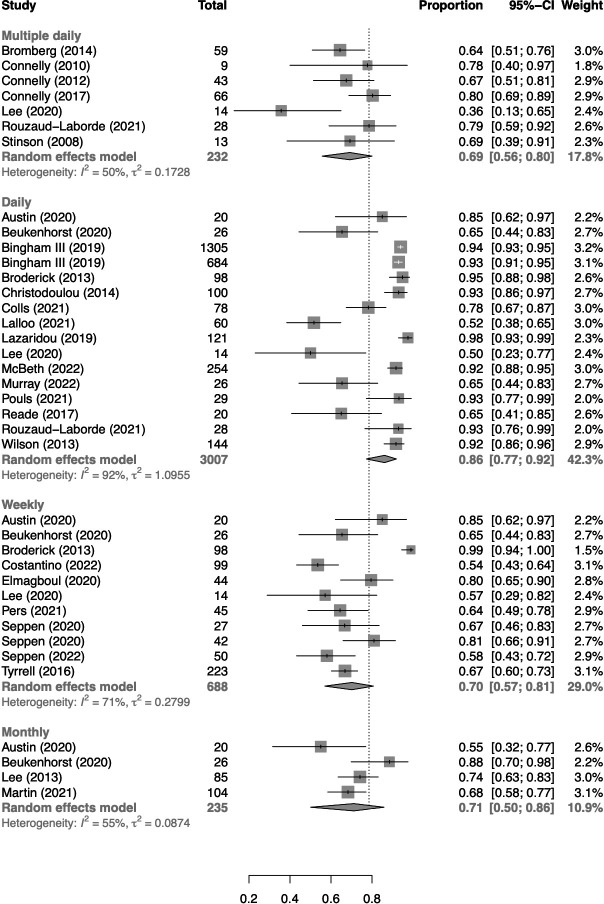

PRO completion rates also differed according to the requested frequency of data collection (p for interaction=0.02), with a pooled completion rate of 86% (95% CI 77% to 92%) for daily, 70% (95% CI 57% to 81%) for weekly and 71% (95% CI 50% to 86%) for monthly intervals (figure 4). In studies collecting data more than once per day, a pooled completion rate of 69% (95% CI 56% to 80%) was reported. Additional subgroup meta-analyses (online supplemental material 3) showed no apparent differences in PRO completion rates according to diagnosis (including only studies in an RA population) and study design (comparing RCTs and observational studies).

Figure 4.

Forest plot of pooled completion rates according to patient-reported outcome collection frequency.

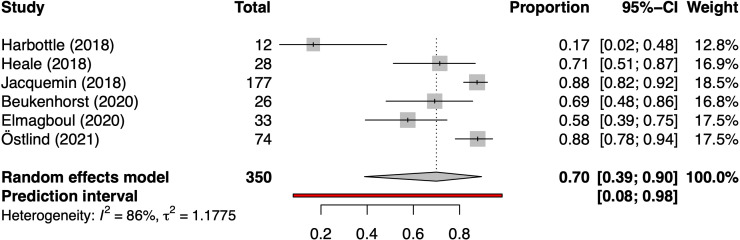

Finally, studies using a wearable activity tracker mostly defined engagement as the proportion of days the tracker was actively worn. Across the six studies for which this was reported,21 30 35–38 the pooled proportion of days worn was 70% (95% CI 39% to 90%) with high between-study heterogeneity (τ² 1.18; I² 86%) (figure 5).

Figure 5.

Forest plot of pooled proportion of study days a wearable activity tracker was actively worn.

In sensitivity analyses excluding abstracts, meta-analysis resulted in a pooled global PRO-completion rate of 80% (unchanged from the analysis of all records) and a pooled proportional tracker wear time of 77% (compared with 70% when considering all records) (online supplemental material 4).

Attrition

In total, 20 studies reported on attrition, defined as a loss of participant engagement over time (table 2). Among these, 14 studies (70%) defined attrition as the difference between the start and end of the study in PRO-completion rate or in the amount of time the wearable tracker was worn. The remaining six articles reported on attrition either as the proportion of participants who were no longer active with the eHealth system by the end of the study, or as the proportion who did not meet a minimal threshold of data completeness.
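To make the two most common definitions concrete, the sketch below computes a completion rate (completed entries divided by requested entries) and attrition as the absolute drop in completion rate between the first and the final period. The counts are hypothetical, and the helper names `completion_rate` and `attrition` are assumptions for illustration. Individual studies may have calculated attrition differently, for example as a relative change.

```python
def completion_rate(completed, requested):
    """Proportion of requested data entries that were actually completed."""
    if requested <= 0:
        raise ValueError("requested must be positive")
    return completed / requested


def attrition(periods):
    """Absolute drop in completion rate from the first to the last period.

    `periods` is a chronological list of (completed, requested) pairs.
    A negative result means engagement increased over time.
    """
    first = completion_rate(*periods[0])
    last = completion_rate(*periods[-1])
    return first - last


# Hypothetical weekly counts: 10 patients asked for one daily PRO over 4 weeks.
weekly = [(63, 70), (56, 70), (49, 70), (42, 70)]

overall = completion_rate(
    sum(done for done, _ in weekly),
    sum(asked for _, asked in weekly),
)
print(f"overall completion rate: {overall:.0%}")
print(f"attrition, first to final week: {attrition(weekly):.0%}")
```

The same overall completion rate can hide very different trajectories, which is why a summary rate and a measure of change over time are best read together.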

Table 2.

Overview of included studies reporting loss of eHealth engagement over time (attrition)

| First author (year) | Duration (days) | eHealth tool | Monitoring frequency | Attrition (definition) | Attrition (%) |
| --- | --- | --- | --- | --- | --- |
| Beukenhorst (2020)30 | 90 | WAT | Continuous + PROs daily; weekly; monthly | Active participants (WAT) + completion rate (overall PROs): baseline to end | 54 + 55 |
| Bingham III (2019)39 | 84 | PDA e-diary | Daily | Completion rate: week 1 to week 12 | 0 |
| Colls (2021)40 | 210 | Smartphone app | Daily | Completion rate: first 3 months to final 3 months | 26 |
| Connelly (2012)64 | 28 | Smartphone app | Thrice daily | Completion rate: first 2 weeks to second 2 weeks | 24 |
| Crouthamel (2018)27 | 84 | Smartphone app | Weekly; monthly | Active participants: baseline to end | 89 |
| Elmagboul (2020)21 | 183 | WAT + smartphone app | Continuous + ePROs weekly | Proportion not meeting minimal WAT wear time | 18 |
| Jacquemin (2018)37 | 90 | WAT | Continuous | Active participants: baseline to end | 22 |
| Kempin (2022)25 | 168 | Smartphone app | Once every 2 weeks | Proportion providing data ≥80% of moments: week 12 to week 24 | 10 |
| Lalloo (2021)70 | 56 | Smartphone app | Daily | Completion rate: first day to final day | 48 |
| Lee (2013)42 | 60 | SMS | Monthly | Completion rate: first month to final month | 10 |
| Nowell (2021)28 | 90 | Smartphone app | Monthly | Active participants: baseline to final month | 45 |
| Murray (2022)73 | 56 | Smartphone app | Daily (alternating cycle) | Completion rate: first week to final week | 16 |
| Östlind (2021)38 | 84 | WAT | Continuous | Proportion of days worn: first week to final week | 20 |
| Pouls (2021)22 | 90 | Smartphone app | Daily | Active participants: baseline to end | 0 |
| Renskers (2020)34 | 14–597 (mean 350) | Web-based | Free to choose (at baseline) | Active participants: baseline to end | 49 |
| Seppen (2020)41 | 28 | Smartphone app | Weekly | Completion rate: first week to final week | 39 |
| | 28 | Smartphone app | Weekly | Completion rate: first week to final week | 63 |
| Seppen (2022)11 | 356 | Smartphone app | Weekly | Completion rate: first 3 months to final 3 months | 4 |
| Skrepnik (2017)46 | 90 (+90 day follow-up) | WAT ± smartphone app (1:1) | Continuous | Proportion providing data >80% of days: day 90 to day 180 (app-group) | 64 |
| Stinson (2008)58 | 14 | PDA e-diary | Thrice daily | Completion rate: week 1 to week 2 | 10 |
| Zaslavsky (2019)76 | 133 | WAT | Continuous | Proportion of hours worn per day: first week to final week | −1 (increase) |

PDA, personal digital assistant; PROs, patient-reported outcomes; SMS, short message service; WAT, wearable activity tracker.

Generally, attrition rates were highly variable, ranging from no change in completion rate over time in a PDA e-diary study nested within a large phase III drug trial39 to as much as 89% drop-out in a smartphone feasibility study that recruited participants exclusively through social media.27 Overall, longer study durations were associated with lower completion rates (p=0.029 in univariable meta-regression) (online supplemental material 5).
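For readers unfamiliar with univariable meta-regression, a minimal sketch follows. It fits a weighted least-squares line of logit-transformed completion rate against study duration, with weights equal to the inverse of the within-study variance plus τ². All inputs are invented, the value of τ² is an arbitrary placeholder rather than an estimate, and the p value uses a normal approximation instead of the Hartung-Knapp adjustment described in the Methods. The function name `meta_regression` is an assumption of this example.

```python
import math


def meta_regression(y, v, x, tau2=0.0):
    """Weighted least-squares fit of y = intercept + slope * x.

    y: effect sizes (here: logit completion rates)
    v: within-study variances of y
    x: study-level covariate (here: study duration in days)
    tau2: between-study variance, added to every within-study variance
    """
    w = [1 / (vi + tau2) for vi in v]
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    se_slope = math.sqrt(1 / sxx)  # model-based SE, treating the weights as known
    return intercept, slope, se_slope


# Hypothetical studies: (duration in days, completed entries, requested entries).
studies = [(14, 450, 500), (28, 840, 1000), (84, 1600, 2000),
           (180, 700, 1000), (356, 600, 1000)]
durations = [d for d, _, _ in studies]
logits = [math.log(c / (r - c)) for _, c, r in studies]
variances = [1 / c + 1 / (r - c) for _, c, r in studies]

# tau2 would normally be estimated from the data; 0.1 is an arbitrary placeholder.
intercept, slope, se = meta_regression(logits, variances, durations, tau2=0.1)

# Two-sided p value from a normal approximation (not Hartung-Knapp).
z = slope / se
p_value = math.erfc(abs(z) / math.sqrt(2))
print(f"slope per day (logit scale): {slope:.4f}, SE {se:.4f}, p = {p_value:.3f}")
```

A negative slope corresponds to lower completion rates in longer studies, which is the direction of the association reported above.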

Factors facilitating or hindering engagement

Facilitators or barriers to eHealth engagement were reported in 17 (38%) of the included studies (table 3), but only 13 of these studies provided quantitative assessments.

Table 3.

Factors quantitatively associated with eHealth engagement in included studies

| Facilitates engagement | Hinders engagement | No apparent influence | Reference | |

| Age: older adults | ♦ | 25 31 32 40 | ||

| Age: children versus adolescents | ♦ | 35 | ||

| Sex: female | ♦ | 31 | ||

| Disease activity: higher | ♦ | ♦ | Facilitates25 Hinders40 |

|

| Treatment: bDMARDs | ♦ | ♦ | Facilitates32 Hinders25 |

|

| Employment: full time | ♦ | 42 | ||

| Employment: non-employed | ♦ | 32 | ||

| Outcomes: less frequent reporting | ♦ | 30 32 43 44 | ||

| Outcomes: morning reporting | ♦ | 58 | ||

| Feedback | ♦ | 27 46 | ||

| Reminders | ♦ | 11 25 | ||

| Habitual use of eHealth tools | ♦ | 45 |

bDMARDs, biological disease-modifying antirheumatic drugs.

First, evidence for the influence of demographic or disease-related aspects on engagement was largely inconsistent. Overall, higher levels of engagement were reported for older adults and for children rather than adolescents with JIA. However, while one study found women with chronic arthritis to be more engaged than men,31 no such difference was apparent in others.28 40 Similarly, higher completion rates were reported with lower disease activity in an established RA population,40 whereas the inverse was found in a recent study on SpA,25 and qualitative data suggested that a lack of symptoms might hinder engagement.41 In addition, one study found higher completion rates for patients treated with biological disease-modifying drugs,32 while this finding was contradicted in another report.25 Moreover, engagement was not affected by the diagnosis itself in any of the included studies with mixed populations. Conflicting results were also reported for the influence of employment status, with one study reporting more dropouts in non-employed participants,32 whereas another found lower completion rates in fully employed patients.42

In addition, several studies indicated that the approach to outcome collection might influence participants’ engagement. For instance, less time-intensive PRO-reporting schedules, such as weekly rather than daily reporting, were associated with higher completion rates in 5 out of 6 studies for which this information was available.30 32 43–45 Moreover, higher engagement was found when feedback was provided to patients about the data they reported, either by discussing them in clinic or through data visualisation in the eHealth tool itself. Specifically, the PARADE study reported a 4% difference in attrition in favour of participants who had daily access to their data compared with those who did not.27 Similarly, using a smartphone app to provide visual feedback on step counts resulted in increased engagement with a wearable activity tracker in another report.46 Interestingly, however, reminders to prompt data entry did not have a clear effect on participant engagement in either of the two studies that investigated this.11 25

Discussion

In this systematic review, we summarised the evidence on patients’ engagement with eHealth systems used to remotely monitor disease activity or impact in chronic arthritis. Relatively high levels of eHealth engagement were reported across 45 included studies, which primarily consisted of observational cohorts and RCTs. The pooled global completion rate for remotely monitored PROs was 80%, while wearable activity trackers were worn for a pooled global proportion of 70% of study days, rising to 77% in a sensitivity analysis excluding abstracts.
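To make the idea of a pooled rate and its heterogeneity more concrete, the following is a minimal sketch of a random-effects meta-analysis of proportions. It assumes a logit transformation and the DerSimonian-Laird estimator of between-study variance. This section does not specify which transformation or estimator was used in the review, so this is an illustration of the general technique and not a reproduction of the authors' analysis. The function name `pool_proportions` and the example data are hypothetical.

```python
import math
from typing import List, Tuple


def pool_proportions(events: List[int], totals: List[int]) -> Tuple[float, float]:
    """Return (pooled proportion, I^2 in %) from a DerSimonian-Laird model on logits.

    Assumes 0 < events < totals for every study, so that each logit is defined.
    """
    # Logit transform with its approximate variance 1/x + 1/(n - x).
    y = [math.log(x / (n - x)) for x, n in zip(events, totals)]
    v = [1 / x + 1 / (n - x) for x, n in zip(events, totals)]

    # Fixed-effect (inverse-variance) weights and Cochran's Q.
    w = [1 / vi for vi in v]
    y_fixed = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    q = sum(wi * (yi - y_fixed) ** 2 for wi, yi in zip(w, y))
    df = len(y) - 1

    # Between-study variance (tau^2), truncated at zero.
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c)

    # Random-effects weights and pooled logit, back-transformed to a proportion.
    w_re = [1 / (vi + tau2) for vi in v]
    y_re = sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re)
    pooled = 1 / (1 + math.exp(-y_re))

    # I^2: share of total variability attributed to between-study heterogeneity.
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return pooled, i2


# Hypothetical studies: completed PRO entries out of expected entries.
pooled, i2 = pool_proportions(events=[80, 150, 45, 270], totals=[100, 200, 90, 300])
print(f"pooled completion rate: {pooled:.0%}, I^2: {i2:.0f}%")
```

With heterogeneous inputs such as these, the random-effects weights become nearly equal across studies, which is why a high I2 calls for caution when interpreting a single pooled figure.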

In general, these results are in line with engagement data from remote monitoring studies in other chronic diseases, including diabetes, obesity and mental health conditions.47 However, between-study heterogeneity for engagement outcomes was high in both previous research and our review. This considerable heterogeneity represents a first major challenge in the current landscape of eHealth research. Among the included studies, much variability was apparent in study population and in study duration, which ranged from only a few days to 1 year, as well as in the incentives offered to participants for providing complete data, and even in how engagement was defined and measured. Furthermore, some studies used an entirely digital recruitment approach without physical contact between patients and investigators, for instance by including participants via social media. All these study-related aspects might affect both engagement with an eHealth monitoring approach and the generalisability of the results. Comparisons between studies are also inevitably limited because of these differences. Heterogeneity in eHealth studies likewise emerged as a major conclusion of several recent systematic reviews focusing on synchronous telemedicine, mobile health interventions and wearable activity trackers in rheumatology.9 48 49 Moreover, this challenge is clearly not limited to the rheumatology field.50 Therefore, future research on the use of eHealth systems should not only assess engagement outcomes, but also report them in a standardised way. Based on our review results, the most suitable measures for this purpose seem to be completion rates and proportional wear time for PRO-based and wearable-based interventions, respectively.
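The two measures suggested above can be sketched as simple ratios. The example below assumes that a completion rate is the number of submitted PRO questionnaires divided by the number scheduled, and that proportional wear time is the share of study days on which the tracker was worn. What counts as a "worn" day (for instance a minimum number of hours) would need to be defined per study and is left as a boolean flag here. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ParticipantLog:
    expected_pros: int      # PRO questionnaires scheduled during follow-up
    completed_pros: int     # PRO questionnaires actually submitted
    days_worn: List[bool]   # one flag per study day: tracker worn or not


def completion_rate(log: ParticipantLog) -> float:
    """Share of scheduled PRO questionnaires that were completed (0 to 1)."""
    if log.expected_pros <= 0:
        raise ValueError("no PRO questionnaires were scheduled")
    return log.completed_pros / log.expected_pros


def proportional_wear_time(log: ParticipantLog) -> float:
    """Share of study days on which the tracker was worn (0 to 1)."""
    if not log.days_worn:
        raise ValueError("no study days recorded")
    return sum(log.days_worn) / len(log.days_worn)


# Hypothetical participant: 12 weekly PROs scheduled, 9 completed;
# tracker worn on 21 of 28 study days.
log = ParticipantLog(expected_pros=12, completed_pros=9,
                     days_worn=[True] * 21 + [False] * 7)
print(completion_rate(log))         # 0.75
print(proportional_wear_time(log))  # 0.75
```

Reporting both the numerator and the denominator alongside the ratio would make it easier to pool such results across studies.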

A second challenge faced by the eHealth research field in rheumatology is the limited availability of data from daily clinical practice, as is clear from the studies included in our review. This seems particularly important when considering the issue of disengagement over time reported both in many eHealth studies and for mobile applications outside of the research setting. For instance, an estimated 71% of app users across all industries stop using the app within 3 months,17 and a study of adherence to activity trackers in students showed 75% disengagement within 4 weeks.51 In our review, only 20 of the 45 included studies actually reported data on attrition, and in most cases these data were collected within a strictly controlled research environment. This is particularly relevant since generally low levels of attrition were primarily reported in studies that were either of shorter duration or included strong incentives to support engagement, such as financial compensation. Similarly, completion rates for the PDA e-diaries did not show any decline over time in the phase III baricitinib trials RA-BEAM and RA-BUILD, again in a setting of close follow-up and targeted participant training.39 By contrast, much higher attrition rates were reported in several ‘fully digital’ studies, with 89% of participants disengaging in less than 3 months in the PARADE study,27 45% disengaging within 3 months in an ancillary study of the ArthritisPower registry28 and 41% not completing the 2-week lead-in period in another ArthritisPower study.32 These striking differences in attrition between closely or less closely controlled research settings have also been reported for other chronic diseases, with higher engagement rates in studies that compensated patients for their participation.52 Consequently, there is a clear need for more eHealth engagement data from routine care settings, where higher attrition rates are likely. Pending such data, the current evidence on eHealth in rheumatology might underestimate the problem of attrition.

However, we should note that some potentially relevant studies from daily practice settings were excluded from our review because they did not report engagement based on data completeness. For instance, in a 12-month, multicentric study from France, patients with RA were randomised to either usual care or additional access to the web-based Sanoia platform, developed to support self-monitoring.53 In this study, no direct incentives to access the platform were provided to optimally mirror daily care. Although satisfaction with the platform was high, 26% of patients never accessed Sanoia, and the number of accesses clearly declined over time.

The phenomenon of attrition has prompted researchers to explore the optimal target population and possible barriers and facilitators for engaging with eHealth systems. Our review identified several demographic and disease-related aspects that might be associated with eHealth engagement. Among these, the finding that older age seems associated with higher engagement is somewhat surprising, given the known age-related barriers to technology use.54 However, since much of the available evidence stems from research populations, it is likely that the most vulnerable elderly patients were either excluded from these studies or did not express an interest in participating. Moreover, older age was associated with lower eHealth engagement in JIA studies, in line with the commonly reported lower treatment adherence in adolescents.55 Interestingly though, the diagnosis did not appear to affect eHealth engagement, either in individual studies or in subgroup meta-analysis, despite the important differences in how different types of chronic arthritis are managed.

Another intriguing finding of our review is that engagement rates do appear to be affected by the approach to outcome collection. For instance, one included study investigated the effect of different pain reporting frequencies on completion rates in JIA patients, using a randomised N-of-1 cross-over design.43 In this study, engagement was higher in less time-intensive schedules, such as weekly rather than daily reporting, even though participants tended to prefer the once-a-day schedule. Similar differences were apparent in an additional four studies,30 32 44 45 while another intervention allowed participants to choose their preferred reporting frequency up-front and found that monthly reporting was chosen most often.34 In contrast, our meta-analysis showed that completion rates were highest in studies requesting daily PRO reporting. This discrepancy might be explained by differences in study or population characteristics. Nevertheless, these findings are particularly interesting in relation to concerns, raised in qualitative research, that more frequent reporting could cause more internal resistance or illness behaviour in patients.16 41 Evidence to support this concern is still limited, however, with one trial indeed showing a negative effect of a gout self-management app on illness perceptions,56 while Lee et al found no differences in pain interference between reporting schedules in their N-of-1 trial.43 Interestingly, the choice of outcome measure to be collected by the eHealth system might be equally important, with some studies allowing participants to freely select their preferred outcome measure.28 34

Regardless of the way eHealth tools are implemented, benefiting from their promising potential requires efforts to maximise and maintain participant engagement. To achieve this, previous research has highlighted the importance of co-designing eHealth systems with patients, optimising system usability, minimising workload and time commitment for users, including community-building efforts or personal contact with care providers, and sending automated reminders for data entry.17 Interestingly though, reminders did not appear to have a clear effect on data completeness in either of the two included studies that quantitatively assessed this. Additionally, qualitative results from the studies included in our review suggested that eHealth engagement might be hindered by well-controlled symptoms, system-related technical issues, a lack of digital literacy, interference from other daily activities and internal resistance such as respondent fatigue.24 30 34 41 57 58

Furthermore, our review showed positive effects on engagement when feedback was provided to participants, either by the physician or by the eHealth system itself,27 46 a finding that is extensively supported by qualitative evidence.16 24 30 34 41 Finally, motivational techniques such as gamification could help users to remain engaged and are generally underused in the existing array of eHealth systems.59 60 Our systematic review adds to this growing body of evidence and could support researchers and system developers in designing and implementing eHealth tools that optimally meet the challenges of attrition and low engagement.

Some limitations of this systematic review should be acknowledged. We focused specifically on remote monitoring in an asynchronous setting, which implies that eHealth tools were not considered if they were intended to facilitate real-time contact between patients and care providers. Consequently, studies based on telehealth interventions like telephone or video consultations were not included. The results of this systematic review therefore cannot be extended to all types of eHealth intervention. In addition, we did not consider purely qualitative studies. Finally, both the conclusions and the generalisability of our review are inherently limited by selection bias in the included studies, the majority of which considered participants only if they had access to digital tools and sufficient experience using them.

However, our review was conducted and reported according to commonly recommended guidelines. The protocol was registered in PROSPERO, the search string was developed in collaboration with biomedical reference librarians, study selection and data extraction were conducted independently by two reviewers in both published and ‘grey’ literature, and a third reviewer was consulted to resolve conflicts. Moreover, the search was updated at a later time, and a meta-analysis was performed with subgroup and sensitivity analyses to explore between-study heterogeneity.

Conclusion

Relatively high levels of participant engagement were reported in studies involving eHealth systems for asynchronous remote monitoring in chronic arthritis. However, comparisons were hindered by considerable heterogeneity and a relative lack of data from routine care settings. This is of particular importance given the observed differences in attrition in closely controlled versus less incentivised research settings, as well as the finding that engagement tends to decline with longer study duration. To provide a clear picture of the feasibility of remote monitoring eHealth strategies, future studies should therefore use standardised measures of engagement, such as PRO-completion rates or proportional tracker wear time, and assess them with study designs that optimally reflect daily clinical practice.

Footnotes

Twitter: @DoumenMichael, @DiederikDeCock, @Will_I_Am_Osler, @sophie_33pl

Contributors: MD, DDC and PV designed the study. CVL, MD and DDC drafted the PROSPERO protocol. CVL and MD performed the systematic literature search, and DDC acted as third reviewer to resolve conflicts. CVL and MD extracted the data and performed the descriptive analyses. MD and AB performed the meta-analysis. CVL and MD assessed risk of bias for included studies. MD drafted the article. DDC, CVL, AB, SP, DB, RW and PV revised the article critically for content. All authors gave final approval of the manuscript to be published. PV and MD are the guarantors.

Funding: MD has received a Strategic Basic Research Fellowship grant from Fonds Wetenschappelijk Onderzoek (FWO) (grant number 1S85521N). No financial funding was received for the study itself.

Competing interests: None declared.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data availability statement

Data are available on reasonable request.

Ethics statements

Patient consent for publication

Not applicable.

References

- 1. Hoy DG, Smith E, Cross M, et al. The global burden of musculoskeletal conditions for 2010: an overview of methods. Ann Rheum Dis 2014;73:982–9. doi:10.1136/annrheumdis-2013-204344

- 2. March L, Smith EUR, Hoy DG, et al. Burden of disability due to musculoskeletal (MSK) disorders. Best Pract Res Clin Rheumatol 2014;28:353–66. doi:10.1016/j.berh.2014.08.002

- 3. Pazmino S, Lovik A, Boonen A, et al. New indicator for discordance between patient-reported and traditional disease activity outcomes in patients with early rheumatoid arthritis. Rheumatology 2022. doi:10.1093/rheumatology/keac213 [Epub ahead of print: 13 Apr 2022].

- 4. Walker UA, Mueller RB, Jaeger VK, et al. Disease activity dynamics in rheumatoid arthritis: patients' self-assessment of disease activity via WebApp. Rheumatology 2017;56:1707–12. doi:10.1093/rheumatology/kex229

- 5. Schoemaker CG, de Wit MPT. Treat-to-target from the patient perspective is bowling for a perfect strike. Arthritis Rheumatol 2021;73:9–11. doi:10.1002/art.41461

- 6. Battafarano DF, Ditmyer M, Bolster MB, et al. 2015 American College of Rheumatology workforce study: supply and demand projections of adult rheumatology workforce, 2015-2030. Arthritis Care Res 2018;70:617–26. doi:10.1002/acr.23518

- 7. World Health Organization. Global diffusion of eHealth: Making universal health coverage achievable. [Internet]. Report of the third global survey on eHealth; 2016: 156. http://who.int/goe/publications/global_diffusion/en/

- 8. Studenic P, Karlfeldt S, Alunno A. The past, present and future of e-health in rheumatology. Joint Bone Spine 2021;88:105163. doi:10.1016/j.jbspin.2021.105163

- 9. Seppen BF, den Boer P, Wiegel J, et al. Asynchronous mHealth interventions in rheumatoid arthritis: systematic scoping review. JMIR Mhealth Uhealth 2020;8:e19260. doi:10.2196/19260

- 10. Beukenhorst AL, Druce KL, De Cock D. Smartphones for musculoskeletal research - hype or hope? Lessons from a decennium of mHealth studies. BMC Musculoskelet Disord 2022;23:487. doi:10.1186/s12891-022-05420-8

- 11. Seppen B, Wiegel J, Ter Wee MM, et al. Smartphone-assisted patient-initiated care versus usual care in patients with rheumatoid arthritis and low disease activity: a randomized controlled trial. Arthritis Rheumatol 2022. doi:10.1002/art.42292 [Epub ahead of print: 11 Jul 2022].

- 12. Müskens WD, Rongen-van Dartel SAA, Vogel C, et al. Telemedicine in the management of rheumatoid arthritis: maintaining disease control with less health-care utilization. Rheumatol Adv Pract 2021;5:rkaa079. doi:10.1093/rap/rkaa079

- 13. Knitza J, Tascilar K, Vuillerme N, et al. Accuracy and tolerability of self-sampling of capillary blood for analysis of inflammation and autoantibodies in rheumatoid arthritis patients-results from a randomized controlled trial. Arthritis Res Ther 2022;24:125. doi:10.1186/s13075-022-02809-7

- 14. Druce KL, Gibson DS, McEleney K, et al. Remote sampling of biomarkers of inflammation with linked patient generated health data in patients with rheumatic and musculoskeletal diseases: an ecological momentary assessment feasibility study. BMC Musculoskelet Disord 2022;23:770. doi:10.1186/s12891-022-05723-w

- 15. Riches PL, Alexander D, Hauser B, et al. Evaluation of supported self-management in gout (GoutSMART): a randomised controlled feasibility trial. Lancet Rheumatol 2022;4:e320–8. doi:10.1016/S2665-9913(22)00062-5

- 16. Doumen M, Westhovens R, Pazmino S, et al. The ideal mHealth-application for rheumatoid arthritis: qualitative findings from stakeholder focus groups. BMC Musculoskelet Disord 2021;22:746. doi:10.1186/s12891-021-04624-8

- 17. Druce KL, Dixon WG, McBeth J. Maximizing engagement in mobile health studies: lessons learned and future directions. Rheum Dis Clin North Am 2019;45:159–72. doi:10.1016/j.rdc.2019.01.004

- 18. Page MJ, McKenzie JE, Bossuyt PM, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 2021;372:n71. doi:10.1136/bmj.n71

- 19. Wells GA, Shea B, O’Connell D. The Newcastle-Ottawa Scale (NOS) for assessing the quality of nonrandomized studies in meta-analyses. Available: http://www.ohri.ca/programs/clinical_epidemiology/oxford.htm

- 20. Maher CG, Sherrington C, Herbert RD, et al. Reliability of the PEDro scale for rating quality of randomized controlled trials. Phys Ther 2003;83:713–21. doi:10.1093/ptj/83.8.713

- 21. Elmagboul N, Coburn BW, Foster J, et al. Physical activity measured using wearable activity tracking devices associated with gout flares. Arthritis Res Ther 2020;22:181. doi:10.1186/s13075-020-02272-2

- 22. Pouls BPH, Bekker CL, Gaffo AL, et al. Tele-monitoring flares using a smartphone app in patients with gout or suspected gout: a feasibility study. Rheumatol Adv Pract 2021;5:rkab100. doi:10.1093/rap/rkab100

- 23. Bromberg MH, Connelly M, Anthony KK, et al. Self-reported pain and disease symptoms persist in juvenile idiopathic arthritis despite treatment advances: an electronic diary study. Arthritis Rheumatol 2014;66:462–9. doi:10.1002/art.38223

- 24. Tyrrell JS, Redshaw CH, Schmidt W. Physical activity in ankylosing spondylitis: evaluation and analysis of an eHealth tool. J Innov Health Inform 2016;23:510–22. doi:10.14236/jhi.v23i2.169

- 25. Kempin R, Richter JG, Schlegel A, et al. Monitoring of disease activity with a smartphone app in routine clinical care in patients with axial spondyloarthritis. J Rheumatol 2022;49:878–84.

- 26. Yu SP, Ferreira ML, Duong V, et al. Responsiveness of an activity tracker as a measurement tool in a knee osteoarthritis clinical trial (ACTIVe-OA study). Ann Phys Rehabil Med 2022;65:101619. doi:10.1016/j.rehab.2021.101619

- 27. Crouthamel M, Quattrocchi E, Watts S, et al. Using a ResearchKit smartphone app to collect rheumatoid arthritis symptoms from real-world participants: feasibility study. JMIR Mhealth Uhealth 2018;6:e177. doi:10.2196/mhealth.9656

- 28. Nowell WB, Gavigan K, Kannowski CL, et al. Which patient-reported outcomes do rheumatology patients find important to track digitally? A real-world longitudinal study in ArthritisPower. Arthritis Res Ther 2021;23:53. doi:10.1186/s13075-021-02430-0

- 29. Christodoulou C, Schneider S, Junghaenel DU, et al. Measuring daily fatigue using a brief scale adapted from the patient-reported outcomes measurement information system (PROMIS®). Qual Life Res 2014;23:1245–53. doi:10.1007/s11136-013-0553-z

- 30. Beukenhorst AL, Howells K, Cook L, et al. Engagement and participant experiences with consumer smartwatches for health research: longitudinal, observational feasibility study. JMIR Mhealth Uhealth 2020;8:e14368. doi:10.2196/14368

- 31. Druce KL, McBeth J, van der Veer SN, et al. Recruitment and ongoing engagement in a UK smartphone study examining the association between weather and pain: cohort study. JMIR Mhealth Uhealth 2017;5:e168. doi:10.2196/mhealth.8162

- 32. Nowell W, Curtis JR, Zhao H. Participant engagement and adherence in an ArthritisPower real-world study to capture smartwatch and patient-reported outcome data among rheumatoid arthritis patients. Arthritis & Rheumatology 2020;72:3959–62.

- 33. McBeth J, Dixon WG, Moore SM, et al. Sleep disturbance and quality of life in rheumatoid arthritis: prospective mHealth study. J Med Internet Res 2022;24:e32825. doi:10.2196/32825

- 34. Renskers L, Rongen-van Dartel SAA, Huis AMP, et al. Patients’ experiences regarding self-monitoring of the disease course: an observational pilot study in patients with inflammatory rheumatic diseases at a rheumatology outpatient clinic in The Netherlands. BMJ Open 2020;10:e033321. doi:10.1136/bmjopen-2019-033321

- 35. Harbottle V, Bennett J, Duong C, et al. 303 feasibility of wearable technologies in children and young people with juvenile idiopathic arthritis. Rheumatology 2018;57:key075.527. doi:10.1093/rheumatology/key075.527

- 36. Heale LD, Dover S, Goh YI, et al. A wearable activity tracker intervention for promoting physical activity in adolescents with juvenile idiopathic arthritis: a pilot study. Pediatr Rheumatol Online J 2018;16:66. doi:10.1186/s12969-018-0282-5

- 37. Jacquemin C, Servy H, Molto A, et al. Physical activity assessment using an activity tracker in patients with rheumatoid arthritis and axial spondyloarthritis: prospective observational study. JMIR Mhealth Uhealth 2018;6:e1. doi:10.2196/mhealth.7948

- 38. Östlind E, Sant'Anna A, Eek F, et al. Physical activity patterns, adherence to using a wearable activity tracker during a 12-week period and correlation between self-reported function and physical activity in working age individuals with hip and/or knee osteoarthritis. BMC Musculoskelet Disord 2021;22:450. doi:10.1186/s12891-021-04338-x

- 39. Bingham CO, Gaich CL, DeLozier AM, et al. Use of daily electronic patient-reported outcome (PRO) diaries in randomized controlled trials for rheumatoid arthritis: rationale and implementation. Trials 2019;20:182. doi:10.1186/s13063-019-3272-0

- 40. Colls J, Lee YC, Xu C, et al. Patient adherence with a smartphone app for patient-reported outcomes in rheumatoid arthritis. Rheumatology 2021;60:108–12. doi:10.1093/rheumatology/keaa202

- 41. Seppen BF, Wiegel J, L'ami MJ, et al. Feasibility of self-monitoring rheumatoid arthritis with a smartphone app: results of two mixed-methods pilot studies. JMIR Form Res 2020;4:e20165. doi:10.2196/20165

- 42. Lee SSS, Xin X, Lee WP, et al. The feasibility of using SMS as a health survey tool: an exploratory study in patients with rheumatoid arthritis. Int J Med Inform 2013;82:427–34. doi:10.1016/j.ijmedinf.2012.12.003

- 43. Lee RR, Shoop-Worrall S, Rashid A, et al. "Asking Too Much?": Randomized N-of-1 trial exploring patient preferences and measurement reactivity to frequent use of remote multidimensional pain assessments in children and young people with juvenile idiopathic arthritis. J Med Internet Res 2020;22:e14503. doi:10.2196/14503

- 44. Broderick JE, Schneider S, Junghaenel DU, et al. Validity and reliability of patient-reported outcomes measurement information system instruments in osteoarthritis. Arthritis Care Res 2013;65:1625–33. doi:10.1002/acr.22025

- 45. Rouzaud Laborde C, Cenko E, Mardini MT, et al. Satisfaction, usability, and compliance with the use of smartwatches for ecological momentary assessment of knee osteoarthritis symptoms in older adults: usability study. JMIR Aging 2021;4:e24553. doi:10.2196/24553

- 46. Skrepnik N, Spitzer A, Altman R, et al. Assessing the impact of a novel smartphone application compared with standard follow-up on mobility of patients with knee osteoarthritis following treatment with Hylan G-F 20: a randomized controlled trial. JMIR Mhealth Uhealth 2017;5:e64. doi:10.2196/mhealth.7179

- 47. Simblett S, Greer B, Matcham F, et al. Barriers to and facilitators of engagement with remote measurement technology for managing health: systematic review and content analysis of findings. J Med Internet Res 2018;20:e10480. doi:10.2196/10480

- 48. Jackson LE, Edgil TA, Hill B, et al. Telemedicine in rheumatology care: a systematic review. Semin Arthritis Rheum 2022;56:152045. doi:10.1016/j.semarthrit.2022.152045

- 49. Davergne T, Pallot A, Dechartres A, et al. Use of wearable activity trackers to improve physical activity behavior in patients with rheumatic and musculoskeletal diseases: a systematic review and meta-analysis. Arthritis Care Res 2019;71:758–67. doi:10.1002/acr.23752

- 50. Amagai S, Pila S, Kaat AJ, et al. Challenges in participant engagement and retention using mobile health apps: literature review. J Med Internet Res 2022;24:e35120. doi:10.2196/35120

- 51. Shih PC, Han K, Poole ES. Use and adoption challenges of wearable activity trackers. iConference 2015 Proceedings, 2015.

- 52. Pratap A, Neto EC, Snyder P, et al. Indicators of retention in remote digital health studies: a cross-study evaluation of 100,000 participants. NPJ Digit Med 2020;3:21. doi:10.1038/s41746-020-0224-8

- 53. Gossec L, Cantagrel A, Soubrier M, et al. An e-health interactive self-assessment website (Sanoia®) in rheumatoid arthritis. A 12-month randomized controlled trial in 320 patients. Joint Bone Spine 2018;85:709–14. doi:10.1016/j.jbspin.2017.11.015

- 54. Wildenbos GA, Peute L, Jaspers M. Aging barriers influencing mobile health usability for older adults: a literature based framework (MOLD-US). Int J Med Inform 2018;114:66–75. doi:10.1016/j.ijmedinf.2018.03.012

- 55. Len CA, Miotto e Silva VB, Terreri MTRA. Importance of adherence in the outcome of juvenile idiopathic arthritis. Curr Rheumatol Rep 2014;16:410. doi:10.1007/s11926-014-0410-2

- 56. Serlachius A, Schache K, Kieser A, et al. Association between user engagement of a mobile health app for gout and improvements in self-care behaviors: randomized controlled trial. JMIR Mhealth Uhealth 2019;7:e15021. doi:10.2196/15021

- 57. Reade S, Spencer K, Sergeant JC, et al. Cloudy with a chance of pain: engagement and subsequent attrition of daily data entry in a smartphone pilot study tracking weather, disease severity, and physical activity in patients with rheumatoid arthritis. JMIR Mhealth Uhealth 2017;5:e37. doi:10.2196/mhealth.6496

- 58. Stinson JN, Petroz GC, Stevens BJ, et al. Working out the kinks: testing the feasibility of an electronic pain diary for adolescents with arthritis. Pain Res Manag 2008;13:375–82. doi:10.1155/2008/326389

- 59. Najm A, Gossec L, Weill C, et al. Mobile health apps for self-management of rheumatic and musculoskeletal diseases: systematic literature review. JMIR Mhealth Uhealth 2019;7:e14730. doi:10.2196/14730

- 60. Geuens J, Swinnen TW, Westhovens R, et al. A review of persuasive principles in mobile apps for chronic arthritis patients: opportunities for improvement. JMIR Mhealth Uhealth 2016;4:e118. doi:10.2196/mhealth.6286

- 61.Austin L, Sharp CA, van der Veer SN, et al. Providing 'the bigger picture': benefits and feasibility of integrating remote monitoring from smartphones into the electronic health record. Rheumatology 2020;59:367–78. 10.1093/rheumatology/kez207 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62. Bellamy N, Patel B, Davis T, et al. Electronic data capture using the WOMAC NRS 3.1 index (m-WOMAC): a pilot study of repeated independent remote data capture in OA. Inflammopharmacology 2010;18:107–11. 10.1007/s10787-010-0040-x

- 63. Connelly M, Anthony KK, Sarniak R, et al. Parent pain responses as predictors of daily activities and mood in children with juvenile idiopathic arthritis: the utility of electronic diaries. J Pain Symptom Manage 2010;39:579–90. 10.1016/j.jpainsymman.2009.07.013

- 64. Connelly M, Bromberg MH, Anthony KK, et al. Emotion regulation predicts pain and functioning in children with juvenile idiopathic arthritis: an electronic diary study. J Pediatr Psychol 2012;37:43–52. 10.1093/jpepsy/jsr088

- 65. Connelly M, Bromberg MH, Anthony KK, et al. Use of smartphones to prospectively evaluate predictors and outcomes of caregiver responses to pain in youth with chronic disease. Pain 2017;158:629–36. 10.1097/j.pain.0000000000000804

- 66. Costantino F, Leboime A, Bessalah L, et al. Correspondence between patient-reported flare and disease activity score variation in axial spondyloarthritis: a 12-months web-based study. Joint Bone Spine 2022;89:105422. 10.1016/j.jbspin.2022.105422

- 67. Fu K, Makovey J, Metcalf B, et al. Sleep quality and fatigue are associated with pain exacerbations of hip osteoarthritis: an Internet-based case-crossover study. J Rheumatol 2019;46:1524–30. 10.3899/jrheum.181406

- 68. Gilbert AL, Lee J, Song J, et al. Relationship between self-reported restless sleep and objectively measured physical activity in adults with knee osteoarthritis. Arthritis Care Res 2021;73:687–92. 10.1002/acr.23581

- 69. Heiberg T, Kvien TK, Dale Ø, et al. Daily health status registration (patient diary) in patients with rheumatoid arthritis: a comparison between personal digital assistant and paper-pencil format. Arthritis Rheum 2007;57:454–60. 10.1002/art.22613

- 70. Lalloo C, Harris LR, Hundert AS, et al. The iCanCope pain self-management application for adolescents with juvenile idiopathic arthritis: a pilot randomized controlled trial. Rheumatology 2021;60:196–206. 10.1093/rheumatology/keaa178

- 71. Lazaridou A, Martel MO, Cornelius M, et al. The association between daily physical activity and pain among patients with knee osteoarthritis: the moderating role of pain catastrophizing. Pain Med 2019;20:916–24. 10.1093/pm/pny129

- 72. Martin MJ, Ng N, Blackler L, et al. P227 Remote monitoring of patients with RA: a user-centred design approach. Rheumatology 2020;59:keaa111.221. 10.1093/rheumatology/keaa111.221

- 73. Murray MT, Penney SP, Landman A, et al. User experience with a voice-enabled smartphone app to collect patient-reported outcomes in rheumatoid arthritis. Clin Exp Rheumatol 2022;40:882–9. 10.55563/clinexprheumatol/xsdfl2

- 74. Pers Y-M, Valsecchi V, Mura T, et al. A randomized prospective open-label controlled trial comparing the performance of a connected monitoring interface versus physical routine monitoring in patients with rheumatoid arthritis. Rheumatology 2021;60:1659–68. 10.1093/rheumatology/keaa462

- 75. Wilson SJ, Martire LM, Keefe FJ, et al. Daily verbal and nonverbal expression of osteoarthritis pain and spouse responses. Pain 2013;154:2045–53. 10.1016/j.pain.2013.06.023

- 76. Zaslavsky O, Thompson HJ, McCurry SM, et al. Use of a wearable technology and motivational interviews to improve sleep in older adults with osteoarthritis and sleep disturbance: a pilot study. Res Gerontol Nurs 2019;12:167–73. 10.3928/19404921-20190319-02

Associated Data

Data Availability Statement

Data are available on reasonable request.