Abstract

One of the challenges in differentiating a duplicate hologram from an original one is reflectivity. A slight change in lighting condition will completely change the reflection pattern exhibited by a hologram, and consequently, a standardized duplicate hologram detector has not yet been created. In this study, a portable and low-cost snapshot hyperspectral imaging (HSI) algorithm-based housing module for differentiating between original and duplicate holograms was proposed. The module consisted of a Raspberry Pi 4 processor, a Raspberry Pi camera, a display, and a light-emitting diode lighting system with a dimmer. A visible HSI algorithm that could convert an RGB image captured by the Raspberry Pi camera into a hyperspectral image was established. A specific region of interest was selected from the spectral image and mean gray value (MGV) and reflectivity were measured. Results suggested that shorter wavelengths are the most suitable for differentiating holograms when using MGV as the parameter for classification, while longer wavelengths are the most suitable when using reflectivity. The key features of this design include low cost, simplicity, lack of moving parts, and no requirement for an additional decoding key.

Subject terms: Engineering, Optics and photonics

Introduction

Various optical systems, such as spectral imaging1–3, optical coherence tomography (OCT)4, photoacoustic (PA) imaging mechanism5, laser scanning micro-profilometry6, ultraviolet–visible (UV–Vis) spectroscopy7, optical fiber sensor8, and scanning microscopy9, are available. In OCT, low coherence light is employed to obtain 3D images from within optical scattering media10,11. The PA imaging technique uses optical illumination and ultrasound wave detection to visualize optical absorption, which is frequently related to the properties of an object12,13. In UV–Vis spectroscopy, the absorption or reflection properties of a material are compared in the ultraviolet and visible bands of the electromagnetic spectrum14,15. In scanning microscopy, accelerated electrons are used as the source of light16,17. Most of these optical systems have been employed to detect different types of fraud, including counterfeit currencies, pharmaceutical drugs, documents, and artwork. However, one application in which optical systems are not widely used is duplicate hologram detection and classification. Holograms, also known as diffractive optically variable image devices, are optically variable objects18,19. They change appearance when viewed from a different angle or under a different lighting system. Therefore, designing an optical system for detecting and classifying any holograms is challenging and expensive20. Moreover, the portability of an optical system should be considered in this case. One of the methods that can overcome all the aforementioned challenges in the classification of holograms is hyperspectral imaging (HSI). HSI is an evolving pioneering statistical and heuristic spectrometric technology21,22. It is a nondestructive technique that is widely used to examine a broad spectrum of light rather than merely examining the primary colors, i.e., red, green, and blue (RGB) in the pixels of an image23,24. 
HSI has been used in various fields and applications, such as cancer detection25, air pollution monitoring26, remote sensing27, agriculture28, astronomy29, quality control30, environment monitoring31, and vegetable classification32. In an HSI image, every pixel contains not only the primary colors but also the absorption and reflectance data33,34. Each pixel includes spectral information, forming 3D values on 2D images35. This structure is known as the hyperspectral data cube22. HSI data are assumed to be sampled spectrally at more than 20 equally distributed wavelengths. HSI can also be extended beyond the visible spectrum (VIS)36 and into near-infrared37 and far-infrared38 spectra.

At present, nearly all HSI applications are restricted to research laboratories because these instruments are heavy, expensive, and laborious to use35. Sumriddetchkajorn and Intaravanne demonstrated the ability of HSI to verify the authenticity of a credit card by analyzing the hologram printed on it1. They obtained different color spectra of the hologram in the credit card by using white light at different angles. However, the instrument they designed is expensive and not portable. Another study built a portable cylindrical light module that can be attached to a mobile device to verify holograms in currency notes39. Although this instrument is portable, it can verify only a small hologram. Moreover, the quality of the camera will vary among different phone models used to acquire the image, and this condition can considerably affect the analysis results. The major goal of HSI research is to make HSI more affordable, user-friendly, and compact.

Therefore, the current study proposes and demonstrates a portable and low-cost HSI-based housing module to differentiate between original and duplicate holograms. The system consists of a Raspberry Pi 4 processor, a Raspberry Pi version 2 camera module, a thin film transistor (TFT) display, a light-emitting diode (LED) lighting system, and an LED dimmer. A VIS-HSI technology is also developed to convert the RGB values captured by the camera into a hyperspectral image. A region of interest (ROI) is selected, and the mean gray value (MGV) acquired from four original holograms is used as a reference and compared with that obtained from four duplicate holograms. A 98% confidence interval (CI) is formed around the MGV at every wavelength and used as a classification criterion. The results demonstrate that the four duplicate holograms are easily differentiated from the original ones. Moreover, the reflectance data of the ROI are measured, indicating that the original and duplicate holograms have different reflective patterns in the longer wavelengths.

Results and discussion

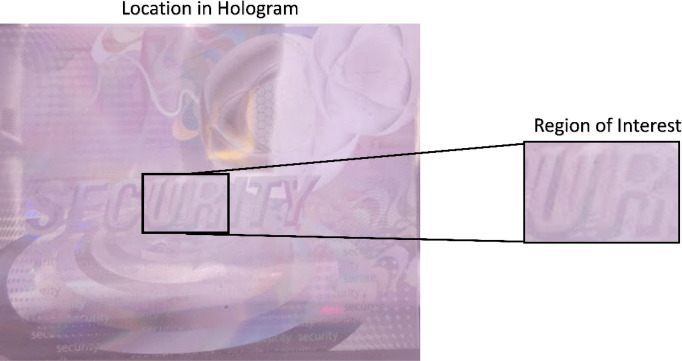

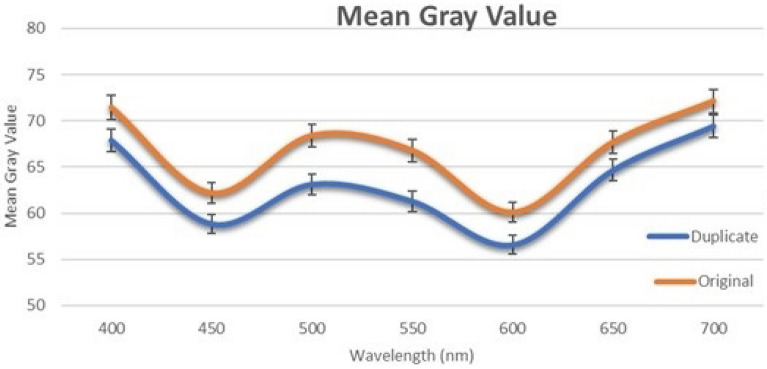

In this study, a Python-based Windows application is developed to capture the real-time image from the Raspberry Pi camera. The captured image is converted into a hyperspectral image, from which one specific ROI is extracted. Finally, the hologram is classified as either an original or a duplicate based on MGV. The ROI consists of the fourth and fifth letters in the word “SECURITY,” i.e., “UR.” This region is selected because it is located at the center of the hologram and is thus easily accessible, as shown in Fig. 1. This ROI has a height and width of 0.6 mm and 0.9 mm, respectively, and contains a total of 33,810 pixels. This research is a pilot study; hence, only four duplicate and four original holograms, provided by K Laser Technology Inc., are used as a reference for numerical analysis. For the ROI, the MGV is measured in the VIS-HSI band between 400 and 700 nm. MGV is the average brightness of all the pixels in the image40,41. In an 8-bit image, the gray value ranges from 0 to 255. Many previous studies have used MGVs for image classification and detection because MGV is a reliable classification parameter40,42–44. Figure 2 shows the mean of the duplicate and original samples between 400 and 700 nm (see Supplement 1 Sect. 3 for the detailed plot of all the samples).
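The MGV measurement described above can be illustrated with a minimal sketch. It assumes that one wavelength band of the hyperspectral image is available as a 2D array of gray values and that the ROI is an axis-aligned rectangle; the function name, the `(top, left, height, width)` ROI layout, and the sample values are hypothetical and are not taken from the application built in this study.

```python
def mean_gray_value(band_image, roi):
    """Average gray level over a rectangular ROI of one wavelength band.

    band_image: 2D list of gray values (0-255) for a single band.
    roi: (top, left, height, width) in pixels; a hypothetical layout.
    """
    top, left, height, width = roi
    rows = band_image[top:top + height]
    pixels = [value for row in rows for value in row[left:left + width]]
    if not pixels:
        raise ValueError("ROI does not overlap the image")
    return sum(pixels) / len(pixels)


# Tiny 4x4 stand-in for one band of the hyperspectral cube (made-up values).
band_image = [
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [90, 100, 110, 120],
    [130, 140, 150, 160],
]

# The 2x2 ROI starting at row 1, column 1 covers the values 60, 70, 100, 110.
mgv = mean_gray_value(band_image, roi=(1, 1, 2, 2))
print(mgv)
```

Repeating this calculation for every band between 400 and 700 nm would give one MGV curve per sample, which is the quantity plotted in Fig. 2.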

Figure 1.

Location of the ROI in the hologram.

Figure 2.

Mean MGV of the duplicate and original samples. The error bar represents a 98% CI range in the specified wavelength (n = 8).

The original and duplicate holograms can be easily differentiated. The RMSE of the MGVs in the shorter wavelengths between 400 and 500 nm is 3.4664, while that in the longer wavelengths between 600 and 700 nm is 3.1126. However, the RMSE of the MGVs in the middle wavelengths is 5.4049. Therefore, based on RMSE, we can infer that the middle wavelengths between 500 and 600 nm are the most suitable for the classification of holograms (see Supplement 1 Sect. 2 for entropy measurement). The samples are classified into two classes: original and duplicate holograms. As shown in Fig. 2, a 98% CI is also calculated around the average MGVs of both classes for each wavelength. The CI represents the range at that specific wavelength within which the MGV of a sample belonging to that class will probably fall. Although 95% is the most commonly used CI, a 98% CI can be used because the MGVs of the samples fall within a narrow range in this study. On this basis, the hologram is classified as original if its MGV falls within the CI of the original class; otherwise, the hologram is classified as duplicate. Although the developed method can measure the MGVs from 400 to 780 nm, the MGVs of the duplicate and original holograms become similar beyond 700 nm and fall within the same 98% CI. Hence, only the MGVs between 400 and 700 nm are considered in this study.
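The classification rule described above can be sketched as follows. The sketch rests on several assumptions that the text does not specify: the CI is taken as a two-sided interval for the class mean under a normal approximation (with only four samples per class, a Student t quantile would be wider), the RMSE is computed between the original and duplicate MGV curves over a band, and all numerical values are made up for illustration.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def confidence_interval(values, level=0.98):
    """Two-sided CI for the mean, using a normal approximation (assumption)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    half_width = z * stdev(values) / sqrt(len(values))
    centre = mean(values)
    return centre - half_width, centre + half_width


def classify(sample_mgv, original_mgvs, level=0.98):
    """Label a sample 'original' if its MGV lies inside the reference CI."""
    low, high = confidence_interval(original_mgvs, level)
    return "original" if low <= sample_mgv <= high else "duplicate"


def rmse(curve_a, curve_b):
    """Root-mean-square difference between two MGV curves of equal length."""
    squared = [(a - b) ** 2 for a, b in zip(curve_a, curve_b)]
    return sqrt(mean(squared))


# Made-up MGVs of four reference originals at one wavelength.
reference = [100.0, 102.0, 98.0, 100.0]
low, high = confidence_interval(reference)
print(round(low, 2), round(high, 2))
print(classify(100.5, reference), classify(110.0, reference))
```

A larger RMSE between the two class curves in a band indicates a wider separation, which is the reasoning used above to prefer the 500 to 600 nm region for MGV-based classification.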

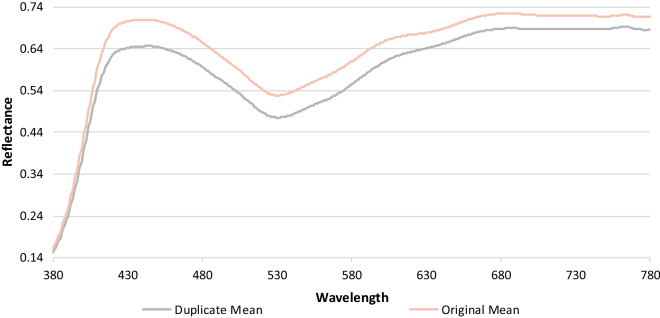

Figure 3.

Mean of the reflectance spectra of four duplicate and four original samples.

The reflectance of the center pixel of all the samples is also calculated using the VIS-HSI algorithm developed in this study. Figure 3 shows the mean reflectance spectrum of the duplicate and original holograms in the visible spectrum (see Supplement 1 Section 3 for individual plots for every sample). In the longer wavelengths, specifically after 580 nm, a clear difference exists between the reflectance of the original and duplicate samples. This result is consistent with those of previous image classification studies that used HSI45–47, in which classifying images was easier in the long wavelengths than in the middle or short wavelengths. Lim and Murukeshan calculated the reflectance spectra of original and duplicate banknotes48. A difference between the reflectance of the original and duplicate banknotes was observed only in longer wavelengths above 550 nm. Qin et al. measured the reflectance spectra of grapefruit samples with normal and different diseased skin conditions49. A clear difference existed in the reflectance data only between 600 nm and 800 nm, which lies in the longer wavelength region. Although both the MGVs and the reflectance values can be used to classify the hologram, MGV is used for classification in this study because the RMSE in the wavelengths between 500 and 550 nm is greatest for MGV. A better classification performance may therefore be achieved by increasing the CI level beyond the 98% used here.

In this study, a stand-alone Python-based Windows application is also developed to classify holograms. To capture images, the Raspberry Pi web camera interface is installed in the Raspberry Pi operating system. The live feed of the Raspberry Pi camera can be accessed directly from the Windows application by clicking the “Start” button, and the camera can be turned off by clicking the “Stop” button. The “Capture” button is used to capture the current image that will be used for classification. The application displays the frames per second and the frame number. The user must input parameters, such as the wavelength of the narrowband in which the sample will be analyzed and the gain value specific to each narrowband, and confirm them by clicking the “Ok” button. Once the “Analyze” button is clicked, the application converts the RGB image captured by the Raspberry Pi camera into a hyperspectral image and crops the ROI from the image. Then, the MGV of the sample is calculated, and the sample is classified into its respective class based on the narrowband wavelength entered by the user. The final result is displayed at the bottom of the application as either “The Hologram is Original” or “The Hologram is Counterfeit” (for the application built in this study, refer to Supplement 1 Figure S10).

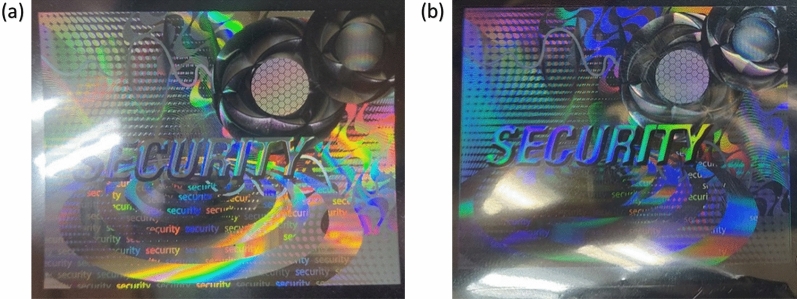

Methods

One of the challenges in differentiating between duplicate and original holograms is reflection. A minor change in lighting angle will create an entirely different reflection pattern. Holograms can be differentiated by capturing and analyzing the reflection data from different incident angles. However, the reflection pattern will vary under different light sources. Therefore, the light source should also be constant. As shown in Fig. 4, a slight change in lighting conditions can lead to a drastic change in the reflection from the final image. Hence, a module must be designed to reduce external light, and lighting conditions must be the same for all holograms.

Figure 4.

(a) Original hologram and (b) duplicate hologram.

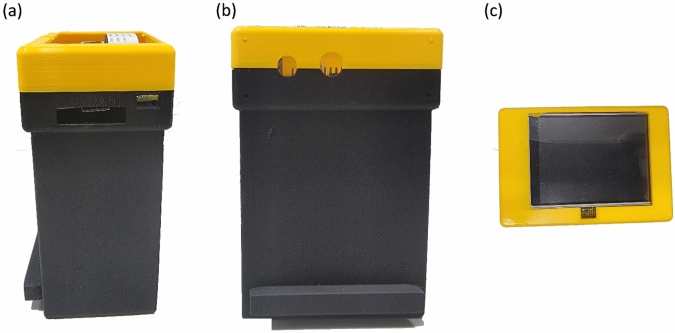

A module that could accommodate all the electronic components and reduce external light noise was designed in the current study. The design was 3D printed using Ultimaker Cura 3. The final product is presented in Fig. 5. The critical factors considered in this study were minimizing the number of optoelectronic components, reducing the size of the design, and optimizing the positioning of different components. In any image processing method based on hyperspectral or multispectral studies, a spectrometer, an optical head, or a multispectral LED board is typically used, making the module costly and non-portable. Hence, the number of components must be reduced to build a low-cost and compact module. Accordingly, only a minimum number of components are utilized in the current study. The whole module can be divided into two units: the processing system and the optical system. The processing system consists of three components: a Raspberry Pi 4 microprocessor, a Raspberry Pi camera module version 2, and a TFT display unit. Meanwhile, the optical system consists of an LED strip, a diffuser, and an LED dimmer (for the detailed specifications of these components, refer to Supplement 1 Section 1).

Figure 5.

(a) Side view, (b) front view, and (c) top view of the 3D printed design.

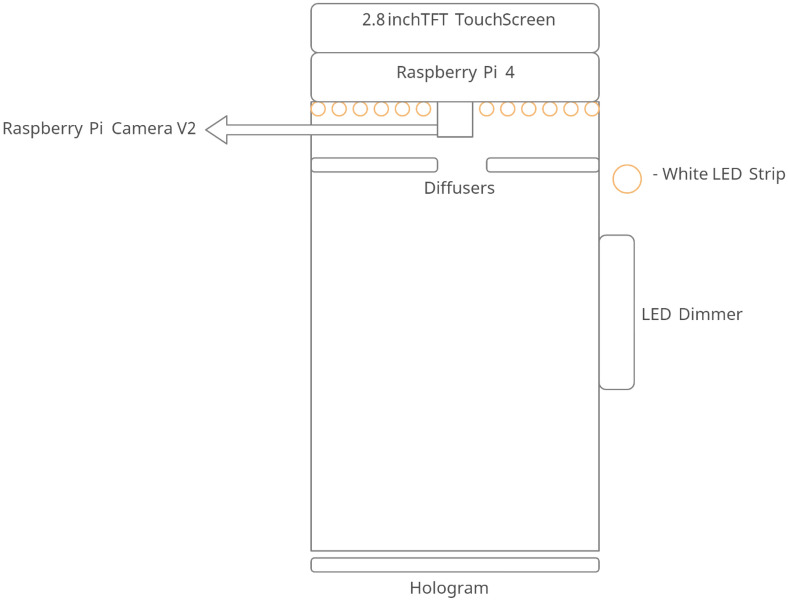

The schematic of the imaging system is presented in Fig. 6. The Raspberry Pi 4 computer Model B is used for processing, while the Raspberry Pi camera module version 2 is used to capture the images of a hologram. The processor is connected to an Adafruit Pi TFT 320 × 240 2.8" touch screen to control the processing unit. The optical system consists of a 3000 K chip-on-board (COB) LED strip light, which is fixed onto the design. The LED strip light is connected to an LED dimmer switch to control brightness. The COB LEDs have a uniform spectral response (no cyan dip) across the blue, green, and red color spectrum. However, the light they emit is not evenly distributed; each LED acts as a point-like light source. An opal profile diffuser is therefore used to reduce the transmittance rate and distribute the light evenly.

Figure 6.

Schematic of the imaging system.

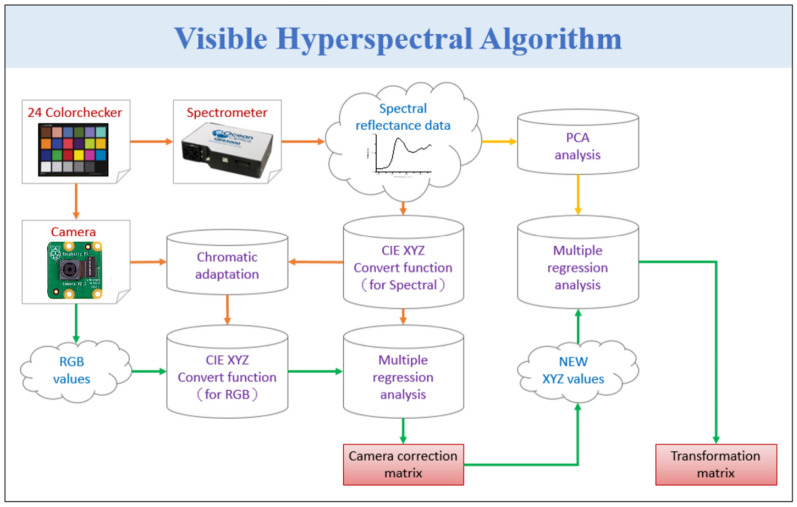

Snapshot-based RGB to HSI conversion algorithm

The core concept of the VIS-HSI technology is to endow a common digital camera with the function of a spectrometer, i.e., the image captured by the camera contains spectral information. To achieve this, a relationship matrix between the camera and the spectrometer must be established. The construction process of this technology is illustrated in Fig. 7. First, a camera (Raspberry Pi Camera) and a spectrometer (Ocean Optics, QE65000) are provided with multiple common targets as reference for the analysis. In the current study, a standard 24-color checker (X-Rite classic) is selected as the target because it contains the most important colors (blue, green, red, and gray) and other common colors found in nature. Because the camera output may be affected by errors such as inaccurate white balance, the standard 24-color card is measured with both the camera and the spectrometer to obtain 24-color patch images (sRGB, 8 bit) and 24-color patch reflectance spectrum data, respectively. Then, the 24-color patch images and the 24-color patch reflectance spectrum data are converted into the CIE 1931 XYZ color space (for the individual conversion formulae, see Supplement 1 Sect. 4).

Figure 7.

VIS-HSI construction process.

In the camera, the image (JPEG, 8 bit) is stored in the sRGB color space. Before an image is converted from the sRGB color space into the XYZ color space, the respective R, G, and B values (0–255) must be rescaled to the range 0–1, and the sRGB values are then converted into linear RGB values through the gamma function. Finally, the conversion matrix is used to convert the linear RGB values into XYZ values normalized in the XYZ color gamut space. For the spectrometer, converting the reflection spectrum data into the XYZ color gamut space requires the XYZ color-matching functions and the light source spectrum. The Y value of the XYZ color gamut space is proportional to the brightness; hence, the maximum brightness of the light source spectrum is calculated, and the Y value is standardized to 100 to obtain the brightness ratio (k). Finally, the reflection spectrum data are converted into the XYZ values (XYZSpectrum) normalized in the XYZ color gamut space. Multiple regression is performed to obtain the correction coefficient matrix C for calibrating the camera, as shown in Eq. (1). The variable matrix V is obtained by analyzing the factors that may cause errors in the camera, such as nonlinear response, dark current, inaccurate color separation of the color filter, and color shifting.
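The camera-side conversion described above (rescaling, gamma linearization, and matrix conversion) can be sketched as follows. The sketch assumes the standard sRGB transfer function and the commonly published sRGB-to-XYZ matrix for the D65 white point, with Y scaled so that white gives 100; the exact formulae used in this study are given in Supplement 1 Sect. 4 and may differ, for example by including a chromatic adaptation step.

```python
def srgb_to_linear(channel_8bit):
    """Rescale an 8-bit sRGB channel to 0-1 and undo the sRGB gamma curve."""
    c = channel_8bit / 255.0
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


# Standard linear-sRGB to CIE 1931 XYZ matrix (D65 white point), assumed here.
SRGB_TO_XYZ_D65 = (
    (0.4124, 0.3576, 0.1805),
    (0.2126, 0.7152, 0.0722),
    (0.0193, 0.1192, 0.9505),
)


def srgb8_to_xyz(r, g, b):
    """Convert 8-bit sRGB values to XYZ, scaled so that white has Y = 100."""
    linear = [srgb_to_linear(v) for v in (r, g, b)]
    return tuple(
        100.0 * sum(coeff * value for coeff, value in zip(row, linear))
        for row in SRGB_TO_XYZ_D65
    )


x, y, z = srgb8_to_xyz(255, 255, 255)
print(round(x, 2), round(y, 2), round(z, 2))
```

In the calibration step that follows, XYZ values obtained in this way from the 24 color patches play the role of the uncorrected camera values.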

$$[C] = [XYZ_{\mathrm{Spectrum}}] \times \operatorname{pinv}([V]) \tag{1}$$

Once the camera is calibrated, the corrected X, Y, and Z values (XYZCorrect) can be obtained using Eq. (2). The average root-mean-square error (RMSE) between XYZCorrect and XYZSpectrum is 0.19, which is negligible in this case. The conversion matrix M is obtained using the reflection spectrum data (RSpectrum) of the 24 color blocks measured by the spectrometer. The important principal components are identified by performing principal component analysis (PCA) on RSpectrum. Moreover, multiple regression is performed on the extracted principal component scores, which are combined with the previously obtained results to determine the conversion matrix M.

$$[XYZ_{\mathrm{Correct}}] = [C] \times [V] \tag{2}$$
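The calibration step can be illustrated with a minimal NumPy sketch. It assumes that the multiple regression is an ordinary least-squares fit solved with a pseudo-inverse, so that C maps the variable matrix V onto the spectrometer-derived XYZ values. The regressors stacked in `build_variable_matrix` (a constant, the linear terms, and the pairwise products) are hypothetical placeholders for the error terms listed in the text, and all data are synthetic.

```python
import numpy as np


def build_variable_matrix(xyz):
    """Stack regressors row-wise; this particular term list is hypothetical."""
    x, y, z = xyz
    return np.vstack([np.ones_like(x), x, y, z, x * y, y * z, x * z])


rng = np.random.default_rng(0)

# Synthetic stand-ins for the 24 color patches (normalized XYZ in 0-1).
xyz_camera = rng.uniform(0.05, 0.95, size=(3, 24))
v = build_variable_matrix(xyz_camera)              # shape (7, 24)

# Pretend spectrometer values: a mildly distorted copy of the camera values.
true_c = np.hstack([np.zeros((3, 1)), np.eye(3), np.zeros((3, 3))])
true_c += 0.05 * rng.normal(size=true_c.shape)
xyz_spectrum = true_c @ v                          # shape (3, 24)

# Least-squares estimate of the correction matrix, then the corrected values.
c_matrix = xyz_spectrum @ np.linalg.pinv(v)        # shape (3, 7)
xyz_correct = c_matrix @ v
rmse = float(np.sqrt(np.mean((xyz_correct - xyz_spectrum) ** 2)))
print(c_matrix.shape, rmse)
```

Because the synthetic target is generated without noise, the fit recovers it almost exactly; with measured data, a residual such as the RMSE of 0.19 reported above would remain.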

To convert XYZCorrect into RSpectrum, the dimensions of RSpectrum must be reduced to increase the correlation between each dimension and XYZCorrect. Therefore, RSpectrum is analyzed via PCA to obtain the eigenvectors. Six principal components are used in the dimensionality reduction because together they explain 99.64% of the data variability. The corresponding principal component scores can be used for regression analysis with XYZCorrect. In the multivariate regression analysis of XYZCorrect and the scores, the variable VColor is selected because it contains all the possible combinations of X, Y, and Z. The transformation matrix M is obtained using Eq. (3), and then XYZCorrect is passed through Eq. (4) to calculate the analogue spectrum (SSpectrum). Finally, the obtained 24-color block analogue spectrum (SSpectrum) is compared with the 24-color block reflection spectrum. The RMSE of each color block is calculated, and the average error is 0.056, which is negligible. The average color difference between the 24-color block analogue spectrum and the 24-color block reflection spectrum is 0.75, suggesting that the color difference is difficult to distinguish. When the color of the processed reflection spectrum is reproduced, it is reproduced accurately. The VIS-HSI technology constructed through the above process can simulate the reflection spectrum from the RGB values captured by the monocular camera to obtain the VIS hyperspectral images.

$$[M] = [\mathrm{Score}] \times \operatorname{pinv}([V_{\mathrm{Color}}]) \tag{3}$$

$$[S_{\mathrm{Spectrum}}] = [EV][M][V_{\mathrm{Color}}] \tag{4}$$
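The PCA and regression steps described above can be sketched in NumPy as follows. Several assumptions are made: PCA is performed by an uncentered singular value decomposition, the regression is solved with a pseudo-inverse, and the terms stacked in `v_color` (a constant, X, Y, Z, and their products) are a hypothetical choice of the combinations of X, Y, and Z. The spectra and the color-matching weights are synthetic, so the printed numbers do not correspond to the values reported in this study.

```python
import numpy as np

rng = np.random.default_rng(1)
n_bands, n_patches, n_pc = 31, 24, 6

# Synthetic reflectance spectra (columns = patches) built from six components.
components = rng.uniform(0, 1, (n_bands, n_pc))
mixing = rng.uniform(0, 1, (n_pc, n_patches)) / n_pc
r_spectrum = components @ mixing                   # shape (31, 24)

# Hypothetical weights mapping each spectrum to corrected XYZ values.
cmf = rng.uniform(0, 1, (3, n_bands)) / n_bands
x, y, z = cmf @ r_spectrum                         # each has shape (24,)

# PCA via SVD: keep the first six eigenvectors and their scores.
u, sv, _ = np.linalg.svd(r_spectrum, full_matrices=False)
ev = u[:, :n_pc]                                   # shape (31, 6)
explained = float(np.sum(sv[:n_pc] ** 2) / np.sum(sv ** 2))
score = ev.T @ r_spectrum                          # shape (6, 24)

# Regress the scores on the color variables, then rebuild the spectra.
v_color = np.vstack([np.ones_like(x), x, y, z, x * y, y * z, x * z, x * y * z])
m = score @ np.linalg.pinv(v_color)                # shape (6, 8)
s_spectrum = ev @ m @ v_color                      # shape (31, 24)

rmse = float(np.sqrt(np.mean((s_spectrum - r_spectrum) ** 2)))
baseline = float(np.sqrt(np.mean(r_spectrum ** 2)))
print(round(explained, 4), rmse < baseline)
```

Once `ev` and `m` have been fitted on the 24 color blocks, the same product can be applied to the `v_color` terms of any pixel, which is how an RGB image is turned into a simulated spectrum per pixel.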

By using this method, an RGB image captured by a digital camera can be converted into an HSI image without using a spectrometer, an optical head, or a hyperspectral camera. By eliminating these components, the machine designed in this study is low cost and highly portable but can still differentiate between duplicate and original holograms.

Conclusion

In this study, a portable and low-cost module has been designed to capture, classify, and detect duplicate holograms. This design reduces the external light noise that causes uneven reflection patterns and provides an even light distribution over the surface of the hologram. A VIS-HSI algorithm has also been built to convert the RGB images captured by the Raspberry Pi camera into hyperspectral images. A region of interest is selected from the hyperspectral image, the mean gray value is measured, and a 98% confidence interval is calculated in the visible band between 400 and 700 nm. Based on the MGVs, the holograms are classified as either original or duplicate. Finally, a stand-alone Python-based Windows application has been built to control the Raspberry Pi microprocessor and access the real-time feed of the Raspberry Pi camera housed in the module. Based on the user-defined narrowband wavelength values, the application analyzes the hologram and displays the class to which the sample belongs. The future scope of this study is to use the same methodology to distinguish counterfeit currencies from original ones. The same design could also be used to develop an NIR-HSI conversion algorithm and a low-cost NIR-HSI module.

Author contributions

Conceptualization, H.-C.W. and A.M.; data curation, Y.-M.T. and A.M.; formal analysis, A.M.; funding acquisition, F.-C.L. and H.-C.W.; investigation, Y.-M.T. and A.M.; methodology, H.-C.W. and A.M.; project administration, Y.-M.T., A.M. and H.-C.W.; resources, H.-C.W. and A.M.; software, Y.-M.T. and A.M.; supervision, Y.-M.T. and H.-C.W.; validation, Y.-M.T. and A.M.; writing—original draft, A.M.; writing—review and editing, A.M. and H.-C.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Science and Technology Council, The Republic of China under the Grants NSTC 111-2221-E-194-007. This work was financially/partially supported by the Advanced Institute of Manufacturing with High-tech Innovations (AIM-HI) and the Center for Innovative Research on Aging Society (CIRAS) from The Featured Areas Research Center Program within the framework of the Higher Education Sprout Project by the Ministry of Education (MOE), and Kaohsiung Armed Forces General Hospital research project MAB108-091 in Taiwan.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Fen-Chi Lin, Email: eses.taiwan@gmail.com.

Hsiang-Chen Wang, Email: hcwang@ccu.edu.tw.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-022-22424-5.

References

- 1. Sumriddetchkajorn S, Intaravanne Y. Hyperspectral imaging-based credit card verifier structure with adaptive learning. Appl. Opt. 2008;47:6594–6600. doi: 10.1364/AO.47.006594.

- 2. Polak A, Kelman T, Murray P, Marshall S, Stothard DJ, Eastaugh N, Eastaugh F. Hyperspectral imaging combined with data classification techniques as an aid for artwork authentication. J. Cult. Herit. 2017;26:1–11. doi: 10.1016/j.culher.2017.01.013.

- 3. Lim H-T, Murukeshan VM. Hyperspectral imaging of polymer banknotes for building and analysis of spectral library. Opt. Lasers Eng. 2017;98:168–175. doi: 10.1016/j.optlaseng.2017.06.022.

- 4. Marques MJ, Green R, King R, Clement S, Hallett P, Podoleanu A. Sub-surface characterisation of latest-generation identification documents using optical coherence tomography. Sci. Justice. 2021;61:119–129. doi: 10.1016/j.scijus.2020.12.001.

- 5. Dal Fovo A, Tserevelakis GJ, Klironomou E, Zacharakis G, Fontana R. First combined application of photoacoustic and optical techniques to the study of an historical oil painting. Eur. Phys. J. Plus. 2021;136:757. doi: 10.1140/epjp/s13360-021-01739-8.

- 6. Zhang H, Hua D, Huang C, Samal SK, Xiong R, Sauvage F, Braeckmans K, Remaut K, De Smedt SC. Materials and technologies to combat counterfeiting of pharmaceuticals: Current and future problem tackling. Adv. Mater. 2020;32:1905486. doi: 10.1002/adma.201905486.

- 7. Martins AR, Talhavini M, Vieira ML, Zacca JJ, Braga JWB. Discrimination of whisky brands and counterfeit identification by UV–Vis spectroscopy and multivariate data analysis. Food Chem. 2017;229:142–151. doi: 10.1016/j.foodchem.2017.02.024.

- 8. Kang D-H, Hong J-H. A study about the discrimination of counterfeit 50,000 won bills using optical fiber sensor. J. Korean Soc. Manuf. Technol. Eng. 2012;21:15–20.

- 9. Shaffer, D.K. Forensic document analysis using scanning microscopy, in Proceedings of the Scanning Microscopy 2009. 398–408 (2009).

- 10. Peng C, Jiang L, Wang H-X, Sun H-H, Zhang Y-L, Liang R-H. Fingerprint anti-counterfeiting method based on optical coherence tomography and optical micro-angiography. Acta Photonica Sin. 2019;48:0611001. doi: 10.3788/gzxb20194806.0611001.

- 11. Marques, M. J., Green, R., King, R., Clement, S., Hallett, P., & Podoleanu, A. Non-destructive identification document inspection with swept-source optical coherence tomography imaging, in Proceedings of the European Conference on Biomedical Optics. EW4A. 6 (2021).

- 12. Gao R, Xu Z, Ren Y, Song L, Liu C. Nonlinear mechanisms in photoacoustics: Powerful tools in photoacoustic imaging. Photoacoustics. 2021;22:100243. doi: 10.1016/j.pacs.2021.100243.

- 13. Hosseinaee Z, Le M, Bell K, Reza PH. Towards non-contact photoacoustic imaging. Photoacoustics. 2020;20:100207. doi: 10.1016/j.pacs.2020.100207.

- 14. Saif F, Yaseen S, Alameen A, Mane S, Undre P. Identification and characterization of Aspergillus species of fruit rot fungi using microscopy, FT-IR, Raman and UV–Vis spectroscopy. Spectrochim. Acta A Mol. Biomol. Spectrosc. 2021;246:119010. doi: 10.1016/j.saa.2020.119010.

- 15. Schilling C, Hess C. Real-time observation of the defect dynamics in working Au/CeO2 catalysts by combined operando Raman/UV–Vis spectroscopy. J. Phys. Chem. C. 2018;122:2909–2917. doi: 10.1021/acs.jpcc.8b00027.

- 16. Mukundan A, Tsao Y-M, Artemkina SB, Fedorov VE, Wang H-C. Growth mechanism of periodic-structured MoS2 by transmission electron microscopy. Nanomaterials. 2022;12:135. doi: 10.3390/nano12010135.

- 17. Mukundan A, Feng S-W, Weng Y-H, Tsao Y-M, Artemkina SB, Fedorov VE, Lin Y-S, Huang Y-C, Wang H-C. Optical and material characteristics of MoS2/Cu2O sensor for detection of lung cancer cell types in hydroplegia. Int. J. Mol. Sci. 2022;23:4745. doi: 10.3390/ijms23094745.

- 18. Michaloudis I, Kanamori K, Pappa I, Kehagias N. U (rano) topia: Spectral skies and rainbow holograms for silica aerogel artworks. J. Sol-Gel Sci. Technol. 2022;5:1–12. doi: 10.1007/s10971-021-05676-x.

- 19. Bessmel’tsev V, Vileiko V, Maksimov M. Method for measuring the main parameters of digital security holograms for expert analysis and real-time control of their quality. Optoelectron. Instrum. Data Process. 2020;56:122–133. doi: 10.3103/S875669902002003X.

- 20. Ay B. Open-set learning-based hologram verification system using generative adversarial networks. IEEE Access. 2022;10:25114–25124. doi: 10.1109/ACCESS.2022.3155870.

- 21. Jiménez-Carvelo AM, Martin-Torres S, Cuadros-Rodríguez L, González-Casado A. 6-Nontargeted fingerprinting approaches. In: Galanakis CM, editor. Food Authentication and Traceability. Academic Press; 2021. pp. 163–193.

- 22. Vasefi F, MacKinnon N, Farkas DL. Chapter 16—hyperspectral and multispectral imaging in dermatology. In: Hamblin MR, Avci P, Gupta GK, editors. Imaging in Dermatology. Academic Press; 2016. pp. 187–201.

- 23. Khan MH, Saleem Z, Ahmad M, Sohaib A, Ayaz H, Mazzara M, Raza RA. Hyperspectral imaging-based unsupervised adulterated red chili content transformation for classification: Identification of red chili adulterants. Neural Comput. Appl. 2021;33:14507–14521. doi: 10.1007/s00521-021-06094-4.

- 24. Faltynkova A, Johnsen G, Wagner M. Hyperspectral imaging as an emerging tool to analyze microplastics: A systematic review and recommendations for future development. Microplast. Nanoplast. 2021;1:1–19. doi: 10.1186/s43591-021-00014-y.

- 25. Tsai C-L, Mukundan A, Chung C-S, Chen Y-H, Wang Y-K, Chen T-H, Tseng Y-S, Huang C-W, Wu I-C, Wang H-C. Hyperspectral imaging combined with artificial intelligence in the early detection of esophageal cancer. Cancers. 2021;13:4593. doi: 10.3390/cancers13184593.

- 26. Chen C-W, Tseng Y-S, Mukundan A, Wang H-C. Air pollution: sensitive detection of PM2.5 and PM10 concentration using hyperspectral imaging. Appl. Sci. 2021;11:4543. doi: 10.3390/app11104543.

- 27. Hou W, Wang J, Xu X, Reid JS, Janz SJ, Leitch JW. An algorithm for hyperspectral remote sensing of aerosols: 3. Application to the GEO-TASO data in KORUS-AQ field campaign. J. Quant. Spectrosc. Radiat. Transf. 2020;253:107161. doi: 10.1016/j.jqsrt.2020.107161.

- 28. Lu B, Dao PD, Liu J, He Y, Shang J. Recent advances of hyperspectral imaging technology and applications in agriculture. Remote Sens. 2020;12:2659. doi: 10.3390/rs12162659.

- 29. Mukundan, A., Patel, A., Saraswat, K.D., Tomar, A., Kuhn, T. Kalam Rover, in AIAA SCITECH 2022 Forum.

- 30. Lu Y, Saeys W, Kim M, Peng Y, Lu R. Hyperspectral imaging technology for quality and safety evaluation of horticultural products: A review and celebration of the past 20-year progress. Postharvest Biol. Technol. 2020;170:111318. doi: 10.1016/j.postharvbio.2020.111318.

- 31. Stuart MB, McGonigle AJ, Willmott JR. Hyperspectral imaging in environmental monitoring: A review of recent developments and technological advances in compact field deployable systems. Sensors. 2019;19:3071. doi: 10.3390/s19143071.

- 32. Ishida T, Kurihara J, Viray FA, Namuco SB, Paringit EC, Perez GJ, Takahashi Y, Marciano JJ., Jr A novel approach for vegetation classification using UAV-based hyperspectral imaging. Comput. Electron. Agric. 2018;144:80–85. doi: 10.1016/j.compag.2017.11.027.

- 33. Bishop MP, Giardino JR. 1.01—Technology-driven geomorphology: Introduction and overview. In: Shroder JF, editor. Treatise on Geomorphology. 2. Academic Press; 2022. pp. 1–17.

- 34. Ozdemir A, Polat K. Deep learning applications for hyperspectral imaging: A systematic review. J. Inst. Electron. Comput. 2020;2:39–56. doi: 10.33969/JIEC.2020.21004.

- 35. Schneider A, Feussner H. Chapter 5—Diagnostic procedures. In: Schneider A, Feussner H, editors. Biomedical Engineering in Gastrointestinal Surgery. Academic Press; 2017. pp. 87–220.

- 36. Özdoğan G, Lin X, Sun D-W. Rapid and noninvasive sensory analyses of food products by hyperspectral imaging: Recent application developments. Trends Food Sci. Technol. 2021;111:151–165. doi: 10.1016/j.tifs.2021.02.044.

- 37. Chandrasekaran I, Panigrahi SS, Ravikanth L, Singh CB. Potential of near-infrared (NIR) spectroscopy and hyperspectral imaging for quality and safety assessment of fruits: An overview. Food Anal. Methods. 2019;12:2438–2458. doi: 10.1007/s12161-019-01609-1.

- 38. Dong X, Jakobi M, Wang S, Köhler MH, Zhang X, Koch AW. A review of hyperspectral imaging for nanoscale materials research. Appl. Spectrosc. Rev. 2019;54:285–305. doi: 10.1080/05704928.2018.1463235.

- 39. Soukup D, Huber-Mörk R. Mobile hologram verification with deep learning. IPSJ Trans. Comput. Vis. Appl. 2017;9:1–6.

- 40. Guerriero S, Pilloni M, Alcazar J, Sedda F, Ajossa S, Mais V, Melis GB, Saba L. Tissue characterization using mean gray value analysis in deep infiltrating endometriosis. Ultrasound Obstet. Gynecol. 2013;41:459–464. doi: 10.1002/uog.12292.

- 41. Arslan H, Ozcan U, Durmus Y. Evaluation of mean gray values of a cat with chronic renal failure: Case report. Arquivo Brasileiro de Medicina Veterinária e Zootecnia. 2021;73:438–444. doi: 10.1590/1678-4162-12172.

- 42. Alcázar JL, León M, Galván R, Guerriero S. Assessment of cyst content using mean gray value for discriminating endometrioma from other unilocular cysts in premenopausal women. Ultrasound Obstet. Gynecol. 2010;35:228–232. doi: 10.1002/uog.7535.

- 43. Lakshmanaprabu S, Mohanty SN, Shankar K, Arunkumar N, Ramirez G. Optimal deep learning model for classification of lung cancer on CT images. Future Gener. Comput. Syst. 2019;92:374–382. doi: 10.1016/j.future.2018.10.009.

- 44. Frighetto-Pereira, L., Menezes-Reis, R., Metzner, G.A., Rangayyan, R.M., Azevedo-Marques, P.M., & Nogueira-Barbosa, M.H. Semiautomatic classification of benign versus malignant vertebral compression fractures using texture and gray-level features in magnetic resonance images, in Proceedings of the 2015 IEEE 28th International Symposium on Computer-Based Medical Systems. 88–92 (2015).

- 45. Li J, Rao X, Ying Y. Detection of common defects on oranges using hyperspectral reflectance imaging. Comput. Electron. Agric. 2011;78:38–48. doi: 10.1016/j.compag.2011.05.010.

- 46. Deng X, Huang Z, Zheng Z, Lan Y, Dai F. Field detection and classification of citrus Huanglongbing based on hyperspectral reflectance. Comput. Electron. Agric. 2019;167:105006. doi: 10.1016/j.compag.2019.105006.

- 47. Sun Y, Wei K, Liu Q, Pan L, Tu K. Classification and discrimination of different fungal diseases of three infection levels on peaches using hyperspectral reflectance imaging analysis. Sensors. 2018;18:1295. doi: 10.3390/s18041295.

- 48. Lim, H.-T., Matham, M.V. Instrumentation challenges of a pushbroom hyperspectral imaging system for currency counterfeit applications, in Proceedings of the International Conference on Optical and Photonic Engineering (icOPEN 2015). 658–664 (2015).

- 49. Qin J, Burks TF, Kim MS, Chao K, Ritenour MA. Citrus canker detection using hyperspectral reflectance imaging and PCA-based image classification method. Sens. Instrum. Food Qual. Saf. 2008;2:168–177. doi: 10.1007/s11694-008-9043-3.
