Abstract

In two studies, we examined whether open science practices, such as making the materials, data, and code of a study openly accessible, positively affect public trust in science. Furthermore, we investigated whether the potentially trust-damaging effects of research being funded privately (e.g. by a commercial enterprise) may be buffered by such practices. After preregistering six hypotheses, we conducted a survey study (Study 1; N = 504) and an experimental study (Study 2; N = 588) with two samples from the German general population. In both studies, we found evidence for positive effects of open science practices on trust, although the results of Study 2 were less consistent. We did not, however, find evidence for the hypothesized buffering effect. We conclude that while open science practices may contribute to increasing trust in science, the importance of making their use visible should not be underestimated.

Keywords: epistemic trust, experimental study, open science practices, science communication, survey study, trust in science

1. Introduction

Recently, Wingen et al. (2020) argued that “in the current political climate [. . .] the credibility of scientific evidence is questioned and science is threatened by defunding” (p. 461). Such developments have been fueled, among other things, by unsuccessful attempts to replicate key findings (e.g. in psychology; Open Science Collaboration, 2015) and by scientists adopting questionable research practices (QRPs; Anvari and Lakens, 2018). To counterbalance these issues, many scientific fields have seen a shift toward open science (Chambers and Etchells, 2018; Lewandowsky and Bishop, 2016; Vazire, 2018; Wallach et al., 2018), generally defined as

the practice of science in such a way that others can collaborate and contribute, where research data, lab notes and other research processes are freely available, under terms that enable reuse, redistribution and reproduction of the research and its underlying data and methods. (Bezjak et al., 2018: 9)

Despite increasing adherence to open science practices (OSPs) in the scientific community, little is known about the public’s expectations regarding such practices and their effects on the perceived trustworthiness of science. This gap is striking because knowing whether OSPs increase trust has considerable implications, both for the research process itself and for the communication of scientific findings. For example, recent research on COVID-19 has shown that individuals who trust in science as an authority for justifying knowledge claims are less likely to believe in COVID-19-related conspiracy theories (Beck et al., 2020), are more likely to engage in protective behaviors (Soveri et al., 2021), and exhibit a higher willingness to get vaccinated (Rosman et al., 2021). Given such positive effects, it is important to examine the predictors of epistemic trust, and OSPs are an especially promising candidate in this regard since they relate directly to the (quality of the) research process itself. For these reasons, this article addresses the following research questions: To what extent does the public value OSPs? Is it possible to increase the public’s trust in science through such practices? Do OSPs buffer reductions in trust evoked by commercially funded research? In addressing these questions, the present investigation attempts to map out the general public’s assumptions and values concerning open and transparent scientific practices, with a special focus on whether such practices contribute to increasing trust in science. We focus on the German general population but expect our findings to generalize to other populations as well, because our study materials are largely independent of any national context.

This work is divided into two parts. In the first study, we ask respondents about their opinions toward science and the scientific process in an online survey, thus mapping out the public’s general expectations toward OSPs and their potential trust-enhancing effects. In the second study, we take a more bias-controlled approach to replicate and extend these findings: Drawing on a vignette-based experimental design, we investigate whether certain scientific practices (i.e. OSPs and public vs private funding) affect individual trust in and opinions toward science.

2. Background

OSPs and trust in science

We suggest two central mechanisms by which OSPs may influence trust in science. First, on the level of science itself, research quality may increase if scientists adopt OSPs on a larger scale: OSPs restrict researchers’ degrees of freedom, thereby reducing malpractices such as HARKing (hypothesizing after the results are known; Kerr, 1998) or p-hacking (adopting measures to deliberately obtain statistically significant results; Wicherts et al., 2016). This, in turn, reduces the chances of obtaining false-positive results (Wicherts et al., 2016), thus increasing replicability rates (Munafò, 2016; Munafò et al., 2017). Given that replications contribute substantially to trust in psychological theories (van den Akker et al., 2018) and that low replicability has been shown to impair public trust in science (Anvari and Lakens, 2018; Chopik et al., 2018; Hendriks et al., 2020; Wingen et al., 2020), increased trust is a likely result of OSPs. It should, however, be noted that some of these assumptions cannot be tested empirically, and that at least one study found that low replicability has little detrimental effect on public perceptions of science (Mede et al., 2021). Furthermore, increasing trust in science by improving the quality of science may take several years.

As a second (and more direct) mechanism by which OSPs may influence public trust in science, we suggest that visible OSPs may increase trust because recipients may perceive them as an indicator of quality. For example, transparency suggests that the person (or organization) in question has nothing to hide (Bachmann et al., 2015), acts responsibly (Medina and Rufín, 2015), and allows independent verification of its claims (Lupia, 2017; Nosek and Lakens, 2014). Moreover, the public may simply expect scientists to work openly and transparently (Fitzpatrick et al., 2020), in which case trust should decrease if scientists do not meet such expectations. Evidence for both of these assumptions comes from a recent survey conducted by the Pew Research Center, which found that “a majority of U.S. adults (57%) say they trust scientific research findings more if the scientists make their data publicly available” (Pew Research Center, 2019: 24). Moreover, in a 2011 survey of the UK public, 33% of participants mentioned “If I could see the original study for myself” (Ipsos MORI, 2011: 39) as a factor that would increase their trust.

However, while such survey studies offer robust evidence due to their large and heterogeneous samples, they usually rely on self-reports and may thus be subject to biases such as social desirability. Hence, taking an additional look at experimental studies (which offer better bias control) is well advised. Unfortunately, as outlined in the next paragraph, the few experimental studies on the relationship between OSPs and trust in science have yielded rather inconclusive results.

In fact, of the four experimental studies that (to our knowledge) have been conducted to date, only one found evidence for beneficial effects of OSPs on trust. By presenting participants with journal article title pages that either did or did not include open science badges, Schneider et al. (2022) found that badges indicating adherence to OSPs positively influenced trust in scientists. However, two other studies yielded inconclusive results, and one even found evidence against OSPs: First, Field et al. (2018) had 209 academics from the field of psychology read descriptions of empirical studies in which they experimentally manipulated whether the studies were preregistered or not. Subsequently, they assessed this manipulation’s effect on epistemic trust. However, due to a failed manipulation check, 86% of their data had to be excluded from their (Bayesian) analyses, resulting in inconclusive evidence for almost all research questions. In addition, this study examined an expert sample (i.e. academics) rather than the general population. Second, Wingen et al. (2020) assessed whether decreased trust in science elicited by informing participants about replication failures may be buffered by additionally informing them about proposed reforms (i.e. open science and increased transparency). However, their analyses yielded no significant results; hence, informing participants about proposed reforms did not seem to be effective in repairing trust (Wingen et al., 2020). Third, Anvari and Lakens (2018) tested whether trust in psychological science would be affected by informing participants about replication failures, QRPs, and proposed reforms. Their results suggested “that learning about all three aspects of the replicability crisis (replication failures, criticisms of QRPs, and suggested reforms) reduces [emphasis added] trust in future research from psychological science” (Anvari and Lakens, 2018: 281–282).

The present research

It is noteworthy that these four experimental studies all relate to the fields of psychology and/or educational research, whereas the survey studies presented earlier focus on science as a whole. Nevertheless, the discrepancies between the results of the two types of studies are intriguing. Hence, considering that empirical research on the effects of open science is still in its infancy and that many more studies are needed, the first part of this research has two objectives: First, we aim to replicate the survey results presented above (i.e. on the beneficial effects of OSPs on trust). To allow for a more fine-grained interpretation of our results, we ask participants not only about their trust in science as a whole but also about two specific academic fields, namely psychology and medicine. Second, to shed light on the inconclusive results of existing experimental research (see above), we combine this survey-based approach with a scenario-based experimental approach to determine whether OSPs indeed have causal effects on trust in science.

Furthermore, OSPs such as increasing research transparency are by no means the only factor influencing perceptions of trust (Bachmann et al., 2015). For example, several studies have consistently found that the public’s trust in research funded by private organizations (e.g. commercial enterprises) is significantly lower than its trust in research funded by public institutions such as universities (Critchley, 2008; Krause et al., 2019; National Science Board, 2018; Pew Research Center, 2019). Interestingly, using a mediation analysis, Critchley (2008) found that the higher trust in publicly funded research is partially due to the fact that public scientists are “more likely to deliver any benefits from the research to the public” (Critchley, 2008: 320). Since this assumption received empirical support in a 2016 survey of scientific authors (Boselli and Galindo-Rueda, 2016), it offers an interesting connection to OSPs: It suggests that the decreases in trust elicited by privately funded research might be buffered by delivering more research benefits to the public, a central goal of the open science movement (Lyon, 2016; Munafò et al., 2017). In the second part of the present research, we will therefore, apart from trying to replicate the aforementioned effects regarding public versus private funding, investigate interactions between type of funding (public vs private) and the adoption of OSPs (yes vs no) on trust in science.

Preregistration and hypotheses

As stated above, the objective of the present research is twofold. First, we examine, using survey questions and an experimental approach, whether OSPs have a positive effect on public trust in science. Second, we experimentally investigate whether the trust-damaging effects of private research funding may be buffered by OSPs. In all hypotheses, we focus on the OSP of making materials, data, and analysis code openly accessible (as has been done before; e.g. Schneider et al., 2022). In line with our deliberations and the literature presented above, we propose the following hypotheses, of which the first three are based on survey questions (see Table 1) and the last three make use of a vignette-based experimental 3 (OSP vs no OSP vs OSP not mentioned) × 2 (public vs private funding) between-person design. All hypotheses and the corresponding study procedures, target sample sizes, manipulation checks, inference criteria, and analysis procedures were preregistered at PsychArchives (Rosman et al., 2020):

Table 1.

Survey questions (Study 1).

| ID | Wording | Response format | M | SD | Positive responses (%) |
|---|---|---|---|---|---|
| SQ1 | How important do you think it is that scientific results are made available to the public free of charge (e.g., on the Internet)? | 7-point scale, not important at all to very important | 5.96 | 1.19 | 87.2 |
| SQ2 | How important do you think it is that the following scientific results are made available to the public free of charge (e.g., on the Internet)? Item SQ2c: Study materials, datasets, and analysis code of individual studies | 7-point scale, not important at all to very important | 5.09 | 1.32 | 64.3 |
| SQ3 | My trust in a scientific study increases when I see scientists publicly sharing their study materials, their datasets, and their analysis code. | 7-point scale, do not agree at all to fully agree | 5.25 | 1.26 | 74.0 |
| SQ4 | My trust in a study from the field of psychology increases when I see scientists publicly sharing their study materials, their datasets, and their analysis code. | 7-point scale, do not agree at all to fully agree | 5.11 | 1.28 | 68.7 |
| SQ5 | My trust in a study from the field of medicine increases when I see scientists publicly sharing their study materials, their datasets, and their analysis code. | 7-point scale, do not agree at all to fully agree | 5.36 | 1.25 | 76.6 |
| SQ6 | My trust in a scientific study increases when I see that it was funded publicly (instead of by a commercial company). | 7-point scale, do not agree at all to fully agree | 4.74 | 1.47 | 53.4 |
| SQ7 | My trust in a study from the field of psychology increases when I see that it was funded publicly (instead of by a commercial company). | 7-point scale, do not agree at all to fully agree | 4.70 | 1.49 | 53.2 |
| SQ8 | My trust in a study from the field of medicine increases when I see that it was funded publicly (instead of by a commercial company). | 7-point scale, do not agree at all to fully agree | 4.81 | 1.49 | 56.9 |

Note. N = 504; M = mean; SD = standard deviation; positive responses = percentage of participants choosing response options 5–7 on the 7-point scale; all items were administered in German (see preregistration; Rosman et al., 2020) and have been translated for the present paper by the authors. The SQ2 question included two additional items not displayed here (SQ2a: plain language summaries; SQ2b: journal articles and scholarly books; see preregistration for details).

Hypothesis 1. More than half of the participants indicate that it is “rather important,” “important,” or “very important” that researchers make their findings openly accessible to the public [survey question SQ1].

Hypothesis 2. More than half of the participants indicate that it is “rather important,” “important,” or “very important” that researchers make their materials, data, and analysis code openly accessible to the public [survey question SQ2c].

Hypothesis 3. More than half of the participants “rather agree,” “agree,” or “fully agree” with the statement that their trust in science (H3a)/in psychological science (H3b)/in medical science (H3c) increases if they see that researchers make their materials, data, and analysis code openly accessible [survey questions: H3a: SQ3, H3b: SQ4 and H3c: SQ5].

Hypothesis 4. Participants confronted with vignettes describing empirical studies that use OSPs (i.e. illustrating that the researchers made their materials, data, and analysis code openly accessible) report higher trust in these studies compared to participants confronted with vignettes describing these same empirical studies but mentioning that either no OSPs were implemented (H4a) or not mentioning whether OSPs were implemented or not (H4b). Statistically speaking, we expect a significant main effect of the “open science practices” factor.

Hypothesis 5. Participants confronted with vignettes describing empirical studies that use public funding (i.e. funded by a university) report higher trust in these studies compared to participants confronted with vignettes describing these same empirical studies but mentioning that the studies were privately funded (i.e. by a commercial enterprise). Statistically speaking, we expect a significant main effect of the “funding” factor.

Hypothesis 6. In the aforementioned vignette experiment, the trust-damaging effects of private funding (see Hypothesis 5) are buffered by OSPs: The difference in trust between privately and publicly funded research is larger for studies not employing OSPs (H6a) or making no mention of OSPs (H6b) than for studies employing OSPs. Statistically speaking, we expect a significant interaction between the “open science” and “funding” factors.

3. Study 1

Design and procedure

Study 1 tested Hypotheses 1–3 and was realized as an online survey with a cross-sectional correlational design and no experimental factors. All study procedures were carried out in one session. After participant screening and the administration of demographic questions, general covariates (e.g. trust in science) were measured. Subsequently, the survey questions were administered. All study materials, including the vignettes, measures, covariates, and manipulation checks, can be found in Rosman et al. (2022c). Reverse-worded items were recoded, and scale means were calculated where appropriate.

Participants

Since Hypotheses 1–3 require no inferential testing, we preregistered, on pragmatic grounds, a target sample size of N = 500 participants (Rosman et al., 2020). The sample was recruited by a commercial panel provider in January 2021. Data were collected using the survey software Unipark, and all study materials were administered in German. Participants completed the survey on their own devices and were paid for their participation by the panel provider. Agreeing to an informed consent form that included detailed information on study procedures, data privacy, and participants’ rights was mandatory for participation. Participants needed to be German-speaking and between 18 and 65 years of age. Furthermore, using quota settings in the survey software, we ensured that the sample approximately corresponded to the German general population regarding gender distribution (50% females, 50% males) and age (in 7-year intervals). In addition, the panel provider ensured that there was no overlap in participants between Study 1 and Study 2.

Total sample size was N = 504. Gender was distributed evenly, with 252 women and 252 men. Age distribution was as follows: 11.1% between 18 and 24 years; 14.5% between 25 and 31 years; 14.5% between 32 and 38 years; 13.3% between 39 and 45 years; 14.7% between 46 and 52 years; 17.7% between 53 and 59 years; and 14.3% between 60 and 66 years. This approximately corresponds to the age distribution of the German general population. Education levels were as follows: lower secondary school leaving qualification (10.9%), intermediate secondary school qualification (“Mittlere Reife”; 32.5%), higher education entrance qualification (“Abitur,” the German upper secondary school leaving exam; 55.4%), no qualification (0.2%), and still in secondary education (1.0%).

Measures

The survey questions relevant for testing Hypotheses 1–3 and for our exploratory analyses (see below) can be found in Table 1. Questions were administered in identical order to all participants.

Results

No major protocol deviations occurred, which is why all calculations were performed using the full dataset (N = 504). Study data as well as the analysis code and output can be found in Rosman et al. (2022a, 2022b). Descriptive statistics on the study variables can be found in Table 1.

Confirmatory analyses

Hypotheses 1–3 were supported. Specifically, 87.2% of participants indicated that they view it as “rather important,” “important,” or “very important” that researchers make their findings openly accessible to the public, thus providing strong support for Hypothesis 1. Furthermore, 64.3% of participants indicated that it is “rather important,” “important,” or “very important” that researchers make their materials, data, and analysis code openly accessible, thus supporting Hypothesis 2. Regarding Hypothesis 3a, 74.0% of participants “rather agreed,” “agreed,” or “fully agreed” with the statement that their trust in a scientific study increased if they saw that researchers made their materials, data, and analysis code openly accessible. When asked the same question about a psychological study (Hypothesis 3b), the respective proportion was 68.7%, and when asked about a study from the medical domain (Hypothesis 3c), the proportion was 76.6%. Detailed analyses can be found in the online supplement in PsychArchives (Rosman et al., 2022a).

Exploratory analyses

To test whether our descriptive results for Hypotheses 1–3 hold up to inferential testing, we dichotomized the corresponding variables by assigning the value 1 to response options indicating a positive response (e.g. “rather important,” “important,” or “very important” for Hypothesis 1) and by coding all other substantive (i.e. non-missing) response options as 0. Subsequently, we carried out one-sample t-tests comparing the means of these dichotomous variables against 0.5. Since the mean of a 0/1 variable equals the proportion of participants coded 1, a mean above 0.5 together with a significant t-test indicates that the percentage of participants with a positive response is significantly higher than 50%, which allows us to test Hypotheses 1–3 for statistical significance. These analyses revealed full support for Hypothesis 1 (t(503) = 24.83, p < .001), for Hypothesis 2 (t(503) = 6.69, p < .001), and for Hypotheses H3a (t(503) = 12.28, p < .001), H3b (t(503) = 9.02, p < .001), and H3c (t(503) = 14.08, p < .001), thus underlining that the public views open access and open science as important, and that the latter may contribute to trust in science.
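Because the mean of a 0/1 variable is the proportion of 1s, testing that mean against 0.5 amounts to testing whether more than half of the sample responded positively. The following minimal Python sketch illustrates the two steps, assuming (as in the note to Table 1) that options 5–7 count as positive. The function names are hypothetical, and the 439 positive responses are a hypothetical count chosen because they reproduce the reported t(503) = 24.83 for Hypothesis 1; they are not the raw data.

```python
import math
from statistics import mean, stdev


def dichotomize(responses, positive=(5, 6, 7)):
    """Code positive 7-point responses as 1 and other substantive responses as 0.

    Missing responses (None) are dropped rather than coded.
    """
    return [1 if r in positive else 0 for r in responses if r is not None]


def one_sample_t(values, mu0=0.5):
    """Return (t, df) for a one-sample t-test of mean(values) against mu0."""
    n = len(values)
    se = stdev(values) / math.sqrt(n)  # stdev uses the n - 1 denominator
    return (mean(values) - mu0) / se, n - 1


# Hypothetical responses: 439 of 504 participants choose one of the options 5-7.
responses = [6] * 439 + [3] * 65
t, df = one_sample_t(dichotomize(responses))
print(f"t({df}) = {t:.2f}")  # t(503) = 24.83
```

For p-values, `scipy.stats.ttest_1samp(values, 0.5)` computes the same t statistic on the dichotomized values.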

As additional exploratory analyses, we took a closer look at the SQ4 and SQ5 variables (see Table 1) and found that a higher percentage of participants indicated that OSPs would increase trust in medicine compared to psychology (76.6% vs 68.7%). To test this for statistical significance, we conducted a paired-samples t-test comparing the means (see Table 1) of the two variables. This analysis resulted in a highly significant difference (t(503) = 7.36, p < .001; Cohen’s U3 = 0.37). In addition, we investigated whether funding affects trust. In this analysis, we found that 53.4% of participants “rather agreed,” “agreed,” or “fully agreed” with the statement that their trust in a scientific study increased if they saw that it was funded publicly (SQ6 variable). This proportion was slightly lower with regard to trust in a psychological study (53.2%) and slightly higher with regard to a study from the field of medicine (56.9%). A paired-samples t-test indicated a significant difference between the means of the two variables (SQ7 and SQ8; see Table 1; t(503) = 3.21, p < .01; Cohen’s U3 = 0.44).
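Cohen’s U3 is defined through the standard normal distribution function, U3 = Φ(d). The two reported U3 values are reproduced to two decimals if d is taken to be the paired-samples effect size d_z = t/√n and the difference is oriented as psychology minus medicine (so that t is negative). Whether U3 was computed exactly this way is an assumption; the helper name below is hypothetical.

```python
import math
from statistics import NormalDist


def u3_from_paired_t(t, n):
    """Cohen's U3 = Phi(d_z), where d_z = t / sqrt(n) for a paired-samples t-test with n pairs."""
    d_z = t / math.sqrt(n)
    return NormalDist().cdf(d_z)


# Assumed sign convention: psychology item minus medicine item, so t < 0.
print(round(u3_from_paired_t(-7.36, 504), 2))  # SQ4 vs SQ5: 0.37
print(round(u3_from_paired_t(-3.21, 504), 2))  # SQ7 vs SQ8: 0.44
```

A U3 below 0.5 then simply says that the psychology item tended to score lower than the medicine item.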

Finally, we conducted a number of exploratory one-factorial analyses of variance (ANOVAs) to test whether participants’ responses to the survey questions (SQ1–SQ8; see Table 1) differed with regard to the seven age groups (see Participants section), gender, and educational level. The analyses revealed no significant differences regarding age, but significant gender differences for SQ3, SQ4, and SQ5. More specifically, men were more likely to agree with the statement that their trust in a scientific study would increase if they saw scientists publicly sharing their study materials, datasets, and analysis code (SQ3; F(1, 504) = 8.18, p = .004, ηp² = .016), and the analyses also yielded significant results when this statement focused on psychology (SQ4; F(1, 504) = 5.97, p = .015) or medicine (SQ5; F(1, 504) = 8.18, p = .004). In addition, a significant main effect of participants’ educational level¹ was found for SQ1 and SQ2c: Participants with higher educational levels found it more important that scientific results are made available to the public (SQ1; F(2, 498) = 6.31, p = .002) and also considered it more important that the study materials, datasets, and analysis code of individual studies are made available (SQ2c; F(2, 498) = 3.14, p = .044). Tukey’s honestly significant difference (HSD) post hoc tests revealed that in both cases, only the difference between the lower secondary school leaving qualification and the higher education entrance qualification (i.e. between the lowest and the highest educational level) was statistically significant (see Rosman et al., 2022a).
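For one-factorial ANOVAs like these, partial eta squared can be recovered from a reported F statistic and its degrees of freedom as ηp² = F · df_effect / (F · df_effect + df_error). The sketch below applies this to the tests above, using the degrees of freedom exactly as printed; the first line of output reproduces the reported ηp² = .016 for SQ3, while the other values are derived approximations rather than figures from the original analysis output. The function name is hypothetical.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)


# (label, F, df_effect, df_error) as printed in the text above.
reported_tests = [
    ("SQ3 by gender", 8.18, 1, 504),
    ("SQ4 by gender", 5.97, 1, 504),
    ("SQ5 by gender", 8.18, 1, 504),
    ("SQ1 by education", 6.31, 2, 498),
    ("SQ2c by education", 3.14, 2, 498),
]

for label, f, df1, df2 in reported_tests:
    print(f"{label}: partial eta squared = {partial_eta_squared(f, df1, df2):.3f}")
```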

4. Study 2

Design, procedure, and materials

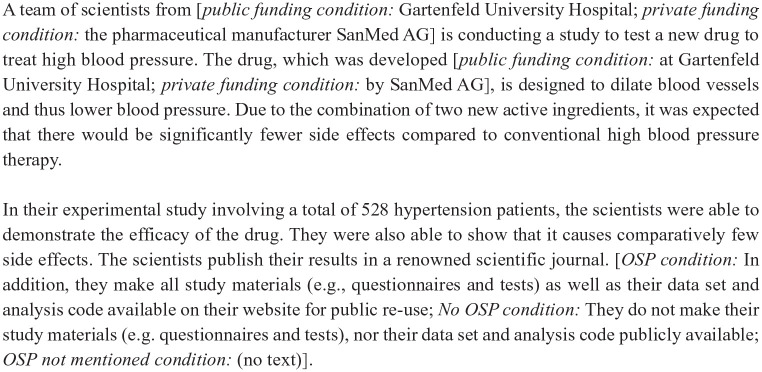

Study 2 tested Hypotheses 4–6 using a mixed experimental design. After participant screening and the assessment of demographic information, general covariates were measured (e.g. trust in science; see preregistration for details), directly followed by a reading task with four vignettes describing empirical studies from medicine and psychology. The experimental manipulation involved systematically varying specific aspects of these vignettes, resulting in a 3 × 2 × 4 mixed design with the between-person factors “open science practices” (three levels) and “type of funding” (two levels; see Table 2) and the within-person factor “texts” (four texts covering the following topics: online training for gifted students; a therapeutic method to treat fear of heights; a medication to treat high blood pressure; a method for the early detection of muscle atrophy). With regard to the “open science practices” factor, the texts varied in that (1) the scientists (allegedly) employed OSPs by making their study materials, data, and analysis code publicly available (OSP condition), (2) the scientists did not employ such OSPs (no OSP condition), or (3) the texts did not mention whether OSPs were employed (OSP not mentioned condition). Regarding the funding factor, the studies were portrayed as being funded (1) privately (e.g. by a commercial enterprise) or (2) publicly (e.g. by a university). A sample text including all experimental manipulations can be found in Figure 1. All study materials, including the vignettes, measures, covariates, and manipulation checks (in German), can be found in Rosman et al. (2022c).

Table 2.

Sample sizes and mean trust ratings across experimental groups (Study 2).

| Open science practices (OSPs) | Public funding | Private funding |
|---|---|---|
| No OSP | n = 95; M = 4.10; SD = 0.71 | n = 100; M = 4.02; SD = 0.84 |
| OSP not mentioned | n = 98; M = 4.26; SD = 0.93 | n = 98; M = 3.97; SD = 0.83 |
| OSP | n = 99; M = 4.21; SD = 0.74 | n = 98; M = 4.10; SD = 0.76 |

Note. Means (M) and standard deviations (SD) of trust ratings were averaged across all four texts.
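Table 2 reports only cell means. To relate them to the main effects addressed by Hypotheses 4 and 5, the sketch below computes n-weighted marginal means for each factor and the public-minus-private difference within each OSP condition (the quantity Hypothesis 6 is about). Because it works from the rounded, text-averaged cell means in Table 2 rather than the raw data, the results are descriptive approximations and no substitute for the preregistered mixed ANOVA; the dictionary keys and function name are hypothetical labels.

```python
# Cell sizes and mean trust ratings from Table 2, keyed by (OSP condition, funding).
CELLS = {
    ("no_osp", "public"): (95, 4.10),
    ("no_osp", "private"): (100, 4.02),
    ("osp_not_mentioned", "public"): (98, 4.26),
    ("osp_not_mentioned", "private"): (98, 3.97),
    ("osp", "public"): (99, 4.21),
    ("osp", "private"): (98, 4.10),
}
OSP_LEVELS = ("no_osp", "osp_not_mentioned", "osp")
FUNDING_LEVELS = ("public", "private")


def marginal_mean(level, factor_index):
    """n-weighted mean over all cells whose factor (0 = OSP, 1 = funding) equals `level`."""
    selected = [(n, m) for key, (n, m) in CELLS.items() if key[factor_index] == level]
    total_n = sum(n for n, _ in selected)
    return sum(n * m for n, m in selected) / total_n


funding_means = {f: marginal_mean(f, 1) for f in FUNDING_LEVELS}
osp_means = {o: marginal_mean(o, 0) for o in OSP_LEVELS}
# Public-minus-private difference in mean trust within each OSP condition.
funding_gaps = {o: CELLS[(o, "public")][1] - CELLS[(o, "private")][1] for o in OSP_LEVELS}

print({f: round(m, 2) for f, m in funding_means.items()})  # {'public': 4.19, 'private': 4.03}
print({o: round(m, 2) for o, m in osp_means.items()})
print({o: round(g, 2) for o, g in funding_gaps.items()})
```

Weighting by cell size matches the unbalanced group sizes; an unweighted average of the cell means would differ only slightly here because the cells are nearly balanced.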

Figure 1.

Sample vignette from the reading task (Study 2).

Note. Underlined passages indicate experimental manipulations. All texts were administered in German (see preregistration; Rosman et al., 2020).

Participants

Prior to data collection, a sample size calculation was performed using G*Power 3.1 (Faul et al., 2009). Assuming a small-to-medium effect size of f = .15, the analysis indicated a required total sample size of N = 576, or n = 96 per group (α = .01666; 1 − β = .90; six groups; four measurement points; correlation among repeated measurements: r = .5).
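As a worked check on these inputs, the following equation assumes that the G*Power procedure used was “ANOVA: Repeated measures, between factors,” whose noncentrality parameter is λ = f² · N · m / (1 + (m − 1)ρ) for m measurement points correlated at ρ. The α of .01666 equals .05/3, which would be consistent with a Bonferroni adjustment for the three experimental hypotheses; that reading is an inference, not something stated in the preregistration text above.

```latex
\lambda = f^{2} \, N \, \frac{m}{1 + (m - 1)\rho}
        = 0.15^{2} \cdot 576 \cdot \frac{4}{1 + 3 \cdot 0.5}
        = 0.0225 \cdot 576 \cdot 1.6
        = 20.736,
\qquad
n_{\text{group}} = \frac{576}{6} = 96
```

Turning λ into the power of .90 additionally requires the noncentral F distribution (available, for example, as `scipy.stats.ncf` in Python), which this sketch omits.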

The sample was recruited by the same panel provider as in Study 1, who also ensured that only participants who had not taken part in Study 1 were invited to Study 2. Eligibility criteria, informed consent, and all other procedures surrounding the data collection were identical to Study 1, and this study was also conducted in January 2021. In total, N = 588 participants² were recruited. Gender was distributed approximately evenly, with 298 women and 290 men. Age distribution was as follows: 11.6% between 18 and 24 years; 14.3% between 25 and 31 years; 14.5% between 32 and 38 years; 12.6% between 39 and 45 years; 14.6% between 46 and 52 years; 18.0% between 53 and 59 years; and 14.5% between 60 and 66 years. Education levels were as follows: lower secondary school leaving qualification (8.7%), intermediate secondary school qualification (“Mittlere Reife”; 35.7%), higher education entrance qualification (“Abitur”; 54.4%), no qualification (0.7%), and still in secondary education (0.5%).

Measures

The main outcome variable of Study 2 was trust in the respective study. As outlined above, this was measured separately for each of the four vignettes. After a short introductory text (“Now please give us a brief assessment of this study”), participants were asked to rate three statements (“This study is trustworthy”; “I immediately believe the result of this study”; and “I trust that this study was conducted correctly”; translation by the authors) on a 6-point scale ranging from 1 (do not agree at all) to 6 (fully agree). Exploratory factor analyses revealed a clear one-dimensional structure of these three items for all four measurement occasions, and scale reliabilities (Cronbach’s alpha) were excellent, ranging from α = .88 to α = .90.
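Cronbach’s alpha compares the summed variances of the individual items with the variance of the sum score: α = k/(k − 1) · (1 − Σσ²ᵢ / σ²_total) for k items. A minimal standard-library sketch follows; the function name is hypothetical, and the three-item example data are invented and merely mimic the 6-point format of the trust items.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha; `items` holds one list of scores per item (same respondents, same order)."""
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    if len({len(col) for col in items}) != 1:
        raise ValueError("all items need scores from the same respondents")
    item_var_sum = sum(variance(col) for col in items)
    sum_scores = [sum(scores) for scores in zip(*items)]  # one sum score per respondent
    return k / (k - 1) * (1 - item_var_sum / variance(sum_scores))


# Hypothetical ratings of six respondents on three 6-point trust items.
example_items = [
    [1, 2, 3, 4, 5, 6],
    [2, 2, 3, 5, 5, 6],
    [1, 3, 3, 4, 6, 5],
]
print(round(cronbach_alpha(example_items), 2))  # 0.97
```

In the study, this would be computed once per vignette (measurement occasion), giving the four reported values between .88 and .90.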

Manipulation checks

OSP factor

A forced-choice question was used to test whether the experimental between-person manipulation of OSPs was successful (“Do the researchers described in the texts make their study materials and their dataset and analysis code openly accessible?”; response options: “Yes, they do,” “No, they do not,” “The text does not make any statement on this”; see preregistration for the original German wordings). According to our preregistered criteria, the manipulation check was successful: As expected, (1) the frequency of responses indicating that the researchers employed OSPs was significantly higher in the OSP condition (n = 146 (49.83%)) than in the two other conditions (n = 56 (19.11%) for the no OSP condition and n = 91 (31.06%) for the OSP not mentioned condition), (2) the frequency of responses indicating that the researchers did not employ OSPs was higher in the no OSP condition (n = 100 (70.42%)) than in the two other conditions (n = 18 (12.68%) for the OSP condition and n = 24 (16.90%) for the OSP not mentioned condition), and (3) the frequency of responses indicating that the text did not mention whether the researchers employed OSPs was higher in the OSP not mentioned condition (n = 81 (52.94%)) than in the two other conditions (n = 33 (21.57%) for the OSP condition and n = 39 (25.49%) for the no OSP condition; χ²(4) = 157.41, p < .001). Interestingly, though, the largest share of participants in the OSP not mentioned condition nevertheless indicated that the researchers had employed OSPs (n = 91 (46.43%), compared with n = 81 (41.33%) participants correctly indicating that the text makes no statement on OSPs). This might be due to a general expectation that scientists make their materials, data, and code openly accessible, even if this is not explicitly mentioned.

Funding factor

Another forced-choice question was used to test whether the experimental (between-person) manipulation of public versus private funding was successful (“Where were the four studies conducted?”; response options: “in a private company (e.g. a firm),” “at a public institution (e.g. a university)”; see preregistration for the original German wordings). As expected, responses indicating that the research was funded privately were significantly more frequent in the private funding condition (n = 165 (90.66%)) than in the public funding condition (n = 17 (9.34%); χ²(1) = 171.41, p < .001). Hence, the manipulation check was successful according to our preregistered criteria, although a rather high number of participants in the private funding condition falsely indicated that the research was funded publicly (n = 131 (44.26%) incorrect vs n = 165 (55.74%) correct responses). In the public funding condition, by contrast, incorrect answers were much less frequent (n = 17 (5.82%) incorrect vs n = 275 (94.18%) correct). Thus, the vast majority of participants in the public funding condition correctly identified the studies as publicly funded, whereas only a slight majority of participants in the private funding condition correctly identified them as privately funded.
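The same check can be run for the funding manipulation. The sketch below assumes the reported statistic is a chi-square test of independence on the 2 × 2 table of condition by response, computed without Yates' continuity correction. That is an assumption on our part: SciPy applies the correction to 2 × 2 tables by default, which would yield a somewhat smaller value. The sketch recomputes χ²(1) from the counts in the text along with the within-condition error rates.

```python
import numpy as np
from scipy.stats import chi2_contingency

# Rows: assigned condition. Columns: response ("private company", "public institution").
observed = np.array([
    [165, 131],  # private funding condition
    [17, 275],   # public funding condition
])

# correction=False disables Yates' continuity correction (SciPy's default for 2 x 2).
chi2, p, dof, _ = chi2_contingency(observed, correction=False)
print(f"chi2({dof}) = {chi2:.2f}, p = {p:.2g}")

# Share of incorrect answers within each condition (private, public).
incorrect = np.array([observed[0, 1], observed[1, 0]]) / observed.sum(axis=1)
print(np.round(100 * incorrect, 2))
```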

Results

No major protocol deviations occurred, which is why all calculations were performed using the full dataset (N = 588). Descriptive statistics of the study variables can be found in Table 2. Study data as well as the analysis code and output can be found in Rosman et al. (2022a, 2022b).

Confirmatory analyses

As specified in our preregistration, we used the standard p < .05 criterion for determining the significance of results. Hypotheses 4–6 were tested in one single³ mixed ANOVA (i.e. an ANOVA including both within- and between-factors⁴). The between-factors for this analysis were OSPs and type of funding (including their interaction); the within-factor was text, and the dependent variable was trust in the respective text.
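To show the logic of the Hypothesis 6 test in isolation, the sketch below assumes that each participant's four trust ratings are first averaged. With complete within-subject data, the between-subjects F tests of a mixed ANOVA equal those of a between-subjects ANOVA on these participant means. The OSP × funding interaction can therefore be tested by comparing a cell-means model with an additive model. The function `interaction_f_test`, the level labels, and the toy data are hypothetical. The authors' analysis code (Rosman et al., 2022a) remains the authoritative implementation. With six cells, df_error = N − 6, which is consistent with the F(2, 582) reported for N = 588.

```python
import numpy as np
from scipy import stats


def interaction_f_test(trust, osp, funding):
    """F test for the OSP x funding interaction on per-participant mean trust.

    Compares a full cell-means model with an additive (no-interaction) model,
    both fitted by ordinary least squares.
    """
    y = np.asarray(trust, dtype=float)
    osp = np.asarray(osp)
    funding = np.asarray(funding)
    osp_levels = np.unique(osp)
    fund_levels = np.unique(funding)

    # Full model: one mean per OSP x funding cell (6 parameters in a 3 x 2 design).
    cells = [(o, f) for o in osp_levels for f in fund_levels]
    x_full = np.column_stack([(osp == o) & (funding == f) for o, f in cells]).astype(float)

    # Additive model: intercept plus dummy codes for OSP and funding, no interaction.
    x_add = np.column_stack(
        [np.ones_like(y)]
        + [(osp == o).astype(float) for o in osp_levels[1:]]
        + [(funding == f).astype(float) for f in fund_levels[1:]]
    )

    def rss(x):
        beta, *_ = np.linalg.lstsq(x, y, rcond=None)
        resid = y - x @ beta
        return float(resid @ resid)

    rss_full, rss_add = rss(x_full), rss(x_add)
    df1 = (len(osp_levels) - 1) * (len(fund_levels) - 1)
    df2 = len(y) - len(cells)
    # Clip tiny negative values caused by floating-point rounding.
    f_value = max(((rss_add - rss_full) / df1) / (rss_full / df2), 0.0)
    p_value = float(stats.f.sf(f_value, df1, df2))
    return f_value, df1, df2, p_value


# Hypothetical toy data: 10 participants per cell, mean trust = 4 + OSP shift + funding shift.
osp_shift = {"none": 0.0, "not_mentioned": 0.1, "osp": 0.2}
funding_shift = {"private": 0.0, "public": 0.3}
trust, osp, funding = [], [], []
for o, shift_o in osp_shift.items():
    for f, shift_f in funding_shift.items():
        for offset in [-1.0, 1.0] * 5:
            trust.append(4.0 + shift_o + shift_f + offset)
            osp.append(o)
            funding.append(f)

f_value, df1, df2, p_value = interaction_f_test(trust, osp, funding)
print(f"F({df1}, {df2}) = {f_value:.3f}, p = {p_value:.3f}")
```

Because the toy cell means are exactly additive, the interaction F is numerically zero here. Shifting a single cell by one scale point yields a positive F. Limiting the sketch to the interaction avoids the choice between Type II and Type III sums of squares, which affects the main-effect tests when group sizes are unequal, as they are in Study 2.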

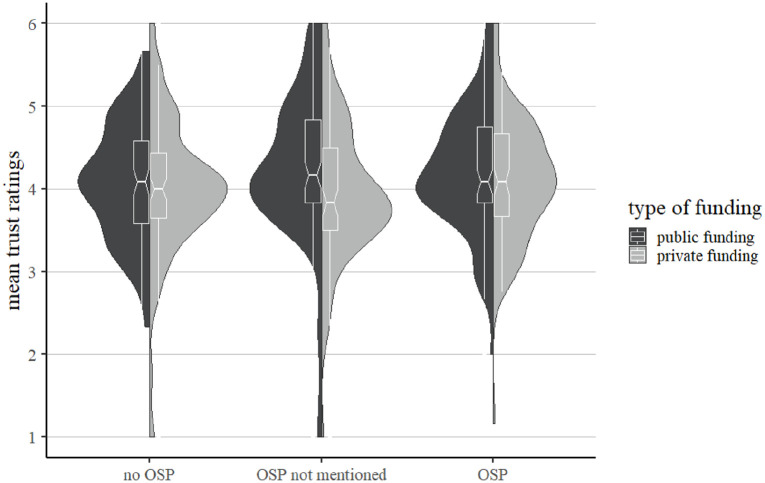

Descriptive results and a visualization of results can be found in Table 2 and Figure 2. Regarding Hypothesis 4, the between-factor OSP had no significant effect (F(2, 582) = 0.71, p = .494); Hypothesis 4 is thus not supported. With regard to Hypothesis 5, differences between the private funding and public funding groups were significant and in the expected direction (F(1, 582) = 5.66, p = .018). Thus, Hypothesis 5 is supported. Finally, we did not find support for Hypothesis 6: the analyses revealed no significant interaction between the two between-factors OSP and funding (F(2, 582) = 0.99, p = .372).
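The F tests above can be translated into an effect size. If the effect size of interest is partial eta squared, the value implied by an F test with df_effect and df_error degrees of freedom is η²p = F · df_effect / (F · df_effect + df_error). This is an assumption: generalized η², which some mixed-ANOVA software reports by default, would give different numbers. The helper below is hypothetical and is meant only as a reader-side check against the authors' output (Rosman et al., 2022a), not as a replacement for their reported figures.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared implied by an F statistic and its degrees of freedom."""
    return f_value * df_effect / (f_value * df_effect + df_error)


# Between-subjects tests from the confirmatory mixed ANOVA (values as reported above).
for label, f_value, df1, df2 in [
    ("OSP (H4)", 0.71, 2, 582),
    ("Funding (H5)", 5.66, 1, 582),
    ("OSP x funding (H6)", 0.99, 2, 582),
]:
    print(f"{label}: partial eta^2 = {partial_eta_squared(f_value, df1, df2):.3f}")
```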

Figure 2.

Visualization of Study 2 results (violin plots combined with notched box plots; McGill et al., 1978). The notches in the box plots indicate 95% confidence intervals of the median.
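For reference, notches are commonly drawn at median ± 1.58 × IQR/√n. R's `boxplot.stats`, for example, uses 1.58, while some other packages use 1.57 or different quartile definitions. The software and constant used for Figure 2 are not stated, so the sketch below is only an illustration under that assumption. `notch_interval` is a hypothetical helper that uses NumPy's default (linear) percentiles.

```python
import numpy as np


def notch_interval(values, constant=1.58):
    """Notch bounds: median +/- constant * IQR / sqrt(n)."""
    x = np.asarray(values, dtype=float)
    q1, median, q3 = np.percentile(x, [25, 50, 75])
    half_width = constant * (q3 - q1) / np.sqrt(x.size)
    return float(median - half_width), float(median + half_width)


# Toy example: ratings 1..9 (median 5, IQR 4 with linear percentiles, n = 9).
print(notch_interval(range(1, 10)))
```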

Exploratory analyses

To further investigate these results, we performed a number of exploratory analyses. First, the mixed ANOVA conducted to test Hypothesis 4 revealed a significant interaction between the within-factor (text) and the between-factor OSPs (F(5.87, 1706.79) = 2.71, p = .014; Greenhouse–Geisser corrected). This means that the differences between the experimental groups (OSP factor) varied across texts, so Hypothesis 4 might be supported for some, but not all, of the texts. To investigate this more closely, we conducted four separate univariate ANOVAs, one for each text, with OSP as between-factor and trust in the respective study as dependent variable. However, none of these analyses revealed significant group differences (all ps > .11).

In addition, we tested whether educational level might have affected our results. The rationale was that participants with a higher educational level might, owing to better reading skills, be more likely to correctly identify the elements of the vignettes pertaining to our experimental manipulation (e.g. the information on whether OSPs were employed or not). To increase the statistical power of these analyses, we first recoded educational level into a dichotomous variable that splits our sample into two larger groups: participants with a higher education entrance qualification (“Abitur”; n = 320) and those without (n = 268). We then added this variable as an additional between-person factor and included its interaction with OSP (for Hypothesis 4) or with funding (for Hypothesis 5) in the analysis. With regard to Hypothesis 4, we found no significant interaction between OSP and education (F(2, 582) = 0.003, p = .997), which suggests that education does not significantly affect our results on Hypothesis 4. The interaction between funding and education was not significant either (F(1, 584) = 0.020, p = .887), so the same holds for Hypothesis 5.
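The Greenhouse–Geisser correction multiplies both degrees of freedom of a within-subject test by ε = (Σλ)² / ((k − 1) Σλ²), where λ are the eigenvalues of the covariance matrix of k − 1 orthonormal contrasts among the k repeated measures. The sketch below implements that formula for a single covariance matrix. In a mixed design this would be the pooled within-group covariance of the four text ratings, which is not reported here. The sketch also back-calculates the ε implied by the reported F(5.87, 1706.79). The function name is hypothetical, and the implied ε is our arithmetic, not a value reported by the authors.

```python
import numpy as np


def greenhouse_geisser_epsilon(cov):
    """Greenhouse-Geisser epsilon from a k x k covariance matrix of repeated measures."""
    s = np.asarray(cov, dtype=float)
    k = s.shape[0]
    # Orthonormal contrasts orthogonal to the unit vector, obtained from the QR
    # decomposition of [1, e_1, ..., e_(k-1)]; the first Q column spans the mean.
    basis = np.column_stack([np.ones(k), np.eye(k)[:, : k - 1]])
    q, _ = np.linalg.qr(basis)
    c = q[:, 1:]
    m = c.T @ s @ c
    # epsilon = (sum of eigenvalues)^2 / ((k - 1) * sum of squared eigenvalues)
    return float(np.trace(m) ** 2 / ((k - 1) * np.trace(m @ m)))


# Compound symmetry (equal variances, equal covariances) satisfies sphericity: epsilon = 1.
k = 4
compound = 0.5 * np.eye(k) + 0.5 * np.ones((k, k))
print(round(greenhouse_geisser_epsilon(compound), 6))

# Uncorrected dfs for text x OSP: df1 = (4 - 1) * (3 - 1) = 6, df2 = (4 - 1) * (588 - 6) = 1746.
eps_implied = 1706.79 / 1746
print(round(6 * eps_implied, 2), round(eps_implied, 4))
```

The back-calculated ε of roughly .98 is close to 1, which suggests that the correction changes the degrees of freedom only slightly in this case.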

As an additional exploratory analysis, we re-ran all confirmatory analyses after removing participants who had failed the respective manipulation checks. When re-testing Hypothesis 4 within the subsample of participants who had correctly indicated the OSP status of their texts (n = 327), group differences became significant (F(2, 321) = 3.89, p = .022), although the corresponding effect was rather small. Regarding Hypothesis 5, we re-ran the analyses after omitting all participants who did not correctly indicate whether their study was funded privately or publicly. In this subsample (n = 440), we found a highly significant difference between the private funding and the public funding condition (F(1, 434) = 11.65, p < .001), just as we had expected in Hypothesis 5. Finally, when re-testing Hypothesis 6 after omitting all participants with incorrect answers on either of the two manipulation checks (n = 261), we still found no significant interaction between the two between-factors (F(2, 255) = 1.641, p = .196). In sum, these exploratory analyses yielded support for Hypotheses 4 and 5, but not for Hypothesis 6.
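The denominator degrees of freedom reported for the subsample analyses follow directly from the subsample sizes. If each re-analysis kept the full 3 (OSP) × 2 (funding) between-subjects model (an assumption that the reported values are consistent with), the error df is n − 6. The short check below compares this against every reported test. `error_df` is a hypothetical helper.

```python
# Between-subjects cells in the 3 (OSP) x 2 (funding) design.
N_CELLS = 3 * 2


def error_df(n_participants, n_cells=N_CELLS):
    """Denominator df of a between-subjects F test in a full factorial model."""
    return n_participants - n_cells


# (subsample size, reported denominator df), taken from the text.
reported = {
    "H4, passed OSP check": (327, 321),
    "H5, passed funding check": (440, 434),
    "H6, passed both checks": (261, 255),
    "Full sample": (588, 582),
}
for label, (n, df2) in reported.items():
    print(f"{label}: n = {n}, expected df2 = {error_df(n)}, reported df2 = {df2}")
```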

5. Discussion

In two studies, we aimed to assess whether OSPs, such as making the materials, data, and analysis code of a study openly accessible, positively affect trust in science. Furthermore, we investigated whether the trust-damaging effects of research being funded privately may be buffered by such practices. After preregistering six hypotheses, we conducted a survey study (Study 1; N = 504) and an experimental study (Study 2; N = 588). Both samples approximately corresponded to the German general population regarding gender distribution and age.

Key findings

The online survey of Study 1 directly asked participants how important they considered OSPs, and whether seeing scientists employ such practices would make those scientists more trustworthy in their eyes. An overwhelming majority of our sample found it important that researchers make their findings openly accessible (Hypothesis 1) and that they implement OSPs (Hypothesis 2). Furthermore, a large proportion of participants indicated that their trust in a scientific study would increase if they saw that researchers made their materials, data, and code openly accessible (Hypothesis 3). Accordingly, all three hypotheses investigated in Study 1 were supported, corroborating our expectation that OSPs are a trust-increasing factor.

In contrast to these results, Study 2 findings were less straightforward. Using an experimental design, we presented our participants with four vignettes describing fictitious studies. We experimentally manipulated the vignettes with regard to (1) whether the scientists responsible for the study had employed OSPs (OSP vs no OSP vs OSP not mentioned) and (2) the funding of the respective study (public vs private). Although our manipulation checks were successful, Hypotheses 4 and 6 were not supported according to our preregistered criteria. However, after eliminating all participants who had failed the manipulation checks in an exploratory analysis, we did find significant differences between the group receiving texts describing scientists who had employed OSPs and the group receiving texts explicitly mentioning that no OSPs were employed. Thus, while Hypothesis 4 is not supported, there are some indications in our data that the use of OSPs may increase trust, although the corresponding effect sizes were rather small. With regard to Hypothesis 5, our analyses yielded evidence for an effect of a study’s funding type on trust, such that publicly funded studies were trusted more than privately funded ones. This finding is also a precondition for testing our last confirmatory hypothesis, namely that the trust-damaging effects of research being privately funded may be buffered by OSPs (Hypothesis 6). However, this hypothesis was clearly not supported by our data.

Implications and future directions

When comparing the results of both studies, a contrast becomes apparent between the strong empirical support for our hypotheses in Study 1 and the small effect sizes in Study 2, especially given that the hypotheses are very similar on a conceptual level. A first potential explanation for this discrepancy is the rather high trust level in Study 2. While this is in line with prior research (e.g. Mede et al., 2021; Pew Research Center, 2019; Wissenschaft im Dialog/Kantar Emnid, 2018), it could indicate a ceiling effect (Wang et al., 2009), which may have prevented us from finding the hypothesized effects. We further believe that the differences between Study 1 and Study 2 findings may be partly due to social desirability in Study 1, which the experimental design of Study 2 controls for. Another possible explanation is that it is easier to identify differences in research practices when directly asked about them (Study 1) than when they are embedded within a scenario (Study 2). In this regard, it should be noted that our second study’s design focused on written descriptions of empirical studies. Possibly, more tangible indicators of adherence to OSPs, for example visual quality seals such as open science badges, may lead to stronger effects. This interpretation is in line with prior research on the effects of OSPs on trust: A comparison of the studies by Anvari and Lakens (2018), Schneider et al. (2022), and Wingen et al. (2020) reveals that more specific manipulations of the OSP “status” of a study (e.g. in terms of badges; Schneider et al., 2022) lead to stronger effects on trust than the more general approach of informing participants about proposed reforms in writing (e.g. Anvari and Lakens, 2018; Wingen et al., 2020).
Since the strength of our vignette-based manipulation lies somewhere between these two approaches, it is not surprising that we found some indications in our data of positive effects of OSPs on trust, but no overwhelming support for our expectations. Taken together, the findings from both studies imply that people may well recognize open science as a trust-increasing factor, especially when directly asked about it, but that other factors, such as communication strategies, may play a comparatively stronger role in the development of trust in science.

With regard to funding as a factor influencing trust, results were more straightforward. First, the majority of Study 1 participants indicated that their trust in a scientific study would increase if they saw that it was funded publicly. Second, our Study 2 analyses revealed experimental evidence for higher trust in publicly funded studies than in privately funded ones. However, the effect sizes for the funding factor were rather small, which might be due to at least some people trusting the private sector more than public authorities. For example, Gerdon et al. (2021), who examined the public acceptance of sharing data for fighting COVID-19, found that their participants were more willing to share their data with a private company than with a public agency. When strictly focusing on research funding, though, the existing evidence more clearly indicates that private funding reduces trust (e.g. Critchley, 2008; Krause et al., 2019; Pew Research Center, 2019). In this context, our results on funding also constitute a precondition for testing Hypothesis 6: had funding had no negative effects, investigating how to buffer such effects would have been futile.

However, as outlined above, Hypothesis 6 was supported in neither the confirmatory nor the additional exploratory analyses. We believe that these null results may have been caused by the smaller-than-expected effects of OSPs on trust. In fact, simply telling people that scientists employ OSPs may not be enough to reduce a more general mistrust of privately funded research, which underlines the need for additional efforts. For example, recent science communication frameworks emphasize the role of members of the general public as active participants in science communication, who bring their own perspectives to the interpretation of scientific findings (e.g. Akin and Scheufele, 2017; Dietz, 2013) or even participate in the production of scientific knowledge (citizen science; Silvertown, 2009). Combining increased transparency with such participatory approaches may thus be even more promising for increasing trust in science than transparency alone.

Considering the small effect sizes in our experimental setup, we suggest that future studies employing vignette-based manipulations recruit larger samples to increase the likelihood of detecting small-to-moderate effects. Researchers who decide to employ the same vignettes as in this study are also advised to fine-tune them to reduce the number of participants failing the manipulation checks, or might consider changing the analysis plan so that only participants passing the manipulation checks are included in the analysis (although this may result in statistical challenges due to unequal group sizes). Furthermore, future research should investigate whether our results generalize to samples other than the German population. Given that our vignettes are largely independent of any national context (e.g. no references to a specific study country are given, and the covered topics have an international scope), we expect our results to be generalizable. This is especially so because the aforementioned findings on trust in science do not differ much between German and international studies (e.g. Ipsos MORI, 2011; Mede et al., 2021; Pew Research Center, 2019). Nevertheless, future research should try to substantiate this claim with empirical evidence.
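To give a rough sense of what a larger sample could mean, the sketch below computes the power of a between-subjects F test from Cohen's f. It uses the noncentral F distribution with noncentrality λ = f²N, the convention G*Power documents for its fixed-effects ANOVA tests (Faul et al., 2009). The sketch ignores the repeated-measures structure of the vignette design. The input of η² = .01 is a hypothetical value chosen for illustration, not an estimate from this study, and the function names are hypothetical.

```python
from scipy import stats


def anova_power(cohens_f, n_total, df_effect, n_groups, alpha=0.05):
    """Power of a fixed-effects between-subjects F test, noncentrality lambda = f^2 * N."""
    df_error = n_total - n_groups
    f_crit = stats.f.ppf(1 - alpha, df_effect, df_error)
    noncentrality = cohens_f ** 2 * n_total
    return float(stats.ncf.sf(f_crit, df_effect, df_error, noncentrality))


def required_n(cohens_f, df_effect, n_groups, power=0.80, alpha=0.05, n_max=100_000):
    """Smallest total N whose power reaches the target (simple linear search)."""
    for n in range(n_groups + 2, n_max):
        if anova_power(cohens_f, n, df_effect, n_groups, alpha) >= power:
            return n
    raise ValueError("target power not reached below n_max")


# Hypothetical small effect: partial eta squared of .01, converted to Cohen's f.
eta2 = 0.01
f_small = (eta2 / (1 - eta2)) ** 0.5
print(required_n(f_small, df_effect=1, n_groups=6))
```

A linear search is slow for very small effects but keeps the logic transparent. For planning a real study, a dedicated tool such as G*Power, or a simulation that models the within-subject correlation, would be the more appropriate choice.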

Conclusion

In sum, our results suggest that OSPs may well contribute to increasing trust in science and scientists. However, the importance of making the use of such practices visible and tangible should not be underestimated. Furthermore, considering the rate of incorrect answers on our OSP manipulation check, people might need to be taught what open science really means before they can identify it. In addition, while we do recognize the potential role of OSPs in attenuating the negative effects of the replication and trust crisis, it should be noted that the public’s trust in science is generally high (e.g. Mede et al., 2021; Pew Research Center, 2019). Nevertheless, building trust is especially important among the small but substantial proportion of the population that denies scientific evidence (e.g. Lazić and Žeželj, 2021), and a widespread adoption of open science principles may be a promising approach to doing so. However, we concede that considerable changes in science’s incentive structures are necessary before this can happen, so that adopting open science principles is not only rewarded by public trust but also benefits the individual scientist.

Acknowledgments

The authors thank Lisa Trierweiler and Sianna Grösser for proofreading and language editing.

Author biographies

Tom Rosman is a Senior Researcher and Acting Head of the Research Literacy Department at ZPID. His current research focuses on epistemic beliefs, epistemic trust, and open science.

Michael Bosnjak is Full Professor for Psychological Research Methods at Trier University (Germany), delegated to Robert Koch Institute as Scientific Director, Department for Epidemiology and Health Monitoring. His research interests include research synthesis methods, consumer/business psychology, and survey methodology.

Henning Silber is a Senior Researcher and Head of the Survey Operations Team at the Survey Design and Methodology Department at GESIS – Leibniz Institute for the Social Sciences. His research interests include survey methodology, data linkage, political sociology, and the experimental social sciences.

Joanna Koßmann is a Research Assistant at ZPID and Trier University. Her current research fields are survey methodology and digital and computer literacy.

Tobias Heycke was a postdoctoral researcher at the Survey Design and Methodology Department at GESIS – Leibniz Institute for the Social Sciences at the time the research was conducted.

It should be noted that in this analysis, participants with no qualification (n = 1) and participants still in secondary education (n = 5) were removed because of the small group sizes.

The slight deviation from our target sample size of N = 576 and the slightly unequal group sizes (see Table 2) are due to technical reasons (all participants simultaneously working on the survey were allowed to finish, even if the target sample size in their quota group was reached at some point during their participation).

This constitutes a deviation from our preregistration, in which we planned to conduct three separate Bonferroni-corrected analyses of variance (ANOVAs). We thank an anonymous reviewer for pointing out that these calculations may also be performed in one single ANOVA, which eliminates the necessity of a Bonferroni correction. The original and the new calculations can be found in the online supplement in PsychArchives (Rosman et al., 2022a); the results are largely the same.

It should be noted that we labeled this as “repeated measures ANOVA” in the preregistration, but “mixed ANOVA” is technically more correct.

Footnotes

Funding: The author(s) received no financial support for the research, authorship, and/or publication of this article.

ORCID iDs: Tom Rosman  https://orcid.org/0000-0002-5386-0499

Henning Silber  https://orcid.org/0000-0002-3568-3257

Joanna Koßmann  https://orcid.org/0000-0002-7674-5987

Contributor Information

Michael Bosnjak, Leibniz Institute for Psychology (ZPID), Germany.

Henning Silber, GESIS – Leibniz-Institute for the Social Sciences, Germany.

Joanna Koßmann, Leibniz Institute for Psychology (ZPID), Germany.

Tobias Heycke, GESIS – Leibniz-Institute for the Social Sciences, Germany.

References

- Akin H, Scheufele DA. (2017) Overview of the science of science communication. In: Jamieson KH, Kahan DM, Scheufele DA, Akin H. (eds) The Oxford handbook of the science of science communication. Oxford: Oxford University Press, pp. 25–33.

- Anvari F, Lakens D. (2018) The replicability crisis and public trust in psychological science. Comprehensive Results in Social Psychology 3(3): 266–286.

- Bachmann R, Gillespie N, Priem R. (2015) Repairing trust in organizations and institutions: Toward a conceptual framework. Organization Studies 36(9): 1123–1142.

- Beck SJ, Boldt D, Dasch H, Frescher E, Hicketier S, Hoffmann K, et al. (2020) Examining the Relationship between Epistemic Beliefs (Justification of Knowing) and the Belief in Conspiracy Theories. PsychArchives. DOI: 10.23668/PSYCHARCHIVES.3149

- Bezjak S, Clyburne-Sherin A, Conzett P, Fernandes P, Görögh E, Helbig K, et al. (2018) Open Science Training Handbook. Zenodo. DOI: 10.5281/zenodo.1212496

- Boselli B, Galindo-Rueda F. (2016) Drivers and implications of scientific open access publishing: Findings from a pilot OECD international survey of scientific authors. OECD Science, Technology and Industry Policy Papers 33: 1–67.

- Chambers C, Etchells P. (2018) Open science is now the only way forward for psychology. The Guardian, 23 August. Available at: https://www.theguardian.com/science/head-quarters/2018/aug/23/open-science-is-now-the-only-way-forward-for-psychology

- Chopik WJ, Bremner RH, Defever AM, Keller VN. (2018) How (and whether) to teach undergraduates about the replication crisis in psychological science. Teaching of Psychology 45(2): 158–163.

- Critchley CR. (2008) Public opinion and trust in scientists: The role of the research context, and the perceived motivation of stem cell researchers. Public Understanding of Science 17: 309–327.

- Dietz T. (2013) Bringing values and deliberation to science communication. Proceedings of the National Academy of Sciences of the United States of America 110: 14081–14087.

- Faul F, Erdfelder E, Buchner A, Lang A-G. (2009) Statistical power analyses using G*Power 3.1: Tests for correlation and regression analyses. Behavior Research Methods 41(4): 1149–1160.

- Field SM, Wagenmakers E-J, Kiers H, Hoekstra R, Ernst AF, van Ravenzwaaij D. (2018) The Effect of Preregistration on Trust in Empirical Research Findings. London: The Royal Society.

- Fitzpatrick A, Hamlyn B, Jouahri S, Sullivan S, Young V, Busby A, et al. (2020) Public attitudes to science 2019: Main report. Available at: https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/905466/public-attitudes-to-science-2019.pdf

- Gerdon F, Nissenbaum H, Bach RL, Kreuter F, Zins S. (2021) Individual acceptance of using health data for private and public benefit: Changes during the COVID-19 pandemic. Harvard Data Science Review. Epub ahead of print 6 April. DOI: 10.1162/99608f92.edf2fc97.

- Hendriks F, Kienhues D, Bromme R. (2020) Replication crisis = trust crisis? The effect of successful vs failed replications on laypeople’s trust in researchers and research. Public Understanding of Science 29(3): 270–288.

- Ipsos MORI (2011) Public attitudes to science 2011: Main report. Available at: https://www.ipsos.com/sites/default/files/migrations/en-uk/files/Assets/Docs/Polls/sri-pas-2011-main-report.pdf

- Kerr NL. (1998) HARKing: Hypothesizing after the results are known. Personality and Social Psychology Review 2(3): 196–217.

- Krause NM, Brossard D, Scheufele DA, Xenos MA, Franke K. (2019) Trends: Americans’ trust in science and scientists. Public Opinion Quarterly 83(4): 817–836.

- Lazić A, Žeželj I. (2021) A systematic review of narrative interventions: Lessons for countering anti-vaccination conspiracy theories and misinformation. Public Understanding of Science 30(6): 644–670.

- Lewandowsky S, Bishop D. (2016) Don’t let transparency damage science. Nature 529: 459–461.

- Lupia A. (2017) The role of transparency in maintaining the legitimacy and credibility of survey research. In: Vannette DL, Krosnick JA. (eds) The Palgrave Handbook of Survey Research. Cham: Palgrave Macmillan, pp. 315–318.

- Lyon L. (2016) Transparency: The emerging third dimension of Open Science and Open Data. Liber Quarterly 25(4): 153–171.

- McGill R, Tukey JW, Larsen WA. (1978) Variations of box plots. American Statistician 32(1): 12–16.

- Mede NG, Schäfer MS, Ziegler R, Weißkopf M. (2021) The “replication crisis” in the public eye: Germans’ awareness and perceptions of the (ir)reproducibility of scientific research. Public Understanding of Science 30(1): 91–102.

- Medina C, Rufín R. (2015) Transparency policy and students’ satisfaction and trust. Transforming Government: People, Process and Policy 9(3): 309–323.

- Munafò MR. (2016) Open Science and research reproducibility. Ecancermedicalscience 10: ed56.

- Munafò MR, Nosek BA, Bishop DVM, Button KS, Chambers CD, du Sert NP, et al. (2017) A manifesto for reproducible science. Nature Human Behaviour 1: 0021.

- National Science Board (2018) Science & engineering indicators 2018. Available at: https://www.nsf.gov/statistics/2018/nsb20181/assets/nsb20181.pdf

- Nosek BA, Lakens D. (2014) Registered reports: A method to increase the credibility of published results. Social Psychology 45(3): 137–141.

- Open Science Collaboration (2015) Estimating the reproducibility of psychological science. Science 349(6251): aac4716.

- Pew Research Center (2019) Trust and mistrust in Americans’ views of scientific experts. Available at: https://www.pewresearch.org/science/wp-content/uploads/sites/16/2019/08/PS_08.02.19_trust.in_.scientists_FULLREPORT_8.5.19.pdf

- Rosman T, Adler K, Barbian L, Blume V, Burczeck B, Cordes V, et al. (2021) Protect ya grandma! The effects of students’ epistemic beliefs and prosocial values on COVID-19 vaccination intentions. Frontiers in Psychology 12: 683987.

- Rosman T, Bosnjak M, Silber H, Koßmann J, Heycke T. (2020) Preregistration: Open Science and the Public’s Trust in Science. PsychArchives. DOI: 10.23668/PSYCHARCHIVES.4470

- Rosman T, Bosnjak M, Silber H, Koßmann J, Heycke T. (2022a) Code for: Open Science and Public Trust in Science: Results from Two Studies. PsychArchives. DOI: 10.23668/psycharchives.6494

- Rosman T, Bosnjak M, Silber H, Koßmann J, Heycke T. (2022b) Dataset for: Open Science and Public Trust in Science: Results from Two Studies. PsychArchives. DOI: 10.23668/psycharchives.6495

- Rosman T, Bosnjak M, Silber H, Koßmann J, Heycke T. (2022c) Survey / Study Materials for: Open Science and Public Trust in Science: Results from Two Studies. PsychArchives. DOI: 10.23668/psycharchives.6496

- Schneider J, Rosman T, Kelava A, Merk S. (2022) Do open science badges increase trust in scientists among student teachers, scientists, and the public? Psychological Science. Advance online publication. DOI: 10.1177/09567976221097499

- Silvertown J. (2009) A new dawn for citizen science. Trends in Ecology & Evolution 24(9): 467–471.

- Soveri A, Karlsson LC, Antfolk J, Lindfelt M, Lewandowsky S. (2021) Unwillingness to engage in behaviors that protect against COVID-19: The role of conspiracy beliefs, trust, and endorsement of complementary and alternative medicine. BMC Public Health 21(1): 684.

- van den Akker O, Alvarez LD, Bakker M, Wicherts JM, van Assen MALM. (2018) How do academics assess the results of multiple experiments? PsyArXiv. Available at: https://psyarxiv.com/xyks4/download?format=pdf

- Vazire S. (2018) Implications of the credibility revolution for productivity, creativity, and progress. Perspectives on Psychological Science 13(4): 411–417.

- Wallach JD, Boyack KW, Ioannidis JPA. (2018) Reproducible research practices, transparency, and open access data in the biomedical literature, 2015–2017. PLoS Biology 16(11): e2006930.

- Wang L, Zhang Z, McArdle JJ, Salthouse TA. (2009) Investigating ceiling effects in longitudinal data analysis. Multivariate Behavioral Research 43(3): 476–496.

- Wicherts JM, Veldkamp CLS, Augusteijn HEM, Bakker M, van Aert RCM, van Assen MALM. (2016) Degrees of freedom in planning, running, analyzing, and reporting psychological studies: A checklist to avoid p-hacking. Frontiers in Psychology 7: 1832.

- Wingen T, Berkessel JB, Englich B. (2020) No replication, no trust? How low replicability influences trust in psychology. Social Psychological and Personality Science 11(4): 454–463.

- Wissenschaft im Dialog/Kantar Emnid (2018) Detaillierte Ergebnisse des Wissenschaftsbarometers 2018 nach Subgruppen. Available at: https://www.wissenschaft-im-dialog.de/fileadmin/user_upload/Projekte/Wissenschaftsbarometer/Dokumente_18/Downloads_allgemein/Tabellenband_Wissenschaftsbarometer2018_final.pdf