Key Points

Question

Is patient-reported health information collected through online portals in pragmatic clinical trials consistent with electronic health record data?

Findings

In this concordance substudy of a large, pragmatic comparative effectiveness research trial of 15 076 patients with atherosclerotic cardiovascular disease, agreement of participant-reported cardiovascular events with electronic health record–derived events was low to moderate.

Meaning

Findings suggest that additional work is needed to optimize the integration of patient-reported health data into pragmatic research studies.

Abstract

Importance

Patient-reported health data can facilitate clinical event capture in pragmatic clinical trials. However, few data are available on the fitness for use of patient-reported data in large-scale health research.

Objective

To evaluate the concordance of a set of variables reported by patients and available in the electronic health record as part of a pragmatic clinical trial.

Design, Setting, and Participants

Data from ADAPTABLE (Aspirin Dosing: A Patient-Centric Trial Assessing Benefits and Long-term Effectiveness), a pragmatic clinical trial, were used in a concordance substudy of a comparative effectiveness research trial. The trial randomized 15 076 patients with existing atherosclerotic cardiovascular disease in a 1:1 ratio to low- or high-dose aspirin from April 2016 through June 30, 2019.

Main Outcomes and Measures

Concordance of data was evaluated from 4 domains (demographic characteristics, encounters, diagnoses, and procedures) present in 2 data sources: patient-reported data captured through an online portal and data from electronic sources (electronic health record data). Overall agreement, sensitivity, specificity, positive predictive value, negative predictive value, and κ statistics with 95% CIs were calculated using patient report as the criterion standard for demographic characteristics and the electronic health record as the criterion standard for clinical outcomes.

Results

Of 15 076 patients with complete information, the median age was 67.6 years (range, 21-99 years), and 68.7% were male. With the use of patient-reported data as the criterion standard, agreement (κ) was high for Black and White race and ethnicity but only moderate for current smoking status. Electronic health record data were highly specific (99.6%) but less sensitive (82.5%) for Hispanic ethnicity. Compared with electronic health record data, patient report of clinical end points had low sensitivity for myocardial infarction (33.0%), stroke (34.2%), and major bleeding (36.6%). Positive predictive value was similarly low for myocardial infarction (40.7%), stroke (38.8%), and major bleeding (21.9%). Coronary revascularization was the most concordant event by data source, although agreement (κ = 0.54) and positive predictive value (54.2%) were only moderate. Agreement metrics varied by site for all demographic characteristics and several clinical events.

Conclusions and Relevance

In a concordance substudy of a large, pragmatic comparative effectiveness research trial, sensitivity and chance-corrected agreement of patient-reported data captured through an online portal for cardiovascular events were low to moderate. Findings suggest that additional work is needed to optimize integration of patient-reported health data into pragmatic research studies.

Trial Registration

ClinicalTrials.gov Identifier: NCT02697916

This concordance substudy of a comparative effectiveness research trial investigates concordance between patient-reported health data and electronic health data among patients with atherosclerotic cardiovascular disease.

Introduction

During the past decade, members of the regulatory, payer, patient, and scientific communities have begun to rethink the classic randomized clinical trial to improve its sustainability, generalizability, and efficiency.1,2 Pragmatic clinical trials use existing data sources and streamlined follow-up methods to measure the risks and benefits of therapies in actual clinical settings.3 The US Food and Drug Administration’s Real-World Evidence Program4 and the National Institutes of Health Pragmatic Trials Collaboratory2 are actively exploring the potential use of real-world evidence for safety and effectiveness determinations in the premarket and postmarket settings.5,6

In the context of pragmatic clinical trials, patient-facing internet portals are increasingly used to collect patient-reported information that answers key questions about disease status, recent health events, the patient experience, and other outcomes. Access to a patient portal may complement identification of clinical events in the electronic health record (EHR) by capturing outcomes that occur outside the patient’s primary health system or after the patient relocates. Furthermore, patient web portals may facilitate clinical trial recruitment and enrollment through preliminary screening for eligibility and web-based consent. Despite these potential benefits, to our knowledge, patient-reported information gathered through such mechanisms has not been systematically evaluated for completeness and validity, and key questions remain regarding its fitness for use in large-scale, pragmatic health research. Although prior work has examined the concordance of participant-reported data collected through in-person visits or telephone interviews with other data sources,7,8,9,10 there is little evidence on the validity of data collected through virtual participant-facing portals. Our analysis was designed to address this evidence gap by evaluating portal-based participant-reported health data collected to support event ascertainment in a pragmatic clinical trial.

The ADAPTABLE (Aspirin Dosing: A Patient-Centric Trial Assessing Benefits and Long-term Effectiveness) trial, to our knowledge the first major randomized comparative effectiveness trial conducted within the National Patient-Centered Clinical Research Network (PCORnet), aimed to identify the optimal dose of aspirin for secondary prevention in atherosclerotic cardiovascular disease. As part of a new genre of patient-centered comparative effectiveness trials, the trial had several key features, including enrollment of more than 15 000 patients across 9 networks, 40 large health care systems, and 1 health plan; an internet portal to obtain patient consent and collect patient-reported information on risk factors, medications, and experiences; and reliance on existing EHR data sources for baseline characteristics and outcomes follow-up. Data collection through participant-facing portals offers an opportunity to address social desirability bias and other factors that may affect the quality of baseline data in the EHR,11,12,13 and to capture events occurring outside a participant’s usual health system. Because ADAPTABLE relied on patients to report key information at baseline and throughout follow-up, it offers a unique opportunity to examine concordance between patient-reported health information and data obtained from the EHR.

Through a supplement to the Pragmatic Trials Collaboratory (formerly the Health Care Systems Research Collaboratory), we aimed to develop methods to assess the quality of patient-reported data in pragmatic clinical trials and to integrate these data with existing electronic health data. We report results from our assessment of concordance between patient-reported information on health events and demographic characteristics and the corresponding information ascertained from electronic sources in the ADAPTABLE trial. Our aims were to inform future efforts to synthesize potentially inconsistent data from patient-reported and EHR sources, identify opportunities to streamline data capture, and facilitate enrollment of study-specific target populations within larger health systems.

Methods

ADAPTABLE Trial Design

This concordance substudy used data collected as part of the ADAPTABLE trial (ClinicalTrials.gov Identifier: NCT02697916), which has been described in detail previously.14 In brief, the goal of ADAPTABLE was to identify the optimal aspirin dose for secondary prevention of ischemic events in patients with existing atherosclerotic vascular disease. The trial randomized 15 076 patients in a 1:1 ratio to receive an aspirin dose of 81 mg/d vs 325 mg/d. Enrollment took place from April 2016 through June 30, 2019, and outcome ascertainment was completed in June 2020. Events were ascertained from patient report at scheduled intervals, EHR-based queries of datamarts at participating sites, and linkage with administrative claims from private health plans and the Centers for Medicare & Medicaid Services. The primary end point was a composite of all-cause death, hospitalization for myocardial infarction (MI), or hospitalization for stroke. The primary safety end point was hospitalization for major bleeding with an associated blood transfusion. Inclusion criteria are displayed in eFigure 1 in the Supplement. Given the pragmatic nature of the trial, investigators did not exclude potential patients according to comorbid conditions, advanced age, or concomitant medications other than oral anticoagulants or ticagrelor. This substudy was initiated after the start of enrollment as an ancillary study of the ADAPTABLE trial. All analyses were prespecified in a statistical analysis plan that was reviewed and approved by all authors.

Data Sources and Patient-Reported Data

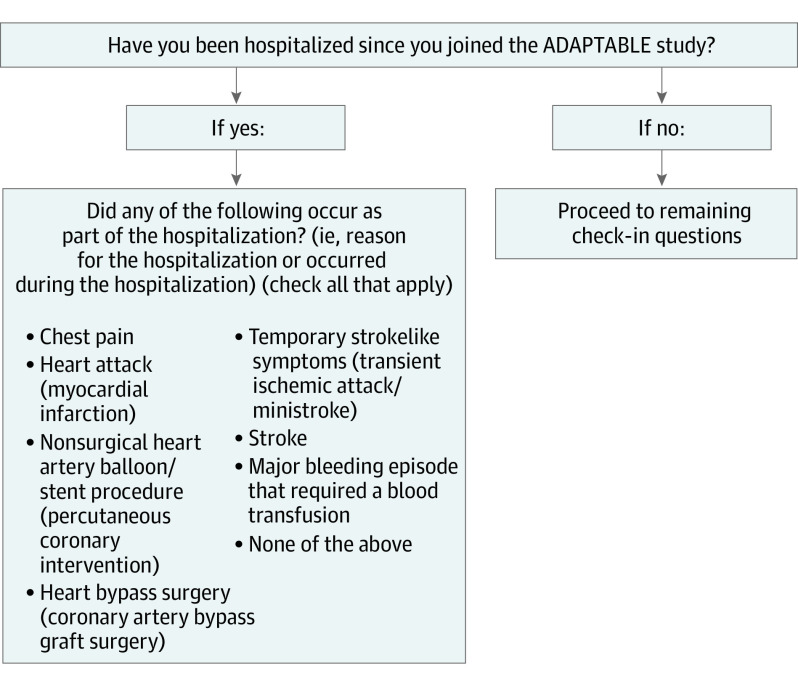

We evaluated concordance between events captured in 2 data sources as part of event ascertainment procedures: (1) patient-reported data captured through an online portal and (2) data from the EHR. Patient-reported health data for ADAPTABLE were captured via the Mytrus platform (Medidata Solutions Inc). Patients were randomized to receive an email every 3 or 6 months with a link to the ADAPTABLE web portal. A Spanish-language version of the survey was available at some sites. Enrolled patients completed 4 domains in Mytrus: patient-reported outcomes for quality of life and general domains of health, specific information about medications they received, specific details about reasons for stopping aspirin when this occurred, and information on hospitalizations. Potential health events requiring hospitalization were queried with both clinical and lay descriptions (Figure). A flow diagram displaying participant engagement with the online portal is shown in eFigure 2 in the Supplement.

Figure. Capture of Hospitalization Information From Patients in the ADAPTABLE Trial.

Electronic Health Data

After a patient completed written informed consent and was randomized, baseline and follow-up data were obtained from the EHR of the enrolling site. As part of PCORnet participation, health systems extract, transform, and load EHR data into a datamart that uses the PCORnet common data model (CDM), which serves as the foundation of the PCORnet data research network.15 By the beginning of phase 2 of PCORnet (October 1, 2015), all participating clinical data research networks (CDRNs) had populated version 3.0 of the CDM, which contains all data necessary for ADAPTABLE except patient-reported outcomes and some specific information about over-the-counter medications, including aspirin dose. Electronic health record data were queried at participating health systems approximately every 6 months during the study. Queries included programmed algorithms that identified clinical outcomes using International Classification of Diseases, Ninth Revision, Clinical Modification (ICD-9-CM), ICD-10-CM, and ICD-10–Procedure Coding System codes. Files were also requested regularly to support safety assessments. Patients were linked across data sources with a unique ADAPTABLE study identifier.
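The outcome queries described above apply programmed ICD code algorithms to each site's datamart and link patients across sources by study identifier. As a minimal sketch of that idea, assuming a simplified diagnosis table, the Python below flags participants with an inpatient MI diagnosis. The field names (`study_id`, `enc_type`, `dx`, `dx_type`) are hypothetical and only loosely inspired by the PCORnet CDM, and the code prefixes are illustrative; the actual ADAPTABLE outcome definitions (including any principal-diagnosis rules and procedure codes) are not reproduced here.

```python
from __future__ import annotations
from dataclasses import dataclass

# Hypothetical, illustrative prefixes only; these are NOT the ADAPTABLE outcome definitions.
MI_PREFIXES = {
    "09": ("410",),        # ICD-9-CM category 410: acute myocardial infarction
    "10": ("I21", "I22"),  # ICD-10-CM I21/I22: acute and subsequent myocardial infarction
}

@dataclass(frozen=True)
class DiagnosisRow:
    study_id: str   # hypothetical shared study identifier used for cross-source linkage
    enc_type: str   # encounter type; "IP" is assumed to mean inpatient
    dx: str         # diagnosis code as stored, e.g. "I21.4" or "410.71"
    dx_type: str    # "09" for ICD-9-CM, "10" for ICD-10-CM (assumed coding)

def flag_mi_hospitalizations(rows: list[DiagnosisRow]) -> set[str]:
    """Return study IDs with at least one inpatient diagnosis matching an MI prefix."""
    flagged = set()
    for row in rows:
        if row.enc_type != "IP":
            continue  # only hospital encounters count toward the end point
        code = row.dx.replace(".", "").upper()
        # str.startswith accepts a tuple; an unknown dx_type yields () and never matches
        if code.startswith(MI_PREFIXES.get(row.dx_type, ())):
            flagged.add(row.study_id)
    return flagged

rows = [
    DiagnosisRow("A001", "IP", "I21.4", "10"),
    DiagnosisRow("A002", "AV", "I21.4", "10"),   # ambulatory visit: ignored
    DiagnosisRow("A003", "IP", "410.71", "09"),
    DiagnosisRow("A004", "IP", "I50.9", "10"),   # heart failure: not an MI code
]
print(sorted(flag_mi_hospitalizations(rows)))
```

Working at the patient level (a set of study IDs) mirrors the patient-level comparisons used in this substudy, where a participant counts as EHR positive if any qualifying encounter is found.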

Study Cohort

There were no additional inclusion criteria for the concordance assessment. Our study included all patients randomized through June 30, 2019 (15 076 patients). Electronic health record CDM data were available through the end of follow-up (June 30, 2020), and EHR CDM information was available for 14 661 of the 15 076 randomized patients (97.2%).

Analytic Variables

We evaluated concordance between data domains available in both electronic and patient-reported data sources (demographic characteristics, encounters, diagnoses, and procedures). Our final comparison evaluated 7 variables common to both data sources: the 2 hospital-based primary end point components (hospitalization for nonfatal MI and hospitalization for nonfatal stroke), the primary safety end point (major bleeding with an associated blood product transfusion), coronary revascularization procedures (percutaneous coronary intervention and coronary artery bypass graft), and demographic information (race, ethnicity, and smoking status). All comparisons were made at the patient level. In a sensitivity analysis, we excluded patients who never returned to the portal after randomization (n = 600) and removed EHR events that occurred after a patient’s last portal visit to promote comparability between data sources (eFigure 2 in the Supplement). Our final analysis of clinical events included data from 14 661 patients who had follow-up in both the Mytrus and EHR data sources. Additional detail on included and excluded events is provided in eFigure 1 in the Supplement.
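The 2-step restriction used for the sensitivity analysis can be sketched as follows. This is an illustrative Python version, not the authors' code: the input layout (a mapping from study ID to last portal visit date, and EHR events as tuples) is hypothetical, and keeping events dated on the same day as the last portal visit is an assumption, since the text does not state how ties were handled.

```python
from datetime import date

def restrict_for_sensitivity(last_portal_visit, ehr_events):
    """
    last_portal_visit: study_id -> date of the last post-randomization portal visit,
                       or None if the participant never returned to the portal.
    ehr_events: iterable of (study_id, event_type, event_date) tuples from the EHR.
    Returns (retained study IDs, retained EHR events).
    """
    # Step 1: drop participants with no post-randomization portal visit.
    retained = {sid for sid, last in last_portal_visit.items() if last is not None}
    # Step 2: drop EHR events dated after that participant's last portal visit
    # (events on the day of the last visit are kept; this cutoff is an assumption).
    kept = [
        (sid, kind, when)
        for sid, kind, when in ehr_events
        if sid in retained and when <= last_portal_visit[sid]
    ]
    return retained, kept

visits = {"A": date(2018, 6, 1), "B": None, "C": date(2019, 1, 15)}
events = [
    ("A", "MI", date(2018, 3, 1)),
    ("A", "stroke", date(2018, 9, 1)),     # after A's last portal visit: dropped
    ("B", "MI", date(2017, 5, 5)),         # B never returned to the portal: dropped
    ("C", "bleeding", date(2019, 1, 15)),  # same day as C's last visit: kept
]
ids, kept = restrict_for_sensitivity(visits, events)
print(sorted(ids), kept)
```

The second step matters because an event that occurs after a participant's last portal visit cannot be reported through the portal, so counting it would penalize patient report for an event it had no chance to capture.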

Demographic characteristics were compared for 14 131 randomized patients with data in both the patient-reported and EHR CDM sources. We focused on concordance of race and ethnicity and of smoking status because prior work has demonstrated challenges with capturing race and ethnicity11,12,16 and smoking status13 in the EHR. Binary comparisons were made for race (White vs not White and Black vs not Black), ethnicity (Hispanic vs not Hispanic), and smoking status (current vs not current). Denominators varied across these measures because of missing responses in one or both data sources. Of the 14 661 patients, 1422 (9.7%) had missing data on race in either the patient portal or EHR, 1423 (9.7%) had missing data on ethnicity in either the patient portal or EHR, and 4141 (28.2%) had missing data on smoking status in the EHR CDM. To facilitate comparison, we retained only patients with complete values for each respective variable of interest.
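The binary recoding and per-variable complete-case rule described above can be sketched in Python as follows. The record layout (dicts with `race` and `smoking` keys) and the category labels are hypothetical. The sketch shows why each variable ends up with its own denominator: a participant missing a value in either source is dropped only from the comparison for that variable.

```python
def indicator(field, positive_value):
    """Build a binary recoder: True/False, or None when the field is missing."""
    def recode(record):
        value = record.get(field)
        return None if value is None else value == positive_value
    return recode

def complete_pairs(portal, ehr, recode):
    """Pair (portal, EHR) binary values, keeping only participants complete for this variable."""
    pairs = []
    for study_id, portal_record in portal.items():
        ehr_record = ehr.get(study_id)
        if ehr_record is None:
            continue  # no EHR CDM data for this participant
        p, e = recode(portal_record), recode(ehr_record)
        if p is None or e is None:
            continue  # missing in either source: excluded for this variable only
        pairs.append((p, e))
    return pairs

# Hypothetical records keyed by study identifier
portal = {
    "1": {"race": "White", "smoking": "current"},
    "2": {"race": "Black", "smoking": None},
    "3": {"race": None, "smoking": "former"},
}
ehr = {
    "1": {"race": "White", "smoking": "never"},
    "2": {"race": "White", "smoking": "current"},
    "3": {"race": "White", "smoking": "former"},
}
black_pairs = complete_pairs(portal, ehr, indicator("race", "Black"))
smoker_pairs = complete_pairs(portal, ehr, indicator("smoking", "current"))
print(black_pairs, smoker_pairs)
```

Participant "2" contributes to the race comparison but not to the smoking comparison, and participant "3" does the opposite.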

Statistical Analysis

We evaluated concordance using binomial proportions with exact 95% CIs for the following metrics: overall agreement, sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV). The κ statistics with 95% CIs were generated directly from SAS, version 9.4 (SAS Institute). Data were analyzed from June 1, 2021, to August 12, 2022. For the baseline characteristics of race, ethnicity, and smoking status, the reference standard was the patient-reported data collected in Mytrus. For the primary end point components (hospitalization for MI or stroke), the safety end point (hospitalization for major bleeding with an associated blood product transfusion), and coronary revascularization, the EHR data source was considered the reference standard against which patient-reported events were compared.
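All of these metrics come from a single 2×2 table once a reference standard is chosen. The Python sketch below is not the authors' SAS code. It computes the 5 proportions with exact binomial CIs and the point estimate of Cohen's unweighted κ. It assumes that "exact 95% CIs" refers to Clopper-Pearson intervals, found here by bisection on the binomial tail probabilities, and it omits the CI for κ (SAS reports its own). Cells are labeled relative to the reference standard: for demographic characteristics the EHR is the test and the portal is the reference, whereas for clinical events the roles are reversed.

```python
import math

def _log_pmf(i, n, p):
    """log P(X = i) for X ~ Binomial(n, p), with 0 < p < 1."""
    return (math.lgamma(n + 1) - math.lgamma(i + 1) - math.lgamma(n - i + 1)
            + i * math.log(p) + (n - i) * math.log1p(-p))

def _prob_between(lo, hi, n, p):
    """P(lo <= X <= hi) for X ~ Binomial(n, p)."""
    return math.fsum(math.exp(_log_pmf(i, n, p)) for i in range(lo, hi + 1))

def _solve(f, target, increasing, iterations=50):
    """Bisection on (0, 1); 50 halvings keep every trial p strictly inside the interval."""
    lo, hi = 0.0, 1.0
    for _ in range(iterations):
        mid = (lo + hi) / 2
        if (f(mid) < target) == increasing:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def clopper_pearson(x, n, alpha=0.05):
    """Exact two-sided CI for a binomial proportion x / n."""
    # Lower bound: P(X >= x | p) = alpha/2; upper bound: P(X <= x | p) = alpha/2
    lower = 0.0 if x == 0 else _solve(lambda p: _prob_between(x, n, n, p), alpha / 2, True)
    upper = 1.0 if x == n else _solve(lambda p: _prob_between(0, x, n, p), alpha / 2, False)
    return lower, upper

def concordance(tp, fp, fn, tn, alpha=0.05):
    """
    tp: positive in both sources            fp: test positive, reference negative
    fn: test negative, reference positive   tn: negative in both sources
    Returns {metric: (estimate, lower, upper)} plus a point estimate of kappa.
    Assumes every denominator is nonzero.
    """
    n = tp + fp + fn + tn
    ratios = {
        "overall_agreement": (tp + tn, n),
        "sensitivity": (tp, tp + fn),
        "specificity": (tn, tn + fp),
        "ppv": (tp, tp + fp),
        "npv": (tn, tn + fn),
    }
    out = {name: (x / d, *clopper_pearson(x, d, alpha)) for name, (x, d) in ratios.items()}
    observed = (tp + tn) / n
    # Chance agreement: product of the positive margins plus product of the negative margins
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    out["kappa"] = (observed - expected) / (1 - expected)
    return out

print(concordance(tp=8, fp=2, fn=2, tn=8))
```

In this toy table, overall agreement is 80%, but half of that agreement is expected by chance from the margins, so κ is 0.6. The same gap between raw agreement and κ explains why overall agreement in Table 3 exceeds 95% for every event while κ stays low.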

To account for clustering of patients within sites (PCORnet CDRNs), we used hierarchic models to combine data from all sites and estimate concordance at the “average” site. This approach includes site as a random effect in the model so that the variance of each concordance metric is estimated appropriately. We report the model-based median and 25th and 75th percentile values for each metric across sites, along with P values for the test of heterogeneity across sites. P values were calculated from a mixed model with CDRN as a random effect, using a likelihood ratio test of the covariance parameters; the null hypothesis was that the G matrix of random effects could be reduced to 0 (ie, that the random effect was not needed). P values were 2-sided, and the level used to indicate statistical significance was .05. Analyses were performed with SAS. The Duke University institutional review board provided ethical oversight for the study. Patients provided written informed consent.
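To show how a heterogeneity P value of this kind is obtained, the sketch below converts a likelihood ratio test of a single random-effect variance into a P value. The −2 log-likelihood values are hypothetical. We do not know which reference distribution the authors' SAS procedure applied, so the sketch reports both the naive χ² (1 df) P value and the P value from the 50:50 mixture of χ²₀ and χ²₁. That mixture is often used when the null hypothesis places a variance on its boundary value of 0.

```python
import math

def variance_component_lrt(neg2ll_without_site, neg2ll_with_site):
    """
    Likelihood ratio test of H0: the site (CDRN) variance is 0.
    Inputs are -2 log-likelihoods of nested models without and with the random site effect.
    Returns (statistic, naive chi-square(1) P value, 50:50 mixture P value).
    """
    stat = max(neg2ll_without_site - neg2ll_with_site, 0.0)
    # Upper tail of chi-square with 1 df: P(chi2_1 > s) = P(|Z| > sqrt(s)) = erfc(sqrt(s / 2))
    p_naive = math.erfc(math.sqrt(stat / 2))
    # Under the boundary null, half the mass sits at 0 (chi2_0), so for s > 0 the tail halves
    p_mixture = 0.5 * p_naive if stat > 0 else 1.0
    return stat, p_naive, p_mixture

# Hypothetical -2 log-likelihoods, for illustration only
print(variance_component_lrt(1012.4, 1005.1))
```

The mixture P value is half the naive one whenever the statistic is positive, so the choice of reference distribution matters most for metrics near the .05 threshold, such as stroke sensitivity (P = .04) or overall agreement for bleeding (P = .03) in Table 4.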

Results

Baseline characteristics of the study population are displayed in Table 1. Of 15 076 patients with complete information on age and sex, the median age was 67.6 years (range, 21-99 years; 25th and 75th percentiles, 60.7 years and 73.6 years), 10 352 were male (68.7%), and 4724 were female (31.3%). According to participant self-report, a total of 114 patients were American Indian or Alaska Native (0.8%), 146 were Asian (1.0%), 1311 were Black or African American (8.7%), 481 were Hispanic (3.2%), 11 990 were White (79.5%), 134 were multiple races (0.9%), and 401 were of other race or ethnicity (2.7%) (“other” was a category for race in the online portal, so no additional breakdown is available). A total of 13 959 patients had complete information on race and ethnicity. Of 14 103 patients with complete information on smoking status, 1382 (9.8%) were current smokers.

Table 1. Patient-Reported Demographic Information.

| Characteristic | All, No. (%) |
|---|---|
| No. | 15 076 |
| Age, y | |
| Mean (SD) | 66.8 (9.8) |
| Median (IQR) [range] | 67.6 (60.7-73.6) [21-99] |
| Sex | |
| Male | 10 352 (68.7) |
| Female | 4724 (31.3) |
| Race | |
| American Indian or Alaska Native | 114 (0.8) |
| Asian | 146 (1.0) |
| Black or African American | 1311 (8.7) |
| White | 11 990 (79.5) |
| Multiple racesᵃ | 134 (0.9) |
| Otherᵇ | 401 (2.7) |
| Prefer not to say or missing | 980 (6.5) |
| Ethnicity | |
| Hispanic | 481 (3.2) |
| Non-Hispanic | 13 553 (89.9) |
| Prefer not to say or missing | 1042 (6.9) |
| Current smoker | |
| Yes | 1382 (9.2) |
| No | 12 721 (84.4) |
| Missing | 973 (6.5) |

ᵃ The category “multiple races” was self-reported, and no further detail was available.

ᵇ “Other” was a category for race in the patient portal, so no additional breakdown is available.

Electronic health record data corresponded most closely to patient-reported data for non-Hispanic Black race (eTable 1 in the Supplement), for which 0.6% of patients (81 of 13 021) were misclassified. Non-Hispanic Black race and ethnicity identified with EHR data had high sensitivity, specificity, PPV, NPV, and κ (Table 2). Overall agreement was also high for non-Hispanic White, non-Hispanic other, and Hispanic ethnicity, which were misclassified for 271 (2.1%), 217 (1.7%), and 109 (0.8%) patients, respectively. Non-Hispanic White race and ethnicity identified with EHR data had high sensitivity, PPV, and NPV but lower specificity (89.7%) than non-Hispanic Black race. Electronic health record data were highly specific (99.6%) but less sensitive (82.5%) for Hispanic ethnicity, with moderate agreement corrected for chance (κ = 0.86). Data on smoking status were available for 9939 of 14 103 participants (70.5%) in the study population, of whom 468 (4.7%) were misclassified in EHR-derived data. Electronic health record–derived smoking status had high specificity but lower sensitivity than the race and ethnicity variables, with a κ statistic indicating moderate concordance between the 2 sources (κ = 0.74) (Table 2).

Table 2. Agreement Metrics for Patient-Reported and Electronic Health Record–Derived Demographic Characteristicsᵃ.

| Variable | Overall agreement, % (95% CI) | Sensitivity, % (95% CI)ᵇ | Specificity, % (95% CI) | PPV, % (95% CI) | NPV, % (95% CI) | κ Statistic (95% CI) |
|---|---|---|---|---|---|---|
| Non-Hispanic | | | | | | |
| Black | 99.4 (99.2-99.5) | 98.0 (97.1-98.7) | 99.5 (99.4-99.6) | 95.5 (94.2-96.5) | 99.8 (99.7-99.9) | 0.96 (0.96-0.97) |
| White | 97.9 (97.7-98.2) | 99.4 (99.2-99.5) | 89.7 (88.3-91.0) | 98.2 (97.9-98.4) | 96.4 (95.5-97.2) | 0.92 (0.91-0.93) |
| Otherᶜ | 98.3 (98.1-98.6) | 47.1 (41.8-52.5) | 99.8 (99.6-99.8) | 83.8 (77.9-88.6) | 98.6 (98.3-98.8) | 0.60 (0.55-0.64) |
| Hispanic | 99.2 (99.0-99.3) | 82.5 (80.8-88.0) | 99.6 (99.5-99.7) | 88.7 (85.2-91.6) | 99.5 (99.4-99.6) | 0.86 (0.84-0.89) |
| Current smoker | 95.3 (94.9-95.7) | 79.7 (77.0-82.2) | 96.9 (96.5-97.3) | 72.8 (70.0-75.5) | 97.9 (97.6-98.2) | 0.74 (0.71-0.76) |

Abbreviations: NPV, negative predictive value; PPV, positive predictive value.

ᵃ Patient-reported data were used as the criterion standard for all demographic comparisons.

ᵇ Sensitivity population excluded 600 patients with no portal visits and excluded events that occurred after the last visit.

ᶜ “Other” was a category for race in the online portal, so no additional breakdown is available.

Observed concordance and individual frequencies of clinical events occurring during trial follow-up are displayed in eTable 2 in the Supplement. Event rates were low for all 4 events of interest (MI, stroke, major bleeding, and coronary revascularization). Fewer than 2% of 14 661 patients were misclassified for stroke (182 [1.2%]) or major bleeding (138 [0.9%]). A total of 398 patients (2.7%) were misclassified for MI, most because the patient did not report an MI that was present in EHR data. Misclassification was most common for coronary revascularization (727 [5.0%]), with most discrepancies occurring because patients reported a revascularization that was not recorded in the EHR data. Table 3 shows agreement metrics for the 4 clinical events of interest, treating EHR data as the criterion standard, for the full trial population and for a sensitivity population that excluded 600 patients with no portal visits and excluded events that occurred after the last visit. In the full population, specificity of patient report was greater than 97% for all 4 clinical events. However, sensitivity of patient report was poor for MI, stroke, and major bleeding: only 114 of 346 (32.9%), 52 of 152 (34.2%), and 26 of 71 (36.6%) patients, respectively, reported these events when there was evidence of their occurrence in the EHR. Positive predictive value was similarly low for MI (40.7%), stroke (38.8%), and major bleeding (21.9%). Agreement corrected for chance was highest for coronary revascularization (κ = 0.54), followed by stroke (κ = 0.36), MI (κ = 0.35), and major bleeding (κ = 0.27). Results were largely similar in the sensitivity population, with slightly higher sensitivity for MI (39.0%), stroke (40.5%), and major bleeding (41.9%).

eTables 3, 4, 5, 6, and 7 in the Supplement display concordance for each event type by baseline demographic characteristics. For MI, discordance was higher among participants who were older, female, American Indian or Alaska Native, Black or African American, or Hispanic, as well as among smokers. Similar patterns were observed for stroke, revascularization, and bleeding events, with 2 exceptions: discordance for revascularization was lower among women than men, and discordance for bleeding events was lower among American Indian or Alaska Native participants than among other subgroups.
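Because PPV equals true positives divided by all patient reports, the event counts reported above can be combined with the PPVs in Table 3 to recover the approximate 2×2 cells for MI, stroke, and major bleeding. The Python sketch below performs only that arithmetic. It uses numbers from this section alone, treats the rounded PPVs as precise enough to round the number of patient reports to a whole patient, and uses the 14 661 patients with follow-up in both sources as the denominator (which affects only the true-negative cell). The recovered false-positive plus false-negative counts reproduce the misclassification totals given in the text (398, 182, and 138). They also show underreporting dominating for MI and overreporting dominating for major bleeding.

```python
def back_calculate(true_pos, ehr_events, ppv, n):
    """
    Approximate 2x2 cells with the EHR as reference, from:
      true_pos   - patients who reported the event and had it in the EHR
      ehr_events - patients with the event in the EHR
      ppv        - reported positive predictive value (a rounded proportion)
      n          - patients in the comparison
    """
    patient_reports = round(true_pos / ppv)  # PPV = TP / (all patient reports)
    fp = patient_reports - true_pos          # reported by patient, absent in EHR
    fn = ehr_events - true_pos               # present in EHR, not reported
    tn = n - true_pos - fp - fn
    return {"tp": true_pos, "fp": fp, "fn": fn, "tn": tn, "misclassified": fp + fn}

N = 14_661  # patients with follow-up in both sources
published = {  # (TP, EHR events, PPV) as reported in the text and Table 3
    "MI": (114, 346, 0.407),
    "stroke": (52, 152, 0.388),
    "major bleeding": (26, 71, 0.219),
}
for event, (tp, ehr_total, ppv) in published.items():
    print(event, back_calculate(tp, ehr_total, ppv, N))
```

This reconstruction is a consistency check on the reported figures, not a reanalysis. The resulting cells are approximate because the PPVs are rounded to 1 decimal place in percentage terms.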

Table 3. Agreement Metrics for Patient-Reported and Electronic Health Record (EHR)–Derived Follow-up Eventsᵃ.

| Variable | Overall agreement, % (95% CI) | Sensitivity, % (95% CI) | Specificity, % (95% CI) | PPV, % (95% CI) | NPV, % (95% CI) | κ Statistic (95% CI) |
|---|---|---|---|---|---|---|
| Full population (N = 15 076) | | | | | | |
| Coronary revascularization | 95.0 (94.7-95.4) | 58.6 (55.1-62.0) | 97.1 (96.9-97.4) | 54.2 (50.8-57.5) | 97.6 (97.3-97.9) | 0.54 (0.51-0.57) |
| MI | 97.3 (97.0-97.5) | 33.0 (28.0-38.2) | 98.8 (98.7-99.0) | 40.7 (34.9-46.7) | 98.4 (98.2-98.6) | 0.35 (0.30-0.40) |
| Stroke | 98.8 (98.6-98.9) | 34.2 (26.7-42.3) | 99.4 (99.3-99.6) | 38.8 (30.5-47.6) | 99.3 (99.2-99.4) | 0.36 (0.29-0.43) |
| Bleeding | 99.1 (98.9-99.2) | 36.6 (25.5-48.9) | 99.4 (99.2-99.5) | 21.9 (14.8-30.4) | 99.7 (99.6-99.8) | 0.27 (0.19-0.35) |
| Sensitivity analysis population (n = 14 476)ᵇ | | | | | | |
| Coronary revascularization | 95.4 (95.1-95.8) | 65.5 (61.9-69.0) | 97.0 (96.7-97.3) | 53.8 (50.4-57.2) | 98.1 (97.9-98.4) | 0.57 (0.54-0.60) |
| MI | 97.6 (97.3-97.8) | 39.0 (33.4-44.9) | 98.8 (98.6-99.0) | 40.1 (34.3-46.2) | 98.7 (98.5-98.9) | 0.38 (0.33-0.44) |
| Stroke | 98.9 (98.7-99.0) | 40.5 (31.8-49.6) | 99.4 (99.3-99.5) | 38.1 (29.8-46.8) | 99.5 (99.3-99.6) | 0.39 (0.31-0.46) |
| Bleeding | 99.1 (98.9-99.2) | 41.9 (29.5-55.2) | 99.3 (99.2-99.5) | 21.9 (14.8-30.4) | 99.7 (99.6-99.8) | 0.28 (0.20-0.37) |

Abbreviations: MI, myocardial infarction; NPV, negative predictive value; PPV, positive predictive value.

ᵃ Electronic health record data were used as the criterion standard for all clinical event comparisons.

ᵇ Restricted population excluded 600 patients with no visits after randomization and excluded EHR events that occurred after the patient’s final portal visit.

Table 4 displays results from the hierarchic models accounting for the natural clustering of ADAPTABLE trial patients within PCORnet CDRNs, with P values derived from mixed models with CDRN as a random effect. Agreement between patient-reported and EHR-derived demographic characteristics differed significantly by CDRN for all metrics except sensitivity and NPV for Black race. Agreement for revascularization differed significantly by CDRN on all metrics except specificity. For MI, sensitivity, specificity, and PPV were consistent across CDRNs, but overall agreement and NPV showed evidence of variability by CDRN. Overall agreement, sensitivity, and NPV differed by CDRN for the stroke end point, but specificity was consistent across networks. Because of small numbers, the model for PPV did not converge for stroke, and the model for sensitivity did not converge for major bleeding. For major bleeding, overall agreement differed by CDRN (P = .03), whereas specificity, PPV, and NPV were consistent across networks. Despite the low concordance of end points from portal and EHR data sources, no differences in trial findings were observed when comparing aspirin doses using participant-reported vs EHR data sources for end point ascertainment (eTable 7 in the Supplement).

Table 4. Hierarchic Modeling Results for Demographic Characteristics and Clinical Events Accounting for Siteᵃ.

| Characteristic | Overall agreement | Sensitivity | Specificity | PPV | NPV |
|---|---|---|---|---|---|
| Demographic details | | | | | |
| Black | | | | | |
| Median (IQR) | 99.2 (99.1-99.6) | 98.3 (97.7-98.6) | 99.6 (99.0-99.7) | 96.3 (94.2-96.8) | 99.8 (99.8-99.8) |
| P valueᵇ | <.001 | .12 | <.001 | <.001 | .42 |
| White | | | | | |
| Median (IQR) | 97.9 (97.5-98.1) | 99.7 (99.5-99.8) | 88.3 (74.5-89.3) | 98.1 (97.2-98.1) | 98.0 (96.5-99.3) |
| P valueᵇ | <.001 | .006 | <.001 | <.001 | <.001 |
| Hispanic ethnicity | | | | | |
| Median (IQR) | 99.0 (98.8-99.2) | 73.1 (68.0-85.5) | 99.6 (99.4-99.7) | 79.8 (78.4-88.2) | 99.5 (99.0-99.6) |
| P valueᵇ | <.001 | <.001 | <.001 | <.001 | <.001 |
| Current smoker | | | | | |
| Median (IQR) | 96.8 (94.2-97.5) | 83.6 (76.9-84.7) | 98.1 (97.8-98.6) | 79.3 (63.5-87.3) | 98.4 (97.8-98.6) |
| P valueᵇ | <.001 | <.001 | <.001 | <.001 | <.001 |
| Clinical events during trial follow-up | | | | | |
| Revascularization | | | | | |
| Median (IQR) | 94.7 (94.5-95.7) | 55.8 (51.0-61.9) | 97.2 (97.1-97.3) | 51.9 (45.5-60.1) | 97.3 (96.4-97.9) |
| P valueᵇ | <.001 | .002 | .33 | <.001 | <.001 |
| Myocardial infarction | | | | | |
| Median (IQR) | 97.4 (96.8-97.6) | 30.1 (28.5-34.2) | 98.9 (98.8-98.9) | 39.3 (39.2-40.6) | 98.1 (97.9-98.8) |
| P valueᵇ | <.001 | .13 | .21 | .30 | <.001 |
| Stroke | | | | | |
| Median (IQR) | 98.7 (98.6-99.1) | 36.4 (28.1-43.3) | 99.5 (99.4-99.5) | 38.8ᶜ | 99.3 (99.0-99.5) |
| P valueᵇ | <.001 | .04 | .18 | >.99 | <.001 |
| Bleeding | | | | | |
| Median (IQR) | 99.1 (99.0-99.2) | 36.6ᶜ | 99.4 (99.4-99.4) | 20.9 (19.1-24.4) | 99.8 (99.7-99.8) |
| P valueᵇ | .03 | >.99 | .16 | .37 | .07 |

Abbreviations: NPV, negative predictive value; PPV, positive predictive value.

ᵃ Includes the median, 25th percentile, and 75th percentile predicted values for each performance metric across all clinical data research networks (CDRNs).

ᵇ From a mixed model with CDRN as a random effect. P > .05 is interpreted as no evidence of a difference in the metric across CDRNs.

ᶜ Estimated site variability for this metric was zero.

Discussion

In this study, we evaluated concordance of a set of variables that were both reported by patients through an online portal and available in the EHR as part of a pragmatic clinical trial. Our main findings were as follows: (1) when patient-reported data were used as the criterion standard, agreement corrected for chance was high for White and Black race but only moderate for current smoking status; (2) EHR data were highly specific but only moderately sensitive for Hispanic ethnicity; (3) patient report of clinical events had low sensitivity compared with EHR data for MI, stroke, and major bleeding; (4) coronary revascularization was the most concordant event by data source, although agreement corrected for chance was only moderate; and (5) there was evidence of variation in agreement metrics by research network (CDRN) for all demographic characteristics and several clinical events.

In September 2021, the US Food and Drug Administration published guidance outlining key considerations when assessing EHR data to support regulatory decisions for drug and biological products.5 The guidance emphasizes the need to determine the reliability and validity of outcomes identified in available real-world data sources, including understanding the degree of outcome misclassification, whether the misclassification was differential, and the potential direction and magnitude of bias introduced by the misclassification. Our study adds to the body of knowledge regarding potential misclassification when real-world data sources are used in the setting of a pragmatic trial. In our study, the overall percentages of patients who were misclassified for stroke, MI, and major bleeding were small (<3%) and expected to be nondifferential across exposure groups. Because the ADAPTABLE trial relied on integrated data from multiple sources to capture events, discrepancies between data sources did not affect overall trial findings. However, our findings underscore the importance of the US Food and Drug Administration recommendation to consider the potential bias introduced by misclassification, particularly if a single data source is used or rare events with imperfectly sensitive event capture are evaluated. An ongoing project evaluating case report form data collected in the Effect of Evolocumab in Patients at High Cardiovascular Risk Without Prior Myocardial Infarction or Stroke trial17 compared with EHR data at participating sites will further explore the extent of and possible associations with misclassification across potential data sources for event ascertainment.

Our study builds on past work evaluating self-reported data captured through conventional mechanisms (in-person visits and telephone interviews) and is the first, to our knowledge, to assess validity of participant-reported health data collected through a virtual portal. Evidence to date suggests that validity of participant self-report varies by collection modality, participant population, and the type of information collected.7,8,9,10 Our findings of relatively low sensitivity and chance-corrected agreement of participant-reported clinical events have important implications given the growing interest in and use of decentralized, direct-to-participant study designs that rely on these data for event capture. Although participant report had high NPV for all events in our study, this finding was likely due in part to chance agreement because of low overall event rates. Because the purpose of patient-reported event data in the ADAPTABLE trial was to support event capture, the low sensitivity for participant-reported cardiovascular events calls into question the utility of these data as the sole method for trial event ascertainment. Future direct-to-participant research efforts should carefully consider both overall agreement and specific concordance statistics in light of the intended use of participant-reported health data.
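The point about chance agreement can be made concrete with Bayes' rule. The Python sketch below uses the full-population MI sensitivity and specificity from Table 3 and the EHR-identified MI count given in the Results (346 of 14 661 patients) to compute the expected NPV and Cohen's κ. It simplifies by assuming that sensitivity and specificity are the same for every patient. Observed reporting gives an NPV of about 0.984 and κ of about 0.35, close to Table 3. A hypothetical participant who never reports an MI still reaches an NPV of about 0.976, yet κ drops to 0.

```python
def expected_cells(sens, spec, prev):
    """Expected 2x2 proportions (tp, fp, fn, tn) for a test vs a reference at a given prevalence."""
    tp, fn = sens * prev, (1 - sens) * prev
    tn, fp = spec * (1 - prev), (1 - spec) * (1 - prev)
    return tp, fp, fn, tn

def npv(sens, spec, prev):
    """Negative predictive value: share of test negatives that are truly negative."""
    tp, fp, fn, tn = expected_cells(sens, spec, prev)
    return tn / (tn + fn)

def kappa(sens, spec, prev):
    """Cohen's kappa computed from expected cell proportions."""
    tp, fp, fn, tn = expected_cells(sens, spec, prev)
    observed = tp + tn
    expected = (tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)
    return (observed - expected) / (1 - expected)

prev_mi = 346 / 14_661  # share of patients with an EHR-identified MI (about 2.4%)
print("observed reporting:", round(npv(0.33, 0.988, prev_mi), 3), round(kappa(0.33, 0.988, prev_mi), 2))
print("never reports MI:  ", round(npv(0.0, 1.0, prev_mi), 3), round(kappa(0.0, 1.0, prev_mi), 2))
```

With an event rate near 2%, NPV cannot fall much below 1 minus the prevalence, whatever the sensitivity. For that reason, sensitivity and κ are the more informative statistics when patient report is intended to support event capture.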

Our results are largely consistent with prior work reporting suboptimal agreement between patient-reported and electronic health data for clinical events. In the Treatment With ADP Receptor Inhibitors: Longitudinal Assessment of Treatment Patterns and Events After Acute Coronary Syndrome study, Krishnamoorthy and colleagues18 found both underreporting and overreporting of hospitalized events when comparing self-report with hospitalizations derived from billing data. In a comparison of self-reported heart attack and acute MI identified in Medicare claims, Yasaitis et al19 found that only one-third of patients who reported a heart attack had a claims-identified acute MI, and one-third of patients with a claims-identified acute MI did not report having a heart attack. Similar results were found in a comparison of self-reported and physician-reported heart attack or stroke in the British Regional Heart Study, which showed differential overreporting vs underreporting of heart attacks and strokes.20 Although both underreporting and overreporting were present in our study for stroke and MI, we found much higher overreporting than underreporting (and worse agreement overall) for the major bleeding end point. This differential agreement by clinical event type was also reported in an analysis of the Women’s Health Initiative, in which agreement was near perfect for coronary revascularization but only fair to moderate for angina, congestive heart failure, and peripheral vascular disease.7

Although low concordance between patient-reported and clinical event data may call into question the use of self-report for clinical events, these data may still play an important role in a comprehensive event ascertainment strategy in pragmatic trials. Variability in reporting by event type or frequency is largely beyond the control of the research team, but prior work suggests that concordance can be enhanced by study designs that minimize known biases associated with self-report. In a systematic review of self-reported health care use data, Bhandari and Wagner21 found that the accuracy of self-report was variable and depended largely on modifiable design factors, including recall timeframe, data collection method, and questionnaire design. Empirical testing of strategies that vary these factors and measure their associations with agreement would help inform future study design. The US Food and Drug Administration recommends consideration of age, level of cognitive development, health state, and health literacy when determining the feasibility of patient self-report.22 The appropriateness of using self-reported data may vary by disease area and target population, so engagement of subject matter experts should drive decisions about its use. Finally, given the pragmatic nature of the ADAPTABLE trial, patients were provided minimal education about event reporting on the online platform. Future trials could implement tailored education focused on specific layperson terms for events of interest, the importance of comprehensive event capture for research, and use of the online interface to further enhance capture of high-quality patient-reported event data.

Although our study used data from a randomized trial, these findings are equally relevant to observational studies, in which information on detailed confounders and outcomes is critical to valid inference. Our study specifically adds to the literature on concordance between EHR and patient-reported data for smoking status and ethnicity, 2 variables that are inconsistently documented in the EHR and are therefore often collected directly from patients. In our study, 64 of 417 patients (15.3%) who identified as Hispanic would have been classified as non-Hispanic had only information from the EHR been used to define ethnicity. This finding is consistent with prior work by Hamilton and colleagues,23 who reported an overall misclassification rate of 15.7% when comparing EHR-derived with self-reported race and ethnicity, with the majority due to missing race and ethnicity information in the medical record. In addition to ethnicity data, patient-reported information may also be useful for supplementing EHR-derived smoking status, a key risk factor that was not populated for more than a quarter of patients in the ADAPTABLE trial. Among individuals with EHR information on smoking status, 190 of 935 self-reported smokers (20.3%) were classified as nonsmokers in the EHR. Taken together with prior work demonstrating inconsistencies in smoking status between structured EHR data and clinical notes,13 these results suggest that patient-facing tools that allow patients to document their own smoking status may enhance the quality of smoking data.
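Both proportions above treat patient report as the criterion standard, but they may handle missing EHR values differently: the ethnicity figure describes what the EHR alone would have implied, whereas the smoking figure is restricted to patients with EHR information. The hypothetical Python helper below (`missed_by_ehr` and its `missing_as_negative` flag are illustrative names, not the study's code) makes that choice explicit; exactly how missing values were coded in the ADAPTABLE analysis is an assumption here.

```python
from typing import Optional, Sequence, Tuple


def missed_by_ehr(
    pairs: Sequence[Tuple[str, Optional[str]]],
    positive: str,
    missing_as_negative: bool,
) -> Tuple[int, int]:
    """Count patients who self-report `positive` but are not `positive` in the
    EHR. Returns (missed, denominator).

    missing_as_negative=True treats an absent EHR value as negative (the EHR
    used alone); False drops patients without EHR information."""
    missed = denom = 0
    for reported, ehr in pairs:
        if reported != positive:
            continue  # negative by the criterion standard: not in denominator
        if ehr is None and not missing_as_negative:
            continue  # no EHR information: excluded from this comparison
        denom += 1
        if ehr != positive:
            missed += 1
    return missed, denom


if __name__ == "__main__":
    toy = [  # made-up (patient-reported, EHR) pairs
        ("Hispanic", "Hispanic"),
        ("Hispanic", "Non-Hispanic"),
        ("Hispanic", None),  # no ethnicity recorded in the EHR
        ("Non-Hispanic", "Non-Hispanic"),
    ]
    print(missed_by_ehr(toy, "Hispanic", missing_as_negative=True))   # (2, 3)
    print(missed_by_ehr(toy, "Hispanic", missing_as_negative=False))  # (1, 2)
    # Percentages reported in the text, recomputed from their counts.
    print(round(100 * 64 / 417, 1), round(100 * 190 / 935, 1))        # 15.3 20.3
```

The same patient can count as misclassified under one convention and be excluded under the other, so a misclassification percentage is interpretable only alongside its rule for missing EHR data.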

Limitations

There are several limitations to our study. First, we identified EHR events with the same algorithms that were used in the main ADAPTABLE trial. Although these algorithms were developed through a multidisciplinary process, other algorithms may be more or less sensitive or specific than those used in the ADAPTABLE trial. Electronic health record data more broadly are also an imperfect criterion standard given the inherent gaps in coverage of a patient’s health care. Second, PCORnet is a national network with ongoing data curation processes to address missingness and implausible values, so EHR data quality at participating sites may differ from that of sites outside this network. Third, we treated patient report as the criterion standard for smoking status, but it is possible that, owing to social desirability bias, some patients underreported current smoking. However, we anticipate that this bias is present in both data sources and may be even more salient during an in-person clinic visit because of the physical presence of a clinician. Fourth, some data elements were captured only through the EHR (eg, comorbidity information) or were used as part of the study record-linkage process (eg, age and sex), so we were unable to examine the validity of participant report for those variables. Fifth, for simplicity and consistency with the trial design, we limited clinical events to the first event occurring during follow-up; agreement patterns may differ for recurrent events.

Conclusions

In this analysis of patient-reported event data captured through an online portal in a pragmatic clinical trial, specificity of patient-reported clinical events was high, but sensitivity was low. Patient-reported information may be most useful to supplement incomplete EHR-based demographic information (such as Hispanic ethnicity) or as part of a multifaceted approach to event capture in the postrandomization period. Future work should focus on empirical testing to determine optimal strategies for capturing and incorporating patient-reported data into pragmatic clinical trials.

eFigure 1. Inclusion Criteria for the ADAPTABLE Trial

eTable 1. Observed Concordance Between Patient-Reported and EHR-Derived Demographic Characteristics

eTable 2. Observed Concordance Between Patient-Reported and EHR-Derived Follow-up Events

eTable 3. Baseline Characteristics by First MI Event Concordance Status

eTable 4. Baseline Characteristics by First Stroke Event Concordance Status

eTable 5. Baseline Characteristics by First Revascularization Event Concordance Status

eTable 6. Baseline Characteristics by First Bleeding Event Concordance Status

eTable 7. Effect Estimates When Using Various Data Sources for Trial End Points

eFigure 2. Participant Portal Engagement and Event Exclusions

References

- 1. Crowley WF Jr, Sherwood L, Salber P, et al. Clinical research in the United States at a crossroads: proposal for a novel public-private partnership to establish a national clinical research enterprise. JAMA. 2004;291(9):1120-1126. doi: 10.1001/jama.291.9.1120

- 2. Weinfurt KP, Hernandez AF, Coronado GD, et al. Pragmatic clinical trials embedded in healthcare systems: generalizable lessons from the NIH Collaboratory. BMC Med Res Methodol. 2017;17(1):144. doi: 10.1186/s12874-017-0420-7

- 3. NIH Pragmatic Trials Collaboratory. Using electronic health record data in pragmatic clinical trials. Accessed March 30, 2022. https://rethinkingclinicaltrials.org/chapters/design/using-electronic-health-record-data-pragmatic-clinical-trials-top/specific-uses-ehr-data-pcts/

- 4. US Food and Drug Administration. Framework for FDA’s real-world evidence program. US Food and Drug Administration. December 2018. Accessed March 30, 2022. https://www.fda.gov/media/120060/download

- 5. US Department of Health and Human Services; Food and Drug Administration; Center for Drug Evaluation and Research (CDER); Center for Biologics Evaluation and Research (CBER); Oncology Center for Excellence (OCE). Real-world data: assessing electronic health records and medical claims data to support regulatory decision-making for drug and biological products: guidance for industry. US Food and Drug Administration. Published September 2021. Accessed March 30, 2022. https://www.fda.gov/media/152503/download

- 6. US Department of Health and Human Services; Food and Drug Administration; Center for Drug Evaluation and Research (CDER); Center for Biologics Evaluation and Research (CBER). Considerations for the use of real-world data and real-world evidence to support regulatory decision-making for drug and biological products. US Food and Drug Administration. Published December 2021. Accessed March 30, 2022. https://www.fda.gov/media/154714/download

- 7. Heckbert SR, Kooperberg C, Safford MM, et al. Comparison of self-report, hospital discharge codes, and adjudication of cardiovascular events in the Women’s Health Initiative. Am J Epidemiol. 2004;160(12):1152-1158. doi: 10.1093/aje/kwh314

- 8. Stirratt MJ, Dunbar-Jacob J, Crane HM, et al. Self-report measures of medication adherence behavior: recommendations on optimal use. Transl Behav Med. 2015;5(4):470-482. doi: 10.1007/s13142-015-0315-2

- 9. Woodfield R, Sudlow CL; UK Biobank Stroke Outcomes Group; UK Biobank Follow-up and Outcomes Working Group. Accuracy of patient self-report of stroke: a systematic review from the UK Biobank Stroke Outcomes Group. PLoS One. 2015;10(9):e0137538. doi: 10.1371/journal.pone.0137538

- 10. Simpson CF, Boyd CM, Carlson MC, Griswold ME, Guralnik JM, Fried LP. Agreement between self-report of disease diagnoses and medical record validation in disabled older women: factors that modify agreement. J Am Geriatr Soc. 2004;52(1):123-127. doi: 10.1111/j.1532-5415.2004.52021.x

- 11. Polubriaginof FCG, Ryan P, Salmasian H, et al. Challenges with quality of race and ethnicity data in observational databases. J Am Med Inform Assoc. 2019;26(8-9):730-736. doi: 10.1093/jamia/ocz113

- 12. Nelson NC, Evans RS, Samore MH, Gardner RM. Detection and prevention of medication errors using real-time bedside nurse charting. J Am Med Inform Assoc. 2005;12(4):390-397. doi: 10.1197/jamia.M1692

- 13. Polubriaginof F, Salmasian H, Albert DA, Vawdrey DK. Challenges with collecting smoking status in electronic health records. AMIA Annu Symp Proc. 2018;2017:1392-1400.

- 14. Hernandez AF, Fleurence RL, Rothman RL. The ADAPTABLE trial and PCORnet: shining light on a new research paradigm. Ann Intern Med. 2015;163(8):635-636. doi: 10.7326/M15-1460

- 15. PCORnet. Data. Accessed September 1, 2018. https://pcornet.org/data-driven-common-model

- 16. Baker DW, Hasnain-Wynia R, Kandula NR, Thompson JA, Brown ER. Attitudes toward health care providers, collecting information about patients’ race, ethnicity, and language. Med Care. 2007;45(11):1034-1042. doi: 10.1097/MLR.0b013e318127148f

- 17. US National Library of Medicine. Effect of Evolocumab in Patients at High Cardiovascular Risk Without Prior Myocardial Infarction or Stroke (VESALIUS-CV). Updated December 7, 2021. Accessed January 11, 2022. https://www.clinicaltrials.gov/ct2/show/NCT03872401

- 18. Krishnamoorthy A, Peterson ED, Knight JD, et al. How reliable are patient-reported rehospitalizations? implications for the design of future practical clinical studies. J Am Heart Assoc. 2016;5(1):e002695.

- 19. Yasaitis LC, Berkman LF, Chandra A. Comparison of self-reported and Medicare claims–identified acute myocardial infarction. Circulation. 2015;131(17):1477-1485. doi: 10.1161/CIRCULATIONAHA.114.013829

- 20. Walker MK, Whincup PH, Shaper AG, Lennon LT, Thomson AG. Validation of patient recall of doctor-diagnosed heart attack and stroke: a postal questionnaire and record review comparison. Am J Epidemiol. 1998;148(4):355-361. doi: 10.1093/oxfordjournals.aje.a009653

- 21. Bhandari A, Wagner T. Self-reported utilization of health care services: improving measurement and accuracy. Med Care Res Rev. 2006;63(2):217-235. doi: 10.1177/1077558705285298

- 22. US Food and Drug Administration. Patient-focused drug development public workshop on guidance 1. Accessed June 20, 2018. https://www.fda.gov/media/109154/download

- 23. Hamilton NS, Edelman D, Weinberger M, Jackson GL. Concordance between self-reported race/ethnicity and that recorded in a Veteran Affairs electronic medical record. N C Med J. 2009;70(4):296-300. doi: 10.18043/ncm.70.4.296
