Summary:

Concomitant with the shift toward competency-based curricula in surgical education, there has been increasing adoption of surgical simulation coupled with virtual, mixed, and augmented reality. These technologies have become more commonplace across multiple surgical disciplines, in domains such as preoperative planning, surgical education, and intraoperative navigation. However, there is a relative paucity of literature on the application of this technology to plastic surgery education. This review outlines the advantages of mixed and augmented reality in the pursuit of an ideal simulation environment, their benefits for the education of plastic surgery trainees, and their role in standardized assessments. In addition, we offer practical solutions to commonly encountered problems with this technology. Augmented reality has tremendous untapped potential in the next phase of plastic surgery education, and we outline steps toward broader implementation to enhance the learning environment for our trainees and to improve patient outcomes.

Takeaways

Question: What is the current state of augmented reality in surgical education and how will broader implementation impact future trainees?

Findings: Augmented reality applications have been implemented across multiple surgical disciplines, although little literature exists pertaining to plastic surgery. We describe the strengths of augmented reality, applications in surgical education, and current limitations, and offer practical guidance for implementation in competency-based curricula.

Meaning: Augmented reality has tremendous untapped potential in plastic surgery. With the creation of standardized assessment tools, augmented/mixed reality (AR/MR) is uniquely positioned to complement the next phase of surgical education.

INTRODUCTION

As the landscape of surgical education has evolved, surgical residents are increasingly required to demonstrate procedural competency as a condition of program completion.1 Concurrently, duty-hour restrictions have reduced the time available for skill and knowledge acquisition, and together with an increased emphasis on patient safety, they may limit both clinical opportunities and the time available for competency-based assessment.1–3 This challenge requires a paradigm shift in surgical education that uses novel strategies and technologies for resident education and assessment.1,3,4 Among the technologies that have seen the most significant innovation for surgical education are surgical simulation coupled with virtual reality (VR), mixed reality (MR), and augmented reality (AR).4,5

The use of simulation in surgical education, achieved with cadaveric, animal, synthetic, or virtual models, aims to recreate the operative experience and allow for skill development. Using these various modalities, trainees are able to develop and refine both technical and cognitive skills in a controlled environment.6 Simulation has been well described in the surgical literature, with multiple studies demonstrating accelerated learning curves and successful development of surgical skills in trainees.3,6,7 The value of simulation has also been demonstrated via improvements in resident competency scores and intraoperative performance for both junior and senior residents.8

VR can be defined as immersion in an entirely simulated digital environment isolated from the external world, often via a wearable headset or other simulator.4,5,9 The terms “AR” and “MR” are often used interchangeably within the surgical literature; however, there are fundamental differences between them. AR describes technology in which digital information, including graphics, audio, or video, is superimposed onto the user’s view of the external environment, as with Google Glass (Google, Inc., Mountain View, Calif.).1,4,5 Unlike in MR, however, this information does not interact with the real world. MR blends the virtual and real worlds: superimposed information is integrated into the user’s surroundings, allowing the user to interact fully with the virtual content.1,4 The most heavily studied technology in this domain is the Microsoft HoloLens (Microsoft Corp., Redmond, Wash.), which consists of a head-mounted display (HMD) that projects visual information over the user’s field of view.3,10

VR, AR, and MR have seen increasing adoption in recent years across multiple surgical disciplines, demonstrating utility for surgical education, preoperative planning, and intraoperative navigation.10,11 However, much of this research has focused on general surgery, orthopedics, and spine surgery, with relatively few publications pertaining to plastic surgery.11,12 Given the breadth of plastic surgery and the need for surgeons to transfer their skills to unique clinical scenarios, plastic surgery is a specialty that could benefit from surgical simulation. This review describes the current state of VR, AR, and MR in the education of plastic surgery trainees, outlines their limitations, and offers guidance for future implementation.

VIRTUAL, AUGMENTED, AND MIXED REALITY—THE BENEFITS OF EACH AND THE PURSUIT OF THE IDEAL SIMULATION ENVIRONMENT

The most recent advances in surgical simulation pertain to the use of VR, AR, and MR, each of which confers its own relative advantages.6 VR simulators provide an immersive experience well suited to the development of knowledge and intraoperative decision-making skills.3,13 VR simulation can also replicate rare clinical scenarios and can deliver constructive feedback without direct supervision by an expert instructor.8,13 AR superimposes information onto the user’s field of view, permitting quick reference to data that assist with procedural completion, and may also be used for task recording and later review.1 In contrast, MR typically uses an HMD, a position-tracking system, and software that integrates superimposed information with the real environment.3 The ability to integrate the real and virtual worlds allows for higher-fidelity simulation and the possibility of remote learning and telementoring.3,14 The advantages and disadvantages of VR, AR, and MR are summarized in Table 1.

Table 1.

Advantages and Disadvantages of VR, AR, and MR

| Modality | Advantages | Disadvantages |
|---|---|---|
| VR | Good for development of knowledge and operative decision-making; can replicate rare clinical scenarios; avoids need for direct supervision by an expert; excellent for anatomic visualization; cost-effective | Excludes the physical environment from the user; often lacks haptic feedback; difficult to facilitate group interaction; may lack construct validity; users may experience nausea and vomiting with prolonged use |
| AR | May be used for task recording; provides access to large amounts of data that may be required during a task; lightweight; hands-free experience | May present false security to the user; may present issues with inattentional blindness; decreased software availability; difficult to incorporate measures of task proficiency; cannot fully replicate human tissue |
| MR | Typically higher-fidelity simulation; more frequently uses haptic feedback; can be used to facilitate remote learning and telementoring; may incorporate and record objective operative performance variables as well as eye tracking and cognitive load; faster learning curves for trainees; can be combined with physical models to improve the fidelity of the simulation | Cannot fully replicate human tissue; may present false security to the user; device weight; expensive technology; may present issues with inattentional blindness; occasional difficulty with precise image registration |

Among the three modalities, AR/MR technology has increasingly gained favor given the advantages it confers over VR.3 The immersive nature of VR excludes the physical environment from the user, haptic/force feedback is difficult to incorporate into VR systems, and VR does not allow natural interaction among a group of teachers and learners.3,13,23 Haptics, or the force feedback capability of the system, is a critical element that improves skill acquisition among trainees.15,23 Many VR systems also lack construct validity, that is, the ability to accurately distinguish intermediate from expert trainees with respect to technical expertise.16 Finally, certain educational VR simulators are noninteractive and may be better suited to anatomic visualization and operative planning than to learning technical skills.13 Therefore, in the pursuit of an ideal simulator for trainees, AR/MR will likely become the predominant modality, although certain limitations pertaining to simulator realism and haptic feedback will need to be overcome.
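Construct validity, as defined above, is typically examined by asking whether simulator scores separate groups of differing expertise. The sketch below is an illustration only: it computes the Mann-Whitney U statistic and the corresponding probability of superiority (U divided by the number of expert-intermediate pairs) for two hypothetical sets of simulator scores. The function names, the scores, and the choice of this particular statistic are assumptions, not the method used in the cited studies.

```python
from itertools import product


def mann_whitney_u(expert_scores: list[float], intermediate_scores: list[float]) -> float:
    """Count expert-intermediate pairs in which the expert scores higher (ties count 0.5)."""
    u = 0.0
    for expert, intermediate in product(expert_scores, intermediate_scores):
        if expert > intermediate:
            u += 1.0
        elif expert == intermediate:
            u += 0.5
    return u


def probability_of_superiority(expert_scores: list[float], intermediate_scores: list[float]) -> float:
    """U scaled to [0, 1]: 0.5 means no separation of the groups, 1.0 means complete separation."""
    pairs = len(expert_scores) * len(intermediate_scores)
    return mann_whitney_u(expert_scores, intermediate_scores) / pairs


# Hypothetical simulator scores (higher is better); not data from any study.
experts = [88.0, 92.0, 85.0, 90.0]
intermediates = [70.0, 86.0, 75.0, 68.0]
print(probability_of_superiority(experts, intermediates))  # 0.9375
```

A value near 0.5 would suggest that the simulator cannot distinguish the two groups. A formal validation study would also report a significance test and confidence interval (for example, with `scipy.stats.mannwhitneyu`) on adequately sized cohorts.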

EDUCATIONAL BENEFITS OF AUGMENTED AND MIXED REALITY

With ongoing improvements, AR/MR applications hold significant promise for surgical training, ranging from anatomy curricula to the development of procedural skills and remote instruction.9,24 This technology has already demonstrated utility as an adjunct to nonsimulation-based training methods, with some studies showing improved comprehension of three-dimensional structures.5

Traditional anatomy teaching has often relied on physical models and cadaveric dissection.5 Because cadaveric specimens are costly and of limited availability, alternatives are needed.25 High-fidelity virtual models provide such an alternative and may improve understanding and conceptualization of anatomical details that are difficult to demonstrate in some cadaveric specimens. For example, Vartanian et al25 developed an interactive 3-D virtual model of the human nose from cadaveric specimens, allowing individual users to review the anatomy in great detail, which may be beneficial for mastering procedures such as rhinoplasty.26 These models can, in turn, be extended to create interactive surgical animations and mobile applications such as Touch Surgery (Touch Surgery, London, United Kingdom).2,5 Flores et al27 have also developed a similar virtual atlas of craniofacial procedures and demonstrated its utility for trainee knowledge and skill acquisition.8,28

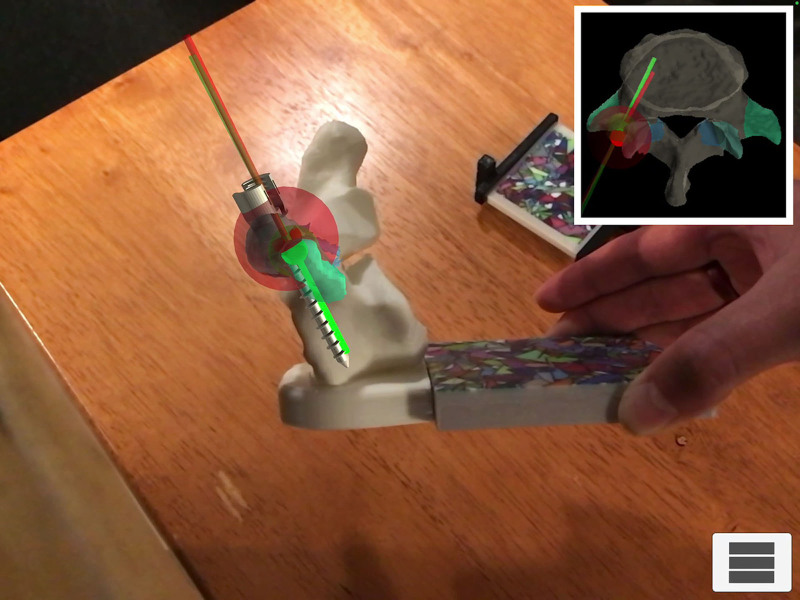

Reduced opportunities for surgical skill development under duty-hour restrictions, coupled with an increased emphasis on patient safety, have created an expectation that trainees acquire greater surgical expertise before performing procedures in the operating room.3 Proponents therefore maintain that simulation is essential, because it permits skill development without compromising patient safety.3 MR/AR simulators are uniquely suited to fill this void, providing an environment for deliberate, hands-on practice away from the clinical setting.2 In addition, they offer flexibility with respect to the timing and location of training, as some of these simulators are available on tablets and phones.1 Furthermore, they provide the repetition and real-time formative feedback that are imperative for skill development.2,6,29 This approach has gained traction in spine surgery for pedicle screw placement, a crucial procedural step in which incorrect positioning can have serious adverse consequences.30 In a study by Gibby et al,30 AR using an HMD to display the needle trajectory and a CT image overlay resulted in 97% accuracy of needle placement into the pedicle. Because needle placement was performed by inexperienced trainees, these results demonstrate the precision of AR technology and its potential for skill development among novice learners.30 Figure 1 demonstrates a similar task, with simulated placement of a pedicle screw in an AR model developed in our laboratory. Logishetty et al29 found no difference in the accuracy of acetabular cup placement during a simulated total hip arthroplasty between a surgeon-trained group and a group trained exclusively with an AR platform. Therefore, AR models may hold significant value in allowing trainees to develop skills effectively in an unsupervised setting, complementing expert-guided instruction.

Fig. 1.

Pedicle screw placement into a spine model using an augmented reality overlay to demonstrate ideal and actual trajectories of the screw.

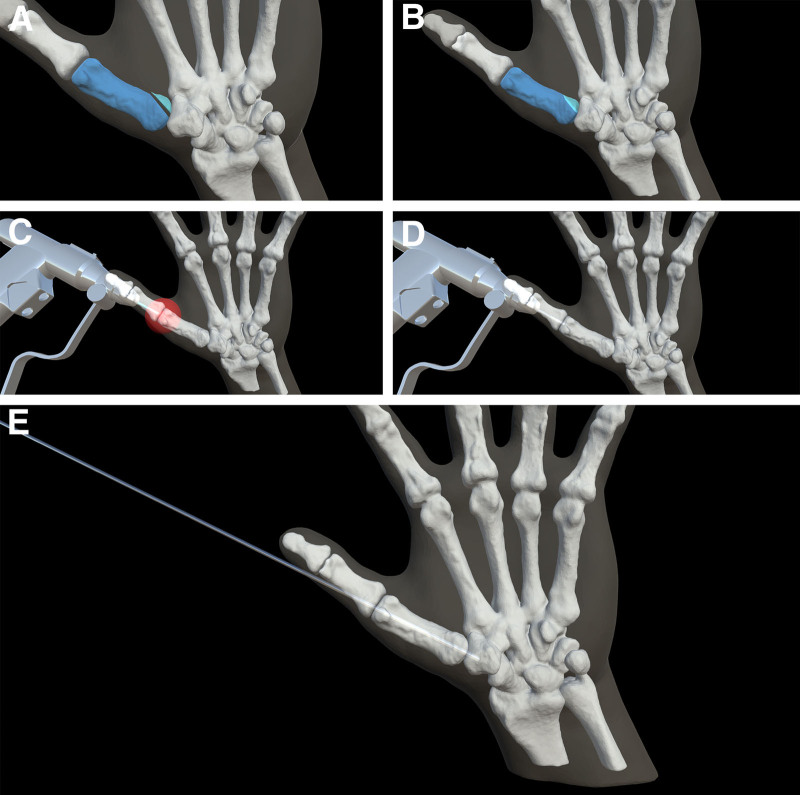

Within plastic surgery, the procedures that would benefit most are those that are technically challenging and require a high degree of visuospatial awareness, and those for which clinical opportunities are limited by rarity or by potential for adverse outcomes. One procedure with a considerable learning curve is operative fixation of scaphoid fractures: incorrect placement of the compression screw can impair bone healing and adversely affect wrist biomechanics, resulting in well-documented complications.31 Another procedure that requires a high degree of visuospatial awareness and often challenges novice learners is K-wire fixation of hand fractures. Although one early study used Google Glass as an adjunct for K-wire fixation, no objective measures of task proficiency or errors were recorded.17 Therefore, more robust models for operative fixation of hand fractures are warranted and may yield significant benefits in skill acquisition and retention for learners (Fig. 2). (See Video [online], which shows a real-time view of an AR simulator for K-wire fixation of a Bennett’s fracture.) AR applications can provide a high-fidelity learning environment and can be coupled with physical models to increase fidelity and haptic feedback for procedures routinely encountered by plastic surgery trainees.14,29

Fig. 2.

Procedural sequence of K-wire fixation task. A, Unreduced Bennett’s fracture. Fracture fragments depicted in blue. B, Reduced Bennett’s fracture. Fracture fragments depicted in blue. C, Starting point for K-wire fixation task. D, K-wire depicted shortly after initiation of task. E, Completed K-wire fixation task with appropriate reduction of fracture fragments.

Video 1. Real-time view of an augmented reality simulator for K-wire fixation of a Bennett’s fracture.

To justify further investment of resources and broader adoption of AR, demonstrable benefits over traditional training techniques are necessary. Vera et al14 demonstrated a shorter learning curve, faster completion times, and reduced error rates using AR for laparoscopic training compared with verbal cues from a staff surgeon. LeBlanc et al32 also reported improved task performance when comparing AR simulation with cadaveric models for colorectal surgery skills. The gynecologic and neurosurgical literature supports analogous benefits to trainees, with improved knowledge-based scores and operative efficiency compared with traditional methods.18,33,34 Plastic surgery trainees may derive similar gains from specialty-specific simulators, although studies comparing AR/MR with traditional learning methods are warranted.

AR can also facilitate telemedicine and remote learning of new techniques.1,3,24 Vyas et al24 reported using AR to deliver an overseas cleft surgery curriculum in which a remote American surgeon guided two Peruvian surgeons through cleft surgery. The remote surgeon’s virtual operative field was merged with live audio and video from the overseas surgeon’s operating room, and the merged view was visible to both parties. A progressive improvement in all seven aspects of cleft lip repair was demonstrated by both groups of surgeons. Technology such as virtual interactive presence in AR allows for real-time interaction between local and remote surgeons and provides an AR overlay for the local surgeon,3,10,35 with early success demonstrated in orthopedics and neurosurgery. Similar technology can be used across multiple institutions, allowing more experienced surgeons to guide colleagues and trainees through an operative procedure.24 Applications of VR/AR/MR are summarized in Table 2.

Table 2.

Notable Examples of Plastic Surgery Applications of VR, AR, and MR

| Modality | Example of Technology | Description |
|---|---|---|
| VR | The virtual nose25 | A novel three-dimensional VR model of the nose, allowing for effective teaching and anatomy visualization |
| VR | Virtual surgical atlas of craniofacial procedures27,28 | Static and dynamic animation of normal and pathologic craniofacial states, as well as procedural steps in commonly performed procedures |
| VR | Touch Surgery1 | Plastic surgery procedures taught in a stepwise format, with both learning and testing modes |
| VR | Crisalix,1 VR-assisted surgical planning10 | Applications designed to render postoperative results from preoperative images and patient scans for demonstration to patients |
| AR | Google Glass for K-wire fixation of metacarpal fractures17 | Heads-up display to augment the surgeon’s visualization of the fracture pattern and K-wire placement |
| MR | Remote learning and telementoring for novice surgeons24 | Remote teaching of overseas cleft surgeons using an AR platform, demonstrating improvement in clinical skills |
| MR | Intraoperative navigation36 | Use of an AR system for intraoperative navigation in mandibular osteotomies, resulting in improved accuracy |
| MR | Preoperative planning37 | AR system to assist with creation of accurate cutting guides for fibula free flap harvest |

Despite its educational potential, AR/MR is intended to complement operating room experience, not to replace operative exposure. These modalities are effective in teaching anatomy and the surgical steps of a procedure. However, they do not fully replicate hands-on surgical training, as they often lack haptic/force feedback and cannot reproduce the more nuanced aspects of tissue dissection.1,3 In one study, participants reported greater satisfaction with a cadaveric model, citing tissue consistency and preservation of anatomic planes as important factors.32 Further improvements in AR simulation will require incorporation of appropriate haptic feedback and better representation of tissue characteristics. The real benefit, therefore, is to allow trainees to acquire skills outside the operating room and then extend these skills in the operative environment, where they can focus on aspects such as tissue handling.

ASSESSMENT BENEFITS OF AUGMENTED REALITY SIMULATION

As residency programs transition to competency-based curricula, more standardized forms of assessment are needed.2 The milestones of such a curriculum reflect knowledge and skill competencies that each trainee must achieve before advancing to the next stage of training and, ultimately, to autonomous practice.38 However, although in-service training examinations exist to assess clinical knowledge, fewer objective tools exist to measure technical proficiency. The variable correlation between in-service examination results and clinical performance also underscores the need for more objective assessment.39

Incorporating AR-based simulators for core plastic surgery procedures in conjunction with validated assessment tools would allow residency programs to track trainee competence on predetermined tasks more objectively.2 As a first step toward uniform implementation, such core procedures would need to be determined at a national level by consensus among program directors and administrators. Simulators and assessment tools tailored to each core procedure could then be developed with both resident and staff input.19,40 Scores from an objective, validated system, benchmarked to postgraduate year, would help to illustrate progressive trainee proficiency and determine readiness to operate on patients.19,40 For example, time to task completion, tool path length, distance from target, tool redirections, and focus shifts are objective measures that can be tracked by an AR simulator and aggregated into a composite score.18,30,33 Systems capable of recording operative performance statistics already exist for general surgery training, such as Fundamentals of Laparoscopic Surgery.41 With real-time tool and instrument tracking,3 these simulators help trainees identify areas of deficiency and allow for more constructive feedback from supervising surgeons.2,30,33 However, no equivalent assessment tool exists for plastic surgery trainees. Complementary measures of the cognitive load on trainees could include the Surgery Task Load Index and eye tracking to record changes in pupil size, blinking, and gaze deviation.41,42 Incorporating measures of cognitive load into simulation would allow for better understanding of procedure-specific task demands and enable personalized learning.20,42
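As an illustration of how such tracked variables might be aggregated, the sketch below derives time to completion, tool path length, final distance from target, and a count of redirections from a stream of timestamped tool-tip positions, then scores each against an expert benchmark. Everything here is a hypothetical assumption: the tracker output format (millimetres and seconds), the 45° redirection threshold, the benchmark values, and the equal weights. Focus shifts are omitted because they would come from eye tracking rather than tool tracking.

```python
import math
from dataclasses import dataclass

Point = tuple[float, float, float]


@dataclass
class Sample:
    t: float    # seconds since task start
    tip: Point  # tracked tool-tip position in mm (hypothetical tracker output)


def path_length(samples: list[Sample]) -> float:
    """Total distance travelled by the tool tip, in mm."""
    return sum(math.dist(a.tip, b.tip) for a, b in zip(samples, samples[1:]))


def redirections(samples: list[Sample], min_step: float = 1.0, min_angle_deg: float = 45.0) -> int:
    """Count direction changes sharper than min_angle_deg, ignoring steps shorter than min_step (jitter)."""
    steps = []
    for a, b in zip(samples, samples[1:]):
        d = tuple(q - p for p, q in zip(a.tip, b.tip))
        if math.hypot(*d) >= min_step:
            steps.append(d)
    count = 0
    for u, v in zip(steps, steps[1:]):
        cos = sum(p * q for p, q in zip(u, v)) / (math.hypot(*u) * math.hypot(*v))
        if math.degrees(math.acos(max(-1.0, min(1.0, cos)))) > min_angle_deg:
            count += 1
    return count


def composite_score(samples: list[Sample], target: Point,
                    benchmarks: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted 0-100 score; each metric earns full credit at or below its expert benchmark."""
    observed = {
        "time_s": samples[-1].t - samples[0].t,
        "path_mm": path_length(samples),
        "error_mm": math.dist(samples[-1].tip, target),
        "redirections": float(redirections(samples)),
    }
    total = 0.0
    for name, weight in weights.items():
        obs, ref = observed[name], benchmarks[name]
        # Lower is better for every metric; credit decays as benchmark / observed.
        total += weight * (1.0 if obs <= ref else ref / obs)
    return 100.0 * total / sum(weights.values())


# Hypothetical expert benchmarks and equal weights.
benchmarks = {"time_s": 5.0, "path_mm": 30.0, "error_mm": 1.0, "redirections": 1.0}
weights = {name: 1.0 for name in benchmarks}
target = (0.0, 0.0, 30.0)

straight = [Sample(float(i), (0.0, 0.0, 10.0 * i)) for i in range(4)]
wandering = [Sample(0.0, (0.0, 0.0, 0.0)), Sample(1.0, (0.0, 0.0, 10.0)),
             Sample(2.0, (10.0, 0.0, 10.0)), Sample(3.0, (10.0, 0.0, 20.0))]
print(round(composite_score(straight, target, benchmarks, weights), 1))   # 100.0
print(round(composite_score(wandering, target, benchmarks, weights), 1))  # 64.3
```

Capping credit at the benchmark means that beating the expert on one metric cannot compensate for a large targeting error on another. A validated tool would instead derive its weights and benchmarks from expert and trainee data.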

LIMITATIONS OF CURRENT TECHNOLOGY

Several important limitations must be addressed before these modalities become routine components of training programs. AR simulators lack the degree of realism necessary to completely replicate human tissue and may lack components of haptic feedback.1,5 Most significantly, these simulators may not reproduce the deleterious sequelae of intraoperative mistakes, such as transection of critical structures, and may therefore give the user a false sense of security.1 However, as technology improves, these simulators should better simulate operative environments by incorporating haptic feedback or by combining physical models with a virtual overlay of information.

Multiple studies have also reported user issues with hardware frequently used in AR, such as the Microsoft HoloLens, including device weight (590 g)5 and nausea and vertigo with prolonged use.1,3 However, these adverse effects are more likely to be experienced with VR applications.21 Issues with network connectivity and security will also need to be addressed,3,24 both to protect sensitive patient information and to facilitate seamless interactivity in remote applications. Finally, the cost of AR devices such as the Microsoft HoloLens, which retails for US $3500 (Microsoft.com), may prevent rapid integration, although the prices of these devices are gradually decreasing.5 The ability to run some simulations on tablets and phones, without complex devices such as the HoloLens, may mitigate this problem and allow wider adoption.

Issues with line of sight and inattentional blindness, the failure to notice objects within one’s operative field, have also been reported in the setting of AR.3,43,44 In two separate neurosurgical studies, participants using AR image guidance were significantly less likely to notice foreign bodies during simulated surgical tasks than those using traditional image guidance.18,43 Such inattentional blindness may pose safety concerns in the clinical setting if critical intraoperative events go unnoticed. Further study is therefore warranted to optimize surgeon awareness during AR-assisted procedures, possibly by incorporating awareness measures into assessments, and to maximize patient safety.

CURRENT APPLICATIONS AND NEXT STEPS

Simulation/Education

Numerous studies describe benefits to trainees using AR technology during learning and skill acquisition, although few data exist regarding the translation of these skills to the clinical setting and their effect on patient outcomes.3,4 Further studies should focus on clinically important outcomes, such as complication rates and cost-effectiveness,12 to establish whether patients derive tangible benefit from the incorporation of AR.4 In addition, the heterogeneity of studies on AR simulation has prevented the establishment of validated outcome measures.4 Such measures would lend further credibility to AR/MR as an important adjunct in the training curriculum. Instrument-tracking software would facilitate measurement of objective variables such as “distance-to-target” and, subsequently, of procedure-specific composite scores,4 in keeping with the objectives of competency-based education. Additionally, AR simulators can tailor a module to a learner’s level of experience. Novice learners could be presented with a clinical scenario containing multiple cues and guidance to proceed through the task, whereas these cues can be omitted for more senior learners. For example, targets and trajectories could guide the learner in tasks such as K-wire insertion during the skill acquisition phase and then be removed during the competency testing phase of the module.
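The staged K-wire module described above might be configured along the following lines. This sketch is hypothetical throughout: the phase names, overlay flags, and metric names are assumptions rather than features of any existing simulator. The acquisition phase shows the target and planned trajectory, the testing phase hides them, and both phases record the same entry-point offset and angular deviation so that performance can be compared across phases.

```python
import math
from enum import Enum

Vector = tuple[float, float, float]


class Phase(Enum):
    ACQUISITION = "acquisition"  # novice practice: guidance cues shown
    TESTING = "testing"          # competency testing: guidance cues hidden


# Hypothetical overlay toggles for an AR K-wire insertion module.
OVERLAYS = {
    Phase.ACQUISITION: {"target": True, "planned_trajectory": True, "step_prompts": True},
    Phase.TESTING: {"target": False, "planned_trajectory": False, "step_prompts": False},
}


def angular_deviation_deg(planned_dir: Vector, actual_dir: Vector) -> float:
    """Angle in degrees between the planned and actual wire directions."""
    dot = sum(p * a for p, a in zip(planned_dir, actual_dir))
    cos = dot / (math.hypot(*planned_dir) * math.hypot(*actual_dir))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def record_attempt(phase: Phase, planned_entry: Vector, actual_entry: Vector,
                   planned_dir: Vector, actual_dir: Vector) -> dict:
    """Return the overlays shown in this phase plus metrics recorded identically in every phase."""
    return {
        "phase": phase.value,
        "overlays": OVERLAYS[phase],
        "entry_offset_mm": math.dist(planned_entry, actual_entry),
        "angle_deg": angular_deviation_deg(planned_dir, actual_dir),
    }


attempt = record_attempt(Phase.TESTING, (0.0, 0.0, 0.0), (3.0, 4.0, 0.0),
                         (0.0, 0.0, 1.0), (0.0, 1.0, 1.0))
print(attempt["entry_offset_mm"], round(attempt["angle_deg"], 1))  # 5.0 45.0
```

Keeping the measured variables identical across phases, and changing only what the learner sees, is what would allow a drop in performance after the cues are removed to be attributed to reliance on guidance.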

Preoperative Planning

AR also has potential for patient-specific preoperative planning and for improving the consultation process. For example, Crisalix (Crisalix SA, Lausanne, Switzerland) and the VR-Assisted Surgery Program are two applications that allow photographs of patients to be rendered and altered digitally, helping patients visualize postoperative results. These programs also allow surgeon and trainee to develop a patient-specific surgical plan based on preoperative imaging.1,5,10 This may prove particularly useful for technically demanding procedures, such as rhytidectomy, perforator flap dissection, and osteotomies.5,37,45 AR has already demonstrated proof of concept in the planning of Le Fort I osteotomies and fibula free flap harvest.22,46

Intraoperative Navigation

AR has been used for intraoperative navigation,1,3–5 for example, to assist with perforator flap dissection and craniofacial osteotomies. However, if AR is to be incorporated further into the clinical environment, improvements are required in image registration and in the integration of preoperative imaging with AR platforms. Because tissues deform and patient positioning changes during a procedure, the originally registered images may become misaligned, which has presented a challenge to seamless implementation in the operative setting.1,47 Moving forward, enhancements in spatial tracking and AR registration systems will be required so that anatomical changes are accounted for without disrupting the surgeon’s line of sight or the virtual operative plan.11
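To illustrate why tissue deformation complicates registration, the sketch below performs point-based rigid registration (a least-squares rotation plus translation) in two dimensions and reports the root-mean-square residual between the registered preoperative landmarks and their intraoperative positions. This is a deliberate simplification chosen for brevity: clinical navigation systems register in three dimensions and may use surface-based or deformable methods, and the landmark coordinates below are hypothetical.

```python
import math

Point2 = tuple[float, float]


def _centroid(points: list[Point2]) -> Point2:
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


def rigid_register_2d(source: list[Point2], target: list[Point2]) -> tuple[float, Point2]:
    """Least-squares rotation angle (radians) and translation mapping source onto target."""
    (sx, sy), (tx, ty) = _centroid(source), _centroid(target)
    a = b = 0.0
    for (x, y), (u, v) in zip(source, target):
        x, y, u, v = x - sx, y - sy, u - tx, v - ty  # work with centred coordinates
        a += x * u + y * v
        b += x * v - y * u
    theta = math.atan2(b, a)
    c, s = math.cos(theta), math.sin(theta)
    # Translation carries the rotated source centroid onto the target centroid.
    return theta, (tx - (c * sx - s * sy), ty - (s * sx + c * sy))


def transform(theta: float, shift: Point2, p: Point2) -> Point2:
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + shift[0], s * p[0] + c * p[1] + shift[1])


def rms_residual(source: list[Point2], target: list[Point2]) -> float:
    """Register source to target, then return the RMS landmark mismatch in mm."""
    theta, shift = rigid_register_2d(source, target)
    sq = [math.dist(transform(theta, shift, p), q) ** 2 for p, q in zip(source, target)]
    return math.sqrt(sum(sq) / len(sq))


# Hypothetical landmarks (mm) from preoperative imaging.
preop = [(0.0, 0.0), (40.0, 0.0), (40.0, 30.0), (0.0, 30.0)]
# Same anatomy after a 90 degree rotation and a 5 mm shift: a rigid model fits exactly.
moved = [(5.0, 5.0), (5.0, 45.0), (-25.0, 45.0), (-25.0, 5.0)]
# One landmark displaced a further 5 mm by soft-tissue deformation: no rigid fit is exact.
deformed = [(5.0, 5.0), (5.0, 45.0), (-25.0, 50.0), (-25.0, 5.0)]
print(f"{rms_residual(preop, moved):.3f}")     # 0.000
print(f"{rms_residual(preop, deformed):.3f}")  # roughly 2 mm
```

The first residual is zero because repositioning alone is fully described by a rotation and translation; the second cannot be removed by any rigid transform, which is why accounting for intraoperative deformation calls for improved tracking or nonrigid approaches rather than repeating the same rigid fit.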

CONCLUSIONS

AR and MR are rapidly advancing technologies that have tremendous untapped potential in plastic surgery, with demonstrated benefits in skill acquisition, task performance, and remote learning. As these modalities are refined to better simulate the surgical environment, their adoption is likely to increase. Together with the creation of standardized assessment tools, AR/MR is uniquely positioned to complement the next phase of surgical education, ultimately improving skill acquisition and patient outcomes through more efficient operative procedures and fewer complications.

Footnotes

Published online 3 November 2022.

Disclosure: The authors have no financial interest to declare in relation to the content of this article.

Related Digital Media are available in the full-text version of the article on www.PRSGlobalOpen.com.

REFERENCES

- 1.Lee GK, Moshrefi S, Fuertes V, et al. What is your reality? Virtual, augmented, and mixed reality in plastic surgery training, education, and practice. Plast Reconstr Surg. 2021;147:505–511. [DOI] [PubMed] [Google Scholar]

- 2.Khelemsky R, Hill B, Buchbinder D. Validation of a novel cognitive simulator for orbital floor reconstruction. J Oral Maxillofac Surg. 2017;75:775–785. [DOI] [PubMed] [Google Scholar]

- 3.McKnight RR, Pean CA, Buck JS, et al. Virtual reality and augmented reality-translating surgical training into surgical technique. Curr Rev Musculoskelet Med. 2020;13:663–674. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Williams MA, McVeigh J, Handa AI, et al. Augmented reality in surgical training: a systematic review. Postgrad Med J. 2020;96:537–542. [DOI] [PubMed] [Google Scholar]

- 5.Sayadi LR, Naides A, Eng M, et al. The new frontier: a review of augmented reality and virtual reality in plastic surgery. Aesthet Surg J. 2019;39:1007–1016. [DOI] [PubMed] [Google Scholar]

- 6.Agrawal N, Turner A, Grome L, et al. Use of simulation in plastic surgery training. Plast Reconstr Surg Glob Open. 2020:8;1–7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Barsom EZ, Graafland M, Schijven MP. Systematic review on the effectiveness of augmented reality applications in medical training. Surg Endosc. 2016;30:4174–4183. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Thomson JE, Poudrier G, Stranix JT, et al. Current status of simulation training in plastic surgery residency programs: a review. Arch Plast Surg. 2018;45:395–402. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Kim Y, Kim H, Kim YO. Virtual reality and augmented reality in plastic surgery: a review. Arch Plast Surg. 2017;44:179–187. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Kanevsky J, Safran T, Zammit D, et al. Making augmented and virtual reality work for the plastic surgeon. Ann Plast Surg. 2019;82:363–368. [DOI] [PubMed] [Google Scholar]

- 11.Casari FA, Navab N, Hruby LA, et al. Augmented reality in orthopedic surgery is emerging from proof of concept towards clinical studies: a literature review explaining the technology and current state of the art. Curr Rev Musculoskelet Med. 2021;14:192–203. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Vles MD, Terng NCO, Zijlstra K, et al. Virtual and augmented reality for preoperative planning in plastic surgical procedures: a systematic review. J Plast Reconstr Aesthet Surg. 2020;73:1951–1959. [DOI] [PubMed] [Google Scholar]

- 13.Ruikar DD, Hegadi RS, Santosh KC. A systematic review on orthopedic simulators for psycho-motor skill and surgical procedure training. J Med Syst. 2018;42:168. [DOI] [PubMed] [Google Scholar]

- 14.Vera AM, Russo M, Mohsin A, et al. Augmented reality telementoring (ART) platform: a randomized controlled trial to assess the efficacy of a new surgical education technology. Surg Endosc. 2014;28:3467–3472. [DOI] [PubMed] [Google Scholar]

- 15.Botden SM, Buzink SN, Schijven MP, et al. Augmented versus virtual reality laparoscopic simulation: what is the difference? A comparison of the ProMIS augmented reality laparoscopic simulator versus LapSim virtual reality laparoscopic simulator. World J Surg. 2007;31:764–772. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Bartlett JD, Lawrence JE, Stewart ME, et al. Does virtual reality simulation have a role in training trauma and orthopaedic surgeons? Bone Joint J. 2018;100-B:559–565. [DOI] [PubMed] [Google Scholar]

- 17.Chimenti PC, Mitten DJ. Google glass as an alternative to standard fluoroscopic visualization for percutaneous fixation of hand fractures: a pilot study. Plast Reconstr Surg. 2015;136:328–330. [DOI] [PubMed] [Google Scholar]

- 18.Marcus HJ, Pratt P, Hughes-Hallett A, et al. Comparative effectiveness and safety of image guidance systems in neurosurgery: a preclinical randomized study. J Neurosurg. 2015;123:307–313. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Gosman A, Mann K, Reid CM, et al. Implementing assessment methods in plastic surgery. Plast Reconstr Surg. 2016;137:617e–623e. [DOI] [PubMed] [Google Scholar]

- 20.Rikers RMJP, Van Gerven PWM, Schmidt HG. Cognitive load theory as a tool for expertise development. Instr Sci. 2004;32:173–182. [Google Scholar]

- 21.Moro C, Štromberga Z, Raikos A, et al. The effectiveness of virtual and augmented reality in health sciences and medical anatomy. Anat Sci Educ. 2017;10:549–559. [DOI] [PubMed] [Google Scholar]

- 22.Tepper OM, Rudy HL, Lefkowitz A, et al. Mixed reality with HoloLens: where virtual reality meets augmented reality in the operating room. Plast Reconstr Surg. 2017;140:1066–1070. [DOI] [PubMed] [Google Scholar]

- 23.Maass H, Chantier BB, Cakmak HK, et al. Fundamentals of force feedback and application to a surgery simulator. Comput Aided Surg. 2003;8:283–291. [DOI] [PubMed] [Google Scholar]

- 24. Vyas RM, Sayadi LR, Bendit D, et al. Using virtual augmented reality to remotely proctor overseas surgical outreach: building long-term international capacity and sustainability. Plast Reconstr Surg. 2020;146:622e–629e.

- 25. Vartanian AJ, Holcomb J, Ai Z, et al. The virtual nose: a 3-dimensional virtual reality model of the human nose. Arch Facial Plast Surg. 2004;6:328–333.

- 26. Kumar N, Pandey S, Rahman E. A novel three-dimensional interactive virtual face to facilitate facial anatomy teaching using Microsoft HoloLens. Aesthetic Plast Surg. 2021.

- 27. Flores RL, Deluccia N, Grayson BH, et al. Creating a virtual surgical atlas of craniofacial procedures: Part I. Three-dimensional digital models of craniofacial deformities. Plast Reconstr Surg. 2010;126:2084–2092.

- 28. Flores RL, Deluccia N, Oliker A, et al. Creating a virtual surgical atlas of craniofacial procedures: Part II. Surgical animations. Plast Reconstr Surg. 2010;126:2093–2101.

- 29. Logishetty K, Western L, Morgan R, et al. Can an augmented reality headset improve accuracy of acetabular cup orientation in simulated THA? A randomized trial. Clin Orthop Relat Res. 2019;477:1190–1199.

- 30. Gibby JT, Swenson SA, Cvetko S, et al. Head-mounted display augmented reality to guide pedicle screw placement utilizing computed tomography. Int J Comput Assist Radiol Surg. 2019;14:525–535.

- 31. Merrell G, Slade J. Technique for percutaneous fixation of displaced and nondisplaced acute scaphoid fractures and select nonunions. J Hand Surg Am. 2008;33:966–973.

- 32. LeBlanc F, Champagne BJ, Augestad KM, et al; Colorectal Surgery Training Group. A comparison of human cadaver and augmented reality simulator models for straight laparoscopic colorectal skills acquisition training. J Am Coll Surg. 2010;211:250–255.

- 33. Bourdel N, Collins T, Pizarro D, et al. Augmented reality in gynecologic surgery: evaluation of potential benefits for myomectomy in an experimental uterine model. Surg Endosc. 2017;31:456–461.

- 34. Siff LN, Mehta N. An interactive holographic curriculum for urogynecologic surgery. Obstet Gynecol. 2018;132(suppl 1):27S–32S.

- 35. Shenai MB, Dillavou M, Shum C, et al. Virtual interactive presence and augmented reality (VIPAR) for remote surgical assistance. Neurosurgery. 2011;68:200–207.

- 36. Zhu M, Liu F, Zhou C, et al. Does intraoperative navigation improve the accuracy of mandibular angle osteotomy: comparison between augmented reality navigation, individualised templates and free-hand techniques. J Plast Reconstr Aesthet Surg. 2018;71:1188–1195.

- 37. Pietruski P, Majak M, Świątek-Najwer E, et al. Supporting fibula free flap harvest with augmented reality: a proof-of-concept study. Laryngoscope. 2020;130:1173–1179.

- 38. ACGME. Clinically-Driven Standards: ACGME Common Program Requirements. Available at https://www.acgme.org/globalassets/pfassets/programrequirements/cprresidency_2022v3.pdf. Published online 2017:1–27.

- 39. Ganesh Kumar N, Benvenuti MA, Drolet BC. Aligning in-service training examinations in plastic surgery and orthopaedic surgery with competency-based education. J Grad Med Educ. 2017;9:650–653.

- 40. Janis JE, Vedder NB, Reid CM, et al. Validated assessment tools and maintenance of certification in plastic surgery: current status, challenges, and future possibilities. Plast Reconstr Surg. 2016;137:1327–1333.

- 41. Gao J, Liu S, Feng Q, et al. Subjective and objective quantification of the effect of distraction on physician’s workload and performance during simulated laparoscopic surgery. Med Sci Monit. 2019;25:3127–3132.

- 42. Kapp CM, Akulian JA, Yu DH, et al. Cognitive load in electromagnetic navigational and robotic bronchoscopy for pulmonary nodules. ATS Sch. 2020;2:97–107.

- 43. Dixon BJ, Daly MJ, Chan HH, et al. Inattentional blindness increased with augmented reality surgical navigation. Am J Rhinol Allergy. 2014;28:433–437.

- 44. Hughes-Hallett A, Mayer EK, Marcus HJ, et al. Inattention blindness in surgery. Surg Endosc. 2015;29:3184–3189.

- 45. Olsson P, Nysjö F, Rodríguez-Lorenzo A, et al. Haptics-assisted virtual planning of bone, soft tissue, and vessels in fibula osteocutaneous free flaps. Plast Reconstr Surg Glob Open. 2015;3:e479.

- 46. Badiali G, Ferrari V, Cutolo F, et al. Augmented reality as an aid in maxillofacial surgery: validation of a wearable system allowing maxillary repositioning. J Craniomaxillofac Surg. 2014;42:1970–1976.

- 47. Liebmann F, Roner S, von Atzigen M, et al. Pedicle screw navigation using surface digitization on the Microsoft HoloLens. Int J Comput Assist Radiol Surg. 2019;14:1157–1165.