Abstract

Voluntary movement requires communication from cortex to the spinal cord, where a dedicated pool of motor units (MUs) activates each muscle. The canonical description of MU function rests upon two foundational tenets. First, cortex cannot control MUs independently but supplies each pool with a common drive. Second, MUs are recruited in a rigid fashion that largely accords with Henneman’s size principle. While this paradigm has considerable empirical support, a direct test requires simultaneous observations of many MUs across diverse force profiles. We developed an isometric task that allowed stable MU recordings, in a rhesus macaque, even during rapidly changing forces. Patterns of MU activity were surprisingly behavior-dependent and could be accurately described only by assuming multiple drives. Consistent with flexible descending control, microstimulation of neighboring cortical sites recruited different MUs. Furthermore, the cortical population response displayed sufficient degrees of freedom to potentially exert fine-grained control. Thus, MU activity is flexibly controlled to meet task demands and cortex may contribute to this ability.

Introduction

Primates produce myriad behaviors, from acrobatic maneuvers to object manipulation, all requiring precise neural control of muscles. A muscle is controlled by a motor neuron pool (MNP) containing hundreds of α-motoneurons, each innervating a unique subset of muscle fibers. A motoneuron and the fibers it innervates constitute a “motor unit” (MU)1. MUs are heterogeneous, differing in size (large MUs innervate more fibers)2, in the duration of the force they generate3, and in the muscle length at which their force is maximal4.

Optimality suggests flexibly using MUs suited to each situation5–7. Yet nearly a century of research supports an alternative rigid strategy that approximates optimality1,8,9. With limited exceptions, MUs within a pool are believed to be recruited in a consistent order10,11 from smallest to largest, obeying Henneman’s size principle12–17. Small-to-large recruitment minimizes force fluctuations18 and energy expenditure19, and is attributed to intrinsic motoneuron properties1,9. Rigid recruitment is computationally efficient20: one ‘common drive’ determines every MU’s activity21. Henneman found small-to-large recruitment regardless of whether input was delivered via electrical stimulation of dorsal roots12; electrical stimulation of the motor cortex, basal ganglia, cerebellum, or brain stem22; or mechanical activation of reflex circuits13.

Rigid recruitment is believed to simplify control (Fig. 1a). If “the brain cannot selectively activate specific motor units1”, it is freed from the need to do so. Instead, each MU’s activity is a fixed function of a unified force command, yielding small-to-large recruitment with limited well-established exceptions. Prior work cautions that these exceptions should not be misinterpreted as flexibility23–26. First, conduction-velocity differences impact recruitment order during ballistic contractions8,23. Second, cell-intrinsic mechanisms cause some MUs to rotate on and off, over many seconds, to combat fatigue27. Finally, for ‘multifunctional’ muscles with multiple mechanical actions, the size principle holds only within each action6,28,29. Each mechanical action is hypothesized to have its own common drive (or ‘synergy’). These drives overlap imperfectly within the MNP30 (Fig. 1a, middle) and are preferentially reflected by different nerve branches31. Recruitment is still considered rigid25 because it is a fixed function of a small number of commands.

Figure 1 |. Rigid versus flexible motor unit control and experimental setup.

(a) Rigid control schematic. The activity of many MUs is determined by a small number of force commands. Commands may be for individual-muscle force, or may be ‘synergies’ that control a mechanical action (e.g., an elbow flexion synergy and a forearm supination synergy). Thus, the number of controlled degrees of freedom is less than or equal to the number of motor neuron pools. Each MU’s activity is a ‘link function’ of one synergy (or for multifunctional muscles, 2–3 synergies). Link functions typically enforce size-based recruitment. (b) Flexible control schematic. Each motor neuron pool is controlled by multiple degrees of freedom, such that recruitment can be size-based but can also be flexibly altered when other solutions are preferable. (c) Task schematic. A monkey modulated the force generated against a load cell to control Pac-Man’s vertical position and intercept a scrolling dot path. (d) Single-trial (gray), trial-averaged (black), and target (cyan) forces from one session. Vertical scale: 4 N. Horizontal scale: 500 ms.

The opposite of rigid control, highly flexible control that leverages many degrees of freedom (Fig. 1b), has rarely been considered viable. EMG frequency analysis suggests flexibility7 within the rat gait cycle32 and across cycling speeds in humans33. Yet most studies argue that speed-based recruitment is absent23,34 and/or of limited benefit35. Thus, the consensus has been that speed does not reveal flexibility1,25,36,37. A possible exception is fast lengthening contractions5, but studies disagree38 regarding whether this is a general property36. Biofeedback training suggests latent flexibility26,39,40, yet this flexibility may be limited41 and could involve known phenomena such as multifunctional muscles24. The prevailing view is thus that flexibility is likely scant36,37 or non-existent25. Yet many have stressed the need for further examination using improved recording techniques7 and a broader range of behaviors25.

Results

Pac-Man Task and EMG recordings

We trained a rhesus macaque to perform an isometric force-tracking task. Force controlled a ‘Pac-Man’ icon that intercepted scrolling dots (Fig. 1c). The dot path instructed force profiles (Fig. 1d, cyan). Actual forces (single trials in gray) closely matched target profiles. We performed three types of experiments across 38 sessions of ~1 hour each. Microstimulation experiments perturbed descending commands. Dynamic experiments employed diverse force profiles. Muscle-length experiments used multiple force profiles across two muscle lengths.

In each session, we recorded from multiple custom-modified percutaneous thin-wire electrodes closely clustered within one muscle head. EMG spike-sorting is notoriously difficult during dynamic tasks; movement threatens stability and vigorous activity produces superimposed waveforms. Three factors aided spike sorting: the isometric task facilitated stability, the number of active MUs could be titrated via task gain, and each MU produced a complex waveform spanning multiple channels (Extended Data Fig. 1). We adapted recent spike-sorting advances, including methods for resolving superimposed waveforms. We isolated 3–21 MUs per session (356 total). To eliminate the possibility that recruitment might mistakenly appear flexible due to properties of multifunctional muscles, forces were all generated with the same mechanical action. Furthermore, recordings included non-multifunctional muscles and analyses considered only simultaneously recorded neighboring MUs.
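The spike-sorting Methods are not reproduced in this section, but the general idea behind resolving superimposed waveforms with known multichannel templates can be sketched as greedy template subtraction (a matching-pursuit-style approach, not necessarily the pipeline used in this study). The sketch assumes templates have already been estimated, share one width, and that background noise is roughly white; the names `matched_filter` and `greedy_peel` and the `min_amp` stopping rule are hypothetical.

```python
import numpy as np


def matched_filter(data, template):
    """Channel-summed cross-correlation of data (n_ch, n_t) with template (n_ch, w).

    Entry k scores a spike whose template starts at sample k.
    """
    return sum(
        np.correlate(data[c], template[c], mode="valid") for c in range(data.shape[0])
    )


def greedy_peel(data, templates, min_amp=0.5, max_iter=100):
    """Greedy template matching that can separate overlapping (superimposed) spikes.

    Each iteration fits every template at every lag by least squares, subtracts the
    single best fit from the residual, and repeats on what is left.
    Returns a list of (unit, start_sample, fitted_amplitude) and the final residual.
    """
    residual = np.asarray(data, dtype=float).copy()
    width = templates[0].shape[1]  # assumption: all templates share one width
    energies = [float(np.sum(t ** 2)) for t in templates]
    spikes = []
    for _ in range(max_iter):
        best = None  # (gain, unit, lag, amplitude)
        for unit, (tmpl, energy) in enumerate(zip(templates, energies)):
            amp = matched_filter(residual, tmpl) / energy  # least-squares amplitude per lag
            gain = np.where(amp > 0, amp ** 2 * energy, 0.0)  # drop in squared residual
            lag = int(np.argmax(gain))
            if best is None or gain[lag] > best[0]:
                best = (gain[lag], unit, lag, amp[lag])
        _, unit, lag, amp = best
        if amp < min_amp:  # nothing left that resembles a (modestly scaled) template
            break
        residual[:, lag:lag + width] -= amp * templates[unit]
        spikes.append((unit, lag, float(amp)))
    return spikes, residual
```

Because each fit scales the template by a least-squares amplitude, a spike whose waveform is somewhat smaller than its template is still captured. A full sorter would also refit overlapping spikes jointly and cope with templates of different widths.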

Cortical perturbations

If rigid MU control is spinally enforced13, artificially perturbing cortex should modulate MU activity while preserving orderly recruitment22. In contrast, if control is flexible, cortex might participate and perturbations might drive diverse recruitment patterns. Prior work argues that this does not occur22, but the possibility is suggested by a mapping study in baboons42. To investigate, we delivered microstimulation in sulcal motor cortex (Fig. 2a), during static forces, while recording from the deltoid, triceps, and pectoralis (18 sessions with 6661 total successful trials; 183 total MUs, 3–21 per session). We compared stimulation-driven responses amongst neighboring cortical electrodes. We also recorded during a four-second force ramp without stimulation.

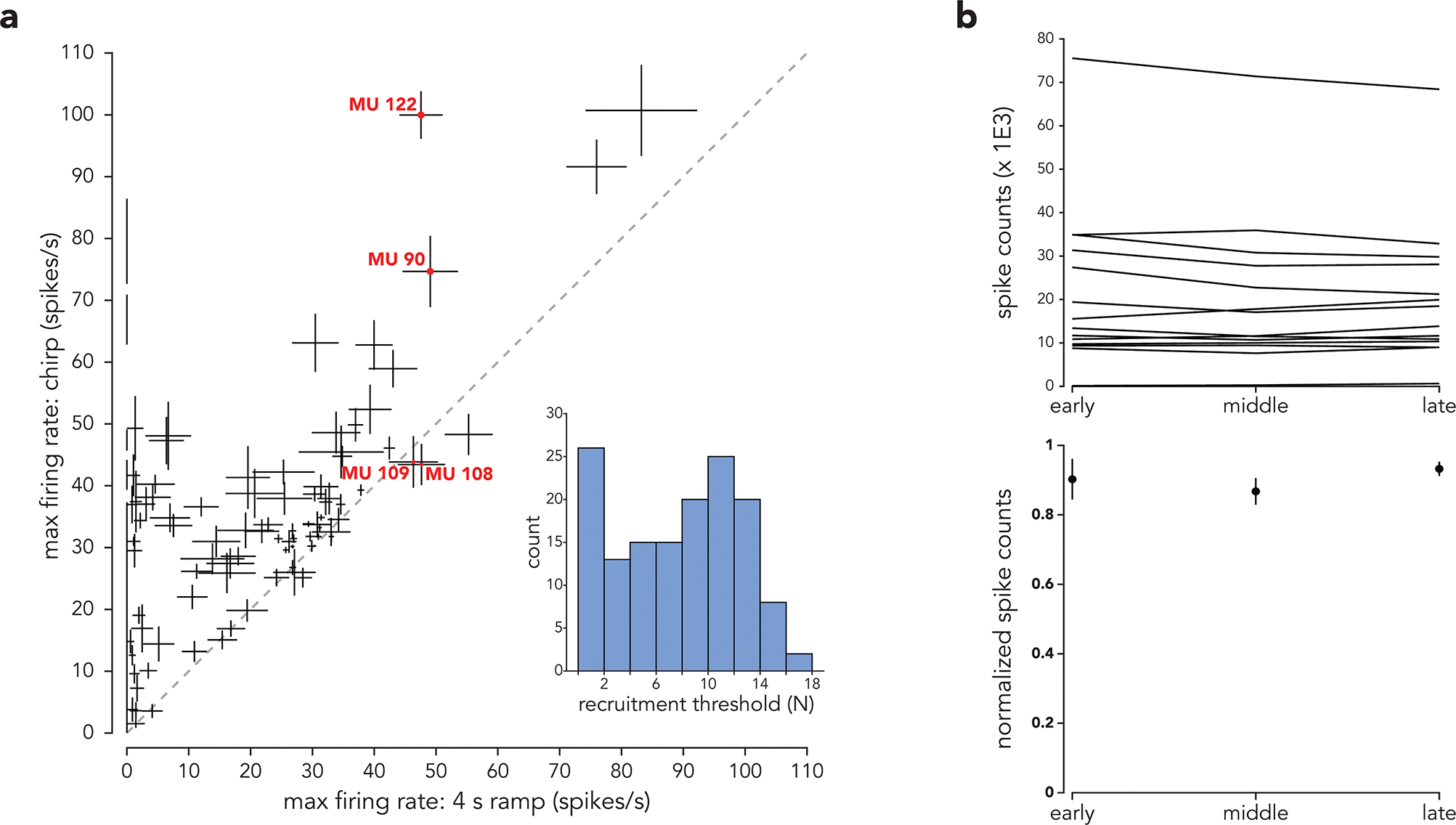

Figure 2 |. Stimulation experiments.

(a) Microstimulation was delivered, on interleaved trials, through a selection of electrodes on a linear array. (b) EMG voltages for two EMG recording channels (of eight total) and four example trials, stimulating on electrode 23 or 27. The two electrodes recruited MUs with different waveform magnitudes, and vertical scales are adjusted accordingly. Stimulation was delivered during each green-shaded region, during which a small stimulation artifact can be seen. Right: spike templates for these two MUs. Vertical scale: 50 standard deviations of background noise (ignoring stimulation artifact). (c) Responses of the same two MUs, for all trials during stimulation on three electrodes. Traces plot firing rates (mean and standard error). Rasters show spike times, separately for the two MUs. (d) As in c but for a different session. (e) Additional example from a different session, showing responses both during the four-second ramp and during stimulation as the monkey held two baseline force levels. Mean force plotted at top. (f) As in e, but for a different session. (g) State-space predictions for rigid control (left) and flexible control (right). (h) State-space plots for two sessions where MUs were recorded from the lateral deltoid. Each subpanel plots joint activity of two MUs during stimulation on each of three electrodes. (i) State-space plots for two sessions recording from the sternal pectoralis major. Left: activity during stimulation on three electrodes. Right: activity during stimulation on two electrodes and during the slow force ramp. (j) State-space plots for two sessions recording from the lateral triceps.

Rigid control is fundamentally a hypothesis regarding the population response. Yet if activity departs from rigid control, there should exist pairwise comparisons (amongst just two MUs) where flexibility becomes patent. Pairwise comparisons are helpful because inspection at the level of spikes and rates allows one to consider trivial explanations. In Fig. 2b, stimulation recruited two neighboring pectoralis MUs, visible on the same channels (MU206’s waveform is smaller and shown on an expanded scale). Cortical electrode 23 primarily recruited MU206. Electrode 27 (400 microns away) primarily recruited MU215. This effect was robust across trials (Fig. 2c). Electrode 24 (physically between 23 and 27) recruited both. These observations are incompatible with electrodes 23 and 27 recruiting the same common drive. Effects cannot be due to sampling error; standard errors of the mean are small. A similar effect is illustrated for two triceps MUs (Fig. 2d). Such effects were common: in 13/18 sessions, the relative activation of MUs depended on which electrode delivered stimulation (ANOVA, interaction between MU identity and electrode; p < 0.001 adjusted for multiple comparisons; N = 11–77 trials per condition; session average = 29).

Cortical stimulation also yielded departures from the natural recruitment observed during the force ramp. For example, based on responses during the ramp, MU295 has a higher recruitment threshold than MU289 (Fig. 2e, left). Yet electrode 24 activated only MU295 (Fig. 2e, middle). When MU289 was already active during static force production (Fig. 2e, right), stimulation had an effect consistent neither with common drive (it differed for the MUs) nor with recruitment during the ramp.

Departures from rigid control were not due to small latency differences amongst MUs23. For example, in Fig. 2c,d, all key departures relate to magnitude not latency. The same is true in Fig. 2e,f. Still, small latency differences exist: e.g., MU206 rises ~20 ms earlier than MU215 (Fig. 2c). Subsequent quantification thus takes latency into account. We saw little evidence for fatigue over a session, both in individual-example rasters and in summary analysis (Extended Data Fig. 2b). Occasionally, fatigue-combatting rotations27 produced ‘streaky’ spike rasters (Fig. 2f, red) but this did not create the departures from rigid control in trial-averaged rates. To minimize any impact of fatigue-related effects, trials for all conditions (including stimulation sites) were interleaved. Occasionally, cortical perturbations produced hysteresis (Fig. 2f, right), likely reflecting persistent inward currents43. Unlike the immediate effect of stimulation, hysteresis rarely altered recruitment relative to natural behavior, consistent with properties of persistent inward currents37.

State-space predictions of rigid control

The hypothesis of rigid control is naturally expressed in a state space where every MU contributes an axis. Each MU’s activity is a monotonic function (termed a ‘link function’) of common drive44,45. Thus, with increasing drive, population activity should move farther from the origin, tracing a monotonic one-dimensional (1D) manifold (Fig. 2g, left). The manifold may curve (due to nonlinear link functions) but greater drive should cause all rates to increase (or remain unchanged if maximal or not yet recruited). In contrast, flexible control predicts recruitment patterns unconfined to a monotonic 1D manifold (Fig. 2g, right). We use MU pairs to illustrate. Responses sometimes adhered to rigid-control predictions (Fig. 2h, left). Yet stimulation on different electrodes often yielded different trajectories (Fig. 2h, right; Fig. 2i,j), as did activity during the ramp. Brief trajectory ‘loops’ likely reflect small latency differences and are thus compatible with rigid control. In contrast, entirely different trajectory directions are not.
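For a finite set of sampled states, this rigid-control prediction can be checked directly: a single monotonic 1D manifold can pass through all states only if every pair of states is ordered the same way in every MU (no MU rises while another falls). A minimal sketch, assuming trial-averaged rates sampled at discrete times and ignoring latency effects; the function name and the `tol` parameter are hypothetical.

```python
import numpy as np


def on_monotonic_manifold(states, tol=0.0):
    """Can one monotonic 1D manifold pass through every population state?

    states: (n_states, n_mus) firing rates. This holds iff every pair of states
    is ordered in every coordinate (the states form a 'chain'). If so, sorting by
    summed rate recovers the chain, so checking consecutive states is enough.
    """
    states = np.asarray(states, dtype=float)
    ordered = states[np.argsort(states.sum(axis=1), kind="stable")]
    steps = np.diff(ordered, axis=0)  # change in each MU between consecutive states
    return bool(np.all(steps >= -tol))
```

Only the ordering of states matters, not their spacing, so a curved manifold produced by nonlinear link functions still passes, while a state in which one MU is higher and another lower than a neighboring state does not.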

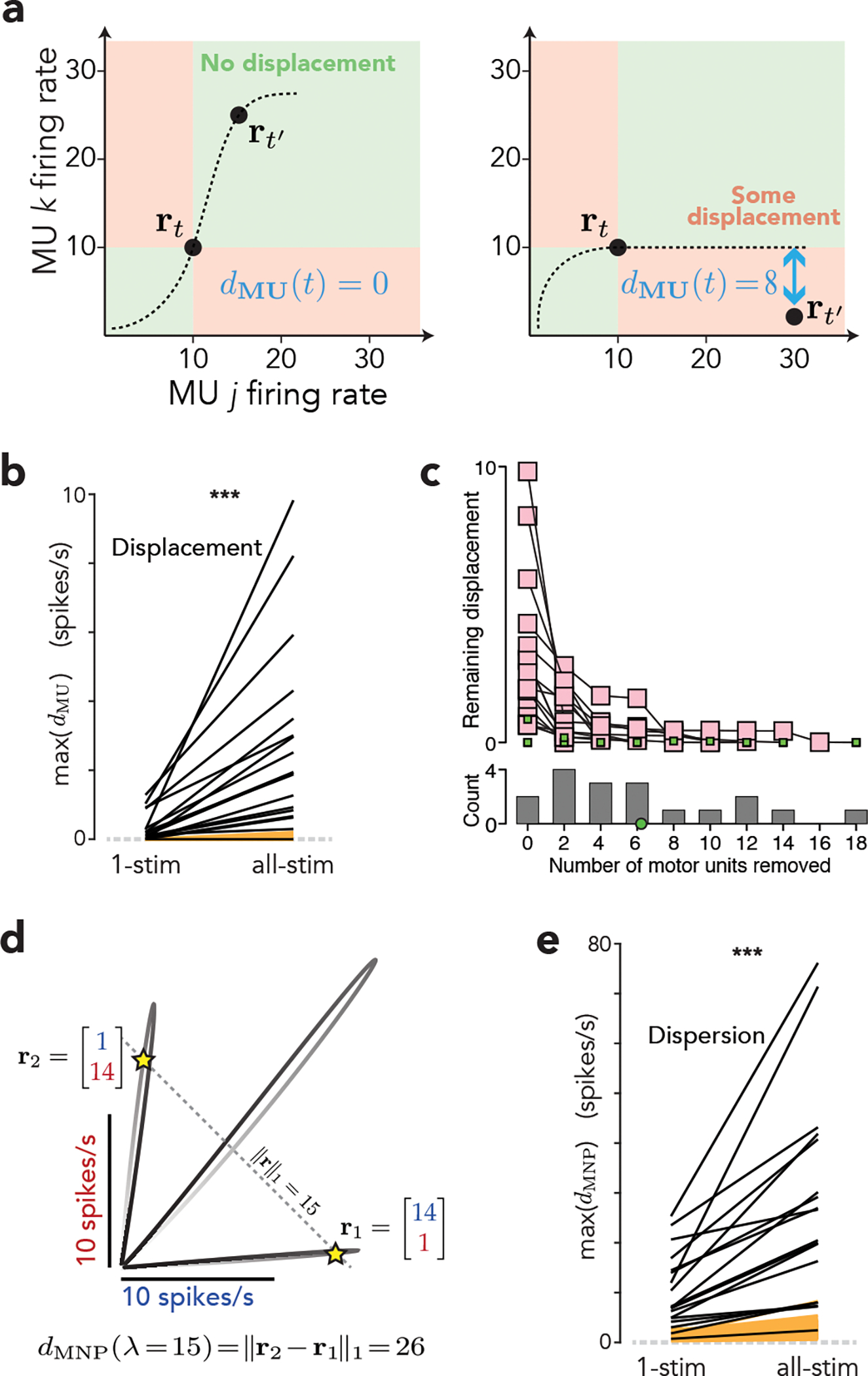

Standard statistical tests (e.g., the ANOVA above) ask whether MU responses are statistically different. However, the key population-level question is not whether responses differ, but whether differences can be explained by different link functions operating upon a common drive. Before engaging in model fitting, a simpler approach is to leverage the state-space perspective and ask whether population trajectories are logically consistent with rigid control. To do so, we applied a novel metric: ‘MU displacement’ (Fig. 3a). Suppose two MUs have rates of 10 spikes/s at time t. If both rates increase by time t’ (possibly within a different condition), one can connect them with a monotonic manifold (left, dashed line). This is not true if rates change in opposition (right). ‘Displacement’ (blue) is the smallest alteration, in either MU’s rate, that renders activity consistent with a monotonic manifold. For each population response (one per session), we identified the MU pair causing the largest displacement, then minimized this displacement by allowing temporal shifts to eliminate latency-based effects. Any remaining displacement was the maximal displacement for that session. For 16/18 sessions, maximum displacement was higher than expected from sampling error (Fig. 3b, p<0.001, two-sample t-test). Displacements were larger when considering population trajectories across all stimulation sites (“all-stim”) versus each site alone (“1-stim”). This agrees with the ANOVA above: different stimulation sites often drove different MU-activity patterns. Even within a site, displacement was occasionally non-negligible, indicating that some MUs displayed responses with opposing polarities or temporal patterns. We extended the “all-stim” analysis by removing, from the population, the MU pair responsible for the largest displacement. We recomputed displacement and iterated until remaining displacement was no larger than the largest expected from sampling error (Fig. 3c). On average it was necessary to remove 6.2 MUs, out of an average of 10.2 per session. Thus, a large fraction of the MU population showed responses inconsistent with rigid control.
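The displacement metric can be made concrete under a stated assumption: the ‘smallest alteration’ is measured as the largest single-MU rate change needed to move the state at t’ into the region lying entirely above or entirely below the state at t (an L∞ distance). For one MU pair whose rates change in opposition, this reduces to the smaller of the two rate changes, as in the schematic of Fig. 3a. The latency search and iterative removal below are simplified (the search is applied to every pair rather than only to the worst unshifted pair, and `criterion` stands in for the sampling-error bound); all function names are hypothetical.

```python
import numpy as np
from itertools import combinations


def pair_displacement(x, y):
    """Largest displacement for one MU pair, over all pairs of sampled times.

    x, y: (n_conditions, n_times) rates of two MUs; times from all conditions
    are pooled (as in the 'all-stim' analysis).
    """
    x, y = np.ravel(x), np.ravel(y)
    dx = x[None, :] - x[:, None]  # dx[t, t2] = change in MU x from time t to t2
    dy = y[None, :] - y[:, None]
    to_below = np.maximum(0.0, np.maximum(dx, dy))    # lowering so state t2 <= state t
    to_above = np.maximum(0.0, np.maximum(-dx, -dy))  # raising so state t2 >= state t
    return float(np.max(np.minimum(to_below, to_above)))


def shifted(x, y, k):
    """Pair x(t + k) with y(t) within each condition, trimming the edges."""
    n_t = x.shape[1]
    if k >= 0:
        return x[:, k:], y[:, : n_t - k]
    return x[:, : n_t + k], y[:, -k:]


def latency_min_displacement(x, y, max_shift):
    """Smallest pair displacement over relative shifts of -max_shift..max_shift bins."""
    return min(
        pair_displacement(*shifted(x, y, k)) for k in range(-max_shift, max_shift + 1)
    )


def iterative_removal(rates, criterion, max_shift=2):
    """rates: (n_conditions, n_times, n_mus). Repeatedly drop the worst MU pair until
    the largest latency-minimized displacement is <= criterion. Returns dropped MUs."""
    remaining = list(range(rates.shape[2]))
    removed = []
    while len(remaining) >= 2:
        scores = {
            (i, j): latency_min_displacement(rates[:, :, i], rates[:, :, j], max_shift)
            for i, j in combinations(remaining, 2)
        }
        (i, j), worst = max(scores.items(), key=lambda item: item[1])
        if worst <= criterion:
            break
        remaining = [m for m in remaining if m not in (i, j)]
        removed += [i, j]
    return removed
```

For a hypothetical pair in which both MUs start at 10 spikes/s and then move to 25 and 2 spikes/s, `pair_displacement(np.array([[10.0, 25.0]]), np.array([[10.0, 2.0]]))` returns 8.0: raising the second MU by 8 spikes/s is a smaller alteration than lowering the first by 15.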

Figure 3 |. Quantifications of flexible control.

(a) Schematic illustrating MU displacement (dMU) for a two-dimensional population state and two times. Left: a monotonic manifold can pass through rt and rt′. Thus, dMU(t) = 0. Right: any monotonic manifold passing through rt is restricted to the green zone, and thus cannot come closer than 8 spikes/s to rt′. Thus, dMU(t) = 8. (b) Maximum displacement (one black line per session). For ‘1-stim’, displacement was computed across times during the response for each stimulation site alone. The maximum was then taken across sites from that session. For ‘all-stim’, the maximum displacement was computed across all times and stimulation sites within a session. ‘All-stim’ displacement was significantly larger (two-sample two-tailed t-test, p = 0.00017, N=18 sessions). Both were significantly larger than the displacement expected due to sampling error alone (yellow, ten resampled artificial populations per empirical dataset). (c) Maximum displacement (for ‘all-stim’) after repeatedly removing the MU pair causing the largest displacement. Each trace corresponds to one session, and ends when remaining displacement is no larger than the largest across the ten resampled populations for that session. Gray histogram plots the distribution, across sessions, of the number of MUs that had to be removed to reach that point (mean in green). (d) Illustration of MNP dispersion metric for two MUs. The three trajectories were driven by stimulation at three cortical sites. The line defined by ∥r∥1 = 15 intercepts these trajectories at six points, with r1 and r2 being most distant. The MNP dispersion for λ = 15 is the L1 distance between r1 and r2. (e) Maximum (across λ) dispersion (one line per session). ‘All-stim’ dispersion was significantly larger (two-sample two-tailed t-test, p = 0.00011, N=18 sessions). Both were significantly larger than the dispersion expected due to sampling error alone (yellow, ten resampled artificial populations per empirical dataset). Results are shown for conditions where stimulation was delivered as the monkey held a low static force, and were nearly identical for a higher static force.

We also applied a related metric, ‘MNP dispersion’. As common drive increases, so should the summed activity of all MUs: the L1-norm, ∥r∥1. Thus, there should not be two different ‘ways’ of achieving a given ∥r∥1. Geometrically, trajectories that cross a given diagonal (∥r∥1 = λ) at distinct points (Fig. 3d) are incompatible with a monotonic manifold. We defined MNP dispersion, dMNP(λ), as the maximum L1 distance between any two population states with norm λ. For each population we computed the maximum dispersion (across λ), then minimized that value by allowing latency shifts. In agreement with analyses above, dispersion was largest when considering all stimulation sites (Fig. 3e). Dispersion exceeded that expected given sampling error for 17/18 sessions (Fig. 3e).
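MNP dispersion can be sketched in the same spirit. Assuming trajectories are sampled at discrete times and rates are non-negative (so ∥r∥1 is simply the summed rate), crossings of ∥r∥1 = λ can be located by linear interpolation between consecutive samples, and dMNP(λ) taken as the largest L1 distance between any two crossing points pooled across trajectories. This is an illustrative sketch rather than the authors' code: the latency-shift minimization is omitted and the function names are hypothetical.

```python
import numpy as np


def crossings(traj, lam):
    """Points where a trajectory (n_times, n_mus) crosses ||r||_1 = lam."""
    norms = traj.sum(axis=1)  # L1 norm, assuming non-negative rates
    pts = []
    for t in range(len(norms) - 1):
        a, b = norms[t] - lam, norms[t + 1] - lam
        if a == 0:
            pts.append(traj[t])
        elif a * b < 0:
            w = a / (a - b)  # fraction of the way from sample t to sample t + 1
            pts.append(traj[t] + w * (traj[t + 1] - traj[t]))
    if norms[-1] == lam:
        pts.append(traj[-1])
    return pts


def dispersion(trajectories, lam):
    """Largest L1 distance between any two states with norm lam, across trajectories."""
    pts = [p for traj in trajectories for p in crossings(np.asarray(traj, float), lam)]
    if len(pts) < 2:
        return 0.0
    P = np.array(pts)
    return float(np.abs(P[:, None, :] - P[None, :, :]).sum(axis=2).max())


def max_dispersion(trajectories, lams):
    """Maximum of d_MNP(lambda) over a grid of norms."""
    return max(dispersion(trajectories, lam) for lam in lams)
```

Under rigid control, a trajectory that rises and then falls crosses each diagonal twice at the same point, so its dispersion stays at zero; dispersion grows only when different trajectories (or different parts of one trajectory) reach the same summed rate through different MU combinations.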

Optimal motor unit recruitment

Is the capacity for flexible MU recruitment used during behavior? Because larger MUs tend to generate shorter-lived forces44, a venerable suggestion is that flexibility might reveal itself when comparing slow versus fast movements5,7,32,33. The prevailing view is that this does not occur1. Recruitment is preserved during slowly and quickly increasing force ramps23, excepting trivial latency effects. Yet studies have found evidence for flexible recruitment5,33 using non-ramping force profiles. We reasoned that revisitation of this topic would benefit from clear expectations regarding which force profiles are most likely to evoke altered recruitment.

We developed a simplified computational model, inspired by prior work that established size-based recruitment as the optimal fixed strategy18–20. We inquired how recruitment would behave were it infinitely flexible and optimized for each force profile. We modeled the force produced by an idealized MNP with N MUs as

$$f_{\mathrm{MNP}}(t) = \sum_{i=1}^{N} \int_{0}^{\infty} h_i(\tau)\, r_i(t-\tau)\, d\tau \qquad (1)$$

where hi(t) is the twitch response (modeled as in44) and ri(t) is the idealized firing rate of the ith MU. Twitch responses were bigger and briefer for larger MUs. For each target-force profile, we optimized MU rates (Fig. 4a) to minimize error (both average error and trial-to-trial variability) between fMNP(t) and intended force (Fig. 4b). Optimization considered the entire force profile, and could thus take into account whether a force would be short-lived.
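To illustrate the kind of optimization described for Eq. (1), the sketch below discretizes time, assumes a twitch of the commonly used form h(t) = A·(t/T)·exp(1 − t/T), and assumes Poisson spiking, so that force variance adds a term linear in the rates. Rates are found by projected gradient descent on squared error plus variance, constrained to be non-negative, with no maximum firing rate imposed. Twitch parameters, the variance model, the solver, and the function names are our assumptions, not taken from the study.

```python
import numpy as np


def twitch_matrix(amp, t_contract, n_t, dt):
    """G[t, s]: twitch force at time t from a spike at time s (zero unless t > s).

    Assumed twitch shape: amp * (lag / t_contract) * exp(1 - lag / t_contract),
    which peaks at amp when lag == t_contract.
    """
    lag = np.subtract.outer(np.arange(n_t), np.arange(n_t)) * dt
    lag = np.clip(lag, 0.0, None)  # causal: spikes do not affect earlier times
    return amp * (lag / t_contract) * np.exp(1.0 - lag / t_contract)


def optimal_rates(target, amps, t_contracts, dt, n_iter=3000):
    """Non-negative MU rates minimizing squared force error plus force variance.

    Mean force: f = dt * sum_i G_i r_i. Variance (Poisson assumption):
    v = dt * sum_i (G_i ** 2) r_i. Cost: sum_t (f - target) ** 2 + v.
    """
    n_t = len(target)
    G = np.hstack([twitch_matrix(a, tc, n_t, dt) for a, tc in zip(amps, t_contracts)])
    var_grad = dt * (G ** 2).sum(axis=0)  # gradient of the (linear) variance term
    lipschitz = 2 * dt ** 2 * np.linalg.norm(G, 2) ** 2  # of the squared-error gradient
    r = np.zeros(G.shape[1])
    for _ in range(n_iter):
        err = dt * (G @ r) - target  # mean force minus target
        grad = 2 * dt * (G.T @ err) + var_grad
        r = np.maximum(0.0, r - grad / lipschitz)  # projected step keeps rates >= 0
    return r.reshape(len(amps), n_t)  # row i: rate of MU i over time


def cost(rates, target, amps, t_contracts, dt):
    """Squared error between mean force and target, plus summed force variance."""
    n_t = len(target)
    mats = [twitch_matrix(a, tc, n_t, dt) for a, tc in zip(amps, t_contracts)]
    mean = sum(dt * (G @ r) for G, r in zip(mats, rates))
    var = sum(dt * ((G ** 2) @ r) for G, r in zip(mats, rates))
    return float(np.sum((mean - target) ** 2) + np.sum(var))
```

Calling `optimal_rates` on a slow ramp and on a fast sinusoid, with hypothetical parameters such as `amps = np.linspace(1.0, 10.0, 5)` and `t_contracts = np.linspace(0.09, 0.03, 5)` (larger MUs twitch harder and faster), is one way to explore the size-versus-speed trade-off. Plain projected gradient is chosen for transparency; a dedicated quadratic-programming solver would converge faster.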

Figure 4 |. Optimal MU recruitment.

(a) Isometric force production was modeled using an idealized MNP containing 5 MUs. MU twitch amplitude varied inversely with contraction time such that small MUs were also slow. A given set of rates resulted in both a mean total force and inferred trial-to-trial variation around that force. The latter was smaller when using multiple small MUs than when using one large MU. Both contributed to mean-squared error between actual and target forces. The optimal set of MU firing rates was numerically derived as the solution minimizing that error. Optimization was independent for each force profile. (b) Example target (cyan) and mean MNP (black) forces (for simplicity no variability is shown). Mean force matched targets very well, with small but notable exceptions at the troughs during sinusoidal forces. (c) Optimal MU firing rates, used to generate the forces in b. Each color corresponds to a different MU, numbered by size (MU1 was the smallest and slowest). (d) State-space plot of MU3 versus MU2 for each condition in c.

Recruitment (Fig. 4c) was purely size-based for slowly ramping forces. During fast ramps, recruitment departed from purely size-based recruitment, but only modestly. Were such deviations observed empirically, most would be indistinguishable from latency differences. Recruitment was more profoundly altered during fast sinusoids. A similar effect was observed within chirps; recruitment changed as frequency increased. State-space plots confirm that, even under the assumption of complete flexibility, activity followed a similar manifold for slow and fast ramps (Fig. 4d). In contrast, flexibility revealed itself when comparing slow ramps with the high-frequency sinusoid, or within the chirp. Flexibility leverages the fact that preferentially using small (slow) MUs minimizes fluctuations18,20. However, for high-frequency sinusoids, it becomes optimal to rely more on large (fast) MUs to avoid excessive force during troughs.

Motor unit activity across force profiles

Using diverse force profiles that included sinusoids (Fig. 1d) we recorded MU populations from the deltoid and triceps (14 sessions with 4734 total successful trials; 134 total MUs, 3–20 MUs per session). MUs showed a range of recruitment thresholds and peak rates (Extended Data Fig. 2a). There was no indication of general fatigue across a session (Extended Data Fig. 2b). As predicted by the optimal-recruitment model, MU recruitment differed between slowly changing forces and high-frequency sinusoids. For example, in Fig. 5c, three lateral triceps MUs are active during the four-second ramp (left). During the 3 Hz sinusoid, these MUs are similarly (or slightly less) active, and are joined by MUs that are active primarily during the sinusoid (right). Extended Data Fig. 3 shows additional single-trial traces, and rasters for all trials / MUs from this session. Of seven recorded MUs, three were strongly active during the 3 Hz sinusoid despite being inactive during the ramp.

Figure 5 |. Dynamic experiments.

(a) Forces for two conditions: the four-second ramp and the 3 Hz sinusoid. Gray traces show all trials in this session. Black traces highlight trials for which EMG data are shown below. (b) MU spike templates across four (of eight) EMG channels recorded in the lateral triceps. Vertical scale: 50 standard deviations of the noise. Each template spans 10 ms. (c) EMG voltage traces for the two trials and time periods highlighted in a. Traces become colored to indicate when spikes were detected. Numbers indicate the identity of the MU producing the spike. In some cases, spikes from two or more MUs overlap. Trace color reflects the relative magnitudes of the contributions made by different MUs. (d) Responses of two MUs during the 0.25 Hz sinusoid. Black trace at top shows trial-averaged force. Colored traces show firing rates (mean and standard error). Rasters show individual spikes on all trials for these conditions. Vertical scales: 8 N and 20 spikes/s. (e) Response of two MUs during the four-second ramp (left) and 3 Hz sinusoid (right). MU311 and MU309 correspond to MUs 3 and 5 for the session focused on in c. (f) Response of two MUs (from a different session) during the four-second ramp (left) and the 0 – 3 Hz chirp (right). (g) Response of two MUs (from a different session) during the 250 ms increasing and decreasing ramps. (h,i,j) State-space plots for the MUs shown in d,e,f. Scale bars: 20 spikes/s. (k) Maximum displacement for every session. Displacement was computed twice: within the four-second ramp only, and again across all conditions. Yellow lines plot displacement expected due to sampling error (ten resampled artificial populations per session). Empirical slopes were significantly larger than expected given sampling error (two-sample two-tailed t-test, p = 0.000003, N=14 sessions). (l) Maximum displacement, across all conditions, after repeatedly removing the MU pair that caused the largest displacement. Each trace corresponds to one session, and ends when remaining displacement was no larger than the largest from the ten resampled datasets (or when no pairs would be left). Gray histogram plots the distribution, across sessions, of the number of MUs that had to be removed (mean in green). One session (lone green square) terminated immediately because it contained only 3 MUs. (m) Cumulative distribution of displacement (dMU(t)). Rather than summarizing a session by taking the maximum across all times (as in k,l), here we plot the distribution of dMU(t) for all times within one session. Distributions were computed for the slowly increasing ramp alone (purple) and all conditions (green). (n) Maximum (across λ) dispersion (one line per session). Slopes were significantly larger than expected given sampling error (two-sample two-tailed t-test, p = 0.0000007, N=14 sessions). (o) Additional within-session analysis of dispersion. Rather than summarizing a session by taking the maximum dMNP(λ) across λ (as in n), here we plot dMNP(λ) versus λ (the norm).

Inspection of examples illustrates a range of effects. When considering only slowly changing forces (Fig. 5d), activity was equally consistent with rigid and flexible control. Activity became inconsistent with rigid control when also considering high-frequency sinusoids. For example, MU309 (Fig. 5e) becomes far more active during the fast sinusoid, even as MU311 becomes slightly less active (these correspond to MU5 and MU3 from Fig. 5c). MU329 (Fig. 5f) is inactive during the four-second ramp and early in the chirp, but becomes active for the higher-frequency cycles even as MU324’s rate declines.

Both prior work and the optimal-recruitment model argue against large recruitment differences between slow and fast ramps. Indeed, despite their high rate of change (~64 N/s), fast (250 ms) ramps rarely produced recruitment that was clearly different from four-second ramps. The only compelling exceptions were at force offset. For example, MU109 (Fig. 5g) tripled its activity in anticipation of a fast downward ramp, even as MU108 became slightly less active. A similar but smaller effect is exhibited by the model: large/fast MUs start to ‘take over’ so force can terminate swiftly (in follow-up simulations, moving to even faster offsets enhanced this strategy).

As above, inspection of examples is useful when considering alternative explanations. Departures were not explained by some MUs reflecting force while others reflected its derivative. For example, MU309 (Fig. 5e) and MU329 (Fig. 5f) are more active during higher-frequency forces, but do not phase lead their neighboring MUs. Furthermore, responses did not exhibit large overshoots during rapidly increasing ramps (e.g., Fig. 5g, left). Effects were also not explainable by some sort of rapid within-trial fatigue. For example, there is no evidence of fatigue in Fig. 5e. In Fig. 5f, one might propose that MU324’s rate declines during the chirp due to fatigue, yet it maintained a consistently high rate during the much longer ramp. In Fig. 5g, the departure occurs because activity increases (not fatigues) in anticipation of force offset. Still, some MUs did show a slight rate ‘sag’, especially during the lengthy four-second ramp (Fig. 2e, left). These long-timescale effects are presumably not a meaningful departure from rigid control, something taken into account when interpreting quantitative analyses below. We occasionally observed fatigue-combatting rotations; an MU could become less active for a handful of trials. To reduce any condition-specific impact on trial-averaged rates, trials for all conditions were interleaved. Inspection of individual-MU responses (also see Extended Data Fig. 3) confirms that different recruitment between ramps and sinusoids cannot be attributed to rotations or other long-timescale fatigue-related effects.

In situations where activity was potentially consistent with rigid control (Fig. 5d), trial-averaged trajectories hewed close to a monotonic manifold (Fig. 5h). When activity was incompatible with rigid control (Fig. 5e,f), trajectories diverged from a monotonic manifold (Fig. 5i,j). To quantify at the population level, we employed the displacement and dispersion metrics. We focused on the model-based prediction that departures from rigid control should be minimal during the four-second ramp (where rigid and flexible control predict the same small-to-large strategy) but become common when considering all conditions. The four-second ramp is also a useful comparison because it provides an estimate of trivial departures from idealized rigid control due to modest temporal instabilities. Indeed, during the four-second ramp, displacement (Fig. 5k) and dispersion (Fig. 5n) were small but higher than expected given sampling error (yellow lines). Inspection of individual cases revealed that such effects were ‘real’ but not evidence of flexibility; they reflected uninteresting phenomena such as a slight sag in rate over long timescales (as in Fig. 2e, left).

When considering the population response across all conditions, displacement (Fig. 5k) and dispersion (Fig. 5n) became much larger (p<0.001). All 14 sessions had a maximum displacement outside the range expected due to sampling error. As above, we removed the MU pair with the largest oppositional change, and iterated until remaining displacement was within the range possible due to sampling error. On average, 6.4 (out of an average of 9.6) MUs had to be removed. A given population typically exhibited a distribution of large, medium and small displacements (Fig. 5m). During the four-second ramp, all displacements were small (purple). When considering all force profiles (green), the increase in displacement was due not to a few outliers, but to a large overall rightwards shift. For dispersion, we assessed robustness by sweeping the norm (rather than taking the maximum across norms). When considering all conditions (green), dispersion was high across a broad range of λ. Thus, population activity did not depart from a 1D monotonic manifold only at extremes, but did so over most of its dynamic range.

The contrast between the four-second ramp and sinusoidal forces also serves as a natural control for the concern that departures from rigid control might be due to occasional missed spikes. Judged subjectively, spike-sorting was excellent, but it remains likely that spikes were sometimes missed. Errors in spike sorting would, to a first approximation, be expected to impact all conditions similarly. Yet departures from rigid control were strongly present for some conditions but not others. One could argue that sorting might become particularly challenging during high-frequency sinusoids, leading to more missed spikes. However, departures from rigid control typically involved some MUs becoming more active during sinusoids. Direct inspection of voltage traces (Fig. 5c; Extended Data Fig. 3c) further rules out this potential source of effects.

Motor unit activity across muscle lengths

The optimal-recruitment-model solutions suggest a broader phenomenon: to achieve optimality, recruitment must depart from a purely size-based order whenever MUs show task-relevant diversity in domains other than size. To explore one potential domain, we employed a task with two postures. The anterior deltoid generated torque in the same axis (shoulder flexion) for both, but was at different lengths as it did so. We recorded MU population activity during six sessions (2971 total successful trials; 39 total MUs, 4–9 MUs per session). Postures could not be interleaved trial by trial, and were thus performed in blocks. The possibility of length-based flexibility has only rarely been considered46. A challenge is that slight shifts in electrode location relative to muscle fibers can impact spike amplitude24,46. Fortunately, a matched-filter approach (see Methods) is insensitive to modest amplitude changes, and can rely on spike shape being preserved across time and channels. Example voltage traces are shown for two postures (Fig. 6a,b; only two channels shown for simplicity). MU3 and MU4 exhibit waveforms that shrink modestly in the second posture, yet could still be identified. MU3 became slightly more active at the shorter length, while MU4 became less active. MU5 was active only when the deltoid was shortened.

Figure 6 |. Muscle-length experiments.

The monkey generated a subset of the force profiles in Fig. 1d with a shoulder-flexion angle of 15° (the deltoid was long) or 50° (the deltoid was short). (a) Example forces and recordings when the deltoid was long, for one static-force condition. Gray traces plot force for all trials. Black trace plots force for one example trial. EMG voltage traces are shown for the highlighted portion (gray window) of that trial. Only two channels are shown for simplicity. Conventions as in Fig. 5c. (b) Same but for later in the session after posture was changed and the deltoid was short. Recordings are from the same two channels and many of the same MUs (MU1, MU3, and MU4) are visible. However, MU4 has become considerably less active and MU5 is now quite active, despite being rarely active when the deltoid was long. (c) Data, from the same session documented in a,b, recorded during the 250 ms decreasing ramp. MU158 and MU159 correspond to MU4 and MU5 from panels a,b. Gray traces at top plot trial-averaged force. Colored traces plot firing rates (mean and standard errors). Rasters show spikes on all trials for this condition. Top and bottom subpanels plot data where the deltoid was long and short, respectively. (d) Example from another session, recorded during the 0–3 Hz chirp. (e) State space plots for four example MU pairs. Top-left: same data as in c. Bottom-left: same data as in d. Top-right: additional comparison from the same session documented in a,b. MUs 157 and 158 correspond to MUs 3 and 4. Responses are during the chirp. Bottom-right: Example from a different session. Responses are during the 250 ms downward ramp. (f) Maximum displacement for every session (one line per session). Displacement was computed three times: within the four-second ramp, across all conditions within a muscle length (then pooled across lengths), and across all conditions and both muscle lengths.
Yellow lines plot displacement expected due to sampling error (ten resampled artificial populations per empirical dataset). The empirical slopes were significantly larger than expected due to sampling error both for the increase from the four-second ramp to all conditions within a muscle length (p = 0.00044) and for the additional increase when considering both muscle lengths (p = 0.022, two-sample two-tailed t-test, N=6 sessions). (g) Maximum displacement (across all conditions and both muscle lengths) after repeatedly removing the MU pair that caused the largest displacement. Each trace corresponds to one session, and ends when remaining displacement was no larger than the largest displacement among the ten resampled populations. Gray histogram plots the distribution, across sessions, of the number of MUs that had to be removed (mean in green). (h) Cumulative distribution of displacement, within one session, for the three key comparisons: four-second ramp (purple), all conditions within a muscle length (green), and all conditions for both muscle lengths (orange). (i) As in f but for dispersion. The two slopes were significantly higher than expected given sampling error (p = 0.00011 and p = 0.03, two-sample two-tailed t-tests, N=6 sessions). (j) dMNP(λ) as a function of the norm, λ, for one session.

Muscle-length-driven recruitment changes could be dramatic (Fig. 6c,d), yielding state-space trajectories far from a single monotonic manifold (Fig. 6e). At the population level, displacement (Fig. 6f) and dispersion (Fig. 6i) were high even when computed within muscle-length (green), reflecting force-profile-specific recruitment. They became higher still when also computed across muscle lengths (orange). All six sessions had maximum displacement outside the range expected due to sampling error. On average, more than half the MUs (4 out of an average of 6.5 recorded MUs) had to be removed from the population to eliminate significant displacement. The displacement distribution (Fig. 6h, orange) was shifted strongly rightwards relative to that observed during the four-second ramp at one muscle length (purple). Dispersion (Fig. 6j) was high across a broad range of norms.

Effects were unrelated to fatigue. Recruitment changed suddenly at block boundaries, not slowly over a session. For example, the blue rasters in Fig. 6d reveal a sudden spiking increase between top and bottom subpanels, not a gradual change spanning both. A different concern is that an MU that becomes inactive might reflect recording instabilities. This is unlikely; other MUs recorded on the same channel always remained visible, indicating minimal electrode movement. Furthermore, often an MU became much less active but still spiked, ruling out this concern (e.g., Extended Data Fig. 4). In some sessions we re-examined the first posture, and confirmed that an MU that fell inactive during the second posture became active upon returning to the first posture.

Latent factor model

The most straightforward test of a hypothesis is to formalize it as a model and ask whether it can fit the data. For rigid control, this has historically been challenging because of the large space of free parameters. Existing models of rigid control use constrained link functions44,45 that are reasonable but limit expressivity. The ideal model should have only those constraints inherent to the hypothesis – only then can it be rejected if it fails to fit the data. Fortunately, modern machine-learning approaches allow fitting models with unconstrained link functions and unknown common drive. We employed a probabilistic latent-factor model (Fig. 7a) where each MU’s rate is a function of common drive: $r_i(t) \sim f_i\big(x(t + \tau_i)\big)$. Fitting used black-box variational inference47 to infer $x(t)$ and learn MU-specific link functions, $f_i$, and time-lags, $\tau_i$. Each $f_i$ was unconstrained other than being monotonically increasing. The resulting model can assume essentially any common drive and set of link functions, and MUs can have different latencies.
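To make the premise of the model concrete, the generative side of rigid control can be written in a few lines. This is a minimal sketch that assumes softplus link functions with positive gains and integer-sample lags; these choices are illustrative only, whereas the fitted model learned unconstrained monotonic $f_i$ and inferred $x(t)$ with black-box variational inference, which is not shown here.

```python
import numpy as np


def softplus(z):
    """Smooth, monotonically increasing nonlinearity: log(1 + exp(z))."""
    return np.logaddexp(0.0, z)


def rigid_control_rates(x, gains, thresholds, lags):
    """Forward model of rigid control: r_i(t) = f_i(x(t + tau_i)).

    x: common drive sampled on a regular grid, shape (n_times,).
    gains, thresholds: parameters of each MU's link function
        f_i(u) = softplus(gain_i * (u - threshold_i)); positive gains keep
        every f_i monotonically increasing (hypothetical parameterization).
    lags: integer time-lags tau_i in samples; at the edges the drive is held
        at its first/last value rather than wrapping around.
    """
    n_times = len(x)
    rates = np.empty((len(gains), n_times))
    for i, (gain, thresh, lag) in enumerate(zip(gains, thresholds, lags)):
        if gain <= 0:
            raise ValueError("link functions must be increasing (gain > 0)")
        idx = np.clip(np.arange(n_times) + lag, 0, n_times - 1)
        rates[i] = softplus(gain * (x[idx] - thresh))
    return rates


drive = np.linspace(0.0, 1.0, 200)  # slowly increasing drive, like a ramp
rates = rigid_control_rates(drive, gains=[20, 15, 10],
                            thresholds=[0.2, 0.5, 0.8], lags=[0, 5, -5])
```

Because every MU is a monotonic function of the same (lagged) drive, a rising drive can only make rates rise together; this is the constraint that the fits described below test.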

Figure 7 |. Latent factor model.

(a) The model embodies the premise of rigid control: MU firing rates are fixed ‘link functions’ of a shared, 1-D latent input. (b) Model performance for the three experiment types. The model was fit separately for each session. Regardless of how many conditions were fit, error was computed only during the four-second ramp. To compute cross-validated error, each session’s data was divided, trial-wise, into two random partitions. The model was fit separately to the mean rates for each partition. For each MU, cross-validated fit error was the dot product of the two residuals. To summarize cross-validated error for that experiment type, we computed the median error across all MUs in all relevant sessions. Partitioning was repeated 10 times. Vertical bars indicate the mean and standard deviation across these 10 partitionings, and thus indicate reliability with respect to sampling error (the standard deviation of the sampling error is equivalent to the standard error). Purple bars plot error when fitting only the four-second ramp. Green bars plot error when fitting additional conditions (stimulation across multiple sites, or different force profiles). For muscle-length experiments, green bars plot error when fitting all force profiles for the same muscle length, then averaging across muscle lengths. Orange bars plot error when fitting all force profiles for both muscle lengths. Circles show cross-validated fit error for an artificial population response that obeyed rigid control, but otherwise closely resembled the empirical population response. (c) Illustration of model fits for simplified situations: the activity of two MUs during the four-second ramp (top) or following cortical stimulation on three different electrodes (bottom). For illustration, the model was fit only to the data shown. (d) Proportion of total MUs that consistently violated the single-latent model when fit to pairs of conditions. 
Each entry is the difference between the proportion of consistent violators obtained from the data and the proportion expected by chance. Left: dynamic experiments. Right: for muscle length experiments, we grouped conditions by frequency (lower on top, higher on bottom).

To be conservative, instead of testing on held-out data, we asked whether the model could fit the data when given every opportunity. Because some fit error is inevitable due to sampling error, we independently fit two random data partitions and computed cross-validated error: the dot product of the two residuals48 (residuals will be uncorrelated if due to sampling error). Cross-validated error can be positive or negative, and should be zero on average for an accurate model and greater than zero for an inaccurate model. To confirm, we used two different methods to construct artificial datasets that obeyed rigid control but were otherwise realistic and contained sampling error (see Methods and Extended Data Fig. 5). The model fit artificial datasets well, with cross-validated error consistently near zero (e.g., Fig. 7b, filled circles).
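The logic of the cross-validated error can be checked numerically. This sketch assumes Gaussian sampling noise and, for simplicity, uses a fixed model prediction rather than refitting a model to each partition. An accurate prediction gives an average error near zero, while a biased prediction gives a positive value because the misfit appears in both residuals.

```python
import numpy as np


def cross_validated_error(data_a, data_b, fit_a, fit_b):
    """Dot product of the residuals from two independent data partitions.

    If residuals reflect only sampling noise, they are uncorrelated across
    partitions and the expected value is zero; systematic misfit appears
    in both residuals and makes the value positive.
    """
    return float(np.dot(data_a - fit_a, data_b - fit_b))


rng = np.random.default_rng(0)
true_rate = np.linspace(0.0, 10.0, 200)  # hypothetical trial-averaged rate


def mean_cv_error(model_bias, n_repeats=500):
    """Average CV error when the model output is the true rate plus a bias."""
    errors = []
    for _ in range(n_repeats):
        data_a = true_rate + rng.normal(0.0, 1.0, true_rate.size)
        data_b = true_rate + rng.normal(0.0, 1.0, true_rate.size)
        fit = true_rate + model_bias  # same model prediction for both halves
        errors.append(cross_validated_error(data_a, data_b, fit, fit))
    return float(np.mean(errors))


accurate = mean_cv_error(0.0)  # expected value 0
biased = mean_cv_error(0.5)    # expected value 200 * 0.5**2 = 50
```

A single cross-validated value can be negative, which is why the text summarizes error across many MUs and repeated partitionings.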

Given the results above, we predicted that the model should fit well during slowly changing forces (where flexible and rigid control make identical predictions) but not across all conditions. To test this prediction, for each session we fit MU population activity during the four-second increasing ramp alone, and again during all conditions. To avoid the concern that different conditions might intrinsically induce different-magnitude errors, in both cases we assessed error only within the four-second ramp. A model embodying rigid control should fit the four-second ramp well unless it is also required to fit other conditions that evoke different recruitment. As predicted, the model performed well when fitting only the four-second increasing ramp. Cross-validated error was above zero but only slightly (Fig. 7b, purple). This confirms that model failures due to trivial phenomena – e.g., short-timescale fatigue – are small.

Cross-validated error (computed during the four-second ramp only) rose dramatically when the model had to also account for other conditions (Fig. 7b, green and orange). On individual sessions, the error ranges across partitionings for ramp-only versus multi-condition fits were non-overlapping for 13/17 stimulation experiments, 12/14 dynamic experiments, and 5/6 muscle-length experiments (analysis could not be performed for one stimulation experiment because the recorded MUs were largely inactive during the ramp). To confirm the source of failures, we inspected example fits. As expected, the model could fit activity that followed a monotonic manifold during slowly changing forces (Fig. 7c, top). However, it could not fit activity that spanned different manifolds across conditions (bottom). Attempting to fit both situations yielded ‘compromise’ link functions that fit neither particularly well.

Historically, the possibility of speed-based flexibility has often been examined using force ramps that increase at different rates. In agreement with the predictions of optimal recruitment (Fig. 4), we did not find this comparison to be strongly diagnostic. When fitting the 250 ms ramp in addition to the four-second ramp, error increased only slightly: the increase was 9% as large as when fitting all conditions. In contrast, when fitting the 3 Hz sinusoid in addition to the four-second ramp, error increased considerably: 65% as much as when fitting all conditions. This supports what can be seen in examples: departures from rigid control are most prevalent when comparing slowly changing forces with fast sinusoids.

To explore further, we considered conditions spanning a range of frequencies, from static to 3 Hz sinusoid (dividing the chirp into two sub-conditions). We fit the latent-factor model to single-trial responses for two conditions at a time. We defined an MU as a ‘consistent violator’ if activity was overestimated for trials from one condition and underestimated for trials from the other, at a rate much higher than expected by chance. Of course, even when the population response is inconsistent with rigid control, only some individual MUs will show effects large enough to be defined as consistent violators (especially when comparing only two conditions and ignoring within-condition violations). This is acceptable because we wish not to interpret absolute scale, but to assess which conditions evoke different recruitment. Consistent violators were rare when two conditions had similar frequency content (Fig. 7d, left, dark entries near diagonal), and prevalent when conditions had dissimilar frequency content. Violations of rigid control during muscle-length experiments were also systematic. We divided conditions into slowly changing forces (Fig. 7d, top-right) and higher-frequency sinusoidal forces (bottom-right). Within each category, consistent violators were uncommon when comparing conditions with the same muscle length (dark block-diagonal entries), and prevalent when comparing across muscle lengths.
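The exact criterion for a ‘consistent violator’ is not spelled out in this section, so the following is a hypothetical sketch of one way to operationalize “at a rate much higher than expected by chance”: compare the sign pattern of per-trial residuals across the two conditions against a permutation null in which condition labels are shuffled. The residual definition, `alpha`, and `n_perm` are all assumptions.

```python
import numpy as np


def is_consistent_violator(res_a, res_b, n_perm=2000, alpha=0.01, seed=0):
    """Flag an MU whose model residuals have opposite sign in two conditions.

    res_a, res_b: per-trial residuals (data minus fitted rate, averaged over
    each trial) for conditions A and B. Hypothetical criterion: the gap
    between the fractions of positive residuals in A and B must exceed the
    (1 - alpha) quantile of a label-permutation null distribution.
    """
    rng = np.random.default_rng(seed)
    positive = np.concatenate([res_a, res_b]) > 0  # underestimated trials
    n_a = len(res_a)
    observed = abs(positive[:n_a].mean() - positive[n_a:].mean())
    null = np.empty(n_perm)
    for k in range(n_perm):
        shuffled = rng.permutation(positive)  # break the condition labels
        null[k] = abs(shuffled[:n_a].mean() - shuffled[n_a:].mean())
    return bool(observed > np.quantile(null, 1 - alpha))


rng = np.random.default_rng(1)
res_a = rng.normal(1.0, 0.3, 40)    # model underestimates condition A
res_b = rng.normal(-1.0, 0.3, 40)   # model overestimates condition B
flagged = is_consistent_violator(res_a, res_b)
```

Using signs rather than residual magnitudes keeps the test focused on systematic over- versus underestimation, which matches the definition given above.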

Might it be possible to elaborate the latent-factor model, and perhaps achieve better fits, while maintaining the core assumptions of rigid control? Further increasing link-function expressivity with more free parameters had almost no effect. We also employed a model that allowed history dependence: there was a single common drive, but each MU could reflect the current drive value and/or integrate past values. For example, common drive could reflect force, with some MUs directly sensitive to force and others to its history. Alternatively, the inferred common drive could reflect a high-passed version of force, allowing some MUs to reflect rate-of-change while others (via low-pass filtering) reflect force. Fits were improved for some specific history-dependent features (likely caused by persistent inward currents). Yet there was still a dramatic increase in error when the model had to account for responses during all conditions.

In contrast, excellent fits were obtained simply by abandoning the core assumption of common drive, and allowing multiple latent-factor ‘drives’ consistent with flexible control. To ask how many drives might be needed (Fig. 8a), we challenged the model using the two dynamic sessions with the most simultaneously recorded MUs (16 and 18 MUs recorded from the triceps, selecting sessions where all MUs were reasonably active at some point). Allowing more drives produced an immediate decrease in cross-validated error, which reached zero around 4–6 factors (Fig. 8b). Thus, the model can fit well; it simply needs more drives than rigid control allows.

Figure 8 |. Quantifying neural degrees of freedom.

(a) Schematic illustrating the central question: how many degrees of freedom might influence the MNP? (b) Cross-validated fit error as a function of the number of latent factors. Cross-validated error was computed within all conditions, but was otherwise calculated as above. For each partitioning, the overall error was the median cross-validated error across all MUs in two sessions. Error bars indicate the mean +/− standard deviation across 10 total partitionings. (c) We recorded neural activity in motor cortex using 128-channel Neuropixels probes. (d) Two sets of trial-averaged rates were created from a random partitioning of trials. Traces show the projection of the first (green) and second (purple) partitions onto PCs found using only the first. Traces for PCs 1 and 50 were manually offset to aid visual comparison. (e) Reliability of neural latent factors for the first 60 factors. Traces plot the mean and central 95% (shading) of the distribution across 25 random partitionings. (f) Same but for latent factors 1–400. The empirical reliability (blue) is compared to that for simulated data with 50 (yellow), 150 (orange), and 500 (red) latent signals.

Neural degrees of freedom

Describing MU population activity required 4–6 drives, even for a subset of MUs (the triceps alone contain a few hundred) during a subset of behaviors. Neural control of the arm may thus be quite high-dimensional, with dozens or even hundreds of degrees of freedom across all muscles. It is unclear what proportion is accessible to descending control, but it could be large. The corticospinal tract alone contains approximately one million axons in humans49, and our cortical microstimulation results suggest remarkably fine-grained control.

For fine-grained descending control to be plausible, motor cortex activity would need to display many degrees of freedom. Yet motor cortex activity is often described as constrained to relatively few dimensions50–52, with 10–20 latent factors accounting for most response variance. Studies have stressed the presence of more degrees of freedom than predicted by simple models, but even the largest estimate (~30 dimensions53) seems insufficient to support detailed control over many muscles. However, the meaning of these numbers is unclear because they depend on methodology. For example, in one study53, a variance-explained threshold yielded an estimated dimensionality of 9, whereas assessing signal reliability yielded an estimate of 29. Both are potentially underestimates given analyzed populations of ~100 neurons. The present task yields the opportunity to revisit this issue using behaviors relevant to flexible control. We considered a large population (881 sulcal neurons) recorded over 13 sessions using the 128-channel primate Neuropixels probes. Force profiles were as in Fig. 1d.
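A variance-explained threshold, the first of the two approaches contrasted above, counts how many principal components are needed to reach a chosen fraction of total variance. A minimal NumPy sketch, assuming rates are arranged as neurons × samples; the synthetic data are constructed to have known PC variances of 5, 3, 1 and 1.

```python
import numpy as np


def n_pcs_for_variance(data, fraction):
    """Number of PCs needed to capture `fraction` of total variance.

    data: array of shape (n_neurons, n_samples), e.g. trial-averaged rates
    with conditions and times concatenated along the second axis.
    """
    centered = data - data.mean(axis=1, keepdims=True)  # center each neuron
    singular_values = np.linalg.svd(centered, compute_uv=False)
    var = singular_values ** 2                          # variance per PC
    cumulative = np.cumsum(var) / var.sum()
    # first index where the cumulative fraction reaches the target, as a count
    return min(int(np.searchsorted(cumulative, fraction)) + 1, len(var))


rng = np.random.default_rng(3)
z = rng.normal(size=(4, 100))
z -= z.mean(axis=1, keepdims=True)                      # zero-mean signals
q, _ = np.linalg.qr(z.T)                                # orthonormal columns
data = np.diag(np.sqrt([5.0, 3.0, 1.0, 1.0])) @ q.T     # PC variances 5, 3, 1, 1
n85 = n_pcs_for_variance(data, 0.85)                    # 3 (cumulative 0.5, 0.8, 0.9, 1.0)
```

The answer depends entirely on the chosen fraction and says nothing about whether small-variance components are reliable, which is the motivation for the reliability-based analysis that follows.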

Neurons displayed a great variety of response patterns (Extended Data Fig. 6). Capturing 85% of the variance required 33 principal components (PCs). This is high relative to prior variance-cutoff-based estimates, possibly due to condition variety in the present task. Yet we stress that ‘variance explained’ is not a particularly meaningful measure given our specific scientific question. Small-variance signals may be deeply relevant to descending control54. Thus, we focused not on size but on whether a factor was reliable across trials (Fig. 8d). For the projection onto a given PC, we defined reliability as the correlation between held-out data and the data used to identify the PC. Each PC past the first 33 captured a small signal, yet those signals were reliable (Fig. 8e); the first 60 had reliability >0.7. Reliability remained well above zero for approximately 200 PCs (Fig. 8f). For comparison, we analyzed artificial datasets that closely matched the real data but had known dimensionality. Even when endowed with 150 latent factors, artificial populations displayed reliability that fell faster than for the data. This is consistent with the empirical population having >150 degrees of freedom – an order of magnitude more than previously considered. Thus, the motor cortex population response has enough complexity that it could, in principle, provide detailed outgoing commands.
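The split-half reliability measure can be sketched as follows, assuming single-trial rates stored as a trials × neurons × times array and a single random partition (the analysis above used 25 partitionings). The synthetic population, with three latent signals plus independent noise, is purely illustrative: reliability is near 1 for the signal-bearing PCs and near 0 for PCs that capture only noise.

```python
import numpy as np


def pc_reliability(trials, n_pcs, seed=0):
    """Split-half reliability of the projection onto each PC.

    trials: array (n_trials, n_neurons, n_times) of single-trial rates.
    PCs are found from the trial average of a random half of the trials;
    both halves are projected onto them and, for each PC, reliability is
    the correlation between the two projections.
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(trials.shape[0])
    half = trials.shape[0] // 2
    mean_a = trials[order[:half]].mean(axis=0)   # (n_neurons, n_times)
    mean_b = trials[order[half:]].mean(axis=0)
    mean_a = mean_a - mean_a.mean(axis=1, keepdims=True)
    mean_b = mean_b - mean_b.mean(axis=1, keepdims=True)
    u, _, _ = np.linalg.svd(mean_a, full_matrices=False)  # PCs from half A only
    proj_a = u[:, :n_pcs].T @ mean_a             # (n_pcs, n_times)
    proj_b = u[:, :n_pcs].T @ mean_b             # held-out half, same PCs
    return np.array([np.corrcoef(proj_a[k], proj_b[k])[0, 1]
                     for k in range(n_pcs)])


rng = np.random.default_rng(2)
time = np.linspace(0.0, 1.0, 100)
latents = np.vstack([np.sin(2 * np.pi * f * time) for f in (1, 2, 3)])
loadings = rng.normal(size=(50, 3))              # 50 hypothetical neurons
signal = loadings @ latents                      # (50, 100)
trials = signal[None] + rng.normal(0.0, 1.0, (40, 50, 100))
rel = pc_reliability(trials, n_pcs=20)
```

Because reliability ignores how much variance a PC explains, a small but repeatable signal scores as highly as a large one.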

Discussion

The hypothesis of rigid control has remained dominant1,9 for three reasons: it describes activity during steady force production1,11,16,21, is optimal in that situation18, and could be implemented via simple mechanisms13,14. It has been argued that flexible control would be difficult to implement and that “it is not obvious... that a more flexible, selective system would offer any advantages14.” In contrast, our findings argue that flexible MU control is a normal aspect of skilled performance in the primate. Flexibility appeared in all three situations we examined.

Different muscles, with different compositions of slow- and fast-twitch fibers, become coordinated differently during high-speed movements: rapid paw shakes55, burst-glide swimming56, and cycling57. Yet it has remained controversial whether similar within-muscle flexibility exists7,25,37. Most studies of single MUs23,34,38 report no speed-based recruitment beyond trivial latency effects. Thus, the prevailing view is that speed-based recruitment does not occur1,25,36, or occurs only in some lengthening contractions37. Other studies indicate speed-based flexibility5,32,33, but often via indirect measures. This contrast might seem to suggest that the presence versus lack of flexibility reflects how carefully MU activity is measured. Our results instead suggest that prior disagreement reflected force-profile choice: ramps versus sinusoids. The optimal-recruitment model predicts large recruitment changes only during the latter. In agreement, whether studies found evidence for flexibility typically reflected which profile they employed.

We observed MUs that became less active at the highest frequencies (e.g., Fig. 5f) but no MUs became inactive, even though the optimal-recruitment model predicted this could happen. This discrepancy may reflect model simplicity: e.g., it doesn’t consider that slow fibers can accelerate under negative load58. Yet despite its simplicity, the optimal-recruitment model is sufficient to make the key high-level point: accurately generating force profiles while minimizing other costs requires respecting diversity not only of size, but in any domain relevant to the range of behaviors being generated. In agreement, muscle length had a large impact on MU recruitment. The anatomical basis of flexibility is likely also domain-specific. Muscle-length-driven flexibility may require only spinally available proprioceptive feedback. In contrast, when flexibility reflects future changes in force, it may depend upon descending signals.

The hypothesis that descending signals can influence MU recruitment has historically been considered implausible; control might be unmanageably complex unless degrees of freedom are limited14,21. For this reason, descending control has typically been considered to involve muscle synergies59, above the individual-muscle level. Yet cortical stimulation induced remarkably selective recruitment. It remains unclear whether this selective activation involves small or large MU groups, but it is certainly below the individual-muscle level. Such fine-grained control may be more prominent in primates than in cats49, in which selective recruitment may be weaker and more easily dismissed as reflecting a damaged preparation22.

Motor cortex, a major source of descending control, is often described as low-dimensional. In an operational sense this is correct: a handful of high-variance signals often account for most response variance51,52, and are informative when testing and developing hypotheses52. Yet there also exist reliable small-variance signals53,54 that could contribute to outgoing commands. Our results reveal that small-but-reliable signals are numerous. Thus, although it is typically proposed that descending control is and should be simple1,60, in our view there is little reason to doubt the capacity for detailed control. The human corticospinal tract contains around a million axons, including direct connections onto α-motoneurons49, and exerts surprisingly selective control over MU activity. Our field has long accepted that detailed information about photoreceptor activity is communicated to cortex via a similarly-sized (and less direct) pathway. It seems time to consider that the corticospinal tract could carry detailed information in the other direction.

Methods

Subject and task

All protocols were in accord with the National Institutes of Health guidelines and approved by the Columbia University Institutional Animal Care and Use Committee (protocol AC-AABE3550). Subject C was a 10-year-old adult, male macaque monkey (Macaca mulatta) weighing 13 kg.

During experiments, the monkey sat in a primate chair with his head restrained via surgical implant and his right arm loosely restrained. To perform the task, he grasped a handle with his left hand while resting his forearm on a small platform that supported the handle. Once he had achieved a comfortable position, we applied tape around his hand and velcro around his forearm, which ensured consistent placement within and between sessions (the monkey could break the tape or velcro if desired, but did not as he was focused on the task). When performing the task, the monkey received both time-varying visual feedback from the screen and time-varying cutaneous feedback from the pressure his hand applied to the handle. Some reports suggest that adding unusual sensory feedback (e.g., via cutaneous nerve or mechanical stimulation) may alter recruitment in some situations61, although this is controversial. This is extremely unlikely to have affected our results, because our task design placed the primary source of feedback (the palm) far from the relevant shoulder muscles, and no interaction across such distance has been suggested (indeed, most studies that report orderly recruitment involve sensory feedback). Furthermore, the handle was held in the same way across force profiles and across stimulation sites, so cutaneous feedback from the grip is unlikely to account for changes in recruitment among those conditions.

The handle controlled a manipulandum, custom made from aluminum (80/20 Inc.) and connected to a ball bearing carriage on a guide rail (McMaster-Carr, PN 9184T52). The carriage was fastened to a load cell (FUTEK, PN FSH01673), which was locked in place. The load cell converted one-dimensional (tensile and compressive) forces to a voltage signal. That voltage was amplified (FUTEK, PN FSH03863) and routed to a Performance real-time target machine (Speedgoat) that executed a Simulink model (MathWorks R2016b) to run the task. Because the load cell was locked in place, forces were applied to the manipulandum via isometric contractions.

The monkey controlled a ‘Pac-Man’ icon, displayed on an LCD monitor (Asus PN PG258Q, 240 Hz refresh, 1920 × 1080 pixels) using Psychophysics Toolbox 3.0. Pac-Man’s horizontal position was fixed on the left-hand side of the screen. Vertical position was directly proportional to the force registered by the load cell. For 0 Newtons of applied force, Pac-Man was positioned at the bottom of the screen; for the calibrated maximum requested force for the session, Pac-Man was positioned at the top of the screen. Maximum requested forces (see Experimental Procedures, below) were titrated with two goals in mind: to be comfortable for the monkey across many trials, and to activate multiple MUs but not so many that EMG signals became unsortable. On each trial, a series of dots scrolled leftwards on screen at a constant speed (1344 pixels/s). The monkey modulated Pac-Man’s position to intercept the dots, for which he received juice reward. Thus, the shape of the scrolling dot path was the temporal force profile the monkey needed to apply to the handle to obtain reward. We trained the monkey to generate static, step, ramp, and sinusoidal forces over a range of amplitudes and frequencies. We define a ‘condition’ as a particular target force profile (e.g., a 2 Hz sinusoid) that was presented on many ‘trials’, each a repetition of the same profile. Each condition included a ‘lead-in’ and ‘lead-out’ period: a one-second static profile appended to the beginning and end of the target profile, which facilitated trial alignment and averaging (see below). Trials lasted 2.25–6 seconds, depending on the particular force profile. Juice was given throughout the trial so long as Pac-Man successfully intercepted the dots, with a large ‘bonus’ reward given at the end of the trial.
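The mapping from force to screen position, and the padding of each target profile, can be sketched as below. The screen height, refresh rate, dot speed and 1 s lead-in/lead-out come from the text; clipping to the screen, holding the first and last profile values during the lead segments, and the 1 kHz profile sampling rate are assumptions. With 1 s added at each end, the 2.25–6 s trial durations are consistent with core profiles of 0.25 to 4 s, such as the 250 ms and four-second ramps.

```python
import numpy as np

SCREEN_HEIGHT_PX = 1080   # LCD resolution from the text (1920 x 1080)
REFRESH_HZ = 240          # monitor refresh rate from the text
SCROLL_PX_PER_S = 1344    # constant leftward dot speed from the text
LEAD_S = 1.0              # static lead-in and lead-out duration


def force_to_height(force_n, max_force_n):
    """Pac-Man height in pixels above the bottom of the screen.

    Height is proportional to force: 0 N maps to the bottom and the
    session's calibrated maximum requested force maps to the top.
    Clipping to the screen is an assumption.
    """
    return np.clip(force_n / max_force_n, 0.0, 1.0) * SCREEN_HEIGHT_PX


def add_lead_in_out(core_profile, fs_hz):
    """Pad a target force profile with 1 s static segments at both ends.

    Holding the first and last values of the core profile is an assumption.
    """
    n_lead = int(round(LEAD_S * fs_hz))
    return np.concatenate([np.full(n_lead, core_profile[0]),
                           core_profile,
                           np.full(n_lead, core_profile[-1])])


fs = 1000.0  # hypothetical sampling rate of the target profile
ramp_250ms = np.linspace(0.0, 1.0, int(0.25 * fs))  # normalized force
trial = add_lead_in_out(ramp_250ms, fs)             # 2.25 s in total
px_per_frame = SCROLL_PX_PER_S / REFRESH_HZ          # 5.6 pixels per frame
```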

The reward schedule was designed to be encouraging; greater accuracy resulted in more frequent rewards (every few dots) and a larger bonus at the end of the trial. To prevent discouraging failures, we also tolerated small errors in the phase of the response at high frequencies. For example, if the target profile was a 3 Hz sinusoid, it was considered acceptable if the monkey generated a sinusoid that had the correct amplitude and frequency but led the target by 100 ms. To enact this tolerance, the target dots sped up or slowed down to match his phase. The magnitude of this phase correction scaled with the target frequency and was capped at ±3 pixels/frame. To discourage inappropriate strategies (e.g., moving randomly, or holding in the middle with the goal of intercepting some dots), a trial was aborted if too many dots were missed (the criterion number was tailored for each condition). The average number of successful trials per session was 370 (stimulation experiments), 338 (dynamic experiments), and 495 (muscle-length experiments). This excludes trials that were failed online and trials that were judged (via an algorithm, see below) not to meet standards for accuracy.
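The phase tolerance can be pictured as a bounded speed adjustment. In this hypothetical sketch the correction is proportional to target frequency times the phase error and is clipped at the stated ±3 pixels/frame; the proportional form, the sign convention and `gain` are illustrative assumptions rather than the task code.

```python
import numpy as np

MAX_CORRECTION_PX_PER_FRAME = 3.0  # cap stated in the text


def dot_speed_correction(phase_error_s, target_freq_hz, gain=1.0):
    """Hypothetical speed adjustment for the scrolling dots (pixels/frame).

    phase_error_s: how far the monkey's force leads (+) or lags (-) the
        target, in seconds (sign convention is an assumption).
    The correction grows with target frequency, as described, and is
    clipped to +/-3 pixels/frame; `gain` is an illustrative free parameter.
    """
    raw = gain * target_freq_hz * phase_error_s
    return float(np.clip(raw, -MAX_CORRECTION_PX_PER_FRAME,
                         MAX_CORRECTION_PX_PER_FRAME))
```

For example, `dot_speed_correction(0.1, 3.0)` returns about 0.3 pixels/frame, while any large error saturates at the cap.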

Surgical procedures

After task performance stabilized at a high level, we performed a sterile surgery, under anesthesia, to implant a cylindrical chamber (Crist Instrument Co., 19 mm inner diameter) that provided access to primary motor cortex (M1). Guided by structural magnetic resonance imaging scans taken prior to surgery, we positioned the chamber surface-normal to the skull, centered over the central sulcus. We covered the skull within the cylinder with a thin layer of dental acrylic. Small (3.5 mm), hand-drilled burr holes through the acrylic provided the entry point for electrodes. Burr holes were drilled under anesthesia and did not cause discomfort. Once stimulation and recordings were complete, the holes healed and were then re-covered with dental acrylic. During recordings, a guide-tube was used to provide stability but did not penetrate the dura, to avoid any damage to motor cortex.

Intracortical recordings and microstimulation

Neural activity was recorded with the passive version of the primate Neuropixels probes. Each probe contained 128 channels (two columns of 64 sites). Probes were lowered into position with a motorized microdrive (Narishige). Recordings were made at depths ranging from 5.6 to 12.1 mm relative to the surface of the dura. Raw neural signals were digitized at 30 kHz and saved with a 128-channel neural signal processor (Blackrock Microsystems, Cerebus).

Intracortical electrical stimulation (20 biphasic pulses, 333 Hz, 400 μs phase durations, 200 μs interphase) was delivered through linear arrays (Plexon Inc., S-Probes) using a neurostimulator (Blackrock Microsystems, Cerestim R96). We did not explore the effectiveness of different parameters but simply used parameters common across many of our studies. We kept pulse-trains relatively short to avoid the more complex movements that occur on longer timescales62. Each probe contained 32 electrode sites with 100 μm separation between them. Probes were positioned with a motorized microdrive (Narishige). We estimated the target depth by recording neural activity prior to stimulation sessions. Each stimulation experiment began with an initial mapping, used to select 4–6 electrode sites to be used in the experiments. That mapping allowed us to determine the muscles activated from each site, and to estimate the associated thresholds. Thresholds were determined based on visual observation and were typically low (10–50 μA), but occasionally quite high (100–150+ μA) depending on depth. Across all 32 electrodes, microstimulation induced twitches of proximal and distal muscles of the upper arm, ranging from the deltoid to the forearm. Rarely did an electrode site fail to elicit any response, but many responses involved multiple muscles or gross movements of the shoulder that were difficult to attribute to a specific muscle. Yet some sites produced more localized responses, prominent only within a single muscle head. Sometimes a narrow (few mm²) region within the head of one muscle would reliably and visibly pulse following stimulation. Because penetration locations were guided by recordings and stimulation on previous days, such effects often involved the muscles central to performance of the task: the deltoid and triceps. In such cases, we selected 4–6 sites that produced responses in one of these muscles, and targeted that muscle with EMG recordings.
EMG recordings were always targeted to a localized region of one muscle head (see below). In cases where stimulation appeared to activate only part of one muscle head, EMG recordings targeted that localized region. Recordings targeted the pectoralis when stimulation had its primary effect there. Pectoralis was only modestly involved in the task, but this did not hinder the ability to compare the impact of stimulation across stimulation sites.

EMG recordings

Intramuscular EMG activity was recorded acutely using paired hook-wire electrodes (Natus Neurology, PN 019–475400). Electrodes were inserted ~1 cm into the muscle belly using 30 mm × 27 G needles. Needles were promptly removed and only the wires remained in the muscle during recording. Wires were thin (50 μm diameter) and flexible and their presence in the muscle is typically not felt after insertion, allowing the task to be performed normally. Wires were removed at the end of the session.

We employed several modifications to facilitate isolation of MU spikes. As originally manufactured, the two wires protruded 2 mm and 5 mm from the end of each needle (thus ending 3 mm apart), and each wire was insulated except for a 2 mm exposed end. We found that spike sorting benefited from including 4 wires per needle (i.e., combining two pairs in a single needle), with each pair having a differently modified geometry. Because the pairs differed, they tended to be optimized for recording different MUs63: one MU might be most prominent on one pair and a different MU on the other. Electrodes were thus modified as follows. The stripped ends of one pair were trimmed to 1 mm, with 1 mm of one wire and 8 mm of the second wire protruding from the needle’s end. The stripped ends of the second pair were trimmed to 0.5 mm, with 3.25 mm of one wire and 5.25 mm of the second wire protruding. Electrodes were hand-fabricated using a microscope (Zeiss), digital calipers, precision tweezers and knives. During experiments, EMG signals were recorded differentially from each pair of wires with the same exposed length; each insertion thus provided two active recording channels. Four insertions (closely spaced so that MUs were often recorded across many pairs) were employed, yielding eight total pairs. This approach was used for both the dynamic and muscle-length experiments, in which normal behavior engaged many MUs and their spikes could therefore overlap in time. Overlap was less of a concern during the microstimulation experiments, because stimulation-induced responses were typically fairly sparse near threshold (a central finding of our study is that cortical stimulation can induce quite selective MU recruitment). Thus, microstimulation experiments employed one electrode pair per insertion and 8 total insertions (rather than two pairs and 4 total insertions), with minimal modification (exposed ends shortened to 1 mm).
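The wire geometries above can be summarized in a small data structure that also makes the derived quantities explicit: tip separation within a pair, and channel count per configuration. This is a bookkeeping sketch; the class and constant names are hypothetical, and the microstimulation entry assumes the factory protrusions (2 mm and 5 mm) were left unchanged, which the text implies but does not state.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WirePair:
    """One differentially recorded hook-wire pair (all lengths in mm)."""
    protrusion_a: float  # length of the first wire protruding past the needle tip
    protrusion_b: float  # length of the second wire protruding past the needle tip
    exposed: float       # stripped (uninsulated) length at the end of each wire

    @property
    def tip_separation(self) -> float:
        # Distance along the insertion axis between the two recording ends.
        return abs(self.protrusion_b - self.protrusion_a)


FACTORY = WirePair(2.0, 5.0, 2.0)    # as manufactured
PAIR_1 = WirePair(1.0, 8.0, 1.0)     # first modified pair (dynamic, muscle-length)
PAIR_2 = WirePair(3.25, 5.25, 0.5)   # second modified pair (dynamic, muscle-length)
MICROSTIM = WirePair(2.0, 5.0, 1.0)  # assumption: only the exposed ends shortened


def n_channels(n_insertions: int, pairs_per_insertion: int) -> int:
    """Each pair is recorded differentially, so one pair yields one channel."""
    return n_insertions * pairs_per_insertion
```

For example, `PAIR_1.tip_separation` is 7 mm and `PAIR_2.tip_separation` is 2 mm, while both `n_channels(4, 2)` (dynamic and muscle-length experiments) and `n_channels(8, 1)` (microstimulation) give eight channels.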

Raw voltages were amplified and analog filtered (band-pass 10 Hz–10 kHz) with ISO-DAM 8A modules (World Precision Instruments), then digitized at 30 kHz with a neural signal processor (Blackrock Microsystems, Cerebus). EMG signals were digitally band-pass filtered online (50 Hz–5 kHz) and saved.
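The digital filtering steps can be approximated with SciPy. The online band-pass (50 Hz–5 kHz) is stated without an order or filter family, so the Butterworth design and order below are assumptions. The offline filter follows the description in the signal-processing section (second-order Butterworth high-pass at 500 Hz); applying it with zero phase via `sosfiltfilt` is a further assumption.

```python
import numpy as np
from scipy import signal

FS_HZ = 30_000  # EMG sampling rate stated in the text


def online_bandpass(x, order=2):
    """Causal 50 Hz-5 kHz band-pass; Butterworth family and order are assumptions."""
    sos = signal.butter(order, [50, 5000], btype="bandpass", fs=FS_HZ, output="sos")
    return signal.sosfilt(sos, x, axis=-1)  # causal, as required for online use


def offline_highpass(x):
    """Second-order Butterworth high-pass at 500 Hz, run forward and backward
    (zero phase, an assumption) along the last axis (channels x samples)."""
    sos = signal.butter(2, 500, btype="highpass", fs=FS_HZ, output="sos")
    return signal.sosfiltfilt(sos, x, axis=-1)
```

Running a filter forward and backward squares its magnitude response, so the zero-phase version attenuates low frequencies more steeply than a single causal pass of the same design would.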

Experimental procedures

Cortical recordings were performed exclusively during one set of experiments (‘dynamic’, defined below), whereas EMG recordings were conducted across three sets of experiments (microstimulation, dynamic, and muscle length). In a given session, the eight EMG electrode pairs were inserted within a small (typically ~2 cm²) region of a single muscle head. This focus aided sorting by ensuring that a given MU spike typically appeared, with different waveforms, on multiple channels. This focus also ensured that any response heterogeneity was due to differential recruitment among neighboring MUs.

Microstimulation experiments employed recordings from the lateral deltoid and lateral triceps. Both muscles exhibited strong task-modulated activity, as documented in the dynamic and muscle-length experiments. We also included recordings from the sternal pectoralis major, as we found cortical sites that reliably activated it. The manipulandum was positioned so that the angle of shoulder flexion was 25° and the angle of elbow flexion was 90°. Microstimulation experiments employed a limited set of force profiles: four static forces (0%, 25%, 50% and 100% of maximal force) and the slow (4 s) increasing ramp. The ramp was included to document the natural recruitment pattern during slowly changing forces. Maximal force was typically set to 16 N, but was increased to 24 N and 28 N for two sessions each in an effort to evoke greater muscle activation during the ramp. Microstimulation was delivered once per trial during static-force conditions, at a randomized time (1000–1500 ms relative to when the first dot reached Pac-Man). Because stimulation evoked activity in muscles used to perform the task, it sometimes caused small but detectable changes in the force applied to the handle. However, these changes were so small that they did not impact the monkey’s ability to perform the task and appeared to go largely unnoticed. These experiments involved a total of 17–25 conditions: the ramp condition (with no stimulation) plus the four static forces for each of the 4–6 chosen electrode sites. Conditions were presented interleaved in block-randomized fashion: a random order was chosen, all conditions were performed, then a new random order was chosen.
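The condition set and block-randomized presentation described above can be sketched as follows. Representing conditions as tuples and the function names are hypothetical choices. Drawing the stimulation time uniformly from 1000 to 1500 ms is an assumption, since the text says only that the time was randomized within that window.

```python
import random

STATIC_FORCES = (0.0, 0.25, 0.50, 1.00)  # fractions of maximal force


def make_conditions(electrodes):
    """Slow increasing ramp (no stimulation) plus every (static force, electrode) pair."""
    conditions = [("ramp", None, None)]
    conditions += [("static", f, e) for e in electrodes for f in STATIC_FORCES]
    return conditions


def schedule_trials(conditions, n_blocks, rng):
    """Block randomization: each block presents every condition once, in a fresh
    random order. Static-force trials get a stimulation time (ms after the first
    dot reaches Pac-Man); ramp trials are unstimulated."""
    trials = []
    for _ in range(n_blocks):
        block = list(conditions)
        rng.shuffle(block)  # new random order for this block
        for kind, force, electrode in block:
            stim_ms = rng.uniform(1000.0, 1500.0) if kind == "static" else None
            trials.append({"kind": kind, "force": force,
                           "electrode": electrode, "stim_ms": stim_ms})
    return trials
```

With four electrode sites this yields 17 conditions (1 + 4 × 4), and with six sites 25 conditions (1 + 4 × 6), matching the range given above.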

In dynamic experiments, the monkey generated a diverse set of target force profiles. The manipulandum was positioned so that the angle of shoulder flexion was 25° and the angle of elbow flexion was 90° (as in stimulation experiments). The maximum requested force was 16 N. We employed twelve conditions (Supp Fig. 1) presented interleaved in block-randomized fashion. Three conditions employed static target forces: 33%, 66% and 100% of maximal force. Four conditions employed ramps, increasing or decreasing across the full force range, either fast (lasting 250 ms) or slow (lasting 4 s). Four conditions involved sinusoids at 0.25, 1, 2, and 3 Hz. The final condition was a 0–3 Hz chirp. The amplitude of all sinusoidal and chirp forces was 75% of maximal force, except for the 0.25 Hz sinusoid, which was 100% of maximal force. Recordings in dynamic experiments were made from the deltoid (typically the anterior head, in some sessions the lateral head) and the triceps (lateral head).
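To make the twelve-condition set concrete, the sketch below generates target force profiles on a common time base. Several details are not given in the text and are assumptions here: sinusoids and the chirp oscillate between zero and the stated amplitude (amplitude treated as peak-to-peak from a zero baseline), the chirp sweeps linearly from 0 to 3 Hz over the whole window, and the window length is arbitrary. Function names are hypothetical.

```python
import numpy as np

F_MAX_N = 16.0  # maximal requested force in dynamic experiments


def ramp(t, duration_s, increasing=True):
    """Linear ramp across the full range (0 to F_MAX_N) over duration_s."""
    frac = np.clip(t / duration_s, 0.0, 1.0)
    return F_MAX_N * (frac if increasing else 1.0 - frac)


def sinusoid(t, freq_hz, amp_frac=0.75):
    """Oscillates between 0 and amp_frac * F_MAX_N (offset and phase assumed)."""
    return F_MAX_N * amp_frac * 0.5 * (1.0 - np.cos(2 * np.pi * freq_hz * t))


def chirp(t, duration_s, f1_hz=3.0, amp_frac=0.75):
    """Linear 0 -> f1_hz sweep; phase = pi * k * t**2 with k = f1_hz / duration_s."""
    k = f1_hz / duration_s
    return F_MAX_N * amp_frac * 0.5 * (1.0 - np.cos(np.pi * k * t ** 2))


def dynamic_conditions(t):
    """Return a dict of the twelve target force profiles (in N) sampled at t (s)."""
    conds = {f"static_{p}": np.full_like(t, F_MAX_N * p / 100) for p in (33, 66, 100)}
    for name, dur in (("fast", 0.25), ("slow", 4.0)):
        conds[f"ramp_up_{name}"] = ramp(t, dur, increasing=True)
        conds[f"ramp_down_{name}"] = ramp(t, dur, increasing=False)
    for f in (0.25, 1.0, 2.0, 3.0):
        amp = 1.0 if f == 0.25 else 0.75  # 0.25 Hz sinusoid spans the full range
        conds[f"sine_{f:g}Hz"] = sinusoid(t, f, amp)
    conds["chirp_0_3Hz"] = chirp(t, t[-1])
    return conds
```

For example, with `t = np.linspace(0, 8, 8001)`, the 1 Hz sinusoid peaks at 12 N (75% of 16 N) and the 0.25 Hz sinusoid at 16 N.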

In muscle-length experiments, the monkey generated force profiles with his deltoid at a long or short length (relative to the neutral position used in the dynamic experiments). The manipulandum was positioned so that the angle of shoulder flexion was 15° (long) or 50° (short), while maintaining an angle of elbow flexion of 90°. Maximal requested forces were 18 N (long) and 14 N (short); the lower maximum was used for the short length because generating force appeared more effortful in the shortened position. To ensure enough trials per condition, we employed only a subset of the force profiles used in the dynamic experiments: two static forces (50% and 100% of maximal force), the slow increasing ramp, both increasing and decreasing fast ramps, all four sinusoids and the chirp. Conditions were presented interleaved in block-randomized fashion (~30 trials per condition) in the lengthened position (15°) before changing to the shortened position (50°). In most experiments we were able to revert to the lengthened position (15°) at the end of the session and verify that MU recruitment returned to the originally observed pattern. Recordings in muscle-length experiments were made from the deltoid (anterior head) only, because it was the deltoid that was shortened by the change in posture.

Signal processing and spike sorting

Cortical voltage signals were spike sorted using KiloSort (version 2.0)64. A total of 881 neurons were isolated across 15 sessions.

EMG signals were digitally filtered offline using a second-order Butterworth high-pass filter (500 Hz cutoff). Channels with low SNR or no signal were omitted from analyses. Motor unit (MU) spike times were extracted using a custom semi-automated algorithm. We adapted recent advances in spike sorting65–67, including methods for resolving superimposed waveforms66. As with standard spike-sorting algorithms used for neural data, individual MU spikes were identified based on their match to a template: a canonical time-varying voltage across all simultaneously recorded channels (example templates are shown in Extended Data Fig. 1, bottom left). Spike templates were inferred using all the data across a given session. Compared with neural recordings, intramuscular records have a very high signal-to-noise ratio (peak-to-peak voltages on the order of mV rather than μV, with negligible thermal noise), but it is common for more than one MU to spike simultaneously, yielding a superposition of waveforms. Such overlaps are relatively rare at low forces but can become common as forces increase. Our algorithm was thus tailored to detect not only voltages that corresponded to single MU spikes, but also those that resulted from the superposition of multiple spikes. Detection of superposition was greatly aided by the multi-channel recordings, because different units were prominent on different channels. Further details are provided in the Supplementary Methods. We also analyzed artificial spiking data with realistic properties (e.g., recruitment based on the actual force profiles we used, and waveforms based on actual recorded waveforms) to verify that spike sorting was accurate in circumstances where the ground truth was known. Mis-sorted spikes were rare, and similarly so during slowly changing forces and during high-frequency sinusoids. Spike-sorting errors are thus unlikely to explain differences in the empirical recruitment between these situations.
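The core idea of explaining a voltage snippet either as a single MU spike or as a superposition of two spikes can be illustrated with a brute-force least-squares search. This is a generic sketch, not the authors' algorithm (which is described in their Supplementary Methods). It assumes fixed-amplitude templates, integer-sample lags, at most two overlapping spikes, and a snippet already cut around a detection event. All names are hypothetical.

```python
import numpy as np


def shift(template, lag):
    """Delay a (channels, samples) template by `lag` samples, zero-padding the edges."""
    out = np.zeros_like(template)
    n = template.shape[1]
    if lag >= 0:
        out[:, lag:] = template[:, :n - lag]
    else:
        out[:, :n + lag] = template[:, -lag:]
    return out


def explain_snippet(snippet, templates, max_lag):
    """Return (units, lags, residual) for the model with the smallest squared
    residual among: no spike, one template, or the sum of two different templates.
    All channels enter the residual jointly, which is what lets multi-channel
    recordings separate units that overlap in time."""
    lags = range(-max_lag, max_lag + 1)
    shifted = [{lag: shift(tpl, lag) for lag in lags} for tpl in templates]
    best = ((), (), float(np.sum(snippet ** 2)))  # baseline: noise only
    for i in range(len(templates)):
        for li in lags:
            resid_i = snippet - shifted[i][li]
            r = float(np.sum(resid_i ** 2))
            if r < best[2]:
                best = ((i,), (li,), r)
            for j in range(i + 1, len(templates)):  # unordered pairs of distinct units
                for lj in lags:
                    r = float(np.sum((resid_i - shifted[j][lj]) ** 2))
                    if r < best[2]:
                        best = ((i, j), (li, lj), r)
    return best
```

The cost grows with the square of both the number of templates and the number of lags, which is one reason practical sorters use more efficient searches; the exhaustive version is shown only because it makes the objective explicit.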

Trial alignment and averaging

Single-trial spike rasters, for a given neuron or MU, were converted into a firing rate via convolution with a 25 ms Gaussian kernel. One analysis (Fig. 7d) focused on single-trial responses, but most employed trial-averaging to identify a reliable average firing rate. To do so, trials for a given condition were aligned temporally and the average firing rate, at each time, was computed across trials. Stimulation trials were simply aligned to stimulation onset. For all other conditions, each trial was aligned on the moment the target force profile ‘began’ (when the target force profile, specified by the dots, reached Pac-Man). This alignment brought the actual (generated) force profile closely into register across trials. However, because the actual force profile could sometimes slightly lead or lag the target force profile, some modest across-trial variability remained. Thus, for all trials with changing forces, we realigned each trial (by shifting it slightly in time) to minimize the mean squared error between the actual force and the target force profile. This ensured that trials were well-aligned in terms of the actual generated forces (the most relevant quantity for analyses of MU activity). Trials were excluded from analysis if they could not be well aligned despite searching over shifts from −200 to 200 ms.
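The smoothing and realignment steps can be sketched as below. Two details are assumptions: the 25 ms value is treated as the Gaussian kernel's standard deviation (it could instead denote a width), and shifts are searched in whole samples of a 1 ms grid, so ±200 samples corresponds to ±200 ms. The criterion for deciding that a trial "could not be well aligned" is not specified and is therefore not implemented. Function names are hypothetical.

```python
import numpy as np


def smooth_rate(spike_counts, dt_s, sigma_s=0.025):
    """Spike counts per bin -> firing rate (spikes/s) via a Gaussian kernel.
    Treating 25 ms as the kernel SD is an assumption."""
    half = int(np.ceil(4 * sigma_s / dt_s))
    x = np.arange(-half, half + 1) * dt_s
    kernel = np.exp(-0.5 * (x / sigma_s) ** 2)
    kernel /= kernel.sum() * dt_s  # unit area in time, so the output is in spikes/s
    return np.convolve(spike_counts, kernel, mode="same")


def best_shift(actual, target, max_shift):
    """Integer shift s minimizing the MSE between actual[t + s] and target[t],
    computed over overlapping samples. Both arrays must have equal length."""
    n = len(target)
    best_s, best_err = 0, np.inf
    for s in range(-max_shift, max_shift + 1):
        if s >= 0:
            a, b = actual[s:], target[:n - s]
        else:
            a, b = actual[:n + s], target[-s:]
        err = np.mean((a - b) ** 2)
        if err < best_err:
            best_s, best_err = s, err
    return best_s, best_err
```

A trial whose generated force lags the target by 30 ms returns a shift of +30 samples; re-indexing that trial so that sample `t + s` is treated as time `t` brings its force into register with the target.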

ANOVA-based examination of microstimulation effects

We quantified whether microstimulation-evoked responses displayed an interaction effect between stimulation electrode and MU identity using a two-way ANOVA. Analysis was performed separately for each session and static force level. For each MU and stimulation trial, we computed the magnitude of the stimulation-driven response by taking the largest absolute change in mean firing rate between a baseline period and a response period. Baseline and response periods were defined as −300 to 0 ms and 30 to 130 ms relative to stimulation onset. We performed a two-way ANOVA (anovan, MATLAB R2019b, R2020b) using the vector of single-trial responses and two grouping vectors: the first contained the identity of each MU, and the second contained the identity of the stimulation electrode. To evaluate whether the responses for a particular session displayed an interaction effect, the significance threshold was divided by the number of static force amplitudes, a Bonferroni correction for the three comparisons made per session.
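The interaction test can be written out explicitly for the balanced case. The sketch assumes equal numbers of stimulation trials per electrode, so every MU × electrode cell holds the same number of single-trial responses; in that case the usual sums-of-squares conventions agree. It computes the interaction F statistic from first principles with NumPy and takes the p-value from SciPy's F distribution. `response_magnitude` implements one reading of the response definition above (largest absolute deviation, within 30 to 130 ms, from the mean baseline rate over −300 to 0 ms); the exact computation used originally may differ. Function names are hypothetical.

```python
import numpy as np
from scipy import stats


def response_magnitude(rate, t_ms):
    """Largest |rate - baseline mean| in the 30-130 ms window (one interpretation)."""
    base = rate[(t_ms >= -300) & (t_ms < 0)].mean()
    resp = rate[(t_ms >= 30) & (t_ms <= 130)]
    return np.max(np.abs(resp - base))


def interaction_anova(y):
    """Two-way ANOVA on a balanced array y[mu, electrode, trial].
    Returns (F, p) for the MU x electrode interaction and the sums of squares."""
    a, b, n = y.shape
    grand = y.mean()
    m_a = y.mean(axis=(1, 2))   # MU means
    m_b = y.mean(axis=(0, 2))   # electrode means
    m_ab = y.mean(axis=2)       # cell means
    ss_a = b * n * np.sum((m_a - grand) ** 2)
    ss_b = a * n * np.sum((m_b - grand) ** 2)
    ss_ab = n * np.sum((m_ab - m_a[:, None] - m_b[None, :] + grand) ** 2)
    ss_e = np.sum((y - m_ab[:, :, None]) ** 2)
    df_ab, df_e = (a - 1) * (b - 1), a * b * (n - 1)
    f_ab = (ss_ab / df_ab) / (ss_e / df_e)
    return f_ab, stats.f.sf(f_ab, df_ab, df_e), (ss_a, ss_b, ss_ab, ss_e)


def is_significant(p, alpha=0.05, n_comparisons=3):
    """Bonferroni: compare p against alpha divided by the number of comparisons."""
    return p < alpha / n_comparisons
```

Under this correction at α = 0.05, a session-level interaction is declared only if p < 0.05/3 ≈ 0.0167.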

Quantifying motor unit flexibility

We developed two metrics, displacement and dispersion, that quantified MU-recruitment flexibility without directly fitting a model (model-based quantification is described below). Both metrics leverage the definition of rigid control to detect population-level patterns of activity that are inconsistent with rigid control even under the most generous assumptions.

Let $\mathbf{r}_t = [r_{1,t}\; r_{2,t}\; \ldots\; r_{n,t}]^\top$ denote the population state at time $t$, where $r_{i,t}$ denotes the firing rate of the $i$th MU. If $\mathbf{r}_t$ traverses a 1-D monotonic manifold, then as the firing rate of one MU increases, the firing rate of every other MU should either increase or remain the same. More generally, the change in firing rate from $t$ to $t'$, $\Delta r_i = r_{i,t'} - r_{i,t}$, should have the same sign (or be zero) for all MUs. If all changes are positive (or zero), one can infer that common drive increased from $t$ to $t'$. If all changes are negative (or zero), one can infer that common drive decreased. Both cases are consistent with rigid control, because there exists some 1-D monotonic manifold that contains the data at both $t$ and $t'$.
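The pairwise criterion above can be checked directly. The sketch below is not the displacement or dispersion metric; it only tests, for every pair of time points, whether the population change has a single sign across MUs, and reports the fraction of pairs that violate that rule. The optional tolerance `tol`, which treats very small changes as zero, is a hypothetical allowance for estimation noise and is not part of the definition given here. Function names are hypothetical.

```python
import numpy as np


def rigid_consistent(r_t, r_tp, tol=0.0):
    """True if r_tp - r_t has one sign (or is zero) across all MUs.
    Changes with |delta| <= tol are treated as zero (hypothetical noise allowance)."""
    delta = np.asarray(r_tp, dtype=float) - np.asarray(r_t, dtype=float)
    delta[np.abs(delta) <= tol] = 0.0
    return bool(np.all(delta >= 0) or np.all(delta <= 0))


def fraction_inconsistent(R, tol=0.0):
    """R has shape (n_mu, n_times). Fraction of time pairs t < t' violating the rule."""
    n_t = R.shape[1]
    bad = total = 0
    for t in range(n_t):
        for tp in range(t + 1, n_t):
            total += 1
            bad += not rigid_consistent(R[:, t], R[:, tp], tol)
    return bad / total if total else 0.0
```

For rates generated by a shared, monotonic function of one drive (even a drive that rises and then falls), the violation fraction is zero; two MUs whose rates trade off against each other violate the rule.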