Abstract

Angular path integration is the ability of a system to estimate its own heading direction from potentially noisy angular velocity (or increment) observations. Non-probabilistic algorithms for angular path integration, which rely on a summation of these noisy increments, do not take into account the reliability of such observations, which is essential for appropriately weighing one’s current heading direction estimate against incoming information. In a probabilistic setting, angular path integration can be formulated as a continuous-time nonlinear filtering problem (circular filtering) with observed state increments. The circular symmetry of heading direction makes this inference task inherently nonlinear, thereby precluding the use of popular inference algorithms such as Kalman filters and rendering the problem analytically inaccessible. Here, we derive an approximate solution to circular continuous-time filtering, which integrates state increment observations while maintaining a fixed representation through both state propagation and observational updates. Specifically, we extend the established projection-filtering method to account for observed state increments and apply this framework to the circular filtering problem. We further propose a generative model for continuous-time angular-valued direct observations of the hidden state, which we integrate seamlessly into the projection filter. Applying the resulting scheme to a model of probabilistic angular path integration, we derive an algorithm for circular filtering, which we term the circular Kalman filter. Importantly, this algorithm is analytically accessible, interpretable, and outperforms an alternative filter based on a Gaussian approximation.

Keywords: Bayesian methods, nonlinear filtering, circular filtering, sensor fusion, continuous-time estimation, stochastic processes

I. Introduction

A compass is an immensely useful tool for a traveler trying to find their way in a barren and featureless landscape. Absent such a tool, the traveler must employ dead reckoning, using what they roughly know about how often and how much they have turned, and summing up those turns to maintain an internal sense of their spatial orientation. Angular path integration, i.e., estimation of heading direction or orientation based on angular self-motion cues, plays an essential role in spatial navigation of humans, other animals and robots [1, 2]. Imperfect sensors make angular path integration an inherently noisy process, and inevitably lead to an accumulation of error in the heading estimate over time. Other cues, such as those from visual landmarks, can help correct the estimate’s error, despite also being noisy and ambiguous. Importantly, properly combining path integration with these external cues requires a reliability-weighted update of the orientation estimate. Computing with uncertainties in such a strategic way is a hallmark of dynamic Bayesian inference, and calls for a probabilistic description. Our goal in this work is to derive a dynamic probabilistic algorithm for angular path integration.

It is well known that many organisms are able to maintain an internal compass which they update by self-motion cues. Since the discovery of orientation-selective head-direction cells in rats [3], and, more recently, the heading direction circuit in Drosophila [4], theoretical efforts to unravel the mechanism of angular path integration in the brain have highlighted the role of angular velocity observations of self-initiated turns [5, 6], e.g., from proprioceptive or vestibular feedback, or from visual flow. Current theories suggest that these biological systems implement angular path integration by neural network motifs called ring attractors [7, 6]. Such networks maintain a heading direction estimate through sustained neural activity, but lack the ability to simultaneously represent the estimate’s certainty. However, efforts to determine whether such biological systems indeed operate only with single point estimates or instead perform probabilistic inference have been hampered by the lack of a probabilistic algorithm for path integration in the brain. The reason for this is the complex set of conditions that are required of such an algorithm: (i) the state-space is circular, (ii) the path integration must operate with a continuous-time stream of inputs, (iii) it must maintain a fixed representation of the underlying probability distribution, in line with the expectation that, within a certain area of computation, the brain maintains a similarly fixed representation, e.g., in terms of a parametric [8] or sampling-based [9] representation, and (iv) in addition to angular velocity observations, (noisy) direct angular observations (e.g., visual landmarks) may be present, which need to be integrated accordingly.

One approach to designing an algorithm that satisfies these conditions is to consider it in the broader context of continuous-time filtering, which aims to continuously update a posterior distribution over a dynamically evolving hidden state variable from noisy observational data. From condition (i), a circular state-space implies that the underlying filtering task is nonlinear, which precludes the use of popular linear schemes such as the Kalman filter [10, 11]. Furthermore, the solution to this so-called circular filtering problem is analytically intractable [12], and needs to be approximated. The approximation we derive here goes beyond existing circular filtering algorithms [13, 14, 15] (see also the review by Kurz et al. [12]) by both considering increment observations — like observations of angular velocity — and by addressing a continuous stream of observations (condition (ii)). Furthermore, while these previous approaches changed the representations used between prediction and update steps, ours uses a fixed representation, satisfying our condition (iii).

A recent promising approach to circular filtering [16] supports continuous-time state transitions, but is limited to discrete-time and direct (rather than increment) observations. Their method is based on projection filtering [17, 18], a rigorous approach that combines nonlinear filtering with information geometry. The idea behind projection filtering is to approximate the posterior between consecutive discrete-time observations with a parametric distribution. This approximation is chosen to minimize the distance between the true and the approximated posterior, as measured by the Fisher metric. By updating only the values of the parameters, this approach automatically keeps a fixed representation for the posterior in terms of a parametric distribution. Furthermore, if the approximated posterior and the emission probabilities of the observations are conjugate, updating posterior parameters in light of further discrete-time direct observations is straightforward. Projection filtering can be generalized to hidden processes that evolve on arbitrary submanifolds of Euclidean space [19], which makes it applicable to the circular filtering problem. Two challenges hamper the direct application of projection filters to angular path integration. First, no variants currently exist that handle increment observations, either in discrete or continuous time, as we would require to process angular velocity observations. In fact, increment observations have generally received little attention in the filtering literature (but see [20]). Second, there is currently no framework that combines projection-filtering with angular-valued continuous-time observations. We will address both challenges in this work.

We introduce a novel continuous-time nonlinear filtering algorithm based on projection filtering that includes increment observations, can be applied to circular filtering and to angular path integration, and meets conditions (i)-(iv) outlined above. To do so, we first describe the general nonlinear filtering problem with increment observations in Euclidean space in Section II. In Section III, we review the projection filtering framework [17, 18] as an approximate solution for nonlinear filtering, and extend this approach to account for increment observations. We demonstrate that applying this framework to a linear filtering problem recovers the generalized Kalman filter. In Section IV, we revisit the continuous-time circular filtering problem. Therein, we first derive a probabilistic algorithm for angular path integration, i.e., when only increment observations are present. We then account for direct angular-valued observations, in addition to increment observations, by proposing a generative model based on a constant information-rate criterion that supports seamless inclusion into the filtering algorithm. Combining all of the above, we finally retrieve, as a special case of the general framework, a circular filtering algorithm for Gaussian-type increment and angular direct observations, which we term the circular Kalman filter. We demonstrate in numerical simulations that this algorithm performs comparably to an asymptotically-exact particle filter, and outperforms a Gaussian approximation in the estimation of both heading direction and its associated certainty.

II. The filtering problem with increment observations

We consider multivariate filtering with observations generated by increments of the hidden state, rather than the hidden state itself. We assume that the hidden state variable evolves according to a stochastic differential equation (SDE) of the form:

| (1) |

with an -Brownian motion (BM) process, a vector-valued drift function , and a matrix , which determines the error covariance of the hidden state process. In the following, we will use the shorthand or skip its argument completely (whenever there is no notational ambiguity). The density of this stochastic process evolves according to a partial differential equation, the Fokker–Planck equation (FPE):

| (2) |

with

| (3) |

Equivalently, expectations of a scalar test function with respect to evolve according to

| (4) |

with the propagator

| (5) |

where denotes the gradient with respect to x, denotes the trace operator and is the Hessian matrix with . Note that and are adjoint operators with respect to the L2 inner product.

We assume that the hidden state process Xt in (1) cannot be observed directly, but instead is partially observed through the process , which is governed by the infinitesimal state increments :

| (6) |

where is an -BM process, and . The matrix determines the level of noise in the increment observations. Due to its dependency on the increment dX, the observation process is effectively governed by two noise sources, and . The first is correlated with the noise in the hidden state dynamics. The second is independent of it. This is in contrast to classical filtering problems, which consider the noise in hidden states and observations to be independent (cf. Appendix V-A).
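As a concrete illustration of this observation model, the following minimal sketch simulates a scalar hidden state together with its noisy increment observations using an Euler-Maruyama scheme. It assumes a one-dimensional model of the form dX = f(X) dt + sigma_x dW and dU = dX + sigma_u dV with independent Brownian motions W and V; the function name `simulate_increments` and the parameters `drift`, `sigma_x`, and `sigma_u` are illustrative choices, not notation taken from the text.

```python
import math
import random


def simulate_increments(n_steps, dt, drift, sigma_x, sigma_u, x0=0.0, seed=0):
    """Euler-Maruyama simulation of a scalar hidden state and its noisy increments.

    Assumed (illustrative) model:
        dX = drift(X) dt + sigma_x dW
        dU = dX + sigma_u dV,   with W and V independent Brownian motions.
    Returns the state path xs (length n_steps + 1) and the observed
    increments dus (length n_steps).
    """
    rng = random.Random(seed)
    sqrt_dt = math.sqrt(dt)
    xs = [x0]
    dus = []
    for _ in range(n_steps):
        x = xs[-1]
        # State increment: drift plus state noise.
        dx = drift(x) * dt + sigma_x * sqrt_dt * rng.gauss(0.0, 1.0)
        # Observed increment: the same dx, corrupted by independent noise.
        du = dx + sigma_u * sqrt_dt * rng.gauss(0.0, 1.0)
        xs.append(x + dx)
        dus.append(du)
    return xs, dus
```

Because `du` contains `dx` itself, the observation inherits the state noise, which is the correlation between the two noise sources described above. Setting `sigma_u = 0` makes the observed increments coincide with the true ones.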

If denotes the filtration generated by the process Ut, then the filtering problem is to compute the posterior density or, equivalently, the posterior expectation . In an uncorrelated noise setting, the Kushner–Stratonovich equation describes the temporal evolution of the posterior expectation [21, 22, 23] (Appendix V-A). To find a similar formal solution for filtering with observed state increments (i.e., correlated noise), we can account for the correlations between observations and the hidden state by introducing a slight modification in the Kushner–Stratonovich equation, resulting in a generalized Kushner–Stratonovich equation (gKSE) [23, Chapter 3.8] (cf. Nüsken et al. [20]). For our particular problem, we show in Appendix V-B that the dynamics of posterior expectations satisfy

| (7) |

with , and

| (8) |

Note that the right-hand side of (7) does not only depend on , but also on , and other expectations of potentially nonlinear functions, which in general cannot be computed from alone. Therefore, in order to completely characterize the probabilistic solution, we would need one equation (7) for every moment of the posterior, with each moment corresponding to a specific choice of . Thus, except for a few very specific generative models, such as linear ones, the dynamics of posterior expectations in (7) will result in a system of an infinite number of coupled SDEs, which in general is analytically intractable.

Remark 1.

Equation (7) is the gKSE if state increments are the only available type of observations. In Appendix V-B, we extend it to the Kushner–Stratonovich equation when both state increments and (Gaussian-type) direct observations are present.

In what follows, we will approximately solve the continuous-time filtering problem with observed state increments by projecting the gKSE onto a submanifold of parametric densities with a finite number of parameters, resulting in a finite system of coupled SDEs for these parameters. For this, we will first review the general projection method, which so far has only been applied to classical filtering problems with uncorrelated state and observation noise, and then extend this framework to filtering problems with observed hidden state increments.

III. Projection filtering for observed continuous-time state increments

A. The general projection filtering method

Projection filtering is a method for approximate nonlinear filtering that is based on differential geometry. In this subsection, we outline briefly the differential geometric setup and derivation of the projection filter, and refer the reader to the seminal papers on projection filters [17, 24, 18], or the intuitive introduction to the subject matter presented in [25], for more detailed derivations and in-depth discussion of the method.

In general, we can interpret the solution of a (stochastic) differential equation (such as the FPE (2)) as a (stochastic) vector field on an infinite-dimensional function space of probability density functions pt. If the vector field is stochastic (which is usually the case in nonlinear filtering [22]), we consider it to be given in Stratonovich form

| (9) |

which is the standard choice for stochastic calculus on manifolds. Let us further assume a parametrization with a finite set of parameters , such that the solution to (9) is reasonably well approximated by these parametrized densities.

Projection filtering provides a solution to the filtering problem by evolving the parameters θ, thereby constraining the approximate posterior to evolve on a finite-dimensional submanifold of , rather than on itself. This implies that we have to project the vector field in (9) onto the tangent space

| (10) |

where the denote the basis vectors of this tangent space. Intuitively, an orthogonal projection minimizes at each timestep the distance between the true posterior pt and its approximation pθ with respect to a Riemannian metric which, for probability distributions, corresponds to the Fisher metric [26]

| (11) |

This allows us to use the general orthogonal projection formula

| (12) |

where denotes the projection operator, the are the components of the inverse Fisher metric, and

| (13) |

is the inner product that is associated with the Fisher metric (see Proposition 1 in Appendix V-C).

To find the parameter updates resulting from this projection, we apply the projection (12) to the dynamics of the probability density pt in (9). Since our approximate dynamics evolve along tangent vectors to the manifold parametrized by θ, the posterior pθ will stay on this manifold. Hence, the left-hand side of (9) can be written in terms of the basis vectors of by using the chain rule:

| (14) |

Further, by letting the projection act on the right-hand side of this equation, and consecutively comparing coefficients in front of the basis vectors , we find the following Stratonovich SDEs for the parameters of the projected density following the evolution in (9):

| (15) |

This is the result of Brigo et al. [18, Theorem 4.3].

To facilitate the comparison to the gKSE (7), we further slightly rewrite this SDE:

| (16) |

| (17) |

where here denotes the expectation with respect to the projected density pθ, and and denote the adjoint of and with respect to the L2 scalar product. Rewriting the SDE in such a way allows us to immediately identify the operators and on the right-hand side of this equation with the operators used for propagating expectations rather than densities.

To illustrate how to identify the operator concretely, let us consider the filtering problem without any observations, i.e., a simple diffusion, which is formally solved by the Fokker–Planck Equation (2). Noting that the operator propagates the density through time, we identify . Similarly, we find for the adjoint. Thus, the projection can be immediately determined from the time evolution of the expectation in Eq. (4), with :

| (18) |

If state increments are observed, expectations are propagated by using the gKSE (7), and identification of the operators and is possible after having transformed the gKSE to Stratonovich form, as we will see in the next section. Since this is usually easier to do than transforming the generalized Kushner equation for the posterior density into Stratonovich form, Eq. (17) is a more convenient choice for the parameter dynamics than Eq. (15).

Remark 2.

The derivation in the seminal papers on projection filtering [17, 18] follows a slightly different route but leads to the same result (15). We also refer the reader to the very accessible derivation presented in [25].

B. Projection with observed state increments

As we have seen, the adjunction between the SDE evolution operators for densities and associated expectations allowed us to use the expectation’s evolution equation to derive the projection filter for a specific problem (18). The same applies if the evolution of the density (or equivalently, that of the expectations) is a stochastic differential equation, as is the case for the KSE and the gKSE (7), as long as these are given in Stratonovich form. This allows us to formulate the projection filter for filtering with observed state increments:

Theorem 1.

The projection filter for the filtering problem with observed state increments is given by the following SDE of the parameters θ of a projected density pθ:

| (19) |

with denoting the Jacobian matrix, and with the short-hand modified generator

| (20) |

Proof.

As a first step, let us rewrite the gKSE (7) in Stratonovich form (Corollary 3 in V-B):

| (21) |

This equation allows us to identify the operators and in Eq. (17) as the operations acting on in front of dt and , respectively. By letting these operators act on in Eq. (17), and evaluating the expectations under the projected density pθ, we obtain SDEs for the desired parameters. These can be further simplified by using

which yields (19). □

The 1D special case follows directly from (19).

Corollary 1.

For univariate filtering problems, i.e. a filtering problem with in (1) and (6), with , , and , the projection filter with observed state increments reads:

| (22) |

C. Projection on exponential family distributions

Analogous to [18], it is possible to derive explicit filter equations for the natural parameters of a projected exponential family distribution. Consider the following exponential family parametrization:

| (23) |

where θ is the vector of natural or canonical parameters, T(x) is the vector of sufficient statistics and is the normalization.

Corollary 2.

The projection filter for the filtering problem with observed state increments is given by the following SDE of the natural parameters θ of a projected density pθ belonging to the exponential family:

| (24) |

where is the posterior expectation of the sufficient statistic . The parameters are sometimes referred to as dual or expectation parameters.

Proof.

Making use of the duality relation between natural and expectation parameters for exponential families,

| (25) |

and the fact that , Eq. (24) follows directly from the projection filter with observed state increments (19). □

Example 1

(Generalized Kalman–Bucy filter). In order to demonstrate the general approach, let us consider a model with linear state dynamics

| (26) |

| (27) |

Here, we will show that a projection on a Gaussian manifold with

| (28) |

results in dynamics for the parameters μt and σt that are consistent with the generalized Kalman–Bucy Filter for observed state increments [20, Section 4.2]. In fact, since Eqs. (26) and (27) are both linear, the posterior density is a Gaussian and thus the projection filter becomes exact. For this particular problem, the projection filter reads:

| (29) |

We will use this to determine SDEs for the parameters μt and , with and , respectively, under the Gaussian assumption. First, the components of the Fisher information matrix of a Gaussian parametrized by its expectation parameters are given by

| (30) |

Since the matrix is diagonal, the components of its inverse are and . This considerably simplifies the projection in Eq. (29) for the time evolution of μt and σt. Explicitly carrying out the expectations in Eq. (29) under the assumed Gaussian density, the SDEs for these parameters read:

| (31) |

| (32) |

We found these Ito SDEs from their Stratonovich form by noting for the first line that the quadratic variation between σt and the observation process Ut is zero. In other words, no correction term according to the Wong–Zakai theorem [27] is required, such that both Ito and Stratonovich form have the same representation. Note that for a nonlinear generative model, this will in general not be the case [18]. Equations (31) and (32) are identical to the generalized Kalman–Bucy filter [20, Eqs. (62) & (65)], thus demonstrating the validity of our approach.

Despite being able to reproduce existing results, the main purpose of the projection filtering approach is to simplify potentially hard filtering problems such that the parameter SDEs become analytically accessible. This becomes particularly useful if a certain parametric form of the posterior is desired, for instance for computational reasons, and can be very appealing if expectations are easily carried out under the assumed posterior and the Fisher matrix is straightforward to invert or even diagonal.

Example 2

(Multivariate Gaussian with diagonal covariance matrix). From a computational perspective, projection on a Gaussian density with diagonal covariance matrix can be advantageous in certain situations. In particular, such a solution only requires equations for 2N parameters, instead of for a general Gaussian with Fisher matrix components

which is in general hard to invert. For a Gaussian with diagonal covariance matrix, these simplify to

while all other components evaluate to zero, making the Fisher matrix diagonal and straightforward to invert. Since the diagonality of the covariance matrix effectively decouples the dimensions, expectations can be carried out in each dimension separately. Nevertheless, the specific form of the parameter SDEs will crucially depend on the specific form of the nonlinear function . For instance, considering the linear case, i.e. , yields

IV. Continuous-time circular filtering

In this section, we will consider continuous-time circular filtering with observed state increments as a concrete application of the framework derived above. We will further extend it to account for quasi continuous-time von Mises-valued observations (formally defined below), to provide a continuous-time generalization of the discrete-time circular filtering problem that is frequently encountered in spatial navigation problems.

A. Assuming observed angular increments only

We assume that the hidden state is parametrized on by angle , effectively embedding in as a unit circle. We further assume that follows a diffusion on the circle:

| (33) |

where Wt is now an -BM process, and drift and diffusion functions are as defined in Section II. The propagator for this process is the same as for the corresponding process in as given in Eq. (5).

For state processes that evolve on submanifolds of , as the considered here, Tronarp and Särkkä [19] have shown that projection filter equations are identical to the case where the state variable Xt evolves in Euclidean space. Since the mathematical operations performed to derive the projection filter with observed state increments in Theorem 1 are essentially the same as in Tronarp and Särkkä [19] for the state diffusion, their result carries over to our problem. Thus, Theorem 1 can straightforwardly be applied to the circular filtering problem by considering a circular projected density , such as the von Mises or a wrapped normal distribution.

Example 3

(Circular diffusion with observed state increments). In this example, we explicitly model angular path integration as the estimation of a circular diffusion based on observed angular increments. Consider a model where the hidden state evolves according to a Brownian motion on the circle, with noisy observations of its increment

| (34) |

| (35) |

Here, could, for instance, correspond to the heading direction of an animal (or a robot) that is navigating in darkness and only has access to self-motion cues , i.e., measurements of angular increments, but not to direct heading cues such as landmark positions. We chose to parametrize the diffusion constants in terms of precisions, and , to make units comparable to that of the precision of the projected density, which we will denote . Thus, the parameter governs the speed of the hidden state diffusion, and the parameter modulates the reliability of the observation process that is governed by the increments. The gKSE for this model’s posterior expectation of a test function in Stratonovich form reads:

| (36) |

As a result, the projection filter for the parameters θ becomes (cf. Eq. (19))

| (37) |

We now want to solve the circular filtering problem with observed state increments by projecting on the von Mises density

| (38) |

parametrized by mean μt and precision , using Eq. (37). Unlike e.g., the wrapped normal distribution, which is another popular choice for unimodal circular distributions, the von Mises distribution is an exponential family distribution and could alternatively be written in natural parametrization (23). Here, we chose to parametrize it by μt and , as it significantly simplifies the computation of the Fisher metric and its inverse, which appears on the right-hand side of (37). Noting that

| (39) |

| (40) |

where denotes a ratio of Bessel functions, the components of the Fisher metric (with respect to the μt, parametrization) are given by:

| (41) |

| (42) |

| (43) |

Since the Fisher metric is diagonal, the components of its inverse are simply , and .
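The diagonal structure of the Fisher metric can be checked numerically. The sketch below evaluates g_ij = E[∂_i log p ∂_j log p] for a von Mises density by quadrature, using the score functions κ sin(φ − μ) and cos(φ − μ) − A(κ) with A = I1/I0. The closed forms it is compared against in the usage note (κA(κ), 0, and 1 − A(κ)/κ − A(κ)²) are the standard von Mises results, stated here as an assumption about what Eqs. (41) to (43) express; the function name `von_mises_fisher_numeric` is hypothetical.

```python
import math


def von_mises_fisher_numeric(mu, kappa, n=2048):
    """Fisher metric of a von Mises density in (mu, kappa), by quadrature.

    Evaluates g_ij = E[d_i log p * d_j log p] with the periodic trapezoid
    rule, using the score functions
        d_mu log p    = kappa * sin(phi - mu)
        d_kappa log p = cos(phi - mu) - A(kappa),   A = I1/I0.
    Returns (A, g_mu_mu, g_mu_kappa, g_kappa_kappa).
    """
    phis = [2.0 * math.pi * i / n for i in range(n)]
    # Unnormalized weights, rescaled by exp(-kappa) for numerical stability.
    ws = [math.exp(kappa * (math.cos(p - mu) - 1.0)) for p in phis]
    z = sum(ws)
    a = sum(w * math.cos(p - mu) for w, p in zip(ws, phis)) / z
    g_mm = g_mk = g_kk = 0.0
    for w, p in zip(ws, phis):
        s_mu = kappa * math.sin(p - mu)
        s_kappa = math.cos(p - mu) - a
        g_mm += w * s_mu * s_mu / z
        g_mk += w * s_mu * s_kappa / z
        g_kk += w * s_kappa * s_kappa / z
    return a, g_mm, g_mk, g_kk
```

Calling `von_mises_fisher_numeric(0.3, 2.0)` returns an off-diagonal entry that vanishes to numerical precision, and diagonal entries that agree with κA(κ) and 1 − A(κ)/κ − A(κ)², so the inverse metric is obtained by inverting the two diagonal entries separately.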

Using these gij’s and explicitly computing the expectations on the right-hand side of Eq. (37) with respect to the von Mises approximation, we find the projection filter equations for this model:

| (44) |

| (45) |

where we defined the strictly positive function

| (46) |

and found the Ito form of from its Stratonovich form by noting that the noise variance is constant, making this conversion straightforward.

The projection filter defined by Eqs. (44) and (45) for orientation tracking in darkness has an intuitive interpretation: the mean μt is updated according to the angular increment observations, weighted by their reliability, as quantified by . Even in the presence of such observations, the estimate’s precision, , decays towards zero, since is strictly positive. This decay reflects the accumulation of noisy observations. Very informative angular velocity observations with large may slow the decay, but cannot fully prevent it. In other words, without direct angular observations (which we will introduce in Section IV-B below), the estimate will inevitably become less accurate over time.
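The qualitative behavior described here can be reproduced with a short Euler discretization. The sketch below is written under stated assumptions rather than copied from Eqs. (44) to (46): it takes the hidden state to diffuse with precision `kappa_phi`, the increment observations to have precision `kappa_u`, and obtains the precision decay by projecting a circular diffusion with diffusion constant 1/(`kappa_phi` + `kappa_u`) onto the von Mises family, which gives a decay rate proportional to A(κ)/A′(κ) with A = I1/I0 and A′ = 1 − A/κ − A². The function names are hypothetical.

```python
import math


def bessel_ratio(kappa, n=2048):
    """A(kappa) = I1(kappa) / I0(kappa), by periodic trapezoid quadrature."""
    num = den = 0.0
    for i in range(n):
        theta = 2.0 * math.pi * i / n
        w = math.exp(kappa * (math.cos(theta) - 1.0))  # rescaled for stability
        num += math.cos(theta) * w
        den += w
    return num / den


def von_mises_increment_step(mu, kappa, du, dt, kappa_phi, kappa_u):
    """One Euler step of a von Mises filter driven by angular increments only.

    Assumptions (illustrative): the mean follows the observed increment du,
    scaled by the gain kappa_u / (kappa_phi + kappa_u); the precision decays
    at rate 0.5 * A / A' / (kappa_phi + kappa_u).
    """
    gain = kappa_u / (kappa_phi + kappa_u)
    diffusion = 1.0 / (kappa_phi + kappa_u)
    a = bessel_ratio(kappa)
    a_prime = 1.0 - a / kappa - a * a        # derivative of A, always positive
    mu_new = (mu + gain * du + math.pi) % (2.0 * math.pi) - math.pi  # wrap to [-pi, pi)
    kappa_new = kappa - 0.5 * diffusion * (a / a_prime) * dt
    return mu_new, max(kappa_new, 1e-9)      # keep the precision positive
```

Iterating this step with any sequence of increments shows the precision shrinking monotonically, more slowly for larger `kappa_u`. For large κ the decay approaches the Gaussian rate κ²/(`kappa_phi` + `kappa_u`), which corresponds to a variance that grows linearly in time.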

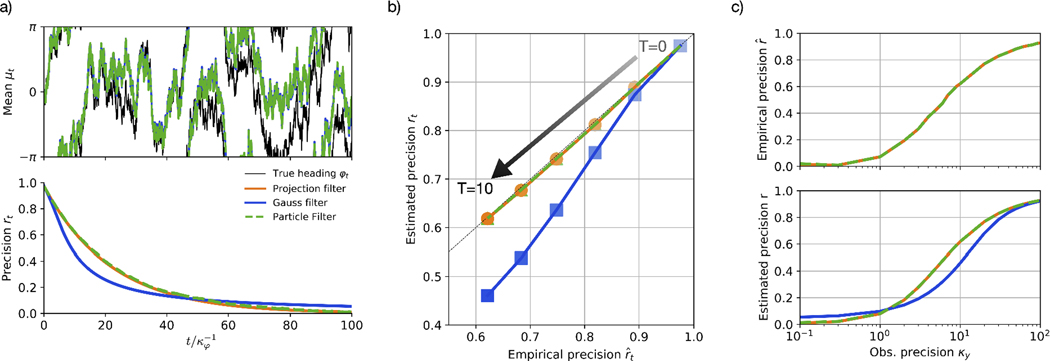

In Figure 1a, we illustrate in an example simulation that, despite the presence of angular increment observations, the estimate slowly drifts away from the true heading . As a benchmark we use a particle filter, and further compare mean μt and precision of the projection filter to those estimated by a Gaussian projection filter approximation (see Appendix V-F for details on these benchmarks). Such a filter relies on the assumption that the hidden state evolves on the real line, and thus leads to a slight deviation in the dynamics of the estimated precision .

Fig. 1. Circular filtering with observed angular increments.

a) The von Mises projection filter in Eqs. (44) and (45) is able to track a diffusion process on the circle based on observed increments, with mean μt and precision rt (given by ) matching those of a particle filter (curves are on top of each other). b) While the precision estimated from the deviation between mean μt and true trajectory and the precision rt estimated by the filter coincide for both the von Mises projection filter and the particle filter, the Gaussian projection filter (“Gauss filter”) tends to underestimate its precision. c) Empirical (upper panel) and estimated (lower panel) precision for different values of the observation precision κu at time . Note that in the upper panel, the empirical precision of the different filters is identical. Parameters for a) and b) are , , times are in units of . Simulations in b) and c) were averaged over 5000 runs.

Numerically, our projection filter’s performance in this example is indistinguishable from that of the particle filter (Figure 1b, c), and its estimated precision rt matches the empirical precision evaluated by averaging the estimation error over 5000 simulation runs. The Gaussian approximation, in contrast, systematically underestimates its precision for large observation reliability, and overestimates it when the angular increment observations become very noisy (Figure 1c).
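One common way to summarize the empirical accuracy of a circular estimate across runs is the mean resultant length of the angular errors, which equals 1 for perfect estimates and approaches 0 when the errors are spread uniformly around the circle. The sketch below computes this quantity; it is offered as an assumption about how such an empirical precision could be evaluated, not as the exact procedure behind Figure 1, and the function name is hypothetical.

```python
import math


def mean_resultant_length(errors):
    """Mean resultant length of a list of angular errors (in radians).

    Averages the unit vectors (cos e, sin e) over all runs and returns the
    length of the resulting mean vector, a value between 0 and 1.
    """
    n = len(errors)
    c = sum(math.cos(e) for e in errors) / n
    s = sum(math.sin(e) for e in errors) / n
    return math.hypot(c, s)
```

Here `errors` would hold the differences between the filter mean and the true heading at a fixed time, one entry per simulation run. Because the computation uses sines and cosines, errors that differ by multiples of 2π are treated as identical.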

Example 4

(Higher-order circular distributions). The previous example is one of the simplest examples of a circular filtering problem, and results in an approximated posterior that is always unimodal. This might be insufficient for certain settings in which we would like to consider more sophisticated projected densities. To show that our framework extends beyond such simple models, let us consider a class of circular distributions with exponential-family densities of the form

| (47) |

The case K = 1 recovers the previously used von Mises distribution (38), but with a different parametrization. For K = 2, this density is referred to as the generalized von Mises distribution, whose properties have been studied extensively [28, 29]. As a proof of concept, we will now use the projection filter for the natural parameters (Eq. (24)) to project the solution to the generative model in Eqs. (34) and (35) onto a distribution with density (47).

The circular distributions with densities (47) belong to the exponential family distributions, with natural parameter vector with and , and sufficient statistics given by

| (48) |

The corresponding expectation parameters are defined by

| (49) |

According to Corollary 2, the projection filter for the natural parameters of an exponential family density requires us to apply the right-hand side of the gKSE (36) to the sufficient statistics. For this, we need to compute

| (50) |

| (51) |

| (52) |

| (53) |

Furthermore, we note that the components of the Fisher matrix are given by

| (54) |

where the θi refer to the ith element in the parameter vector θ that contains the elements of both a and b. Inverting this matrix to get the inverse components is in general not straightforward. In fact, already the Fisher metric needs to be computed numerically, as the normalization Z(a, b) is inaccessible in closed form. We will thus treat symbolically and sort the parameters such that is composed of the blocks

| (55) |

Then, the projection filter for the natural parameters can be formally written as

| (56) |

| (57) |

where k = (1, …, K) denotes the vector of values k, k² results from the element-wise squaring of k, η is the vector of expectation parameters, and ⊙ denotes the element-wise (Hadamard) product. This example demonstrates that our framework could, in principle, be applied to project the posterior onto more general, and inevitably more complicated, densities, should the need arise. Although we do not show this explicitly here, one instance where this might increase filtering accuracy is when the initial density is multimodal. The example also shows that a projection filtering approach is not always the most practical one. Here in particular, the components of the Fisher matrix might only be computable numerically, which is computationally expensive. This highlights the need for a careful choice of the posterior density and its parametrization such that, ideally, expectations of sufficient statistics are available in closed form.
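To make the numerical burden mentioned above concrete, the following Python sketch evaluates the expectation parameters and the Fisher matrix by quadrature on a uniform grid. It assumes that the density (47) is proportional to exp(Σk [ak cos(kϕ) + bk sin(kϕ)]) and relies on the standard exponential-family identity that the Fisher matrix in natural parameters equals the covariance of the sufficient statistics. The function name `gvm_moments` and the grid size are illustrative choices and not part of the original work.

```python
import math

def gvm_moments(a, b, n_grid=2048):
    """Expectation parameters and Fisher matrix of the (assumed) density
    p(phi) ∝ exp(sum_k a[k-1]*cos(k*phi) + b[k-1]*sin(k*phi)),
    computed with the trapezoid rule on a uniform grid over [0, 2*pi)."""
    K = len(a)
    phis = [2.0 * math.pi * i / n_grid for i in range(n_grid)]
    # Sufficient statistics: all cosines first, then all sines.
    T = [[math.cos(k * p) for k in range(1, K + 1)] +
         [math.sin(k * p) for k in range(1, K + 1)] for p in phis]
    theta = list(a) + list(b)
    log_w = [sum(th * t for th, t in zip(theta, row)) for row in T]
    shift = max(log_w)  # subtract the maximum for numerical stability
    w = [math.exp(lw - shift) for lw in log_w]
    Z = sum(w)  # normalization, up to a constant factor that cancels below
    w = [wi / Z for wi in w]
    dim = 2 * K
    # Expectation parameters: eta_j = E[T_j(phi)].
    eta = [sum(wi * row[j] for wi, row in zip(w, T)) for j in range(dim)]
    # Fisher matrix: covariance of the sufficient statistics.
    fisher = [[sum(wi * row[j] * row[l] for wi, row in zip(w, T)) - eta[j] * eta[l]
               for l in range(dim)] for j in range(dim)]
    return eta, fisher

# K = 1 corresponds to a von Mises density with precision 1 and mean 0.
eta, fisher = gvm_moments(a=[1.0], b=[0.0])
```

Every filter step would require such a quadrature (plus a matrix inversion) for K > 1, which illustrates why closed-form expectations are preferable in practice.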

B. Quasi continuous-time von Mises observations

So far we have focused on filtering algorithms that rely exclusively on observed angular increments. Such algorithms are bound to accumulate noise, such that their precision will decay to zero in the long run. To counteract this effect, let us now consider how to additionally include observations that are generated directly from the hidden state, rather than only from its increments. Specifically, in this section we propose an observation model for (quasi-)continuous-time von Mises-valued observations Zt, which we will refer to as direct angular observations because they are governed by the hidden state directly. This model will allow us to formulate a von Mises projection filter for both direct angular observations and angular increment observations in the continuous-time circular filtering problem.

In classical continuous-time filtering settings, continuous-time observations Yt are usually considered to follow a Gaussian diffusion process, whose drift component is governed by the hidden state Xt [23]. Equivalently, one could consider ‘time-discretized’ (or ‘quasi-continuous’) observations with sampling time step Δt, according to

| (58) |

which is the usual setting of discrete-time filtering (with fixed Δt), where the observation function is potentially nonlinear. Notably, the Fisher information about the state of the hidden variable Xt that is conveyed by these quasi-continuous observations grows linearly with the sampling time step Δt (see Proposition 2 in Appendix V-D). The consequence of this scaling is rather intuitive: decreasing the sampling time step results in more observations per unit time, each of which is individually less informative about the state Xt. This renders the information rate (information per unit time) independent of the chosen time step.

Analogously, we now consider observations that are drawn from a von Mises distribution centered around a nonlinear S¹-valued transformation of the true hidden state,

| (59) |

We would like this observation model to have the same linear information scaling properties as the Gaussian observations encountered in classical filtering problems, i.e., when hidden state noise and observation noise are uncorrelated. Thus, we need to choose the function α such that the information content about the state scales linearly with step size Δt and observation precision κz.

Theorem 2.

If is chosen such that

| (60) |

where is the inverse of (and as defined earlier), then the information about the state of the random variable scales linearly with sampling time step and observation precision, i.e., .

Proof.

The information content about the random variable is given by the Fisher information

| (61) |

| (62) |

We require that the information content per time step is constant and proportional to κz, which can be achieved if α varies with Δt and κz according to

| (63) |

This can be achieved if , with

| (64) |

□

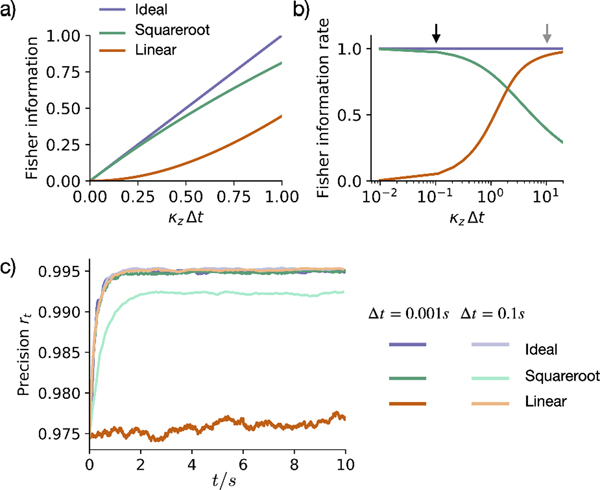

Once a step size Δt is chosen, α can be computed numerically. For sufficiently small κzΔt, e.g., in the continuum limit, this function is well approximated by a square-root function of κzΔt, while it becomes linear in κzΔt for large κzΔt (Figure 2). The latter is consistent with the intuition that, for highly informative observations, the single-observation likelihood is well approximated by a Gaussian (an approximation that breaks down in the continuum limit).

Fig. 2. Time scaling of quasi-continuous angular observations.

Both a) the Fisher information per observation and b) the Fisher information rate are linear and constant, respectively, in the sampling time step Δt when using the α of Theorem 2 (“Ideal”). For comparison, we also plot the square-root approximation (small κzΔt approximation, “Squareroot”) and the linear approximation (Gaussian approximation, “Linear”). c) Sample simulation with the constant position of the state estimated from quasi-continuous observations with different α functions and sampling time steps Δt. Ideally, the estimated precision rt should be independent of the chosen simulation time step Δt. This is satisfied by all simulations except for the linear approximation for small Δt (dark orange), and the square-root approximation for large Δt (light green). In these simulations, we used time units of seconds (s), and set and (by design, κz has units of Fisher information per unit time), without loss of generality. Precision estimates were averaged over 10 simulation runs. The black and grey arrows in panel b) correspond to the two time step sizes shown in panel c).

What we have considered here is, in essence, a modified discrete-time observation model, which implies that we can take advantage of filtering methods available for circular filtering with discrete-time observations [12, 13]. However, by allowing the precision to vary with the time step, we additionally ensure that the information rate stays constant: decreasing the time step results in more observations per unit time, which is compensated for by less informative individual observations. Thus, the observation model defined in (59) and (60) constitutes a quasi continuous-time observation model.
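The numerical computation of α can be sketched in a few lines of Python. The sketch assumes that the Fisher information about the location of a von Mises distribution with precision α is α·I1(α)/I0(α), and that the target information per observation is κzΔt; α is then found by bisection. The function names (`bessel_ratio`, `fisher_information`, `alpha_ideal`) and the quadrature resolution are illustrative assumptions, not taken from the original code.

```python
import math

def bessel_ratio(x, n_grid=2000):
    """A(x) = I1(x) / I0(x), from the integral representation
    I_n(x) = (1/pi) * int_0^pi exp(x*cos(t)) * cos(n*t) dt.
    Both integrals are scaled by exp(-x) to avoid overflow."""
    h = math.pi / n_grid
    num = den = 0.0
    for i in range(n_grid + 1):
        t = i * h
        weight = 0.5 if i in (0, n_grid) else 1.0  # trapezoid end points
        e = math.exp(x * (math.cos(t) - 1.0))
        num += weight * e * math.cos(t)
        den += weight * e
    return num / den

def fisher_information(alpha):
    """Fisher information about the location of a von Mises with precision alpha."""
    return alpha * bessel_ratio(alpha)

def alpha_ideal(kappa_z, dt, n_iter=60):
    """Solve fisher_information(alpha) = kappa_z * dt for alpha by bisection."""
    target = kappa_z * dt
    lo, hi = 0.0, 1.0
    while fisher_information(hi) < target:  # expand the bracket if needed
        hi *= 2.0
    for _ in range(n_iter):
        mid = 0.5 * (lo + hi)
        if fisher_information(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

alpha_small = alpha_ideal(kappa_z=1.0, dt=1e-4)  # square-root regime
alpha_large = alpha_ideal(kappa_z=1.0, dt=50.0)  # linear regime
```

Under these assumptions, the small-step solution behaves like a square root of κzΔt and the large-step solution grows linearly in κzΔt, in line with the two regimes described above. The fixed quadrature grid limits the sketch to moderate values of κzΔt.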

C. Adding quasi continuous-time observations to the circular projection filter

If the measurement function in (59) is the identity, we can add the direct observations to our filter by straightforwardly making use of Bayes’ theorem at every time step. Specifically, since we assumed our approximated (projected) density to be von Mises at all times, the measurement likelihood

| (65) |

is conjugate to the density before the update . In other words, the posterior is guaranteed to be a von Mises density as well:

| (66) |

| (67) |

As expected from an exponential family distribution, the natural parameters are updated according to

| (68) |

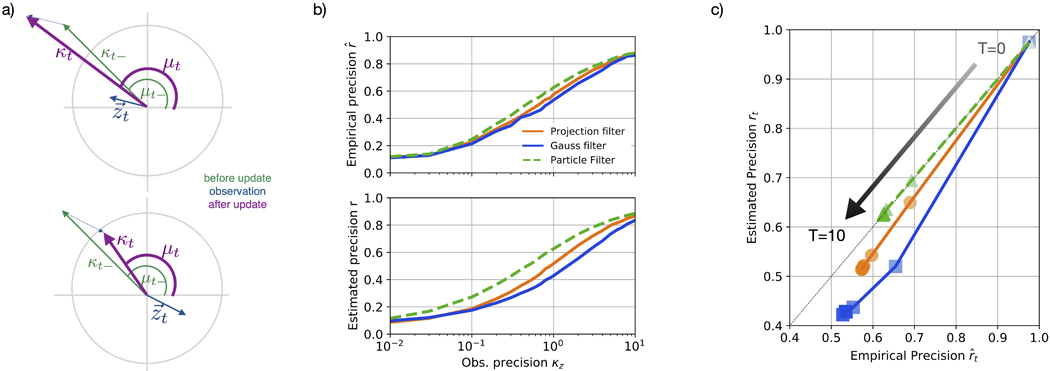

This operation is equivalent to a summation of vectors in ℝ², where the natural parameters correspond to Euclidean coordinates, and (μ, κ) are the corresponding polar coordinates (Figure 3a). In the continuum limit, we write

| (69) |

where we used that for . A coordinate transform from θ to (μ, κ) recovers the update equations for mean μt and κt that result from quasi-continuous time observations

| (70) |

| (71) |

Fig. 3. Filtering with quasi-continuous time angular observations.

a) Single quasi-continuous time update step (68) with angular observation zt, where the length of the vector indicates observation reliability . The update step for Bayesian inference on the circle is equivalent to a vector addition in the 2D plane. The lower panel demonstrates that a conflicting observation leads to a decreased certainty of the estimate directly after the update, corresponding to a shorter vector. b) Empirical precision (upper panel) and estimated precision rt of the circular Kalman filter (circKF) and a Gaussian projection filter (Gauss filter), when compared to a particle filter, for different values of the observation precision κz at time . Parameters were and , times are in units of . c) Estimated versus empirical precision up to for the different filters at . The precisions shown in b) and c) are averages across 5000 simulation runs.

The recovered update equations are appealingly simple for identity (or linear) observation functions, as such functions allow us to leverage Bayesian conjugacy properties. For nonlinear observation functions, in contrast, the posterior after the update is in general not in the same class of densities (and in this particular case no longer a von Mises distribution). To apply the projection filtering framework to project such a nonlinear observation model back onto the desired manifold of densities, one would need to know the vector field for the density pt that incorporates the observation-induced update, a derivation that is beyond the scope of this work.¹
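For the identity observation function, the single conjugate update step (68) can be sketched as a vector addition in the plane. The sketch below assumes that the natural parameters are the Euclidean coordinates (κ cos μ, κ sin μ) and that an observation z with precision `alpha` contributes the vector (α cos z, α sin z); the function name `von_mises_update` is a hypothetical helper.

```python
import math

def von_mises_update(mu, kappa, z, alpha):
    """Conjugate update of a von Mises density (mean mu, precision kappa)
    with a von Mises likelihood centered on z with precision alpha."""
    # Add prior and observation vectors in Euclidean (natural) coordinates.
    x = kappa * math.cos(mu) + alpha * math.cos(z)
    y = kappa * math.sin(mu) + alpha * math.sin(z)
    # Convert back to polar coordinates (posterior mean and precision).
    return math.atan2(y, x), math.hypot(x, y)

# An observation that agrees with the estimate increases the precision ...
mu_agree, kappa_agree = von_mises_update(0.3, 2.0, 0.3, 1.0)
# ... while a conflicting observation shortens the vector and decreases it.
mu_conflict, kappa_conflict = von_mises_update(0.0, 2.0, math.pi, 1.0)
```

The second call illustrates the behavior shown in the lower panel of Figure 3a: the posterior precision after a conflicting observation is smaller than the prior precision.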

Example 5

(The circular Kalman filter). Let us revisit angular path integration, which we introduced in Example 3 as a circular diffusion with observed angular increments, and extend it to include direct angular observations Zt with likelihood (65). Such angular observations could, for instance, correspond to directly accessible angular cues, such as visual landmarks. Combining Eqs. (44) and (45) with Eqs. (70) and (71) to include the quasi-continuous updates results in

| (72) |

| (73) |

Due to the simplicity of these equations, as well as their structural similarity to the generalized Kalman filter, while taking full account of the circular state and measurement space, we term the SDEs (72) and (73) the circular Kalman filter (circKF).

Considering that α is modulated by the direct observation’s reliability κz, the final terms in the filter’s update equations nicely reflect the reliability weighting that is already present in classical filtering problems: more reliable direct observations, relative to the current certainty κt, have a stronger impact on the mean μt. Furthermore, if the current observation Zt is similar to the current estimate μt, this estimate is hardly updated, while the estimate’s certainty κt increases. In contrast, if direct observations and the current estimate are in conflict, the certainty κt might temporarily decrease (Figure 3a). What makes this last feature particularly interesting is that it only occurs in the circKF, but not in its Euclidean counterpart, the standard Kalman filter, in which an update induced by direct observations always leads to an increase in certainty. Numerically, the circKF performs close to a particle filter over a wide range of parameters, while outperforming a Gaussian projection filter (Figure 3b, c). The reason for the deviation of the circKF from the optimal solution is that the von Mises distribution is still an approximation of the true posterior, which leads to slight deviations in the updates when the direct observations are integrated. These deviations are offset by the more than 10-fold decrease in computation time of the circKF that we observed in simulations: a single run in Figure 3c took 3.14 ± 0.11 s with the particle filter, but only 0.113 ± 0.005 s with the circKF, on a MacBook Pro (Mid 2019) with a 2.3 GHz 8-core Intel Core i9, using NumPy 1.19.2 on Python 3.9.1.

V. Conclusion

In this paper, we derived a continuous-time nonlinear filter with observed state increments, based on a projection filtering approach. Using this framework, we revisited the problem of probabilistic angular path integration. By additionally proposing a quasi continuous-time model for von Mises-valued direct observations, we were able to formulate a circular filtering algorithm that accounts for both increment and direct angular observations. Notably, this algorithm fulfills the following four conditions: (i) it operates on a circular state-space and (ii) in continuous time, (iii) it maintains a consistent representation during state propagation and observation update, as ensured by the projection filtering method, and (iv) it performs the proper integration of both increment and direct observations. Even though we have only fully worked out the algorithm for univariate circular filtering problems, we have formulated the overall projection filtering framework for more general multivariate problems. Based on the results in [19], we expect our framework to carry over to multivariate circular filtering problems, such as reference vector tracking on the unit sphere. A possible shortcoming of this approach is that the class of generative models it can deal with is fairly limited. The generalized Kushner–Stratonovich equation is only valid if the error covariance of the observation process does not explicitly depend on the value of the hidden state. This constrains the generative model in the following way: first, we can only allow additive noise in the state process, as any multiplicative noise would enter the increment observation process Ut as state-dependent noise. Second, only linear transformations of state increments can be considered.
As demonstrated in Example 4, another shortcoming of the projection-filtering method in general is that this approach is only computationally feasible for problems that are analytically accessible, i.e., where expectations under the projected density can be computed rapidly, or in closed form. In this paper, we thus focused on projected densities where these expectations could be efficiently computed.

Despite these limitations, the analytical accessibility and interpretability of our main result, in particular the circular Kalman filter, make it an attractive algorithm for unimodal circular filtering problems. First, it is straightforward to implement in software. Since its representation stays fixed at all times, it relies on only two equations that can be integrated straightforwardly, e.g., with an Euler–Maruyama scheme [30]. As we have seen in our numerical experiments, this makes the filter much faster than established methods, such as particle filters. Second, its dynamics are intuitively interpretable. Third, since it is a continuous-time formulation, it automatically adapts to the chosen sampling step size (as long as that step size is sufficiently small). This is an advantage over continuous-discrete filters, which usually assume a fixed sampling step size and need to be reformulated should the sampling rate of the observations change or vary across time. Lastly, animals navigate the world based on a continuous stream of sensory information, which motivates the use of continuous-time models when trying to understand how the brain operates under uncertainty. Thus, one possible application could be a conceptual description of how the brain performs angular path integration [31].

Acknowledgements

We would like to thank Melanie Basnak and Rachel Wilson (Harvard Medical School) for active and ongoing discussions that motivated the question of probabilistic path integration, and who helped us formulate the questions that we address in this manuscript. We further would like to thank Johannes Bill (Harvard Medical School) and Simone Surace (University of Bern), as well as the anonymous reviewers, for helpful feedback and suggestions on the manuscript.

Funding

A.K. was funded by the Swiss National Science Foundation (grant numbers P2ZHP2 184213 and P400PB 199242). J.D. and L.R. were supported by the Harvard Medical School Dean’s Initiative Award Program for Innovative Grants in the Basic and Social Sciences, and a grant from the National Institutes of Health (NIH/NINDS, 1R34NS123819).

APPENDIX

A. Nonlinear filtering in a nutshell

Let us briefly review the classical nonlinear filtering setting, i.e., filtering with observations that follow a diffusion process with noise uncorrelated to that of the hidden state process, in order to compare this with filtering with observed state increments. In line with standard literature [23], let denote the process of -valued direct observations. Then the generative model commonly referred to in classical nonlinear filtering is given by

| (74) |

| (75) |

with and Wt independent standard Brownian motion processes, and a potentially nonlinear, vector-valued observation function. All other quantities have been defined below (1). Note that the observations process is a diffusion with error covariance . The goal of nonlinear filtering is to compute dynamics of the posterior expectations , where denotes the filtration generated by the process Yt. Formally, this is solved by the Kushner–Stratonovich equation (KSE, [23, Theorem 3.30]):

| (76) |

with .

B. Derivation of the generalized Kushner–Stratonovich equation for the posterior expectation (Eqs. (7) and (21))

Let us revisit the generative model in Eqs. (1) and (6):

| (77) |

| (78) |

with Wt and Vt independent vector-valued Brownian motion processes, as defined earlier. Here, we derive the gKSE (7) by treating this model as a correlated noise filtering problem, which allows us to directly apply established results [23, Corollary 3.38].

Lemma 1.

The generalized Kushner–Stratonovich equation (gKSE) for the evolution of the conditional expectation of a test function in the presence of state increment observations is given by (Itô form)

| (79) |

Proof.

To simplify calculations, we first require that the observations process has an error covariance that equals the identity, and thus we rescale the process by ,

| (80) |

Eqs. (77) and (80) define a filtering problem where the noise in hidden process Xt and observation process are correlated, with quadratic covariation

| (81) |

Thus, the result of [23, Corollary 3.38] (KSE for correlated noise filtering problems) is directly applicable to our problem. For more details on [23, Corollary 3.38], we kindly refer to the proof based on the innovations method provided therein. An alternative proof based on the change of measure approach is presented in [20].

Note that the KSE for correlated noise [23, Eq. 3.72] is similar to the classical KSE for uncorrelated noise problems (76), except for a correction term, given by a vector field ,

| (82) |

The vector field can be read out from the dynamics of the quadratic covariation between the process and the rescaled observations process . Using Itô’s lemma, we can write:

| (83) |

and thus

| (84) |

| (85) |

Further identifying , we find

| (86) |

Rescaling yields (79).

Remark 3.

By combining (76) and (79) it is possible to derive a generalized Kushner equation when both types of observations are present, i.e., when we consider both the process Yt (Eq. 75) and the process Ut (Eq. 78) as the observations:

| (87) |

In this case, the expectations are with respect to the filtration and , i.e., .

Corollary 3.

In Stratonovich form, the generalized Kushner–Stratonovich equation (gKSE) for the evolution of the conditional expectation of a test function in the presence of increment observations reads:

| (88) |

with

| (89) |

For one-dimensional problems with , , , and , (88) simplifies to

| (90) |

Proof.

We convert between Itô and Stratonovich calculus using the Wong–Zakai theorem [27]:

| (91) |

where the symbol ◦ denotes Stratonovich calculus, and is the quadratic covariation between the processes Yt and Bt. We identify (cf. Eq. (79))

| (92) |

and write

| (93) |

| (94) |

To obtain the change in quadratic covariation , it is helpful to find by Itô’s lemma

| (95) |

The evolution of the expectations is obtained by straightforward application of the gKSE (79), substituting with the functions , ft and . This will result in terms that multiply , which are those relevant for computing the change of the covariation process, . Further note that the quadratic covariation of the observation process evolves according to . Some tedious but straightforward algebra and term rearrangements then result for the quadratic covariation in

| (96) |

Plugging this into (94), and again some algebra, yields

| (97) |

This equation can be further simplified by noting that . Further, we can substitute

| (98) |

in the first line, which yields Eq. (88). Equation (90) follows from (88) as the 1D special case.

C. Fisher metric and scalar product

Proposition 1.

The scalar product defined in Eq. (13),

| (99) |

is the scalar product on that is associated with the Fisher metric [26]

| (100) |

Proof.

Consider Z1 and Z2 to correspond to two of the basis vectors of the tangent space , i.e., and . Then

| (101) |

| (102) |

| (103) |

This concludes the proof. □

D. Information scaling

Proposition 2.

The Fisher information about the hidden state variable that is conveyed by Gaussian-type discrete-time observations,

| (104) |

grows linearly with the time step .

Proof.

The information content about the variable that is conveyed by the observation is given by the Fisher information

□

E. Details on numerical simulations

Our numerical simulations in Figures 1 and 3, corresponding to Examples 3 and 5, were based on artificial data generated from the true model equations. In particular, the “true” state is a single trajectory from Eq. (34), and observations are drawn at each time point from Eq. (35) and, in the case of Example 5, additionally from Eq. (65). To simulate trajectories and observations, we use the Euler–Maruyama approximation [30]. In this approximation, the time-discretized generative model with fixed time step size in Examples 3 and 5 reads:

| (105) |

| (106) |

| (107) |

The same time-discretization scheme was used to numerically integrate the SDEs (44), (45), (72) and (73) for the von Mises parameters μt and κt. Unless stated otherwise, we used in all our numerical simulations, and give times in units of κϕ.
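The Euler–Maruyama discretization of the generative model can be sketched as follows. The sketch assumes a driftless circular diffusion with diffusion precision κϕ, increment observations corrupted by independent Gaussian noise with precision κu, and direct observations drawn from a von Mises distribution with precision `alpha` around the current state. The function name `simulate`, its argument names, and the parameter values are illustrative and not taken from the published code.

```python
import math
import random

def simulate(n_steps, dt, kappa_phi, kappa_u, alpha, seed=0):
    """Euler-Maruyama simulation of the hidden heading, the increment
    observations, and direct von Mises observations (assumed model form)."""
    rng = random.Random(seed)
    phi = 0.0
    phis, dus, zs = [], [], []
    for _ in range(n_steps):
        # State increment of the diffusion over one time step.
        dphi = math.sqrt(dt / kappa_phi) * rng.gauss(0.0, 1.0)
        # Increment observation: true increment plus independent noise.
        du = dphi + math.sqrt(dt / kappa_u) * rng.gauss(0.0, 1.0)
        # Propagate the state and wrap it onto [0, 2*pi).
        phi = (phi + dphi) % (2.0 * math.pi)
        # Direct angular observation; vonmisesvariate returns a value in [0, 2*pi).
        z = rng.vonmisesvariate(phi, alpha)
        phis.append(phi)
        dus.append(du)
        zs.append(z)
    return phis, dus, zs

phis, dus, zs = simulate(n_steps=1000, dt=0.01, kappa_phi=1.0, kappa_u=1.0, alpha=2.0)
```

In a quasi-continuous setting, `alpha` would itself depend on the time step and on κz; here it is passed in as a fixed number to keep the sketch short.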

F. Benchmarks for numerical simulations

For our numerical simulations in Figures 1 and 3, corresponding to Examples 3 and 5, we used the following filtering algorithms to compare the circKF against.

1). Particle Filter:

As benchmark, we used a Sequential Importance Sampling/Resampling particle filter (SIS-PF, [32]), that was modified to account for state increment observations .

The N particles in the SIS-PF were propagated according to

| (108) |

and each particle j was weighted at each time step according to

| (109) |

yielding an SIS for this model that is asymptotically exact in the N → ∞ limit. Mean μt and precision rt of the filtering distribution were determined at each time step according to a weighted average on the circle, i.e., the first circular moment:

| (110) |

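For a von Mises distribution, the radius r of the first circular moment and the precision parameter κ are related via r = I1(κ)/I0(κ), where I0 and I1 denote the modified Bessel functions of the first kind of order 0 and 1, which is why we use r rather than κ in our plots.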

In our simulations, we used N = 10³ if direct angular observations Zt were present, and N = 10⁴ if only state increment observations were present. We resampled the particles whenever the effective number of particles, Neff = 1/Σj (wj)² for normalized weights wj, was lower than N/2.
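The two summary quantities used by the particle filter can be sketched as follows: the first circular moment of a weighted particle set, and the effective number of particles that triggers resampling. The function names are hypothetical helpers, and the effective sample size uses the common definition (Σj wj)²/Σj wj², which reduces to 1/Σj wj² for normalized weights.

```python
import math

def circular_moment(angles, weights):
    """Weighted first circular moment: returns (mean direction, radius r)."""
    c = sum(w * math.cos(p) for p, w in zip(angles, weights))
    s = sum(w * math.sin(p) for p, w in zip(angles, weights))
    total = sum(weights)
    return math.atan2(s, c), math.hypot(c, s) / total

def effective_sample_size(weights):
    """Effective number of particles for (possibly unnormalized) weights."""
    total = sum(weights)
    return total ** 2 / sum(w * w for w in weights)

def needs_resampling(weights):
    """Resampling criterion used in the text: N_eff below N/2."""
    return effective_sample_size(weights) < 0.5 * len(weights)

mu, r = circular_moment([0.5, 0.5, 0.5, 0.5], [0.25, 0.25, 0.25, 0.25])
```

A radius r close to 1 indicates a sharply concentrated particle cloud, whereas r close to 0 indicates particles spread over the whole circle.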

2). Gaussian approximation:

The reference filter, which we refer to as “Gauss filter”, is a heuristic method that assumes posterior mean μt and variance σt to evolve according to a generalized Kalman–Bucy filter (Eqs. (31) and (32)). Such a filter is often referred to as “assumed density filter” (ADF) in the literature, which under certain conditions, such as for the circular filtering problem we consider here, becomes fully equivalent to a Gaussian projection filter (see [18, Section 7] for in-depth discussion). In order to make the resulting distribution circular, this Gaussian is consecutively approximated by a von Mises distribution via , resulting in the following update equations for the model in Example 3:

| (111) |

| (112) |

For the model used in Example 5, this is combined with the observations in the same way as for the circKF. Note that in the absence of direct angular observations zt, the mean dynamics are the same as for the circKF, while κt deviates (as shown in Figure 1). When direct angular observations are present, this deviation, in turn, affects the update of the mean, which leads to a worse numerical performance than that of the circKF.

G. Code availability

Jupyter notebooks to generate Figs. 1–3, the underlying simulation data, and the Python scripts used to generate these data have been deposited at Zenodo and are publicly available at https://doi.org/10.5281/zenodo.5820406.

Footnotes

¹ As outlined earlier, for a Gaussian setting this would be the update part of the Kushner–Stratonovich equation. To the best of our knowledge, no such equation exists for von Mises-valued observations.

References

- [1] Heinze S, Narendra A, and Cheung A, “Principles of insect path integration,” Current Biology, vol. 28, no. 17, pp. R1043–R1058, Sep 2018. [Online]. Available: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6462409/

- [2] Kreiser R, Cartiglia M, Martel JNP, Conradt J, and Sandamirskaya Y, “A Neuromorphic Approach to Path Integration: A Head-Direction Spiking Neural Network with Vision-driven Reset,” in 2018 IEEE International Symposium on Circuits and Systems (ISCAS), May 2018, pp. 1–5.

- [3] Taube JS, Muller RU, and Ranck JB, “Head-direction cells recorded from the postsubiculum in freely moving rats. I. Description and quantitative analysis,” The Journal of Neuroscience, vol. 10, no. 2, pp. 420–35, 1990. [Online]. Available: http://www.ncbi.nlm.nih.gov/pubmed/2303851

- [4] Seelig JD and Jayaraman V, “Neural dynamics for landmark orientation and angular path integration,” Nature, vol. 521, no. 7551, pp. 186–191, 2015.

- [5] Skaggs WE, Knierim JJ, Kudrimoti HS, and McNaughton BL, “A Model of the Neural Basis of the Rat’s Sense of Direction,” Advances in neural information processing systems, p. 10, 1995.

- [6] Turner-Evans D, Wegener S, Rouault H, Franconville R, Wolff T, Seelig JD, Druckmann S, and Jayaraman V, “Angular velocity integration in a fly heading circuit,” eLife, vol. 6, p. e23496, May 2017. [Online]. Available: https://elifesciences.org/articles/23496

- [7] Xie X, Hahnloser RH, and Seung HS, “Double-ring network model of the head-direction system,” Physical Review E - Statistical Physics, Plasmas, Fluids, and Related Interdisciplinary Topics, vol. 66, no. 4, pp. 9–9, 2002.

- [8] Ma WJ, Beck JM, Latham PE, and Pouget A, “Bayesian inference with probabilistic population codes,” Nature Neuroscience, vol. 9, no. 11, pp. 1432–8, Nov 2006. [Online]. Available: http://www.ncbi.nlm.nih.gov/pubmed/17057707

- [9] Fiser J, Berkes P, Orban G, and Lengyel M, “Statistically optimal perception and learning: from behavior to neural representations,” Trends in Cognitive Sciences, vol. 14, no. 3, pp. 119–130, Mar 2010. [Online]. Available: https://linkinghub.elsevier.com/retrieve/pii/S1364661310000045

- [10] Kalman RE, “A New Approach to Linear Filtering and Prediction Problems,” Transactions of the ASME Journal of Basic Engineering, vol. 82, no. Series D, pp. 35–45, 1960. [Online]. Available: http://fluidsengineering.asmedigitalcollection.asme.org/article.aspx?articleid=1430402

- [11] Kalman RE and Bucy RS, “New Results in Linear Filtering and Prediction Theory,” Journal of Basic Engineering, vol. 83, no. 1, pp. 95–108, Mar 1961. [Online]. Available: https://asmedigitalcollection.asme.org/fluidsengineering/article/83/1/95/426820/New-Results-in-Linear-Filtering-and-Prediction

- [12] Kurz G, Gilitschenski I, and Hanebeck UD, “Recursive Bayesian filtering in circular state spaces,” IEEE Aerospace and Electronic Systems Magazine, vol. 31, no. 3, pp. 70–87, Mar 2016. [Online]. Available: https://ieeexplore.ieee.org/document/7475421

- [13] Azmani M, Reboul S, Choquel JB, and Benjelloun M, “A recursive fusion filter for angular data,” 2009 IEEE International Conference on Robotics and Biomimetics, ROBIO 2009, pp. 882–887, 2009.

- [14] Traa J and Smaragdis P, “A Wrapped Kalman Filter for Azimuthal Speaker Tracking,” IEEE Signal Processing Letters, vol. 20, no. 12, pp. 1257–1260, 2013.

- [15] Kurz G, Gilitschenski I, and Hanebeck UD, “Recursive nonlinear filtering for angular data based on circular distributions,” 2013 American Control Conference, pp. 5439–5445, 2014.

- [16] Tronarp F, Hostettler R, and Särkkä S, “Continuous-Discrete von Mises-Fisher Filtering on S2 for Reference Vector Tracking,” 2018 21st International Conference on Information Fusion, FUSION 2018, no. July, pp. 1345–1352, 2018.

- [17] Hanzon B and Hut R, “New Results on the Projection Filter,” Research Memorandum, vol. 105, no. March, pp. 9457–9475, 1991. [Online]. Available: http://www.sciencedirect.com/science/article/pii/S0377221796004043

- [18] Brigo D, Hanzon B, and Le Gland F, “Approximate nonlinear filtering by projection on exponential manifolds of densities,” Bernoulli, vol. 5, no. 3, pp. 495–534, 1999. [Online]. Available: http://projecteuclid.org/euclid.bj/1172617201

- [19] Tronarp F and Särkkä S, “Continuous-Discrete Filtering and Smoothing on Submanifolds of Euclidean Space,” arXiv:2004.09335 [math, stat], Apr. 2020. [Online]. Available: http://arxiv.org/abs/2004.09335

- [20] Nüsken N, Reich S, and Rozdeba PJ, “State and Parameter Estimation from Observed Signal Increments,” Entropy, vol. 21, no. 5, p. 505, May 2019. [Online]. Available: https://www.mdpi.com/1099-4300/21/5/505

- [21] Stratonovich RL, “Conditional Markov Processes,” Theory of Probability & Its Applications, vol. 5, no. 2, pp. 156–178, Jan 1960. [Online]. Available: http://epubs.siam.org/doi/10.1137/1105015

- [22] Kushner HJ, “On the Differential Equations Satisfied by Conditional Probability Densities of Markov Processes, with Applications,” Journal of the Society for Industrial and Applied Mathematics Series A Control, vol. 2, no. 1, pp. 106–119, Jan 1964. [Online]. Available: http://epubs.siam.org/doi/10.1137/0302009

- [23] Bain A and Crisan D, Fundamentals of stochastic filtering, ser. Stochastic modelling and applied probability. New York: Springer, 2009, no. 60.

- [24] Brigo D, Hanzon B, and Le Gland F, “A differential geometric approach to nonlinear filtering: the projection filter,” IEEE Transactions on Automatic Control, vol. 43, no. 2, pp. 247–252, 1998. [Online]. Available: http://ieeexplore.ieee.org/document/661075/

- [25] van Handel R and Mabuchi H, “Quantum projection filter for a highly nonlinear model in cavity QED,” Journal of Optics B: Quantum and Semiclassical Optics, vol. 7, no. 10, pp. S226–S236, Oct 2005. [Online]. Available: https://iopscience.iop.org/article/10.1088/1464-4266/7/10/005

- [26] Amari S, Differential-geometrical methods in statistics, 2nd ed., ser. Lecture notes in statistics. Berlin; New York: Springer-Verlag, 1990, no. 28.

- [27] Wong E and Zakai M, “On the relation between ordinary and stochastic differential equations,” International Journal of Engineering Science, vol. 3, no. 2, pp. 213–229, Jul 1965. [Online]. Available: https://linkinghub.elsevier.com/retrieve/pii/0020722565900455

- [28] Gatto R and Jammalamadaka SR, “The generalized von Mises distribution,” Statistical Methodology, vol. 4, no. 3, pp. 341–353, Jul 2007. [Online]. Available: https://escholarship.org/uc/item/8m91b0p7

- [29] Gatto R, “Some computational aspects of the generalized von Mises distribution,” Statistics and Computing, vol. 18, no. 3, pp. 321–331, Sep 2008.

- [30] Kloeden PE and Platen E, Numerical solution of stochastic differential equations, corr. 3. print ed., ser. Applications of mathematics. Berlin: Springer, 2010, no. 23.

- [31] Kutschireiter A, Basnak MA, Wilson RI, and Drugowitsch J, “A Bayesian perspective on the ring attractor for heading-direction tracking in the Drosophila central complex,” bioRxiv, Cold Spring Harbor Laboratory, Dec. 2021. [Online]. Available: https://www.biorxiv.org/content/10.1101/2021.12.17.473253v1

- [32] Doucet A, Godsill S, and Andrieu C, “On sequential Monte Carlo sampling methods for Bayesian filtering,” Statistics and Computing, p. 12, 2010.