Abstract

Deep learning techniques, in particular generative models, have taken on great importance in medical image analysis. This paper surveys fundamental deep learning concepts related to medical image generation. It provides concise overviews of studies which use some of the latest state-of-the-art models from last years applied to medical images of different injured body areas or organs that have a disease associated with (e.g., brain tumor and COVID-19 lungs pneumonia). The motivation for this study is to offer a comprehensive overview of artificial neural networks (NNs) and deep generative models in medical imaging, so more groups and authors that are not familiar with deep learning take into consideration its use in medicine works. We review the use of generative models, such as generative adversarial networks and variational autoencoders, as techniques to achieve semantic segmentation, data augmentation, and better classification algorithms, among other purposes. In addition, a collection of widely used public medical datasets containing magnetic resonance (MR) images, computed tomography (CT) scans, and common pictures is presented. Finally, we feature a summary of the current state of generative models in medical image including key features, current challenges, and future research paths.

Keywords: Generative adversarial networks, Variational autoencoders, Convolutional neural networks, Medical imaging, Computer vision, Artificial neural networks

Introduction

Since the appearance of machine learning (ML) as a part of artificial intelligence (AI) in the 1950s, the advances on data processing have achieved extraordinary achievements. Early machine learning techniques were unable to process data as it was gathered from its source due to high dimensionality; hence, the pattern identification ability that characterizes them was totally dependent on feature extraction methods. It required high expertise and careful fine tuning of the system to transform raw data into a different representation from which the algorithm could detect or classify patterns.

Nowadays, ML systems have a huge impact on the modern society and generate important benefits to many institutions along multiple industries, including the biomedical field. Among their many uses are recognizing objects in images, speech translation, and user profiling: these being extremely helpful utilities in the medical field. The present survey focuses on the specialized and leading edge subfield of generative models in deep neural networks (DNNs) applied in medical imaging. These models are based on the assumption that the features of an object in an image can be learned, and then a synthetic image could be generated so the differences between a real and a fake one are almost unnoticeable for human perception.

The main motivation for reviewing and explaining how artificial neural networks (NNs) and deep generative models work in medical imaging is to encourage its use in medical works. Surveys and reviews as those of authors like Akazawa and Hashimoto [1], De Siqueira et al. [2], Fernando et al. [3], Chen et al. [4], Sah and Direkoglu [5], and Abdou [6] cover a significant amount of works that apply deep learning to medical image analysis. Regarding generative models, Zhai et al. [7] review numerous autoencoder variants, while Kazeminia et al. [8] focus on the application of GANs for medical image analysis. Despite the fact that the reviews and studies above offer a broad representation of the works currently under way, they show a very technical report that requires an in-depth knowledge of how neural networks work to completely understand certain aspects of the covered subjects. We feel that important areas are left behind since many studies assume that the reader already has extensive knowledge of the fundamental internal workings of the models. This can lead to its usage as a “black box” instead of as an understandable and reliable tool. This situation can further cause opposition to its use by non computer science technical authors, who may not trust a not well-known tool that they are unable to account.

This survey includes recent applications of generative models in medical image processing, comprehensive explanations of its main architectures, and several lists of datasets consisting of medical images of different types. The rest of this paper is structured as follows. Section 2 shows an overview of the deep learning predecessors and outlines the main components and techniques used in many of the state-of-the-art models. Section 3 introduces the concept of generative models focusing on generative adversarial networks and variational autoencoders. Section 4 lists widely used medical and general images datasets. Finally, Sect. 5 discusses the main key features and challenges of generative models in medical image processing.

Deep learning

The aim of deep learning models is to represent probability distributions over data, such as natural images or natural language. This kind of corpora has a large number of features that common and simple artificial intelligence techniques are not able to extract to correctly infer conclusions from the data. Deep learning models stand out when used as a discriminative tool, being able to map high-dimensional data to a class label thanks to multiple techniques as forward and backpropagation through activation and loss functions, complex architectures, and the incorporation of operations as convolution and pooling [9].

In this section, we present a theoretical analysis of artificial neural networks and the techniques that allow them to stand out from other AI models.

Artificial neural networks

Conventional artificial neural networks (NNs) consist of many simple nodes called neurons, each performing a very specific task. Neurons get values as input and perform a linear function previously specified in a manner that an inference is obtained as output. The input can be obtained from sensors perceiving the environment or previous neurons, depending on the problem and how many computational stages it would require to be solved [10].

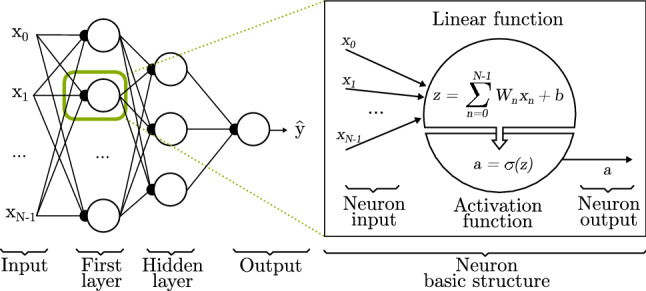

As noted above, a neuron is responsible of processing the information in its input, being the base unit of a larger network. For an array of () inputs, one neuron (j) multiplies each value by a weight () and adds it to a bias (), then all obtained values are summed and an activation function () is applied to the result. This process is the so-called forward propagation which delivers a real number as output [11].

Forward propagation

As Goodfellow et al. [11] explain, each neuron (j) stores a weight () for each input and a bias (), both of which being real numbers and also known as trainable parameters. Formally, for each neuron j and considering N inputs, forward propagation can be expressed as follows.

First, it uses a linear function to compute z (Eq. 1). Next, it applies an activation function (Sect. 2.1.2) to convert z into a probability (Eq. 2).

| 1 |

| 2 |

As represented in Fig. 1, a NN does not only own one neuron, but a set of neurons. These sets of neurons are organized in layers. The neurons of each layer are connected to the previous and to the next layers as a chain.

Fig. 1.

Basic artificial neural network structure. It consists of N inputs, ranging from to , and 3 layers; one input layer with one neuron for each input , one hidden layer that takes as input the previous layer output, and one output layer where the final result is deployed

During the training process, each data example with an associated label (y) is fed through the input layer. The final layer, called the output layer, yields an approximation of the label . The learning algorithm must decide how to update the W and b values of each intermediate layers to better approximate to y [11]. The intermediate layers are called hidden layers, and a more detailed explanation of its working is provided in Sect. 2.2. In this way, assuming N as the number of inputs in a layer and J as the number of neurons in a layer, W is a matrix of size equal to the number of neurons in a layer and its inputs ([J, N]), and the bias b is a vector of 1 and the number of neurons ([1, N]), since it is a single value in each neuron.

As the number of neurons increases and more layers are included in the NN, the number of operations needed to perform forward propagation is also greatly incremented. To reduce the operational burden, matrix operations can be used instead of an iterative process. This is the so-called vectorization technique, which offers a great leap forward in NN models since the matrix operations can be performed on graphic processing units (GPUs) achieving better performance, system optimization and reducing execution times. Eqs. 3 and 4 are vectorized alternatives to Eqs. 1 and 2.

| 3 |

| 4 |

Parameters a and relate to the same value, being common to name when it refers to the final output of a multiple layered NN, and a when it is an output of a neuron that will be part of the input of a different neuron on the next layer. Layers are covered in Sect. 2.2.

Since the probability value delivered in relies on the input, it is usually expressed as . Still, is not only affected by the inputs, but also considers the values found in the weights (W) and bias (b) of each neuron. Therefore, the neuron weight and bias can be updated considering the real value y and the predicted to make them as similar as possible, giving the neuron the ability to learn. The process of training (updating) the parameters W and b is done through backpropagation (Sect. 2.1.4) considering a loss function (Sect. 2.1.3).

Activation function

Before moving toward, it is worth focusing on the activation function and its goal. Generally, NN classification tasks require predicting the value of a variable y. As already seen before, this probability can be expressed as .

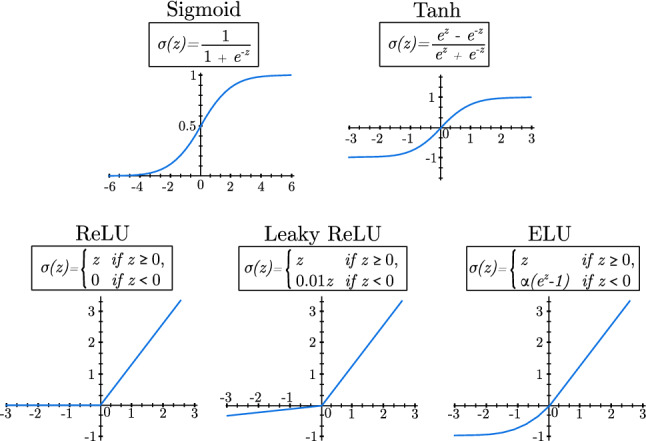

Activation functions take the real number value computed by the linear function (z) and transform it into a probability. Activation functions can be categorized depending on the number of values a class can take, and therefore, the system should predict. If class values are binary, the probability must lie between 0 and 1. In contrast, if the class is represented by a discrete variable with n possible values, the [0, 1] range is not suitable and a different function should be considered.

For binary classes, the most used activation functions are Sigmoid, Tanh, ReLU (Rectified Linear Unit), Leaky ReLU, and ELU (exponential linear unit) [11, 12]. Figure 2 shows a graphic representation of the activation functions.

Fig. 2.

Most used activation functions

For multi-class classification, the most employed activation function is the so-called Softmax, a generalization of the sigmoid function in Fig. 2. It is able to represent the probability distribution over N different classes [11]. Softmax outputs a vector of N elements, each one storing a value between 0 and 1, and since the vector represents a valid probability distribution, the whole vector sums 1. In Eq. 5, and relate to the elements of the input vector z, and i refers to each position of the output vector:

| 5 |

Loss functions

In this section, a binary classification example is used to keep explanation complexity at a mininum as multi-class examples could be inferred the same way.

Loss function (L) provides a value of how correct was the prediction made by the NN for a specific example. Although initial NN uses the square error loss function, better choices appear later. One of the most used loss function is the binary cross-entropy (BCE) shown in Eq. 7. It is a special case of the cross-entropy (CE) function shown in Eq. 6, which was originally formulated to find the loss among C number of classes.

| 6 |

| 7 |

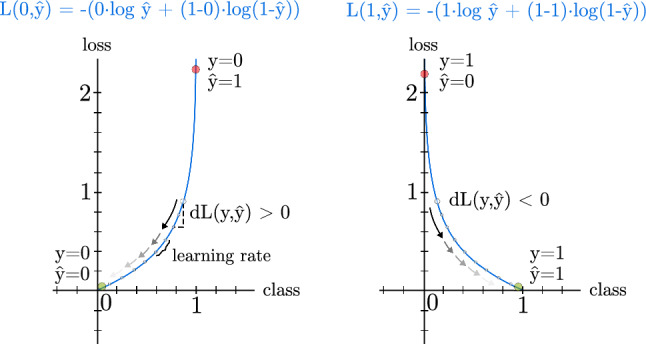

As can be seen in Fig. 3, the slope of the function can be calculated as a derivative. In this way, if the derivative is positive, hence a positive slope will be obtained. On the contrary, if the derivative is negative and its slope too. The derivatives help to know how correct the prediction was. Since this function returns a high value when the prediction is wrong and a low value when the prediction is correct, the trainable parameters of the neurons are changed over the training process to gradually improve the results.

Fig. 3.

Loss function for an individual example throughout the training process. y is the label of an example of the training data, while is the predicted label of the same example. A steady learning rate is presented as example, but its value can be variable

Section 2.1.4 discusses backpropagation, explaining how derivatives affect trainable parameters W and b via a learning parameter .

BCE works well when used in a distribution-based scenario where an image must be classified as a whole into a category, but better options are available when the classification must be made at pixel level. An image is made up of square pixels, and groups of pixels define the different elements shown in it. Classifying these pixels depending on the element, they are part of is called semantic image segmentation [13], a subject that is extended in Sect. 3.2.

Selecting a loss function is very important when working in complex semantic segmentation. The most successful alternatives are focal loss [14], dice loss [15], Tversky loss [16], and shape-aware loss [17].

Focal loss [14] estimates the probability of belonging to a class as :

| 8 |

In this way, cross-entropy can be assessed as , and the focal loss function defined as:

| 9 |

Parameter works as a modulating factor to lower the weight of examples that are easier to classify, emphasizing on the hard negatives. The authors also propose the use of a balancing parameter () to improve accuracy. These conditions make the focal loss function perfect to work with highly imbalanced datasets.

Dice loss [15] is also useful when working with highly imbalanced data. It is defined as:

| 10 |

The main strength of dice loss lies on image to image comparison, being it particularly recommended when a set of images with their related classes, also known as ground truth, is available. Parameter ensures that the loss function avoids numerical issues when both y and equal 0.

Salehi et al. [16] build upon dice loss function and propose the Tversky Loss function, one of the loss functions for semantic segmentation of medical images that offers best results [13]. It adds weight to false positives and false negatives through the parameter, improving its reliability. Equation 11 shows its definition.

| 11 |

Lastly, the shape-aware loss function [17] considers the shape of the object; therefore, it is an excellent loss function in cases with difficult boundaries segmentation. It uses the cross-entropy (CE) loss function, and a coefficient of the average Euclidean distance (E) among i points around curves of predicted segmentation compared to the ground truth [13]. It is calculated as follows:

| 12 |

Building on the above loss functions, new alternatives arise combining them in such a way that results are improved. Examples of this are combinations of existing loss functions as combo loss [18] (combination of dice loss and BCE), and variants inspired in them as focal Tversky loss [19] and log-cosh dice loss [20].

Backpropagation

Early NNs were based on linear regression methods and were not able to learn. It was not until late 1900s when NNs benefited from backpropagation and gradient descent. As shown in the previous section, loss function and derivatives show how correct is the prediction. Thanks to backpropagation, a NN is able to learn from the accuracy of current predictions and update W and b values to improve it on the next iteration.

The goal is, therefore, to find the parameter values that minimize the loss, so when a new example is inputted to the system, it is classified correctly. To optimize this process, the values are not computed for each particular case, but the average of the loss functions of the dataset is calculated and used as a measure. This measure is called Cost function, and it is formally expressed as J(W, b), being M the number of examples in the dataset (see Eq. 13).

| 13 |

Considering that derivatives portray how much a value changes related to another variable, the derivative of the cost function related to the parameters W and b separately tells how much they should be changed. If predictions are accurate, then the loss values will be low; hence, parameters will be barely changed. Otherwise, high loss values lead to major changes. Also, a learning rate value () is used to control the update ratio so it does not escalate out of control. Eqs. 14 and 15 show the updating process of the trainable parameters.

| 14 |

| 15 |

Many authors succeeded at working with low layered NNs architectures. Bollschweiler et al. [21] implement a hidden layer NN for gastric cancer prediction before surgery. Dietzel et al. [22] study how a NN with a single hidden layer helps in breast cancer prediction through breast magnetic resonance images. Biglarian et al. [23] use a one hidden layer NN for early detection of distant metastasis in colorectal cancer.

Gardner et al. [24] train a NN consisting of only one hidden layer to detect diabetic retinopathy through 300 black and white retina pictures. When compared with an expert ophthalmologist judgment, the network achieved good accuracy for the detection of the illness. Years later, Sinthanayothin et al. [25] use a bigger set of colored retinal images (25,094) on a similar three layered NN. They conclude that working with colored RGB (red, green and blue) images improves the detection of retinal elements as optic disc, fovea, and blood vessels, which can be analyzed to detect sight threatening complications such as disc neovascularization, vascular changes, or foveal exudation.

NNs are not only used to analyze medical images, but other types of data collection can be also considered. Özbay et al. [26] introduce a new fuzzy clustering NN architecture (FCNN) for early diagnosis of electrocardiography arrhythmias. The NN consists of one hidden layer, achieving a high accuracy and improving previously reported works [27, 28].

Even after NN were generally dropped out and ignored for other techniques, many authors continued using them successfully. All presented models consisted on one input layer (100 - 400 nodes), one hidden layer (4 - 20 nodes) and a final output layer (1 - 10 nodes). It was not until the early 2000s that NN are brought again globally thanks, among other things, to the rising of fast graphics processing units (GPUs) and its convenient programming capabilities. They open a gate to what it is known today as deep neural networks (DNNs), offering a greater number of hidden layers and nodes.

Optimizer algorithms

As previously stated, the learning process is done through backpropagation in order to update the internal values of the network and decrease the loss, offering a more accurate prediction. This process is described in the previous section, and is known as gradient descent, one of the first optimizer algorithms. As the complexity of the data and the number of training cases increase, it is also more difficult to find the minima and its computational cost. Considering this fact, multiple optimization algorithms have risen over the years.

Stochastic gradient descent (SGD) is an iterative algorithm that follows a function until finding its lowest point as gradient descent. The only difference lies in that SGD updates the internal parameters of the network based on random examples instead of processing all of them. The process is computationally less expensive, but parameters have higher variance and larger fluctuation steps. Momentum algorithm aims to reduce the oscillations and variance of SGD using a parameter stored at each iteration that influences the next update in a way reminiscent of acceleration [29].

The previous optimizers use a constant learning rate, which is a problem when the gradients are sparse or small. Algorithms as AdaGrad [30] and its variants, such as RMSProp [31], Adam [32], or AdaDelta [33], introduce a parameter to modify it, achieving a variable learning rate. This variants aim to mitigate the decay of the learning rate that AdaGrad suffers using exponential moving averages of past gradients [34].

Evaluation metrics

Throughout the training process the optimizer algorithms work to minimize the loss function that the model uses as a tool to update its internal parameters, thus learning from the training dataset. However, these elements are not a reflection of the model performance, for which additional metrics are used depending on the task. When the output of the model is a discrete value, classification metrics must be taking into account. Conversely, if the output is a real value, regression metrics are the appropriate.

Classification metrics are based on the true and predicted condition of the training examples, being true positives (TP) or true negatives (TN) the instances that the model correctly predict, and false positives (FP) or false negatives (FN) the ones that it fails. While there are numerous metrics, the most common are accuracy, specificity, recall, precision, and F1 Score, a combination of the latter two [35]. Accuracy shows a percentage of the correct predictions over the total examples.

| 16 |

Specificity shows how well the model classifies true negatives.

| 17 |

Recall, also known as Sensitivity, is a metric representative of the correctly predicted samples. It is mainly used to know how well the model predicts true positives.

| 18 |

Precision is mainly used when one of the classes is under-represented, situation where the accuracy offers a high value that would not correctly represent reality.

| 19 |

Lastly, F1-Score combines precision and recall to provide a single metric in cases where both are important.

| 20 |

Furthermore, regression metrics use the predicted () and actual value (y) of each example to get the average deviation of the model predictions. One of the most extended metrics is the mean squared error (MSE), that can also be used as a root mean squared error (RMSE) to scale its value to be average deviation between the predicted and the real values.

| 21 |

Mean absolute error (MAE) is a regression metric that represents the absolute distance between the predicted and real values. It is a solid alternative to MSE, as outliers affect it to a lesser extent.

| 22 |

Deep neural networks

Numerous improvements to shallow NN have been proposed, but the groundbreaking advancement in this field is the evolution of NN to a deep architecture. They are called deep neural networks (DNNs), and their main characteristic is the inclusion of a high number of hidden layers. As seen in Fig. 1, a hidden layer is a set of neurons fully connected to the neurons of the previous (input) and next (output) layer.

DNNs composed of hidden layers replace hand-engineered feature detectors and are able to learn complex patterns. This type of multilayer architectures can be trained using simple stochastic gradient descent. As long as the linear functions of the neurons are relatively smooth functions of their inputs and of their internal weights (W) and bias (b), gradients can be computed using the backpropagation procedure [36].

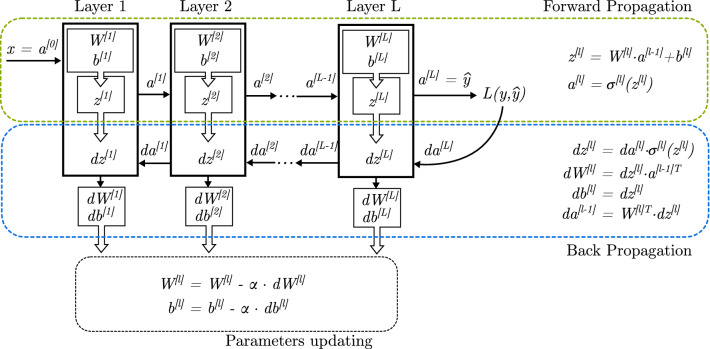

Through the forward propagation process, each layer l has an input vector x. It is represented as if it is an external input, or when it is the output of a previous layer (). Also, its output is expressed as , or when it is the final output layer.

During the Backward Propagation procedure, each layer l computes derivatives , , and . First two values are used to update (train) and , while the last one is served as input to the previous layer so the process can continue until reaching the input layer.

Figure 4 shows the forward and backward propagation processes. Because of the derivatives chain rule, the backpropagation equation can be applied to propagate gradients through all layers of the DNN. It starts on the output given by the forward propagation process, and continues layer by layer until it reaches the first layer (also called input layer).

Fig. 4.

Deep neural network training through forward and backward propagation. In this figure, the layer boxes represent a layer of artificial neurons fully connected with the previous and next layers

A great number of authors have capitalized on the possibilities that DNNs with fully connected layers offer, achieving key milestones in deep learning applied to medical studies. Clarke et al. [37] compare a backpropagation artificial neural network with two hidden layers (14 and 8 nodes each) to maximum likelihood method (MLM), and k-nearest neighbors (k-NN) algorithms over magnetic resonance (MR) image segmentation. They show that NN performs considerably better than MLM and similar to k-NN, providing improved boundary definition between tissues of similar MR parameters such as tumor and edema.

Cancer illness is an important subject of study whose pace has quickened thanks to NN since its early adoption. Veltri et al. [38] use a 2-3 hidden layers NN to detect prostate cancer, giving a significantly higher overall classification accuracy than logistic regression. Kan et al. [39] findings confirm that a NN with two hidden layers have superior potential in comparison with other methods of analysis for the prediction of lymph node metastasis in esophageal cancers. Nigam and Graupe [40] describe a method for automated detection of epileptic seizures from scalp-recorded electroencephalograms. They train a 4 layered NN where the input layer is connected simultaneously to both hidden layers. Darby et al. [41] use two hidden layers in a NN to predict which lymph nodes have a highest percentage of being metastasized in head and neck cancer.

In spite of the good results obtained with DNN architectures, their computation process is highly demanding. Moreover, as medical images with better quality and resolution appear, DNN fall behind as new models specifically crafted for image processing arise. This leads out to one of the most used and studied technique in recent works called convolution.

Convolutional neural networks

As can be inferred from Sect. 2.2, training and use of DNNs, where each layer has a high number of neurons, leads to a very computational demanding process. Moreover, the use of a high number of parameters as input (e.g., pixels of an image), makes it even more computationally demanding.

As colored images become an important subject of study, research focuses on processing data formed by multiple arrays of data. Considering one RGB (red, green, and blue) image as input of the NN, it could be disassembled into three 2D arrays, one for each color layer and one element for each pixel value. This is why convolution operations, which are specifically designed to work with matrices, are introduced as an alternative to fully connected layers. Multiple works [36, 42, 43] have demonstrated that convolutional networks (ConvNets or CNNs) offer similar or better results than DNNs with far less trainable parameters, resulting in less computation operations and smaller size architectures.

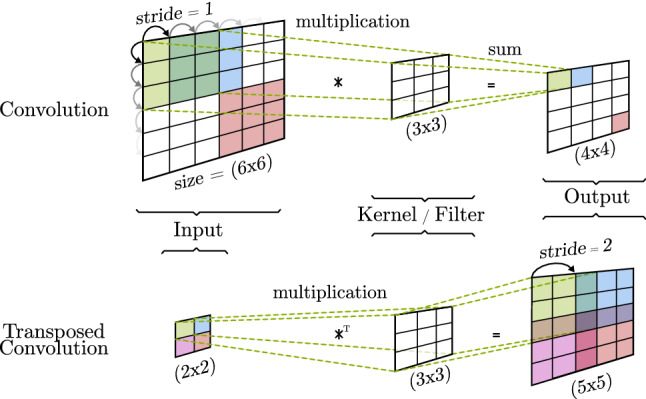

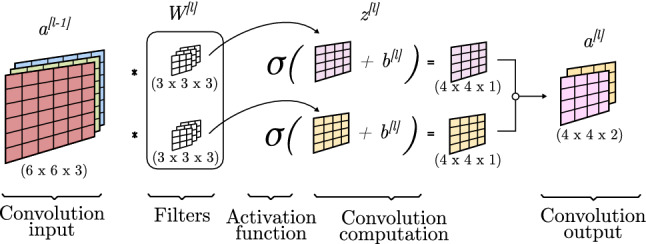

Trainable operations: convolution

The role of a convolution is to detect features found as patterns from the input. To achieve this, as Fig. 5 shows, a convolutional operation makes use of kernels, also called filters. From a mathematical point of view, a convolution operation is the result of an element-wise multiplication between a segment of the input and the filter, and the addition of the results. The process is repeated shifting the position of the segment a number of times set by the stride until the full surface of the input is covered. In this way, a single value for each segment is computed, shaping a new output matrix [44].

Fig. 5.

Basic convolution and transposed convolution

Basic convolutions can reduce (valid convolution) or keep (same convolution) the size of the input as output, while transposed convolutions (also called deconvolutions) take a small input and output a new matrix of greater size. The first type creates an abstract representation of the input, allowing feature extraction and classification techniques to be executed. The second alternative upsamples the input to increase its dimensions [45]. The size of the output matrix can be calculated using the input matrix size (n), the number of excluded cells on the edge of the output (), the number of positions that the filter is moved (), the filter size (f), and the number of filters (F). Eqs. 23 and 24 show the high width number of channels of the convolution and transposed convolution, respectively. It must be noted that many variants of the transposed convolutions are used as up-sample techniques, each one having its own output size calculation.

| 23 |

| 24 |

Originally, the values contained on the filters were fixed and manually crafted to detect a specific feature. LeCun et al. [36] applied backpropagation to convolutional operations, which enabled the training of the filters, automatically updating their values to gain new and better patter recognition values. Figure 6 shows how the convolution components can be associated with components of fully connected neural layers, making the back-propagation procedure very similar to what it is explained in Sect. 2.1.4. In this example, each filter of the two groups of filters has different values and each group of filters is trained separately. Moreover, each group of filters consists of 3 channels, meaning that each one is applied to a channel of the input and all values are summed together. At the end of this process, each group of filters outputs a one-dimensional matrix, so the final output is formed by two channels.

Fig. 6.

Convolution propagation

For the forward propagation process, a convolution operation is applied to the input of the l convolution block (Sect. 2.3.3) considering the trainable filters . A bias () is element-wise added to the matrix and is obtained. Finally, this new matrix is passed through an activation function and is obtained.

For the backward propagation process, gradients are propagated and the values of the bias and filters are updated as matrices as seen in Eqs. 14 and 15. To compute the calculations, dW and db are obtained as the addition of all the gradients of dZ (Eqs. 25 and 26):

| 25 |

| 26 |

These presented techniques are very basic alternatives used as explanation examples. As many authors have covered, problems like vanishing gradients [46, 47] or checkered patterns [45, 48] (caused by transposed convolutions as seen in Fig. 5) require additional techniques to be applied so convolutional operations can optimally work.

Non trainable operations: pooling

In contrast to convolutions, pooling is used to merge semantically similar features into one. They are fixed and not trainable operations that associate neighbor values reducing the dimension of the representation and creating an invariance to small shifts and distortions [36].

Multiple variants of pooling techniques (Fig. 7) are available depending on the intended outcome. The main difference between techniques is denoted by its application over a portion (window) of the input (Normal Pooling) or over all its values at the same time (Global Pooling).

Fig. 7.

Pooling operations

The most used variants are: max or min pooling (higher or lower value goes to the input unmodified) and average pooling (an average value of all the input values affected is obtained as output). Nirthika et al. [49] perform an empirical study of pooling operations in CNN for medical image analysis. They conclude that choosing an appropriate pooling technique for a particular job is related to the size and scale of the images and its class-specific features.

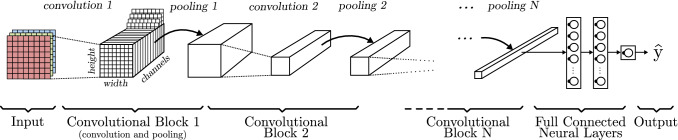

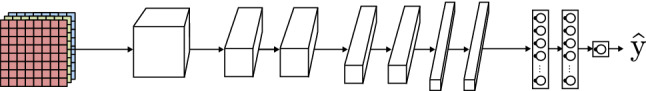

CNN models

Although convolutions are known since late 1970s [50], it was not until 1995 that they were applied to medical images [51], and in 1998 in a real-world application in LeNet [36] for hand-written digit recognition. Eventually, Krizhevsky et al. [52] proposed the AlexNet, a CNN able to classify high-resolution images [53] that established the foundations to many modern models.

As pointed by LeCun et al. [36], CNNs offer four key advantages: local connections, shared weights, pooling, and the use of many layers. Convolutional neural networks consist of consecutive convolution and pooling layers, also called convolutional blocks. The way a convolutional block is built can be changed, allowing highly personalized architectures to fit multiple case studies. Many authors discuss how the the different layers can be combined, but the most relevant aspect of this architecture is the way the first convolutional layers specialize in recognizing simple patters (e.g., vertical or horizontal lines), while final layers are able to classify more complex layouts (types of objects, faces, etc.) [43].

Figure 8 shows a typical CNN model that consists of a high number of convolutional blocks containing different convolution and pooling layers with multiple sizes, strides and padding. Generally, each consecutive convolutional block decreases the high and width of the representation, and increases the number of channels (depth) but, as seen in Sect. 2.3.1, the output size and number of channels is totally dependant on the internal parameters of the convolution operation (stride, filter size, number of filters, etc.). Right before the output layer, some fully connected layers are located. These layers, also known as dense layers, are identical in function and have the same structure as the ones addressed in Sect. 2.1. Their objective is to take the features extracted by the convolutional layers and learn a function to perform a classification of the data.

Fig. 8.

Convolutional neural network basic model based on AlexNet [52] architecture

Throughout the reviewed works, a set of CNN models stands out being embraced by the majority of authors as a base work to create their own models. They are broadly known and were originally created to solve the intrinsic problems of CNN architectures (e.g., overfitting and vanishing gradients) while achieving satisfactory results. AlexNet [52] was the largest CNN to date, it contains five convolutional and three fully connected layers. It is the first big CNN which showed that computer vision systems do not need to be carefully hand-designed, but can be trained to automatically learn from a labeled dataset as it did from ImageNet (Fig. 9). Several authors adjusted the AlexNet model to either improve the results or make them equal with a smaller model. The VGG-19 CNN [54], proposed by Simonyan and Zisserman, uses very small and convolution filters building a deeper model (19 layers) able to achieve better accuracy for localization and classification than the state-of-the-art models. They highlight the relevance of depth in vision systems. Moreover, SqueezeNet [55] achieves AlexNet-level accuracy on ImageNet with 50x fewer parameters. To accomplish this, Iandola et al. present a convolutional block named Fire. It replaces filters with filters and decreases the number of input channels to filters to drastically reduce the number of parameters in the CNN. Finally, they use large strides through the model to prevent the shrinking of the convolutional blocks output and therefore delaying downsampling leading to higher classification accuracy.

Fig. 9.

Convolutional neural network (CNN) based on AlexNet [52] and the VGG-19 [54]

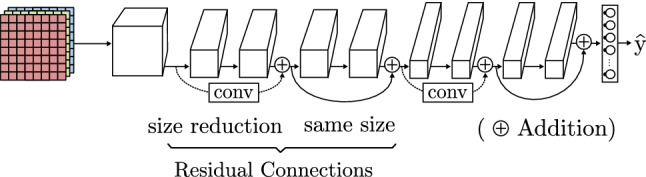

Increasing the CNN models depth also leads to a degradation of the accuracy caused by the inclusion of new convolutional layers. He et al. [46] introduce the residual connection concept in its ResNet model (Fig. 10). They insert shortcut connections from a previous layer onto a later layer without any extra parameter nor computation complexity. The authors achieve high accuracy results even with very deep models, thereby avoiding vanishing gradients issues.

Fig. 10.

Residual CNN architecture based on ResNet [46]

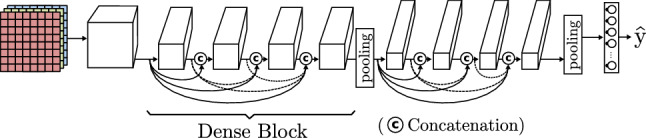

Huang et al. [56] suggest a more brute solution to the degradation of information based on the ResNet. They propose a model, called DenseNet, composed of dense blocks (Fig. 11). Each dense block is formed by many convolution operations and a final pooling. The most distinguishing feature is that every input is concatenated to the output of the convolutions inside the block. These dense residual connections allow to build deeper architectures avoiding data loss.

Fig. 11.

Dense CNN based on DenseNet [56]

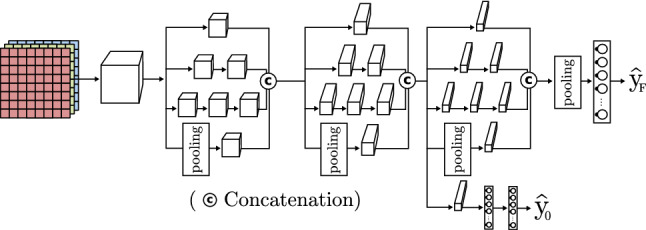

Drawing inspiration from ResNet, Szegedy et al. introduce the inception-V4 model [57] (Fig. 12). It comes from previous inception models (v1 or GoogLeNet [42], v2-v3 [47]), and the ResNet previously seen. A basic inception block uses various size convolutions with different filters and pooling, which are, finally, concatenated to obtain the next layer input. The authors improve this model through versions optimizing both accuracy and computation time.

Fig. 12.

Inception CNN based on inception model [42]

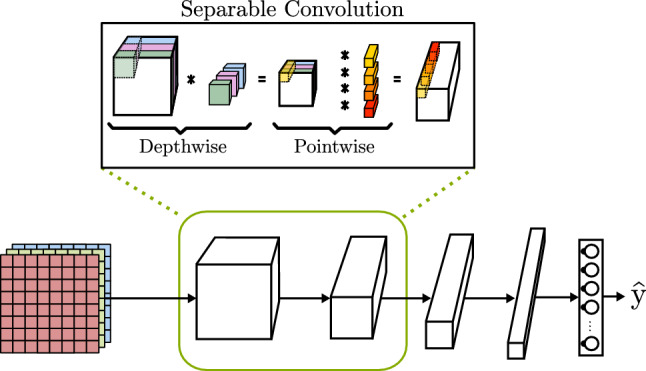

When analyzing CNN architectures is far from clear that if the objective is to process high-quality images, the number of computational operations is very large. This implies the use of high-end computers and long run times. To address this problem, the MobileNetV3 proposed by Howard et al. [58] is specifically tuned to achieve high accuracy on mobile hardware with low specifications as mobile phones and on-board computers, with priority given to fast analysis of images. The MobileNet introduces the use of a separable convolution operation that is able to achieve similar results as basic convolution with far less number of operations. As can be seen in Fig. 13, a separable convolution executes two operations: first, it performs a depthwise convolution where each filter is applied to only one of the input channels. The number of output channels is the same as the input, but the height and width of the feature map is changed depending on the convolution characteristics. Secondly, a pointwise convolution is executed through all the channels. In this time, the output height and width are the same as the input, but the number of channels is equal to the number of filters.

Fig. 13.

CNN with separable convolution block based on MobileNet [58]

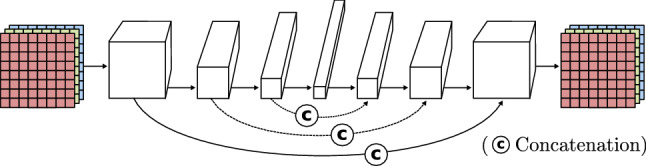

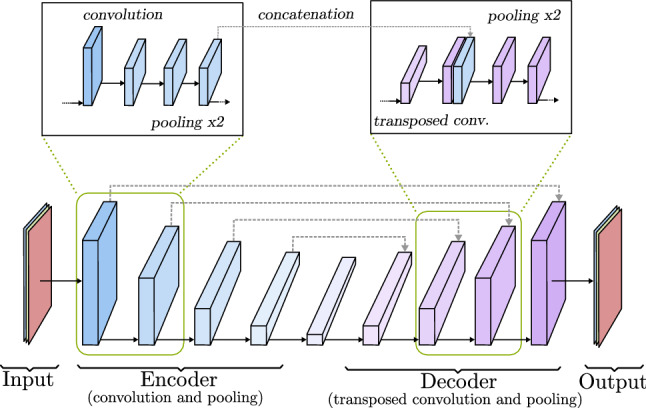

To conclude, convolutions are a very important component of widely known systems specialized in segmentation [59] and object localization [58, 60]. One of the first and most important is the U-Net [59]. It uses a collection of convolutions and transposed convolutions without fully connected layers to obtain a segmented image of the input (Fig. 14), establishing a starting point in encoder-decoder architectures (Sect. 3.5).

Fig. 14.

Encoder-decoder model based on U-Net [59]

All above models propose new CNN architectures for image classification or image generation. In recent years, a collection of models appeared that focus on optimal detection and classification of objects in images. The most popular are Fast-RCNN [61] using a VGG CNN, DetectNet [62] through an inception CNN, SSD (Single Shot Detector) [63] with a MobileNet CNN, and YOLO (You Only Look Once) [60] which uses multiple CNNs in different versions, among others. These models use already existent CNNs to get a classification of the objects in an image, then with all this information they propose different techniques to add them to the original images (i.e., squares pointing the location of the image or coloring the pixels according to the object that they shape). Table 1 collects the most relevant CNN models and their main features.

Table 1.

Overview of convolutional neural networks. The models are ordered from an initial simpler model to the most sophisticated ones. Each model offers an improvement and capitalizes on the previous models advancements. C Classification, IR Image Recognition, SS Semantic Segmentation, IM Image Modification, OD Object Detection, and OP Object Positioning

| Model | Main features | Application |

|---|---|---|

| VGG-19 [54] | Use of smaller convolution filters in order to achieve a deeper model. The authors apply a combination of convolutions and poolings as feature extractors followed by three fully connected layers. | C, IR |

| ResNet [46] | Connection of convolution layers to further layers through a matrix addition. When the connection is done between matrices of different size, the model performs a convolution to adjust its height and width. | |

| DenseNet [56] | Introduction of Dense Blocks containing multiple convolutions and pooling steps. Thanks to the use of the same convolutions, the feature map size does not change inside the block, allowing to concatenate the channels of the input of the block with all its inside convolution outputs. This model achieves deeper architectures avoiding the data loss related to very deep models. | |

| Inception [42] | Introduction of inception block where different filters are applied to the same input, concatenating all their outputs. The model is able to extract features using a lower depth and incorporates multiple model outputs. In this way, it is able to evaluate the result in an intermediate state. | |

| MobileNet [58] | Use of depthwise separable convolution, a convolution operation that splits the operation, reducing the computational costs. This model is designed to run on low-powered devices as mobile phones and on-board computers, with priority given to fast analysis of images. | |

| U-net [59] | Introduces an encoder-decoder structure. First, the model performs a set of convolutions to extract features from an input image (encoder). Second, a collection of transposed convolutions tries to reconstruct the input image while including new information (decoder). To do so, the convolutions output is concatenated to the matching transposed convolution input. | SS, IM |

| Fast-RCNN [61] | These architectures use a convolutional neural network as main tool to detect objects. Their value is not in offering a new CNN model, but using its output to better detect objects and point at its location in a picture. | OD, OP |

| DetectNet [62] | ||

| SSD [63] | ||

| Yolo [60] |

CNNs are successfully applied in many medical fields, focusing on those areas where images are the main asset for diagnosis and analysis. Many techniques are used to adapt a convolutional architecture to different data input. The most common input are 2D images [64–66], but also 2.5D (slice-based) [67, 68] and 3D [69–71] representations are used, extracting a more useful representation across all three axis [72].

The authors in [64] train a CNN to classify images of suspect lesions as melanoma or atypical nevi, outperforming dermatologists of different hierarchical categories of experience. Kather et al. [65] evaluate the performance of five CNN models applied to colorectal cancer tissue images. The evaluated models are AlexNet [52], SqueezeNet [55], GoogLeNet [42], Resnet50 [46], and VGG19 [54], the latter having the best performance. In [66], the inceptionV3 model [47] is used together with a set of feature extraction and classifying techniques for the identification of pneumonia caused by COVID-19 in X-ray images.

Yun et al. [67] use a 2.5D CNN for pulmonary airway segmentation in volumetric CT. The authors employ three images for each spatial point they want to analyze. These images are taken from different angles (axial, sagittal, and coronal), where the center of the images corresponds to the same point. This way, the system can analyze three dimensions without having the computational requirements of using complete 3D images. It represents a major development on the early detection of obstructive lung disease. Similarly, Geng et al. [68] use three parallel slices in each of the three views as input of 3 different CNN (one for each input) fused by a fully connected layer. Their model is able to effectively detect lung diseases as atelectasis, edema, pneumonia, and nodule.

As 2D filters are applied to 2D images, 3D filters can be used to perform 3D convolutions. Multiple models can be used together with 3D convolutions increasing the computational cost but also being able to put together more information. In [69], 3D CT scans are used along with a modified U-Net model [59]. In order to process the 3D models, the authors have to subsample them, worsening the quality of the images, while 2D model could use high resolution examples. They are not able to offer quantitative comparisons between 2D and 3D models, but as GPU computation power and memory capacity continue to grow, future research could focus on employing high resolution 3D scans. Nair et al. [70] train a 3D U-Net able to detect Multiple Sclerosis lesion from multi-sequence 3D MR images. Last, Zhang et al. [73] build a model that merge together 2D and 3D CNN for pancreas segmentation that could be applied to tumor detection. Their progress improves the state-of-the-art segmentation algorithms and helps reducing the input size of the 3D CNN, decreasing the computational cost while maintaining the accuracy.

Generative models

Neural networks have played a key role in analyzing and classifying data. As already seen, many authors have demonstrated how deep models are capable of learning main attributes of different data (e.g., images) to achieve some specific goal (e.g., tumor detection). However, NN capabilities are not limited to data analysis, but they are able to generate new data in a way that can even seem real to human observers. Generative models are being used increasingly by authors for tasks like semantic segmentation, object detection or localization, image quality improvement, and data augmentation, among others.

Image generation

Although fully connected networks are able to generate images, CNNs have demonstrated that they are able to obtain high-quality images with far less training time and computational requirement. Image generation models can be separated into two types depending on their input: a vector or an image.

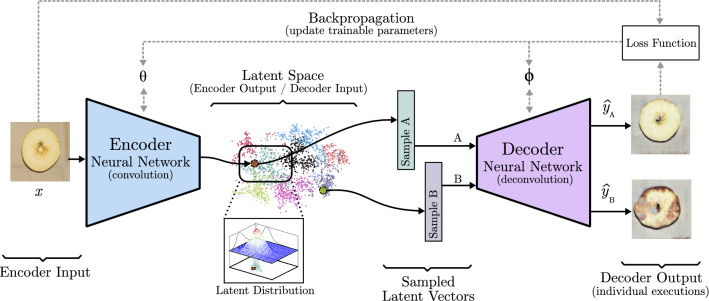

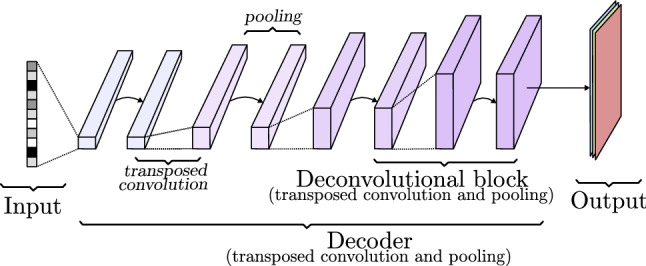

A decoder (Fig. 15) is a collection of transposed convolutional blocks arranged in a way that increases the width and height while reducing the number of channels until reaching the desired image size and the required number of channels, three for colored images and one for black and white images. The internal trainable parameters of the convolution (known as filters in Sect. 2.3.1) shape the image layer by layer. Through forward and backward propagation, this model is able to learn how to generate realistic images and it is controlled by the input vector, which can be a class definition vector or noise to add variation to the generation process. More details can be seen in Sect. 3.4.2.

Fig. 15.

Decoder model based on transposed convolutions

If the goal is to generate a new image from an already existing one, a model like the one represented in Fig. 16 is the way to go. It is composed of a decoder, like previously seen, but its input is the output of a convolutional network called encoder. This encoder-decoder architecture is able to extract the features of one image (input) like a normal CNN. Instead of relying on a few last fully connected layers to output a conclusion, it removes them and creates a new image (output) from the output of the last encoder convolutional block through the decoder. Each layer of the decoder takes the last pooling output of the encoder counterpart layer and concatenates it to the convolution output, this is known as skip connection. In this way, encoding information is transferred to the image creation process. During the training process, the output image is compared to the input image or to a paired image of the input, depending on whether the aim of the model is to rebuild the original image or to change its content. The internal parameters of the encoder and the decoder are changed considering how similar the target and the output image are. This basic idea is further developed in models like U-Net [59] (Sect. 3.4), and variational autoencoders (VAE) (Sect. 3.5).

Fig. 16.

Encoder-decoder model based on U-Net [59]

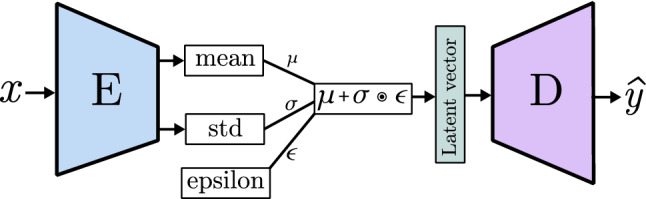

Over the years, multiple generative models have emerged achieving even more reliable results. Although techniques as autorregresive models [74] or flow models [75] perform relatively well, the most used models nowadays are the variational autoencoders (VAEs) [76, 77] and generative adversarial networks (GANs) [9].

In this section, both VAE and GAN are analyzed, emphasizing on their main differences and similarities. Considering that the main internal parts of these networks are convolution, deconvolution, pooling layers (Sect. 2.3), and forward and backward propagation (Sects. 2.1, 2.2) are still used as training method, only main architecture characteristics are included. The main goal of these sections is to gather comprehensive knowledge of the models architecture, their capabilities, and how they influence the medical field.

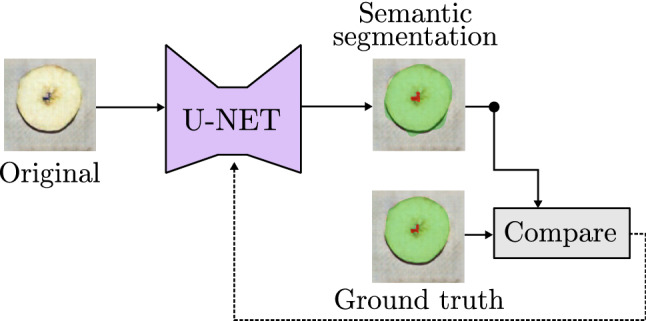

Semantic image segmentation

A great number of works in medical and health-care field aim to locate certain elements in an image, not only to know where they are, but to also visualize their shape. Thanks to models as the U-Net, that generate an image from an existing one, it is possible to analyze each one of the input image pixels, classifying them according to the object that are part of. This process is known as Semantic Image Segmentation and, as can be seen in Fig. 17, the output is a matrix with the same size as the original image that contains a predicted class to each of the pixels. This information can be used to generate a completely new image, or merge it with the original as a mask to point out the classification of the objects in the image [78]. The training process of semantic segmentation models is based on the use of ground truth segmentation. The predicted class of each pixel is compared to the real class, updating the internal values of the model based on one of the loss functions described in Sect. 2.1.3.

Fig. 17.

Example of image segmentation model based on U-Net [59]

Image segmentation has important applications in medical image analysis with multiple models rising along last years. Minaee et al. [79] and Asgari et al. [80] gather and review an extensive collection of works focusing on image segmentation.

In the medical field, several architectures to classify each pixel of an image have been proposed by different authors as, for example, full convolutional networks or adversarial training (Sect. 3.4). But, regarding medical works, the most extended architectures are encoder-decoder models and attention models.

When working with full convolutional networks (FCN), as seen in Section (Sect. 2.3), final layers do not match the size and shape of the input image. Because of it, after the last layers, new deconvolutional ones are introduced to reach the original size following a similar process as decoder models. Ben-Cohen et al. [81] propose to use FCN to detect liver metastases in CT examinations. The authors obtain good results, but nowadays this approach is being abandoned in favor of encoder-decoder models with a larger decoder network, and techniques as skip connections that help to achieve better quality segmentation.

Regarding adversarial training, works as the one presented by Daiqing et al. [82] rely on discriminative networks to train a generative model. The authors build their architecture on the StyleGAN [83], introducing an encoder network as a feature extractor of the input image. Doing so, the generation process is much closer to encoder-decoder networks than plain generative adversarial networks, although adversarial principles are still present.

Encoder-decoder architectures are the most commonly employed, in particular U-Net [59]-based models. Imtiaz et al. [84] apply an encoder-decoder model to screen glaucoma disease from retinal vessel images. Their model outperforms accuracy and processing time of other state-of-the-art works by using a pre-trained VGG16 [54] network as encoder. Rehman et al. [85] modify the skip connections of the U-Net introducing a feature enhancer block that adds more detail to the extracted features, helping the architecture to identify small regions. In a similar way, Zunair and Ben [86] introduce a sharp block in each skip connection of the U-Net in order to prevent the fusion of different features through the encoder convolution process and its merge with the decoder blocks. The sharpening process is performed by high pass kernels that infuse more relevance to distinct features. Su et al. [87] also improve the feature extraction process introducing the MSU-Net (Multi-Scale U-Net) to medical image processing. Specifically, the authors introduce separate convolution sizes in the same block in order to explore the input features at different levels, then each scale output is concatenated in order to be processed by the next convolutional block. To test their model, multiple datasets are used, including breast ultrasound images to detect cancer-harmed tissue, chest X-ray images showing tuberculosis cases and skin lesions pictures. Isense et al. [88] develop the nnU-Net tool, an auto-configurable architecture that adapts its internal parameters to work with multiple medical image datasets of different body parts. The authors use variations of U-Net including 2D U-Net, 3D U-Net, and 3D U-Net cascade to work with low-resolution images whose size is increased over time.

Despite the overall good performance of U-Net models, the very nature of the convolutional networks that build the encoder and decoder networks make them weak when working with high variable shape objects. This situation is very common in medical datasets, as the shape of target organs varies among different patients [89]. In order to overcome this drawback, recent works suggest to use Attention mechanisms that do not rely solely in the shape of the objects, highlighting the position where the main object that must be identified is located. This technique is drawn from natural language processing networks [90], where it is used as a method to check the connection between words using the weights that the model assigns to each word regarding the other terms of the text.

When working in computer vision, Attention mechanisms are usually introduced to existing semantic segmentation models as Attention blocks. These blocks divide the output features of a convolutional block in patches and explore how they are related to each other. This process helps to emphasize salient features, better locate objects of interest, and remove not useful elements by considering the relation to their neighbor area through trainable weights for the extracted features of each convolutional block [91].

Recently, multiple authors propose models that introduce Attention techniques in semantic segmentation tasks for medical images. Ouyang et al. [92] improve the diagnosis of pneumonia caused by COVID-19 by training two models, the first one using the whole lung dataset, and the second one using images with small infection area. While the second model gains more attention on the minority classes at the expense of possible over-fitting, the first one learns the feature representation from the original data distribution, thus addressing the fitting problems. The authors claim that attention mechanisms help the models to focus on important regions and improve affected area localization. This affirmation is also stated and demonstrated by Pang et al. [93] in liver tumor segmentation; Zuo et al. [89], also working with liver tumor images skin lesions, and retinal vascular segmentation; and Sinha and Dolz [94] which, using different resolutions of the same image to extract features at multiple scales, are able to better segment liver and brain tumor images.

Evaluation

Image generation models output a complete image, not a real or a discrete value. This change of the output nature causes evaluation metrics as described in Sect. 2.1.6 to be unsuitable. When working in semantic segmentation tasks, the objective is to compare the output of the model to the ground truth, checking which pixels were correctly predicted. The most common metrics are Pixel Accuracy, Intersection-Over-Union (IOU), and Dice Coefficient [35].

Pixel Accuracy shows which percentage of pixels are classified correctly. This method may be troublesome when studying images with one predominant class as it shows a high accuracy value even when many pixels of the smaller class are not correctly classified.

| 27 |

Intersection-over-Union (IoU) shows how the prediction and the ground truth overlap, thus taking into account even classes represented by few pixels.

| 28 |

Dice Coefficient (F1 Score) is very similar to IoU, and its results are equivalent. Both are common in medical studies, being author preference the main reason for choosing one over the other.

| 29 |

When a ground truth does not exist, as in realistic image generation tasks, above metrics lie pointless as a comparison can not be possible. In those cases metrics as inception score, Frechet inception distance, peak signal-to-noise ratio (PSNR), and structural similarity index (SSIM) must be adopted.

Inception score (IS) [95] and Frechet inception distance (FID) [96] focus on analyzing how realistic and variable the generated images are. To get these metrics, the authors use a pretrained inception model [42] to classify the generated images and compare its label distribution to the real examples. IS merges the probability of correctly classify an image, as a metric of its quality, and its distribution to check its diversity. FID introduces the usage of a multivariate Gaussian distribution to calculate the distance between real and fakes images distribution, making it a better option to analyze image diversity.

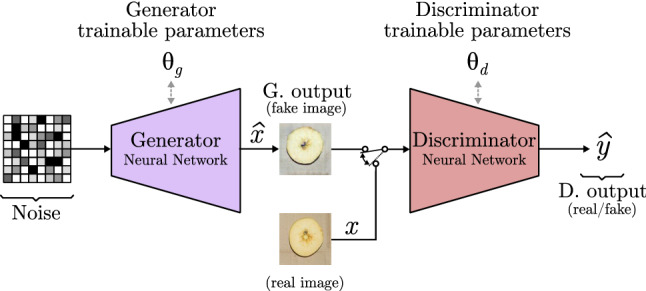

Generative adversarial networks

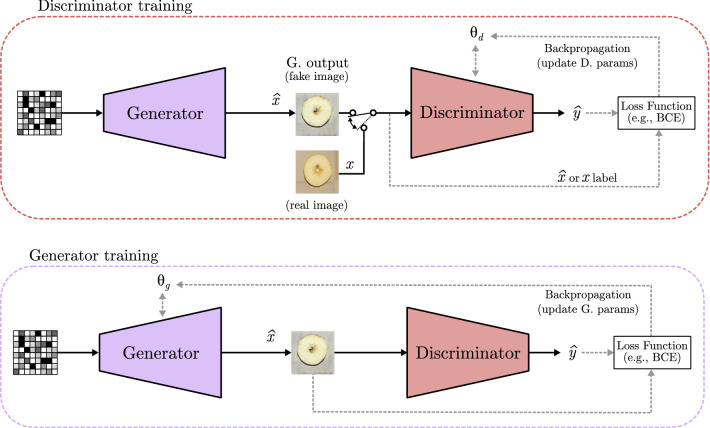

Generative adversarial networks, also known as GANs, were introduced by Goodfellow et al. [9] as a new framework for estimating generative models via an adversarial process. GANs consist of two different neural networks that are trained separately. One of the two models is a generator (G), a deconvolutional NN that captures the data distribution and generates a fake image. The other is a discriminator (D), which works as a classifier telling if its input is a real or fake image.

Following the work of the original authors [9], GANs can be formally described as two models based on a minimax game, as shown in Eq. 30. The objective is to train D using real data (x) to maximize the probability of assigning the correct label to images generated by G given noise variable z, while minimizing the chance of generating images in G that not look real to D. This means that GANs optimal end would be the distribution of real data () equal to the distribution of generated images ().

| 30 |

In Eq. 30, the first addend computes the probability of D predicting that real images (x) are authentic, while the second addend estimates the probability of D predicting that the generated images from G giving a noise z are not real.

Figure 18 shows the basic structure of a GAN, where and represent the trainable parameters of the generator and discriminator, respectively. A GAN is trained using a set of real images, where each one is represented as x features. Moreover, a fake example generated by G is represented as . The fake and real images are feed separately into the discriminator, whose job is to tell if they are fake () or real (x). D output is depicted as (like in Sects. 2.1 and 2.2). In order to prevent that the generator always generates the same output, a random noise vector is used as input. At the first, it was only used as a seed to generate different outputs, but as models become more complex, the noise turned into a way of controlling the content of the fake image. It can be combined with different data to select image characteristics or which class is represented in its content. To do so, different techniques have been proposed, such as the inclusion of a one-hot class vector to select the image class in the conditional GAN model [97], or the modification of the noise to select what the image shows in controllable GAN model [98].

Fig. 18.

Generative adversarial network model based on deep convolutional GAN [99]

The training process of a GAN corresponds to a minimax two-model game, where each of the models is trained separately but needs to learn at same pace. On the one hand, if a completely trained generator is used while a naive discriminator is employed as classifier, the discriminator will be not able to distinguish whether it is a real or a fake image because the generator is doing a very good job generating images that seem real, thus not being able to learn. On the other hand, if a completely trained discriminator is used with an untrained Generator, the classifier will detect all the fake images and the generator will be not able to learn how to “trick” the discriminator. This is why the training process is done separately by batches, where G learns how to produce very good fake images, and then D learns how to detect them. Figure 19 shows how a GAN is trained. In both cases, the discriminator output is compared to the real label of the image, starting the backpropagation process of the trainable parameters or , depending on the model being trained. The training process is repeated until desired results are achieved. Finally, the discriminator model can be discarded and the generator is used to create the fake images as final output.

Fig. 19.

Generative adversarial networks training

GANs convergence

The GAN backpropagation procedure is the same as seen in Sect. 2.2, the only difference being the loss function implementation. Initially, the BCE loss function is implemented independently in each model, updating the parameters individually based on a discrete value returned by D depending on the prediction of the input image (real or fake). A high discriminator loss and low generator loss mean that the model generates images that it is not able to detect as fake, which is a desirable output. Specifically, the training point where G reaches the lowest point and D the highest is called convergence. Previous model states would generate low-quality images that are easily detected as fakes, and following steps would possibly introduce noise in the images, decreasing its quality. This shows that generative models do not necessarily benefit from long training procedures.

This process can lead to multiple problems. One of the main issues is the inability to converge, meaning that the model does not learn to generate images that look real. But still, achieving to trick the discriminator to not detect fake images is not sign of success, as the generator might be producing always the same almost real image as output, situation known as mode collapse. In order to sort out these problems, the loss function evolved to quantify the similarity between generated images and real data distributions based on the assumption that the more similar the distributions are, the better the models generation accuracy is, meaning more diverse and realistic fake images.

Arjovsky et al. [100] analyze and define different ways to measure the distance between two data distributions and propose the Wasserstein GAN (W-GAN). W-GAN swaps the discriminator for a new model known as “critic.” The critic model outputs a real value pointing out how real an image is, instead of a discrete one only stating if it is a real or a fake picture. Furthermore, W-GAN introduces the use of Wasserstein metric, an indicative measure of the cost of transforming one data distribution into another given an specific Earth-Mover distance. These changes to classic GANs help to train a model more gradually, avoiding mode collapse.

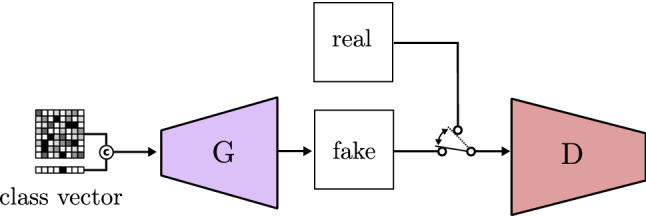

GANs noise manipulation

As previously stated, GANs are able to produce fake images that seem real. However, random or single class generation would heavily constrain GANs potential. This is why many authors worked on methods to control what the GAN is generating. Mirza and Osindero [97] propose a model called conditional GAN, represented in Fig. 20, that generates fake images of different classes. The class selection is done through a one-hot encoded vector concatenated to the noise vector. This class vector points out which class must be generated (1), while the other remains as (0), and the noise vector provides randomness.

Fig. 20.

Conditional GAN based on CGAN [97]

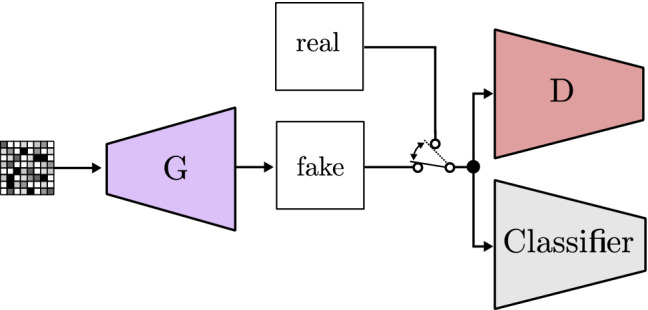

Lee and Seok [98] improve the model and propose the controllable GAN. The authors show how noise manipulation can influence which features are included in the fake image. As can be seen in Fig. 21, they add a third neural network working as a classifier whose main job is to help updating the noise, in order to produce more of the desired feature. In contrast to previously seen updating processes, the noise modification is done through gradient ascent, so the feature is maximized.

Fig. 21.

Controllable GAN based on CGAN [98]

Lastly, Shen et al. [101] review how the modification of the noise to control image features is related to a latent space. The authors claim that GANs are able to encode different semantics inside the latent space across multiple latent variables, being each of the variables a different feature. For instance, if the study focuses on human faces, the features would include eyes color, gender, and smiling, and others. To prove this, they propose the InterFaceGAN, a framework to explore how single or multiple semantics are encoded in the latent space of GANs that enables semantic face editing with any fixed pre-trained GAN model.

GANs applied to biomedical works

Multiple GAN models have been used as tools, inspiration, or as a starting point to develop new models applied to medicine. Some of them (Conditional GAN [97] and Controllable GAN [98]) are addressed in Sect. 3.4.2, but GANs have been on a continuous evolution since those models were proposed by their authors. Some of the most popular and widespread models are outlined below.

Radford et al. [102] propose a new model called deep convolutional GAN (DCGAN), and a set of guidelines to improve GANs including convolutional layers. They conclude that the elimination of the fully connected layers for convolutional layers, the use of ReLU activation in all generator layers except the output (which uses a tanh function), and the LeakyReLU activation in discrimination layers, among others, improve the quality of the generated images.

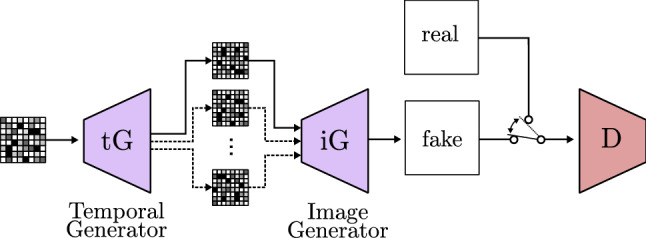

Saito et al. [103] propose a new model, called T-GAN (temporal GAN), able to generate sequences of images. They report the possibility to analyze video frames to train a model which generates consecutive images that together give the impression of movement (video). It first uses a temporal generator to build a collection of noises, one for each image of the sequence. As Fig. 22 shows, the noises are individually used to generate images through the GAN. Unfortunately, this model requires a lot of computation power, meaning that it is still not viable to use for many other authors, but as GPUs power keeps increasing, future works could benefit from this study.

Fig. 22.

Temporal GAN based on TGAN [103]

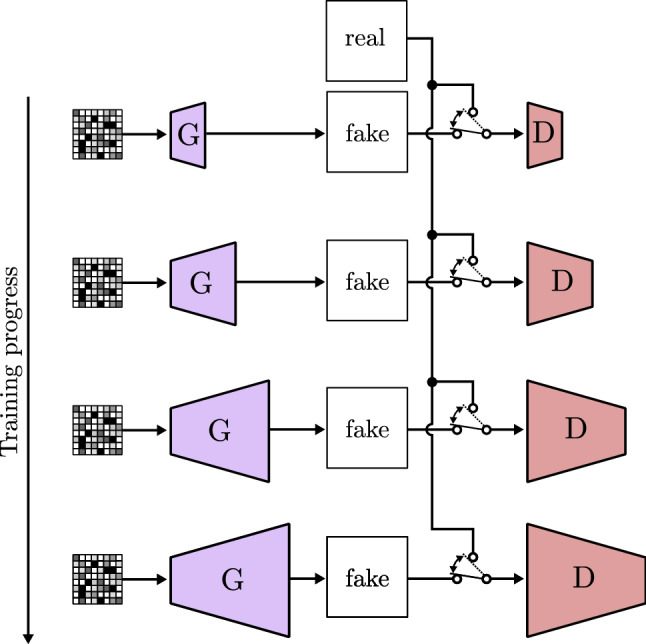

In recent years, many authors look forward to find new techniques in order to improve generated images resolution and quality, as well as to improve images variability to make GANs broader in scope. Karras et al. [104] advocate the use of progressive growing GANs (PGGAN). This model makes the generator and the discriminator bigger as training progresses, starting from low resolution and finally ending with a high resolution image (Fig. 23). The authors add new increased size layers allowing the training process to first find large-scale structures and then switch to finer details as new layers are included. PGGAN achieves training time reduction, more stable training, and high-resolution image generation with never obtained before quality.

Fig. 23.

Progressive growing GAN based on PGGAN [104]

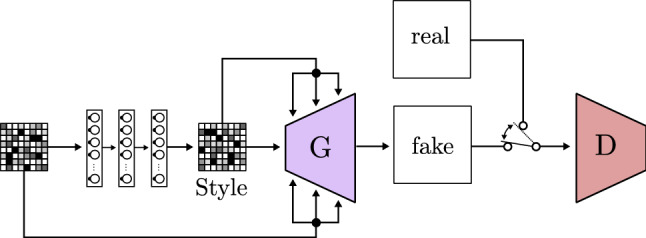

In a later paper [83], Karras et al. study how to transfer the style of an image to generate a variation of another image thanks to the proposed model StyleGAN. The authors introduce a fully connected network that transforms the input of the generator and feeds it in different entry points. This architecture, shown in Fig. 24, is based on a block called AdaIN (Adaptive Instance Normalization), which merges the intermediate latent space, the noise, and the convolution layer output/input to improve image generation.

Fig. 24.

Style GAN based on StyleGAN [83]

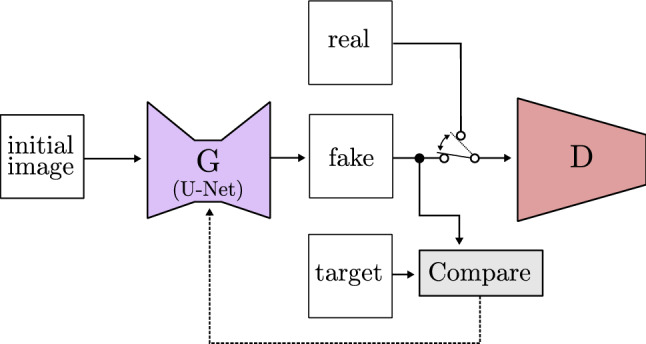

Finally, a rising number of models focuses on image to image translation. This technique consists of taking one image as input and transforming it to a different image that is still related to it. Isola et al. [105] assess this task and propose a model based on CGAN called Pix2Pix (Fig. 25). It uses the U-Net structure as a generator, offering great results in image semantic segmentation. Furthermore, Pix2Pix loss learning is adapted to the data used for its training, which makes it suitable for a wide variety of fields.

Fig. 25.

Image to image GAN based on Pix2Pix [105]

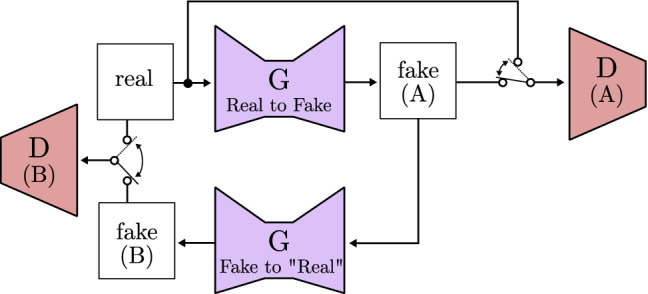

Zhu et al. [106] work with the Pix2Pix framework presented by Isola et al. [105] and the StyleGAN proposed by Karras et al. [83]. Their model, called cycle-consistent adversarial network(CycleGAN), is able to automatically translate an image into another and viceversa without a paired dataset used for training. As can be seen in Fig. 26, the authors achieve this by using two generators and two discriminators. First, it transforms the real image obtaining the output (fake A), then the process is reversed to get the original image (fake B) through a different generator, thus creating a cycle. Finally, different losses acquired through the comparison of different outputs of the model are combined together and used as learning loss. This is one of the most complex models to date, but results are evidence of its effectiveness.

Fig. 26.

Cycle consistent GAN based on CycleGAN [106]

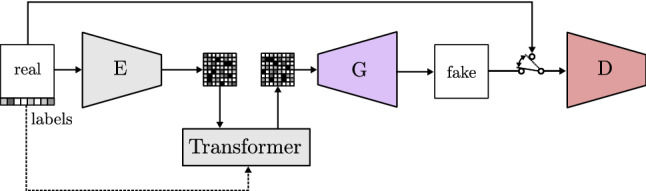

Among the recent models gathered in Table 2 is the VQ-GAN (vector quantized GAN) proposed by Esser et al. [107]. It draws inspiration from transformers structures used in models applied to text analysis. The authors suggest to use transformers to learn a distribution of the different labeled characteristics of the images. The model, shown in Fig. 27, uses an encoder to represent the image as a vector of image features merged with the transformer data. In this way, the image is represented by a rich feature vector instead of the values of the pixels. Finally, this new vector is fed to a GAN following the conventional path.

Table 2.

Overview of generative adversarial models. The models are ordered from an initial simpler model to the most sophisticated ones. Each model offers an improvement and capitalizes on the previous models advancements

| Model | Main features | Application |

|---|---|---|

| GAN [9] | First model that introduces the adversarial philosophy to generate synthetic images. It faces two different neural networks: the generator and the discriminator. The generator captures the data distribution and generates a new image, while the discriminator tries to categorize images as fake or real. The conditional GAN (CGAN) [97] was developed along the GAN. It is the first GAN model able to select and control the class of the generated image. | Image generation |

| CGAN [98] | (Controllable GAN) It extends the basic GAN functionality by adjusting the no| values to control the generated image content. It is able to generate images using more detailed labels that the basic GAN thanks to the introduction of a classifier. The generator keeps generating images and the fiscriminator categorizes them as fake or real, while the Classifier detects if the target class is depicted in the image. | Image generation using detailed labels |

| DCGAN [102] | (Deep convolutional GAN) Unsupervised model that introduces the use of convolutions in all layers, removing the last full connected layers that previous models included. It also uses Batch Normalization to stabilize learning, achieving better feature detection and images of higher resolution than previous models using less trainable parameters. | High resolution image generation, unsupervised learning |

| Pix2Pix [105] | The authors introduce the use of a U-Net model as a henerator instead of an encoder, and a dataset of paired images for the training process. This model, in addition to the discriminator, compares the fake image with the target image paired to the input. In this way, it is able to learn how to translate images to images with different features. | Image to image translation, semantic segmentation |

| TGAN [103] | (Temporal GAN) This model uses a temporal generator to build one noise matrix for each frame of the sequential image from the initial noise. Each of these new noises is sent to another generator that creates the images. | Generation of image sequences |

| CycleGAN [106] | It uses two generators, one to transform a real image to a fake one, and the other to transform the fake to another fake image that must be as close as possible to the real image. CycleGAN uses two different discriminators, and combine all the losses to obtain a cycle loss in order to be trained. | Image to image translation |

| PGGAN [104] | (Progressive growing GAN) A model that increases the number of layers of the generator and discriminator along the training process. It first detects large features structures and then shifts to discover finer scale details. | Production of high-quality images |

| StyleGAN [83] | This models uses a fully connected neural network to extract the style of an image, and a convolutional block called AdaIN to insert it in an input image. This style is inserted in multiple points of the model. | Merging of images |

| BigGAN [108] | It proves that GANs benefit from increasing the size of the model. BigGan establishes a threshold and re-samples the noise matrix in order to increase the number of trainable parameters, and as a result obtaining images with better fidelity and variety. | Generation of a great variety of high-quality images |

| VQ-GAN [107] | (Vector quantized GAN) It uses the transformer block, commonly used in natural language processing, to analyze the interaction between different parts of the image. First, an encoder synthesizes the image and represents it semantically as a collection of features. Then, this feature matrix is fed to the generator, from there, the standard GAN path is followed. | High resolution images |

Fig. 27.

Vector featured GAN based on VQ-GAN [107]

Although GANs are used along multiple areas, they have a great influence over the biomedical field, where the analysis and the generation of images play a very important role. They take advantage of the recent increase of computational power and massive medical data availability. A big part of the data generated is applied to neuroimaging and neuroradiology, brain segmentation, stroke imaging, neuropsychiatric disorders, breast cancer, chest imaging, imaging in oncology, and medical ultrasound, among others [99].

Ghassemi et al. [99] propose a DCGAN to produce MR images of the brain. The authors train the GAN so the discriminator can detect fake MR images and extract their main features. Then, the fully connected layers of the Discriminator are replaced by a softmax layer which is trained again. In this way, they use it as a classifier able to detect meningiomas, gliomas, and pituitary tumors with high accuracy. Nema et al. [109] also study how GANs can be applied to unlabeled MR images. They propose an enhanced version of CycleGAN, called RescueNet, which offers excellent results regarding brain tumor segmentation. Klages et al. [110] evaluate CT image generation for head and neck cancer patients using Pix2Pix and Cycle-GAN models. The generated image is used together with MR imaging, which provides superior soft tissue contrast showing improvements in tumor delineation, segmentation and treatment outcomes in neck and head cancer. The authors conclude that the Pix2Pix model requires near perfect alignment between CT and MR images, while CycleGAN relaxes the constraint of using aligned images or even images acquired from the same patient.

In spite of being a widely known technique, MR images generation can be degraded due to patient motion, leading to increased cost and patient inconveniences. Do et al. [111] propose two GAN models (X-net and Y-net) able to rebuild downsampled MR images to speed up the process with no quality loss. Both models were firstly implemented as basic U-Nets, but the inclusion of a GAN discriminator improved the results and contributed to obtain more realistic images. Furthermore, certain procedures require more representative images, adding techniques to basic MR images. Positron Emission Tomography (PET) is often used along MR images (PET-MRI) and CT (PET-CT). PET-CT provides better results in scan time, costs, and patient comfort, but PET-MRI reduces the patient exposure to radiation [112]. This is one of the reasons why Pozaruk et al. [113] study how GANs can improve prostate cancer PET-MRI. They propose a GAN model to generate pseudo PET-CT images from PET-MR scans, meaning that a safer method is used to obtain high-quality images. Results show improved quantitative accuracy of PET-MR measurements, enabling its use in clinical lesion grading in a non-invasive manner. It leads to better prognostication and reduce or remove the need for biopsy or re-biopsy. Zhou et al. [114] also propose a GAN to enhance MR images of the brain for Alzheimer disease classification. They use 2.5D and 3D scans along with 3D-GAN which uses 3D convolutions to generate a better quality MR images. Then, a classifier analyzes the synthetic image to tell if the patient may suffer Alzheimer disease or not.