SUMMARY

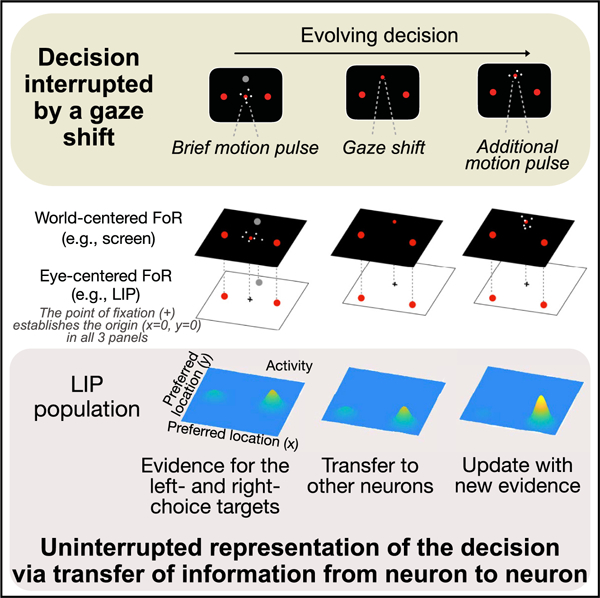

Neurons in the lateral intraparietal cortex represent the formation of a decision when it is linked to a specific action, such as an eye movement to a choice target. However, these neurons should be unable to represent a decision that transpires across actions that would disrupt this linkage. We investigated this limitation by simultaneously recording many neurons from two rhesus monkeys. Although intervening actions disrupt the representation by single neurons, the ensemble achieves continuity of the decision process by passing information from currently active neurons to neurons that will become active after the action. In this way, the representation of an evolving decision can be generalized across actions and transcends the frame of reference that specifies the neural response fields. The finding extends previous observations of receptive field remapping, thought to support the stability of perception across eye movements, to the continuity of a thought process, such as a decision.

Graphical abstract

In brief

Neurons in area LIP represent intention in an oculocentric frame of reference. They are therefore unable to represent a decision when evidence is acquired before and after a change of gaze direction. So and Shadlen show that continuity of a decision arises via transfer of information from neuron to neuron.

INTRODUCTION

The study of decision-making in human and non-human primates has led to an understanding of how the brain integrates samples of information toward a belief in a proposition or a commitment to an action. Two innovations continue to facilitate the elucidation of the neural mechanisms. First, a focus on perceptual decisions permits experimental control of the quality of evidence and builds on psychophysical and neural characterizations of the signal-to-noise properties. Second, a focus on neurons at the nexus of sensory and motor systems—specifically those capable of representing information over flexible time scales—permits a practical framing of decision-making as the gradual formation of a plan to execute the action used to report the choice. For instance, when a choice is expressed as the next eye movement, single neurons in sensorimotor areas, such as the lateral intraparietal area (LIP), reflect the evolving decision for or against a choice target in the neuron’s response field (Shadlen and Newsome, 1996). The neural response reflects the accumulation of noisy evidence to a threshold that terminates the decision, resulting in either an immediate eye movement or in sustained activity, representing the plan to make said eye movement when permitted.

It may be unsurprising that neurons involved in action selection (or spatial attention) would represent the outcome of a decision communicated by the act, but it was not a foregone conclusion that those neurons would also represent the evolving decision as it is formed. Some interpret this observation as consistent with action-based theories of perception and cognition (Thompson and Varela, 2001; Clark, 1997; Merleau-Ponty, 1945; Shadlen and Kandel, 2021) and the idea that the purpose of vision is to identify affordances (Gibson, 1986). To others, the observation seems limiting because decisions feel disembodied, that is, independent of the way they are reported—if they are reported at all.

Indeed, a limitation of tying decision-making to actions is that the neurons that plan action tend to do so in fixed frames of reference. Neurons in area LIP, in particular, represent space in an oculocentric frame of reference (FoR), suitable for planning eye movements or controlling spatial attention (Gnadt and Andersen, 1988; Barash et al., 1991; Colby et al., 1995). Moreover, in tasks that require multiple eye movements, LIP neurons are typically informative about only the next saccade (Barash et al., 1991; Mazzoni et al., 1996; Andersen and Buneo, 2002; but see Mirpour et al., 2009). These two properties would appear to limit the role of neurons in area LIP, and we set out to evaluate these limitations directly. We tested whether LIP neurons represent the formation of a decision (1) when neither choice target is the object of the next saccadic eye movement and (2) when the retinal coordinates of the choice targets change while the decision is formed.

We found that single neurons in LIP represent decision formation associated with a choice target in their response fields even when this target is not the object of the next eye movement. The representation disappears, however, when the gaze shifts, but it appears in the activity of simultaneously recorded neurons with response fields that overlap the target position relative to the new direction of gaze. Through this transfer of information, the population supports an uninterrupted representation of the decision throughout the change in gaze. The mechanism allows decision-making to transcend the oculocentric FoR that delineates the response fields of neurons in LIP.

RESULTS

We recorded from 832 well-isolated single neurons (Table 1) in area LIP of two rhesus monkeys (Macaca mulatta). The monkeys were trained to decide the net direction of motion in dynamic random dot displays (Figure 1). The axis of motion was horizontal (left/right) or vertical (up/down) and determined for each session based on the response fields of the recorded neurons. The random dot motion (RDM) was centered on the point of fixation and flanked by a pair of choice targets, T+ and T−, corresponding to the direction of motion. As in previous experiments, the monkey indicated its decision about the direction by making a saccadic eye movement to T+ or T−. The same sign convention is used to designate whether the direction of motion was associated with T+ or T− (e.g., Figures 1B–1D). The association was established from the beginning of the trial, but unlike previous experiments, the monkey did not report its choice until after making a sequence of instructed eye movements. We used three versions of the task (Figure 1A). In the first (top), the monkey viewed the RDM for a variable duration (100–550 ms) and then made a saccade to a third target, T0, followed by a smooth-pursuit eye movement back to the original point of fixation. Only then, after another brief delay, was the monkey permitted to indicate its choice. This ‘‘variable duration task’’ mainly serves to evaluate whether a decision process, dissociated from the very next eye movement, leads to a representation of the decision variable in LIP. It also allows us to track the representation of the decision outcome across the intervening eye movements (IEMs). The other two tasks require the monkey to form a decision from two brief (80 ms) pulses of motion, P1 and P2, presented before and after the IEM. In both ‘‘two-pulse tasks,’’ the pulses share the same direction of motion, but their strengths are independent and unpredictable.

Table 1.

Number of leader and supporter neurons by task

| Task | Leaders | Supporters | Total |
|---|---|---|---|
| Variable duration task | 90 | 177 | 247 |
| Two-pulse task (1st variant) | 73 | 211 | 257 |
| Two-pulse task (2nd variant) | 91 | 312 | 328 |

The total counts are less than the sum of leaders and supporters because two target-configurations were employed in most sessions (see neural recording). Thus the same neuron was sometimes classified as a leader in one target configuration and a supporter in the other (e.g., see Figure S3). The table does not include the neurons described in Figure S5.

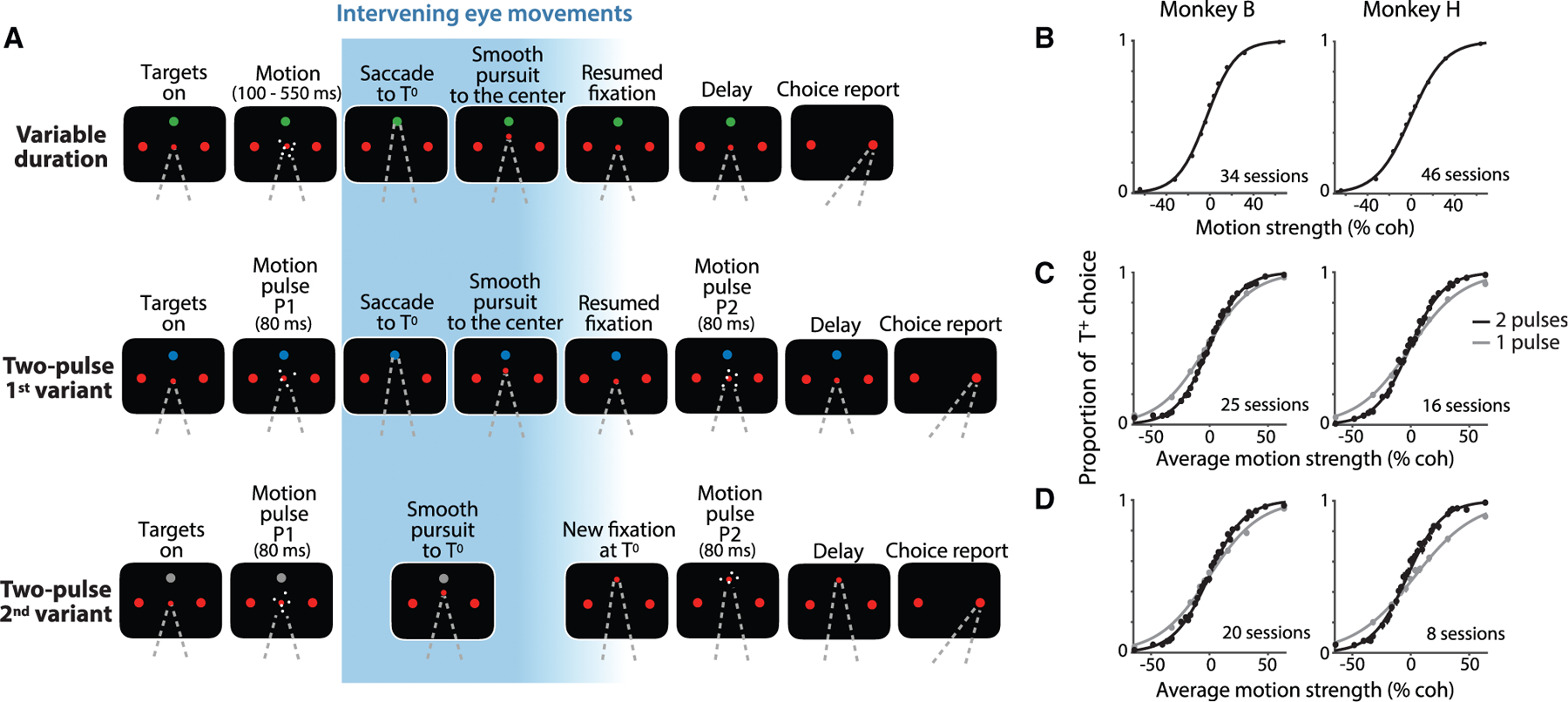

Figure 1. Tasks and behavior.

The monkey decided the net direction of random dot motion (RDM) by making an eye movement to the associated choice target (T+ or T−). The RDM stimulus was displayed at the point of fixation (FP). The choice targets remained visible at fixed positions throughout the trial, but the monkey made intervening eye movements (IEMs; blue gradient) between the initial fixation and the final choice saccade. The first intervening eye movement was always to the choice-neutral target, T0, which was displayed in a different color than the red choice targets.

(A) Sequence of events in the three tasks. In the variable duration task (top), the RDM stimulus was displayed for 100–550 ms. After the post-RDM delay (500 ms), the monkey made a saccade to T0, held fixation there, and made a smooth-pursuit eye movement back to the original FP. After a variable delay, the FP was extinguished, and the monkey reported its choice. In the two-pulse tasks (middle and bottom rows), the monkey reported the common direction of two brief (80 ms) motion pulses displayed before (P1) and after (P2) the IEM. The monkey indicated its decision after a 500 ms delay. In the 1st variant (middle), the monkey executed the same IEM as in the variable duration task, such that the monkey viewed P1 and P2 from the same gaze direction. In the 2nd variant (bottom), the monkey made an intervening smooth-pursuit eye movement to T0 and viewed P2 from this gaze direction. The choice targets remained fixed at the same screen locations throughout the trial and therefore occupied different retinal locations during viewing of P1 and P2.

(B–D) Performance of the two monkeys on the three tasks. Proportions of T+ choices are plotted as a function of motion strength and direction (indicated by sign). Curves are logistic regression fits. Error bars are SE; some are smaller than the data points. In the variable duration task (B), all stimulus durations are combined. See also Figures S1A and S1B. In the two-pulse tasks (C and D), the proportion of T+ choices is plotted as a function of the average strength of the motion in the two pulses. Gray circles and curves represent the data and the fits from catch trials (one third of trials), where the monkey viewed P1 only. See also Figures S1C and S1D.

Behavior

In all three tasks, monkeys based their decisions on the direction and strength of motion, that is, on the signed coherence of the RDM (Figure 1B) or the average of the signed coherences of the two pulses (Figures 1C and 1D). In the variable duration experiment, the performance improved as a function of viewing duration (Figure S1), consistent with a process of bounded evidence accumulation, as shown previously (Kiani et al., 2008). In the two-pulse experiments, the choices were formed using information from both pulses. The sensitivity, measured by the slope of the choice functions, was greater than on a 1-pulse control (Figures 1C and 1D; p < 10⁻³⁵). Additional analyses, described in Figures S1C and S1D and STAR Methods, rule out alternative accounts for this improvement that use only one of the pulses (e.g., the stronger one) on individual trials.
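As an illustration of what "sensitivity, measured by the slope of the choice functions" means in practice, the following is a minimal sketch of a logistic choice-function fit. It assumes the form P(T+) = logistic(b0 + b1 × signed coherence); the coherence levels, trial counts, and true slopes are invented for the demonstration, and the function names (`fit_choice_function`, `simulate_choices`) are hypothetical. It is not the regression model specified in STAR Methods.

```python
import math
import random

def logistic(x):
    """Standard logistic function."""
    return 1.0 / (1.0 + math.exp(-x))

def fit_choice_function(coherences, choices, n_iter=100, tol=1e-10):
    """Fit P(T+) = logistic(b0 + b1 * coherence) by Newton-Raphson.

    Returns (b0, b1): b0 is the bias, b1 is the slope (sensitivity).
    """
    b0 = b1 = 0.0
    for _ in range(n_iter):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for c, y in zip(coherences, choices):
            p = logistic(b0 + b1 * c)
            w = p * (1.0 - p)
            g0 += y - p            # gradient of the log-likelihood
            g1 += (y - p) * c
            h00 += w               # negative Hessian (2 x 2, symmetric)
            h01 += w * c
            h11 += w * c * c
        det = h00 * h11 - h01 * h01
        d0 = (h11 * g0 - h01 * g1) / det
        d1 = (h00 * g1 - h01 * g0) / det
        b0 += d0
        b1 += d1
        if abs(d0) + abs(d1) < tol:
            break
    return b0, b1

def simulate_choices(true_slope, n_trials, rng):
    """Draw synthetic (coherence, choice) pairs from an unbiased logistic observer."""
    levels = [-0.5, -0.25, -0.1, 0.0, 0.1, 0.25, 0.5]  # assumed, not the study's values
    coh = [rng.choice(levels) for _ in range(n_trials)]
    ch = [1 if rng.random() < logistic(true_slope * c) else 0 for c in coh]
    return coh, ch

rng = random.Random(0)
# Hypothetical observers: the "two-pulse" observer is twice as sensitive.
coh_one, ch_one = simulate_choices(6.0, 4000, rng)
coh_two, ch_two = simulate_choices(12.0, 4000, rng)
bias_one, slope_one = fit_choice_function(coh_one, ch_one)
bias_two, slope_two = fit_choice_function(coh_two, ch_two)
print(f"one-pulse slope: {slope_one:.2f}, two-pulse slope: {slope_two:.2f}")
```

A steeper fitted slope corresponds to a steeper choice function, which is the sense in which two-pulse trials are said to show greater sensitivity than the 1-pulse control.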

Decisions dissociated from the next eye movement

The distinguishing feature of the present study is that the monkey always made at least one other eye movement before indicating its decision. Thus, a third target, T0, was present while the monkey viewed the RDM, and it was the object of the first eye movement from the fixation point (FP) in all three tasks. An earlier study of saccadic sequences (Mazzoni et al., 1996) showed that LIP neurons typically modulate their activity to represent the next saccade, not the one made subsequently. As neither T+ nor T− is the object of the next eye movement, it seemed possible that neurons with response fields overlapping the choice targets would not represent decision formation in our tasks.

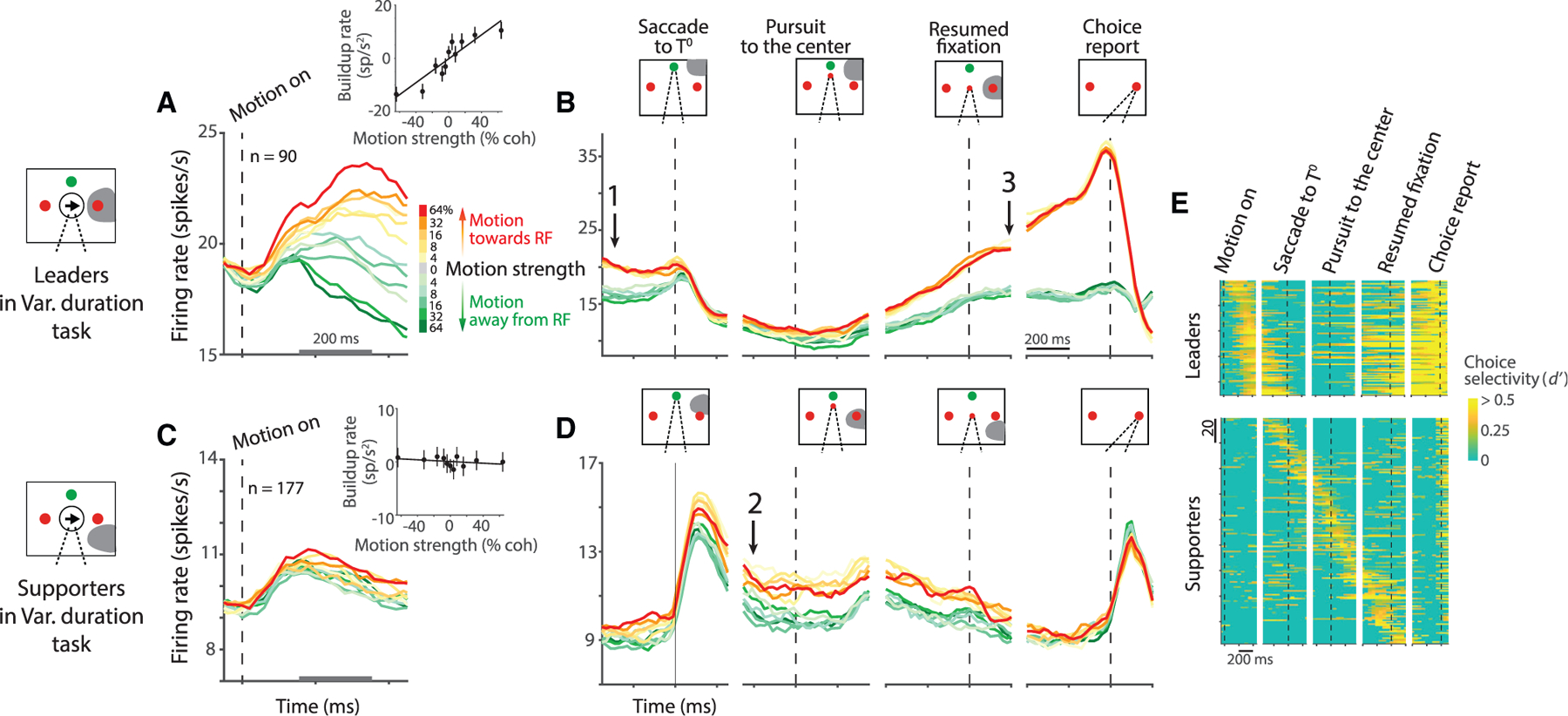

We evaluated this possibility using the variable duration task. As shown in Figure 2A, neurons representing the ultimate choice target exhibit decision-related activity, although the monkey would make the next saccadic eye movement to T0. The neuronal activity evolves with the strength of the evidence supporting the choice that will be reported later by a saccade into or away from the response field—a T+ or T− choice, respectively. The rate of the increase or the decrease in firing rates, termed the buildup rate, is influenced by the strength of motion (Figure 2A, inset; p = 0.0001). The decision-related activity also exhibits second-order statistical features that evolve in a manner consistent with a diffusion-like accumulation process (Churchland et al., 2011; Figure S2). The decision-related activity of these ‘‘leader neurons’’ reflects the monkey’s ultimate saccadic choice by the end of the motion-viewing epoch (arrow-1 in Figure 2B).
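The buildup-rate analysis can be sketched as two nested least-squares fits: a slope of firing rate against time within the first 200 ms for each motion strength, followed by a regression of those slopes on signed motion strength. The sketch below uses synthetic firing rates; the baseline rate, the gain constant `GAIN`, the noise level, and the coherence levels are all assumptions made for illustration and are not taken from the recordings.

```python
import random

def ls_slope(x, y):
    """Ordinary least-squares slope of y regressed on x."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx

rng = random.Random(1)
times = [0.01 * k for k in range(21)]                   # 0 to 200 ms, in seconds
coherences = [-0.5, -0.25, -0.1, 0.0, 0.1, 0.25, 0.5]   # assumed signed strengths
GAIN = 100.0  # hypothetical: buildup in sp/s^2 per unit signed coherence

# Step 1: buildup rate = slope of the average firing rate over time,
# computed separately for each signed motion strength.
buildup_rates = []
for coh in coherences:
    rates = [20.0 + GAIN * coh * t + rng.gauss(0.0, 1.0) for t in times]
    buildup_rates.append(ls_slope(times, rates))

# Step 2: effect of signed motion strength on buildup rate.
coherence_effect = ls_slope(coherences, buildup_rates)
print(f"estimated effect of coherence on buildup rate: {coherence_effect:.1f} sp/s^2")
```

A positive `coherence_effect` is the pattern summarized by the regression line in the inset of Figure 2A: stronger motion toward T+ yields a faster rise in firing rate, and stronger motion toward T− yields a faster decline.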

Figure 2. Time course of neural activity in the variable duration task.

(A) Average activity from 90 leader neurons aligned to the onset of motion. A leader neuron contains one of the choice targets, T+, in its response field (shading in diagrams). Colors indicate motion strength and direction. Both correct and error trials are included. Inset shows the effect of signed motion strength on buildup rate during the first 200 ms of putative integration (gray scale bar on the abscissa). Buildup rates are plotted as a function of signed motion strength (line, least-squares regression). Error bars are SE. Leader neurons reflect the sensory evidence bearing on the choice target in the response field, although the next eye movement is to the green, choice-neutral target (T0). See also Figure S2.

(B) Average activity of the leader neurons aligned to task events following the motion-viewing epoch. The coherence dependence gives way to a discrete binary representation of the decision outcome before the saccade to T0 (arrow 1). The representation disappears after the saccade, and it is recovered as the pursuit eye movement places the gaze at the original FP (arrow 3). There is a perisaccadic response associated with T+ choices. Only correct trials are included.

(C and D) Average activity from 177 supporter neurons aligned to the same events as in (A) and (B). The neurons first represent the decision outcome after the saccade to T0 (arrow 2). They retain this representation until reacquisition of the original FP at the end of the smooth-pursuit eye movement. Then, they show only a nonselective post-saccadic response, as both T+ and T− are outside the response field.

(E) Choice selectivity (d’) of individual neurons (rows). The neurons are ordered by the time of maximum d’ up to the 1st saccade (leader neurons; top) or time of maximum d’ throughout the trial (supporter neurons; bottom). Some supporter neurons represented the choice just before the 1st saccade and not after. Note that as a population, LIP represents the decision at all times: from motion viewing, through the IEM, to the final saccade to T+ or T−.

When the monkey shifts its gaze to T0 (dashed vertical line, Figure 2B, leftmost panel), the leader neurons cease to represent the choice. This is because the response field no longer overlaps T+. The activity reemerges when the subsequent pursuit eye movement returns the gaze to the original point of fixation, thereby realigning the response field to T+. The activity then exhibits stereotyped preparatory activity, followed by a perisaccadic burst, accompanying T+ choices (arrow-3 in Figure 2B). During the IEM—when the leader neurons are uninformative—other LIP neurons represent the decision (arrow-2 in Figure 2D). These neurons have response fields that overlap the choice targets from the new gaze angle. For example, when the gaze is to T0, neurons with response fields below and to the right of fixation (i.e., the location of T+) represent the decision. Such neurons do not represent the decision process during the motion-viewing epoch (Figure 2C, inset; p > 0.1). They do so only when the monkey’s gaze aligns the response field to one of the choice targets. These ‘‘supporter neurons’’ maintain working memory of the decision outcome through the epoch that the leader neurons are uninformative. (Note that the leaders and supporters are distinguished merely by the location of their response fields in the context of the task; see Figure S3.) During the pursuit eye movement, different supporter neurons maintain the working memory at different times, in accordance with the changing direction of the gaze (Figure 2E, bottom). We refer to the displacement of the representation across the population as a ‘‘transfer’’ of decision-related information.

It thus appears that LIP neurons represent the accumulation of evidence bearing on the likelihood that T+, a target in its response field, is associated with reward, even when the saccadic eye movement required to select the target is not the next to be executed. LIP then retains a representation of the decision outcome across intervening saccadic and pursuit eye movements by maintaining a state of elevated firing rate by neurons that contain the chosen target in their response field. We next address three related questions. (1) Is such transfer limited to the outcome of the decision, or can partial information bearing on the decision also undergo transfer? (2) Does the initial representation of evidence accumulation require that the retinal coordinates of the choice targets remain the same before and after the IEM? (3) Is this also required for the recovery of the information after the IEM? These questions are answered by recording from LIP during the two-pulse experiments (Figure 1A).

Graded representation of the decision variable across eye movements

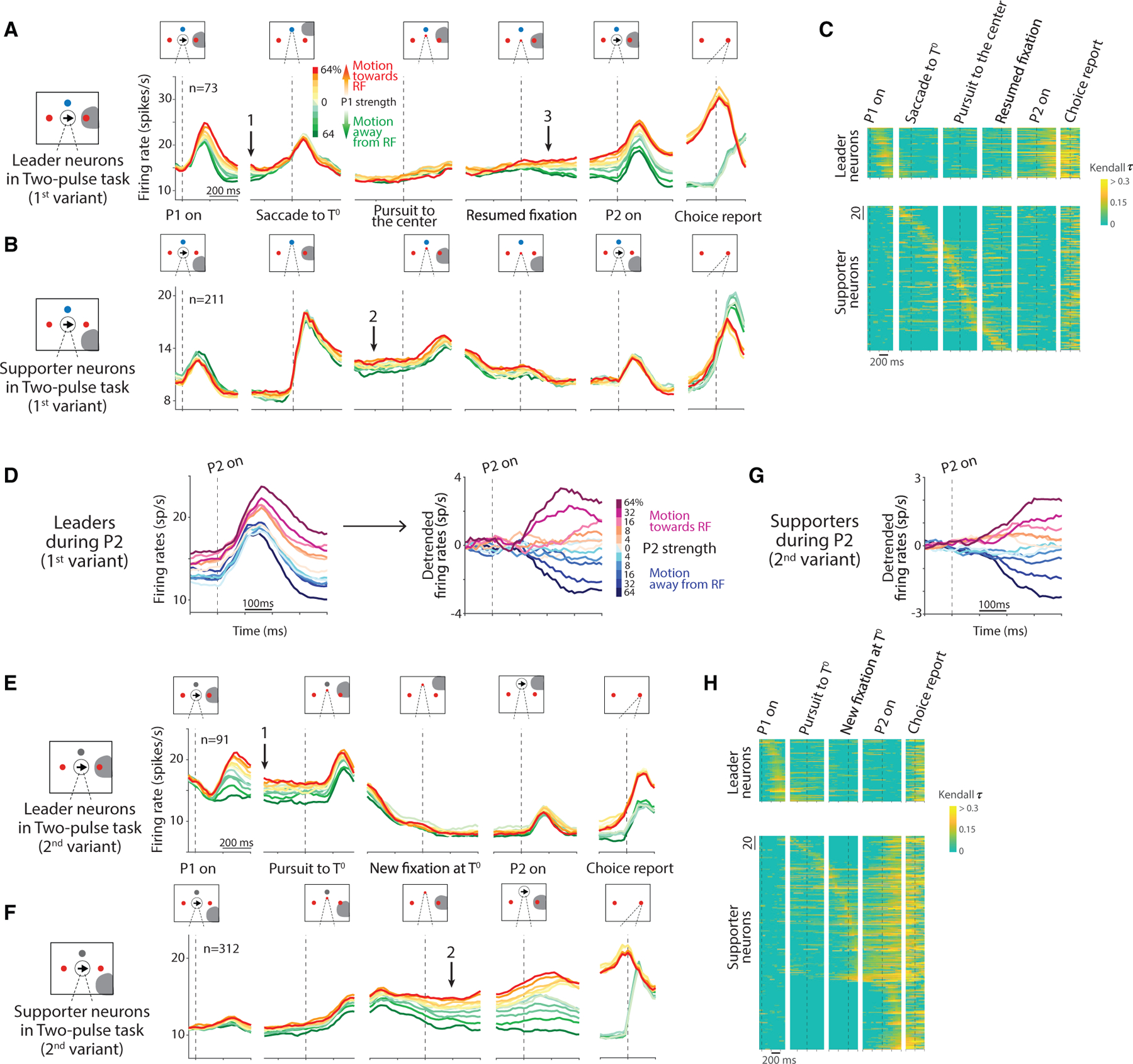

In the two-pulse task, the monkeys base their decisions on two brief (80 ms) pulses, one preceding and the other following the IEM. The two 80 ms pulses are considerably weaker than one 160 ms pulse using the average coherence of the two pulses (see behavioral tasks). Our intent was to encourage the monkey to use both pulses to inform the decision. The 1st variant of the two-pulse task, illustrated in the middle row of Figure 1A, uses the same sequence of IEM as in the variable duration task—a saccade to T0 and smooth-pursuit back to the original fixation. In the two-pulse task, however, the monkey has only partial information about the decision during these movements. This is reflected in the graded, coherence-dependent firing rates of the leader and supporter neurons. Unlike in the variable duration task, the leader neuron responses do not group into two decision categories before the saccade to T0. Instead, they exhibit a clear dependence on the strength of the first motion pulse, P1 (compare arrows-1 in Figures 2B and 3A). This is especially vivid during and after the IEM—in the activity of the supporter neurons after the saccade to T0 (Figure 3B, arrow-2) and in the activity of the leader neurons after the return of gaze to the original point of fixation (Figure 3A, arrow-3). This representation of evidence from P1 is then updated with the second motion pulse, P2, before the activities group into T+ and T− choices in the short delay preceding the saccadic choice. The additive effect of P2 is evident in Figure 3D, which displays the component of the response induced by P2 (see STAR Methods). Regression analyses also confirm that the leader neurons recover the decision variable at the beginning of the P2-viewing epoch (p = 0.001; Equation 8) and update the firing rate based on the new information supplied by P2 (p < 10⁻³⁰; Equation 9). In an additional analysis, we directly demonstrate that individual leader neurons are affected by both the first and the second motion pulses within single trials (Figure S4A). This observation complements the demonstration in Figures S1C and S1D that both pulses also affect the choice within single trials.

Figure 3. Time course of neural activity in the two-pulse tasks.

Plotting conventions are similar to the ones in Figure 2.

(A and B) Average activity from 73 leader neurons (A) and 211 supporter neurons (B) in the 1st variant. Colors indicate the strength and direction of the first pulse (P1). Leader neurons show a graded representation of the decision variable from P1 (arrow 1), which disappears around the saccade to T0 as some supporter neurons begin to carry the representation, which develops further after the saccade to T0 (arrow 2). The graded representation returns to the leaders after the smooth-pursuit eye movement to the original FP (arrow 3) and persists through the presentation of P2. Arrows 1–3 mark the same time points as the arrows in Figures 2B and 2D. See also Figure S4.

(C) Decision-related activity of individual neurons (rows) in the 1st variant. The heatmap shows the strength of correlation of the activity with signed motion strength (Kendall τ). Leader and supporter neurons are ordered as in Figure 2.

(D) Response of leader neurons to P2 (1st variant). Traces are sorted by the signed coherence of P2, which shares the same sign as P1 but with random strength (including 0%). The raw averages (left) are detrended (right).

(E and F) Average activity from 91 leader neurons (E) and 312 supporter neurons (F) in the 2nd variant. Same plotting conventions as in (A) and (B). Leader neurons show a graded representation of the decision variable from P1 through the initiation of the smooth-pursuit eye movement to T0 (arrow 1). The representation passes to supporter neurons as the gaze reaches T0 (arrow 2). P2 affects only the supporter neurons.

(G) Response of supporter neurons to P2 (2nd variant). Same plotting conventions as in (D). Only the detrended version is shown.

(H) Decision-related activity of individual neurons (rows) in the 2nd variant. The colors in this heatmap correspond to the same Kendall τ values as in (C).

The pattern is even more striking in the 2nd variant (Figures 3E–3H). Here, the only IEM is smooth-pursuit to T0, and the second motion pulse appears at this new point of fixation. The smooth pursuit gradually removes the target from the leader’s response field, whereas the saccade does so abruptly in the 1st variant. The leader neurons thus maintain the representation of the decision variable through the early phase of the pursuit eye movement (Figure 3E, arrow-1), and the supporter neurons begin to represent the decision variable as the gaze approaches T0 (Figure 3F, arrow-2). The second motion pulse, P2, causes the supporter neurons to update the representation of the decision variable. The change in activity is best appreciated by extracting the component of the response induced by P2 (Figure 3G). Again, regression analyses confirm that the supporter neurons represent the decision variable at the beginning of the P2-viewing epoch (p < 10⁻⁵; Equation 8) and change the activity based on the new information supplied by P2 (p < 10⁻⁵⁷; Equation 9).

The 2nd variant of the two-pulse task extends our characterization in two ways. First, it rules out the possibility that leader neurons represent decision formation only because the information bears on the likelihood of making the saccadic eye movement specified by the vector to T+, what might be termed a deferred oculomotor plan. The monkey never executes an eye movement specified by the direction and distance of T+ or T− relative to the initial gaze position. Second, the final decision need not involve the same neurons as the first pulse of evidence. In the 1st variant of the two-pulse task, the same leader neurons represent the decision process before and after the IEM. Hence, it is possible that the leader neurons maintain the information, despite the gap in spiking activity during the IEM, and restore the activity from so-called silent working memory (e.g., Mongillo et al., 2008). The 2nd variant of the two-pulse task renders this explanation highly unlikely.

Continuous representation of the decision variable across pools of neurons

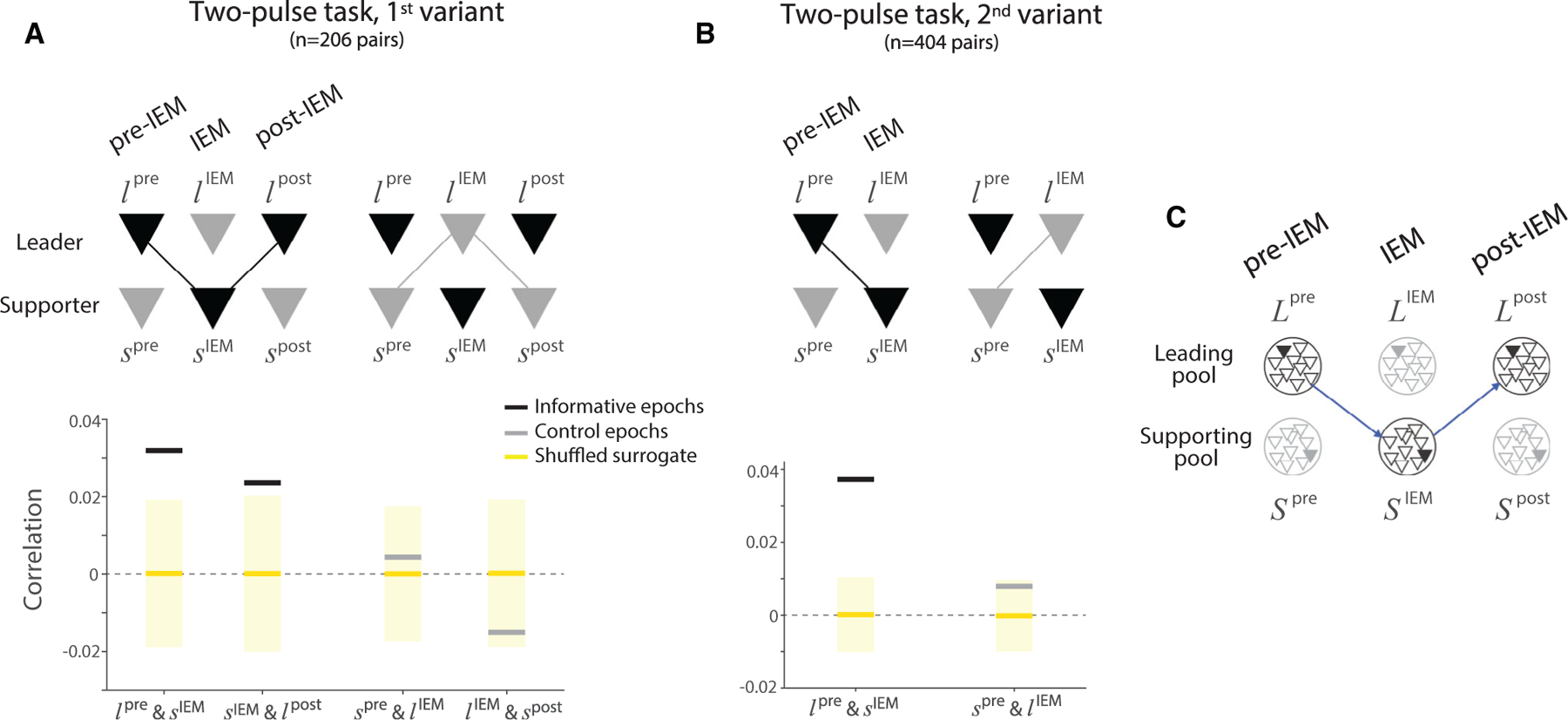

Both two-pulse experiments show that the transfer of information in LIP is not limited to the outcome of the decision. LIP maintains a representation of graded evidence through the IEM. This representation is uninterrupted at the level of the population, supported by the transfer of information (Figure 3). The heatmaps in panels C and H are based on averaged firing rates across trials, but there is also evidence for continuity at the level of single neurons on single trials. Two analyses bear on this point. The first focuses on the correlation between pairs of simultaneously recorded leader and supporter neurons, using spike counts during the epochs marked by the arrows in Figures 3A, 3B, 3E, and 3F. These epochs are separated by the IEM; however, the trial-to-trial variability in firing rates of pairs is correlated (horizontal black lines in Figures 4A and 4B). These correlations are present only when the pairs represent the decision variables (compare the black and gray lines in Figures 4A and 4B). Regression analyses also confirm the positive correlations and demonstrate that they are not explained by other factors, such as the direction/strength of P1 and the monkey’s choice (p < 0.01; Equations 15 and 16).
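The logic of comparing a leader-supporter correlation against a shuffled control (1,000 permutations, as in Figure 4) can be sketched as follows. This is a simplified illustration on raw synthetic spike counts with a plain Pearson correlation; the study's statistic is computed on conditional expectations of the spike counts (see STAR Methods), and the generative model, trial count, and noise levels here are assumptions.

```python
import math
import random

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def shuffle_null(x, y, n_perm, rng):
    """Mean and SD of the correlation after shuffling the trial order of y."""
    y_perm = list(y)
    null = []
    for _ in range(n_perm):
        rng.shuffle(y_perm)
        null.append(pearson(x, y_perm))
    mean = sum(null) / n_perm
    sd = math.sqrt(sum((r - mean) ** 2 for r in null) / (n_perm - 1))
    return mean, sd

rng = random.Random(2)
N_TRIALS = 300  # hypothetical number of trials for one pair

# Toy model (assumption): a shared trial-by-trial decision variable drives the
# leader before the IEM and the supporter after the IEM, plus private noise.
leader_pre, supporter_post = [], []
for _ in range(N_TRIALS):
    dv = rng.gauss(0.0, 1.0)
    leader_pre.append(10.0 + 2.0 * dv + rng.gauss(0.0, 2.0))
    supporter_post.append(8.0 + 2.0 * dv + rng.gauss(0.0, 2.0))

r_obs = pearson(leader_pre, supporter_post)
null_mean, null_sd = shuffle_null(leader_pre, supporter_post, 1000, rng)
print(f"observed r = {r_obs:.3f}; shuffled mean = {null_mean:.3f}, SD = {null_sd:.3f}")
```

Shuffling the trial order breaks any within-trial relationship while preserving each neuron's marginal distribution, so an observed correlation well outside the mean ± 2 SD band of the shuffled values indicates shared trial-to-trial variability.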

Figure 4. Correlation between representations of the decision variable by leader and supporter neurons.

The analysis examines the within-trial correlation between the representation of the decision variable by pairs of leader and supporter neurons in epochs bracketing the IEM. The correlation is between the conditional expectations of the spike counts (see STAR Methods).

(A) Correlation between firing rates in the 1st variant of the two-pulse task. Black bars show the correlations when the pair is informative: during the transfer from leader to supporter neurons, before and after the saccade to T0, and from supporter back to the same leader neurons, before and after the completion of smooth-pursuit to the original FP. The informative transfer is shown by the black connector in the cartoon above. Gray bars show the correlation between the same neurons in adjacent uninformative epochs (light gray connectors in the cartoon). The tick and cartoon labels use l and s for leader and supporter, respectively; superscripts indicate the epoch of the sample. Yellow lines and shading show the mean and the two standard deviations of the same correlation statistic under shuffled control (1,000 permutations).

(B) Same analysis applied to the 2nd variant of the two-pulse task. There is only one informative transfer between the leader and supporter neurons around the one IEM. Same conventions as in (A).

(C) Transfer of the decision variable is mediated between pools of weakly correlated leader (L) and supporter (S) neurons. Each circle represents a pool of neurons (triangles) that share the same response field. For each pool, the filled triangle represents the neuron that is observed (recorded), while the unfilled triangles represent the other neurons that are not recorded in the experiment. The diagram applies to the 1st variant of the two-pulse task. If the pools contain only one neuron, then perfect transfer of the decision variable from the L-pool to the S-pool and back to the L-pool (blue arrows) might predict no autocorrelation between L^pre and L^post, conditional on S^IEM. However, a single neuron would retain conditional autocorrelation if it were a member of a pool of weakly correlated neurons.

The second analysis examines the autocorrelation in the spike counts of single leader neurons during the epochs before and after the IEM (arrows 1 and 3 in Figure 3A). Recall that in the 1st variant of the two-pulse task, the gaze returns to the initial point of fixation after the IEM, which, in effect, returns the choice target to the response field of the leader neuron. The spike counts in these two epochs are positively autocorrelated (the prefix, ‘‘auto,’’ serves as a reminder that this correlation is between activities of the same neuron in different epochs; see Figure S4B). This analysis was first performed using single neuron recordings; it was subsequently extended in the multi-neuron recording sessions. The multi-neuron recordings allow us to determine the degree to which the autocorrelation is mediated by a single supporter neuron sampled at the time indicated by arrow-2 in Figure 3B. The positive autocorrelation is barely reduced by conditioning on such activity and remains statistically significant (p < 0.01; Equation 18). This observation suggests that the transfer is not mediated solely by one supporter neuron, but by a pool of weakly correlated neurons that share the same response field (Figure 4C), consistent with the notion that the signaling unit in cortex is a pool of 50–100 weakly correlated neurons (Zohary et al., 1994; Shadlen and Newsome, 1994, 1998).
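The reasoning behind the pool interpretation can be illustrated with a toy simulation. In the sketch below, the decision variable passes from a leader neuron to a pool of noisy supporter neurons and back. Conditioning on one recorded supporter barely reduces the leader's autocorrelation, whereas conditioning on the pool average (which is not observable in the experiment) removes it. The generative model, pool size, and noise levels are assumptions, and the partial correlation used here stands in for, but is not, the regression of Equation 18.

```python
import math
import random

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def partial_corr(x, y, z):
    """First-order partial correlation of x and y, controlling for z."""
    rxy, rxz, ryz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (rxy - rxz * ryz) / math.sqrt((1.0 - rxz ** 2) * (1.0 - ryz ** 2))

rng = random.Random(3)
N_TRIALS = 2000   # hypothetical
POOL_SIZE = 50    # hypothetical pool of supporter neurons

l_pre, l_post, s_single, s_pool = [], [], [], []
for _ in range(N_TRIALS):
    dv = rng.gauss(0.0, 1.0)                       # decision variable on this trial
    pool = [dv + rng.gauss(0.0, 3.0) for _ in range(POOL_SIZE)]
    pool_mean = sum(pool) / POOL_SIZE
    l_pre.append(dv + rng.gauss(0.0, 1.0))         # leader before the IEM
    s_single.append(pool[0])                       # the one recorded supporter
    s_pool.append(pool_mean)                       # whole pool (unobserved in practice)
    l_post.append(pool_mean + rng.gauss(0.0, 1.0)) # leader after the IEM

r_raw = pearson(l_pre, l_post)
r_given_single = partial_corr(l_pre, l_post, s_single)
r_given_pool = partial_corr(l_pre, l_post, s_pool)
print(f"raw: {r_raw:.3f}, given one supporter: {r_given_single:.3f}, "
      f"given pool mean: {r_given_pool:.3f}")
```

In this toy model a single supporter is a noisy proxy for the signal carried by its pool, so it accounts for little of the leader's autocorrelation. That is the pattern expected if the transfer is mediated by a pool rather than by the recorded supporter alone.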

Together, the results support the conclusion that there is a continuous representation of the decision-related signal and its trial-to-trial variability across pools of LIP neurons. Like single LIP neurons, each pool represents decision-related information in an oculocentric FoR. It is the movement of information from pool to pool that achieves a continuous representation of the evolving decision (two-pulse tasks) and its outcome (variable duration task), thereby transcending the oculocentric FoR.

DISCUSSION

The study of decision-making in animals necessitates some report by the animal, and this report typically involves an action. Thus, what the experimenter interprets as a representation of a decision process also corresponds to an intention—even if unrealized—to act. Indeed, the best-understood neural correlates of decision formation are depicted as an evolution of these very intentions—that is, the accumulation of noisy evidence to a criterion that establishes a readiness to act. This intention-based framing is germane to the study of neurons in the posterior parietal and prefrontal cortices, which serve as nodes in systems for the control of reaching, gazing, and directing attention (Snyder et al., 1997; Colby et al., 1996; Bisley and Goldberg, 2003). It has been argued that the neural mechanisms elucidated through the study of such neurons are likely to be relevant to the broader class of deliberative processes, which may be viewed as bearing on a provisional intention to act in some way (Clark, 1997; Shadlen et al., 2008; Shadlen and Kandel, 2021; Cisek, 2007).

An obvious challenge to this scheme arises when the action associated with decision outcome changes during or after deliberation. This is particularly relevant when intention is specified in a fixed FoR. Area LIP represents space in an oculocentric FoR, suitable for planning eye movements or controlling spatial attention (Gnadt and Andersen, 1988; Barash et al., 1991; Colby et al., 1995). The IEMs in our study invite representation of the choice targets in a FoR relative to the world (egocentric) or video monitor (allocentric). Thus, at the outset of the experiment, it seemed possible that another brain area—one that represents the evolving decision in a more general FoR—would be required, and LIP would play little if any role. We found instead that single neurons in LIP represent the evolving decision when a choice target is in the response field. The representation disappears when an IEM removes the choice target from the response field, only to reappear in the activity of other neurons, whose response fields now contain the same choice target. In this way, a population of LIP neurons with diverse response fields achieves a continuous representation of the decision. Although the gaze changes, there is no moment in time when the representation is absent across the population. Thus, the representation of an intention can be generalized across actions and is not limited to a specific FoR. The limitation holds for single neurons, but the population escapes this limitation via the transfer of information. Although the finding does not preclude the possibility of neural representations in higher order frames of reference, it opens the possibility that such representations are not strictly required.

The findings are related to the phenomenon of perisaccadic, response-field ‘‘remapping.’’ Perisaccadic remapping refers to the anticipatory response of an LIP neuron to a visual stimulus that is about to enter its response field upon completion of a saccadic eye movement (Duhamel et al., 1992). The response occurs either just before the eye movement or after the saccade but before there is sufficient time for the stimulus to evoke a visual response, hence the term, anticipatory. Remapping is observed in several brain areas, and it is thought to support the perceptual stability of objects in the visual field across eye movements (reviewed in Wurtz, 2018 and Golomb and Mazer, 2021). Remapping is also thought to facilitate continuity of an intention to foveate a peripheral object despite an IEM. This is the situation that arises in a double-saccade, where the first and second targets (T1 & T2) are flashed momentarily in rapid sequence such that T2 is flashed before the first saccade is initiated (Becker and Jürgens, 1979; Mays and Sparks, 1980; Sommer and Wurtz, 2002). The saccadic vector required to foveate T2 is not the same as the one specified by the retinal coordinates of T2, relative to the initial FP. Therefore, different LIP neurons represent T2 before and after the first saccade.

The capacity to perform this double-saccade is thought to be supported by the transfer of information between neurons that represent T2 in the two oculocentric frames of reference—that is, from neurons with response fields that overlap the flashed peripheral target, relative to the pre-saccadic point of fixation (i.e., our leader neurons), to the neurons with future response fields that overlap the target, relative to the new gaze position (i.e., our supporter neurons), as proposed by Cavanagh et al. (2010) and Wurtz (2018). Simultaneous recordings of such pairs had not been conducted before the present study. Our findings reveal that the phenomenon is both robust and more general than previously thought. The information that is remapped is not just the location of the object, but a graded quantity, bearing on the degree of desirability or salience (e.g., a decision variable). This observation might provide a partial solution to the ‘‘hard binding problem’’ identified by Cavanagh et al. (2010). (See also Golomb and Mazer, 2021 and Subramanian and Colby, 2014.) Leader and supporter neurons effectively bind the decision variable with the location of the choice targets.

The present findings demystify a puzzling feature of remapping, specifically, that a visual object is represented concurrently by neurons with different response fields. This would seem to work against perceptual stability because it introduces ambiguity about the location of the object (Golomb et al., 2008). This concurrent representation is also unnecessary to perform the double-saccade task. The time between the two saccades is sufficient to update the final saccadic vector to T2 from the new direction of gaze (on T1). The update only requires subtraction of the first saccadic vector (FP to T1) from the vector, FP to T2. This operation can be achieved if the second saccade occurs at least 50 ms after the first, which is less than half the intersaccadic interval (Sparks and Mays, 1983; Sparks and Porter, 1983). In our study, the neural representation is not just about where the salient target is but also the degree to which it should be selected (i.e., its relative salience). In other words, in addition to the identity of the active neurons, the magnitude of the neuronal activity carries information that is subject to further computation. This information is not in the world but in the brain. In order for such information to transfer from one neuron to another, it is inevitable that the representations would overlap in time, especially between neurons with persistent activity.

The transfer of information among pools of neurons enables LIP to maintain a representation of the decision variable across eye movements. Broadly, there are two classes of mechanisms that could bring this about: (1) local transfer of information within area LIP and (2) gating of information from higher order areas to LIP. ‘‘Local transfer’’ would make use of information about the next saccade, within the LIPs of both hemispheres, to determine which neurons are to represent the decision variable next. Importantly, it would rely on neurons (or, more precisely, neural pools) that adhere to an oculocentric FoR. Alternatively, ‘‘gating’’ presupposes the existence of a more general representation of the targets (e.g., egocentric FoR) or an abstract representation of the decision variable, independent of the report of choice (e.g., the categories, leftward and rightward). Neurons that support these more general representations must be able to send information to the appropriate neurons in area LIP. To achieve this, they would need to access proprioceptive information as well as the anatomical organization of the oculocentric representation in LIP. We cannot rule out this possibility, but it seems odd that it would address different pools of LIP neurons at the same time. We thus interpret the simultaneous representation as support for local transfer.

Further support for local transfer may be adduced from the patterns of neurological deficits that accompany parietal lobe damage in humans. When the damage is in one hemisphere, patients exhibit a form of spatial neglect that is not restricted to the contralateral visual field. The deficits tend to be more complex and contralateral with respect to landmarks, such as the head, body parts, and items in the environment (Driver and Mattingley, 1998). In bilateral damage (e.g., Bálint syndrome; Bálint, 1909), patients are unable to point to, or reach for, objects that they can see (optic ataxia), as these operations require translating between oculocentric and craniocentric frames of reference. The more common symptoms, simultanagnosia (an inability to see two objects presented at the same time) and extinction, might also be explained as a breakdown of the ability to comprehend the locations of objects relative to each other.

Whether the transfer of information is achieved locally within LIP or through the gating of information from higher order areas, there must be a way to achieve the appropriate addressing from sender to receiver neurons. For local transfer to work in our experiment, a leader neuron must be capable of forming a communication channel with the appropriate pool of supporter neurons. The possible connections are broad, potentially including neurons in the opposite hemisphere, and yet the effective connectivity at any moment must be highly specific. The present findings do not address the underlying mechanism (cf. Odean et al., 2022), but the information required to identify receiver neurons is available within LIP. For example, the saccadic vector of the first intervening saccade, $\vec{v}_{\mathrm{IEM}}$, is represented by neurons in area LIP before execution of the saccade (Gnadt and Andersen, 1988). Subtracting this vector from the retinal coordinates of the leader neuron response fields, $\vec{r}_{\mathrm{leader}}$, identifies the coordinates of the supporter neuron response fields: $\vec{r}_{\mathrm{supporter}} = \vec{r}_{\mathrm{leader}} - \vec{v}_{\mathrm{IEM}}$. In principle, the leader neurons could broadcast their signal widely, provided that the receptivity to this signal is limited to neurons with response fields overlapping $\vec{r}_{\mathrm{supporter}}$. Gating from higher-order areas would require the same logic. It, too, requires a calculation of $\vec{r}_{\mathrm{supporter}}$, which is likely to be established in LIP.
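The vector subtraction described in this paragraph can be illustrated with a minimal sketch. The function name and the coordinate values are hypothetical and chosen only for illustration; they are not taken from the experiment.

```python
from typing import Tuple

Vec = Tuple[float, float]  # (horizontal, vertical) in degrees of visual angle


def supporter_rf_center(leader_rf: Vec, saccade: Vec) -> Vec:
    """Retinal location of a stationary target after the eyes move by `saccade`.

    A target at retinal position `leader_rf` before the eye movement falls at
    `leader_rf - saccade` afterwards, which is where the response field of a
    supporter neuron would need to be.
    """
    return (leader_rf[0] - saccade[0], leader_rf[1] - saccade[1])


# Hypothetical example: a choice target 8 deg to the right of fixation and an
# intervening saccade of 6 deg straight up.
leader_rf = (8.0, 0.0)
saccade = (0.0, 6.0)
print(supporter_rf_center(leader_rf, saccade))  # (8.0, -6.0)
```

After the upward saccade, the same target lies down and to the right of the fovea, so a different pool of neurons must carry its representation.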

A similar operation may apply to representations in other frames of reference. For example, the representation of an object relative to the hand could be achieved in parietal cortex using neurons that represent hand displacement in an oculocentric FoR (see Snyder et al., 1997; Batista, 1999; de Lafuente et al., 2015; Stuphorn et al., 2000). The mechanism might also make use of proprioceptive signals from other areas to LIP, such as eye position information from area 7a (Andersen et al., 1990) or efference copy from the thalamus (Asanuma et al., 1985; Hardy and Lynch, 1992; Sommer and Wurtz, 2006). These signals have been invoked to construct representations in more general frames of reference (e.g., craniocentric; Zipser and Andersen, 1988; Semework et al., 2018). The important insight here is that such general representations may not be necessary.

Most decisions ultimately lead to an action, which might explain why the brain areas associated with planning motor actions have provided insights into the neural correlates of decision formation (Shadlen and Newsome, 1996; Horwitz and Newsome, 1999; Kim and Shadlen, 1999). Our findings extend this body of work by establishing that the intentions need not be for the very next action and that the provisional plan for an action can be formed and maintained by transferring information among different pools of neurons—in a way that transcends a fixed oculocentric FoR. In that sense, we speculate that even some mental operations involving abstract concepts that are free from any spatial FoR, such as mental arithmetic and linguistic evaluation of syntactic dependencies (e.g., wh-movement; Chomsky, 1977), might involve operations similar to the information transfer studied here. Just as it does for more general frames of reference, the transfer of information might eliminate the need for direct representations of some concepts (e.g., the subtraction equality, 12 – 7 = 5, as a fact). Instead, such representations may exist at the operational level (i.e., the transfer), and therefore, as noted by Zipser and Andersen (1988), ‘‘exist only in the behavior [or intention] of the animal.’’

STAR★METHODS

RESOURCE AVAILABILITY

Lead contact

Further information and requests for resources should be directed to and will be fulfilled by the lead contact, Michael N. Shadlen (shadlen@columbia.edu).

Materials availability

This study did not generate new unique reagents.

Data and code availability

Behavioral and neuronal data reported in this paper have been deposited to Mendeley Data and are publicly available. DOI is listed in the key resources table.

All original code has been deposited at Zenodo and is publicly available as of the date of publication. DOI is listed in the key resources table.

Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

KEY RESOURCES TABLE

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Deposited data | | |
| Behavioral and neuronal data | This paper | Mendeley Data, V1, https://doi.org/10.17632/ptcvtxg55j.1 |
| Experimental models: Organisms/strains | | |
| Rhesus macaque (Macaca mulatta) | Columbia University | N/A |
| Software and algorithms | | |
| Original analysis code | This paper | https://doi.org/10.5281/zenodo.6835463 |
| PsychToolbox | Brainard, 1997 | http://psychtoolbox.org/ |
| MATLAB | The MathWorks | https://www.mathworks.com/products/matlab.html |

EXPERIMENTAL MODEL AND SUBJECT DETAILS

All training, surgery, and recording procedures were in accordance with the National Institutes of Health Guide for the Care and Use of Laboratory Animals (National Research Council, 2011) and approved by the Columbia University Institutional Animal Care and Use Committee.

We performed extracellular neural recordings in area LIP of two adult male rhesus macaques (M. mulatta). The animals were 8 and 12 years old and weighed 10 and 9 kg, respectively. Prior to data collection, the monkeys were fitted with cranial pins made of surgical grade titanium (Thomas Recordings) to permit head stabilization during training and neural recordings. A PEEK plastic recording chamber, designed and positioned based on the MRI of each monkey (Rogue Research), was placed above a craniotomy over area LIP in the left hemisphere. These procedures were conducted under general anesthesia in an AAALAC-accredited operating facility using sterile techniques and state-of-the-art monitoring.

METHOD DETAILS

The main data set comprises 832 well-isolated single neurons from LIP, recorded over 149 sessions. The experiments were controlled by the Rex system (Hays et al., 1982) running under the QNX operating system integrated with other devices in real time. Visual stimuli were displayed on a CRT monitor (Sony GDM-17SE2T, 75 Hz refresh rate, viewing distance 60 cm) controlled by a Macintosh computer running Psychtoolbox (Brainard, 1997) under MATLAB (MathWorks). Eye position was monitored by infrared video using an EyeLink 1000 system (1 kHz sampling rate; SR Research). Neural data were acquired using Omniplex (Plexon Inc).

Behavioral tasks

Variable duration task

Each trial begins when the monkey fixates a point (FP; diameter 0.3°, i.e., degrees visual angle) at the center of the visual display. After a delay (50–250 ms), three targets (0.5° diameter, eccentricities 6–9°) appear: two red choice targets (T+ and T−) and a green target (T0) that marks the destination of the first intervening eye movement (IEM). The three target locations were chosen to maximize the number of simultaneously recorded leader and supporter neurons. Example target configurations are shown in Figure S3. After another delay (200–500 ms), a dynamic random dot motion stimulus (RDM) is displayed at the point of fixation. The RDM comprises a sequence of video frames of random dots (2 × 2 pixels) within an invisible aperture (5° diameter) to achieve an average density of 16.7 dots/deg²/s. The difficulty of the decision was controlled by varying the duration of the RDM (100–550 ms; truncated exponential distribution) and the motion strength: the probability that a dot plotted in frame n would be displaced by Δx in frame n + 3, where Δx is a displacement consistent with 5 °/s velocity toward T+ or T−. We refer to this probability as the motion strength or coherence, |C|, with the sign indicating the direction, C ∈ ±{0, 0.04, 0.08, 0.16, 0.32, 0.64}. With the remaining probability, 1 – |C|, dots are presented at random locations. Code to produce the RDM is publicly available (https://github.com/arielzylberberg/RandomDotMotion_Psychtoolbox).
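The dot-update rule described above can be sketched in a few lines. This is an illustration under stated assumptions, not the authors' stimulus code (which is MATLAB/Psychtoolbox at the link above): the direction of motion is taken to be horizontal, dots that would leave the aperture are simply replotted at random, and the displacement is derived here from the stated speed and refresh rate rather than quoted from the paper.

```python
import random
from typing import List, Tuple

Dot = Tuple[float, float]  # (x, y) in degrees, relative to the aperture center

FRAME_RATE_HZ = 75.0   # monitor refresh rate stated in the text
SPEED_DEG_PER_S = 5.0  # dot speed stated in the text
FRAME_LAG = 3          # a dot plotted in frame n is redrawn in frame n + 3
APERTURE_RADIUS = 2.5  # half of the 5 deg aperture diameter

# Displacement over three frame intervals (derived here: 5 deg/s * 40 ms = 0.2 deg).
DX = SPEED_DEG_PER_S * FRAME_LAG / FRAME_RATE_HZ


def random_dot(rng: random.Random) -> Dot:
    """Draw a uniformly distributed location inside the circular aperture."""
    while True:
        x = rng.uniform(-APERTURE_RADIUS, APERTURE_RADIUS)
        y = rng.uniform(-APERTURE_RADIUS, APERTURE_RADIUS)
        if x * x + y * y <= APERTURE_RADIUS ** 2:
            return (x, y)


def advance(dots: List[Dot], coherence: float, rng: random.Random) -> List[Dot]:
    """Return the dots for frame n + 3 given the dots plotted in frame n.

    Each dot is displaced by DX with probability |coherence| (rightward for
    positive values, leftward for negative values; an assumed convention) and
    is otherwise replotted at a random location.
    """
    direction = 1.0 if coherence >= 0 else -1.0
    out = []
    for x, y in dots:
        if rng.random() < abs(coherence):
            x_new = x + direction * DX
            if x_new * x_new + y * y <= APERTURE_RADIUS ** 2:
                out.append((x_new, y))
                continue
        # Random replot (also used, by assumption, for dots leaving the aperture).
        out.append(random_dot(rng))
    return out
```

Because the frames are interleaved in three independent sets under this rule, the first three frames of any stimulus contain no displaced dots.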

The fixation point disappears 500 ms after the offset of the motion stimulus, thereby cueing the monkey to make a saccadic eye movement to T0. The monkey must hold fixation at T0 through a variable delay (500–600 ms) until a new target (0.3° diameter) appears on top of T0 and moves at a constant speed (8–12 °/s) to the original point of fixation. The monkey must continue to foveate this moving target by making a smooth-pursuit eye movement (733 ms) and hold fixation at its resting place (the original FP location) through another variable delay (200–600 ms) until the FP is extinguished. This event serves as the final go signal. The monkey then indicates its decision by making a saccadic eye movement to one of the choice targets and receives a juice reward if the choice is correct, or on a random half of trials when C = 0.

Two-pulse task, 1st variant

The task is identical to the variable duration task, except (i) T0 is blue, (ii) the viewing duration of the motion is 80 ms, and (iii) a second 80 ms RDM stimulus is shown after the smooth-pursuit eye movement that returns the gaze to the original point of fixation. We refer to these brief RDM stimuli as pulses 1 and 2 (P1 & P2). They share the same direction, sgn(C), but the motion strengths are random and independent (uniformly distributed from the set defined above). Note that the strength of the motion from the two pulses is weaker than a continuous 160 ms duration RDM at the average coherence of the two pulses. Because of the way the RDM stimulus is constructed, the first informative displacement does not occur until the 4th video frame. Put simply, the first 40 ms (3 video frames) of each pulse is indistinguishable from 0% coherence motion. For a random third of the trials, P2 was not shown, and the monkey had to report the decision based on P1 only. These single-pulse catch trials were included to encourage the monkey to use information from both pulses.
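The frame arithmetic behind the statement that two pulses are weaker than one continuous stimulus can be checked directly. The sketch below assumes only the 75 Hz refresh rate and the three-frame lag given in the text; the function names are hypothetical.

```python
FRAME_RATE_HZ = 75  # refresh rate stated in the text
FRAME_LAG = 3       # dots are displaced between frame n and frame n + 3


def n_frames(duration_ms: int) -> int:
    """Number of video frames shown in a stimulus of the given duration."""
    return round(duration_ms * FRAME_RATE_HZ / 1000)


def n_informative_frames(duration_ms: int) -> int:
    """Frames that can carry coherent displacement (all but the first FRAME_LAG)."""
    return max(n_frames(duration_ms) - FRAME_LAG, 0)


pulse = n_informative_frames(80)        # one 80 ms pulse
continuous = n_informative_frames(160)  # one continuous 160 ms stimulus
print(n_frames(80), pulse, 2 * pulse, continuous)  # 6 3 6 9
```

Each 80 ms pulse spans six frames, of which only the last three can be informative, so two pulses provide six informative frames, whereas a continuous 160 ms stimulus would provide nine.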

Two-pulse task, 2nd variant

The task is similar to the 1st variant, except (i) T0 is gray, (ii) the only IEM is a pursuit eye movement as the original FP moves to T0, and (iii) after a variable delay, P2 is presented in an imaginary aperture centered on the now foveal T0. From this new gaze position at T0, the monkey reports the choice. In other words, P1 and P2 are presented at the same retinal coordinates, but the retinal coordinates of the choice targets are not the same when viewing P1 and P2.

Neural recording

In each session, either a single channel tungsten electrode (Thomas Recordings; 72 sessions) or a 24-channel V-probe (Plexon Inc.; 77 sessions) was lowered through a grid to the ventral part of LIP (LIPv; Lewis and Van Essen, 2000). The response fields of all well-isolated neurons were characterized using an oculomotor delayed response task (Hikosaka and Wurtz, 1983; Funahashi et al., 1989) in which the saccade target either remained visible through the instructed delay or was flashed briefly at the beginning of the memory delay (800–1200 ms). These tasks served to map the response field and to identify neurons with spatially selective persistent activity. For the recordings using a single-channel electrode, we targeted the cells that show spatially selective persistent activity in the memory-delay. For the recordings using 24-channel V-probes, we identified spatially selective cells post hoc. See Cell categorization below for details. To improve the yield of task-relevant neurons during the multi-channel recordings, we randomly interleaved two distinct target configurations on a trial-by-trial basis (65/77 sessions). The two configurations were of the same task variant, as only one task variant was used in an experimental session. The data from the two configurations were treated independently. For instance, there are cases where a leader neuron in one configuration becomes a supporter neuron in the other configuration (see Figure S3). We included the data from both configurations in our analyses (Table 1).

QUANTIFICATION AND STATISTICAL ANALYSIS

Analysis of behavioral data

Variable duration task

We constructed the choice functions in Figure 1B by fitting the proportion of T+ choices (Pr+ ) as a function of the signed motion coherence, C, using logistic regression:

| $\mathrm{Pr}_{+} = \left[1 + e^{-(\beta_0 + \beta_1 C)}\right]^{-1}$ | (Equation 1) |

For the analysis of the behavior during multi-neuron recordings, we defined T+ as the target in the response field of the majority of the neurons.
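As an illustration of fitting a choice function by logistic regression, the following sketch estimates an offset and a slope by maximum likelihood using Newton's method. It is not the authors' analysis code; the simulated data, parameter values, and function names are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

COHERENCES = [0.0, 0.04, 0.08, 0.16, 0.32, 0.64]  # motion strengths from the text


def logistic(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def simulate_choices(n_trials: int, b0: float, b1: float,
                     rng: random.Random) -> Tuple[List[float], List[int]]:
    """Simulate T+ (1) or T- (0) choices from a logistic choice function."""
    cohs, choices = [], []
    for _ in range(n_trials):
        c = rng.choice([-1.0, 1.0]) * rng.choice(COHERENCES)
        cohs.append(c)
        choices.append(1 if rng.random() < logistic(b0 + b1 * c) else 0)
    return cohs, choices


def fit_logistic(cohs: Sequence[float], choices: Sequence[int],
                 n_iter: int = 25) -> Tuple[float, float]:
    """Maximum-likelihood fit of Pr+ = logistic(b0 + b1 * C) by Newton's method."""
    b0, b1 = 0.0, 0.0
    for _ in range(n_iter):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for c, y in zip(cohs, choices):
            p = logistic(b0 + b1 * c)
            g0 += y - p               # gradient of the log likelihood
            g1 += (y - p) * c
            w = p * (1.0 - p)         # entries of the (negative) Hessian
            h00 += w
            h01 += w * c
            h11 += w * c * c
        det = h00 * h11 - h01 * h01
        b0 += (h11 * g0 - h01 * g1) / det  # Newton step: beta += H^-1 g
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1
```

A typical use would be `fit_logistic(*simulate_choices(2000, 0.2, 10.0, random.Random(1)))`, which should return estimates near the generating values of 0.2 and 10.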

The effect of stimulus duration on accuracy was assessed by modeling the monkey’s behavior using a bounded drift-diffusion model (Shadlen et al., 2006; Kiani and Shadlen, 2009). The decision variable, V(t), is described by the stochastic differential equation,

| $dV = \kappa\,(C + C_0)\,dt + dW$ | (Equation 2) |

where C is signed motion strength, C0 is a bias in units of C, and κ is a constant that determines the stimulus- and bias-dependent drift. See Hanks et al. (2011) for justification of incorporating the bias as an offset of the drift rate. W represents a standard Wiener process, where dW is drawn from a Normal distribution with mean 0 and variance dt, $\mathcal{N}(0, dt)$. A choice is made when the decision variable V reaches a bound, ± B: + B for a T+ choice, and – B for a T− choice. If V does not reach either bound by the RDM duration, tdur, the choice is determined by the sign of V at this time. Three free parameters, κ, B and C0, are fit to the choices (maximum likelihood). The fitted curves in Figure S1A are generated by calculating the probability of a correct choice, for each motion strength |C| > 0, and duration.
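The bounded drift-diffusion process described above can be simulated with a simple Euler scheme. This is a sketch for intuition only: the parameter values (κ = 15, B = 0.8, C0 = 0) and the 1 ms time step are hypothetical, and the authors fit the model by maximum likelihood rather than by simulation.

```python
import math
import random


def simulate_choice(coh: float, t_dur: float, kappa: float, bound: float,
                    c0: float, rng: random.Random, dt: float = 0.001) -> int:
    """Simulate one trial; return +1 for a T+ choice and -1 for a T- choice."""
    v = 0.0
    drift = kappa * (coh + c0)
    sd = math.sqrt(dt)  # standard deviation of each Wiener increment
    for _ in range(int(round(t_dur / dt))):
        v += drift * dt + rng.gauss(0.0, sd)
        if v >= bound:
            return 1
        if v <= -bound:
            return -1
    # No bound reached by the end of the stimulus: choose by the sign of V.
    return 1 if v >= 0 else -1


def accuracy(coh: float, t_dur: float, n_trials: int, rng: random.Random,
             kappa: float = 15.0, bound: float = 0.8, c0: float = 0.0) -> float:
    """Proportion of T+ choices for a positive coherence (i.e., proportion correct)."""
    correct = sum(simulate_choice(coh, t_dur, kappa, bound, c0, rng) == 1
                  for _ in range(n_trials))
    return correct / n_trials
```

With these illustrative parameters, accuracy for a 300 ms stimulus is close to 1 at the strongest motion and only modestly above chance at the weakest nonzero motion, which is the qualitative pattern that the fitted curves summarize.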

Two-pulse task

To construct the choice functions shown in Figures 1C and 1D, we used the averaged motion strength (Cavg) of the two pulses shown in each trial:

| $\mathrm{Pr}_{+} = \left[1 + e^{-(\beta_0 + \beta_1 C_{\mathrm{avg}})}\right]^{-1}$ | (Equation 3) |

We conducted a series of analyses to evaluate the possibility that the monkey used just one of the pulses to make the decision on single trials. The following two logistic functions compare the relative influence of the pulses based on their order,

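| $\mathrm{Pr}_{+} = \left[1 + e^{-(\beta_0 + \beta_1 C_{\mathrm{P1}} + \beta_2 C_{\mathrm{P2}})}\right]^{-1}$ | (Equation 4) |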

or based on their relative strength,

| $\mathrm{Pr}_{+} = \left[1 + e^{-(\beta_0 + \beta_1 C_{\mathrm{weak}} + \beta_2 C_{\mathrm{strong}})}\right]^{-1}$ | (Equation 5) |

While β1 and β2 are positive in both factorizations (p < 10⁻⁷⁴), this does not rule out the possibility that choices are based on only one pulse, chosen randomly, perhaps, on each trial (Model-1). We exploit the factorizations in Equations 4 and 5 to compare Model-1 to its alternative: choices are based on both pulses on each trial (Model-2).

We simulated choices under both models. For each simulation, 10,000 choices were generated from the Bernoulli distribution, where the probability of choosing T+ is governed by

| $\mathrm{Pr}_{+} = \left[1 + e^{-(\alpha_0 + \alpha_1 C_{\mathrm{rand}})}\right]^{-1}$ | (Equation 6) |

for Model-1, where Crand is the coherence of one randomly selected pulse in each trial. For Model-2:

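| $\mathrm{Pr}_{+} = \left[1 + e^{-(\alpha_0 + \alpha_1 (C_{\mathrm{P1}} + C_{\mathrm{P2}}))}\right]^{-1}$ | (Equation 7) |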

For both Equations 6 and 7, the αi are adapted from the fits in Figure 1. Using the fitted βi in Equation 3, α0 = β0 in both models. α1 = β1 and β1/2 in Models 1 and 2, respectively. The simulations of both models produce choices similar to those in Figures 1C and 1D (Figure S1C).

We fit each simulated data set using the factorization in Equation 5. Figure S1D displays {β1, β2} derived from fits to the actual data superimposed on the means and standard deviations derived from 1,000 simulations for each model. The exercise is founded on the following intuition. If one pulse, chosen randomly, is to achieve the sensitivity of the monkey (Figures 1C and 1D), weaker pulses would require greater weights, whereas if both pulses contribute to the choice, the weights should be equal (β1 ≈ β2). Note that the factorization in Equation 4 does not distinguish the models because Model-1 assumes unbiased sampling of P1 and P2. Indeed, the fits of Equation 4 to the data and to model simulations yield β1 ≈ β2.
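The two simulated models can be sketched as follows. This is one reading of the description above, not the authors' code: Model-1 applies the full weight β1 to one randomly selected pulse, and Model-2 applies β1/2 to the sum of the two pulses. The values of β0 and β1 passed to the functions are hypothetical placeholders for the fitted values.

```python
import math
import random
from typing import List, Tuple

COHERENCES = [0.0, 0.04, 0.08, 0.16, 0.32, 0.64]  # motion strengths from the text


def logistic(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def p_model1(c_rand: float, beta0: float, beta1: float) -> float:
    """Model-1: the choice depends on one randomly selected pulse."""
    return logistic(beta0 + beta1 * c_rand)


def p_model2(c_p1: float, c_p2: float, beta0: float, beta1: float) -> float:
    """Model-2: both pulses contribute, each with half the weight."""
    return logistic(beta0 + (beta1 / 2.0) * (c_p1 + c_p2))


def simulate(model: int, n_trials: int, beta0: float, beta1: float,
             rng: random.Random) -> List[Tuple[float, float, int]]:
    """Return (C_P1, C_P2, choice) per trial; choice is 1 for T+ and 0 for T-."""
    trials = []
    for _ in range(n_trials):
        sign = rng.choice([-1.0, 1.0])      # the pulses share a direction
        c1 = sign * rng.choice(COHERENCES)  # strengths are independent
        c2 = sign * rng.choice(COHERENCES)
        if model == 1:
            p = p_model1(rng.choice([c1, c2]), beta0, beta1)
        else:
            p = p_model2(c1, c2, beta0, beta1)
        trials.append((c1, c2, 1 if rng.random() < p else 0))
    return trials
```

When the two pulses have equal strength, the models predict the same choice probability; they differ only on trials with unequal pulses, which is why the factorization by relative strength is the informative one.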

Analysis of single neurons

Cell categorization

For the experimental sessions using single-channel electrodes, the three visual targets were placed strategically, based on the neuron’s response field, thereby placing the neuron in the role of leader or supporter. In the sessions with multi-channel electrodes, we could not employ this strategy simultaneously for all recorded neurons. We therefore categorized neurons post hoc, based on the epoch(s) in which they exhibited decision-related activity.

In the variable duration task, a leader neuron must exhibit sustained choice selectivity in the motion viewing epoch (600 ms after motion onset) and not in the epoch of the IEM (beginning 250 ms after the first saccadic eye movement to T0 and ending 200 ms before re-acquisition of the FP). A supporter neuron must exhibit sustained choice selectivity in the epoch of the IEM. The designation, sustained choice selectivity, is satisfied by three consistent, statistically significant rank sum tests (p < 0.05) using the spike counts in consecutive, overlapping 300 ms windows shifted by 50 ms, thus spanning at least 400 ms. The heat maps in Figure 2E display the magnitude of the choice selectivity using d’ calculated using the same shifting 300 ms counting windows. We chose 300 ms wide windows to ensure there are enough spikes in each counting window, as the response during motion viewing was often weak when the next eye movement was not toward the response field. The criterion for sustained selectivity (≥ 400 ms) was chosen to include supporter neurons that may represent the choice only briefly during the pursuit eye movement (e.g., neurons with small response fields). In principle, the designation could allow a brief but strong response to be misclassified as sustained, but the heat maps (Figure 2E) demonstrate that such events were rare (if they occurred at all).
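The criterion for sustained selectivity can be expressed as a short check over the sequence of sliding-window tests. The sketch assumes that the p-values and the signs of the selectivity have already been computed for each window, and it interprets "consistent" as the same sign of selectivity in all three windows; both the function names and that interpretation are assumptions.

```python
from typing import Sequence

WINDOW_MS = 300    # width of each spike-counting window
STEP_MS = 50       # shift between consecutive windows
N_CONSECUTIVE = 3  # number of consecutive significant windows required


def span_ms(n_windows: int = N_CONSECUTIVE) -> int:
    """Total time spanned by n overlapping windows."""
    return WINDOW_MS + (n_windows - 1) * STEP_MS


def has_sustained_selectivity(p_values: Sequence[float], signs: Sequence[int],
                              alpha: float = 0.05,
                              n_consecutive: int = N_CONSECUTIVE) -> bool:
    """True if n consecutive windows are significant with the same sign."""
    run, prev_sign = 0, 0
    for p, s in zip(p_values, signs):
        if p < alpha and s != 0:
            run = run + 1 if s == prev_sign else 1
            prev_sign = s
            if run >= n_consecutive:
                return True
        else:
            run, prev_sign = 0, 0
    return False


print(span_ms())  # 400
```

Three 300 ms windows shifted by 50 ms cover 300 + 2 × 50 = 400 ms, which is the minimum span quoted in the text.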

In the two-pulse task, instead of relying on choice selectivity, we required the neural response to be correlated significantly with the sign and strength of P1 or the sum of the strengths of P1 and P2. The change in metric is warranted because the two-pulse tasks are intended to preserve a graded quantity through the IEM. In each 300 ms spike-counting window, we computed the Kendall τ and repeated the measure by shifting the window in steps of 50 ms. The designation, sustained decision-related activity, is satisfied by significant correlations in three consecutive windows (p < 0.05). In the 1st variant, a leader and a supporter must achieve this during the epochs described above for the variable duration task. Additionally, a leader must exhibit decision-related activity during the 600 ms epoch after P2 onset. In the 2nd variant, a leader and a supporter must exhibit sustained decision-related activity after P1 or P2, but not both. Only the cells characterized as leaders or supporters are included in the main data set. Figure S5 further characterizes the neurons that do not fit the definition of leader and supporter. The group comprises many neurons that do not have response fields that overlap with choice targets as well as neurons with large response fields that contain a choice target viewed from both FP and T0.

Analyses of decision-related activity

Average firing rates, r(t), from single neurons are obtained from the spike times relative to an event of interest and grouped by signed motion strength and/or choice. The union of the raw point processes is convolved with a non-causal 100 ms boxcar. Therefore, the firing rate plotted (or analyzed) at time t = τ represents the average rate over the window τ ± 50 ms. For trials with different stimulus durations (e.g., Figures 2A and 2C), we exclude spikes occurring later than 250 ms after motion offset. An exception to this practice occurs in the estimation of the buildup rate, the rate of change of the firing rate. The buildup rate is estimated to test the effect of the motion strength on the evolution of the activity during the motion-viewing epoch in the variable duration task. For this analysis, we computed peristimulus time histograms (PSTH; bin width = 20 ms) for each motion strength. We then detrended the PSTH by subtracting the average activity across all motion strengths and estimated the slope of a best fitting line to the independent samples (i.e., separated by one bin width) of average firing rate over the first 200 ms of putative integration. The onset of decision-related activity was estimated as the first spike-counting window in which the activity could discriminate the direction of the two strongest motion stimuli (rank sum test, p < 0.01). The estimates of the buildup rate and the standard errors of the fit are shown in the insets of Figures 2A and 2C. The relationship between the buildup rate and the motion strength is assessed using linear regression.
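The boxcar averaging and the slope estimate used for the buildup rate can be illustrated as follows. This is a minimal sketch with hypothetical function names and toy spike times; it evaluates the rate at a single time point rather than performing a full convolution.

```python
from typing import Sequence


def average_rate(trials: Sequence[Sequence[float]], t_ms: float,
                 width_ms: float = 100.0) -> float:
    """Trial-averaged firing rate (spikes/s) at time t_ms.

    Counts the spikes pooled over trials that fall within t_ms +/- width_ms / 2
    (a non-causal boxcar) and divides by the number of trials and the width.
    """
    half = width_ms / 2.0
    n_spikes = sum(1 for trial in trials for s in trial
                   if t_ms - half <= s < t_ms + half)
    return n_spikes / (len(trials) * width_ms / 1000.0)


def line_slope(x: Sequence[float], y: Sequence[float]) -> float:
    """Least-squares slope of y against x (e.g., rate against time)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    num = sum((a - mx) * (b - my) for a, b in zip(x, y))
    den = sum((a - mx) ** 2 for a in x)
    return num / den


# Toy example: spike times (ms) from two trials, aligned to an event at t = 0.
trials = [[10.0, 40.0, 120.0], [60.0, 95.0]]
rate_at_50 = average_rate(trials, 50.0)  # four spikes in [0, 100) over two trials
```

For the buildup rate, `line_slope` would be applied to the bin centers and the detrended PSTH values of the 20 ms bins spanning the first 200 ms of putative integration.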

For the two-pulse task, average firing rates are grouped by the motion strength of P1 (Figures 3A, 3B, 3E, and 3F). We also visualize the effect of P2 on the neuronal activity in Figures 3D and 3G, by grouping the activity by the motion strength of P2, detrending the activity of each neuron (by subtracting the average activity across all trials), and adjusting the baseline activity (by subtracting the activity during the 300 ms window around the P2 onset) to remove the effect of the previously displayed P1. This procedure isolates the effect of P2.

We conducted several analyses to determine whether neurons receive a graded representation of the motion evidence after an IEM and update it with new evidence. We first test whether the previously viewed P1 is represented in the starting level of activity in the P2-viewing epoch (RP2,start) for leader neurons (1st variant) and supporter neurons (2nd variant),

| $R_{\mathrm{P2,start}} = \alpha_0 + \alpha_1 C_{\mathrm{P1}}$ | (Equation 8) |

where RP2,start is measured in a 300 ms window centered at P2 onset (H0 : α1 = 0). We then determine whether the responses at the end of the P2-viewing epoch (RP2,end) are also altered systematically by the coherence of P2,

| $R_{\mathrm{P2,end}} = \alpha_0 + \alpha_1 C_{\mathrm{P1}} + \beta_1 C_{\mathrm{P2}}$ | (Equation 9) |

where the αi are inherited from Equation 8 and RP2,end is measured in a 300 ms time window centered at 300 ms after P2 onset (H0 : β1 = 0). RP2,start and RP2,end are standardized for each neuron and combined across neurons. To control for a possible confounding effect of choice, we included only correct trials and performed the regression separately for T+ and T− choice trials. The reported p-values are the larger of the two.

Analysis of simultaneously recorded neuronal pairs

We measured the correlation between the activities of leader and supporter neurons using the spike counts sampled in 300 ms windows before, during, and after the IEM. The three epochs correspond to the times when either the leader or the supporter neuron represents the decision variable. The first epoch, pre-IEM, begins 200 ms after the onset of P1. The last epoch, post-IEM, centers at the onset of P2. Specification of the middle epoch, IEM, is guided by the time that the supporter neuron represents the decision (Figure 3C). We first identified the time when the supporter neuron begins to exhibit sustained decision-related activity, as explained above (see Cell categorization). We used a 300 ms window beginning 50 ms after this starting time.

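Our interest is in the correlation between the latent firing rates (CorCE) that are expected to represent the intensity of the evidence, that is, the quantity that appears to move between neurons. To this end, we removed the Poisson-like component of the variance associated with spike counts (the variability that would be present even if the rates were identical from trial to trial, termed the point process variance in Churchland et al., 2011). We first establish the residual spike counts for each neuron and epoch. For instance, on trial i, the residual spike counts of each leader neuron (l) during the pre-IEM epoch and each supporter neuron (s) during the IEM epoch are computed as follows:

| $\tilde{n}^{\,l,\mathrm{pre}}_{i} = n^{\,l,\mathrm{pre}}_{i} - \langle n^{\,l,\mathrm{pre}} \rangle_{k}$ | (Equation 10) |

| $\tilde{n}^{\,s,\mathrm{IEM}}_{i} = n^{\,s,\mathrm{IEM}}_{i} - \langle n^{\,s,\mathrm{IEM}} \rangle_{k}$ | (Equation 11) |

where $n_i$ is the spike count on trial i and $\langle \cdot \rangle_{k}$ refers to the mean over the trials sharing the same signed (or 0%) coherence k for the first pulse. We obtain the variance from the union of these residuals, $\tilde{n}^{\,l,\mathrm{pre}}$ and $\tilde{n}^{\,s,\mathrm{IEM}}$, and subtract the component of the variance attributed to the point process to obtain estimates of the variance of the latent rates (i.e., the conditional expectations of the counts):

| $\mathrm{VarCE}^{\,l,\mathrm{pre}} = \mathrm{Var}\left(\tilde{n}^{\,l,\mathrm{pre}}\right) - \varphi\,\overline{n^{\,l,\mathrm{pre}}}$ | (Equation 12) |

| $\mathrm{VarCE}^{\,s,\mathrm{IEM}} = \mathrm{Var}\left(\tilde{n}^{\,s,\mathrm{IEM}}\right) - \varphi\,\overline{n^{\,s,\mathrm{IEM}}}$ | (Equation 13) |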

where φ is the Fano factor (ratio of variance to mean count) of the point process that characterizes the conversion of firing rate to spike counts (Nawrot et al., 2008). We use an estimate of φ = 0.6, derived from the leader’s activity during decision formation in the variable duration experiment (Figure S2). It is a free parameter that minimizes the squared standardized error between the 10 CorCE values and the predictions from unbounded diffusion ($\rho_{i,j} = \sqrt{i/j}$ for time bins $i < j$; Fisher-z). Seven of the 10 unique CorCE values are plotted in Figure S2B. The conclusions we draw are robust to a range of φ between 0.5 and 0.9.

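For the analyses in Figure 4, the scalar φ affects the conversion of covariance to correlation by replacement of variance with VarCE. Denoting the residual spike counts of the leader and supporter by $\tilde{n}^{\,l}$ and $\tilde{n}^{\,s}$:

| $\mathrm{CorCE} = \dfrac{\mathrm{Cov}\left(\tilde{n}^{\,l}, \tilde{n}^{\,s}\right)}{\sqrt{\mathrm{VarCE}^{\,l}\,\mathrm{VarCE}^{\,s}}}$ | (Equation 14) |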

The assumption is that the conversion of spike rate to random numbers of spikes in different epochs is conditionally independent, given the two rates (see Churchland et al., 2011). The resulting CorCEs are reported in Figures 4A and 4B. We establish the distribution of the statistic under H0 using a permutation test (1,000 surrogate data sets).
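The computation of CorCE can be sketched as follows, following the verbal description above: residuals are formed by subtracting the mean count for each signed coherence, the point process variance (φ times the mean count) is subtracted from the variance of the residuals, and the covariance of the residuals is normalized by the resulting VarCE values. The function names and the toy spike counts are hypothetical, and the sketch omits the permutation test.

```python
import math
from collections import defaultdict
from typing import List, Sequence


def residuals(counts: Sequence[float], cohs: Sequence[float]) -> List[float]:
    """Subtract from each count the mean over trials with the same coherence."""
    groups = defaultdict(list)
    for n, k in zip(counts, cohs):
        groups[k].append(n)
    means = {k: sum(v) / len(v) for k, v in groups.items()}
    return [n - means[k] for n, k in zip(counts, cohs)]


def covariance(x: Sequence[float], y: Sequence[float]) -> float:
    """Sample covariance (n - 1 in the denominator)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)


def var_ce(counts: Sequence[float], cohs: Sequence[float], phi: float) -> float:
    """Variance of the residuals minus the point process variance."""
    r = residuals(counts, cohs)
    return covariance(r, r) - phi * (sum(counts) / len(counts))


def cor_ce(leader: Sequence[float], supporter: Sequence[float],
           cohs: Sequence[float], phi: float = 0.6) -> float:
    """Correlation between latent rates of a leader and a supporter neuron."""
    v_l, v_s = var_ce(leader, cohs, phi), var_ce(supporter, cohs, phi)
    if v_l <= 0 or v_s <= 0:
        raise ValueError("VarCE must be positive to compute CorCE")
    cov = covariance(residuals(leader, cohs), residuals(supporter, cohs))
    return cov / math.sqrt(v_l * v_s)


# Toy spike counts from eight trials, four at each of two signed coherences.
cohs = [0.16] * 4 + [-0.16] * 4
leader = [2, 6, 10, 14, 1, 3, 5, 7]
supporter = [3, 5, 9, 15, 2, 2, 6, 6]
```

With φ = 0 the function reduces to the ordinary correlation of the residuals; a positive φ shrinks the denominator and therefore increases the magnitude of the estimate, which, with few trials, can exceed 1.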

We supplemented the correlation analyses with regression. Regression analyses allow us to evaluate the significance of the correlation in pairs after accounting for other shared task variables, such as the motion strength of P1 () and the monkey’s choice (Ichoice):

| (Equation 15) |

| (Equation 16) |

We report the p-value associated with H0 : β3 = 0.

We also measured the autocorrelation of the leader’s activity before and after the IEM (lpre and lpost) in the 1st variant of the two-pulse task:

| (Equation 17) |

applying the same null hypothesis, and we asked whether the effect of lpre on lpost is mediated by the supporter:

| (Equation 18) |

Again H0 : β3 = 0.

Supplementary Material

Highlights.

Neurons in area LIP represent decisions in an oculocentric frame of reference (FoR)

When the gaze shifts, the representation moves to different LIP neurons

The transfer confers continuity of a decision about an object in a world-centered FoR

ACKNOWLEDGMENTS

We thank Brian Madeira and Cornel Duhaney for technical support and animal care. We thank members of the Shadlen lab for critical input throughout the research. This work was supported by the Howard Hughes Medical Institute (HHMI) and the National Institutes of Health (R01-NS113113). This article is subject to HHMI’s Open Access to Publications policy. HHMI lab heads have previously granted a nonexclusive CC BY 4.0 license to the public and a sublicensable license to HHMI in their research articles. Pursuant to those licenses, the author-accepted manuscript of this article can be made freely available under a CC BY 4.0 license immediately upon publication.

Footnotes

SUPPLEMENTAL INFORMATION

Supplemental information can be found online at https://doi.org/10.1016/j.neuron.2022.07.019.

DECLARATION OF INTERESTS

The authors declare no competing interests.

REFERENCES

- Andersen RA, Bracewell RM, Barash S, Gnadt JW, and Fogassi L (1990). Eye position effects on visual, memory, and saccade-related activity in areas LIP and 7a of macaque. J. Neurosci 10, 1176–1196.

- Andersen RA, and Buneo CA (2002). Intentional maps in posterior parietal cortex. Annu. Rev. Neurosci 25, 189–220.

- Asanuma C, Andersen RA, and Cowan WM (1985). The thalamic relations of the caudal inferior parietal lobule and the lateral prefrontal cortex in monkeys: divergent cortical projections from cell clusters in the medial pulvinar nucleus. J. Comp. Neurol 241, 357–381.

- Bálint R (1909). Seelenlähmung des Schauens, optische Ataxie, räumliche Störung der Aufmerksamkeit. Eur. Neurol 25, 67–81.

- Barash S, Bracewell RM, Fogassi L, Gnadt JW, and Andersen RA (1991). Saccade-related activity in the lateral intraparietal area. II. Spatial properties. J. Neurophysiol 66, 1109–1124.

- Batista AP, Buneo CA, Snyder LH, and Andersen RA (1999). Reach plans in eye-centered coordinates. Science 285, 257–260.

- Becker W, and Jürgens R (1979). An analysis of the saccadic system by means of double step stimuli. Vision Res 19, 967–983.

- Bisley JW, and Goldberg ME (2003). Neuronal activity in the lateral intraparietal area and spatial attention. Science 299, 81–86.

- Brainard DH (1997). The psychophysics toolbox. Spat. Vision 10, 433–436.

- Cavanagh P, Hunt AR, Afraz A, and Rolfs M (2010). Visual stability based on remapping of attention pointers. Trends Cogn. Sci 14, 147–153.

- Chomsky N (1977). On wh-movement. In Formal Syntax, Culicover P, Wasow T, and Akmajian A, eds. (Academic Press), pp. 71–133.

- Churchland AK, Kiani R, Chaudhuri R, Wang XJ, Pouget A, and Shadlen MN (2011). Variance as a signature of neural computations during decision making. Neuron 69, 818–831.

- Cisek P (2007). Cortical mechanisms of action selection: the affordance competition hypothesis. Philos. Trans. R. Soc. Lond. B Biol. Sci 362, 1585–1599.

- Clark A (1997). Being There: Putting Brain, Body, and World Together Again (MIT Press).

- Colby CL, Duhamel JR, and Goldberg ME (1995). Oculocentric spatial representation in parietal cortex. Cereb. Cortex 5, 470–481.

- Colby CL, Duhamel JR, and Goldberg ME (1996). Visual, presaccadic, and cognitive activation of single neurons in monkey lateral intraparietal area. J. Neurophysiol 76, 2841–2852.

- de Lafuente V, Jazayeri M, and Shadlen MN (2015). Representation of accumulating evidence for a decision in two parietal areas. J. Neurosci 35, 4306–4318.

- Driver J, and Mattingley JB (1998). Parietal neglect and visual awareness. Nat. Neurosci 1, 17–22.

- Duhamel JR, Colby CL, and Goldberg ME (1992). The updating of the representation of visual space in parietal cortex by intended eye movements. Science 255, 90–92.

- Funahashi S, Bruce CJ, and Goldman-Rakic PS (1989). Mnemonic coding of visual space in the monkey’s dorsolateral prefrontal cortex. J. Neurophysiol 61, 331–349.

- Gibson JJ (1986). The Ecological Approach to Visual Perception (Lawrence Erlbaum Associates).

- Gnadt JW, and Andersen RA (1988). Memory related motor planning activity in posterior parietal cortex of macaque. Exp. Brain Res 70, 216–220.

- Golomb JD, Chun MM, and Mazer JA (2008). The native coordinate system of spatial attention is retinotopic. J. Neurosci 28, 10654–10662.

- Golomb JD, and Mazer JA (2021). Visual remapping. Annu. Rev. Vis. Sci 7, 257–277.

- Hanks TD, Mazurek ME, Kiani R, Hopp E, and Shadlen MN (2011). Elapsed decision time affects the weighting of prior probability in a perceptual decision task. J. Neurosci 31, 6339–6352.

- Hardy SG, and Lynch JC (1992). The spatial distribution of pulvinar neurons that project to two subregions of the inferior parietal lobule in the macaque. Cereb. Cortex 2, 217–230.

- Hays AV, Richmond BJ, and Optican LM (1982). Unix-based multiple-process system, for real-time data acquisition and control. WESCON Conference Proc. https://www.osti.gov/biblio/5213621.

- Hikosaka O, and Wurtz RH (1983). Visual and oculomotor functions of monkey substantia nigra pars reticulata. III. Memory-contingent visual and saccade responses. J. Neurophysiol 49, 1268–1284.

- Horwitz GD, and Newsome WT (1999). Separate signals for target selection and movement specification in the superior colliculus. Science 284, 1158–1161.

- Kiani R, Hanks TD, and Shadlen MN (2008). Bounded integration in parietal cortex underlies decisions even when viewing duration is dictated by the environment. J. Neurosci 28, 3017–3029.

- Kiani R, and Shadlen MN (2009). Representation of confidence associated with a decision by neurons in the parietal cortex. Science 324, 759–764.

- Kim JN, and Shadlen MN (1999). Neural correlates of a decision in the dorsolateral prefrontal cortex of the macaque. Nat. Neurosci 2, 176–185.

- Lewis JW, and Van Essen DC (2000). Corticocortical connections of visual, sensorimotor, and multimodal processing areas in the parietal lobe of the macaque monkey. J. Comp. Neurol 428, 112–137.

- Mays LE, and Sparks DL (1980). Dissociation of visual and saccade-related responses in superior colliculus neurons. J. Neurophysiol 43, 207–232.

- Mazzoni P, Bracewell RM, Barash S, and Andersen RA (1996). Motor intention activity in the macaque’s lateral intraparietal area. I. Dissociation of motor plan from sensory memory. J. Neurophysiol 76, 1439–1456.

- Merleau-Ponty M (1945). Phenomenology of Perception (Routledge).

- Mirpour K, Arcizet F, Ong WS, and Bisley JW (2009). Been there, seen that: A neural mechanism for performing efficient visual search. J. Neurophysiol 102, 3481–3491.

- Mongillo G, Barak O, and Tsodyks M (2008). Synaptic theory of working memory. Science 319, 1543–1546.

- National Research Council (2011). Guide for the Care and Use of Laboratory Animals, Eighth Edition (The National Academies Press). https://www.nap.edu/catalog/12910/guide-for-the-care-and-use-of-laboratory-animals-eighth.

- Nawrot MP, Boucsein C, Rodriguez Molina VR, Riehle A, Aertsen A, and Rotter S (2008). Measurement of variability dynamics in cortical spike trains. J. Neurosci. Methods 169, 374–390. https://doi.org/10.1016/j.jneumeth.2007.10.013.

- Odean NN, Sanayei M, and Shadlen MN (2022). Transient oscillations of neural firing rate associated with routing of evidence in a perceptual decision. Preprint at bioRxiv. https://doi.org/10.1101/2022.02.07.478903.

- Semework M, Steenrod SC, and Goldberg ME (2018). A spatial memory signal shows that the parietal cortex has access to a craniotopic representation of space. eLife 7, e30762.

- Shadlen MN, Hanks TD, Churchland AK, Kiani R, and Yang T (2006). The speed and accuracy of a simple perceptual decision: a mathematical primer. In Bayesian Brain: Probabilistic Approaches to Neural Coding, Doya K, Ishii S, Pouget A, and Rao R, eds. (MIT Press), pp. 209–237.

- Shadlen MN, and Kandel ER (2021). Decision-making and consciousness. In Principles of Neural Science, 6e, Kandel ER, Koester JD, Mack SH, and Siegelbaum SA, eds. (McGraw Hill).

- Shadlen MN, Kiani R, Hanks TD, and Churchland AK (2008). Neurobiology of decision making: an intentional framework. In Better than Conscious?, Engel C and Singer W, eds. (MIT Press), pp. 71–101.

- Shadlen MN, and Newsome WT (1994). Noise, neural codes and cortical organization. Curr. Opin. Neurobiol 4, 569–579.

- Shadlen MN, and Newsome WT (1996). Motion perception: seeing and deciding. Proc. Natl. Acad. Sci. USA 93, 628–633.

- Shadlen MN, and Newsome WT (1998). The variable discharge of cortical neurons: implications for connectivity, computation, and information coding. J. Neurosci 18, 3870–3896.

- Snyder LH, Batista AP, and Andersen RA (1997). Coding of intention in the posterior parietal cortex. Nature 386, 167–170.

- Sommer MA, and Wurtz RH (2002). A pathway in primate brain for internal monitoring of movements. Science 296, 1480–1482.

- Sommer MA, and Wurtz RH (2006). Influence of the thalamus on spatial visual processing in frontal cortex. Nature 444, 374–377.

- Sparks DL, and Mays LE (1983). Spatial localization of saccade targets. I. Compensation for stimulation-induced perturbations in eye position. J. Neurophysiol 49, 45–63.

- Sparks DL, and Porter JD (1983). Spatial localization of saccade targets. II. Activity of superior colliculus neurons preceding compensatory saccades. J. Neurophysiol 49, 64–74.

- Stuphorn V, Bauswein E, and Hoffmann KP (2000). Neurons in the primate superior colliculus coding for arm movements in gaze-related coordinates. J. Neurophysiol 83, 1283–1299.

- Subramanian J, and Colby CL (2014). Shape selectivity and remapping in dorsal stream visual area LIP. J. Neurophysiol 111, 613–627.

- Thompson E, and Varela FJ (2001). Radical embodiment: neural dynamics and consciousness. Trends Cogn. Sci 5, 418–425.

- Wurtz RH (2018). Corollary discharge contributions to perceptual continuity across saccades. Annu. Rev. Vis. Sci 4, 215–237.

- Zipser D, and Andersen RA (1988). A back-propagation programmed network that simulates response properties of a subset of posterior parietal neurons. Nature 331, 679–684.

- Zohary E, Shadlen MN, and Newsome WT (1994). Correlated neuronal discharge rate and its implications for psychophysical performance. Nature 370, 140–143.

Data Availability Statement

Behavioral and neuronal data reported in this paper have been deposited to Mendeley Data and are publicly available. DOI is listed in the key resources table.

All original code has been deposited at Zenodo and is publicly available as of the date of publication. DOI is listed in the key resources table.

Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

KEY RESOURCES TABLE

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Deposited data | | |
| Behavioral and neuronal data | This paper | Mendeley Data, V1, https://doi.org/10.17632/ptcvtxg55j.1 |
| Experimental models: Organisms/strains | | |
| Rhesus macaque (Macaca mulatta) | Columbia University | N/A |
| Software and algorithms | | |
| Original analyses code | This paper | https://doi.org/10.5281/zenodo.6835463 |
| PsychToolbox | Brainard, 1997 | http://psychtoolbox.org/ |
| MATLAB | The MathWorks | https://www.mathworks.com/products/matlab.html |