Abstract

With the rapid development of technologies for medical diagnosis and treatment, novel and complicated concepts and usages of clinical terms, especially those of surgical procedures, have become common in daily routine. Surgical procedures are expected to be performed in an operating room and accompanied by an incision based on expert discretion, and their terms imply a clinical understanding of diagnosis, examination, testing, equipment, drugs, symptoms, etc. These terms are difficult to recognize because they are highly distinctive, with long morphological length and complex linguistic phenomena. To achieve higher recognition performance and overcome the absence of natural delimiters in Chinese sentences, we propose a Named Entity Recognition (NER) model named Structural-SoftLexicon-Bi-LSTM-CRF (SSBC), empowered by the pre-trained model BERT. In particular, we pre-trained a lexicon embedding over a large-scale medical corpus to better leverage domain-specific structural knowledge. With the input additionally augmented by BERT, rich multigranular information and structural term information are transferred from Structural-SoftLexicon to the downstream Bi-LSTM-CRF model, which yields a globally optimal prediction for the input sequence. We evaluate our model on a self-built corpus, and the results show that SSBC with the pre-trained model outperforms the baseline models by at least 3.77% in F1 score. We hope this study will benefit the Diagnostic Related Groups (DRGs) and Diagnosis Intervention Package (DIP) grouping systems, medical records statistics and analysis, the Medicare payment system, etc.

Keywords: Natural language processing, Surgical procedure, Long term recognition, SoftLexicon


1. Introduction

Surgical procedures contextualized in the medical field refer to a procedure that would be expected to be performed in an operating room and accompanied by an incision based on expert discretion. As a normative expression for clinical examination and treatment, surgical procedure terms, such as “ovary transplantation”, “pulmonary artery thrombolytic agent perfusion”, etc., become an important part of the standard medical terminology system, as well as standards with the smallest granularity and the highest necessity for medical and health information standard system. Those terms imply a clinical understanding of diagnosis, examination, testing, equipment, drugs, and symptoms, etc., and are a key building block for many intelligent medical systems.

As part of public standard medical terminology resources, surgical procedure terms face a coverage problem, that is, a limited ability to describe real-world data [1]. With the booming development of the biomedical field, surgical procedures, which are composed of operative approaches, equipment, techniques, drugs, and the like, change continuously in practical use. Usage forms such as syntagmatic and cross use among these element types cause complicated and diversified changes in term morphology, and the ever-increasing length of terms further exacerbates the problem. It is increasingly difficult for static vocabularies and standards to discover real-world variants and derivatives, and therefore to cover surgical procedure terms fully and promptly. How to address this problem, or more precisely, how to address the part of it that originates from increased term length, is the heart of our concern. Previous studies provide excellent named entity recognition (NER) methods for detecting similar entities [2, 3, 4, 5].

Named entity recognition is the process of identifying target entities and their corresponding domain entity tags in unstructured, unlabeled text. Improved NER capability on surgical procedures directly translates into better identification performance for the groupers of Diagnostic Related Groups (DRGs) and Diagnosis Intervention Package (DIP), and further facilitates the Medicare payment system as well as the statistics and analysis of Electronic Medical Records (EMRs) and Electronic Health Records (EHRs). More generally, rapid and accurate identification of such terms is of great significance to efficient hospital management, automatic updating of terminology knowledge bases, terminology standardization and interoperability, and the construction of a Chinese medical terminology system. However, previous NER studies in the biomedical domain mainly concentrate on more common concepts [6, 7, 8, 9, 10, 11, 12], and little effort has been put into this field or into long entity recognition. Current NER models also struggle to process these long and complicated terms [13, 14]. Therefore, targeted studies are urgently needed for the automatic recognition of long surgical procedure terms.

This paper proposes an NER model named Structural-SoftLexicon-Bi-LSTM-CRF (SSBC), empowered by the pre-trained model BERT [15]. SSBC first maps the characters of the input sequence into dense vectors; the SoftLexicon feature is then built and added to each character vector. The augmented character representations are fed into the sequence modeling layer and the CRF (conditional random field) [16, 17] layer to generate the final predictions. During this process, a pre-trained language model is applied to supply prior semantic knowledge. SSBC alleviates the long term recognition problem by applying a lexicon-character combination strategy only at the embedding layer, without reconstructing the whole architecture, and proves to be effective.

The contributions of this work are summarized as follows:

- We constructed a term corpus and combined term structural features with a deep learning method to overcome combination and cross ambiguity. Test results outperformed the baseline methods and reached a new high F1 score of 79.63%.

- We proposed the Structural-SoftLexicon-Bi-LSTM-CRF (SSBC) model, which integrates lexicon and character features and avoids complex sequence modeling. The overall architecture is simple, and its transferability is strong.

- We introduced a pre-trained domain lattice and a pre-trained language model, which effectively tackle the problem of OOV (out-of-vocabulary) words. The model performance can be improved further by subsequent lexicon expansion.

2. Characteristics and difficulties of surgical procedures recognition

Considering the average length appeared in various surgical taxonomies and standards, we define surgical procedure long terms as terms with at least 5 Chinese characters. For entity recognition tasks, we summarize term features as follows:

(1) Highly domain-specific and sensitive. Replacing even a few characters may change the semantics of a term drastically or make it erroneous. Thus, character representations that carry rich information are required.

(2) Long. As defined above, long surgical procedure terms contain at least 5 Chinese characters. For instance, in the normative document Operations And Procedures Classification Code National Clinic Edition 3.0 [4], terms of at least 5 characters account for about 97% of all terms. Semantic dependency and complexity correlate positively with term length.

(3) Highly structural. A surgical procedure term takes the leading term as its core element and combines other kinds of components to form a term unit, as shown in Figure 1. In fact, almost all term elements follow national or industrial norms, which makes it possible to make full use of external lexicons to recognize terms. We define 7 types of term elements: Ana (anatomy), App (operative approach), Dis (disease), Lea (leading term), Equ (equipment), Drug (drug), and Sym (symptom) (see Figure 2).

Figure 1.

Examples of term segmentation by term type.

Figure 2.

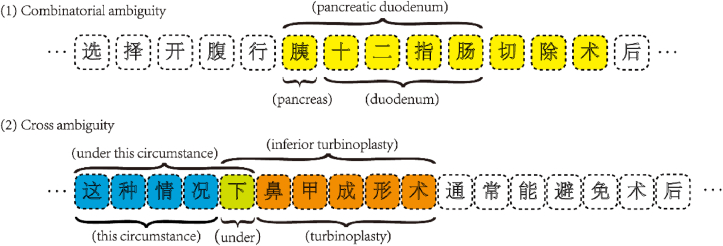

(1) The first example indicates that the ground-truth entity (characters highlighted in yellow) may be mistakenly cut into an entity without “pancreas”, which is still legal at the semantic level but has a different meaning; (2) the second example shows that the polysemous character “下” is legal in both word dependency relations, but only one boundary cut yields the ground-truth entity (ENG: inferior turbinoplasty).

Consequently, 3 challenges need to be overcome:

(1) For a term, App + Ana + Dis + Lea is the basic formula. However, syntagmatic and cross ambiguity lead to changes in word boundaries and therefore generate cutting errors.

(2) Although most terms in practical use are standardized, not all of them are. Because of their descriptive narrative and naming style, surgical procedure terms often show novel and diversified morphological patterns with weak naming regularity, and they are full of OOV words, which hampers recognition performance.

(3) Terms contain a large number of symbols and multilingual words. For instance, English words, Arabic numerals, and hyphens are widely used in terms like “Bacon-Black术” (Bacon-Black), “AVAULTA全盆底重建术” (AVAULTA Total Pelvic Floor Reconstruction), and “重组人类活化C蛋白输注” (Recombinant Human Activated Protein C Perfusion).

3. Related works

NER is widely used on Chinese medical texts, including electronic medical records [18, 19], traditional Chinese medicine texts [20, 21], clinical guidelines [22], disease subtypes [23], admission notes [24], PICO [25], and disease and plant names [26]. Most approaches focus on feature extraction, either by rule- and dictionary-based means or by machine learning and deep learning means. Studies [27, 28] detect entities in unstructured texts by using rules and dictionaries at the character-word level, while [29, 30] use machine learning models to extract medical entities based on manually designed character-word level features such as dictionaries, POS tags, and n-grams. Rule-based approaches rely heavily on manually formulated heuristic rules, which requires thorough analysis of the corpus and the ability to enumerate possible rules. Dictionary-based approaches face much the same problem in covering all related entities, which causes poor recognition performance [31]. Early machine learning-based approaches take a naïve route and simply classify each word independently [32]. The main problem is the assumption that named entity labels are independent, which is not the case. Later machine learning-based approaches regard NER as a sequence labeling problem, and methods such as conditional random fields, Hidden Markov Models [33], support vector machines (SVM) [34], and maximum entropy (ME) [35] are extensively used. Specifically, these approaches model dependencies within and across input sequences based on labels, and a downstream global optimization prediction layer then tackles labeling bias.

Deep learning methods, characterized by end-to-end learning, deep feature extraction, and automatic modeling, have surpassed the results of traditional machine learning approaches [36]. Haixin Tan et al. [37] propose an ensemble model composed of Bi-LSTM, CRF, and a transformer encoder [trans] for medical term recognition, incorporating Chinese character radical information and a pre-trained language model. Researchers have gradually found that, by optimizing the representation layer, models become easier to deploy and yield competitive results [MLNER and lexicon]. Articles [11, 38, 39] use encyclopedia, abstract, and patent corpora to feed pre-trained word vectors into the model, and capture word features to form a character-word hybrid embedding as model input. Yuan Li et al. [40] apply static and dynamic attention mechanisms to weigh the adjacent words around each character, obtain word importance information, and concatenate it with the character vectors. To obtain clearer word boundaries, some studies use segmentation tagging [41] and tagging with different word boundaries generated by feature template segmentation [42] to assist entity recognition. Other scholars exploit domain features and combine an external thesaurus with character or word embeddings. Along this line, Heng-Yi Wu et al. [43] used Drugbank 4.0, the Medical Subject Headings (MeSH) vocabulary, and medical literature to construct a dictionary of drug names and metabolism, and combined part-of-speech information to identify whether entities are related terms or substrings. Lerner et al. [44] use a terminology system based on the Unified Medical Language System (UMLS) Metathesaurus and the Systematized Nomenclature of Medicine (SNOMED) to obtain entity type information, and combine character and word embeddings as model input to detect drug names.

In addition to referring to external taxonomies, extracting boundary-specified subwords from known entities has also become a feasible way to enrich embeddings. For instance, C.Y. Hou [45] used the BPE iterative merging algorithm to generate subword units from known entities and form a character-subword mixed embedding as the input of an ID-CNNs-CRF model, which shortens the sequence length and facilitates the determination of long entity boundaries, achieving superior long term recognition. Although some distinctive techniques such as denoising algorithms [46] and adversarial training [47] are used, the main structures of these methods are built on the embedding layer. Multi-level feature fusion, e.g., of character, word, morphology, syntactic, pinyin, and radical features, is more popular than ever.

Although many deep neural network models have been applied to entity recognition, they all have defects of varying degree. First, methods that incorporate lexical information inevitably inherit word segmentation errors, which can propagate further downstream. Given the long span of surgical procedure terms, word-based methods have a negative impact on recognition. Character-based methods avoid the word segmentation problem, and empirically they perform better than word-based deep learning models [48, 49], but they miss word boundary information, and a character sequence is often longer than the corresponding word sequence, which increases the difficulty. Naturally, more and more researchers integrate word information into character-based NER methods to take advantage of both and avoid their shortcomings. This idea follows two pathways: one is to dynamically modify the sequence modeling layer [50, 51], and the other is to improve the embedding representation layer [52].

As a classical model for refactoring sequence modeling layers to utilize lexicon information, Lattice-LSTM [51] refreshes top scores for several tasks. However, Lattice-LSTM is slow in training speed, complex in architecture, and hard to combine with other deep neural networks. Ruotian Ma et al. [53] proposed SoftLexicon by improving Lattice-LSTM, which avoids complex sequence modeling and large-scale annotation corpus construction and can be combined with other neural network NER models by adjusting embedding representation layer. Therefore, we believe that it can transfer well to operation and procedure term recognition.

In this paper, we adopt a deep learning model that incorporates character information, lexicon information, and term structural features. The embedding representation layer is adapted to accept character embedding, BERT [54] embedding, and SoftLexicon embedding, and a Bi-LSTM model is used for sequence modeling. In the decoding layer, we use CRF to solve the labeling bias problem in Bi-LSTM and thus obtain a globally optimal tagging. In experiments on the constructed corpus, our model reaches an F1 value of 79.63% and outperforms the baseline models by at least 3.77%.

4. Model

We choose SoftLexicon to encode lexicon information in the character representations. As an encoding scheme, SoftLexicon keeps all matched words of a character rather than only the matched tags. Every character in the input sequence thus obtains all lexicon words in which it occupies one of the four positions of the ‘BMES’ tagging scheme (begin, middle, or end of a word, or a single character forming a word). In this way we introduce not only word boundaries but also word semantics.

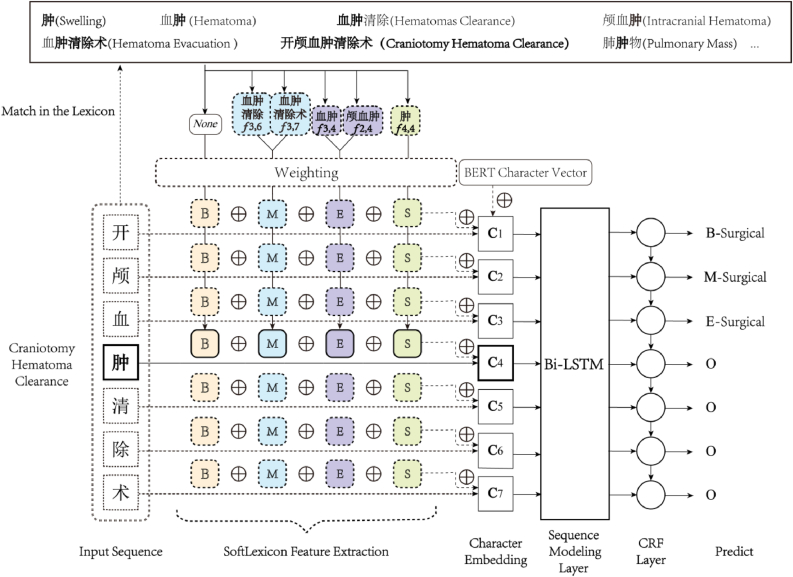

The overall structure of the model is shown in Figure 3. First, the model takes a character from an input sequence containing the surgical procedure mention “Craniotomy Hematoma Clearance”, in this case “肿” (Swelling), maps it into a dense vector, and concatenates it with the character vector pre-trained by BERT. Meanwhile, the sub-sequences in which the character “肿” is located, such as “血肿” (Hematoma) and “颅血肿” (Intracranial Hematoma), are matched against the words in the lexicon. A weighted representation is calculated according to the frequency of the matched words in which “肿” appears at different positions. For example, “肿” is in the middle of the word “血肿清除术” (Hematoma Evacuation), so this word is categorized into the word set “M”, where “M” originates from the BMES tagging scheme. If no matched word starts with “肿”, the set “B” is empty. To adapt the model to the medical field, we further expanded the lexicon to obtain more domain knowledge. After these steps, the SoftLexicon features are added to the characters to form an augmented representation, which passes through the sequence modeling layer and finally a CRF layer to predict the results.

Figure 3.

Architecture of SoftLexicon.

4.1. Character embedding

Each character in the Chinese input sequence is mapped into a dense vector, but because large-scale annotated corpora are lacking, it is difficult to obtain rich information among characters. Pre-trained language models such as BERT have proven effective on this issue: BERT learns rich prior semantic knowledge from large unlabeled, unstructured text and improves model performance by passing this knowledge downstream, refreshing state-of-the-art results on a range of natural language processing tasks [55]. Let $s = \{c_1, c_2, \ldots, c_n\}$ denote a sequence of tokens. After BERT embedding, each character $c_i$ is denoted as $x_i^{b}$ and is later concatenated with its character embedding to form an augmented embedding $x_i^{c}$, as shown in Eq. (1). Here, $e^{c}$ stands for a lookup table for character embedding.

$$x_i^{c} = \left[\, e^{c}(c_i);\ x_i^{b} \,\right] \tag{1}$$

4.2. SoftLexicon

SoftLexicon takes the following 3 steps to incorporate lexicon information.

4.2.1. Categorizing the matched words

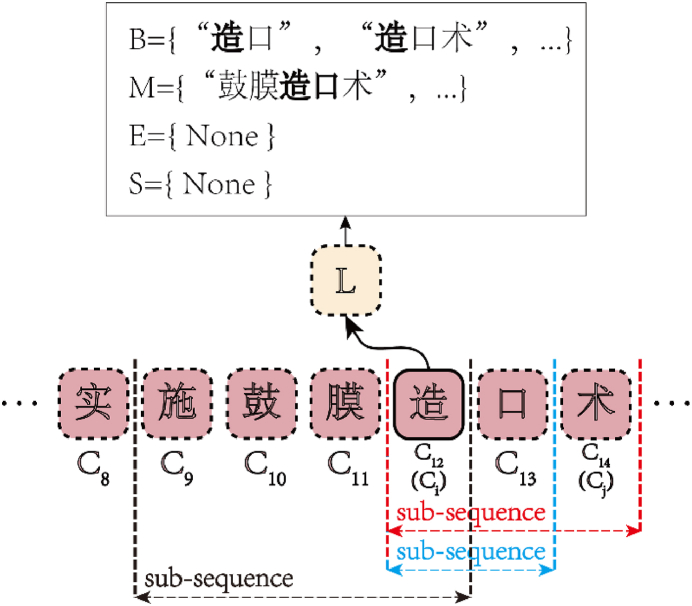

We denote a lexicon as $L$. For a sequence of tokens $s = \{c_1, c_2, \ldots, c_n\}$, if a sub-sequence containing the character $c_i$ matches a word in $L$, the word is grouped into one of four word sets tagged ‘$B$’, ‘$M$’, ‘$E$’, and ‘$S$’, in accordance with the “BMES” tagging scheme. The grouping follows Eqs. (2), (3), (4), and (5). If a word set has no matched word in $L$, it is filled with the special word “NONE”, representing an empty set.

$$B(c_i) = \{\, w_{i,k} \mid w_{i,k} \in L,\ i < k \le n \,\} \tag{2}$$

$$M(c_i) = \{\, w_{j,k} \mid w_{j,k} \in L,\ 1 \le j < i < k \le n \,\} \tag{3}$$

$$E(c_i) = \{\, w_{j,i} \mid w_{j,i} \in L,\ 1 \le j < i \,\} \tag{4}$$

$$S(c_i) = \{\, c_i \mid c_i \in L \,\} \tag{5}$$

In Eq. (2), $w_{i,k}$ represents a word (also a sub-sequence) spanning from the character indexed $i$ to the character indexed $k$ (the word span is no longer than $n$, the length of the input sequence). $w_{i,k}$ shares its starting index with the character $c_i$, which means $c_i$ is the first component character of the word $w_{i,k}$. As Figure 3 shows, given a character $c_i$ from an input sequence, the sub-sequences whose first component character is $c_i$, i.e., the sub-sequences labeled in red and blue in Figure 3, are recognized as $w_{i,k}$. If such a $w_{i,k}$ exists in $L$, it is grouped into the word set $B(c_i)$. Note that a single $c_i$ can produce several $w_{i,k}$, some of which are meaningless (the sub-sequence labeled in black in Figure 4). Thus, only a $w_{i,k}$ that satisfies Eq. (2) is considered a legal element of the word set $B(c_i)$.

Figure 4.

An example of grouping words into word set $B$.

Similarly, Eq. (3) states that if the character $c_i$ lies in the middle of the component characters of $w_{j,k}$ and $w_{j,k}$ appears in $L$, then $w_{j,k}$ is included in the word set $M(c_i)$. Eq. (4) states that if a sub-sequence $w_{j,i}$ ends with the character $c_i$ and $w_{j,i}$ appears in $L$, then $w_{j,i}$ is included in the word set $E(c_i)$. Eq. (5) states that if the character $c_i$ itself exists in $L$, then $c_i$ is included in the word set $S(c_i)$.
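The grouping step described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function name `match_bmes`, the tiny lexicon, and the use of empty lists (instead of a placeholder word) for empty sets are all assumptions made for the example.

```python
def match_bmes(sentence, lexicon, max_len=None):
    """Group lexicon words covering each character into B/M/E/S sets.

    sentence: a string of characters; lexicon: a set of known words.
    max_len (hypothetical parameter) caps the length of candidate words.
    """
    n = len(sentence)
    max_len = max_len or n
    sets = [{"B": [], "M": [], "E": [], "S": []} for _ in range(n)]
    for i in range(n):
        for k in range(i, min(n, i + max_len)):
            word = sentence[i:k + 1]
            if word not in lexicon:
                continue
            if i == k:
                sets[i]["S"].append(word)      # single-character word
            else:
                sets[i]["B"].append(word)      # word begins at i
                sets[k]["E"].append(word)      # word ends at k
                for j in range(i + 1, k):
                    sets[j]["M"].append(word)  # j lies strictly inside
    return sets


# Toy lexicon (an assumption for illustration only).
lexicon = {"开颅", "血肿", "颅血肿", "血肿清除术", "清除术"}
sets = match_bmes("开颅血肿清除术", lexicon)
print(sets[3])  # word sets of the character "肿"
```

For the character “肿” in this toy setting, the set B is empty, M holds “血肿清除术”, and E holds “颅血肿” and “血肿”, which mirrors the example given in the model overview.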

4.2.2. Condensing word sets

Word sets of different sizes lead to inconsistent dimensions, so each word set must be condensed into a fixed-dimensional vector. Considering the unequal importance of different words for entity recognition, and to speed up training, word frequency weighting is applied to obtain the weighted representation $v^{s}(\mathcal{S})$ of a word set $\mathcal{S} \in \{B, M, E, S\}$ in Eq. (6). Here $z(w)$ is the static frequency of the lexicon word $w$ in the statistics, $e^{w}$ is the lookup table for word embedding, and $Z$ in Eq. (7) is the sum of the frequencies of all words in the BMES sets.

$$v^{s}(\mathcal{S}) = \frac{4}{Z} \sum_{w \in \mathcal{S}} z(w)\, e^{w}(w) \tag{6}$$

where

$$Z = \sum_{w \in B \cup M \cup E \cup S} z(w) \tag{7}$$

4.2.3. Concatenating multi-granularity features

After applying Eq. (6) to obtain all four word set representations, we merge them into a fixed-dimensional feature in Eq. (8) and concatenate it with the character vector to form a new embedding in Eq. (9).

$$e^{s}(B, M, E, S) = \left[\, v^{s}(B);\ v^{s}(M);\ v^{s}(E);\ v^{s}(S) \,\right] \tag{8}$$

$$x^{c} \leftarrow \left[\, x^{c};\ e^{s}(B, M, E, S) \,\right] \tag{9}$$
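To make the condensing and concatenation steps concrete, the following pure-Python sketch computes a frequency-weighted vector for each word set and appends the four vectors to a character vector. It assumes the weighting of the original SoftLexicon method [53], in which each set vector is a frequency-weighted sum scaled by 4/Z; the function names, toy frequencies, and 2-dimensional embeddings are hypothetical.

```python
def condense(word_set, freq, emb, total, dim):
    """Frequency-weighted sum (4 / Z) * sum_w z(w) * e(w) for one word set."""
    vec = [0.0] * dim
    if total == 0:
        return vec  # no matched word at all: keep a zero vector
    for w in word_set:
        weight = 4.0 * freq[w] / total
        for d in range(dim):
            vec[d] += weight * emb[w][d]
    return vec


def soft_lexicon_feature(char_vec, sets, freq, emb, dim):
    """Concatenate the character vector with the four condensed set vectors."""
    total = sum(freq[w] for tag in "BMES" for w in sets[tag])  # Z
    feature = list(char_vec)
    for tag in "BMES":
        feature.extend(condense(sets[tag], freq, emb, total, dim))
    return feature


# Toy inputs (assumptions for illustration only).
sets = {"B": [], "M": ["血肿清除术"], "E": ["颅血肿", "血肿"], "S": []}
freq = {"血肿清除术": 1, "颅血肿": 1, "血肿": 2}
emb = {"血肿清除术": [1.0, 0.0], "颅血肿": [0.0, 1.0], "血肿": [1.0, 1.0]}
feature = soft_lexicon_feature([0.5], sets, freq, emb, dim=2)
print(len(feature))  # 1 character dimension + 4 sets * 2 dimensions
```

Because every set is reduced to the same dimension, the resulting feature has a fixed length regardless of how many lexicon words match a character.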

4.3. Sequence modeling layer

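Bi-directional Long Short-Term Memory (Bi-LSTM) [56] is the mainstream model for sequence labeling tasks. Bi-LSTM combines a forward LSTM [57] and a backward LSTM, uses two hidden layers to store information about the input from the two directions respectively, and concatenates the information at step $t$ as $h_t = [\overrightarrow{h_t};\ \overleftarrow{h_t}]$. An LSTM hidden unit is composed of an input gate $i_t$, a forget gate $f_t$, an output gate $o_t$, and a memory cell $c_t$. We define $x_t$ as the input produced by the convolutional neural network (CNN) layer. At step $t$, the LSTM unit can be expressed as:

$$i_t = \sigma\left(W_{xi} x_t + W_{hi} h_{t-1} + b_i\right) \tag{10}$$

$$f_t = \sigma\left(W_{xf} x_t + W_{hf} h_{t-1} + b_f\right) \tag{11}$$

$$o_t = \sigma\left(W_{xo} x_t + W_{ho} h_{t-1} + b_o\right) \tag{12}$$

$$\tilde{c}_t = \tanh\left(W_{xc} x_t + W_{hc} h_{t-1} + b_c\right) \tag{13}$$

$$c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \tag{14}$$

$$h_t = o_t \odot \tanh(c_t) \tag{15}$$

where $W_{xi}$, $W_{hi}$, $W_{xf}$, $W_{hf}$, $W_{xo}$ and $W_{ho}$ in Eqs. (10), (11), and (12) are the weight matrices of the input gate $i_t$, forget gate $f_t$, and output gate $o_t$. Parameters $b_i$, $b_f$ and $b_o$ are the bias vectors of the input gate, forget gate, and output gate. Parameters $W_{xc}$, $W_{hc}$ and $b_c$ in Eq. (13) are the weight matrices and bias vector of the new memory $\tilde{c}_t$, which is the intermediate state of the memory cell waiting to be updated. $h_{t-1}$ is the hidden state at step $t-1$, $\sigma$ is the sigmoid function, $\tanh$ is the hyperbolic tangent function [58], and the operator $\odot$ denotes element-wise multiplication.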
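As an illustration of the gate computations, the sketch below implements one step of a standard LSTM cell and a simple bidirectional pass in pure Python. It follows the textbook LSTM formulation, which is assumed here to match the one used in the paper; the parameter layout (`params["i"] = (W_x, W_h, b)` and so on) and the function names are choices made for this example only.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def affine(W_x, W_h, b, x, h):
    """Compute W_x x + W_h h + b with list-based matrices and vectors."""
    return [
        sum(wx * xi for wx, xi in zip(W_x[r], x))
        + sum(wh * hi for wh, hi in zip(W_h[r], h))
        + b[r]
        for r in range(len(b))
    ]


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step: gates, candidate memory, cell update, hidden state."""
    i = [sigmoid(v) for v in affine(*params["i"], x, h_prev)]         # input gate
    f = [sigmoid(v) for v in affine(*params["f"], x, h_prev)]         # forget gate
    o = [sigmoid(v) for v in affine(*params["o"], x, h_prev)]         # output gate
    c_new = [math.tanh(v) for v in affine(*params["c"], x, h_prev)]   # candidate
    c = [ft * cp + it * cn for ft, cp, it, cn in zip(f, c_prev, i, c_new)]
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]
    return h, c


def bilstm(xs, fwd, bwd, hidden):
    """Run a forward and a backward LSTM and concatenate their states per step."""
    def run(seq, params):
        h, c, out = [0.0] * hidden, [0.0] * hidden, []
        for x in seq:
            h, c = lstm_step(x, h, c, params)
            out.append(h)
        return out

    forward = run(xs, fwd)
    backward = run(xs[::-1], bwd)[::-1]
    return [hf + hb for hf, hb in zip(forward, backward)]


# Toy parameters: input size 2, hidden size 1, all weights zero (illustrative).
zero = ([[0.0, 0.0]], [[0.0]], [0.0])
params = {"i": zero, "f": zero, "o": zero, "c": zero}
h, c = lstm_step([1.0, 2.0], [0.0], [2.0], params)
states = bilstm([[1.0, 2.0], [0.0, 1.0]], params, params, hidden=1)
```

With all-zero weights every gate equals 0.5 and the candidate memory is 0, so the cell simply halves its previous value, which is a convenient sanity check for the update rule.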

4.4. CRF layer

A Conditional Random Field can make full use of the context information of text labels and model the dependencies among sequence labels to obtain a globally optimal prediction. Through tag transition scores, the CRF adds sentence-level tag transition information on top of Bi-LSTM. For an input sequence $X = (x_1, \ldots, x_n)$, the corresponding predicted tag sequence is $y = (y_1, \ldots, y_n)$, and the tag sequence score $s(X, y)$ is obtained by adding the entries of the tag transition matrix $A$ and of the tag probability matrix $P$ output by Bi-LSTM, as shown in Eq. (16). During training, the loss function is calculated using maximum likelihood estimation in Eq. (17), where $Y_X$ is the set of all possible tag sequences and $\tilde{y}$ is an element of $Y_X$. Finally, the Viterbi algorithm in Eq. (18) is used to find the highest-scoring sequence and obtain the optimal output $y^{*}$.

$$s(X, y) = \sum_{i=1}^{n-1} A_{y_i, y_{i+1}} + \sum_{i=1}^{n} P_{i, y_i} \tag{16}$$

$$\mathcal{L} = -\log p(y \mid X) = \log \sum_{\tilde{y} \in Y_X} e^{s(X, \tilde{y})} - s(X, y) \tag{17}$$

$$y^{*} = \arg\max_{\tilde{y} \in Y_X} s(X, \tilde{y}) \tag{18}$$
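The decoding step can be illustrated with a compact Viterbi implementation. This is a generic sketch under simplifying assumptions (no special start or end transitions, scores given as plain Python lists), not the authors' code; `emissions` plays the role of the per-step tag scores from Bi-LSTM and `transitions` the role of the tag transition matrix.

```python
def viterbi(emissions, transitions):
    """Return the highest-scoring tag path and its score.

    emissions[t][k]: score of tag k at step t.
    transitions[j][k]: score of moving from tag j to tag k.
    """
    n_tags = len(emissions[0])
    score = list(emissions[0])
    backptr = []
    for t in range(1, len(emissions)):
        new_score, ptr = [], []
        for k in range(n_tags):
            # Best previous tag for reaching tag k at step t.
            best_j = max(range(n_tags), key=lambda j: score[j] + transitions[j][k])
            new_score.append(score[best_j] + transitions[best_j][k] + emissions[t][k])
            ptr.append(best_j)
        score = new_score
        backptr.append(ptr)
    last = max(range(n_tags), key=lambda k: score[k])
    path = [last]
    for ptr in reversed(backptr):
        path.append(ptr[path[-1]])  # follow back-pointers to the first step
    return path[::-1], score[last]


# Two tags; switching tags is heavily penalised (toy numbers).
emissions = [[2, 0], [0, 1], [2, 0]]
transitions = [[0, -10], [-10, 0]]
path, best = viterbi(emissions, transitions)
print(path, best)
```

In this toy case the per-step best tags would be 0, 1, 0, but the transition penalty makes the globally best path stay on tag 0 throughout, which is the kind of sentence-level correction the CRF layer contributes.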

5. Experiments

We evaluate the proposed model SSBC on a self-built corpus and compare its effectiveness with other state-of-the-art models. Corpus, parameter settings and results of our experiments are described below.

5.1. Corpus

Because no large annotated corpus is available for this field, we built a corpus from scratch. By entering the search query “YE = 2018–2022 AND (TI = 手术 OR AB = 手术)” (手术: surgery) on the CNKI (China National Knowledge Infrastructure) online journal database, we finally included 1232 articles to meet the quantity requirement. We then manually extracted surgical terms from the full text of these articles under the guidance of clinical experts, and annotated the extracted corpus, which contains 2600 terms, with the BMES tagging scheme. Table 1 shows the row structure of the corpus and Table 2 gives detailed statistics. In the experiments, the corpus is randomly divided into train, dev, and test sets in the proportion 8:1:1.
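The annotation and splitting steps can be sketched as follows. The helper names, the use of an “O” tag for characters outside a term, and the fixed random seed are assumptions made for illustration; the paper does not specify these details.

```python
import random


def bmes_tags(context, term):
    """Tag every occurrence of term in context with B/M/E (or S), others with O."""
    tags = ["O"] * len(context)
    start = context.find(term)
    while start != -1:
        end = start + len(term) - 1
        if start == end:
            tags[start] = "S"
        else:
            tags[start] = "B"
            tags[end] = "E"
            for i in range(start + 1, end):
                tags[i] = "M"
        start = context.find(term, end + 1)
    return tags


def split_corpus(samples, seed=0):
    """Shuffle and split samples into train/dev/test at 8:1:1."""
    items = list(samples)
    random.Random(seed).shuffle(items)
    n_train = len(items) * 8 // 10
    n_dev = len(items) // 10
    return items[:n_train], items[n_train:n_train + n_dev], items[n_train + n_dev:]


context = "颅脑损伤开颅术是颅脑损伤科临床上常见的手术之一"
tags = bmes_tags(context, "颅脑损伤开颅术")
train, dev, test = split_corpus(range(2600))
print(len(train), len(dev), len(test))
```

For a corpus of 2600 items this split gives 2080, 260, and 260 samples; integer arithmetic is used so the sizes do not depend on floating-point rounding.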

Table 1.

Corpus structure.

| Article Title | Term | Context |

|---|---|---|

| 复方曲肽注射液治疗颅脑术后脑水肿的临床效果及对不良反应、血清肿瘤坏死因子-α水平的影响 | 颅脑损伤开颅术 | 颅脑损伤开颅术是颅脑损伤科临床上常见的手术之一, 是指通过机械设备来打开患者的颅骨后进行手术, 从而达到清除颅内血肿, 减轻颅内压力 |

Table 2.

Detailed corpus statistics.

| Type | Train | Dev | Test |

|---|---|---|---|

| Sentence | 2.2k | 0.2k | 0.2k |

| Char | 183.2k | 22.9k | 22.9k |

We selected published journal articles as the corpus source for 3 reasons: (1) the peer-review and publication mechanism of journal articles makes the surgical terms that appear in them more standardized and well recognized; (2) their text is closer to natural language; (3) they are more openly accessible and are not restricted by medical safety and privacy rules.

5.2. Environment setting

Experiments were run on a 2.10 GHz CPU, an NVIDIA Tesla V100 GPU (16 GB), and 64 GB of RAM under Ubuntu 16.04. For development, we used Python 3.6 and PyCharm 2021.

5.3. Hyper-parameters

Table 3 shows the hyper-parameter settings used during training.

Table 3.

Hyper-parameter settings.

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| char_emb_size | 50 | epoch | 100 |
| bichar emb size | 50 | dropout | 0.5 |
| lattice_emb_size | 50 | learning rate | 0.005 |
| batch_size | 10 | learning rate decay | 0.05 |
| LSTM hidden_dim | 200 | LSTM_layer | 1 |

5.4. Lattice training

In addition to inheriting the general-domain lattice embedding used in [51], we construct a specialized lattice for surgical procedure terms from an encyclopedia and an open-access corpus. We use terms from the seven element types described in Section 2 as search queries to obtain encyclopedia content. Table 4 shows details of the entry words. In addition, we use the Chinese Medical Conversational Question-Answer (CMCQA) dataset [59] as a supplementary training corpus. CMCQA is a large conversational question answering dataset for the Chinese medical field, collected from the medical dialogue Q&A website Chunyu; it covers 45 departments and contains 1.3 million complete dialogues and 19.83 million sentences, 2.84 GB in total. We fuse the corpora from the two sources, perform automatic word segmentation, and use Word2Vec [60] to train 50-dimensional word vectors. We then compare model performance using the different embeddings.

Table 4.

Encyclopedia entry words details.

| Category | Size | Source |
|---|---|---|
| Leading term & Operative approach | 372 | Surgical Procedure Classification Code National Clinical Edition 3.0 |
| Anatomy (body) | 1,084 | Chinese Medical Subject Headings (CMeSH) |
| Disease | 2,813 | Disease Classification and Codes (GB T14396-2016) |
| Equipment | 6,609 | Medical Device Classification Catalog 2017 Edition |
| Comprehensive | 18,749 | Tsinghua University Open Chinese Thesaurus (Medical) |

5.5. Evaluation metrics

The traditional practice is to calculate precision, recall, and F1-score from the classification results of the NER model. We follow this set of metrics to reflect how well our model classifies samples into the desired category. Precision ($P$) is the ratio of correctly predicted entities to all predicted entities. Recall ($R$) is the ratio of correctly predicted entities to all entities in the test set. The F1-score is the harmonic mean of $P$ and $R$, measures overall performance, and is calculated by Eq. (19):

$$F1 = \frac{2 \times P \times R}{P + R} \tag{19}$$
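As a quick check of how these metrics behave, the sketch below computes precision, recall, and F1 from sets of predicted and gold entity spans, assuming exact span matching (an assumption; the matching criterion is not spelled out in the text). The helper `f1_from` simply applies the harmonic-mean formula to given precision and recall values.

```python
def prf(gold, pred):
    """Entity-level precision, recall and F1 over sets of (start, end) spans."""
    gold, pred = set(gold), set(pred)
    correct = len(gold & pred)
    p = correct / len(pred) if pred else 0.0
    r = correct / len(gold) if gold else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


def f1_from(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


# One of three predictions matches one of two gold spans (toy data).
p, r, f1 = prf({(0, 6), (10, 12)}, {(0, 6), (8, 9), (10, 13)})
print(round(p, 3), round(r, 3), round(f1, 3))

# Reported precision and recall of BERT-SSBC, in percent.
print(round(f1_from(73.24, 87.25), 2))
```

Applying `f1_from` to the reported precision (73.24) and recall (87.25) of BERT-SSBC reproduces the reported F1 of 79.63 after rounding.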

In this work, we conduct several comprehensive experiments to verify the proposed model. The compared models include (1) BERT-Bi-LSTM-CRF [61], a BERT model with a bidirectional long short-term memory (LSTM) network followed by a CRF layer, which is the most popular architecture for the NER task but lacks the ability to leverage lexicon information; (2) BERT-FLAT-Lattice-Transformer [50], a BERT model with a flat-lattice Transformer that incorporates lexicon information but is structurally complicated and cannot be deployed at the embedding layer alone; (3) SSBC, our proposed model, which simply fuses lexicon-character level features without a pre-trained language model; (4) Bichar-SSBC, our proposed model with bichar feature embedding; and (5) BERT-SSBC, our proposed model augmented with BERT. In principle, this comparison can demonstrate the importance of lexicon information and of a pre-trained language model. The experimental results are shown in Table 5.

Table 5.

The performances of different models in the test set of self-built corpus.

| Model | P (%) | R (%) | F1 (%) |
|---|---|---|---|
| BERT-Bi-LSTM-CRF | 64.71 | **91.67** | 75.86 |
| BERT-FLAT-Lattice-Transformer | 62.61 | 72.02 | 67.09 |
| SSBC | 70.52 | 85.91 | 77.46 |
| Bichar-SSBC | 71.47 | 86.58 | 78.30 |
| BERT-SSBC | **73.24** | 87.25 | **79.63** |

The bold font denotes the best result for each column.

To see how domain knowledge influences the lexicon-based model, we use different combinations of lattice embeddings; the outcomes are shown in Table 6.

Table 6.

Outcomes using different lattice embeddings.

| Model | +General Lattice: P | +General Lattice: R | +General Lattice: F1 | +General & Medical Lattice: P | +General & Medical Lattice: R | +General & Medical Lattice: F1 | +Medical Lattice: P | +Medical Lattice: R | +Medical Lattice: F1 |
|---|---|---|---|---|---|---|---|---|---|
| SoftLexicon (LSTM) | 70.52 | 85.91 | 77.46 | 71.15 | 86.91 | 78.25 | 75.45 | 83.56 | 79.30 |
| SoftLexicon (LSTM)+bichar | 71.47 | 86.58 | 78.30 | 71.11 | 85.91 | 77.81 | 72.10 | 87.58 | 79.10 |
| SoftLexicon (LSTM)+BERT | 73.24 | 87.25 | 79.63 | 71.43 | 87.25 | 78.55 | 72.25 | 88.26 | 79.46 |

6. Discussion

First, our BERT-SSBC achieves the highest F1-score, 79.63%, among the five models, surpassing the baseline models BERT-Bi-LSTM-CRF and BERT-FLAT-Lattice-Transformer by 3.77% and 12.54% respectively. The three models based on SoftLexicon are all competitive, since the gap between their F1 scores is no more than 2.5%, and the one with BERT achieves the highest score (79.63% in Table 5), which indicates the superiority of the SoftLexicon structure and the benefit of rich prior semantic knowledge. BERT-SSBC also has the highest precision, implying that the use of the lexicon greatly reduces segmentation errors.

Second, as shown in [51], adding word-level characteristics such as bigrams is of great importance for representing character information. By using the pre-trained bigram embedding of Chinese Giga-Word, some entity boundary segmentation errors are reduced, and the model obtains considerable word information on top of the characters. Compared with BERT-Bi-LSTM-CRF, Bichar-SSBC performs better in both P and F1 score.

Third, SoftLexicon performs much better than FLAT-Lattice-Transformer even though both use the same lattice. This can partly be attributed to (1) the distinctive structural features of the surgical procedure field, and (2) the design of the “Middle” group, which enables a character to exploit information not only from the words that begin or end with it, but also from the words that contain it.

Fourth, owing to the characteristics of SoftLexicon, the choice of domain lexicon has an important impact on model performance. We therefore examine this effect by adding lexicons from different domains to the model. As shown in Table 6, models using the medical lexicon tend to be better on most metrics. BERT-SSBC achieves the best recall and F1 under every lattice setting, and the best precision under all but the medical lattice. The medical lexicon competes closely with the general lexicon, implying that domain knowledge is as important as general knowledge. Considering that the medical lexicon is only about 1% the size of the general lattice, we find that high-quality information representations can be obtained effectively from specialized fields.

Our model correctly predicts more than 70% of long terms even with a small pre-trained lexicon, which shows room for improvement by enlarging the pre-training corpus and suggests transferability to other domain-specific fields. However, it is still not sufficient for daily routine processing, since precision is markedly lower than recall, indicating a weakness in recognizing longer entities. Compared with short entities, long entities tend to be more unitary and exhibit more inter-entity nesting and blurred boundary truncation. Focusing on innovations beyond models and algorithms may be a feasible way forward.

7. Conclusion and future work

Aiming to recognize long terms for surgical procedures, this article applies a deep learning model that integrates field structural features, multi-granularity information, and the pre-trained language model BERT to alleviate the difficulty of recognizing surgical terms in Chinese literature text. Experiments on the self-built data set show that our method effectively improves Chinese NER performance, reaching a new high F1 value of 79.63%. Moreover, we find that the effect of the domain-specific lexicon is no worse than that of the general-domain lexicon, showing room for continued improvement and potential for transferability.

Our study has several limitations. First, the data set was built by extracting relevant contexts from research articles, which may lose information presented elsewhere in the full-text documents. For instance, we found a prevailing description style in the sentences where surgical procedure terms occur. This uniformity of context does not match real-world scenarios well and therefore limits the model's transferability to other domain-specific fields. For future work, it is important to consider extracting information from various full-text sources. Additionally, the model is still not sufficient for daily routine processing, since the precision rate is significantly lower than the recall rate, indicating a weakness in recognizing longer entities. To substantiate the usefulness of the system, future work should test it on a larger lattice and a more complex corpus. Finally, in-depth research on polysemy, abbreviations, relationships among entities, and entity normalization is the next task in our future work.

Declarations

Author contribution statement

Nan Jiale: Conceived and designed the experiments; Performed the experiments; Analyzed and interpreted the data; Contributed reagents, materials, analysis tools or data; Wrote the paper.

Gao Dongping: Conceived and designed the experiments; Contributed reagents, materials, analysis tools or data; Wrote the paper.

Sun Yuanyuan: Contributed reagents, materials, analysis tools or data; Analyzed and interpreted the data.

Li Xiaoying: Analyzed and interpreted the data.

Shen Xifeng; Li Meiting; Zhang Weining; Ren Huiling; Qin Yi: Contributed reagents, materials, analysis tools or data.

Funding statement

Dongping Gao was supported by the National Key Research and Development Program of China [2020AAA0104905].

Data availability statement

Data will be made available on request.

Declaration of interests statement

The authors declare no conflict of interest.

Additional information

No additional information is available for this paper.

Acknowledgements

This work was supported by the National Key Research and Development Program of China [grant ID 2020AAA0104905].

Footnotes

A surgical procedure term usually ends with a leading term, that is, a word or character denoting a clinical operative pattern. When coding operations or procedures in hospital medical records, determining the leading term is the first step in the coding flow.

References

- 1. Cheng Y., Jiang T.L., Deng L.Z., Chen L.M., Jiang K. Research on the coverage of standard Chinese medical terminology to practical application. Chinese Journal of Health Informatics and Management. 2020;17(5):601–605+636.

- 2. Yin J., Luo S., Wu Z., Pan L.M. Chinese named entity recognition with character-level BLSTM and soft attention model. J. Beijing Inst. Technol. 2020;29(1):12.

- 3. Tang Z., Wan B., Yang L. Word-character graph convolution network for Chinese named entity recognition. IEEE/ACM Transactions on Audio, Speech, and Language Processing. 2020;28:1520–1532.

- 4. Zhu Q., Li X., Conesa A., Pereira C. GRAM-CNN: A deep learning approach with local context for named entity recognition in biomedical text. Bioinformatics. 2018;34(9):1547–1554. doi: 10.1093/bioinformatics/btx815.

- 5. Yang H.M., Li L., Yang R.D. Named entity recognition based on bidirectional long short-term memory combined with case report form. Chinese Journal of Tissue Engineering Research. 2018;22:3237–3242.

- 6. Zhang F.C., Qing Q.L., Jiang Y., Zhuang R.T. Research on Chinese EMR named entity recognition based on RoBERTa-WWM-BiLSTM-CRF. Data Analysis and Knowledge Discovery. 2022;6(Z1):251–262.

- 7. Jing S.Q., Zhao Y.L. Recognizing clinical named entity from Chinese electronic medical record texts based on semi-supervised deep learning. J. Inf. Rec. Mater. 2021;11(6):105–115.

- 8. Qu Q.Q., Kan H.X. Named entity recognition of Chinese medical text based on Bert-BiLSTM-CRF. Electronic Design Engineering. 2021;29(19):5.

- 9. Yu H., Xu C., Liu Y.R., Fu Y.W., Gao D.P. BiLSTM and CRF-based extraction of therapeutic events from Chinese clinical guidelines. Chinese Journal of Medical Library and Information Science. 2020;29(2):6.

- 10. Yang L., Huang X.S., Wang J.Y., Ding L.L., Li Z.X., Li J. Clinical trial disease subtype identification based on BERT-TextCNN. Data Analysis and Knowledge Discovery. 2022;6(4):69–81.

- 11. Wu H.Y., Lu D., Hyder M., Zhang S., Quinney S.K. DrugMetab: an integrated machine learning and lexicon mapping named entity recognition method for drug metabolite. CPT Pharmacometrics Syst. Pharmacol. 2018;7. doi: 10.1002/psp4.12340.

- 12. Lerner I., Paris N., Tannier X. 2019. Terminologies Augmented Recurrent Neural Network Model for Clinical Named Entity Recognition.

- 13. Wang S.K., Li S.Z., Chen T.S. Recognition of Chinese medicine named entity based on condition random field. J. Xiamen Univ. 2009;48(3):359–364.

- 14. Liang Y.H., Zhang W.J., Zhou D.F. A hybrid strategy for high precision long term extraction. J. Chin. Inf. Process. 2009;23(6):26–30.

- 15. Devlin J., Chang M.W., Lee K., Toutanova K. 2018. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding.

- 16. Lafferty J.D., McCallum A., Pereira F.C.N. Proc. 18th Int. Conf. Mach. Learn., Williamstown, MA, USA. 2001. Conditional random fields: Probabilistic models for segmenting and labeling sequence data; pp. 282–289. Jun./Jul.

- 17. National Health Commission of the People’s Republic of China, Official Announcement for Hospital Administration. 2020. http://www.nhc.gov.cn/yzygj/s7659/202006/7912483be2784e2ca08a9ea4628369b8.shtml (accessed 8 December 2021)

- 18. Zhang F.C., Qing Q.L., Jiang Y., Zhuang R.T. Research on Chinese EMR named entity recognition based on RoBERTa-WWM-BiLSTM-CRF. Data Analysis and Knowledge Discovery. 2022;6(Z1):251–262.

- 19. Jing S.Q., Zhao Y.L. Recognizing clinical named entity from Chinese electronic medical record texts based on semi-supervised deep learning. J. Inf. Rec. Mater. 2021;11(6):105–115.

- 20. Qu Q.Q., Kan H.X. Named entity recognition of Chinese medical text based on Bert-BiLSTM-CRF. Electronic Design Engineering. 2021;29(19):5.

- 21. Liu Z., Luo C., Zheng Z., Li Y., Fu D., Yu X., Zhao J. TCMNER and PubMed: a novel Chinese character-level-based model and a dataset for TCM named entity recognition. J Healthc Eng. 2021 Aug 7. doi: 10.1155/2021/3544281.

- 22. Yu H., Xu C., Liu Y.R., Fu Y.W., Gao D.P. BiLSTM and CRF-based extraction of therapeutic events from Chinese clinical guidelines. Chinese Journal of Medical Library and Information Science. 2020;29(2):6.

- 23. Yang L., Huang X.S., Wang J.Y., Ding L.L., Li Z.X., Li J. Clinical trial disease subtype identification based on BERT-TextCNN. Data Analysis and Knowledge Discovery. 2022;6(4):69–81.

- 24. Shijia E., Xiang Y. Association for Computing Machinery; Singapore: 2017. Chinese Named Entity Recognition with Character-word Mixed Embedding. ACM Conference on Information and Knowledge Management (CIKM) pp. 2055–2058.

- 25. Mutinda F.W., Liew K., Yada S., Wakamiya S., Aramaki E. Automatic data extraction to support meta-analysis statistical analysis: a case study on breast cancer. BMC Med. Inf. Decis. Making. 2022 Jun;22(1):158. doi: 10.1186/s12911-022-01897-4.

- 26. Cho H., Choi W., Lee H. A method for named entity normalization in biomedical articles: application to diseases and plants. BMC Bioinf. 2017 Oct 13;18(1):451. doi: 10.1186/s12859-017-1857-8.

- 27. Tsuruoka Yoshimasa, Tsujii Jun’ichi. Improving the performance of dictionary based approaches in protein name recognition. J. Biomed. Inf. 2004;37(6):461–470. doi: 10.1016/j.jbi.2004.08.003.

- 28. Tsai R.T., Sung C.L., Dai H.J., Hung H.C., Sung T.Y., Hsu W.L. NERBio: using selected word conjunctions, term normalization, and global patterns to improve biomedical named entity recognition. BMC Bioinf. 2006 Dec 18;7(Suppl 5):S11. doi: 10.1186/1471-2105-7-S5-S11.

- 29. Jiang Min, Chen Yukun, Liu Mei, Trent Rosenbloom S., Mani Subramani, Denny Joshua C., Xu Hua. A study of machine-learning-based approaches to extract clinical entities and their assertions from discharge summaries. J. Am. Med. Inf. Assoc. 2011;18(5):601–606. doi: 10.1136/amiajnl-2011-000163.

- 30. Lei Jianbo, Tang Buzhou, Lu Xueqin, Gao Kaihua, Jiang Min, Xu Hua. A comprehensive study of named entity recognition in Chinese clinical text. J. Am. Med. Inf. Assoc. 2014;21(5):808–814. doi: 10.1136/amiajnl-2013-002381.

- 31. Ma P., Jiang B., Lu Z., Li N., Jiang Z. Cybersecurity named entity recognition using bidirectional long short-term memory with conditional random fields. Tsinghua Sci. Technol. 2021;26(3):11–17.

- 32. Zhang Q., Hou L., Lv P., Zhang M., Yang H. Chinese medical entity recognition model based on character and word vector fusion. Sci. Program. 2021;2021:1–12. Article ID 5933652.

- 33. Zhou G., Su J. Proc. 40th Annu. Meeting Assoc. Comput. Linguistics, Philadelphia, PA, USA. 2002. Named entity recognition using an HMM-based chunk tagger; pp. 473–480.

- 34. Cortes C., Vapnik V. Support vector network. Mach. Learn. 1995;20:273–297.

- 35. Liang J., Xian X., He X., et al. A novel approach towards medical entity recognition in Chinese clinical text. Journal of Healthcare Engineering. 2017. Article ID 4898963. doi: 10.1155/2017/4898963.

- 36. Wang Q., Iwaihara M. IEEE International Conference on Big Data and Smart Computing. BigComp; 2019. Deep neural architectures for joint named entity recognition and disambiguation.

- 37. Tan H., Yang Z., Ning J., Ding Z., Liu Q. 2021 International Conference on Asian Language Processing. IALP; 2021. Chinese medical named entity recognition based on Chinese character radical features and pre-trained language models; pp. 121–124.

- 38. Habibi M., Weber L., Neves M., Wiegandt D.L., Leser U. Deep learning with word embeddings improves biomedical named entity recognition. Bioinformatics. 2017;33:i37–i48. doi: 10.1093/bioinformatics/btx228.

- 39. Cho M., Ha J., Park C., Park S. Combinatorial feature embedding based on CNN and LSTM for biomedical named entity recognition. J. Biomed. Inf. 2020;103:8. doi: 10.1016/j.jbi.2020.103381.

- 40. Li Y., Du G., Xiang Y., Li S., Chen H. Towards Chinese clinical named entity recognition by dynamic embedding using domain-specific knowledge. J. Biomed. Inf. 2020;106. doi: 10.1016/j.jbi.2020.103435.

- 41. Jin Y., Xie J., Guo W., Luo C., Wang R. IEEE Access; 2019. LSTM-CRF Neural Network with Gated Self Attention for Chinese NER; p. 1.

- 42. Zhao H., Kit C. Proceedings of the Sixth SIGHAN Workshop on Chinese Language Processing. 2008. Unsupervised segmentation helps supervised learning of character tagging for word segmentation and named entity recognition.

- 43. Wu H.Y., Lu D., Hyder M., Zhang S., Quinney S.K. DrugMetab: an integrated machine learning and lexicon mapping named entity recognition method for drug metabolite. CPT Pharmacometrics Syst. Pharmacol. 2018;7. doi: 10.1002/psp4.12340.

- 44. Lerner I., Paris N., Tannier X. 2019. Terminologies Augmented Recurrent Neural Network Model for Clinical Named Entity Recognition.

- 45. Hou C.Y., Wang M.L., Li C.L. Springer-Verlag Singapore Pte Ltd; Hangzhou, China: 2019. Entity Subword Encoding for Chinese Long Entity Recognition. 4th China Conference on Knowledge Graph and Semantic Computing (CCKS) pp. 123–135.

- 46. Kim H., Kang J. How do your biomedical named entity recognition models generalize to novel entities? IEEE Access. 2022 Mar 8;10:31513–31523. doi: 10.1109/ACCESS.2022.3157854.

- 47. Zhao S., Cai Z., Chen H., Wang Y., Liu A. Adversarial training based lattice lstm for Chinese clinical named entity recognition. J. Biomed. Inf. 2019;99(14). doi: 10.1016/j.jbi.2019.103290.

- 48. Xu Y., Wang Y.N., Liu T.R., Liu J.H., Fan Y.B., Qian Y., et al. Joint segmentation and named entity recognition using dual decomposition in Chinese discharge summaries. J. Am. Med. Inf. Assoc. 2014;21:E84–E92. doi: 10.1136/amiajnl-2013-001806.

- 49. Liu K.X., Hu Q.C., Liu J.W., Xing C.X. IEEE; Liuzhou, China: 2017. Named Entity Recognition in Chinese Electronic Medical Records Based on CRF. 14th Web Information Systems and Applications Conference (WISA) pp. 105–110.

- 50. Li Xiaonan, Yan Hang, Qiu Xipeng, Huang Xuanjing. Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics. Online. Association for Computational Linguistics; 2020. FLAT: Chinese NER using flat-lattice transformer; pp. 6836–6842.

- 51. Zhang Y., Yang J. 56th Annual Meeting of the Association for Computational Linguistics (ACL). Association for Computational Linguistics; Melbourne, Australia: 2018. Chinese NER using lattice LSTM; pp. 1554–1564.

- 52. Cheng J.R., Liu J.X., Xu X.B., Xia D.W., Liu L., Sheng V.S. A review of Chinese named entity recognition. KSII Trans Internet Inf Syst. 2021;15:2012–2030.

- 53. Ma Ruotian, Peng Minlong, Zhang Qi, Wei Zhongyu, Huang Xuanjing. Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics. Online. Association for Computational Linguistics; 2020. Simplify the usage of lexicon in Chinese NER; pp. 5951–5960.

- 54. Devlin J., Chang M.W., Lee K., Toutanova K. 2018. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding.

- 55. Lample G., Ballesteros M., Subramanian S., Kawakami K., Dyer C. Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. 2016. Neural architectures for named entity recognition.

- 56. Graves A., Schmidhuber J. Framewise phoneme classification with bidirectional LSTM and other neural network architectures. Neural Network. 2005;18(5–6):602–610. doi: 10.1016/j.neunet.2005.06.042.

- 57. Hochreiter Sepp, Schmidhuber Jürgen. Long short-term memory. Neural Comput. 1997;9(8):1735–1780. doi: 10.1162/neco.1997.9.8.1735.

- 58. M. Rhif, A. B. Abbes, B. Martinez, and I. R. Farah, A deep learning approach for forecasting non-stationary big remote sensing time series, Arabian J. Geosci., vol. 13.

- 59. Weng Yixuan. 2022. CMCQA. https://github.com/WENGSYX/CMCQA (accessed 8 December 2021)

- 60. Mikolov T., Sutskever I., Chen K., Corrado G.S., Dean J. Proc. Adv. Neural Inf. Process. Syst., Lake Tahoe, NV, USA. Dec. 2013. Distributed Representations of Words and Phrases and Their Compositionality; pp. 3111–3119.

- 61. Li Hui, Lin Yu, Zhang Jie, Lyu Ming. Fusion deep learning and machine learning for heterogeneous military entity recognition. Wireless Commun. Mobile Comput. 2022;2022:1–11. Article ID 1103022.
