Abstract

Free energy calculations are rapidly becoming indispensable in structure-enabled drug discovery programs. As new methods, force fields, and implementations are developed, assessing their accuracy on real-world systems (benchmarking) becomes critical to provide users with an assessment of the accuracy expected when these methods are applied within their domain of applicability, and developers with a way to assess the expected impact of new methodologies. These assessments require construction of a benchmark—a set of well-prepared, high quality systems with corresponding experimental measurements designed to ensure the resulting calculations provide a realistic assessment of expected performance. To date, the community has not yet adopted a common standardized benchmark, and existing benchmark reports suffer from a myriad of issues, including poor data quality, limited statistical power, and statistically deficient analyses, all of which can conspire to produce benchmarks that are poorly predictive of real-world performance. Here, we address these issues by presenting guidelines for (1) curating experimental data to develop meaningful benchmark sets, (2) preparing benchmark inputs according to best practices to facilitate widespread adoption, and (3) analysis of the resulting predictions to enable statistically meaningful comparisons among methods and force fields. We highlight challenges and open questions that remain to be solved in these areas, as well as recommendations for the collection of new datasets that might optimally serve to measure progress as methods become systematically more reliable. Finally, we provide a curated, versioned, open, standardized benchmark set adherent to these standards (protein-ligand-benchmark) and an open source toolkit for implementing standardized best practices assessments (arsenic) for the community to use as a standardized assessment tool. While our main focus is benchmarking free energy methods based on molecular simulations, these guidelines should prove useful for assessment of the rapidly growing field of machine learning methods for affinity prediction as well.

1. Overview

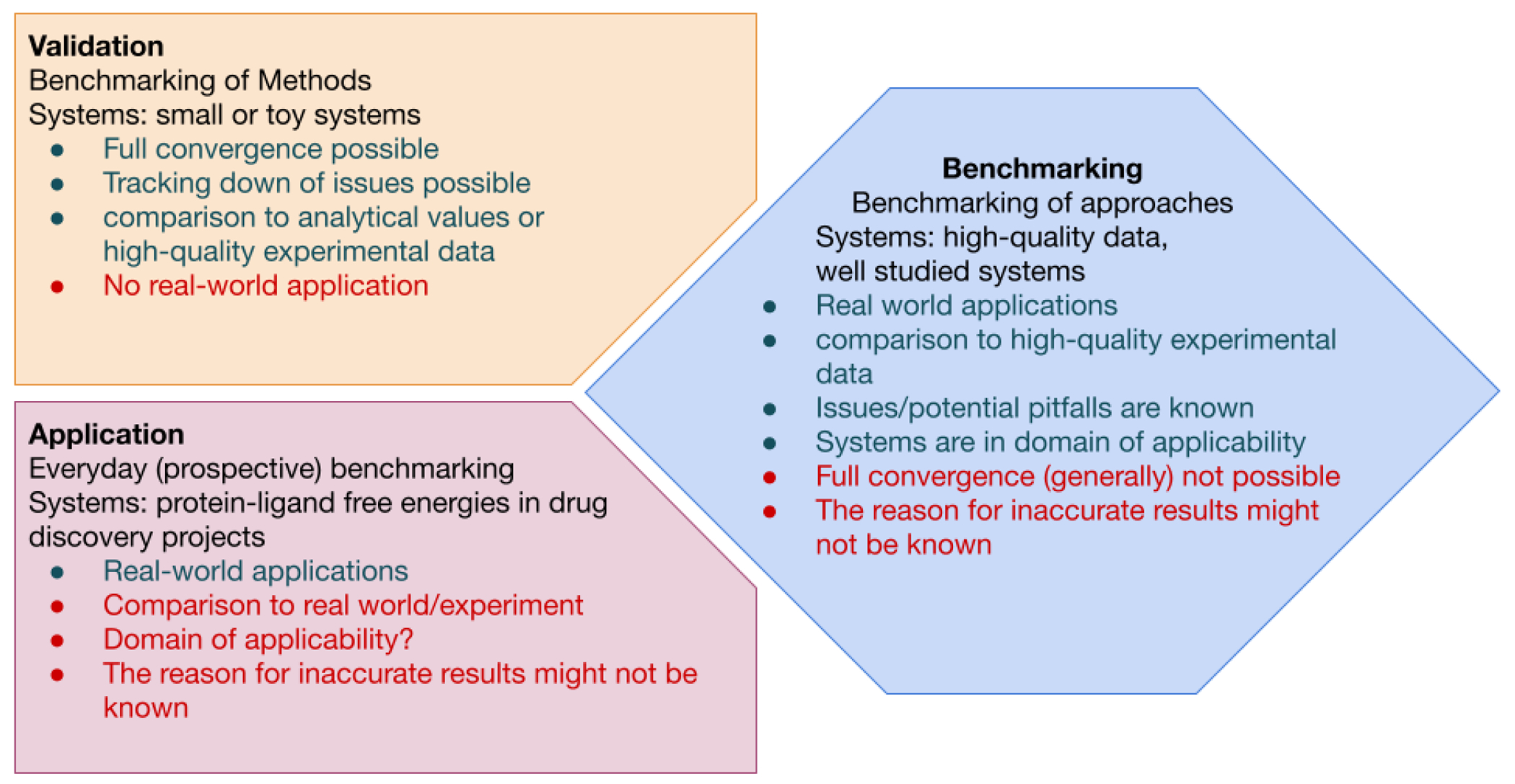

This guide focuses on recommended best practices for benchmarking the accuracy of small molecule binding free energy (FE) calculations. Here, we define benchmarking as the assessment of expected real-world performance relative to experiment. We contrast this with the assessment of methods or tools intended to arrive at the same target free energy, which we refer to as validation (Figure 1), the comparison of the computational efficiency or speed of these methods, or mapping of effort-accuracy trade offs, all of which also play essential roles in dictating real-world usage. Importantly, validation calculations are often performed on systems selected for tractability, rather than intended to be representative of real-world applications [1–3].

Figure 1. Illustration of the definitions of Validation, Application, and Benchmarking used in this guide.

For each term, the definition, advantages (green) and potential short-comings (red) in terms of method evaluation are listed in the three panels. Validation (top left panel) uses systems that will confidently converge, the expected results are known, and the underlying issues are well understood. Validation sets allows robust development and improvement of methods. Application (bottom left panel) of a method, on the other hand, uses real-world systems and enables methods to be continuously evaluated on real-world applications of interest. Because the systems may not be well understood, it is possible for methods to fail in new ways that are difficult to detect. Benchmarking (right panel) bridges validation and application by aiming to assess the accuracy of real-world applications relative to experiment in cases where experimental data quality is not limiting and the method is known to be applied within its domain of applicability. Compared to validation, the size and complexity of the system may introduce challenges to producing robust, repeatable results.

As illustrated in Figure 1, benchmarking against experiment would ideally be performed on high quality data in order to provide an accurate assessment of expected performance under conditions where structure or assay deficiencies do not limit performance. In good benchmark sets, the potential pitfalls and complications in the data are well understood, but these systems may still challenge methodologies to produce reproducible, consistent predictions due to conformational sampling timescales–unlike simpler systems selected for methodology validation. We also differentiate benchmarking from application (Figure 1), where one is often constrained by the availability of experimental data and limited to a particular target, which may not always fall within the domain of applicability of the methodology. We aim to construct benchmarks that provide a good predictor of the expected accuracy in applications that fall squarely within the domain of applicability and for which good experimental data is available.

Organization:

This best practices guide is organized as follows: First, we give a brief overview of protein-ligand binding free energy methods and their use with the goal of highlighting key concepts that guide the construction of a meaningful benchmark. Next, we discuss recommendations for the construction of a high-quality experimental benchmark dataset, which must consider the availability of high-quality structural and bioactivity data as well as the expected domain of applicability. Next we provide recommendations on preparing structures for free energy calculations in a manner that will enable the benchmark dataset to be widely and readily usable by practitioners and developers, incorporating best practices for carrying out free energy calculations. We then discuss recommendations for the statistical analysis of both retrospective benchmarks and blind prospective challenges in order to derive robust conclusions about the accuracy of these methods and insights into where they fail. To address the absence of a standard community-wide benchmark, we provide a curated, versioned, open, standardized benchmark set adherent to these standards (protein-ligand-benchmark). In addition, we provide an open source toolkit that implements standardized best practices for assessment and analysis of free energy calculations (arsenic). Finally, we conclude with recommendations for data collection and curation to guide the systematic improvement of available benchmark sets and drive the expansion of the domain of applicability of free energy methods.

2. Introduction

The quantitative prediction of protein ligand binding affinity is a key task in computer-aided drug discovery (CADD). Accurate predictions of ligand affinity can significantly accelerate preclinical stages of drug discovery programs when used to prioritize compounds for synthesis with the goal of improving or maintaining potency [4, 5]. Binding free energy calculations–particularly alchemical binding free energy calculations–have emerged as arguably the most promising tool [6]. Alchemical methods, which include a multitude of approaches such as free energy perturbation (FEP) [7, 8] and thermodynamic integration (TI) [9–11], have a substantial legacy, with the original theory dating back many decades. Seminal work in the 1980’s and 90’s demonstrated that molecular dynamics (MD) and Monte Carlo (MC) simulation packages could carry out these calculations for practical applications in organic and biomolecular systems [12–18].

Alchemical perturbations in binding free energy calculations involve the transformation of one chemical species into another, or its complete creation or deletion, via a chemically unrealistic pathway (alchemical) that can only be achieved in silico by manipulating its interactions in a defined way. This is achieved by changing an atom from one chemical element to another. Alchemical calculations are often classified as either relative (RBFE) or absolute (ABFE) binding free energy calculations. While the underlying theory is similar, the implementation differs in how the thermodynamic cycle is constructed and which quantities can be computed: In RBFEs, a generally modest alchemical transformation of the chemical substructures that differ between the ligands is performed to compute the difference in free energy of binding between the related ligands (ΔΔG). By contrast, ABFEs alchemically remove an entire ligand, enabling the absolute binding free energy of a ligand (ΔG) to be computed and directly compared to experiment. A detailed review of commonly-used alchemical methodologies and best practices for their use is provided in a separate best practices guide [19].

In drug discovery, lead optimization (LO) typically involves the synthesis of hundreds of close analogues, often differing by only small structural modifications, in order to identify the optimal leads that show a good balance of target potency and other properties. This makes it an ideal scenario for RBFE, where small differences in structure are well suited to alchemical perturbation.

A number of recent studies have highlighted the good performance of RBFE for LO tasks. An early influential publication from Schrödinger [20] reported mean unsigned errors of < 1.2 kcal/mol on a curated set of 8 protein targets, 199 ligands, and 330 perturbations using their commercial implementation of FEP. Minimal discussion was devoted to how these targets were selected, other than their diversity and the availability of published structural and bioactivity data for a congeneric series for each target; notably, some ligands appearing in the published studies from which the data were curated were omitted due to the presence of presumed changes in net charge and the potential for multiple binding modes that would fall outside the domain of applicability. Schrödinger utilized the same benchmark set to assess subsequent commercial force field releases (OPLS3 [21] and OPLS3e [22]). In the absence of other significant efforts to curate benchmark sets, this set (often called the “Schrödinger JACS set”) has become the de facto dataset for most large scale RBFE reports, used to compare the performance of Amber/TI calculations [23], Flare’s FEP (a collaboration between Cresset and the Michel group) [24], and PMX/Gromacs [25], as well as machine learning studies [26, 27]. By contrast, ABFE calculations have not been studied on datasets of similar scale to date, although individual reports have shown success accurately predicting binding affinities [28, 29].

Despite the reported success of RBFE calculations on these benchmark sets, there are many reports demonstrating that RBFE calculations still struggle in scenarios [30] such as with scaffold modifications [31], ring expansion [32], water displacement [33–36], protein flexibility [37–39], applications to GPCRs [40, 41], and the modelling of cofactors such as metal ions or heme [42, 43]. This is manifested in a large-scale study of FEP applied to active drug discovery projects at Merck KGaA, in which Schindler et al. reported several cases of disappointing outcomes due to various of the above reasons [44].

In addition, new methods and implementation improvements for FE calculations continue to emerge, for instance the efforts on lambda dynamics [45, 46], and non-equilibrium RBFE calculations [25, 47]. Furthermore, there are many other methodologies such as end-point binding FE calculations (for instance MMGBSA, MMPBSA) or pathway based FE calculations that continue to be developed and applied [48]. Therefore, we must balance the increased confidence that simulation-based FE calculations can impact drug discovery, with the need to further understand, test, and overcome limitations of the current methods.

In brief, the issues mentioned above are related to three challenges for FE calculations, (1) an accurate representation of the biological system, (2) an accurate force field, and (3) sufficient sampling. Therefore, despite the importance of FE methods to drug discovery and chemical biology it is surprising that there are no benchmark sets or standard benchmark methodologies that allow calculation approaches to be compared in a manner that will reflect their future performance.

The Drug Design Data Resource [49] (D3R) and Statistical Assessment of the Modeling of Proteins and Ligands [50] (SAMPL) prospective challenges have demonstrated the utility of focusing the community on common benchmark systems and using common methods to analyze performance [51–60]. Mobley and Gilson discussed the need for well-chosen validation datasets and how this will have multiple benefits to understanding and expanding the domain of applicability of FE methods [1]. They focused on validation systems that will confidently converge, and where the underlying issues are well understood. The aim was to describe systems that could be used only to assess method performance in a robust manner. A set of recommendations for comparison of computational chemistry methods has been assembled by Jain and Nicholls [61]. As mentioned above, here we define benchmarking as assessing accuracy relative to experiment and focus on the best practices in the particular field of alchemical protein-ligand free energy calculations. This has implications that will be discussed in more detail throughout this article, for instance, the reliability of the underlying experimental data (structure and bioactivities), the confidence in the system setup such as protein and ligand preparation, the suitability of alchemical perturbations for FE, the statistical power of the dataset, the ability of the datasets to capture challenging real-world applications, and recommendations for analysing results. Essentially, we seek to understand what performance can be achieved when all these variables are handled to the best of our abilities.

Here, our proposed benchmark set augments existing datasets while recommending cleaning up or removing entirely some protein-ligand sets. We highlight key considerations in the construction of a useful set of protein-ligand benchmarks and the preparation of these systems for use as a community-wide benchmark. These recommendations are mirrored in a living benchmark set, which can be used to reliably launch future studies [62]. We seek to improve the initial version of this benchmark set in the future with help of the whole community. We welcome any contribution either to improve the existing set or to expand the set with new protein-ligand sets, if they meet the requirements established in here. We also recommend statistical analyses for assessing and comparing the accuracy of different methods and provide a set of open source tools that implement our recommendations [63]. We hope these materials will become a common standard utilized by the community for assessing performance and comparing methodologies.

3. Prerequisites and Scope

We assume a basic familiarity with molecular dynamics (MD) simulations, as well as alchemical free energy protocols. If you are unfamiliar with both of these concepts we suggest the best practices guides by Braun et al. [64] on molecular simulations and Mey et al. [19] on alchemical free energy calculations as a starting point. Note that the best practices of the latter guide are briefly repeated in Section 5 for completeness. Both guides agree with each other and are complementary. The sections on dataset selection (Sections 4), the analysis of benchmark calculations (Section 6), and the key learnings (Section 7) are unique to the present guide.

4. Dataset selection

Details of our criteria for the construction of good benchmark datasets will follow throughout the rest of the manuscript. Here, we examine the purpose of protein-ligand benchmark datasets, and the rationale for expanding these sets. We propose a core of robust datasets that match our suggested optimal criteria for benchmarking, but emphasize the need to supplement this core with new datasets which explore increasingly difficult challenges in order to continue to expand the domain of applicability of predictive methods. A variety of parameters can guide future datasets.

4.1. Protein Selection

The selection of target proteins in the benchmark set is generally dependent on the availability of experimental data and whether the applied methods are applicable to the specific targets. A good benchmark system (consisting of a protein target and small molecules with available experimental binding data) should ideally be representative of classical drug discovery targets and chemistry; a good benchmark set should also be diverse in terms of targets and chemistry. Expansion of this set to include additional systems should ideally reflect the evolution of drug discovery and the emergence of new target families and chemistries. While binding free energy calculations are agnostic to protein classification, there can be a pragmatic value in expanding benchmark sets to new protein families that may present unexpected inherent difficulties (see Section 4.3).

To merit inclusion in a good benchmark set, the available structural data must meet certain quality thresholds (Section 4.4), and the structure should be adequately prepared for molecular simulation to enable the benchmark to be broadly and readily useful (Section 5.1).

4.2. Ligand Selection

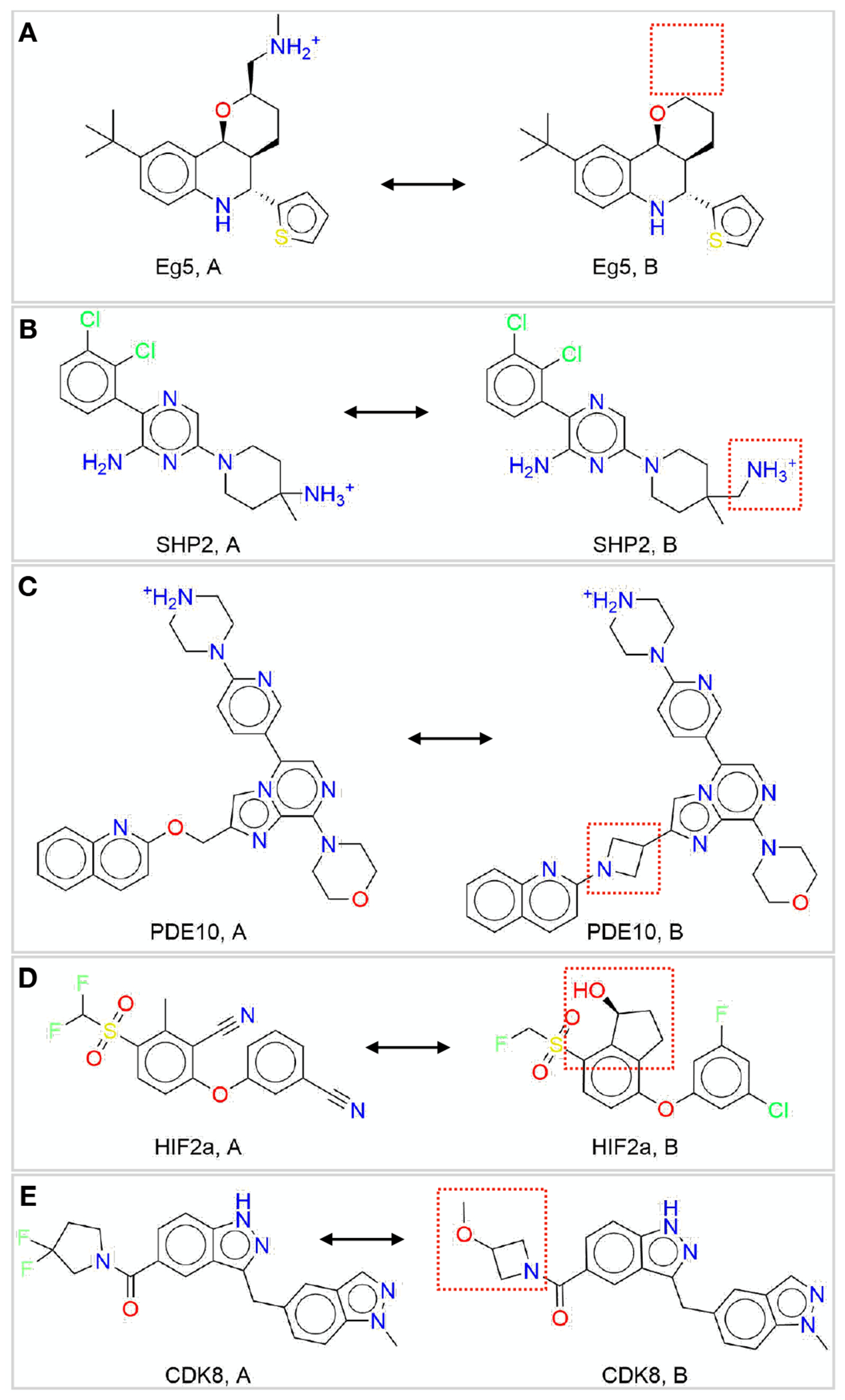

While some methods (such as machine learning and GBSA rescoring) can make rapid predictions of affinity, free energy methods are generally relatively costly in terms of computational effort. In order to make statistically meaningful comparisons among methods, however, a sufficient number of reliable experimental measurements (Section 4.5) will be necessary for a benchmark set. These measurements also need to cover an adequate dynamic range, i.e. the activity range should be sufficiently large. Such a set enables a statistical analysis with sufficient power to distinguish how methods are expected to perform on larger test sets for the same targets (Section 6). In addition, the set of ligands should be both unambiguously specified (with resolved stereochemistry or unambiguous tautomeric or protonation states) and have chemistries that fall within the domain of applicability of the particular free energy method used. In order for standardized benchmark sets to be broadly applicable to a range of methodologies and software packages, we recommend annotating systems in terms of common challenges that may exclude their assessment by certain methods or packages. For relative free energy calculations, these labels should denote transformations that include (1) charge changes, (2) change of the location of a charge, (3) ring breaking, (4) changes in ring size, (5) linker modifications, (6) change in binding mode, and (7) irreversible (covalent) inhibitors. Several of these issues are illustrated in Figure 2. If the ligand sets are sufficiently large, they can then be split into separate subsets (subsets with e.g., different ring sizes or different charges).

Figure 2. Five ligand pairs (A, B) for different targets (with each pair for a single target) having structural differences which can be challenging to simulate.

(A) Eg5: charge change, (B) SHP2: charge move, (C) PDE10: linker change, (D) HIF2α: ring creation, (E) CDK8: ring size change.

Adequately sampling ligand conformers can pose a challenge for some methods, especially if the ligands contain many rotatable bonds, invertible stereocenters, or macrocycles. Aromatic rings with asymmetric substitution will usually sample dihedral rotations freely in solvent, but in complex can become trapped in protein pockets during short simulations [65, 66]. Barriers to inversion of pyramidal centers can sometimes be long compared to typical simulation timescales [67]. Macrocycles present more extreme challenges for ligand sampling, and likely require special consideration to ensure their conformation spaces are adequately sampled [68–70].

The chemical diversity of ligands considered for inclusion in a benchmark set also needs to be suitable for the given free energy method. Single RBFEs rely on common structural elements between the molecules being compared, and are hence more appropriate for a congeneric series of ligands. ABFEs are more amenable for comparing sets of small molecules that differ more substantially in scaffold, or where the common structural elements are minimal. In both kinds of calculations, the size of the structural elements that differ between ligands within a congeneric series is also important to consider, since larger changes may also affect the binding mode of the ligand; the quality and availability of crystal structures for representative ligands of this system becomes critical in assessing these assumptions.

4.3. Addressing specific challenges

Besides the challenges mentioned in Sections 4.1 and 4.2, there are specific challenges which can be addressed by a benchmark set. These include water displacement in binding sites, the presence of cofactors in the binding site, slow motions of ligands (e.g. rotatable bonds) and proteins, and activity cliffs. We recommend annotating these challenging cases in the benchmark set.

4.4. Structural Data

A successful free energy calculation requires a well-prepared, experimentally accurate model of the system to be simulated, with structure(s) representative of the equilibrium state of the system. Just as choices made selecting binding data are critical, the choices made when selecting a protein model will impact benchmarking.

Often structural studies use shorter constructs that might be missing several domains compared to the full-length protein. To facilitate crystallization or expression, mutations might have been introduced. In addition, parts of the protein might not be resolved or modelled in available structures. Ideally, such deviations should be kept to a minimum in a benchmark dataset.

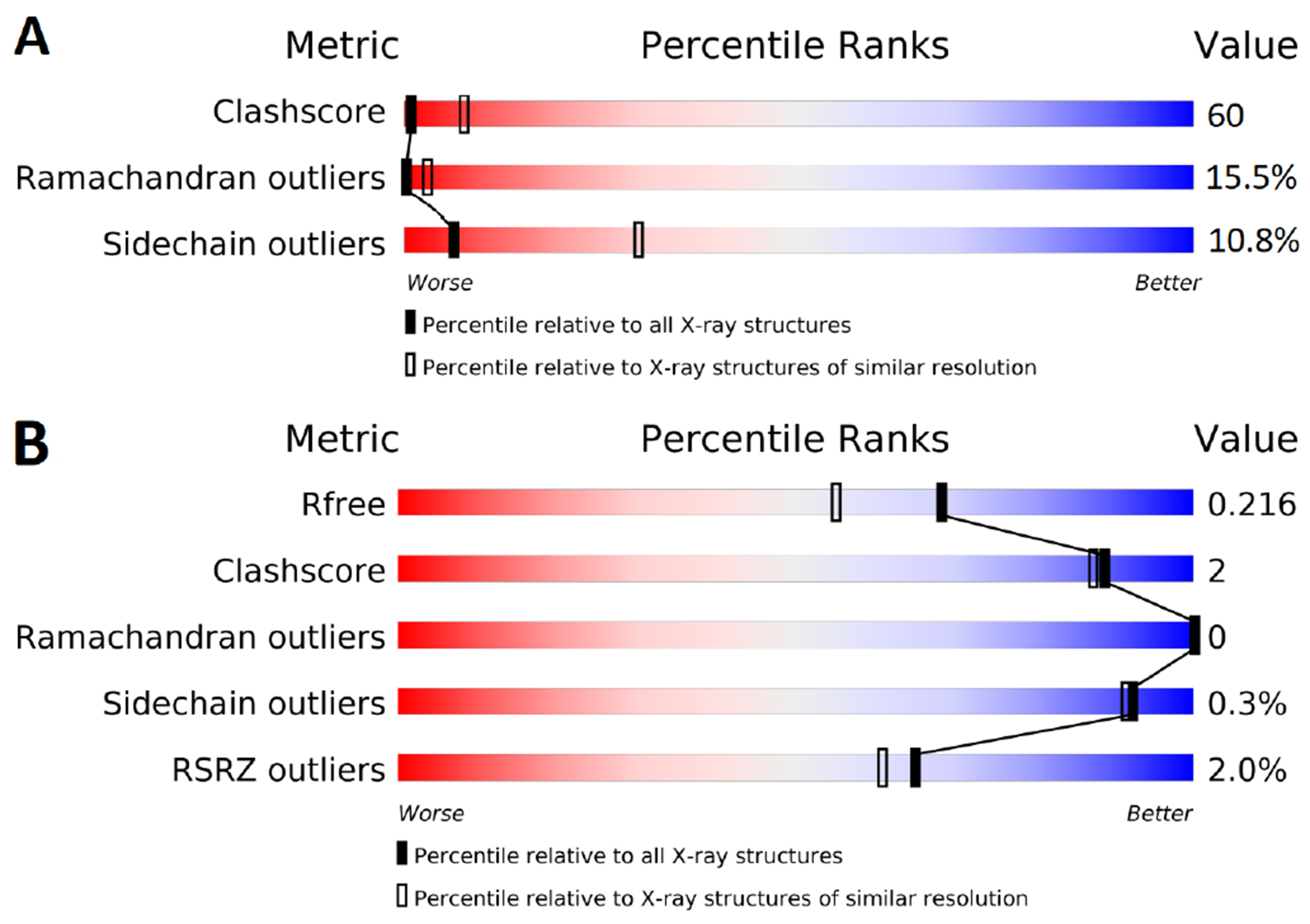

Starting structures are typically obtained from experimentally constrained models, most commonly from X-ray diffraction data. Other sources of structures include cryo-EM, NMR, homology models, or machine-learning-based structural models [6, 29, 44, 71]. However, while these sources could be practical for applications, we do not recommended them for input structures of benchmark calculations without additional validation. As free energy calculations are usually run at atomic resolution, the input structure needs to provide the coordinates of all atoms, with those coordinates ideally determined by the experimental model. For X-ray and cryo-EM structures, this requirement is only met by high quality structures. OpenEye Iridium can guide the assessment of X-ray structures [72]. Based on a set of identification criteria, an Iridium score is calculated, which states higher structure quality for lower scores. The Iridium classification categorizes each structure into not trustworthy (NT), mildly trustworthy (MT) and highly trustworthy (HT) categories. It is important to note that the Iridium criteria were designed to assess structures for benchmarking docking and not necessarily for free energy calculations. As such there is one important criterion missing - completeness of the model - which is likely to be far more important for free energy calculations than docking.

Any protein structural assessment should be done using two filters; overall (global) and local. Traditionally, overall quality of the structure (global) had been assessed using X-ray or cryo-EM resolution as it is easily accessible. However, this metric provides a theoretical limit and does not assess the quality of the model. Therefore, it is not a good metric for accuracy, completeness or quality and should only be used alongside other metrics. Iridium, by design, does not set a resolution limit but suggests a resolution threshold of < 3.5 Å [72] because it is difficult to model side chain atoms precisely above that threshold. Stricter thresholds have been suggested (i.e. < 2.0 Å in a recent benchmark [44]).

More meaningful metrics for X-ray structures are R, Rfree and the coordinate error. Currently, equivalent metrics for cryo-EM structures either do not exist or are less well understood. As a result the rest of the discussion will focus on criteria for structures determined using X-ray or neutron diffraction data. It should be noted that cryo-EM maps can still be visualized with the model to get an idea of the agreement between the model and the data. The R-factor is a measure for the difference between the predicted data (by the model) and the measured data. A smaller R-factor indicates an experimentally consistent model. A complication with R-factor is that it is a non-normalized metric. For a given dataset the model with the lowest R-factor is best fit to the data. Unfortunately, for different datasets, even for the same protein, lowest R-factor may not be the highest quality model.

The Rfree-factor is calculated the same way, but uses only a held out randomly selected subset of the measured data. Thus, it can be used to identify overfit models as these result in a larger difference between R-factor and Rfree (typically more than 0.05). Both R-factors are easily accessible for reported crystallographic data, e.g. in the protein data bank (PDB) [99]. The coordinate error, while more difficult to find or calculate, provides the best way to assess the precision and quality of the model:

| (1) |

where is the number of heavy atoms with occupancy of 1, is the volume of the asymmetric unit cell and is the number of non-Rfree reflections used during refinement. A high-quality structure should have a coordinate error < 0.7. Recent PDB entries usually include a coordinate error estimate which can be found by searching for ESU Rfree, Cruick-shank or Blow Density Precision Index (DPI). The coordinate error (as shown in Equation 1) is BlowDPI.

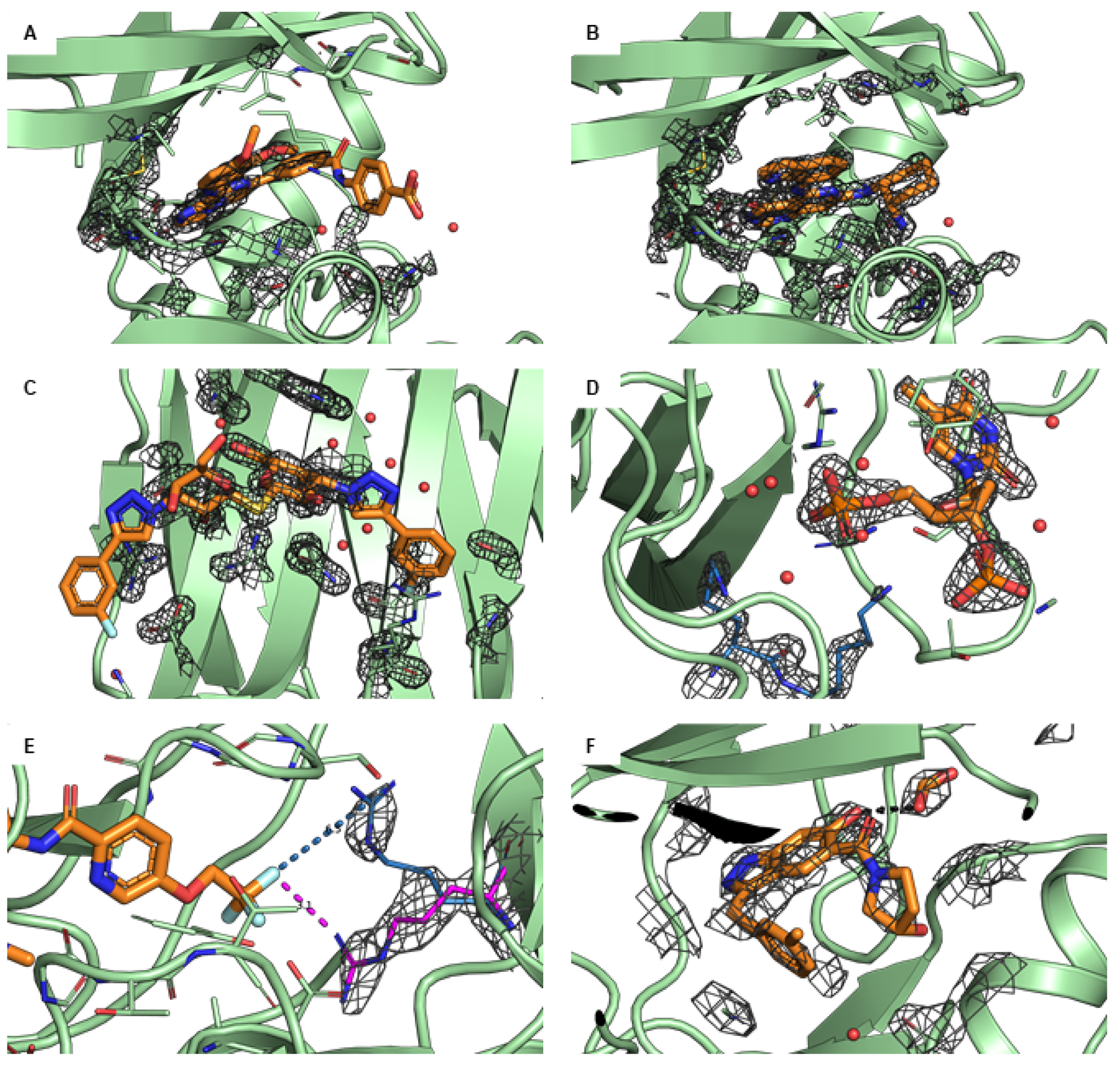

While understanding the global quality of a structure is important, it is the local active site or ligand binding site that will have the largest impact on benchmarking performance. Therefore special care should be taken to assess the ligand and surrounding active site residues. Of highest priority is to identify all unmodeled residues and side chain atoms within 6 to 8 Å of any ligand atom. When multiple structures with similar coordinate error are available, the structure with no missing residues or side chain atoms that meets subsequent criteria should be used. The electron density around the ligand should cover at least 90% of the ligand atom centers, which can be checked visually or by checking for a real space correlation coefficient (RSCC) value > 0.90. Examples for a poor ligand density are shown in Figures 4(A) (in comparison to Figure 4(B) with a good density) and 4(C). Ligand atoms where there are crystal packing atoms within 6 Å should be identified (see Figure 4(D)), as such packing atoms may affect the observed binding mode. All ligand and active site atoms with occupancy < 1.0 should be identified. If there is only partial density for the ligand and the active site residue atoms, these partial-density atoms should be identified (see Figure 4(A)). If alternate conformations for the ligand or active site residues are available, the selected conformation should be determined based on the electron density (see Figure 4(E)). Local metrics such as electron density support for individual atoms (EDIA) [100] or a number of RSCC [101] calculators can indicate if the electron density is sufficient to support the crystallographic placement of a given atom. Covalently bound ligands present as cofactors should be identified and appropriately modelled. Enabling the support of covalent ligand modifications for the free energy calculations has been demonstrated, yet it remains in its early stage of adoption by the community. Hence, we would not recommend including covalent ligands into the standardized benchmark sets [102].

Figure 4. Examples of common challenges encountered when using X-ray crystal structures.

The protein is shown in green and the ligand in orange. If not stated differently, the 2Fo-Fc maps are illustrated as grey isomesh at level. (A) PDB ID 4PV0 shows poor density (at ) for residues in the active site. The beta sheet loop at the top of the active site has residue side chains modeled with no density to support the conformation and the end of the loop has residues that are not modeled. (B) The recommended structure PDB ID 4PX6 for the same protein has complete density (and modeled atoms) for the whole loop (at ). (C) PDB ID 5E89 shows poor ligand density, especially for the m-Cl-phenyl (left) and the hydroxymethyl (center). This means that the ligand conformation, as shown, is not specified by the data, and thus should not be used as input to a computational study unless there is additional data supporting this binding mode. (D) The ligand of PDB ID 1SNC has crystal contacts with the residues K70 and K71 (blue) of the neighboring unit that directly interact with the ligand, potentially affecting the binding mode relative to a solution environment. (E) PDB ID 3ZOV has two alternate side chain conformations. Residue R368 in the B conformation (magenta) has clearly more density () than the A conformation (blue). The B conformation interacts with the ligand (distance 3.2 Å) whereas the A conformation does not interact with the ligand (distance 6.5 Å). If the user does not look at both conformations and chooses A (by default), this would likely be incorrect and miss a potentially important protein-ligand interaction. (F) In PDB ID 5HNB, there is an excipient (formic acid) that interacts directly with the ligand (2.7 Å O-O distance shown in black). The formic acid could be replacing a bridging water. From the data it is not possible to determine how the excipient is affecting the ligand/protein conformation, but for a study of ligand binding in the absence of formic acid, this should be removed.

Additional aspects should be considered beyond the quality of the model and the data (see also structure preparation, Section 5.1). The structure of a complex could be deformed due to crystal contacts or by experimental conditions like additives, pressure or temperature. These conditions might not be representative for the biological environment and therefore biologically active conformation of the complex (see Figure 4(D)). Other factors could play an important role in determining active conformations, such as crystal waters, co-factors or co-binders. These should usually be included to model the natural environment of the protein (see Figure 4(F) and 5(C)). One, however, needs to be cautious when retaining crystallographic waters in the binding site: for the cases where a modelled ligand clashes with the X-ray water, a careful equilibration with position restraints on the ligand atoms may be necessary to ensure further stable simulations. It may be undesired to trap waters near the bound ligand, as overhydration of the binding site may be detrimental to the free energy prediction accuracy due to potentially slow exchange of water between the binding cavity and the bulk [103]. It is also important to remember that for X-ray data, modeling water (versus amino acids or organic compounds) is less precise than for other atoms particularly when the crystal is formed in a high salt environment. The ligand in the experimental structure should be sufficiently close to the ligand to be simulated to have a model of the correct binding mode.

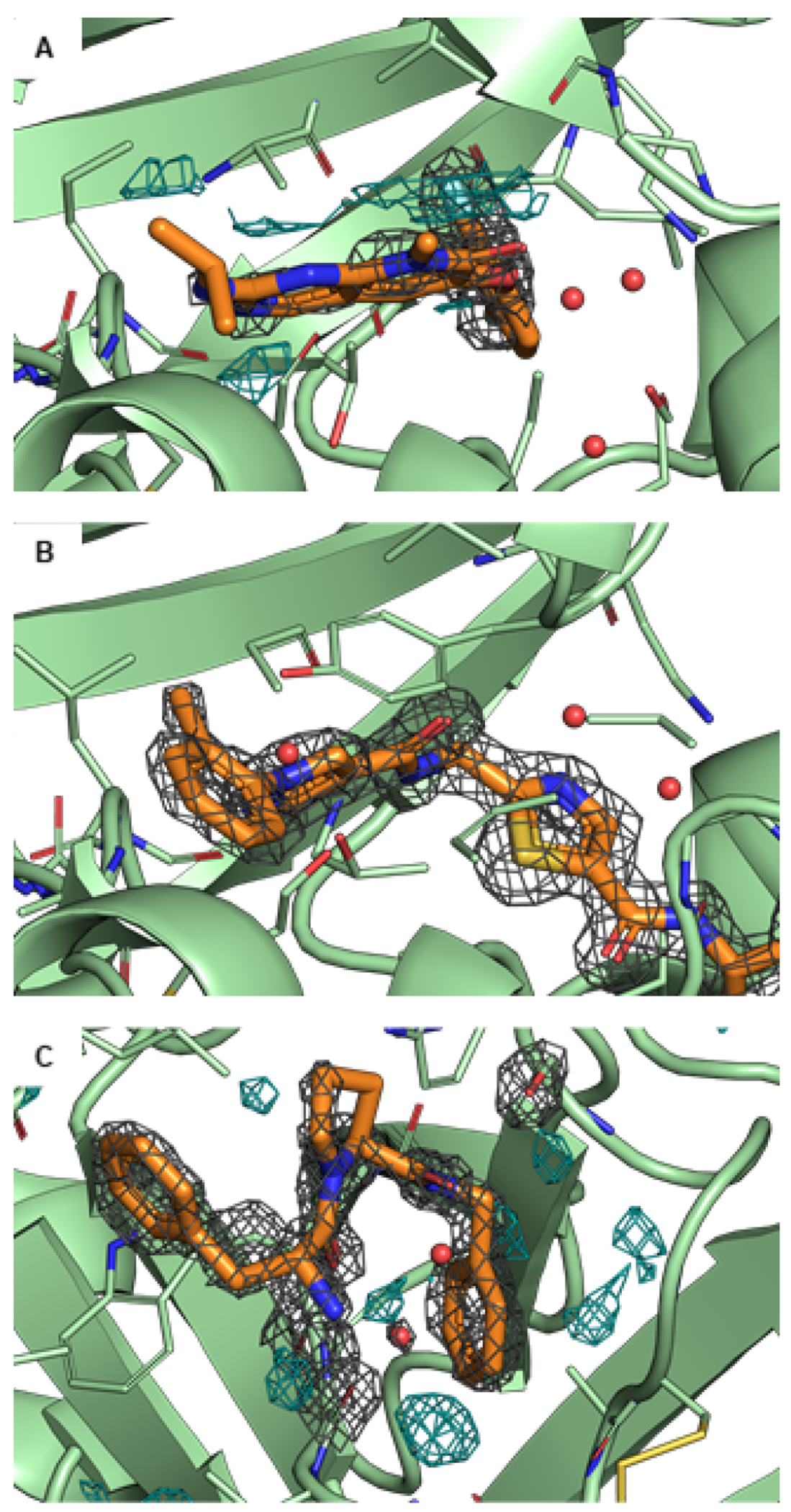

Figure 5. Examples of challenges encountered for ligand modelling using X-ray crystal structures.

The protein is shown in green and the ligand in orange. If not stated differently, the 2Fo-Fc maps are illustrated as grey isomesh at level. In some panels, the difference density Fo-Fc map is illustrated as cyan isomesh at level. (A) In PDB ID 3FLY, there is significant difference density, likely indicating that the ligand conformation is not modeled correctly. It is suspected that there is a low occupancy alternate conformation that is not modeled. (B) The suggested alternate structure of the same protein, PDB ID 6SFI, has no difference density. (C) PDB ID 2ZFF shows unexplained electron density in the binding pocket (difference map, bottom, center, cyan). This could be either water or a Na+ ion, as Na+ is present and modeled in other sites.

The criteria for selecting high-quality protein-ligand structures are summarized in the checklist “Choose Suitable Protein Structures for Benchmarking”. A use case for these selection criteria to score and select structures from prior benchmarking datasets is found in Table 1.

Table 1. Evaluation of the quality of structural and activity experimental data of the proposed benchmark set.

The structures listed in “Used structure” are those used in the initial version of this dataset, which is drawn in part from previous studies. However, alternate available structures may be superior. In these cases, we provide a PDB ID of a higher quality structure and its quality measures, in the “Alternate structure” field. The alternate structures are sorted according to best structure (lowest Iridium score) first. The footnotes “b” denote structures with similar ligands as the used structure. For each structure, the PDB ID is followed with the Iridium classification and Iridium score in the brackets. The Iridium classification categorizes each structure into not trustworthy (NT), mildly trustworthy (MT) and highly trustworthy (HT) categories. The lower the Iridium score, the better the structure [72]. For the used structures, also the diffraction-component precision indeces (DPI) is listed. We define a high ligand similarity as the OpenEye TanimotoCombo (Shape and Color Tanimoto, range from 0 to 2) being larger than 1.4 (standard cutoff). Regarding activity data (“Ligand Information”), the following metrics are given: The number of ligands N, the dynamic range DR (), and a simulated RMSE. For the calculation of the simulated RMSE, predicted data was drawn from a Gaussian distribution around the experimental value with a standard deviation of , taking also the experimental error into account. The numbers in the brackets are the 95% confidence intervals, obtained by bootstrapping using 1000 bootstrap samples. The quality metrics are color coded to highlight ideal quality (dark green), minimum quality (light green) and low quality (red). The ideal and minimum quality codes correspond to the minimal and ideal requirements of the checklist “Minimal requirements for a dataset”.

| Target | Used structure | Alternate structures | Ligand Information | ||||

|---|---|---|---|---|---|---|---|

| PDB | DPI | N | DR | RMSE | |||

| [kcal mol−1] | |||||||

| BACE[73, 74] | 4DJW (HT, 0.32) | 0.11 | 6UWP (HT, 0.28) | 3INF (HT, 0.33)b | 36 | 4.0 | 0.98 [0.78,1.18] |

| 3TPP (HT, 0.28)a | 3IN3 (HT, 0.36)a,b | ||||||

| 4DJV (HT, 0.31)b | 3LHG (HT, 0.36)b | ||||||

| 3INH (HT, 0.33)b | |||||||

| BACE_HUNT[75–77] | 4JPC (HT, 0.32) | 0.12 | 6UWP (HT, 0.28) | 4JOO (HT, 0.33)b | 32 | 4.9 | 0.97 [0.73, 1.19] |

| 3TPP (HT, 0.28)a | 4RRO (HT, 0.33)b | ||||||

| 4JP9 (HT, 0.31)a,b | 4JPE (HT, 0.35)b | ||||||

| BACE_P2[77, 78] | 3IN4 (HT, 0.59) | 0.28 | 6UWP (HT, 0.28) | 3INF (HT, 0.33)b | 12 | 0.8 | 1.00 [0.59,1.33] |

| 3TPP (HT, 0.28)a | 3IN3 (HT, 0.36)a,b | ||||||

| 4DJV (HT, 0.31)b | 3LHG (HT, 0.36)b | ||||||

| CDK2[74, 79] | 1H1Q (MT, 0.87) | 0.28 | 3DDQ (HT, 0.31)a | 4EOR (HT, 0.39)a,b | 16 | 4.3 | 1.00 [0.67,1.29] |

| CDK8[44, 80] | 5HNB (MT, 0.74) | 0.22 | 5XS2 (HT, 0.33) | 4CRL (HT, 0.42)a | 33 | 5.7 | 0.96 [0.73,1.18] |

| 5IDN (HT, 0.36)c | |||||||

| c-MET[44, 81] | 4R1Y (MT, 0.75)h | 0.17 | 5EOB (HT, 0.28) | 3ZXZ (HT, 0.44)a,c | 24 | 6.2 | 0.99 [0.72,1.26] |

| 3I5N (HT, 0.37)a,c | 4DEG (HT, 0.46)a,c | ||||||

| 3ZC5 (HT, 0.38)a,c | 5EYD (HT, 0.47)a,c | ||||||

| 3CD8 (HT, 0.39)a,c | |||||||

| EG5[44, 82] | 3L9H (MT, 0.88) | 0.18 | 2X7C (HT, 0.32) | 3K3B (HT, 0.41)c | 28 | 3.5 | 0.98 [0.72,1.22] |

| 3K5E (HT, 0.35)a | |||||||

| Galectin[83, 84] | 5E89 (MT, 1.04) | 0.07 | 5NF7 (HT, 0.30) | 4BM8 (MT, 0.38)a, d,b | 8 | 2.7 | 1.04 [0.55,1.42] |

| 1KJR (HT, 0.30)a | 5OAX (HT, 0.54)b | ||||||

| 5ODY (MT, 0.33)b, e, g | |||||||

| HIF2a[44, 85] | 5TBM (HT, 0.35) | 0.17 | 3H82 (HT, 0.30)a | 5UFB (HT, 0.36)b | 42 | 4.6 | 1.03 [0.79,1.27] |

| 6D09 (HT, 0.35)b | |||||||

| Jnk1[74, 86] | 2GMX (NT, -)f | 0.77 | 3ELJ (MT, 0.31)a | 3V3V (MT, 1.5)b, h, e | 21 | 3.4 | 0.98 [0.68,1.26] |

| MCL1[74, 87] | 4HW3 (HT, 0.41) | 0.26 | 6O6F (HT, 0.30) | 4WMU (HT, 0.41)b | 42 | 4.2 | 1.01 [0.80,1.21] |

| 4ZBF (HT, 0.35)b | 4ZBI (HT, 0.45)b | ||||||

| 3WIX (HT, 0.37)a, c | |||||||

| P38(MAPK14) [74, 88] | 3FLY (HT, 0.6) | 0.12 | 6SFI (HT, 0.30) | 3FLN (HT, 0.33) | 34 | 3.8 | 0.99 [0.76,1.22] |

| 3FMK (HT, 0.30 )a, b | 3FMH (HT, 0.43)a, b | ||||||

| PDE2[89, 90] | 6EZF (MT, 0.3) | 0.07 | 6C7E (HT, 0.29) | 6B97 (HT, 0.46)c | 21 | 3.2 | 1.05 [0.75,1.32] |

| 5TYY (HT, 0.30)a | |||||||

| PFKFB3[44, 91] | 6HVI (HT, 0.31) | 0.11 | 6HVH (HT, 0.36)a, b | 40 | 3.8 | 1.04 [0.82,1.25] | |

| PTP1B[74, 92] | 2QBS (MT, 0.33) | 0.15 | 2HB1 (HT, 0.32)a | 2QBR (HT, 0.65)b, a | 23 | 5.2 | 0.95 [0.67,1.21] |

| 2ZMM (MT, 0.33)b, d | |||||||

| SHP2[44, 93] | 5EHR (MT, 0.32) | 0.1 | 5EHP (MT, 0.33)b, g | 6MD7 (HT, 0.35) | 26 | 4.3 | 1.06 [0.76,1.34] |

| 6MDD (HT, 0.35)a | |||||||

| SYK[44, 94] | 4PV0 (MT, 0.69) | 0.19 | 4PX6 (HT, 0.3)a | 4FYO (HT, 0.40)c | 44 | 5.9 | 1.01 [0.81,1.21] |

| Thrombin[74, 95] | 2ZFF (HT, 0.3) | 0.06 | 5JZY (HT, 0.27)b | 3QX5 (HT, 0.28)a, b | 11 | 1.7 | 0.97 [0.63,1.28] |

| TNKS2[96] | 4UI5 (HT, 0.29) | 0.08 | 4PC9 (HT, 0.27)a | 4UVZ (HT, 0.29)b | 27 | 4.4 | 1.04 [0.78,1.28] |

| 4BU9 (HT, 0.29)a, b | |||||||

| TYK2[74, 97, 98] | 4GIH (HT, 0.5) | 0.15 | 3LXP (HT, 0.31)a | 5WAL (MT, 0.48)c, g | 16 | 4.3 | 0.97 [0.60,1.30] |

structure was already available 6 month prior to publication of first benchmark study.

ligand similarity > 1.4

ligand considerably similar > 0.8.

crystal contacts

packing.

alternate conformations.

low ligand density below 0.95.

A choice of the simulation conditions like temperature, ion concentration, other additives like co-factors or membranes require additional considerations. Ideally, these conditions are close to those for the structural experiment, the affinity measurements and physiological conditions. Most likely, a trade-off between all of these has to be found. Where possible select structures where data was collected at room temperature that were crystallized using non-salt precipitants. Be aware that room temperature data will have lower precision and more conformational heterogeneity.

If these requirements are not met, it does not necessarily mean that the data is not usable and the results will not match the experimental measurements. A structure not meeting the requirements may suffice after more manual intervention by the user, ideally an experienced one. Unresolved areas can be modelled with current tools and knowledge about atom interactions, though this can be a cause for concern if these are near the binding site. This concern has been validated, at least anecdotally, in a recent publication where different protein preparation procedures where shown to have a substantial effect on the accuracy of the free energy predictions [104].

Collective intelligence could be a way to mitigate the influence of individuals on the prepared input structures of a benchmark set. On a platform, other scientists could suggest changes to structures and updated versions could be deposited, increasing the quality of the benchmark set. Endorsement and rating of deposited structures could increase the level of trust given to specific structures and the database in general.

4.5. Experimental binding affinity data

Choosing high-quality experimental data is crucial for constructing meaningful benchmarks of methods that predict ligand binding affinities. Evaluating whether experimental data merits inclusion requires an in-depth understanding of the biological system and the particular experimental assay that assesses protein-ligand affinity. While a detailed overview of all experimental affinity measurement techniques is beyond the scope of this best practices guide, this section aims to summarize general aspects that should be considered when evaluating whether an experimental dataset is suitable for benchmarking purposes. We note that, in practice, it is often difficult to identify datasets that meet all the recommendations discussed below.

Overall, it is necessary that the experimental data used in benchmarks intended to measure the accuracy of reproducing experimental data are consistent, reliable, correspond well to the model system that is used in the simulations, allowing robust conclusions on accuracy to be drawn.

4.5.1. Deriving free energies from experimental affinities

Binding of a ligand to a receptor protein can be described as an equilibrium between unbound and bound states with the equilibrium constant of the dissociation Kd as

with [PL] being the concentration of the bound protein-ligand complex and [P] and [L] the concentrations of the unbound protein and unbound ligand respectively. The binding free energy ΔG can be related to the dissociation constant via the following equation

| (2) |

with Boltzmann constant kB, temperature T and standard state reference concentration , which is typically . In many drug discovery projects, potency of compounds is assessed by measuring the half-maximal inhibitory concentration (IC50) of a substance on a biological or biochemical function. This is often converted to pIC50

Typically, the substance is competing in these experiments with either a probe or substrate. For such competition assays, IC50 can be related to the binding affinity of the inhibitor Ki via the Cheng-Prusoff equation

| (3) |

where [S] is the concentration of the substrate and Km the Michaelis constant. Assuming that all binding events result in effective protein inhibition, we can relate . Many assays are conducted using a substrate concentration of [S] = Km. This leads to a conversion factor of 0.5 between IC50 and Ki based on Equation 3 and to a constant offset in ΔG. This offset cancels out for a congeneric ligand series with the same mode-of-action in identical assay conditions. Hence, in this case, ΔpIC50 values are a useful bioactivity that can be compared to relative binding free energy calculations. We can then use the approximation

For absolute calculation comparison to experiment, the offset remains relevant. One suggestion to circumvent the issue could be to transform absolute estimates to , this way cancelling the offset and basing the further benchmarking on the relative free energy differences.

4.5.2. Consistency of datasets

The paucity of experimental affinity measurement data may tempt practitioners to cobble together all available measurements for a given target (say, from a ChEMBL query) to construct a dataset with a sufficiently large number of measurements to provide statistical power in discriminating the performance of different methodologies on a given target. This temptation should generally be resisted, as assay conditions or protocols in different labs might not be comparable. Figure 6 illustrates this by comparing two sets of data obtained by different methods. These differences could, for example, result from the concentration of the substrate (see Equation 3), the protein construct, the incubation time or the composition of the buffer, and might not be sufficiently documented in the reported experimental methodology. However, in comparison to the inherent experimental error (see below), mixing experimental data from different laboratories might add only a moderate amount of noise [105]. To ensure consistency within a dataset such that relative free energy differences are as reliable as possible, we highly recommend the use of data from a single source (e.g., a single publication or a patent).

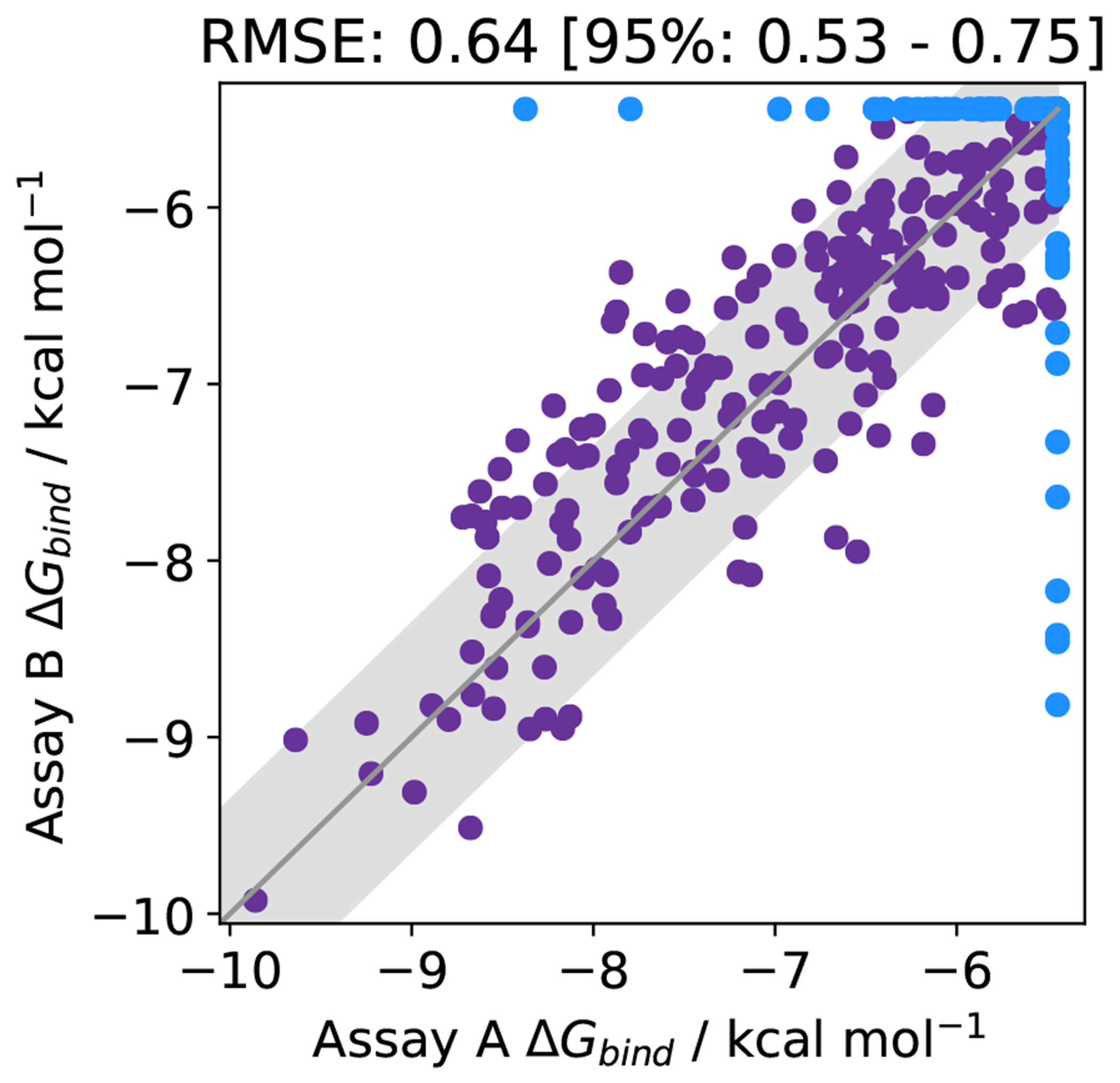

Figure 6. Experimental uncertainties can be on the order of 0.64 kcal mol−1.

The binding affinity of 365 molecules assayed by two different methods for the open source COVID moonshot project [106]. Molecules that were predicted to bind in one assay, but inactive (i.e., affinity lower than the assay limit) in the other are shown in blue. The RMSE agreement between the methods, for both purple and blue data points is 0.64 kcal mol−1. Data was collected from the PostEra website accessed 22/11/2020 [107]. The grey region indicates an assay variability of 0.64 kcal mol−1.

To avoid rounding or unit conversion errors that often arise from automated or manual data extraction, data should be extracted from the original source.1 Going back to the original publication is also important to identify compounds that are outside of the detection limit of the assay but are still reported with specific numerical values (e.g., reported ). Such ligands should be excluded from benchmark sets to ensure that accuracy measures can be properly evaluated.

4.5.3. Experimental uncertainty

To assess the reliability, ideally, errors are reported for all ligand affinities or at least for a subset. The primary publication of the experimental results is typically the best source of experimental uncertainty as cited affinities may occasionally be subject to rounding differences or unit errors [110]. Errors quoted will likely be an estimate of the repeatability of the assay, rather than true, independent reproducibility. Publications with essential experimental controls reported – such as incubation time and concentration regime to demonstrate equilibrium – can add confidence to the reported affinity, however these may be performed and not reported [111]. Meta-analyses of both repeatability [112] and reproducibility [110] found errors in pKi of 0.3-0.4 log units (0.43-0.58 kcal mol−1) and 0.44 log units (0.64 kcal mol−1) respectively. Another analysis for reproducibility found that variability in pIC50 was even 21-26% higher than for pKi data (0.55 log units) [105]. These values provide a guideline for experimental error, if none is available. Note that for difference measures ΔpIC50, the individual experimental errors propagate as .

4.5.4. Choosing representative experimental assays for FE calculations

There are two main requirements to consider in order to ensure that the experimental data are representative of the physics-based binding free energy that is calculated from the simulations. First, the measured output should reflect or closely correlate with actual protein-ligand binding. Second, the assay conditions and the protein-ligand system used in the simulation should match as closely as possible. The first point relates to choosing the appropriate type of experimental data to compare with. Ideally, these would be biophysical binding data such as KD determined from isothermal titration calorimetry (ITC) or surface plasmon resonance (SPR). However, this type of data is often only available for a small number of compounds in drug discovery projects (and the related literature), typically for a few representatives per series. In addition, ITC data are often only available for a narrow dynamic range [113, 114]. Since having a sufficiently large dataset with a large dynamic range is also very important (see below), it may often be necessary to use data from functional assays (e.g., IC50 from a biochemical assay) instead. For this assay, correlation with a biophysical readout should be checked before using the system as a benchmark dataset [105].

With regards to matching simulation and binding assay, as mentioned above, it is important to have detailed knowledge of the assay conditions available; e.g., salt concentrations and co-factors. This information is needed for setting up a simulation model that closely matches the experimental conditions (see Section 5.1). Generally, salt concentration should match experimental assay conditions to capture screening effects, though sometimes salt identity may be varied because of force field limitations. For a benchmark set, experimental data with assay conditions involving many co-factors or multiple protein partners should be avoided. In addition, one should check which protein construct was used in the structural studies compared to the assay (see Section 4.4). These should match as closely as possible.

4.5.5. Ensuring sufficient statistical power

Finally, a dataset used for benchmarking of free energy calculations needs to be suitable to draw robust conclusions on the success of the methods ideally by both accuracy and correlation statistics. Whether a dataset is suitable depends on the number of data points in the set, the experimental dynamic range and the experimental uncertainty.

Quantifying the experimental uncertainty is necessary for understanding the upper-limit of feasible accuracy for a model [115]. Understanding this is both useful for fair comparison between methods, and for conveying the reliability of a model to medicinal chemists [116]. Building predictive models becomes more difficult with (a) a small experimental dynamic range and (b) large experimental uncertainties. It is useful to understand the upper limit of success a computational method can have for a set of experimental results:

| (4) |

where is the highest achievable R2 for a dataset with a standard deviation of affinities () and an experimental uncertainty of [112]. This relation is illustrated in Figure 7.

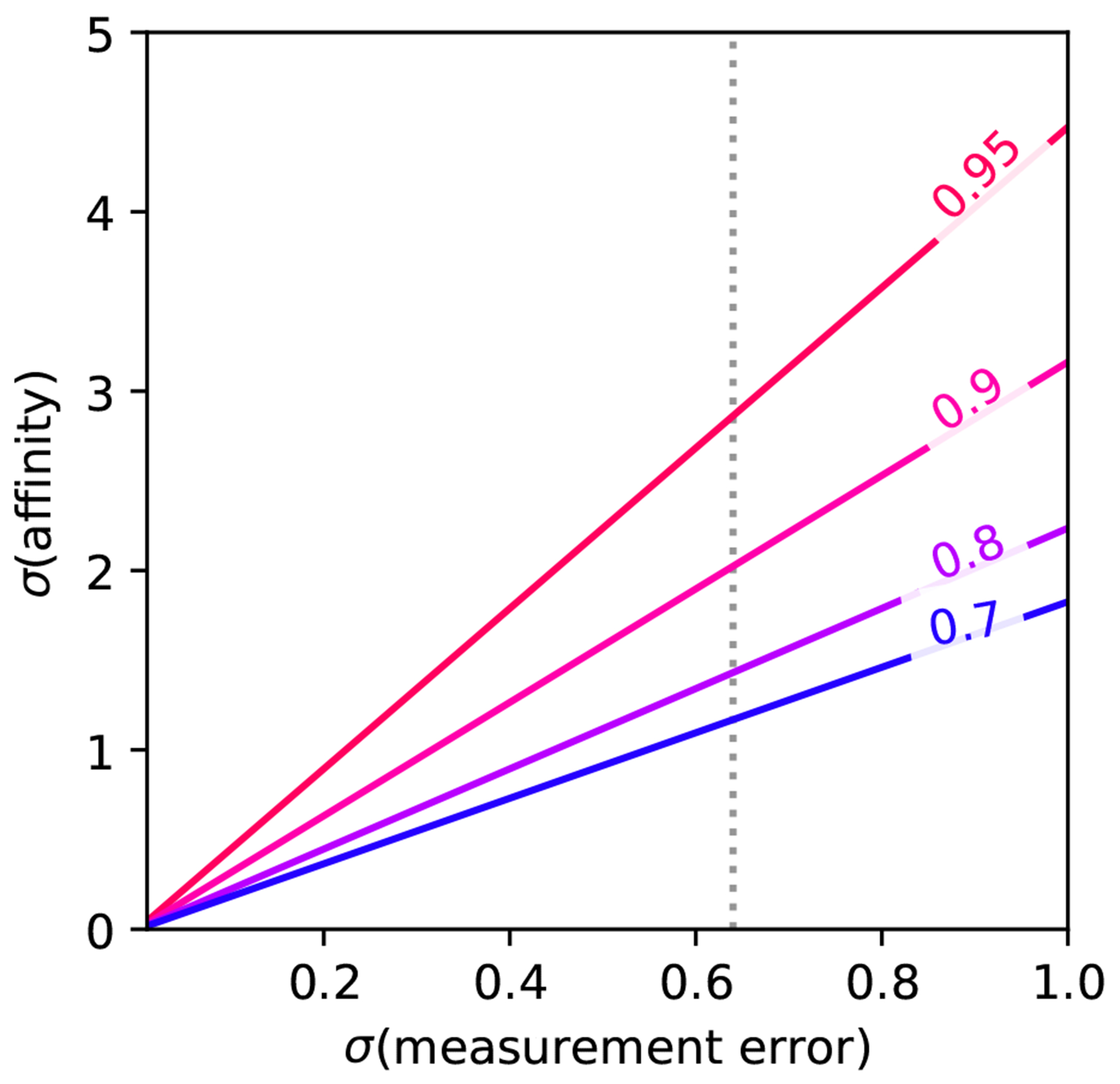

Figure 7. The larger the experimental uncertainty, the larger the affinity range required for a given .

Corresponding to Equation 4, the maximum achievable R2 for a given dataset is limited by the range of affinities and the associated experimental uncertainty. The illustration assumes that (measurement error) and (affinity) are in the same units, with an experimental error of 0.64 kcal mol−1 indicated.

For a typical experimental error of 0.64 kcal mol−1 (see Section 4.5.3) and a desired , a standard deviation of affinities (≈1.5 log units) is required. Assuming a uniform distribution of experimental affinities in the dataset, this corresponds to a required dynamic range of 7.01 kcal mol−1 (e.g., from −12 to −5 kcal mol−1) or ≈ 5 log units (e.g., from 1 nM to ). This dynamic range and the associated standard deviation of affinities also allow to differentiate typical free energy methods from a trivial affinity prediction model where all predicted affinities are equal to the mean experimental affinity . Note that for such a model RMSE is equal to the standard deviation of the affinities , while there is no correlation between predicted and experimental affinities. In practice, experimental datasets with a dynamic range of 7 kcal mol−1 are difficult to obtain. Using the same assumptions as before, a dynamic range of 5 and 3 kcal mol−1 correspond to a standard deviation of affinities of and and hence and respectively. Balancing data availability and achievable , we recommend collecting datasets with a dynamic range of 5 kcal mol−1 (3.7 log units).

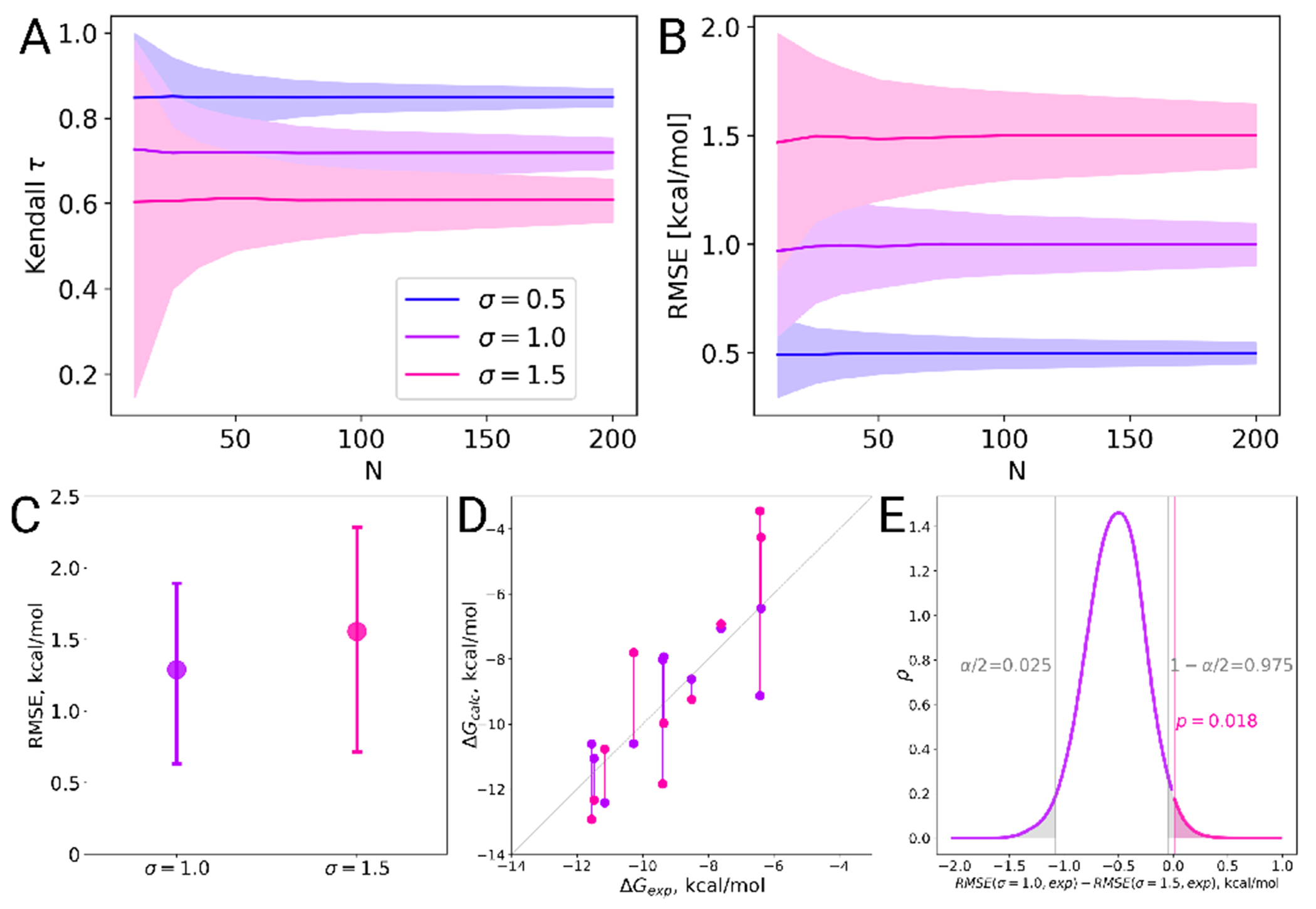

In order to robustly evaluate statistics with small confidence intervals, the dataset needs to be sufficiently large. Figure 8(A) and (B) illustrate the dependence of the confidence interval obtained by bootstrapping for correlation statistics and accuracy statistics for simulated toy data. The “experimental” toy data were simulated using a uniform distribution with an affinity range of 7 kcal mol−1. This would be the optimal dynamic range for an experimental error of 0.64 kcal mol−1 (see Section 4.5.3). Predicted toy data were derived from the experimental toy data using a Gaussian distribution with standard deviation of . While the absolute values that can be obtained for the correlation statistics are strongly affected by the dynamic range of the experimental data, the effect on the confidence intervals estimated via bootstrapping is relatively small (very similar results in terms of the size of the confidence intervals can be obtained assuming a dynamic range of 5 kcal mol−1).

Figure 8. The larger the dataset, the smaller the uncertainty in the performance statistics.

(A) Kendall and (B) RMSE were evaluated for 1,000 toy datasets for a given size of the dataset N. The experimental data were simulated from a uniform distribution over the interval [−12:−5] and the predicted affinities were simulated from the experimental toy data using a Gaussian distribution with different standard deviation . The statistic was evaluated for the whole dataset and 95% confidence intervals were estimated via bootstrapping. These were then averaged over all 1,000 toy datasets. In (C-E) we illustrate a specific case, where two sampled sets of size N =10 were chosen for a closer inspection. (C) Their RMSE values have overlapping confidence intervals. (D) However, when investigating the underlying sets of points in a pair-wise manner, it appears that one case mostly yields values closer to experimental reference than the other. (E) Bootstrap analysis of these dependent samples reveals that the RMSE difference in this case is statistically significant at the confidence level of .

Based on these simulations, we recommend a dataset size of 25 to 50 ligands. For a dataset size of 50, it is possible to distinguish between all three toy methods reliably in terms of RMSE. For an affinity prediction method with Gaussian error this would yield the following estimated statistics: Kendall and . Note that for relative calculations, a smaller number of ligands could be sufficient since multiple edges are typically evaluated for each ligand. On the other hand, for relative calculations, the experimental error for the relative free energies are larger because experimental errors for both ligands add up.

It is important to note that for the case of overlapping error bars, it is not possible to immediately conclude that the compared methods do not differ significantly. This is due to the compared data sets being paired, i.e. the sets are not independent. Analytical rules for deducing whether it is possible to determine if the differences are statistically significant have been nicely summarized by Nicholls [117]. Here, we suggest how to probe for the significance in difference between predictions by means of bootstrap. For the Figure 8 (C), two sets of points were selected from the previous samplings depicted in panels (A) and (B). In this case we used sets of only 10 points each and the resulting RMSE values have overlapping error bars. The sets of points are shown explicitly in the Figure 8D, where it becomes clear that for most of the point pairs the case with has a value closer to the experimental reference than the sampling with . Now to assess the statistical significance of the observed RMSE difference (panel C) we sample with repetition pairs of points in panel D. For every resampling, RMSE values for the two cases are calculated and the difference between the RMSEs is stored. Such a resampling strategy ensures that the dependence between the points is retained. Finally, analyzing the distribution of collected RMSE differences will show whether the difference is statistically significant from zero at a chosen level.

As demonstrated in the example above and Figure 8, uncertainties of the estimated statistics strongly depend on the standard errors of individual free energy predictions. Naturally, this necessitates accurate estimation of uncertainties for the calculated (or ) values. Depending on the free energy protocol and estimator used, an analytical uncertainty estimator might be available. Another possibility is to use bootstrapping, i.e. resample the raw calculation data with repetition to reconstruct the sampling distribution and estimate its standard deviation. However, probably the most reliable, yet computationally demanding, approach to obtaining the standard error is by repeating the whole calculation procedure multiple times [118–120].

As stated before, in practice it is challenging to find datasets that meet these criteria for dynamic range and number of ligands. We therefore currently recommend annotating benchmark datasets according to these criteria to make challenges and limitations visible.

5. How to best set up and run benchmark free energy simulations

5.1. Structure preparation

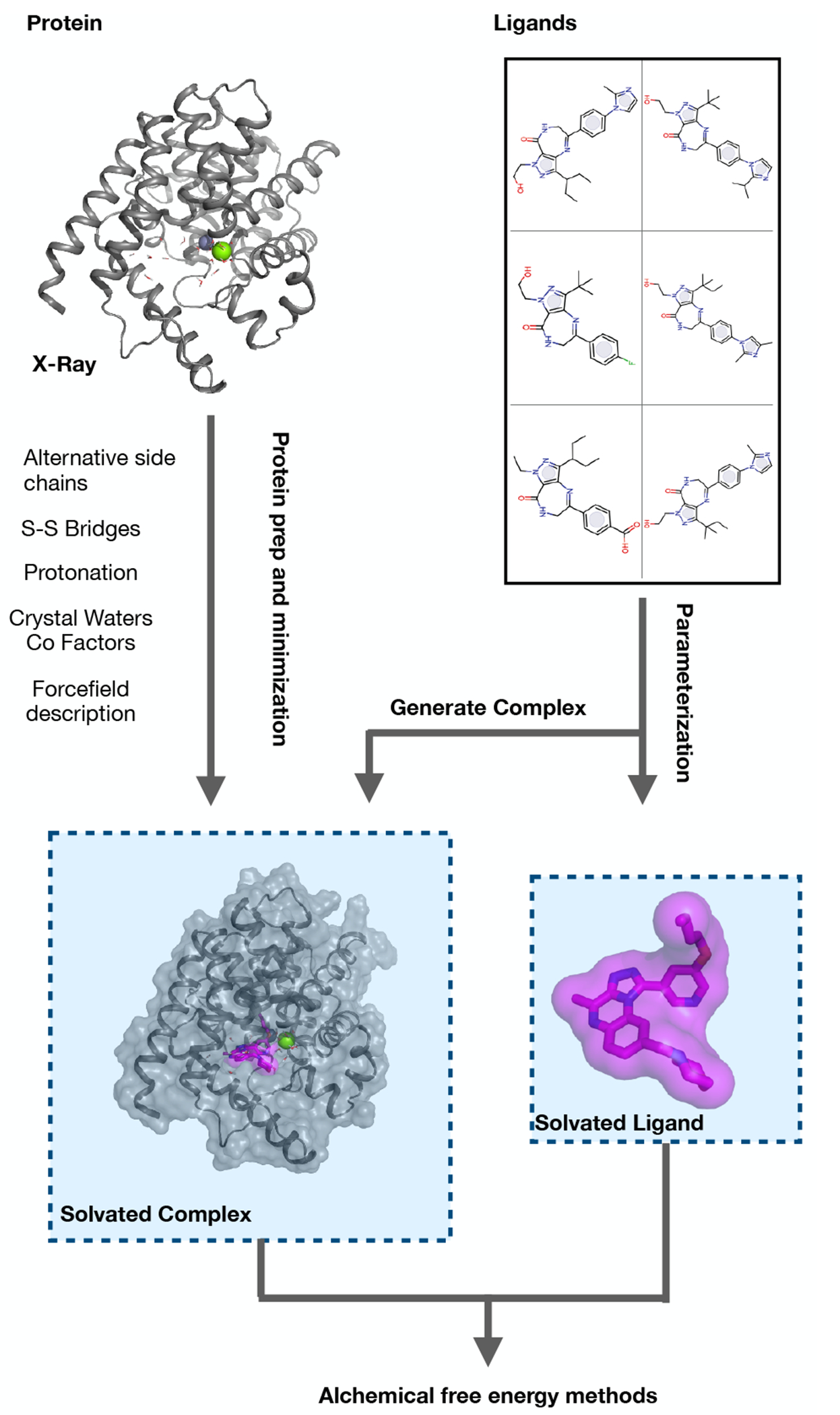

Starting with an experimental crystal structure, often an X-ray structure for the protein or protein-ligand complex, the most error-prone stage of protein preparation is the translation from this experimental structure into a simulation model: inferring missing atoms and making choices about which X-ray components to include. Having chosen biological unit based on the criteria in the above section, some domains of the structure may be removed if they are large and unlikely to affect the biological activities of interest. The truncation of the system needs to be assessed carefully as it has been shown in some cases, such as the dimeric form of PDE2 and the presence of cyclin with CDK2, as a more authentic representation of the system was beneficial for stability during simulations and improved the free energy calculations. In some cases, though, truncation gains efficiency by decreasing the size of the overall simulation system while maintaining its biological activity, with potentially minimal impact on results. Datasets for benchmarking may be run many times so this efficiency gain can be meaningful.

In addition to the protein itself, the subsystem carried forward from the X-ray structure into simulation may have other components: ligand, cofactors, structural waters, other ligands (if simulating a multimer), post-translational modifications (PTMs), and excipients. The cofactors should be deliberately included or excluded based on their role in the biological activity being modeled, removing a cofactor from its cavity might cause unexpected movements or collapse of the cavity during the simulations. To avoid this, a careful equilibration and solvation of that pocket might be needed. All structural waters close to the protein should be considered for inclusion by carefully verifying that water positions are compatible with the modelled ligands: in principle the MD sampling could allow waters to arrange in equilibrium positions, but experimental and theoretical work has shown that the timescales for this can be impractically long. Also, internal structural waters even very distal from the active site are integral to the protein structure, and omitting them can adversely affect the protein dynamics. Generally, we recommend excluding excipients (often specific to the crystallization media and not present in the assay). PTMs require a judgement call: surface-exposed and distal from the active site they can often be safely excluded, for example glycosylations which could otherwise greatly increase the size of the calculation. This again can save on the overall system size and prevent parameterization difficulties. PTMs proximal to the active site or known to be directly implicated in activity should be retained. Ligands other than that in the active site are again a judgement call: retaining them is only necessary if there is biological cooperativity in the biological assay. As this is in practice often not known, they should be kept if possible.

For the absolute binding free energy calculations, it is also necessary to account for the free energy change in the protein’s transition from its apo to holo state. Therefore, initializing the simulations of the alchemical ligand coupling based on the crystallographically resolved protein’s apo state may facilitate convergence.

5.1.1. Protein preparation

The experimental protein structure frequently has missing coordinates for atoms, residues or groups of residues due to the lack of supporting data (electron density) from the X-ray experiments. These often include N-terminal and C-terminal residues, mobile loops (e.g. the activation loop in kinases), and residue sidechains. Also, there can be extra coordinates available in the structure as “alternate locations” (AltLocs): residue sidechains, or occasionally entire residues or the ligand, for which the experimental density supports more than one distinct orientation in a single X-ray structure solution. For the simulation, the protein must have all the atoms provided for every residue modeled. Missing residue sidechains should always be modeled in, assigning them the most preferred rotamer given the local environment.

If the N- and/or C-terminal residues are missing due to lack of electron density, this may provide a basis for omitting them from the model, but the truncated N- and C-termini should be “capped” by neutral termini, usually an acetate (ACE) cap on the N-terminus and an N-methyl (NME) cap on the C-terminus to mimic the peptide backbone up to the carbon-alpha. Of course, one must be careful not to cap the charged protein termini which are properly resolved in the X-ray: these can be critical for function and structure.

This “capping” tactic can also treat the termini of “gaps”: regions of missing residues over the span of the peptide chain, usually missing loop regions due to lack of experimental density. While capping the ends of a loop instead of modeling the whole loop may be acceptable for MD runs of relatively short duration, over longer simulations there is a risk of having the protein around the capped ends of the missing loop gradually lose its structure. Even if a loop is unstructured (and therefore not resolved in the X-ray structure), its presence still affects the remainder of the structure and can provide stability by restricting movement of the connecting residues, thus raising concerns if these are capped instead. Strategic use of a distance restraints during the simulations can mitigate this liability.

Another possibility for missing loops is to close the ends with a short modeled loop of glycine residues of sufficient size to link the termini without introducing strain, but not necessarily of the full length of the missing loop. There are several reasons why this can be desirable. If the missing loop is particularly large (for instance >15 or 20 amino acids) accurately modeling its conformation could be challenging and introduce more uncertainty and instability to MD simulations. Furthermore, if the missing loop is distal from the binding site and not expected to affect protein-ligand interactions, the replacement only needs to stabilize the termini and avoids the use of restraints.

However, all of these approaches are likely inferior to using a good quality model of the missing loop.

When multiple alternate models of a particular region of the protein are available, the experimental model indicates that this region potentially occupies two (or more) mutually exclusive conformations, but one must be chosen for the model. Again, this selection can be a judgement call depending on where this region occurs relative to the active site: distal from the active site, the choice may be less critical; proximal requires more careful consideration. Higher occupancy for one of the alternate models could provide a reason to choose that particular model for the calculations. For critical or uncertain cases, we recommend repeating test simulations beginning from different models to analyze the sensitivity of the choice.

Once the above issues have been resolved, there remains one more round of decision-making to select sidechain rotamers and protonation states. Protein X-ray experiments usually cannot resolve the positions of hydrogens, making decisions on the protonation states an issue. Another challenge is determining sidechain orientations: sidechain flips are particularly relevant for HIS, ASN, and GLN, because the X-ray crystallography experiments cannot distinguish between different first-row elements O, N, and C. These elements produce similar density and are indistinguishable. This means that even with good electron density the sidechain orientations of ASN and GLN can have either orientation, swapping O and N positions, and thus interchanging H-bond donors and acceptors. The two possible orientations of HIS sidechains effectively interchange N and C positions in the ring. Surface exposed, these different orientations may be of little consequence, but in the interior of the protein, proximal to the active site, or especially interacting with the ligand, this can be very important and can change patterns of hydrogen bond donors and acceptors. In principle these orientations can be sampled over the course of the MD run but only if the trajectory is long enough for the sampling scheme to allow it. Considering that these orientations are experimentally ambiguous, it is a matter of judgement at setup time of whether these sidechains should be reoriented to make a more chemically reasonable model.

Protonation of the protein model is generally straightforward with one key exception: the ionization state of sidechains, especially HIS, ASP and GLU, which may undergo pKa shifts due to changes in the environment. Active site catalytic CYS is another case requiring care, and occasionally LYS can be deprotonated in some circumstances. The two main determining factors are the pH of the biological milieu and the microscopic environment around the ionizable sidechain. In general, the ionization state of each residue is chosen during the setup of the protein and remains constant over the course of the simulation, even if the microenvironment changes. Note that a formal charge on the bound ligand can also affect the ionization state of nearby protein residues; this can be particularly problematic when the ligand charge alchemically changes over the course of a relative free energy calculation. Unlike side-chain rotamers, which may sample other orientations within a simulation, incorrect protonation state assignments cannot correct themselves without the use of constant-pH algorithms, that have not been routinely implemented within free energy calculations yet.

There are a number of tools to automate the steps described in this section, notably the Protein Preparation Wizard [121], the Molecular Operating Environment (MOE) [122], and Spruce [123]. We recommend manual inspections after applying these.

5.1.2. Ligand preparation

In the preparation of the ligand for simulation it is important to verify that the chemical structure is correct. While this is less problematic for structures generated from small-molecule sources, historically it has been a frequent problem for ligands taken from protein-ligand X-ray structures. Since X-ray structures lack protons and do not provide bond orders or other key information, if a PDB structure is used as input, some tool must be applied to supply this information, presenting a frequent source of failure (though, for structures in the RCSB, a ligand SMILES string can provide a more complete representation of the ligand’s identity).

Once the underlying chemical structure, including bond orders and stereochemistry, is correct, the key issues are the tautomer and ionization states. As with the ionizable protein residue discussed above, the main factors are the macroscopic pKa of the ligand (for ionization states), the intrinsic relative stability of different tautomer states, and the perturbing effects of the active site micro-environment of the bound ligand. Compounding the complexity is if the unbound ligand (used as a reference state) would have a different tautomer/ionization state. These need to be carefully examined at setup to make sure there is complementarity between the protein and ligand independently of the alchemical change between ligands, and then to flag and resolve alchemical conversions between inconsistent states of the protein.

5.1.3. Preparation of the complex

Once protein and ligand have been prepared, the complex is assembled and solvated in water with counter-ions at an appropriate ionic strength, or embedded in membrane if the protein belongs to a membrane protein family. Membrane simulations should use an appropriate equilibrated membrane that matches experimental criteria of thickness and area per lipid as well as the appropriate counter ions. Once the system box is constructed the step involves neutralizing the net charge on the protein-ligand complex, but beyond this a higher concentration of salt (usually sodium chloride) is often warranted to mimic the biological milieu being modeled; most assays are run in a significant salt concentration (100 to 150 mM) to emulate biological environments. The salt concentration can strongly affect experimental binding affinities, particularly with highly polar active sites. The ion placement needs to be handled with care, for example by prohibiting insertion of ions within a given distance from the protein-ligand complex. Otherwise, positioning an ion in a close proximity to the bound ligand may destabilize the binding pose, in turn affecting the prediction accuracy [124].

Once the above decisions have been made and the complete simulation system has been set up, it is important to let it relax and equilibrate at simulation temperature and pressure, which should mimic the assay conditions.

5.2. Alchemical free energy calculations pose specific setup challenges

There are an abundance of details that must be considered during the set up of any simulation and in particular for alchemical free energy calculations. These simulations require setting up an alchemical perturbation of the small molecules, but also require making a variety of assumptions with respect to the environment at the two end states. In the following we will address all essential choices that need to be made for the setup. For a very detailed introduction to best practices for alchemical free energy calculations and a much broader discussion on choices for their setup please refer to the relevant best practices guide [19].

5.2.1. Should I run an absolute or relative free energy calculation?

There are two possible ways in which to run alchemical free energy calculations, which both provide free energies of binding, but will require different routes for their setup. Relative free energy calculations provide free energies of binding with respect to a reference ligand, meaning that all compounds that are to be assessed for their binding affinity should share a similar scaffold. In contrast, absolute free energies of binding can be used for a set of ligands that do not share any commonalities, since the reference state for the free energy of binding is the standard state. This is probably the easiest deciding factor in terms of what kind of calculation to run. If the particular benchmark dataset contains ligands that form a congeneric series then a relative calculation is likely a better choice. Of course, congeneric ligand series can also be assessed using absolute free energy calculations, or it may be of interest to compare relative to absolute calculations for a given benchmark dataset.

5.2.2. Alchemical pathway

Choices in topology

The choice of topology may be dictated by the simulation software of choice as not all common MD codes implement all topologies. The topology refers to the way in which a molecule A is changed to molecule B, in case of relative free energy calculations. Selecting either a dual or single topology approach is acceptable, unless performance of different topologies is assessed across the benchmark datasets. For more details on the different topology choices and implementations please refer to Mey et al. [19].

Choices concerning

In order to connect the initial and final state of the alchemical free energy calculation an alchemical pathway must be chosen. This pathway is regulated by a variable , which, in the simplest formulation, at represents molecule A and at molecule B. As free energy is a state function, the computed free energy is in principle independent on the pathway, but different choices in pathway can make the problem computationally more or less tractable. The simplest way to switch between molecule A and B is using a linear switching function for the Hamiltonian of the form:

| (5) |

where is the Hamiltonian, is the set of positions, is the set of momenta and the switching parameter. However, this typical approach fails when atoms are being inserted or deleted, requiring alternate choices, as reviewed elsewhere [19].

For the free energy perturbation (FEP) protocol, considerable care needs to be taken in selecting the switching function and spacing of so-called -windows. Common choices are, how many -windows should be used? What functional form should my switching function take? The concept of difficult and easy transformation is more and more explored, but currently heuristics based on phase space overlap between neighboring -windows is the best way to assess how many windows should be simulated. This can for example be done by looking at the off-diagonals of an overlap matrix [125, 126]. Furthermore, the choice of simulation protocol will influence what switching function and how many -windows should be used.

5.2.3. Choice of simulation protocol

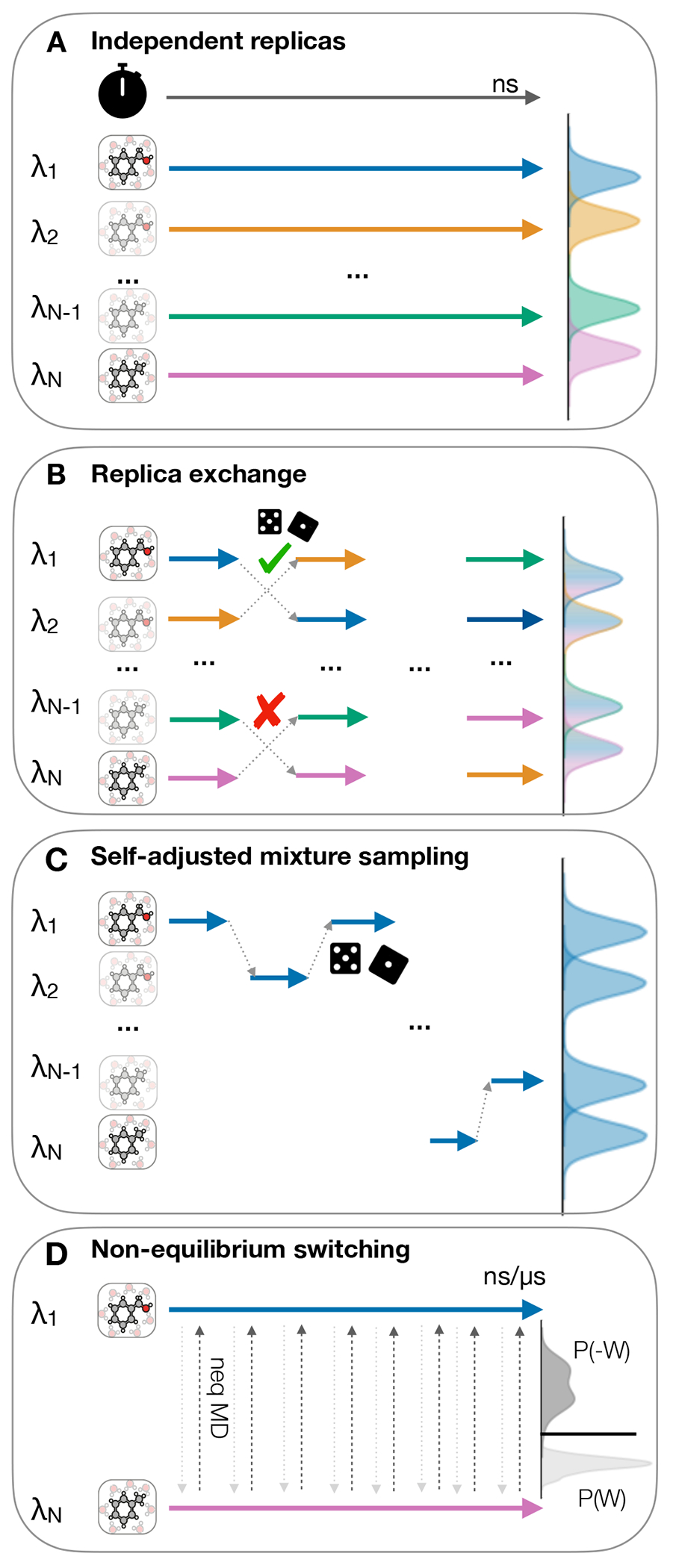

Various simulations protocols for alchemical free energy calculations are available and can be categorized into reference state, independent replica with constant or variable states and ensemble (multiple replica) methods. In reference state methods, one reference state is simulated in a single simulation and free energy differences to other states are extrapolated. Examples for these is one step perturbation [7, 127–130] or enveloping distribution sampling (EDS) [131–135]. In independent replica methods, one or several simulations are performed at different states of the coupling parameter . These parameters may be constant like in discrete thermodynamic integration [9–11] or free energy perturbation [136]. Other methods allow the simulation to adopt discrete states as in self-adjusted mixture sampling [137] or expanded ensemble simulations [138–142]. can also be varied continuously in slow growth thermodynamic integration [143] or dynamics [144–150]. Fast growth or non-equilibrium switching are special cases of independent replica methods where is rapidly changed in non-equilibrium simulations [151–154]. In multiple replica or ensemble methods, two or more replicas of the same system are simulated in parallel and are in equilibrium with each other. In Hamiltonian replica exchange, swaps between replicas at different fixed states are attempted and either accepted or rejected according to the Metropolis-Hastings criterion [155–157]. We provide examples of four of the above protocols, which are summarised in Figure 10. These are: Figure 10(A) independent replicas at constant states, (B) replica exchange, (C) Single replica, self adjusted mixture modelling and (D) non-equilibrium switching. Particularly for (B) and (C) the choice of -spacing will be important, as in (B) it dictates the success of replicas exchanging between and in (C), often tightly spaced replicas allow for a best exploration. Independent replicas are not necessarily recommended, but are still commonly implemented in software packages.

Figure 10. There are four simulations protocols available for for generating samples and evaluating the Hamiltonian at the states.

(A) Independent replicas run in parallel at different as indicated by differently colored arrows, (B) Replica exchange attempts after short simulation for each replica (C) Self-adjusted mixture sampling with a single replica exploring all of , (D) Non-equilibrium methods with equilibrium end-state simulation and frequent nonequilibrium switching between end states. The clock icon is indicating the flow of simulation time and the pair of dice indicate a Metropolis Hastings based trial move

5.2.4. End-state environments

When setting up a relative free energy calculation it is important to be aware of the similarity of the ‘end states’, i.e. of the conformational, hydration, and electrostatic environment of ligand A and B. Many of these end-state issues can be addressed with longer sampling, but this may be impractical and should be considered when planning perturbations. Issues can arise, if there are two distinct bound conformations (different binding modes) for ligand A and ligand B, it may be necessary to sample both binding modes, or extend the simulation time to allow for sufficient rearrangements. A similar issue that may be addressed with extended sampling times are scaffold changes that occur between ligand A and B. Different hydration patterns may also cause inaccuracies in computed binding free energies. Probably the most difficult issue to address are changes in charge states that occur either between the two ligands or may even affect the protein depending on the type of ligand binding. Methods to address this issue are double system/single box setups [158] to retain neutral charges, the use of alchemical ions [159], or the post-hoc corrections [160, 161] to values.

For the absolute binding free energy calculations, the situation is further complicated by the need to account for the change in protein’s conformation when transitioning from apo to holo state. Converging larger protein reorganizations may become challenging already in relative free energy calculations [162], thus in estimating absolute binding failure to properly capture this contribution may manifest in a substantial offset of predicted values with respect to experimental measurement [103]. In principle, longer or enhanced sampling could help in improving convergence of large conformational changes [19]. Another option is to explicitly make use of the crystallographic apo state (if it is available) to initialize ligand coupling simulations for the non-equilibrium switching scheme [163] or seed an FEP based simulation [164].

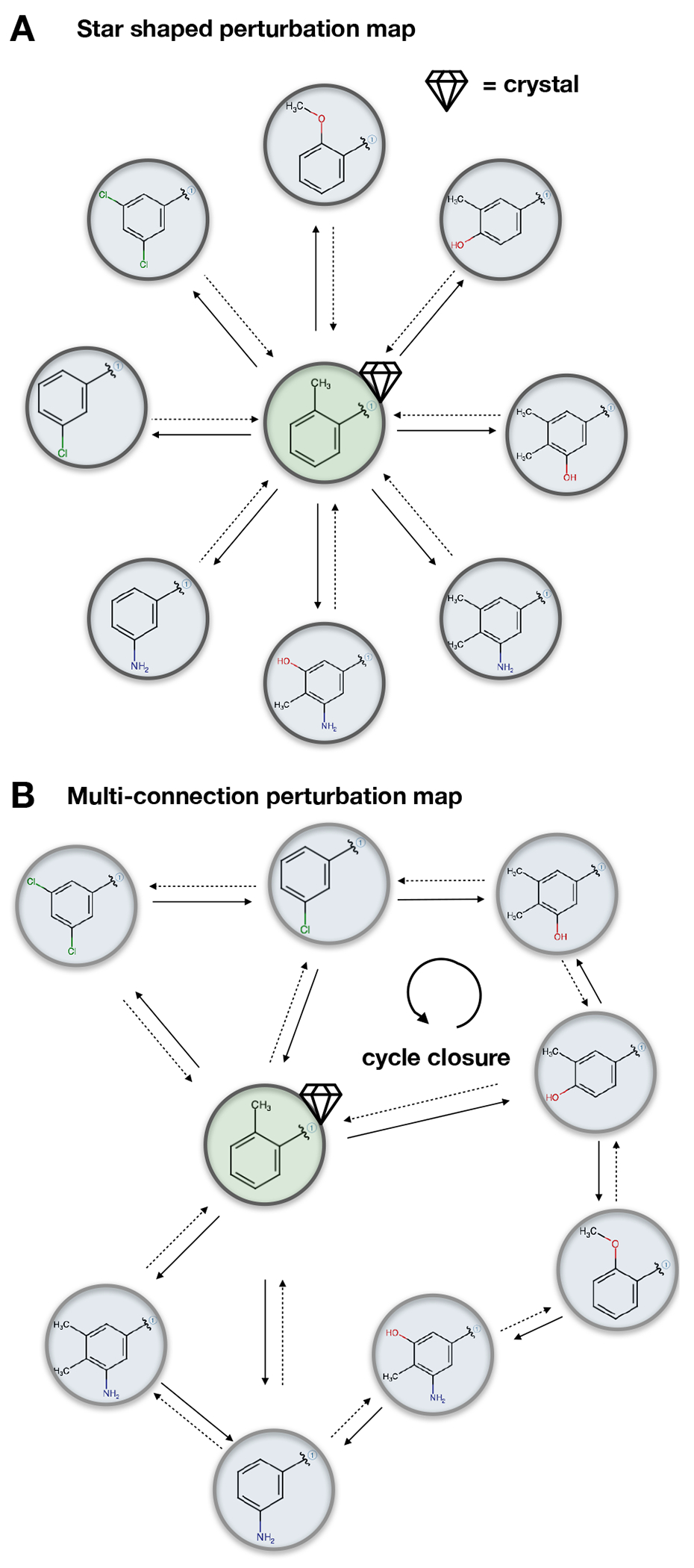

5.2.5. Perturbation maps for relative calculations

In relative free energy calculations a network of perturbations between ligands needs to be constructed. The choice of which relative calculations to carry out is vast and can have a substantial effect on the accuracy of the results. The way in which different ligands are connected by relative alchemical calculations is called a perturbation map. In particular for benchmarking free energy methods, perturbation maps should be held fixed for a given benchmark set, unless the goal is to test different approaches for setting up perturbation maps. In this way each edge of the perturbation map will be maintained across subsequent tests and plots created during the analysis phase later will be comparable.