Abstract

Motivation

Fast, lightweight methods for comparing the sequence of ever larger assembled genomes from ever growing databases are increasingly needed in the era of accurate long reads and pan-genome initiatives. Matching statistics is a popular method for computing whole-genome phylogenies and for detecting structural rearrangements between two genomes, since it is amenable to fast implementations that require a minimal setup of data structures. However, current implementations use a single core, take too much memory to represent the result, and do not provide efficient ways to analyze the output in order to explore local similarities between the sequences.

Results

We develop practical tools for computing matching statistics between large-scale strings, and for analyzing its values, faster and using less memory than the state-of-the-art. Specifically, we design a parallel algorithm for shared-memory machines that computes matching statistics 30 times faster with 48 cores in the cases that are most difficult to parallelize. We design a lossy compression scheme that shrinks the matching statistics array to a bitvector that takes from 0.8 to 0.2 bits per character, depending on the dataset and on the value of a threshold, and that achieves 0.04 bits per character in some variants. And we provide efficient implementations of range-maximum and range-sum queries that take a few tens of milliseconds while operating on our compact representations, and that allow computing key local statistics about the similarity between two strings. Our toolkit makes construction, storage and analysis of matching statistics arrays practical for multiple pairs of the largest genomes available today, possibly enabling new applications in comparative genomics.

Availability and implementation

Our C/C++ code is available at https://github.com/odenas/indexed_ms under GPL-3.0. The data underlying this article are available in NCBI Genome at https://www.ncbi.nlm.nih.gov/genome and in the International Genome Sample Resource (IGSR) at https://www.internationalgenome.org.

Supplementary information

Supplementary data are available at Bioinformatics online.

1 Introduction

Several large-scale projects are under way to assemble the genomes of hundreds of new species, and comparing such genomes is crucial for understanding the genetic basis and origin of complex traits and related diseases (for a small sampler, see e.g. Feng et al., 2020; Formenti et al., 2020; Hecker and Hiller, 2020; Jebb et al., 2020; Rhie et al., 2020; Serres Armero et al., 2020; Teeling et al., 2018; Zhang et al., 2014). Efficient tools for comparing genome-scale sequences are thus becoming increasingly necessary. The matching statistics of a string S, called the query, with respect to another string T, called the text, is an array $\mathrm{MS}_{S,T}[0..|S|-1]$ such that $\mathrm{MS}_{S,T}[i]$ is the length of the longest prefix of $S[i..|S|-1]$ that occurs anywhere in T without errors. Since the match can occur anywhere in T, matching statistics is robust to large-scale rearrangements and horizontal transfers that are common in genomes, and the average matching statistics length over the whole sequence has been used for building consistent whole-genome phylogenies without alignment and, unlike k-mer methods, without parameters (Cohen and Chor, 2012; Ulitsky et al., 2006). The effectiveness of matching statistics in alignment-free phylogenetics has even motivated variants that allow for a user-specified number of mismatches (for a small sampler see e.g. Apostolico et al., 2016; Leimeister and Morgenstern, 2014; Pizzi, 2016; Thankachan et al., 2016, 2017); and other, seemingly different, distances can be expressed in terms of matching statistics as well (Castiglione et al., 2019; Ehrenfeucht and Haussler, 1988; Ukkonen, 1992). For genomes from the same or from closely related species, matching statistics has been used for computing estimators of the number of substitutions per site, of the number of pairwise mismatches, and of the occurrence of recombination events (see e.g. Domazet-Lošo and Haubold, 2009; Haubold and Pfaffelhuber, 2012; Haubold et al., 2009, 2011, 2013); finally, the related notion of shortest unique substring, defined on a single sequence, has been employed for computing measures of genome repetitiveness (Haubold and Wiehe, 2006; Haubold et al., 2005), and it could be used as a parameter-free method for detecting segmental duplications (Pu et al., 2018). Since every position of the query S is assigned a match length, matching statistics can reveal ranges of locally high similarity (i.e. of large average matching statistics in the range) induced e.g. by horizontal gene transfer, or conversely ranges of locally low similarity induced by chromosomes of S missing from T, or by horizontal transfer events that affected S but not T (Domazet-Lošo and Haubold, 2011a, b; Haubold and Pfaffelhuber, 2012; Haubold and Wiehe, 2006; Haubold et al., 2011). See Supplementary Figures S6 and S7 for a concrete example. This idea has been recently applied to targeted Nanopore sequencing, using online matching statistics to eject from the pore a long DNA molecule that is not likely to belong to the species of interest, after having read just a short segment of the molecule (Ahmed et al., 2021).
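The definition of matching statistics can be checked on toy inputs with a brute-force sketch. This is not one of the indexed algorithms discussed in this paper: it tests substring membership with Python's `in` operator (quadratic or worse), and it only uses the property $\mathrm{MS}[i] \geq \mathrm{MS}[i-1] - 1$ to avoid restarting every match from length zero. The function names are illustrative.

```python
def matching_statistics(s: str, t: str) -> list[int]:
    """MS[i] = length of the longest prefix of s[i:] that occurs in t (brute force)."""
    ms = []
    prev = 0
    for i in range(len(s)):
        # For i > 0, s[i:i+prev-1] is a suffix of the previous match, so it occurs in t.
        length = max(prev - 1, 0)
        while i + length < len(s) and s[i:i + length + 1] in t:
            length += 1
        ms.append(length)
        prev = length
    return ms


def average_ms(s: str, t: str) -> float:
    """Average matching statistics value over the whole query."""
    ms = matching_statistics(s, t)
    return sum(ms) / len(ms) if ms else 0.0


print(matching_statistics("CGTA", "ACGT"))  # [3, 2, 1, 1]
```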

Computing $\mathrm{MS}_{S,T}$ is a classical problem in string processing, and in practice it involves building an index on a fixed T to answer a large number of queries S. Thus, solutions typically differ in the index they use, which can be the textbook suffix tree, the compressed suffix tree (Ohlebusch et al., 2010) or compressed suffix array, the colored longest common prefix array (Garofalo et al., 2018), a Burrows–Wheeler index combined with the suffix tree topology (Belazzougui and Cunial, 2014; Belazzougui et al., 2018), or the r-index combined with balanced grammars (Boucher et al., 2021). In the frequent case where T consists of one genome (or proteome), or of the concatenation of a few similar genomes or of many dissimilar genomes, the Burrows–Wheeler transform of T does not compress well, and the best space-time tradeoffs are achieved by the implementation in Belazzougui et al. (2018) (see Boucher et al., 2021 for a runtime comparison, and see Supplementary Fig. S2 for a memory comparison). In this paper, we develop several practical tools for computing the matching statistics array between genome-scale strings, and for analyzing its values, faster and using less memory than the state-of-the-art.

Specifically, we design a practical variant of the algorithm by Belazzougui et al. (2018) that computes MS in parallel on a shared-memory machine, and that achieves approximately a 41-fold speedup of the core procedures and a 30-fold speedup of the entire program with 48 cores on the instances that are most difficult to parallelize. Our implementation takes around 12 min to compute the MS between the Homo sapiens and the Pan troglodytes genomes on a standard 48-core server. We also describe a theoretical variant with better asymptotic complexity, which takes time and bits of space when executed on t processors, where σ is the size of the integer alphabet of S and T. To the best of our knowledge, no algorithm for computing matching statistics in parallel existed before.

Then, we implement fast range queries for computing the average and the maximum matching statistics value inside a substring of S, taking advantage of the compact encoding of $\mathrm{MS}_{S,T}$ introduced by Belazzougui and Cunial (2014): this encoding takes just $2|S|$ bits, and allows one to retrieve $\mathrm{MS}_{S,T}[i]$ in constant time for any i using just $o(|S|)$ more bits. In some cases this bitvector is compressible, so our code can operate both on the plain encoding and on its compressed versions. Overall, we can answer queries over arbitrary ranges of the human genome in a few tens of milliseconds, using just a few extra megabytes of space. No tool for fast range queries over a compact matching statistics encoding existed before.
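As a plain illustration of the two queries, the following sketch answers range-sum (hence range-average) and range-maximum queries over explicitly decoded MS values, using a prefix-sum array and a sparse table. This is a textbook substitute chosen for brevity, not the implementation described here: it does not operate on the compact bitvector or its compressed versions, and it takes $O(|S| \log |S|)$ words of space. The class and method names are hypothetical.

```python
class RangeIndex:
    """Range-sum, range-average and range-max queries over an MS array (inclusive ranges)."""

    def __init__(self, ms: list[int]):
        # prefix[k] = MS[0] + ... + MS[k-1]
        self.prefix = [0]
        for v in ms:
            self.prefix.append(self.prefix[-1] + v)
        # table[l][i] = max(MS[i .. i + 2^l - 1])
        self.table = [list(ms)]
        width = 1
        while 2 * width <= len(ms):
            prev = self.table[-1]
            self.table.append([max(prev[i], prev[i + width])
                               for i in range(len(ms) - 2 * width + 1)])
            width *= 2

    def range_sum(self, i: int, j: int) -> int:
        return self.prefix[j + 1] - self.prefix[i]

    def range_average(self, i: int, j: int) -> float:
        return self.range_sum(i, j) / (j - i + 1)

    def range_max(self, i: int, j: int) -> int:
        # Two overlapping power-of-two windows cover [i, j].
        level = (j - i + 1).bit_length() - 1
        return max(self.table[level][i], self.table[level][j - (1 << level) + 1])


idx = RangeIndex([3, 2, 1, 3, 2, 1, 1])
print(idx.range_sum(0, 6), idx.range_max(2, 5))  # 13 3
```

Both queries take constant time after construction; the sparse table trades space for simplicity.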

Finally, we describe a lossy compression scheme that can reduce the size of our compact encoding to much less than $2|S|$ bits when S and T are dissimilar, by replacing small matching statistics values (that typically arise from random matches) with other, suitably chosen small values. In practice, this is most useful in applications that need the matching statistics array of every pair of genomes in a large dataset. The threshold of our lossy compression can be set according to some expected length of matches (see e.g. Haubold and Pfaffelhuber, 2012; Haubold et al., 2009, 2011), or it could be learnt from the distribution of match lengths itself, which usually peaks at noisy values (see e.g. Supplementary Fig. S4). Depending on the threshold, our scheme can shrink the encoding from 40% to 10% of its original size of 2 bits per character, and one of our variants achieves 2% for large thresholds. Another popular data structure in string indexing, the permuted longest common prefix array (Sadakane, 2007), has a similar bitvector encoding and shrinks at similar rates under our scheme in practice.

Our compression method bears some similarities to the lossless algorithm by Boffa et al. (2021), which builds an approximation of the select function on arbitrary bitvectors, and stores corrections: in our case, discarding the corrections would amount to replacing every matching statistics value (regardless of whether it is small or large) with another value (which could be either bigger or smaller) within a user-specified error. This might be undesirable for matching statistics, since there is often an expected length of random matches, and large values that carry information are better kept intact for downstream analysis. The two lossy schemes are incomparable. In practice the one by Boffa et al. (2021) tends to produce smaller files, since it has more degrees of freedom; our methods manage to achieve compression rates of similar magnitude in several cases (see Supplementary Fig. S15). The lossless version of Boffa et al. (2021) expands our bitvectors for all settings (see Supplementary Fig. S3).

2 Preliminaries and notation

2.1 Strings and string indexes

Let $\Sigma = [1..\sigma]$ be an integer alphabet, and let $T \in \Sigma^n$ be a string. We call $\overline{T}$ the reverse of T, i.e. the string obtained by reading T from right to left, and we denote by $f_T(W)$ the number of occurrences (or frequency) of string W in T. For reasons of space, we assume the reader to be already familiar with the notion of suffix tree $\mathrm{ST}_T = (V, E)$ of T, which we do not define here. We just recall that every edge in E is labeled with a string of length possibly greater than one, and that a substring W of T can be extended to the right with at least two distinct characters iff $W = \ell(v)$ for some internal node v of the suffix tree, where $\ell(v)$ is the string label of node $v \in V$ obtained by concatenating the label of every edge in the path from the root to v. It is well known that all the nodes in a suffix tree path have distinct frequencies, which decrease from top to bottom. If u is a node of the suffix tree of T, we use $f_T(u)$ as a shorthand for $f_T(\ell(u))$. We assume the reader to be familiar with the notion of suffix link connecting a node v with $\ell(v) = aW$ for some $a \in \Sigma$, to a node w with $\ell(w) = W$. Here, we just recall that inverting the direction of all suffix links yields the so-called explicit Weiner links. Given an internal node v of $\mathrm{ST}_T$ and a symbol $a \in \Sigma$, it might happen that string $a\ell(v)$ occurs in T, but that it is not the label of any internal node: all such left extensions of internal nodes that end in the middle of an edge or in a leaf are called implicit Weiner links. An internal node of $\mathrm{ST}_T$ can have several outgoing Weiner links, and every one of them is labeled with a distinct character.

We call suffix tree topology a data structure that supports operations on the shape of $\mathrm{ST}_T$, like $\mathrm{parent}(v)$, which returns the parent of a node v; $\mathrm{lca}(u,v)$, which returns the lowest common ancestor of nodes u and v; $\mathrm{leftmostLeaf}(v)$ and $\mathrm{rightmostLeaf}(v)$, which compute the identifier of the leftmost (respectively, rightmost) leaf in the subtree rooted at node v; $\mathrm{selectLeaf}(i)$, which returns the identifier of the ith leaf in preorder traversal; and $\mathrm{leafRank}(v)$, which computes the number of leaves that occur before leaf v in preorder traversal. It is known that the topology of an ordered tree with n nodes can be represented using $2n$ bits as a sequence of 2n balanced parentheses, and that $o(n)$ additional bits suffice to support every operation described above in constant time (Navarro and Sadakane, 2014; Sadakane and Navarro, 2010). We assume the reader to be familiar also with the Burrows–Wheeler transform of T (denoted $\mathrm{BWT}_T$ in what follows). Here, we just recall that every suffix tree node corresponds to a contiguous lexicographic interval in the BWT, and that following a Weiner link in the suffix tree, i.e. extending a string W to the left with one character a, corresponds to the well-known backward step from the BWT interval of W to the BWT interval of aW. We also mention the classical operations $\mathrm{rank}_a(T,i)$, which returns the number of occurrences of character a in string T up to position i, inclusive; and $\mathrm{select}_a(T,i)$, which returns the position of the ith occurrence of a in T. In what follows we omit subscripts that are clear from the context, and we use $\overline{\mathrm{BWT}}_T$ and $\overline{\mathrm{ST}}_T$ as shorthands for $\mathrm{BWT}_{\overline{T}}$ and $\mathrm{ST}_{\overline{T}}$, respectively.
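The backward step can be sketched on a toy BWT built by sorting the rotations of T followed by a terminator `$`. The sketch assumes that `$` is unique and smaller than every other character, uses half-open intervals, and counts characters by slicing instead of using a succinct rank structure; none of this reflects how a production index stores the BWT, and the function names are illustrative.

```python
def bwt(text: str) -> str:
    """BWT of text + '$' by sorting all rotations (quadratic, for illustration only)."""
    s = text + "$"
    rotations = sorted(s[k:] + s[:k] for k in range(len(s)))
    return "".join(r[-1] for r in rotations)


def c_array(b: str) -> dict[str, int]:
    """C[a] = number of characters in the BWT that are strictly smaller than a."""
    c, total = {}, 0
    for a in sorted(set(b)):
        c[a] = total
        total += b.count(a)
    return c


def backward_step(b: str, c: dict[str, int], sp: int, ep: int, a: str) -> tuple[int, int]:
    """Map the half-open interval [sp, ep) of W to the interval of aW."""
    if a not in c:
        return 0, 0
    # b[:k].count(a) plays the role of rank_a on the prefix of length k.
    return c[a] + b[:sp].count(a), c[a] + b[:ep].count(a)


def backward_search(b: str, c: dict[str, int], pattern: str) -> tuple[int, int]:
    sp, ep = 0, len(b)  # interval of the empty string
    for a in reversed(pattern):
        sp, ep = backward_step(b, c, sp, ep, a)
        if sp >= ep:
            return 0, 0
    return sp, ep


b = bwt("ACGTACGA")
c = c_array(b)
sp, ep = backward_search(b, c, "ACG")
print(ep - sp)  # 2 occurrences of ACG
```

An empty interval after a backward step is exactly the failure condition that the matching statistics algorithm reacts to.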

2.2 Matching statistics in small space

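As mentioned, given a query string $S \in \Sigma^+$, we call matching statistics an array $\mathrm{MS}_{S,T}[0..|S|-1]$ such that $\mathrm{MS}_{S,T}[i]$ is the length of the longest prefix of $S[i..|S|-1]$ that occurs somewhere in T without errors. In this paper, we work with the compact representation of $\mathrm{MS}_{S,T}$ as a bitvector $\mathtt{MS}_{S,T}$ of at most $2|S|$ bits, which is built by appending, for each $i \in [0..|S|-1]$ in increasing order, $\mathrm{MS}[i] - \mathrm{MS}[i-1] + 1$ zeros followed by a one (Belazzougui and Cunial, 2014). $\mathrm{MS}[-1]$ is assumed to be one. Since the number of zeros before the ith one in $\mathtt{MS}$ equals $\mathrm{MS}[i] + i$, one can compute $\mathrm{MS}[i]$ for any i using select operations on $\mathtt{MS}$: $\mathrm{MS}[i] = \mathrm{select}_1(\mathtt{MS}, i) - 2i$. As customary, $\mathrm{select}_a(T,i)$ is the position of the ith occurrence of character a in string T, starting from zero. $\mathrm{rank}_a(T,i)$ is the number of occurrences of a in T up to position i, inclusive and starting from zero. We also work with the algorithm by Belazzougui and Cunial (2014), which we summarize here for completeness. This offline algorithm computes $\mathtt{MS}$ using both a backward and a forward scan over S, and in each scan it needs just $\mathrm{BWT}_T$ with rank support and the topology of $\mathrm{ST}_T$, or just $\overline{\mathrm{BWT}}_T$ with rank support and the topology of $\overline{\mathrm{ST}}_T$. The two phases are connected via a bitvector $\mathtt{runs}[0..|S|-2]$, such that $\mathtt{runs}[i] = 1$ iff $\mathrm{MS}[i+1] = \mathrm{MS}[i] - 1$, i.e. iff there is no zero between the ith and the (i+1)th ones in $\mathtt{MS}$.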
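The encoding and the select-based decoding can be sketched as follows. Here `select1` is a linear scan standing in for a constant-time select structure, bitvectors are plain Python lists, and the function names are illustrative; the sketch also derives the bitvector of runs from the positions of consecutive ones.

```python
def encode_ms(ms: list[int]) -> list[int]:
    """For each i, append MS[i] - MS[i-1] + 1 zeros and a one, with MS[-1] = 1."""
    bits, prev = [], 1
    for v in ms:
        zeros = v - prev + 1
        if zeros < 0:
            raise ValueError("MS[i] >= MS[i-1] - 1 must hold")
        bits.extend([0] * zeros)
        bits.append(1)
        prev = v
    return bits


def select1(bits: list[int], i: int) -> int:
    """Position of the i-th one, counting from zero (linear scan for illustration)."""
    seen = -1
    for pos, bit in enumerate(bits):
        if bit == 1:
            seen += 1
            if seen == i:
                return pos
    raise IndexError(i)


def ms_at(bits: list[int], i: int) -> int:
    # The i-th one is preceded by MS[i] + i zeros and by i ones.
    return select1(bits, i) - 2 * i


def runs_from_bits(bits: list[int], n: int) -> list[int]:
    """runs[i] = 1 iff no zero separates the i-th and (i+1)-th ones, i.e. MS[i+1] = MS[i] - 1."""
    return [int(select1(bits, i + 1) == select1(bits, i) + 1) for i in range(n - 1)]


bits = encode_ms([3, 2, 1, 1])
print("".join(map(str, bits)))  # 00011101
```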

First, we scan S from right to left, using $\mathrm{BWT}_T$ with rank support and the suffix tree topology of T, to determine the runs of consecutive ones in $\mathtt{MS}$. Assume that we know the interval in $\mathrm{BWT}_T$ that corresponds to substring $W = S[i..i+\mathrm{MS}[i]-1]$, as well as the identifier of the proper locus v of W in the topology of $\mathrm{ST}_T$. We try to perform a backward step using character $a = S[i-1]$: if the resulting interval is non-empty, we set $\mathtt{runs}[i-1] = 1$ and we reset W to aW. Otherwise, we set $\mathtt{runs}[i-1] = 0$, we replace v by $\mathrm{parent}(v)$ using the topology, we update the current interval to the interval of $\mathrm{parent}(v)$ in $\mathrm{BWT}_T$, and we try another backward step with character a from it. We keep doing parent operations, followed by backward step trials, until the backward step succeeds or v is the root of $\mathrm{ST}_T$.
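The right-to-left phase can be simulated without a BWT by replacing each backward step with a substring test (`a + w in t`) and each parent operation with the removal of the last character of W. All strings that end inside the same suffix tree edge have the same occurrences, so shortening one character at a time reaches the same string as jumping to parent nodes, only more slowly. The sketch below (hypothetical helper names, quadratic time) therefore computes the same bitvector of runs as the indexed scan, under the convention that $\mathtt{runs}[x] = 1$ iff $\mathrm{MS}[x+1] = \mathrm{MS}[x] - 1$.

```python
def left_extend(t: str, w: str, a: str) -> tuple[int, str]:
    """Try to extend the current match w to the left with a.

    Returns (bit, new_w): bit is 1 iff a + w occurs in t, and new_w is the
    longest prefix of a + w that occurs in t (the match at the new position).
    """
    if a + w in t:
        return 1, a + w
    while w and a + w not in t:
        w = w[:-1]  # stands in for v <- parent(v)
    return 0, (a + w if a + w in t else "")


def runs_right_to_left(s: str, t: str) -> list[int]:
    """runs[x] = 1 iff MS[x+1] = MS[x] - 1, for x in [0 .. |s|-2]."""
    if len(s) <= 1:
        return []
    runs = [0] * (len(s) - 1)
    w = s[-1] if s[-1] in t else ""  # match starting at the last position
    for x in range(len(s) - 2, -1, -1):
        runs[x], w = left_extend(t, w, s[x])
    return runs


print(runs_right_to_left("GATTACA", "TACGAT"))  # [1, 1, 0, 1, 1, 0]
```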

In the second phase, we scan S from left to right, and we build $\mathtt{MS}$ using $\overline{\mathrm{BWT}}_T$ with rank support, the suffix tree topology of $\overline{T}$, and bitvector $\mathtt{runs}$. Assume that we know the interval in $\overline{\mathrm{BWT}}_T$ that corresponds to substring such that but . We try to perform a backward step with character : if the backward step succeeds, we continue issuing backward steps with the following characters of S, until we reach a position in S such that a backward step with character from the interval of substring in $\overline{\mathrm{BWT}}_T$ fails. At this point we know that , so we append zeros and a one to $\mathtt{MS}$. Moreover, we iteratively reset the current interval in $\overline{\mathrm{BWT}}_T$ to the interval of , where is the proper locus of in $\overline{\mathrm{ST}}_T$, and we try another backward step with character , until we reach an interval for which the backward step succeeds. Let this interval correspond to substring . Note that and for all , so is the position of the first zero to the right of position k in $\mathtt{runs}$, and we can append ones to $\mathtt{MS}$. Finally, we repeat the whole process from substring and its interval in $\overline{\mathrm{BWT}}_T$. This algorithm can be easily extended to compute the frequency of every in T: see Supplementary Section S2.
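The role of runs in the left-to-right phase can be illustrated with a simplified sketch that does not reproduce the indexed scan. It relies only on two facts: if $\mathtt{runs}[i-1] = 1$, then $\mathrm{MS}[i] = \mathrm{MS}[i-1] - 1$ and no index access is needed; otherwise $\mathrm{MS}[i] \geq \mathrm{MS}[i-1]$, so a match of length $\mathrm{MS}[i-1]$ is guaranteed and the search can resume from there. Substring tests (`in`) stand in for backward steps on the BWT of the reversed text, and the function names are hypothetical.

```python
def extend_match(s: str, t: str, i: int, known: int) -> int:
    """Longest prefix of s[i:] occurring in t, given that its first `known` chars occur."""
    length = known
    while i + length < len(s) and s[i:i + length + 1] in t:
        length += 1
    return length


def build_ms_bits(s: str, t: str, runs: list[int]) -> list[int]:
    """Left-to-right pass that emits the MS bitvector, consulting runs."""
    bits, prev = [], 1  # MS[-1] is assumed to be one
    for i in range(len(s)):
        if i > 0 and runs[i - 1] == 1:
            cur = prev - 1  # decided by runs alone: emits a single one
        else:
            cur = extend_match(s, t, i, prev if i > 0 else 0)
        bits.extend([0] * (cur - prev + 1))
        bits.append(1)
        prev = cur
    return bits


bits = build_ms_bits("GATTACA", "TACGAT", [1, 1, 0, 1, 1, 0])
print("".join(map(str, bits)))  # 00011100011101
```

Only positions where the bit of runs is zero touch the index, which is why long matches make this phase cheap.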

3 Computing matching statistics in parallel

It is natural to try to parallelize the construction of MS when query strings are long. In the case of proteomes, or of concatenations of several small genomes, reads or contigs, one could just split the query into chunks of approximately equal size along concatenation boundaries, and process each chunk in parallel. For the large, contiguous genome assemblies that are increasingly achievable with long reads, one could compute MS in parallel for each chromosome, but chromosomes might have widely different lengths and their number might be much smaller than the number of cores available. In this section, we describe algorithms for computing MS in parallel for long query strings, without assuming that they are the concatenation of shorter strings.

We work in the concurrent read, exclusive write (CREW) model of a parallel random-access machine, in which multiple processors are allowed to read from the same memory location at the same time, but only one processor is allowed to write to a memory location at any given time. Let $S_1, \ldots, S_t$ be a partition of S into t blocks of equal size, and let $p_i$ be the first position of block $S_i$. Once S and $\mathtt{runs}$ are loaded in memory, one can build $\mathtt{MS}$ in parallel with t threads, by computing $\mathrm{MS}[p_i..p_{i+1}-1]$ independently for each i: this works since every thread can safely read the suffix of S to the right of its own block, as well as the corresponding positions of $\mathtt{runs}$. Recall, however, that the algorithm outputs a compact representation of array $\mathrm{MS}$, rather than array $\mathrm{MS}$ itself. Let $\mathtt{MS}_i$ be the bitvector representation of $\mathrm{MS}[p_i..p_{i+1}-1]$. Thread i computes $\mathtt{MS}_i$ starting from position $p_i$ of S, and it appends to the beginning of $\mathtt{MS}_i$ a run of zeros followed by a one without knowing $\mathrm{MS}[p_i - 1]$; however, in the final bitvector $\mathtt{MS}$, this run should consist of exactly $\mathrm{MS}[p_i] - \mathrm{MS}[p_i - 1] + 1$ zeros followed by a one. We perform this correction in a final pass, in which a single thread concatenates all output bitvectors. Specifically, we use $\mathrm{MS}[p_2 - 1]$, i.e. the last value encoded by $\mathtt{MS}_1$, to correct the first run of zeros of $\mathtt{MS}_2$, and so on for the other blocks.
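The final correction pass can be sketched as follows. In this sketch each worker encodes its block as if the MS value preceding the block were one (an assumption of the sketch, not necessarily what the implementation does), so its first run has $\mathrm{MS}[p_i]$ zeros; a single sequential pass then replaces that run with $\mathrm{MS}[p_i] - \mathrm{MS}[p_i-1] + 1$ zeros. Python threads only illustrate the data flow, since the global interpreter lock prevents a real speedup, and the per-block values are computed by brute force rather than from runs. All names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def block_ms(s: str, t: str, p: int, q: int) -> list[int]:
    """MS[p..q-1] by brute force; the worker may read s beyond its own block."""
    out, prev = [], 0
    for i in range(p, q):
        length = max(prev - 1, 0) if i > p else 0
        while i + length < len(s) and s[i:i + length + 1] in t:
            length += 1
        out.append(length)
        prev = length
    return out


def encode_block(values: list[int]) -> list[int]:
    """Encode a block as if the value before it were one."""
    bits, prev = [], 1
    for v in values:
        bits.extend([0] * (v - prev + 1))
        bits.append(1)
        prev = v
    return bits


def parallel_ms_bits(s: str, t: str, num_blocks: int) -> list[int]:
    n = len(s)
    bounds = [(k * n // num_blocks, (k + 1) * n // num_blocks) for k in range(num_blocks)]

    def work(bound: tuple[int, int]) -> tuple[list[int], list[int]]:
        values = block_ms(s, t, *bound)
        return encode_block(values), values

    with ThreadPoolExecutor(max_workers=num_blocks) as pool:
        results = list(pool.map(work, bounds))

    # Sequential pass: fix the first run of zeros of every block and concatenate.
    out, prev = [], 1
    for bits, values in results:
        if not values:
            continue
        first = values[0]  # bits starts with `first` zeros followed by a one
        out.extend([0] * (first - prev + 1))
        out.extend(bits[first:])
        prev = values[-1]
    return out


print("".join(map(str, parallel_ms_bits("GATTACA", "TACGAT", 3))))  # 00011100011101
```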

We compute bitvector $\mathtt{runs}$ with t parallel threads, as follows. Let $R_1, \ldots, R_t$ be the partition of $\mathtt{runs}$ induced by blocks $S_1, \ldots, S_t$. Thread i executes the algorithm for computing $\mathtt{runs}$ independently just inside blocks $S_i$ and $R_i$, starting with filling the last bit of $R_i$. Assume that thread i, while proceeding from right to left, sets bit $b_i$ of $R_i$ to zero (the rightmost zero it writes), and that it sets $R_i[b_i+1..|R_i|-1]$ to all ones. All the bits that thread i sets in $R_i[0..b_i]$ are correct, since they can be decided without looking at blocks $S_{i+1}, \ldots, S_t$. However, to decide the value of bits $R_i[b_i+1..|R_i|-1]$ one needs to look at the blocks that follow $S_i$. We call marked the last block $R_t$, as well as any block $R_i$ that contains a zero after this phase. If $R_i$ is marked, let $W_i = S[p_i..p_i + \mathrm{MS}[p_i] - 1]$: then, thread i stores the BWT interval and the topology identifier of the locus of $W_i$ in $\mathrm{ST}_T$. In practice, we expect $b_i$ to be close to $|R_i| - 1$, and we expect most blocks to be marked. However, there could be an $R_i$ that contains no zero after this phase. Thus, we have to run a second phase in which, for every marked block $R_i$, we start a thread that updates all the one-bits, in all blocks between $R_{i-1}$ and the rightmost marked block $R_j$ before $R_i$, including the suffix of $R_j$ after its last zero. We perform this correction using the information stored in the previous phase. Note that this strategy might result both in using fewer than t threads (since we issue just one thread per marked block), and in linear time per thread, since the number of one-bits that a thread might have to update could be proportional to $|S|$.
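The two-phase scheme for runs can be simulated on small strings. In this sketch (hypothetical names; substring tests stand in for backward steps, and Python threads only illustrate the data flow) each worker starts from an empty match at the right end of its block. Because its current match is always a prefix of the true one, its bits are exact from its rightmost zero leftwards, and its final match at the block start is exact whenever the block is marked. Each correcting worker then rewrites the possibly wrong ones between its block and the nearest marked block to the left; these ranges are disjoint, so no two workers write the same position.

```python
from concurrent.futures import ThreadPoolExecutor


def left_extend(t: str, w: str, a: str) -> tuple[int, str]:
    """Return (bit, new match) after extending the match w to the left with a."""
    if a + w in t:
        return 1, a + w
    while w and a + w not in t:
        w = w[:-1]
    return 0, (a + w if a + w in t else "")


def sequential_runs(s: str, t: str) -> list[int]:
    runs = [0] * max(len(s) - 1, 0)
    w = s[-1] if s and s[-1] in t else ""
    for x in range(len(s) - 2, -1, -1):
        runs[x], w = left_extend(t, w, s[x])
    return runs


def parallel_runs(s: str, t: str, num_blocks: int) -> list[int]:
    m = len(s) - 1  # number of bits in runs
    if m <= 0:
        return []
    bounds = [(k * m // num_blocks, (k + 1) * m // num_blocks) for k in range(num_blocks)]
    bounds = [(lo, hi) for lo, hi in bounds if lo < hi]
    runs = [0] * m

    def local(bound):
        lo, hi = bound
        is_last = hi == m
        # Only the last block knows its true starting match; the others start empty.
        w = s[-1] if is_last and s[-1] in t else ""
        last_zero = None
        for x in range(hi - 1, lo - 1, -1):
            runs[x], w = left_extend(t, w, s[x])
            if runs[x] == 0 and last_zero is None:
                last_zero = x  # rightmost zero: bits in [lo, x] are final
        return w, last_zero, is_last or last_zero is not None

    with ThreadPoolExecutor() as pool:
        state = list(pool.map(local, bounds))

    def correct(i):
        # Nearest marked block to the left; rewrite the bits after its last zero.
        j = max((k for k in range(i) if state[k][2]), default=None)
        stop = state[j][1] + 1 if j is not None else 0
        w = state[i][0]  # exact match at the first position of marked block i
        for x in range(bounds[i][0] - 1, stop - 1, -1):
            runs[x], w = left_extend(t, w, s[x])

    with ThreadPoolExecutor() as pool:
        list(pool.map(correct, [i for i in range(1, len(bounds)) if state[i][2]]))
    return runs


print(parallel_runs("GATTACA", "TACGAT", 3))  # [1, 1, 0, 1, 1, 0]
```

When S equals T, no block except the last one contains a zero, so a single correcting worker rewrites almost the whole bitvector, which is the worst case described above.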

These problems occur when S and T have long exact matches, and they become extreme when S = T. Thus, we experiment with the pairs of similar genomes and proteomes described in Supplementary Section S1. The construction of both $\mathtt{runs}$ and $\mathtt{MS}$ scales well on genomes and proteomes of similar species, although achieving the ideal speedup gets more difficult as the number of threads increases (Fig. 1). Correcting the bitvector $\mathtt{runs}$ takes a negligible fraction of the total time for processing $\mathtt{runs}$, even for similar genomes, and it takes more time for proteomes than for genomes, probably because the proteomes of related species are more similar to one another than their genomes (see Supplementary Fig. S1). The fraction of time spent in correcting $\mathtt{runs}$ grows with the number of threads, probably because more threads imply shorter blocks, and shorter blocks are more likely to intersect with exact matches between S and T, or to be fully contained in them. Correcting the bitvector $\mathtt{MS}$ takes even less time than correcting $\mathtt{runs}$. Even running the algorithm on the very similar pairs of chromosome 1 from different human individuals shows the same trends (Supplementary Fig. S1). When S = T, correcting $\mathtt{runs}$ uses just one thread, since only the last block is marked, and it takes time proportional to $|S|$ (Fig. 1); building $\mathtt{MS}$ uses all threads, but each one of them has to process the whole suffix of S that starts from its block, thus there is no speedup with respect to the sequential version. In the following section, we describe a way to achieve better asymptotic complexity even when S = T.

Fig. 1.

Scaling of our parallel implementation as the number of threads t increases. Line: ideal scaling $1/t$. Circles along the line: genomes of similar species, proteomes of similar species, pairs of human chromosome 1 from distinct random individuals. Circles far from the line: identical query and text (human chromosome 1 from two random individuals). Vertical axis: time of the parallel implementation divided by the time of the sequential implementation. Correction of $\mathtt{MS}$ is not shown since it is negligible. See Supplementary Figure S1 for a color version.

3.1 Better asymptotic complexity

Another way of computing in parallel could be by performing a backward search from the end of every block Si using , by mapping the resulting interval to the corresponding interval in , and by starting the computation of each block from such intervals. This naive approach has the disadvantage of requiring linear time per thread in the worst case to compute the initial BWT intervals of the blocks, and of needing to translate intervals from one BWT to the other. However, the general idea can be used to achieve better complexity, as follows:

Lemma 1.

Let T be a string on alphabet $[1..\sigma]$, and assume that we have a representation of that support suffix array and inverse suffix array queries in O(p) time, and a representation of that support and queries in O(q) time. Given a query string S and t processors, we can compute $\mathtt{MS}_{S,T}$ in time using bits of working space.

Proof. Without loss of generality, we assume that t is a power of two. To compute the bitvector, we proceed as follows. First, we split S into t blocks , and we build the BWT interval of every block Si in parallel, spending time overall. Then, we build the BWT interval of every disjoint superblock that consists of adjacent blocks, for all , in phases. In phase j, we compute the BWT interval of every one of the superblocks in parallel, by merging the BWT intervals of the two smaller superblocks from the previous phase that compose it. Every such merge can be performed in time using a data structure that takes additional bits of space (Fischer et al., 2016), so all merging phases take time in total. Note that storing the BWT intervals of all superblocks from all phases takes just bits of working space. Then we compute, for every , the largest such that occurs in T [we call g(i) such a value of j in what follows]. This can be done by assigning a processor to every block Si, and by making the processor merge the BWT intervals of pairs of superblocks computed previously. This takes again time overall. Finally, for every Si in parallel, we try to extend the BWT interval of inside the next block , by performing backward steps in overall time. We use the resulting intervals for computing the block of that corresponds to every block Si, independently and in parallel, in overall time. To compute the block of that corresponds to each Si, independently and in parallel, we first need to compute the interval in of the longest prefix of that occurs in T: we compute all such intervals using the same superblock approach described above. □

Plugging into Lemma 1 some well-known suffix array representations, we can get time and bits of space for an integer alphabet of size σ, or time and bits of space for an alphabet that is polynomial in . We can further improve on the complexity of every step of Lemma 1, by using a parallel rather than a sequential algorithm for computing the BWT interval of VW, given the BWT intervals of V and of W. Specifically, we use the algorithm by Fischer et al. (2016), which runs in time with t processors, taking again bits of working space:

Lemma 2.

Given the assumptions of Lemma 1, we can compute $\mathtt{MS}_{S,T}$ in time using bits of working space.

Proof. To build the BWT interval of every superblock, we proceed as follows. In phase j we have to merge pairs of BWT intervals (one for each superblock of blocks), thus we can afford to allocate processors to each merge: it is easy to see that this yields time overall. Then, to compute g(i) for each i, we proceed as follows. If we had to solve the problem just for the blocks whose ID is a multiple of , we could allocate processors to each task and be done in time, which is . More generally, we could organize the computation in iterations: at iteration j we solve the problem for all remaining blocks whose ID is a multiple of for increasing j (i.e. from larger to smaller offsets). Thus, every block i that we want to solve at iteration j lies between two blocks that we solved at iteration j − 1. Clearly there are total blocks between and (excluded), thus in the current iteration we have to compute the solution for blocks that lie between and . Moreover, since we are dealing with matching statistics, , and the sum of over all x is at most t. It follows that, if we assigned processors to compute each solution between and , we would end up using processors in total: since we have just t processors, we should thus assign processors to each solution (actually, since at iteration j we compute up to total solutions, we could afford to allocate processors per solution). Since , we need to merge just pairs of superblocks to compute the solution for any Si, thus the total running time of one iteration is using the parallel algorithm by Fischer et al. (2016): it is easy to see that this is , thus we get the claimed bound over iterations. □

By plugging into Lemma 2 the same data structures as before, we can get time and bits of space, or time and bits of space, which is comparable to the complexity of prefix matching queries described by Fischer et al. (2016).

4 Compressing the matching statistics bitvector

Even though $\mathtt{MS}_{S,T}$ takes just $2|S|$ bits, storing the bitvector of every pair of genomes in a large dataset for later analysis and querying might still require too much space overall. Real bitvectors, however, have several features that could be exploited for lossless compression. Specifically, if S and T are similar, they are likely to contain long maximal exact matches (MEMs), i.e. triplets $(i, j, \ell)$ such that $S[i..i+\ell-1] = T[j..j+\ell-1]$ and the match can be extended neither to the left nor to the right. In practice, MEMs tend to be surrounded in S by regions with short matches with T (see the example in Fig. 2), so $\mathrm{MS}[i-1]$ is likely to be short, and the run of zeros induced by $\mathrm{MS}[i]$ in $\mathtt{MS}$ is likely to be long; moreover, in practice for all for some small k (see again Fig. 2). Thus, every MEM is likely to induce a long run of zeros followed by a long run of ones in $\mathtt{MS}$, and if S and T share several long MEMs, run-length encoding (RLE) might save space. Another property of real bitvectors is that the length of a long run of zeros tends to be similar to the length of the following long run of ones, since is likely to be small (see Supplementary Figs S18 and S19). So, given a pair $(z_i, o_i)$ representing a run of $z_i$ zeros and the following run of $o_i$ ones, and given an encoder δ, one might encode the pair as if $z_i$ is large, and as otherwise.
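To make the run structure concrete, the following sketch splits a bitvector into the pairs $(z_i, o_i)$ described above and estimates the size of a plain run-length encoding that codes every non-empty run with the Elias delta code. The choice of the delta code is an assumption of this sketch (the text leaves the encoder δ generic), the names are illustrative, and the estimate ignores the few bits a real format would spend to signal an empty first run of zeros.

```python
def run_pairs(bits: list[int]) -> list[tuple[int, int]]:
    """Split bits into pairs (z_i, o_i): z_i zeros followed by o_i ones."""
    pairs, i, n = [], 0, len(bits)
    while i < n:
        z = 0
        while i < n and bits[i] == 0:
            z, i = z + 1, i + 1
        o = 0
        while i < n and bits[i] == 1:
            o, i = o + 1, i + 1
        pairs.append((z, o))
    return pairs


def elias_delta_len(x: int) -> int:
    """Number of bits of the Elias delta code of x >= 1."""
    n = x.bit_length() - 1        # floor(log2 x)
    m = (n + 1).bit_length() - 1  # floor(log2(n + 1))
    return n + 2 * m + 1


def rle_size_estimate(bits: list[int]) -> int:
    return sum(elias_delta_len(r) for z, o in run_pairs(bits) for r in (z, o) if r > 0)


bits = [0, 0, 0, 1, 1, 1, 0, 0, 0, 1, 1, 1, 0, 1]  # MS = [3, 2, 1, 3, 2, 1, 1]
print(run_pairs(bits))          # [(3, 3), (3, 3), (1, 1)]
print(rle_size_estimate(bits))  # 18
```

On this toy input every run of zeros is followed by a run of ones of the same length, which is the regularity that a pair-aware encoder could exploit.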

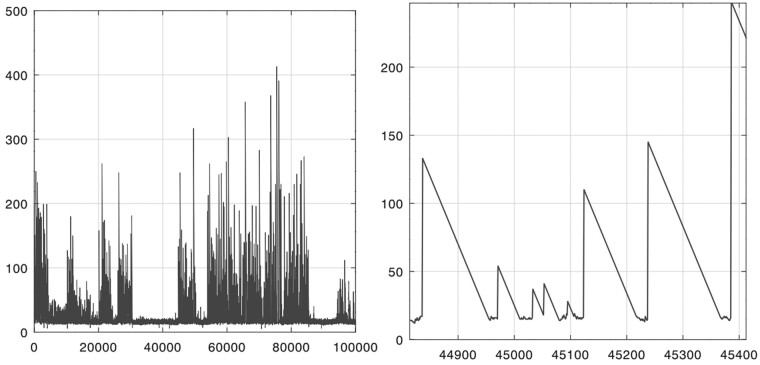

Fig. 2.

Values of matching statistics (vertical axis) in a range of positions along human chromosome 1 (horizontal axis). Query: H.sapiens. Text: P.troglodytes. The right panel is a zoom-in of the left panel. See Supplementary Figure S6 for a bigger range. The PLCP array of a single genome (defined in Section 4) has a similar shape

Run-length encoding the bitvectors of pairs of genomes from human individuals, using e.g. the data structure by Sirén (2009), yields compression rates of about 20 (Fig. 3, right panel), and compressing the same bitvectors with the data structure from the SDSL library [Gog et al., 2014; which implements the RRR scheme by Raman et al. (2007)] yields compression rates of about 6 (see Supplementary Fig. S8). However, the bitvectors of pairs of genomes from different species are recalcitrant to compression, even when the species are related: RLE expands those files by a factor of 2 (Fig. 3, inset in the left panel), and RRR expands most of them slightly (by a factor of 1.1), and manages to compress just a few pairs with rate 1.25 (Supplementary Fig. S8). The same happens with pairs of artificial strings with controlled mutation rate (see Supplementary Figs S16 and S17).

Fig. 3.

Ratio between the size of the data structure by Sirén (2009) built on a permuted bitvector, and the size of the data structure from SDSL built on the original bitvector, for the D, DL and ND lossy variants on pairs of genomes from different species and on pairs of genomes from human individuals, allowing and disallowing negative MS values. Size is measured on disk. The vertical axis in the middle panel shows negative powers of 10. Computation is exact for windows with up to 300 zeros and 300 ones, then it uses the first greedy strategy described in the text

In some applications, including genome comparison, short matches are considered noise by the user, and the precise length of a match can be discarded safely as long as we keep track of the fact that the match at that position was short. Given an array $\mathrm{MS}_{S,T}$ and a user-defined threshold τ, let a thresholded matching statistics array $\mathrm{MS}^{\tau}_{S,T}$ be such that $\mathrm{MS}^{\tau}_{S,T}[i] = \mathrm{MS}_{S,T}[i]$ if $\mathrm{MS}_{S,T}[i] \geq \tau$, and $\mathrm{MS}^{\tau}_{S,T}[i]$ equals an arbitrary (possibly negative) value smaller than τ otherwise. In some applications, τ might even change along S, e.g. when S is the concatenation of several genomes with different similarity to T. This notion is symmetrical to the one defined by Cunial et al. (2019), which discards instead long MS values in order to prune the suffix tree topologies and to make the data structures smaller. Given an encoder δ, we are interested in the array $\mathrm{MS}^{\tau}_{S,T}$ whose bitvector $\mathtt{MS}^{\tau}_{S,T}$ takes the smallest amount of space when encoded with δ. In what follows, we drop S and T from the subscripts whenever they are clear from the context.

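Note that every $\mathtt{MS}^{\tau}$ is a permutation of $\mathtt{MS}$, since the two bitvectors must contain the same number of zeros and ones. Moreover, if $\mathrm{MS}[i] \geq \tau$ corresponds to the one-bit at position y in $\mathtt{MS}$, then every $\mathtt{MS}^{\tau}$ must also have a one at position y, which corresponds to $\mathrm{MS}^{\tau}[i]$ and is preceded by the same number of ones and zeros as position y in $\mathtt{MS}$ (this follows from the fact that $\mathrm{MS}^{\tau}[i] = \mathrm{MS}[i]$). Let $i_1 < i_2 < \cdots < i_k$ be the sequence of all and only the positions of S whose MS value is at least τ, and let $y_1 < y_2 < \cdots < y_k$ be the sequence of the corresponding one-bits in $\mathtt{MS}$. Clearly it can happen that $i_{j+1} = i_j + 1$; if this does not happen, then $\mathrm{MS}[i_j]$ must be equal to τ, and $\mathtt{MS}[y_j + 1]$ must be a one and $\mathtt{MS}[y_{j+1} - 1]$ must be a zero, both in $\mathtt{MS}$ and in any $\mathtt{MS}^{\tau}$. Thus, if we compress $\mathtt{MS}^{\tau}$ by delta-coding the length of every run, we can build an $\mathtt{MS}^{\tau}$ that is smallest after compression, by concatenating a permutation of every such interval $\mathtt{MS}[y_j + 1..y_{j+1} - 1]$ that is smallest after compression, as well as of the non-empty intervals $\mathtt{MS}[0..y_1 - 1]$ and $\mathtt{MS}[y_k + 1..|\mathtt{MS}| - 1]$ (and all such permutations can be computed in parallel).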
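The decomposition into fixed one-bits and freely permutable intervals can be computed directly from the MS values, since the one of position i sits at $\mathrm{MS}[i] + 2i$. The sketch below uses illustrative names, encodes the array with $\mathrm{MS}[-1] = 1$ only to obtain the bitvector length, and returns half-open bit intervals.

```python
def encode_ms(ms: list[int]) -> list[int]:
    bits, prev = [], 1  # MS[-1] is assumed to be one
    for v in ms:
        bits.extend([0] * (v - prev + 1))
        bits.append(1)
        prev = v
    return bits


def fixed_ones(ms: list[int], tau: int) -> list[int]:
    """Positions y_j = MS[i_j] + 2 i_j of the ones of all positions with MS >= tau."""
    return [v + 2 * i for i, v in enumerate(ms) if v >= tau]


def free_windows(ms: list[int], tau: int) -> list[tuple[int, int]]:
    """Non-empty half-open bit intervals before, between and after the fixed ones."""
    total = len(encode_ms(ms))
    windows, start = [], 0
    for y in fixed_ones(ms, tau):
        if y > start:
            windows.append((start, y))
        start = y + 1
    if start < total:
        windows.append((start, total))
    return windows


ms = [3, 2, 1, 1, 0, 3]
print(fixed_ones(ms, 3), free_windows(ms, 3))  # [3, 13] [(0, 3), (4, 13)]
```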

Assume that we want to compute a smallest permutation of window $\mathtt{MS}[y_j + 1..y_{j+1} - 1]$, where $i_{j+1} > i_j + 1$, and every run is delta-coded in isolation. Clearly we could just replace the window with $1^p 0^q$, where p (respectively, q) is the total number of ones (respectively, zeros) in the window; this could make some MS values negative, thus the resulting $\mathtt{MS}^{\tau}$ might not be a valid MS bitvector, and before replacing $\mathtt{MS}$ with $\mathtt{MS}^{\tau}$ one should make sure that any implementation that uses $\mathtt{MS}^{\tau}$ handles negative values correctly. Building an MS bitvector without negative values is easy:
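The following sketch applies the replacement by $1^p 0^q$ to one window and decodes the result with $\mathrm{MS}[i] = \mathrm{select}_1(\mathtt{MS}, i) - 2i$, showing how a negative value can appear. The example array and window are made up for illustration: the window lies between the ones of the only two positions whose MS value is at least τ = 3, and the function names are hypothetical.

```python
def encode_ms(ms: list[int]) -> list[int]:
    bits, prev = [], 1  # MS[-1] is assumed to be one
    for v in ms:
        bits.extend([0] * (v - prev + 1))
        bits.append(1)
        prev = v
    return bits


def decode_ms(bits: list[int]) -> list[int]:
    """MS[i] = (position of the i-th one) - 2i."""
    ones = [pos for pos, bit in enumerate(bits) if bit == 1]
    return [y - 2 * i for i, y in enumerate(ones)]


def replace_window(bits: list[int], start: int, end: int) -> list[int]:
    """Replace bits[start:end] with all its ones followed by all its zeros."""
    window = bits[start:end]
    p = sum(window)
    q = len(window) - p
    return bits[:start] + [1] * p + [0] * q + bits[end:]


bits = encode_ms([3, 2, 1, 1, 0, 3])
print(decode_ms(replace_window(bits, 4, 13)))  # [3, 2, 1, 0, -1, 3]
```

The window 110110000 (four runs) becomes 111100000 (two runs), at the price of decoding $\mathrm{MS}^{\tau}[4] = -1$; the values at the two fixed positions are unchanged.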

Lemma 3.

Given an interval of , with z total zeros and o total ones, we can compute a smallest permutation with no negative value in time and words of space.

Proof. Every permutation of the interval can be represented as a sequence of pairs $(z_0, o_0), (z_1, o_1), \dots, (z_k, o_k)$ for some $k \geq 0$, where $z_i$ is the length of a run of zeros, $o_i$ is the length of a run of ones, $z_i > 0$ for all i > 0, and $o_i > 0$ for all $i < k$. We work with the sequence of cumulative pairs $(Z_i, O_i)$, where $Z_i = \sum_{h=0}^{i} z_h$ and $O_i = \sum_{h=0}^{i} o_h$. Given a pair (Zi, Oi), we use as a shorthand for (i.e. the MS value that corresponds to the last one-bit of the pair), and we say that the pair is valid iff it satisfies , and . We draw a directed arc from every valid pair (Zi, Oi) to every other valid pair (Zj, Oj) such that Zj > Zi, Oj > Oi, and (this is the MS value of the first one-bit in the last run of ones in the pair), and we assign cost to the arc. Moreover, we add the invalid pair (z, o), we connect it to every valid pair , and we assign cost to the arc. A start pair (Zi, Oi) is a valid pair with Zi = 0, and it is assigned cost . A permutation of smallest size corresponds to a path in the resulting DAG , from a start pair to pair (z, o), that minimizes the sum of the costs of its arcs plus the cost of the start pair. This can be derived by computing, for every node that does not correspond to a start pair, quantity , using dynamic programming over the topologically-sorted DAG. □
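
The following Python sketch solves the same optimization on small windows with a dynamic program in the spirit of Lemma 3. It is not a transcription of the DAG in the proof: its states (Z, O, t) record how many zeros and ones have been placed so far and whether the last run was of zeros (t = 0) or of ones (t = 1). It assumes that every run costs the length of its Elias delta code, that the window is preceded by `zeros_before` zeros and `ones_before` ones, that the MS value of the k-th one-bit equals the number of zeros before it minus k, and that every one-bit in the window must satisfy lo ≤ MS < τ. All names are hypothetical.

```python
import math


def delta_len(n):
    """Length in bits of the Elias delta code of the positive integer n."""
    L = n.bit_length() - 1
    return L + 2 * ((L + 1).bit_length() - 1) + 1


def best_permutation(z, o, zeros_before, ones_before, tau, lo=0):
    """Cheapest arrangement of z zeros and o ones (runs delta-coded in isolation)
    such that every one-bit has lo <= MS < tau. Returns (cost, bits) or None."""
    INF = math.inf
    # cost[Z][O][t]: cheapest prefix with Z zeros and O ones whose last run has type t
    cost = [[[INF, INF] for _ in range(o + 1)] for _ in range(z + 1)]
    back = {}
    cost[0][0][0] = cost[0][0][1] = 0  # empty prefix: the first run may be of either type
    for Z in range(z + 1):
        for O in range(o + 1):
            # Append a run of l ones after a run of zeros (or at the start).
            c = cost[Z][O][0]
            first = zeros_before + Z - (ones_before + O)  # MS of the run's first one-bit
            if c < INF and first < tau:
                # MS drops by one per one-bit, so the last one-bit has MS first - l + 1.
                for l in range(1, min(o - O, first - lo + 1) + 1):
                    if c + delta_len(l) < cost[Z][O + l][1]:
                        cost[Z][O + l][1] = c + delta_len(l)
                        back[(Z, O + l, 1)] = (Z, O, 0, l)
            # Append a run of l zeros after a run of ones (or at the start).
            c = cost[Z][O][1]
            if c < INF:
                for l in range(1, z - Z + 1):
                    if c + delta_len(l) < cost[Z + l][O][0]:
                        cost[Z + l][O][0] = c + delta_len(l)
                        back[(Z + l, O, 0)] = (Z, O, 1, l)
    t = 0 if cost[z][o][0] <= cost[z][o][1] else 1
    if cost[z][o][t] == INF:
        return None
    runs, state = [], (z, o, t)
    while state in back:  # walk back to the empty prefix
        Z, O, prev_t, l = back[state]
        runs.append([state[2]] * l)
        state = (Z, O, prev_t)
    return cost[z][o][t], [b for run in reversed(runs) for b in run]


# Window [1, 0, 0, 1, 0, 0, 0] at the start of the bitvector, tau = 3.
print(best_permutation(5, 2, 0, 0, 3))  # (8, [1, 0, 1, 0, 0, 0, 0])
```

Since MS values decrease by one inside a run of ones, it suffices to check the upper bound at the first one-bit of a run and the lower bound at the last one. In the example, the original arrangement costs 1 + 4 + 1 + 5 = 11 bits, against 8 bits for the optimum. The sketch takes O(zo(z + o)) time and O(zo) space.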

One can easily modify this construction to enforce MS values in the permuted interval to be at least a positive number, rather than zero. To make compression faster in practice, we fix z and o to a large value and, for every τ used by the target application, we precompute and store a variant of the DAG that answers every possible query of length at most z + o: in addition to (z, o), this variant includes every pair (Zi, Oi) with , it connects it to all valid pairs as described for (z, o), and it computes the min-cost path to every node. To permute a window with zeros and ones such that , and , we go to node in the DAG and we backtrack along an optimal precomputed path. If does not belong to the DAG, we select a valid in-neighbor (Zi, Oi) of using a greedy strategy (e.g. the neighbor that maximizes or ): if (Zi, Oi) belongs to the DAG, we backtrack, otherwise we take another greedy step. In what follows, we label this approach ‘ND’.

As mentioned, in real MS bitvectors the length of a run of zeros and that of the following run of ones tend to be similar: we can take this into account by setting the cost of an arc between (Zi, Oi) and (Zj, Oj) to the length of $\delta(f(x, y))$, where $x = Z_j - Z_i$, $y = O_j - O_i$, and f is the following map: since $y \geq 1$, and thus $y - x \geq 1 - x$, we map all the negative values of y − x to the even integers $2, 4, \dots, 2(x-1)$ in increasing order of $|y - x|$, we map the positive values of y − x up to x − 2 to the odd integers $3, 5, \dots, 2x - 3$, and we map every remaining value of y − x to y. We use integer one to encode y = x. Recall that the interval of the MS bitvector that we want to permute lies between $y_i$ and $y_{i+1}$, where $y_i$ belongs to a (possibly long) run of one-bits, and $y_{i+1}$ is the first one-bit of a (possibly long) run. We might not want to alter the lengths of such runs of ones, so we might be interested in permuting just the subinterval $[p..q]$, where p is the first zero after $y_i$ and q is the last one before $y_{i+1}$ (if negative values of MS are allowed, the trivial scheme of writing all the ones at the beginning of $[p..q]$ cannot be used, since it would alter the length of the run of $y_i$). We call this variant ‘D’ in what follows. Since in practice the correlation between the length of a run of zeros and the following run of ones is strong only for long runs, one might want to encode a run of x zeros and the following run of y ones as $\delta(f(x, y))$ only when the runs are long, and to encode it as $\delta(x)\,\delta(y)$ otherwise. This would require permuting just $[p..q]$, but in an optimal way with respect to the latter encoding. We call this variant ‘DL’ in what follows.
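
The map f described above can be checked directly. The sketch below takes a run of x ≥ 1 zeros followed by a run of y ≥ 1 ones; for each fixed x it is a bijection onto the positive integers, so δ(f(x, y)) is always defined, and it yields small integers (hence short delta codes) when y is close to x. The function names are hypothetical, and the sketch does not implement the length condition that selects between the joint and the separate encodings in variant DL.

```python
def delta_len(n):
    """Length in bits of the Elias delta code of the positive integer n."""
    L = n.bit_length() - 1
    return L + 2 * ((L + 1).bit_length() - 1) + 1


def f(x, y):
    """Map a run of x >= 1 zeros followed by y >= 1 ones to a positive integer."""
    d = y - x
    if d == 0:
        return 1  # y == x is encoded by the integer one
    if d < 0:
        return -2 * d  # d = -1, ..., -(x-1)  ->  2, 4, ..., 2(x-1)
    if d <= x - 2:
        return 2 * d + 1  # d = 1, ..., x-2  ->  3, 5, ..., 2x-3
    return y  # y >= 2x-1  ->  y itself


# Runs of similar length are cheap to encode jointly.
print(delta_len(f(100, 101)), delta_len(100) + delta_len(101))  # 4 22
```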

When S and T are dissimilar and negative MS values are not allowed, run-length compressing the permuted bitvectors expands them for small values of τ (Fig. 3, inset in the left panel). For τ = 32, RLE shrinks the bitvectors of most of our variants to ∼40% of their original size, and increasing τ progressively brings their size down to 10% of the original. We do not detect any clear difference in performance between the variants, with D being significantly smaller in some but not all cases (Supplementary Fig. S11). A detailed analysis of how the permutation schemes compare when varying the similarity between query and text is provided in Supplementary Figs S16 and S17. For pairs of genomes from human individuals, applying RLE to the original bitvector already brings its size down to ∼4.5% of the original, and increasing τ shrinks the bitvectors to 2% of the input (Fig. 3, right panel). Allowing negative MS values compresses some pairs of genomes from different species already at τ = 16, and for larger values of τ it shrinks the bitvector to ∼2% of the original (Fig. 3, center panel). Negative MS values do not give any significant gain for genomes of individuals (data not shown). Pairs of proteomes display similar trends, but this time RLE is able to compress some bitvectors, and τ = 8 is enough to compress most pairs (Supplementary Figs S9 and S10). Finally, we test our lossy compression on the permuted longest common prefix array (PLCP) of the genomes in our dataset, since this data structure admits a compact encoding that is very similar to the MS bitvector (Sadakane, 2007): we again observe a shrinkage from 40% to 10% of the original size as τ increases (Supplementary Fig. S14).

Clearly, when very few MS values are above threshold, storing just those values might take less space than compressing the bitvector. Consider a first scheme that stores every MS value that is at least τ, together with its position, in the minimum number of bits necessary to encode the respective numbers, and a second scheme in which every MS value that is at least τ is stored in a fixed number of bits that depends only on M, and every position in a fixed number of bits that depends only on L, where M is the maximum observed MS value and L is the length of the query. Accessing MS from such structures might be much slower than using the bitvector with select queries. For sufficiently large τ, values above threshold are rare enough in the MS arrays of genomes from different species that most compressed bitvectors take much more space than either scheme (Supplementary Fig. S12). When negative values of MS are allowed, however, the permuted bitvectors of several pairs of genomes become smaller than, or comparable to, both schemes (Supplementary Fig. S13), and for pairs of individuals the permuted bitvectors are always two or three orders of magnitude smaller than both schemes, since 80% or more of all MS values are above threshold for every τ (Supplementary Fig. S12).

5 Querying the matching statistics bitvector

As mentioned, it is natural to formulate questions on the similarity between a substring of the query and the whole text in terms of matching statistics, and this approach has already been used in bioinformatics for detecting horizontal gene transfer and other structural variations between two genomes. In this section, we focus on two types of range queries, which we implement on the MS bitvector: given an interval $[i..j]$, we want to return either $\sum_{k=i}^{j} \mathrm{MS}[k]$ (e.g. to compute a local version of the score by Ulitsky et al. (2006)) or $\max_{k \in [i..j]} \mathrm{MS}[k]$ (e.g. to detect the presence of significant matches).

We answer the max query using the standard approach of dividing the bitvector into blocks with a fixed number of bits, extracting for each block the maximum MS value that corresponds to a one-bit in the block, and building a range-maximum query (RMQ) data structure on such values. Given a range $[i..j]$ in the query, we find the block $b_i$ of the bitvector that contains the ith one-bit and the block $b_j$ that contains the jth one-bit, and we query the RMQ data structure on the range of blocks $[b_i + 1..b_j - 1]$ (if it is not empty): this returns the index of a block with largest value, so we perform a linear scan of the returned block, as well as of the suffix of block $b_i$ and of the prefix of block $b_j$. We implement this approach using data structures from the SDSL library (Gog et al., 2014). For ranges approximately equal to two blocks or larger, this method answers a range-max query in the same time as scanning two full blocks and querying the RMQ (see Fig. 4); for shorter ranges, it takes the same time as a linear scan of the range. In practice, when the query is the human genome and the block size is, say, 1024 bits, we can answer arbitrary range-max queries in a few milliseconds using just two megabytes for the RMQ and 12 megabytes for the precomputed maximum of each block (if we do not want to compute it on the fly). Other space/time tradeoffs are possible, but we omit a detailed analysis for brevity.
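
A minimal Python sketch of the block-plus-RMQ approach follows. It assumes that position i of S contributes MS[i] - MS[i-1] + 1 zeros followed by a one (with MS[-1] = 1), so that the k-th one-bit (0-based) at position y has MS value y - 2k. For simplicity it stores the block maxima explicitly (so the returned block need not be rescanned), uses a plain sparse table instead of a succinct RMQ, and emulates select and rank with a list of one-bit positions and `bisect`. The class and method names are hypothetical and do not correspond to the SDSL API.

```python
from bisect import bisect_left


def encode_ms(ms):
    """Assumed encoding: MS[i] - MS[i-1] + 1 zeros, then a one (MS[-1] := 1)."""
    bits, prev = [], 1
    for v in ms:
        bits.extend([0] * (v - prev + 1) + [1])
        prev = v
    return bits


class BlockRangeMax:
    def __init__(self, bits, B):
        self.bits, self.B = bits, B
        self.ones = [y for y, b in enumerate(bits) if b]  # stand-in for select_1
        nb = (len(bits) + B - 1) // B
        bmax = [float("-inf")] * nb  # -inf marks blocks without one-bits
        for k, y in enumerate(self.ones):
            bmax[y // B] = max(bmax[y // B], y - 2 * k)  # MS of the k-th one-bit
        # Sparse table: sp[j][b] = max of bmax[b .. b + 2^j - 1].
        self.sp, j = [bmax], 1
        while (1 << j) <= nb:
            prev, h = self.sp[-1], 1 << (j - 1)
            self.sp.append([max(prev[b], prev[b + h]) for b in range(nb - (1 << j) + 1)])
            j += 1

    def _blocks_max(self, lo, hi):
        """Max over block maxima lo..hi (inclusive) with two table lookups."""
        j = (hi - lo + 1).bit_length() - 1
        return max(self.sp[j][lo], self.sp[j][hi - (1 << j) + 1])

    def _scan(self, start, end, k):
        """Max MS over one-bits in bits[start:end]; k = number of ones before start."""
        best = float("-inf")
        for y in range(start, end):
            if self.bits[y]:
                best = max(best, y - 2 * k)
                k += 1
        return best

    def query(self, i, j):
        """max(MS[i..j]) for 0-based positions i <= j of S."""
        yi, yj = self.ones[i], self.ones[j]
        bi, bj = yi // self.B, yj // self.B
        if bj - bi <= 1:  # short range: plain scan
            return self._scan(yi, yj + 1, i)
        left = self._scan(yi, (bi + 1) * self.B, i)  # suffix of block bi
        right = self._scan(bj * self.B, yj + 1, bisect_left(self.ones, bj * self.B))
        return max(left, self._blocks_max(bi + 1, bj - 1), right)


ms = [3, 2, 2, 4, 3, 5, 4, 3, 2, 6, 5]
rmq = BlockRangeMax(encode_ms(ms), B=4)
print(rmq.query(0, 8), rmq.query(1, 10))  # 5 6
```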

Fig. 4.

Diagonal line: non-optimized scanning of the SDSL data structure without an index. Plateaus: scanning combined with an RMQ index for different block sizes B. Every point is the average of 20 random queries of the same size. Vertical dashed lines: query sizes that are approximately equal to two blocks of the bitvector (labels: average size in bits of the query range when mapped to the bitvector). Dataset: H. sapiens and Mus musculus genomes. Similar trends appear for pairs of genomes from human individuals.

After taking care of some details, this approach can be applied to compressed versions of the bitvector as well: our implementation supports RRR-compressed and RLE-compressed bitvectors, using data structures from SDSL and from the RLCSA code by Sirén (2009), respectively. During a scan, issuing one access operation for every bit is clearly suboptimal: instead, in the uncompressed and in the RRR-compressed bitvector, we extract 64 bits at a time and look up every byte in a precomputed table. This gives speedups between 2 and 8, depending on dataset and range size (see Supplementary Fig. S20). In the RLE-compressed bitvector, we process one run at a time, and for very similar strings scanning becomes a hundred to a thousand times faster than accessing every bit, or more. Overall, scanning the RLE-compressed bitvector of very similar strings one run at a time is approximately 10 times faster than scanning the corresponding uncompressed bitvector 64 bits at a time (see Supplementary Fig. S21). Thus, if the target application is not interested in MS values below some threshold, one might replace the uncompressed bitvector with one of the permuted and RLE-compressed variants described in Section 4, and this might speed up range-max queries at no cost.
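
The byte-table idea can be sketched as follows, assuming that the MS value of a one-bit equals the number of zeros minus the number of ones that precede it. For each of the 256 byte values, the table stores the number of zeros and ones in the byte and the largest MS offset reached at a one-bit inside it, relative to the value at the start of the byte; the scan then reads 64-bit words and performs one lookup per byte. This Python version only illustrates the logic (it computes the maximum over a whole, zero-padded bitvector rather than over an arbitrary range), says nothing about the speedups measured in C++, and all names are hypothetical.

```python
def build_table():
    """For every byte (most significant bit first): (zeros, ones, best relative MS or None)."""
    table = []
    for byte in range(256):
        zeros = ones = 0
        best = None
        for t in range(7, -1, -1):
            if (byte >> t) & 1:
                rel = zeros - ones  # offset of this one-bit's MS from the byte's base
                best = rel if best is None else max(best, rel)
                ones += 1
            else:
                zeros += 1
        table.append((zeros, ones, best))
    return table


TABLE = build_table()


def pack(bits):
    """Pack a 0/1 list into bytes, most significant bit first; pad with zero bits."""
    out = bytearray()
    for s in range(0, len(bits), 8):
        chunk = bits[s:s + 8]
        out.append(int("".join(map(str, chunk + [0] * (8 - len(chunk)))), 2))
    return bytes(out)


def max_ms_wordwise(packed):
    """Max MS over all one-bits, reading 64-bit words and one table lookup per byte."""
    padded = packed + bytes(-len(packed) % 8)  # trailing zero bits do not change the max
    best, base = None, 0  # base = zeros - ones seen before the current byte
    for w in range(0, len(padded), 8):
        word = int.from_bytes(padded[w:w + 8], "big")
        for shift in range(56, -8, -8):
            zeros, ones, rel = TABLE[(word >> shift) & 0xFF]
            if rel is not None and (best is None or base + rel > best):
                best = base + rel
            base += zeros - ones
    return best


bits = [0, 0, 0, 1, 1, 0, 1, 0, 0, 0, 1]  # MS = [3, 2, 2, 4] under the assumed convention
print(max_ms_wordwise(pack(bits)))  # 4
```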

As customary, by recursing on the output of RMQ queries one can report all blocks in the range with maximum MS value, or all blocks with MS value at least τ, in time linear in the size of the output. With an appropriate choice of block size (as a function of the length of the bitvector), the RMQ data structure stays compact, and the linear scan of a block can be replaced with a constant-time lookup from a precomputed table in which we store the relative location of a largest value inside each block. We use the RMQ to detect all blocks in the range that contain at least one large value, and we use lookups from another precomputed table (which stores offsets between one-bits with MS value at least τ) to report all locations with MS value at least τ in time linear in their number.
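
The output-sensitive reporting step can be illustrated on an array of block maxima. The sketch below builds a sparse table that returns the index of a maximum, then repeatedly splits the range around each reported maximum and stops on a subrange as soon as its maximum drops below τ, so the number of RMQ calls is at most twice the number of reported blocks plus one. It uses an explicit stack in place of recursion; names and example values are hypothetical.

```python
def build_argmax_table(vals):
    """Sparse table: sp[j][b] = index of a maximum of vals[b .. b + 2^j - 1]."""
    n = len(vals)
    sp, j = [list(range(n))], 1
    while (1 << j) <= n:
        prev, h = sp[-1], 1 << (j - 1)
        sp.append([prev[b] if vals[prev[b]] >= vals[prev[b + h]] else prev[b + h]
                   for b in range(n - (1 << j) + 1)])
        j += 1
    return sp


def argmax(vals, sp, lo, hi):
    """Index of a maximum of vals[lo..hi] (inclusive), leftmost on ties."""
    j = (hi - lo + 1).bit_length() - 1
    a, c = sp[j][lo], sp[j][hi - (1 << j) + 1]
    return a if vals[a] >= vals[c] else c


def report_at_least(vals, sp, lo, hi, tau):
    """All indices in [lo, hi] whose value is at least tau, in output-sensitive time."""
    out, stack = [], [(lo, hi)]
    while stack:
        lo, hi = stack.pop()
        if lo > hi:
            continue
        m = argmax(vals, sp, lo, hi)
        if vals[m] < tau:
            continue  # nothing in this subrange reaches tau
        out.append(m)
        stack.extend([(lo, m - 1), (m + 1, hi)])
    return out


block_max = [3, 2, 4, 5, 4, -1, 6]  # hypothetical per-block maxima
sp = build_argmax_table(block_max)
print(sorted(report_at_least(block_max, sp, 0, 6, 4)))  # [2, 3, 4, 6]
```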

The optimized scanning can be applied to range-sum queries as well, with similar speedups (see Supplementary Fig. S21). To implement a range-sum query over an arbitrary range, we just store the prefix sums that correspond to the last one-bit in every block, and we scan the two blocks that contain the one-bit that corresponds to i and the one-bit that corresponds to j. Scanning can also be used to implement other primitives in analytics, like plotting all MS values in a range or their histogram, computing the position k of a longest interval that contains , or finding all windows of fixed length k inside the query range with maximum sum.
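
The range-sum scheme can be sketched in the same setting. The code assumes that position i of S contributes MS[i] - MS[i-1] + 1 zeros followed by a one (with MS[-1] = 1), so the k-th one-bit at position y has MS value y - 2k; it stores, for every block, the prefix sum at its last one-bit and answers a query as the difference of two prefix sums, each computed from a stored value plus a scan of a single block. Select and rank are emulated with a plain list and `bisect`; all names are hypothetical.

```python
from bisect import bisect_left


def encode_ms(ms):
    """Assumed encoding: MS[i] - MS[i-1] + 1 zeros, then a one (MS[-1] := 1)."""
    bits, prev = [], 1
    for v in ms:
        bits.extend([0] * (v - prev + 1) + [1])
        prev = v
    return bits


class BlockRangeSum:
    def __init__(self, bits, B):
        self.bits, self.B = bits, B
        self.ones = [y for y, b in enumerate(bits) if b]  # stand-in for select_1
        # block_prefix[b] = sum of MS over all one-bits in blocks 0..b,
        # i.e. the prefix sum at the last one-bit of block b (carried over if empty)
        self.block_prefix, total, k = [], 0, 0
        for b in range((len(bits) + B - 1) // B):
            for y in range(b * B, min((b + 1) * B, len(bits))):
                if bits[y]:
                    total += y - 2 * k  # MS of the k-th one-bit
                    k += 1
            self.block_prefix.append(total)

    def prefix(self, k):
        """Sum of MS[0..k]: stored value for earlier blocks plus a scan of one block."""
        y = self.ones[k]
        b = y // self.B
        s = self.block_prefix[b - 1] if b > 0 else 0
        r = bisect_left(self.ones, b * self.B)  # ones before block b (rank_1 stand-in)
        for t in range(b * self.B, y + 1):
            if self.bits[t]:
                s += t - 2 * r
                r += 1
        return s

    def range_sum(self, i, j):
        """Sum of MS[i..j] for 0-based positions i <= j of S."""
        return self.prefix(j) - (self.prefix(i - 1) if i > 0 else 0)


ms = [3, 2, 2, 4, 3, 5, 4, 3, 2, 6, 5]
rs = BlockRangeSum(encode_ms(ms), B=4)
print(rs.range_sum(2, 9))  # 29
```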

Financial Support: none declared.

Conflict of Interest: none declared.

Supplementary Material

References

- Ahmed O. et al. (2021) Pan-genomic matching statistics for targeted Nanopore sequencing. iScience, 24, 102696.

- Apostolico A. et al. (2016) Sequence similarity measures based on bounded Hamming distance. Theor. Comput. Sci., 638, 76–90.

- Belazzougui D., Cunial F. (2014) Indexed matching statistics and shortest unique substrings. In: International Symposium on String Processing and Information Retrieval. Springer, Cham, pp. 179–190.

- Belazzougui D. et al. (2018) Fast matching statistics in small space. In: Proceedings of the 17th International Symposium on Experimental Algorithms (SEA 2018), Vol. 103, pp. 17:1–17:14. Schloss Dagstuhl-Leibniz-Zentrum fuer Informatik.

- Boffa A. et al. (2021) A “learned” approach to quicken and compress rank/select dictionaries. In: 2021 Proceedings of the Workshop on Algorithm Engineering and Experiments (ALENEX). SIAM, pp. 46–59.

- Boucher C. et al. (2021) PHONI: streamed matching statistics with multi-genome references. In: 2021 Data Compression Conference (DCC). IEEE, pp. 193–202.

- Castiglione G. et al. (2019) Some investigations on similarity measures based on absent words. Fund. Inform., 171, 97–112.

- Cohen E., Chor B. (2012) Detecting phylogenetic signals in eukaryotic whole genome sequences. J. Comput. Biol., 19, 945–956.

- Cunial F. et al. (2019) A framework for space-efficient variable-order Markov models. Bioinformatics, 35, 4607–4616.

- Domazet-Loso M., Haubold B. (2009) Efficient estimation of pairwise distances between genomes. Bioinformatics, 25, 3221–3227.

- Domazet-Lošo M., Haubold B. (2011a) Alignment-free detection of horizontal gene transfer between closely related bacterial genomes. Mobile Genet. Elem., 1, 230–235.

- Domazet-Lošo M., Haubold B. (2011b) Alignment-free detection of local similarity among viral and bacterial genomes. Bioinformatics, 27, 1466–1472.

- Ehrenfeucht A., Haussler D. (1988) A new distance metric on strings computable in linear time. Discrete Appl. Math., 20, 191–203.

- Feng S. et al. (2020) Dense sampling of bird diversity increases power of comparative genomics. Nature, 587, 252–257.

- Fischer J. et al. (2016) On the benefit of merging suffix array intervals for parallel pattern matching. In: 27th Annual Symposium on Combinatorial Pattern Matching (CPM 2016). Tel Aviv, Israel, pp. 26:1–26:11.

- Formenti G. et al. (2020) Complete vertebrate mitogenomes reveal widespread gene duplications and repeats. bioRxiv.

- Garofalo F. et al. (2018) The colored longest common prefix array computed via sequential scans. In: International Symposium on String Processing and Information Retrieval. Springer, Cham, pp. 153–167.

- Gog S. et al. (2014) From theory to practice: plug and play with succinct data structures. In: 13th International Symposium on Experimental Algorithms (SEA 2014), pp. 326–337. Springer, Cham.

- Haubold B., Pfaffelhuber P. (2012) Alignment-free population genomics: an efficient estimator of sequence diversity. G3, 2, 883–889.

- Haubold B., Wiehe T. (2006) How repetitive are genomes? BMC Bioinform., 7, 541–510.

- Haubold B. et al. (2005) Genome comparison without alignment using shortest unique substrings. BMC Bioinform., 6, 123.

- Haubold B. et al. (2009) Estimating mutation distances from unaligned genomes. J. Comput. Biol., 16, 1487–1500.

- Haubold B. et al. (2011) Alignment-free estimation of nucleotide diversity. Bioinformatics, 27, 449–455.

- Haubold B. et al. (2013) An alignment-free test for recombination. Bioinformatics, 29, 3121–3127.

- Hecker N., Hiller M. (2020) A genome alignment of 120 mammals highlights ultraconserved element variability and placenta-associated enhancers. GigaScience, 9, giz159.

- Jebb D. et al. (2020) Six reference-quality genomes reveal evolution of bat adaptations. Nature, 583, 578–584.

- Leimeister C.-A., Morgenstern B. (2014) Kmacs: the k-mismatch average common substring approach to alignment-free sequence comparison. Bioinformatics, 30, 2000–2008.

- Navarro G., Sadakane K. (2014) Fully functional static and dynamic succinct trees. ACM Trans. Algor., 10, 1–39.

- Ohlebusch E. et al. (2010) Computing matching statistics and maximal exact matches on compressed full-text indexes. In: SPIRE, pp. 347–358. Springer, Cham.

- Pizzi C. (2016) MissMax: alignment-free sequence comparison with mismatches through filtering and heuristics. Algor. Mol. Biol., 11, 6.

- Pu L. et al. (2018) Detection and analysis of ancient segmental duplications in mammalian genomes. Genome Res., 28, 901–909.

- Raman R. et al. (2007) Succinct indexable dictionaries with applications to encoding k-ary trees, prefix sums and multisets. ACM Trans. Algor., 3, 43.

- Rhie A. et al. (2020) Towards complete and error-free genome assemblies of all vertebrate species. bioRxiv.

- Sadakane K. (2007) Compressed suffix trees with full functionality. Theory Comput. Syst., 41, 589–607.

- Sadakane K., Navarro G. (2010) Fully-functional succinct trees. In: Proceedings of the Twenty-First Annual ACM-SIAM Symposium on Discrete Algorithms. SIAM, pp. 134–149.

- Serres Armero A. et al. (2020) A comparative genomics multitool for scientific discovery and conservation. Nature, 587, 240.

- Sirén J. (2009) Compressed suffix arrays for massive data. In: International Symposium on String Processing and Information Retrieval. Springer, Cham, pp. 63–74.

- Teeling E.C. et al. (2018) Bat biology, genomes, and the Bat1K project: to generate chromosome-level genomes for all living bat species. Annu. Rev. Anim. Biosci., 6, 23–46.

- Thankachan S.V. et al. (2016) A provably efficient algorithm for the k-mismatch average common substring problem. J. Comput. Biol., 23, 472–482.

- Thankachan S.V. et al. (2017) A greedy alignment-free distance estimator for phylogenetic inference. BMC Bioinform., 18, 238.

- Ukkonen E. (1992) Approximate string-matching with q-grams and maximal matches. Theor. Comput. Sci., 92, 191–211.

- Ulitsky I. et al. (2006) The average common substring approach to phylogenomic reconstruction. J. Comput. Biol., 13, 336–350.

- Zhang G. et al. (2014) Comparative genomics reveals insights into avian genome evolution and adaptation. Science, 346, 1311–1320.
