Abstract

Objective

Digital exposure notifications (DEN) systems were an emergency response to the coronavirus disease 2019 (COVID-19) pandemic, harnessing smartphone-based technology to enhance conventional pandemic response strategies such as contact tracing. We identify and describe performance measurement constructs relevant to the implementation of DEN tools: (1) reach (number of users enrolled in the intervention); (2) engagement (utilization of the intervention); and (3) effectiveness in preventing transmissions of COVID-19 (impact of the intervention). We also describe Washington (WA) State’s experience utilizing these constructs to design data-driven evaluation approaches.

Methods

We conducted an environmental scan of DEN documentation and relevant publications. Participation in multidisciplinary collaborative environments facilitated shared learning. We compiled available data sources and synthesized their relevance to implementation and operation workflows to develop implementation evaluation constructs.

Results

We identified 8 useful performance indicators within reach, engagement, and effectiveness constructs.

Discussion

We use implementation science to frame the evaluation of DEN tools by linking the theoretical constructs with the metrics available in the underlying disparate, deidentified, and aggregate data infrastructure. Our challenges in developing meaningful metrics include limited data science competencies in public health, validation of analytic methodologies in the complex and evolving pandemic environment, and the lack of integration with the public health infrastructure.

Conclusion

Continued collaboration and multidisciplinary consensus activities can improve the utility of DEN tools for future public health emergencies.

Keywords: privacy, public health informatics, disease notification, COVID-19

INTRODUCTION

Background

Identifying and notifying individuals who may have been exposed to someone who is infected with a communicable disease is a long-standing, essential public health strategy for mitigating infectious disease outbreaks.1–4 With timely notification, exposed individuals can engage in protective behaviors (eg, isolation and testing) to limit further transmission. Smartphone-based digital exposure notifications (DEN) systems were developed to rapidly supplement traditional exposure notification strategies (eg, contact tracing) during the coronavirus disease 2019 (COVID-19) pandemic.5 Unlike manual case investigation and contact tracing protocols, DEN systems are a low-burden and innovative approach to public health notifications, as users can learn of an exposure to an infected person whether the contact is known to the index case or not.6

The Google-Apple Exposure Notifications (GAEN) system, released in May 2020, is a widely used DEN system that leverages Bluetooth technology already embedded in iOS and Android smartphone operating systems for proximity detection.7 Passive tracking of close contact encounters is encrypted, and the system anonymously distributes exposure notifications (ENs) to users who were in contact with a person who has recently tested positive for COVID-19. GAEN relies on a decentralized, privacy-preserving infrastructure, in that users’ phones maintain a local log of their exposure history so no location tracking or personal data sharing is required.8,9 For public health authorities (PHAs), GAEN supports the development of customized DEN apps that are interoperable across jurisdictions. However, GAEN privacy protections create a barrier to evaluating the utility of a DEN implementation.6 Individual-level data are not available due to random noise infused into the data (“differential privacy”) to enhance anonymity. Since users are unknown, PHAs are unable to investigate clusters or outbreaks or follow up with resources to support quarantine or other social service needs. In addition, the aggregate-level data provided to PHAs are difficult to evaluate.

Reliable metrics for assessing the performance of DEN systems are needed by the PHAs deploying these systems, developers seeking to make improvements, and policymakers who need information about whether these tools are contributing to pandemic mitigation.10,11 In November 2020, Washington (WA) State deployed WA Notify, its GAEN-based COVID-19 DEN system.12 Public health interventions are rarely introduced without an evidence base justifying their necessity and effectiveness.13 However, the pandemic caused by the novel SARS-CoV-2 virus compelled deployment before the effectiveness of this public health intervention could be established. In this article, we summarize the process of identifying and utilizing performance indicators to evaluate DEN tools, with WA Notify serving as a case study.

Objective

To identify and describe performance measurement constructs relevant to the implementation14 of DEN tools: (1) reach (number of users enrolled in the intervention); (2) engagement (utilization of the intervention); and (3) effectiveness in preventing transmissions of COVID-19 (impact of the intervention). WA State’s experience utilizing these constructs to design data-driven evaluation approaches within the GAEN data infrastructure is described.

METHODS

The approach used to identify potential performance indicators combined:

Conducting an iterative environmental scan of DEN app development, metrics, and system documentation;

Participating in collaborative learning environments; and,

Compiling available data sources and their relevance within DEN app implementation and operation workflows.

The project plan was reviewed by the University of WA Institutional Review Board and determined to be a public health surveillance quality improvement activity.

Environmental scan. The environmental scan included an informal review of published and grey (preprint, technical reports, news, governmental and PHA informational websites, etc.) literature and monitoring developments among states and countries implementing DEN systems.15,16 Searches using Google Scholar and Google focused on capturing information and reports related to data and measurement of DEN systems. Available GAEN platform system and workflow documentation and PHA data access protocols were also reviewed.17

Collaborative learning environments. Information was shared during regular meetings that included internal (WA Notify development, technical assistance, and evaluation team members and the WA State Department of Health [DOH]) and external WA Notify stakeholders (the Centers for Disease Control and Prevention [CDC]-hosted Learning Lab, the Western States Collaborative, MIT’s ImPACT Foundation, and Linux Foundation Public Health18).

Compiled data sources. Supplementary Appendix A provides additional detailed information for each data source listed below.

iOS EN Setting Activations and Android Downloads: iOS activation data come from hits on a “banner image” and a weighting factor Apple provides to estimate deactivations. Android user-based data are based on device acquisition and device loss data provided by the Google Play Store Developer Console. Logs are discarded and no IP tracking or web analytics are performed.

APHL Exposure Notification Verification Server (APHL-ENCV): The Association of Public Health Laboratories (APHL) hosts national servers for US public health entities. The servers issue verification codes and manage anonymous Bluetooth “keys,” using Google and Microsoft cloud services, respectively. These national services allow GAEN to be interoperable across PHAs, but not across countries. Data available to PHAs include time-series counts of verification codes issued to WA Notify users, as well as the number of diagnoses electively verified by users.

Exposure Notifications Private Analytics (ENPA): Analytics are available based on users who opt-in to ENPA when installing WA Notify. These are infused with differential privacy (ie, statistical noise) at the device level and processed by a third party who provides access to PHAs via both dashboards and APIs.

WA State DOH “What to do next” Web page Counter: When users receive an exposure notification, the notice includes a “tap to learn more” that takes the user to a hidden WA State DOH Landing Page with information regarding “next steps,” such as instructions to self-isolate, get tested, and engage in protective behaviors based on the current CDC and WA State guidance. Page hits record the total number of ENs opened per day. As with activation web hits, logs are discarded, and no IP tracking or web analytics are performed.

WA Notify User Experience Surveys19,20: Two optional surveys are available to WA Notify EN recipients: (1) A baseline survey when users receive an EN that includes questions regarding their intention to engage in protective behaviors (ie, testing and quarantine), and (2) a follow-up survey that asks users about COVID-19 testing and actual engagement in protective behaviors.
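To illustrate why device-level noise still permits useful aggregate estimates, the following minimal sketch uses randomized response, a classic local privacy technique. It is swapped in purely for illustration and is not claimed to be the mechanism ENPA uses; the function names, the truth probability of 0.5, and the simulated 10% rate are all assumptions.

```python
import random


def randomized_response(true_bit, p_truth, rng):
    """Report the true bit with probability p_truth, otherwise a fair coin flip."""
    if rng.random() < p_truth:
        return true_bit
    return 1 if rng.random() < 0.5 else 0


def debiased_rate(reports, p_truth):
    """Invert the noise using E[report] = p_truth * rate + (1 - p_truth) * 0.5."""
    observed = sum(reports) / len(reports)
    return (observed - (1 - p_truth) * 0.5) / p_truth


# Hypothetical population: 100,000 devices, 10% of which hold a "1" (assumed values).
rng = random.Random(0)
true_bits = [1 if i % 10 == 0 else 0 for i in range(100_000)]
reports = [randomized_response(bit, 0.5, rng) for bit in true_bits]
estimate = debiased_rate(reports, 0.5)
print(f"Estimated rate: {estimate:.3f} (true rate 0.100)")
```

No single report reveals a device's true value, yet the aggregate rate can be recovered approximately. The recovered value carries sampling error that shrinks as the number of reporting devices grows, which is one reason small counts are hard to interpret in noised data.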

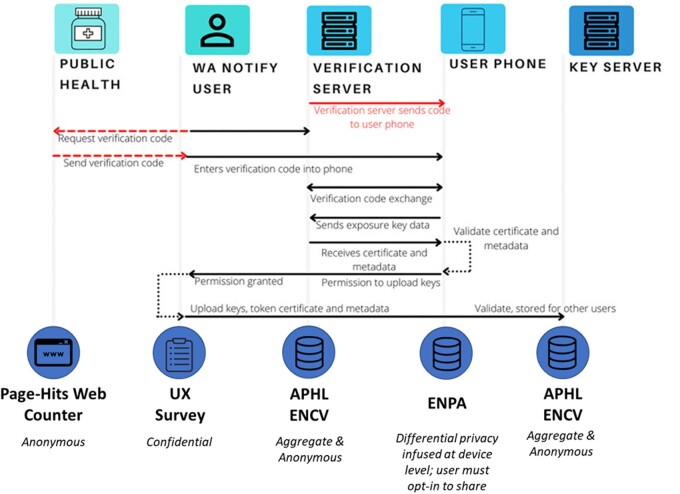

Figure 1 illustrates the process of the WA Notify intervention and the data sources accessible to PHAs for evaluation and system maintenance purposes.

Figure 1.

WA Notify intervention process and underlying data sources accessible to PHAs. This figure depicts the interactions described in Apple GAEN technical documentation.21

RESULTS

The WA State experience was used as a case study to highlight performance measurement opportunities within the constraints of the disparate, deidentified, and aggregated databases associated with the tool and framed by the implementation evaluation constructs of reach, engagement, and effectiveness. See Supplementary Appendix A for details about measurements available in each data source across the user workflow.

An early evaluation of the SwissCovid app in Switzerland by Salathé et al22 proposed technical, behavioral, and procedural conditions that must be met for a DEN tool to be an effective intervention. At the individual level, users must (1) install the tool, (2) engage with it (report a diagnosis; open an EN), and (3) elect to change their behavior to prevent secondary transmissions. At a population level, (1) enough users must adopt the tool, (2) testing must be available to promptly diagnose individuals, and (3) there must be a clear path for willing individuals to report and validate positive diagnoses. Whether a positive individual isolates themselves to prevent further disease transmission is an important indicator of the effectiveness of this intervention. Metrics identified for DEN tools, as illustrated by the WA Notify case example, are presented in Table 1.

Table 1.

Performance indicators and metrics applied in evaluation of DEN systems

| Performance indicator | Metric | WA Notify estimates as of March 2022ᵃ |
|---|---|---|
| Reach | | |
| Number of installations within the population | Count of active and cumulative users measured by user installations | >3M activations, representing ∼51% of the adult WA population |
| Engagement | | |
| Utilization among infected users | | |
| Utilization among exposed users | Count of ENs received, opened or dismissed | |
| Ratio of utilization among infected and exposed users | Ratio of exposures per diagnosis, measured as count of ENs opened per key published | 7.9 ENs opened per diagnosis reported |
| Effectivenessᵃ | | |
| Transmissions prevented | Model of cases averted | 5500 infections prevented during the first 4 months |
| Transmission characteristics | Secondary attack rate (SAR; ie, the estimated proportion of individuals who develop COVID-19 among those who received an exposure notification) | SAR values used in cases averted modeling range from 5.1% to 13.7% during the first 4 months of WA Notify |
| Timeliness | Time from exposure-to-EN | 5.3 days |
| Transmission characteristics | Adherence to quarantine reported in UX surveys | 53% of WA Notify user survey respondents reported staying home after receiving an EN |

ᵃ The effectiveness estimates are based on the November 2020–March 2021 cases averted analysis.

Reach reflects the number of individuals who installed the tool and are eligible to receive ENs. The environmental scan informed several considerations regarding this performance indicator. For example, a Canadian evaluation of DEN implementations highlighted wide variation in the adoption of DEN tools, ranging from 17.32% to 59.69% of the province populations within 6 months of implementation.23 Early agent-based modeling using WA State data reported that DEN tools must be installed by at least 15% of the population to have a meaningful impact on case aversion.24 Obtaining an accurate count of current active DEN users is a challenge due to differences between iOS and Android operating systems. The approach to count Android installations of DEN is accurate and reliable: users download an app through the Google Play Store, and the Google Play Developer Console provides the number of app installations to the PHA. As described in the Methods section above, the installation experience differs for iOS users in WA State because DEN is enabled as a setting on iOS devices and does not require the user to download an app. The privacy-preserving infrastructure does not allow for direct observation of the number of activations embedded in users’ settings on iOS devices. A proxy measurement for activations in iOS comes from hits on the “banner” image embedded in the settings page where a user enables and “turns on” Exposure Notifications. The banner is a publicly readable image hosted by the PHA with information about the region’s DEN tool and banner image hits occur when Apple displays the region consent screen, which happens once per activation. Given these measurement differences and constraints, generating a reliable estimate of active users across the two device systems will require ongoing development and validation of new methods.
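The combination of platform-specific proxies described above can be sketched as follows. This is a minimal illustration, not the WA Notify estimation method: the function names are hypothetical, the single iOS weighting factor is a simplifying assumption standing in for the factor Apple provides, and all input numbers are invented round values rather than WA data.

```python
def estimate_active_users(android_acquisitions, android_losses,
                          ios_banner_hits, ios_retention_weight):
    """Combine per-platform proxies into a single active-user estimate.

    Android: device acquisitions minus device losses (Play Console counts).
    iOS: banner image hits scaled by a weighting factor for deactivations.
    """
    android_active = android_acquisitions - android_losses
    ios_active = ios_banner_hits * ios_retention_weight
    return android_active + ios_active


def reach_fraction(active_users, adult_population):
    """Share of the adult population estimated to have the tool enabled."""
    if adult_population <= 0:
        raise ValueError("adult_population must be positive")
    return active_users / adult_population


# Hypothetical inputs for illustration only.
active = estimate_active_users(
    android_acquisitions=1_200_000,
    android_losses=200_000,
    ios_banner_hits=2_500_000,
    ios_retention_weight=0.8,
)
print(f"Estimated active users: {active:,.0f}")
print(f"Estimated reach: {reach_fraction(active, 6_000_000):.0%}")
```

Because the two terms come from different measurement processes, any error in the iOS weighting factor propagates directly into the combined estimate, which is why validation of the proxy matters.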

Engagement is measured by utilization of the installed tool, quantified by type, frequency, and volume of usage across the tool’s functionalities, beginning with a user voluntarily reporting a positive test result. This is a multistep process that includes the option to confirm a diagnosis through a laboratory test or self-report (if a home test kit was used); the diagnosis is then reported to the PHA. In both reporting pathways, the user receives a verification code sent from the APHL server and then claims that code to start the diagnosis reporting process. The number of diagnoses successfully reported is measured by the number of keys published, as aggregated in the APHL-ENCV data and also available in the ENPA data. For evaluation purposes, it would be useful to know the number of positive cases among all users, the denominator needed to estimate the proportion reported in the DEN tool. However, a user who tests positive for COVID-19 may choose not to report their diagnosis. Because the tool is privacy-preserving, anonymous, and voluntary, much remains unknown about user characteristics, including users’ intentions and their willingness to report information honestly given a desire to maintain privacy. User surveys focusing on experiences with COVID-19 testing and intentions to engage in protective behaviors are an important evaluation activity to supplement utilization and other DEN metrics for understanding the user base descriptively.

The next area of engagement is the utilization of the intervention itself as measured by number of ENs received, opened, and/or dismissed. Because the EN generation process occurs locally on a user’s device, the true number of ENs generated cannot be observed directly. Web counter page hits are used as a proxy for ENs opened, though these data may not represent unique users. The relationship between number of diagnoses reported and ENs opened is used by PHA decision-makers to explore the changing attenuation thresholds and risk score parameters (ie, level of infectiousness based on time since diagnosis and proximity and duration of the close contact encounter) to establish duration and proximity thresholds for generating ENs. Further analyses into the thresholds of “meaningful” timing and targeting of ENs coincide with efforts to evaluate the effectiveness of DEN tools to prevent further transmission of COVID-19.
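The ratio of ENs opened to diagnoses reported can be computed from two aggregate time series, as in the minimal sketch below. The function name and the daily counts are hypothetical; in practice the numerator would come from the web page counter and the denominator from keys published in the APHL-ENCV data.

```python
def ens_opened_per_key(daily_ens_opened, daily_keys_published):
    """Ratio of ENs opened to keys published over the same time window."""
    total_opened = sum(daily_ens_opened)
    total_keys = sum(daily_keys_published)
    if total_keys == 0:
        raise ValueError("no keys published in the window")
    return total_opened / total_keys


# Hypothetical three-day window (illustrative counts only).
opened = [70, 85, 82]
keys = [10, 10, 10]
print(f"{ens_opened_per_key(opened, keys):.1f} ENs opened per diagnosis reported")
```

Pooling counts over a window before dividing, rather than averaging daily ratios, keeps days with very few published keys from dominating the result.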

Effectiveness, that is, whether DEN tools prevent forward disease transmission, is perhaps the broadest area of performance. It can be estimated using a cases-averted modeling approach developed in the evaluation of the National Health Service (NHS) COVID-19 app in the United Kingdom, which was the first to define the relationship among the epidemiological parameters of interest for modeling the impact of DEN on preventing COVID-19 transmission.25 The epidemiological parameters include the number exposed to the intervention, timeliness, transmission rate, adherence rate, and secondary attack rate (SAR), calculated as the number of exposed individuals who develop the disease and test positive divided by the total number of exposed and “susceptible” contacts. SAR represents the magnitude and rate of disease spread as well as the infections that occur between app users. However, a challenge for interpreting SAR in the context of DEN systems is that it does not account for the overlap in close contact encounters between DEN tool users and nonusers or individuals who may receive an EN through other mechanisms such as contact tracing. Also unique to the evaluation of the NHS app was the ability to track outcomes among EN recipients, because COVID-19 test requests were integrated with the app and the NHS infrastructure allows access to test results. The NHS app also asks users to report the first two digits of their postal code when installing the tool, giving insight into geographic variation and allowing the model to account for clusters and adjust for confounding and time-varying factors. DEN tools adopted in the United States lack these geographic location and testing outcomes data, a reflection of a trade-off between privacy and the approaches available to measure the impact of the tool.
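The SAR definition above translates directly into a ratio, and applying the reported SAR range to a count of notifications gives a rough range of expected infections among EN recipients. The sketch below is an illustration only and is not the cases averted model: the function names are hypothetical, and it assumes each generated EN corresponds to one exposed, susceptible recipient. The inputs are the 5.1%–13.7% SAR range from Table 1 and the 10 741 ENs estimated as generated during the preliminary modeling period.

```python
def secondary_attack_rate(positive_contacts, susceptible_contacts):
    """Exposed contacts who test positive divided by all exposed, susceptible contacts."""
    if susceptible_contacts <= 0:
        raise ValueError("susceptible_contacts must be positive")
    return positive_contacts / susceptible_contacts


def expected_positive_contacts(notified, sar):
    """Expected infections among notified users, assuming one contact per EN."""
    return notified * sar


SAR_LOW, SAR_HIGH = 0.051, 0.137   # range reported in Table 1
ENS_GENERATED = 10_741             # preliminary modeling period estimate

low = expected_positive_contacts(ENS_GENERATED, SAR_LOW)
high = expected_positive_contacts(ENS_GENERATED, SAR_HIGH)
print(f"Expected infections among EN recipients: {low:.0f} to {high:.0f}")
```

The width of this range shows how sensitive any downstream estimate is to the SAR assumption, independent of the other parameters in the model.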

The second effectiveness parameter of interest is the time from exposure to exposure notification (E-to-EN), which reflects the timeliness of notifying a contact who has been exposed so that they can engage in protective behaviors and reduce further spread as soon as possible. The probability of being notified within a meaningful timeframe is conditional on the level of infectiousness at the time of the exposure encounter and on when the index case tested positive for COVID-19; therefore, the timing of the encounter and the symptom onset date provided by users who report their diagnosis are key metrics reported in the ENPA and APHL-ENCV aggregate data.26 The E-to-EN delay is included in the cases averted model as a function of the probability of a positive test result among those recently notified about a COVID-19 exposure. The longer the delay, the less likely a notification will correlate with reporting of a positive test result (ie, the individual may have been tested for other reasons). Two areas where DEN tools may have increased value in reducing spread with timely notification are when the index case is unknown to the user and/or the user is currently asymptomatic or presymptomatic. Of interest is the extent to which DEN tools reduce the E-to-EN time compared to traditional contact tracing, which primarily captures contacts known to the index case. Recent investigations of this effectiveness parameter report that DEN tools may reach close contacts 2–3 days sooner than manual contact tracing.27,28 However, there are limitations to ENPA and APHL-ENCV timeliness metrics. In ENPA, E-to-EN data are reported in aggregate 3-day bins, distorted with differential privacy noise, and limited to those users who opt in to share their analytics.
In APHL-ENCV data, the distribution of time between symptom onset date and diagnosis report date, while relevant to estimating the level of infectiousness for risk score algorithms, does not allow for a direct calculation of individual events in the E-to-EN timeline. These limitations highlight the challenges inherent in relying on these aggregated data sources to quantify the E-to-EN timeline.
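One consequence of binned, noised timeliness data is that only an approximate average delay can be recovered. The minimal sketch below estimates a mean E-to-EN delay from 3-day bins using bin midpoints. The function name, bin edges, and counts are hypothetical, and clipping negative noised counts to zero is a simplifying assumption that can itself introduce bias.

```python
def mean_delay_from_bins(bin_edges_days, noisy_counts):
    """Approximate mean delay from binned counts using bin midpoints.

    Negative counts (possible after noise is added) are clipped to zero.
    """
    weighted_sum = 0.0
    total = 0.0
    for (start, end), count in zip(bin_edges_days, noisy_counts):
        count = max(count, 0.0)
        midpoint = (start + end) / 2
        weighted_sum += midpoint * count
        total += count
    if total == 0:
        raise ValueError("no non-negative counts to average")
    return weighted_sum / total


# Hypothetical 3-day bins and noised counts (illustrative values only).
bins = [(0, 3), (3, 6), (6, 9), (9, 12)]
counts = [20, 50, 30, -2]
print(f"Approximate mean E-to-EN delay: {mean_delay_from_bins(bins, counts):.1f} days")
```

The midpoint approximation cannot distinguish a delay of 3.1 days from one of 5.9 days, so differences smaller than the bin width are not resolvable from these data.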

Finally, the third effectiveness parameter focuses on whether users engage in protective behaviors after learning about their COVID-19 exposure. However, anonymity and privacy-preservation limit access to DEN users and their behaviors.29 EN recipient surveys, while a potentially useful strategy for reaching users, are inherently biased. For example, participants in the WA Notify user experience survey are those who received an EN, opened it, clicked on the link to go to the DOH landing page that provides information about what to do next, and then opted to take the survey. Those willing to participate in the survey may represent a unique population of users who are more concerned about their level of risk or more willing to follow public health recommendations. However, at the same time, embedding the survey link into the user experience workflow strengthens the utility of survey responses as it captures individuals in close proximity in time to their experience of receiving an EN. The hidden landing page reduces the chance that individuals could access the link to the survey from sources outside of receiving an EN (eg, the landing page will not show up in Web search results). Measuring attrition across the user workflow can serve to understand the representation of survey participants in the context of other DEN metrics. For the WA Notify preliminary cases averted modeling (November 30, 2020–March 31, 2021), we estimated that 10 741 ENs were generated, 5215 ENs opened, 1168 surveys started, and 1155 surveys completed, indicating that 48.6% of individuals who received an EN opened it, 22.4% of individuals who opened the EN clicked on the survey link embedded on the landing page, and 10.8% of individuals who received an EN completed the survey. In addition, the WA Notify follow-up survey provides insight into the types of protective behaviors respondents endorsed and whether their intention to engage in those behaviors changed over time. 
This type of contextual information is necessary for assessing the user experience of DEN, as engagement in protective behaviors can also be related to confounding factors, such as vaccination status, home or workspace conditions (eg, ventilation), or travel plans.
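The attrition percentages reported above follow from the four workflow counts, as the short sketch below shows. The function name and dictionary keys are illustrative choices; the counts are those reported for the preliminary cases averted modeling period.

```python
def funnel_rates(generated, opened, started, completed):
    """Conversion rates across the EN-to-survey workflow."""
    return {
        "opened_of_generated": opened / generated,
        "started_of_opened": started / opened,
        "completed_of_generated": completed / generated,
    }


# Counts reported for November 30, 2020 to March 31, 2021.
rates = funnel_rates(generated=10_741, opened=5_215, started=1_168, completed=1_155)
for step, rate in rates.items():
    print(f"{step}: {rate:.1%}")
# opened_of_generated: 48.6%
# started_of_opened: 22.4%
# completed_of_generated: 10.8%
```

Tracking each step against its own denominator makes it clear where respondents drop out, which helps in judging how representative survey participants are of all EN recipients.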

DISCUSSION

In this article, we explored the DEN data architecture upon which WA Notify is built and outlined a set of performance metrics for evaluating real-world DEN implementations. Categorizing these metrics into dimensions of reach, engagement, and effectiveness highlights their alignment with, and utility for, the development of solid evaluation objectives. Linking the theoretical implementation constructs30 with the metrics available within the underlying disparate, deidentified and aggregate data infrastructure serves to advance the measurement science of evaluation of DEN tools while also highlighting critical gaps.31,32 As a result, this metrics summary describes the existing challenges and identifies pathways towards validating new measurement approaches within DEN systems.

Despite the local variations in implementation approaches and specifications of the intervention itself (ie, risk score parameters), we identified shared challenges in developing meaningful metrics for evaluation where advancing analysis beyond the current cases averted modeling would be beneficial. First, applied data science skills in public health are needed to handle the constraints of data that are protected with differential privacy methods and infused with statistical noise. Here, methodologies are required to understand the volume of data and the appropriate time-based theories needed to identify a statistically meaningful signal and extract underlying trends obscured by the noise present in the data. A second challenge is the validation of methodologies for estimating epidemiological parameters of interest in the context of COVID-19 as a novel and evolving disease with unique transmission characteristics.33 A third challenge is conducting systematic explorations of DEN tool user characteristics and other contextual, and possibly confounding, factors.34 The complexities of the broader environment should be taken into account in analyses, given the dramatic variation in infection rates, population behavior (eg, mask wearing), and ongoing changes to local, state, and federal government recommendations. Lastly, the lack of integration of the tool within a PHA’s public health infrastructure can present challenges for maintenance and prioritization among ongoing data modernization efforts. Siloing of tools is a general challenge for public health infrastructure,35 and while the decentralized nature of DEN tools contributes to their privacy preservation, it can also perpetuate complexities in the management and funding of the tool and may influence its usefulness when there are other competing efforts to engage the public in infection control (eg, vaccine uptake).

In the early testing phases of WA Notify, implementers developed evaluation and monitoring strategies in parallel with the design and deployment of the tool. PHAs should continue to allow room for growth by embedding a process for ongoing, iterative, and flexible evaluations that are responsive as systems, features, and processes change. For example, late in 2021, WA Notify users who tested positive with an at-home COVID-19 test could self-attest, that is, request verification codes and report diagnoses without waiting for laboratory confirmation. This new functionality immediately shifted the distribution of timeliness metrics since rapid test results can be reported within 15 minutes. This change influenced both analytics and PHA considerations around WA Notify's threshold settings and translated into revised definitions for performance indicators and new evaluation objectives.

A formal synthesis of evidence and the consensus of experts on measurement objectives are the traditional mechanisms for establishing key performance indicators. These efforts necessitate strong governance, stakeholder engagement, and decision-making around standards. The role of governance in applied public health informatics is rapidly expanding as PHAs across the nation face increasingly complex interoperability and surveillance infrastructure needs. As a group, DEN implementers can identify pathways towards developing a common framework for assessing DEN performance and value to public health while also defining priorities for standards and validation activities.

The tension between privacy and utility of DEN tools is rooted in the balance between trust and the role of public health to act to mitigate communicable disease outbreaks.36 The digital ecosphere often raises concerns from the public about being tracked and losing control of personal data, among other undesired uses of identifiable information. Although surveillance is not a tangible function of DEN tools when employed as a pandemic response tool, ensuring trust with the community is paramount to promote adoption and willingness to share information honestly and accurately. Most publications about DEN tools emphasize the importance of maintaining the decentralized architecture to protect privacy.37 Balancing the need for epidemiological information to evaluate the tool with legitimate data privacy will be essential to ensure that performance metrics of DEN tools can support ongoing maintenance and improvements, inform policy-related decisions, and communicate their value for pandemic mitigation to the public.

CONCLUSION

During the SARS-CoV-2 pandemic, over 50 national and state PHAs developed and implemented DEN tools to meet unprecedented challenges in public health. Using WA Notify as an example, we identified the challenges encountered with data available to measure DEN performance using the implementation constructs of reach, engagement, and effectiveness and describe how we addressed those challenges in our analytic efforts. Eliminating technical hurdles that create adoption barriers as well as communicating the benefits of DEN tools are crucial to promote further uptake and adherence of such tools and, ultimately, to enhance their effectiveness. To do so, we need collaborative, multidisciplinary, consensus activities to explore the utility of these tools and their potential application to future public health emergencies.

FUNDING

This work was supported by an Interagency Agreement between the State of Washington Department of Health and the University of Washington (HED 25742).

AUTHOR CONTRIBUTIONS

Conceptualization: CS, WL, JB, and BK; Analysis: CS, WL, and DL; Writing—Original Draft: CS, WL, JB, and DR; Writing—Review & Editing: CS, WL, DL, DR, BK, and JB.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

ACKNOWLEDGMENTS

We wish to thank our collaborators at the Washington State Department of Health, notably Amanda Higgins and Andrea King.

CONFLICT OF INTEREST STATEMENT

None declared.

Contributor Information

Courtney D Segal, Department of Health Systems and Population Health, University of Washington, Seattle, Washington, USA.

William B Lober, Department of Biobehavioral Nursing and Health Informatics, University of Washington, Seattle, Washington, USA; Clinical Informatics Research Group, University of Washington, Seattle, Washington, USA.

Debra Revere, Department of Health Systems and Population Health, University of Washington, Seattle, Washington, USA.

Daniel Lorigan, Clinical Informatics Research Group, University of Washington, Seattle, Washington, USA.

Bryant T Karras, Washington State Department of Health, Olympia, Washington, USA.

Janet G Baseman, Department of Epidemiology, University of Washington, Seattle, Washington, USA.

Data Availability

The data are not publicly available.

REFERENCES

- 1. Mays GP, McHugh MC, Shim K, et al. Getting what you pay for: public health spending and the performance of essential public health services. J Public Health Manag Pract 2004; 10 (5): 435–43. [DOI] [PubMed] [Google Scholar]

- 2. Centers for Disease Control. Contact tracing for COVID-19 | CDC. https://www.cdc.gov/coronavirus/2019-ncov/php/contact-tracing/contact-tracing-plan/contact-tracing.html. Accessed March 31, 2022.

- 3. World Health Organization. Contact tracing in the context of COVID-19. https://www.who.int/publications/i/item/contact-tracing-in-the-context-of-covid-19. Accessed March 31, 2022. [PubMed]

- 4. Svoboda T, Henry B, Shulman L, et al. Public health measures to control the spread of the severe acute respiratory syndrome during the outbreak in Toronto. N Engl J Med 2004; 350 (23): 2352–61. [DOI] [PubMed] [Google Scholar]

- 5. Kleinman RA, Merkel C.. Digital contact tracing for COVID-19. CMAJ 2020; 192 (24): E653–6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Ranisch R, Nijsingh N, Ballantyne A, et al. Digital contact tracing and exposure notification: ethical guidance for trustworthy pandemic management. Ethics Inf Technol 2021; 23 (3): 285–94. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Google & Apple. Privacy-preserving contact tracing - Apple and Google. Apple.com 1–7. https://covid19.apple.com/contacttracing; 2020. Accessed March 31, 2022.

- 8. Troncoso C, Payer M, Hubaux JP, et al. Decentralized privacy-preserving proximity tracing. arXiv preprint arXiv:2005.12273. May 25, 2020.

- 9. Lovett T, Briers M, Charalambides M, et al. Inferring proximity from Bluetooth low energy RSSI with unscented Kalman smoothers. Preprint at https://arxiv.org/abs/2007.05057. 2020.

- 10. Carney TJ, Shea CM.. Informatics metrics and measures for a smart public health systems approach: information science perspective. Comput Math Methods Med 2017; 2017: 1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Yasnoff WA, Overhage JM, Humphreys BL, LaVenture M. A national agenda for public health informatics: summarized recommendations from the 2001 AMIA Spring Congress. J Am Med Inform Assoc 2001; 8 (6): 535–45.

- 12. Chen T, Baseman J, Lober WB, et al. WA Notify: the planning and implementation of a Bluetooth exposure notification tool for COVID-19 pandemic response in Washington State. Online J Public Health Inform 2021; 13 (1).

- 13. World Health Organization. The Transformation Agenda Series 6: Towards a Stronger Focus on Quality and Results: Programmatic key performance indicators. Congo, Brazzaville: WHO Regional Office for Africa; 2019.

- 14. Glasgow RE, Harden SM, Gaglio B, et al. RE-AIM planning and evaluation framework: adapting to new science and practice with a 20-year review. Front Public Health 2019; 7: 64.

- 15. Exposure notifications. APHL. (2021). https://www.aphl.org/programs/preparedness/Crisis-Management/COVID-19-Response/Pages/exposure-notifications.aspx. Accessed March 8, 2021.

- 16. Exposure Notification. Wikipedia (2020). https://en.wikipedia.org/wiki/Exposure_Notification. Accessed November 1, 2021.

- 17. Analytics in Exposure Notifications Express: FAQ. GitHub. https://github.com/google/exposure-notifications-android/blob/master/doc/enexpress-analytics-faq.md. Accessed March 8, 2022.

- 18. Linux Foundation Public Health. Updating exposure notification risk score recommendations. December 15, 2021. https://www.lfph.io/2021/12/15/risk-score-recommendations-2/. Accessed March 8, 2022.

- 19. Baseman JG, Karras BT, Revere D. Engagement in protective behaviors by digital exposure notification users during the COVID-19 pandemic, Washington State, January–June 2021. Public Health Rep 2022; 003335492211103.

- 20. Revere D, Karras BT, Higgins A. EN User Experience. OSF. May 4, 2022. osf.io/tnav3.

- 21. Apple. Supporting Exposure Notifications Express. Apple Developer Documentation. https://developer.apple.com/documentation/exposurenotification/supporting_exposure_notifications_express. Accessed March 30, 2022.

- 22. Salathé M, Althaus CL, Anderegg N, et al. Early evidence of effectiveness of digital contact tracing for SARS-CoV-2 in Switzerland. Swiss Med Wkly 2020; 150: w20457.

- 23. Sun S, Shaw M, Moodie EE, Ruths D. The epidemiological impact of the Canadian COVID Alert App. Can J Public Health 2022; 113 (4): 519–27.

- 24. Abueg M, Hinch R, Wu N, et al. Modeling the effect of exposure notification and non-pharmaceutical interventions on COVID-19 transmission in Washington state. NPJ Digit Med 2021; 4 (1): 49.

- 25. Wymant C, Ferretti L, Tsallis D, et al. The epidemiological impact of the NHS COVID-19 app. Nature 2021; 594 (7863): 408–12.

- 26. Ferretti L, Wymant C, Kendall M, et al. Quantifying SARS-CoV-2 transmission suggests epidemic control with digital contact tracing. Science 2020; 368 (6491). doi: 10.1126/science.abb6936.

- 27. Menges D, Aschmann HE, Moser A, Althaus CL, von Wyl V. A data-driven simulation of the exposure notification cascade for digital contact tracing of SARS-CoV-2 in Zurich, Switzerland. JAMA Netw Open 2021; 4 (4): e218184.

- 28. Abeler J, Bäcker M, Buermeyer U, Zillessen H. Covid-19 contact tracing and data protection can go together. JMIR Mhealth Uhealth 2020; 8 (4): e19359.

- 29. Bengio Y, Janda R, Yu YW, et al. The need for privacy with public digital contact tracing during the COVID-19 pandemic. Lancet Digit Health 2020; 2 (7): e342–e344.

- 30. King DK, Glasgow RE, Leeman-Castillo B. Reaiming RE-AIM: using the model to plan, implement, and evaluate the effects of environmental change approaches to enhancing population health. Am J Public Health 2010; 100 (11): 2076–84.

- 31. Colizza V, Grill E, Mikolajczyk R, et al. Time to evaluate COVID-19 contact-tracing apps. Nat Med 2021; 27 (3): 361–2.

- 32. von Wyl V, Bonhoeffer S, Bugnion E, et al. A research agenda for digital proximity tracing apps. Swiss Med Wkly 2020; 150, 29–30.

- 33. Panovska-Griffiths J, Swallow B, Hinch R, et al. Statistical and agent-based modelling of the transmissibility of different SARS-CoV-2 variants in England and impact of different interventions. Philos Trans A Math Phys Eng Sci 2022; 380 (2233): 20210315.

- 34. Ullah S, Khan MS, Lee C, Hanif M. Understanding users’ behavior towards applications privacy policies. Electronics 2022; 11 (2): 246.

- 35. Bragge P, Becker U, Breu T, et al. How policymakers and other leaders can build a more sustainable post-COVID-19 normal. Discov Sustain 2022; 3 (1): 7–6.

- 36. Hulkower R, Penn M, Schmit C. Privacy and confidentiality of public health information. In: Magnuson JA, Fu PC Jr, eds. Public Health Informatics and Information Systems. Cham: Springer; 2020: 147–166.

- 37. Kolasa K, Mazzi F, Leszczuk-Czubkowska E, Zrubka Z, Péntek M. State of the art in adoption of contact tracing apps and recommendations regarding privacy protection and public health: systematic review. JMIR Mhealth Uhealth 2021; 9 (6): e23250.
