Abstract

Objective

Meditation with mobile apps has been shown to improve mental and physical health. However, regular, long-term meditation app use is needed to maintain these health benefits, and many people have a difficult time maintaining engagement with meditation apps over time. Our goal was to determine the length of the timeframe over which usage data must be collected before future app abandonment can be predicted accurately in order to better target additional behavioral support to those who are most likely to stop using the app.

Methods

Data were collected from a randomly drawn sample of 2600 new subscribers to a 1-year membership of the mobile app Calm, who started using the app between July and November of 2018. App usage data contained the duration and start time of all meditation sessions with the app over 365 days. We used these data to construct the following predictive model features: total daily sessions, total daily duration, and a measure of temporal similarity between consecutive days based on the dynamic time warping (DTW) distance measure. We then fit random forest models using increasingly longer periods of data after users subscribed to Calm to predict whether they performed any meditation sessions over 2-week intervals in the future. Model fit was assessed using the area under the receiver operating characteristic curve (AUC), and an exponential growth model was used to determine the minimal amount of data needed to reach an accurate prediction (95% of max AUC) of future engagement.

Results

After first subscribing to Calm, 83.1% of the sample used the Calm app on at least 1 more day. However, by day 350 after subscribing, 58.0% of users abandoned their use of the app. For the persistent users, the average number of daily sessions was 0.33 (SD = 0.02), the average daily duration of meditating was 3.93 minutes (SD = 0.25), and the average DTW distance to the previous day was 1.50 (SD = 0.17). The exponential growth models revealed that an average of 64 days of observations after subscribing to Calm are needed to reach an accurate prediction of future app engagement.

Discussion

Our results are consistent with existing estimates of the time required to develop a new habit. Additionally, this research demonstrates how to use app usage data to quickly and accurately predict the likelihood of users’ future app abandonment. This research allows future researchers to better target just-in-time interventions towards users at risk of abandonment.

Keywords: mindfulness meditation, mobile apps, mHealth, habit formation, dynamic time warping, app engagement

INTRODUCTION

Background and significance

Mindfulness meditation is a mind–body technique that has been found to reduce psychological symptoms from stress, anxiety, and depression,1–4 improve workplace productivity,5,6 and reduce healthcare expenditures.7,8 However, the benefits of meditation are primarily attained through persistent long-term practice,9,10 and many people who initiate meditation struggle to maintain their meditation practice.11–13 Thus, there is a need to better understand how meditation behaviors change over time in order to design and target behavioral strategies that can help individuals better maintain their meditation practice.

Mobile health (mHealth) tools, such as smartphone applications (apps), have become a popular and promising approach for helping individuals establish persistent health behaviors,14,15 as they are easily accessible, relatively inexpensive, and can provide personalized and adaptive interventions to help individuals combat a wide range of behavioral barriers. Meditation apps in particular have become increasingly popular, but users also struggle to maintain consistent app-based meditation practices over time.16–18 Daily adherence to app-based meditation interventions can be as low as 24%.19 Additionally, in real-world (ie, not research study) settings, it was recently reported that only 2% of health app users adhere to a level necessary to attain the corresponding health benefits.19–21 Thus, additional behavioral interventions are likely necessary to help users maintain their app-based meditation practices.

The use of personalized, adaptive, and/or just-in-time adaptive interventions (JITAIs) has been shown to successfully combat dropout and low adherence to many different mHealth behaviors.22 However, the success of these dynamic intervention approaches relies on the ability of researchers to detect when an individual needs a different (or additional) intervention tool,23 which requires active assessments. Health apps offer the ability to passively evaluate users’ maintenance without using burdensome assessment methods such as ecological momentary assessments,24 but little is known about how to best leverage health app usage data to accurately predict users’ future engagement. Additionally, it is unknown how many days of app usage data are needed to successfully identify users who are likely to abandon the app and thus may need additional behavioral supports.

Health behavior-change research provides important guidance for identifying app subscribers who are confronting barriers to behavioral maintenance. The Transtheoretical Model posits that individuals progress through 6 stages of behavior change when implementing a new behavior: precontemplation, contemplation, preparation, action, maintenance, and termination.25,26 By downloading (and potentially paying for) a new meditation app, new subscribers are likely in the action stage, and based on their subsequent app usage data we can determine whether they successfully transition to maintenance or if they require additional interventions to help move from action to maintenance. One mechanism for maintaining behaviors is through the formation of a contextually cued habit.27,28 Psychologists and neuroscientists have demonstrated that repeatedly performing a new behavior in response to the same stimuli (or contextual cue) over time establishes a reflexive habit.28–31 Such contextually cued, reflexive habits have been found to support many persistent health habits, such as improved dietary habits32,33 and high medication adherence,34–37 and the temporal similarity of these behaviors (ie, performing the behavior at approximately the same time of day) has been used to identify those who likely have formed contextually cued habits.13,38 These results suggest that both the frequency and timing of app use within the day can serve as important predictors of future app engagement.

Objective

The purpose of this study was to determine how quickly mobile app usage data from new subscribers of the Calm meditation app could be used to accurately predict the maintenance of app engagement. Our data-driven approach to studying meditation app maintenance can be applied to a wide range of other mobile health data, such as trackers of physical activity, medication adherence, and diet, to similarly help target dynamic behavioral interventions across these diverse health behavior settings.

MATERIALS AND METHODS

Predictive variable construction

Detailed, longitudinal behavioral data were collected by the mobile meditation app Calm, which had over 4 million paying subscribers at the time of data collection. These data contained information on individual meditation sessions for a random sample of 2600 new users who subscribed to Calm between July and November of 2018. The data included the date, start time, and duration of each meditation session performed by these users over a 365-day sample period. More information about these data can be found in Huberty et al.39 Each participant’s daily longitudinal data were split into two periods: (1) the first 151 days after subscribing to Calm, which were used to define model features and (2) data ranging from day 181 through 364, which were used to define outcome measures of future meditation app engagement. These two periods (ie, the initial data for prediction and the data used to define future engagement) were separated by at least 30 days to ensure the models were capturing predictors of maintenance and not shorter-term behavioral patterns. This study was approved by the Arizona State University Institutional Review Board (Study #: 00012530).

To predict future meditation app engagement, we constructed 3 daily model features based on the first 151 days after subscribing to Calm: (1) the total time spent meditating with the app, (2) the number of meditation sessions, and (3) a measure of the similarity in the daily timing of meditation sessions between consecutive days. Behavior theorists posit that daily temporal consistency is a predictor of the habit formation process since most habitual behaviors are performed near the same location and at the same time of day.40,41 This existing evidence motivated our inclusion of a temporal similarity measure as a predictive feature. To construct this temporal similarity measure, a dichotomous minute-level time series was constructed for each day whereby each minute was assigned a value of 1 if it corresponded to a subscriber’s meditation session and 0 otherwise. The temporal similarity between consecutive days was then computed using the dynamic time warping (DTW) algorithm, which calculates an adjusted distance measure that allows for flexibility in the timing of similar data patterns. For example, if a subscriber meditated from 9:05 to 9:15 am on the previous day and 9:06 to 9:16 am on the current day, the traditional Euclidean distance between these time series would be 2 minutes, while the DTW distance is 0 minutes since the general pattern of a session at similar times is consistent over the days. This was calculated using the Python software package ‘dtw’.42 We used the Sakoe-Chiba window type (‘sakoechiba’ in the package) with a window size of 60 (to allow 1 hour of flexibility) and the ‘symmetric1’ step pattern.
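The worked example above can be reproduced with a small, dependency-free sketch. This is not the ‘dtw’ package used in the study; it is a plain re-implementation of the unweighted DTW recursion with a band constraint, and the function names `minute_series` and `dtw_distance` are hypothetical. It assumes sessions are given as (start minute, end minute) pairs with the end minute excluded.

```python
def minute_series(sessions):
    """Build a 1440-element 0/1 list for one day.

    `sessions` is a list of (start_minute, end_minute) pairs, end exclusive.
    """
    series = [0] * 1440
    for start, end in sessions:
        for minute in range(max(start, 0), min(end, 1440)):
            series[minute] = 1
    return series


def dtw_distance(a, b, window=60):
    """Unweighted DTW distance restricted to a band of |i - j| <= window."""
    n, m = len(a), len(b)
    inf = float("inf")
    prev = [inf] * (m + 1)
    prev[0] = 0.0  # cost of aligning two empty prefixes
    for i in range(1, n + 1):
        cur = [inf] * (m + 1)
        for j in range(max(1, i - window), min(m, i + window) + 1):
            cost = abs(a[i - 1] - b[j - 1])
            # Each step (vertical, horizontal, diagonal) has weight 1.
            cur[j] = cost + min(prev[j], cur[j - 1], prev[j - 1])
        prev = cur
    return prev[m]


# Example from the text: 9:05-9:15 am on the previous day, 9:06-9:16 am today.
yesterday = minute_series([(9 * 60 + 5, 9 * 60 + 15)])
today = minute_series([(9 * 60 + 6, 9 * 60 + 16)])

pointwise = sum(abs(x - y) for x, y in zip(yesterday, today))  # minutes that differ
warped = dtw_distance(yesterday, today, window=60)
print(pointwise, warped)
```

The pointwise comparison counts the two minutes where the days disagree, while the warped distance is zero because a one-minute shift falls well inside the 60-minute band.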

When comparing activity patterns over consecutive days, it is important to distinguish between temporal consistency associated with app use versus consistency associated with nonuse. Specifically, the DTW distance between consecutive days with no meditation is 0 (minimum), which is the same as the DTW distance between perfectly consistent days of meditations. In other words, the algorithm cannot distinguish between 2 days of perfect temporal consistency and 2 days of no behavior. This complicates the interpretation of the DTW distance, potentially making it an inaccurate signal of temporally consistent meditation. Thus, the DTW distance measure was adjusted by penalizing days with consecutive 0 meditations; where this was the case, the adjusted DTW was defined as 1, and all other DTW distances were scaled by dividing by the total number of minutes spent meditating with the app on the previous day (plus 1 to avoid division by 0). This scaling allowed the penalized distances to be high in comparison to days with actual meditation app use. The adjustment on DTW was done as follows:

$$
\mathrm{DTW}^{\mathrm{adj}}_{t} =
\begin{cases}
1, & \text{if } m_{t} = 0 \text{ and } m_{t-1} = 0,\\[4pt]
\dfrac{\mathrm{DTW}_{t}}{m_{t-1} + 1}, & \text{otherwise,}
\end{cases}
$$

where $\mathrm{DTW}_{t}$ is the DTW distance between day $t$ and day $t-1$, and $m_{t}$ is the total number of minutes spent meditating with the app on day $t$.
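A minimal sketch of this adjustment follows. It assumes that "consecutive 0 meditations" means zero meditation minutes on both the previous and the current day; the function name `adjusted_dtw` and its argument names are hypothetical.

```python
def adjusted_dtw(dtw_dist, minutes_prev, minutes_curr):
    """Adjusted DTW distance between the current day and the previous day.

    Assumption: the penalty applies when neither day contains any meditation.
    """
    if minutes_prev == 0 and minutes_curr == 0:
        return 1.0  # penalty for two consecutive days of nonuse
    # Scale by the previous day's meditation minutes (+1 avoids division by 0).
    return dtw_dist / (minutes_prev + 1)


print(adjusted_dtw(0.0, 0, 0))    # two days of nonuse
print(adjusted_dtw(0.0, 10, 10))  # two perfectly consistent days of use
print(adjusted_dtw(4.0, 9, 12))   # some mismatch after a 9-minute day
```

Under this rule, two days of nonuse score 1, whereas two temporally consistent days of meditation score 0, so the two cases are no longer conflated.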

Persistence variable construction

Future app engagement was measured by indicator variables of persistent use that identified whether any meditation sessions (of any duration) were performed over a 2-week period. For example, the persistence outcome that started on day 300 was equal to 1 if the subscriber meditated at least once between days 300 and 314, and 0 otherwise. Persistence outcomes were constructed starting on each day from 181 to 350, and the predictive ability of the model features was assessed for these various outcomes at further and further points into the future.
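The outcome definition can be sketched as follows, assuming each user's sessions are summarized as a set of day numbers on which at least one session occurred, and that the 2-week period includes both endpoints (days 300 through 314 in the example above). The name `persistence_outcome` is hypothetical.

```python
def persistence_outcome(session_days, start_day, window=14):
    """Return 1 if any session falls in [start_day, start_day + window], else 0."""
    return int(any(start_day <= day <= start_day + window for day in session_days))


# Hypothetical user who meditated on days 12 and 305 after subscribing.
user_days = {12, 305}

# One outcome per start day from 181 to 350 (170 outcomes in total).
outcomes = {start: persistence_outcome(user_days, start) for start in range(181, 351)}
print(outcomes[300], outcomes[306], len(outcomes))
```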

Predictive models

A random forest ensemble method was chosen as the main prediction model of future meditation engagement because of its ability to detect nonlinear relationships. Specifically, these random forest models were created using the Python statistical learning software package ‘scikit-learn’.43 All models were initialized with a balanced initial class weight, which automatically adjusts weights inversely proportional to class frequencies in the input data. All other model specifications were left to their default settings according to version 0.24 of scikit-learn. Specifically, each model used 100 base estimators, had no limitations on the maximum depth of each tree or the number of leaf nodes, and applied a bootstrap sampling method when building the trees. For more details on the default parameters of the scikit-learn random forest classifier, see the scikit-learn documentation.43 Additionally, all of the model features were log-transformed before being entered into the model.

Random forest models were separately estimated using varying amounts of data to construct model features and using meditation persistence outcomes that varied in their distance from each user’s start date with the app. First, a model was created that used 3 predictive variables based on the first and second days after subscribing to the app to predict meditation behavior on the outcome defined on day 181. Then, a second model was created using the predictive variables based on days 1–3 (6 total predictors) to predict the meditation behavior on day 181. This procedure continued until a model using observations over the first 151 days was created to predict the outcome defined on day 181. This is shown in the schematic in Figure 1, with the varying number of feature days shown on the left side of each model.

Figure 1.

Schematic for modeling procedure: models were estimated using varying amounts of predictors defined from days 1–151 to predict varying persistence outcomes defined on days 181 through 350. In total, the number of intervals used for defining predictive variables (n = 150) and for defining persistence outcomes (n = 170) produced 25 500 models.

Additionally, predictions of the persistence outcome at progressively further points into the future were also made. First, as described above, varying predictor variables over the first 151 days were used to predict persistence on day 181. Then, using the same predictor variables from the first 151 days, additional random forest models were estimated to predict the persistence outcome defined on day 182. Following this same pattern, random forest models were estimated predicting the persistent meditation outcomes defined on days 181 through 350. This changing outcome day is shown in the schematic in Figure 1 on the right side of each model.
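The grid of models described above can be enumerated in a few lines. The sketch assumes 3 features per feature day starting from day 2 (so that days 1–2 give 3 predictors and days 1–3 give 6, as stated in the text); the names `n_predictors` and `model_grid` are hypothetical.

```python
from itertools import product

FEATURES_PER_DAY = 3  # duration, session count, adjusted DTW

feature_end_days = range(2, 152)  # feature windows 1-2, 1-3, ..., 1-151
outcome_days = range(181, 351)    # persistence outcomes starting on days 181..350


def n_predictors(end_day):
    """Number of predictors when days 1..end_day are used to build features."""
    return FEATURES_PER_DAY * (end_day - 1)


# Every (feature window, outcome day) pair corresponds to one random forest model.
model_grid = list(product(feature_end_days, outcome_days))
print(len(feature_end_days), len(outcome_days), len(model_grid))
```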

In total, all combinations of the number of intervals used for defining predictive variables (n = 150) and for defining persistence outcomes (n = 170) produced 25 500 models. Each model was trained to minimize the Gini index and was evaluated by 10-fold cross-validation. The data were split into 10 subsamples and each model was trained on 9 of the 10 subsamples to obtain predictions for the remaining 10th of the data. This was done 10 times using each 10th as a test subsample. These model predictions were assessed by the area under the receiver operating characteristic curve (AUC).
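A single model from this grid might be fit and scored roughly as shown below. This is a sketch on synthetic data, not the study's code. Several details are assumptions that the text does not specify: the log transform is taken as log(1 + x) because the features contain zeros, the folds are stratified and shuffled, and the AUC is computed on pooled out-of-fold predictions.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import StratifiedKFold, cross_val_predict

rng = np.random.default_rng(0)

# Synthetic stand-in for the real data: 500 hypothetical users, 30 predictors.
n_users, n_features = 500, 30
X_raw = rng.exponential(scale=2.0, size=(n_users, n_features))
# Synthetic outcome loosely tied to the first 5 predictors so there is signal.
y = (X_raw[:, :5].sum(axis=1) + rng.normal(0, 2, n_users) > 12).astype(int)

X = np.log1p(X_raw)  # assumption: log(1 + x) to handle zero-valued features

model = RandomForestClassifier(
    n_estimators=100,         # 100 base estimators, as described in the text
    criterion="gini",         # splits chosen by Gini impurity
    class_weight="balanced",  # weights inversely proportional to class frequency
    random_state=0,
)

# 10-fold cross-validation: each user is predicted by a model that never saw them.
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=0)
proba = cross_val_predict(model, X, y, cv=cv, method="predict_proba")[:, 1]
auc = roc_auc_score(y, proba)
print(round(auc, 3))
```

Repeating this for every pair of feature window and outcome day would yield the full surface of AUC values analyzed in the Results.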

Growth curve analysis

To determine how many initial observations were needed to accurately predict future app engagement, an exponential growth function was fit to the results from the random forest models. Specifically, Hull’s formula44 was used to model the AUC from each combination of initial feature days and persistence outcomes using the following formula:

$$
y = a - b\,e^{-c x}
$$

where x is the window of observation days (eg, x = 100 when days 1–101 were used to calculate model features), y is the AUC score for a given persistence outcome, a represents the asymptote of the curve, b is the difference between the asymptote and the modeled initial value of y (when x = 0), and c describes the rate at which the maximum is reached. This exponential growth model was separately fit for every persistence outcome variable.45 After fitting the model, the number of feature days needed to reach 95% of the exponential growth model’s asymptote was calculated for each persistence outcome. This can be calculated as follows:

$$
x_{95} = -\frac{1}{c}\,\ln\!\left(\frac{0.05\,a}{b}\right)
$$

which follows from setting $y = 0.95\,a$ in the growth model and solving for $x$.
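The calculation can be checked numerically with a short sketch. It assumes the growth curve has the form y = a − b·exp(−c·x), which matches the parameter descriptions above; the function names are hypothetical. The example plugs in the mean coefficients reported in Table 2, so its result will differ somewhat from the reported mean of the per-outcome values.

```python
import math


def hull_curve(x, a, b, c):
    """Exponential growth toward the asymptote a, starting from a - b at x = 0."""
    return a - b * math.exp(-c * x)


def days_to_fraction_of_asymptote(a, b, c, fraction=0.95):
    """Solve a - b * exp(-c * x) = fraction * a for x.

    Requires b > (1 - fraction) * a; otherwise the curve already starts above
    the target and the solution is not positive.
    """
    return -math.log((1.0 - fraction) * a / b) / c


# Mean coefficients from Table 2 (a = 0.78, b = 0.29, c = 0.03).
x95 = days_to_fraction_of_asymptote(0.78, 0.29, 0.03)
print(round(x95, 1), round(hull_curve(x95, 0.78, 0.29, 0.03), 4))
```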

In addition, the number of initial feature days needed to reach 90% of the maximum AUC and the number of feature days needed to reach 95% of the maximum AUC were also calculated to assess the robustness of the findings. Lastly, to describe the aggregate decline in meditation app engagement that occurred over the sample period, we defined a survival variable that was equal to 1 if a user meditated at least once on or after a given day, and 0 otherwise.
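Both quantities in this paragraph are simple to compute. The sketch below assumes that "days to reach 90% (or 95%) of the maximum AUC" means the smallest number of feature days whose observed AUC meets that threshold, and that each user is summarized by the day of their last session; all names and the toy values are hypothetical.

```python
def days_to_fraction_of_max(auc_by_feature_days, fraction=0.95):
    """Smallest number of feature days whose AUC is >= fraction * max AUC."""
    target = fraction * max(auc_by_feature_days.values())
    for n_days in sorted(auc_by_feature_days):
        if auc_by_feature_days[n_days] >= target:
            return n_days


def survival_curve(last_session_days, days):
    """Percent of users with at least one session on or after each day."""
    n_users = len(last_session_days)
    return {
        day: 100.0 * sum(last >= day for last in last_session_days) / n_users
        for day in days
    }


# Toy AUC values for one persistence outcome, keyed by number of feature days.
toy_auc = {1: 0.50, 2: 0.73, 3: 0.78, 4: 0.80}
print(days_to_fraction_of_max(toy_auc, 0.90), days_to_fraction_of_max(toy_auc, 0.95))

# Three toy users whose last sessions were on days 1, 50, and 350.
print(survival_curve([1, 50, 350], [1, 2, 51, 351]))
```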

RESULTS

Sample characteristics

Among our sample of meditation app users, the number of completed meditation sessions over days 1–365 ranged from 0 to 670 sessions (mean 31.2). Demographic information for these users was not directly obtained, although it was estimated by Calm that the average age was 38 years (SD 10.51) and roughly 72% of these new subscribers were female.

After the day of subscription, 83.1% of users returned to use the Calm app at least one more time. After the first 100 days, 35.2% of all users stopped using the app entirely, and by day 350 (the last day used in our analysis), only 42.0% of users performed any meditations on that day or on any subsequent day (see Figure 2A).

Figure 2.

(A) (left) The percent of users with any subsequent app use over days 1–350 after subscribing to Calm. There is a steady abandonment in app use over time. (B) (right) The percent of the sample satisfying the persistence outcome over days 181–350 after subscribing to Calm. For days 181–350, between 15.4% and 22.0% of users satisfied the outcome.

For the persistence outcomes defined over days 181–350, between 15.4% and 22.0% of all users satisfied a given persistence outcome (ie, performing any meditation session on or within 14 days after the indicated day). This can be seen in Figure 2B, which plots the percentage of users that satisfied each of the persistence outcomes defined on days 181–350. Among the persistent users, the average number of daily sessions was 0.33 (SD = 0.02), the average daily duration of meditating was 3.93 minutes (SD = 0.25), and the average DTW distance to the previous day was 1.50 (SD = 0.17) from days 181 to 351. As seen in Figure 2B, there is a slight decline in the percent of users that satisfied the persistence outcome as the outcome start date moves further into the future, but the values are relatively consistent.

Table 1 presents summary statistics for the model features defined from days 1 to 151. In total, there were 390 000 observations, which is the number of feature days (n = 150) for each user (n = 2600). Over these observations, 0.11 (SD = 0.38) sessions of meditation were conducted per day, meditation sessions were performed for roughly 1.35 minutes (SD = 4.63), and the average daily DTW distance measure was equal to 1.03 (SD = 2.19). To better understand long-term meditation maintenance, Table 1 also displays summary statistics for the same model features constructed from days 181 to 351, which were not used in our predictive analyses. Over this later period, the mean number of meditation sessions dropped to 0.06 (SD = 0.28), with an average time spent meditating of 0.77 minutes (SD = 3.54) per day, and a mean adjusted DTW distance of 1.02 (SD = 1.76).

Table 1.

Summary statistics for the daily measure of users’ meditation duration, meditation session count, and the adjusted dynamic time warping (DTW) distance separately averaged over the first 151 days after subscribing to Calm and days 181–351

| Statistic | Days 1–151: Duration (min) | Days 1–151: Number of sessions | Days 1–151: Adjusted DTW | Days 181–351: Duration (min) | Days 181–351: Number of sessions | Days 181–351: Adjusted DTW |
|---|---|---|---|---|---|---|
| Mean | 1.35 | 0.11 | 1.03 | 0.77 | 0.06 | 1.02 |
| SD | 4.63 | 0.38 | 2.19 | 3.54 | 0.28 | 1.76 |
| Min | 0 | 0 | 0 | 0 | 0 | 0 |
| Max | 37.1 | 8 | 445 | 37.1 | 4 | 300 |
| Total n | 390 000 | 390 000 | 390 000 | 442 000 | 442 000 | 442 000 |

Predicting meditation persistence

The cross-validated AUC scores from each combination of observation days (1–2, 1–3, …, 1–151) and persistence outcomes (181,182, …, 350) are visualized in 3D space in Figure 3. In this figure, the “feature days” axis displays the number of initial days of observations used to construct model features, the “outcome day” axis displays the starting day of the defined persistence outcome, and the vertical axis displays the corresponding random forest model’s AUC score. The color map, in Figure 4, identifies ranges of AUC scores, with the darker red color indicating the highest AUC scores (AUC > 0.80) and the darker blue indicating the lowest AUC scores (AUC < 0.50).

Figure 3.

This plot shows the predictive performance (measured by the AUC displayed on the vertical axis) for separate random forest models with persistence outcomes defined from days 181–350 (displayed on the Outcome Day axis) using varying amounts of app usage data (displayed on the Feature Days axis). AUC scores were the highest when the outcome was defined closest to the feature days as well as when more feature days were included in the model. Specifically, AUC levels declined slightly as the outcome day increased (far left), and the AUC increased as more feature days were included (far right).

Figure 4.

A heatmap corresponding to the 3D plot that indicates high areas of AUC (red) and low areas of AUC (blue) for each combination of feature days and outcome day. The highest AUC scores are found in the area greater than 250 in the outcome day and greater than 100 in the number of feature days.

Overall, across all sets of observations (n = 150) and all persistence outcomes (n = 170), the AUC values ranged from 0.44 to 0.85 with an average of 0.72 (SD = 0.08). The AUC was highest using larger numbers of initial days to construct model features. Additionally, the AUC was higher when predicting persistence outcomes defined over earlier subsequent periods (eg, outcome day 181 as opposed to day 182). The average AUC over all the persistence outcomes using only the first observation day to construct model features was 0.47 (SD = 0.01), while the average when using all 151 days was 0.79 (SD = 0.03) over all persistence outcomes. As would be expected, using more days to construct model features always produced a greater than or equal AUC for a given persistence outcome, which can be seen in Figure 3, where the AUC increases with the addition of feature days, for a given outcome measure (ie, outcome day).

Additionally, the random forest models’ AUC values were highest when the models were fit to persistence outcomes defined on start days that were closer in time to the feature days (eg, day 181 is closer in time to the observation days than day 182). For all models used to predict the persistence outcome on day 181, the average AUC was 0.76 (SD = 0.06), while the average AUC for models used to predict the persistence outcome on day 350 was 0.68 (SD = 0.07). Generally, the mean AUC decreased as the persistence outcome was defined further in the future, as can be seen in Figure 4.

Growth curve analysis

The second stage of our analysis was to fit an exponential growth model to these trends in AUC for each persistence outcome. A visualization of the exponential growth model using the AUC results for the persistence outcome defined on day 181 is displayed in Figure 5.

Figure 5.

The model predictive performance (AUC) for the persistence outcome defined on day 181 using varying amounts of initial app usage data (eg, days 1–2, 1–3, …, 1–151). The rate of change in the AUC begins to slow down around day 50 (where days 1–50 were included as features in the model).

Table 2 presents the results from fitting exponential growth models to the trends in AUC for each persistence outcome. The coefficient a, which captures asymptotic model AUC, was between 0.74 and 0.83 for all models, with a mean of 0.78 and a standard deviation of 0.02. The number of days to reach 95% of the model asymptote was estimated from these coefficients and is also displayed in Table 2. Across all models, the average number of feature days required to reach 95% of the asymptote was 63.27 days (SD = 13.76). This means that, on average, 64 days of information were needed to accurately predict future app engagement. For comparison purposes, the days to reach 90% and 95% of the maximum AUC were also calculated. The days to reach 90% of maximum AUC were less than the days to reach 95% of the exponential growth model asymptote, with a mean number of days of 50.73 (SD = 9.89). Additionally, days to reach 95% of maximum AUC were greater than the days to reach 95% of the growth model asymptote, with a mean number of days of 86.0 (SD = 9.39).

Table 2.

The results from the exponential growth model of predictive performance (AUC) are displayed as summary statistics over all combinations of persistence outcome measures and the number of days for defining model features

| Statistic | Outcome day | a (asymptote) | b | c | Days to reach 95% of asymptote | Days to reach 90% of max AUC | Days to reach 95% of max AUC |
|---|---|---|---|---|---|---|---|
| Mean | 265.50 | 0.78 | 0.29 | 0.03 | 63.27 | 50.73 | 86.01 |
| SD | 49.22 | 0.02 | 0.01 | 0.01 | 13.76 | 9.89 | 9.39 |
| Min | 181.00 | 0.74 | 0.23 | 0.02 | 44.85 | 29.00 | 58.00 |
| Max | 350.00 | 0.83 | 0.32 | 0.04 | 108.89 | 79.00 | 109.00 |

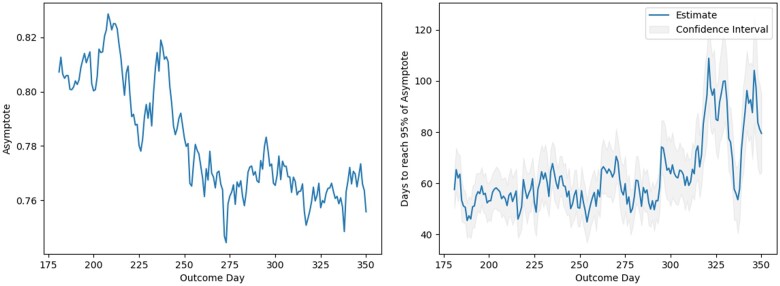

To help visualize these results, the exponential growth models’ asymptote is plotted in Figure 6A for each of the persistent outcomes (days 181–350). As the persistence outcomes are defined further into the future, the asymptote trends downward. For example, the model’s asymptote for the random forest models predicting persistence starting on day 181 was roughly 0.81, while the model’s asymptote for the models predicting persistence starting on day 350 was below 0.76.

Figure 6.

(A) (left) The change in the exponential growth model asymptote as the persistent outcome day varies. As the outcome moves further into the future the AUC asymptote decreases. (B) (right) The number of days to reach 95% of the exponential growth model asymptote for each of the different persistent outcome measures, with confidence intervals that were estimated using a bootstrap resampling method. These values remained below 80 until the models were predicting meditation app persistence defined on day 320.

The number of days to reach 95% of the exponential growth model’s asymptote is displayed in Figure 6B along with a 95% confidence interval, which was estimated using a bootstrap resampling method that generated 1000 new samples. The days to reach 95% of the model’s asymptote remained below 80 until the models were predicting meditation app persistence defined on day 320. The days to reach 95% of the growth models’ asymptote then quickly increased for the persistence outcomes defined after day 320.
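For readers unfamiliar with the technique, a generic percentile bootstrap is sketched below. The text does not state which units were resampled or which type of bootstrap interval was used, so this is only an illustration of the general idea on toy numbers; the function name `bootstrap_ci` and the input values are hypothetical.

```python
import random
import statistics


def bootstrap_ci(values, stat=statistics.mean, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for `stat` of `values`."""
    rng = random.Random(seed)
    n = len(values)
    # Resample with replacement n_boot times and recompute the statistic.
    estimates = sorted(
        stat([values[rng.randrange(n)] for _ in range(n)]) for _ in range(n_boot)
    )
    lower = estimates[int((alpha / 2) * n_boot)]
    upper = estimates[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


# Toy input only: 30 made-up values standing in for repeated estimates.
toy_values = list(range(50, 80))
low, high = bootstrap_ci(toy_values)
print(low, statistics.mean(toy_values), high)
```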

DISCUSSION

Principal findings

The purpose of this study was to determine how quickly mobile app usage data from new users of the Calm meditation app could be used to accurately predict future app engagement. We found that meditation app use declined quickly after subscribing to the app, with 35.2% of new subscribers never performing another meditation session after their first 100 days. High rates of abandonment are not uncommon in mobile app-based behaviors. A systematic review found that mental health app abandonment is roughly 26%.18 More broadly, researchers have shown that mobile health apps have an average abandonment rate of approximately 43%.20 To date, it is not known how these app abandonment rates differ between free and subscription-based apps or what features promote sustained app engagement; however, it has been reported that abandonment may be even higher in observational settings.20 This significant drop-off in meditation performance demonstrates the value of being able to quickly identify users who are unlikely to maintain their use of the app in order to more accurately target additional behavioral supports that may enable these users to better maintain their meditation practice and attain the corresponding health benefits.

Our models of future app engagement showed that the predictability of future meditation behavior increased when using more observations to construct model features and when the persistence outcomes were closer in time to the observations used to create model features. These patterns are consistent with expectations, as machine learning models will always improve when nonredundant features are added to the training of a prediction model.46 Interestingly though, the models’ predictive performance leveled off as the persistent outcome moved further into the future. Based on our exponential growth modeling analysis, we estimated that observing approximately 64 days was sufficient for accurately identifying persistent and nonpersistent app users. In other words, after 64 days from subscribing to a meditation app, it can be determined with high predictive performance, measured via AUC, whether someone will remain engaged with the app through the end of the year. The 64-day result is fairly consistent with prior work in the health habits literature, which has found that it takes around 66 days for a healthy habit to form.45 If it takes around 66 days for a habit to form, then it appears that our predictive models were able to identify the users who failed to form a new habit given 64 days of observations.

Our analyses revealed important patterns in model predictive performance that demonstrate how mobile app data can be leveraged to estimate users’ future app behavior. Since the goal of many preventative health behavior interventions is to establish persistent behavior change, for example, persistent physical activity or dietary improvements, these findings indicate that a follow-up intervention may be accurately targeted around 64 days after first starting the new behavior. Preventative health behavior interventions that are not provided at an optimal time risk reduced success. For example, if an intervention is delivered too early, it may be provided to those who do not need it, rendering the intervention ineffective and/or wasting resources. Likewise, late interventions risk users reaching a state of permanent abandonment or discouragement from pursuing the healthy behavior.

Our approach to modeling the maintenance of a health behavior, app-based meditation, builds on existing work that has examined the persistence of other health behaviors.47,48 Additionally, this study used DTW distance as a measure of temporal similarity between consecutive days, which adds to a growing literature that uses DTW distance for detecting temporal patterns in health behavior data and for identifying contextually cued habits.49–54 This article also contributes to the mHealth literature characterizing mobile phone app engagement over time. Declining app usage is an important concern and limitation of many existing app-based health promotion tools, and researchers have found that app engagement durations are shortening.55 Researchers have also documented declining engagement to other mHealth intervention tools, such as physical activity trackers56 and glucose monitors.57 Thus, our research provides important insights for researchers seeking to target behavioral tools towards those unlikely to maintain engagement with an mHealth intervention.

Limitations

This research uses a large sample of real-world app subscribers to inform our analyses, but despite our statistical power, this research has several limitations. First, the analysis focused only on meditation use with the Calm app. Although our study was primarily interested in the behavior (and subsequent health impact) of meditation, the app has several other features that users can employ to relax and practice mindfulness, such as breathing exercises and sleep stories. As a result, it could be that some users persistently used other features of the app that are similar to mindfulness meditation and were incorrectly classified as nonpersistent by our outcome measures. Second, we did not use random forest models tailored for time-series data. In other words, the models did not know the relative difference between features from day 1 and those from day 150. Since we added model features iteratively by day, our results still characterize how additional observations improve model predictive performance, but further research should attempt to estimate models that take the time-series nature of the data into account. Furthermore, future work that attempts to target interventions for increased temporal consistency should apply more detailed feature selection since the findings in this study are associative rather than causal. Finally, the data lacked contextual information on where meditations were performed, as well as demographic or other background information about new Calm subscribers. This limitation is particularly important when trying to identify the users who did and did not establish a contextually cued habit. Future research that combines objective daily app data with these additional sources of information could provide a more complete picture of the habit formation process and more accurately predict future meditation app engagement.

CONCLUSION

This study demonstrates how mobile meditation app data from new subscribers can be used to quickly determine whether they will persistently meditate over their first year with the app. Our findings indicate that meditation app users can be accurately identified as either persistent or nonpersistent users after approximately two months (64 days), which can help to better target additional behavioral supports for maintaining their app engagement. Importantly, the methods we used in this study can be readily applied to other mHealth data to similarly help in targeting nonpersistent users for additional interventions that can establish persistent healthy habits.

AUTHOR CONTRIBUTIONS

All authors contributed to the concept and design of the study. RF, VB, and CS cleaned and prepared the data. RF and CS implemented the study methods and interpreted the study results. RF drafted the manuscript and CS reviewed it.

CONFLICT OF INTEREST STATEMENT

JH discloses that she receives an annual salary from Calm and holds stock within the company. However, her salary and equity are not dependent upon the results of her research. All other authors have no actual or potential conflicts of interest to disclose.

Contributor Information

Rylan Fowers, College of Health Solutions, Arizona State University, Phoenix, Arizona, USA.

Vincent Berardi, Department of Psychology, Chapman University, Orange, California, USA.

Jennifer Huberty, College of Health Solutions, Arizona State University, Phoenix, Arizona, USA.

Chad Stecher, College of Health Solutions, Arizona State University, Phoenix, Arizona, USA.

Data Availability

The data underlying this article were provided by Calm by permission and cannot be shared publicly due to privacy concerns. The data will be shared on reasonable request to the corresponding author; however, a separate IRB may be required.

REFERENCES

- 1. Bostock S, Crosswell AD, Prather AA, et al. Mindfulness on-the-go: effects of a mindfulness meditation app on work stress and well-being. J Occup Health Psychol 2019; 24 (1): 127–38.

- 2. Eberth J, Sedlmeier P. The effects of mindfulness meditation: a meta-analysis. Mindfulness 2012; 3 (3): 174–89.

- 3. Edenfield TM, Saeed SA. An update on mindfulness meditation as a self-help treatment for anxiety and depression. Psychol Res Behav Manag 2012; 5: 131–41.

- 4. Lacaille J, Sadikaj G, Nishioka M, et al. Daily mindful responding mediates the effect of meditation practice on stress and mood: the role of practice duration and adherence. J Clin Psychol 2018; 74 (1): 109–22.

- 5. Kirk U, Wieghorst A, Nielsen CM, et al. On-the-spot binaural beats and mindfulness reduces behavioral markers of mind wandering. J Cogn Enhanc 2019; 3 (2): 186–92.

- 6. Killingsworth MA, Gilbert DT. A wandering mind is an unhappy mind. Science 2010; 330 (6006): 932.

- 7. Herman PM, Anderson ML, Sherman KJ, et al. Cost-effectiveness of mindfulness-based stress reduction vs cognitive behavioral therapy or usual care among adults with chronic low-back pain. Spine (Phila PA 1976) 2017; 42 (20): 1511–20.

- 8. Stahl JE, Dossett ML, LaJoie AS, et al. Relaxation response and resiliency training and its effect on healthcare resource utilization. PLoS One 2015; 10 (10): e0140212.

- 9. Shen H, Chen M, Cui D. Biological mechanism study of meditation and its application in mental disorders. Gen Psychiatr 2020; 33 (4): e100214.

- 10. Tang Y-Y, Lu Q, Fan M, et al. Mechanisms of white matter changes induced by meditation. Proc Natl Acad Sci USA 2012; 109 (26): 10570–4.

- 11. Huberty J, Eckert R, Larkey L, et al. Smartphone-based meditation for myeloproliferative neoplasm patients: feasibility study to inform future trials. JMIR Form Res 2019; 3 (2): e12662.

- 12. Stecher C, Sullivan M, Huberty J. Using personalized anchors to establish routine meditation practice with a mobile app: randomized controlled trial. JMIR Mhealth Uhealth 2021; 9 (12): e32794.

- 13. Stecher C, Berardi V, Fowers R, et al. Identifying app-based meditation habits and the associated mental health benefits: longitudinal observational study. J Med Internet Res 2021; 23 (11): e27282.

- 14. Lee J-A, Choi M, Lee SA, et al. Effective behavioral intervention strategies using mobile health applications for chronic disease management: a systematic review. BMC Med Inform Decis Mak 2018; 18 (1): 1–18.

- 15. Han M, Lee E. Effectiveness of mobile health application use to improve health behavior changes: a systematic review of randomized controlled trials. Healthc Inform Res 2018; 24 (3): 207–26.

- 16. Forbes L, Gutierrez D, Johnson SK. Investigating adherence to an online introductory mindfulness program. Mindfulness 2018; 9 (1): 271–82.

- 17. Howells A, Ivtzan I, Eiroa-Orosa FJ. Putting the ‘app’ in happiness: a randomised controlled trial of a smartphone-based mindfulness intervention to enhance wellbeing. J Happiness Stud 2016; 17 (1): 163–85.

- 18. Torous J, Lipschitz J, Ng M, et al. Dropout rates in clinical trials of smartphone apps for depressive symptoms: a systematic review and meta-analysis. J Affect Disord 2020; 263: 413–9.

- 19. Fleming T, Bavin L, Lucassen M, et al. Beyond the trial: systematic review of real-world uptake and engagement with digital self-help interventions for depression, low mood, or anxiety. J Med Internet Res 2018; 20 (6): e9275.

- 20. Meyerowitz-Katz G, Ravi S, Arnolda L, et al. Rates of attrition and dropout in app-based interventions for chronic disease: systematic review and meta-analysis. J Med Internet Res 2020; 22 (9): e20283.

- 21. Helander E, Kaipainen K, Korhonen I, et al. Factors related to sustained use of a free mobile app for dietary self-monitoring with photography and peer feedback: retrospective cohort study. J Med Internet Res 2014; 16 (4): e3084.

- 22. Nahum-Shani I, Smith SN, Spring BJ, et al. Just-in-time adaptive interventions (JITAIs) in mobile health: key components and design principles for ongoing health behavior support. Ann Behav Med 2018; 52 (6): 446–62.

- 23. Pace-Schott EF, Shepherd E, Spencer RM, et al. Napping promotes inter-session habituation to emotional stimuli. Neurobiol Learn Mem 2011; 95 (1): 24–36.

- 24. Shiffman S, Stone AA, Hufford MR. Ecological momentary assessment. Annu Rev Clin Psychol 2008; 4: 1–32.

- 25. Prochaska JO, DiClemente CC. Stages and processes of self-change of smoking: toward an integrative model of change. J Consult Clin Psychol 1983; 51 (3): 390–5.

- 26. Prochaska JO, Velicer WF. The transtheoretical model of health behavior change. Am J Health Promot 1997; 12 (1): 38–48.

- 27. Rothman AJ, Gollwitzer PM, Grant AM, et al. Hale and hearty policies: how psychological science can create and maintain healthy habits. Perspect Psychol Sci 2015; 10 (6): 701–5.

- 28. Wood W, Neal DT. A new look at habits and the habit-goal interface. Psychol Rev 2007; 114 (4): 843–63.

- 29. Gollwitzer PM, Brandstätter V. Implementation intentions and effective goal pursuit. J Personality Soc Psychol 1997; 73 (1): 186–99.

- 30. Gollwitzer PM. Implementation intentions: strong effects of simple plans. Am Psychol 1999; 54 (7): 493–503.

- 31. Marteau TM, Hollands GJ, Fletcher PC. Changing human behavior to prevent disease: the importance of targeting automatic processes. Science 2012; 337 (6101): 1492–5.

- 32. Adriaanse MA, Vinkers CDW, De Ridder DTD, et al. Do implementation intentions help to eat a healthy diet? A systematic review and meta-analysis of the empirical evidence. Appetite 2011; 56 (1): 183–93.

- 33. Adriaanse MA, Kroese FM, Gillebaart M, et al. Effortless inhibition: Habit mediates the relation between self-control and unhealthy snack consumption. Front Psychol 2014; 5: 444.

- 34. Alison Phillips L, Leventhal H, Leventhal EA. Assessing theoretical predictors of long-term medication adherence: patients’ treatment-related beliefs, experiential feedback and habit development. Psychol Health 2013; 28 (10): 1135–51.

- 35. Kronish IM, Ye S. Adherence to cardiovascular medications: lessons learned and future directions. Prog Cardiovasc Dis 2013; 55 (6): 590–600.

- 36. Liddelow C, Ferrier A, Mullan B. Understanding the predictors of medication adherence: Applying temporal self-regulation theory. Psychol Health 2021; 1–20.

- 37. Brooks TL, Leventhal H, Wolf MS, et al. Strategies used by older adults with asthma for adherence to inhaled corticosteroids. J Gen Intern Med 2014; 29 (11): 1506–12.

- 38. Phillips LA, Burns E, Leventhal H. Time-of-day differences in treatment-related habit strength and adherence. Ann Behav Med 2021; 55 (3): 280–5.

- 39. Huberty J, Green J, Puzia M, et al. Evaluation of mood check-in feature for participation in meditation mobile app users: retrospective longitudinal analysis. JMIR Mhealth Uhealth 2021; 9 (4): e27106.

- 40. Forgas SoP, Forgas JP, Williams KD, et al. Social Motivation: Conscious and Unconscious Processes. Cambridge, United Kingdom: Cambridge University Press; 2005.

- 41. Wood W, Quinn JM, Kashy DA. Habits in everyday life: thought, emotion, and action. J Pers Soc Psychol 2002; 83 (6): 1281–97.

- 42. Giorgino T. Computing and visualizing dynamic time warping alignments in R: the dtw Package. J Stat Soft 2009; 31 (7): 1–24. doi: 10.18637/jss.v031.i07

- 43. scikit-learn: machine learning in Python — scikit-learn 0.24.2 documentation. https://scikit-learn.org/stable/. Accessed May 20, 2021.

- 44. Hull CL. Principles of Behavior: An Introduction to Behavior Theory. Oxford, England: Appleton-Century; 1943.

- 45. Lally P, Jaarsveld C v, Potts HWW, et al. How are habits formed: modelling habit formation in the real world. Eur J Soc Psychol 2010; 40 (6): 998–1009.

- 46. Kotsiantis SB, Kanellopoulos D, Pintelas PE. Data preprocessing for supervised leaning. Int J Comput Information Eng 2007; 1: 4104–9.

- 47. Davis MJ, Addis ME. Predictors of attrition from behavioral medicine treatments. Ann Behav Med 1999; 21 (4): 339–49.

- 48. Linke SE, Gallo LC, Norman GJ. Attrition and adherence rates of sustained vs. intermittent exercise interventions. Ann Behav Med 2011; 42 (2): 197–209.

- 49. Fritsche L, Schlaefer A, Budde K, et al. Recognition of critical situations from time series of laboratory results by case-based reasoning. J Am Med Informatics Assoc: JAMIA 2002; 9 (5): 520–8.

- 50. Zhang Y, Huang Y, Lu B, et al. Real-time sitting behavior tracking and analysis for rectification of sitting habits by strain sensor-based flexible data bands. Meas Sci Technol 2020; 31 (5): 055102.

- 51. Bautista M, Hernández Vela A, Escalera S, et al. A gesture recognition system for detecting behavioral patterns of ADHD. IEEE Trans Cybern 2016; 46 (1): 136–47.

- 52. Eicher-Miller HA, Gelfand S, Hwang Y, et al. Distance metrics optimized for clustering temporal dietary patterning among U.S. adults. Appetite 2020; 144: 104451.

- 53. Forestier G, Lalys F, Riffaud L, et al. Classification of surgical processes using dynamic time warping. J Biomed Inform 2012; 45 (2): 255–64.

- 54. Faruqui SHA, Du Y, Meka R, et al. Development of a deep learning model for dynamic forecasting of blood glucose level for type 2 diabetes mellitus: secondary analysis of a randomized controlled trial. JMIR Mhealth Uhealth 2019; 7 (11): e14452.

- 55. Vagrani A, Kumar N, Ilavarasan PV. Decline in mobile application life cycle. Procedia Comput Sci 2017; 122: 957–64.

- 56. Whelan ME, Orme MW, Kingsnorth AP, et al. Examining the use of glucose and physical activity self-monitoring technologies in individuals at moderate to high risk of developing type 2 diabetes: randomized trial. JMIR Mhealth Uhealth 2019; 7 (10): e14195.

- 57. Tatara N, Arsand E, Hartvigsen G, et al. Long-term engagement with a mobile self-management system for people with type 2 diabetes. JMIR Mhealth Uhealth 2013; 1 (1): e1.
