Abstract

The Hohenberg-Kohn theorem of density-functional theory establishes the existence of a bijection between the ground-state electron density and the external potential of a many-body system. This guarantees a one-to-one map from the electron density to all observables of interest including electronic excited-state energies. Time-Dependent Density-Functional Theory (TDDFT) provides one framework to resolve this map; however, the approximations inherent in practical TDDFT calculations, together with their computational expense, motivate finding a cheaper, more direct map for electronic excitations. Here, we show that determining density and energy functionals via machine learning allows the equations of TDDFT to be bypassed. The framework we introduce is used to perform the first excited-state molecular dynamics simulations with a machine-learned functional on malonaldehyde and correctly capture the kinetics of its excited-state intramolecular proton transfer, allowing insight into how mechanical constraints can be used to control the proton transfer reaction in this molecule. This development opens the door to using machine-learned functionals for highly efficient excited-state dynamics simulations.

Subject terms: Density functional theory, Method development, Chemical physics, Excited states

Density functional theory provides a formal map from the electron density to all observables of interest of a many-body system; however, maps for electronic excited states are unknown. Here, the authors demonstrate a data-driven machine learning approach for constructing multistate functionals.

Introduction

Electronic excitations underlie numerous biological and physical processes of interest, including photosynthesis1,2, DNA damage3,4, photodynamic therapy5, photopharmacology6,7, and solar energy conversion8,9. Excited-state dynamics simulations are a powerful tool to uncover the mechanism of photoresponse in these systems, particularly when combined with ab initio electronic-structure calculations, since the important molecular motions do not need to be known a priori but are revealed by the dynamical trajectories10,11. Given its reasonable accuracy within current approximations, linear-response time-dependent density-functional theory (LR-TDDFT)12,13 has become a work-horse method in the field; however, its computational expense (formally scaling with system size as N 3) and the need to solve the electronic-structure problem at every simulation timestep limits the system size and timescale amenable to study. Thus, a cheaper approach to electronic excitations and excited-state dynamics is highly desirable but poses a significant challenge.

One might question whether the expense of LR-TDDFT is needed in the first place. For the ground state (GS), the first Hohenberg–Kohn (HK1) Theorem14, which provides the foundation of DFT, establishes the existence of a bijection from the ground-state electron density to the external potential of a many-body system. As a consequence, there exists a formal map from the ground-state electron density not only to the ground-state energy but also to every property of the system. The map from density to ground-state energy is encoded by an unknown universal density functional; however, practical approaches make use of the Kohn-Sham (KS) theory15, which expresses the functional via a fictitious non-interacting system that shares the density of the true system, allowing an exact treatment of kinetic energy and Coulomb contributions to the functional and approximations to the remaining small exchange and correlation terms. While KS theory provides a practical scheme for solving simultaneously for the ground-state density and energy of a system, functionals that map from the ground-state density to excited-state energies are currently unknown, although, their formal existence is established by the HK1 theorem. As an alternative, LR-TDDFT provides an indirect means to the excited-state energies from knowledge of the ground-state density and its response to an external driving field12,13. Given the expense of LR-TDDFT, a direct map from density to excited-state energies would be preferable.

Theoretical support for a direct route to excited-state energies comes from a generalization of the HK1 theorem to an excited-state density-energy bijection. While a universally general HK1 theorem for excited states with arbitrary external potentials has been disproved16, a special excited-state HK1 theorem for Coulombic external potentials (i.e., molecular systems) has been argued to exist17 and has motivated recent orbital-optimized DFT approaches to electronic excited states18,19.

Alongside theoretical developments in excited-state DFT, there has been significant recent progress in using data-driven approaches to machine-learn ground-state density functionals20–28. Related to the current work, there has also been progress in developing machine-learning models to directly predict electronic excited-state energies, gradients, and non-adiabatic couplings given a particular molecular geometry, and the SchNarc approach by Westermayr, Marquetand et al. has been demonstrated to predict non-adiabatic dynamics in agreement with ab initio simulations using a reasonable amount of training data29–35. In the current work, we explore the proposition that a multistate density functional can be machine learned via an excited-state HK map. This new development establishes a framework that is potentially more powerful than directly learning excited-state energies, since densities and density functionals can yield any desired property.

In order to construct an ML excited-state density functional, we work in the framework introduced in ref. 22, which uses a data-driven ML model with a physically motivated representation of the molecule. The fundamental object in this approach is a potential-to-density map, n[v](r), which is machine-learned by computing the external potential and corresponding density at a desired level of theory for a range of nuclear geometries of the system and then inputting these functions as training data. For ground-state densities, this map is known as the machine-learned Hohenberg–Kohn (ML-HK) map; once learned, it can be used to compute any related quantities, including ground-state energies, and other observables at the same level of theory22,23. It is worth noting that the level of theory is not restricted to DFT but can also be performed, for example, using coupled-cluster theory23. In this work, we generalize the potential-to-density map to excited states in a multistate Hohenberg–Kohn framework (ML-MSHK), allowing us to learn excited-state densities and energies simultaneously with comparable accuracy to the ground state.

In order to test our ML-MSHK model, we consider the excited-state proton transfer (ESPT) reaction in malonaldehyde (MA), a small organic molecule exhibiting a non-trivial internal reaction that is sensitive to electronic excitation. ESPT is at the heart of photoacidity and is a key step in the photocycle of many photoactive proteins, such as green fluorescent protein (GFP)36,37. Intramolecular proton transfer, as in MA, serves as a useful model to study ESPT, since the reaction rate is not limited by the diffusion of the proton donor and acceptor together, and can therefore be probed by ultrafast spectroscopy38,39.

The structural simplicity of MA makes it an especially appealing model of ESPT. In particular, one conformation of malonaldehyde is a ring structure with an internal hydrogen bond that allows for a proton-transfer reaction between the two oxygen atoms (see molecular graphic in Fig. 1a). Excitation of this molecule from the S0 ground state to the S2 singlet excited state (ππ*) leads to a substantial reduction of the proton-transfer barrier from several kcal/mol in the ground state to an essentially barrierless reaction in the excited state. However, the presence of a lower-lying singlet nπ* state in MA significantly complicates its ESPT reaction, and ultrafast non-adiabatic transitions from S2 to S1 are believed to compete with the ESPT reaction40–42. The barrier to proton transfer on S1 is even higher than the ground-state40,43, and furthermore, a three-state intersection is predicted to be energetically accessible41, meaning the electronic excitation can be efficiently quenched to the ground state. As a result, the ESPT reaction in MA is largely hindered by competing processes and has yet to be directly observed experimentally42.

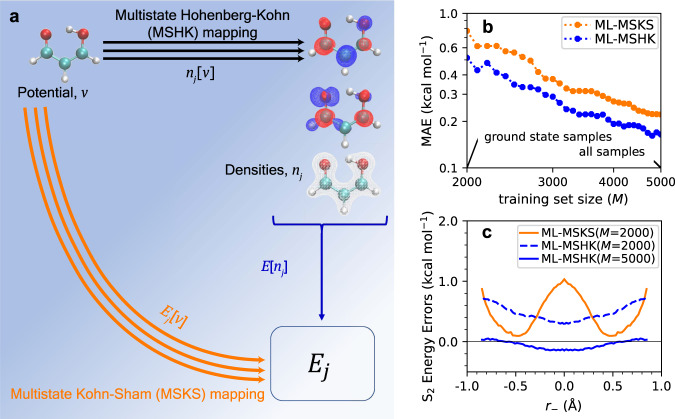

Fig. 1. Overview and performance of the multistate machine-learning (ML) approach.

a DFT maps used in this paper. The orange arrows represent a direct ML-MSKS map from the external potential, v, to total electronic energies, Ej, for each state j. The black arrows represent ML-MSHK maps between v and electron densities of each state, nj. The blue arrow represents a single multistate energy functional that maps a density to its energy. The structure of the malonaldehyde (MA) molecule studied here is shown in the top left of this panel. b Learning curves (on a logarithmic scale), for MA’s S2 energy predictions from the ML-MSHK model (blue curve) using the lowest three densities (n0, n1, n2) and ML-MSKS model (orange curve). Training sets are formed starting from 2000 geometries sampled from S0 dynamics and sequentially adding geometries extracted from ab initio molecular dynamics (AIMD) trajectories on the S2 state in the manner described in Supplementary Note 1. c Errors of energy prediction against a TD-PBE0 benchmark along a minimum energy pathway for proton transfer of MA in the S2 state predicted with ML-MSKS and ML-MSHK maps using training sets of different sizes. For each fixed value of proton-transfer coordinate, r− (see text for definition), geometries were optimized on S2 at the TD-PBE0/aug-cc-pvdz level, subject to a constraint of planarity.

One approach to suppressing the competing processes in MA and, thereby, controlling the ESPT reaction is to raise the nπ* state energy via functional group modifications. For example, the nπ* and ππ* energetic ordering is reversed in methyl salicylate (MS)44, which shares the chelate ring of MA yet exhibits efficient ESPT, as the non-adiabatic transitions to the nπ* state are removed38,45,46. In this work, using excited-state dynamics with our ML-MSHK method, we seek to explore whether mechanical restrictions applied to MA can instead be used to promote the ESPT reaction. Recognizing that the S2/S1 transition in MA is brought about by torsional motions41, we propose that a restraint of planarity on the molecule will allow the ESPT reaction to proceed unhindered. This restraint can be viewed as biomimetic of a similar mechanism in GFP whose protein environment enforces planarity of the 4-(p-hydroxybenzylidene)imidazolidin-5-one chromophore, preventing nonadiabatic transitions and enhancing its fluorescence quantum yield47.

Results

Theory

There is a growing recognition of the importance of using physics-based principles in choosing descriptors and models for machine-learning molecular properties48,49. The blessing, and perhaps curse, of ML models is their great flexibility to predict almost any quantity given sufficient data. This can lead to a loss of physical insight, and concerns of overfitting must always be addressed. By using physical principles, fundamental constraints are automatically introduced into the model that mitigate these problems. In the context of learning electronic structure, a very natural physical framework relies on the connections between the electron density, n(r), external potential, v(r), and energy, E, of a many-body quantum system. These connections are formalized by the HK1 theorem, which proves the existence of a bijection from the ground-state electron density to the external potential of a many-body system: n0(r) ↔ v(r)14. The electron density, which gives the probability for finding an electron at a certain location in space, is a particularly convenient quantity to work with since, unlike the many-body wavefunction, it is a three-dimensional scalar field regardless of the number of electrons and is, therefore, straightforward to represent numerically. For molecular systems, the external potential is simply the Coulombic scalar potential arising from the nuclear charges: . Since the external potential uniquely defines the molecular electronic Hamiltonian (for a given spin state and number of electrons), the electronic energy must also be uniquely determined from knowledge of the external potential or, per HK1, the density14. These maps from external potential to density and energy are realized as functionals, e.g., n[v](r), which inspired the framework for a previous approach that machine-learned density functionals for ground-state energy predictions (ML-HK)22. Part of the success of the ML-HK approach can be attributed to the uniqueness of the external potential and density as molecular descriptors, a property not shared by some other choices50.

We now demonstrate how these ideas can be extended to electronic excited states. As mentioned in the introduction, an excited-state extension of HK1 has been argued to exist for Coulombic external potentials17, meaning that the map from external potential to each state’s density is encoded as a functional, nj[v](r), where j = 0, 1, 2, . . . . labels each excited state. Furthermore, a property of the (unknown) exact universal functional is that each extremal density of the energy functional corresponds to an electronic eigenstate with the functional returning the exact (excited-state) eigenvalue, Ej = E[nj]51. This motivates a machine-learning approach that leverages an excited-state Hohenberg–Kohn mapping.

Learning excited states with ML-MSHK

The first step of our ML model is to learn multiple electronic state densities. We start by expanding the densities in an orthonormal basis set, ϕl(r), as , and we learn the set of basis coefficients by training on a set of input potentials corresponding to different geometries of a system. We allow for the learning of ground- and excited-state densities with a unique map to each state j. Various ML models, including artificial neural networks and kernel methods, have been used to learn total energies from electronic-structure calculations22,23,30,52–59. However, learning excited-state HK maps is unique to our approach, and we follow the ground-state ML-HK functional’s successful use of the kernel ridge regression (KRR) method60. In principle, the functionals could instead be learned with neural networks, but a comparison of different methods is beyond the scope of this manuscript.

With a set of learned densities, a second KRR model is used to learn ground- or excited-state energies from a set of input training densities. We consider two types of energy functionals, depending on the nature of the input used. The first relies on the proven existence of a single functional that maps ground or excited-state densities to their respective energy eigenvalues51. We thus learn a single map from density to energy and use multiple states in the training of this map:

| 1 |

where K(ui,j[v], ui,k[vi]) is the kernel, k runs over the states used in the training of the model, and {αi,k} are the coefficients learned in the energy functional model. Since energy predictions from this model rely on training with multiple electron densities for a given molecular geometry, we call the combination of the two types of maps the multistate Hohenberg–Kohn approach (ML-MSHK). Learning Eq. (1) comes at the cost of retaining multiple densities for each training example, increasing storage needs; however, the advantage is the resulting energy functional is not state specific. We note that while this work focuses on the planar S2 state, we also train on densities from the S1 excited state to demonstrate that including additional densities does not degrade the performance of the ML-MSHK model.

The second energy functional we consider is of the external potential itself, without using the density as an intermediate descriptor. Following the naming of a similar approach for ground-state energies22, we call this the multistate Kohn-Sham map (ML-MSKS):

| 2 |

where {γi,j} are the coefficients learned in the ML-MSKS energy functional model. Such functionals must also exist since the external potential uniquely defines the molecular electronic Hamiltonian and, therefore, also its eigenstates14. Eq. (2) has the advantage that it uses only external potentials rather than densities, reducing computational storage requirements compared to Eq. (1). However, a disadvantage is that the energy maps in this model are state specific, which we expect will introduce errors near electronic crossings30.

In all cases, models are trained against ab initio densities and energies following the procedure described in section “Methods”. A schematic of the different maps used in this study is shown in Fig. 1a.

Excited-state energy predictions

In order to test the performance of the excited-state machine-learned density functionals, we start by considering learning curves for training S2 excited-state energies of MA, shown in Fig. 1b, in which the lowest three densities (n0, n1, n2) were used for the ML-MSHK model. The smallest training set considered contains 2000 molecular conformations of MA generated from a ground-state AIMD trajectory, propagated as described in section “Training and test set generation”. A training set similar to this was previously found to yield a ML-HK potential that accurately described the proton-transfer reaction on the ground state22. From Fig. 1b, however, we see somewhat large out-of-sample mean absolute errors (MAEs) of 0.5 and 0.8 kcal/mol for the ML-MSHK and ML-MSKS models, respectively, with this training set. Furthermore, for the ML-MSKS model, the error is seen to vary strongly with geometry, as revealed by the orange curve in Fig. 1c, which shows energy prediction errors along a minimal-energy pathway for the ESPT reaction as a function of the proton-transfer coordinate, r− = rHO1 − rHO2, where rHOi is the distance of the proton from oxygen atom i. Using the same 2000 training geometries, the ML-MSHK error (dashed blue curve) is more uniform than the ML-MSKS predictions, demonstrating the advantage of the former.

In order to use the ML-MSHK model for excited-state dynamics, we must further reduce its prediction errors; however, we found essentially no improvement upon adding more samples from the ground-state trajectory. As we will see below, the remaining source of error in models trained only with ground-state samples is that the S2-initiated ESPT involves nuclear responses also in modes orthogonal to the direct proton transfer mode, and these are not adequately sampled by the ground-state trajectory. Thus, we extended the training set by including geometries extracted from 30 S2 excited-state AIMD trajectories, in a manner described in Supplementary Note 1, yielding the learning curves shown in Fig. 1b.

After including geometries extracted from excited-state trajectories in the training (ground and excited-state samples), both ML models perform significantly better than the ML model constructed using only ground-state samples in the training. Interestingly, the ML-MSKS map (Fig. 1b, orange curve) always performs worse than the MSHK map (blue curve). Similar behavior was noted in the ground-state ML-HK study of ref. 22. The rest of this work pertains to the ML-HK models since learning the densities as an intermediate step yields the models that outperform the direct potential-to-energy approach, and the inclusion of density learning has other benefits such as the capability of density-based delta-learning to add corrections from high-level wavefunction theory23. Encouragingly, the out-of-sample error for ML-MSHK converges below 0.2 kcal/mol once the sample size reaches M = 5000. This error is comparable to that found in the previous ground-state study of MA22, suggesting that ML-MSHK will be suitable for molecular dynamics simulations (confirmed below). Having found convergence at M = 5000, our final training set generation follows the protocol described in section “Training and test set generation”. For the adiabatic excited-state dynamics considered in this work, it is possible to train state-specific ML-HK models to return the S2 energies using only ground-state or S2 densities as input (see Supplementary Fig. 1). While we do not include any non-adiabatic transitions in this study, we note that the state-specific models are worse than ML-MSHK in the vicinity of electronic crossings (see Supplementary Note 5). Given the excellent performance of the ML-MSHK model and its ability to capture multiple electronic states simultaneously, we focus exclusively on this model for the remainder of this work.

Excited-state density predictions

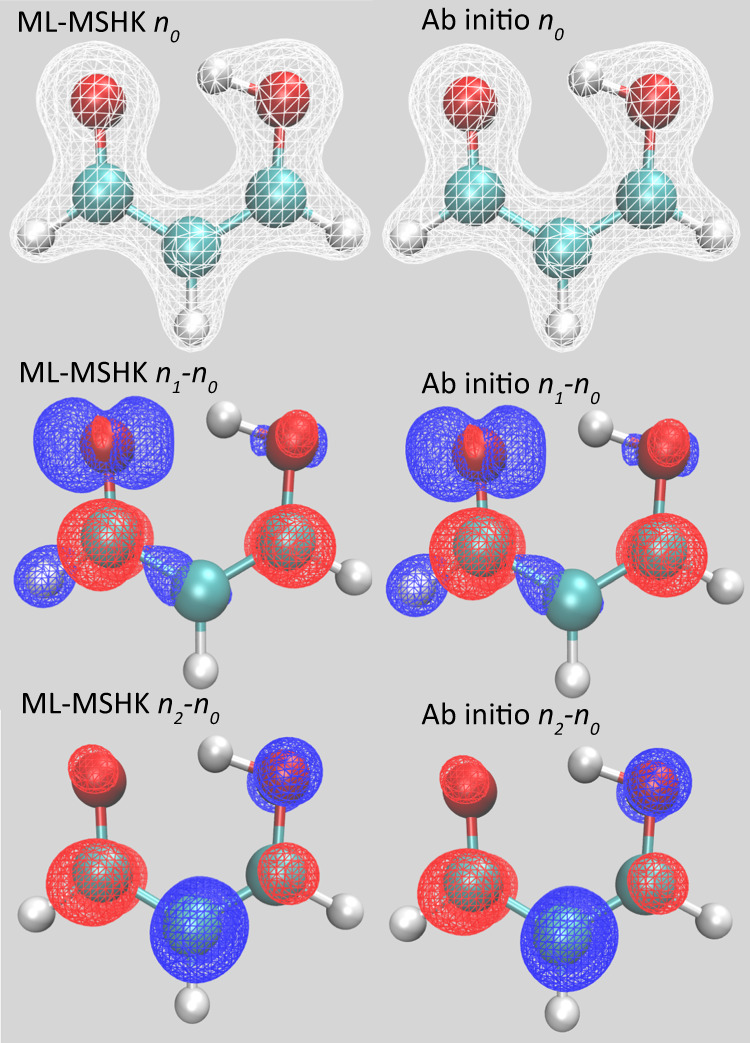

Equally important to energy predictions is the question of how well the model reproduces electron densities. This is explored in Fig. 2, which shows electron densities for the CS-symmetric ground-state optimized geometry of MA (similar results for the proton transfer transition state are shown in Supplementary Fig. 4). In order to highlight the changes in bonding structure, excited-state densities are displayed as density differences with respect to the ground state. For all states considered, there is no discernible difference between the ML-MSHK predicted densities (left) and ab initio TD-PBE0 densities (right), even though the ground-state optimized structure was not included in the training set. Indeed, the out-of-sample integrated MAE in the total electron densities is very small (0.012 e).

Fig. 2. Ground and excited-state electron densities for the CS ground-state minimum energy structure of MA.

1st row: S0 ground-state densities. 2nd row: density differences between S1 and S0. 3rd row: density differences between S2 and S0. An isosurface of 0.1 e/Bohr3 was used for plotting densities and density differences. Left column: ML predictions, right column: ab initio TD-PBE0 predictions. Each density is represented by an isosurface plot. For density differences, red means an accumulation of electronic charge in the excited state and blue means a depletion.

Knowledge of the density differences also provides insight into the nature of the electronic excitations. From Fig. 2, S1 clearly corresponds to an nπ* transition with a depletion of density from the proton-accepting oxygen’s px orbital and an accumulation on the backbone π* orbital. S2, on the other hand, is a ππ* transition, as identified by a depletion of density on the central conjugated carbon’s pz orbital.

Excited-state MD using ML potentials

Having demonstrated that our ML-MSHK model provides a quantitative prediction of excited-state electron densities and total energies within our test set of geometries, we next consider its ability to generate a functional for use in excited-state MD. We thus initiated non-equilibrium MD trajectories of MA on its S2 state following vertical excitation from the ground state. 1000 ML-MSHK and 50 AIMD trajectories were run and compared. It should be noted that the computational effort associated with propagating dynamics on the ML-MSHK surface is negligible compared to AIMD dynamics. Thus, for the cost of 50 excited-state AIMD trajectories used to generate the training and test sets, we gain the ability to perform excited-state dynamics with 1000 ML-MSHK trajectories using the resulting machine-learned functional to converge the excited-state non-equilibrium averaged properties.

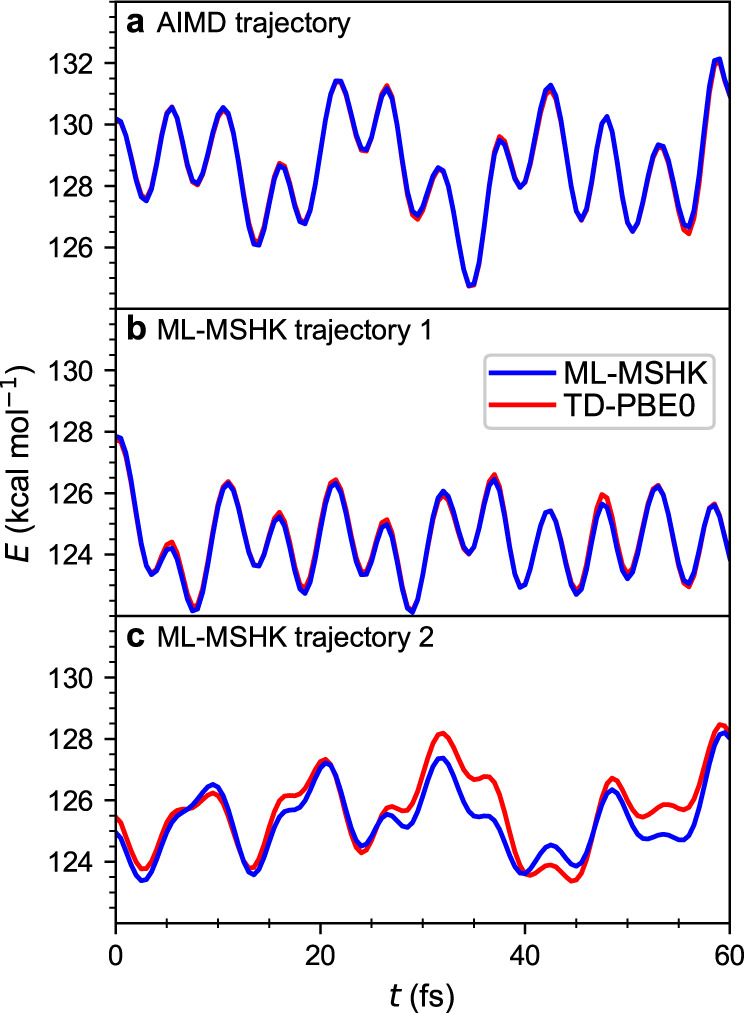

We consider three metrics to assess the quality of the machine-learned functional. The first concerns how well ML-MSHK is able to interpolate the energy between geometries explicitly included in the training. This is accomplished by evaluating ML-MSHK energies along an AIMD excited-state trajectory from which some geometries were included in the training. The result is shown in Fig. 3a, where we see almost perfect agreement between ML-MSHK and the AIMD TD-PBE0 energies. Note that only 32 geometries out of 481 from this trajectory were used in the training set of the ML-MSHK model, highlighting the fidelity to which ML-MSHK is able to interpolate the functional between training points. Second, we assess how well ML-MSHK propagates the dynamics starting from a geometry included in the training set, shown in panel b, where again we see almost perfect agreement with AIMD energies evaluated on the ML trajectory snapshots. Finally, we check how well the machine-learned functional does on arbitrary geometries by evaluating ab initio TD-PBE0 energies along an ML-MSHK trajectory where no geometry was included in the training set, shown in panel c. Here, slightly larger deviations between ML-MSHK and TD-PBE0 energies are seen; however, the difference is within the expected MAE bounds from the learning curve and does not grow with time, showing that ML-MSHK faithfully reproduces the excited-state energy functional, at least in the configurational space sampled during non-equilibrium dynamics. The error could be reduced by further increasing the training set; however, we found this unnecessary since this small error does not influence the dynamical predictions as we show below.

Fig. 3. Predictions of electronic energies along trajectories.

ML-MSHK predictions (blue curves) and TD-PBE0 reference values (red curves) are shown along three different representative trajectories taken from: a an ab initio molecular dynamics (AIMD) trajectory and b an ML-generated excited-state trajectory, both initiated from geometries in the training set, and c an ML-generated excited-state trajectory initiated from a geometry out of the training set.

ESPT in planar MA

Having seen that ML-MSHK provides an accurate description of the S2 excited-state functional of MA, we next consider the predictions of excited-state dynamics of the ESPT reaction using this functional. To that end, we compute the following non-equilibrium response function:

| 3 |

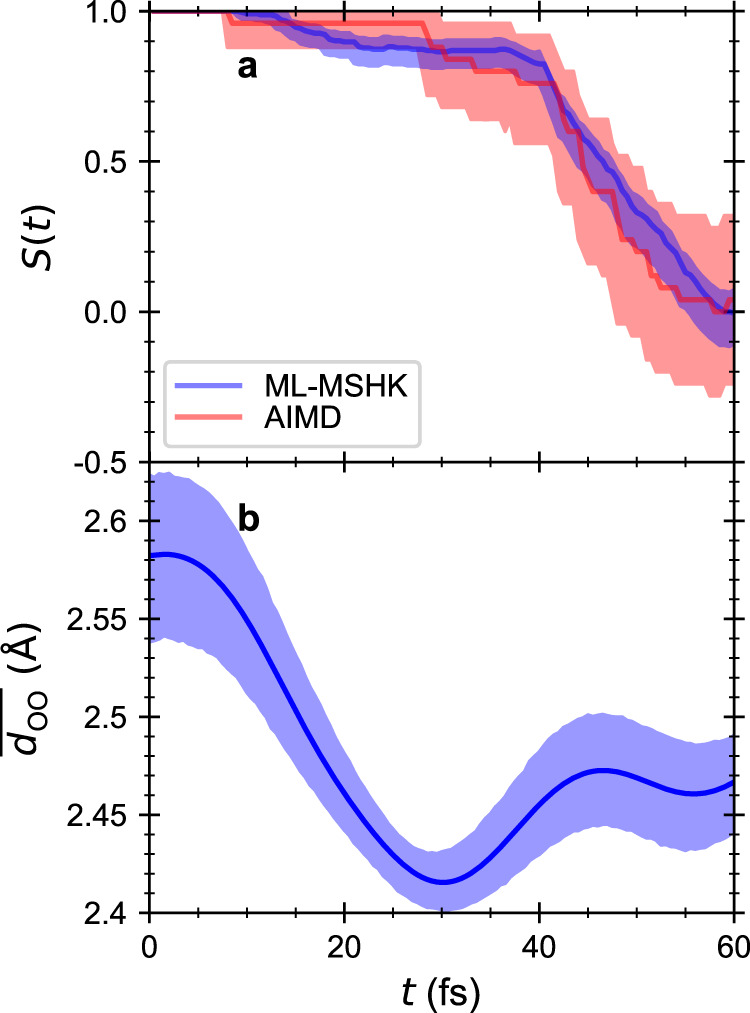

where t = 0 corresponds to the instance of vertical excitation from an equilibrium configuration on the S0 state to the S2 state, and the overbar indicates a non-equilibrium average over initial conditions. S(t) thus measures the memory of which reaction basin the proton is in (reactant or product). A value of S = 1 means the proton is in the reactant basin, a value of S = − 1 means the proton is in the product basin, and a value of S = 0 means memory has been lost. Since MA has a symmetric proton donor and acceptor, S = 0 indicates the reaction is complete.

Figure 4a shows the ESPT reaction’s non-equilibrium response function, S(t), computed for 50 AIMD trajectories (red) and 1,000 ML-MSHK trajectories (blue). Shaded areas represent the 95% confidence intervals computed with the bootstrap method61, as implemented in SciPy v. 1.7.162. The results show that the AIMD and ML-MSHK agree within statistical certainty, highlighting the predictive power of ML-MSHK. Furthermore, an interesting feature of S(t) becomes clear in the ML-MSHK results: apart from a ~10% fraction of trajectories that undergo almost immediate proton transfer, there is a waiting period of ~40 fs before the remainder of ESPT reaction proceeds, which is then rapidly completed by 60 fs. This behavior is hinted at in the AIMD results but becomes much more apparent in the ML-MSHK predictions due to averaging over many more trajectories.

Fig. 4. The non-equilibrium response of the excited-state proton transfer reaction and its relationship to the time varying oxygen–oxygen distance of MA.

a Non-equilibrium response function of the proton’s location (reactant or product basin according to Eq. (3)), S(t), that reflects the progress of the excited-state proton transfer reaction. The solid curves are the expectation values of S(t) and the shaded area shows the 95% confidence intervals estimated from bootstrap analysis using 50 trajectories for ab initio molecular dynamics (AIMD) (red) and 1000 trajectories (blue) for ML-MSHK. The converged S(t) of the ML-MSHK model shows good agreement with the result from AIMD simulations. b The average oxygen–oxygen distance is shown as a function of time following photoexcitation.

The observed 40-fs waiting period arises from important responses of the heavy atoms following excitation to S2. This can be seen for example in the average oxygen–oxygen distance, , plotted in Fig. 4b, which exhibits a decrease from ~2.57 Å to ~2.42 Å in the first 30 fs following excitation. This timescale is comparable to the waiting period of the ESPT reaction, suggesting that the oxygen–oxygen motion gates the reaction, which does not proceed rapidly until a distance of dOO ≤ 2.45 Å is reached.

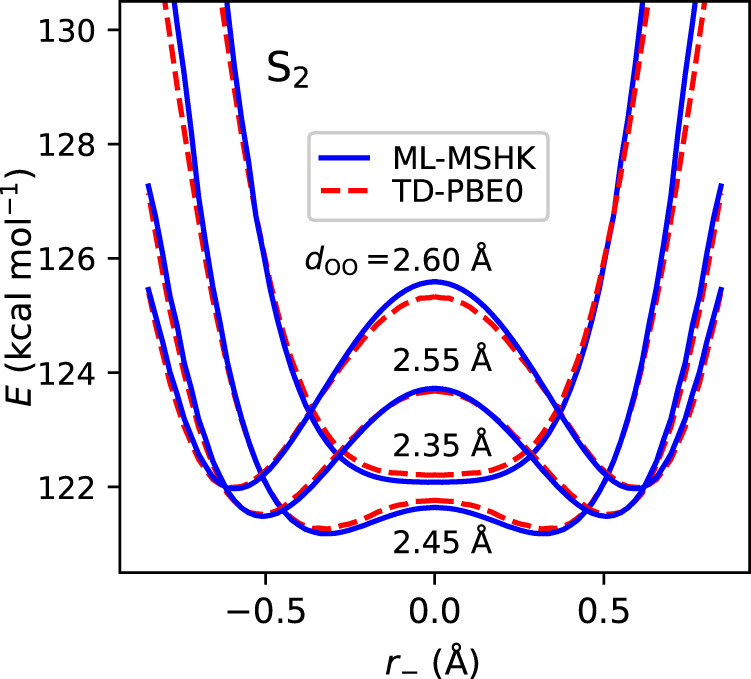

The response of dOO following excitation to S2 can be understood from changes in the electron density shown in Fig. 2. The reduction in π-bonding character lessens the rigidity of the conjugated system, allowing the chelating ring to contract and the oxygen atoms to become closer. Bringing together the proton donor and acceptor oxygen atoms then reduces the barrier to ESPT, thus explaining the gating mechanism. This is explored explicitly in Fig. 5, which plots the potential energy profile of the minimum energy pathway of ESPT on S2 for a series of fixed dOO distances. Encouragingly, quantitative agreement between ML-MSHK (blue curves) and TD-PBE0 (dashed red curves) is seen, despite none of the minimum energy pathway geometries being included explicitly in the training set. For the initial average dOO distance (~2.57 Å), a proton-transfer barrier of >2 kcal/mol is seen, explaining why only a small fraction of excited trajectories undergo ESPT in the first 20 fs. The S2 potential is downhill for motions that reduce dOO to a value of 2.45 Å (following the minimum of the potential energy profile as a function of dOO), which also brings about a reduction of the barrier to proton transfer, having a value of 0.3 kcal/mol at dOO = 2.45 Å. The barrier disappears completely for dOO = 2.35 Å; however, this oxygen–oxygen distance is disfavored due to an overall increase in the potential, explaining why the initial reduction in dOO is seen to reverse at t = 30 fs in Fig. 4b.

Fig. 5. S2 potential energy surfaces of MA along the H-transfer coordinate, r−, for a series of fixed oxygen–oxygen distances, doo.

All coordinates except r− and doo are optimized at the TD-PBE0/aug-cc-pvdz level for S2, subject to a constraint of planarity, following the procedure described in Supplementary Note 3. We see excellent agreement between ab initio results (dashed red curves) and ML-MSHK predictions (solid blue curves).

Discussion

In this paper, we developed a multistate Hohenberg–Kohn machine-learning framework for the accurate prediction of excited-state densities and energies. We demonstrated that excited-state energies, which are expressible as a single functional of multiple state densities, can be learned as accurately as the ground-state energy, even for the moderately sized molecule malonaldehyde, which exhibits a non-trivial excited-state proton transfer reaction. The resulting machine-learned functional is faithful to the underlying excited-state AIMD trajectories on which the model was based, allowing accurate non-equilibrium excited-state molecular dynamics trajectories to be performed with a 10-fold computational saving compared to AIMD. Furthermore, our study yielded new insight into the excited-state proton transfer reaction in malonaldehyde. This was aided by the low-cost of ML-MSHK energy predictions that allowed us to converge the non-equilibrium dynamics with 1,000 excited-state trajectories, revealing the 40-fs waiting period following photoexcitation, after which ESPT promptly completed.

The observed gating mechanism of proton transfer in planar MA by heavy atom motion has been seen previously in other intramolecular ESPT reactions which are not precluded by ultrafast non-adiabatic transitions. In particular, the lack of isotope effect for ESPT in methyl salicylate was explained by a delocalization of the reaction coordinate to modes other than the donor OH stretch38. Later studies in related ESPT molecules found the reaction path was dominated by anharmonic low-frequency backbone modes that modulated the donor-acceptor distance63–65. Thus, by enforcing planarity in MA, we have suppressed nonadiabatic transitions that compete with ESPT and find the mechanism of proton transfer is indeed multidimensional in nature and follows the concensus picture that has emerged for intramolecular chelate ring structures. That we correctly captured the multidimensional nature of ESPT from a limited training of excited-state trajectories is a testament to the power of our ML-MSHK method.

This work can be extended in a number of directions. As an example, we removed the restraint of planarity on malonaldehyde (see Supplementary Note 5). Models using additional non-planar training geometries show that the multistate density functional outperforms the state-specific functionals near S2/S1 state crossings, which is vital for running future non-adiabatic MD trajectories. In addition, the LR-TDDFT training data could be replaced with high-level wavefunction-based electronic structure for input to train the ML-MSHK density and energy maps. A similar approach was recently shown to be successful for the ground-state ML-HK method to learn coupled-cluster energies23. Based on the promising results presented in this work, we are optimistic that ML-MSHK will find practical use in excited-state non-adiabatic simulations with the costly electronic-structure steps done only once to build the training set.

Methods

Machine-learning model

In order to predict the excited-state energies of a molecule using electron densities via the ML-MSHK map introduced in section “Learning excited states with ML-MSHK”, we start by using a basis expansion of the densities:

| 4 |

where j indexes an electronic state (j = 0 is the ground state, j = 1 is the first excited state, etc). Following the ground-state ML-HK method22, we choose a Fourier basis. In Eq. (4), l indexes a basis function, of which there are L. In this work 50 functions are used in each dimension (125,000 = 50 × 50 × 50 in total). The coefficients of the basis set expansion are learned using KRR. In particular, are represented as a kernel expansion of the form

| 5 |

Here, parameterizes the kernel model, and the kernel functional κ has a Gaussian form

| 6 |

where σ is a kernel width hyperparameter. The external potentials, vi(r), i = 1, . . . , M, are unique to each of the M training geometries, and are paired with M training densities, ni,j(r), for each state j. Like the density, we use a basis representation of the external potential; however, care must be taken to avoid the Coulomb singularities in v(r). We therefore use a Gaussian representation of the potential:

| 7 |

where Ra is the position of the ath nucleus, Za is its corresponding nuclear charge, and σpot is a width parameter22,66. The potential from Eq. (7) is then formed on a 3D grid surrounding the molecule and stored as a vector, vi, for each sample i to be used in Eq. (6). 60 × 50 × 30 grid points were used with a spacing of 0.2 Å, commensurate with the shape of the molecule. We used previously optimized values of σpot = 0.2 Å and a grid spacing for MA from ref. 22.

As a final step, we train a multistate total energy functional:

| 8 |

where k sums over the states of the densities used in the training and κ is another Gaussian kernel.

Reference ab initio electronic structure

Reference calculations on MA’s excited states were performed at the LR-TDDFT level using the PBE067,68 approximate exchange and correlation functional. This level of theory, which we abbreviate as TD-PBE0, was chosen as a compromise between accuracy and efficiency in this first demonstration of our ML-MSHK method. In particular, PBE0 provides qualitatively correct ground-state thermochemical properties of MA, yielding proton transfer barrier height of 2.0 kcal/mol compared to the predicted barrier from high-level theory of 4.1 kcal/mol69,70. In addition, TD-PBE0 provides reasonable spectroscopic quantities for MA, with a S0-S2 vertical excitation of 5.3 eV compared to the experimental value of 4.7 eV71. Electronic structure calculations were performed in CPMD v. 4.3.072 using a plane-wave basis with a kinetic energy cutoff of 90 Rydberg. Core electrons were replaced with Troullier-Martins norm-conserving pseudopotentials73.

AIMD excited-state molecular dynamics

Ab initio excited-state Born-Oppenheimer MD simulations of a gas-phase malonaldehyde molecule were performed in CPMD using the same TD-PBE0 level of theory discussed above. 50 independent non-equilibrium trajectories were initiated on the S2 state following vertical excitations spaced every 100 fs from an AIMD ground-state trajectory sampled at 300 K taken from ref. 22. Excited-state dynamics were propagated in the microcanonical ensemble with a 0.25 fs timestep for 120 fs, which takes 5027 min for one simulation with all cores (24) of dual Intel Xeon E5 2650 v4 (2.2 GHz) CPUs. Planarity was maintained with the following added restraining potential: , where ka is the strength of each restraint and is 9 kcal/(mol bohr2) for hydrogen and 40 kcal/(mol bohr2) for heavy atoms. za is the z-coordinate of each atom. The planarity restraints were chosen to be stiffer for the heavy atoms, since backbone torsions have been identified as bringing about the S2/S1 electronic crossing in MA41.

Training and test set generation

It has been shown that exploiting molecular point group symmetries helps to increase the effective dataset size significantly without performing additional quantum chemical calculations23. We thus generated training sets for malonaldehyde that included reflection about the mirror symmetry plane perpendicular to the molecular axis, , of an idealized planar C2v structure, since this symmetry operation ensures the equivalence of the two oxygen atoms as proton donors/acceptors. To achieve a symmetrization of the training sets while avoiding unnecessary duplication of samples, we combined symmeterization with a clustering of molecular geometries. For example, the training set for MD runs was formed by starting with 2000 structures taken from a previous ground-state AIMD trajectory22 and 14,400 structures taken every timestep from 30 independent ab initio excited-state trajectories described in section “AIMD excited-state molecular dynamics”. Next, all geometries were aligned to a reference geometry representing the planar proton-transfer transition state with C2v symmetry (see Supplementary Note 4). Then, following alignment, any molecular structures with a negative proton-transfer coordinate, r−, were reflected in the plane, i.e., to ensure r− > 0. The training set was then clustered with K-Means74 to make a set of 2500 samples, using a metric related to the L2 deviation of the external potential, , where vi represents the potential from Eq. (7). Finally, the training set was doubled in size by applying the reflection operator in the plane to yield the production training set of 5000 geometries. The test set contains 240 aligned snapshots extracted every 10 fs from 20 independent ab initio excited-state trajectories described in section “AIMD excited-state molecular dynamics”.

The electronic-structure calculations for excited-state energies and densities take 40 min with 8 cores of an Intel Xeon E5 2650 v4 (2.2 GHz) CPU for one geometry and the training takes 10 min using the same number of cores with hyperparameters given.

ML excited-state molecular dynamics

To generate initial conditions to perform excited-state dynamics on the ML S2 potential, a ground-state AIMD trajectory was run in CPMD using the PBE exchange and correlation functional67 with the same kinetic energy cutoff and pseudopotentials discussed above. This trajectory was run in the canonical ensemble at 300 K using massive Nosé-Hoover chain thermostats with a timestep of 0.5 fs75. 1000 independent non-equilibrium trajectories were initiated on the S2 state following vertical excitations spaced every 100 fs from the ground-state trajectory. Excited-state dynamics were propagated in the microcanonical ensemble with a 0.25 fs timestep using the atomistic simulation environment v.3.19.076. Atomic forces were evaluated numerically using central differences with a step length of dx = 0.001 Å. Dynamics were propagated for 60 fs, which was sufficient to observe the ESPT reaction. The propagation of one 60-fs dynamics simulation takes 20 min with 2 cores of an Intel Xeon E5 2650 v4 (2.2 GHz) CPU.

Supplementary information

Acknowledgements

W.J.G. acknowledges financial support from the National Natural Science Foundation of China (NSFC, grant no. 22173060), the NSFC Fund for International Excellent Young Scientists (grant no. 22150610466), the Ministry of Science and Technology of the People’s Republic of China (MOST) National Foreign Experts Program Fund (grant No. QN2021013001L), and the MOST Foreign Young Talents Program (grant no. WGXZ2022006L). M.E.T. acknowledges support from the National Science Foundation (grant no. CHE-1955381). L.V.-M. acknowledges support from the NYU University Research Challenge Fund. This project was supported in whole (or in part) by the NYU Shanghai Boost Fund. Computational resources were supported by the NYU-ECNU Center for Computational Chemistry and a start-up fund from NYU Shanghai. We thank Mihail Bogojeski for sharing an early version of the ML-DFT code.

Source data

Author contributions

Y.B. developed the ML python code, performed TDDFT calculations, ab initio MD simulations, and ML experiments. L.V.-M. assisted with developing the ML python code and dataset clustering. L.V.-M., M.E.T., and W.J.G. initiated the work, and M.E.T. and W.J.G. contributed to the theory and experiments. All authors contributed to the manuscript.

Peer review

Peer review information

Nature Communications thanks the anonymous reviewers for their contribution to the peer review of this work.

Data availability

The molecular coordinates, electronic densities and energies for ground and excited states used in this study are available in Zenodo [10.5281/zenodo.7064211]. The source data of the figures are provided in the Source Data file. Source data are provided with this paper.

Code availability

Codes used in this work are available at 10.5281/zenodo.7064211.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

The online version contains supplementary material available at 10.1038/s41467-022-34436-w.

References

- 1.Meech SR, Hoff AJ, Wiersma DA. Role of charge-transfer states in bacterial photosynthesis. Proc. Natl. Acad. Sci. USA. 1986;83:9464. doi: 10.1073/pnas.83.24.9464. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Wahadoszamen M, Margalit I, Ara AM, van Grondelle R, Noy D. The role of charge-transfer states in energy transfer and dissipation within natural and artificial bacteriochlorophyll proteins. Nat. Commun. 2014;5:5287. doi: 10.1038/ncomms6287. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Simons J. How do low-energy (0.1-2 eV) electrons cause DNA-strand breaks? Acc. Chem. Res. 2006;39:772. doi: 10.1021/ar0680769. [DOI] [PubMed] [Google Scholar]

- 4.Alizadeh E, Sanz AG, García G, Sanche L. Radiation damage to DNA: The indirect effect of low-energy electrons. J. Phys. Chem. Lett. 2013;4:820. doi: 10.1021/jz4000998. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Benov L. Photodynamic therapy: current status and future directions. Med. Princ. Pract. 2015;24:14. doi: 10.1159/000362416. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Lerch MM, Hansen MJ, van Dam GM, Szymanski W, Feringa BL. Emerging targets in photopharmacology. Angew. Chem. Intl. Ed. 2016;55:10978. doi: 10.1002/anie.201601931. [DOI] [PubMed] [Google Scholar]

- 7.Hüll K, Morstein J, Trauner D. In vivo photopharmacology. Chem. Rev. 2018;118:10710. doi: 10.1021/acs.chemrev.8b00037. [DOI] [PubMed] [Google Scholar]

- 8.Clarke TM, Durrant JR. Charge photogeneration in organic solar cells. Chem. Rev. 2010;110:6736. doi: 10.1021/cr900271s. [DOI] [PubMed] [Google Scholar]

- 9.Zhao Y, Liang W. Charge transfer in organic molecules for solar cells: Theoretical perspective. Chem. Soc. Rev. 2012;41:1075. doi: 10.1039/c1cs15207f. [DOI] [PubMed] [Google Scholar]

- 10.Virshup AM, Chen J, Martínez TJ. Nonlinear dimensionality reduction for nonadiabatic dynamics: the influence of conical intersection topography on population transfer rates. J. Chem. Phys. 2012;137:22A519. doi: 10.1063/1.4742066. [DOI] [PubMed] [Google Scholar]

- 11.Pieri E, et al. The non-adiabatic nanoreactor: towards the automated discovery of photochemistry. Chem. Sci. 2021;12:7294. doi: 10.1039/d1sc00775k. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Gross. E. & Kohn, W. in Density Functional Theory of Many-Fermion Systems, Vol. 21 Advances in Quantum Chemistry (ed. Löwdin, P.-O.) 255–291 (Academic Press, 1990).

- 13.Casida, M. E. in Recent Advances in Density Functional Methods (ed. Chong, D. P.) 155–192 (World Scientific Publishing, 1995).

- 14.Hohenberg P, Kohn W. Inhomogeneous electron gas. Phys. Rev. 1964;136:B864. [Google Scholar]

- 15.Kohn W, Sham LJ. Self-consistent equations including exchange and correlation effects. Phys. Rev. 1965;140:A1133. [Google Scholar]

- 16.Gaudoin R, Burke K. Lack of Hohenberg-Kohn theorem for excited states. Phys. Rev. Lett. 2004;93:173001. doi: 10.1103/PhysRevLett.93.173001. [DOI] [PubMed] [Google Scholar]

- 17.Ayers PW, Levy M, Nagy A. Time-independent density-functional theory for excited states of Coulomb systems. Phys. Rev. A. 2012;85:042518. [Google Scholar]

- 18.Hait D, Head-Gordon M. Excited state orbital optimization via minimizing the square of the gradient: general approach and application to singly and doubly excited states via density functional theory. J. Chem. Theory Comput. 2020;16:1699. doi: 10.1021/acs.jctc.9b01127. [DOI] [PubMed] [Google Scholar]

- 19.Hait D, Head-Gordon M. Orbital optimized density functional theory for electronic excited states. J. Phys. Chem. Lett. 2021;12:4517. doi: 10.1021/acs.jpclett.1c00744. [DOI] [PubMed] [Google Scholar]

- 20.Snyder JC, Rupp M, Hansen K, Müller K-R, Burke K. Finding density functionals with machine learning. Phys. Rev. Lett. 2012;108:253002. doi: 10.1103/PhysRevLett.108.253002. [DOI] [PubMed] [Google Scholar]

- 21.Mardirossian N, Head-Gordon M. ωB97X-V: A 10-parameter, range-separated hybrid, generalized gradient approximation density functional with nonlocal correlation, designed by a survival-of-the-fittest strategy. Phys. Chem. Chem. Phys. 2014;16:9904. doi: 10.1039/c3cp54374a. [DOI] [PubMed] [Google Scholar]

- 22.Brockherde F, et al. Bypassing the Kohn-Sham equations with machine learning. Nat. Commun. 2017;8:872. doi: 10.1038/s41467-017-00839-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Bogojeski M, Vogt-Maranto L, Tuckerman ME, Müller K-R, Burke K. Quantum chemical accuracy from density functional approximations via machine learning. Nat. Commun. 2020;11:5223. doi: 10.1038/s41467-020-19093-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Dick S, Fernandez-Serra M. Machine learning accurate exchange and correlation functionals of the electronic density. Nat. Commun. 2020;11:3509. doi: 10.1038/s41467-020-17265-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Nagai R, Akashi R, Sugino O. Completing density functional theory by machine learning hidden messages from molecules. npj Comput. Mater. 2020;6:43. [Google Scholar]

- 26.Cuevas-Zuviría B, Pacios LF. Analytical model of electron density and its machine learning inference. J. Chem. Inf. Model. 2020;60:3831. doi: 10.1021/acs.jcim.0c00197. [DOI] [PubMed] [Google Scholar]

- 27.Gedeon J, et al. Machine learning the derivative discontinuity of density-functional theory. Mach. Learn. Sci. Technol. 2021;3:015011. [Google Scholar]

- 28.Cuevas-Zuviría B, Pacios LF. Machine learning of analytical electron density in large molecules through message-passing. J. Chem. Inf. Model. 2021;61:2658. doi: 10.1021/acs.jcim.1c00227. [DOI] [PubMed] [Google Scholar]

- 29.Dral PO, Barbatti M, Thiel W. Nonadiabatic excited-state dynamics with machine learning. J. Phys. Chem. Lett. 2018;9:5660. doi: 10.1021/acs.jpclett.8b02469. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Westermayr J, Marquetand P. Machine learning for electronically excited states of molecules. Chem. Rev. 2020;121:9873. doi: 10.1021/acs.chemrev.0c00749. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Hu D, Xie Y, Li X, Li L, Lan Z. Inclusion of machine learning kernel ridge regression potential energy surfaces in on-the-fly nonadiabatic molecular dynamics simulation. J. Phys. Chem. Lett. 2018;9:2725. doi: 10.1021/acs.jpclett.8b00684. [DOI] [PubMed] [Google Scholar]

- 32.Westermayr J, et al. Machine learning enables long time scale molecular photodynamics simulations. Chem. Sci. 2019;10:8100. doi: 10.1039/c9sc01742a. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Westermayr J, Gastegger M, Marquetand P. Combining SchNet and SHARC: The SchNarc machine learning approach for excited-state dynamics. J. Phys. Chem. Lett. 2020;11:3828. doi: 10.1021/acs.jpclett.0c00527. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Li J, Stein R, Adrion DM, Lopez SA. Machine-learning photodynamics simulations uncover the role of substituent effects on the photochemical formation of cubanes. J. Am. Chem. Soc. 2021;143:20166. doi: 10.1021/jacs.1c07725. [DOI] [PubMed] [Google Scholar]

- 35.Li J, et al. Automatic discovery of photoisomerization mechanisms with nanosecond machine learning photodynamics simulations. Chem. Sci. 2021;12:5302. doi: 10.1039/d0sc05610c. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Chattoraj M, King BA, Bublitz GU, Boxer SG. Ultra-fast excited state dynamics in green fluorescent protein: multiple states and proton transfer. Proc. Natl. Acad. Sci. USA. 1996;93:8362. doi: 10.1073/pnas.93.16.8362. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Tsien RY. The green fluorescent protein. Annu. Rev. Biochem. 1998;67:509. doi: 10.1146/annurev.biochem.67.1.509. [DOI] [PubMed] [Google Scholar]

- 38.Herek JL, Pedersen S, Bañares L, Zewail AH. Femtosecond real-time probing of reactions. IX. Hydrogen-atom transfer. J. Chem. Phys. 1992;97:9046. [Google Scholar]

- 39.Formosinho SJ, Arnaut LG. Excited-state proton transfer reactions II. Intramolecular reactions. J. Photochem. Photobiol. A. 1993;75:21. [Google Scholar]

- 40.Sobolewski AL, Domcke W. Photophysics of malonaldehyde: An ab initio study. J. Phys. Chem. A. 1999;103:4494. [Google Scholar]

- 41.Coe JD, Martínez TJ. Ab initio molecular dynamics of excited-state intramolecular proton transfer around a three-state conical intersection in malonaldehyde. J. Phys. Chem. A. 2006;110:618. doi: 10.1021/jp0535339. [DOI] [PubMed] [Google Scholar]

- 42.List NH, Dempwolff AL, Dreuw A, Norman P, Martínez TJ. Probing competing relaxation pathways in malonaldehyde with transient X-ray absorption spectroscopy. Chem. Sci. 2020;11:4180. doi: 10.1039/d0sc00840k. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.do Monte SA, Dallos M, Müller T, Lischka H. MR-CISD and MR-AQCC calculation of excited states of malonaldehyde: geometry optimizations using analytical energy gradient methods and a systematic investigation of reference configuration sets. Collect. Czech. Chem. Commun. 2003;68:447. [Google Scholar]

- 44.Aquino AJA, Lischka H, Hättig C. Excited-state intramolecular proton transfer: a survey of TDDFT and RI-CC2 excited-state potential energy surfaces. J. Phys. Chem. A. 2005;109:3201. doi: 10.1021/jp050288k. [DOI] [PubMed] [Google Scholar]

- 45.Coe JD, Levine BG, Martínez TJ. Ab initio molecular dynamics of excited-state intramolecular proton transfer using multireference perturbation theory. J. Phys. Chem. A. 2007;111:11302. doi: 10.1021/jp072027b. [DOI] [PubMed] [Google Scholar]

- 46.Coe JD, Martinez TJ. Ab initio multiple spawning dynamics of excited state intramolecular proton transfer: the role of spectroscopically dark states. Mol. Phys. 2008;106:537. [Google Scholar]

- 47.Park JW, Rhee YM. Electric field keeps chromophore planar and produces high yield fluorescence in green fluorescent protein. J. Am. Chem. Soc. 2016;138:13619. doi: 10.1021/jacs.6b06833. [DOI] [PubMed] [Google Scholar]

- 48.Bereau T, DiStasio RA, Tkatchenko A, von Lilienfeld OA. Non-covalent interactions across organic and biological subsets of chemical space: physics-based potentials parametrized from machine learning. J. Chem. Phys. 2018;148:241706. doi: 10.1063/1.5009502. [DOI] [PubMed] [Google Scholar]

- 49.Zubatiuk T, Isayev O. Development of multimodal machine learning potentials: toward a physics-aware artificial intelligence. Acc. Chem. Res. 2021;54:1575. doi: 10.1021/acs.accounts.0c00868. [DOI] [PubMed] [Google Scholar]

- 50.von Lilienfeld OA, Ramakrishnan R, Rupp M, Knoll A. Fourier series of atomic radial distribution functions: a molecular fingerprint for machine learning models of quantum chemical properties. Int. J. Quant. Chem. 2015;115:1084. [Google Scholar]

- 51.Perdew JP, Levy M. Extrema of the density functional for the energy: Excited states from the ground-state theory. Phys. Rev. B. 1985;31:6264. doi: 10.1103/physrevb.31.6264. [DOI] [PubMed] [Google Scholar]

- 52.Rupp M, Tkatchenko A, Müller K-R, von Lilienfeld OA. Fast and accurate modeling of molecular atomization energies with machine learning. Phys. Rev. Lett. 2012;108:058301. doi: 10.1103/PhysRevLett.108.058301. [DOI] [PubMed] [Google Scholar]

- 53.Behler J. Perspective: machine learning potentials for atomistic simulations. J. Chem. Phys. 2016;145:170901. doi: 10.1063/1.4966192. [DOI] [PubMed] [Google Scholar]

- 54.Schütt KT, Arbabzadah F, Chmiela S, Müller KR, Tkatchenko A. Quantum-chemical insights from deep tensor neural networks. Nat. Commun. 2017;8:13890. doi: 10.1038/ncomms13890. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Chmiela, S. et al. Machine learning of accurate energy-conserving molecular force fields. Sci. Adv. 3, 5 (2017) [DOI] [PMC free article] [PubMed]

- 56.Chmiela S, Sauceda HE, Müller K-R, Tkatchenko A. Towards exact molecular dynamics simulations with machine-learned force fields. Nat. Commun. 2018;9:3887. doi: 10.1038/s41467-018-06169-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Faber FA, Christensen AS, Huang B, von Lilienfeld OA. Alchemical and structural distribution based representation for universal quantum machine learning. J. Chem. Phys. 2018;148:241717. doi: 10.1063/1.5020710. [DOI] [PubMed] [Google Scholar]

- 58.Schütt KT, Gastegger M, Tkatchenko A, Müller K-R, Maurer RJ. Unifying machine learning and quantum chemistry with a deep neural network for molecular wavefunctions. Nat. Commun. 2019;10:5024. doi: 10.1038/s41467-019-12875-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.Christensen AS, von Lilienfeld OA. On the role of gradients for machine learning of molecular energies and forces. Mach. Learn. Sci. Technol. 2020;1:045018. [Google Scholar]

- 60.Muller K-R, Mika S, Ratsch G, Tsuda K, Scholkopf B. An introduction to kernel-based learning algorithms. IEEE Trans. Neural. Netw. 2001;12:181. doi: 10.1109/72.914517. [DOI] [PubMed] [Google Scholar]

- 61.Efron B, Tibshirani R. The bootstrap method for assessing statistical accuracy. Behaviormetrika. 1985;12:1. [Google Scholar]

- 62.Virtanen P, et al. SciPy 1.0: fundamental algorithms for scientific computing in python. Nat. Methods. 2020;17:261. doi: 10.1038/s41592-019-0686-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Chudoba C, Riedle E, Pfeiffer M, Elsaesser T. Vibrational coherence in ultrafast excited state proton transfer. Chem. Phys. Lett. 1996;263:622. [Google Scholar]

- 64.Lochbrunner S, Szeghalmi A, Stock K, Schmitt M. Ultrafast proton transfer of 1-hydroxy-2-acetonaphthone: Reaction path from resonance Raman and transient absorption studies. J. Chem. Phys. 2005;122:244315. doi: 10.1063/1.1914764. [DOI] [PubMed] [Google Scholar]

- 65.Takeuchi S, Tahara T. Coherent nuclear wavepacket motions in ultrafast excited-state intramolecular proton transfer: Sub-30-fs resolved pump-probe absorption spectroscopy of 10-hydroxybenzo[h]quinoline in solution. J. Phys. Chem. A. 2005;109:10199. doi: 10.1021/jp0519013. [DOI] [PubMed] [Google Scholar]

- 66.Bartók AP, Payne MC, Kondor R, Csányi G. Gaussian approximation potentials: the accuracy of quantum mechanics, without the electrons. Phys. Rev. Lett. 2010;104:136403. doi: 10.1103/PhysRevLett.104.136403. [DOI] [PubMed] [Google Scholar]

- 67.Perdew JP, Ernzerhof M, Burke K. Rationale for mixing exact exchange with density functional approximations. J. Chem. Phys. 1996;105:9982. [Google Scholar]

- 68.Adamo C, Barone V. Toward reliable density functional methods without adjustable parameters: the PBE0 model. J. Chem. Phys. 1999;110:6158. [Google Scholar]

- 69.Barone V, Adamo C. Proton transfer in the ground and lowest excited states of malonaldehyde: A comparative density functional and post-Hartree-Fock study. J. Chem. Phys. 1996;105:11007. [Google Scholar]

- 70.Sadhukhan S, Muñoz D, Adamo C, Scuseria GE. Predicting proton transfer barriers with density functional methods. Chem. Phys. Lett. 1999;306:83. [Google Scholar]

- 71.Seliskar CJ, Hoffman RE. Electronic spectroscopy of malondialdehyde. Chem. Phys. Lett. 1976;43:481. [Google Scholar]

- 72.Marx, D. et al. CPMD, IBM Corporation 1990–2019 and MPI für Festkörperforschung Stuttgart 1997–2001. http://www.cpmd.org (2019).

- 73.Troullier N, Martins JL. Efficient pseudopotentials for plane-wave calculations. Phys. Rev. B. 1991;43:1993. doi: 10.1103/physrevb.43.1993. [DOI] [PubMed] [Google Scholar]

- 74.Steinhaus H. Sur la division des corps matériels en parties. Bull. Acad. Polon. Sci. 1956;1:801. [Google Scholar]

- 75.Martyna GJ, Klein ML, Tuckerman M. Nosé-Hoover chains: the canonical ensemble via continuous dynamics. J. Chem. Phys. 1992;97:2635. [Google Scholar]

- 76.Larsen AH, et al. The atomic simulation environment—a python library for working with atoms. J. Phys. Condens. Matter. 2017;29:273002. doi: 10.1088/1361-648X/aa680e. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data Availability Statement

The molecular coordinates, electronic densities and energies for ground and excited states used in this study are available in Zenodo [10.5281/zenodo.7064211]. The source data of the figures are provided in the Source Data file. Source data are provided with this paper.

Codes used in this work are available at 10.5281/zenodo.7064211.