Abstract

Hearing with a cochlear implant (CI) is limited compared to natural hearing. Although CI users may develop compensatory strategies, it is currently unknown whether these extend from auditory to visual functions, and whether compensatory strategies vary between different CI user groups. To better understand the experience-dependent contributions to multisensory plasticity in audiovisual speech perception, the current event-related potential (ERP) study presented syllables in auditory, visual, and audiovisual conditions to CI users with unilateral or bilateral hearing loss, as well as to normal-hearing (NH) controls. Behavioural results revealed shorter audiovisual response times compared to unisensory conditions for all groups. Multisensory integration was confirmed by electrical neuroimaging, including topographic and ERP source analysis, showing a visual modulation of the auditory-cortex response at N1 and P2 latency. However, CI users with bilateral hearing loss showed a distinct pattern of N1 topography, indicating a stronger visual impact on auditory speech processing compared to CI users with unilateral hearing loss and NH listeners. Furthermore, both CI user groups showed a delayed auditory-cortex activation and an additional recruitment of the visual cortex, and a better lip-reading ability compared to NH listeners. In sum, these results extend previous findings by showing distinct multisensory processes not only between NH listeners and CI users in general, but even between CI users with unilateral and bilateral hearing loss. However, the comparably enhanced lip-reading ability and visual-cortex activation in both CI user groups suggest that these visual improvements are evident regardless of the hearing status of the contralateral ear.

Keywords: Cochlear implant, Single-sided-deafness, Bilateral hearing loss, Event-related potential, Cortical plasticity, Multisensory integration, Audiovisual speech perception

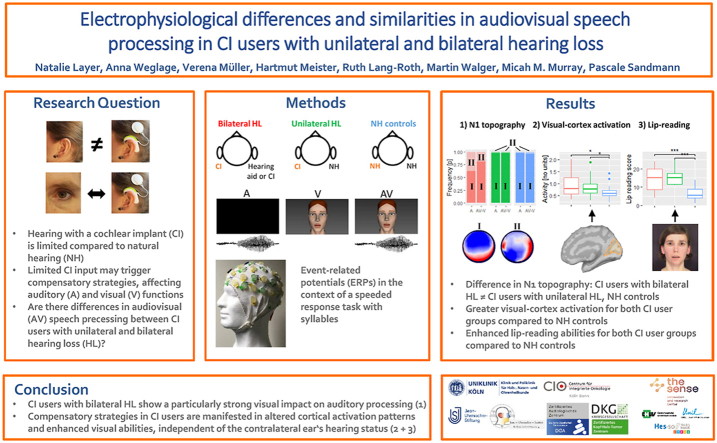

Graphical abstract

Highlights

- Altered audiovisual speech processing after unilateral and bilateral hearing loss.
- CI users with unilateral and bilateral hearing loss differ in their N1 topography.
- Enhanced lip-reading and stronger visual-cortex activation in different CI groups.
- Visual improvements with CI are independent of the contralateral ear's hearing status.

1. Introduction

A cochlear implant (CI) can help restore the communication abilities in patients with severe to profound sensorineural hearing loss by electrically stimulating the auditory nerve (Zeng, 2011). However, listening with a CI is completely different from conventional hearing, as the electrical signal provided by the CI transmits only a limited amount of spectral and temporal information (Drennan and Rubinstein, 2008). Consequently, the central auditory system must learn to interpret the artificially sounding CI input as meaningful information (Giraud et al., 2001c; Sandmann et al., 2015). The ability of the nervous system to adapt to a new type of stimulus is an example of neural plasticity (Glennon et al., 2020; Merzenich et al., 2014). This phenomenon has been investigated in various studies with CI users, manifesting as an increase in activation in the auditory cortex to auditory stimuli during the first months after CI implantation (Giraud et al., 2001c; Green et al., 2005; Sandmann et al., 2015). Additional evidence for neural plasticity in CI users comes from the observation that these individuals recruit visual cortices for purely auditory speech tasks (Chen et al., 2016; Giraud et al., 2001c); a phenomenon referred to as cross-modal plasticity (e.g. Glennon et al., 2020).

Previous research has shown that event-related potentials (ERPs) derived from continuous electroencephalography (EEG) are an adequate method for studying cortical plasticity in CI users (Beynon et al., 2005; Finke et al., 2016a; Sandmann et al., 2009, 2015; Schierholz et al., 2015, 2017; Sharma et al., 2002; Viola et al., 2012; Layer et al., 2022). The primary benefit of analysing ERPs is the high temporal resolution, which allows for the tracking of individual cortical processing steps (Biasiucci et al., 2019; Michel and Murray, 2012). For instance, the auditory N1 (negative potential around 100 ms after stimulus onset) and the auditory P2 ERPs (positive potential around 200 ms after stimulus onset) are at least partly generated in the primary and secondary auditory cortices (Ahveninen et al., 2006; Bosnyak et al., 2004; Näätänen and Picton, 1987). Current models of auditory signal propagation recognise that there is an underlying anatomy exhibiting a semi-hierarchical and highly parallel organisation (e.g. Kaas and Hackett, 2000). In terms of auditory ERPs this would suggest that prominent components, such as the N1–P2 complex, include generators not only within primary auditory cortices, but within a distributed network along the superior temporal cortices as well as fronto-parietal structures and even visual cortices. Moreover, top-down effects, such as attention or expectation of incoming auditory events mediated by the frontal cortex, can influence these auditory processes (Dürschmid et al., 2019). The majority of previous ERP studies with CI users have used auditory stimuli to show that the N1 and P2 ERPs have a reduced amplitude and a prolonged latency in comparison to normal-hearing (NH) individuals. 
This observation suggests that CI users have difficulties in processing auditory stimuli (Beynon et al., 2005; Finke et al., 2016a; Henkin et al., 2014; Sandmann et al., 2009) and is consistent with previous behavioural results of impaired auditory discrimination ability in CI users (Sandmann et al., 2010, 2015; Finke et al., 2016a, Finke et al., 2016b).

Although multisensory conditions more likely represent everyday situations, only a few ERP studies so far have been conducted with audiovisual stimuli. These studies primarily concentrated on rudimentary, non-linguistic audiovisual stimuli (sinusoidal tones and white discs) and showed a prolonged N1 response, and a greater visual modulation of the auditory N1 ERPs in CI users compared to NH listeners (Schierholz et al., 2015, 2017). Our previous study extended these results to more complex audiovisual syllables (Layer et al., 2022), using electrical neuroimaging (Michel et al., 2004, 2009; Michel and Murray, 2012) to perform topographic and ERP source analyses. Unlike traditional ERP data analysis, which is based on waveform morphology at specific electrode positions, electrical neuroimaging is reference-independent and takes into account the spatial characteristics and temporal dynamics of the global electric field to distinguish between the effects of response strength, latency, and distinct topographies (Murray et al., 2008; Michel et al., 2009). By using this topographic analysis approach, we previously showed a group-specific topographic pattern at N1 latency and an enhanced activation in the visual cortex at N1 latency for CI users when compared to NH listeners (Layer et al., 2022). These observations confirm a recent report about alterations in audiovisual processing and a multisensory benefit for CI users, if additional (congruent) visual information is provided (Radecke et al., 2022).

Based on previous ERP and behavioural results, one might conclude that multisensory processes, in particular integration of auditory and visual speech cues, remain intact in CI users despite the limited auditory signal provided by the CI. Nevertheless, it remains unclear whether the enhanced visual impact on auditory speech processing applies to all of the CI users, given that large inter-individual differences (e.g. with regards to the hearing threshold in the contralateral ear) have not been taken into account. Most of the aforementioned studies have included CI users with bilateral hearing loss (e.g. Finke et al., 2016a; Sandmann et al., 2015; Schierholz et al., 2015; Radecke et al., 2022; Layer et al., 2022), either provided with a CI on both ears (CI + CI on contralateral side) or on one ear (CI + hearing aid on contralateral side). These CI users will be referred to as CI-CHD users (CHD = ‘contralateral hearing device’) in the following. However, over the last years, the clinical margins for CI indication have been extended to unilateral hearing loss, enabling the implantation of single-sided deaf (SSD) patients (CI + NH on contralateral side; Arndt et al., 2011; Arndt et al., 2017; Buechner et al., 2010). This CI user group is particularly interesting, as the signal quality of the input is very different for the two ears, leading to maximally asymmetric auditory processing (Gordon et al., 2013; Kral et al., 2013). The variable hearing ability in the contralateral ear across different CI groups may at least partly account for the large variability in speech recognition ability observed in CI users (Lazard et al., 2012). 
To better understand the factors contributing to this variability and to extend previous findings on CI-CHD users and NH listeners (Layer et al., 2022), the current study systematically compared the time course of auditory and audiovisual speech processing as well as the lip-reading abilities between different groups of CI users, in particular CI-CHD users and CI-SSD users, and NH listeners. The inclusion of the additional group of patients crucially extends our previous study because it not only evaluates the transferability of our previous results to different patient groups but also provides deeper insights into the influence of individual factors, specifically the hearing ability of the second ear, on audiovisual speech processing in CI users. This is noteworthy because literature comparing CI-SSD to bimodal or bilateral CI users is scarce. The few existing studies reported differences in speech-in-noise performance (Williges et al., 2019) and in situations with multiple concurrent speakers (Bernstein et al., 2016) between CI-SSD users and bimodal or bilateral CI users, respectively. Given this first evidence for purely auditory situations, we hypothesised that further differences, which have yet to be reported, would emerge for audiovisual stimulation.

Given that CI-SSD users have an intact ear on the contralateral side, it is reasonable that this NH ear serves as the main communication channel despite the advantages given by the CI (Kitterick et al., 2015; Ludwig et al., 2021). Therefore, we hypothesised that CI-SSD users are less influenced by visual information, benefit less from audiovisual input and show poorer lip-reading skills than CI-CHD users. However, we expected a delay in cortical responses in the CI-SSD group, similar to the group of CI-CHD users, when compared to NH individuals, based on previous results from studies with purely auditory stimuli comparing the CI and the NH ear (Finke et al., 2016b; Bönitz et al., 2018; Weglage et al., 2022).

2. Material and methods

The study was conducted in accordance with the Declaration of Helsinki and was approved by the Ethics Committee of the medical faculty of the University of Cologne (application number: ). Prior to data collection, all participants gave written informed consent, and they were reimbursed.

2.1. Participants

In total, twelve post-lingually deafened CI-SSD patients were invited to participate in this study to extend the results from our previous study (Layer et al., 2022) by including an additional subgroup of CI-SSD users. Among these participants, one had to be excluded due to poor EEG data quality (high artefact load), resulting in a total of eleven CI-SSD patients (two right-implanted). Accordingly, we selected post-lingually deafened CI-CHD patients and NH listeners from our previous study (Layer et al., 2022; n = 11 each) such that they matched the CI-SSD patients as closely as possible by gender, age, handedness, stimulated ear and years of education. The matched subset datasets from our previous study of CI-CHD users and NH listeners were reused for the current study and were extended by newly acquired data from an additional group of CI-SSD users. The CI-CHD users were implanted either unilaterally (n = 2; all left-implanted using a hearing aid on the contralateral ear) or bilaterally (n = 9; two right-implanted). All CI users had been using their device continuously for at least one year prior to the experiment. For the experiment, only the ear with a CI was stimulated and, in the case of bilateral implantation, the ‘better’ ear (the ear showing the higher speech recognition scores in the Freiburg monosyllabic test) was used as stimulation side.

Thus, for final analyses, thirty-three volunteers were included, with eleven CI-SSD patients (7 female, mean age: 56.5 years ± 9.2 years, range: , 10 right-handed), eleven CI-CHD patients (7 female, mean age: 61.4 years ± 9.7 years, range: , 11 right-handed) and eleven NH listeners (7 female, mean age: 60.1 years ± 10.1 years, range: , 11 right-handed). Detailed information on the implant system and the demographic data are provided in Table 1. To check that cognitive abilities were age-appropriate, the DemTect Ear test battery was used (Brünecke et al., 2018). All participants scored within the normal, age-appropriate range ( points). In addition, the German Freiburg monosyllabic speech test (Hahlbrock, 1970) with a sound intensity level of 65 dB SPL (see Table 4 for scores) was used to assess speech recognition abilities. To obtain a hearing threshold (HT) of the contralateral ear, we measured the aided HT in CI-CHD users in free-field and the unaided HT of the NH ear of CI-SSD users with headphones (see Table 1). All participants were native German speakers, had normal or corrected-to-normal vision (assessed by the Landolt test according to the DIN-norm; Wesemann et al., 2010) and none of the participants had a history of psychiatric disorder. Their handedness was assessed by the Edinburgh inventory (Oldfield, 1971).

Table 1.

Demographic information on the CI participants; HT (hearing threshold; average over 500 Hz, 1 kHz, 2 kHz, 4 kHz); Stim. = stimulated; HL = hearing loss; m = male; f = female.

| ID | Group | Sex | Age | Handedness | Fitting | HT (dB HL; contralateral ear) | Stim. ear | Etiology | Age at onset of HL (years) | CI use of the stim. ear (months) | CI manufacturer |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | CI-CHD | m | 61 | right | bilateral | 32 | left | unknown | 41 | 15 | MedEl |
| 2 | CI-CHD | f | 75 | right | bilateral | 31 | left | hereditary | 57 | 30 | Advanced Bionics |
| 3 | CI-CHD | f | 39 | right | bilateral | 26 | right | otosclerosis | 24 | 17 | Advanced Bionics |
| 4 | CI-CHD | f | 70 | right | bilateral | 37 | left | unknown | 37 | 56 | MedEl |
| 5 | CI-CHD | f | 70 | right | bilateral | 37 | left | meningitis | 69 | 20 | MedEl |
| 6 | CI-CHD | m | 59 | right | bimodal | 85 | left | unknown | 49 | 33 | Advanced Bionics |
| 7 | CI-CHD | f | 63 | right | bilateral | 36 | left | meningitis | 20 | 106 | Advanced Bionics |
| 8 | CI-CHD | f | 64 | right | bilateral | 29 | left | whooping cough | 9 | 78 | Cochlear |
| 9 | CI-CHD | m | 53 | right | bilateral | 36 | left | unknown | 30 | 235 | Cochlear |
| 10 | CI-CHD | f | 58 | right | bimodal | 41 | left | unknown | 49 | 18 | Advanced Bionics |
| 11 | CI-CHD | m | 56 | right | bilateral | 35 | right | hereditary | 19 | 63 | MedEl |
| 12 | CI-SSD | f | 64 | right | SSD | 10 | left | unknown | 49 | 30 | Cochlear |
| 13 | CI-SSD | f | 40 | right | SSD | 10 | right | sudden hearing loss | 34 | 77 | MedEl |
| 14 | CI-SSD | m | 43 | right | SSD | 12 | left | bike accident | 42 | 12 | Cochlear |
| 15 | CI-SSD | m | 54 | right | SSD | 17 | left | unknown | 52 | 28 | MedEl |
| 16 | CI-SSD | f | 49 | right | SSD | 23 | right | otosclerosis | 39 | 19 | MedEl |
| 17 | CI-SSD | m | 59 | left | SSD | 17 | left | sudden hearing loss | 49 | 54 | Cochlear |
| 18 | CI-SSD | f | 57 | right | SSD | 12 | left | sudden hearing loss | 20 | 53 | Cochlear |
| 19 | CI-SSD | m | 62 | right | SSD | 13 | left | sudden hearing loss | 47 | 63 | Cochlear |
| 20 | CI-SSD | f | 62 | right | SSD | 15 | left | otitis media | 14 | 65 | MedEl |
| 21 | CI-SSD | f | 68 | right | SSD | 25 | left | unknown | 60 | 50 | Cochlear |
| 22 | CI-SSD | f | 52 | right | SSD | 22 | left | hereditary | 20 | 12 | MedEl |

Table 4.

Other behavioural measures for CI users and NH listeners. In the Freiburg monosyllabic word test and in the lip-reading test, a score of 100% means that all words have been repeated correctly. A higher value for the exertion rating means it was more effortful to perform the task (range: 6–20; 6 = no effort, 20 = highly effortful).

| Group | Freiburg test (%) | Lip-reading test | Exertion rating |
|---|---|---|---|
| CI-CHD | 70.9 ± 12.2 | 14.5 ± 6.7 | 12.5 ± 2.0 |
| CI-SSD | 71.4 ± 13.1 | 15.5 ± 4.4 | 12.2 ± 1.8 |
| NH | 98.2 ± 4.0 | 6.9 ± 4.7 | 11.9 ± 1.6 |

2.2. Stimuli

The stimuli in this study were identical to those used in our previous study (Layer et al., 2022) and they were presented in three different conditions: visual-only (V), auditory-only (A) and audiovisual (AV). Additionally, there were trials with a black screen only (‘nostim’), to which the participants were instructed not to react. The stimuli were delivered using the Presentation software (Neurobehavioral Systems, version 21.1) and a computer in combination with a duplicated monitor (69 inch). The stimuli consisted of the two syllables /ki/ and /ka/, which are included in the Oldenburg logatome speech corpus (OLLO; Wesker et al., 2005). They were cut from the available logatomes from one speaker (female speaker 1, V6 ‘normal spelling style’, no dialect). These two syllables differed in the phonetic distinctive features (vowel place and height of articulation) of their vowel contrast (/a/ vs. /i/; Micco et al., 1995). These German vowels differ in the central frequencies of the first (F1) and second formant (F2), representing the highest contrast between German vowels (e.g. Obleser et al., 2003), which makes them easily distinguishable for CI users. Importantly, as we presented visual-only syllables as well, the chosen syllables differ strongly not only in their auditory (phoneme) realisation, but also in their visual articulatory (viseme) realisation. A viseme is the visual equivalent of the phoneme: a static image of a person articulating a phoneme (Dong et al., 2003). The syllables were edited in Audacity (version 3.0.2) by cutting and adjusting them to the same duration of 400 ms. The syllables were normalised (adjusted to the maximal amplitude) in Adobe Audition CS6 (version 5.0.2).

To create a visual articulation of the auditory syllables, we used the MASSY (Modular Audiovisual Speech SYnthesizer; Fagel and Clemens, 2004), which is a computer-based video animation of a talking head. This talking head has been previously validated for CI users (Meister et al., 2016; Schreitmüller et al., 2018) and is an adequate tool to generate audiovisual and visual speech stimuli (Massaro and Light, 2004). To generate articulatory movements matching the auditory speech sounds, one has to provide files that transform the previously transcribed sounds into a probabilistic pronunciation model providing the segmentation and the timing of every single phoneme. This can be done by means of the web-based tool MAUS (Munich Automatic Segmentation; Schiel, 1999). To obtain a video file of the MASSY output, the screen recorder Bandicam (version 4.1.6) was used in order to save the finished video files. Finally, the stimuli were edited in Pinnacle Studio 22 (version 22.3.0.377), making video files of each syllable in each condition: 1) Audiovisual (AV): articulatory movements with corresponding auditory syllables, 2) Auditory-only (A): black screen (video track turned off) combined with auditory syllables, 3) Visual-only (V): articulatory movements without auditory syllables (audio track turned off). Each trial started with a static face (500 ms) and was followed by the video, which lasted for 800 ms (20 ms initiation of articulatory movements + 400 ms auditory syllable + 380 ms completion of articulatory movements). For further analyses, we focused on the moving face (starting 500 ms post-stimulus onset/after the static face), as the responses to static faces comparing NH listeners and CI users have been investigated previously (Stropahl et al., 2015).

Supplementary video related to this article can be found at https://doi.org/10.1016/j.crneur.2022.100059


In general, all participants were assessed monaurally, meaning that in CI-SSD users the CI-ear was measured, in bimodal CI users the CI-ear was measured and in bilateral CI users the better CI-ear was measured. Regarding the CI-SSD patients, we followed the procedures of a previous study (Weglage et al., 2022) and positioned the processor inside an aqua case (Advanced Bionics; https://www.advancedbionics.com) to specifically stimulate the CI-ear without risking cross-talk to the NH ear. Note that stimulation via a loudspeaker is not possible in this group, because an ear-plug is not enough to cover and mask the NH-ear, as it only reduces the intensity level by maximally 30 dB (Park et al., 2021). We decided against using noise to mask the NH ear, as this noise would have to be very loud to fully mask the information. Such stimulation would rather represent a speech-in-noise condition and would differ much more from the stimulation used in the other two groups. An insert earphone (3M E-A-RTONE 3A) was put inside a hole of the aqua case, where it was placed right over the microphone of the CI. A long coil cable was used to connect the processor with the implant. Regarding the CI-CHD users, the stimuli were presented via a loudspeaker (Audiometer-Box, type: LAB 501, Westra Electronic GmbH) which was placed in front of the participant. The hearing aid or the CI at the contralateral side was removed during the experiment and the ear was additionally covered with an ear-plug. For NH participants, the ear of the matched CI user was stimulated via an insert earphone (3M E-A-RTONE 3A), and the contralateral ear was closed with an ear-plug as well to avoid cross-talk to the contralateral ear. The stimuli were calibrated to 65 dB SPL to ensure that the intensity level was equal for each stimulation technique.
All participants rated the perceived loudness of the syllables with a seven-point loudness rating scale (as used in Sandmann et al., 2009, 2010), to ensure that the syllable intensity was perceived at a moderate level of dB (Allen et al., 1990). The stimuli (video files) are provided as supplementary material and can be downloaded.

2.3. Procedure

The procedure was identical to our previous study (see Layer et al., 2022). The additional CI-SSD users were seated comfortably in an electromagnetically shielded and dimly lit booth at a viewing distance of 175 cm to the screen. The participants were instructed to discriminate as fast and as accurately as possible between the syllables /ki/ and /ka/. The given response was registered using a mouse, with each of the two buttons assigned to one syllable. The button assignment was counterbalanced across the participants to prevent confounds caused by the finger used.

For each stimulus condition (AV, A, V), 90 trials were presented per syllable, and a further 90 ‘nostim’ trials were included, resulting in a total number of 630 trials (90 repetitions x 3 conditions (AV, A, V) x 2 syllables (/ki/, /ka/) + 90 ‘nostim’-conditions). Each trial began with a ‘nostim’-condition or a static face of the talking head (500 ms) followed by a visual-only, auditory-only or an audiovisual syllable. Afterwards, a fixation cross was shown until the participant pressed a button. In the case of a ‘nostim’-trial, the participants were asked not to respond. The trials were pseudo-randomised such that no trial of the same condition and syllable appeared twice in a row. The experiment lasted for 25 min excluding breaks, composed of five blocks of approximately 5 min each. A short break was given after each block. To ensure that the task was understood by the participants, we presented a short practice block consisting of five trials per condition before starting the recording. An illustration of the experimental paradigm can be found in Fig. 1A.
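The trial structure described above can be illustrated with a minimal sketch. It is not the Presentation script used in the study: the function names, the weighted-draw strategy and the treatment of ‘nostim’ as a trial type that also may not repeat are assumptions made for illustration only.

```python
import random
from collections import Counter

CONDITIONS = ("AV", "A", "V")
SYLLABLES = ("ki", "ka")
REPETITIONS = 90   # per condition and syllable
N_NOSTIM = 90      # black-screen trials without a syllable


def build_trials():
    """Unshuffled trial list: 90 x 3 conditions x 2 syllables + 90 'nostim'."""
    trials = [(c, s) for c in CONDITIONS for s in SYLLABLES
              for _ in range(REPETITIONS)]
    trials += [("nostim", None)] * N_NOSTIM
    return trials


def pseudo_randomise(trials, rng):
    """Order trials so that no trial type occurs twice in a row.

    Hypothetical strategy: draw the next type at random (weighted by the
    number of trials left), never the type just presented; if the draw
    runs into a dead end, start again from scratch.
    """
    while True:
        remaining = Counter(trials)
        sequence, last = [], None
        while remaining:
            candidates = [t for t in remaining if t != last]
            if not candidates:
                break  # only the previous type is left: restart
            weights = [remaining[t] for t in candidates]
            choice = rng.choices(candidates, weights=weights, k=1)[0]
            sequence.append(choice)
            remaining[choice] -= 1
            if remaining[choice] == 0:
                del remaining[choice]
            last = choice
        if not remaining:
            return sequence


if __name__ == "__main__":
    order = pseudo_randomise(build_trials(), random.Random(2022))
    print(len(order), order[:5])
```

A plain shuffle followed by rejection would almost never satisfy the constraint with 630 trials and seven trial types, which is why the sketch builds the sequence step by step.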

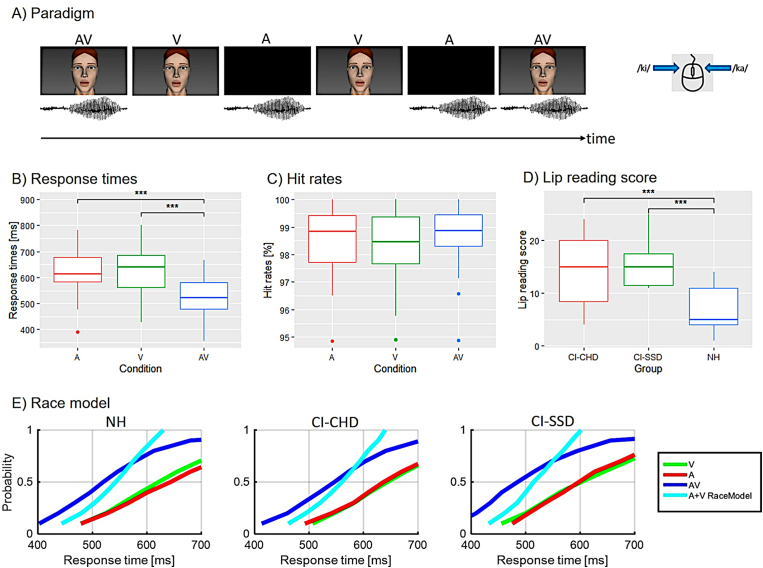

Fig. 1.

Behavioural results. A) Overview of the paradigm (adapted from Layer et al., 2022). B) Mean response times for auditory (red), visual (green) and audiovisual (blue) syllables averaged over all groups, demonstrating that response times were shorter for audiovisual syllables than for auditory-only and visual-only syllables. C) Mean hit rates for auditory (red), visual (green) and audiovisual (blue) syllables averaged across all groups, with no differences between the three conditions. D) Cumulative distribution functions for CI-CHD, CI-SSD and NH. The race model is violated in all three groups: the probability of fast response times is higher for audiovisual stimuli (blue line) than estimated by the race model (cyan line). Significant differences are indicated (* , ** , *** ). (For interpretation of the references to colour in this figure legend, the reader is referred to the Web version of this article.)

To obtain further behavioural measures apart from the ones registered in the EEG task (hit rates, response times), we asked the additionally measured CI-SSD users to rate the exertion of performing the task after each experimental block by using the ‘Borg Rating of Perceived Exertion’-scale (Borg RPE-scale; Williams, 2017). Further, we measured the lip-reading abilities by means of a behavioural lip-reading test consisting of monosyllabic words from the German Freiburg test (Hahlbrock, 1970), which were articulated by various speakers and filmed (Stropahl et al., 2015). The participants were asked to watch the short, muted videos of the monosyllabic words and to report which word they had understood. This test was used in previous studies with CI patients (Stropahl et al., 2015; Stropahl and Debener, 2017), as well as in our previous study with CI-CHD users and NH listeners (Layer et al., 2022), whose scores we compared to those of the CI-SSD users in the current study.

2.4. EEG recording

Similar to our previous study (Layer et al., 2022), the EEG data of the additionally measured CI-SSD users were continuously recorded by means of 64 Ag/AgCl ActiCap slim electrodes using a BrainAmp system (BrainProducts, Gilching, Germany) and a customised electrode cap with an electrode layout (Easycap, Herrsching, Germany) according to the 10-10 system. To record an electrooculogram (EOG), two electrodes were placed below and beside the left eye (vertical and horizontal eye movements, respectively). The nose-tip was used as reference, and a midline electrode placed slightly anterior to Fz served as ground. Data recording was performed using a sampling rate of 1000 Hz. The online analog filter was set between 0.02 and 250 Hz. Electrode impedances were maintained below 10 kΩ during data acquisition.

2.5. Data analysis

The subset data taken from our previous study including the newly acquired data of the CI-SSD users were analysed in MATLAB 9.8.0.1323502 (R2020a; Mathworks, Natick, MA) and R (version 3.6.3; R Core Team (2020), Vienna, Austria). Topographic analyses were carried out in CARTOOL (version 3.91; Brunet et al., 2011). Source analyses were performed in Brainstorm (Tadel et al., 2011). The following R packages have been used: ggplot2 (version 2.3.3) for creating plots; dplyr (version 1.0.4), tidyverse (version 1.3.0) and tidyr (version 1.1.3) for data formatting; ggpubr (version 0.4.0) and rstatix (version 0.7.0) for statistical analyses.

2.5.1. Behavioural data

In a first step, we collapsed the syllables /ki/ and /ka/ for each condition (A, V, AV), as they did not show substantial differences between each other (mean RTs ± one standard deviation of the mean: CI-CHD: /ki/= 620 ms ± 88.3 ms, /ka/= 611 ms ± 88.0 ms; CI-SSD: /ki/= 574 ms ± 100.0 ms, /ka/= 566 ms ± 94.0 ms; NH: /ki/= 607 ms ± 91.6 ms, /ka/= 587 ms ± 102.0 ms; all p ≥ 0.354). Second, false alarms or missing responses were discarded from the dataset. Trials that exceeded the individual mean by more than three standard deviations for each condition were declared as outliers and were removed from the dataset. Then, RTs and hit rates were computed for each condition (A, V, AV) for each individual. To analyse the performance for each condition and group, a 3 x 3 mixed ANOVA was used separately for the RTs and the hit rates, with condition (AV, A, V) as the within-subjects factor and group (NH, CI-CHD, CI-SSD) as the between-subjects factor. In the case of violation of the sphericity assumption, a Greenhouse-Geisser correction was applied. Moreover, post-hoc t-tests were carried out and corrected for multiple comparisons using a Bonferroni correction, in the case of significant main effects or interactions (). As the hit rates were very high in our previous study (Layer et al., 2022), we did not expect CI-SSD users to deviate from this pattern. Concerning the RTs, we expected similar results for CI-SSD users as for CI-CHD users and NH listeners, with shorter RTs for AV conditions compared to unisensory (A, V) conditions.
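The per-participant cleaning steps described above can be sketched as follows. This is an illustrative reading of the text rather than the authors' MATLAB/R code: the function names and the trial format are hypothetical, the outlier criterion is interpreted as one-sided (RTs more than three standard deviations above the mean), and the hit rate is assumed to be the proportion of correct responses among all presented trials of a condition.

```python
from statistics import mean, stdev


def remove_slow_outliers(rts, n_sd=3.0):
    """Drop RTs that exceed the mean by more than n_sd standard deviations."""
    if len(rts) < 2:
        return list(rts)
    limit = mean(rts) + n_sd * stdev(rts)
    return [rt for rt in rts if rt <= limit]


def summarise_condition(trials, n_sd=3.0):
    """Summarise one participant and one condition (A, V or AV).

    trials: list of (rt_ms, correct) tuples; rt_ms is None for a missing
    response. This input format is an assumption made for the sketch.
    """
    n_presented = len(trials)
    # Discard false alarms and missing responses before the RT analysis.
    correct_rts = [rt for rt, correct in trials if correct and rt is not None]
    hit_rate = len(correct_rts) / n_presented if n_presented else float("nan")
    kept = remove_slow_outliers(correct_rts, n_sd)
    mean_rt = mean(kept) if kept else float("nan")
    return {"hit_rate": hit_rate, "mean_rt": mean_rt, "n_kept": len(kept)}


if __name__ == "__main__":
    demo = [(500 + i, True) for i in range(20)] + [(5000, True), (None, False)]
    print(summarise_condition(demo))
```

The resulting per-condition means and hit rates would then enter the 3 x 3 mixed ANOVA; that step is not shown here.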

In a next step, we analysed the origin of the redundant signals effect, which is the effect of achieving faster RTs for audiovisual stimuli in comparison to unimodal stimuli (A, V) (Miller, 1982). For this purpose, we reused a subset of our previously reported data of the CI-CHD users and NH listeners (Layer et al., 2022) and extended these by the additional group of CI-SSD users. There are two accounts explaining this issue: the race model (Raab, 1962) and the coactivation model (Miller, 1982). Briefly, the race model claims that due to statistical facilitation it is more probable that either of the stimuli (A and V) will result in shorter response times in comparison to one stimulus alone (A or V). Therefore, one can assume that RTs of redundant signals (AV) are significantly faster, and that no neural integration is required to observe a redundant signals effect (Raab, 1962). In contrast, the coactivation model (Miller, 1982) assumes an interaction between the unimodal stimuli which forms a new product before initiating a motor response, leading to faster RTs. A widely used method in multisensory research is to test for the race model inequality (RMI; Miller, 1982) to explain whether the redundant signals effect was caused by multisensory processes or by statistical facilitation. According to the RMI, the cumulative distribution function (CDF) of the RTs in the multisensory condition (AV) can never exceed the sum of the CDFs of the two unisensory (A, V) conditions:

$$P(RT_{AV} \le t) \le P(RT_{A} \le t) + P(RT_{V} \le t),$$

where $P(RT_{x} \le t)$ represents the likelihood of a response time in condition $x$ being less than or equal to an arbitrary value $t$. Violation of this model, for any given value of $t$, is an indication for multisensory processes (see also Ulrich et al. (2007) for details). By applying the RMITest software by Ulrich et al. (2007), the CDFs of the RT distributions for each condition (AV, A, V) and for the sum of the modality-specific conditions (A + V) were estimated. The individual RTs were rank ordered for each condition to obtain percentile values (Ratcliff, 1979). Next, for each group separately (NH, CI-CHD, CI-SSD), the CDFs for the redundant signals conditions (AV) and the modality-specific sum (A + V) were compared for the five fastest deciles (bin width: 10 %). We used one-tailed paired t-tests followed by a Bonferroni correction to account for multiple comparisons. Significance at any decile bin was treated as violation of the race model, suggesting multisensory interactions in the behavioural responses. Here, we expected a similar redundant signals effect for CI-SSD users, as CI-CHD users and NH listeners both showed a violation of the race model inequality in our previous study (Layer et al., 2022).
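To make the logic of the race model inequality concrete, the following sketch checks it descriptively for one set of response times. It is a simplification and not the RMITest algorithm of Ulrich et al. (2007): it evaluates empirical CDFs on a grid of observed RTs instead of comparing percentile values across participants with t-tests, and all function names are hypothetical.

```python
from bisect import bisect_right


def ecdf(sample):
    """Return the empirical CDF t -> P(RT <= t) of a list of RTs."""
    xs = sorted(sample)
    n = len(xs)
    return lambda t: bisect_right(xs, t) / n


def rmi_differences(rt_a, rt_v, rt_av):
    """Difference between the AV CDF and the race-model bound at each RT.

    The bound is min(1, G_A(t) + G_V(t)); positive differences mean that
    fast AV responses are more frequent than the race model allows.
    """
    g_a, g_v, g_av = ecdf(rt_a), ecdf(rt_v), ecdf(rt_av)
    grid = sorted(set(rt_a) | set(rt_v) | set(rt_av))
    return [(t, g_av(t) - min(1.0, g_a(t) + g_v(t))) for t in grid]


def violates_race_model(rt_a, rt_v, rt_av, tol=1e-12):
    """True if the AV CDF exceeds the bound at any evaluated time point."""
    return any(d > tol for _, d in rmi_differences(rt_a, rt_v, rt_av))


if __name__ == "__main__":
    a = [500, 520, 540, 560, 580]
    v = [600, 620, 640, 660, 680]
    av = [300, 320, 340, 360, 380]
    print(violates_race_model(a, v, av))
```

In a group analysis, such differences would be computed per participant and tested against zero in the fastest bins, as described in the text.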

To assess differences between the CI user groups and the NH listeners in the lip-reading task and in the subjective rating of exertion, we performed one-way ANOVAs. Whenever a significant main effect of group was present, we performed follow-up tests with a Bonferroni correction to account for multiple comparisons. Concerning the lip-reading ability, we anticipated that CI-SSD users would perform worse than CI-CHD users due to their intact contralateral ear, which may reduce the need to rely on lip movements in their everyday life. In terms of subjective exertion rating, we expected no difference between experimental groups because our previous study (Layer et al., 2022) found no difference, which was likely due to the easy task.

2.5.2. EEG pre-processing

The pre-processing of the EEG data was done with EEGLAB (version v2019.1; Delorme and Makeig, 2004), a toolbox running within the MATLAB environment (MathWorks, Natick, MA). The raw data were down-sampled (500 Hz) and filtered with a FIR-filter, having a high pass cut-off frequency of 0.5 Hz with a maximum possible transition bandwidth of 1 Hz (cut-off frequency multiplied by two), and a low pass cut-off frequency of 40 Hz with a transition bandwidth of 2 Hz. For both filters, the Kaiser-window (Kaiser-β = 5.653, max. stopband attenuation = −60 dB, max. passband deviation = 0.001; Widmann et al., 2015) was used to maximise the energy concentration in the main lobe by averaging out noise in the spectrum and minimising information loss at the edges of the window. Electrodes in the proximity of the speech processor and transmitter coil were removed for CI users (mean: 2.8 electrodes; range: ). Afterwards, the datasets were epoched into 2 s dummy epoch segments, and pruned of unique, non-stereotyped artefacts using an amplitude threshold criterion of four standard deviations. An independent component analysis (ICA) was computed (Bell and Sejnowski, 1995) and the resulting ICA weights were applied to the epoched original data ( Hz, −200 to 1220 ms relative to the stimulus onset (including the static and moving face)). Independent components reflecting vertical and horizontal ocular movements, electrical heartbeat activity, as well as other sources of non-cerebral activity were rejected (Jung et al., 2000). Independent components exhibiting artefacts of the CI were identified based on the side of stimulation and the time course of the component activity, showing a pedestal artefact around 700 ms after the auditory stimulus onset (520 ms). The identified components were removed from the EEG data.
In a next step, we interpolated the missing channels using a spherical spline interpolation (Perrin et al., 1989) which allows for a solid dipole source localisation of auditory ERPs in CI users (Debener et al., 2008; Sandmann et al., 2009). Only trials yielding correct responses (NH: 91.0 ± 3.9 %; CI-CHD: 88.1 ± 3.8 %; CI-SSD: 86.6 ± 4.5 %) were kept for further ERP analyses.
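The Kaiser-window parameters quoted for the two FIR filters are linked by Kaiser's standard design formulas. The sketch below shows how a stopband attenuation of 60 dB leads to a shape parameter of about 5.653, and how the transition bandwidth drives the filter order. The function names are hypothetical, and the filter orders actually used by the EEGLAB routine are not stated in the text, so the order estimate is only an approximation.

```python
import math


def kaiser_beta(attenuation_db):
    """Kaiser's empirical formula for the window shape parameter beta."""
    if attenuation_db > 50:
        return 0.1102 * (attenuation_db - 8.7)
    if attenuation_db >= 21:
        excess = attenuation_db - 21
        return 0.5842 * excess ** 0.4 + 0.07886 * excess
    return 0.0


def kaiser_order(attenuation_db, transition_hz, fs_hz):
    """Kaiser's estimate of the FIR filter order for a transition bandwidth."""
    delta_omega = 2 * math.pi * transition_hz / fs_hz  # in rad/sample
    return math.ceil((attenuation_db - 7.95) / (2.285 * delta_omega))


beta = kaiser_beta(60)                       # approximately 5.653
order_highpass = kaiser_order(60, 1.0, 500)  # 1 Hz transition band
order_lowpass = kaiser_order(60, 2.0, 500)   # 2 Hz transition band
print(round(beta, 3), order_highpass, order_lowpass)
```

Halving the transition bandwidth roughly doubles the required order, which is why the narrow 1 Hz transition band of the high-pass filter results in a much longer filter than the low-pass filter.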

2.5.3. EEG data analysis

We compared event-related potentials (ERPs) of all conditions (AV, A, V) between the two CI user groups and the NH participants. The additive model, which is denoted by the equation $AV = A + V$ (Barth et al., 1995), was used to investigate multisensory interactions. The model is satisfied and suggests independent and linear processing if the multisensory (AV) responses equal the sum of the unisensory (A, V) responses. If the model is not satisfied, non-linear interactions between the unisensory modalities are assumed (Barth et al., 1995). Similar to our previous study (Layer et al., 2022), we rearranged the equation to $A = AV - V$ such that we could compare the directly measured auditory ERP response (A) with the term [AV-V], denoting an ERP difference wave representing a visually-modulated auditory ERP response. Hence, [AV-V] is an estimate of an auditory response elicited in a multisensory context. In the case of a lack of interaction between the two unisensory (A, V) modalities, both A and AV-V should be identical. However, if the auditory (A) and the modulated auditory (AV-V) ERPs are not identical, this would point to non-linear multisensory interactions (Besle et al., 2004; Murray et al., 2005; Cappe et al., 2010; Foxe et al., 2000; Molholm et al., 2002). Such non-linear effects can be either super-additive ($AV > A + V$) or sub-additive ($AV < A + V$). But, since interpreting these effects is not straightforward, it is necessary to obtain reference-independent measurements of power or of source estimates (e.g. Cappe et al., 2010). Before creating the difference waves (AV-V), we randomly reduced the number of epochs based on the condition with the lowest number of epochs for each individual to guarantee that there was an equal contribution of each condition to the resulting difference wave. The difference waves were only created for the CI-SSD users in this study, and the difference waves for the NH listeners and CI-CHD users were reused from our previous study (Layer et al., 2022).
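The construction of the difference wave can be sketched for a single channel as follows. This is an illustrative sketch with hypothetical function names and toy values, not the authors' pipeline: epochs are first randomly reduced to the smallest condition count, then averaged, and finally the visual ERP is subtracted from the audiovisual ERP.

```python
import random


def equalise_epochs(conditions, seed=0):
    """Randomly subsample every condition to the smallest epoch count."""
    rng = random.Random(seed)
    n_min = min(len(epochs) for epochs in conditions.values())
    return {name: rng.sample(epochs, n_min)
            for name, epochs in conditions.items()}


def average_erp(epochs):
    """Average equally long single-trial epochs sample by sample."""
    n = len(epochs)
    return [sum(samples) / n for samples in zip(*epochs)]


def modulated_auditory(erp_av, erp_v):
    """Difference wave AV-V: the visually modulated auditory response."""
    return [av - v for av, v in zip(erp_av, erp_v)]


# Toy single-channel epochs (two samples each); values are hypothetical.
conditions = {
    "A": [[1.0, 2.0]] * 3,
    "V": [[0.5, 0.5]] * 5,
    "AV": [[1.5, 2.5]] * 4,
}
equalised = equalise_epochs(conditions)
erp_a = average_erp(equalised["A"])
erp_av_minus_v = modulated_auditory(average_erp(equalised["AV"]),
                                    average_erp(equalised["V"]))
print(erp_a, erp_av_minus_v)
```

In this toy case the audiovisual response is exactly additive, so A and AV-V coincide. Any systematic difference between the two waveforms would indicate a non-linear interaction.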

As in our previous study (Layer et al., 2022), we analysed our ERP data within an electrical neuroimaging framework (Murray et al., 2008; Michel et al., 2009; Michel and Murray, 2012), comprising topographic and ERP source analysis to compare auditory (A) and modulated (AV-V) ERPs within and between groups (NH, CI-CHD, CI-SSD). We investigated the global field power (GFP) and the global map dissimilarity (GMD) to quantify ERP differences in response strength and response topography, respectively (Murray et al., 2008). First, we looked at the GFP, at the time window of the N1 and the P2 (N1: ms; P2: ms), which were chosen based on visual inspection of the GFP computed for the grand average ERPs across conditions and groups. The GFP is the spatial standard deviation of all electrode values at a specific time point (Murray et al., 2008) and was first described by Lehmann and Skrandies (1980). The reason for choosing the GFP instead of selecting specific channels of interest is that this approach allows for a more objective peak detection. The GFP peak mean amplitudes and latencies were detected for each individual, condition (A, AV-V) and time window (N1, P2) and were statistically analysed by using a 3 x 2 mixed ANOVA with group (NH, CI-CHD, CI-SSD) as the between-subjects factor and condition (A, AV-V) as the within-subjects factor for each peak separately. Based on previous observations with CI-CHD users (Beynon et al., 2005; Finke et al., 2016a; Henkin et al., 2014; Sandmann et al., 2009; Layer et al., 2022) and CI-SSD users (Finke et al., 2016b; Bönitz et al., 2018; Weglage et al., 2022), we expected delayed N1 and reduced P2 responses for all CI user groups compared to NH controls.
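Because the GFP is defined as the spatial standard deviation across electrodes at one time point, it can be computed in a few lines. The sketch below uses hypothetical function names, toy voltages, and a placeholder time window; the exact peak-detection rule used in the study is not specified here, so taking the maximum inside the window is an assumption.

```python
import math


def gfp(voltages):
    """Global field power at one time point: spatial SD across electrodes."""
    n = len(voltages)
    mean_v = sum(voltages) / n  # subtracting the mean equals average referencing
    return math.sqrt(sum((v - mean_v) ** 2 for v in voltages) / n)


def gfp_peak(erp, times_ms, window_ms):
    """Latency and amplitude of the GFP maximum inside a time window.

    erp is a list of time frames, each holding one voltage per electrode.
    """
    start, end = window_ms
    in_window = [(gfp(frame), t)
                 for frame, t in zip(erp, times_ms) if start <= t <= end]
    amplitude, latency = max(in_window)
    return latency, amplitude


# Toy data: four time frames, four electrodes (hypothetical values).
erp = [[0, 0, 0, 0], [1, -1, 1, -1], [3, -3, 3, -3], [2, -2, 2, -2]]
times_ms = [100, 110, 120, 130]
print(gfp_peak(erp, times_ms, window_ms=(105, 135)))
```

Since the measure pools all electrodes, it does not depend on the choice of reference electrode or on a preselected channel of interest.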

Second, we analysed the GMD (Lehmann and Skrandies, 1980) to quantify topographic dissimilarities (and by extension, dissimilar configurations of neural sources; Vaughan Jr, 1982) between experimental conditions and groups, regardless of the signal strength (Murray et al., 2008). The GMD was analysed in CARTOOL by computing a ‘topographic ANOVA’ (TANOVA; Murray et al., 2008) to quantify differences in topographies between groups for each condition. Despite its name, this is not an analysis of variance, but a non-parametric randomisation test. This randomisation test was executed with 5000 permutations and by calculating sample-by-sample p-values. To control for multiple comparisons, an FDR correction was applied (FDR = false discovery rate; Benjamini and Hochberg, 1995). Since ERP topographies remain stable for a certain period of time before changing to another topography (called ‘microstates’; Michel and Koenig, 2018) and to account for temporal autocorrelation, the minimal significant duration was adjusted to 15 consecutive time frames, corresponding to 30 ms.
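The logic of the GMD and of the randomisation test can be sketched for a single time point. This is a simplified illustration with hypothetical function names and toy maps, not CARTOOL's implementation: each map is average-referenced and scaled to unit GFP, the GMD is the root mean square difference between the scaled maps, and group labels are shuffled to build the null distribution. Whether maps are normalised per participant before averaging is an assumption left open here.

```python
import math
import random


def normalise(topography):
    """Average-reference a map and scale it to unit global field power."""
    n = len(topography)
    mean_v = sum(topography) / n
    centred = [v - mean_v for v in topography]
    power = math.sqrt(sum(c * c for c in centred) / n)
    return [c / power for c in centred]


def gmd(map_u, map_v):
    """Global map dissimilarity: 0 for equal maps, 2 for inverted maps."""
    u, v = normalise(map_u), normalise(map_v)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)) / len(u))


def mean_map(maps):
    """Electrode-wise average over participants."""
    return [sum(values) / len(maps) for values in zip(*maps)]


def tanova_p(group1, group2, n_perm=5000, seed=0):
    """Permutation p-value for the GMD between two group-mean maps."""
    rng = random.Random(seed)
    observed = gmd(mean_map(group1), mean_map(group2))
    pooled = list(group1) + list(group2)
    count = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # reassign participants to groups at random
        perm1, perm2 = pooled[:len(group1)], pooled[len(group1):]
        if gmd(mean_map(perm1), mean_map(perm2)) >= observed:
            count += 1
    return observed, count / n_perm


# Toy maps (four electrodes, three participants per group; hypothetical).
group1 = [[1.0 + 0.01 * i, -1.0, 0.5, -0.5] for i in range(3)]
group2 = [[-1.0 - 0.01 * i, 1.0, -0.5, 0.5] for i in range(3)]
observed, p_value = tanova_p(group1, group2, n_perm=200)
print(round(observed, 3), p_value)
```

Because both maps are scaled to unit GFP before comparison, the GMD is insensitive to differences in response strength and reflects only the spatial configuration.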

2.5.4. Hierarchical clustering and single-subject fitting analysis

Whenever differences in topographies (GMD) for two groups or conditions are found, this is an indication for distinct neural generators contributing to these topographies (e.g. Vaughan Jr, 1982). However, it is also possible that a GMD is caused by a latency shift of the ERP, meaning that the same topographies are present but just shifted in time (Murray et al., 2008). To disentangle these two possible GMD causes, we performed a hierarchical topographic clustering analysis with group-averaged data (NH(A), NH(AV-V), CI-CHD(A), CI-CHD(AV-V), CI-SSD(A), CI-SSD(AV-V)) to identify template topographies within the time windows of interest (N1, P2). Again, we used CARTOOL for this analysis and chose the atomize and agglomerate hierarchical clustering (AAHC) which has been especially designed for ERP-data (detailed in Murray et al., 2008). This method includes the global explained variance of a cluster and prevents blind combinations (agglomerations) of short-duration clusters. Thus, this clustering method identifies the minimal number of topographies accounting for the greatest variance within a dataset (here NH(A), NH(AV-V), CI-CHD(A), CI-CHD(AV-V), CI-SSD(A), CI-SSD(AV-V)).

In a next step, the template maps detected by the AAHC were entered into a single-subject fitting (Murray et al., 2008) to identify the distribution of specific templates by calculating sample-wise spatial correlations for each individual and condition between each template topography and the observed voltage topographies. Each sample was matched to the template map with the largest spatial correlation. For statistical analyses, we focused on the first onset of maps (latency) and the map presence (number of samples in time frames), which are two of the output options provided by CARTOOL. Specifically, we performed a mixed ANOVA with group (NH, CI-CHD, CI-SSD) as the between-subjects factor and condition (A, AV-V) and template map as within-subject factors, separately for each time window (N1, P2). In the case of significant three-way interactions, group-wise mixed ANOVAs (condition × template map) were computed. A Greenhouse-Geisser correction was applied whenever there was a violation of the sphericity assumption. Post-hoc t-tests were computed and corrected for multiple comparisons using a Bonferroni correction. We anticipated that the analysis of the first onset of maps would confirm a delayed N1 latency for both CI-CHD and CI-SSD users based on previous results. In terms of map presence at N1 latency range, we speculated that CI-SSD users would show a pattern intermediate between those of CI-CHD users and NH listeners, as they have both a CI and an NH ear. However, we are not aware of previous studies reporting similar results for CI-SSD users.
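The fitting step can be sketched as follows: every time frame is labelled with the template map that correlates best with it, and the first onset and the presence of each map are then read off the label sequence. The function names and toy topographies are hypothetical, and the sketch does not reproduce CARTOOL's options such as temporal smoothing. The frame duration of 2 ms follows from the 500 Hz sampling rate.

```python
import math


def spatial_correlation(map_u, map_v):
    """Pearson correlation between two topographies across electrodes."""
    n = len(map_u)
    mean_u, mean_v = sum(map_u) / n, sum(map_v) / n
    cov = sum((a - mean_u) * (b - mean_v) for a, b in zip(map_u, map_v))
    norm_u = math.sqrt(sum((a - mean_u) ** 2 for a in map_u))
    norm_v = math.sqrt(sum((b - mean_v) ** 2 for b in map_v))
    return cov / (norm_u * norm_v)


def fit_templates(erp, templates):
    """Label each time frame with the best-correlating template map."""
    return [max(templates,
                key=lambda name: spatial_correlation(frame, templates[name]))
            for frame in erp]


def map_statistics(labels, name, frame_ms=2.0, start_ms=0.0):
    """First onset (ms) and presence (number of frames) of one template."""
    presence = labels.count(name)
    onset = start_ms + labels.index(name) * frame_ms if presence else None
    return onset, presence


# Toy templates and single-subject data (four electrodes; hypothetical).
templates = {"Map A": [1, -1, 0, 0], "Map B": [0, 0, 1, -1]}
erp = [[2, -2, 0.1, 0], [0, 0.1, 3, -3], [0.1, 0, 1, -1]]
labels = fit_templates(erp, templates)
print(labels, map_statistics(labels, "Map B"))
```

A later first onset of the same map in one group points to a latency shift, whereas a different distribution of map presence points to distinct generator configurations.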

2.5.5. Source analysis

We performed an ERP source analysis for each group and condition by means of Brainstorm (Tadel et al., 2011) to find out whether topographic differences can be explained by fundamentally different configurations of neural generators. The tutorial provided by Stropahl et al. (2018) served as a guideline for conducting the source analysis. As in our previous study (Layer et al., 2022) and in various studies with CI patients (Bottari et al., 2020; Stropahl et al., 2015; Stropahl and Debener, 2017), we selected the method of dynamic statistical parametric mapping (dSPM; Dale et al., 2000). dSPM works more precisely in identifying deeper sources than standard minimum-norm methods, even though the spatial resolution stays relatively low (Lin et al., 2006). It takes the minimum-norm inverse maps with constrained dipole orientations to approximate the locations of electrical activity recorded on the scalp. This method can be successfully used to localise small cortical areas such as the auditory cortex (Stropahl et al., 2018). First, individual noise covariances were calculated from single-trial pre-stimulus onset baseline intervals (−50 to 0 ms) to estimate single-subject based noise standard deviations at each location (Hansen et al., 2010). As a head model, the boundary element method (BEM) as implemented in OpenMEEG was used. The BEM gives three realistic layers and representative anatomical information (Gramfort et al., 2010). The final activity data is then displayed as absolute values with arbitrary units based on the normalisation within the dSPM algorithm. Consistent with the procedures of our previous study (Layer et al., 2022), we defined an auditory and a visual ROI by combining smaller regions within the Destrieux-atlas (Destrieux et al., 2010; Tadel et al., 2011; auditory: G_temp_sup-G_T_transv, S_temporal_transverse, G_temp_sup-Plan_tempo and Lat_Fis-post; visual: G_cuneus, S_calcarine, S_parieto_occipital).
These ROIs were chosen in accordance with several previous studies (Stropahl et al., 2015; Stropahl and Debener, 2017; Giraud et al., 2001b; Giraud et al., 2001c; Prince et al., 2021; Layer et al., 2022). Specifically, the chosen parts of the auditory ROI have been reported as both N1 (Näätänen and Picton, 1987; Godey et al., 2001; Woods et al., 1993; Bosnyak et al., 2004) and P2 (Crowley and Colrain, 2004 (for review); Hari et al., 1987; Bosnyak et al., 2004; Ross and Tremblay, 2009) generators. The selected ROIs can be viewed in Fig. 4A.

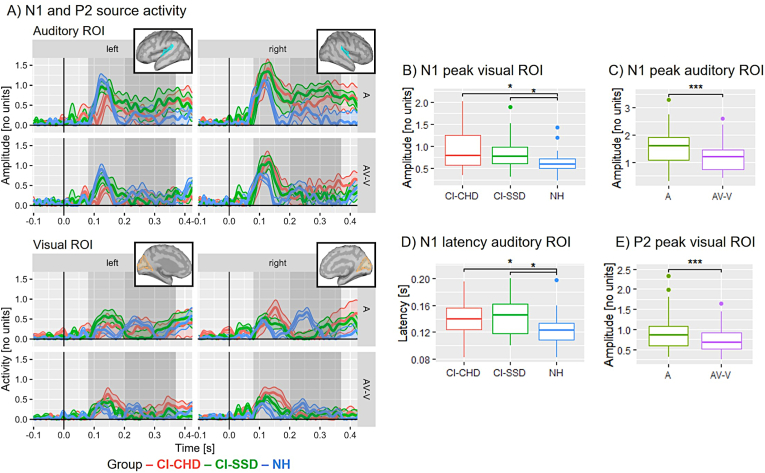

Fig. 4.

ERP results on the source level. A) N1 and P2 source activity for CI-CHD users (red), CI-SSD users (green) and NH listeners (blue) separately for each ROI and each hemisphere with standard error (standard error shading was capped at zero). The source activity is displayed as absolute values with arbitrary units based on the normalisation within Brainstorm's dSPM algorithm. The grey shaded areas mark the N1 (light grey) and the P2 (dark grey) time windows. The boxes depict the location of the defined ROIs, with auditory ROIs in blue and visual ROIs in yellow. B) Group effect of the N1 peak mean in the visual cortex: both CI-CHD and CI-SSD users show more activity in the visual cortex compared to NH listeners, regardless of condition. C) Condition effect of the N1 peak mean in the auditory cortex: there is a significantly reduced auditory-cortex activation for AV-V compared to A, indicating multisensory interactions in all groups. D) N1 latency effect in the auditory cortex: both CI-CHD and CI-SSD users show a prolonged N1 latency compared to NH listeners in the auditory cortex, regardless of the condition. This suggests a delayed auditory-cortex activation in CI users, independent of the hearing threshold in the contralateral ear. E) P2 condition effect in the visual cortex: there is a significantly reduced visual-cortex activation for AV-V compared to A, pointing towards multisensory interactions in all groups. Significant differences are indicated (* , ** , *** ). (For interpretation of the references to colour in this figure legend, the reader is referred to the Web version of this article.)

Source activities for each ROI, condition and group were exported from Brainstorm for each participant. Afterwards, the peak means and latencies for each time window of interest (N1: ms, P2: ms) were extracted. A mixed-model ANOVA was performed separately for each time window with group (NH, CI-CHD, CI-SSD) as between-subject factor and condition (A, AV-V), ROI (auditory, visual) and hemisphere (left, right) as within-subjects factors. A Greenhouse-Geisser correction was applied in the case of violation of the sphericity assumption. In the case of significant interactions or main effects, post-hoc t-tests were computed and corrected for multiple comparisons using a Bonferroni correction. Similar to our hypotheses for the fitting data, we speculated that the recruitment of the visual cortex in CI-SSD users would lie between that of CI-CHD users, for whom we observed such a recruitment in our previous study (Layer et al., 2022), and that of NH listeners. In addition, in accordance with the fitting data and the GFP, we expected a delayed auditory cortex response for CI-SSD users as well. Finally, based on our previous study, we expected to find indications for multisensory processing, with different activity for AV-V compared to A for CI-SSD users, too.

3. Results

3.1. Behavioural results

Overall, all participants showed hit rates of % in all conditions, and the mean RTs were between 504 ms and 638 ms (see Table 2). The 3 x 3 mixed ANOVA with condition (AV, A, V) as within-subject factor and group (CI-CHD, CI-SSD, NH) as between-subject factor revealed for RTs no main effect of group () and no group × condition interaction, but a main effect of condition (). Follow-up post-hoc t-tests showed that RTs to redundant signals (AV) were significantly faster when compared to V () or A (). There was no difference in RTs between the unisensory stimuli A and V (). These results are displayed in Fig. 1B.

Table 2.

Mean hit rates (in %) and mean response times (in ms).

| Condition | Hit rate: NH | Hit rate: CI-CHD | Hit rate: CI-SSD | Response time: NH | Response time: CI-CHD | Response time: CI-SSD |
|---|---|---|---|---|---|---|
| A | 99.0 ± 0.7 | 98.6 ± 1.1 | 98.1 ± 1.6 | 638 ± 84.1 | 623 ± 92.4 | 607 ± 79.4 |
| V | 98.5 ± 1.2 | 98.0 ± 1.3 | 98.5 ± 1.3 | 638 ± 98.4 | 623 ± 96.1 | 602 ± 87.7 |
| AV | 98.7 ± 1.0 | 98.3 ± 1.3 | 99.1 ± 0.8 | 526 ± 81.9 | 530 ± 89.4 | 504 ± 74.0 |

For the hit rates, the 3 x 3 mixed ANOVA with condition (AV, A, V) as within-subject factor and group (CI-CHD, CI-SSD, NH) as between-subject factor showed no main effects or interactions (see Fig. 1C).

Concerning the race model, the one-tailed paired t-tests were significant in at least one decile for each group (see Table 3). This means that response times to redundant signals (AV) were faster than those predicted by the race model (A + V). Fig. 1D displays the results of the race model. Overall, the violation of the race model in CI-CHD users, CI-SSD users and NH listeners confirms the existence of multisensory integration in all tested groups.

Table 3.

Redundant signals and modality-specific sum in each decile. AV is the redundant signals condition. A + V is the modality-specific sum. Paired-samples one-tailed t-tests were conducted for each group (with Bonferroni correction for multiple comparisons). An asterisk indicates a statistically significant result ().

| Decile | NH: AV | NH: A + V | NH: p | CI-CHD: AV | CI-CHD: A + V | CI-CHD: p | CI-SSD: AV | CI-SSD: A + V | CI-SSD: p |
|---|---|---|---|---|---|---|---|---|---|
| .10 | 402 | 444 | .000* | 413 | 462 | .000* | 373 | 432 | .000* |
| .20 | 437 | 480 | .000* | 460 | 494 | .008* | 409 | 461 | .000* |
| .30 | 467 | 504 | .000* | 489 | 520 | .014 | 435 | 484 | .000* |
| .40 | 496 | 525 | .003* | 519 | 541 | .056 | 456 | 450 | .000* |
| .50 | 520 | 543 | .011 | 547 | 560 | .169 | 485 | 515 | .000* |

For the other behavioural measures, we calculated one-way ANOVAs with subsequent t-tests to assess differences in auditory word recognition ability and (visual) lip-reading abilities between CI-CHD, CI-SSD users and NH listeners. The ANOVAs showed a main effect of group for both the auditory word recognition ability () and (visual) lip-reading abilities (). Follow-up t-tests revealed poorer speech recognition ability in the Freiburg monosyllabic test (), but better lip-reading skills for all CI users when compared to NH listeners (CI-CHD vs. NH: , CI-SSD vs. NH: ). There was no difference between the two CI-user groups (CI-CHD vs. CI-SSD: ). Concerning the subjective exertion measured during the EEG task, the ANOVA did not show a difference between the CI-CHD, the CI-SSD users and NH listeners (). These results indicate that none of the tested groups perceived the task as more effortful than another group. The scores of these tests can be found in Table 4.

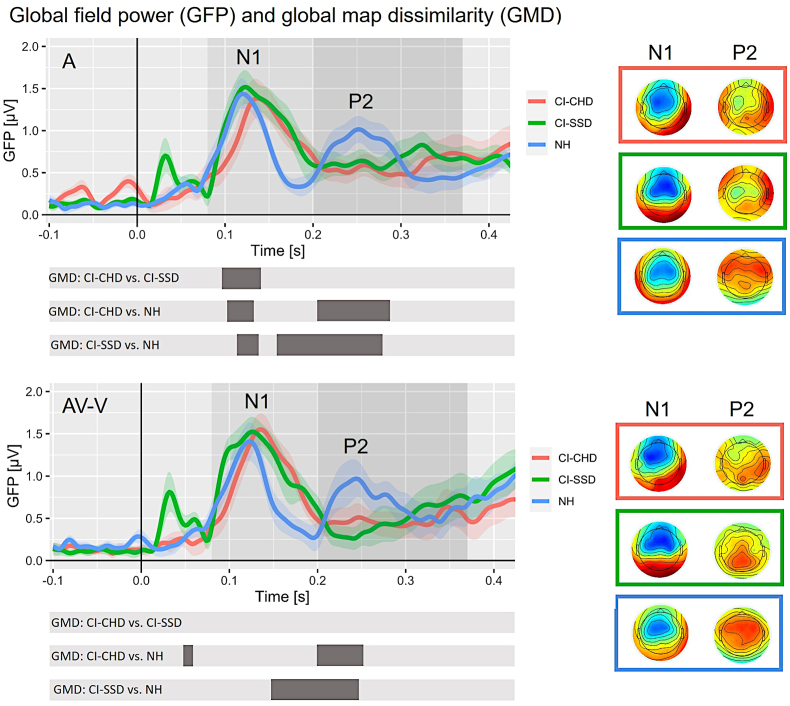

3.2. ERP results on the sensor level: GFP

In Fig. 2 the GFP of the grand averaged ERPs for the unisensory auditory (A) and the visually modulated auditory (AV-V) responses are shown for each group. Approximately between 120 and 140 ms, the first prominent peak is visible for all three groups. This peak fits into the time window of an N1 ERP. The next peak is around 240 ms and seems to be more prominent in NH listeners when compared to the two CI user groups. In the following, this peak is labelled as the P2 ERP. The GFP of the other conditions (V, AV) are also shown in the supplementary material (including the GMD between groups for each condition) to give an idea of the “raw,” non-difference wave data. First, we calculated a 3 × 2 mixed ANOVA with group (NH, CI-CHD, CI-SSD) as between-subject variable and condition (A, AV-V) as within-subject factor for the N1 GFP peak mean amplitude and the GFP peak latency. For the N1 peak amplitude, no statistically significant main effects or interactions were found. However, the ANOVA with N1 latency revealed a significant main effect of group (). Follow-up t-tests showed a prolonged N1 latency for both the CI-CHD () and the CI-SSD users () compared to NH individuals. There was no significant difference between the two CI groups ().

Fig. 2.

ERP results on the sensor level. A) GFP of conditions A and AV-V for CI-CHD users (red), CI-SSD users (green) and NH listeners (blue), including standard error. It is important to note that the GFP only provides positive values because it represents the standard deviation across all electrodes separately for each time point. The ERP topographies at the GFP peaks (N1(A) = CI-CHD: 147 ms, CI-SSD: 136 ms, NH: 118 ms; N1(AV-V) = CI-CHD: 137 ms, CI-SSD: 135 ms, NH: 118 ms; P2(A) = CI-CHD: 305 ms, CI-SSD: 288 ms, NH: 256 ms; P2(AV-V) = CI-CHD: 284 ms, CI-SSD: 307 ms, NH: 245 ms) are given separately for each group (displayed on the right). The grey-shaded areas represent the N1 and P2 time windows for detecting peak and latency. The grey bars below represent the time window in which significant GMDs between the three groups were observed. (For interpretation of the references to colour in this figure legend, the reader is referred to the Web version of this article.)

We performed the same 3 × 2 mixed ANOVA for the P2 GFP peak mean amplitude and latency. For both the P2 peak amplitude and latency, there were no significant main effects or interactions.

3.3. ERP results on the sensor level: GMD

The GMD was analysed sample-by-sample to identify if and when ERP topographies significantly differ between conditions and groups. We compared CI-CHD with NH listeners (CI-CHD vs. NH), CI-SSD with NH listeners (CI-SSD vs. NH) and both CI groups (CI-CHD vs. CI-SSD) separately for each condition (A and AV-V). For the auditory condition (A), the results revealed topographic differences for all group comparisons within the time window of the N1 (CI-CHD vs. NH: ms, CI-SSD vs. NH: ms, CI-CHD vs. CI-SSD: ms). Concerning the topographic differences within the P2 time window, there were no differences between the two groups of CI users, but we observed differences between the NH listeners and the two CI groups (CI-CHD vs. NH: ms, CI-SSD vs. NH: ms).

Regarding the GMD for the modulated condition (AV-V), there was a difference between NH listeners and CI-CHD at the N1 time window (NH vs. CI-CHD: ms). Within the P2 time window, again there were no differences between the two CI groups, however there were differences between the NH listeners and each CI group (CI-CHD vs. NH: ms, CI-SSD vs. NH: ms). In addition, the GMD duration at the P2 time window was shorter for AV-V compared to A. The exact durations displaying differences between the groups are illustrated in Fig. 2 (grey bars beneath the GFP plots).

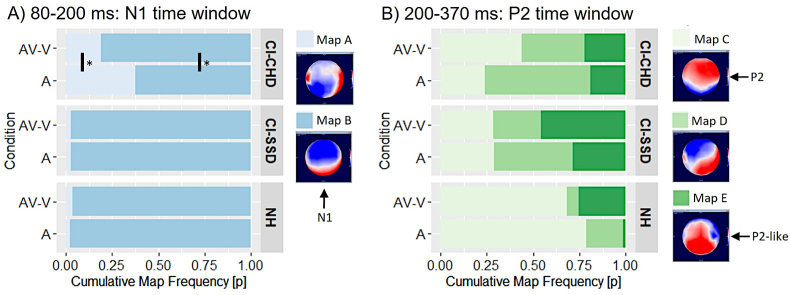

3.4. ERP results on the sensor level: Hierarchical clustering and single-subject fitting results

To better understand the underlying topographic differences (i.e. GMD) between the three groups, we conducted a hierarchical topographic clustering analysis by using the group-averaged data (CI-CHD(A); CI-CHD(AV-V); CI-SSD(A); CI-SSD(AV-V); NH(A); NH(AV-V)) in order to find template topographies within the N1 and P2 time windows. For that purpose, we chose a segment ranging from −100 ms to 470 ms (50–235 time frames). Specifically, we employed the atomize and agglomerate hierarchical clustering (AAHC) to identify the minimal number of topographies that best explain the variance in our data set. This method detected 17 template maps in 18 clusters that explained 88.08 % of all data. To be precise, we detected two maps within the N1 time window (Map A and Map B) and three prominent maps within the P2 time window (Map C, Map D, Map E). With these template maps, we performed a single-subject fitting analysis (Murray et al., 2008) to determine how well each of the template maps spatially correlated with the data from each participant. As the template Map B matches the topography from a conventional N1 peak (Fig. 2; Finke et al., 2016a; Sandmann et al., 2015), this template map will be referred to as N1 topography hereafter. Template Map C looks like a typical P2 topography (Fig. 2; Finke et al., 2016a; Schierholz et al., 2021) and therefore we will refer to this template map as the P2 topography. The template Map E is particularly prominent in the two CI-user groups and will be referred to as P2-like topography due to its similarity to the P2 topography (Fig. 2).

Dissimilarities within the topography across groups and conditions (see section ‘ERP results on the sensor level: GMD’) can be explained by a latency shift of the ERPs and/or by distinct neural generator configurations. To shed light on the origin of these differences, we analysed the first onset of maps and the map presence for the N1 and the P2 time windows. These results are presented in the following two subsections.

3.4.1. N1 time window

On the descriptive level, the CI-CHD users showed a Map A and a Map B (= N1 topography) which were both present in the auditory-only condition (A; number of samples Map A: 18.3 ± 18.6; Map B: 30.5 ± 19.0). Interestingly, specifically in the modulated condition (AV-V), Map B (= N1 topography) was clearly more frequent compared to Map A (number of samples 39.9 ± 18.2 (Map B) vs. 9.27 ± 16.6 (Map A)). By contrast, both the NH listeners and the CI-SSD users showed a greater presence of Map B (= N1 topography) in general, irrespective of condition (A: number of samples: NH 40.8 ± 10.8; CI-SSD 48.0 ± 6.71; AV-V: number of samples: NH 36.9 ± 12.5; CI-SSD 47.5 ± 8.26).

To obtain an explanation for a potential ERP latency shift, we statistically analysed the first onset of maps by using a mixed-model ANOVA with group (NH, CI-CHD, CI-SSD) as the between-subjects factor and condition (A, AV-V) and template map as the within-subject factors for the N1 time window. The three-way mixed ANOVA revealed a significant group × map interaction (). Follow-up t-tests showed that the onset of Map B (= N1 topography) was earlier in the NH listeners when compared with the CI-CHD users () and the CI-SSD users (). There was no group difference in the onset of the N1 topography between CI-CHD and CI-SSD users (). The results suggest that the N1 is generated later in CI users compared to NH individuals, regardless of the hearing threshold of the contralateral ear.

Second, we statistically analysed the number of time frames of the maps that showed the highest spatial correlations to the single-subject data, i.e. the map presence. This variable can provide an explanation for potentially distinct underlying neural generators between the three groups (CI-CHD, CI-SSD, NH) and the two conditions (A, AV-V). As above, we calculated a mixed-model ANOVA with group (NH, CI-CHD, CI-SSD) as the between-subjects factor and condition (A, AV-V) and template map as the within-subjects factors for the N1 time window.

For the N1 template maps, the ANOVA results showed a group × map × condition interaction (). Post-hoc t-tests revealed for the CI-CHD users, but not for the CI-SSD users or NH listeners, that the presence of Map B (= N1 topography) was significantly enhanced for the modulated (AV-V) compared to the auditory-only (A) condition (). These results are illustrated in Fig. 3A. Given that template Map B corresponds to a conventional N1 topography, the results suggest that CI-CHD users specifically generate an N1 ERP map more frequently for the modulated response (AV-V) than for the unisensory (A) condition. This visual modulation effect at the N1 latency was not observable for the NH listeners and the CI-SSD users.

Fig. 3.

Results from the hierarchical clustering and the single-subject fitting. A) Cumulative map frequency of the N1 maps: the CI-CHD users, but not the NH listeners or CI-SSD users, show a condition effect, with more frequent N1 map presence for AV-V compared to A. The corresponding map topographies are displayed on the right side, with Map B being referred to as the N1 topography. B) Cumulative map frequency of the P2 maps: there is a group effect (independent of the condition): NH listeners reveal a more frequent presence of a P2 topography (Map C) compared to CI-SSD users, and CI-SSD users show a more frequent presence of a P2-like topography (Map E) compared to NH listeners. CI-CHD users show a more frequent presence of Map D compared to NH listeners. Additionally, there is a condition effect (independent of the group): the presence of the P2-like topography (Map E) is enhanced for AV-V compared to A. This suggests a visual modulation of auditory speech processing at P2 latency in all groups. Significant differences are indicated (* , ** , *** ).

Taken together, our results for the N1 on the first onset of maps and the map presence suggest that the observed topographic group differences at N1 latency can be explained by the following two reasons: 1) there are generally delayed cortical N1 ERPs in CI users, regardless of the condition (auditory-only or modulated response) and regardless of whether these patients have unilateral or bilateral hearing loss, and 2) there is a distinct pattern of ERP topographies specifically for the CI-CHD users compared to NH listeners and CI-SSD users. The visual modulation effect in the N1 topography was only observed for CI-CHD users, which suggests that this CI group in particular has a strong visual impact on auditory speech processing. By contrast, the visual impact in the CI-SSD users seems to be less pronounced and appears to be comparable to the NH listeners.

3.4.2. P2 time window

Similar to the analysis of the N1 time window, we analysed the first onset of maps by using a mixed-model ANOVA with group (NH, CI-CHD, CI-SSD) as the between-subjects factor and condition (A, AV-V) and template map as the within-subject factors for the P2 time window. The results did not reveal any significant main effects or interactions.

In a second step, we analysed the map presence for the P2 time window. As above, we calculated a mixed-model ANOVA with group (NH, CI-CHD, CI-SSD) as the between-subjects factor and condition (A, AV-V) and template map as the within-subjects factors for the P2 time window. The three-way mixed ANOVA showed a significant group × map () and a condition × map () interaction. For the group × map interaction, follow-up t-tests revealed for the NH listeners a significantly enhanced presence of Map C (= P2 topography) compared to CI-SSD users (). Vice versa, CI-SSD users showed a significantly enhanced presence of Map E (= P2-like topography) compared to NH listeners (), regardless of the condition. Finally, for Map D, there was a significant difference between CI-CHD users and NH individuals (), with CI-CHD users showing a more dominant presence of this map compared to NH controls. These results are shown in Fig. 3B. Following the condition × map interaction, follow-up t-tests revealed significant differences between A and AV-V only for Map E (). This result suggests that a P2-like topography (Map E) is generated more often for modulated responses (AV-V) compared to unmodulated responses (A), which is shown in Fig. 3B.

In sum, our results about the first onset of maps and the map presence at P2 latency suggest group-specific topographic differences at P2 latency, with a stronger presence of a conventional P2 topography (Map C) in NH listeners compared to CI-SSD users and a stronger presence of the P2-like topography (Map E) in CI-SSD users compared to NH listeners. Together with the observation that Map D is more present in CI-CHD users than in NH listeners, these results confirm our GMD results, showing a significant group difference between NH listeners and the two CI-user groups for both conditions (A, AV-V) at P2 latency (Fig. 2). Finally, all groups show a P2-like topography (Map E) that is more frequent in the modulated than in the auditory-only condition, which points to alterations in the cortical processing at P2 latency due to the additional visual information in the speech signal.

3.5. Results from ERP source analysis

We conducted a source analysis to further analyse the differences between the three groups, focusing on the auditory and visual cortex activity in both hemispheres. Single-subject source activities for each ROI, condition and group were exported from Brainstorm and were statistically analysed. The source waveforms for the N1 and the P2 are illustrated in Fig. 4A, showing the response in the auditory cortex (N1 peak latency mean: CI-CHD = 141 ms ± 27 ms; CI-SSD = 143 ms ± 28 ms; NH = 122 ms ± 22 ms) and in the visual cortex (N1 peak latency mean: CI-CHD = 143 ms ± 29 ms; CI-SSD = 136 ms ± 28 ms; NH = 136 ms ± 35 ms) for all groups. The peak mean amplitudes and latencies were the dependent variables for the following ANOVA. We performed a mixed-model ANOVA with group (NH, CI-CHD, CI-SSD) as the between-subjects factor and condition (A, AV-V) and hemisphere (left, right) as the within-subject factors for each time window of interest (N1, P2) and each ROI (auditory, visual) separately.

Concerning the N1 peak mean in the visual cortex, the mixed-model ANOVA showed a significant main effect of group (). Post-hoc t-tests confirmed a significant difference between the NH listeners and both CI groups (NH vs. CI-CHD: ; NH vs. CI-SSD: ), but no difference between the two CI groups (CI-CHD vs. CI-SSD: ). Thus, both CI user groups showed more recruitment of the visual cortex compared to NH listeners, regardless of hemisphere and condition (see Fig. 4B).

For the N1 peak mean in the auditory cortex, the mixed-model ANOVA revealed a significant main effect of hemisphere () and a significant main effect of condition (). Resolving the main effect of hemisphere, follow-up t-tests showed a greater amplitude for the right hemisphere compared to the left hemisphere (), regardless of group and condition. Following the main effect of condition, the subsequent t-tests revealed reduced amplitudes for AV-V compared to A (), regardless of hemisphere and group, which points to multisensory interaction processes (see Fig. 4C).

For the N1 peak latency in the auditory cortex, the mixed-model ANOVA identified a significant main effect of group () and a significant main effect of hemisphere (). Following the main effect of hemisphere, the post-hoc t-test revealed a significant difference between the left and the right auditory cortex () with the right hemisphere showing faster latencies compared to the left hemisphere. Resolving the main effect of group, follow-up t-tests revealed a significantly shorter latency of the auditory-cortex response in the NH listeners compared to both CI groups (NH vs. CI-CHD: ; NH vs. CI-SSD: ), but no difference between the two CI groups (CI-CHD vs. CI-SSD: ). Hence, both CI user groups showed a delayed auditory-cortex response compared to NH listeners, regardless of hemisphere and condition (see Fig. 4C). For the N1 peak latency in the visual cortex, the mixed-model ANOVA did not show any significant main effects or interactions.

Concerning the P2 peak mean in the auditory cortex, the mixed-model ANOVA found a significant main effect of condition (). Resolving this main effect, the post-hoc t-tests revealed a significant difference between A and AV-V (), with A showing greater amplitudes than AV-V, regardless of group and hemisphere. This points to multisensory interaction processes in the auditory cortex at P2 latency.

For the P2 peak mean in the visual cortex, the mixed-model ANOVA found a significant main effect of condition () as well. Follow-up t-tests revealed a significant difference between A and AV-V (), with A showing greater amplitudes than AV-V, regardless of group and hemisphere. This points to multisensory interaction processes in the visual cortex at P2 latency as well (see Fig. 4E).

Regarding the P2 peak latency in the auditory and visual cortices, the mixed-model ANOVA found neither significant main effects nor significant interactions.

3.6. Correlations

We performed correlations for each CI user group (CI-SSD and CI-CHD), using Pearson's correlation and the Benjamini-Hochberg (BH) procedure to control for multiple comparisons (Benjamini and Hochberg, 1995). First, we tested whether lip-reading abilities are related to CI experience and the age at onset of hearing loss (Stropahl et al., 2015; Stropahl and Debener, 2017; Layer et al., 2022). The results revealed a trend for a positive relationship between lip-reading abilities and CI experience (CI-CHD: ; ; p corrected = 0.076; CI-SSD: ; ; p corrected = 0.147) and a negative relationship between lip-reading abilities and the age at onset of hearing loss for both CI user groups (CI-CHD: ; ; p corrected = 0.005; CI-SSD: ; ; p corrected = 0.027). Thus, for both CI user groups, the earlier the onset of hearing impairment, the more pronounced the lip-reading abilities. Moreover, we aimed to reproduce the relationship between CI experience and the activation in the visual cortex (Giraud et al., 2001c; Layer et al., 2022). The results did not reach significance (; ; p corrected = 0.16; ; ; p corrected = 0.29).
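The correction step can be illustrated with a short sketch of Pearson's correlation coefficient and BH-adjusted p-values. This is a generic implementation, not the analysis code of this study; the function names and the example p-values are hypothetical, and the number of tests that formed one correction family is not stated here, so the family size below is an assumption.

```python
import math


def pearson_r(x, y):
    """Pearson's correlation coefficient for two equally long samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def bh_adjust(p_values):
    """Benjamini-Hochberg adjusted p-values, returned in the input order.

    The i-th smallest p-value is scaled by m / i; a running minimum from the
    largest rank downwards keeps the adjusted values monotone.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


# Hypothetical uncorrected p-values from a family of four correlation tests.
raw_p = [0.01, 0.04, 0.03, 0.005]
corrected_p = bh_adjust(raw_p)
```

A correlation is then reported as significant when its corrected p-value falls below the chosen false discovery rate, which is how the "p corrected" values above are to be read.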

4. Discussion

In this follow-up study, we used behavioural and EEG measures to investigate audiovisual interactions in CI users with unilateral (CI-SSD) and bilateral (CI-CHD) hearing loss and in a group of NH controls. This study was conducted to extend the results from our previous study comparing CI-CHD users with NH listeners (Layer et al., 2022) by including a third group of participants, namely the CI-SSD users. A subset of our previously reported data was reused and compared to the additional group of CI-SSD users. The inclusion of this additional patient group significantly extends our previous study because it not only examines the transferability of our previous findings to different CI patient groups, but also provides valuable insights into the influence of individual factors, specifically the hearing ability of the second ear, on audiovisual speech processing in CI users.

At the behavioural level, we confirmed multisensory interactions for all three groups, as evidenced by the shortened response times for the audiovisual condition compared to each of the two unisensory conditions (Fig. 1B) and by the violation of the race model (Fig. 1E). This was in line with the ERP analyses, which confirmed a multisensory effect for all groups by showing a reduced activation in the auditory and visual cortex for the modulated (AV-V) response compared to the auditory-only (A) response at both the N1 and P2 latencies (Fig. 4C and E, respectively). In addition to this multisensory effect across all groups, we found group-specific differences. First, only the CI-CHD users showed a change of N1 voltage topographies when additional visual information accompanied the auditory information (Fig. 3A), which suggests a particularly strong visual impact on auditory speech processing in CI users with bilateral hearing loss. Second, both groups of CI users revealed a delayed auditory-cortex activation (Fig. 4D), enhanced lip-reading abilities (Fig. 1D) and stronger visual-cortex activation (Fig. 4B) when compared to the NH controls. Thus, the current results extend the results of our previous study (Layer et al., 2022) by showing distinct multisensory processes not only between NH listeners and CI users in general, but even between CI users with unilateral (CI-SSD) and bilateral (CI-CHD) hearing loss.

4.1. Behavioural multisensory integration in all groups

The behavioural results revealed that both the NH listeners and the two CI user groups had faster reaction times for audiovisual syllables than for unisensory (auditory-alone, visual-alone) syllables (Fig. 1; Table 2). No difference was found between the auditory and visual conditions. Hence, all groups exhibited a clear redundant signals effect for audiovisual syllables, implying that the benefit of cross-modal input is comparable between the CI user groups and NH listeners on a behavioural level (Laurienti et al., 2004; Schierholz et al., 2015; Layer et al., 2022), at least when considering syllables that are combined with a talking head. The violation of the race model for each group (CI-CHD, CI-SSD, NH) suggests that multisensory integration was the cause of the observed redundant signals effect in both CI user groups and NH listeners. Notably, the behavioural responses of the CI users were not slower than those of the NH listeners, even though the signal provided by the CI is known to be limited in comparison to a natural hearing experience (Drennan and Rubinstein, 2008). The observation of comparable response times in CI users can be explained by the fact that there were only two syllables, and that the difficulty of the task was correspondingly low. Compatible with this, all groups were equally able to perform the task, and the subjective rating of the listening effort showed no difference between the groups.
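The logic of a race model violation can be sketched as follows. Under a race model, the cumulative probability of an audiovisual response by time t cannot exceed the sum of the two unisensory cumulative probabilities (the race model inequality), so time points where the audiovisual distribution exceeds this bound indicate integration rather than statistical facilitation. The code below is only an illustration with hypothetical response times and function names; it is not the procedure used for Fig. 1E.

```python
def ecdf(samples, t):
    """Empirical cumulative probability of a response time <= t."""
    return sum(1 for s in samples if s <= t) / len(samples)


def race_model_violations(rt_a, rt_v, rt_av, time_points):
    """Return the time points at which the race model inequality is violated.

    Inequality tested: F_AV(t) <= min(1, F_A(t) + F_V(t)).
    """
    violations = []
    for t in time_points:
        bound = min(1.0, ecdf(rt_a, t) + ecdf(rt_v, t))
        if ecdf(rt_av, t) > bound:
            violations.append(t)
    return violations


# Hypothetical response times in ms for one participant.
rt_a = [400, 420, 440, 460]    # auditory-only
rt_v = [410, 430, 450, 470]    # visual-only
rt_av = [300, 320, 340, 360]   # audiovisual
violated_at = race_model_violations(rt_a, rt_v, rt_av, [350, 500])
```

In this toy example the inequality is violated at 350 ms, where most audiovisual responses have already occurred but no unisensory response has, and it holds at 500 ms, where all distributions have saturated.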

One might expect the CI users to be better and faster at identifying the purely visual syllables, given that previous studies with congenitally deaf individuals and CI users have shown visual enhancements, in particular visually induced activation in the auditory cortex (Bottari et al., 2014; Finney et al., 2003; Hauthal et al., 2014; Bavelier and Neville, 2002; Heimler et al., 2014; Sandmann et al., 2012). This cross-modal activation seems to be driven by auditory deprivation and might form the neural basis for specific superior visual abilities (Lomber et al., 2010). Importantly, auditory impairment is not only experienced in CI users before receiving a CI, but also after the implantation when only a limited auditory input is provided by the CI. Thus, it is not surprising that CI users reveal compensatory visual strategies, such as enhanced lip-reading abilities, in order to overcome the limited CI signal (Rouger et al., 2007; Schreitmüller et al., 2018; Stropahl et al., 2015; Stropahl and Debener, 2017). Our results extend previous observations of enhanced visual abilities in CI users by showing that not only CI-CHD users, but surprisingly also CI-SSD users demonstrate a better lip-reading ability when compared to NH listeners. Importantly, the lip-reading ability was comparable between the two patient groups, and in both groups it correlated negatively with the age at onset of hearing loss, indicating that an earlier onset of hearing loss is associated with improved behavioural visual abilities. Our results demonstrate that this visual improvement develops across different groups of CI patients, independent of the hearing abilities of the contralateral ear.

However, behavioural visual improvements in CI users seem to be stimulus- and task-specific, as indicated by our finding that the two CI user groups showed comparable behavioural results to NH listeners in the speeded response task. Our finding is consistent with previous studies using a speeded response task with simple tones and white discs as auditory and visual stimuli, respectively (Schierholz et al., 2015, 2017). It seems that in our study the task with the basic stimuli and the two syllables was too easy, leading to ceiling effects in all groups. This interpretation is in line with our observation that the perceived listening effort was comparable between all three groups. Importantly, behavioural group differences have been reported in a previous study using more complex stimuli presented in the context of a difficult recognition paradigm, showing an enhanced audiovisual gain in CI users when compared to NH listeners (Radecke et al., 2022). This is consistent with the view that behavioural advantages due to additional visual information in CI users are task- and stimulus-selective, and that they become evident under specific circumstances, for instance in conditions with semantic information (Moody-Antonio et al., 2005; Rouger et al., 2008; Tremblay et al., 2010; Radecke et al., 2022). Thus, future studies should use linguistically complex stimuli, such as words or sentences presented in auditory, visual and audiovisual conditions, in order to better understand the behavioural advantages for visual and audiovisual speech conditions in CI users compared to NH individuals.

4.2. Electrophysiological correlates of multisensory speech perception

Similar to the behavioural data, we also discovered commonalities among groups at the ERP level. Nonetheless, group differences were found as well, which will be discussed in the following sections.

4.2.1. Group similarities in multisensory speech processing

Similar to the behavioural results, we found evidence for multisensory effects in the ERP responses. The topographic clustering analysis with subsequent single-subject fitting confirmed multisensory interactions for both CI user groups and NH individuals by revealing an increase in P2-like topographies for the modulated ERPs (AV-V) compared to the purely auditory condition (A). This observation points to a visual modulation of the auditory ERPs in the two CI user groups as well as in the NH individuals.