Abstract

Purpose:

Infectious keratitis, especially viral keratitis (VK), can be challenging to diagnose in resource-limited settings and carries a high risk of misdiagnosis, contributing to significant ocular morbidity. We aimed to study the application of artificial intelligence-based deep learning (DL) algorithms to the diagnosis of VK.

Methods:

A single-center retrospective study was conducted in a tertiary care center from January 2017 to December 2019, employing a DL algorithm to diagnose VK from slit-lamp (SL) photographs. Three hundred and seven diffusely illuminated SL photographs from 285 eyes with polymerase chain reaction–proven herpes simplex viral stromal necrotizing keratitis (HSVNK) or culture-proven nonviral keratitis (NVK) were included. Patients with only HSV epithelial dendrites, endothelitis, or mixed infection, and those without SL photographs, were excluded. DenseNet, a convolutional neural network, was used; the images in each of the two categories were divided into a training subset and a testing subset. The performance of DenseNet was also compared with that of ResNet and Inception. Sensitivity, specificity, the receiver operating characteristic (ROC) curve, and the area under the curve (AUC) were calculated.

Results:

The accuracy of DenseNet on the test dataset was 72%, and it performed better than ResNet and Inception in the given task. The AUC for HSVNK was 0.73, with a sensitivity of 69.6% and specificity of 76.5%. The results were also visualized using gradient-weighted class activation mapping (Grad-CAM), which highlighted the regions of the input that were significant for accurate predictions from these DL-based models.

Conclusion:

DL algorithms can be a useful aid in diagnosing VK, especially in primary care centers where appropriate laboratory facilities or expert manpower are not available.

Keywords: Artificial Intelligence, deep learning, HSV keratitis, viral keratitis

Herpes simplex viral (HSV) keratitis is a common viral infection of the cornea. Stromal HSV infection has both infective and immune components and is often a recurrent and potentially blinding corneal disease, particularly in its necrotizing form.[1] Unlike most other forms of microbial keratitis, which mandate corneal scraping, epithelial HSV infection is diagnosed largely on the basis of its clinical appearance and the corneal staining of dendrites. However, stromal infection can mimic bacterial and fungal keratitis, complicating and delaying treatment. Recurrent stromal HSV infection can also alter the typical clinical appearance of the disease through vascularization and scarring. Laboratory tests such as immunofluorescent microscopy and polymerase chain reaction (PCR) to detect the viral genome are expensive and are reserved for atypical and recurrent cases.[2,3]

Artificial intelligence-based deep learning (DL) algorithms are increasingly being employed in medical diagnostics. Recent literature describes DL-based diagnosis of microbial keratitis, especially fungal corneal ulcers, from high-resolution slit-lamp photographs.[4,5] We studied a similar application in active, confirmed HSV stromal necrotizing keratitis (HSVNK), because this condition is usually diagnosed by primary care physicians on the basis of clinical presentation rather than investigations. As an ancillary tool to clinical and, at times, laboratory methods, DL could facilitate the diagnosis of HSVNK and prompt early speciality referral so that appropriate treatment of this morbid disease can be initiated.

Methods

A retrospective study was conducted using diffusely illuminated 8 MP slit-lamp images of patients who had microbiologically proven infectious keratitis and presented to our hospital from January 2017 to December 2019. The study adhered to the Declaration of Helsinki and was approved by our Institutional Review Board and Ethical Committee. Informed consent to acquire and use the slit-lamp images for medical research and education had been obtained from the patients. Digital slit-lamp photographs were acquired using a Topcon D series slit lamp with a beam splitter and an 8.1 MP digital camera attached via a DC-3 digital camera attachment. The camera is operated with the DC-3 EZ Capture software.

We included patients who presented with infectious keratitis and had either PCR-proven active HSVNK or culture-proven nonviral keratitis (NVK). All cases selected in both groups were symptomatic patients with active corneal infiltration. Resolving or scarred infections and microbiologically negative cases were not included in the study.

Because DL models benefit from larger datasets, more than one image was used for some eyes, giving a total of 307 images from 285 eyes. Of these, 177 (57.7%) images were from the HSVNK category and the remaining 130 belonged to the NVK category, which included 43 (14.0%) bacterial and 87 (28.3%) fungal keratitis images. Patients who had only HSV epithelial dendrites, endothelitis, mixed infection, Pythium keratitis, or Acanthamoeba keratitis, and those with no slit-lamp photographs, were excluded from the study.

Data preprocessing and analysis

Two datasets, HSVNK and NVK, were employed, each divided into two subsets: one for training and one for testing. Of the 307 images, 177 were in the HSVNK category and 130 were in the NVK category (consisting of proven fungal and bacterial keratitis images). Two hundred and sixty-seven images were used to train the DL models, and 40 images were used for testing, with 20 images from each category picked at random. The training data thus consisted of 157 HSVNK images and 110 NVK images. Although the training data were slightly imbalanced, the test data had an equal split between viral and nonviral images. As part of preprocessing, all images were resized to 224 × 224 pixels and normalized to the range zero to one.
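
The split and normalization described above can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes the images are already loaded as a NumPy array of 8-bit RGB pixels resized to 224 × 224 (the resizing itself would be done with an image library), uses the labels 1 = HSVNK and 0 = NVK, and the helper names and random seed are hypothetical. Dividing by 255 is one way to map 8-bit pixels into [0, 1]; the text does not state the exact scaling.

```python
import numpy as np

def split_fixed_test(labels, n_test_per_class=20, seed=0):
    """Pick n_test_per_class random images from each class for testing;
    all remaining images form the training set. Returns index arrays."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)  # the seed is an arbitrary assumption
    test_idx = []
    for cls in np.unique(labels):
        cls_idx = np.flatnonzero(labels == cls)
        test_idx.extend(rng.choice(cls_idx, size=n_test_per_class, replace=False))
    test_idx = np.sort(np.array(test_idx))
    train_idx = np.setdiff1d(np.arange(len(labels)), test_idx)
    return train_idx, test_idx

def normalize_01(images_uint8):
    """Scale 8-bit pixel values (0-255) to floats in [0, 1]."""
    return images_uint8.astype(np.float32) / 255.0

# Class sizes reported in the text: 177 HSVNK (label 1) and 130 NVK (label 0).
labels = np.array([1] * 177 + [0] * 130)
train_idx, test_idx = split_fixed_test(labels)
print(len(train_idx), int(labels[train_idx].sum()))  # training size, HSVNK count in training
```

With the reported class sizes, this leaves 267 training images, of which 157 are HSVNK, matching the counts given above.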

Model

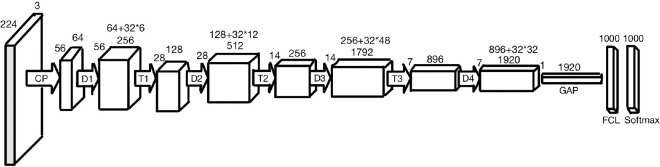

Convolutional neural networks (CNNs) are DL architectures that are extensively used in computer vision.[6] Widely used CNN architectures include AlexNet, VGGNet, ResNet, and the Inception family. DenseNet-201 is a CNN that is 201 layers deep.[7] Its architecture is shown in Fig. 1.[8] DenseNet has demonstrated better performance than these other models because of its implicitly modeled deep supervision and feature reuse.[9] ResNet-50 and the GoogLeNet Inception models were also trained on this dataset to provide a comparative evaluation of these techniques and to determine the best model.

Figure 1.

DenseNet-201 architecture: CP refers to the convolutional block, D refers to a dense block, T refers to a transition layer, and FCL refers to a fully connected layer
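
The feature reuse that distinguishes DenseNet comes from dense connectivity: inside a dense block, each layer receives the concatenation of the feature maps of all preceding layers and adds a fixed number of new channels (the growth rate). The NumPy sketch below illustrates only this connectivity pattern; each real batch-normalization, ReLU, and convolution unit is replaced by a hypothetical random 1 × 1 channel-mixing step followed by ReLU. The layer count at the end uses the DenseNet-201 configuration from the original DenseNet paper (dense blocks of 6, 12, 48, and 32 layers, each layer containing a 1 × 1 and a 3 × 3 convolution).

```python
import numpy as np

def dense_block(x, num_layers, growth_rate, rng):
    """x: feature maps of shape (H, W, C0). Each layer sees the concatenation
    of all earlier outputs and contributes `growth_rate` new channels."""
    features = [x]
    for _ in range(num_layers):
        inp = np.concatenate(features, axis=-1)          # reuse all previous maps
        w = rng.standard_normal((inp.shape[-1], growth_rate))
        new = np.maximum(inp @ w, 0.0)                   # stand-in for the BN-ReLU-Conv unit
        features.append(new)
    return np.concatenate(features, axis=-1)

rng = np.random.default_rng(0)
out = dense_block(np.ones((7, 7, 64)), num_layers=6, growth_rate=32, rng=rng)
print(out.shape)  # 64 input channels + 6 layers x 32 new channels = 256 channels

# Depth of DenseNet-201: two convolutions per dense layer, plus the initial
# convolution, three transition layers, and the final fully connected layer.
blocks = (6, 12, 48, 32)
print(2 * sum(blocks) + 1 + 3 + 1)  # 201
```

Because every layer's input grows by the growth rate, later layers can draw directly on low-level features computed early in the block instead of relearning them.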

Training

Transfer learning was employed to train the CNN.[10] Because the training dataset was small, training the entire model from scratch would likely have led to severe overfitting. DenseNet-201 and the other models were pretrained on ImageNet.[11] For all the models, the final 1000-node layer with SoftMax activation was replaced with a single node with sigmoid activation for binary classification. This new last layer was trained from scratch, while the rest of the layers were frozen.
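
The transfer-learning setup described above can be sketched in Keras as follows. This is a minimal illustration under stated assumptions, not the authors' code: it uses `keras.applications.DenseNet201` with `include_top=False` and global average pooling in place of the removed 1000-node head, the dropout rate of 0.5 is a guess (the text gives none), and binary cross-entropy is used because it is the loss that pairs with a single sigmoid output. The helper name `build_transfer_model` is hypothetical.

```python
from tensorflow import keras

def build_transfer_model(weights="imagenet", input_shape=(224, 224, 3), dropout_rate=0.5):
    """DenseNet-201 backbone with its 1000-class softmax head removed and a
    single sigmoid output added for HSVNK-vs-NVK classification."""
    base = keras.applications.DenseNet201(
        include_top=False,          # drop the 1000-node ImageNet classifier
        weights=weights,            # "imagenet" for pretraining, None for random init
        input_shape=input_shape,
        pooling="avg",              # global average pooling -> 1920-d feature vector
    )
    base.trainable = False          # freeze every pretrained layer

    inputs = keras.Input(shape=input_shape)
    x = base(inputs, training=False)                 # keep batch-norm statistics fixed
    x = keras.layers.Dropout(dropout_rate)(x)        # dropout rate is an assumption
    outputs = keras.layers.Dense(1, activation="sigmoid")(x)  # new head, trained from scratch
    model = keras.Model(inputs, outputs)

    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=1e-4),  # learning rate from the text
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

With hypothetical arrays `x_train` and `y_train`, training with the reported batch size and epoch count would be `model.fit(x_train, y_train, batch_size=32, epochs=375, validation_split=0.2)`; the validation split is likewise an assumption. Only the two weights of the new output layer are trainable in this configuration.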

The hyperparameters were as follows. The Adam optimizer was used with an initial learning rate of 0.0001. The loss function and the activation function were categorical cross-entropy and the Rectified Linear Unit (ReLU), respectively. Each model was trained for 375 epochs; validation accuracy saturated beyond 350 epochs, which is why training was not continued further. The batch size was 32. Batch normalization was used, and dropout was applied as regularization to prevent overfitting. For an effective comparison, these hyperparameters were kept the same across the three models.
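
The observation that validation accuracy saturates beyond about 350 epochs can be made operational with a simple plateau rule. The sketch below is one such rule, not the criterion the authors used (the text does not specify one); the function name, the window length, and the minimum gain are hypothetical. In Keras, the built-in `keras.callbacks.EarlyStopping(monitor="val_accuracy", min_delta=..., patience=...)` applies a similar idea during training.

```python
def saturation_epoch(val_acc, window=25, min_gain=0.005):
    """Return the first epoch (1-based) after which the best validation accuracy
    improves by less than `min_gain` over the next `window` epochs, or None.
    `window` and `min_gain` are illustrative assumptions."""
    for e in range(len(val_acc) - window):
        best_so_far = max(val_acc[: e + 1])
        best_ahead = max(val_acc[e + 1 : e + 1 + window])
        if best_ahead - best_so_far < min_gain:
            return e + 1
    return None

# A synthetic curve that rises steadily and then flattens at epoch ~350.
curve = [min(0.5 + 0.001 * i, 0.85) for i in range(375)]
print(saturation_epoch(curve))
```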

The models were trained on Google Colab using an NVIDIA Tesla K80 graphics processing unit (GPU). The code was written in Keras.[12]

Dataset

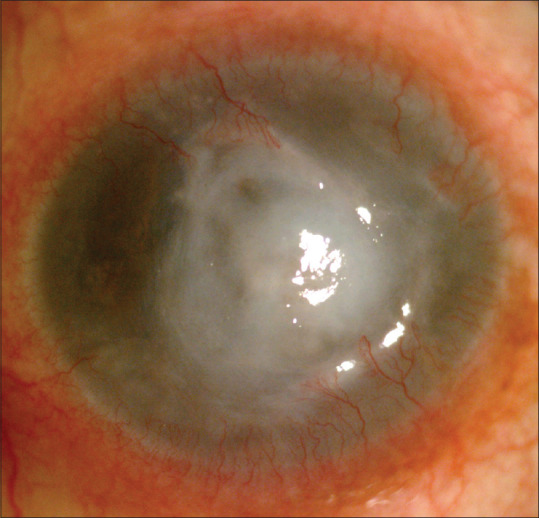

The dataset consisted of infectious keratitis images; a scaled-down sample image is shown in Fig. 2. The images were labeled as viral, bacterial, or fungal based on clinical history and microbiology. The artificial intelligence (AI) model was used to classify the images as HSVNK or NVK.

Figure 2.

Sample image from the dataset

Training

Cross-validation was used for training the model. The training data were divided into a fixed number of groups; one group was held out for validation, and the model was trained on the remaining groups. The model weights were updated accordingly, and these steps were repeated until every group had been used once for validation. The model was fine-tuned for 350 epochs on the training data. The test data were never seen by the network during training and were used exclusively to evaluate its final performance.
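
A minimal sketch of the fold construction, assuming plain (unstratified) k-fold splitting of the 267 training images with k = 5. The text does not state the number of groups, so k, the seed, and the helper name are hypothetical. The 40 test images are deliberately left out of this loop, and the sketch only generates the index partitions; scikit-learn's `KFold` and `StratifiedKFold` provide equivalent, class-aware splitters.

```python
import numpy as np

def kfold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation.
    k=5 and the seed are assumptions; the text does not state the number of folds."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    folds = np.array_split(order, k)          # k nearly equal groups
    for i in range(k):
        val_idx = folds[i]                    # hold out one group for validation
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx

for train_idx, val_idx in kfold_indices(267, k=5):
    print(len(train_idx), len(val_idx))
```

Every training image appears in exactly one validation group, so the averaged validation score uses all 267 images without touching the test set.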

Comparison with other algorithms

The performance of DenseNet was compared with that of other DL networks, ResNet and Inception.[13,14] Since the target dataset was quite different from the ImageNet dataset on which the models were pretrained, the last few layers were fine-tuned on the new dataset.

The diagnostic performance of the algorithm was also evaluated using the area under the receiver operating characteristic (ROC) curve (AUC) with 95% confidence intervals (CIs). The sensitivity, specificity, and accuracy of the model were calculated.
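
The three threshold-based metrics can be computed from the confusion matrix of the test predictions, as in the sketch below. It treats HSVNK as the positive class and applies a 0.5 cutoff to the sigmoid output; both choices are assumptions, since the text does not state them, and the function name is hypothetical.

```python
def diagnostic_metrics(y_true, y_score, threshold=0.5):
    """Sensitivity, specificity, and accuracy for a binary classifier.
    y_true: 1 = HSVNK (positive class), 0 = NVK; y_score: predicted probability of HSVNK."""
    tp = fp = tn = fn = 0
    for t, s in zip(y_true, y_score):
        pred = 1 if s >= threshold else 0
        if pred == 1 and t == 1:
            tp += 1          # HSVNK correctly called HSVNK
        elif pred == 1 and t == 0:
            fp += 1          # NVK wrongly called HSVNK
        elif pred == 0 and t == 0:
            tn += 1          # NVK correctly called NVK
        else:
            fn += 1          # HSVNK missed
    sensitivity = tp / (tp + fn)   # true-positive rate
    specificity = tn / (tn + fp)   # true-negative rate
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    return sensitivity, specificity, accuracy
```

Moving the threshold trades sensitivity against specificity; the ROC curve summarizes that trade-off over all thresholds.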

Results

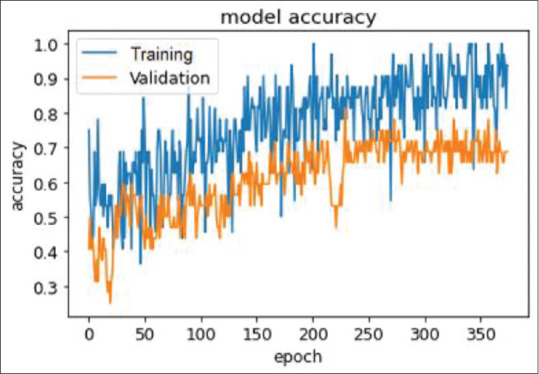

DenseNet gave an accuracy of 72% on the test dataset, as shown in Fig. 3. ResNet gave an accuracy of 50% on the test dataset, compared with 60% on the training data. Inception performed better, with an accuracy of 62.5% on the test dataset, possibly because the depth of the model allowed it to capture features more effectively than the 50-layer ResNet model. DenseNet outperformed both models, likely because of its ability to model deep representations more effectively, as discussed above. It showed no sign of overfitting, as its validation accuracy increased with the number of epochs. Toward the end of training, the validation accuracy began to saturate, at which point training was stopped.

Figure 3.

Graph showing the performance of DenseNet with an accuracy of 72% in correctly diagnosing herpes simplex viral stromal necrotizing keratitis

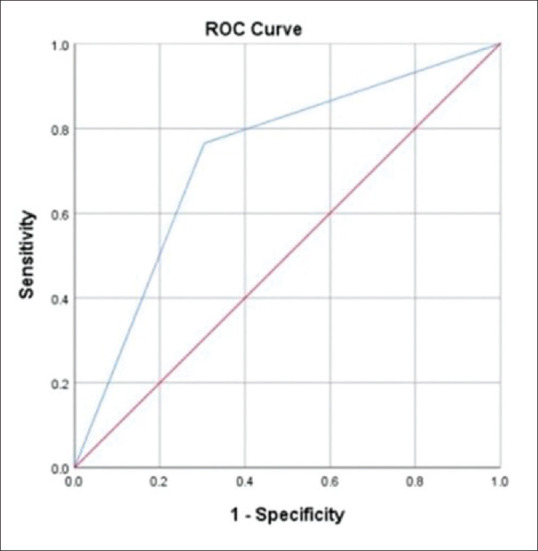

The ROC curve [Fig. 4] plots the true-positive rate (sensitivity) on the y-axis against the false-positive rate (100 − specificity) on the x-axis for different cutoff points. An AUC between 0.7 and 0.8 is conventionally considered acceptable discrimination. The AUC for HSVNK was 0.73 (95% CI: 0.568–0.892), with a sensitivity of 69.6%, specificity of 76.5%, and accuracy of 72.5%, indicating fairly good performance.

Figure 4.

ROC curve showing the AUC for herpes simplex viral necrotizing stromal keratitis. AUC = area under the curve, ROC = receiver operating characteristic
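
The ROC analysis can be sketched in the same way: sweeping the decision threshold over every distinct predicted score yields the (false-positive rate, true-positive rate) points of the curve, and the trapezoidal rule gives the AUC, with tied scores receiving half credit. The text does not say how the 95% CI was obtained; the Hanley and McNeil (1982) standard-error approximation included here is one common option, shown as an assumption rather than the authors' method. The function names are hypothetical, and scikit-learn's `roc_curve` and `roc_auc_score` compute the curve and AUC directly.

```python
import numpy as np

def roc_points(y_true, y_score):
    """(FPR, TPR) arrays obtained by sweeping the decision threshold over every
    distinct score, from strictest to most lenient."""
    y_true = np.asarray(y_true)
    y_score = np.asarray(y_score, dtype=float)
    pos, neg = (y_true == 1).sum(), (y_true == 0).sum()
    fpr, tpr = [0.0], [0.0]
    for thr in np.unique(y_score)[::-1]:                    # descending thresholds
        pred = y_score >= thr
        tpr.append((pred & (y_true == 1)).sum() / pos)      # sensitivity
        fpr.append((pred & (y_true == 0)).sum() / neg)      # 1 - specificity
    return np.array(fpr), np.array(tpr)

def auc_trapezoid(fpr, tpr):
    """Area under the ROC curve by the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2))

def hanley_mcneil_ci(auc, n_pos, n_neg, z=1.96):
    """Approximate 95% CI for an AUC using the Hanley-McNeil standard error."""
    q1 = auc / (2 - auc)
    q2 = 2 * auc**2 / (1 + auc)
    var = (auc * (1 - auc) + (n_pos - 1) * (q1 - auc**2)
           + (n_neg - 1) * (q2 - auc**2)) / (n_pos * n_neg)
    se = var ** 0.5
    return max(0.0, auc - z * se), min(1.0, auc + z * se)

fpr, tpr = roc_points([1, 1, 0, 0], [0.8, 0.4, 0.6, 0.2])
print(auc_trapezoid(fpr, tpr))  # 3 of 4 positive-negative pairs ranked correctly
```

With only 40 test images, the CI is wide under any method, which is consistent with the interval reported above.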

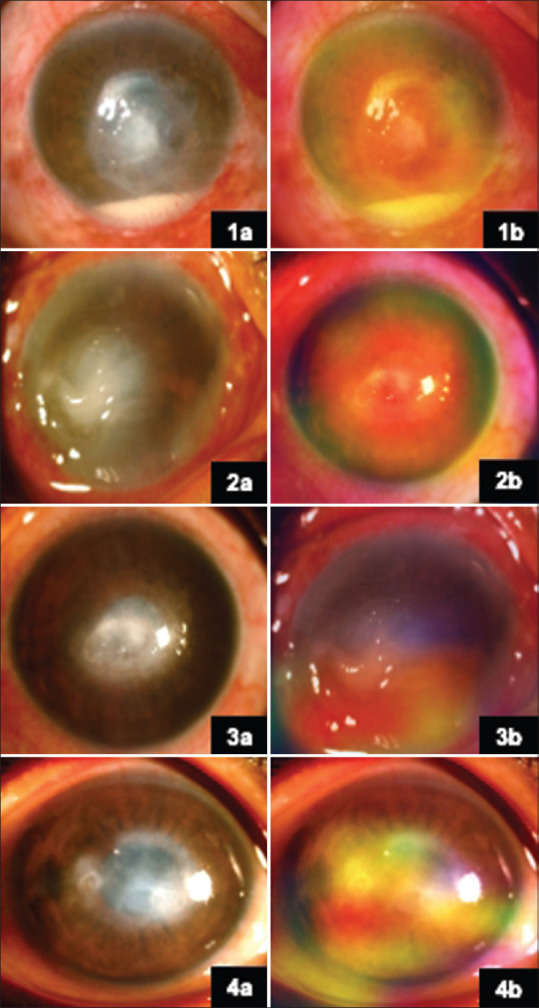

Visualization of the results was also attempted using gradient-weighted class activation mapping (Grad-CAM) [Fig. 5]. Heat maps were generated for test images from both categories, all of which were classified correctly by the model. The red and yellow regions in the Grad-CAM images signify maximum activation, that is, the regions that influenced the model's classification to the greatest extent. The blue and green regions contributed to a lesser extent, and the rest of the image, which is not highlighted, contributed little to the classification. Grad-CAM–based visualization was an ancillary outcome and not a driving tool for this study.

Figure 5.

(1a) Slit-lamp image of NVK. (2a, 3a, 4a) Slit-lamp images of HSVNK. (1b, 2b, 3b, 4b) Corresponding heat maps on Grad-CAM. The regions in red and yellow are the regions with maximum activity, while those in blue and green show activities of lesser degree. Grad-CAM = gradient-weighted class activation mapping, HSVNK = herpes simplex viral stromal necrotizing keratitis, NVK = nonviral keratitis
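
Grad-CAM combines the activation maps of a chosen convolutional layer with the gradients of the class score with respect to those maps. Given those two arrays (in Keras they could be obtained with `tf.GradientTape`; that step is not shown), the heat map reduces to the NumPy arithmetic below. The function name, the choice of layer, and the rescaling to [0, 1] for display are assumptions of this sketch, not details reported in the text; upsampling the map to the 224 × 224 image is also omitted.

```python
import numpy as np

def grad_cam(activations, gradients):
    """activations, gradients: arrays of shape (H, W, K) for one image, where
    gradients = d(class score) / d(activations). Returns an (H, W) map in [0, 1]."""
    alphas = gradients.mean(axis=(0, 1))                      # one weight per channel
    cam = np.tensordot(activations, alphas, axes=([2], [0]))  # weighted sum over channels
    cam = np.maximum(cam, 0.0)                                # ReLU: keep positive evidence only
    peak = cam.max()
    return cam / peak if peak > 0 else cam                    # scale to [0, 1] for display
```

The ReLU step is what restricts the map to regions that push the prediction toward the chosen class, which is why unhighlighted areas are interpreted as contributing little.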

Discussion

HSV-1 is an important causative agent that can infect all layers of the cornea and lead to recurrent episodes.[15] HSV stromal keratitis is a corneal infection of variable severity and a potentially blinding condition. Although stromal HSV keratitis is classified into immune and necrotizing ulcerative types, the infective and immune components of the disease often coexist. Compared with bacterial or fungal keratitis, HSV stromal keratitis is difficult to diagnose for several reasons. Firstly, the infection looks morphologically different in different layers of the cornea.[15] Secondly, HSV necrotizing stromal keratitis can look like bacterial or fungal keratitis. Thirdly, infective and immune components can present variably in the same eye. Lastly, multiple recurrences can mar the typical clinical appearance owing to scarring and vascularization.[16]

Because epithelial integrity is important for resolution, and because methods of microbiological diagnosis are limited and expensive, HSV keratitis largely remains a clinical diagnosis. Corneal scraping for PCR is usually reserved for atypical or nonresponding disease. Confocal microscopy, which can be employed in diagnosing fungal keratitis, is of limited value in HSV keratitis.[17]

CNNs are DL architectures that are extensively used for classification tasks, and DenseNet is one such CNN. We hypothesized that DL algorithms to detect HSVNK could support clinical diagnosis and aid early referral by general ophthalmologists to cornea speciality. The first study using neural networks to diagnose infective keratitis used many clinical and laboratory parameters but no photographs.[18] More recent work along the same lines used clinical photographs and DL algorithms to diagnose fungal keratitis.[4,5]

In our study, DL was used to diagnose microbiologically proven HSV keratitis from slit-lamp photographs taken with diffuse white-light illumination. Good-quality photographs and expert clinical diagnosis are imperative for successful and accurate DL-based diagnosis.

The limited number of images and the retrospective design are definite limitations of the current study. With more images of infectious keratitis, including slit-illuminated and fluorescein-stained images, the model could be fine-tuned to incorporate this information and improve its performance. The training curve in Fig. 3 suggests scope for further improvement as more images become available. Depending on how closely the newly acquired images match the original distribution, the layers to fine-tune could be chosen accordingly; if there is large variation, more layers could be unfrozen and fine-tuned.
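
Selective unfreezing of this kind could look like the following Keras sketch. It assumes the backbone is a Keras model such as `keras.applications.DenseNet201`, keeps batch-normalization layers frozen (a common precaution when fine-tuning on small datasets, not something stated in the text), and leaves the number of unfrozen layers `n` as a hypothetical parameter. The helper name is also hypothetical. After changing trainability, the full classifier must be recompiled, typically with a lower learning rate, for the change to take effect.

```python
from tensorflow import keras

def set_trainable_tail(backbone, n):
    """Make the last n layers of `backbone` trainable while keeping earlier layers
    and every BatchNormalization layer frozen. Recompile the full model afterwards."""
    backbone.trainable = True                      # reset, then freeze selectively
    layers_list = backbone.layers
    for i, layer in enumerate(layers_list):
        in_tail = i >= len(layers_list) - n        # True only for the last n layers
        is_bn = isinstance(layer, keras.layers.BatchNormalization)
        layer.trainable = in_tail and not is_bn
    return backbone
```

For example, `set_trainable_tail(base, 30)` followed by `model.compile(optimizer=keras.optimizers.Adam(learning_rate=1e-5), ...)` would fine-tune roughly the last dense block's worth of layers; both the value 30 and the learning rate are arbitrary choices for illustration.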

Nevertheless, given the difficulty of this task, our model provided an encouraging baseline performance, because viral, fungal, and bacterial keratitis can appear clinically similar and an expert cornea specialist is often required to differentiate them. However, there is considerable room for improvement before the model can perform close to specialists and become an aid for accurately diagnosing such diseases.

Grad-CAM increases the explainability of DL models by visualizing the regions of the input that are significant for their predictions.[19] We believe this could improve the efficiency of the primary clinician in an outpatient setting by providing a visual explanation of the pathological areas detected by the CNN.

Conclusion

To the best of our knowledge, this is the first study highlighting the utility of artificial intelligence in the diagnosis of HSV stromal necrotizing keratitis, which has a varied spectrum of presentation and hence carries a risk of misdiagnosis. Artificial intelligence and DL-based detection can be an additional tool that aids clinical diagnosis.

This could support the recognition of infectious HSV keratitis, particularly by general ophthalmologists with limited speciality experience and in primary care centers where appropriate laboratory facilities are unavailable, and could help in the early speciality referral of patients with this potentially blinding disease.

Financial support and sponsorship

Nil.

Conflicts of interest

There are no conflicts of interest.

Acknowledgements

We thank Mr. Viswanathan Natarajan for his assistance in the statistical analysis involved in this study.

References

1. Guess S, Stone DU, Chodosh J. Evidence-based treatment of herpes simplex virus keratitis: A systematic review. Ocul Surf. 2007;5:240–50. doi: 10.1016/s1542-0124(12)70614-6.

2. Erdem E, Harbiyeli İİ, Öztürk G, Oruz O, Açıkalın A, Yağmur M, et al. Atypical herpes simplex keratitis: Frequency, clinical presentations and treatment results. Int Ophthalmol. 2020;40:659–65. doi: 10.1007/s10792-019-01226-1.

3. Ma JX, Wang LN, Zhou RX, Yu Y, Du TX. Real-time polymerase chain reaction for the diagnosis of necrotizing herpes stromal keratitis. Int J Ophthalmol. 2016;9:682–6. doi: 10.18240/ijo.2016.05.07.

4. Kuo MT, Hsu BWY, Yin YK, Fang PC, Lai HY, Chen A, et al. A deep learning approach in diagnosing fungal keratitis based on corneal photographs. Sci Rep. 2020;10:14424. doi: 10.1038/s41598-020-71425-9.

5. Gu H, Guo Y, Gu L, Wei A, Xie S, Ye Z, et al. Deep learning for identifying corneal diseases from ocular surface slit-lamp photographs. Sci Rep. 2020;10:17851. doi: 10.1038/s41598-020-75027-3.

6. Esteva A, Chou K, Yeung S, Naik N, Madani A, Mottaghi A, et al. Deep learning-enabled medical computer vision. NPJ Digit Med. 2021;4:5. doi: 10.1038/s41746-020-00376-2.

7. Huang G, Liu Z, Van Der Maaten L, Weinberger KQ. Densely Connected Convolutional Networks. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2017:2261–69.

8. Wang SH, Zhang YD. DenseNet-201-based deep neural network with composite learning factor and precomputation for multiple sclerosis classification. ACM Trans Multimedia Comput Commun Appl. 2020;16:1–19.

9. Lee CY, Xie S, Gallagher P, Zhang Z, Tu Z. Deeply-supervised nets. Proc Mach Learn Res. 2015;38:562–70.

10. Zhuang F, Qi Z, Duan K, Xi D, Zhu Y, Zhu H, et al. A comprehensive survey on transfer learning. Proc IEEE. 2021;109:43–76.

11. Deng J, Dong W, Socher R, Li LJ, Li K, Li FF. ImageNet: A Large-Scale Hierarchical Image Database. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2009:248–55.

12. Gulli A, Pal S. Deep Learning with Keras. Packt Publishing; 2017.

13. He K, Zhang X, Ren S, Sun J. Deep Residual Learning for Image Recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2016:770–8.

14. Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z. Rethinking the Inception Architecture for Computer Vision. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2016:2818–26.

15. Azher TN, Yin XT, Tajfirouz D, Huang AJ, Stuart PM. Herpes simplex keratitis: Challenges in diagnosis and clinical management. Clin Ophthalmol. 2017;11:185–91. doi: 10.2147/OPTH.S80475.

16. Wilhelmus KR. Antiviral treatment and other therapeutic interventions for herpes simplex virus epithelial keratitis. Cochrane Database Syst Rev. 2015;1:CD002898. doi: 10.1002/14651858.CD002898.pub5.

17. Poon SHL, Wong WHL, Lo ACY, Yuan H, Chen CF, Jhanji V, et al. A systematic review on advances in diagnostics for herpes simplex keratitis. Surv Ophthalmol. 2021;66:514–30. doi: 10.1016/j.survophthal.2020.09.008.

18. Saini JS, Jain AK, Kumar S, Vikal S, Pankaj S, Singh S. Neural network approach to classify infective keratitis. Curr Eye Res. 2003;27:111–6. doi: 10.1076/ceyr.27.2.111.15949.

19. Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Proceedings of the IEEE International Conference on Computer Vision (ICCV). 2017:618–26.