Abstract

Osteosarcoma is one of the most prevalent malignant bone tumors. Its diagnosis and treatment cycle is long and the prognosis is poor. Manually identifying osteosarcoma in magnetic resonance imaging (MRI) scans is time-consuming. Medical image processing technology has greatly alleviated the problems faced by medical diagnosis. However, MRI images of osteosarcoma are characterized by high noise and blurred edges, and these complex features make the lesion area harder to identify. Therefore, this study proposes an osteosarcoma MRI image segmentation method (OSTransnet) based on Transformer and U-Net. The method primarily addresses blurred tumor edge segmentation and the overfitting caused by data noise. First, we optimize the dataset by changing the spatial distribution of noise and by augmenting the data through image rotation. The tumor is then segmented by a model that combines U-Net and Transformer with edge enhancement; channel-based transformers compensate for the semantic limitations of U-Net. Finally, we add an edge enhancement module (BAB) and a combined loss function to improve edge segmentation performance. The method's accuracy and stability are demonstrated by training and detection results on more than 4,000 MRI images of osteosarcoma, which also show how well the method works as an adjunct to clinical diagnosis and treatment.

1. Introduction

Osteosarcoma is the most common primary malignant bone tumor, accounting for approximately 44% of primary malignant tumors in orthopedics [1]. In developing countries, where medical resources are limited, the death rate of osteosarcoma far exceeds that of developed countries. The survival rate of patients with advanced osteosarcoma is less than 20% [2]. Early detection and timely development of reasonable treatment strategies can effectively improve the survival rate of patients [3]. The advantage of MRI is that it can detect aberrant signals in the early stages of a lesion. It can produce multidimensional images thanks to its multidirectional imaging. It can also display more information about the soft tissues and their relationship to the surrounding neurovascular structures [4], and it can quantify the extent of the bone marrow cavity's involvement [5]. As a result, MRI is a critical technique for doctors to use when diagnosing and evaluating probable osteosarcoma.

In most developing countries, the treatment and prognosis of osteosarcoma remain a persistent problem for clinicians and a source of hardship for every osteosarcoma patient. Developing nations are unable to provide patients with osteosarcoma with a more individualized course of treatment due to their economic underdevelopment and lack of medical resources and equipment [6]. In addition, the lack of technical personnel and outdated medical technology make the early diagnosis of osteosarcoma a huge problem [7–10]. The larger problem is that even with adequate screening equipment and MRI images, inefficient manual recognition may lead to delays in diagnosis and treatment, thus worsening the condition of patients with osteosarcoma. Although 600–700 MRI images are generated per patient [11], often fewer than 20 of them are valid osteosarcoma images. This large amount of data can only be diagnosed by doctors' manual identification [11, 12], which burdens doctors. Long-term high-intensity work can also fatigue doctors and reduce the speed and accuracy of discrimination [13]. Worst of all, the location, structure, shape, and density of different osteosarcomas are not identical [14], so it is difficult to distinguish the tumor location from normal tissues. Different osteosarcomas may also differ in appearance under the same imaging method [15–17]. Diagnosis with the naked eye is therefore extremely difficult and requires doctors to have rich diagnostic experience. Otherwise, it may lead to inaccurate diagnostic results and delays in patient treatment [18].

Medical image processing technology has steadily been employed in the direction of medical diagnostics as computer image technology has progressed [19]. Among the existing studies, there are many types of segmentation algorithms applied to medical images, such as thresholding [20, 21], region growing [22, 23], machine learning [24, 25], deep learning [26, 27], active contouring [28, 29], quantum-inspired compilation [30, 31], and computational intelligence [32, 33]. These algorithms are able to provide effective support for the clinical routine. Through algorithm processing, the system can more accurately segment the tumor area that the doctor is interested in [34]. It is helpful for precise localization and diagnosis and treatment, reducing the possibility of tumor recurrence, and thereby greatly improving the survival rate of patients [35]. For example, the literature [36] uses the convolutional neural network for the localization and segmentation of brain tumors, and the literature [37] realizes the classification of brain tumors and the grading of glial tumors. However, segmenting osteosarcoma MRI images remains a significant difficulty. The amount of noise in MRI pictures varies. Furthermore, the segmentation model is prone to noise [38] and overfitting, resulting in worse segmentation accuracy. Meanwhile, osteosarcoma has a wide range of local tissue development and shape [39, 40]. These properties cause indistinct tumor boundaries and complex form structures, making it difficult to maintain edge features [41–43]. As a result, it is worth looking into how to segment osteosarcoma effectively and properly.

We present a segmentation approach for osteosarcoma MRI images with edge enhancement features (OSTransnet). To begin, we optimize the dataset by altering the spatial distribution of natural noise, which mitigates the overfitting of deep learning models caused by MRI image noise. Then, for osteosarcoma image segmentation, we employ UCTransnet, a network model that combines Transformer and U-Net. UCTransnet introduces the channel Transformer (CTrans) module, which replaces the skip connections of U-Net. This compensates for the semantic shortcomings of U-Net segmentation and accomplishes global multiscale segmentation of tumor patches of various sizes. It also increases the accuracy of osteosarcoma segmentation by handling the complicated and changeable lesion areas in osteosarcoma MRI images. Finally, we employ a combined loss function and an edge enhancement module. Together they improve the segmentation results and effectively handle the problem of tumor edge blurring. This method increases diagnostic efficiency and reduces diagnostic workload and time without compromising diagnostic accuracy.

The contributions to this paper are listed as follows:

A new data alignment method is introduced in this paper to optimize the dataset. The new data alignment is achieved by altering the spatial distribution of real noise to generate more training samples that include both actual content and noise. The strategy effectively mitigates the effect of noise on model segmentation while broadening the data.

The segmentation model utilized in this paper is UCTransnet, which is built on Transformer and U-Net. In place of the skip connections of U-Net, this network structure uses the channel Transformer module (CTrans). It realizes the localization and identification of tumors of different scales.

The edge enhancement module (BAB) with a combined loss function is introduced in this study. This module can increase tumor border segmentation accuracy and effectively tackle the problem of tumor edge blurring.

The experimental results show that our proposed method of osteosarcoma segmentation has higher precision than previous methods and has advantages in various evaluation indexes. The results can be used by physicians to assist in the diagnosis and treatment of osteosarcoma. This study has important implications for the ancillary diagnosis, treatment, and prognosis of osteosarcoma.

2. Related Work

With the development of computer technology, there have been many artificial intelligence decision-making systems and image processing methods used in these systems to assist in disease diagnosis. In the diagnosis of osteosarcoma, we use computer technology to analyze and process images to help doctors quickly find the tumor location and improve the speed and accuracy of diagnosis. This has become a research hotspot today, and some mainstream algorithms in this field are introduced below:

To discriminate between live tumors, necrotic tumors, and nontumors, Ahmed et al. [44] proposed a compact CNN architecture to classify osteosarcoma images. The method combines a regularized model with the CNN architecture to reduce overfitting, which achieves good results on balanced datasets. Fu et al. [45] designed a DS-Net algorithm combining a depth model with a Siamese network to address the phenomenon of overfitting of small datasets in osteosarcoma classification. Anisuzzaman et al. [46] used a CNN network for pretraining. In this way, an automatic classifier of osteosarcoma tissue images is realized, thereby better predicting the patient's condition.

Additionally, a lot of research has suggested osteosarcoma segmentation algorithms that predict and separate the tumor region of osteosarcoma. Nasir and Obaid [47] proposed an algorithm-KCG that combines multiple image processing techniques, which involves iterative morphological operations and object counting, and achieves high accuracy on existing datasets. The MSFCN method was proposed by Huang et al. [48]. The idea is to add a supervised output layer to ensure that both local and global image features can be captured. The MSRN proposed by Zhang et al. [49] can provide automatic and accurate segmentation for the osteosarcoma region of the image. By adding three additional supervised side output modules, the extraction of image shape and semantic features is realized respectively. Shuai et al. [50] designed a W-net++ model by considering two cascading U-Net networks in an integrated manner. It is mainly implemented by applying multiscale inputs to the network and introducing deep adaptive supervision. Ho et al. [51] described a deeply interactive learning (DIAL) approach to training a CNN as a labeling method for predictive assessment of prognostic factors for survival in osteosarcoma. This method can effectively predict the necrosis rate within the variation rate range.

In addition to osteosarcoma segmentation, there are many studies on the application of computer technology in the treatment of osteosarcoma. Kim et al. [52] compared the performance of different methods in predicting response to neoadjuvant chemotherapy in osteosarcoma patients, which can help clinicians decide whether to proceed with further treatment of a patient. Dufau et al. [53] developed a support vector machine-based predictive model that predicts the chemotherapy response of patients to neoadjuvant chemotherapy before treatment starts. Hu et al. [46, 54] established an MRI image recognition model based on the proposed CSDCNN algorithm. This method obtained better indicators than SegNet, LeNet, and other algorithms. The F-HHO-based GAN proposed by Badshah et al. [47, 54] can be used for early osteosarcoma detection. The method classifies tumors by GAN and uses GAN to detect and segment the extracted image features.

With the development of deep learning-based networks, many researchers embed the latest algorithms of the team into the system for implementation. Arunachalam et al. [55] created a deep learning architecture that implements a fully automated tumor classification system. It establishes the groundwork for automating the deep learning algorithms' extraction of tumor prediction maps from raw images. Bansal et al. [56] implemented an automatic detection system based on the F-FSM-C classification model. The model can classify the original image into three types: surviving tumor, nonsurviving tumor, and nontumor, reducing the number of network features. In view of the characteristic of high noise in osteosarcoma MRI images, Wu et al. [57] proposed a segmentation system based on deep convolutional neural networks, which effectively improved the speed and accuracy of osteosarcoma MRI images.

From the above research work, it can be seen that image segmentation methods have become increasingly important for disease diagnosis and prognosis. However, as shown in Table 1, existing studies still face many problems in the detection of osteosarcoma MRI images. In particular, it is still difficult to reasonably preserve edge features when segmenting osteosarcoma images. Since images are sensitive to noise, it is necessary to reduce MRI image noise to improve segmentation accuracy. To compensate for segmentation inaccuracy, we present a segmentation method based on edge enhancement from osteosarcoma MRI (OSTransnet). The method uses strategies such as dataset optimization, model segmentation, edge enhancement, and mixed loss functions to improve the accuracy of osteosarcoma segmentation.

Table 1.

Comparison of different auxiliary diagnostic methods for osteosarcoma.

| Detection object | Literature | Technology involved | Application advantages | Limitation |
|---|---|---|---|---|
| CT image | Literature [48] | Image normalization, CNN | Make sure to capture global and local image features | Segmentation performance is limited in the face of small tumor regions |
| | Literature [49] | Residual network | Realize the extraction of image shape and semantic features | |
| | Literature [51] | U-Net, channel attention module | Prevents loss of detail caused by multiple encodings and subsampling | |
| Pathological image | Literature [44] | Regularization model, CNN | Differentiate between live tumors, necrotic tumors, and nontumors | Pathological evaluation of tissue samples is prone to interobserver variability and is highly subjective. Some of the features used as input to automated machine learners depend on the features identified by the pathologist and require higher costs |
| | Literature [45] | Siamese network, FCN | Solve the problem of small data overfitting | |
| | Literature [46] | CNN, transfer learning, VGG19 | Automatic classification of tissue images to predict patient conditions | |
| | Literature [52] | CNN | Prognostic factors predicting survival in osteosarcoma, assessing necrosis rates within a variable range | |
| | Literature [54] | GAN, F–HHO algorithm | Detect and segment the extracted image features | |
| | Literature [55] | Deep learning | Lays the groundwork for an automated process for obtaining tumor prediction maps from raw images | |
| | Literature [56] | Binary arithmetic optimization | Three types of surviving tumor, nonsurviving tumor and nontumor are distinguished | High computational cost and slow system speed |
| F-FDG PET image | Literature [52] | CNN | To compare the use of different methods in predicting the effect of neoadjuvant chemotherapy | The data source is relatively single |
| Diffusion weighted imaging | Literature [54] | CNN | Precise localization of lesions in patients with osteosarcoma | The sample size is small |
| MRI | Literature [53] | Support vector machines | Predicting a patient's chemotherapy response before treatment | Not validated for large scale data |
| | Literature [47] | K-means, Chan–Vese segmentation | High precision segmentation | The complexity of the model is high |
| | Literature [57] | Mean-teacher, SepUNet, CRF | Tumor region segmentation | Segmentation accuracy is limited |

3. System Model Design

The diagnosis and treatment of osteosarcoma present many difficulties in most underdeveloped countries due to financial and technical constraints [58]. Osteosarcoma MRI scans are complex and data-intensive. Manual screening and diagnosis consume a lot of medical resources and are extremely difficult for clinicians to carry out [59, 60]. Image processing technology is increasingly employed in disease diagnosis, treatment, and prognosis to aid clinicians and increase diagnostic efficiency [61]. In addition, due to the complexity of osteosarcoma MRI images and the diversity of tumors, existing detection methods do not achieve ideal segmentation results [62]. This study offers a segmentation approach (OSTransnet) for osteosarcoma MRI images with edge enhancement features based on Transformer and U-Net. It is primarily intended to help clinicians identify osteosarcoma lesion areas more precisely and rapidly from MRI images. Experiments demonstrate that OSTransnet outperforms current well-known network architectures in osteosarcoma segmentation accuracy. Figure 1 depicts the overall architecture of the method.

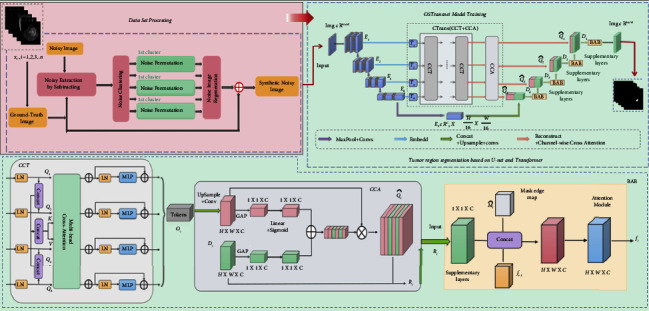

Figure 1.

Overall architecture diagram.

We construct an edge-enhanced osteosarcoma MRI image segmentation method (OSTransnet), which is mainly divided into two parts: dataset optimization processing and MRI image segmentation model based on U-Net and Transformer with edge-enhanced features. In Section 3.1, we introduced a new data alignment. It is better for the subsequent segmentation and diagnosis of the osteosarcoma lesion region. By taking the optimized image data in 3.1 and feeding it into the segmentation network in 3.2, we can locate the location and extent of the tumor and provide aid to the doctor's decision-making for diagnosis and prediction of the disease.

3.1. Dataset Optimization

One of the most important problems in AI-assisted diagnosis systems is the lack of labeled pictures for diagnosing osteosarcoma, despite a large amount of data in MRI images. Deep learning-based models are prone to overfitting if there are insufficient training samples. Data enhancement is an effective way to avoid the overfitting problem. At the same time, osteosarcoma images have the characteristic of being susceptible to noise. It is not feasible to directly discard labeled images that contain noise, and they can also contribute to the model. We introduce a new data alignment method that utilizes the natural noise in authentic noisy images to solve this problem. More training examples with actual content and noise are generated by altering the spatial distribution of natural noise.

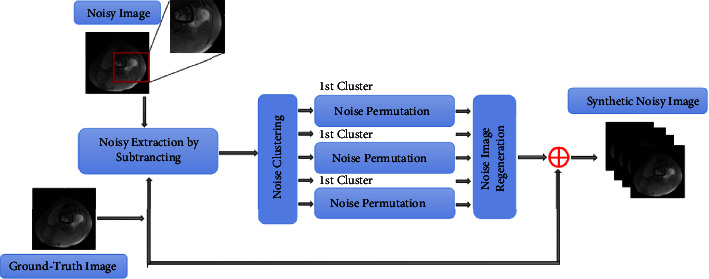

The first step is to extract the noise by subtracting the valid labeled images from the corresponding noisy images, as shown in Figure 2. A noise clustering technique then divides the noise into groups based on the ground-truth intensity values. Within each cluster, the positions of the noise values are swapped using a random permutation. The permuted noise is then combined with the corresponding ground-truth labeled image to form a new synthetic noisy MRI image. This limits the impact of noise on segmentation model accuracy while expanding the breadth of data.

Figure 2.

Dataset optimization process.
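The four steps above (extract noise, cluster by intensity, permute within clusters, recombine) can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the implementation used in the study: the function name `synthesize_noisy_image`, the parameter `n_clusters`, and the use of equal-width intensity bins as a stand-in for the noise clustering technique are all hypothetical choices, and `clean` denotes the reference image that the text calls the ground truth.

```python
import numpy as np

def synthesize_noisy_image(noisy, clean, n_clusters=4, rng=None):
    """Create a synthetic noisy image by permuting noise within intensity clusters."""
    rng = np.random.default_rng() if rng is None else rng
    noisy = np.asarray(noisy, dtype=np.float64)
    clean = np.asarray(clean, dtype=np.float64)

    # Step 1: isolate the noise by subtracting the reference image.
    noise = noisy - clean

    # Step 2: group pixels by reference intensity.
    # Assumption: equal-width bins stand in for the clustering step.
    edges = np.linspace(clean.min(), clean.max(), n_clusters + 1)
    labels = np.digitize(clean.ravel(), edges[1:-1])  # values in 0..n_clusters-1

    # Step 3: randomly permute the noise positions inside each cluster.
    flat = noise.ravel().copy()
    for k in range(n_clusters):
        idx = np.flatnonzero(labels == k)
        flat[idx] = flat[rng.permutation(idx)]

    # Step 4: add the displaced noise back onto the reference image.
    return clean + flat.reshape(clean.shape)
```

Because noise is only moved between pixels of similar intensity, the synthetic image keeps the same content and the same overall noise values as the original, just in different positions.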

In this section, we preprocessed osteosarcoma MRI images. The processed images can not only reduce the waste of ineffective model training but also improve the segmentation performance. Furthermore, these images can be used as a reference for doctors' clinical diagnoses, which can also improve detection accuracy and diagnosis speed. In the next section, we describe the MRI image segmentation process in detail.

3.2. Osteosarcoma Image Segmentation

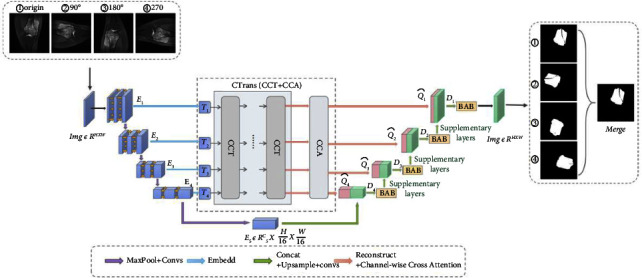

The osteosarcoma segmentation model consists of four main parts: U-Net without skip connection mechanism, channeled Transformer module (CTrans), edge enhancement module (BAB), and combined loss function. The general design is shown in Figure 3.

Figure 3.

Segmentation model diagram.

3.2.1. U-Net without Skip Connection Mechanism

U-Net [30] is the most commonly used model for image segmentation in the medical field due to its lightweight properties. As a classical encoder-decoder network structure, its performance in medical image segmentation has been outstanding. As a result, the U-Net model is used to segment MRI images of osteosarcoma. U-Net consists of two sections: the contracting path and the expansive path. The contracting path is on the left and functions mostly as an encoder for low-level and high-level features. It follows the conventional construction of a convolutional network and is made of repeated applications of two 3 × 3 unpadded convolutions, each followed by a rectified linear unit (ReLU), and a 2 × 2 max pooling operation with a stride of 2 for downsampling. At each downsampling step, the number of feature channels is doubled. The expansive path, on the right, is mostly employed as a decoder, combining semantic features to produce the final result. Each step in the expansive path upsamples the feature map and applies a 2 × 2 upconvolution that halves the number of feature channels so that they match the corresponding feature map from the contracting path. Once the features are concatenated, the osteosarcoma MRI feature map is subjected to 3 × 3 convolutions, each of which is again followed by a ReLU.
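The effect of the contracting path on feature-map size and channel count can be traced with a few lines of arithmetic. The sketch below is illustrative only: the function name is hypothetical, and the 572-pixel input and 64 base channels in the usage note are the values from the original U-Net design, assumed here because this paper does not state its own input size or channel widths.

```python
def trace_contracting_path(size, base_channels=64, depth=4):
    """Return (spatial_size, channels) after each stage of an unpadded U-Net encoder."""
    stages = []
    channels = base_channels
    for level in range(depth + 1):
        # Two unpadded 3x3 convolutions: each one trims 2 pixels per dimension.
        size -= 4
        if size <= 0:
            raise ValueError("input is too small for this depth")
        stages.append((size, channels))
        if level < depth:
            if size % 2:
                raise ValueError("feature map size must be even before pooling")
            size //= 2      # 2x2 max pooling with stride 2 halves the resolution
            channels *= 2   # the number of feature channels doubles per level
    return stages
```

For example, `trace_contracting_path(572)` yields `[(568, 64), (280, 128), (136, 256), (64, 512), (28, 1024)]`, which shows resolution falling as channel depth grows toward the bottleneck.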

The feature connection in the original U-Net uses a skip connection mechanism. However, the features in the encoder and decoder stages are incompatible, which leads to a semantic gap that degrades the segmentation model. To segment osteosarcoma MRI images more accurately, we introduce channel-based transformers (CTrans) in place of U-Net's skip connections. CTrans combines the strengths of transformers and U-Net: it cross-fuses multiscale channel information and connects it effectively to the decoder features while removing ambiguity. Exploring sufficient global context at multiple scales bridges the semantic gap and resolves the inconsistency between semantic levels. Better segmentation results are obtained in this way.

3.2.2. Channeled Transformer Module (CTrans)

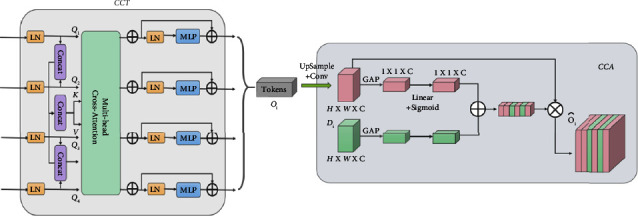

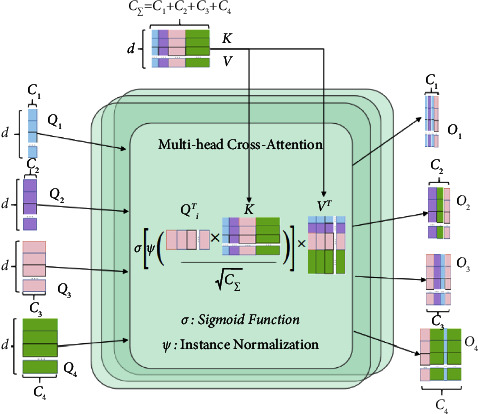

To eliminate the semantic gap and fuse the encoder features so as to improve the segmentation of osteosarcoma MRI images, a channel Transformer module (CTrans) is constructed in this paper, as shown in Figure 4. Its main purpose is to establish channel-wise dependencies between the U-Net encoder and decoder. This module consists of two parts: the Channel-wise Cross Fusion Transformer (CCT) and the Channel-wise Cross-Attention (CCA). CCT realizes multiscale encoder feature fusion and CCA is used for decoder fusion. Together, they form the channel transformer (CTrans) that replaces the skip connections of U-Net.

Figure 4.

Channeled transformer module (CTrans).

(1) CCT: Channel-wise Cross Fusion Transformer for Transforming Encoder Features. To better fuse multiscale features, we present a channel-wise cross fusion transformer (CCT) that uses the long-range dependency modeling of the Transformer to fuse the multiscale encoder features of osteosarcoma MRI images during segmentation. The CCT module consists of three parts: multiscale feature embedding, multihead channel cross-attention, and multilayer perceptron. They are described in detail below.

Multiscale feature embedding. We tokenize the osteosarcoma features and reshape them into sequences of flattened 2D patches. So that the patches map to the same region of the encoder features at the four scales, we set the patch sizes to P, P/2, P/4, and P/8, respectively, and take the outputs of the four skip-connection layers, $E_i \in \mathbb{R}^{\frac{HW}{i^2} \times C_i}$, as the input to the multiscale feature embedding. The original channel dimensions are preserved during this process. The tokens of the four layers, $T_i$ ($i = 1, 2, 3, 4$), $T_i \in \mathbb{R}^{\frac{HW}{i^2} \times C_i}$, are then concatenated to serve as the keys and values:

$$T_\Sigma = \mathrm{Concat}(T_1, T_2, T_3, T_4) \tag{1}$$
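The embedding step can be illustrated with a small NumPy sketch. It shows why halving the patch size at each level gives every scale the same number of tokens, so the tokens can be concatenated along the channel axis. The function names, the example shapes, and the use of patch averaging in place of a learned projection are assumptions made for illustration, not details from the paper.

```python
import numpy as np

def embed_tokens(feature, patch):
    """Turn a (C, H, W) feature map into (num_patches, C) tokens.

    Assumption: each non-overlapping patch is averaged into one token,
    standing in for a learned patch-embedding layer.
    """
    c, h, w = feature.shape
    gh, gw = h // patch, w // patch
    blocks = feature[:, :gh * patch, :gw * patch].reshape(c, gh, patch, gw, patch)
    return blocks.mean(axis=(2, 4)).reshape(c, gh * gw).T

def multiscale_tokens(features, base_patch):
    """Embed four encoder levels with patch sizes P, P/2, P/4, P/8 and concatenate them."""
    tokens = [embed_tokens(f, base_patch // 2 ** i) for i, f in enumerate(features)]
    # Every level yields the same token count, so channels can be concatenated.
    return tokens, np.concatenate(tokens, axis=1)
```

With hypothetical encoder outputs of shape (64, 32, 32), (128, 16, 16), (256, 8, 8), and (512, 4, 4) and `base_patch=8`, each level produces 16 tokens, and the concatenated token has 64 + 128 + 256 + 512 = 960 channels.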

Multihead channel cross-attention module. The tokens are passed to the multihead channel cross-attention module, which uses multiscale features to refine the features at each U-Net encoder level. This is followed by a multilayer perceptron (MLP) with a residual structure that encodes the channels and their dependencies.

The proposed CCT module has five inputs, as shown in Figure 5, with four tokens Ti serving as queries and a connected token TΣ serving as keys and values:

$$Q_i = T_i W_{Q_i}, \quad K = T_\Sigma W_K, \quad V = T_\Sigma W_V \tag{2}$$

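where $W_{Q_i} \in \mathbb{R}^{C_i \times d}$, $W_K \in \mathbb{R}^{C_\Sigma \times d}$, and $W_V \in \mathbb{R}^{C_\Sigma \times d}$ are the weights of the different inputs, d is the length of the sequence, and $C_i$ ($i = 1, 2, 3, 4$) is the channel dimension of the i-th skip-connection layer. With $Q_i \in \mathbb{R}^{C_i \times d}$, $K \in \mathbb{R}^{C_\Sigma \times d}$, and $V \in \mathbb{R}^{C_\Sigma \times d}$, the similarity matrix $M_i$ is computed and used to weight the values V.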

| (3) |

Figure 5.

Multihead channel cross-attention module.

The cross-attention (CA) mechanism is as follows:

| (4) |

where ψ(·) and σ(·) denote the instance normalization and softmax functions, respectively.

We operate attention along the channel axis rather than the patch axis, which is quite different from the original self-attention mechanism. We also apply instance normalization to the similarity matrix of each instance so that the gradient propagates smoothly. With N attention heads, the output after multihead cross-attention is computed as follows:

| (5) |

In this formula, N is the total number of heads.
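To make the channel-axis attention concrete, the following NumPy sketch computes a channel-to-channel similarity matrix, normalizes it, applies softmax, and uses it to weight the values. It is a sketch under assumptions rather than the paper's exact formulation: the tokens are stored as (sequence length, channels), the projection weights are square matrices over channels, the scaling by the square root of the key channel count is assumed, and the heads are combined by simple averaging. All function names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def instance_norm(m, eps=1e-5):
    """Normalize one similarity matrix to zero mean and unit variance."""
    return (m - m.mean()) / np.sqrt(m.var() + eps)

def channel_cross_attention(t_i, t_sigma, w_q, w_k, w_v):
    """t_i: (d, C_i) query tokens; t_sigma: (d, C_sigma) concatenated tokens."""
    q = t_i @ w_q        # (d, C_i)
    k = t_sigma @ w_k    # (d, C_sigma)
    v = t_sigma @ w_v    # (d, C_sigma)
    # Similarity is taken between channels, not between patches.
    sim = q.T @ k / np.sqrt(k.shape[1])            # (C_i, C_sigma)
    attn = softmax(instance_norm(sim), axis=-1)    # each row sums to 1
    return (attn @ v.T).T                          # (d, C_i)

def multihead_cross_attention(t_i, t_sigma, heads):
    """Average the outputs of N heads; heads is a list of (w_q, w_k, w_v) tuples."""
    outputs = [channel_cross_attention(t_i, t_sigma, *w) for w in heads]
    return np.mean(outputs, axis=0)
```

The attention matrix here has shape (C_i, C_sigma) regardless of how many patches there are, which is what distinguishes channel-wise attention from the usual patch-wise self-attention.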

After that, we use MLP and residual operator to get the following output:

| (6) |

For simplicity, we omit layer normalization (LN) from the equation. We repeat the operation of formula (6) L times to form an L-layer transformer. Both N and L are set to 4, because experimental validation with 2, 4, 8, and 12 CCT layers showed that the model achieves state-of-the-art segmentation performance on the dataset with 4 layers and 4 heads.

(2) CCA: Channel-wise Cross-Attention for Feature Fusion in the Decoder. The channel-wise cross-attention module guides the channel-wise information filtering of the Transformer features and removes their ambiguity with respect to the decoder features. Its main purpose is to fuse features that are semantically inconsistent between the channel Transformer and the U-Net decoder.

We take the level-i transformer output $O_i \in \mathbb{R}^{C \times H \times W}$ and the level-i decoder feature map $D_i \in \mathbb{R}^{C \times H \times W}$ as inputs. A global average pooling (GAP) layer embeds the global spatial information, from which the attention mask is shaped:

| (7) |

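where $\varsigma(X) = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} X^{k}(i, j)$ with $\varsigma(X) \in \mathbb{R}^{C \times 1 \times 1}$, $L_1 \in \mathbb{R}^{C \times C}$ and $L_2 \in \mathbb{R}^{C \times C}$ are the weights of two linear layers, and $\delta(\cdot)$ is the ReLU operator.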

To avoid the effect of dimensionality reduction on channel attention learning, we construct the channel attention map with a single linear layer and a sigmoid function, and the resulting vector is used to recalibrate or excite $O_i$.
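The gating described above can be sketched as follows. This is an illustrative reading, not the paper's equation: it assumes that the pooled transformer and decoder features are each passed through one linear layer and summed to form the attention mask, and that a sigmoid of this mask rescales the transformer output channel by channel. The function names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_cross_gate(o_i, d_i, l1, l2):
    """Recalibrate transformer output o_i using decoder features d_i.

    o_i, d_i: arrays of shape (C, H, W); l1, l2: linear weights of shape (C, C).
    """
    # Global average pooling squeezes each channel to a single value.
    gap_o = o_i.mean(axis=(1, 2))      # (C,)
    gap_d = d_i.mean(axis=(1, 2))      # (C,)
    # Assumption: the mask is the sum of the two linearly projected vectors.
    mask = l1 @ gap_o + l2 @ gap_d     # (C,)
    gate = sigmoid(mask)[:, None, None]
    return gate * o_i                  # channel-wise rescaling of o_i
```

The recalibrated output keeps the shape of `o_i`, so it can be combined with the decoder feature map at the same level.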

With this method, the self-attention of the transformer is rethought from the perspective of the channel, and the semantic gap between features is closed through more effective feature fusion and multiscale channel cross-attention. This captures more intricate channel dependencies and enhances the performance of MRI image segmentation models for osteosarcoma.

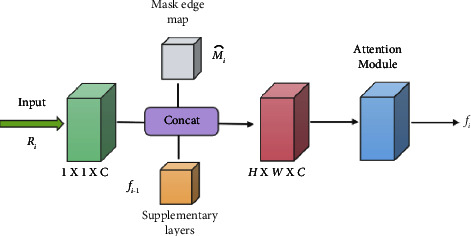

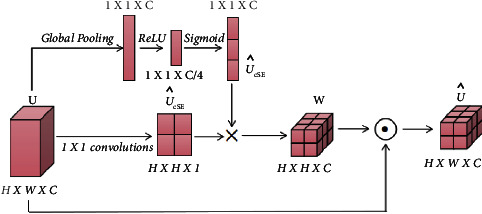

3.2.3. Edge Enhancement Module (BAB)

In the MRI image segmentation of osteosarcoma, blurred edge segmentation, and partial region missing have been the main problems to be solved, which affect the accuracy of MRI image segmentation to a certain extent. We introduce the edge augmentation block (BAB) to solve this problem, as shown in Figure 6. It focuses more on enhancing the edge information of the lesion region by a mask extraction algorithm and attention mechanism, as shown in Figure 7. Edge enhancement is performed on osteosarcoma MRI images to supplement the missing regions. The BAB module solves the segmentation problem of blurred edges to a certain extent.

Figure 6.

Edge enhancement (BAB) module.

Figure 7.

Attention mechanism of BAB module.

The final feature map D1,D2,D3,D4 of the decoder in the U-Net path is fed to the BAB module as an input layer.

After the input feature map is convolved, the mask edge map $M_i$ is obtained by the mask edge extraction algorithm as an important complement to the edge information. The mask edge extraction algorithm works as follows: traverse each pixel (i, j) of the mask; when the traversed pixel value is 0 and the other pixels in the 3 × 3 neighborhood centered on that pixel are not all 0, the pixel is recorded as 0. Once all pixels of the mask have been traversed, the mask edge map $M_i$ is generated.
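The traversal can be sketched in plain Python. The sketch applies the stated test (the pixel is 0 and its 3 × 3 neighborhood is not all 0) and, as an assumption about representation, flags qualifying pixels with 1 in a separate edge map rather than writing a value back into the mask. The function name is hypothetical.

```python
def extract_mask_edge(mask):
    """Return a binary edge map for a 2D mask given as a list of lists of 0/1."""
    h, w = len(mask), len(mask[0])
    edge = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            if mask[i][j] != 0:
                continue  # only background pixels can qualify
            # Collect the other pixels of the 3x3 neighborhood, clipped at the border.
            neighbours = [
                mask[y][x]
                for y in range(max(i - 1, 0), min(i + 2, h))
                for x in range(max(j - 1, 0), min(j + 2, w))
                if (y, x) != (i, j)
            ]
            if any(neighbours):
                edge[i][j] = 1  # background pixel touching the lesion region
    return edge
```

For a mask with a single foreground pixel, the result is the ring of eight background pixels that surround it.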

The feature maps obtained after convolution are concatenated along the channel dimension with the complementary feature maps $f_{i-1}$ obtained from the previous layer after BAB upsampling, and the result is input to the attention module to obtain the final prediction.

| (8) |

where ds(∙) denotes the convolution function, c(∙) denotes the concatenation operation, AB(∙) denotes the attention module function, and $U \in \mathbb{R}^{C \times H \times W}$ denotes the output.

For the input feature map $U \in \mathbb{R}^{C \times H \times W}$, the feature map $U_{sCE} \in \mathbb{R}^{C \times H \times W}$ and the vector $U_{sCE} \in \mathbb{R}^{1 \times 1 \times C}$ are obtained by compressing it along the channel and spatial dimensions, respectively. The two are multiplied to obtain the weight $W \in \mathbb{R}^{C \times H \times W}$, which is then multiplied pixel by pixel with the input feature map to obtain the output.

| (9) |

where × represents multiplication after expansion (broadcasting) and ⊙ represents pixel-by-pixel multiplication.
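The attention step can be illustrated with NumPy broadcasting. The sketch assumes a squeeze-style design: a 1 × 1 convolution over channels followed by a sigmoid gives the spatial map, and global average pooling followed by a linear layer and a sigmoid gives the channel vector. These layer choices, the parameter names `w_spatial` and `w_channel`, and the function name are assumptions for illustration only.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def bab_attention(u, w_spatial, w_channel):
    """u: (C, H, W) features; w_spatial: (C,) weights; w_channel: (C, C) weights."""
    # Compress along the channel axis to obtain one value per pixel.
    spatial_map = sigmoid(np.tensordot(w_spatial, u, axes=1))       # (H, W)
    # Compress along the spatial axes to obtain one value per channel.
    channel_vec = sigmoid(w_channel @ u.mean(axis=(1, 2)))           # (C,)
    # Multiply after expansion (broadcasting) to build the full weight.
    weight = channel_vec[:, None, None] * spatial_map[None, :, :]    # (C, H, W)
    # Pixel-by-pixel multiplication with the input feature map.
    return weight * u
```

With all weights set to zero, both sigmoids return 0.5, so the output is simply 0.25 times the input, which is a convenient sanity check on the broadcasting.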

3.2.4. Combined Loss Functions

Osteosarcoma MRI images often have the problem of class imbalance, which leads to the training being dominated by the class with more pixels. It is challenging to learn the features of the part with fewer pixels, thus, affecting the effectiveness of the network. Therefore, we mostly use the Dice loss function, which measures the overlapping part of the samples, to solve the class imbalance. However, for osteosarcoma, MRI images have the image characteristics of blurred edges, and the Dice loss function cannot focus on the image edge information. So we propose a combined loss function L. It combines region-based Dice loss and edge-based Boundary loss, supervised in two different focus dimensions. Dice loss and Boundary loss are defined as follows:

| (10) |

where i denotes each pixel point, c denotes the classification, gic denotes whether the classification is correct, and sic denotes the probability of being classified into a certain class.

| (11) |

If $\xi \in G$ (ground truth), then $\phi_G(\xi) = -D_G(\xi)$; otherwise, $\phi_G(\xi) = D_G(\xi)$. Here $\phi_G$ is the level set representation of the boundary, $D_G(\xi)$ is the distance map of the ground truth, and $S_\theta(\xi)$ is the softmax probability output of the network.

The combined loss function L is defined as shown in (12):

$$L = \alpha L_{\mathrm{Dice}} + \beta L_{\mathrm{Boundary}} \tag{12}$$

where, parameters α and β are balance coefficients to balance the effect of area loss and edge loss on the final result.
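A small NumPy sketch shows how a region term and an edge term can be weighted and summed. It is a simplified, binary (single foreground class) illustration under assumptions: the Dice term uses a common soft-Dice form with a smoothing constant, the signed distance is computed by brute force to the nearest pixel of the opposite class, and α and β are fixed numbers rather than learned. All function names are hypothetical.

```python
import numpy as np

def dice_loss(prob, target, eps=1e-6):
    """Soft Dice loss for one foreground class; prob and target share a shape."""
    inter = np.sum(prob * target)
    return 1.0 - (2.0 * inter + eps) / (np.sum(prob) + np.sum(target) + eps)

def signed_distance(target):
    """Distance map: negative inside the ground truth, positive outside.

    Brute force, intended for tiny examples only.
    """
    fg = np.argwhere(target > 0)
    bg = np.argwhere(target == 0)
    phi = np.zeros(target.shape, dtype=float)
    if len(fg) == 0 or len(bg) == 0:
        return phi
    for p in np.ndindex(*target.shape):
        inside = target[p] > 0
        other = bg if inside else fg
        dist = np.sqrt(((other - np.array(p)) ** 2).sum(axis=1)).min()
        phi[p] = -dist if inside else dist
    return phi

def boundary_loss(prob, target):
    """Mean of the signed distance map weighted by the predicted probability."""
    return float(np.mean(signed_distance(target) * prob))

def combined_loss(prob, target, alpha=1.0, beta=1.0):
    """Weighted sum of the region-based and edge-based terms."""
    return alpha * dice_loss(prob, target) + beta * boundary_loss(prob, target)
```

Probability mass placed far outside the ground truth is multiplied by a large positive distance and is penalized most, while mass inside contributes a negative value, so the edge term pushes predictions toward the true boundary.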

The loss function L combines the region-based Dice loss and the edge-based Boundary loss, allowing the network to focus on both region and edge information. It complements the edge information while ensuring small missing values in the region, thus improving the accuracy of segmentation. As the neural network continues to iterate, the balance coefficients α and β are updated by self-learning adjustments, prompting the Dice loss to occupy a larger proportion of the first half of the U-Net network. Thus, the U-Net network is relatively more concerned with regional information. Boundary loss pays more attention to edge information, so it occupies a larger proportion of the second half of the edge-attention module. In this paper, a combined loss function is used to play the role of an edge attention module, which realizes attention to regional information without losing edge information. It solves the problems of large missing values and unclear edges in current medical image segmentation.

Our segmentation algorithm can accurately segment the tumor region in different slices of osteosarcoma MRI images, and it can also handle the blurred boundary of the lesion region in these images. Our model places a greater emphasis on edge information, which is beneficial for precise border segmentation. The final lesion area and segmentation results from the model can help doctors diagnose and treat osteosarcoma. This increases the effectiveness and accuracy of osteosarcoma diagnosis and lessens the pressure on doctors in many nations who treat osteosarcoma. Additionally, it is valuable for the auxiliary diagnosis, prognosis, and prediction of osteosarcoma.

4. Experimental Results

4.1. Dataset

The Center for Artificial Intelligence Research at Monash University provided the data for this article [57]. We gathered more than 4,000 osteosarcoma MRI images and other index data. To improve the accuracy and robustness of the segmentation results, we rotated the images by 90, 180, and 270 degrees before feeding them into the segmentation network. The training set consisted of 80% of the data, and the test set consisted of the remaining 20%.

4.2. Evaluation Metrics

To evaluate the performance of the model, we used the Intersection over Union (IOU), Dice Similarity Coefficient (DSC), Accuracy (ACC), Precision (Pre), Recall (Re), and F1-score (F1) as the measures [63]. These indicators are defined as follows:

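$$
\begin{aligned}
\mathrm{IOU} &= \frac{|I_1 \cap I_2|}{|I_1 \cup I_2|}, & \mathrm{DSC} &= \frac{2\,|I_1 \cap I_2|}{|I_1| + |I_2|}, \\
\mathrm{ACC} &= \frac{TP + TN}{TP + TN + FP + FN}, & \mathrm{Pre} &= \frac{TP}{TP + FP}, \\
\mathrm{Re} &= \frac{TP}{TP + FN}, & \mathrm{F1} &= \frac{2 \cdot \mathrm{Pre} \cdot \mathrm{Re}}{\mathrm{Pre} + \mathrm{Re}}
\end{aligned} \tag{13}
$$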

where I1 and I2 are the predicted and actual tumor areas, respectively. A true positive (TP) is a tumor area that has been correctly identified as an osteosarcoma area. A true negative (TN) is a normal area that has been correctly predicted to be normal. A false positive (FP) is normal tissue that has been wrongly identified as tumor. A false negative (FN) is an area predicted to be normal that is actually a tumor area [64].
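For concreteness, these metrics can be computed from a pair of binary masks as in the following sketch. It assumes pixel-wise counting on a single image with the tumor as the positive class; the function name and the zero-denominator convention (returning 0.0) are illustrative choices, not taken from the paper.

```python
import numpy as np


def segmentation_metrics(pred, truth):
    """Pixel-wise metrics for binary masks (True/1 = tumor)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)

    tp = int(np.sum(pred & truth))    # tumor predicted as tumor
    tn = int(np.sum(~pred & ~truth))  # normal predicted as normal
    fp = int(np.sum(pred & ~truth))   # normal predicted as tumor
    fn = int(np.sum(~pred & truth))   # tumor predicted as normal

    def ratio(num, den):
        # Convention for this sketch: an empty denominator yields 0.0.
        return num / den if den else 0.0

    pre = ratio(tp, tp + fp)
    re = ratio(tp, tp + fn)
    return {
        "IOU": ratio(tp, tp + fp + fn),          # |I1 ∩ I2| / |I1 ∪ I2|
        "DSC": ratio(2 * tp, 2 * tp + fp + fn),  # 2|I1 ∩ I2| / (|I1| + |I2|)
        "ACC": ratio(tp + tn, tp + tn + fp + fn),
        "Pre": pre,
        "Re": re,
        "F1": ratio(2 * pre * re, pre + re),
    }
```

For example, `segmentation_metrics([[1, 1, 0, 0]], [[1, 0, 1, 0]])` counts one pixel in each of TP, FP, FN, and TN.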

In addition, for comparative experimental analysis, we use FCN [65], PSPNet [66], MSFCN [48], MSRN [49], U-Net [67], FPN [68], and our proposed OSTransnet.

4.3. Training Strategy

To improve the robustness of the model and avoid learning meaningless features, we perform data augmentation on the dataset before training. We use natural noise augmentation and enlarge the dataset by rotating the images.

For the AI model, a rotated image is a new image. To make the segmentation more robust and accurate, we rotated each image by 90, 180, and 270 degrees as data augmentation and obtained the final segmentation probability as a weighted average of the probabilities of the four images.
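The rotation-and-average step can be sketched as follows. This is a minimal illustration under stated assumptions: `predict_fn` is a hypothetical callable that maps a 2D image to a per-pixel tumor probability map of the same shape, each prediction is rotated back to the original orientation before averaging, and the four weights default to equal values because the paper does not report them.

```python
import numpy as np


def predict_with_rotations(predict_fn, image, weights=(0.25, 0.25, 0.25, 0.25)):
    """Weighted average of predictions on the image rotated by 0/90/180/270 degrees.

    predict_fn: hypothetical model wrapper, (H, W) array -> (H, W) probabilities.
    weights: one weight per rotation; equal weights are an assumption.
    """
    image = np.asarray(image, dtype=float)
    total = np.zeros(image.shape, dtype=float)
    for k, w in enumerate(weights):
        rotated = np.rot90(image, k)     # k quarter-turns counterclockwise
        prob = predict_fn(rotated)       # predict in the rotated frame
        total += w * np.rot90(prob, -k)  # undo the rotation before averaging
    return total / sum(weights)
```

Rotating each prediction back keeps the four probability maps pixel-aligned, so the weighted average is taken over the same anatomical location in every view.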

The segmentation neural network was trained for a total of 200 epochs. In the U-net, a joint training optimization strategy was applied to the convolution and CTrans parameters, and the inferior attention parameters of the two channels were optimized. We first trained the U-net and then the parameters of the OSTransnet using the same data.

4.4. Results

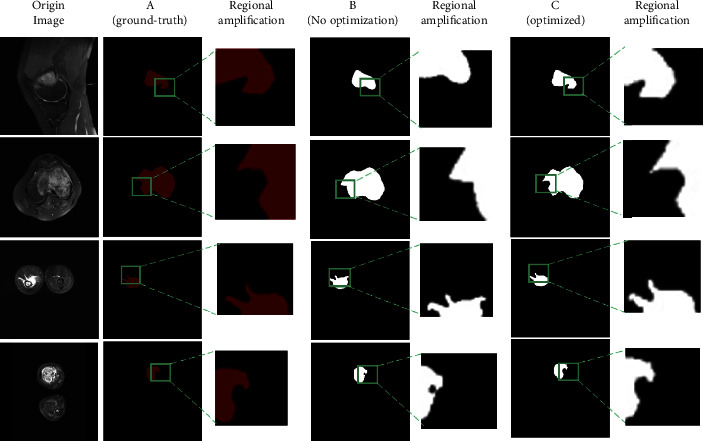

Figure 8 shows the segmentation effect of the model before and after dataset optimization. Each row has three columns: column A shows the ground truth, column B shows the model's segmentation result without dataset optimization, and column C shows the model's segmentation result after optimization. As the zoomed-in local regions in column B illustrate, partial and erroneous segmentation occurs before optimization. After dataset optimization, the segmentation results are closer to the real labels, as shown in column C, and the completeness and accuracy of the segmentation can be clearly seen in the enlarged local regions. Before the dataset is optimized, MRI image noise degrades the accuracy of the segmentation model. After the dataset is optimized, data augmentation using real noise suppresses the influence of noise on segmentation accuracy to a certain extent, and there is a significant improvement in segmentation completeness and accuracy. Furthermore, the segmentation of tumor margins in MRI images is significantly improved.

Figure 8.

Comparison of the impact of segmentation before and after dataset optimization.

As shown in Table 2, both the dataset optimization and the edge improvement module improve the prediction results, demonstrating that optimizing the dataset can considerably improve the border segmentation of OSTransnet. Pre increased by around 0.5%, F1 by roughly 0.3%, IOU by roughly 0.7%, and DSC by roughly 0.7%. Following segmentation optimization, DSC improved by 1.1%, Pre by 0.2%, Re by 0.5%, F1 by 0.2%, and IOU by 0.8%.

Table 2.

Comparison of OSTransnet performance under different conditions.

| Model | IOU | DSC | Pre | Re | F1 |
|---|---|---|---|---|---|
| Ours (OSTransnet), no optimization + BAB | 0.889 | 0.931 | 0.917 | 0.974 | 0.946 |
| Ours (OSTransnet), no BAB | 0.896 | 0.938 | 0.922 | 0.976 | 0.949 |
| Ours (OSTransnet) | 0.904 | 0.949 | 0.924 | 0.981 | 0.951 |

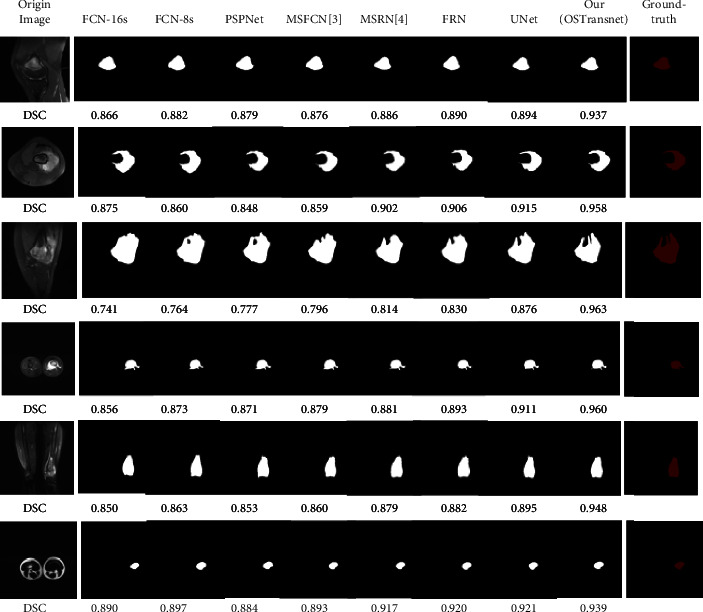

Figure 9 shows the effect of each model on the segmentation of osteosarcoma MRI images. We compared the segmentation results of FCN-16s, FCN-8s, PSPNet, MSFCN, MSRN, FPN, and U-Net with those of our OSTransnet model. The ground-truth segmentations allow each model's performance to be examined visually, and we also report the DSC metric. The six osteosarcoma examples show that OSTransnet achieves better segmentation results on osteosarcoma MRI images. Especially for MRI images with blurred tumor borders, such as the third example, our method segments more accurately and completely. The FCN, PSPNet, and MSFCN models show an oversegmentation problem.

Figure 9.

Comparison of the effect of each model on MRI image segmentation of osteosarcoma.

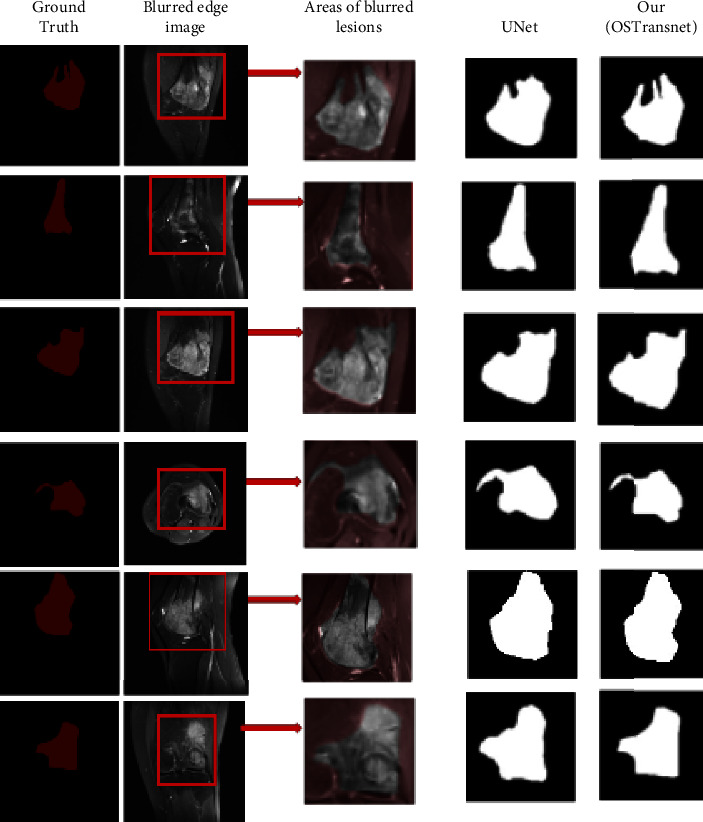

To evaluate the segmentation effect of the model on MRI images with fuzzy edges, we selected six osteosarcoma images with the same fuzzy edge feature as the third example in Figure 9 for detailed comparison. In this paper, we used U-Net, which has the best segmentation effect among many comparison models, and OSTransnet for the comparative analysis of the images. From the detailed comparison in Figure 10, we can intuitively see that our model has a more accurate segmentation effect for the images with the blurred boundaries of the lesion regions. Compared with other contrasting models, our OSTransnet model has greater advantages in boundary blur segmentation due to its unique edge enhancement module and combined loss function. It can be clearly seen that it more effectively and accurately segments the boundary of the lesion area. The OSTransnet model effectively solves the blurred segmentation edge that often occurs in osteosarcoma MRI images.

Figure 10.

Comparison of edge blur image segmentation effect.

To further examine each method, we quantified its performance. Experimental evaluation was performed on the osteosarcoma MRI dataset, and the results are shown in Table 3. The FCN-8s model had the highest precision (0.941), but it performed poorly on several other metrics. In particular, the FCN models had the worst recall: only 0.882 for FCN-16s and 0.873 for FCN-8s. The PSPNet model had the lowest IOU at 0.772. The MSFCN and MSRN models performed relatively better. Both improved in IOU, recall, and F1 over the FCN and PSPNet models, with recall exceeding 0.9. The U-Net model performed best among the baseline methods, with an IOU of 0.867 and a DSC of 0.892. The OSTransnet model proposed in this paper performed best overall, with the highest DSC, IOU, recall, and F1. It achieved a DSC of 0.949, which is about 6.4% higher than that of U-Net in relative terms (0.949 vs. 0.892). This indicates that the OSTransnet model performs better in osteosarcoma segmentation.

Table 3.

Performance comparison of different methods on the osteosarcoma dataset.

| Model | IOU | DSC | Pre | Re | F1 |
|---|---|---|---|---|---|
| FCN-16s | 0.824 | 0.859 | 0.922 | 0.882 | 0.900 |
| FCN-8s | 0.830 | 0.876 | 0.941 | 0.873 | 0.901 |
| PSPNet | 0.772 | 0.870 | 0.856 | 0.888 | 0.872 |
| MSFCN | 0.841 | 0.874 | 0.881 | 0.936 | 0.906 |
| MSRN | 0.853 | 0.887 | 0.893 | 0.945 | 0.918 |
| FPN | 0.852 | 0.888 | 0.914 | 0.924 | 0.919 |
| U-Net | 0.867 | 0.892 | 0.922 | 0.924 | 0.923 |
| Ours (OSTransnet) | 0.904 | 0.949 | 0.924 | 0.981 | 0.951 |

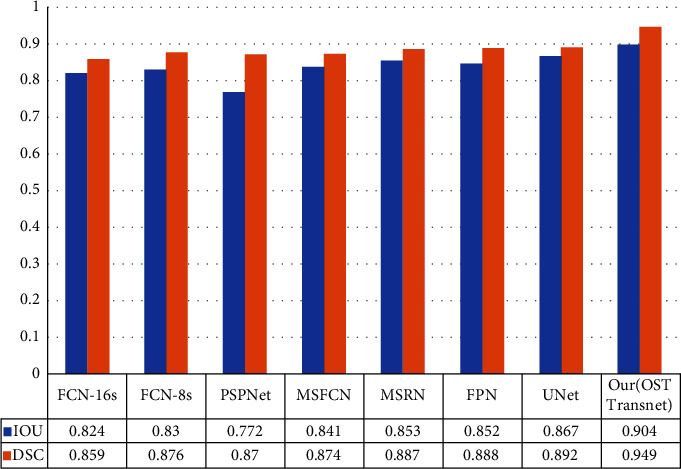

Figure 11 compares the DSC and IOU of the different approaches on the osteosarcoma dataset. According to the data, our proposed osteosarcoma segmentation model is more accurate: its DSC is about 5.7 percentage points higher than that of the second-best model, U-Net (0.949 vs. 0.892), and its IOU is about 3.7 percentage points higher (0.904 vs. 0.867).

Figure 11.

Comparison of DSC and IOU for different methods.

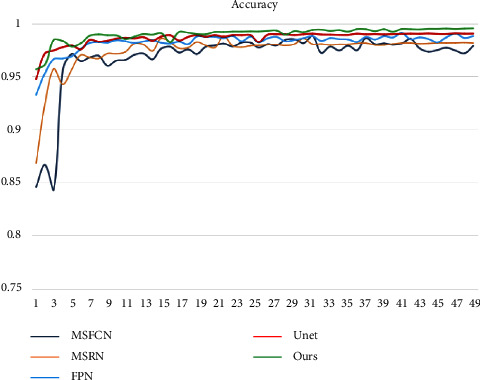

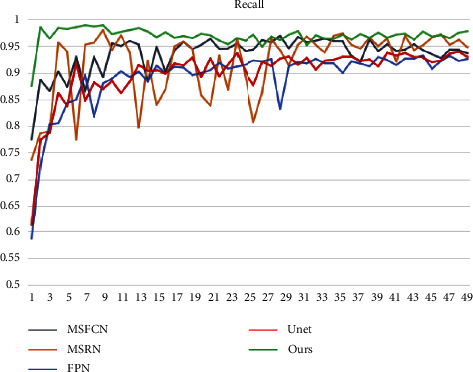

Figure 12 depicts the accuracy variation of each model. We trained for a total of 200 epochs and used systematic sampling to select 50 epochs (1 epoch randomly selected from every 4 epochs) for comparative analysis. The accuracy of each model begins to stabilize after about 50 epochs on average. Our OSTransnet is the most stable, with 98.7% reliability. Based on the figure, the accuracy ranking among the models is OSTransnet > U-Net > FPN > MSRN > MSFCN. As shown in Figure 13, the recall of MSRN and MSFCN changes substantially throughout the first 120 epochs of training. After that, all models except MSRN converge to a stable state. Overall, the recall of our proposed method remains consistently high, which keeps the risk of a missed diagnosis low.

Figure 12.

Variation of accuracy for each model.

Figure 13.

Change in recall of each model.
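The epoch sampling used for Figures 12 and 13 (one epoch drawn at random from every four) can be sketched as below. This is an illustrative sketch only: the 1-based epoch numbering, the fixed seed, and the function name are assumptions.

```python
import random


def sample_epochs(total_epochs=200, block=4, seed=0):
    """Pick one random epoch from each consecutive block of `block` epochs.

    Epochs are numbered 1..total_epochs (an assumption of this sketch).
    """
    rng = random.Random(seed)  # fixed seed so the selection is reproducible
    picks = []
    for start in range(1, total_epochs + 1, block):
        end = min(start + block - 1, total_epochs)
        picks.append(rng.randint(start, end))  # inclusive on both ends
    return picks
```

With 200 epochs and blocks of four, this yields 50 epochs in increasing order, one from each block.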

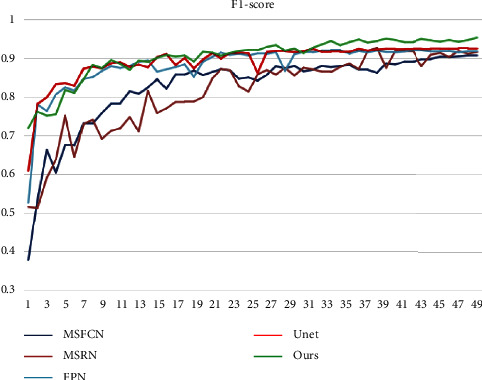

Finally, we compared the F1-score of each model. As shown in Figure 14, the F1 of every model fluctuates, but our model fluctuates the least. In addition, our model's F1 is always the highest among the models. This demonstrates the robustness of our method. Compared with each of the other models in Table 3, we obtained better performance and segmentation results on the osteosarcoma MRI dataset. This method can support the diagnosis, treatment, and prediction of osteosarcoma and offers doctors a diagnostic tool for the disease.

Figure 14.

Change of F1-score of each model.

4.5. Discussion

According to the analysis in Section 4.4, the models differ widely in tumor region recognition. On the one hand, the shape and location of osteosarcoma in MRI images vary greatly. On the other hand, osteosarcoma MRI images are limited by the acquisition equipment, resulting in low resolution and high noise. All of these factors strongly affect segmentation. Using deeper and more complex networks alone does not improve segmentation accuracy much. The FCN model performs relatively poorly and easily misclassifies normal tissue as tumor. Although the PSPNet and FPN models perform better, both have lower recognition accuracy for tumor subtleties and for tumors of different scales. Both the MSFCN and MSRN models showed substantial improvements, but their performance still fell short of the ideal because of the heterogeneity of osteosarcoma and the complexity of the MRI image background. The U-net model can better avoid the interference of the complex background in MRI images by incorporating contextual information, so it segments better and outperforms the other comparison methods in the experiment. However, because of its network architecture, it is not sensitive enough to multiscale tumors and edge details.

Our OSTransnet model has the best segmentation performance, especially for tumors of different scales and for subtleties between tumors, and it achieves better segmentation results for both. This is mainly due to the combination of the Transformer and U-Net network models. Introducing the Channel Transformer (CTrans) module to replace the skip connection in U-Net effectively addresses the semantic gap in U-Net and thus enables the identification of tumors at different scales. In addition, we introduce the edge enhancement module (BAB) with a combined loss function. This module improves the accuracy of tumor boundary segmentation and effectively addresses the problem of tumor edge blurring.

However, although this approach narrows the semantic and resolution gaps, it still cannot fully capture local information because of the channel-wise cross-attention it introduces. It still has difficulty identifying tumors at all scales in MRI images. In addition, the small sample dataset has a large impact on the performance of the model. Overall, the results from Section 4.4 show that our approach has less computational cost and better segmentation performance, achieving a better balance between model effectiveness and efficiency. The superiority of the OSTransnet method can be seen in Figure 9 and Table 3. Therefore, our method is more suitable as a clinical aid in diagnosis and treatment.

5. Conclusions

In this study, a U-Net and Transformer-based MRI image segmentation algorithm (OSTransnet) for osteosarcoma with edge correction is proposed. Dataset optimization, model segmentation, edge improvement, and a combined loss function are all part of the strategy. The method outperforms other existing methods and has good segmentation performance, according to the findings of the experiments. In addition, we visualized the segmentation findings for data processing, which can aid clinicians in better identifying the osteosarcoma lesion location and diagnosing osteosarcoma.

With the development of image processing techniques, we will add more information to the method, enabling us to design a multiscale segmentation method. This will help us to better address segmentation errors caused by slight gray-scale differences between tumor tissue and surrounding tissue, as well as improve the accuracy of segmentation.

Acknowledgments

This research was funded by the Focus on Research and Development Projects in Shandong Province (Soft Science Project), No. 2021RKY02029.

Contributor Information

Jun Zhu, Email: zhujun190301@163.com.

Baolong Lv, Email: lvbaolong2010@sina.com.

Jia Wu, Email: jiawu5110@163.com.

Data Availability

Data used to support the findings of this study are currently under embargo while the research findings are commercialized. Requests for data, 12 months after the publication of this article, will be considered by the corresponding author. All data analyzed during the current study are included in the submission.

Conflicts of Interest

The authors declare that there are no conflicts of interest.

Authors' Contributions

All authors designed this work.

References

- 1. Corre I., Verrecchia F., Crenn V., Redini F., Trichet V. The osteosarcoma microenvironment: a complex but targetable ecosystem. Cells. 2020;9(4):p. 976. doi: 10.3390/cells9040976.

- 2. Ouyang T., Yang S., Gou F., Dai Z., Wu J. Rethinking U-net from an attention perspective with transformers for osteosarcoma MRI image segmentation. Computational Intelligence and Neuroscience. 2022;2022:17. doi: 10.1155/2022/7973404.

- 3. Sadoughi F., Maleki Dana P., Asemi Z., Yousefi B. DNA damage response and repair in osteosarcoma: defects, regulation and therapeutic implications. DNA Repair. 2021;102:103105. doi: 10.1016/j.dnarep.2021.103105.

- 4. Ling Z., Yang S., Gou F., Dai Z., Wu J. Intelligent assistant diagnosis system of osteosarcoma MRI image based on transformer and convolution in developing countries. IEEE Journal of Biomedical and Health Informatics. 2022:1–12. doi: 10.1109/JBHI.2022.3196043.

- 5. Shen Y., Gou F., Dai Z. Osteosarcoma MRI image-assisted segmentation system base on guided aggregated bilateral network. Mathematics. 2022;10(7):p. 1090. doi: 10.3390/math10071090.

- 6. Zhou L., Tan Y. A residual fusion network for osteosarcoma MRI image segmentation in developing countries. Computational Intelligence and Neuroscience. 2022:2022–21. doi: 10.1155/2022/7285600.

- 7. Wang L., Yu L., Zhu J., Tang H., Gou F., Wu J. Auxiliary segmentation method of osteosarcoma in MRI images based on denoising and local enhancement. Healthcare. 2022;10(8):p. 1468. doi: 10.3390/healthcare10081468.

- 8. Yu G., Chen Z., Wu J., Tan Y. Medical decision support system for cancer treatment in precision medicine in developing countries. Expert Systems with Applications. 2021;186:115725. doi: 10.1016/j.eswa.2021.115725.

- 9. Jiao Y., Qi H., Wu J. Capsule network assisted electrocardiogram classification model for smart healthcare. Biocybernetics and Biomedical Engineering. 2022;42(2):543–555. doi: 10.1016/j.bbe.2022.03.006.

- 10. Chang L., Moustafa N., Bashir A. K., Yu K. AI-driven synthetic biology for non-small cell lung cancer drug effectiveness-cost analysis in intelligent assisted medical systems. IEEE Journal of Biomedical and Health Informatics. 2021. doi: 10.1109/JBHI.2021.3133455.

- 11. Zhuang Q., Dai Z., Wu J. Deep active learning framework for lymph node metastasis prediction in medical support system. Computational Intelligence and Neuroscience. 2022;2022:1–13. doi: 10.1155/2022/4601696.

- 12. Gou F., Wu J. Triad link prediction method based on the evolutionary analysis with IoT in opportunistic social networks. Computer Communications. 2022;181:143–155. doi: 10.1016/j.comcom.2021.10.009.

- 13. Lv B., Liu F., Gou F., Wu J. Multi-scale tumor localization based on priori guidance-based segmentation method for osteosarcoma MRI images. Mathematics. 2022;10(12):p. 2099. doi: 10.3390/math10122099.

- 14. Wu J., Gou F., Tan Y. A staging auxiliary diagnosis model for nonsmall cell lung cancer based on the intelligent medical system. Computational and Mathematical Methods in Medicine. 2021;2021:1–15. doi: 10.1155/2021/6654946.

- 15. Wu J., Xia J., Gou F. Information transmission mode and IoT community reconstruction based on user influence in opportunistic social networks. Peer-to-Peer Networking and Applications. 2022;15(3):1398–1416. doi: 10.1007/s12083-022-01309-4.

- 16. Gou F., Wu J. Data transmission strategy based on node motion prediction IoT system in opportunistic social networks. Wireless Personal Communications. 2022;126(2):1751–1768. doi: 10.1007/s11277-022-09820-w.

- 17. Chen H., Liu J., Cheng Z., et al. Development and external validation of an MRI-based radiomics nomogram for pretreatment prediction for early relapse in osteosarcoma: a retrospective multicenter study. European Journal of Radiology. 2020;129:109066. doi: 10.1016/j.ejrad.2020.109066.

- 18. Shen Y., Gou F., Wu J. Node screening method based on federated learning with IoT in opportunistic social networks. Mathematics. 2022;10(10):p. 1669. doi: 10.3390/math10101669.

- 19. Yu L., Fangfang G. Data transmission scheme based on node model training and time division multiple access with IoT in opportunistic social networks. Peer-to-Peer Networking and Applications. 2022. doi: 10.1007/s12083-022-01365-w.

- 20. Ilhan U., Ilhan A. Brain tumor segmentation based on a new threshold approach. Procedia Computer Science. 2017;120:580–587. doi: 10.1016/j.procs.2017.11.282.

- 21. Tamal M. Intensity threshold based solid tumour segmentation method for Positron Emission Tomography (PET) images: a review. Heliyon. 2020;6(10):e05267. doi: 10.1016/j.heliyon.2020.e05267.

- 22. Rundo L., Militello C., Vitabile S., et al. Combining split-and-merge and multi-seed region growing algorithms for uterine fibroid segmentation in MRgFUS treatments. Medical & Biological Engineering & Computing. 2016;54(7):1071–1084. doi: 10.1007/s11517-015-1404-6.

- 23. Biratu E. S., Schwenker F., Debelee T. G., Kebede S. R., Negera W. G., Molla H. T. Enhanced region growing for brain tumor MR image segmentation. Journal of Imaging. 2021;7(2):p. 22. doi: 10.3390/jimaging7020022.

- 24. Militello C., Rundo L., Dimarco M., et al. Semi-automated and interactive segmentation of contrast-enhancing masses on breast DCE-MRI using spatial fuzzy clustering. Biomedical Signal Processing and Control. 2022;71:103113. doi: 10.1016/j.bspc.2021.103113.

- 25. Vadhnani S., Singh N. Brain tumor segmentation and classification in MRI using SVM and its variants: a survey. Multimedia Tools and Applications. 2022;2022:26.

- 26. Baccouche A., Garcia-Zapirain B., Castillo Olea C., Elmaghraby A. S. Connected-UNets: a deep learning architecture for breast mass segmentation. NPJ Breast Cancer. 2021;7(1):151–212. doi: 10.1038/s41523-021-00358-x.

- 27. Gunashekar D. D., Bielak L., Hagele L., et al. Explainable AI for CNN-based prostate tumor segmentation in multi-parametric MRI correlated to whole mount histopathology. Radiation Oncology. 2022;17(1):65–10. doi: 10.1186/s13014-022-02035-0.

- 28. Dake S. S., Nguyen M., Yan W. Q., Kazi S. Human tumor detection using active contour and region growing segmentation. Proceedings of the 2019 4th International Conference and Workshops on Recent Advances and Innovations in Engineering (ICRAIE); March 2019; Kedah, Malaysia. IEEE; pp. 1–5.

- 29. Shahvaran Z., Kazemi K., Fouladivanda M., Helfroush M. S., Godefroy O., Aarabi A. Morphological active contour model for automatic brain tumor extraction from multimodal magnetic resonance images. Journal of Neuroscience Methods. 2021;362:109296. doi: 10.1016/j.jneumeth.2021.109296.

- 30. Sergioli G., Militello C., Rundo L., et al. A quantum-inspired classifier for clonogenic assay evaluations. Scientific Reports. 2021;11(1):2830–2910. doi: 10.1038/s41598-021-82085-8.

- 31. Amin J., Anjum M. A., Gul N., Sharif M. A secure two-qubit quantum model for segmentation and classification of brain tumor using MRI images based on blockchain. Neural Computing and Applications. 2022;2022:14.

- 32. Vijay V., Kavitha A., Rebecca S. R. Automated brain tumor segmentation and detection in MRI using enhanced Darwinian particle swarm optimization (EDPSO). Procedia Computer Science. 2016;92:475–480. doi: 10.1016/j.procs.2016.07.370.

- 33. Zhang T., Zhang J., Xue T., Rashid M. H. A brain tumor image segmentation method based on quantum entanglement and wormhole behaved particle swarm optimization. Frontiers of Medicine. 2022;9:794126. doi: 10.3389/fmed.2022.794126.

- 34. Liu F., Gou F., Wu J. An attention-preserving network-based method for assisted segmentation of osteosarcoma MRI images. Mathematics. 2022;10(10):p. 1665. doi: 10.3390/math10101665.

- 35. Li L., Gou F., Wu J. Modified data delivery strategy based on stochastic block model and community detection in opportunistic social networks. Wireless Communications and Mobile Computing. 2022;2022:16. doi: 10.1155/2022/5067849.

- 36. Ali T. M., et al. A sequential machine learning-cum-attention mechanism for effective segmentation of brain tumor. Frontiers in Oncology. 2022;12. doi: 10.3389/fonc.2022.873268.

- 37. Rizwan M., Shabbir A., Javed A. R., Shabbir M., Baker T., Al-Jumeily Obe D. Brain tumor and glioma grade classification using Gaussian convolutional neural network. IEEE Access. 2022;10:29731–29740. doi: 10.1109/ACCESS.2022.3153108.

- 38. Tian X., Yan L., Jiang L., et al. Comparative transcriptome analysis of leaf, stem, and root tissues of Semiliquidambar cathayensis reveals candidate genes involved in terpenoid biosynthesis. Molecular Biology Reports. 2022;49(6):5585–5593. doi: 10.1007/s11033-022-07492-0.

- 39. Tian X., Jia W. Optimal matching method based on rare plants in opportunistic social networks. Journal of Computational Science. 2022;64:101875. doi: 10.1016/j.jocs.2022.101875.

- 40. Wu J., Xiao P., Huang H., Gou F., Zhou Z., Dai Z. An artificial intelligence multiprocessing scheme for the diagnosis of osteosarcoma MRI images. IEEE Journal of Biomedical and Health Informatics. 2022;26(9):4656–4667. doi: 10.1109/JBHI.2022.3184930.

- 41. Wu J., Gou F., Xiong W., Zhou X. A reputation value-based task-sharing strategy in opportunistic complex social networks. Complexity. 2021;2021:16. doi: 10.1155/2021/8554351.

- 42. Deng Y., Gou F., Wu J. Hybrid data transmission scheme based on source node centrality and community reconstruction in opportunistic social networks. Peer-to-Peer Networking and Applications. 2021;14(6):3460–3472. doi: 10.1007/s12083-021-01205-3.

- 43. Li L., Gou F., Long H., He K., Wu J. Effective data optimization and evaluation based on social communication with AI-assisted in opportunistic social networks. Wireless Communications and Mobile Computing. 2022;2022:14. doi: 10.1155/2022/4879557.

- 44. Ahmed I., Sardar H., Aljuaid H., Alam Khan F., Nawaz M., Awais A. Convolutional neural network for histopathological osteosarcoma image classification. Computers, Materials & Continua. 2021;69(3):3365–3381. doi: 10.32604/cmc.2021.018486.

- 45. Fu Y., Xue P., Ji H., Cui W., Dong E. Deep model with Siamese network for viable and necrotic tumor regions assessment in osteosarcoma. Medical Physics (Woodbury). 2020;47(10):4895–4905. doi: 10.1002/mp.14397.

- 46. Anisuzzaman D. M., Barzekar H., Tong L., Luo J., Yu Z. A deep learning study on osteosarcoma detection from histological images. Biomedical Signal Processing and Control. 2021;69:102931. doi: 10.1016/j.bspc.2021.102931.

- 47. Nasor M., Obaid W. Segmentation of osteosarcoma in MRI images by K‐means clustering, Chan‐Vese segmentation, and iterative Gaussian filtering. IET Image Processing. 2021;15(6):1310–1318. doi: 10.1049/ipr2.12106.

- 48. Huang L., Xia W., Zhang B., Qiu B., Gao X. MSFCN-multiple supervised fully convolutional networks for the osteosarcoma segmentation of CT images. Computer Methods and Programs in Biomedicine. 2017;143:67–74. doi: 10.1016/j.cmpb.2017.02.013.

- 49. Zhang R., Huang L., Xia W., Zhang B., Qiu B., Gao X. Multiple supervised residual network for osteosarcoma segmentation in CT images. Computerized Medical Imaging and Graphics. 2018;63:1–8. doi: 10.1016/j.compmedimag.2018.01.006.

- 50. Shuai L., Gao X., Wang J. Wnet ++: a nested W-shaped network with multiscale input and adaptive deep supervision for osteosarcoma segmentation. 23:p. 2021. doi: 10.1109/ICEICT53123.2021.9531311.

- 51. Ho D. J., et al. Deep interactive learning: an efficient labeling approach for deep learning-based osteosarcoma treatment response assessment. Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; 2020; Springer; pp. 540–549.

- 52. Kim J., Jeong S. Y., Kim B. C., et al. Prediction of neoadjuvant chemotherapy response in osteosarcoma using convolutional neural network of tumor center 18F-fdg PET images. Diagnostics. 2021;11(11):p. 1976. doi: 10.3390/diagnostics11111976.

- 53. Dufau J., Bouhamama A., Leporq B., et al. Prediction of chemotherapy response in primary osteosarcoma using the machine learning technique on radiomic data. Bulletin Du Cancer. 2019;106(11):983–999. doi: 10.1016/j.bulcan.2019.07.005.

- 54. Hu Y., Tang J., Zhao S., Li Y. Diffusion-weighted imaging-magnetic resonance imaging information under class-structured deep convolutional neural network algorithm in the prognostic chemotherapy of osteosarcoma. Scientific Programming. 2021;2021:1–12. doi: 10.1155/2021/4989166.

- 55. Arunachalam H. B., Mishra R., Daescu O., et al. Viable and necrotic tumor assessment from whole slide images of osteosarcoma using machine-learning and deep-learning models. PLoS One. 2019;14(4):p. e0210706. doi: 10.1371/journal.pone.0210706.

- 56. Bansal P. Automatic detection of osteosarcoma based on integrated features and feature selection using binary arithmetic optimization algorithm. Multimedia Tools and Applications. 2022;2022:28. doi: 10.1007/s11042-022-11949-6.

- 57. Wu J., Yang S., Gou F., et al. Intelligent segmentation medical assistance system for MRI images of osteosarcoma in developing countries. Computational and Mathematical Methods in Medicine. 2022;2022:1–17. doi: 10.1155/2022/7703583.

- 58. Wu J., Gou F., Tian X. Disease control and prevention in rare plants based on the dominant population selection method in opportunistic social networks. Computational Intelligence and Neuroscience. 2022;2022:1–16. doi: 10.1155/2022/1489988.

- 59. Gou F., Wu J. Message transmission strategy based on recurrent neural network and attention mechanism in iot system. Journal of Circuits, Systems, and Computers. 2022;31(07):2250126. doi: 10.1142/S0218126622501262.

- 60. Zhan X., Long H., Gou F., Duan X., Kong G., Wu J. A convolutional neural network-based intelligent medical system with sensors for assistive diagnosis and decision-making in non-small cell lung cancer. Sensors. 2021;21(23):p. 7996. doi: 10.3390/s21237996.

- 61. Yu G., Chen Z., Wu J., Tan Y. A diagnostic prediction framework on auxiliary medical system for breast cancer in developing countries. Knowledge-Based Systems. 2021;232:107459. doi: 10.1016/j.knosys.2021.107459.

- 62. Qin Y., Li X., Wu J., Yu K. A management method of chronic diseases in the elderly based on IoT security environment. Computers & Electrical Engineering. 2022;102:108188. doi: 10.1016/j.compeleceng.2022.108188.

- 63. Wu J., Guo Y., Gou F., Dai Z. A medical assistant segmentation method for MRI images of osteosarcoma based on DecoupleSegNet. International Journal of Intelligent Systems. 2022;37(11):8436–8461. doi: 10.1002/int.22949.

- 64. Wu J., Liu Z., Gou F., et al. BA-GCA net: boundary-aware grid contextual attention net in osteosarcoma MRI image segmentation. Computational Intelligence and Neuroscience. 2022;2022:1–16. doi: 10.1155/2022/3881833.

- 65. Long J., Shelhamer E., Darrell T. Fully convolutional networks for semantic segmentation. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; March 2015; pp. 3431–3440.

- 66. Zhao H., Shi J., Qi X., Wang X., Jia J. Pyramid scene parsing network. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; July 2017; Honolulu, HI, USA. pp. 2881–2890.

- 67. Ronneberger O., Fischer P., Brox T. U-net: convolutional networks for biomedical image segmentation. Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; May 2015; Springer; pp. 234–241.

- 68. Lin T.-Y., Dollár P., Girshick R., He K., Hariharan B., Belongie S. Feature pyramid networks for object detection. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; October 2017; pp. 2117–2125.
