Abstract

Introduction

Governments in low-income and middle-income countries (LMICs) and official development assistance agencies use a variety of performance measurement and management approaches to improve the performance of healthcare systems. The effectiveness of such approaches is contingent on the extent to which managers and care providers use performance information. To date, major knowledge gaps exist about the contextual factors that contribute, or not, to performance information use by primary healthcare (PHC) decision-makers in LMICs. This study will address three research questions: (1) How do decision-makers use performance information, and for what purposes? (2) What are the contextual factors that influence the use or non-use of performance information? and (3) What are the proximal outcomes reported by PHC decision-makers from performance information use?

Methods and analysis

We present the protocol of a theory-driven, qualitative study with a multiple case study design to be conducted in El Salvador, Lebanon and Malawi. Data sources include semi-structured in-depth interviews and document review. Interviews will be conducted with approximately 60 respondents, including PHC system decision-makers and providers. We follow an interdisciplinary theoretical framework that draws on health policy and systems research, public administration, organisational science and health service research. Data will be analysed using thematic analysis to explore whether and how respondents use performance information, for what purposes, and the barriers to and facilitators of use.

Ethics and dissemination

The ethical boards of the participating universities approved the protocol presented here. Study results will be disseminated through peer-reviewed journals and global health conferences.

Keywords: Organisation of health services, Health policy, Primary care

Strengths and limitations of this study

Strengths include the use of theory to guide study design, data collection and reporting; the consideration of rival explanations; and the use of triangulation of data sources, respondent accounts and researcher interpretation.

The use of a case study design with embedded units in different country contexts can contribute to theoretical generalisations about the influence of contextual factors on performance information use and non-use, and thus influence future comparative research.

Limitations include reduced transferability of findings to contexts other than the three participating countries and to other populations of decision-makers and providers. The use of virtual interviews may create potential loss of rapport between interviewers and respondents.

Introduction

This protocol aims to describe how decision-makers and providers in three low-income and middle-income countries (LMICs) use available data to assess the performance of their primary healthcare (PHC) systems. Acquiring this knowledge is important for improving PHC systems responsiveness and can contribute to the achievement of Universal Health Coverage in the era of Sustainable Development Goals. High-performing PHC systems have also proven to be key in the preparedness for and response to pandemics and other public health emergencies.1 2

PHC has been defined as a whole-of-government and whole-of-society approach that combines multisectoral policy and action, empowered people and communities and primary care and essential public health functions as the core of integrated health services.3 PHC systems are first points of entry into health service delivery, are essential for people-centred service delivery and connect citizens to health systems.4

During the last 40 years, performance measurement and management (PMM) systems have become prevalent in healthcare management and organisation.5–7 Governments, official development assistance agencies and various global health partnerships have used diverse PMM approaches to improve performance of policies and programmes in maternal and child health,8 9 HIV/AIDS, malaria and tuberculosis,10 and other global health priorities. Outcomes-driven financing approaches have also been used as a means to improve PHC system performance.11

PMM systems

PMM systems were originally conceived as ensembles of management control mechanisms designed to stimulate the delivery of organisational priorities and to influence desirable organisational behaviours.12–14 However, depending on contextual factors and historical antecedents, PMM systems have evolved in response to contrasting organisational logics.15 Directive systems tend to be guided by a logic of consequences, are prevalent in systems that favour audit cultures,16 are designed with a view towards accountability and follow the utility-maximising assumptions of Homo economicus in agency theory.17 Enabling approaches are guided by logics of improvement and learning; can create conditions for adaptive and iterative cycles of error, reflection, sensemaking and corrective action; and conceive of performance as emergent processes, influenced by managers’ and workers’ agency, motives, means and opportunities.18 19

Studies on PMM systems’ effectiveness have identified several sources of leverage for performance improvement in public sector organisations.20–23 Organisational performance tends to be positively associated with PMM systems that reinforce workforce motivation24; promote performance measurement at multiple levels (ie, individual, interpersonal and interorganisational)25; and where decision-makers use the information generated by the PMM system.26 27

Governments use, and official development assistance agencies promote, a diverse set of approaches to performance management including financial arrangements, accountability approaches and implementation strategies.28 An evidence gap map of PMM interventions in the PHC systems of LMICs showed that most primary studies to date have focused on provider-level implementation strategies such as in-service training and supervision, and on financial arrangements like pay-for-performance.29 The mapping exercise also identified absolute gaps in evidence for PMM interventions that operate at organisational levels, particularly accountability arrangements like public release of performance information or social accountability. There is also limited knowledge about the role of contextual factors in enabling or hindering the use of performance information at the organisational level of teams, facilities and district health systems. Table 1 summarises the interventions mapped in the evidence gap map above.

Table 1.

Interventions and approaches in primary healthcare systems performance measurement and management

| Implementation strategies | Accountability arrangements | Financial arrangements |
| --- | --- | --- |
| Provider-level: Clinical practice guidelines, reminders, in-service training and continuous education. Organisational-level: Clinical incident reporting; clinical practice guidelines; local opinion leaders; continuous quality improvement; and supervision. | Individual-level or organisational-level: Audit and feedback. Community-level: Public release of performance information, social accountability. | Individual-level and organisational-level: Results-based financing, pay-for-performance and other provider incentives and rewards. |

The widespread use of PMM systems in the public sector, particularly in health, has shown that, when not tailored to context, PMM systems can not only be ineffective but can also contribute to negative outcomes such as gaming, goal displacement and data manipulation.30 Further, public administration research has shown that decision-makers do not consistently use performance information and that, when they do, the largest impacts on service delivery are attained when it is used as part of organisational dialogues that inform changes in operational and strategic direction.26 27 31 The literature has also shown that official development assistance agencies promote and use various PMM approaches for improving accountability to donors and beneficiaries; enhancing organisational learning and communications; and informing changes in strategic direction.32

The literature on routine health information systems (RHIS) in LMICs has identified organisational, behavioural and technical challenges to the production and use of information including, among others, fragmentation, duplication and poor data quality.33 It has also been shown that even when quality health information is available, LMIC health managers may not use it, leading to suboptimal decision-making processes that may negatively affect governance and healthcare management. Previous research has also found that non-use of data from RHIS can be explained by lack of motivation or scarce capacity among decision-makers, and by non-existent or poorly functioning feedback and supervision mechanisms.34–37

To address the above gaps in evidence and increase the understanding of performance information use, or non-use, in LMIC settings, this article presents the protocol of a qualitative multiple case study.

Methods and analysis

Study aims and research questions

The study described here will assess the experiences of PHC decision-makers and providers with PMM systems in El Salvador, Lebanon and Malawi. Research findings will be used to inform an applied research agenda on PHC system performance; contribute to improving the measurement and management of PHC system performance; and develop an evaluation framework for assessing performance information use in other country contexts. Our research questions are: (1) How do PHC system decision-makers use performance information, or not, and for what purposes? (2) What are the contextual factors that influence the decision to use performance information, or not? (3) What are the proximal outcomes reported by PHC decision-makers from performance information use and non-use?

Theoretical framework

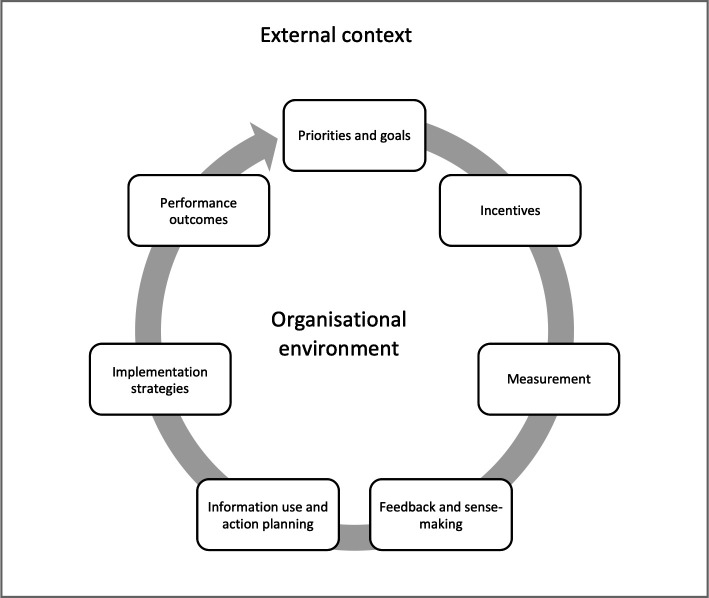

Based on PMM models in public administration research, implementation research and organisational science6 22 38–40 we developed an interdisciplinary theoretical framework to help guide study design. PMM systems are conceptualised as continuous and recursive cycles of (1) organisational priorities and goals; (2) incentives; (3) performance measurement, feedback and sensemaking; (4) implementation strategies; and (5) performance outcomes,6 as represented in figure 1.

Figure 1.

Performance measurement and management system.

Organisational priorities and goals are the ultimate expression of what desirable performance ought to be; they are identifiable in policy documents, summarised in logical models and sometimes reflected as measurable targets in performance frameworks. Incentive systems are managerial practices aimed at stimulating workforce motivation and fostering organisational performance by means of extrinsic and intrinsic stimuli. Extrinsic motivators include rewards, recognition, pay-for-performance, bonuses and in-kind incentives, among others.41 Intrinsic motivators can trigger satisfaction of workers’ basic psychological needs such as competence, autonomy, and connection.42 43 It is believed that both types of motivators are central to organisational performance.44

Performance measurement processes generate raw data about past performance and use metrics that reflect organisational priorities and goals. Performance data are usually compiled into registers that feed into RHIS, and can be summarised and disseminated via reports, scorecards and dashboards. Given the perceived low quality of RHIS, particularly in LMICs,35 performance data are also sometimes sourced from population surveys. The latter have become one of the most frequently used data sources for tracking health programmes’ performance in LMICs.36 37

The data acquired via RHIS and/or population surveys are usually contrasted against expected targets and goals which, in turn, are disseminated in ways that generate performance information flows aimed at different users. Upward flows bring information through organisational hierarchies usually for reporting and accountability purposes. Information can also be fed back to the frontlines of service provision as part of feedback and audit, quality improvement or supportive supervision processes.45 As organisational actors engage with performance data, ascribe meaning to it and imagine future courses of action in response to perceived gaps in performance, the managerial processes above can contribute to collective sensemaking,46 a process that helps people ‘understand issues or events that are novel, ambiguous, confusing or in some other way violate expectations’.47 It can also inform decisions among organisational actors to engage or not in addressing the gaps in performance made evident by available information. Action plans, budgets, changes in service delivery and other processes of course-correction can then be considered for future implementation.

Once courses of action are decided, organisational actors can deploy various strategies to implement them. Implementation strategies help system actors appraise and respond in adaptive fashion to factors in their immediate environment that can enable or hinder collective action (see table 1). In the short-term, performance information can be used for planning, compliance, reporting or rapid course-correction purposes, among others; it can also be misused through gaming processes, or not used.30 As iterative PMM cycles are repeated through time, performance information can also be used (or not) as the basis for testing new processes and services, for internal advocacy and/or for policy formation or redesign.

PMM cycles can contribute to proximal performance outcomes that feed into long causal chains of outcomes occurring at multiple levels within an organisation (eg, at individual, team and organisational levels). Outcomes can include (1) proximal changes resulting from using performance information (or not), such as action plans implemented, compliance with procedural standards, timely reporting and rapid course-correction; (2) intermediate effects that emerge at the organisational behaviour level and may include changes in workforce motivation, job satisfaction, morale or organisational commitment; and (3) downstream population-level health effects and equity outcomes resulting from the iterative repetition of PMM cycles in dynamic and changing environments.

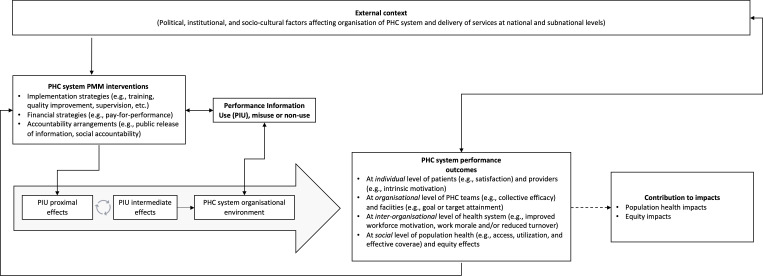

We integrated the elements of the PMM model described above into a theoretical framework that represents the hypothetical process of performance change and the role played by performance information use and non-use. The framework contains the following elements: external context; PMM approaches in use within the public sector; performance information production and use; PHC systems’ internal organisational environment; and the causal pathways connecting performance information use and non-use to proximal, intermediate and distal outcomes.

The theoretical framework is represented in figure 2. Here, the managerial practices used to measure and change performance are influenced by external and internal contextual factors and by the implementation strategies in use, and are modulated by the use and non-use of performance information. The processes of change thus generated can contribute, via long causal chains, to a variety of outcomes and impacts. Proximal effects from performance information use are represented by single-loop learning effects48 such as changes in planned action, rapid course-correction and improvements in service quality. The repetition of such iterative cycles may, in turn, contribute to the emergence of double-loop learning effects such as changes in strategic direction and new practices among service providers and managers.49

Figure 2.

Theoretical framework. PHC, primary healthcare; PMM, performance measurement and management.

The use of performance information is causally linked to proximal performance outcomes at the individual level of providers and patients; those outcomes are also causally connected to intermediate outcomes at the organisational level such as improved workforce motivation, enhanced organisational commitment, increased trust between providers and PHC system users and reduced staff turnover, among others. These outcomes can contribute to distal population health and equity outcomes (intended and otherwise). Depending on context, the causal chain of outcomes described above can also be interrupted, be limited to isolated pockets of excellence, or be altogether absent.

Study design

The present study will explore the uses of performance information in the PHC systems in El Salvador, Lebanon and Malawi. Investigation across different contexts allows for the generation of context-specific insights of value to local actors and, potentially, to broader understandings of the phenomena of interest.50

To address the research questions, we chose a theory-informed, multiple case study design with embedded units of analysis.51 Case studies are well-suited for obtaining an in-depth understanding of context-specific processes in complex systems.52 Here, a case is defined as each country’s PMM practices; the two units of analysis included are PHC service provision and PHC policy implementation at national and subnational levels.

Study setting

El Salvador

El Salvador is a lower-middle-income country with a population of 6.4 million. Since the end of its civil war in 1992, the country has reduced inequality by about 5 percentage points (between 2007 and 2016); increased coverage of institutional deliveries and immunisation to 98% and 93%, respectively; and achieved the under-5 mortality reduction target of the Millennium Development Goals.53 54

Starting in 2009, El Salvador universalised access to free, comprehensive PHC. Existing infrastructure was reorganised into PHC networks, one for each of the departments into which the country is administratively divided. Service delivery was delegated to multiprofessional teams of PHC providers. The oversight of each department’s network is the responsibility of a decentralised Ministry of Health (MOH) coordination team known by its Spanish acronym, SEBASI. PHC teams have a nominal catchment area of 3000 individuals and are co-located within the communities they serve. A basic PHC team is made up of one medical doctor, two nurses and up to three community health promoters; some teams have specialised care providers. PHC teams provide community outreach as well as facility-based services and deliver a package of benefits containing approximately 300 interventions.55

In 2011, the government of El Salvador joined the Salud Mesoamerica Initiative (SMI), a public–private partnership focused on improving the performance of PHC systems in the eight nation states of Mesoamerica. In El Salvador, SMI covers 75 PHC teams operating in the poorest rural municipalities in the country. PMM interventions used include PHC team target-setting; monitoring of PHC teams’ performance using population and facility surveys and RHIS; provision of feedback to teams; and team-based in-kind incentives.55 56

Lebanon

Lebanon is home to approximately 6.8 million people and is classified as an upper-middle-income country.57 However, the financial crisis that started in 2019 reduced real per-capita gross domestic product by 37.1% between 2018 and 2021. The country also hosts the largest number of refugees per capita in the world58 and has suffered additional internal shocks. The combined effect of these various shocks has put major pressure on an already stretched healthcare system.59 60

PHC services in Lebanon are provided by a combination of private-for-profit and not-for-profit providers; the latter are the most accessible and used sources of care by vulnerable Lebanese and refugee populations.61 62 Lebanon’s official PHC network is comprised of 213 centres that have contractual agreements with the Ministry of Public Health based on pre-met community care delivery standards.

In terms of performance measurement at the PHC level, the MOH has developed policies and practices to monitor service delivery patterns, quality of care and performance of PHC centres within the national network.63 Monitoring involves regular visits by MOH inspectors and administration of patient satisfaction surveys.63 Accreditation is also used to regulate the quality of care at the PHC level. By establishing a National Accreditation Program for PHC centres in 2009, the MOH aimed to ensure continuous and sustainable quality control, improve compliance with legal and safety standards, enhance transparency and accountability and establish a positive image of standards of practice and service at PHC centres.63

Despite the various health reforms implemented in Lebanon, there is still no active national strategic plan designed around PHC.64–67 Furthermore, many PHC centres remain underdeveloped with no availability of basic diagnostic imaging and laboratory medicine, resulting in perceived lack of confidence in the quality of services offered.60

Malawi

Malawi is a landlocked, low-income country with a population of approximately 18.6 million. The economy is mainly dependent on the agricultural sector which employs 80% of the population. A 5-year development plan, Malawi’s Growth and Development Strategy, guides the country’s development; the current plan is focused on education, health, agriculture, energy and tourism.68

Malawi’s epidemiological profile combines a high burden of disease from both preventable conditions and non-communicable diseases. The country has a high population density and a total fertility rate of 4.4. Prevalent social determinants of health include poverty and inequality, high levels of illiteracy and limited coverage of social safety programmes.68 69

Primary care is the main platform for the delivery of health services in Malawi. However, the PHC system is characterised by poor distribution of human and physical resources, fragmentation of services and chronic shortages of staff.70 To reduce service fragmentation, Malawi developed in 2017 a new community health policy centred on a team-based approach. Community health teams comprise health surveillance assistants, clinicians, environmental health officers and community health volunteers.71

Data collection

The proposed study will use document review and semi-structured interviews with informants who typically hold ‘great knowledge…[and] who can shed light on the inquiry issues’.72 We will use document review to identify domestic priorities and explore the external context, available resources and ongoing official development assistance programmes. Documents to be reviewed include MOH policy documents, strategic frameworks, operational plans, results frameworks, performance reports and logical models, among others. Semi-structured interviews will be conducted with PHC decision-makers at the national and subnational levels and with PHC providers. To be eligible for inclusion, decision-makers will be current or former officials responsible for PHC system policy formulation or implementation at national and subnational levels; providers will be staff currently working as clinical care providers or community health workers.

Respondent selection and recruitment will follow an information power approach based on criteria that are suitable for reaching saturation in qualitative studies using non-probabilistic, purposive sampling.73 Respondent sampling will be guided by our understanding of the types of participants who can provide highly specific information to address the study’s research questions; by insights from the preliminary theoretical framework; and by the quality of the dialogue elicited during data collection. We estimate approximately 20 respondents per site, for a total of about 60 respondents across the three countries. However, sampling numbers will be further refined, and may be expanded, based on preliminary analysis of data while data collection is ongoing. Respondent inclusion criteria will be calibrated to the context of each study setting; site-specific approaches to data collection will be reported in each country case study.

In the interviews with service providers, we will explore experiences of the PHC system organisational environment; the ways in which PHC performance is measured, analysed and made sense of; the extent to which performance information is used or not, and for what purposes; and the reported effects from using performance information. Interviews with decision-makers at national and subnational levels will explore PHC priorities, goals and/or targets; characterise the public sector institutional context; explore sources and frequency of performance data appraisal; and inquire about the uses of performance information. We will also triangulate the data resulting from document review and interviews, and the PHC experiences reported by the two types of respondents.

In each country, the research team will develop a Project Brief summarising the study’s aims and highlighting the voluntary nature of participation. An invitation to participate in the interview will be sent individually via email to each potential respondent. Once the respondent agrees to participate in the interview, a remote interview will be scheduled (or in-person, if allowed by an ethical review board). Before initiation of the interview, the interviewer shall read the consent form and obtain verbal consent from the interviewee which will be recorded and reflected in the interview transcript accordingly. Site-specific interview guidelines are available in online supplemental file 1.

Analysis

Interviews will be audio taped, transcribed verbatim and imported into NVivo V.12.0. Transcripts will be coded independently by at least two researchers in each country. We will use an iterative, directed approach to analysis74 informed by the theoretical framework. The latter shall also inform the design of a codebook to guide deductive coding of the data. Inductive codes emerging from the data will also be identified and included in the analysis. We will convene analytical workshops among the research teams in participating countries to discuss the codebook, the coding process, thematic analysis and data synthesis procedures.

After the conclusion of coding in each country, we will execute code queries for each code, stratified by respondent type (eg, providers and decision-makers) to extract code-specific data. Subsequently, we will review and summarise the code-specific and respondent-specific data from the query outputs into code summary memos using a standardised template.
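The query-and-summarise step described above can be illustrated outside NVivo with a minimal Python sketch. It assumes that coded segments have been exported as simple records; the field names (`code`, `respondent_type`, `respondent_id`, `text`), the memo fields and the sample data are hypothetical placeholders chosen for illustration, not part of the study's actual tooling or data.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CodedSegment:
    """One coded interview excerpt; all field names are hypothetical."""
    code: str
    respondent_type: str  # e.g. "provider" or "decision-maker"
    respondent_id: str
    text: str


def query_by_code(segments):
    """Group coded segments by code, then by respondent type."""
    grouped = defaultdict(lambda: defaultdict(list))
    for seg in segments:
        grouped[seg.code][seg.respondent_type].append(seg)
    return grouped


def memo_skeleton(code, by_type):
    """Build an empty code summary memo for a researcher to complete."""
    return {
        "code": code,
        # Which respondents of each type contributed to this code.
        "respondents": {
            rtype: sorted({s.respondent_id for s in segs})
            for rtype, segs in by_type.items()
        },
        # The remaining fields are filled in by hand during review.
        "narrative": "",
        "exemplary_quotes": [],
        "deviant_narratives": [],
        "links_to_other_codes": [],
    }


# Illustrative placeholder data, not study data.
segments = [
    CodedSegment("information_use", "provider", "P01", "placeholder excerpt A"),
    CodedSegment("information_use", "decision-maker", "D01", "placeholder excerpt B"),
    CodedSegment("information_use", "provider", "P02", "placeholder excerpt C"),
    CodedSegment("barriers", "provider", "P01", "placeholder excerpt D"),
]

grouped = query_by_code(segments)
memos = {code: memo_skeleton(code, by_type) for code, by_type in grouped.items()}
```

The sketch only automates the grouping; the narrative synthesis in each memo remains an interpretive task for the researcher.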

Code summary memos will include a respondents table to capture brief and relevant information from each type of respondent, and narratives constructed by the researcher reviewing the query output, supported by exemplary quotes. Code summary memos will also include deviant narratives and quotes that run counter to the main narrative(s), and a section for recording researcher insights on where and how codes may be connected to others. In a final step, the synthesised data in the code summary memos will be organised into thematic matrices to formalise linkages between codes and construct themes. The resulting themes will be used to report country-specific findings and to develop a refined theoretical framework. Results for each country case will be organised using the Standards for Reporting Qualitative Research checklist75 (online supplemental file 2). To increase the credibility of our findings we will consider rival explanations and triangulate across data sources (ie, SMI-relevant programme documents and in-depth interviews), respondents (decision-makers and providers), researchers and social and behavioural science theories. Data collection and analysis will take place between June 2020 and June 2022.

The proposed study has several strengths including the use of theory to guide study design, data collection and reporting; the consideration of rival explanations76; and the use of triangulation of data sources, respondent accounts and researcher interpretation.77 Case study research has limitations including reduced transferability of findings to other contexts and different populations of decision-makers and PHC providers.76 Also, the use of virtual interviews may create potential loss of rapport between interviewers and respondents.

Patient and public involvement

Neither patients nor the public were involved in the conduct, reporting or dissemination of the research presented in this protocol.

Ethical considerations and dissemination

Ethical approval for this study was provided by the Institutional Review Boards of the participating universities (study numbers NCR203102 for the George Washington University; SBS-2021–0162 for the American University of Beirut; and P.11/20/3198 for the University of Malawi). We will follow ethical principles of voluntary and informed involvement in the study, confidentiality and safety of all participants. Verbal consent will be obtained from all respondents and be reflected in the respective interview transcripts.

A database will be maintained containing information on all interviews completed, including demographic data and time of the interview as well as confirming verbal consent by each respondent. All identifying information will be stored in an encrypted database, hosted in encrypted and password protected cloud services provided by each of the hosting research institutions. The identifier information database will be permanently deleted after the completion of data analysis.

Findings will be reported to the participating ministries of health, the commissioners of this study and to development finance partners, where applicable. Results will also be presented at local, national and international conferences and disseminated via peer-reviewed publications. We aim to produce individual country case study manuscripts followed by a multiple case study synthesising findings from the three study sites.

Study significance

Research on the use of performance information in PHC systems is scarce; to the best of our knowledge, multicountry case studies in LMICs are non-existent. The study presented here can contribute to an understanding of the contextual factors and organisational environments that enable or hinder the use of performance information in the PHC systems of El Salvador, Lebanon and Malawi. Such knowledge can inform future research and contribute to improving the strategies used in LMIC settings to measure and manage PHC system performance.

Acknowledgments

We would like to acknowledge the Primary Healthcare Research Consortium for their support, in particular Dr D Praveen and Manushi Sharma. This work was supported, in whole, by the Bill & Melinda Gates Foundation (INV-000970). Under the grant conditions of the Foundation, a Creative Commons Attribution 4.0 Generic License has already been assigned to the Author Accepted Manuscript version that might arise from this submission.

Footnotes

Contributors: The study was conceptualised by WM, MM and FE-J. The first draft was written by SSW, W-CY and WM, with inputs from LD, MM and FE-J. All authors have read and approved the final manuscript.

Funding: This study is funded by the Bill & Melinda Gates Foundation through the Primary Healthcare Research Consortium hosted at the George Institute for Global Health in India (INV-000970).

Competing interests: None declared.

Patient and public involvement: Patients and/or the public were not involved in the design, or conduct, or reporting, or dissemination plans of this research.

Provenance and peer review: Not commissioned; externally peer reviewed.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

Ethics statements

Patient consent for publication

Not applicable.
