Abstract

The advancements in machine learning opened a new opportunity to bring intelligence to the low-end Internet-of-Things nodes such as microcontrollers. Conventional machine learning deployment has high memory and compute footprint hindering their direct deployment on ultra resource-constrained microcontrollers. This paper highlights the unique requirements of enabling onboard machine learning for microcontroller class devices. Researchers use a specialized model development workflow for resource-limited applications to ensure the compute and latency budget is within the device limits while still maintaining the desired performance. We characterize a closed-loop widely applicable workflow of machine learning model development for microcontroller class devices and show that several classes of applications adopt a specific instance of it. We present both qualitative and numerical insights into different stages of model development by showcasing several use cases. Finally, we identify the open research challenges and unsolved questions demanding careful considerations moving forward.

Keywords: Feature projection, internet-of-things, machine learning, microcontrollers, model compression, neural architecture search, neural networks, optimization, sensors, TinyML

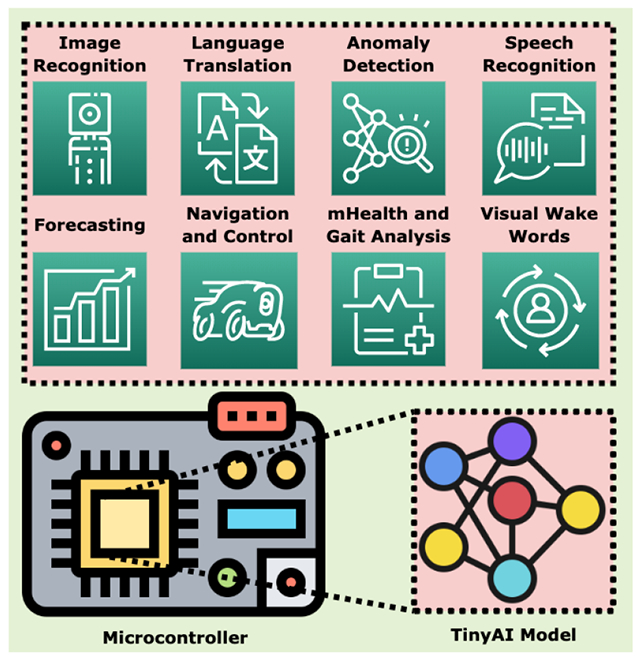

Graphical Abstract

I. Introduction

Low-end Internet-of-Things (IoT) nodes such as microcontrollers are widely adopted in resource-limited applications such as wildlife monitoring, oceanic health tracking, search and rescue, activity tracking, industrial machinery debugging, onboard navigation, and aerial robotics [1] [2]. These applications limit the compute device payload capabilities, and necessitate the deployment of lightweight hardware and inference pipelines. Traditionally, microcontrollers operated on low-dimensional structured sensor data (e.g., temperature and humidity) using classical methods, making simple inferences at the edge. Recently, with the advent of machine learning, considerable endeavors are underway to bring machine learning (ML) to the edge [3] [4].

However, directly porting ML models designed for high-end edge devices such as mobile phones or single-board computers are not suitable for microcontrollers. A typical microcontroller has 128 KB RAM and 1 MB of flash, while a mobile phone can have 4 GB of RAM and 64 GB of storage [5]. The ultra resource limitations of microcontroller class IoT nodes demand the design of a systematic workflow and tools to guide onboard deployment of ML pipelines.

This paper presents the unique requirements, challenges, and opportunities presented when developing ML models doing sensor information processing on microcontrollers. While prior surveys [3] [4] [6] [7] present a qualitative review of the model development cycle for microcontrollers, they fail to provide quantitative comparisons across alternative workflow choices and insights from application-specific case studies. In contrast, we illustrate a closed-loop workflow of ML model development and deployment for microcontroller class IoT nodes with quantitative evaluation, numerical analysis, and benchmarks showing different instances of proposed workflow across various applications. Specifically, we discuss in detail workflow components while making performance comparisons and tradeoffs of the workflow adoptions in the existing literature. Finally, we also identify bottlenecks in the current model development cycle and propose open research challenges going forward. Our contributions are as follows:

We illustrate a coherent and closed-loop ML model development and deployment workflow for microcontrollers. We delineate each block in the workflow, providing both qualitative and numerical insights.

We provide application-dependent quantitative evaluation and comparison of proposed workflow adaptations.

We discuss several tradeoffs in the existing model-development process for microcontrollers and showcase opportunities and ideas in this workspace.

The rest of the paper is organized as follows: Section II outlines the TinyML workflow of model development and deployment for microcontrollers. Section III explores data engineering frameworks. Section IV shows feature projection techniques. Section V discusses model compression methods. Section VI describes lightweight ML blocks suitable for microcontrollers. Section VII discusses neural architecture search (NAS) frameworks for microcontrollers. Section VIII outlines several software suites available for porting developed models onto microcontrollers. Section IX showcases TinyML online learning frameworks. Section X provides quantitative and qualitative comparison of workflow variations depending on application. Section XI presents inter-relative and quantitative analysis of individual portions of the workflow through case studies. Section XII illustrates open challenges and ideas for future research. Section XIII provides concluding remarks.

II. TinyML Workflow

We use the term ”TinyML” to refer to model compression, machine-learning blocks, AutoML frameworks, and hardware and software suites designed to perform ultra-low-power (≤ 1 mW), always-on, and on-board sensor data analytics [4] [6] [7] on resource-constrained platforms. Typical TinyML platforms such as microcontrollers have SRAM in the order of 100 – 102 kB and flash in the order of 103 kB [6]. Table I provides characteristics of these devices compared to cloud servers and mobile phones. Given the widespread penetration of microcontroller-based IoT platforms in our daily lives for pervasive perception-processing-feedback applications, there is a growing push towards embedding intelligence into these frugal smart objects [3]. Embedded AI on microcontrollers is motivated by applicability, independence from network infrastructure, security and privacy, and low deployment cost:

TABLE I.

Comparison of hardware for doing machine learning on cloud servers, mobile phones, and microcontrollers [8]

| Platform | Memory | Storage | Power |

|---|---|---|---|

| Cloud GPU | 16 GB HBM | TB/PB | 250W |

| Mobile CPU | 4 GB DRAM | 64 GB Flash | 8W |

| Microcontroller | 2-1024 kB SRAM | 32-2048 kB eFlash | 0.1-0.3W |

(i). Applicability:

Neural networks have been shown to provide rich and complex inferences over the first-principle approaches for sensor data analytics without domain expertise. With the emergence of real-time ML for microcontrollers, it is possible to turn IoT nodes from simple data harvesters or first-principles data processors to learning-enabled inference generators. TinyML combines the lightweightness of first-principle approaches with the accuracy of large neural networks.

(ii). Independence from Network Infrastructure via Remote Deployment:

Traditionally, sensor data is offloaded onto models running on mobile devices or cloud servers [19] [20]. This is not suitable for time-critical sense-compute-actuation applications such as autonomous driving [21] [22], robot control [4] [23], and industrial control system. Moreover, reliable network bandwidth or power may not be available for communicating with online models, such as in wildlife monitoring [1] or energy-harvesting intermittent systems [24] [25] [26]. TinyML allows offline and on-board inference without requiring data offloading or cloud-based inference.

(iii). Security and Privacy:

Streaming private data onto third-party cloud servers yields privacy concerns from end-users, while cybercriminals can exploit weakly protected data streams. Federated learning [27], secure aggregation [28], and homomorphic encryption [29] allow privacy-preserving and secure inference, but suffer from expensive network and compute requirement. On-board inference constrains the source and destination of private data within the IoT node itself, reducing the probability of privacy leaks and attack surfaces.

(iv). Low Deployment Cost:

While graphics processing units (GPUs) have revolutionized deep-learning [30], GPUs are energy-hungry and expensive to maintain continually for inference using small models, leading to long term financial and environmental degeneration [5]. A Cortex M4 class microcontroller costs around 5-10 USD and can run on a coin-cell battery for months, if not years [7]. TinyML allows these microcontrollers to be exploited for ultra-low-power and low-cost AI inference.

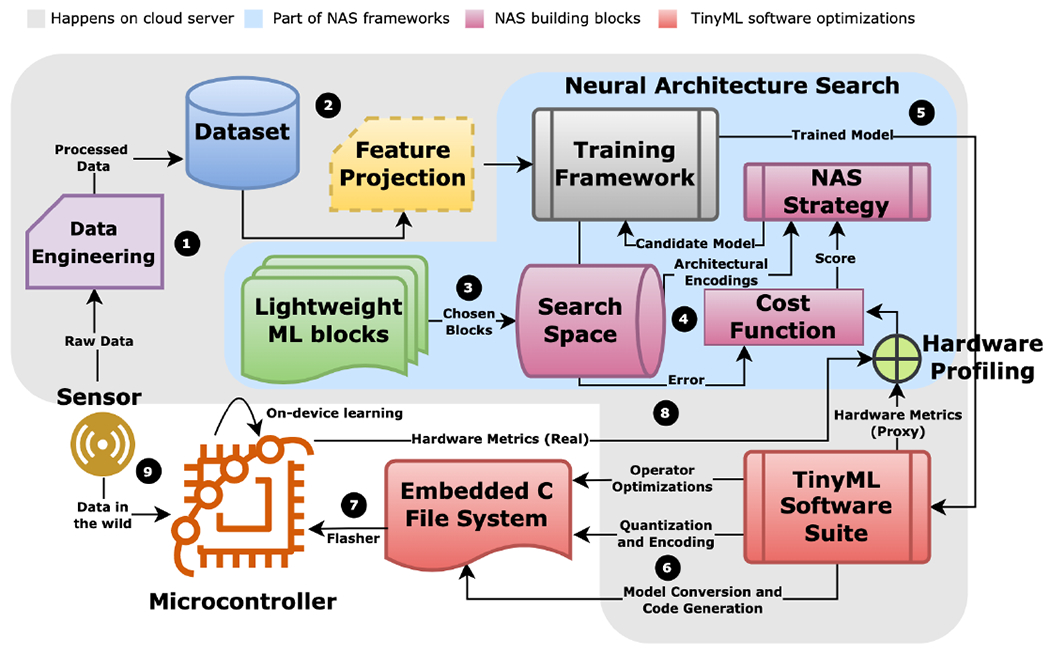

Achieving low deployment cost without sacrificing performance gains requires an unique workflow to port machine learning models onto microcontrollers compared to traditional model design. Fig. 1 illustrates the general ”closed-loop” workflow for TinyML model development and deployment. For various parts of this workflow, specific technologies and variations have emerged [6] [8] [31], which we discuss in upcoming sections. The workflow can be divided into two phases:

Fig. 1.

Closed-loop workflow of porting machine learning models onto microcontrollers. Step (3) to Step (8) are repeated until desired performance is achieved. (1) Data engineering performs acquisition, analytics and storage of raw sensor streams (Section III). (2) Optional feature projection directly reduces dimensionality of input data (Section IV). (3) Models are chosen from a lightweight ML zoo based on the application and hardware specifications (Section VI and Section X). (4) Neural architecture search strategy builds candidate models from the search space for training and evaluates the model based on cost function (Section VII). (5) Trained candidate model is ported to a TinyML software suite. (6) The TinyML software suite performs inference engine optimizations, deep compression and code generation. It also provides approximate hardware metrics (e.g., SRAM, Flash and latency) (Section V, Section VII, and Section VIII). (7) The embedded C file system is ported onto the microcontroller via command line interface. (8) The microcontroller optionally reports real runtime hardware metrics back to the neural architecture search strategy (Section VII). (9) On-device training or federated learning are used occasionally to account for shifts in incoming data distribution (Section IX).

(i). Model Development Phase:

The phase begins by preparing a dataset from raw sensor streams using data engineering techniques (Section III). Data engineering frameworks are used to collect, analyze, label, and clean sensory streams to produce a dataset. Optionally, feature projection (Section IV) is also performed at this stage. Feature projection reduces the dimensionality of the input data through linear methods, non-linear methods, or domain-specific feature extraction. Next, several models are chosen from a pool of established lightweight model zoo based on the application and hardware constraints (Section VI and Section X). The zoo contains optimized blocks for well-known machine-learning primitives (e.g., convolutional neural networks, recurrent neural networks, decision trees, k-nearest neighbors, convolutional-recurrent architectures, and attention mechanisms). To achieve maximal accuracy within microcontroller SRAM, flash, and latency targets, neural architecture search or hyperparameter tuning is performed on candidate models from the zoo (Section VII). The hardware metrics are either obtained through proxies (approximations) or real measurements.

(ii). Model Deployment Phase:

The deployment phase begins by porting the best performing model to a TinyML software suite (Section VIII). These suites perform inference engine optimizations, operator optimizations, and model compression (Section V), along with embedded code generation. The embedded C file system is then flashed onto the microcontroller for inference. The model can be periodically fine-tuned to account for data distribution shifts using online learning (on-device training and federated learning) frameworks (Section IX).

To measure and compare the performance of the tinyML workflow for specific applications, Banbury et al. [9] proposed the widely-used MLPerf Tiny Benchmark Suite, illustrated in Table II. The benchmark contains four tasks representing a wider array of applications expected from microcontroller-class models. These include multiclass image recognition, binary image recognition, keyword spotting, and outlier detection. The benchmark suite also embraces the usage of standard datasets for each task and provides quality target metrics and model size that new workflows should aim to achieve. Hardware metrics include the working memory requirements (SRAM), model size (flash), number of multiply and add operations (MACs), and latency. From Section III to Section IX, we discuss each block in the TinyML workflow, while in Section X, we provide quantitative evaluation of the entire workflow based on applications in light of the benchmarks. In Section XI, we break down the end-to-end workflow and provide analysis of individual aspects.

TABLE II.

MLPerf Tiny v0.5 Inference Benchmarks [9]

| Application | Dataset (Input Size) | Model Type (TFLM model size) | Quality Target (Metric) |

|---|---|---|---|

| Keyword Spotting | Speech Commands [10] (49×10) | DS-CNN [11] [12] [13] (52.5 kB) | 90% (Top-1) |

| Visual Wake Words | VWW Dataset [14] (96×96) | MobileNetV1 [12] (325 kB) | 80% (Top-1) |

| Image Recognition | CIFAR-10 [15] (32×32) | ResNetV1 [16] (96 kB) | 85% (Top-1) |

| Anomaly Detection | ToyADMOS [17], MIMII [18] (5×128) | FC-Autoencoder [9] (270 kB) | 0.85 AUC |

III. Data Engineering

Data engineering is the practice of building systems for acquisition, analytics, and storage of data at scale [37]. Data engineering is well explored in production-scale big data systems, where robust and scalable analytics engines (e.g., Apache Spark, Apache Hadoop, Apache Hive, Apache H2O, Apache Flink, and DataBricks LakeHouse) ingest real-time sensory data via publish-subscribe paradigms (e.g., MQTT and Apache Kafka) [38]. Data streaming systems provide real-time data acquisition protocols for requirement definitions and data gathering, while analytics engines provide support for data provenance, refinement, and sustainment. Popular general-purpose exploratory data analysis tools used in TinyML data analytics include MATLAB [39], Giotto-TDA [40], OpenCV [41], ImgAug [42], Pillow [43], Scikit-learn [44], and SciPy [45]. To suit the specific needs and goals of data engineering for TinyML systems, several specialized frameworks have emerged, illustrated in Table III.

TABLE III.

Features of Notable TinyML Data Engineering Frameworks

| Framework | Data Type | Collection | Labeling | Alignment | Augmentation | Visualization | Cleaning | Open-source |

|---|---|---|---|---|---|---|---|---|

| Edge Impulse [32] | Audio, images, time-series | Real-time (WebUSB, serial daemon, Linux SDK), offline | AI-assisted, DSP-assisted, manual | χ | Geometric image transforms, noise, audio spectrogram transforms, color depth | Images, plots: raw, spectrogram, statistical, DSP, MFE, MFCC, syntiant, feature explorer | Class balancing, crop, scale, split | χ |

| MSWC [33] | Audio with transcription | Offline speech datasets | Heuristic-based auto | Montreal forced | Synthetic noise, environmental noise | Plots: raw, spectrogram, feature embeddings | Gender balance, speaker diversity, self-supervised quality estimation | ✓ |

| SensiML DCL [34] | Time-series, audio | Real-time (WiFi, BLE, Serial daemon), offline | Plot-assisted, Threshold-based auto | Video-assisted | Noise, pool, convolve, drift, dropout, quantize, reverse, time warp | Plots: raw, spectrogram, statistical, DSP, MFCC | Class balancing, crop, scale, split | χ |

| Qeexo AutoML [35] | Time-series, audio | Real-time (Serial daemon, BLE), offline | Plot-assisted | χ | χ | Plots: raw, spectrogram, statistical, DSP, MFCC, feature embeddings | Segment | χ |

| Plumerai Data [36] | Images | offline | AI-assisted, manual | χ | Targetted image transforms, oversampling | Images (AI-assisted visual similarity) | Unit tests, failure case identification | χ |

A major challenge for enabling applications that use machine learning on microcontrollers is preparing the data and learning techniques that can automatically generalize well on unseen scenarios [33]. Thereby, most of these frameworks provide common data augmentation and data cleaning techniques such as geometric transforms, spectral transforms, oversampling, class balancing, and noise addition. MSWC [33] and Plumerai Data [36] go one step further, providing unit tests and anomaly detectors to identify problematic samples and evaluate the quality of labeled data. Plumerai Data can also automatically identify samples in the training set that are likely to be edge cases or problematic based on model performance on detected problematic samples. Such test-driven development can help users discover edge cases and outliers during model validation stages, and allow users to apply targeted augmentation, oversampling, and label correction. To reduce data collection bias, labeling errors and manual labeling effort, Edge Impulse [32], MSWC [33], SensiML DCL [34] and Plumerai Data [36] provide AI, DSP and heuristic-assisted automated labeling tools. In particular, for large-scale keyword spotting dataset generation, MSWC can automatically estimate word boundaries from audio with transcription using forced alignment and extract keywords based on user-defined heuristics in 50 languages. MSWC also automatically ensures that the generated dataset is balanced by gender and speaker diversity. Edge Impulse provides automated labeling of object detection data using YoLov5 and extraction of word boundaries from keyword spotting audio samples using DSP techniques. SensiML DCL allows video-assisted threshold-based semi-automated labeling of sensor data. Overall, these frameworks ensure that the data being used for training are relevant in context, free from bias, class-balanced, correctly labeled, contains edge cases, free from shortcuts, and encompass sufficient diversity [36].

IV. Feature Projection

An optional step in the TinyML workflow is to directly reduce the dimensionality of the data. Models operating on intrinsic dimensions of the data are computationally tractable and mitigate the curse of dimensionality. Feature projection can be divided into three types:

Linear Methods:

Linear methods for dimensionality reduction commonly used in large-scale data-mining include matrix factorization and principal component analysis (PCA) techniques such as singular value decomposition (SVD) [61], flattened convolutions [61], non-negative matrix factorization (NMF) [62], independent component analysis (ICA) [63], and linear discriminant analysis [64]. PCA is used to maximize the preservation of variance of the data in the low-dimensional manifold [65]. Among the popular linear methods, NMF is suitable for finding sparse, parts-based, and interpretable representations of non-negative data [62]. SVD is useful for finding a holistic yet deterministic representation of input data, with a hierarchical and geometric basis ordered by correlation among the most relevant variables. SVD provides a deeper factorization with lower information loss than NMF. ICA is suitable for finding independent features (blind source separation) from non-Gaussian input data [63]. ICA does not maximize variance or mutual orthogonality among the selected features. Nevertheless, linear methods are unable to model non-linearities or preserve the global relationship among features, and struggle in presence of outliers, skewed data distribution, and one-hot encoded variables.

Non-linear Methods:

Non-linear methods minimize a distance metric (e.g., fuzzy embedding topology [66], Kullback-Leibler divergence [67], local neighbourhoods [68], and Euclidean norm [69]) between the high-dimensional data and a low-dimensional latent representation. Non-linear methods to handle non-linear sampling of low-dimensional manifolds by high-dimensional vectors include locally linear embedding (LLE) [68], kernel PCA [69], t-distributed stochastic neighbor embedding (t-SNE) [70], uniform manifold approximation and projection (UMAP) [66], and autoencoders [71]. Kernel PCA couples k-NN, Dijkstra’s algorithm, and partial eigenvalue decomposition to maintain geodesic distance in a low-dimensional space [69]. Similarly, LLE can be thought of as a PCA ensemble maintaining local neighborhoods in the embedding space, decomposing the latent space into several small linear functions [68]. However, both LLE and kernel PCA do not perform well with large and complex datasets. t-SNE optimizes KL-divergence between student’s T distribution in the manifold-space and Gaussian joint probabilities in the higher-dimensional space [70]. t-SNE is able to reveal data structures at multiple scales, manifolds, and clusters. Unfortunately, t-SNE is computationally expensive, lacks explicit global structure preservation, and relies on random seeds. UMAP optimizes a low-dimensional fuzzy embedding to be as topologically similar as the Cech complex embedding [66]. Compared to t-SNE, UMAP provides a more accurate global structure representation, while also being faster due to the use of graph approximations. Nonetheless, while linear methods have been ported to microcontrollers [32] [72], non-linear methods are not suitable for real-time execution on microcontrollers and are usually used for visualizing high-dimensional handcrafted features.

Feature Engineering:

Feature engineering uses domain expertise to extract tractable features from the raw data [73]. Typical features include spectral and statistical features. Domain-specific feature extraction is generally more suited for micro-controllers over linear and non-linear dimensionality reduction techniques due to their relative lightweightness, as well as the availability of dedicated signal processors in commodity microcontrollers for spectral processing. However, feature engineering requires human knowledge to design statistically significant features. Feature selection can reduce the number of redundant features further during model development [74]. Feature selection methods include statistical tests, correlation modeling, information-theoretic techniques, tree ensembles, and metaheuristic methods (e.g., wrappers, filters, and embedded techniques) [75].

V. Pruning, Quantization and Encoding

Model compression aims to reduce the bitwidth and exploit the redundancy and sparsity inherent in neural networks to reduce memory and latency. Han et al. [49] first showed the concept of pruning, quantization, and Huffman coding jointly in the context of pre-trained deep neural networks (DNN). Pruning [76] refers to masking redundant weights (i.e., weights lying within a certain activation interval) and representing them in a row form. The network is then retrained to update the weights for other connections. Quantization [77] accelerates DNN inference latency by rounding off weights to reduce bit width while clustering similar ones for weight sharing. Encoding (e.g., Huffman encoding) represents common weights with fewer bits, either through conversion of dense matrices to sparse matrices [49] or smaller dense matrices through parameter redundancies [78]. Combining the three techniques can drastically reduce the size of state-of-the-art DNNs such as AlexNet (35, 6.9 MB), LeNet-5 (39, 44 kB), LeNet-300- 100 (40, 27 kB), and VGG-16 (49, 11.3 MB) without losing accuracy [49].

Common Model Compression Techniques:

Table IV showcases and compares several frameworks for model compression for microcontrollers. Among the different frameworks, TensorFlow Lite [46] is available as part of the TensorFlow training framework [79], while others are standalone libraries that can be integrated with TensorFlow or PyTorch. 88% of the frameworks provide various quantization primitives, while 50% of the frameworks support several pruning algorithms. Most of these techniques result in unstructured or random sparse patterns.

TABLE IV.

Features of Notable TinyML Model Compression Frameworks

| Framework | Compression Type | Parameters | Size or Latency Change* | Open-Source |

|---|---|---|---|---|

|

TensorFlow Lite [46] |

Post-training quantization | Bit-width (float16, int16, int8), scheme (full-integer, dynamic, float16) | 4× smaller, 2-3× speedup [47] | ✓ |

| Quantization-aware training | Bit-width (arbitrary) | Depends on bit-width (upto 8× smaller) | ||

| Weight pruning | Sparsity distribution (constant, polynomial decay), pruning policy | 5-10× smaller [48], 4× speedup [49] | ||

| Weight clustering | Number of clusters, initial distribution (random, density-based, linear) | 3-6× smaller | ||

| QKeras [50] | Quantization-aware training | Bit-width (arbitrary), symmetry, quantized layer definitions, quantized activation functions | Depends on bit-width (upto 8× smaller) | ✓ |

|

Qualcomm AIMET [51] |

Post-training quantization | Bit-width (arbitrary), rounding mode (nearest, stochastic), scheme (data-free, adaptive rounding) | Depends on bit-width (upto 8× smaller) | ✓ |

| Quantization-aware training | Bit-width (arbitrary), scheme (vanilla, range-learning) | |||

| Channel pruning | Compression ratio, layers to ignore, compression ratio candidates, reconstruction samples, cost metric | 2× smaller | ||

| Matrix factorization | Factorization algorithm (weight SVD, spatial SVD), compression ratio, fine-tuning (per layer, rank rounding) | |||

| Plumerai LARQ [52] | Binarized network training | Bit-width (int1), quantized activation functions, quantized layer definitions (convolution primitives and dense), binarized model backbones | 8× smaller, 8.5-19× speedup (with LARQ compute engine) [53] | ✓ |

|

Microsoft NNI [54] |

Post-training quantization | Scheme (naive, observer), bit-width (8-bit, arbitrary), type (dynamic, integer), operator type | Depends on bit-width (upto 8× smaller) | ✓ |

| Quantization-aware training | Scheme (Vanilla, LARQ, learned step size, DoReFa), bit-width (8-bit, arbitrary), type (dynamic, integer), operator type, optimizer | |||

| Basic pruners | Sparsity distribution, mode (normal, dependency-aware), operator type, training scheme, pruning algorithm (level, L1, L2, FPGM, slim, ADMM, activation APOZ rank, activation mean rank, Taylor FO) | 1.4-20× smaller, 1.6-5× speedup | ||

| Scheduled pruners | All parameters of basic pruners, basic pruning algorithm, scheduled pruning algorithm (linear, AGP, lottery ticket, simulated annealing, auto compress, AMC) | 1.1-120× smaller, 1.81-4× speedup | ||

| CMix-NN [55] | Quantization-aware training (mixed precision) | Bit-width (int2, int4, int8), weight quantization type (per-channel, per-layer), batch normalization folding type and delay, memory constraints, quantized convolution primitives | 7× smaller | ✓ |

| Microsoft SeeDot [56] | Post-training quantization (with autotuned and optimized operators) | Bit-width (8-bit), model (Bonsai [57], ProtoNN [58], Fast-GRNN [59], RNNPool [60]), error metric, scale parameter | 2.4-82.2× speedup [56] | ✓ |

| Genesis@ [24] | Tucker decomposition and weight pruning | Rank decomposition, network configuration, sparsity distribution, pruning policy, sensing energy, communication energy | 2-109× smaller | χ |

for ~1-4% drop in accuracy over uncompressed models.

compression framework for intermittent computing systems.

(i). Quantization Schemes:

From Table IV, we can observe that the most widely-used quantization technique for microcontrollers is the fixed-precision uniform affine post-training quantizer, where a real number is mapped to a fixed-point representation via a scale factor and zero-point (offset) after training [80] [47]. Variations include quantization of weights, weights, and activations, and weights, activations, and inputs [81]. While post-training quantization (with 4, 8, and 16 bits) has been shown to reduce the model size by 4× and speed up inference by 2-3×, quantization-aware training is recommended for microcontroller-class models to mitigate layer-wise quantization error due to a large range of weights across channels [80] [47]. This is achieved through the injection of simulated quantization operations, weight clamping, and fusion of special layers [51], allowing up to 8× model size reduction for same or lower accuracy drop. However, care must be taken to ensure that the target hardware supports the used bitwidth. To account for distinct compute and memory requirements of different layers, mixed-precision quantization assigns different bit-widths for weights and activation for each layer [82]. For microcontrollers, the network subgraph is represented as a quantized convolutional layer with vectorized MAC unit, while special layers are folded into the activation function via integer channel normalization [83] [55]. Mixed-precision quantization provides 7× memory reduction [55] but is supported by limited models of microcontrollers. Recently, binarized neural networks [84] have been ported onto microcontroller-class hardware [52], where the weights and activations are quantized to a single bit (−1 or +1). Binarized quantization can provide 8.5-19× speedup and 8× memory reduction [53].

(ii). Pruning Algorithms:

Among the different pruning algorithms, weight pruning is the most common, providing 4× speedup and 5-10× memory reduction [49] [48]. Weight pruning follows a schedule that specifies the type of layers to consider, the sparsity distribution to follow during training or fine-tuning, and the metric to follow when pruning (pruning policy). Common weight pruning evaluation metrics include the level and norm of weights [79] [54]. For intermittent computing systems with extremely limited power budgets, the pruning policy usually includes the energy and memory budget to maximize the collection of interesting events per unit of energy [24]. Pruning policies for intermittent computing treat pruning as a hyperparameter tuning problem, sweeping through the memory, energy, and accuracy spaces to build a Pareto frontier. Some frameworks [51] [54] provide support for structured pruning, allowing policies for channel and filter pruning rather than pruning weights in an irregular fashion.

Structured Sparsity:

Although model compression improves speedup, eliminates ineffective computations, and reduces storage and memory access costs, unstructured sparsity can induce irregular processing and waste execution time. The benefits of efficient acceleration through sparsity require special hardware and software support for storage, extraction, communication, computation, and load-balance of nonzero and trivial elements and inputs [85]. Several techniques for exploiting structured sparsity for microcontrollers have emerged. Bayesian compression [86] [87] assumes hierarchical, sparsity-promoting priors on channels (output activations for convolutional layers and input features for fully-connected layers) via variational inference, approximating the weight posterior by a certain distribution. For the same accuracy, Bayesian compression can reduce parameter count by 80× over unpruned models. Layer-wise SIMD-aware weight pruning [88] divides the weights into groups equal to the SIMD width of the microcontroller for maximal SIMD unit utilization and column index sharing. Trivial weight groups are pruned based on the root mean square of each group. SIMD-aware pruning provides 3.54× speedup and 88% reduction in model size, compared to 1.90× speedup and 80% reduction in model size provided by traditional weight pruning over unpruned models. Differentiable network pruning [89] performs structured channel pruning during training by applying channel-wise binary masks depending on channel salience. The size of each layer is learned through bi-level continuous gradient descent relaxation through pruning feedback and resource feedback losses without additional training overhead. Compared to traditional pruning methods, differentiable pruning provides up to 1.7× speedup, while compressing unpruned models by 80×. Doping [90] [91] improves the accuracy and compression factor of networks compressed using structured matrices (e.g. Kronecker products (KP)) by adding an extremely sparse matrix, using co-matrix regularization to reduce co-matrix adaptation during training. Doped KP matrices achieve a 2.5-5.5× speedup and 1.3-2.4× higher compression factor over traditional compression techniques, beating weight pruning and low-rank methods with 8% higher accuracy.

VI. Lightweight Machine Learning Blocks

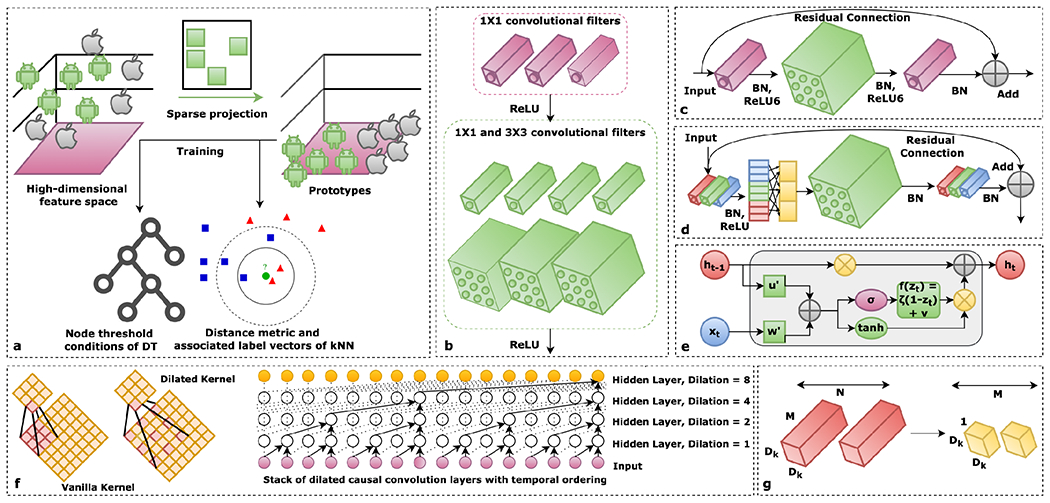

To reduce the memory footprint and latency while retaining the performance of ML models running on microcontrollers, several ultra-lightweight machine learning blocks have been proposed, illustrated in Fig. 2. We describe some of these blocks in this section.

Fig. 2.

Example of lightweight machine learning blocks. (a) Sparse projection onto low-dimensional linear manifold yields lightweight decision trees and k-nearest neighbor classifiers. (b) Fire module containing bottleneck (pointwise) and excitation (pointwise and depthwise) convolutional layers. (c) The inverted residual connection between squeeze layers instead of excitation layers reduces memory and compute. (d) Group convolution with channel shuffle improves cross-channel relations. (e) Adding a gated residual connection and enforcing RNN matrices to be low rank, sparse, and quantized yields stable and lightweight RNN. (f) Temporal convolutional networks extract spatio-temporal representations using causal and dilated convolution kernels. (g) Depthwise separable convolution yields 7-9× memory savings over vanilla convolution kernel (figure adapted from [2]).

Sparse Projection:

When the input feature space is high-dimensional, sparsely projecting input features onto a low-dimensional linear manifold, called prototypes, can reduce the parameter count and improve compute efficiency of models. The projection matrix can be learned as part of the model training process using stochastic gradient descent and iterative hard thresholding to mitigate accuracy loss. Bonsai [57] is a non-linear, shallow, and sparse decision tree (DT) that can make inferences on prototypes. Similarly, ProtoNN [58] is a lightweight k-nearest neighbor (kNN) classifier that operates on prototypes.

Lightweight Spatial Convolution:

SqueezeNet [92] brought on several micro-architectural enhancements to AlexNet [93]. These include replacing 3×3 kernels with point-wise filters, decreasing input channel count using point-wise filters as a linear bottleneck, and late downsampling to enhance feature maps. The resulting network consists of stacked ”fire modules”, with each module containing a bottleneck layer (layer with point-wise filters) and an excitation layer (mix of point-wise and 3×3 filters). Using pruning, quantization, and encoding, SqueezeNet reduced the size of AlexNet by 510× (< 0.5 MB). MobileNetsV1 [12] introduced depthwise separable convolution [11] (channel-wise convolution followed by bottleneck layer), and width and resolution multipliers to control layer width and input resolution of AlexNet. Depth-wise separable convolution is 9× cheaper and induces 7-9× memory savings over 3×3 kernels. MobileNetV2 [94] introduced the concepts of inverted residuals and linear bottleneck, where a residual connection exists between bottleneck layers rather than excitation layers, and a linear output is enforced at the last convolution of a residual block. To reduce channel count, the depthwise separable convolution layer can be enclosed between the pointwise group convolution layer with channel shuffle, thereby improving the semantic relation between input and output channels across all the groups through the use of wide activation maps [95]. Instead of having residual connections across two layers, the gradient highway can act as a medium to feed each layer activation maps of all preceding layers. This is known as channel-wise feature concatenation [96] and encourages reuse and stronger propagation of low-complexity diversified feature maps and gradients while drastically reducing network parameter count.

Lightweight Multiscale Spatial Convolution:

For scalable, efficient, and real-time object detection across scales, EfficientDet [97] introduced a bidirectional feature pyramid network (FPN) to aggregate features at different resolutions with two-way information flow. The feature network topology is optimized through NAS via heuristic compound scaling of weight, depth, and resolution. EfficientDet is 4–9× smaller, uses 13-42× fewer FLOPS, and outperforms (in terms of latency and mean average precision) YOLOv3, RetinaNet, AmoebaNet, Resnet, and DeepLabV3. Scaled-YOLOv4 [98] converts portions of FPN of YOLOv4 [99] to cross-stage partial networks [100], which saves up-to 50% computational budget over vanilla CNN backbones. Removal or fusion of batch normalization layers and downscaling input resolution can speed up multi-resolution inference by 3.6-8.8× [101] over vanilla YOLO [102] or MobileNetsV1 [12]

Low-Rank, Stabilized, and Quantized Recurrent Models:

Although recurrent neural networks (RNN) are lightweight by design, they suffer from exploding and vanishing gradient problem (EVGP) for long time-series sequences. Widely-used solutions to EVGP, namely long short-term memory (LSTM) [103], gated recurrent units [104], and unitary RNN [105] either cause loss in accuracy, or increase memory and compute overhead. FastRNN [59] solves EVGP by adding a weighted residual connection with two scalars between RNN layers to stabilize gradients during training without adding significant compute overhead. The scalars control the hidden state update extent based on inputs. FastGRNN [59] then converts the residual connection to a gate while enforcing the RNN matrices to be low-rank, sparse, and quantized. The resulting RNN is 35× smaller than gated or unitary RNN. Kronecker recurrent units [90] [106] use Kronecker products to stabilize RNN training and decompose large RNN matrices into rank-preserving smaller matrices with fewer parameters, compressing RNN by 16-50× without significant accuracy loss. Doping, co-matrix adaptation and co-matrix regularization can further compress Kronecker recurrent units by 1.3-2.4× [91]. Legendre memory units (LMU) [107], derived to orthogonalize its continuous-time history, have 10,000× more capacity while being 100× smaller than LSTM.

Temporal Convolutional Networks:

Temporal convolutional networks (TCN) [108] [109] can jointly handle spatial and temporal features hierarchically without the explosion of parameter count, memory footprint, layer count, or overfitting. TCN convolves only on current and past elements from earlier layers but not future inputs, thereby maintaining temporal ordering without requiring recurrent connections. Dilated kernels allow the network to discover semantic connections in long temporal sequences while increasing network capacity and receptive field size with fewer parameters or layers over vanilla RNN. Two TCN layers are fused through a gated residual connection for expressive non-linearity and temporal correlation modeling. A time-series TCN can be 100× smaller over a CNN-LSTM [110] [111]. TCN also supports parallel and out-of-order training.

Attention Mechanisms, Transformers, and Autoencoders:

Attention mechanisms allow neural networks to focus on and extract important features from long temporal sequences. Multi-head self-attention forms the central component in transformers, extracting domain-invariant long-term dependencies from sequences without recurrent units while being efficient and parallelizable [112]. Attention condensers are lightweight, self-contained, and standalone attention mechanisms independent of local context convolution kernels that learn condensed embeddings of the semantics of both local and cross-channel activations [113]. Each module contains an encoder-decoder architecture coupled with a self-attention mechanism. Coupled with machine design exploration, attention condensers have been used for image classification (4.17× fewer parameters than MobileNetsV1) [114], keyword spotting (up to 507× fewer parameters over previous work) [113], and semantic segmentation (72× fewer parameters over RefineNet and Edge-SegNet) [115] at the edge. Long-short range attention (LSRA) uses two heads (convolution and attention) to capture both local and global context, expanding the bottleneck while using condensed embeddings to reduce computation cost [116]. Combined with pruning and quantization, LSRA transformers can be 18× smaller than the vanilla transformer architecture. MobileViT combines the benefits of convolutional networks and transformers by replacing local processing in convolution with global processing, allowing lightweight and low-latency transformers to be implemented using convolution [117]. Instead of using special attention and transformer blocks, transformer knowledge distillation teaches a small transformer to mimic the behavior of a larger transformer, allowing up to 7.5× smaller and 9.4× faster inference over bidirectional encoder representations from transformers [118]. Customized data layout and loop reordering of each attention kernel, coupled with quantization, has allowed porting transformers onto microcontrollers [119] by minimizing computationally intensive data marshaling operations. The use of depthwise and pointwise convolution has been shown to yield autoencoder architectures as small as 2.7 kB for anomaly detection [120].

VII. Neural Architecture Search

NAS is the automated process of finding the most optimal neural network within a neural network search space given target architecture and network architecture constraints, achieving a balance between accuracy, latency, and energy usage [125] [126] [127]. Table V compares several NAS frameworks developed for microcontrollers. There are three key elements in a hardware-aware NAS pipeline, namely the search space formulation (Section VII-A), search strategy (Section VII-B), and cost function (Section VII-C).

TABLE V.

Neural Architecture Search Frameworks Targetted Towards Microcontrollers

| Framework | Search Strategy | Hardware Profiling | Inference Engine | Optimization Parameters | Open-Source |

|---|---|---|---|---|---|

| SpArSe [86] | Gradient-driven Bayesian | Analytical | uTensor | Error, SRAM, Flash | χ |

| MCUNet [31] [121] | Evolutionary | Lookup tables, prediction models | TinyEngine (closed-source) | Latency, Error, SRAM, Flash | χ |

| MicroNets [8] | Gradient-driven | Analytical | Tensorflow Lite Micro | Latency, Error, SRAM, Flash | χ |

| μNAS [122] | Evolutionary | Analytical | Tensorflow Lite Micro | Latency, Error, SRAM, Flash | ✓ |

| THIN-Bayes [123] | Gradient-free Bayesian | Hardware-in-the-loop, analytical | Tensorflow Lite Micro | Latency, Error, SRAM, Flash, Arena size, Energy | ✓ |

| iNAS [124] | Reinforcement Learning | Lookup tables, analytical | Accelerated Intermittent Inference (custom) | Latency*, Error, Volatile Buffer, Flash, Power-Cycle Energy@ | ✓ |

sum of progres preservation, progress recovery, battery recharge and compute cost

sum of progres preservation, progress recovery, and compute cost

A. Search Space Formulation

The search space provides a set of ML operators, valid connection rules and possible parameter values for the search algorithm to explore. The neural network search space can be represented as layer-wise, cell-wise, or hierarchical [125].

Layer-wise:

In layer-wise search spaces, the entire model is generated from a collection of serialized or sequential neural operators. The macro-architecture (e.g., number of layers and dimensions of each layer), initial and terminal layers of the network are fixed, while the remaining layers are optimized. The structure and connectivity among various operators are specified using variable-length strings, encoded in the action space of the search strategy [126]. Although such search spaces are expressive, layer-wise search spaces are computationally expensive, require hardcoding associations among different operators and parameters, and are not gradient friendly.

Cell-wise:

For cell-wise (or template-wise) search spaces, the network is constructed by stacking repeating fixed blocks or motifs called cells. A cell is a directed acyclic graph constructed from a collection of neural operators, representing some feature transformation. The search strategy finds the most optimal set of operators to construct the cell recursively in stages, by treating the output of past hidden layers as hidden states to apply a predefined set of ML operations on [128]. Cell-based search spaces are more time-efficient compared to layer-wise approaches and easily transferable across datasets but are less flexible for hardware specialization. In addition, the global architecture of the network is fixed.

Hierarchical:

In tree-based search spaces, bigger blocks encompassing specific cells are created and optimized after cell-wise optimization. Primitive templates which are known to perform well are used to construct larger network graphs and higher-level motifs recursively, with feature maps of low-level motifs being fed into high-level motifs. Factorized hierarchical search spaces allow each layer to have different blocks without increasing the search cost while allowing for hardware specialization [129].

For applications with extreme memory and energy budget (e.g., intermittent computing systems), the search space goes down to the execution level to include operator and inference optimizations (e.g., loop transformations, data reuse, and choice of in-place operators) rather than optimizing the model at the architectural level. iNAS [124] uses RL to optimize the tile dimensions per layer, loop order in each layer, and the number of tile outputs to preserve in a power cycle for convolutional models. When combined with appropriate power-cycle energy, memory, and latency constraints, iNAS reduced intermittent inference latency by 60% compared to NAS frameworks operating at the architectural level, with a 7% increase in search overhead. Likewise, micro-TVM [130] uses a learning-enabled schedule explorer to perform automated operator and inference optimizations at the execution level. We discuss some of these optimizations in Section VIII-A, as well as operation of micro-TVM in Section VIII-B.

B. Search Strategy

The search strategy involves sampling, training and evaluating candidate models from the search space, with the goal of finding the best performing model. This is done using reinforcement learning (RL), one-shot gradient-driven NAS, evolutionary algorithms (with weight sharing), or Bayesian optimization [134]. Recent techniques, known as training-free NAS, aim to perform NAS without the costly inner-loop training [135]. Table VI compares the performance of different NAS search strategies on the ImageNet dataset for MBNetv3 [133] backbone. We distill the insights from Table VI below.

TABLE VI.

| Search Strategy | Top-1% Accuracy | Latency∧ | Model Size (MAC) | Training Cost (GPU hours) | Search Cost (GPU hours) |

|---|---|---|---|---|---|

| Reinforcement Learning∨ | 74%-75.2% | 58mS-70 mS | 219M-564M | None*, 180N@ | 40000N-48000N*, None@ |

| Gradient-driven | 73.1%-74.9% | 71mS | 320M-595M | 250N-384N | 96N-(288+24N) |

| Evolutionary | 72.4%-80.0% | 58mS-59mS | 230M-595M | 1200-(1200+kN) | 40 |

| Bayesian ∨ | 73.4%-75.8% | - | 225M | None | 23N-552N |

dataset: ImageNet-1000, backbone network: MBNetV3, k = fine-tuning epoch count

on Google Pixel1 smartphone, N = Number of deployment scenarios for which different models must be found [5]

Techniques based on RL and Bayesian usually have coupled training and search (training cost included with search cost)

MBNetV3 Search [133]

Reinforcement Learning:

RL techniques, such as NASNet [128] and MNASNet [132], model NAS as a Markov Decision Process on a proxy dataset to reduce search time. RL controllers (e.g., RNNs trained via proximal policy optimization (PPo), deep deterministic policy gradient (DDPG), and Q-learning) are used to find the optimal combination of neural network cells from a pre-defined set recursively. The network graph can either consist of a series of repeatable and identical blocks (e.g., convolutional cells) whose structures are found via the controller or represented in a factorized hierarchical fashion via a layer-wise stochastic super-network. Device constraints are included in the reward function to formulate a multi-objective optimization problem. Among the different RL controllers, Q-learning-based algorithms works for simple search space (i.e, discrete and finite with tens of parameters) [136] created through expert knowledge. PPO and DDPG are useful when the search space is complex (i.e, continuous with thousands of parameters) [137]. PPO-based on-policy algorithms are more stable than DDPG but demand more samples to converge than DDPG [138]. Overall, RL processes are slow to converge, preventing fast exploration of the search space. In addition, fine-tuning candidate networks increases search costs.

Gradient-driven NAS:

Differentiable NAS using continuous gradient descent relaxation can reduce the search and training cost further on the target dataset over RL-based techniques. The goal is to learn the weights and architectural encodings through a nested bi-level optimization problem, with the gradients obtained approximately. The optimization problem can be efficiently handled using path binarization, where the weights and encodings of an over-parametrized network are alternatively frozen during gradient update using binarized gates. The final sub-network is obtained using path-level pruning. Hardware metrics are converted to a gradient-friendly continuous model before being used in the optimization function. The search space can consist of static blocks of directed acyclic graphs containing network weights, edge operations, activations, and hyperparameters or a factorized hierarchical super-network. Examples of gradient-driven NAS include DARTS [139], FBNet [140], ProxylessNAS [129], and MicroNets [8]. Drawbacks of include high GPU memory consumption and training time due to large super-network parameter count and inability to generalize across a broad spectrum of target hardware, requiring the NAS process to be repeated for new hardware.

Evolutionary Search with Weight Sharing:

To eliminate the need for performing NAS for different hardware platforms separately and reduce the training time of candidate networks, several weight-sharing mechanisms (WS-NAS) have emerged [5] [31] [121] [143] [144]. WS-NAS decouples training from search by training a ”once-for-all” super-network consisting of several sub-networks which fits the constraints of eclectic target platforms. Evolutionary search is used during the search phase, where the best performing sub-networks are selected from the super-network, crossed, and mutated to produce the next generation of candidate subnetworks. Progressive shrinking and knowledge distillation ensure all the sub-networks are jointly fine-tuned without interfering with large sub-networks. Evolutionary search can also be applied to RL search spaces [145] for faster convergence or applied on several candidate architectures not part of a super-network [122]. Nevertheless, evolutionary WS-NAS suffers from excessive computation and time requirements due to super-network training, exacerbated by fine-tuning of candidate networks and slow convergence of evolutionary algorithms.

Bayesian Optimization:

When training infrastructure is weak, the search space and hardware metrics are discontinuous, and the training cost per model is high, Bayesian NAS is used as a black-box optimizer. Given their problem-independent nature, Bayesian NAS can be applied across different datasets and heterogenous architectures without being constrained to one specific type of network (e.g., CNN or RNN), provided support for conditional search. The performance of the optimizer is highly dependent on the surrogate model [146]. The most widely adopted surrogate model is the Gaussian process, which allows uncertainty metrics to propagate forward while looking for a Pareto-optimal frontier and is known to outperform other choices like random forest or Tree of Parzen Estimators [146]. The acquisition function decides the next set of parameters from the search space to sample from, balancing exploration and exploitation. The loss function is modeled as a constrained single-objective or scalarized multi-objective optimization problem. Examples include SpArSe [86], Vizier [147], and THIN-Bayes [123]. Unfortunately, Bayesian NAS does not perform well in high dimensional search spaces (e.g., performance degrades beyond a dozen parameters [148]). Moreover, Bayesian optimizers are typically used to optimize hyperparameters for fixed network architectures instead of multiple architectures as the Gaussian process does not directly support conditional search across architectures. Only THIN-Bayes can sample across different architectures thanks to support for conditional search via multiple Gaussian surrogates [123].

Training-Free NAS:

Training-free NAS estimates the accuracy of a neural network either by using proxies developed from architectural heuristics of well-known network architectures [135] or by using a graph neural network (GNN) to predict the accuracy of models generated from a known search space [149] [150]. Examples of gradient-based accuracy proxies include the correlation of ReLU activations (Jacobian covariance) between minibatch datapoints at CNN initialization [151], the sum of the gradient Euclidean norms after training with a single minibatch datapoints [152], change in loss due to layer-level pruning after training with a single minibatch datapoints (Fisher) [152], change in loss due to parameter pruning after training with a single minibatch data-points (Snip) [153], change in gradient norm due to parameter pruning after training with a single minibatch datapoints (Grasp) [154], the product of all network parameters (Synaptic Flow) [155], the spectrum of the neural tangent kernel [156], and the number of linear regions in the search space [156]. Gradient-based proxies still require the use of a GPU for gradient calculation. Recently, Li et al. [135] proposed a gradient-free accuracy proxy, namely the sum of the average degree of each building block in a CNN from a network topology perspective. Unfortunately, both proxies and GNN suffer from the lack of generalizability across different datasets, model architectures, and design space, while the latter also suffers from the training cost of the accuracy prediction network itself.

C. Cost Function

The cost function provides numerical feedback to the search strategy about the performance of a candidate network. Common parameters in the cost function include network accuracy, SRAM usage, flash usage, latency, and energy usage. The goal of NAS is to find a candidate network that finds the extrema of the cost function, i.e., the cost function can be thought of as seeking a Pareto-optimal configuration of network parameters.

Cost Function Formulation:

The cost function can be formulated as either a single or multi-objective optimization problem. Single objective optimization problems only optimize for model accuracy. To take hardware constraints into account, single-objective optimization problems are usually treated as constrained optimization problems with hardware costs acting as regularizers [123]. Multi-objective cost functions are usually transformed into a single objective optimization problem via weighted-sum or scalarization techniques [86] or solved using genetic algorithms.

Hardware Profiling:

Hardware-aware NAS employs hardware-specific cost functions or search heuristics via hardware profiling. The target hardware can be profiled in real-time by running sampled models on the actual target device (hardware-in-the-loop), estimated using lookup tables, prediction models, and silicon-accurate emulators [157] or analytically estimated using architectural heuristics. Common hardware profiling techniques are shown in Table VII. Hardware-in-the-loop is slowest but most accurate during NAS runtime, while analytical estimation is fastest but least accurate [125] [134]. Examples of analytical models for microcontrollers include using FLOPS as a proxy for latency [8] [122], and standard RAM usage model [86] for working memory estimation. Recently, latency prediction models have been made more accurate through kernel (execution unit) detection and adaptive sampling [158]. For intermittent computing systems, the latency is the time required for progress preservation (writing progress indicators and computed tile outputs to flash at the end of a power cycle), progress recovery (system reboot, loading progress indicators, and tiled outputs into SRAM), battery recharge, and running inference (cost of computing multiple tiles per energy cycle) [124]. The SRAM usage in such systems is the sum of memory consumed by the input feature map, weights, and output feature map, dependent upon the tile dimensions, loop order, and preservation batch size in the search space [124].

TABLE VII.

NAS Hardware Profiling Strategies for Microcontrollers

| Method | Speed | Accuracy | NAS Frameworks |

|---|---|---|---|

| Real measurements | Slow | High | THIN-Bayes [123], MNASNet [132], One-shot NAS [141] |

| Lookup tables | Fast-Medium | Medium-High | FBNet [140], Once-for-All [5], MCUNet [31] [121] |

| Prediction models | Medium | Medium | ProxylessNAS [129], Once-for-All [5], MCUNet [31] [121], LEMONADE [142] |

| Analytical | Fast | Low | THIN-Bayes [123], MicroNets [8], μNAS [122], SpArSe [86] |

VIII. TinyML Software Suites

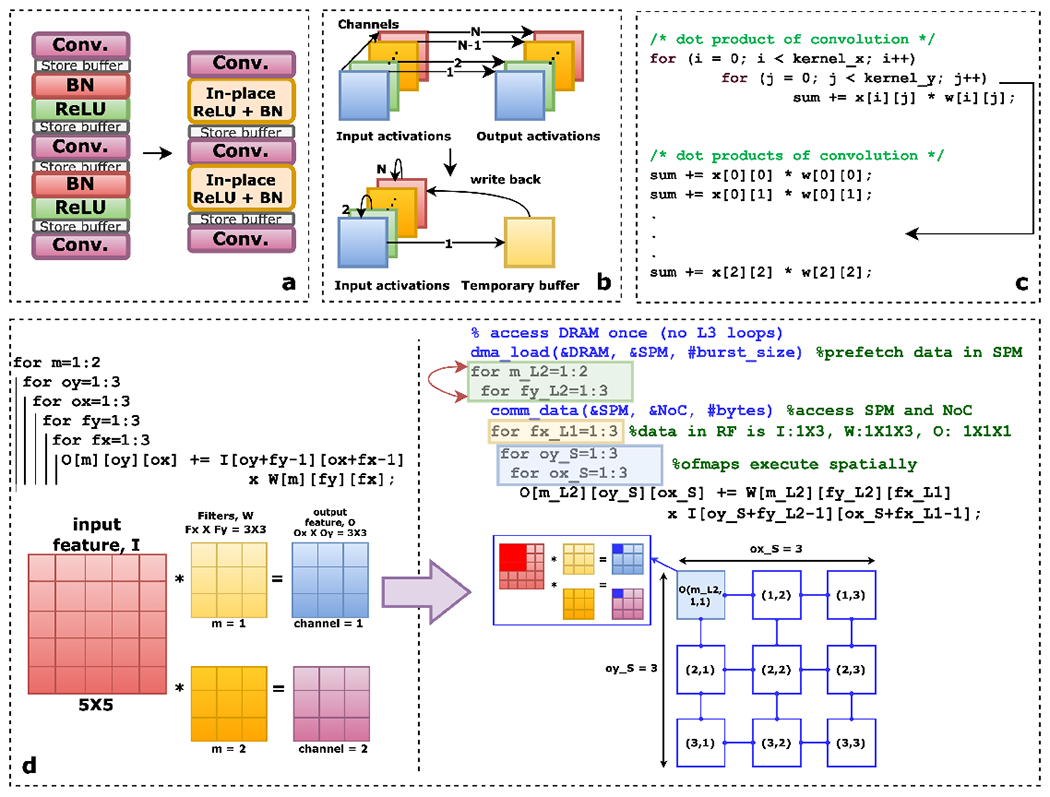

After the best model is constructed from lightweight ML blocks through NAS, the model needs to be prepared for deployment onto microcontrollers. This is performed by TinyML software suites, which generate embedded code and perform operator and inference engine optimizations, some of which are shown in Fig. 3 and discussed in Section VIII-A. In addition, some of these frameworks also provide inference engines for resource management and model execution during deployment. We discuss features of notable TinyML software suites in Section VIII-B.

Fig. 3.

Example operator optimizations performed by TinyML software suites. (a) Use of fused and in-place activated operators reduce memory access cost and improves inference speed [158] [159]. (b) Converting depthwise convolution to in-place depthwise convolution reduces peak memory usage by 1.6×, by allowing first channel output activation (stored in a buffer) to overwrite the previous channel’s inpur activation until written back to the last channel’s input activation [31]. (c) Loop unrolling eliminates branch instruction overhead [31]. (d) Loop tiling encourages resuse of array elements within each tile by partitioning the loop’s iterative space into blocks [160], while loop reordering (with tiling) improves spatiotemporal execution and locality of reference within device memory constraints [161] [162].

A. Operator and Inference Optimizations

All TinyML software suites perform several operator and inference engine optimizations to improve data locality, memory usage, and spatiotemporal execution [162]. Common techniques include the use of fused or in-place operators [130], loop transformations [161], and data reuse (output sharing or value sharing) [163].

In-Place and Fused Operators:

Operator fusion or folding combines several ML operators into a specialized kernel without saving the intermediate feature representations in memory (known as in-place activation) [130]. The software suites follow user-defined rules for operator fusion depending on graph operator type (e.g., injective, reduction, complex-out fusable, and opaque) [130]. Use of fused and in-place operators have been shown to reduce memory usage by 1.6× [31] and improve speedup by 1.2-2× [130].

Loop Transformations:

Loop transformations aim to improve spatiotemporal execution and inference speed by reducing loop overheads [161]. Common loop transformations include loop reordering, loop reversal, loop fusion, loop distribution, loop unrolling, and loop tiling [161] [162] [161] [160]. Loop reordering (and reversal) finds the loop permutation that maximizes data reuse and spatiotemporal locality. Loop fusion combines different loop nests into one, thereby improving temporal locality, and increasing data locality and reuse by creating perfect loop nests from imperfect loop nests. To enable loop permutation for loop nests that are not permutable, loop distribution breaks a single loop into multiple loops [161]. Loop unrolling helps eliminate branch penalties and helps hide memory access latencies [130]. Loop tiling improves data reuse by diving the loops into blocks while considering the size of each level of memory hierarchy [160].

Data Reuse:

Data reuse aims to improve data locality and reduce memory access costs. While data reuse is mostly achieved through loop transformations, several other techniques have also been proposed. CMSIS-NN provides special pooling and multiplication operations to promote data reuse [164]. TF-Net [163] proposed the use of direct buffer convolution on Cortex-M microcontrollers to reduce input unpacking overhead, which reuses inputs in the current window unpacked in a buffer space for all weight filters. Input reuse reduces SRAM usage by 2.57× and provides 2× speedup. Similarly, for GAP8 processors, the PULP-NN library provides a reusable im2col buffer (height-width-channel data layout) to reduce im2col creation overhead [165] [166], providing partial spatial data reuse. PULP-NN also features register-level data reuse, achieving 20% speedup over CMSIS-NN and 1.9× improvement over native GAP8-NN libraries.

B. Notable TinyML Software Suites

Notable open-source TinyML frameworks and inference engines include TensorFlow Lite Micro [167] [46], uTensor [168], uTVM [130], Microsoft EdgeML [57] [58] [59] [60], [169–171], CMSIS-NN [164], EloquentML [72], Sklearn Porter [174], EmbML [175], and FANN-on-MCU [176]. Closed-source TinyML frameworks and inference engines include STM32Cube.AI [172], NanoEdge AI Studio [173], Edge Impulse EON Compiler [32], TinyEngine [31] [121], Qeexo AutoML [35], Deeplite Neutrino [177], Imagimob AI [178], Neuton TinyML [179], Reality AI [180], and SensiML Analytics Studio and Knowledge Pack [34]. Table VIII compares the features of some of these frameworks.

TABLE VIII.

Features of Notable TinyML Software Suites for Microcontrollers

| Framework | Supported Platforms | Supported Models | Supported Training Libraries | Open-Source | Free |

|---|---|---|---|---|---|

| TensorFlow Lite Micro (Google) [167] [46] | ARM Cortex-M, Espressif ESP32, Himax WE-I Plus | NN | TensorFlow | ✓ | ✓ |

| uTensor (ARM) [168] | ARM Cortex-M (Mbed-enabled) | NN | TensorFlow | ✓ | ✓ |

| uTVM (Apache) [130] | ARM Cortex-M | NN | PyTorch, TensorFlow, Keras | ✓ | ✓ |

| EdgeML (Microsoft) [57] [58] [59] [60], [169]–[171] | ARM Cortex-M, AVR RISC | NN, DT, kNN, unary classifier | PyTorch, TensorFlow | ✓ | ✓ |

| CMSIS-NN (ARM) [164] | ARM Cortex-M | NN | PyTorch, TensorFlow, Caffe | ✓ | ✓ |

| EON Compiler (Edge Impulse) [32] | ARM Cortex-M, TI CC1352P, ARM Cortex-A, Espressif ESP32, Himax WE-I Plus, TENSAI SoC | NN, k-means, regressors (supports feature extraction) | TensorFlow, Scikit-Learn | χ | ✓ |

| STM32Cube.AI (STMicroelectronics) [172] | ARM Cortex-M (STM32 series) | NN, k-means, SVM, RF, kNN, DT, NB, regressors | PyTorch, Scikit-Learn, TensorFlow, Keras, Caffe, MATLAB, Microsoft Cognitive Toolkit, Lasagne, ConvnetJS | χ | ✓ |

| NanoEdge AI Studio (STMicroelectronics) [173] | ARM Cortex-M (STM32 series) | Unsupervised learning | - | χ | χ |

| EloquentML [72] | ARM Cortex-M, Espressif ESP32, Espressif ESP8266, AVR RISC | NN, DT, SVM, RF, XGBoost, NB, RVM, SEFR (feature extraction through PCA) | TensorFlow, Scikit-Learn | ✓ | ✓ |

| Sklearn Porter [174] | - | NN (MLP), DT, SVM, RF, AdaBoost, NB | Scikit-Learn | ✓ | ✓ |

| EmbML [175] | ARM Cortex-M, AVR RISC | NN (MLP), DT, SVM, regressors | Scikit-Learn, Weka | ✓ | ✓ |

| FANN-on-MCU [176] | ARM Cortex-M, PULP | NN | FANN | ✓ | ✓ |

| SONIC, TAILS@ [24] | TI MSP430 | NN | TensorFlow | ✓ | ✓ |

inference framework for intermittent computing systems.

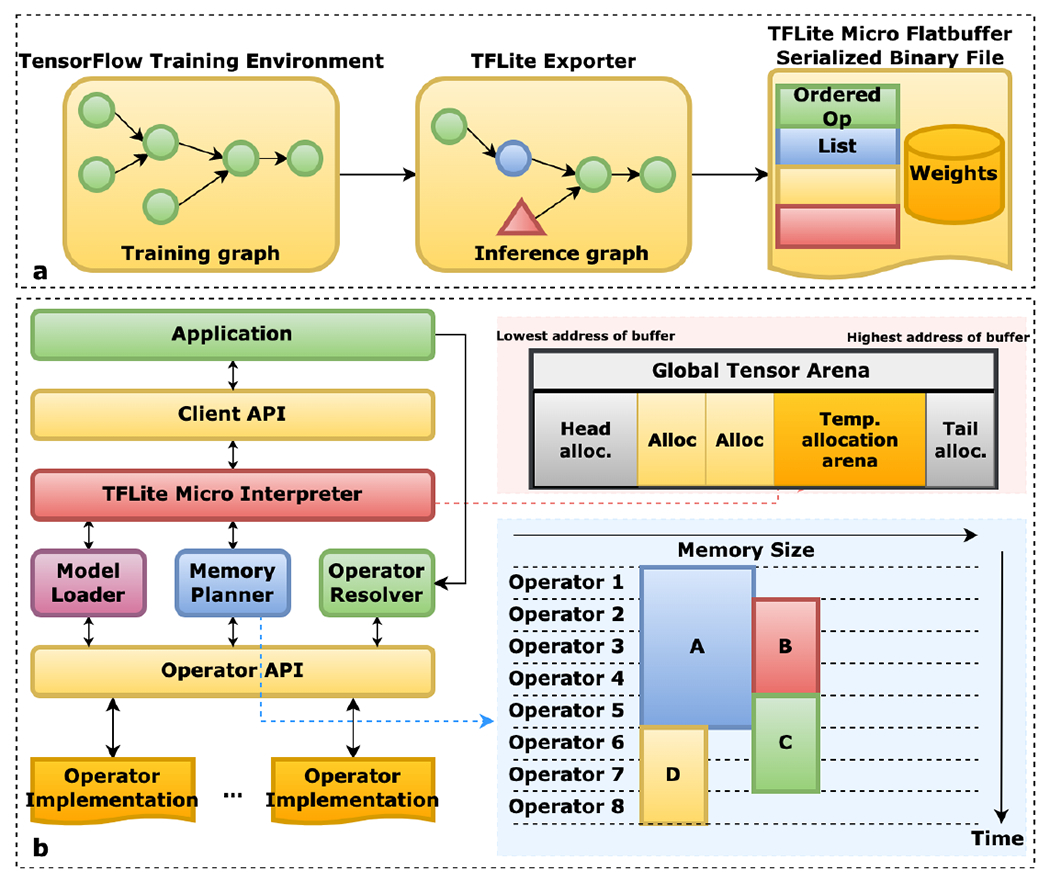

TensorFlow Lite Micro:

TensorFlow Lite Micro (TFLM) [46] [167] is a specialized version of TFLite aimed towards optimizing TF models for Cortex-M and ESP32 MCU. TFLite Micro embraces several embedded runtime design philosophies. TFLM drops uncommon features, data types, and operations for portability. It also avoids specialized libraries, operating systems, or build-system dependencies for heterogeneous hardware support and memory efficiency. TFLM avoids dynamic memory allocation to mitigate memory fragmentation. TFLM interprets the neural network graph at runtime rather than generating C++ code to support easy pathways for upgradability, multi-tenancy, multi-threading, and model replacement while sacrificing finite savings in memory. Fig 4 summarizes the operation of TFLM. TFLM consists of three primary components. First, the operator resolver links only essential operations to the model binary file. Second, TFLM pre-allocates a contiguous memory stack called the arena for initialization and storing runtime variables. TFLM uses a two-stack allocation strategy to discard initialization variables after their lifetime, thereby minimizing memory consumption. The space between the two stacks is used for temporary allocations during memory planning, where TFLM uses bin-packing to encourage memory reuse and yield optimal compacted memory layouts during runtime. Lastly, TFLM uses an interpreter to resolve the network graph at runtime, allocate the arena, and perform runtime calculations. TFLM was shown to provide 2.2× speedup and 1.08× memory and flash savings over CMSIS-NN for image recognition [31].

Fig. 4.

Operation of TensorFlow Lite Micro, an interpreter-based inference engine. (a) The training graph is frozen, optimized and converted to a flatbuffer serialized model schema, suitable for deployment in embedded devices. (b) The TFLM runtime API preallocates a portion of memory in the SRAM (called arena) and performs bin-packing during runtime to optimize memory usage (figure adapted from [167]).

uTensor:

uTensor [168] generates C++ files from TF models for Mbed-enabled boards, aiming to generate models of < 2 kB in size. It is subdivided into two parts. The uTensor core provides a set of optimized runtime data structures and interfaces under computing constraints. The uTensor library provides default error handlers, allocators, contexts, ML operations, and tensors built on the core. Basic data types include integral type, uTensor strings, tensor shape, and quantization primitives borrowed from TFLite. Interfaces include the memory allocator interface, tensor interface, tensor maps, and operator interface. For memory allocation, uTensor uses the concept of arena borrowed from TFLM. In addition, uTensor boasts a series of optimized (built to run CMSIS-NN under the hood), legacy, and quantized ML operators consisting of activation functions, convolution operators, fully-connected layers, and pooling.

uTVM:

micro-TVM [130] extends the TVM compiler stack to run models on bare-metal IoT devices without the need for operating systems, virtual memory, or advanced programming languages. micro-TVM first generates a high-level and quantized computational graph (with support for complex data structures) from the model using the relay module. The functional representation is then fed into the TVM intermediate representation module, which generates C-code by performing operator and loop optimizations via AutoTVM and Metascheduler, procedural optimizations, and graph-level modeling for whole program memory planning. AutoTVM consists of an automatic schedule explorer to generate promising and valid operator and inference optimization configurations for a specific microcontroller, and an XGBoost model to predict the performance of each configuration based on features of the lowered loop program. The developer can either specify the configuration parameters to explore using a schedule template specification API, or possible parameters can be extracted from the hardware computation description written in the tensor expression language. AutoTVM has lower data and exploration costs than black-box optimizers (e.g., ATLAS [181]), and provides more accurate modeling than polyhedral methods [182] without needing a hardware-dependent cost model. The generated code is integrated alongside the TVM C runtime, built, and flashed onto the device. Inference is made on the device using a graph extractor. AutoTVM was shown to generate code that is only 1.2× slower compared to handcrafted CMSIS-NN-based code for image recognition.

Microsoft EdgeML:

EdgeML provides a collection of lightweight ML algorithms, operators, and tools aimed towards deployment on Class 0 devices, written in PyTorch and TF. Included algorithms include Bonsai [57], ProtoNN [58], FastRNN [59], FastGRNN [59], ShallowRNN [169], EMI-RNN [170], RNNPool [60], and DROCC [171]. EMI-RNN exploits the fact that only a small, tight portion of a time-series plot for a certain class contributes to the final classification while other portions are common among all classes. Shallow-RNN is a hierarchical RNN architecture that divides the time-series signal into various blocks and feeds them in parallel to several RNNs with shared weights and activation maps. RNNPool is a non-linear pooling operator that can perform ”pooling” on intermediate layers of a CNN by a downsampling factor much larger than 2 (4-8×) without losing accuracy while reducing memory usage and decreasing compute. Deep robust one-class classifier (DROCC) is an OCC under limited negatives and anomaly detector without requiring domain heuristics or handcrafted features. The framework also includes a quantization tool called SeeDot [56].

CMSIS-NN:

Cortex Microcontroller Software Interface Standard-NN [164] was designed to transform TF, PyTorch, and Caffe models for Cortex-M series MCU. It generates C++ files from the model, which can be included in the main program file and compiled. It consists of a collection of optimized neural network functions with fixed-point quantization, including fully connected layers, depth-wise separable convolution, partial image-to-column convolution, in-situ split x-y pooling, and activation functions (ReLU, sigmoid, and tanh, with the latter two implemented via lookup tables). It also features a collection of support functions including data type conversion and activation function tables (for sigmoid and tanh). CMSIS-NN provides 4.6× speedup and 4.9× energy savings over non-optimized convolutional models.

Edge Impulse EON Compiler:

Edge Impulse [32] provides a complete end-to-end model deployment solution for TinyML devices, starting with data collection using IoT devices, extracting features, training the models, and then deployment and optimization of models for TinyML devices. It uses the interpreter-less Edge Optimized Neural (EON) compiler for model deployment, while also supporting TFLM. EON compiler directly compiles the network to C++ source code, eliminating the need to store ML operators that are not in use (at the cost of portability). EON compiler was shown to run the same network with 25-55% less SRAM and 35% less flash than TFLM.

STM32Cube.AI and NanoEdge AI Studio:

X-Cube-AI from STMicroelectronics [172] generates STM32 compatible C code from a wide variety of deep-learning frameworks (e.g., PyTorch, TensorFlow, Keras, Caffe, MATLAB, Microsoft Cognitive Toolkit, Lasagne and ConvnetJS). It allows quantization (min-max), operator fusion, and the use of external flash or SRAM to store activation maps or weights. The tool also features functions to measure system performance and deployment accuracy and suggests a list of compatible STM32 platforms based on model complexity. X-Cube-AI was shown to provide 1.3× memory reduction and 2.2× speedup over TFLM for gesture recognition and keyword spotting [189]. NanoEdge AI Studio [173] is another AutoML framework from STMicroelectronics for prototyping anomaly detection, outlier detection, classification, and regression problems for STM32 platforms, including an embedded emulator.

Eloquent MicroML and TinyML:

MicroMLgen ports decision trees, support vector machines (linear, polynomial, radial kernels or one-class), random forests, XGboost, Naive Bayes, relevant vector machines, and SEFR (a variant of SVM) from SciKit-Learn to Arduino-style C code, with the model entities stored on flash. It also supports onboard feature projection through PCA. TinyMLgen ports TFLite models to optimized C code using TFLite’s code generator [72].

Sklearn Porter:

Sklearn Porter [174], generates C, Java, PHP, Ruby, GO, and Javascript code from Scikit-Learn models. It supports the conversion of support vector machines, decision trees, random forests, AdaBoost, k-nearest neighbors, Naive Bayes, and multi-layer perceptrons.

EmbML:

Embedded ML [175] converts logistic regressors, decision trees, multi-layer perceptrons, and support vector machines (linear, polynomial, or radial kernels) models generated by Weka or Scikit-Learn to C++ code native to embedded hardware. It generates initialization variables, structures, and functions for classification, storing the classifier data on flash to avoid high memory usage, and supports the quantization of floating-point entities. EmbML was shown to reduce memory usage by 31% and latency by 92% over Sklearn Porter.

FANN-on-MCU:

FANN-on-MCU [176] ports multi-layer perceptrons generated by fast artificial neural networks (FANN) library to Cortex-M series processors. It allows model quantization and produces an independent callable C function based on the specific instruction set of the MCU. It takes the memory of the target architecture into account and stores network parameters in either RAM or flash depending upon whichever does not overflow and closer to the processor (e.g., RAM is closer than flash).

SONIC, TAILS:

Software-only neural intermittent computing (SONIC) and tile-accelerated intermittent low energy accelerator (LEA) support (TAILS) [24] are inference engines for intermittent computing systems. SONIC eliminates redo-logging, task transitions, and wasted work associated with moving data between SRAM and flash by introducing loop continuation, which allows loop index modification directly on the flash without expensive saving and restoring. To ensure idempotence, SONIC uses loop ordered buffering (loop reordering and double buffering partial feature maps to eliminate commits in a loop iterations) and sparse undo-logging (buffer reuse to ensure idempotence for sparse ML operators). SONIC introduces a latency overhead of only 25-75% over non-intermittent neural network execution (compared to 10× overhead from baseline intermittent model execution frameworks), reducing inference energy by 6.9× over competing baselines. TAILS exploits LEA in MSP430 microcontrollers to maximize throughput using direct-memory access and parallelism. LEA supports acceleration of finite-impulse-response discrete-time convolution. TAILS further reduces inference energy by 12.2× over competing baselines.

IX. Online Learning

After deployment, the on-device model needs to be periodically updated to account for shifts in feature distribution in the wild [183]. While models trained on new data on a server could be sent out to the microcontroller once in a while, limited communication bandwidth and privacy concerns can prevent offloading the training to a server. However, the conventional training memory and energy footprint are much larger than the inference memory and energy footprint, rendering traditional GPu-based training techniques unsuitable for microcontrollers [19]. Thus, several on-device training and federated learning (FL) frameworks have emerged for microcontrollers, summarized in Table IX and Table X.

TABLE IX.

Features of Notable TinyML On-Device Learning Frameworks

| Framework | Working Principle | Supported Hardware | Tested Application | Network Type | Open-source |

|---|---|---|---|---|---|

| Learning in the Wild [183] |

W: Per-output feature distribution divergence. H: Transfer learning on last-layer; sample importance weighing to maximize learning effect. T: Gradient norm for sample selection via uncertainty and diversity. |

TI MSP430 | Image recognition (MNIST, CIFAR-10, GT-SRB) | CNN | χ |

| TinyOL [184] |

W: Running mean and variance of streaming input H: Transfer learning on additional layer at the output of the frozen network using stochastic gradient descent (SGD). |

ARM Cortex-M | Anomaly detection | Autoencoder | χ |

| ML-MCU [185] | H: Optimized SGD (inherits stability of GD and efficiency of SGD); optimized one-versus-one (OVO) binary classifiers for multiclass classification | ARM Cortex-M, Espressif ESP32 | Image recognition (MNIST), mHealth (Heart Disease, Breast Cancer), Other (Iris) | Optimized OVO binary classifiers | ✓ |

| Train++ [186] |

W: Confidence score of prediction. H: Incremental training via constrained optimization classifier update |

ARM Cortex-M, ARM Cortex-A, Espressif ESP32, Xtensa LX | Image recognition (MNIST, Banknote Authentication), mHealth (Heart Disease, Breast Cancer, Haberman’s Survival), Other (Iris, Titanic Survival) | Binary classifiers | ✓ |

| TinyTL [19] | H: Update bias instead of weights and use lite residual learning modules to recoup accuracy loss | ARM Cortex-A | Face recognition (CelebA), Image recognition (Cars, Flowers, Aircraft, CUB, Pets, Food, CIFAR-10, CIFAR-100) | CNN (ProxylessNAS-MB, MBNetV2) | ✓ |

| Imbal-OL [187] | T: Weighted replay and oversampling for minority classes | ARM Cortex-A | Image recognition (CIFAR-10, CIFAR-100) | CNN (ResNet-18) | χ |

| QLR-CL [188] | H: Continual learning with quantized latent replays (store activation maps at latent replay layer instead of samples), slow-learning below the latent replay layer. | PULP | Image recognition (Core50) | CNN (MBNetV1) | χ |

W: When to learn, H: How to learn, T: What to learn (sample selection)

TABLE X.

Features of Notable TinyML Federated Learning Frameworks