Abstract

The COVID-19 pandemic has reshaped Internet traffic, as the profound lifestyle changes it imposed led people to rely more and more on collaboration and communication apps to accomplish daily tasks. These dramatic changes call for novel traffic management solutions able to cope with such unexpected and massive shifts in traffic characteristics.

In this paper, we focus on communication and collaboration apps whose traffic experienced a sudden growth during the last two years. Specifically, we consider nine apps whose traffic we collect, reliably label, and publicly release as a new dataset (MIRAGE-COVID-CCMA-2022) to the scientific community. First, we investigate the capability of state-of-the-art single-modal and multimodal Deep Learning-based classifiers in identifying the specific app, the activity performed by the user, or both. While state-of-the-art solutions report a more-than-satisfactory performance in app classification (96%–98% F-measure), evident shortcomings emerge in activity classification (56%–65% F-measure) when using approaches that leverage the transport-layer payload and/or per-packet information attainable from the initial part of the biflows. In line with these limitations, we design a novel set of inputs (namely Context Inputs) that provide clues about the nature of a biflow by observing the biflows coexisting with it.

Based on these considerations, we propose Mimetic-All, a novel early traffic classification multimodal solution that leverages Context Inputs as an additional modality, achieving F-measure in activity classification. Also, capitalizing on the multimodal nature of Mimetic-All, we evaluate different combinations of the inputs. Interestingly, experimental results show that Mimetic-ConSeq, a variant that uses the Context Inputs but does not rely on payload information (and is thus more robust to the more opaque encryption sub-layers that may be adopted in the future), experiences only F-measure drop in performance w.r.t. Mimetic-All and results in a shorter training time.

Keywords: Communication apps, Collaboration apps, COVID-19, Deep Learning, Encrypted traffic, Multimodal techniques, Contextual counters, Traffic classification

1. Introduction

The outbreak of the Covid-19 pandemic has induced governments worldwide to impose lockdown periods during the last two years. These events have forced millions of citizens to stay at home and, where possible, to study, work, and socialize from there. As a consequence, Internet traffic from residential users has grown significantly (e.g., EU Internet traffic volume) [1], with implications also for the mobility patterns of users in cellular networks, e.g., an increase in voice traffic and uplink traffic in suburbs [2].

Focusing on pandemic-related change of traffic composition, Affinito et al. [3] highlighted the changes in the usage of different categories of applications for smart working and distance learning (i.e. Video, SocialMedia, Messaging, and Collaboration Tools) based on the investigation of websites and domains during the enforcement of social distancing measures. Analogous changes in usage and volume (increased use of collaboration platforms, VPNs, and remote desktop services) have been found analyzing traffic in campus networks [4], [5], [6], [7].

The sudden change in the spatial distribution, timing, and usage mix of online presence has had a measurable impact on network performance in terms of increased variability of delay, loss rate, and latency [8], and degradation of over-the-top services [9], to the point that these measurements have been effectively used for global-scale inference of work-from-home and lockdown events and maps [10]. Indeed, unexpected and massive changes in traffic characteristics pose a challenge to efficient network resource management, that in turn calls for enhanced network monitoring capabilities. More specifically, the possibility to infer the application or the type of application that generated the observed traffic (viz. the process of Traffic Classification [11], TC in the following) becomes paramount to most management, planning, and policy enforcement actions.

While TC has been a hard problem and an active field of research for decades, its application is further challenged by specific characteristics of communication and collaboration apps: (i) consistent use of encryption; (ii) shared application-level protocols used as transport sublayers (namely, TLS and HTTP); (iii) different functioning modes (activities) for a single application; (iv) execution from mobile devices (platforms characterized by frequent and automated software updates). These challenges are now pushing toward the adoption of advanced Deep Learning (DL) approaches, which are able to cope with frequently changing input nature and offer promising performance when dealing with complex and hard-to-model problems [12].

Thus, on the one hand, a better understanding is required of the traffic of applications that have seen a surge in utilization after the Covid-19 pandemic. On the other hand, an assessment (and improvement) of modern TC approaches applied to this specific scenario is needed. This is all the more the case given the mentioned peculiarity of multiple activities that the user can access from within the same application. This specific characteristic poses several problems: (i) as we show in our experimental evaluation, different activities in the same app present different traffic patterns while being similar to the same activity within other apps, which can confuse the classifiers and lead to poor performance; (ii) different activities have different requirements in terms of Quality of Service, policy enforcement, and monitoring, so managing traffic at app level applies network management actions more broadly than needed; (iii) analyses of user behavior, aimed at identifying needs and planning infrastructure and service deployments, are impacted by the coarseness of the surveyed information.

Following these considerations, the objective of our work is to tackle the activity-level early classification of network traffic generated by the most popular communication and collaboration mobile apps, whose utilization has increased with the COVID-19 pandemic and keeps shaping the nature of Internet traffic. Specifically, we target nine communication and collaboration apps (Discord, GotoMeeting, Meet, Messenger, Skype, Slack, Teams, Webex, and Zoom) that have seen a dramatic increase in usage during lockdowns. We analyze their traffic and assess the performance of state-of-the-art DL approaches for classifying the specific app and/or the kind of activity (Audio-call, Chat, Video-call). As we find the results much wanting, informed by the traffic characterization of mobile apps [13], we propose and evaluate a novel set of inputs observed from contextual traffic from the same app, and design a novel architecture that also exploits these data, showing much improved activity-classification performance. Exploiting such novel inputs, we also design a novel DL architecture that does not rely on application-layer payload, with minimal loss of accuracy but shorter training time and increased robustness to more opaque encrypted protocols. We remark that, although the present work focuses on Internet traffic data generated by some of the apps whose use has grown significantly in conjunction with the Covid-19 pandemic, the proposed methodology is general. Indeed, it can be applied in all contexts in which it is crucial to classify the app and the activity performed by the user (e.g., QoS/QoE management and user profiling).

Accordingly, the main contributions of this work are summarized in the following:

• We collect and publicly release a novel dataset, named MIRAGE-COVID-CCMA-2022 (where CCMA stands for Communication-and-Collaboration Mobile Apps), encompassing the traffic of nine communication-and-collaboration mobile apps run by users executing three different activities (Audio-call, Video-call, and Chat). The collected dataset is human-generated, recent, and reliably labeled with both the mobile app that generated the traffic and the activity that was performed by the user. The considered apps have experienced a sudden change in volume and spatial/temporal patterns during the pandemic, thus are of specific interest for network operators, network managers, academia, and society at large.

• We experimentally evaluate the capability of state-of-the-art DL approaches in classifying the traffic generated by communication-and-collaboration apps at different granularity levels (i.e. application and activity), highlighting the shortcomings deriving from the features leveraged by these solutions. Specifically, we apply state-of-the-art (and input-size-optimized) DL architectures (a 1D-CNN [14], a hybrid 2D-CNN+LSTM [15], and the multimodal Mimetic-Enhanced architecture [16]) to the collected dataset, to classify the traffic at app, activity, and joint (app and activity) granularity. For each activity, we analyze the traffic in terms of (payload-carrying) packet direction, payload length, payload content, TCP window size, and inter-arrival time, for the initial part of the biflow1 (early behavior analysis), and point out the limitations of this choice in activity recognition.

• Prompted by the limitations in classifying user activities deriving from the inputs commonly adopted in related literature [12], we investigate the traffic patterns generated by a traffic source and design a novel set of inputs (namely Context Inputs) able to provide hints about a biflow by observing its context (i.e. the set of co-existing biflows which run in parallel). Hence, we perform a characterization analysis suggesting that Context Inputs are able to support user-activity classification.

• Capitalizing on the appeal of Context Inputs, we design a novel classification solution starting from Mimetic-Enhanced, named Mimetic-All, which leverages them as an additional modality. Mimetic-All effectively exploits the Context Inputs, always obtaining the best performance when compared with both ML- and DL-based TC solutions fed with the same input, while also being suitable for early traffic classification.

• Exploiting the multimodal nature of the architecture, we also provide a second novel classification solution, which we name Mimetic-ConSeq, that trades the inputs based on payload for the new Context Inputs, paying a negligible performance cost (less than 1% F-measure drop for activity classification w.r.t. Mimetic-Enhanced) to gain both a shorter training time and greater robustness to future, more opaque encryption sublayers (e.g., TLS with Encrypted Server Name Indication or Encrypted Client Hello extensions [17]).

• In addition to TC performance, we evaluate the reliability of the proposed novel classification solutions and considered variants via a calibration analysis proving the beneficial effect of Context Inputs in reducing the expected calibration error.

We remark that the present paper constitutes the expansion and continuation of the work described in the conference paper [18], which only analyzed existing solutions applied to the problem of app and activity classification (also evaluated on a smaller dataset).

The remainder of the manuscript is organized as follows. Section 2 surveys related studies analyzing changes of Internet traffic during the Covid-19 pandemic and ML/DL approaches for app or app-user-activity identification, positioning our work against related literature. Section 3 describes the considered experimental setup, including the collected dataset used for analysis and validation. In Section 4, we highlight the shortcomings of current state-of-the-art (multimodal) DL-based approaches used for TC. Then, in Section 5, we introduce the Context Inputs and describe our novel proposals based on such inputs. The experimental analysis is provided in Section 6. Finally, Section 7 provides conclusions and future prospects. In the Appendix, we report the acronyms and abbreviations used in the manuscript.

2. Related work

In this section, we discuss recent studies focusing on the impact of the Covid-19 pandemic (Section 2.1) and position our study by detailing the latest advancements in TC via DL (Section 2.2) and user activity recognition (Section 2.3).

2.1. Impact of Covid-19 on the nature of internet traffic

Several studies have investigated the changes that the global spread of the Covid-19 pandemic has caused to Internet traffic as a result of lockdown periods and the consequent shift in daily habits. Regarding the increasing use of tools for smart working and distance learning, Affinito et al. [3] provide insights on the usage of different categories of Internet applications for collaboration and entertainment, by analyzing websites and domains visited during the enforcement of the lockdown to contain the spread of the virus. As shown, during the reference period, the most used applications were Youtube, Netflix, Facebook, Whatsapp, Skype, and Zoom. Other works investigate the variation in traffic volumes and network performance [1], [2], [4], [8], [9]. Specifically, Feldmann et al. [1] analyze the effect of the lockdowns on traffic shifts during the period Mar–Jun 2020, by using network flow data from multiple vantage points (i.e. an ISP, three IXPs, and a large academic network). They point out that during the period considered, the volume of European Internet traffic increased by up to , mainly due to residential traffic generated by applications for work and distance education, whose volume increased by up to . Lutu et al. [2] analyze the changes in mobility and their impact on cellular network traffic, showing an overall increase in voice traffic, with a decrease in download traffic and an increase in uplink traffic. Additional degradations of network services are highlighted by Böttger et al. [9], who analyze the traffic growth in different regions of the world and how the network responded to the increased demand, from the perspective of the Facebook edge network, at the beginning of the Covid-19 pandemic. The authors observed a world-wide increase in traffic throughput between March and July 2020, as well as a correlation between the phase of traffic growth and the spread of Covid-19 for each region. Similar network traffic shifts are also investigated by Candela et al. [8], who highlight a higher variability in latency and loss rates in Italy during the first weeks of the 2020 lockdown w.r.t. the pre-pandemic period. Finally, Favale et al. [4] analyze the impact of lockdown measures and the switch to online collaboration and e-learning solutions on a university campus during the months of March/April 2020, showing a peak of in the university network traffic due to the increased use of digital tools for collaboration and remote working.

2.2. Deep Learning-based (mobile) traffic classification

In recent years, several works have tackled TC via DL approaches. Wang [19] was the first to use a Stacked AutoEncoder (SAE) for unencrypted traffic identification, achieving superior performance w.r.t. standard neural networks ( precision and recall). Encrypted TC is targeted by Wang et al. [14], who propose a method based on a 1D Convolutional Neural Network (1D-CNN), which outperforms the 2D variant, to tackle four different TC tasks: (i) VPN/nonVPN, (ii) encrypted traffic classes, (iii) VPN-tunneled traffic classes, and (iv) encrypted applications. Similar tasks are tackled by Lotfollahi et al. [20], who propose Deep Packet (based on 1D-CNN and SAE), which outperforms ML-based classifiers for encrypted TC at packet granularity in both application identification and traffic characterization. Recurrent Neural Networks have been considered by Lopez-Martin et al. [15], who propose different hybrid DL architectures that combine Long Short-Term Memory (LSTM) and 2D-convolutional layers. Zeng et al. [21] propose a framework for encrypted TC and intrusion detection, named Deep-Full-Range, based on three different DL architectures (i.e. CNN, LSTM, and SAE) using raw traffic as input data. The framework was evaluated on both classification tasks on two public datasets, obtaining better performance than state-of-the-art methods on both.

Focusing on the classification of mobile-app traffic, Rezaei et al. [22] leverage a CNN fed with the header and the payload of the first six packets of a biflow. Similarly, Liu et al. [23] devise FS-Net, an encoder–decoder architecture based on Bidirectional Gated Recurrent Units (BiGRU) taking as input IP-packet sizes of flow sequences. In this context, in our previous work [12], we define a systematic framework to dissect the encrypted mobile TC using DL, and compare a number of the aforementioned techniques for a comprehensive evaluation. Common usage of biased inputs (e.g., local-network metadata [14], or source and destination ports [15]) inflating TC performance is also discussed (and discouraged).

Multimodal DL solutions have been recently proposed to face the challenges of mobile-app TC. We propose Mimetic [24], a general framework for capitalizing on the heterogeneous views associated with a traffic object, along with a novel training procedure based on pre-training and fine-tuning. Experimental results show that the Mimetic classifier outperforms single-modal and ML-based traffic classifiers, as well as their late combination, in terms of both TC performance and training complexity. Following the same research direction, Wang et al. [25] propose App-Net, consisting of two modalities: a (bidirectional) LSTM and a 1D-CNN. Experimental results show that App-Net outperforms ML-based and single-modal DL-based traffic classifiers, while performing almost on par w.r.t. Mimetic. In the same research direction, we propose Distiller [26], a multimodal multitask DL approach for traffic classification that capitalizes on the heterogeneity of traffic data and solves multiple traffic categorization problems simultaneously. A specific instance of the proposed framework was experimentally compared with state-of-the-art multitask DL traffic classifiers [27], [28] on the public dataset ISCX VPN-nonVPN, showing that Distiller achieves gains over all the TC tasks considered, while also exhibiting very manageable training complexity and a lower computational burden than the overall-best-performing multitask baseline. Recently, Akbari et al. [29] have proposed a tripartite multimodal DL architecture based on convolutional and LSTM layers for encrypted-traffic classification at service (HTTPS traffic) and application (QUIC traffic) level. Each modality is fed with different input data, namely (i) raw TLS handshake bytes, (ii) flow time-series of IAT, size, and direction, and (iii) handcrafted (post-mortem) flow statistics. Unfortunately, the authors (i) do not compare their proposal with other multimodal solutions, (ii) include the adoption of both handcrafted and post-mortem flow statistics, and (iii) do not consider Context Inputs in the design of the proposal.

Capitalizing on the latest advancements on TC solutions via DL, we investigate the performance of a state-of-art multimodal architecture and compare its performance against some other recent but simpler proposals.

2.3. User activity recognition

While app-level classification of mobile app traffic is just a particularly hard case of network-traffic classification, modern apps characterized by multiple usage modes (activities) pose, on the one hand, an even harder problem and offer, on the other hand, the possibility of obtaining more actionable information than the sole indication of the app. Therefore, recent academic studies have investigated and demonstrated the ability to infer user actions performed in mobile apps by analyzing encrypted network traffic. To this aim, in our previous work [30] we have addressed the characterization and modeling (by means of multimodal Markov Chains) of network traffic (at trace, activity, and flow level) generated by apps that have experienced a traffic surge due to the spread of the Covid-19 pandemic. Our results highlight interesting traffic peculiarities related to both the apps and the specific activities they are used for.

Conti et al. [31] propose a framework to infer which specific actions the users perform while running a certain mobile app, based on packet direction/size information. This is achieved by using both supervised (Random Forest, RF) and unsupervised learning (agglomerative clustering with a distance based on dynamic time warping) approaches for service burst classification. It is shown that by knowing the app generating the traffic, it is possible to identify a user action with accuracy for most of the actions considered within a set of Android apps. Saltaformaggio et al. [32] tackle a similar task via their Netscope proposal: the evaluation is carried out considering a set of popular activities (for both Android and iOS devices), based on statistics originated from IP headers. For elementary-behavior discovery, K-means clustering is used, and then a Support Vector Classifier (SVC) is trained/tested on activity-behaviors binary mapping, resulting in performance that varies depending on the device being tested, but averages 78.04% precision and 76.04% recall. Grolman et al. [33] extend the feature-extraction method proposed in [31] and employ transfer learning (based on a modified co-training method requiring only few samples in the source domain) to transfer patterns that allow identifying specific in-app activities (i.e. tweets and posts) across different configurations (i.e. device- or version-wise) to improve the process of recognizing user actions utilizing existing unlabeled encrypted data. The classification of user actions in target configuration is made by using two co-training learners based on RF and AdaBoost algorithms.

Aiolli et al. [34] focus on identifying user activities on smartphone-based Bitcoin wallet apps, leveraging the earlier methodology proposed in [35]. The fingerprints are collected by running apps automatically on Android and iOS devices and simulating user actions using scripted commands. Network traces are pre-processed (to remove background traffic and extract features) to train an SVC and an RF. Statistical features are collected on sets of packets defined through timing criteria and IP address/port pairs. The authors deal with the classification problem in a multi-stage hierarchical fashion to infer the app category (Bitcoin vs. other), the OS (Android vs. iOS), the app, and finally the specific action performed by the user. The results, evaluated on apps ( Bitcoin) and user actions, report 95% accuracy.

Li et al. [36] address the problem of inferring activities from a targeted set of mobile apps via analyzing the continuous encrypted user traffic stream. The authors propose a DL-based framework in which, focusing on activities having duration , they proceed by dividing each traffic stream into segments using a sliding-window approach, where each segment corresponds to an activity of a targeted app. Subsequently, the segments are normalized and represented by a time-space matrix and a traffic spectrum vector that are used to feed 2D-CNN and 1D-CNN branches of a multimodal DL architecture, respectively. The proposed solution is compared with state-of-art classifiers on a semi-synthetic traffic dataset and attains accuracy regarding app, activity, and both classification tasks.

Finally, Li et al. [37] propose a two-step strategy for mobile-service TC. In detail, in the first step, a joint DL model is exploited as a basic classifier to observe the mobile service traffic at multiple timescales (i.e. micro-time interval, short-time interval, and long-time interval). Specifically, based on the timescale, the basic classifier leverages a different architecture, including Logistic Regression (LR), Recurrent Neural Network (RNN), and Convolutional Neural Network (CNN), to extract information from the features used to feed the model. In the second step, an attention mechanism is used to aggregate the basic predictions made in the first step in order to observe the mobile service traffic at an extra-long timescale. Experimental results show that the two-step strategy outperforms pure DL strategies when used in the classification of different services (e.g., video on demand, video call, live stream, chat), both in terms of accuracy and time delay.

Starting from the aforementioned literature on app and activity recognition, we investigate their identifiability via a real dataset targeting apps that were massively used due to the pandemic events, by avoiding handcrafted features and post-mortem identification.

3. Experimental setup

In the following, we first provide the details about the MIRAGE-COVID-CCMA-2022 dataset we collected (Section 3.1), also explaining the rationale behind the selection of the apps (Section 3.2). Then, we introduce the considered classifiers in the experimental analysis (Section 3.3).

3.1. Dataset collection and ground truth generation

The MIRAGE-COVID-CCMA-2022 dataset was collected by students and researchers during Apr.–Dec. 2021 leveraging the Mirage architecture [38] (conveniently optimized to capture the traffic of communication and collaboration apps) in the ARCLAB laboratory at the University of Napoli “Federico II”.2 Experimenters used three different mobile devices (all equipped with Android 10): a Google Nexus 6 and two Samsung Galaxy A5. In each capture session the experimenters performed a specific activity on a given communication and collaboration app (details are given in the later Section 3.2), so as to obtain a traffic dataset that reflects the common usage of the considered apps.3 The session duration spanned from to min based on the specific activity being carried out. Accordingly, each session resulted in a PCAP traffic trace with associated ground-truth information obtained via additional system log-files.

Based on the latter, each biflow4 was reliably labeled with the corresponding Android package-name by considering established network-connections (via the standard Linux command netstat).5 This information was further enriched with a custom label referring to the specific activity performed by the user operating the device.
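The labeling step described above can be sketched as follows. This is only an illustrative sketch, not the Mirage implementation: it assumes the usual notion of a biflow as the set of packets sharing the same 5-tuple regardless of direction, and it assumes that the socket information extracted from the system logs has already been parsed into records. The record layout (`socket`, `package`), the packet field names, and all function names are hypothetical.

```python
from collections import defaultdict


def biflow_key(src_ip, src_port, dst_ip, dst_port, proto):
    """Direction-agnostic 5-tuple: both directions map to the same key."""
    a, b = (src_ip, src_port), (dst_ip, dst_port)
    return (proto,) + (a + b if a <= b else b + a)


def group_biflows(packets):
    """Group packet dicts into biflows, preserving arrival order."""
    biflows = defaultdict(list)
    for pkt in packets:
        key = biflow_key(pkt["src_ip"], pkt["src_port"],
                         pkt["dst_ip"], pkt["dst_port"], pkt["proto"])
        biflows[key].append(pkt)
    return dict(biflows)


def label_biflows(biflows, socket_log, activity):
    """Attach (package name, activity) to every biflow.

    socket_log: hypothetical pre-parsed records, each with a "socket"
    5-tuple (src_ip, src_port, dst_ip, dst_port, proto) and the owning
    Android "package". Biflows without a matching record get None.
    """
    owners = {biflow_key(*rec["socket"]): rec["package"] for rec in socket_log}
    return {key: (owners.get(key), activity) for key in biflows}
```

Since one capture session corresponds to one activity, the sketch applies the same activity label to all the biflows of a session; biflows with no matching socket record keep `None` as package name and can be filtered out afterwards.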

To foster replicability and reproducibility we publicly release the MIRAGE-COVID-CCMA-2022 dataset.6

3.2. Apps’ and activities’ selection rationale

Communication and collaboration apps, used for business meetings, classes, and social interaction, experienced a huge increase in utilization when “stay-at-home” orders were issued worldwide, and they are still widely used owing to the change in the life- and work-style of people around the globe. The wide adoption of these apps, which expose complex network behaviors, has prompted recent research from academics and practitioners focusing on different facets, ranging from the reaction to varying network conditions [39] to the different usages of RTP/RTCP protocols [40] for multimedia transfers.

Based on both popularity and utilization boost, herein we focus on nine communication and collaboration apps: Discord, GotoMeeting, Meet, Messenger, Skype, Slack, Teams, Webex, and Zoom. Indeed, Zoom has obtained the steepest increment with its traffic scaling by orders of magnitude, followed by Webex, GotoMeeting, Teams, BlueJeans (whose traffic we are currently collecting), and Skype [41]. Also, during 15th–21st March 2020, Zoom was downloaded , , and more than the weekly average during Q4 2019 in the US, UK, and Italy, respectively [42]. Similarly, Teams also experienced significant growth in Italy (resp. France) with (resp. ) more downloads. The considered apps have been extensively exploited for remote (and blended) teaching in Italian7 and European8 institutions and universities. As also highlighted by Sandvine [43], in 2021 video traffic has proven to be even more significant compared to the previous year, both as a standalone and as an embedded component of app mashups. Indeed, social and communication applications have gained further popularity, with WhatsApp, Zoom, Teams, and Messenger being the most used for messaging, and Zoom, Webex, and Teams for enterprise conferencing.

Fig. 1 depicts the mobile apps collected in MIRAGE-COVID-CCMA-2022 and used in this study, highlighting also the activities carried out with each, and the amount of traffic collected in terms of number of biflows and packets. Specifically, according to the observed app usage, the experimentation covered the following activities (all related to live events): Chat (“Chat”), which involves just two participants exchanging textual messages and/or multimedia content (e.g., images or GIFs); Audio-call (“ACall”), which involves just two participants transmitting only audio; Video-call (“VCall”), which involves multiple attendees who can transmit both video and audio (e.g., live events such as video calls between two or more attendees or webinars).

Fig. 1.

Communication and collaboration apps in MIRAGE-COVID-CCMA-2022 (alphabetical order). Performed activities, number of biflows (left bar), and number of packets (right bar) are reported for each app. Note that the number of packets is reported on a log scale.

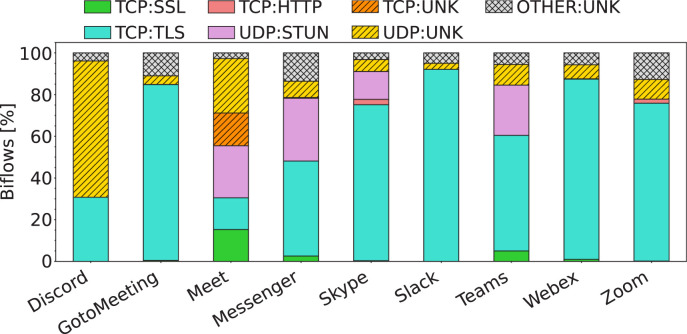

Furthermore, Fig. 2 reports the characterization of MIRAGE-COVID-CCMA-2022 traffic in terms of the adopted protocols for each app. Specifically, for all the apps a significant percentage of SSL/TLS biflows is observed (ranging from 30% for Discord to 90% for Slack). Moreover, all apps generate a relevant portion of UDP biflows. Remarkably, in the case of Discord (unlike the other apps) the UDP traffic is predominant compared to TCP counterpart (i.e. 65% vs. 30%). Finally, for Meet, Messenger, Skype, and Teams there is also a significant presence of STUN9 biflows (between 10% and 30%), commonly used for multimedia communications in the prevalent case of presence of a Network Address Translator (NAT). These findings are consistent with the outcomes of traffic analysis performed in other studies [39], [40].

Fig. 2.

Protocol distribution in terms of biflows. UNK stands for unknown, SSL stands for undetected version of SSL/TLS.

3.3. Baselines considered and learning setup

In the following, we consider state-of-the-art classifiers selected among the best single-modal and multimodal alternatives—based on extensive performance evaluation carried out in our previous works [12], [16], [24]—in terms of both DL architecture and unbiased input data.

Specifically, we consider a 1D-CNN fed with the first bytes of transport-layer payload (PAY) of each biflow [14]. Also, we evaluate a hybrid composition of 2D-CNN+LSTM (named Hybrid hereinafter) as proposed in [15], having as input the following informative fields (SEQ) of the first packets of each biflow: (i) the number of bytes in transport-layer payload (PL), (ii) TCP window size (TCPWIN, set to zero for UDP packets), (iii) inter-arrival time (IAT), and (iv) packet direction (DIR).
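The construction of the two input types can be sketched as follows. This is a minimal illustration under stated assumptions rather than the preprocessing used in [14], [15]: the packet fields (`ts`, `payload`, `tcp_win`, `proto`, `dir`), the +1/-1 direction encoding, the zero padding of short biflows, the zero IAT assigned to the first packet, and the parameters `n_bytes` and `n_packets` are all hypothetical, and any normalization is omitted.

```python
def build_pay_input(packets, n_bytes):
    """PAY: first n_bytes of the biflow's transport-layer payload.

    Payloads are concatenated in arrival order; shorter biflows are
    zero-padded (assumed padding scheme).
    """
    data = b"".join(p["payload"] for p in packets)[:n_bytes]
    return list(data) + [0] * (n_bytes - len(data))


def build_seq_input(packets, n_packets):
    """SEQ: one [PL, TCPWIN, IAT, DIR] row for each of the first n_packets.

    TCPWIN is set to zero for UDP packets, as stated in the text. The IAT
    of the first packet is set to zero and missing packets are padded with
    all-zero rows (both are assumptions of this sketch).
    """
    rows, prev_ts = [], None
    for p in packets[:n_packets]:
        iat = 0.0 if prev_ts is None else p["ts"] - prev_ts
        prev_ts = p["ts"]
        tcpwin = p.get("tcp_win", 0) if p["proto"] == "TCP" else 0
        rows.append([len(p["payload"]), tcpwin, iat, p["dir"]])
    rows.extend([0, 0, 0.0, 0] for _ in range(n_packets - len(rows)))
    return rows
```

Both functions only look at the initial part of a biflow, which is what makes the resulting inputs usable for early classification.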

Finally, we also consider the multimodal Mimetic-Enhanced classifier [16], being an enhanced version of the generic Mimetic framework originally proposed in [24]. Mimetic-Enhanced consists of two modalities fed each with one of the two input types (namely PAY and SEQ) used for the baseline single-modal classifiers described above. First, to augment the information carried by input data, Mimetic-Enhanced improves the original Mimetic by introducing a trainable embedding layer for both modalities. From the architectural viewpoint, the modality fed with the PAY input type consists of two 1D convolutional layers – each followed by a 1D max-pooling layer – and a final dense layer. On the other hand, the modality fed with the SEQ input type consists of a BiGRU and one dense layer. Finally, the intermediate features extracted by each modality are concatenated and fed to a shared dense layer and then to the last softmax classifier. Mimetic-Enhanced is trained via a two-phase procedure consisting of the pre-training of individual modalities plus the fine-tuning of the whole architecture, and adopts an adaptive learning-rate scheduler. We refer to [16] for further details on the Mimetic-Enhanced architecture and related hyperparameters.

In all the following analyses, the performance evaluation is based on a stratified ten-fold cross-validation. Indeed, the latter represents a solid assessment setup since it keeps the sample ratio among classes for each fold. Also, to foster a fair comparison, all the classifiers (both novel proposals and baselines, cf. Section 5.3 for details on our proposals) are trained for a total of epochs (for Mimetic-Enhanced and the novel multimodal proposals, we consider epochs for pre-training of each modality and epochs for fine-tuning) to minimize the categorical cross-entropy loss, and exploit the Adam optimizer (with a batch size of ) and a validation-based early-stopping technique to prevent overfitting. In detail, for each fold, we consider 80% of the whole training data as the actual training set, while the remaining 20% is assigned to the validation set.
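The evaluation protocol above can be sketched as follows: samples are assigned to ten folds class by class (so each fold keeps the class ratio), and for each held-out test fold the remaining data are split 80/20 into training and validation sets. The function names, the fixed seed, and the plain random (non-stratified) validation split are assumptions of this sketch, not details taken from the paper.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def stratified_folds(labels: Sequence[str], k: int = 10, seed: int = 0) -> List[List[int]]:
    """Assign sample indices to k folds, spreading each class evenly."""
    rng = random.Random(seed)
    by_class: Dict[str, List[int]] = defaultdict(list)
    for idx, lab in enumerate(labels):
        by_class[lab].append(idx)
    folds: List[List[int]] = [[] for _ in range(k)]
    for lab in sorted(by_class):
        idxs = by_class[lab]
        rng.shuffle(idxs)
        # Round-robin assignment keeps the per-class ratio in every fold.
        for pos, idx in enumerate(idxs):
            folds[pos % k].append(idx)
    return folds


def train_val_test(
    folds: List[List[int]], test_fold: int, val_fraction: float = 0.2, seed: int = 0
) -> Tuple[List[int], List[int], List[int]]:
    """Hold out one fold for testing; split the rest into training/validation."""
    test = list(folds[test_fold])
    rest = [i for f, fold in enumerate(folds) if f != test_fold for i in fold]
    random.Random(seed).shuffle(rest)
    n_val = int(round(val_fraction * len(rest)))
    return rest[n_val:], rest[:n_val], test
```

The validation set returned here would drive early stopping, while the test fold is used only for the final performance figures.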

Finally, hereinafter we take advantage of the ground-truth information associated with each biflow (labeled with both the app generating the traffic and the activity performed) to train and evaluate different supervised strategies corresponding to three TC tasks: (i) classifying the app (App-TC), (ii) classifying the activity (Act-TC), and (iii) classifying both the app and the activity (Joint-TC). Accordingly, we train the above models with: (a) app-related ground truth only (App), (b) activity-related ground truth only (Act), and (c) the joint app-and-activity ground truth (App Act). Note that App and Act produce classifiers able to address only the specific task they are trained for (i.e., classifying either apps or activities), whereas when training the DL architectures based on App Act, all three TC tasks described above can be addressed.
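The reason a classifier trained on the joint ground truth can address all three tasks is that each joint prediction can be projected onto its app and activity components. A minimal sketch follows; the `app|activity` string encoding and the helper names are illustrative assumptions.

```python
from typing import List, Tuple


def joint_label(app: str, activity: str) -> str:
    """Encode an (app, activity) pair as a single joint class label."""
    return f"{app}|{activity}"


def split_joint(label: str) -> Tuple[str, str]:
    """Recover the (app, activity) pair from a joint class label."""
    app, activity = label.split("|", 1)
    return app, activity


def accuracies(y_true: List[str], y_pred: List[str]) -> Tuple[float, float, float]:
    """Return (Joint-TC, App-TC, Act-TC) accuracy from joint predictions."""
    n = len(y_true)
    pairs = list(zip(y_true, y_pred))
    joint = sum(t == p for t, p in pairs) / n
    app = sum(split_joint(t)[0] == split_joint(p)[0] for t, p in pairs) / n
    act = sum(split_joint(t)[1] == split_joint(p)[1] for t, p in pairs) / n
    return joint, app, act
```

A prediction such as `Teams|ACall` for a true `Teams|VCall` biflow counts as correct for App-TC but wrong for both Act-TC and Joint-TC.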

4. Empirical evaluation of state-of-the-art shortcomings in activity classification

After tuning the size of their inputs (Section 4.1), we assess the capability of state-of-art solutions in tackling classification at both app and activity granularity (Section 4.2). Then, we look at traffic patterns to understand the causes of the poor performance attained for activity TC (Section 4.3) and investigate alternative paths viable to highlight peculiarities in the traffic generated by different activities, considering its temporal evolution (Section 4.4), and aggregate behavior (Section 4.5).

4.1. Sensitivity analysis

First, we perform a sensitivity analysis to tune the dimension of the above input types, i.e. the number of bytes and the number of packets. For brevity, we refer to the Joint-TC task, i.e. the hardest by nature.

Fig. 3 shows the accuracy and F-measure 10 attained by the 1D-CNN and Hybrid architectures, when varying and packets, respectively.11 We also report how the number of trainable parameters (TP) varies with the size of the considered input data to highlight the (necessary) input size-complexity trade-off. As reported in Fig. 3(a), although the best performance in terms of accuracy and F-measure is obtained with , when passing from to , both the accuracy and F-measure remain stable despite the larger input size. Additionally, the same variation of causes a non-negligible increase in the number of trainable parameters (), resulting in a much more complex architecture with a limited improvement (i.e. in terms of F-measure).

Fig. 3.

Accuracy [%], F-measure [%], and number of trainable parameters of 1D-CNN (a) when varying the input dimensions and Hybrid (b) when varying the input dimensions . Results refer to the Joint-TC task. The best trade-off value is highlighted via a marker.

On the other hand, regarding Fig. 3(b), we can notice that Hybrid reaches the best performance in terms of both accuracy and F-measure by using packets. In this specific case, considering packets instead of still provides a small improvement of performance in terms of F-measure, at the cost of a slight (and thus manageable) increase in complexity ( in terms of number of trainable parameters).12 Regarding the latter, it is useful to underline that the number of trainable parameters corresponding to is comparable to that obtained by using packets. The reason for this can be traced to the fact that such a small input implies the use of different padding, which adds complexity to the implementation of the Hybrid architecture. Hence, in the following we employ and packets in order to balance classification performance (in terms of F-measure) and complexity of the obtained classifiers. Additionally, it is apparent that there is an upper bound on the achievable TC performance even when optimizing the input size, whose causes are investigated in the following sections.

4.2. App and activity classification via state-of-the-art approaches

Hereinafter, we evaluate the performance achieved by state-of-the-art classifiers (i.e., 1D-CNN, Hybrid, and Mimetic-Enhanced), when adopting different training strategies (i.e., App, Act, and App Act). We recall that App (resp. Act) is able to solve only the App-TC (resp. Act-TC) task, whereas App Act allows solving both each separate task and the Joint-TC problem.

As a result, Table 1 reports the performance of the classifiers in terms of accuracy and F-measure attained for each TC task (column-wise). The table is also complemented by a computational complexity assessment (via the “TP” and the training “Time” columns).13 By comparing the general performance achieved on the three TC problems, Joint-TC is clearly confirmed to be the most difficult task to tackle (with accuracy and F-measure ranges) due to the greater number of classes (i.e. the combinations of apps and activities). In contrast, the (easier) App-TC task (consisting of classes) can be effectively solved by all the architectures ( accuracy and F-measure). Finally, the performance obtained on the Act-TC task highlights the actual difficulty in activity recognition. Indeed, despite the smallest number of classes (i.e. the activities) considered in our study, very low performance ( accuracy and F-measure) is attained with all the state-of-art approaches.

Table 1.

Comparison of accuracy, F-measure, number of Trainable Parameters (#TP), and Training Time (Time) for the three state-of-art DL architectures (1D-CNN, Hybrid, and Mimetic-Enhanced) when trained on different class-labels (i.e. related to AppAct, App, and Act) for different classification tasks. #TP slightly varies with the classification task (with variations being smaller than reported precision). Results are in the format obtained over 10 folds. Training time was computed by pre-training the individual modalities in parallel. The best result per metric (column) is highlighted in boldface. MM denotes a multi-modal architecture.

| Classifier | MM | Training Strategy | Joint-TC Accuracy [%] | Joint-TC F-measure [%] | App-TC Accuracy [%] | App-TC F-measure [%] | Act-TC Accuracy [%] | Act-TC F-measure [%] | #TP [k] | Time [min] |
|---|---|---|---|---|---|---|---|---|---|---|
| 1D-CNN | | AppAct | | | | | | | 4272 | |
| | | App | – | – | | | – | – | 4256 | |
| | | Act | – | – | – | – | | | 4250 | |
| Hybrid | | AppAct | | | | | | | 428 | |
| | | App | – | – | | | – | – | | |
| | | Act | – | – | – | – | | | | |
| Mimetic-Enhanced | ● | AppAct | | | | | | | 1235 | |
| | | App | – | – | | | – | – | 1225 | |
| | | Act | – | – | – | – | | | 1221 | |

From the classifier standpoint, Mimetic-Enhanced outperforms the two competing approaches on all the three considered TC tasks. For instance, Mimetic-Enhanced roughly achieves (resp. ) F-measure than Hybrid (resp. 1D-CNN) on Joint-TC. Interestingly, Table 1 also highlights that the training strategy adopted may impact the performance achieved by the models. Indeed, while for App-TC all the architectures achieve better performance when relying on the App training strategy, for Act-TC the App Act training strategy results in better performance for Mimetic-Enhanced and Hybrid. Hence, this highlights that app classification may be conducive to activity recognition, while the opposite does not necessarily hold.

Furthermore, looking at the complexity of the considered architectures, the analysis provides other interesting pieces of evidence (last two columns of Table 1). In fact, the complexity varies widely with the considered architecture: 1D-CNN and Mimetic-Enhanced have roughly 10 and 3 times as many trainable parameters as Hybrid, respectively (about 4272k and 1235k vs. 428k for the AppAct strategy, cf. Table 1), and the number of trainable parameters is roughly proportional to both the training time and the memory occupation. Hence, Mimetic-Enhanced provides the best trade-off between TC performance and complexity.

To provide details on the performance at a finer grain, Fig. 4 reports the confusion matrix of each architecture on the Joint-TC task. Delving into these matrices, we can notice that Mimetic-Enhanced can substantially reduce the misclassification patterns w.r.t. both 1D-CNN and Hybrid, confining the errors within the activities of the same app, illustrated by the more evident block diagonal pattern in Fig. 4(c). Still, the block-diagonal pattern of all the confusion matrices confirms the general difficulty in discriminating adequately among the activities with the given set of inputs.14

Fig. 4.

Confusion matrices of 1D-CNN (a), Hybrid (b), and Mimetic-Enhanced (c) when trained with the AppAct strategy and evaluated on the Joint-TC task. Note that a log scale is used to highlight small errors.
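The block-diagonal pattern discussed above can also be quantified as the share of misclassified biflows whose predicted class still belongs to the true app. The sketch below is an illustrative metric introduced here (it is not reported in the paper); `confusion[i][j]` is assumed to count samples of true class `i` predicted as class `j`, and `class_apps[i]` to give the app of joint class `i`.

```python
from typing import List, Sequence


def within_app_error_share(confusion: List[List[int]], class_apps: Sequence[str]) -> float:
    """Share of errors that stay inside the block of the true app."""
    within = 0
    total = 0
    for i, row in enumerate(confusion):
        for j, count in enumerate(row):
            if i == j:
                continue  # correct predictions are not errors
            total += count
            if class_apps[i] == class_apps[j]:
                within += count  # right app, wrong activity
    return within / total if total else 0.0
```

A value close to 1 indicates that nearly all errors are confusions among activities of the same app, i.e. a pronounced block-diagonal structure.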

4.3. Understanding the causes: Biflow-level characterization of early behavior

In the previous analysis, we have shown that state-of-art classifiers achieve unsatisfactory performance when used to solve the activity classification problem. Consequently, in this section we analyze the typical behavior of biflows in the initial part of the communication, in order to collect clues about whether activities related to specific apps can be discriminated by observing the inputs used to feed the considered DL architectures. Accordingly, we analyze the PL/DIR/IAT/TCPWIN sequences of the first packets15 (i.e. the fields considered in the SEQ input) and the first B (i.e. the PAY input). For each packet/byte index, we report the average value across all biflows for a given app. The analysis presented in Fig. 5 focuses on Skype,16 Teams,17 and Webex.18 For these apps, the above information is broken down into the considered activities (ACall, Chat, and VCall) and also reported in summary form (All).

Fig. 5.

Properties of biflows’ time-series with respect to PL, DIR, IAT, TCPWIN, and PAY for Skype (a, c, e, g, i), Teams (b, d, f, h, j), and Webex (k, l, m, n, o) based on the activity-type and in summary (All) form. The downstream and upstream DIR is mapped on and , respectively. For each input type, the vertical dashed line marks the size of the input fed to classifiers, based on the sensitivity analysis in Section 4.1.

Considering the generic behavior of the specific app (All), in all cases there are significant differences between the initial part – which extends at most up to the 15th packet – and the remaining part of the sequence, when considering PL/DIR/IAT. This does not occur in the case of TCPWIN, for which the behavior is slightly less evident. Specifically, in the cases of Skype and Teams, the main patterns are located on the first packets, while for Webex the trend extends up to the 15th packet.

Moreover, when focusing on the specific app, similarities can be seen between the patterns associated with the different activities. For Skype and Teams we observe characteristic patterns associated with ACall and VCall on the first packets, while for Chat we observe a trend that extends over all packets. Specifically, focusing on VCall and ACall, if we consider PL and DIR, in the case of Skype we observe identical behavior between the two activities (excluding the 5th packet), while in the case of Teams the pattern associated with VCall is slightly more pronounced (i.e. packets with higher payload in the downstream direction). On the contrary, this does not hold when looking at the IAT, for which we observe longer times in the case of ACall. It is also interesting to note that, in the case of Webex, ACall and VCall present an identical behavior. This is probably because Webex,19 unlike the other two apps shown, is designed for video calls and has no native audio-call function: simple audio calls are made by adapting the video-call function. Finally, referring to PAY, similar observations can be made about the indistinguishability between the activities associated with Skype, Teams, and Webex, especially regarding ACall and VCall.

Different activities performed by the user using a specific app exhibit very similar behaviors when considering the features that are typically used as input to feed the classifiers.

4.4. Highlighting activity peculiarities via an alternative view: Time evolution of app connections

The previous analysis suggests that the shortcomings encountered in TC performance are related to the nature of the considered inputs, which are not able to capture the peculiarities of the activities performed. Hence, here we investigate whether alternate paths are viable based on the observation of “simultaneous” (viz. concurrent) biflows.

Indeed, the behavior of an app on the network is the result of the mixture of multiple concurrent traffic biflows between the client – running a certain (communication and collaboration) app – and a variety of servers [13], whose number and characteristics may depend both on the app and the activity. To validate this hypothesis, we analyze the overall traffic generated or received by the app during a traffic capture in order to quantify the number of concurrent biflows over time, by considering (non-overlapping) windows.

Formally, for a capture starting at time and having duration , the th aggregation interval gathers all biflows that transmit at least one packet within , with , where is the duration of the sampling window. Considering three exemplifying apps (i.e. Skype, Teams, and Webex), Fig. 6 shows the amount of concurrent biflows considering a (non-overlapping) window size . We can observe a limited time frame at the very beginning of the communication during which the client generates several biflows whose number depends on the type of action conducted by the user (this is particularly evident for instance in Fig. 6(b)). These concurrent interactions might be connected to authorization/accounting, telemetry, and advertising services, each expected to be located on dedicated servers.20
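The windowed counting described above can be sketched as follows. The function and parameter names are hypothetical, the 5-second default mirrors the slot size stated in the caption of Fig. 6, and the half-open convention for window boundaries is an assumption of this sketch.

```python
import math
from typing import Dict, Hashable, List, Set


def concurrent_biflows(
    packet_times: Dict[Hashable, List[float]],
    t_start: float,
    duration: float,
    window: float = 5.0,
) -> List[int]:
    """Count, per non-overlapping window, the biflows sending >= 1 packet.

    packet_times maps a biflow identifier to its packet arrival times.
    Window i covers [t_start + i*window, t_start + (i+1)*window).
    """
    n_windows = math.ceil(duration / window)
    active: List[Set[Hashable]] = [set() for _ in range(n_windows)]
    for biflow, times in packet_times.items():
        for t in times:
            i = int((t - t_start) // window)
            if 0 <= i < n_windows:
                active[i].add(biflow)
    return [len(s) for s in active]
```

A biflow that stays silent during a window is not counted in that window, even if it has not been closed yet.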

Fig. 6.

Number of concurrent biflows ( across different captures) in each 5-second slot for Skype (a), Teams (b), and Webex (c) across the different activities performed. Values are calculated considering the first of each capture.

The number of generated biflows then settles to a lower value (which varies with both the app and the activity, and ranges from to biflows). Interestingly, for some apps such as Teams, this plateau value appears to be more stable for both ACall and VCall, whereas Chat exhibits more pronounced variations across multiple captures. Generally, if we compare the number of biflows associated with ACall and VCall before reaching the plateau, we can observe that in the case of Teams, VCall is characterized by a higher number of biflows than ACall, while the opposite scenario occurs in the case of Webex.

Observing the steady-state behavior, VCall tends to maintain a higher number of active biflows than ACall in the case of Teams, while the two activities tend to maintain the same number of active biflows for Webex. Interestingly, the behavior of the two activities is very different for Teams—with VCall always being associated with a higher number of active biflows. This does not hold for Skype where the behavior is very similar in both phases. Finally, for both Skype and Teams, the traffic related to Chat is always carried by a lower number of biflows compared to the other two activities.

We also evaluated the number of different server sockets contacted by the client, which characterize the packets belonging to the same service burst [45]. The definition of service burst starts from that of burst [46], defined as a sequence of packets (regardless of the biflow they belong to) having an inter-packet time smaller than a given threshold. Hence, a service burst is defined as the subset of packets of a burst belonging to biflows that share the same transport protocol and destination IP:port pair. The service burst ideally groups packets related to the same server application and strictly-related information (due to the timing), and has been used previously for the purpose of TC [31], [45], [47], implying also that the client device is set (as in our analysis). At any time, a service burst can refer to a single server socket, not including traffic related to other simultaneous communications from the same client (see Fig. 8).
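The two definitions above can be sketched in a few lines: packets are first cut into bursts wherever the inter-packet time reaches the threshold, and each burst is then partitioned by transport protocol and destination IP:port. The dictionary-based packet representation and the function names are assumptions made for illustration.

```python
from collections import defaultdict
from typing import Any, Dict, List, Tuple

PacketRec = Dict[str, Any]  # hypothetical keys: ts, proto, dst_ip, dst_port


def split_bursts(packets: List[PacketRec], gap: float) -> List[List[PacketRec]]:
    """Group packets into bursts; a new burst starts when the
    inter-packet time is not smaller than `gap`."""
    bursts: List[List[PacketRec]] = []
    current: List[PacketRec] = []
    prev_ts = None
    for pkt in sorted(packets, key=lambda p: p["ts"]):
        if prev_ts is not None and pkt["ts"] - prev_ts >= gap:
            bursts.append(current)
            current = []
        current.append(pkt)
        prev_ts = pkt["ts"]
    if current:
        bursts.append(current)
    return bursts


def service_bursts(burst: List[PacketRec]) -> Dict[Tuple[str, str, int], List[PacketRec]]:
    """Split one burst by (transport protocol, destination IP, destination port)."""
    groups: Dict[Tuple[str, str, int], List[PacketRec]] = defaultdict(list)
    for pkt in burst:
        groups[(pkt["proto"], pkt["dst_ip"], pkt["dst_port"])].append(pkt)
    return dict(groups)
```

The sketch makes the sensitivity to `gap` explicit: changing the threshold alters where bursts are cut and therefore which packets end up in the same service burst.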

Fig. 8.

Comparison between context biflows of a reference biflow and service bursts. All packets are generated or received by the same mobile app.

Performing an analysis of concurrent server sockets (strictly analogous to what we have done with biflows) we can observe trends similar to those shown in Fig. 6 for biflows but with a notable offset. Specifically, we found that the number of server sockets is at most 50% of the overall number of active biflows. This clearly implies that multiple biflows concurrently reach the same service. Hence, the presence of a significant number of concurrent biflows and multiple concurrent service bursts implies that a single service burst (not to say a single biflow) cannot account for most traffic exchanged by a given app in a given time interval. Therefore, contextual information is missed when using biflow-based or service-burst-based inputs. This (expected) experimental finding will inform the definition of novel inputs in the following Section 5, and motivates the aggregated characterization of app traffic in the following.

4.5. Highlighting activity peculiarities via an alternative view: Characterization of simultaneous biflows

Informed by the analysis on time evolution of app communications, in this section we characterize the traffic carried by concurrent biflows in terms of aggregate metrics, namely bit- and packet-rates, computed considering a (non-overlapping) window of size according to the aforementioned methodology. Results are shown in Figs. 7(a) and 7(b), depicting the downstream and upstream bit-rate, respectively. Similarly, Figs. 7(c) and 7(d) report the downstream and upstream packet-rate, respectively. In addition, for each app, the distribution is broken down across the specific activities highlighted with different colors.

Fig. 7.

Downstream bitrate (a), upstream bitrate (b), downstream packet-rate (c), and upstream packet-rate (d). Values are evaluated over time intervals of . Boxes report the 1st and 3rd quartiles (1Q and 3Q, respectively), while whiskers mark 1Q−1.5 IQR and 3Q+1.5 IQR, where IQR=3Q−1Q. Black diamonds highlight outliers.
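For reference, the box-plot quantities can be computed as in the sketch below, assuming the conventional fences at 1Q − 1.5 IQR and 3Q + 1.5 IQR and the "inclusive" quartile method of the Python standard library; the quartile estimator actually used for the figure is not stated in the text, so this choice is an assumption.

```python
import statistics
from typing import Dict, List, Union


def box_stats(values: List[float]) -> Dict[str, Union[float, List[float]]]:
    """Quartiles, IQR, whisker fences, and outliers of a sample."""
    q1, _median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low = q1 - 1.5 * iqr    # lower whisker fence
    high = q3 + 1.5 * iqr   # upper whisker fence
    outliers = [v for v in values if v < low or v > high]
    return {"q1": q1, "q3": q3, "iqr": iqr, "low": low, "high": high, "outliers": outliers}
```

Samples falling outside the two fences are the points that would be drawn as outliers.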

Observing the downstream bit- and packet-rates (in Figs. 7(a) and 7(c)), we can notice that the different activities are characterized by significantly-different patterns in terms of median rate which, however, do not seem strictly related to a specific app. In fact, for all apps VCall always corresponds to the highest bit-rate (resp. packet-rate) which varies in the range (resp. ). This is expected given the simultaneous transmission of both audio and video traffic. On the contrary, in the case of ACall and Chat, the bit-rate (resp. packet-rate) varies in the ranges (resp. ) and (resp. ), respectively.

In contrast, when upstream bit- and packet-rates (in Figs. 7(b) and 7(d)) are considered, there is no clear separation as in the downstream case. In fact, the different activities tend to have closer median values, varying in the range (resp. ) for the bit-rate (resp. packet-rate) and depending more on the type of app. Indeed, for both Skype and Teams we observe differences between ACall and VCall. On the contrary, focusing on Webex, the same two activities are characterized by very similar bit- and packet-rates, i.e. vs. and vs. , respectively. Moreover, referring to Chat, the activity pattern seems quite distinguishable from ACall and VCall for both rate metrics (cf. Figs. 7(b) and 7(d)) in the case of Teams. Conversely, this does not hold for Skype, for which the Chat bit-rate is much more similar to that of VCall.

5. Context-based multimodal deep learning traffic classification

Hereinafter, we introduce the definition of context behavior and related Context Inputs in Section 5.1; the traffic characterization of Context Inputs is then provided in Section 5.2. Finally, Section 5.3 describes the novel Mimetic-All architecture proposed herein.

5.1. Definition of context behavior

Based on the above considerations, in addressing the TC problem at activity level we intend to exploit both (i) the intrinsic characteristics of the biflow to be classified and (ii) the information related to its context, namely taking into account the traffic related to the biflows that are contextually created by the same app when the user performs a specific activity. To be able to do this in a practically meaningful and useful manner, we need to clarify the concepts of classification object and contextual information.

A classification object represents the (aggregated) unit of traffic that will receive the classification label—and thus will be subject to the management actions that motivated the classification, e.g., it will be throttled, or blocked, or given higher priority, or will trigger a special logging rule, etc. Typically, the classification object is also the source of input data for the classifier. This is the case for the biflow, whose information is used to extract classification features, and is the typical unit of traffic for network management.

Considering the service burst, defined in Section 4.4, and recently used in the context of TC as classification object [31], [45], [47], we notice that this aggregate of traffic presents however some significant limitations, discussed hereafter. First, the (service) burst definition is highly-sensitive w.r.t. the choice of the inter-packet-time threshold: this value can critically depend on the network conditions, and on the presence (or lack thereof) of multiple clients accessing the same service. Secondly, using a service burst as the TC object poses a practical problem on the usage of the classification result: from both network management and network security standpoints the biflow is a well-known and widely-used granularity to apply relevant actions such as filtering, throttling, priority queuing, accounting. Conversely, it is not straightforward (or necessarily meaningful) to apply the same actions on varying sets of packets separated by the aforementioned transmission gaps. Finally, service bursts are unlikely to reflect (and allow to exploit) the heterogeneous nature of modern-application traffic. Indeed, a single application to perform its functions can at the same time communicate with different network services [13], and a mix of services is likely to be more indicative of a specific activity of the same application. In Sections 4.4, 4.5 we experimentally validated the presence of multiple simultaneous biflows, and several service bursts, that differently characterize the time evolution and volume of traffic of each app and activity.

Following these considerations, we keep the biflow as the TC object, and we will use the information from simultaneous same-app communications as contextual information that will help discerning the activities of a given application. Specifically, in what follows we refer to the TC object as the reference biflow or . We consider the traffic generated by the same device, identified by its IP address21 hereafter referred to as device IP, and the same app generating : a filter on the device IP address and a pre-classification stage detecting the app22 allow to select all biflows potentially related to . To restrict the contextual information to only communications simultaneous to , and to impose causality (thus enabling online classification), we further restrict considered packets to biflows that were open during the transmission of the first payload-carrying packets of . In summary, naming the arrival time of the SYN packet of , and the arrival time of its th payload-carrying packet, the set of packets defining contextual biflows satisfy all the following four conditions:

• same device IP of ;

• same app label of ;

• biflow did not end before ;

• arrival time of the packet precedes .

The overall mechanism is illustrated in Fig. 9 and described hereafter. For each biflow, the starting time and the current number of bytes/packets transmitted (in both the upstream and downstream directions) are saved. Then, for each reference biflow , at time (arrival of its th payload-carrying packet), the packets belonging to its contextual biflows are used to compute nine aggregate metrics to be used as Context Inputs (Context in short). Specifically, these metrics correspond to: (a) the number of contextual biflows (), (b) the amount of transmitted byte/packets (/) and (c) the bit-/packet- rate23 (/) in both directions (we use and to denote the upstream and downstream directions, respectively).
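The selection of contextual packets and the computation of the nine Context Inputs can be sketched as follows. Several details are assumptions of this sketch and are labeled as such in the code: the `Pkt` record and the function name, the exclusion of the reference biflow from its own context, the reading of the third condition as "the biflow did not end before the start of the reference biflow", and the definition of the rates as volume divided by the time elapsed between the first contextual packet and the arrival of the last considered packet of the reference biflow (the paper defines the rates in a footnote not reproduced here).

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Pkt:
    """Hypothetical packet record."""
    ts: float         # arrival time [s]
    size: int         # bytes
    upstream: bool    # True if sent by the client
    biflow: str       # biflow identifier (e.g. the 5-tuple)
    device_ip: str    # IP address of the monitored device
    app: str          # app label from the pre-classification stage


def context_inputs(packets: List[Pkt], biflow_end: Dict[str, float], ref_biflow: str,
                   device_ip: str, app: str, t_syn: float, t_n: float) -> Dict[str, float]:
    """Nine aggregate metrics over the context of a reference biflow.

    t_syn: start time of the reference biflow; t_n: arrival time of its
    last considered payload-carrying packet.
    """
    ctx = [p for p in packets
           if p.biflow != ref_biflow            # assumption: reference excluded
           and p.device_ip == device_ip         # same device IP
           and p.app == app                     # same app label
           and biflow_end[p.biflow] >= t_syn    # assumption: still open at t_syn
           and p.ts < t_n]                      # causality: packet precedes t_n
    up = [p for p in ctx if p.upstream]
    down = [p for p in ctx if not p.upstream]
    # Assumed rate definition: volume over the observed context time span.
    elapsed = (t_n - min(p.ts for p in ctx)) if ctx else 0.0

    def rate(x: float) -> float:
        return x / elapsed if elapsed > 0 else 0.0

    return {
        "n_biflows": float(len({p.biflow for p in ctx})),
        "bytes_up": float(sum(p.size for p in up)),
        "bytes_down": float(sum(p.size for p in down)),
        "pkts_up": float(len(up)),
        "pkts_down": float(len(down)),
        "bitrate_up": rate(8 * sum(p.size for p in up)),
        "bitrate_down": rate(8 * sum(p.size for p in down)),
        "pktrate_up": rate(len(up)),
        "pktrate_down": rate(len(down)),
    }
```

Since only packets preceding `t_n` are used, the metrics are available at classification time, which is what makes online (early) classification possible.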

Fig. 9.

For each biflow are highlighted: the arrival time of the first packet () (i.e. the SYN packet for TCP biflows), the arrival time of the last packet (), and the arrival time of first packet with non-zero payload (). Additionally, for the current biflow , in addition to the arrival time of the first packet (), the arrival time of the packet with non-zero payload is also highlighted (). PAY denotes the first byte of transport-level payload. SEQ denotes header fields extracted from the sequence of the first packets. CONTEXT denotes Context Inputs computed at the arrival of the th packet of the reference biflow (i.e., the biflow to be classified). refers to the starting time of the generic biflow identified by the quintuple . and denote the total amount of byte and packets, respectively, transmitted/received by the biflow , up to time .

Regarding the practical feasibility of the definition above, we highlight that the Context Inputs are time-sliced analogous to flow counters kept by routing devices and traffic monitoring middleboxes (NetFlow and IPFIX standard [49]), and can be directly derived from their values sampled at two instants determined by each reference biflow (the start and the arrival of th packet).

5.2. Analysis of the Context Inputs

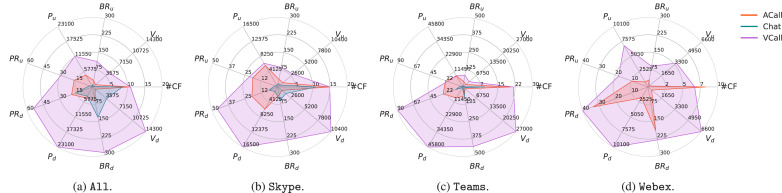

In this section, we use the Context Inputs defined above to characterize the traffic generated when the user performs one of the considered activities (i.e. ACall, Chat, and VCall), taking into account also the specific app. To this end, in Fig. 10, for each activity, we report the average value of each feature calculated in correspondence of the 20th packet of the reference biflow as a radar chart. In detail, Fig. 10(a) focuses on activities without taking into account the generating app whereas Figs. 10(b)–10(d) provide a drill-down for Skype, Teams, and Webex, respectively.

Fig. 10.

Properties of biflows with respect to the nine Context Inputs (i.e., , arranged symmetrically in the upper half for upstream, lower half for downstream) for All apps (a), Skype (b), Teams (c), and Webex (d) based on the activity-type (ACall, Chat, and VCall). Values are calculated at the arrival of the 20th packet for each current biflow and averaged over all biflows. are reported in . are reported in . are reported in . are reported in .

As shown in Fig. 10(a), although the (average) number of contextual biflows (#CF) is similar, the different activities show very different behaviors w.r.t. the other Context Inputs. In fact, as expected, VCall represents the activity originating the highest contextual traffic for both directions, in terms of packets and bytes. This is mainly due to the simultaneous transmission of audio and video traffic streams. Also, comparing Chat and ACall, we notice that the former presents a predominance of downstream traffic (in terms of both volume and byte-rate) whereas the latter presents a more balanced traffic that stands out especially for the quantity and rate of packets transmitted in the upstream direction.

Focusing on the downstream direction, we notice that for both Skype and Teams (cf. Figs. 10(b) and 10(c)) VCall still continues to have the highest amount of traffic both in terms of bytes and packets. Furthermore, for Webex (cf. Fig. 10(d)), we notice a similar behavior between VCall and ACall in terms of packet- and byte-rate, whereas this does not occur when considering the number of packets and byte volume received, which are higher in the case of VCall. Moreover, when comparing ACall and Chat in the case of Skype and Teams, the two activities differ mostly in terms of packets, whereas if we consider the byte volume, the difference becomes less evident, especially for Teams.

On the other hand, by considering the upstream direction, we notice a clear pattern for Chat that results in a smaller amount of traffic w.r.t. all the Context Inputs. Conversely, this does not apply to ACall and VCall. In fact, for Skype and Teams (cf. Figs. 10(b) and 10(c)), there is a similarity between the two activities in terms of number of packets transmitted and packet-rate. However, this is not true when looking at the byte volume and the byte-rate. Finally, in the case of Webex (cf. Fig. 10(d)), a clear distinction between ACall and VCall w.r.t. all the Context Inputs is evident.

5.3. Leveraging Context Inputs: the Mimetic-All architecture

In Section 4.2, we have shown that the state-of-art Mimetic-Enhanced [16] is able to outperform the considered single-modality classifiers, especially when addressing classification tasks that take into account the activities performed by the users. In this section, taking advantage of the modularity offered by the general Mimetic framework [24], we describe the design of Mimetic-All to exploit the Context Inputs discussed in Section 5.1.

As shown in Fig. 11, the proposed Mimetic-All architecture consists of three per-modality (viz. input-specific) branches, henceforth named simply bPAY, bSEQ, and bContext (where the initial “b” stands for “branch”). As in the original proposal [16], the bPAY and bSEQ branches take as input the first bytes of the transport layer payload (PAY) and the informative fields extracted from the sequence of the first packets (SEQ), respectively (cf. Section 3.3 for details on such input types). Additionally, both branches exploit a trainable embedding layer to embed each input element into a vector of dimension , resulting in an overall embedding matrix , with denoting the input dimensionality (i.e. and for the PAY- and SEQ-modality, respectively). The embedding is motivated by the categorical nature of PAY and SEQ and provides a better (i.e. more informative) representation of the inputs.

Fig. 11.

Proposed Mimetic-All classifier. The macro-blocks shared with the original Mimetic-Enhanced architecture are highlighted in blue. The green macro-block denotes the new branch (i.e., bContext) dedicated to the novel set of Context Inputs. For each single block the color filling indicates the type of layer. The  symbol characterizes the layers that are not part of the architecture when considering the single-modality (bPAY, bSEQ, or bContext) alone. The  symbol marks layers frozen during the fine-tuning phase.

Specifically, in the bPAY branch, the corresponding embedding matrix is fed to a sequence of single-modality layers, consisting of two 1D convolutional layers (with a kernel size of , unit stride, and and filters, respectively), each followed by a 1D max-pooling layer (with spatial extent of and unit stride), and finally one dense layer with neurons. On the other hand, the layers of the bSEQ branch are a bidirectional GRU (BiGRU), with units and return-sequences behavior, and one dense layer with neurons. All these layers use the Rectified Linear Unit (ReLU) activation function. Besides the above branches, the newly-added bContext branch takes as input the contextual (aggregated) inputs associated with the th packet of each reference biflow (Context). In detail, inspired by the proposal of Akbari et al. [29], we have used a Multi-Layer Perceptron (MLP) network to ingest (viz. distill information from) them. This choice is driven by the fact that, unlike PAY and SEQ, the Context Inputs are calculated in an aggregate form and have no natural ordering or sequentiality; hence, there is no spatial/time evolution dependence that could be exploited by convolutional/recurrent layers. Specifically, bContext consists of two dense layers, characterized by neurons and a LeakyReLU activation function. Similar to the other two branches, we also use a final dense layer with units and a ReLU activation function. Finally, the features extracted by the single-modality branches are joined via a concatenation layer and fed to a (dense) shared representation layer – with neurons and ReLU activation – before performing the classification through a softmax.
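The final fusion step (concatenation of the per-branch features, shared dense layer with ReLU, softmax output) can be illustrated with the dependency-free sketch below. It is not the authors' implementation: the helper names are hypothetical, weights are passed in explicitly, layer sizes are left to the caller, and dropout and training are omitted.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # one row of weights per output neuron


def dense(x: Vector, weights: Matrix, bias: Vector) -> Vector:
    """Affine layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v: Vector) -> Vector:
    return [max(0.0, a) for a in v]


def softmax(v: Vector) -> Vector:
    m = max(v)  # subtract the maximum for numerical stability
    exps = [math.exp(a - m) for a in v]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_and_classify(branch_features: List[Vector], shared_w: Matrix, shared_b: Vector,
                      out_w: Matrix, out_b: Vector) -> Vector:
    """Concatenate branch outputs, apply the shared layer, return class probabilities."""
    concat = [f for feats in branch_features for f in feats]
    shared = relu(dense(concat, shared_w, shared_b))
    return softmax(dense(shared, out_w, out_b))
```

Because the branches only meet at the concatenation, a modality can be removed by dropping its feature vector and the matching columns of the shared layer, which is what makes it straightforward to evaluate different input combinations.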

As in the original proposal, Mimetic-All is trained via a two-phase procedure: an independent pre-training of each single-modality branch, followed by a fine-tuning of the entire architecture after freezing the lower single-modality layers (i.e., the dense, 1D convolutional, and BiGRU layers, as highlighted in Fig. 11 via the symbol). In more detail, we performed the pre-training of each branch and the fine-tuning for and epochs, respectively, to minimize the respective categorical cross-entropy loss functions via the ADAM optimizer (with a batch size of ).24

Additionally, as opposed to the Mimetic-Enhanced proposal [16], preliminary investigation showed that using a fixed learning-rate (i.e. equal to 0.001) instead of an adaptive one results in a performance improvement for Joint-TC.25

Finally, to improve regularization and mitigate possible overfitting, we added a dropout of 0.2 at the end of each single-modality branch and after every dense layer, and adopted early stopping monitored on the validation accuracy, with the same setup described in Section 3.3 (i.e. 20% of training data are used for validation).
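The early-stopping criterion mentioned above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the `patience` and `min_delta` values and the names `EarlyStopping`, `split_train_validation`, and `epochs_until_stop` are assumptions introduced here; only the 20% validation share and the monitoring of the validation accuracy come from the text. The split below simply holds out the tail of the list, whereas the setup of Section 3.3 may shuffle or stratify the data.

```python
class EarlyStopping:
    """Stop training when validation accuracy has not improved for `patience` epochs."""

    def __init__(self, patience=2, min_delta=0.0):
        self.patience = patience      # hypothetical value, not given in the text
        self.min_delta = min_delta    # hypothetical minimum improvement
        self.best = float("-inf")
        self.wait = 0

    def update(self, val_accuracy):
        """Record one epoch; return True when training should stop."""
        if val_accuracy > self.best + self.min_delta:
            self.best = val_accuracy
            self.wait = 0
        else:
            self.wait += 1
        return self.wait >= self.patience


def split_train_validation(samples, val_fraction=0.2):
    """Hold out the last `val_fraction` of the samples for validation."""
    n_val = int(round(len(samples) * val_fraction))
    cut = len(samples) - n_val
    return samples[:cut], samples[cut:]


def epochs_until_stop(val_accuracies, patience=2):
    """Return the (1-based) epoch at which early stopping would trigger."""
    stopper = EarlyStopping(patience=patience)
    for epoch, acc in enumerate(val_accuracies, start=1):
        if stopper.update(acc):
            return epoch
    return len(val_accuracies)
```

In a real training loop, `update` would be called once per epoch with the accuracy measured on the held-out 20% of the training data.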


Remarks on hyperparameter choice: during the design process of the bContext branch, we evaluated several combinations of activation functions and numbers of neurons for the dense layers. Regarding the former, we obtained slightly better performance by using a LeakyReLU on the first two layers and a ReLU on the last one. Compared with the ReLU, the LeakyReLU allows for a small, non-zero gradient when the unit is saturated and not active, alleviating potential problems caused by the hard activation of the ReLU [50]. Regarding the latter, trying different configurations26 we obtained the best trade-off between performance and complexity by setting neurons on the first two dense layers. Moreover, we noticed better performance when considering the same number of neurons (i.e., ) in the final dense layers of the single modalities before concatenation.
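The difference between the two activation functions can be made concrete with a small sketch. The negative slope `alpha` is an assumption (the text does not state the value used), and the function names are illustrative only.

```python
def relu(x):
    """Rectified Linear Unit: passes positive inputs, zeroes the rest."""
    return x if x > 0 else 0.0


def relu_grad(x):
    """Gradient of ReLU: exactly zero for inactive (non-positive) inputs."""
    return 1.0 if x > 0 else 0.0


def leaky_relu(x, alpha=0.01):
    """LeakyReLU: keeps a small slope `alpha` (assumed value) for non-positive inputs."""
    return x if x > 0 else alpha * x


def leaky_relu_grad(x, alpha=0.01):
    """Gradient of LeakyReLU: small but non-zero for inactive inputs."""
    return 1.0 if x > 0 else alpha
```

For a negative pre-activation, `relu_grad` returns zero, so the unit receives no weight update, whereas `leaky_relu_grad` returns `alpha` and the unit keeps learning.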

6. Experimental evaluation

As shown in Section 4.2, the sole combination of biflow transport-layer payload (PAY) and packet-level (SEQ) inputs within the Mimetic-Enhanced classifier is not sufficient to guarantee adequate performance when dealing with the Joint-TC and Act-TC tasks. Thus, in Section 5 we identified a new set of inputs – which we named Context Inputs – suitable to distinguish traffic associated with different activities performed with the same app, and proposed the Mimetic-All classifier leveraging them (Section 5.3).

In this section, we evaluate the impact of this context-based modality on TC performance when adopting the App Act training strategy. The latter strategy indeed allows tackling all the considered classification tasks (i.e. App-TC, Act-TC, and Joint-TC).
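One way to see why a model trained on joint app/activity labels can serve all three tasks is to project each joint prediction onto its two components. The sketch below assumes that labels are represented as `(app, activity)` tuples and that App-TC and Act-TC predictions are obtained by projecting the predicted joint class; both are assumptions of this example (the classifier could equally marginalize its soft outputs), and the function names are hypothetical.

```python
def accuracy(true_labels, predicted_labels):
    """Fraction of samples whose predicted label matches the true one."""
    if len(true_labels) != len(predicted_labels) or not true_labels:
        raise ValueError("label lists must be non-empty and of equal length")
    hits = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return hits / len(true_labels)


def task_accuracies(true_joint, predicted_joint):
    """Evaluate Joint-TC, App-TC, and Act-TC from (app, activity) predictions."""
    true_app = [app for app, _ in true_joint]
    pred_app = [app for app, _ in predicted_joint]
    true_act = [act for _, act in true_joint]
    pred_act = [act for _, act in predicted_joint]
    return {
        "Joint-TC": accuracy(true_joint, predicted_joint),
        "App-TC": accuracy(true_app, pred_app),
        "Act-TC": accuracy(true_act, pred_act),
    }
```

A joint prediction that gets the app right but the activity wrong counts as an error for Joint-TC and Act-TC but as a hit for App-TC, which is why the three tasks can show different scores for the same model.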

For the sake of a complete evaluation and aiming at performing a reasoned ablation study, other than Mimetic-Enhanced and Mimetic-All, in the following analysis we investigate the performance of classifiers obtained by selecting a subset of the three modalities available. For readers’ convenience, in Table 2 we report the different instances drawn from the Mimetic framework according to the considered type of input (i.e. PAY, SEQ, and Context).

Table 2.

Variants of classifiers used in this work with respective input data used to feed them.

| Variant | PAY | SEQ | Context |
|---|---|---|---|
| bPAY | ✓ | – | – |
| bSEQ | – | ✓ | – |
| bContext | – | – | ✓ |
| Mimetic-Enhanced | ✓ | ✓ | – |
| Mimetic-ConPay | ✓ | – | ✓ |
| Mimetic-ConSeq | – | ✓ | ✓ |
| Mimetic-All | ✓ | ✓ | ✓ |

PAY: First bytes of transport-layer payload.

SEQ: Informative header fields extracted from the sequence of the first packets.

Context: Context Inputs computed at the arrival of the th packet.
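The seven variants of Table 2 are exactly the non-empty subsets of the three modalities, which is what makes the comparison an ablation study. The following sketch enumerates them; the data structure and the function name `enumerate_variants` are illustrative assumptions, while the variant names and their inputs are taken from Table 2.

```python
from itertools import combinations

MODALITIES = ("PAY", "SEQ", "Context")

# Variant names as listed in Table 2, keyed by the set of inputs they use.
VARIANT_NAMES = {
    frozenset({"PAY"}): "bPAY",
    frozenset({"SEQ"}): "bSEQ",
    frozenset({"Context"}): "bContext",
    frozenset({"PAY", "SEQ"}): "Mimetic-Enhanced",
    frozenset({"PAY", "Context"}): "Mimetic-ConPay",
    frozenset({"SEQ", "Context"}): "Mimetic-ConSeq",
    frozenset({"PAY", "SEQ", "Context"}): "Mimetic-All",
}


def enumerate_variants():
    """Return (name, inputs) pairs for every non-empty subset of modalities."""
    variants = []
    for size in range(1, len(MODALITIES) + 1):
        for subset in combinations(MODALITIES, size):
            variants.append((VARIANT_NAMES[frozenset(subset)], subset))
    return variants
```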

6.1. Overall performance comparison

In Table 3, we report the performance of DL-based traffic classifiers combining different mixes of modalities, considering both the single-modality branches alone (each fed with one of the three input types PAY, SEQ, and Context) and their combinations. The following results are obtained considering the best-performing and packets. As shown, performance varies depending on the considered type of input and TC task.

Table 3.

Accuracy, F-measure, number of Trainable Parameters (#TP), and Training Time (Time) comparison of DL-based traffic classifiers when combining different mixes of modalities. Models are trained using AppAct class labels. Results are in the format obtained over 10-folds. The best result per metric (column) is highlighted in boldface. Training time is calculated by pre-training the individual modalities in parallel. The input types fed to each classifier are shown in Table 2.

| Classifier | Joint-TC Accuracy [%] | Joint-TC F-measure [%] | App-TC Accuracy [%] | App-TC F-measure [%] | Activity-TC Accuracy [%] | Activity-TC F-measure [%] | #TP [k] | Time [min] |
|---|---|---|---|---|---|---|---|---|
| bPAY | | | | | | | 460 | |
| bSEQ | | | | | | | 706 | |
| bContext | | | | | | | | |
| Mimetic-Enhanced | | | | | | | 1235 | |
| Mimetic-ConPay | | | | | | | 628 | |
| Mimetic-ConSeq | | | | | | | 875 | |
| Mimetic-All | | | | | | | 1368 | |
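The metrics reported in Table 3 are computed per fold and then summarized over the 10 folds. The sketch below shows one plausible way to do this; it assumes that the F-measure is macro-averaged over classes and that the spread is the population standard deviation, neither of which is stated in this section. The function names are hypothetical.

```python
from statistics import mean, pstdev


def macro_f_measure(y_true, y_pred):
    """Unweighted mean of the per-class F-measure (assumed macro averaging)."""
    classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        # F = 2TP / (2TP + FP + FN); set to 0 when the class never occurs.
        scores.append(2 * tp / denom if denom else 0.0)
    return mean(scores)


def summarize_folds(fold_scores):
    """Return (mean, standard deviation) of a metric over the folds."""
    return mean(fold_scores), pstdev(fold_scores)
```

With macro averaging, rare classes weigh as much as frequent ones, which would explain why the F-measure can drop well below the accuracy when a few app/activity pairs are hard to recognize.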

In detail, the suitability of the Context Inputs discussed in Section 5.2 is demonstrated by the fact that considering only the bContext branch results in a significant performance improvement on the Act-TC task compared to Mimetic-Enhanced ( F-measure). Given the ability of Mimetic-Enhanced to discriminate apps and the modularity of the multimodal architecture, these results suggest that the addition of the Context Inputs can lead to a classifier that simultaneously achieves good performance on all the considered tasks.

Therefore, the impact on performance when combining Context with PAY and SEQ is evaluated. Considering the classification performance of Mimetic-Enhanced as a reference, combining Context and PAY (Mimetic-ConPay) results in an increase of in terms of F-measure for both Joint- and Act-TC tasks ( and of accuracy, respectively). Unfortunately, the same combination results in a non-negligible decrease of of F-measure (and of accuracy) for the App-TC.

In contrast, combining SEQ and Context (Mimetic-ConSeq) we obtain a performance increase for both Joint- and Act-TC, i.e. (resp. ) and (resp. ) in terms of F-measure (resp. accuracy), w.r.t. Mimetic-Enhanced. It is noteworthy that this input combination makes it possible to ignore the payload content (i.e. PAY): this peculiarity makes the solution suitable also for future scenarios where more opaque encryption sublayers are deployed (e.g., extensions for Encrypted Server Name Indication and Encrypted Client Hello in TLS 1.3 [17]), likely hindering classification solutions leveraging payload (see [16] for an analysis of DL usage of payload-based inputs). Finally, the model combining the three input types (Mimetic-All) outperforms the other configurations for all the considered TC tasks.

Overall, in agreement with the results already discussed in Section 4.2, leveraging the bPAY and bSEQ branches individually leads to a performance degradation for all TC tasks, compared to Mimetic-Enhanced. In fact, when considering the PAY input only (i.e. the bPAY branch), we incur the worst performance, which corresponds to a loss between (resp. ) and (resp. ) in terms of F-measure (resp. accuracy). On the contrary, when we consider only the SEQ input (i.e. the bSEQ branch) we obtain a significant loss regarding the Joint-TC task ( F-measure) while for App- and Act-TC tasks the loss is more contained ( F-measure).

More in detail, Fig. 12(a) depicts the Act-TC performance of Mimetic-Enhanced (i.e. the state-of-art baseline), conditioned on each application. To further investigate the results obtained by combining Context with the other inputs, in Figs. 12(b)–12(d) we report the gain/loss w.r.t. Mimetic-Enhanced at the activity granularity (conditioned on each app).

Fig. 12.

Per-activity performance for each app achieved by Mimetic-Enhanced (i.e., the basic configuration) (a). Gain/loss w.r.t. Mimetic-Enhanced obtained by considering the Context Inputs and related to Mimetic-ConPay (b), Mimetic-ConSeq (c), and Mimetic-All (d).

As depicted in Fig. 12(a), for most applications ( out of ), VCall shows the best classification performance (). This does not hold for Messenger, Slack, and Teams, for which it falls in the range 40%–60%. On the contrary, in the case of Chat, F-measure is achieved regardless of the considered app (except for Slack). Finally, for ACall the performance varies strongly depending on the app, between 38% (for Zoom) and 77% (for Discord). Interestingly, for Slack, the performance (always in the range of –65% F-measure) does not significantly vary with the activity.

The addition of Context results in a gain in terms of F-measure that depends on the specific combination of inputs used and varies with the app and the activity (Figs. 12(b)–12(d)). Moreover, while Mimetic-ConSeq and Mimetic-All exhibit approximately the same average performance, Figs. 12(c)–12(d) show that the gains obtained strongly depend on the specific app. Indeed, Mimetic-All results in higher gains than Mimetic-ConSeq on the activities of Skype, Teams, and Webex, but lower performance improvements are observed for the remaining apps (i.e. Discord, GotoMeeting, Meet, Messenger, Slack, and Zoom). For the latter apps, the main differences are related to ACall, for which Mimetic-All obtains a gain about 3%–4% lower than Mimetic-ConSeq. Finally, the worse performance of Mimetic-ConPay compared to Mimetic-ConSeq and Mimetic-All is mainly due to a generally lower gain for the activities of all the apps, especially regarding VCall for Teams and ACall for Meet, Teams, and Webex. In this regard, it is worth noting that for Teams, Mimetic-ConPay brings no improvement w.r.t. Mimetic-Enhanced for the ACall activity.

The novel set of Context Inputs is thus useful when dealing with the classification of both the app and the specific activity performed by the user. Also, the results show that combining them with the traffic inputs commonly used in the literature at biflow level (e.g., the first bytes of the transport-layer payload and/or features extracted from the first packets) is fruitful.

6.2. Calibration analysis

In this section, after demonstrating the effectiveness of Mimetic-Enhanced when tackling the Joint-TC task through the inclusion of Context Inputs, we evaluate its reliability [51] by means of a metric quantifying its calibration. To this end, we consider both the whole confidence vector, i.e. $\mathbf{p} = [p_1, \ldots, p_C]$ over the $C$ classes, and the confidence associated to the inferred class, i.e. $\hat{p} = \max_{c} p_c$.

In general, a calibrated classifier is such that, for each sample, the confidence of the predicted class equals the probability that the prediction is correct, i.e. $P(\hat{y} = y \mid \hat{p} = p) = p$, where $y$ and $\hat{y}$ are the true and predicted labels, respectively. To quantify the degree of this (mis)alignment, we partition the predictions into $M$ equally-spaced bins and compute both the (expected) accuracy and confidence for each of these.

Specifically, let $B_m$ be the set of tested TC objects for which the confidence associated with the predicted label falls within the $m$th interval $I_m$; the accuracy and confidence associated with the bin $B_m$ are:

$$\mathrm{acc}(B_m) = \frac{1}{|B_m|} \sum_{i \in B_m} \mathbb{1}(\hat{y}_i = y_i) \tag{1}$$

$$\mathrm{conf}(B_m) = \frac{1}{|B_m|} \sum_{i \in B_m} \hat{p}_i \tag{2}$$

where $y_i$, $\hat{y}_i$, and $\hat{p}_i$ are the true label, the predicted label, and the predicted confidence of the $i$th sample, respectively. Since the confidence values vary in $[1/C, 1]$, we consider the starting point of the confidence interval to be $1/C$. Accordingly, to obtain a summary metric of the deviation from the perfect calibration, we consider the Expected Calibration Error (ECE), defined as $\mathrm{ECE} = \mathbb{E}_{\hat{p}}\left[\left| P(\hat{y} = y \mid \hat{p}) - \hat{p} \right|\right]$, which represents the expected absolute deviation between the confidence and the confidence-conditional accuracy. The above metric can be approximated over the $N$ tested samples as:

$$\mathrm{ECE} \approx \sum_{m=1}^{M} \frac{|B_m|}{N} \left| \mathrm{acc}(B_m) - \mathrm{conf}(B_m) \right| \tag{3}$$
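A binned ECE estimate of this kind can be computed as in the following sketch. It is an illustration under stated assumptions, not the authors' code: the number of bins `n_bins`, the parameter `lower` (which lets the first bin start above zero, e.g. at the reciprocal of the number of classes), and the function name are all introduced here.

```python
def expected_calibration_error(confidences, predictions, labels, n_bins=10, lower=0.0):
    """Binned ECE: weighted mean of |accuracy - confidence| over equally-spaced bins.

    `confidences` holds the confidence of the predicted class for each sample;
    `n_bins` and `lower` are assumed settings, not values from the text.
    """
    n = len(confidences)
    if n == 0 or not (n == len(predictions) == len(labels)):
        raise ValueError("inputs must be non-empty and of equal length")

    width = (1.0 - lower) / n_bins
    bins = [[] for _ in range(n_bins)]
    for conf, pred, lab in zip(confidences, predictions, labels):
        # Clamp so that conf == 1.0 (or conf < lower) still lands in a valid bin.
        idx = min(max(int((conf - lower) / width), 0), n_bins - 1)
        bins[idx].append((conf, pred == lab))

    ece = 0.0
    for members in bins:
        if not members:
            continue  # empty bins contribute nothing to the sum
        acc = sum(hit for _, hit in members) / len(members)
        avg_conf = sum(conf for conf, _ in members) / len(members)
        ece += len(members) / n * abs(acc - avg_conf)
    return ece
```

A classifier that is always correct with confidence 1.0 yields an ECE of zero, while over-confident predictions (high confidence, low accuracy in the same bin) increase it.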