Abstract

Research has explored how the COVID-19 pandemic triggered a wave of conspiratorial thinking and online hate speech, but little is empirically known about how different phases of the pandemic are associated with hate speech against adversaries identified by online conspiracy communities. This study addresses this gap by combining observational methods with exploratory automated text analysis of content from an Italian-themed conspiracy channel on Telegram during the first year of the pandemic. We found that, before the first lockdown in early 2020, the primary target of hate was China, which was blamed for a new bioweapon. Yet over the course of 2020 and particularly after the beginning of the second lockdown, the primary targets became journalists and healthcare workers, who were blamed for exaggerating the threat of COVID-19. This study advances our understanding of the association between hate speech and a complex and protracted event like the COVID-19 pandemic, and it suggests that country-specific responses to the virus (e.g., lockdowns and re-openings) are associated with online hate speech against different adversaries depending on the social and political context.

Keywords: conspiracy theory, online hate speech, COVID-19, pandemic, Telegram, topic modeling, automated text classification, Sinophobia, anti-journalist hate, anti-doctors hate

Introduction

Since the beginning of the pandemic in 2020, numerous conspiracy theories about the origin of COVID-19 have circulated in online communities. For example, some suggested that the Chinese government intentionally created the virus to attack the West. Others posited that the pandemic was a hoax encouraged by domestic elites to legitimize draconian measures to control the population (see Imhoff & Lamberty, 2020). The objective of this study is to understand whether hate speech against different adversaries identified by online conspiracy communities is associated with country-specific responses to the virus.

We address this research objective by examining the discussions on one of the largest and most active Italian conspiracy Telegram channels: La Cruna dell’Ago. This is a meaningful case study for three reasons. First, Italy was the first Western country to experience a significant number of infections in early 2020 immediately after the virus was detected in Wuhan (Fanelli & Piazza, 2020). This makes Italy a useful context to assess how domestic conspiracy communities reacted to the pandemic when little was known about the virus. Second, Telegram is a valuable environment to examine hate speech because it has limited content-moderation policies: it only bans messages promoting violence on public channels and illegal pornographic material. As a result of these narrow policies, Telegram has become an important platform where conspiracy theorists and hate groups gather (Guhl & Davey, 2020), attracting more users after centrally controlled networks such as Twitter, Facebook, or YouTube increased their de-platforming efforts against hate speech and other crimes (Innes & Innes, 2021). Third, the channel La Cruna dell’Ago is a valuable case study because of its prominence in the Italian context: it was created by Cesare Sacchetti, a notorious conspiracy theorist and one of the top five super-spreaders of online misinformation about COVID-19 in Europe, and it attracted thousands of active users (see McDonald et al., 2020).

Previous research has considered the impact on hateful content online of trigger events such as riots (Bliuc et al., 2019), rallies (van der Vegt et al., 2021), terrorist attacks (Burnap et al., 2014; Williams & Burnap, 2015), and political elections (Müller & Schwarz, 2018; Scrivens et al., 2020; Siegel et al., 2018). It is well documented that the COVID-19 pandemic triggered an increase in conspiracy theories circulating online (Fan et al., 2020; Velásquez et al., 2020) and a wave of COVID-19-related online hate speech, in particular against targets such as Asian and Jewish minorities (Croucher et al., 2020; Ziems et al., 2020). We contribute to this growing body of research by looking at the pandemic not as a homogeneous event, but rather as a process made of different phases marked by country-specific responses to the virus (e.g., lockdowns and re-openings). Specifically, we investigate how lockdowns and re-openings are associated with increases in online hate speech against different adversaries identified by different conspiratorial narratives.

This study contributes to the empirical literature on the spread of extreme right-wing misinformation on Telegram (e.g., Gallagher & O’Connor, 2021; Walther & McCoy, 2021) as well as the interconnections between far-right actors (Urman & Katz, 2020) and mobilization efforts there (Guhl & Davey, 2020). In what follows, we define conspiracy theories and describe their symbiotic relationships with hate. We then review the key conspiracy theories associated with COVID-19 that circulate in the Telegram channel we examine in the current study, as well as the Italian context during the first year of the pandemic. Finally, we describe the research questions guiding the current study, data and methods, and study results, followed by a discussion of the theoretical implications of our findings.

Online Hate and Conspiracy Theories

Hate—defined as an intense, sustained, and stable dislike of the target (Allport, 1954)—is the fundamental force motivating hate speech. There is enormous variation in the definitions of hate speech (Vergani et al., 2022), but all fundamentally refer to the expression of hatred toward particular people and groups, which implicitly or explicitly stigmatizes them and depicts them as undesirable and a legitimate object of hostility (Parekh, 2006). When hate speech takes place online, it is often referred to as cyberhate or online hate (Costello et al., 2019), and when hate speech targets specific identities (e.g., racial minorities), it can be referred to with terms like cyber-racism (Bliuc et al., 2018). In all its forms, hate speech aims to preserve unequal power relationships by denigrating out-groups while simultaneously bolstering the superiority of the speaker and reinforcing the discrimination and marginalization of the target (Burch, 2018).

Conspiracy theories can be defined as attempts to explain the causes of socio-political events with claims of a “secret plot by two or more powerful actors” (Douglas et al., 2019, p. 4). Conspiracy theories have been present in both modern and traditional societies (see West & Sanders, 2003), and large portions of the human population believe in at least one conspiracy theory. For example, Oliver and Wood (2014) found that half of the American population consistently endorses at least one conspiracy theory and that belief in conspiracy theories is widespread across the ideological spectrum. Conspiracy theories are not monolithic and static (Dean, 2000; Klein et al., 2018). As Byford (2014) suggests, they are dynamic and historically contingent sets of beliefs that are flexibly drawn upon, modified, debated, and applied to novel circumstances in the course of everyday sense-making practices. In social media, conspiracy theories can be conceptualized as an assemblage of arguments, images, and interpretations shared by individuals who constantly contribute to developing and adding new parts to the main plot. Research suggests that believing in one conspiracy theory is one of the strongest predictors of believing in other conspiracy theories (Douglas et al., 2019; Goldberg, 2008; Spark, 2000). This happens because conspiracy theories are based on the belief that important things are covered up, or hidden from the public, by powerful elites. For people who believe in one conspiracy theory, other conspiracies seem more plausible (Douglas, 2021).

There is a natural affinity between conspiracy theories and online hate. Endorsing conspiracy theories is associated with intergroup hostility and anger (Jolley & Paterson, 2020; Ullrich et al., 2014), and conspiratorial thinking might serve as a core mindset to identify the enemies (Bilewicz & Sędek, 2015; Jolley et al., 2020). Online communities of conspiracy believers tend to be isolated and to have a siege mentality (Swami & Furnham, 2014). Recent research conducted during the COVID-19 pandemic found that hate speech on Twitter tends to be higher in clusters of users who are denser and more isolated from the rest of the users (Fan et al., 2020; Gruzd & Mai, 2020). Conspiracy theories identify an adversary who is responsible for the theorists’ or the world’s misfortunes. This is usually the government, but it can be “any group perceived as powerful and malevolent” (Douglas et al., 2019, p. 5). For example, previous research has examined conspiracy theories promoting hate against the Jews via centuries-old tropes like the Protocols of the Learned Elders of Zion (Bronner, 2003; Kofta et al., 2020).

COVID-19 Conspiracy Theories

In the first year of the COVID-19 pandemic, we propose that there were two main types of conspiratorial narratives about the origins of COVID-19: the first portrayed the COVID-19 virus as created by foreign adversaries and the second as a hoax promoted by domestic adversaries. In European and North American contexts, the first type, which we refer to as foreign-focused theory, was the idea that the virus was designed and released by the Chinese government intentionally to attack the West (Douglas, 2021; Huang & Liu, 2020). This was reinforced by the narrative of the “China virus” and “Wuhan virus” circulating in social media in the first months of 2020 and supported by political leaders like Donald Trump who extensively used these terms in public speeches (Darling-Hammond et al., 2020). This narrative was associated with anti-Asian hate, which translated into a peak of online and offline victimization of Asian minorities in Europe, North America, and Australia (Kamp et al., 2021). This foreign-focused theory was symmetrical to conspiracy theories circulating in non-Western areas of the world blaming the West for COVID-19. For example, in Pakistan a popular conspiracy theory suggested that COVID-19 was created by the United States to attack the Muslim world (see Y. H. Khan et al., 2020). This anti-Western conspiracy theory was linked with older conspiracies about the CIA using anti-polio vaccines to make Muslims infertile (see M. A. Khan & Kanwal, 2015).

The second type, which we refer to as domestic-focused theory, was the idea that the pandemic was a hoax to allow political elites within one’s country to pass unpopular and restrictive laws, with the ultimate aim of controlling the population (e.g., making vaccination mandatory to inoculate microchips hidden in vaccines) (Imhoff & Lamberty, 2020). Within this narrative, two professional categories played a key role: health professionals and journalists (Privitera, 2020). Both professional groups were accused of being used by powerful national elites to exaggerate the consequences of the pandemic by spreading (allegedly) false information about the risks of COVID-19 and the collapse of the healthcare system. This conspiracy was associated with a wave of online hate against journalists, doctors, and nurses (Dearden, 2021), including documented episodes of real-world violence against them (e.g., Orellana, 2020).

The two types of COVID-19 conspiratorial narratives (foreign-focused and domestic-focused) were broadly complementary rather than mutually exclusive, and they tended to circulate in online communities that already shared pre-existing conspiratorial beliefs. For example, many adherents of the QAnon theory saw COVID-19 as a global hoax created by a mix of foreign and domestic adversaries, including the Chinese government and American and European politicians (Hannah, 2021). QAnon is an assemblage of far-right conspiracy theories that emerged in 2017, which holds that Donald Trump is waging a secret war against an international cabal of satanic pedophiles that includes government officials, the media, and the Hollywood elite (see Amarasingam & Argentino, 2020).

Although the QAnon narrative is mainly focused on US domestic politics and centered around the figure of Donald Trump, it spread globally in 2020, including in non-English-speaking countries like Italy, Germany, and Brazil (Rauhala & Morris, 2020). Outside of the United States, QAnon adherents tend to concentrate their hate not only on pedophile networks but also on the global repercussions of the alleged war between Donald Trump (seen by QAnon adherents as the hero) and his arch-enemies, such as Barack Obama and Hillary Clinton, who were often accused of conspiring against Trump together with national elites (Roose, 2021).

Importantly, the QAnon theory borrowed many of its elements from pre-existing conspiracy theories like the New World Order (NWO) theory (Amarasingam & Argentino, 2020). Since the 1990s, the NWO theory has been mainly popular in right-wing and conservative Christian circles worried about a globalist takeover led by Jewish and left-wing elites (Spark, 2000). In short, the NWO theory alleges that a secretive elite—composed of powerful figures including Jews, progressive atheist socialist elites, financial elites, and bankers—wants to establish a totalitarian world government replacing nation-states (Goldberg, 2008).

The Italian Context During the First Year of the COVID-19 Pandemic

Italy was the first European country to experience a significant number of COVID-19 infections in early 2020, which led to an initial peak of deaths around 21 March 2020 (Fanelli & Piazza, 2020). In the first months of the pandemic, the reported mortality rate of the virus in Italy was between 4% and 8%, substantially higher than in China, where it was between 1% and 3% (Fanelli & Piazza, 2020). This was likely because the virus spread into retirement homes and because of the aging Italian population (Boccia et al., 2020). Italy declared the first extended lockdown on 9 March 2020, which lasted until 18 May of that same year. The initial reaction of the Italian population was national unity, with public celebrations of healthcare workers as heroes, angels, and soldiers defending the Italian population from the virus (Arcolaci, 2020; Ceccarelli, 2020).

The Italian government relaxed the restrictive measures during the summer months (Comin & Partners, 2020). After the summer holidays, Italy experienced a new wave of infections, which led to a second national lockdown from 6 November, which lasted until the end of 2020 and continued into 2021. Whereas in March Italy experienced a sharp increase in popular support for the government, with 62% of the public trusting Prime Minister Conte and his response to the pandemic, by November trust in the Prime Minister was down to 45% (Stefanoni, 2020). One of the main drivers of the change in public sentiment was concern about the economic situation: the share of Italians who were positive about the government’s measures to sustain the economy went down from 55% in March to 24% in November (Stefanoni, 2020).

Importantly, the perception of the health threat posed by the pandemic changed substantially during 2020: the percentage of Italians who believed that COVID-19 “killed only the elderly or people who are already ill” went from 15% in March to 39% in November (Stefanoni, 2020). According to a national survey conducted at the end of October 2020, 6.5% of Italians believed that the pandemic was fabricated to justify political and economic decisions (Bucchi & Saracino, 2020). Similarly, the perception of healthcare workers started shifting from heroes to villains: a survey published in July 2020 revealed that, out of a sample of 627 healthcare workers, 25% reported at least 1 episode of discrimination and 11.3% more than 10 episodes of discrimination (Cabrini et al., 2020). These ranged from refusal to assist healthcare workers in their daily needs (e.g., shopping, hairdresser) to property vandalism and physical assaults (Cabrini et al., 2020). The incidents were likely motivated by fear of being infected (Cabrini et al., 2020) as well as by the spread of conspiracy theories in which healthcare workers were seen as helping to inflate the real scale of the pandemic (see Fuschetto, 2020). In the second half of 2020, healthcare workers became the target of widespread hate on social media (Viafora, 2020), and on 2 November a famous piece of graffiti celebrating healthcare workers in Milan was vandalized (Ranieri, 2020).

Data and Methods

In this exploratory study, we looked at whether and how the language used in the Telegram channel La Cruna dell’Ago changed over time during the year 2020. Specifically, we assessed whether the language changed before and after the key country-specific responses to the virus: (1) 9 March 2020 (the beginning of the first lockdown); (2) 19 May 2020 (the re-opening for the summer months); and (3) 6 November 2020 (the beginning of the second lockdown). All messages written in the La Cruna dell’Ago Telegram channel were collected from its inception on 7 February 2020 until 31 December 2020. Data were downloaded as an HTML file using the Telegram desktop app (capturing only text) and consisted of 239,711 messages from 4,023 users. At the time of access, La Cruna dell’Ago was a public channel: it was open to anyone requesting access without limits and all users were granted access to view, download content, and post messages. We accessed the group as observers and remained in the group until the end of 2020, regularly observing the group’s interactions. The observation of the channel activities and the regular reading of content posted in the online community were crucial to understand the context, to guide the automated text analyses and the interpretation of the results. In this section, we provide detail about the interactions between observational and automated text analysis methods.
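The three cut-off dates above split 2020 into four periods. As a minimal sketch, a message date can be mapped to its period as follows. The function name `assign_period` and the half-open interval convention (each period starts on its cut-off date) are assumptions made for illustration; the text does not state how boundary days were handled.

```python
from datetime import date

# Cut-off dates taken from the text; treating each period as starting
# on its cut-off date is an assumption made for this sketch.
FIRST_LOCKDOWN_START = date(2020, 3, 9)
REOPENING = date(2020, 5, 19)
SECOND_LOCKDOWN_START = date(2020, 11, 6)


def assign_period(day: date) -> int:
    """Return the period (1-4) that a message date falls into."""
    if day < FIRST_LOCKDOWN_START:
        return 1  # before the first lockdown
    if day < REOPENING:
        return 2  # first lockdown
    if day < SECOND_LOCKDOWN_START:
        return 3  # in between lockdowns
    return 4  # second lockdown
```

For example, a message posted on the channel's first day (7 February 2020) falls into Period 1, and a message posted on 6 November 2020 falls into Period 4.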

Data Pre-Processing

Pre-processing was adapted to the diverse nature of posts and the needs of the text classification model described below, which uses AlBERTo (Polignano et al., 2019), the Italian language version of the popular BERT model (Devlin et al., 2018). AlBERTo is language specific and, like BERT, cannot process some longer messages. Furthermore, one message may contain claims about different narratives. Hence, we first removed the small subset of messages that were not in Italian using a language identifier (Lui & Baldwin, 2011) and then divided the remaining messages into sentences using the pre-trained Italian language model in the Python package spaCy.1 In addition, we removed sentences that: (1) contained no words (corresponding to videos and images not stored by Telegram); (2) contained only URLs (after storing them separately for reporting the key sources); (3) were automated messages from the user “Group Help”; or (4) were shorter than 10 characters. After filtering, the remaining dataset had 2,718 users, 155,073 messages, and 298,125 sentences, with a mean of 12.46 words per sentence.
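The four sentence-level removal rules can be sketched with the Python standard library alone. This is an illustration under stated assumptions, not the study's code: the function name `keep_sentence`, the URL pattern, and the choice to measure length after trimming whitespace are all hypothetical, and the language identification and spaCy sentence splitting steps are not reproduced here.

```python
import re

# Hypothetical pattern: a sentence counts as "URL only" when it consists
# solely of one or more http(s) links separated by whitespace.
URL_ONLY = re.compile(r"^\s*(?:https?://\S+\s*)+$", re.IGNORECASE)
MIN_CHARS = 10             # threshold taken from the text
BOT_USER = "Group Help"    # automated account named in the text


def keep_sentence(text: str, user: str) -> bool:
    """Return True if a sentence survives the four removal rules."""
    stripped = text.strip()
    if not re.search(r"\w", stripped):
        return False  # rule 1: no words (e.g., media-only posts)
    if URL_ONLY.match(stripped):
        return False  # rule 2: only URLs
    if user == BOT_USER:
        return False  # rule 3: automated messages
    if len(stripped) < MIN_CHARS:
        return False  # rule 4: shorter than 10 characters
    return True
```

Applying the rules in this order is a design choice of the sketch; because a sentence is dropped as soon as any rule matches, the order does not change which sentences are kept.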

Topic Modeling

To identify the key topics discussed in the Telegram channel, we used top2vec, which represents documents and words as vectors (i.e., long series of coordinates). In other words, top2vec places documents in a “semantic space” where closer documents are closer in meaning. A cluster of documents is then understood to be a potential topic (see Angelov, 2020). Top2vec and related approaches have been extensively used in research related to online discourses, including research on COVID-19 and vaccine hesitancy (Ghasiya & Okamura, 2021). Top2vec’s key difference to other topic modeling tools (e.g., Latent Dirichlet Allocation [LDA]) is that it does not require a predetermined number of topics to search (k value). This is particularly valuable when conducting exploratory analysis of social media text, which need not conform to any particular set of categories.
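The idea that closer documents are closer in meaning can be illustrated with a toy example. The sketch below does not use top2vec and does not learn any embedding; it only shows, with hand-made two-dimensional vectors, how a document vector can be compared with topic vectors by cosine similarity. The function names and the vectors are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest_topic(doc_vec, topic_vecs):
    """Return (topic name, similarity) for the most similar topic vector."""
    scored = ((name, cosine_similarity(doc_vec, vec))
              for name, vec in topic_vecs.items())
    return max(scored, key=lambda pair: pair[1])


# Toy vectors for illustration only; real embeddings have many dimensions.
topics = {"illness": [1.0, 0.0], "us_politics": [0.0, 1.0]}
name, similarity = nearest_topic([0.9, 0.1], topics)
```

In this toy space the document vector points almost entirely along the "illness" axis, so that topic is returned as the nearest one.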

While document clustering performs better than ordinary bag-of-words approaches on notoriously difficult social media data (Curiskis et al., 2020), sentence-length “documents” can yield many very similar clusters that must be further processed. Hence, we created standard “documents” by merging contiguous sentences into chunks of approximately 100 words. Once top2vec has created the semantic space and identified clusters, it generates a list of words associated with each cluster for human interpretation. Finally, it associates each 100-word document with the closest cluster based on spatial distance. We evaluated these word lists and assigned reasonable labels to them. Most lists had a very apparent theme that was consistent with the discussions we observed in the Telegram channel. Only 15 of the 196 clusters detected by the model had no clear theme. Through an iterative process of randomly sampling the labeled documents, and drawing on subject matter expertise, we grouped these labels (and their corresponding documents) into the final topics. We removed any documents that were not close enough to any cluster (spatial distance score < 0.2). After also excluding the clusters left unlabeled, we labeled 88% of the conversations by length.
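Merging contiguous sentences into chunks of approximately 100 words can be done in several ways. The sketch below uses one simple greedy rule, which is an assumption: sentences are appended in order, and a chunk is closed as soon as it reaches the target length. The function name `chunk_sentences` and the whitespace-based word count are hypothetical choices for illustration.

```python
def chunk_sentences(sentences, target_words=100):
    """Greedily merge contiguous sentences into chunks of about target_words."""
    chunks = []
    current = []   # sentences collected for the chunk being built
    count = 0      # running word count of the current chunk
    for sentence in sentences:
        current.append(sentence)
        count += len(sentence.split())
        if count >= target_words:
            chunks.append(" ".join(current))
            current, count = [], 0
    if current:
        # Keep the shorter leftover chunk rather than discarding text.
        chunks.append(" ".join(current))
    return chunks
```

Under this rule every chunk except possibly the last contains at least `target_words` words, and no sentence is ever split across two chunks.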

The six most common topics as a proportion of the length of all messages with meaningful topics included: elites and conspiracies (keywords include “masonry,” “aliens,” “Gates,” “chemtrails,” “Mossad”), banks and finance (keywords include “debt,” “cash,” “banks,” “economic,” “fund”), illness (keywords include “mortality,” “lethality,” “deceased,” “contagion,” “respirators”), religion (keywords include “church,” “Jesus,” “Christ,” “Benedict,” “Prophet”), social control (keywords include “Zuckerberg,” “censorship,” “Apple,” “tracking,” “drones”), and US politics (keywords include “Trump,” “Biden,” “Pence,” “election,” “fraud”).

Hate Speech Analysis

To detect hate speech, we used the text classification model of the Innovative Monitoring Systems and Prevention Policies of Online Hate Speech (IMSyPP)2 project. The model classifies each input into one of four classes: acceptable, inappropriate (containing swearing that is not abusive in nature), offensive (containing degrading and insulting language targeting a group), and violent speech (containing references to violence against a group) (Kralj Novak et al., 2021).3 Table 1 reports examples of each category of sentences detected in our dataset.

Table 1.

Examples of Acceptable, Inappropriate, Offensive, and Violent Speech.

| Category | Examples (Italian language) | Examples (English translation) |
|---|---|---|
| Acceptable | Al suo apice, una carriola piena di banconote, per l’equivalente di 100 miliardi di marchi, non bastava a comprare nemmeno un tozzo di pane. | At its peak, a wheelbarrow full of banknotes, for the equivalent of 100 billion marks, was not enough to buy even a loaf of bread. |
| Inappropriate | Quel che é certo é che tutta questa storia puzza e che il fanatismo creatosi attorno a sto cazzo si vaccino sta raggiungendo punte da integralismo religioso. | What is certain is that this whole story stinks and that the fanaticism created around this fucking vaccine is reaching peaks of religious fundamentalism. |
| Offensive | Questa è malata di mente . . . sta li con uno straccio sulla faccia e non si capisce che dice. | She is mentally ill . . . she stands there with a rag over her face and it’s not clear what she says. |
| Violent | Questi BISOGNA ucciderli | These MUST be killed. |

To ensure that the IMSyPP was a valid tool to capture hate speech in our dataset of Telegram messages, we used two different approaches. First, we cross-checked the IMSyPP with the Complex Networks Research Group (CNERG) model (Aluru et al., 2020), which was trained to distinguish hate speech from non-hate speech in a range of languages. As the IMSyPP and the CNERG models measure equivalent concepts and both distinguish between hate speech and non-hate speech, their agreement gives us confidence about the overall performance of the IMSyPP labeling. The two models largely agreed: 82% of the sentences categorized as “acceptable” by the IMSyPP were labeled “non-hate” by the CNERG, and 84% of the sentences categorized as “violent” by the IMSyPP were labeled “hate” by the CNERG (see Table 2). Second, we extracted and manually coded a randomly selected sample of 100 sentences per label type “acceptable,” “inappropriate,” “offensive,” and “violent” (total sample = 400 sentences). We then compared the IMSyPP labels with the manually coded labels. The results indicate a good performance for individual classes “acceptable” (F1 = 0.86), “inappropriate” (F1 = 0.86), “offensive” (F1 = 0.93), and “violent” (F1 = 0.86). The overall accuracy coefficient of the IMSyPP model on the manually labeled sentences is Acc = 0.87, 95% CI = [0.84, 0.90]. Our results were in line with those reported by the model creators, who used the model for the same purpose of detecting hate speech in two separate papers looking at YouTube comments (Cinelli et al., 2021) and tweets (Evkoski et al., 2021). Overall, the comparison between the IMSyPP and the CNERG models, and the comparison between the IMSyPP and our manually annotated set, gives us confidence that the IMSyPP model was a valid tool to detect hate speech in our dataset of Telegram messages.

Table 2.

Comparison Between IMSyPP and CNERG Models.

| IMSyPP | CNERG | Percentage of sentences in each category |
|---|---|---|
| Acceptable | Hate | 18% |
| Acceptable | Non-hate | 82% |
| Inappropriate | Hate | 78% |
| Inappropriate | Non-hate | 22% |
| Offensive | Hate | 63% |
| Offensive | Non-hate | 37% |
| Violent | Hate | 84% |
| Violent | Non-hate | 16% |

IMSyPP: Innovative Monitoring Systems and Prevention Policies of Online Hate Speech.
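The validation metrics reported above (per-class F1 and overall accuracy with a 95% interval) can be illustrated with a short sketch. F1 is the harmonic mean of precision and recall. The text does not state which interval method was used; a normal-approximation (Wald) interval is assumed here, and with Acc = 0.87 and n = 400 it gives bounds that round to the reported [0.84, 0.90]. The function names are hypothetical.

```python
import math


def f1_for_class(gold, predicted, label):
    """F1 score for one class, from parallel lists of gold and predicted labels."""
    pairs = list(zip(gold, predicted))
    tp = sum(1 for g, p in pairs if g == label and p == label)
    fp = sum(1 for g, p in pairs if g != label and p == label)
    fn = sum(1 for g, p in pairs if g == label and p != label)
    if tp == 0:
        return 0.0  # no correct predictions for this class
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def wald_interval(proportion, n, z=1.96):
    """Normal-approximation confidence interval for a proportion (assumed method)."""
    half_width = z * math.sqrt(proportion * (1 - proportion) / n)
    return proportion - half_width, proportion + half_width


# Accuracy and sample size taken from the text.
low, high = wald_interval(0.87, 400)
```

Other interval methods (for example, exact binomial intervals) would give slightly different bounds, so the agreement with the reported interval is suggestive rather than conclusive.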

Hate Speech Targets

Based on our observation of the discussions in the Telegram channel, we identified the main adversaries associated with each of four conspiratorial narratives: “China,” “Chinese,” and “Wuhan” for the foreign-focused theory; “doctors,” “nurses,” and “journalists” for the domestic-focused theory; “Jews,” “banks,” and “finance” for the NWO theory; “pedophiles,” “Obama,” and “Clinton” for the QAnon theory. We avoided targets like “government,” “politician,” “leftist,” “communist,” or “masonry” because they are key targets shared across too many different narratives. Instead, we decided to focus on targets that allowed us to distinguish between different conspiracy theories. For example, looking at targets like “Jews,” “banks,” and “finance” allowed us to measure whether the Telegram channel discussions focused on adversaries identified by more traditional (i.e., NWO) as opposed to more contemporary (i.e., QAnon) conspiracy theories. For each adversary, we matched all occurrences (after lower-casing) of a theoretically and observationally informed list of synonyms and variations of the words in Italian, including slang (e.g., for China we included “Cina,” “cinesata,” “filocinese”; for Jew we included “ebreo,” “ebrei,” “ebrea,” “giudeo,” “giudea”).
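The keyword matching step can be sketched as follows. The lexicon below contains only the variants quoted in the text, so it is incomplete by construction; the full lists used in the study are not given here. Matching on whole words (so that "medicina" does not count as a mention of "cina") is an assumption of this sketch, as is the function name `targets_mentioned`.

```python
import re

# Only the example variants quoted in the text; the study's full lists
# are longer and are not reproduced here.
LEXICON = {
    "China": ["cina", "cinesata", "filocinese"],
    "Jews": ["ebreo", "ebrei", "ebrea", "giudeo", "giudea"],
}

# One whole-word pattern per adversary (whole-word matching is an assumption).
PATTERNS = {
    target: re.compile(r"\b(?:" + "|".join(map(re.escape, words)) + r")\b")
    for target, words in LEXICON.items()
}


def targets_mentioned(sentence):
    """Return the set of adversaries whose keywords occur in the sentence."""
    lowered = sentence.lower()  # lower-casing before matching, as in the text
    return {target for target, pattern in PATTERNS.items()
            if pattern.search(lowered)}
```

A sentence can mention more than one adversary, which is why the function returns a set rather than a single label.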

Analysis Plan

This was an exploratory study aimed at generating hypotheses. As a result, our primary focus was on describing the trends that we identified in the data, and notably the differences across four distinct periods: (1) the period before the first lockdown (4 weeks); (2) the period of the first lockdown (11 weeks); (3) the period in between lockdowns (24 weeks); and (4) the period of the second lockdown (9 weeks). We note that each period contained different levels of our measured variables, namely mentions of hate targets and topic occurrence. To statistically verify this point, we estimated the mean of the weekly measured outcomes for each period and the differences between periods using a linear mixed model. The model included period as the independent categorical variable and assumed an autoregressive process with lag defined using an autocorrelation plot with Bartlett’s 95% confidence bands. Sidak’s adjusted p-values for pairwise comparisons between periods are reported.
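With four periods there are six pairwise comparisons, and the Sidak adjustment corrects each p-value for that multiplicity. The sketch below shows the standard single-step form, p_adj = 1 - (1 - p)^m with m comparisons. Whether the statistical software used in the study applied exactly this form is an assumption, and the linear mixed model itself is not reproduced here.

```python
from itertools import combinations

PERIODS = [1, 2, 3, 4]
# All unordered pairs of periods: (1, 2), (1, 3), (1, 4), (2, 3), (2, 4), (3, 4).
PAIRS = list(combinations(PERIODS, 2))


def sidak_adjust(p_value, n_comparisons):
    """Single-step Sidak adjustment: 1 - (1 - p) ** m, capped at 1."""
    return min(1.0, 1.0 - (1.0 - p_value) ** n_comparisons)


# Example: an unadjusted p-value of .01 across the six comparisons.
adjusted = sidak_adjust(0.01, len(PAIRS))
```

In this example the adjusted value is roughly .059, which shows how a comparison that looks significant on its own can fall short of the .05 threshold after adjustment.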

Results

Descriptive Information of the Sample

Figure 1 includes descriptive information about the sample (i.e., total number of messages over time and the proportion of messages produced by top posters) and shows some macro-level patterns in the data. First, the channel activity increased over time and reached its peak during the second lockdown. Second, although a large portion of the group activity was driven by top posters during the first months of 2020, grassroots engagement increased noticeably, especially during the second lockdown. Third, it is interesting to notice that the creator of the Telegram channel, Cesare Sacchetti, was the second top poster. The first top poster was a user who displayed both the Italian and the US flags in their username, which suggests a key focus on US issues and topics.4 Fourth, the content shared in the Telegram channel mainly originated from mainstream social media (e.g., YouTube, Facebook, and Twitter). Here, we found 22,367 raw URLs, compared to 40,986 URLs shared with a message, over the course of the year. The most common source of URLs was YouTube (8,715), followed by Twitter (7,659), Facebook (2,709), and other Italian websites.

Figure 1.

Messages over time.

Topic Modeling

Figure 2 shows that the discussions about “illnesses”—which revolved around the health issues associated with COVID-19—clearly peaked just before the beginning of the first lockdown. This is confirmed by the statistical analysis, which showed that the mean of the weekly proportion of conversations about illness was significantly higher in the weeks before the first lockdown (Period 1) compared to the period in between lockdowns (Period 3, p = .045) and the second lockdown (Period 4, p = .011) (Table 3). Additionally, Figure 2 shows that the discussions about US politics peaked just after the beginning of the second lockdown. Consistently, the statistical model revealed that the mean of the weekly proportion of conversations about US politics was significantly higher in the weeks of the second lockdown (Period 4) than in the rest of the year (p < .001 for all comparisons; Table 3).

Figure 2.

Most common topics.

Top six most common topics discussed in the Telegram channel as a proportion of the length of labeled text, averaged weekly.

Table 3.

Estimated Mean of the Weekly Proportion of Conversations About Illness and US Politics by Period.

| Outcome | Period 1, mean (SE) | Period 2, mean (SE) | Period 3, mean (SE) | Period 4, mean (SE) | p: 1 vs 2 | p: 1 vs 3 | p: 1 vs 4 | p: 2 vs 3 | p: 2 vs 4 | p: 3 vs 4 |
|---|---|---|---|---|---|---|---|---|---|---|
| Illness | 0.291 (0.038) | 0.203 (0.024) | 0.181 (0.017) | 0.147 (0.026) | .240 | .045 | .011 | .967 | .524 | .858 |
| US politics | 0.055 (0.016) | 0.03 (0.009) | 0.056 (0.006) | 0.251 (0.01) | .732 | 1.000 | <.001 | .105 | <.001 | <.001 |

SE: standard error; p: Sidak’s adjusted p-value for the pairwise comparison between periods.

Period 1: pre-lockdown; Period 2: lockdown 1; Period 3: in between lockdowns; Period 4: second lockdown.

Estimates and p-values obtained under a linear mixed model with autocorrelation structure lag(2) for US politics and lag(1) for illness.

Detecting Hate Speech

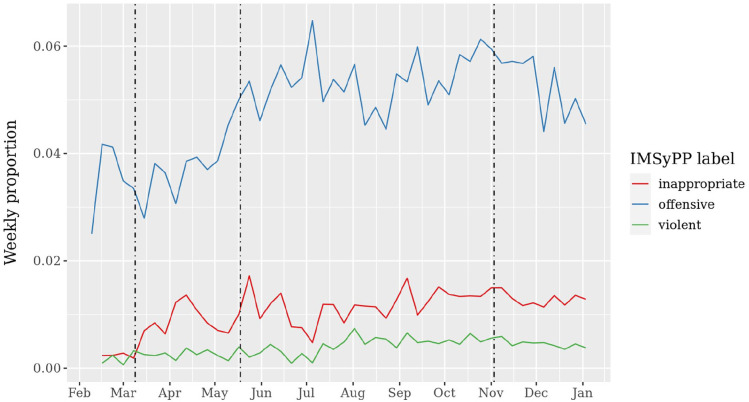

Using the IMSyPP model to detect hate speech, Figure 3 shows the proportion of sentences containing inappropriate, offensive, and violent speech in the dataset. Figure 3 shows that there was an overall increase in offensive speech across 2020. The increase took place during the first lockdown, and levels remained high across the whole year. A similar trend can be observed for inappropriate and violent speech, although less noticeable.

Figure 3.

Evolution of inappropriate, offensive, and violent speech over time.

Presented as a proportion of sentences labeled over all sentences in each week.
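The weekly proportion plotted in Figure 3 can be computed from labeled sentences as sketched below. Grouping by ISO calendar week is an assumption, since the text does not say how weeks were delimited, and the function name `weekly_proportion` and the input layout (pairs of date and label) are hypothetical.

```python
from collections import Counter
from datetime import date


def weekly_proportion(records, label):
    """Share of sentences carrying `label` in each (ISO year, ISO week)."""
    totals = Counter()  # all sentences per week
    hits = Counter()    # sentences with the requested label per week
    for day, sentence_label in records:
        week = tuple(day.isocalendar())[:2]  # (ISO year, ISO week number)
        totals[week] += 1
        if sentence_label == label:
            hits[week] += 1
    return {week: hits[week] / totals[week] for week in sorted(totals)}


# Toy records for illustration only.
records = [
    (date(2020, 3, 9), "offensive"),
    (date(2020, 3, 10), "acceptable"),
    (date(2020, 3, 16), "offensive"),
]
proportions = weekly_proportion(records, "offensive")
```

With these toy records, half of the sentences in the week starting 9 March 2020 are offensive, and the single sentence in the following week is offensive.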

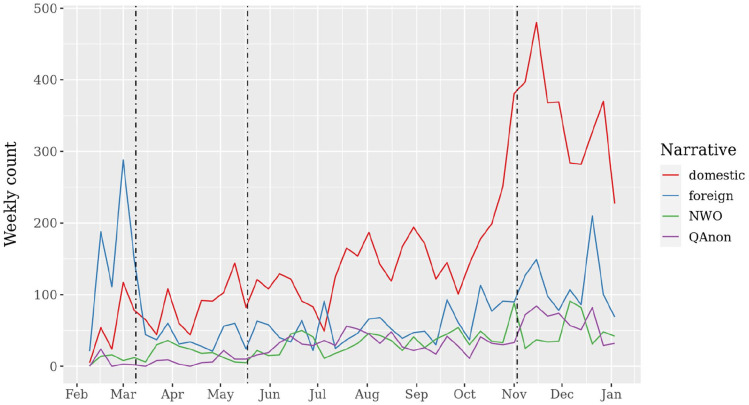

In examining the extent to which the adversaries associated with the four conspiracy theories (foreign-focused, domestic-focused, NWO, QAnon) were discussed in the sample, Figure 4 shows two visually noticeable trends. First, there was a dramatic increase in the frequency of discussions about domestic-focused adversaries right before the beginning of the second lockdown, with the mean count of domestic hate targets during the second lockdown (Period 4) significantly higher than in the other three periods (compared to Period 1, p = .002; Period 2, p < .001; Period 3, p = .007; Table 4). Second, the figure shows that the foreign-focused adversaries (i.e., China) were discussed most frequently before the first lockdown (i.e., the month of February 2020, Period 1) and during the second lockdown (Period 4), with both periods significantly higher than Period 2 and Period 3 (see Table 4). There were no significant differences between Period 1 and Period 4, or between Period 2 and Period 3.

Figure 4.

Counts of keywords capturing adversaries in each conspiratorial narrative.

Table 4.

Estimated Mean of the Weekly Count of Sentences Mentioning Domestic and Foreign Adversaries by Period.

| Outcome | Period 1 | Period 2 | Period 3 | Period 4 | 1 vs 2 | 1 vs 3 | 1 vs 4 | 2 vs 3 | 2 vs 4 | 3 vs 4 |
|---|---|---|---|---|---|---|---|---|---|---|
| | Mean (standard error) | | | | p-value (Sidak’s adjusted) | | | | | |
| Domestic | 75.8 (44.2) | 91.2 (32.7) | 158.5 (25.2) | 281.1 (35.7) | 1.000 | .432 | .002 | .319 | <.001 | .007 |
| Foreign | 150.6 (21) | 49.4 (12.9) | 58.1 (8.8) | 113.3 (14.2) | <.001 | <.001 | .597 | .994 | .005 | .006 |

Period 1: pre-lockdown; Period 2: lockdown 1; Period 3: in between lockdowns; Period 4: second lockdown.

Estimates and p-values obtained under a linear mixed model with autocorrelation structure lag(2) for US politics and lag(1) for illness.
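As a rough illustration of the Sidak adjustment named in the table header, the sketch below applies the standard formula, adjusted p = 1 − (1 − p)^m, to the m = 6 pairwise contrasts among four periods. The unadjusted p-values are not reported in the article, so the input value used in the usage note is hypothetical, and the statistical software the authors used may implement the correction with additional details not shown here.

```python
from itertools import combinations


def sidak_adjust(p_raw, n_comparisons):
    """Sidak-adjusted p-value for one of `n_comparisons` tests."""
    return min(1.0, 1.0 - (1.0 - p_raw) ** n_comparisons)


# Four periods give six pairwise contrasts (1 vs 2, 1 vs 3, ..., 3 vs 4),
# matching the six p-value columns in the table.
periods = [1, 2, 3, 4]
pairs = list(combinations(periods, 2))
n_pairs = len(pairs)
```

For example, `sidak_adjust(0.01, n_pairs)` evaluates to roughly 0.0585, so a hypothetical unadjusted p-value of .01 would no longer fall below .05 after adjusting for six comparisons.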

Looking at the intensity of hate against different targets, Figure 5 suggests that offensive speech against targets identified by the foreign-focused theory is highest before the first lockdown. Consistently, the weekly proportion of sentences mentioning foreign adversaries and offensive speech was significantly higher in the weeks before the first lockdown than in the rest of the year. After the first lockdown, offensive speech against domestic adversaries tended to be higher than offensive speech against other adversaries.

Figure 5.

Inappropriate, offensive, and violent speech against adversaries.

Labeled sentences are allocated to each narrative by the presence of keywords.
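The keyword-based allocation described in the note to Figure 5 could look roughly like the following sketch. The English keyword lists are illustrative stand-ins drawn from the adversaries named in this article (the channel's content was not in English, and the authors' actual keyword lists are not reproduced here), and the prefix-matching rule and the function name `allocate` are assumptions.

```python
import re

# Hypothetical keyword lists, one per conspiratorial narrative.
NARRATIVE_KEYWORDS = {
    "foreign": ["china", "chinese"],
    "domestic": ["journalist", "doctor", "nurse"],
    "qanon": ["obama", "clinton", "pedophile"],
    "nwo": ["jews", "banks", "finance"],
}


def allocate(sentence, keywords=NARRATIVE_KEYWORDS):
    """Return the sorted list of narratives whose keywords occur in `sentence`.

    A token matches a keyword when it starts with it, so "journalists"
    matches "journalist". A sentence may match several narratives or none.
    """
    tokens = set(re.findall(r"\w+", sentence.lower()))
    return sorted(
        narrative
        for narrative, words in keywords.items()
        if any(tok.startswith(w) for tok in tokens for w in words)
    )


both = allocate("The journalists and China are lying")
none = allocate("Nothing to see here")
```

In this sketch a sentence that mentions adversaries from two narratives is counted under both; how such sentences were handled in the study is not stated, so that choice is an assumption.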

Discussion

The primary contribution of our study is to show that a complex and protracted event like the COVID-19 pandemic needs to be disaggregated by country-specific responses (e.g., lockdowns and re-openings), which can present different associations with online hate speech against various targets depending on the social and political context. While previous research considered the pandemic as a homogeneous event triggering hate speech against one target group (e.g., Asian or Jewish minorities) (Croucher et al., 2020; Ziems et al., 2020), we found heterogeneous associations between hate speech against different targets and policy responses to the virus. Overall, our exploratory study suggests that, when COVID-19 was perceived by the Telegram channel members as a significant health threat (hence the discussion about illness), the hate speech primarily targeted China, reflecting a foreign-focused conspiracy theory. When the health threat became less salient in the channel’s conversations, the conspiratorial narratives, the topics of discussion, and the targets of hate speech aligned with a domestic-focused conspiracy theory associated with US-related themes. These are hypotheses that should be explored in future research.

Gottschalk’s (2017) criminological concept of convenience and social identity theory applied to political groups (Huddy, 2001) provide insights into why some conspiracy theories gained traction and others lost popularity over time in the Italian-themed Telegram channel. Online conspiracy communities need adversaries to blame for the misfortunes of their group (e.g., their country, their ethnic, racial or religious group) (Bilewicz & Sędek, 2015; Jolley et al., 2020). In a new and rapidly changing context, like the first year of the COVID-19 pandemic, the Telegram channel users rapidly shifted from one conspiracy theory/adversary to another, in order to maintain the othering narrative aligned with the issues that were more salient in the social and political context. Shifting the adversary and target of hate was dictated by convenience because it was a response to different public opinion and perceived threats to the group’s political identity (Huddy, 2001) during 2020. China (a foreign power) was convenient to blame in a context of national unity and when the health threat of COVID-19 was most salient (Arcolaci, 2020). Domestic elites were convenient to blame in a social and environmental context of decreasing support for domestic elites and lockdowns (Ceccarelli, 2020; Stefanoni, 2020).

Previous research suggests that events of social and political significance such as elections (Müller & Schwarz, 2018) and terrorist attacks (Burnap et al., 2014) can trigger waves of online hate speech. COVID-19 lockdowns and re-openings have been perceived as political events in Western democracies, which have experienced an ideological split in the support for COVID-19 policy responses with conservatives being opposed and liberals being in favor of restrictions (Han et al., 2020). This is likely the reflection of conservative elites endorsing COVID-19-related conspiracy theories, including the idea—especially in the United States—that COVID-19 was being used to attack President Trump (Uscinski et al., 2020). Our study detected a general increase in hate speech in the Italian-themed Telegram channel over the course of the pandemic in 2020. The hate speech levels increased during the first lockdown and did not return to pre-lockdown levels for the remainder of the year. This finding aligns with previous research, which showed that the COVID-19 pandemic triggered an increase in online hate speech (Croucher et al., 2020; Ziems et al., 2020).

Previous research has found that cultural worldviews adapt to the changing social, cultural, and economic environment over long periods of time (Ornatowski, 1996) and that conspiracy theories are often interpreted by the communities that adopt them and adapted to different local cultures (Byford, 2014; Swami, 2012). Our finding that the discussions in the Telegram channel shifted their main focus from foreign adversaries (i.e., China) to domestic adversaries (i.e., journalists and healthcare workers) between March and November 2020 suggests that the conspiracy theories circulating in online communities can adapt to the social and political context over a relatively short period of time (i.e., months). Additionally, by showing that elements of multiple conspiracy theories (including foreign-focused, domestic-focused, QAnon, and NWO) coexisted and that their importance varied significantly over the course of 2020, we show that the culture of online communities of conspiracy theorists is a dynamic set of ideas made of moving parts that gain (or lose) dominance over time. In the Italian-themed Telegram channel, numerous conspiracy theories coexisted and circulated during the pandemic in 2020. These conspiracy theories formed layers that built on top of each other: they included deeper layers of classic conspiracy theories like the NWO, and more contemporary layers such as QAnon and the domestic-focused and foreign-focused conspiracies about the origin of COVID-19.

The relevance of the discussions about the US elections in the Italian Telegram channel (i.e., it was the most discussed topic during the second lockdown, and the top poster of the Telegram channel had both Italian and US flags in their username) suggests that channel members were invested in US politics, and—also based on the research team’s observations—that US-based conspiracy theories (i.e., QAnon) were circulating there. Consistently, we detected a small but significant increase in hate speech against QAnon targets (i.e., Obama, Clinton, pedophiles) alongside domestic targets (i.e., journalists and healthcare workers). More research is needed to understand the contagion effect of the US election and the QAnon narrative at a global level, and how that might have changed—in the short, medium, and long term—the content of the conspiratorial narratives and the adversaries in global contexts. It is important to note that the beginning of the second lockdown took place only 3 days after the US presidential election, which might have been a trigger event for discussions about US politics, too.

Limitations and Ethical Considerations

A limitation of this study is that we attempted to distinguish between different conspiracy theories (foreign-focused, domestic-focused, QAnon, and NWO) that, although conceptually distinct and broadly complementary, present many overlaps and were sometimes conflated and merged by members of the Telegram channel. For example, some Telegram channel users (including Sacchetti) wrote that China was plotting with the Italian secret service against Trump using the pandemic as a weapon to strengthen a global elite of pedophiles. Often, conspiracy theories are not mutually exclusive; rather, they can coexist and merge into one another because they share the same adversaries, which become common targets of hate. For example, QAnon and the NWO conspiracies target domestic politicians and secret elites such as the masonry and the Illuminati (Amarasingam & Argentino, 2020; Barkun, 2013; Imhoff & Lamberty, 2020). Satan-worshippers and communists are key adversaries in both the NWO and the QAnon conspiracy theories (Amarasingam & Argentino, 2020). Adversaries identified by the NWO theory (“Jews,” “banks,” and “finance”) might also be adversaries within the QAnon theory, and potentially also within the domestic-focused and foreign-focused theories. This overlap exists because the NWO was a blueprint for QAnon, as well as many other contemporary conspiracy theories (Amarasingam & Argentino, 2020). For these reasons, we had to exclude from our data collection adversaries that were shared across different conspiracy theories (i.e., “government,” “politicians,” “leftists,” “communists,” or “masonry”). Future studies should attempt to identify building blocks of different conspiratorial narratives and track their evolution and interactions over time.

We acknowledge that conducting research on social media data is a gray area for research ethics because the users did not explicitly consent for their data to be used, and because they might not have anticipated that their data could be used for research purposes. For this reason, we do not provide any individual-level data attached to this publication. The Telegram channel that we analyzed was a public channel: it was open to anyone, its name appeared in Italian mainstream media, it was large (over 4,000 users), and there were no disclaimers or limitations on the use of data. The Telegram privacy guidelines state, “like everything on Telegram, the data you post in public communities is encrypted, both in storage and in transit—but everything you post in public will be accessible to everyone.”5 We provided aggregate analyses of the users’ language, and there is no way that any user could be re-identified from our analyses. The only user that we named is the Telegram channel creator, Cesare Sacchetti, who is a high-profile public figure in the Italian conspiracy milieu and has been named multiple times in conspiracy-related research (e.g., McDonald et al., 2020). We believe that providing his name—and explaining who he is—provided important context to understand the origins of the Telegram channel.

Conclusion

Online communities like the one that we explored in this study are hotspots for polarizing opinions. Individuals who belong to groups that endorse conspiracy theories tend to have a siege mentality (Fan et al., 2020; Gruzd & Mai, 2020). In the Telegram channel, this is demonstrated by “social control” being one of the main topics of discussion, with users intensively discussing the issues of censorship and control that they perceive to be motivated by obscure conspiratorial goals. This siege mentality, the separation from the rest of society, and the associated mindset create a fertile ground for the sharing of deliberately false and implausible materials that are uncritically distributed (Bessi et al., 2015). Whether—and to what extent—this process of polarization might translate into real-world harm is an important question that needs to be explored by both academics and practitioners working in violence prevention.

We believe that this study is a potential warning signal for government and non-governmental organizations that work on preventing and mitigating real-world hate. Different conspiracy theories are associated with different behavioral and attitudinal outcomes (Oleksy et al., 2020, 2021). Imhoff and Lamberty (2020) found that, depending on whether COVID-19 is believed to be a hoax or human-made, participants in the United States, the United Kingdom, and Germany indicated less compliance with government responses and more engagement in self-reported behavior targeting personal benefits of the crisis. Previous research established that, at an aggregate level, online hate speech is associated with episodes of real-world violence (Williams et al., 2020). Therefore, our findings warn about the potential for an increase in real-world violence against professional groups like journalists and healthcare workers.

Author Biographies

Matteo Vergani (PhD) is a Senior Lecturer in Sociology at Deakin University. His research focuses on the “ecosystem of hate,” which includes discrimination, micro-aggressions, hate speech, hate crime, and politically motivated violence. His research interests include the factors that trigger and accelerate hate-motivated behaviors, as well as the factors that contribute to prevent and mitigate hate, such as social cohesion and inclusion of diverse communities in multicultural societies.

Alfonso Martinez Arranz (PhD) is a researcher at the Faculty of Engineering and Information Technology of the University of Melbourne. He has a PhD in Public Policy from Monash University. His interests are in sustainability transitions, low-carbon technologies, climate and energy policy, and the use of data-intensive research methods to answer important political, societal, and environmental questions.

Ryan Scrivens (PhD) is an Assistant Professor in the School of Criminal Justice at Michigan State University. He is also an Associate Director at the International CyberCrime Research Centre at Simon Fraser University and a Research Fellow at the VOX-Pol Network of Excellence. He conducts problem-oriented interdisciplinary research with a focus on terrorists’ and extremists’ use of the Internet, right-wing terrorism and extremism, combating violent extremism, hate crime, and computational social science.

Liliana Orellana (PhD) is Professor in Biostatistics in the Faculty of Health at Deakin University. She has a PhD in Biostatistics from Harvard University, and her major empirical areas of interest include cancer prevention and surveillance, palliative care, HIV epidemiology and treatment, nutrition, and eating disorders in children and adolescents. Major methodological areas of interest include causal inference, optimal dynamic regimes, complex epidemiological surveys, and robust multivariate methods.

For its Italian version, the model was trained on 119,670 YouTube comments and tested on an independent test set of 21,072 YouTube comments. See https://huggingface.co/IMSyPP/hate_speech_it.

The top posters were anonymized in Figure 1.

Footnotes

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) received no financial support for the research, authorship, and/or publication of this article.

ORCID iD: Matteo Vergani  https://orcid.org/0000-0003-0546-4771


References

- Allport G. W. (1954). The nature of prejudice. Addison-Wesley.

- Aluru S. S., Mathew B., Saha P., Mukherjee A. (2020). Deep learning models for multilingual hate speech detection. arXiv preprint. arXiv:2004.06465.

- Amarasingam A., Argentino M. A. (2020). The QAnon conspiracy theory: A security threat in the making. CTC Sentinel, 13(7), 37–44.

- Angelov D. (2020). Top2vec: Distributed representations of topics. arXiv preprint. arXiv:2008.09470.

- Arcolaci A. (2020). Coronavirus, medici e infermieri: “Siamo stremati” [Coronavirus, doctors and nurses: “We are exhausted”]. Vanity Fair. https://www.vanityfair.it/news/storie-news/2020/03/18/coronavirus-medici-e-infermieri-appello-storie

- Barkun M. (2013). A culture of conspiracy: Apocalyptic visions in contemporary America. University of California Press.

- Bessi A., Coletto M., Davidescu G. A., Scala A., Caldarelli G., Quattrociocchi W. (2015). Science vs conspiracy: Collective narratives in the age of misinformation. PLOS ONE, 10(2), Article e0118093. 10.1371/journal.pone.0118093

- Bilewicz M., Sędek G. (2015). Conspiracy stereotypes: Their sociopsychological antecedents and consequences. In Bilewicz M., Cichocka A., Soral W. (Eds.), The psychology of conspiracy (pp. 3–22). Routledge.

- Bliuc A. M., Betts J., Vergani M., Iqbal M., Dunn K. (2019). Collective identity changes in far-right online communities: The role of offline intergroup conflict. New Media & Society, 21(8), 1770–1786. 10.1177/1461444819831779

- Bliuc A. M., Faulkner N., Jakubowicz A., McGarty C. (2018). Online networks of racial hate: A systematic review of 10 years of research on cyber-racism. Computers in Human Behavior, 87, 75–86. 10.1016/j.chb.2018.05.026

- Boccia S., Ricciardi W., Ioannidis J. P. (2020). What other countries can learn from Italy during the COVID-19 pandemic. JAMA Internal Medicine, 180(7), 927–928. 10.1001/jamainternmed.2020.1447

- Bronner S. E. (2003). A rumor about the Jews: Antisemitism, conspiracy, and the Protocols of Zion. Oxford University Press.

- Bucchi M., Saracino B. (2020). Vaccino Covid-19, un italiano su cinque non lo vuole. I dati di Observa Science in Society [Covid-19 vaccine, one in five Italians doesn’t want it. The data of Observa Science in Society]. Corriere della Sera. http://corriereinnovazione.corriere.it/cards/vaccino-covid-terzo-italiani-non-vuole-dati-sondaggio-osservatorio-observa-science/percezione-pericolo-fonti-informative_principale.shtml

- Burch L. (2018). “You are a parasite on the productive classes”: Online disablist hate speech in austere times. Disability & Society, 33(3), 392–415. 10.1080/09687599.2017.1411250

- Burnap P., Williams M. L., Sloan L., Rana O., Housley W., Edwards A., Knight V., Procter R., Voss A. (2014). Tweeting the terror: Modelling the social media reaction to the Woolwich terrorist attack. Social Network Analysis and Mining, 4, Article 206. 10.1007/s13278-014-0206-4

- Byford J. (2014). Beyond belief: The social psychology of conspiracy theories and the study of ideology. In Antaki C., Condor S. (Eds.), Rhetoric, ideology and social psychology: Essays in honour of Michael Billig (pp. 83–94). Routledge.

- Cabrini L., Grasselli G., Cecconi M. (2020). Yesterday heroes, today plague doctors: The dark side of celebration. Intensive Care Medicine, 46(9), 1790–1791. 10.1007/s00134-020-06166-4

- Ceccarelli D. (2020). Coronavirus, anche i medici-eroi hanno bisogno del pronto soccorso [Coronavirus, also the doctors-heroes need the emergency room]. Sole 24 Ore. https://www.ilsole24ore.com/art/coronavirus-anche-medici-eroi-hanno-bisogno-pronto-soccorso-ADTADqI?refresh_ce=1

- Cinelli M., Pelicon A., Mozetič I., Quattrociocchi W., Novak P. K., Zollo F. (2021). Dynamics of online hate and misinformation. Scientific Reports, 11(1), 1–12. 10.1038/s41598-021-01487-w

- Comin & Partners. (2020). Coronavirus e turismo: il sondaggio di IZI e Comin & Partners [Coronavirus and tourism: the survey of IZI and Comin & Partners]. https://www.cominandpartners.com/en/comunicati-stampa/coronavirus-e-turismo-il-sondaggio-di-izi-e-comin-partners/

- Costello M., Hawdon J., Bernatzky C., Mendes K. (2019). Social group identity and perceptions of online hate. Sociological Inquiry, 89(3), 427–452. 10.1111/soin.12274

- Croucher S. M., Nguyen T., Rahmani D. (2020). Prejudice toward Asian Americans in the COVID-19 pandemic: The effects of social media use in the United States. Frontiers in Communication, 5, Article 39. 10.3389/fcomm.2020.00039

- Curiskis S. A., Drake B., Osborn T. R., Kennedy P. J. (2020). An evaluation of document clustering and topic modelling in two online social networks: Twitter and Reddit. Information Processing & Management, 57(2), Article 102034. 10.1016/j.ipm.2019.04.002

- Darling-Hammond S., Michaels E. K., Allen A. M., Chae D. H., Thomas M. D., Nguyen T. T., Johnson R. C. (2020). COVID-19 after “the China virus” went viral: Racially charged coronavirus coverage and trends in bias against Asian Americans. Health Education & Behavior, 47(6), 870–879. 10.1177/1090198120957949

- Dean J. (2000). Theorizing conspiracy theory. Theory & Event, 4(3). https://muse.jhu.edu/article/32599/summary

- Dearden L. (2021). Kate Shemirani: Covid conspiracy theorist investigated by police over doctor hanging comments. The Independent. https://www.independent.co.uk/news/uk/crime/covid-vaccine-doctors-hanging-shemirani-police-b1890636.html

- Devlin J., Chang M.-W., Lee K., Toutanova K. (2018). BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint. arXiv:1810.04805.

- Douglas K. M. (2021). COVID-19 conspiracy theories. Group Processes & Intergroup Relations, 24(2), 270–275. 10.1177/1368430220982068

- Douglas K. M., Uscinski J. E., Sutton R. M., Cichocka A., Nefes T., Ang C. S., Deravi F. (2019). Understanding conspiracy theories. Political Psychology, 40(S1), 3–35. 10.1111/pops.12568

- Evkoski B., Ljubešić N., Pelicon A., Mozetič I., Kralj Novak P. (2021). Evolution of topics and hate speech in retweet network communities. Applied Network Science, 6(1), 1–20.

- Fan L., Yin Z., Yu H., Gilliland A. (2020). Using data-driven analytics to enhance archival processing of the COVID-19 Hate Speech Twitter Archive (CHSTA). Preprint. 10.31229/osf.io/gkydm

- Fanelli D., Piazza F. (2020). Analysis and forecast of COVID-19 spreading in China, Italy and France. Chaos, Solitons & Fractals, 134, Article 109761. 10.1016/j.chaos.2020.109761

- Fuschetto C. (2020). C’è chi pensa che il coronavirus sia un complotto dei governi [There are people who think that coronavirus is a government’s conspiracy]. AGI. https://www.agi.it/blog-italia/scienza/post/2020-03-19/coronavirus-complotto-governi-7643757/

- Gallagher A., O’Connor C. (2021). Layers of lies: A first look at Irish far-right activity on Telegram. Institute for Strategic Dialogue.

- Ghasiya P., Okamura K. (2021). Investigating COVID-19 news across four nations: A topic modeling and sentiment analysis approach. IEEE Access, 9, 36645–36656. 10.1109/access.2021.3062875

- Goldberg R. A. (2008). Enemies within: The culture of conspiracy in modern America. Yale University Press.

- Gottschalk P. (2017). Convenience in white-collar crime: Introducing a core concept. Deviant Behavior, 38(5), 605–619. 10.1080/01639625.2016.1197585

- Gruzd A., Mai P. (2020). Going viral: How a single tweet spawned a COVID-19 conspiracy theory on Twitter. Big Data & Society, 7(2), 1–9. 10.1177/2053951720938405

- Guhl J., Davey J. (2020). A safe space to hate: White supremacist mobilisation on Telegram. Institute for Strategic Dialogue. https://www.isdglobal.org/wp-content/uploads/2020/06/A-Safe-Space-to-Hate.pdf

- Han J., Cha M., Lee W. (2020). Anger contributes to the spread of COVID-19 misinformation. Harvard Kennedy School Misinformation Review, 1(3). https://misinforeview.hks.harvard.edu/article/anger-contributes-to-the-spread-of-covid-19-misinformation/

- Hannah M. (2021). QAnon and the information dark age. First Monday. https://firstmonday.org/ojs/index.php/fm/article/download/10868/10067

- Huang J., Liu R. (2020). Xenophobia in America in the age of coronavirus and beyond. Journal of Vascular and Interventional Radiology, 31(7), 1187–1188. 10.1016/j.jvir.2020.04.020

- Huddy L. (2001). From social to political identity: A critical examination of social identity theory. Political Psychology, 22(1), 127–156. 10.1111/0162-895X.00230

- Imhoff R., Lamberty P. (2020). A bioweapon or a hoax? The link between distinct conspiracy beliefs about the Coronavirus disease (COVID-19) outbreak and pandemic behavior. Social Psychological and Personality Science, 11(8), 1110–1118. 10.1177/1948550620934692

- Innes H., Innes M. (2021). De-platforming disinformation: Conspiracy theories and their control. Information, Communication & Society. Advance online publication. 10.1080/1369118X.2021.1994631

- Jolley D., Meleady R., Douglas K. M. (2020). Exposure to intergroup conspiracy theories promotes prejudice which spreads across groups. British Journal of Psychology, 111(1), 17–35. 10.1111/bjop.12385

- Jolley D., Paterson J. L. (2020). Pylons ablaze: Examining the role of 5G COVID-19 conspiracy beliefs and support for violence. British Journal of Social Psychology, 59(3), 628–640. 10.1111/bjso.12394

- Kamp A., Denson N., Atie R., Dunn K., Sharples R., Vergani M., Walton J., Sisko S. (2021). Asian Australians’ experiences of racism during the COVID-19 pandemic. Centre for Resilient and Inclusive Societies. https://static1.squarespace.com/static/5d48cb4d61091100011eded9/t/60f655dd3ca5073dfd636d88/1626756586620/COVID+racism+report+190721.pdf

- Khan M. A., Kanwal N. (2015). Is unending polio because of religious militancy in Pakistan? A case of federally administrated tribal areas. International Journal of Development and Conflict, 5(1), 32–47.

- Khan Y. H., Mallhi T. H., Alotaibi N. H., Alzarea A. I., Alanazi A. S., Tanveer N., Hashmi F. K. (2020). Threat of COVID-19 vaccine hesitancy in Pakistan: The need for measures to neutralize misleading narratives. The American Journal of Tropical Medicine and Hygiene, 103(2), 603–604. 10.4269/ajtmh.20-0654

- Klein C., Clutton P., Polito V. (2018). Topic modeling reveals distinct interests within an online conspiracy forum. Frontiers in Psychology, 9, Article 189. 10.3389/fpsyg.2018.00189

- Kofta M., Soral W., Bilewicz M. (2020). What breeds conspiracy antisemitism? The role of political uncontrollability and uncertainty in the belief in Jewish conspiracy. Journal of Personality and Social Psychology, 118(5), 900–918. 10.1037/pspa0000183

- Kralj Novak P., Mozetič I., Ljubešić N. (2021). Slovenian Twitter hate speech dataset IMSyPP-sl. http://imsypp.ijs.si/

- Lui M., Baldwin T. (2011). Cross-domain Feature Selection for Language Identification. In Proceedings of 5th International Joint Conference on Natural Language Processing (pp. 553–561). Chiang Mai, Thailand: Asian Federation of Natural Language Processing. https://aclanthology.org/I11-1062

- McDonald K., Padovese V., Labbe C., Richter M. (2020). Monitoraggio della diffusione via Twitter della disinformazione sul COVID-19 in Europa [Monitoring the diffusion of COVID-19 misinformation via Twitter in Europe]. NewsGuard. https://www.newsguardtech.com/it/twitter-superspreaders-europe/

- Müller K., Schwarz C. (2018). Making America hate again? Twitter and hate crime under Trump. Preprint. 10.2139/ssrn.3149103

- Oleksy T., Wnuk A., Gambin M., Łyś A. (2021). Dynamic relationships between different types of conspiracy theories about COVID-19 and protective behaviour: A four-wave panel study in Poland. Social Science & Medicine, 280, Article 114028. 10.1016/j.socscimed.2021.114028

- Oleksy T., Wnuk A., Maison D., Łyś A. (2020). Content matters. Different predictors and social consequences of general and government-related conspiracy theories on COVID-19. Personality and Individual Differences, 168, Article 110289. 10.1016/j.paid.2020.110289

- Oliver J. E., Wood T. J. (2014). Conspiracy theories and the paranoid style(s) of mass opinion. American Journal of Political Science, 58(4), 952–966. 10.1111/ajps.12084

- Orellana C. I. (2020). Health workers as hate crimes targets during COVID-19 outbreak in the Americas. Revista de Salud Pública, 22(2), Article e386766.

- Ornatowski G. K. (1996). Confucian ethics and economic development: A study of the adaptation of Confucian values to modern Japanese economic ideology and institutions. The Journal of Socio-Economics, 25(5), 571–590. [Google Scholar]

- Parekh B. (2006). Hate speech. Public Policy Research, 12(4), 213–223. [Google Scholar]

- Polignano M., Basile V., Basile P., de Gemmis M., Semeraro G. (2019). AlBERTo: Modeling Italian Social Media Language with BERT. IJCoL: Italian Journal of Computational Linguistics, 5(5–2), 11–31. 10.4000/ijcol.472 [DOI] [Google Scholar]

- Privitera G. (2020). Italy’s doctors face new threat: Conspiracy theories. Politico. https://www.politico.eu/article/italy-coronavirus-doctors-face-conspiracy-theories/

- Ranieri G. (2020). Milano, lo sfregio agli eroi del covid: vandalizzato il murales dedicato a medici e infermieri [Milan, an affront to the heroes of covid: vandalised the graffiti dedicated to doctors and nurses]. Milano Today. https://www.milanotoday.it/attualita/coronavirus/murales-medici-infermieri-vandali.html

- Rauhala E., Morris L. (2020). In the United States, QAnon is struggling. The conspiracy theory is thriving abroad. The Washington Post. https://www.washingtonpost.com/world/qanon-conspiracy-global-reach/2020/11/12/ca312138-13a5-11eb-a258-614acf2b906d_story.html

- Roose K. (2021). What is QAnon, the viral pro-Trump conspiracy theory? The New York Times. https://www.nytimes.com/article/what-is-qanon.html

- Scrivens R., Burruss G., Holt T., Chermak S., Freilich J., Frank R. (2020). Triggered by defeat or victory? Assessing the impact of presidential election results on extreme right-wing mobilization online. Deviant Behavior, 42(5), 630–645. 10.1080/01639625.2020.1807298 [DOI] [Google Scholar]

- Siegel A. A., Nikitin E., Barberá P., Sterling J., Pullen B., Bonneau R., Nagler J., Tucker J. A. (2018). Measuring the prevalence of online hate speech, with an application to the 2016 US Election. https://smappnyu.org/wp-content/uploads/2018/11/Hate_Speech_2016_US_Election_Text.pdf

- Spark A. (2000). Conjuring order: The new world order and conspiracy theories of globalization. The Sociological Review, 48(2 Suppl.), 46–62. 10.1111/j.1467-954X.2000.tb03520.x [DOI] [Google Scholar]

- Stefanoni F. (2020). Covid, cambia la paura degli italiani e il governo Conte perde fiducia [Covid, the fear of Italian people changes, and the Conte government loses trust]. Corriere della Sera. https://www.corriere.it/politica/20_novembre_12/covid-cambia-paura-italiani-governo-conte-perde-fiducia-e030d07e-24e3-11eb-9615-de24e09c8a4a.shtml

- Swami V. (2012). Social psychological origins of conspiracy theories: The case of the Jewish conspiracy theory in Malaysia. Frontiers in Psychology, 3, Article 280. 10.3389/fpsyg.2012.00280 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Swami V., Furnham A. (2014). Political paranoia and conspiracy theories. In Prooijen J., Lange P. (Eds.), Power, Politics, and Paranoia: Why People are Suspicious of their Leaders (pp. 218–236). Cambridge University Press.

- Ullrich S., Keers R., Coid J. W. (2014). Delusions, anger, and serious violence: New findings from the MacArthur Violence Risk Assessment Study. Schizophrenia Bulletin, 40(5), 1174–1181. 10.1093/schbul/sbt126

- Urman A., Katz S. (2020). What they do in the shadows: Examining the far-right networks on Telegram. Information, Communication & Society, 25(7), 904–923. 10.1080/1369118X.2020.1803946

- Uscinski J. E., Enders A. M., Klofstad C., Seelig M., Funchion J., Everett C., Murthi M. (2020). Why do people believe COVID-19 conspiracy theories? Harvard Kennedy School Misinformation Review, 1(3). https://misinforeview.hks.harvard.edu/article/why-do-people-believe-covid-19-conspiracy-theories/

- van der Vegt I., Mozes M., Gill P., Kleinberg B. (2021). Online influence, offline violence: Language use on YouTube surrounding the “Unite the Right” rally. Journal of Computational Social Science, 4(1), 333–354. 10.1007/s42001-020-00080-x

- Velásquez N., Leahy R., Restrepo N. J., Lupu Y., Sear R., Gabriel N., Johnson N. F. (2020). Hate multiverse spreads malicious COVID-19 content online beyond individual platform control. arXiv preprint. arXiv:2004.00673.

- Vergani M., Perry B., Freilich J., Chermak S., Scrivens R., Link R. (2022). PROTOCOL: Mapping the scientific knowledge and approaches to defining and measuring hate crime, hate speech, and hate incidents. Campbell Systematic Reviews, 18(2), Article e1228.

- Viafora G. (2020). Covid, negazionismo e insulti ai medici: Facebook non cancella i post. “Rispettano i nostri standard” [Covid, denialism and insults against doctors: Facebook does not delete the posts. “They respect our standards”]. Corriere della Sera. https://www.corriere.it/cronache/20_dicembre_01/covid-negazionismo-insulti-medici-facebook-non-cancella-post-rispettano-nostri-standard-3b7d3c72-33be-11eb-be82-c9839d3e98fa.shtml

- Walther S., McCoy A. (2021). US extremism on Telegram: Fueling disinformation, conspiracy theories, and accelerationism. Perspectives on Terrorism, 15(2), 100–124.

- West H. G., Sanders T. (Eds.) (2003). Transparency and conspiracy: Ethnographies of suspicion in the new world order. Duke University Press.

- Williams M. L., Burnap P. (2015). Cyberhate on social media in the aftermath of Woolwich: A case study in computational criminology and big data. British Journal of Criminology, 56(2), 211–238. 10.1093/bjc/azv059

- Williams M. L., Burnap P., Javed A., Liu H., Ozalp S. (2020). Hate in the machine: Anti-Black and anti-Muslim social media posts as predictors of offline racially and religiously aggravated crime. British Journal of Criminology, 60(1), 93–117. 10.1093/bjc/azz049

- Ziems C., He B., Soni S., Kumar S. (2020). Racism is a virus: Anti-Asian hate and counterhate in social media during the COVID-19 crisis. arXiv preprint. arXiv:2005.12423.