Abstract

Given the recent success of artificial intelligence (AI) in computer vision applications, many pathologists anticipate that AI will be able to assist them in a variety of digital pathology tasks. Simultaneously, tremendous advancements in deep learning have enabled image-based diagnosis built on digital pathology. Efforts are under way to develop AI-based tools that save pathologists time and eliminate errors. Here, we describe the elements in the development of computational pathology (CPATH), its applicability to AI development, and the challenges it faces, such as algorithm validation and interpretability, computing systems, reimbursement, ethics, and regulations. Furthermore, we present an overview of novel AI-based approaches that could be integrated into pathology laboratory workflows.

Keywords: artificial intelligence, computational pathology, digital pathology, histopathology image analysis, deep learning

1. Introduction

Pathologists examine pathology slides under a microscope. To diagnose diseases with these glass slides, many traditional technologies, such as hematoxylin and eosin (H&E) staining and special staining, have been used. However, even for experienced pathologists, intra- and interobserver disagreement cannot be avoided through visual observation and subjective interpretation [1]. This limited agreement has resulted in the necessity of computational methods for pathological diagnosis [2,3,4]. Because automated approaches can achieve reliable results, digital imaging is the first step in computer-aided analysis [5]. When compared to traditional digital imaging technologies that process static images through cameras, whole-slide imaging (WSI) is a more advanced and widely used technology in pathology [6].

Digital pathology refers to the environment that includes tools and systems for digitizing pathology slides and associated metadata, in addition to their storage, evaluation, and analysis, as well as supporting infrastructure. WSI has been proven in multiple studies to have an excellent correlation with traditional light microscopy diagnosis [7] and to be a reliable tool for routine surgical pathology diagnosis [8,9]. Indeed, WSI technology provides a number of advantages over traditional microscopy, including portability, ease of sharing and retrieving images, and task balance [10]. The establishment of the digital pathology environment contributed to the development of a new branch of pathology known as computational pathology (CPATH) [11]. Novel terminology and definitions have resulted from advances in computational pathology (Table 1) [12]. The computational analysis of pathology slide images has made direct disease investigation possible rather than relying on a pathologist analyzing images on a screen [13]. AI approaches based on deep learning are frequently used to combine information from digitized pathology images with their associated metadata. Using AI approaches that computationally evaluate the entire slide image, researchers can detect features that are difficult to detect by eye alone, which is now the state of the art in digital pathology [14].

Table 1.

Computational pathology definitions.

| Term | Definition |
|---|---|
| Artificial intelligence (AI) | The broadest definition of computer science dealing with the ability of a computer to simulate human intelligence and perform complicated tasks. |
| Computational pathology (CPATH) | A branch of pathology that involves computational analysis of a broad array of methods to analyze patient specimens for the study of disease. In this paper, we focus on the extraction of information from digitized pathology images in combination with their associated metadata, typically using AI methods such as deep learning. |
| Convolutional neural network (CNN) | A form of deep neural network with one or more convolutional layers and various other layers that can be trained using the backpropagation algorithm and is suitable for learning 2D data such as images. |
| Deep learning | A subclassification of machine learning that imitates a logical structure similar to how people reach conclusions, using a layered algorithm structure called an artificial neural network. |
| Digital pathology | An environment in which traditional pathology analysis utilizing slides made of cells or tissues is converted to a digital environment using a high-resolution scanner. |
| End-to-end training | The opposite of feature-crafted methods in a machine learning model: a method that learns the ideal values simultaneously rather than sequentially, using only one pipeline. It works smoothly when the dataset is large enough. |
| Ground truth | A dataset’s ‘true’ category, quantity, or label that serves as direction to an algorithm in the training step. The ground truth varies from the patient or slide level to objects or areas within the picture, depending on the objective. |
| Image segmentation | A technique for classifying each region into a semantic category by decomposing an image to the pixel level. |
| Machine learning | A form of artificial intelligence that parses data, learns from it, and makes intelligent judgments based on what it has learned. |
| Metadata | A type of data that explains other data. A single histopathology slide image in CPATH may include patient disease, demographic information, previous treatment records and medical history, slide staining information, and scanner information as metadata. |
| Whole-slide image (WSI) | A whole histopathological glass slide digitized at microscopic resolution as a digital representation. Slide scanners are commonly used to create these complete slide scans. A slide scan viewing platform allows for image examination similar to that of a regular microscope. |

The conventional machine learning approach to digital pathology images requires specially trained pathologists to manually categorize abnormal image attributes before incorporating them into algorithms. Manually extracting and analyzing features from pathological images was a time-consuming, labor-intensive, and costly method that led to many disagreements among pathologists on whether features are typical [15]. Human-extracted visual characteristics must be translated into numerical forms for computer algorithms, but identifying patterns and expressing them with a finite number of feature markers was nearly impossible in some complex diseases. Numerous studies on how best to learn handcrafted features became the basis for commercially available medical image analysis systems. Even after all the algorithm development steps, performance often suffered from a high false-positive rate, and generalization even to typical pathological images was limited [16]. Deep learning, however, has enabled computers to automatically extract feature vectors from example pathology image data and learn to build optimal algorithms on their own [17,18], even outperforming physicians in some cases, and has now emerged as a cutting-edge machine learning method in clinical practice [19]. Diverse deep architectures trained with huge image datasets provide biological informatics discoveries and outstanding object recognition [20].

The purpose of this review is to enhance the understanding of the reader with an update on the implementation of artificial intelligence in the pathology department regarding requirements, work process and clinical application development.

2. Development of AI-Aided Computational Pathology

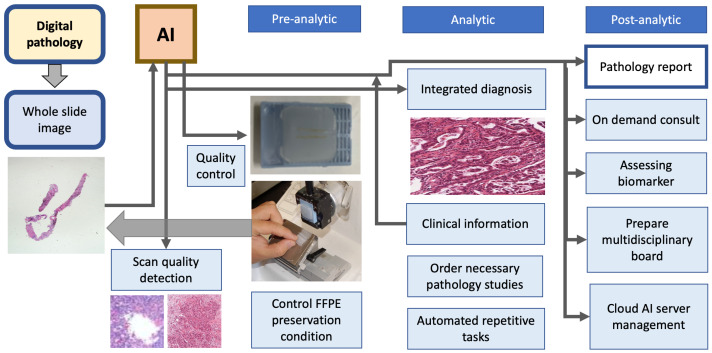

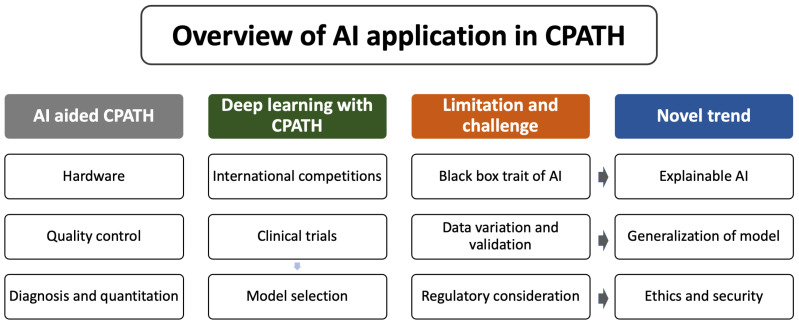

Integrating artificial intelligence into the workflow of the pathology department enables quality control of the pre-analytic, analytic, and post-analytic phases of the department’s work process. This includes quality control of scan images and formalin-fixed paraffin-embedded tissue blocks, integrated diagnosis that incorporates clinical information, ordering of necessary pathology studies including immunohistochemistry and molecular studies, automation of repetitive tasks, on-demand consultation, and cloud server management (Figure 1). Ultimately, this allows precision medicine by enabling the use of a wide range of patient data, including pathological images, to develop disease prevention and treatment methods tailored to individual patient features. Achieving these goals requires several crucial elements for CPATH. A simple summary of the steps required to apply AI with CPATH is shown in Figure 2.

Figure 1.

Embedding AI into the pathology department workflow. Digital pathology supplies whole-slide images to artificial intelligence, which performs quality control of the pre-analytic, analytic, and post-analytic phases of the pathology laboratory process.

Figure 2.

Requirement for clinical applications of artificial intelligence with CPATH.

2.1. Equipment

Transitioning from glass to digital workflows in anatomic pathology (AP) requires new digital pathology equipment, image management systems, improved data management and storage capacities, and additional trained technicians [21]. While the use of advanced high-resolution hardware with multiple graphical processing units can speed up training, it can become prohibitively expensive. Pathologists must agree to changes to a century-old workflow. Given that change takes time, pathologist end-users should anticipate change-management challenges independent of technological and financial hurdles. AI deployment in the pathology department requires digital pathology. Digital pathology has many proven uses, including primary and secondary clinical diagnosis, telepathology, slide sharing, research dataset development, and pathology education or teaching [22]. With adequate slide image management software, integrated reporting systems, improved scanning speeds, and high-quality images, digital pathology systems save time and cost compared with traditional microscopy and reduce inter-observer variation. Significant barriers include the introduction of technologies without regulatory-driven, evidence-based validation, the resistance of developers (academic and industrial), and the requirement for commercial integration and open-source data formats.

2.2. Whole Slide Image

In the field of radiology, picture archiving and communication systems (PACS) were successfully introduced owing to infrastructure such as stable servers and high-performance processing devices, and they are now widely used as sources of data for deep learning [23,24]. Similarly, in the pathology field, a digital pathology system was developed that scans traditional glass slides using a slide scanner to produce a WSI; it then stores and transmits it to servers [13]. Because a WSI has an average of 1.6 billion pixels and occupies 4600 megabytes (MB) per unit, thus taking up much more space than an image in DICOM (Digital Imaging and Communications in Medicine) format, this transition took place later in pathology than in radiology [25]. However, in recent years, scanners, servers, and technology that can quickly process WSIs have made this possible, allowing pathologists to inspect images on a PC screen [6].
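As a rough plausibility check of the storage figure quoted above, the following sketch computes the raw size of an image with 1.6 billion pixels. It assumes uncompressed 8-bit RGB storage (3 bytes per pixel), which the text does not state; real WSI files are usually compressed and pyramidal, so actual file sizes will differ.

```python
# Back-of-the-envelope check of the WSI size quoted in the text.
# Assumption (not stated in the source): uncompressed 8-bit RGB, 3 bytes/pixel.
PIXELS_PER_WSI = 1_600_000_000   # average pixel count quoted in the text
BYTES_PER_PIXEL = 3              # assumed: one byte each for R, G and B


def uncompressed_size_bytes(pixels, bytes_per_pixel=BYTES_PER_PIXEL):
    """Raw size in bytes of an image stored without compression."""
    return pixels * bytes_per_pixel


size_bytes = uncompressed_size_bytes(PIXELS_PER_WSI)
size_mib = size_bytes / 2**20    # mebibytes (1 MiB = 1,048,576 bytes)
size_mb = size_bytes / 10**6     # decimal megabytes

print(f"{size_mib:.0f} MiB ({size_mb:.0f} MB)")
```

Under this assumption the raw size lands in the same range as the quoted 4600 MB, which suggests the figure refers to uncompressed pixel data.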

2.3. Quality Control Using Artificial Intelligence

AI tools can be embedded within a pathology laboratory workflow before or after the diagnosis of the pathologist. Before cases are sent to pathologists for review, an AI tool can be used to triage them (for example, cancer priority or improper tissue section) or to help with screening for unexpected events (e.g., tissue contamination or microorganisms). After reviewing a case, pathologists can also use AI tools to execute certain tasks (e.g., counting mitotic figures for tumor grading or measuring nucleic acid quantification). AI software can also run in the background and execute tasks such as quality control and other tasks all the time (e.g., correlation with clinical or surgical information). The ability of AI, digital pathology, and laboratory information systems to work together is the key to making a successful AI workflow that fits the needs of a pathology department. Furthermore, pre-analytic AI implementation can affect the process of molecular pathology. Personalized medicine and accurate quantification of tumor and biomarker expression have emerged as critical components of cancer diagnostics. Quality control (QC) of clinical tissue samples is required to confirm the adequacy of tumor tissue to proceed with further molecular analysis [26]. The digitization of stained tissue slides provides a valuable way to archive, preserve, and retrieve important information when needed.

2.4. Diagnosis and Quantitation

A combination of deep learning methods in CPATH has been developed to excavate unique and remarkable biomarkers for clinical applications. Tumor-infiltrating lymphocytes (TILs) are a prime illustration, as their spatial distributions have been demonstrated to be useful for cancer diagnosis and prognosis in the field of oncology [27]. TILs are the principal activator of anticancer immunity in theory, and if TILs could be objectively measured across the tumor microenvironment (TME), they could be a reliable biomarker [20]. TILs have been shown to be associated with recurrence and genetic mutations in non-small cell lung cancer (NSCLC) [28], and lymphocytes, which have been actively made immune, have proved to have a better response, leading to a longer progression-free survival than the ones that did not show much immunity [29]. Because manual quantification necessitates a tremendous amount of work and is easily influenced by interobserver heterogeneity [30,31], many approaches are being tested in order to overcome these hurdles and determine a clinically meaningful TIL cutoff threshold [32]. Recently, a spatial molecular imaging technique obtaining spatial lymphocytic patterns linked to the rich genomic characterization of TCGA samples has exemplified one application of the TCGA image archives, providing insights into the tumor-immune microenvironment [20].

On a cellular level, spatial organization analysis of TME containing multiple cell types, rather than only TILs, has been explored, and it is expected to yield information on tumor progression, metastasis, and treatment outcomes [33]. Tissue segmentation is done using the comprehensive immunolabeling of specific cell types or spatial transcriptomics to identify a link between tissue content and clinical features, such as survival and recurrence [34,35]. In a similar approach, assessing image analysis on tissue components, particularly focusing on the relative amount of area of tumor and intratumoral stroma, such as the tumor-stroma ratio (TSR), is a widely studied prognostic factor in several cancers, including breast cancer [36,37], colorectal cancer [38,39], and lung cancer [40]. Other studies in CPATH include an attempt to predict the origin of a tumor in cancers of unknown primary source using only a histopathology image of the metastatic site [41].
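To make the idea of an area-based tissue measure concrete, the sketch below computes a stroma fraction from a segmentation label map. The label codes, the function name, and the definition used (stroma area divided by tumor plus stroma area) are illustrative assumptions; published TSR protocols define the region of interest and the ratio in different ways.

```python
from collections import Counter

# Hypothetical label codes for a per-pixel (or per-patch) segmentation map.
BACKGROUND, TUMOR, STROMA = 0, 1, 2


def stroma_fraction(label_map):
    """Stroma area divided by tumor-plus-stroma area; None if neither is present."""
    counts = Counter(label for row in label_map for label in row)
    tumor, stroma = counts[TUMOR], counts[STROMA]
    total = tumor + stroma
    return stroma / total if total else None


# Toy 3 x 4 label map: 7 tumor pixels, 3 stroma pixels, 2 background pixels.
toy_map = [
    [0, 1, 1, 2],
    [1, 1, 2, 2],
    [1, 1, 1, 0],
]
print(stroma_fraction(toy_map))
```

Counting labels rather than pixels intensities keeps the measure independent of staining, provided the upstream segmentation is reliable.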

One of the advantages of CPATH is that it allows the simultaneous inspection of histopathology images along with patient metadata, such as demographic, gene sequencing or expression data, and progression and treatment outcomes. Several attempts are being made to integrate patient pathological tissue images and one or more metadata to obtain novel information that may be used for diagnosis and prediction, as it was discovered that predicting survival using merely pathologic tissue images was challenging and inaccurate [42]. Mobadersany et al. used a Cox proportional hazards model integrated with a CNN to predict the overall survival of patients with gliomas using tissue biopsy images and genetic biomarkers such as chromosome deletion and gene mutation [43]. He et al. used H&E histopathology images and spatial transcriptomics, which analyzes RNA to assess gene activity and allocate cell types to their locations in histology sections to construct a deep learning algorithm to predict genomic expression in patients with breast cancer [44]. Furthermore, Wang et al. employed a technique known as ‘transcriptome-wide expression-morphology’ analysis, which allows for the prediction of mRNA expression and proliferation markers using conventional histopathology WSIs from patients with breast cancer [45]. It is also highly promising in that, as deep learning algorithms progress in CPATH, it can be a helpful tool for pathologists and doctors making decisions. Studies have been undertaken to see how significant an impact assisting diagnosis can have. Wang et al. showed that pathologists employing a predictive deep learning model to diagnose the metastasis of breast cancer from WSIs of sentinel lymph nodes reduced the human error rate by nearly 85% [46]. In a similar approach, Steiner et al. looked at the influence of AI in the histological evaluation of breast cancer with lymph node metastasis, comparing pathologist performance supported by AI with pathologist performance unassisted by AI to see whether supplementation may help. It was discovered that algorithm-assisted pathologists outperformed unassisted pathologists in terms of accuracy, sensitivity, and time effectiveness [47].
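When a Cox proportional hazards model is combined with a neural network, the network typically outputs one risk score per patient and is trained by minimizing the negative log partial likelihood. The sketch below shows that generic objective only; it is not the implementation of any study cited above, the names `risks`, `times`, and `events` are illustrative, and tied event times receive no special treatment.

```python
import math


def cox_neg_log_partial_likelihood(risks, times, events):
    """Negative log partial likelihood of the Cox model.

    risks  -- predicted log-risk score per patient (e.g. a network output)
    times  -- follow-up time per patient
    events -- 1 if the event (death) was observed, 0 if censored
    """
    loss = 0.0
    for r_i, t_i, e_i in zip(risks, times, events):
        if not e_i:
            continue  # censored patients contribute only through risk sets
        # Risk set: everyone still under observation at time t_i.
        at_risk = [r_j for r_j, t_j in zip(risks, times) if t_j >= t_i]
        loss -= r_i - math.log(sum(math.exp(r) for r in at_risk))
    return loss


times = [1.0, 2.0, 3.0]
events = [1, 1, 1]
# Higher predicted risk for earlier events should give the lower loss.
concordant = cox_neg_log_partial_likelihood([2.0, 1.0, 0.0], times, events)
discordant = cox_neg_log_partial_likelihood([0.0, 1.0, 2.0], times, events)
print(concordant < discordant)
```

The loss depends only on the ordering of risk scores relative to survival times, which is why censored patients can still be used during training.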

3. Deep Learning from Computational Pathology

3.1. International Competitions

The exponential development in scanner performance making producing WSI easier and faster than previously, along with sophisticated viewing devices, major advancements in both computer technology and AI, as well as the accordance to regulatory requirements of the complete infrastructure within the clinical context, have fueled CPATH’s rapid growth in recent years [15]. Following the initial application of CNNs in histopathology at ICPR 2012 [48], several studies have been conducted to assess the performance of automated deep learning algorithms analyzing histopathology images in a variety of diseases, primarily cancer. CPATH challenges are being promoted in the same way that competitions and challenges are held in the field of computer engineering to develop technologies and discover talented rookies. CAMELYON16 was the first grand challenge ever held, with the goal of developing CPATH solutions for the detection of breast cancer metastases in H&E-stained slides of sentinel lymph nodes and to assess the accuracy of the deep learning algorithms developed by competition participants, medical students and experienced professional pathologists [49]. The dataset from the CAMELYON16 challenge, which took a great deal of work, was used in several other studies and provided motive for other challenges [50,51,52], attracting major machine learning companies such as Google to the medical artificial intelligence field [53], and is said to have influenced US government policy [54]. Since then, new challenges have been proposed in many more cancer areas using other deep learning architectures with greater datasets, providing the driving force behind the growth of CPATH (Table 2). Histopathology deep learning challenges can attract non-medical engineers and medical personnel, provide prospects for businesses, and make the competition’s dataset publicly available, benefiting future studies. 
Stronger deep learning algorithms are expected to emerge, speeding the clinical use of new algorithms in digital image analysis. Traditional digital image analysis works on three major types of measures: image object localization, classification, and quantification [12], and deep learning in CPATH focuses on those metrics similarly. CPATH applications include tumor detection and classification, invasive or metastatic foci detection, primarily lymph nodes, image segmentation and analysis of spatial information, including ratio and density, cell and nuclei classification, mitosis counting, gene mutation prediction, and histological scoring. Two or more of these categories are often researched together, and deep learning architectures like convolutional neural networks (CNN) and recurrent neural networks are utilized for training and applications.

Table 2.

Examples of grand challenges held in CPATH.

| Challenge | Year | Staining | Challenge Goal | Dataset |
|---|---|---|---|---|
| GlaS challenge [55] | 2015 | H&E | Segmentation of colon glands of stage T3 and T4 colorectal adenocarcinoma | Private set: 165 images from 16 WSIs |
| CAMELYON16 [56] | 2016 | H&E | Evaluation of new and current algorithms for automatic identification of metastases in WSIs from H&E-stained lymph node sections | Private set: 221 images |
| TUPAC challenge [57] | 2016 | H&E | Prediction of tumor proliferation scores and gene expression of breast cancer using histopathology WSIs | 821 TCGA WSIs |
| BreastPathQ [58] | 2018 | H&E | Development of quantitative biomarkers to determine cancer cellularity of breast cancer from H&E-stained WSIs | Private set: 96 WSIs |
| BACH challenge [59] | 2018 | H&E | Classification of H&E-stained breast histopathology images and performing pixel-wise labeling of WSIs | Private set: 40 WSIs and 500 images |
| LYON19 [60] | 2019 | IHC | Provision of a dataset as well as an evaluation platform for current lymphocyte detection algorithms in IHC-stained images | LYON19 test set containing 441 ROIs |
| DigestPath [61] | 2019 | H&E | Evaluation of algorithms for detecting signet ring cells and screening colonoscopy tissue from histopathology images of the digestive system | Private set: 127 WSIs |
| HEROHE ECDP [62] | 2020 | H&E | Evaluation of algorithms to discriminate HER2-positive breast cancer specimens from HER2-negative breast cancer specimens with high sensitivity and specificity only using H&E-stained slides | Private set: 359 WSIs |
| MIDOG challenge [63] | 2021 | H&E | Detection of mitotic figures from breast cancer histopathology images scanned by different scanners to overcome the ‘domain-shift’ problem and improve generalization | Private set: 200 cases |
| CoNIC challenge [64] | 2022 | H&E | Evaluation of algorithms for nuclear segmentation and classification into six types, along with cellular composition prediction | 4981 patches |
| ACROBAT [65] | 2022 | H&E, IHC | Development of WSI registration algorithms that can align WSIs of IHC-stained breast cancer tissue sections with corresponding H&E-stained tissue regions | Private dataset: 750 cases consisting of 1 H&E and 1–4 matched IHC |

3.2. Dataset and Deep Learning Model

Because public datasets for machine learning in CPATH, such as The Cancer Genome Atlas (TCGA), The Cancer Imaging Archive (TCIA), and datasets released by challenges such as CAMELYON16, are freely accessible, researchers who do not have their own private data can conduct research, and several researchers can use the same dataset as a standard benchmark to compare the performance of their algorithms [15]. Coudray et al. [66], using the Inception-v3 model as a deep learning architecture, assessed the performance of algorithms in classification and genomic mutation prediction of NSCLC histopathology images from TCGA and a portion of an independent private dataset; this noteworthy study showed that genetic mutations such as STK11 (AUC 0.85), KRAS (AUC 0.81), and EGFR (AUC 0.75) could be detected from WSIs. Guo et al. used the Inception-v3 model to classify the tumor region of breast cancer [67]. Bulten et al. used 1243 WSIs of private prostate biopsies, segmenting individual glands to determine Gleason growth patterns using UNet, followed by cancer grading, and achieved performance comparable to pathologists [68]. Table 3 contains additional published examples utilizing various deep learning architectures and diverse datasets. A complete and extensive explanation of deep learning concepts and existing architectures can be found in [17,69], while specific applications of deep learning in medical image analysis are described in [70,71,72]. To avoid bias in algorithm development, datasets should be truly representative, encompassing the range of data that would be expected in the real world [19], including both the expected range of tissue features (normal and pathological) and the expected variation in tissue and slide preparation between laboratories.

Table 3.

Summary of recent convolutional neural network models in pathology image analysis.

| Publication | Deep Learning | Input | Training Goal | Dataset |
|---|---|---|---|---|
| Zhang et al. [73] | CNN | WSI | Diagnosis of bladder cancer | TCGA and private: 913 WSIs |
| Shim et al. [74] | CNN | WSI | Prognosis of lung cancer | Private: 393 WSIs |
| Im et al. [75] | CNN | WSI | Diagnosis of brain tumor subtype | Private: 468 WSIs |
| Mi et al. [76] | CNN | WSI | Diagnosis of breast cancer | Private: 540 WSIs |
| Hu et al. [77] | CNN | WSI | Diagnosis of gastric cancer | Private: 921 WSIs |
| Pei et al. [78] | CNN | WSI | Diagnosis of brain tumor classification | TCGA: 549 WSIs |
| Salvi et al. [79] | CNN | WSI | Segmentation of normal prostate gland | Private: 150 WSIs |
| Lu et al. [80] | CNN | WSI | Genomic correlation of breast cancer | TCGA and private: 1157 WSIs |
| Cheng et al. [81] | CNN | WSI | Screening of cervical cancer | Private: 3545 WSIs |
| Kers et al. [82] | CNN | WSI | Classification of transplant kidney | Private: 5844 WSIs |
| Zhou et al. [83] | CNN | WSI | Classification of colon cancer | TCGA: 1346 WSIs |
| Hohn et al. [84] | CNN | WSI | Classification of skin cancer | Private: 431 WSIs |
| Wang et al. [45] | CNN | WSI | Prognosis of gastric cancer | Private: 700 WSIs |
| Shin et al. [85] | CNN, GAN | WSI | Diagnosis of ovarian cancer | TCGA: 142 WSIs |

Abbreviations: CNN, convolutional neural network; GAN, generative adversarial network; WSI, whole-slide image; TCGA, The Cancer Genome Atlas.

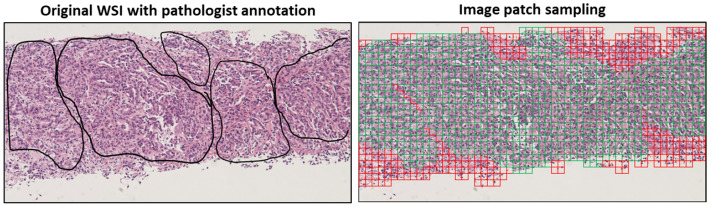

CNNs are difficult to train end-to-end on WSIs because gigapixel WSIs are too large to fit in GPU memory, unlike many natural images evaluated in computer vision applications. A single WSI can require terabytes of memory, yet high-end GPUs provide only tens of gigabytes. Researchers have suggested alternatives such as partitioning the WSI into small patches (Figure 3), using only a subset of them, or using the full WSI compressed with semantic information preserved. Breaking a WSI into small patches and feeding all of them to the GPU for training takes too long; thus, picking patches that represent the WSI is critical. For these reasons, randomly sampling patches [86], selecting patches from regions of interest [42], and randomly selecting patches among image clusters [87] have been proposed. The multi-instance learning (MIL) method is then mostly employed in the patch aggregation step, which involves collecting several patches from a single WSI and learning information about the WSI as a result. Traditional MIL treats a single WSI as a bag, assuming that all patches contained within it share the properties of the WSI. All patches from a cancer WSI, for example, are considered cancer patches. This method appears to be very simple, yet it is quite beneficial for cancer detection, and representation can be ensured if the learning dataset is large enough [88], which also provides a reason why various large datasets should be produced. If the learning size is insufficient, predicted patch scores are averaged, or the class that accounts for the majority of patch class predictions is used to represent the WSI. A more typical way is to learn patch weights using a self-attention mechanism, which uses patch encodings to calculate a weighted sum of patch embeddings [89], with a higher weight for the patch that is closest to the ideal patch for performing a certain task for each model. Techniques such as max or mean pooling and certainty pooling, which are commonly utilized in CNNs, are sometimes applied here. Approaches such as self-attention also offer interpretability to pathologists using the algorithm, because the patch weights can be presented as a heatmap on the WSI.
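The aggregation options described above can be compared on a toy example. The sketch below implements mean pooling, max pooling, majority voting, and an attention-weighted sum of patch embeddings. All function names and values are illustrative; in a trained attention-based MIL model the attention logits would come from a small learned scoring network, whereas here they are simply supplied as inputs.

```python
import math


def mean_pool(scores):
    """Slide score = average of the patch scores."""
    return sum(scores) / len(scores)


def max_pool(scores):
    """Slide score = most suspicious patch."""
    return max(scores)


def majority_vote(scores, threshold=0.5):
    """Slide label = class predicted for most patches (ties give 0)."""
    positives = sum(s >= threshold for s in scores)
    return int(positives > len(scores) / 2)


def softmax(logits):
    """Numerically stable softmax over a list of numbers."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(embeddings, attention_logits):
    """Weighted sum of patch embeddings; weights are softmax-normalised logits."""
    weights = softmax(attention_logits)
    dim = len(embeddings[0])
    slide = [sum(w * emb[d] for w, emb in zip(weights, embeddings))
             for d in range(dim)]
    return slide, weights


patch_scores = [0.2, 0.4, 0.9]               # toy per-patch tumor probabilities
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attention_logits = [0.0, 0.0, 2.0]           # assumed output of a learned scorer
slide_vec, weights = attention_pool(embeddings, attention_logits)
print(mean_pool(patch_scores), max_pool(patch_scores), majority_vote(patch_scores))
print([round(w, 3) for w in weights])
```

The per-patch `weights` returned by `attention_pool` are the quantities that would be painted back onto the slide as a heatmap.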

Figure 3.

Images are divided into small patches obtained from tissue of WSI, which are subsequently prepared to have semantic features extracted from each patch. Green tiles indicate tumor region; red tiles indicate non-tumor region. Images from Yeouido St. Mary’s hospital.
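The patch division illustrated in Figure 3 can be sketched as a grid of patch coordinates followed by random sampling of a subset. The function names, the toy image dimensions, and the choice to drop partial patches at the image border are assumptions made for illustration; reading pixel data from an actual WSI file and filtering out background are omitted.

```python
import random


def tile_coordinates(width, height, patch_size, stride=None):
    """Yield (x, y) top-left corners of patches that fit fully inside the image."""
    stride = stride or patch_size            # default: non-overlapping patches
    for y in range(0, height - patch_size + 1, stride):
        for x in range(0, width - patch_size + 1, stride):
            yield (x, y)


def sample_patches(coords, k, seed=0):
    """Pick at most k patch positions uniformly at random (reproducible via seed)."""
    coords = list(coords)
    rng = random.Random(seed)
    return rng.sample(coords, min(k, len(coords)))


# Toy dimensions; a real WSI is tens of thousands of pixels on each side.
grid = list(tile_coordinates(width=1000, height=600, patch_size=256))
subset = sample_patches(grid, k=4)
print(len(grid), "patches in the grid;", len(subset), "sampled")
```

Setting `stride` smaller than `patch_size` produces overlapping patches, which increases the number of training examples at the cost of redundancy.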

3.3. Overview of Deep Learning Workflows

WSIs are flooding out of clinical pathology facilities around the world as a result of the development of CPATH, including publicly available datasets, which can be considered a desirable cornerstone for the development of deep learning because it means more data are available for research. However, as shown in some of the previous studies, the accuracy of performance, such as classification and segmentation by algorithms commonly expressed in the area under the curve (AUC), must be compared to pathological images manually annotated by humans in order to calculate the accuracy of the performance. In this way, supervised learning is a machine learning model that uses labeled learning data for algorithm learning and learns functions based on it, and it is the machine learning model most utilized in CPATH so far. According to the amount and type of data, object and purpose (whether the target is cancer tissue or substrate tissue and calculating the number of lymphocytes), it can be divided into qualitative and distinct or quantitative and continuous representations, expressed as ‘classification’ [90] and ‘regression’ [91], respectively. Because the model is constructed by simulating learning data, labeled data are crucial, and the machine learning model’s performance may vary. Unsupervised learning uses unlabeled images, unlike previous scenarios. This technology is closer to an AI since it helps humans collect information and build knowledge about the world. Except for the most basic learning, such as language character acquisition, we can identify commonalities by looking at applied situations and extending them to other objects. To teach young children to recognize dogs and cats, it is not required to exhibit all breeds. 
‘Unsupervised learning’ can find and assess patterns in unlabeled data, divide them into groups, or perform data visualization in which specific qualities are compacted to two or three if there are multiple data characteristics or variables that are hard to see. A study built a complex tissue classifier for CNS tumours based on histopathologic patterns without manual annotation. It provided a framework comparable to the WHO [92], which was based on microscopic traits, molecular characteristics, and well-understood biology [93]. This study demonstrated that the computer can optimize and use some of the same histopathologic features used by pathologists to assist grouping on its own.
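Because the AUC is named above as the usual way of reporting classifier performance against human annotations, a minimal sketch of how it can be computed may help. The version below uses the rank interpretation of the AUC: the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as one half. The function name and the toy labels and scores are illustrative.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of positives and negatives."""
    pos = [s for label, s in zip(labels, scores) if label == 1]
    neg = [s for label, s in zip(labels, scores) if label == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    # Each positive/negative pair scores 1 for a correct ranking, 0.5 for a tie.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


labels = [0, 0, 1, 1]                 # ground truth from manual annotation
scores = [0.1, 0.4, 0.35, 0.8]        # model outputs for the same four cases
print(roc_auc(labels, scores))
```

The pairwise form is quadratic in the number of examples, which is fine for illustration; sorting-based implementations are preferable for large test sets.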

In CPATH, it is very important to figure out how accurate a newly made algorithm is, so there is still a lot of supervised learning. Unsupervised learning still makes it hard to keep up with user-defined tasks, but it has the benefit of being a very flexible way to build data patterns that are not predictable. It also lets us deal with changes we did not expect and allows us to learn more outside of the limits of traditional learning. It helps us understand histopathology images and acts as a guide for precision medicine [94].

Nonetheless, unsupervised learning is still underdeveloped in CPATH, and even after unsupervised learning, it is sometimes compared with labeled data to verify performance, making the purpose a little ambiguous. Bulten et al. classified prostate cancer and non-cancer pathology using clustering, but still had to verify the algorithm’s ability using manually annotated images, for example [95].

Currently, efforts are made to make different learning datasets by combining the best parts of supervised and unsupervised learning. This is done by manually labeling large groups of pathological images. Instead of manually labeling images, such as in the 2016 TUPAC Challenge, which was an attempt to build standard references for mitosis detection [96], “weakly supervised learning” means figuring out only a small part of an image and then using machine learning to fill in the rest. Several studies have shown that combining sophisticated learning strategies with weakly supervised learning methods can produce results that are similar to those of a fully supervised model. Since then, many more studies have been done on the role of detection and segmentation in histopathology images. “NuClick”, a CNN-based algorithm that won the LYON19 Challenge in 2019, showed that structures such as nuclei, cells, and glands in pathological images can be labeled quickly, consistently, and reliably [97], whereas ‘CAMEL’, developed in another study, only uses sparse image-level labels to produce pixel-level labels for creating datasets to train segmentation models for fully supervised learning [98].

4. Current Limitations and Challenges

Despite considerable technical advancements in CPATH in recent years, the deployment of deep learning algorithms in real clinical settings is still far from adequate. This is because, in order to be implemented into existing or future workflows, the CPATH algorithm must be scientifically validated, have considerable clinical benefit, and not cause harm or confuse people at the same time [99]. In this section, we will review the roadblocks to full clinical adoption of the CPATH algorithm, as well as what efforts are currently being made.

4.1. Acquiring Quality Data

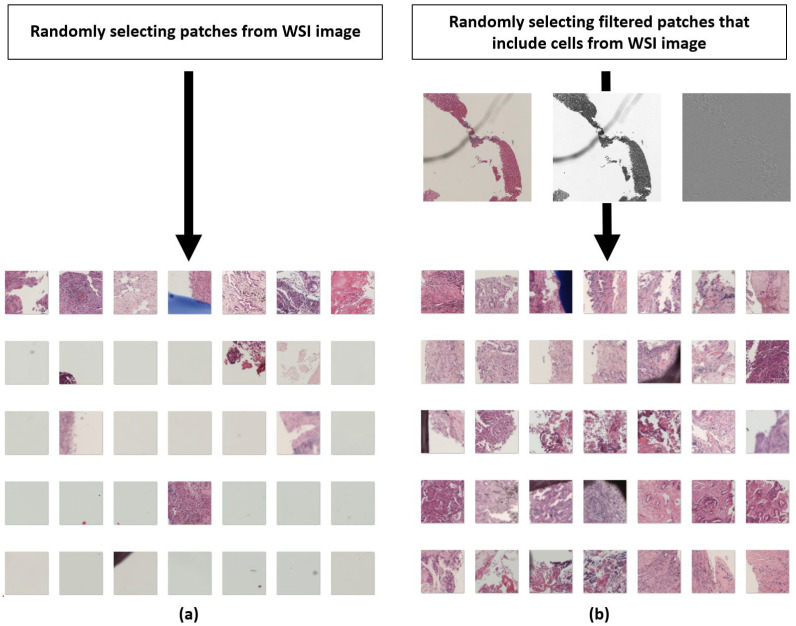

It is critical that CPATH algorithms be trained with high-quality data so that they can deal with the diverse datasets encountered in real-world clinical practice. Even in deep learning, supervised training requires the ground truth to be manually incorporated into the dataset so that the model learns the appropriate diagnostic context for classifying, segmenting, and predicting images [100]. Depending on the study’s goals, the ground truth can be derived from pathology reports on patient outcomes or tumor grading, or from scores assessed by molecular experiments; in either case it is still determined by human experts, and obtaining a ‘correct’ dataset requires a significant amount of manual labor [12]. Although datasets created by professional pathologists are of excellent quality, they are difficult to obtain in large quantities because of the time, cost, and repetitive, arduous work required. As a result, publicly available datasets have been continuously created, such as the ones from TCGA or grand challenges, with the help of weakly supervised learning. Alternative efforts have recently been made to gather annotated images at massive scale by crowdsourcing online. Hughes et al. used a crowdsourced image presentation platform to demonstrate deep learning performance comparable to that of a single professional pathologist [101], while López-Pérez et al. used a crowdsourced deep learning algorithm to help a group of doctors or medical students who were not pathologists make annotations comparable to those of an expert in breast cancer images [102]. Crowdsourcing may generate some noise, but it shows that non-professionals of various skill levels could assist with pathological annotation and dataset generation. Obtaining quality data entails more than collecting enough raw pathological slide images of a single disease from a patient or hospital; it also includes preparing materials to analyze and process the images in order to extract useful data for deep learning model training.
Strategies such as selecting patches that contain cells while excluding patches without cells from the raw images, as demonstrated in Figure 4, can make collecting quality data easier.

Figure 4.

(a) Random sampling of 100 patches selected arbitrarily from a WSI. (b) Random sampling of 100 patches from a WSI after application of a Laplace filter (which highlights areas of rapid intensity change). Images from Yeouido St. Mary’s Hospital.
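
The patch selection illustrated in Figure 4 can be sketched in a few lines of Python. The example below is a minimal illustration and not the procedure used to produce the figure: it assumes each patch is a small grayscale array, scores it with a 4-neighbour Laplacian, and keeps patches whose score exceeds a threshold. The function names `laplacian_energy` and `select_informative_patches`, and the threshold value, are hypothetical.

```python
from typing import List, Sequence

Patch = Sequence[Sequence[float]]  # 2D grayscale patch, row-major


def laplacian_energy(patch: Patch) -> float:
    """Mean absolute 4-neighbour Laplacian response over interior pixels."""
    height, width = len(patch), len(patch[0])
    if height < 3 or width < 3:
        return 0.0  # too small to have interior pixels
    total = 0.0
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            response = (
                patch[y - 1][x] + patch[y + 1][x]
                + patch[y][x - 1] + patch[y][x + 1]
                - 4.0 * patch[y][x]
            )
            total += abs(response)
    return total / ((height - 2) * (width - 2))


def select_informative_patches(patches: List[Patch], threshold: float) -> List[int]:
    """Return indices of patches whose Laplacian energy exceeds the threshold."""
    return [i for i, p in enumerate(patches) if laplacian_energy(p) > threshold]


# Hypothetical toy data: a blank (background-like) patch and a textured one.
blank = [[255.0] * 5 for _ in range(5)]
textured = [[255.0 if (x + y) % 2 == 0 else 0.0 for x in range(5)] for y in range(5)]
kept = select_informative_patches([blank, textured], threshold=10.0)
```

A uniform background patch scores zero and is discarded, whereas a patch with sharp local intensity changes is kept. A suitable threshold would probably need to be tuned per scanner and stain, which this sketch does not attempt.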

4.2. Data Variation

Platform diversity, integration, and interoperability represent yet another significant hurdle for the creation and use of AI tools [103]. Recent findings show that current AI models trained on insufficient datasets, even with precise pixel-by-pixel labelling, can exhibit a 20% decline in performance when evaluated on independent datasets [88]. Deep learning-based algorithms have produced outstanding outcomes in image analysis applications, including digitized slide analysis, but such systems face several technological problems, including huge WSI data, image heterogeneity, and feature complexity. To generalize successfully, the training data must include a diverse and representative sample of the disease’s biological and morphological variability, as well as the technical variables introduced in the pre-analytical and analytical processes in the pathology department and in the image acquisition process [104]. A generic deep learning-based framework for histopathology tissue analysis has been introduced that combines a series of strategies in the preprocessing-training-inference pipeline and showed improved efficiency and generalizability. Such strategies include an ensemble segmentation model, dividing the WSI into smaller overlapping patches, efficient inference algorithms, and a patch-based uncertainty estimation methodology [105,106]. Technical variability can also be addressed by standardizing and preparing CPATH data to limit its effects, or by making the models robust to it. Training the deep learning model on large and diverse datasets may lower the generalization error to some extent [107].
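
Of the strategies listed above, dividing a WSI into smaller overlapping patches is the simplest to illustrate. The following Python sketch computes patch origins for a given slide size, patch size, and stride; it is an assumption-laden illustration rather than the implementation of the cited framework, and the names `tile_origins` and `overlapping_patches` are hypothetical. The last patch on each axis is shifted back so that it ends flush with the slide edge, which is one of several reasonable edge-handling choices.

```python
from typing import Iterator, List, Tuple


def tile_origins(length: int, patch: int, stride: int) -> Iterator[int]:
    """Yield start offsets along one axis so that patches cover [0, length)."""
    if patch <= 0 or stride <= 0:
        raise ValueError("patch and stride must be positive")
    if length <= patch:
        yield 0  # a single patch; the caller may need to pad it
        return
    pos = 0
    while pos + patch < length:
        yield pos
        pos += stride
    yield length - patch  # final patch ends exactly at the edge


def overlapping_patches(width: int, height: int, patch: int, stride: int) -> List[Tuple[int, int]]:
    """Return the (x, y) top-left corner of every patch, row by row."""
    return [
        (x, y)
        for y in tile_origins(height, patch, stride)
        for x in tile_origins(width, patch, stride)
    ]


# Hypothetical toy dimensions; real WSIs are many thousands of pixels wide.
corners = overlapping_patches(width=10, height=8, patch=4, stride=3)
```

Choosing a stride smaller than the patch size produces the overlap; a stride equal to the patch size yields non-overlapping tiles except possibly at the edges.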

The amount and quality of input data determine the performance of the deep learning algorithm [108,109]. Although datasets have grown over the years with the development of CPATH, even algorithms that perform well on test sets cannot be assumed to perform well in actual clinical encounters, because clinical data come from significantly more diverse sources than study data. Similarly, when deep learning algorithms are evaluated on the specific validation set of a grand challenge, it is difficult to predict whether they will perform well in actual clinical practice. Color variation is a representative example of data variation. It is caused by differences in raw materials, staining techniques used across different pathology labs, interpatient variability, and different slide scanners, which affect not just color but also overall data variation [110]. As a result, color standardization has long been used as an image preparation method to overcome this problem in WSI. Because earlier color normalization relied on predefined template images, style transformation between different image datasets was difficult, but recent advances in generative adversarial networks (GANs) have allowed patches to be standardized without altering tissue structure. For example, using the cycle-GAN technique, Swiderska-Chadaj et al. reported an AUC of 0.98 and 0.97 for two different datasets constructed from prostate cancer WSIs [72,111].
While efforts are being made to reduce variation and create well-defined standardized data, such as color standardization and attempts to establish global standards for pathological tissue processing, staining, scanning, and digital image processing, data augmentation techniques are also being used to create learning datasets with as many variations as possible in order to learn the many variations encountered in real life. Not only the performance of the CPATH algorithm but also considerations such as cost and explainability should be thoroughly addressed when deciding which approach is more effective for actual clinical introduction.
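
To make the template-based color normalization discussed above concrete, the following Python sketch matches the per-channel mean and standard deviation of a patch to those of a template. It is a deliberately simplified illustration: classical statistics-matching methods such as Reinhard’s operate in a perceptual color space, whereas this sketch works directly on RGB values, and it is not the GAN-based approach described in the text. The names `channel_stats` and `match_to_template` are hypothetical.

```python
from statistics import fmean, pstdev
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]
Stats = List[Tuple[float, float]]  # (mean, std) per channel


def channel_stats(pixels: Sequence[Pixel]) -> Stats:
    """Per-channel (mean, population std) for a flat list of RGB pixels."""
    return [(fmean(channel), pstdev(channel)) for channel in zip(*pixels)]


def match_to_template(pixels: Sequence[Pixel], template: Stats, eps: float = 1e-6) -> List[Pixel]:
    """Shift and scale each channel so its mean/std match the template's."""
    source = channel_stats(pixels)
    normalized = []
    for pixel in pixels:
        mapped = []
        for value, (s_mean, s_std), (t_mean, t_std) in zip(pixel, source, template):
            scaled = (value - s_mean) / max(s_std, eps) * t_std + t_mean
            mapped.append(min(255.0, max(0.0, scaled)))  # clamp to valid range
        normalized.append(tuple(mapped))
    return normalized


# Hypothetical toy data: two pixels per "image".
template_stats = channel_stats([(150.0, 100.0, 100.0), (190.0, 140.0, 140.0)])
result = match_to_template([(100.0, 50.0, 200.0), (120.0, 70.0, 220.0)], template_stats)
```

Because every image is forced toward a single template, this kind of normalization depends heavily on the choice of template, which is the limitation that motivated the learned, GAN-based alternatives.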

4.3. Algorithm Validation

Several steps of validation are conducted during the lengthy process of developing a CPATH algorithm in order to test its performance and safety. To train models and evaluate performance, CPATH studies of typical supervised algorithms separate annotated data into learning datasets and test datasets. In this so-called ‘internal validation’ stage, most test datasets have features fairly similar to those of the learning datasets. Afterwards, ‘external validation’, which tests on data that were not used for training, makes it feasible to roughly evaluate whether the algorithm performs well on the data it would encounter in real clinical practice [15]. However, good performance at this phase does not guarantee that the algorithm will function equally well in clinical practice [112]. While many studies on CPATH algorithms are being conducted, most apply their own standards because established clinical verification standards and institutional validation are lacking. Even if deep learning algorithms perform well and are employed with provisional permission, it is difficult to confirm that they retain the same performance when the algorithm is upgraded during subsequent operation. Efforts are being made to make diverse algorithms understandable and comparable regardless of research technique, such as the development of TRIPOD-AI, a complete and transparent reporting guideline for prediction models [113].
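
The distinction between internal and external validation can be illustrated with a small data-splitting sketch in Python. It assumes, purely for illustration, that each slide record carries a `site` field naming the contributing institution; slides from held-out sites form the external test set, and the remainder are shuffled into training and internal test sets. The function name `split_by_site` and the record layout are hypothetical, and a real study would typically also group slides by patient to avoid leakage.

```python
import random
from typing import Dict, List, Set, Tuple

Slide = Dict[str, str]  # e.g. {"slide_id": "A-001", "site": "A"}


def split_by_site(
    slides: List[Slide],
    external_sites: Set[str],
    test_fraction: float = 0.2,
    seed: int = 0,
) -> Tuple[List[Slide], List[Slide], List[Slide]]:
    """Return (train, internal_test, external_test)."""
    # External validation: sites the model never sees during training.
    external = [s for s in slides if s["site"] in external_sites]
    internal = [s for s in slides if s["site"] not in external_sites]
    # Internal validation: a random hold-out from the same sites as training.
    rng = random.Random(seed)
    rng.shuffle(internal)
    n_test = int(round(len(internal) * test_fraction))
    return internal[n_test:], internal[:n_test], external


# Hypothetical toy cohort: ten slides from site A, five from site B.
cohort = [{"slide_id": f"A-{i}", "site": "A"} for i in range(10)]
cohort += [{"slide_id": f"B-{i}", "site": "B"} for i in range(5)]
train, internal_test, external_test = split_by_site(cohort, external_sites={"B"})
```

The internal test set shares scanners and staining protocols with the training data, so a performance gap between the two test sets gives a rough indication of how much an algorithm depends on site-specific characteristics.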

Finally, it should be noted that a developed algorithm does not deliver a single, one-off result; rather, it operates throughout the course of the patient’s disease and plays an auxiliary role in decision-making. Relying solely on performance as the criterion for approval is therefore not ideal. This suggests that, even when an algorithm’s measured performance is deemed comparable or superior to that of pathologists, its quality should be defined by examining its role across the whole course of a patient’s disease in practice [114]. This is also linked to the solution of the gold-standard paradox [14]. The paradox may arise during quality control of a segmentation model: pathologists are regarded as the most competent at pathological image analysis, yet algorithmic data are likely to be superior in accuracy and reproducibility. This paradox may alternatively be overcome by implementing the algorithm as part of a larger system that tracks the patient’s progress and outcomes [12].

4.4. Regulatory Considerations

For deep learning algorithms to be approved by regulatory agencies for clinical use, one of the most crucial requirements is an understanding of how they work; AI is sometimes referred to as a “black box” because it is difficult for humans to comprehend exactly what it does [114]. Because deep neural networks are difficult to open up, and their explainability is limited by the difficulty of understanding how countless parameters interact simultaneously, more reliable and explainable models are required for complex, high-responsibility tasks such as diagnosis, treatment decisions, and prediction [115]. As a result, attempts have been made to turn deep learning algorithms into “glass boxes” by clarifying the input and calculating the output in a way that humans can understand and analyze [116,117,118].

The existing regulatory paradigm is poorly suited to AI, because AI development requires relatively little infrastructure and human interaction and its progress and results are opaque to outsiders, so potential dangers are usually difficult to identify [119]. Thus far, the White House issued a memorandum on high-level regulatory principles for AI in all fields in November 2020 [120], the European Commission issued a similar white paper in February 2020 [121], and UNESCO published a global guideline on AI ethics in November 2021 [122], but these documents unfortunately do not provide detailed guidance on how to operate AI in practice. Because AI is generally developed in confined computer systems, progress has so far been made outside of regulatory environments, and regulatory uncertainty can accelerate development while also fueling systemic dangers. As with many new technologies, successful AI regulation is expected to remain difficult, as regulations and legal rules will continue to lag behind technological breakthroughs [123]. Self-regulation in industrial settings can be theoretically beneficial and is already in use [124], but it has limitations in practice because it is not enforced. Ultimately, a significant degree of regulatory innovation is required to develop a stable AI environment. The most crucial issue in this regard is that, in domains such as health care, where even a slight change can have a serious influence, AI regulations should be built with consideration of their overall impact on humans rather than through arbitrary, isolated decisions.

5. Novel Trends in CPATH

5.1. Explainable AI

Because most AI algorithms are complex, behave opaquely, and often lack robustness, there are substantial issues with trust in AI [125]. Furthermore, there is no agreement on how pathologists should incorporate computational pathology systems into their workflow [126]. Building computational pathology systems with explainable artificial intelligence (xAI) methods is a strong alternative to opaque AI models for addressing these issues [127]. The needs for xAI techniques and their possible applications fall into four categories [128]: (1) Model justification: explaining why a decision was made, particularly when it is significant or unexpected, with the goal of developing trust in the model’s operation; (2) Model controlling and debugging: avoiding dangerous outcomes, since a better understanding of the system raises the visibility of unknown defects and aids in the rapid identification and correction of problems; (3) Model improving: when users understand why and how a system achieved a specific result, they can readily modify and improve it, making it wiser and possibly faster. Understanding the judgments made by the AI model, in addition to strengthening the explanation-generating model, can improve the overall work process; (4) Knowledge discovery: one can discover new rules by observing previously unseen model results and understanding why and how they appeared. Furthermore, because AI systems can outperform humans at certain tasks, it is possible to learn new abilities by understanding their behavior.

Recent xAI studies in breast pathology rapidly presented the important diagnostic areas in an interactive and understandable manner by automatically previewing tissue WSIs and identifying the regions of interest, which can serve pathologists as an interactive computational guide for computer-assisted primary diagnosis [127,129]. Research is ongoing to determine which explanations are best for artificial intelligence development, application, and quality control [130], which are appropriate for high-stakes situations [115], and which are faithful to the explained model [131].

With the increasing popularity of graph neural networks (GNNs), their application in a variety of disciplines, including medicine, calls for explanations for scientific or ethical reasons [132]. The diversity of these applications makes it difficult to define generalized explanation methods, a task further complicated by heterogeneous data domains and graphs. Most explanations are therefore model- and domain-specific. GNN models can be used for node labeling, link prediction, and graph classification [133]. While most models can be used for any of the above tasks, defining and generating explanations can affect how a GNN xAI model is structured. However, the power of these GNN models is limited by their complexity and the underlying data complexity, although most, if not all, of the models can be grouped under the augmented paradigm [134]. Popular deep learning algorithms and explainability techniques based on pixel-wise processing ignore biological elements, limiting pathologists’ comprehension. Using biological entity-based graph processing and graph explainers, pathologists can now access explanations.
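
As an illustration of what a biological entity-based graph might look like before it is passed to a GNN, the Python sketch below connects each detected nucleus to its nearest spatial neighbours. This k-nearest-neighbour construction is an assumption made here for illustration and is not taken from the cited studies; the function name `knn_cell_graph` and the centroid coordinates are hypothetical, and nucleus detection itself is taken as given.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Edge = Tuple[int, int]


def knn_cell_graph(centroids: Sequence[Point], k: int) -> List[Edge]:
    """Undirected edges linking each nucleus to its k nearest neighbours."""
    edges = set()
    for i, origin in enumerate(centroids):
        # Rank all other nuclei by Euclidean distance from nucleus i.
        neighbours = sorted(
            (j for j in range(len(centroids)) if j != i),
            key=lambda j: math.dist(origin, centroids[j]),
        )
        for j in neighbours[:k]:
            edges.add((min(i, j), max(i, j)))  # store each pair once
    return sorted(edges)


# Hypothetical nucleus centroids forming two well-separated pairs.
nuclei = [(0.0, 0.0), (1.0, 0.0), (10.0, 0.0), (11.0, 0.0)]
graph_edges = knn_cell_graph(nuclei, k=1)
```

In such a graph each node corresponds to an entity a pathologist recognizes, so an explanation that highlights nodes or edges can be read in terms of cells and their neighbourhoods rather than individual pixels. The brute-force neighbour search shown here is quadratic in the number of nuclei and would need a spatial index for whole-slide use.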

5.2. Ethics and Security

AI tool creation must take into account the requirement for research and ethics approval, which is typically necessary during the research and clinical trial stages. Developers must follow the ethics of using patient data for research and commercial advantages. Recognizing the usefulness of patient data for research and the difficulties in obtaining agreement for its use, the corresponding institution should establish a proper scheme to provide individual patients some influence over how their data are used [103]. Individual institutional review boards may have additional local protocols for permitting one to opt out of data use for research, and it is critical that all of these elements are understood and followed throughout the design stage of AI tool creation [104]. There are many parallels to be found with the AI development pipeline; while successful items will most likely transit through the full pathway, supported by various resources, many products will, however, fail at some point. Each stage of the pipeline, including the justification of the tool for review and being recommended for usage in clinical guidelines, can benefit from measurable outcomes of success in order to make informed judgments about which products should be promoted [135]. This usually calls for proof of cost or resource savings, quality improvements, and patient impact and is thus frequently challenging to demonstrate, especially when the solution entails major transformation and process redesign.

Whether one uses a cloud-based AI solution for pathology diagnostics depends on several factors, such as the preferred workflow, frequency of instrument use, software and hardware costs, and whether the IT security risk group is willing to allow the use of cloud-based solutions. Cloud-based systems must include a business associate agreement, end-to-end encryption, and unambiguous data-use agreements to prevent data breaches and inappropriate use of patient data [21].

6. Conclusions and Future Directions

AI currently has enormous potential to improve pathology practice by reducing errors, improving reproducibility, and facilitating expert communication, all of which were previously difficult with microscopic glass slides. AI applications should be affordable, practical, interoperable, explainable, generalizable, manageable, and reimbursable [21]. Many researchers are convinced that AI in general and deep learning in particular could help with many repetitive tasks using digital pathology because of recent successes in image recognition. However, there are currently only a few AI-driven software tools in this field. As a result, we believe pathologists should be involved from the start, even when developing algorithms, to ensure that these eagerly anticipated software packages are improved or even replaced by AI algorithms. Despite popular belief, AI will be difficult to implement in pathology. AI tools are likely to be approved by regulators such as the Food and Drug Administration.

The quantitative nature of CPATH has the potential to transform pathology laboratory and clinical practices. Case stratification, expedited review and annotation, and the output of meaningful models to guide treatment decisions and predict patterns in medical fields are all possibilities. The pathology community needs more research to develop safe and reliable AI. As clinical AI’s requirements become clearer, this gap will close. AI in pathology is young and will continue to mature as researchers, doctors, industry, regulatory agencies, and patient advocacy groups innovate and bring new technology to health care practitioners. To accomplish its successful application, robust and standardized computational, clinical, and laboratory practices must be established concurrently and validated across multiple partnering sites.

Author Contributions

Conceptualization, T.-J.K.; data curation, K.K. and Y.S.; writing—original draft preparation, I.K.; writing—review and editing, I.K. and T.-J.K.; supervision, T.-J.K.; funding acquisition, T.-J.K. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Institutional Review Board of Yeouido St. Mary’s Hospital (SC18RNSI0005 approved on 22 January 2018).

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This work was supported by the National Research Foundation of Korea (NRF) through a grant funded by the Korean government (MSIT) (grant number 2017R1E1A1A01078335 and 2022R1A2C1092956) and the Institute of Clinical Medicine Research in the Yeouido St. Mary’s Hospital.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Gurcan M.N., Boucheron L.E., Can A., Madabhushi A., Rajpoot N.M., Yener B. Histopathological image analysis: A review. IEEE Rev. Biomed. Eng. 2009;2:147–171. doi: 10.1109/RBME.2009.2034865. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Wolberg W.H., Street W.N., Heisey D.M., Mangasarian O.L. Computer-derived nuclear features distinguish malignant from benign breast cytology. Hum. Pathol. 1995;26:792–796. doi: 10.1016/0046-8177(95)90229-5. [DOI] [PubMed] [Google Scholar]

- 3.Choi H.K., Jarkrans T., Bengtsson E., Vasko J., Wester K., Malmström P.U., Busch C. Image analysis based grading of bladder carcinoma. Comparison of object, texture and graph based methods and their reproducibility. Anal. Cell. Pathol. 1997;15:1–18. doi: 10.1155/1997/147187. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Keenan S.J., Diamond J., Glenn McCluggage W., Bharucha H., Thompson D., Bartels P.H., Hamilton P.W. An automated machine vision system for the histological grading of cervical intraepithelial neoplasia (CIN) J. Pathol. 2000;192:351–362. doi: 10.1002/1096-9896(2000)9999:9999<::AID-PATH708>3.0.CO;2-I. [DOI] [PubMed] [Google Scholar]

- 5.Weinstein R.S., Graham A.R., Richter L.C., Barker G.P., Krupinski E.A., Lopez A.M., Erps K.A., Bhattacharyya A.K., Yagi Y., Gilbertson J.R. Overview of telepathology, virtual microscopy, and whole slide imaging: Prospects for the future. Hum. Pathol. 2009;40:1057–1069. doi: 10.1016/j.humpath.2009.04.006. [DOI] [PubMed] [Google Scholar]

- 6.Farahani N., Parwani A.V., Pantanowitz L. Whole slide imaging in pathology: Advantages, limitations, and emerging perspectives. Pathol. Lab. Med. Int. 2015;7:4321. [Google Scholar]

- 7.Saco A., Ramírez J., Rakislova N., Mira A., Ordi J. Validation of whole-slide imaging for histolopathogical diagnosis: Current state. Pathobiology. 2016;83:89–98. doi: 10.1159/000442823. [DOI] [PubMed] [Google Scholar]

- 8.Al-Janabi S., Huisman A., Vink A., Leguit R.J., Offerhaus G.J.A., Ten Kate F.J., Van Diest P.J. Whole slide images for primary diagnostics of gastrointestinal tract pathology: A feasibility study. Hum. Pathol. 2012;43:702–707. doi: 10.1016/j.humpath.2011.06.017. [DOI] [PubMed] [Google Scholar]

- 9.Snead D.R., Tsang Y.W., Meskiri A., Kimani P.K., Crossman R., Rajpoot N.M., Blessing E., Chen K., Gopalakrishnan K., Matthews P., et al. Validation of digital pathology imaging for primary histopathological diagnosis. Histopathology. 2016;68:1063–1072. doi: 10.1111/his.12879. [DOI] [PubMed] [Google Scholar]

- 10.Williams B.J., Bottoms D., Treanor D. Future-proofing pathology: The case for clinical adoption of digital pathology. J. Clin. Pathol. 2017;70:1010–1018. doi: 10.1136/jclinpath-2017-204644. [DOI] [PubMed] [Google Scholar]

- 11.Astrachan O., Hambrusch S., Peckham J., Settle A. The present and future of computational thinking. ACM SIGCSE Bulletin. 2009;41:549–550. doi: 10.1145/1539024.1509053. [DOI] [Google Scholar]

- 12.Abels E., Pantanowitz L., Aeffner F., Zarella M.D., van der Laak J., Bui M.M., Vemuri V.N., Parwani A.V., Gibbs J., Agosto-Arroyo E., et al. Computational pathology definitions, best practices, and recommendations for regulatory guidance: A white paper from the Digital Pathology Association. J. Pathol. 2019;249:286–294. doi: 10.1002/path.5331. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Zarella M.D., Bowman D., Aeffner F., Farahani N., Xthona A., Absar S.F., Parwani A., Bui M., Hartman D.J. A practical guide to whole slide imaging: A white paper from the digital pathology association. Arch. Pathol. Lab. Med. 2019;143:222–234. doi: 10.5858/arpa.2018-0343-RA. [DOI] [PubMed] [Google Scholar]

- 14.Aeffner F., Wilson K., Martin N.T., Black J.C., Hendriks C.L.L., Bolon B., Rudmann D.G., Gianani R., Koegler S.R., Krueger J., et al. The gold standard paradox in digital image analysis: Manual versus automated scoring as ground truth. Arch. Pathol. Lab. Med. 2017;141:1267–1275. doi: 10.5858/arpa.2016-0386-RA. [DOI] [PubMed] [Google Scholar]

- 15.Van der Laak J., Litjens G., Ciompi F. Deep learning in histopathology: The path to the clinic. Nat. Med. 2021;27:775–784. doi: 10.1038/s41591-021-01343-4. [DOI] [PubMed] [Google Scholar]

- 16.Chan H.P., Samala R.K., Hadjiiski L.M., Zhou C. Deep learning in medical image analysis. Annu. Rev. Biomed. Eng. 2020;19:221–248. doi: 10.1146/annurev-bioeng-071516-044442. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.LeCun Y., Bengio Y., Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539. [DOI] [PubMed] [Google Scholar]

- 18.Schmidhuber J. Deep learning in neural networks: An overview. Neural Netw. 2015;61:85–117. doi: 10.1016/j.neunet.2014.09.003. [DOI] [PubMed] [Google Scholar]

- 19.Verma P., Tiwari R., Hong W.C., Upadhyay S., Yeh Y.H. FETCH: A Deep Learning-Based Fog Computing and IoT Integrated Environment for Healthcare Monitoring and Diagnosis. IEEE Access. 2022;10:12548–12563. doi: 10.1109/ACCESS.2022.3143793. [DOI] [Google Scholar]

- 20.Saltz J., Gupta R., Hou L., Kurc T., Singh P., Nguyen V., Samaras D., Shroyer K.R., Zhao T., Batiste R., et al. Spatial organization and molecular correlation of tumor-infiltrating lymphocytes using deep learning on pathology images. Cell Rep. 2018;23:181–193. doi: 10.1016/j.celrep.2018.03.086. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Cheng J.Y., Abel J.T., Balis U.G., McClintock D.S., Pantanowitz L. Challenges in the development, deployment, and regulation of artificial intelligence in anatomic pathology. Am. J. Pathol. 2021;191:1684–1692. doi: 10.1016/j.ajpath.2020.10.018. [DOI] [PubMed] [Google Scholar]

- 22.Volynskaya Z., Evans A.J., Asa S.L. Clinical applications of whole-slide imaging in anatomic pathology. Adv. Anat. Pathol. 2017;24:215–221. doi: 10.1097/PAP.0000000000000153. [DOI] [PubMed] [Google Scholar]

- 23.Mansoori B., Erhard K.K., Sunshine J.L. Picture Archiving and Communication System (PACS) implementation, integration & benefits in an integrated health system. Acad. Radiol. 2012;19:229–235. doi: 10.1016/j.acra.2011.11.009. [DOI] [PubMed] [Google Scholar]

- 24.Lee J.G., Jun S., Cho Y.W., Lee H., Kim G.B., Seo J.B., Kim N. Deep learning in medical imaging: General overview. Korean J. Radiol. 2017;18:570–584. doi: 10.3348/kjr.2017.18.4.570. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Kohli M.D., Summers R.M., Geis J.R. Medical image data and datasets in the era of machine learning—Whitepaper from the 2016 C-MIMI meeting dataset session. J. Digit. Imaging. 2017;30:392–399. doi: 10.1007/s10278-017-9976-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Chung M., Lin W., Dong L., Li X. Tissue requirements and DNA quality control for clinical targeted next-generation sequencing of formalin-fixed, paraffin-embedded samples: A mini-review of practical issues. J. Mol. Genet. Med. 2017;11:1747-0862. [Google Scholar]

- 27.Salgado R., Denkert C., Demaria S., Sirtaine N., Klauschen F., Pruneri G., Wienert S., Van den Eynden G., Baehner F.L., Pénault-Llorca F., et al. The evaluation of tumor-infiltrating lymphocytes (TILs) in breast cancer: Recommendations by an International TILs Working Group 2014. Ann. Oncol. 2015;26:259–271. doi: 10.1093/annonc/mdu450. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.AbdulJabbar K., Raza S.E.A., Rosenthal R., Jamal-Hanjani M., Veeriah S., Akarca A., Lund T., Moore D.A., Salgado R., Al Bakir M., et al. Geospatial immune variability illuminates differential evolution of lung adenocarcinoma. Nat. Med. 2020;26:1054–1062. doi: 10.1038/s41591-020-0900-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Park S., Ock C.Y., Kim H., Pereira S., Park S., Ma M., Choi S., Kim S., Shin S., Aum B.J., et al. Artificial Intelligence-Powered Spatial Analysis of Tumor-Infiltrating Lymphocytes as Complementary Biomarker for Immune Checkpoint Inhibition in Non-Small-Cell Lung Cancer. J. Clin. Oncol. 2022;40:1916–1928. doi: 10.1200/JCO.21.02010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Khoury T., Peng X., Yan L., Wang D., Nagrale V. Tumor-infiltrating lymphocytes in breast cancer: Evaluating interobserver variability, heterogeneity, and fidelity of scoring core biopsies. Am. J. Clin. Pathol. 2018;150:441–450. doi: 10.1093/ajcp/aqy069. [DOI] [PubMed] [Google Scholar]

- 31.Swisher S.K., Wu Y., Castaneda C.A., Lyons G.R., Yang F., Tapia C., Wang X., Casavilca S.A., Bassett R., Castillo M., et al. Interobserver agreement between pathologists assessing tumor-infiltrating lymphocytes (TILs) in breast cancer using methodology proposed by the International TILs Working Group. Ann. Surg. Oncol. 2016;23:2242–2248. doi: 10.1245/s10434-016-5173-8. [DOI] [PubMed] [Google Scholar]

- 32.Gao G., Wang Z., Qu X., Zhang Z. Prognostic value of tumor-infiltrating lymphocytes in patients with triple-negative breast cancer: A systematic review and meta-analysis. BMC Cancer. 2020;20:179. doi: 10.1186/s12885-020-6668-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Lee K., Lockhart J.H., Xie M., Chaudhary R., Slebos R.J., Flores E.R., Chung C.H., Tan A.C. Deep Learning of Histopathology Images at the Single Cell Level. Front. Artif. Intell. 2021;4:754641. doi: 10.3389/frai.2021.754641. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Jiao Y., Li J., Qian C., Fei S. Deep learning-based tumor microenvironment analysis in colon adenocarcinoma histopathological whole-slide images. Comput. Methods Programs Biomed. 2021;204:106047. doi: 10.1016/j.cmpb.2021.106047. [DOI] [PubMed] [Google Scholar]

- 35.Failmezger H., Muralidhar S., Rullan A., de Andrea C.E., Sahai E., Yuan Y. Topological tumor graphs: A graph-based spatial model to infer stromal recruitment for immunosuppression in melanoma histology. Cancer Res. 2020;80:1199–1209. doi: 10.1158/0008-5472.CAN-19-2268. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Moorman A., Vink R., Heijmans H., Van Der Palen J., Kouwenhoven E. The prognostic value of tumour-stroma ratio in triple-negative breast cancer. Eur. J. Surg. Oncol. 2012;38:307–313. doi: 10.1016/j.ejso.2012.01.002. [DOI] [PubMed] [Google Scholar]

- 37.Roeke T., Sobral-Leite M., Dekker T.J., Wesseling J., Smit V.T., Tollenaar R.A., Schmidt M.K., Mesker W.E. The prognostic value of the tumour-stroma ratio in primary operable invasive cancer of the breast: A validation study. Breast Cancer Res. Treat. 2017;166:435–445. doi: 10.1007/s10549-017-4445-8. [DOI] [PubMed] [Google Scholar]

- 38.Kather J.N., Krisam J., Charoentong P., Luedde T., Herpel E., Weis C.A., Gaiser T., Marx A., Valous N.A., Ferber D., et al. Predicting survival from colorectal cancer histology slides using deep learning: A retrospective multicenter study. PLoS Med. 2019;16:e1002730. doi: 10.1371/journal.pmed.1002730. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Geessink O.G., Baidoshvili A., Klaase J.M., Ehteshami Bejnordi B., Litjens G.J., van Pelt G.W., Mesker W.E., Nagtegaal I.D., Ciompi F., van der Laak J.A. Computer aided quantification of intratumoral stroma yields an independent prognosticator in rectal cancer. Cell. Oncol. 2019;42:331–341. doi: 10.1007/s13402-019-00429-z. [DOI] [PubMed] [Google Scholar]

- 40.Zhang T., Xu J., Shen H., Dong W., Ni Y., Du J. Tumor-stroma ratio is an independent predictor for survival in NSCLC. Int. J. Clin. Exp. Pathol. 2015;8:11348. [PMC free article] [PubMed] [Google Scholar]

- 41.Lu M.Y., Chen T.Y., Williamson D.F., Zhao M., Shady M., Lipkova J., Mahmood F. AI-based pathology predicts origins for cancers of unknown primary. Nature. 2021;594:106–110. doi: 10.1038/s41586-021-03512-4.

- 42.Zhu X., Yao J., Huang J. Deep convolutional neural network for survival analysis with pathological images; Proceedings of the 2016 IEEE International Conference on Bioinformatics and Biomedicine (BIBM); Shenzhen, China. 15–18 December 2016; pp. 544–547.

- 43.Mobadersany P., Yousefi S., Amgad M., Gutman D.A., Barnholtz-Sloan J.S., Vega J.E.V., Brat D.J., Cooper L.A. Predicting cancer outcomes from histology and genomics using convolutional networks. Proc. Natl. Acad. Sci. USA. 2018;115:E2970–E2979. doi: 10.1073/pnas.1717139115.

- 44.He B., Bergenstråhle L., Stenbeck L., Abid A., Andersson A., Borg Å., Maaskola J., Lundeberg J., Zou J. Integrating spatial gene expression and breast tumour morphology via deep learning. Nat. Biomed. Eng. 2020;4:827–834. doi: 10.1038/s41551-020-0578-x.

- 45.Wang Y., Kartasalo K., Weitz P., Acs B., Valkonen M., Larsson C., Ruusuvuori P., Hartman J., Rantalainen M. Predicting molecular phenotypes from histopathology images: A transcriptome-wide expression-morphology analysis in breast cancer. Cancer Res. 2021;81:5115–5126. doi: 10.1158/0008-5472.CAN-21-0482.

- 46.Wang D., Khosla A., Gargeya R., Irshad H., Beck A.H. Deep learning for identifying metastatic breast cancer. arXiv. 2016. arXiv:1606.05718.

- 47.Steiner D.F., MacDonald R., Liu Y., Truszkowski P., Hipp J.D., Gammage C., Thng F., Peng L., Stumpe M.C. Impact of deep learning assistance on the histopathologic review of lymph nodes for metastatic breast cancer. Am. J. Surg. Pathol. 2018;42:1636. doi: 10.1097/PAS.0000000000001151.

- 48.Roux L., Racoceanu D., Loménie N., Kulikova M., Irshad H., Klossa J., Capron F., Genestie C., Le Naour G., Gurcan M.N. Mitosis detection in breast cancer histological images: An ICPR 2012 contest. J. Pathol. Inform. 2013;4:8. doi: 10.4103/2153-3539.112693.

- 49.Bejnordi B.E., Veta M., Van Diest P.J., Van Ginneken B., Karssemeijer N., Litjens G., Van Der Laak J.A., Hermsen M., Manson Q.F., Balkenhol M., et al. Diagnostic assessment of deep learning algorithms for detection of lymph node metastases in women with breast cancer. JAMA. 2017;318:2199–2210. doi: 10.1001/jama.2017.14585.

- 50.Kong B., Wang X., Li Z., Song Q., Zhang S. Information Processing in Medical Imaging. IPMI 2017. Springer; Cham, Switzerland: 2017. Cancer metastasis detection via spatially structured deep network; pp. 236–248. Lecture Notes in Computer Science.

- 51.Li Y., Ping W. Cancer metastasis detection with neural conditional random field. arXiv. 2018. arXiv:1806.07064.

- 52.Lin H., Chen H., Graham S., Dou Q., Rajpoot N., Heng P.A. Fast scannet: Fast and dense analysis of multi-gigapixel whole-slide images for cancer metastasis detection. IEEE Trans. Med. Imaging. 2019;38:1948–1958. doi: 10.1109/TMI.2019.2891305.

- 53.Liu Y., Gadepalli K., Norouzi M., Dahl G.E., Kohlberger T., Boyko A., Venugopalan S., Timofeev A., Nelson P.Q., Corrado G.S., et al. Detecting cancer metastases on gigapixel pathology images. arXiv. 2017. arXiv:1703.02442.

- 54.Bundy A. Preparing for the Future of Artificial Intelligence. Executive Office of the President National Science and Technology Council; Washington, DC, USA: 2017.

- 55.Sirinukunwattana K., Pluim J.P., Chen H., Qi X., Heng P.A., Guo Y.B., Wang L.Y., Matuszewski B.J., Bruni E., Sanchez U., et al. Gland segmentation in colon histology images: The glas challenge contest. Med. Image Anal. 2017;35:489–502. doi: 10.1016/j.media.2016.08.008.

- 56.Fan K., Wen S., Deng Z. Innovation in Medicine and Healthcare Systems, and Multimedia. Springer; Berlin/Heidelberg, Germany: 2019. Deep learning for detecting breast cancer metastases on WSI; pp. 137–145.

- 57.Zerhouni E., Lányi D., Viana M., Gabrani M. Wide residual networks for mitosis detection; Proceedings of the 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017); Melbourne, Australia. 18–21 April 2017; pp. 924–928.

- 58.Rakhlin A., Tiulpin A., Shvets A.A., Kalinin A.A., Iglovikov V.I., Nikolenko S. Breast tumor cellularity assessment using deep neural networks; Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshops; Seoul, Korea. 27–28 October 2019.

- 59.Aresta G., Araújo T., Kwok S., Chennamsetty S.S., Safwan M., Alex V., Marami B., Prastawa M., Chan M., Donovan M., et al. Bach: Grand challenge on breast cancer histology images. Med. Image Anal. 2019;56:122–139. doi: 10.1016/j.media.2019.05.010.

- 60.Jahanifar M., Koohbanani N.A., Rajpoot N. Nuclick: From clicks in the nuclei to nuclear boundaries. arXiv. 2019. arXiv:1909.03253.

- 61.Da Q., Huang X., Li Z., Zuo Y., Zhang C., Liu J., Chen W., Li J., Xu D., Hu Z., et al. DigestPath: A Benchmark Dataset with Challenge Review for the Pathological Detection and Segmentation of Digestive-System. Med. Image Anal. 2022;80:102485. doi: 10.1016/j.media.2022.102485.

- 62.Eloy C., Zerbe N., Fraggetta F. Europe unites for the digital transformation of pathology: The role of the new ESDIP. J. Pathol. Inform. 2021;12:10. doi: 10.4103/jpi.jpi_80_20.

- 63.Aubreville M., Stathonikos N., Bertram C.A., Klopfleisch R., ter Hoeve N., Ciompi F., Wilm F., Marzahl C., Donovan T.A., Maier A., et al. Mitosis domain generalization in histopathology images–The MIDOG challenge. arXiv. 2022. arXiv:2204.03742. doi: 10.1016/j.media.2022.102699.

- 64.Graham S., Jahanifar M., Vu Q.D., Hadjigeorghiou G., Leech T., Snead D., Raza S.E.A., Minhas F., Rajpoot N. Conic: Colon nuclei identification and counting challenge 2022. arXiv. 2021. arXiv:2111.14485.

- 65.Weitz P., Valkonen M., Solorzano L., Hartman J., Ruusuvuori P., Rantalainen M. ACROBAT - Automatic Registration of Breast Cancer Tissue; Proceedings of the 25th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI 2022); Singapore. 18–22 September 2022.

- 66.Coudray N., Ocampo P.S., Sakellaropoulos T., Narula N., Snuderl M., Fenyö D., Moreira A.L., Razavian N., Tsirigos A. Classification and mutation prediction from non–small cell lung cancer histopathology images using deep learning. Nat. Med. 2018;24:1559–1567. doi: 10.1038/s41591-018-0177-5.

- 67.Guo Z., Liu H., Ni H., Wang X., Su M., Guo W., Wang K., Jiang T., Qian Y. A fast and refined cancer regions segmentation framework in whole-slide breast pathological images. Sci. Rep. 2019;9:882. doi: 10.1038/s41598-018-37492-9.

- 68.Bulten W., Pinckaers H., van Boven H., Vink R., de Bel T., van Ginneken B., van der Laak J., Hulsbergen-van de Kaa C., Litjens G. Automated deep-learning system for Gleason grading of prostate cancer using biopsies: A diagnostic study. Lancet Oncol. 2020;21:233–241. doi: 10.1016/S1470-2045(19)30739-9.

- 69.Goodfellow I., Bengio Y., Courville A. Deep Learning. MIT Press; Cambridge, MA, USA: 2016.

- 70.Litjens G., Kooi T., Bejnordi B.E., Setio A.A.A., Ciompi F., Ghafoorian M., Van Der Laak J.A., Van Ginneken B., Sánchez C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017;42:60–88. doi: 10.1016/j.media.2017.07.005.

- 71.Shen D., Wu G., Suk H.I. Deep learning in medical image analysis. Annu. Rev. Biomed. Eng. 2017;19:221–248. doi: 10.1146/annurev-bioeng-071516-044442.

- 72.Yi X., Walia E., Babyn P. Generative adversarial network in medical imaging: A review. Med. Image Anal. 2019;58:101552. doi: 10.1016/j.media.2019.101552.

- 73.Zhang Z., Chen P., McGough M., Xing F., Wang C., Bui M., Xie Y., Sapkota M., Cui L., Dhillon J., et al. Pathologist-level interpretable whole-slide cancer diagnosis with deep learning. Nat. Mach. Intell. 2019;1:236–245. doi: 10.1038/s42256-019-0052-1.

- 74.Shim W.S., Yim K., Kim T.J., Sung Y.E., Lee G., Hong J.H., Chun S.H., Kim S., An H.J., Na S.J., et al. DeepRePath: Identifying the prognostic features of early-stage lung adenocarcinoma using multi-scale pathology images and deep convolutional neural networks. Cancers. 2021;13:3308. doi: 10.3390/cancers13133308.

- 75.Im S., Hyeon J., Rha E., Lee J., Choi H.J., Jung Y., Kim T.J. Classification of diffuse glioma subtype from clinical-grade pathological images using deep transfer learning. Sensors. 2021;21:3500. doi: 10.3390/s21103500.

- 76.Mi W., Li J., Guo Y., Ren X., Liang Z., Zhang T., Zou H. Deep learning-based multi-class classification of breast digital pathology images. Cancer Manag. Res. 2021;13:4605. doi: 10.2147/CMAR.S312608.

- 77.Hu Y., Su F., Dong K., Wang X., Zhao X., Jiang Y., Li J., Ji J., Sun Y. Deep learning system for lymph node quantification and metastatic cancer identification from whole-slide pathology images. Gastric Cancer. 2021;24:868–877. doi: 10.1007/s10120-021-01158-9.

- 78.Pei L., Jones K.A., Shboul Z.A., Chen J.Y., Iftekharuddin K.M. Deep neural network analysis of pathology images with integrated molecular data for enhanced glioma classification and grading. Front. Oncol. 2021;11:2572. doi: 10.3389/fonc.2021.668694.

- 79.Salvi M., Bosco M., Molinaro L., Gambella A., Papotti M., Acharya U.R., Molinari F. A hybrid deep learning approach for gland segmentation in prostate histopathological images. Artif. Intell. Med. 2021;115:102076. doi: 10.1016/j.artmed.2021.102076.

- 80.Lu Z., Zhan X., Wu Y., Cheng J., Shao W., Ni D., Han Z., Zhang J., Feng Q., Huang K. BrcaSeg: A deep learning approach for tissue quantification and genomic correlations of histopathological images. Genom. Proteom. Bioinform. 2021;19:1032–1042. doi: 10.1016/j.gpb.2020.06.026.

- 81.Cheng S., Liu S., Yu J., Rao G., Xiao Y., Han W., Zhu W., Lv X., Li N., Cai J., et al. Robust whole slide image analysis for cervical cancer screening using deep learning. Nat. Commun. 2021;12:5639. doi: 10.1038/s41467-021-25296-x.

- 82.Kers J., Bülow R.D., Klinkhammer B.M., Breimer G.E., Fontana F., Abiola A.A., Hofstraat R., Corthals G.L., Peters-Sengers H., Djudjaj S., et al. Deep learning-based classification of kidney transplant pathology: A retrospective, multicentre, proof-of-concept study. Lancet Digit. Health. 2022;4:e18–e26. doi: 10.1016/S2589-7500(21)00211-9.

- 83.Zhou C., Jin Y., Chen Y., Huang S., Huang R., Wang Y., Zhao Y., Chen Y., Guo L., Liao J. Histopathology classification and localization of colorectal cancer using global labels by weakly supervised deep learning. Comput. Med. Imaging Graph. 2021;88:101861. doi: 10.1016/j.compmedimag.2021.101861.

- 84.Höhn J., Krieghoff-Henning E., Jutzi T.B., von Kalle C., Utikal J.S., Meier F., Gellrich F.F., Hobelsberger S., Hauschild A., Schlager J.G., et al. Combining CNN-based histologic whole slide image analysis and patient data to improve skin cancer classification. Eur. J. Cancer. 2021;149:94–101. doi: 10.1016/j.ejca.2021.02.032.

- 85.Shin S.J., You S.C., Jeon H., Jung J.W., An M.H., Park R.W., Roh J. Style transfer strategy for developing a generalizable deep learning application in digital pathology. Comput. Methods Programs Biomed. 2021;198:105815. doi: 10.1016/j.cmpb.2020.105815.

- 86.Naik N., Madani A., Esteva A., Keskar N.S., Press M.F., Ruderman D., Agus D.B., Socher R. Deep learning-enabled breast cancer hormonal receptor status determination from base-level H&E stains. Nat. Commun. 2020;11:5727. doi: 10.1038/s41467-020-19334-3.

- 87.Yao J., Zhu X., Jonnagaddala J., Hawkins N., Huang J. Whole slide images based cancer survival prediction using attention guided deep multiple instance learning networks. Med. Image Anal. 2020;65:101789. doi: 10.1016/j.media.2020.101789.

- 88.Campanella G., Hanna M.G., Geneslaw L., Miraflor A., Werneck Krauss Silva V., Busam K.J., Brogi E., Reuter V.E., Klimstra D.S., Fuchs T.J. Clinical-grade computational pathology using weakly supervised deep learning on whole slide images. Nat. Med. 2019;25:1301–1309. doi: 10.1038/s41591-019-0508-1.

- 89.Lu M.Y., Williamson D.F., Chen T.Y., Chen R.J., Barbieri M., Mahmood F. Data-efficient and weakly supervised computational pathology on whole-slide images. Nat. Biomed. Eng. 2021;5:555–570. doi: 10.1038/s41551-020-00682-w.

- 90.He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition; Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition; Las Vegas, NV, USA. 27–30 June 2016; pp. 770–778.