Abstract

Magnetic Resonance Imaging (MRI) is a noninvasive technique used in medical imaging to diagnose a variety of disorders. Most previous systems performed well on MRI datasets with a small number of images, but their performance deteriorated on large MRI datasets. The objective of this work is therefore to develop a fast and reliable classification system that sustains high performance on a comprehensive MRI dataset. This paper presents a robust approach for analyzing and classifying different types of brain diseases from MRI images. First, global histogram equalization is used to remove unwanted details and enhance the MRI images. A symlet wavelet transform-based technique is then proposed to extract the most discriminative features from the enhanced images. The symlet wavelet used for feature extraction on the grayscale images is compactly supported, with the least asymmetry and the highest number of vanishing moments for a given support width. Because the symlet wavelet can accommodate the orthogonal, biorthogonal, and reverse biorthogonal features of grayscale images, it delivers higher classification results. After feature extraction, linear discriminant analysis (LDA) is employed to reduce the dimensionality of the feature space. The model is trained and evaluated using logistic regression, and it correctly classifies several types of brain illnesses from MRI images. To demonstrate the effectiveness of the proposed strategy, a standard dataset from Harvard Medical School and the Open Access Series of Imaging Studies (OASIS), covering 24 different brain disorders as well as normal brains, is used. The proposed technique achieved a classification accuracy of 96.6%, the best among the compared state-of-the-art systems.

Keywords: brain, MRI, medical imaging, feature extraction, recognition, healthcare

1. Introduction

The brain, the most important structural element of the human body, contains 50–100 billion neurons [1]. It is also regarded as the body’s core organ. Furthermore, it is known as the “processor” or “kernel” of the nervous system, and it plays the most important and critical role in the nervous system [2,3]. Diagnosing brain disease is difficult and complex, in part because the brain is enclosed by the skull [4].

Medical imaging is the use of technology to examine individuals in order to identify, monitor, and treat medical conditions. Magnetic resonance imaging (MRI) is a precise and noninvasive medical imaging technique that can be used to diagnose a variety of disorders. Over the last few decades, many researchers have proposed state-of-the-art methods for brain MRI classification, most of which focused on individual modules of the MRI system.

The authors of [5] proposed a convolutional neural network (CNN)-based method, combined with data augmentation and image processing, to recognize brain MRI images across various diseases. They compared their approach with a pre-trained VGG-16 model using transfer learning on a small dataset. Another deep learning-based method for detecting brain tumors in MRI images was developed in [6]. This approach consisted of three phases: first, CNN-based classifiers were applied; second, a region-based CNN was applied to the output of the first phase; and third, the brain tumor boundary was segmented using the Chan–Vese energy function followed by an edge detection method. On the other hand, an integrated approach combining a mathematical morphological operator with an OASIS operator was designed in [7]. In the first step, the authors extracted the largest connected region, i.e., the brain. An unsupervised framework was then employed to extract the various axial slices of the brain. The main contribution of that work was automatic brain identification, which was evaluated with five metrics on a publicly available dataset. Similarly, glioma was analyzed in [8] using the Gaussian Naïve Bayes technique. In their approach, the authors applied the grow-cut method followed by 3D feature extraction on MRI images. They then statistically analyzed the corresponding values with Spearman and Mann–Whitney U tests and reported better results on a standard MRI dataset. The authors of [9] proposed an integrated approach for tumor detection in brain MRI images that combines two well-known methods: morphological edge detection followed by fuzzy methods. In this method, the tumor was located through edge detection, the detection performance was enhanced by a fuzzy algorithm, and the approach showed the best recognition rate on a brain MRI dataset.

The authors of [10] recently used deep learning and transfer learning to classify different MRI images of brain tumors, and they showed acceptable results on a small public dataset of brain tumor MRI images. Likewise, an artificial neural network (ANN)-based approach that efficiently classified normal and abnormal MRI images was developed in [11]. In this approach, a median filter was used in the preprocessing step to reduce noise in the MRI images. For feature extraction, the wavelet transform was employed to extract the best features from the enhanced images, and the dimensions were then reduced using color moments. Finally, a feed-forward ANN was used to classify normal and abnormal MRI images. Only 70 images were used in their experiments. In contrast, a system with three fundamental modules was created in [12] for identifying brain tumors. Histogram equalization was used in the preprocessing module to enhance the contrast of the brain MRI images, while principal component analysis followed by independent component analysis was used in the feature extraction module to extract prominent features. Finally, an integrated classifier based on Naïve Bayes and recurrent neural networks was used. The authors claimed better performance on publicly available brain MRI datasets.

Additionally, [13] established a reliable method for recognizing and segmenting brain tumors in MRI images. To distinguish between a wide range of tumor tissues in normal and abnormal MRI images and to segment the tumor area, the authors used the Berkeley wavelet transform followed by a deep learning classifier. Most of the aforementioned approaches showed good performance on small brain MRI datasets. However, their classification accuracy decreases on large brain MRI datasets.

Therefore, this study proposes a precise and effective system for classifying brain diseases using MRI images. The main contributions are as follows.

In the preprocessing step, the MRI images are enhanced using a well-known existing technique, global histogram equalization.

Then, for feature extraction, an accurate and robust technique based on the symlet wavelet transform is proposed. This technique yields better classification outcomes because it can handle the orthogonal, biorthogonal, and reverse biorthogonal features of grayscale images. Our experiments support this frequency-based assumption. The statistical dependence of the wavelet coefficients was assessed for each frame of grayscale MRI data: for each wavelet coefficient, the joint probability of a grayscale frame is calculated by collecting geometrically aligned MRI images, and the strength of the statistical dependence is quantified by the mutual information between the two MRI images, computed from these distributions.
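The text measures statistical dependence with mutual information but does not state how the joint probabilities are estimated. The following is a minimal sketch, assuming a simple joint-histogram estimator with 32 bins (both choices are our assumptions, not the authors' settings), that computes the mutual information in bits between two geometrically aligned arrays of wavelet coefficients.

```python
import numpy as np


def mutual_information(coeffs_a, coeffs_b, bins=32):
    """Mutual information (bits) between two geometrically aligned coefficient
    maps, estimated from their joint histogram (hypothetical estimator choice)."""
    a = np.ravel(np.asarray(coeffs_a, dtype=float))
    b = np.ravel(np.asarray(coeffs_b, dtype=float))
    if a.shape != b.shape:
        raise ValueError("coefficient maps must be aligned and equally sized")
    joint, _, _ = np.histogram2d(a, b, bins=bins)  # counts per (a, b) cell
    p_ab = joint / joint.sum()                     # joint probability
    p_a = p_ab.sum(axis=1, keepdims=True)          # marginal of a, shape (bins, 1)
    p_b = p_ab.sum(axis=0, keepdims=True)          # marginal of b, shape (1, bins)
    nz = p_ab > 0                                  # 0 * log 0 is treated as 0
    return float(np.sum(p_ab[nz] * np.log2(p_ab[nz] / (p_a * p_b)[nz])))
```

For identical inputs the value equals the entropy of the binned coefficients, and for independent inputs it approaches zero (up to a small positive estimation bias that shrinks as the number of coefficients grows).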

After feature extraction, linear discriminant analysis (LDA) is used to reduce the dimensionality of the feature space.

After the best features are selected, the model is trained using logistic regression, whose coefficient values indicate which features are most important in deciding the class to which a sample belongs. The coefficients are used to compute the conditional probability of each class for every sample, and the class with the highest probability is assigned as the predicted label.

To assess the performance of the proposed approach, a comprehensive set of experiments was performed on a brain MRI dataset covering 24 different brain diseases.

For this assessment, a comprehensive dataset was collected from Harvard Medical School [14] and the Open Access Series of Imaging Studies (OASIS) [15]. It contains a total of 24 diseases, namely Fatal stroke (FS), Motor neuron disease (MN), Glioma (GL), Vascular dementia (VD), Cavernous angioma (CA), Hypertensive encephalopathy (HY), Cerebral calcinosis (CC), Metastatic adenocarcinoma (MA), Chronic subdural hematoma (CS), Multiple embolic infarctions (MI), AIDS dementia (AD), Cerebral toxoplasmosis (CT), Meningioma (ME), Pick’s disease (PD), Sarcoma (SR), Alzheimer’s disease (AL), Creutzfeldt–Jakob disease (CJ), Metastatic bronchogenic carcinoma (MB), Alzheimer’s disease with visual agnosia (AV), Multiple sclerosis (MS), Lyme encephalopathy (LE), Herpes encephalitis (HE), Cerebral haemorrhage (CH), and Huntington’s disease (HD), together with normal brain (NB) images. The proposed technique achieved better performance on this comprehensive MRI dataset.

The rest of the paper is organized as follows: Section 2 describes existing MRI systems along with their disadvantages. Section 3 presents the proposed approach, and Section 4 describes the experimental setup. The results obtained under this setup are presented in Section 5. Finally, Section 6 summarizes the proposed approach and outlines future directions.

2. Literature Review

In the past few years, many studies have addressed the classification of various types of brain ailments using MRI images. Most of these studies performed best on small brain MRI datasets, and their performance degraded on larger test datasets. Therefore, a robust and accurate framework is designed in this work that shows good classification results on a large brain MRI dataset.

A novel method based on statistical features coupled with various machine learning techniques was proposed in [16]. The authors claimed the best performance on a small MRI dataset; however, this approach is computationally much more expensive. A framework for classifying Alzheimer’s disease using MRI images was designed in [17]. In this framework, the MRI image is enhanced in the preprocessing step, and the brain tissues are segmented in the post-processing step. Several deep learning techniques (convolutional neural networks) are then employed to classify the disease. However, convolutional neural networks are prone to overfitting [18], and this approach was tested and validated only on a small dataset. Similarly, an accurate and robust method was proposed in [19], which used stepwise linear discriminant analysis (SWLDA) for feature extraction and support vector machines for classification on a large brain MRI dataset, achieving the best performance on that dataset. However, SWLDA is a linear method that may only be applicable in a small subspace of binary classification problems [20].

On the other hand, an integrated approach was designed in [21], in which the authors combined a feature-based classifier and an image-based classifier for brain tumor classification. Their architecture was based on deep neural networks and deep convolutional networks, and it achieved a comparable classification rate. However, this approach requires a huge number of training images and carefully constructed deep networks [22]. Similarly, a framework was designed in [23] to classify brain MRI images along with gender and age. The authors used a deep neural network, a convolutional network, LeNet, AlexNet, ResNet, and an SVM to accurately classify abnormal and normal MRI images. However, their good performance was shown on a small dataset, and most of the experiments were conducted in a static environment. Likewise, the authors of [24] proposed an efficient brain image classification system using an MRI dataset. They extracted features using shape and texture methods, such as the region-based active contour, and showed good performance. However, the major limitation of region-based methods is their sensitivity to initialization, because of which the region of interest may not be segmented properly [25].

Nayak et al. [26] reported a method for classifying various brain illnesses using MRI images. They used a convolutional neural network-based dense EfficientNet coupled with min–max normalization for categorization and showed better performance on the MRI dataset. However, this approach requires a huge number of operations, which makes the model computationally slower [27]. Similarly, an integrated framework was designed in [28], in which the authors used a semantic segmentation network coupled with GoogLeNet and a convolutional neural network (CNN) for brain tumor classification using MRI and CT images. They achieved better results on a small dataset of brain MRI and CT images. However, the fully connected layers in GoogLeNet cannot handle varying input image sizes [29].

A fully automated brain tumor segmentation approach based on support vector machines and CNNs was developed in [30], in which segmentation used structural, morphological, and relaxometry details. However, the methodologies used in this framework are comparatively less effective with larger amounts of input MRI images [31], because it is challenging for these methods to accurately detect abnormalities in brain MRI images [31]. Moreover, a modified CNN-based model was developed in [32] for the analysis of brain tumors. The authors employed a CNN along with parameter optimization techniques such as the sunflower optimization algorithm (SFOA), the forensic-based investigation algorithm (FBIA), and the material generation algorithm (MGA), and they claimed the highest classification accuracy on the MRI dataset. However, SFOA is very sensitive to initialization and prone to premature convergence [33]. Moreover, in MGA, predictions are made from single-slice inputs, which potentially restricts the information available to the network [34].

An integrated framework based on a VGG19 feature extractor together with a progressive growing generative adversarial network (PGGAN) augmentation model was proposed in [35] for brain tumor classification using MRI images. It achieved good classification results on a publicly available MRI dataset. However, this approach cannot generate high-resolution images with the PGGAN model [36], and it may not generate new examples with objects in the desired condition [37]. Another scheme, proposed in [38], consisted of preprocessing, segmentation, feature extraction, and classification steps. The image was enhanced with a Wiener filter followed by edge detection, and the tumor was segmented with a mean shift clustering algorithm. Features were extracted from the segmented tumor using the gray level co-occurrence matrix (GLCM), and classification was performed with support vector machines. However, the GLCM method is not robust to Gaussian noise, and the extracted features are based on the difference between corresponding pixels without taking the magnitude of the difference into account [39].

A fused method based on the gray level co-occurrence matrix (GLCM), the spatial grey level dependence matrix (SGLDM), and Harris hawks optimization (HHO), followed by support vector machines, was developed in [40] for brain tumor detection. However, this approach depends on manual selection of the region of interest, which makes the parameter values, and therefore the results, dependent on the extracted region [40].

Therefore, in this work, a solid framework is created for classifying various brain illnesses using an MRI dataset. A symlet wavelet-based feature extraction method is designed and used in the proposed framework to extract key features from brain MRI images. The dimensions of the feature space are then reduced by LDA, and classification is performed with logistic regression. The proposed approach achieved the best classification results on MRI images compared with existing publications.

3. Proposed Feature Extraction Methodology

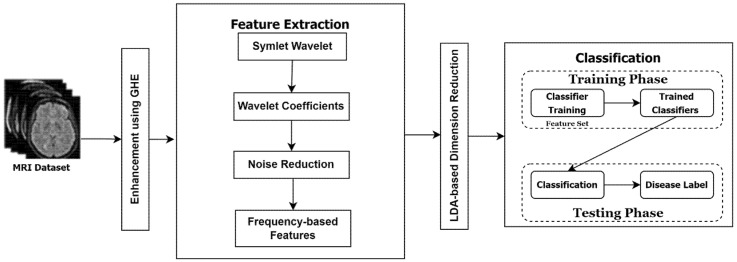

The overall workflow of the proposed brain MRI classification approach is presented in Figure 1.

Figure 1.

The working diagram of the proposed MRI classification approach.

3.1. Preprocessing

Most images contain extra elements, including background information, lighting effects, and irrelevant details that could lead to classification errors. To allow fast processing and improve image quality, it is important to remove such superfluous content. Global histogram equalization (GHE) is therefore applied in the preprocessing stage to improve image quality by stretching the intensity dynamic range using the histogram of the entire image. In essence, GHE computes the cumulative sum of the histogram, normalizes it, and multiplies it by the highest gray-level value. These values are then mapped back onto the original gray levels through a one-to-one correspondence. The GHE transformation function is given in Equation (1).

| $G_k = C(r_k) = \sum_{i=0}^{k} P(g_i)$ | (1) |

where $k = 0, 1, 2, \ldots, N - 1$, $0 \le G_k \le 1$, n is the total number of pixels in the input image, $n_i$ is the number of pixels with grey level $g_i$, and $P(g_i)$ is the PDF of the input grey level. To evenly distribute the brightness histogram of image I in GHE, the image must first be normalized before the PDF can be calculated, as shown in Equation (2):

| $P(g_i) = \dfrac{n_i}{n}$ | (2) |

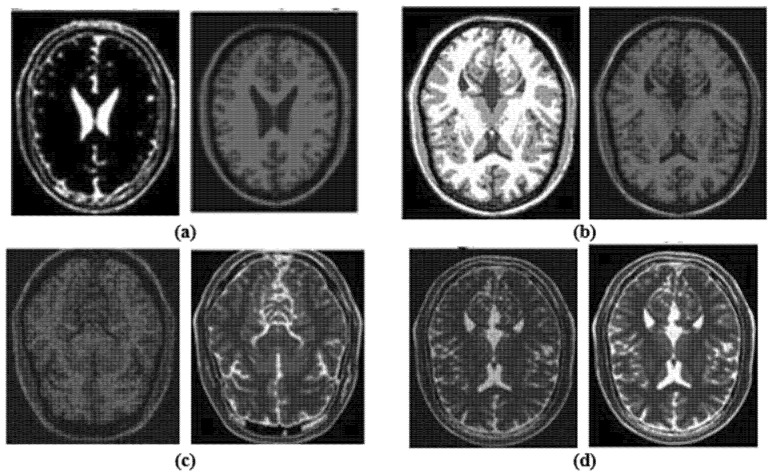

where the cumulative distribution function (CDF), which depends on the PDF, is denoted by C(rk) in Equation (1). Multiplying the transformation function in Equation (1) by (N − 1) maps it onto the dynamic range [0, N − 1], which yields the GHE output. This method produced images with a resolution of 240 × 320 pixels. The corresponding results are shown in Figure 2: in panels (a) and (b), the left-hand images are affected by lighting and the right-hand images are the corresponding outputs enhanced by the preprocessing step, while in panels (c) and (d), the left-hand images are affected by noise and the right-hand images are the corresponding enhanced outputs.

Figure 2.

Results of the preprocessing step.
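The GHE steps described in Section 3.1 (cumulative histogram, normalization, scaling by N − 1, and per-level mapping) can be sketched in NumPy as follows. This is an illustrative reimplementation, not the authors' MATLAB code: it assumes integer grayscale images with N = 256 levels and round-to-nearest mapping, and MATLAB's own `histeq` may differ in details.

```python
import numpy as np


def global_histogram_equalization(image, levels=256):
    """Global histogram equalization of an integer grayscale image whose gray
    levels lie in 0..levels-1 (levels plays the role of N)."""
    img = np.asarray(image)
    if not np.issubdtype(img.dtype, np.integer):
        raise TypeError("expected an integer-valued grayscale image")
    if img.min() < 0 or img.max() >= levels:
        raise ValueError("pixel values must lie in [0, levels - 1]")
    counts = np.bincount(img.ravel(), minlength=levels)  # n_i for each gray level g_i
    pdf = counts / img.size                               # P(g_i) = n_i / n
    cdf = np.cumsum(pdf)                                  # G_k, between 0 and 1
    lut = np.round((levels - 1) * cdf).astype(np.int64)   # scale to [0, N - 1]
    return lut[img]                                       # per-level lookup table


low_contrast = np.array([[100, 101], [102, 103]], dtype=np.uint8)
print(global_histogram_equalization(low_contrast).tolist())  # [[64, 128], [191, 255]]
```

The four adjacent input levels are spread across the full 0 to 255 range, which is the contrast-stretching effect the preprocessing step relies on.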

3.2. Symlet Wavelet Transform

After the MRI images are enhanced in the preprocessing stage, the symlet wavelet transform is used to extract a number of salient features from them. The decomposition used in this procedure requires grayscale frames, so the images were converted from RGB to grayscale to improve efficiency. Wavelet decomposition can be understood as the decomposition of the signal into a group of distinct feature vectors, each of which includes smaller sub-vectors, such as

| (3) |

where F represents the 2D feature vector. Assume that a 2D MRI image Y is divided into orthogonal sub-images at different resolutions. One level of decomposition is given by

| $Y = R_1 + P_1$ | (4) |

where $R_1$ and $P_1$ denote the rough (approximation) and precise (detail) coefficient vectors, respectively, and Y denotes the decomposed image. If the MRI image is decomposed over multiple levels, Equation (4) can be expressed as

| $Y = R_j + P_j + P_{j-1} + \cdots + P_1$ | (5) |

where j indicates the decomposition level. Only the rough coefficients were used for feature extraction, because the precise coefficients typically consist mostly of noise. Each frame is decomposed into up to four levels (j = 4), because beyond this level the image loses much of its information, making it difficult to find the useful coefficients and possibly leading to misclassification.

The precise coefficients further consist of three sub-coefficients, so Equation (4) can be written as

| $Y = R_1 + P_1^{v} + P_1^{h} + P_1^{d}$ | (6) |

Similarly, Equation (5) can be written as

| $Y = R_j + \sum_{i=1}^{j} \left( P_i^{v} + P_i^{h} + P_i^{d} \right)$ | (7) |

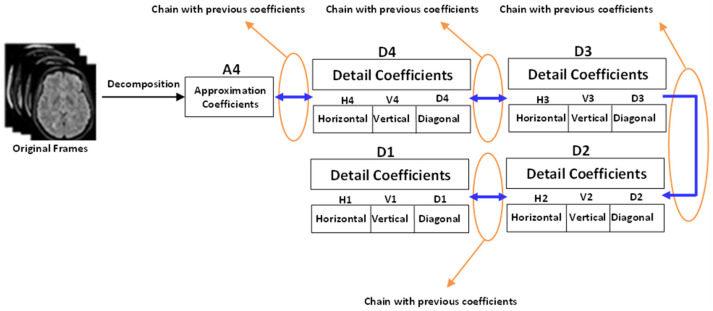

where $P^{v}$, $P^{h}$, and $P^{d}$ represent the vertical, horizontal, and diagonal coefficients, respectively. As Equations (6) and (7) show, all the coefficients are linked to one another in a chain, which makes it simple to identify the salient features. Figure 3 displays these coefficients graphically. At each stage of the decomposition, the rough and precise coefficient vectors are produced by passing the signal through low-pass and high-pass filters, respectively.

Figure 3.

All the coefficients are connected in the form of a chain.
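The multilevel decomposition described above can be sketched in NumPy. This is a simplified illustration rather than the authors' MATLAB code: it assumes the sym2 wavelet (whose filters coincide with db2 and have a closed form), periodic boundary handling, and image sides that stay even at every level; the symlet order actually used is not stated in the text. Following the text, only the level-4 rough coefficients are kept as features. For other symlet orders, PyWavelets (`pywt.wavedec2(image, 'sym4', level=4)`) could be used instead, although its default boundary mode differs from the periodic one assumed here.

```python
import numpy as np

# sym2 decomposition low-pass filter (identical to db2), in closed form.
_R3 = np.sqrt(3.0)
SYM2_LO = np.array([1 - _R3, 3 - _R3, 3 + _R3, 1 + _R3]) / (4 * np.sqrt(2.0))
# Quadrature-mirror high-pass filter: g[k] = (-1)^k * h[L - 1 - k].
SYM2_HI = SYM2_LO[::-1] * np.array([1.0, -1.0, 1.0, -1.0])


def _filter_down(x, filt, axis):
    """Circular (periodized) filtering along `axis`, keeping every second sample."""
    out = sum(c * np.roll(x, -k, axis=axis) for k, c in enumerate(filt))
    return np.take(out, np.arange(0, x.shape[axis], 2), axis=axis)


def dwt2(x):
    """One level: rough coefficients R and precise coefficients (P^v, P^h, P^d)."""
    lo = _filter_down(x, SYM2_LO, axis=1)    # low-pass along each row
    hi = _filter_down(x, SYM2_HI, axis=1)    # high-pass along each row
    rough = _filter_down(lo, SYM2_LO, axis=0)
    p_h = _filter_down(lo, SYM2_HI, axis=0)  # horizontal detail
    p_v = _filter_down(hi, SYM2_LO, axis=0)  # vertical detail
    p_d = _filter_down(hi, SYM2_HI, axis=0)  # diagonal detail
    return rough, (p_v, p_h, p_d)


def decompose(image, levels=4):
    """Return the rough coefficients of the last level and the precise
    coefficients of every level (level 1 first)."""
    rough = np.asarray(image, dtype=float)
    precise = []
    for _ in range(levels):
        if any(s < len(SYM2_LO) or s % 2 for s in rough.shape):
            raise ValueError("each level needs even sides of at least 4 samples")
        rough, details = dwt2(rough)
        precise.append(details)
    return rough, precise


frame = np.random.default_rng(0).random((240, 320))  # stand-in for a grayscale MRI frame
rough4, precise = decompose(frame, levels=4)
features = rough4.ravel()  # only the rough coefficients are kept as features
print(rough4.shape)        # (15, 20)
```

Because the periodized sym2 transform is orthonormal, the energy of the rough and precise coefficients together equals the energy of the input frame, which is a convenient sanity check.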

After the decomposition, the feature vector is produced by averaging all the frequencies present in the MRI images. The frequency of each MRI image within a given time window is calculated by applying the wavelet transform to the relevant frame [41].

| (8) |

where ψ is the wavelet function used for estimating frequency and t is time. To obtain a finer resolution for frequency estimation, $a_i$ is the scale of the wavelet, ranging between the lower and upper frequency boundaries, and $b_j$ represents the wavelet’s position within the time frame with respect to the signal sampling period. The wavelet coefficients for the given scale and position parameters are denoted by $W(a_i, b_j)$, and their scale-to-frequency conversion is

| $F_{a_i} = \dfrac{F_c}{a_i \cdot \Delta}$ | (9) |

where $F_c$ is the center (average) frequency of the wavelet function and Δ is the sampling period of the signal. To obtain the feature vector, the frequencies of all frames of each MRI image are averaged as follows:

| (10) |

where, K denotes the total number of frames for every MRI image, is the last frame of the current disease, and favg denotes the average values of the frequency for every MRI image. It is also a feature vector for that MRI.
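A minimal sketch of this frequency feature, assuming the standard scale-to-pseudo-frequency relation F_a = F_c / (a · Δ) for the conversion described above and, as a hypothetical choice not stated in the text, taking each frame's frequency from the scale whose coefficients W(a_i, b_j) carry the most energy. The helper names and the center-frequency and sampling-period values in the example are illustrative only.

```python
import numpy as np


def scale_to_frequency(scale, center_freq, sampling_period):
    """Pseudo-frequency F_a = F_c / (a * Delta) of a wavelet at scale a."""
    return center_freq / (scale * sampling_period)


def frame_frequency(cwt_coeffs, scales, center_freq, sampling_period):
    """Hypothetical per-frame estimate: the pseudo-frequency of the scale whose
    coefficient row W(a_i, :) has the largest energy."""
    energy = np.sum(np.abs(np.asarray(cwt_coeffs)) ** 2, axis=1)  # one value per scale
    best = int(np.argmax(energy))
    return scale_to_frequency(scales[best], center_freq, sampling_period)


def average_frequency(frame_frequencies):
    """f_avg: mean of the K per-frame frequency estimates of one MRI image."""
    freqs = np.asarray(frame_frequencies, dtype=float)
    if freqs.size == 0:
        raise ValueError("at least one frame is required")
    return float(freqs.mean())


# Illustrative numbers only: 3 scales x 4 positions, energy peaks at scale 2.
coeffs = np.array([[0.1, 0.2, 0.1, 0.0],
                   [1.0, -2.0, 1.5, 0.5],
                   [0.3, 0.1, 0.0, 0.2]])
f = frame_frequency(coeffs, scales=[1.0, 2.0, 4.0], center_freq=0.5, sampling_period=0.01)
f_avg = average_frequency([f, 20.0, 30.0])
```

In MATLAB, the center frequency of a given wavelet can be obtained with `centfrq`, and `scal2frq` performs the scale-to-frequency conversion directly.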

3.3. Feature Selection and Dimension Reduction via Linear Discriminant Analysis (LDA)

LDA ensures maximum class separability by maximizing the ratio of between-class variance to within-class variance in a given dataset. LDA has been widely used for classification problems such as speech recognition. It maps the input into a classification space, where the class of each sample is determined by an optimal linear discriminant function produced by LDA. LDA easily handles the case in which the within-class frequencies are unequal, and its performance has been examined on randomly generated test data. The within-class scatter VARW and the between-class scatter VARB are computed as

| $VAR_W = \sum_{i=1}^{c} \sum_{m_k \in C_i} (m_k - \bar{m}_i)(m_k - \bar{m}_i)^{T}$ | (11) |

| $VAR_B = \sum_{i=1}^{c} V_i \, (\bar{m}_i - \bar{m})(\bar{m}_i - \bar{m})^{T}$ | (12) |

where c is the total number of classes (in our case, the number of MRI disease classes), $V_i$ is the number of vectors in the ith class $C_i$, $\bar{m}_i$ is the mean of class $C_i$, $m_k$ is a vector of a specific class, and $\bar{m}$ is the mean of all vectors. The optimal projection matrix for discrimination, $D_o$, is chosen to maximize the ratio of the determinant of the between-class scatter matrix to that of the within-class scatter matrix of the projected samples:

| $D_o = \arg\max_{D} \dfrac{\det(D^{T} VAR_B D)}{\det(D^{T} VAR_W D)} = [d_1, d_2, \ldots, d_t]$ | (13) |

where $D_o$ is the set of discriminant vectors of VARW and VARB corresponding to the c − 1 largest generalized eigenvalues λ. $D_o$ has size r × t, where r is the dimension of a vector. Then,

| $VAR_B \, d_i = \lambda_i \, VAR_W \, d_i, \quad i = 1, 2, \ldots, t$ | (14) |

where the upper bound of t is c − 1, because the rank of VARB is c − 1 or less.

Thus, LDA minimizes the within-class scatter of the classes (here, MRI diseases) while maximizing the between-class scatter of the data. Please refer to [42] for additional information on LDA.
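A NumPy sketch of the LDA step, computing the within-class and between-class scatter matrices and keeping the leading generalized eigenvectors (at most c − 1 of them). Weighting each class's between-class term by its number of samples and solving the generalized eigenproblem through a pseudo-inverse are our implementation choices; a library routine such as scikit-learn's `LinearDiscriminantAnalysis` could be used instead.

```python
import numpy as np


def lda_projection(X, y, n_components=None):
    """Fisher LDA projection matrix (r x t) from features X (samples x r) and
    labels y, keeping the t <= c - 1 leading generalized eigenvectors."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    classes = np.unique(y)
    c, r = len(classes), X.shape[1]
    t = c - 1 if n_components is None else min(n_components, c - 1)
    mean_all = X.mean(axis=0)
    var_w = np.zeros((r, r))
    var_b = np.zeros((r, r))
    for label in classes:
        X_c = X[y == label]
        mean_c = X_c.mean(axis=0)
        centered = X_c - mean_c
        var_w += centered.T @ centered            # within-class scatter
        diff = (mean_c - mean_all)[:, None]
        var_b += len(X_c) * (diff @ diff.T)       # between-class scatter
    # Solve VAR_B d = lambda VAR_W d through pinv(VAR_W) @ VAR_B; the
    # pseudo-inverse is our choice for robustness when VAR_W is singular.
    eigvals, eigvecs = np.linalg.eig(np.linalg.pinv(var_w) @ var_b)
    order = np.argsort(eigvals.real)[::-1][:t]
    return eigvecs[:, order].real


# Usage: project the wavelet features onto the discriminant axes, e.g.
# Z = X @ lda_projection(X, y)
```

The reduced features Z (one column per discriminant direction) are what the classifier would then consume.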

3.4. Classification via Logistic Regression

Logistic regression is a popular linear model that can be used for image classification. In this model, the probabilities describing the possible outcomes of a single trial are modeled using a logistic function.

Logistic regression can be binary, One-vs-Rest, or multinomial, with optional ℓ1, ℓ2, or Elastic-Net regularization.

As an optimization problem, binary-class ℓ2-regularized logistic regression minimizes the following cost function:

| $\min_{w, c} \frac{1}{2} w^{T} w + C \sum_{i=1}^{n} \log\left(\exp\left(-y_i (X_i^{T} w + c)\right) + 1\right)$ | (15) |

Similarly, ℓ1-regularized logistic regression minimizes the following cost function:

| $\min_{w, c} \lVert w \rVert_1 + C \sum_{i=1}^{n} \log\left(\exp\left(-y_i (X_i^{T} w + c)\right) + 1\right)$ | (16) |

Elastic-Net regularization combines ℓ1 and ℓ2 and minimizes the following cost function:

| $\min_{w, c} \frac{1 - \rho}{2} w^{T} w + \rho \lVert w \rVert_1 + C \sum_{i=1}^{n} \log\left(\exp\left(-y_i (X_i^{T} w + c)\right) + 1\right)$ | (17) |

where w and c are the weight vector and intercept, $X_i$ is the ith of the n training samples, C is the inverse of the regularization strength, and ρ controls the relative strength of ℓ1 regularization versus ℓ2 regularization. Note that, in this notation, the target $y_i$ is assumed to take values in the set {−1, 1} at trial i. It can also be seen that Elastic-Net is equivalent to ℓ1 when ρ = 1 and to ℓ2 when ρ = 0. Please see [43] for a comprehensive description of logistic regression.
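To make the binary ℓ2 objective concrete, the sketch below minimizes ½wᵀw + C Σᵢ log(exp(−yᵢ(Xᵢᵀw + c)) + 1), with labels yᵢ in {−1, 1}, by plain gradient descent. The learning rate and iteration count are illustrative assumptions; practical solvers (for example, the `lbfgs` or `liblinear` solvers of scikit-learn's `LogisticRegression`) converge faster, and the ℓ1 and Elastic-Net variants need subgradient or proximal updates that are not shown here.

```python
import numpy as np


def _sigmoid(z):
    """Numerically stable logistic function."""
    return 0.5 * (1.0 + np.tanh(0.5 * z))


def fit_l2_logistic(X, y, C=1.0, lr=0.001, n_iter=2000):
    """Minimize 0.5 * w.w + C * sum(log(1 + exp(-y_i (x_i.w + c)))) over w, c
    with labels y_i in {-1, 1}, using plain gradient descent."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    if not np.all(np.isin(y, (-1.0, 1.0))):
        raise ValueError("labels must be -1 or 1")
    w = np.zeros(X.shape[1])
    c = 0.0
    for _ in range(n_iter):
        margins = y * (X @ w + c)
        coef = -C * y * _sigmoid(-margins)  # derivative of each loss term w.r.t. its score
        w -= lr * (w + X.T @ coef)          # gradient of the l2 term plus the data term
        c -= lr * coef.sum()
    return w, c


def predict_proba(X, w, c):
    """P(y = 1 | x) under the fitted model."""
    return _sigmoid(np.asarray(X, dtype=float) @ w + c)
```

For the multiclass disease problem, one such binary model per class can be combined One-vs-Rest, assigning each image to the class with the highest probability.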

4. Designed Approach Evaluation

The proposed technique is evaluated as described below.

4.1. MRI Images Dataset

A comprehensive and generalized MRI dataset was created from real MRI images in the Harvard Medical School and OASIS MRI databases. The collection contains T1- and T2-weighted brain MRI images. Each input image is 256 × 256 × 3 pixels in size and is accompanied by demographic and clinical data, including the patients’ gender, age, clinical dementia rating, mental state examination, and test parameters. All patients are right-handed. The dataset is separated into two groups: the first comprises eleven diseases (and is used as a benchmark dataset by most existing works), and the second contains 24 diseases, including the eleven from the first group. The second group is more comprehensive and is used for the large-scale experiments. The first group contains 255 brain MRI images (220 abnormal and 35 normal), while the second group contains 340 images (260 abnormal and 80 normal).

4.2. Experiment Settings

The performance of the proposed approach is assessed through the following set of experiments, which were carried out in MATLAB on a machine with 8 GB of RAM and a 1.7 GHz processor.

The first experiment assesses the performance of the developed method on a publicly available MRI dataset. The entire experiment uses an n-fold cross-validation scheme, in which every image is used for both training and testing, in different folds.
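A minimal sketch of the n-fold split, assuming shuffled folds with a fixed seed (the text does not state n, the shuffling, or whether folds are stratified; n = 10 and 340 images in the check below are only an illustration). Each image lands in exactly one test fold and in the training set of every other fold.

```python
import numpy as np


def n_fold_indices(n_samples, n_folds, seed=0):
    """Yield (train_idx, test_idx) pairs so that every sample is tested exactly
    once and used for training in the remaining n_folds - 1 folds."""
    if not 2 <= n_folds <= n_samples:
        raise ValueError("need 2 <= n_folds <= n_samples")
    order = np.random.default_rng(seed).permutation(n_samples)
    folds = np.array_split(order, n_folds)
    for k in range(n_folds):
        test_idx = folds[k]
        train_idx = np.concatenate([folds[j] for j in range(n_folds) if j != k])
        yield train_idx, test_idx


folds = list(n_fold_indices(340, 10))
```

Per-fold predictions can then be pooled into a single confusion matrix covering every image once.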

The second experiment shows the importance of the proposed technique within the MRI classification system. For this purpose, a comprehensive set of sub-experiments was executed in which well-known existing feature extraction algorithms, namely Speeded Up Robust Features (SURF), Gray Texture Features, Fusion Feature, Partial Least Squares, Semidefinite Embedding, Latent Semantic Analysis, and Independent Component Analysis (ICA), were employed instead of the developed approach.

Finally, the third experiment compares the developed approach with state-of-the-art systems using three metrics, namely sensitivity, accuracy, and specificity, which are computed from the numbers of true and false positives and negatives.
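The three metrics can be computed per class in a one-vs-rest manner from a confusion matrix such as Table 1. The sketch below assumes that rows are true classes and columns are predicted classes; it is an illustration, not the authors' evaluation code.

```python
import numpy as np


def one_vs_rest_metrics(confusion):
    """Per-class sensitivity, specificity and accuracy from a square confusion
    matrix whose rows are true classes and columns are predicted classes."""
    cm = np.asarray(confusion, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp  # class-i samples predicted as another class
    fp = cm.sum(axis=0) - tp  # other samples predicted as class i
    tn = total - tp - fn - fp
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / total
    return sensitivity, specificity, accuracy


sens, spec, acc = one_vs_rest_metrics([[8, 2], [1, 9]])
```

Averaging the per-class values gives macro-averaged figures that can be compared across systems.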

5. Experimental Results

The performance of the proposed approach is evaluated through the following set of experiments.

5.1. 1st Experiment

This experiment shows the performance of the developed technique on the brain MRI dataset. An n-fold cross-validation scheme was used, in which every MRI image was used for both training and validation. Table 1 reports the performance of the proposed approach.

Table 1.

Performance (confusion matrix) of the developed approach on the MRI dataset (unit: %).

| Illnesses | FS | MN | GL | VD | CA | HY | CC | MA | CS | MI | AD | CT | ME | PD | SR | AL | CJ | MB | AV | MS | LE | HE | CH | HD | NB |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| FS | 96 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |

| MN | 0 | 98 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| GL | 1 | 0 | 99 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| VD | 0 | 0 | 0 | 100 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| CA | 0 | 0 | 1 | 0 | 95 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 |

| HY | 2 | 0 | 0 | 0 | 0 | 93 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 |

| CC | 0 | 0 | 0 | 0 | 0 | 1 | 99 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| MA | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 97 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| CS | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 96 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| MI | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 2 | 94 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |

| AD | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 97 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| CT | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 98 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| ME | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 100 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| PD | 2 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 94 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 |

| SR | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 96 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| AL | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 97 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 |

| CJ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 99 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| MB | 1 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 94 | 0 | 0 | 0 | 0 | 2 | 0 | 0 |

| AV | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 1 | 95 | 0 | 0 | 0 | 0 | 1 | 0 |

| MS | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 96 | 0 | 0 | 0 | 0 | 0 |

| LE | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 98 | 0 | 0 | 0 | 0 |

| HE | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 99 | 0 | 0 | 0 |

| CH | 0 | 1 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 2 | 94 | 0 | 0 |

| HD | 2 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 93 | 1 |

| NB | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 97 |

| Average | 96.58% | ||||||||||||||||||||||||

Table 1 shows that the developed technique achieved high classification rates on a large brain MRI dataset, with an average accuracy of 96.58%. This is because the proposed method measures the statistical dependence of the wavelet coefficients: the joint probabilities are calculated by collecting geometrically aligned MRI images for each wavelet coefficient, and the strength of the statistical dependence is computed from these distributions as the mutual information between the two MRI images. The execution time for classifying every class with the proposed approach was 21.5 s on the brain MRI dataset, which indicates that the proposed approach is not only accurate but also computationally inexpensive.

5.2. 2nd Experiment

In the second experiment, a series of tests was performed to demonstrate the value of the proposed feature extraction method for classifying brain MRI images. In these tests, the proposed feature extraction method was replaced in the MRI system by existing state-of-the-art feature extraction techniques, while the experimental setup of the first experiment was kept unchanged. Specifically, Speeded Up Robust Features (SURF), Gray Texture Features, Fusion Feature, Latent Semantic Analysis, Partial Least Squares, Semidefinite Embedding, and Independent Component Analysis (ICA) were each employed in the MRI system. The results are presented in Table 2, Table 3, Table 4, Table 5, Table 6, Table 7 and Table 8, respectively.

Table 2.

Performance of the Speeded Up Robust Features (SURF) method (used in place of the developed approach) on the MRI dataset (unit: %).

| Illnesses | FS | MN | GL | VD | CA | HY | CC | MA | CS | MI | AD | CT | ME | PD | SR | AL | CJ | MB | AV | MS | LE | HE | CH | HD | NB |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| FS | 87 | 0 | 2 | 0 | 0 | 0 | 1 | 0 | 2 | 0 | 0 | 2 | 0 | 0 | 0 | 2 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 2 | 0 |

| MN | 2 | 88 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 1 | 0 | 2 |

| GL | 0 | 1 | 90 | 0 | 0 | 2 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 1 | 0 | 2 | 0 | 0 |

| VD | 1 | 0 | 0 | 85 | 2 | 0 | 1 | 0 | 1 | 0 | 2 | 0 | 0 | 3 | 0 | 0 | 0 | 2 | 0 | 1 | 0 | 0 | 0 | 2 | 0 |

| CA | 2 | 0 | 3 | 1 | 80 | 0 | 0 | 2 | 0 | 1 | 1 | 0 | 2 | 0 | 2 | 1 | 0 | 0 | 2 | 0 | 1 | 2 | 0 | 0 | 0 |

| HY | 0 | 1 | 0 | 0 | 0 | 85 | 2 | 0 | 1 | 0 | 0 | 2 | 0 | 1 | 0 | 0 | 2 | 0 | 0 | 2 | 0 | 0 | 2 | 0 | 2 |

| CC | 0 | 0 | 1 | 0 | 2 | 0 | 88 | 1 | 0 | 0 | 2 | 0 | 2 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 2 | 0 | 0 | 0 |

| MA | 2 | 0 | 0 | 2 | 0 | 2 | 0 | 82 | 0 | 2 | 0 | 1 | 0 | 2 | 0 | 2 | 1 | 0 | 2 | 0 | 1 | 0 | 0 | 1 | 0 |

| CS | 0 | 2 | 1 | 0 | 0 | 0 | 4 | 0 | 83 | 0 | 2 | 0 | 1 | 0 | 2 | 0 | 0 | 1 | 0 | 2 | 0 | 0 | 1 | 0 | 1 |

| MI | 1 | 0 | 0 | 2 | 1 | 0 | 1 | 0 | 0 | 85 | 0 | 2 | 0 | 2 | 0 | 0 | 1 | 0 | 2 | 0 | 0 | 1 | 0 | 2 | 0 |

| AD | 0 | 1 | 0 | 0 | 0 | 2 | 0 | 2 | 0 | 1 | 87 | 0 | 1 | 0 | 0 | 2 | 0 | 1 | 0 | 0 | 2 | 0 | 0 | 0 | 1 |

| CT | 0 | 0 | 2 | 0 | 0 | 0 | 2 | 0 | 1 | 0 | 2 | 91 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |

| ME | 2 | 1 | 0 | 1 | 2 | 0 | 0 | 1 | 0 | 2 | 0 | 1 | 79 | 3 | 0 | 2 | 1 | 0 | 2 | 0 | 1 | 0 | 2 | 0 | 0 |

| PD | 0 | 1 | 2 | 0 | 0 | 1 | 2 | 0 | 2 | 0 | 1 | 0 | 2 | 80 | 2 | 0 | 1 | 2 | 0 | 1 | 0 | 2 | 0 | 1 | 0 |

| SR | 1 | 0 | 0 | 2 | 1 | 0 | 0 | 1 | 0 | 2 | 0 | 2 | 0 | 0 | 81 | 2 | 0 | 1 | 2 | 0 | 1 | 0 | 2 | 0 | 2 |

| AL | 2 | 0 | 1 | 0 | 0 | 2 | 0 | 0 | 2 | 0 | 1 | 0 | 1 | 2 | 0 | 82 | 2 | 0 | 0 | 2 | 0 | 1 | 0 | 2 | 0 |

| CJ | 0 | 1 | 0 | 2 | 0 | 0 | 1 | 2 | 0 | 1 | 0 | 2 | 0 | 0 | 1 | 0 | 86 | 1 | 0 | 0 | 2 | 0 | 1 | 0 | 0 |

| MB | 0 | 0 | 2 | 0 | 1 | 2 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 1 | 0 | 1 | 0 | 88 | 1 | 0 | 0 | 0 | 0 | 0 | 2 |

| AV | 1 | 0 | 0 | 2 | 0 | 0 | 1 | 0 | 2 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 90 | 2 | 0 | 1 | 0 | 0 | 0 |

| MS | 0 | 2 | 0 | 0 | 0 | 1 | 0 | 2 | 0 | 1 | 0 | 0 | 2 | 0 | 1 | 0 | 1 | 0 | 0 | 89 | 0 | 0 | 0 | 1 | 0 |

| LE | 2 | 0 | 1 | 0 | 2 | 0 | 0 | 0 | 1 | 0 | 2 | 0 | 0 | 1 | 0 | 0 | 0 | 2 | 1 | 0 | 84 | 0 | 2 | 0 | 2 |

| HE | 0 | 1 | 0 | 2 | 0 | 0 | 2 | 0 | 0 | 2 | 0 | 1 | 0 | 0 | 1 | 2 | 0 | 0 | 0 | 2 | 0 | 86 | 0 | 1 | 0 |

| CH | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 2 | 0 | 0 | 0 | 2 | 0 | 2 | 0 | 1 | 0 | 87 | 0 | 2 |

| HD | 0 | 0 | 2 | 0 | 0 | 2 | 1 | 0 | 2 | 0 | 0 | 1 | 0 | 1 | 2 | 0 | 0 | 1 | 0 | 1 | 0 | 2 | 3 | 82 | 0 |

| NB | 2 | 1 | 0 | 1 | 0 | 0 | 2 | 2 | 0 | 1 | 1 | 0 | 2 | 0 | 0 | 2 | 1 | 0 | 1 | 0 | 2 | 0 | 0 | 1 | 81 |

| Average | 85.04% | | | | | | | | | | | | | | | | | | | | | | | | |
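
The Average row of each confusion matrix appears to be the unweighted mean of the diagonal, that is, of the 25 per-class recognition rates. The short sketch below reproduces the 85.04% reported for Table 2 from its diagonal. The function names are hypothetical, and the equal weighting of classes is inferred from the reported values rather than stated in the text.

```python
# Diagonal of Table 2 (SURF), in header order FS, MN, GL, ..., HD, NB (unit: %).
SURF_DIAGONAL = [87, 88, 90, 85, 80, 85, 88, 82, 83, 85, 87, 91, 79,
                 80, 81, 82, 86, 88, 90, 89, 84, 86, 87, 82, 81]


def average_recognition_rate(diagonal):
    """Unweighted mean of the per-class recognition rates (in %)."""
    return sum(diagonal) / len(diagonal)


def average_from_matrix(matrix, tol=1e-9):
    """Average rate from a full row-normalized confusion matrix (each row in %)."""
    for r, row in enumerate(matrix):
        if abs(sum(row) - 100) > tol:
            raise ValueError(f"row {r} sums to {sum(row)}, expected 100")
    return average_recognition_rate([matrix[i][i] for i in range(len(matrix))])


print(f"{average_recognition_rate(SURF_DIAGONAL):.2f}%")  # 85.04%
```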

Table 3.

Performance of the Gray Texture Features method (used in place of the developed approach) on the MRI dataset (unit: %).

| Illnesses | FS | MN | GL | VD | CA | HY | CC | MA | CS | MI | AD | CT | ME | PD | SR | AL | CJ | MB | AV | MS | LE | HE | CH | HD | NB |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| FS | 72 | 0 | 4 | 2 | 1 | 2 | 0 | 0 | 2 | 0 | 1 | 2 | 2 | 0 | 0 | 1 | 2 | 1 | 2 | 0 | 2 | 2 | 0 | 0 | 2 |

| MN | 1 | 69 | 0 | 2 | 0 | 1 | 4 | 2 | 0 | 2 | 2 | 0 | 4 | 2 | 1 | 0 | 1 | 0 | 2 | 2 | 1 | 0 | 2 | 2 | 0 |

| GL | 0 | 1 | 78 | 0 | 2 | 2 | 1 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 2 | 2 | 0 | 2 | 1 | 0 | 2 | 2 | 0 | 1 | 1 |

| VD | 2 | 0 | 1 | 76 | 0 | 0 | 3 | 0 | 1 | 3 | 0 | 0 | 1 | 2 | 0 | 1 | 1 | 3 | 4 | 0 | 1 | 0 | 1 | 0 | 0 |

| CA | 0 | 3 | 1 | 0 | 77 | 2 | 0 | 0 | 4 | 0 | 1 | 2 | 0 | 0 | 2 | 1 | 0 | 0 | 3 | 1 | 0 | 1 | 1 | 0 | 1 |

| HY | 3 | 1 | 0 | 2 | 0 | 71 | 2 | 2 | 0 | 2 | 0 | 1 | 1 | 1 | 2 | 0 | 0 | 3 | 4 | 0 | 1 | 2 | 0 | 1 | 1 |

| CC | 0 | 2 | 1 | 1 | 0 | 0 | 68 | 3 | 2 | 0 | 1 | 1 | 2 | 1 | 4 | 3 | 0 | 2 | 0 | 4 | 2 | 1 | 2 | 0 | 0 |

| MA | 1 | 3 | 0 | 0 | 1 | 2 | 2 | 70 | 3 | 2 | 1 | 3 | 2 | 1 | 0 | 0 | 2 | 1 | 1 | 2 | 0 | 1 | 1 | 0 | 1 |

| CS | 0 | 0 | 2 | 0 | 1 | 1 | 0 | 0 | 80 | 1 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 2 | 3 | 0 | 1 | 2 | 2 | 1 | 1 |

| MI | 1 | 0 | 0 | 0 | 0 | 2 | 1 | 2 | 1 | 81 | 0 | 3 | 2 | 0 | 1 | 0 | 1 | 1 | 2 | 0 | 0 | 1 | 1 | 0 | 0 |

| AD | 1 | 1 | 1 | 1 | 2 | 1 | 0 | 0 | 3 | 1 | 74 | 1 | 1 | 2 | 1 | 1 | 0 | 0 | 3 | 2 | 0 | 2 | 1 | 0 | 1 |

| CT | 1 | 0 | 0 | 3 | 2 | 0 | 2 | 1 | 0 | 1 | 1 | 73 | 2 | 1 | 1 | 4 | 0 | 2 | 1 | 0 | 2 | 1 | 0 | 2 | 0 |

| ME | 0 | 2 | 2 | 0 | 1 | 0 | 1 | 2 | 1 | 0 | 2 | 1 | 69 | 4 | 0 | 0 | 3 | 1 | 3 | 1 | 1 | 0 | 3 | 2 | 1 |

| PD | 2 | 0 | 0 | 1 | 1 | 3 | 1 | 1 | 0 | 3 | 2 | 1 | 1 | 66 | 2 | 4 | 1 | 2 | 2 | 0 | 3 | 1 | 2 | 0 | 1 |

| SR | 1 | 3 | 0 | 0 | 2 | 1 | 1 | 1 | 4 | 1 | 2 | 1 | 3 | 1 | 72 | 0 | 0 | 0 | 1 | 0 | 0 | 2 | 2 | 0 | 2 |

| AL | 0 | 1 | 0 | 1 | 0 | 2 | 2 | 1 | 0 | 0 | 1 | 2 | 3 | 1 | 1 | 73 | 1 | 2 | 3 | 1 | 0 | 2 | 0 | 1 | 2 |

| CJ | 1 | 2 | 2 | 0 | 1 | 1 | 2 | 1 | 1 | 1 | 2 | 0 | 0 | 1 | 2 | 2 | 75 | 0 | 1 | 0 | 2 | 0 | 1 | 1 | 1 |

| MB | 0 | 0 | 1 | 0 | 2 | 0 | 0 | 3 | 0 | 1 | 0 | 2 | 1 | 0 | 1 | 0 | 1 | 79 | 3 | 0 | 1 | 1 | 0 | 1 | 3 |

| AV | 2 | 1 | 1 | 2 | 1 | 3 | 3 | 0 | 0 | 0 | 1 | 1 | 0 | 3 | 1 | 1 | 2 | 0 | 68 | 2 | 1 | 2 | 1 | 2 | 2 |

| MS | 3 | 1 | 2 | 0 | 0 | 2 | 0 | 1 | 1 | 0 | 2 | 0 | 3 | 2 | 1 | 0 | 0 | 2 | 5 | 69 | 0 | 1 | 2 | 1 | 2 |

| LE | 0 | 1 | 1 | 2 | 3 | 4 | 1 | 2 | 1 | 3 | 0 | 0 | 1 | 0 | 2 | 1 | 1 | 0 | 2 | 3 | 67 | 0 | 2 | 3 | 0 |

| HE | 0 | 3 | 2 | 0 | 0 | 2 | 0 | 2 | 2 | 4 | 0 | 0 | 0 | 0 | 2 | 3 | 1 | 1 | 1 | 2 | 2 | 70 | 2 | 0 | 1 |

| CH | 1 | 2 | 0 | 3 | 1 | 0 | 1 | 0 | 1 | 0 | 2 | 2 | 2 | 3 | 1 | 0 | 0 | 1 | 2 | 3 | 1 | 0 | 71 | 1 | 2 |

| HD | 2 | 0 | 3 | 1 | 2 | 1 | 0 | 3 | 0 | 1 | 1 | 2 | 1 | 0 | 0 | 0 | 0 | 2 | 1 | 1 | 0 | 0 | 2 | 74 | 3 |

| NB | 1 | 2 | 1 | 1 | 0 | 2 | 0 | 1 | 1 | 0 | 2 | 1 | 0 | 0 | 0 | 1 | 2 | 3 | 1 | 0 | 2 | 1 | 0 | 2 | 76 |

| Average | 72.72% | | | | | | | | | | | | | | | | | | | | | | | | |

Table 4.

Performance of the Fusion Feature method (used in place of the developed approach) on the MRI dataset (unit: %).

| Illnesses | FS | MN | GL | VD | CA | HY | CC | MA | CS | MI | AD | CT | ME | PD | SR | AL | CJ | MB | AV | MS | LE | HE | CH | HD | NB |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| FS | 70 | 2 | 3 | 1 | 0 | 1 | 2 | 2 | 1 | 0 | 1 | 1 | 0 | 2 | 1 | 2 | 0 | 0 | 0 | 2 | 4 | 2 | 1 | 0 | 2 |

| MN | 2 | 66 | 0 | 1 | 3 | 4 | 1 | 1 | 0 | 3 | 2 | 1 | 3 | 1 | 1 | 1 | 0 | 3 | 0 | 1 | 3 | 1 | 0 | 1 | 1 |

| GL | 0 | 1 | 67 | 1 | 2 | 0 | 2 | 1 | 1 | 2 | 0 | 0 | 2 | 2 | 3 | 2 | 2 | 1 | 3 | 0 | 1 | 2 | 3 | 0 | 2 |

| VD | 1 | 0 | 1 | 71 | 1 | 2 | 0 | 3 | 0 | 3 | 2 | 2 | 1 | 0 | 2 | 0 | 1 | 2 | 1 | 1 | 2 | 0 | 2 | 1 | 1 |

| CA | 0 | 0 | 1 | 1 | 72 | 2 | 1 | 2 | 1 | 1 | 1 | 0 | 2 | 3 | 0 | 2 | 3 | 0 | 0 | 0 | 2 | 2 | 1 | 3 | 0 |

| HY | 1 | 1 | 0 | 0 | 2 | 73 | 2 | 1 | 1 | 2 | 0 | 1 | 1 | 2 | 1 | 0 | 2 | 1 | 2 | 1 | 1 | 2 | 3 | 0 | 0 |

| CC | 3 | 0 | 1 | 1 | 0 | 1 | 74 | 2 | 1 | 3 | 1 | 0 | 0 | 2 | 1 | 1 | 0 | 2 | 0 | 3 | 0 | 1 | 0 | 2 | 1 |

| MA | 0 | 1 | 0 | 0 | 1 | 1 | 1 | 77 | 2 | 0 | 0 | 2 | 2 | 1 | 1 | 2 | 0 | 0 | 0 | 2 | 1 | 2 | 2 | 0 | 2 |

| CS | 1 | 2 | 2 | 1 | 2 | 0 | 2 | 2 | 66 | 1 | 2 | 1 | 1 | 2 | 0 | 0 | 2 | 0 | 2 | 1 | 1 | 2 | 2 | 1 | 4 |

| MI | 3 | 1 | 0 | 0 | 0 | 4 | 0 | 0 | 0 | 64 | 5 | 0 | 0 | 1 | 1 | 1 | 0 | 4 | 5 | 0 | 4 | 0 | 3 | 2 | 2 |

| AD | 1 | 2 | 1 | 1 | 2 | 1 | 1 | 2 | 2 | 1 | 68 | 2 | 3 | 1 | 0 | 0 | 1 | 2 | 1 | 1 | 0 | 1 | 2 | 1 | 3 |

| CT | 0 | 0 | 2 | 0 | 2 | 1 | 0 | 0 | 0 | 0 | 2 | 79 | 1 | 2 | 1 | 1 | 0 | 0 | 2 | 0 | 2 | 3 | 1 | 0 | 1 |

| ME | 2 | 1 | 0 | 2 | 3 | 0 | 3 | 2 | 4 | 0 | 0 | 5 | 60 | 2 | 2 | 3 | 2 | 1 | 1 | 1 | 0 | 4 | 1 | 0 | 1 |

| PD | 4 | 0 | 2 | 0 | 0 | 3 | 1 | 1 | 2 | 1 | 0 | 0 | 2 | 71 | 3 | 2 | 0 | 1 | 0 | 3 | 1 | 2 | 1 | 0 | 0 |

| SR | 1 | 1 | 0 | 1 | 1 | 0 | 0 | 3 | 1 | 0 | 0 | 2 | 1 | 1 | 73 | 1 | 0 | 1 | 2 | 1 | 3 | 0 | 2 | 3 | 2 |

| AL | 1 | 2 | 1 | 2 | 0 | 2 | 1 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 2 | 76 | 0 | 1 | 2 | 0 | 0 | 2 | 2 | 1 | 1 |

| CJ | 3 | 1 | 0 | 0 | 1 | 0 | 1 | 2 | 1 | 1 | 1 | 0 | 0 | 0 | 3 | 1 | 77 | 2 | 2 | 1 | 0 | 0 | 2 | 0 | 1 |

| MB | 2 | 3 | 1 | 4 | 3 | 1 | 0 | 1 | 3 | 0 | 6 | 0 | 1 | 0 | 1 | 0 | 0 | 67 | 1 | 1 | 0 | 3 | 0 | 1 | 1 |

| AV | 0 | 2 | 1 | 3 | 1 | 0 | 1 | 2 | 0 | 3 | 1 | 1 | 2 | 2 | 2 | 1 | 1 | 2 | 69 | 1 | 0 | 2 | 1 | 2 | 0 |

| MS | 1 | 3 | 2 | 0 | 1 | 1 | 0 | 0 | 2 | 4 | 1 | 1 | 3 | 0 | 1 | 0 | 0 | 2 | 1 | 72 | 2 | 1 | 0 | 1 | 1 |

| LE | 1 | 0 | 1 | 1 | 1 | 2 | 1 | 1 | 3 | 0 | 2 | 0 | 0 | 0 | 0 | 1 | 2 | 2 | 1 | 1 | 75 | 1 | 1 | 1 | 2 |

| HE | 2 | 1 | 1 | 0 | 0 | 1 | 3 | 0 | 0 | 0 | 2 | 3 | 1 | 1 | 1 | 0 | 0 | 2 | 1 | 1 | 2 | 74 | 1 | 0 | 3 |

| CH | 3 | 0 | 0 | 2 | 3 | 4 | 1 | 1 | 3 | 2 | 1 | 0 | 0 | 0 | 3 | 2 | 2 | 1 | 3 | 0 | 1 | 0 | 66 | 2 | 0 |

| HD | 0 | 3 | 2 | 0 | 1 | 1 | 1 | 0 | 3 | 5 | 0 | 2 | 1 | 1 | 2 | 3 | 1 | 2 | 0 | 1 | 1 | 2 | 1 | 65 | 2 |

| NB | 2 | 1 | 2 | 3 | 0 | 0 | 0 | 2 | 1 | 4 | 3 | 2 | 1 | 1 | 0 | 0 | 4 | 2 | 2 | 0 | 0 | 1 | 3 | 2 | 64 |

| Average | 70.24% | | | | | | | | | | | | | | | | | | | | | | | | |

Table 5.

Performance of the Latent Semantic Analysis method (used in place of the developed approach) on the MRI dataset (unit: %).

| Illnesses | FS | MN | GL | VD | CA | HY | CC | MA | CS | MI | AD | CT | ME | PD | SR | AL | CJ | MB | AV | MS | LE | HE | CH | HD | NB |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| FS | 65 | 0 | 2 | 2 | 0 | 1 | 2 | 4 | 0 | 2 | 1 | 2 | 1 | 4 | 0 | 2 | 2 | 2 | 1 | 0 | 2 | 2 | 2 | 0 | 1 |

| MN | 2 | 66 | 1 | 0 | 2 | 2 | 0 | 1 | 2 | 2 | 0 | 1 | 2 | 2 | 2 | 0 | 4 | 1 | 2 | 2 | 2 | 0 | 1 | 3 | 0 |

| GL | 1 | 2 | 71 | 2 | 1 | 0 | 2 | 2 | 1 | 0 | 2 | 1 | 1 | 4 | 0 | 2 | 1 | 0 | 1 | 0 | 2 | 2 | 0 | 0 | 2 |

| VD | 0 | 1 | 2 | 69 | 2 | 2 | 1 | 0 | 2 | 1 | 0 | 4 | 2 | 1 | 2 | 0 | 2 | 2 | 2 | 1 | 1 | 0 | 2 | 1 | 0 |

| CA | 2 | 2 | 3 | 1 | 67 | 0 | 0 | 2 | 1 | 3 | 2 | 1 | 0 | 0 | 1 | 3 | 1 | 1 | 2 | 1 | 0 | 2 | 1 | 2 | 2 |

| HY | 3 | 1 | 2 | 1 | 1 | 64 | 2 | 1 | 1 | 2 | 4 | 0 | 1 | 2 | 0 | 0 | 0 | 2 | 1 | 2 | 1 | 2 | 3 | 1 | 3 |

| CC | 4 | 0 | 1 | 3 | 2 | 4 | 60 | 0 | 2 | 0 | 2 | 3 | 2 | 2 | 1 | 2 | 1 | 3 | 2 | 0 | 2 | 0 | 1 | 2 | 1 |

| MA | 0 | 2 | 2 | 1 | 0 | 2 | 4 | 61 | 1 | 3 | 2 | 0 | 1 | 2 | 2 | 3 | 2 | 0 | 2 | 2 | 1 | 2 | 2 | 0 | 4 |

| CS | 2 | 1 | 0 | 2 | 2 | 1 | 0 | 2 | 67 | 2 | 0 | 1 | 2 | 1 | 2 | 0 | 1 | 2 | 2 | 2 | 3 | 0 | 1 | 2 | 2 |

| MI | 1 | 2 | 2 | 1 | 2 | 0 | 2 | 1 | 2 | 69 | 2 | 0 | 1 | 2 | 0 | 2 | 2 | 0 | 1 | 2 | 1 | 2 | 0 | 1 | 2 |

| AD | 2 | 1 | 1 | 0 | 1 | 2 | 0 | 2 | 0 | 2 | 72 | 1 | 0 | 2 | 2 | 1 | 0 | 2 | 2 | 0 | 2 | 2 | 2 | 1 | 0 |

| CT | 0 | 2 | 2 | 1 | 2 | 0 | 2 | 1 | 2 | 0 | 1 | 73 | 2 | 0 | 1 | 2 | 2 | 1 | 2 | 1 | 0 | 1 | 0 | 2 | 0 |

| ME | 1 | 0 | 1 | 2 | 0 | 2 | 1 | 0 | 1 | 2 | 2 | 2 | 75 | 1 | 2 | 0 | 1 | 2 | 0 | 2 | 1 | 0 | 1 | 0 | 1 |

| PD | 0 | 1 | 0 | 1 | 2 | 1 | 0 | 2 | 0 | 1 | 0 | 1 | 0 | 77 | 1 | 2 | 2 | 0 | 1 | 1 | 2 | 1 | 0 | 2 | 2 |

| SR | 2 | 1 | 2 | 0 | 1 | 0 | 2 | 0 | 1 | 0 | 2 | 0 | 1 | 0 | 78 | 1 | 0 | 1 | 2 | 2 | 0 | 2 | 1 | 1 | 0 |

| AL | 1 | 2 | 1 | 2 | 0 | 2 | 1 | 2 | 2 | 2 | 0 | 1 | 0 | 2 | 1 | 71 | 2 | 2 | 0 | 1 | 2 | 1 | 0 | 2 | 1 |

| CJ | 2 | 0 | 2 | 1 | 2 | 4 | 0 | 1 | 2 | 1 | 2 | 2 | 2 | 1 | 3 | 0 | 63 | 1 | 2 | 2 | 1 | 2 | 2 | 0 | 2 |

| MB | 3 | 2 | 2 | 4 | 1 | 0 | 2 | 2 | 1 | 1 | 3 | 0 | 1 | 2 | 0 | 1 | 1 | 61 | 2 | 3 | 1 | 0 | 2 | 3 | 2 |

| AV | 2 | 3 | 2 | 0 | 1 | 2 | 3 | 0 | 2 | 0 | 2 | 1 | 4 | 0 | 3 | 2 | 0 | 5 | 60 | 0 | 2 | 2 | 1 | 3 | 0 |

| MS | 0 | 2 | 2 | 1 | 1 | 2 | 5 | 3 | 0 | 2 | 2 | 2 | 1 | 3 | 4 | 0 | 3 | 2 | 0 | 59 | 2 | 1 | 2 | 0 | 1 |

| LE | 1 | 1 | 1 | 0 | 2 | 1 | 2 | 0 | 3 | 1 | 0 | 0 | 2 | 1 | 0 | 2 | 1 | 2 | 4 | 0 | 71 | 2 | 0 | 1 | 2 |

| HE | 3 | 2 | 0 | 0 | 0 | 1 | 2 | 2 | 3 | 1 | 1 | 0 | 1 | 1 | 0 | 0 | 0 | 2 | 1 | 3 | 1 | 73 | 2 | 1 | 0 |

| CH | 0 | 1 | 3 | 1 | 1 | 2 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 2 | 2 | 2 | 1 | 2 | 3 | 1 | 0 | 0 | 74 | 1 | 1 |

| HD | 2 | 2 | 0 | 0 | 3 | 1 | 1 | 1 | 0 | 0 | 2 | 0 | 1 | 0 | 0 | 0 | 2 | 4 | 0 | 0 | 1 | 1 | 1 | 76 | 2 |

| NB | 1 | 1 | 1 | 2 | 2 | 0 | 0 | 0 | 1 | 1 | 2 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 1 | 1 | 2 | 2 | 1 | 2 | 78 |

| Average | 68.80% | | | | | | | | | | | | | | | | | | | | | | | | |

Table 6.

Performance of the Partial Least Squares method (used in place of the developed approach) on the MRI dataset (unit: %).

| Illnesses | FS | MN | GL | VD | CA | HY | CC | MA | CS | MI | AD | CT | ME | PD | SR | AL | CJ | MB | AV | MS | LE | HE | CH | HD | NB |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| FS | 61 | 0 | 2 | 1 | 2 | 0 | 4 | 2 | 1 | 0 | 4 | 2 | 1 | 2 | 3 | 0 | 2 | 2 | 1 | 0 | 2 | 4 | 1 | 0 | 3 |

| MN | 2 | 67 | 1 | 2 | 0 | 2 | 1 | 3 | 2 | 1 | 0 | 1 | 2 | 4 | 0 | 2 | 1 | 2 | 2 | 2 | 0 | 1 | 0 | 2 | 0 |

| GL | 1 | 2 | 70 | 0 | 2 | 1 | 0 | 2 | 1 | 0 | 2 | 3 | 0 | 1 | 2 | 1 | 2 | 0 | 1 | 2 | 2 | 0 | 2 | 1 | 2 |

| VD | 0 | 1 | 2 | 74 | 1 | 2 | 2 | 0 | 1 | 1 | 2 | 0 | 2 | 0 | 1 | 2 | 1 | 2 | 0 | 1 | 1 | 2 | 1 | 0 | 1 |

| CA | 2 | 2 | 0 | 1 | 73 | 1 | 0 | 2 | 0 | 2 | 1 | 2 | 1 | 1 | 2 | 0 | 2 | 1 | 2 | 2 | 0 | 1 | 0 | 2 | 0 |

| HY | 1 | 0 | 2 | 2 | 2 | 63 | 1 | 2 | 1 | 2 | 4 | 2 | 0 | 3 | 1 | 2 | 1 | 2 | 2 | 0 | 2 | 0 | 2 | 1 | 2 |

| CC | 2 | 2 | 1 | 0 | 3 | 2 | 62 | 1 | 2 | 0 | 1 | 4 | 2 | 1 | 2 | 1 | 2 | 0 | 1 | 2 | 1 | 2 | 4 | 0 | 2 |

| MA | 0 | 1 | 2 | 2 | 1 | 2 | 2 | 60 | 1 | 2 | 0 | 1 | 2 | 2 | 3 | 4 | 0 | 2 | 3 | 1 | 2 | 1 | 0 | 4 | 2 |

| CS | 4 | 2 | 1 | 2 | 2 | 1 | 0 | 2 | 59 | 1 | 2 | 2 | 1 | 2 | 1 | 2 | 2 | 3 | 0 | 2 | 4 | 2 | 1 | 2 | 0 |

| MI | 1 | 2 | 2 | 1 | 0 | 2 | 2 | 1 | 2 | 66 | 1 | 2 | 4 | 1 | 0 | 1 | 2 | 1 | 2 | 1 | 0 | 1 | 2 | 2 | 1 |

| AD | 2 | 0 | 1 | 2 | 2 | 2 | 1 | 2 | 0 | 2 | 68 | 1 | 2 | 2 | 1 | 2 | 1 | 0 | 1 | 2 | 1 | 2 | 1 | 0 | 2 |

| CT | 1 | 2 | 2 | 0 | 1 | 0 | 2 | 1 | 2 | 0 | 2 | 71 | 0 | 2 | 2 | 1 | 2 | 1 | 2 | 0 | 2 | 1 | 0 | 2 | 1 |

| ME | 2 | 1 | 0 | 2 | 2 | 1 | 2 | 0 | 1 | 2 | 2 | 0 | 68 | 1 | 2 | 2 | 0 | 2 | 1 | 2 | 2 | 0 | 2 | 1 | 2 |

| PD | 0 | 2 | 2 | 1 | 2 | 2 | 1 | 2 | 4 | 1 | 0 | 3 | 2 | 61 | 1 | 2 | 2 | 1 | 2 | 1 | 0 | 2 | 1 | 2 | 3 |

| SR | 2 | 2 | 1 | 2 | 1 | 2 | 2 | 1 | 0 | 2 | 2 | 1 | 2 | 2 | 60 | 0 | 1 | 4 | 1 | 2 | 2 | 3 | 2 | 1 | 2 |

| AL | 1 | 0 | 2 | 1 | 2 | 1 | 2 | 0 | 2 | 1 | 2 | 2 | 1 | 2 | 2 | 64 | 2 | 0 | 2 | 1 | 2 | 2 | 1 | 4 | 1 |

| CJ | 2 | 2 | 1 | 2 | 1 | 2 | 0 | 2 | 1 | 2 | 1 | 1 | 2 | 0 | 2 | 2 | 66 | 2 | 1 | 2 | 1 | 1 | 2 | 0 | 2 |

| MB | 1 | 2 | 0 | 1 | 0 | 1 | 2 | 1 | 2 | 0 | 2 | 2 | 1 | 2 | 1 | 2 | 2 | 69 | 2 | 0 | 2 | 2 | 1 | 2 | 0 |

| AV | 2 | 1 | 2 | 0 | 2 | 0 | 1 | 0 | 1 | 2 | 2 | 0 | 2 | 1 | 2 | 0 | 2 | 2 | 72 | 2 | 1 | 0 | 2 | 0 | 1 |

| MS | 0 | 2 | 1 | 2 | 1 | 2 | 0 | 2 | 2 | 1 | 0 | 2 | 1 | 1 | 0 | 1 | 1 | 0 | 2 | 73 | 2 | 1 | 0 | 2 | 1 |

| LE | 2 | 1 | 2 | 1 | 0 | 2 | 2 | 1 | 2 | 0 | 2 | 1 | 0 | 2 | 2 | 1 | 0 | 2 | 1 | 2 | 69 | 2 | 2 | 1 | 0 |

| HE | 1 | 2 | 0 | 2 | 1 | 1 | 2 | 2 | 0 | 1 | 1 | 2 | 2 | 1 | 1 | 2 | 1 | 1 | 2 | 0 | 2 | 70 | 1 | 0 | 2 |

| CH | 2 | 0 | 2 | 1 | 2 | 2 | 4 | 0 | 2 | 2 | 1 | 2 | 1 | 2 | 2 | 0 | 2 | 2 | 1 | 2 | 1 | 2 | 62 | 2 | 1 |

| HD | 1 | 2 | 2 | 2 | 1 | 0 | 1 | 2 | 2 | 1 | 2 | 0 | 4 | 1 | 2 | 3 | 1 | 0 | 2 | 1 | 2 | 3 | 2 | 61 | 2 |

| NB | 2 | 2 | 1 | 0 | 4 | 2 | 2 | 3 | 1 | 2 | 0 | 4 | 1 | 2 | 1 | 2 | 0 | 2 | 1 | 2 | 0 | 2 | 1 | 2 | 60 |

| Average | 65.96% | | | | | | | | | | | | | | | | | | | | | | | | |

Table 7.

Performance of the Semidefinite Embedding method (used in place of the developed approach) on the MRI dataset (unit: %).

| Illnesses | FS | MN | GL | VD | CA | HY | CC | MA | CS | MI | AD | CT | ME | PD | SR | AL | CJ | MB | AV | MS | LE | HE | CH | HD | NB |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| FS | 79 | 0 | 1 | 0 | 2 | 2 | 0 | 1 | 0 | 2 | 0 | 1 | 2 | 0 | 1 | 0 | 1 | 2 | 0 | 2 | 1 | 2 | 0 | 1 | 0 |

| MN | 2 | 82 | 0 | 1 | 0 | 1 | 0 | 0 | 2 | 0 | 1 | 0 | 0 | 2 | 0 | 1 | 0 | 1 | 2 | 0 | 2 | 0 | 1 | 0 | 2 |

| GL | 0 | 1 | 87 | 0 | 2 | 0 | 1 | 0 | 0 | 1 | 0 | 2 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 2 | 0 | 1 | 0 |

| VD | 2 | 0 | 1 | 77 | 0 | 2 | 1 | 1 | 0 | 2 | 2 | 0 | 1 | 0 | 2 | 0 | 2 | 2 | 1 | 0 | 1 | 0 | 2 | 0 | 1 |

| CA | 0 | 1 | 0 | 2 | 81 | 0 | 2 | 0 | 2 | 0 | 1 | 2 | 0 | 2 | 0 | 1 | 0 | 0 | 1 | 2 | 0 | 1 | 0 | 2 | 0 |

| HY | 2 | 0 | 1 | 0 | 1 | 79 | 0 | 1 | 0 | 2 | 0 | 1 | 2 | 0 | 1 | 2 | 1 | 0 | 2 | 0 | 1 | 0 | 2 | 0 | 2 |

| CC | 1 | 2 | 0 | 2 | 0 | 1 | 75 | 0 | 2 | 1 | 2 | 0 | 1 | 2 | 0 | 1 | 2 | 2 | 0 | 1 | 0 | 2 | 2 | 1 | 0 |

| MA | 0 | 1 | 2 | 0 | 2 | 0 | 1 | 83 | 0 | 0 | 1 | 2 | 0 | 1 | 2 | 0 | 0 | 1 | 2 | 0 | 0 | 1 | 0 | 0 | 1 |

| CS | 1 | 0 | 0 | 1 | 0 | 2 | 0 | 1 | 85 | 0 | 2 | 0 | 1 | 0 | 0 | 2 | 1 | 0 | 0 | 2 | 1 | 0 | 1 | 0 | 0 |

| MI | 0 | 2 | 0 | 0 | 1 | 0 | 2 | 0 | 0 | 87 | 0 | 1 | 0 | 0 | 2 | 0 | 0 | 1 | 0 | 0 | 0 | 2 | 0 | 1 | 1 |

| AD | 2 | 0 | 1 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 88 | 0 | 2 | 0 | 0 | 0 | 1 | 0 | 2 | 0 | 0 | 0 | 1 | 0 | 1 |

| CT | 0 | 1 | 0 | 2 | 1 | 2 | 0 | 0 | 1 | 2 | 0 | 80 | 0 | 1 | 2 | 1 | 0 | 2 | 0 | 1 | 2 | 0 | 0 | 2 | 0 |

| ME | 1 | 0 | 2 | 0 | 0 | 0 | 2 | 1 | 0 | 0 | 1 | 0 | 84 | 0 | 1 | 0 | 2 | 0 | 1 | 0 | 0 | 2 | 1 | 0 | 2 |

| PD | 0 | 2 | 0 | 1 | 0 | 1 | 0 | 0 | 2 | 1 | 0 | 1 | 0 | 85 | 0 | 2 | 0 | 1 | 0 | 1 | 1 | 0 | 0 | 2 | 0 |

| SR | 1 | 0 | 1 | 0 | 2 | 0 | 1 | 2 | 0 | 0 | 2 | 0 | 1 | 0 | 82 | 0 | 2 | 0 | 1 | 0 | 0 | 2 | 1 | 0 | 2 |

| AL | 0 | 2 | 0 | 1 | 0 | 1 | 0 | 1 | 2 | 1 | 0 | 1 | 0 | 1 | 2 | 81 | 0 | 2 | 0 | 1 | 1 | 0 | 2 | 1 | 0 |

| CJ | 3 | 0 | 0 | 0 | 1 | 1 | 1 | 2 | 2 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 78 | 2 | 1 | 1 | 0 | 0 | 2 | 3 | 1 |

| MB | 2 | 1 | 1 | 1 | 0 | 2 | 0 | 1 | 1 | 1 | 2 | 1 | 0 | 1 | 0 | 0 | 1 | 79 | 2 | 2 | 1 | 1 | 0 | 0 | 0 |

| AV | 0 | 0 | 0 | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 2 | 89 | 0 | 0 | 0 | 0 | 1 | 0 |

| MS | 0 | 1 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 1 | 90 | 0 | 1 | 0 | 1 | 0 |

| LE | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 2 | 91 | 0 | 1 | 0 | 1 |

| HE | 1 | 1 | 1 | 0 | 2 | 1 | 0 | 0 | 0 | 0 | 1 | 2 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 1 | 86 | 0 | 0 | 1 |

| CH | 1 | 0 | 0 | 0 | 0 | 2 | 1 | 1 | 1 | 0 | 0 | 0 | 1 | 1 | 1 | 0 | 0 | 1 | 2 | 1 | 0 | 0 | 87 | 0 | 0 |

| HD | 2 | 1 | 0 | 2 | 1 | 0 | 0 | 2 | 0 | 1 | 1 | 0 | 1 | 1 | 2 | 3 | 0 | 0 | 0 | 0 | 1 | 2 | 0 | 80 | 0 |

| NB | 1 | 0 | 1 | 1 | 0 | 1 | 0 | 0 | 1 | 1 | 1 | 2 | 0 | 0 | 0 | 0 | 3 | 1 | 0 | 0 | 0 | 1 | 1 | 0 | 85 |

| Average | 83.20% | | | | | | | | | | | | | | | | | | | | | | | | |

Table 8.

Performance of the Independent Component Analysis (ICA) method (used in place of the developed approach) on the MRI dataset (unit: %).

| Illnesses | FS | MN | GL | VD | CA | HY | CC | MA | CS | MI | AD | CT | ME | PD | SR | AL | CJ | MB | AV | MS | LE | HE | CH | HD | NB |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| FS | 71 | 0 | 2 | 1 | 2 | 2 | 0 | 1 | 2 | 2 | 0 | 1 | 2 | 0 | 2 | 1 | 2 | 0 | 2 | 1 | 1 | 0 | 2 | 2 | 1 |

| MN | 1 | 77 | 0 | 2 | 0 | 0 | 2 | 2 | 1 | 0 | 1 | 2 | 0 | 1 | 2 | 2 | 0 | 1 | 2 | 2 | 0 | 1 | 0 | 1 | 0 |

| GL | 2 | 0 | 81 | 0 | 2 | 1 | 0 | 1 | 0 | 2 | 0 | 1 | 1 | 0 | 1 | 1 | 2 | 0 | 1 | 0 | 2 | 0 | 1 | 0 | 1 |

| VD | 0 | 2 | 0 | 82 | 0 | 1 | 2 | 0 | 1 | 0 | 2 | 0 | 0 | 2 | 0 | 0 | 1 | 2 | 0 | 1 | 0 | 2 | 0 | 2 | 0 |

| CA | 1 | 0 | 2 | 1 | 73 | 0 | 1 | 2 | 1 | 2 | 0 | 1 | 2 | 0 | 2 | 2 | 0 | 1 | 2 | 0 | 2 | 0 | 1 | 2 | 2 |

| HY | 0 | 1 | 0 | 0 | 1 | 84 | 2 | 0 | 2 | 0 | 1 | 2 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 2 | 0 | 2 | 0 | 1 | 0 |

| CC | 2 | 0 | 1 | 1 | 0 | 0 | 85 | 1 | 0 | 2 | 0 | 0 | 1 | 0 | 0 | 2 | 0 | 0 | 1 | 0 | 1 | 0 | 2 | 0 | 1 |

| MA | 0 | 2 | 0 | 2 | 1 | 1 | 0 | 81 | 1 | 0 | 2 | 1 | 0 | 2 | 1 | 0 | 1 | 2 | 0 | 0 | 0 | 1 | 0 | 2 | 0 |

| CS | 1 | 0 | 1 | 0 | 2 | 0 | 2 | 0 | 80 | 1 | 0 | 2 | 0 | 0 | 2 | 1 | 0 | 0 | 1 | 2 | 2 | 0 | 2 | 1 | 0 |

| MI | 0 | 2 | 0 | 1 | 0 | 0 | 0 | 2 | 0 | 88 | 0 | 0 | 1 | 0 | 0 | 0 | 2 | 1 | 0 | 0 | 0 | 2 | 0 | 0 | 1 |

| AD | 2 | 0 | 1 | 0 | 2 | 1 | 2 | 0 | 2 | 0 | 74 | 1 | 0 | 2 | 1 | 2 | 0 | 0 | 2 | 1 | 1 | 0 | 2 | 2 | 2 |

| CT | 2 | 1 | 0 | 2 | 1 | 0 | 2 | 1 | 0 | 2 | 2 | 75 | 1 | 0 | 2 | 0 | 1 | 2 | 0 | 2 | 0 | 2 | 0 | 2 | 0 |

| ME | 0 | 2 | 1 | 0 | 2 | 1 | 1 | 0 | 2 | 1 | 0 | 2 | 77 | 1 | 0 | 2 | 0 | 1 | 2 | 0 | 1 | 1 | 2 | 0 | 1 |

| PD | 1 | 0 | 2 | 1 | 0 | 0 | 2 | 1 | 0 | 0 | 1 | 0 | 2 | 79 | 1 | 0 | 2 | 2 | 0 | 1 | 2 | 0 | 1 | 2 | 0 |

| SR | 2 | 1 | 0 | 0 | 1 | 2 | 0 | 0 | 2 | 1 | 0 | 1 | 0 | 0 | 83 | 2 | 0 | 0 | 2 | 0 | 0 | 2 | 0 | 1 | 0 |

| AL | 0 | 0 | 1 | 1 | 0 | 0 | 1 | 2 | 0 | 0 | 2 | 0 | 2 | 1 | 0 | 85 | 1 | 0 | 0 | 2 | 0 | 0 | 2 | 0 | 0 |

| CJ | 1 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 89 | 2 | 0 | 0 | 1 | 0 | 0 | 2 | 0 |

| MB | 0 | 2 | 0 | 1 | 0 | 1 | 0 | 2 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 90 | 0 | 1 | 0 | 0 | 1 | 0 | 0 |

| AV | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 2 | 0 | 0 | 1 | 0 | 2 | 0 | 0 | 91 | 0 | 0 | 2 | 0 | 0 | 0 |

| MS | 0 | 0 | 2 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 92 | 0 | 0 | 0 | 0 | 2 |

| LE | 2 | 1 | 0 | 2 | 0 | 2 | 0 | 1 | 0 | 2 | 2 | 1 | 0 | 0 | 2 | 1 | 2 | 0 | 2 | 1 | 76 | 0 | 2 | 1 | 0 |

| HE | 1 | 0 | 2 | 0 | 1 | 0 | 2 | 0 | 2 | 0 | 1 | 0 | 1 | 2 | 0 | 2 | 0 | 2 | 0 | 2 | 2 | 78 | 0 | 1 | 1 |

| CH | 0 | 2 | 0 | 1 | 0 | 2 | 0 | 1 | 0 | 1 | 0 | 2 | 0 | 2 | 1 | 0 | 1 | 0 | 2 | 0 | 1 | 1 | 81 | 0 | 2 |

| HD | 2 | 0 | 1 | 0 | 2 | 0 | 1 | 0 | 2 | 0 | 2 | 0 | 1 | 0 | 2 | 2 | 0 | 1 | 0 | 1 | 2 | 2 | 0 | 77 | 2 |

| NB | 0 | 1 | 0 | 2 | 0 | 1 | 0 | 2 | 1 | 1 | 0 | 2 | 0 | 1 | 2 | 0 | 2 | 0 | 1 | 2 | 0 | 0 | 2 | 0 | 80 |

| Average | 81.16% | | | | | | | | | | | | | | | | | | | | | | | | |

It is evident from Table 2, Table 3, Table 4, Table 5, Table 6, Table 7 and Table 8 that the MRI classification system achieved lower recognition rates with the existing well-known feature extraction methods (averages between 65.96% and 85.04%) than with the proposed method (96.58%, Table 1). This demonstrates the importance of the proposed feature extraction method for the MRI disease classification system. The improvement can be attributed to the ability of the proposed approach to handle the orthogonal, biorthogonal, and reverse biorthogonal properties of gray scale images. Our experiments also support the frequency-based assumption: the statistical dependence of the wavelet coefficients is assessed for all grayscale MRI images, the joint probability for a gray scale frame is calculated by collecting geometrically aligned MRI images for each wavelet coefficient, and the strength of the statistical dependence between two MRI images is measured by the mutual information of the wavelet coefficients derived from these distributions.
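
The orthogonality of the symlet basis referred to above can be checked numerically. The sketch below is an assumed illustration rather than the authors' feature extractor. It performs one level of a 1-D periodic discrete wavelet transform with the order-2 symlet, which coincides with the order-2 Daubechies wavelet, so its closed-form filters are used. It is applied to a hypothetical row of gray levels, and the check confirms that energy is preserved and that the row is perfectly reconstructed. A 2-D transform of an MRI slice would apply the same step to rows and then to columns. Note that filter ordering and sign conventions differ between wavelet libraries.

```python
import math

# Order-2 symlet low-pass analysis filter (identical to Daubechies-2).
_R3, _NORM = math.sqrt(3.0), 4.0 * math.sqrt(2.0)
LOW = [(1 + _R3) / _NORM, (3 + _R3) / _NORM, (3 - _R3) / _NORM, (1 - _R3) / _NORM]
# Quadrature-mirror high-pass filter: g[k] = (-1)^k * h[L - 1 - k].
HIGH = [(-1) ** k * LOW[len(LOW) - 1 - k] for k in range(len(LOW))]


def dwt_level(signal):
    """One level of a periodic orthogonal DWT; returns (approximation, detail)."""
    n = len(signal)
    if n % 2:
        raise ValueError("signal length must be even")
    approx, detail = [], []
    for i in range(0, n, 2):
        approx.append(sum(h * signal[(i + k) % n] for k, h in enumerate(LOW)))
        detail.append(sum(g * signal[(i + k) % n] for k, g in enumerate(HIGH)))
    return approx, detail


def idwt_level(approx, detail):
    """Inverse of dwt_level: applies the transpose of the orthogonal analysis operator."""
    n = 2 * len(approx)
    out = [0.0] * n
    for j, (a, d) in enumerate(zip(approx, detail)):
        for k in range(len(LOW)):
            out[(2 * j + k) % n] += a * LOW[k] + d * HIGH[k]
    return out


row = [52, 55, 61, 66, 70, 61, 64, 73]  # hypothetical row of gray levels
a, d = dwt_level(row)
energy_in = sum(x * x for x in row)
energy_out = sum(c * c for c in a + d)
print(math.isclose(energy_in, energy_out, rel_tol=1e-12))  # True
```

Because the transform is orthogonal, the approximation and detail coefficients together carry exactly the energy of the input row, and the inverse is simply the transpose of the analysis step.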

5.3. 3rd Experiment

Finally, in the third experiment, we compared the recognition rate of the proposed approach with those of existing state-of-the-art systems. These systems were run with the settings described in their respective articles. For a fair comparison, we used the authors' implementations for some systems, while for others we used the results reported in the respective studies. In addition, the proposed approach and the existing state-of-the-art methods were evaluated with several measures, namely sensitivity, accuracy, and specificity, which were computed with the following formulas.

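$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{18}$$

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{19}$$

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{20}$$

where $TP$ is the number of true positives, $TN$ the number of true negatives, $FP$ the number of false positives, and $FN$ the number of false negatives. The resulting comparisons are presented in Table 9, Table 10 and Table 11.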
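
The three measures can be computed directly from the confusion counts. The following minimal sketch uses the standard definitions of sensitivity, accuracy, and specificity; the function name is hypothetical, and the counts in the example are illustrative values, not results from Table 10.

```python
def evaluation_measures(tp, tn, fp, fn):
    """Return (sensitivity, accuracy, specificity) from confusion counts."""
    sensitivity = tp / (tp + fn)                 # TP / (TP + FN)
    accuracy = (tp + tn) / (tp + tn + fp + fn)   # (TP + TN) / all samples
    specificity = tn / (tn + fp)                 # TN / (TN + FP)
    return sensitivity, accuracy, specificity


# Hypothetical counts for illustration only.
sens, acc, spec = evaluation_measures(tp=90, tn=80, fp=20, fn=10)
print(f"{sens:.1%} {acc:.1%} {spec:.1%}")  # 90.0% 85.0% 80.0%
```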

Table 9.

Performance comparison of the state-of-the-art methods and the proposed approach on the brain MRI dataset (595 images: 115 normal and 480 abnormal).

| Published Methods | Used Methods | Recognition Rates | Misclassification |

|---|---|---|---|

| Orouskhani, et al. [44] | Conditional Deep Triplet Network | 92.5% | 1.2% |

| Inglese, et al. [45] | Decision Support System | 81.0% | 2.5% |

| Mandle, et al. [46] | Kernel-based SVM | 90.2% | 3.3% |

| Abdulmunem, et al. [47] | Deep Belief Network | 88.9% | 3.5% |

| Jang, et al. [48] | Sorting Algorithm | 72.6% | 4.6% |

| Popuri, et al. [49] | Ensemble Learning | 90.3% | 3.1% |

| Latif, et al. [50] | Neural-Network-Based Features with SVM Classifier | 89.9% | 0.9% |

| Nawaz, et al. [51] | Multilayer Perceptron, J48, Meta Bagging, Random Tree | 83.8% | 2.0% |

| Assam, et al. [52] | Random Forest | 94.1% | 3.9% |

| Islam, et al. [53] | Convolutional Neural Network | 78.9% | 4.8% |

| Dehkordi, et al. [54] | Evolutionary Convolutional Neural Network | 91.3% | 2.0% |

| Krishna, et al. [55] | Local Linear Radial Basis Function Neural Network | 88.7% | 3.9% |

| Takrouni, et al. [56] | Deep Convolutional Network | 92.5% | 2.0% |

| Fayaz, et al. [57] | Convolutional Neural Network | 86.8% | 5.2% |

| Proposed Scheme | Logistic Regression | 96.6% | 3.4% |

Table 10.

Performance comparison of the state-of-the-art methods and the proposed approach in terms of true positives, true negatives, false positives, and false negatives on the brain MRI dataset (595 images: 115 normal and 480 abnormal).

| Published Methods | Used Methods | True Positive | True Negative | False Positive | False Negative |

|---|---|---|---|---|---|

| Orouskhani, et al. [44] | Conditional Deep Triplet Network | 375 | 175 | 60 | 8 |

| Inglese, et al. [45] | Decision Support System | 360 | 178 | 57 | 7 |

| Mandle, et al. [46] | Kernel-based SVM | 350 | 177 | 63 | 9 |

| Abdulmunem, et al. [47] | Deep Belief Network | 365 | 178 | 67 | 10 |

| Jang, et al. [48] | Sorting Algorithm | 380 | 174 | 66 | 8 |

| Popuri, et al. [49] | Ensemble Learning | 350 | 175 | 61 | 7 |

| Latif, et al. [50] | Neural-Network-Based Features with SVM Classifier | 355 | 176 | 64 | 11 |

| Nawaz, et al. [51] | Multilayer Perceptron, J48, Meta Bagging, Random Tree | 375 | 169 | 59 | 6 |

| Assam, et al. [52] | Random Forest | 380 | 172 | 58 | 7 |

| Islam, et al. [53] | Convolutional Neural Network | 370 | 174 | 60 | 9 |

| Dehkordi, et al. [54] | Evolutionary Convolutional Neural Network | 360 | 173 | 55 | 8 |

| Krishna, et al. [55] | Local Linear Radial Basis Function Neural Network | 355 | 177 | 62 | 9 |

| Takrouni, et al. [56] | Deep Convolutional Network | 365 | 171 | 68 | 10 |

| Fayaz, et al. [57] | Convolutional Neural Network | 370 | 178 | 60 | 8 |

| Proposed Approach | Logistic Regression | 405 | 185 | 30 | 5 |

Table 11.

Performance comparison of the state-of-the-art methods and the proposed approach in terms of sensitivity, accuracy, and specificity on the brain MRI dataset (595 images: 115 normal and 480 abnormal).

| Published Methods | Used Methods | Sensitivity | Accuracy | Specificity |

|---|---|---|---|---|

| Orouskhani, et al. [44] | Conditional Deep Triplet Network | 93.1% | 92.5% | 87.8% |

| Inglese, et al. [45] | Decision Support System | 83.8% | 81.0% | 76.5% |

| Mandle, et al. [46] | Kernel-based SVM | 89.3% | 90.2% | 92.4% |

| Abdulmunem, et al. [47] | Deep Belief Network | 91.4% | 88.9% | 85.4% |

| Jang, et al. [48] | Sorting Algorithm | 76.7% | 72.6% | 67.3% |

| Popuri, et al. [49] | Ensemble Learning | 91.6% | 90.3% | 86.6% |

| Latif, et al. [50] | Neural-Network-Based Features with SVM Classifier | 90.2% | 89.9% | 88.1% |

| Nawaz, et al. [51] | Multilayer Perceptron, J48, Meta Bagging, Random Tree | 81.2% | 83.8% | 85.3% |

| Assam, et al. [52] | Random Forest | 95.6% | 94.1% | 89.9% |

| Islam, et al. [53] | Convolutional Neural Network | 80.8% | 78.9% | 74.0% |

| Dehkordi, et al. [54] | Evolutionary Convolutional Neural Network | 93.6% | 91.3% | 90.1% |

| Krishna, et al. [55] | Local Linear Radial Basis Function Neural Network | 89.4% | 84.1% | 85.2% |

| Takrouni, et al. [56] | Deep Convolutional Network | 93.1% | 90.5% | 87.6% |

| Fayaz, et al. [57] | Convolutional Neural Network | 88.7% | 84.9% | 90.2% |

| Proposed Approach | Logistic Regression | 97.9% | 96.6% | 92.1% |

Table 9 shows that the designed framework achieved a higher recognition rate (96.6%) than the state-of-the-art studies, the closest being Assam et al. [52] with 94.1%. This is because the proposed framework handles the orthogonal, biorthogonal, and reverse biorthogonal properties of gray scale images, which produces higher classification results.

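Similarly, Table 10 compares the proposed approach with the state-of-the-art methods in terms of true positives, true negatives, false positives, and false negatives. The proposed approach yields the largest numbers of true positives (405) and true negatives (185) and the smallest numbers of false positives (30) and false negatives (5).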

Likewise, Table 11 compares the proposed approach with the existing studies in terms of sensitivity, accuracy, and specificity. The proposed approach achieves the highest sensitivity (97.9%) and accuracy (96.6%), while its specificity (92.1%) is second only to that of Mandle et al. [46] (92.4%).

6. Conclusions

In medical imaging, magnetic resonance imaging (MRI) is a precise and noninvasive technique that can be used to diagnose a variety of disorders. A number of researchers have developed algorithms for brain MRI classification. Most of these algorithms performed well and achieved high recognition rates on small MRI datasets, but their performance degrades on larger MRI datasets. The objective of this study was therefore to create a quick and precise classification system that can sustain a high recognition rate across a sizable MRI dataset. To this end, a well-known enhancement method, global histogram equalization (GHE), is used to reduce undesirable information in MRI images. Furthermore, a reliable and accurate feature extraction technique is proposed for extracting and selecting the most prominent features from an MRI image. The proposed feature extraction method for grayscale images is based on the symlet wavelet, a compactly supported wavelet that has the greatest number of vanishing moments and the least asymmetry for a given support width. Our study supports the frequency-based hypothesis: the statistical dependence of the wavelet coefficients is assessed for all grayscale MRI images, the joint probability for a gray scale frame is calculated by collecting geometrically aligned MRI images for each wavelet coefficient, and the degree of statistical dependence between two MRI images is evaluated using the mutual information of the wavelet coefficients derived from these distributions. After feature extraction, linear discriminant analysis is used to select the best features and reduce the dimensionality of the feature space, which may improve the performance of the proposed method in generating feature vectors. Finally, logistic regression is used to classify the brain illnesses. A large dataset from Harvard Medical School and OASIS, comprising a total of 24 distinct types of brain disorders, is used to evaluate the proposed method.
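
Global histogram equalization remaps gray levels through the image's cumulative histogram so that intensities spread over the full available range. The sketch below uses the common textbook mapping for an 8-bit image stored as a list of rows. The authors do not state which GHE variant they used, so the rounding formula, the 256-level assumption, and the function name are ours.

```python
from collections import Counter


def global_histogram_equalization(image, levels=256):
    """Equalize a gray image given as a list of rows of ints in [0, levels)."""
    pixels = [p for row in image for p in row]
    n = len(pixels)
    hist = Counter(pixels)
    # Cumulative histogram over all gray levels 0 .. levels-1.
    cdf, running = [], 0
    for g in range(levels):
        running += hist[g]
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)  # count at the darkest occupied level
    if n == cdf_min:  # a single gray level: nothing to spread out
        return [row[:] for row in image]
    # Textbook mapping: v -> round((cdf(v) - cdf_min) / (N - cdf_min) * (levels - 1)).
    lut = [max(0, round((c - cdf_min) / (n - cdf_min) * (levels - 1))) for c in cdf]
    return [[lut[p] for p in row] for row in image]


# Hypothetical 2x2 patch with a narrow intensity range.
print(global_histogram_equalization([[52, 55], [61, 66]]))  # [[0, 85], [170, 255]]
```

The mapping is monotonic, so pixels that share a gray level always receive the same output level and the ordering of intensities is preserved while contrast is stretched.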

In the proposed approach, the optimal set of features, which is important for improving accuracy, is extracted from the MRI images. The rate of convergence is also one of the main factors behind the accuracy achieved in this research, while the number of features is kept small to limit computational complexity. In future work, the proposed approach will be evaluated on MRI datasets from various healthcare domains. Because the proposed approach is robust and efficient, it may also be useful for real-time diagnostic applications. The proposed method could therefore play a significant role in assisting radiologists and physicians with the initial diagnosis of brain diseases from MRI.

Author Contributions

Conceptualization, methodology, and validation, M.H.S.; formal analysis, M.A. and Y.A.; resources and data curation, Y.A.; writing—review and editing, M.H.S.; funding acquisition, Y.A. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This work was funded by the Deanship of Scientific Research at Jouf University under Grant no. DSR–2021–02–0352.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Islam M.K., Ali M.S., Miah M.S., Rahman M.M., Alam M.S., Hossain M.A. Brain tumor detection in MR image using superpixels, principal component analysis and template-based K-means clustering algorithm. Mach. Learn. Appl. 2021;5:100044. doi: 10.1016/j.mlwa.2021.100044.

- 2. Alam M.S., Rahman M.M., Amazad M.H., Islam M.K., Ahmed K.M., Ahmed K.T., Sing B.C., Miah M.S. Automatic human brain tumor detection in MRI image using template-based K means and improved fuzzy C means clustering algorithm. Big Data Cogn. Comput. 2019;3:27. doi: 10.3390/bdcc3020027.

- 3. Islam M.K., Ali M.S., Das A.A., Duranta D., Alam M. Human brain tumor detection using k-means segmentation and improved support vector machine. Int. J. Sci. Eng. Res. 2020;11:583–588.

- 4. Gondal A.H., Khan M.N.A. A review of fully automated techniques for brain tumor detection from MR images. Int. J. Mod. Educ. Comput. Sci. 2013;5:55–61. doi: 10.5815/ijmecs.2013.02.08.

- 5. Khan H.A., Jue W., Mushtaq M., Mushtaq M.U. Brain tumor classification in MRI image using convolutional neural network. Math. Biosci. Eng. 2020;17:6203–6216. doi: 10.3934/mbe.2020328.

- 6. Gunasekara S.R., Kaldera H.N.T.K., Dissanayake M.B. A systematic approach for MRI brain tumor localization and segmentation using deep learning and active contouring. J. Healthc. Eng. 2021;2021:6695108. doi: 10.1155/2021/6695108.

- 7. Duarte K.T.N., Moura M.A.N., Martins P.S., de Carvalho M.A.G. Brain Extraction in Multiple T1-weighted Magnetic Resonance Imaging slices using Digital Image Processing techniques. IEEE Lat. Am. Trans. 2022;20:831–838.

- 8. Cinarer G., Emiroglu B.G. Classification of brain tumours using radiomic features on MRI. New Trends Issues Proc. Adv. Pure Appl. Sci. 2020;12:80–90. doi: 10.18844/gjpaas.v0i12.4989.

- 9. Mathur N., Meena Y.K., Mathur S., Mathur D. Detection of brain tumor in MRI image through fuzzy-based approach. In: High-Resolution Neuroimaging: Basic Physical Principles and Clinical Applications. InTech; Rijeka, Croatia: 2018.

- 10. Qodri K.N., Soesanti I., Nugroho H.A. Image Analysis for MRI-Based Brain Tumor Classification Using Deep Learning. Int. J. Inf. Technol. Electr. Eng. 2021;5:21–28. doi: 10.22146/ijitee.62663.

- 11. Ullah Z., Lee S.H., Fayaz M. Enhanced feature extraction technique for brain MRI classification based on Haar wavelet and statistical moments. Int. J. Adv. Appl. Sci. 2019;6:89–98. doi: 10.21833/ijaas.2019.07.012.

- 12. Kaur D., Singh S., Mansoor W., Kumar Y., Verma S., Dash S., Koul A. Computational Intelligence and Metaheuristic Techniques for Brain Tumor Detection through IoMT-Enabled MRI Devices. Wirel. Commun. Mob. Comput. 2022;2022:1519198. doi: 10.1155/2022/1519198.

- 13. Arif M., Ajesh F., Shamsudheen S., Geman O., Izdrui D., Vicoveanu D. Brain Tumor Detection and Classification by MRI Using Biologically Inspired Orthogonal Wavelet Transform and Deep Learning Techniques. J. Healthc. Eng. 2022;2022:2693621. doi: 10.1155/2022/2693621. (Retracted)

- 14. Harvard Medical School Dataset. Available online: http://med.harvard.edu/AANLIB/ (accessed on 30 June 2022).

- 15. Open Access Series of Imaging Studies (OASIS) Dataset. Available online: http://www.oasis-brains.org/ (accessed on 30 June 2022).

- 16. Fayaz M., Qureshi M.S., Kussainova K., Burkanova B., Aljarbouh A., Qureshi M.B. An improved brain MRI classification methodology based on statistical features and machine learning algorithms. Comput. Math. Methods Med. 2021;2021:8608305. doi: 10.1155/2021/8608305. (Retracted)

- 17. Aaraji Z.S., Abbas H.H. Automatic Classification of Alzheimer's Disease using brain MRI data and deep Convolutional Neural Networks. arXiv. 2022. arXiv:2204.00068.

- 18. Yamashita R., Nishio M., Do R.K.G., Togashi K. Convolutional neural networks: An overview and application in radiology. Insights Into Imaging. 2018;9:611–629. doi: 10.1007/s13244-018-0639-9.

- 19. Siddiqi M.H., Alsayat A., Alhwaiti Y., Azad M., Alruwaili M., Alanazi S., Kamruzzaman M.M., Khan A. A Precise Medical Imaging Approach for Brain MRI Image Classification. Comput. Intell. Neurosci. 2022;2022:6447769. doi: 10.1155/2022/6447769. (Retracted)

- 20. Mowla M.R., Gonzalez-Morales J.D., Rico-Martinez J., Ulichnie D.A., Thompson D.E. A comparison of classification techniques to predict brain-computer interfaces accuracy using classifier-based latency estimation. Brain Sci. 2020;10:734. doi: 10.3390/brainsci10100734.

- 21. Veeramuthu A., Meenakshi S., Mathivanan G., Kotecha K., Saini J., Vijayakumar V., Subramaniyaswamy V. MRI Brain Tumor Image Classification Using a Combined Feature and Image-Based Classifier. Front. Psychol. 2022;13:848784. doi: 10.3389/fpsyg.2022.848784.

- 22. Tambe P., Saigaonkar R., Devadiga N., Chitte P.H. Deep Learning techniques for effective diagnosis of Alzheimer's disease using MRI images; Proceedings of the International Conference on Automation, Computing and Communication; Mumbai, India. 14–15 July 2021.

- 23. Wahlang I., Maji A.K., Saha G., Chakrabarti P., Jasinski M., Leonowicz Z., Jasinska E. Brain Magnetic Resonance Imaging Classification Using Deep Learning Architectures with Gender and Age. Sensors. 2022;22:1766. doi: 10.3390/s22051766.

- 24. Shenbagarajan A., Ramalingam V., Balasubramanian C., Palanivel S. Tumor diagnosis in MRI brain image using ACM segmentation and ANN-LM classification techniques. Indian J. Sci. Technol. 2016;9:1–12. doi: 10.17485/ijst/2016/v9i1/78766.

- 25. Despotović I., Goossens B., Philips W. MRI segmentation of the human brain: Challenges, methods, and applications. Comput. Math. Methods Med. 2015;2015:450341. doi: 10.1155/2015/450341.

- 26. Nayak D.R., Padhy N., Mallick P.K., Zymbler M., Kumar S. Brain Tumor Classification Using Dense Efficient-Net. Axioms. 2022;11:34. doi: 10.3390/axioms11010034.

- 27. Hazarika R.A., Kandar D., Maji A.K. An experimental analysis of different deep learning-based models for Alzheimer's disease classification using brain magnetic resonance images. J. King Saud Univ. Comput. Inf. Sci. 2021;34:8576–8598. doi: 10.1016/j.jksuci.2021.09.003.

- 28. Ruba T., Tamilselvi R., ParisaBeham M., Aparna N. Accurate classification and detection of brain cancer cells in MRI and CT images using nano contrast agents. Biomed. Pharmacol. J. 2020;13:1227–1237. doi: 10.13005/bpj/1991.

- 29. Malhotra P., Gupta S., Koundal D., Zaguia A., Enbeyle W. Deep Neural Networks for Medical Image Segmentation. J. Healthc. Eng. 2022;2022:9580991. doi: 10.1155/2022/9580991. (Retracted)

- 30. Jia Z., Chen D. Brain tumor identification and classification of MRI images using deep learning techniques. IEEE Access. 2020:1–10. doi: 10.1109/ACCESS.2020.3016319.

- 31. Yamanakkanavar N., Choi J.Y., Lee B. MRI segmentation and classification of human brain using deep learning for diagnosis of Alzheimer's disease: A survey. Sensors. 2020;20:3243. doi: 10.3390/s20113243.

- 32. Nayak D.R., Padhy N., Mallick P.K., Bagal D.K., Kumar S. Brain Tumour Classification Using Noble Deep Learning Approach with Parametric Optimization through Metaheuristics Approaches. Computers. 2022;11:10. doi: 10.3390/computers11010010.

- 33. Jaya M.R.A., Pravin A. Clustering by Hybrid K-Means-Based Rider Sunflower Optimization Algorithm for Medical Data. Adv. Fuzzy Syst. 2022;2022:7783196.

- 34. Shen L., Zheng J., Lee E.H., Shpanskaya K., McKenna E.S., Atluri M.G., Plasto D., Mitchell C., Lai L.M., Guimaraes C.V., et al. Attention-guided deep learning for gestational age prediction using fetal brain MRI. Sci. Rep. 2022;12:1408. doi: 10.1038/s41598-022-05468-5.

- 35. Gab Allah A.M., Sarhan A.M., Elshennawy N.M. Classification of Brain MRI Tumor Images Based on Deep Learning PGGAN Augmentation. Diagnostics. 2021;11:2343. doi: 10.3390/diagnostics11122343.

- 36. Koshino K., Werner R.A., Pomper M.G., Bundschuh R.A., Toriumi F., Higuchi T., Rowe S.P. Narrative review of generative adversarial networks in medical and molecular imaging. Ann. Transl. Med. 2021;9:821. doi: 10.21037/atm-20-6325.

- 37. You A., Kim J.K., Ryu I.H., Yoo T.K. Application of generative adversarial networks (GAN) for ophthalmology image domains: A survey. Eye Vis. 2022;9:6. doi: 10.1186/s40662-022-00277-3.

- 38. Mahalakshmi D.M., Sumathi S. Performance Analysis of SVM and Deep Learning with CNN for Brain Tumor Detection and Classification. ICTACT J. Image Video Process. 2020;10:2145–2152.

- 39. Barburiceanu S., Terebes R., Meza S. 3D texture feature extraction and classification using GLCM and LBP-based descriptors. Appl. Sci. 2021;11:2332. doi: 10.3390/app11052332.

- 40. Sassi O.B., Sellami L., Slima M.B., Chtourou K., Hamida A.B. Improved spatial gray level dependence matrices for texture analysis. Int. J. Comput. Sci. Inf. Technol. 2012;4:209. doi: 10.5121/ijcsit.2012.4615.

- 41. Turunen J. A Wavelet-Based Method for Estimating Damping in Power Systems. Ph.D. Thesis. Aalto University School of Electrical Engineering; Espoo, Finland: 25 March 2011.

- 42. Belhumeur P.N., Hespanha J.P., Kriegman D.J. Eigenfaces vs. fisherfaces: Recognition using class specific linear projection. IEEE Trans. Pattern Anal. Mach. Intell. 1997;19:711–720. doi: 10.1109/34.598228.

- 43. Logistic Regression. Available online: https://scikit-learn.org/stable/modules/linear_model.html#logistic-regression (accessed on 15 July 2022).

- 44. Orouskhani M., Rostamian S., Zadeh F.S., Shafiei M., Orouskhani Y. Alzheimer's Disease Detection from Structural MRI Using Conditional Deep Triplet Network. Neurosci. Inform. 2022;2:100066. doi: 10.1016/j.neuri.2022.100066.

- 45. Inglese M., Patel N., Linton-Reid K., Loreto F., Win Z., Perry R.J., Carswell C., Grech-Sollars M., Crum W.R., Lu H., et al. A predictive model using the mesoscopic architecture of the living brain to detect Alzheimer's disease. Commun. Med. 2022;2:70. doi: 10.1038/s43856-022-00133-4.

- 46. Mandle A.K., Sahu S.P., Gupta G. Brain Tumor Segmentation and Classification in MRI using Clustering and Kernel-Based SVM. Biomed. Pharmacol. J. 2022;15:699–716. doi: 10.13005/bpj/2409.

- 47. Abdulmunem I.A. Brain MR Images Classification for Alzheimer's Disease. Iraqi J. Sci. 2022;63:2725–2740. doi: 10.24996/ijs.2022.63.6.37.

- 48. Jang I., Danley G., Chang K., Kalpathy-Cramer J. Decreasing Annotation Burden of Pairwise Comparisons with Human-in-the-Loop Sorting: Application in Medical Image Artifact Rating. arXiv. 2022. arXiv:2202.04823.