Abstract

Simple Summary

The goal of this paper is to provide an overview of current radiomic and AI applications in veterinary diagnostic imaging. We discuss the essential elements of AI for veterinary practitioners, with the aim of helping them make informed decisions when applying AI technologies in their practices. We also emphasize that veterinarians will play an integral role in ensuring the appropriate use of AI and the suitable curation of data. The expertise of veterinary professionals will be vital to ensuring suitable data and, subsequently, AI that meets the needs of the profession.

Abstract

Great advances have been made in human health care in the application of radiomics and artificial intelligence (AI) in a variety of areas, ranging from hospital management and virtual assistants to remote patient monitoring and medical diagnostics and imaging. To improve accuracy and reproducibility, there has been a recent move to integrate radiomics and AI as tools to assist clinical decision making and to incorporate them into routine clinical workflows and diagnosis. Although lagging behind human medicine, the use of radiomics and AI in veterinary diagnostic imaging is becoming more frequent, with an increasing number of reported applications. The goal of this paper is to provide an overview of current radiomic and AI applications in veterinary diagnostic imaging.

Keywords: radiomics, artificial intelligence, veterinary medicine, diagnostic imaging

1. Introduction

Human health care has shown great advances in the application of radiomics and artificial intelligence (AI) in a variety of areas, ranging from hospital management and virtual assistants to remote patient monitoring and medical diagnostics and imaging [1]. Disciplines dealing with large data components, in particular, benefit from the assistance of AI. Digital imaging is used in a wide variety of clinical settings, in both human and veterinary medicine, including X-ray, ultrasound (US), computed tomography (CT), magnetic resonance imaging (MRI), positron emission tomography (PET) scans, retinal photography, dermoscopy, and histology, among others [1,2]. These fields already benefit from the applications of radiomics and AI [1,2]. The combination of radiomics and AI with diagnostic imaging offers a number of advantages, including the ability to perform complex pattern recognition automatically, which can be applied in an accurate and reproducible manner to digital image analysis [1]. This can be integrated into a variety of medical imaging applications, including disease detection, characterization, and monitoring. Traditionally, this role is performed by trained diagnostic imaging specialists who assess and evaluate medical images. However, image interpretation can be subjective and greatly influenced by education and prior experience. Consequently, there has been a recent move to integrate radiomics and AI as tools to assist clinical decision making and to incorporate them into routine clinical workflows and diagnoses. Although lagging behind human medicine, the use of radiomics and AI in veterinary diagnostic imaging is becoming more frequent, with an increasing number of reported applications. The goal of this paper is to provide an overview of current radiomic and AI applications in veterinary diagnostic imaging.

2. Radiomics and AI

2.1. Radiomics

In medicine, various ways to generate big data exist, including the well-known fields of genomics, proteomics, metabolomics, transcriptomics, and microbiomics. Imaging is increasingly being utilized to build a specific omics cluster called “radiomics”, which is similar to these “omics” clusters. Radiomics is a relatively recent area of precision medicine [3]. It is a quantitative approach to medical imaging that tries to improve the data available to doctors through advanced and sometimes counterintuitive mathematical analysis [3]. The notion of radiomics is founded on the assumption that biological images contain information about disease-specific processes that is undetectable to the human eye and thus unavailable through typical visual analysis of the generated image [4]. It consists of the extraction of a large number of features from medical images and modalities. The mapping of these images into quantitative data, which can be mined with appropriate and sophisticated statistical tools, can be an important step toward personalized precision medicine (PPM) [5,6,7]. Radiomics has been applied to different fields in human health, including magnetic resonance imaging (MRI), computed tomography (CT), positron emission tomography (PET), and ultrasound (US) [8,9,10,11].

Traditionally, information from medical images was extracted solely based on visual inspection. In addition to the intra- and inter-operator variability, visual extraction cannot pull out important information hidden in these images. Radiomics was introduced as a tool to extract as much quantitative hidden information as possible to aid practitioners in diagnosis and decision making. As an example, in their study of tumor phenotype for lung and head-neck cancer, and using CT images with a radiomic approach, Aerts et al. (2014) found several new features that were not identified as significant previously, with high prognostic power [12]. This radiomic signature was validated with biological data on three independent data sets, demonstrating the performance of radiomics [12].

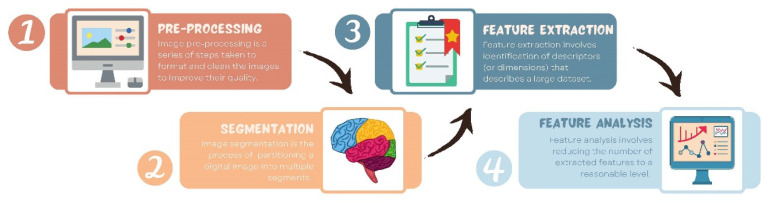

The workflow of radiomics is usually described in four steps: (i) preprocessing, (ii) imaging and image segmentation, (iii) feature extraction such as shape, texture, intensity, and filters, and (iv) feature analysis (see Figure 1) [13]. In the following, we briefly describe each of these four steps.

Figure 1.

Illustration of the radiomics workflow steps: preprocessing, segmentation, feature extraction, and feature analysis.

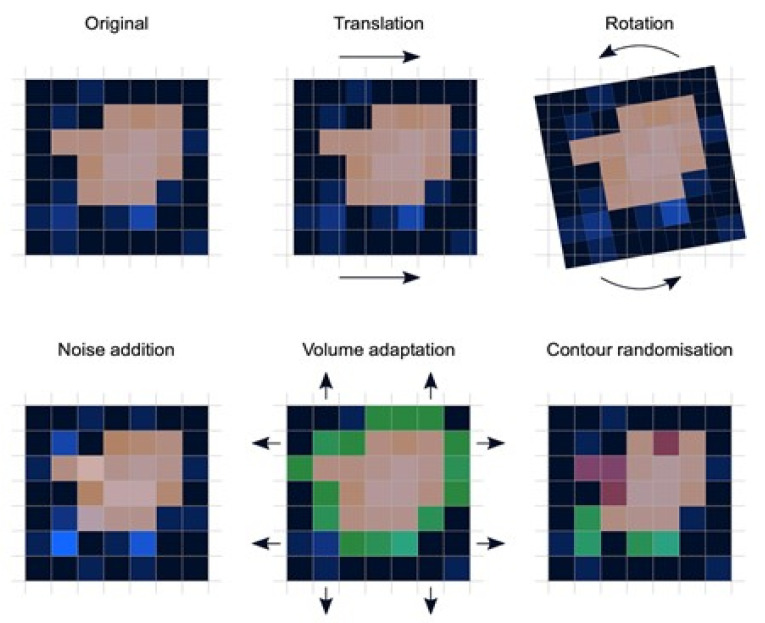

Preprocessing: Image preprocessing is the practice of applying a series of transformations to an initial image in order to improve image quality and make statistical analysis more repeatable and comparable. However, there is no predetermined analytic method to do this, and the approach varies depending on the data collected and the disease under study. Since radiomics deals primarily with images, it depends highly on the image parameters of a given modality, such as the size of pixels (2D) or voxels (3D), the number of gray levels and the range of gray-level values, as well as the type of 3D-reconstruction algorithm [14]. This is important because the stability and robustness of radiomics features depend on the image processing settings [14,15]. Image acquisition, segmentation, intensity normalization, co-registration, and noise filtering are further analytical steps that are crucial for the quantitative analysis of the images (Figure 2).
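As an illustration of two of the preprocessing operations mentioned above, the following minimal sketch shows intensity normalization and gray-level discretization on a toy list of ROI intensities. The z-score scheme, the equal-width binning, the function names, and the sample values are all assumptions chosen for illustration; they are not a method prescribed by the cited studies.

```python
from statistics import mean, pstdev


def zscore_normalize(pixels):
    """Rescale intensities to zero mean and unit standard deviation."""
    mu = mean(pixels)
    sigma = pstdev(pixels)
    if sigma == 0:
        # A perfectly uniform region carries no contrast to normalize.
        return [0.0 for _ in pixels]
    return [(p - mu) / sigma for p in pixels]


def discretize(pixels, n_bins):
    """Map intensities onto gray levels 1..n_bins using equal-width bins."""
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        return [1 for _ in pixels]
    width = (hi - lo) / n_bins
    # The maximum intensity would land in bin n_bins + 1, so clamp it.
    return [min(int((p - lo) / width) + 1, n_bins) for p in pixels]


roi = [10, 20, 30, 40, 50]  # hypothetical ROI intensities
normalized = zscore_normalize(roi)
levels = discretize(roi, n_bins=4)
print(levels)
```

Changing `n_bins` changes the gray levels from which texture features are later computed, which is one reason feature values depend on the image processing settings.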

Segmentation: This is the delineation of the region of interest (ROI) in 2D or volume of interest (VOI) in 3D. This is the most critical step in the radiomics workflow since it specifies the area/volume from which the features will be extracted. Segmentation is tedious and is usually done manually by a human operator or semi-manually using standard segmentation software [16]. However, segmentation is subject to intra- and inter-operator variability. Therefore, fully automated segmentation was introduced recently, using deep learning techniques [17]. Deep learning (DL) is based on artificial neural networks where multiple layers (such as neurons in the human brain) are used to perform complex operations and extract higher levels of features from data. DL techniques require a large amount of data and considerable computing resources to achieve the required accuracy.

Feature extraction: This is mainly a software-based process aimed at extracting and calculating quantitative feature descriptors. Most feature extraction procedures follow the guidelines of the IBSI (“Image Biomarker Standardisation Initiative”), which groups features into major categories such as intensity-based features, shape and edge features, texture features, and morphological features [18]. Gray-level descriptors or histograms alone provide no information on the spatial distribution of an image’s content; this information can be obtained by evaluating texture features [19]. In some images, regions with comparable pixel/voxel intensities can be distinguished by their differing textures. Because texture features can represent the intricacies of a lesion observed in an image, they have become increasingly relevant [20]. The geometric features extracted from the segmented object, such as its contours, junctions, curves, and polygonal regions, are referred to as shape features. Quantifying object shapes is a difficult task because it depends on the efficacy of the segmentation algorithm. Moreover, methods such as wavelet transformation, Laplacian of Gaussian, square root, and local binary pattern are used to refine this step. For example, a wavelet transformation is a powerful tool for multiscale feature extraction. A wavelet is a function that resembles a wave. Scale and location are the basic characteristics of the wavelet function. The scale defines how “stretched” or “squished” the function is, whereas the location identifies the wavelet’s position in time (or space). Decreasing the scale of the wavelet captures high-frequency information, which makes wavelets well suited to analyzing high-spatial-frequency phenomena that are localized in space.
The Laplacian of Gaussian filter smooths the image with a Gaussian filter and then applies the Laplacian to find edges (areas of rapid gray-level change). Square and square root image filters are classed as gamma modifiers. The square filter takes the square of the image intensities, and the square root filter takes the square root of the absolute value of the image intensities [21]. The local binary pattern assigns a binary label to each pixel of the image by thresholding the neighboring pixels against the central pixel value, and the histogram of these labels is used as a texture feature [22].
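The local binary pattern described above can be sketched in a few lines. This is a minimal illustration under assumed conventions: an 8-pixel neighborhood, a neighbor counted as 1 when it is at least as bright as the center, bits ordered clockwise from the top-left corner, and border pixels skipped. Real radiomics packages may use different conventions, and the function names and 3 × 3 image are hypothetical.

```python
def lbp_codes(image):
    """Return the 8-neighbour local binary pattern code of each interior pixel."""
    # Neighbour offsets (row, col), clockwise starting at the top-left corner.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    rows, cols = len(image), len(image[0])
    codes = []
    for r in range(1, rows - 1):
        row_codes = []
        for c in range(1, cols - 1):
            center = image[r][c]
            code = 0
            for bit, (dr, dc) in enumerate(offsets):
                # A neighbour at least as bright as the centre sets its bit.
                if image[r + dr][c + dc] >= center:
                    code |= 1 << bit
            row_codes.append(code)
        codes.append(row_codes)
    return codes


def lbp_histogram(codes):
    """Count how often each of the 256 possible codes occurs."""
    hist = [0] * 256
    for row in codes:
        for code in row:
            hist[code] += 1
    return hist


image = [
    [5, 9, 1],
    [4, 6, 7],
    [2, 3, 8],
]
codes = lbp_codes(image)
hist = lbp_histogram(codes)
print(codes)
```

For the single interior pixel (value 6), the neighbors 9, 7, and 8 are brighter, so bits 1, 3, and 4 are set. The histogram of such codes over a region of interest is what serves as the texture descriptor.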

Feature analysis: The number of features extracted can be very high, which makes the analysis process cumbersome and the application of artificial intelligence ill-posed, particularly if the number of samples is small. Reducing the number of features to a reasonable yet meaningful number is called “feature selection” or “dimension reduction” and helps to exclude redundant and non-relevant features from the data set before the final analysis. It also helps retain only the features that are the most consistent and relevant, in order to build a reliable model for further prediction and classification [23]. Dimension reduction techniques, such as principal component analysis and partial least squares, construct ‘super variables’ (usually linear combinations of the original input variables) and use them in classification. Although they may lead to satisfactory classification, the biomedical implications of the resulting classifiers are usually not obvious, since all input features are used in the construction of the super variables and hence in the classification. Feature selection methods can be classified into three categories. The filter approach separates feature selection from classifier construction: the algorithm first picks out the most important features and eliminates the others, and then, in a second step, uses only those features to classify the data. The wrapper approach measures the “usefulness” of features based on classifier performance, using a greedy search that evaluates candidate combinations of features against a classification-based evaluation criterion and keeps searching until a certain accuracy criterion is satisfied. The embedded approach embeds feature selection within classifier construction. Embedded approaches have less computational complexity than wrapper methods.
Compared with filter methods, embedded methods can better account for correlations among input variables. Penalization methods are a subset of embedded methods in which feature selection and classifier construction are achieved simultaneously by computing the parameters involved in a penalized objective function. Many algorithms have been proposed to achieve this; the most popular include lasso, adaptive lasso, bridge, elastic net, and SCAD [24,25,26,27,28]. Table 1 summarizes an assessment of some publicly available open-source radiomics extraction tools and their primary characteristics.
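The filter approach described above can be sketched as follows: each feature is scored independently of any classifier, and only the top-ranked features are kept. The score used here (absolute Pearson correlation with a binary label) is one assumed choice among many, and the feature names and values are hypothetical.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient; returns 0.0 for a constant input."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / sqrt(vx * vy)


def filter_select(features, labels, k):
    """Rank features by |correlation with the label| and keep the top k."""
    scores = {name: abs(pearson(values, labels))
              for name, values in features.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k], scores


# Hypothetical radiomic features for six lesions (three per class).
features = {
    "mean_intensity": [1.0, 1.2, 0.9, 3.1, 3.0, 2.8],
    "entropy": [2.0, 2.1, 1.9, 2.0, 2.1, 1.9],
    "constant": [5.0, 5.0, 5.0, 5.0, 5.0, 5.0],
}
labels = [0, 0, 0, 1, 1, 1]

selected, scores = filter_select(features, labels, k=1)
print(selected)
```

Because each feature is scored on its own, a filter of this kind cannot account for correlations among features, which is the limitation that embedded methods such as lasso are designed to address.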

Figure 2.

Variations in patient positioning, image acquisition, and segmentation affect each feature to varying degrees. If radiomic models use features that are not robust against such influences, they will perform poorly when applied to new data. Assessing feature robustness is thus recommended to improve the generalizability of radiomic models and feature analysis.

Table 1.

Primary characteristics of publicly available open-source radiomics extraction tools.

| Tool | Programming Language | IBSI 1 Feature Definition | Full OS Compatibility | DICOM-RT 2 Import | Integrated Visualization | Radiomics Metadata Storage | Built-in Segmentation | Reference |
|---|---|---|---|---|---|---|---|---|
| MITK | C++ | No | Yes | Yes | No | No | No | Götz et al. 2019 [29] |
| MaZda | C++/Delphi | No | No | No | Yes | No | Yes | Szczypinski et al. 2009 [30] |
| PyRadiomics | Python | Yes | Yes | No | No | No | No | van Griethuysen et al. 2017 [31] |
| IBEX | Matlab/C++ | No | No | Yes | Yes | Yes | Yes | Zhang et al. 2015 [32] |
| CERR | Matlab | Yes | Yes | Yes | Yes | Yes | Yes | Apte et al. 2018 [33] |

1 IBSI: “Image Biomarker Standardisation Initiative”. 2 DICOM-RT: “Digital Imaging and Communications in Medicine: Radiation Therapy”, an international standard to store, transmit, process, and display imaging data in medicine.

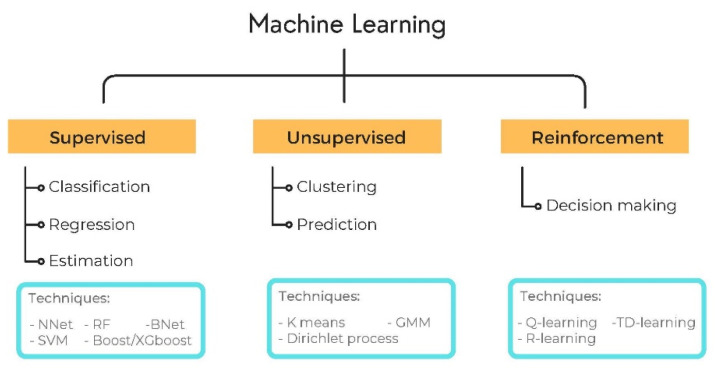

2.2. AI

Artificial Intelligence (AI) has attracted a great deal of attention in the past decade. It is a field that uses and develops computer systems to imitate human intelligence and learn from experience to perform and improve the tasks assigned. Machine Learning (ML) is a major subfield of AI that develops algorithms to learn from existing data and perform statistical inference to make accurate predictions on new data. The training of the algorithm on the data can be performed in two main ways: supervised and unsupervised. Supervised learning trains its algorithms on previously labeled/annotated data to find the relationship between the labels and the data features and generalizes this knowledge to predict new (unlabeled) cases. Unsupervised learning refers to the process of grouping data that have not been classified or categorized into clusters using automated methods or algorithms, by finding relationships between intrinsic features of the data (see Figure 3) [34]. Many machine learning models are linear and, therefore, cannot capture features that are intrinsically nonlinear. Several machine learning algorithms based on nonlinear models have arisen in recent decades to solve regression, classification, and estimation challenges. A linear model for prediction uses a linear function, whereas a nonlinear model uses a nonlinear function at the cost of greater computational complexity, which can limit its use. In classification, linearity refers to the fact that the decision surface is a linear separator; in a plane, for example, this is a line that divides the positive and negative points in the training set. Similarly, a nonlinear model will use a nonlinear decision surface, such as a parabola, to divide classes. Details of linear and nonlinear learning models are also summarized in Table 2.
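The difference between supervised and unsupervised learning can be illustrated with a minimal sketch on one-dimensional toy data. The nearest-centroid classifier and the two-cluster k-means routine below are assumed, deliberately simple stand-ins for the two paradigms, and the measurements and class names are hypothetical.

```python
def nearest_centroid_fit(values, labels):
    """Supervised: compute one centroid per known label."""
    centroids = {}
    for label in set(labels):
        members = [v for v, lab in zip(values, labels) if lab == label]
        centroids[label] = sum(members) / len(members)
    return centroids


def nearest_centroid_predict(centroids, value):
    """Assign a new value to the label with the closest centroid."""
    return min(centroids, key=lambda label: abs(value - centroids[label]))


def two_means(values, iterations=10):
    """Unsupervised: split unlabeled values into two clusters (k-means, k = 2)."""
    c0, c1 = min(values), max(values)  # initial cluster centres
    for _ in range(iterations):
        g0 = [v for v in values if abs(v - c0) <= abs(v - c1)]
        g1 = [v for v in values if abs(v - c0) > abs(v - c1)]
        if not g1:
            break  # all values identical: nothing to split
        c0, c1 = sum(g0) / len(g0), sum(g1) / len(g1)
    return [0 if abs(v - c0) <= abs(v - c1) else 1 for v in values]


values = [1.0, 1.2, 0.8, 5.0, 5.3, 4.9]  # hypothetical feature values
labels = ["normal", "normal", "normal", "abnormal", "abnormal", "abnormal"]

# Supervised: the labels guide training, and new cases receive a label.
centroids = nearest_centroid_fit(values, labels)
prediction = nearest_centroid_predict(centroids, 4.0)

# Unsupervised: no labels are used; the data are only grouped.
clusters = two_means(values)
print(prediction, clusters)
```

The unsupervised routine recovers the same two groups but cannot name them; attaching a clinical meaning to each cluster still requires expert input.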

Figure 3.

Supervised, unsupervised, and reinforcement machine learning.

Table 2.

List of the most commonly used machine learning algorithms in medical imaging.

| Model | Algorithm | Reference |
|---|---|---|
| Linear learning model | | |
| Nonlinear learning model | | (2015) [49]; Goodfellow (2016) [50] |

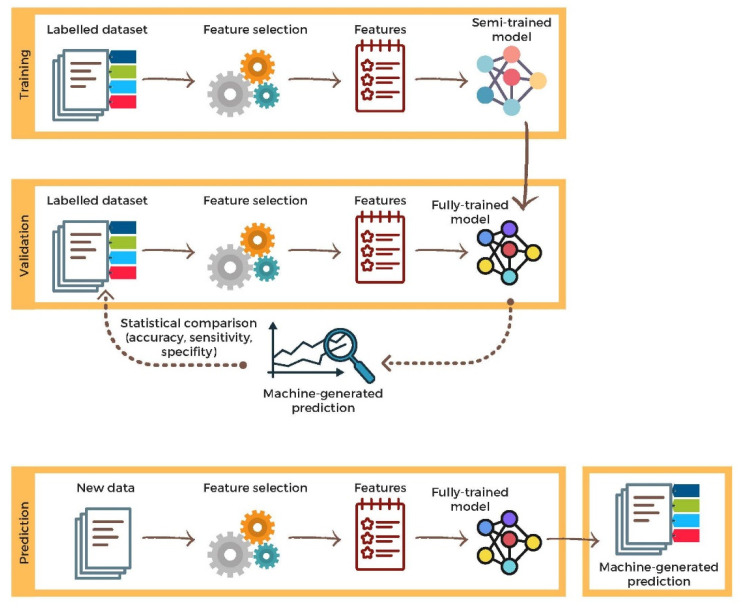

Deep learning (DL) is a more advanced subfield of ML. Instead of teaching the algorithm to process and learn from the data, a DL algorithm teaches itself to process and learn from the data. This is done through layers of artificial neural networks (ANN) using large amounts of data (see Section 2.3) [51]. Another type of machine learning algorithm is called reinforcement learning, where the algorithm is trained to take a sequence of decisions based on trial and error in which, after each operation, the algorithm gets rewarded or penalized until a solution is achieved [52]. There are several metrics to assess the outcome of AI models; the most common are sensitivity, specificity, and accuracy. Sensitivity is the proportion of positive cases (e.g., malignant tumors) that are reported as positive. Specificity is the proportion of negative cases that are reported as negative. Accuracy is the proportion of all cases (positive or negative) that are classified correctly. In most applications in medicine, supervised learning is the preferred strategy for association/prediction or classification, particularly in radiomics. It applies the same concept as a student learning under the supervision of a teacher. Figure 4 summarizes the principle of employing supervised learning techniques, from creating, training, and testing data to prediction or classification.
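The three metrics defined above follow directly from the counts of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). The short sketch below computes them for a hypothetical set of ten cases; the labels and predictions are invented for illustration only.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, TN, FP, FN for binary labels (1 = positive, 0 = negative)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def sensitivity(tp, fn):
    """Proportion of positive cases reported as positive."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """Proportion of negative cases reported as negative."""
    return tn / (tn + fp)


def accuracy(tp, tn, fp, fn):
    """Proportion of all cases that are classified correctly."""
    return (tp + tn) / (tp + tn + fp + fn)


# Hypothetical ground truth and model output for ten cases.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]

tp, tn, fp, fn = confusion_counts(y_true, y_pred)
sens = sensitivity(tp, fn)
spec = specificity(tn, fp)
acc = accuracy(tp, tn, fp, fn)
print(sens, spec, acc)
```

In this toy example the model finds three of the four positive cases (sensitivity 0.75) but also flags two of the six negative cases, so the three metrics differ and are best reported together.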

Figure 4.

A brief description of supervised learning flowchart, including training, validation, and prediction.

2.3. Radiomics-AI Combination

The field of radiomics deals with an ever-growing number of images and imaging modalities. The resulting large number of features extracted from these images requires sophisticated and powerful data analytic tools beyond traditional statistical inference, which AI can provide. Moreover, these features provide valuable quantitative metrics that are well suited for AI algorithms. Consequently, the fields of radiomics and AI have become closely intertwined in diagnostic imaging [53]. With the development of artificial intelligence and new machine learning tools, auxiliary diagnostic systems have expanded greatly and have been used in many different tasks with all medical imaging modalities. One of the areas of artificial intelligence that has gained the most attention in the scientific community recently is deep learning [54]. Traditional machine learning methods have limitations in data processing, mainly related to the need for segmentation and the development of feature extractors to represent images and serve as input for the classifiers. Researchers therefore began to develop algorithms that integrate the processes of feature extraction and image classification within the ANN itself. As a result, in deep learning techniques, the need for preprocessing or segmentation is minimized. However, the method also has disadvantages, such as the need for a very large set of images (hundreds to thousands), greater dependence on exam quality and clinical data, and difficulty in identifying the logic used (“processing black box”). The most widely known method of deep learning in medicine is the convolutional neural network (CNN).
A CNN is basically composed of three types of layers: the first (convolutional layer) detects and extracts features; the second (pooling layer) selects features and reduces their number; and the third (fully connected layer) serves to integrate all features extracted by the previous layers, typically by using a multilayer perceptron-like neural network to perform the final image classification, which is given by the prediction of the most likely class [55,56].
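The three layer types described above can be illustrated with a minimal forward pass written in plain Python. This is only a sketch under strong assumptions: a single hand-picked 2 × 2 kernel, a ReLU activation, 2 × 2 max pooling, and fixed, untrained fully connected weights. A real CNN learns its kernels and weights from data, and every value below is hypothetical.

```python
from math import exp


def conv2d(image, kernel):
    """Convolutional layer: slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


def relu(fmap):
    """Keep positive responses and set negative ones to zero."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Pooling layer: keep the strongest response in each size x size block."""
    return [[max(fmap[r + i][c + j] for i in range(size) for j in range(size))
             for c in range(0, len(fmap[0]) - size + 1, size)]
            for r in range(0, len(fmap) - size + 1, size)]


def fully_connected(vector, weights, biases):
    """Fully connected layer: one weighted sum per output class."""
    return [sum(w * v for w, v in zip(ws, vector)) + b
            for ws, b in zip(weights, biases)]


def softmax(scores):
    """Convert class scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Toy 5 x 5 image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
kernel = [[-1, 1], [-1, 1]]  # hand-picked vertical-edge detector

fmap = relu(conv2d(image, kernel))   # 4 x 4 feature map
pooled = max_pool(fmap)              # 2 x 2 after pooling
flat = [v for row in pooled for v in row]

weights = [[1.0, 0.0, 1.0, 0.0],     # hypothetical, untrained weights
           [0.0, 1.0, 0.0, 1.0]]
biases = [0.0, 0.0]
probs = softmax(fully_connected(flat, weights, biases))
print(pooled, probs)
```

The predicted class is the index of the largest probability, which corresponds to the "most likely class" step described in the text.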

2.4. Validation

Another important step in the machine learning process is validation and performance assessment. Given a set of images, a machine learning classifier must use at least two different subsets to perform algorithm training and predictive model validation. A widely used strategy in radiomics is cross-validation. In its simplest (hold-out) form, the samples are separated into three subsets: one for training, one for validation, and one (independent subset) for testing only [57,58]. Another strategy is K-fold cross-validation, in which the data are randomly divided into K subsets (folds); the model is trained K times, each time holding out a different fold for validation and training on the remaining K − 1 folds, and the results are averaged. Performance is typically evaluated by calculating the accuracy, sensitivity, specificity, and area under the receiver operating characteristic (ROC) curve for the method in question. An area under the curve (AUC) closer to 1 (on a scale from 0 to 1) indicates better discrimination by the method.
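A minimal sketch of K-fold splitting and of the AUC is given below. The splitting scheme (shuffle once, then deal indices into K folds) and the pairwise AUC formula (the probability that a randomly chosen positive case scores higher than a randomly chosen negative case, with ties counted as one half) are standard formulations, but the function names, the seed, and the toy scores are assumptions made for illustration.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Return k (train, validation) index pairs; each sample validates once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    splits = []
    for i in range(k):
        validation = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, validation))
    return splits


def auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of positives and negatives."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


splits = k_fold_indices(n_samples=10, k=5)

# Hypothetical classifier scores for two negative and two positive cases.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
area = auc(labels, scores)
print(area)
```

Here three of the four positive/negative pairs are ranked correctly, giving an AUC of 0.75. In practice an independent test set should still be held back, because the folds are reused for model selection.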

2.5. Open-Source Data for Radiomics

Publicly accessible data sets, such as the RIDER data set, aid in the understanding of the impact of various parameters in radiomics [59]. Furthermore, the availability of a public phantom data set for radiomics reproducibility tests on CT could aid in determining the impact of collection parameters in order to minimize non-robust radiomic characteristics [60]. However, more research is needed to see if data collected on a phantom can be used on humans [61]. Similar endeavors for PET and MRI would aid in the knowledge of how changes in environments affect radiomics. To put it another way, open-source data is critical to the future advancement of radiomics.

3. Application of AI and Radiomics in Veterinary Diagnostic Imaging

3.1. Lesion Detection

One of the earliest publications describing the use of AI in veterinary diagnostic imaging involved the evaluation of a linear partial least squares discriminant analysis (PLS-DA) and a nonlinear artificial neural network (ANN) model in the application of machine learning for canine pelvic radiograph classification (see Table 3) [62]. Classification error, sensitivity, and specificity of 6.7%, 100%, and 89% for the PLS-DA model and of 8.9%, 86%, and 100% for the ANN model were achieved [62]. Although the classification in this study was not focused on the presence of hip joint pathology but on the presence of a hip joint in an image, this study was one of the first to demonstrate that common machine learning algorithms could be applied to the classification of veterinary radiographic images and suggested that for future studies the same models could potentially be used for multiclass classifiers [62].

Table 3.

Literature review of AI/radiomics studies in the veterinary imaging applications, with reported accuracies and conclusions. CNN: convolutional neural networks. N/A: not available.

| Reference | Topic | Scale | Species | AI/Radiomic Algorithms | Accuracy | Conclusion |
|---|---|---|---|---|---|---|
| Basran et al., 2021 [63] | Lesion detection: equine proximal sesamoid bone micro-CT | Clinical, N = 8 cases and 8 controls | Equine | Radiomics | N/A | Radiomics analysis of μCT images of equine proximal sesamoid bones was able to identify differences in image features between cases and controls |
| Becker et al., 2018 [64] | Lesion detection: murine hepatic MRIs | Pre-clinical, N = 8 cases and 2 controls | Murine | Radiomics | N/A | Texture features may quantitatively detect intrahepatic tumor growth not yet visible to the human eye |
| Boissady et al., 2020 [65] | Lesion detection: canine and feline thoracic radiographic lesions | Clinical, N = 6584 cases | Canine and feline | Machine learning | N/A | The described network can aid detection of lesions but not provide a diagnosis; potential to be used as a tool to aid general practitioners |
| McEvoy and Amigo, 2013 [62] | Lesion detection: canine pelvic radiograph classification | Clinical, N = 60 cases | Canine | Machine learning | N/A | Demonstrated feasibility to classify images, dependent on availability of training data |
| Yoon et al., 2018 [66] | Lesion detection: canine thoracic radiographic lesions | Clinical, N = 3122 cases | Canine | Machine learning | CNN: 92.9–96.9%; BOF: 79.6–96.9% | Both CNN and BOF capable of distinguishing abnormal thoracic radiographs; CNN showed higher accuracy and sensitivity than BOF |
| Banzato et al., 2018 [67] | Lesion characterization: MRI differentiation of canine meningiomas vs. gliomas | Clinical, N = 80 cases | Canine | Machine learning | 94% on post-contrast T1 images, 91% on pre-contrast T1 images, 90% on T2 images | CNN can reliably distinguish between different meningiomas and gliomas on MR images |
| D’Souza et al., 2019 [68] | Lesion characterization: assessment of B-mode US for murine hepatic fibrosis | Pre-clinical, N = 22 cases and 4 controls | Murine | Radiomics | N/A | Quantitative analysis of computer-extracted B-mode ultrasound features can be used to characterize hepatic fibrosis in mice |
| Kim et al., 2019 [69] | Lesion characterization: canine corneal ulcer image classification | Clinical, N = 281 cases | Canine | Machine learning | Most models > 90% for superficial and deep corneal ulcers; ResNet and VGGNet > 90% for normal corneas, superficial and deep corneal ulcers | CNN multiple image classification models can be used to effectively determine corneal ulcer severity in dogs |
| Wanamaker et al., 2021 [70] | Lesion characterization: MRI differentiation of canine glial cell neoplasia vs. noninfectious inflammatory meningoencephalitis | Clinical, N = 119 cases | Canine | Radiomics | Random forest classifier accuracy was 76% to differentiate glioma vs. noninfectious inflammatory meningoencephalitis | Texture analysis using a random forest algorithm to classify inflammatory and neoplastic lesions approached previously reported radiologist accuracy; however, it performed poorly for differentiating tumor grades and types |

Yoon and colleagues (2018) were among the first to perform a feasibility study that evaluated bag-of-features (BOF) and convolutional neural networks (CNN) in veterinary imaging for the purpose of computer-aided detection to identify abnormal canine radiographic findings, which included cardiomegaly, abnormal lung patterns, mediastinal shift, pleural effusion, and pneumothorax (see Table 3) [66]. The results indicated that while both models showed the possibility of improving work efficiency with the potential for double reading, CNN showed higher accuracy (92.9–96.9%) and sensitivity (92.1–100%) when compared to BOF (accuracy 74.1–94.8%; sensitivity 79.6–96.9%) [66]. Later, Boissady et al. (2020) developed a unique deep neural network (DNN) for thoracic radiographic screening in dogs and cats for 15 different abnormalities [65]. For the purpose of training, more than 22,000 thoracic radiographs, with corresponding reports from a board-certified veterinary radiologist, were provided to the algorithms. Following training, 120 radiographs were then evaluated by three groups of observers: the best-performing network, veterinarians, and veterinarians aided by the network. The results showed that the overall error rate of the network alone was 10.7%, significantly lower (p = 0.001) than the overall error rate of the veterinarians (16.8%) or the veterinarians aided by the network (17.2%) [65]. It is interesting to note that the network failed to statistically improve the veterinarians’ error rate in this study, which the authors hypothesized could be due to a lack of experience with the use of AI as an aid and failure to trust CNN’s pattern recognition [65]. These results indicated that although the network could not provide a specific diagnosis, it could perform very well at detecting various lesion types (15 different abnormalities), confirming the usefulness of CNN for the purpose of identification of thoracic abnormalities in small animals [65].

Several papers have been published investigating the use of AI applied to rodents, which are commonly used as animal models of disease (see Table 3). In these cases, the studies focus on liver disease. In 2018, one such study investigated whether AI could be used to detect texture features on mouse MRIs, which could be correlated with metastatic intrahepatic tumor growth before they become visible to the human eye [64]. The results of this study suggested that livers affected by both neoplastic metastases and micrometastases develop systematic changes in texture features [64]. Three clusters or features derived from each of the gray-level matrices were found to have an independent linear correlation with tumor growth [64]. The authors concluded that changes in texture features at a sub-resolution level could be used to detect micrometastases within the liver before they become visually detectable by the human eye [64].

Another report on the use of radiomics in veterinary medicine describes a radiomics-based approach to the analysis of micro-CTs (μCT) of equine proximal sesamoid bones to be able to distinguish image features in controls compared to cases who developed catastrophic proximal sesamoid bone fractures (see Table 3) [63]. Using radiomics, it was possible to consistently identify differences in image features between cases and controls, as well as highlight several features previously undetected by the human eye [63]. This work provides an initial framework for future automation of image biomarkers in equine proximal sesamoid bones, with potential applications including the identification of racehorses in training at high risk of catastrophic proximal sesamoid bone fracture [63].

3.2. Lesion Characterization

The term ‘characterization’ of a lesion encompasses the segmentation, diagnosis, and staging of a disease [1]. This depends on a number of quantifiable radiological characteristics of a lesion, including size, extent, and texture [1]. Humans are limited in their capability to interpret medical diagnostic images in this regard due to our finite capacity to handle multiple qualitative features simultaneously. AI, on the other hand, has the capacity to process a large number of quantitative features in a reproducible manner. In the veterinary literature, several examples exist of the use of AI for lesion characterization in a variety of applications.

Interpretation and characterization of brain lesions on MRI can be challenging. In 2018, Banzato et al. evaluated the ability and accuracy of a deep CNN to differentiate between canine meningiomas and gliomas on pre- and post-contrast T1-weighted and T2-weighted MRI images and developed an image classifier based on this to predict whether a lesion (characterized by final histopathological diagnosis) is a meningioma or glioma [67]. The image classifier was found to be 94% accurate on post-contrast T1-weighted images, 91% on pre-contrast T1-weighted images, and 90% on T2-weighted images, thus concluding that it had potential as a reliable tool to distinguish canine meningiomas and gliomas on MRIs [67].

Similarly, more recently, the use of Texture Analysis (TA) to differentiate canine glial cell neoplasia from noninfectious inflammatory meningoencephalitis was investigated [70]. This can be challenging even for experienced diagnostic imaging specialists due to a number of overlapping image characteristics. A group of 119 dogs with diagnoses confirmed on histology were used, 59 with gliomas and 60 with noninfectious inflammatory meningoencephalitis [70]. The authors found that cohorts differed significantly in 45 out of 120 texture metrics [70]. TA was unable to classify glioma grade or cell type correctly and could only partially differentiate between subtypes of inflammatory meningoencephalitis (e.g., granulomatous vs. necrotizing) [70]. However, with a random forest algorithm (supervised learning algorithm where the "forest" built is an ensemble of decision trees, usually trained with the “bagging” method), its accuracy for differentiating between inflammatory and neoplastic brain disease was found to approach that previously reported for subjective radiologist evaluation [70].

Another study focusing on rodent livers described the use of quantitative analysis of computer-extracted features of B-mode ultrasound as a non-invasive alternative to liver biopsy for the characterization of hepatic fibrosis [68]. Computer-extracted quantitative parameters included brightness and variance of the hepatic B-mode ultrasound images [68]. In rats (n = 22) with hepatic fibrosis induced through oral administration of diethylnitrosamine (DEN), hepatic echo intensity increased from 37.1 ± 7.8 to 53.5 ± 5.7 (at 10 weeks) and 57.5 ± 6.1 (at 13 weeks), while the control group remained unchanged at an average of 34.5 ± 4 [68]. A similar effect was seen over time in the hepatorenal index, heterogeneity, and anisotropy. Three other features were studied that also increased over time in the DEN group [68]. Subsequent hepatic histology revealed more severe fibrosis grades in DEN rats compared to controls [68]. The US parameters showed a significant positive correlation with fibrosis grade, with anisotropy having the strongest correlation (ρ = 0.58) [68]. Computer-extracted features of B-mode US images consistently increased over time in a quantifiable manner as hepatic damage and fibrosis progressed in rats, making this quantitative tool a potentially beneficial adjunct to the clinical diagnosis and assessment of hepatic fibrosis and chronic liver disease [68].
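As an illustration of what "computer-extracted" brightness and variance can mean in practice, the sketch below computes both from a small region of interest and then relates a feature to fibrosis grade with a rank correlation. This is a simplified example, not the pipeline of [68]: the use of Spearman's coefficient is an assumption, the gray levels, feature values, and grades are invented, and the function names are hypothetical.

```python
from statistics import mean, pvariance

def roi_features(roi):
    """Mean brightness and variance of an ROI given as rows of gray levels."""
    pixels = [p for row in roi for p in row]
    return {"brightness": mean(pixels), "variance": pvariance(pixels)}

def ranks(values):
    """1-based ranks; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = shared
        i = j + 1
    return result

def pearson(x, y):
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)

def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))

# Hypothetical 2 x 2 ROI of gray levels from a B-mode image.
features = roi_features([[30, 40], [50, 60]])
print(features)

# Hypothetical per-animal echo intensities and histological fibrosis grades.
echo_intensity = [34.0, 37.5, 52.0, 55.5, 58.0]
fibrosis_grade = [0, 1, 2, 3, 4]
rho = spearman(echo_intensity, fibrosis_grade)
print(rho)
```

In this toy data set the feature rises monotonically with grade, so the coefficient is at its maximum; real measurements are noisier and give intermediate values.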

CNN technology has also been developed to classify canine corneal ulcer severity (normal vs. superficial vs. deep) from corneal photographs that had previously been classified by veterinary ophthalmologists [69]. After labeling, the images (1040 in total) were evaluated using GoogLeNet [71], ResNet [72], and VGGNet [73] models, implemented with an open-source software library and fine-tuned from a CNN model trained on the ImageNet data set [69]. Most of the models, including ResNet [72] and VGGNet [73], achieved accuracies greater than 90% for the classification of superficial and deep corneal ulcers [69]. This study concluded that the proposed CNN method could effectively differentiate ulcer severity in dogs based on corneal photographs and that multiple image classification models are applicable for use in veterinary medicine [69].
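Accuracy figures such as those above are derived by comparing model predictions with the reference labels. The following sketch shows one common way to do this for a three-class problem, using a confusion matrix. The labels and predictions are invented for illustration and do not reproduce the results of [69]; the function names are hypothetical.

```python
from collections import Counter

CLASSES = ["normal", "superficial", "deep"]

def confusion_matrix(truth, predicted):
    """Rows are reference classes, columns are predicted classes."""
    counts = Counter(zip(truth, predicted))
    return [[counts[(t, p)] for p in CLASSES] for t in CLASSES]

def overall_accuracy(matrix):
    """Share of all cases that lie on the diagonal (correct predictions)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total

def per_class_recall(matrix):
    """For each reference class, the fraction of its cases predicted correctly."""
    return {c: matrix[i][i] / sum(matrix[i]) for i, c in enumerate(CLASSES)}

# Hypothetical reference labels (ophthalmologist) and model predictions.
truth = ["normal"] * 4 + ["superficial"] * 3 + ["deep"] * 3
predicted = [
    "normal", "normal", "normal", "superficial",
    "superficial", "superficial", "deep",
    "deep", "deep", "deep",
]

matrix = confusion_matrix(truth, predicted)
print(matrix)                     # [[3, 1, 0], [0, 2, 1], [0, 0, 3]]
print(overall_accuracy(matrix))   # 0.8
print(per_class_recall(matrix))
```

Reporting per-class values alongside overall accuracy helps to show whether a model performs well on every severity grade or mainly on the most frequent one.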

4. Discussion

Several challenges are inherent to the data sets used in veterinary diagnostic imaging. Due to the nature of the patient caseload and the variability of the species and breeds encountered, the acquisition of large, uniform data sets can be challenging. Therefore, learning tasks must often be performed using small and variable data sets. The scarcity of examples of rare diseases for algorithm training is a further limitation, meaning some diagnoses may be missed if such examples are not included in the training sets [2]. The availability of data sets will likely present one of the greatest challenges to the advancement of AI in veterinary diagnostic imaging, hence the need to develop large open-source data sets. Such data must also be curated to ensure ease of access and retrieval [1]. Additional challenges are also likely to arise and will mirror those seen in human medicine, such as those associated with regulation and benchmarking of AI-related activities, as well as privacy and other ethical considerations such as culpability for misdiagnoses [1].

Despite a general openness and enthusiasm to adopt and implement AI in human medical radiology, a knowledge gap still exists that must be addressed before AI can be fully adopted in veterinary medicine [74]. As the study by Boissady et al. (2020) showed, operators must be familiar and experienced with the use of AI as an aid, and trust its results, in order to benefit from it [65]. Failure to do so can introduce error into the process. In addition, a significant proportion of radiographers still perceive that AI could threaten or disrupt radiology practice, mainly due to a possible drop in demand or loss of respect for the profession [74,75]. It is likely that these perceptions also exist within veterinary diagnostic imaging and thus present a further hurdle to overcome before AI can be fully accepted within this profession.

5. Conclusions

Although no reports exist in the veterinary literature, one logical next step for AI application in veterinary diagnostic imaging involves its use for the monitoring of lesion progression over time. Monitoring disease over time is essential not only for diagnosis and prognostic estimation but also for the evaluation of response to treatment. It consists of aligning diseased tissue across multiple diagnostic images taken over time and comparing simple data to quantify change, for example, change in size, as well as variations in texture or heterogeneity. Computer-aided change analysis could detect subtle changes in characteristics not easily identified by the human eye and would also avoid the problems encountered with interobserver variability [1]. It is also likely that in the future, AI will play a greater administrative role, including patient identification and registration and medical reporting, and these advances are also likely to spill over into veterinary fields [1].
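The kind of change quantification described above can be sketched in a few lines. The example below assumes that the lesion has already been segmented and aligned at two time points, summarizes each time point by area and by a simple heterogeneity index (coefficient of variation of pixel intensity, chosen here as an assumption), and reports the percentage change. All values and function names are hypothetical.

```python
from statistics import mean, pstdev

def lesion_summary(pixels, pixel_area_mm2):
    """Area and a simple heterogeneity index for one segmented lesion."""
    return {
        "area_mm2": len(pixels) * pixel_area_mm2,
        # Coefficient of variation: spread of intensities relative to the mean.
        "heterogeneity": pstdev(pixels) / mean(pixels),
    }

def percent_change(baseline, follow_up):
    """Relative change of each summary value, in percent of the baseline."""
    return {
        key: 100.0 * (follow_up[key] - baseline[key]) / baseline[key]
        for key in baseline
    }

# Hypothetical intensities of the pixels inside the lesion at two time points.
baseline = lesion_summary([90, 110, 90, 110], pixel_area_mm2=0.25)
follow_up = lesion_summary([80, 120, 80, 120, 80, 120], pixel_area_mm2=0.25)

change = percent_change(baseline, follow_up)
print(change)
```

Because the same computation is applied at every time point, the result does not depend on which observer reads the images, which is the source of the reproducibility advantage noted above.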

Author Contributions

Conceptualization, O.B., H.B. and J.P.J.; writing—original draft preparation, O.B., H.B. and J.P.J.; writing—review and editing, O.B., H.B., J.P.J., F.D. and A.S.; visualization, A.S. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research received no external funding.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Hosny A., Parmar C., Quackenbush J., Schwartz L.H., Aerts H.J.W.L. Artificial Intelligence in Radiology. Nat. Rev. Cancer. 2018;18:500–510. doi: 10.1038/s41568-018-0016-5.

- 2. Ker J., Wang L., Rao J., Lim T. Deep Learning Applications in Medical Image Analysis. IEEE Access. 2017;6:9375–9389. doi: 10.1109/ACCESS.2017.2788044.

- 3. Gillies R.J., Kinahan P.E., Hricak H. Radiomics: Images Are More than Pictures, They Are Data. Radiology. 2016;278:563–577. doi: 10.1148/radiol.2015151169.

- 4. Mannil M., von Spiczak J., Manka R., Alkadhi H. Texture Analysis and Machine Learning for Detecting Myocardial Infarction in Noncontrast Low-Dose Computed Tomography: Unveiling the Invisible. Investig. Radiol. 2018;53:338–343. doi: 10.1097/RLI.0000000000000448.

- 5. Miles K. Radiomics for Personalised Medicine: The Long Road Ahead. Br. J. Cancer. 2020;122:929–930. doi: 10.1038/s41416-019-0699-8.

- 6. Castellano G., Bonilha L., Li L.M., Cendes F. Texture Analysis of Medical Images. Clin. Radiol. 2004;59:1061–1069. doi: 10.1016/j.crad.2004.07.008.

- 7. Tourassi G.D. Journey toward Computer-Aided Diagnosis: Role of Image Texture Analysis. Radiology. 1999;213:317–320. doi: 10.1148/radiology.213.2.r99nv49317.

- 8. Wang X.-H., Long L.-H., Cui Y., Jia A.Y., Zhu X.-G., Wang H.-Z., Wang Z., Zhan C.-M., Wang Z.-H., Wang W.-H. MRI-Based Radiomics Model for Preoperative Prediction of 5-Year Survival in Patients with Hepatocellular Carcinoma. Br. J. Cancer. 2020;122:978–985. doi: 10.1038/s41416-019-0706-0.

- 9. Reuzé S., Schernberg A., Orlhac F., Sun R., Chargari C., Dercle L., Deutsch E., Buvat I., Robert C. Radiomics in Nuclear Medicine Applied to Radiation Therapy: Methods, Pitfalls, and Challenges. Int. J. Radiat. Oncol. Biol. Phys. 2018;102:1117–1142. doi: 10.1016/j.ijrobp.2018.05.022.

- 10. Li Y., Yu M., Wang G., Yang L., Ma C., Wang M., Yue M., Cong M., Ren J., Shi G. Contrast-Enhanced CT-Based Radiomics Analysis in Predicting Lymphovascular Invasion in Esophageal Squamous Cell Carcinoma. Front. Oncol. 2021;11:644165. doi: 10.3389/fonc.2021.644165.

- 11. Qin H., Wu Y.-Q., Lin P., Gao R.-Z., Li X., Wang X.-R., Chen G., He Y., Yang H. Ultrasound Image-Based Radiomics: An Innovative Method to Identify Primary Tumorous Sources of Liver Metastases. J. Ultrasound Med. 2021;40:1229–1244. doi: 10.1002/jum.15506.

- 12. Aerts H.J.W.L., Velazquez E.R., Leijenaar R.T.H., Parmar C., Grossmann P., Cavalho S., Bussink J., Monshouwer R., Haibe-Kains B., Rietveld D., et al. Decoding Tumour Phenotype by Noninvasive Imaging Using a Quantitative Radiomics Approach. Nat. Commun. 2014;5:4006. doi: 10.1038/ncomms5006.

- 13. van Timmeren J.E., Cester D., Tanadini-Lang S., Alkadhi H., Baessler B. Radiomics in Medical Imaging-“how-to” Guide and Critical Reflection. Insights Imaging. 2020;11:91. doi: 10.1186/s13244-020-00887-2.

- 14. Wichtman B.D., Attenberger U.I., Harder F.N., Schonberg S.O., Maintz D., Weiss K., Pinto dos Santos D., Baessler B. Influence of Image Processing on the Robustness of Radiomic Features Derived from Magnetic Resonance Imaging—A Phantom Study; Proceedings of the ISMRM 27th Annual Meeting & Exhibition; Montreal, QC, Canada. 27 May 2019.

- 15. Altazi B.A., Zhang G.G., Fernandez D.C., Montejo M.E., Hunt D., Werner J., Biagioli M.C., Moros E.G. Reproducibility of F18-FDG PET Radiomic Features for Different Cervical Tumor Segmentation Methods, Gray-Level Discretization, and Reconstruction Algorithms. J. Appl. Clin. Med. Phys. 2017;18:32–48. doi: 10.1002/acm2.12170.

- 16. Olabarriaga S.D., Smeulders A.W. Interaction in the Segmentation of Medical Images: A Survey. Med. Image Anal. 2001;5:127–142. doi: 10.1016/S1361-8415(00)00041-4.

- 17. Egger J., Colen R.R., Freisleben B., Nimsky C. Manual Refinement System for Graph-Based Segmentation Results in the Medical Domain. J. Med. Syst. 2012;36:2829–2839. doi: 10.1007/s10916-011-9761-7.

- 18. Zwanenburg A., Leger S., Vallières M., Löck S. Image Biomarker Standardisation Initiative. Radiology. 2020;295:328–338. doi: 10.1148/radiol.2020191145.

- 19. Bovik A.C. Handbook of Image and Video Processing (Communications, Networking and Multimedia). Academic Press; Cambridge, MA, USA: 2005.

- 20. Bernal J., del C. Valdés-Hernández M., Escudero J., Viksne L., Heye A.K., Armitage P.A., Makin S., Touyz R.M., Wardlaw J.M. Analysis of Dynamic Texture and Spatial Spectral Descriptors of Dynamic Contrast-Enhanced Brain Magnetic Resonance Images for Studying Small Vessel Disease. Magn. Reson. Imaging. 2020;66:240–247. doi: 10.1016/j.mri.2019.11.001.

- 21. Huang K., Aviyente S. Wavelet Feature Selection for Image Classification. IEEE Trans. Image Process. 2008;17:1709–1720. doi: 10.1109/TIP.2008.2001050.

- 22. Bernatz S., Zhdanovich Y., Ackermann J., Koch I., Wild P., Santos D.P.D., Vogl T., Kaltenbach B., Rosbach N. Impact of Rescanning and Repositioning on Radiomic Features Employing a Multi-Object Phantom in Magnetic Resonance Imaging. Sci. Rep. 2021;11:14248. doi: 10.1038/s41598-021-93756-x.

- 23. Hira Z.M., Gillies D.F. A Review of Feature Selection and Feature Extraction Methods Applied on Microarray Data. Adv. Bioinform. 2015;2015:198363. doi: 10.1155/2015/198363.

- 24. Tibshirani R. Regression Shrinkage and Selection Via the Lasso. J. R. Stat. Soc. Ser. B Methodol. 1996;58:267–288. doi: 10.1111/j.2517-6161.1996.tb02080.x.

- 25. Leng C., Lin Y., Wahba G. A note on the lasso and related procedures in model selection. Stat. Sin. 2006;16:1273–1284.

- 26. Fu W., Knight K. Asymptotics for Lasso-Type Estimators. Ann. Stat. 2000;28:1356–1378. doi: 10.1214/aos/1015957397.

- 27. Zou H., Hastie T. Regularization and Variable Selection via the Elastic Net. J. R. Stat. Soc. Ser. B Stat. Methodol. 2005;67:301–320. doi: 10.1111/j.1467-9868.2005.00503.x.

- 28. Fan J., Li R. Variable Selection via Nonconcave Penalized Likelihood and Its Oracle Properties. J. Am. Stat. Assoc. 2001;96:1348–1360. doi: 10.1198/016214501753382273.

- 29. Götz M., Nolden M., Maier-Hein K. MITK Phenotyping: An Open-Source Toolchain for Image-Based Personalized Medicine with Radiomics. Radiother. Oncol. 2019;131:108–111. doi: 10.1016/j.radonc.2018.11.021.

- 30. Szczypiński P.M., Strzelecki M., Materka A., Klepaczko A. MaZda--a Software Package for Image Texture Analysis. Comput. Methods Programs Biomed. 2009;94:66–76. doi: 10.1016/j.cmpb.2008.08.005.

- 31. van Griethuysen J.J., Fedorov A., Parmar C., Hosny A., Aucoin N., Narayan V., Beets-Tan R.G., Fillion-Robin J.-C., Pieper S., Aerts H.J. Computational Radiomics System to Decode the Radiographic Phenotype. Cancer Res. 2017;77:e104–e107. doi: 10.1158/0008-5472.CAN-17-0339.

- 32. Zhang L., Fried D.V., Fave X.J., Hunter L.A., Yang J., Court L.E. IBEX: An Open Infrastructure Software Platform to Facilitate Collaborative Work in Radiomics. Med. Phys. 2015;42:1341–1353. doi: 10.1118/1.4908210.

- 33. Apte A.P., Iyer A., Crispin-Ortuzar M., Pandya R., van Dijk L.V., Spezi E., Thor M., Um H., Veeraraghavan H., Oh J.H., et al. Technical Note: Extension of CERR for Computational Radiomics: A Comprehensive MATLAB Platform for Reproducible Radiomics Research. Med. Phys. 2018. doi: 10.1002/mp.13046.

- 34. Erhan D., Bengio Y., Courville A., Manzagol P.-A., Vincent P., Bengio S. Why Does Unsupervised Pre-Training Help Deep Learning? J. Mach. Learn. Res. 2010;11:625–660.

- 35. Nelder J.A., Wedderburn R.W.M. Generalized Linear Models. J. R. Stat. Soc. Ser. A Gen. 1972;135:370. doi: 10.2307/2344614.

- 36. Jolliffe I.T. Principal Component Analysis. 2nd ed. Springer; New York, NY, USA: 2002.

- 37. McLachlan G.J. Discriminant Analysis and Statistical Pattern Recognition. John Wiley & Sons, Ltd.; Hoboken, NJ, USA: 1992.

- 38. Walker S.H., Duncan D.B. Estimation of the Probability of an Event as a Function of Several Independent Variables. Biometrika. 1967;54:167–179. doi: 10.1093/biomet/54.1-2.167.

- 39. Norvig P., Russell S.J. Artificial Intelligence: A Modern Approach. 4th ed. Prentice Hall; Hoboken, NJ, USA: [(accessed on 10 October 2021)]. Available online: http://aima.cs.berkeley.edu/

- 40. Hastie T., Tibshirani R., Friedman J. Boosting and Additive Trees. In: Hastie T., Tibshirani R., Friedman J., editors. The Elements of Statistical Learning: Data Mining, Inference, and Prediction. Springer; New York, NY, USA: 2009. pp. 337–387. (Springer Series in Statistics).

- 41. Quinlan J.R. Simplifying Decision Trees. Int. J. Man-Mach. Stud. 1987;27:221–234. doi: 10.1016/S0020-7373(87)80053-6.

- 42. Cortes C., Vapnik V. Support-Vector Networks. Mach. Learn. 1995;20:273–297. doi: 10.1007/BF00994018.

- 43. Chen T., Guestrin C. XGBoost: A Scalable Tree Boosting System; Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining; San Francisco, CA, USA. 13 August 2016; pp. 785–794.

- 44. Ho T.K. The Random Subspace Method for Constructing Decision Forests. IEEE Trans. Pattern Anal. Mach. Intell. 1998;20:832–844. doi: 10.1109/34.709601.

- 45. Kleene S.C. Representation of Events in Nerve Nets and Finite Automata. RAND Corporation; Santa Monica, CA, USA: 1951.

- 46. Fix E., Hodges J.L. Discriminatory Analysis. Nonparametric Discrimination: Consistency Properties. Int. Stat. Rev. Rev. Int. Stat. 1989;57:238–247. doi: 10.2307/1403797.

- 47. Bishop C.M. Pattern Recognition and Machine Learning. [(accessed on 10 October 2021)]. Available online: https://docs.google.com/viewer?a=v&pid=sites&srcid=aWFtYW5kaS5ldXxpc2N8Z3g6MjViZDk1NGI1NjQzOWZiYQ.

- 48. Schmidhuber J. Deep Learning in Neural Networks: An Overview. Neural Netw. 2015;61:85–117. doi: 10.1016/j.neunet.2014.09.003.

- 49. LeCun Y., Bengio Y., Hinton G. Deep Learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539.

- 50. Goodfellow I., Bengio Y., Courville A. Deep Learning. MIT Press; Cambridge, MA, USA: 2016.

- 51. Alzubaidi L., Zhang J., Humaidi A.J., Al-Dujaili A., Duan Y., Al-Shamma O., Santamaría J., Fadhel M.A., Al-Amidie M., Farhan L. Review of Deep Learning: Concepts, CNN Architectures, Challenges, Applications, Future Directions. J. Big Data. 2021;8:53. doi: 10.1186/s40537-021-00444-8.

- 52. Jonsson A. Deep Reinforcement Learning in Medicine. Kidney Dis. 2019;5:18–22. doi: 10.1159/000492670.

- 53. Sollini M., Antunovic L., Chiti A., Kirienko M. Towards Clinical Application of Image Mining: A Systematic Review on Artificial Intelligence and Radiomics. Eur. J. Nucl. Med. Mol. Imaging. 2019;46:2656–2672. doi: 10.1007/s00259-019-04372-x.

- 54. Bartholomai B., Koo C., Johnson G., White D., Raghunath S., Rajagopalan S., Moynagh M., Lindell R., Hartman T. Pulmonary Nodule Characterization, Including Computer Analysis and Quantitative Features. J. Thorac. Imaging. 2015;30:139–156. doi: 10.1097/RTI.0000000000000137.

- 55. Fukushima K. Neocognitron: A Self Organizing Neural Network Model for a Mechanism of Pattern Recognition Unaffected by Shift in Position. Biol. Cybern. 1980;36:193–202. doi: 10.1007/BF00344251.

- 56. Lecun Y., Bottou L., Bengio Y., Haffner P. Gradient-Based Learning Applied to Document Recognition. Volume 86. Scientific Research Publishing; Wuhan, China: 1998. [(accessed on 10 June 2022)]. pp. 2278–2324. Available online: https://www.scirp.org/reference/referencespapers.aspx?referenceid=2761538.

- 57. Aerts H.J.W.L., Grossmann P., Tan Y., Oxnard G.R., Rizvi N., Schwartz L.H., Zhao B. Defining a Radiomic Response Phenotype: A Pilot Study Using Targeted Therapy in NSCLC. Sci. Rep. 2016;6:33860. doi: 10.1038/srep33860.

- 58. Erickson B.J., Korfiatis P., Akkus Z., Kline T.L. Machine Learning for Medical Imaging. Radiographics. 2017;37:505–515. doi: 10.1148/rg.2017160130.

- 59. Ronneberger O., Fischer P., Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In: Navab N., Hornegger J., Wells W.M., Frangi A.F., editors. Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015. Volume 9351. Springer International Publishing; Cham, Switzerland: 2015. pp. 234–241. Lecture Notes in Computer Science.

- 60. Zhao B., James L.P., Moskowitz C.S., Guo P., Ginsberg M.S., Lefkowitz R.A., Qin Y., Riely G.J., Kris M.G., Schwartz L.H. Evaluating Variability in Tumor Measurements from Same-Day Repeat CT Scans of Patients with Non–Small Cell Lung Cancer. Radiology. 2009;252:263–272. doi: 10.1148/radiol.2522081593.

- 61. Kalendralis P., Traverso A., Shi Z., Zhovannik I., Monshouwer R., Starmans M.P.A., Klein S., Pfaehler E., Boellaard R., Dekker A., et al. Multicenter CT Phantoms Public Dataset for Radiomics Reproducibility Tests. Med. Phys. 2019;46:1512–1518. doi: 10.1002/mp.13385.

- 62. McEvoy F.J., Amigo J.M. Using Machine Learning to Classify Image Features from Canine Pelvic Radiographs: Evaluation of Partial Least Squares Discriminant Analysis and Artificial Neural Network Models. Vet. Radiol. Ultrasound. 2013;54:122–126. doi: 10.1111/vru.12003.

- 63. Basran P.S., Gao J., Palmer S., Reesink H.L. A Radiomics Platform for Computing Imaging Features from µCT Images of Thoroughbred Racehorse Proximal Sesamoid Bones: Benchmark Performance and Evaluation. Equine Vet. J. 2021;53:277–286. doi: 10.1111/evj.13321.

- 64. Becker A.S., Schneider M.A., Wurnig M.C., Wagner M., Clavien P.A., Boss A. Radiomics of Liver MRI Predict Metastases in Mice. Eur. Radiol. Exp. 2018;2:11. doi: 10.1186/s41747-018-0044-7.

- 65. Boissady E., de La Comble A., Zhu X., Hespel A.-M. Artificial Intelligence Evaluating Primary Thoracic Lesions Has an Overall Lower Error Rate Compared to Veterinarians or Veterinarians in Conjunction with the Artificial Intelligence. Vet. Radiol. Ultrasound. 2020;61:619–627. doi: 10.1111/vru.12912.

- 66. Yoon Y., Hwang T., Lee H. Prediction of Radiographic Abnormalities by the Use of Bag-of-Features and Convolutional Neural Networks. Vet. J. 2018;237:43–48. doi: 10.1016/j.tvjl.2018.05.009.

- 67. Banzato T., Bernardini M., Cherubini G.B., Zotti A. A Methodological Approach for Deep Learning to Distinguish between Meningiomas and Gliomas on Canine MR-Images. BMC Vet. Res. 2018;14:317. doi: 10.1186/s12917-018-1638-2.

- 68. D’Souza J.C., Sultan L.R., Hunt S.J., Schultz S.M., Brice A.K., Wood A.K.W., Sehgal C.M. B-Mode Ultrasound for the Assessment of Hepatic Fibrosis: A Quantitative Multiparametric Analysis for a Radiomics Approach. Sci. Rep. 2019;9:8708. doi: 10.1038/s41598-019-45043-z.

- 69. Kim J.Y., Lee H.E., Choi Y.H., Lee S.J., Jeon J.S. CNN-Based Diagnosis Models for Canine Ulcerative Keratitis. Sci. Rep. 2019;9:14209. doi: 10.1038/s41598-019-50437-0.

- 70. Wanamaker M.W., Vernau K.M., Taylor S.L., Cissell D.D., Abdelhafez Y.G., Zwingenberger A.L. Classification of Neoplastic and Inflammatory Brain Disease Using MRI Texture Analysis in 119 Dogs. Vet. Radiol. Ultrasound. 2021;62:445–454. doi: 10.1111/vru.12962.

- 71. Boddapati V., Petef A., Rasmusson J., Lundberg L. Classifying Environmental Sounds Using Image Recognition Networks. Procedia Comput. Sci. 2017;112:2048–2056. doi: 10.1016/j.procs.2017.08.250.

- 72. McAllister P., Zheng H., Bond R., Moorhead A. Combining Deep Residual Neural Network Features with Supervised Machine Learning Algorithms to Classify Diverse Food Image Datasets. Comput. Biol. Med. 2018;95:217–233. doi: 10.1016/j.compbiomed.2018.02.008.

- 73. Jalali A., Mallipeddi R., Lee M. Sensitive Deep Convolutional Neural Network for Face Recognition at Large Standoffs with Small Dataset. Expert Syst. Appl. 2017;87:304–315. doi: 10.1016/j.eswa.2017.06.025.

- 74. Abuzaid M., Elshami W., Tekin H., Issa B. Assessment of the Willingness of Radiologists and Radiographers to Accept the Integration of Artificial Intelligence into Radiology Practice. Acad. Radiol. 2022;29:87–94. doi: 10.1016/j.acra.2020.09.014.

- 75. Bhandari A., Purchuri S.N., Sharma C., Ibrahim M., Prior M. Knowledge and Attitudes towards Artificial Intelligence in Imaging: A Look at the Quantitative Survey Literature. Clin. Imaging. 2021;80:413–419. doi: 10.1016/j.clinimag.2021.08.004.
