Abstract

Plant phenomics (PP) has been recognized as a bottleneck in studying the interactions of genomics and environment on plants, limiting the progress of smart breeding and precise cultivation. High-throughput plant phenotyping is challenging owing to the spatio-temporal dynamics of traits. Proximal and remote sensing (PRS) techniques are increasingly used for plant phenotyping because of their advantages in multi-dimensional data acquisition and analysis. Substantial progress of PRS applications in PP has been observed over the last two decades and is analyzed here from an interdisciplinary perspective based on 2972 publications. This progress covers most aspects of PRS application in PP, including patterns of global spatial distribution and temporal dynamics, specific PRS technologies, phenotypic research fields, working environments, species, and traits. Subsequently, we demonstrate how to link PRS to multi-omics studies, including how to achieve multi-dimensional PRS data acquisition and processing, how to systematically integrate all kinds of phenotypic information and derive phenotypic knowledge with biological significance, and how to link PP to multi-omics association analysis. Finally, we identify three future perspectives for PRS-based PP: (1) strengthening the spatial and temporal consistency of PRS data, (2) exploring novel phenotypic traits, and (3) facilitating multi-omics communication.

Keywords: plant phenomics, remote sensing, phenotyping, phenotypic traits, multi-omics, breeding, precision cultivation

This review analyzes the progress of proximal and remote sensing (PRS) in plant phenomics (PP) over the last two decades from an interdisciplinary perspective. Means of linking PRS to multi-omics studies are demonstrated, and challenges and prospects for PRS in PP applications are highlighted.

Introduction

Improving crop yield is a serious global challenge driven by a growing population, limited resources, and a deteriorating climate (Rosegrant and Cline, 2003). Breeding ideal varieties and realizing precision cultivation are fundamental ways to meet this challenge (Moreira et al., 2020). High-throughput genomics, transcriptomics, and proteomics have been achieved in the last two decades, enabling the large-scale dissection of the genetic basis of important traits (Varshney et al., 2009; Roitsch et al., 2019). However, high-throughput, high-precision, and multi-dimensional phenotypic data acquisition and analysis lag seriously behind and have become bottlenecks hindering high-throughput genetic-improvement breeding and precision cultivation management (Montes et al., 2007; Grosskinsky et al., 2015).

Plant phenomics (PP), an emerging interdisciplinary subject, is well recognized as an accelerator for breeding and an optimizer for the cultivation of plants (Watt et al., 2020), including agricultural, forest, horticultural, and grassland plants. The history of phenomics can be traced back to 1911, when the concept of phenotype was proposed to represent “all types of organisms, distinguishable by direct inspection or by finer methods of measuring or description” (Johannsen, 1911). Phenomics is a much wider concept that refers to the acquisition of high-dimensional phenotype data on an organism-wide scale (Soule, 1967). The concepts of phenotype and phenomics were both proposed by geneticists to decipher the relationship between genes and target traits (e.g., cancer). When these concepts were adopted by plant scientists, plant phenotype and PP were formed to study plant growth, performance, and composition (Furbank, 2011). In addition, the methods and protocols in the process from plant phenotype to PP have been defined as plant phenotyping (Schurr, 2013).

Traditional phenotyping has been implemented mainly by manual measurement or scoring, which is tedious, time-consuming, and labor-intensive (Dhondt et al., 2013). The development of rapid breeding and precision cultivation has placed new demands on the throughput, accuracy, repeatability, and novelty of phenotyping. First, high-throughput, highly accurate, and high-precision phenotyping is the basis. Second, there is an increasing demand for non-destructive, timely, and repeatable phenotyping such as senescence dynamics (Anderegg et al., 2020). Third, novel, high-dimensional, and invisible phenotypes, such as the leaf to panicle ratio (LPR) (Xiao et al., 2021a) and canopy occupation volume (COV) (Liu et al., 2021a), are difficult to measure with traditional methods.

Remote sensing technology is the acquisition of information without contact (Navalgund et al., 2007). It has been widely used in geoscience and engineering and sheds new light on plant phenotyping (Jin et al., 2021b). Since the first aerial photo was taken from a hot-air balloon in 1858, remote sensing technology has passed through two stages, from qualitative to quantitative analysis, benefiting from the development of sensors (e.g., RGB, hyperspectral, thermal, and light detection and ranging [LiDAR]), platforms (e.g., robot, drone, and satellite), and information technologies (e.g., computer vision) (Horning, 2008). With the development of platform techniques and their widespread application to PP, the definition of remote sensing techniques has taken on a more precise distinction in the modern context, i.e., proximal and remote sensing (PRS) (Jin et al., 2021b; Li et al., 2021; Pineda et al., 2021). The use of sensors close to plants is defined as proximal sensing (PS) and includes approaches such as computed tomography (CT) and magnetic resonance imaging (MRI). By contrast, the use of sensors at a distance from plants is defined as remote sensing (RS) and includes airborne and space-borne imaging (Figure 1). The quality of PRS data depends on its temporal, spatial, and spectral resolutions, which determine its advantages for quantitative plant phenotyping (Navalgund et al., 2007; Toth and Jóźków, 2016). The noncontact working mechanism makes PRS a suitable tool for non-destructive and repeatable measurements. Various temporal, spatial, and spectral resolutions have boosted the acquisition of time-series, multi-scale, and multi-dimensional phenotyping data.

Figure 1.

The spectral, spatial, and temporal dimensions of PRS (sensors and platforms) in PP under the background of genetic diversity and environmental gradients.

The three axes of the cube represent spectral, temporal, and spatial dimensional data from different PRS platforms, including proximal platforms (such as tripods, gantries, and vehicles) and remote platforms (such as drones, airplanes, and satellites). The spectral dimension refers to phenotyping plants with an electromagnetic spectrum that ranges from gamma rays to microwaves. The temporal dimension is the time interval for plant observation, including single, diurnal, seasonal, and inter-annual observations. The spatial dimension includes phenotyping from the cell/tissue level to the global level.

Unprecedented progress of PRS in PP has been witnessed in the last two decades. PRS has provided observations of plants from the cell (Piovesan et al., 2021) to the population (Inostroza et al., 2016), from above ground (Maesano et al., 2020) to underground (Shi et al., 2013), and from indoor (controlled) environments to field conditions on multiple spatial (Pallottino et al., 2019; Jin et al., 2020d; Xie and Yang, 2020) and temporal scales (Din et al., 2017; Chivasa et al., 2019; Weksler et al., 2020). Meanwhile, image analysis methods enable the transformation of multiple spatio-temporal and spectral data into phenotypic knowledge, including plant structural, physiological, and performance-related traits (e.g., biomass and yield) (Feng et al., 2008; Koppe et al., 2010; Dhondt et al., 2013; Din et al., 2017). More importantly, multi-dimensional PRS data mining and interpretation have brought about a renaissance for PP (Yang et al., 2013; Li et al., 2014).

Combining PP and PRS can accelerate breeding and cultivation management (Xiao et al., 2022). Breeders are concerned about the stability of inheritance and the expression of genes in the natural environment, which often takes a long time. Over the past years, PRS technology has helped breeders rapidly characterize the performance of genotypes in multiple environments, enabling early seedling assessment (Yu et al., 2013), key growth period monitoring (Song et al., 2021b), and yield prediction (Zhuo et al., 2022) and thereby improving and accelerating the trait selection process. In addition, the existence of pleiotropism and multigenic effects contributes substantial uncertainty to breeding (Jin et al., 2020d). Multiple spatial and temporal analyses of PRS technology have increased the interpretability of genomic × environment (G × E) interactions and have established an effective feedback mechanism between breeding and cultivation (Jung et al., 2021).

The increasing interdisciplinary applications of PRS in PP have spawned some important review articles during the last decade. These efforts have mainly concerned the applications of specific PRS sensors (e.g., laser scanner, Jin et al., 2021b; optical imager, Li et al., 2014), platforms (e.g., unmanned aerial vehicle [UAV]; Feng et al., 2021), and phenotypic methods (Jiang and Li, 2020) and have emphasized how to link PP to breeding by plant scientists (Araus and Cairns, 2014; Araus et al., 2018; Yang et al., 2020a; Moreira et al., 2020). However, the PP community lacks a systematic review from the PRS perspective, covering phenotypic observation, data interpretation, and phenomics analysis. Therefore, this paper aims to make the following contributions: (1) reviewing the overall applications of PRS to PP during the last two decades rather than focusing on only one class of sensor or platform; (2) summarizing the data acquisition, processing, modeling, and analysis techniques that help link PRS to PP and multi-omics analysis; and (3) highlighting interdisciplinary challenges and prospects from PRS insight.

Progress of PRS in PP

Overview of PRS

PRS is the noncontact acquisition of information on objects or phenomena. The history of PRS can be traced back to the picture of Paris taken from a hot-air balloon in 1858. After that, the balloon platform was replaced by more advanced planes and satellites, enabling PRS to be used in military reconnaissance (Hudson and Hudson, 1975), land surveying (Sheng-qing, 2002), topography mapping (Schuler et al., 1998), and so forth. Meanwhile, sensors have developed from simple RGB cameras to various passive and active sensors. PRS methods are classed as passive or active depending on whether the electromagnetic radiation received from the target originates from sunlight or from light emitted by the sensor (Toth and Jóźków, 2016). Passive PRS sensors commonly include visible light cameras (RGB), multi/hyperspectral imagers (MS/HS), chlorophyll fluorescence imagers, and thermal imagers. Active PRS sensors mainly include LiDAR, laser-induced chlorophyll fluorescence (LIF), synthetic aperture radar (SAR), and CT. Active and passive sensors provide a wealth of data sources for phenotypic observations under different working conditions, and they are inseparable from the development of multiple platforms.

PRS platforms can be either stationary or mobile, indoor or outdoor, proximal or remote (Toth and Jóźków, 2016; Guo et al., 2020b) and include tripods, gantries, vehicles, drones, airplanes, and satellites (Figure 1). These platforms have undergone development from RS (e.g., satellites) to PS (e.g., drones) in PP. Satellite platforms usually have the advantage of global accessibility. Although most satellite platforms have a relatively low spatio-temporal resolution, some low-orbit satellite platforms show promising sub-meter resolution (e.g., WorldView-4, GeoEye-1, and GF-2) and/or near-daily temporal resolution (e.g., AVHRR/MODIS, WorldView-4, and SuperView-1). Airborne platforms (e.g., helicopters) have the advantage of mobilization flexibility, and they can mount various types of sensors and respond quickly under proper conditions. The emergence of drones has further improved the flexibility of low-altitude observations and greatly reduced data costs. However, the endurance and load capacity of drones are far inferior to those of ground platforms such as fixed tripods, mobile gantries, and ground vehicles. As the technology matures and costs decrease, portable and affordable personal PS devices (e.g., smartphones and handheld LiDAR) (Balenović et al., 2021) are becoming powerful tools for exploring plant phenotypes (Lane et al., 2010; Fan et al., 2018).

The development and popularity of PRS sensors and platforms have promoted the formation of highly automated plant phenomics facilities (PPFs) for phenotype acquisition and data transmission in indoor and field environments (Pratap et al., 2015). The indoor platform has the advantage of high stability and can enable the mutual feedback adjustment of phenotypic observation and environmental control. However, most indoor PPFs have limited space and controllable environments, and plant samples are therefore transported to the facilities, which are described as “plant-to-sensor” facilities. Well-known plant-to-sensor facilities include CropDesign (BASF, Germany) (Sinclair, 2006), PHENOPSIS (INRAE, France) (Granier et al., 2006), the PlantScreen system (NPEC, Holland) (https://plantphenotyping.com/), and HRPF (HZAU, China) (Yang et al., 2014). By contrast, most outdoor PPFs measure phenotypes under natural environmental conditions where plants are fixed but sensors are mounted on moveable facilities, which are described as “sensor-to-plant” facilities. Representative sensor-to-plant facilities include Field Scanalyzer (Rothamsted Research, UK) (Virlet et al., 2017), Crop 3D (CAS, China) (Guo et al., 2016), and NU-Spidercam (University of Nebraska, USA) (Bai et al., 2019). These indoor and outdoor PPFs combine an automatic control system and multiple sensors, enabling high-throughput and high-precision observations of multi-scale (from cell to population level), time-series (single time, diurnal, seasonal, and inter-annual), and multi-dimensional plant phenotypes (Figure 1). These PRS-based observations further enable the versatile applications of PRS in PP.

Overview of PRS applications in PP

To analyze the advances, challenges, and perspectives of PRS in PP, we retrieved publications from the Web of Science database (Clarivate Analytics, USA) between 2000 and 2020 using the following keywords and criteria: ("plant") AND ("remote sensing" or "RGB" or "visible light" or "digital camera" or "*spectral" or "lidar" or "light detection and ranging" or "laser scanning" or "thermal" or "Chlorophyll Fluorescence" or "SIF" or "CT" or "Computed Tomography" or "PET" or "Positron Emission Tomography" or "NMR" or "Nuclear Magnetic Resonance" or "MRI" or "Magnetic Resonance Imaging"). A total of 52,492 papers in English appeared after conference papers were excluded. Subsequently, a large number of automatically retrieved articles that did not match the review topic were manually eliminated from the analysis based on their article titles, keywords, and abstracts. Finally, 2972 articles were selected and classified into different categories in terms of global spatial distribution and temporal dynamics patterns, specific PRS technologies, phenotypic research fields, working environments, species, traits, and so forth. Specific categorization criteria and final classification results are provided in a supplementary table that is available at https://github.com/ShichaoJin/PRSinPP.
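The search string above can be assembled programmatically so that the sensor terms are easy to audit or extend. The following is a minimal sketch only: the function name `build_query` is hypothetical, the terms are copied from the criteria above, and the exact operator and wildcard syntax accepted by the Web of Science interface is an assumption that should be checked against its documentation.

```python
# Sensor-related search terms, copied from the criteria described above.
SENSOR_TERMS = [
    "remote sensing", "RGB", "visible light", "digital camera", "*spectral",
    "lidar", "light detection and ranging", "laser scanning", "thermal",
    "Chlorophyll Fluorescence", "SIF", "CT", "Computed Tomography",
    "PET", "Positron Emission Tomography", "NMR",
    "Nuclear Magnetic Resonance", "MRI", "Magnetic Resonance Imaging",
]


def build_query(subject, terms):
    """Join one subject term and a list of alternative terms into a boolean string."""
    alternatives = " OR ".join(f'"{term}"' for term in terms)
    return f'("{subject}") AND ({alternatives})'


query = build_query("plant", SENSOR_TERMS)
print(query)
```

Keeping the terms in a list makes it straightforward to rerun the retrieval with added or removed sensor keywords and to record exactly which string produced a given set of results.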

The global spatial distribution and temporal dynamics of phenotypic applications using PRS show that (1) the total number of global PRS phenotyping applications has increased every year, especially after 2014; (2) the major continents producing publications over the past two decades have been America and Europe, whereas Asia developed slowly in the first decade but has developed rapidly in the last 10 years, surpassing Europe and drawing level with America (Figure 2A); and (3) most countries in the Americas but only a few countries in Africa are conducting PRS phenotyping research, with China, Germany, and Australia making major contributions in Asia, Europe, and Oceania, respectively (Figure 2B).

Figure 2.

The global spatial distribution and temporal dynamics of the number of phenotypic applications using PRS during 2000–2020.

(A–D) (A) The number of yearly publications by continent, (B) total number of publications by country, (C) yearly publications with different phenotypic sensors, and (D) yearly publications with different phenotypic platforms.

To analyze the specific PRS technologies applied in phenotyping, we analyzed temporal trends in phenotypic sensors and platforms. RGB sensors appeared early and flourished owing to the development of PS in the last 5 years (Figure 2C). Multi-/hyperspectral sensors are the most popular sensors, exceeding 50% of the sensors used in almost all years. Some emerging PS sensors have had fewer applications, but they show a growth trend. For example, LiDAR is mainly used to measure structural phenotypes, and CT is usually used to measure the fine internal structures of plants (Lafond et al., 2015). Furthermore, the simultaneous use of multiple sensors has gradually increased, indicating an increasing demand for multiple phenotypes when studying plant growth.

In terms of PRS platforms (Figure 2D), ground-based platforms are the mainstream application choice, followed by space-borne platforms and airborne platforms. Space-borne platforms have grown steadily, whereas airborne platform applications have increased more rapidly in the last 5 years, mainly owing to the popularity of near-ground aircraft such as drones. Phenotyping applications of multi-platform combinations have also emerged in recent years, but their proportion is small.

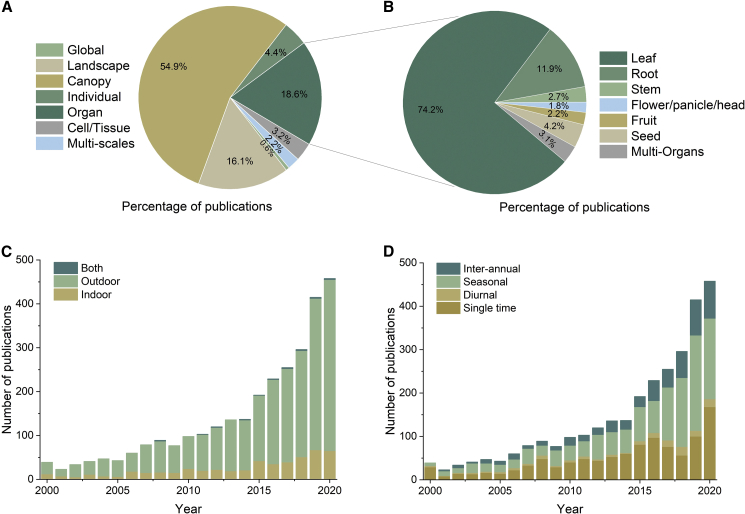

The above PRS sensors and platforms have boosted multi-spatial and multi-temporal PP studies of different organ types in different working environments (Figure 3). Outdoor phenotyping research far exceeds indoor phenotyping research, and there are a few studies that span indoor and outdoor environments (Figure 3C). In addition, phenotyping has been conducted at multiple spatial scales, including the cell, organ, individual, canopy, landscape, and global scales (Figure 3A). Canopy, organ, and landscape are the top three scales, accounting for nearly 90% of the surveyed publications. In terms of organ phenotyping, these publications mainly focus on leaves and roots (Figure 3B). Meanwhile, analysis of multi-temporal studies showed that the early application of PRS to PP consisted mainly of one-off (single-time) observations and later changed gradually to focus on growth cycle applications, including diurnal, seasonal, and inter-annual phenotyping (Figure 3D). Among different multi-temporal phenotyping applications, inter-annual phenotyping was the most popular owing to the low requirement for high-throughput phenotyping, whereas diurnal applications were less common because of the challenges of high-throughput, repeatable, and comparable phenotyping. On the whole, there have been relatively few phenotypic studies across multiple environments, organs, and spatiotemporal scales.

Figure 3.

The number of PRS in PP publications for multi-spatial and multi-temporal PP studies of different organ types in different working environments.

(A–D) (A) Multi-spatial scales: from cell to global; (B) specific organ types; (C) working environments, including indoor, outdoor, and both; (D) multi-temporal scales, including single, diurnal, seasonal, and inter-annual phenotyping.

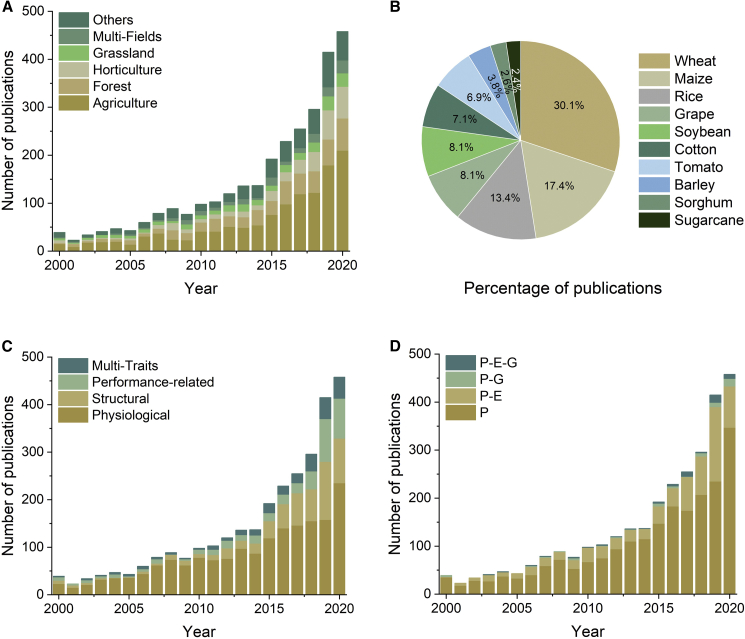

The multiple spatio-temporal applications of PRS in PP can be further analyzed from the perspective of research fields (Figure 4). The growing numbers of published papers show that the main research fields are agriculture, forestry, horticulture, and grasslands (Figure 4A). Because PRS has always been most widely used in agriculture, we next analyzed specific research species. The top 10 commonly studied agricultural species are summarized in Figure 4B, which shows that cereal crops, including wheat, maize, and rice, are the most commonly studied species. Plant phenotypic traits in different fields can be divided into three categories according to Dhondt et al. (2013): physiological, structural, and performance-related; physiological traits account for the largest proportion of studies and performance-related traits the smallest (Figure 4C). In addition, multiple phenotypic traits have only emerged in the last decade. The specific phenotypic traits in each category and the technological readiness level (TRL) of applications using different sensors are evaluated using the methods of Araus et al. (2018) and Jin et al. (2021b) (Table 1). Although phenotyping itself has achieved rapid growth, it has also promoted interdisciplinary research on phenomics (P), genomics (G), and the environment (E). In these interdisciplinary studies, the P × E interaction has been most commonly studied, whereas there have been relatively few studies of P × G and P × E × G interactions (Figure 4D).

Figure 4.

PRS applications in PP.

(A–D) PRS applications in PP in terms of (A) research field, (B) agricultural species, (C) trait class, and (D) interdisciplinary studies.

Table 1.

TRL of different phenotypic traits at different spatial scales using different sensors.

Note: the TRL method was described by Araus et al. (2018) and Jin et al. (2021b).

fAPAR, fraction of absorbed photosynthetically active radiation.

Legend: High TRL; Medium TRL; Low TRL; Not applicable.

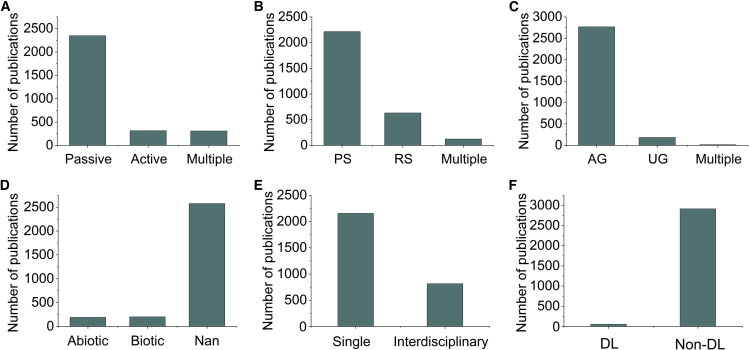

This section summarizes progress in the application of PRS to PP in terms of the technological and practical aspects of PRS. Passive sensors are the most frequently used sensor type (Figure 5A), whereas active sensors (e.g., LiDAR) have gradually attracted the interest of researchers because they rely less on environmental conditions. PS is becoming the dominant approach for plant phenotyping (Figure 5B) owing to its high spatial, temporal, and spectral resolution. The research targets (organs) are mainly aboveground, but a considerable part of the work has focused on underground phenotypes or on a combination of aboveground and underground phenotypes (Figure 5C). In addition, although phenotypic research on abiotic/biotic stress, interdisciplinary approaches, and deep learning are current interests, they still represent only a small proportion of the published literature (Figures 5D–5F). How to leverage PRS to better address these research interests and contribute to multi-omics studies is a question worth pursuing.

Figure 5.

Current PRS technology applications.

(A–F) A summary of current PRS technology applications in PP from different perspectives, including (A) passive versus active sensing, (B) proximal versus remote sensing, (C) aboveground versus underground traits, (D) stress versus non-stress, (E) phenomics versus multi-omics, and (F) deep learning versus non-deep learning.

How to link PRS to G × P × E studies

The development of PP is becoming increasingly important for the promotion of multi-omics studies. Genomic and phenomic association analysis methods have been successfully used in crop improvement breeding, enabling researchers to map chromosome regions that condition complex traits (Carlson et al., 2019), screen drought-resistant germplasm (Wu et al., 2021b), and predict crop yield or quality (Romero-Bravo et al., 2019; Sun et al., 2019). Plant phenotypes are also affected by the environment, showing different types of phenotypic plasticity (Sultan, 2000). Stotz et al. (2021) provided a good interpretation of the G × E interaction of plant phenotyping by studying the differences in plant phenotypic plasticity across biogeographic scales; they emphasized the need to consider environmental factors in order to improve the genetic potential of future plants to adapt to climate change.

However, owing to the interactions between genes and the environment (Dowell et al., 2010), plant phenotypes are comprehensive and spatio-temporal, meaning that the same genotype may give rise to different traits, and different genotypes may give rise to the same trait. Similarly, it is important to note that the same physical matter/material may have different spectra and that similar spectra may correspond to different physical matter/materials, as has been studied for several decades in PRS. Methods that have been proposed for determining the essential relationship between PRS signals and intrinsic properties of matter include linear mixing models such as the geometric method (Drumetz et al., 2020), nonnegative matrix factorization (Fu et al., 2019b), Bayesian method (Shuai et al., 2019), and sparse unmixing (Sun et al., 2020); non-linear mixing models such as bilinear mixing models (Luo et al., 2019); and multilinear mixing models (Li et al., 2019b). For example, Zhou et al. (2019) used a spectral unmixing analysis method, vertex component analysis (VCA), to identify and visualize pathogen infection from hyperspectral images. The results showed that abundance maps calculated by VCA could support high-throughput screening of plant disease infection at the early stages. Yuan et al. (2021) distinguished and amplified the spectral differences between rice and background by integrating the abundance information of the mixed components of rice fields calculated by the bilinear mixing model (BMM) with the spectral index, which also improved the accuracy of the rice yield estimation model. These PRS theories and methods may provide a way to analyze the complexity of phenotypes and multi-omics.
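The idea behind linear mixing models can be illustrated with the simplest possible case: a pixel whose spectrum is a weighted sum of two known endmember spectra. The sketch below is not VCA, BMM, or any of the cited methods; it is a two-endmember least-squares inversion with the abundance constrained to the range 0 to 1. The function name `unmix_two_endmembers` and all spectra are hypothetical values chosen for illustration.

```python
def unmix_two_endmembers(pixel, plant, soil):
    """Return the plant abundance in [0, 1] under a two-endmember linear mixing model.

    The model assumes pixel = a * plant + (1 - a) * soil + noise in every band,
    so the least-squares estimate of a is a projection onto (plant - soil).
    """
    diff = [p - s for p, s in zip(plant, soil)]
    denom = sum(d * d for d in diff)
    if denom == 0:
        raise ValueError("endmember spectra must differ")
    a = sum((x - s) * d for x, s, d in zip(pixel, soil, diff)) / denom
    # Clip to the physically meaningful range of an abundance fraction.
    return min(1.0, max(0.0, a))


# Hypothetical reflectance spectra in four bands (blue, green, red, near-infrared).
plant = [0.04, 0.10, 0.05, 0.50]
soil = [0.10, 0.15, 0.20, 0.30]

# Synthetic mixed pixel built as 70% plant and 30% soil, without noise.
mixed = [0.7 * p + 0.3 * s for p, s in zip(plant, soil)]
abundance = unmix_two_endmembers(mixed, plant, soil)
print(round(abundance, 3))
```

Applying such an inversion to every pixel yields an abundance map. With more than two endmembers or non-linear interactions between components, a constrained or bilinear solver of the kind cited above would be needed instead.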

PRS for plant phenotyping

Data acquisition

PRS enables multi-spatial, multi-temporal, and multispectral data acquisition in a non-invasive and high-throughput manner (Song et al., 2021a; Jangra et al., 2021) (Figure 6). Multispectral data can be collected using various sensors. Graph, shape, and spectral information can be ideally captured by high-resolution RGB cameras, three-dimensional (3D) sensors (e.g., LiDAR), and hyperspectral imagers, respectively. RGB cameras provide fast access to two-dimensional (2D) plant canopy morphology information (Poire et al., 2014; Yang and Han, 2020). Time-of-flight sensors, such as TOF cameras and LiDAR, can produce finer 3D structural phenotypes of plants (Paulus, 2019; Li et al., 2022). Beyond these morphological and structural traits, physiological traits involved in plant biochemical processes can be obtained using multispectral, hyperspectral (Guo et al., 2017), chlorophyll fluorescence (Jiang and Bai, 2019), and thermal imaging technologies (Rajsnerova and Klem, 2012). These physiological traits can quickly and effectively indicate plant growth and developmental status, enabling early assessment of plant vigor (Candiago et al., 2015), early detection of plant pathogens (Rumpf et al., 2010), estimation of total gross primary production (Zhang et al., 2020c), and characterization of changes in stomatal conductance (Vialet-Chabrand and Lawson, 2019). Finally, proximal tomography techniques, such as CT, PET, MRI, and NMR, are recommended for acquiring traits that are not visible to the human eye, such as photoassimilate distribution (Wang et al., 2014), root system structure (Xu et al., 2018), metabolic processes (Phetcharaburanin et al., 2020), plant internal damage (Lyons et al., 2020), and cellular water status (Musse et al., 2017).

Figure 6.

The path of linking PRS to multi-omics by phenotyping and phenomics practices.

In multi-omics analysis, the P2G in black represents the pathway (black arrow) from phenomics to genomics, and the G2P in red represents the pathway (red arrow) from genomics to phenomics.

Multi-spatial data are usually acquired by integrating multiple platforms according to the requirements of a working environment and data quality (Ravi et al., 2019). Ground-based platforms are the most common platforms for data acquisition and can be further divided into indoor and outdoor platforms depending on the working environment. Indoor platforms are usually under controlled conditions and are oriented toward phenotyping at the level of the individual plant (Bi et al., 2021), organ (Sarkar et al., 2021), or cell (Sun et al., 2021), which is particularly advantageous for acquiring comparable phenotypic data. Ground-based outdoor platforms such as gantries, handheld or backpack instruments, and mobile vehicles focus on plant- to canopy-scale phenotyping. In addition, airborne and satellite platforms can be used to obtain phenotypes of plant populations from field to global scales (higher spatial throughput), facilitating the study of environmental and genetic plasticity in the expression of plants in different ecological contexts.

Multi-temporal data can be divided into single-time, diurnal, seasonal, and inter-annual frequencies. In the early years, PRS phenotype monitoring was mostly a one-off exploratory exercise because of the limitations of phenotyping platforms and algorithm performance (Weiss et al., 2020). The advent of satellite imagery products has enabled inter-annual observations of phenology traits on a global scale (Setiyono et al., 2018). The rapid development of airplane and drone technology in recent years has greatly increased the temporal resolution of phenotyping (Holman et al., 2016), allowing for the acquisition of seasonal and even diurnal phenotype data. Thanks to the development of active sensors (e.g., LiDAR; Guo et al., 2018b) and high-throughput phenotyping facilities (e.g., gantries; Guo et al., 2016), diurnal plant phenotyping (e.g., circadian rhythms; Chaudhury et al., 2019; Jin et al., 2021a) can be fully achieved, enabling analysis of plant growth at higher temporal resolution.

Data processing

High-throughput and high-precision trait extraction from PRS data is an essential step from sensors to biological knowledge (Tardieu et al., 2017). Data preprocessing, such as radiometric calibration and geometric alignment, is important for ensuring accuracy in phenotyping. For example, the raw spectral signal (e.g., one-dimensional [1D] curve or 2D image) records digital number values, which need to be converted to physical quantities such as radiance and reflectance (Zhu et al., 2020a). In addition, distortion of information in the spatial domain can occur during PRS image acquisition. Systematic errors are predictable and are usually calibrated at the sensor end (Berra and Peppa, 2020). Random observation errors are usually corrected by geometrically calibrating the PRS image to a known ground coordinate system (such as topographic maps and ground control points) (Han et al., 2020; Liu et al., 2021b). After preprocessing, the data processing pipeline is usually sensor specific. 1D spectral curves, such as the hyperspectral curve, usually require dimension reduction (Luo et al., 2020), wavelet transformation (Paul and Chaki, 2021), and spectral index calculation (Fu et al., 2020). 2D image–based phenotyping (e.g., with RGB images or multi-/hyperspectral images) usually involves image registration (Tondewad and Dale, 2020), classification (Cheng et al., 2020), segmentation (Hossain and Chen, 2019), and trait extraction (Jiang et al., 2020). 3D data, such as LiDAR or image-reconstructed point clouds, typically undergo a processing pipeline of registration (Cheng et al., 2018), denoising (Hu et al., 2021), sampling (Bergman et al., 2020), filtering (Jin et al., 2020a), normalization (Kwan and Yan, 2020), classification/segmentation (Mao and Hou, 2019), and trait extraction (Jin et al., 2021b). These phenotyping methods enable the extraction of structural, physiological, and performance-related traits.
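Two of the steps described above, converting digital numbers to reflectance and computing a spectral index, can be sketched in a few lines. This is a simplified illustration under stated assumptions: the calibration is a linear one-panel correction with a dark reading, the index is the widely used normalized difference vegetation index (NDVI), which the text does not single out, and the function names and all numeric readings are hypothetical.

```python
def dn_to_reflectance(dn, dark_dn, panel_dn, panel_reflectance):
    """Convert a raw digital number to reflectance with a one-panel linear calibration."""
    if panel_dn == dark_dn:
        raise ValueError("panel and dark readings must differ")
    return (dn - dark_dn) / (panel_dn - dark_dn) * panel_reflectance


def ndvi(red, nir):
    """Normalized difference vegetation index from red and near-infrared reflectance."""
    total = nir + red
    return 0.0 if total == 0 else (nir - red) / total


# Hypothetical readings for one canopy pixel; every number is illustrative.
DARK_DN = 100            # sensor reading with no incoming light
PANEL_REFLECTANCE = 0.5  # known reflectance of the reference panel

red = dn_to_reflectance(dn=500, dark_dn=DARK_DN, panel_dn=2100,
                        panel_reflectance=PANEL_REFLECTANCE)
nir = dn_to_reflectance(dn=2500, dark_dn=DARK_DN, panel_dn=2500,
                        panel_reflectance=PANEL_REFLECTANCE)
print(red, nir, ndvi(red, nir))
```

In practice the same two functions would be applied band by band to whole images, and operational workflows often use several panels or sensor-specific calibration coefficients rather than a single reference panel.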

Recently, integrated analysis platforms have been developed to process phenotypic data with improved throughput and efficiency. Image Harvest is a high-throughput image analysis framework (Knecht et al., 2016) that significantly reduces the phenotyping costs required by plant biologists. MISIRoot, an in situ and non-destructive root phenotyping robot, was developed to detect the health of plant roots (Song et al., 2021c). In light of the large volume of phenotypic data, some open-source and cross-platform frameworks have been proposed for flexible and effective phenotyping. PhenoImage is a typical open-source image processing platform that provides simple access to high-throughput/efficient phenotyping for non-computer professionals (Zhu et al., 2021). These high-throughput phenotyping systems with integrated data acquisition and processing have become ideal options for breeders (Wu et al., 2020) because they provide automated and flexible procedures for high-throughput image processing algorithms (Zhang et al., 2019b), paving the way for PP studies with the help of data modeling.

PRS for PP

Data modeling is important for exploring phenotypic knowledge with biological significance from multi-dimensional phenotypes. Basic statistical models mainly suffice for simple preliminary analyses. By contrast, machine-learning methods are superior for high-dimensional and non-linear modeling of phenotypic tasks such as yield prediction (Ashapure et al., 2020). However, machine-learning-based methods usually require handcrafted features, and their performance has not been significantly improved under the accumulation of big data (Guo et al., 2020a). Deep learning, a new branch of data-driven machine learning, can handle more complex phenotypic tasks by performing automatic feature extraction from massive datasets, creating a new paradigm shift in phenomics analysis (Jiang and Li, 2020; Nabwire et al., 2021). For example, wheat ears can be identified and counted from thousands of RGB images of the wheat canopy (Misra et al., 2020). However, deep-learning-based methods usually have higher requirements for data volume and quality.

In addition to empirical and data-driven methods whose generalizability and interpretability may be questioned, mechanistic models based on physical and mathematical quantities are of great interest because of their explainability and generalizability (Berger et al., 2018a). There have been many studies using radiative transfer models (RTMs) as a reliable method for characterizing crop canopy differences, and one of the most popular physical models is PROSAIL (Su et al., 2019a), which has enabled significant progress in the inversion of leaf area index (LAI) and canopy chlorophyll content (CCC). A recent study demonstrated the potential of the PROSAIL model for studying crop growth traits based on UAV RS (Wan et al., 2021). Crop growth models (CGMs) such as WOFOST (CWFS&WUR, the Netherlands) (Van Diepen et al., 1989), DSSAT (University of Florida, USA) (Jones et al., 2003), Agricultural Production System Simulator (APSIM) (CSIRO, Australia) (Keating et al., 2003), STICS (INRA, France) (Brisson et al., 2003), and CropGrow (NJAU, China) (Zhu et al., 2020c) have also received widespread attention. By integrating the interactions among crop genetic potential, environmental effects, and cultivation techniques, crop growth models can simulate the growth and development of crops under different conditions, effectively predicting plant responses to stress (Tang et al., 2009), simulating the effects of climate extremes on crop yield (Pohanková et al., 2022), predicting the performance of varieties in target environments (Lamsal et al., 2017), and explaining how G × E interactions affect crop productivity (Messina et al., 2018). Therefore, CGMs may provide decision support for precision agriculture, variety selection, and management optimization (Kherif et al., 2022).
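Model inversion of the kind described for RTMs is often done with a look-up table (LUT): the forward model is run over a grid of candidate trait values, and the candidate whose simulated reflectance best matches the observation is returned. The sketch below illustrates only this idea. The forward model is a toy saturating function, not PROSAIL, and every parameter value and name is an assumption made for illustration.

```python
import math

# Toy per-band parameters (assumed): bare-soil reflectance, dense-canopy
# reflectance, and an extinction-like coefficient.
BANDS = {
    "red": {"r_soil": 0.20, "r_inf": 0.03, "k": 0.6},
    "nir": {"r_soil": 0.25, "r_inf": 0.50, "k": 0.4},
}


def toy_canopy_reflectance(lai, r_soil, r_inf, k):
    """Toy forward model: reflectance moves from soil to dense canopy as LAI grows."""
    return r_inf + (r_soil - r_inf) * math.exp(-k * lai)


def simulate(lai):
    """Simulate reflectance in every band for a given LAI."""
    return {band: toy_canopy_reflectance(lai, **p) for band, p in BANDS.items()}


def build_lut(lai_min=0.0, lai_max=8.0, step=0.05):
    """Tabulate (LAI, simulated spectrum) pairs on a regular LAI grid."""
    n_steps = int(round((lai_max - lai_min) / step))
    return [(lai_min + i * step, simulate(lai_min + i * step))
            for i in range(n_steps + 1)]


def invert(observed, lut):
    """Return the LAI whose simulated spectrum has the smallest squared error."""
    def cost(entry):
        _, simulated = entry
        return sum((simulated[b] - observed[b]) ** 2 for b in observed)
    return min(lut, key=cost)[0]


lut = build_lut()
observed = simulate(3.0)  # stand-in for a measured canopy spectrum
estimated_lai = invert(observed, lut)
print(f"estimated LAI = {estimated_lai:.2f}")
```

With a real RTM the table would span several parameters (for example LAI, chlorophyll content, and leaf angle), and the inversion is typically regularized because different parameter combinations can produce similar spectra.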

PP for multi-omics

Accurate and rapid analysis of phenomics data can not only help expand our understanding of the dynamic developmental processes of plants but also provide a novel approach to interdisciplinary dialogue among multi-omics, including genomic, epigenomic, transcriptomic, proteomic, metabolomic, and microbiomic studies, which shows significant potential value in integrating multi-dimensional regulatory networks from plant genes to phenotypes (Watt et al., 2020).

Multi-omics analysis pipelines can generally be grouped into two types: phenomics to omics (e.g., P2G, from phenomics to genomics) and omics to phenomics (e.g., G2P, genomics to phenomics) (Figure 6). Combining phenomics with other omics and using association analysis for different times and environments, quantitative trait loci (QTLs) can be located and candidate genes or networks discovered (Furbank, 2009). Optimal traits (ideotypes) can then be designed by genome editing.

PRS-based phenomics has been used to accelerate multi-omics studies for identifying new genetic loci, screening high-quality varieties, and accelerating breeding. In terms of rapid genetic loci identification, non-destructive, dynamic, and high-throughput phenotyping provides high-quality phenomics data for genome-wide association studies (GWASs), leading to rapid identification of the genetic architecture of important agronomic traits (Guo et al., 2018c). For example, Wu et al. (2019a) used a high-throughput micro-CT-RGB imaging system to obtain 739 traits from 234 rice accessions at nine time points. A total of 402 significantly associated loci were identified, and two of them were associated with yield and vigor, thus contributing to the selection of high-yielding varieties. Zhang et al. (2017) quantified 106 maize phenotypic traits using an automated high-throughput phenotyping platform and identified 988 QTLs, including three hotspots. They revealed the dynamic genetic structure of maize growth and provided a new strategy for selecting superior maize varieties. In terms of high-quality genetic selection, the high-throughput phenotyping platform has proven its feasibility. Using phenomics information on plant height, leaf shape, color, and flowering time, 18 stable genetic mutants were successfully screened from a library of several thousand tobacco mutants (Wang et al., 2017). Dynamic high-throughput phenotyping data were used for genomic selection to assess optimal wheat varieties under drought and high-temperature environments, demonstrating that the combination of genetic information and phenomics data can help breeders identify and select quality wheat lines more effectively (Crain et al., 2018). In addition, high-throughput phenotyping sensors have provided important data support for accelerating breeding. The target selection cycle of corn oil content can be reduced from 100 generations to 18 generations by combining MRI and near-infrared sensors (Song et al., 1999; Dudley and Lambert, 2004; Song and Chen, 2004). In all, PP has played a crucial role in indoor germplasm screening and field performance evaluation of crop varieties.
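The core of a phenotype-to-genotype association scan can be sketched as a per-marker regression of a trait on allele dosage. The Python example below is a deliberately naive illustration: it ignores population structure and kinship, which real GWAS pipelines usually correct for with mixed models. The accessions, marker names, and trait values are invented.

```python
import math


def marker_regression(dosages, phenotypes):
    """Regress a trait on allele dosage (0/1/2); return (effect, t statistic)."""
    n = len(dosages)
    mean_x = sum(dosages) / n
    mean_y = sum(phenotypes) / n
    sxx = sum((x - mean_x) ** 2 for x in dosages)
    if sxx == 0:  # monomorphic marker carries no information
        return 0.0, 0.0
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(dosages, phenotypes))
    effect = sxy / sxx
    rss = sum((y - mean_y - effect * (x - mean_x)) ** 2
              for x, y in zip(dosages, phenotypes))
    std_err = math.sqrt(rss / (n - 2) / sxx)
    t_stat = effect / std_err if std_err > 0 else math.inf
    return effect, t_stat


def scan(genotypes, phenotypes):
    """Rank markers by the absolute t statistic of their dosage effect."""
    results = {name: marker_regression(d, phenotypes)
               for name, d in genotypes.items()}
    return sorted(results.items(), key=lambda kv: abs(kv[1][1]), reverse=True)


# Invented example: an image-derived trait for eight accessions, two markers.
trait = [10.1, 9.8, 12.2, 11.9, 14.0, 14.3, 10.0, 13.8]
genotypes = {
    "m1": [0, 0, 1, 1, 2, 2, 0, 2],
    "m2": [0, 1, 2, 0, 1, 2, 1, 0],
}
ranking = scan(genotypes, trait)
top_marker, (top_effect, top_t) = ranking[0]
print(top_marker, round(top_effect, 2))
```

For time-series phenotypes, the same scan can be repeated per time point to distinguish loci that persist across growth stages from loci that act only at specific stages.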

In summary, PRS paves the way for multi-omics studies by providing phenotyping methodology and phenomics knowledge, as shown in Figure 6. By acquiring multi-spatial, multi-temporal, and multispectral data, structural, physiological, and performance-related traits can be extracted with data processing and modeling methods. Empirical, data-driven, and mechanistic methods can integrate multi-dimensional phenotypes and transform data into phenomics knowledge. As a bridge for multi-omics research, PRS-based PP provides unprecedented opportunities, although there are still many challenges.

Challenges and future perspectives

Strengthening the spatial and temporal consistency of PRS data

The plant phenotype involves comprehensive traits (e.g., biomass) that also show spatio-temporal changes with plant growth and development owing to the co-regulation of genomics and the environment (Dowell et al., 2010). PRS enables high-throughput, high-precision, and multi-dimensional phenotyping, benefiting from various available and affordable sensors and platforms. Some considerations are recommended to improve phenotypic data quality. First, choose an appropriate spectral/spatial/temporal resolution based on the phenotypic targets. Second, standardize data collection processes to ensure comparability and improve processing efficiency by following standards published by international organizations (Liping, 2003; Kresse, 2010). More importantly, because of the increasing need for repeatable phenotyping, data sharing, and interdisciplinary collaboration, a more serious challenge for PRS-based phenotyping is to maintain the spatio-temporal consistency of multi-source data.

Spatio-temporal consistency is the key to ensuring the comparability of phenotypes, which is especially important for PS applications. Unlike RS, which observes the earth in similar ways using unified data acquisition, transfer, and processing protocols, PS usually has comparability problems between different sensors due to their different working settings and spatio-temporal resolutions (Aasen et al., 2018). Therefore, it is necessary but challenging to improve the spatio-temporal consistency of PS-based phenotyping. For data acquisition, a space and ground integrated network with wireless sensor networks (WSNs) can be operated to coordinate plant traits from satellites and ground-based systems (Huang et al., 2018). Another promising approach is to develop novel sensors that can fuse data at the signal level. Some efforts have been made to develop hyperspectral LiDAR and fluorescence LiDAR sensors using techniques like RGB color-based restoration (Wang et al., 2020a), geometric invariability-based calibration (Zhang et al., 2019a), and multispectral waveform decomposition (Song et al., 2019). These have initially achieved the simultaneous acquisition of geometric and radiometric information for plant structural and biochemical traits (Du et al., 2016; Bi et al., 2020b).

At the feature and/or decision levels, fusing multi-source data is also beneficial for strengthening spatiotemporal consistency and fully utilizing the complementary advantages of multiple sensors and platforms. Traditional multi-source feature fusion approaches are usually implemented with simple linear regression by assigning weights to different extracted features (Rischbeck et al., 2016; Sobejano-Paz et al., 2020). With the increasing volume, heterogeneity, and non-linear characteristics of PRS data, especially hyperspectral imagery and LiDAR data, advanced machine-learning algorithms (e.g., domain adaptation and transfer learning) have been developed to provide fusion solutions (Ghamisi et al., 2019). Deep learning is a fast-growing field in PRS and has been used for multi-source data fusion. For example, a classification method based on an interleaving perception convolutional neural network (IP-CNN) was developed to fuse spectral and spatial features (Zhang et al., 2022). Similarly, these statistical, machine-learning, and deep-learning methods have also been used to fuse multi-source data at the decision level (Ouhami et al., 2021). For example, the nitrogen nutrition status of multiple organs in almond trees was successfully assessed by integrating spectral reflectances of leaf and root tissues based on the weighted partial least squares (Paz-Kagan et al., 2020). A micro-phenotypic analysis of micronutrient stress was achieved by combining fluorescence kinetics with cell-related traits (e.g., stomatal conductance) in leaves (Mijovilovich et al., 2020). Although integrating multi-source phenotypic traits can deepen our understanding of plant behavior under multiple conditions, the automatic alignment of heterogeneous PRS data and auxiliary data (e.g., geolocated texts and images) is still challenging.
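Decision-level fusion by weighting can be illustrated with a short Python sketch in which per-sensor trait estimates are combined using weights inversely proportional to each sensor's validation error. This inverse-error weighting is one simple, commonly used choice and is an assumption here, not the method of the cited studies. The sensor names and numbers are hypothetical.

```python
def fusion_weights(validation_residuals):
    """Map {sensor: residuals} to normalized inverse mean-squared-error weights."""
    inverse_mse = {}
    for sensor, residuals in validation_residuals.items():
        mse = sum(r * r for r in residuals) / len(residuals)
        if mse == 0:
            raise ValueError(f"zero validation error for {sensor}")
        inverse_mse[sensor] = 1.0 / mse
    total = sum(inverse_mse.values())
    return {sensor: value / total for sensor, value in inverse_mse.items()}


def fuse(predictions, weights):
    """Weighted average of per-sensor predictions of the same trait."""
    return sum(weights[sensor] * value for sensor, value in predictions.items())


# Hypothetical validation residuals (predicted minus measured plant height, m).
residuals = {"lidar": [0.1, -0.1], "multispectral": [0.2, -0.2]}
weights = fusion_weights(residuals)

# Hypothetical per-sensor height estimates for one plot.
fused_height = fuse({"lidar": 1.0, "multispectral": 2.0}, weights)
print(weights, round(fused_height, 3))
```

The sensor with the smaller validation error dominates the fused estimate, which is the intended behavior when sensors differ in reliability for a given trait.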

In addition, data transmission, processing, and storage should include a complete record of metadata, and standardized methods should be adopted to further ensure spatio-temporal data consistency (Jang et al., 2020; Guo et al., 2021). Detailed documentation may include metadata names, acquisition steps (e.g., sensor types and configurations), and experimental conditions (such as experimental design, environmental conditions, time, and geographic location). Metadata also include standard processing methods and unified data formats, which are particularly significant for the integration of multi-source phenomics data and other omics data (Coppens et al., 2017). Metadata will help to build a PP database based on plant ontology. For example, the GnpIS database contains data from indoor and outdoor experiments, from experimental design to data collection, and follows the findable, accessible, interoperable, and reusable (FAIR) principles (Pommier et al., 2019), enabling data to be shared with researchers in different fields over time.
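A metadata record of the kind described above could be represented as a small structured object that is checked for completeness before data are shared. The Python sketch below is illustrative only: the field names are hypothetical and do not follow any particular community standard, and the example values are invented.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AcquisitionMetadata:
    """Hypothetical minimal metadata for one PRS data acquisition."""
    dataset_name: str
    sensor_type: str
    sensor_config: dict
    platform: str
    acquired_at_utc: str          # ISO 8601 timestamp
    latitude_deg: float
    longitude_deg: float
    experimental_design: str
    environment: dict = field(default_factory=dict)
    processing_steps: list = field(default_factory=list)

    def missing_fields(self):
        """List fields that were left empty."""
        return [name for name, value in asdict(self).items()
                if value in ("", None, {}, [])]

    def to_json(self):
        """Serialize to JSON with stable key order for sharing or archiving."""
        return json.dumps(asdict(self), sort_keys=True)


record = AcquisitionMetadata(
    dataset_name="maize_plot_scan",
    sensor_type="LiDAR",
    sensor_config={"scan_rate_hz": 10},
    platform="UAV",
    acquired_at_utc="2021-07-15T02:30:00Z",
    latitude_deg=32.03,
    longitude_deg=118.84,
    experimental_design="randomized complete block",
    environment={"air_temperature_c": 29.5},
)
print(record.missing_fields())
```

Here the processing steps were left empty, so the check flags that field. A shared database would typically validate such records against an agreed schema and ontology terms rather than free-form strings.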

Finally, data sharing is an important step for strengthening interdisciplinary studies and promotes the integration of data sets from multiple sources, creating an unprecedented amount of information that can be reused to generate novel knowledge (Roitsch et al., 2019). For example, an open plant breeding network database, ImageBreed, was designed for image-based phenotyping queries against genotype, phenotype, and experimental design information to train machine-learning models and support breeding decisions (Morales et al., 2020). In particular, Harfouche et al. (2019) considered the sharing of data between individual researchers and breeders to be one of the key challenges for artificial intelligence (AI) breeding in the next decade. They emphasized that data sharing may contribute to deeper insights into data and improve the robustness of breeding programs. Several shared databases have been constructed, such as the GnpIS database (Pommier et al., 2019) described above.

Exploring novel phenotypic traits

Plant phenotypic traits are the “spokespersons” of PP and multi-omics communication, yet many phenotypic traits are still unexplored or unnoticed. PRS may expand the frontier of plant phenotyping and enrich plant science studies.

PRS may help to discover traditionally unobservable phenotypes. The root system is a key organ for keeping plants vigorous and vibrant (Watt et al., 2020), but its phenotyping is easily overlooked owing to limited accessibility and the lack of efficient tools for trait extraction. There are challenges involved in underground phenotyping. As a commonly used non-invasive geophysical technique, ground-penetrating radar has been successfully used to characterize various root system traits. Liu et al. (2022) proposed an automated framework for processing ground-penetrating radar data that provides new opportunities for determining root water content under field conditions and increasing our understanding of plant root system interactions with the environment (soil and water). Research interest in root phenology has increased the application of other 3D visualization techniques such as CT and MRI, and the development of these techniques and new methods has, in turn, increased the potential for understanding complex root systems and their environmental interactions (Topp et al., 2013; Maenhout et al., 2019; Falk et al., 2020). The leaf stoma is another challenging microscopic phenotype that can be observed using advanced PRS technology. Xie et al. (2021a) introduced a high-throughput epidermal cell phenotype analysis pipeline based on confocal microscopy that was combined with QTL techniques to identify the heritability of epidermal traits in field maize, providing a physiological and genetic basis for further studies on stomatal development and conductance.

PRS enables the proposition of novel biologically meaningful traits. Using RGB cameras and deep learning, Yang et al. (2020b) proposed LPR as a novel phenotypic trait indicative of source-sink relationships, revealing unique canopy light interception patterns of ideal-plant-architecture varieties from a solar perspective. Liu et al. (2021a) used a LiDAR camera to develop a new algorithm to segment maize stems and leaves and introduced COV, a new phenotyping trait that characterizes the photosynthetic capacity of plant canopies. The fusion of multi-source PRS data also helps us to understand plant phenotyping traits at a finer scale in multiple dimensions. Shen et al. (2020b) resolved the pattern of biochemical pigmentation with age and species vertical variation in different tree species based on fused hyperspectral and LiDAR data, providing an important potential indicator for quantifying the terrestrial carbon cycle.

PRS boosts time-series phenotyping. Monitoring and tracking plant growth dynamics such as growth duration (Park et al., 2016), flowering rate (Zhang et al., 2019e), filling habit, and senescence dynamics (Han et al., 2018) is a long-term interest of biologists. As early as the eighteenth century, the astronomer Jean-Jacques d'Ortous de Mairan discovered that leaves of the mimosa plant exhibit a regular daily rhythm that persists independently of changes in daylight, suggesting a regular adaptation of the plant to its environment, now known as the circadian rhythm (McClung, 2006). Plant rhythms are important for the study of plant responses to changing environments (Webb, 2003). However, time-series observations of plant phenotyping lag far behind the study of growth rhythms in plant physiology (e.g., the circadian clock). LiDAR, as an active sensing technology, is less affected by environmental light conditions and has been successfully used to explore the seasonal and circadian rhythms of plant growth at the individual and organ levels (Puttonen et al., 2016, 2019; Herrero-Huerta et al., 2018; Jin et al., 2021a). However, in situ measurements occur only at a specific time and place, and the resulting conclusions may not be universal. Recently launched and forthcoming earth observation satellites (e.g., OCO-3) offer a possible solution. These satellites have diurnal sampling capabilities that can increase the exploration of diurnal patterns of carbon and water uptake by different ecosystems and plants at different life stages (Xiao et al., 2021b). These time-series phenotypes derived from PS and RS may help to find new traits/genes (Das Choudhury et al., 2018) and large-scale phenotypic plasticity (Stotz et al., 2021) in response to environmental change, respectively.
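A diurnal rhythm in a time-series phenotype can be summarized by fitting a single cosine with a fixed 24-hour period, often called a cosinor fit. The Python sketch below uses closed-form estimates that are exact only when samples are evenly spaced and cover a whole number of periods, which is an assumption of this example. The leaf-angle series is synthetic and does not come from the cited studies.

```python
import math


def fit_cosinor(times_h, values, period_h=24.0):
    """Estimate mean level, amplitude, and peak time of a periodic signal.

    Assumes evenly spaced samples spanning a whole number of periods.
    """
    n = len(values)
    omega = 2.0 * math.pi / period_h
    mesor = sum(values) / n  # rhythm-adjusted mean
    cos_coef = 2.0 / n * sum(y * math.cos(omega * t)
                             for t, y in zip(times_h, values))
    sin_coef = 2.0 / n * sum(y * math.sin(omega * t)
                             for t, y in zip(times_h, values))
    amplitude = math.hypot(cos_coef, sin_coef)
    peak_time_h = (math.atan2(sin_coef, cos_coef) / omega) % period_h
    return mesor, amplitude, peak_time_h


# Synthetic hourly leaf-angle series over two days, peaking at 14:00.
hours = list(range(48))
leaf_angle = [10.0 + 3.0 * math.cos(2.0 * math.pi * (t - 14) / 24.0)
              for t in hours]
mesor, amplitude, peak_time_h = fit_cosinor(hours, leaf_angle)
print(round(mesor, 2), round(amplitude, 2), round(peak_time_h, 2))
```

The three fitted quantities (mean level, amplitude, and peak time) can themselves be treated as derived traits and compared across genotypes or environments. Irregularly sampled series would need an ordinary least-squares fit instead of these closed-form sums.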

PRS may also be beneficial for multi-scale phenotyping. Combining RS techniques to capture phenotypic variation that connects molecular biology to earth-system science would also be a major research interest (Pallas et al., 2018; Porcar-Castell et al., 2021). Meanwhile, large-scale phenotypic analysis could help us to understand within-species variation in plants and thus reveal whether local provenances have sufficient genetic variation in functional traits to cope with environmental change (Camarretta et al., 2020). Mizuno et al. (2020) described the tolerance of inbred quinoa lines to salt stress under three different landscape conditions and demonstrated the genotype-phenotype relationships for salt tolerance among quinoa lines, providing a useful basis for molecular elucidation and genetic improvement of quinoa. Other studies have analyzed genetic gains at the landscape and individual scales (Dungey et al., 2018; Tauro et al., 2022). It is foreseeable that combining PRS techniques will be beneficial for unraveling more universal genetic mechanisms across different scales.

However, phenotyping discrepancies among scales should be considered (Wu et al., 2019b) because PRS signals are influenced by different targets. For example, the leaf-level spectrum is mainly related to leaf thickness, structure, pigment, and water content, whereas the canopy-level spectrum is influenced by canopy structure (e.g., LAI and leaf inclination distribution) (Berger et al., 2018b). In addition, topographic and climatic factors need to be considered at landscape and even higher levels (Zarnetske et al., 2019). Solutions for multi-scale transformation include (1) physical models, such as scaling from leaf to canopy level based on the PROSPECT and SAIL model (Li et al., 2018c); (2) pixel-based methods, such as fractal theory (Wu et al., 2015), for correcting the scaling effect of the LAI estimated from heterogeneous pixels in coarse-resolution images; and (3) object-based methods, such as simulation zone partitions for separating and clustering large regions into smaller zones with similar crop growth traits and environments (Guo et al., 2018a). Despite these existing multi-scale phenotyping and modeling solutions, more efforts are needed to understand the interactions of phenotypes among different scales that may contribute to multi-omics analysis.

Facilitating multi-omics communication

In the coming years, an important challenge in phenomics will be to identify the genetic and environmental determinants of phenotypes. Multi-omics analysis shows promise for resolving the spatio-temporal regulatory networks of important agronomic traits (Yang et al., 2020a).

Discovering genes according to phenotypic variation has enabled tremendous advances, but high-throughput gene discovery still has a long way to go with PRS-derived phenomics (P2G) (Furbank et al., 2019). "Genetic gain" is a fundamental concept in quantitative genetics and breeding and refers to the incremental performance per unit of time achieved through artificial selection (Araus et al., 2018). The integration of different levels of phenotyping and modern breeding techniques, such as marker-assisted selection (MAS), QTLs, and GWAS, can help maximize genetic gain and further shorten breeding cycles (Xiao et al., 2022). At the population level, Sun et al. (2019) investigated the possibility of using hyperspectral traits (i.e., Normalized Difference Spectral Index) for genetic studies. At the organ level, Nehe et al. (2021) explored genetic variation based on a combination of RGB images and KASP (Kompetitive Allele Specific PCR) markers for marker-assisted selection of drought-tolerant wheat varieties. At the cell/tissue level, Zhang et al. (2021) revealed natural genetic variation and dissected the genetic structure of vascular bundles using GWAS of 48 micro-phenotypic traits based on CT scans. Time-series phenotypes for genetic analysis have also increased our understanding of the genetic basis of dynamic plant phenotypes. Campbell et al. (2019) discovered a locus associated with rice shoot growth trajectories using random regression methods based on continuous visible light images, providing a viable solution for revealing persistent and time-specific QTLs. Therefore, a collaboration between high-throughput PP and functional genomics has enhanced our ability to identify new genetic variants (Grzybowski et al., 2021), thereby accelerating precision breeding and cultivation and bridging the research gap between genomics and phenomics (Araus and Kefauver, 2018; Singh et al., 2019).
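The link between phenotyping and genetic gain can be made concrete with the standard breeder's equation, in which expected gain per year is the product of selection intensity, selection accuracy, and additive genetic standard deviation, divided by the length of the breeding cycle. The Python sketch below evaluates it for two scenarios. All input values are invented for illustration and are not taken from the cited studies.

```python
def genetic_gain_per_year(selection_intensity, accuracy, additive_sd, cycle_years):
    """Breeder's equation: expected genetic gain per year.

    selection_intensity: standardized selection differential (i)
    accuracy: correlation between selection criterion and breeding value (r)
    additive_sd: additive genetic standard deviation of the trait
    cycle_years: length of one breeding cycle in years (L)
    """
    if cycle_years <= 0:
        raise ValueError("cycle_years must be positive")
    return selection_intensity * accuracy * additive_sd / cycle_years


# Hypothetical baseline: top 10% selected (i is about 1.755), 5-year cycle.
baseline = genetic_gain_per_year(1.755, accuracy=0.5, additive_sd=0.5,
                                 cycle_years=5)

# Hypothetical scenario: better phenotyping raises accuracy and shortens cycles.
improved = genetic_gain_per_year(1.755, accuracy=0.6, additive_sd=0.5,
                                 cycle_years=4)
print(round(baseline, 4), round(improved, 4))
```

The equation shows the two routes by which high-throughput phenotyping can raise gain: higher selection accuracy in the numerator and shorter cycles in the denominator.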

Predicting phenotypes according to genetic variation is another direction of the genomics and phenomics combination that is important for guiding gene editing to achieve smart breeding (G2P) (Yang et al., 2014; Ma et al., 2018). With the advent of the breeding 4.0 era, in which phenomics, genomics, bioinformatics, and biotechnology are involved in the conventional breeding pipeline, researchers have identified diverse molecular mechanisms of phenotype formation controlled by the expression of deoxyribonucleic acid (DNA) (Wang et al., 2020b). Therefore, correlating molecular phenotypes with phenotypes at the organism-wide scale can further reveal genetic loci associated with plant phenotypes, enabling the establishment of a complete information flow model from DNA to phenotypic traits (Salon et al., 2017). Furthermore, researchers can use the information flow model to explain the causal relationship of genetic variants to phenotypic variation, remove deleterious alleles, and introduce beneficial alleles, significantly accelerating the process of crop improvement (Rodriguez-Leal et al., 2017; Wang et al., 2020b).

Phenotype differences due to various gene expressions in heterogeneous environments have recently been studied (Friedman et al., 2019). However, current studies do not fully consider multiple environmental factors and environmental dynamics. The surrounding environment (e.g., soil, moisture, light) and plant internal environment (e.g., pH) are specific and different for each genotype or variety (Xu, 2016). An integrated understanding of environmental dynamics and accurate environmental factor measurements are also extremely important for breeding resilient varieties because of G × E interactions (Langstroff et al., 2022). CGMs provide a quantitative framework for linking the effects of genes or alleles to traits. The motivation and potential benefits of CGMs as a G2P trait linkage function for applying quantitative genetic mechanisms to predict expected traits were explored in a recent review (Cooper et al., 2020). When applied to practical production, however, the model achieved only small improvements in accuracy, probably caused by the difficulty of estimating parameters for CGMs (Toda et al., 2020). PRS-based high-throughput phenotyping technologies offer opportunities for parameter improvement of CGMs. Combining PRS and CGMs for phenomics-genomics research is an interesting endeavor (Kasampalis et al., 2018). For example, Yang et al. (2021) integrated RGB images to parameterize the APSIM for the development of varieties with performance in the target environment. Furthermore, developing virtual CGMs to predict crop growth states based on G × E data in real time and thus regulate real plant growth is promising (Liu et al., 2019a).

Although multi-source PRS data provide the above-mentioned opportunities for multi-omics analysis, they also introduce new data processing challenges due to massive data accumulation. Therefore, deep-learning methods have gained wide popularity in recent years (Xiao et al., 2022). Image-based deep-learning methods have been well investigated for phenotypic analyses such as wheat head counting (Khaki et al., 2022) and stress detection (Wang et al., 2022). More deep-learning-based phenotypic applications have been reviewed (Singh et al., 2018; Guo et al., 2020a; Arya et al., 2022). Here, we want to highlight the challenges of deep learning for phenomics based on PRS data. One challenge is to construct large-volume, well-labeled, and openly available datasets. There are already ways to promote open data in the current phenotyping community, such as algorithm competitions. Meanwhile, “sharing the right data right” has been proposed because it allows for scientific reproducibility (Tsaftaris and Scharr, 2019). Another challenge is the development of networks with multimodal data. Multi-task learning (MTL) is an important direction that not only may facilitate multiple task implementation but also can integrate multi-source input. MTL has been proven effective and efficient for phenotyping (Dobrescu et al., 2020). For example, Sun et al. (2022) used MTL to simultaneously predict both yield and grain protein content of wheat from LiDAR and multispectral data. In addition, generative adversarial networks (GANs) are also promising for the analysis of very large, multi-source datasets that lack labeled phenotypic data. Yasrab et al. (2021) predicted plant leaf and root growth from multi-temporal data using a GAN. GAN-based methods may also be coupled with growth models to relate digital pairs of plant simulation and plant growth, supporting smart breeding and intelligent management (Drees et al., 2021).

Concluding remarks

PP has become a bottleneck technology for high-throughput breeding and a key valve for increasing yield production. Our era has witnessed tremendous advances in PRS and PP, but there has not previously been a systematic understanding of the history, applications, and trends of PRS in PP. This review focuses on PRS applications in PP from an interdisciplinary perspective over the last two decades and covers the overall history, systematic application progress, and pipeline to link PRS-based phenomics to multi-omics analysis, giving insights into future challenges and perspectives. To the best of our knowledge, the application analysis covers nearly all aspects of PRS application in PP, including the global spatial distribution and pattern of temporal dynamics, specific PRS technologies (sensor and platform types), phenotypic research fields, working environments, species, and traits. As a bridge for multi-omics research, PRS-based PP involves multi-dimensional data acquisition, processing, and modeling, which can be used to accelerate multi-omics studies to identify new genetic loci, screen high-quality varieties, and accelerate breeding. We also highlight some key directions to better promote the in-depth development of PRS in PP, including strengthening the spatial and temporal consistency of PRS data, exploring novel phenotypic traits, and facilitating multi-omics communication.

Funding

This work was supported by the Hainan Yazhou Bay Seed Lab (no. B21HJ1005), the Fundamental Research Funds for the Central Universities (no. KYCYXT2022017), the Open Project of Key Laboratory of Oasis Eco-agriculture, Xinjiang Production and Construction Corps (no. 202101), the Jiangsu Association for Science and Technology Independent Innovation Fund Project (no. CX(21)3107), the High Level Personnel Project of Jiangsu Province (no. JSSCBS20210271), the China Postdoctoral Science Foundation (no. 2021M691490), the Jiangsu Planned Projects for Postdoctoral Research Funds (no. 2021K520C), and the JBGS Project of Seed Industry Revitalization in Jiangsu Province (no. JBGS[2021]007).

Author contributions

S.J. designed the study. H.T., J.Z., and S.J. prepared figures and tables. S.J., H.T., and S.X. wrote the manuscript. Y.W. and H.T. organized the references. S.J., Y.G., Y.T., Z.L., G.Z., X.D., Z.Z., Y.D., D.J., and Q.G. helped to revise the manuscript. S.J. provided funding support. All authors read and approved the final manuscript.

Acknowledgments

We gratefully acknowledge Qing Li and Songyin Zhang at the Nanjing Agricultural University for their help in preparing figures and tables. No conflict of interest is declared.

Published: June 2, 2022

Footnotes

Published by the Plant Communications Shanghai Editorial Office in association with Cell Press, an imprint of Elsevier Inc., on behalf of CSPB and CEMPS, CAS.

References

- Aasen H., Honkavaara E., Lucieer A., Zarco-Tejada P.J. Quantitative remote sensing at ultra-high resolution with UAV spectroscopy: a review of sensor technology, measurement procedures, and data correction workflows. Rem. Sens. 2018;10:1091–1131. doi: 10.3390/rs10071091. [DOI] [Google Scholar]

- Ajadi O.A., Liao H., Jaacks J., Delos Santos A., Kumpatla S.P., Patel R., Swatantran A. Landscape-scale crop lodging assessment across Iowa and Illinois using synthetic aperture radar (SAR) images. Rem. Sens. 2020;12:3885–3899. doi: 10.3390/rs12233885. [DOI] [Google Scholar]

- Anderegg J., Yu K., Aasen H., Walter A., Liebisch F., Hund A. Spectral vegetation indices to track senescence dynamics in diverse wheat germplasm. Front. Plant Sci. 2020;10:1749. doi: 10.3389/fpls.2019.01749. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Araus J.L., Cairns J.E. Field high-throughput phenotyping: the new crop breeding frontier. Trends Plant Sci. 2014;19:52–61. doi: 10.1016/j.tplants.2013.09.008. [DOI] [PubMed] [Google Scholar]

- Araus J.L., Kefauver S.C., Zaman-Allah M., Olsen M.S., Cairns J.E. Translating high-throughput phenotyping into genetic gain. Trends Plant Sci. 2018;23:451–466. doi: 10.1016/j.tplants.2018.02.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Araus J.L., Kefauver S.C. Breeding to adapt agriculture to climate change: affordable phenotyping solutions. Curr. Opin. Plant Biol. 2018;45:237–247. doi: 10.1016/j.pbi.2018.05.003.

- Arya S., Sandhu K.S., Singh J., Kumar S. Deep learning: as the new frontier in high-throughput plant phenotyping. Euphytica. 2022;218:47. doi: 10.1007/s10681-022-02992-3.

- Asaari M.S.M., Mertens S., Dhondt S., Inzé D., Wuyts N., Scheunders P. Analysis of hyperspectral images for detection of drought stress and recovery in maize plants in a high-throughput phenotyping platform. Comput. Electron. Agric. 2019;162:749–758. doi: 10.1016/j.compag.2019.05.018.

- Ashapure A., Jung J., Chang A., Oh S., Yeom J., Maeda M., Maeda A., Dube N., Landivar J., Hague S., et al. Developing a machine learning based cotton yield estimation framework using multi-temporal UAS data. ISPRS J. Photogrammetry Remote Sens. 2020;169:180–194. doi: 10.1016/j.isprsjprs.2020.09.015.

- Backoulou G.F., Elliott N.C., Giles K., Phoofolo M., Catana V. Development of a method using multispectral imagery and spatial pattern metrics to quantify stress to wheat fields caused by Diuraphis noxia. Comput. Electron. Agric. 2011;75:64–70. doi: 10.1016/j.compag.2010.09.011.

- Baghdadi N.N., El Hajj M., Zribi M., Fayad I. Coupling SAR C-band and optical data for soil moisture and leaf area index retrieval over irrigated grasslands. IEEE J. Sel. Top. Appl. Earth Obs. Rem. Sens. 2016;9:1229–1243. doi: 10.1109/jstars.2015.2464698.

- Bai G., Ge Y.F., Scoby D., Leavitt B., Stoerger V., Kirchgessner N., Irmak S., Graef G., Schnable J., Awada T. NU-Spidercam: a large-scale, cable-driven, integrated sensing and robotic system for advanced phenotyping, remote sensing, and agronomic research. Comput. Electron. Agric. 2019;160:71–81. doi: 10.1016/j.compag.2019.03.009.

- Balenović I., Liang X., Jurjević L., Hyyppä J., Seletković A., Kukko A. Hand-held personal laser scanning: current status and perspectives for forest inventory application. Croat. J. For. Eng. 2021;42:165–183. doi: 10.5552/crojfe.2021.858.

- Bånkestad D., Wik T. Growth tracking of basil by proximal remote sensing of chlorophyll fluorescence in growth chamber and greenhouse environments. Comput. Electron. Agric. 2016;128:77–86. doi: 10.1016/j.compag.2016.08.004.

- Bendel N., Kicherer A., Backhaus A., Köckerling J., Maixner M., Bleser E., Klück H.C., Seiffert U., Voegele R.T., Töpfer R. Detection of grapevine leafroll-associated virus 1 and 3 in white and red grapevine cultivars using hyperspectral imaging. Rem. Sens. 2020;12:1693–1719. doi: 10.3390/rs12101693.

- Berger K., Atzberger C., Danner M., D’Urso G., Mauser W., Vuolo F., Hank T. Evaluation of the PROSAIL model capabilities for future hyperspectral model environments: a review study. Rem. Sens. 2018;10:1–26. doi: 10.3390/rs10010085.

- Berger K., Atzberger C., Danner M., Wocher M., Mauser W., Hank T. Model-based optimization of spectral sampling for the retrieval of crop variables with the PROSAIL model. Rem. Sens. 2018;10:2063. doi: 10.3390/rs10122063.

- Bergman A.W., Lindell D.B., Wetzstein G. Deep adaptive LiDAR: end-to-end optimization of sampling and depth completion at low sampling rates. 2020 IEEE International Conference on Computational Photography (ICCP). 2020:1–11.

- Beriaux E., Lucau-Danila C., Auquiere E., Defourny P. Multiyear independent validation of the water cloud model for retrieving maize leaf area index from SAR time series. Int. J. Rem. Sens. 2013;34:4156–4181. doi: 10.1080/01431161.2013.772676.

- Bériaux E., Waldner F., Collienne F., Bogaert P., Defourny P. Maize leaf area index retrieval from synthetic Quad Pol SAR time series using the water cloud model. Rem. Sens. 2015;7:16204–16225. doi: 10.3390/rs71215818.

- Berra E.F., Peppa M.V. Advances and challenges of UAV SFM MVS photogrammetry and remote sensing: short review. 2020 IEEE Latin American GRSS & ISPRS Remote Sensing Conference (LAGIRS). 2020:533–538.

- Bi K., Niu Z., Gao S., Xiao S., Pei J., Zhang C., Huang N. Simultaneous extraction of plant 3-D biochemical and structural parameters using hyperspectral LiDAR. IEEE Geosci. Rem. Sens. Lett. 2022;19:1–5. doi: 10.1109/lgrs.2020.3025321.

- Bi K., Niu Z., Xiao S., Bai J., Sun G., Wang J., Han Z., Gao S. Non-destructive monitoring of maize nitrogen concentration using a hyperspectral LiDAR: an evaluation from leaf-level to plant-level. Rem. Sens. 2021;13:5025. doi: 10.3390/rs13245025.

- Bi K., Xiao S., Gao S., Zhang C., Huang N., Niu Z. Estimating vertical chlorophyll concentrations in maize in different health states using hyperspectral LiDAR. IEEE Trans. Geosci. Rem. Sens. 2020;58:8125–8133. doi: 10.1109/tgrs.2020.2987436.

- Blanquart J.E., Sirignano E., Lenaerts B., Saeys W. Online crop height and density estimation in grain fields using LiDAR. Biosyst. Eng. 2020;198:1–14. doi: 10.1016/j.biosystemseng.2020.06.014.

- Blunk S., Hafeez Malik A., de Heer M.I., Ekblad T., Fredlund K., Mooney S.J., Sturrock C.J. Quantification of differences in germination behaviour of pelleted and coated sugar beet seeds using x-ray computed tomography (x-ray CT). Biomed. Phys. Eng. Express. 2017;3:044001. doi: 10.1088/2057-1976/aa7c3f.

- Bodner G., Alsalem M., Nakhforoosh A., Arnold T., Leitner D. RGB and spectral root imaging for plant phenotyping and physiological research: experimental setup and imaging protocols. J. Vis. Exp. 2017:1–21. doi: 10.3791/56251.

- Brisson N., Gary C., Justes E., Roche R., Mary B., Ripoche D., Zimmer D., Sierra J., Bertuzzi P., Burger P., et al. An overview of the crop model STICS. Eur. J. Agron. 2003;18:309–332. doi: 10.1016/s1161-0301(02)00110-7.

- Bruning B., Liu H.J., Brien C., Berger B., Lewis M., Garnett T. The development of hyperspectral distribution maps to predict the content and distribution of nitrogen and water in wheat (Triticum aestivum). Front. Plant Sci. 2019;10:1380–1396. doi: 10.3389/fpls.2019.01380.

- Bu H.G., Sharma L.K., Denton A., Franzen D.W. Sugar beet yield and quality prediction at multiple harvest dates using active-optical sensors. Agron. J. 2016;108:273–284. doi: 10.2134/agronj2015.0268.

- Buchaillot M.L., Gracia-Romero A., Vergara-Diaz O., Zaman-Allah M.A., Tarekegne A., Cairns J.E., Prasanna B.M., Araus J.L., Kefauver S.C. Evaluating maize genotype performance under low nitrogen conditions using RGB UAV phenotyping techniques. Sensors. 2019;19:1815–1842. doi: 10.3390/s19081815.

- Camarretta N., Harrison A., Lucieer A.P., Lucieer A.M., Potts B., Davidson N., Hunt M. From drones to phenotype: using UAV-LiDAR to detect species and provenance variation in tree productivity and structure. Rem. Sens. 2020;12:3184–3200. doi: 10.3390/rs12193184.

- Camino C., Zarco-Tejada P.J., Gonzalez-Dugo V. Effects of heterogeneity within tree crowns on airborne-quantified SIF and the CWSI as indicators of water stress in the context of precision agriculture. Rem. Sens. 2018;10:604–622. doi: 10.3390/rs10040604.

- Campbell M., Momen M., Walia H., Morota G. Leveraging breeding values obtained from random regression models for genetic inference of longitudinal traits. Plant Genome. 2019;12:180075. doi: 10.3835/plantgenome2018.10.0075.

- Candiago S., Remondino F., De Giglio M., Dubbini M., Gattelli M. Evaluating multispectral images and vegetation indices for precision farming applications from UAV images. Rem. Sens. 2015;7:4026–4047. doi: 10.3390/rs70404026.

- Cao Q., Miao Y.X., Gao X.W., Liu B., Feng G.H., Yue S.C. Estimating the nitrogen nutrition index of winter wheat using an active canopy sensor in the North China Plain. 2012 First International Conference on Agro-Geoinformatics (Agro-Geoinformatics). 2012:178–182.

- Cao Q., Miao Y.X., Wang H.Y., Huang S.Y., Cheng S.S., Khosla R., Jiang R.F. Non-destructive estimation of rice plant nitrogen status with Crop Circle multispectral active canopy sensor. Field Crop. Res. 2013;154:133–144. doi: 10.1016/j.fcr.2013.08.005.

- Carlson C.H., Gouker F.E., Crowell C.R., Evans L., DiFazio S.P., Smart C.D., Smart L.B. Joint linkage and association mapping of complex traits in shrub willow (Salix purpurea L.). Ann. Bot. 2019;124:701–715. doi: 10.1093/aob/mcz047.

- Casadesús J., Kaya Y., Bort J., Nachit M.M., Araus J.L., Amor S., Ferrazzano G., Maalouf F., Maccaferri M., Martos V., et al. Using vegetation indices derived from conventional digital cameras as selection criteria for wheat breeding in water-limited environments. Ann. Appl. Biol. 2007;150:227–236. doi: 10.1111/j.1744-7348.2007.00116.x.

- Castro W., Marcato Junior J., Polidoro C., Osco L.P., Gonçalves W., Rodrigues L., Santos M., Jank L., Barrios S., Valle C., et al. Deep learning applied to phenotyping of biomass in forages with UAV-based RGB imagery. Sensors. 2020;20:4802–4820. doi: 10.3390/s20174802.

- Chang C.Y., Zhou R.Q., Kira O., Marri S., Skovira J., Gu L.H., Sun Y. An Unmanned Aerial System (UAS) for concurrent measurements of solar-induced chlorophyll fluorescence and hyperspectral reflectance toward improving crop monitoring. Agric. For. Meteorol. 2020;294:108145. doi: 10.1016/j.agrformet.2020.108145.

- Chaudhury A., Ward C., Talasaz A., Ivanov A.G., Brophy M., Grodzinski B., Hüner N.P.A., Patel R.V., Barron J.L. Machine vision system for 3D plant phenotyping. IEEE ACM Trans. Comput. Biol. Bioinf. 2019;16:2009–2022. doi: 10.1109/tcbb.2018.2824814.

- Chen R., Chu T., Landivar J.A., Yang C., Maeda M.M. Monitoring cotton (Gossypium hirsutum L.) germination using ultrahigh-resolution UAS images. Precis. Agric. 2018;19:161–177. doi: 10.1007/s11119-017-9508-7.

- Chen X.J., Mo X.G., Zhang Y.C., Sun Z.G., Liu Y., Hu S., Liu S.X. Drought detection and assessment with solar-induced chlorophyll fluorescence in summer maize growth period over North China Plain. Ecol. Indicat. 2019;104:347–356. doi: 10.1016/j.ecolind.2019.05.017.

- Cheng G., Xie X., Han J., Guo L., Xia G.-S. Remote sensing image scene classification meets deep learning: challenges, methods, benchmarks, and opportunities. IEEE J. Sel. Top. Appl. Earth Obs. Rem. Sens. 2020;13:3735–3756. doi: 10.1109/jstars.2020.3005403.

- Cheng L., Chen S., Liu X., Xu H., Wu Y., Li M., Chen Y. Registration of laser scanning point clouds: a review. Sensors. 2018;18:1641–1666. doi: 10.3390/s18051641.

- Chivasa W., Mutanga O., Biradar C. Phenology-based discrimination of maize (Zea mays L.) varieties using multitemporal hyperspectral data. J. Appl. Remote Sens. 2019;13:017504–017525. doi: 10.1117/1.jrs.13.017504.

- Chivasa W., Mutanga O., Biradar C. UAV-based multispectral phenotyping for disease resistance to accelerate crop improvement under changing climate conditions. Rem. Sens. 2020;12:2445–2472. doi: 10.3390/rs12152445.

- Coast O., Shah S., Ivakov A., Gaju O., Wilson P.B., Posch B.C., Bryant C.J., Negrini A.C.A., Evans J.R., Condon A.G., et al. Predicting dark respiration rates of wheat leaves from hyperspectral reflectance. Plant Cell Environ. 2019;42:2133–2150. doi: 10.1111/pce.13544.

- Converse A.K., Ahlers E.O., Bryan T., Williams P.H., Barnhart T., Engle J.W., Nickles R.J., DeJesus O.T. Positron emission tomography (PET) of radiotracer uptake and distribution in living plants: methodological aspects. J. Radioanal. Nucl. Chem. 2013;297:241–246. doi: 10.1007/s10967-012-2383-9.

- Converse A.K., Ahlers E.O., Bryan T.W., Hetue J.D., Lake K.A., Ellison P.A., Engle J.W., Barnhart T.E., Nickles R.J., Williams P.H., et al. Mathematical modeling of positron emission tomography (PET) data to assess radiofluoride transport in living plants following petiolar administration. Plant Methods. 2015;11:1–17. doi: 10.1186/s13007-015-0061-y.

- Cooper M., Powell O., Voss-Fels K.P., Messina C.D., Gho C., Podlich D.W., Technow F., Chapman S.C., Beveridge C.A., Ortiz-Barrientos D., et al. Modelling selection response in plant-breeding programs using crop models as mechanistic gene-to-phenotype (CGM-G2P) multi-trait link functions. in silico Plants. 2020;3:1–21. doi: 10.1093/insilicoplants/diaa016.

- Coppens F., Wuyts N., Inzé D., Dhondt S. Unlocking the potential of plant phenotyping data through integration and data-driven approaches. Curr. Opin. Syst. Biol. 2017;4:58–63. doi: 10.1016/j.coisb.2017.07.002.

- Cotrozzi L., Lorenzini G., Nali C., Pellegrini E., Saponaro V., Hoshika Y., Arab L., Rennenberg H., Paoletti E. Hyperspectral reflectance of light-adapted leaves can predict both dark- and light-adapted Chl fluorescence parameters, and the effects of chronic ozone exposure on date palm (Phoenix dactylifera). Int. J. Mol. Sci. 2020;21:6441–6459. doi: 10.3390/ijms21176441.

- Crain J., Mondal S., Rutkoski J., Singh R.P., Poland J. Combining high-throughput phenotyping and genomic information to increase prediction and selection accuracy in wheat breeding. Plant Genome. 2018;11:170043–170057. doi: 10.3835/plantgenome2017.05.0043.

- Das Choudhury S., Bashyam S., Qiu Y., Samal A., Awada T. Holistic and component plant phenotyping using temporal image sequence. Plant Methods. 2018;14:35. doi: 10.1186/s13007-018-0303-x.

- Delalieux S., van Aardt J., Keulemans W., Schrevens E., Coppin P. Detection of biotic stress (Venturia inaequalis) in apple trees using hyperspectral data: non-parametric statistical approaches and physiological implications. Eur. J. Agron. 2007;27:130–143. doi: 10.1016/j.eja.2007.02.005.

- Dente L., Satalino G., Mattia F., Rinaldi M. Assimilation of leaf area index derived from ASAR and MERIS data into CERES-Wheat model to map wheat yield. Rem. Sens. Environ. 2008;112:1395–1407. doi: 10.1016/j.rse.2007.05.023.