Abstract

Research assessing the validity and reliability of many forensic science disciplines has been published; however, the quality of this research varies depending on the methodologies employed. This was a major point of contention with the United States' President's Council of Advisors on Science and Technology, who recognized the existing literature but found the majority lacking because of methodological issues. Questionable scientific methodologies have undermined the forensic science community's ability to defend the scientific foundations and examination protocols used to examine evidence in criminal cases. Such scientific failures have significant legal implications. Registered reports, which strengthen the quality of scientific research and reliability of laboratory protocols, can provide transparency, validity and a stronger scientific foundation for forensic science.

Keywords: registered reports, forensic science, quality, methodology, justice

1. Forensic methods in question

Forensic science is a cornerstone of advancing investigations or establishing facts in question to support criminal or civil litigation. Interpretations and conclusions made by specialists following forensic examinations have life-changing consequences for individuals and society. Thus, it is imperative that interpretations and conclusions are based on sound scientific principles, methods and practices. Over the years, concerns have grown within the scientific community about the validity and reliability of the scientific methodologies underpinning various forensic science disciplines [1,2]; for example, ‘PCAST found few black-box studies appropriately designed to assess scientific validity of subjective methods’ [1, p. 68]. Although thousands of research studies related to forensic science methods and applications have been published in peer-reviewed journals over the years [3], the validity of these studies varies depending on the rigour of the underlying scientific methodology employed.

Issues concerning the quality of existing research supporting forensic science became a key point of contention in 2009, when the National Research Council (NRC) questioned the extent to which there is science in any given forensic science discipline [2]. In 2016, the President's Council of Advisors on Science and Technology (PCAST) applied a critical lens to the existing body of research often referenced by the forensic science community as the bedrock of their examination methodologies and was largely dismissive of a significant proportion of studies intended to assess the accuracy and reliability of many forensic disciplines [1]. Many within the forensic science community, including several major forensic science professional organizations, were quick to publicly disagree with the findings from PCAST and reaffirm the credibility of forensic science methods (e.g. [4–6]). They took issue with PCAST's decision to discount and ignore research on methodological grounds, such as small sample sizes and a lack of open-set, black-box designs. Although significant negative reactions across the forensic science community caused PCAST to reflect on its findings and recommendations and re-engage relevant stakeholders, it ultimately stood firm in its assessments [7]. These concerns are not limited to PCAST. More recently, controversy has arisen over the methodological design and calculation of error rates, specifically how ‘inconclusive’ decisions are accounted for in error rate studies [8–15]. These concerns have raised questions as to whether courts and the public have been misled when presented with statistics touting the reliability of forensic evidence.
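To make the dispute over ‘inconclusive’ decisions concrete, the following minimal Python sketch computes an error rate from the same set of examiner decisions under three illustrative conventions. The function name, the three conventions as labelled here and the counts are assumptions made for illustration; they are not taken from any of the cited studies.

```python
def error_rates(correct: int, errors: int, inconclusive: int) -> dict[str, float]:
    """Return the error rate under three illustrative treatments of inconclusives."""
    if min(correct, errors, inconclusive) < 0:
        raise ValueError("counts must be non-negative")
    conclusive = correct + errors
    total = conclusive + inconclusive
    if conclusive == 0:
        raise ValueError("need at least one conclusive decision")
    return {
        # Inconclusives dropped from the denominator entirely.
        "excluded": errors / conclusive,
        # Inconclusives kept in the denominator but never counted as errors.
        "counted_as_non_error": errors / total,
        # Inconclusives treated as errors.
        "counted_as_error": (errors + inconclusive) / total,
    }


# Hypothetical counts, invented for illustration only.
rates = error_rates(correct=900, errors=10, inconclusive=90)
for name, rate in rates.items():
    print(f"{name}: {rate:.1%}")
```

With these hypothetical counts, the reported error rate is roughly 1.1%, 1.0% or 10.0% depending solely on the convention chosen, which is why the choice needs to be fixed and justified in the study design rather than after the results are known.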

Irrespective of whether one agrees with the PCAST and others within the scientific community that have been critical of the methodological design of forensic science research, the controversy has nevertheless cast a shadow over the credibility of many forensic science disciplines. This has caused some litigators and courts to question the reliability of many traditional forensic science practices and challenge the admissibility of forensic evidence. This is a problem that cannot be addressed through mere arguments and critiques in response. Instead, it will require a fundamental shift in how forensic science research is approached.

2. Reliable methodologies underpin legal admissibility

One of the most important aspects of the admission of, and reliance on, forensic evidence in criminal trials is the extent to which a forensic discipline is founded on a reliable scientific methodology [2]. However, in 2009 the NRC noted that while ‘[f]orensic science examiners need to understand the principles, practices and contexts of scientific methodology, as well as the distinctive features of their specialty’, they ‘face pressure to sacrifice appropriate methodology for the sake of expediency’ [2, pp. 23, 24, 27]. This environment flies in the face of statutes and rulings that emphasize the court's focus only on the expert's foundational principles and applied methodology and not on any conclusions that result from them. Expert conclusions and opinions based on a methodology that diverges significantly from the procedures accepted by recognized authorities in the field cannot be generally accepted as a reliable technique [2].

For example, US Federal Rule of Criminal Procedure 16(a)(1)(G) states,

At the defendant's request, the government must provide a written summary of any testimony that the government intends to use under Rules 702, 703, or 705 of the Federal Rules of Evidence during its case-in-chief at trial. If the government requests discovery under subdivisions (b)(1)(C)(ii) and the defendant complies, the government must, at the defendant's request, give to the defendant a written summary of testimony that the government intends to use under Rules 702, 703, or 705 of the Federal Rules of Evidence as evidence at trial on the issue of the defendant's mental condition. The summary provided under this subparagraph must describe the witness's opinions, the bases and reasons for those opinions, and the witness's qualifications (emphasis added) [16].

Federal Rule of Evidence 702 states, in part,

A witness who is qualified as an expert by knowledge, skill, experience, training, or education may testify in the form of an opinion or otherwise if…(c) the testimony is the product of reliable principles and methods; and (d) the expert has reliably applied the principles and methods to the facts of the case (emphasis added) [17].

The USA is not unique in these requirements. The Supreme Court of Canada has adopted Rule 702's reliability requirements in its expert evidence doctrine (White Burgess Langille Inman v Abbott and Haliburton Co, 2015). Similarly, several Australian jurisdictions require expert witnesses to provide the court with the ‘necessary scientific criteria for testing the accuracy’ of their opinions as a requirement for admitting that evidence (Makita Pty Ltd v Sprowles, 2001).

As a result, forensic science is on the front line of society's current ‘crisis of expertise’ [18]. In other words, many fields struggle to maintain legitimacy in the face of politically motivated attacks on scientific findings. Legitimacy takes on a different meaning and level of importance in forensic science, in which sound, valid methodologies enhance the credibility of the field to courts and the public. Because forensic science is a public good, the public expects transparency and an absence of reporting bias in the science that informs the criminal justice process. Repeatedly exaggerating findings has consequences for their credibility, regardless of their veracity [19]. Once outside the laboratory, the conclusions are in the hands of lawyers and judges, who often have insufficient training and background in scientific methodology. Legal actors often fail to fully comprehend the approaches employed by different forensic science disciplines and the reliability of forensic science evidence offered in trials [2]. Therefore, it is up to the scientific community, and the forensic science community specifically, to ensure that methodologies are sound and valid before being applied in casework. How best to vet the myriad methods used by forensic service providers worldwide? We contend that methodology, the bedrock of any science's legitimacy and reliability, presents a way forward via registered reports.

3. Registered reports

Registered reports are a publishing format that promotes rigorous methodology and adherence to that methodology by conducting peer reviews prior to the results being known. Authors submit ‘Stage 1’ manuscripts (including theory, hypotheses, methods and analysis plans) prior to collecting data—or looking at the data if the data are pre-existing. Stage 1 manuscripts are peer-reviewed based on methodological quality and their ability to address research questions. If they pass this process, then the ‘Stage 2’ manuscript (the approved protocol plus results) will be published if the authors follow through with the accepted methodology.

The registered report format is designed to reward best practices in the design of transparent, reproducible methods which lead to unambiguous reporting, regardless of the outcome. It eliminates a variety of questionable research practices by removing the incentive to selectively report results and increasing transparency such that selective reporting would be easy to detect. The format also allows reviewers to help improve the methods by suggesting more appropriate statistical models and encouraging higher powered designs, among other benefits.

Early research on registered reports suggests that these salutary aims have been achieved. Registered reports are associated with more realistic proportions of positive results [20] and a perceived higher quality of research [21]. As a result, they seem to improve research quality while reducing publication bias. More than 300 journals now accept registered reports [22]. Recently, PLOS Biology observed an 88% acceptance rate for registered reports, with substantial revisions for most submissions, compared to 50% acceptance in its regular track [23]. The 88% rate is possibly due to reviewers being able to improve the methods during peer review and ensure that the methodologies are sound from the outset, before resources are expended and the research is conducted. Registered reports seem to improve the quality and credibility of the published literature in several fields.

4. Registered reports as a tool for improving validated methods

While forensic practitioners focus on daily operational activities, many are motivated to involve themselves in scientific research and development as well as the validation studies required for accreditation and admissibility. Depending on the scientific health of, and cultural changes within, their organizations, practitioners may have limited access to qualified collaborators. At the same time, practitioners bring an important perspective, along with direct access to specialist tools, expertise and other qualified practitioners in allied disciplines. How these in-house projects are designed, what is performed and what results are obtained, let alone their validity, remain largely unknown, which leaves them less defensible than studies with more transparent methodological designs.

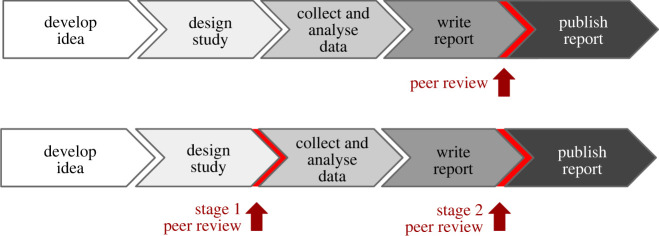

Notably, technical guidelines regarding the performance of validation studies have been published for practitioners working in operational environments (e.g. ENFSI and UK Regulator); however, in-house studies still lack scientific accountability with respect to the quality, validity and reliability of the methods applied and the results reported. Registered reports provide that accountability and, more importantly from the practitioner's perspective, a set of clear instructions to ensure a robust and more defensible approach (figure 1; for a complete overview of the process, see https://osf.io/rr/). Although it may seem a daunting task at first glance, the field should aim to encourage a more transparent, consistent platform that promotes scientific integrity. Currently, countless in-house validation studies and other research and development studies remain unpublished, hidden or lost behind organizational lines. The community would be better served by a more transparent process that ensures the results of these studies are published for the wider forensic community. A registered report would be published regardless of the study's outcomes; this feedback is crucial for forensic science to move forward. Publication of results requires a valid study design, which the registered report process makes explicit and subjects to peer review.

Figure 1. Traditional research publication process and the registered report protocol compared. Redrawn from: https://osf.io/rr.

5. Registered reports can improve forensic methods and justice outcomes

Forensic Science International: Synergy was the first journal in forensic science to adopt registered reports. The journal's mission holds that, because forensic services are sciences integral to a just society governed by the rule of law, they are unarguably a public good and should be accessible to anyone. Open, transparent science helps to promote justice, and we believe that registered reports support this mission.

To this end, registered reports can provide more reliable results by vetting methods prior to data collection, yielding a more realistic proportion of positive results and addressing issues with method quality before conclusions are drawn. Moreover, registered reports are in line with many laws that provide for methodological transparency in the justice process. For example, the ability to fully examine evidence tendered against an accused is a component of the right to a fair trial in many jurisdictions. In the United States, the Sixth Amendment of the Constitution provides that a person accused of a crime has the right to confront a witness against them in a criminal action. This ensures that witnesses testify under oath and understand the serious nature of the trial process, allows the accused to cross-examine witnesses who testify against them, and, crucially for registered reports, allows jurors to assess the credibility of a witness. The witness's credibility stems, in large part, from the validity of their methods and the conclusions drawn from them. On the stand, the witness would be able to discuss the vetting of the method and its increased validity through the registered reports process, and would thus be better seen as a neutral arbiter of science rather than an advocate using untested methods only they can make work; see [24] for a particularly egregious example of a lack of validation.

Several jurisdictions also exclude evidence when its probative value is exceeded by the danger of unfair prejudice [25]. The probative value is undermined by poor methodology and a lack of methodological transparency. Methodological opacity also makes evidence untestable by the adverse party, heightening its unfair prejudice and potential exclusion if it infringes on due process (e.g. relating to the Fifth and Fourteenth Amendments to the Constitution).

6. Conclusion

In summary, registered reports can provide a strong evidence base for forensic science. They align with principles of justice, such as the right to examine and confront evidence, and with the purposes that underlie the rules of evidence in many jurisdictions. Researchers, editors and other stakeholders can sign an open letter to help bring registered reports to other forensic science journals. The profession and discipline of forensic science must demonstrate validity and transparency to its stakeholders and the public it serves.

Acknowledgements

The authors would like to thank the reviewers of the manuscript for their helpful and professional comments.

Footnotes

Data accessibility

No data supporting this work were generated.

Authors' contributions

M.M.H.: conceptualization, writing—original draft and writing—review and editing; J.C.: conceptualization, writing—original draft and writing—review and editing; H.S.: conceptualization, writing—original draft and writing—review and editing; C.G.: conceptualization, writing—original draft and writing—review and editing.

All authors gave final approval for publication and agreed to be held accountable for the work performed therein.

Conflict of interest declaration

All the authors are associated with the journal Forensic Science International: Synergy.

Funding

This work was not funded by any outside entity.

References

- 1. President's Council of Advisors on Science and Technology (PCAST). 2016. Forensic science in criminal courts: ensuring scientific validity of feature-comparison methods. Report to the President. Washington, DC. See https://obamawhitehouse.archives.gov/sites/default/files/microsites/ostp/PCAST/pcast_forensic_science_report_final.pdf.

- 2. US National Research Council. 2009. Strengthening forensic science in the United States: a path forward. Washington, DC: National Academies of Science.

- 3. Jesubright JJ, Saravanan P. 2014. A scientometric analysis of global forensic science research publications. Library Phil. Practice 1, 1024.

- 4. American Society of Crime Laboratory Directors (ASCLD). 2016. Statement on September 20, 2016 PCAST Report on Forensic Science. See https://www.ascld.org/wp-content/uploads/2016/10/ASCLD-Statement-on-PCAST-Report.pdf.

- 5. Association of Firearms and Tool Mark Examiners (AFTE). 2016. AFTE Response to the September 2016 PCAST Report. See https://afte.org/news/afte-response-to-the-september-2016-pcast-report.

- 6. Federal Bureau of Investigation (FBI). 2016. Comments on: President's Council of Advisor on Science and Technology Report to the President. Forensic science in federal criminal courts: ensuring scientific validity of pattern comparison methods. See https://www.fbi.gov/file-repository/fbi-pcast-response.pdf/view.

- 7. President's Council of Advisors on Science and Technology (PCAST). 2017. An addendum to the PCAST report on forensic science in criminal courts. Report to the President. See https://obamawhitehouse.archives.gov/sites/default/files/microsites/ostp/PCAST/pcast_forensics_addendum_finalv2.pdf.

- 8. Dror IE, Scurich N. 2020. (Mis)use of scientific measurements in forensic science. Forensic Sci. Int. 2, 333–338. (doi:10.1016/j.fsisyn.2020.08.006)

- 9. Weller TJ, Morris MD. 2020. Commentary on: I. Dror, N. Scurich (Mis)use of scientific measurements in forensic science. Forensic Sci. Int. 2, 701–702. (doi:10.1016/j.fsisyn.2020.10.004)

- 10. Biedermann A, Kotsoglou KN. 2021. Forensic science and the principle of excluded middle: ‘inconclusive’ decisions and the structure of error rate studies. Forensic Sci. Int. 3, 100147. (doi:10.1016/j.fsisyn.2021.100147)

- 11. Arkes HR, Koehler JJ. 2021. Inconclusives and error rates in forensic science: a signal detection theory approach. Law Probab. Risk 20, 153–168. (doi:10.1093/lpr/mgac005)

- 12. Dorfman AH, Valliant R. 2022. Inconclusives, errors, and error rates in forensic firearms analysis: three statistical perspectives. Forensic Sci. Int. 5, 100273. (doi:10.1016/j.fsisyn.2022.100273)

- 13. Hicklin RA, et al. 2022. Accuracy and reliability of forensic handwriting comparisons. Proc. Natl Acad. Sci. USA 119, e2119944119. (doi:10.1073/pnas.2119944119)

- 14. Kukucka J. 2022. In studies of forensic errors, the devil is in the details. Psychology Today. See https://www.psychologytoday.com/gb/blog/reasonable-doubt/202208/in-studies-forensic-errors-the-devil-is-in-the-details.

- 15. Dror IE. 2022. The use and abuse of the elusive construct of inconclusive decisions. Law Probab. Risk mgac008. (doi:10.1093/lpr/mgac008)

- 16. US Federal Rules of Criminal Procedure, Rule 16. Discovery and Inspection.

- 17. US Federal Rules of Evidence, Article VII. Opinions and Expert Testimony, Rule 702. Testimony by Experts.

- 18. Medvecky F, Leach J. 2019. An ethics of science communication. Cham, Switzerland: Palgrave Macmillan.

- 19. Ibaviosa CM, Chin JM. 2016. Beyond CSI: calibrating public beliefs about the reliability of forensic science through openness and transparency. Sci. Justice 62, 272–283.

- 20. Scheel AM, Schijen MR, Lakens D. 2021. An excess of positive results: comparing the standard psychology literature with registered reports. Adv. Methods Practices Psychol. Sci. 4, 25152459211007467. (doi:10.1177/25152459211007467)

- 21. Soderberg CK, Errington TM, Schiavone SR, Bottesini J, Thorn FS, Vazire S, Esterling KM, Nosek BA. 2021. Initial evidence of research quality of registered reports compared with the standard publishing model. Nat. Hum. Behav. 5, 990–997. (doi:10.1038/s41562-021-01142-4)

- 22. Chambers CD, Tzavella L. 2022. The past, present and future of registered reports. Nat. Hum. Behav. 6, 29–42. (doi:10.1038/s41562-021-01193-7)

- 23. Pariente N, PLOS Biology Staff Editors. 2022. Premiering pre-registration at PLOS Biology. PLoS Biol. 20, e3001611. (doi:10.1371/journal.pbio.3001611)

- 24. Balko R, Carrington T. 2018. The cadaver king and the country dentist: a true story of injustice in the American South. New York, NY: PublicAffairs.

- 25. Chin J, Cullen HJ, Clarke B. In press. The prejudices of expert evidence. Monash Law Rev.
