Abstract

Microcalcifications (MCs) are the main signs of precancerous cells, and the development of computer-aided systems for their detection remains a challenge for researchers in this field. In this paper, we propose a system for MC detection based on the multifractal approach that classifies mammographic regions of interest (ROIs) as normal (healthy) or abnormal (containing MCs). The proposed method is divided into four main steps. The first is a mammogram pre-processing step based on breast selection, breast density reduction using a haze removal algorithm, and contrast enhancement using multifractal measures. The second step consists of extracting the normal and abnormal ROIs and calculating the multifractal spectrum of each ROI. The third step is the extraction of the multifractal features from the multifractal spectrum and of the gray-level co-occurrence matrix (GLCM) characteristics of each ROI. The last step is the classification of ROIs, for which three classifiers are tested: k-nearest neighbors (KNN), decision tree (DT), and support vector machine (SVM). The system is evaluated on images from the INbreast database (308 images) with a total of 2688 extracted ROIs (1344 normal, 1344 with MCs) from different BI-RADS classes. In this study, the SVM classifier gave the best classification results, with a sensitivity, specificity, and precision of 98.66%, 97.77%, and 98.20%, respectively. These results compare favorably with those reported in the literature.

Keywords: Multifractal, Multifractal spectrum, Mammogram pre-processing, Microcalcification detection, Multifractal features and ROI classification

Introduction

More than two million women worldwide are affected by breast cancer, and more than five hundred thousand die of the disease each year [1]. Awareness, screening, and early detection remain the only means of fighting this scourge. Mammography is the most effective and most widely used X-ray imaging technique for breast cancer detection. However, the complex architecture of the breast, the distribution of breast density, the image quality, and the radiologist’s level of experience all influence the reading and analysis of the mammogram. As a result, the radiologist can miss small abnormal details such as microcalcifications (MCs), which are calcium deposits representing the main signs of precancerous cells. Hence, researchers have devoted great effort to developing computer-aided detection or diagnosis (CAD) systems that assist radiologists in detecting abnormalities and diagnosing breast cancer. These systems increase the cancer detection rate at an early stage and reduce false positive interpretations.

The classification of mammographic regions of interest (ROIs) has been the subject of several scientific studies and remains a challenge for researchers in this field, given the considerable assistance it offers radiologists in the early detection of breast cancer [2]. Most of the proposed CAD systems for the classification of mammographic ROIs go through four major stages:

Pre-processing: This stage consists of removing noise and artifacts from the mammogram, selecting the breast, removing the pectoral muscle and the background, and, finally, enhancing the contrast. Many techniques for mammogram enhancement have been proposed in the literature. Table 1 summarizes all these techniques [3].

ROI extraction: This is done either by cropping (with the help of the ground truth or the annotation of the expert) or by segmentation approaches. Different ROI sizes were considered in different papers.

Feature extraction: Different sets of features are computed in the literature: statistical features (mean, standard deviation, skewness, uniformity, kurtosis, smoothness…), textural features using gray-level co-occurrence matrix (GLCM) or gray-level run length matrix (GLRLM) (contrast, homogeneity, correlation, entropy, sum average, sum entropy, difference variance, difference entropy, inverse difference moments…), shape features (area, perimeter, convexity, circularity, rectangularity, compactness, area ratio, perimeter ratio…), and multiscale features (wavelets coefficients, directional sub-bands coefficients…).

Classification: Extracted features are fed to a machine-learning algorithm to classify the ROI (normal/abnormal, benign/malignant). A large number of machine learning algorithms have been proposed in the literature, and the most frequently used models are support vector machines (SVM) and artificial neural networks (ANN) [2].

Table 1.

Mammogram enhancement techniques in the literature [3]

| Technique | Methods |
|---|---|
| Contrast stretching | Adaptive neighborhood processing: fixed neighborhood approaches; adaptive neighborhood contrast enhancement; gradient and local statistics based enhancement. Histogram-based enhancement techniques: histogram equalization; adaptive histogram equalization (AHE); contrast limited adaptive histogram equalization (CLAHE); histogram modified local contrast enhancement; fuzzy clipped CLAHE (FC-CLAHE). Unsharp masking (UM)-based enhancement techniques: linear and order statistics UM; quadratic UM; rational, cubic, and adaptive UM; UM based on region segmentation; non-linear UM |
| Region based and feature based techniques | Region-based enhancement techniques: region growing algorithm; region based algorithm using watershed segmentation; direct image contrast enhancement algorithm. Feature-based enhancement techniques: wavelet-based multi-resolution techniques; Laplacian pyramid-based techniques; miscellaneous multi-resolution techniques |
| Non-linear techniques | Morphological filtering; fuzzy-based enhancement techniques; enhancement using non-linear filtering |

However, we also find in the literature some CAD systems that use the whole mammogram to detect the suspicious region without going through the stage of ROI extraction. Some other CAD systems do not go through the pre-processing stage. Other CAD systems initially extract a large number of features, then go through the stage of relevant feature selection.

Moreover, different problems have been studied in the classification of mammographic ROIs: the classification of normal and abnormal ROIs, and the classification of benign and malignant ROIs. The suspicious ROIs are either masses [4–7] or MCs [8–11], or the type of lesion is left unspecified [12–18].

In this work, we are interested in the detection of ROIs containing MCs, in other words, in classifying ROIs as normal or abnormal (containing MCs). We therefore review some recent works that addressed the same problem.

In [19], the authors proposed a system based on the wavelet transform for the classification of normal and abnormal ROIs with MCs, followed by the classification of benign and malignant MCs. Without a pre-processing step, they worked directly on the cropped ROIs, from which they extracted statistical and multi-scale features based on the Haar wavelet transform, interest points, and corners. In total, 50 features were fed to a random forest classifier. The system was tested on the BCDR database with a total of 192 ROIs (96 normal, 96 abnormal). The obtained accuracy, sensitivity, and specificity were 95.83%, 96.84%, and 95.09%, respectively.

In [20], the authors proposed a system based on pattern recognition and size prediction of MCs. The pattern of an MC was found from its physical characteristics (the reflection coefficient and mass density of the lesion). The detected MC pattern was then projected as a 3D image to find the size of the MC. This was tested on 100 images from the DDSM database (100 abnormal, 10 normal). The obtained accuracy, sensitivity, and specificity were 99%, 99%, and 100%, respectively.

In [21], the authors compared three feature extraction techniques: the rotation invariant local frequency (RILF), the local binary pattern (LBP), and segmented fractal texture analysis (SFTA); the RILF technique gave the best results. The IRMA database was used with a total of 1620 ROIs (932 normal, 688 abnormal). The extracted histogram features were fed to an SVM classifier. The obtained accuracy, sensitivity, and specificity were 91.10%, 98.04%, and 81.17%, respectively.

In [22], the authors proposed a system based on transfer learning for the classification of normal and abnormal ROIs containing MCs or masses. They started with a pre-processing step that used morphological operations, binarization, and component selection. ROIs were cropped and resized. Features were extracted and classified using ResNet. The system was tested on the mini-MIAS database and achieved an accuracy of 95.91%.

In [23], the authors proposed a system for the classification of normal and abnormal ROIs with MCs, followed by the classification of benign and malignant MCs. They started by extracting the ROIs, which were pre-processed using a pixel assignment-based spatial filter to enhance the visibility of MCs in mammograms. Then, six statistical features were extracted and fed to SVM, multilayer perceptron neural network (MLPNN), and linear discriminant analysis (LDA) classifiers. This was tested on 219 mammograms extracted from the DDSM database (124 normal, 95 abnormal). The obtained accuracy, sensitivity, and specificity were 90.9%, 98.4%, and 81.3%, respectively.

In [24], the authors proposed an improvement of the previous work [19] that uses only two features (interest points and interest corners), with a total of 260 ROIs (130 normal, 130 abnormal). The obtained accuracy, sensitivity, and specificity were 97.31%, 94.62%, and 100%, respectively, using a random forest classifier.

In this paper, we propose a novel approach to the classification of normal and abnormal ROIs containing MCs based on multifractal analysis. First, the original mammogram is pre-processed using the mammogram enhancement approach proposed in [25], which is based on multifractal measures. Then, the ROI is cropped automatically according to the expert annotation. For each ROI (normal and abnormal), the multifractal spectrum is computed using two different methods and five features are extracted. Finally, the multifractal features combined with GLCM features are fed to three classifiers (SVM, KNN, and DT) for the classification of normal and abnormal ROIs.

The paper is organized as follows: the “Materials and Methods” section presents the multifractal theory and the proposed approach; the “Results and Discussion” section discusses the obtained results; and the “Conclusion” section closes the paper.

Materials and Methods

Fractal and Multifractal Theories

The mathematician Benoît Mandelbrot introduced the concept of fractals to describe complex objects that Euclidean geometry cannot describe. These objects are characterized by scale invariance, also called self-similarity: similar structures are seen on all scales, i.e., the object is composed of smaller parts, each of which is a smaller copy of the whole. The main parameter used to describe the geometry and heterogeneity of these irregular objects, and to quantify their internal structure repeated over a range of scales, is the fractal dimension ($D$). For a fractal object, the number of features of a certain size $\varepsilon$, $N(\varepsilon)$, varies as [26]:

$$N(\varepsilon) \sim \varepsilon^{-D} \tag{1}$$

Equation (1) is the scaling (or power) law that describes the size distribution of the object.

One of the simplest ways to calculate the fractal dimension is to take the logarithmic ratio of the change in detail ($N$) to the change in scale ($\varepsilon$): $D = \log N / \log(1/\varepsilon)$. The most widely used method to estimate $D$ is the box-counting technique. This method uses sampling elements, which are arrays of pixels, and the scale corresponds to the size of the sampling element. It consists of covering the measure with boxes of size $\varepsilon$ and counting the number of boxes $N(\varepsilon)$ that contain at least one pixel of the object under study. $D$ is then estimated as [26]:

$$D = \lim_{\varepsilon \to 0} \frac{\log N(\varepsilon)}{\log (1/\varepsilon)} \tag{2}$$

Using Eq. (2), the box-counting dimension can be determined as the negative slope of $\log N(\varepsilon)$ versus $\log \varepsilon$ measured over a range of box sizes.
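As an illustration of Eq. (2), the box-counting estimate can be sketched in a few lines of Python. This is a minimal sketch, not the implementation used in this work: the function names (`box_count`, `slope`, `box_counting_dimension`) are hypothetical, the image is assumed to be a binary 2D list, and plain lists are used only to keep the example free of dependencies.

```python
import math


def box_count(image, size):
    """Count boxes of side `size` that contain at least one object pixel."""
    rows, cols = len(image), len(image[0])
    count = 0
    for r0 in range(0, rows, size):
        for c0 in range(0, cols, size):
            if any(image[r][c]
                   for r in range(r0, min(r0 + size, rows))
                   for c in range(c0, min(c0 + size, cols))):
                count += 1
    return count


def slope(xs, ys):
    """Least-squares slope of ys against xs."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def box_counting_dimension(image, sizes):
    """Negative slope of log N(eps) versus log eps."""
    log_eps = [math.log(s) for s in sizes]
    log_n = [math.log(box_count(image, s)) for s in sizes]
    return -slope(log_eps, log_n)


# Sanity checks on shapes whose dimension is known in advance.
sizes = [1, 2, 4, 8, 16, 32]
filled_square = [[1] * 64 for _ in range(64)]
single_line = [[1 if r == 0 else 0 for _ in range(64)] for r in range(64)]
d_square = box_counting_dimension(filled_square, sizes)  # close to 2
d_line = box_counting_dimension(single_line, sizes)      # close to 1
```

A filled square and a straight line are used because their dimensions (2 and 1) are known, which makes the slope fit easy to check before applying it to real ROIs.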

However, the limits of monofractal analysis remain in its inability to describe the local fractal behaviors of images, hence the birth of multifractal analysis.

Multifractals are a generalization of fractals. A multifractal object is more complex and it is always invariant by translation. Multifractal analysis studies the global regularity of a signal from its local regularity. It is based on the estimation of two sets of coefficients: the Hölder exponents, which quantify the local regularity of the signal, and the multifractal spectrum, which quantifies its multifractality. It consists of decomposing the signal into subsets having the same regularity and then measuring the “size” of the subsets thus obtained. The local regularity of a signal $X$ at any point $x_0$ is determined by the Hölder exponent defined as [27]:

$$\alpha(x_0) = \liminf_{h \to 0} \frac{\log |X(x_0 + h) - X(x_0)|}{\log |h|} \tag{3}$$

Since the exponent $\alpha$ is defined at every point $x$, the original signal $X$ can be associated with its Hölder function, $\alpha(x)$, which describes how the regularity of $X$ varies: the smaller $\alpha(x_0)$, the more irregular $X$ is at $x_0$, and vice versa [27].

The second step in multifractal analysis is to study the sets [27]:

$$E_\alpha = \{x : \alpha(x) = \alpha\} \tag{4}$$

where $E_\alpha$ is the set of points (pixel locations for a 2D signal) of the same regularity $\alpha$. The measurement of these sets gives a global description of the distribution of the singularities of the signal $X$. This can be done using a geometrical or a statistical approach. The result is, in all cases, a “multifractal spectrum,” i.e., a function $f(\alpha)$, which describes “how many” points of the signal have a regularity equal to $\alpha$. In the geometrical approach, the multifractal spectrum represents the dimension of the set of all points having the exponent $\alpha$, i.e., $f(\alpha) = \dim(E_\alpha)$. In the statistical approach, the multifractal spectrum is estimated from the probability of encountering a pixel whose regularity is of the order of $\alpha$ [27]. In this paper, we use the statistical approach.

Several methods for estimating the multifractal spectrum have been proposed in the literature. There are methods based on box counting, methods based on wavelets and methods based on detrended fluctuation analysis (DFA). A summary of these methods is presented in Table 2. In this paper, we use the generalized fractal dimensions method and the moving average DFA method for the classification of mammographic ROI.

Table 2.

Multifractal spectrum estimation methods for two dimensional signal

| Family | Methods |
|---|---|
| Box-counting methods | Generalized fractal dimensions [28]; the “sand box” or cumulative mass method [29]; the large-deviation multifractal spectrum [30] |
| Wavelet methods | The wavelet transform modulus maxima (WTMM) method [31]; the wavelet leaders method [32] |
| Detrending analysis methods | Multifractal detrended fluctuation analysis (MFDFA) [33]; multifractal detrended moving average (MFDMA) [33]; multiscale multifractal detrended-fluctuation analysis (MMFDFA) [34] |

Generalized Fractal Dimensions and Legendre Spectrum

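In practice, in order to quantify the local densities of a considered set (an image), the mass probability in the $i$th box is estimated as [35]:

$$P_i(\varepsilon) = \frac{N_i(\varepsilon)}{N_T} \tag{5}$$

and varies as:

$$P_i(\varepsilon) \sim \varepsilon^{\alpha_i} \tag{6}$$

where $N_i(\varepsilon)$ is the number of pixels in the $i$th box, $N_T$ is the total mass of the set, and $\alpha_i$ is the Hölder exponent that reflects the local behavior of the mass probability $P_i(\varepsilon)$ around the center of each box of size $\varepsilon$. It can be estimated as [35]:

$$\alpha_i = \frac{\log P_i(\varepsilon)}{\log \varepsilon} \tag{7}$$

The number of boxes $N_\varepsilon(\alpha)$ in which the probability $P_i$ has exponent values between $\alpha$ and $\alpha + d\alpha$ follows a power law in the box size $\varepsilon$ governed by the multifractal spectrum $f(\alpha)$ [35]:

$$N_\varepsilon(\alpha) \sim \varepsilon^{-f(\alpha)} \tag{8}$$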

Since multifractals are affected by distortions, the process of multifractal analysis is equivalent to applying warp filters to an image to analyze imperceptible features. Warp filters are a set of arbitrary exponents, traditionally denoted by the symbol “$q$” and usually taken from a set bracketing 0 symmetrically (e.g., from −5 to 5). A characterization of multifractal measures can therefore be made through the scaling of the $q$th moments of the $P_i$ distributions in the form:

$$\sum_{i=1}^{N(\varepsilon)} P_i^q(\varepsilon) \sim \varepsilon^{\tau(q)} \tag{9}$$

The exponent $\tau(q)$ is called the mass exponent of the $q$th order moment, and $D_q$, $q \in \mathbb{R}$, are the generalized fractal dimensions defined from Eq. (9) as:

$$D_q = \frac{\tau(q)}{q - 1} = \frac{1}{q - 1} \lim_{\varepsilon \to 0} \frac{\log \sum_{i=1}^{N(\varepsilon)} P_i^q(\varepsilon)}{\log \varepsilon}, \quad q \neq 1 \tag{10}$$

where $\sum_i P_i^q(\varepsilon)$ is the $q$th moment of the distribution of the distorted probability mass for a size $\varepsilon$, and the generalized dimension ($D_q$) is determined for each $q$, each mass $P_i$ being distorted by being raised to $q$. So, $q$ can be considered as a “microscope” that allows different regions of the distribution to be explored. Low values of $q$ favor boxes with low $P_i$ (low irregularities) and high values of $q$ favor boxes with high values of $P_i$ (high irregularities). In other words, for $q \gg 1$, $D_q$ represents the more singular regions, and for $q \ll -1$, it accentuates the less singular regions [36].

The relation between the exponents $\alpha$ and $f(\alpha)$ and the mass exponent $\tau(q)$ is established via the Legendre transformation:

$$\alpha(q) = \frac{d\tau(q)}{dq} \tag{11}$$

and

$$f(\alpha(q)) = q\,\alpha(q) - \tau(q) \tag{12}$$

However, the determination of $f(\alpha)$ requires smoothing the $D_q$ curve before applying the Legendre transformation. The smoothing operation introduces errors in the estimation of $f(\alpha)$ and misses phase transitions when the spectrum exhibits discontinuities. In order to avoid these numerical errors, Chhabra and Jensen proposed a method for a direct calculation of the multifractal spectrum using the following formulas [28]:

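First, a family of normalized measures $\mu_i(q, \varepsilon)$ is constructed, where the probabilities in the boxes of size $\varepsilon$ are:

$$\mu_i(q, \varepsilon) = \frac{P_i^q(\varepsilon)}{\sum_{j} P_j^q(\varepsilon)} \tag{13}$$

Then, the direct computation of the $\alpha(q)$ and $f(q)$ values is:

$$\alpha(q) = \lim_{\varepsilon \to 0} \frac{\sum_i \mu_i(q, \varepsilon) \log P_i(\varepsilon)}{\log \varepsilon} \tag{14}$$

$$f(q) = \lim_{\varepsilon \to 0} \frac{\sum_i \mu_i(q, \varepsilon) \log \mu_i(q, \varepsilon)}{\log \varepsilon} \tag{15}$$

For each $q$, the values of $\alpha(q)$ and $f(q)$ are obtained from the slopes of the plots of the numerators of Eqs. (14) and (15) vs. $\log \varepsilon$ over the entire range of $\varepsilon$ values considered. The $\alpha(q)$ and $f(q)$ functions obtained over a given range of $q$ are used to construct the $f(\alpha)$-spectrum as an implicit function of $q$ and $\varepsilon$.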
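The direct method of Chhabra and Jensen can be sketched as follows. This is an illustrative sketch under stated assumptions rather than the code used in this work: the helper names are hypothetical, the image is a 2D list of non-negative gray levels, the box mass is taken as the sum of the gray levels in the box, and empty boxes are discarded.

```python
import math


def slope(xs, ys):
    """Least-squares slope of ys against xs."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def box_probabilities(image, size):
    """Mass probabilities P_i(eps) of the non-empty boxes of side `size`."""
    rows, cols = len(image), len(image[0])
    total = sum(map(sum, image))
    probs = []
    for r0 in range(0, rows, size):
        for c0 in range(0, cols, size):
            mass = sum(image[r][c]
                       for r in range(r0, min(r0 + size, rows))
                       for c in range(c0, min(c0 + size, cols)))
            if mass > 0:
                probs.append(mass / total)
    return probs


def chhabra_jensen(image, sizes, qs):
    """Return alpha(q) and f(q) as slopes of the numerators versus log eps."""
    log_eps = [math.log(s) for s in sizes]
    alphas, fs = [], []
    for q in qs:
        num_alpha, num_f = [], []
        for s in sizes:
            p = box_probabilities(image, s)
            weights = [pi ** q for pi in p]
            z = sum(weights)
            mu = [w / z for w in weights]  # normalized measures
            num_alpha.append(sum(m * math.log(pi) for m, pi in zip(mu, p)))
            num_f.append(sum(m * math.log(m) for m in mu))
        alphas.append(slope(log_eps, num_alpha))
        fs.append(slope(log_eps, num_f))
    return alphas, fs


# A perfectly uniform image is monofractal: alpha = f = 2 for every q.
uniform = [[1] * 16 for _ in range(16)]
alphas, fs = chhabra_jensen(uniform, sizes=[1, 2, 4, 8], qs=[-2, 0, 2])
```

Because the box size enters only through the slope against $\log \varepsilon$, using the size in pixels or the size normalized by the image side gives the same $\alpha(q)$ and $f(q)$.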

Multifractal Detrended Moving Average (MFDMA)

The two-dimensional multifractal detrended moving average (2D-MFDMA) algorithm is described as follows [33]. Consider a surface with possible multifractal properties, which can be denoted by a two-dimensional matrix $X(i_1, i_2)$, with $i_1 = 1, 2, \ldots, N_1$ and $i_2 = 1, 2, \ldots, N_2$.

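Step 1: Calculate the sum $Y(i_1, i_2)$.

The first step consists of calculating the sum $Y(i_1, i_2)$ in a sliding window of size $n_1 \times n_2$, where $n_1 \le i_1 \le N_1 - \lfloor (n_1 - 1)\theta_1 \rfloor$ and $n_2 \le i_2 \le N_2 - \lfloor (n_2 - 1)\theta_2 \rfloor$. $\theta_1$ and $\theta_2$ are two position parameters that vary in the range $[0, 1]$. Specifically, we extract a sub-matrix $Z(u_1, u_2)$ of size $n_1 \times n_2$ from the matrix $X$, where $i_1 - n_1 + 1 \le u_1 \le i_1$ and $i_2 - n_2 + 1 \le u_2 \le i_2$. We can calculate the sum $Y(i_1, i_2)$ of $Z$ as follows:

$$Y(i_1, i_2) = \sum_{j_1=1}^{n_1} \sum_{j_2=1}^{n_2} Z(j_1, j_2) \tag{16}$$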

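Step 2: Determine the moving average function $\widetilde{Y}(i_1, i_2)$.

First, we extract a sub-matrix $W(k_1, k_2)$ of size $n_1 \times n_2$ from the matrix $X$, where $i_1 - \lceil (n_1 - 1)(1 - \theta_1) \rceil \le k_1 \le i_1 + \lfloor (n_1 - 1)\theta_1 \rfloor$ and $i_2 - \lceil (n_2 - 1)(1 - \theta_2) \rceil \le k_2 \le i_2 + \lfloor (n_2 - 1)\theta_2 \rfloor$. Then, we calculate the cumulative sum $\widetilde{W}(m_1, m_2)$ of the matrix $W$:

$$\widetilde{W}(m_1, m_2) = \sum_{d_1=1}^{m_1} \sum_{d_2=1}^{m_2} W(d_1, d_2) \tag{17}$$

where $1 \le m_1 \le n_1$ and $1 \le m_2 \le n_2$.

The moving average function can be calculated as follows:

$$\widetilde{Y}(i_1, i_2) = \frac{1}{n_1 n_2} \sum_{m_1=1}^{n_1} \sum_{m_2=1}^{n_2} \widetilde{W}(m_1, m_2) \tag{18}$$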

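Step 3: The residual matrix $\epsilon(i_1, i_2)$.

The matrix $Y$ is detrended by removing the moving average function $\widetilde{Y}(i_1, i_2)$ from $Y(i_1, i_2)$ to obtain the residual matrix $\epsilon(i_1, i_2)$:

$$\epsilon(i_1, i_2) = Y(i_1, i_2) - \widetilde{Y}(i_1, i_2) \tag{19}$$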

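Step 4: The detrended fluctuation function.

The residual matrix $\epsilon(i_1, i_2)$ is partitioned into $N_{n_1} \times N_{n_2}$ disjoint rectangular segments of the same size $n_1 \times n_2$. Each segment can be denoted by $\epsilon_{v_1, v_2}$ such that $\epsilon_{v_1, v_2}(i_1, i_2) = \epsilon(l_1 + i_1, l_2 + i_2)$ for $1 \le i_1 \le n_1$ and $1 \le i_2 \le n_2$, where $l_1 = (v_1 - 1) n_1$ and $l_2 = (v_2 - 1) n_2$. The detrended fluctuation $F_{v_1, v_2}(n_1, n_2)$ of the segment $\epsilon_{v_1, v_2}$ can be calculated as follows:

$$F_{v_1, v_2}^2(n_1, n_2) = \frac{1}{n_1 n_2} \sum_{i_1=1}^{n_1} \sum_{i_2=1}^{n_2} \epsilon_{v_1, v_2}^2(i_1, i_2) \tag{20}$$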

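Step 5: The $q$th order overall fluctuation function $F_q(n)$ is calculated as follows:

$$F_q(n) = \left\{ \frac{1}{N_{n_1} N_{n_2}} \sum_{v_1=1}^{N_{n_1}} \sum_{v_2=1}^{N_{n_2}} F_{v_1, v_2}^{q}(n_1, n_2) \right\}^{1/q} \tag{21}$$

$F_{v_1, v_2}(n_1, n_2)$ is a vector obtained from the detrended fluctuation of each segment for each value of $n$. It is then used to calculate the multifractal scaling exponent $h(q)$. Mathematically, $q$ can take any real value except $q = 0$. In practice, if $q = 0$, $F_0(n)$ is given by:

$$\ln F_0(n) = \frac{1}{N_{n_1} N_{n_2}} \sum_{v_1=1}^{N_{n_1}} \sum_{v_2=1}^{N_{n_2}} \ln F_{v_1, v_2}(n_1, n_2)$$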

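Step 6: Varying the segment sizes $n_1$ and $n_2$, we can determine the power-law relation between the $q$-order overall fluctuation function $F_q(n)$ and the scale $n$:

$$F_q(n) \sim n^{h(q)} \tag{22}$$

For each $q$, we can get the corresponding traditional $\tau(q)$ function through:

$$\tau(q) = q\,h(q) - D_f \tag{23}$$

where $D_f$ is the fractal dimension of the geometric support of the measure ($D_f = 2$ for a surface), and obtain the singularity strength function $\alpha(q)$ and the multifractal spectrum $f(\alpha)$ via the Legendre transform.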
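The last stage of MFDMA, going from the scaling exponents to the spectrum, can be illustrated with a short Python sketch. It assumes that the values of $h(q)$ have already been fitted, that $\tau(q) = q\,h(q) - D_f$ with $D_f = 2$ for an image, and that the derivative in the Legendre transform is approximated by finite differences; the function name `spectrum_from_hq` is hypothetical.

```python
def spectrum_from_hq(qs, hs, support_dim=2.0):
    """Return tau(q), alpha(q) and f(alpha) from fitted exponents h(q).

    tau(q) = q*h(q) - D_f, alpha = d tau / d q, f = q*alpha - tau.
    The derivative uses central differences inside the q range and
    one-sided differences at its two ends.
    """
    taus = [q * h - support_dim for q, h in zip(qs, hs)]
    alphas = []
    for i in range(len(qs)):
        lo = max(i - 1, 0)
        hi = min(i + 1, len(qs) - 1)
        alphas.append((taus[hi] - taus[lo]) / (qs[hi] - qs[lo]))
    fs = [q * a - t for q, a, t in zip(qs, alphas, taus)]
    return taus, alphas, fs


# Monofractal check: a constant h(q) collapses the spectrum to one point.
qs = [-2.0, -1.0, 0.0, 1.0, 2.0]
hs = [1.5] * len(qs)
taus, alphas, fs = spectrum_from_hq(qs, hs)
```

With a constant $h(q) = 1.5$, every $\alpha(q)$ equals 1.5 and every $f$ equals 2, which is the expected degenerate spectrum of a monofractal surface. A dependence of $h$ on $q$ is what widens the spectrum.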

Comparison Between the Two Methods

The generalized fractal dimensions method is the most widely used in the literature owing to its simple implementation and its robustness. Compared to MFDMA, its computation time is short (Table 3) and only two parameters need to be adjusted (Table 4):

Box sizes $\varepsilon$: powers of two, i.e., $\varepsilon = 1, 2, 4, \ldots, 2^P$, where $P$ is the smallest integer such that $\max(\mathrm{size}(I)) \le 2^P$ for an image $I$.

q-orders: described previously. In this paper, we took .

Table 3.

Computation time of each method according to the image size

| Image (ROI) size | Generalized fractal dimension | MFDMA |

|---|---|---|

| 64 × 64 | 2.243523 s | 2.767289 s |

| 256 × 256 | 2.225408 s | 17.780042 s |

Table 4.

Parameters to be set for each method

| Generalized fractal dimension | MFDMA |
|---|---|
| Box size; q-orders | Moving average detrending; sample size; scaling range; q-orders |

Database

There are several mammographic image databases which are used by researchers in the breast analysis field. In this paper, we used the INbreast database. This database was acquired at the Breast Center of Porto. The image matrices are 3328 × 4084 or 2560 × 3328 pixels saved in DICOM format. It has FFDM (full-field digital mammograms) images from screening, diagnostic, and follow-up cases with a total of 115 cases (a total of 410 images) of which 90 cases (MLO and CC) are from women with both breasts affected (four images per case) and 25 cases are from mastectomy patients (two images per case). It includes several types of lesions: masses, calcifications, asymmetries, architectural distortions, and multiple findings (Fig. 1) and provides information regarding patient’s age at the time of image acquisition, family history, BI-RADS classification of the breast density (Fig. 2), and abnormality [37].

Fig. 1.

Chart describing findings in the INbreast database [37]

Fig. 2.

BI-RADS classification of the breast density. a Almost entirely fat tissue, b scattered fibro-glandular tissue, c heterogeneously dense tissue, and d extremely dense tissue

The database contains 308 images of calcifications of different BI-RADS breast density classes (Fig. 2, Table 5). In total, 6880 calcifications were individually identified in 299 images. Annotations were made by a specialist in the field and validated by a second specialist (Fig. 3). Both specialists are experts in reading mammograms. A detailed contour of each finding was drawn, and an ellipse enclosing the entire cluster was used to annotate the clusters of MCs [37].

Table 5.

Number of mammograms containing MCs of each BI-RADS class

| BI-RADS class | A | B | C | D |

|---|---|---|---|---|

| No. of MCs cases | 102 | 105 | 81 | 20 |

Fig. 3.

Example of a mammogram containing MCs from the INbreast database. a Original mammogram and b original mammogram with the MC annotations

Proposed Method

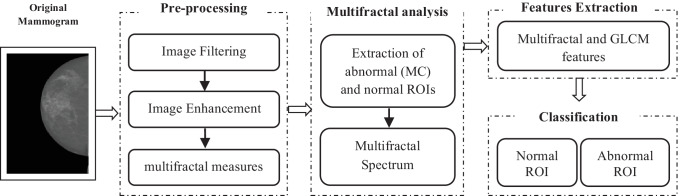

In this paper, we propose an approach based on multifractal analysis for the characterization and classification of normal and abnormal ROIs to detect MCs. Figure 4 shows the global diagram of the proposed approach. Our method is divided into five steps: pre-processing, ROI extraction, multifractal analysis, feature extraction, and classification.

Fig. 4.

Global diagram of the proposed approach

Pre-Processing

Image Filtering

Images of the INbreast database are of high quality and do not contain labels or artifacts, so they do not require a filtering step. But they are of large size and contain many empty rows and columns which represent the background of the mammogram (represented in black). So, in order to reduce the computation time of the next steps, the mammogram is filtered from this black background by removing the empty rows and columns and keeping only the breast [25].
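The background removal described above can be sketched as a bounding-box crop. This is only an illustration: the function name `crop_to_breast` and the `threshold` parameter are hypothetical, the mammogram is assumed to be a 2D list of gray levels with a background equal to zero, and keeping the bounding box of the non-empty rows and columns is an assumption about how the empty rows and columns are removed.

```python
def crop_to_breast(image, threshold=0):
    """Keep the bounding box of rows and columns that contain breast pixels.

    A row or column counts as empty (background) when all of its pixels
    are less than or equal to `threshold`.
    """
    keep_rows = [r for r, row in enumerate(image)
                 if any(v > threshold for v in row)]
    keep_cols = [c for c in range(len(image[0]))
                 if any(row[c] > threshold for row in image)]
    if not keep_rows or not keep_cols:
        return []  # the whole image is background
    r0, r1 = keep_rows[0], keep_rows[-1]
    c0, c1 = keep_cols[0], keep_cols[-1]
    return [row[c0:c1 + 1] for row in image[r0:r1 + 1]]


# Toy 4 x 4 "mammogram" with a one-pixel black border.
toy = [
    [0, 0, 0, 0],
    [0, 5, 7, 0],
    [0, 3, 0, 0],
    [0, 0, 0, 0],
]
cropped = crop_to_breast(toy)
```

On the toy image the border is discarded and the 2 × 2 block of breast pixels is kept, including the zero that lies inside the bounding box.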

Image Enhancement

Considering breast density as haze in mammography, we use a low-light image enhancement algorithm based on the haze removal technique [38] to reduce breast density and highlight MCs in relation to density [25].

α-image (Multifractal Measures)

The α-image is constructed from the multifractal measures and the estimated Hölder exponents $\alpha$. To estimate the Hölder exponents $\alpha$, the natural logarithms of the measure value $\mu$ and of the window size $\varepsilon$ (Eq. 2) are calculated, and the corresponding points are plotted in a bi-logarithmic diagram of $\ln \mu$ vs. $\ln \varepsilon$, where three boxes of 1 × 1, 3 × 3, and 5 × 5 pixels in size were considered. Then, the limiting value of $\alpha$ is estimated as the slope of the linear regression line. All steps are detailed in the previous work [25]. The final result represents the enhanced mammogram.
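The slope estimate behind the α-image can be sketched for a single pixel. The details of the measures are given in [25] and are not reproduced here, so this sketch makes its own assumptions: the measure is taken as the sum of the gray levels in the window, windows are clipped at the image border, and the names `holder_exponent` and `slope` are hypothetical.

```python
import math


def slope(xs, ys):
    """Least-squares slope of ys against xs."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def holder_exponent(image, r, c, sizes=(1, 3, 5)):
    """Slope of ln(measure) versus ln(window size) around pixel (r, c)."""
    rows, cols = len(image), len(image[0])
    xs, ys = [], []
    for size in sizes:
        half = size // 2
        mass = sum(image[i][j]
                   for i in range(max(r - half, 0), min(r + half + 1, rows))
                   for j in range(max(c - half, 0), min(c + half + 1, cols)))
        if mass > 0:  # the logarithm is undefined for an empty window
            xs.append(math.log(size))
            ys.append(math.log(mass))
    if len(xs) < 2:
        return 0.0
    return slope(xs, ys)


flat = [[10] * 7 for _ in range(7)]   # smooth, uniform tissue
spot = [[0] * 7 for _ in range(7)]    # one isolated bright pixel
spot[3][3] = 1
alpha_flat = holder_exponent(flat, 3, 3)
alpha_spot = holder_exponent(spot, 3, 3)
```

Under these assumptions a uniform neighborhood gives an exponent of 2, whereas an isolated bright point gives 0, which is the kind of contrast in local regularity that makes small structures such as MCs stand out in an α-image.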

ROI Extraction and Multifractal Analysis

Normal and abnormal ROIs containing MCs are extracted from the enhanced image. Based on the expert’s annotations, the coordinates of MCs are extracted, and then the ROI is cropped such that these coordinates represent the center of the ROI. For a normal ROI, the coordinates of the center are chosen randomly where there is no annotation. The ROIs are nonoverlapping of size 64 × 64 pixels. Then, for each extracted ROI, the multifractal spectrum is calculated using the two different methods described in the previous section.
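Cropping a 64 × 64 ROI centered on an annotated coordinate can be sketched as follows. The function name `crop_roi` is hypothetical, the image is assumed to be a 2D list at least 64 pixels wide and high, and shifting the window inward when the center lies near the border is an assumption, since the border handling is not specified here.

```python
def crop_roi(image, center_row, center_col, size=64):
    """Return a size x size ROI centered on (center_row, center_col).

    The window is shifted inward when the center is too close to the
    image border, so the ROI always has the requested size.
    """
    half = size // 2
    rows, cols = len(image), len(image[0])
    top = min(max(center_row - half, 0), rows - size)
    left = min(max(center_col - half, 0), cols - size)
    return [row[left:left + size] for row in image[top:top + size]]


# Synthetic 100 x 100 image whose pixel value encodes its position.
image = [[r * 100 + c for c in range(100)] for r in range(100)]
roi_center = crop_roi(image, 50, 50)  # window starts at row 18, column 18
roi_corner = crop_roi(image, 5, 5)    # window is shifted to start at (0, 0)
```

For a normal ROI, the same function would be called with a randomly drawn center that lies outside every annotated region.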

Feature Extraction

Multifractal Features

From the multifractal spectrum of each ROI, five multifractal parameters are extracted: the Hölder exponent $\alpha_0$ (corresponding to the maximum of the spectrum), the spectrum width ($\Delta\alpha$, denoted $w$ in Tables 6 and 7), $R$, $L$, and the asymmetry ($A$) (Fig. 5).

Fig. 5.

Schematic representation of the multifractal spectrum and the main parameters

The Hölder exponent $\alpha_0$ measures the intensity of the local irregularities present in the image [39]. The parameter Δα measures the degree of multifractality: a smaller Δα indicates that the function tends toward monofractality, whereas a larger Δα indicates stronger multifractality [40]. The asymmetry measures the symmetry of the spectrum. It is null for symmetrical shapes and positive or negative for left and right asymmetric shapes, respectively [41]. A spectrum that is asymmetric on the right corresponds to a concentration of irregularities in large structures; conversely, a spectrum that is asymmetric on the left corresponds to a concentration of irregularities in fine structures [42].
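The five parameters can be read off a sampled spectrum as in the sketch below. The exact definitions are assumptions: $L$ and $R$ are taken as the left and right half-widths around $\alpha_0$, $w$ as their sum, and $A$ as the ratio $R/L$, a choice that appears consistent with the values listed in Table 6 but is not stated explicitly in the text. The function name `spectrum_features` is hypothetical.

```python
def spectrum_features(alphas, fs):
    """Extract alpha0, w, R, L and A from a sampled spectrum f(alpha).

    alpha0 is the abscissa of the maximum of f. L and R are the left and
    right half-widths, w is the full width, and A is the ratio R / L
    (an assumed definition of the asymmetry).
    """
    i0 = max(range(len(fs)), key=fs.__getitem__)
    alpha0 = alphas[i0]
    a_min, a_max = min(alphas), max(alphas)
    left = alpha0 - a_min
    right = a_max - alpha0
    asym = right / left if left > 0 else float("inf")
    return {"alpha0": alpha0, "w": a_max - a_min,
            "R": right, "L": left, "A": asym}


# Hypothetical spectrum samples, used only to exercise the function.
alphas = [1.8, 1.9, 2.0, 2.2, 2.4]
fs = [1.2, 1.7, 2.0, 1.6, 1.1]
features = spectrum_features(alphas, fs)
```

With these sample values the maximum sits at $\alpha_0 = 2.0$, the right half-width is twice the left one, and the ratio $A$ is therefore 2.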

Gray Level Co-occurrence Matrix (GLCM)

The GLCM is defined as the distribution of co-occurring values at a given offset. It consists of identifying patterns of pairs of pixels separated by a distance $d$ in a direction $\theta$ by calculating how often a pixel with a gray-level value $i$ occurs either horizontally ($\theta = 0°$), vertically ($\theta = 90°$), or diagonally ($\theta = 45°$ or $\theta = 135°$) to adjacent pixels with the value $j$.

In this paper, since MCs are small structures of different sizes and shapes, we extracted GLCM metrics for single-pixel distances (an offset $d = 1$) along the horizontal direction ($\theta = 0°$). If we apply large distances or different directions, the GLCM can miss detailed information. The chosen metrics also allow us to minimize execution time.

After the creation of the GLCM, several statistics that provide information about the texture of the image can be derived from it [43].
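A horizontal GLCM with an offset of one pixel, together with a few statistics derived from it, can be sketched as follows. The statistics shown (contrast, energy, and homogeneity) are common choices used here as an assumption, since the exact list of GLCM metrics is not recoverable from the text; the function names are hypothetical and the image is assumed to hold integer gray levels in `range(levels)`.

```python
def glcm_horizontal(image, levels):
    """Normalized GLCM for a pixel and its right-hand neighbor (d = 1)."""
    counts = [[0] * levels for _ in range(levels)]
    for row in image:
        for a, b in zip(row, row[1:]):
            counts[a][b] += 1
    total = sum(map(sum, counts))
    return [[v / total for v in r] for r in counts]


def glcm_stats(p):
    """Contrast, energy and homogeneity of a normalized GLCM."""
    n = len(p)
    pairs = [(i, j) for i in range(n) for j in range(n)]
    contrast = sum(p[i][j] * (i - j) ** 2 for i, j in pairs)
    energy = sum(p[i][j] ** 2 for i, j in pairs)
    homogeneity = sum(p[i][j] / (1 + abs(i - j)) for i, j in pairs)
    return {"contrast": contrast, "energy": energy,
            "homogeneity": homogeneity}


# Two-level toy image: each of the four gray-level pairs occurs once.
toy = [
    [0, 0, 1],
    [1, 1, 0],
]
p = glcm_horizontal(toy, levels=2)
stats = glcm_stats(p)
```

The matrix is not symmetrized, so the pair $(i, j)$ and the pair $(j, i)$ are counted separately. A symmetric GLCM would add the transposed counts before normalizing.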

Classification

Classification based on machine-learning techniques is a two-step process: a learning step, in which the model is built from given training data, and a prediction step, in which the trained model is used to predict the response for new data. It is generally a supervised machine-learning task that uses training data and their associated labels during the learning process. The objective is to predict the output labels of input data from what the model has learned during the training phase, so each output response belongs to a specific class. Many supervised machine-learning algorithms have been proposed in the literature [44]. The most popular are support vector machines (SVM), K-nearest neighbors (KNN), and decision trees (DT), which are used in this research to classify ROIs as either normal (healthy) or abnormal (MCs).

Support Vector Machine (SVM)

Support vector machines (SVM) are one of the main supervised machine-learning algorithms and are both accurate and highly robust. In the SVM algorithm, each data item is plotted as a point in n-dimensional space (where n is the number of features), with the value of each feature being the value of a particular coordinate. The objective is to find the separating hyperplane that passes between the two classes and best separates them. To do so, the SVM maximizes the margin, i.e., the distance between the hyperplane and the closest data points of each class [44, 45].

Decision Tree (DT)

A decision tree is a hierarchical supervised learning model. The goal of using a DT is to create a training model that can be used to predict the class of the target variable by learning simple decision rules (in the form of yes or no questions) inferred from prior data (training data). A DT is made up of internal decision nodes and terminal leaves. Each decision node uses a test function that labels the branches with discrete scores. A test is used at every node with an input, and one of the branches is chosen depending on the result. This process starts from the root with the complete training information, the best split must be checked in each phase. It divides the training data into two or more classes. Then, we continue to divide recursively with the relevant subset until there is no longer any need to split; at this stage, a leaf node is generated and labeled [44].

K-Nearest Neighbors (KNN)

In k-nearest neighbors classification, examples are classified based on the class of their nearest neighbors (more than one neighbor). The K-NN classification has two stages: the first is the determination of the nearest neighbors, and the second is the determination of the class using those neighbors. When classification needs to be determined for an unlabeled object, the distance metric between the labeled object and the unlabeled object is calculated. The k-nearest neighbors are selected based on this distance metric. Thereby, the identification of the k-nearest neighbors is attained. Therefore, the nearest neighbors’ class labels are employed in order to identify the object’s class label [44, 46].
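The two stages of KNN classification can be sketched with the standardized Euclidean distance mentioned for this classifier. This is a minimal illustration rather than the configuration used in this work: the value `k=3`, the function name `knn_predict`, the use of the population standard deviation for scaling, and the toy feature vectors are all assumptions.

```python
import math
from collections import Counter
from statistics import pstdev


def knn_predict(train_x, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest neighbors.

    Distances are standardized Euclidean: each feature difference is
    divided by the standard deviation of that feature over the training
    set (1.0 is used when a feature is constant).
    """
    scales = [pstdev(col) or 1.0 for col in zip(*train_x)]

    def dist(a, b):
        return math.sqrt(sum(((x - y) / s) ** 2
                             for x, y, s in zip(a, b, scales)))

    # Stage 1: find the k nearest labeled examples.
    nearest = sorted(zip(train_x, train_y),
                     key=lambda item: dist(item[0], query))[:k]
    # Stage 2: take the majority label among them.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Toy two-feature training set (hypothetical values).
train_x = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
train_y = ["normal"] * 3 + ["abnormal"] * 3
label_a = knn_predict(train_x, train_y, [0.5, 0.5])
label_b = knn_predict(train_x, train_y, [5.5, 5.5])
```

Standardizing each feature matters here because multifractal parameters and GLCM statistics live on very different numeric scales, and an unscaled distance would be dominated by the largest one.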

To assess the performance of our approach, the extracted features are fed to the classifiers and classification accuracy, sensitivity, and specificity are calculated. The SVM classifier was trained using standardized predictors and the second-order polynomial kernel. The KNN classifier was trained using standardized Euclidean distance metric. The DT classifier was trained without using specific options.

To split our database into training and testing sets, the k-fold cross-validation method is used. It consists of splitting the database into k groups; each group is taken in turn as the test data while the remaining groups are taken as training data. This process is repeated k times. In this paper, each classifier was tested using 5-fold cross-validation.
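The splitting scheme can be sketched with a small generator. The function name `k_fold_indices` and the fixed shuffling seed are assumptions made for the example; the number of samples matches the 2688 ROIs of this study.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train, test) index lists for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of nearly equal size.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i
                 for idx in fold]
        yield train, test


splits = list(k_fold_indices(2688, k=5))
fold_sizes = [len(test) for _, test in splits]
```

Each ROI appears in exactly one test fold, so every sample is used once for testing and four times for training.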

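Let TP, FP, TN, and FN be the numbers of true positives, false positives, true negatives, and false negatives, respectively. Sensitivity, specificity, and accuracy are defined by:

$$\text{Sensitivity} = \frac{TP}{TP + FN}, \qquad \text{Specificity} = \frac{TN}{TN + FP}, \qquad \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$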
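These three measures follow directly from the confusion-matrix counts, as the short sketch below shows. The function name `classification_metrics` is hypothetical, and the counts in the example are invented for illustration; they are not results from this study.

```python
def classification_metrics(tp, fp, tn, fn):
    """Return (sensitivity, specificity, accuracy) from confusion counts."""
    sensitivity = tp / (tp + fn)   # share of abnormal ROIs detected
    specificity = tn / (tn + fp)   # share of normal ROIs recognized
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    return sensitivity, specificity, accuracy


# Hypothetical counts, chosen only to make the arithmetic easy to follow.
sens, spec, acc = classification_metrics(tp=90, fp=20, tn=80, fn=10)
```

With 90 of 100 abnormal cases detected and 80 of 100 normal cases recognized, the sketch gives a sensitivity of 0.90, a specificity of 0.80, and an accuracy of 0.85.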

Results and Discussion

The proposed system was tested and validated using MATLAB R2019b on a personal computer with an Intel(R) Core(TM) i5-3230M CPU and 8 GB of RAM, running the Windows 7 operating system.

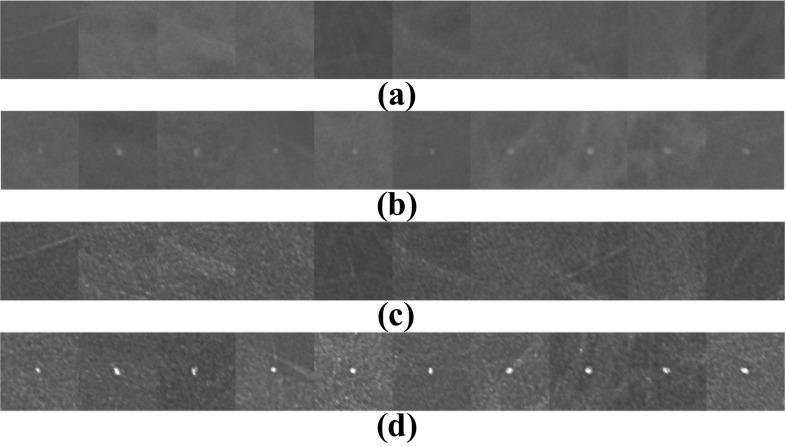

After the pre-processing step (Fig. 6), normal ROIs and abnormal ROIs (containing MCs) of size 64 × 64 pixels were extracted from the pre-processed mammograms based on the expert’s annotations. The size of the ROI influences the multifractal spectrum and its parameters. A larger ROI contains more singularities (structures), which can negatively impact the classification, whereas a smaller ROI gives better classification results. This choice of size also reduces the computation time of the multifractal spectrum. Figures 7 and 8 compare some examples of ROIs obtained before (without the pre-processing step) and after pre-processing (with the pre-processing step). The influence of pre-processing was explained, evaluated, and discussed in the previous work [25].

Fig. 6.

Result of mammogram pre-processing using the proposed approach, case “20587320” of the INbreast database. a Original mammogram. b Original ROIs. c Enhanced mammogram. d Enhanced ROIs with highlighted MCs

Fig. 7.

Examples of abnormal ROIs containing MCs. a, c, e Original ROI. b, d, f Pre-processed ROI

Fig. 8.

Examples of normal ROIs. a, c, e Original ROI. b, d, f Pre-processed ROI

The next step is to compute the multifractal spectrum of each ROI (normal and abnormal) and to extract the parameters described in the previous section. To illustrate this, we took 10 examples of normal ROIs and 10 examples of abnormal ROIs (Fig. 9). For each ROI, we calculated the multifractal spectrum using the generalized fractal dimensions method, before and after pre-processing, and then extracted the multifractal parameters. Figure 10 shows the resulting multifractal spectra of the ROIs before and after pre-processing. The extracted parameters are presented in Tables 6 and 7.
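
The parameter extraction can be sketched as follows, starting from a spectrum already sampled as pairs (α, f(α)). The definitions used here are assumptions inferred from Tables 6 and 7, not quoted from the previous section: α0 is the position of the spectrum maximum, R = αmax − α0, L = α0 − αmin, w = R + L, and A = R / L. They agree with the tabulated values up to rounding (for example, 0.0012 + 0.0011 = 0.0023 for the first normal ROI before pre-processing). The function name and the sample spectrum are hypothetical.

```python
def spectrum_parameters(alphas, f_values):
    """Return (alpha0, w, R, L, A) from a sampled multifractal spectrum f(alpha)."""
    if len(alphas) != len(f_values) or len(alphas) < 3:
        raise ValueError("need at least three (alpha, f) samples of equal length")
    # alpha0: abscissa at which the spectrum reaches its maximum.
    peak = max(range(len(f_values)), key=f_values.__getitem__)
    alpha0 = alphas[peak]
    right = max(alphas) - alpha0   # R: width of the right branch
    left = alpha0 - min(alphas)    # L: width of the left branch
    width = right + left           # w: total spectrum width
    # A: asymmetry, assumed here to be the ratio R / L.
    asymmetry = right / left if left > 0 else float("inf")
    return alpha0, width, right, left, asymmetry


# Hypothetical spectrum samples, for illustration only.
alphas = [1.996, 1.998, 2.000, 2.003, 2.006]
f_values = [1.90, 1.97, 2.00, 1.96, 1.88]
params = spectrum_parameters(alphas, f_values)
```

Under these assumed definitions, a value of A well above 1 means that the right branch of the spectrum is much wider than the left one, which is the pattern seen for the pre-processed abnormal ROIs in Table 7.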

Fig. 9.

Examples of normal and abnormal ROIs. a Ten original normal ROIs. b Ten original abnormal ROIs. c, d The corresponding ROIs after pre-processing

Fig. 10.

Multifractal spectrums of normal (red) and abnormal ROIs (blue). a Before pre-processing. b After pre-processing

Table 6.

Extracted parameters from the multifractal spectrum of normal ROI. (a) Before pre-processing, (b) after pre-processing

| ROI | α0 (a) | α0 (b) | w (a) | w (b) | R (a) | R (b) | L (a) | L (b) | A (a) | A (b) |
|---|---|---|---|---|---|---|---|---|---|---|
| im_01 | 2.0001 | 2.0004 | 0.0023 | 0.0063 | 0.0012 | 0.0035 | 0.0011 | 0.0028 | 1.1075 | 1.2393 |
| im_02 | 2.0002 | 2.0007 | 0.0026 | 0.0105 | 0.0013 | 0.0055 | 0.0013 | 0.0050 | 0.9485 | 1.1017 |
| im_03 | 2.0003 | 2.0009 | 0.0054 | 0.0143 | 0.0026 | 0.0075 | 0.0027 | 0.0068 | 0.9565 | 1.1049 |
| im_04 | 2.0001 | 2.0006 | 0.0022 | 0.0088 | 0.0011 | 0.0046 | 0.0011 | 0.0041 | 1.0424 | 1.1163 |
| im_05 | 2.0001 | 2.0003 | 0.0022 | 0.0049 | 0.0011 | 0.0025 | 0.0011 | 0.0024 | 0.9804 | 1.0311 |
| im_06 | 2.0003 | 2.0006 | 0.0049 | 0.0095 | 0.0025 | 0.0051 | 0.0024 | 0.0044 | 1.0183 | 1.1493 |
| im_07 | 2.0001 | 2.0003 | 0.0010 | 0.0049 | 0.0005 | 0.0025 | 0.0005 | 0.0024 | 1.0072 | 1.0618 |
| im_08 | 2.0003 | 2.0006 | 0.0044 | 0.0101 | 0.0022 | 0.0055 | 0.0021 | 0.0046 | 1.0561 | 1.1882 |
| im_09 | 2.0002 | 2.0004 | 0.0025 | 0.0069 | 0.0013 | 0.0037 | 0.0011 | 0.0032 | 1.1614 | 1.1247 |
| im_10 | 2.0001 | 2.0003 | 0.0021 | 0.0055 | 0.0011 | 0.0029 | 0.0010 | 0.0026 | 1.0540 | 1.1312 |

Table 7.

Extracted parameters from the multifractal spectrum of abnormal ROI. (a) Before pre-processing, (b) after pre-processing

| ROI | α0 (a) | α0 (b) | w (a) | w (b) | R (a) | R (b) | L (a) | L (b) | A (a) | A (b) |
|---|---|---|---|---|---|---|---|---|---|---|
| im_01 | 2.0001 | 2.0007 | 0.0013 | 0.0168 | 0.0007 | 0.0124 | 0.0006 | 0.0045 | 1.0404 | 2.7652 |
| im_02 | 2.0003 | 2.0013 | 0.0043 | 0.0431 | 0.0025 | 0.0377 | 0.0018 | 0.0054 | 1.4420 | 6.9454 |
| im_03 | 2.0002 | 2.0011 | 0.0030 | 0.0281 | 0.0016 | 0.0227 | 0.0014 | 0.0054 | 1.1525 | 4.1829 |
| im_04 | 2.0003 | 2.0011 | 0.0046 | 0.0259 | 0.0022 | 0.0197 | 0.0024 | 0.0062 | 0.9241 | 3.1956 |
| im_05 | 2.0001 | 2.0011 | 0.0019 | 0.0229 | 0.0010 | 0.0161 | 0.0009 | 0.0068 | 1.0744 | 2.3755 |
| im_06 | 2.0001 | 2.0008 | 0.0017 | 0.0216 | 0.0009 | 0.0178 | 0.0008 | 0.0038 | 1.0958 | 4.6315 |
| im_07 | 2.0002 | 2.0013 | 0.0034 | 0.0300 | 0.0018 | 0.0233 | 0.0016 | 0.0067 | 1.1788 | 3.4643 |
| im_08 | 2.0004 | 2.0016 | 0.0057 | 0.0445 | 0.0030 | 0.0366 | 0.0027 | 0.0079 | 1.1109 | 4.6651 |
| im_09 | 2.0004 | 2.0015 | 0.0062 | 0.0405 | 0.0034 | 0.0329 | 0.0029 | 0.0076 | 1.1778 | 4.3309 |
| im_10 | 2.0001 | 2.0011 | 0.0016 | 0.0309 | 0.0009 | 0.0256 | 0.0007 | 0.0054 | 1.2305 | 4.7778 |

From the figures and tables above, we notice that before pre-processing, the normal ROIs were confused with the abnormal ROIs containing only one MC, and the multifractal spectrum could not differentiate between them. But after pre-processing, a very good discrimination of these ROIs was obtained from the multifractal spectrum and the extracted parameters.

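According to Table 8, before pre-processing, the variation intervals of each parameter are very close for the normal and abnormal ROIs, and they overlap for every parameter. After pre-processing, a large difference between these intervals is recorded: the intervals of w, R, and A for normal and abnormal ROIs no longer overlap, while those of α0 and L still overlap partially. This yields a better characterization and discrimination between the normal and the abnormal ROIs.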

Table 8.

Interval of variation of each multifractal parameter before and after pre-processing

| Parameter | Normal ROIs (before pre-processing) | Abnormal ROIs (before pre-processing) | Normal ROIs (after pre-processing) | Abnormal ROIs (after pre-processing) |
|---|---|---|---|---|
| α0 | [2.0001–2.0003] | [2.0001–2.0004] | [2.0003–2.0009] | [2.0007–2.0016] |
| w | [0.0010–0.0054] | [0.0013–0.0062] | [0.0049–0.0143] | [0.0168–0.0445] |
| R | [0.0005–0.0026] | [0.0007–0.0034] | [0.0025–0.0075] | [0.0124–0.0377] |
| L | [0.0005–0.0027] | [0.0006–0.0029] | [0.0024–0.0068] | [0.0038–0.0079] |
| A | [0.9485–1.1614] | [0.9241–1.4420] | [1.0311–1.2393] | [2.3755–6.9454] |
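
The separation shown in Table 8 can be checked with a short script that tests, for each parameter, whether the interval of the normal ROIs overlaps that of the abnormal ROIs. The interval bounds are copied from Table 8; the variable and function names are hypothetical. Since the intervals come from only 10 ROIs per class, this is an illustration rather than a statistical test.

```python
# Intervals copied from Table 8, stored as (normal ROIs, abnormal ROIs).
before = {
    "alpha0": ((2.0001, 2.0003), (2.0001, 2.0004)),
    "w": ((0.0010, 0.0054), (0.0013, 0.0062)),
    "R": ((0.0005, 0.0026), (0.0007, 0.0034)),
    "L": ((0.0005, 0.0027), (0.0006, 0.0029)),
    "A": ((0.9485, 1.1614), (0.9241, 1.4420)),
}
after = {
    "alpha0": ((2.0003, 2.0009), (2.0007, 2.0016)),
    "w": ((0.0049, 0.0143), (0.0168, 0.0445)),
    "R": ((0.0025, 0.0075), (0.0124, 0.0377)),
    "L": ((0.0024, 0.0068), (0.0038, 0.0079)),
    "A": ((1.0311, 1.2393), (2.3755, 6.9454)),
}


def intervals_overlap(a, b):
    """True when the closed intervals a = (lo, hi) and b = (lo, hi) share a point."""
    return a[0] <= b[1] and b[0] <= a[1]


def separating_parameters(table):
    """Names of the parameters whose normal and abnormal intervals do not overlap."""
    return [name for name, (normal, abnormal) in table.items()
            if not intervals_overlap(normal, abnormal)]


separating_before = separating_parameters(before)
separating_after = separating_parameters(after)
```

On these 20 example ROIs, no parameter separates the two classes before pre-processing, whereas w, R, and A separate them completely after pre-processing; α0 and L still overlap.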

Results of ROI Classification

The proposed approach was applied to each BI-RADS class of the INbreast database (Table 9). After the extraction of normal and abnormal ROIs, where only ROIs with an individual MC were considered, the multifractal spectrum was calculated using the two methods described in the previous section. The extracted parameters were used to classify the ROIs with the three classifiers. The results are reported in Tables 10, 11 and 12. Table 13 compares our results with the state of the art.

Table 9.

Number of ROIs in each BI-RADS class

| BI-RADS class | A | B | C | D |
|---|---|---|---|---|
| Normal ROIs | 300 | 376 | 368 | 300 |
| Abnormal ROIs (MC) | 300 | 376 | 368 | 300 |
| Total | 600 | 752 | 736 | 600 |

Table 10.

Classification of normal and abnormal ROIs of each BI-RADS class using the generalized fractal dimension method

| BI-RADS class | Classifier | Sensitivity (before pre-processing) | Specificity (before pre-processing) | Accuracy (before pre-processing) | Sensitivity (after pre-processing) | Specificity (after pre-processing) | Accuracy (after pre-processing) |
|---|---|---|---|---|---|---|---|
| A | KNN | 83.55% | 82.66% | 83.17% | 100% | 99.67% | 99.83% |
| A | SVM | 91.06% | 76.35% | 83.83% | 100% | 99.64% | 99.83% |
| A | DT | 80.01% | 79.36% | 79.83% | 99.68% | 99.66% | 99.67% |
| B | KNN | 81.14% | 78.59% | 79.79% | 99.49% | 100% | 99.74% |
| B | SVM | 92.82% | 76.95% | 84.84% | 99.75% | 99.15% | 99.47% |
| B | DT | 81.35% | 79.79% | 80.59% | 99.78% | 100% | 99.87% |
| C | KNN | 66.21% | 71.62% | 68.89% | 97.27% | 98.02% | 97.69% |
| C | SVM | 97.81% | 53.27% | 75.54% | 98.12% | 96.51% | 97.28% |
| C | DT | 73.93% | 71.85% | 72.83% | 96.73% | 96.41% | 96.60% |
| D | KNN | 50.14% | 53.68% | 51.83% | 90% | 91.32% | 90.67% |
| D | SVM | 30.21% | 77.15% | 52.83% | 94.01% | 92.95% | 93.50% |
| D | DT | 55.04% | 52.65% | 54% | 87.48% | 87.87% | 87.83% |

Table 11.

Classification of normal and abnormal ROIs of each BI-RADS class using the MFDMA method

| BI-RADS class | Classifier | Sensitivity (before pre-processing) | Specificity (before pre-processing) | Accuracy (before pre-processing) | Sensitivity (after pre-processing) | Specificity (after pre-processing) | Accuracy (after pre-processing) |
|---|---|---|---|---|---|---|---|
| A | KNN | 75.76% | 74.46% | 75.17% | 96.63% | 97.29% | 97% |
| A | SVM | 96.09% | 57.40% | 76.83% | 97.31% | 91.88% | 94.67% |
| A | DT | 75.18% | 71.28% | 73.17% | 96.33% | 95.99% | 96.17% |
| B | KNN | 81% | 78.88% | 79.92% | 96.53% | 95.44% | 96.01% |
| B | SVM | 96.66% | 68.74% | 82.71% | 97.87% | 94.36% | 96.14% |
| B | DT | 78.63% | 79.68% | 78.99% | 97.34% | 96.81% | 97.08% |
| C | KNN | 70.62% | 72.99% | 71.74% | 89.57% | 88.87% | 89.13% |
| C | SVM | 98.09% | 54.79% | 76.36% | 97.64% | 82.54% | 90.08% |
| C | DT | 69.06% | 71.51% | 70.24% | 90.89% | 88.26% | 89.54% |
| D | KNN | 53.77% | 51.62% | 52.83% | 68.97% | 70.08% | 69.67% |
| D | SVM | 57.74% | 64.86% | 61.17% | 83.34% | 75.32% | 79.33% |
| D | DT | 54.86% | 51.86% | 53.33% | 72.34% | 69.59% | 71% |

Table 12.

ROI classification results for all BI-RADS classes combined, using the generalized fractal dimension method

| Classifier | Sens (%), multifractal features | Spec (%), multifractal features | Acc (%), multifractal features | Sens (%), multifractal and GLCM features | Spec (%), multifractal and GLCM features | Acc (%), multifractal and GLCM features |
|---|---|---|---|---|---|---|
| KNN | 96.68 | 97.24 | 96.97 | 97.83 | 96.87 | 97.34 |
| SVM | 98.04 | 97.17 | 97.61 | 98.66 | 97.77 | 98.20 |
| DT | 97.37 | 97.39 | 97.38 | 97.53 | 97.03 | 97.27 |

Acc: accuracy; Sens: sensitivity; Spec: specificity
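
Because the data set is balanced (1344 normal and 1344 abnormal ROIs), the overall accuracy should equal the mean of sensitivity and specificity, up to rounding and fold averaging. The sketch below checks this on the values copied from Table 12; the dictionary layout and names are hypothetical.

```python
# (sensitivity, specificity, accuracy) in percent, copied from Table 12.
table_12 = {
    ("KNN", "multifractal"): (96.68, 97.24, 96.97),
    ("SVM", "multifractal"): (98.04, 97.17, 97.61),
    ("DT", "multifractal"): (97.37, 97.39, 97.38),
    ("KNN", "multifractal + GLCM"): (97.83, 96.87, 97.34),
    ("SVM", "multifractal + GLCM"): (98.66, 97.77, 98.20),
    ("DT", "multifractal + GLCM"): (97.53, 97.03, 97.27),
}


def mean_of_rates(sensitivity, specificity):
    """Accuracy implied by sensitivity and specificity when both classes have equal size."""
    return (sensitivity + specificity) / 2


# Largest difference between the reported accuracy and the implied one.
max_gap = max(abs(mean_of_rates(sens, spec) - acc)
              for sens, spec, acc in table_12.values())
```

The largest gap is below 0.05 percentage points, so the three reported measures are mutually consistent for every classifier and feature set.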

Table 13.

Comparison of classification results with state-of-the-art methods

| Method | Database (no. images) | No. ROIs | Feature type (number) | Classifier | Acc (%) | Sens (%) | Spec (%) |
|---|---|---|---|---|---|---|---|
| [19] | BCDR (176) | Normal: 96; MC: 96; Total: 192 | Statistical, interest points, interest corners (50) | Random forest | 95.83 | 96.84 | 95.09 |
| [20] | DDSM (100) | Normal: 10; MC: 10; Total: 110 | Physical characteristics (2) | – | 99 | 99 | 100 |
| [21] | IRMA (–) | Normal: 932; MC: 688; Total: 1620 | Rotation invariant local frequency magnitude descriptors | SVM | 91.10 | 98.04 | 81.17 |
| [22] | Mini-MIAS (–) | Normal: 208; Abnormal: 112; Total: 330 | ResNet features | ResNet | 95.91 | – | – |
| [23] | DDSM (219) | Normal: 124; MC: 95; Total: 219 | Statistical features (6) | SVM, LDA, MLPNN | 90.9 | 98.4 | 81.3 |
| [24] | BCDR (–) | Normal: 130; MC: 130; Total: 260 | Interest points, interest corners (2) | Random forest | 97.31 | 94.62 | 100 |
| Proposed approach | INbreast (308) | Normal: 1344; MC: 1344; Total: 2688 | Multifractals and GLCM (9) | SVM | 98.20 | 98.66 | 97.77 |

Acc: accuracy; Sens: sensitivity; Spec: specificity; Abnormal: mass + MC

The classification results obtained after the pre-processing step are markedly better than those obtained before it (Tables 10 and 11), which indicates that the proposed mammogram pre-processing method is effective in enhancing the contrast of MCs by reducing the effect of breast density.

Comparing the two methods, the MFDMA method is more complicated to implement, since several parameters need to be adjusted, and it takes more computation time. It also gives poorer classification results than the generalized fractal dimensions method (Tables 10 and 11).

In most studies, the analyzed MC ROIs are ones in which the MCs (generally clusters) are clearly visible and well separated from the surrounding tissue. Critical cases, in which MCs are hidden by high breast density (BI-RADS C and D), are not analyzed in those studies. In our work, it should be noted that the analyzed abnormal ROIs contain only one MC. Since the multifractal spectrum was sensitive to a single MC, we expect the results to be better for ROIs containing more than one MC (a cluster, for example) or a mass.

The BI-RADS density classification ranks mammograms according to the density of the breast tissue, from the least dense to the densest. The denser the tissue, the more difficult detection becomes, because breast density masks lesions, especially small ones such as MCs. In this paper, all BI-RADS density classes (A, B, C, and D) have been taken into consideration. With the generalized fractal dimensions method after pre-processing, the best classification accuracy obtained for classes A, B, and C exceeds 97% (Table 10); for class D, the best accuracy of 93.50% (SVM) is lower than for the other classes, but it remains a promising result for detecting MCs. Grouping all classes, the classification accuracy reached 98.20% (Table 12), which is a very satisfactory rate compared with the literature.

The proposed algorithm could also be applied to other databases, such as BCDR or DDSM. The pre-processing step may not work in the same way, because these databases do not have the same image quality and further filtering steps would be required. Multifractal analysis nevertheless remains a powerful tool for singularity analysis.

Conclusion

In this paper, we have proposed a computer-aided system for the detection of MCs that classifies mammographic ROIs as normal or abnormal based on multifractal features. The approach relies on multifractal analysis: multifractal measures were used to enhance the contrast of the MCs, and the multifractal spectrum was then computed to extract the multifractal attributes used for the classification of the ROIs.

Generally, multifractal analysis does not require a pre-processing step, because it is a pointwise analysis that measures the local regularity and studies its variation from one point to another. In this paper, however, the pre-processing step was necessary for a better characterization and classification of the ROIs. A brief comparison of two spectrum computation methods was made, in which the generalized fractal dimensions method gave the best results. Combining the multifractal features with the GLCM features improved the classification, with the SVM classifier giving the best results. In comparison with the literature, the proposed system has given very satisfactory and promising results. The particularity of our work lies in the fact that all cases of breast density have been taken into consideration, which has not been done in the literature. In addition, the analyzed abnormal ROIs in our study contained only one MC, i.e., the spectrum was sensitive to a single MC.

Finally, since this approach has been effective for the detection of individual MCs (ROIs containing one MC), it is expected to be effective as well for the detection and classification of ROIs containing other abnormalities, such as clusters of MCs or masses. This approach could also be used for the discrimination and classification of benign and malignant breast abnormalities.

Data Availability

The INbreast dataset used in this work was provided by the database manager under a signed transfer agreement.

Code Availability

Some of the MATLAB code used in this work is available in MATLAB toolboxes; the code written by the authors is personal and is not available.

Declarations

Ethics Approval

Not applicable.

Consent to Participate

Not applicable.

Consent for Publication

Not applicable.

Competing Interests

The authors declare no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. “Cancer.” https://www.who.int/fr/news-room/fact-sheets/detail/cancer (accessed Jun. 06, 2021).

- 2. S. B. Yengec Tasdemir, K. Tasdemir, and Z. Aydin, “A review of mammographic region of interest classification,” Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, vol. 10, no. 5. Wiley-Blackwell, Sep. 01, 2020, 10.1002/widm.1357.

- 3. V. Bhateja, M. Misra, and S. Urooj, Non-Linear Filters for Mammogram Enhancement: A Robust Computer-aided Analysis Framework for Early Detection of Breast Cancer, Studies in Computational Intelligence, vol. 861. [Online]. Available: http://www.springer.com/series/7092.

- 4. Elmoufidi A, El Fahssi K, Jai-andaloussi S, Sekkaki A, Gwenole Q, Lamard M. Anomaly classification in digital mammography based on multiple-instance learning. IET Image Process. 2018;12(3):320–328. doi: 10.1049/iet-ipr.2017.0536.

- 5. Gautam A, Bhateja V, Tiwari A, Satapathy SC. An improved mammogram classification approach using back propagation neural network. Advances in Intelligent Systems and Computing. 2018;542:369–376. doi: 10.1007/978-981-10-3223-3_35.

- 6. Tavakoli N, Karimi M, Norouzi A, Karimi N, Samavi S, Soroushmehr SMR. Detection of abnormalities in mammograms using deep features. J. Ambient Intell. Humaniz. Comput. 2019. doi: 10.1007/s12652-019-01639-x.

- 7. D. Muduli, R. Dash, and B. Majhi, “Automated breast cancer detection in digital mammograms: A moth flame optimization based ELM approach,” Biomed. Signal Process. Control, vol. 59, May 2020, 10.1016/j.bspc.2020.101912.

- 8. Hu K, Yang W, Gao X. Microcalcification diagnosis in digital mammography using extreme learning machine based on hidden Markov tree model of dual-tree complex wavelet transform. Expert Syst. Appl. 2017;86:1339–1351. doi: 10.1016/j.eswa.2017.05.062.

- 9. Suhail Z, Denton ERE, Zwiggelaar R. Classification of micro-calcification in mammograms using scalable linear Fisher discriminant analysis. Med. Biol. Eng. Comput. 2018;56(8):1475–1485. doi: 10.1007/s11517-017-1774-z.

- 10. B. Singh and M. Kaur, “An approach for classification of malignant and benign microcalcification clusters,” Sādhanā, vol. 43, 2018, 10.1007/s12046-018-0805-2S.

- 11. J. G. Melekoodappattu and P. S. Subbian, “A Hybridized ELM for Automatic Micro Calcification Detection in Mammogram Images Based on Multi-Scale Features,” J. Med. Syst., vol. 43, no. 7, Jul. 2019, 10.1007/s10916-019-1316-3.

- 12. M. Dong, Z. Wang, C. Dong, X. Mu, and Y. Ma, “Classification of Region of Interest in Mammograms Using Dual Contourlet Transform and Improved KNN,” J. Sensors, vol. 2017, 2017, 10.1155/2017/3213680.

- 13. K. U. Sheba and S. Gladston Raj, “An approach for automatic lesion detection in mammograms,” Cogent Eng., vol. 5, no. 1, Jan. 2018, 10.1080/23311916.2018.1444320.

- 14. Mohanty F, Rup S, Dash B, Majhi B, Swamy MNS. Mammogram classification using contourlet features with forest optimization-based feature selection approach. Multimed. Tools Appl. 2019;78(10):12805–12834. doi: 10.1007/s11042-018-5804-0.

- 15. H. Kaur, J. Virmani, Kriti, and S. Thakur, “A genetic algorithm-based metaheuristic approach to customize a computer-aided classification system for enhanced screen film mammograms,” in U-Healthcare Monitoring Systems, Elsevier, 2019, pp. 217–259.

- 16. S. A. Agnes, J. Anitha, S. I. A. Pandian, and J. D. Peter, “Classification of Mammogram Images Using Multiscale all Convolutional Neural Network (MA-CNN),” J. Med. Syst., vol. 44, no. 1, Jan. 2020, 10.1007/s10916-019-1494-z.

- 17. F. Mohanty, S. Rup, B. Dash, B. Majhi, and M. N. S. Swamy, “An improved scheme for digital mammogram classification using weighted chaotic salp swarm algorithm-based kernel extreme learning machine,” Appl. Soft Comput. J., vol. 91, Jun. 2020, 10.1016/j.asoc.2020.106266.

- 18. Mohanty F, Rup S, Dash B, Majhi B, Swamy MNS. Digital mammogram classification using 2D-BDWT and GLCM features with FOA-based feature selection approach. Neural Comput. Appl. 2020;32(11):7029–7043. doi: 10.1007/s00521-019-04186-w.

- 19. L. Losurdo et al., “A combined approach of multiscale texture analysis and interest point/corner detectors for microcalcifications diagnosis,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 2018, vol. 10813 LNBI, pp. 302–313, 10.1007/978-3-319-78723-7_26.

- 20. G. R. Jothilakshmi, A. Raaza, V. Rajendran, Y. Sreenivasa Varma, and R. Guru Nirmal Raj, “Pattern Recognition and Size Prediction of Microcalcification Based on Physical Characteristics by Using Digital Mammogram Images,” J. Digit. Imaging, vol. 31, no. 6, pp. 912–922, Dec. 2018, 10.1007/s10278-018-0075-x.

- 21. Paramkusham S, Rao KMM, Rao BVVSNP. Comparison of rotation invariant local frequency, LBP and SFTA methods for breast abnormality classification. Int. J. Signal and Imaging Syst. Eng. 2018;11(3):136–150. doi: 10.1504/IJSISE.2018.093266.

- 22. Yu X, Wang SH. Abnormality Diagnosis in Mammograms by Transfer Learning Based on ResNet18. Fundam. Informaticae. 2019;168(2–4):219–230. doi: 10.3233/FI-2019-1829.

- 23. Hekim M, Yurdusev AA, Oral C. The detection and classification of microcalcifications in the visibility-enhanced mammograms obtained by using the pixel assignment-based spatial filter. Adv. Electr. Comput. Eng. 2019;19(4):73–82. doi: 10.4316/AECE.2019.04009.

- 24. A. Fanizzi et al., “A machine learning approach on multiscale texture analysis for breast microcalcification diagnosis,” BMC Bioinformatics, vol. 21, Mar. 2020, 10.1186/s12859-020-3358-4.

- 25. N. Kermouni Serradj, S. Lazzouni, and M. Messadi, “Mammograms enhancement based on multifractal measures for microcalcifications detection,” Int. J. Biomed. Eng. Technol. (in press).

- 26. A. N. D. Posadas, D. Giménez, R. Quiroz, and R. Protz, “Multifractal characterization of soil pore systems,” Soil Sci. Soc. Am. J., vol. 67, pp. 1361–1369, 2003.

- 27. J. L. Véhel, “Fractal and multifractal processing of images,” Traitement du Signal, vol. 20, pp. 303–311, 2003. [Online]. Available: http://www-rocq.inria.fr/fractales.

- 28. Chhabra A, Jensen RV. Direct Determination of the f(α) Singularity Spectrum. Phys. Rev. Letters. 1989;62(12):1327–1330. doi: 10.1103/PhysRevLett.62.1327.

- 29. S. G. De Bartolo, R. Gaudio, and S. Gabriele, “Multifractal analysis of river networks: Sandbox approach,” Water Resour. Res., vol. 40, no. 2, 2004, 10.1029/2003WR002760.

- 30. Broniatowski M, Mignot P. A self-adaptive technique for the estimation of the multifractal spectrum. Statistics and Probability Letters. 2001;54:125–135. doi: 10.1016/S0167-7152(00)00210-8.

- 31. A. Arneodo, B. Audit, P. Kestener, and S. Roux, “Multifractal Formalism based on the Continuous Wavelet Transform.” Scholarpedia, vol. 3, 2007.

- 32. Jaffard S, Bruno L, Patrice A. Wavelet leaders in multifractal analysis. In: Tao Q, Mang IV, Xu Y, editors. Wavelet Analysis and Applications. Switzerland: Birkhauser Verlag Basel; 2006. pp. 219–264.

- 33. Xi C, Zhang S, Xiong G, Zhao H. A comparative study of two-dimensional multifractal detrended fluctuation analysis and two-dimensional multifractal detrended moving average algorithm to estimate the multifractal spectrum. Phys. A Stat. Mech. its Appl. 2016;454:34–50. doi: 10.1016/j.physa.2016.02.027.

- 34. F. Wang, Q. Fan, and H. E. Stanley, “Multiscale multifractal detrended-fluctuation analysis of two-dimensional surfaces,” Phys. Rev. E, vol. 93, no. 4, Apr. 2016, 10.1103/PhysRevE.93.042213.

- 35. B. Yao, F. Imani, A. S. Sakpal, E. W. Reutzel, and H. Yang, “Multifractal Analysis of Image Profiles for the Characterization and Detection of Defects in Additive Manufacturing,” J. Manuf. Sci. Eng. Trans. ASME, vol. 140, no. 3, Mar. 2018, 10.1115/1.4037891.

- 36. Lopes R, Betrouni N. Fractal and multifractal analysis: A review. Med. Image Anal. 2009;13(4):634–649. doi: 10.1016/j.media.2009.05.003.

- 37. Moreira IC, Amaral I, Domingues I, Cardoso A, Cardoso MJ, Cardoso JS. INbreast: Toward a Full-field Digital Mammographic Database. Acad. Radiol. 2012;19(2):236–248. doi: 10.1016/j.acra.2011.09.014.

- 38. “Low-Light Image Enhancement - MATLAB & Simulink Example - MathWorks France.” https://fr.mathworks.com/help/images/low-light-image-enhancement.html (accessed Jun. 06, 2021).

- 39. A. Ouahabi, Signal and image Multiresolution Analysis. Wiley-ISTE, 2012.

- 40. Dick OE, Svyatogor IA. Potentialities of the wavelet and multifractal techniques to evaluate changes in the functional state of the human brain. Neurocomputing. 2012;82:207–215. doi: 10.1016/j.neucom.2011.11.013.

- 41. Telesca L, Colangelo G, Lapenna V, Macchiato M. Monofractal and multifractal characterization of geoelectrical signals measured in southern Italy. Chaos, Solitons and Fractals. 2003;18(2):385–399. doi: 10.1016/S0960-0779(02)00655-0.

- 42. Oudjemia S. Analyse des signaux biomedicaux par des approches multifractales et entropiques: Application à la variabilité du rythme cardiaque foetal. Tizi-Ouzou: Mouloud Mammeri University; 2015.

- 43. “Properties of gray-level co-occurrence matrix - MATLAB graycoprops - MathWorks France.” https://fr.mathworks.com/help/images/ref/graycoprops.html (accessed Jun. 06, 2021).

- 44. A. Subasi, “Machine learning techniques,” in Practical Machine Learning for Data Analysis Using Python, Elsevier, 2020, pp. 91–202.

- 45. S. Huang, C. A. I. Nianguang, P. Penzuti Pacheco, S. Narandes, Y. Wang, and X. U. Wayne, “Applications of support vector machine (SVM) learning in cancer genomics,” Cancer Genomics and Proteomics, vol. 15, no. 1. International Institute of Anticancer Research, pp. 41–51, Jan. 01, 2018, 10.21873/cgp.20063.

- 46. P. Cunningham and S. J. Delany, “k-Nearest Neighbour Classifiers: 2nd Edition (with Python examples),” Apr. 2020, [Online]. Available: http://arxiv.org/abs/2004.04523.
