Abstract

Objectives:

Automatically detecting dental conditions using artificial intelligence (AI) and reporting them visually are now needed for treatment planning and dental health management. This work presents a comprehensive computer-aided detection system to detect dental restorations.

Methods:

Ten state-of-the-art deep-learning detection models were used, with R-CNN, Faster R-CNN, SSD, YOLOv3, and RetinaNet as detectors. ResNet-50, ResNet-101, XCeption-101, VGG16, and DarkNet53 were integrated as backbones and feature extractors, in addition to efficient approaches such as Side-Aware Boundary Localization, cascaded structures, and simple model frameworks like Libra and Dynamic.

A total of 684 objects in panoramic radiographs were used to detect three classes, namely, dental restorations, dentures, and implants.

Each model was evaluated by mean average precision (mAP), average recall (AR), and precision-recall curve using Common Objects in Context (COCO) detection evaluation metrics.

Results:

mAP varied between 0.755 and 0.973 for the ten models explored, while AR ranged between 0.605 and 0.771. Faster R-CNN RegnetX provided the best detection performance, with mAP of 0.973 and AR of 0.771. The area under the precision-recall curve was 0.952. The precision-recall curve indicated that errors were mainly dominated by localization confusions.

Conclusions:

Results showed that the proposed AI-based computer-aided system has great potential, with reliable and accurate performance in detecting dental restorations, dentures, and implants in panoramic radiographs. As models are trained with more data and reporting becomes standardized, AI-based solutions are expected to be implemented in dental clinics for daily use soon.

Keywords: deep learning, dentistry, detection, COCO, evaluation, implant, restoration

Introduction

Dental examination and treatment planning include physical and radiographic examinations. Dental imaging modalities are routinely used for radiographic examination, which plays a key role in decision making, diagnosis, and treatment planning of diseases. Intraoral and extraoral radiographs are employed as needed. Periapical and bitewing radiographs are intraoral images. A periapical radiograph visualizes teeth with the surrounding bone, while a bitewing radiograph images the crowns of posterior teeth to examine tooth interfaces. 1 The panoramic radiograph (PR), an extraoral image, covers wider anatomical regions with a relatively low radiation dose. 2 It is the first choice for evaluating teeth, oral structures, pathologies, cephalometric landmarks, bone loss, root morphology, jaws, and surrounding anatomic structures before complementary examinations, if needed. Although PRs are a very useful tool for dentists in the diagnosis of diseases, they have some limitations. 3 Two-dimensional, low-resolution radiographs with artifacts and inhomogeneity can cause misinterpretation. Moreover, dental procedures take considerable time for each patient every day. There is therefore a need for fast, automated identification of intraoral conditions. Detecting and classifying dental conditions automatically helps (i) to overcome interobserver variability, (ii) to increase the effectiveness of care, and (iii) to provide more accurate and reliable evaluation for observers and new graduates in clinics.

Over the past two decades, research in Computer-Aided Diagnosis (CAD) has shown promising advancements. Medical image analysis attempts to give radiologists and doctors a more effective diagnostic and therapeutic process. Conventional CAD systems based on pattern recognition techniques depended on handcrafted features and on learning methods that map those features to a decision. The feature preparation and transformation process introduces a large human bias. 4 Artificial Intelligence (AI) has replaced rule-based, problem-specific solutions with learning-based, mostly generic ones. 5 A CAD system can help patients where resources are limited and experts are not available. It also improves the accuracy and reduces the variability of interpretations. Artificial neural networks, first presented in the 1960s, consist of several interconnected layers inspired by the natural neural interconnections in the brain. 6 A non-linear transformation function, such as $y_{\mathrm{output}} = f(x_{\mathrm{input}})$, is applied to the input. 7 With advancements in computer science and electronics, models with many more layers are now used to extract useful information from input data. 8 Machine learning is a subset of AI that refers to algorithms that learn from input data, enabling computers to solve a specific problem. Deep learning is the leading machine-learning approach. Deep-learning models are trained for a specific task in such a way that more input data scales up their performance for object detection, segmentation, classification, and object recognition. 9,10 Deep learning covers a wide range of image analysis applications in medical research.

AI research has been rapidly gaining attention in the field of dentistry. 11 Dental radiographs from clinical routine create sufficient resources to develop AI-based applications. Deep learning as an AI technology has been applied to various research fields, including dentistry, for object detection, prediction, classification, and other related tasks. 12–15 In dentistry, the diagnosis of dental conditions and diseases has previously been addressed using deep-learning models. 2,3,6,9,11,16

This work aimed to develop a computer-aided detection system to automatically detect dental restorations, dentures, and implants in panoramic radiographs. It comprised a state-of-the-art detection solution consisting of 10 different deep-learning models. Five detectors were investigated: R-CNN and Faster R-CNN as two-stage techniques, and SSD, YOLO, and RetinaNet as one-stage techniques. Additionally, ResNet-50, ResNet-101, XCeption-101, VGG16, and DarkNet53 were used together with the detectors as backbones or feature extractors. Moreover, Side-Aware Boundary Localization (SABL) and cascaded structures were two approaches used in this work to improve detection performance. Similarly, the Libra and Dynamic model frameworks were also integrated. The performance of each model was evaluated by mean average precision (mAP), average recall (AR), precision-recall curves, and Common Objects in Context (COCO) detection evaluation metrics to examine whether all objects in an image were found and whether all detected objects were assigned to the correct class.

Methods and materials

This work was approved by the Ethical Review Board of Ankara Yildirim Beyazit University (approval number 2021-69). It was performed in accordance with the ethical standards of the Helsinki Declaration.

PRs were randomly chosen from an image database created between 2018 and 2020. Patients were between 18 and 65 years old. A total of 123 PRs with 684 objects were used. Digital PRs were taken with the same dental panoramic device (Planmeca Oy, Helsinki, Finland). During the selection process, PRs with metallic artifacts, position-based distortions, or incomplete root formations were excluded. PRs were randomly divided into training, validation, and test folders with a ratio of 80, 10, and 10%, respectively. There were three classes: teeth with restoration, denture, and implant. Fillings and single-unit fixed crowns were categorized as dental restorations. The denture class included multiunit fixed crowns, while the implant class consisted of a single implant-supported crown as one object. Data labeling was performed by an oral and maxillofacial radiologist (B.Ç.) with more than 5 years of experience in the field. The LabelImg software was used to draw a rectangular bounding box around each object and assign its corresponding label. 17
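The random 80/10/10% division described above can be sketched as follows. This is a minimal illustration rather than the authors' procedure: the file names, the fixed seed, and the rounding rule (remainder assigned to the test set) are assumptions, and the exact number of PRs per folder is not stated in the text.

```python
import random

def split_dataset(items, ratios=(0.8, 0.1, 0.1), seed=0):
    """Shuffle items and split them into train/validation/test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * ratios[0])
    n_val = round(len(shuffled) * ratios[1])
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test

# Hypothetical file names standing in for the 123 panoramic radiographs.
image_ids = [f"pr_{i:03d}.png" for i in range(123)]
train, val, test = split_dataset(image_ids)
print(len(train), len(val), len(test))
```

Splitting at the image level, as here, keeps all objects from one radiograph in the same folder.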

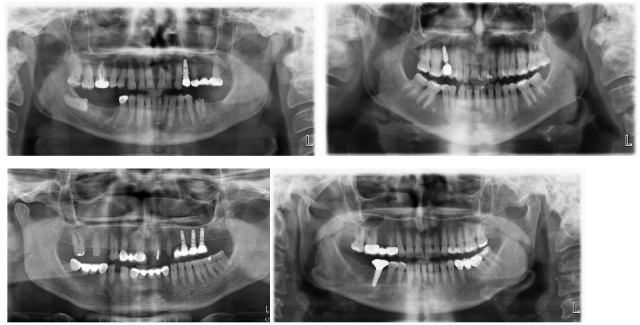

The original images were in DICOM (DCM) format with a resolution of 2943 × 1435. They were first converted to PNG in MATLAB and then resized to 640 × 640 before being passed to the models. The PyTorch library and Google Colab were primarily used for developing the models. Figure 1 shows PRs, each containing one of the classes, that were used as inputs for the proposed detection solution.

Figure 1.

Example PRs used to train detection models.
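Because resizing from 2943 × 1435 to 640 × 640 changes the aspect ratio, bounding-box annotations must be scaled by different factors along each axis. The sketch below assumes a plain resize without padding, which the text does not specify; the function name and the example box are hypothetical.

```python
def rescale_box(box, src_size, dst_size=(640, 640)):
    """Map an (x_min, y_min, x_max, y_max) box from src_size to dst_size.

    Sizes are given as (width, height) in pixels.
    """
    sx = dst_size[0] / src_size[0]  # horizontal scale factor
    sy = dst_size[1] / src_size[1]  # vertical scale factor
    x_min, y_min, x_max, y_max = box
    return (x_min * sx, y_min * sy, x_max * sx, y_max * sy)

# Hypothetical box annotated on an original 2943 x 1435 radiograph.
box = (1000, 500, 1200, 700)
print(rescale_box(box, src_size=(2943, 1435)))
```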

Object detection, typically performed with convolutional neural networks (CNNs), refers to determining where objects are located within images and which class each object belongs to. The advantages of deep learning–based object detection using CNNs over traditional methods are (i) hierarchical feature representations learned by a multistage structure, (ii) increased learning and expressive capability by means of deep architectures, and (iii) the benefit of jointly optimizing two related tasks, which combines classification and localization in a multitask learning approach. Object detection models can be categorized into two groups. The first group consists of region proposal-based techniques that first generate region proposals and then classify each proposal into an object class. It includes R-CNN, Fast R-CNN, and Faster R-CNN. 18,19 The other group consists of regression-based algorithms that predict object classes and locations directly instead of first selecting regions of interest in an image. YOLO, SSD, and RetinaNet are examples of regression-based methods. 20–22 Transfer learning, a technique that starts from a pretrained network and fine-tunes it for the application at hand, is implemented. 23 Models are pre-trained on the COCO dataset, a general-purpose image dataset with 80 object classes that provides sufficient data for training. 24 Each detection model is fine-tuned using the custom dataset with three classes.

State-of-the-art object detection algorithms fall into two main categories: two-stage and one-stage architectures. The former use a proposal-driven mechanism in which object locations are first proposed and each location is then assigned to one of the classes using a CNN. The latter use anchor boxes to localize regions of interest in the image in a single step without region proposals. The AI-based computer-aided detection system includes 10 state-of-the-art deep-learning models covering both two-stage and one-stage detectors. Faster R-CNN and R-CNN are two-stage techniques that need a backbone as a feature extractor, while SSD, YOLO, and RetinaNet perform the detection task in a single step. ResNet-50, ResNet-101, VGG16, and DarkNet-53 CNNs are used as backbones for the detectors.

Deep learning-based detection: Detectors, Backbones, and Approaches

The Faster R-CNN architecture has two subnetworks working together: a Region Proposal Network (RPN) and a Fast R-CNN network. 18,19 As a region proposal technique, it first generates potential bounding boxes and then runs a classifier on these proposed boxes. The RPN, which is a fully convolutional network, predicts object bounds and objectness scores with a 3 × 3 sliding window on the convolutional feature map. Classification is then followed by a post-processing step to refine the bounding boxes, eliminate duplicates, and rescore the boxes.
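Duplicate removal in the post-processing step is commonly done with non-maximum suppression (NMS). The following is a minimal pure-Python sketch of greedy NMS, assuming boxes in (x_min, y_min, x_max, y_max) form and an IoU threshold of 0.5; it illustrates the idea only and is not the implementation used in this work.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def nms(boxes, scores, iou_threshold=0.5):
    """Return indices of boxes kept after greedy non-maximum suppression."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep

# Hypothetical detections: the first two boxes cover the same object.
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 150, 150)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
print(kept)
```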

YOLO (You Only Look Once) is a one-stage object detector that exploits features learned by a deep CNN. 20 Predictions are made at different scales by downsampling the image dimensions at different layers of a fully convolutional network and using the resulting feature maps. Feature extraction is performed by the DarkNet network. Cross-entropy loss and logistic regression are primarily used to predict the class of each predicted bounding box.
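To make the multi-scale prediction concrete, the sketch below computes the output grid sizes for a 640 × 640 input with three classes. The strides (32, 16, 8) and three anchors per scale follow the YOLOv3 paper; that the trained model used exactly this configuration is an assumption.

```python
def yolo_output_shapes(input_size=640, num_classes=3,
                       strides=(32, 16, 8), anchors_per_scale=3):
    """Return (grid_h, grid_w, channels) for each prediction scale."""
    shapes = []
    for stride in strides:
        grid = input_size // stride
        # Per anchor: 4 box coordinates + 1 objectness score + class scores.
        channels = anchors_per_scale * (5 + num_classes)
        shapes.append((grid, grid, channels))
    return shapes

shapes = yolo_output_shapes()
total_boxes = sum(h * w * 3 for h, w, _ in shapes)
print(shapes, total_boxes)
```

Most of these candidate boxes are discarded by confidence thresholding and duplicate removal.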

Single Shot MultiBox Detector (SSD) is an object detector that optimizes a loss function combining objectness and bounding box position losses. 21 It uses a VGG network whose fully connected layers are replaced with convolutional layers. This makes it possible to extract features at different scales while progressively reducing the size of each subsequent layer. Detection is performed with a single forward pass.

RetinaNet addresses the downsides of one-stage detection algorithms with two improvements: Feature Pyramid Networks (FPN) and Focal Loss. FPN combines low-level and high-level features, while Focal Loss prevents the class imbalance caused by the large number of background examples from dominating the loss. 22
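The effect of Focal Loss can be illustrated with its binary form, FL(p_t) = -α_t (1 - p_t)^γ log(p_t). The sketch below uses the defaults reported in the RetinaNet paper (α = 0.25, γ = 2); the example probabilities are hypothetical.

```python
import math

def cross_entropy(p, y):
    """Binary cross-entropy for foreground probability p and label y in {0, 1}."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(p_t)

def focal_loss(p, y, alpha=0.25, gamma=2.0):
    """Binary focal loss: down-weights examples that are already well classified."""
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)

# An easy background example (confidently rejected) vs. a hard one.
easy = focal_loss(0.01, 0)
hard = focal_loss(0.90, 0)
print(easy, hard)
```

The easy background example contributes far less to the loss than the hard one, so the many trivial background regions no longer dominate training.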

Dynamic R-CNN is an efficient model architecture that automatically adapts the label assignment criteria and the regression loss function based on the proposals during training. 25 This allows the detector to make better use of training samples by fitting it to higher-quality samples.

Libra R-CNN is a simple model framework that addresses typical limitations caused by imbalances during the training process. 26 Three components, IoU-balanced sampling, a balanced feature pyramid, and balanced L1 loss, are combined to reduce imbalance at the sample, feature, and objective levels, respectively.

RegNet is a network design paradigm that designs network design spaces, which parametrize populations of networks, instead of an individual network. 27 The structure of the design space was explored to arrive at a low-dimensional space of regular networks. The parametrization of good networks can be explained by a quantized linear function.

Side-Aware Boundary Localization (SABL) is an approach to improve on bounding box regression, the technique mainly used to localize objects. 28 Instead of predicting the centers and sizes of objects, which is vulnerable to displacements between anchors and targets, it uses a two-step localization scheme. First, a range of movement is predicted; then, the precise position within the predicted block is determined. This provides improvements between 1 and 3% for both two-stage and single-stage detection models.

Cascade R-CNN is a multistage object detection architecture consisting of a sequence of detectors trained with increasing IoU thresholds to address overfitting and the quality mismatch between the detector and the test hypotheses. 29 The detectors are trained sequentially, with the output of one detector used as the input of the next, which improves hypothesis quality and decreases overfitting.

VGG16 is a CNN model with 13 convolutional and five pooling layers. It uses receptive fields with a 3 × 3 kernel size, which increase the non-linearity of the network while decreasing the total number of parameters, yielding better performance with ease of implementation. 30
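The parameter saving from small kernels can be checked with a short calculation: two stacked 3 × 3 convolutions cover the same 5 × 5 receptive field as a single 5 × 5 convolution, with fewer weights and one extra non-linearity in between. The channel count of 64 below is an illustrative assumption, and biases are ignored.

```python
def stacked_receptive_field(num_layers, kernel=3):
    """Receptive field of num_layers stacked stride-1 convolutions."""
    return 1 + num_layers * (kernel - 1)

def conv_weights(kernel, channels):
    """Weights of one kernel x kernel convolution with `channels` in and out."""
    return kernel * kernel * channels * channels

channels = 64
two_3x3 = 2 * conv_weights(3, channels)  # two stacked 3 x 3 layers
one_5x5 = conv_weights(5, channels)      # one 5 x 5 layer, same receptive field
print(stacked_receptive_field(2), two_3x3, one_5x5)
```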

Deeper architectures suffer from extremely small back-propagated gradients, resulting in saturated or degraded performance. Residual networks deal with this through residual connections: identity shortcut connections that skip one or more layers and perform identity mappings. 31 This also reduces the number of parameters needed for a deep network. ResNet variants, such as ResNet-50 and ResNet-101, have depths of up to 152 layers.
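In the notation of the ResNet paper, a residual block with input $x$, output $y$, and stacked layers $F$ with weights $\{W_i\}$ can be written as follows. The gradient expression is added here as a brief illustration of why the shortcut helps.

$$
y = F(x, \{W_i\}) + x, \qquad \frac{\partial y}{\partial x} = \frac{\partial F}{\partial x} + I
$$

The identity term $I$ gives the gradient a direct path through the block, so it does not vanish even when $\partial F / \partial x$ is very small.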

Xception is a CNN architecture that interprets Inception modules as depthwise separable convolutions and replaces them accordingly. 32 It maps the spatial correlations for each channel separately instead of separating the input data into multiple blocks. Then, a 1 × 1 pointwise convolution is performed to capture cross-channel correlations.
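A short weight count shows why separating the spatial and cross-channel steps is cheaper than a standard convolution. The layer sizes below (3 × 3 kernel, 128 input and 256 output channels) are illustrative assumptions, and biases are ignored.

```python
def standard_conv_weights(k, c_in, c_out):
    """Weights of a standard k x k convolution."""
    return k * k * c_in * c_out

def separable_conv_weights(k, c_in, c_out):
    """Weights of a depthwise separable convolution."""
    depthwise = k * k * c_in  # one k x k spatial filter per input channel
    pointwise = c_in * c_out  # 1 x 1 convolution mixing the channels
    return depthwise + pointwise

standard = standard_conv_weights(3, 128, 256)
separable = separable_conv_weights(3, 128, 256)
print(standard, separable)
```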

Darknet-53 is a CNN that works as a backbone to extract features for the YOLOv3 object detector. 20 Compared to its predecessor Darknet-19, it includes residual shortcut connections and more convolutional layers.

Evaluation criteria for detection performance

Object detection models predict the bounding box coordinates and the class of the objects in input images as outputs. Detection performance is evaluated by mean average precision (mAP), recall, and precision metrics. mAP is also the metric used in the well-known PASCAL VOC object-detection challenge. 33

Intersection over Union (IoU) is the ratio of the area of overlap between the predicted bounding box and the ground truth to the area of their union. It indicates how correctly the bounding box is predicted. It takes values between 0 and 1, indicating no overlap and exact overlap, respectively.

$$\mathrm{IoU} = \frac{\text{area of overlap}}{\text{area of union}} \qquad (1)$$
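The IoU definition above can be sketched in a few lines, assuming axis-aligned boxes given as (x_min, y_min, x_max, y_max). The function name and the example boxes are illustrative.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    # Width and height of the overlap are clamped at zero for disjoint boxes.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
```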

Precision indicates how exact the model is in identifying relevant objects, while recall refers to the ability of the model to find correct detections among all ground truths. They can be calculated using Eq 2–3 from the counts of true positives (TP), false positives (FP), and false negatives (FN). When comparing models, higher precision and recall values indicate better detection performance.

$$\mathrm{Precision} = \frac{TP}{TP + FP} \qquad (2)$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \qquad (3)$$

Average Precision (AP) refers to the area under the precision-recall curve (AUC) evaluated at a given IoU threshold. It is calculated using Eq 4.

$$AP = \int_{0}^{1} p(r)\,dr \qquad (4)$$
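In practice, the area under the precision-recall curve is computed from a ranked list of detections. The sketch below uses all-point interpolation, which is one common convention; COCO itself samples the curve at 101 recall points, so values may differ slightly from those reported here. The input list is hypothetical.

```python
def average_precision(is_tp, num_gt):
    """AP from detections sorted by descending confidence.

    is_tp: list of booleans, True where the detection matched a ground truth.
    num_gt: number of ground-truth objects of this class.
    """
    if num_gt == 0:
        return 0.0
    tp = fp = 0
    recalls, precisions = [], []
    for hit in is_tp:
        if hit:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Interpolate: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the rectangle areas under the interpolated curve.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap

print(average_precision([True, False, True], num_gt=2))
```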

AP@threshold means that AP is calculated at a given IoU threshold. Most commonly, the threshold is 0.5, denoted AP@0.5. Additionally, AP is calculated for each class in the data. When the n AP values of the n classes are averaged, the mean Average Precision (mAP) is obtained with Eq 5.

$$mAP = \frac{1}{n}\sum_{i=1}^{n} AP_i \qquad (5)$$

Similarly, mAP@0.5:0.95 indicates averaged AP over all classes and different IoU thresholds from 0.5 to 0.95 with a step of 0.05.
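The two averaging steps, over classes and over IoU thresholds, can be sketched as follows. The per-class AP values are hypothetical placeholders, not results from this study.

```python
def mean_average_precision(ap_per_class):
    """Average the per-class AP values at a single IoU threshold."""
    return sum(ap_per_class.values()) / len(ap_per_class)

def coco_iou_thresholds():
    """IoU thresholds from 0.5 to 0.95 with a step of 0.05."""
    return [round(0.5 + 0.05 * i, 2) for i in range(10)]

def map_over_thresholds(ap_table):
    """ap_table maps each IoU threshold to a {class name: AP} dictionary."""
    per_threshold = [mean_average_precision(aps) for aps in ap_table.values()]
    return sum(per_threshold) / len(per_threshold)

# Hypothetical AP values for the three classes at IoU = 0.5.
aps_at_05 = {"restoration": 0.9, "denture": 0.8, "implant": 0.7}
print(mean_average_precision(aps_at_05))
print(coco_iou_thresholds())
```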

Object detection models output a bounding box and a class label for each prediction. For each object in the image, intersection over union (IoU) measures the overlap between the predicted bounding box and the ground truth. 7,8 If the IoU exceeds the IoU threshold, the detection is counted as a true positive (TP). Precision and recall are calculated based on these assignments.

Detection performance is evaluated with the Common Objects in Context (COCO) detection evaluation metrics. 24 These include multiple metrics, some of which are plotted in the precision-recall curve; the area under the curve (AUC) of each is given in brackets in the legend. The evaluation metrics that appear in the legend of the precision-recall curve are briefly explained below.
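The assignment of detections to true positives can be sketched for a single class as below. Greedy matching in descending score order, with each ground truth matched at most once, is a common convention and an assumption here; the boxes and scores are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def match_detections(preds, gts, iou_threshold=0.5):
    """preds: list of (box, score); gts: list of boxes. Returns (tp, fp, fn)."""
    matched = set()
    tp = fp = 0
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        best_iou, best_j = 0.0, None
        for j, gt in enumerate(gts):
            if j in matched:
                continue  # each ground truth can be matched only once
            value = iou(box, gt)
            if value > best_iou:
                best_iou, best_j = value, j
        if best_j is not None and best_iou > iou_threshold:
            matched.add(best_j)
            tp += 1
        else:
            fp += 1
    fn = len(gts) - len(matched)  # ground truths that were never detected
    return tp, fp, fn

def precision_recall(tp, fp, fn):
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [((0, 0, 10, 10), 0.9), ((1, 1, 11, 11), 0.8), ((50, 50, 60, 60), 0.7)]
counts = match_detections(preds, gts)
print(counts, precision_recall(*counts))
```

In this example the second prediction is a duplicate of an already matched object and therefore counts as a false positive.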

C75 refers to the AUC at an IoU threshold of 0.75,

C50 refers to the AUC at an IoU threshold of 0.5,

Loc refers to the AUC when localization errors are ignored,

Sim refers to the AUC when supercategory class confusions are removed,

Oth refers to the AUC when all class confusions are removed,

BG refers to the AUC when all background and class confusions are removed,

FN refers to the AUC when all remaining errors are removed.

Results

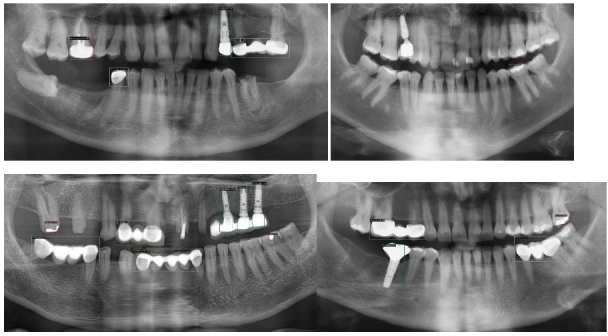

The detection performance of each model was evaluated by mAP, average recall, and COCO detection evaluation metrics. Precision-recall and loss curves are then presented for the three best-performing models for further analysis. Figure 2 demonstrates how accurately objects were detected on PRs.

Figure 2.

Detection results with predicted bounding boxes and class names.

Table 1 shows performance results for two categories, multistage methods and single-stage methods, using mAP and average recall metrics. Eight of the ten models explored gave an mAP@0.5 higher than 0.9, while nine of the ten produced an average recall higher than 0.7; the exception was YOLO DarkNet-53, with an average recall of 0.605. Faster R-CNN RegnetX provided the best detection performance, with mAP of 0.97 and 0.72 at IoU of 0.5 and 0.5:0.95, respectively. Its average recall was 0.77.

Table 1.

Detection performance of each model

| Models | mAP@0.5 | mAP@0.5:0.95 | Average Recall |
|---|---|---|---|
| MultiStage Methods | | | |
| Faster R-CNN ResNet-50 | 0.958 | 0.677 | 0.732 |
| Faster R-CNN ResNet-101 | 0.930 | 0.705 | 0.745 |
| Faster R-CNN XCeption-101 | 0.941 | 0.682 | 0.720 |
| Libra R-CNN XCeption-101 | 0.938 | 0.711 | 0.767 |
| Faster R-CNN RegnetX 3.2GF | 0.973 | 0.723 | 0.771 |
| Cascade R-CNN ResNet-101 | 0.917 | 0.689 | 0.749 |
| Dynamic R-CNN | 0.934 | 0.688 | 0.736 |
| Single-Stage Methods | | | |
| SSD512 VGG16 | 0.875 | 0.623 | 0.721 |
| YOLO DarkNet-53 | 0.755 | 0.442 | 0.605 |
| RetinaNet ResNet-101 | 0.903 | 0.665 | 0.751 |

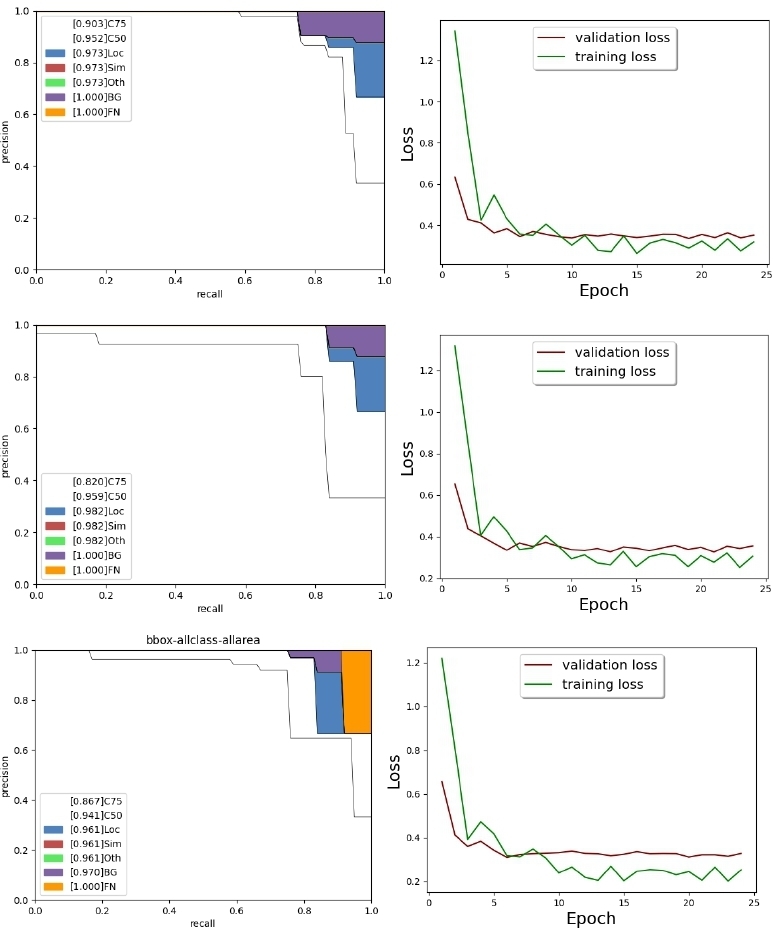

Faster R-CNN RegnetX, Faster R-CNN ResNet-50, and Faster R-CNN XCeption-101 are the top three models, with mAP@0.5 of 0.973, 0.958, and 0.941, respectively. Figure 3 shows the precision-recall and loss curves for them.

Figure 3.

Precision-Recall and loss curves for the best three models.

Precision-recall curves are used to analyze where models make detection errors in terms of object localization, class-based confusions, false positives caused by background, and missed detections. The area under the precision-recall curve (AUC) for Faster R-CNN RegnetX, Faster R-CNN ResNet-50, and Faster R-CNN XCeption-101 starts at 0.90, 0.82, and 0.86, respectively. If localization errors were removed, it would become 0.973, 0.982, and 0.961, respectively. Removing class-based confusions did not change the AUC for any of the three models. If background errors were also removed, it would become 1, 1, and 0.97. Lastly, if missed detections were removed, it would be 1 for all three models. This demonstrates that errors were dominated by localization confusions for the three best-performing models. Background confusions slightly affected their performance, whereas there were no class-based errors. Moreover, there were no missed detections for Faster R-CNN RegnetX and Faster R-CNN ResNet-50.

The time required to train each model was also investigated. Table 2 shows the computation time for each model. In general, more complex architectures and models with more layers took longer to train.
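The contribution of each error type can be read off as the AUC gained at each removal step. The short sketch below does this arithmetic on the values quoted in the paragraph above; the variable and function names are illustrative.

```python
# AUC values quoted in the text: baseline, then after successively removing
# localization, class-confusion, background, and missed-detection errors.
auc_steps = {
    "Faster R-CNN RegnetX": [0.90, 0.973, 0.973, 1.0, 1.0],
    "Faster R-CNN ResNet-50": [0.82, 0.982, 0.982, 1.0, 1.0],
    "Faster R-CNN XCeption-101": [0.86, 0.961, 0.961, 0.97, 1.0],
}
error_types = ["localization", "class confusion", "background", "missed detection"]

def error_breakdown(steps):
    """AUC gained by removing each error type in turn."""
    return {
        name: round(after - before, 3)
        for name, before, after in zip(error_types, steps, steps[1:])
    }

breakdown = {model: error_breakdown(steps) for model, steps in auc_steps.items()}
for model, gains in breakdown.items():
    print(model, gains)
```

For all three models the largest gain comes from the localization step, which matches the conclusion drawn in the text.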

Table 2.

Total computation time for each model

| Models | Computation Time |
|---|---|
| MultiStage Methods | |
| Faster R-CNN ResNet-50 | 17 min 1 sec |
| Faster R-CNN ResNet-101 | 21 min 46 sec |
| Faster R-CNN XCeption-101 | 30 min 43 sec |
| Libra R-CNN XCeption-101 | 46 min 41 sec |
| Faster R-CNN RegnetX 3.2GF | 30 min 4 sec |
| Cascade R-CNN ResNet-101 | 30 min 5 sec |
| Dynamic R-CNN | 17 min 3 sec |
| Single-Stage Methods | |
| SSD512 VGG16 | 48 min 8 sec |
| YOLO DarkNet-53 | 39 min 40 sec |
| RetinaNet ResNet-101 | 24 min 22 sec |

Discussion

Deep-learning-based applications have increased significantly in recent years, especially in dental radiology research, showing valuable potential for various purposes. 2,3,6,9,11 Applications are based on classification, detection, and segmentation, and include tooth detection and numbering as well as anatomical structures, caries, lesions, landmarks, root morphology, and bone loss. 2,3,6,9,11 In contrast, prior studies on the detection of dental restorations were limited in terms of the dental conditions examined and the number of deep-learning architectures explored.

Recent studies have reported that AI using deep-learning techniques can detect implants. Kim et al. detected three different types of implant fixtures in periapical radiographs. 34 The dataset consisted of 355 periapical radiographs that were divided into training and test sets with a ratio of 8 to 2. One model, YOLOv3, was used to evaluate the performance. Sensitivity, specificity, accuracy, and confidence score were 94.4, 97.9, 96.7, and 0.75%, respectively. The proposed work explored ten models, including YOLOv3, for three classes, including implants, resulting in superior detection performance for most of the models used and similar performance for YOLOv3, with an AP of 0.657 for the implant class alone.

Takahashi et al. identified six dental implant systems from three manufacturers using deep learning. 35 A total of 1282 panoramic radiographs with implants were used to train YOLOv3. AP was reported to vary between 0.51 and 0.85, while mAP was 0.71. The proposed work analyzed the detection performance of YOLOv3 for implant detection, resulting in an AP of 0.657 for the implant class alone and an mAP of 0.755. The other nine models provided AP between 0.657 and 0.758 for the implant class alone, while mAP varied between 0.755 and 0.973.

In another work, Takahashi et al. used deep learning to recognize 11 types of dental prostheses and restorations. 36 With YOLOv3, the AP of each prosthesis class varied between 0.59 and 0.93. It was concluded that metallic-colored dental prostheses and restorations can be identified better than tooth-colored ones. Owing to the limited number of PRs, the proposed work included three classes, namely restorations, denture, and implant, rather than a finer categorization of each condition. YOLOv3 gave an AP of 0.414 for the denture class alone, while AP varied between 0.414 and 0.833 for the other nine models.

Lee et al. evaluated the classification performance of a deep CNN and compared its accuracy with that of dental professionals. 37 A total of 11,980 panoramic and periapical radiographs with six different implants were fed to an automated deep CNN, resulting in accuracy, sensitivity, and specificity of 0.95, 0.95, and 0.85, respectively.

Park et al. segmented missing tooth regions for planning dental implant placement. 38 A total of 455 panoramic radiographs were given as input to a segmentation model, Mask R-CNN with ResNet-101 as the backbone; the resulting teeth masks were then passed to a detection model, Faster R-CNN, to predict missing tooth regions. mAP for the segmentation and detection tasks was 0.92 and 0.59, respectively. The proposed work used Faster R-CNN with four of its variants, for which mAP varied between 0.93 and 0.973.

Santos et al. developed a computer-assisted system based on AI to identify dental implant brands of three different manufacturers in periapical radiographs. 39 A total of 1800 periapical radiographs of dental implants were supplied to a CNN architecture that had five convolutional layers, each followed by a pooling layer, and five dense layers. The reported accuracy, sensitivity, and specificity of implant identification were 85.29, 89.9, and 82.4%, respectively.

Başaran et al. used AI for diagnostic charting of 10 different dental conditions, including implant, filling, and crown, in panoramic radiographs. 40 The Faster R-CNN Inception v2 model was used to evaluate the performance. The sensitivity and precision of the implant, filling, and crown classes were 0.96, 0.92, 0.86, 0.87, 0.96, and 0.86, respectively.

Lee et al. detected and classified dental implant fractures using 445 panoramic and periapical radiographic images. 41 Three different deep CNNs provided detection and classification accuracy over 0.8.

Rohrer et al. segmented dental restorations using U-Net, a CNN for semantic image segmentation. 42 A total of 1781 panoramic radiographs with fillings, crowns, and root canal fillings were used. It was shown that using more equally spaced rectangular image crops increased model performance: the mean F1 score increased from 0.7 for the one-tile case to 0.95 for the 20-tile case.

Compared to prior works, this work presents, for the first time, a comprehensive solution for the automatic detection of dental restorations, dentures, and implants using 10 types of deep-learning models. A direct comparison between the proposed work and previous works is complicated for four reasons. First, previous works mostly examined the performance of only one deep-learning model for a single class, whereas this work covers the problem to a broader extent using 10 different state-of-the-art detection models. Second, previous works performed classification or segmentation tasks instead of detection for various applications, whereas this work emphasized the detection of common dental conditions with three classes. Third, the evaluation metrics are incompatible as a consequence of the difference in tasks. mAP is a standard metric for evaluating the performance of a detection model in computer vision; however, it was not always reported in prior works. Last, the type of radiograph differs. This work used PRs to analyze the objects, while other prior works used different types of intraoral images, such as periapical and bitewing radiographs.

This work has limitations. Although the proposed work achieved quite successful detection performance, the number of PRs is limited. It is known that an increased amount of data can significantly improve performance. 2,3,6,9,11,16 This will be addressed by collecting and annotating more PRs to obtain more robust results. Data augmentation can also help with this issue. With more data, each dental condition could be examined separately instead of being grouped into a class. Moreover, this work used PRs taken by only one device. Model performance with different devices and exposure settings is uncertain.

Conclusion

Deep learning has gained significant attention, particularly in radiology research in medicine and dentistry. In recent years, deep learning has shown valuable potential for various purposes in dental radiography. The results of this study demonstrate that dental restorations and implants can be detected in PR images using deep-learning-based object-detection algorithms. AI-based solutions appear likely to become available in routine dental clinical practice soon to assist dentists in the decision-making process, once several limitations are addressed, i.e. once learning performance is increased with larger numbers of images in subsequent studies and technical and clinical evidence is obtained. As reliable and robust diagnostic and detection tools, AI-based systems have valuable potential to assist dentists in diagnosis, treatment planning, and dental healthcare management.

Contributor Information

Berrin Çelik, Email: bcelik@ybu.edu.tr.

Mahmut Emin Çelik, Email: mahmutemincelik@gazi.edu.tr.

REFERENCES

- 1. Zadrożny Ł, Regulski P, Brus-Sawczuk K, Czajkowska M, Parkanyi L, Ganz S, et al. Artificial intelligence application in assessment of panoramic radiographs. Diagnostics (Basel) 2022; 12: 224. doi: 10.3390/diagnostics12010224 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Prados-Privado M, Villalón JG, Martínez-Martínez CH, Ivorra C. Dental images recognition technology and applications: a literature review. Applied Sciences 2020; 10: 2856. doi: 10.3390/app10082856 [DOI] [Google Scholar]

- 3. Hung K, Montalvao C, Tanaka R, Kawai T, Bornstein MM. The use and performance of artificial intelligence applications in dental and maxillofacial radiology: a systematic review. Dentomaxillofac Radiol 2020; 49(1): 20190107. doi: 10.1259/dmfr.20190107 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4. Shin H-C, Roth HR, Gao M, Lu L, Xu Z, Nogues I, et al. Deep convolutional neural networks for computer-aided detection: CNN architectures, dataset characteristics and transfer learning. IEEE Trans Med Imaging 2016; 35: 1285–98. doi: 10.1109/TMI.2016.2528162 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Kooi T, Litjens G, van Ginneken B, Gubern-Mérida A, Sánchez CI, Mann R, et al. Large scale deep learning for computer aided detection of mammographic lesions. Med Image Anal 2017; 35: 303–12: S1361-8415(16)30124-4. doi: 10.1016/j.media.2016.07.007 [DOI] [PubMed] [Google Scholar]

- 6. Khanagar SB, Al-Ehaideb A, Maganur PC, Vishwanathaiah S, Patil S, Baeshen HA, et al. Developments, application, and performance of artificial intelligence in dentistry - A systematic review. J Dent Sci 2021; 16: 508–22. doi: 10.1016/j.jds.2020.06.019 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7. Skansi S. Introduction to deep learning. In: Introduction to Deep Learning: from logical calculus to artificial intelligence. Cham: Springer; 2018. doi: 10.1007/978-3-319-73004-2 [DOI] [Google Scholar]

- 8. Goodfellow I, Bengio Y, Courville A. Deep learning. MIT press; 2016. [Google Scholar]

- 9. Khan A, Sohail A, Zahoora U, Qureshi AS. A survey of the recent architectures of deep convolutional neural networks. Artif Intell Rev 2018; 53: 5455–5516. doi: 10.1007/s10462-020-09825-6 [DOI] [Google Scholar]

- 10. Liu L, Ouyang W, Wang X, Fieguth P, Chen J, Liu X, et al. Deep learning for generic object detection: a survey. Int J Comput Vis 2018; 128: 261–318. doi: 10.1007/s11263-019-01247-4 [DOI] [Google Scholar]

- 11. Carrillo-Perez F, Pecho OE, Morales JC, Paravina RD, Della Bona A, Ghinea R, et al. Applications of artificial intelligence in dentistry: A comprehensive review. J Esthet Restor Dent 2022; 34: 259–80. doi: 10.1111/jerd.12844 [DOI] [PubMed] [Google Scholar]

- 12. Leite AF, Gerven AV, Willems H, Beznik T, Lahoud P, Gaêta-Araujo H, et al. Artificial intelligence-driven novel tool for tooth detection and segmentation on panoramic radiographs. Clin Oral Investig 2021; 25: 2257–67. doi: 10.1007/s00784-020-03544-6 [DOI] [PubMed] [Google Scholar]

- 13. Tuzoff DV, Tuzova LN, Bornstein MM, Krasnov AS, Kharchenko MA, Nikolenko SI, et al. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofac Radiol 2019; 48(4): 20180051. doi: 10.1259/dmfr.20180051

- 14. Chang H-J, Lee S-J, Yong T-H, Shin N-Y, Jang B-G, Kim J-E, et al. Deep learning hybrid method to automatically diagnose periodontal bone loss and stage periodontitis. Sci Rep 2020; 10: 7531. doi: 10.1038/s41598-020-64509-z

- 15. Celik ME. Deep learning based detection tool for impacted mandibular third molar teeth. Diagnostics (Basel) 2022; 12(4): 942. doi: 10.3390/diagnostics12040942

- 16. Hiraiwa T, Ariji Y, Fukuda M, Kise Y, Nakata K, Katsumata A, et al. A deep-learning artificial intelligence system for assessment of root morphology of the mandibular first molar on panoramic radiography. Dentomaxillofac Radiol 2019; 48(3): 20180218. doi: 10.1259/dmfr.20180218

- 17. Tzutalin. LabelImg. Git code. 2015. Available from: https://github.com/tzutalin/labelImg (accessed 5 Oct 2015)

- 18. Girshick R, Donahue J, Darrell T, Malik J. Rich feature hierarchies for accurate object detection and semantic segmentation. 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Columbus, OH, USA; 2014. doi: 10.1109/CVPR.2014.81

- 19. Ren S, He K, Girshick R, Sun J. Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans Pattern Anal Mach Intell 2017; 39: 1137–49. doi: 10.1109/TPAMI.2016.2577031

- 20. Redmon J, Farhadi A. YOLOv3: an incremental improvement. ArXiv 2018: arXiv:1804.02767.

- 21. Liu W, Anguelov D, Erhan D, Szegedy C, Reed S, Fu C-Y, et al. SSD: single shot multibox detector. In: Leibe B, Matas J, Sebe N, Welling M, eds. Computer Vision – ECCV 2016. Cham: Springer International Publishing; 2016. doi: 10.1007/978-3-319-46448-0

- 22. Lin T-Y, Goyal P, Girshick R, He K, Dollár P. Focal loss for dense object detection. 2017 IEEE International Conference on Computer Vision (ICCV); Venice; 2017. doi: 10.1109/ICCV.2017.324

- 23. Pan SJ, Yang Q. A survey on transfer learning. IEEE Trans Knowl Data Eng 2010; 22: 1345–59. doi: 10.1109/TKDE.2009.191

- 24. Lin TY, Maire M, Belongie S, Hays J, Perona P, Ramanan D, et al. Microsoft COCO: common objects in context. In: European Conference on Computer Vision. Cham: Springer; September 2014. pp. 740–55. doi: 10.1007/978-3-319-10602-1

- 25. Zhang H, Chang H, Ma B, Wang N, Chen X. Dynamic R-CNN: towards high quality object detection via dynamic training. In: Computer Vision – ECCV 2020. Cham: Springer International Publishing; 2020.

- 26. Pang J, Chen K, Shi J, Feng H, Ouyang W, Lin D. Libra R-CNN: towards balanced learning for object detection. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); Long Beach, CA, USA; 2019. doi: 10.1109/CVPR.2019.00091

- 27. Radosavovic I, Kosaraju RP, Girshick R, He K, Dollár P. Designing network design spaces. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); Seattle, WA, USA; 2020. doi: 10.1109/CVPR42600.2020.01044

- 28. Wang J, Zhang W, Cao Y, Chen K, Pang J, Gong T, et al. Side-aware boundary localization for more precise object detection. In: European Conference on Computer Vision. Cham: Springer; August 2020. pp. 403–19. doi: 10.1007/978-3-030-58548-8

- 29. Cai Z, Vasconcelos N. Cascade R-CNN: delving into high quality object detection. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); Salt Lake City, UT; 2018. pp. 6154–62. doi: 10.1109/CVPR.2018.00644

- 30. Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. ArXiv 2014: arXiv:1409.1556.

- 31. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Las Vegas, NV, USA; June 2016. pp. 770–78. doi: 10.1109/CVPR.2016.90

- 32. Chollet F. Xception: deep learning with depthwise separable convolutions. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Honolulu, HI; July 2017. pp. 1251–58. doi: 10.1109/CVPR.2017.195

- 33. Everingham M, Van Gool L, Williams CKI, Winn J, Zisserman A. The PASCAL visual object classes (VOC) challenge. Int J Comput Vis 2010; 88: 303–38. doi: 10.1007/s11263-009-0275-4

- 34. Kim HS, Ha EG, Kim YH, Jeon KJ, Lee C, Han SS. Transfer learning in a deep convolutional neural network for implant fixture classification: A pilot study. Imaging Sci Dent 2022; 52: 219–24. doi: 10.5624/isd.20210287

- 35. Takahashi T, Nozaki K, Gonda T, Mameno T, Wada M, Ikebe K. Identification of dental implants using deep learning-pilot study. Int J Implant Dent 2020; 6: 53. doi: 10.1186/s40729-020-00250-6

- 36. Takahashi T, Nozaki K, Gonda T, Mameno T, Ikebe K. Deep learning-based detection of dental prostheses and restorations. Sci Rep 2021; 11: 1960. doi: 10.1038/s41598-021-81202-x

- 37. Lee JH, Kim YT, Lee JB, Jeong SN. A performance comparison between automated deep learning and dental professionals in classification of dental implant systems from dental imaging: A multi-center study. Diagnostics (Basel) 2020; 10: 910. doi: 10.3390/diagnostics10110910

- 38. Park J, Lee J, Moon S, Lee K. Deep learning based detection of missing tooth regions for dental implant planning in panoramic radiographic images. Applied Sciences 2022; 12: 1595. doi: 10.3390/app12031595

- 39. da Mata Santos RP, Vieira Oliveira Prado HE, Soares Aranha Neto I, Alves de Oliveira GA, Vespasiano Silva AI, Zenóbio EG, et al. Automated identification of dental implants using artificial intelligence. Int J Oral Maxillofac Implants 2021; 36: 918–23. doi: 10.11607/jomi.8684

- 40. Başaran M, Çelik Ö, Bayrakdar IS, Bilgir E, Orhan K, Odabaş A, et al. Diagnostic charting of panoramic radiography using deep-learning artificial intelligence system. Oral Radiol 2022; 38: 363–69. doi: 10.1007/s11282-021-00572-0

- 41. Lee DW, Kim SY, Jeong SN, Lee JH. Artificial intelligence in fractured dental implant detection and classification: evaluation using dataset from two dental hospitals. Diagnostics (Basel) 2021; 11: 233. doi: 10.3390/diagnostics11020233

- 42. Rohrer C, Krois J, Patel J, Meyer-Lueckel H, Rodrigues JA, Schwendicke F. Segmentation of dental restorations on panoramic radiographs using deep learning. Diagnostics (Basel) 2022; 12: 1316. doi: 10.3390/diagnostics12061316