Abstract

Decision-making is an essential cognitive process by which we interact with the external world. However, attempts to understand the neural mechanisms of decision-making are limited by the currently available animal models and the technologies that can be applied to them. Here, we build on the renewed interest in using tree shrews (Tupaia belangeri) in vision research and provide strong support for them as a model for studying visual perceptual decision-making. Tree shrews learned very quickly to perform a two-alternative forced choice contrast discrimination task, and their response time distributions differed depending on the reward and punishment structure of the task. Specifically, they made occasional fast guesses when incorrect responses were punished by a constant increase in the interval between trials. This behavior was suppressed when faster incorrect responses were discouraged by longer intertrial intervals. By fitting the behavioral data with two variants of racing diffusion decision models, we found that the between-trial delay affected decision-making by modulating the drift rate of a time accumulator. Our results thus support the existence of an internal process, independent of evidence accumulation, in decision-making and lay a foundation for future mechanistic studies of perceptual decision-making using tree shrews.

Keywords: sequential sampling model, decision-making, tree shrew, timed racing diffusion model

Significance Statement

Despite decades of work on decision-making, we still lack a clear brain-wide model of how perceptual decisions are formed and executed. A major reason for this gap is the limited range of animal models used in decision-making studies. Here, we established a rigorous perceptual decision-making paradigm in tree shrews and evaluated their choice and response time behaviors with both summary statistics and trial-level computational modeling. Our results suggest that an endogenously driven decision process, in addition to standard stimulus-dependent evidence accumulation, is necessary to explain the observed behavior. Our study thus underscores the importance of characterizing additional factors that affect decisions and encourages future investigations using tree shrews to reveal the neural mechanisms underlying these cognitive processes.

Introduction

Decision-making is a vital cognitive process that plays an important role in many brain functions, such as categorization, learning, memory, and reasoning. Among the different forms of decision-making, perceptual decision-making, in which decisions are based on sensory stimuli, is simple yet informative and particularly amenable to experimental study. Visual stimuli are often used because the visual system is arguably the best-studied sensory system, which makes it advantageous for understanding perceptual decision-making from sensation to action.

Because decision-making is a dynamic process involving complex combinations of distinct underlying variables, researchers have frequently applied sequential sampling models (SSMs) to interpret and decompose decision behaviors. These models assume that evidence (i.e., a variable that depends on sensory stimulus strength) is accumulated over time and that a choice is made when the accumulated evidence passes a threshold. By defining these stochastic accumulation processes, SSMs can simulate decisions and response times (RTs) with the stimulus as the input. The discovery of “ramping neurons” during decisions in many brain regions provides neural evidence for these models (Horwitz and Newsome, 1999; Roitman and Shadlen, 2002; Ding and Gold, 2010; Mante et al., 2013). Despite the models’ effectiveness in a wide range of applications, variants of the SSM make different predictions regarding which decision variables (bias, threshold, time perception, etc.) are involved and how they interact (Ratcliff, 1978; Usher and McClelland, 2001; Brown and Heathcote, 2005; Cisek et al., 2009). More importantly, the neural mechanisms underlying these variables and their interactions remain largely unknown, and elucidating them typically requires studies in animal models.
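To make the accumulate-to-threshold idea concrete, the sketch below simulates a generic two-boundary drift-diffusion process (in the spirit of Ratcliff, 1978) with NumPy. It is an illustration only, not one of the models fitted in this study; the drift values, threshold, noise level, and non-decision time are hypothetical. A stronger stimulus (larger drift) should produce both higher accuracy and shorter RTs.

```python
import numpy as np

def simulate_ddm_trial(drift, threshold=1.0, noise=1.0, dt=0.001, t0=0.2,
                       max_t=10.0, rng=None):
    """Simulate one trial of a two-boundary drift-diffusion process.

    Evidence starts at 0 and is updated with Euler-Maruyama steps until it
    crosses +threshold (counted as correct) or -threshold (error); if max_t
    is reached first, the sign of the evidence decides. Returns (correct, rt),
    where rt includes the non-decision time t0.
    """
    rng = np.random.default_rng() if rng is None else rng
    step_sd = noise * np.sqrt(dt)
    x, t = 0.0, 0.0
    while abs(x) < threshold and t < max_t:
        x += drift * dt + step_sd * rng.standard_normal()
        t += dt
    return bool(x > 0), t + t0

rng = np.random.default_rng(0)
results = {}
for drift in (0.5, 2.0):  # hypothetical weak vs strong stimulus
    trials = [simulate_ddm_trial(drift, rng=rng) for _ in range(300)]
    accuracy = np.mean([correct for correct, _ in trials])
    mean_rt = np.mean([rt for _, rt in trials])
    results[drift] = (accuracy, mean_rt)
    print(f"drift={drift}: accuracy={accuracy:.2f}, mean RT={mean_rt:.2f} s")
```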

Monkeys and rodents (mostly rats and mice) are commonly used in decision-making studies, each with advantages and drawbacks. Monkeys are closely related to humans, but they are expensive and of limited availability, which makes individual differences difficult to study or control. Furthermore, most modern “circuit-busting” optogenetic and chemogenetic techniques are not yet routinely used in primates. On the other hand, the recent use of rodents, especially mice, has significantly advanced our understanding of decision-making (Odoemene et al., 2018; International Brain Laboratory et al., 2021; Ashwood et al., 2022). However, mice and rats are nocturnal animals with poor eyesight, making them less than ideal for visual tasks. In addition, rodents often learn visual tasks slowly (Aoki et al., 2017; Urai et al., 2021), costing time and effort to obtain high-quality data. Here, we use a different animal model, the tree shrew (Tupaia belangeri; Fig. 1A), for visual decision studies. Belonging to the order Scandentia, tree shrews are evolutionarily closer to primates than rodents are (Yao, 2017). They are diurnal, have excellent visual acuity, and display visual system complexity similar to that of primates (Petry and Bickford, 2019). Earlier studies have shown that they can be reliably trained to perform visual (color, orientation, spatial frequency, temporal frequency, etc.) discrimination tasks (Casagrande and Diamond, 1974; Petry et al., 1984; Petry and Kelly, 1991; Callahan and Petry, 2000; Mustafar et al., 2018). In addition, tree shrews are less costly, smaller, and have a faster reproductive cycle than monkeys, making them more accessible. Finally, modern viral, genetic, and imaging techniques are being applied in tree shrews with greater success than in primates (Lee et al., 2016; Li et al., 2017; Sedigh-Sarvestani et al., 2021; Savier et al., 2021).
Taken together, tree shrews have the potential to advance the understanding of neural mechanisms underlying perceptual decision-making. In this study, we seek to establish a rigorous perceptual decision-making paradigm for tree shrews and to quantitatively characterize decision-making in this animal model, including both response accuracy and response time, using both summary statistics and trial-level computational modeling.

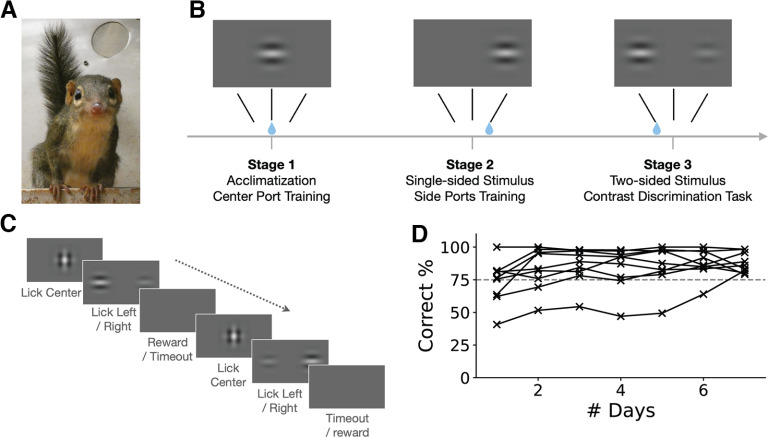

Figure 1.

Experimental design. A, A photograph of a tree shrew in the home cage. B, A schematic of the training procedure. C, The contrast discrimination task. The animal needs to choose the side with the higher-contrast gabor and report the choice by licking the corresponding port. D, Learning curves of individual animals. The y-axis is the response accuracy for the easiest condition on each day. Day 1 refers to the first day of training with two-sided gabor stimuli. Dashed gray line, 75% accuracy. Most animals reached this level by day 2 and all by day 7.

Materials and Methods

Contrast discrimination task

We trained a total of nine (male = 7, female = 2) freely moving tree shrews to perform a two-alternative forced choice (2AFC) contrast discrimination task (Fig. 1C). At the beginning of each trial, a visual stimulus of two orthogonal, overlapping α-transparent gabors appeared at the screen center to indicate that the tree shrew could lick the center port to initiate the trial. After initiation, the center stimulus disappeared, and two gabor patches were immediately presented on the left and right of the screen. The tree shrews needed to choose the side with the higher contrast by licking the corresponding lick port. This self-initiation design helped ensure that the animals were focused from the beginning of each trial and allowed us to record accurate RTs, calculated as the interval between the appearance of the stimulus (the two side gabors) and the detection of the side-port lick. Once a choice lick was detected, the stimulus disappeared from the screen. We adopted a free-response design: if no choice was detected, the stimulus remained on screen indefinitely.

Intertrial intervals (ITIs) were randomly drawn from a truncated normal distribution with a mean of 0.6, an SD of 1, a lower bound of 0.5, and an upper bound of 0.7 (unit: seconds; here and below, the mean and SD are those of the normal distribution before truncation). For correct responses, liquid reward (50% grape juice) was given immediately after the animals reported their choices. The reward volume was set by the duration of the valve opening, which was randomly drawn from a truncated normal distribution with a mean of 0.1, an SD of 0.06, a lower bound of 0.2, and an upper bound of 0.4 (unit: seconds). The liquid flow rate was 150 μl/s, and the average reward volume in one correct trial was 33 μl (0.22 s). The random ITI and random reward duration helped the animals stay engaged in the task.
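As a consistency check on these numbers, the stdlib-only sketch below computes the mean of a truncated normal in closed form and draws samples by simple rejection. It assumes, as the numbers imply, that the stated mean and SD describe the normal before truncation; `truncnorm_mean` and `sample_truncnorm` are illustrative helpers, not functions from the authors' code. The closed-form mean valve duration (about 0.225 s) and the resulting volume at 150 μl/s (about 33.7 μl) agree with the reported averages of 0.22 s and 33 μl.

```python
import random
from statistics import NormalDist

_STD = NormalDist()  # standard normal, provides pdf() and cdf()

def truncnorm_mean(mu, sd, lower, upper):
    """Mean of Normal(mu, sd) truncated to [lower, upper]."""
    a, b = (lower - mu) / sd, (upper - mu) / sd
    mass = _STD.cdf(b) - _STD.cdf(a)
    return mu + sd * (_STD.pdf(a) - _STD.pdf(b)) / mass

def sample_truncnorm(mu, sd, lower, upper, rng):
    """Draw one value by rejection sampling (adequate for these bounds)."""
    while True:
        x = rng.gauss(mu, sd)
        if lower <= x <= upper:
            return x

FLOW_UL_PER_S = 150.0  # valve flow rate given in the text

iti_mean = truncnorm_mean(0.6, 1.0, 0.5, 0.7)      # symmetric bounds: 0.6 s
valve_mean = truncnorm_mean(0.1, 0.06, 0.2, 0.4)   # about 0.225 s
reward_ul = valve_mean * FLOW_UL_PER_S             # about 33.7 ul

rng = random.Random(0)
draws = [sample_truncnorm(0.1, 0.06, 0.2, 0.4, rng) for _ in range(5000)]
print(f"ITI {iti_mean:.3f} s; valve {valve_mean:.3f} s "
      f"(sampled {sum(draws) / len(draws):.3f} s); reward {reward_ul:.1f} ul")
```

Because the valve distribution's location (0.1 s) lies below its lower bound (0.2 s), most of the retained mass sits just above 0.2 s, which is why the average duration is only slightly above the lower bound.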

For incorrect responses, two protocols were used to generate a delay as a punishment. (1) A fixed delay of 4 s was used in the first group of tree shrews for all incorrect responses. If the animal licked the center port during the delay (i.e., blank screen licks; detected in 0.8 s periods), a penalty of 0.8 s was then added to the delay, with a maximum of 8 s for the total delay. (2) An exponential decay function (Equation 1) was applied in the second group of animals to generate a between-trial delay based on the trial-level RT:

| (1) |

where T is the between-trial delay, RT is the response time of the current incorrect trial, and l and s are location and scale parameters that shift and scale the function in the stimulus generation code. For all animals, we used l = 0.1 and s = 1.7. For the blank screen lick penalty, 1.5 s was added for every center-port lick, with the total delay being max(T, t_passed + penalty) and no upper limit. To determine the potential effect of these two delay paradigms, we calculated the reward rate (Eq. 2) using the data of a representative animal from the first group of tree shrews: the response accuracy of each RT bin was fitted with a sigmoid function, which was then used to calculate the theoretical reward per unit time (pulse/s).

| (2) |

where RR(t) is the reward rate for a response time of t, Acc(t) is the response accuracy (i.e., the proportion of correct choices) at response time t obtained from the observed data, and Delay(t) is the intertrial delay for incorrect responses, which is 4 s under the fixed-delay rule and follows the exponential decay function defined above (Eq. 1) under the exponential-delay rule.
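Because the exact form of Equation 2 did not survive extraction, the sketch below assumes a common reward-rate form, RR(t) = Acc(t) / [t + (1 − Acc(t)) · Delay(t)], which may omit terms (such as the ITI) that the original includes. It also assumes a logistic accuracy curve rising from chance (0.5) and fits it with SciPy's `curve_fit` to synthetic per-bin accuracies; `sigmoid_acc`, `reward_rate`, and all numbers are hypothetical, not the authors' fit.

```python
import numpy as np
from scipy.optimize import curve_fit

def sigmoid_acc(t, acc_max, t_mid, width):
    """Assumed accuracy-vs-RT curve: rises from chance (0.5) toward acc_max."""
    return 0.5 + (acc_max - 0.5) / (1.0 + np.exp(-(t - t_mid) / width))

def reward_rate(t, acc, delay):
    """ASSUMED form of Eq. 2: expected reward pulses per second.

    A response at RT t is rewarded with probability acc; an error (1 - acc)
    adds `delay` seconds. ITIs and other fixed intervals are ignored here.
    """
    return acc / (t + (1.0 - acc) * delay)

# Synthetic per-bin accuracies (bin centres in s); NOT data from the study.
rt_bins = np.linspace(0.2, 2.0, 10)
acc_obs = sigmoid_acc(rt_bins, 0.9, 0.6, 0.15)

params, _ = curve_fit(sigmoid_acc, rt_bins, acc_obs, p0=[0.8, 0.5, 0.2])
t_grid = np.linspace(0.2, 2.0, 181)
rr_fixed = reward_rate(t_grid, sigmoid_acc(t_grid, *params), delay=4.0)
best_t = t_grid[np.argmax(rr_fixed)]
print("fitted acc_max, t_mid, width:", np.round(params, 3))
print(f"RT that maximizes the reward rate under the 4-s delay: {best_t:.2f} s")
```

Under this assumed form, near-chance fast responses pay poorly once a 4-s error delay is charged, so the curve peaks at an intermediate RT. Passing an array of delays computed from Equation 1 as `delay` would give the corresponding exponential-delay curve.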

Animal training and data collection

Tree shrews were first acclimated to the behavior box for 1–2 d. For most animals (seven of nine), water restriction started at this stage of training (stage 1); for the other two, it started a few days before acclimation. Two approaches to water restriction were used: (1) we gradually reduced water intake from baseline (20–40 ml/d) to 5–10 ml/d by limiting the availability of drinking water; (2) we provided citric acid (CA; Urai et al., 2021) water in the home cage to reduce water intake, gradually increasing its concentration from 2% to 4%. The progress of water restriction depended on each animal’s weight loss, baseline water intake, and tolerance, so that the animals were motivated to stay focused on the task for at least 25 min/d while not experiencing any health issues (weight ≥90% of baseline). Depending on the animals’ acclimation and learning speed, the water restriction period (2–7 d) could extend into stage 2 or even stage 3 before a stable restriction level was reached.

During stage 1, a single gabor stimulus was shown right above the center lick port. After the gabor appeared, the animals could lick the center port at any time to trigger a liquid reward (grape juice diluted with water in a 1:1 ratio). For acclimation, each tree shrew was left in the behavior box to learn to use the center port for no more than 20 min per day; this stage usually took only 1 d (20–40 trials per day). Having learned to obtain liquid reward from the center port, the animals progressed to the next stage. In stage 2, the contrast discrimination task was set up with contrast pairs of 1.0 (full contrast) versus 0.0 (zero contrast); that is, a single side stimulus was shown. The goal of stage 2 was to train the animals to use the left and right lick ports. Liquid reward from the center port was gradually reduced to zero within ∼50 trials. Animals usually performed 100–300 trials per day at this stage. Once they had learned the task and had a stable correct rate of >75%, they progressed to stage 3. Note that most animals learned very quickly and completed both stages 1 and 2 within 2 d.

In stage 3, we first gave the animals an easy condition, using contrast pairs of 1.0 versus 0.1, and gradually mixed in pairs with smaller contrast differences until reaching the stimulus set used in the formal data collection. During this stage, we also adjusted the ratio of easy (e.g., highest vs lowest contrast) to difficult (same or similar contrast) trials for each animal. By including sufficient easy trials and limiting the number of equal-contrast trials, we kept the animals motivated to continue the task. For equal-contrast trials, the correct answer was randomly assigned to left or right, so the animals still had a 50% chance of reward in these trials. At this stage, the animals performed 500–600 trials per day. Some animals could finish within 30 min, whereas others needed as long as 1 h, especially when they made many incorrect choices (incurring more penalty time) or began to lose patience and focus (incurring more idle time). To limit frustration, we stopped training when a session exceeded 1 h. At this stage, some animals (50%) also developed biased behavior, making most choices to the same side. We discouraged this behavior by automatically adjusting the probability of left/right trials according to real-time performance. For example, we calculated the proportion of rightward choices in the previous 10 trials, denoted Pr, and set the probability of the next trial being rightward to 1 – Pr. This real-time bias correction quickly discouraged biased behavior in the tree shrews.
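The bias-correction rule above can be sketched as a small scheduler: keep the last 10 choices, compute Pr, and draw the next correct side as "right" with probability 1 − Pr. How the first trials of a session were handled is not stated, so the fallback to 0.5 with no history (and to the available choices when fewer than 10 exist) is an assumption; `BiasCorrector` is a hypothetical name, not part of SMILE or the authors' code.

```python
import random
from collections import deque

class BiasCorrector:
    """Anti-bias trial scheduler: P(next correct side = right) = 1 - Pr,
    where Pr is the fraction of rightward choices in the last `window` trials."""

    def __init__(self, window=10, seed=None):
        self.recent = deque(maxlen=window)  # 1 = chose right, 0 = chose left
        self.rng = random.Random(seed)

    def record_choice(self, chose_right):
        self.recent.append(1 if chose_right else 0)

    def p_right(self):
        # Assumption: sides are equiprobable before any choice is recorded;
        # with fewer than `window` choices, Pr uses the choices available.
        if not self.recent:
            return 0.5
        return 1.0 - sum(self.recent) / len(self.recent)

    def next_side(self):
        return "right" if self.rng.random() < self.p_right() else "left"

# A simulated animal that always licks right is pushed toward left trials.
corrector = BiasCorrector(seed=0)
for _ in range(10):
    corrector.record_choice(chose_right=True)
print(corrector.p_right(), corrector.next_side())  # 0.0 left
```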

After the animals achieved a stable overall accuracy ≥60% for three to five consecutive days (accuracy is expected to be lower at this stage because of the equal-contrast and other difficult trials), we collected data over consecutive days (500–600 trials per day) until there were at least 100 repeats of each contrast condition. During preprocessing, the data were culled by removing trials whose Box–Cox-transformed response times lay more than three SDs from the mean. The remaining trials were used in further analysis.
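A minimal sketch of this outlier step, assuming the Box–Cox λ is estimated by maximum likelihood (the default of `scipy.stats.boxcox` when no λ is given) and that the 3-SD rule is applied to one animal's RT vector at a time; whether the authors pooled or split by animal is not stated. The RTs below are simulated, with three implausible trials injected.

```python
import numpy as np
from scipy import stats

def rt_outlier_mask(rts, n_sd=3.0):
    """Boolean mask of trials to keep: Box-Cox-transformed RTs within n_sd SDs.

    scipy.stats.boxcox estimates lambda by maximum likelihood when none is
    given; all RTs must be strictly positive.
    """
    transformed, _lmbda = stats.boxcox(np.asarray(rts, dtype=float))
    z = (transformed - transformed.mean()) / transformed.std()
    return np.abs(z) <= n_sd

rng = np.random.default_rng(0)
rts = rng.lognormal(mean=-0.5, sigma=0.4, size=1000)  # hypothetical RTs (s)
rts[:3] = [0.01, 25.0, 60.0]                           # injected implausible trials
keep = rt_outlier_mask(rts)
print(f"kept {keep.sum()} of {rts.size} trials")
```

The transform makes the right-skewed RT distribution roughly symmetric first, so a symmetric SD cut-off treats very fast and very slow trials comparably.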

All animal procedures were performed in accordance with the University of Virginia animal care committee’s regulations.

Stimulus and apparatus

The experimental program was written in Python, and the stimuli were generated and presented with the State Machine Interface Library for Experiments (SMILE; https://github.com/compmem/smile). The Gabor patch size was 28°, and the spatial frequency was 0.2 cpd. The stimulus screen had a 1280 × 1024 resolution and a 60-Hz refresh rate, was γ-corrected, and was placed 15 cm from the animal. There were six evenly spaced contrast levels ranging from 0.08 to 0.99. All combinations of left and right contrasts were presented in a randomized order.
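The stimulus set can be enumerated directly. The sketch below assumes "evenly spaced" means linear spacing in contrast units; it lists the six levels, all 36 left/right pairs (including the six equal-contrast pairs), and the 11 signed contrast differences (right − left) that form the x-axis of the psychometric curves.

```python
from itertools import product

# Six evenly spaced contrast levels from 0.08 to 0.99 (linear spacing assumed).
n_levels, c_min, c_max = 6, 0.08, 0.99
step = (c_max - c_min) / (n_levels - 1)
contrasts = [round(c_min + i * step, 3) for i in range(n_levels)]

# Every left/right combination, including equal-contrast pairs.
pairs = list(product(contrasts, repeat=2))
# Signed contrast difference used on the psychometric-curve x-axis: R - L.
diffs = sorted({round(r - l, 3) for l, r in pairs})

print(contrasts)          # [0.08, 0.262, 0.444, 0.626, 0.808, 0.99]
print(len(pairs), len(diffs))  # 36 11
```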

The lick-detector circuit (adapted from Marbach and Zador, 2017), and reward-valve control circuit (adapted from https://bc-robotics.com/tutorials/controlling-a-solenoid-valve-with-arduino/) were controlled with an NI USB-6001 multifunction I/O device (https://www.ni.com/en-us/support/model.usb-6001.html). The Plexiglas behavior box was L: 40 cm × W: 22 cm × H: 20 cm with a transparent window on the front side to allow the animals to watch the screen.

Data analysis and models

To test the relationship between RT and contrast difference, we fitted a mixed effect linear regression model with RT as the dependent variable, the absolute contrast difference between left and right stimuli as the independent variable, and individual animal as the group variable, using the statsmodels library in Python.
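The regression described above can be sketched with the statsmodels formula interface, using `smf.mixedlm` with a random intercept per animal. The data here are simulated (five hypothetical animals, 600 trials each, RT decreasing with the absolute contrast difference), so the fitted slope should recover the simulated value of −0.2.

```python
import numpy as np
import pandas as pd
import statsmodels.formula.api as smf

# Hypothetical data: 5 animals x 600 trials, RT shrinking with |contrast difference|.
rng = np.random.default_rng(1)
contrasts = np.linspace(0.08, 0.99, 6)
rows = []
for animal in range(5):
    baseline = 0.8 + rng.normal(0.0, 0.1)          # animal-specific intercept
    for _ in range(600):
        left, right = rng.choice(contrasts, size=2)
        abs_diff = abs(right - left)
        rt = baseline - 0.2 * abs_diff + rng.normal(0.0, 0.1)
        rows.append({"animal": animal, "abs_diff": abs_diff, "rt": rt})
df = pd.DataFrame(rows)

# RT ~ |contrast difference| with a random intercept for each animal.
result = smf.mixedlm("rt ~ abs_diff", data=df, groups=df["animal"]).fit()
slope = result.params["abs_diff"]
print(result.summary())
```

Only a random intercept is modeled, matching the text's description of animal as the grouping variable; a per-animal random slope could be added with `re_formula="~abs_diff"` if needed.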

We fitted the behavioral data with two sequential sampling decision-making models, the timed racing diffusion model (TRDM) and the racing diffusion model (RDM), and compared their performance using a Bayesian approach. TRDM contains three independent accumulation processes, namely two evidence accumulators and one time accumulator (or “timer”), whereas RDM only has the two evidence accumulators. The probability density function (PDF) [f(t)] and cumulative distribution function [F(t)] for each evidence or time accumulation process are defined by the inverse Gaussian (Wald) distribution in Equation 3:

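$$
\begin{aligned}
f(t) &= \frac{\alpha}{\sigma\sqrt{2\pi (t-t_0)^3}}\exp\left[-\frac{\left(\alpha-\rho\,(t-t_0)\right)^2}{2\sigma^2 (t-t_0)}\right],\\
F(t) &= \Phi\left(\frac{\rho\,(t-t_0)-\alpha}{\sigma\sqrt{t-t_0}}\right)+\exp\left(\frac{2\alpha\rho}{\sigma^2}\right)\Phi\left(-\frac{\rho\,(t-t_0)+\alpha}{\sigma\sqrt{t-t_0}}\right)
\end{aligned}
\tag{3}
$$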

where t is the response time, ρ is the mean drift rate, σ is the within-trial variability of the drift rate, α is the threshold (which was fixed to 1.0), t0 is the nondecision time, Φ is the cumulative distribution function of a standard normal distribution (Heathcote, 2004; Hawkins and Heathcote, 2021).
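Equation 3 can be coded directly. The stdlib-only sketch below implements the shifted Wald density and distribution function with the same symbols (ρ, σ, α, t0) and checks numerically that integrating the density reproduces the CDF; `wald_pdf` and `wald_cdf` are illustrative names, not functions from the authors' `waldrace.py`.

```python
import math
from statistics import NormalDist

_PHI = NormalDist().cdf  # standard normal CDF (Phi in Eq. 3)

def wald_pdf(t, rho, sigma=1.0, alpha=1.0, t0=0.0):
    """First-passage density of a diffusion with drift rho, noise sigma and
    threshold alpha, shifted by non-decision time t0 (zero for t <= t0)."""
    s = t - t0
    if s <= 0:
        return 0.0
    return (alpha / (sigma * math.sqrt(2 * math.pi * s ** 3))
            * math.exp(-(alpha - rho * s) ** 2 / (2 * sigma ** 2 * s)))

def wald_cdf(t, rho, sigma=1.0, alpha=1.0, t0=0.0):
    """Probability that the accumulator has reached alpha by time t.
    Note: math.exp overflows for very large 2*alpha*rho/sigma**2."""
    s = t - t0
    if s <= 0:
        return 0.0
    root = sigma * math.sqrt(s)
    return (_PHI((rho * s - alpha) / root)
            + math.exp(2 * alpha * rho / sigma ** 2) * _PHI(-(rho * s + alpha) / root))

# Sanity check: a midpoint-rule integral of the density should match the CDF.
rho, t0, t_end, n_steps = 2.0, 0.2, 1.5, 20_000
h = (t_end - t0) / n_steps
area = h * sum(wald_pdf(t0 + (i + 0.5) * h, rho, t0=t0) for i in range(n_steps))
print(f"integral of pdf = {area:.5f}, cdf = {wald_cdf(t_end, rho, t0=t0):.5f}")
```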

The mean drift rate (ρ) of each evidence accumulator was calculated using the following equation (Eq. 4), taking into consideration both the stimulus difference and their total strength:

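$$
\begin{aligned}
\rho_l &= v_0 + v_d\,(s_l - s_r) + v_s\,(s_l + s_r),\\
\rho_r &= v_0 + v_d\,(s_r - s_l) + v_s\,(s_l + s_r)
\end{aligned}
\tag{4}
$$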

where ρl and ρr are the mean drift rate of the left and right evidence accumulators, v0 is the baseline drift rate, sl and sr are the contrasts of left and right stimuli, vd is the coefficient of the contrast difference term, vs is the coefficient of the contrast summation term (van Ravenzwaaij et al., 2020).
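A worked example of Equation 4 follows. The coefficient values are hypothetical, and the contrasts (0.262 vs 0.808) are two levels from the stimulus set.

```python
def drift_rates(s_left, s_right, v0, vd, vs):
    """Eq. 4: mean drift rates (rho_l, rho_r) of the two evidence accumulators."""
    shared = v0 + vs * (s_left + s_right)   # baseline + contrast-sum term
    rho_l = shared + vd * (s_left - s_right)
    rho_r = shared + vd * (s_right - s_left)
    return rho_l, rho_r

# Hypothetical coefficients; contrasts taken from the stimulus set.
rho_l, rho_r = drift_rates(s_left=0.262, s_right=0.808, v0=1.0, vd=2.0, vs=0.5)
print(round(rho_l, 3), round(rho_r, 3))  # 0.443 2.627
```

The difference ρr − ρl = 2vd(sr − sl) depends only on the contrast difference, whereas the sum 2v0 + 2vs(sl + sr) grows with total contrast, so vs can speed up both accumulators on high-contrast trials without changing which one is favored. Because the Wald expressions in Equation 3 describe a proper distribution only for ρ > 0, fitted coefficients would need to keep both rates positive.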

The accumulators race against each other. If one of the evidence accumulators reaches the threshold first, a corresponding choice is made. If the time accumulator reaches the threshold first, one of the options will be chosen randomly with a partial dependence on which evidence is greater at that time point. This is done through a process controlled by a parameter γ, ranging from 0 to 1, with 1 being fully dependent on the evidence accumulated up until that point, and 0 being completely random regardless of the accumulated evidence. Other parameters of the model include ρt, ω, and t0, as described in Table 1.
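The race can be simulated by sampling each accumulator's finishing time from the Wald distribution with NumPy's `Generator.wald` (mean α/ρ, shape α²/σ²). How the choice is made when the timer wins is only described qualitatively here, so the rule below is an assumption: with probability γ the choice follows the sign of a Gaussian approximation to the right-minus-left evidence at the timer's finishing time (ignoring the absorbing threshold), otherwise it is a coin flip. The bias ω is omitted, and all parameter values are hypothetical; this is not the authors' implementation.

```python
import numpy as np

def simulate_trdm(rho_l, rho_r, rho_t, gamma, t0, sigma=1.0, sigma_t=1.0,
                  alpha=1.0, n=10_000, seed=0):
    """Simulate a three-way race: left/right evidence accumulators plus a timer.

    Each finishing time is drawn from the Wald (inverse Gaussian) first-passage
    distribution: mean alpha / rho, shape alpha**2 / sigma**2 (requires rho > 0).
    Returns (choice, rt, timer_won) arrays; choice is 1 for right, 0 for left.
    """
    rng = np.random.default_rng(seed)
    t_l = rng.wald(alpha / rho_l, alpha**2 / sigma**2, size=n)
    t_r = rng.wald(alpha / rho_r, alpha**2 / sigma**2, size=n)
    t_t = rng.wald(alpha / rho_t, alpha**2 / sigma_t**2, size=n)

    first_evidence = np.minimum(t_l, t_r)
    timer_won = t_t < first_evidence
    choice = (t_r < t_l).astype(int)            # evidence-driven choice
    decision_time = np.where(timer_won, t_t, first_evidence)

    # ASSUMED timer rule: with probability gamma, follow the sign of a Gaussian
    # approximation to (right - left) evidence at time t_t; otherwise guess.
    tt = t_t[timer_won]
    evidence_says_right = rng.normal((rho_r - rho_l) * tt, sigma * np.sqrt(2 * tt)) > 0
    use_evidence = rng.random(tt.size) < gamma
    coin_flip = rng.random(tt.size) < 0.5
    choice[timer_won] = np.where(use_evidence, evidence_says_right, coin_flip)
    return choice, decision_time + t0, timer_won

# Hypothetical parameters: a fast vs a slow timer with identical evidence drifts.
fast = simulate_trdm(1.0, 2.5, rho_t=3.0, gamma=0.2, t0=0.15, sigma_t=0.3)
slow = simulate_trdm(1.0, 2.5, rho_t=0.5, gamma=0.2, t0=0.15, sigma_t=0.3)
for label, (choice, rt, timer_won) in (("fast timer", fast), ("slow timer", slow)):
    print(f"{label}: P(right)={choice.mean():.2f}, mean RT={rt.mean():.2f} s, "
          f"timer-induced={timer_won.mean():.1%}")
```

A faster timer (larger ρt) truncates more trials with largely random choices, which is the qualitative signature the TRDM uses to capture fast guesses.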

Table 1.

Priors of free parameters in tested models

| Parameter | Description | Prior |
|---|---|---|
| ω | Bias | IL(0, 1.4) |
| t0,c | Nondecision time of choice | IL(0, 1.4) |
| v0, vd, vs | Drift rate coefficients of choice | LN(1.56, 1.5) |
| ρt* | Mean drift rate of timer | LN(1.56, 1.5) |
| ηc, ηt* | Within-trial variability | LN(1.56, 1.5) |
| γ* | Mixture between random and evidence-based timer-induced decision | IL(–1, 1.0) |

IL, inverse logit distribution.

LN, log-normal distribution.

*Parameters only exist in TRDM.

The best fitting parameters of the two models for each animal are shown in Extended Data Tables 1-2 and 1-3. We also tested the relationship between RT and contrast difference using nonmodel statistics described in Extended Data Table 1-1.

Table 1-1. Statistical table.

Table 1-2. TRDM best fitting parameters of each animal.

Table 1-3. RDM best fitting parameters of each animal.

To apply Bayesian inference, we first defined the “priors,” our belief about the true parameter values before observing the data, by assigning a probability distribution to each parameter based on previous experience (Table 1; Kirkpatrick et al., 2021). We then used the observed data to update the prior distributions, yielding a more constrained posterior distribution over the parameters that could have generated the observed data under each model. Posterior samples were generated with the differential evolution Markov chain Monte Carlo (DE-MCMC; Ter Braak, 2006; Turner and Sederberg, 2012; Turner et al., 2013) algorithm, which has been shown to be computationally efficient, as implemented in the RunDEMC library (https://github.com/compmem/RunDEMC). We ran 10k parallel chains (k is the number of parameters) for 200 burn-in iterations, followed by 500 iterations to sample the posterior.

Specifically, we applied a standard Metropolis–Hastings step to accept or reject proposed samples from the posterior. A new parameter proposal is evaluated by comparing its posterior probability with that of the current proposal and is accepted with probability:

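$$
P(\text{accept } \theta') = \min\left(1, \frac{P(D \mid \theta')\,P(\theta')}{P(D \mid \theta)\,P(\theta)}\right) \tag{5}
$$

where D represents the observed data, θ′ is the new proposal, θ is the current proposal, P(D|θ′) and P(D|θ) are the likelihoods calculated with Equation 6, and P(θ′) and P(θ) are the priors.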
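The sampler described above can be illustrated with a compact DE-MCMC loop in NumPy: each chain proposes a jump along the difference of two other randomly chosen chains (scaled by the common default 2.38/√(2k)) plus small uniform noise, and accepts it with the Metropolis–Hastings rule of Equation 5 evaluated in log space. The toy Gaussian target, the `de_mcmc` helper, and the jitter size are hypothetical; chains are updated one at a time in place, a common variant, and RunDEMC's actual implementation and API may differ.

```python
import numpy as np

def log_posterior(theta):
    """Hypothetical toy target: independent normals, mean (1, -2), sd (0.5, 1)."""
    mu, sd = np.array([1.0, -2.0]), np.array([0.5, 1.0])
    return -0.5 * np.sum(((theta - mu) / sd) ** 2)

def de_mcmc(log_post, init, n_burn=200, n_samp=500, eps=1e-4, seed=0):
    """Differential-evolution MCMC (after Ter Braak, 2006) with MH acceptance."""
    rng = np.random.default_rng(seed)
    chains = np.array(init, dtype=float)         # shape (n_chains, k)
    n_chains, k = chains.shape
    jump = 2.38 / np.sqrt(2 * k)                 # standard DE-MC jump scale
    logp = np.array([log_post(c) for c in chains])
    draws = []
    for it in range(n_burn + n_samp):
        for i in range(n_chains):
            others = [j for j in range(n_chains) if j != i]
            m, n = rng.choice(others, size=2, replace=False)
            proposal = (chains[i] + jump * (chains[m] - chains[n])
                        + rng.uniform(-eps, eps, size=k))
            logp_new = log_post(proposal)
            # Eq. 5 in log space: accept if log(u) < log posterior ratio.
            if np.log(rng.uniform()) < logp_new - logp[i]:
                chains[i], logp[i] = proposal, logp_new
        if it >= n_burn:                         # keep post-burn-in states only
            draws.append(chains.copy())
    return np.concatenate(draws)                 # (n_samp * n_chains, k)

k = 2
init = np.random.default_rng(1).normal(0.0, 3.0, size=(10 * k, k))  # 10k chains
samples = de_mcmc(log_posterior, init)
print(samples.mean(axis=0), samples.std(axis=0))
```

In a real fit, `log_posterior` would add the log prior (Table 1) to the log-likelihood of the choices and RTs.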

To calculate the likelihood of observing the data D given the parameters θ, we multiply the likelihoods of observing each choice and RT as determined by the model probability density function (PDF) defined by the parameters θ. For example, the PDF for observing a left response with a decision time t is defined by the following equation (Heathcote, 2004; Hawkins and Heathcote, 2021):

| (6) |

where f(t) and F(t) are the density and distribution functions defined above, fE and FE are for the evidence accumulators, while fT and FT are for the time accumulator. FX is the cumulative distribution function for the random variable X, and X follows a normal distribution defined by the difference in evidence accumulator distributions. ρl and ρr are the mean drift rate for left and right evidence accumulators, ηc is the within-trial variability of the drift rate for the evidence accumulators.
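Because Equation 6 could not be recovered here, the sketch below covers only the simpler RDM case (no timer and no bias term). In that case, a left response at time t has the defective density f_E(t; ρl)[1 − F_E(t; ρr)], and a right response has the mirror-image expression. It uses SciPy's `invgauss`, mapping the Wald mean α/ρ and shape α²/σ² onto SciPy's parameterization, and checks one density value against Equation 3. `wald_dist` and `rdm_loglik` are hypothetical helpers, and the data are simulated.

```python
import numpy as np
from scipy.stats import invgauss

def wald_dist(rho, sigma=1.0, alpha=1.0, t0=0.0):
    """Frozen scipy inverse Gaussian matching Eq. 3.

    Mean alpha/rho and shape lam = alpha**2/sigma**2 map onto scipy's
    parameterization as invgauss(mu=mean/lam, loc=t0, scale=lam).
    """
    lam = alpha**2 / sigma**2
    return invgauss(mu=(alpha / rho) / lam, loc=t0, scale=lam)

def rdm_loglik(rt, choice, rho_l, rho_r, t0, sigma=1.0):
    """Log-likelihood of a two-accumulator race (no timer, no bias term).

    A left choice (0) at time t has density f_l(t) * (1 - F_r(t)); a right
    choice (1) has f_r(t) * (1 - F_l(t)). Trial log-densities are summed.
    """
    rt, choice = np.asarray(rt, dtype=float), np.asarray(choice)
    left = wald_dist(rho_l, sigma, t0=t0)
    right = wald_dist(rho_r, sigma, t0=t0)
    density = np.where(choice == 0,
                       left.pdf(rt) * right.sf(rt),
                       right.pdf(rt) * left.sf(rt))
    return np.sum(np.log(np.maximum(density, 1e-300)))  # floor avoids log(0)

# Simulated data from known drifts (hypothetical values), then compare fits.
rng = np.random.default_rng(3)
t_l = rng.wald(1 / 1.0, 1.0, size=2000)
t_r = rng.wald(1 / 2.0, 1.0, size=2000)
rt = np.minimum(t_l, t_r) + 0.2
choice = (t_r < t_l).astype(int)
ll_true = rdm_loglik(rt, choice, rho_l=1.0, rho_r=2.0, t0=0.2)
ll_swapped = rdm_loglik(rt, choice, rho_l=2.0, rho_r=1.0, t0=0.2)
print(ll_true, ll_swapped)
```

As a spot check, for ρ = 2, α = σ = 1, and t − t0 = 0.5, the exponent in Equation 3 vanishes and the density equals 2/√π ≈ 1.128, which `wald_dist(2.0, t0=0.2).pdf(0.7)` reproduces.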

Finally, to compare the performance of the two models, we first calculated the Bayesian information criterion (BIC) values (Eq. 7) of each model fitting result:

$$
\mathrm{BIC} = k\ln(n) - 2\ln(\hat{L}) \tag{7}
$$

where k is the number of parameters, n is the number of data points, and L̂ is the maximum likelihood of the model’s fit to the data. We then approximated the Bayes factor (BF) from the BIC values as in Equation 8 (Kass and Raftery, 1995):

$$
\mathrm{BF}_{i,j} \approx \exp\left(\frac{\mathrm{BIC}_j - \mathrm{BIC}_i}{2}\right) \tag{8}
$$

where BICi and BICj are the BIC values for Model i (here, the TRDM) and Model j (the RDM), respectively. BFi,j > 1 means that the evidence favors Model i over Model j. BFi,j > 3, 20, and 150 (correspondingly, ln(BFi,j) > 1, 3, and 5) indicate positive, strong, and very strong evidence for Model i over Model j, respectively (Lodewyckx et al., 2011).
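Equations 7 and 8 and the verbal cut-offs reduce to a few lines of arithmetic. The log-likelihoods, parameter counts (k = 9 and k = 6), and trial count below are hypothetical. The second comparison shows that, with identical fit quality, the BIC penalty alone makes the simpler model win, which is the situation described for the exponential-delay group in the Results. The label for |ln BF| ≤ 1 is an assumption, since the text does not name that category.

```python
import math

def bic(max_log_lik, k, n):
    """Eq. 7: BIC = k ln(n) - 2 ln(L_hat), from the maximized log-likelihood."""
    return k * math.log(n) - 2.0 * max_log_lik

def ln_bayes_factor(bic_i, bic_j):
    """Eq. 8 on the log scale: ln BF_ij ~ (BIC_j - BIC_i) / 2; > 0 favours model i."""
    return (bic_j - bic_i) / 2.0

def describe(ln_bf):
    """Verbal label using the ln BF cut-offs quoted in the text (1, 3, 5)."""
    size = abs(ln_bf)
    if size > 5:
        strength = "very strong"
    elif size > 3:
        strength = "strong"
    elif size > 1:
        strength = "positive"
    else:
        strength = "weak"  # label for |ln BF| <= 1 is an assumption
    favoured = "model i" if ln_bf > 0 else "model j"
    return f"{strength} evidence for {favoured}"

# Hypothetical fits to n = 3000 trials (i = TRDM, j = RDM).
n = 3000
bic_trdm = bic(max_log_lik=-1200.0, k=9, n=n)
bic_rdm = bic(max_log_lik=-1260.0, k=6, n=n)
ln_bf = ln_bayes_factor(bic_trdm, bic_rdm)
print(round(ln_bf, 2), describe(ln_bf))  # 47.99 very strong evidence for model i

# Equal fit quality: the BIC penalty alone favours the simpler model.
ln_bf_tie = ln_bayes_factor(bic(-1200.0, 9, n), bic(-1200.0, 6, n))
print(round(ln_bf_tie, 2), describe(ln_bf_tie))
```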

Code accessibility

Python code for preprocessing and for running the TRDM/RDM models is included in Extended Data 1.

Extended Data 1. Code for analysis and modeling (fit_rdm.py, fit_trdm.py, single_animal_preprocessing.ipynb, waldrace.py).

Results

Tree shrews quickly learned to perform a contrast discrimination 2AFC task

We trained a total of nine (male = 7, female = 2) tree shrews to perform a 2AFC contrast discrimination task (Fig. 1). We chose the 2AFC design over other classic paradigms such as “Go/no-Go” tasks because it eliminates the asymmetry between responses for different options. We also designed the trials to be self-initiated and self-paced by the animals, to obtain precise response time (RT) data for comprehensive behavioral analysis. During training, freely moving tree shrews were first acclimated in the behavior box with a single gabor stimulus appearing at the center or on either side of the screen (Fig. 1B). After the animals learned the association between the stimulus and liquid reward, often within 1–2 d, two gabors of different contrasts were introduced, with the higher-contrast one indicating the location of the reward (Fig. 1C). All tree shrews learned the task and reached an accuracy >75% for the easiest condition within one week (Fig. 1D); most reached 75% accuracy within 2 d. Notably, once the animals performed well, the overall difficulty was increased progressively, so the “easiest” condition often became more difficult over successive days. Nevertheless, the animals’ performance remained stably above 75%, indicating that they had learned the rule of the task, rather than the specific stimuli, within a very short period. These observations highlight the impressive learning capability of tree shrews and indicate that they are a promising animal model for cognitive neuroscience research.

Tree shrews showed different behaviors under two training schemes

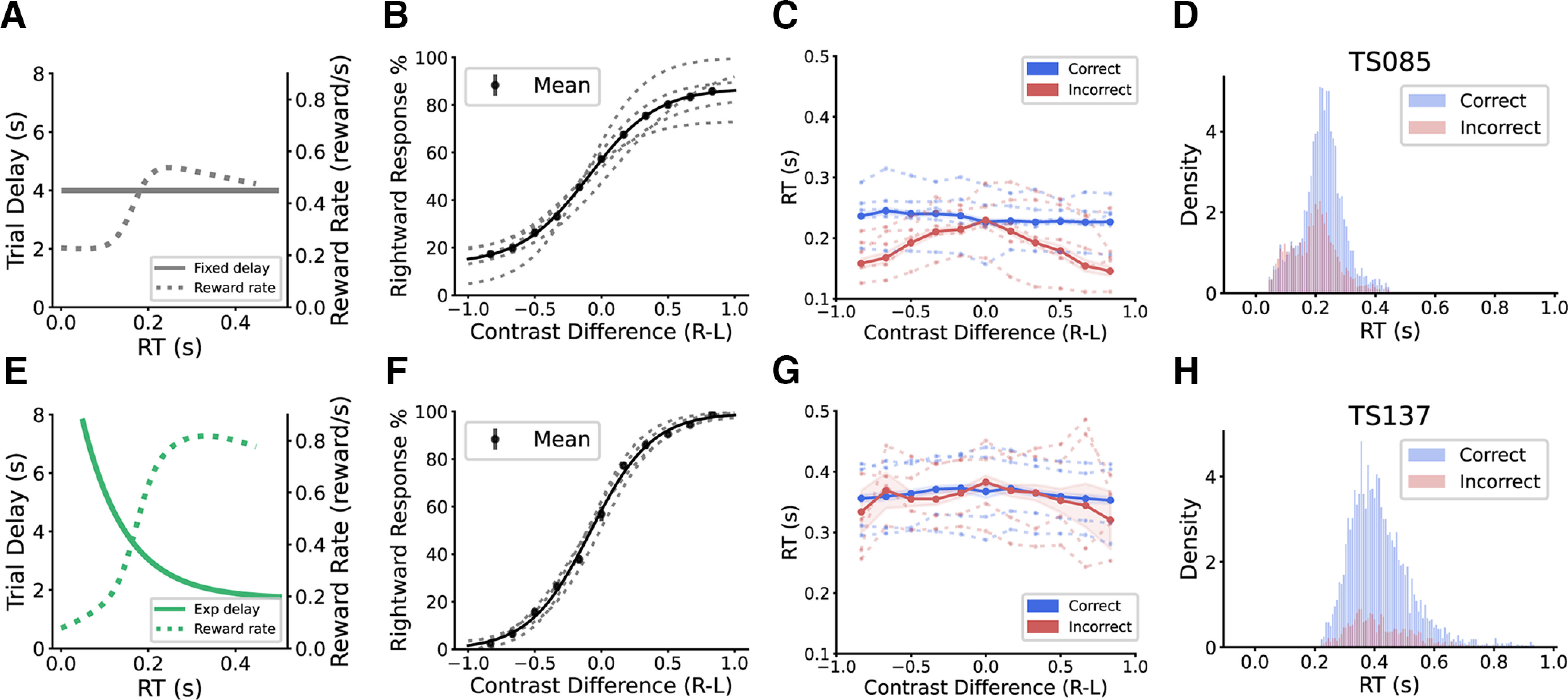

In the first group of animals (n = 5; male = 4, female = 1), a fixed trial delay of 4 s was used to punish incorrect responses (Fig. 2A). All animals were able to learn the task. An increase in difficulty (i.e., a decrease in the contrast difference between the two stimuli) induced the expected drop in response accuracy (Fig. 2B). However, task difficulty did not have a significant effect on RT in correct trials (mixed effect linear regression, β = 0.008a, p = 0.125; Extended Data Table 1-1), whereas RT in incorrect trials increased with task difficulty (Fig. 2C; mixed effect linear regression, β = –0.075b, p < 0.001; because the regressor is the absolute contrast difference, a negative β means longer RTs on harder trials). This result differs from the RT trend previously reported in humans, monkeys, and mice (Roitman and Shadlen, 2002; Palmer et al., 2005; Philiastides et al., 2011; Dmochowski and Norcia, 2015; Jun et al., 2021; Orsolic et al., 2021), where increasing task difficulty usually results in longer RTs in correct trials. When we examined the RT distributions of individual animals, most animals in this group (n = 4 of 5) showed a bimodal-like shape (Fig. 2D; Extended Data Fig. 2-1) instead of the more common log-normal-like distribution (Ratcliff, 1978; Smith and Ratcliff, 2004). Furthermore, the first, smaller peak of the RT distribution contained similar proportions of correct and incorrect trials, whereas the second peak contained many more correct than incorrect trials. This bimodal distribution suggested two possible modes of behavioral response: a “fast-guessing” mode with chance-level performance and a slower mode in which the animal was more “engaged” in the task.

Figure 2.

Tree shrews show different behaviors under two training schemes. A, A fixed delay of 4 s (solid line) was used in training one group of animals. The dashed line shows the theoretical reward rate under this fixed delay. B, Psychometric curves of animals trained under this scheme. Contrast difference: right contrast (R) – left contrast (L). Gray dashed lines, Individual animals. Black solid line, Average across animals. C, Response time (RT) as a function of contrast difference. Dashed lines, Individual animals. Solid line, Average across animals. The shaded area is the 95% confidence interval. D, RT density histogram from a representative animal. Correct and incorrect trials are plotted separately. E, An exponential decay delay scheme (solid line) was applied in another group. The dashed line shows the theoretical reward rate under this scheme. F–H, Same as B–D but for the second group. Extended Data Figures 2-1 and 2-2 show the RT distributions of individual animals from the fixed-delay group and exponential-delay group, respectively.

Figure 2-1. Response time distributions of the individual animals from the fixed-delay group.

To discourage “fast guessing,” we applied an exponential decay trial delay for incorrect responses in the second group (n = 4; male = 3, female = 1; Fig. 2E). The exponential decay delay punished fast incorrect responses more than slow ones, and more aggressively than the fixed trial delay procedure (Fig. 2A,E). All animals in this group again learned the task quickly (Fig. 2F,G). Notably, RTs were substantially longer overall than in the fixed-delay group, indicating the effectiveness of the new trial delay paradigm. Furthermore, RTs in correct trials increased slightly with task difficulty (mixed effect linear regression, β = –0.021c, p = 0.001), while the effect of difficulty on incorrect RTs was less prominent than in the fixed-delay group (mixed effect linear regression, β = –0.046d, p = 0.014). The RT distributions of individual animals were unimodal and approximately log-normal, similar to those reported in other species, with clearly above-chance accuracy across the entire RT range (Fig. 2H; Extended Data Fig. 2-2). These behavioral data thus demonstrate that the tree shrews behaved differently under the two trial delay schemes.

Figure 2-2. Response time distributions of the individual animals from the exponential-delay group.

Non-evidence-accumulation mechanism is crucial to interpreting tree shrew behaviors

The above behavioral data suggest the involvement of a process in addition to evidence accumulation during decision-making. One possibility is a time accumulation process: the animals may have had an internal time threshold for the task and would make a more or less random choice if this threshold was reached before enough evidence had accumulated to guide the choice. This time limit would differ between the two trial delay paradigms, being shorter under the fixed delay and thus leading to many fast guesses. To test the plausibility of this explanation, we turned to cognitive models of decision-making.

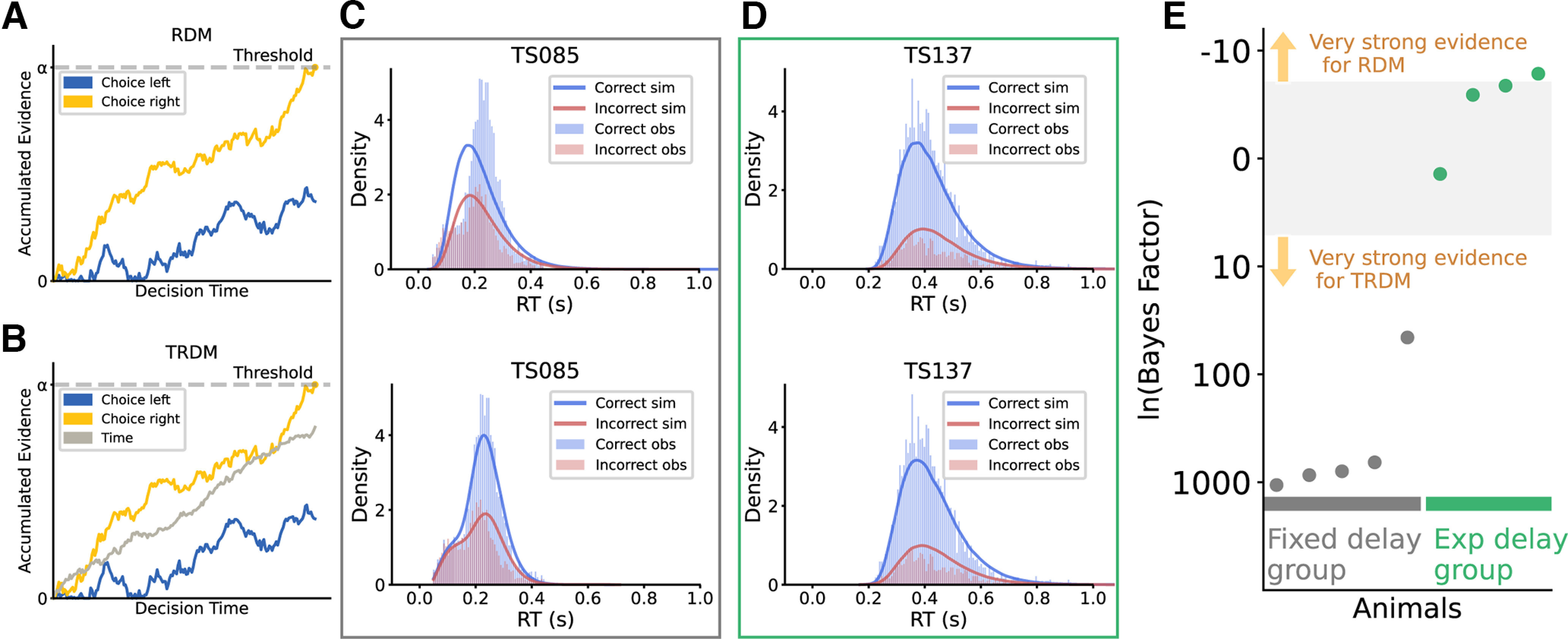

We fitted two models, the racing diffusion model (RDM) and the timed racing diffusion model (TRDM; Hawkins and Heathcote, 2021), to the data obtained from individual animals. In a 2AFC task, the RDM describes two independent evidence accumulators racing against each other; when one of the accumulators reaches the threshold first, the corresponding choice is made (Fig. 3A). The TRDM has one additional accumulator that tracks time (Fig. 3B). If the time accumulator reaches the threshold before the evidence accumulators, a decision is made based on the currently accumulated evidence with probability γ, and otherwise at random. We fixed all accumulation thresholds to 1. A fast time accumulator was thus effectively equivalent to a short time limit, as described above. The two models allowed us to test whether an additional timing mechanism better explains tree shrew decision behaviors.

Figure 3.

Modeling results suggest that evidence accumulation combined with a timing mechanism better fits tree shrew decision-making behavior. A, B, Racing diffusion model (RDM; A) and timed racing diffusion model (TRDM; B). Blue trace, The evidence accumulator for left choice. Yellow trace, The evidence accumulator for right choice. Gray trace, The time accumulator. The two evidence accumulation processes race against each other. In these schematics, the accumulator for right stimuli (yellow) reaches the threshold first, resulting in a rightward choice. C, Observed (histograms) and simulated (lines) RT distribution for the representative animal from the fixed-delay group. Top, RDM simulation. Bottom, TRDM simulation. D, Observed and simulated RT distribution for the representative animal from the exponential-delay group. Top, RDM simulation. Bottom, TRDM simulation. E, Estimated log Bayes factor comparing the two models’ performance. Positive values favor TRDM, while negative values favor RDM. Gray dots represent the animals from the fixed-delay training, and green dots represent the exponential-delay group. The upper and lower edges of the gray shaded area mark the thresholds for “very strong” evidence in either direction [ln(BF) = ±5].

We used a Bayesian approach for model fitting (Ter Braak, 2006; Turner and Sederberg, 2012; Turner et al., 2013) and then simulated choice and RT data with the best fitting parameters to visualize the goodness of fit. The RDM captured the RT distribution of the exponential-delay group well but failed to fit the fixed-delay group (Fig. 3C,D, top panels), whereas the TRDM fit both groups well (Fig. 3C,D, bottom panels). To quantify this difference, we estimated the Bayes factor (BF) comparing the two models for each animal (Fig. 3E). For animals in the fixed-delay group, the ln(BF) values were extremely high, ranging from 45 to 1062, providing overwhelming support for the TRDM. These values are far above 5, a conventional threshold for “very strong” evidence for one model over another in Bayesian modeling (Lodewyckx et al., 2011). For the exponential-delay group, the evidence favored the RDM for three of the four tree shrews, although it was much weaker [ln(BF) ranging from −6 to 1]. Note that the BIC-based Bayes factor used here penalizes models with more parameters. Consequently, because the two models fit the exponential-delay data similarly, the simpler RDM obtained the more favorable BF.

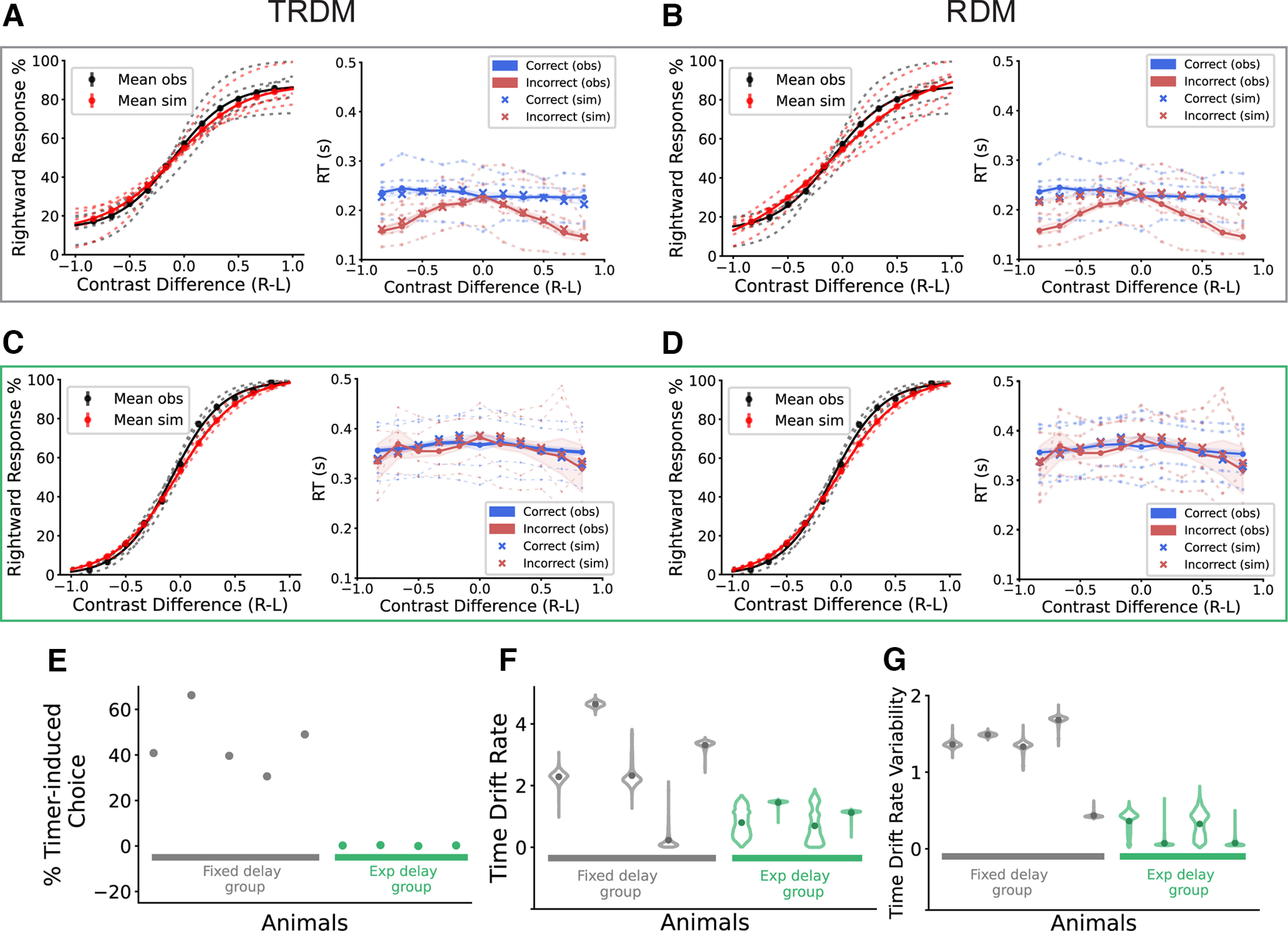

We then simulated choice and RT data for each animal with the best-fitting parameters of the winning model (Extended Data Tables 1-2 and 1-3) to visually check the goodness of fit. The TRDM fit the data of the fixed-delay group well (Fig. 4A), and the RDM reproduced the behavior of the exponential-delay group (Fig. 4D), for both the psychometric curves and the RT-contrast relationship. Consistent with the results in Figure 3, the TRDM also fit the psychometric curves and the RT-contrast relationship of the exponential-delay group (Fig. 4C) about as well as the RDM did, whereas the RDM failed to capture the RT-contrast relationship of the fixed-delay group (Fig. 4B). That the TRDM could explain the behavior of both groups supports the involvement of a process other than evidence accumulation in tree shrew visual decision-making, and suggests that this process can be manipulated through the trial-delay rule.
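The psychometric curves and RT-contrast functions in Figure 4 summarize trial-level data, whether observed or simulated, by contrast difference. Below is a minimal sketch of that summary. It assumes each trial is described by a signed contrast difference (right minus left), a rightward-choice flag, an RT, and a correctness flag. The function and argument names are hypothetical and are not taken from the authors’ code.

```python
import numpy as np


def summarize_by_contrast(contrast_diff, chose_right, rt, correct):
    """Group trials by signed contrast difference (right minus left).

    Returns the unique contrast levels, the proportion of rightward choices
    at each level (psychometric curve), and the mean RT of correct trials at
    each level (RT-contrast relationship; NaN if a level has no correct trial).
    """
    contrast_diff = np.asarray(contrast_diff, dtype=float)
    chose_right = np.asarray(chose_right, dtype=bool)
    rt = np.asarray(rt, dtype=float)
    correct = np.asarray(correct, dtype=bool)

    levels = np.unique(contrast_diff)
    p_right = np.array([chose_right[contrast_diff == c].mean() for c in levels])
    mean_rt_correct = np.array([
        rt[(contrast_diff == c) & correct].mean()
        if np.any((contrast_diff == c) & correct) else np.nan
        for c in levels
    ])
    return levels, p_right, mean_rt_correct


levels, p_right, mean_rt = summarize_by_contrast(
    [-0.5, -0.5, 0.5, 0.5, 0.5],
    [False, True, True, True, False],
    [0.4, 0.3, 0.5, 0.7, 0.2],
    [True, False, True, True, False],
)
print(levels, p_right, mean_rt)
```

Equal-evidence trials have no objectively correct answer. The sketch therefore leaves it to the caller to define `correct` for those trials, for example by following the task’s reward rule.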

Figure 4.

Model simulation of the psychometric curves and associated response time, and the posterior of the timer-related parameters. A, TRDM simulation for the fixed-delay group. Left, Observed (black) and simulated (red) psychometric curves for individual animals (dotted lines) and the group average (solid lines). The simulations were done with the best fitting parameters of the TRDM. Right, Observed (dots, solid lines, and dotted lines) and simulated RT function (“x”). Dotted lines, Individual animals. Solid lines, Group average. B, RDM simulation for the fixed-delay group. C, TRDM simulation for the exponential-delay group. D, RDM simulation for the exponential-delay group. E, Percentage of timer-induced choice calculated from the TRDM-simulated data for each animal. F, The posterior distribution of the time accumulator mean drift rate (ρt) for individual animals from the TRDM fitting. The dot in each distribution indicates the mean value. G, Same as F, but for the drift rate variability of the time accumulator (ηt). Figure 4-1 shows the decomposed simulation data of TRDM for one example animal.

The models allowed us to trace the generating mechanism of each simulated trial, i.e., whether the decision was triggered by an evidence accumulator or by the timer crossing its threshold. When we separated the TRDM-simulated data for each animal by generating mechanism, the timer and the evidence accumulators contributed two separate RT peaks. Extended Data Figure 4-1 compares simulated and observed data for an example tree shrew from the fixed-delay group (Fig. 2D). The fast RTs were largely generated by the timer (Extended Data Fig. 4-1A). In addition, the simulated RTs for correct choices generated by the evidence accumulators alone increased with task difficulty (Extended Data Fig. 4-1D), similar to what has been reported in humans, monkeys, and mice (Roitman and Shadlen, 2002; Palmer et al., 2005; Philiastides et al., 2011; Dmochowski and Norcia, 2015; Jun et al., 2021; Orsolic et al., 2021). These modeling results suggest that the tree shrews learned the visual decision-making task and behaved similarly to other species when “engaged” in it. Moreover, the timer-driven random choices explained why accuracy plateaued below 100% even in the easiest conditions (Extended Data Fig. 4-1C).
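The decomposition by generating mechanism can be illustrated with a toy simulation that tags each trial by whichever accumulator crossed its threshold first. Below is a minimal sketch of a simplified timed race, not the authors’ fitting code. It assumes unit-noise accumulators with fixed positive evidence drifts, a timer drift drawn per trial from Normal(ρt, ηt), and the rule that a timer-triggered response is an unbiased guess. That last rule matches this article’s description of timer-induced choices as random, but the full TRDM may specify it differently. All names and parameter values are hypothetical.

```python
import numpy as np


def simulate_trdm(n_trials, v_left, v_right, b_evidence, rho_t, eta_t, b_time,
                  t0=0.1, seed=None):
    """Simulate a simplified timed racing diffusion model (illustrative only).

    Each accumulator is a unit-noise Wiener process starting at zero, so its
    threshold-crossing time is Wald (inverse Gaussian) with mean b / v and
    shape b**2. Returns choice (1 = right, 0 = left), RT, and the source of
    each decision ("evidence" or "timer").
    """
    rng = np.random.default_rng(seed)

    # Two evidence accumulators with fixed, positive drift rates (assumed).
    t_left = rng.wald(b_evidence / v_left, b_evidence**2, size=n_trials)
    t_right = rng.wald(b_evidence / v_right, b_evidence**2, size=n_trials)
    t_evidence = np.minimum(t_left, t_right)

    # Time accumulator: drift varies across trials as Normal(rho_t, eta_t).
    # Simplification: a non-positive drift means the timer never finishes.
    v_time = rng.normal(rho_t, eta_t, size=n_trials)
    t_time = np.full(n_trials, np.inf)
    finite = v_time > 0
    t_time[finite] = rng.wald(b_time / v_time[finite], b_time**2)

    timer_wins = t_time < t_evidence
    evidence_choice = (t_right < t_left).astype(int)
    # Assumed choice rule: a timer-triggered response is an unbiased guess.
    guess = rng.integers(0, 2, size=n_trials)
    choice = np.where(timer_wins, guess, evidence_choice)
    rt = t0 + np.minimum(t_time, t_evidence)
    source = np.where(timer_wins, "timer", "evidence")
    return choice, rt, source


# A fast timer (high rho_t) versus a slow timer (low rho_t).
for rho_t, eta_t in ((8.0, 1.0), (0.5, 0.1)):
    choice, rt, source = simulate_trdm(5000, v_left=1.0, v_right=2.0,
                                       b_evidence=2.0, rho_t=rho_t,
                                       eta_t=eta_t, b_time=2.0, seed=0)
    print(rho_t, "fraction timer-induced:", np.mean(source == "timer"))
```

Because each simulated trial carries its `source` label, RT histograms and psychometric curves can be split by generating mechanism, in the spirit of Extended Data Figure 4-1.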

Figure 4-1. Decomposition of an example animal’s simulated RT distribution by the TRDM. A, The simulated RTs for one example animal (TS085) from the fixed-delay group are divided into four categories: RTs generated by the evidence accumulators for correct (blue) and incorrect (pink) responses, and RTs generated by the time accumulator for correct (green) and incorrect (yellow) responses. Compared with the observed data (B), the plots show that the TRDM attributes the first peak (fast RTs) in the RT distribution to the time accumulator. C, Simulated psychometric curves generated by the evidence accumulators and by the time accumulator. D, RTs simulated by the evidence accumulators as a function of contrast difference.

Next, for each tree shrew, we quantified the percentage of timer-induced choices in the TRDM-simulated data (Fig. 4E). As expected from the above analysis, all animals in the fixed-delay group showed many timer-induced choices (30% to 66%), whereas the value was near zero for every animal in the exponential-delay group. To understand which decision variables were altered by the change in delay rule, we examined the posterior distributions of the TRDM parameters. The posteriors of the timer-related parameters showed a general trend toward a higher mean drift rate of the time accumulator (ρt) and a higher drift rate variability (ηt) in the fixed-delay group than in the exponential-delay group (Fig. 4F,G). Together, these two parameters determine how quickly time accumulates during a decision, and the fixed-delay group had faster timers. The model results therefore suggest a possible mechanism: the exponential delay slowed the time accumulation process in the tree shrews, which resulted in far fewer “timer-induced” fast responses with compromised accuracy and more correct responses guided by evidence accumulation.
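Why would both a higher ρt and a higher ηt make the timer faster? A minimal sketch helps here. It computes the probability that a unit-noise time accumulator has reached its threshold by a short time t, averaging the Wald (first-passage) distribution function over trial-to-trial drifts drawn from Normal(ρt, ηt). Raising ρt shifts every drift upward. Raising ηt adds trials with very large drifts, and because early finishes become much more likely as drift increases, this also raises the fraction of early finishes under the values assumed here. The threshold, time point, and function names are hypothetical and not fitted values.

```python
import math

import numpy as np


def _phi(x):
    """Standard normal CDF."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def wald_cdf(t, v, b):
    """P(first passage <= t) for a unit-noise Wiener process with drift v that
    starts at 0 and has threshold b > 0. For v <= 0 the same expression gives
    the defective distribution (the threshold may never be reached)."""
    s = math.sqrt(t)
    return _phi((v * t - b) / s) + math.exp(2.0 * v * b) * _phi(-(v * t + b) / s)


def p_timer_finished(t, rho_t, eta_t, b_time=1.0, n_draws=20000, seed=0):
    """Monte Carlo average of wald_cdf over drifts v ~ Normal(rho_t, eta_t)."""
    rng = np.random.default_rng(seed)
    drifts = rng.normal(rho_t, eta_t, size=n_draws)
    return float(np.mean([wald_cdf(t, v, b_time) for v in drifts]))


for rho_t, eta_t in [(1.0, 0.2), (1.0, 1.0), (2.0, 0.2)]:
    print(rho_t, eta_t, round(p_timer_finished(0.3, rho_t, eta_t), 3))
```

The ηt effect depends on the early part of the finishing-time distribution rising steeply with drift. At long time points or for very fast timers, extra drift variability need not speed the timer up.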

Discussion

In this study, we established a response-time paradigm of perceptual decision-making for tree shrews. The behavioral results showed that tree shrews can perform a contrast-discrimination perceptual decision task and generate informative choice and response time data. Model-based analyses suggest that, in addition to the choice-related evidence accumulation process, other mechanisms, presumably ones that keep track of time, are involved in the decision-making process, depending on how the trial delay after incorrect responses is designed. Because tree shrews learn quickly, behave reliably, are increasingly available, and are amenable to modern techniques, this new animal model should facilitate future decision-making studies.

We carefully considered two points when designing the behavioral paradigm. First, we adopted a 2AFC framework, in which two alternative options map symmetrically onto two response targets. Other widely used tasks often have an asymmetry in either the responses or the stimulus categories, which can complicate the interpretation of behavior. For example, Go/No-Go tasks involve an action (“go”) and the suppression of an action (“no-go”) as the two responses, which are likely driven by different neural circuits. Such tasks are therefore better suited to studying impulsivity and inhibition (Eagle et al., 2008; Dong et al., 2010; Ding et al., 2014). Yes/no tasks, on the other hand, offer two asymmetric stimulus categories as options, which are likely represented differently at the neural level (Wentura, 2000; Donner et al., 2009). In comparison, a forced-choice framework with symmetric alternatives, such as the 2AFC design used here, is better suited for perceptual decision-making studies. Second, we designed the task to be self-initiated and self-paced by the animals. Self-initiation ensures that the animals are attending during stimulus presentation, and self-pacing encourages them to respond without delay once they reach a decision. In the commonly used design, stimuli appear automatically and animals can respond at any time within a fixed response window. Our design, by contrast, allowed us to collect precise response times in addition to choice data. Response times are particularly useful because they are continuous (whereas choices are discrete) and therefore more informative for characterizing decision behavior. For example, fast correct responses may arise from different mechanisms than slow correct responses, a distinction that would be impossible to study without RT information.

We used models from the sequential sampling model (SSM) family to fit tree shrew decision behavior at the trial level. SSMs predict the joint distribution of choices and RTs from a mathematically defined, dynamic decision process controlled by cognitively meaningful parameters, and they offer testable hypotheses about the underlying mechanisms. Signal detection models have also been used to explain perceptual decision-making behavior (Newsome et al., 1989). However, they predict only the choices made in a decision process and ignore the information contained in response times. Furthermore, choice data are usually averaged over trials, which further reduces the information available in the raw data. By comparison, SSMs use both the choice and the RT of every trial, which maximizes the efficiency of the animal experiments and data analysis (Ratcliff et al., 2003).
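The joint choice-RT prediction of a racing SSM can be written as a trial-level likelihood. The accumulator that produced the choice must cross its threshold at the decision time, while every other accumulator must still be below its threshold. Below is a minimal sketch for a racing diffusion model with unit-noise Wald (inverse Gaussian) finishing times, a shared threshold b, and non-decision time t0. It is not the authors’ waldrace.py. Adding the time accumulator of the TRDM, its drift variability ηt, and the rule that maps a timer-triggered response onto a choice would all require extensions not shown here. The function names are hypothetical.

```python
import math


def _phi(x):
    """Standard normal CDF."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def wald_pdf(t, v, b):
    """First-passage density at time t for a unit-noise Wiener process with
    drift v, starting at 0, and threshold b."""
    return b / math.sqrt(2.0 * math.pi * t**3) * math.exp(-((b - v * t) ** 2) / (2.0 * t))


def wald_cdf(t, v, b):
    """Probability that the same process has crossed b by time t."""
    s = math.sqrt(t)
    return _phi((v * t - b) / s) + math.exp(2.0 * v * b) * _phi(-(v * t + b) / s)


def race_loglik(choice, rt, drifts, b, t0):
    """Log-likelihood of one (choice, RT) pair under a racing diffusion model.

    The chosen accumulator must cross its threshold at t = rt - t0 while every
    other accumulator has not yet crossed:
        f_choice(t) * prod_{j != choice} (1 - F_j(t))
    """
    t = rt - t0
    if t <= 0:
        return -math.inf
    density = wald_pdf(t, drifts[choice], b)
    for j, v in enumerate(drifts):
        if j != choice:
            density *= 1.0 - wald_cdf(t, v, b)
    return math.log(density) if density > 0 else -math.inf


# Accumulator 1 (e.g., "right") has the stronger drift in this example.
print(race_loglik(1, 0.6, [1.0, 2.0], b=1.0, t0=0.2))
print(race_loglik(0, 0.6, [1.0, 2.0], b=1.0, t0=0.2))
```

Summing this log-likelihood over trials gives the quantity that a Bayesian sampler would evaluate. A signal detection model, by contrast, would use only the choice and discard the RT.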

Although the RDM obtained a slightly better Bayes factor than the TRDM in the exponential-delay group because of its simplicity, the TRDM reproduced the observed choice and RT patterns equally well. Together with its overwhelmingly better performance in the fixed-delay group, this makes the TRDM the better model overall for this dataset. By examining the source of the simulated data (Extended Data Fig. 4-1), we found that timer-induced random choices largely account for the plateau of nonperfect accuracy in the easiest conditions. Canonically, this nonperfect accuracy is modeled by a “lapse rate” in the signal detection framework (Wichmann and Hill, 2001; International Brain Laboratory et al., 2021; Wang et al., 2020; Prins, 2012). Lapses are usually assumed to follow a Bernoulli process, i.e., the animals simply guess at some constant rate, independently from trial to trial. This account specifies how often lapses occur but not how the choices are generated. In comparison, the TRDM uses a time accumulator, highly similar to the evidence accumulators, to generate random choices. It thus offers a more integrated account of the interaction between evidence-based and stimulus-independent mechanisms, which may be more plausible at the neuronal level than two separate processes that involve very different computations. In addition, the TRDM can explain why extremely long RTs are rare in the difficult conditions, especially the equal-evidence conditions. The time accumulator limits the RT so that decision-makers do not spend too much time on a single decision when the evidence is ambiguous. We therefore think that the TRDM has more explanatory power than models that include a “lapse rate.” Furthermore, a recent study showed that mice alternate between states, such as lapses or biased decisions, during a perceptual decision-making task, and that they are more likely to remain in the same state across consecutive trials (Ashwood et al., 2022). Therefore, Bernoulli “lapses” would be an oversimplified explanation of how nonperfect choices happen. In future studies, the temporal sequence of choices and RTs should also be analyzed to further investigate the mechanism of decision state switching.
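The difference between Bernoulli lapses and persistent lapse states can be illustrated with a toy simulation. Below is a minimal sketch that generates lapse sequences from a two-state Markov chain, a deliberate simplification of the state-switching models of Ashwood et al. (2022). When `p_stay` equals `p_enter`, lapses are independent Bernoulli events. With `p_stay` well above `p_enter`, the overall lapse rate can be similar (about 9% to 10% with the hypothetical values below), but a lapse becomes far more likely right after another lapse. The accuracy-plateau helper uses the standard lapse-mixture form for unbiased guesses. All names and parameter values are hypothetical.

```python
import numpy as np


def p_correct_with_lapses(p_engaged, lapse_rate):
    """Accuracy when a fraction `lapse_rate` of trials are unbiased guesses.

    Even with perfect engaged performance (p_engaged = 1), accuracy
    plateaus at 1 - lapse_rate / 2.
    """
    return (1.0 - lapse_rate) * p_engaged + 0.5 * lapse_rate


def simulate_lapse_states(n_trials, p_enter, p_stay, seed=None):
    """Two-state Markov chain of lapse (True) versus engaged (False) trials.

    p_enter: P(lapse on trial i | engaged on trial i - 1)
    p_stay:  P(lapse on trial i | lapse on trial i - 1)
    Setting p_stay == p_enter gives independent Bernoulli lapses.
    """
    rng = np.random.default_rng(seed)
    u = rng.random(n_trials)
    states = np.zeros(n_trials, dtype=bool)
    states[0] = u[0] < p_enter
    for i in range(1, n_trials):
        p = p_stay if states[i - 1] else p_enter
        states[i] = u[i] < p
    return states


def lapse_persistence(states):
    """Empirical P(lapse on trial i | lapse on trial i - 1)."""
    return states[1:][states[:-1]].mean()


bernoulli = simulate_lapse_states(50_000, p_enter=0.1, p_stay=0.1, seed=0)
sticky = simulate_lapse_states(50_000, p_enter=0.02, p_stay=0.8, seed=0)
for name, s in (("Bernoulli", bernoulli), ("sticky", sticky)):
    print(name, round(s.mean(), 3), round(lapse_persistence(s), 3))
```

A fitted lapse rate alone cannot tell these two sequences apart. Conditioning on the previous trial, as `lapse_persistence` does, is one simple way to look at the temporal structure.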

Finally, it is intriguing that the tree shrews in this study made a fair number of premature choices under the fixed trial delay, although this strategy was suboptimal in that it did not maximize the reward rate. The TRDM suggests that the animals actively applied a fast timer (or a short time limit) to the task without being trained to respond quickly. Interestingly, this tendency to rush into choices was discouraged by the exponential trial-delay design, which specifically punished fast incorrect responses more heavily. The baseline suboptimal behavior could partly be due to (1) the characteristics of this animal model and/or (2) the stimulus design. The tree shrews responded much faster than humans on similar tasks (Kirkpatrick et al., 2021). They were very nimble and showed swift movements and reactions in various environments (behavior rig, home cage, nature, etc.). Given their motor capabilities, fast responding could be a survival strategy that secures the total amount of reward through frequent sampling at slightly compromised accuracy. Such a strategy could be used broadly to facilitate “exploration” behaviors unless specifically discouraged. Additionally, previous perceptual decision-making studies usually used stochastic motion stimuli such as random dot kinematograms (Roitman and Shadlen, 2002; Ditterich, 2006; Resulaj et al., 2009). These stimuli require temporal integration to acquire evidence for choices. In our study, we used a static feature (contrast) as evidence. Although studies in other species have supported evidence accumulation even with static stimuli (Kirkpatrick et al., 2021), temporal integration might be less necessary to generate a choice in this situation. This could shorten response times, pushing the animals into a faster RT regime that is more prone to premature choices, and could mask the effect of task difficulty on RT (Fig. 2G; the effect was minor, although significant).

Nevertheless, the tree shrew data emphasize the natural existence of evidence-independent mechanisms in decision-making and offer an opportunity to examine their effects. These behavioral patterns also suggest that, when interpreting behavioral and neural data from decision-making tasks in other animal or human models, we should consider processes in addition to evidence accumulation. Here, we included an independent time accumulator to implement this additional process in our decision-making models (Hawkins and Heathcote, 2021). However, mechanisms other than the time accumulator could also generate the fast-guessing responses, and our results do not rule out these possible mechanisms. In other words, the time accumulator is not necessarily the true underlying mechanism. Rather, our results provide evidence that decisions involve multiple generative processes instead of a single one. Other studies have indeed applied alternative approaches to account for decisions that are not entirely based on evidence accumulation, such as combining the decision process with a probabilistic fast-guess mode that generates normally distributed guessing times (Ratcliff and Kang, 2021). Future studies incorporating neural data will be needed to reveal exactly how response times in perceptual decision tasks are affected by information other than sensory strength.
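A timer is not the only way to obtain fast guesses. The sketch below simulates a diffusion/fast-guess mixture in the spirit of Ratcliff and Kang (2021). On a random subset of trials, the response is a guess with a normally distributed RT and chance accuracy. On the remaining trials, the response comes from a two-boundary diffusion process with unit noise. Parameter values are hypothetical, the Euler discretization is a simplification, and nothing here is fitted to the tree shrew data. The sketch only shows that such a mixture also yields a fast, chance-level RT component without any time accumulator.

```python
import numpy as np


def simulate_diffusion_fast_guess(n_trials, drift, bound, t0, p_guess,
                                  guess_mu, guess_sd, dt=0.001, max_t=5.0,
                                  seed=None):
    """Simulate a diffusion / fast-guess mixture (illustrative sketch).

    Diffusion trials: unit-noise evidence starts at 0 and is absorbed at
    +bound (correct) or -bound (error), simulated with Euler steps of size dt.
    Guess trials (probability p_guess): RT ~ Normal(guess_mu, guess_sd),
    truncated at 0, and the response is correct with probability 0.5.
    Returns rt, correct, is_guess. Diffusion trials that do not finish
    within max_t keep rt = NaN.
    """
    rng = np.random.default_rng(seed)
    x = np.zeros(n_trials)
    rt = np.full(n_trials, np.nan)
    correct = np.zeros(n_trials, dtype=bool)
    active = np.ones(n_trials, dtype=bool)

    for step in range(1, int(round(max_t / dt)) + 1):
        idx = np.flatnonzero(active)
        if idx.size == 0:
            break
        x[idx] += drift * dt + np.sqrt(dt) * rng.standard_normal(idx.size)
        up = x[idx] >= bound
        down = x[idx] <= -bound
        finished = idx[up | down]
        rt[finished] = t0 + step * dt
        correct[idx[up]] = True
        active[finished] = False

    # Stimulus-independent fast-guess mode overrides a random subset of trials.
    is_guess = rng.random(n_trials) < p_guess
    n_guess = int(is_guess.sum())
    rt[is_guess] = np.clip(rng.normal(guess_mu, guess_sd, size=n_guess), 0.0, None)
    correct[is_guess] = rng.random(n_guess) < 0.5
    return rt, correct, is_guess


rt, correct, is_guess = simulate_diffusion_fast_guess(
    4000, drift=2.0, bound=1.0, t0=0.2, p_guess=0.3,
    guess_mu=0.25, guess_sd=0.05, seed=1)
print("guess trials:", round(np.mean(rt[is_guess]), 3), round(correct[is_guess].mean(), 3))
print("diffusion trials:", round(np.nanmean(rt[~is_guess]), 3), round(correct[~is_guess].mean(), 3))
```

Like the TRDM, this mixture produces an early RT peak with near-chance accuracy. This is why, as noted above, the time accumulator should be read as one instance of a second generative process rather than as the established mechanism.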

Acknowledgments

We thank Masashi Kawasaki for the help with making the behavior box, Seiji Tanabe for advice on building electronic circuits of the lick port system and animal training, Michele Basso and Vaibhav Thakur for the helpful discussion and suggestions, Alev Erisir for sharing and coordinating tree shrew usage, Brandon Jacques for technical assistance and feature addition of the SMILE library, and Ryan Kirkpatrick for advice on modeling procedure.

Synthesis

Reviewing Editor: Nicholas J. Priebe, University of Texas at Austin

Decisions are customarily a result of the Reviewing Editor and the peer reviewers coming together and discussing their recommendations until a consensus is reached. When revisions are invited, a fact-based synthesis statement explaining their decision and outlining what is needed to prepare a revision will be listed below. The following reviewer(s) agreed to reveal their identity: Santiago Jaramillo. Note: If this manuscript was transferred from JNeurosci and a decision was made to accept the manuscript without peer review, a brief statement to this effect will instead be what is listed below.

SYNTHESIS

Two reviewers evaluated the manuscript “Tree shrews as an animal model for studying perceptual decision-making reveal a critical role of stimulus-independent processes in guiding behavior” and both reviewers agreed that the revisions address the issues previously raised. The manuscript establishes a perceptual decision-making task using tree shrews, and demonstrates that, as with other mammals, tree shrew task training and behavior are influenced by stimulus-independent processes. The paper will be useful for future studies examining decision making in tree shrews. The reviewers did, however, have some minor concerns and questions. These issues are detailed below, which you should find useful in revising your manuscript.

Issues:

1) The authors agree that they cannot distinguish the TRDM from many other models that incorporate fast guessing. This is an extremely important caveat that should be clear to the reader. We appreciate that the authors emphasize stimulus-independent processes instead of the specific timing mechanism in the text and the additions to the last paragraph of the discussion. The authors should plainly state in the discussion that there is nothing in their results that specifically supports the time accumulator as the mechanism for fast guessing and that many other models that do not include a time accumulator might also explain the data.

2) “Even if we add an additional random guess mechanism into Snapshot models, the random guess probability will be independent of the stimulus strength, still unable to reproduce the relationship between the incorrect RTs and contrast difference observed in our data” (line 410). It is not clear that this statement is correct. In this model, the large majority of error trials in the easiest conditions would be generated by fast guesses, whereas error trials in the difficult conditions would be a mixture of fast guesses and snapshots (which have RTs longer than the fast guesses). Therefore, error RTs will follow the observed pattern. Further, using the authors’ logic, they should rule out sequential sampling models in the fixed-delay data because they do not observe an effect of stimulus strength on RT. In more general terms, if the authors want to claim that the snapshot model with fast guessing cannot explain their data, then they should fit it to their data and compare it to the TRDM. This comparison is not necessary to support the claims outlined above, but it is critical that readers understand that the space of models that might explain the data is large.

3) It may not be helpful to characterize collapsing bounds or urgency signals as evidence-independent processes (they are mathematically equivalent). The bound is applied directly to the accumulated evidence. It also produces error RTs that are longer than correct RTs, which is the opposite of what the authors observed. Finally, collapsing bounds are the optimal solution in terms of maximizing reward rate when there is a mixture of stimulus difficulties (Drugowitsch et al, 2012). The useful distinction is between models with a single generative process for choice/RT and models that contain multiple.

4) Lines 390-396: It is difficult to understand the point the authors are conveying.

5) Many of the plots do not have ticks on the abscissa.

6) The authors may want to rephrase the sentence in the abstract that states their main results: “Specifically, they made occasional fast guesses under a constant increase in the delay between trials for incorrect responses that did not vary with the response time.” The current version is difficult to interpret because it is not clear what the last clause (“did not vary with response time”) refers to. Please consider splitting the sentence.

7) The units in Fig.1D are not correct. The vertical axis should be “fraction” (instead of %) or the values should be 0-100 instead of 0-1.

8) When presenting p-values (e.g., in line 276) the authors should indicate what statistical test was used.

The reviewers also included ideas that may be helpful, but that the authors are free to ignore.

1) A useful discussion point could be to consider why there was such a minimal effect of stimulus strength on RT. It is a major discrepancy between monkey and human data and a primary aim of the paper is to establish tree shrews as an alternative animal model for decision making. Is it a species-specific difference? Is it a training effect? Is it because of the specific stimulus used? Most studies in humans and monkeys with large modulation of RTs used a stochastic stimulus which yields noisy samples of evidence, which makes accumulating evidence more beneficial. The current study used a static stimulus. The authors also might find it useful to know (if they didn’t already) that it takes several months of training (after learning the basics of the task) for monkeys to show stimulus-dependent RTs.

2) It is surprising that the percentage of trials estimated to be induced by the timer wasn’t emphasized more. It suggests that 30-60% of trials in the fixed delay condition result from a stimulus independent generative process even though the psychometric functions look reasonably good. That’s a huge number! How does that estimate compare to the estimate yielded by fitting a lapse rate to the simulated choice data? If the estimate yielded by the lapse rate is lower than the true rate, it suggests that people might be underestimating just how corrupted their data is by stimulus-independent generative processes.

3) Is there a link between serial choice biases (e.g. win-stay, lose-switch) that are commonly observed and the fast guessing observed here? Do the animals exhibit these biases? An analysis that quantifies these biases separately for different RT quantiles could be interesting. Similarly, one could try to predict the animal’s RTs using the trial history. Most studies model serial biases as a weight on the decision process, but it could be that they result from a separate generative process which maps onto the fast guesses.

References

- Aoki R, Tsubota T, Goya Y, Benucci A (2017) An automated platform for high-throughput mouse behavior and physiology with voluntary head-fixation. Nat Commun 8:1196. 10.1038/s41467-017-01371-0

- Ashwood ZC, Roy NA, Stone IR, International Brain Laboratory; Urai AE, Churchland AK, Pouget A, Pillow JW (2022) Mice alternate between discrete strategies during perceptual decision-making. Nat Neurosci 25:201–212. 10.1038/s41593-021-01007-z

- Brown S, Heathcote A (2005) A ballistic model of choice response time. Psychol Rev 112:117–128. 10.1037/0033-295X.112.1.117

- Callahan TL, Petry HM (2000) Psychophysical measurement of temporal modulation sensitivity in the tree shrew (Tupaia belangeri). Vision Res 40:455–458. 10.1016/S0042-6989(99)00194-7

- Casagrande VA, Diamond IT (1974) Ablation study of the superior colliculus in the tree shrew (Tupaia glis). J Comp Neurol 156:207–237. 10.1002/cne.901560206

- Cisek P, Puskas GA, El-Murr S (2009) Decisions in changing conditions: the urgency-gating model. J Neurosci 29:11560–11571. 10.1523/JNEUROSCI.1844-09.2009

- Ding L, Gold JI (2010) Caudate encodes multiple computations for perceptual decisions. J Neurosci 30:15747–15759. 10.1523/JNEUROSCI.2894-10.2010

- Ditterich J (2006) Evidence for time-variant decision making. Eur J Neurosci 24:3628–3641. 10.1111/j.1460-9568.2006.05221.x

- Dmochowski JP, Norcia AM (2015) Cortical components of reaction-time during perceptual decisions in humans. PLoS One 10:e0143339. 10.1371/journal.pone.0143339

- Dong G, Zhou H, Zhao X (2010) Impulse inhibition in people with Internet addiction disorder: electrophysiological evidence from a Go/NoGo study. Neurosci Lett 485:138–142. 10.1016/j.neulet.2010.09.002

- Donner TH, Siegel M, Fries P, Engel AK (2009) Buildup of choice-predictive activity in human motor cortex during perceptual decision making. Curr Biol 19:1581–1585. 10.1016/j.cub.2009.07.066

- Eagle DM, Bari A, Robbins TW (2008) The neuropsychopharmacology of action inhibition: cross-species translation of the stop-signal and go/no-go tasks. Psychopharmacology (Berl) 199:439–456. 10.1007/s00213-008-1127-6

- Hawkins GE, Heathcote A (2021) Racing against the clock: evidence-based versus time-based decisions. Psychol Rev 128:222–263. 10.1037/rev0000259

- Heathcote A (2004) Fitting Wald and ex-Wald distributions to response time data: an example using functions for the S-PLUS package. Behav Res Methods Instrum Comput 36:678–694. 10.3758/bf03206550

- Horwitz GD, Newsome WT (1999) Separate signals for target selection and movement specification in the superior colliculus. Science 284:1158–1161. 10.1126/science.284.5417.1158

- International Brain Laboratory, et al. (2021) Standardized and reproducible measurement of decision-making in mice. Elife 10:e63711. 10.7554/eLife.63711

- Jun EJ, Bautista AR, Nunez MD, Allen DC, Tak JH, Alvarez E, Basso MA (2021) Causal role for the primate superior colliculus in the computation of evidence for perceptual decisions. Nat Neurosci 24:1121–1131. 10.1038/s41593-021-00878-6

- Kass RE, Raftery AE (1995) Bayes factors. J Am Stat Assoc 90:773–795. 10.1080/01621459.1995.10476572

- Kirkpatrick RP, Turner BM, Sederberg PB (2021) Equal evidence perceptual tasks suggest a key role for interactive competition in decision-making. Psychol Rev 128:1051–1087. 10.1037/rev0000284

- Lee KS, Huang X, Fitzpatrick D (2016) Topology of on and off inputs in visual cortex enables an invariant columnar architecture. Nature 533:90–94. 10.1038/nature17941

- Li CH, Yan LZ, Ban WZ, Tu Q, Wu Y, Wang L, Bi R, Ji S, Ma YH, Nie WH, Lv LB, Yao YG, Zhao XD, Zheng P (2017) Long-term propagation of tree shrew spermatogonial stem cells in culture and successful generation of transgenic offspring. Cell Res 27:241–252. 10.1038/cr.2016.156

- Lodewyckx T, Kim W, Lee MD, Tuerlinckx F, Kuppens P, Wagenmakers EJ (2011) A tutorial on Bayes factor estimation with the product space method. J Math Psychol 55:331–347. 10.1016/j.jmp.2011.06.001

- Mante V, Sussillo D, Shenoy KV, Newsome WT (2013) Context-dependent computation by recurrent dynamics in prefrontal cortex. Nature 503:78–84. 10.1038/nature12742

- Marbach F, Zador A (2017) A self-initiated two-alternative forced choice paradigm for head-fixed mice. bioRxiv e073783.

- Mustafar F, Harvey MA, Khani A, Arató J, Rainer G (2018) Divergent solutions to visual problem solving across mammalian species. eNeuro 5:ENEURO.0167-18.2018. 10.1523/ENEURO.0167-18.2018

- Ding WN, Sun JH, Sun YW, Chen X, Zhou Y, Zhuang ZG, Li L, Zhang Y, Xu JR, Du YS (2014) Trait impulsivity and impaired prefrontal impulse inhibition function in adolescents with internet gaming addiction revealed by a Go/No-Go fMRI study. Behav Brain Funct 10:20–29. 10.1186/1744-9081-10-20

- Newsome WT, Britten KH, Movshon JA (1989) Neuronal correlates of a perceptual decision. Nature 341:52–54. 10.1038/341052a0

- Odoemene O, Pisupati S, Nguyen H, Churchland AK (2018) Visual evidence accumulation guides decision-making in unrestrained mice. J Neurosci 38:10143–10155. 10.1523/JNEUROSCI.3478-17.2018

- Orsolic I, Rio M, Mrsic-Flogel TD, Znamenskiy P (2021) Mesoscale cortical dynamics reflect the interaction of sensory evidence and temporal expectation during perceptual decision-making. Neuron 109:1861–1875.e10. 10.1016/j.neuron.2021.03.031

- Palmer J, Huk AC, Shadlen MN (2005) The effect of stimulus strength on the speed and accuracy of a perceptual decision. J Vis 5:376–404. 10.1167/5.5.1

- Petry HM, Kelly JP (1991) Psychophysical measurement of spectral sensitivity and color vision in red-light-reared tree shrews (Tupaia belangeri). Vision Res 31:1749–1757. 10.1016/0042-6989(91)90024-Y

- Petry HM, Bickford ME (2019) The second visual system of the tree shrew. J Comp Neurol 527:679–693. 10.1002/cne.24413

- Petry HM, Fox R, Casagrande VA (1984) Spatial contrast sensitivity of the tree shrew. Vision Res 24:1037–1042. 10.1016/0042-6989(84)90080-4

- Philiastides MG, Auksztulewicz R, Heekeren HR, Blankenburg F (2011) Causal role of dorsolateral prefrontal cortex in human perceptual decision making. Curr Biol 21:980–983. 10.1016/j.cub.2011.04.034

- Prins N (2012) The psychometric function: the lapse rate revisited. J Vis 12:25. 10.1167/12.6.25

- Ratcliff R (1978) A theory of memory retrieval. Psychol Rev 85:59–108. 10.1037/0033-295X.85.2.59

- Ratcliff R, Kang I (2021) Qualitative speed-accuracy tradeoff effects can be explained by a diffusion/fast-guess mixture model. Sci Rep 11:15169. 10.1038/s41598-021-94451-7

- Ratcliff R, Cherian A, Segraves M (2003) A comparison of macaque behavior and superior colliculus neuronal activity to predictions from models of two-choice decisions. J Neurophysiol 90:1392–1407. 10.1152/jn.01049.2002

- Resulaj A, Kiani R, Wolpert DM, Shadlen MN (2009) Changes of mind in decision-making. Nature 461:263–266. 10.1038/nature08275

- Roitman JD, Shadlen MN (2002) Response of neurons in the lateral intraparietal area during a combined visual discrimination reaction time task. J Neurosci 22:9475–9489. 10.1523/JNEUROSCI.22-21-09475.2002

- Savier E, Sedigh-Sarvestani M, Wimmer R, Fitzpatrick D (2021) A bright future for the tree shrew in neuroscience research: summary from the inaugural tree shrew users meeting. Zool Res 42:478–481. 10.24272/j.issn.2095-8137.2021.178

- Sedigh-Sarvestani M, Lee KS, Jaepel J, Satterfield R, Shultz N, Fitzpatrick D (2021) A sinusoidal transformation of the visual field is the basis for periodic maps in area V2. Neuron 109:4068–4079.e6. 10.1016/j.neuron.2021.09.053

- Smith PL, Ratcliff R (2004) Psychology and neurobiology of simple decisions. Trends Neurosci 27:161–168. 10.1016/j.tins.2004.01.006

- Ter Braak CJ (2006) A Markov chain Monte Carlo version of the genetic algorithm differential evolution: easy Bayesian computing for real parameter spaces. Stat Comput 16:239–249. 10.1007/s11222-006-8769-1

- Turner BM, Sederberg PB (2012) Approximate Bayesian computation with differential evolution. J Math Psychol 56:375–385. 10.1016/j.jmp.2012.06.004

- Turner BM, Sederberg PB, Brown SD, Steyvers M (2013) A method for efficiently sampling from distributions with correlated dimensions. Psychol Methods 18:368–384. 10.1037/a0032222

- Urai AE, Aguillon-Rodriguez V, Laranjeira IC, Cazettes F, Mainen ZF, Churchland AK; International Brain Laboratory (2021) Citric acid water as an alternative to water restriction for high-yield mouse behavior. eNeuro 8:ENEURO.0230-20.2020. 10.1523/ENEURO.0230-20.2020

- Usher M, McClelland JL (2001) The time course of perceptual choice: the leaky, competing accumulator model. Psychol Rev 108:550–592. 10.1037/0033-295X.108.3.550 [DOI] [PubMed] [Google Scholar]

- van Ravenzwaaij D, Brown SD, Marley AA, Heathcote A (2020) Accumulating advantages: a new conceptualization of rapid multiple choice. Psychol Rev 127:186–215. 10.1037/rev0000166 [DOI] [PubMed] [Google Scholar]

- Wang L, McAlonan K, Goldstein S, Gerfen CR, Krauzlis RJ (2020) A causal role for mouse superior colliculus in visual perceptual decision-making. J Neurosci 40:3768–3782. 10.1523/JNEUROSCI.2642-19.2020 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wentura D (2000) Dissociative affective and associative priming effects in the lexical decision task: yes versus no responses to word targets reveal evaluative judgment tendencies. J Exp Psychol Learn Mem Cogn 26:456–469. 10.1037/0278-7393.26.2.456 [DOI] [PubMed] [Google Scholar]

- Wichmann FA, Hill NJ (2001) The psychometric function: I. Fitting, sampling, and goodness of fit. Percept Psychophys 63:1293–1313. 10.3758/bf03194544 [DOI] [PubMed] [Google Scholar]

- Yao YG (2017) Creating animal models, why not use the Chinese tree shrew (Tupaia belangeri chinensis)? Zool Res 38:118–126.


Supplementary Materials

Table 1-1. Statistical table (DOC file, 32.5 KB).

Table 1-2. Best-fitting TRDM parameters for each animal (DOC file, 79.5 KB).

Table 1-3. Best-fitting RDM parameters for each animal (DOC file, 62.5 KB).

Extended Data 1. Code for analysis and modeling: fit_rdm.py, fit_trdm.py, single_animal_preprocessing.ipynb, and waldrace.py (ZIP file, 42.8 KB).

Figure 2-1. Response time distributions of individual animals from the fixed-delay group (TIF file, 9.3 MB).

Figure 2-2. Response time distributions of individual animals from the exponential-delay group (TIF file, 13.4 MB).

Figure 4-1. Decomposition of an example animal’s simulated RT distribution by the TRDM. A, Simulated RTs for one example animal (TS085) from the first group, split into four categories: RTs generated by the evidence accumulators for correct (blue) and incorrect (pink) responses, and RTs generated by the time accumulator for correct (green) and incorrect (yellow) responses. Comparison with the observed data (B) shows that the TRDM attributes the first (fast-RT) peak of the RT distribution to the time accumulator. C, Simulated psychometric curves generated by the evidence accumulators and by the time accumulator. D, RTs simulated by the evidence accumulators as a function of contrast difference (TIF file, 12 MB).
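The four-way split in panel A can be illustrated with a small simulation. The sketch below is a minimal, hypothetical timed racing diffusion model, not the authors' fit_trdm.py or waldrace.py. Two evidence accumulators (one per response) and one stimulus-independent time accumulator race to their thresholds. Each finishing time is drawn as the first passage of a unit-noise diffusion with drift v to threshold b, which is an inverse Gaussian (Wald) variate with mean b/v and shape b². The function name `simulate_trdm`, the linear dependence of the correct-response drift on contrast difference, all parameter values, and the rule that a timer win yields an unbiased guess are assumptions made for illustration only.

```python
import numpy as np


def simulate_trdm(contrast_diff, n_trials, v_base=1.0, v_gain=4.0,
                  b_evidence=1.5, v_timer=0.8, b_timer=2.0, t0=0.2,
                  p_guess_correct=0.5, seed=0):
    """Simulate choices and RTs from a hypothetical timed racing diffusion model.

    Assumed parameterization (illustrative only, not fitted values):
    correct-response accumulator drift = v_base + v_gain * contrast_diff,
    incorrect-response accumulator drift = v_base,
    time accumulator drift = v_timer (independent of the stimulus).
    """
    v_correct = v_base + v_gain * contrast_diff
    if min(v_correct, v_base, v_timer) <= 0:
        raise ValueError("all drift rates must be positive")
    rng = np.random.default_rng(seed)

    # First-passage time of a unit-noise diffusion with drift v to threshold b
    # is inverse Gaussian (Wald) with mean b / v and shape b**2.
    t_correct = rng.wald(b_evidence / v_correct, b_evidence**2, size=n_trials)
    t_incorrect = rng.wald(b_evidence / v_base, b_evidence**2, size=n_trials)
    t_timer = rng.wald(b_timer / v_timer, b_timer**2, size=n_trials)

    # The earliest of the three accumulators determines the response time.
    t_first = np.minimum(np.minimum(t_correct, t_incorrect), t_timer)
    timer_won = t_timer <= np.minimum(t_correct, t_incorrect)

    # Evidence win: the choice follows whichever evidence accumulator finished first.
    evidence_correct = t_correct < t_incorrect
    # Timer win (assumption): an unbiased guess between the two responses.
    guess_correct = rng.random(n_trials) < p_guess_correct
    correct = np.where(timer_won, guess_correct, evidence_correct)

    rt = t0 + t_first  # add a non-decision time
    return rt, correct, timer_won


# Split the simulated RTs into the four categories used in panel A.
rt, correct, timer_won = simulate_trdm(contrast_diff=0.2, n_trials=10_000)
categories = {
    "evidence, correct": rt[~timer_won & correct],
    "evidence, incorrect": rt[~timer_won & ~correct],
    "timer, correct": rt[timer_won & correct],
    "timer, incorrect": rt[timer_won & ~correct],
}
for name, x in categories.items():
    median = f"{np.median(x):.3f} s" if x.size else "n/a"
    print(f"{name:20s} n={x.size:5d} median RT={median}")
```

In this sketch, increasing `v_timer` relative to the evidence drifts makes the time accumulator win more often, so a larger share of responses is produced at chance accuracy regardless of contrast, which is the kind of contribution panel C separates from the evidence-driven psychometric curve.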