Abstract

Instance generation creates representative examples to interpret a learning model, as in regression and classification. For example, representative sentences of a topic of interest describe the topic specifically for sentence categorization. In such a situation, a large number of unlabeled observations may be available in addition to labeled data; for example, many unclassified text corpora (unlabeled instances) are available with only a few classified sentences (labeled instances). In this article, we introduce a novel generative method, called a coupled generator, which produces instances given a specific learning outcome, based on indirect and direct generators. The indirect generator uses the inverse principle to yield the corresponding inverse probability, enabling instance generation by leveraging unlabeled data. The direct generator learns the distribution of an instance given its learning outcome. Then, the coupled generator selects the better of the indirect and direct generators, and is designed to enjoy the benefits of both and deliver higher generation accuracy. For sentence generation given a topic, we develop an embedding-based regression/classification in conjunction with an unconditional recurrent neural network for the indirect generator, whereas a conditional recurrent neural network is natural for the corresponding direct generator. Moreover, we derive finite-sample generation error bounds for the indirect and direct generators to reveal the generative aspects of both methods, thus explaining the benefits of the coupled generator. Finally, we apply the proposed methods to a real benchmark of abstract classification and demonstrate that the coupled generator composes reasonably good sentences from a dictionary to describe a specific topic of interest.

Keywords: Classification, Natural language processing, Numerical embeddings, Semisupervised generation, Unstructured data

1. Introduction

Generating an essay or a text for given structured information is an important Artificial Intelligence (AI) problem, which automatically imitates a certain style of writing. Whereas solving this AI problem is rather challenging, we tackle its simpler version in this article, which we call instance (example) generation, that is, generation of representative instances given a specific outcome to describe and interpret the corresponding learning model, for instance, classification and regression.

The use of black-box predictive models such as deep neural networks has delivered high empirical learning accuracy in many real-life applications [14, 15]. Yet, it is difficult to make sense of such a learning model. From the generative perspective, instance generation can describe the relationship between an instance and an outcome retrospectively. Its applications include topic description in sentence categorization, abstractive text summarization [12], and image captioning [25], where generated sentences render descriptive examples of topics, texts, and images. In such a situation, sentence generation allows us to compose a novel essay or an image caption when the structured information is supplied. For example, the UCI abstract categorization benchmark¹ consists of sentences from abstracts of articles, which are labeled with one of five topic categories. The goal here is to learn a sentence generation mechanism to compose a novel abstract given a specific topic, in which the generation performance is measured by the cross-entropy error based on a test sample.

In the literature, instance generation, despite its many important applications in AI, remains largely unexplored, although some approaches have been suggested for sentence generation. For example, a computational linguistics approach represents words/phrases as trees to model linguistic dependencies [20], whereas a learning approach uses a large text corpus to learn a sentence’s structure without any access to linguistic annotation [5]. In [26], a sentence generating model is proposed to produce a document by sampling the latent topic of a sentence and then the words of the sentence using a recurrent neural network (RNN). In [37, 17], image captioning links the image content to a language model through an interplay between a convolutional neural network (CNN) and an RNN. Yet, there is a paucity of work on instance generation given structured information that incorporates both labeled and unlabeled data.

One of the primary characteristics of topic-instance data is that the amount of unlabeled data may be significantly larger than that of labeled data. For example, in sentence generation, uncategorized sentences are about ten times as numerous as categorized ones. This parallels semisupervised learning, whose focus is on leveraging unlabeled data to enhance the predictive accuracy of supervised learning [42, 18], in contrast to our objective of generation given a learning outcome.

Our main contribution lies in the development of a new semisupervised generation framework for producing instances given an outcome. On this ground, we propose three generative methods: indirect, direct, and coupled generators. The indirect generator uses the principle of inverse learning to estimate the conditional probability distribution of an outcome given an instance, which makes it possible to leverage unlabeled data, if available. On the other hand, the direct generator estimates the corresponding conditional probability of an instance given an outcome in a supervised manner. Then, the coupled generator is designed to enjoy the benefits of both generators. The proposed generators are illustrated in sentence generation, where we generate a sentence through sequential next-word-prediction. Specifically, we develop regularized embedding-based regression/classification in conjunction with an unconditional RNN for the indirect generator, whereas we use a conditional RNN for the direct generator.

To shed light on the generative performance of the three generators, we derive finite-sample generation error bounds for each method. Interestingly, the generation error of the indirect generator is governed by the complexity of the parameter space of the conditional densities of an outcome given an instance and that of the marginal densities. Similarly, that of the direct generator is determined by the complexity of the parameter space of the conditional densities of an instance given an outcome. As a result, the indirect and direct generators have their own advantages with respect to generation when the unlabeled data is large, and importantly the coupled generator enjoys the benefits of both in terms of generation accuracy. This, together with a real benchmark of sentence categorization, demonstrates the utility of coupled generation for composing reasonably good sentences to describe a specific topic. Numerically, the proposed method outperforms a separate RNN method, and the indirect generator can leverage additional unlabeled data to further enhance the performance.

This paper is organized as follows. Section 2 introduces the framework of coupled generation based on indirect and direct generations. Section 3 develops a theory of the generation performance of the proposed methods. Section 4 is devoted to the development of a novel sentence generative method given a topic of interest through sequential next-word prediction. Section 5 investigates the operating characteristics of the coupled generator and compares it with the direct and indirect generators as well as one competitor. The Appendix contains technical proofs.

2. Methods

Consider a generative model in which the goal is to generate an instance X given an outcome Y, where X and Y represent instance and response variables, which can be numerical or unstructured such as texts and documents that cannot be expressed in a predefined manner. In this article, we focus on instance generation under a generative model, based on the conditional distribution pX|Y of X given an outcome of Y. As an example, in sentence generation [26], instance generation produces representative examples of X given a specific topic of Y, where X and Y represent a sentence and its associated topic.

For instance generation, a labeled training sample of size n is available as well as an instance-only (unlabeled) sample, whose size may be much larger or smaller than n. In our context, we leverage the unlabeled sample to enhance the generative accuracy of instance generation.

Indirect generator.

An indirect generator produces instances using an estimate of pX|Y through the inverse relation (1): an estimate of pY|X based on the labeled sample in (2) and an estimate of the marginal density pX based on the combined labeled and unlabeled data in (3). That is,

| (1) |

| (2) |

| (3) |

where the two estimates in (1) are regularized maximum likelihood estimates of pY|X and pX, Jb and Jm are regularizers, for example, L1- or L2-regularization in a neural network model, λb ≥ 0 and λm ≥ 0 are tuning parameters controlling the weights of regularization, and the minimization in (2) and in (3) is over parameter spaces of pY|X and pX, respectively. Note that the denominator in (1) normalizes the estimate to become a probability density, although normalization is unnecessary when only some aspects of the distribution such as the modes or percentiles are of concern, as opposed to the distribution itself. Importantly, the indirect generator leverages instance-only (unlabeled) data, but any potential bias in estimation of pX based on such data could translate into that of pY|X.
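The inverse relation can be illustrated on a finite instance space. The following is a minimal sketch, assuming that estimates of pY|X and pX are already available as lookup tables; the function name `indirect_conditional` and the toy probabilities are hypothetical and are not taken from the paper.

```python
def indirect_conditional(p_y_given_x, p_x, y):
    """Return p(x | y) over a finite set of instances via the inverse relation.

    p_y_given_x: dict mapping instance x -> dict mapping outcome -> probability.
    p_x:         dict mapping instance x -> marginal probability.
    """
    # Numerator of the inverse relation: p(y | x) * p(x) for each instance x.
    unnormalized = {x: p_y_given_x[x].get(y, 0.0) * p_x[x] for x in p_x}
    # The denominator only rescales the weights so that they sum to one.
    total = sum(unnormalized.values())
    if total == 0.0:
        raise ValueError("outcome has zero probability under the model")
    return {x: w / total for x, w in unnormalized.items()}


# Toy example (assumed values): three instances and a binary outcome.
p_x = {"a": 0.5, "b": 0.3, "c": 0.2}
p_y_given_x = {
    "a": {0: 0.9, 1: 0.1},
    "b": {0: 0.5, 1: 0.5},
    "c": {0: 0.2, 1: 0.8},
}
p_x_given_1 = indirect_conditional(p_y_given_x, p_x, y=1)
mode = max(p_x_given_1, key=p_x_given_1.get)
```

In this sketch the mode can be found from the unnormalized weights alone, which reflects the remark that normalization is unnecessary when only the mode is of interest.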

Direct generator.

A direct generator uses pX|Y to generate instances, estimated by minimizing the regularized negative conditional log-likelihood of X given Y based on the labeled sample:

| (4) |

where is a parameter space of pX|Y, Jf is a regularizer, and λf ≥ 0 is a tuning parameter controlling the weight of regularization.

It appears that (4) can be extended to leverage additional unlabeled data through the conditional likelihood of pX|Y and a mixture relation expressing pX as a mixture of the conditional densities pX|Y. Unfortunately, however, the mixture approach may suffer from an asymptotic bias when additional unlabeled data is included, thus degrading the estimation performance of pX|Y [8, 9, 39]. This is because the aforementioned mixture relation may not hold when the model is misspecified, and moreover its impact could be minimal even if it holds, especially when the support of Y is large. As suggested by the theorem in Section 4 of [39], the supervised and semisupervised maximum likelihood estimates may converge to different values, and thus more unlabeled data produces a larger estimation bias as measured by the Kullback-Leibler divergence, when the model is misspecified in that the true density does not belong to the parameter space or the mixture relation is not satisfied. Furthermore, as demonstrated by Figures 1 and 2 of [8] and Figure 4.1 of [7], empirical studies indicate that an EM algorithm based on both labeled and unlabeled data tends to perform worse than one based solely on the labeled data when the size of labeled data exceeds 30 in the SecStr dataset. As a result, pX|Y estimated from labeled data alone renders a better performance than that estimated from labeled and unlabeled data.

In summary, how to leverage unlabeled data to enhance the generation performance of the direct generator remains an open question, whose answer depends on model assumptions that may not be verifiable in practice. It is worth mentioning that (4) is a general formulation that makes no specific assumption on how pX is related to pX|Y. However, if such an assumption becomes available in practice, (4) can be generalized based on it to incorporate unlabeled data for improvement. At present, we shall not pursue this aspect, as the indirect method can benefit from additional unlabeled data, as suggested by Theorem 1 in Section 3.

Coupled generator.

The level of difficulty of estimating pX|Y directly and that of estimating pY|X may differ, particularly when pX can be well estimated from both labeled and unlabeled data. Depending on the situation, either may be more difficult than the other. Some theoretical results on this aspect are illustrated in Section 4.5. We therefore propose coupled generation by choosing, between the two generators, the one maximizing a predictive log-likelihood, or equivalently minimizing a negative log-likelihood, such as (23) in the sentence generation example. In particular, a coupled generator is defined as

| (5) |

The estimated probability density gives the whole spectrum of values of X given Y. First, we may generate representative instances using the mode of pX|Y to give one representation, or by sampling based on pX|Y for multiple representations. Second, discriminative features of X with respect to Y can be extracted by comparing the estimated density at different Y-values retrospectively. For example, in classification with Y = ±1, a comparison of the estimated densities at Y = 1 and Y = −1 leads to discriminative features. This aspect will be further investigated elsewhere.

Coupled learning has its own distinct characteristics, although it appears remotely related to semisupervised variational auto-encoders [18] and inverse autoregressive flows [19]. In particular, [19] uses a generative model pX|Y and pX to enhance a discriminative model pY|X, regarding the marginal distribution as a mixture of conditional distributions, whereas the proposed indirect generator integrates the unlabeled data to separately estimate the marginal distribution. Furthermore, [18] estimates the marginal density pX of X via a chain of latent factors and invertible transformations of autoregressive neural networks and connects blocks by invertible relations. In contrast, the proposed method links two conditional densities by Bayes’ law. Finally, the theoretical justification of [19] and [18] remains unknown.

3. Theory

This section develops a learning theory to investigate the generation errors of the direct, indirect, and coupled generators. In particular, we derive finite-sample generation error bounds for the estimators defined in (1), (4), and (5).

The generation error for generating X given Y is defined as the expected Hellinger-distance between two conditional densities pX|Y and qX|Y with respect to Y :

where μ is the Lebesgue measure on x, and is the expectation with respect to Y.
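For discrete instances, the expected Hellinger distance can be computed directly. The sketch below is illustrative only: it assumes the normalization h(p, q) = sqrt(½ Σ (√p − √q)²), which may differ by a constant factor from the paper's definition, and the function names and toy distributions are hypothetical.

```python
import math


def hellinger(p, q):
    """Hellinger distance between two discrete distributions given as dicts.

    Assumed convention: h(p, q) = sqrt(0.5 * sum (sqrt(p) - sqrt(q))^2),
    so that h lies in [0, 1].
    """
    support = set(p) | set(q)
    s = sum(
        (math.sqrt(p.get(x, 0.0)) - math.sqrt(q.get(x, 0.0))) ** 2
        for x in support
    )
    return math.sqrt(0.5 * s)


def generation_error(p_cond, q_cond, p_y):
    """Expectation over Y of the Hellinger distance between p(.|y) and q(.|y)."""
    return sum(p_y[y] * hellinger(p_cond[y], q_cond[y]) for y in p_y)


# Toy example (assumed values): the estimate q is wrong for y = 0 only.
p_cond = {0: {"a": 1.0}, 1: {"a": 0.5, "b": 0.5}}
q_cond = {0: {"b": 1.0}, 1: {"a": 0.5, "b": 0.5}}
p_y = {0: 0.25, 1: 0.75}
err = generation_error(p_cond, q_cond, p_y)
```

Here the two densities for y = 0 have disjoint supports and contribute the maximal distance, while those for y = 1 coincide and contribute nothing, so the error equals the probability of y = 0.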

Three parameter spaces , , and are defined for estimating pY|X in (2), pX in (3), and pX|Y in (4), each of which is allowed to depend on the corresponding sample size. Then their regularized parameter spaces are given as follows: for (2), for (3), and for (4). On this ground, we define the metric entropy to measure their complexities to be used for our theory.

The u-bracketing metric entropy H(u, ) of space with respect to a distance D is defined as the logarithm of the cardinality of the u-bracketing of of the smallest size. A u-bracketing of is a finite set (of pairs of functions) such that for any , there is a j such that with . Note that , h2(pX, qX), and , respectively for , , and .

To quantify the degree of approximation of the true density by , we introduce a distance , where gα(x) = α−1(xα − 1) for α ∈ (0, 1). As suggested in Section 4 of [38], this distance is stronger than the corresponding Hellinger distance. Similarly, and are defined for approximating the true densities and by and , respectively.

Let and be two approximating points of and in that and for some sequences γb ≥ 0 and γm ≥ 0. Of course, γb = 0 when and γm = 0 when .

Theorem 1 (Indirect generator).

Suppose there exist some positive constants c1–c6, such that, for any ϵb > 0 and λb ≥ 0,

| (6) |

and, for any ϵm > 0 and λm ≥ 0,

| (7) |

then

| (8) |

provided that λb and λm , and c7–c9 are some positive constants. Consequently, as n, under under .

Theorem 1 indicates that the generation error of the indirect generator is governed by the estimation errors ϵb and ϵm from (2) and (3), and the approximation errors γb and γm, where ϵb and ϵm can be obtained by solving the entropy integral equations in (6) and (7). Moreover, optimal rates of convergence can be obtained through tuning of λb and λm. Note that ηm can be of a smaller order than ηb when the size of the unlabeled data greatly exceeds that of the labeled data. Then the generation error of the indirect method is governed primarily by ηb. In other words, the indirect generator’s performance is mainly determined by the estimation of pY|X.

For the direct generator, let pX|Y* be an approximation of pX|Y0 in that ρf(pX|Y0, pX|Y*) ≤ γf for some γf ≥ 0.

Theorem 2 (Direct generator).

Suppose there exist some positive constants c10–c12, such that, for any ϵf > 0, and λf ≥ 0,

| (9) |

then

| (10) |

provided that λf , and c13 > 0 is a constant. Consequently, as n → ∞ under .

In contrast to the indirect generation, the generation error ηf of the direct generation could be much larger or smaller than that of the indirect generation ηb, depending on the complexities of the corresponding parameter spaces and the approximation errors γf and γb, when pX can be sufficiently well estimated. This suggests that either may outperform the other depending on the model assumptions.

Note that γf, γb, and γm are approximation errors that reflect the approximation capabilities of the corresponding function spaces [35, 40]. In particular, when the function space is defined by a ReLU deep neural network to approximate a function in a Sobolev space, the approximation error is available and related to the scale of the neural network [40].

Theorem 3 says that the coupled generator performs no worse than the indirect and direct generators when (5) is used to select based on an independent cross-validation sample of size N.

Theorem 3 (Coupled generation).

Under , as N → ∞, the coupled generator defined in (5) satisfies , where K(pX|Y, qX|Y) is the Kullback-Leibler divergence between pX|Y and qX|Y.

Remarks:

In Theorem 3, if for some constant c14 > 0, then , which occurs when the likelihood ratio is bounded.

4. Sentence generation given a topic

This section derives generative methods of sentence generation, which integrate the likelihood methods developed previously with language models to compose a sentence. As a result, a new sentence can be generated that may not appear in the training data; see Table 3 for an example.

Table 3.

An abstract generated by the coupled generator based on one random partition of the UCI benchmark text corpus for sentence categorization. Here the five sentences (1)-(5) correspond to the five categories AIM, OWN, CONTRAST, BASIS, and MISC, with the first five words of each sentence prespecified. All sentences are grammatically legitimate except that “improves” in (4) is a grammatical error, and “kolmogorov” in (1) and “israelis” in (3) should be capitalized. These errors are correctable by a grammar checker.

| (1) The paper extends research on the theory of choice rules. (2) We test our predictions using the ideas and the notion of kolmogorov complexity bound on the number of examples of the data sets. (3) The results demonstrate that israelis models can be used to provide new results for classification accuracy. (4) We show that implementation concerns the performance of the learning algorithm for improves the optimal predictor of the prediction. (5) The effect of balance is described by a high level of events and the objects that are shared. |

A complete sentence is represented by a word vector X1:T = (X1, …, XT)′, where Xt is the t-th word, T is a sentence-specific length, and ′ denotes the transpose of a vector. For convenience, we write X1 = “START” and XT+1 = “END” as the null words marking the start and end of a sentence, respectively. For example, X1 = “START”, X2 = “Football”, X3 = “is”, X4 = “a”, X5 = “popular”, X6 = “sport”, and X7 = “END”. Together with X1:T, its associated topic category Y = (Y1, …, YK)′ is available, where Yj ∈ {0, 1} or Yj is real-valued. Finally, we construct a dictionary containing all composing words.

For simplicity, we consider the case of a fixed T, where sentences of different lengths can be processed with a fixed length, as illustrated in Table 1. Sentence generation given a topic Y generates a sentence X1:T+1 using the conditional probability P(X1:T+1 = x1:T+1 |Y = y). However, estimation of this probability at the sentence level is infeasible. Therefore, we decompose it at the word level by the probability chain rule:

| (11) |

This decomposition (11) permits sequential generation of a sentence through next-word-prediction given existing words by learning p(Xt+1 = xt+1 | x1:t, y) from data; t =1, …,T.
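The chain-rule decomposition means that a sentence-level log-probability is a sum of next-word log-probabilities. A minimal sketch follows, assuming a user-supplied function `next_word_prob(word, context, topic)` that returns p(xt+1 | x1:t, y); this interface and the uniform toy model are hypothetical.

```python
import math


def sentence_log_prob(words, topic, next_word_prob):
    """Sum of log p(x_{t+1} | x_{1:t}, y) over all next-word predictions."""
    tokens = ["START"] + list(words) + ["END"]
    total = 0.0
    for t in range(1, len(tokens)):
        # tokens[:t] is the context x_{1:t}; tokens[t] is the next word.
        total += math.log(next_word_prob(tokens[t], tokens[:t], topic))
    return total


# Toy model (assumed): uniform over a vocabulary of four words incl. "END".
def uniform_model(word, context, topic):
    return 0.25


log_p = sentence_log_prob(["football", "is", "popular"], "MISC", uniform_model)
```

Three words plus the final “END” give four next-word predictions, so the toy log-probability is four times log(0.25).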

Table 1.

Eleven next-word-prediction sequences associated with a sentence.

| Topic | Sentence |
|---|---|
| MISC | The loss bound of SYMBOL implies convergence in probability. |
| 1. | Null Null Null Null Null Null Null Null Null Null START → The |
| 2. | Null Null Null Null Null Null Null Null Null START The → loss |
| 3. | Null Null Null Null Null Null Null Null START The loss → bound |
| 4. | Null Null Null Null Null Null Null START The loss bound → of |
| 5. | Null Null Null Null Null Null START The loss bound of → SYMBOL |
| 6. | Null Null Null Null Null START The loss bound of SYMBOL → implies |
| 7. | Null Null Null Null START The loss bound of SYMBOL implies → convergence |
| 8. | Null Null Null START The loss bound of SYMBOL implies convergence → in |
| 9. | Null Null START The loss bound of SYMBOL implies convergence in → probability |
| 10. | Null START The loss bound of SYMBOL implies convergence in probability → . |
| 11. | START The loss bound of SYMBOL implies convergence in probability. → END |

Yet, estimation of p(Xt+1 = xt+1 | x1:t, y) remains challenging for unstructured X1:t because of a lack of observations in any conditioning event of X1:t given Y even with large training data. Furthermore, it is difficult to utilize unlabeled data to estimate p(Xt+1 = xt+1 | x1:t, y).

4.1. Indirect generator

In this context, we derive a version of (2) and that of (3) through (11) to estimate the inverse probabilities. Specifically, p(xt+1 | x1:t, y) can be written as

| (12) |

for t = 1, …, T. Then, we estimate the inverse probability p(y | x1:t+1) based on labeled data, and estimate p(xt+1 | x1:t) based on both labeled data for t = 1, …, Ti and unlabeled data for t = 1, …, Tj.

Estimation of p(y | x1:t) may proceed with unstructured predictors x1:t. To proceed, we map a sentence x1:t to a numerical vector , known as a numerical embedding of size p via a pre-trained embedding model such as Doc2Vec [23, 24] and BERT [11]. If a pre-trained embedding model is sufficient in that [10], the numerical embedding captures word-to-word relations expressed in terms of co-occurrence of words, which may raise the level of predictability of unstructured predictors X1:t. Next, we model p(y | x1:t) through when Y ∈ {0, 1}K is categorical or is continuous with an embedded label Y :

| (13) |

where K is the dimension of Y, σ(·) is the softmax function [1], and f is a nonparametric classification or regression function forest [3] or linear function with . For illustration, we use a linear representation in (13) sequentially. Now the cost function Lb (θb) in (2) becomes

| (14) |

where λb ≥ 0 is a tuning parameter and Jb (f) ≥ 0 is a regularizer, for example, if , where ∥·∥F is the Frobenius-norm of a matrix.

On the other hand, the next-word probability is estimated by an RNN of the form

| (15) |

where [xt+1] = {j : wj = xt+1}, oj(xt, ht, θm) is the probability of occurrence of the j-th word in the dictionary, h(xt, ht+1, θm) is a hidden state function, such as a long short-term memory unit (LSTM) [16], a bidirectional unit [32], a gated recurrent unit (GRU) [6], or GPT2 [30], and θm is the parameter of a specific RNN model. For example, in a basic RNN,

| (16) |

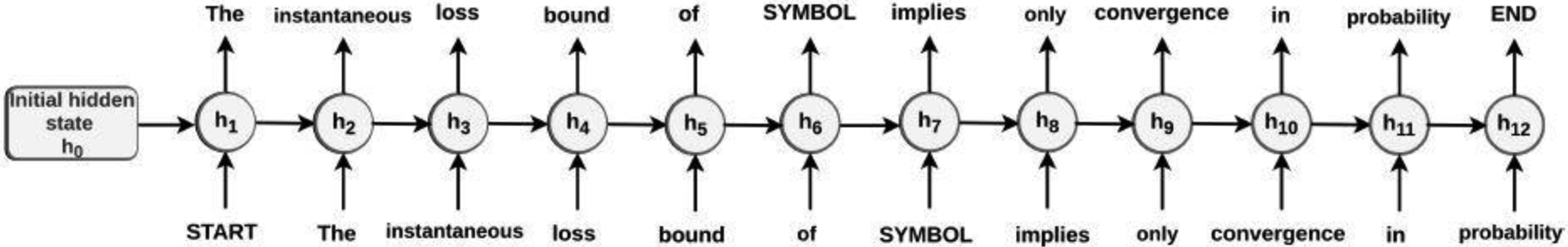

where σ(·), as defined before, is the softmax and ϕ is an activation function such as the ReLU function [1], , , and , and rm is the number of latent factors of the RNN. See Figure 1 for a display of the architecture of a basic RNN.
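To make the recurrence concrete, the following is a minimal sketch of one step of an Elman-style basic RNN with a ReLU activation and a softmax output. The exact parameterization in (16) is not reproduced here; the weight names `U`, `W`, `V`, their shapes, and the numerical values are assumptions chosen for illustration.

```python
import math


def softmax(z):
    """Numerically stable softmax of a list of scores."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def matvec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]


def rnn_step(x_onehot, h, U, W, V):
    """One step: h_next = relu(U x + W h), probs = softmax(V h_next)."""
    pre = [a + b for a, b in zip(matvec(U, x_onehot), matvec(W, h))]
    h_next = [max(0.0, v) for v in pre]
    return softmax(matvec(V, h_next)), h_next


# Toy dimensions (assumed): dictionary of 3 words, 2 latent factors.
U = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]    # hidden x dictionary
W = [[0.1, 0.0], [0.0, 0.1]]                # hidden x hidden
V = [[1.0, 0.0], [0.0, 1.0], [-1.0, 1.0]]   # dictionary x hidden
h0 = [0.0, 0.0]                             # zero initial hidden state
probs, h1 = rnn_step([1.0, 0.0, 0.0], h0, U, W, V)
```

The returned `probs` plays the role of the next-word probabilities over the dictionary, and `h1` is passed to the next step together with the next word.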

Fig. 1.

A sentence generated by the indirect and direct RNN generators in (15) and (20), where the RNN architecture is displayed, in which the sentence “The instantaneous loss bound of SYMBOL implies only convergence in probability” with topic “MISC” is generated word by word, ht is the hidden node of the RNNs in (15) and (20), and h0 is the initial hidden state, which is zero under (15) and “MISC” under (20).

On the ground of (15), the cost function Lm(θm) in (3) becomes

| (17) |

where λm ≥ 0 is a tuning parameter and Jm(θm) is a regularizer on the weight matrices and the activation layer [22].

Minimizing (14) and (17) yields estimates of θb and θm, respectively, and hence estimates of the conditional probabilities p(y | x1:t+1) and p(xt+1 | x1:t). Plugging these estimates into (12), we obtain the estimated probability, and the process is summarized as

| (18) |

Then, a sentence is sequentially generated as follows:

| (19) |

This generation process begins with x1 = “START” or pre-specified t0-words and proceeds until the word “END” is generated, at which point generation terminates. It is worth mentioning that the denominator in (18) normalizes the probability but need not be computed when only a maximizer of (18) is desired in (19).
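The sequential generation can be sketched as a greedy loop that scores each candidate next word by the product of a topic-classifier probability and a language-model probability, skipping the normalizing denominator because only the maximizer is needed. The callables `classifier(topic, words)` and `language_model(word, context)` are hypothetical interfaces, and the toy bigram table and keyword classifier below are made up for illustration.

```python
def generate_indirect(topic, classifier, language_model, vocab, max_len=20):
    """Greedy next-word generation from unnormalized indirect scores."""
    sentence = ["START"]
    while len(sentence) < max_len:
        # Score of w: p(topic | context + w) * p(w | context), unnormalized.
        scores = {
            w: classifier(topic, sentence + [w]) * language_model(w, sentence)
            for w in vocab
        }
        best = max(scores, key=scores.get)
        sentence.append(best)
        if best == "END":
            break
    return sentence


# Toy components (assumed): a bigram language model and a keyword classifier.
BIGRAM = {
    "START": {"goal": 0.5, "vote": 0.5, "END": 0.0},
    "goal": {"goal": 0.1, "vote": 0.1, "END": 0.8},
    "vote": {"goal": 0.1, "vote": 0.1, "END": 0.8},
}
KEYWORD = {"sport": "goal", "politics": "vote"}


def toy_language_model(word, context):
    return BIGRAM[context[-1]][word]


def toy_classifier(topic, words):
    if KEYWORD[topic] in words:
        return 0.9
    if any(k in words for k in KEYWORD.values()):
        return 0.1
    return 0.5


vocab = ["goal", "vote", "END"]
sport_sentence = generate_indirect("sport", toy_classifier, toy_language_model, vocab)
politics_sentence = generate_indirect("politics", toy_classifier, toy_language_model, vocab)
```

The language model alone cannot distinguish the two topics in this toy setting; the classifier term is what steers the first word toward the topic keyword.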

4.2. Direct generator

The direct generation is inspired by a conditional RNN (C-RNN; [37, 17]) by estimating

| (20) |

where θf represents the parameters of an RNN, and h0 is built on the label information as opposed to h0 = 0 in (16). As in (16), the direct generator requires additional parameters to model the effect of y as follows:

| (21) |

where , , , and rf is the number of latent factors of the RNN. On this ground, the cost function in (4) becomes

| (22) |

where λf ≥ 0 is a tuning parameter and Jf(θf) is a nonnegative regularizer. Minimizing (22) in θf yields an estimate of θf and thus the estimated probability from (20). Then, sentence generation proceeds as in (19).

It is worth noting that the direct and indirect generators can be implemented using different RNN models, for example, GPT2 for the direct RNN in (20) and an LSTM for the indirect RNN in (15). Moreover, different RNN architectures may yield different empirical results. This aspect is illustrated in Section 5.

4.3. Coupled generator

Given the estimated probabilities from the indirect and direct generators, the coupled generator chooses between the two on a validation set by minimizing an empirical version of the log-likelihood loss,

| (23) |
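The selection step can be sketched as a comparison of average negative log-likelihoods on held-out data. This is a simplified illustration: each model is assumed to be a callable returning the estimated probability of an observed x given y, where in the sentence setting x would be a next word and y would include the topic and the preceding words; the names and toy models are hypothetical.

```python
import math


def negative_log_likelihood(model, validation):
    """Average of -log model(x, y) over validation pairs (x, y)."""
    return -sum(math.log(model(x, y)) for x, y in validation) / len(validation)


def coupled_select(indirect, direct, validation):
    """Return the name of the generator with the smaller validation loss."""
    losses = {
        "indirect": negative_log_likelihood(indirect, validation),
        "direct": negative_log_likelihood(direct, validation),
    }
    name = min(losses, key=losses.get)
    return name, losses


# Toy models (assumed): constant probabilities for every observed pair.
def toy_indirect(x, y):
    return 0.5


def toy_direct(x, y):
    return 0.25


validation = [("goal", "sport"), ("vote", "politics"), ("goal", "sport")]
chosen, losses = coupled_select(toy_indirect, toy_direct, validation)
```

Because the comparison uses data not seen during fitting, the choice reflects predictive rather than in-sample fit.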

4.4. Large-scale computation

This section develops a computational scheme for the indirect generator in (14)–(17), whereas the direct generator in (22) can be treated by a standard RNN implementation as in [36, 29]. In particular, when the stochastic backpropagation-through-time gradient method is used, the computational complexity is of the order of the number of parameters per time step [27].

In what follows, we apply gradient descent [41] or stochastic gradient descent [31] to solve (14). For (17), we apply a classical back-propagation algorithm. In each case, we use an analytic gradient expression for updates.

Gradient for indirect generation.

The gradient expression for θm in (17) is given in [29], while that for θb in (14) is computed as

| (24) |

where θb,k denotes the kth column of θb.

The detail of gradient descent for the indirect generator is summarized as follows.

Algorithm 1 can be updated by a stochastic gradient scheme [2]. Lemma 1 describes computational properties of Algorithm 1.
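As an illustration of the gradient-descent update for the classification part, the sketch below fits a linear softmax model on numerical embeddings with a squared Frobenius-norm penalty. It is not a transcription of Algorithm 1: the loss is assumed to be the average negative log-likelihood plus λ times the squared Frobenius norm, and the step size, iteration count, and toy embeddings are arbitrary choices.

```python
import math


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def loss_and_grad(theta, X, y, lam):
    """theta is a list of K columns (one per class), each of length p."""
    n, K, p = len(X), len(theta), len(X[0])
    # Penalty term lam * ||theta||_F^2 and its gradient 2 * lam * theta.
    loss = lam * sum(v * v for col in theta for v in col)
    grad = [[2.0 * lam * theta[k][j] for j in range(p)] for k in range(K)]
    for xi, yi in zip(X, y):
        scores = [sum(t * v for t, v in zip(theta[k], xi)) for k in range(K)]
        probs = softmax(scores)
        loss -= math.log(probs[yi]) / n
        for k in range(K):
            # Gradient of the log-loss w.r.t. column k: (prob_k - 1[y = k]) * x.
            coef = (probs[k] - (1.0 if k == yi else 0.0)) / n
            for j in range(p):
                grad[k][j] += coef * xi[j]
    return loss, grad


def gradient_descent(X, y, K, lam=0.01, step=0.5, iters=200):
    p = len(X[0])
    theta = [[0.0] * p for _ in range(K)]
    history = []
    for _ in range(iters):
        loss, grad = loss_and_grad(theta, X, y, lam)
        history.append(loss)
        theta = [
            [theta[k][j] - step * grad[k][j] for j in range(p)]
            for k in range(K)
        ]
    return theta, history


# Toy embeddings (assumed): two classes in a 2-dimensional embedding space.
X = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
y = [0, 0, 1, 1]
theta, history = gradient_descent(X, y, K=2)
predictions = [
    max(range(2), key=lambda k: sum(t * v for t, v in zip(theta[k], xi)))
    for xi in X
]
```

With a linear model this objective is convex, which is consistent with gradient descent reaching a global minimizer for a sufficiently small step size.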

Lemma 1.

Suppose that the cost functions Lb in (14) and Lm in (17) are twice continuously differentiable, and that the probability measure of random initialization is absolutely continuous with respect to the Lebesgue measure. Then, θb is a global minimizer of (14), while θm is a local minimizer of (17) almost surely, provided that the step size in Algorithm 1 is sufficiently small.

4.5. Theory for sentence generation

This section generalizes the theoretical result of Section 3 to the problem of next-word-prediction.

Now we use pX|Y, pY|X, and pX to respectively represent , and . The expected square Hellinger-distance for next-word-prediction is

| (25) |

where .

The metric entropy of is defined by a distance . Similarly, , and d2(pX|Y, qX|Y) are used for and , respectively.

The approximation error for pY|X0 is . Similarly, the approximation errors and are used for pX0 and pX|Y0.

Corollary 1 (Sequential generation).

All the results in Theorems 1 and 2 continue to hold with the distance d(·,·) defined in (25).

Next we provide a theoretical example to illustrate Corollary 1.

Theoretical example.

Suppose that the RNN in (15) is a basic recurrent network with , that is, , and , where , , and , rm is the number of latent factors of the RNN, and ϕ(z) is an activation function, such as the sigmoid function ϕ(z) = 1/ (1+ exp(−z)), the tanh function ϕ(z) = tanh(z), and the Rectified linear unit (ReLU) ϕ(z) = z+. For illustration, we focus on the sigmoid function.

The RNN in (20) is that , , and . The network parameters are , where , , , and , and rf is the number of latent factors of the RNN in the direct generation.

Corollary 2 gives the generation errors of the direct and indirect generators.

Corollary 2 (Theoretical example).

For the estimated next-word probabilities by the direct generator in (22), we have that , where

, , and c15 > 0 and c16 > 0 are constants with . Similarly, the estimated next-word probabilities by the indirect generator in (14) and (17) satisfies: , where

Λb = Kp, , , , and c16 > 0 and c18 > 0 are constants with .

Corollary 2 says that the generation error of the indirect generator in (1) becomes when , when γb = γm = 0. In fact, the generation error is primarily dominated by its estimation error of p(Y | X1:t), because p(Xt+1 | X1:t) can be well estimated with the help of large unlabeled data with . In this situation, the indirect method outperforms the direct method, particularly when Λb < Λf, suggesting that the estimation complexity of the indirect method is less than that of the direct method. Interestingly, the generation error of the direct generator agrees with that of the maximum likelihood estimates under the Hellinger-distance [34, 38]. With respect to tuning, a large value of Λb, Λm, and Λf increases the complexity of the corresponding functional space for probability estimation, thus reduces the generation errors. Consequently, the generation errors of the direct and indirect generators indeed depend on the model complexity of parameter spaces and .

To illustrate the synergy of indirect and direct generators’ respective strengths, we consider two situations. First, but is bounded away from zero if an unlabeled sample follows a different marginal distribution of the labeled sample . Second, but is bounded away from zero in the presence of a new word in labeled but unlabeled samples. However, in both situations, , when Kullback-Leibler divergence is equivalent to the Hellinger-distance. In other words, only the coupled generator has a generation error tending to zero in both situations.

5. Benchmark

This section examines the performance of the coupled, indirect, and direct generators in one benchmark example, and compares them with a baseline method, “Separate RNN”, which fits RNNs for each topic as in [36]. The benchmark concerns sentence categorization based on a text corpus in the UCI machine learning repository². This corpus contains 1,039 labeled sentences collected from abstracts and introductions of 30 articles, in which the five topic categories are AIM (a specific aim of the present paper), OWN (description of own work presented in the present paper), CONTRAST (comparison statements with other works, including strengths and weaknesses), BASIS (statements of agreement with other works or continuation of other works), and MISC (generally accepted scientific background or description of other works). These labels originate from three scientific domains: computational biology (PLOS), the machine learning repository on arXiv (ARXIV), and the psychology journal Judgment and Decision Making (JDM). For example, a typical sentence such as “The instantaneous loss bound of SYMBOL implies only convergence in probability.” is labeled as “MISC” according to scientific topic classification. In addition to the aforementioned labeled sentences, this corpus contains 34,481 unlabeled sentences from 300 articles in PLOS, ARXIV, and JDM.

Before proceeding, we pre-process the text corpus to filter out redundant components of each sentence so that numerical embeddings can be applied for the indirect generator. First, we replace all numerical values, symbolic values, and citations by “NUMBER”, “SYMBOL”, and “CITATION”, respectively, and remove all standalone punctuation marks except commas, periods, and semicolons. Then, for unlabeled sentences, we remove words appearing fewer than 20 times in the corpus, which leads to an unlabeled corpus of 8,286 sentences. On this basis, a dictionary is constructed, consisting of 5,369 words extracted from labeled and unlabeled sentences.
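The pre-processing steps above can be sketched in Python as follows. This is only an illustration under stated assumptions: the regular expressions, the helper names `normalize`, `frequent_vocab`, and `drop_rare_sentences`, and the reading that a sentence containing a rare word is dropped are ours and are not taken from the paper. The replacement of symbolic values by “SYMBOL” is omitted because it depends on how symbols are marked up in the raw corpus.

```python
import re
from collections import Counter

# Assumed patterns; the paper does not list its exact replacement rules.
CITATION_RE = re.compile(r"\[\d+(?:\s*,\s*\d+)*\]")  # e.g. [12] or [3, 7]
NUMBER_RE = re.compile(r"\b\d+(?:\.\d+)?\b")          # integers and decimals
KEPT_PUNCT = {",", ".", ";"}


def normalize(sentence):
    """Replace citations and numbers by placeholders and drop stray punctuation."""
    s = CITATION_RE.sub(" CITATION ", sentence)
    s = NUMBER_RE.sub(" NUMBER ", s)
    s = re.sub(r"([,.;])", r" \1 ", s)  # make kept punctuation standalone tokens
    # Keep commas, periods, semicolons, and any token with a letter or digit.
    return [t for t in s.split()
            if t in KEPT_PUNCT or any(ch.isalnum() for ch in t)]


def frequent_vocab(tokenized, min_count=20):
    """Set of tokens appearing at least min_count times in the corpus."""
    counts = Counter(tok for sent in tokenized for tok in sent)
    return {tok for tok, c in counts.items() if c >= min_count}


def drop_rare_sentences(tokenized, min_count=20):
    """Assumed reading: discard sentences that contain any rare token."""
    vocab = frequent_vocab(tokenized, min_count)
    return [sent for sent in tokenized if all(tok in vocab for tok in sent)]
```

The citation pattern is applied before the number pattern so that the digits inside a bracketed citation are not replaced by “NUMBER” first.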

For training, we generate word strings for next-word prediction based on the maximum length of all sentences in the dataset, so that all the previous tokens in a sentence contribute to predicting the next word. Specifically, we create next-word-prediction sequences consisting of consecutive previous words and pad them with the null word “NULL” so that all word strings have the same length. An example of such a next-word-prediction sequence is displayed in Table 1. In this fashion, we gather enough training sequences, as the null words do not impact our learning process. Now, 28,180 labeled next-word-prediction sequences are generated from the original 1,039 labeled sentences, together with 174,355 unlabeled sequences from the original 8,286 unlabeled sentences.
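As a minimal sketch of this construction, the following Python function expands one tokenized sentence into padded next-word-prediction pairs. The function name, the choice of padding on the left, and the inclusion of an all-“NULL” context for predicting the first word are assumptions made for illustration; the layout actually used is the one displayed in Table 1.

```python
NULL = "NULL"


def next_word_sequences(tokens, max_len):
    """Return one (padded context, next word) pair per position of a sentence.

    max_len is the maximum sentence length in the dataset, so every context
    (which has at most max_len - 1 words) can be padded to the same length.
    """
    pairs = []
    for t in range(len(tokens)):
        context = tokens[:t]                   # all previous words
        pad = [NULL] * (max_len - len(context))
        pairs.append((pad + context, tokens[t]))  # left padding (assumed)
    return pairs
```

For example, `next_word_sequences(["the", "cat", "sat"], 3)` yields three pairs, so a sentence of T words contributes T training sequences under this convention.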

The generation performance is measured by two commonly used metrics, namely, the next-word entropy loss and the Bi-Lingual Evaluation Understudy (BLEU) loss [28] over a test sample, approximating the predicted Kullback-Leibler divergence and Jaccard distance [13], respectively. Given sentences generated from p and its reference sentence given a topic y, the entropy loss is defined as the empirical version of (23), while the BLEUl-loss (l = 1, …, 4) can be written as

where ntest is the number of sentences in the testing set, |·| denotes a set’s size and graml(·) is the l-gram set for a sentence. For a sentence “the cat in the hat”, its 1-gram set is {“the”, “cat”, “in”, “the”, “hat”}, the 2-gram set is {“the cat”, “cat in”, “in the”, “the hat”}, and the 3-gram set is {“the cat in”, “cat in the”, “in the hat”}. The BLEUl-loss can be computed using the NLTK library in Python. Whereas the entropy loss measures the occurrence probability of the reference sentences, the BLEUl-loss focuses on exact matching between l consecutive words of two sentences. Moreover, we also consider the SF-BLEUl-loss to evaluate the diversity of generated sentences [43], defined as

where , and a higher SF-BLEUl-loss indicates more diverse generated sentences.
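To make the l-gram notation concrete, the sketch below extracts l-grams and computes a Jaccard-style overlap loss between a generated and a reference sentence. It is an illustrative stand-in only: the function names are ours, the normalization by the union of the two l-gram sets is an assumption motivated by the Jaccard distance mentioned above, and it is neither the displayed BLEUl-loss formula nor the BLEU implementation in NLTK.

```python
def lgrams(sentence, l):
    """List of l-grams (l consecutive words) of a whitespace-tokenized sentence."""
    tokens = sentence.split()
    return [" ".join(tokens[i:i + l]) for i in range(len(tokens) - l + 1)]


def overlap_loss(generated, reference, l):
    """One minus the Jaccard similarity of the two l-gram sets (assumed form)."""
    g, r = set(lgrams(generated, l)), set(lgrams(reference, l))
    if not g | r:
        return 1.0  # convention chosen here: no l-grams means no match
    return 1.0 - len(g & r) / len(g | r)


print(lgrams("the cat in the hat", 2))
print(overlap_loss("the cat sat", "the cat ran", 1))
```

A loss of 0 corresponds to identical l-gram sets and a loss of 1 to no shared l-gram, which matches the direction of the losses reported below, where smaller is better.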

For training, validation, and testing, we randomly split all the labeled articles into three sets with a partition ratio of 60%, 20%, and 20%, respectively. Moreover, for a sentence x1:T and its associated topic y in a testing set, the five starting words x1:5, as opposed to the null word, are given to predict the rest of the sentence.
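A random 60/20/20 partition at the article level can be sketched as below. The function name, the use of a seed, and the rounding of set sizes are assumptions for illustration; splitting by article rather than by sentence follows the description above.

```python
import random


def split_articles(article_ids, seed=0, ratios=(0.6, 0.2, 0.2)):
    """Randomly partition article identifiers into train, validation, and test."""
    ids = list(article_ids)
    random.Random(seed).shuffle(ids)  # seeded for reproducibility (assumed)
    n_train = round(ratios[0] * len(ids))
    n_val = round(ratios[1] * len(ids))
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]      # remainder goes to the test set
    return train, val, test


train, val, test = split_articles(range(30))
print(len(train), len(val), len(test))
```

Repeating the call with different seeds gives different random partitions, in the spirit of the 20 random partitions summarized in Table 2.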

Consider two situations of the semantic label: (1) Y ∈ {0, 1}K is categorical and is coded as a 0–1 vector using the one-hot encoding from the topic category; (2) is continuous with each topic as a p = 128-dimensional vector based on Doc2Vec. In (2), each topic is represented by the averaged embedding of all the sentences in this topic category in training data.
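The two label codings can be illustrated with the following sketch. The order of the topics in `TOPICS`, the function names, and the toy two-dimensional vectors in the usage are assumptions; in the benchmark the sentence vectors would be 128-dimensional Doc2Vec embeddings.

```python
TOPICS = ["AIM", "OWN", "CONTRAST", "BASIS", "MISC"]  # order is an assumption


def one_hot(topic):
    """Situation (1): code a topic as a 0-1 vector of length K = 5."""
    return [1 if t == topic else 0 for t in TOPICS]


def topic_embeddings(sentence_vectors, sentence_topics):
    """Situation (2): average the sentence embeddings within each topic."""
    sums, counts = {}, {}
    for vec, topic in zip(sentence_vectors, sentence_topics):
        if topic not in sums:
            sums[topic] = [0.0] * len(vec)
            counts[topic] = 0
        sums[topic] = [s + v for s, v in zip(sums[topic], vec)]
        counts[topic] += 1
    return {t: [s / counts[t] for s in sums[t]] for t in sums}


print(one_hot("CONTRAST"))
print(topic_embeddings([[1.0, 3.0], [3.0, 5.0], [0.0, 2.0]],
                       ["AIM", "AIM", "MISC"]))
```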

In the case of Y ∈ {0, 1}K, the indirect generator involves (14) and (17). For (14), we perform regularized multinomial logistic regression using the Python library sklearn on the embedded next-word-prediction sequence training samples , where is the numerical embedding of Doc2Vec of size p = 128 and the optimal λb is obtained by minimizing the entropy loss on validation data over the grid {.0001, .001, .01, .1, 1, 10, 100}. For (17), the indirect RNN is trained on both labeled and unlabeled next-word-prediction sequences in training data. The indirect RNN model in (17) is structured in four layers, including an embedding layer consisting of 5,369 nodes with each node corresponding to one word in the dictionary , an LSTM layer of 128 latent factors, and a dense layer with output dimension 5,369. Note that the tuning parameter in (17) is fixed as λm = .0001 through in the embedding layer to regularize words that are absent from a training set. Similarly, the direct generator trains the RNN model in (22), which has the same configuration as the indirect RNN except that the input dimension is in its embedding layer. Moreover, Separate RNN has the same structure as the indirect RNN given each topic.
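The selection of λb over the grid can be sketched generically as follows. Here the entropy loss is taken to be the average negative log-probability assigned to the observed labels, which is our reading of the empirical loss; `fit` is a hypothetical callback that trains a model for a given λ and returns a prediction function, and it stands in for the sklearn fit used in the benchmark.

```python
import math

GRID = [0.0001, 0.001, 0.01, 0.1, 1, 10, 100]


def entropy_loss(probs, labels):
    """Average negative log-probability of the observed class labels."""
    return -sum(math.log(p[y]) for p, y in zip(probs, labels)) / len(labels)


def select_lambda(grid, fit, val_x, val_y):
    """Pick the grid value whose fitted model has the smallest validation loss.

    fit(lam) is a hypothetical callback returning a function that maps a list
    of inputs to a list of class-probability vectors.
    """
    best_lam, best_loss = None, float("inf")
    for lam in grid:
        predict = fit(lam)
        loss = entropy_loss(predict(val_x), val_y)
        if loss < best_loss:
            best_lam, best_loss = lam, loss
    return best_lam, best_loss
```

The training data never enter `select_lambda` directly; only the validation sample is used to compare grid values, which mirrors the tuning described above.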

As discussed in Section 4.2, different RNN model architectures may yield different empirical performance. To this end, we compare the LSTM architecture with the GPT2 architecture for the direct RNNs. In particular, we consider the base GPT2 with 12 layers and 117M parameters [30] for the direct method, denoted as direct-GPT2. One key difference between LSTM and GPT2 lies in the masked self-attention layer of GPT2, which masks future tokens and passes the attention information through tokens that are positioned at the left of the current position.
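The masking of future tokens can be illustrated with a small pure-Python sketch. It is a simplification for exposition, not the GPT2 implementation: the scores are supplied directly rather than computed from queries and keys, and the function names are ours.

```python
import math


def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def masked_attention_weights(scores):
    """Row-wise softmax of attention scores with future positions zeroed out."""
    n = len(scores)
    mask = causal_mask(n)
    weights = []
    for i in range(n):
        exps = [math.exp(scores[i][j]) if mask[i][j] else 0.0 for j in range(n)]
        total = sum(exps)  # positive, since position i always attends to itself
        weights.append([e / total for e in exps])
    return weights
```

With equal scores, the first position puts all its weight on itself and the second splits its weight evenly over the first two positions, so no information flows from later tokens to earlier ones.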

In the case of continuous Y after numerical embedding, the indirect generation proceeds as in the categorical case except that linear regression, as opposed to multinomial logistic regression, is performed in (14) using sklearn on the labeled embedded next-word-prediction sequences in training data , where each yi is a 128-dimensional embedding vector.

All RNN models are trained using Keras with a batch size of 200 and 100 epochs, using the Adam optimizer, and over-fitting is prevented by early termination [4] with a patience of 20. Moreover, the coupled generator is tuned as in (5).
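The early-termination rule with a patience of 20 can be sketched as follows. Keras provides an `EarlyStopping` callback with a `patience` argument for this purpose; the stand-alone function below only illustrates the logic, and monitoring the validation loss is an assumption since the monitored quantity is not stated above.

```python
def early_stopping_epoch(val_losses, patience=20, max_epochs=100):
    """Return (stop_epoch, best_epoch), both 0-indexed.

    Training stops once the validation loss has failed to improve on its best
    value for `patience` consecutive epochs, or after max_epochs epochs.
    """
    losses = list(val_losses)[:max_epochs]
    best_loss, best_epoch, waited = float("inf"), -1, 0
    for epoch, loss in enumerate(losses):
        if loss < best_loss:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch
    return len(losses) - 1, best_epoch


print(early_stopping_epoch([5, 4, 3, 3.5, 3.6, 3.7], patience=2))
```

In practice the model weights from `best_epoch` would typically be the ones retained, although whether the benchmark restores them is not specified here.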

As indicated in Table 2, when only labeled data are available, the coupled generator delivers higher accuracy than the direct and indirect generators, which suggests the advantage of the proposed method. When labeled data are combined with unlabeled data, the coupled generator, which selects the indirect generator in this case, outperforms the direct generator and Separate RNN for both categorical and continuous labels. With respect to the entropy loss, the amounts of improvement of the indirect generator over the Separate RNN method and the direct generator are 20.3% and 14.5% for the categorical case and 29.1% and 16.1% for the continuous case. With respect to the BLEU1–BLEU4 losses, a similar situation occurs, although the amount of improvement varies, with the best improvement around 15.6%. Concerning unlabeled data, a comparison between the indirect generator with and without unlabeled data suggests that unlabeled data indeed help to improve the performance of the indirect generation by over 14.5%. Interestingly, in terms of the entropy loss, the direct generator based on fine-tuned GPT2 outperforms the LSTM-based direct and indirect generators without unlabeled data, while the coupled generator achieves the best performance among them. However, they perform similarly in terms of BLEUl scores. In view of the SF-BLEUl scores, sentences generated by the direct and indirect generators have a high degree of diversity. Moreover, the semantic label Y based on Doc2Vec sentence embeddings performs slightly worse than its categorical counterpart for the indirect and direct generations, indicating that semantic relations or linguistic dependencies, as captured by the sentence embeddings, may not have an impact given that there are only five categories. Finally, as suggested in Table 3, an abstract generated based on the five categories is reasonable except for three grammatical errors that are correctable by a grammar checker.
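The relative improvements quoted above, and the idea of picking the better of two candidate generators, can be checked with a few lines of Python. The function names are ours, and the selection function is only a schematic of choosing the candidate with the smaller loss; the actual rule (5) is based on a validation sample, whereas the numbers below are test entropy losses copied from Table 2 purely to illustrate the arithmetic.

```python
def relative_improvement(baseline_loss, new_loss):
    """Fraction by which new_loss improves on baseline_loss (lower is better)."""
    return (baseline_loss - new_loss) / baseline_loss


def select_generator(losses):
    """Return the name of the candidate generator with the smallest loss."""
    return min(losses, key=losses.get)


# Entropy losses from Table 2 (test errors, used for illustration only).
categorical = {"Indirect": 7.424, "Direct": 9.537}
print(select_generator(categorical))
print(round(100 * relative_improvement(9.317, 7.424), 1))  # vs Separate RNN
print(round(100 * relative_improvement(9.102, 7.641), 1))  # continuous, vs Direct
```

The last two lines reproduce the 20.3% improvement over Separate RNN in the categorical case and the 16.1% improvement over the direct generator in the continuous case.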

Table 2.

Test errors in loss functions–Entropy, BLEUl, and SF-BLEUl (standard errors in parentheses) of various generators based on 20 random partitions of the UCI sentence categorization text corpus. Here “Separate RNN”, “Indirect”, “Direct”, “Direct-GPT2” and “Coupled” denote the separate RNN, indirect, and direct generators based on the RNN-LSTM architecture, the direct generator based on the RNN-GPT architecture, and the coupled generator, while Indirect-label or Coupled-label refers to the generation without unlabeled data.

Y: categorical label

| Method | Entropy | BLEU1-loss | BLEU2-loss | BLEU3-loss | BLEU4-loss |
|---|---|---|---|---|---|
| Separate RNN | 9.317(.040) | 0.895(.010) | 0.926(.008) | 0.954(.007) | 0.971(.005) |
| Indirect | 7.424(.049) | 0.768(.003) | 0.854(.002) | 0.885(.002) | 0.914(.002) |
| Indirect-label | 8.839(.060) | 0.831(.008) | 0.878(.005) | 0.899(.004) | 0.923(.003) |
| Direct | 9.537(.054) | 0.823(.008) | 0.872(.005) | 0.895(.005) | 0.919(.004) |
| Direct-GPT2 | 8.684(.051) | 0.900(.006) | 0.954(.002) | 0.970(.001) | 0.981(.001) |
| Coupled | 7.424(.049) | 0.768(.003) | 0.854(.002) | 0.885(.002) | 0.914(.002) |
| Coupled-label | 8.644(.050) | 0.880(.008) | 0.932(.008) | 0.949(.007) | 0.963(.006) |

| Method | SF-BLEU1-loss | SF-BLEU2-loss | SF-BLEU3-loss | SF-BLEU4-loss |
|---|---|---|---|---|
| Separate RNN | 0.076(.010) | 0.208(.027) | 0.271(.036) | 0.303(.043) |
| Indirect | 0.105(.006) | 0.296(.009) | 0.416(.012) | 0.502(.013) |
| Indirect-label | 0.138(.008) | 0.363(.022) | 0.472(.029) | 0.545(.036) |
| Direct | 0.139(.006) | 0.372(.019) | 0.487(.026) | 0.561(.032) |
| Direct-GPT2 | 0.053(.006) | 0.159(.019) | 0.255(.031) | 0.320(.040) |
| Coupled | 0.105(.006) | 0.296(.009) | 0.416(.012) | 0.502(.013) |
| Coupled-label | 0.082(.011) | 0.233(.028) | 0.342(.038) | 0.417(.045) |

Y: continuous label based on Doc2Vec [23, 24]

| Method | Entropy | BLEU1-loss | BLEU2-loss | BLEU3-loss | BLEU4-loss |
|---|---|---|---|---|---|
| Indirect | 7.641(.036) | 0.768(.005) | 0.851(.003) | 0.883(.003) | 0.912(.003) |
| Indirect-label | 8.512(.041) | 0.912(.010) | 0.937(.008) | 0.949(.007) | 0.960(.005) |
| Direct | 9.102(.050) | 0.916(.010) | 0.939(.007) | 0.950(.005) | 0.961(.004) |
| Coupled | 7.641(.036) | 0.768(.005) | 0.851(.003) | 0.883(.003) | 0.912(.003) |
| Coupled-label | 8.512(.041) | 0.912(.010) | 0.937(.008) | 0.949(.007) | 0.960(.005) |

| Method | SF-BLEU1-loss | SF-BLEU2-loss | SF-BLEU3-loss | SF-BLEU4-loss |
|---|---|---|---|---|
| Indirect | 0.097(.005) | 0.261(.008) | 0.361(.010) | 0.440(.012) |
| Indirect-label | 0.064(.010) | 0.165(.026) | 0.211(.035) | 0.232(.040) |
| Direct | 0.079(.014) | 0.202(.037) | 0.252(.046) | 0.271(.050) |
| Coupled | 0.097(.005) | 0.261(.008) | 0.361(.010) | 0.440(.012) |
| Coupled-label | 0.064(.010) | 0.165(.026) | 0.211(.035) | 0.232(.040) |

Acknowledgments

The authors thank the editors, the associate editor, and two anonymous referees for helpful comments and suggestions.

Research supported in part by NSF grants DMS-1712564, DMS-1721216, DMS-1952539, DMS-1952386, and NIH grants 1R01GM126002, R01HL105397, and R01AG065636.

Appendix

Proof of Lemma 1.

Note that Lb(θb) in (14) is convex in θb, and Lb(θb) and Lm(θm) in (17) are twice continuously differentiable. Then the result follows from Theorem 4 of [21]. This completes the proof. □

Proof of Theorem 1.

Note that and

Furthermore, . It follows from the triangle inequality that

| (26) |

Note that . By the triangle inequality,

Hence, . Consequently,

To bound I1, let

where c9 = 1 − 2exp(−τ / 2) / (1 − exp(−τ / 2))² > 0 is a constant defined by the truncation constant τ > 0. Then I1 is upper bounded by

By the Markov inequality,

By Corollary 1 of [33], , implying for some constant c7 > 0. For I2, a similar probabilistic bound can be established by applying the same argument of Theorem 2 and switching the role of X and Y. This leads to for some constant c8 > 0. The desired result then follows. □

Proof of Theorem 2.

Denote

By the definition of a minimizer, for any ηf > 0,

where and

is the left truncation of pX|Y(x|y).

Next, we bound I5 and I6 separately. An application of the same argument as in [38] yields that

| (27) |

where the second inequality follows from and the third inequality follows from Markov’s inequality.

Our treatment of bounding I6 relies on a chaining argument over a suitable partition of and the left-truncation of likelihood ratios as in [38, 33]. Now, consider a partition of :

where . Then for any ηf > 0,

| (28) |

where . To treat Ikj, we control the mean and variance of . By Lemma 4 of [38],

| (29) |

and the variance is bounded by

| (30) |

where the second inequality follows from Lemma 3 of [38]. Then, Ikj is upper-bounded by

| (31) |

where a3 > 0 is a constant, , , the second inequality follows from the assumption that and (29), and the last inequality follows from Lemma 2 and the fact that the j-th (j ≥ 2) moment is bounded by

where a1 = (exp(τ / 2) − 1 − τ / 2) / (1 − exp(−τ / 2))² > 0 is a constant and the last inequality follows from Lemma 5 in [34]. It suffices to verify the condition (2.4) of [38]. A combination of (28) and (31) yields that , which, together with (27), yields that . The desired result then follows. □

Proof of Theorem 3.

Let be a cross-validation sample. By (5),

then the desired result follows from the law of large numbers, by taking the limit on both sides as N → ∞. This completes the proof. □

Proof of Corollary 1.

For the direct sequential generation, we apply the same argument in the proof of Theorem 2. Denote

Then , where p(τ) (Xt+1 | X1:t, Y) is the left truncation of p(Xt+1 | X1:t, Y) as defined in the proof of Theorem 2.

For I7,

For I8, let . For the first moment,

For the j-th moment with j ≥ 2,

where the first inequality follows from Jensen’s inequality. Then

| (32) |

follows the same arguments as in the proof of Theorem 2.

For the indirect generation, let pt (·) = p(·| X1:t) and . Then,

where the first inequality follows from (26) by replacing p(·) as pt (·), and , . Therefore,

where the last inequality follows from (32). Similarly, bounds for and can be established. The desired result then follows. □

Proof of Corollary 2.

It suffices to verify the entropy conditions in Corollary 1. For the direct generation, let and in . Then

where the last inequality uses the fact that

which uses the fact that

and .

Hence, and the entropy condition is met by setting .

For the indirect generation, it suffices to verify the entropy conditions in Corollary 2. Let and . Note that and

Then, and the entropy condition is met by setting .

Moreover, if Y ∈ {0,1}K,

Similarly, and the entropy condition is met by setting .

If , then

| (33) |

implying that , and the entropy condition holds when . This completes the proof. □

Lemma 2.

Let , assume that there exist some generic constants a2 > 0 and a3 > 0, for j ≥ 2, such that

and for any δ > 0, if

where , then there exist some constants a4 > 0 and a5 > 0 depending on a2 and a3 such that

| (34) |

where P* is the outer probability measure corresponding to .

Proof of Lemma 2.

The result follows from Lemmas 5 and 7 in [38], by replacing the Hellinger distance in Lemma 5 with a generic distance d(·,·). □

Footnotes

Supplementary Materials

The supplementary materials provide the Python code used in the real data application.

References

- [1] Bishop CM. Pattern recognition and machine learning. Springer, 2006.

- [2] Bottou L. Large-scale machine learning with stochastic gradient descent. In Proceedings of COMPSTAT’2010, pages 177–186. Springer, 2010.

- [3] Breiman L. Random forests. Machine Learning, 45(1):5–32, 2001.

- [4] Caruana R, Lawrence S, and Giles CL. Overfitting in neural nets: Backpropagation, conjugate gradient, and early stopping. In Advances in Neural Information Processing Systems, pages 402–408, 2001.

- [5] Cheng J and Lapata M. Neural summarization by extracting sentences and words. arXiv preprint arXiv:1603.07252, 2016.

- [6] Cho K, Van Merriënboer B, Gulcehre C, Bahdanau D, Bougares F, Schwenk H, and Bengio Y. Learning phrase representations using rnn encoder-decoder for statistical machine translation. arXiv preprint arXiv:1406.1078, 2014.

- [7] Cozman F and Cohen I. Risks of semi-supervised learning. Semi-supervised learning, pages 56–72, 2006.

- [8] Cozman FG, Cohen I, and Cirelo M. Unlabeled data can degrade classification performance of generative classifiers. In Flairs conference, pages 327–331, 2002.

- [9] Cozman FG, Cohen I, and Cirelo MC. Semi-supervised learning of mixture models. In Proceedings of the 20th international conference on machine learning (ICML-03), pages 99–106, 2003.

- [10] Dai B, Shen X, and Wang J. Embedding learning. Journal of the American Statistical Association, (in press), 2020.

- [11] Devlin J, Chang M-W, Lee K, and Toutanova K. Bert: Pre-training of deep bidirectional transformers for language understanding. In NAACL-HLT, 2018.

- [12] Dong L, Yang N, Wang W, Wei F, Liu X, Wang Y, Gao J, Zhou M, and Hon H-W. Unified language model pre-training for natural language understanding and generation. In Advances in Neural Information Processing Systems, pages 13042–13054, 2019.

- [13] Gjorgjioski V, Kocev D, and Džeroski S. Comparison of distances for multi-label classification with pcts. In Proceedings of the Slovenian KDD Conference on Data Mining and Data Warehouses (SiKDD’11), 2011.

- [14] Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, and Bengio Y. Generative adversarial nets. In Advances in Neural Information Processing Systems, pages 2672–2680, 2014.

- [15] He D, Xia Y, Qin T, Wang L, Yu N, Liu T-Y, and Ma W-Y. Dual learning for machine translation. In Advances in Neural Information Processing Systems, pages 820–828, 2016.

- [16] Hochreiter S and Schmidhuber J. Long short-term memory. Neural Computation, 9(8):1735–1780, 1997.

- [17] Karpathy A and Fei-Fei L. Deep visual-semantic alignments for generating image descriptions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 3128–3137, 2015.

- [18] Kingma DP, Mohamed S, Rezende DJ, and Welling M. Semi-supervised learning with deep generative models. In Advances in Neural Information Processing Systems, pages 3581–3589, 2014.

- [19] Kingma DP, Salimans T, Jozefowicz R, Chen X, Sutskever I, and Welling M. Improved variational inference with inverse autoregressive flow. In Advances in Neural Information Processing Systems, pages 4743–4751, 2016.

- [20] Langkilde I. Forest-based statistical sentence generation. In Proceedings of the 1st North American chapter of the Association for Computational Linguistics conference, pages 170–177. Association for Computational Linguistics, 2000.

- [21] Lee JD, Simchowitz M, Jordan MI, and Recht B. Gradient descent only converges to minimizers. In Conference on Learning Theory, pages 1246–1257, 2016.

- [22] Merity S, Keskar NS, and Socher R. Regularizing and optimizing LSTM language models. In International Conference on Learning Representations, 2018.

- [23] Mikolov T, Sutskever I, Chen K, Corrado GS, and Dean J. Distributed representations of words and phrases and their compositionality. In Advances in Neural Information Processing Systems, pages 3111–3119, 2013.

- [24] Mikolov T, Yih W-T, and Zweig G. Linguistic regularities in continuous space word representations. In Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 746–751, 2013.

- [25] Mullachery V and Motwani V. Image captioning. arXiv preprint arXiv:1805.09137, 2018.

- [26] Nallapati R, Melnyk I, Kumar A, and Zhou B. Sengen: Sentence generating neural variational topic model. arXiv preprint arXiv:1708.00308, 2017.

- [27] Ollivier Y, Tallec C, and Charpiat G. Training recurrent networks online without backtracking. arXiv preprint arXiv:1507.07680, 2015.

- [28] Papineni K, Roukos S, Ward T, and Zhu W-J. Bleu: a method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting on Association for Computational Linguistics, pages 311–318. Association for Computational Linguistics, 2002.

- [29] Pascanu R, Mikolov T, and Bengio Y. On the difficulty of training recurrent neural networks. In International Conference on Machine Learning, pages 1310–1318, 2013.

- [30] Radford A, Wu J, Child R, Luan D, Amodei D, and Sutskever I. Language models are unsupervised multitask learners. OpenAI Blog, 1(8):9, 2019.

- [31] Schmidt M, Le Roux N, and Bach F. Minimizing finite sums with the stochastic average gradient. Mathematical Programming, 162(1–2):83–112, 2017.

- [32] Schuster M and Paliwal KK. Bidirectional recurrent neural networks. IEEE Transactions on Signal Processing, 45(11):2673–2681, 1997.

- [33] Shen X. On the method of penalization. Statistica Sinica, 8(2):337–357, 1998.

- [34] Shen X and Wong WH. Convergence rate of sieve estimates. Annals of Statistics, pages 580–615, 1994.

- [35] Smale S and Zhou D-X. Estimating the approximation error in learning theory. Analysis and Applications, 1(01):17–41, 2003.

- [36] Sutskever I, Martens J, and Hinton GE. Generating text with recurrent neural networks. In Proceedings of the 28th International Conference on Machine Learning (ICML-11), pages 1017–1024, 2011.

- [37] Vinyals O, Toshev A, Bengio S, and Erhan D. Show and tell: A neural image caption generator. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 3156–3164, 2015.

- [38] Wong WH, Shen X, et al. Probability inequalities for likelihood ratios and convergence rates of sieve mles. Annals of Statistics, 23(2):339–362, 1995.

- [39] Yang T and Priebe CE. The effect of model misspecification on semi-supervised classification. IEEE Transactions on Pattern Analysis and Machine Intelligence, 33(10):2093–2103, 2011.

- [40] Yarotsky D. Error bounds for approximations with deep relu networks. Neural Networks, 94:103–114, 2017.

- [41] Yu H-F, Huang F-L, and Lin C-J. Dual coordinate descent methods for logistic regression and maximum entropy models. Machine Learning, 85(12):41–75, 2011.

- [42] Zhou D, Hofmann T, and Schölkopf B. Semi-supervised learning on directed graphs. In Advances in Neural Information Processing Systems, pages 1633–1640, 2004.

- [43] Zhu Y, Lu S, Zheng L, Guo J, Zhang W, Wang J, and Yu Y. Texygen: A benchmarking platform for text generation models. In The 41st International ACM SIGIR Conference on Research & Development in Information Retrieval, pages 1097–1100, 2018.
