The ethical impact of AI algorithms in healthcare should be assessed at each phase, from data creation to model deployment, so that they narrow rather than widen inequalities.

Artificial intelligence (AI) has the potential to revolutionize many aspects of clinical care, but it can also harm patients and communities, misallocate health resources, and exacerbate existing health inequities, greatly threatening its overall efficacy and social impact [1]. These issues have prompted the movement towards “ethical AI”, a field devoted to surfacing and addressing ethical issues related to AI development and application.

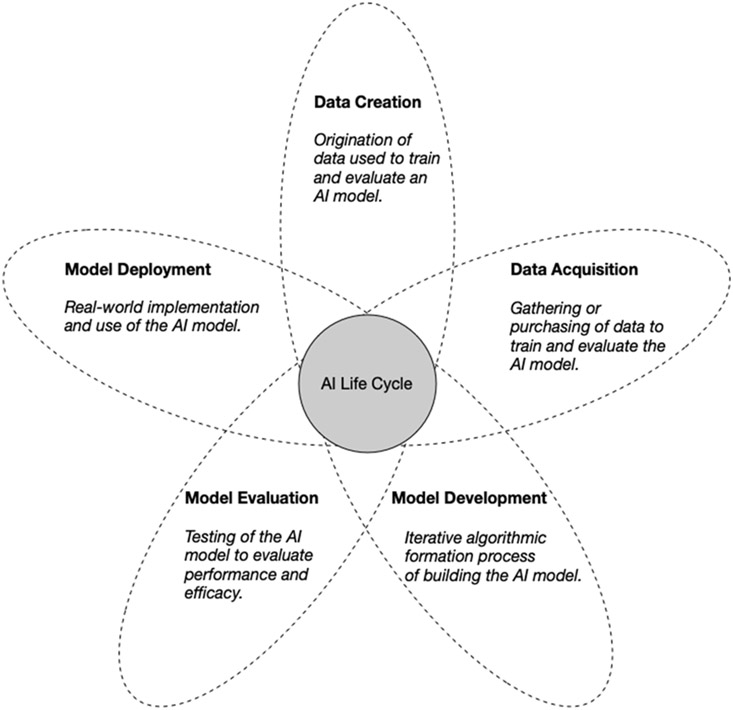

A key part of promoting ethical AI is understanding algorithms not only through the lens of performance but also through the actors, processes, and objectives that drive the development and eventual application of the algorithm. An AI life cycle consisting of five broad phases (data creation, data acquisition, model development, model evaluation, and model deployment) can be used to holistically evaluate the ethical nature of a clinical AI algorithm (Figure 1). Most efforts in ethical AI examine ethical implications that impinge on discrete phases of the life cycle; the life-cycle approach instead emphasizes the need to consider these implications in concert, including how the phases interact. Centering on any single phase fails to comprehensively address issues such as transparency, fairness, and harm, which emerge differentially across multiple phases. To truly tackle the ethical challenges afflicting clinical AI algorithms, it is critical to preemptively examine how phase-specific decisions can affect an algorithm’s overall adherence to appropriate ethical norms.

Figure 1: Overview of a reimagined AI life cycle.

The ethical implications that impinge on discrete phases of the AI life cycle should not be examined in isolation; their impact must be considered in concert and in interaction with all the phases.

Data Creation

The data creation phase involves understanding how the data used to train and evaluate a clinical AI algorithm were produced and the circumstances surrounding their generation. Data from clinician-patient interactions in routine care are often the most granular and are an effective source for answering many clinical questions using AI. Additional sources, such as commercially marketed tools like Fitbit and 23andMe, and data from clinical research trials may also be used to create clinical AI algorithms. The amount, quality, and depth of these data can vary widely depending on their source. Data from many of these sources often lack diversity in socioeconomic status, geography, and racial and ethnic representation [2].

The context in which data were created should be published alongside any AI dataset. At present, this context is typically limited to naming the clinical site and listing patient characteristics such as race, ethnicity, sex, and age, which may not always be representative [3]. At most, other information, such as comorbidities or biomarkers, is included at this point of data origin. Moving forward, those involved in data creation should be encouraged to call attention to areas of under- or misrepresentation and to disclose the status of efforts tackling the systemic structural and social barriers that prevent the inclusion of certain subgroups in the first place. Using data that are unrepresentative of the populations an AI algorithm will likely serve (in the model deployment phase) will raise ethical concerns in downstream phases.
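As a minimal sketch of how under-representation could be surfaced and reported alongside a dataset, the Python snippet below compares each subgroup's share of a dataset with its share of the target population. The function name `representation_report`, the 0.8 flagging threshold, and the example proportions are all hypothetical; in practice the reference shares would come from census or catchment-area data for the population the model is meant to serve.

```python
from collections import Counter


def representation_report(dataset_groups, population_shares, threshold=0.8):
    """Compare subgroup shares in a dataset against a target population.

    Hypothetical helper: returns {group: (dataset_share, population_share,
    ratio, flagged)}, where ratio = dataset_share / population_share and
    flagged is True when the ratio falls below `threshold` (an assumed cutoff).
    Population shares must be positive; groups absent from
    `population_shares` are not reported.
    """
    counts = Counter(dataset_groups)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("dataset_groups is empty")
    report = {}
    for group, pop_share in population_shares.items():
        data_share = counts.get(group, 0) / total
        ratio = data_share / pop_share
        report[group] = (data_share, pop_share, ratio, ratio < threshold)
    return report


# Hypothetical example: 100 records versus an assumed target population.
records = ["A"] * 70 + ["B"] * 20 + ["C"] * 10
target = {"A": 0.5, "B": 0.3, "C": 0.2}
for group, (data_share, pop_share, ratio, flagged) in representation_report(records, target).items():
    status = "UNDER-REPRESENTED" if flagged else "ok"
    print(f"{group}: dataset {data_share:.2f} vs population {pop_share:.2f} (ratio {ratio:.2f}) {status}")
```

With these made-up numbers, group A is over-represented while groups B and C fall below the assumed threshold; a report of this kind could accompany the dataset so that later phases know where representation is thin.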

Data Acquisition

The data acquisition phase is the process of obtaining and consolidating data for AI model development. At this stage, creators of AI algorithms seek out data sources that satisfy the demands of their model. Often, developers use the data that are most convenient rather than the data that are most fit for purpose. Data available for acquisition will likely vary substantially in completeness, depth, and relevance because of factors left unaddressed at the data creation stage. Legal and regulatory mandates such as HIPAA, data privacy laws such as the GDPR or the California Consumer Privacy Act (CCPA), and proprietary agreements may lead to the redaction of important sociodemographic or clinical information from shared health datasets. The goal of these requirements, protecting patient privacy, is laudable, but by removing essential demographic information they can also lead to unethical AI algorithms.

Information regarding data acquisition for AI algorithms is often limited to statements about data deidentification and Institutional Review Board (IRB) approval. Satisfying current regulatory standards does not necessarily mean that the health data used for AI algorithm development were ethically acquired. Transparency regarding the extent of financial arrangements, privacy protections, and patient sentiment about data acquisition for AI algorithm development is important for determining ethical use. As data change hands, information that is lost or omitted leaves those in later phases, who are not privy to data creation or acquisition details, unable to accurately assess whether the data are fit for purpose. Moreover, as clinical data continue to be used for the development and eventual deployment of clinical AI algorithms, expanding regulatory and transparency requirements in the data acquisition phase can lead to tools that are more reliable across populations, fostering trust among patients, clinicians, and other stakeholders in the AI life cycle.

Model Development

Developing an AI model requires a clear definition of the problem or question the algorithm is tasked to address, the model end user, and the model beneficiary. The problem framing itself should not be biased, and, for the model to be useful in clinical care, the end user must be able to take a feasible mitigating action based on the model output. Decisions made during model development are heavily informed by both preceding and subsequent phases: development hinges on the data available for training, and the model must be tuned to perform according to evaluation methods and deployment goals.

Once the purpose of a model is agreed upon, data pre-processing techniques, model architectures, and hyperparameters are explored. Whom the model is optimized for and how data are labeled, processed, and aggregated can all perpetuate discrimination against some groups and prevent under-represented populations from benefiting from the model. Data labeling and the development of a ground truth (the labels representing the true state of the problem under study, from which the model learns) are essential to understanding model applicability [4]. Knowing who established a ground truth can reveal labeling biases shaped by the labelers' lived experience. For example, a ground truth established by trainees could differ vastly from one developed by seasoned professionals. Ground truths for AI algorithms should be developed on the basis of reliability and representativeness rather than cost and resource constraints. Model development processes should be disclosed, including deliberations on what constitutes a fair model. Reflection on topics such as social value and embedded biases can help reveal potential ethical shortcomings at this phase.
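One way to make labeler-dependent differences in a ground truth visible is to have two groups label the same cases and measure their chance-corrected agreement. The sketch below uses Cohen's kappa, a standard inter-rater agreement statistic; applying it to trainee versus senior labels is an illustrative assumption rather than a method prescribed here, and the labels are invented.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two raters who labeled the same items.

    kappa = (p_observed - p_expected) / (1 - p_expected), where p_expected is
    the agreement expected by chance from each rater's label frequencies.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and the same length")
    n = len(labels_a)
    p_observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    categories = set(freq_a) | set(freq_b)
    p_expected = sum(freq_a[c] * freq_b[c] for c in categories) / (n * n)
    if p_expected == 1:
        # Both raters used one identical category throughout; kappa is
        # undefined, so this sketch reports perfect agreement by convention.
        return 1.0
    return (p_observed - p_expected) / (1 - p_expected)


# Invented labels (1 = condition present) for the same eight cases.
trainee_labels = [1, 1, 0, 0, 1, 0, 1, 0]
senior_labels = [1, 0, 0, 0, 1, 0, 1, 1]
print(f"kappa = {cohens_kappa(trainee_labels, senior_labels):.2f}")
```

Here the two groups agree on 6 of 8 cases, but once chance agreement is removed kappa is 0.50, well short of perfect agreement; a low value would suggest that the choice of labelers materially shapes the ground truth the model learns from.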

Technical specifications of a model should be described in detail. Many journals now require open-source code, which encourages researchers not only to describe the AI model’s architecture, including selected training methods and parameters, but also to provide publicly available source code. The data used to train and evaluate a model should also be made publicly available, allowing the model development process to be fully replicated and inspected. Considerable effort must also go into anticipating the impact of the AI algorithm upon its eventual deployment.
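To illustrate what structured disclosure of technical specifications might look like, the sketch below defines a minimal machine-readable reporting record, similar in spirit to published "model card" proposals. The class name, field names, and example values are hypothetical, not a standard schema; the `missing_fields` check simply lists fields left empty, so that gaps in reporting are visible before publication.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelReport:
    """Hypothetical reporting record for a clinical AI model (illustrative fields)."""

    intended_use: str
    end_user: str
    beneficiary: str
    training_data_source: str
    label_source: str  # who established the ground truth, e.g. trainees or specialists
    architecture: str
    hyperparameters: dict = field(default_factory=dict)
    fairness_deliberations: str = ""  # what was considered a "fair" model, and why
    code_url: str = ""

    def missing_fields(self):
        """Return the names of fields left empty, in declaration order."""
        return [name for name, value in asdict(self).items() if value in ("", {}, None)]

    def to_json(self):
        """Serialize the record so it can be published alongside the model."""
        return json.dumps(asdict(self), indent=2)


report = ModelReport(
    intended_use="30-day readmission risk",
    end_user="care managers",
    beneficiary="adult inpatients",
    training_data_source="single-site EHR extract",
    label_source="chart review by attending physicians",
    architecture="gradient-boosted trees",
)
print(report.missing_fields())
```

In this invented example the hyperparameters, fairness deliberations, and code location are still blank, so they are listed as missing; a journal or institution could require such a list to be empty before accepting a model report.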

Model Evaluation

The purpose of evaluating a clinical AI algorithm is to understand how well it performs its learned task in its intended environment. Recent methodologies allow algorithms to be evaluated on the basis of fairness [5,6]. However, models are commonly evaluated only on measures of performance, such as the receiver operating characteristic (ROC) curve and the area under the ROC curve (AUC), in scenarios that do not represent the range of possible applications. Reports of AI algorithms sometimes fail to present a comprehensive evaluation of performance and fairness [7] and do not evaluate the algorithms as fully integrated tools within a clinical workflow, where clinician biases, system-specific logistics, and diagnostic uncertainties come into play. Limited evaluations can mask the potential societal harms that an AI algorithm might cause certain populations once it is deployed.
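To illustrate how a single overall metric can hide subgroup differences, the sketch below computes the AUC overall and within each subgroup. It uses the rank-based definition of AUC (the probability that a randomly chosen positive case scores higher than a randomly chosen negative one, counting ties as one half), which is quadratic in sample size and meant only for illustration; the scores, labels, and group names are invented.

```python
from collections import defaultdict


def auc(scores, labels):
    """Rank-based AUC; returns None if only one outcome class is present."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        return None
    wins = 0.0
    for p in positives:
        for q in negatives:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


def auc_by_group(scores, labels, groups):
    """Return (overall AUC, {group: AUC within that group})."""
    indices = defaultdict(list)
    for i, g in enumerate(groups):
        indices[g].append(i)
    per_group = {
        g: auc([scores[i] for i in idx], [labels[i] for i in idx])
        for g, idx in indices.items()
    }
    return auc(scores, labels), per_group


# Invented predictions for two subgroups of four patients each.
scores = [0.9, 0.8, 0.3, 0.2, 0.6, 0.4, 0.7, 0.5]
labels = [1, 1, 0, 0, 1, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
overall, per_group = auc_by_group(scores, labels, groups)
print(f"overall AUC = {overall:.3f}")
for g, value in per_group.items():
    print(f"group {g} AUC = {value:.3f}")
```

With these invented numbers the overall AUC is about 0.81, which looks respectable, yet the model ranks group A perfectly (AUC 1.0) and performs worse than chance for group B (AUC 0.25).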

Generalizability across populations is often a focal point of model evaluation discussions. As in the data creation phase, there may be inadequate data to test algorithm accuracy for certain under-represented populations. Models are often evaluated in settings that differ from their deployment environment, and few models report evaluations across populations or settings, preventing an assessment of model generalizability [8]. Furthermore, standard metrics for model calibration (how well predicted risks match observed outcomes, and whether a model under- or overestimates risk for certain populations) are often ignored [7]. Calibration analyses can highlight important differences in performance across populations, as shown by Obermeyer and colleagues [9]. Model evaluation should assess and report performance across populations, because performance may be degraded for populations with limited inclusion in the data.
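Calibration can be checked per subgroup in the same spirit. The minimal sketch below compares the mean predicted risk with the observed event rate in each group (sometimes called calibration-in-the-large); a positive gap means the model underestimates risk for that group. This is a deliberately coarse summary, and a fuller analysis would also bin predictions to draw calibration curves; all data shown are invented.

```python
from collections import defaultdict


def calibration_in_the_large(predicted, observed, groups):
    """Per group: (mean predicted risk, observed event rate, observed - predicted)."""
    by_group = defaultdict(lambda: ([], []))
    for p, y, g in zip(predicted, observed, groups):
        by_group[g][0].append(p)
        by_group[g][1].append(y)
    summary = {}
    for g, (preds, outcomes) in by_group.items():
        mean_pred = sum(preds) / len(preds)
        event_rate = sum(outcomes) / len(outcomes)
        summary[g] = (mean_pred, event_rate, event_rate - mean_pred)
    return summary


# Invented data: the model assigns every patient a 20% risk.
predicted = [0.2] * 10
observed = [0, 0, 1, 0, 0,   # group A: 1 event in 5
            1, 0, 1, 0, 0]   # group B: 2 events in 5
groups = ["A"] * 5 + ["B"] * 5
for g, (mean_pred, rate, gap) in calibration_in_the_large(predicted, observed, groups).items():
    print(f"group {g}: predicted {mean_pred:.2f}, observed {rate:.2f}, gap {gap:+.2f}")
```

In this toy case the model is well calibrated for group A but underestimates group B's risk by about 20 percentage points, the kind of difference that a pooled calibration check can miss.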

Model Deployment

A goal of creating AI algorithms for clinical settings is to eventually deploy them where they can improve patient care and outcomes. It is therefore critical to understand the full scope of model deployment in practice, including the model’s actual performance across populations, how clinicians might interpret its output, and the extent of its clinical utility. There is little published information on the implementation of AI algorithms or on how best to measure the success or impact of clinical AI algorithms. Few AI models have been deployed at the point of care, so few studies describe successful deployment of clinical AI algorithms, and even fewer describe ethical concerns, such as the impact on patient care when clinicians differ in how they interact with and use the model [10]. Model deployment is therefore an understudied yet hugely important phase of the AI life cycle. Evidence is needed on how best to integrate an AI algorithm into a health professional’s workflow, what knowledge and training intended users need, and what resources are required to deploy and maintain an AI model in a healthcare setting.

Transparency, generalizability, and fairness are all relevant concerns at the deployment phase, where shortcomings manifest as real-world consequences. Lack of transparency about the training data and how the model was built can cause distrust or confusion among patients and practitioners alike. Generalizability and fairness should be adequately assessed and demonstrated in the model evaluation phase; otherwise, populations that are under-represented in testing datasets will likely not benefit from the model and may even suffer negative consequences from its deployment.

Accessibility is a key concern: the algorithm must reach the populations that would benefit most from its deployment. AI deployment requires technical resources, training materials, and AI-trained experts, which are likely available only in resource-rich settings. Without explicit discussion of how the model can be used in low-resource settings, such as community or rural healthcare facilities, the model will not be equitably accessible to patients [11].

A model that enters the deployment phase itself becomes a creator of clinical data, funneling back into the data creation phase, where its outputs may serve as a data source for future models. In one study, an algorithm that based its risk scores on healthcare costs rather than healthcare needs allocated fewer resources to Black patients than to equally sick White patients [9]. This bias, whereby Black patients receive fewer resources for similar illnesses, is then amplified by the AI algorithm, creating additional biased data that future algorithms will use. This link between the data creation, data acquisition, and deployment phases underscores how the interconnected phases of the AI life cycle can amplify biases.
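The mechanism described above can be illustrated with a toy, deterministic example; it is not a reproduction of the cited study. Assume two hypothetical groups with identical distributions of health need, where barriers to access mean group B incurs only 65% of group A's cost at the same level of need (an invented figure). Ranking patients by cost, the proxy, rather than by need, the target, then selects far fewer group B patients for extra resources, and those selected are sicker than the group A patients who qualify.

```python
def select_top(patients, key, k):
    """Return the k patients ranked highest by `key` (stable for ties)."""
    return sorted(patients, key=key, reverse=True)[:k]


COST_FACTOR = {"A": 1.0, "B": 0.65}  # hypothetical cost per unit of need
patients = [
    {"group": g, "need": need, "cost": need * COST_FACTOR[g]}
    for g in ("A", "B")
    for need in range(1, 11)  # identical need levels 1..10 in both groups
]

k = 6  # hypothetical number of program slots
by_cost = select_top(patients, key=lambda p: p["cost"], k=k)
by_need = select_top(patients, key=lambda p: p["need"], k=k)

for label, selected in (("cost (proxy)", by_cost), ("need (target)", by_need)):
    n_b = sum(p["group"] == "B" for p in selected)
    min_need = {
        g: min((p["need"] for p in selected if p["group"] == g), default=None)
        for g in ("A", "B")
    }
    print(f"ranked by {label}: {n_b}/{k} slots to group B; lowest qualifying need: {min_need}")
```

Under the cost proxy only one of six slots goes to group B, and a group B patient must be at the highest need level to qualify, whereas ranking by need splits the slots evenly. If these allocations were later recorded and reused as training data, the disparity would be carried into the next model.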

Connect the phases

The phases of the AI life cycle, from data creation to deployment, are all connected, creating ethical complexities that arise at one phase but act across multiple phases. Ethical complexities within a single phase, if not adequately addressed, will continue to undermine the integrity of the algorithm as it moves through the life cycle. Actions taken at any single phase should therefore be assessed within the context of the full AI life cycle, to make clear the importance of every action and its future consequences. This framework can help advance the field of ethical AI by emphasizing the need to research and address the interrelated concerns arising from an increasingly AI-driven healthcare environment.

Table 1. AI biases and how to fix them

| Phase | Ethical issues | Recommendation |
|---|---|---|
| Data creation | Data that are unrepresentative of the target population will lead to algorithms that poorly serve much of that population. | The context in which data are created, including the original purpose of data generation, areas of representation, and efforts that tackle barriers to inclusion, should be published alongside any AI dataset. |
| Data acquisition | Incomplete data collection, mandatory redactions, and lack of information on financial and privacy protections limit the ability to assess whether the data source is fit for purpose and meets ethical standards. | Expand regulatory and transparency requirements to enable the additional sharing of essential demographic and health information, as well as the logistical data acquisition details, needed to promote the development of reliable AI tools. |
| Model development | Model optimization with regard to data labeling, inclusion and exclusion criteria, and benchmarks for assessment can perpetuate discrimination against some groups and prevent marginalized populations from benefiting from the model. | Technical specifications of the AI model and any fairness deliberations should be required reporting criteria and made publicly available, allowing the model development process to be fully replicated and inspected. |
| Model evaluation | Limited evaluation of algorithm performance and fairness, particularly as fully integrated tools within a clinical workflow, can mask potential societal harms the algorithm might cause to certain populations once deployed. | A comprehensive set of evaluation metrics, including fairness evaluations, should be required by regulatory leaders, including journal editors, hospital administrators, and federal agencies. |
| Model deployment | Algorithms are deployed with minimal prior understanding of their impact and risks to populations, their implementation, and the resources required for their success. Shortcomings in transparency, generalizability, and fairness can lead to inequitable real-world consequences. | Focused studies on the challenges and successes of AI deployment, including identifying standards and/or metrics to measure success, are needed, especially in low-resource healthcare settings. |

Acknowledgments

Research reported in this publication was supported by the Agency for Healthcare Research and Quality under Award Number R01HS027434, the Ethics, Society, and Technology (EST) Hub at Stanford University, and the Gordon and Betty Moore Foundation. The content is solely the responsibility of the authors and does not necessarily represent the official views of the funders.

Footnotes

Competing interests

The authors declare no competing interests.

References

1. Challen R, Denny J, Pitt M, Gompels L, Edwards T, Tsaneva-Atanasova K. Artificial intelligence, bias and clinical safety. BMJ Qual Saf. March 2019;28(3):231–237. doi: 10.1136/bmjqs-2018-008370

2. Goldstein BA, Navar AM, Pencina MJ, Ioannidis JP. Opportunities and challenges in developing risk prediction models with electronic health records data: a systematic review. J Am Med Inform Assoc. Jan 2017;24(1):198–208. doi: 10.1093/jamia/ocw042

3. Bozkurt S, Cahan EM, Seneviratne MG, et al. Reporting of demographic data and representativeness in machine learning models using electronic health records. J Am Med Inform Assoc. Dec 2020;27(12):1878–1884. doi: 10.1093/jamia/ocaa164

4. Gordon ML, Lam MS, Park JS, et al. Jury learning: Integrating dissenting voices into machine learning models. 2022:1–19.

5. Corbett-Davies S, Goel S. The measure and mismeasure of fairness: A critical review of fair machine learning. arXiv preprint arXiv:1808.00023. 2018.

6. Pfohl SR, Foryciarz A, Shah NH. An empirical characterization of fair machine learning for clinical risk prediction. J Biomed Inform. January 2021;113:103621. doi: 10.1016/j.jbi.2020.103621

7. Röösli E, Bozkurt S, Hernandez-Boussard T. Peeking into a black box, the fairness and generalizability of a MIMIC-III benchmarking model. Sci Data. January 24 2022;9(1):24. doi: 10.1038/s41597-021-01110-7

8. Futoma J, Simons M, Panch T, Doshi-Velez F, Celi LA. The myth of generalisability in clinical research and machine learning in health care. Lancet Digit Health. September 2020;2(9):e489–e492. doi: 10.1016/S2589-7500(20)30186-2

9. Obermeyer Z, Powers B, Vogeli C, Mullainathan S. Dissecting racial bias in an algorithm used to manage the health of populations. Science. Oct 25 2019;366(6464):447–453. doi: 10.1126/science.aax2342

10. Sujan M, Furniss D, Grundy K, et al. Human factors challenges for the safe use of artificial intelligence in patient care. BMJ Health Care Inform. Nov 2019;26(1). doi: 10.1136/bmjhci-2019-100081

11. Nsoesie EO. Evaluating Artificial Intelligence Applications in Clinical Settings. JAMA Netw Open. September 07 2018;1(5):e182658. doi: 10.1001/jamanetworkopen.2018.2658

12. Wall A, Lee GH, Maldonado J, Magnus D. Genetic disease and intellectual disability as contraindications to transplant listing in the United States: A survey of heart, kidney, liver, and lung transplant programs. Pediatr Transplant. November 2020;24(7):e13837. doi: 10.1111/petr.13837