Abstract

Context:

The Public Health Workforce Interests and Needs Survey (PH WINS) was fielded in 2014 and 2017 and is the largest survey of the governmental public health workforce. It captures individual employees' perspectives on key issues such as workplace engagement and satisfaction, intention to leave, training needs, and ability to address public health issues, and it collects demographic information. This article describes the methods used for the 2021 PH WINS fielding.

PH WINS 2021:

PH WINS 2021 was fielded to a nationally representative sample of staff in State Health Agency-Central Offices (SHA-COs) and local health departments (LHDs) from September 13, 2021, to January 14, 2022. The instrument was revised to assess the pandemic's potential toll on the workforce, including deployment to COVID-19 response roles and well-being, and the country's renewed focus on health equity and “Racism as a Public Health Crisis.” PH WINS 2021 had 3 sampling frames: SHAs, Big Cities Health Coalition (BCHC) members, and LHDs. All participating agencies were surveyed using a census approach.

Participation:

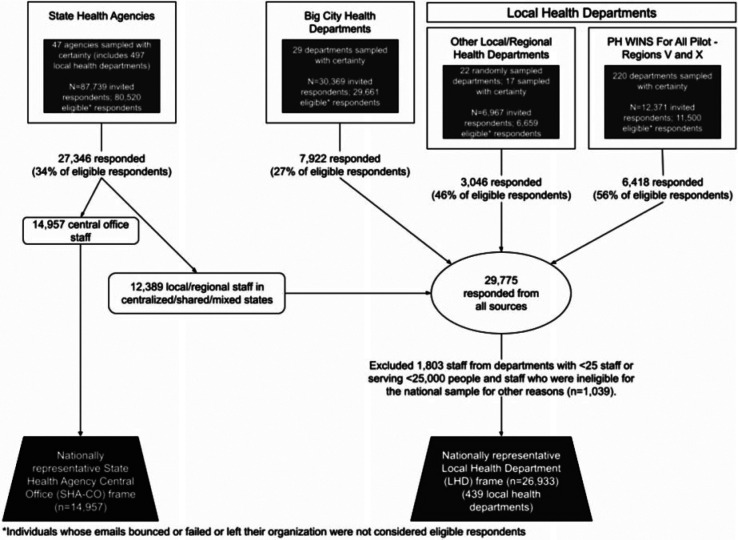

Overall, staff lists for 47 SHAs, 29 BCHC members, and 259 LHDs were collected, and the survey was sent to 137 446 individuals. PH WINS received a total of 44 732 responses, 35% of eligible respondents. The nationally representative SHA-CO frame includes a total of 14 957 individuals, and the nationally representative LHD frame includes 26 933 individuals from 439 LHDs (decentralized and nondecentralized).

Considerations for Analysis:

PH WINS now offers a multiyear, nationally representative sample of both SHA-CO and LHD staff. Both practice and academia can use PH WINS to better understand the strengths, needs, and opportunities of the workforce. When using PH WINS for additional data analysis, there are a number of considerations both within the 2021 data set and when conducting multiyear and multiple cross-sectional analyses.

Keywords: governmental public health practice, methodology report, Public Health Workforce Interests and Needs Survey (PH WINS), survey research methods, workforce research

Context

The Public Health Workforce Interests and Needs Survey (PH WINS) has filled a foundational gap in knowledge about the training needs, motivations, satisfaction, and intent to leave of the governmental public health workforce. Fielded in 2014, 2017, and 2021, PH WINS remains the only survey of its kind by providing the workforce with valuable information that has improved efforts around recruitment and retention, workforce development, and strategic planning in agencies and departments across the United States.1–3 Initially, PH WINS was designed to field every 3 years, off-cycle with other major national tentpole studies such as the Profile studies from the Association of State and Territorial Health Officials (ASTHO) and the National Association of County and City Health Officials (NACCHO). However, the all-hands-on-deck response to the novel coronavirus and the ensuing COVID-19 pandemic necessitated a mild reimagining of PH WINS, its fielding, its instrument, and its potential impact on the field.

The elevation of public health into the spotlight has also drawn attention to its workforce and to the relative lack of workforce surveillance mechanisms in the United States. PH WINS is one of few extant surveys in the field, but it seemed imprudent to ask public health practitioners to participate in something like this while they were responding to a once-in-a-century pandemic. The downside, however, is that the field then had to wait more than a year to better understand the impact of that very pandemic on the workforce. There have been media reports and some published materials enumerating the deep and broad challenges faced by public health practitioners.4,5 But we think it likely that, on sheer numbers alone, PH WINS will be one of the largest sources of workforce sentiment, perception, and intent-to-leave research conducted during the COVID-19 response: approximately 45 000 state and local governmental public health staff members across the United States participated in PH WINS in 2021.

In this article, we outline the detailed sampling approaches and revisions to fielding PH WINS 2021, including instrument revision, fielding and administration, as well as considerations for analysis, including multiple cross-sectional analyses across multiple years of PH WINS for those who may want to compare several fieldings now and into the future.

PH WINS 2014 and 2017

The need for PH WINS was divined during a 2013 summit hosted by the de Beaumont Foundation and ASTHO that included leaders of public health membership organizations, discipline-specific affiliate groups, federal partners, and other public health workforce experts.6,7 The summit not only established leadership perspectives on training but also reinforced the need for staff perspectives on these matters, as well as many others, making the case for PH WINS quite strong. PH WINS became the first and only nationally representative survey of its kind, surveying governmental public health departments at the individual level.6 The survey was first fielded in 2014 and then a second time in 2017. Over time, it has evolved to meet the changing needs of the governmental public health workforce.

PH WINS 2014 and 2017 consisted of 4 major domains: workplace environment, training needs, emerging concepts in public health, and demographics.6 Between 2014 and 2017, because of feedback from participating departments and other stakeholders, the training needs section was revised to include more specific, actionable skills spanning a broader array of training needs.6 The most recognizable difference between the instruments was that the 2017 training needs section was specific to the supervisory levels: nonsupervisor, supervisors and managers, and executives. Additional instrument changes are well documented in earlier articles in this journal.6,7

In 2014, PH WINS participation included a nationally representative sample of State Health Agency-Central Office (SHA-CO) staff (“state frame”) and a local pilot.7 Overall, PH WINS 2014 was completed by 23 229 staff members representing 37 state health agencies (SHAs) (n = 19 171; 48% response rate), 14 large local health departments (LHDs) that were members of the Big Cities Health Coalition (BCHC) (n = 2670; 26% response rate), and 50 smaller LHDs (n = 1325; 40% response rate).7 The sampling strategy in 2017 was expanded to include all SHAs, all large LHDs that were members of BCHC, and a nationally representative sample of LHDs that had a staff size of at least 25 and served a population of at least 25 000.6 Beyond the addition of the nationally representative sample of LHDs, all employees at participating agencies were invited to complete PH WINS, which differed from the 2014 strategy of using a representative sample within most agencies.6 Overall, PH WINS 2017 was completed by 47 604 staff members representing 47 SHAs (n = 35 909; 46% response rate), 25 LHDs that were members of BCHC (n = 7174; 43% response rate), and 71 LHDs with a staff size greater than 25 and serving a population greater than 25 000 (n = 4521; 61% response rate). The full history of PH WINS as well as its sampling strategy is available in earlier articles in this journal.6,7

PH WINS 2021

Timeline

PH WINS was designed to be fielded every 3 years, providing enough time between fieldings for robust reporting by the de Beaumont Foundation and ASTHO, and for participating departments to put the data into practice. PH WINS was set to enter the field for its third iteration in September 2020 but was delayed by 1 year, until September 2021, due to the demand of COVID-19 response on the governmental public health workforce. The survey opened on September 13, 2021, and closed on January 14, 2022. It was originally set to close at the end of 2021 but remained open for an additional 2 weeks at the request of a large number of participating agencies. Approximately 68% of responses were received by the end of October, a further 9% during November, a further 10% during December, and the remainder between then and survey closure.

Instrument revisions

The goal of the PH WINS 2021 instrument was to assess the pandemic's potential toll on the workforce and the country's renewed focus on “Racism as a Public Health Crisis” (RaPHC), in addition to the workforce's training needs, engagement, satisfaction, and intention to leave. Specifically related to the pandemic, the instrument aimed not only to be inclusive of the workforce's experience with COVID-19 and its response but to also have other foci, similar to the philosophy behind the revisions to the Federal Employee Viewpoint Survey around the same time.8 We do recognize that all results from 2021 would have to be viewed with the pandemic in mind, and that this would also be a unique opportunity to measure the impact of the pandemic on the workforce, so we attempted to strike a balance in prioritizing how much of the instrument to dedicate to COVID-19 measurement. The instrument revision process included gathering feedback from a wide array of stakeholders, including past participants, federal organizations, public health researchers, practice organizations such as the regional public health training centers (PHTCs), and membership organizations. Stakeholders were able to provide written and oral feedback. All reviewers were asked to think about the future needs of the workforce as they reviewed the instrument as a means of recognizing the importance of longitudinal data. The final PH WINS 2021 instrument had 5 domains: workplace environment, COVID-19 response, training needs, addressing public health issues, and demographics. While the training needs and demographic sections remained largely the same, some important additions were made to the workplace environment section and the COVID-19 response and addressing public health issues sections were entirely new (Box).

BOX • Summary of Instrument Revisions

- Addition of the following questions/question topics:
  - Rating of overall mental and emotional health
  - Agreement with statements about bullying/harassment and undermining/challenging public health expertise
  - Identifying symptoms of posttraumatic stress disorder related to COVID-19 and its response
  - Impact of the pandemic on an employee's intention to leave or stay at their organization
  - Employment status prior to March 2020
  - Whether the respondent fully or partially served in a COVID-19 response role
  - Degree to which they served in a COVID-19 response role by quarter
  - Average amount of overtime worked as they served in a COVID-19 response role
  - Quarter in which a new employee was hired
  - What was needed to effectively respond to COVID-19, beyond funding
  - Skill items related to programmatic expertise
  - Awareness of and confidence in addressing health equity concepts
  - Addressing Racism as a Public Health Crisis
- Revision of gender question to be more in line with research standards
- Removal of the Oldenburg Burnout Index
- Revision of program area response and job-type response options
- Revision of program area question type from single select to multiselect with the addition of a follow-up on percent time working in each program area

Stakeholders identified the well-being of the workforce as an area of high interest and a gap in previous versions of PH WINS. To address this, the following set of questions was added to the workplace environment section of the survey:

- In general, how would you rate your mental or emotional health?9
- Please rate your level of agreement with the following items5:
  - I have felt bullied, threatened, or harassed by individuals outside of my health department because of my role as a public health professional.
  - I have felt my public health expertise was undermined or challenged by individuals outside of the health department.
- Has the coronavirus or COVID-19 outbreak been so frightening, horrible, or upsetting10:
  - that you had nightmares about it or thought about it when you did not want to?
  - that you tried hard not to think about it or went out of your way to avoid situations that reminded you of it?
  - that you were constantly on guard, watchful, or easily startled?
  - that you felt numb or detached from others, activities, or your surroundings?

Bullying and harassment of public health officials have become a focus of public health researchers due to the increased number of reported incidents throughout the pandemic.5,11 Quantitatively capturing those experiences, as well as those related to challenging public health expertise, will be important in defining the breadth and scope of this issue for future workforce development efforts. The aforementioned final 4-item question measures the prevalence of probable posttraumatic stress disorder (PTSD) and was adapted from the Primary Care PTSD Screen for DSM-IV developed by the US Department of Veterans Affairs.10

Several questions directly related to COVID-19 were added to the survey to understand the impact of the pandemic in relation to workforce development and planning. A question was added to the workplace environment section asking about the impact of the pandemic on employees' intention to leave or stay at their organization. In addition, the COVID-19 response section was included to understand the burden of the response on the workforce's capacity to fulfill both COVID-19 response roles and those foundational or essential to a health department. Participants were asked to respond to questions about their employment status prior to March 2020, whether they fully or partially served in a COVID-19 response role and the degree to which they served in that role by quarter from Q1 2020 (January-March) until the quarter the respondent completed the survey (those who completed the survey in January 2022 provided this information for Q1 2022), and the average amount of overtime worked as they served in a COVID-19 response role. New hires, specifically, were also asked to select the quarter in which they were hired. Finally, all participants were asked what they needed to effectively respond to COVID-19, beyond funding.

The other major change to the survey was the overhaul to the “Emerging Concepts in Public Health” section, now called “Addressing Issues in Public Health.” It starts with 5 different concepts: (1) health equity, (2) social determinants of equity, (3) social determinants of health, (4) structural racism, and (5) environmental justice. Participants were provided with definitions of each concept and then asked to rate their level of awareness of and confidence in addressing those concepts in their work. The section then dug deeper into RaPHC asking whether they have been involved in addressing RaPHC through their work, whether they believed that their agency should be involved in addressing RaPHC, and what they need to better address RaPHC. This was of particular importance to the Foundation and stakeholders because of the Centers for Disease Control and Prevention's declaration that racism is a public health crisis.12

Other adjustments to the instrument included revising the gender question to be more in line with research standards,13 removing the Oldenburg Burnout Index (OLBI) from 2017, and updating the response options for the program area and job classification questions using the “other” responses from 2017. Removal of the OLBI was a difficult decision, given the high levels of burnout expected among the workforce. However, it was decided that other questions, such as the engagement and well-being items, would provide a more holistic picture of the workforce's overall mental health and well-being, not just levels of burnout. In addition to adding response options, the program area question was revised to be a multiselect question with a follow-up question that asked respondents the percentage of time they worked in each program area selected. All new questions were pretested before use.

Cognitive interviews were conducted with 12 individuals representing 4 SHAs, 6 LHDs, and 2 external stakeholders to test the perception of certain questions. A pretest survey was sent to 348 individuals representing all participating health departments (n = 240; 126 responses), a subset of contractors (n = 82; 15 responses) in one large LHD, and de Beaumont Foundation staff members (n = 26; 6 responses). The survey instrument was found to be exempt by the NORC IRB (IRB00000967), under its Federal-wide Assurance #FWA00000142.

Recruitment and sampling

PH WINS 2021 had 3 sampling frames: SHA-COs, BCHC members, and LHDs. Recruitment for each frame was led by a different PH WINS partner; however, the process was very similar. The recruitment partner contacted leadership in target health departments and asked to have the department participate. Upon agreeing to participate, department leadership would designate a PH WINS workforce champion (WC), generally human resources or workforce development personnel, with whom we worked closely to prepare for fielding. The WC role included the following activities:

Gathering the department's full staff list, including temporary and contractor staff, for survey administration. The addition of these staff members was new to 2021 to fully capture the COVID-19 response workforce, which was largely made up of these types of employees. WCs were able to update their staff list at designated times during fielding.

Identifying a contact in the health department's Information Technology division to ensure the survey would not get stuck in the department's spam filters, a process called allow-listing.

Pretesting PH WINS.

Promoting PH WINS within their department to encourage high response rates.

The target population for the SHA-CO frame included all SHAs across the United States. ASTHO conducted recruitment and then shared WC contact information with the PH WINS team. Ultimately, 47 SHAs, of varying governance, participated in the 2021 survey and utilized a census approach. Governance refers to the relationship an SHA has with the LHDs in its state. There are 4 types of governance: decentralized, centralized, mixed, and shared.6,14 Staff lists from SHAs in nondecentralized states include both employees working in the SHA-CO and those working in LHDs in the state. Employees working in the SHA-CO were identified through screening questions, such as whether they were central office staff or worked in district offices, because not all staff lists had the level of detail necessary to determine who worked in the central office and who did not. All employees identified as working in the central office were included in the final SHA-CO sample.

The target population for the BCHC frame included all employees in each of the participating departments. BCHC conducted recruitment and shared WC information with the PH WINS team. All BCHC departments that were members at the time of recruitment, 29 out of 29, participated in the survey and utilized a census approach.

The target population for the LHD frame included only those departments that had a staff size of at least 25 and served a population of at least 25 000, excluding small LHDs. The de Beaumont Foundation conducted recruitment, with assistance from NACCHO and several State Associations of County and City Health Officials, and in partnership with 2 regional PHTCs. LHDs in the sample were contributed either by probability or certainty from 4 recruitment streams:

Probability: A stratified, clustered random sample based on a list of all eligible LHDs. Strata were constructed on the basis of cross-classification of 10 Health and Human Services (HHS) regions15 and 2 population sizes (25 000-250 000 and >250 000).

Certainty: LHDs that participated in 2017 through the random sample were invited to participate in the 2021 survey.

Certainty: Local staff that participated as an employee of a BCHC department or LHD in a nondecentralized state.

Certainty: LHDs that participated through the PH WINS for All pilot program, a partnership with the Region V PHTC and Northwest Center for Public Health Practice's Region X PHTC aimed at recruiting all LHDs in each region (Region V: Illinois, Indiana, Michigan, Minnesota, Ohio, Wisconsin; Region X: Alaska, Idaho, Oregon, Washington), including those with a staff size of less than 25 and serving a population of less than 25 000. The PH WINS for All pilot proved to be successful, with participation from nearly 50% of the LHDs in both regions, while providing small LHDs the opportunity to participate in PH WINS for the first time. Additional information can be found in Kulik et al (2022; this issue).

Overall, 439 LHDs were included in the LHD sample; 208 LHDs were selected via probability and 22 participated. Among the 71 LHDs that participated in the 2017 random sample, 17 LHDs opted to participate in the 2021 survey. Local staff were contributed with certainty from 497 LHDs in nondecentralized states; 220 LHDs participated through PH WINS for All, 100 of which were small LHDs. The other 120 LHDs from PH WINS for All were included in the local sample. In addition, a number of local staff members were identified through the SHA frame when they indicated that they were a noncentral (ie, local or regional) employee. The final PH WINS local frame comprises both the initial LHD sample's 4 recruitment streams and the SHA sample.

COVID-19 was an obstacle at all levels of recruitment and participation. Recruitment partners had trouble getting in contact with department leadership, and leadership was hesitant to agree to participate because of the strain of COVID-19 response on their staff. This was largely the case for the LHD sample, which was drawn multiple times to account for high decline rates. There were also several health departments, including 2 SHAs, that originally agreed to participate but then backed out because of COVID-19 response activities.

Participation

Participants for PH WINS were identified through each participating health department. We worked with WCs, whose activities were described previously, to collect a list of the names and e-mail addresses of all staff members in the department. Other pertinent information included division, bureau, or unit for those larger agencies that wanted more information to conduct targeted outreach during fielding. These staff lists were then standardized (standard column names and the addition of the agency ID and sampling frame), cleaned, and combined to be entered into Qualtrics, the Web-based platform used to field PH WINS. During fielding, WCs were able to provide updates to their staff lists so that employees who had left their organizations could be removed from the response rate calculation and new employees would have the opportunity to participate. The final fielding approach, including participation numbers and response rates, can be found in Figure 1.

FIGURE 1.

Overview of Fielding Approach and Participation

We worked with 47 SHAs, 29 BCHC departments, and 259 LHDs to collect e-mails for the 137 446 individuals who were invited to participate in PH WINS 2021. After accounting for bounced or failed e-mails and those who left their organization, the number of eligible respondents was 128 340. PH WINS received 44 732 responses, 35% of eligible respondents. This response rate is lower than in previous years (48% in 2017),6 which was expected because of the demand of COVID-19 on the workforce.

In the initial SHA frame, 87 739 respondents were invited to participate and 27 346 responded (34% of eligible respondents). In the BCHC frame, 7922 responded (28% of eligible respondents). Within the LHD frame, 3046 staff members from other LHDs (46% of eligible respondents) and 6418 staff members from LHDs in Regions V and X (56% of eligible respondents) responded. Of the 220 LHDs that participated through PH WINS for All, 97% responded overall (employees from 215 LHDs in Regions V and X completed the survey). Notably, 100 departments in this pilot, accounting for 968 respondents, are not large enough to presently be included in the national sample because they did not have at least 25 staff members or serve at least 25 000 people. However, Regions V and X, as pilot sites in 2021, have first-of-their-kind workforce estimates for state central office, large, medium, and now small agencies in PH WINS.

One important change in 2021 around staffing inclusion for SHA-CO staff was the inclusion of nonpermanent employees in the nationally representative frame. In PH WINS 2017, nonpermanent employees were excluded from the final nationally representative SHA-CO frame owing to (1) their small presence in the field and (2) the conceptual importance of focusing workforce development efforts on a permanent workforce. However, the decision was made to include them in the PH WINS 2021 data as many nonpermanent employees were those who were hired to increase the capacity of the workforce during COVID-19. This choice may have some implication for researchers wishing to make multiple cross-sectional comparisons across PH WINS fieldings.

Survey Implementation

PH WINS 2021 was fielded from September 13, 2021, to January 14, 2022. The survey was administered using Qualtrics, a Web-based survey platform. Recruitment of agencies started in April 2021 and extended through the start of the survey. For this reason, the survey was administered in 5 separate cohorts, which was similar to PH WINS 2017. This allowed the PH WINS team additional time to work with WCs to prepare for fielding.

Survey preparation

WCs were asked to attend one WC webinar to learn about PH WINS and their role in implementation; WC webinars were held weekly from June to August 2021. Optional WC check-ins, an additional touchpoint for WCs, occurred weekly from August to September 2021 (survey start) and then periodically throughout survey administration. WCs were provided with implementation kits that had key dates, requirements for staff lists, and sample language for promotion before and during fielding. We sent an e-mail weekly from the end of July through the close of the survey in January 2022 to all WCs detailing what was new with PH WINS, key dates and reminders, response rates (during fielding only), and other pertinent information.

As stated, because of differing sign-on times, PH WINS had 5 different launch groups (“cohorts”). WCs were asked to submit their staff list 2 to 4 weeks before their department's launch date. Staff lists needed to include the first name, last name, and e-mail address of employees. Additional helpful information included the division/unit, position, or title of the employee. Division or unit information was important if a WC wanted response rates at that level to conduct more targeted outreach during fielding. WCs were asked to include all public health agency staff and local offices if the state was centralized. WCs were encouraged to include contractors and federal employees, which differs from past fieldings, because of their vital role in the pandemic response. On receiving a staff list, we standardized the headings and ran the list through a cleaning program to check for any discrepancies. About a week before a department's survey launch date, WCs were encouraged to send an e-mail to their department to inform them of PH WINS.

Survey administration

Survey launch dates were approximately every 2 weeks starting on September 13, 2021, with the last cohort, cohort 5, launching on December 6, 2021. Each cohort received reminders, typically weekly with some exceptions. The number of reminders per cohort ranged from 6 to 9 depending on the cohort's start date. WCs were encouraged to send reminder e-mails that coincided with the reminder e-mails we sent through Qualtrics to promote participation. Each invited employee received their own unique link to the survey. The Appendix Table details the types of agencies in each cohort, cohort start date, and dates of reminder e-mails.

Response rate reporting

To receive a department-level report, each participating agency needed to reach a minimum required response rate (“benchmark response rate”) that was calculated using the department's staff size and a margin of error of ±5%. These benchmark response rates help ensure that department-level results are accurate and precise and maintain the confidentiality and anonymity that respondents are promised.
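The formula behind the benchmark is not reported here. As an illustration only, the sketch below assumes a standard sample size calculation for a proportion (95% confidence, p = 0.5) with a finite population correction. The function name and default values are hypothetical and are not taken from the PH WINS programs.

```python
import math


def benchmark_response_rate(staff_size, margin=0.05, z=1.96, p=0.5):
    """Return (completes needed, benchmark response rate) for one department.

    Assumptions (not stated in the article): Cochran's formula with a
    finite population correction, 95% confidence (z = 1.96), and p = 0.5.
    The article states only that staff size and a +/-5% margin were used.
    """
    if staff_size <= 0:
        raise ValueError("staff_size must be positive")
    # Sample size for an effectively infinite population
    n0 = (z ** 2) * p * (1 - p) / margin ** 2
    # Finite population correction shrinks the requirement for small departments
    n = n0 / (1 + (n0 - 1) / staff_size)
    needed = min(staff_size, math.ceil(n))
    return needed, needed / staff_size


if __name__ == "__main__":
    for size in (25, 100, 1000):
        needed, rate = benchmark_response_rate(size)
        print(f"staff={size}: need {needed} completes ({rate:.0%})")
```

Under these assumptions, small departments need a much higher response rate than large ones to reach the same margin of error, which is consistent with the benchmark depending on staff size.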

To share response rates in a cohesive and timely manner, the PH WINS team created a response rate reporting dashboard that included bar graphs for all participating agencies within a sampling frame. The sampling frames were SHAs, Big Cities (BCHC departments), other LHDs, and PH WINS for All (Region V and X LHDs). Each department was identified using the state abbreviation for SHAs or a NACCHO ID. A NACCHO ID lookup table was provided. In addition to the department's response rate and benchmark response rate, the response rate dashboard provided each department with the number of surveys completed, number of surveys needed to reach the benchmark response rate, and number of eligible employees (employees whose e-mails failed or bounced and those who left their organization were considered ineligible).

Data were extracted from Qualtrics weekly (at the start of fielding) or twice weekly (toward the end of fielding), survey responses were deduplicated on the basis of e-mail address and first and last names, and the cleaned responses were merged with staff distribution lists. Duplicate survey responses resulted from a respondent sharing their survey link with another staff member who then took the survey themselves using another link provided by the PH WINS team. In addition to the agency-level response rates provided through the dashboard, agency response rates by division or unit were calculated for SHAs and BCHC departments. These data were provided in Excel spreadsheets.
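The deduplication and response rate steps can be sketched as follows. This is a minimal illustration, not the cleaning program used for PH WINS: the field names (`agency_id`, `email`, `first_name`, `last_name`, `eligible`) are hypothetical, and keeping the first response per person and matching names within an agency are assumptions.

```python
def normalize(text):
    """Lowercase and collapse whitespace so trivial differences do not hide duplicates."""
    return " ".join(text.lower().split())


def deduplicate(responses):
    """Keep the first response per person.

    Assumption: a response is a duplicate if its e-mail address, or its
    (agency, first name, last name) combination, has already been seen.
    The article names the matching fields but not the tie-breaking rule.
    """
    seen = set()
    kept = []
    for r in responses:
        email_key = ("email", normalize(r["email"]))
        name_key = ("name", r["agency_id"],
                    normalize(r["first_name"]), normalize(r["last_name"]))
        if email_key in seen or name_key in seen:
            continue
        seen.update((email_key, name_key))
        kept.append(r)
    return kept


def response_rate(staff_list, responses):
    """Deduplicated completes divided by eligible staff.

    Staff whose e-mails bounced or who left the organization are flagged
    eligible=False and are excluded from the denominator.
    """
    eligible = sum(1 for s in staff_list if s["eligible"])
    return len(deduplicate(responses)) / eligible
```

Matching names only within an agency is a design choice made here to avoid merging two different people who share a common name across departments.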

Weighting

Weighting programs were developed to create multiple sets of weights: the sample design weights and the balanced repeated replication (BRR) weights for variance estimation. The programs were developed to allow for necessary changes in response to the final set of completed surveys and any challenges that arose (eg, allowing for collapsing across regions to account for small sample sizes). The following paragraphs outline the methods implemented in the programs that created the final weights.

The programs and final weights were created in Stata 16 (StataCorp LLC, College Station, Texas), using data from PH WINS 2017. The first part of the programs created the needed agency and national-level sample design weights for all respondents, for both the SHAs and LHDs.

The SHA-CO national sample weight was constructed in a multistep process: (1) a sample design weight was calculated at the state level for each participating SHA to account for any subsampling of staff; (2) a nonresponse adjustment was applied to the sample design weight to bring the total weighted count of central office–completed surveys in alignment with the known staff totals of central office employees for each SHA; (3) a poststratification adjustment was applied to the nonresponse adjusted weight to bring the total weighted count of staff in each of the HHS regions in alignment with region-level staff totals providing the final sample weights. The weights summed across participating SHAs in an HHS region will equal the total population of central office state-level employees in that region. Note that Regions I and II and Regions VII and VIII were collapsed because of sample size constraints at the local level.
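The 3 steps above can be illustrated with a small sketch. It is not the Stata program used for PH WINS: the field names are hypothetical, and both adjustments are assumed to be simple ratio adjustments that rescale weights to known totals within each SHA and then within each HHS region.

```python
from collections import defaultdict


def scale_to_totals(rows, in_key, out_key, group_key, totals):
    """Rescale weights in place so they sum to a known total within each group."""
    sums = defaultdict(float)
    for row in rows:
        sums[row[group_key]] += row[in_key]
    for row in rows:
        group = row[group_key]
        row[out_key] = row[in_key] * totals[group] / sums[group]
    return rows


def sha_co_weights(completes, sha_totals, region_totals):
    """Apply the 3 weighting steps to a list of completed-survey records.

    Assumption: nonresponse and poststratification are both plain ratio
    adjustments; the article does not give the exact adjustment cells.
    """
    # Step 1: design weight (1.0 under a census; larger if staff were subsampled)
    for row in completes:
        row.setdefault("design_w", 1.0)
    # Step 2: nonresponse adjustment to known central office totals per SHA
    scale_to_totals(completes, "design_w", "nr_w", "sha", sha_totals)
    # Step 3: poststratification to staff totals per HHS region
    scale_to_totals(completes, "nr_w", "final_w", "region", region_totals)
    return completes


if __name__ == "__main__":
    rows = [
        {"sha": "A", "region": 1}, {"sha": "A", "region": 1},
        {"sha": "B", "region": 1},
    ]
    # Region total (20) exceeds A + B (15) because it also covers a
    # hypothetical nonparticipating SHA in the same region.
    sha_co_weights(rows, {"A": 10, "B": 5}, {1: 20})
    print([round(r["final_w"], 3) for r in rows])
```

In this toy example the final weights sum to the region total, which mirrors the property described above for weights summed across participating SHAs in a region.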

The local national sample weights were calculated for the combined sample comprising completed surveys from the participating BCHCs, from the sample of LHDs in decentralized states, and from the completed surveys of local employees contributed from the SHA frame for nondecentralized states. Similar to the SHA weights, the multistep weighting process to create the local sample weights was as follows: (1) a sample design weight was calculated for staff in each participating big city agency and each LHD based on their probabilities of selection, and for local staff coming from the SHA frame; (2) for big city and LHD completes, a nonresponse adjustment was applied to the sample design weight to bring the total weighted count of completed surveys in alignment with the known staff totals for each big city or LHD; (3) a poststratification adjustment was applied to the nonresponse adjusted weight to bring the total weighted count of staff in each of the 20 strata defined by HHS region and population-served size in alignment with staff totals; and (4) the poststratified weights were trimmed to remove outliers, that is, weights that exceeded the median by more than 3 times the interquartile range (IQR) were capped at the median plus 3 times the IQR. These final local national weights summed across participating LHDs in an HHS region will equal the total population of local employees in that region as well as by population served size above and below 250 000. Note that Regions I and II and Regions VII and VIII were collapsed because of sample size constraints among LHDs.
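The trimming rule in step 4 can be sketched in a few lines. The quartile definition (Python's "inclusive" method) and the function name are assumptions made for illustration; the article does not say which quartile method was used or whether weights were rescaled after trimming, so this sketch does not rescale.

```python
import statistics


def trim_weights(weights, k=3.0):
    """Cap weights at median + k * IQR and return (trimmed weights, cap).

    Assumption: quartiles use the 'inclusive' method; other quartile
    definitions would give a slightly different cap.
    """
    q1, median, q3 = statistics.quantiles(weights, n=4, method="inclusive")
    cap = median + k * (q3 - q1)
    return [min(w, cap) for w in weights], cap


if __name__ == "__main__":
    trimmed, cap = trim_weights([1, 1, 1, 2, 2, 2, 3, 3, 50])
    print(cap, trimmed)
```

Only weights above the cap change; all other weights are left as they were.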

In a complex sample survey setting such as for the national PH WINS, variance estimates computed using standard statistical software packages that assume simple random sampling are generally too low (ie, significance levels are overstated), as well as biased because they do not account for the differential weighting among sampled persons. Resampling methods for variance estimation, such as BRR, are popular because of the relative simplicity of replication-based estimates, especially for nonlinear estimators. In BRR, multiple replicates (also called subsamples) are drawn from a full sample according to a specific resampling scheme, which requires a design with 2 primary sampling units (PSUs) per stratum. For the SHA sample, we collapsed the state SHAs into 39 pseudo-strata, collapsing the smallest SHAs within the HHS region as needed, and then created 2 pseudo-PSUs per stratum by randomly assigning completed cases equally to each of the 2 pseudo-PSUs. For the local sample, we again created pseudo-strata at the state level, within region, by collapsing NACCHO IDs together until fewer than 40 pseudo-strata were defined. The collapsing rules allowed for approximately equal sizes for each pseudo-stratum while ensuring that each pseudo-stratum also crossed all 3 size strata within each region (A-250, B-250, E-25). In this way, homogeneous strata were created. We collapsed PSUs within strata down to 2 by randomly assigning each case in the pseudo-stratum to PSU 1 or PSU 2.
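For readers unfamiliar with BRR, the following is a minimal sketch of the general technique: cases are split at random into 2 pseudo-PSUs per stratum, half-samples are defined by the rows of a Hadamard matrix, and the variance is the mean squared deviation of the replicate estimates from the full-sample estimate. This is textbook BRR under stated assumptions, not the PH WINS production code; the Sylvester matrix construction, the function names, and the toy data are all hypothetical choices.

```python
import random


def hadamard(order):
    """Sylvester construction of a Hadamard matrix; order must be a power of 2."""
    h = [[1]]
    while len(h) < order:
        h = [row + row for row in h] + [row + [-x for x in row] for row in h]
    return h


def assign_pseudo_psus(cases, seed=0):
    """Randomly split the cases of each stratum as evenly as possible into PSU 1 and PSU 2."""
    rng = random.Random(seed)
    by_stratum = {}
    for c in cases:
        by_stratum.setdefault(c["stratum"], []).append(c)
    for members in by_stratum.values():
        rng.shuffle(members)
        for i, c in enumerate(members):
            c["psu"] = 1 + (i % 2)
    return cases


def brr_variance(cases, estimator):
    """Return (full-sample estimate, BRR variance) for estimator(cases, weights)."""
    strata = sorted({c["stratum"] for c in cases})
    order = 1
    while order <= len(strata):  # smallest power of 2 above the stratum count
        order *= 2
    full = estimator(cases, [c["weight"] for c in cases])
    replicates = []
    for row in hadamard(order):
        # Skip the all-ones first column; column i + 1 picks the PSU kept in stratum i.
        keep = {s: (1 if row[i + 1] == 1 else 2) for i, s in enumerate(strata)}
        # Kept PSUs get double weight; the other half-sample gets weight 0.
        w = [2 * c["weight"] if c["psu"] == keep[c["stratum"]] else 0.0 for c in cases]
        replicates.append(estimator(cases, w))
    return full, sum((r - full) ** 2 for r in replicates) / len(replicates)


def weighted_mean(cases, weights):
    return sum(w * c["y"] for c, w in zip(cases, weights)) / sum(weights)


# Toy data (hypothetical): 2 pseudo-strata with 4 completed cases each.
toy = [{"stratum": s, "weight": 10.0, "y": float(v)}
       for s, values in {"S1": [1, 0, 1, 1], "S2": [0, 0, 1, 0]}.items()
       for v in values]
estimate, variance = brr_variance(assign_pseudo_psus(toy), weighted_mean)
```

Each stratum needs at least 2 completed cases so that both pseudo-PSUs are nonempty. In practice, analysts would normally use the replicate weights supplied with the data set rather than rebuild them.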

Considerations for Analysis

Considerations for 2021 analyses

PH WINS 2021 is not without limitations that bear consideration in analysis. Nonresponse assessment shows that the primary potential for bias lies both in differential nonresponse from individuals and, potentially, in the participation or nonparticipation of invited agencies. Among those local agencies invited from the probability-based frame, only a few dozen agreed to participate, while there was much higher participation from those sampled with certainty. Strictly speaking, BRR weights and the broader poststratifications account for this complex sampling design, as does the weight trimming, but this is a consideration nonetheless for those planning to use the data for national analysis. Beyond sampling design considerations, it seems worth noting, especially in 2021, that nonresponse bias is always a concern for sentiment and perception surveys (and surveys generally). It seems especially relevant that a survey about job satisfaction and COVID-19 response, fielded during COVID-19, has a relatively modest response rate compared with previous years. Notably, PH WINS 2021 not only has a lower overall response rate but also has a high incomplete rate. In 2017, the response rate was 48%, the e-mail open rate was 57%, and the survey completion rate once opened was 85%. In 2021, the response rate was 35%, the e-mail open rate was 40%, and the survey completion rate once opened was 87%.
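One way to read these figures, offered here as an assumption rather than as the stated calculation, is that the response rate is approximately the product of the e-mail open rate and the completion rate among those who opened the survey. The short check below uses only the percentages reported above; the function and variable names are hypothetical.

```python
def implied_response_rate(open_rate, completion_rate):
    """Response rate implied if it equals open rate times completion rate once opened."""
    return open_rate * completion_rate


# (e-mail open rate, completion rate once opened), as reported in the text.
reported = {2017: (0.57, 0.85), 2021: (0.40, 0.87)}
implied = {year: round(implied_response_rate(o, c), 2) for year, (o, c) in reported.items()}
```

Under this reading, the implied rates round to 48% for 2017 and 35% for 2021, which suggests that the decline is associated mainly with the lower e-mail open rate rather than with completion once the survey was opened.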

Considerations for comparisons with other fieldings of PH WINS

PH WINS 2021 is roughly comparable with PH WINS 2017 as a national estimate of the workforce, with one minor exception. For the SHA-CO frame, directly comparable estimates would need to exclude nonpermanent staff from 2021 using the employment status variable, Q5_17. Given the COVID-19 response, excluding these staff may somewhat distort the true picture of the 2021 workforce, but including them would likely diminish the value of 2017 to 2021 multiple cross-sectional comparisons. A more appropriate approach likely would be to create longitudinal comparisons in which researchers create cohorts of agencies that participated in both 2017 and 2021, and so can be less concerned about the permanency status of employees and more concerned that the agencies themselves participated in PH WINS. This is relevant because different agencies participated in different years. A different set of agency-based weights is included in restricted data sets for researchers who obtain permission to conduct these types of analysis. These longitudinal-type comparisons will likely allow for the most precise and accurate measures of change over time, though they might not be nationally representative. Analyses between 2014, 2017, and 2021 are only possible for the nationally representative SHA-CO frame, and possibly for big cities (contingent on the analysis in question), as the nationally representative LHD frame did not exist until 2017.
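The two approaches described in this section can be sketched as simple record filters. Only the variable name Q5_17 comes from the text; the `agency_id` field, the coding of Q5_17 as the string "permanent", and the toy records are hypothetical, and the data documentation should be consulted for the actual field names and response codes.

```python
def agency_cohort(records_a, records_b, agency_key="agency_id"):
    """Keep only records from agencies that appear in both fieldings."""
    shared = {r[agency_key] for r in records_a} & {r[agency_key] for r in records_b}
    keep_a = [r for r in records_a if r[agency_key] in shared]
    keep_b = [r for r in records_b if r[agency_key] in shared]
    return keep_a, keep_b


def permanent_only(records, status_key="Q5_17", permanent_codes=("permanent",)):
    """Drop nonpermanent staff; the code values here are hypothetical."""
    return [r for r in records if r[status_key] in permanent_codes]


# Toy records (hypothetical): agency B participated only in 2017, agency C only in 2021.
y2017 = [{"agency_id": "A"}, {"agency_id": "B"}]
y2021 = [
    {"agency_id": "A", "Q5_17": "permanent"},
    {"agency_id": "A", "Q5_17": "temporary"},
    {"agency_id": "C", "Q5_17": "permanent"},
]
cohort_2017, cohort_2021 = agency_cohort(y2017, y2021)
cross_sectional_2021 = permanent_only(y2021)
```

The cohort filter keeps all staff of agencies present in both years, whereas the permanency filter keeps all agencies but restricts 2021 to permanent staff, which mirrors the trade-off described above.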

Reflections for Future Iterations of PH WINS

The PH WINS team strived to build a relationship with WCs and plans to maintain that relationship in the interim years between PH WINS fieldings. WCs are the backbone of survey administration, and maintaining these relationships through regular postfielding communications will hopefully make recruitment easier for the next iteration of PH WINS. One of the biggest struggles was getting LHDs to commit to participate. Through the PH WINS for All model, working with regional PHTCs to increase LHD participation and expand to small LHDs (<25 staff members or <25 000 served) proved to be fruitful, with nearly half of all LHDs in those regions participating and more than half of all staff members in those departments completing the survey. Expanding this model in the next iteration of PH WINS to include more regions could provide the nation with an even more robust picture of the interests and needs of the governmental public health workforce and possibly provide enough information to create a nationally representative sample of small LHDs. The lack of small LHDs remains the biggest limitation of PH WINS, but onboarding many hundreds more agencies into the PH WINS process is practically infeasible without additional structured partnerships, such as those made possible through the PH WINS for All pilot.

Future iterations of PH WINS may also benefit from additional innovations to increase response rates. PH WINS 2017 had a 47% response rate and PH WINS 2021 had a 35% response rate. While it can be assumed that some of the decrease in response rate was due to the increased workload among the workforce as they responded to the COVID-19 pandemic, it is likely that there are other contributing factors such as survey length or communication tactics. To address survey length, the PH WINS team plans to evaluate the psychometrics of PH WINS 2021 to support data-based decision making in the next round of instrument revisions.

Implications for Policy & Practice

de Beaumont and ASTHO were able to use PH WINS 2021 to respond to what was happening in the world by asking new questions about the mental health effects of COVID-19 response on the workforce, the movement of employees due to COVID-19 response activities, and the ability and willingness of the workforce to address racism and key equity-related concepts in their work.

PH WINS 2021 can be compared to past PH WINS data to identify trends in satisfaction, engagement, intention to leave, training needs, and demographics of the workforce.

The expanded PH WINS local sample through the PH WINS for All pilot provides data on small decentralized LHDs (staff <25; serving <25 000) for the first time. Results show that small LHDs operate in a very different context and have different needs than their medium, large, and state counterparts.

Participating agencies, regional public health training centers, national membership organizations, and the federal government can use PH WINS as a platform for action. Results can be used to identify the strengths and gaps of the workforce to develop supports that meet the unique needs of the governmental public health workforce.

APPENDIX TABLE. Information on Each Cohort, Including Type and Number of Agencies in Each Cohort, the Cohort Start Date, and Date and Number of Reminders.

| | Cohort 1 | Cohort 2 | Cohort 3 | Cohort 4 | Cohort 5 |
|---|---|---|---|---|---|
| Types of agencies, n | | | | | |
| SHAs | 38 | 1 | 4 | 1 | 3 |
| BCHC | 24 | 4 | 1 | 0 | 0 |
| LHDs | 18 | 4 | 8 | 2 | 7 |
| PH WINS for All | 197 | 16 | 2 | 1 | 4 |
| Total in each cohort | 277 | 25 | 15 | 4 | 14 |
| Launch and reminder e-mails | | | | | |
| Sep 13, 2021ᵃ | P | | | | |
| Sep 20, 2021 | P | | | | |
| Sep 27, 2021ᵃ | P | | | | |
| Oct 4, 2021 | P | P | | | |
| Oct 12, 2021ᵃ | P | P | P | | |
| Oct 18, 2021 | P | P | P | | |
| Oct 25, 2021ᵃ | P | P | P | P | |
| Nov 1, 2021 | P | P | P | P | |
| Dec 6, 2021ᵃ | P | P | P | P | P |
| Dec 13, 2021 | P | P | P | P | |
| Dec 20, 2021 | P | | | | |
| Jan 5, 2022 | P | P | P | P | P |
| Jan 12, 2022 | P | P | | | |
| Jan 14, 2022 | P | P | | | |
| Total # of e-mails | 9 | 9 | 7 | 7 | 6 |

Abbreviations: BCHC, Big Cities Health Coalition; LHD, local health department; SHA, State Health Agency.

ᵃ The date the survey launched for each cohort.

Footnotes

PH WINS is funded by the de Beaumont Foundation. All authors were funded by the de Beaumont Foundation to work on the fielding and evaluation of PH WINS.

The authors have indicated that they have no potential conflicts of interest to disclose.

Human Participant Compliance Statement: PH WINS 2021 was determined to be exempt by the NORC IRB (IRB00000967), under its Federalwide Assurance #FWA00000142.

Contributor Information

Moriah Robins, Email: gendelman@debeaumont.org.

Jonathon P. Leider, Email: leider@umn.edu.

Kay Schaffer, Email: schaffer@debeaumont.org.

Melissa Gambatese, Email: gambateseconsulting@gmail.com.

Elizabeth Allen, Email: allen-elizabeth@norc.org.

Rachel Hare Bork, Email: harebork@debeaumont.org.

References

- 1.Becker SJ, Hannan C, Robitscher JW. A win for workforce development: the value of PH WINS for ASTHO affiliates. J Public Health Manag Pract. 2019;25(suppl 2):S177–S179.

- 2.Brown JN, Fuchs J, Ristow A. Prioritizing the public health workforce: harnessing PH WINS data in local health departments for workforce development. J Public Health Manag Pract. 2019;25(suppl 2):S183–S184.

- 3.McKeown K, Matthies R, Valdes Lupi M, Mortell T. Using data to advance workforce development in public health agencies: perspectives from state and local health officials. J Public Health Manag Pract. 2019;25(suppl 2):S180–S182.

- 4.Johns Hopkins Bloomberg School of Public Health. Harassment of public health officials widespread during the initial phase of the COVID-19 pandemic. https://publichealth.jhu.edu/2022/harassment-of-public-health-officials-widespread-during-the-initial-phase-of-the-covid-19-pandemic. Published March 17, 2022. Accessed April 15, 2022.

- 5.Ward JA, Stone EM, Mui P, Resnick B. Pandemic-related workplace violence and its impact on public health officials, March 2020-January 2021. Am J Public Health. 2022;112(5):736–746.

- 6.Leider JP, Pineau V, Bogaert K, Ma Q, Sellers K. The methods of PH WINS 2017: approaches to refreshing nationally representative state-level estimates and creating nationally representative local-level estimates of public health workforce interests and needs. J Public Health Manag Pract. 2019;25:S49–S57.

- 7.Leider JP, Bharthapudi K, Pineau V, Liu L, Harper E. The methods behind PH WINS. J Public Health Manag Pract. 2015;21(suppl 6):S28–S35.

- 8.US Department of the Treasury. 2021 Federal Employee Viewpoint Survey results. https://home.treasury.gov/about/careers-at-treasury/2021-federal-employee-viewpoint-survey-results. Published 2021. Accessed April 15, 2022.

- 9.Ahmad F, Jhajj AK, Stewart DE, Burghardt M, Bierman AS. Single item measures of self-rated mental health: a scoping review. BMC Health Serv Res. 2014;14:398.

- 10.Prins A, Bovin MJ, Kimerling R, et al. The Primary Care PTSD Screen for DSM-5 (PC-PTSD-5). https://www.ptsd.va.gov/professional/assessment/screens/pc-ptsd.asp. Published 2015. Accessed May 15, 2022.

- 11.Yeager VA. The politicization of public health and the impact on health officials and the workforce: charting a path forward. Am J Public Health. 2022;112(5):734–735.

- 12.Walensky RP. Director's commentary—racism and health. https://www.cdc.gov/healthequity/racism-disparities/director-commentary.html. Published October 25, 2021. Accessed May 15, 2022.

- 13.Amaya A. Adapting how we ask about the gender of our survey respondents. https://medium.com/pew-research-center-decoded/adapting-how-we-ask-about-the-gender-of-our-survey-respondents-77b0cb7367c0. Published September 11, 2020. Accessed May 15, 2022.

- 14.Meit M, Sellers K, Kronstadt J, et al. Governance typology: a consensus classification of state-local health department relationships. J Public Health Manag Pract. 2012;18(6):520–528.

- 15.Department of Health and Human Services. HHS regional offices. https://www.hhs.gov/about/agencies/iea/regional-offices/index.html. Accessed May 15, 2022.